modelId (string, length 5-122) | author (string, length 2-42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0-738M) | likes (int64, 0-11k) | library_name (string, 245 classes) | tags (sequence, length 1-4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, length 1-901k) |

|---|---|---|---|---|---|---|---|---|---|

smanjil/German-MedBERT | smanjil | 2022-06-13T16:52:46Z | 1,297 | 20 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"exbert",

"German",

"de",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: de

tags:

- exbert

- German

---

<a href="https://huggingface.co/exbert/?model=smanjil/German-MedBERT">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

# German Medical BERT

This model is German BERT fine-tuned on German medical-domain text. It has been trained only on the masked language modeling (MLM) objective, so it can be adapted to a downstream task of your choice; I evaluated it on the NTS-ICD-10 text classification task.

## Overview

**Language model:** bert-base-german-cased

**Language:** German

**Fine-tuning:** Medical articles (diseases, symptoms, therapies, etc.)

**Eval data:** NTS-ICD-10 dataset (Classification)

**Infrastructure:** Google Colab

## Details

- We fine-tuned using PyTorch with the Hugging Face library on a Colab GPU.

- We used the standard fine-tuning parameter settings mentioned in the original BERT paper.

- For classification, however, we had to train for up to 25 epochs.

## Performance (Micro precision, recall, and f1 score for multilabel code classification)

|Models|P|R|F1|

|:------|:------|:------|:------|

|German BERT|86.04|75.82|80.60|

|German MedBERT-256 (fine-tuned)|87.41|77.97|82.42|

|German MedBERT-512 (fine-tuned)|87.75|78.26|82.73|
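
As a quick consistency check on the table above, F1 can be recomputed from the reported precision and recall as their harmonic mean. This is only a sketch: the function name is illustrative, and it assumes the reported F1 was derived from the rounded P and R values, which the card does not state.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above
rows = {
    "German BERT": (86.04, 75.82, 80.60),
    "German MedBERT-256 (fine-tuned)": (87.41, 77.97, 82.42),
    "German MedBERT-512 (fine-tuned)": (87.75, 78.26, 82.73),
}

for name, (p, r, reported) in rows.items():
    recomputed = f1_from_precision_recall(p, r)
    print(f"{name}: recomputed F1 = {recomputed:.2f} (reported {reported})")
```

The recomputed values agree with the reported ones to within rounding.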

## Author

Manjil Shrestha: `shresthamanjil21 [at] gmail.com`

## Related Paper: [Report](https://opus4.kobv.de/opus4-rhein-waal/frontdoor/index/index/searchtype/collection/id/16225/start/0/rows/10/doctypefq/masterthesis/docId/740)

Get in touch:

[LinkedIn](https://www.linkedin.com/in/manjil-shrestha-038527b4/)

|

taeminlee/polyglot_12.8b_ins_orcastyle | taeminlee | 2023-10-10T07:32:26Z | 1,297 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_neox",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-10T06:16:36Z | Entry not found |

gizmo-ai/q-and-a-llama-7b-awq | gizmo-ai | 2023-10-24T16:23:42Z | 1,297 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-24T13:22:00Z | Entry not found |

metterian/llama-2-ko-7b-pt | metterian | 2023-11-25T16:20:14Z | 1,297 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-25T16:12:28Z | Entry not found |

shleeeee/mistral-7b-wiki | shleeeee | 2024-03-08T00:10:36Z | 1,297 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"finetune",

"ko",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-28T13:00:50Z | ---

language:

- ko

pipeline_tag: text-generation

tags:

- finetune

---

# Model Card for mistral-7b-wiki

This model is Mistral-7B fine-tuned on Korean data.

## Model Details

* **Model Developers** : shleeeee(Seunghyeon Lee) , oopsung(Sungwoo Park)

* **Repository** : To be added

* **Model Architecture** : mistral-7b-wiki is a fine-tuned version of Mistral-7B-v0.1.

* **Lora target modules** : q_proj, k_proj, v_proj, o_proj,gate_proj

* **train_batch** : 2

* **Max_step** : 500

## Dataset

Korean Custom Dataset

## Prompt template: Mistral

```

<s>[INST]{['instruction']}[/INST]{['output']}</s>

```
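The template above can be filled with a small helper. This is a sketch under assumptions: it reads `{['instruction']}` and `{['output']}` as the instruction and output fields of a training record, the function name is hypothetical, and leaving the output empty at inference time is a guess rather than something the card specifies.

```python
def format_example(instruction: str, output: str = "") -> str:
    """Fill the card's template: <s>[INST]{instruction}[/INST]{output}</s>.

    With an empty output (assumed inference-time use), the prompt stops after
    [/INST] so the model can generate the answer itself.
    """
    prompt = f"<s>[INST]{instruction}[/INST]"
    if output:
        prompt += f"{output}</s>"
    return prompt


print(format_example("Summarize the article.", "It is about Korean NLP."))
print(format_example("Summarize the article."))
```

Many tokenizers insert the `<s>` token on their own, so it may be worth checking the tokenized prompt for a doubled start token before relying on this string verbatim.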

## Usage

```

# Load model directly

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("shleeeee/mistral-7b-wiki")

model = AutoModelForCausalLM.from_pretrained("shleeeee/mistral-7b-wiki")

# Use a pipeline as a high-level helper

from transformers import pipeline

pipe = pipeline("text-generation", model="shleeeee/mistral-7b-wiki")

```

## Evaluation

|

gordicaleksa/YugoGPT | gordicaleksa | 2024-02-22T15:29:25Z | 1,297 | 25 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-22T14:51:21Z | ---

license: apache-2.0

---

This repo contains YugoGPT - the best open-source base 7B LLM for BCS (Bosnian, Croatian, Serbian) languages developed by Aleksa Gordić.

You can access more powerful iterations of YugoGPT already through the recently announced [RunaAI's API platform](https://dev.runaai.com/)!

Serbian LLM eval results compared to Mistral 7B, LLaMA 2 7B, and GPT2-orao (also see this [LinkedIn post](https://www.linkedin.com/feed/update/urn:li:activity:7143209223722627072/)):

Eval was computed using https://github.com/gordicaleksa/serbian-llm-eval

It was trained on tens of billions of BCS tokens and is based off of [Mistral 7B](https://huggingface.co/mistralai/Mistral-7B-v0.1).

## Notes

1) YugoGPT is a base model and therefore does not have any moderation mechanisms.

2) Since it's a base model it won't follow your instructions as it's just a powerful autocomplete engine.

3) If you want access to much more powerful BCS LLMs (some of which are powering [yugochat](https://www.yugochat.com/)), you can access the models through [RunaAI's API](https://dev.runaai.com/)

# Credits

The data for the project was obtained with the help of [Nikola Ljubešić](https://nljubesi.github.io/), [CLARIN.SI](https://www.clarin.si), and [CLASSLA](https://www.clarin.si/info/k-centre/). Thank you!

# Project Sponsors

A big thank you to the project sponsors!

## Platinum sponsors 🌟

* <b>Ivan</b> (anon)

* [**Things Solver**](https://thingsolver.com/)

## Gold sponsors 🟡

* **qq** (anon)

* [**Adam Sofronijevic**](https://www.linkedin.com/in/adam-sofronijevic-685b911/)

* [**Yanado**](https://yanado.com/)

* [**Mitar Perovic**](https://www.linkedin.com/in/perovicmitar/)

* [**Nikola Ivancevic**](https://www.linkedin.com/in/nivancevic/)

* **Rational Development DOO**

* [**Ivan**](https://www.linkedin.com/in/ivan-kokic-258262175/) and [**Natalija Kokić**](https://www.linkedin.com/in/natalija-kokic-19a458131/)

## Silver sponsors ⚪

[**psk.rs**](https://psk.rs/), [**OmniStreak**](https://omnistreak.com/), [**Luka Važić**](https://www.linkedin.com/in/vazic/), [**Miloš Durković**](https://www.linkedin.com/in/milo%C5%A1-d-684b99188/), [**Marjan Radeski**](https://www.linkedin.com/in/marjanradeski/), **Marjan Stankovic**, [**Nikola Stojiljkovic**](https://www.linkedin.com/in/nikola-stojiljkovic-10469239/), [**Mihailo Tomić**](https://www.linkedin.com/in/mihailotomic/), [**Bojan Jevtic**](https://www.linkedin.com/in/bojanjevtic/), [**Jelena Jovanović**](https://www.linkedin.com/in/eldumo/), [**Nenad Davidović**](https://www.linkedin.com/in/nenad-davidovic-662ab749/), [**Mika Tasich**](https://www.linkedin.com/in/mikatasich/), [**TRENCH-NS**](https://www.linkedin.com/in/milorad-vukadinovic-64639926/), [**Nemanja Grujičić**](https://twitter.com/nemanjagrujicic), [**tim011**](https://knjigovodja.in.rs/sh)

**Also a big thank you to the following individuals:**

- [**Slobodan Marković**](https://www.linkedin.com/in/smarkovic/) - for spreading the word! :)

- [**Aleksander Segedi**](https://www.linkedin.com/in/aleksander-segedi-08430936/) - for help around bookkeeping!

## Citation

```

@article{YugoGPT,

author = "Gordić Aleksa",

title = "YugoGPT - an open-source LLM for Serbian, Bosnian, and Croatian languages",

year = "2024",

howpublished = {\url{https://huggingface.co/gordicaleksa/YugoGPT}},

}

``` |

Slep/CondViT-B16-txt | Slep | 2024-05-15T14:56:48Z | 1,297 | 0 | transformers | [

"transformers",

"safetensors",

"condvit",

"feature-extraction",

"lrvsf-benchmark",

"custom_code",

"dataset:Slep/LAION-RVS-Fashion",

"arxiv:2306.02928",

"license:cc-by-nc-4.0",

"model-index",

"region:us"

] | feature-extraction | 2024-04-05T15:15:20Z | ---

license: cc-by-nc-4.0

model-index:

- name: CondViT-B16-txt

results:

- dataset:

name: LAION - Referred Visual Search - Fashion

split: test

type: Slep/LAION-RVS-Fashion

metrics:

- name: R@1 +10K Dist.

type: recall_at_1|10000

value: 94.18 ± 0.86

- name: R@5 +10K Dist.

type: recall_at_5|10000

value: 98.78 ± 0.32

- name: R@10 +10K Dist.

type: recall_at_10|10000

value: 99.25 ± 0.30

- name: R@20 +10K Dist.

type: recall_at_20|10000

value: 99.71 ± 0.17

- name: R@50 +10K Dist.

type: recall_at_50|10000

value: 99.79 ± 0.13

- name: R@1 +100K Dist.

type: recall_at_1|100000

value: 87.07 ± 1.30

- name: R@5 +100K Dist.

type: recall_at_5|100000

value: 95.28 ± 0.61

- name: R@10 +100K Dist.

type: recall_at_10|100000

value: 96.99 ± 0.44

- name: R@20 +100K Dist.

type: recall_at_20|100000

value: 98.04 ± 0.36

- name: R@50 +100K Dist.

type: recall_at_50|100000

value: 98.98 ± 0.26

- name: R@1 +500K Dist.

type: recall_at_1|500000

value: 79.41 ± 1.02

- name: R@5 +500K Dist.

type: recall_at_5|500000

value: 89.65 ± 1.08

- name: R@10 +500K Dist.

type: recall_at_10|500000

value: 92.72 ± 0.87

- name: R@20 +500K Dist.

type: recall_at_20|500000

value: 94.88 ± 0.58

- name: R@50 +500K Dist.

type: recall_at_50|500000

value: 97.13 ± 0.48

- name: R@1 +1M Dist.

type: recall_at_1|1000000

value: 75.60 ± 1.40

- name: R@5 +1M Dist.

type: recall_at_5|1000000

value: 86.62 ± 1.42

- name: R@10 +1M Dist.

type: recall_at_10|1000000

value: 90.13 ± 1.06

- name: R@20 +1M Dist.

type: recall_at_20|1000000

value: 92.82 ± 0.76

- name: R@50 +1M Dist.

type: recall_at_50|1000000

value: 95.61 ± 0.62

- name: Available Dists.

type: n_dists

value: 2000014

- name: Embedding Dimension

type: embedding_dim

value: 512

- name: Conditioning

type: conditioning

value: text

source:

name: LRVSF Leaderboard

url: https://huggingface.co/spaces/Slep/LRVSF-Leaderboard

task:

type: Retrieval

tags:

- lrvsf-benchmark

datasets:

- Slep/LAION-RVS-Fashion

---

# Conditional ViT - B/16 - Text

*Introduced in <a href=https://arxiv.org/abs/2306.02928>**LRVSF-Fashion: Extending Visual Search with Referring Instructions**</a>, Lepage et al. 2023*

<div align="center">

<div id=links>

|Data|Code|Models|Spaces|

|:-:|:-:|:-:|:-:|

|[Full Dataset](https://huggingface.co/datasets/Slep/LAION-RVS-Fashion)|[Training Code](https://github.com/Simon-Lepage/CondViT-LRVSF)|[Categorical Model](https://huggingface.co/Slep/CondViT-B16-cat)|[LRVS-F Leaderboard](https://huggingface.co/spaces/Slep/LRVSF-Leaderboard)|

|[Test set](https://zenodo.org/doi/10.5281/zenodo.11189942)|[Benchmark Code](https://github.com/Simon-Lepage/LRVSF-Benchmark)|[Textual Model](https://huggingface.co/Slep/CondViT-B16-txt)|[Demo](https://huggingface.co/spaces/Slep/CondViT-LRVSF-Demo)|

</div>

</div>

## General Infos

Model finetuned from CLIP ViT-B/16 on LRVSF at 224x224. The conditioning text is preprocessed by a frozen [Sentence T5-XL](https://huggingface.co/sentence-transformers/sentence-t5-xl).

Research use only.
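
The metrics in this card's metadata are recall at K (R@K) measured against 10K to 1M distractors. As a rough illustration of what such a number means, the sketch below computes R@K as the percentage of queries whose target is ranked in the top K. It assumes one target per query and uses toy data; the function name is hypothetical, and the exact benchmark protocol (including how the ± intervals are obtained) is defined by the benchmark code, not by this snippet.

```python
from typing import Sequence


def recall_at_k(
    ranked_ids: Sequence[Sequence[str]],
    target_ids: Sequence[str],
    k: int,
) -> float:
    """Percentage of queries whose target appears among the top-k ranked items."""
    if len(ranked_ids) != len(target_ids):
        raise ValueError("need exactly one target per ranking")
    if not target_ids:
        return 0.0
    hits = sum(
        target in ranking[:k] for ranking, target in zip(ranked_ids, target_ids)
    )
    return 100.0 * hits / len(target_ids)


# Toy rankings: each inner list is the gallery sorted by similarity to one query.
rankings = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
targets = ["a", "e", "x"]  # the third target is never retrieved

for k in (1, 2, 3):
    print(f"R@{k} = {recall_at_k(rankings, targets, k):.2f}")
```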

## How to Use

```python

from PIL import Image

import requests

from transformers import AutoProcessor, AutoModel

import torch

model = AutoModel.from_pretrained("Slep/CondViT-B16-txt")

processor = AutoProcessor.from_pretrained("Slep/CondViT-B16-txt")

url = "https://huggingface.co/datasets/Slep/LAION-RVS-Fashion/resolve/main/assets/108856.0.jpg"

img = Image.open(requests.get(url, stream=True).raw)

txt = "a brown bag"

inputs = processor(images=[img], texts=[txt])

raw_embedding = model(**inputs)

normalized_embedding = torch.nn.functional.normalize(raw_embedding, dim=-1)

``` |

RichardErkhov/state-spaces_-_mamba-370m-hf-gguf | RichardErkhov | 2024-06-22T18:56:54Z | 1,297 | 0 | null | [

"gguf",

"region:us"

] | null | 2024-06-22T18:47:41Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

mamba-370m-hf - GGUF

- Model creator: https://huggingface.co/state-spaces/

- Original model: https://huggingface.co/state-spaces/mamba-370m-hf/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [mamba-370m-hf.Q2_K.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q2_K.gguf) | Q2_K | 0.2GB |

| [mamba-370m-hf.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.IQ3_XS.gguf) | IQ3_XS | 0.23GB |

| [mamba-370m-hf.IQ3_S.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.IQ3_S.gguf) | IQ3_S | 0.23GB |

| [mamba-370m-hf.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q3_K_S.gguf) | Q3_K_S | 0.23GB |

| [mamba-370m-hf.IQ3_M.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.IQ3_M.gguf) | IQ3_M | 0.23GB |

| [mamba-370m-hf.Q3_K.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q3_K.gguf) | Q3_K | 0.23GB |

| [mamba-370m-hf.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q3_K_M.gguf) | Q3_K_M | 0.23GB |

| [mamba-370m-hf.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q3_K_L.gguf) | Q3_K_L | 0.23GB |

| [mamba-370m-hf.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.IQ4_XS.gguf) | IQ4_XS | 0.26GB |

| [mamba-370m-hf.Q4_0.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q4_0.gguf) | Q4_0 | 0.27GB |

| [mamba-370m-hf.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.IQ4_NL.gguf) | IQ4_NL | 0.27GB |

| [mamba-370m-hf.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q4_K_S.gguf) | Q4_K_S | 0.27GB |

| [mamba-370m-hf.Q4_K.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q4_K.gguf) | Q4_K | 0.27GB |

| [mamba-370m-hf.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q4_K_M.gguf) | Q4_K_M | 0.27GB |

| [mamba-370m-hf.Q4_1.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q4_1.gguf) | Q4_1 | 0.28GB |

| [mamba-370m-hf.Q5_0.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q5_0.gguf) | Q5_0 | 0.3GB |

| [mamba-370m-hf.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q5_K_S.gguf) | Q5_K_S | 0.3GB |

| [mamba-370m-hf.Q5_K.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q5_K.gguf) | Q5_K | 0.3GB |

| [mamba-370m-hf.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q5_K_M.gguf) | Q5_K_M | 0.3GB |

| [mamba-370m-hf.Q5_1.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q5_1.gguf) | Q5_1 | 0.32GB |

| [mamba-370m-hf.Q6_K.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q6_K.gguf) | Q6_K | 0.34GB |

| [mamba-370m-hf.Q8_0.gguf](https://huggingface.co/RichardErkhov/state-spaces_-_mamba-370m-hf-gguf/blob/main/mamba-370m-hf.Q8_0.gguf) | Q8_0 | 0.42GB |

Original model description:

---

library_name: transformers

tags: []

---

# Mamba

<!-- Provide a quick summary of what the model is/does. -->

This repository contains the `transformers`-compatible `mamba-370m`. The checkpoints are untouched, but the full `config.json` and tokenizer are pushed to this repo.

# Usage

You need to install `transformers` from `main` until `transformers=4.39.0` is released.

```bash

pip install git+https://github.com/huggingface/transformers@main

```

We also recommend installing both `causal-conv1d` and `mamba-ssm`:

```bash

pip install "causal-conv1d>=1.2.0"

pip install mamba-ssm

```

If either of these is not installed, the "eager" implementation will be used. Otherwise, the more optimised `cuda` kernels will be used.
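To see in advance which path you are likely to get, you can check whether both packages are importable. This is a minimal sketch, assuming the import names are `causal_conv1d` and `mamba_ssm`; the helper name is hypothetical, and the check `transformers` performs internally may involve more than importability (for example, a CUDA device being present).

```python
import importlib.util


def fast_path_available(modules=("causal_conv1d", "mamba_ssm")) -> bool:
    """Return True only if every listed top-level module can be found."""
    return all(importlib.util.find_spec(name) is not None for name in modules)


if fast_path_available():
    print("Both optional packages were found; the optimised kernels may be used.")
else:
    print("At least one optional package is missing; expect the eager implementation.")
```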

## Generation

You can use the classic `generate` API:

```python

>>> from transformers import MambaConfig, MambaForCausalLM, AutoTokenizer

>>> import torch

>>> tokenizer = AutoTokenizer.from_pretrained("state-spaces/mamba-370m-hf")

>>> model = MambaForCausalLM.from_pretrained("state-spaces/mamba-370m-hf")

>>> input_ids = tokenizer("Hey how are you doing?", return_tensors="pt")["input_ids"]

>>> out = model.generate(input_ids, max_new_tokens=10)

>>> print(tokenizer.batch_decode(out))

["Hey how are you doing?\n\nI'm doing great.\n\nI"]

```

## PEFT finetuning example

In order to finetune using the `peft` library, we recommend keeping the model in float32!

```python

from datasets import load_dataset

from trl import SFTTrainer

from peft import LoraConfig

from transformers import AutoTokenizer, AutoModelForCausalLM, TrainingArguments

tokenizer = AutoTokenizer.from_pretrained("state-spaces/mamba-370m-hf")

model = AutoModelForCausalLM.from_pretrained("state-spaces/mamba-370m-hf")

dataset = load_dataset("Abirate/english_quotes", split="train")

training_args = TrainingArguments(

output_dir="./results",

num_train_epochs=3,

per_device_train_batch_size=4,

logging_dir='./logs',

logging_steps=10,

learning_rate=2e-3

)

lora_config = LoraConfig(

r=8,

target_modules=["x_proj", "embeddings", "in_proj", "out_proj"],

task_type="CAUSAL_LM",

bias="none"

)

trainer = SFTTrainer(

model=model,

tokenizer=tokenizer,

args=training_args,

peft_config=lora_config,

train_dataset=dataset,

dataset_text_field="quote",

)

trainer.train()

```

|

ai4bharat/IndicBART | ai4bharat | 2022-08-07T17:12:33Z | 1,296 | 21 | transformers | [

"transformers",

"pytorch",

"mbart",

"text2text-generation",

"multilingual",

"nlp",

"indicnlp",

"as",

"bn",

"gu",

"hi",

"kn",

"ml",

"mr",

"or",

"pa",

"ta",

"te",

"arxiv:2109.02903",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | ---

language:

- as

- bn

- gu

- hi

- kn

- ml

- mr

- or

- pa

- ta

- te

tags:

- multilingual

- nlp

- indicnlp

---

IndicBART is a multilingual, sequence-to-sequence pre-trained model focusing on Indic languages and English. It currently supports 11 Indian languages and is based on the mBART architecture. You can use the IndicBART model to build natural language generation applications for Indian languages by finetuning the model with supervised training data for tasks like machine translation, summarization, question generation, etc. Some salient features of IndicBART are:

<ul>

<li >Supported languages: Assamese, Bengali, Gujarati, Hindi, Marathi, Odiya, Punjabi, Kannada, Malayalam, Tamil, Telugu and English. Not all of these languages are supported by mBART50 and mT5. </li>

<li >The model is much smaller than the mBART and mT5(-base) models, so less computationally expensive for finetuning and decoding. </li>

<li> Trained on large Indic language corpora (452 million sentences and 9 billion tokens) which also includes Indian English content. </li>

<li> All languages, except English, have been represented in Devanagari script to encourage transfer learning among the related languages. </li>

</ul>

You can read more about IndicBART in this <a href="https://arxiv.org/abs/2109.02903">paper</a>.

For detailed documentation, look here: https://github.com/AI4Bharat/indic-bart/ and https://indicnlp.ai4bharat.org/indic-bart/

# Pre-training corpus

We used the <a href="https://indicnlp.ai4bharat.org/corpora/">IndicCorp</a> data spanning 12 languages with 452 million sentences (9 billion tokens). The model was trained using the text-infilling objective used in mBART.

# Usage:

```

from transformers import MBartForConditionalGeneration, AutoModelForSeq2SeqLM

from transformers import AlbertTokenizer, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("ai4bharat/IndicBART", do_lower_case=False, use_fast=False, keep_accents=True)

# Or use tokenizer = AlbertTokenizer.from_pretrained("ai4bharat/IndicBART", do_lower_case=False, use_fast=False, keep_accents=True)

model = AutoModelForSeq2SeqLM.from_pretrained("ai4bharat/IndicBART")

# Or use model = MBartForConditionalGeneration.from_pretrained("ai4bharat/IndicBART")

# Some initial mapping

bos_id = tokenizer._convert_token_to_id_with_added_voc("<s>")

eos_id = tokenizer._convert_token_to_id_with_added_voc("</s>")

pad_id = tokenizer._convert_token_to_id_with_added_voc("<pad>")

# To get lang_id use any of ['<2as>', '<2bn>', '<2en>', '<2gu>', '<2hi>', '<2kn>', '<2ml>', '<2mr>', '<2or>', '<2pa>', '<2ta>', '<2te>']

# First tokenize the input and outputs. The format below is how IndicBART was trained so the input should be "Sentence </s> <2xx>" where xx is the language code. Similarly, the output should be "<2yy> Sentence </s>".

inp = tokenizer("I am a boy </s> <2en>", add_special_tokens=False, return_tensors="pt", padding=True).input_ids # tensor([[ 466, 1981, 80, 25573, 64001, 64004]])

out = tokenizer("<2hi> मैं एक लड़का हूँ </s>", add_special_tokens=False, return_tensors="pt", padding=True).input_ids # tensor([[64006, 942, 43, 32720, 8384, 64001]])

# Note that if you use any language other than Hindi or Marathi, you should convert its script to Devanagari using the Indic NLP Library.

model_outputs=model(input_ids=inp, decoder_input_ids=out[:,0:-1], labels=out[:,1:])

# For loss

model_outputs.loss ## This is not label smoothed.

# For logits

model_outputs.logits

# For generation. Pardon the messiness. Note the decoder_start_token_id.

model.eval() # Set dropouts to zero

model_output=model.generate(inp, use_cache=True, num_beams=4, max_length=20, min_length=1, early_stopping=True, pad_token_id=pad_id, bos_token_id=bos_id, eos_token_id=eos_id, decoder_start_token_id=tokenizer._convert_token_to_id_with_added_voc("<2en>"))

# Decode to get output strings

decoded_output=tokenizer.decode(model_output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False)

print(decoded_output) # I am a boy

# Note that if your output language is not Hindi or Marathi, you should convert its script from Devanagari to the desired language using the Indic NLP Library.

# What if we mask?

inp = tokenizer("I am [MASK] </s> <2en>", add_special_tokens=False, return_tensors="pt", padding=True).input_ids

model_output=model.generate(inp, use_cache=True, num_beams=4, max_length=20, min_length=1, early_stopping=True, pad_token_id=pad_id, bos_token_id=bos_id, eos_token_id=eos_id, decoder_start_token_id=tokenizer._convert_token_to_id_with_added_voc("<2en>"))

decoded_output=tokenizer.decode(model_output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False)

print(decoded_output) # I am happy

inp = tokenizer("मैं [MASK] हूँ </s> <2hi>", add_special_tokens=False, return_tensors="pt", padding=True).input_ids

model_output=model.generate(inp, use_cache=True, num_beams=4, max_length=20, min_length=1, early_stopping=True, pad_token_id=pad_id, bos_token_id=bos_id, eos_token_id=eos_id, decoder_start_token_id=tokenizer._convert_token_to_id_with_added_voc("<2en>"))

decoded_output=tokenizer.decode(model_output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False)

print(decoded_output) # मैं जानता हूँ

inp = tokenizer("मला [MASK] पाहिजे </s> <2mr>", add_special_tokens=False, return_tensors="pt", padding=True).input_ids

model_output=model.generate(inp, use_cache=True, num_beams=4, max_length=20, min_length=1, early_stopping=True, pad_token_id=pad_id, bos_token_id=bos_id, eos_token_id=eos_id, decoder_start_token_id=tokenizer._convert_token_to_id_with_added_voc("<2en>"))

decoded_output=tokenizer.decode(model_output[0], skip_special_tokens=True, clean_up_tokenization_spaces=False)

print(decoded_output) # मला ओळखलं पाहिजे

```
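The input and output formats described in the comments above ("Sentence </s> <2xx>" for the source, "<2yy> Sentence </s>" for the target) can be wrapped in two small helpers so the tags are not typed by hand. The helper names are hypothetical; the language codes are the ones listed in the usage snippet.

```python
# Language codes taken from the tag list in the usage snippet above.
LANG_CODES = ("as", "bn", "en", "gu", "hi", "kn", "ml", "mr", "or", "pa", "ta", "te")


def _check_lang(lang: str) -> None:
    if lang not in LANG_CODES:
        raise ValueError(f"unsupported language code: {lang!r}")


def format_source(sentence: str, lang: str) -> str:
    """Encoder-side string: 'Sentence </s> <2xx>'."""
    _check_lang(lang)
    return f"{sentence} </s> <2{lang}>"


def format_target(sentence: str, lang: str) -> str:
    """Decoder-side string: '<2yy> Sentence </s>'."""
    _check_lang(lang)
    return f"<2{lang}> {sentence} </s>"


print(format_source("I am a boy", "en"))
print(format_target("मैं एक लड़का हूँ", "hi"))
```

The resulting strings are meant to be passed to the tokenizer with `add_special_tokens=False`, as in the snippet above, since the special tokens are already part of the text.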

# Notes:

1. This is compatible with the latest version of transformers but was developed with version 4.3.2 so consider using 4.3.2 if possible.

2. While I have only shown how to get logits and loss and how to generate outputs, you can do pretty much everything the MBartForConditionalGeneration class can do as in https://huggingface.co/docs/transformers/model_doc/mbart#transformers.MBartForConditionalGeneration

3. Note that the tokenizer I have used is based on sentencepiece and not BPE. Therefore, I used the AlbertTokenizer class and not the MBartTokenizer class.

4. If you wish to use any language written in a non-Devanagari script (except English), then you should first convert it to Devanagari using the <a href="https://github.com/anoopkunchukuttan/indic_nlp_library">Indic NLP Library</a>. After you get the output, you should convert it back into the original script.

# Fine-tuning on a downstream task

1. If you wish to fine-tune this model, then you can do so using the <a href="https://github.com/prajdabre/yanmtt">YANMTT</a> toolkit, following the instructions <a href="https://github.com/AI4Bharat/indic-bart ">here</a>.

2. (Untested) Alternatively, you may use the official huggingface scripts for <a href="https://github.com/huggingface/transformers/tree/master/examples/pytorch/translation">translation</a> and <a href="https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization">summarization</a>.

# Contributors

<ul>

<li> Raj Dabre </li>

<li> Himani Shrotriya </li>

<li> Anoop Kunchukuttan </li>

<li> Ratish Puduppully </li>

<li> Mitesh M. Khapra </li>

<li> Pratyush Kumar </li>

</ul>

# Paper

If you use IndicBART, please cite the following paper:

```

@misc{dabre2021indicbart,

title={IndicBART: A Pre-trained Model for Natural Language Generation of Indic Languages},

author={Raj Dabre and Himani Shrotriya and Anoop Kunchukuttan and Ratish Puduppully and Mitesh M. Khapra and Pratyush Kumar},

year={2021},

eprint={2109.02903},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

# License

The model is available under the MIT License. |

wngkdud/llama2_koen_13b_SFTtrain | wngkdud | 2024-03-13T02:14:05Z | 1,296 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-15T00:11:36Z | ---

license: cc-by-nc-sa-4.0

---

llama2_koen_13b_SFTtrain model

|

sronger/koko_test | sronger | 2023-11-29T08:25:13Z | 1,296 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"feature-extraction",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | feature-extraction | 2023-11-29T06:50:46Z | Entry not found |

mncai/llama2-13b-dpo-v4 | mncai | 2023-12-14T03:33:12Z | 1,296 | 3 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"ko",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-05T01:35:03Z | ---

license: cc-by-nc-sa-4.0

language:

- en

- ko

---

# Model Card for llama2-13b-dpo-v4

### Introduction of MindsAndCompany

https://mnc.ai/

We create various AI models and develop solutions that can be applied to businesses. And as for generative AI, we are developing products like Code Assistant, TOD Chatbot, LLMOps, and are in the process of developing Enterprise AGI (Artificial General Intelligence).

### Model Summary

Based on beomi/llama-2-koen-13b, instruction-tuned and then trained with DPO.

### How to Use

Here is an example of how to use our model.

```python

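import torch
import transformers
from transformers import AutoTokenizer

hf_model = 'mncai/llama2-13b-dpo-v4'

# Assumed setup: the original snippet used `tokenizer` and `pipeline`
# without defining them, so a standard text-generation pipeline is built here.
tokenizer = AutoTokenizer.from_pretrained(hf_model)
pipeline = transformers.pipeline(
    "text-generation",
    model=hf_model,
    tokenizer=tokenizer,
    torch_dtype=torch.float16,
)

# Prompt (Korean): "There are two spheres with diameters 1 and 2; by what
# factor do their volumes differ? Please explain as well."
message = "<|user|>\n두 개의 구가 있는데 각각 지름이 1, 2일때 구의 부피는 몇배 차이가 나지? 설명도 같이 해줘.\n<|assistant|>\n"

sequences = pipeline(
    message,
    do_sample=True,
    top_k=10,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
    max_length=2048,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")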

```
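The example message above follows a `<|user|>` / `<|assistant|>` layout. A small helper pair can build that prompt and strip it from the generated text again. This is a sketch with hypothetical function names: it assumes a single-turn prompt exactly as in the example and assumes the pipeline returns the prompt followed by the continuation.

```python
ASSISTANT_TAG = "<|assistant|>\n"


def build_prompt(user_message: str) -> str:
    """Wrap a single user message in the layout used by the example above."""
    return f"<|user|>\n{user_message}\n{ASSISTANT_TAG}"


def extract_reply(generated_text: str) -> str:
    """Return only the text after the first assistant tag."""
    return generated_text.split(ASSISTANT_TAG, 1)[-1].strip()


prompt = build_prompt("What is the volume ratio of two spheres with diameters 1 and 2?")
# Stand-in for seq['generated_text']; a real call would come from the pipeline.
fake_generation = prompt + "The larger sphere has 8 times the volume."
print(extract_reply(fake_generation))
```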

### LICENSE

Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International Public License, under LLAMA 2 COMMUNITY LICENSE AGREEMENT

### Contact

If you have any questions, please raise an issue or contact us at [email protected] |

mncai/llama2-13b-dpo-v6 | mncai | 2023-12-11T14:05:20Z | 1,296 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-11T13:58:02Z | Entry not found |

IBI-CAAI/MELT-llama-2-7b-chat-v0.1 | IBI-CAAI | 2024-01-06T13:17:55Z | 1,296 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-31T19:35:52Z | ---

license: apache-2.0

language:

- en

library_name: transformers

---

# Model Card MELT-llama-2-7b-chat-v0.1

The MELT-llama-2-7b-chat-v0.1 Large Language Model (LLM) is a generative text model pre-trained and fine-tuned using publicly available medical data.

MELT-llama-2-7b-chat-v0.1 demonstrates a 31.4% improvement over llama-2-7b-chat-hf across 3 medical benchmarks: USMLE, Indian AIIMS, and NEET medical examination examples.

## Model Details

The Medical Education Language Transformer (MELT) models have been trained on a wide range of text, chat, Q/A, and instruction data in the medical domain.

While the model was evaluated using publicly available [USMLE](https://www.usmle.org/), Indian AIIMS, and NEET medical examination example questions, its use is intended to be more broadly applicable.

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [Center for Applied AI](https://caai.ai.uky.edu/)

- **Funded by:** [Institute for Biomedical Informatics](https://www.research.uky.edu/IBI)

- **Model type:** LLM

- **Language(s) (NLP):** English

- **License:** Apache 2.0

- **Finetuned from model:** [llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf)

## Uses

MELT is intended for research purposes only. MELT models are best suited for prompts using a QA or chat format.

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

MELT is intended for research purposes only and should not be used for medical advice.

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

MELT was trained using publicly available collections, which likely contain biased and inaccurate information. The training and evaluation datasets have not been evaluated for content or accuracy.

## How to Get Started with the Model

Use this model like you would any llama-2-7b-chat-hf model.
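The card does not spell out a prompt template, so the following is only a minimal sketch that assumes the standard Llama-2 chat format of the base model (`[INST]` / `<<SYS>>` markers). The helper name `build_llama2_chat_prompt` and the example strings are hypothetical and not part of this repository. The leading `<s>` token is left out because the tokenizer normally adds it.

```python
def build_llama2_chat_prompt(user_message, system_prompt=None):
    """Format a single-turn prompt in the Llama-2 chat style (assumed template)."""
    user_message = user_message.strip()
    if system_prompt:
        # The system prompt is wrapped in <<SYS>> tags inside the first [INST] block.
        return (
            f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    return f"[INST] {user_message} [/INST]"


# Hypothetical research-only example prompt.
prompt = build_llama2_chat_prompt(
    "Which vitamin deficiency causes scurvy?",
    system_prompt="Answer the exam-style question concisely.",
)
print(prompt)
```

The resulting string can then be tokenized and passed to the model in the same way as for any other llama-2-7b-chat-hf checkpoint.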

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

The following datasets were used for training:

[Expert Med](https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/Q3A969)

[MedQA train](https://huggingface.co/datasets/bigbio/med_qa)

[MedMCQA train](https://github.com/MedMCQA/MedMCQA?tab=readme-ov-file#data-download-and-preprocessing)

[LiveQA](https://github.com/abachaa/LiveQA_MedicalTask_TREC2017)

[MedicationQA](https://huggingface.co/datasets/truehealth/medicationqa)

[MMLU clinical topics](https://huggingface.co/datasets/Stevross/mmlu)

[Medical Flashcards](https://huggingface.co/datasets/medalpaca/medical_meadow_medical_flashcards)

[Wikidoc](https://huggingface.co/datasets/medalpaca/medical_meadow_wikidoc)

[Wikidoc Patient Information](https://huggingface.co/datasets/medalpaca/medical_meadow_wikidoc_patient_information)

[MEDIQA](https://huggingface.co/datasets/medalpaca/medical_meadow_mediqa)

[MMMLU](https://huggingface.co/datasets/medalpaca/medical_meadow_mmmlu)

[icliniq 10k](https://drive.google.com/file/d/1ZKbqgYqWc7DJHs3N9TQYQVPdDQmZaClA/view?usp=sharing)

[HealthCare Magic 100k](https://drive.google.com/file/d/1lyfqIwlLSClhgrCutWuEe_IACNq6XNUt/view?usp=sharing)

[GenMedGPT-5k](https://drive.google.com/file/d/1nDTKZ3wZbZWTkFMBkxlamrzbNz0frugg/view?usp=sharing)

[Mental Health Conversational](https://huggingface.co/datasets/heliosbrahma/mental_health_conversational_dataset)

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Training Hyperparameters

- **Lora Rank:** 64

- **Lora Alpha:** 16

- **Lora Targets:** "o_proj","down_proj","v_proj","gate_proj","up_proj","k_proj","q_proj"

- **LR:** 2e-4

- **Epoch:** 3

- **Precision:** bf16 <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->
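To give a feel for what these LoRA settings imply, the sketch below estimates the adapter scaling factor and the number of trainable adapter parameters. It assumes the standard LoRA formulation (scaling = alpha / rank, two low-rank matrices per targeted layer) and the published Llama-2-7B dimensions (hidden size 4096, MLP size 11008, 32 layers); none of these values are stated in the card itself, and the variable names are illustrative.

```python
LORA_RANK = 64
LORA_ALPHA = 16

# Assumed Llama-2-7B dimensions (not stated in this card).
HIDDEN_SIZE = 4096
INTERMEDIATE_SIZE = 11008
NUM_LAYERS = 32

# (input features, output features) of each targeted projection in one layer.
TARGET_SHAPES = {
    "q_proj": (HIDDEN_SIZE, HIDDEN_SIZE),
    "k_proj": (HIDDEN_SIZE, HIDDEN_SIZE),
    "v_proj": (HIDDEN_SIZE, HIDDEN_SIZE),
    "o_proj": (HIDDEN_SIZE, HIDDEN_SIZE),
    "gate_proj": (HIDDEN_SIZE, INTERMEDIATE_SIZE),
    "up_proj": (HIDDEN_SIZE, INTERMEDIATE_SIZE),
    "down_proj": (INTERMEDIATE_SIZE, HIDDEN_SIZE),
}


def lora_param_count(in_features, out_features, rank):
    """A LoRA adapter adds A (rank x in) and B (out x rank) matrices."""
    return rank * (in_features + out_features)


lora_scaling = LORA_ALPHA / LORA_RANK
per_layer = sum(lora_param_count(i, o, LORA_RANK) for i, o in TARGET_SHAPES.values())
total_trainable = per_layer * NUM_LAYERS

print(f"LoRA scaling factor: {lora_scaling}")
print(f"Trainable adapter parameters: {total_trainable:,}")
```

Under these assumptions the adapters amount to roughly 2% of a 7B-parameter base model, which is why all seven projection types can be targeted at rank 64 on modest hardware.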

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

MELT-llama-2-7b-chat-v0.1 demonstrated an average 31.4% improvement over llama-2-7b-chat-hf across 3 medical examination benchmarks (USMLE, Indian AIIMS, and NEET).

### llama-2-7b-chat-hf

- **medqa:** {'base': {'Average': 36.43, 'STEP-1': 36.87, 'STEP-2&3': 35.92}}

- **mausmle:** {'base': {'Average': 30.11, 'STEP-1': 35.29, 'STEP-2': 29.89, 'STEP-3': 26.17}}

- **medmcqa:** {'base': {'Average': 39.25, 'MEDICINE': 38.04, 'OPHTHALMOLOGY': 38.1, 'ANATOMY': 42.47, 'PATHOLOGY': 41.86, 'PHYSIOLOGY': 35.61, 'DENTAL': 36.85, 'RADIOLOGY': 35.71, 'BIOCHEMISTRY': 42.98, 'ANAESTHESIA': 43.48, 'GYNAECOLOGY': 37.91, 'PHARMACOLOGY': 44.38, 'SOCIAL': 43.33, 'PEDIATRICS': 37.88, 'ENT': 47.37, 'SURGERY': 33.06, 'MICROBIOLOGY': 45.21, 'FORENSIC': 53.49, 'PSYCHIATRY': 77.78, 'SKIN': 60.0, 'ORTHOPAEDICS': 35.71, 'UNKNOWN': 100.0}}

- **average:** 35.2%

### MELT-llama-2-7b-chat-v0.1

- **medqa:** {'base': {'Average': 48.39, 'STEP-1': 49.12, 'STEP-2&3': 47.55}}

- **mausmle:** {'base': {'Average': 44.8, 'STEP-1': 42.35, 'STEP-2': 43.68, 'STEP-3': 47.66}}

- **medmcqa:** {'base': {'Average': 45.4, 'MEDICINE': 45.65, 'OPHTHALMOLOGY': 38.1, 'ANATOMY': 41.78, 'PATHOLOGY': 49.22, 'PHYSIOLOGY': 44.7, 'DENTAL': 41.47, 'RADIOLOGY': 48.21, 'BIOCHEMISTRY': 52.89, 'ANAESTHESIA': 52.17, 'GYNAECOLOGY': 35.95, 'PHARMACOLOGY': 51.12, 'SOCIAL': 50.0, 'PEDIATRICS': 50.76, 'ENT': 36.84, 'SURGERY': 48.39, 'MICROBIOLOGY': 49.32, 'FORENSIC': 51.16, 'PSYCHIATRY': 66.67, 'SKIN': 60.0, 'ORTHOPAEDICS': 42.86, 'UNKNOWN': 100.0}}

- **average:** 46.2%
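The card does not state how the headline improvement figure was derived. As one plausible reading, the sketch below averages the three per-benchmark `Average` scores listed above and computes the relative change between the two models. The aggregation method is an assumption, so the result may differ slightly from the reported figure.

```python
# Per-benchmark "Average" scores copied from the two result lists above.
base_scores = {"medqa": 36.43, "mausmle": 30.11, "medmcqa": 39.25}
melt_scores = {"medqa": 48.39, "mausmle": 44.8, "medmcqa": 45.4}


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


def relative_improvement(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


base_avg = mean(base_scores.values())
melt_avg = mean(melt_scores.values())
improvement = relative_improvement(base_avg, melt_avg)

print(f"llama-2-7b-chat-hf average: {base_avg:.2f}")
print(f"MELT average:               {melt_avg:.2f}")
print(f"Relative improvement:       {improvement:.1f}%")
```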

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[MedQA test](https://huggingface.co/datasets/bigbio/med_qa)

[MedMCQA test](https://github.com/MedMCQA/MedMCQA?tab=readme-ov-file#data-download-and-preprocessing)

[MA USMLE](https://huggingface.co/datasets/medalpaca/medical_meadow_usmle_self_assessment)

## Disclaimer:

The use of large language models, such as this one, is provided without warranties or guarantees of any kind. While every effort has been made to ensure accuracy, completeness, and reliability of the information generated, it should be noted that these models may produce responses that are inaccurate, outdated, or inappropriate for specific purposes. Users are advised to exercise discretion and judgment when relying on the information generated by these models. The outputs should not be considered as professional, legal, medical, financial, or any other form of advice. It is recommended to seek expert advice or consult appropriate sources for specific queries or critical decision-making. The creators, developers, and providers of these models disclaim any liability for damages, losses, or any consequences arising from the use, reliance upon, or interpretation of the information provided by these models. The user assumes full responsibility for their interactions and usage of the generated content. By using these language models, users agree to indemnify and hold harmless the developers, providers, and affiliates from any claims, damages, or liabilities that may arise from their use. Please be aware that these models are constantly evolving, and their capabilities, limitations, and outputs may change over time without prior notice. Your use of this language model signifies your acceptance and understanding of this disclaimer.

|

shramay-palta/test-demo-t5-large-qa | shramay-palta | 2024-05-01T19:47:49Z | 1,296 | 0 | transformers | [

"transformers",

"safetensors",

"t5",

"text2text-generation",

"arxiv:1910.09700",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text2text-generation | 2024-05-01T19:43:38Z | ---

library_name: transformers

license: mit

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

nes470/test-demo-qa-with-roberta | nes470 | 2024-05-06T23:52:24Z | 1,296 | 0 | transformers | [

"transformers",

"safetensors",

"roberta",

"question-answering",

"arxiv:1910.09700",

"license:mit",

"endpoints_compatible",

"region:us"

] | question-answering | 2024-05-06T23:48:33Z | ---

library_name: transformers

license: mit

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

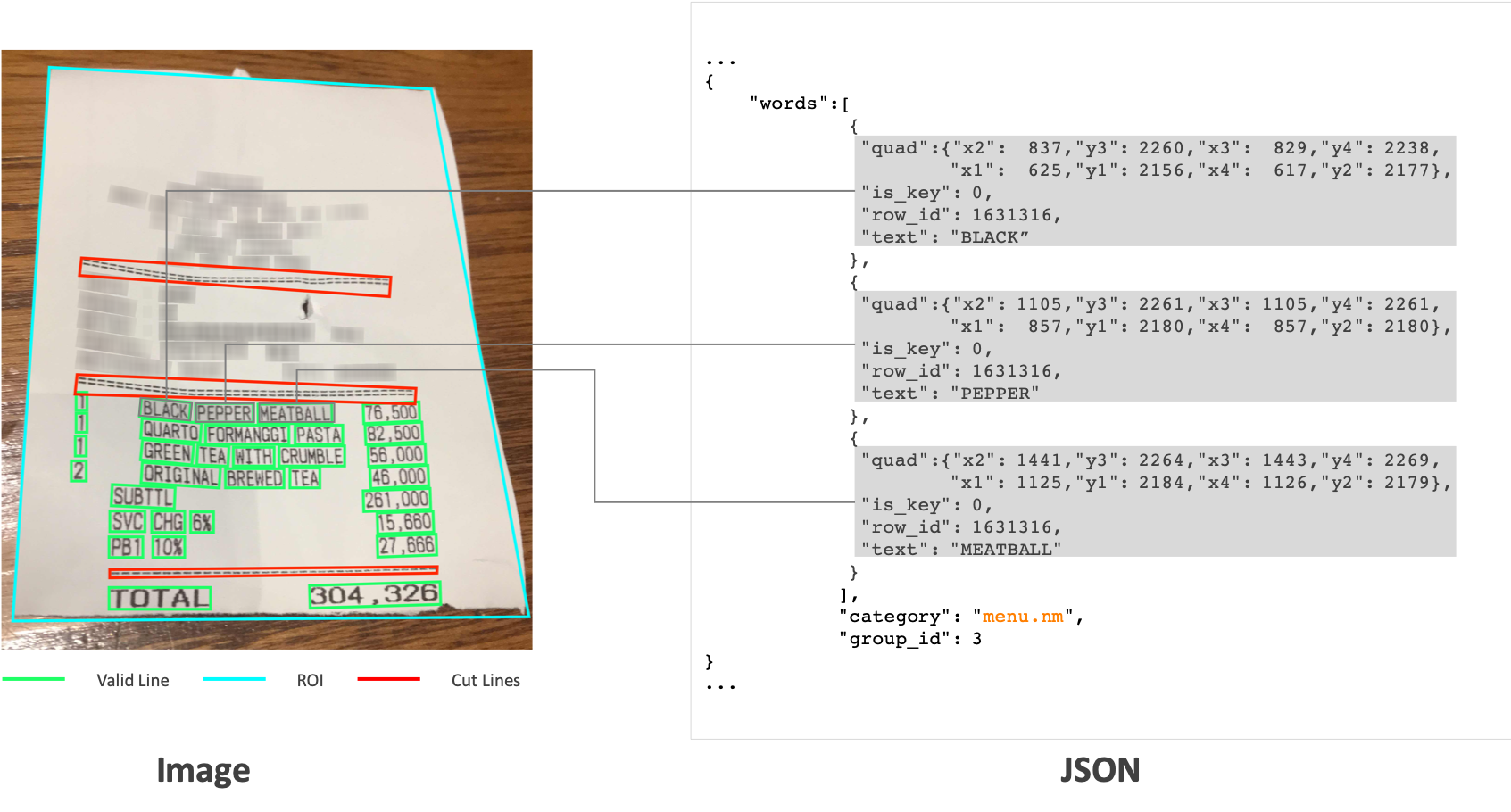

Theivaprakasham/layoutlmv2-finetuned-sroie | Theivaprakasham | 2022-03-02T08:12:26Z | 1,295 | 2 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"generated_from_trainer",

"dataset:sroie",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | ---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

datasets:

- sroie

model-index:

- name: layoutlmv2-finetuned-sroie

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv2-finetuned-sroie

This model is a fine-tuned version of [microsoft/layoutlmv2-base-uncased](https://huggingface.co/microsoft/layoutlmv2-base-uncased) on the sroie dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0291

- Address Precision: 0.9341

- Address Recall: 0.9395

- Address F1: 0.9368

- Address Number: 347

- Company Precision: 0.9570

- Company Recall: 0.9625

- Company F1: 0.9598

- Company Number: 347

- Date Precision: 0.9885

- Date Recall: 0.9885

- Date F1: 0.9885

- Date Number: 347

- Total Precision: 0.9253

- Total Recall: 0.9280

- Total F1: 0.9266

- Total Number: 347

- Overall Precision: 0.9512

- Overall Recall: 0.9546

- Overall F1: 0.9529

- Overall Accuracy: 0.9961
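The F1 values above are the harmonic mean of the corresponding precision and recall. A small sketch of that relationship follows; the function name is illustrative, and because the reported precision and recall are already rounded, recomputed values can differ in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; defined as 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the evaluation results above.
reported = {
    "Address": (0.9341, 0.9395),
    "Company": (0.9570, 0.9625),
    "Date": (0.9885, 0.9885),
    "Total": (0.9253, 0.9280),
    "Overall": (0.9512, 0.9546),
}

for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1_score(p, r):.4f}")
```

The zero guard matters for early checkpoints, where a field with no correct predictions has precision and recall of 0.0.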

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 3000

- mixed_precision_training: Native AMP
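To make the schedule concrete, here is a framework-free sketch of a linear learning-rate schedule with warmup using the values listed above. It is meant to approximate the behaviour of the linear scheduler in Transformers, but the rounding of the warmup step count is an assumption and the function name is illustrative.

```python
import math


def linear_schedule_lr(step, base_lr=5e-5, total_steps=3000, warmup_ratio=0.1):
    """Learning rate at a given step: linear warmup, then linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 150, 300, 1650, 3000):
    print(f"step {step:4d}: lr = {linear_schedule_lr(step):.2e}")
```

With a warmup ratio of 0.1 and 3000 training steps, the peak learning rate is reached at step 300 and decays for the remaining 2700 steps.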

### Training results

| Training Loss | Epoch | Step | Validation Loss | Address Precision | Address Recall | Address F1 | Address Number | Company Precision | Company Recall | Company F1 | Company Number | Date Precision | Date Recall | Date F1 | Date Number | Total Precision | Total Recall | Total F1 | Total Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:-----------------:|:--------------:|:----------:|:--------------:|:-----------------:|:--------------:|:----------:|:--------------:|:--------------:|:-----------:|:-------:|:-----------:|:---------------:|:------------:|:--------:|:------------:|:-----------------:|:--------------:|:----------:|:----------------:|

| No log | 0.05 | 157 | 0.8162 | 0.3670 | 0.7233 | 0.4869 | 347 | 0.0617 | 0.0144 | 0.0234 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.3346 | 0.1844 | 0.2378 | 0.9342 |

| No log | 1.05 | 314 | 0.3490 | 0.8564 | 0.8934 | 0.8745 | 347 | 0.8610 | 0.9280 | 0.8932 | 347 | 0.7297 | 0.8559 | 0.7878 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.8128 | 0.6693 | 0.7341 | 0.9826 |

| No log | 2.05 | 471 | 0.1845 | 0.7970 | 0.9049 | 0.8475 | 347 | 0.9211 | 0.9424 | 0.9316 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.8978 | 0.7089 | 0.7923 | 0.9835 |

| 0.7027 | 3.05 | 628 | 0.1194 | 0.9040 | 0.9222 | 0.9130 | 347 | 0.8880 | 0.9135 | 0.9006 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.0 | 0.0 | 0.0 | 347 | 0.9263 | 0.7061 | 0.8013 | 0.9853 |

| 0.7027 | 4.05 | 785 | 0.0762 | 0.9397 | 0.9424 | 0.9410 | 347 | 0.8889 | 0.9222 | 0.9052 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.7740 | 0.9078 | 0.8355 | 347 | 0.8926 | 0.9402 | 0.9158 | 0.9928 |

| 0.7027 | 5.05 | 942 | 0.0564 | 0.9282 | 0.9308 | 0.9295 | 347 | 0.9296 | 0.9510 | 0.9402 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.7801 | 0.8588 | 0.8176 | 347 | 0.9036 | 0.9323 | 0.9177 | 0.9946 |

| 0.0935 | 6.05 | 1099 | 0.0548 | 0.9222 | 0.9222 | 0.9222 | 347 | 0.6975 | 0.7378 | 0.7171 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.8608 | 0.8732 | 0.8670 | 347 | 0.8648 | 0.8804 | 0.8725 | 0.9921 |

| 0.0935 | 7.05 | 1256 | 0.0410 | 0.92 | 0.9280 | 0.9240 | 347 | 0.9486 | 0.9568 | 0.9527 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9091 | 0.9222 | 0.9156 | 347 | 0.9414 | 0.9488 | 0.9451 | 0.9961 |

| 0.0935 | 8.05 | 1413 | 0.0369 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9569 | 0.9597 | 0.9583 | 347 | 0.9772 | 0.9885 | 0.9828 | 347 | 0.9143 | 0.9222 | 0.9182 | 347 | 0.9463 | 0.9524 | 0.9494 | 0.9960 |

| 0.038 | 9.05 | 1570 | 0.0343 | 0.9282 | 0.9308 | 0.9295 | 347 | 0.9624 | 0.9597 | 0.9610 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9206 | 0.9020 | 0.9112 | 347 | 0.9500 | 0.9452 | 0.9476 | 0.9958 |

| 0.038 | 10.05 | 1727 | 0.0317 | 0.9395 | 0.9395 | 0.9395 | 347 | 0.9598 | 0.9625 | 0.9612 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9280 | 0.9280 | 0.9280 | 347 | 0.9539 | 0.9546 | 0.9543 | 0.9963 |

| 0.038 | 11.05 | 1884 | 0.0312 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9514 | 0.9597 | 0.9555 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9226 | 0.9280 | 0.9253 | 347 | 0.9498 | 0.9539 | 0.9518 | 0.9960 |

| 0.0236 | 12.05 | 2041 | 0.0318 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9043 | 0.8991 | 0.9017 | 347 | 0.9467 | 0.9474 | 0.9471 | 0.9956 |

| 0.0236 | 13.05 | 2198 | 0.0291 | 0.9337 | 0.9337 | 0.9337 | 347 | 0.9598 | 0.9625 | 0.9612 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9164 | 0.9164 | 0.9164 | 347 | 0.9496 | 0.9503 | 0.9499 | 0.9960 |

| 0.0236 | 14.05 | 2355 | 0.0300 | 0.9286 | 0.9366 | 0.9326 | 347 | 0.9459 | 0.9568 | 0.9513 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9275 | 0.9222 | 0.9249 | 347 | 0.9476 | 0.9510 | 0.9493 | 0.9959 |

| 0.0178 | 15.05 | 2512 | 0.0307 | 0.9366 | 0.9366 | 0.9366 | 347 | 0.9513 | 0.9568 | 0.9540 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9275 | 0.9222 | 0.9249 | 347 | 0.9510 | 0.9510 | 0.9510 | 0.9959 |

| 0.0178 | 16.05 | 2669 | 0.0300 | 0.9312 | 0.9366 | 0.9339 | 347 | 0.9543 | 0.9625 | 0.9584 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9171 | 0.9251 | 0.9211 | 347 | 0.9477 | 0.9532 | 0.9504 | 0.9959 |

| 0.0178 | 17.05 | 2826 | 0.0292 | 0.9368 | 0.9395 | 0.9381 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9519 | 0.9546 | 0.9532 | 0.9961 |

| 0.0178 | 18.05 | 2983 | 0.0291 | 0.9341 | 0.9395 | 0.9368 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9512 | 0.9546 | 0.9529 | 0.9961 |

| 0.0149 | 19.01 | 3000 | 0.0291 | 0.9341 | 0.9395 | 0.9368 | 347 | 0.9570 | 0.9625 | 0.9598 | 347 | 0.9885 | 0.9885 | 0.9885 | 347 | 0.9253 | 0.9280 | 0.9266 | 347 | 0.9512 | 0.9546 | 0.9529 | 0.9961 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.8.0+cu101

- Datasets 1.18.4.dev0

- Tokenizers 0.11.6

|

mncai/Mistral-7B-v0.1-orca-2k | mncai | 2023-10-22T06:00:24Z | 1,295 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"MindsAndCompany",

"en",

"ko",

"dataset:kyujinpy/OpenOrca-KO",

"arxiv:2306.02707",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-22T05:46:39Z | ---

pipeline_tag: text-generation

license: mit

language:

- en

- ko

library_name: transformers

tags:

- MindsAndCompany

datasets:

- kyujinpy/OpenOrca-KO

---

## Model Details

* **Developed by**: [Minds And Company](https://mnc.ai/)

* **Backbone Model**: [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1)

* **Library**: [HuggingFace Transformers](https://github.com/huggingface/transformers)

## Dataset Details

### Used Datasets

- kyujinpy/OpenOrca-KO

### Prompt Template

- Llama Prompt Template

## Limitations & Biases:

Llama 2 and its fine-tuned variants are a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover, all scenarios. For these reasons, as with all LLMs, the potential outputs of Llama 2 and any fine-tuned variant cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of Llama 2 variants, developers should perform safety testing and tuning tailored to their specific applications of the model.

Please see the Responsible Use Guide available at https://ai.meta.com/llama/responsible-use-guide/

## License Disclaimer:

This model is bound by the license and usage restrictions of the original Llama-2 model, and comes with no warranty or guarantees of any kind.

## Contact Us

- [Minds And Company](https://mnc.ai/)

## Citation:

Please kindly cite using the following BibTeX:

```bibtex

@misc{mukherjee2023orca,

title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},

author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},

year={2023},

eprint={2306.02707},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

```

@misc{Orca-best,

title = {Orca-best: A filtered version of orca gpt4 dataset.},

author = {Shahul Es},

year = {2023},

publisher = {HuggingFace},

journal = {HuggingFace repository},

howpublished = {\url{https://huggingface.co/datasets/shahules786/orca-best/}},

}

```

```

@software{touvron2023llama2,

title={Llama 2: Open Foundation and Fine-Tuned Chat Models},

author={Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava,

Shruti Bhosale, Dan Bikel, Lukas Blecher, Cristian Canton Ferrer, Moya Chen, Guillem Cucurull, David Esiobu, Jude Fernandes, Jeremy Fu, Wenyin Fu, Brian Fuller,

Cynthia Gao, Vedanuj Goswami, Naman Goyal, Anthony Hartshorn, Saghar Hosseini, Rui Hou, Hakan Inan, Marcin Kardas, Viktor Kerkez, Madian Khabsa, Isabel Kloumann,

Artem Korenev, Punit Singh Koura, Marie-Anne Lachaux, Thibaut Lavril, Jenya Lee, Diana Liskovich, Yinghai Lu, Yuning Mao, Xavier Martinet, Todor Mihaylov,

Pushkar Mishra, Igor Molybog, Yixin Nie, Andrew Poulton, Jeremy Reizenstein, Rashi Rungta, Kalyan Saladi, Alan Schelten, Ruan Silva, Eric Michael Smith,

Ranjan Subramanian, Xiaoqing Ellen Tan, Binh Tang, Ross Taylor, Adina Williams, Jian Xiang Kuan, Puxin Xu, Zheng Yan, Iliyan Zarov, Yuchen Zhang, Angela Fan,

Melanie Kambadur, Sharan Narang, Aurelien Rodriguez, Robert Stojnic, Sergey Edunov, Thomas Scialom},

year={2023}

}

```

> Readme format: [Riiid/sheep-duck-llama-2-70b-v1.1](https://huggingface.co/Riiid/sheep-duck-llama-2-70b-v1.1) |

kyujinpy/Kosy-platypus2-13B-v5 | kyujinpy | 2023-11-02T01:53:02Z | 1,295 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"ko",

"dataset:kyujinpy/KOpen-platypus",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-01T17:25:47Z | ---

language:

- ko

datasets:

- kyujinpy/KOpen-platypus

library_name: transformers

pipeline_tag: text-generation

license: cc-by-nc-sa-4.0

---

# **Kosy🍵llama**

## Model Details

**Model Developers** Kyujin Han (kyujinpy)

**Model Description**

A new version of Ko-platypus2, trained using the [NEFTune](https://github.com/neelsjain/NEFTune) method!

(Noisy + KO + llama = Kosy🍵llama)

**Repo Link**

GitHub **KoNEFTune**: [Kosy🍵llama](https://github.com/Marker-Inc-Korea/KoNEFTune)

If you visit our GitHub, you can easily apply **Random_noisy_embedding_fine-tuning**!!
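For readers unfamiliar with NEFTune, its core idea can be sketched as adding uniform noise to the token embeddings during training, scaled by `alpha / sqrt(L * d)` for sequence length `L` and embedding dimension `d`. The snippet below is a framework-free illustration of that rule as described in the NEFTune paper, not the code in the KoNEFTune repository; the function name `add_neftune_noise` and the default `noise_alpha=5.0` are assumptions.

```python
import math
import random


def add_neftune_noise(embeddings, noise_alpha=5.0, rng=None):
    """Return a noisy copy of a [seq_len][dim] embedding matrix (training only)."""
    rng = rng or random.Random()
    seq_len = len(embeddings)
    dim = len(embeddings[0])
    # Noise magnitude shrinks as the sequence gets longer or the embedding wider.
    scale = noise_alpha / math.sqrt(seq_len * dim)
    return [
        [value + scale * rng.uniform(-1.0, 1.0) for value in row]
        for row in embeddings
    ]


# Toy example: 4 tokens with 4-dimensional zero embeddings.
toy_embeddings = [[0.0] * 4 for _ in range(4)]
noisy = add_neftune_noise(toy_embeddings, noise_alpha=5.0, rng=random.Random(0))
print(noisy[0])
```

The noise is applied only in the training forward pass; at inference time the embeddings are used unchanged.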

**Base Model**

[hyunseoki/ko-en-llama2-13b](https://huggingface.co/hyunseoki/ko-en-llama2-13b)

**Training Dataset**

Version of combined dataset: [kyujinpy/KOpen-platypus](https://huggingface.co/datasets/kyujinpy/KOpen-platypus)

I used an A100 40GB GPU on Colab for training.

# **Model comparisons**

[KO-LLM leaderboard](https://huggingface.co/spaces/upstage/open-ko-llm-leaderboard)

# **NEFT comparisons**

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |

| --- | --- | --- | --- | --- | --- | --- |

| [Ko-Platypus2-13B](https://huggingface.co/kyujinpy/KO-Platypus2-13B) | 45.60 | 44.20 | 54.31 | 42.47 | 44.41 | 42.62 |

| *NEFT(🍵kosy)+MLP-v1 | 43.64 | 43.94 | 53.88 | 42.68 | 43.46 | 34.24 |

| *NEFT(🍵kosy)+MLP-v2 | 45.45 | 44.20 | 54.56 | 42.60 | 42.68 | 42.98 |

| [***NEFT(🍵kosy)+MLP-v3**](https://huggingface.co/kyujinpy/Kosy-platypus2-13B-v3) | 46.31 | 43.34 | 54.54 | 43.38 | 44.11 | 46.16 |

| NEFT(🍵kosy)+Attention | 44.92 | 42.92 | 54.48 | 42.99 | 43.00 | 41.20 |

| NEFT(🍵kosy) | 45.08 | 43.09 | 53.61 | 41.06 | 43.47 | 43.21 |

> *Different hyperparameters, such as learning_rate, batch_size, epoch, etc.

# Implementation Code

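```python
# Load Kosy-platypus2 and its tokenizer from the Hugging Face Hub
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

# Repo ID taken from this model card's entry
repo = "kyujinpy/Kosy-platypus2-13B-v5"

model = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,  # half precision to reduce memory use
    device_map="auto",  # spread layers across available devices
)
tokenizer = AutoTokenizer.from_pretrained(repo)
```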

--- |

wons/llama2-13b-dpo-test-v0.2 | wons | 2023-11-29T14:05:44Z | 1,295 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-29T01:51:51Z | Entry not found |

wons/mistral-7B-test-v0.2 | wons | 2023-11-29T03:17:31Z | 1,295 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-29T03:46:06Z | Entry not found |

sronger/mistral-ko-llm | sronger | 2023-11-30T04:42:28Z | 1,295 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-30T04:39:22Z | Entry not found |

oopsung/llama2-7b-koNqa-test-v1 | oopsung | 2023-11-30T07:08:30Z | 1,295 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-30T07:01:34Z | Entry not found |

yuntaeyang/Yi-Ko-6B-lora | yuntaeyang | 2023-12-27T09:59:19Z | 1,295 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-27T09:55:18Z | Entry not found |

Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2 | Josephgflowers | 2024-03-09T13:55:48Z | 1,295 | 0 | transformers | [

"transformers",

"safetensors",

"gguf",

"llama",

"text-generation",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-04T16:31:30Z | ---

license: mit

model-index:

- name: Tinyllama-1.3B-Cinder-Reason-Test-2

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 32.76

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 57.92

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 25.42

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 37.26

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 64.8

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 2.81

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test-2

name: Open LLM Leaderboard

---

A 1.3B test model built by merging two Cinder models (layers 1-22 and 18-22), trained on math and step-by-step reasoning. Model overview: Cinder is an AI chatbot tailored for engaging users in scientific and educational conversations, offering companionship, and sparking imaginative exploration. It is built on the TinyLlama 1.1B-parameter model and trained on a unique combination of datasets. Testing on the Reason-with-cinder dataset.

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_Josephgflowers__Tinyllama-1.3B-Cinder-Reason-Test-2)

| Metric |Value|

|---------------------------------|----:|

|Avg. |36.83|

|AI2 Reasoning Challenge (25-Shot)|32.76|

|HellaSwag (10-Shot) |57.92|

|MMLU (5-Shot) |25.42|

|TruthfulQA (0-shot) |37.26|

|Winogrande (5-shot) |64.80|

|GSM8k (5-shot) | 2.81|
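The `Avg.` row appears to be the unweighted mean of the six benchmark scores. A minimal sketch that checks this, with the scores copied from the table above (the variable names are illustrative, and this is not the leaderboard's own code):

```python
# Benchmark scores copied from the results table above.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 32.76,
    "HellaSwag (10-Shot)": 57.92,
    "MMLU (5-Shot)": 25.42,
    "TruthfulQA (0-shot)": 37.26,
    "Winogrande (5-shot)": 64.80,
    "GSM8k (5-shot)": 2.81,
}

# Assumption: the reported average is a plain arithmetic mean of the six scores.
average = sum(scores.values()) / len(scores)
print(f"Avg. = {average:.2f}")  # prints: Avg. = 36.83
```

The result matches the reported 36.83, which is consistent with an unweighted mean.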

|

PassionFriend/5DRV1wBnJwX5bnZot2nawK2nErQjidffoTV2Genxwn5NLrdU_vgg | PassionFriend | 2024-03-01T06:46:12Z | 1,295 | 0 | keras | [

"keras",

"region:us"

] | null | 2024-02-15T15:59:48Z | Entry not found |

ChrisWilson011016/5FUaZbKuahuFmCRhmKWF936zmmGM8D21J1jqp6a2i6UZRLvn_vgg | ChrisWilson011016 | 2024-03-04T18:55:55Z | 1,295 | 0 | keras | [

"keras",

"region:us"

] | null | 2024-02-24T15:21:26Z | Entry not found |

LoneStriker/openbuddy-gemma-7b-v19.1-4k-GGUF | LoneStriker | 2024-03-02T18:31:05Z | 1,295 | 1 | transformers | [

"transformers",

"gguf",

"text-generation",

"zh",

"en",

"fr",

"de",

"ja",

"ko",

"it",

"ru",

"fi",

"base_model:google/gemma-7b",

"license:other",

"region:us"

] | text-generation | 2024-03-02T18:18:01Z | ---

language:

- zh

- en

- fr

- de

- ja

- ko

- it

- ru

- fi

pipeline_tag: text-generation

inference: false

library_name: transformers

license: other

license_name: gemma

license_link: https://ai.google.dev/gemma/terms

base_model: google/gemma-7b

---

# OpenBuddy - Open Multilingual Chatbot

GitHub and Usage Guide: [https://github.com/OpenBuddy/OpenBuddy](https://github.com/OpenBuddy/OpenBuddy)

Website and Demo: [https://openbuddy.ai](https://openbuddy.ai)

Evaluation result of this model: [Evaluation.txt](Evaluation.txt)

# Copyright Notice

BaseModel: Gemma-7b

Gemma is provided under and subject to the Gemma Terms of Use found at ai.google.dev/gemma/terms

## Disclaimer

All OpenBuddy models have inherent limitations and may potentially produce outputs that are erroneous, harmful, offensive, or otherwise undesirable. Users should not use these models in critical or high-stakes situations that may lead to personal injury, property damage, or significant losses. Examples of such scenarios include, but are not limited to, the medical field, controlling software and hardware systems that may cause harm, and making important financial or legal decisions.

OpenBuddy is provided "as-is" without any warranty of any kind, either express or implied, including, but not limited to, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement. In no event shall the authors, contributors, or copyright holders be liable for any claim, damages, or other liabilities, whether in an action of contract, tort, or otherwise, arising from, out of, or in connection with the software or the use or other dealings in the software.

By using OpenBuddy, you agree to these terms and conditions, and acknowledge that you understand the potential risks associated with its use. You also agree to indemnify and hold harmless the authors, contributors, and copyright holders from any claims, damages, or liabilities arising from your use of OpenBuddy.

## 免责声明

所有OpenBuddy模型均存在固有的局限性,可能产生错误的、有害的、冒犯性的或其他不良的输出。用户在关键或高风险场景中应谨慎行事,不要使用这些模型,以免导致人身伤害、财产损失或重大损失。此类场景的例子包括但不限于医疗领域、可能导致伤害的软硬件系统的控制以及进行重要的财务或法律决策。

OpenBuddy按“原样”提供,不附带任何种类的明示或暗示的保证,包括但不限于适销性、特定目的的适用性和非侵权的暗示保证。在任何情况下,作者、贡献者或版权所有者均不对因软件或使用或其他软件交易而产生的任何索赔、损害赔偿或其他责任(无论是合同、侵权还是其他原因)承担责任。

使用OpenBuddy即表示您同意这些条款和条件,并承认您了解其使用可能带来的潜在风险。您还同意赔偿并使作者、贡献者和版权所有者免受因您使用OpenBuddy而产生的任何索赔、损害赔偿或责任的影响。 |

johnsnowlabs/JSL-MedMNX-7B | johnsnowlabs | 2024-04-18T19:22:24Z | 1,295 | 2 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"reward model",

"RLHF",

"medical",

"conversational",

"en",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-15T19:18:02Z | ---

license: cc-by-nc-nd-4.0

language:

- en

library_name: transformers

tags:

- reward model

- RLHF

- medical

---

# JSL-MedMNX-7B

[<img src="https://repository-images.githubusercontent.com/104670986/2e728700-ace4-11ea-9cfc-f3e060b25ddf">](http://www.johnsnowlabs.com)

JSL-MedMNX-7B is a 7-billion-parameter model developed by [John Snow Labs](https://www.johnsnowlabs.com/).

This model is trained on medical datasets to provide state-of-the-art performance on biomedical benchmarks: [Open Medical LLM Leaderboard](https://huggingface.co/spaces/openlifescienceai/open_medical_llm_leaderboard).

This model is available under a [CC-BY-NC-ND](https://creativecommons.org/licenses/by-nc-nd/4.0/deed.en) license and must also conform to this [Acceptable Use Policy](https://huggingface.co/johnsnowlabs). If you need to license this model for commercial use, please contact us at [email protected].

## 💻 Usage

```python

# Install dependencies first (shell command, or prefix with "!" in a notebook cell):
#   pip install -qU transformers accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "johnsnowlabs/JSL-MedMNX-7B"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)