| modelId (string, length 5 to 122) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 distinct values) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 48 distinct values) | createdAt (timestamp[us, tz=UTC]) | card (string, length 1 to 901k) |
|---|---|---|---|---|---|---|---|---|---|

Yntec/epiCVision | Yntec | 2023-08-17T03:53:13Z | 1,267 | 10 | diffusers | [

"diffusers",

"safetensors",

"Photorealistic",

"Realistic",

"Analog",

"Portrait",

"Semi-Realistic",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"SG_161222",

"epinikion",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-08-17T03:14:17Z | ---

license: creativeml-openrail-m

language:

- en

library_name: diffusers

pipeline_tag: text-to-image

tags:

- Photorealistic

- Realistic

- Analog

- Portrait

- Semi-Realistic

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- SG_161222

- epinikion

---

# epiCVision

A mix of epicRealism and realisticVision. I don't like false modesty, so I claim this is better than either model.


Sample and prompt:

very cute princess with curly hair wearing choker who would marry me

Original pages:

https://civitai.com/models/25694?modelVersionId=30761

https://civitai.com/models/4201?modelVersionId=5196

Full recipe:

# Add Difference 1.0

Primary model:

epicRealism

Secondary model:

epicRealism

Tertiary model:

v1-5-pruned-fp16-no-ema (https://huggingface.co/Yntec/DreamLikeRemix/resolve/main/v1-5-pruned-fp16-no-ema.safetensors)

Output Model:

Temporary

# Weighted Sum 0.70

Primary model:

RealisticVision

Secondary model:

Temporary

Output Model:

epiCVision |
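A minimal sketch of what the two merge steps in the recipe above compute. It assumes the AUTOMATIC1111 WebUI checkpoint-merger conventions (Add Difference: A + (B - C) * M; Weighted Sum: A * (1 - M) + B * M), which the card itself does not spell out. Checkpoints are modeled as toy dicts of floats, keys missing from any input are simply skipped, and all function names are hypothetical; a real merge applies the same per-key arithmetic to every tensor in the `.safetensors` state dicts.

```python
from typing import Dict, Mapping

# Toy stand-in for a checkpoint: parameter name -> value. Real checkpoints map
# names to tensors, but the per-key arithmetic is the same.
StateDict = Mapping[str, float]


def add_difference(a: StateDict, b: StateDict, c: StateDict, m: float) -> Dict[str, float]:
    """Assumed convention: A + (B - C) * M, over keys present in all three models."""
    return {k: a[k] + (b[k] - c[k]) * m for k in a if k in b and k in c}


def weighted_sum(a: StateDict, b: StateDict, m: float) -> Dict[str, float]:
    """Assumed convention: A * (1 - M) + B * M, over keys present in both models."""
    return {k: a[k] * (1 - m) + b[k] * m for k in a if k in b}


def epicvision_recipe(epic_realism: StateDict, sd15: StateDict,
                      realistic_vision: StateDict) -> Dict[str, float]:
    # Step 1: Add Difference 1.0 (primary and secondary: epicRealism,
    # tertiary: v1-5-pruned-fp16-no-ema) -> "Temporary".
    temporary = add_difference(epic_realism, epic_realism, sd15, 1.0)
    # Step 2: Weighted Sum 0.70 (primary: RealisticVision, secondary: Temporary).
    return weighted_sum(realistic_vision, temporary, 0.70)


if __name__ == "__main__":
    merged = epicvision_recipe({"w": 2.0}, {"w": 1.0}, {"w": 0.0})
    print(merged)
```

Under this convention, because epicRealism is both the primary and the secondary model, the first step reduces to 2 * epicRealism - SD 1.5, and the final model is 0.3 * RealisticVision + 0.7 * Temporary.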

MaziyarPanahi/WizardLM-Math-70B-v0.1 | MaziyarPanahi | 2024-03-04T13:34:47Z | 1,267 | 3 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"merge",

"wizardlm",

"mique",

"license:agpl-3.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-14T00:03:45Z | ---

license: agpl-3.0
tags:
- merge
- wizardlm
- mique
pipeline_tag: text-generation
model-index:
- name: WizardLM-Math-70B-v0.1
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 67.06
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 86.01
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 69.14
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 57.07
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 81.77
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 64.44
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=MaziyarPanahi/WizardLM-Math-70B-v0.1
      name: Open LLM Leaderboard

---

## Examples

There are three killers in a room. Someone enters the room and kills one of them. Nobody leaves the room. How many killers are left in the room? Explain your reasoning step by step.

```

Step 1: Identify the initial situation

There are three killers in a room, which we can label as A, B, and C.

Step 2: Describe the event

Someone (let's call this person D) enters the room and kills one of the killers. For the sake of simplicity, let's say that Killer A is killed.

Step 3: Analyze the new situation

After the event, there are now two killers left in the room: B and C. The person who entered the room, D, is also still present. However, since D did not leave the room after killing A, it means that D is also a killer. Therefore, there are actually three killers in the room: B, C, and D.

Final Answer: After the event, there are three killers left in the room: B, C, and D.

```

Mark is faster than Mary, Mary is faster than Joe. Is Joe faster than Mark? Let's think step by step

```

Step 1: Understand the given information

We know that Mark is faster than Mary, and Mary is faster than Joe. This means that Mark is the fastest among the three, followed by Mary, and then Joe.

Step 2: Analyze the question

The question asks if Joe is faster than Mark. Based on the given information, we can conclude that Joe is not faster than Mark. In fact, Mark is faster than Joe.

Final Answer: No, Joe is not faster than Mark.

```

If I lay 2 wet shirts out in the sun flat on the ground to dry and it takes 5 hours until they are dry, how long would it take to dry 4 shirts of the same type that way simultaneously?

```

The time taken for a shirt to dry depends on several factors such as the material of the shirt, the humidity, temperature, wind speed, etc. However, if we assume that all four shirts have similar properties and the weather conditions remain constant, then you can expect the drying time to be proportional to the number of shirts.

In other words, if two shirts take 5 hours to dry, then four shirts will likely take twice as long, or 10 hours. This is because there is more fabric to dry, which means it will absorb more water from the air, thus taking longer to reach equilibrium with the surrounding environment.

However, this is just an estimate, and the actual drying time may vary depending on the specific circumstances.

```

Wrong: it takes the same amount of time (5 hours), since the shirts dry simultaneously!

explain step by step 25-4*2+3=?

```

Step 1: Perform the multiplication operation first.

25 - 4 * 2 + 3 = 25 - 8 + 3

Step 2: Now, perform the subtraction and addition operations from left to right.

25 - 8 + 3 = 17 + 3

Step 3: Finally, perform the remaining addition operation.

17 + 3 = 20

So, 25-4*2+3 equals 20.

```

## Eval

### WizardLM/WizardLM-70B-V1.0 vs. MaziyarPanahi/WizardLM-Math-70B-v0.1

### Leaderboard

```json

{

"all": {

"acc": 0.6914116069568377,

"acc_stderr": 0.03063431437342948,

"acc_norm": 0.6938613221179539,

"acc_norm_stderr": 0.031238741076549784,

"mc1": 0.40269277845777235,

"mc1_stderr": 0.01716883093518722,

"mc2": 0.5707095526544473,

"mc2_stderr": 0.01525040450448649

},

"harness|arc:challenge|25": {

"acc": 0.6322525597269625,

"acc_stderr": 0.014090995618168482,

"acc_norm": 0.6706484641638225,

"acc_norm_stderr": 0.013734057652635474

},

"harness|hellaswag|10": {

"acc": 0.6746664011153157,

"acc_stderr": 0.0046754187743142306,

"acc_norm": 0.8600876319458275,

"acc_norm_stderr": 0.0034618713240671846

},

"harness|hendrycksTest-abstract_algebra|5": {

"acc": 0.34,

"acc_stderr": 0.04760952285695236,

"acc_norm": 0.34,

"acc_norm_stderr": 0.04760952285695236

},

"harness|hendrycksTest-anatomy|5": {

"acc": 0.6518518518518519,

"acc_stderr": 0.041153246103369526,

"acc_norm": 0.6518518518518519,

"acc_norm_stderr": 0.041153246103369526

},

"harness|hendrycksTest-astronomy|5": {

"acc": 0.7894736842105263,

"acc_stderr": 0.03317672787533157,

"acc_norm": 0.7894736842105263,

"acc_norm_stderr": 0.03317672787533157

},

"harness|hendrycksTest-business_ethics|5": {

"acc": 0.73,

"acc_stderr": 0.04461960433384741,

"acc_norm": 0.73,

"acc_norm_stderr": 0.04461960433384741

},

"harness|hendrycksTest-clinical_knowledge|5": {

"acc": 0.7283018867924528,

"acc_stderr": 0.027377706624670713,

"acc_norm": 0.7283018867924528,

"acc_norm_stderr": 0.027377706624670713

},

"harness|hendrycksTest-college_biology|5": {

"acc": 0.8194444444444444,

"acc_stderr": 0.032166008088022675,

"acc_norm": 0.8194444444444444,

"acc_norm_stderr": 0.032166008088022675

},

"harness|hendrycksTest-college_chemistry|5": {

"acc": 0.5,

"acc_stderr": 0.050251890762960605,

"acc_norm": 0.5,

"acc_norm_stderr": 0.050251890762960605

},

"harness|hendrycksTest-college_computer_science|5": {

"acc": 0.57,

"acc_stderr": 0.049756985195624284,

"acc_norm": 0.57,

"acc_norm_stderr": 0.049756985195624284

},

"harness|hendrycksTest-college_mathematics|5": {

"acc": 0.37,

"acc_stderr": 0.04852365870939099,

"acc_norm": 0.37,

"acc_norm_stderr": 0.04852365870939099

},

"harness|hendrycksTest-college_medicine|5": {

"acc": 0.6878612716763006,

"acc_stderr": 0.035331333893236574,

"acc_norm": 0.6878612716763006,

"acc_norm_stderr": 0.035331333893236574

},

"harness|hendrycksTest-college_physics|5": {

"acc": 0.35294117647058826,

"acc_stderr": 0.047551296160629475,

"acc_norm": 0.35294117647058826,

"acc_norm_stderr": 0.047551296160629475

},

"harness|hendrycksTest-computer_security|5": {

"acc": 0.7,

"acc_stderr": 0.046056618647183814,

"acc_norm": 0.7,

"acc_norm_stderr": 0.046056618647183814

},

"harness|hendrycksTest-conceptual_physics|5": {

"acc": 0.676595744680851,

"acc_stderr": 0.030579442773610337,

"acc_norm": 0.676595744680851,

"acc_norm_stderr": 0.030579442773610337

},

"harness|hendrycksTest-econometrics|5": {

"acc": 0.40350877192982454,

"acc_stderr": 0.046151869625837026,

"acc_norm": 0.40350877192982454,

"acc_norm_stderr": 0.046151869625837026

},

"harness|hendrycksTest-electrical_engineering|5": {

"acc": 0.5793103448275863,

"acc_stderr": 0.04113914981189261,

"acc_norm": 0.5793103448275863,

"acc_norm_stderr": 0.04113914981189261

},

"harness|hendrycksTest-elementary_mathematics|5": {

"acc": 0.4497354497354497,

"acc_stderr": 0.02562085704293665,

"acc_norm": 0.4497354497354497,

"acc_norm_stderr": 0.02562085704293665

},

"harness|hendrycksTest-formal_logic|5": {

"acc": 0.46825396825396826,

"acc_stderr": 0.04463112720677172,

"acc_norm": 0.46825396825396826,

"acc_norm_stderr": 0.04463112720677172

},

"harness|hendrycksTest-global_facts|5": {

"acc": 0.46,

"acc_stderr": 0.05009082659620332,

"acc_norm": 0.46,

"acc_norm_stderr": 0.05009082659620332

},

"harness|hendrycksTest-high_school_biology|5": {

"acc": 0.8129032258064516,

"acc_stderr": 0.022185710092252252,

"acc_norm": 0.8129032258064516,

"acc_norm_stderr": 0.022185710092252252

},

"harness|hendrycksTest-high_school_chemistry|5": {

"acc": 0.5369458128078818,

"acc_stderr": 0.035083705204426656,

"acc_norm": 0.5369458128078818,

"acc_norm_stderr": 0.035083705204426656

},

"harness|hendrycksTest-high_school_computer_science|5": {

"acc": 0.79,

"acc_stderr": 0.040936018074033256,

"acc_norm": 0.79,

"acc_norm_stderr": 0.040936018074033256

},

"harness|hendrycksTest-high_school_european_history|5": {

"acc": 0.8363636363636363,

"acc_stderr": 0.02888787239548795,

"acc_norm": 0.8363636363636363,

"acc_norm_stderr": 0.02888787239548795

},

"harness|hendrycksTest-high_school_geography|5": {

"acc": 0.8686868686868687,

"acc_stderr": 0.024063156416822502,

"acc_norm": 0.8686868686868687,

"acc_norm_stderr": 0.024063156416822502

},

"harness|hendrycksTest-high_school_government_and_politics|5": {

"acc": 0.927461139896373,

"acc_stderr": 0.018718998520678178,

"acc_norm": 0.927461139896373,

"acc_norm_stderr": 0.018718998520678178

},

"harness|hendrycksTest-high_school_macroeconomics|5": {

"acc": 0.7025641025641025,

"acc_stderr": 0.023177408131465953,

"acc_norm": 0.7025641025641025,

"acc_norm_stderr": 0.023177408131465953

},

"harness|hendrycksTest-high_school_mathematics|5": {

"acc": 0.34814814814814815,

"acc_stderr": 0.02904560029061626,

"acc_norm": 0.34814814814814815,

"acc_norm_stderr": 0.02904560029061626

},

"harness|hendrycksTest-high_school_microeconomics|5": {

"acc": 0.7941176470588235,

"acc_stderr": 0.02626502460827588,

"acc_norm": 0.7941176470588235,

"acc_norm_stderr": 0.02626502460827588

},

"harness|hendrycksTest-high_school_physics|5": {

"acc": 0.4503311258278146,

"acc_stderr": 0.04062290018683776,

"acc_norm": 0.4503311258278146,

"acc_norm_stderr": 0.04062290018683776

},

"harness|hendrycksTest-high_school_psychology|5": {

"acc": 0.8954128440366973,

"acc_stderr": 0.013120530245265593,

"acc_norm": 0.8954128440366973,

"acc_norm_stderr": 0.013120530245265593

},

"harness|hendrycksTest-high_school_statistics|5": {

"acc": 0.5787037037037037,

"acc_stderr": 0.03367462138896078,

"acc_norm": 0.5787037037037037,

"acc_norm_stderr": 0.03367462138896078

},

"harness|hendrycksTest-high_school_us_history|5": {

"acc": 0.9166666666666666,

"acc_stderr": 0.019398452135813905,

"acc_norm": 0.9166666666666666,

"acc_norm_stderr": 0.019398452135813905

},

"harness|hendrycksTest-high_school_world_history|5": {

"acc": 0.8860759493670886,

"acc_stderr": 0.020681745135884565,

"acc_norm": 0.8860759493670886,

"acc_norm_stderr": 0.020681745135884565

},

"harness|hendrycksTest-human_aging|5": {

"acc": 0.757847533632287,

"acc_stderr": 0.028751392398694755,

"acc_norm": 0.757847533632287,

"acc_norm_stderr": 0.028751392398694755

},

"harness|hendrycksTest-human_sexuality|5": {

"acc": 0.8702290076335878,

"acc_stderr": 0.029473649496907065,

"acc_norm": 0.8702290076335878,

"acc_norm_stderr": 0.029473649496907065

},

"harness|hendrycksTest-international_law|5": {

"acc": 0.8181818181818182,

"acc_stderr": 0.03520893951097655,

"acc_norm": 0.8181818181818182,

"acc_norm_stderr": 0.03520893951097655

},

"harness|hendrycksTest-jurisprudence|5": {

"acc": 0.8148148148148148,

"acc_stderr": 0.03755265865037181,

"acc_norm": 0.8148148148148148,

"acc_norm_stderr": 0.03755265865037181

},

"harness|hendrycksTest-logical_fallacies|5": {

"acc": 0.7791411042944786,

"acc_stderr": 0.03259177392742179,

"acc_norm": 0.7791411042944786,

"acc_norm_stderr": 0.03259177392742179

},

"harness|hendrycksTest-machine_learning|5": {

"acc": 0.48214285714285715,

"acc_stderr": 0.047427623612430116,

"acc_norm": 0.48214285714285715,

"acc_norm_stderr": 0.047427623612430116

},

"harness|hendrycksTest-management|5": {

"acc": 0.8446601941747572,

"acc_stderr": 0.03586594738573974,

"acc_norm": 0.8446601941747572,

"acc_norm_stderr": 0.03586594738573974

},

"harness|hendrycksTest-marketing|5": {

"acc": 0.905982905982906,

"acc_stderr": 0.019119892798924974,

"acc_norm": 0.905982905982906,

"acc_norm_stderr": 0.019119892798924974

},

"harness|hendrycksTest-medical_genetics|5": {

"acc": 0.67,

"acc_stderr": 0.047258156262526066,

"acc_norm": 0.67,

"acc_norm_stderr": 0.047258156262526066

},

"harness|hendrycksTest-miscellaneous|5": {

"acc": 0.8697318007662835,

"acc_stderr": 0.012036729568216054,

"acc_norm": 0.8697318007662835,

"acc_norm_stderr": 0.012036729568216054

},

"harness|hendrycksTest-moral_disputes|5": {

"acc": 0.7774566473988439,

"acc_stderr": 0.02239421566194282,

"acc_norm": 0.7774566473988439,

"acc_norm_stderr": 0.02239421566194282

},

"harness|hendrycksTest-moral_scenarios|5": {

"acc": 0.5553072625698324,

"acc_stderr": 0.016619881988177012,

"acc_norm": 0.5553072625698324,

"acc_norm_stderr": 0.016619881988177012

},

"harness|hendrycksTest-nutrition|5": {

"acc": 0.7516339869281046,

"acc_stderr": 0.024739981355113592,

"acc_norm": 0.7516339869281046,

"acc_norm_stderr": 0.024739981355113592

},

"harness|hendrycksTest-philosophy|5": {

"acc": 0.77491961414791,

"acc_stderr": 0.023720088516179027,

"acc_norm": 0.77491961414791,

"acc_norm_stderr": 0.023720088516179027

},

"harness|hendrycksTest-prehistory|5": {

"acc": 0.7962962962962963,

"acc_stderr": 0.02240967454730417,

"acc_norm": 0.7962962962962963,

"acc_norm_stderr": 0.02240967454730417

},

"harness|hendrycksTest-professional_accounting|5": {

"acc": 0.5390070921985816,

"acc_stderr": 0.029736592526424445,

"acc_norm": 0.5390070921985816,

"acc_norm_stderr": 0.029736592526424445

},

"harness|hendrycksTest-professional_law|5": {

"acc": 0.5586701434159062,

"acc_stderr": 0.012682016335646683,

"acc_norm": 0.5586701434159062,

"acc_norm_stderr": 0.012682016335646683

},

"harness|hendrycksTest-professional_medicine|5": {

"acc": 0.7242647058823529,

"acc_stderr": 0.027146271936625162,

"acc_norm": 0.7242647058823529,

"acc_norm_stderr": 0.027146271936625162

},

"harness|hendrycksTest-professional_psychology|5": {

"acc": 0.761437908496732,

"acc_stderr": 0.017242385828779627,

"acc_norm": 0.761437908496732,

"acc_norm_stderr": 0.017242385828779627

},

"harness|hendrycksTest-public_relations|5": {

"acc": 0.7454545454545455,

"acc_stderr": 0.041723430387053825,

"acc_norm": 0.7454545454545455,

"acc_norm_stderr": 0.041723430387053825

},

"harness|hendrycksTest-security_studies|5": {

"acc": 0.7877551020408163,

"acc_stderr": 0.026176967197866767,

"acc_norm": 0.7877551020408163,

"acc_norm_stderr": 0.026176967197866767

},

"harness|hendrycksTest-sociology|5": {

"acc": 0.8805970149253731,

"acc_stderr": 0.02292879327721974,

"acc_norm": 0.8805970149253731,

"acc_norm_stderr": 0.02292879327721974

},

"harness|hendrycksTest-us_foreign_policy|5": {

"acc": 0.9,

"acc_stderr": 0.030151134457776334,

"acc_norm": 0.9,

"acc_norm_stderr": 0.030151134457776334

},

"harness|hendrycksTest-virology|5": {

"acc": 0.5602409638554217,

"acc_stderr": 0.03864139923699122,

"acc_norm": 0.5602409638554217,

"acc_norm_stderr": 0.03864139923699122

},

"harness|hendrycksTest-world_religions|5": {

"acc": 0.8596491228070176,

"acc_stderr": 0.0266405825391332,

"acc_norm": 0.8596491228070176,

"acc_norm_stderr": 0.0266405825391332

},

"harness|truthfulqa:mc|0": {

"mc1": 0.40269277845777235,

"mc1_stderr": 0.01716883093518722,

"mc2": 0.5707095526544473,

"mc2_stderr": 0.01525040450448649

},

"harness|winogrande|5": {

"acc": 0.8176795580110497,

"acc_stderr": 0.010851565594267207

},

"harness|gsm8k|5": {

"acc": 0.6444275966641395,

"acc_stderr": 0.013185402252713852

}

}

```

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_MaziyarPanahi__WizardLM-Math-70B-v0.1)

| Metric |Value|
|---------------------------------|----:|
|Avg. |70.92|
|AI2 Reasoning Challenge (25-Shot)|67.06|
|HellaSwag (10-Shot) |86.01|
|MMLU (5-Shot) |69.14|
|TruthfulQA (0-shot) |57.07|
|Winogrande (5-shot) |81.77|
|GSM8k (5-shot) |64.44|
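As a quick consistency check between the raw results JSON and the table, the sketch below rescales the per-task metrics to percentages and averages them. It assumes the Avg. is a plain arithmetic mean of the six scores, and that the metric per task is `acc_norm` for ARC and HellaSwag, `mc2` for TruthfulQA and `acc` for Winogrande and GSM8k (inferred by matching the values above). MMLU is taken from the table rather than recomputed from the 57 `hendrycksTest` subtasks; the function names are hypothetical.

```python
from typing import Dict

# Raw scores copied from the results JSON above (fractions in [0, 1]).
RAW: Dict[str, float] = {
    "AI2 Reasoning Challenge (25-Shot)": 0.6706484641638225,  # arc:challenge acc_norm
    "HellaSwag (10-Shot)": 0.8600876319458275,                # hellaswag acc_norm
    "TruthfulQA (0-shot)": 0.5707095526544473,                # truthfulqa:mc mc2
    "Winogrande (5-shot)": 0.8176795580110497,                # winogrande acc
    "GSM8k (5-shot)": 0.6444275966641395,                     # gsm8k acc
}
# MMLU is taken from the table, not recomputed from the subtasks.
MMLU_PERCENT = 69.14


def leaderboard_scores(raw: Dict[str, float], mmlu_percent: float) -> Dict[str, float]:
    # Convert fractions to percentages and add the MMLU score.
    scores = {name: value * 100 for name, value in raw.items()}
    scores["MMLU (5-Shot)"] = mmlu_percent
    return scores


def leaderboard_average(scores: Dict[str, float]) -> float:
    # Assumed: the leaderboard "Avg." is the unweighted mean of the six scores.
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    scores = leaderboard_scores(RAW, MMLU_PERCENT)
    for name, value in scores.items():
        print(f"{name:<35}{value:6.2f}")
    print(f"{'Avg.':<35}{leaderboard_average(scores):6.2f}")
```

Averaging the unrounded percentages avoids floating-point surprises when rounding a mean of already-rounded values.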

|

yanolja/EEVE-Korean-2.8B-v1.0 | yanolja | 2024-02-24T13:54:36Z | 1,267 | 9 | transformers | [

"transformers",

"safetensors",

"phi",

"text-generation",

"generated_from_trainer",

"conversational",

"custom_code",

"arxiv:2402.14714",

"base_model:microsoft/phi-2",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-22T04:39:37Z | ---

license: apache-2.0

base_model: microsoft/phi-2

tags:

- generated_from_trainer

model-index:
- name: yanolja/EEVE-Korean-2.8B-v1.0
  results: []

---

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

<p align="left">

<img src="https://huggingface.co/yanolja/EEVE-Korean-2.8B-v1.0/resolve/main/eeve_logo.webp" width="50%"/>

</p>

# EEVE-Korean-2.8B-v1.0

## Join Our Community on Discord!

If you're passionate about the field of Large Language Models and wish to exchange knowledge and insights, we warmly invite you to join our Discord server. Note that Korean is the primary language used on this server. The LLM landscape is evolving rapidly, and without active sharing, our collective knowledge risks quickly becoming outdated. Let's collaborate and drive greater impact together! Join us here: [Discord Link](https://discord.gg/b27bAHg95m).

## Our Dedicated Team (Alphabetical Order)

| Research | Engineering | Product Management | UX Design |
|-----------------|-----------------|--------------------|--------------|
| Myeongho Jeong | Geon Kim | Bokyung Huh | Eunsue Choi |
| Seungduk Kim | Rifqi Alfi | | |
| Seungtaek Choi | Sanghoon Han | | |
| | Suhyun Kang | | |

## About the Model

This model is a Korean vocabulary-extended version of [microsoft/phi-2](https://huggingface.co/microsoft/phi-2), specifically fine-tuned on various Korean web-crawled datasets available on Hugging Face. Our approach was to expand the model's understanding of Korean by pre-training the embeddings for new tokens and partially fine-tuning the `lm_head` embeddings for the already existing tokens, while preserving the original parameters of the base model.

### Technical Deep Dive

<p align="left">

<img src="https://huggingface.co/yanolja/EEVE-Korean-2.8B-v1.0/resolve/main/EEVE_figure.png" width="100%"/>

</p>

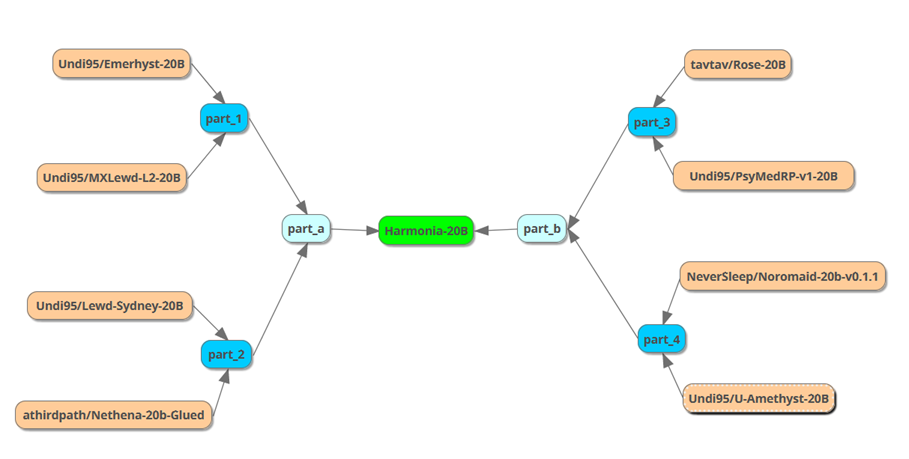

To adapt foundational models from English to Korean, we use subword-based embedding with a seven-stage training process involving parameter freezing.

This approach progressively trains from input embeddings to full parameters, efficiently extending the model's vocabulary to include Korean.

Our method enhances the model's cross-linguistic applicability by carefully integrating new linguistic tokens, focusing on causal language modeling pre-training.

We leverage the inherent capabilities of foundational models trained on English to efficiently transfer knowledge and reasoning to Korean, optimizing the adaptation process.

For more details, please refer to our technical report: [Efficient and Effective Vocabulary Expansion Towards Multilingual Large Language Models](https://arxiv.org/abs/2402.14714).

Here is a simplified version of the code for our key approach:

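```python
# number_of_old_tokens is the size of the tokenizer before vocabulary extension.
# For example, for EEVE-Korean-10.8B-v1.0, number_of_old_tokens is 32000.
# `model` is the already-loaded, vocabulary-extended model.

def freeze_partial_embedding_hook(grad):
    # Zero the gradients of the pre-existing token rows so that only the
    # embeddings of newly added tokens are updated. PyTorch hooks should not
    # modify their argument in place, so work on a copy.
    grad = grad.clone()
    grad[:number_of_old_tokens] = 0
    return grad

for name, param in model.named_parameters():
    if ("lm_head" in name or "embed_tokens" in name) and "original" not in name:
        # Train the output head and the input embeddings...
        param.requires_grad = True
        if "embed_tokens" in name:
            # ...but mask updates to the old-token input embeddings.
            param.register_hook(freeze_partial_embedding_hook)
    else:
        # Keep every other base-model parameter frozen.
        param.requires_grad = False
```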

### Usage and Limitations

Keep in mind that this model hasn't been fine-tuned with instruction-based training. While it excels in Korean language tasks, we advise careful consideration and further training for specific applications.

### Training Details

Our model’s training was comprehensive and diverse:

- **Vocabulary Expansion:**

We meticulously selected 8,960 Korean tokens based on their frequency in our Korean web corpus. This process involved multiple rounds of tokenizer training, manual curation, and token frequency analysis, ensuring a rich and relevant vocabulary for our model.

1. **Initial Tokenizer Training:** We trained an intermediate tokenizer on a Korean web corpus, with a vocabulary of 40,000 tokens.

2. **Extraction of New Korean Tokens:** From the intermediate tokenizer, we identified all Korean tokens not present in the original SOLAR tokenizer.

3. **Manual Tokenizer Construction:** We then built the target tokenizer, focusing on these new Korean tokens.

4. **Frequency Analysis:** Using the target tokenizer, we processed a 100GB Korean corpus to count each token's frequency.

5. **Refinement of Token List:** We removed tokens appearing fewer than 6,000 times, to ensure there are enough occurrences of each token to train models on later.

6. **Inclusion of Single-Letter Characters:** We counted Korean single-letter characters missing from the target tokenizer and added those that appeared more than 6,000 times.

7. **Iterative Refinement:** We repeated steps 2 to 6 until there were no tokens to drop or add.

8. **Training Bias Towards New Tokens:** Our training data was biased to include more texts with new tokens, for effective learning.

This rigorous approach ensured a comprehensive and contextually rich Korean vocabulary for the model.
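The refinement loop in steps 2 and 4 to 7 can be sketched as a toy fixed-point iteration. This is not the authors' code: the greedy longest-match tokenizer is a hypothetical stand-in for the real target tokenizer, only precomposed Hangul syllables count as "single-letter characters", the thresholds follow the wording above (keep at 6,000 or more, add above 6,000), and steps 1, 3 and 8 are not modeled. All function names are hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable, List, Set

# Hypothetical tokenizer signature: tokenize(text, vocab) -> tokens.
Tokenize = Callable[[str, Set[str]], List[str]]


def greedy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Toy stand-in for the target tokenizer: greedy longest match over
    `vocab`, falling back to single characters for anything unknown."""
    max_len = max((len(t) for t in vocab), default=1)
    tokens, i = [], 0
    while i < len(text):
        for n in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + n]
            if n == 1 or piece in vocab:
                tokens.append(piece)
                i += n
                break
    return tokens


def is_hangul_syllable(token: str) -> bool:
    # Precomposed Hangul syllables occupy U+AC00 to U+D7A3.
    return len(token) == 1 and "\uac00" <= token <= "\ud7a3"


def refine_once(new_tokens: Set[str], original_vocab: Set[str], corpus: List[str],
                min_freq: int, tokenize: Tokenize) -> Set[str]:
    vocab = original_vocab | new_tokens
    # Step 4: count token frequencies with the current target tokenizer.
    counts = Counter(t for doc in corpus for t in tokenize(doc, vocab))
    # Step 5: drop new tokens that appear fewer than `min_freq` times.
    kept = {t for t in new_tokens if counts[t] >= min_freq}
    # Step 6: add missing single Hangul characters seen more than `min_freq` times.
    missing = {t for t, c in counts.items()
               if is_hangul_syllable(t) and t not in vocab and c > min_freq}
    return kept | missing


def refine_vocabulary(candidates: Iterable[str], original_vocab: Set[str],
                      corpus: Iterable[str], min_freq: int = 6_000,
                      tokenize: Tokenize = greedy_tokenize,
                      max_rounds: int = 100) -> Set[str]:
    corpus = list(corpus)
    # Step 2: keep only candidates absent from the original tokenizer.
    tokens = set(candidates) - original_vocab
    # Step 7: repeat until nothing is dropped or added (capped for safety).
    for _ in range(max_rounds):
        updated = refine_once(tokens, original_vocab, corpus, min_freq, tokenize)
        if updated == tokens:
            break
        tokens = updated
    return tokens
```

Because step 6 can add tokens that change how step 4 segments the corpus, the loop rebuilds the vocabulary on every pass; the `max_rounds` cap is only a guard against oscillation in this toy version.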

## Citation

```

@misc{kim2024efficient,

title={Efficient and Effective Vocabulary Expansion Towards Multilingual Large Language Models},

author={Seungduk Kim and Seungtaek Choi and Myeongho Jeong},

year={2024},

eprint={2402.14714},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

Jayant9928/orpo_med_v0 | Jayant9928 | 2024-05-21T12:44:00Z | 1,267 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-30T11:30:12Z | ---

license: apache-2.0

---

# Model Card for Model ID

## Model Details

### Model Description

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]
- **Funded by [optional]:** [More Information Needed]
- **Shared by [optional]:** [More Information Needed]
- **Model type:** [More Information Needed]
- **Language(s) (NLP):** [More Information Needed]
- **License:** [More Information Needed]
- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

- **Repository:** [More Information Needed]
- **Paper [optional]:** [More Information Needed]
- **Demo [optional]:** [More Information Needed]

## Uses

### Direct Use

[More Information Needed]

### Downstream Use [optional]

[More Information Needed]

### Out-of-Scope Use

[More Information Needed]

## Bias, Risks, and Limitations

[More Information Needed]

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

[More Information Needed]

### Training Procedure

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed]

#### Speeds, Sizes, Times [optional]

[More Information Needed]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

[More Information Needed]

#### Factors

[More Information Needed]

#### Metrics

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

[More Information Needed] |

TheBloke/Llama-2-Coder-7B-GGUF | TheBloke | 2023-09-27T12:49:06Z | 1,266 | 13 | transformers | [

"transformers",

"gguf",

"llama",

"generated_from_trainer",

"code",

"coding",

"text-generation",

"dataset:HuggingFaceH4/CodeAlpaca_20K",

"base_model:mrm8488/llama-2-coder-7b",

"license:apache-2.0",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-13T16:28:12Z | ---

language:

- code

license: apache-2.0

tags:

- generated_from_trainer

- code

- coding

- llama

datasets:

- HuggingFaceH4/CodeAlpaca_20K

base_model: mrm8488/llama-2-coder-7b

inference: false

model_creator: mrm8488

model_type: llama

pipeline_tag: text-generation

prompt_template: 'You are a coding assistant that will help the user to resolve the
  following instruction:

  ### Instruction: {prompt}

  ### Solution:

  '

quantized_by: TheBloke

thumbnail: https://huggingface.co/mrm8488/llama-2-coder-7b/resolve/main/llama2-coder-logo-removebg-preview.png

model-index:
- name: FalCoder
  results: []

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Llama 2 Coder 7B - GGUF

- Model creator: [mrm8488](https://huggingface.co/mrm8488)

- Original model: [Llama 2 Coder 7B](https://huggingface.co/mrm8488/llama-2-coder-7b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [mrm8488's Llama 2 Coder 7B](https://huggingface.co/mrm8488/llama-2-coder-7b).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata and is designed to be extensible.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive, easy-to-use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Llama-2-Coder-7B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF)

* [mrm8488's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/mrm8488/llama-2-coder-7b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: CodingAssistant

```

You are a coding assistant that will help the user to resolve the following instruction:

### Instruction: {prompt}

### Solution:

```
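
A minimal Python sketch of filling this template programmatically. The helper name `build_prompt` is hypothetical, and the exact line breaks are an assumption taken from the `llama.cpp` example command later in this README (one newline after the system line, a blank line before `### Solution:`).

```python
# Hypothetical helper: fills the CodingAssistant template for one instruction.
# Assumption: line breaks mirror the llama.cpp example command in this README.
SYSTEM = (
    "You are a coding assistant that will help the user "
    "to resolve the following instruction:"
)


def build_prompt(instruction: str) -> str:
    """Return the full prompt string for a single instruction."""
    return f"{SYSTEM}\n### Instruction: {instruction}\n\n### Solution:"


if __name__ == "__main__":
    print(build_prompt("Write a Python function that reverses a string."))
```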

<!-- prompt-template end -->

<!-- licensing start -->

## Licensing

The creator of the source model has listed its license as `apache-2.0`, and this quantization has therefore used that same license.

As this model is based on Llama 2, it is also subject to the Meta Llama 2 license terms, and the license files for that are additionally included. It should therefore be considered as being claimed to be licensed under both licenses. I contacted Hugging Face for clarification on dual licensing but they do not yet have an official position. Should this change, or should Meta provide any feedback on this situation, I will update this section accordingly.

In the meantime, any questions regarding licensing, and in particular how these two licenses might interact, should be directed to the original model repository: [mrm8488's Llama 2 Coder 7B](https://huggingface.co/mrm8488/llama-2-coder-7b).

<!-- licensing end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th 2023 onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.
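
To check which GGUF version a downloaded file uses, its fixed-size header can be read directly. This is a sketch that assumes the GGUF v2/v3 header layout (little-endian: 4-byte magic `GGUF`, `uint32` version, `uint64` tensor count, `uint64` metadata key/value count). `read_gguf_header` is a hypothetical helper, not part of llama.cpp.

```python
# Sketch: read the fixed-size header at the start of a GGUF file.
# Assumed layout (GGUF v2/v3, little-endian): magic b"GGUF", uint32 version,
# uint64 tensor count, uint64 metadata key/value count.
import struct
import sys

HEADER = struct.Struct("<4sIQQ")  # 24 bytes, no padding because of "<"


def read_gguf_header(data: bytes) -> dict:
    """Parse the first HEADER.size bytes of a GGUF file."""
    magic, version, n_tensors, n_kv = HEADER.unpack_from(data, 0)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    return {"version": version, "n_tensors": n_tensors, "n_kv": n_kv}


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python read_header.py llama-2-coder-7b.Q4_K_M.gguf
    with open(sys.argv[1], "rb") as f:
        print(read_gguf_header(f.read(HEADER.size)))
```

A GGUFv2 file, as provided here, should report `version` 2.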

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw
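
The bpw figures for Q3_K to Q6_K can be reproduced with simple bookkeeping over one super-block. This sketch assumes (it is not stated above) that every super-block also stores one fp16 scale, plus one fp16 min for "type-1" formats. Under those same assumptions the Q2_K description would come to 2.625 bpw rather than the quoted 2.5625, so Q2_K is left out. `k_quant_bpw` is a hypothetical helper.

```python
# Sketch: bits per weight for K-quant super-blocks.
# Assumption: one fp16 super-block scale, plus one fp16 min for "type-1".


def k_quant_bpw(weight_bits, n_blocks, block_size, scale_bits, type1):
    """Average storage cost per weight for one super-block, in bits."""
    n_weights = n_blocks * block_size             # 256 for these layouts
    quant_bits = n_weights * weight_bits          # the quantized weights
    per_block = scale_bits * (2 if type1 else 1)  # block scale (+ block min)
    super_block = 16 * (2 if type1 else 1)        # fp16 scale (+ fp16 min)
    total = quant_bits + n_blocks * per_block + super_block
    return total / n_weights


LAYOUTS = {
    # name: (weight bits, blocks per super-block, weights per block, scale bits, type-1?)
    "Q3_K": (3, 16, 16, 6, False),
    "Q4_K": (4, 8, 32, 6, True),
    "Q5_K": (5, 8, 32, 6, True),
    "Q6_K": (6, 16, 16, 8, False),
}

for name, layout in LAYOUTS.items():
    print(f"{name}: {k_quant_bpw(*layout)} bpw")
```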

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [llama-2-coder-7b.Q2_K.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q2_K.gguf) | Q2_K | 2 | 2.83 GB| 5.33 GB | smallest, significant quality loss - not recommended for most purposes |
| [llama-2-coder-7b.Q3_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q3_K_S.gguf) | Q3_K_S | 3 | 2.95 GB| 5.45 GB | very small, high quality loss |
| [llama-2-coder-7b.Q3_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q3_K_M.gguf) | Q3_K_M | 3 | 3.30 GB| 5.80 GB | very small, high quality loss |
| [llama-2-coder-7b.Q3_K_L.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q3_K_L.gguf) | Q3_K_L | 3 | 3.60 GB| 6.10 GB | small, substantial quality loss |
| [llama-2-coder-7b.Q4_0.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q4_0.gguf) | Q4_0 | 4 | 3.83 GB| 6.33 GB | legacy; small, very high quality loss - prefer using Q3_K_M |
| [llama-2-coder-7b.Q4_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q4_K_S.gguf) | Q4_K_S | 4 | 3.86 GB| 6.36 GB | small, greater quality loss |
| [llama-2-coder-7b.Q4_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q4_K_M.gguf) | Q4_K_M | 4 | 4.08 GB| 6.58 GB | medium, balanced quality - recommended |
| [llama-2-coder-7b.Q5_0.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q5_0.gguf) | Q5_0 | 5 | 4.65 GB| 7.15 GB | legacy; medium, balanced quality - prefer using Q4_K_M |
| [llama-2-coder-7b.Q5_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q5_K_S.gguf) | Q5_K_S | 5 | 4.65 GB| 7.15 GB | large, low quality loss - recommended |
| [llama-2-coder-7b.Q5_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q5_K_M.gguf) | Q5_K_M | 5 | 4.78 GB| 7.28 GB | large, very low quality loss - recommended |
| [llama-2-coder-7b.Q6_K.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q6_K.gguf) | Q6_K | 6 | 5.53 GB| 8.03 GB | very large, extremely low quality loss |
| [llama-2-coder-7b.Q8_0.gguf](https://huggingface.co/TheBloke/Llama-2-Coder-7B-GGUF/blob/main/llama-2-coder-7b.Q8_0.gguf) | Q8_0 | 8 | 7.16 GB| 9.66 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
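
In the table above, every "Max RAM required" value equals the file size plus 2.50 GB. That is an observation about these rows, not a documented formula. Under that assumption, the sketch below picks the largest non-legacy quant that fits a given RAM budget with no GPU offloading. The helper names are hypothetical, and the legacy Q4_0/Q5_0 files are omitted, following the table's own advice.

```python
# Sketch: choose the largest quant that fits a RAM budget (no GPU offloading).
# Assumption: RAM need = file size + 2.50 GB, as observed in the table above.
FILE_SIZES_GB = {  # legacy Q4_0 / Q5_0 omitted
    "Q2_K": 2.83, "Q3_K_S": 2.95, "Q3_K_M": 3.30, "Q3_K_L": 3.60,
    "Q4_K_S": 3.86, "Q4_K_M": 4.08, "Q5_K_S": 4.65, "Q5_K_M": 4.78,
    "Q6_K": 5.53, "Q8_0": 7.16,
}
OVERHEAD_GB = 2.50  # inferred from the table, not an official constant


def max_ram_gb(quant: str) -> float:
    """Estimated peak RAM for one quant, rounded like the table."""
    return round(FILE_SIZES_GB[quant] + OVERHEAD_GB, 2)


def largest_fitting(budget_gb: float):
    """Return the biggest quant whose estimated RAM need fits, else None."""
    fitting = [q for q in FILE_SIZES_GB if max_ram_gb(q) <= budget_gb]
    return max(fitting, key=FILE_SIZES_GB.get, default=None)


print(largest_fitting(8.0))
```

Offloading layers to the GPU moves part of this need into VRAM, so the estimate is only an upper bound for system RAM in that case.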

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Llama-2-Coder-7B-GGUF and below it, a specific filename to download, such as: llama-2-coder-7b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install 'huggingface-hub>=0.17.1'

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Llama-2-Coder-7B-GGUF llama-2-coder-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Llama-2-Coder-7B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```
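
To preview which files a pattern like `'*Q4_K*gguf'` would select, this sketch applies it to filenames copied from the Provided files table. It assumes the pattern is matched shell-style and case-sensitively against each filename, which Python's `fnmatch.fnmatchcase` approximates. It is not the hub library's own matching code.

```python
# Sketch: preview which filenames a shell-style --include pattern selects.
# Assumption: case-sensitive glob matching, approximated by fnmatchcase.
from fnmatch import fnmatchcase

FILES = [  # a subset of the filenames in the Provided files table
    "llama-2-coder-7b.Q3_K_M.gguf",
    "llama-2-coder-7b.Q4_0.gguf",
    "llama-2-coder-7b.Q4_K_S.gguf",
    "llama-2-coder-7b.Q4_K_M.gguf",
    "llama-2-coder-7b.Q5_K_M.gguf",
]

selected = [f for f in FILES if fnmatchcase(f, "*Q4_K*gguf")]
print(selected)
```

The legacy `Q4_0` file is not selected, because the pattern requires the `Q4_K` substring.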

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Llama-2-Coder-7B-GGUF llama-2-coder-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HF_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m llama-2-coder-7b.Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "You are a coding assistant that will help the user to resolve the following instruction:\n### Instruction: {prompt}\n\n### Solution:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md).

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install 'ctransformers>=0.2.24'

# Or with CUDA GPU acceleration

pip install 'ctransformers[cuda]>=0.2.24'

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Llama-2-Coder-7B-GGUF", model_file="llama-2-coder-7b.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: mrm8488's Llama 2 Coder 7B

<div style="text-align:center;width:250px;height:250px;">

<img src="https://huggingface.co/mrm8488/llama-2-coder-7b/resolve/main/llama2-coder-logo-removebg-preview.png" alt="llama-2 coder logo">

</div>

# LlaMa 2 Coder 🦙👩‍💻

**LlaMa-2 7b** fine-tuned on the **CodeAlpaca 20k instructions dataset** by using the method **QLoRA** with [PEFT](https://github.com/huggingface/peft) library.

## Model description 🧠

[Llama-2](https://huggingface.co/meta-llama/Llama-2-7b)


Meta developed and publicly released the Llama 2 family of large language models (LLMs), a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. Our fine-tuned LLMs, called Llama-2-Chat, are optimized for dialogue use cases. Llama-2-Chat models outperform open-source chat models on most benchmarks we tested, and in our human evaluations for helpfulness and safety, are on par with some popular closed-source models like ChatGPT and PaLM.

## Training and evaluation data 📚

[CodeAlpaca_20K](https://huggingface.co/datasets/HuggingFaceH4/CodeAlpaca_20K): contains 20K instruction-following examples used for fine-tuning the Code Alpaca model.

### Training hyperparameters ⚙

```py
optim="paged_adamw_32bit",
num_train_epochs=2,
eval_steps=50,
save_steps=50,
evaluation_strategy="steps",
save_strategy="steps",
save_total_limit=2,
seed=66,
load_best_model_at_end=True,
logging_steps=1,
learning_rate=2e-4,
fp16=True,
bf16=False,
max_grad_norm=0.3,
warmup_ratio=0.03,
group_by_length=True,
lr_scheduler_type="constant"
```

### Training results 🗒️

| Step | Training Loss | Validation Loss |
|------|----------|----------|
| 50 | 0.624400 | 0.600070 |
| 100 | 0.634100 | 0.592757 |
| 150 | 0.545800 | 0.586652 |
| 200 | 0.572500 | 0.577525 |
| 250 | 0.528000 | 0.590118 |
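
With `load_best_model_at_end=True`, the checkpoint kept at the end is the one with the best evaluation metric. This sketch assumes `metric_for_best_model` was left at its default, which for the transformers `Trainer` is the evaluation loss (lower is better). It picks that checkpoint from the table above. The variable names are illustrative only.

```python
# Sketch: which checkpoint load_best_model_at_end=True would keep.
# Assumption: metric_for_best_model left at its default (evaluation loss).
RESULTS = [  # (step, training loss, validation loss), copied from the table
    (50, 0.624400, 0.600070),
    (100, 0.634100, 0.592757),
    (150, 0.545800, 0.586652),
    (200, 0.572500, 0.577525),
    (250, 0.528000, 0.590118),
]

best_step, _, best_val = min(RESULTS, key=lambda row: row[2])
print(f"best checkpoint: step {best_step} (validation loss {best_val})")
```

Validation loss rises again at step 250 while training loss keeps falling, which is why the final step is not the one selected.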

### Eval results 📊

WIP

### Example of usage 👩‍💻

```py
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

model_id = "mrm8488/llama-2-coder-7b"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id).to("cuda")


def create_prompt(instruction):
    system = "You are a coding assistant that will help the user to resolve the following instruction:"
    instruction = "### Instruction: " + instruction
    return system + "\n" + instruction + "\n\n" + "### Solution:" + "\n"


def generate(
    instruction,
    max_new_tokens=128,
    temperature=0.1,
    top_p=0.75,
    top_k=40,
    num_beams=4,
    **kwargs,
):
    prompt = create_prompt(instruction)
    print(prompt)
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].to("cuda")
    attention_mask = inputs["attention_mask"].to("cuda")
    generation_config = GenerationConfig(
        temperature=temperature,
        top_p=top_p,
        top_k=top_k,
        num_beams=num_beams,
        **kwargs,
    )
    with torch.no_grad():
        generation_output = model.generate(
            input_ids=input_ids,
            attention_mask=attention_mask,
            generation_config=generation_config,
            return_dict_in_generate=True,
            output_scores=True,
            max_new_tokens=max_new_tokens,
            early_stopping=True,
        )
    s = generation_output.sequences[0]
    output = tokenizer.decode(s)
    # Keep only the text generated after the "### Solution:" marker
    return output.split("### Solution:")[1].lstrip("\n")


instruction = """
Edit the following XML code to add a navigation bar to the top of a web page
<html>
<head>
<title>CliBrAIn</title>
</head>
"""

print(generate(instruction))
```

### Citation

```

@misc {manuel_romero_2023,

author = { {Manuel Romero} },

title = { llama-2-coder-7b (Revision d30d193) },

year = 2023,

url = { https://huggingface.co/mrm8488/llama-2-coder-7b },

doi = { 10.57967/hf/0931 },

publisher = { Hugging Face }

}

```

<!-- original-model-card end -->

|

Locutusque/Hyperion-2.1-Mistral-7B | Locutusque | 2024-03-12T20:38:14Z | 1,266 | 3 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"en",

"dataset:Locutusque/hyperion-v2.0",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-10T17:20:02Z | ---

library_name: transformers

license: apache-2.0

datasets:

- Locutusque/hyperion-v2.0

language:

- en

---

# Description

Further fine-tuned Locutusque/Hyperion-2.0-Mistral-7B at a higher learning rate. This was done to see if performance increased. Read Locutusque/Hyperion-2.0-Mistral-7B's model card for more information. A slight performance gain was observed. More checkpoints will be released in the future.

# Disclaimer

This model is very compliant. It will respond to any request without refusal. If you intend to deploy this model at an enterprise level, I would recommend aligning this model using DPO.

## Quants

ExLlamaV2: https://huggingface.co/bartowski/Hyperion-2.1-Mistral-7B-exl2

GGUF: https://huggingface.co/bartowski/Hyperion-2.1-Mistral-7B-GGUF

AWQ: https://huggingface.co/solidrust/Hyperion-2.1-Mistral-7B-AWQ

|

Nekochu/Confluence-Renegade-7B | Nekochu | 2024-03-30T14:24:58Z | 1,266 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"base_model:localfultonextractor/Erosumika-7B",

"base_model:Nitral-AI/Infinitely-Laydiculous-7B",

"base_model:Kunocchini-7b-128k-test",

"base_model:Endevor/EndlessRP-v3-7B",

"base_model:ChaoticNeutrals/BuRP_7B",

"base_model:daybreak-kunoichi-2dpo-7b",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-19T10:05:26Z | ---

license: apache-2.0

base_model:

- localfultonextractor/Erosumika-7B

- Nitral-AI/Infinitely-Laydiculous-7B

- Kunocchini-7b-128k-test

- Endevor/EndlessRP-v3-7B

- ChaoticNeutrals/BuRP_7B

- daybreak-kunoichi-2dpo-7b

library_name: transformers

tags:

- mergekit

- merge

---

My first merge of 7B RP models using mergekit. They are simply RP models trending on r/, and half of the merge is BuRP_7B. I have not used any of them. A **dumb** merge, but hopefully a lucky one! ^^'

## Update 03/2024:

- Original model Card Confluence-Renegade-7B <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/Confluence-Renegade-7B-v2-8.0bpw-h8-exl2">[8.0bpw-exl]</a>

- Added Model and merge recipe branch: <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/blob/Confluence-Renegade-7B-v2/mergekit_config.yml">Confluence-Renegade-7B-v2</a>

- Added Model and merge recipe branch: <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/blob/RoleBeagle-Moistral-11B-v2/mergekit_config.yml">RoleBeagle-Moistral-11B-v2</a> <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/blob/RoleBeagle-Moistral-7B-v2/mergekit_config.yml">[7B truncated]</a> and Quants <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/blob/RoleBeagle-Moistral-11B-v2-2.4bpw-h6-exl2/mergekit_config.yml">RoleBeagle-Moistral-11B-v2-2.4bpw-h6-exl2</a>, <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/RoleBeagle-Moistral-11B-v2-4.25bpw-h6-exl2">4.25bpw-h6</a>, <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/RoleBeagle-Moistral-11B-v2-8.0bpw-h8-exl2">8.0bpw-h8</a>

- Added Branch: <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/blob/Confluence-Shortcake-20B/mergekit_config.yml"> Confluence-Shortcake-20B Model recipes</a> and Quants here <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/Confluence-Shortcake-20B-2.4bpw-h6-exl2">Confluence-Shortcake-20B-2.4bpw-h6-exl2</a>, <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/Confluence-Shortcake-20B-4.25bpw-h6-exl2">4.25bpw-h6</a>, <a href="https://huggingface.co/Nekochu/Confluence-Renegade-7B/tree/Confluence-Shortcake-20B-8.0bpw-h8-exl2">8.0bpw-h8</a>

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/d38LuOG.png" alt="Nekochu" style="width: 250%; min-width: 400px; display: block; margin: auto;">

</div>

The name reflects the merge: *Confluence* for the many unique RP models combined, and *Renegade* because most of them come from models without guardrails.

## Download branch instructions

```shell

git clone --single-branch --branch Confluence-Shortcake-20B-2.4bpw-h6-exl2 https://huggingface.co/Nekochu/Confluence-Renegade-7B

```

### Configuration Confluence-Renegade-7B

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: ./modela/Erosumika-7B
    parameters:
      density: [1, 0.8, 0.6]
      weight: 0.2
  - model: ./modela/Infinitely-Laydiculous-7B
    parameters:
      density: [0.9, 0.7, 0.5]
      weight: 0.2
  - model: ./modela/Kunocchini-7b-128k-test
    parameters:
      density: [0.8, 0.6, 0.4]
      weight: 0.2
  - model: ./modela/EndlessRP-v3-7B
    parameters:
      density: [0.7, 0.5, 0.3]
      weight: 0.2
  - model: ./modela/daybreak-kunoichi-2dpo-7b
    parameters:
      density: [0.5, 0.3, 0.1]
      weight: 0.2
merge_method: dare_linear
base_model: ./modela/Mistral-7B-v0.1
parameters:
  normalize: true
  int8_mask: true
dtype: bfloat16
name: intermediate-model
---
slices:
  - sources:
      - model: intermediate-model
        layer_range: [0, 32]
      - model: ./modela/BuRP_7B
        layer_range: [0, 32]
merge_method: slerp
base_model: intermediate-model
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5 # fallback for rest of tensors
dtype: bfloat16
name: gradient-slerp
```

```shell
mergekit-mega config.yml ./output-model-directory --cuda --allow-crimes --lazy-unpickle
```
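
The second stage of the config combines two ideas: SLERP (spherical linear interpolation) between two weight tensors, and a per-layer interpolation factor `t` given as a gradient list. The NumPy sketch below illustrates both. It assumes the gradient anchors are spread evenly over the 32 layers and linearly interpolated. That is my reading of how mergekit treats list values, not its actual implementation, and `layer_t` and `slerp` are hypothetical names.

```python
# Sketch: per-layer t from a gradient list, and SLERP between two tensors.
# Assumption: gradient anchors are evenly spaced over layers and linearly
# interpolated; this mirrors, but is not, mergekit's own code.
import numpy as np


def layer_t(gradient, num_layers):
    """Per-layer t values for a gradient such as [0, 0.5, 0.3, 0.7, 1]."""
    anchors = np.linspace(0, num_layers - 1, num=len(gradient))
    return np.interp(np.arange(num_layers), anchors, gradient)


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two tensors (treated as flat vectors)."""
    a, b = v0.ravel(), v1.ravel()
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps)
    omega = np.arccos(np.clip(cos, -1.0, 1.0))
    if np.sin(omega) < eps:  # nearly parallel: fall back to plain lerp
        return (1 - t) * v0 + t * v1
    w0 = np.sin((1 - t) * omega) / np.sin(omega)
    w1 = np.sin(t * omega) / np.sin(omega)
    return w0 * v0 + w1 * v1


attn_t = layer_t([0, 0.5, 0.3, 0.7, 1], 32)  # self_attn filter
mlp_t = layer_t([1, 0.5, 0.7, 0.3, 0], 32)   # mlp filter
print(np.round(attn_t[:4], 3), np.round(mlp_t[:4], 3))
```

Because the `mlp` list is the `self_attn` list mirrored (each value is 1 minus the other), the two per-layer schedules always sum to 1 under linear interpolation.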

### Models Merged Confluence-Renegade-7B

The following models were included in the merge:

- [localfultonextractor/Erosumika-7B](https://huggingface.co/localfultonextractor/Erosumika-7B)

- [Nitral-AI/Infinitely-Laydiculous-7B](https://huggingface.co/Nitral-AI/Infinitely-Laydiculous-7B)

- [Kunocchini-7b-128k-test](https://huggingface.co/Nitral-AI/Kunocchini-7b-128k-test)

- [Endevor/EndlessRP-v3-7B](https://huggingface.co/Endevor/EndlessRP-v3-7B)

- [ChaoticNeutrals/BuRP_7B](https://huggingface.co/ChaoticNeutrals/BuRP_7B)

- [daybreak-kunoichi-2dpo-7b](https://huggingface.co/crestf411/daybreak-kunoichi-2dpo-7b) |

NotAiLOL/Med-Yi-1.5-9B | NotAiLOL | 2024-05-14T10:17:33Z | 1,266 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"text-generation-inference",

"unsloth",

"trl",

"sft",

"en",

"dataset:Kabatubare/medical-alpaca",

"base_model:01-ai/Yi-1.5-9B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-14T09:04:37Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- sft

base_model: 01-ai/Yi-1.5-9B

datasets:

- Kabatubare/medical-alpaca

---

# Uploaded model

- **Developed by:** NotAiLOL

- **License:** apache-2.0

- **Finetuned from model:** 01-ai/Yi-1.5-9B

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

# Model detail

## Prompt format

The model uses the alpaca format:

```

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

{}

### Input:

{}

### Response:

{}

``` |

timm/maxvit_rmlp_small_rw_224.sw_in1k | timm | 2023-05-11T00:19:51Z | 1,265 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2204.01697",

"arxiv:2111.09883",

"license:apache-2.0",

"region:us"

] | image-classification | 2023-01-20T21:34:10Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for maxvit_rmlp_small_rw_224.sw_in1k

A timm-specific MaxViT image classification model with an MLP Log-CPB (continuous log-coordinate relative position bias, motivated by Swin-V2). Trained in `timm` on ImageNet-1k by Ross Wightman.

ImageNet-1k training done on TPUs thanks to support of the [TRC](https://sites.research.google/trc/about/) program.

### Model Variants in [maxxvit.py](https://github.com/huggingface/pytorch-image-models/blob/main/timm/models/maxxvit.py)

MaxxViT covers a number of related model architectures that share a common structure including:

- CoAtNet - Combining MBConv (depthwise-separable) convolutional blocks in early stages with self-attention transformer blocks in later stages.

- MaxViT - Uniform blocks across all stages, each containing an MBConv (depthwise-separable) convolution block followed by two self-attention blocks with different partitioning schemes (window followed by grid).

- CoAtNeXt - A timm specific arch that uses ConvNeXt blocks in place of MBConv blocks in CoAtNet. All normalization layers are LayerNorm (no BatchNorm).

- MaxxViT - A timm specific arch that uses ConvNeXt blocks in place of MBConv blocks in MaxViT. All normalization layers are LayerNorm (no BatchNorm).

- MaxxViT-V2 - A MaxxViT variation that removes the window block attention leaving only ConvNeXt blocks and grid attention w/ more width to compensate.

Aside from the major variants listed above, there are more subtle changes from model to model. Any model name containing the string `rw` is a `timm`-specific config with modelling adjustments made to favour PyTorch eager use. These were created while training initial reproductions of the models, so there are variations.

All models with the string `tf` exactly match TensorFlow-based models by the original paper authors, with weights ported to PyTorch. This covers a number of MaxViT models. The official CoAtNet models were never released.

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 64.9
  - GMACs: 10.7
  - Activations (M): 49.3
  - Image size: 224 x 224
- **Papers:**
  - MaxViT: Multi-Axis Vision Transformer: https://arxiv.org/abs/2204.01697
  - Swin Transformer V2: Scaling Up Capacity and Resolution: https://arxiv.org/abs/2111.09883
- **Dataset:** ImageNet-1k

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm
import torch

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('maxvit_rmlp_small_rw_224.sw_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'maxvit_rmlp_small_rw_224.sw_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 64, 112, 112])
    #  torch.Size([1, 96, 56, 56])
    #  torch.Size([1, 192, 28, 28])
    #  torch.Size([1, 384, 14, 14])
    #  torch.Size([1, 768, 7, 7])
    print(o.shape)

```
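
The spatial sizes in the comments above follow from each feature level's reduction (stride) relative to the 224 x 224 input. This sketch assumes reductions of 2, 4, 8, 16 and 32, which is what the listed shapes imply, and copies the channel counts from those comments. For a `features_only` model, timm should also report these values via `model.feature_info.channels()` and `model.feature_info.reduction()`.

```python
# Sketch: derive the expected feature-map shapes from per-level reductions.
# Assumption: reductions 2..32, as implied by the example shapes above.
IMG_SIZE = 224
REDUCTIONS = [2, 4, 8, 16, 32]
CHANNELS = [64, 96, 192, 384, 768]  # copied from the example output

shapes = [(1, c, IMG_SIZE // r, IMG_SIZE // r) for c, r in zip(CHANNELS, REDUCTIONS)]
for s in shapes:
    print(s)
```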

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'maxvit_rmlp_small_rw_224.sw_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 768, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Model Comparison

### By Top-1

|model |top1 |top5 |samples / sec |Params (M) |GMAC |Act (M)|
|------------------------------------------------------------------------------------------------------------------------|----:|----:|--------------:|--------------:|-----:|------:|
|[maxvit_xlarge_tf_512.in21k_ft_in1k](https://huggingface.co/timm/maxvit_xlarge_tf_512.in21k_ft_in1k) |88.53|98.64| 21.76| 475.77|534.14|1413.22|
|[maxvit_xlarge_tf_384.in21k_ft_in1k](https://huggingface.co/timm/maxvit_xlarge_tf_384.in21k_ft_in1k) |88.32|98.54| 42.53| 475.32|292.78| 668.76|
|[maxvit_base_tf_512.in21k_ft_in1k](https://huggingface.co/timm/maxvit_base_tf_512.in21k_ft_in1k) |88.20|98.53| 50.87| 119.88|138.02| 703.99|
|[maxvit_large_tf_512.in21k_ft_in1k](https://huggingface.co/timm/maxvit_large_tf_512.in21k_ft_in1k) |88.04|98.40| 36.42| 212.33|244.75| 942.15|
|[maxvit_large_tf_384.in21k_ft_in1k](https://huggingface.co/timm/maxvit_large_tf_384.in21k_ft_in1k) |87.98|98.56| 71.75| 212.03|132.55| 445.84|
|[maxvit_base_tf_384.in21k_ft_in1k](https://huggingface.co/timm/maxvit_base_tf_384.in21k_ft_in1k) |87.92|98.54| 104.71| 119.65| 73.80| 332.90|
|[maxvit_rmlp_base_rw_384.sw_in12k_ft_in1k](https://huggingface.co/timm/maxvit_rmlp_base_rw_384.sw_in12k_ft_in1k) |87.81|98.37| 106.55| 116.14| 70.97| 318.95|
|[maxxvitv2_rmlp_base_rw_384.sw_in12k_ft_in1k](https://huggingface.co/timm/maxxvitv2_rmlp_base_rw_384.sw_in12k_ft_in1k) |87.47|98.37| 149.49| 116.09| 72.98| 213.74|
|[coatnet_rmlp_2_rw_384.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_rmlp_2_rw_384.sw_in12k_ft_in1k) |87.39|98.31| 160.80| 73.88| 47.69| 209.43|
|[maxvit_rmlp_base_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/maxvit_rmlp_base_rw_224.sw_in12k_ft_in1k) |86.89|98.02| 375.86| 116.14| 23.15| 92.64|
|[maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k) |86.64|98.02| 501.03| 116.09| 24.20| 62.77|
|[maxvit_base_tf_512.in1k](https://huggingface.co/timm/maxvit_base_tf_512.in1k) |86.60|97.92| 50.75| 119.88|138.02| 703.99|
|[coatnet_2_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_2_rw_224.sw_in12k_ft_in1k) |86.57|97.89| 631.88| 73.87| 15.09| 49.22|
|[maxvit_large_tf_512.in1k](https://huggingface.co/timm/maxvit_large_tf_512.in1k) |86.52|97.88| 36.04| 212.33|244.75| 942.15|
|[coatnet_rmlp_2_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_rmlp_2_rw_224.sw_in12k_ft_in1k) |86.49|97.90| 620.58| 73.88| 15.18| 54.78|
|[maxvit_base_tf_384.in1k](https://huggingface.co/timm/maxvit_base_tf_384.in1k) |86.29|97.80| 101.09| 119.65| 73.80| 332.90|
|[maxvit_large_tf_384.in1k](https://huggingface.co/timm/maxvit_large_tf_384.in1k) |86.23|97.69| 70.56| 212.03|132.55| 445.84|
|[maxvit_small_tf_512.in1k](https://huggingface.co/timm/maxvit_small_tf_512.in1k) |86.10|97.76| 88.63| 69.13| 67.26| 383.77|
|[maxvit_tiny_tf_512.in1k](https://huggingface.co/timm/maxvit_tiny_tf_512.in1k) |85.67|97.58| 144.25| 31.05| 33.49| 257.59|
|[maxvit_small_tf_384.in1k](https://huggingface.co/timm/maxvit_small_tf_384.in1k) |85.54|97.46| 188.35| 69.02| 35.87| 183.65|
|[maxvit_tiny_tf_384.in1k](https://huggingface.co/timm/maxvit_tiny_tf_384.in1k) |85.11|97.38| 293.46| 30.98| 17.53| 123.42|
|[maxvit_large_tf_224.in1k](https://huggingface.co/timm/maxvit_large_tf_224.in1k) |84.93|96.97| 247.71| 211.79| 43.68| 127.35|
|[coatnet_rmlp_1_rw2_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_rmlp_1_rw2_224.sw_in12k_ft_in1k) |84.90|96.96| 1025.45| 41.72| 8.11| 40.13|
|[maxvit_base_tf_224.in1k](https://huggingface.co/timm/maxvit_base_tf_224.in1k) |84.85|96.99| 358.25| 119.47| 24.04| 95.01|
|[maxxvit_rmlp_small_rw_256.sw_in1k](https://huggingface.co/timm/maxxvit_rmlp_small_rw_256.sw_in1k) |84.63|97.06| 575.53| 66.01| 14.67| 58.38|
|[coatnet_rmlp_2_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_2_rw_224.sw_in1k) |84.61|96.74| 625.81| 73.88| 15.18| 54.78|
|[maxvit_rmlp_small_rw_224.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_small_rw_224.sw_in1k) |84.49|96.76| 693.82| 64.90| 10.75| 49.30|
|[maxvit_small_tf_224.in1k](https://huggingface.co/timm/maxvit_small_tf_224.in1k) |84.43|96.83| 647.96| 68.93| 11.66| 53.17|
|[maxvit_rmlp_tiny_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_tiny_rw_256.sw_in1k) |84.23|96.78| 807.21| 29.15| 6.77| 46.92|
|[coatnet_1_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_1_rw_224.sw_in1k) |83.62|96.38| 989.59| 41.72| 8.04| 34.60|
|[maxvit_tiny_rw_224.sw_in1k](https://huggingface.co/timm/maxvit_tiny_rw_224.sw_in1k) |83.50|96.50| 1100.53| 29.06| 5.11| 33.11|
|[maxvit_tiny_tf_224.in1k](https://huggingface.co/timm/maxvit_tiny_tf_224.in1k) |83.41|96.59| 1004.94| 30.92| 5.60| 35.78|
|[coatnet_rmlp_1_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_1_rw_224.sw_in1k) |83.36|96.45| 1093.03| 41.69| 7.85| 35.47|
|[maxxvitv2_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxxvitv2_nano_rw_256.sw_in1k) |83.11|96.33| 1276.88| 23.70| 6.26| 23.05|
|[maxxvit_rmlp_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxxvit_rmlp_nano_rw_256.sw_in1k) |83.03|96.34| 1341.24| 16.78| 4.37| 26.05|
|[maxvit_rmlp_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_nano_rw_256.sw_in1k) |82.96|96.26| 1283.24| 15.50| 4.47| 31.92|
|[maxvit_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_nano_rw_256.sw_in1k) |82.93|96.23| 1218.17| 15.45| 4.46| 30.28|
|[coatnet_bn_0_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_bn_0_rw_224.sw_in1k) |82.39|96.19| 1600.14| 27.44| 4.67| 22.04|
|[coatnet_0_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_0_rw_224.sw_in1k) |82.39|95.84| 1831.21| 27.44| 4.43| 18.73|
|[coatnet_rmlp_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_nano_rw_224.sw_in1k) |82.05|95.87| 2109.09| 15.15| 2.62| 20.34|
|[coatnext_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnext_nano_rw_224.sw_in1k) |81.95|95.92| 2525.52| 14.70| 2.47| 12.80|
|[coatnet_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_nano_rw_224.sw_in1k) |81.70|95.64| 2344.52| 15.14| 2.41| 15.41|
|[maxvit_rmlp_pico_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_pico_rw_256.sw_in1k) |80.53|95.21| 1594.71| 7.52| 1.85| 24.86|
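The tables trade accuracy against throughput. One way to narrow the choice is to keep only the Pareto-optimal rows, meaning rows that no other row beats or matches on both top-1 and samples / sec. You can then take the most accurate row that meets a minimum throughput. The sketch below applies this to a subset of (model, top-1, samples / sec) values copied from the table above. `Row`, `pareto_front` and `most_accurate_at_least` are hypothetical helpers and are not part of timm. The throughput numbers are only comparable with each other under the benchmark setup that produced them, and this section does not describe that setup.

```python
from typing import List, NamedTuple, Optional


class Row(NamedTuple):
    name: str
    top1: float
    samples_per_sec: float


# Subset of (model, top-1, samples / sec) values copied from the "By Top-1" table.
ROWS: List[Row] = [
    Row("maxvit_xlarge_tf_512.in21k_ft_in1k", 88.53, 21.76),
    Row("maxvit_base_tf_512.in21k_ft_in1k", 88.20, 50.87),
    Row("maxvit_large_tf_512.in21k_ft_in1k", 88.04, 36.42),
    Row("maxvit_base_tf_384.in21k_ft_in1k", 87.92, 104.71),
    Row("maxvit_rmlp_base_rw_384.sw_in12k_ft_in1k", 87.81, 106.55),
    Row("coatnet_rmlp_2_rw_384.sw_in12k_ft_in1k", 87.39, 160.80),
    Row("maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k", 86.64, 501.03),
    Row("coatnet_2_rw_224.sw_in12k_ft_in1k", 86.57, 631.88),
    Row("coatnet_rmlp_1_rw2_224.sw_in12k_ft_in1k", 84.90, 1025.45),
    Row("maxvit_tiny_tf_224.in1k", 83.41, 1004.94),
    Row("coatnext_nano_rw_224.sw_in1k", 81.95, 2525.52),
    Row("maxvit_rmlp_pico_rw_256.sw_in1k", 80.53, 1594.71),
]


def pareto_front(rows: List[Row]) -> List[Row]:
    """Return rows not beaten or matched on both top-1 and throughput, fastest first."""
    front: List[Row] = []
    best_top1 = float("-inf")
    # Walk from fastest to slowest (ties: most accurate first). A row survives
    # only if it is more accurate than every row already seen.
    for row in sorted(rows, key=lambda r: (-r.samples_per_sec, -r.top1)):
        if row.top1 > best_top1:
            front.append(row)
            best_top1 = row.top1
    return front


def most_accurate_at_least(rows: List[Row], min_samples_per_sec: float) -> Optional[Row]:
    """Return the highest top-1 row meeting the throughput floor, or None."""
    candidates = [r for r in rows if r.samples_per_sec >= min_samples_per_sec]
    return max(candidates, key=lambda r: r.top1, default=None)


for row in pareto_front(ROWS):
    print(f"{row.name}: {row.top1:.2f} top-1 at {row.samples_per_sec:.2f} samples/sec")

choice = most_accurate_at_least(ROWS, 500)
print(choice.name if choice else "no model meets the budget")
```

With a 500 samples/sec floor, this subset selects `maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k`. `maxvit_large_tf_512.in21k_ft_in1k` drops out of the front because `maxvit_base_tf_512.in21k_ft_in1k` is both faster and more accurate in the table.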

### By Throughput (samples / sec)

|model |top1 |top5 |samples / sec |Params (M) |GMAC |Act (M)|
|------------------------------------------------------------------------------------------------------------------------|----:|----:|--------------:|--------------:|-----:|------:|
|[coatnext_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnext_nano_rw_224.sw_in1k) |81.95|95.92| 2525.52| 14.70| 2.47| 12.80|
|[coatnet_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_nano_rw_224.sw_in1k) |81.70|95.64| 2344.52| 15.14| 2.41| 15.41|
|[coatnet_rmlp_nano_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_nano_rw_224.sw_in1k) |82.05|95.87| 2109.09| 15.15| 2.62| 20.34|
|[coatnet_0_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_0_rw_224.sw_in1k) |82.39|95.84| 1831.21| 27.44| 4.43| 18.73|
|[coatnet_bn_0_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_bn_0_rw_224.sw_in1k) |82.39|96.19| 1600.14| 27.44| 4.67| 22.04|
|[maxvit_rmlp_pico_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_pico_rw_256.sw_in1k) |80.53|95.21| 1594.71| 7.52| 1.85| 24.86|
|[maxxvit_rmlp_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxxvit_rmlp_nano_rw_256.sw_in1k) |83.03|96.34| 1341.24| 16.78| 4.37| 26.05|
|[maxvit_rmlp_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_nano_rw_256.sw_in1k) |82.96|96.26| 1283.24| 15.50| 4.47| 31.92|
|[maxxvitv2_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxxvitv2_nano_rw_256.sw_in1k) |83.11|96.33| 1276.88| 23.70| 6.26| 23.05|
|[maxvit_nano_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_nano_rw_256.sw_in1k) |82.93|96.23| 1218.17| 15.45| 4.46| 30.28|
|[maxvit_tiny_rw_224.sw_in1k](https://huggingface.co/timm/maxvit_tiny_rw_224.sw_in1k) |83.50|96.50| 1100.53| 29.06| 5.11| 33.11|
|[coatnet_rmlp_1_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_1_rw_224.sw_in1k) |83.36|96.45| 1093.03| 41.69| 7.85| 35.47|
|[coatnet_rmlp_1_rw2_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_rmlp_1_rw2_224.sw_in12k_ft_in1k) |84.90|96.96| 1025.45| 41.72| 8.11| 40.13|
|[maxvit_tiny_tf_224.in1k](https://huggingface.co/timm/maxvit_tiny_tf_224.in1k) |83.41|96.59| 1004.94| 30.92| 5.60| 35.78|
|[coatnet_1_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_1_rw_224.sw_in1k) |83.62|96.38| 989.59| 41.72| 8.04| 34.60|
|[maxvit_rmlp_tiny_rw_256.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_tiny_rw_256.sw_in1k) |84.23|96.78| 807.21| 29.15| 6.77| 46.92|
|[maxvit_rmlp_small_rw_224.sw_in1k](https://huggingface.co/timm/maxvit_rmlp_small_rw_224.sw_in1k) |84.49|96.76| 693.82| 64.90| 10.75| 49.30|
|[maxvit_small_tf_224.in1k](https://huggingface.co/timm/maxvit_small_tf_224.in1k) |84.43|96.83| 647.96| 68.93| 11.66| 53.17|
|[coatnet_2_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_2_rw_224.sw_in12k_ft_in1k) |86.57|97.89| 631.88| 73.87| 15.09| 49.22|
|[coatnet_rmlp_2_rw_224.sw_in1k](https://huggingface.co/timm/coatnet_rmlp_2_rw_224.sw_in1k) |84.61|96.74| 625.81| 73.88| 15.18| 54.78|
|[coatnet_rmlp_2_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/coatnet_rmlp_2_rw_224.sw_in12k_ft_in1k) |86.49|97.90| 620.58| 73.88| 15.18| 54.78|
|[maxxvit_rmlp_small_rw_256.sw_in1k](https://huggingface.co/timm/maxxvit_rmlp_small_rw_256.sw_in1k) |84.63|97.06| 575.53| 66.01| 14.67| 58.38|
|[maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k](https://huggingface.co/timm/maxxvitv2_rmlp_base_rw_224.sw_in12k_ft_in1k) |86.64|98.02| 501.03| 116.09| 24.20| 62.77|