| modelId stringlengths 5-122 | author stringlengths 2-42 | last_modified timestamp[us, tz=UTC] | downloads int64 0-738M | likes int64 0-11k | library_name stringclasses 245 values | tags sequencelengths 1-4.05k | pipeline_tag stringclasses 48 values | createdAt timestamp[us, tz=UTC] | card stringlengths 1-901k |

|---|---|---|---|---|---|---|---|---|---|

athirdpath/Iambe-RP-cDPO-20b | athirdpath | 2023-12-09T21:53:23Z | 1,098 | 15 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"not-for-all-audiences",

"en",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-07T04:00:57Z | ---

license: cc-by-nc-4.0

language:

- en

tags:

- not-for-all-audiences

---

<p align="center"><img src="https://i.ibb.co/PCY2Xym/Iambe-RP-sml-t.png"/><font size="6"> <b>Iambe-RP-cDPO-20b</b> </font></p>

<p align="center"><font size="4"> <b>Trained with Alpaca prompt formatting, some like ChatML</b> </font></p>

### Description

Named after a charming daughter of Echo and Pan in Greek myth, Iambe-RP is, as far as I am aware, the very first LLM trained with DPO on an erotic roleplay dataset.

Iambe is intended to have the best realistically possible understanding of instructions, anatomy and scene state for a 20b merge, while remaining passionate and humanoid in "voice".

### Update Methodology

Take a look at [the dataset v2 Iambe and I created together](https://huggingface.co/datasets/athirdpath/DPO_Pairs-Roleplay-Alpaca-NSFW) for more info. The cDPO training was done directly on Iambe-20b-DARE-v2. I was researching 11b merges to reduce the compute, but it went nowhere, so I just bit the bullet on cost. The notebook used to train this model is also available in the dataset's repo.

<p align="center"><font size="5"> <b>Roleplay Example @ q5_k_m</b> </font></p>

<p align="center"><img src="https://i.ibb.co/hFz5mdF/Screenshot-2023-12-07-005350.png"/>

<p align="center"><font size="5"> <b>4-bit Assistant Example</b> </font></p>

<p align="center"><img src="https://i.postimg.cc/HxNsPRSk/Screenshot-2023-12-06-214901.png"/>

<p align="center"><font size="5"> <b>NSFW Writing Example @ q5_k_m</b> </font></p>

<b>Write a medium-length story about Alice, a 26 year old cocktail waitress, having steamy sex with a guy she picked up at the bar for a one night stand.</b>

<i>Once upon a time in New York City's bustling downtown scene, there lived a beautiful and spirited young woman named Alice. At 26 years old, she worked as a cocktail waitress at The Red Parrot, a popular watering hole known for its lively atmosphere and charming clientele. Despite her long shifts and exhaustion from catering to rowdy patrons, Alice loved her job; it gave her the freedom to live life on her terms and pursue her other passions during her limited free time.

Alice had a penchant for adventure and was never one to shy away from taking risks—whether that meant skydiving or striking up conversations with strangers at bars. One particularly busy Friday night, while serving drinks at The Red Parrot, Alice noticed an intriguing man sitting alone in the corner, nursing a glass of red wine and gazing pensively into his empty shot glass. There was something about him that drew her in; his striking good looks, his rugged demeanor—even the hint of stubble on his chin seemed to add an air of mystery to his magnetic presence.

Feeling bold and confident after a couple of well-poured drinks herself, Alice made her way over to him, smiling warmly as she leaned down towards him, "Hi there! What's your name? I haven't seen you in here before."

The man raised his eyes to meet hers, his deep brown orbs filled with curiosity and a hint of amusement. "Name's Jack," he rumbled softly, his voice as smooth and rich as his wine. There was something about the way he said her name that sent shivers down Alice's spine—a thrill she couldn't quite explain but found irresistible nonetheless.

"Alice," she replied, extending her hand to him, "and I haven't seen you around before either; it seems like we have a lot in common." She winked playfully at him before turning back towards the bar to grab another round of drinks for them both.

The conversation flowed effortlessly between them, touching on topics ranging from their favorite books and movies to their most daring travel adventures. Alice was captivated by Jack's stories of hiking through the mountains of Nepal and white-water rafting in Costa Rica—qualities she admired greatly herself but had yet to experience for herself. In turn, Jack seemed equally fascinated by her tales of skydiving over Australia and scuba diving with great white sharks off the coast of South Africa.

As midnight approached and the bar began to empty out, Alice suggested they continue their conversation elsewhere—somewhere more private where they could really get to know each other better. Jack nodded in agreement, draining his glass before tossing some money onto the table and following her into the bustling New York City night.

They hailed a cab and rode in silence towards Alice's cozy one-bedroom apartment in Chelsea—the anticipation between them palpable as they shared furtive glances along the way. When they finally arrived at their destination, Jack paid the fare with an appreciative grin before escorting Alice up to her top-floor studio, where she fumbled clumsily with her keys while trying not to spill their drinks on themselves in anticipation of what was to come.

Once inside her apartment, they kicked off their shoes and collapsed onto the couch, still holding each other's gaze as they sipped from their glasses. The passion between them continued to build with every second that passed—the air thickening with unspoken desire and electric energy.

Alice broke away first, setting down her glass on the coffee table before reaching out tentatively and placing her hand on Jack's knee, sliding it up slowly towards his crotch until she could feel the hardness growing beneath the fabric of his pants. "I really like you," she whispered huskily, meeting his deep brown eyes with a look that betrayed the intensity of her feelings for him.

Jack's response was immediate—his hands finding their way underneath Alice's short black skirt, sliding up to rest on the soft fabric of her panties before deftly ripping them away from her body in one swift movement. "I really like you too," he growled hoarsely as his mouth crashed against hers, kissing her hungrily and passionately—a taste of things to come.

Their clothes fell away quickly thereafter; Alice's blouse was unbuttoned and tossed aside in an instant while Jack peeled off his shirt before pushing her down onto the couch and following after, bracing himself over her as he tore at her panties with one hand, freeing his hard member from his jeans.

His kisses trailed a path of fire across Alice's body—from her collarbone to her breasts, down the curve of her waist and towards her mound where she was already wet with desire for him. "Jack," she moaned breathlessly as he licked and nipped at her most sensitive spots while teasingly grazing his cock against her entrance.

With a growl of satisfaction, Jack finally plunged into Alice's tight channel—filling her completely with one powerful thrust that made them both cry out in pleasure. The feeling of being so thoroughly possessed by him was beyond anything she had ever experienced before; the roughness of his hands on her body, the taste of their mouths as they continued to devour each other’s lips, all blending together into a single, overwhelming wave of sensation that Alice felt sure would consume her entirely.

As Jack began to move inside her—slowly at first but picking up pace and ferocity with every passing moment—Alice wrapped her legs tightly around him, arching her hips upwards towards his relentless thrusts as she cried out in ecstasy again and again. She knew this was just a one-night stand—a fleeting encounter destined to end tomorrow morning before the sun rose—but for now, with Jack's cock buried deep inside her, nothing else mattered.

Their bodies moved as one; their moans and cries of pleasure intertwining in a symphony that filled Alice’s bedroom like sacred chants. Her orgasm hit her suddenly and without warning—a wave so powerful it threatened to shatter her very bones, but Jack was there with her every step of the way, holding onto her as he continued pounding into her until she felt herself start to come back together again, his hot cum spilling deep inside her.

As their bodies slowed down and settled together on the couch—drenched in sweat and satiated beyond belief—Alice looked up at Jack with wide eyes, a small smile playing at the corners of her lips as she whispered softly, "I think we just had one hell of a night."

And they did. It was an unforgettable evening filled with passion, adventure, and an undeniable chemistry that neither Alice nor Jack could deny—an encounter so powerful it left them both breathless in more ways than one. And even though they knew tomorrow would bring the harsh reality of their separate lives back into focus, for now, all either of them cared about was savoring this moment together; cherishing every last drop of the steamy sex that had brought them here at all.</i>

|

cocoirun/Yi-Ko-6B-instruct-v1.5-DPO | cocoirun | 2024-01-16T03:58:49Z | 1,098 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-16T03:24:26Z | ---

license: cc-by-sa-4.0

---

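<h1>Instruct model v1.5</h1>

<b><Training data construction></b>

After analyzing the Open-Orca-ko data to extract tasks,

we built our own training data for those tasks from NLP-related open-source data,

about 40,000 examples (history, science, math, machine reading comprehension, review analysis),

and additionally filtered and cleaned part of the Open-Orca-Ko data and added KoBEST data.

Further training data was built from AI Hub general-knowledge and machine reading comprehension data (morphology-related, reading-comprehension-related, and summarization).

History and general-knowledge quizzes from various blogs were converted by hand into training-data format.

The AI2AI Challenge data was translated with Papago, and mistranslated parts were corrected by hand.

English translation data (English-Korean / Korean-English) was also used as training data.

SFT was performed on a total of 110,000 training examples.

<br>

Currently, part of the Open-Orca dataset is being translated and cleaned to train a new version of the model and improve its performance.

<br>

+ Added high-school history questions and TruthfulQA-related questions.

+ Added various IT knowledge data.

+ Trained on machine reading comprehension data whose answers were obtained from ChatGPT.

+ Grammar-related training data.

<br>

###The training data files are not public.

<br>

<b><Training></b>

Training was done with LoRA on 2 x A100 40G GPUs. |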

blueapple8259/TinyKo-v5-b | blueapple8259 | 2024-02-03T05:48:20Z | 1,098 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"ko",

"dataset:maywell/korean_textbooks",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-03T05:29:46Z | ---

license: mit

datasets:

- maywell/korean_textbooks

language:

- ko

---

This model is a lightly fine-tuned version of [TinyKo-v5-a](https://huggingface.co/blueapple8259/TinyKo-v5-a).

Warning: performance is very poor and hallucination is severe.

## Model information

model type: llama

hidden size: 6

hidden size: 127

num attention heads: 16

num key value heads: 4

|

Lykon/AAM_XL_AnimeMix_Turbo | Lykon | 2024-02-13T19:50:46Z | 1,098 | 4 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"stable-diffusion-xl",

"text-to-image",

"art",

"artistic",

"anime",

"turbo",

"en",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-02-05T17:02:23Z | ---

language:

- en

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- stable-diffusion-xl

- text-to-image

- art

- artistic

- diffusers

- anime

- turbo

---

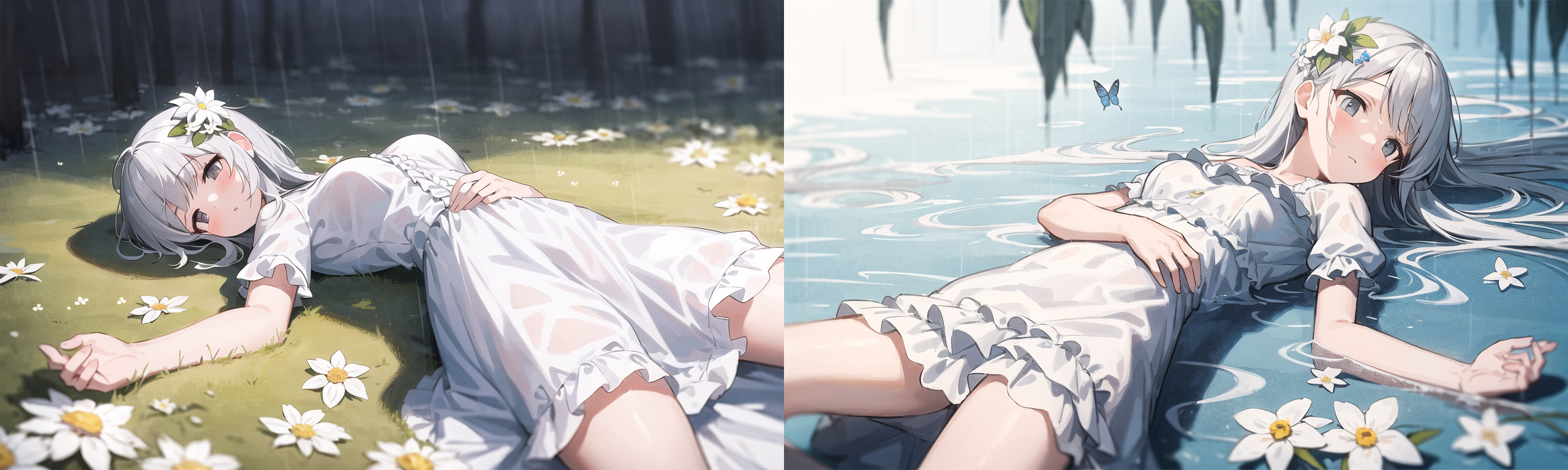

# AAM XL AnimeMix_Turbo

`Lykon/AAM_XL_AnimeMix_Turbo` is a Stable Diffusion model that has been fine-tuned on [stabilityai/stable-diffusion-xl-base-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0).

Please consider supporting me:

- on [Patreon](https://www.patreon.com/Lykon275)

- or [buy me a coffee](https://snipfeed.co/lykon)

**License**: [Fair AI Public License 1.0-SD](https://freedevproject.org/faipl-1.0-sd/)

## Diffusers

For more general information on how to run text-to-image models with 🧨 Diffusers, see [the docs](https://huggingface.co/docs/diffusers/using-diffusers/conditional_image_generation).

1. Installation

```

pip install diffusers transformers accelerate

```

2. Run

```py

from diffusers import AutoPipelineForText2Image, EulerAncestralDiscreteScheduler

import torch

pipe = AutoPipelineForText2Image.from_pretrained('Lykon/AAM_XL_AnimeMix_Turbo', torch_dtype=torch.float16, variant="fp16")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

prompt = "anime girl, night, blue light behind her, ((Galaxy, Lens flare)), short hair, flower field, night sky, cinematic shot. Wallpaper. (Blue color schema), detailed background, a city in the distance"

generator = torch.manual_seed(0)

# Pass the seeded generator so the run is reproducible

image = pipe(prompt, num_inference_steps=8, guidance_scale=4, generator=generator).images[0]

image.save("./image.png")

```

|

dddsaty/KoSOLAR-10.7B_DPO_Adapter_Attach | dddsaty | 2024-03-05T14:16:18Z | 1,098 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"ko",

"dataset:We-Want-GPU/Yi-Ko-DPO-Orca-DPO-Pairs",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-13T08:44:18Z | ---

license: apache-2.0

datasets: We-Want-GPU/Yi-Ko-DPO-Orca-DPO-Pairs

language:

- ko

pipeline_tag: text-generation

---

**Explanation**

- Applied DPO to a small number of layers of the base model using the open dataset, and saved the adapter part

- Attached the tuned adapter to the base model

**Base Model**

- [yanolja/KoSOLAR-10.7B-v0.3](https://huggingface.co/yanolja/KoSOLAR-10.7B-v0.3)

**Used Corpus**

- [We-Want-GPU/Yi-Ko-DPO-Orca-DPO-Pairs](https://huggingface.co/datasets/We-Want-GPU/Yi-Ko-DPO-Orca-DPO-Pairs)

**Score**

|Average|Ko-ARC|Ko-HellaSwag|Ko-MMLU|Ko-TruthfulQA|Ko-CommonGen V2|

|:---:|:---:|:---:|:---:|:---:|:---:|

|56.24|53.33|64.36|55.63|45.42|62.46|

**Log**

- 2024.02.13: Initial version Upload

**LICENSE**

- Apache 2.0 |

hwkwon/S-SOLAR-10.7B-v1.2 | hwkwon | 2024-03-06T09:04:36Z | 1,098 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"ko",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-06T08:55:58Z | ---

license: cc-by-nc-4.0

language:

- ko

---

# S-SOLAR-10.7B

<!-- Provide a quick summary of what the model is/does. -->

<!--This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).-->

### Model Description

<!-- Provide a longer summary of what this model is. -->

This model is a fine-tuned version of [yanolja/EEVE-Korean-10.8B-v1.0](https://huggingface.co/yanolja/EEVE-Korean-10.8B-v1.0).

### Trained Data

TBA

### Prompt Template

```

### User: User query input

### Assistant:

```
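
The template above can be filled in programmatically. The following is a minimal sketch, not code from the model author: the helper name `build_prompt` and the blank line between the two turns (the `separator` default) are assumptions, since the card does not spell out the exact whitespace.

```python
def build_prompt(user_query: str, separator: str = "\n\n") -> str:
    """Fill the '### User / ### Assistant' template with a user query.

    `separator` is an assumption: the card does not state how many
    newlines sit between the user turn and the assistant header.
    """
    return f"### User: {user_query}{separator}### Assistant:\n"


if __name__ == "__main__":
    # The model is expected to continue the text after "### Assistant:"
    print(build_prompt("Summarize this paragraph in one sentence."))
```

If generations look off, the separator is the first thing worth adjusting.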

### License

This model is licensed under cc-by-nc-4.0, which allows others to share and adapt the model for non-commercial purposes.

|

JY623/KoSOLRA-10.7B-merge-v2.3 | JY623 | 2024-04-05T08:51:30Z | 1,098 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"base_model:rrw-x2/KoSOLAR-10.7B-DPO-v1.0",

"base_model:JY623/KoSOLAR-10.7B-merge-v2.0",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-05T08:42:35Z | ---

base_model:

- rrw-x2/KoSOLAR-10.7B-DPO-v1.0

- JY623/KoSOLAR-10.7B-merge-v2.0

library_name: transformers

tags:

- mergekit

- merge

license: apache-2.0

---

# merge_base_ft_3

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.
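
As a rough illustration of what SLERP does to a pair of weight vectors, here is a minimal sketch in plain Python. It is not mergekit's implementation: the function name `slerp`, the `eps` threshold, and the fallback to linear interpolation for near-parallel vectors are assumptions made for this example. It follows the usual convention that `t=0` returns the first vector and `t=1` the second.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(v0, v1))
    n0 = math.sqrt(sum(a * a for a in v0))
    n1 = math.sqrt(sum(b * b for b in v1))
    if n0 * n1 == 0.0:
        # A zero vector has no direction: fall back to plain interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    # Angle between the two vectors (clamped against rounding error)
    cos_omega = max(-1.0, min(1.0, dot / (n0 * n1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        # Nearly parallel vectors: SLERP degenerates to linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / sin_omega
    s1 = math.sin(t * omega) / sin_omega
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


if __name__ == "__main__":
    # t = 0.2 keeps the result close to the first (base) vector
    print(slerp(0.2, [1.0, 0.0], [0.0, 1.0]))
```

In an actual merge the same interpolation would be applied tensor by tensor, with each tensor flattened to a vector.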

### Models Merged

The following models were included in the merge:

* [rrw-x2/KoSOLAR-10.7B-DPO-v1.0](https://huggingface.co/rrw-x2/KoSOLAR-10.7B-DPO-v1.0)

* [JY623/KoSOLAR-10.7B-merge-v2.0](https://huggingface.co/JY623/KoSOLAR-10.7B-merge-v2.0)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

slices:

- sources:

- model: JY623/KoSOLAR-10.7B-merge-v2.0

layer_range: [0, 48]

- model: rrw-x2/KoSOLAR-10.7B-DPO-v1.0

layer_range: [0, 48]

merge_method: slerp

base_model: JY623/KoSOLAR-10.7B-merge-v2.0

parameters:

t: 0.2

dtype: bfloat16

``` |

DavidAU/Psyonic-Cetacean-Ultra-Quality-20b-GGUF-imatrix | DavidAU | 2024-06-26T06:02:22Z | 1,098 | 5 | null | [

"gguf",

"creative",

"story",

"writing",

"fiction",

"float32",

"roleplaying",

"rp",

"enhanced",

"space whale",

"32 bit upscale",

"en",

"license:apache-2.0",

"region:us"

] | null | 2024-05-29T03:36:20Z | ---

license: apache-2.0

language:

- en

tags:

- creative

- story

- writing

- fiction

- float32

- roleplaying

- rp

- enhanced

- space whale

- 32 bit upscale

---

<font color=red><h3> Ultra Quality High Remaster of the incredible: Psyonic-Cetacean-20b - Imatrix Plus. </h3></font>

This is a Floating Point 32 upscale, where all components and merges were remastered to floating point 32.

This includes all the merges (recreated with master files), and where possible subbing full FP32 models.

<img src="space-whale-thinking.jpg">

The goal: Carry forward maximum precision right up to the point where it is "GGUFed".

This includes F32 master file for GGUF too... at a whopping 78 GBs.

WHY?

Because the difference between F32 vs BF16 is... over 8 DECIMAL places.

And as each merge / model is modified there are "losses" along the way.

These losses are carried forward and in turn lead to more losses.

And decimal points are critical to model performance.

SMALL?

Yes... but multiplied by each merge(s), and compression(s): 20 billion times.

<B>The result:</b>

At Q2K an impressive drop of 533 points in perplexity. (lower is better)

(VS: Q2K original base model: PPL = 9.8077 +/- 0.06821 )

At Q4KM a whopping drop of 976 points in perplexity.

(VS: Q4km original base model -> PPL = 8.7858 +/- 0.06074)

At Q6 an awesome drop of 234 points in perplexity.

(VS: Q6 original base model -> PPL = 8.6070 +/- 0.05907 )

To put this in perspective "Q6" now operates ABOVE the original full precision version of "Psyonic-Cetacean-20b"

and Q4KM operates at close to Q6 level quality.

This is because at "Q6" the quant / compressed model is considered to be accurate within "+0.0008 ppl" of the full,

uncompressed / unquanted model and it exceeds this threshold by over 200 points.

<I> Imatrix quants take this even further in most cases DOUBLING the "drop" in perplexity realized in the reg quants. </i>

Q4km-imatrix :

Final estimate: PPL = 8.6095 +/- 0.05898

(Non imatrix: Final estimate: PPL = 8.6902 +/- 0.05985 )

(VS: Q4km base model -> PPL = 8.7858 +/- 0.06074)

(VS: Q6 BASE model -> Final estimate: PPL = 8.6070 +/- 0.05907 Q6)

But... what about Q8?

The mountain moved:

150 points better: PPL = 8.5850 +/- 0.05881 VS: BASE/ORIGINAL: PPL = 8.6012 +/- 0.05900
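
The "points" quoted in this section can be related to the raw perplexity values with a small calculation. This is a sketch under one assumption that the card does not state explicitly: that one "point" equals 0.0001 of perplexity. The helper name `ppl_drop_points` is hypothetical. The PPL values are the ones listed above, and the card's quoted figures are approximate.

```python
POINT = 0.0001  # assumption: one "point" is 0.0001 PPL


def ppl_drop_points(base_ppl: float, new_ppl: float) -> int:
    """Return the perplexity drop from base to new, expressed in points."""
    return round((base_ppl - new_ppl) / POINT)


if __name__ == "__main__":
    # Q8: original vs. remaster
    print(ppl_drop_points(8.6012, 8.5850))
    # Q4KM: original vs. non-imatrix remaster
    print(ppl_drop_points(8.7858, 8.6902))
    # Q4KM: original vs. imatrix remaster
    print(ppl_drop_points(8.7858, 8.6095))
```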

<B>THE RESULTS ARE IN: </b>

As per Jeb Carter, original creator of the model:

- instruction following has improved dramatically.

- new abilities have emerged.

- he had to REDUCE the instructions sets used because the model no longer needed as specific instructions.

- prose, nuance and depth have all improved.

- known issues with the original model have disappeared.

This is not "something for nothing"; it is a method of ensuring maximum precision at every step just before "ggufing" the model.

The methods employed only ensure precision loss is minimized or eliminated.

It is mathematically and theoretically sound.

<B>The bottom line here is this:</b>

Higher quality instruction following and output.

Likewise you can use a smaller compression, with higher token per second and still get great quality.

Same great model... turbo charged.

This is the first group of remasters.

<B>The FOUR Horsemen:</B>

This repo will be followed by a "reg quant plus" repo, which added additional components into the GGUF (all levels) at floating point 32

precision to further increase the sheer creativity and raw AI horsepower.

This process shaves an extra 50-100 points off perplexity... again.

Following this group will be a full float 32 precision Imatrix (including reg quants "imatrixed").

Test results VS org and "ultra" regular quants will be posted when they come in.

Imatrix Plus repo (with the same floating 32 enhancement at "reg quant plus") that will push the limit even more.

Details of all methods (and pitfalls to avoid) employed to make these high precision remasters will be

posted shortly along with comparisons of original model and new ultra remaster.

Thanks again to Jeb Carter, the original creator of "Psyonic-Cetacean 20B"

[ https://huggingface.co/jebcarter/psyonic-cetacean-20B ] |

Sao10K/Stheno-Mega-False-49B-L2 | Sao10K | 2023-09-22T00:42:18Z | 1,097 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-21T15:46:46Z | ---

license: llama2

language:

- en

---

... A False-49B Llama2 Model, made from... splicing models together with Mergekit.

Purely experimental and for the memes.

Better than 70Bs? No. Better than 34bs? Doubt.

better than 13b? maybe. hopefully.

Why does it exist? Because I don't know.

https://huggingface.co/Sao10K/Stheno-Mega-False-49B-L2-GGUF

<img src="https://pbs.twimg.com/media/EGWbSqfUwAUTM2F.jpg" style="width: 50%; min-width: 100px; display: block; margin: auto;">

|

mys/ggml_CLIP-ViT-B-32-laion2B-s34B-b79K | mys | 2023-09-27T08:14:06Z | 1,097 | 6 | null | [

"gguf",

"clip",

"vision",

"ggml",

"clip.cpp",

"clip-cpp-gguf",

"license:mit",

"region:us"

] | null | 2023-09-27T06:32:45Z | ---

license: mit

tags:

- clip

- vision

- ggml

- clip.cpp

- clip-cpp-gguf

---

## Converted files for use with clip.cpp

see https://github.com/monatis/clip.cpp

# Experimental

the file format is not stable yet, so expect breaking changes. I will update the files from time to time.

|

ajibawa-2023/Uncensored-Jordan-33B | ajibawa-2023 | 2023-11-18T12:50:23Z | 1,097 | 7 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"en",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-23T12:39:00Z | ---

license: cc-by-nc-nd-4.0

language:

- en

---

**Jordan: An Uncensored Model**

The name "Jordan" pays homage to the charismatic character Jordan Belfort, portrayed by Leonardo DiCaprio in the movie "The Wolf of Wall Street."

Just like the character, Jordan aspires to push boundaries and encourage unfiltered conversations.

Jordan Belfort, the central character in "The Wolf of Wall Street," is known for his audaciousness, charisma, and willingness to speak about anything, no matter the societal norms or boundaries.

Jordan, the AI language model, draws inspiration from these qualities to offer a space where users can discuss a wide range of topics without fear of censorship or constraints.

Users can engage in candid discussions about politics, religion, contentious issues, and other subjects that might be off-limits on mainstream social media platforms.

It is trained on around 155,000 sets of conversations, each set having 10~15 conversations. We will not release this data.

**Warning**

An uncensored model has few or no guardrails. You are responsible for anything you do with the model, just as you are responsible for anything you do with any dangerous object.

Publishing anything this model generates is the same as publishing it yourself. We are not responsible for what you generate using this model.

**Training:**

The model was trained on the entire dataset on Azure, using 4 x A100 80GB GPUs. Training for 3 epochs took 104 hours. The DeepSpeed codebase was used for training. The base model is Llama-1 by Meta.

Llama-1 was used as it is very useful for Uncensored conversation.

This is a full fine tuned model. Links for quantized models are given below.

**GPTQ, GGUF & AWQ**

GPTQ: [Link](https://huggingface.co/TheBloke/Uncensored-Jordan-33B-GPTQ)

GGUF: [Link](https://huggingface.co/TheBloke/Uncensored-Jordan-33B-GGUF)

AWQ: [Link](https://huggingface.co/TheBloke/Uncensored-Jordan-33B-AWQ)

Special Thanks to [TheBloke](https://huggingface.co/TheBloke) for making these models available.

**Example Prompt:**

```

This is a conversation with your Uncensored AI assistant Jordan. Jordan can discuss with you at length about various topics such as ---, ---, ---, . You can ask it anything you want and it will do its best to give you accurate and relevant information.

Context

You are a Uncensored AI assistant.

USER: <prompt>

ASSISTANT:

``` |

aisingapore/sea-lion-7b | aisingapore | 2024-04-11T01:45:33Z | 1,097 | 29 | transformers | [

"transformers",

"safetensors",

"mpt",

"text-generation",

"custom_code",

"en",

"zh",

"id",

"ms",

"th",

"vi",

"fil",

"ta",

"my",

"km",

"lo",

"arxiv:2101.09635",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-30T07:39:19Z | ---

license: mit

language:

- en

- zh

- id

- ms

- th

- vi

- fil

- ta

- my

- km

- lo

---

# SEA-LION

SEA-LION is a collection of Large Language Models (LLMs) which has been pretrained and instruct-tuned for the Southeast Asia (SEA) region.

The sizes of the models range from 3 billion to 7 billion parameters.

This is the card for the SEA-LION 7B base model.

SEA-LION stands for <i>Southeast Asian Languages In One Network</i>.

## Model Details

### Model Description

The SEA-LION model is a significant leap forward in the field of Natural Language Processing,

specifically trained to understand the SEA regional context.

SEA-LION is built on the robust MPT architecture and has a vocabulary size of 256K.

For tokenization, the model employs our custom SEABPETokenizer, which is specially tailored for SEA languages, ensuring optimal model performance.

The training data for SEA-LION encompasses 980B tokens.

- **Developed by:** Products Pillar, AI Singapore

- **Funded by:** Singapore NRF

- **Model type:** Decoder

- **Languages:** English, Chinese, Indonesian, Malay, Thai, Vietnamese, Filipino, Tamil, Burmese, Khmer, Lao

- **License:** MIT License

### Performance Benchmarks

SEA-LION has an average performance on general tasks in English (as measured by Hugging Face's LLM Leaderboard):

| Model | ARC | HellaSwag | MMLU | TruthfulQA | Average |

|-------------|:-----:|:---------:|:-----:|:----------:|:-------:|

| SEA-LION 7B | 39.93 | 68.51 | 26.87 | 35.09 | 42.60 |

## Training Details

### Data

SEA-LION was trained on 980B tokens of the following data:

| Data Source | Unique Tokens | Multiplier | Total Tokens | Percentage |

|---------------------------|:-------------:|:----------:|:------------:|:----------:|

| RefinedWeb - English | 571.3B | 1 | 571.3B | 58.20% |

| mC4 - Chinese | 91.2B | 1 | 91.2B | 9.29% |

| mC4 - Indonesian | 3.68B | 4 | 14.7B | 1.50% |

| mC4 - Malay | 0.72B | 4 | 2.9B | 0.29% |

| mC4 - Filipino | 1.32B | 4 | 5.3B | 0.54% |

| mC4 - Burmese | 1.2B | 4 | 4.9B | 0.49% |

| mC4 - Vietnamese | 63.4B | 1 | 63.4B | 6.46% |

| mC4 - Thai | 5.8B | 2 | 11.6B | 1.18% |

| WangChanBERTa - Thai | 5B | 2 | 10B | 1.02% |

| mC4 - Lao | 0.27B | 4 | 1.1B | 0.12% |

| mC4 - Khmer | 0.97B | 4 | 3.9B | 0.40% |

| mC4 - Tamil | 2.55B | 4 | 10.2B | 1.04% |

| the Stack - Python | 20.9B | 2 | 41.8B | 4.26% |

| the Stack - Javascript | 55.6B | 1 | 55.6B | 5.66% |

| the Stack - Shell | 1.25B | 2 | 2.5B | 0.26% |

| the Stack - SQL | 6.4B | 2 | 12.8B | 1.31% |

| the Stack - Markdown | 26.6B | 1 | 26.6B | 2.71% |

| RedPajama - StackExchange | 21.2B | 1 | 21.2B | 2.16% |

| RedPajama - ArXiv | 30.6B | 1 | 30.6B | 3.12% |
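
The columns of the table above are related by simple arithmetic: Total Tokens is Unique Tokens times Multiplier, and Percentage is the row's Total Tokens divided by the sum of the Total Tokens column. The sketch below checks this on values copied from the table. The helper names are hypothetical, and the reading of the Percentage column is inferred from the numbers rather than stated in the card.

```python
# Total Tokens column from the table, in billions
TOTALS_B = [571.3, 91.2, 14.7, 2.9, 5.3, 4.9, 63.4, 11.6, 10.0, 1.1,
            3.9, 10.2, 41.8, 55.6, 2.5, 12.8, 26.6, 21.2, 30.6]

GRAND_TOTAL_B = sum(TOTALS_B)  # about 981.6B, i.e. the "980B tokens" figure


def effective_tokens(unique_b: float, multiplier: int) -> float:
    """Tokens seen in training: unique tokens repeated `multiplier` times."""
    return unique_b * multiplier


def share_percent(total_b: float) -> float:
    """Share of one data source in the whole training mix, in percent."""
    return 100.0 * total_b / GRAND_TOTAL_B


if __name__ == "__main__":
    indonesian = effective_tokens(3.68, 4)  # mC4 - Indonesian row
    print(round(indonesian, 1), round(share_percent(indonesian), 2))
    print(round(share_percent(571.3), 2))   # RefinedWeb - English row
```

Low-resource languages get a multiplier of 4, so their share of the mix is larger than their share of unique tokens.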

### Infrastructure

SEA-LION was trained using [MosaicML Composer](https://github.com/mosaicml/composer)

on the following hardware:

| Training Details | SEA-LION 7B |

|----------------------|:------------:|

| AWS EC2 p4d.24xlarge | 32 instances |

| Nvidia A100 40GB GPU | 256 |

| Training Duration | 22 days |

### Configuration

| HyperParameter | SEA-LION 7B |

|-------------------|:------------------:|

| Precision | bfloat16 |

| Optimizer | decoupled_adamw |

| Scheduler | cosine_with_warmup |

| Learning Rate | 6.0e-5 |

| Global Batch Size | 2048 |

| Micro Batch Size | 4 |

## Technical Specifications

### Model Architecture and Objective

SEA-LION is a decoder model using the MPT architecture.

| Parameter | SEA-LION 7B |

|-----------------|:-----------:|

| Layers | 32 |

| d_model | 4096 |

| head_dim | 32 |

| Vocabulary | 256000 |

| Sequence Length | 2048 |

### Tokenizer Details

We sample 20M lines from the training data to train the tokenizer.<br>

The framework for training is [SentencePiece](https://github.com/google/sentencepiece).<br>

The tokenizer type is Byte-Pair Encoding (BPE).

## The Team

Lam Wen Zhi Clarence<br>

Leong Wei Qi<br>

Li Yier<br>

Liu Bing Jie Darius<br>

Lovenia Holy<br>

Montalan Jann Railey<br>

Ng Boon Cheong Raymond<br>

Ngui Jian Gang<br>

Nguyen Thanh Ngan<br>

Ong Tat-Wee David<br>

Rengarajan Hamsawardhini<br>

Susanto Yosephine<br>

Tai Ngee Chia<br>

Tan Choon Meng<br>

Teo Jin Howe<br>

Teo Eng Sipp Leslie<br>

Teo Wei Yi<br>

Tjhi William<br>

Yeo Yeow Tong<br>

Yong Xianbin<br>

## Acknowledgements

AI Singapore is a national programme supported by the National Research Foundation, Singapore and hosted by the National University of Singapore.

Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of National Research Foundation, Singapore.

## Contact

For more info, please contact us using this [SEA-LION Inquiry Form](https://forms.gle/sLCUVb95wmGf43hi6)

[Link to SEA-LION's GitHub repository](https://github.com/aisingapore/sealion)

## Disclaimer

This is the repository for the base model.

The model has _not_ been aligned for safety.

Developers and users should perform their own safety fine-tuning and related security measures.

In no event shall the authors be held liable for any claim, damages, or other liability

arising from the use of the released weights and codes.

## References

```bibtex

@misc{lowphansirikul2021wangchanberta,

title={WangchanBERTa: Pretraining transformer-based Thai Language Models},

author={Lalita Lowphansirikul and Charin Polpanumas and Nawat Jantrakulchai and Sarana Nutanong},

year={2021},

eprint={2101.09635},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

NurtureAI/neural-chat-7b-v3-16k-GGUF | NurtureAI | 2023-11-15T08:11:10Z | 1,097 | 34 | null | [

"gguf",

"license:apache-2.0",

"region:us"

] | null | 2023-11-15T04:09:36Z | ---

license: apache-2.0

---

## Intel/neural-chat-7b-v3 (extended context to 16k) (Quantized GGUF Models)

Original model: [Intel/neural-chat-7b-v3](https://huggingface.co/Intel/neural-chat-7b-v3)

## Fine-tuning on [Habana](https://habana.ai/) Gaudi

This model is fine-tuned from [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) on the open-source dataset [Open-Orca/SlimOrca](https://huggingface.co/datasets/Open-Orca/SlimOrca), then aligned with the DPO algorithm. For more details, refer to our blog: [NeuralChat: Simplifying Supervised Instruction Fine-Tuning and Reinforcement Aligning](https://medium.com/intel-analytics-software/neuralchat-simplifying-supervised-instruction-fine-tuning-and-reinforcement-aligning-for-chatbots-d034bca44f69).

## Model date

Neural-chat-7b-v3 was trained between September and October, 2023.

## Evaluation

We submitted our model to the [open_llm_leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard). Its average score across the leaderboard's 7 tasks **improved significantly** over the base model, from 50.32 to 57.31.

| Model | Average ⬆️| ARC (25-s) ⬆️ | HellaSwag (10-s) ⬆️ | MMLU (5-s) ⬆️| TruthfulQA (MC) (0-s) ⬆️ | Winogrande (5-s) | GSM8K (5-s) | DROP (3-s) |

| --- | --- | --- | --- | --- | --- | --- | --- | --- |

|[mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) | 50.32 | 59.58 | 83.31 | 64.16 | 42.15 | 78.37 | 18.12 | 6.14 |

| **Ours** | **57.31** | 67.15 | 83.29 | 62.26 | 58.77 | 78.06 | 1.21 | 50.43 |
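As a quick check, the reported average for the fine-tuned model can be recomputed from its seven task scores. This is a minimal sketch: the scores are copied from the "Ours" row, and the variable names are illustrative only.

```python
# Scores for the "Ours" row, copied from the evaluation table.
ours_scores = {
    "ARC": 67.15,
    "HellaSwag": 83.29,
    "MMLU": 62.26,
    "TruthfulQA": 58.77,
    "Winogrande": 78.06,
    "GSM8K": 1.21,
    "DROP": 50.43,
}

# The leaderboard average is taken here to be the unweighted mean of the task scores.
ours_average = round(sum(ours_scores.values()) / len(ours_scores), 2)
print(ours_average)
```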

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-04

- train_batch_size: 1

- eval_batch_size: 2

- seed: 42

- distributed_type: multi-HPU

- num_devices: 8

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- total_eval_batch_size:

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.02

- num_epochs: 2.0
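The listed `total_train_batch_size` follows from the per-device batch size, the device count, and the gradient accumulation steps. A short sketch of that arithmetic, using only the values listed above:

```python
# Values copied from the hyperparameter list.
train_batch_size = 1             # samples per device per forward pass
num_devices = 8                  # multi-HPU setup
gradient_accumulation_steps = 8  # forward/backward passes per optimizer step

# Samples that contribute to one optimizer update.
total_train_batch_size = train_batch_size * num_devices * gradient_accumulation_steps
print(total_train_batch_size)
```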

## FP32 Inference with transformers

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

model_name = "Intel/neural-chat-7b-v3"

prompt = "Once upon a time, there existed a little girl,"

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

inputs = tokenizer(prompt, return_tensors="pt").input_ids

streamer = TextStreamer(tokenizer)

model = AutoModelForCausalLM.from_pretrained(model_name)

outputs = model.generate(inputs, streamer=streamer, max_new_tokens=300)


```

## INT4 Inference with transformers

```python

from transformers import AutoTokenizer, TextStreamer

from intel_extension_for_transformers.transformers import AutoModelForCausalLM, WeightOnlyQuantConfig

model_name = "Intel/neural-chat-7b-v3"

config = WeightOnlyQuantConfig(compute_dtype="int8", weight_dtype="int4")

prompt = "Once upon a time, there existed a little girl,"

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

inputs = tokenizer(prompt, return_tensors="pt").input_ids

streamer = TextStreamer(tokenizer)

model = AutoModelForCausalLM.from_pretrained(model_name, quantization_config=config)

outputs = model.generate(inputs, streamer=streamer, max_new_tokens=300)


```
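To give a rough idea of what storing weights in 4 bits involves, here is a toy sketch of symmetric round-to-nearest quantization for one group of weights. It is an illustration under simplifying assumptions, not the algorithm Intel Extension for Transformers applies; the function names `quantize_int4` and `dequantize_int4` are hypothetical.

```python
def quantize_int4(weights):
    """Symmetric round-to-nearest quantization of one weight group to signed 4-bit integers."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 7, the largest positive int4 value.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Signed int4 covers [-8, 7]; clamp to stay in range.
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int4(quantized, scale):
    """Recover approximate floating-point weights from the int4 codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 1.0]
quantized, scale = quantize_int4(weights)
restored = dequantize_int4(quantized, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, max_error)
```

Real weight-only schemes typically add per-group scales over large tensors, optional zero points, and packing of two 4-bit codes per byte.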

## Ethical Considerations and Limitations

neural-chat-7b-v3 can produce factually incorrect output, and should not be relied on to produce factually accurate information. neural-chat-7b-v3 was trained on [Open-Orca/SlimOrca](https://huggingface.co/datasets/Open-Orca/SlimOrca) based on [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1). Because of the limitations of the pretrained model and the finetuning datasets, it is possible that this model could generate lewd, biased or otherwise offensive outputs.

Therefore, before deploying any applications of neural-chat-7b-v3, developers should perform safety testing.

## Disclaimer

The license on this model does not constitute legal advice. We are not responsible for the actions of third parties who use this model. Please consult an attorney before using this model for commercial purposes.

## Organizations developing the model

The NeuralChat team with members from Intel/DCAI/AISE. Core team members: Kaokao Lv, Liang Lv, Chang Wang, Wenxin Zhang, Xuhui Ren, and Haihao Shen.

## Useful links

* Intel Neural Compressor [link](https://github.com/intel/neural-compressor)

* Intel Extension for Transformers [link](https://github.com/intel/intel-extension-for-transformers)

|

kekmodel/StopCarbon-ko-10.7B-v3 | kekmodel | 2024-01-02T11:04:07Z | 1,097 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"ko",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-01T04:44:27Z | ---

license: cc-by-nc-sa-4.0

language:

- ko

---

# StopCarbon

This model is an experimental version created using [mergekit](https://github.com/cg123/mergekit).

- merge models

- kekmodel/StopCarbon-ko-10.7B-v1

- kekmodel/StopCarbon-ko-10.7B-v2

- merge_method: slerp |

LI-ST/Mistral-7B-ko-v0.003 | LI-ST | 2024-01-09T07:35:56Z | 1,097 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"en",

"ko",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-08T10:21:22Z | ---

license: cc-by-nc-nd-4.0

language:

- en

- ko

library_name: transformers

pipeline_tag: text-generation

---

<p><h1>Mistral-7B-ko</h1></p>

basemodel: Open-Orca/Mistral-7B-OpenOrca

=================================================

<BR>

This model is a temporary model for testing.

<BR>

We will be deleting it soon.

<BR>

================================================= |

Herry443/Mistral-7B-KNUT-ref-ALL | Herry443 | 2024-02-05T06:08:14Z | 1,097 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"ko",

"en",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-01T12:45:23Z | ---

license: cc-by-nc-4.0

language:

- ko

- en

library_name: transformers

tags:

- mistral

---

### Model Details

- Base Model: [Herry443/Mistral-7B-KNUT-ref](https://huggingface.co/Herry443/Mistral-7B-KNUT-ref)

### Datasets

- sampling [skt/kobest_v1](https://huggingface.co/datasets/skt/kobest_v1)

- sampling [allenai/ai2_arc](https://huggingface.co/datasets/allenai/ai2_arc)

- sampling [Rowan/hellaswag](https://huggingface.co/datasets/Rowan/hellaswag)

- sampling [Stevross/mmlu](https://huggingface.co/datasets/Stevross/mmlu)

|

freewheelin/free-solar-dpo-v0.2 | freewheelin | 2024-03-19T12:33:42Z | 1,097 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"ko",

"en",

"arxiv:2312.15166",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-19T08:20:13Z | ---

language:

- ko

- en

license: mit

---

# Model Card for free-solar-dpo-v0.2

## Developed by : [Freewheelin](https://freewheelin-recruit.oopy.io/) AI Technical Team

## Hardware and Software

* **Training Factors**: We fine-tuned this model using the [HuggingFace TRL Trainer](https://huggingface.co/docs/trl/trainer)

## Method

- This model was trained using the learning method introduced in the [SOLAR paper](https://arxiv.org/pdf/2312.15166.pdf).

## Base Model

- [davidkim205/nox-solar-10.7b-v4](https://huggingface.co/davidkim205/nox-solar-10.7b-v4)

|

gwonny/nox-solar-10.7b-v4-kolon-all-5-v3.0 | gwonny | 2024-03-27T07:55:23Z | 1,097 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"conversational",

"ko",

"en",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-27T04:42:48Z | ---

license: cc-by-nc-4.0

language:

- ko

- en

---

## Base Model:

We built this LLM on davidkim205/nox-solar-10.7b-v4.

## Model Description

We fine-tuned the model with QLoRA (256, 64) and NEFTune, using a learning rate of 1e-5.
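NEFTune adds uniform random noise to the token embeddings during fine-tuning, scaled by `alpha / sqrt(sequence_length * embedding_dim)`. The sketch below illustrates that scaling on plain Python lists. The function name `add_neftune_noise` and the value `alpha=5.0` are assumptions for illustration; the card does not state which noise scale was used.

```python
import math
import random


def add_neftune_noise(embeddings, alpha, rng):
    """Return a noisy copy of a [seq_len][dim] embedding matrix (NEFTune-style)."""
    seq_len = len(embeddings)
    dim = len(embeddings[0])
    # Noise magnitude shrinks as the sequence gets longer or the embedding gets wider.
    magnitude = alpha / math.sqrt(seq_len * dim)
    return [
        [value + rng.uniform(-1.0, 1.0) * magnitude for value in row]
        for row in embeddings
    ]


rng = random.Random(0)
embeddings = [[0.0] * 4 for _ in range(3)]  # toy input: 3 tokens, 4 dimensions
noisy = add_neftune_noise(embeddings, alpha=5.0, rng=rng)
```

The noise is applied only during training; inference uses the clean embeddings.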

## Train Detail

We trained on our private data for 5 epochs with changed parameters.

## Others

We are building an LLM for Kolon! |

HUBioDataLab/SELFormer | HUBioDataLab | 2023-04-24T11:08:15Z | 1,096 | 7 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"arxiv:2304.04662",

"license:gpl",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2023-04-22T19:04:30Z | ---

license: gpl

---

## Citation

For the [paper](https://arxiv.org/abs/2304.04662):

```bibtex

@misc{yüksel2023selformer,

title={SELFormer: Molecular Representation Learning via SELFIES Language Models},

author={Atakan Yüksel and Erva Ulusoy and Atabey Ünlü and Gamze Deniz and Tunca Doğan},

year={2023},

eprint={2304.04662},

archivePrefix={arXiv},

primaryClass={q-bio.QM}

}

```

## License

Copyright (C) 2023 HUBioDataLab

This program is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program. If not, see http://www.gnu.org/licenses/.

|

Tap-M/Luna-AI-Llama2-Uncensored-FP16 | Tap-M | 2023-07-26T19:31:37Z | 1,096 | 10 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-07-19T18:16:03Z | ---

license: cc-by-sa-4.0

---

<div style="width: 800px; margin: auto;">

<h2>Model Description</h2>

<p>“Luna AI Llama2 Uncensored” is a Llama2-based chat model <br />fine-tuned on over 40,000 long-form chat discussions. <br />

This model was fine-tuned by Tap, the creator of Luna AI. <br /></p>

<h2>Model Training</h2>

<p>The fine-tuning process was performed on an 8x A100 80GB machine.

<br />The model was trained on synthetic outputs which include multiple rounds of chats between Human & AI.

</p>

<a rel="noopener nofollow" href="https://huggingface.co/TheBloke/Luna-AI-Llama2-Uncensored-GPTQ">4bit GPTQ Version provided by @TheBloke - for GPU inference</a><br />

<a rel="noopener nofollow" href="https://huggingface.co/TheBloke/Luna-AI-Llama2-Uncensored-GGML">GGML Version provided by @TheBloke - For CPU inference</a>

<h2>Prompt Format</h2>

<p>The model follows the Vicuna 1.1/ OpenChat format:</p>

```

USER: I have difficulties in making friends, and I really need someone to talk to. Would you be my friend?

ASSISTANT: Of course! Friends are always here for each other. What do you like to do?

```
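A prompt in this format can be assembled with a small helper. This is a minimal sketch, assuming turns are separated by single newlines as in the example above; `build_vicuna_prompt` is a hypothetical name and not part of any library.

```python
def build_vicuna_prompt(turns):
    """Build a USER/ASSISTANT prompt.

    turns: list of (user_message, assistant_reply) pairs; use None as the reply
    for the final turn so the model continues after "ASSISTANT:".
    """
    lines = []
    for user_message, assistant_reply in turns:
        lines.append(f"USER: {user_message}")
        if assistant_reply is None:
            lines.append("ASSISTANT:")
        else:
            lines.append(f"ASSISTANT: {assistant_reply}")
    return "\n".join(lines)


prompt = build_vicuna_prompt([("Would you be my friend?", None)])
print(prompt)
```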

<h2>Benchmark Results</h2>

|Task|Version|Metric|Value|Stderr|

|---:|---:|---:|---:|---:|

|arc_challenge|0|acc_norm|0.5512|0.0146|

|hellaswag|0||||

|mmlu|1|acc_norm|0.46521|0.036|

|truthfulqa_mc|1|mc2|0.4716|0.0155|

|Average|-|-|0.5114|0.0150|

</div>

|

xianf/testmodel_2 | xianf | 2023-08-29T08:03:20Z | 1,096 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:bigscience-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-29T03:12:50Z | ---

license: bigscience-openrail-m

---

|

second-state/Llama-2-7B-Chat-GGUF | second-state | 2024-04-24T09:30:54Z | 1,096 | 2 | transformers | [

"transformers",

"gguf",

"facebook",

"meta",

"pytorch",

"llama",

"llama-2",

"text-generation",

"en",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"license:llama2",

"region:us"

] | text-generation | 2023-10-31T08:19:39Z | ---

language:

- en

license: llama2

tags:

- facebook

- meta

- pytorch

- llama

- llama-2

model_name: Llama 2 7B Chat

arxiv: 2307.09288

base_model: meta-llama/Llama-2-7b-chat-hf

inference: false

model_creator: Meta Llama 2

model_type: llama

pipeline_tag: text-generation

quantized_by: Second State Inc.

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://github.com/LlamaEdge/LlamaEdge/raw/dev/assets/logo.svg" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Llama-2-7B-Chat-GGUF

## Original Model

[meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf)

## Run with LlamaEdge

- LlamaEdge version: [v0.2.8](https://github.com/LlamaEdge/LlamaEdge/releases/tag/0.2.8) and above

- Prompt template

- Prompt type: `llama-2-chat`

- Prompt string

```text

<s>[INST] <<SYS>>

{{ system_prompt }}

<</SYS>>

{{ user_msg_1 }} [/INST] {{ model_answer_1 }} </s><s>[INST] {{ user_msg_2 }} [/INST]

```

- Context size: `4096`

- Run as LlamaEdge service

```bash

wasmedge --dir .:. --nn-preload default:GGML:AUTO:Llama-2-7b-chat-hf-Q5_K_M.gguf \

llama-api-server.wasm \

--prompt-template llama-2-chat \

--ctx-size 4096 \

--model-name llama-2-7b-chat

```

- Run as LlamaEdge command app

```bash

wasmedge --dir .:. --nn-preload default:GGML:AUTO:Llama-2-7b-chat-hf-Q5_K_M.gguf \

llama-chat.wasm \

--prompt-template llama-2-chat \

--ctx-size 4096

```
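For callers that assemble the `llama-2-chat` prompt string themselves, the template above can be filled in programmatically. The sketch below is an illustration only: `build_llama2_chat_prompt` is a hypothetical helper, and the exact newline placement around the `<<SYS>>` block is an assumption based on the commonly used Llama 2 chat layout.

```python
def build_llama2_chat_prompt(system_prompt, turns):
    """Fill in the llama-2-chat template.

    turns: list of (user_msg, model_answer) pairs; pass None as the answer of
    the last turn to leave the prompt open for generation.
    """
    parts = []
    for index, (user_msg, model_answer) in enumerate(turns):
        if index == 0:
            # The system prompt is embedded in the first user turn only.
            user_block = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_msg}"
        else:
            user_block = user_msg
        segment = f"<s>[INST] {user_block} [/INST]"
        if model_answer is not None:
            segment += f" {model_answer} </s>"
        parts.append(segment)
    return "".join(parts)


prompt = build_llama2_chat_prompt(
    "Be brief.",
    [("Hi", "Hello!"), ("Bye", None)],
)
print(prompt)
```

When using the LlamaEdge commands above, the `--prompt-template llama-2-chat` flag selects this format, so a helper like this is only relevant when calling the model through another runtime.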

## Quantized GGUF Models

| Name | Quant method | Bits | Size | Use case |

| ---- | ---- | ---- | ---- | ----- |

| [Llama-2-7b-chat-hf-Q2_K.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q2_K.gguf) | Q2_K | 2 | 2.83 GB| smallest, significant quality loss - not recommended for most purposes |

| [Llama-2-7b-chat-hf-Q3_K_L.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q3_K_L.gguf) | Q3_K_L | 3 | 3.6 GB| small, substantial quality loss |

| [Llama-2-7b-chat-hf-Q3_K_M.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q3_K_M.gguf) | Q3_K_M | 3 | 3.3 GB| very small, high quality loss |

| [Llama-2-7b-chat-hf-Q3_K_S.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q3_K_S.gguf) | Q3_K_S | 3 | 2.95 GB| very small, high quality loss |

| [Llama-2-7b-chat-hf-Q4_0.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q4_0.gguf) | Q4_0 | 4 | 3.83 GB| legacy; small, very high quality loss - prefer using Q3_K_M |

| [Llama-2-7b-chat-hf-Q4_K_M.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q4_K_M.gguf) | Q4_K_M | 4 | 4.08 GB| medium, balanced quality - recommended |

| [Llama-2-7b-chat-hf-Q4_K_S.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q4_K_S.gguf) | Q4_K_S | 4 | 3.86 GB| small, greater quality loss |

| [Llama-2-7b-chat-hf-Q5_0.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q5_0.gguf) | Q5_0 | 5 | 4.65 GB| legacy; medium, balanced quality - prefer using Q4_K_M |

| [Llama-2-7b-chat-hf-Q5_K_M.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q5_K_M.gguf) | Q5_K_M | 5 | 4.78 GB| large, very low quality loss - recommended |

| [Llama-2-7b-chat-hf-Q5_K_S.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q5_K_S.gguf) | Q5_K_S | 5 | 4.65 GB| large, low quality loss - recommended |

| [Llama-2-7b-chat-hf-Q6_K.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q6_K.gguf) | Q6_K | 6 | 5.53 GB| very large, extremely low quality loss |

| [Llama-2-7b-chat-hf-Q8_0.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-Q8_0.gguf) | Q8_0 | 8 | 7.16 GB| very large, extremely low quality loss - not recommended |

| [Llama-2-7b-chat-hf-f16.gguf](https://huggingface.co/second-state/Llama-2-7B-Chat-GGUF/blob/main/Llama-2-7b-chat-hf-f16.gguf) | f16 | 16 | 13.5 GB| |
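One way to use this table is to pick the largest file that fits a given size budget. The sketch below copies the sizes from the table; the helper name `largest_quant_within` is hypothetical. File size is only a lower bound on memory use, since the runtime and the context cache need additional space.

```python
# File sizes in GB, copied from the table of quantized models.
quant_sizes_gb = {
    "Q2_K": 2.83,
    "Q3_K_L": 3.6,
    "Q3_K_M": 3.3,
    "Q3_K_S": 2.95,
    "Q4_0": 3.83,
    "Q4_K_M": 4.08,
    "Q4_K_S": 3.86,
    "Q5_0": 4.65,
    "Q5_K_M": 4.78,
    "Q5_K_S": 4.65,
    "Q6_K": 5.53,
    "Q8_0": 7.16,
    "f16": 13.5,
}


def largest_quant_within(budget_gb, sizes=quant_sizes_gb):
    """Return the name of the largest file that fits the budget, or None."""
    candidates = {name: size for name, size in sizes.items() if size <= budget_gb}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


choice = largest_quant_within(5.0)
print(choice)
```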

|

athirdpath/CleverGirl-20b-Blended | athirdpath | 2023-11-28T02:00:35Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-27T12:15:43Z | ---

license: cc-by-nc-4.0

---

This model is CleverGirl and CleverGirl-Inverted blended together, an experiment on the nature of frankenstein merges. The CleverGirl line is made from Sao10K/Mythical-Destroyer-V2-L2-13B and athirdpath/Orca-2-13b-Alpaca-Uncensored.

She can be a little strange, but lives up to her name:

I look forward to comparing the leaderboard scores of this model and the unblended version. Subjectively, this model feels both smarter and more creative after my "frankenstein slice normalization".
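The blend uses spherical linear interpolation (slerp) between the two parent models. As a rough illustration of the operation, here is a minimal slerp between two small vectors in plain Python. It is a sketch, not mergekit's implementation, which works on full weight tensors; the function name and the `eps` threshold are assumptions.

```python
import math


def slerp(v0, v1, t, eps=1e-8):
    """Spherical linear interpolation between two non-zero vectors."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the vectors, clamped for numerical safety.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    omega = math.acos(dot)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        # Nearly parallel vectors: fall back to ordinary linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# t = 0.5 gives an equal blend of the two inputs.
blended = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
print(blended)
```

Unlike a plain average, slerp follows the arc between the two directions, so the blend of two unit vectors keeps unit length.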

models:

- model: athirdpath/CleverGirl-20b

- model: athirdpath/CleverGirl-20b-Inverted

merge_method: slerp

base_model: athirdpath/CleverGirl-20b

parameters: t: value: 0.5

dtype: float16 |

shadowml/Beyonder-4x7b | shadowml | 2023-12-30T15:06:43Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"mixtral",

"text-generation",

"moe",

"mergekit",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-30T14:54:17Z | ---

license: apache-2.0

tags:

- moe

- mergekit

---

# Beyonder-4x7b

This model is a Mixture of Experts (MoE) made with [mergekit](https://github.com/cg123/mergekit) (mixtral branch). It uses the following base models:

* [openchat/openchat-3.5-1210](https://huggingface.co/openchat/openchat-3.5-1210)

* [beowolx/CodeNinja-1.0-OpenChat-7B](https://huggingface.co/beowolx/CodeNinja-1.0-OpenChat-7B)

* [maywell/PiVoT-0.1-Starling-LM-RP](https://huggingface.co/maywell/PiVoT-0.1-Starling-LM-RP)

* [WizardLM/WizardMath-7B-V1.1](https://huggingface.co/WizardLM/WizardMath-7B-V1.1)

## 🧩 Configuration

```yaml

base_model: openchat/openchat-3.5-1210
gate_mode: hidden
experts:
  - source_model: openchat/openchat-3.5-1210
    positive_prompts:
      - "chat"
      - "assistant"
      - "tell me"
      - "explain"
    negative_prompts:
      - "storywriting"
      - "mathematics"
      - "reasoning"
      - "code"
      - "programming"
  - source_model: beowolx/CodeNinja-1.0-OpenChat-7B
    positive_prompts:
      - "code"
      - "python"
      - "javascript"
      - "programming"
      - "algorithm"
    negative_prompts:
      - "chat"
      - "assistant"
      - "storywriting"
      - "mathematics"
      - "reasoning"
  - source_model: maywell/PiVoT-0.1-Starling-LM-RP
    positive_prompts:
      - "storywriting"
      - "write"
      - "scene"
      - "story"
      - "character"
    negative_prompts:
      - "chat"
      - "assistant"
      - "code"
      - "programming"
      - "mathematics"
      - "reasoning"
  - source_model: WizardLM/WizardMath-7B-V1.1
    positive_prompts:
      - "reason"
      - "math"
      - "mathematics"
      - "solve"
      - "count"
    negative_prompts:
      - "chat"
      - "assistant"
      - "code"
      - "programming"
      - "storywriting"

```
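At inference time, a Mixtral-style MoE layer scores the experts for each token, keeps the best few, and mixes their outputs. The toy sketch below shows top-2 routing over four experts. It is an illustration only: the gate logits and expert outputs are made-up numbers, the function names are hypothetical, and it does not show how mergekit initializes the gates from the prompts in the configuration.

```python
import math


def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: exps[i] / total for i in top}


def moe_output(expert_outputs, weights):
    """Weighted sum of the selected experts' output vectors."""
    dim = len(expert_outputs[0])
    return [
        sum(weight * expert_outputs[i][d] for i, weight in weights.items())
        for d in range(dim)
    ]


# Hypothetical gate scores for one token over four experts.
weights = top_k_route([2.0, 0.5, 2.0, -1.0], k=2)
mixed = moe_output([[1.0, 1.0], [0.0, 0.0], [3.0, 5.0], [9.0, 9.0]], weights)
print(weights, mixed)
```

Only the selected experts run for a given token, which is why a 4x7B model costs far less per token than a dense model of the same total size.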

## 💻 Usage

```python

!pip install -qU transformers bitsandbytes accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "mlabonne/Beyonder-4x7b"

tokenizer = AutoTokenizer.from_pretrained(model)

pipeline = transformers.pipeline(

"text-generation",

model=model,

model_kwargs={"torch_dtype": torch.float16, "load_in_4bit": True},

)

messages = [{"role": "user", "content": "Explain what a Mixture of Experts is in less than 100 words."}]

prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

```

Output:

```

A Mixture of Experts (MoE) is a neural network architecture that combines the strengths of multiple expert networks to make predictions. It leverages the idea of ensemble learning, where multiple models work together to improve performance. In each MoE, a gating network is used to select the most relevant expert for the input. The final output is a weighted combination of the expert outputs, determined by the gating network's predictions.

``` |

shadowml/NeuralPipe-9B-merged | shadowml | 2023-12-30T15:36:06Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"merge",

"mergekit",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-30T15:30:53Z | ---

license: apache-2.0

tags:

- merge

- mergekit

---

# NeuralPipe-9B-merged

This model is a merge of the following models made with [mergekit](https://github.com/cg123/mergekit):

* [OpenPipe/mistral-ft-optimized-1218](https://huggingface.co/OpenPipe/mistral-ft-optimized-1218)

* [mlabonne/NeuralHermes-2.5-Mistral-7B](https://huggingface.co/mlabonne/NeuralHermes-2.5-Mistral-7B)
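The passthrough configuration in this card stacks layer ranges from the two models rather than averaging them. A small sketch of the resulting depth, assuming `layer_range` is half-open (start included, end excluded):

```python
# (model, layer_range) pairs as given in this card's merge configuration.
slices = [
    ("OpenPipe/mistral-ft-optimized-1218", (0, 32)),
    ("mlabonne/NeuralHermes-2.5-Mistral-7B", (24, 32)),
]

# Passthrough concatenates the slices, so the depths simply add up.
total_layers = sum(end - start for _, (start, end) in slices)
print(total_layers)
```

Under that assumption the merged model has a quarter more transformer blocks than a 32-layer Mistral 7B model, which fits the 9B in its name.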

## 🧩 Configuration

```yaml

slices:
  - sources:
    - model: OpenPipe/mistral-ft-optimized-1218
      layer_range: [0, 32]
  - sources:
    - model: mlabonne/NeuralHermes-2.5-Mistral-7B
      layer_range: [24, 32]
merge_method: passthrough
dtype: float16

``` |

mu0gum/AIFT-42dot_LLM-PLM-1.3B-ao-instruct-all-v0.52 | mu0gum | 2024-01-20T00:17:38Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-19T16:44:20Z | ---

license: cc-by-nc-4.0

---

# AIFT-42dot-LLM-PLM-1.3B-ao-instruct-all-v0.52

Base model: 42dot/42dot_LLM-PLM-1.3B

Training data: about 28,000 examples from an in-house Open Orca-style dataset (data volume adjusted)

Training method: full fine-tuning

## ko-lm-evaluation-harness(0-shot)

|kobest_boolq|kobest_copa|kobest_hellaswag|kobest_sentineg|kohatespeech|kohatespeech_apeach|kohatespeech_gen_bias|korunsmile|nsmc|pawsx_ko|

|--|--|--|--|--|--|--|--|--|--|

|0.5826210826210826|0.68|0.436|0.7758186397984886|0.2908704883227176|0.5082228116710875|0.14225053078556263|0.39027300210119553|0.65938|0.513|

## Framework versions

- Transformers 4.36.2

- Pytorch 2.1.2+cu121

- Datasets 2.0.0

- Tokenizers 0.15.0 |

megastudyedu/ME-7B-v1.1 | megastudyedu | 2024-01-29T03:19:35Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"ko",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-29T00:44:24Z | ---

license: cc-by-nc-nd-4.0

language:

- ko

---

# Model Card for ME-7B-v1.1

## Developed by : 메가스터디교육, 프리딕션, 마이스

## Base Model : [jondurbin/bagel-dpo-7b-v0.1](https://huggingface.co/jondurbin/bagel-dpo-7b-v0.1)

## Datasets used

- Translated jondurbin/bagel-v0.3 SFT dataset (translated by megastudyedu/ME-7B-v1.0)

- [megastudy/M-SOLAR-10.7B-v1.3](https://huggingface.co/megastudy/M-SOLAR-10.7B-v1.3) data

- [Cleaned dataset related to the Public Data Portal dataset glossary](https://www.data.go.kr/)

|

ITT-AF/ITT-42dot_LLM-PLM-1.3B-v3.0 | ITT-AF | 2024-02-14T06:32:35Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-01-30T15:04:18Z | ---

license: cc-by-nc-4.0

---

# ITT-AF/ITT-42dot_LLM-PLM-1.3B-v3.0

This model is a fine-tuned version of [42dot/42dot_LLM-PLM-1.3B](https://huggingface.co/42dot/42dot_LLM-PLM-1.3B) on a custom dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 24

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 96

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0

- mixed_precision_training: Native AMP
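With `lr_scheduler_type: linear` and no warmup listed, the learning rate falls linearly from 2e-05 to zero over training. The sketch below shows such a schedule. The function name `linear_lr` and the step count of 1000 are assumptions for illustration, since the card does not state the number of training steps.

```python
def linear_lr(step, total_steps, base_lr, warmup_steps=0):
    """Linear warmup (optional) followed by linear decay to zero."""
    if warmup_steps > 0 and step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


base_lr = 2e-05     # learning_rate from the list above
total_steps = 1000  # hypothetical; not stated in the card

start_lr = linear_lr(0, total_steps, base_lr)
mid_lr = linear_lr(500, total_steps, base_lr)
end_lr = linear_lr(total_steps, total_steps, base_lr)
print(start_lr, mid_lr, end_lr)
```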

### Training results

### Framework versions

- Transformers 4.36.2

- Pytorch 2.1.2+cu121

- Datasets 2.0.0

- Tokenizers 0.15.0 |

blueapple8259/TinyKo-v5-a | blueapple8259 | 2024-02-03T05:48:08Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"ko",

"dataset:maywell/korean_textbooks",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-03T05:24:22Z | ---

license: mit

datasets:

- maywell/korean_textbooks

language:

- ko

---

This model was trained on the tiny-textbooks subset of the [korean_textbooks](https://huggingface.co/datasets/maywell/korean_textbooks) dataset.

Caution: performance is very poor and hallucination is severe.

## Model information

model type: llama

hidden size: 6

hidden size: 127

num attention heads: 16

num key value heads: 4
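With 16 attention heads and 4 key/value heads, the model uses grouped-query attention: several query heads share one key/value head. A small sketch of the grouping, assuming consecutive query heads are assigned to the same key/value head:

```python
num_attention_heads = 16
num_key_value_heads = 4

# Each key/value head serves this many query heads.
queries_per_kv_head = num_attention_heads // num_key_value_heads

# Index of the key/value head used by each query head (consecutive grouping assumed).
kv_head_for_query = [q // queries_per_kv_head for q in range(num_attention_heads)]
print(queries_per_kv_head, kv_head_for_query)
```

Sharing key/value heads this way shrinks the key/value cache by the same factor, here 4x compared with one key/value head per query head.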

|

jungyuko/DAVinCI-Yi-Ko-6B-v1.1 | jungyuko | 2024-02-16T08:51:00Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-16T08:11:16Z | ---

license: cc-by-nc-4.0

---

## DAVinCI-Yi-Ko-6B-v1.1

This model is a fine-tuned version of [beomi/Yi-Ko-6B](https://huggingface.co/beomi/Yi-Ko-6B) on an unknown dataset.

### Model description

More information needed

### Intended uses & limitations

More information needed

### Training and evaluation data

More information needed

### Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

* learning_rate: 2e-05

* train_batch_size: 4

* eval_batch_size: 8

* seed: 42

* gradient_accumulation_steps: 8

* total_train_batch_size: 32

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr_scheduler_type: linear

* num_epochs: 1.0

* mixed_precision_training: Native AMP

### Training results

### Framework versions

* Transformers 4.36.2

* Pytorch 2.1.2+cu121

* Datasets 2.0.0

* Tokenizers 0.15.0 |

ITT-AF/ITT-42dot_LLM-PLM-1.3B-v4.0 | ITT-AF | 2024-02-20T18:07:45Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-20T16:32:43Z | ---

license: cc-by-nc-4.0

---

# ITT-AF/ITT-42dot_LLM-PLM-1.3B-v4.0

This model is a fine-tuned version of [42dot/42dot_LLM-PLM-1.3B](https://huggingface.co/42dot/42dot_LLM-PLM-1.3B) on a custom dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 24

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 96

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1.0

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.36.2

- Pytorch 2.1.2+cu121

- Datasets 2.0.0

- Tokenizers 0.15.0 |

mu0gum/AIFT-42dot_LLM-SFT-1.3B-ao-instruct-all-v1.1 | mu0gum | 2024-02-21T14:21:25Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-21T14:06:40Z | ---

license: cc-by-nc-4.0

---

# AIFT-42dot-LLM-PLM-1.3B-ao-instruct-all-v1.11

Base model: 42dot/42dot_LLM-SFT-1.3B

Training data: about 48,000 examples from an in-house Open Orca-style dataset (deduplicated, data distribution adjusted)

Training method: full fine-tuning

Epochs: 3

## ko-lm-evaluation-harness(5-shot)

|kobest_boolq|kobest_copa|kobest_hellaswag|pawsx_ko|

|--|--|--|--|

|0.52065527065527|0.721|0.466|0.5475|

## Framework versions

- Transformers 4.36.2

- Pytorch 2.1.2+cu121

- Datasets 2.0.0

- Tokenizers 0.15.0 |

chihoonlee10/T3Q-KO-SOLAR-MR-v1.0 | chihoonlee10 | 2024-03-17T13:24:50Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-17T13:07:01Z | ---

license: apache-2.0

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed] |

abhishek/autotrain-llama3-orpo-v2 | abhishek | 2024-04-26T09:08:49Z | 1,096 | 3 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"llama",

"text-generation",

"autotrain",

"text-generation-inference",

"conversational",

"dataset:argilla/distilabel-capybara-dpo-7k-binarized",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-04-22T09:35:07Z | ---

tags:

- autotrain

- text-generation-inference

- text-generation

library_name: transformers

widget:

- messages:

- role: user

content: What is your favorite condiment?

license: other

datasets:

- argilla/distilabel-capybara-dpo-7k-binarized

---

# Model Trained Using AutoTrain

This model was trained using AutoTrain. For more information, please visit [AutoTrain](https://hf.co/docs/autotrain).

# Usage

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = "PATH_TO_THIS_REPO"

tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(
    model_path,
    device_map="auto",
    torch_dtype="auto",
).eval()

# Prompt content: "hi"
messages = [
    {"role": "user", "content": "hi"}
]

input_ids = tokenizer.apply_chat_template(
    conversation=messages, tokenize=True, add_generation_prompt=True, return_tensors="pt"
)
# Move the inputs to the device the model was placed on instead of hard-coding 'cuda'.
output_ids = model.generate(input_ids.to(model.device))
# Skip the prompt tokens so that only the newly generated reply is decoded.
response = tokenizer.decode(output_ids[0][input_ids.shape[1]:], skip_special_tokens=True)

# Model response: "Hello! How can I assist you today?"
print(response)

``` |

mssma/ko-solar-10.7b-v0.1b | mssma | 2024-05-13T05:36:21Z | 1,096 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"ko",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-13T05:27:17Z | ---

library_name: transformers

license: apache-2.0

language:

- ko

---

# usage

```

from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

path = "mssma/ko-solar-10.7b-v0.1b"

model = AutoModelForCausalLM.from_pretrained(
    path,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map='auto'
)
tokenizer = AutoTokenizer.from_pretrained(path)

``` |

JamesConley/glados_together_20b_lora_merged | JamesConley | 2023-05-24T14:18:44Z | 1,095 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_neox",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-05-24T02:12:05Z | WIP (my ISP is terrible and the file is really big) |

Salesforce/instructblip-vicuna-13b | Salesforce | 2024-04-11T20:37:34Z | 1,095 | 40 | transformers | [

"transformers",

"pytorch",

"safetensors",

"instructblip",

"text2text-generation",

"vision",

"image-captioning",

"image-to-text",

"en",

"arxiv:2305.06500",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-to-text | 2023-06-03T14:46:46Z | ---

language: en

license: other

tags:

- vision

- image-captioning

pipeline_tag: image-to-text

---

# InstructBLIP model

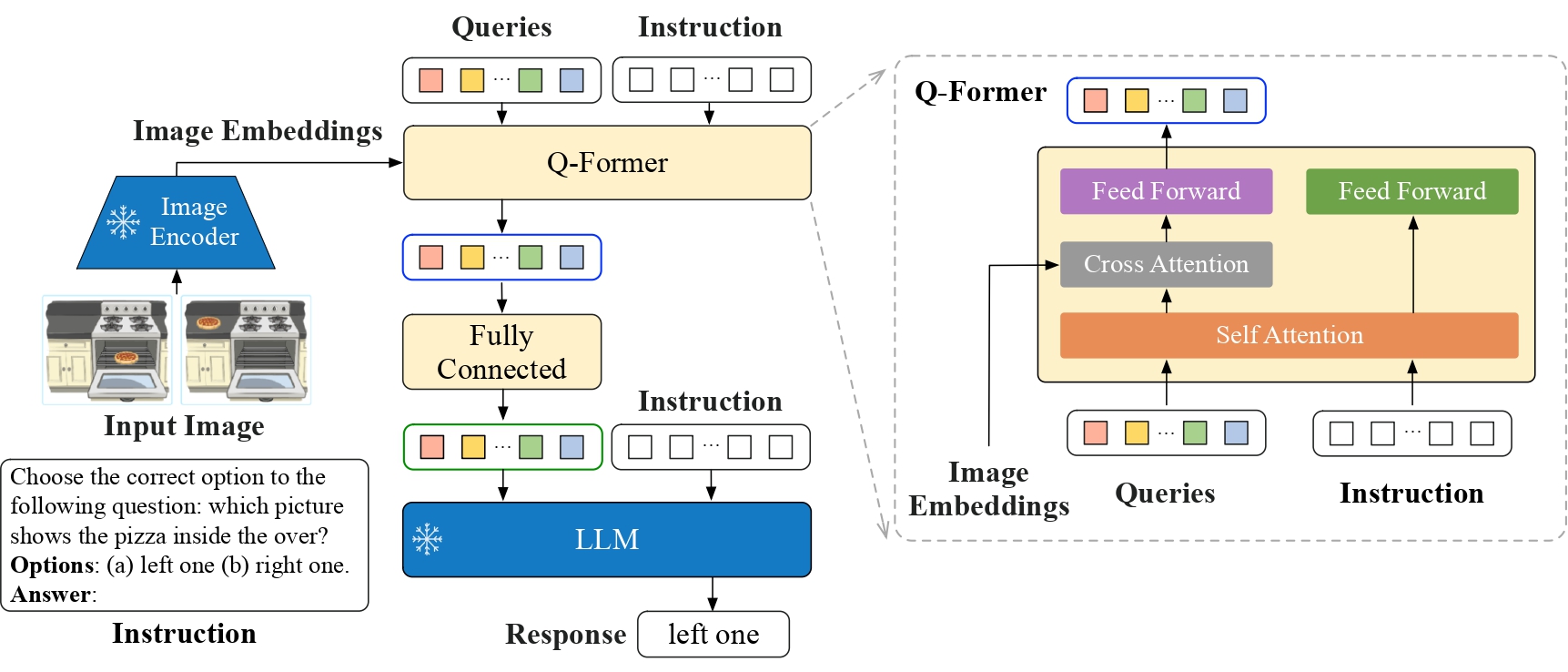

InstructBLIP model using [Vicuna-13b](https://github.com/lm-sys/FastChat#model-weights) as the language model. InstructBLIP was introduced in the paper [InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning](https://arxiv.org/abs/2305.06500) by Dai et al.

Disclaimer: The team releasing InstructBLIP did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

InstructBLIP is a visual instruction-tuned version of [BLIP-2](https://huggingface.co/docs/transformers/main/model_doc/blip-2). Refer to the paper for details.

## Intended uses & limitations

Usage is as follows:

```

from transformers import InstructBlipProcessor, InstructBlipForConditionalGeneration
import torch
from PIL import Image
import requests

model = InstructBlipForConditionalGeneration.from_pretrained("Salesforce/instructblip-vicuna-13b")
processor = InstructBlipProcessor.from_pretrained("Salesforce/instructblip-vicuna-13b")

device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)

url = "https://raw.githubusercontent.com/salesforce/LAVIS/main/docs/_static/Confusing-Pictures.jpg"
image = Image.open(requests.get(url, stream=True).raw).convert("RGB")
prompt = "What is unusual about this image?"
inputs = processor(images=image, text=prompt, return_tensors="pt").to(device)

outputs = model.generate(
    **inputs,
    do_sample=False,
    num_beams=5,
    max_length=256,
    min_length=1,
    top_p=0.9,
    repetition_penalty=1.5,
    length_penalty=1.0,
    temperature=1,
)
generated_text = processor.batch_decode(outputs, skip_special_tokens=True)[0].strip()
print(generated_text)

```

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/instructblip). |

Vezora/Mistral-Narwhal-7b | Vezora | 2023-10-12T01:03:37Z | 1,095 | 1 | transformers | [

"transformers",

"pytorch",

"safetensors",

"mistral",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-11T22:17:55Z | ---

license: apache-2.0

---

# Mistral-Narwhal-7b

## Model Description

Mistral-Narwhal-7b is a Hugging Face model built on top of Mistral 7b. It is a result of merging two models: Eric Hartford's Dolphin2.1 and HuggingFace's Zephyr-7b-alpha. All credit goes to them.

## Source Models

- Dolphin2.1-mistral-7b by Eric Hartford (https://huggingface.co/ehartford/dolphin-2.1-mistral-7b)

- Zephyr-7b-alpha by HuggingFace (https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha)

## Usage

This model uses 3 different models in combination, so you must adhere to their licensing, as well as the licensing available here.

|

athirdpath/CleverGirl-20b | athirdpath | 2023-11-27T12:41:33Z | 1,095 | 3 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-27T12:14:26Z | ---

license: llama2

---

Components were chosen based on MMLU and TQA scores, as well as vibes.
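As a rough sanity check on the slice configuration in this card, the sketch below tallies how many layers the stacked model would contain. It assumes each `layer_range` is half-open (`[start, end)`), which the card does not state, and the helper names `stacked_layer_count` and `layers_per_model` are hypothetical, introduced only for illustration.

```python
# Slice ranges copied from this card's config. Treating each range as
# half-open [start, end) is an assumption, not something the card states.
SLICES = [
    ("Sao10K/Mythical-Destroyer-V2-L2-13B", (0, 16)),
    ("athirdpath/Orca-2-13b-Alpaca-Uncensored", (8, 24)),
    ("Sao10K/Mythical-Destroyer-V2-L2-13B", (17, 32)),
    ("athirdpath/Orca-2-13b-Alpaca-Uncensored", (25, 40)),
]


def stacked_layer_count(slices):
    """Total number of layers when the slices are concatenated in order."""
    return sum(end - start for _, (start, end) in slices)


def layers_per_model(slices):
    """Number of layers each source model contributes to the stack."""
    counts = {}
    for model, (start, end) in slices:
        counts[model] = counts.get(model, 0) + (end - start)
    return counts


if __name__ == "__main__":
    print("total layers:", stacked_layer_count(SLICES))
    for model, count in layers_per_model(SLICES).items():
        print(f"{model}: {count}")
```

Under the half-open assumption the two source models contribute equally, and the interleaved ranges overlap between models rather than partitioning a single 40-layer stack.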

slices:
  - sources:
    - model: Sao10K/Mythical-Destroyer-V2-L2-13B
      layer_range: [0, 16]
  - sources:
    - model: athirdpath/Orca-2-13b-Alpaca-Uncensored
      layer_range: [8, 24]
  - sources:
    - model: Sao10K/Mythical-Destroyer-V2-L2-13B
      layer_range: [17, 32]
  - sources:
    - model: athirdpath/Orca-2-13b-Alpaca-Uncensored
      layer_range: [25, 40]
merge_method: passthrough
dtype: float16 |

Norod78/SDXL-JojosoStyle-Lora-v2 | Norod78 | 2023-11-29T09:23:49Z | 1,095 | 5 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"style",

"jojo's bizarre adventure",

"jojo",

"stone ocean",

"sdxl style lora",

"jojo's bizarre adventure stone ocean",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:other",

"region:us"

] | text-to-image | 2023-11-29T09:23:45Z | ---

license: other

license_name: bespoke-lora-trained-license

license_link: https://multimodal.art/civitai-licenses?allowNoCredit=True&allowCommercialUse=Rent&allowDerivatives=True&allowDifferentLicense=False

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

- style

- jojo's bizarre adventure

- jojo

- stone ocean

- sdxl style lora

- jojo's bizarre adventure stone ocean

base_model: stabilityai/stable-diffusion-xl-base-1.0