modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

kaiku03/wildchat2 | kaiku03 | 2024-05-07T06:05:43Z | 662 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-07T03:26:58Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: wildchat2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wildchat2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.28.0

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.13.3

|

LLM4Binary/llm4decompile-1.3b-v1.5 | LLM4Binary | 2024-06-20T07:01:03Z | 662 | 2 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"decompile",

"binary",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-10T12:49:04Z | ---

license: mit

tags:

- decompile

- binary

widget:

- text: "# This is the assembly code:\n<func0>:\nendbr64\nlea (%rdi,%rsi,1),%eax\nretq\n# What is the source code?\n"

---

### 1. Introduction of LLM4Decompile

LLM4Decompile aims to decompile x86 assembly instructions into C. The newly released V1.5 series are trained with a larger dataset (15B tokens) and a maximum token length of 4,096, with remarkable performance (up to 100% improvement) compared to the previous model.

- **Github Repository:** [LLM4Decompile](https://github.com/albertan017/LLM4Decompile)

### 2. Evaluation Results

| Model/Benchmark | HumanEval-Decompile | | | | | ExeBench | | | | |

|:----------------------:|:-------------------:|:-------:|:-------:|:-------:|:-------:|:--------:|:-------:|:-------:|:-------:|:-------:|

| Optimization Level | O0 | O1 | O2 | O3 | AVG | O0 | O1 | O2 | O3 | AVG |

| DeepSeek-Coder-6.7B | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.0000 |

| GPT-4o | 0.3049 | 0.1159 | 0.1037 | 0.1159 | 0.1601 | 0.0443 | 0.0328 | 0.0397 | 0.0343 | 0.0378 |

| LLM4Decompile-End-1.3B | 0.4720 | 0.2061 | 0.2122 | 0.2024 | 0.2732 | 0.1786 | 0.1362 | 0.1320 | 0.1328 | 0.1449 |

| LLM4Decompile-End-6.7B | 0.6805 | 0.3951 | 0.3671 | 0.3720 | 0.4537 | 0.2289 | 0.1660 | 0.1618 | 0.1625 | 0.1798 |

| LLM4Decompile-End-33B | 0.5168 | 0.2956 | 0.2815 | 0.2675 | 0.3404 | 0.1886 | 0.1465 | 0.1396 | 0.1411 | 0.1540 |

### 3. How to Use

Here is an example of how to use our model (Revised for V1.5).

Note: **Replace** func0 with the function name you want to decompile.

**Preprocessing:** Compile the C code into binary, and disassemble the binary into assembly instructions.

```python

import subprocess

import os

OPT = ["O0", "O1", "O2", "O3"]

fileName = 'samples/sample' #'path/to/file'

for opt_state in OPT:

output_file = fileName +'_' + opt_state

input_file = fileName+'.c'

compile_command = f'gcc -o {output_file}.o {input_file} -{opt_state} -lm'#compile the code with GCC on Linux

subprocess.run(compile_command, shell=True, check=True)

compile_command = f'objdump -d {output_file}.o > {output_file}.s'#disassemble the binary file into assembly instructions

subprocess.run(compile_command, shell=True, check=True)

input_asm = ''

with open(output_file+'.s') as f:#asm file

asm= f.read()

if '<'+'func0'+'>:' not in asm: #IMPORTANT replace func0 with the function name

raise ValueError("compile fails")

asm = '<'+'func0'+'>:' + asm.split('<'+'func0'+'>:')[-1].split('\n\n')[0] #IMPORTANT replace func0 with the function name

asm_clean = ""

asm_sp = asm.split("\n")

for tmp in asm_sp:

if len(tmp.split("\t"))<3 and '00' in tmp:

continue

idx = min(

len(tmp.split("\t")) - 1, 2

)

tmp_asm = "\t".join(tmp.split("\t")[idx:]) # remove the binary code

tmp_asm = tmp_asm.split("#")[0].strip() # remove the comments

asm_clean += tmp_asm + "\n"

input_asm = asm_clean.strip()

before = f"# This is the assembly code:\n"#prompt

after = "\n# What is the source code?\n"#prompt

input_asm_prompt = before+input_asm.strip()+after

with open(fileName +'_' + opt_state +'.asm','w',encoding='utf-8') as f:

f.write(input_asm_prompt)

```

**Decompilation:** Use LLM4Decompile to translate the assembly instructions into C:

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_path = 'LLM4Binary/llm4decompile-1.3b-v1.5' # V1.5 Model

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModelForCausalLM.from_pretrained(model_path,torch_dtype=torch.bfloat16).cuda()

with open(fileName +'_' + OPT[0] +'.asm','r') as f:#optimization level O0

asm_func = f.read()

inputs = tokenizer(asm_func, return_tensors="pt").to(model.device)

with torch.no_grad():

outputs = model.generate(**inputs, max_new_tokens=4000)

c_func_decompile = tokenizer.decode(outputs[0][len(inputs[0]):-1])

with open(fileName +'.c','r') as f:#original file

func = f.read()

print(f'original function:\n{func}')# Note we only decompile one function, where the original file may contain multiple functions

print(f'decompiled function:\n{c_func_decompile}')

```

### 4. License

This code repository is licensed under the MIT License.

### 5. Contact

If you have any questions, please raise an issue.

|

QuantFactory/Mistral-7B-Instruct-v0.3-GGUF | QuantFactory | 2024-05-23T07:03:15Z | 662 | 1 | transformers | [

"transformers",

"gguf",

"mistral",

"text-generation",

"base_model:mistralai/Mistral-7B-Instruct-v0.3",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | text-generation | 2024-05-23T04:58:44Z | ---

license: apache-2.0

base_model: mistralai/Mistral-7B-Instruct-v0.3

library_name: transformers

pipeline_tag: text-generation

tags:

- mistral

---

# Mistral-7B-Instruct-v0.3-GGUF

- This is quantized version of [mistralai/Mistral-7B-Instruct-v0.3](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.3) created using llama.cpp

# Model Description

The Mistral-7B-Instruct-v0.3 Large Language Model (LLM) is an instruct fine-tuned version of the Mistral-7B-v0.3.

Mistral-7B-v0.3 has the following changes compared to [Mistral-7B-v0.2](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2/edit/main/README.md)

- Extended vocabulary to 32768

- Supports v3 Tokenizer

- Supports function calling

### Chat

After installing `mistral_inference`, a `mistral-chat` CLI command should be available in your environment. You can chat with the model using

```

mistral-chat $HOME/mistral_models/7B-Instruct-v0.3 --instruct --max_tokens 256

```

### Instruct following

```py

from mistral_inference.model import Transformer

from mistral_inference.generate import generate

from mistral_common.tokens.tokenizers.mistral import MistralTokenizer

from mistral_common.protocol.instruct.messages import UserMessage

from mistral_common.protocol.instruct.request import ChatCompletionRequest

tokenizer = MistralTokenizer.from_file(f"{mistral_models_path}/tokenizer.model.v3")

model = Transformer.from_folder(mistral_models_path)

completion_request = ChatCompletionRequest(messages=[UserMessage(content="Explain Machine Learning to me in a nutshell.")])

tokens = tokenizer.encode_chat_completion(completion_request).tokens

out_tokens, _ = generate([tokens], model, max_tokens=64, temperature=0.0, eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id)

result = tokenizer.instruct_tokenizer.tokenizer.decode(out_tokens[0])

print(result)

```

### Function calling

```py

from mistral_common.protocol.instruct.tool_calls import Function, Tool

from mistral_inference.model import Transformer

from mistral_inference.generate import generate

from mistral_common.tokens.tokenizers.mistral import MistralTokenizer

from mistral_common.protocol.instruct.messages import UserMessage

from mistral_common.protocol.instruct.request import ChatCompletionRequest

tokenizer = MistralTokenizer.from_file(f"{mistral_models_path}/tokenizer.model.v3")

model = Transformer.from_folder(mistral_models_path)

completion_request = ChatCompletionRequest(

tools=[

Tool(

function=Function(

name="get_current_weather",

description="Get the current weather",

parameters={

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g. San Francisco, CA",

},

"format": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "The temperature unit to use. Infer this from the users location.",

},

},

"required": ["location", "format"],

},

)

)

],

messages=[

UserMessage(content="What's the weather like today in Paris?"),

],

)

tokens = tokenizer.encode_chat_completion(completion_request).tokens

out_tokens, _ = generate([tokens], model, max_tokens=64, temperature=0.0, eos_id=tokenizer.instruct_tokenizer.tokenizer.eos_id)

result = tokenizer.instruct_tokenizer.tokenizer.decode(out_tokens[0])

print(result)

```

## Generate with `transformers`

If you want to use Hugging Face `transformers` to generate text, you can do something like this.

```py

from transformers import pipeline

messages = [

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

{"role": "user", "content": "Who are you?"},

]

chatbot = pipeline("text-generation", model="mistralai/Mistral-7B-Instruct-v0.3")

chatbot(messages)

```

## Limitations

The Mistral 7B Instruct model is a quick demonstration that the base model can be easily fine-tuned to achieve compelling performance.

It does not have any moderation mechanisms. We're looking forward to engaging with the community on ways to

make the model finely respect guardrails, allowing for deployment in environments requiring moderated outputs.

## The Mistral AI Team

Albert Jiang, Alexandre Sablayrolles, Alexis Tacnet, Antoine Roux, Arthur Mensch, Audrey Herblin-Stoop, Baptiste Bout, Baudouin de Monicault, Blanche Savary, Bam4d, Caroline Feldman, Devendra Singh Chaplot, Diego de las Casas, Eleonore Arcelin, Emma Bou Hanna, Etienne Metzger, Gianna Lengyel, Guillaume Bour, Guillaume Lample, Harizo Rajaona, Jean-Malo Delignon, Jia Li, Justus Murke, Louis Martin, Louis Ternon, Lucile Saulnier, Lélio Renard Lavaud, Margaret Jennings, Marie Pellat, Marie Torelli, Marie-Anne Lachaux, Nicolas Schuhl, Patrick von Platen, Pierre Stock, Sandeep Subramanian, Sophia Yang, Szymon Antoniak, Teven Le Scao, Thibaut Lavril, Timothée Lacroix, Théophile Gervet, Thomas Wang, Valera Nemychnikova, William El Sayed, William Marshall |

unsloth/Qwen2-1.5B-Instruct | unsloth | 2024-06-06T17:18:48Z | 662 | 0 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"unsloth",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-06-06T16:40:40Z | ---

language:

- en

license: apache-2.0

library_name: transformers

tags:

- unsloth

- transformers

- qwen2

---

# Finetune Mistral, Gemma, Llama 2-5x faster with 70% less memory via Unsloth!

We have a Google Colab Tesla T4 notebook for Qwen2 7b here: https://colab.research.google.com/drive/1mvwsIQWDs2EdZxZQF9pRGnnOvE86MVvR?usp=sharing

And a Colab notebook for [Qwen2 0.5b](https://colab.research.google.com/drive/1-7tjDdMAyeCueyLAwv6vYeBMHpoePocN?usp=sharing) and another for [Qwen2 1.5b](https://colab.research.google.com/drive/1W0j3rP8WpgxRdUgkb5l6E00EEVyjEZGk?usp=sharing)

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/Discord%20button.png" width="200"/>](https://discord.gg/u54VK8m8tk)

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/buy%20me%20a%20coffee%20button.png" width="200"/>](https://ko-fi.com/unsloth)

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

## ✨ Finetune for Free

All notebooks are **beginner friendly**! Add your dataset, click "Run All", and you'll get a 2x faster finetuned model which can be exported to GGUF, vLLM or uploaded to Hugging Face.

| Unsloth supports | Free Notebooks | Performance | Memory use |

|-----------------|--------------------------------------------------------------------------------------------------------------------------|-------------|----------|

| **Llama-3 8b** | [▶️ Start on Colab](https://colab.research.google.com/drive/135ced7oHytdxu3N2DNe1Z0kqjyYIkDXp?usp=sharing) | 2.4x faster | 58% less |

| **Gemma 7b** | [▶️ Start on Colab](https://colab.research.google.com/drive/10NbwlsRChbma1v55m8LAPYG15uQv6HLo?usp=sharing) | 2.4x faster | 58% less |

| **Mistral 7b** | [▶️ Start on Colab](https://colab.research.google.com/drive/1Dyauq4kTZoLewQ1cApceUQVNcnnNTzg_?usp=sharing) | 2.2x faster | 62% less |

| **Llama-2 7b** | [▶️ Start on Colab](https://colab.research.google.com/drive/1lBzz5KeZJKXjvivbYvmGarix9Ao6Wxe5?usp=sharing) | 2.2x faster | 43% less |

| **TinyLlama** | [▶️ Start on Colab](https://colab.research.google.com/drive/1AZghoNBQaMDgWJpi4RbffGM1h6raLUj9?usp=sharing) | 3.9x faster | 74% less |

| **CodeLlama 34b** A100 | [▶️ Start on Colab](https://colab.research.google.com/drive/1y7A0AxE3y8gdj4AVkl2aZX47Xu3P1wJT?usp=sharing) | 1.9x faster | 27% less |

| **Mistral 7b** 1xT4 | [▶️ Start on Kaggle](https://www.kaggle.com/code/danielhanchen/kaggle-mistral-7b-unsloth-notebook) | 5x faster\* | 62% less |

| **DPO - Zephyr** | [▶️ Start on Colab](https://colab.research.google.com/drive/15vttTpzzVXv_tJwEk-hIcQ0S9FcEWvwP?usp=sharing) | 1.9x faster | 19% less |

- This [conversational notebook](https://colab.research.google.com/drive/1Aau3lgPzeZKQ-98h69CCu1UJcvIBLmy2?usp=sharing) is useful for ShareGPT ChatML / Vicuna templates.

- This [text completion notebook](https://colab.research.google.com/drive/1ef-tab5bhkvWmBOObepl1WgJvfvSzn5Q?usp=sharing) is for raw text. This [DPO notebook](https://colab.research.google.com/drive/15vttTpzzVXv_tJwEk-hIcQ0S9FcEWvwP?usp=sharing) replicates Zephyr.

- \* Kaggle has 2x T4s, but we use 1. Due to overhead, 1x T4 is 5x faster. |

sharmajai901/UL_base_classification | sharmajai901 | 2024-06-11T10:02:28Z | 662 | 0 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:google/vit-base-patch16-224",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | image-classification | 2024-06-11T10:02:13Z | ---

license: apache-2.0

base_model: google/vit-base-patch16-224

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: UL_base_classification

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: validation

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8921161825726142

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# UL_base_classification

This model is a fine-tuned version of [google/vit-base-patch16-224](https://huggingface.co/google/vit-base-patch16-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3125

- Accuracy: 0.8921

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 7

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:------:|:----:|:---------------:|:--------:|

| 0.8296 | 0.9756 | 20 | 0.5683 | 0.8230 |

| 0.4462 | 2.0 | 41 | 0.3949 | 0.8603 |

| 0.3588 | 2.9756 | 61 | 0.3633 | 0.8575 |

| 0.3196 | 4.0 | 82 | 0.3247 | 0.8852 |

| 0.2921 | 4.9756 | 102 | 0.3374 | 0.8728 |

| 0.2688 | 6.0 | 123 | 0.3125 | 0.8921 |

| 0.2366 | 6.8293 | 140 | 0.3137 | 0.8866 |

### Framework versions

- Transformers 4.41.2

- Pytorch 2.3.0+cu121

- Datasets 2.19.2

- Tokenizers 0.19.1

|

scientisthere/sap_model-13june_all | scientisthere | 2024-06-13T05:53:27Z | 662 | 0 | transformers | [

"transformers",

"safetensors",

"t5",

"text2text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text2text-generation | 2024-06-13T05:52:20Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

mradermacher/DarkForest-20B-v2.0-i1-GGUF | mradermacher | 2024-06-14T01:25:16Z | 662 | 0 | transformers | [

"transformers",

"gguf",

"merge",

"not-for-all-audiences",

"en",

"base_model:TeeZee/DarkForest-20B-v2.0",

"license:other",

"endpoints_compatible",

"region:us"

] | null | 2024-06-13T17:47:15Z | ---

base_model: TeeZee/DarkForest-20B-v2.0

language:

- en

library_name: transformers

license: other

license_name: microsoft-research-license

quantized_by: mradermacher

tags:

- merge

- not-for-all-audiences

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/TeeZee/DarkForest-20B-v2.0

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/DarkForest-20B-v2.0-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ1_S.gguf) | i1-IQ1_S | 4.5 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ1_M.gguf) | i1-IQ1_M | 4.9 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 5.5 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ2_XS.gguf) | i1-IQ2_XS | 6.0 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ2_S.gguf) | i1-IQ2_S | 6.5 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ2_M.gguf) | i1-IQ2_M | 7.0 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q2_K.gguf) | i1-Q2_K | 7.5 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 7.7 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ3_XS.gguf) | i1-IQ3_XS | 8.3 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ3_S.gguf) | i1-IQ3_S | 8.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q3_K_S.gguf) | i1-Q3_K_S | 8.8 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ3_M.gguf) | i1-IQ3_M | 9.3 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q3_K_M.gguf) | i1-Q3_K_M | 9.8 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q3_K_L.gguf) | i1-Q3_K_L | 10.7 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-IQ4_XS.gguf) | i1-IQ4_XS | 10.8 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q4_0.gguf) | i1-Q4_0 | 11.4 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q4_K_S.gguf) | i1-Q4_K_S | 11.5 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q4_K_M.gguf) | i1-Q4_K_M | 12.1 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q5_K_S.gguf) | i1-Q5_K_S | 13.9 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q5_K_M.gguf) | i1-Q5_K_M | 14.3 | |

| [GGUF](https://huggingface.co/mradermacher/DarkForest-20B-v2.0-i1-GGUF/resolve/main/DarkForest-20B-v2.0.i1-Q6_K.gguf) | i1-Q6_K | 16.5 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his hardware for calculating the imatrix for these quants.

<!-- end -->

|

John6666/randomizer89-pdxl-merge-v3-sdxl | John6666 | 2024-06-25T08:55:28Z | 662 | 1 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"stable-diffusion-xl",

"anime",

"pony",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-06-25T08:50:37Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

- stable-diffusion-xl

- anime

- pony

---

Original model is [here](https://civitai.com/models/402600?modelVersionId=596863).

|

allenai/led-large-16384-arxiv | allenai | 2023-01-24T16:27:02Z | 661 | 28 | transformers | [

"transformers",

"pytorch",

"tf",

"led",

"text2text-generation",

"en",

"dataset:scientific_papers",

"arxiv:2004.05150",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | ---

language: en

datasets:

- scientific_papers

license: apache-2.0

---

## Introduction

[Allenai's Longformer Encoder-Decoder (LED)](https://github.com/allenai/longformer#longformer).

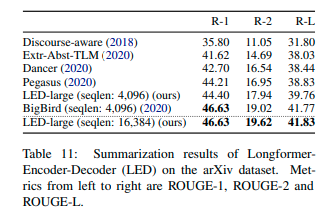

This is the official *led-large-16384* checkpoint that is fine-tuned on the arXiv dataset.*led-large-16384-arxiv* is the official fine-tuned version of [led-large-16384](https://huggingface.co/allenai/led-large-16384). As presented in the [paper](https://arxiv.org/pdf/2004.05150.pdf), the checkpoint achieves state-of-the-art results on arxiv

## Evaluation on downstream task

[This notebook](https://colab.research.google.com/drive/12INTTR6n64TzS4RrXZxMSXfrOd9Xzamo?usp=sharing) shows how *led-large-16384-arxiv* can be evaluated on the [arxiv dataset](https://huggingface.co/datasets/scientific_papers)

## Usage

The model can be used as follows. The input is taken from the test data of the [arxiv dataset](https://huggingface.co/datasets/scientific_papers).

```python

LONG_ARTICLE = """"for about 20 years the problem of properties of

short - term changes of solar activity has been

considered extensively . many investigators

studied the short - term periodicities of the

various indices of solar activity . several

periodicities were detected , but the

periodicities about 155 days and from the interval

of @xmath3 $ ] days ( @xmath4 $ ] years ) are

mentioned most often . first of them was

discovered by @xcite in the occurence rate of

gamma - ray flares detected by the gamma - ray

spectrometer aboard the _ solar maximum mission (

smm ) . this periodicity was confirmed for other

solar flares data and for the same time period

@xcite . it was also found in proton flares during

solar cycles 19 and 20 @xcite , but it was not

found in the solar flares data during solar cycles

22 @xcite . _ several autors confirmed above

results for the daily sunspot area data . @xcite

studied the sunspot data from 18741984 . she found

the 155-day periodicity in data records from 31

years . this periodicity is always characteristic

for one of the solar hemispheres ( the southern

hemisphere for cycles 1215 and the northern

hemisphere for cycles 1621 ) . moreover , it is

only present during epochs of maximum activity (

in episodes of 13 years ) .

similarinvestigationswerecarriedoutby + @xcite .

they applied the same power spectrum method as

lean , but the daily sunspot area data ( cycles

1221 ) were divided into 10 shorter time series .

the periodicities were searched for the frequency

interval 57115 nhz ( 100200 days ) and for each of

10 time series . the authors showed that the

periodicity between 150160 days is statistically

significant during all cycles from 16 to 21 . the

considered peaks were remained unaltered after

removing the 11-year cycle and applying the power

spectrum analysis . @xcite used the wavelet

technique for the daily sunspot areas between 1874

and 1993 . they determined the epochs of

appearance of this periodicity and concluded that

it presents around the maximum activity period in

cycles 16 to 21 . moreover , the power of this

periodicity started growing at cycle 19 ,

decreased in cycles 20 and 21 and disappered after

cycle 21 . similaranalyseswerepresentedby + @xcite

, but for sunspot number , solar wind plasma ,

interplanetary magnetic field and geomagnetic

activity index @xmath5 . during 1964 - 2000 the

sunspot number wavelet power of periods less than

one year shows a cyclic evolution with the phase

of the solar cycle.the 154-day period is prominent

and its strenth is stronger around the 1982 - 1984

interval in almost all solar wind parameters . the

existence of the 156-day periodicity in sunspot

data were confirmed by @xcite . they considered

the possible relation between the 475-day (

1.3-year ) and 156-day periodicities . the 475-day

( 1.3-year ) periodicity was also detected in

variations of the interplanetary magnetic field ,

geomagnetic activity helioseismic data and in the

solar wind speed @xcite . @xcite concluded that

the region of larger wavelet power shifts from

475-day ( 1.3-year ) period to 620-day ( 1.7-year

) period and then back to 475-day ( 1.3-year ) .

the periodicities from the interval @xmath6 $ ]

days ( @xmath4 $ ] years ) have been considered

from 1968 . @xcite mentioned a 16.3-month (

490-day ) periodicity in the sunspot numbers and

in the geomagnetic data . @xcite analysed the

occurrence rate of major flares during solar

cycles 19 . they found a 18-month ( 540-day )

periodicity in flare rate of the norhern

hemisphere . @xcite confirmed this result for the

@xmath7 flare data for solar cycles 20 and 21 and

found a peak in the power spectra near 510540 days

. @xcite found a 17-month ( 510-day ) periodicity

of sunspot groups and their areas from 1969 to

1986 . these authors concluded that the length of

this period is variable and the reason of this

periodicity is still not understood . @xcite and +

@xcite obtained statistically significant peaks of

power at around 158 days for daily sunspot data

from 1923 - 1933 ( cycle 16 ) . in this paper the

problem of the existence of this periodicity for

sunspot data from cycle 16 is considered . the

daily sunspot areas , the mean sunspot areas per

carrington rotation , the monthly sunspot numbers

and their fluctuations , which are obtained after

removing the 11-year cycle are analysed . in

section 2 the properties of the power spectrum

methods are described . in section 3 a new

approach to the problem of aliases in the power

spectrum analysis is presented . in section 4

numerical results of the new method of the

diagnosis of an echo - effect for sunspot area

data are discussed . in section 5 the problem of

the existence of the periodicity of about 155 days

during the maximum activity period for sunspot

data from the whole solar disk and from each solar

hemisphere separately is considered . to find

periodicities in a given time series the power

spectrum analysis is applied . in this paper two

methods are used : the fast fourier transformation

algorithm with the hamming window function ( fft )

and the blackman - tukey ( bt ) power spectrum

method @xcite . the bt method is used for the

diagnosis of the reasons of the existence of peaks

, which are obtained by the fft method . the bt

method consists in the smoothing of a cosine

transform of an autocorrelation function using a

3-point weighting average . such an estimator is

consistent and unbiased . moreover , the peaks are

uncorrelated and their sum is a variance of a

considered time series . the main disadvantage of

this method is a weak resolution of the

periodogram points , particularly for low

frequences . for example , if the autocorrelation

function is evaluated for @xmath8 , then the

distribution points in the time domain are :

@xmath9 thus , it is obvious that this method

should not be used for detecting low frequency

periodicities with a fairly good resolution .

however , because of an application of the

autocorrelation function , the bt method can be

used to verify a reality of peaks which are

computed using a method giving the better

resolution ( for example the fft method ) . it is

valuable to remember that the power spectrum

methods should be applied very carefully . the

difficulties in the interpretation of significant

peaks could be caused by at least four effects : a

sampling of a continuos function , an echo -

effect , a contribution of long - term

periodicities and a random noise . first effect

exists because periodicities , which are shorter

than the sampling interval , may mix with longer

periodicities . in result , this effect can be

reduced by an decrease of the sampling interval

between observations . the echo - effect occurs

when there is a latent harmonic of frequency

@xmath10 in the time series , giving a spectral

peak at @xmath10 , and also periodic terms of

frequency @xmath11 etc . this may be detected by

the autocorrelation function for time series with

a large variance . time series often contain long

- term periodicities , that influence short - term

peaks . they could rise periodogram s peaks at

lower frequencies . however , it is also easy to

notice the influence of the long - term

periodicities on short - term peaks in the graphs

of the autocorrelation functions . this effect is

observed for the time series of solar activity

indexes which are limited by the 11-year cycle .

to find statistically significant periodicities it

is reasonable to use the autocorrelation function

and the power spectrum method with a high

resolution . in the case of a stationary time

series they give similar results . moreover , for

a stationary time series with the mean zero the

fourier transform is equivalent to the cosine

transform of an autocorrelation function @xcite .

thus , after a comparison of a periodogram with an

appropriate autocorrelation function one can

detect peaks which are in the graph of the first

function and do not exist in the graph of the

second function . the reasons of their existence

could be explained by the long - term

periodicities and the echo - effect . below method

enables one to detect these effects . ( solid line

) and the 95% confidence level basing on thered

noise ( dotted line ) . the periodogram values are

presented on the left axis . the lower curve

illustrates the autocorrelation function of the

same time series ( solid line ) . the dotted lines

represent two standard errors of the

autocorrelation function . the dashed horizontal

line shows the zero level . the autocorrelation

values are shown in the right axis . ] because

the statistical tests indicate that the time

series is a white noise the confidence level is

not marked . ] . ] the method of the diagnosis

of an echo - effect in the power spectrum ( de )

consists in an analysis of a periodogram of a

given time series computed using the bt method .

the bt method bases on the cosine transform of the

autocorrelation function which creates peaks which

are in the periodogram , but not in the

autocorrelation function . the de method is used

for peaks which are computed by the fft method (

with high resolution ) and are statistically

significant . the time series of sunspot activity

indexes with the spacing interval one rotation or

one month contain a markov - type persistence ,

which means a tendency for the successive values

of the time series to remember their antecendent

values . thus , i use a confidence level basing on

the red noise of markov @xcite for the choice of

the significant peaks of the periodogram computed

by the fft method . when a time series does not

contain the markov - type persistence i apply the

fisher test and the kolmogorov - smirnov test at

the significance level @xmath12 @xcite to verify a

statistically significance of periodograms peaks .

the fisher test checks the null hypothesis that

the time series is white noise agains the

alternative hypothesis that the time series

contains an added deterministic periodic component

of unspecified frequency . because the fisher test

tends to be severe in rejecting peaks as

insignificant the kolmogorov - smirnov test is

also used . the de method analyses raw estimators

of the power spectrum . they are given as follows

@xmath13 for @xmath14 + where @xmath15 for

@xmath16 + @xmath17 is the length of the time

series @xmath18 and @xmath19 is the mean value .

the first term of the estimator @xmath20 is

constant . the second term takes two values (

depending on odd or even @xmath21 ) which are not

significant because @xmath22 for large m. thus ,

the third term of ( 1 ) should be analysed .

looking for intervals of @xmath23 for which

@xmath24 has the same sign and different signs one

can find such parts of the function @xmath25 which

create the value @xmath20 . let the set of values

of the independent variable of the autocorrelation

function be called @xmath26 and it can be divided

into the sums of disjoint sets : @xmath27 where +

@xmath28 + @xmath29 @xmath30 @xmath31 + @xmath32 +

@xmath33 @xmath34 @xmath35 @xmath36 @xmath37

@xmath38 @xmath39 @xmath40 well , the set

@xmath41 contains all integer values of @xmath23

from the interval of @xmath42 for which the

autocorrelation function and the cosinus function

with the period @xmath43 $ ] are positive . the

index @xmath44 indicates successive parts of the

cosinus function for which the cosinuses of

successive values of @xmath23 have the same sign .

however , sometimes the set @xmath41 can be empty

. for example , for @xmath45 and @xmath46 the set

@xmath47 should contain all @xmath48 $ ] for which

@xmath49 and @xmath50 , but for such values of

@xmath23 the values of @xmath51 are negative .

thus , the set @xmath47 is empty . . the

periodogram values are presented on the left axis

. the lower curve illustrates the autocorrelation

function of the same time series . the

autocorrelation values are shown in the right axis

. ] let us take into consideration all sets

\{@xmath52 } , \{@xmath53 } and \{@xmath41 } which

are not empty . because numberings and power of

these sets depend on the form of the

autocorrelation function of the given time series

, it is impossible to establish them arbitrary .

thus , the sets of appropriate indexes of the sets

\{@xmath52 } , \{@xmath53 } and \{@xmath41 } are

called @xmath54 , @xmath55 and @xmath56

respectively . for example the set @xmath56

contains all @xmath44 from the set @xmath57 for

which the sets @xmath41 are not empty . to

separate quantitatively in the estimator @xmath20

the positive contributions which are originated by

the cases described by the formula ( 5 ) from the

cases which are described by the formula ( 3 ) the

following indexes are introduced : @xmath58

@xmath59 @xmath60 @xmath61 where @xmath62 @xmath63

@xmath64 taking for the empty sets \{@xmath53 }

and \{@xmath41 } the indices @xmath65 and @xmath66

equal zero . the index @xmath65 describes a

percentage of the contribution of the case when

@xmath25 and @xmath51 are positive to the positive

part of the third term of the sum ( 1 ) . the

index @xmath66 describes a similar contribution ,

but for the case when the both @xmath25 and

@xmath51 are simultaneously negative . thanks to

these one can decide which the positive or the

negative values of the autocorrelation function

have a larger contribution to the positive values

of the estimator @xmath20 . when the difference

@xmath67 is positive , the statement the

@xmath21-th peak really exists can not be rejected

. thus , the following formula should be satisfied

: @xmath68 because the @xmath21-th peak could

exist as a result of the echo - effect , it is

necessary to verify the second condition :

@xmath69\in c_m.\ ] ] . the periodogram values

are presented on the left axis . the lower curve

illustrates the autocorrelation function of the

same time series ( solid line ) . the dotted lines

represent two standard errors of the

autocorrelation function . the dashed horizontal

line shows the zero level . the autocorrelation

values are shown in the right axis . ] to

verify the implication ( 8) firstly it is

necessary to evaluate the sets @xmath41 for

@xmath70 of the values of @xmath23 for which the

autocorrelation function and the cosine function

with the period @xmath71 $ ] are positive and the

sets @xmath72 of values of @xmath23 for which the

autocorrelation function and the cosine function

with the period @xmath43 $ ] are negative .

secondly , a percentage of the contribution of the

sum of products of positive values of @xmath25 and

@xmath51 to the sum of positive products of the

values of @xmath25 and @xmath51 should be

evaluated . as a result the indexes @xmath65 for

each set @xmath41 where @xmath44 is the index from

the set @xmath56 are obtained . thirdly , from all

sets @xmath41 such that @xmath70 the set @xmath73

for which the index @xmath65 is the greatest

should be chosen . the implication ( 8) is true

when the set @xmath73 includes the considered

period @xmath43 $ ] . this means that the greatest

contribution of positive values of the

autocorrelation function and positive cosines with

the period @xmath43 $ ] to the periodogram value

@xmath20 is caused by the sum of positive products

of @xmath74 for each @xmath75-\frac{m}{2k},[\frac{

2m}{k}]+\frac{m}{2k})$ ] . when the implication

( 8) is false , the peak @xmath20 is mainly

created by the sum of positive products of

@xmath74 for each @xmath76-\frac{m}{2k},\big [

\frac{2m}{n}\big ] + \frac{m}{2k } \big ) $ ] ,

where @xmath77 is a multiple or a divisor of

@xmath21 . it is necessary to add , that the de

method should be applied to the periodograms peaks

, which probably exist because of the echo -

effect . it enables one to find such parts of the

autocorrelation function , which have the

significant contribution to the considered peak .

the fact , that the conditions ( 7 ) and ( 8) are

satisfied , can unambiguously decide about the

existence of the considered periodicity in the

given time series , but if at least one of them is

not satisfied , one can doubt about the existence

of the considered periodicity . thus , in such

cases the sentence the peak can not be treated as

true should be used . using the de method it is

necessary to remember about the power of the set

@xmath78 . if @xmath79 is too large , errors of an

autocorrelation function estimation appear . they

are caused by the finite length of the given time

series and as a result additional peaks of the

periodogram occur . if @xmath79 is too small ,

there are less peaks because of a low resolution

of the periodogram . in applications @xmath80 is

used . in order to evaluate the value @xmath79 the

fft method is used . the periodograms computed by

the bt and the fft method are compared . the

conformity of them enables one to obtain the value

@xmath79 . . the fft periodogram values are

presented on the left axis . the lower curve

illustrates the bt periodogram of the same time

series ( solid line and large black circles ) .

the bt periodogram values are shown in the right

axis . ] in this paper the sunspot activity data (

august 1923 - october 1933 ) provided by the

greenwich photoheliographic results ( gpr ) are

analysed . firstly , i consider the monthly

sunspot number data . to eliminate the 11-year

trend from these data , the consecutively smoothed

monthly sunspot number @xmath81 is subtracted from

the monthly sunspot number @xmath82 where the

consecutive mean @xmath83 is given by @xmath84 the

values @xmath83 for @xmath85 and @xmath86 are

calculated using additional data from last six

months of cycle 15 and first six months of cycle

17 . because of the north - south asymmetry of

various solar indices @xcite , the sunspot

activity is considered for each solar hemisphere

separately . analogously to the monthly sunspot

numbers , the time series of sunspot areas in the

northern and southern hemispheres with the spacing

interval @xmath87 rotation are denoted . in order

to find periodicities , the following time series

are used : + @xmath88 + @xmath89 + @xmath90

+ in the lower part of figure [ f1 ] the

autocorrelation function of the time series for

the northern hemisphere @xmath88 is shown . it is

easy to notice that the prominent peak falls at 17

rotations interval ( 459 days ) and @xmath25 for

@xmath91 $ ] rotations ( [ 81 , 162 ] days ) are

significantly negative . the periodogram of the

time series @xmath88 ( see the upper curve in

figures [ f1 ] ) does not show the significant

peaks at @xmath92 rotations ( 135 , 162 days ) ,

but there is the significant peak at @xmath93 (

243 days ) . the peaks at @xmath94 are close to

the peaks of the autocorrelation function . thus ,

the result obtained for the periodicity at about

@xmath0 days are contradict to the results

obtained for the time series of daily sunspot

areas @xcite . for the southern hemisphere (

the lower curve in figure [ f2 ] ) @xmath25 for

@xmath95 $ ] rotations ( [ 54 , 189 ] days ) is

not positive except @xmath96 ( 135 days ) for

which @xmath97 is not statistically significant .

the upper curve in figures [ f2 ] presents the

periodogram of the time series @xmath89 . this

time series does not contain a markov - type

persistence . moreover , the kolmogorov - smirnov

test and the fisher test do not reject a null

hypothesis that the time series is a white noise

only . this means that the time series do not

contain an added deterministic periodic component

of unspecified frequency . the autocorrelation

function of the time series @xmath90 ( the lower

curve in figure [ f3 ] ) has only one

statistically significant peak for @xmath98 months

( 480 days ) and negative values for @xmath99 $ ]

months ( [ 90 , 390 ] days ) . however , the

periodogram of this time series ( the upper curve

in figure [ f3 ] ) has two significant peaks the

first at 15.2 and the second at 5.3 months ( 456 ,

159 days ) . thus , the periodogram contains the

significant peak , although the autocorrelation

function has the negative value at @xmath100

months . to explain these problems two

following time series of daily sunspot areas are

considered : + @xmath101 + @xmath102 + where

@xmath103 the values @xmath104 for @xmath105

and @xmath106 are calculated using additional

daily data from the solar cycles 15 and 17 .

and the cosine function for @xmath45 ( the period

at about 154 days ) . the horizontal line ( dotted

line ) shows the zero level . the vertical dotted

lines evaluate the intervals where the sets

@xmath107 ( for @xmath108 ) are searched . the

percentage values show the index @xmath65 for each

@xmath41 for the time series @xmath102 ( in

parentheses for the time series @xmath101 ) . in

the right bottom corner the values of @xmath65 for

the time series @xmath102 , for @xmath109 are

written . ] ( the 500-day period ) ] the

comparison of the functions @xmath25 of the time

series @xmath101 ( the lower curve in figure [ f4

] ) and @xmath102 ( the lower curve in figure [ f5

] ) suggests that the positive values of the

function @xmath110 of the time series @xmath101 in

the interval of @xmath111 $ ] days could be caused

by the 11-year cycle . this effect is not visible

in the case of periodograms of the both time

series computed using the fft method ( see the

upper curves in figures [ f4 ] and [ f5 ] ) or the

bt method ( see the lower curve in figure [ f6 ] )

. moreover , the periodogram of the time series

@xmath102 has the significant values at @xmath112

days , but the autocorrelation function is

negative at these points . @xcite showed that the

lomb - scargle periodograms for the both time

series ( see @xcite , figures 7 a - c ) have a

peak at 158.8 days which stands over the fap level

by a significant amount . using the de method the

above discrepancies are obvious . to establish the

@xmath79 value the periodograms computed by the

fft and the bt methods are shown in figure [ f6 ]

( the upper and the lower curve respectively ) .

for @xmath46 and for periods less than 166 days

there is a good comformity of the both

periodograms ( but for periods greater than 166

days the points of the bt periodogram are not

linked because the bt periodogram has much worse

resolution than the fft periodogram ( no one know

how to do it ) ) . for @xmath46 and @xmath113 the

value of @xmath21 is 13 ( @xmath71=153 $ ] ) . the

inequality ( 7 ) is satisfied because @xmath114 .

this means that the value of @xmath115 is mainly

created by positive values of the autocorrelation

function . the implication ( 8) needs an

evaluation of the greatest value of the index

@xmath65 where @xmath70 , but the solar data

contain the most prominent period for @xmath116

days because of the solar rotation . thus ,

although @xmath117 for each @xmath118 , all sets

@xmath41 ( see ( 5 ) and ( 6 ) ) without the set

@xmath119 ( see ( 4 ) ) , which contains @xmath120

$ ] , are considered . this situation is presented

in figure [ f7 ] . in this figure two curves

@xmath121 and @xmath122 are plotted . the vertical

dotted lines evaluate the intervals where the sets

@xmath107 ( for @xmath123 ) are searched . for

such @xmath41 two numbers are written : in

parentheses the value of @xmath65 for the time

series @xmath101 and above it the value of

@xmath65 for the time series @xmath102 . to make

this figure clear the curves are plotted for the

set @xmath124 only . ( in the right bottom corner

information about the values of @xmath65 for the

time series @xmath102 , for @xmath109 are written

. ) the implication ( 8) is not true , because

@xmath125 for @xmath126 . therefore ,

@xmath43=153\notin c_6=[423,500]$ ] . moreover ,

the autocorrelation function for @xmath127 $ ] is

negative and the set @xmath128 is empty . thus ,

@xmath129 . on the basis of these information one

can state , that the periodogram peak at @xmath130

days of the time series @xmath102 exists because

of positive @xmath25 , but for @xmath23 from the

intervals which do not contain this period .

looking at the values of @xmath65 of the time

series @xmath101 , one can notice that they

decrease when @xmath23 increases until @xmath131 .

this indicates , that when @xmath23 increases ,

the contribution of the 11-year cycle to the peaks

of the periodogram decreases . an increase of the

value of @xmath65 is for @xmath132 for the both

time series , although the contribution of the

11-year cycle for the time series @xmath101 is

insignificant . thus , this part of the

autocorrelation function ( @xmath133 for the time

series @xmath102 ) influences the @xmath21-th peak

of the periodogram . this suggests that the

periodicity at about 155 days is a harmonic of the

periodicity from the interval of @xmath1 $ ] days

. ( solid line ) and consecutively smoothed

sunspot areas of the one rotation time interval

@xmath134 ( dotted line ) . both indexes are

presented on the left axis . the lower curve

illustrates fluctuations of the sunspot areas

@xmath135 . the dotted and dashed horizontal lines

represent levels zero and @xmath136 respectively .

the fluctuations are shown on the right axis . ]

the described reasoning can be carried out for

other values of the periodogram . for example ,

the condition ( 8) is not satisfied for @xmath137

( 250 , 222 , 200 days ) . moreover , the

autocorrelation function at these points is

negative . these suggest that there are not a true

periodicity in the interval of [ 200 , 250 ] days

. it is difficult to decide about the existence of

the periodicities for @xmath138 ( 333 days ) and

@xmath139 ( 286 days ) on the basis of above

analysis . the implication ( 8) is not satisfied

for @xmath139 and the condition ( 7 ) is not

satisfied for @xmath138 , although the function

@xmath25 of the time series @xmath102 is

significantly positive for @xmath140 . the

conditions ( 7 ) and ( 8) are satisfied for

@xmath141 ( figure [ f8 ] ) and @xmath142 .

therefore , it is possible to exist the

periodicity from the interval of @xmath1 $ ] days

. similar results were also obtained by @xcite for

daily sunspot numbers and daily sunspot areas .

she considered the means of three periodograms of

these indexes for data from @xmath143 years and

found statistically significant peaks from the

interval of @xmath1 $ ] ( see @xcite , figure 2 )

. @xcite studied sunspot areas from 1876 - 1999

and sunspot numbers from 1749 - 2001 with the help

of the wavelet transform . they pointed out that

the 154 - 158-day period could be the third

harmonic of the 1.3-year ( 475-day ) period .

moreover , the both periods fluctuate considerably

with time , being stronger during stronger sunspot

cycles . therefore , the wavelet analysis suggests

a common origin of the both periodicities . this

conclusion confirms the de method result which

indicates that the periodogram peak at @xmath144

days is an alias of the periodicity from the

interval of @xmath1 $ ] in order to verify the

existence of the periodicity at about 155 days i

consider the following time series : + @xmath145

+ @xmath146 + @xmath147 + the value @xmath134

is calculated analogously to @xmath83 ( see sect .

the values @xmath148 and @xmath149 are evaluated

from the formula ( 9 ) . in the upper part of

figure [ f9 ] the time series of sunspot areas

@xmath150 of the one rotation time interval from

the whole solar disk and the time series of

consecutively smoothed sunspot areas @xmath151 are

showed . in the lower part of figure [ f9 ] the

time series of sunspot area fluctuations @xmath145

is presented . on the basis of these data the

maximum activity period of cycle 16 is evaluated .

it is an interval between two strongest

fluctuations e.a . @xmath152 $ ] rotations . the

length of the time interval @xmath153 is 54

rotations . if the about @xmath0-day ( 6 solar

rotations ) periodicity existed in this time

interval and it was characteristic for strong

fluctuations from this time interval , 10 local

maxima in the set of @xmath154 would be seen .

then it should be necessary to find such a value

of p for which @xmath155 for @xmath156 and the

number of the local maxima of these values is 10 .

as it can be seen in the lower part of figure [ f9

] this is for the case of @xmath157 ( in this

figure the dashed horizontal line is the level of

@xmath158 ) . figure [ f10 ] presents nine time

distances among the successive fluctuation local

maxima and the horizontal line represents the

6-rotation periodicity . it is immediately

apparent that the dispersion of these points is 10

and it is difficult to find even few points which

oscillate around the value of 6 . such an analysis

was carried out for smaller and larger @xmath136

and the results were similar . therefore , the

fact , that the about @xmath0-day periodicity

exists in the time series of sunspot area

fluctuations during the maximum activity period is

questionable . . the horizontal line represents

the 6-rotation ( 162-day ) period . ] ] ]

to verify again the existence of the about

@xmath0-day periodicity during the maximum

activity period in each solar hemisphere

separately , the time series @xmath88 and @xmath89

were also cut down to the maximum activity period

( january 1925december 1930 ) . the comparison of

the autocorrelation functions of these time series

with the appriopriate autocorrelation functions of

the time series @xmath88 and @xmath89 , which are

computed for the whole 11-year cycle ( the lower

curves of figures [ f1 ] and [ f2 ] ) , indicates

that there are not significant differences between

them especially for @xmath23=5 and 6 rotations (

135 and 162 days ) ) . this conclusion is

confirmed by the analysis of the time series

@xmath146 for the maximum activity period . the

autocorrelation function ( the lower curve of

figure [ f11 ] ) is negative for the interval of [

57 , 173 ] days , but the resolution of the

periodogram is too low to find the significant

peak at @xmath159 days . the autocorrelation

function gives the same result as for daily

sunspot area fluctuations from the whole solar

disk ( @xmath160 ) ( see also the lower curve of

figures [ f5 ] ) . in the case of the time series

@xmath89 @xmath161 is zero for the fluctuations

from the whole solar cycle and it is almost zero (

@xmath162 ) for the fluctuations from the maximum

activity period . the value @xmath163 is negative

. similarly to the case of the northern hemisphere

the autocorrelation function and the periodogram

of southern hemisphere daily sunspot area

fluctuations from the maximum activity period

@xmath147 are computed ( see figure [ f12 ] ) .

the autocorrelation function has the statistically

significant positive peak in the interval of [ 155

, 165 ] days , but the periodogram has too low

resolution to decide about the possible

periodicities . the correlative analysis indicates

that there are positive fluctuations with time

distances about @xmath0 days in the maximum

activity period . the results of the analyses of

the time series of sunspot area fluctuations from

the maximum activity period are contradict with

the conclusions of @xcite . she uses the power

spectrum analysis only . the periodogram of daily

sunspot fluctuations contains peaks , which could

be harmonics or subharmonics of the true

periodicities . they could be treated as real

periodicities . this effect is not visible for

sunspot data of the one rotation time interval ,

but averaging could lose true periodicities . this

is observed for data from the southern hemisphere

. there is the about @xmath0-day peak in the

autocorrelation function of daily fluctuations ,

but the correlation for data of the one rotation

interval is almost zero or negative at the points

@xmath164 and 6 rotations . thus , it is

reasonable to research both time series together

using the correlative and the power spectrum

analyses . the following results are obtained :

1 . a new method of the detection of statistically

significant peaks of the periodograms enables one

to identify aliases in the periodogram . 2 . two

effects cause the existence of the peak of the

periodogram of the time series of sunspot area

fluctuations at about @xmath0 days : the first is

caused by the 27-day periodicity , which probably

creates the 162-day periodicity ( it is a

subharmonic frequency of the 27-day periodicity )

and the second is caused by statistically

significant positive values of the autocorrelation

function from the intervals of @xmath165 $ ] and

@xmath166 $ ] days . the existence of the

periodicity of about @xmath0 days of the time

series of sunspot area fluctuations and sunspot

area fluctuations from the northern hemisphere

during the maximum activity period is questionable

. the autocorrelation analysis of the time series

of sunspot area fluctuations from the southern

hemisphere indicates that the periodicity of about

155 days exists during the maximum activity period

. i appreciate valuable comments from professor j.

jakimiec ."""

from transformers import LEDForConditionalGeneration, LEDTokenizer

import torch

tokenizer = LEDTokenizer.from_pretrained("allenai/led-large-16384-arxiv")

input_ids = tokenizer(LONG_ARTICLE, return_tensors="pt").input_ids.to("cuda")

global_attention_mask = torch.zeros_like(input_ids)

# set global_attention_mask on first token

global_attention_mask[:, 0] = 1

model = LEDForConditionalGeneration.from_pretrained("allenai/led-large-16384-arxiv", return_dict_in_generate=True).to("cuda")

sequences = model.generate(input_ids, global_attention_mask=global_attention_mask).sequences

summary = tokenizer.batch_decode(sequences)

```

|

aubmindlab/bert-base-arabertv01 | aubmindlab | 2023-06-09T12:24:20Z | 661 | 2 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"ar",

"dataset:wikipedia",

"dataset:OSIAN",

"dataset:1.5B_Arabic_Corpus",

"arxiv:2003.00104",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language: ar

datasets:

- wikipedia

- OSIAN

- 1.5B_Arabic_Corpus

widget:

- text: " عاصمة لبنان هي [MASK] ."

---

# !!! A newer version of this model is available !!! [AraBERTv02](https://huggingface.co/aubmindlab/bert-base-arabertv02)

# AraBERT v1 & v2 : Pre-training BERT for Arabic Language Understanding

<img src="https://raw.githubusercontent.com/aub-mind/arabert/master/arabert_logo.png" width="100" align="left"/>

**AraBERT** is an Arabic pretrained lanaguage model based on [Google's BERT architechture](https://github.com/google-research/bert). AraBERT uses the same BERT-Base config. More details are available in the [AraBERT Paper](https://arxiv.org/abs/2003.00104) and in the [AraBERT Meetup](https://github.com/WissamAntoun/pydata_khobar_meetup)

There are two versions of the model, AraBERTv0.1 and AraBERTv1, with the difference being that AraBERTv1 uses pre-segmented text where prefixes and suffixes were splitted using the [Farasa Segmenter](http://alt.qcri.org/farasa/segmenter.html).

We evalaute AraBERT models on different downstream tasks and compare them to [mBERT]((https://github.com/google-research/bert/blob/master/multilingual.md)), and other state of the art models (*To the extent of our knowledge*). The Tasks were Sentiment Analysis on 6 different datasets ([HARD](https://github.com/elnagara/HARD-Arabic-Dataset), [ASTD-Balanced](https://www.aclweb.org/anthology/D15-1299), [ArsenTD-Lev](https://staff.aub.edu.lb/~we07/Publications/ArSentD-LEV_Sentiment_Corpus.pdf), [LABR](https://github.com/mohamedadaly/LABR)), Named Entity Recognition with the [ANERcorp](http://curtis.ml.cmu.edu/w/courses/index.php/ANERcorp), and Arabic Question Answering on [Arabic-SQuAD and ARCD](https://github.com/husseinmozannar/SOQAL)

# AraBERTv2

## What's New!

AraBERT now comes in 4 new variants to replace the old v1 versions:

More Detail in the AraBERT folder and in the [README](https://github.com/aub-mind/arabert/blob/master/AraBERT/README.md) and in the [AraBERT Paper](https://arxiv.org/abs/2003.00104v2)

Model | HuggingFace Model Name | Size (MB/Params)| Pre-Segmentation | DataSet (Sentences/Size/nWords) |

---|:---:|:---:|:---:|:---:

AraBERTv0.2-base | [bert-base-arabertv02](https://huggingface.co/aubmindlab/bert-base-arabertv02) | 543MB / 136M | No | 200M / 77GB / 8.6B |

AraBERTv0.2-large| [bert-large-arabertv02](https://huggingface.co/aubmindlab/bert-large-arabertv02) | 1.38G 371M | No | 200M / 77GB / 8.6B |

AraBERTv2-base| [bert-base-arabertv2](https://huggingface.co/aubmindlab/bert-base-arabertv2) | 543MB 136M | Yes | 200M / 77GB / 8.6B |

AraBERTv2-large| [bert-large-arabertv2](https://huggingface.co/aubmindlab/bert-large-arabertv2) | 1.38G 371M | Yes | 200M / 77GB / 8.6B |

AraBERTv0.1-base| [bert-base-arabertv01](https://huggingface.co/aubmindlab/bert-base-arabertv01) | 543MB 136M | No | 77M / 23GB / 2.7B |

AraBERTv1-base| [bert-base-arabert](https://huggingface.co/aubmindlab/bert-base-arabert) | 543MB 136M | Yes | 77M / 23GB / 2.7B |

All models are available in the `HuggingFace` model page under the [aubmindlab](https://huggingface.co/aubmindlab/) name. Checkpoints are available in PyTorch, TF2 and TF1 formats.

## Better Pre-Processing and New Vocab

We identified an issue with AraBERTv1's wordpiece vocabulary. The issue came from punctuations and numbers that were still attached to words when learned the wordpiece vocab. We now insert a space between numbers and characters and around punctuation characters.

The new vocabulary was learnt using the `BertWordpieceTokenizer` from the `tokenizers` library, and should now support the Fast tokenizer implementation from the `transformers` library.

**P.S.**: All the old BERT codes should work with the new BERT, just change the model name and check the new preprocessing dunction

**Please read the section on how to use the [preprocessing function](#Preprocessing)**

## Bigger Dataset and More Compute

We used ~3.5 times more data, and trained for longer.

For Dataset Sources see the [Dataset Section](#Dataset)

Model | Hardware | num of examples with seq len (128 / 512) |128 (Batch Size/ Num of Steps) | 512 (Batch Size/ Num of Steps) | Total Steps | Total Time (in Days) |

---|:---:|:---:|:---:|:---:|:---:|:---:

AraBERTv0.2-base | TPUv3-8 | 420M / 207M |2560 / 1M | 384/ 2M | 3M | -

AraBERTv0.2-large | TPUv3-128 | 420M / 207M | 13440 / 250K | 2056 / 300K | 550K | -

AraBERTv2-base | TPUv3-8 | 520M / 245M |13440 / 250K | 2056 / 300K | 550K | -

AraBERTv2-large | TPUv3-128 | 520M / 245M | 13440 / 250K | 2056 / 300K | 550K | -

AraBERT-base (v1/v0.1) | TPUv2-8 | - |512 / 900K | 128 / 300K| 1.2M | 4 days

# Dataset

The pretraining data used for the new AraBERT model is also used for Arabic **GPT2 and ELECTRA**.

The dataset consists of 77GB or 200,095,961 lines or 8,655,948,860 words or 82,232,988,358 chars (before applying Farasa Segmentation)

For the new dataset we added the unshuffled OSCAR corpus, after we thoroughly filter it, to the previous dataset used in AraBERTv1 but with out the websites that we previously crawled:

- OSCAR unshuffled and filtered.

- [Arabic Wikipedia dump](https://archive.org/details/arwiki-20190201) from 2020/09/01

- [The 1.5B words Arabic Corpus](https://www.semanticscholar.org/paper/1.5-billion-words-Arabic-Corpus-El-Khair/f3eeef4afb81223df96575adadf808fe7fe440b4)

- [The OSIAN Corpus](https://www.aclweb.org/anthology/W19-4619)

- Assafir news articles. Huge thank you for Assafir for giving us the data

# Preprocessing

It is recommended to apply our preprocessing function before training/testing on any dataset.

**Install farasapy to segment text for AraBERT v1 & v2 `pip install farasapy`**

```python

from arabert.preprocess import ArabertPreprocessor

model_name="bert-base-arabertv01"

arabert_prep = ArabertPreprocessor(model_name=model_name)

text = "ولن نبالغ إذا قلنا إن هاتف أو كمبيوتر المكتب في زمننا هذا ضروري"

arabert_prep.preprocess(text)

```

## Accepted_models

```

bert-base-arabertv01

bert-base-arabert

bert-base-arabertv02

bert-base-arabertv2

bert-large-arabertv02

bert-large-arabertv2

araelectra-base

aragpt2-base

aragpt2-medium

aragpt2-large

aragpt2-mega

```

# TensorFlow 1.x models

The TF1.x model are available in the HuggingFace models repo.

You can download them as follows:

- via git-lfs: clone all the models in a repo

```bash

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash

sudo apt-get install git-lfs

git lfs install

git clone https://huggingface.co/aubmindlab/MODEL_NAME

tar -C ./MODEL_NAME -zxvf /content/MODEL_NAME/tf1_model.tar.gz

```

where `MODEL_NAME` is any model under the `aubmindlab` name

- via `wget`:

- Go to the tf1_model.tar.gz file on huggingface.co/models/aubmindlab/MODEL_NAME.

- copy the `oid sha256`

- then run `wget https://cdn-lfs.huggingface.co/aubmindlab/aragpt2-base/INSERT_THE_SHA_HERE` (ex: for `aragpt2-base`: `wget https://cdn-lfs.huggingface.co/aubmindlab/aragpt2-base/3766fc03d7c2593ff2fb991d275e96b81b0ecb2098b71ff315611d052ce65248`)

# If you used this model please cite us as :

Google Scholar has our Bibtex wrong (missing name), use this instead

```

@inproceedings{antoun2020arabert,

title={AraBERT: Transformer-based Model for Arabic Language Understanding},

author={Antoun, Wissam and Baly, Fady and Hajj, Hazem},