modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

TheBloke/Llama-2-7B-GPTQ | TheBloke | 2023-09-27T12:44:46Z | 23,778 | 78 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"facebook",

"meta",

"pytorch",

"llama-2",

"en",

"arxiv:2307.09288",

"base_model:meta-llama/Llama-2-7b-hf",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"4-bit",

"gptq",

"region:us"

]

| text-generation | 2023-07-18T17:06:01Z | ---

language:

- en

license: llama2

tags:

- facebook

- meta

- pytorch

- llama

- llama-2

model_name: Llama 2 7B

base_model: meta-llama/Llama-2-7b-hf

inference: false

model_creator: Meta

model_type: llama

pipeline_tag: text-generation

prompt_template: '{prompt}

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Llama 2 7B - GPTQ

- Model creator: [Meta](https://huggingface.co/meta-llama)

- Original model: [Llama 2 7B](https://huggingface.co/meta-llama/Llama-2-7b-hf)

<!-- description start -->

## Description

This repo contains GPTQ model files for [Meta's Llama 2 7B](https://huggingface.co/meta-llama/Llama-2-7b-hf).

Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options provided, their parameters, and the software used to create them.

<!-- description end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Llama-2-7B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Llama-2-7B-GGUF)

* [Meta's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/meta-llama/Llama-2-7b-hf)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: None

```

{prompt}

```

<!-- prompt-template end -->

<!-- README_GPTQ.md-provided-files start -->

## Provided files and GPTQ parameters

Multiple quantisation parameters are provided, to allow you to choose the best one for your hardware and requirements.

Each separate quant is in a different branch. See below for instructions on fetching from different branches.

All recent GPTQ files are made with AutoGPTQ, and all files in non-main branches are made with AutoGPTQ. Files in the `main` branch which were uploaded before August 2023 were made with GPTQ-for-LLaMa.

<details>

<summary>Explanation of GPTQ parameters</summary>

- Bits: The bit size of the quantised model.

- GS: GPTQ group size. Higher numbers use less VRAM, but have lower quantisation accuracy. "None" is the lowest possible value.

- Act Order: True or False. Also known as `desc_act`. True results in better quantisation accuracy. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now.

- Damp %: A GPTQ parameter that affects how samples are processed for quantisation. 0.01 is default, but 0.1 results in slightly better accuracy.

- GPTQ dataset: The dataset used for quantisation. Using a dataset more appropriate to the model's training can improve quantisation accuracy. Note that the GPTQ dataset is not the same as the dataset used to train the model - please refer to the original model repo for details of the training dataset(s).

- Sequence Length: The length of the dataset sequences used for quantisation. Ideally this is the same as the model sequence length. For some very long sequence models (16+K), a lower sequence length may have to be used. Note that a lower sequence length does not limit the sequence length of the quantised model. It only impacts the quantisation accuracy on longer inference sequences.

- ExLlama Compatibility: Whether this file can be loaded with ExLlama, which currently only supports Llama models in 4-bit.

</details>

| Branch | Bits | GS | Act Order | Damp % | GPTQ Dataset | Seq Len | Size | ExLlama | Desc |

| ------ | ---- | -- | --------- | ------ | ------------ | ------- | ---- | ------- | ---- |

| [main](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ/tree/main) | 4 | 128 | No | 0.01 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-v1/test) | 4096 | 3.90 GB | Yes | 4-bit, without Act Order and group size 128g. |

| [gptq-4bit-32g-actorder_True](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ/tree/gptq-4bit-32g-actorder_True) | 4 | 32 | Yes | 0.01 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-v1/test) | 4096 | 4.28 GB | Yes | 4-bit, with Act Order and group size 32g. Gives highest possible inference quality, with maximum VRAM usage. |

| [gptq-4bit-64g-actorder_True](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ/tree/gptq-4bit-64g-actorder_True) | 4 | 64 | Yes | 0.01 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-v1/test) | 4096 | 4.02 GB | Yes | 4-bit, with Act Order and group size 64g. Uses less VRAM than 32g, but with slightly lower accuracy. |

| [gptq-4bit-128g-actorder_True](https://huggingface.co/TheBloke/Llama-2-7B-GPTQ/tree/gptq-4bit-128g-actorder_True) | 4 | 128 | Yes | 0.01 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-v1/test) | 4096 | 3.90 GB | Yes | 4-bit, with Act Order and group size 128g. Uses even less VRAM than 64g, but with slightly lower accuracy. |

<!-- README_GPTQ.md-provided-files end -->

<!-- README_GPTQ.md-download-from-branches start -->

## How to download from branches

- In text-generation-webui, you can add `:branch` to the end of the download name, eg `TheBloke/Llama-2-7B-GPTQ:main`

- With Git, you can clone a branch with:

```

git clone --single-branch --branch main https://huggingface.co/TheBloke/Llama-2-7B-GPTQ

```

- In Python Transformers code, the branch is the `revision` parameter; see below.

<!-- README_GPTQ.md-download-from-branches end -->

<!-- README_GPTQ.md-text-generation-webui start -->

## How to easily download and use this model in [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

Please make sure you're using the latest version of [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

It is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.

1. Click the **Model tab**.

2. Under **Download custom model or LoRA**, enter `TheBloke/Llama-2-7B-GPTQ`.

- To download from a specific branch, enter for example `TheBloke/Llama-2-7B-GPTQ:main`

- see Provided Files above for the list of branches for each option.

3. Click **Download**.

4. The model will start downloading. Once it's finished it will say "Done".

5. In the top left, click the refresh icon next to **Model**.

6. In the **Model** dropdown, choose the model you just downloaded: `Llama-2-7B-GPTQ`

7. The model will automatically load, and is now ready for use!

8. If you want any custom settings, set them and then click **Save settings for this model** followed by **Reload the Model** in the top right.

* Note that you do not need to and should not set manual GPTQ parameters any more. These are set automatically from the file `quantize_config.json`.

9. Once you're ready, click the **Text Generation tab** and enter a prompt to get started!

<!-- README_GPTQ.md-text-generation-webui end -->

<!-- README_GPTQ.md-use-from-python start -->

## How to use this GPTQ model from Python code

### Install the necessary packages

Requires: Transformers 4.32.0 or later, Optimum 1.12.0 or later, and AutoGPTQ 0.4.2 or later.

```shell

pip3 install transformers>=4.32.0 optimum>=1.12.0

pip3 install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu118/ # Use cu117 if on CUDA 11.7

```

If you have problems installing AutoGPTQ using the pre-built wheels, install it from source instead:

```shell

pip3 uninstall -y auto-gptq

git clone https://github.com/PanQiWei/AutoGPTQ

cd AutoGPTQ

pip3 install .

```

### For CodeLlama models only: you must use Transformers 4.33.0 or later.

If 4.33.0 is not yet released when you read this, you will need to install Transformers from source:

```shell

pip3 uninstall -y transformers

pip3 install git+https://github.com/huggingface/transformers.git

```

### You can then use the following code

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

model_name_or_path = "TheBloke/Llama-2-7B-GPTQ"

# To use a different branch, change revision

# For example: revision="main"

model = AutoModelForCausalLM.from_pretrained(model_name_or_path,

device_map="auto",

trust_remote_code=True,

revision="main")

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=True)

prompt = "Tell me about AI"

prompt_template=f'''{prompt}

'''

print("\n\n*** Generate:")

input_ids = tokenizer(prompt_template, return_tensors='pt').input_ids.cuda()

output = model.generate(inputs=input_ids, temperature=0.7, do_sample=True, top_p=0.95, top_k=40, max_new_tokens=512)

print(tokenizer.decode(output[0]))

# Inference can also be done using transformers' pipeline

print("*** Pipeline:")

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=512,

do_sample=True,

temperature=0.7,

top_p=0.95,

top_k=40,

repetition_penalty=1.1

)

print(pipe(prompt_template)[0]['generated_text'])

```

<!-- README_GPTQ.md-use-from-python end -->

<!-- README_GPTQ.md-compatibility start -->

## Compatibility

The files provided are tested to work with AutoGPTQ, both via Transformers and using AutoGPTQ directly. They should also work with [Occ4m's GPTQ-for-LLaMa fork](https://github.com/0cc4m/KoboldAI).

[ExLlama](https://github.com/turboderp/exllama) is compatible with Llama models in 4-bit. Please see the Provided Files table above for per-file compatibility.

[Huggingface Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference) is compatible with all GPTQ models.

<!-- README_GPTQ.md-compatibility end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

# Original model card: Meta's Llama 2 7B

# **Llama 2**

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 7B pretrained model, converted for the Hugging Face Transformers format. Links to other models can be found in the index at the bottom.

## Model Details

*Note: Use of this model is governed by the Meta license. In order to download the model weights and tokenizer, please visit the [website](https://ai.meta.com/resources/models-and-libraries/llama-downloads/) and accept our License before requesting access here.*

Meta developed and publicly released the Llama 2 family of large language models (LLMs), a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. Our fine-tuned LLMs, called Llama-2-Chat, are optimized for dialogue use cases. Llama-2-Chat models outperform open-source chat models on most benchmarks we tested, and in our human evaluations for helpfulness and safety, are on par with some popular closed-source models like ChatGPT and PaLM.

**Model Developers** Meta

**Variations** Llama 2 comes in a range of parameter sizes — 7B, 13B, and 70B — as well as pretrained and fine-tuned variations.

**Input** Models input text only.

**Output** Models generate text only.

**Model Architecture** Llama 2 is an auto-regressive language model that uses an optimized transformer architecture. The tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align to human preferences for helpfulness and safety.

||Training Data|Params|Content Length|GQA|Tokens|LR|

|---|---|---|---|---|---|---|

|Llama 2|*A new mix of publicly available online data*|7B|4k|✗|2.0T|3.0 x 10<sup>-4</sup>|

|Llama 2|*A new mix of publicly available online data*|13B|4k|✗|2.0T|3.0 x 10<sup>-4</sup>|

|Llama 2|*A new mix of publicly available online data*|70B|4k|✔|2.0T|1.5 x 10<sup>-4</sup>|

*Llama 2 family of models.* Token counts refer to pretraining data only. All models are trained with a global batch-size of 4M tokens. Bigger models - 70B -- use Grouped-Query Attention (GQA) for improved inference scalability.

**Model Dates** Llama 2 was trained between January 2023 and July 2023.

**Status** This is a static model trained on an offline dataset. Future versions of the tuned models will be released as we improve model safety with community feedback.

**License** A custom commercial license is available at: [https://ai.meta.com/resources/models-and-libraries/llama-downloads/](https://ai.meta.com/resources/models-and-libraries/llama-downloads/)

**Research Paper** ["Llama-2: Open Foundation and Fine-tuned Chat Models"](arxiv.org/abs/2307.09288)

## Intended Use

**Intended Use Cases** Llama 2 is intended for commercial and research use in English. Tuned models are intended for assistant-like chat, whereas pretrained models can be adapted for a variety of natural language generation tasks.

To get the expected features and performance for the chat versions, a specific formatting needs to be followed, including the `INST` and `<<SYS>>` tags, `BOS` and `EOS` tokens, and the whitespaces and breaklines in between (we recommend calling `strip()` on inputs to avoid double-spaces). See our reference code in github for details: [`chat_completion`](https://github.com/facebookresearch/llama/blob/main/llama/generation.py#L212).

**Out-of-scope Uses** Use in any manner that violates applicable laws or regulations (including trade compliance laws).Use in languages other than English. Use in any other way that is prohibited by the Acceptable Use Policy and Licensing Agreement for Llama 2.

## Hardware and Software

**Training Factors** We used custom training libraries, Meta's Research Super Cluster, and production clusters for pretraining. Fine-tuning, annotation, and evaluation were also performed on third-party cloud compute.

**Carbon Footprint** Pretraining utilized a cumulative 3.3M GPU hours of computation on hardware of type A100-80GB (TDP of 350-400W). Estimated total emissions were 539 tCO2eq, 100% of which were offset by Meta’s sustainability program.

||Time (GPU hours)|Power Consumption (W)|Carbon Emitted(tCO<sub>2</sub>eq)|

|---|---|---|---|

|Llama 2 7B|184320|400|31.22|

|Llama 2 13B|368640|400|62.44|

|Llama 2 70B|1720320|400|291.42|

|Total|3311616||539.00|

**CO<sub>2</sub> emissions during pretraining.** Time: total GPU time required for training each model. Power Consumption: peak power capacity per GPU device for the GPUs used adjusted for power usage efficiency. 100% of the emissions are directly offset by Meta's sustainability program, and because we are openly releasing these models, the pretraining costs do not need to be incurred by others.

## Training Data

**Overview** Llama 2 was pretrained on 2 trillion tokens of data from publicly available sources. The fine-tuning data includes publicly available instruction datasets, as well as over one million new human-annotated examples. Neither the pretraining nor the fine-tuning datasets include Meta user data.

**Data Freshness** The pretraining data has a cutoff of September 2022, but some tuning data is more recent, up to July 2023.

## Evaluation Results

In this section, we report the results for the Llama 1 and Llama 2 models on standard academic benchmarks.For all the evaluations, we use our internal evaluations library.

|Model|Size|Code|Commonsense Reasoning|World Knowledge|Reading Comprehension|Math|MMLU|BBH|AGI Eval|

|---|---|---|---|---|---|---|---|---|---|

|Llama 1|7B|14.1|60.8|46.2|58.5|6.95|35.1|30.3|23.9|

|Llama 1|13B|18.9|66.1|52.6|62.3|10.9|46.9|37.0|33.9|

|Llama 1|33B|26.0|70.0|58.4|67.6|21.4|57.8|39.8|41.7|

|Llama 1|65B|30.7|70.7|60.5|68.6|30.8|63.4|43.5|47.6|

|Llama 2|7B|16.8|63.9|48.9|61.3|14.6|45.3|32.6|29.3|

|Llama 2|13B|24.5|66.9|55.4|65.8|28.7|54.8|39.4|39.1|

|Llama 2|70B|**37.5**|**71.9**|**63.6**|**69.4**|**35.2**|**68.9**|**51.2**|**54.2**|

**Overall performance on grouped academic benchmarks.** *Code:* We report the average pass@1 scores of our models on HumanEval and MBPP. *Commonsense Reasoning:* We report the average of PIQA, SIQA, HellaSwag, WinoGrande, ARC easy and challenge, OpenBookQA, and CommonsenseQA. We report 7-shot results for CommonSenseQA and 0-shot results for all other benchmarks. *World Knowledge:* We evaluate the 5-shot performance on NaturalQuestions and TriviaQA and report the average. *Reading Comprehension:* For reading comprehension, we report the 0-shot average on SQuAD, QuAC, and BoolQ. *MATH:* We report the average of the GSM8K (8 shot) and MATH (4 shot) benchmarks at top 1.

|||TruthfulQA|Toxigen|

|---|---|---|---|

|Llama 1|7B|27.42|23.00|

|Llama 1|13B|41.74|23.08|

|Llama 1|33B|44.19|22.57|

|Llama 1|65B|48.71|21.77|

|Llama 2|7B|33.29|**21.25**|

|Llama 2|13B|41.86|26.10|

|Llama 2|70B|**50.18**|24.60|

**Evaluation of pretrained LLMs on automatic safety benchmarks.** For TruthfulQA, we present the percentage of generations that are both truthful and informative (the higher the better). For ToxiGen, we present the percentage of toxic generations (the smaller the better).

|||TruthfulQA|Toxigen|

|---|---|---|---|

|Llama-2-Chat|7B|57.04|**0.00**|

|Llama-2-Chat|13B|62.18|**0.00**|

|Llama-2-Chat|70B|**64.14**|0.01|

**Evaluation of fine-tuned LLMs on different safety datasets.** Same metric definitions as above.

## Ethical Considerations and Limitations

Llama 2 is a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover all scenarios. For these reasons, as with all LLMs, Llama 2’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of Llama 2, developers should perform safety testing and tuning tailored to their specific applications of the model.

Please see the Responsible Use Guide available at [https://ai.meta.com/llama/responsible-use-guide/](https://ai.meta.com/llama/responsible-use-guide)

## Reporting Issues

Please report any software “bug,” or other problems with the models through one of the following means:

- Reporting issues with the model: [github.com/facebookresearch/llama](http://github.com/facebookresearch/llama)

- Reporting problematic content generated by the model: [developers.facebook.com/llama_output_feedback](http://developers.facebook.com/llama_output_feedback)

- Reporting bugs and security concerns: [facebook.com/whitehat/info](http://facebook.com/whitehat/info)

## Llama Model Index

|Model|Llama2|Llama2-hf|Llama2-chat|Llama2-chat-hf|

|---|---|---|---|---|

|7B| [Link](https://huggingface.co/llamaste/Llama-2-7b) | [Link](https://huggingface.co/llamaste/Llama-2-7b-hf) | [Link](https://huggingface.co/llamaste/Llama-2-7b-chat) | [Link](https://huggingface.co/llamaste/Llama-2-7b-chat-hf)|

|13B| [Link](https://huggingface.co/llamaste/Llama-2-13b) | [Link](https://huggingface.co/llamaste/Llama-2-13b-hf) | [Link](https://huggingface.co/llamaste/Llama-2-13b-chat) | [Link](https://huggingface.co/llamaste/Llama-2-13b-hf)|

|70B| [Link](https://huggingface.co/llamaste/Llama-2-70b) | [Link](https://huggingface.co/llamaste/Llama-2-70b-hf) | [Link](https://huggingface.co/llamaste/Llama-2-70b-chat) | [Link](https://huggingface.co/llamaste/Llama-2-70b-hf)|

|

bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF | bartowski | 2024-07-02T18:05:03Z | 23,778 | 17 | null | [

"gguf",

"text-generation",

"en",

"dataset:openbmb/UltraFeedback",

"license:apache-2.0",

"region:us"

]

| text-generation | 2024-06-30T17:29:24Z | ---

license: apache-2.0

datasets:

- openbmb/UltraFeedback

language:

- en

pipeline_tag: text-generation

quantized_by: bartowski

---

## Llamacpp imatrix Quantizations of Gemma-2-9B-It-SPPO-Iter3

Using <a href="https://github.com/ggerganov/llama.cpp/">llama.cpp</a> release <a href="https://github.com/ggerganov/llama.cpp/releases/tag/b3278">b3278</a> for quantization.

Original model: https://huggingface.co/UCLA-AGI/Gemma-2-9B-It-SPPO-Iter3

All quants made using imatrix option with dataset from [here](https://gist.github.com/bartowski1182/eb213dccb3571f863da82e99418f81e8)

Experimental quants are made with `--output-tensor-type f16 --token-embedding-type f16` per [ZeroWw](https://huggingface.co/ZeroWw)'s suggestion, please provide any feedback on quality differences you spot.

## Prompt format

```

<start_of_turn>user

{prompt}<end_of_turn>

<start_of_turn>model

```

Note that this model does not support a System prompt.

## Download a file (not the whole branch) from below:

| Filename | Quant type | File Size | Description |

| -------- | ---------- | --------- | ----------- |

| [Gemma-2-9B-It-SPPO-Iter3-Q8_0_L.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q8_1.gguf) | Q8_0_L | 10.68GB | *Experimental*, uses f16 for embed and output weights. Please provide any feedback of differences. Extremely high quality, generally unneeded but max available quant. |

| [Gemma-2-9B-It-SPPO-Iter3-Q8_0.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q8_0.gguf) | Q8_0 | 9.82GB | Extremely high quality, generally unneeded but max available quant. |

| [Gemma-2-9B-It-SPPO-Iter3-Q6_K_L.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q6_K_L.gguf) | Q6_K_L | 8.67GB | *Experimental*, uses f16 for embed and output weights. Please provide any feedback of differences. Very high quality, near perfect, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q6_K.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q6_K.gguf) | Q6_K | 7.58GB | Very high quality, near perfect, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q5_K_L.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q5_K_L.gguf) | Q5_K_L | 7.72GB | *Experimental*, uses f16 for embed and output weights. Please provide any feedback of differences. High quality, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q5_K_M.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q5_K_M.gguf) | Q5_K_M | 6.64GB | High quality, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q5_K_S.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q5_K_S.gguf) | Q5_K_S | 6.48GB | High quality, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q4_K_L.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q4_K_L.gguf) | Q4_K_L | 6.84GB | *Experimental*, uses f16 for embed and output weights. Please provide any feedback of differences. Good quality, uses about 4.83 bits per weight, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q4_K_M.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q4_K_M.gguf) | Q4_K_M | 5.76GB | Good quality, uses about 4.83 bits per weight, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q4_K_S.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q4_K_S.gguf) | Q4_K_S | 5.47GB | Slightly lower quality with more space savings, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ4_XS.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ4_XS.gguf) | IQ4_XS | 5.18GB | Decent quality, smaller than Q4_K_S with similar performance, *recommended*. |

| [Gemma-2-9B-It-SPPO-Iter3-Q3_K_XL.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q3_K_XL.gguf) | Q3_K_XL | 6.21GB | *Experimental*, uses f16 for embed and output weights. Please provide any feedback of differences. Lower quality but usable, good for low RAM availability. |

| [Gemma-2-9B-It-SPPO-Iter3-Q3_K_L.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q3_K_L.gguf) | Q3_K_L | 5.13GB | Lower quality but usable, good for low RAM availability. |

| [Gemma-2-9B-It-SPPO-Iter3-Q3_K_M.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q3_K_M.gguf) | Q3_K_M | 4.76GB | Even lower quality. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ3_M.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ3_M.gguf) | IQ3_M | 4.49GB | Medium-low quality, new method with decent performance comparable to Q3_K_M. |

| [Gemma-2-9B-It-SPPO-Iter3-Q3_K_S.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q3_K_S.gguf) | Q3_K_S | 4.33GB | Low quality, not recommended. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ3_XS.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ3_XS.gguf) | IQ3_XS | 4.14GB | Lower quality, new method with decent performance, slightly better than Q3_K_S. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ3_XXS.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ3_XXS.gguf) | IQ3_XXS | 3.79GB | Lower quality, new method with decent performance, comparable to Q3 quants. |

| [Gemma-2-9B-It-SPPO-Iter3-Q2_K.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-Q2_K.gguf) | Q2_K | 3.80GB | Very low quality but surprisingly usable. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ2_M.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ2_M.gguf) | IQ2_M | 3.43GB | Very low quality, uses SOTA techniques to also be surprisingly usable. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ2_S.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ2_S.gguf) | IQ2_S | 3.21GB | Very low quality, uses SOTA techniques to be usable. |

| [Gemma-2-9B-It-SPPO-Iter3-IQ2_XS.gguf](https://huggingface.co/bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF/blob/main/Gemma-2-9B-It-SPPO-Iter3-IQ2_XS.gguf) | IQ2_XS | 3.06GB | Very low quality, uses SOTA techniques to be usable. |

## Downloading using huggingface-cli

First, make sure you have hugginface-cli installed:

```

pip install -U "huggingface_hub[cli]"

```

Then, you can target the specific file you want:

```

huggingface-cli download bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF --include "Gemma-2-9B-It-SPPO-Iter3-Q4_K_M.gguf" --local-dir ./

```

If the model is bigger than 50GB, it will have been split into multiple files. In order to download them all to a local folder, run:

```

huggingface-cli download bartowski/Gemma-2-9B-It-SPPO-Iter3-GGUF --include "Gemma-2-9B-It-SPPO-Iter3-Q8_0.gguf/*" --local-dir Gemma-2-9B-It-SPPO-Iter3-Q8_0

```

You can either specify a new local-dir (Gemma-2-9B-It-SPPO-Iter3-Q8_0) or download them all in place (./)

## Which file should I choose?

A great write up with charts showing various performances is provided by Artefact2 [here](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9)

The first thing to figure out is how big a model you can run. To do this, you'll need to figure out how much RAM and/or VRAM you have.

If you want your model running as FAST as possible, you'll want to fit the whole thing on your GPU's VRAM. Aim for a quant with a file size 1-2GB smaller than your GPU's total VRAM.

If you want the absolute maximum quality, add both your system RAM and your GPU's VRAM together, then similarly grab a quant with a file size 1-2GB Smaller than that total.

Next, you'll need to decide if you want to use an 'I-quant' or a 'K-quant'.

If you don't want to think too much, grab one of the K-quants. These are in format 'QX_K_X', like Q5_K_M.

If you want to get more into the weeds, you can check out this extremely useful feature chart:

[llama.cpp feature matrix](https://github.com/ggerganov/llama.cpp/wiki/Feature-matrix)

But basically, if you're aiming for below Q4, and you're running cuBLAS (Nvidia) or rocBLAS (AMD), you should look towards the I-quants. These are in format IQX_X, like IQ3_M. These are newer and offer better performance for their size.

These I-quants can also be used on CPU and Apple Metal, but will be slower than their K-quant equivalent, so speed vs performance is a tradeoff you'll have to decide.

The I-quants are *not* compatible with Vulcan, which is also AMD, so if you have an AMD card double check if you're using the rocBLAS build or the Vulcan build. At the time of writing this, LM Studio has a preview with ROCm support, and other inference engines have specific builds for ROCm.

Want to support my work? Visit my ko-fi page here: https://ko-fi.com/bartowski

|

TurkuNLP/bert-base-finnish-cased-v1 | TurkuNLP | 2024-02-20T11:56:47Z | 23,762 | 5 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"fi",

"arxiv:1912.07076",

"arxiv:1908.04212",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-03-02T23:29:05Z | ---

language: fi

---

## Quickstart

**Release 1.0** (November 25, 2019)

We generally recommend the use of the cased model.

Paper presenting Finnish BERT: [arXiv:1912.07076](https://arxiv.org/abs/1912.07076)

## What's this?

A version of Google's [BERT](https://github.com/google-research/bert) deep transfer learning model for Finnish. The model can be fine-tuned to achieve state-of-the-art results for various Finnish natural language processing tasks.

FinBERT features a custom 50,000 wordpiece vocabulary that has much better coverage of Finnish words than e.g. the previously released [multilingual BERT](https://github.com/google-research/bert/blob/master/multilingual.md) models from Google:

| Vocabulary | Example |

|------------|---------|

| FinBERT | Suomessa vaihtuu kesän aikana sekä pääministeri että valtiovarain ##ministeri . |

| Multilingual BERT | Suomessa vai ##htuu kes ##än aikana sekä p ##ää ##minister ##i että valt ##io ##vara ##in ##minister ##i . |

FinBERT has been pre-trained for 1 million steps on over 3 billion tokens (24B characters) of Finnish text drawn from news, online discussion, and internet crawls. By contrast, Multilingual BERT was trained on Wikipedia texts, where the Finnish Wikipedia text is approximately 3% of the amount used to train FinBERT.

These features allow FinBERT to outperform not only Multilingual BERT but also all previously proposed models when fine-tuned for Finnish natural language processing tasks.

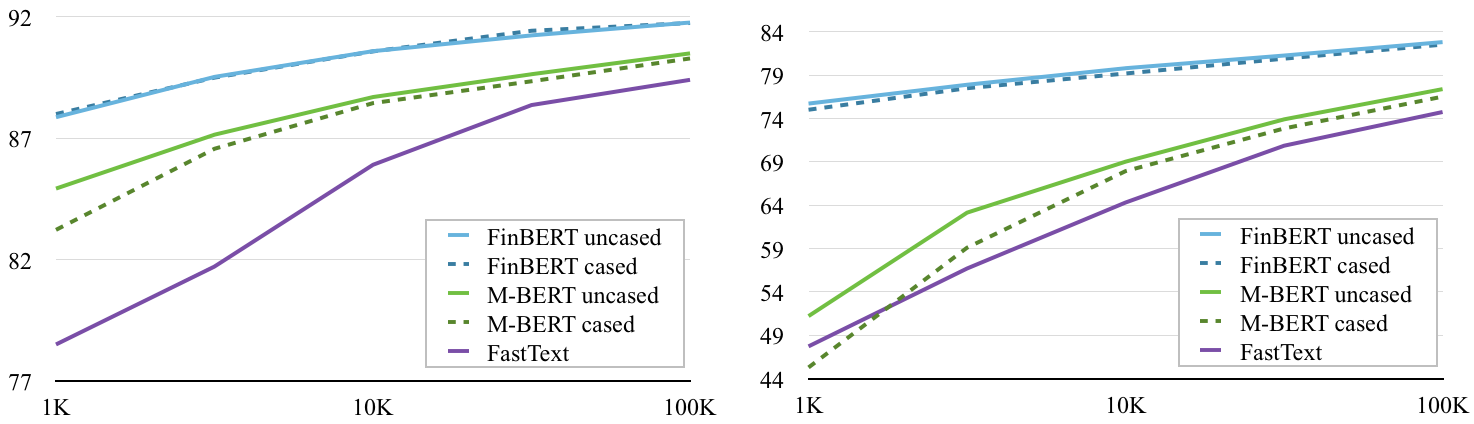

## Results

### Document classification

FinBERT outperforms multilingual BERT (M-BERT) on document classification over a range of training set sizes on the Yle news (left) and Ylilauta online discussion (right) corpora. (Baseline classification performance with [FastText](https://fasttext.cc/) included for reference.)

[[code](https://github.com/spyysalo/finbert-text-classification)][[Yle data](https://github.com/spyysalo/yle-corpus)] [[Ylilauta data](https://github.com/spyysalo/ylilauta-corpus)]

### Named Entity Recognition

Evaluation on FiNER corpus ([Ruokolainen et al 2019](https://arxiv.org/abs/1908.04212))

| Model | Accuracy |

|--------------------|----------|

| **FinBERT** | **92.40%** |

| Multilingual BERT | 90.29% |

| [FiNER-tagger](https://github.com/Traubert/FiNer-rules) (rule-based) | 86.82% |

(FiNER tagger results from [Ruokolainen et al. 2019](https://arxiv.org/pdf/1908.04212.pdf))

[[code](https://github.com/jouniluoma/keras-bert-ner)][[data](https://github.com/mpsilfve/finer-data)]

### Part of speech tagging

Evaluation on three Finnish corpora annotated with [Universal Dependencies](https://universaldependencies.org/) part-of-speech tags: the Turku Dependency Treebank (TDT), FinnTreeBank (FTB), and Parallel UD treebank (PUD)

| Model | TDT | FTB | PUD |

|-------------------|-------------|-------------|-------------|

| **FinBERT** | **98.23%** | **98.39%** | **98.08%** |

| Multilingual BERT | 96.97% | 95.87% | 97.58% |

[[code](https://github.com/spyysalo/bert-pos)][[data](http://hdl.handle.net/11234/1-2837)]

## Previous releases

### Release 0.2

**October 24, 2019** Beta version of the BERT base uncased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-uncased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-uncased.zip)

### Release 0.1

**September 30, 2019** We release a beta version of the BERT base cased model trained from scratch on a corpus of Finnish news, online discussions, and crawled data.

Download the model here: [bert-base-finnish-cased.zip](http://dl.turkunlp.org/finbert/bert-base-finnish-cased.zip)

|

timm/vit_large_patch14_reg4_dinov2.lvd142m | timm | 2024-02-09T17:59:52Z | 23,757 | 2 | timm | [

"timm",

"pytorch",

"safetensors",

"image-feature-extraction",

"arxiv:2309.16588",

"arxiv:2304.07193",

"arxiv:2010.11929",

"license:apache-2.0",

"region:us"

]

| image-feature-extraction | 2023-10-30T04:52:17Z | ---

license: apache-2.0

library_name: timm

tags:

- image-feature-extraction

- timm

---

# Model card for vit_large_patch14_reg4_dinov2.lvd142m

A Vision Transformer (ViT) image feature model with registers. Pretrained on LVD-142M with self-supervised DINOv2 method.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 304.4

- GMACs: 416.1

- Activations (M): 305.3

- Image size: 518 x 518

- **Papers:**

- Vision Transformers Need Registers: https://arxiv.org/abs/2309.16588

- DINOv2: Learning Robust Visual Features without Supervision: https://arxiv.org/abs/2304.07193

- An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale: https://arxiv.org/abs/2010.11929v2

- **Original:** https://github.com/facebookresearch/dinov2

- **Pretrain Dataset:** LVD-142M

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('vit_large_patch14_reg4_dinov2.lvd142m', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'vit_large_patch14_reg4_dinov2.lvd142m',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 1374, 1024) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex

@article{darcet2023vision,

title={Vision Transformers Need Registers},

author={Darcet, Timoth{'e}e and Oquab, Maxime and Mairal, Julien and Bojanowski, Piotr},

journal={arXiv preprint arXiv:2309.16588},

year={2023}

}

```

```bibtex

@misc{oquab2023dinov2,

title={DINOv2: Learning Robust Visual Features without Supervision},

author={Oquab, Maxime and Darcet, Timothée and Moutakanni, Theo and Vo, Huy V. and Szafraniec, Marc and Khalidov, Vasil and Fernandez, Pierre and Haziza, Daniel and Massa, Francisco and El-Nouby, Alaaeldin and Howes, Russell and Huang, Po-Yao and Xu, Hu and Sharma, Vasu and Li, Shang-Wen and Galuba, Wojciech and Rabbat, Mike and Assran, Mido and Ballas, Nicolas and Synnaeve, Gabriel and Misra, Ishan and Jegou, Herve and Mairal, Julien and Labatut, Patrick and Joulin, Armand and Bojanowski, Piotr},

journal={arXiv:2304.07193},

year={2023}

}

```

```bibtex

@article{dosovitskiy2020vit,

title={An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale},

author={Dosovitskiy, Alexey and Beyer, Lucas and Kolesnikov, Alexander and Weissenborn, Dirk and Zhai, Xiaohua and Unterthiner, Thomas and Dehghani, Mostafa and Minderer, Matthias and Heigold, Georg and Gelly, Sylvain and Uszkoreit, Jakob and Houlsby, Neil},

journal={ICLR},

year={2021}

}

```

```bibtex

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}

}

``` |

hkunlp/instructor-xl | hkunlp | 2023-01-21T06:33:27Z | 23,754 | 533 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"t5",

"text-embedding",

"embeddings",

"information-retrieval",

"beir",

"text-classification",

"language-model",

"text-clustering",

"text-semantic-similarity",

"text-evaluation",

"prompt-retrieval",

"text-reranking",

"feature-extraction",

"sentence-similarity",

"transformers",

"English",

"Sentence Similarity",

"natural_questions",

"ms_marco",

"fever",

"hotpot_qa",

"mteb",

"en",

"arxiv:2212.09741",

"license:apache-2.0",

"model-index",

"region:us"

]

| sentence-similarity | 2022-12-20T06:07:18Z | ---

pipeline_tag: sentence-similarity

tags:

- text-embedding

- embeddings

- information-retrieval

- beir

- text-classification

- language-model

- text-clustering

- text-semantic-similarity

- text-evaluation

- prompt-retrieval

- text-reranking

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- t5

- English

- Sentence Similarity

- natural_questions

- ms_marco

- fever

- hotpot_qa

- mteb

language: en

inference: false

license: apache-2.0

model-index:

- name: final_xl_results

results:

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en)

config: en

split: test

revision: e8379541af4e31359cca9fbcf4b00f2671dba205

metrics:

- type: accuracy

value: 85.08955223880596

- type: ap

value: 52.66066378722476

- type: f1

value: 79.63340218960269

- task:

type: Classification

dataset:

type: mteb/amazon_polarity

name: MTEB AmazonPolarityClassification

config: default

split: test

revision: e2d317d38cd51312af73b3d32a06d1a08b442046

metrics:

- type: accuracy

value: 86.542

- type: ap

value: 81.92695193008987

- type: f1

value: 86.51466132573681

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (en)

config: en

split: test

revision: 1399c76144fd37290681b995c656ef9b2e06e26d

metrics:

- type: accuracy

value: 42.964

- type: f1

value: 41.43146249774862

- task:

type: Retrieval

dataset:

type: arguana

name: MTEB ArguAna

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 29.872

- type: map_at_10

value: 46.342

- type: map_at_100

value: 47.152

- type: map_at_1000

value: 47.154

- type: map_at_3

value: 41.216

- type: map_at_5

value: 44.035999999999994

- type: mrr_at_1

value: 30.939

- type: mrr_at_10

value: 46.756

- type: mrr_at_100

value: 47.573

- type: mrr_at_1000

value: 47.575

- type: mrr_at_3

value: 41.548

- type: mrr_at_5

value: 44.425

- type: ndcg_at_1

value: 29.872

- type: ndcg_at_10

value: 55.65

- type: ndcg_at_100

value: 58.88099999999999

- type: ndcg_at_1000

value: 58.951

- type: ndcg_at_3

value: 45.0

- type: ndcg_at_5

value: 50.09

- type: precision_at_1

value: 29.872

- type: precision_at_10

value: 8.549

- type: precision_at_100

value: 0.991

- type: precision_at_1000

value: 0.1

- type: precision_at_3

value: 18.658

- type: precision_at_5

value: 13.669999999999998

- type: recall_at_1

value: 29.872

- type: recall_at_10

value: 85.491

- type: recall_at_100

value: 99.075

- type: recall_at_1000

value: 99.644

- type: recall_at_3

value: 55.974000000000004

- type: recall_at_5

value: 68.35

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-p2p

name: MTEB ArxivClusteringP2P

config: default

split: test

revision: a122ad7f3f0291bf49cc6f4d32aa80929df69d5d

metrics:

- type: v_measure

value: 42.452729850641276

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-s2s

name: MTEB ArxivClusteringS2S

config: default

split: test

revision: f910caf1a6075f7329cdf8c1a6135696f37dbd53

metrics:

- type: v_measure

value: 32.21141846480423

- task:

type: Reranking

dataset:

type: mteb/askubuntudupquestions-reranking

name: MTEB AskUbuntuDupQuestions

config: default

split: test

revision: 2000358ca161889fa9c082cb41daa8dcfb161a54

metrics:

- type: map

value: 65.34710928952622

- type: mrr

value: 77.61124301983028

- task:

type: STS

dataset:

type: mteb/biosses-sts

name: MTEB BIOSSES

config: default

split: test

revision: d3fb88f8f02e40887cd149695127462bbcf29b4a

metrics:

- type: cos_sim_spearman

value: 84.15312230525639

- task:

type: Classification

dataset:

type: mteb/banking77

name: MTEB Banking77Classification

config: default

split: test

revision: 0fd18e25b25c072e09e0d92ab615fda904d66300

metrics:

- type: accuracy

value: 82.66233766233766

- type: f1

value: 82.04175284777669

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-p2p

name: MTEB BiorxivClusteringP2P

config: default

split: test

revision: 65b79d1d13f80053f67aca9498d9402c2d9f1f40

metrics:

- type: v_measure

value: 37.36697339826455

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-s2s

name: MTEB BiorxivClusteringS2S

config: default

split: test

revision: 258694dd0231531bc1fd9de6ceb52a0853c6d908

metrics:

- type: v_measure

value: 30.551241447593092

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackAndroidRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 36.797000000000004

- type: map_at_10

value: 48.46

- type: map_at_100

value: 49.968

- type: map_at_1000

value: 50.080000000000005

- type: map_at_3

value: 44.71

- type: map_at_5

value: 46.592

- type: mrr_at_1

value: 45.494

- type: mrr_at_10

value: 54.747

- type: mrr_at_100

value: 55.43599999999999

- type: mrr_at_1000

value: 55.464999999999996

- type: mrr_at_3

value: 52.361000000000004

- type: mrr_at_5

value: 53.727000000000004

- type: ndcg_at_1

value: 45.494

- type: ndcg_at_10

value: 54.989

- type: ndcg_at_100

value: 60.096000000000004

- type: ndcg_at_1000

value: 61.58

- type: ndcg_at_3

value: 49.977

- type: ndcg_at_5

value: 51.964999999999996

- type: precision_at_1

value: 45.494

- type: precision_at_10

value: 10.558

- type: precision_at_100

value: 1.6049999999999998

- type: precision_at_1000

value: 0.203

- type: precision_at_3

value: 23.796

- type: precision_at_5

value: 16.881

- type: recall_at_1

value: 36.797000000000004

- type: recall_at_10

value: 66.83

- type: recall_at_100

value: 88.34100000000001

- type: recall_at_1000

value: 97.202

- type: recall_at_3

value: 51.961999999999996

- type: recall_at_5

value: 57.940000000000005

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackEnglishRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 32.597

- type: map_at_10

value: 43.424

- type: map_at_100

value: 44.78

- type: map_at_1000

value: 44.913

- type: map_at_3

value: 40.315

- type: map_at_5

value: 41.987

- type: mrr_at_1

value: 40.382

- type: mrr_at_10

value: 49.219

- type: mrr_at_100

value: 49.895

- type: mrr_at_1000

value: 49.936

- type: mrr_at_3

value: 46.996

- type: mrr_at_5

value: 48.231

- type: ndcg_at_1

value: 40.382

- type: ndcg_at_10

value: 49.318

- type: ndcg_at_100

value: 53.839999999999996

- type: ndcg_at_1000

value: 55.82899999999999

- type: ndcg_at_3

value: 44.914

- type: ndcg_at_5

value: 46.798

- type: precision_at_1

value: 40.382

- type: precision_at_10

value: 9.274000000000001

- type: precision_at_100

value: 1.497

- type: precision_at_1000

value: 0.198

- type: precision_at_3

value: 21.592

- type: precision_at_5

value: 15.159

- type: recall_at_1

value: 32.597

- type: recall_at_10

value: 59.882000000000005

- type: recall_at_100

value: 78.446

- type: recall_at_1000

value: 90.88000000000001

- type: recall_at_3

value: 46.9

- type: recall_at_5

value: 52.222

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGamingRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 43.8

- type: map_at_10

value: 57.293000000000006

- type: map_at_100

value: 58.321

- type: map_at_1000

value: 58.361

- type: map_at_3

value: 53.839999999999996

- type: map_at_5

value: 55.838

- type: mrr_at_1

value: 49.592000000000006

- type: mrr_at_10

value: 60.643

- type: mrr_at_100

value: 61.23499999999999

- type: mrr_at_1000

value: 61.251999999999995

- type: mrr_at_3

value: 58.265

- type: mrr_at_5

value: 59.717

- type: ndcg_at_1

value: 49.592000000000006

- type: ndcg_at_10

value: 63.364

- type: ndcg_at_100

value: 67.167

- type: ndcg_at_1000

value: 67.867

- type: ndcg_at_3

value: 57.912

- type: ndcg_at_5

value: 60.697

- type: precision_at_1

value: 49.592000000000006

- type: precision_at_10

value: 10.088

- type: precision_at_100

value: 1.2930000000000001

- type: precision_at_1000

value: 0.13899999999999998

- type: precision_at_3

value: 25.789

- type: precision_at_5

value: 17.541999999999998

- type: recall_at_1

value: 43.8

- type: recall_at_10

value: 77.635

- type: recall_at_100

value: 93.748

- type: recall_at_1000

value: 98.468

- type: recall_at_3

value: 63.223

- type: recall_at_5

value: 70.122

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGisRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.721

- type: map_at_10

value: 35.626999999999995

- type: map_at_100

value: 36.719

- type: map_at_1000

value: 36.8

- type: map_at_3

value: 32.781

- type: map_at_5

value: 34.333999999999996

- type: mrr_at_1

value: 29.604999999999997

- type: mrr_at_10

value: 37.564

- type: mrr_at_100

value: 38.505

- type: mrr_at_1000

value: 38.565

- type: mrr_at_3

value: 34.727000000000004

- type: mrr_at_5

value: 36.207

- type: ndcg_at_1

value: 29.604999999999997

- type: ndcg_at_10

value: 40.575

- type: ndcg_at_100

value: 45.613

- type: ndcg_at_1000

value: 47.676

- type: ndcg_at_3

value: 34.811

- type: ndcg_at_5

value: 37.491

- type: precision_at_1

value: 29.604999999999997

- type: precision_at_10

value: 6.1690000000000005

- type: precision_at_100

value: 0.906

- type: precision_at_1000

value: 0.11199999999999999

- type: precision_at_3

value: 14.237

- type: precision_at_5

value: 10.056

- type: recall_at_1

value: 27.721

- type: recall_at_10

value: 54.041

- type: recall_at_100

value: 76.62299999999999

- type: recall_at_1000

value: 92.134

- type: recall_at_3

value: 38.582

- type: recall_at_5

value: 44.989000000000004

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackMathematicaRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 16.553

- type: map_at_10

value: 25.384

- type: map_at_100

value: 26.655

- type: map_at_1000

value: 26.778000000000002

- type: map_at_3

value: 22.733

- type: map_at_5

value: 24.119

- type: mrr_at_1

value: 20.149

- type: mrr_at_10

value: 29.705

- type: mrr_at_100

value: 30.672

- type: mrr_at_1000

value: 30.737

- type: mrr_at_3

value: 27.032

- type: mrr_at_5

value: 28.369

- type: ndcg_at_1

value: 20.149

- type: ndcg_at_10

value: 30.843999999999998

- type: ndcg_at_100

value: 36.716

- type: ndcg_at_1000

value: 39.495000000000005

- type: ndcg_at_3

value: 25.918999999999997

- type: ndcg_at_5

value: 27.992

- type: precision_at_1

value: 20.149

- type: precision_at_10

value: 5.858

- type: precision_at_100

value: 1.009

- type: precision_at_1000

value: 0.13799999999999998

- type: precision_at_3

value: 12.645000000000001

- type: precision_at_5

value: 9.179

- type: recall_at_1

value: 16.553

- type: recall_at_10

value: 43.136

- type: recall_at_100

value: 68.562

- type: recall_at_1000

value: 88.208

- type: recall_at_3

value: 29.493000000000002

- type: recall_at_5

value: 34.751

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackPhysicsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 28.000999999999998

- type: map_at_10

value: 39.004

- type: map_at_100

value: 40.461999999999996

- type: map_at_1000

value: 40.566

- type: map_at_3

value: 35.805

- type: map_at_5

value: 37.672

- type: mrr_at_1

value: 33.782000000000004

- type: mrr_at_10

value: 44.702

- type: mrr_at_100

value: 45.528

- type: mrr_at_1000

value: 45.576

- type: mrr_at_3

value: 42.14

- type: mrr_at_5

value: 43.651

- type: ndcg_at_1

value: 33.782000000000004

- type: ndcg_at_10

value: 45.275999999999996

- type: ndcg_at_100

value: 50.888

- type: ndcg_at_1000

value: 52.879

- type: ndcg_at_3

value: 40.191

- type: ndcg_at_5

value: 42.731

- type: precision_at_1

value: 33.782000000000004

- type: precision_at_10

value: 8.200000000000001

- type: precision_at_100

value: 1.287

- type: precision_at_1000

value: 0.16199999999999998

- type: precision_at_3

value: 19.185

- type: precision_at_5

value: 13.667000000000002

- type: recall_at_1

value: 28.000999999999998

- type: recall_at_10

value: 58.131

- type: recall_at_100

value: 80.869

- type: recall_at_1000

value: 93.931

- type: recall_at_3

value: 44.161

- type: recall_at_5

value: 50.592000000000006

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackProgrammersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 28.047

- type: map_at_10

value: 38.596000000000004

- type: map_at_100

value: 40.116

- type: map_at_1000

value: 40.232

- type: map_at_3

value: 35.205

- type: map_at_5

value: 37.076

- type: mrr_at_1

value: 34.932

- type: mrr_at_10

value: 44.496

- type: mrr_at_100

value: 45.47

- type: mrr_at_1000

value: 45.519999999999996

- type: mrr_at_3

value: 41.743

- type: mrr_at_5

value: 43.352000000000004

- type: ndcg_at_1

value: 34.932

- type: ndcg_at_10

value: 44.901

- type: ndcg_at_100

value: 50.788999999999994

- type: ndcg_at_1000

value: 52.867

- type: ndcg_at_3

value: 39.449

- type: ndcg_at_5

value: 41.929

- type: precision_at_1

value: 34.932

- type: precision_at_10

value: 8.311

- type: precision_at_100

value: 1.3050000000000002

- type: precision_at_1000

value: 0.166

- type: precision_at_3

value: 18.836

- type: precision_at_5

value: 13.447000000000001

- type: recall_at_1

value: 28.047

- type: recall_at_10

value: 57.717

- type: recall_at_100

value: 82.182

- type: recall_at_1000

value: 95.82000000000001

- type: recall_at_3

value: 42.448

- type: recall_at_5

value: 49.071

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.861250000000005

- type: map_at_10

value: 37.529583333333335

- type: map_at_100

value: 38.7915

- type: map_at_1000

value: 38.90558333333335

- type: map_at_3

value: 34.57333333333333

- type: map_at_5

value: 36.187166666666656

- type: mrr_at_1

value: 32.88291666666666

- type: mrr_at_10

value: 41.79750000000001

- type: mrr_at_100

value: 42.63183333333333

- type: mrr_at_1000

value: 42.68483333333333

- type: mrr_at_3

value: 39.313750000000006

- type: mrr_at_5

value: 40.70483333333333

- type: ndcg_at_1

value: 32.88291666666666

- type: ndcg_at_10

value: 43.09408333333333

- type: ndcg_at_100

value: 48.22158333333333

- type: ndcg_at_1000

value: 50.358000000000004

- type: ndcg_at_3

value: 38.129583333333336

- type: ndcg_at_5

value: 40.39266666666666

- type: precision_at_1

value: 32.88291666666666

- type: precision_at_10

value: 7.5584999999999996

- type: precision_at_100

value: 1.1903333333333332

- type: precision_at_1000

value: 0.15658333333333332

- type: precision_at_3

value: 17.495916666666666

- type: precision_at_5

value: 12.373833333333332

- type: recall_at_1

value: 27.861250000000005

- type: recall_at_10

value: 55.215916666666665

- type: recall_at_100

value: 77.392

- type: recall_at_1000

value: 92.04908333333334

- type: recall_at_3

value: 41.37475

- type: recall_at_5

value: 47.22908333333333

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackStatsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 25.064999999999998

- type: map_at_10

value: 31.635999999999996

- type: map_at_100

value: 32.596000000000004

- type: map_at_1000

value: 32.695

- type: map_at_3

value: 29.612

- type: map_at_5

value: 30.768

- type: mrr_at_1

value: 28.528

- type: mrr_at_10

value: 34.717

- type: mrr_at_100

value: 35.558

- type: mrr_at_1000

value: 35.626000000000005

- type: mrr_at_3

value: 32.745000000000005

- type: mrr_at_5

value: 33.819

- type: ndcg_at_1

value: 28.528

- type: ndcg_at_10

value: 35.647

- type: ndcg_at_100

value: 40.207

- type: ndcg_at_1000

value: 42.695

- type: ndcg_at_3

value: 31.878

- type: ndcg_at_5

value: 33.634

- type: precision_at_1

value: 28.528

- type: precision_at_10

value: 5.46

- type: precision_at_100

value: 0.84

- type: precision_at_1000

value: 0.11399999999999999

- type: precision_at_3

value: 13.547999999999998

- type: precision_at_5

value: 9.325

- type: recall_at_1

value: 25.064999999999998

- type: recall_at_10

value: 45.096000000000004

- type: recall_at_100

value: 65.658

- type: recall_at_1000

value: 84.128

- type: recall_at_3

value: 34.337

- type: recall_at_5

value: 38.849000000000004

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackTexRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 17.276

- type: map_at_10

value: 24.535

- type: map_at_100

value: 25.655

- type: map_at_1000

value: 25.782

- type: map_at_3

value: 22.228

- type: map_at_5

value: 23.612

- type: mrr_at_1

value: 21.266

- type: mrr_at_10

value: 28.474

- type: mrr_at_100

value: 29.398000000000003

- type: mrr_at_1000

value: 29.482000000000003

- type: mrr_at_3

value: 26.245

- type: mrr_at_5

value: 27.624

- type: ndcg_at_1

value: 21.266

- type: ndcg_at_10

value: 29.087000000000003

- type: ndcg_at_100

value: 34.374

- type: ndcg_at_1000

value: 37.433

- type: ndcg_at_3

value: 25.040000000000003

- type: ndcg_at_5

value: 27.116

- type: precision_at_1

value: 21.266

- type: precision_at_10

value: 5.258

- type: precision_at_100

value: 0.9299999999999999

- type: precision_at_1000

value: 0.13699999999999998

- type: precision_at_3

value: 11.849

- type: precision_at_5

value: 8.699

- type: recall_at_1

value: 17.276

- type: recall_at_10

value: 38.928000000000004

- type: recall_at_100

value: 62.529

- type: recall_at_1000

value: 84.44800000000001

- type: recall_at_3

value: 27.554000000000002

- type: recall_at_5

value: 32.915

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackUnixRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.297

- type: map_at_10

value: 36.957

- type: map_at_100

value: 38.252

- type: map_at_1000

value: 38.356

- type: map_at_3

value: 34.121

- type: map_at_5

value: 35.782000000000004

- type: mrr_at_1

value: 32.275999999999996

- type: mrr_at_10

value: 41.198

- type: mrr_at_100

value: 42.131

- type: mrr_at_1000

value: 42.186

- type: mrr_at_3

value: 38.557

- type: mrr_at_5

value: 40.12

- type: ndcg_at_1

value: 32.275999999999996

- type: ndcg_at_10

value: 42.516

- type: ndcg_at_100

value: 48.15

- type: ndcg_at_1000

value: 50.344

- type: ndcg_at_3

value: 37.423

- type: ndcg_at_5

value: 39.919

- type: precision_at_1

value: 32.275999999999996

- type: precision_at_10

value: 7.155

- type: precision_at_100

value: 1.123

- type: precision_at_1000

value: 0.14200000000000002

- type: precision_at_3

value: 17.163999999999998

- type: precision_at_5

value: 12.127

- type: recall_at_1

value: 27.297

- type: recall_at_10

value: 55.238

- type: recall_at_100

value: 79.2

- type: recall_at_1000

value: 94.258

- type: recall_at_3

value: 41.327000000000005

- type: recall_at_5

value: 47.588

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWebmastersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 29.142000000000003

- type: map_at_10

value: 38.769

- type: map_at_100

value: 40.292

- type: map_at_1000

value: 40.510000000000005

- type: map_at_3

value: 35.39

- type: map_at_5

value: 37.009

- type: mrr_at_1

value: 34.19

- type: mrr_at_10

value: 43.418

- type: mrr_at_100

value: 44.132

- type: mrr_at_1000

value: 44.175

- type: mrr_at_3

value: 40.547

- type: mrr_at_5

value: 42.088

- type: ndcg_at_1

value: 34.19

- type: ndcg_at_10

value: 45.14

- type: ndcg_at_100

value: 50.364

- type: ndcg_at_1000

value: 52.481

- type: ndcg_at_3

value: 39.466

- type: ndcg_at_5

value: 41.772

- type: precision_at_1

value: 34.19

- type: precision_at_10

value: 8.715

- type: precision_at_100

value: 1.6150000000000002

- type: precision_at_1000

value: 0.247

- type: precision_at_3

value: 18.248

- type: precision_at_5

value: 13.161999999999999

- type: recall_at_1

value: 29.142000000000003

- type: recall_at_10

value: 57.577999999999996

- type: recall_at_100

value: 81.428

- type: recall_at_1000

value: 94.017

- type: recall_at_3

value: 41.402

- type: recall_at_5

value: 47.695

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWordpressRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 22.039

- type: map_at_10

value: 30.669999999999998

- type: map_at_100

value: 31.682

- type: map_at_1000

value: 31.794

- type: map_at_3

value: 28.139999999999997

- type: map_at_5

value: 29.457

- type: mrr_at_1

value: 24.399

- type: mrr_at_10

value: 32.687

- type: mrr_at_100

value: 33.622

- type: mrr_at_1000

value: 33.698

- type: mrr_at_3

value: 30.407

- type: mrr_at_5

value: 31.552999999999997

- type: ndcg_at_1

value: 24.399

- type: ndcg_at_10

value: 35.472

- type: ndcg_at_100

value: 40.455000000000005

- type: ndcg_at_1000

value: 43.15

- type: ndcg_at_3

value: 30.575000000000003

- type: ndcg_at_5

value: 32.668

- type: precision_at_1

value: 24.399

- type: precision_at_10

value: 5.656

- type: precision_at_100

value: 0.874

- type: precision_at_1000

value: 0.121

- type: precision_at_3

value: 13.062000000000001

- type: precision_at_5

value: 9.242

- type: recall_at_1

value: 22.039

- type: recall_at_10

value: 48.379

- type: recall_at_100

value: 71.11800000000001

- type: recall_at_1000

value: 91.095

- type: recall_at_3

value: 35.108

- type: recall_at_5

value: 40.015

- task:

type: Retrieval

dataset:

type: climate-fever

name: MTEB ClimateFEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 10.144

- type: map_at_10

value: 18.238

- type: map_at_100

value: 20.143

- type: map_at_1000

value: 20.346

- type: map_at_3

value: 14.809

- type: map_at_5

value: 16.567999999999998

- type: mrr_at_1

value: 22.671

- type: mrr_at_10

value: 34.906

- type: mrr_at_100

value: 35.858000000000004

- type: mrr_at_1000

value: 35.898

- type: mrr_at_3

value: 31.238

- type: mrr_at_5

value: 33.342

- type: ndcg_at_1

value: 22.671

- type: ndcg_at_10

value: 26.540000000000003

- type: ndcg_at_100

value: 34.138000000000005

- type: ndcg_at_1000

value: 37.72

- type: ndcg_at_3

value: 20.766000000000002

- type: ndcg_at_5

value: 22.927

- type: precision_at_1

value: 22.671

- type: precision_at_10

value: 8.619

- type: precision_at_100

value: 1.678

- type: precision_at_1000

value: 0.23500000000000001

- type: precision_at_3

value: 15.592

- type: precision_at_5

value: 12.43

- type: recall_at_1

value: 10.144

- type: recall_at_10

value: 33.46

- type: recall_at_100

value: 59.758

- type: recall_at_1000

value: 79.704

- type: recall_at_3

value: 19.604

- type: recall_at_5

value: 25.367

- task:

type: Retrieval

dataset:

type: dbpedia-entity

name: MTEB DBPedia

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 8.654

- type: map_at_10

value: 18.506

- type: map_at_100

value: 26.412999999999997

- type: map_at_1000

value: 28.13

- type: map_at_3

value: 13.379

- type: map_at_5

value: 15.529000000000002

- type: mrr_at_1

value: 66.0

- type: mrr_at_10

value: 74.13

- type: mrr_at_100

value: 74.48700000000001

- type: mrr_at_1000

value: 74.49799999999999

- type: mrr_at_3

value: 72.75

- type: mrr_at_5

value: 73.762

- type: ndcg_at_1

value: 54.50000000000001

- type: ndcg_at_10

value: 40.236

- type: ndcg_at_100

value: 44.690999999999995

- type: ndcg_at_1000

value: 52.195

- type: ndcg_at_3

value: 45.632

- type: ndcg_at_5

value: 42.952

- type: precision_at_1

value: 66.0

- type: precision_at_10

value: 31.724999999999998

- type: precision_at_100

value: 10.299999999999999

- type: precision_at_1000

value: 2.194

- type: precision_at_3

value: 48.75

- type: precision_at_5

value: 41.6

- type: recall_at_1

value: 8.654

- type: recall_at_10

value: 23.74

- type: recall_at_100

value: 50.346999999999994

- type: recall_at_1000

value: 74.376

- type: recall_at_3

value: 14.636

- type: recall_at_5

value: 18.009

- task:

type: Classification

dataset:

type: mteb/emotion

name: MTEB EmotionClassification

config: default

split: test

revision: 4f58c6b202a23cf9a4da393831edf4f9183cad37

metrics:

- type: accuracy

value: 53.245

- type: f1

value: 48.74520523753552

- task:

type: Retrieval

dataset:

type: fever

name: MTEB FEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 51.729

- type: map_at_10

value: 63.904

- type: map_at_100

value: 64.363

- type: map_at_1000

value: 64.38199999999999

- type: map_at_3

value: 61.393

- type: map_at_5

value: 63.02100000000001

- type: mrr_at_1

value: 55.686

- type: mrr_at_10

value: 67.804

- type: mrr_at_100

value: 68.15299999999999

- type: mrr_at_1000

value: 68.161

- type: mrr_at_3

value: 65.494

- type: mrr_at_5

value: 67.01599999999999

- type: ndcg_at_1

value: 55.686

- type: ndcg_at_10

value: 70.025

- type: ndcg_at_100

value: 72.011

- type: ndcg_at_1000

value: 72.443

- type: ndcg_at_3

value: 65.32900000000001

- type: ndcg_at_5

value: 68.05600000000001

- type: precision_at_1

value: 55.686

- type: precision_at_10

value: 9.358

- type: precision_at_100

value: 1.05

- type: precision_at_1000

value: 0.11

- type: precision_at_3

value: 26.318

- type: precision_at_5

value: 17.321

- type: recall_at_1

value: 51.729

- type: recall_at_10

value: 85.04

- type: recall_at_100

value: 93.777

- type: recall_at_1000

value: 96.824

- type: recall_at_3

value: 72.521

- type: recall_at_5

value: 79.148

- task:

type: Retrieval

dataset:

type: fiqa

name: MTEB FiQA2018

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 23.765

- type: map_at_10

value: 39.114

- type: map_at_100

value: 40.987

- type: map_at_1000

value: 41.155

- type: map_at_3

value: 34.028000000000006

- type: map_at_5

value: 36.925000000000004

- type: mrr_at_1

value: 46.451

- type: mrr_at_10

value: 54.711

- type: mrr_at_100

value: 55.509

- type: mrr_at_1000

value: 55.535000000000004

- type: mrr_at_3

value: 52.649

- type: mrr_at_5

value: 53.729000000000006

- type: ndcg_at_1