| modelId (string, 5 to 122 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, 1 to 901k chars) |

|---|---|---|---|---|---|---|---|---|---|

DavidLanz/Taiwan-tinyllama-v1.0-chat | DavidLanz | 2024-06-30T07:24:15Z | 543 | 2 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"zh",

"dataset:benchang1110/pretrainedtw",

"dataset:HuggingFaceTB/cosmopedia-100k",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-05-29T01:00:31Z | ---

library_name: transformers

license: apache-2.0

datasets:

- benchang1110/pretrainedtw

- HuggingFaceTB/cosmopedia-100k

language:

- zh

widget:

- text: '在很久以前,這座島上'

example_title: Example1

---

# Model Card for Taiwan-tinyllama-v1.0-chat

This is a continually pretrained version of [TinyLlama](https://huggingface.co/TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T) tailored for Traditional Chinese. The continued-pretraining dataset contains roughly 2B tokens.

# Usage

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

MODEL_ID = "DavidLanz/Taiwan-tinyllama-v1.0-chat"


def generate_response(prompt):
    '''
    Simple test for the model: greedy decoding with a repetition penalty.
    '''
    # tokenize the input and move it to the model's device
    tokenized_input = tokenizer(prompt, return_tensors='pt').to(device)
    # generate the response
    outputs = model.generate(
        input_ids=tokenized_input['input_ids'],
        attention_mask=tokenized_input['attention_mask'],
        # Llama tokenizers may define no pad token; fall back to EOS in that case
        pad_token_id=tokenizer.pad_token_id if tokenizer.pad_token_id is not None else tokenizer.eos_token_id,
        do_sample=False,
        repetition_penalty=1.3,
        max_length=500,
    )
    # decode the response
    return tokenizer.decode(outputs[0], skip_special_tokens=True)


if __name__ == '__main__':
    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, device_map=device, torch_dtype=torch.bfloat16)
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    while True:
        text = input("input a simple prompt:")
        print('System:', generate_response(text))
```

With bfloat16 weights, the model needs roughly 3 GB of VRAM.
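The usage code passes `repetition_penalty=1.3` to `generate`. As a minimal sketch of what that parameter does, assuming the CTRL-style rule that `transformers` applies (logits of tokens already in the sequence are divided by the penalty when positive and multiplied by it when negative), here it is on a plain Python list rather than on the library's tensors. `apply_repetition_penalty` is a hypothetical helper, not a `transformers` function:

```python
def apply_repetition_penalty(logits, previous_token_ids, penalty=1.3):
    """Return a copy of `logits` with a CTRL-style repetition penalty applied.

    Every token id already seen in `previous_token_ids` becomes less likely:
    positive logits are divided by `penalty`, negative logits multiplied by it.
    """
    penalized = list(logits)
    for token_id in set(previous_token_ids):  # each seen token is penalized once
        score = penalized[token_id]
        penalized[token_id] = score * penalty if score < 0 else score / penalty
    return penalized


# Toy 4-token vocabulary; tokens 0 and 2 were already generated.
logits = [2.0, 1.8, -0.5, 0.3]
penalized = apply_repetition_penalty(logits, [0, 2, 0])
greedy_choice = max(range(len(penalized)), key=penalized.__getitem__)
print(penalized, greedy_choice)
```

With greedy decoding (`do_sample=False`), the penalty is what lets the unused token 1 overtake the already-used token 0 in this toy example.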

|

mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF | mradermacher | 2024-06-10T19:30:13Z | 543 | 1 | transformers | [

"transformers",

"gguf",

"Yi",

"llama",

"llama 2",

"en",

"dataset:jondurbin/airoboros-2.2.1",

"base_model:Doctor-Shotgun/airoboros-2.2.1-limarpv3-y34b",

"license:other",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-09T05:59:56Z | ---

base_model: Doctor-Shotgun/airoboros-2.2.1-limarpv3-y34b

datasets:

- jondurbin/airoboros-2.2.1

language:

- en

library_name: transformers

license: other

license_link: LICENSE

license_name: yi-license

quantized_by: mradermacher

tags:

- Yi

- llama

- llama 2

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

Weighted/imatrix quants of https://huggingface.co/Doctor-Shotgun/airoboros-2.2.1-limarpv3-y34b

<!-- provided-files -->

Static quants are available at https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.

## Provided Quants

(Sorted by size, not necessarily quality. IQ-quants are often preferable to similarly sized non-IQ quants.)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ1_S.gguf) | i1-IQ1_S | 7.6 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ1_M.gguf) | i1-IQ1_M | 8.3 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 9.4 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ2_XS.gguf) | i1-IQ2_XS | 10.4 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ2_S.gguf) | i1-IQ2_S | 11.0 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ2_M.gguf) | i1-IQ2_M | 11.9 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q2_K.gguf) | i1-Q2_K | 12.9 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 13.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ3_XS.gguf) | i1-IQ3_XS | 14.3 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q3_K_S.gguf) | i1-Q3_K_S | 15.1 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ3_S.gguf) | i1-IQ3_S | 15.1 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ3_M.gguf) | i1-IQ3_M | 15.7 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q3_K_M.gguf) | i1-Q3_K_M | 16.8 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q3_K_L.gguf) | i1-Q3_K_L | 18.2 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-IQ4_XS.gguf) | i1-IQ4_XS | 18.6 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q4_0.gguf) | i1-Q4_0 | 19.6 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q4_K_S.gguf) | i1-Q4_K_S | 19.7 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q4_K_M.gguf) | i1-Q4_K_M | 20.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q5_K_S.gguf) | i1-Q5_K_S | 23.8 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q5_K_M.gguf) | i1-Q5_K_M | 24.4 | |

| [GGUF](https://huggingface.co/mradermacher/airoboros-2.2.1-limarpv3-y34b-i1-GGUF/resolve/main/airoboros-2.2.1-limarpv3-y34b.i1-Q6_K.gguf) | i1-Q6_K | 28.3 | practically like static Q6_K |
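Because the table is sorted by file size, picking a quant usually comes down to "the largest file that still fits in memory". A minimal sketch of that rule, using the sizes from the table above; `largest_fitting_quant` is a hypothetical helper, and the 2 GB `headroom_gb` default for context/KV cache and runtime overhead is an assumption that depends on your context length and backend:

```python
# File sizes in GB, copied from the "Provided Quants" table.
QUANT_SIZES_GB = {
    "i1-IQ1_S": 7.6, "i1-IQ1_M": 8.3, "i1-IQ2_XXS": 9.4, "i1-IQ2_XS": 10.4,
    "i1-IQ2_S": 11.0, "i1-IQ2_M": 11.9, "i1-Q2_K": 12.9, "i1-IQ3_XXS": 13.4,
    "i1-IQ3_XS": 14.3, "i1-Q3_K_S": 15.1, "i1-IQ3_S": 15.1, "i1-IQ3_M": 15.7,
    "i1-Q3_K_M": 16.8, "i1-Q3_K_L": 18.2, "i1-IQ4_XS": 18.6, "i1-Q4_0": 19.6,
    "i1-Q4_K_S": 19.7, "i1-Q4_K_M": 20.8, "i1-Q5_K_S": 23.8, "i1-Q5_K_M": 24.4,
    "i1-Q6_K": 28.3,
}


def largest_fitting_quant(budget_gb, headroom_gb=2.0):
    """Return the largest quant whose size plus `headroom_gb` fits in `budget_gb`.

    On equal size, IQ quants win, following the table's notes
    (e.g. IQ3_S "beats Q3_K*"). Returns None when nothing fits.
    """
    fitting = [
        (size, name.startswith("i1-IQ"), name)
        for name, size in QUANT_SIZES_GB.items()
        if size + headroom_gb <= budget_gb
    ]
    return max(fitting)[2] if fitting else None


print(largest_fitting_quant(24))  # e.g. a 24 GB GPU -> i1-Q4_K_M
```

File size is only a proxy: the notes column (for example "IQ3_XXS probably better" next to Q2_K) can justify choosing a slightly smaller quant.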

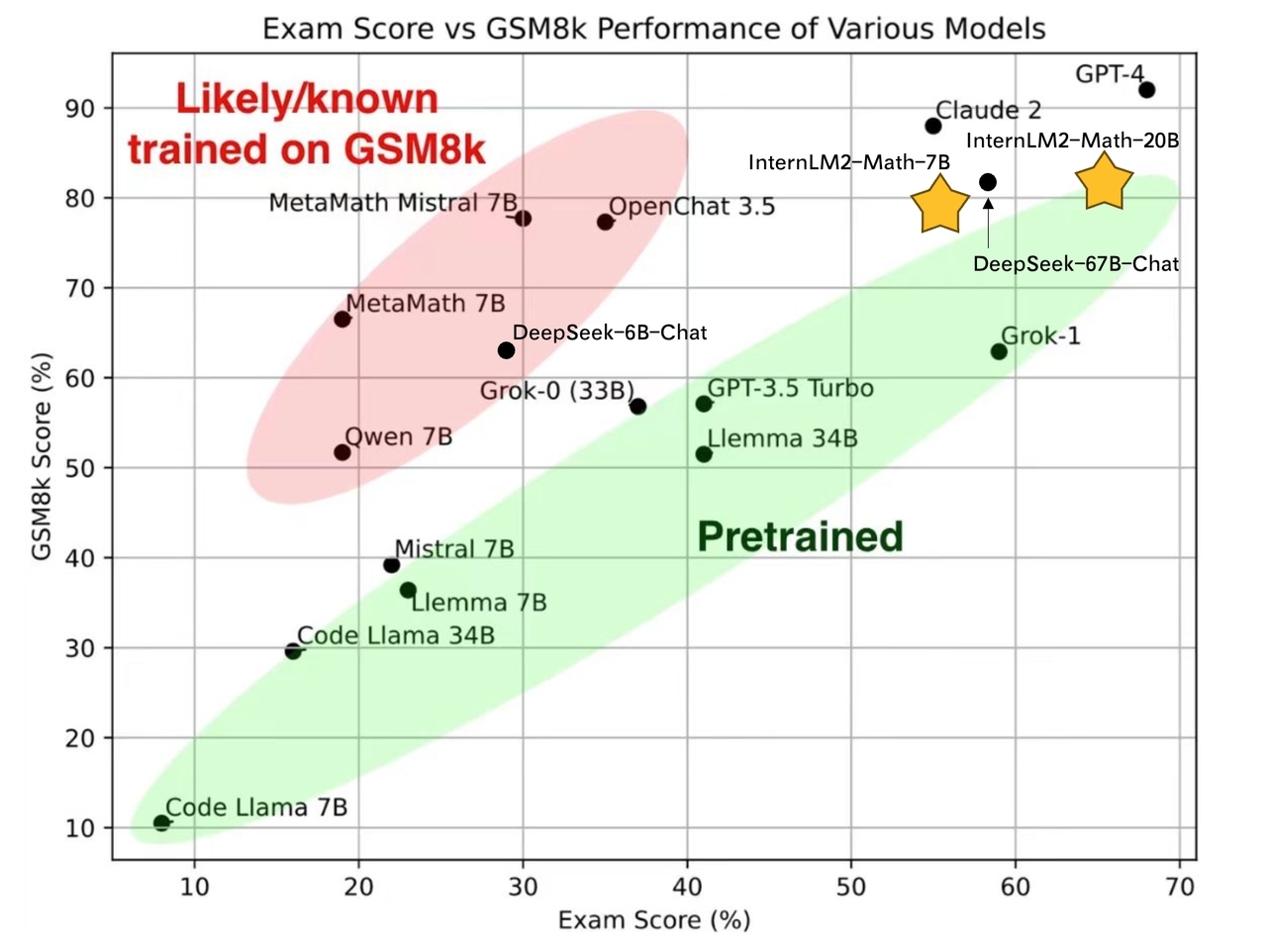

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his hardware for calculating the imatrix for these quants.

<!-- end -->

|

DavidAU/DarkSapling-V2-Ultra-Quality-7B-GGUF-imatPLUS2-imatX | DavidAU | 2024-06-22T05:07:34Z | 543 | 1 | null | [

"gguf",

"ultra quality",

"upscale 32 bit",

"imatrix plus 2",

"imatrix x",

"creative",

"story",

"roleplay",

"rp",

"remastered",

"writing",

"license:apache-2.0",

"region:us"

]

| null | 2024-06-10T12:17:23Z | ---

license: apache-2.0

tags:

- ultra quality

- upscale 32 bit

- imatrix plus 2

- imatrix x

- creative

- story

- roleplay

- rp

- remastered

- writing

---

<h3> Experimental quants of "Dark Sapling V2 7B Ultra Quality" (32k context) in Imatrix Plus 2 and Imatrix X protocols. </h3>

<img src="robot-ferrari.jpg" style="width:400px; height:400px; float:right; padding:10px;">

Imatrix Plus 2 is an advanced Imatrix quantization method that uses multiple datasets.

Imatrix X takes Imatrix Plus 2 a step further and introduces a "pass-through merge" method between Imatrix Plus 2 and regular quants.

This leverages the full power of both Imatrix Plus 2 and the regular quant (which is based on Ultra Quality 32-bit precision).

This marries (to varying degrees) the best of THREE worlds: the Ultra Quality model, Imatrix Plus 2 (and the enhancements from its datasets), and the raw power of the unfiltered/unaltered Ultra Quality model itself.

These methods target the weak point(s) and enhance the strong point(s) at the same time.

Imatrix Plus 2 and Imatrix X are the next steps in the Ultra Quality experience.

The 11 quants hosted here are various versions of "Imatrix X" (with Imatrix Plus 2), with the exception of "DarkSapling-V2-Ultra-Quality-7B-Q4_k_m-imat1.gguf", which is standalone Imatrix Plus 2.

All quants work; however, more work is in progress, and these quants are subject to revision without notice.

For testing purposes (if you download more than one), set the temperature to 0 ("TEMP:0") and test your prompt(s).

Use prompts with "no right answer" to fully evaluate each quant (and to compare it against the other quants here).

Not all of these quants are suitable for all purposes, and the protocol(s) are still under development.

Dark Sapling V2 7B is a fiction, roleplay, fantasy and storytelling model.

Although all quants in this repo are "Q4_K_M Imatrix 2" quants, they differ greatly due to the Imatrix X protocols.

Some will be far more verbose than others, answer differently, make stuff up, lie to you, or worse... they might flirt with you.

Some will be more "sciency" in their replies, while others will be more "fictional".

Some Imatrix X versions even bring the core model to new levels of performance, above and beyond current 32-bit Ultra Quality levels.

Caution: Can generate NSFW content.

For more information on Dark Sapling V2 Ultra Quality please see:

[https://huggingface.co/DavidAU/DarkSapling-V2-Ultra-Quality-7B-GGUF](https://huggingface.co/DavidAU/DarkSapling-V2-Ultra-Quality-7B-GGUF) |

bloomberg/KeyBART | bloomberg | 2023-02-21T18:06:45Z | 542 | 36 | transformers | [

"transformers",

"pytorch",

"bart",

"text2text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-03-02T23:29:05Z | ---

license: apache-2.0

---

# KeyBART

KeyBART, as described in "Learning Rich Representation of Keyphrases from Text" (Findings of NAACL 2022, https://aclanthology.org/2022.findings-naacl.67.pdf), pre-trains a BART-based architecture to produce a concatenated sequence of keyphrases in the CatSeqD format.

We provide some examples of downstream evaluation setups, and also show how the model can be used for text-to-text generation in a zero-shot setting.

## Downstream Evaluation

### Keyphrase Generation

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
from datasets import load_dataset

tokenizer = AutoTokenizer.from_pretrained("bloomberg/KeyBART")
model = AutoModelForSeq2SeqLM.from_pretrained("bloomberg/KeyBART")

dataset = load_dataset("midas/kp20k")
```

Reported Results:

#### Present Keyphrase Generation

| Model | Inspec F1@5 | Inspec F1@M | NUS F1@5 | NUS F1@M | Krapivin F1@5 | Krapivin F1@M | SemEval F1@5 | SemEval F1@M | KP20k F1@5 | KP20k F1@M |
|---|---|---|---|---|---|---|---|---|---|---|
| catSeq | 22.5 | 26.2 | 32.3 | 39.7 | 26.9 | 35.4 | 24.2 | 28.3 | 29.1 | 36.7 |
| catSeqTG | 22.9 | 27 | 32.5 | 39.3 | 28.2 | 36.6 | 24.6 | 29.0 | 29.2 | 36.6 |
| catSeqTG-2RF1 | 25.3 | 30.1 | 37.5 | 43.3 | 30 | 36.9 | 28.7 | 32.9 | 32.1 | 38.6 |
| GANMR | 25.8 | 29.9 | 34.8 | 41.7 | 28.8 | 36.9 | N/A | N/A | 30.3 | 37.8 |
| ExHiRD-h | 25.3 | 29.1 | N/A | N/A | 28.6 | 34.7 | 28.4 | 33.5 | 31.1 | 37.4 |
| Transformer (Ye et al., 2021) | 28.15 | 32.56 | 37.07 | 41.91 | 31.58 | 36.55 | 28.71 | 32.52 | 33.21 | 37.71 |
| BART* | 23.59 | 28.46 | 35.00 | 42.65 | 26.91 | 35.37 | 26.72 | 31.91 | 29.25 | 37.51 |
| KeyBART-DOC* | 24.42 | 29.57 | 31.37 | 39.24 | 24.21 | 32.60 | 24.69 | 30.50 | 28.82 | 37.59 |
| KeyBART* | 24.49 | 29.69 | 34.77 | 43.57 | 29.24 | 38.62 | 27.47 | 33.54 | 30.71 | 39.76 |
| KeyBART* (Zero-shot) | 30.72 | 36.89 | 18.86 | 21.67 | 18.35 | 20.46 | 20.25 | 25.82 | 12.57 | 15.41 |

#### Absent Keyphrase Generation

| Model | Inspec F1@5 | Inspec F1@M | NUS F1@5 | NUS F1@M | Krapivin F1@5 | Krapivin F1@M | SemEval F1@5 | SemEval F1@M | KP20k F1@5 | KP20k F1@M |
|---|---|---|---|---|---|---|---|---|---|---|
| catSeq | 0.4 | 0.8 | 1.6 | 2.8 | 1.8 | 3.6 | 1.6 | 2.8 | 1.5 | 3.2 |
| catSeqTG | 0.5 | 1.1 | 1.1 | 1.8 | 1.8 | 3.4 | 1.1 | 1.8 | 1.5 | 3.2 |
| catSeqTG-2RF1 | 1.2 | 2.1 | 1.9 | 3.1 | 3.0 | 5.3 | 2.1 | 3.0 | 2.7 | 5.0 |
| GANMR | 1.3 | 1.9 | 2.6 | 3.8 | 4.2 | 5.7 | N/A | N/A | 3.2 | 4.5 |
| ExHiRD-h | 1.1 | 2.2 | N/A | N/A | 2.2 | 4.3 | 1.7 | 2.5 | 1.6 | 3.2 |
| Transformer (Ye et al., 2021) | 1.02 | 1.94 | 2.82 | 4.82 | 3.21 | 6.04 | 2.05 | 2.33 | 2.31 | 4.61 |
| BART* | 1.08 | 1.96 | 1.80 | 2.75 | 2.59 | 4.91 | 1.34 | 1.75 | 1.77 | 3.56 |
| KeyBART-DOC* | 0.99 | 2.03 | 1.39 | 2.74 | 2.40 | 4.58 | 1.07 | 1.39 | 1.69 | 3.38 |
| KeyBART* | 0.95 | 1.81 | 1.23 | 1.90 | 3.09 | 6.08 | 1.96 | 2.65 | 2.03 | 4.26 |
| KeyBART* (Zero-shot) | 1.83 | 2.92 | 1.46 | 2.19 | 1.29 | 2.09 | 1.12 | 1.45 | 0.70 | 1.14 |
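The two tables score present keyphrases (phrases that appear in the source document) and absent keyphrases (phrases that do not) separately, using F1@5 (the top-5 predictions) and F1@M (all predictions the model emits). A minimal sketch of both ideas, assuming exact matching on lowercased word tokens; evaluation scripts in the literature commonly also stem tokens and may differ in other details, so this is not the script behind the reported numbers. All function names here are hypothetical:

```python
import re


def tokens(text):
    """Lowercased word tokens (no stemming in this sketch)."""
    return re.findall(r"\w+", text.lower())


def is_present(keyphrase, source_text):
    """True if the keyphrase's tokens occur as a contiguous run in the source."""
    kp, src = tokens(keyphrase), tokens(source_text)
    n = len(kp)
    return n > 0 and any(src[i:i + n] == kp for i in range(len(src) - n + 1))


def split_present_absent(keyphrases, source_text):
    """Partition keyphrases into (present, absent) with respect to the source."""
    present = [kp for kp in keyphrases if is_present(kp, source_text)]
    absent = [kp for kp in keyphrases if not is_present(kp, source_text)]
    return present, absent


def f1_at_k(predicted, gold, k=None):
    """F1 with exact matching on normalized phrases.

    k=None gives F1@M: every prediction is scored. For k=5, only the top-5
    predictions are scored and precision is divided by k even when fewer were
    predicted (an assumption here, equivalent to padding with wrong answers).
    """
    gold_set = {" ".join(tokens(g)) for g in gold}
    preds = []
    for p in predicted:  # normalize and drop duplicates, keeping the original order
        norm = " ".join(tokens(p))
        if norm and norm not in preds:
            preds.append(norm)
    if k is not None:
        preds = preds[:k]
    correct = sum(p in gold_set for p in preds)
    if correct == 0:
        return 0.0
    precision = correct / (k if k is not None else len(preds))
    recall = correct / len(gold_set)
    return 2 * precision * recall / (precision + recall)


pred = ["language model", "keyphrase generation", "pre-training setup"]
gold = ["keyphrase generation", "Language Model", "keyphrase extraction", "KBIR"]
print(f1_at_k(pred, gold), f1_at_k(pred, gold, k=5))
```

With three predictions of which two are correct, F1@M uses a precision of 2/3 while F1@5 uses 2/5, which is why F1@5 is the lower of the two in this toy case.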

### Abstractive Summarization

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
from datasets import load_dataset

tokenizer = AutoTokenizer.from_pretrained("bloomberg/KeyBART")
model = AutoModelForSeq2SeqLM.from_pretrained("bloomberg/KeyBART")

dataset = load_dataset("cnn_dailymail")
```

Reported Results:

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| BART (Lewis et al., 2019) | 44.16 | 21.28 | 40.9 |
| BART* | 42.93 | 20.12 | 39.72 |
| KeyBART-DOC* | 42.92 | 20.07 | 39.69 |
| KeyBART* | 43.10 | 20.26 | 39.90 |

## Zero-shot settings

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("bloomberg/KeyBART")
model = AutoModelForSeq2SeqLM.from_pretrained("bloomberg/KeyBART")
```

Alternatively, use the Hosted Inference API console at https://huggingface.co/bloomberg/KeyBART.

Sample Zero Shot result:

```

Input: In this work, we explore how to learn task specific language models aimed towards learning rich representation of keyphrases from text documents.

We experiment with different masking strategies for pre-training transformer language models (LMs) in discriminative as well as generative settings.

In the discriminative setting, we introduce a new pre-training objective - Keyphrase Boundary Infilling with Replacement (KBIR),

showing large gains in performance (upto 9.26 points in F1) over SOTA, when LM pre-trained using KBIR is fine-tuned for the task of keyphrase extraction.

In the generative setting, we introduce a new pre-training setup for BART - KeyBART, that reproduces the keyphrases related to the input text in the CatSeq

format, instead of the denoised original input. This also led to gains in performance (upto 4.33 points in F1@M) over SOTA for keyphrase generation.

Additionally, we also fine-tune the pre-trained language models on named entity recognition (NER), question answering (QA), relation extraction (RE),

abstractive summarization and achieve comparable performance with that of the SOTA, showing that learning rich representation of keyphrases is indeed beneficial

for many other fundamental NLP tasks.

Output: language model;keyphrase generation;new pre-training objective;pre-training setup;

```
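The sample output is a single string of keyphrases joined by `;` (the CatSeq format), with a trailing separator. A minimal sketch for turning such a string into a list, assuming `;` is the only separator (as in the sample) and that dropping duplicates is desirable; `parse_catseq` is a hypothetical helper, not part of `transformers`:

```python
def parse_catseq(generated):
    """Split a CatSeq-style string ("kp1;kp2;...;") into a list of keyphrases.

    Empty fragments (e.g. from a trailing ';') are dropped, surrounding
    whitespace is stripped, and duplicates keep only their first occurrence.
    """
    keyphrases = []
    for fragment in generated.split(";"):
        phrase = fragment.strip()
        if phrase and phrase not in keyphrases:
            keyphrases.append(phrase)
    return keyphrases


sample = "language model;keyphrase generation;new pre-training objective;pre-training setup;"
print(parse_catseq(sample))
```

In practice, `generated` would be the decoded output of `model.generate(...)` from the zero-shot snippet, for example via `tokenizer.decode(..., skip_special_tokens=True)`.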

## Citation

Please cite this work using the following BibTeX entry:

```

@inproceedings{kulkarni-etal-2022-learning,

title = "Learning Rich Representation of Keyphrases from Text",

author = "Kulkarni, Mayank and

Mahata, Debanjan and

Arora, Ravneet and

Bhowmik, Rajarshi",

booktitle = "Findings of the Association for Computational Linguistics: NAACL 2022",

month = jul,

year = "2022",

address = "Seattle, United States",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-naacl.67",

doi = "10.18653/v1/2022.findings-naacl.67",

pages = "891--906",

abstract = "In this work, we explore how to train task-specific language models aimed towards learning rich representation of keyphrases from text documents. We experiment with different masking strategies for pre-training transformer language models (LMs) in discriminative as well as generative settings. In the discriminative setting, we introduce a new pre-training objective - Keyphrase Boundary Infilling with Replacement (KBIR), showing large gains in performance (upto 8.16 points in F1) over SOTA, when the LM pre-trained using KBIR is fine-tuned for the task of keyphrase extraction. In the generative setting, we introduce a new pre-training setup for BART - KeyBART, that reproduces the keyphrases related to the input text in the CatSeq format, instead of the denoised original input. This also led to gains in performance (upto 4.33 points in F1@M) over SOTA for keyphrase generation. Additionally, we also fine-tune the pre-trained language models on named entity recognition (NER), question answering (QA), relation extraction (RE), abstractive summarization and achieve comparable performance with that of the SOTA, showing that learning rich representation of keyphrases is indeed beneficial for many other fundamental NLP tasks.",

}

```

Please direct all questions to [email protected] |

timm/convnextv2_large.fcmae | timm | 2024-02-10T23:29:21Z | 542 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-feature-extraction",

"arxiv:2301.00808",

"license:cc-by-nc-4.0",

"region:us"

]

| image-feature-extraction | 2023-01-05T01:51:30Z | ---

license: cc-by-nc-4.0

library_name: timm

tags:

- image-feature-extraction

- timm

---

# Model card for convnextv2_large.fcmae

A ConvNeXt-V2 self-supervised feature representation model, pretrained with a fully convolutional masked autoencoder framework (FCMAE). This model has no pretrained head and is only useful for fine-tuning or feature extraction.

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 196.4
  - GMACs: 34.4
  - Activations (M): 43.1
  - Image size: 224 x 224
- **Papers:**
  - ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders: https://arxiv.org/abs/2301.00808
- **Original:** https://github.com/facebookresearch/ConvNeXt-V2
- **Pretrain Dataset:** ImageNet-1k

## Model Usage

### Image Classification

```python
from urllib.request import urlopen
from PIL import Image
import timm
import torch

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model('convnextv2_large.fcmae', pretrained=True)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # unsqueeze single image into batch of 1

# note: this FCMAE checkpoint has no pretrained classifier head, so these values
# are not meaningful ImageNet class probabilities until the model is fine-tuned
top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)
```

### Feature Map Extraction

```python
from urllib.request import urlopen
from PIL import Image
import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'convnextv2_large.fcmae',
    pretrained=True,
    features_only=True,
)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 192, 56, 56])
    #  torch.Size([1, 384, 28, 28])
    #  torch.Size([1, 768, 14, 14])
    #  torch.Size([1, 1536, 7, 7])
    print(o.shape)
```
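The four feature-map shapes in the comments follow from ConvNeXt's layout: a stride-4 stem followed by three stride-2 downsampling layers gives overall strides of 4, 8, 16 and 32, while the channel widths (192, 384, 768, 1536 for this Large model) are read off the shapes above. A minimal sketch of that arithmetic, assuming an input size divisible by 32 (other sizes may round differently in the real model); `expected_feature_shapes` is a hypothetical helper, not a timm function:

```python
STAGE_WIDTHS = (192, 384, 768, 1536)  # ConvNeXt-Large widths, as in the shapes above
STAGE_STRIDES = (4, 8, 16, 32)        # stride-4 stem, then a 2x downsample per later stage


def expected_feature_shapes(image_size=224, batch_size=1):
    """(batch, channels, height, width) of each stage output for a square input."""
    return [
        (batch_size, width, image_size // stride, image_size // stride)
        for width, stride in zip(STAGE_WIDTHS, STAGE_STRIDES)
    ]


for shape in expected_feature_shapes():
    print(shape)
```

The same arithmetic explains the `(1, 1536, 7, 7)` unpooled output of `forward_features`: it is simply the last stage at stride 32.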

### Image Embeddings

```python
from urllib.request import urlopen
from PIL import Image
import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'convnextv2_large.fcmae',
    pretrained=True,
    num_classes=0,  # remove classifier nn.Linear
)
model = model.eval()

# get model specific transforms (normalization, resize)
data_config = timm.data.resolve_model_data_config(model)
transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0))  # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))
# output is unpooled, a (1, 1536, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)
# output is a (1, num_features) shaped tensor
```

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

All timing numbers are from eager-mode PyTorch 1.13 on an RTX 3090 with AMP.

| model | top1 (%) | top5 (%) | img_size (px) | param_count (M) | gmacs | macts (M) | samples_per_sec | batch_size |

|------------------------------------------------------------------------------------------------------------------------------|------|------|--------|-----------|------|------|---------------|----------|

| [convnextv2_huge.fcmae_ft_in22k_in1k_512](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_512) |88.848|98.742|512 |660.29 |600.81|413.07|28.58 |48 |

| [convnextv2_huge.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_384) |88.668|98.738|384 |660.29 |337.96|232.35|50.56 |64 |

| [convnext_xxlarge.clip_laion2b_soup_ft_in1k](https://huggingface.co/timm/convnext_xxlarge.clip_laion2b_soup_ft_in1k) |88.612|98.704|256 |846.47 |198.09|124.45|122.45 |256 |

| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384) |88.312|98.578|384 |200.13 |101.11|126.74|196.84 |256 |

| [convnextv2_large.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k_384) |88.196|98.532|384 |197.96 |101.1 |126.74|128.94 |128 |

| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320) |87.968|98.47 |320 |200.13 |70.21 |88.02 |283.42 |256 |

| [convnext_xlarge.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k_384) |87.75 |98.556|384 |350.2 |179.2 |168.99|124.85 |192 |

| [convnextv2_base.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k_384) |87.646|98.422|384 |88.72 |45.21 |84.49 |209.51 |256 |

| [convnext_large.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k_384) |87.476|98.382|384 |197.77 |101.1 |126.74|194.66 |256 |

| [convnext_large_mlp.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_augreg_ft_in1k) |87.344|98.218|256 |200.13 |44.94 |56.33 |438.08 |256 |

| [convnextv2_large.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k) |87.26 |98.248|224 |197.96 |34.4 |43.13 |376.84 |256 |

| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384) |87.138|98.212|384 |88.59 |45.21 |84.49 |365.47 |256 |

| [convnext_xlarge.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k) |87.002|98.208|224 |350.2 |60.98 |57.5 |368.01 |256 |

| [convnext_base.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k_384) |86.796|98.264|384 |88.59 |45.21 |84.49 |366.54 |256 |

| [convnextv2_base.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k) |86.74 |98.022|224 |88.72 |15.38 |28.75 |624.23 |256 |

| [convnext_large.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k) |86.636|98.028|224 |197.77 |34.4 |43.13 |581.43 |256 |

| [convnext_base.clip_laiona_augreg_ft_in1k_384](https://huggingface.co/timm/convnext_base.clip_laiona_augreg_ft_in1k_384) |86.504|97.97 |384 |88.59 |45.21 |84.49 |368.14 |256 |

| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k) |86.344|97.97 |256 |88.59 |20.09 |37.55 |816.14 |256 |

| [convnextv2_huge.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in1k) |86.256|97.75 |224 |660.29 |115.0 |79.07 |154.72 |256 |

| [convnext_small.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_small.in12k_ft_in1k_384) |86.182|97.92 |384 |50.22 |25.58 |63.37 |516.19 |256 |

| [convnext_base.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in1k) |86.154|97.68 |256 |88.59 |20.09 |37.55 |819.86 |256 |

| [convnext_base.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k) |85.822|97.866|224 |88.59 |15.38 |28.75 |1037.66 |256 |

| [convnext_small.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k_384) |85.778|97.886|384 |50.22 |25.58 |63.37 |518.95 |256 |

| [convnextv2_large.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in1k) |85.742|97.584|224 |197.96 |34.4 |43.13 |375.23 |256 |

| [convnext_small.in12k_ft_in1k](https://huggingface.co/timm/convnext_small.in12k_ft_in1k) |85.174|97.506|224 |50.22 |8.71 |21.56 |1474.31 |256 |

| [convnext_tiny.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k_384) |85.118|97.608|384 |28.59 |13.14 |39.48 |856.76 |256 |

| [convnextv2_tiny.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k_384) |85.112|97.63 |384 |28.64 |13.14 |39.48 |491.32 |256 |

| [convnextv2_base.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in1k) |84.874|97.09 |224 |88.72 |15.38 |28.75 |625.33 |256 |

| [convnext_small.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k) |84.562|97.394|224 |50.22 |8.71 |21.56 |1478.29 |256 |

| [convnext_large.fb_in1k](https://huggingface.co/timm/convnext_large.fb_in1k) |84.282|96.892|224 |197.77 |34.4 |43.13 |584.28 |256 |

| [convnext_tiny.in12k_ft_in1k](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k) |84.186|97.124|224 |28.59 |4.47 |13.44 |2433.7 |256 |

| [convnext_tiny.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k_384) |84.084|97.14 |384 |28.59 |13.14 |39.48 |862.95 |256 |

| [convnextv2_tiny.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k) |83.894|96.964|224 |28.64 |4.47 |13.44 |1452.72 |256 |

| [convnext_base.fb_in1k](https://huggingface.co/timm/convnext_base.fb_in1k) |83.82 |96.746|224 |88.59 |15.38 |28.75 |1054.0 |256 |

| [convnextv2_nano.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k_384) |83.37 |96.742|384 |15.62 |7.22 |24.61 |801.72 |256 |

| [convnext_small.fb_in1k](https://huggingface.co/timm/convnext_small.fb_in1k) |83.142|96.434|224 |50.22 |8.71 |21.56 |1464.0 |256 |

| [convnextv2_tiny.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in1k) |82.92 |96.284|224 |28.64 |4.47 |13.44 |1425.62 |256 |

| [convnext_tiny.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k) |82.898|96.616|224 |28.59 |4.47 |13.44 |2480.88 |256 |

| [convnext_nano.in12k_ft_in1k](https://huggingface.co/timm/convnext_nano.in12k_ft_in1k) |82.282|96.344|224 |15.59 |2.46 |8.37 |3926.52 |256 |

| [convnext_tiny_hnf.a2h_in1k](https://huggingface.co/timm/convnext_tiny_hnf.a2h_in1k) |82.216|95.852|224 |28.59 |4.47 |13.44 |2529.75 |256 |

| [convnext_tiny.fb_in1k](https://huggingface.co/timm/convnext_tiny.fb_in1k) |82.066|95.854|224 |28.59 |4.47 |13.44 |2346.26 |256 |

| [convnextv2_nano.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k) |82.03 |96.166|224 |15.62 |2.46 |8.37 |2300.18 |256 |

| [convnextv2_nano.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in1k) |81.83 |95.738|224 |15.62 |2.46 |8.37 |2321.48 |256 |

| [convnext_nano_ols.d1h_in1k](https://huggingface.co/timm/convnext_nano_ols.d1h_in1k) |80.866|95.246|224 |15.65 |2.65 |9.38 |3523.85 |256 |

| [convnext_nano.d1h_in1k](https://huggingface.co/timm/convnext_nano.d1h_in1k) |80.768|95.334|224 |15.59 |2.46 |8.37 |3915.58 |256 |

| [convnextv2_pico.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_pico.fcmae_ft_in1k) |80.304|95.072|224 |9.07 |1.37 |6.1 |3274.57 |256 |

| [convnext_pico.d1_in1k](https://huggingface.co/timm/convnext_pico.d1_in1k) |79.526|94.558|224 |9.05 |1.37 |6.1 |5686.88 |256 |

| [convnext_pico_ols.d1_in1k](https://huggingface.co/timm/convnext_pico_ols.d1_in1k) |79.522|94.692|224 |9.06 |1.43 |6.5 |5422.46 |256 |

| [convnextv2_femto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_femto.fcmae_ft_in1k) |78.488|93.98 |224 |5.23 |0.79 |4.57 |4264.2 |256 |

| [convnext_femto_ols.d1_in1k](https://huggingface.co/timm/convnext_femto_ols.d1_in1k) |77.86 |93.83 |224 |5.23 |0.82 |4.87 |6910.6 |256 |

| [convnext_femto.d1_in1k](https://huggingface.co/timm/convnext_femto.d1_in1k) |77.454|93.68 |224 |5.22 |0.79 |4.57 |7189.92 |256 |

| [convnextv2_atto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_atto.fcmae_ft_in1k) |76.664|93.044|224 |3.71 |0.55 |3.81 |4728.91 |256 |

| [convnext_atto_ols.a2_in1k](https://huggingface.co/timm/convnext_atto_ols.a2_in1k) |75.88 |92.846|224 |3.7 |0.58 |4.11 |7963.16 |256 |

| [convnext_atto.d2_in1k](https://huggingface.co/timm/convnext_atto.d2_in1k) |75.664|92.9 |224 |3.7 |0.55 |3.81 |8439.22 |256 |

## Citation

```bibtex

@article{Woo2023ConvNeXtV2,

title={ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders},

author={Sanghyun Woo and Shoubhik Debnath and Ronghang Hu and Xinlei Chen and Zhuang Liu and In So Kweon and Saining Xie},

year={2023},

journal={arXiv preprint arXiv:2301.00808},

}

```

```bibtex

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}

}

```

|

TheBloke/Llama-2-13B-LoRA-Assemble-GGUF | TheBloke | 2023-09-27T12:49:12Z | 542 | 4 | transformers | [

"transformers",

"gguf",

"llama",

"base_model:oh-yeontaek/llama-2-13b-LoRA-assemble",

"license:llama2",

"text-generation-inference",

"region:us"

]

| null | 2023-09-14T10:24:22Z | ---

license: llama2

model_name: Llama 2 13B LoRA Assemble

base_model: oh-yeontaek/llama-2-13b-LoRA-assemble

inference: false

model_creator: oh-yeontaek

model_type: llama

prompt_template: '{prompt}

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Llama 2 13B LoRA Assemble - GGUF

- Model creator: [oh-yeontaek](https://huggingface.co/oh-yeontaek)

- Original model: [Llama 2 13B LoRA Assemble](https://huggingface.co/oh-yeontaek/llama-2-13b-LoRA-assemble)

<!-- description start -->

## Description

This repo contains GGUF format model files for [oh-yeontaek's Llama 2 13B LoRA Assemble](https://huggingface.co/oh-yeontaek/llama-2-13b-LoRA-assemble).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata, and is designed to be extensible.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF)

* [oh-yeontaek's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/oh-yeontaek/llama-2-13b-LoRA-assemble)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Unknown

```

{prompt}

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.
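To check whether a file really is GGUFv2 or later before handing it to an older client, you can read its fixed-size header. This is a minimal sketch assuming the header layout from the GGUF specification in the ggml repository: the 4-byte magic `GGUF`, a little-endian `uint32` version, then (from version 2 onward) two little-endian `uint64` counts for tensors and metadata key/value pairs. `parse_gguf_header` is a hypothetical helper, not part of llama.cpp or any library.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"
# 4 (magic) + 4 (uint32 version) + 8 (uint64 tensor count) + 8 (uint64 metadata KV count)
HEADER_SIZE = 24


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def parse_gguf_header(data: bytes) -> GGUFHeader:
    """Parse the first 24 bytes of a little-endian GGUF (v2 or later) file."""
    if len(data) < HEADER_SIZE:
        raise ValueError(f"need at least {HEADER_SIZE} bytes, got {len(data)}")
    if data[:4] != GGUF_MAGIC:
        raise ValueError(f"not a GGUF file (magic={data[:4]!r})")
    (version,) = struct.unpack_from("<I", data, 4)
    if version < 2:
        # GGUFv1 stored the counts as uint32, so this layout does not apply
        raise ValueError(f"GGUF version {version} is older than v2")
    tensor_count, metadata_kv_count = struct.unpack_from("<QQ", data, 8)
    return GGUFHeader(version, tensor_count, metadata_kv_count)
```

Usage: `with open("llama-2-13b-lora-assemble.Q4_K_M.gguf", "rb") as f: print(parse_gguf_header(f.read(24)))`. Only the header is read, so this is cheap even for multi-GB files.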

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
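The bpw figures above follow from the super-block layout. The sketch below is a simplified accounting, assuming each super-block stores its quantized weights, one low-bit scale per block (plus one min per block for "type-1" methods), and one fp16 super-block scale (plus an fp16 super-block min for "type-1"). Under those assumptions it reproduces the Q3_K, Q4_K, Q5_K and Q6_K numbers; `k_quant_bpw` is a hypothetical helper, not a llama.cpp function.

```python
def k_quant_bpw(weight_bits: int, n_blocks: int, block_size: int,
                scale_bits: int, type1: bool) -> float:
    """Bits per weight for one k-quant super-block (simplified model)."""
    n_weights = n_blocks * block_size
    # the quantized weights themselves
    total_bits = n_weights * weight_bits
    # per-block scales, plus per-block mins for "type-1" (scale + min) methods
    params_per_block = 2 if type1 else 1
    total_bits += n_blocks * params_per_block * scale_bits
    # fp16 super-block scale, plus an fp16 super-block min for "type-1"
    total_bits += 16 * params_per_block
    return total_bits / n_weights


examples = {
    "Q3_K": k_quant_bpw(3, 16, 16, 6, type1=False),
    "Q4_K": k_quant_bpw(4, 8, 32, 6, type1=True),
    "Q5_K": k_quant_bpw(5, 8, 32, 6, type1=True),
    "Q6_K": k_quant_bpw(6, 16, 16, 8, type1=False),
}
for name, bpw in examples.items():
    print(f"{name}: {bpw} bpw")
```

Each super-block here holds 256 weights, so for Q4_K the sketch counts 256 × 4 + 8 × 2 × 6 + 2 × 16 = 1152 bits, which gives 4.5 bpw.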

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [llama-2-13b-lora-assemble.Q2_K.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [llama-2-13b-lora-assemble.Q3_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [llama-2-13b-lora-assemble.Q3_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [llama-2-13b-lora-assemble.Q3_K_L.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [llama-2-13b-lora-assemble.Q4_0.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [llama-2-13b-lora-assemble.Q4_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [llama-2-13b-lora-assemble.Q4_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [llama-2-13b-lora-assemble.Q5_0.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [llama-2-13b-lora-assemble.Q5_K_S.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [llama-2-13b-lora-assemble.Q5_K_M.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [llama-2-13b-lora-assemble.Q6_K.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [llama-2-13b-lora-assemble.Q8_0.gguf](https://huggingface.co/TheBloke/Llama-2-13B-LoRA-Assemble-GGUF/blob/main/llama-2-13b-lora-assemble.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
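In the table, every "Max RAM required" value is the file size plus 2.5 GB. The sketch below uses that observed offset to pick the largest file that fits a given RAM budget with no GPU offloading. The sizes are copied from the table, and the legacy Q4_0 and Q5_0 files are left out because the table recommends their k-quant alternatives. The `pick_quant` helper and the fixed 2.5 GB overhead are assumptions for illustration; actual usage also grows with the context length you choose.

```python
from typing import Optional

# File sizes in GB, copied from the "Provided files" table (legacy Q4_0/Q5_0 omitted)
QUANT_SIZES_GB = {
    "Q2_K": 5.43, "Q3_K_S": 5.66, "Q3_K_M": 6.34, "Q3_K_L": 6.93,
    "Q4_K_S": 7.41, "Q4_K_M": 7.87, "Q5_K_S": 8.97, "Q5_K_M": 9.23,
    "Q6_K": 10.68, "Q8_0": 13.83,
}
# Observed difference between "Max RAM required" and "Size" in the table
RAM_OVERHEAD_GB = 2.5


def pick_quant(ram_budget_gb: float) -> Optional[str]:
    """Return the largest quant whose estimated peak RAM fits the budget, or None."""
    fitting = [
        (size, name)
        for name, size in QUANT_SIZES_GB.items()
        if size + RAM_OVERHEAD_GB <= ram_budget_gb
    ]
    if not fitting:
        return None
    # largest file size wins; sizes in the table are distinct
    return max(fitting)[1]


print(pick_quant(12.0))
```

Offloading layers to the GPU moves part of this budget into VRAM, so a GPU user could afford a larger file than this CPU-only estimate suggests.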

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Llama-2-13B-LoRA-Assemble-GGUF and below it, a specific filename to download, such as: llama-2-13b-lora-assemble.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install "huggingface-hub>=0.17.1"

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Llama-2-13B-LoRA-Assemble-GGUF llama-2-13b-lora-assemble.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Llama-2-13B-LoRA-Assemble-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).
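To preview which files a pattern such as `--include='*Q4_K*gguf'` would select, you can test it locally against the file names from the table. This is a sketch assuming the CLI applies shell-style glob semantics similar to Python's `fnmatch`; it uses case-sensitive matching, since file names in the repo are written with upper-case quant names such as `Q4_K_M`. `select_files` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from typing import List, Sequence

# Quant names copied from the "Provided files" table
QUANTS = ["Q2_K", "Q3_K_S", "Q3_K_M", "Q3_K_L", "Q4_0", "Q4_K_S",
          "Q4_K_M", "Q5_0", "Q5_K_S", "Q5_K_M", "Q6_K", "Q8_0"]
FILES = [f"llama-2-13b-lora-assemble.{q}.gguf" for q in QUANTS]


def select_files(pattern: str, files: Sequence[str] = FILES) -> List[str]:
    """Return the file names that match a shell-style glob (case-sensitive)."""
    return [name for name in files if fnmatchcase(name, pattern)]


print(select_files("*Q4_K*gguf"))
# A lower-case "q4_K_M" does not match the upper-case file name
print(select_files("llama-2-13b-lora-assemble.q4_K_M.gguf"))
```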

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Llama-2-13B-LoRA-Assemble-GGUF llama-2-13b-lora-assemble.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HF_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m llama-2-13b-lora-assemble.Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "{prompt}"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)
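If you launch `llama.cpp` from a script, the flag choices described above can be assembled programmatically. This is a sketch assuming the `./main` binary and only the flags that appear in the example command (`-ngl`, `-m`, `--color`, `-c`, `--temp`, `--repeat_penalty`, `-n`, `-p`, and `-i -ins` for chat mode); `build_main_args` is a hypothetical helper.

```python
import shlex
from typing import List, Optional


def build_main_args(model_path: str, prompt: Optional[str] = None,
                    n_gpu_layers: int = 32, ctx_size: int = 4096,
                    temp: float = 0.7, repeat_penalty: float = 1.1,
                    interactive: bool = False) -> List[str]:
    """Build an argument list mirroring the example llama.cpp command."""
    args = ["./main"]
    # Omit -ngl entirely when no layers should be offloaded to the GPU
    if n_gpu_layers > 0:
        args += ["-ngl", str(n_gpu_layers)]
    args += ["-m", model_path, "--color", "-c", str(ctx_size),
             "--temp", str(temp), "--repeat_penalty", str(repeat_penalty),
             "-n", "-1"]
    if interactive:
        # chat-style session instead of a one-shot prompt
        args += ["-i", "-ins"]
    elif prompt is not None:
        args += ["-p", prompt]
    else:
        raise ValueError("pass a prompt or set interactive=True")
    return args


args = build_main_args("llama-2-13b-lora-assemble.Q4_K_M.gguf", prompt="Tell me about llamas.")
print(shlex.join(args))
# To actually run it (requires a built llama.cpp in the current directory):
# import subprocess; subprocess.run(args, check=True)
```

Passing a list rather than a single string to `subprocess.run` avoids shell-quoting problems with prompts that contain quotes or braces.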

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install "ctransformers>=0.2.24"

# Or with CUDA GPU acceleration

pip install "ctransformers[cuda]>=0.2.24"

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install "ctransformers>=0.2.24" --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install "ctransformers>=0.2.24" --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Llama-2-13B-LoRA-Assemble-GGUF", model_file="llama-2-13b-lora-assemble.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: oh-yeontaek's Llama 2 13B LoRA Assemble

No original model card was available.

<!-- original-model-card end -->

|

abetlen/replit-code-v1_5-3b-GGUF | abetlen | 2023-11-08T16:46:59Z | 542 | 7 | null | [

"gguf",

"license:apache-2.0",

"region:us"

]

| null | 2023-11-08T08:44:54Z | ---

license: apache-2.0

---

|

MaziyarPanahi/Llama-3-11B-Instruct-v0.1-GGUF | MaziyarPanahi | 2024-04-20T07:41:45Z | 542 | 7 | transformers | [

"transformers",

"gguf",

"mistral",

"quantized",

"2-bit",

"3-bit",

"4-bit",

"5-bit",

"6-bit",

"8-bit",

"GGUF",

"text-generation",

"mixtral",

"base_model:MaziyarPanahi/Llama-3-11B-Instruct-v0.1",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-19T10:37:09Z | ---

tags:

- quantized

- 2-bit

- 3-bit

- 4-bit

- 5-bit

- 6-bit

- 8-bit

- GGUF

- text-generation

- mixtral

- text-generation

model_name: Llama-3-11B-Instruct-v0.1-GGUF

base_model: MaziyarPanahi/Llama-3-11B-Instruct-v0.1

inference: false

model_creator: MaziyarPanahi

pipeline_tag: text-generation

quantized_by: MaziyarPanahi

---

# [MaziyarPanahi/Llama-3-11B-Instruct-v0.1-GGUF](https://huggingface.co/MaziyarPanahi/Llama-3-11B-Instruct-v0.1-GGUF)

- Model creator: [MaziyarPanahi](https://huggingface.co/MaziyarPanahi)

- Original model: [MaziyarPanahi/Llama-3-11B-Instruct-v0.1](https://huggingface.co/MaziyarPanahi/Llama-3-11B-Instruct-v0.1)

## Description

[MaziyarPanahi/Llama-3-11B-Instruct-v0.1-GGUF](https://huggingface.co/MaziyarPanahi/Llama-3-11B-Instruct-v0.1-GGUF) contains GGUF format model files for [MaziyarPanahi/Llama-3-11B-Instruct-v0.1](https://huggingface.co/MaziyarPanahi/Llama-3-11B-Instruct-v0.1).

## Load GGUF models

You **must** follow the prompt template provided by Llama-3:

```sh

./llama.cpp/main -m Llama-3-11B-Instruct.Q2_K.gguf -r '<|eot_id|>' --in-prefix "\n<|start_header_id|>user<|end_header_id|>\n\n" --in-suffix "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n" -p "<|begin_of_text|><|start_header_id|>system<|end_header_id|>\n\nYou are a helpful, smart, kind, and efficient AI assistant. You always fulfill the user's requests to the best of your ability.<|eot_id|>\n<|start_header_id|>user<|end_header_id|>\n\nHi! How are you?<|eot_id|>\n<|start_header_id|>assistant<|end_header_id|>\n\n" -n 1024

```
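The prompt string in the command above can be built from a system message and a user turn. This sketch reproduces the exact layout used in that command, including the newline after each `<|eot_id|>`. Meta's reference Llama-3 template does not put a newline there, so treat this as mirroring the command rather than the canonical format. `build_llama3_prompt` is a hypothetical helper.

```python
def build_llama3_prompt(system: str, user: str) -> str:
    """Assemble a single-turn Llama-3 prompt in the layout used by the command above."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system}<|eot_id|>\n"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user}<|eot_id|>\n"
        # leave the assistant header open so the model writes the reply
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


prompt = build_llama3_prompt(
    "You are a helpful, smart, kind, and efficient AI assistant. "
    "You always fulfill the user's requests to the best of your ability.",
    "Hi! How are you?",
)
print(prompt)
```

Generation should stop at `<|eot_id|>`, which is why the command passes `-r '<|eot_id|>'`.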

|

appvoid/dot-v2.7 | appvoid | 2024-05-10T04:57:01Z | 542 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"arxiv:2311.03099",

"arxiv:2306.01708",

"base_model:matlok/tinyllama-cinder-openhermes-32k",

"base_model:raidhon/coven_tiny_1.1b_32k_orpo_alpha",

"base_model:TinyLlama/TinyLlama-1.1B-Chat-v1.0",

"base_model:appvoid/palmer-003",

"base_model:BEE-spoke-data/TinyLlama-1.1bee",

"base_model:ShieldX/manovyadh-1.1B-v1-chat",

"base_model:microsoft/rho-math-1b-interpreter-v0.1",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-05-10T04:47:10Z | ---

base_model:

- matlok/tinyllama-cinder-openhermes-32k

- raidhon/coven_tiny_1.1b_32k_orpo_alpha

- TinyLlama/TinyLlama-1.1B-Chat-v1.0

- appvoid/palmer-003

- BEE-spoke-data/TinyLlama-1.1bee

- ShieldX/manovyadh-1.1B-v1-chat

- microsoft/rho-math-1b-interpreter-v0.1

library_name: transformers

tags:

- mergekit

- merge

---

# merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [DARE](https://arxiv.org/abs/2311.03099) [TIES](https://arxiv.org/abs/2306.01708) merge method using [appvoid/palmer-003](https://huggingface.co/appvoid/palmer-003) as a base.

### Models Merged

The following models were included in the merge:

* [matlok/tinyllama-cinder-openhermes-32k](https://huggingface.co/matlok/tinyllama-cinder-openhermes-32k)

* [raidhon/coven_tiny_1.1b_32k_orpo_alpha](https://huggingface.co/raidhon/coven_tiny_1.1b_32k_orpo_alpha)

* [TinyLlama/TinyLlama-1.1B-Chat-v1.0](https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0)

* [BEE-spoke-data/TinyLlama-1.1bee](https://huggingface.co/BEE-spoke-data/TinyLlama-1.1bee)

* [ShieldX/manovyadh-1.1B-v1-chat](https://huggingface.co/ShieldX/manovyadh-1.1B-v1-chat)

* [microsoft/rho-math-1b-interpreter-v0.1](https://huggingface.co/microsoft/rho-math-1b-interpreter-v0.1)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:
  - model: BEE-spoke-data/TinyLlama-1.1bee
    parameters:
      density: 0.33
      weight: 0.50
  - model: raidhon/coven_tiny_1.1b_32k_orpo_alpha
    parameters:
      density: 0.36
      weight: 0.40
  - model: ShieldX/manovyadh-1.1B-v1-chat
    parameters:
      density: 0.33
      weight: 0.30
  - model: TinyLlama/TinyLlama-1.1B-Chat-v1.0
    parameters:
      density: 0.40
      weight: 0.45
  - model: matlok/tinyllama-cinder-openhermes-32k
    parameters:
      density: 0.32
      weight: 0.26
  - model: microsoft/rho-math-1b-interpreter-v0.1
    parameters:
      density: 0.38
      weight: 0.35
merge_method: dare_ties
base_model: appvoid/palmer-003
parameters:
  normalize: true
  int8_mask: true
dtype: float16

```
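To make the `density`, `weight` and `normalize` parameters above more concrete, here is a toy, pure-Python sketch of the DARE-TIES idea on flat parameter lists. Each model's difference from the base is randomly sparsified (DARE keeps roughly a fraction `density` of entries and rescales the survivors by `1/density`), a per-parameter sign is elected from the weighted sum (TIES), and only contributions that agree with that sign are combined. This is an illustration under simplifying assumptions, not mergekit's actual implementation; the function names are hypothetical.

```python
import random
from typing import List, Sequence


def dare(delta: Sequence[float], density: float, rng: random.Random) -> List[float]:
    """DARE: drop each entry with probability 1 - density, rescale survivors by 1/density."""
    return [d / density if rng.random() < density else 0.0 for d in delta]


def dare_ties_merge(base: Sequence[float], models: Sequence[Sequence[float]],
                    weights: Sequence[float], densities: Sequence[float],
                    normalize: bool = True, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    # task vectors: fine-tuned minus base, sparsified with DARE
    deltas = [dare([m - b for m, b in zip(model, base)], dens, rng)
              for model, dens in zip(models, densities)]
    merged = []
    for i, b in enumerate(base):
        weighted = [w * d[i] for w, d in zip(weights, deltas)]
        total = sum(weighted)
        # TIES sign election: the sign of the weighted sum wins
        sign = 1.0 if total > 0 else -1.0 if total < 0 else 0.0
        agree = [(w, wd) for w, wd in zip(weights, weighted) if wd * sign > 0]
        contribution = sum(wd for _, wd in agree)
        if normalize and agree:
            # divide by the total weight of the models that agreed on this entry
            contribution /= sum(w for w, _ in agree)
        merged.append(b + contribution)
    return merged


# density 1.0 disables dropping, so the result is deterministic
print(dare_ties_merge([0.0, 0.0], [[1.0, -1.0], [3.0, 2.0]],
                      weights=[1.0, 1.0], densities=[1.0, 1.0]))
```

In the second parameter the two models disagree in sign, so only the model matching the elected (positive) sign contributes, which is how TIES reduces interference between merged models.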

|

RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf | RichardErkhov | 2024-05-21T12:53:55Z | 542 | 0 | null | [

"gguf",

"region:us"

]

| null | 2024-05-21T10:08:35Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Llama-2-7b-sft-model-ocra-500k - GGUF

- Model creator: https://huggingface.co/OpenLLMAI/

- Original model: https://huggingface.co/OpenLLMAI/Llama-2-7b-sft-model-ocra-500k/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [Llama-2-7b-sft-model-ocra-500k.Q2_K.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q2_K.gguf) | Q2_K | 2.36GB |

| [Llama-2-7b-sft-model-ocra-500k.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.IQ3_XS.gguf) | IQ3_XS | 2.6GB |

| [Llama-2-7b-sft-model-ocra-500k.IQ3_S.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.IQ3_S.gguf) | IQ3_S | 2.75GB |

| [Llama-2-7b-sft-model-ocra-500k.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q3_K_S.gguf) | Q3_K_S | 2.75GB |

| [Llama-2-7b-sft-model-ocra-500k.IQ3_M.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.IQ3_M.gguf) | IQ3_M | 2.9GB |

| [Llama-2-7b-sft-model-ocra-500k.Q3_K.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q3_K.gguf) | Q3_K | 3.07GB |

| [Llama-2-7b-sft-model-ocra-500k.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q3_K_M.gguf) | Q3_K_M | 3.07GB |

| [Llama-2-7b-sft-model-ocra-500k.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q3_K_L.gguf) | Q3_K_L | 3.35GB |

| [Llama-2-7b-sft-model-ocra-500k.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.IQ4_XS.gguf) | IQ4_XS | 3.4GB |

| [Llama-2-7b-sft-model-ocra-500k.Q4_0.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q4_0.gguf) | Q4_0 | 3.56GB |

| [Llama-2-7b-sft-model-ocra-500k.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.IQ4_NL.gguf) | IQ4_NL | 3.58GB |

| [Llama-2-7b-sft-model-ocra-500k.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q4_K_S.gguf) | Q4_K_S | 3.59GB |

| [Llama-2-7b-sft-model-ocra-500k.Q4_K.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q4_K.gguf) | Q4_K | 3.8GB |

| [Llama-2-7b-sft-model-ocra-500k.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q4_K_M.gguf) | Q4_K_M | 3.8GB |

| [Llama-2-7b-sft-model-ocra-500k.Q4_1.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q4_1.gguf) | Q4_1 | 3.95GB |

| [Llama-2-7b-sft-model-ocra-500k.Q5_0.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q5_0.gguf) | Q5_0 | 4.33GB |

| [Llama-2-7b-sft-model-ocra-500k.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q5_K_S.gguf) | Q5_K_S | 4.33GB |

| [Llama-2-7b-sft-model-ocra-500k.Q5_K.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q5_K.gguf) | Q5_K | 4.45GB |

| [Llama-2-7b-sft-model-ocra-500k.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q5_K_M.gguf) | Q5_K_M | 4.45GB |

| [Llama-2-7b-sft-model-ocra-500k.Q5_1.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q5_1.gguf) | Q5_1 | 4.72GB |

| [Llama-2-7b-sft-model-ocra-500k.Q6_K.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q6_K.gguf) | Q6_K | 5.15GB |

| [Llama-2-7b-sft-model-ocra-500k.Q8_0.gguf](https://huggingface.co/RichardErkhov/OpenLLMAI_-_Llama-2-7b-sft-model-ocra-500k-gguf/blob/main/Llama-2-7b-sft-model-ocra-500k.Q8_0.gguf) | Q8_0 | 6.67GB |

Original model description:

Entry not found

|

RaphaelMourad/Mistral-Chem-v1-417M | RaphaelMourad | 2024-06-05T12:21:24Z | 542 | 2 | transformers | [

"transformers",

"safetensors",

"mixtral",

"text-generation",

"pretrained",

"mistral",

"chemistry",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-05-23T20:09:11Z | ---

license: apache-2.0

tags:

- pretrained

- mistral

- chemistry

---

# Model Card for Mistral-Chem-v1-417M (Mistral for chemistry)

The Mistral-Chem-v1-417M Large Language Model (LLM) is a pretrained generative chemical molecule model with 417M parameters.

It is derived from the Mixtral-8x7B-v0.1 model, which was simplified for molecules: the number of layers and the hidden size were reduced.

The model was pretrained using 10M molecule SMILES strings from the ZINC 15 database.

## Model Architecture

Like Mixtral-8x7B-v0.1, it is a transformer model, with the following architecture choices:

- Grouped-Query Attention

- Sliding-Window Attention

- Byte-fallback BPE tokenizer

- Mixture of Experts
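The Mixture of Experts item refers to sparse expert routing: a small router scores every expert for each token, only the top-scoring experts are run, and their outputs are mixed using renormalised router weights. The sketch below shows top-2 selection as used in Mixtral-8x7B. The expert functions and router logits are made up for illustration, and this is not the model's actual code.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]


def top_k_route(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalise their softmax weights."""
    # softmax over all experts (subtract the max for numerical stability)
    m = max(router_logits)
    exps = [math.exp(x - m) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # keep the k most probable experts
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # renormalise so the selected weights sum to 1
    kept = sum(probs[i] for i in top)
    return [(i, probs[i] / kept) for i in top]


def moe_layer(x: List[float], experts: Sequence[Expert],
              router_logits: Sequence[float], k: int = 2) -> List[float]:
    """Mix the outputs of the selected experts for one token."""
    out = [0.0] * len(x)  # assumes each expert preserves the vector size
    for i, w in top_k_route(router_logits, k):
        y = experts[i](x)
        out = [a + w * b for a, b in zip(out, y)]
    return out


print(top_k_route([1.0, 2.0, 0.5, 3.0]))
```

Only k experts run per token, so compute per token stays close to that of a much smaller dense model while the total parameter count is larger.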

## Load the model from Hugging Face

```python

import torch

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("RaphaelMourad/Mistral-Chem-v1-417M", trust_remote_code=True)

model = AutoModel.from_pretrained("RaphaelMourad/Mistral-Chem-v1-417M", trust_remote_code=True)

```

## Calculate the embedding of a molecule (SMILES string)

```python

chem = "CCCCC[C@H](Br)CC"

inputs = tokenizer(chem, return_tensors = 'pt')["input_ids"]

hidden_states = model(inputs)[0] # [1, sequence_length, 256]

# embedding with max pooling

embedding_max = torch.max(hidden_states[0], dim=0)[0]

print(embedding_max.shape) # expect to be 256

```

## Troubleshooting

Ensure you are using a stable version of Transformers; since the model uses the Mixtral architecture, 4.36.0 or newer (the release that added Mixtral) is needed.

## Notice

Mistral-Chem-v1-417M is a pretrained base model for chemistry.

## Contact

Raphaël Mourad.

|

optimum-internal-testing/tiny_random_bert_neuron | optimum-internal-testing | 2024-07-01T12:33:42Z | 542 | 0 | transformers | [

"transformers",

"bert",

"feature-extraction",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

]

| feature-extraction | 2024-06-05T16:15:25Z | Entry not found |

neopolita/qwen2-0.5b-gguf | neopolita | 2024-06-06T23:36:39Z | 542 | 0 | null | [

"gguf",

"region:us"

]

| null | 2024-06-06T23:32:37Z | ---

{}

---

# GGUF quants for [**Qwen/Qwen2-0.5B**](https://huggingface.co/Qwen/Qwen2-0.5B) using [llama.cpp](https://github.com/ggerganov/llama.cpp)

**Terms of Use**: Please check the [**original model**](https://huggingface.co/Qwen/Qwen2-0.5B)

<picture>

<img alt="cthulhu" src="https://huggingface.co/neopolita/common/resolve/main/profile.png">

</picture>

## Quants

* `q2_k`: Uses Q4_K for the attention.wv and feed_forward.w2 tensors, Q2_K for the other tensors.

* `q3_k_s`: Uses Q3_K for all tensors

* `q3_k_m`: Uses Q4_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else Q3_K

* `q3_k_l`: Uses Q5_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else Q3_K

* `q4_0`: Original quant method, 4-bit.

* `q4_1`: Higher accuracy than q4_0 but not as high as q5_0. However, it has quicker inference than q5 models.

* `q4_k_s`: Uses Q4_K for all tensors

* `q4_k_m`: Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, else Q4_K

* `q5_0`: Higher accuracy, higher resource usage and slower inference.

* `q5_1`: Even higher accuracy, resource usage and slower inference.

* `q5_k_s`: Uses Q5_K for all tensors

* `q5_k_m`: Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, else Q5_K

* `q6_k`: Uses Q6_K for all tensors

* `q8_0`: Almost indistinguishable from float16. High resource use and slow. Not recommended for most users. |

BK-Lee/TroL-3.8B | BK-Lee | 2024-06-19T04:46:53Z | 542 | 2 | transformers | [

"transformers",

"safetensors",

"trol",

"text-generation",

"conversational",

"arxiv:2406.12246",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-06-18T07:00:50Z | ---

license: mit

---

# Only two steps are needed.

First step: clone the repository and install the required packages.

```shell

git clone https://github.com/ByungKwanLee/TroL

cd TroL

bash install

```

Second step: open, edit, and run `demo.py`.

```python

import torch
from config import *
from PIL import Image
from utils.utils import *
import torch.nn.functional as F
from trol.load_trol import load_trol
from torchvision.transforms.functional import pil_to_tensor

# model selection
link = "TroL-3.8B" # [Select One] 'TroL-1.8B' | 'TroL-3.8B' | 'TroL-7B'

# User prompt
prompt_type = "with_image" # Select one option "text_only", "with_image"
img_path = 'figures/demo.png'
question = "What is the troll doing? Provide the detail in the image and imagine what the event happens."

# loading model
model, tokenizer = load_trol(link=link)

# cpu -> gpu
for param in model.parameters():
    if not param.is_cuda:
        param.data = param.to('cuda:0')

# prompt type -> input prompt
image_token_number = None
if prompt_type == 'with_image':
    # Image Load
    image = pil_to_tensor(Image.open(img_path).convert("RGB"))
    if "3.8B" not in link:
        # non-3.8B variants: use 1225 image tokens and resize to 490x490
        image_token_number = 1225
        image = F.interpolate(image.unsqueeze(0), size=(490, 490), mode='bicubic').squeeze(0)
    inputs = [{'image': image, 'question': question}]
elif prompt_type == 'text_only':
    inputs = [{'question': question}]

# Generate
with torch.inference_mode():
    _inputs = model.eval_process(inputs=inputs,
                                 data='demo',
                                 tokenizer=tokenizer,
                                 device='cuda:0',
                                 img_token_number=image_token_number)
    generate_ids = model.generate(**_inputs, max_new_tokens=256, use_cache=True)
    response = output_filtering(tokenizer.batch_decode(generate_ids, skip_special_tokens=False)[0], model)
print(response)

```
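The constant `image_token_number = 1225` in the demo matches a 490×490 image split into 14×14-pixel patches: 490 / 14 = 35 patches per side, and 35 × 35 = 1225. The demo does not state the 14-pixel patch size. It is an assumption that happens to reproduce the constant. The sketch below also shows one way to build the `inputs` list so that an unrecognized `prompt_type` raises an error. In the demo as written, such a value would leave `inputs` undefined. `num_image_tokens` and `build_inputs` are hypothetical helpers and are not part of the TroL repository.

```python
def num_image_tokens(image_size: int, patch_size: int = 14) -> int:
    """Patch-token count for a square image cut into square, non-overlapping patches.

    Assumption: patch_size=14 is chosen because it reproduces the demo's
    constant of 1225 tokens for a 490x490 image; TroL does not document it here.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side


def build_inputs(prompt_type: str, question: str, image=None) -> list:
    """Build an `inputs` list shaped like the one in demo.py (hypothetical helper)."""
    if prompt_type == "with_image":
        if image is None:
            raise ValueError("prompt_type 'with_image' requires an image")
        return [{"image": image, "question": question}]
    if prompt_type == "text_only":
        return [{"question": question}]
    # Fail loudly instead of silently leaving `inputs` undefined
    raise ValueError(f"unknown prompt_type: {prompt_type!r}")


print(num_image_tokens(490))  # 1225
print(build_inputs("text_only", "What is the troll doing?"))
```

In the demo, only the non-3.8B models take the resize branch. For TroL-3.8B, `image_token_number` stays `None`.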

So easy! Let's say TroL!

# Paper: [arxiv.org/abs/2406.12246](https://arxiv.org/abs/2406.12246) |

NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF | NikolayKozloff | 2024-06-21T21:01:45Z | 542 | 2 | null | [

"gguf",

"not-for-all-audiences",

"llama-cpp",

"gguf-my-repo",

"base_model:TheDrummer/Llama-3SOME-8B-v2",

"license:cc-by-nc-4.0",

"region:us"

]

| null | 2024-06-21T21:01:16Z | ---

base_model: TheDrummer/Llama-3SOME-8B-v2

license: cc-by-nc-4.0

tags:

- not-for-all-audiences

- llama-cpp

- gguf-my-repo

---

# NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF

This model was converted to GGUF format from [`TheDrummer/Llama-3SOME-8B-v2`](https://huggingface.co/TheDrummer/Llama-3SOME-8B-v2) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/TheDrummer/Llama-3SOME-8B-v2) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF --hf-file llama-3some-8b-v2-q5_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF --hf-file llama-3some-8b-v2-q5_k_s.gguf -c 2048

```
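Once `llama-server` is running, you can query it over HTTP. The sketch below assumes the server's default address `http://127.0.0.1:8080` and its native `/completion` endpoint. That endpoint takes a JSON body with `prompt` and `n_predict` and returns the generated text in a `content` field. Check the llama.cpp server documentation for the version you installed. The code uses only the Python standard library, and `build_completion_request` and `complete` are illustrative names.

```python
import json
import urllib.request


def build_completion_request(prompt: str, n_predict: int = 128,
                             host: str = "127.0.0.1", port: int = 8080) -> urllib.request.Request:
    """Build a POST request for llama-server's /completion endpoint.

    Assumption: host and port are llama-server's defaults; adjust if you
    started the server with different settings.
    """
    body = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/completion",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text (needs a running server)."""
    request = build_completion_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read().decode("utf-8"))["content"]
```

With the server from the command above running, call `complete("The meaning to life and the universe is")` to get the continuation as a string.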

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF --hf-file llama-3some-8b-v2-q5_k_s.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo NikolayKozloff/Llama-3SOME-8B-v2-Q5_K_S-GGUF --hf-file llama-3some-8b-v2-q5_k_s.gguf -c 2048

```

|

CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF | CHE-72 | 2024-06-22T18:15:57Z | 542 | 0 | null | [

"gguf",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"zh",

"en",

"base_model:MediaTek-Research/Breeze-7B-Instruct-v1_0",

"license:apache-2.0",

"region:us"

]

| text-generation | 2024-06-22T18:15:43Z | ---

base_model: MediaTek-Research/Breeze-7B-Instruct-v1_0

language:

- zh

- en

license: apache-2.0

pipeline_tag: text-generation

tags:

- llama-cpp

- gguf-my-repo

---

# CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF

This model was converted to GGUF format from [`MediaTek-Research/Breeze-7B-Instruct-v1_0`](https://huggingface.co/MediaTek-Research/Breeze-7B-Instruct-v1_0) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/MediaTek-Research/Breeze-7B-Instruct-v1_0) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF --hf-file breeze-7b-instruct-v1_0-q3_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF --hf-file breeze-7b-instruct-v1_0-q3_k_s.gguf -c 2048

```
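Because Breeze-7B-Instruct is a chat model, the server's OpenAI-compatible `/v1/chat/completions` endpoint is often more convenient than raw completion. That endpoint accepts a list of role/content `messages` and returns the reply under `choices[0].message.content`. The sketch below assumes the default address `http://127.0.0.1:8080` and that the server applies the chat template stored in the GGUF file. `build_chat_payload` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

SERVER_URL = "http://127.0.0.1:8080"  # assumption: llama-server default host and port


def build_chat_payload(user_message, system_prompt=None, max_tokens=256, temperature=0.7):
    """Assemble an OpenAI-style chat request body."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return {"messages": messages, "max_tokens": max_tokens, "temperature": temperature}


def chat(user_message, system_prompt=None, base_url=SERVER_URL, **kwargs):
    """POST the payload to /v1/chat/completions and return the assistant's reply."""
    payload = build_chat_payload(user_message, system_prompt, **kwargs)
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return reply["choices"][0]["message"]["content"]
```

Since the base model lists both `zh` and `en`, `chat()` should accept prompts in either language. `json.dumps` escapes non-ASCII characters by default, which is still valid JSON for the server.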

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF --hf-file breeze-7b-instruct-v1_0-q3_k_s.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo CHE-72/Breeze-7B-Instruct-v1_0-Q3_K_S-GGUF --hf-file breeze-7b-instruct-v1_0-q3_k_s.gguf -c 2048

```

|

sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF | sosoai | 2024-07-01T20:26:14Z | 542 | 0 | null | [

"gguf",

"llama-cpp",

"gguf-my-repo",

"base_model:sosoai/Hansoldeco-Gemma-2-9b-it-v0.1",

"region:us"

]

| null | 2024-07-01T20:25:49Z | ---

base_model: sosoai/Hansoldeco-Gemma-2-9b-it-v0.1

tags:

- llama-cpp

- gguf-my-repo

---

# sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF

This model was converted to GGUF format from [`sosoai/Hansoldeco-Gemma-2-9b-it-v0.1`](https://huggingface.co/sosoai/Hansoldeco-Gemma-2-9b-it-v0.1) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/sosoai/Hansoldeco-Gemma-2-9b-it-v0.1) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF --hf-file hansoldeco-gemma-2-9b-it-v0.1-q4_k_m.gguf -p "The meaning to life and the universe is"

```
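In the GGUF-my-repo cards in this section, the `--hf-file` value follows a visible pattern: drop the owner, drop the trailing `-GGUF`, lowercase the rest, and append `.gguf`. The sketch below derives the file name that way and builds the same `llama-cli` argument list as the command above. The naming rule is an assumption based on these cards and is not a documented guarantee. Other repositories may name their files differently. `gguf_file_from_repo`, `llama_cli_args`, and `run_llama_cli` are hypothetical helpers.

```python
import shutil
import subprocess


def gguf_file_from_repo(repo_id: str) -> str:
    """Guess the --hf-file name from a GGUF-my-repo repository id.

    Assumption: follows the pattern observed in these cards, not a documented rule.
    """
    name = repo_id.split("/", 1)[-1]
    if name.endswith("-GGUF"):
        name = name[: -len("-GGUF")]
    return name.lower() + ".gguf"


def llama_cli_args(repo_id: str, prompt: str, binary: str = "llama-cli") -> list:
    """Argument list equivalent to the CLI command shown above."""
    return [binary, "--hf-repo", repo_id, "--hf-file", gguf_file_from_repo(repo_id), "-p", prompt]


def run_llama_cli(repo_id: str, prompt: str) -> int:
    """Run llama-cli if it is on PATH and return its exit code."""
    if shutil.which("llama-cli") is None:
        raise FileNotFoundError("llama-cli not found on PATH (see the brew install step)")
    return subprocess.run(llama_cli_args(repo_id, prompt), check=False).returncode
```

Passing an argument list to `subprocess.run`, rather than a single shell string, keeps prompts containing quotes or spaces intact without extra escaping.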

### Server:

```bash

llama-server --hf-repo sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF --hf-file hansoldeco-gemma-2-9b-it-v0.1-q4_k_m.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF --hf-file hansoldeco-gemma-2-9b-it-v0.1-q4_k_m.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo sosoai/Hansoldeco-Gemma-2-9b-it-v0.1-Q4_K_M-GGUF --hf-file hansoldeco-gemma-2-9b-it-v0.1-q4_k_m.gguf -c 2048

```

|

timm/vit_base_patch32_clip_224.openai_ft_in1k | timm | 2023-05-06T00:04:11Z | 541 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"dataset:wit-400m",

"arxiv:2212.07143",

"arxiv:2103.00020",

"arxiv:2010.11929",

"license:apache-2.0",

"region:us"

]

| image-classification | 2022-11-10T07:58:45Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

- wit-400m

---

# Model card for vit_base_patch32_clip_224.openai_ft_in1k

A Vision Transformer (ViT) image classification model. Pretrained on WIT-400M image-text pairs by OpenAI using CLIP. Fine-tuned on ImageNet-1k in `timm`. See recipes in [Reproducible scaling laws](https://arxiv.org/abs/2212.07143).

## Model Details

- **Model Type:** Image classification / feature backbone
- **Model Stats:**
  - Params (M): 88.2
  - GMACs: 4.4
  - Activations (M): 4.2
  - Image size: 224 x 224
- **Papers:**
  - Learning Transferable Visual Models From Natural Language Supervision: https://arxiv.org/abs/2103.00020
  - Reproducible scaling laws for contrastive language-image learning: https://arxiv.org/abs/2212.07143
  - An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale: https://arxiv.org/abs/2010.11929v2
- **Dataset:** ImageNet-1k
- **Pretrain Dataset:**
  - WIT-400M
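The `patch32` in the model name means the 224 x 224 input is cut into 32 x 32 patches. That gives 224 / 32 = 7 patches per side and 7 × 7 = 49 patch tokens, plus a class token, for 50 tokens in total. At the same resolution, a `/16` variant sees 197 tokens. This is why the `/32` model's compute (4.4 GMACs above) is comparatively low. The sketch assumes a single class token and no register tokens. `vit_sequence_length` is a hypothetical helper, not a `timm` function.

```python
def vit_sequence_length(image_size: int, patch_size: int, num_prefix_tokens: int = 1) -> int:
    """Tokens seen by a ViT encoder: one per non-overlapping patch plus prefix tokens.

    Assumption: num_prefix_tokens=1 means a single class token and no register tokens.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side ** 2 + num_prefix_tokens


print(vit_sequence_length(224, 32))  # 50
print(vit_sequence_length(224, 16))  # 197
```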

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('vit_base_patch32_clip_224.openai_ft_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1