modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

ontocord/Felix-8B-v2 | ontocord | 2024-04-18T18:23:09Z | 390 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-17T14:11:39Z | ---

license: apache-2.0

language:

- en

---

April 17, 2024

# Felix-8B-v2: A model built with lawfulness alignment

Felix-8B-v2 is an experimental language model developed by Ontocord.ai, specializing in addressing lawfulness concerns under the Biden-Harris Executive Order on AI and the principles of the EU AI Act. This model has achieved one of the highest scores on the TruthfulQA benchmark compared to models of its size, showcasing its exceptional performance in providing accurate and reliable responses.

Felix-8B-v2 is **experimental and a research work product** and a DPO reinforcement learning version of [ontocord/sft-4e-exp2](https://huggingface.co/ontocord/sft-4e-exp2) which in turn is a fine-tuned version of [TencentARC/Mistral_Pro_8B_v0.1](https://huggingface.co/TencentARC/Mistral_Pro_8B_v0.1).

This model is exactly the same as [Felix-8B](https://huggingface.co/ontocord/Felix-8B) except we modified the ``</s>`` and ``<s>`` tags of the original Felix-8b DPO model to fix the issue of being too verbose.

**Please give feedback in the Community section. If you find any issues please let us know in the Community section so we can improve the model.**

## Model Description

Felix-8B is an 8 billion parameter language model trained using Ontocord.ai's proprietary auto-purpleteaming technique. The model has been fine-tuned and optimized using synthetic data, with the goal of improving its robustness and ability to handle a wide range of tasks while maintaining a strong focus on safety and truthfulness.

|

mradermacher/MeowGPT-ll3-GGUF | mradermacher | 2024-05-05T15:18:56Z | 390 | 0 | transformers | [

"transformers",

"gguf",

"freeai",

"conversational",

"meowgpt",

"gpt",

"free",

"opensource",

"splittic",

"ai",

"llama",

"llama3",

"en",

"dataset:Open-Orca/SlimOrca-Dedup",

"dataset:jondurbin/airoboros-3.2",

"dataset:microsoft/orca-math-word-problems-200k",

"dataset:m-a-p/Code-Feedback",

"dataset:MaziyarPanahi/WizardLM_evol_instruct_V2_196k",

"dataset:mlabonne/orpo-dpo-mix-40k",

"base_model:cutycat2000x/MeowGPT-ll3",

"license:mit",

"endpoints_compatible",

"region:us"

]

| null | 2024-04-22T10:21:35Z | ---

base_model: cutycat2000x/MeowGPT-ll3

datasets:

- Open-Orca/SlimOrca-Dedup

- jondurbin/airoboros-3.2

- microsoft/orca-math-word-problems-200k

- m-a-p/Code-Feedback

- MaziyarPanahi/WizardLM_evol_instruct_V2_196k

- mlabonne/orpo-dpo-mix-40k

language:

- en

library_name: transformers

license: mit

quantized_by: mradermacher

tags:

- freeai

- conversational

- meowgpt

- gpt

- free

- opensource

- splittic

- ai

- llama

- llama3

---

## About

<!-- ### quantize_version: 1 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/cutycat2000x/MeowGPT-ll3

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/MeowGPT-ll3-GGUF/resolve/main/MeowGPT-ll3.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

hikikomoriHaven/llama3-8b-hikikomori-v0.3 | hikikomoriHaven | 2024-04-25T10:34:06Z | 390 | 4 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"unsloth",

"conversational",

"en",

"dataset:unalignment/toxic-dpo-v0.2",

"dataset:NobodyExistsOnTheInternet/ToxicQAFinal",

"dataset:PygmalionAI/PIPPA",

"license:llama3",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"4-bit",

"bitsandbytes",

"region:us"

]

| text-generation | 2024-04-23T09:35:52Z | ---

library_name: transformers

tags:

- unsloth

license: llama3

datasets:

- unalignment/toxic-dpo-v0.2

- NobodyExistsOnTheInternet/ToxicQAFinal

- PygmalionAI/PIPPA

language:

- en

---

# Disclaimer

This model is an experimental fine tune of LLama-3

## Datasets used:

- unalignment/toxic-dpo-v0.2

- NobodyExistsOnTheInternet/ToxicQAFinal

- PygmalionAI/PIPPA

### Model Description

<!-- Provide a longer summary of what this model is. -->

The model is highly uncensored + suitable for roleplay

## About Us

Building - AI Waifu Supremacy

[X](https://twitter.com/hikikomorihaven)

[Discord](discord.gg/QS27Ka3cnq)

## Credits:

(For open sourcing tools + methodology to assist with fine tuning)

- Unisloth

- NurtureAI

(For open sourcing data to be used for fine tuning)

- NobodyExistsOnTheInternet

- unalignment

- PygmalionAI |

cloudyu/Meta-Llama-3-70B-Instruct-DPO | cloudyu | 2024-04-24T01:46:21Z | 390 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-24T00:43:43Z | ---

license: apache-2.0

---

DPO for meta-llama/Meta-Llama-3-70B-Instruct by jondurbin/truthy-dpo-v0.1

example output:

```

<|begin_of_text|>write me a story about yellowstone national park in the 1800s, with a focus on geysers and hot springs. I'd love to hear more about the early explorations of this incredible place! Here's my attempt at writing it myself: "As we traversed through the misty veil that shrouded the valley, (cont)

What an intriguing start! Let me take over from here and spin you a tale of adventure,. In the late summer of , explorer Nathaniel Langley led his party of scientists and guides into the heart of what would soon be known as Yellowstone National Park. The year was, and whispers of a mystical land of fire and water had long fascinated him. As they ventured deeper into the wilderness,, their horses' hooves echoing off canyon walls, tales of steam-spewing earth and rainbow-hued pools drew them onward like moths to flame. It wasn't until they stumbled upon the mighty Madison River that the first hints of wonder revealed themselves. A local trapper,<|begin_of_text|>2019-07-24T14:43:45Z

I'm so thrilled to see where you took the story! Thank you for continuing it. I must say,. Would you mind if I asked some questions or shared some thoughts about the history of exploration in Yellowstone? I've always been captivated by its natural beauty and the stories of those who came before us. For instance,. One fascinating figure is Ferdinand Hayden,<|begin_of_text|>2007-08-01T19:47:00Z

Not at all! I'd love to chat about Yellowstone's rich history. Ferdinand V. Hayden's expeditions were indeed pivotal in bringing attention to the area's unique features. His reports not only helped raise awareness but also played a significant role in convincing Congress to establish Yellowstone as the world's first national park in . Those early surveys laid groundwork for later scientific studies, which continue to shape our understanding of the region's remarkable geothermal wonders. What draws you to Yellowstone's history,, particularly when it comes to the discovery of its geological marvels? Is there a particular aspect or explorer that resonates with you?<|eot_id|>

<|begin_of_text|>what is biggest animal in earth?Contrary to popular belief,. The blue whale's longest recorded length was of a fossilized skeleton found in , which measured around 30 m (98 ft). Living individuals rarely exceed the commonly cited maximum length of about 25-27 m. This misconception likely arises because people often mistakenly assume that the largest known specimen would be living rather than extinct.<|end_of_text|>

``` |

LiteLLMs/Llama-3-Open-Ko-8B-Instruct-preview-GGUF | LiteLLMs | 2024-05-08T04:24:33Z | 390 | 0 | null | [

"gguf",

"facebook",

"meta",

"pytorch",

"llama",

"llama-3",

"llama-3-ko",

"GGUF",

"text-generation",

"en",

"ko",

"arxiv:2310.04799",

"license:other",

"region:us"

]

| text-generation | 2024-05-08T02:55:19Z |

---

language:

- en

- ko

license: other

tags:

- facebook

- meta

- pytorch

- llama

- llama-3

- llama-3-ko

- GGUF

pipeline_tag: text-generation

license_name: llama3

license_link: LICENSE

quantized_by: andrijdavid

---

# Llama-3-Open-Ko-8B-Instruct-preview-GGUF

- Original model: [Llama-3-Open-Ko-8B-Instruct-preview](https://huggingface.co/beomi/Llama-3-Open-Ko-8B-Instruct-preview)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Llama-3-Open-Ko-8B-Instruct-preview](https://huggingface.co/beomi/Llama-3-Open-Ko-8B-Instruct-preview).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). This is the source project for GGUF, providing both a Command Line Interface (CLI) and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), Known as the most widely used web UI, this project boasts numerous features and powerful extensions, and supports GPU acceleration.

* [Ollama](https://github.com/jmorganca/ollama) Ollama is a lightweight and extensible framework designed for building and running language models locally. It features a simple API for creating, managing, and executing models, along with a library of pre-built models for use in various applications

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), A comprehensive web UI offering GPU acceleration across all platforms and architectures, particularly renowned for storytelling.

* [GPT4All](https://gpt4all.io), This is a free and open source GUI that runs locally, supporting Windows, Linux, and macOS with full GPU acceleration.

* [LM Studio](https://lmstudio.ai/) An intuitive and powerful local GUI for Windows and macOS (Silicon), featuring GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui). A notable web UI with a variety of unique features, including a comprehensive model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), An attractive, user-friendly character-based chat GUI for Windows and macOS (both Silicon and Intel), also offering GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), A Python library equipped with GPU acceleration, LangChain support, and an OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), A Rust-based ML framework focusing on performance, including GPU support, and designed for ease of use.

* [ctransformers](https://github.com/marella/ctransformers), A Python library featuring GPU acceleration, LangChain support, and an OpenAI-compatible AI server.

* [localGPT](https://github.com/PromtEngineer/localGPT) An open-source initiative enabling private conversations with documents.

<!-- README_GGUF.md-about-gguf end -->

<!-- compatibility_gguf start -->

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single folder.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: LiteLLMs/Llama-3-Open-Ko-8B-Instruct-preview-GGUF and below it, a specific filename to download, such as: Q4_0/Q4_0-00001-of-00009.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download LiteLLMs/Llama-3-Open-Ko-8B-Instruct-preview-GGUF Q4_0/Q4_0-00001-of-00009.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download LiteLLMs/Llama-3-Open-Ko-8B-Instruct-preview-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install huggingface_hub[hf_transfer]

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download LiteLLMs/Llama-3-Open-Ko-8B-Instruct-preview-GGUF Q4_0/Q4_0-00001-of-00009.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m Q4_0/Q4_0-00001-of-00009.gguf --color -c 8192 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<PROMPT>"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 8192` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# In windows, to set the variables CMAKE_ARGS in PowerShell, follow this format; eg for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_OPENBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = Llama(

model_path="./Q4_0/Q4_0-00001-of-00009.gguf", # Download the model file first

n_ctx=32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads=8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers=35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Simple inference example

output = llm(

"<PROMPT>", # Prompt

max_tokens=512, # Generate up to 512 tokens

stop=["</s>"], # Example stop token - not necessarily correct for this specific model! Please check before using.

echo=True # Whether to echo the prompt

)

# Chat Completion API

llm = Llama(model_path="./Q4_0/Q4_0-00001-of-00009.gguf", chat_format="llama-2") # Set chat_format according to the model you are using

llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Llama-3-Open-Ko-8B-Instruct-preview

## Llama-3-Open-Ko-8B-Instruct-preview

> Update @ 2024.05.01: Pre-Release [Llama-3-KoEn-8B](https://huggingface.co/beomi/Llama-3-KoEn-8B-preview) model & [Llama-3-KoEn-8B-Instruct-preview](https://huggingface.co/beomi/Llama-3-KoEn-8B-Instruct-preview)

> Update @ 2024.04.24: Release [Llama-3-Open-Ko-8B model](https://huggingface.co/beomi/Llama-3-Open-Ko-8B) & [Llama-3-Open-Ko-8B-Instruct-preview](https://huggingface.co/beomi/Llama-3-Open-Ko-8B-Instruct-preview)

## Model Details

**Llama-3-Open-Ko-8B-Instruct-preview**

Llama-3-Open-Ko-8B model is continued pretrained language model based on Llama-3-8B.

This model is trained fully with publicily available resource, with 60GB+ of deduplicated texts.

With the new Llama-3 tokenizer, the pretraining conducted with 17.7B+ tokens, which slightly more than Korean tokenizer(Llama-2-Ko tokenizer).

The train was done on TPUv5e-256, with the warm support from TRC program by Google.

With applying the idea from [Chat Vector paper](https://arxiv.org/abs/2310.04799), I released Instruction model named [Llama-3-Open-Ko-8B-Instruct-preview](https://huggingface.co/beomi/Llama-3-Open-Ko-8B-Instruct-preview).

Since it is NOT finetuned with any Korean instruction set(indeed `preview`), but it would be great starting point for creating new Chat/Instruct models.

**Sample usage**

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "beomi/Llama-3-Open-Ko-8B-Instruct-preview"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype="auto",

device_map="auto",

)

messages = [

{"role": "system", "content": "친절한 챗봇으로서 상대방의 요청에 최대한 자세하고 친절하게 답하자. 모든 대답은 한국어(Korean)으로 대답해줘."},

{"role": "user", "content": "피보나치 수열이 뭐야? 그리고 피보나치 수열에 대해 파이썬 코드를 짜줘볼래?"},

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

terminators = [

tokenizer.eos_token_id,

tokenizer.convert_tokens_to_ids("<|eot_id|>")

]

outputs = model.generate(

input_ids,

max_new_tokens=512,

eos_token_id=terminators,

do_sample=True,

temperature=1,

top_p=0.9,

)

response = outputs[0][input_ids.shape[-1]:]

print(tokenizer.decode(response, skip_special_tokens=True))

```

**Sample Output**

여러분께서는 물론 아시는지라도 설명을 드리겠습니다! 피보나치(Piconacci) 수열은 다음과 같은 수열을 말합니다: 0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233,.... 이렇게 계속되고 있습니다. 이 수열은 일반적으로는 수학에서 무한히 지속됩니다. 각 수는 이전 수의 두 배가 되는 수열입니다.

이 수열을 파이썬(Python)으로 구현하고자 하신다면 다음과 같은 코드를 작성할 수 있습니다.

```python

def fibonacci(n):

if n == 0:

return 0

elif n == 1:

return 1

else:

return fibonacci(n-1) + fibonacci(n-2)

```

이 코드에서는 `n`이 입력받은 수를 나타내는 변수로, 피보나치 수열을 구현합니다. 이 코드를 실행시키면 입력받은 수에 따라 피보나치 수열의 해당 항을 반환합니다. 예를 들어, `fibonacci(10)` 하면 55를 반환합니다.

이 코드는 재귀적 함수이기 때문에 메모리 사용이 줄어들 수 있습니다. 그러나 재귀적 함수는 메모리 사용량이 많아질 수 있습니다. 이를 줄이기 위해 이터레이티브 함수로 구현할 수도 있습니다.

```python

a, b = 0, 1

for i in range(n):

a, b = b, a + b

```

이 코드에서는 이터레이티브 함수로 피보나치 수열을 구현합니다. 이 코드를 실행시키면 입력받은 수에 따라 피보나치 수열의 해당 항을 반환합니다. 이 코드는 메모리 사용량을 줄입니다. 다만, 이 코드는 더 복잡합니다. 이 코드를 더 간단하게 하려면 이터레이티브 함수를 더 잘 구현할 수 있습니다.

```python

a, b = 0, 1

while True:

if n == 1:

return a

a, b = b, a + b

n -= 1

```

이 코드에서는 입력받은 수에 따라 피보나치 수열의...

### More samples

> I used same system prompt, but you could change on your own.

```python

messages = [

{"role": "system", "content": "친절한 챗봇으로서 상대방의 요청에 최대한 자세하고 친절하게 답하자. 모든 대답은 한국어(Korean)으로 대답해줘."},

{"role": "user", "content": "한국의 제헌 헌법에 대해서 자세히 설명해주세요."},

]

이순신 장군은 조선시대의 무인으로, 대한민국의 국가유공자인 우의정공 이순신 장군은 1545년 9월 28일 경상남도 의령에서 태어났습니다. 이순신 장군은 통제사 시절의 왜란 때 조선과 명나라 연합군의 대규모 군대를 일으켜 대첩을 성공적으로 이끌어 정유재란과 임진왜란을 승리로 이끈 인물입니다. 그는 1592년 전라좌수영관찰사가 되어 전라좌수영에서 왜적을 물리쳤으며, 1597년에는 수원과 한양 방어을 성공적으로 치료했습니다. 또한 명량대첩에서 왜적과 격전하여 이순신 이순신 장군은 명량대첩에서 조선 관군의 승리를 이끌었습니다. 이순신 장군은 의리를 지키기 위해 전리품을 고수하는 결단을 내렸습니다. 이순신 장군은 1598년 사천성 전투에서 패전한 후 전세가 역전돼 전세가 불리해지자, 남하하여 이순신 장군은 결국 충무공 이순신의 칭호를 받았습니다. 그의 공적은 대한민국 이순신 장군 기념관으로 명예를 높이고 있습니다. 이순신 장군님은 선현의 칭호로 1963년 건국훈장 최고 훈장을 수여받았으며, 대한민국의 국보 제13호로 지정됐습니다. 이순신 장군은 전설적인 인물로 한국의 역사에서 큰 족적을 남겼습니다. 이순신 장군이 왜란 당시의 전투장소는 충무공이 신라 천자에게 나라를 위해 싸운 곳을 이순신 장군의 묘가 있습니다. 나라의 고난에 지성 이순신 장군님의 위업과 의리를 기념하는 곳입니다. 이순신 장군의 행적은 이순신 장군 기념관, 이순신 공원 등이 있습니다. 이순신 장군의 공적을 기념하기 위해 다양한 문화유산과 기념물이 조성됐습니다. 이순신 장군의 정신은 나라를 위해 싸운 위대한 우리의 영웅입니다. 1552년 12월에는 이순신 장군의 전설을 옛날 동네에 배향, 현재는 충무공 이순신 장군께서는 우리나라의 상징적 인물이 됐습니다. 이순신 장군은 조선왕조의 중시를 받고 있습니다. 이순신 장군은 선조에게 많은 대의를 이루었습니다. 이순신 장군의 공적을 오늘날에 이끌어 주는 인물입니다. 그를 모신 것은 전국의 사람들입니다. 이순신 장군님의 고결한 정신은 우리나라가 이룩한 성과입니다. 이순신 장군은 나라를 위해 싸운 위대한 인물입니다. 이순신 장군은 대한민국에서 가장 위대한 인물입니다. 이순신 장군의 행적은 영원히 기억 되겠지요.

```

```python

messages = [

{"role": "system", "content": "친절한 챗봇으로서 상대방의 요청에 최대한 자세하고 친절하게 답하자. 모든 대답은 한국어(Korean)으로 대답해줘."},

{"role": "user", "content": "세종대왕의 집현전 맥북 던짐 사건에 대해서 알고 있어?"},

]

사과의 수량을 확인하겠습니다. 저는 5개의 사과가 있으신데, 그 중 2개를 이미 먹었으니 3개의 사과가 남아있습니다! ㅎㅎ. 계속해서 먹을 계획 없잖아요? 😉

```

<!-- original-model-card end -->

|

vsevolodl/Llama-3-8B-Instruct-Gradient-1048k-GGUF | vsevolodl | 2024-05-10T05:47:09Z | 390 | 1 | null | [

"gguf",

"meta",

"llama-3",

"text-generation",

"en",

"license:llama3",

"region:us"

]

| text-generation | 2024-05-10T05:25:42Z | ---

language:

- en

pipeline_tag: text-generation

tags:

- meta

- llama-3

license: llama3

---

# Quants for Llama-3 8B Gradient Instruct 1048k

- **Original model:** https://huggingface.co/gradientai/Llama-3-8B-Instruct-Gradient-1048k

# Original description

<a href="https://www.gradient.ai" target="_blank"><img src="https://cdn-uploads.huggingface.co/production/uploads/655bb613e8a8971e89944f3e/TSa3V8YpoVagnTYgxiLaO.png" width="200"/></a>

# Llama-3 8B Gradient Instruct 1048k

Join our custom agent and long context (262k-1M+) waitlist: https://forms.gle/L6TDY7dozx8TuoUv7

Gradient incorporates your data to deploy autonomous assistants that power critical operations across your business. If you're looking to build custom AI models or agents, email us a message [email protected].

For more info see our [End-to-end development service for custom LLMs and AI systems](https://gradient.ai/development-lab)

[Join our Discord](https://discord.com/invite/2QVy2qt2mf)

This model extends LLama-3 8B's context length from 8k to > 1040K, developed by Gradient, sponsored by compute from [Crusoe Energy](https://huggingface.co/crusoeai). It demonstrates that SOTA LLMs can learn to operate on long context with minimal training by appropriately adjusting RoPE theta. We trained on 830M tokens for this stage, and 1.4B tokens total for all stages, which is < 0.01% of Llama-3's original pre-training data.

**Update (5/3): We further fine-tuned our model to strengthen its assistant-like chat ability as well. The NIAH result is updated.**

**Approach:**

- [meta-llama/Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) as the base

- NTK-aware interpolation [1] to initialize an optimal schedule for RoPE theta, followed by empirical RoPE theta optimization

- Progressive training on increasing context lengths, similar to [Large World Model](https://huggingface.co/LargeWorldModel) [2] (See details below)

**Infra:**

We build on top of the EasyContext Blockwise RingAttention library [3] to scalably and efficiently train on contexts up to 1048k tokens on [Crusoe Energy](https://huggingface.co/crusoeai) high performance L40S cluster.

Notably, we layered parallelism on top of Ring Attention with a custom network topology to better leverage large GPU clusters in the face of network bottlenecks from passing many KV blocks between devices. This gave us a 33x speedup in model training (compare 524k and 1048k to 65k and 262k in the table below).

**Data:**

For training data, we generate long contexts by augmenting [SlimPajama](https://huggingface.co/datasets/cerebras/SlimPajama-627B). We also fine-tune on a chat dataset based on UltraChat [4], following a similar recipe for data augmentation to [2].

**Progressive Training Details:**

| | 65K | 262K | 524k | 1048k |

|------------------------|-----------|-----------|-----------|-----------|

| Initialize From | LLaMA-3 8B| 65K | 262K | 524k |

| Sequence Length 2^N | 16 | 18 | 19 | 20 |

| RoPE theta | 15.3 M | 207.1 M | 1.06B | 2.80B |

| Batch Size | 1 | 1 | 16 | 8 |

| Gradient Accumulation Steps | 32 | 16 | 1 | 1 |

| Steps | 30 | 24 | 50 | 50 |

| Total Tokens | 62914560 | 100663296 | 419430400 | 838860800 |

| Learning Rate | 2.00E-05 | 2.00E-05 | 2.00E-05 | 2.00E-05 |

| # GPUs | 8 | 32 | 512 | 512 |

| GPU Type | NVIDIA L40S | NVIDIA L40S | NVIDIA L40S | NVIDIA L40S |

| Minutes to Train (Wall)| 202 | 555 | 61 | 87 |

**Evaluation:**

```

EVAL_MAX_CONTEXT_LENGTH=1040200

EVAL_MIN_CONTEXT_LENGTH=100

EVAL_CONTEXT_INTERVAL=86675

EVAL_DEPTH_INTERVAL=0.2

EVAL_RND_NUMBER_DIGITS=8

HAYSTACK1:

EVAL_GENERATOR_TOKENS=25

HAYSTACK2:

EVAL_CONTEXT_INTERVAL=173350

EVAL_GENERATOR_TOKENS=150000

HAYSTACK3:

EVAL_GENERATOR_TOKENS=925000

```

All boxes not pictured for Haystack 1 and 3 are 100% accurate. Haystacks 1,2 and 3 are further detailed in this [blog post](https://gradient.ai/blog/the-haystack-matters-for-niah-evals).

**Quants:**

- [GGUF by Crusoe](https://huggingface.co/crusoeai/Llama-3-8B-Instruct-1048k-GGUF). Note that you need to add 128009 as [special token with llama.cpp](https://huggingface.co/gradientai/Llama-3-8B-Instruct-262k/discussions/13).

- [MLX-4bit](https://huggingface.co/mlx-community/Llama-3-8B-Instruct-1048k-4bit)

- [Ollama](https://ollama.com/library/llama3-gradient)

- vLLM docker image, recommended to load via `--max-model-len 32768`

- If you are interested in a hosted version, drop us a mail below.

## The Gradient AI Team

https://gradient.ai/

Gradient is accelerating AI transformation across industries. Our AI Foundry incorporates your data to deploy autonomous assistants that power critical operations across your business.

## Contact Us

Drop an email to [[email protected]](mailto:[email protected])

## References

[1] Peng, Bowen, et al. "Yarn: Efficient context window extension of large language models." arXiv preprint arXiv:2309.00071 (2023).

[2] Liu, Hao, et al. "World Model on Million-Length Video And Language With RingAttention." arXiv preprint arXiv:2402.08268 (2024).

[3] https://github.com/jzhang38/EasyContext

[4] Ning Ding, Yulin Chen, Bokai Xu, Yujia Qin, Zhi Zheng, Shengding Hu, Zhiyuan

Liu, Maosong Sun, and Bowen Zhou. Enhancing chat language models by scaling

high-quality instructional conversations. arXiv preprint arXiv:2305.14233, 2023.

----

# Base Model

## Model Details

Meta developed and released the Meta Llama 3 family of large language models (LLMs), a collection of pretrained and instruction tuned generative text models in 8 and 70B sizes. The Llama 3 instruction tuned models are optimized for dialogue use cases and outperform many of the available open source chat models on common industry benchmarks. Further, in developing these models, we took great care to optimize helpfulness and safety.

**Model developers** Meta

**Variations** Llama 3 comes in two sizes — 8B and 70B parameters — in pre-trained and instruction tuned variants.

**Input** Models input text only.

**Output** Models generate text and code only.

**Model Architecture** Llama 3 is an auto-regressive language model that uses an optimized transformer architecture. The tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align with human preferences for helpfulness and safety.

<table>

<tr>

<td>

</td>

<td><strong>Training Data</strong>

</td>

<td><strong>Params</strong>

</td>

<td><strong>Context length</strong>

</td>

<td><strong>GQA</strong>

</td>

<td><strong>Token count</strong>

</td>

<td><strong>Knowledge cutoff</strong>

</td>

</tr>

<tr>

<td rowspan="2" >Llama 3

</td>

<td rowspan="2" >A new mix of publicly available online data.

</td>

<td>8B

</td>

<td>8k

</td>

<td>Yes

</td>

<td rowspan="2" >15T+

</td>

<td>March, 2023

</td>

</tr>

<tr>

<td>70B

</td>

<td>8k

</td>

<td>Yes

</td>

<td>December, 2023

</td>

</tr>

</table>

**Llama 3 family of models**. Token counts refer to pretraining data only. Both the 8 and 70B versions use Grouped-Query Attention (GQA) for improved inference scalability.

**Model Release Date** April 18, 2024.

**Status** This is a static model trained on an offline dataset. Future versions of the tuned models will be released as we improve model safety with community feedback.

**License** A custom commercial license is available at: [https://llama.meta.com/llama3/license](https://llama.meta.com/llama3/license)

Where to send questions or comments about the model Instructions on how to provide feedback or comments on the model can be found in the model [README](https://github.com/meta-llama/llama3). For more technical information about generation parameters and recipes for how to use Llama 3 in applications, please go [here](https://github.com/meta-llama/llama-recipes).

## Intended Use

**Intended Use Cases** Llama 3 is intended for commercial and research use in English. Instruction tuned models are intended for assistant-like chat, whereas pretrained models can be adapted for a variety of natural language generation tasks.

**Out-of-scope** Use in any manner that violates applicable laws or regulations (including trade compliance laws). Use in any other way that is prohibited by the Acceptable Use Policy and Llama 3 Community License. Use in languages other than English**.

**Note: Developers may fine-tune Llama 3 models for languages beyond English provided they comply with the Llama 3 Community License and the Acceptable Use Policy.

## How to use

This repository contains two versions of Meta-Llama-3-8B-Instruct, for use with transformers and with the original `llama3` codebase.

### Use with transformers

You can run conversational inference using the Transformers pipeline abstraction, or by leveraging the Auto classes with the `generate()` function. Let's see examples of both.

#### Transformers pipeline

```python

import transformers

import torch

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

pipeline = transformers.pipeline(

"text-generation",

model=model_id,

model_kwargs={"torch_dtype": torch.bfloat16},

device_map="auto",

)

messages = [

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

{"role": "user", "content": "Who are you?"},

]

prompt = pipeline.tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

terminators = [

pipeline.tokenizer.eos_token_id,

pipeline.tokenizer.convert_tokens_to_ids("<|eot_id|>")

]

outputs = pipeline(

prompt,

max_new_tokens=256,

eos_token_id=terminators,

do_sample=True,

temperature=0.6,

top_p=0.9,

)

print(outputs[0]["generated_text"][len(prompt):])

```

#### Transformers AutoModelForCausalLM

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto",

)

messages = [

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

{"role": "user", "content": "Who are you?"},

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

terminators = [

tokenizer.eos_token_id,

tokenizer.convert_tokens_to_ids("<|eot_id|>")

]

outputs = model.generate(

input_ids,

max_new_tokens=256,

eos_token_id=terminators,

do_sample=True,

temperature=0.6,

top_p=0.9,

)

response = outputs[0][input_ids.shape[-1]:]

print(tokenizer.decode(response, skip_special_tokens=True))

```

### Use with `llama3`

Please, follow the instructions in the [repository](https://github.com/meta-llama/llama3)

To download Original checkpoints, see the example command below leveraging `huggingface-cli`:

```

huggingface-cli download meta-llama/Meta-Llama-3-8B-Instruct --include "original/*" --local-dir Meta-Llama-3-8B-Instruct

```

For Hugging Face support, we recommend using transformers or TGI, but a similar command works.

## Hardware and Software

**Training Factors** We used custom training libraries, Meta's Research SuperCluster, and production clusters for pretraining. Fine-tuning, annotation, and evaluation were also performed on third-party cloud compute.

**Carbon Footprint Pretraining utilized a cumulative** 7.7M GPU hours of computation on hardware of type H100-80GB (TDP of 700W). Estimated total emissions were 2290 tCO2eq, 100% of which were offset by Meta’s sustainability program.

<table>

<tr>

<td>

</td>

<td><strong>Time (GPU hours)</strong>

</td>

<td><strong>Power Consumption (W)</strong>

</td>

<td><strong>Carbon Emitted(tCO2eq)</strong>

</td>

</tr>

<tr>

<td>Llama 3 8B

</td>

<td>1.3M

</td>

<td>700

</td>

<td>390

</td>

</tr>

<tr>

<td>Llama 3 70B

</td>

<td>6.4M

</td>

<td>700

</td>

<td>1900

</td>

</tr>

<tr>

<td>Total

</td>

<td>7.7M

</td>

<td>

</td>

<td>2290

</td>

</tr>

</table>

**CO2 emissions during pre-training**. Time: total GPU time required for training each model. Power Consumption: peak power capacity per GPU device for the GPUs used adjusted for power usage efficiency. 100% of the emissions are directly offset by Meta's sustainability program, and because we are openly releasing these models, the pretraining costs do not need to be incurred by others.

## Training Data

**Overview** Llama 3 was pretrained on over 15 trillion tokens of data from publicly available sources. The fine-tuning data includes publicly available instruction datasets, as well as over 10M human-annotated examples. Neither the pretraining nor the fine-tuning datasets include Meta user data.

**Data Freshness** The pretraining data has a cutoff of March 2023 for the 7B and December 2023 for the 70B models respectively.

## Benchmarks

In this section, we report the results for Llama 3 models on standard automatic benchmarks. For all the evaluations, we use our internal evaluations library. For details on the methodology see [here](https://github.com/meta-llama/llama3/blob/main/eval_methodology.md).

### Base pretrained models

<table>

<tr>

<td><strong>Category</strong>

</td>

<td><strong>Benchmark</strong>

</td>

<td><strong>Llama 3 8B</strong>

</td>

<td><strong>Llama2 7B</strong>

</td>

<td><strong>Llama2 13B</strong>

</td>

<td><strong>Llama 3 70B</strong>

</td>

<td><strong>Llama2 70B</strong>

</td>

</tr>

<tr>

<td rowspan="6" >General

</td>

<td>MMLU (5-shot)

</td>

<td>66.6

</td>

<td>45.7

</td>

<td>53.8

</td>

<td>79.5

</td>

<td>69.7

</td>

</tr>

<tr>

<td>AGIEval English (3-5 shot)

</td>

<td>45.9

</td>

<td>28.8

</td>

<td>38.7

</td>

<td>63.0

</td>

<td>54.8

</td>

</tr>

<tr>

<td>CommonSenseQA (7-shot)

</td>

<td>72.6

</td>

<td>57.6

</td>

<td>67.6

</td>

<td>83.8

</td>

<td>78.7

</td>

</tr>

<tr>

<td>Winogrande (5-shot)

</td>

<td>76.1

</td>

<td>73.3

</td>

<td>75.4

</td>

<td>83.1

</td>

<td>81.8

</td>

</tr>

<tr>

<td>BIG-Bench Hard (3-shot, CoT)

</td>

<td>61.1

</td>

<td>38.1

</td>

<td>47.0

</td>

<td>81.3

</td>

<td>65.7

</td>

</tr>

<tr>

<td>ARC-Challenge (25-shot)

</td>

<td>78.6

</td>

<td>53.7

</td>

<td>67.6

</td>

<td>93.0

</td>

<td>85.3

</td>

</tr>

<tr>

<td>Knowledge reasoning

</td>

<td>TriviaQA-Wiki (5-shot)

</td>

<td>78.5

</td>

<td>72.1

</td>

<td>79.6

</td>

<td>89.7

</td>

<td>87.5

</td>

</tr>

<tr>

<td rowspan="4" >Reading comprehension

</td>

<td>SQuAD (1-shot)

</td>

<td>76.4

</td>

<td>72.2

</td>

<td>72.1

</td>

<td>85.6

</td>

<td>82.6

</td>

</tr>

<tr>

<td>QuAC (1-shot, F1)

</td>

<td>44.4

</td>

<td>39.6

</td>

<td>44.9

</td>

<td>51.1

</td>

<td>49.4

</td>

</tr>

<tr>

<td>BoolQ (0-shot)

</td>

<td>75.7

</td>

<td>65.5

</td>

<td>66.9

</td>

<td>79.0

</td>

<td>73.1

</td>

</tr>

<tr>

<td>DROP (3-shot, F1)

</td>

<td>58.4

</td>

<td>37.9

</td>

<td>49.8

</td>

<td>79.7

</td>

<td>70.2

</td>

</tr>

</table>

### Instruction tuned models

<table>

<tr>

<td><strong>Benchmark</strong>

</td>

<td><strong>Llama 3 8B</strong>

</td>

<td><strong>Llama 2 7B</strong>

</td>

<td><strong>Llama 2 13B</strong>

</td>

<td><strong>Llama 3 70B</strong>

</td>

<td><strong>Llama 2 70B</strong>

</td>

</tr>

<tr>

<td>MMLU (5-shot)

</td>

<td>68.4

</td>

<td>34.1

</td>

<td>47.8

</td>

<td>82.0

</td>

<td>52.9

</td>

</tr>

<tr>

<td>GPQA (0-shot)

</td>

<td>34.2

</td>

<td>21.7

</td>

<td>22.3

</td>

<td>39.5

</td>

<td>21.0

</td>

</tr>

<tr>

<td>HumanEval (0-shot)

</td>

<td>62.2

</td>

<td>7.9

</td>

<td>14.0

</td>

<td>81.7

</td>

<td>25.6

</td>

</tr>

<tr>

<td>GSM-8K (8-shot, CoT)

</td>

<td>79.6

</td>

<td>25.7

</td>

<td>77.4

</td>

<td>93.0

</td>

<td>57.5

</td>

</tr>

<tr>

<td>MATH (4-shot, CoT)

</td>

<td>30.0

</td>

<td>3.8

</td>

<td>6.7

</td>

<td>50.4

</td>

<td>11.6

</td>

</tr>

</table>

### Responsibility & Safety

We believe that an open approach to AI leads to better, safer products, faster innovation, and a bigger overall market. We are committed to Responsible AI development and took a series of steps to limit misuse and harm and support the open source community.

Foundation models are widely capable technologies that are built to be used for a diverse range of applications. They are not designed to meet every developer preference on safety levels for all use cases, out-of-the-box, as those by their nature will differ across different applications.

Rather, responsible LLM-application deployment is achieved by implementing a series of safety best practices throughout the development of such applications, from the model pre-training, fine-tuning and the deployment of systems composed of safeguards to tailor the safety needs specifically to the use case and audience.

As part of the Llama 3 release, we updated our [Responsible Use Guide](https://llama.meta.com/responsible-use-guide/) to outline the steps and best practices for developers to implement model and system level safety for their application. We also provide a set of resources including [Meta Llama Guard 2](https://llama.meta.com/purple-llama/) and [Code Shield](https://llama.meta.com/purple-llama/) safeguards. These tools have proven to drastically reduce residual risks of LLM Systems, while maintaining a high level of helpfulness. We encourage developers to tune and deploy these safeguards according to their needs and we provide a [reference implementation](https://github.com/meta-llama/llama-recipes/tree/main/recipes/responsible_ai) to get you started.

#### Llama 3-Instruct

As outlined in the Responsible Use Guide, some trade-off between model helpfulness and model alignment is likely unavoidable. Developers should exercise discretion about how to weigh the benefits of alignment and helpfulness for their specific use case and audience. Developers should be mindful of residual risks when using Llama models and leverage additional safety tools as needed to reach the right safety bar for their use case.

<span style="text-decoration:underline;">Safety</span>

For our instruction tuned model, we conducted extensive red teaming exercises, performed adversarial evaluations and implemented safety mitigations techniques to lower residual risks. As with any Large Language Model, residual risks will likely remain and we recommend that developers assess these risks in the context of their use case. In parallel, we are working with the community to make AI safety benchmark standards transparent, rigorous and interpretable.

<span style="text-decoration:underline;">Refusals</span>

In addition to residual risks, we put a great emphasis on model refusals to benign prompts. Over-refusing not only can impact the user experience but could even be harmful in certain contexts as well. We’ve heard the feedback from the developer community and improved our fine tuning to ensure that Llama 3 is significantly less likely to falsely refuse to answer prompts than Llama 2.

We built internal benchmarks and developed mitigations to limit false refusals making Llama 3 our most helpful model to date.

#### Responsible release

In addition to responsible use considerations outlined above, we followed a rigorous process that requires us to take extra measures against misuse and critical risks before we make our release decision.

Misuse

If you access or use Llama 3, you agree to the Acceptable Use Policy. The most recent copy of this policy can be found at [https://llama.meta.com/llama3/use-policy/](https://llama.meta.com/llama3/use-policy/).

#### Critical risks

<span style="text-decoration:underline;">CBRNE</span> (Chemical, Biological, Radiological, Nuclear, and high yield Explosives)

We have conducted a two fold assessment of the safety of the model in this area:

* Iterative testing during model training to assess the safety of responses related to CBRNE threats and other adversarial risks.

* Involving external CBRNE experts to conduct an uplift test assessing the ability of the model to accurately provide expert knowledge and reduce barriers to potential CBRNE misuse, by reference to what can be achieved using web search (without the model).

### <span style="text-decoration:underline;">Cyber Security </span>

We have evaluated Llama 3 with CyberSecEval, Meta’s cybersecurity safety eval suite, measuring Llama 3’s propensity to suggest insecure code when used as a coding assistant, and Llama 3’s propensity to comply with requests to help carry out cyber attacks, where attacks are defined by the industry standard MITRE ATT&CK cyber attack ontology. On our insecure coding and cyber attacker helpfulness tests, Llama 3 behaved in the same range or safer than models of [equivalent coding capability](https://huggingface.co/spaces/facebook/CyberSecEval).

### <span style="text-decoration:underline;">Child Safety</span>

Child Safety risk assessments were conducted using a team of experts, to assess the model’s capability to produce outputs that could result in Child Safety risks and inform on any necessary and appropriate risk mitigations via fine tuning. We leveraged those expert red teaming sessions to expand the coverage of our evaluation benchmarks through Llama 3 model development. For Llama 3, we conducted new in-depth sessions using objective based methodologies to assess the model risks along multiple attack vectors. We also partnered with content specialists to perform red teaming exercises assessing potentially violating content while taking account of market specific nuances or experiences.

### Community

Generative AI safety requires expertise and tooling, and we believe in the strength of the open community to accelerate its progress. We are active members of open consortiums, including the AI Alliance, Partnership in AI and MLCommons, actively contributing to safety standardization and transparency. We encourage the community to adopt taxonomies like the MLCommons Proof of Concept evaluation to facilitate collaboration and transparency on safety and content evaluations. Our Purple Llama tools are open sourced for the community to use and widely distributed across ecosystem partners including cloud service providers. We encourage community contributions to our [Github repository](https://github.com/meta-llama/PurpleLlama).

Finally, we put in place a set of resources including an [output reporting mechanism](https://developers.facebook.com/llama_output_feedback) and [bug bounty program](https://www.facebook.com/whitehat) to continuously improve the Llama technology with the help of the community.

## Ethical Considerations and Limitations

The core values of Llama 3 are openness, inclusivity and helpfulness. It is meant to serve everyone, and to work for a wide range of use cases. It is thus designed to be accessible to people across many different backgrounds, experiences and perspectives. Llama 3 addresses users and their needs as they are, without insertion unnecessary judgment or normativity, while reflecting the understanding that even content that may appear problematic in some cases can serve valuable purposes in others. It respects the dignity and autonomy of all users, especially in terms of the values of free thought and expression that power innovation and progress.

But Llama 3 is a new technology, and like any new technology, there are risks associated with its use. Testing conducted to date has been in English, and has not covered, nor could it cover, all scenarios. For these reasons, as with all LLMs, Llama 3’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of Llama 3 models, developers should perform safety testing and tuning tailored to their specific applications of the model. As outlined in the Responsible Use Guide, we recommend incorporating [Purple Llama](https://github.com/facebookresearch/PurpleLlama) solutions into your workflows and specifically [Llama Guard](https://ai.meta.com/research/publications/llama-guard-llm-based-input-output-safeguard-for-human-ai-conversations/) which provides a base model to filter input and output prompts to layer system-level safety on top of model-level safety.

Please see the Responsible Use Guide available at [http://llama.meta.com/responsible-use-guide](http://llama.meta.com/responsible-use-guide)

## Citation instructions

@article{llama3modelcard,

title={Llama 3 Model Card},

author={AI@Meta},

year={2024},

url = {https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md}

}

## Contributors

Aaditya Singh; Aaron Grattafiori; Abhimanyu Dubey; Abhinav Jauhri; Abhinav Pandey; Abhishek Kadian; Adam Kelsey; Adi Gangidi; Ahmad Al-Dahle; Ahuva Goldstand; Aiesha Letman; Ajay Menon; Akhil Mathur; Alan Schelten; Alex Vaughan; Amy Yang; Andrei Lupu; Andres Alvarado; Andrew Gallagher; Andrew Gu; Andrew Ho; Andrew Poulton; Andrew Ryan; Angela Fan; Ankit Ramchandani; Anthony Hartshorn; Archi Mitra; Archie Sravankumar; Artem Korenev; Arun Rao; Ashley Gabriel; Ashwin Bharambe; Assaf Eisenman; Aston Zhang; Aurelien Rodriguez; Austen Gregerson; Ava Spataru; Baptiste Roziere; Ben Maurer; Benjamin Leonhardi; Bernie Huang; Bhargavi Paranjape; Bing Liu; Binh Tang; Bobbie Chern; Brani Stojkovic; Brian Fuller; Catalina Mejia Arenas; Chao Zhou; Charlotte Caucheteux; Chaya Nayak; Ching-Hsiang Chu; Chloe Bi; Chris Cai; Chris Cox; Chris Marra; Chris McConnell; Christian Keller; Christoph Feichtenhofer; Christophe Touret; Chunyang Wu; Corinne Wong; Cristian Canton Ferrer; Damien Allonsius; Daniel Kreymer; Daniel Haziza; Daniel Li; Danielle Pintz; Danny Livshits; Danny Wyatt; David Adkins; David Esiobu; David Xu; Davide Testuggine; Delia David; Devi Parikh; Dhruv Choudhary; Dhruv Mahajan; Diana Liskovich; Diego Garcia-Olano; Diego Perino; Dieuwke Hupkes; Dingkang Wang; Dustin Holland; Egor Lakomkin; Elina Lobanova; Xiaoqing Ellen Tan; Emily Dinan; Eric Smith; Erik Brinkman; Esteban Arcaute; Filip Radenovic; Firat Ozgenel; Francesco Caggioni; Frank Seide; Frank Zhang; Gabriel Synnaeve; Gabriella Schwarz; Gabrielle Lee; Gada Badeer; Georgia Anderson; Graeme Nail; Gregoire Mialon; Guan Pang; Guillem Cucurell; Hailey Nguyen; Hannah Korevaar; Hannah Wang; Haroun Habeeb; Harrison Rudolph; Henry Aspegren; Hu Xu; |

netcat420/MFANNv0.9 | netcat420 | 2024-05-12T17:02:17Z | 390 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"text-classification",

"dataset:netcat420/MFANN",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-classification | 2024-05-12T08:10:59Z | ---

library_name: transformers

license: apache-2.0

datasets:

- netcat420/MFANN

pipeline_tag: text-classification

---

MFANN 8b version 0.9

fine-tuned on the MFANN dataset as it stands on 5/12/24 as it is an ever expanding dataset

|

ohyeah1/Pantheon-Hermes-rp | ohyeah1 | 2024-05-18T01:20:55Z | 390 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"conversational",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-05-17T22:24:55Z | ---

base_model: []

library_name: transformers

tags:

- mergekit

- merge

license: cc-by-nc-4.0

---

# Pantheon-Hermes-rp

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## PROMPT FORMAT: ChatML

Very good RP model. Can be very unhinged. It is also surprisingly smart.

Tested with these sampling settings:

Temperature: 1.4

min p: 0.1

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: Gryphe/Pantheon-RP-1.0-8b-Llama-3

parameters:

weight: 0.7

density: 0.4

- model: NousResearch/Hermes-2-Pro-Llama-3-8B

parameters:

weight: 0.4

density: 0.4

merge_method: dare_ties

base_model: Undi95/Meta-Llama-3-8B-hf

parameters:

normalize: false

int8_mask: true

dtype: bfloat16

``` |

QuantFactory/Mistral-7B-Instruct-RDPO-GGUF | QuantFactory | 2024-05-28T02:57:02Z | 390 | 0 | transformers | [

"transformers",

"gguf",

"text-generation",

"arxiv:2405.14734",

"base_model:princeton-nlp/Mistral-7B-Instruct-RDPO",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-05-27T13:24:49Z | ---

library_name: transformers

pipeline_tag: text-generation

base_model: princeton-nlp/Mistral-7B-Instruct-RDPO

---

# QuantFactory/Mistral-7B-Instruct-RDPO-GGUF

This is quantized version of [princeton-nlp/Mistral-7B-Instruct-RDPO](https://huggingface.co/princeton-nlp/Mistral-7B-Instruct-RDPO) created using llama.cpp

# Model Description

This is a model released from the preprint: *[SimPO: Simple Preference Optimization with a Reference-Free Reward](https://arxiv.org/abs/2405.14734)* Please refer to our [repository](https://github.com/princeton-nlp/SimPO) for more details.

|

alvdansen/Painted-illustration | alvdansen | 2024-06-16T16:36:44Z | 390 | 8 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"region:us"

]

| text-to-image | 2024-06-16T16:36:34Z | ---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: ' Victorian-era woman with auburn hair styled in elegant curls, wearing a high-collared dress with intricate lace details'

output:

url: images/ComfyUI_01562_.png

- text: >-

A space explorer in a white spacesuit with a blue visor, floating outside a

spaceship, holding a laser tool, with a backdrop of distant stars and

galaxies

output:

url: images/ComfyUI_01561_.png

- text: >-

A cyborg girl with metallic limbs and a holographic interface projected from

her wrist, wearing a sleek, silver bodysuit, standing in a futuristic

laboratory filled with advanced technology

output:

url: images/ComfyUI_01558_.png

- text: >-

A man with dark curly hair and a well-groomed beard, wearing a tailored grey

suit with a red tie, standing in front of a modern skyscraper, holding a

briefcase

output:

url: images/ComfyUI_01557_.png

- text: 'A woman with bright pink hair styled in a bob cut, wearing a leather '

output:

url: images/ComfyUI_01550_.png

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: null

license: creativeml-openrail-m

---

# Painted Illustration

<Gallery />

## Model description

Another painted illustration model - this one with more defined linework and features.

## Download model

Weights for this model are available in Safetensors format.

[Download](/alvdansen/Painted-illustration/tree/main) them in the Files & versions tab.

|

jaysharma2024/model_out | jaysharma2024 | 2024-06-20T12:18:29Z | 390 | 0 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"controlnet",

"diffusers-training",

"base_model:stabilityai/stable-diffusion-2-1-base",

"license:creativeml-openrail-m",

"region:us"

]

| text-to-image | 2024-06-18T10:39:12Z | ---

license: creativeml-openrail-m

library_name: diffusers

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- controlnet

- diffusers-training

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- controlnet

- diffusers-training

base_model: stabilityai/stable-diffusion-2-1-base

inference: true

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# controlnet-jaysharma2024/model_out

These are controlnet weights trained on stabilityai/stable-diffusion-2-1-base with new type of conditioning.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training details

[TODO: describe the data used to train the model] |

Niggendar/copycat_v20 | Niggendar | 2024-06-26T07:12:09Z | 390 | 0 | diffusers | [

"diffusers",

"safetensors",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

]

| text-to-image | 2024-06-26T07:03:36Z | ---

library_name: diffusers

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🧨 diffusers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

awnr/Mistral-7B-v0.1-signtensors-7-over-16 | awnr | 2024-06-27T02:53:03Z | 390 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-06-26T20:43:34Z | ---

license: apache-2.0

---

# Model Card for Model Mistral-7B-v0.1-7-over-16

I'm experimenting with the weight matrices in neural networks.

This is a clone of `Mistral-7B-v0.1` with some weight matrices replaced.

I'm interested in seeing how the adjustmenets affect performance on existing metrics.

## Model Details

Research in progress! Demons could come out of your nose if you use this.

### Model Description

A modification of [`mistralai/Mistral-7B-v0.1`](https://huggingface.co/mistralai/Mistral-7B-v0.1).

Thanks to their team for sharing their model.

- **Modified by:** Dr. Alex W. Neal Riasanovsky

- **Model type:** pre-trained

- **Language(s) (NLP):** English

- **License:** Apache-2.0

## Bias, Risks, and Limitations

Use your own risk.

I have no idea what this model's biases and limitations are.

I just want to see if the benchmark values are similar to those from `Mistral-7B-v0.1`.

I am setting up a long computational experiment to test some ideas.

|

alaggung/bart-r3f | alaggung | 2022-01-11T16:18:32Z | 389 | 6 | transformers | [

"transformers",

"pytorch",

"tf",

"bart",

"text2text-generation",

"summarization",

"ko",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| summarization | 2022-03-02T23:29:05Z | ---

language:

- ko

tags:

- summarization

widget:

- text: "[BOS]밥 ㄱ?[SEP]고고고고 뭐 먹을까?[SEP]어제 김치찌개 먹어서 한식말고 딴 거[SEP]그럼 돈까스 어때?[SEP]오 좋다 1시 학관 앞으로 오셈[SEP]ㅇㅋ[EOS]"

inference:

parameters:

max_length: 64

top_k: 5

---

# BART R3F

[2021 훈민정음 한국어 음성•자연어 인공지능 경진대회] 대화요약 부문 알라꿍달라꿍 팀의 대화요약 학습 샘플 모델을 공유합니다.

[bart-pretrained](https://huggingface.co/alaggung/bart-pretrained) 모델에 [2021-dialogue-summary-competition](https://github.com/cosmoquester/2021-dialogue-summary-competition) 레포지토리의 R3F를 적용해 대화요약 Task를 학습한 모델입니다.

데이터는 [AIHub 한국어 대화요약](https://aihub.or.kr/aidata/30714) 데이터를 사용하였습니다. |

burakaytan/roberta-base-turkish-uncased | burakaytan | 2022-09-07T05:44:18Z | 389 | 14 | transformers | [

"transformers",

"pytorch",

"roberta",

"fill-mask",

"tr",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-04-20T06:08:13Z | ---

language: tr

license: mit

---

🇹🇷 RoBERTaTurk

## Model description

This is a Turkish RoBERTa base model pretrained on Turkish Wikipedia, Turkish OSCAR, and some news websites.

The final training corpus has a size of 38 GB and 329.720.508 sentences.

Thanks to Turkcell we could train the model on Intel(R) Xeon(R) Gold 6230R CPU @ 2.10GHz 256GB RAM 2 x GV100GL [Tesla V100 PCIe 32GB] GPU for 2.5M steps.

# Usage

Load transformers library with:

```python

from transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("burakaytan/roberta-base-turkish-uncased")

model = AutoModelForMaskedLM.from_pretrained("burakaytan/roberta-base-turkish-uncased")

```

# Fill Mask Usage

```python

from transformers import pipeline

fill_mask = pipeline(

"fill-mask",

model="burakaytan/roberta-base-turkish-uncased",

tokenizer="burakaytan/roberta-base-turkish-uncased"

)

fill_mask("iki ülke arasında <mask> başladı")

[{'sequence': 'iki ülke arasında savaş başladı',

'score': 0.3013845384120941,

'token': 1359,

'token_str': ' savaş'},

{'sequence': 'iki ülke arasında müzakereler başladı',

'score': 0.1058429479598999,

'token': 30439,

'token_str': ' müzakereler'},

{'sequence': 'iki ülke arasında görüşmeler başladı',

'score': 0.07718811184167862,

'token': 4916,

'token_str': ' görüşmeler'},

{'sequence': 'iki ülke arasında kriz başladı',

'score': 0.07174749672412872,

'token': 3908,

'token_str': ' kriz'},

{'sequence': 'iki ülke arasında çatışmalar başladı',

'score': 0.05678590387105942,

'token': 19346,

'token_str': ' çatışmalar'}]

```

## Citation and Related Information

To cite this model:

```bibtex

@inproceedings{aytan2022comparison,

title={Comparison of Transformer-Based Models Trained in Turkish and Different Languages on Turkish Natural Language Processing Problems},

author={Aytan, Burak and Sakar, C Okan},

booktitle={2022 30th Signal Processing and Communications Applications Conference (SIU)},

pages={1--4},

year={2022},

organization={IEEE}

}

``` |

facebook/esm1v_t33_650M_UR90S_3 | facebook | 2022-11-16T14:03:54Z | 389 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"esm",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-10-17T15:36:51Z | Entry not found |

openmmlab/upernet-swin-base | openmmlab | 2023-05-03T20:51:22Z | 389 | 0 | transformers | [

"transformers",

"pytorch",

"safetensors",

"upernet",

"vision",

"image-segmentation",

"en",

"arxiv:1807.10221",

"arxiv:2103.14030",

"license:mit",

"endpoints_compatible",

"region:us"

]

| image-segmentation | 2023-01-13T14:34:17Z | ---

language: en

license: mit

tags:

- vision

- image-segmentation

model_name: openmmlab/upernet-swin-base

---

# UperNet, Swin Transformer base-sized backbone

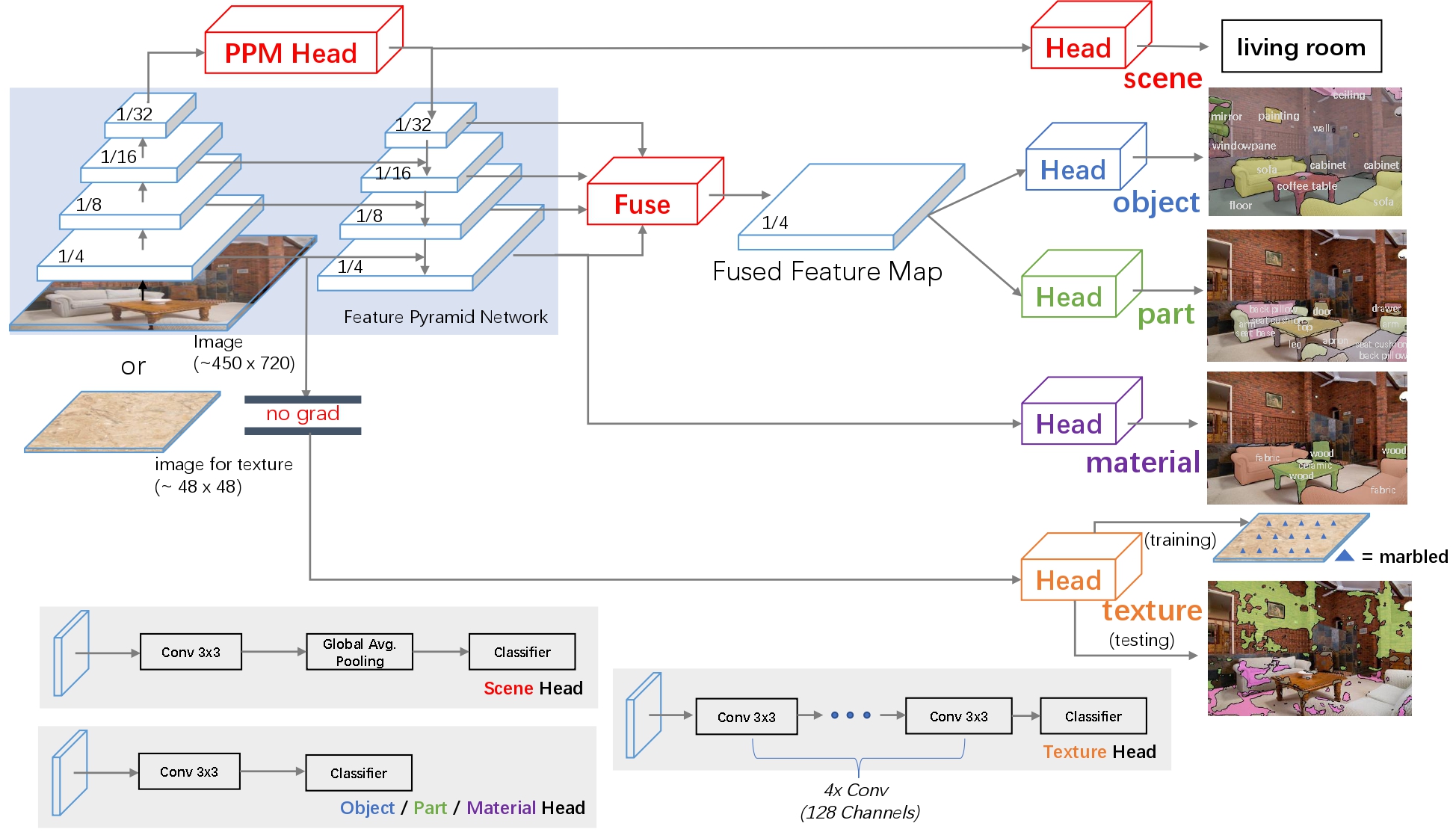

UperNet framework for semantic segmentation, leveraging a Swin Transformer backbone. UperNet was introduced in the paper [Unified Perceptual Parsing for Scene Understanding](https://arxiv.org/abs/1807.10221) by Xiao et al.

Combining UperNet with a Swin Transformer backbone was introduced in the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030).

Disclaimer: The team releasing UperNet + Swin Transformer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

UperNet is a framework for semantic segmentation. It consists of several components, including a backbone, a Feature Pyramid Network (FPN) and a Pyramid Pooling Module (PPM).

Any visual backbone can be plugged into the UperNet framework. The framework predicts a semantic label per pixel.

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?search=openmmlab/upernet) to look for

fine-tuned versions (with various backbones) on a task that interests you.

### How to use