| modelId (string, length 5 to 122) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, length 1 to 901k) |
|---|---|---|---|---|---|---|---|---|---|

NbAiLab/nb-bert-base-mnli

|

NbAiLab

| 2023-03-24T11:32:00Z | 363 | 9 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"safetensors",

"bert",

"text-classification",

"nb-bert",

"zero-shot-classification",

"tensorflow",

"norwegian",

"no",

"dataset:mnli",

"dataset:multi_nli",

"dataset:xnli",

"arxiv:1909.00161",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

zero-shot-classification

| 2022-03-02T23:29:04Z |

---

language: no

license: cc-by-4.0

thumbnail: https://raw.githubusercontent.com/NBAiLab/notram/master/images/nblogo_2.png

pipeline_tag: zero-shot-classification

tags:

- nb-bert

- zero-shot-classification

- pytorch

- tensorflow

- norwegian

- bert

datasets:

- mnli

- multi_nli

- xnli

widget:

- example_title: Nyhetsartikkel om FHI

text: Folkehelseinstituttets mest optimistiske anslag er at alle voksne er ferdigvaksinert innen midten av september.

candidate_labels: helse, politikk, sport, religion

---

**Release 1.0** (March 11, 2021)

# NB-Bert base model finetuned on Norwegian machine translated MNLI

## Description

The most effective way of creating a good classifier is to finetune a pre-trained model for the specific task at hand. However, in many cases this is simply impossible.

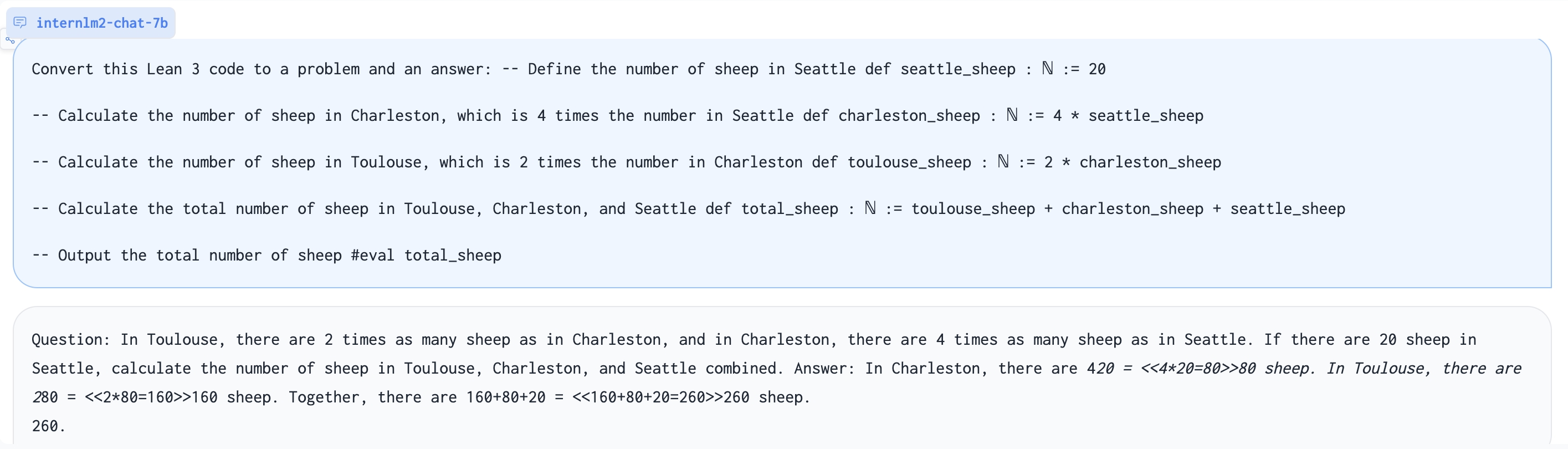

[Yin et al.](https://arxiv.org/abs/1909.00161) proposed a clever way of using pre-trained MNLI models as zero-shot sequence classifiers. The method works by reformulating the question as an MNLI hypothesis. To find out whether a text is about "sport", we simply state that "This text is about sport" ("Denne teksten handler om sport").

When the model is finetuned on the 400k-example MNLI task, it is in many cases able to solve this classification task. There is no MNLI set of this size in Norwegian, so we trained the model on a machine-translated version of the original MNLI set.
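The reformulation step can be sketched in a few lines. This is a minimal illustration rather than the model's actual preprocessing: `build_hypotheses` is a hypothetical helper, and the template is the Norwegian example sentence quoted above.

```python
def build_hypotheses(labels, template="Denne teksten handler om {}."):
    """Turn each candidate label into an NLI hypothesis sentence."""
    return {label: template.format(label) for label in labels}


premise = (
    "Folkehelseinstituttets mest optimistiske anslag er at alle voksne "
    "er ferdigvaksinert innen midten av september."
)
hypotheses = build_hypotheses(["helse", "politikk", "sport", "religion"])

# Each (premise, hypothesis) pair is what an MNLI model would score for entailment.
for label, hypothesis in hypotheses.items():
    print(f"{label}: {hypothesis}")
```

The label whose hypothesis receives the strongest entailment score is taken as the predicted class.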

## Testing the model

For testing the model, we recommend the [NbAiLab Colab Notebook](https://colab.research.google.com/gist/peregilk/769b5150a2f807219ab8f15dd11ea449/nbailab-mnli-norwegian-demo.ipynb)

## Hugging Face zero-shot-classification pipeline

The easiest way to try this out is by using the Hugging Face pipeline. Note that you will get better results with a Norwegian hypothesis template than with the default English one.

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification", model="NbAiLab/nb-bert-base-mnli")

```

You can then use this pipeline to classify sequences into any of the class names you specify.

```python

sequence_to_classify = 'Folkehelseinstituttets mest optimistiske anslag er at alle voksne er ferdigvaksinert innen midten av september.'

candidate_labels = ['politikk', 'helse', 'sport', 'religion']

hypothesis_template = 'Dette eksempelet er {}.'

# `multi_label` replaces the older, deprecated `multi_class` argument.
classifier(sequence_to_classify, candidate_labels, hypothesis_template=hypothesis_template, multi_label=True)

# {'labels': ['helse', 'politikk', 'sport', 'religion'],

# 'scores': [0.4210019111633301, 0.0674605593085289, 0.000840459018945694, 0.0007541406666859984],

# 'sequence': 'Folkehelseinstituttets mest optimistiske anslag er at alle over 18 år er ferdigvaksinert innen midten av september.'}

```
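The scores in the output above do not sum to 1, which is what we would expect when each label is scored independently. The sketch below contrasts independent and mutually exclusive scoring. It assumes that independent scoring takes a softmax over the (contradiction, entailment) logits of each label, while exclusive scoring takes a softmax over the entailment logits of all labels. The logits are made-up numbers, not real model output.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical (contradiction, entailment) logits per label; not real model output.
logits = {
    "helse": (-0.2, 0.3),
    "politikk": (1.5, -1.1),
    "sport": (3.9, -3.2),
}

# Independent scoring: softmax over (contradiction, entailment) for each label,
# keeping the entailment probability. The scores need not sum to 1.
independent = {label: softmax(list(pair))[1] for label, pair in logits.items()}

# Exclusive scoring: softmax over the entailment logits of all labels.
# The scores sum to 1, so the labels compete with each other.
labels = list(logits)
exclusive = dict(zip(labels, softmax([logits[name][1] for name in labels])))

print(independent)
print(exclusive)
```

Independent scoring is the better fit when a text can belong to several categories at once, or to none of them.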

## More information

For more information on the model, see

https://github.com/NBAiLab/notram

Here you will also find a Colab notebook explaining in more detail how to use the zero-shot-classification pipeline.

|

Salesforce/mixqg-3b

|

Salesforce

| 2021-10-18T16:19:00Z | 363 | 7 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"en",

"arxiv:2110.08175",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] |

text2text-generation

| 2022-03-02T23:29:04Z |

---

language: en

widget:

- text: Robert Boyle \\n In the late 17th century, Robert Boyle proved that air is necessary for combustion.

---

# MixQG (3b-sized model)

MixQG is a new question generation model pre-trained on a collection of QA datasets with a mix of answer types. It was introduced in the paper [MixQG: Neural Question Generation with Mixed Answer Types](https://arxiv.org/abs/2110.08175) and the associated code is released in [this](https://github.com/salesforce/QGen) repository.

### How to use

Using the Hugging Face pipeline abstraction:

```python
from transformers import pipeline

nlp = pipeline("text2text-generation", model='Salesforce/mixqg-3b', tokenizer='Salesforce/mixqg-3b')

CONTEXT = "In the late 17th century, Robert Boyle proved that air is necessary for combustion."
ANSWER = "Robert Boyle"

def format_inputs(context: str, answer: str):
    # Input layout: answer, a literal backslash-n separator, then the context.
    return f"{answer} \\n {context}"

text = format_inputs(CONTEXT, ANSWER)

nlp(text)
# should output [{'generated_text': 'Who proved that air is necessary for combustion?'}]
```

Using the pre-trained model directly:

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained('Salesforce/mixqg-3b')
model = AutoModelForSeq2SeqLM.from_pretrained('Salesforce/mixqg-3b')

CONTEXT = "In the late 17th century, Robert Boyle proved that air is necessary for combustion."
ANSWER = "Robert Boyle"

def format_inputs(context: str, answer: str):
    # Input layout: answer, a literal backslash-n separator, then the context.
    return f"{answer} \\n {context}"

text = format_inputs(CONTEXT, ANSWER)

input_ids = tokenizer(text, return_tensors="pt").input_ids
generated_ids = model.generate(input_ids, max_length=32, num_beams=4)
# batch_decode returns a list with one string per generated sequence.
output = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

print(output)
# should output ['Who proved that air is necessary for combustion?']
```
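Since the answer is part of the input, one context can yield several questions by varying the answer. The sketch below only prepares the input strings; `format_batch` and the extra answers are hypothetical additions for illustration, and the resulting list would still have to be passed to the pipeline or tokenizer shown above.

```python
def format_inputs(context: str, answer: str) -> str:
    # Same layout as above: answer, a literal backslash-n separator, then the context.
    return f"{answer} \\n {context}"


def format_batch(context: str, answers):
    """Hypothetical helper: build one model input per candidate answer."""
    return [format_inputs(context, answer) for answer in answers]


CONTEXT = "In the late 17th century, Robert Boyle proved that air is necessary for combustion."
batch = format_batch(CONTEXT, ["Robert Boyle", "air", "the late 17th century"])

for text in batch:
    print(text)
```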

### Citation

```

@misc{murakhovska2021mixqg,

title={MixQG: Neural Question Generation with Mixed Answer Types},

author={Lidiya Murakhovs'ka and Chien-Sheng Wu and Tong Niu and Wenhao Liu and Caiming Xiong},

year={2021},

eprint={2110.08175},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

jonatasgrosman/whisper-large-zh-cv11

|

jonatasgrosman

| 2022-12-22T23:51:35Z | 363 | 66 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"zh",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-18T07:28:34Z |

---

language:

- zh

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

- cer

model-index:

- name: Whisper Large Chinese (Mandarin)

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 zh-CN

type: mozilla-foundation/common_voice_11_0

config: zh-CN

split: test

args: zh-CN

metrics:

- name: WER

type: wer

value: 55.02141421204441

- name: CER

type: cer

value: 9.550758567294045

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: google/fleurs cmn_hans_cn

type: google/fleurs

config: cmn_hans_cn

split: test

args: cmn_hans_cn

metrics:

- name: WER

type: wer

value: 70.62596203181118

- name: CER

type: cer

value: 11.761282471826888

---

# Whisper Large Chinese (Mandarin)

This model is a fine-tuned version of [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) on Chinese (Mandarin) using the train and validation splits of [Common Voice 11](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0). Not all of the validation split was used for training: I held out 1k samples from it for evaluation during fine-tuning.

## Usage

```python
from transformers import pipeline

transcriber = pipeline(
    "automatic-speech-recognition",
    model="jonatasgrosman/whisper-large-zh-cv11"
)

transcriber.model.config.forced_decoder_ids = (
    transcriber.tokenizer.get_decoder_prompt_ids(
        language="zh",
        task="transcribe"
    )
)

transcription = transcriber("path/to/my_audio.wav")
```

## Evaluation

I evaluated the model on the test split of two datasets: [Common Voice 11](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0) (the dataset used for fine-tuning) and [Fleurs](https://huggingface.co/datasets/google/fleurs) (not seen during fine-tuning). Because Whisper can transcribe casing and punctuation, I evaluated it in two scenarios, one using the raw text and the other using normalized text (lowercased, with punctuation removed). For Fleurs, I also evaluated the model on only the samples without numerical values. Fleurs writes numbers differently from Common Voice, the dataset used for fine-tuning, so this difference is expected to hurt the model's performance on such transcriptions.
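The normalization and the two metrics can be sketched as follows. This is a minimal illustration, not the evaluation script behind the reported numbers: the helper names and the example sentences are made up, and words are assumed to be split on whitespace.

```python
import unicodedata


def normalize(text: str) -> str:
    """Lowercase and drop every Unicode punctuation character."""
    return "".join(
        ch for ch in text.lower() if not unicodedata.category(ch).startswith("P")
    )


def edit_distance(ref, hyp) -> int:
    """Levenshtein distance between two sequences (single-row dynamic programming)."""
    row = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        prev_diag, row[0] = row[0], i
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            prev_diag, row[j] = row[j], min(row[j] + 1, row[j - 1] + 1, prev_diag + cost)
    return row[len(hyp)]


def error_rate(ref_tokens, hyp_tokens) -> float:
    """Edits needed to turn the hypothesis into the reference, per reference token."""
    return edit_distance(ref_tokens, hyp_tokens) / len(ref_tokens)


reference = "今天天气很好。"   # made-up example, not taken from either dataset
hypothesis = "今天天气真好"

ref, hyp = normalize(reference), normalize(hypothesis)
cer = error_rate(list(ref), list(hyp))      # character level
wer = error_rate(ref.split(), hyp.split())  # whitespace-separated "words"
print(f"CER={cer:.3f} WER={wer:.3f}")
```

With whitespace splitting, an unsegmented Chinese sentence counts as a single word, so one wrong character makes the whole sentence wrong. This may partly explain why the WER figures are so much higher than the CER figures, although how words were segmented for the reported numbers is not stated here. Both rates can exceed 100 when the hypothesis contains many insertions.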

### Common Voice 11

| | CER | WER |

| --- | --- | --- |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) | 9.31 | 55.94 |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) + text normalization | 9.55 | 55.02 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) | 33.33 | 101.80 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) + text normalization | 29.90 | 95.91 |

### Fleurs

| | CER | WER |

| --- | --- | --- |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) | 15.00 | 93.45 |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) + text normalization | 11.76 | 70.63 |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) + keep only non-numeric samples | 10.95 | 87.91 |

| [jonatasgrosman/whisper-large-zh-cv11](https://huggingface.co/jonatasgrosman/whisper-large-zh-cv11) + text normalization + keep only non-numeric samples | 7.83 | 62.12 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) | 23.49 | 101.28 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) + text normalization | 17.58 | 83.22 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) + keep only non-numeric samples | 21.03 | 101.95 |

| [openai/whisper-large-v2](https://huggingface.co/openai/whisper-large-v2) + text normalization + keep only non-numeric samples | 15.22 | 79.28 |

|

SargeZT/controlnet-sd-xl-1.0-depth-faid-vidit

|

SargeZT

| 2023-07-29T07:29:51Z | 363 | 5 |

diffusers

|

[

"diffusers",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"controlnet",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-07-28T22:48:38Z |

---

license: creativeml-openrail-m

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- controlnet

inference: true

---

# controlnet-SargeZT/controlnet-sd-xl-1.0-depth-faid-vidit

These are controlnet weights trained on stabilityai/stable-diffusion-xl-base-1.0 with a new type of conditioning.

You can find some example images below.

prompt: the vaporwave hills from your nightmare, unsettling, light temperature 3500, light direction south-east

## License

[SDXL 1.0 License](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/blob/main/LICENSE.md)

|

TheBloke/LLaMA-7b-AWQ

|

TheBloke

| 2023-11-09T18:18:27Z | 363 | 1 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"4-bit",

"awq",

"region:us"

] |

text-generation

| 2023-09-20T02:27:31Z |

---

base_model: https://ai.meta.com/blog/large-language-model-llama-meta-ai

inference: false

license: other

model_creator: Meta

model_name: LLaMA 7B

model_type: llama

prompt_template: '{prompt}

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# LLaMA 7B - AWQ

- Model creator: [Meta](https://huggingface.co/none)

- Original model: [LLaMA 7B](https://ai.meta.com/blog/large-language-model-llama-meta-ai)

<!-- description start -->

## Description

This repo contains AWQ model files for [Meta's LLaMA 7b](https://ai.meta.com/blog/large-language-model-llama-meta-ai).

### About AWQ

AWQ is an efficient, accurate and blazing-fast low-bit weight quantization method, currently supporting 4-bit quantization. Compared to GPTQ, it offers faster Transformers-based inference.

It is also now supported by the continuous batching server [vLLM](https://github.com/vllm-project/vllm), allowing use of AWQ models for high-throughput concurrent inference in multi-user server scenarios. Note that, at the time of writing, overall throughput is still lower than running vLLM with unquantised models. However, using AWQ enables much smaller GPUs, which can lead to easier deployment and overall cost savings. For example, a 70B model can be run on 1 x 48GB GPU instead of 2 x 80GB.
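The GPU sizing example follows from simple arithmetic on the weights. The sketch below is a rough estimate under stated assumptions: it counts the weights only and ignores quantization scales, the KV cache, and activations, so real memory use will be higher. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for name, n_params in [("7B", 7e9), ("70B", 70e9)]:
    fp16 = weight_memory_gb(n_params, 16)
    awq4 = weight_memory_gb(n_params, 4)
    print(f"{name}: fp16 ~{fp16:.1f} GB, 4-bit ~{awq4:.1f} GB")
```

For a 70B model this gives roughly 140 GB in fp16, which needs two 80GB GPUs, against roughly 35 GB at 4 bits, which leaves headroom on a single 48GB GPU.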

<!-- description end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/LLaMA-7b-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/LLaMA-7b-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/LLaMA-7b-GGUF)

* [Meta's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/huggyllama/llama-7b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: None

```

{prompt}

```

<!-- prompt-template end -->

<!-- README_AWQ.md-provided-files start -->

## Provided files and AWQ parameters

For my first release of AWQ models, I am releasing 128g models only. I will consider adding 32g as well if there is interest, and once I have done perplexity and evaluation comparisons, but at this time 32g models are still not fully tested with AutoAWQ and vLLM.

Models are released as sharded safetensors files.

| Branch | Bits | GS | AWQ Dataset | Seq Len | Size |

| ------ | ---- | -- | ----------- | ------- | ---- |

| [main](https://huggingface.co/TheBloke/LLaMA-7b-AWQ/tree/main) | 4 | 128 | [wikitext](https://huggingface.co/datasets/wikitext/viewer/wikitext-2-v1/test) | 4096 | 3.89 GB |

<!-- README_AWQ.md-provided-files end -->

<!-- README_AWQ.md-use-from-vllm start -->

## Serving this model from vLLM

Documentation on installing and using vLLM [can be found here](https://vllm.readthedocs.io/en/latest/).

When using vLLM as a server, pass the `--quantization awq` parameter, for example:

```shell
python3 -m vllm.entrypoints.api_server --model TheBloke/LLaMA-7b-AWQ --quantization awq
```

When using vLLM from Python code, pass the `quantization="awq"` parameter, for example:

```python
from vllm import LLM, SamplingParams

prompts = [
    "Hello, my name is",
    "The president of the United States is",
    "The capital of France is",
    "The future of AI is",
]
sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

llm = LLM(model="TheBloke/LLaMA-7b-AWQ", quantization="awq")

outputs = llm.generate(prompts, sampling_params)

# Print the outputs.
for output in outputs:
    prompt = output.prompt
    generated_text = output.outputs[0].text
    print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")
```

<!-- README_AWQ.md-use-from-vllm end -->

<!-- README_AWQ.md-use-from-python start -->

## How to use this AWQ model from Python code

### Install the necessary packages

Requires: [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) 0.0.2 or later

```shell

pip3 install autoawq

```

If you have problems installing [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) using the pre-built wheels, install it from source instead:

```shell

pip3 uninstall -y autoawq

git clone https://github.com/casper-hansen/AutoAWQ

cd AutoAWQ

pip3 install .

```

### You can then try the following example code

```python
from awq import AutoAWQForCausalLM
from transformers import AutoTokenizer

model_name_or_path = "TheBloke/LLaMA-7b-AWQ"

# Load model
model = AutoAWQForCausalLM.from_quantized(model_name_or_path, fuse_layers=True,
                                          trust_remote_code=False, safetensors=True)
tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=False)

prompt = "Tell me about AI"
prompt_template=f'''{prompt}
'''

print("\n\n*** Generate:")

tokens = tokenizer(
    prompt_template,
    return_tensors='pt'
).input_ids.cuda()

# Generate output
generation_output = model.generate(
    tokens,
    do_sample=True,
    temperature=0.7,
    top_p=0.95,
    top_k=40,
    max_new_tokens=512
)

print("Output: ", tokenizer.decode(generation_output[0]))

# Inference can also be done using transformers' pipeline
from transformers import pipeline

print("*** Pipeline:")
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=512,
    do_sample=True,
    temperature=0.7,
    top_p=0.95,
    top_k=40,
    repetition_penalty=1.1
)

print(pipe(prompt_template)[0]['generated_text'])
```

<!-- README_AWQ.md-use-from-python end -->

<!-- README_AWQ.md-compatibility start -->

## Compatibility

The files provided are tested to work with [AutoAWQ](https://github.com/casper-hansen/AutoAWQ), and [vLLM](https://github.com/vllm-project/vllm).

[Huggingface Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference) is not yet compatible with AWQ, but a PR is open which should bring support soon: [TGI PR #781](https://github.com/huggingface/text-generation-inference/issues/781).

<!-- README_AWQ.md-compatibility end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

# Original model card: Meta's LLaMA 7b

This contains the weights for the LLaMA-7b model. This model is under a non-commercial license (see the LICENSE file).

You should only use this repository if you have been granted access to the model by filling out [this form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform?usp=send_form) but either lost your copy of the weights or had trouble converting them to the Transformers format.

|

TheBloke/MegaMix-S1-13B-GGUF

|

TheBloke

| 2023-09-30T18:32:19Z | 363 | 0 |

transformers

|

[

"transformers",

"gguf",

"llama",

"base_model:gradientputri/MegaMix-S1-13B",

"license:llama2",

"text-generation-inference",

"region:us"

] | null | 2023-09-30T18:26:24Z |

---

base_model: gradientputri/MegaMix-S1-13B

inference: false

license: llama2

model_creator: Putri

model_name: Megamix S1 13B

model_type: llama

prompt_template: '{prompt}

'

quantized_by: TheBloke

---


# Megamix S1 13B - GGUF

- Model creator: [Putri](https://huggingface.co/gradientputri)

- Original model: [Megamix S1 13B](https://huggingface.co/gradientputri/MegaMix-S1-13B)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Putri's Megamix S1 13B](https://huggingface.co/gradientputri/MegaMix-S1-13B).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/MegaMix-S1-13B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/MegaMix-S1-13B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF)

* [Putri's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/gradientputri/MegaMix-S1-13B)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Unknown

```

{prompt}

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
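The bits-per-weight figures for Q3_K to Q6_K can be reproduced from the block layouts listed in the details above. The sketch below is a worked calculation under one assumption that the list does not state: each super-block also stores one fp16 scale, plus one fp16 min for the "type-1" formats. `bits_per_weight` is a hypothetical helper, not part of llama.cpp.

```python
def bits_per_weight(blocks, weights_per_block, quant_bits, scale_bits,
                    has_mins, fp16_fields):
    """Effective bits per weight for one super-block."""
    n_weights = blocks * weights_per_block
    per_block_meta = scale_bits * (2 if has_mins else 1)  # scale, plus min for "type-1"
    total_bits = (
        n_weights * quant_bits      # the quantized weights themselves
        + blocks * per_block_meta   # quantized per-block scales (and mins)
        + 16 * fp16_fields          # assumed fp16 super-block scale (and min)
    )
    return total_bits / n_weights


layouts = {
    "Q3_K": bits_per_weight(16, 16, 3, 6, has_mins=False, fp16_fields=1),
    "Q4_K": bits_per_weight(8, 32, 4, 6, has_mins=True, fp16_fields=2),
    "Q5_K": bits_per_weight(8, 32, 5, 6, has_mins=True, fp16_fields=2),
    "Q6_K": bits_per_weight(16, 16, 6, 8, has_mins=False, fp16_fields=1),
}
for name, bpw in layouts.items():
    print(name, bpw)
```

For Q4_K, for example, a super-block holds 256 weights: 1024 bits of weights, 96 bits of block scales and mins, and 32 bits of fp16 fields give 1152 / 256 = 4.5 bpw.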

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [megamix-s1-13b.Q2_K.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [megamix-s1-13b.Q3_K_S.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [megamix-s1-13b.Q3_K_M.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [megamix-s1-13b.Q3_K_L.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [megamix-s1-13b.Q4_0.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [megamix-s1-13b.Q4_K_S.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [megamix-s1-13b.Q4_K_M.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [megamix-s1-13b.Q5_0.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [megamix-s1-13b.Q5_K_S.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [megamix-s1-13b.Q5_K_M.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [megamix-s1-13b.Q6_K.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [megamix-s1-13b.Q8_0.gguf](https://huggingface.co/TheBloke/MegaMix-S1-13B-GGUF/blob/main/megamix-s1-13b.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
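In every row of the table, "Max RAM required" is the file size plus 2.5 GB. Under that reading, choosing a file for a given RAM budget can be sketched as below. `largest_fitting` is a hypothetical helper, and the result is only an estimate for CPU-only inference.

```python
# (quant method, file size in GB) copied from the table above.
FILES = [
    ("Q2_K", 5.43), ("Q3_K_S", 5.66), ("Q3_K_M", 6.34), ("Q3_K_L", 6.93),
    ("Q4_0", 7.37), ("Q4_K_S", 7.41), ("Q4_K_M", 7.87), ("Q5_0", 8.97),
    ("Q5_K_S", 8.97), ("Q5_K_M", 9.23), ("Q6_K", 10.68), ("Q8_0", 13.83),
]
OVERHEAD_GB = 2.5  # difference between "Max RAM required" and "Size" in every row


def largest_fitting(ram_budget_gb: float):
    """Return the largest quant whose estimated RAM need fits the budget, or None."""
    fitting = [(size, name) for name, size in FILES if size + OVERHEAD_GB <= ram_budget_gb]
    return max(fitting)[1] if fitting else None


for budget in (7.0, 11.0, 16.0):
    print(budget, largest_fitting(budget))
```

Largest is not always best: the table recommends the K-quant variants over the legacy Q4_0 and Q5_0 files of similar size.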

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/MegaMix-S1-13B-GGUF and below it, a specific filename to download, such as: megamix-s1-13b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/MegaMix-S1-13B-GGUF megamix-s1-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/MegaMix-S1-13B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/MegaMix-S1-13B-GGUF megamix-s1-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
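
To preview which files an `--include` pattern would select before downloading, the sketch below applies a shell-style glob to a few file names with Python's standard `fnmatch` module. It assumes the pattern follows ordinary glob semantics; the file list is limited to names that appear in the table above, and `select` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# File names taken from the table above.
FILES = [
    "megamix-s1-13b.Q4_K_M.gguf",
    "megamix-s1-13b.Q5_0.gguf",
    "megamix-s1-13b.Q5_K_S.gguf",
    "megamix-s1-13b.Q5_K_M.gguf",
    "megamix-s1-13b.Q6_K.gguf",
    "megamix-s1-13b.Q8_0.gguf",
]


def select(pattern, names=FILES):
    """Return the names matched by a shell-style glob pattern."""
    return [name for name in names if fnmatchcase(name, pattern)]


print(select("*Q4_K*gguf"))
print(select("*Q5_K*gguf"))
```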

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m megamix-s1-13b.Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "{prompt}"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p "{prompt}"` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)
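
When `llama.cpp` is launched from a script, it can help to assemble the arguments programmatically. The sketch below only builds the argument list for the command shown above; `build_llama_command` is a hypothetical helper, and it assumes the `./main` binary and the flags from this section.

```python
import shlex


def build_llama_command(model_path, prompt, gpu_layers=32, context=4096):
    """Build the example ./main invocation as an argument list."""
    cmd = ["./main"]
    if gpu_layers > 0:
        # Omit -ngl entirely when there is no GPU acceleration.
        cmd += ["-ngl", str(gpu_layers)]
    cmd += [
        "-m", model_path,
        "--color",
        "-c", str(context),
        "--temp", "0.7",
        "--repeat_penalty", "1.1",
        "-n", "-1",
        "-p", prompt,
    ]
    return cmd


cmd = build_llama_command("megamix-s1-13b.Q4_K_M.gguf", "Hello", gpu_layers=0)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`; keeping it as a list avoids shell-quoting problems with prompts that contain spaces or quotes.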

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/MegaMix-S1-13B-GGUF", model_file="megamix-s1-13b.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```
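
The section above also mentions llama-cpp-python. A minimal sketch of the equivalent call follows, assuming `llama-cpp-python` is installed and the GGUF file has already been downloaded to the current directory. The helper names are hypothetical, and the parameter values simply mirror the `llama.cpp` command earlier in this README.

```python
def llama_settings(model_path, gpu_layers=32, context=4096):
    """Constructor arguments mirroring -m, -ngl and -c from the llama.cpp command."""
    return {"model_path": model_path, "n_gpu_layers": gpu_layers, "n_ctx": context}


def complete(prompt, settings, max_tokens=128):
    """Run one completion; llama_cpp is imported lazily so this file loads without it."""
    from llama_cpp import Llama  # requires: pip install llama-cpp-python

    llm = Llama(**settings)
    result = llm(prompt, max_tokens=max_tokens, temperature=0.7, repeat_penalty=1.1)
    return result["choices"][0]["text"]


settings = llama_settings("./megamix-s1-13b.Q4_K_M.gguf")
# Uncomment once the model file is present:
# print(complete("AI is going to", settings))
```

Set `gpu_layers=0` if no GPU acceleration is available, as with `gpu_layers` in the ctransformers example.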

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Pierre Kircher, Stanislav Ovsiannikov, Michael Levine, Eugene Pentland, Andrey, 준교 김, Randy H, Fred von Graf, Artur Olbinski, Caitlyn Gatomon, terasurfer, Jeff Scroggin, James Bentley, Vadim, Gabriel Puliatti, Harry Royden McLaughlin, Sean Connelly, Dan Guido, Edmond Seymore, Alicia Loh, subjectnull, AzureBlack, Manuel Alberto Morcote, Thomas Belote, Lone Striker, Chris Smitley, Vitor Caleffi, Johann-Peter Hartmann, Clay Pascal, biorpg, Brandon Frisco, sidney chen, transmissions 11, Pedro Madruga, jinyuan sun, Ajan Kanaga, Emad Mostaque, Trenton Dambrowitz, Jonathan Leane, Iucharbius, usrbinkat, vamX, George Stoitzev, Luke Pendergrass, theTransient, Olakabola, Swaroop Kallakuri, Cap'n Zoog, Brandon Phillips, Michael Dempsey, Nikolai Manek, danny, Matthew Berman, Gabriel Tamborski, alfie_i, Raymond Fosdick, Tom X Nguyen, Raven Klaugh, LangChain4j, Magnesian, Illia Dulskyi, David Ziegler, Mano Prime, Luis Javier Navarrete Lozano, Erik Bjäreholt, 阿明, Nathan Dryer, Alex, Rainer Wilmers, zynix, TL, Joseph William Delisle, John Villwock, Nathan LeClaire, Willem Michiel, Joguhyik, GodLy, OG, Alps Aficionado, Jeffrey Morgan, ReadyPlayerEmma, Tiffany J. Kim, Sebastain Graf, Spencer Kim, Michael Davis, webtim, Talal Aujan, knownsqashed, John Detwiler, Imad Khwaja, Deo Leter, Jerry Meng, Elijah Stavena, Rooh Singh, Pieter, SuperWojo, Alexandros Triantafyllidis, Stephen Murray, Ai Maven, ya boyyy, Enrico Ros, Ken Nordquist, Deep Realms, Nicholas, Spiking Neurons AB, Elle, Will Dee, Jack West, RoA, Luke @flexchar, Viktor Bowallius, Derek Yates, Subspace Studios, jjj, Toran Billups, Asp the Wyvern, Fen Risland, Ilya, NimbleBox.ai, Chadd, Nitin Borwankar, Emre, Mandus, Leonard Tan, Kalila, K, Trailburnt, S_X, Cory Kujawski

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Putri's Megamix S1 13B

No original model card was available.

<!-- original-model-card end -->

|

LanguageBind/LanguageBind_Depth

|

LanguageBind

| 2024-02-01T06:57:09Z | 363 | 0 |

transformers

|

[

"transformers",

"pytorch",

"LanguageBindDepth",

"zero-shot-image-classification",

"arxiv:2310.01852",

"license:mit",

"endpoints_compatible",

"region:us"

] |

zero-shot-image-classification

| 2023-10-06T09:07:38Z |

---

license: mit

---

<p align="center">

<img src="https://s11.ax1x.com/2024/02/01/pFMDAm9.png" width="250" style="margin-bottom: 0.2;"/>

</p>

<h2 align="center"> <a href="https://arxiv.org/pdf/2310.01852.pdf">【ICLR 2024 🔥】LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment</a></h2>

<h5 align="center"> If you like our project, please give us a star ⭐ on GitHub for the latest updates. </h5>

## 📰 News

* **[2024.01.27]** 👀👀👀 Our [MoE-LLaVA](https://github.com/PKU-YuanGroup/MoE-LLaVA) is released! A sparse model with 3B parameters outperformed the dense model with 7B parameters.

* **[2024.01.16]** 🔥🔥🔥 Our LanguageBind has been accepted at ICLR 2024! We earned review scores of 6(3)8(6)6(6)6(6) [here](https://openreview.net/forum?id=QmZKc7UZCy&noteId=OgsxQxAleA).

* **[2023.12.15]** 💪💪💪 We have expanded the 💥💥💥 VIDAL dataset and now have **10M video-text data**. We have launched **LanguageBind_Video 1.5**; check our [model zoo](#-model-zoo).

* **[2023.12.10]** We expand the 💥💥💥 VIDAL dataset and now have **10M depth and 10M thermal data**. We are in the process of uploading thermal and depth data on [Hugging Face](https://huggingface.co/datasets/LanguageBind/VIDAL-Depth-Thermal) and expect the whole process to last 1-2 months.

* **[2023.11.27]** 🔥🔥🔥 We have updated our [paper](https://arxiv.org/abs/2310.01852) with emergency zero-shot results; check our ✨ [results](#emergency-results).

* **[2023.11.26]** 💥💥💥 We have open-sourced all textual sources and corresponding YouTube IDs [here](DATASETS.md).

* **[2023.11.26]** 📣📣📣 We have open-sourced fully fine-tuned **Video & Audio** models, which improve performance once again; check our [model zoo](#-model-zoo).

* **[2023.11.22]** We are about to release a fully fine-tuned version, and the **HUGE** version is currently undergoing training.

* **[2023.11.21]** 💥 We are releasing sample data in [DATASETS.md](DATASETS.md) so that individuals who are interested can further modify the code to train it on their own data.

* **[2023.11.20]** 🚀🚀🚀 [Video-LLaVA](https://github.com/PKU-YuanGroup/Video-LLaVA) builds a large visual-language model to achieve 🎉SOTA performances based on LanguageBind encoders.

* **[2023.10.23]** 🎶 LanguageBind-Audio achieves 🎉🎉🎉**state-of-the-art (SOTA) performance on 5 datasets**; check our ✨ [results](#multiple-modalities)!

* **[2023.10.14]** 😱 Released a stronger LanguageBind-Video; check our ✨ [results](#video-language)! The video checkpoint **has been updated** on the Huggingface Model Hub!

* **[2023.10.10]** We provide sample data, which can be found in [assets](assets), and [emergency zero-shot usage](#emergency-zero-shot) is described.

* **[2023.10.07]** The checkpoints are available on 🤗 [Huggingface Model](https://huggingface.co/LanguageBind).

* **[2023.10.04]** Code and [demo](https://huggingface.co/spaces/LanguageBind/LanguageBind) are available now! Welcome to **watch** 👀 this repository for the latest updates.

## 😮 Highlights

### 💡 High performance, but NO intermediate modality required

LanguageBind is a **language-centric** multimodal pretraining approach, **taking the language as the bind across different modalities** because the language modality is well-explored and contains rich semantics.

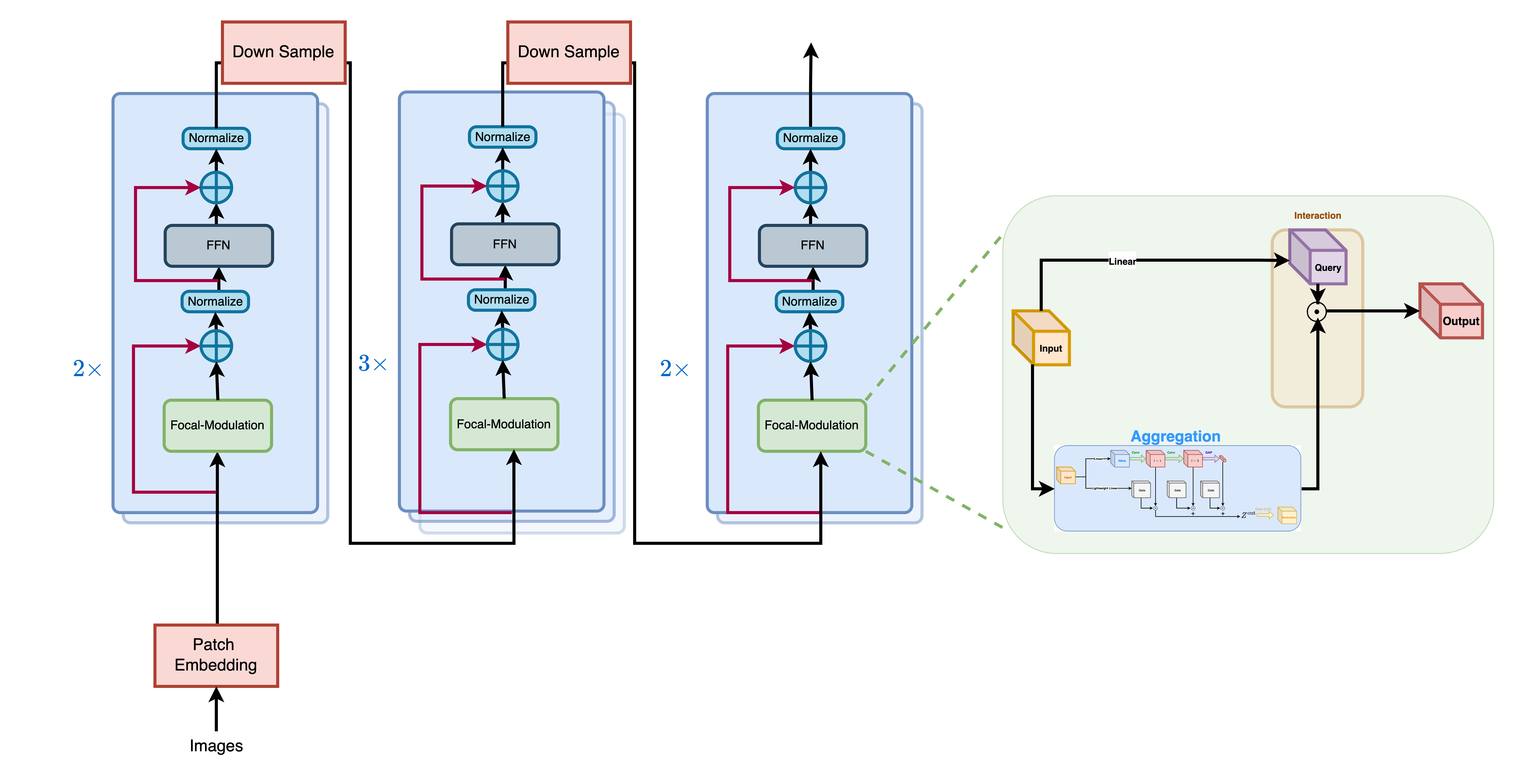

* The first figure shows the architecture of LanguageBind. LanguageBind can be easily extended to segmentation and detection tasks, and potentially to unlimited modalities.

### ⚡️ A multimodal, fully aligned and voluminous dataset

We propose **VIDAL-10M**, **10 Million data** with **V**ideo, **I**nfrared, **D**epth, **A**udio and their corresponding **L**anguage, which greatly expands the data beyond visual modalities.

* The second figure shows our proposed VIDAL-10M dataset, which includes five modalities: video, infrared, depth, audio, and language.

### 🔥 Multi-view enhanced description for training

We make multi-view enhancements to language. We produce multi-view descriptions that combine **meta-data**, **spatial**, and **temporal** information to greatly enhance the semantic information of the language. In addition, we further **enhance the language with ChatGPT** to create a good semantic space for the language aligned with each modality.

## 🤗 Demo

* **Local demo.** We highly recommend trying out our web demo, which incorporates all features currently supported by LanguageBind.

```bash

python gradio_app.py

```

* **Online demo.** We provide the [online demo](https://huggingface.co/spaces/LanguageBind/LanguageBind) in Huggingface Spaces. In this demo, you can calculate the similarity of modalities to language, such as audio-to-language, video-to-language, and depth-to-image.

## 🛠️ Requirements and Installation

* Python >= 3.8

* Pytorch >= 1.13.1

* CUDA Version >= 11.6

* Install required packages:

```bash

git clone https://github.com/PKU-YuanGroup/LanguageBind

cd LanguageBind

pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116

pip install -r requirements.txt

```

## 🐳 Model Zoo

The names in the table represent different encoder models. For example, `LanguageBind/LanguageBind_Video_FT` represents the fully fine-tuned version, while `LanguageBind/LanguageBind_Video` represents the LoRA-tuned version.

You can freely replace them in the recommended [API usage](#-api). We recommend using the fully fine-tuned version, as it offers stronger performance.

<div align="center">

<table border="1" width="100%">

<tr align="center">

<th>Modality</th><th>LoRA tuning</th><th>Fine-tuning</th>

</tr>

<tr align="center">

<td>Video</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video">LanguageBind_Video</a></td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video_FT">LanguageBind_Video_FT</a></td>

</tr>

<tr align="center">

<td>Audio</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Audio">LanguageBind_Audio</a></td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Audio_FT">LanguageBind_Audio_FT</a></td>

</tr>

<tr align="center">

<td>Depth</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Depth">LanguageBind_Depth</a></td><td>-</td>

</tr>

<tr align="center">

<td>Thermal</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Thermal">LanguageBind_Thermal</a></td><td>-</td>

</tr>

</table>

</div>

<div align="center">

<table border="1" width="100%">

<tr align="center">

<th>Version</th><th>Tuning</th><th>Model size</th><th>Num_frames</th><th>HF Link</th><th>MSR-VTT</th><th>DiDeMo</th><th>ActivityNet</th><th>MSVD</th>

</tr>

<tr align="center">

<td>LanguageBind_Video</td><td>LoRA</td><td>Large</td><td>8</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video">Link</a></td><td>42.6</td><td>37.8</td><td>35.1</td><td>52.2</td>

</tr>

<tr align="center">

<td>LanguageBind_Video_FT</td><td>Full-tuning</td><td>Large</td><td>8</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video_FT">Link</a></td><td>42.7</td><td>38.1</td><td>36.9</td><td>53.5</td>

</tr>

<tr align="center">

<td>LanguageBind_Video_V1.5_FT</td><td>Full-tuning</td><td>Large</td><td>8</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video_V1.5_FT">Link</a></td><td>42.8</td><td>39.7</td><td>38.4</td><td>54.1</td>

</tr>

<tr align="center">

<td>LanguageBind_Video_V1.5_FT</td><td>Full-tuning</td><td>Large</td><td>12</td><td>Coming soon</td><td>-</td><td>-</td><td>-</td><td>-</td>

</tr>

<tr align="center">

<td>LanguageBind_Video_Huge_V1.5_FT</td><td>Full-tuning</td><td>Huge</td><td>8</td><td><a href="https://huggingface.co/LanguageBind/LanguageBind_Video_Huge_V1.5_FT">Link</a></td><td>44.8</td><td>39.9</td><td>41.0</td><td>53.7</td>

</tr>

<tr align="center">

<td>LanguageBind_Video_Huge_V1.5_FT</td><td>Full-tuning</td><td>Huge</td><td>12</td><td>Coming soon</td><td>-</td><td>-</td><td>-</td><td>-</td>

</tr>

</table>

</div>

## 🤖 API

**We open-source the preprocessing code for all modalities.** If you want to load a model (e.g. `LanguageBind/LanguageBind_Thermal`) from the Hugging Face model hub or from a local path, you can use the following code snippets!

### Inference for Multi-modal Binding

We have provided some sample data in [assets](assets) so you can quickly see how LanguageBind works.

```python

import torch
from languagebind import LanguageBind, to_device, transform_dict, LanguageBindImageTokenizer

if __name__ == '__main__':
    device = 'cuda:0'
    device = torch.device(device)

    # One encoder checkpoint per modality.
    clip_type = {
        'video': 'LanguageBind_Video_FT',  # also LanguageBind_Video
        'audio': 'LanguageBind_Audio_FT',  # also LanguageBind_Audio
        'thermal': 'LanguageBind_Thermal',
        'image': 'LanguageBind_Image',
        'depth': 'LanguageBind_Depth',
    }

    model = LanguageBind(clip_type=clip_type, cache_dir='./cache_dir')
    model = model.to(device)
    model.eval()

    pretrained_ckpt = 'lb203/LanguageBind_Image'
    tokenizer = LanguageBindImageTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir/tokenizer_cache_dir')
    modality_transform = {c: transform_dict[c](model.modality_config[c]) for c in clip_type.keys()}

    # Two samples per modality; sample i is described by language[i].
    image = ['assets/image/0.jpg', 'assets/image/1.jpg']
    audio = ['assets/audio/0.wav', 'assets/audio/1.wav']
    video = ['assets/video/0.mp4', 'assets/video/1.mp4']
    depth = ['assets/depth/0.png', 'assets/depth/1.png']
    thermal = ['assets/thermal/0.jpg', 'assets/thermal/1.jpg']
    language = ["Training a parakeet to climb up a ladder.", 'A lion climbing a tree to catch a monkey.']

    inputs = {
        'image': to_device(modality_transform['image'](image), device),
        'video': to_device(modality_transform['video'](video), device),
        'audio': to_device(modality_transform['audio'](audio), device),
        'depth': to_device(modality_transform['depth'](depth), device),
        'thermal': to_device(modality_transform['thermal'](thermal), device),
    }
    inputs['language'] = to_device(tokenizer(language, max_length=77, padding='max_length',
                                             truncation=True, return_tensors='pt'), device)

    with torch.no_grad():
        embeddings = model(inputs)

    # Row i of each matrix is the softmax over the texts for sample i.
    print("Video x Text: \n",
          torch.softmax(embeddings['video'] @ embeddings['language'].T, dim=-1).detach().cpu().numpy())
    print("Image x Text: \n",
          torch.softmax(embeddings['image'] @ embeddings['language'].T, dim=-1).detach().cpu().numpy())
    print("Depth x Text: \n",
          torch.softmax(embeddings['depth'] @ embeddings['language'].T, dim=-1).detach().cpu().numpy())
    print("Audio x Text: \n",
          torch.softmax(embeddings['audio'] @ embeddings['language'].T, dim=-1).detach().cpu().numpy())
    print("Thermal x Text: \n",
          torch.softmax(embeddings['thermal'] @ embeddings['language'].T, dim=-1).detach().cpu().numpy())

```

This returns the following result.

```bash

Video x Text:

[[9.9989331e-01 1.0667283e-04]

[1.3255903e-03 9.9867439e-01]]

Image x Text:

[[9.9990666e-01 9.3292067e-05]

[4.6132666e-08 1.0000000e+00]]

Depth x Text:

[[0.9954276 0.00457235]

[0.12042473 0.8795753 ]]

Audio x Text:

[[0.97634876 0.02365119]

[0.02917843 0.97082156]]

Thermal x Text:

[[0.9482511 0.0517489 ]

[0.48746133 0.5125386 ]]

```
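
Each matrix above is a row-wise softmax over dot products: row *i* shows how strongly input *i* matches each text. The dependency-free sketch below reproduces that computation on made-up 2-D embeddings (the vectors are illustrative, not model outputs) and picks the best-matching text for each row. The helper names `softmax` and `match_matrix` are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of logits."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def match_matrix(inputs, texts):
    """Row-wise softmax of inputs @ texts.T, as in the print statements above."""
    logits = [[sum(a * b for a, b in zip(x, t)) for t in texts] for x in inputs]
    return [softmax(row) for row in logits]


# Toy embeddings: each input is aligned with the text at the same index.
toy_inputs = [[1.0, 0.0], [0.0, 1.0]]
toy_texts = [[1.0, 0.0], [0.0, 1.0]]

probs = match_matrix(toy_inputs, toy_texts)
best = [row.index(max(row)) for row in probs]
print(probs)
print(best)
```

Because every row sums to 1, a strong diagonal (as in the Video and Image results above) means each sample is matched to its own caption.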

### Emergency zero-shot

Since LanguageBind binds every modality to language, we also observe **emergency zero-shot** behavior: two non-language modalities can be compared directly. It is very simple to use.

```python

print("Video x Audio: \n", torch.softmax(embeddings['video'] @ embeddings['audio'].T, dim=-1).detach().cpu().numpy())

print("Image x Depth: \n", torch.softmax(embeddings['image'] @ embeddings['depth'].T, dim=-1).detach().cpu().numpy())

print("Image x Thermal: \n", torch.softmax(embeddings['image'] @ embeddings['thermal'].T, dim=-1).detach().cpu().numpy())

```

Then, you will get:

```

Video x Audio:

[[1.0000000e+00 0.0000000e+00]

[3.1150486e-32 1.0000000e+00]]

Image x Depth:

[[1. 0.]

[0. 1.]]

Image x Thermal:

[[1. 0.]

[0. 1.]]

```

### Different branches for X-Language task

Additionally, LanguageBind can be **disassembled into different branches** to handle different tasks. Note that we do not train the Image branch; it is simply initialized from OpenCLIP.

#### Thermal

```python

import torch

from languagebind import LanguageBindThermal, LanguageBindThermalTokenizer, LanguageBindThermalProcessor

pretrained_ckpt = 'LanguageBind/LanguageBind_Thermal'

model = LanguageBindThermal.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

tokenizer = LanguageBindThermalTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

thermal_process = LanguageBindThermalProcessor(model.config, tokenizer)

model.eval()

data = thermal_process([r"your/thermal.jpg"], ['your text'], return_tensors='pt')

with torch.no_grad():
    out = model(**data)

print(out.text_embeds @ out.image_embeds.T)

```

#### Depth

```python

import torch

from languagebind import LanguageBindDepth, LanguageBindDepthTokenizer, LanguageBindDepthProcessor

pretrained_ckpt = 'LanguageBind/LanguageBind_Depth'

model = LanguageBindDepth.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

tokenizer = LanguageBindDepthTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

depth_process = LanguageBindDepthProcessor(model.config, tokenizer)

model.eval()

data = depth_process([r"your/depth.png"], ['your text.'], return_tensors='pt')

with torch.no_grad():
    out = model(**data)

print(out.text_embeds @ out.image_embeds.T)

```

#### Video

```python

import torch

from languagebind import LanguageBindVideo, LanguageBindVideoTokenizer, LanguageBindVideoProcessor

pretrained_ckpt = 'LanguageBind/LanguageBind_Video_FT' # also 'LanguageBind/LanguageBind_Video'

model = LanguageBindVideo.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

tokenizer = LanguageBindVideoTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

video_process = LanguageBindVideoProcessor(model.config, tokenizer)

model.eval()

data = video_process(["your/video.mp4"], ['your text.'], return_tensors='pt')

with torch.no_grad():
    out = model(**data)

print(out.text_embeds @ out.image_embeds.T)

```

#### Audio

```python

import torch

from languagebind import LanguageBindAudio, LanguageBindAudioTokenizer, LanguageBindAudioProcessor

pretrained_ckpt = 'LanguageBind/LanguageBind_Audio_FT' # also 'LanguageBind/LanguageBind_Audio'

model = LanguageBindAudio.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

tokenizer = LanguageBindAudioTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

audio_process = LanguageBindAudioProcessor(model.config, tokenizer)

model.eval()

data = audio_process([r"your/audio.wav"], ['your audio.'], return_tensors='pt')

with torch.no_grad():
    out = model(**data)

print(out.text_embeds @ out.image_embeds.T)

```

#### Image

Note that our image encoder is the same as OpenCLIP. It is **not** fine-tuned like the other modalities.

```python

import torch

from languagebind import LanguageBindImage, LanguageBindImageTokenizer, LanguageBindImageProcessor

pretrained_ckpt = 'LanguageBind/LanguageBind_Image'

model = LanguageBindImage.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

tokenizer = LanguageBindImageTokenizer.from_pretrained(pretrained_ckpt, cache_dir='./cache_dir')

image_process = LanguageBindImageProcessor(model.config, tokenizer)

model.eval()

data = image_process([r"your/image.jpg"], ['your text.'], return_tensors='pt')

with torch.no_grad():
    out = model(**data)

print(out.text_embeds @ out.image_embeds.T)

```

## 💥 VIDAL-10M

The dataset is described in [DATASETS.md](DATASETS.md).

## 🗝️ Training & Validating

The training and validation instructions are in [TRAIN_AND_VALIDATE.md](TRAIN_AND_VALIDATE.md).

## 👍 Acknowledgement

* [OpenCLIP](https://github.com/mlfoundations/open_clip) An open source pretraining framework.

* [CLIP4Clip](https://github.com/ArrowLuo/CLIP4Clip) An open source Video-Text retrieval framework.

* [sRGB-TIR](https://github.com/rpmsnu/sRGB-TIR) An open source framework to generate infrared (thermal) images.

* [GLPN](https://github.com/vinvino02/GLPDepth) An open source framework to generate depth images.

## 🔒 License

* The majority of this project is released under the MIT license as found in the [LICENSE](https://github.com/PKU-YuanGroup/LanguageBind/blob/main/LICENSE) file.

* The dataset of this project is released under the CC-BY-NC 4.0 license as found in the [DATASET_LICENSE](https://github.com/PKU-YuanGroup/LanguageBind/blob/main/DATASET_LICENSE) file.

## ✏️ Citation

If you find our paper and code useful in your research, please consider giving a star :star: and citation :pencil:.

```BibTeX

@misc{zhu2023languagebind,

title={LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment},

author={Bin Zhu and Bin Lin and Munan Ning and Yang Yan and Jiaxi Cui and Wang HongFa and Yatian Pang and Wenhao Jiang and Junwu Zhang and Zongwei Li and Cai Wan Zhang and Zhifeng Li and Wei Liu and Li Yuan},

year={2023},

eprint={2310.01852},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

## ✨ Star History

[Star history chart](https://star-history.com/#PKU-YuanGroup/LanguageBind&Date)

## 🤝 Contributors

<a href="https://github.com/PKU-YuanGroup/LanguageBind/graphs/contributors">

<img src="https://contrib.rocks/image?repo=PKU-YuanGroup/LanguageBind" />

</a>

|

maddes8cht/syzymon-long_llama_3b_instruct-gguf

|

maddes8cht

| 2023-11-26T23:51:54Z | 363 | 2 | null |

[

"gguf",

"code",

"text-generation-inference",

"text-generation",

"dataset:Open-Orca/OpenOrca",

"dataset:zetavg/ShareGPT-Processed",

"dataset:bigcode/starcoderdata",

"dataset:togethercomputer/RedPajama-Data-1T",

"dataset:tiiuae/falcon-refinedweb",

"arxiv:2307.03170",

"arxiv:2305.16300",

"model-index",

"region:us"

] |

text-generation

| 2023-11-26T21:33:10Z |

---

datasets:

- Open-Orca/OpenOrca

- zetavg/ShareGPT-Processed

- bigcode/starcoderdata

- togethercomputer/RedPajama-Data-1T

- tiiuae/falcon-refinedweb

metrics:

- code_eval

- accuracy

pipeline_tag: text-generation

tags:

- code

- text-generation-inference

model-index:

- name: long_llama_3b_instruct

results:

- task:

name: Code Generation

type: code-generation

dataset:

name: "HumanEval"

type: openai_humaneval

metrics:

- name: pass@1

type: pass@1

value: 0.12

verified: false

---


I'm constantly enhancing these model descriptions to provide you with the most relevant and comprehensive information.

# long_llama_3b_instruct - GGUF

- Model creator: [syzymon](https://huggingface.co/syzymon)

- Original model: [long_llama_3b_instruct](https://huggingface.co/syzymon/long_llama_3b_instruct)

OpenLLaMA is a free reimplementation of the original LLaMA model, released under the Apache 2.0 license.

# About GGUF format

`gguf` is the current file format used by the [`ggml`](https://github.com/ggerganov/ggml) library.

A growing list of software uses it and can therefore use this model.

The core project making use of the ggml library is the [llama.cpp](https://github.com/ggerganov/llama.cpp) project by Georgi Gerganov.

# Quantization variants

A range of quantized files is available to cater to your specific needs. Here's how to choose the best option for you:

# Legacy quants

Q4_0, Q4_1, Q5_0, Q5_1 and Q8_0 are `legacy` quantization types.

Nevertheless, they are fully supported, as there are several circumstances that cause certain models not to be compatible with the modern K-quants.

## Note:

Now there's a new option to use K-quants even for previously 'incompatible' models, although this involves some fallback solution that makes them not *real* K-quants. More details can be found in affected model descriptions.

(This mainly refers to Falcon 7b and Starcoder models)

# K-quants

K-quants are designed with the idea that different levels of quantization in specific parts of the model can optimize performance, file size, and memory load.

So, if possible, use K-quants.

With a Q6_K, you'll likely find it challenging to discern a quality difference from the original model. Ask your model the same question twice and you may encounter bigger quality differences.

---

# Original Model Card:

# LongLLaMA: Focused Transformer Training for Context Scaling

<div align="center">

<a href="https://colab.research.google.com/github/CStanKonrad/long_llama/blob/main/long_llama_instruct_colab.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg"></a>

</div>

<div align="center">

[TLDR](#TLDR) | [Overview](#Overview) | [Usage](#Usage) | [LongLLaMA performance](#LongLLaMA-performance) | [Authors](#Authors) | [Citation](#Citation) | [License](#License) | [Acknowledgments](#Acknowledgments)

</div>

## TLDR

This repo contains [LongLLaMA-Instruct-3Bv1.1](https://huggingface.co/syzymon/long_llama_3b_instruct) that is for **research purposes only**.

LongLLaMA is built upon the foundation of [OpenLLaMA](https://github.com/openlm-research/open_llama) and fine-tuned using the [Focused Transformer (FoT)](https://arxiv.org/abs/2307.03170) method. We release a smaller 3B base variant (not instruction tuned) of the LongLLaMA model on a permissive license (Apache 2.0) and inference code supporting longer contexts on [Hugging Face](https://huggingface.co/syzymon/long_llama_3b). Our model weights can serve as the drop-in replacement of LLaMA in existing implementations (for short context up to 2048 tokens). Additionally, we provide evaluation results and comparisons against the original OpenLLaMA models. Stay tuned for further updates.

## Overview

### Base models

[Focused Transformer: Contrastive Training for Context Scaling](https://arxiv.org/abs/2307.03170) (FoT) presents a simple method for endowing language models with the ability to handle context consisting possibly of millions of tokens while training on significantly shorter input. FoT permits a subset of attention layers to access a memory cache of (key, value) pairs to extend the context length. The distinctive aspect of FoT is its training procedure, drawing from contrastive learning. Specifically, we deliberately expose the memory attention layers to both relevant and irrelevant keys (like negative samples from unrelated documents). This strategy incentivizes the model to differentiate keys connected with semantically diverse values, thereby enhancing their structure. This, in turn, makes it possible to extrapolate the effective context length much beyond what is seen in training.
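
The idea of a memory attention layer can be sketched in a few lines: the query attends over the (key, value) pairs of the current window together with pairs cached from earlier windows. This is a toy, dependency-free illustration under simplifying assumptions (a single head, one query, made-up 2-D vectors), not the LongLLaMA implementation; `attend` is a hypothetical helper.

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    width = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return output, weights


# (key, value) pairs from the current context window.
local_keys, local_values = [[1.0, 0.0]], [[1.0, 0.0]]
# (key, value) pairs cached from earlier windows: the "memory".
memory_keys, memory_values = [[0.0, 1.0]], [[0.0, 1.0]]

# A memory layer attends over local and cached pairs together.
query = [0.0, 4.0]
output, weights = attend(query, local_keys + memory_keys, local_values + memory_values)
print(weights)
print(output)
```

Here the query aligns with the cached key, so most of the attention weight falls on the memory entry. The contrastive training described above is what encourages keys to be structured so that relevant entries stand out from irrelevant ones.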

**LongLLaMA** is an [OpenLLaMA](https://github.com/openlm-research/open_llama) model finetuned with the FoT method,

with three layers used for context extension. **Crucially, LongLLaMA is able to extrapolate much beyond the context length seen in training: $8k$. E.g., in the passkey retrieval task, it can handle inputs of length $256k$**.

<div align="center">

| | [LongLLaMA-3B](https://huggingface.co/syzymon/long_llama_3b_instruct) | [LongLLaMA-3Bv1.1](https://huggingface.co/syzymon/long_llama_3b_v1_1) | LongLLaMA-7B<br />*(coming soon)*| LongLLaMA-13B<br />*(coming soon)*|

|----------------|----------|----------|-----------|-----------|

| Source model | [OpenLLaMA-3B](https://huggingface.co/openlm-research/open_llama_3b_easylm) | [OpenLLaMA-3Bv2](https://huggingface.co/openlm-research/open_llama_3b_v2_easylm) | - | - |

| Source model tokens | 1T | 1T | - | - |

| Fine-tuning tokens | 10B | 5B | - | -|

| Memory layers | 6, 12, 18 | 6, 12, 18 | - | -|

</div>

### Instruction/Chat tuning

In the [fine_tuning](fine_tuning) subfolder we provide the code that was used to create [LongLLaMA-Instruct-3Bv1.1](https://huggingface.co/syzymon/long_llama_3b_instruct), an instruction-tuned version of [LongLLaMA-3Bv1.1](https://huggingface.co/syzymon/long_llama_3b_v1_1). We used [OpenOrca](https://huggingface.co/datasets/Open-Orca/OpenOrca) (instructions) and [zetavg/ShareGPT-Processed](https://huggingface.co/datasets/zetavg/ShareGPT-Processed) (chat) datasets for tuning.

## Usage

See also:

* [Colab with LongLLaMA-Instruct-3Bv1.1](https://colab.research.google.com/github/CStanKonrad/long_llama/blob/main/long_llama_instruct_colab.ipynb).

* [Colab with an example usage of base LongLLaMA](https://colab.research.google.com/github/CStanKonrad/long_llama/blob/main/long_llama_colab.ipynb).

### Requirements

```

pip install --upgrade pip

pip install transformers==4.30 sentencepiece accelerate

```

### Loading model

```python

import torch

from transformers import LlamaTokenizer, AutoModelForCausalLM

tokenizer = LlamaTokenizer.from_pretrained("syzymon/long_llama_3b_instruct")

model = AutoModelForCausalLM.from_pretrained("syzymon/long_llama_3b_instruct",
                                             torch_dtype=torch.float32,
                                             trust_remote_code=True)

```

### Input handling and generation

LongLLaMA uses the Hugging Face interface. A long input given to the model will be split into context windows and loaded into the memory cache.

```python
prompt = "My name is Julien and I like to"
input_ids = tokenizer(prompt, return_tensors="pt").input_ids
outputs = model(input_ids=input_ids)
```

During the model call, one can provide the parameter `last_context_length` (default $1024$), which specifies the number of tokens left in the last context window. Tuning this parameter can improve generation as the first layers do not have access to memory. See details in [How LongLLaMA handles long inputs](#How-LongLLaMA-handles-long-inputs).

```python
generation_output = model.generate(
    input_ids=input_ids,
    max_new_tokens=256,
    num_beams=1,
    last_context_length=1792,
    do_sample=True,
    temperature=1.0,
)
print(tokenizer.decode(generation_output[0]))
```

### Additional configuration

LongLLaMA has several other parameters:

* `mem_layers` specifies the layers endowed with memory (should be either an empty list or a list of all memory layers specified in the description of the checkpoint).

* `mem_dtype` allows changing the data type of the memory cache.

* `mem_attention_grouping` can trade off speed for reduced memory usage. When equal to `(4, 2048)`, the memory layers will process at most $4*2048$ queries at once ($4$ heads and $2048$ queries for each head).

```python
import torch
from transformers import LlamaTokenizer, AutoModelForCausalLM

tokenizer = LlamaTokenizer.from_pretrained("syzymon/long_llama_3b_instruct")
model = AutoModelForCausalLM.from_pretrained(
    "syzymon/long_llama_3b_instruct", torch_dtype=torch.float32,
    mem_layers=[],
    mem_dtype='bfloat16',
    trust_remote_code=True,
    mem_attention_grouping=(4, 2048),
)
```
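
To get a feel for the speed/memory trade-off of `mem_attention_grouping`, the back-of-the-envelope sketch below counts how many chunks a memory layer would need under a given grouping. It assumes the queries are tiled into blocks of at most `heads_per_group` heads by `queries_per_group` queries. The function name `num_attention_chunks` and the example sizes (32 heads, 4096 queries) are hypothetical and not taken from the LongLLaMA code.

```python
import math


def num_attention_chunks(num_heads, num_queries, grouping):
    """Count the blocks needed to tile (heads x queries) by `grouping`.

    Illustrative assumption about how grouping bounds the work done at once;
    this is not the library's implementation.
    """
    heads_per_group, queries_per_group = grouping
    head_groups = math.ceil(num_heads / heads_per_group)
    query_groups = math.ceil(num_queries / queries_per_group)
    return head_groups * query_groups


# Hypothetical sizes: 32 attention heads, 4096 queries per head.
print(num_attention_chunks(32, 4096, (4, 2048)))   # 16 smaller chunks
print(num_attention_chunks(32, 4096, (32, 4096)))  # 1 large chunk
```

Under this reading, a smaller grouping means more sequential chunks (slower) but a lower peak memory footprint per chunk.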

### Drop-in use with LLaMA code

LongLLaMA checkpoints can also be used as a drop-in replacement for LLaMA checkpoints in the [Hugging Face implementation of LLaMA](https://huggingface.co/docs/transformers/main/model_doc/llama), but in this case they will be limited to the original context length of $2048$.

```python
from transformers import LlamaTokenizer, LlamaForCausalLM
import torch

tokenizer = LlamaTokenizer.from_pretrained("syzymon/long_llama_3b_instruct")
model = LlamaForCausalLM.from_pretrained("syzymon/long_llama_3b_instruct", torch_dtype=torch.float32)
```

### How LongLLaMA handles long inputs

Inputs over $2048$ tokens are automatically split into windows $w_1, \ldots, w_m$. The first $m-2$ windows contain $2048$ tokens each, $w_{m-1}$ has no more than $2048$ tokens, and $w_m$ contains the number of tokens specified by `last_context_length`. The model processes the windows one by one, extending the memory cache after each. If `use_cache` is `True`, the last window will not be loaded into the memory cache but into the local (generation) cache.
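
The splitting rule can be sketched as a small helper that returns only the window lengths. This is a minimal illustration of the description above, not the code used in the repository. The function name `split_into_windows` and its arguments are hypothetical, and it assumes that any remainder ends up in $w_{m-1}$ and that inputs of at most $2048$ tokens are kept as a single window.

```python
def split_into_windows(num_tokens, last_context_length=1024, window_size=2048):
    """Return the lengths of windows w_1..w_m for an input of `num_tokens`.

    Hypothetical helper mirroring the description: full windows first,
    then a (possibly shorter) w_{m-1}, then w_m of `last_context_length`.
    """
    if not 0 < last_context_length <= window_size:
        raise ValueError("last_context_length must be in (0, window_size]")
    if num_tokens <= window_size:
        # Assumption: short inputs are not split at all.
        return [num_tokens]

    remaining = num_tokens - last_context_length
    full_windows, remainder = divmod(remaining, window_size)

    windows = [window_size] * full_windows
    if remainder:
        windows.append(remainder)        # w_{m-1}, at most window_size tokens
    windows.append(last_context_length)  # w_m
    return windows


print(split_into_windows(5000))                            # [2048, 1928, 1024]
print(split_into_windows(6000, last_context_length=1792))  # [2048, 2048, 112, 1792]
```

In both examples the lengths sum to the input length, and only the final window would go to the local (generation) cache when `use_cache` is `True`.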

The memory cache stores $(key, value)$ pairs for each head of the specified memory layers `mem_layers`. In addition to this, it stores attention masks.

If `use_cache=True` (which is the case in generation), LongLLaMA will use two caches: the memory cache for the specified layers and the local (generation) cache for all layers. When the local cache exceeds $2048$ elements, its content is moved to the memory cache for the memory layers.
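
The interplay of the two caches during generation can be pictured with a toy simulation that tracks only cache sizes. It is a sketch under stated assumptions: the class `ToyCaches` is hypothetical, it models a single memory layer, and it assumes that the entire local cache is moved to memory as soon as it exceeds the limit. The actual implementation may differ in these details.

```python
from dataclasses import dataclass


@dataclass
class ToyCaches:
    """Tracks entry counts of the local and memory caches (one memory layer)."""
    local_limit: int = 2048
    local_size: int = 0
    memory_size: int = 0

    def add_tokens(self, n=1):
        # Tokens are added one at a time, as during autoregressive generation.
        for _ in range(n):
            self.local_size += 1
            if self.local_size > self.local_limit:
                # Assumption: the whole local cache is moved to memory.
                self.memory_size += self.local_size
                self.local_size = 0


caches = ToyCaches()
caches.add_tokens(2048)
print(caches.local_size, caches.memory_size)  # 2048 0
caches.add_tokens(1)
print(caches.local_size, caches.memory_size)  # 0 2049
```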

For simplicity, context extension is realized with a memory cache and full attention in this repo. Replacing this simple mechanism with a KNN search over an external database is possible with systems like [Faiss](https://github.com/facebookresearch/faiss). This could potentially enable further context length scaling. We leave this as future work.

## LongLLaMA performance

We present some illustrative examples of LongLLaMA results. Refer to our paper [Focused Transformer: Contrastive Training for Context Scaling](https://arxiv.org/abs/2307.03170) for more details.

We manage to achieve good performance on the passkey retrieval task from [Landmark Attention: Random-Access Infinite Context Length for Transformers](https://arxiv.org/abs/2305.16300). The code for generating the prompt and running the model is located in `examples/passkey.py`.

<p align="center" width="100%">

<img src="assets/plot_passkey.png" alt="LongLLaMA" style="width: 70%; min-width: 300px; display: block; margin: auto;">

</p>

Our LongLLaMA 3B model also shows improvements when using long context on two downstream tasks, TREC question classification and WebQS question answering.

<div align="center">

| Context/Dataset | TREC | WebQS |
| --- | --- | --- |
| $2K$ | 67.0 | 21.2 |
| $4K$ | 71.6 | 21.4 |
| $6K$ | 72.9 | 22.2 |
| $8K$ | **73.3** | **22.4** |

</div>

LongLLaMA retains performance on tasks that do not require long context. We provide a comparison with OpenLLaMA on [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) in the zero-shot setting.

<div align="center">

| Task/Metric | OpenLLaMA-3B | LongLLaMA-3B |
|----------------|----------|-----------|
| anli_r1/acc | 0.33 | 0.32 |
| anli_r2/acc | 0.32 | 0.33 |
| anli_r3/acc | 0.35 | 0.35 |
| arc_challenge/acc | 0.34 | 0.34 |
| arc_challenge/acc_norm | 0.37 | 0.37 |
| arc_easy/acc | 0.69 | 0.68 |
| arc_easy/acc_norm | 0.65 | 0.63 |
| boolq/acc | 0.68 | 0.68 |
| hellaswag/acc | 0.49 | 0.48 |
| hellaswag/acc_norm | 0.67 | 0.65 |
| openbookqa/acc | 0.27 | 0.28 |
| openbookqa/acc_norm | 0.40 | 0.38 |
| piqa/acc | 0.75 | 0.73 |
| piqa/acc_norm | 0.76 | 0.75 |
| record/em | 0.88 | 0.87 |
| record/f1 | 0.89 | 0.87 |
| rte/acc | 0.58 | 0.60 |
| truthfulqa_mc/mc1 | 0.22 | 0.24 |
| truthfulqa_mc/mc2 | 0.35 | 0.38 |
| wic/acc | 0.48 | 0.50 |
| winogrande/acc | 0.62 | 0.60 |
| Avg score | 0.53 | 0.53 |

</div>

Starting with the v1.1 models, we use the [EleutherAI](https://github.com/EleutherAI) implementation of [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) with a slight modification that adds a `<bos>` token at the beginning of the input sequence. The results are provided in the table below.

<div align="center">

| Task/Metric | LongLLaMA-3B | OpenLLaMA-3Bv2 | LongLLaMA-3Bv1.1 | LongLLaMA-Instruct-3Bv1.1 |
|:-----------------------|:-------------|:---------------|:-----------------|:--------------------------|
| anli_r1/acc | 0.32 | 0.33 | 0.31 | 0.33 |
| anli_r2/acc | 0.33 | 0.35 | 0.33 | 0.35 |
| anli_r3/acc | 0.35 | 0.38 | 0.35 | 0.38 |
| arc_challenge/acc | 0.34 | 0.33 | 0.32 | 0.36 |
| arc_challenge/acc_norm | 0.37 | 0.36 | 0.36 | 0.37 |
| arc_easy/acc | 0.67 | 0.68 | 0.68 | 0.70 |
| arc_easy/acc_norm | 0.63 | 0.63 | 0.63 | 0.63 |
| boolq/acc | 0.68 | 0.67 | 0.66 | 0.77 |
| hellaswag/acc | 0.48 | 0.53 | 0.52 | 0.52 |
| hellaswag/acc_norm | 0.65 | 0.70 | 0.69 | 0.68 |
| openbookqa/acc | 0.28 | 0.28 | 0.28 | 0.28 |
| openbookqa/acc_norm | 0.38 | 0.39 | 0.37 | 0.41 |
| piqa/acc | 0.73 | 0.77 | 0.77 | 0.78 |
| piqa/acc_norm | 0.75 | 0.78 | 0.77 | 0.77 |
| record/em | 0.87 | 0.87 | 0.86 | 0.85 |
| record/f1 | 0.88 | 0.88 | 0.87 | 0.86 |
| rte/acc | 0.60 | 0.53 | 0.62 | 0.70 |
| truthfulqa_mc/mc1 | 0.24 | 0.22 | 0.21 | 0.25 |
| truthfulqa_mc/mc2 | 0.38 | 0.35 | 0.35 | 0.40 |
| wic/acc | 0.50 | 0.50 | 0.50 | 0.54 |
| winogrande/acc | 0.60 | 0.66 | 0.63 | 0.65 |
| Avg score | 0.53 | 0.53 | 0.53 | 0.55 |

</div>

We also provide results on HumanEval. We cut the generated text at any of the following sequences:

* `"\ndef "`

* `"\nclass "`

* `"\nif __name__"`

<div align="center">

| | OpenLLaMA-3Bv2 | LongLLaMA-3Bv1.1 | LongLLaMA-Instruct-3Bv1.1 |
| - | - | - | - |
| pass@1 | 0.09 | 0.12 | 0.12 |

</div>
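
The truncation used for the HumanEval numbers can be sketched as follows. This is an illustrative helper, not the evaluation script itself. The name `truncate_completion` is hypothetical, and it assumes that cutting means dropping everything from the earliest occurrence of any stop sequence onward.

```python
STOP_SEQUENCES = ["\ndef ", "\nclass ", "\nif __name__"]


def truncate_completion(text, stop_sequences=STOP_SEQUENCES):
    """Cut `text` at the earliest occurrence of any stop sequence.

    Assumption: the stop sequence itself and everything after it are dropped.
    """
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


completion = "    return a + b\n\ndef unrelated():\n    pass\n"
print(repr(truncate_completion(completion)))  # '    return a + b\n'
```

Truncating this way keeps only the body of the function being completed and discards any extra definitions the model continues to generate.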

## Authors

- [Szymon Tworkowski](https://scholar.google.com/citations?user=1V8AeXYAAAAJ&hl=en)

- [Konrad Staniszewski](https://scholar.google.com/citations?user=CM6PCBYAAAAJ)

- [Mikołaj Pacek](https://scholar.google.com/citations?user=eh6iEbQAAAAJ&hl=en&oi=ao)

- [Henryk Michalewski](https://scholar.google.com/citations?user=YdHW1ycAAAAJ&hl=en)

- [Yuhuai Wu](https://scholar.google.com/citations?user=bOQGfFIAAAAJ&hl=en)

- [Piotr Miłoś](https://scholar.google.pl/citations?user=Se68XecAAAAJ&hl=pl&oi=ao)

## Citation

To cite this work please use

```bibtex
@misc{tworkowski2023focused,
      title={Focused Transformer: Contrastive Training for Context Scaling},
      author={Szymon Tworkowski and Konrad Staniszewski and Mikołaj Pacek and Yuhuai Wu and Henryk Michalewski and Piotr Miłoś},
      year={2023},
      eprint={2307.03170},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}
```

## License

The code and base model checkpoints are licensed under [Apache License, Version 2.0](http://www.apache.org/licenses/LICENSE-2.0).

The instruction/chat tuned models are for research purposes only.

Some of the examples use external code (see headers of files for copyright notices and licenses).

## Acknowledgments