| modelId (string, 5 to 122 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, 1 to 901k chars) |

|---|---|---|---|---|---|---|---|---|---|

w4r10ck/SOLAR-10.7B-Instruct-v1.0-uncensored | w4r10ck | 2024-05-31T16:56:44Z | 359 | 28 | transformers | ["transformers", "safetensors", "llama", "text-generation", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-12-14T01:33:36Z |

---

license: apache-2.0

---

# SOLAR-10.7B-Instruct-v1.0-uncensored

SOLAR-10.7B-Instruct-v1.0, fine-tuned to be less censored. Refer to [upstage/SOLAR-10.7B-Instruct-v1.0](https://huggingface.co/upstage/SOLAR-10.7B-Instruct-v1.0) for model info and usage instructions.

## Training details

This model was trained using LoRA and TRL's `DPOTrainer` on the [unalignment/toxic-dpo-v0.1](https://huggingface.co/datasets/unalignment/toxic-dpo-v0.1) dataset.
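For context, DPO trains directly on (chosen, rejected) response pairs such as those in the dataset above. The per-pair objective can be sketched in plain Python as shown below. This is the standard sigmoid form of the DPO loss, written only for illustration. The card does not state the `beta` or any other hyperparameters used for this model, so the default of 0.1 is an assumption, and the log-probabilities in the example are made-up numbers.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Sigmoid DPO loss for one (chosen, rejected) preference pair.

    Each *_logp is the summed log-probability of a whole response under the
    trainable policy or the frozen reference model. beta=0.1 is an assumed default.
    """
    # How much more (or less) likely each response became relative to the reference.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == log(1 + exp(-margin)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: the margin is 0, so the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
```

Minimizing this loss pushes the policy to raise the likelihood of the chosen response relative to the rejected one, with both measured against the reference model.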

## How to Cite

```
@misc{solarUncensoredDPO,
  title={solar-10.7b-instruct-V1.0-uncensored},
  url={https://huggingface.co/w4r10ck/SOLAR-10.7B-Instruct-v1.0-uncensored},
  author={Stepan Zuev},
  year={2023},
  month={Dec}
}
```

|

mmnga/lightblue-karasu-7B-chat-plus-unleashed-gguf | mmnga | 2024-05-07T12:58:38Z | 359 | 4 | null | ["gguf", "mistral", "ja", "dataset:OpenAssistant/oasst1", "dataset:zetavg/ShareGPT-Processed", "dataset:augmxnt/ultra-orca-boros-en-ja-v1", "license:apache-2.0", "region:us"] | null | 2023-12-29T12:21:29Z |

---

license: apache-2.0

tags:

- mistral

language:

- ja

datasets:

- OpenAssistant/oasst1

- zetavg/ShareGPT-Processed

- augmxnt/ultra-orca-boros-en-ja-v1

---

# lightblue-karasu-7B-chat-plus-unleashed-gguf

A GGUF-format conversion of [karasu-7B-chat-plus-unleashed, published by lightblue](https://huggingface.co/lightblue/karasu-7B-chat-plus-unleashed).

## Other models

[mmnga/lightblue-Karasu-Mixtral-8x22B-v0.1-gguf](https://huggingface.co/mmnga/lightblue-Karasu-Mixtral-8x22B-v0.1-gguf)

[mmnga/lightblue-suzume-llama-3-8B-multilingual-gguf](https://huggingface.co/mmnga/lightblue-suzume-llama-3-8B-multilingual-gguf)

[mmnga/lightblue-suzume-llama-3-8B-japanese-gguf](https://huggingface.co/mmnga/lightblue-suzume-llama-3-8B-japanese-gguf)

[mmnga/lightblue-ao-karasu-72B-gguf](https://huggingface.co/mmnga/lightblue-ao-karasu-72B-gguf)

[mmnga/lightblue-karasu-1.1B-gguf](https://huggingface.co/mmnga/lightblue-karasu-1.1B-gguf)

[mmnga/lightblue-karasu-7B-chat-plus-unleashed-gguf](https://huggingface.co/mmnga/lightblue-karasu-7B-chat-plus-unleashed-gguf)

[mmnga/lightblue-qarasu-14B-chat-plus-unleashed-gguf](https://huggingface.co/mmnga/lightblue-qarasu-14B-chat-plus-unleashed-gguf)

## Usage

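```
git clone https://github.com/ggerganov/llama.cpp.git
cd llama.cpp
make -j
# The Japanese prompt: system "You are an AI assistant.", user "Who is the Prime Minister of the UK?"
# Newer llama.cpp releases build with CMake and name this binary llama-cli instead of main.
./main -m 'lightblue-karasu-7B-chat-plus-unleashed-q4_0.gguf' -n 512 -p '[INST] <<SYS>>あなたはAIアシスタントです。 <</SYS>> イギリスの首相は誰ですか? [/INST]'
```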
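The `-p` argument above packs a system message and a user question into a single Llama-2-style `[INST] <<SYS>> ... <</SYS>> ... [/INST]` string. To ask other questions, you can rebuild that string with a small helper. The sketch below copies the spacing of the example command exactly. The helper name is hypothetical, and the upstream lightblue model card remains the authority on the intended chat template.

```python
def build_inst_prompt(system: str, user: str) -> str:
    """Rebuild the single-line prompt used in the llama.cpp example above.

    Hypothetical helper: the spacing mirrors the example command, which may
    differ from the chat template the model was actually trained on.
    """
    return f"[INST] <<SYS>>{system} <</SYS>> {user} [/INST]"


# System: "You are an AI assistant."  User: "Who is the Prime Minister of the UK?"
prompt = build_inst_prompt("あなたはAIアシスタントです。", "イギリスの首相は誰ですか?")
print(prompt)
```

The resulting string can be passed as the `-p` value in the command above.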

## License

*This conversion uses the original model's license unchanged, but the applicable terms may differ depending on the datasets used for training. Please verify before use.*

|

cazure87/5CkfAvdbsqJ3j8QgzfXPpmWd7LQfxEkMdx6hXFFgRFEnfpAo_vgg | cazure87 | 2024-03-02T06:47:57Z | 359 | 0 | keras | ["keras", "region:us"] | null | 2024-02-15T19:15:19Z |

Entry not found

|

CompendiumLabs/bge-large-zh-v1.5-gguf | CompendiumLabs | 2024-02-17T22:04:50Z | 359 | 4 | null | ["gguf", "license:mit", "region:us"] | null | 2024-02-17T21:58:26Z |

---

license: mit

---

<img src="https://raw.githubusercontent.com/CompendiumLabs/compendiumlabs.ai/main/images/logo_text_crop.png" alt="Compendium Labs" style="width: 500px;">

# bge-large-zh-v1.5-gguf

Source model: https://huggingface.co/BAAI/bge-large-zh-v1.5

Quantized and unquantized embedding models in GGUF format for use with `llama.cpp`. Compared with `transformers`, a large speedup is almost guaranteed. The gain over ONNX varies by application, but these files appear to give a large speedup on CPU and a modest speedup on GPU for larger models. Because these models are relatively small, quantization does not provide huge benefits, but it still yields up to a 30% speedup on CPU with minimal loss in accuracy.

<br/>

# Files Available

<div style="width: 500px; margin: 0;">

| Filename | Quantization | Size |
|:-------- | ------------ | ---- |
| [bge-large-zh-v1.5-f32.gguf](https://huggingface.co/CompendiumLabs/bge-large-zh-v1.5-gguf/blob/main/bge-large-zh-v1.5-f32.gguf) | F32 | 1.3 GB |
| [bge-large-zh-v1.5-f16.gguf](https://huggingface.co/CompendiumLabs/bge-large-zh-v1.5-gguf/blob/main/bge-large-zh-v1.5-f16.gguf) | F16 | 620 MB |
| [bge-large-zh-v1.5-q8_0.gguf](https://huggingface.co/CompendiumLabs/bge-large-zh-v1.5-gguf/blob/main/bge-large-zh-v1.5-q8_0.gguf) | Q8_0 | 332 MB |
| [bge-large-zh-v1.5-q4_k_m.gguf](https://huggingface.co/CompendiumLabs/bge-large-zh-v1.5-gguf/blob/main/bge-large-zh-v1.5-q4_k_m.gguf) | Q4_K_M | 193 MB |

</div>

<br/>

# Usage

These model files can be used with pure `llama.cpp` or with the `llama-cpp-python` Python bindings:

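```python
from llama_cpp import Llama

gguf_path = "bge-large-zh-v1.5-q8_0.gguf"  # a locally downloaded file from the table above
model = Llama(gguf_path, embedding=True)  # load in embedding mode, not generation mode

# A single string or a list of strings ("Beijing is the capital of China.", "The weather is nice today.")
texts = ["北京是中国的首都。", "今天天气很好。"]
embed = model.embed(texts)
```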

Here `texts` can be either a string or a list of strings. A single string returns one embedding vector, and a list returns a list of embedding vectors. The inputs are grouped into batches automatically for efficient execution. There is also LangChain integration through `langchain_community.embeddings.LlamaCppEmbeddings`.
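Embedding vectors like these are usually compared with cosine similarity, for example to rank passages against a query in retrieval. A dependency-free sketch follows. The toy 3-dimensional vectors are made up for illustration; real vectors would come from `embed` above, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: Sequence[float], docs: Sequence[Sequence[float]]) -> List[int]:
    """Return document indices ordered from most to least similar to the query."""
    scores = [cosine_similarity(query, d) for d in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


# Toy vectors standing in for real embeddings.
query = [1.0, 0.0, 0.0]
docs = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [-1.0, 0.0, 0.0]]
print(rank_by_similarity(query, docs))
```

With real model output, a call such as `rank_by_similarity(embed[0], embed[1:])` would rank the remaining texts against the first.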

|

RunDiffusion/Juggernaut-XL-v7 | RunDiffusion | 2024-03-11T20:07:38Z | 359 | 1 | diffusers | ["diffusers", "art", "people", "diffusion", "Cinematic", "Photography", "Landscape", "Interior", "Food", "Car", "Wildlife", "Architecture", "en", "base_model:stabilityai/stable-diffusion-xl-base-1.0", "license:creativeml-openrail-m", "autotrain_compatible", "endpoints_compatible", "diffusers:StableDiffusionXLPipeline", "region:us"] | text-to-image | 2024-02-21T23:50:00Z |

---

language:

- en

license: creativeml-openrail-m

library_name: diffusers

tags:

- art

- people

- diffusion

- Cinematic

- Photography

- Landscape

- Interior

- Food

- Car

- Wildlife

- Architecture

thumbnail: https://imagedelivery.net/siANnpeNAc_S2q1M3-eDrA/7bbcc140-3e88-442d-d127-85a79e7fbb00/padthumb

base_model: stabilityai/stable-diffusion-xl-base-1.0

---

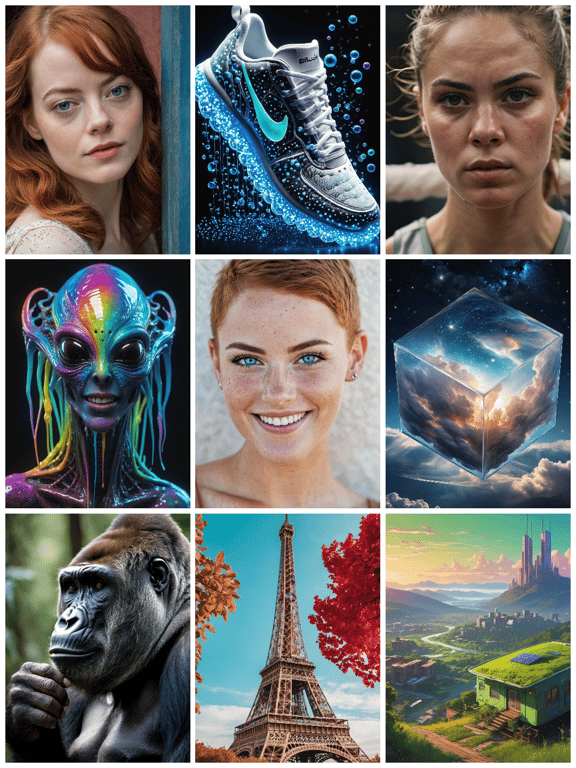

# Juggernaut XL v7 + RunDiffusion Photo v1 Official

## Juggernaut v9 is here! [Juggernaut v9 + RunDiffusion Photo v2](https://huggingface.co/RunDiffusion/Juggernaut-XL-v9)

This model is not permitted to be used behind API services. Please contact [[email protected]](mailto:[email protected]) for business inquiries, commercial licensing, custom models, and consultation.

Juggernaut is available on the new Auto1111 Forge on [RunDiffusion](http://rundiffusion.com/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo)

A big thanks for Version 7 goes to [RunDiffusion](http://rundiffusion.com/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo) ([Photo Model](https://rundiffusion.com/rundiffusion-photo/?utm_source=huggingface&utm_medium=referral&utm_campaign=Kandoo)) and [Adam](https://twitter.com/Colorblind_Adam), who diligently helped me test :) (Leave some love for them ;) )

For business inquiries, commercial licensing, custom models, and consultation, contact me at [email protected]

|

ChrisWilson011016/5C7jPXvfkFY5WgfsZoJfjtNkBhcyZZ7cMr7Y5jWyJga6WKMa_vgg | ChrisWilson011016 | 2024-03-04T18:53:30Z | 359 | 0 | keras | ["keras", "region:us"] | null | 2024-02-24T15:16:21Z |

Entry not found

|

levimorin/5GsSNa8Xv3bywNtkzmqCuDsNMALTztXkpLTpnnQs3CPxMZnf_vgg | levimorin | 2024-03-08T19:10:06Z | 359 | 0 | keras | ["keras", "region:us"] | null | 2024-03-03T04:59:41Z |

Entry not found

|

FreedomIntelligence/Apollo-7B-GGUF | FreedomIntelligence | 2024-04-28T16:05:32Z | 359 | 7 | null | ["gguf", "arxiv:2403.03640", "license:apache-2.0", "region:us"] | null | 2024-03-22T11:16:48Z |

---

license: apache-2.0

---

# Multilingual Medicine: Model, Dataset, Benchmark, Code

Covering English, Chinese, French, Hindi, Spanish, and Arabic so far

<p align="center">

👨🏻💻<a href="https://github.com/FreedomIntelligence/Apollo" target="_blank">Github</a> •📃 <a href="https://arxiv.org/abs/2403.03640" target="_blank">Paper</a> • 🌐 <a href="https://apollo.llmzoo.com/" target="_blank">Demo</a> • 🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus" target="_blank">ApolloCorpus</a> • 🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/XMedbench" target="_blank">XMedBench</a>

<br> <a href="./README_zh.md"> 中文 </a> | <a href="./README.md"> English </a>

</p>

## 🌈 Update

* **[2024.04.28]** We have updated multiple versions of the Apollo-7B GGUF model.

* **[2024.03.07]** [Paper](https://arxiv.org/abs/2403.03640) released.

* **[2024.02.12]** <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus" target="_blank">ApolloCorpus</a> and <a href="https://huggingface.co/datasets/FreedomIntelligence/XMedbench" target="_blank">XMedBench</a> are published!🎉

* **[2024.01.23]** Apollo repo is published!🎉

## Overview

| Type | Size/GB | Notes |
|:-|----:|:----|
| [Q2_K](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q2_K.gguf) | 3.6 | |
| [IQ3_XS](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.IQ3_XS.gguf) | 3.9 | |
| [IQ3_S](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.IQ3_S.gguf) | 4.1 | beats Q3_K* |
| [Q3_K_S](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q3_K_S.gguf) | 4.1 | |
| [IQ3_M](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.IQ3_M.gguf) | 4.2 | |
| [Q3_K_M](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q3_K_M.gguf) | 4.5 | lower quality |
| [Q3_K_L](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q3_K_L.gguf) | 4.8 | |
| [IQ4_XS](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.IQ4_XS.gguf) | 4.9 | |
| [Q4_K_S](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q4_K_S.gguf) | 5.1 | fast, recommended |
| [Q4_K_M](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q4_K_M.gguf) | 5.4 | fast, recommended |
| [Q5_K_S](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q5_K_S.gguf) | 6.1 | |
| [Q5_K_M](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q5_K_M.gguf) | 6.2 | |
| [Q6_K](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q6_K.gguf) | 7.1 | very good quality |
| [Q8_0](https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF/resolve/main/Apollo-7B.Q8_0.gguf) | 9.2 | fast, best quality, but very large |

## Results

🤗<a href="https://huggingface.co/FreedomIntelligence/Apollo-0.5B" target="_blank">Apollo-0.5B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-1.8B" target="_blank">Apollo-1.8B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-2B" target="_blank">Apollo-2B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-6B" target="_blank">Apollo-6B</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-7B" target="_blank">Apollo-7B</a>

🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-0.5B-GGUF" target="_blank">Apollo-0.5B-GGUF</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-2B-GGUF" target="_blank">Apollo-2B-GGUF</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-6B-GGUF" target="_blank">Apollo-6B-GGUF</a> • 🤗 <a href="https://huggingface.co/FreedomIntelligence/Apollo-7B-GGUF" target="_blank">Apollo-7B-GGUF</a>

## Dataset & Evaluation

- Dataset

🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus" target="_blank">ApolloCorpus</a>

<details><summary>Click to expand</summary>

- [Zip File](https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus/blob/main/ApolloCorpus.zip)
- [Data category](https://huggingface.co/datasets/FreedomIntelligence/ApolloCorpus/tree/main/train)
  - Pretrain:
    - data item:
      - json_name: {data_source}_{language}_{data_type}.json
      - data_source: medicalBook, medicalGuideline, medicalPaper, medicalWeb (from online forums), medicalWiki
      - language: en (English), zh (Chinese), es (Spanish), fr (French), hi (Hindi)
      - data_type: text, or qa (QA pairs generated from the text)
      - data_type==text: list of strings
        ```
        [
          "string1",
          "string2",
          ...
        ]
        ```
      - data_type==qa: list of QA lists, each alternating question and answer strings
        ```
        [
          [
            "q1",
            "a1",
            "q2",
            "a2",
            ...
          ],
          ...
        ]
        ```
  - SFT:
    - json_name: {data_source}_{language}.json
    - data_source: code, general, math, medicalExam, medicalPatient
    - data item: list of QA lists, each alternating question and answer strings
      ```
      [
        [
          "q1",
          "a1",
          "q2",
          "a2",
          ...
        ],
        ...
      ]
      ```

</details>
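Based on the file layout described above, a loader for one ApolloCorpus JSON file might look like the sketch below. It assumes the `{data_source}_{language}[_{data_type}].json` naming and the documented shapes: a list of strings, or a list of lists alternating question and answer strings. It also assumes that no field contains an underscore. The helper names are hypothetical and are not part of the Apollo codebase.

```python
import json
from pathlib import Path
from typing import Dict, Iterator, List, Tuple


def parse_corpus_name(filename: str) -> Dict[str, str]:
    """Split a file name into the fields of its naming template.

    Pretrain: {data_source}_{language}_{data_type}.json
    SFT:      {data_source}_{language}.json
    """
    parts = Path(filename).stem.split("_")
    if len(parts) not in (2, 3):
        raise ValueError(f"unexpected file name: {filename!r}")
    keys = ("data_source", "language", "data_type")
    return dict(zip(keys, parts))


def qa_pairs(conversations: List[List[str]]) -> Iterator[Tuple[str, str]]:
    """Yield (question, answer) pairs from a list of [q1, a1, q2, a2, ...] lists."""
    for turns in conversations:
        if len(turns) % 2:
            raise ValueError(f"odd number of turns: {turns!r}")
        # Even indices are questions; the following odd index is the answer.
        yield from zip(turns[0::2], turns[1::2])


def load_corpus_file(path: str):
    """Read one corpus file: a list of strings (text) or a list of QA lists."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

For a QA file, `list(qa_pairs(load_corpus_file(path)))` would flatten every conversation into question-answer pairs.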

- Evaluation

🤗 <a href="https://huggingface.co/datasets/FreedomIntelligence/XMedbench" target="_blank">XMedBench</a>

<details><summary>Click to expand</summary>

- EN:
  - [MedQA-USMLE](https://huggingface.co/datasets/GBaker/MedQA-USMLE-4-options)
  - [MedMCQA](https://huggingface.co/datasets/medmcqa/viewer/default/test)
  - [PubMedQA](https://huggingface.co/datasets/pubmed_qa): Not used in the paper because the results fluctuated too much.
  - [MMLU-Medical](https://huggingface.co/datasets/cais/mmlu)
    - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- ZH:
  - [MedQA-MCMLE](https://huggingface.co/datasets/bigbio/med_qa/viewer/med_qa_zh_4options_bigbio_qa/test)
  - [CMB-single](https://huggingface.co/datasets/FreedomIntelligence/CMB): Not used in the paper
    - 2,000 randomly sampled single-answer multiple-choice questions
  - [CMMLU-Medical](https://huggingface.co/datasets/haonan-li/cmmlu)
    - Anatomy, Clinical_knowledge, College_medicine, Genetics, Nutrition, Traditional_chinese_medicine, Virology
  - [CMExam](https://github.com/williamliujl/CMExam): Not used in the paper
    - 2,000 randomly sampled multiple-choice questions
- ES: [Head_qa](https://huggingface.co/datasets/head_qa)
- FR: [FrenchMedMCQA](https://github.com/qanastek/FrenchMedMCQA)
- HI: [MMLU_HI](https://huggingface.co/datasets/FreedomIntelligence/MMLU_Hindi)
  - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine
- AR: [MMLU_Ara](https://huggingface.co/datasets/FreedomIntelligence/MMLU_Arabic)
  - Clinical knowledge, Medical genetics, Anatomy, Professional medicine, College biology, College medicine

</details>

## Results reproduction

<details><summary>Click to expand</summary>

**Waiting for Update**

</details>

## Acknowledgment

We sincerely thank [mradermacher](https://huggingface.co/mradermacher/Apollo-7B-GGUF) for the assistance in providing multiple versions of the Apollo-7B GGUF model!

## Citation

Please use the following citation if you intend to use our dataset for training or evaluation:

```
@misc{wang2024apollo,
  title={Apollo: Lightweight Multilingual Medical LLMs towards Democratizing Medical AI to 6B People},
  author={Xidong Wang and Nuo Chen and Junyin Chen and Yan Hu and Yidong Wang and Xiangbo Wu and Anningzhe Gao and Xiang Wan and Haizhou Li and Benyou Wang},
  year={2024},
  eprint={2403.03640},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

|

mradermacher/MergerixInex12-7B-GGUF | mradermacher | 2024-05-06T05:03:11Z | 359 | 0 | transformers | ["transformers", "gguf", "merge", "mergekit", "lazymergekit", "automerger", "en", "base_model:automerger/MergerixInex12-7B", "license:apache-2.0", "endpoints_compatible", "region:us"] | null | 2024-04-10T20:23:40Z |

---

base_model: automerger/MergerixInex12-7B

language:

- en

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- merge

- mergekit

- lazymergekit

- automerger

---

## About

<!-- ### quantize_version: 1 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/automerger/MergerixInex12-7B

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.
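Some large GGUF uploads are split into plain byte-level parts. These must be joined, in order, before llama.cpp can load them, typically with `cat part1 part2 > model.gguf`. A Python equivalent is sketched below. The `<name>.partNofM` naming is an assumption about how the parts are labeled, and the helper names are hypothetical. This does not apply to shards produced by llama.cpp's own `gguf-split` tool, which llama.cpp loads directly.

```python
import re
import shutil
from pathlib import Path
from typing import List


def order_parts(names: List[str], stem: str) -> List[str]:
    """Return the '<stem>.partNofM' names sorted by N, checking none are missing.

    The partNofM naming is an assumption; adjust the pattern to your files.
    """
    pattern = re.compile(re.escape(stem) + r"\.part(\d+)of(\d+)")
    found = []
    for name in names:
        m = pattern.fullmatch(name)
        if m:
            found.append((int(m.group(1)), int(m.group(2)), name))
    if not found:
        raise FileNotFoundError(f"no parts found for {stem!r}")
    found.sort()
    total = found[0][1]
    numbers = [n for n, _, _ in found]
    if numbers != list(range(1, total + 1)):
        raise ValueError(f"expected parts 1..{total}, got {numbers}")
    return [name for _, _, name in found]


def join_parts(directory: str, stem: str) -> Path:
    """Concatenate all parts of `stem` in `directory` into `directory/stem`."""
    folder = Path(directory)
    names = order_parts([p.name for p in folder.iterdir()], stem)
    output = folder / stem
    with open(output, "wb") as out:
        for name in names:
            with open(folder / name, "rb") as src:
                # Stream in 16 MiB chunks so multi-GB parts never sit fully in memory.
                shutil.copyfileobj(src, out, 16 * 1024 * 1024)
    return output
```

For a hypothetical split file in `./downloads`, `join_parts("downloads", "model.Q8_0.gguf")` would write `downloads/model.Q8_0.gguf` from its `.part1of2` and `.part2of2` pieces.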

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q2_K.gguf) | Q2_K | 2.8 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.IQ3_XS.gguf) | IQ3_XS | 3.1 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q3_K_S.gguf) | Q3_K_S | 3.3 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.IQ3_S.gguf) | IQ3_S | 3.3 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.IQ3_M.gguf) | IQ3_M | 3.4 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q3_K_L.gguf) | Q3_K_L | 3.9 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.IQ4_XS.gguf) | IQ4_XS | 4.0 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q5_K_S.gguf) | Q5_K_S | 5.1 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q5_K_M.gguf) | Q5_K_M | 5.2 | |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q6_K.gguf) | Q6_K | 6.0 | very good quality |
| [GGUF](https://huggingface.co/mradermacher/MergerixInex12-7B-GGUF/resolve/main/MergerixInex12-7B.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

nitsuai/llama-3-neural-chat-v1-8b-GGUF | nitsuai | 2024-04-22T01:21:42Z | 359 | 0 | transformers | ["transformers", "gguf", "text-generation", "dataset:mlabonne/orpo-dpo-mix-40k", "dataset:Open-Orca/SlimOrca-Dedup", "dataset:jondurbin/airoboros-3.2", "dataset:microsoft/orca-math-word-problems-200k", "dataset:m-a-p/Code-Feedback", "dataset:MaziyarPanahi/WizardLM_evol_instruct_V2_196k", "base_model:meta-llama/Meta-Llama-3-8B", "license:other", "endpoints_compatible", "region:us"] | text-generation | 2024-04-22T01:21:42Z |

---

library_name: transformers

license: other

datasets:

- mlabonne/orpo-dpo-mix-40k

- Open-Orca/SlimOrca-Dedup

- jondurbin/airoboros-3.2

- microsoft/orca-math-word-problems-200k

- m-a-p/Code-Feedback

- MaziyarPanahi/WizardLM_evol_instruct_V2_196k

base_model: meta-llama/Meta-Llama-3-8B

quantized_by: bartowski

pipeline_tag: text-generation

---

## Llamacpp iMatrix Quantizations of llama-3-neural-chat-v1-8b

This model has the <|eot_id|> token set to not-special, which seems to work better with current inference engines.

Quantized using pcuenca's fork of <a href="https://github.com/ggerganov/llama.cpp/">llama.cpp</a> (branch <a href="https://github.com/pcuenca/llama.cpp/tree/llama3-conversion">llama3-conversion</a>).

Original model: https://huggingface.co/Locutusque/llama-3-neural-chat-v1-8b

All quants were made using the imatrix option with the dataset provided by Kalomaze [here](https://github.com/ggerganov/llama.cpp/discussions/5263#discussioncomment-8395384).

## Prompt format

```
<|im_start|>system
{system_prompt}<|im_end|>
<|im_start|>user
{prompt}<|im_end|>
<|im_start|>assistant
```
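The template above follows the ChatML layout. A helper that fills it in might look like the sketch below. It assumes a newline after the final `<|im_start|>assistant` header, which is conventional for ChatML, although the card's template does not show whether one is expected. The function name is hypothetical.

```python
def build_chatml_prompt(system_prompt: str, prompt: str) -> str:
    """Fill the ChatML-style template shown above.

    The string stops right after the assistant header so the model writes the
    reply; the trailing newline there is an assumption.
    """
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>user\n{prompt}<|im_end|>\n"
        f"<|im_start|>assistant\n"
    )


print(build_chatml_prompt("You are a helpful assistant.", "What is GGUF?"))
```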

## Download a file (not the whole branch) from below:

| Filename | Quant type | File Size | Description |
| -------- | ---------- | --------- | ----------- |
| [llama-3-neural-chat-v1-8b-Q8_0.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q8_0.gguf) | Q8_0 | 8.54GB | Extremely high quality, generally unneeded but max available quant. |
| [llama-3-neural-chat-v1-8b-Q6_K.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q6_K.gguf) | Q6_K | 6.59GB | Very high quality, near perfect, *recommended*. |
| [llama-3-neural-chat-v1-8b-Q5_K_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q5_K_M.gguf) | Q5_K_M | 5.73GB | High quality, *recommended*. |
| [llama-3-neural-chat-v1-8b-Q5_K_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q5_K_S.gguf) | Q5_K_S | 5.59GB | High quality, *recommended*. |
| [llama-3-neural-chat-v1-8b-Q4_K_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q4_K_M.gguf) | Q4_K_M | 4.92GB | Good quality, uses about 4.83 bits per weight, *recommended*. |
| [llama-3-neural-chat-v1-8b-Q4_K_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q4_K_S.gguf) | Q4_K_S | 4.69GB | Slightly lower quality with more space savings, *recommended*. |
| [llama-3-neural-chat-v1-8b-IQ4_NL.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ4_NL.gguf) | IQ4_NL | 4.67GB | Decent quality, slightly smaller than Q4_K_S with similar performance, *recommended*. |
| [llama-3-neural-chat-v1-8b-IQ4_XS.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ4_XS.gguf) | IQ4_XS | 4.44GB | Decent quality, smaller than Q4_K_S with similar performance, *recommended*. |
| [llama-3-neural-chat-v1-8b-Q3_K_L.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q3_K_L.gguf) | Q3_K_L | 4.32GB | Lower quality but usable, good for low RAM availability. |
| [llama-3-neural-chat-v1-8b-Q3_K_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q3_K_M.gguf) | Q3_K_M | 4.01GB | Even lower quality. |
| [llama-3-neural-chat-v1-8b-IQ3_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ3_M.gguf) | IQ3_M | 3.78GB | Medium-low quality, new method with decent performance comparable to Q3_K_M. |
| [llama-3-neural-chat-v1-8b-IQ3_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ3_S.gguf) | IQ3_S | 3.68GB | Lower quality, new method with decent performance, recommended over Q3_K_S quant, same size with better performance. |
| [llama-3-neural-chat-v1-8b-Q3_K_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q3_K_S.gguf) | Q3_K_S | 3.66GB | Low quality, not recommended. |
| [llama-3-neural-chat-v1-8b-IQ3_XS.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ3_XS.gguf) | IQ3_XS | 3.51GB | Lower quality, new method with decent performance, slightly better than Q3_K_S. |
| [llama-3-neural-chat-v1-8b-IQ3_XXS.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ3_XXS.gguf) | IQ3_XXS | 3.27GB | Lower quality, new method with decent performance, comparable to Q3 quants. |
| [llama-3-neural-chat-v1-8b-Q2_K.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-Q2_K.gguf) | Q2_K | 3.17GB | Very low quality but surprisingly usable. |
| [llama-3-neural-chat-v1-8b-IQ2_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ2_M.gguf) | IQ2_M | 2.94GB | Very low quality, uses SOTA techniques to also be surprisingly usable. |
| [llama-3-neural-chat-v1-8b-IQ2_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ2_S.gguf) | IQ2_S | 2.75GB | Very low quality, uses SOTA techniques to be usable. |
| [llama-3-neural-chat-v1-8b-IQ2_XS.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ2_XS.gguf) | IQ2_XS | 2.60GB | Very low quality, uses SOTA techniques to be usable. |
| [llama-3-neural-chat-v1-8b-IQ2_XXS.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ2_XXS.gguf) | IQ2_XXS | 2.39GB | Lower quality, uses SOTA techniques to be usable. |
| [llama-3-neural-chat-v1-8b-IQ1_M.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ1_M.gguf) | IQ1_M | 2.16GB | Extremely low quality, *not* recommended. |
| [llama-3-neural-chat-v1-8b-IQ1_S.gguf](https://huggingface.co/bartowski/llama-3-neural-chat-v1-8b-GGUF/blob/main/llama-3-neural-chat-v1-8b-IQ1_S.gguf) | IQ1_S | 2.01GB | Extremely low quality, *not* recommended. |
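The file sizes in the table can be approximated as parameter count × bits per weight ÷ 8. As a worked check, take the Q4_K_M row, which states about 4.83 bits per weight. Assuming roughly 8.03 billion parameters for a Llama-3-8B derivative, 8.03 × 10⁹ × 4.83 / 8 ≈ 4.85 × 10⁹ bytes, which is close to the listed 4.92GB. The sketch below assumes that GB means 10⁹ bytes. It ignores metadata and per-tensor precision differences, so treat the result as a ballpark figure only.

```python
def estimate_gguf_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough quantized file size in GB (10**9 bytes).

    Ignores file metadata and per-tensor precision differences; ballpark only.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Roughly 8.03e9 parameters for Llama-3-8B (an assumption for this example).
print(round(estimate_gguf_size_gb(8.03e9, 4.83), 2))
```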

## Which file should I choose?

A great write-up with charts comparing the performance of the various quant types is provided by Artefact2 [here](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9).

The first thing to figure out is how big a model you can run. To do this, you'll need to figure out how much RAM and/or VRAM you have.

If you want your model running as FAST as possible, you'll want to fit the whole thing on your GPU's VRAM. Aim for a quant with a file size 1-2GB smaller than your GPU's total VRAM.

If you want the absolute maximum quality, add your system RAM and your GPU's VRAM together, then similarly grab a quant with a file size 1-2GB smaller than that total.

Next, you'll need to decide if you want to use an 'I-quant' or a 'K-quant'.

If you don't want to think too much, grab one of the K-quants. These are in format 'QX_K_X', like Q5_K_M.

If you want to get more into the weeds, you can check out this extremely useful feature chart:

[llama.cpp feature matrix](https://github.com/ggerganov/llama.cpp/wiki/Feature-matrix)

But basically, if you're aiming for below Q4, and you're running cuBLAS (Nvidia) or rocBLAS (AMD), you should look towards the I-quants. These are in format IQX_X, like IQ3_M. These are newer and offer better performance for their size.

These I-quants can also be used on CPU and Apple Metal, but will be slower than their K-quant equivalent, so speed vs performance is a tradeoff you'll have to decide.

The I-quants are *not* compatible with Vulkan, which also runs on AMD cards, so if you have an AMD card, double-check whether you're using the rocBLAS build or the Vulkan build. At the time of writing, LM Studio has a preview with ROCm support, and other inference engines have specific builds for ROCm.
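The rules of thumb above can be encoded in a small selection helper. This sketch rests on several assumptions. The 1.5 GB headroom is the midpoint of the suggested 1-2GB margin. The file sizes are copied from the table above, omitting the IQ1 quants that the table marks as not recommended. The I-quant advice is reduced to a single `allow_iquants` switch. The function and its parameters are hypothetical.

```python
from typing import Optional

# File sizes in GB from the table above (IQ1 quants omitted as "not recommended").
QUANT_SIZES_GB = {
    "Q8_0": 8.54, "Q6_K": 6.59, "Q5_K_M": 5.73, "Q5_K_S": 5.59,
    "Q4_K_M": 4.92, "Q4_K_S": 4.69, "IQ4_NL": 4.67, "IQ4_XS": 4.44,
    "Q3_K_L": 4.32, "Q3_K_M": 4.01, "IQ3_M": 3.78, "IQ3_S": 3.68,
    "Q3_K_S": 3.66, "IQ3_XS": 3.51, "IQ3_XXS": 3.27, "Q2_K": 3.17,
    "IQ2_M": 2.94, "IQ2_S": 2.75, "IQ2_XS": 2.60, "IQ2_XXS": 2.39,
}


def pick_quant(memory_gb: float, allow_iquants: bool, headroom_gb: float = 1.5) -> Optional[str]:
    """Return the largest quant whose file leaves `headroom_gb` of memory free.

    memory_gb: GPU VRAM alone for maximum speed, or RAM + VRAM for maximum quality.
    allow_iquants: True for cuBLAS/rocBLAS builds; False for Vulkan (no I-quant
        support) or when K-quant speed on CPU/Metal matters more.
    """
    budget = memory_gb - headroom_gb
    fitting = [
        (size, name)
        for name, size in QUANT_SIZES_GB.items()
        if size <= budget and (allow_iquants or not name.startswith("IQ"))
    ]
    # max() on (size, name) tuples picks the biggest file that still fits.
    return max(fitting)[1] if fitting else None


print(pick_quant(8.0, allow_iquants=True))   # 8 GB GPU with a CUDA (cuBLAS) build
print(pick_quant(6.0, allow_iquants=False))  # 6 GB GPU with a Vulkan build
```

Returning `None` signals that even the smallest listed quant would not leave the requested headroom.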

Want to support my work? Visit my ko-fi page here: https://ko-fi.com/bartowski

|

mradermacher/kappa-3-phi-abliterated-GGUF | mradermacher | 2024-05-12T19:08:59Z | 359 | 3 | transformers | ["transformers", "gguf", "en", "base_model:failspy/kappa-3-phi-abliterated", "license:mit", "endpoints_compatible", "region:us"] | null | 2024-05-12T18:55:20Z |

---

base_model: failspy/kappa-3-phi-abliterated

language:

- en

library_name: transformers

license: mit

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/failspy/kappa-3-phi-abliterated

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q2_K.gguf) | Q2_K | 1.5 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.IQ3_XS.gguf) | IQ3_XS | 1.7 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.IQ3_S.gguf) | IQ3_S | 1.8 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q3_K_S.gguf) | Q3_K_S | 1.8 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.IQ3_M.gguf) | IQ3_M | 2.0 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q3_K_M.gguf) | Q3_K_M | 2.1 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.IQ4_XS.gguf) | IQ4_XS | 2.2 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q3_K_L.gguf) | Q3_K_L | 2.2 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q4_K_S.gguf) | Q4_K_S | 2.3 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q4_K_M.gguf) | Q4_K_M | 2.5 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q5_K_S.gguf) | Q5_K_S | 2.7 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q5_K_M.gguf) | Q5_K_M | 2.9 | |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q6_K.gguf) | Q6_K | 3.2 | very good quality |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.Q8_0.gguf) | Q8_0 | 4.2 | fast, best quality |
| [GGUF](https://huggingface.co/mradermacher/kappa-3-phi-abliterated-GGUF/resolve/main/kappa-3-phi-abliterated.f16.gguf) | f16 | 7.7 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

gokaygokay/paligemma-docci-transformers

|

gokaygokay

| 2024-05-16T21:13:34Z | 359 | 1 |

transformers

|

[

"transformers",

"safetensors",

"paligemma",

"pretraining",

"image-text-to-text",

"en",

"dataset:google/docci",

"license:apache-2.0",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] |

image-text-to-text

| 2024-05-16T20:17:59Z |

---

license: apache-2.0

datasets:

- google/docci

language:

- en

library_name: transformers

pipeline_tag: image-text-to-text

---

Fine-tuned version of the [PaliGemma](https://huggingface.co/google/paligemma-3b-pt-224-jax) model on the [google/docci](https://huggingface.co/datasets/google/docci) dataset.

```

pip install git+https://github.com/huggingface/transformers

```

```python
from transformers import AutoProcessor, PaliGemmaForConditionalGeneration
from PIL import Image
import requests
import torch

model_id = "gokaygokay/paligemma-docci-transformers"
url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"
image = Image.open(requests.get(url, stream=True).raw)

model = PaliGemmaForConditionalGeneration.from_pretrained(model_id).eval()
processor = AutoProcessor.from_pretrained(model_id)

# Task prefix: "caption en" asks for an English caption
prompt = "caption en"
model_inputs = processor(text=prompt, images=image, return_tensors="pt")
input_len = model_inputs["input_ids"].shape[-1]

with torch.inference_mode():
    generation = model.generate(**model_inputs, max_new_tokens=256, do_sample=False)
    # Drop the prompt tokens and keep only the newly generated ones
    generation = generation[0][input_len:]
    decoded = processor.decode(generation, skip_special_tokens=True)
    print(decoded)
```

|

MaziyarPanahi/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF

|

MaziyarPanahi

| 2024-05-21T18:37:50Z | 359 | 0 |

transformers

|

[

"transformers",

"gguf",

"mistral",

"quantized",

"2-bit",

"3-bit",

"4-bit",

"5-bit",

"6-bit",

"8-bit",

"GGUF",

"safetensors",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"automerger",

"base_model:Kukedlc/NeuralSynthesis-7B-v0.1",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us",

"base_model:automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B"

] |

text-generation

| 2024-05-21T18:05:26Z |

---

tags:

- quantized

- 2-bit

- 3-bit

- 4-bit

- 5-bit

- 6-bit

- 8-bit

- GGUF

- transformers

- safetensors

- mistral

- text-generation

- merge

- mergekit

- lazymergekit

- automerger

- base_model:Kukedlc/NeuralSynthesis-7B-v0.1

- license:apache-2.0

- autotrain_compatible

- endpoints_compatible

- text-generation-inference

- region:us

- text-generation

model_name: Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF

base_model: automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B

inference: false

model_creator: automerger

pipeline_tag: text-generation

quantized_by: MaziyarPanahi

---

# [MaziyarPanahi/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF](https://huggingface.co/MaziyarPanahi/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF)

- Model creator: [automerger](https://huggingface.co/automerger)

- Original model: [automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B](https://huggingface.co/automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B)

## Description

[MaziyarPanahi/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF](https://huggingface.co/MaziyarPanahi/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B-GGUF) contains GGUF format model files for [automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B](https://huggingface.co/automerger/Ognoexperiment27multi_verse_modelNeuralsynthesis-7B).

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.
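To make the format more concrete, here is a minimal Python sketch that reads the fixed-size header at the start of a GGUF file. It makes three assumptions: the file is version 2 or later, it uses little-endian byte order, and its layout is a 4-byte magic `GGUF`, a `uint32` version, then `uint64` tensor and metadata key/value counts. The function names are illustrative and not part of any library.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (metadata kv count)


def parse_gguf_header(data: bytes) -> dict:
    """Parse the fixed GGUF header; assumes version >= 2 and little-endian layout."""
    if len(data) < HEADER_SIZE:
        raise ValueError("need at least 24 bytes for a GGUF v2+ header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (bad magic)")
    (version,) = struct.unpack_from("<I", data, 4)
    if version < 2:
        # GGUF v1 stored the two counts as uint32, so its layout differs
        raise ValueError(f"unsupported GGUF version {version}")
    tensor_count, metadata_kv_count = struct.unpack_from("<QQ", data, 8)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": metadata_kv_count,
    }


def read_gguf_header(path: str) -> dict:
    """Read only the first 24 bytes of a .gguf file and parse them."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

Only the header is read, so this is cheap even for multi-gigabyte files. The metadata key/value pairs and tensor descriptors that follow have variable-length encodings and are not handled here.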

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU acceleration, LangChain support, and an OpenAI-compatible API server.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU acceleration across all platforms and GPU architectures. Especially good for storytelling.

* [GPT4All](https://gpt4all.io/index.html), a free and open-source GUI that runs locally, supporting Windows, Linux and macOS with full GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive, easy-to-use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU acceleration, LangChain support, and OpenAI-compatible AI server. Note: as of the time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

## Special thanks

🙏 Special thanks to [Georgi Gerganov](https://github.com/ggerganov) and the whole team working on [llama.cpp](https://github.com/ggerganov/llama.cpp/) for making all of this possible.

|

RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf

|

RichardErkhov

| 2024-05-21T23:20:54Z | 359 | 0 | null |

[

"gguf",

"region:us"

] | null | 2024-05-21T20:45:29Z |

Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

OmniCorso-7B - GGUF

- Model creator: https://huggingface.co/macadeliccc/

- Original model: https://huggingface.co/macadeliccc/OmniCorso-7B/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [OmniCorso-7B.Q2_K.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q2_K.gguf) | Q2_K | 2.53GB |
| [OmniCorso-7B.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.IQ3_XS.gguf) | IQ3_XS | 2.81GB |
| [OmniCorso-7B.IQ3_S.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.IQ3_S.gguf) | IQ3_S | 2.96GB |
| [OmniCorso-7B.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q3_K_S.gguf) | Q3_K_S | 2.95GB |
| [OmniCorso-7B.IQ3_M.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.IQ3_M.gguf) | IQ3_M | 3.06GB |
| [OmniCorso-7B.Q3_K.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q3_K.gguf) | Q3_K | 3.28GB |
| [OmniCorso-7B.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q3_K_M.gguf) | Q3_K_M | 3.28GB |
| [OmniCorso-7B.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q3_K_L.gguf) | Q3_K_L | 3.56GB |
| [OmniCorso-7B.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.IQ4_XS.gguf) | IQ4_XS | 3.67GB |
| [OmniCorso-7B.Q4_0.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q4_0.gguf) | Q4_0 | 3.83GB |
| [OmniCorso-7B.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.IQ4_NL.gguf) | IQ4_NL | 3.87GB |
| [OmniCorso-7B.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q4_K_S.gguf) | Q4_K_S | 3.86GB |
| [OmniCorso-7B.Q4_K.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q4_K.gguf) | Q4_K | 4.07GB |
| [OmniCorso-7B.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q4_K_M.gguf) | Q4_K_M | 4.07GB |
| [OmniCorso-7B.Q4_1.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q4_1.gguf) | Q4_1 | 4.24GB |
| [OmniCorso-7B.Q5_0.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q5_0.gguf) | Q5_0 | 4.65GB |
| [OmniCorso-7B.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q5_K_S.gguf) | Q5_K_S | 4.65GB |
| [OmniCorso-7B.Q5_K.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q5_K.gguf) | Q5_K | 4.78GB |
| [OmniCorso-7B.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q5_K_M.gguf) | Q5_K_M | 4.78GB |
| [OmniCorso-7B.Q5_1.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q5_1.gguf) | Q5_1 | 5.07GB |
| [OmniCorso-7B.Q6_K.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q6_K.gguf) | Q6_K | 5.53GB |
| [OmniCorso-7B.Q8_0.gguf](https://huggingface.co/RichardErkhov/macadeliccc_-_OmniCorso-7B-gguf/blob/main/OmniCorso-7B.Q8_0.gguf) | Q8_0 | 7.17GB |
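The file sizes above can be turned into an approximate bits-per-weight figure. This sketch rests on two assumptions that the card does not state:

* the "GB" column means GiB (2^30 bytes);
* OmniCorso-7B, as a merge of Mistral-7B-based models, has roughly 7.24 billion parameters.

Under these assumptions, Q8_0 comes out close to 8.5 bits per weight. That matches the format's nominal size of 32 int8 weights plus one fp16 scale per block.

```python
GIB = 1024 ** 3  # assumption: the table's "GB" means GiB
N_PARAMS = 7.24e9  # assumption: approximate parameter count of a Mistral-7B-class model


def bits_per_weight(size_gib: float, n_params: float = N_PARAMS) -> float:
    """Average storage bits per parameter implied by a file size."""
    return size_gib * GIB * 8 / n_params


# A few rows from the table above (sizes as listed)
for name, size in [("Q2_K", 2.53), ("Q4_K_M", 4.07), ("Q6_K", 5.53), ("Q8_0", 7.17)]:
    print(f"{name}: {bits_per_weight(size):.2f} bits/weight")
```

Treat the results as averages over the whole file. Some tensors are stored at a different precision than the nominal quant type, so the numbers are estimates rather than exact per-format values.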

Original model description:

---

license: cc

tags:

- mergekit

- merge

base_model:

- macadeliccc/MBX-7B-v3-DPO

- mlabonne/OmniBeagle-7B

model-index:

- name: OmniCorso-7B

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 72.7

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 88.7

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 64.91

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 73.43

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 83.74

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 70.96

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=macadeliccc/OmniCorso-7B

name: Open LLM Leaderboard

---

# OmniCorso-7B

## Code Example

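```python
from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("macadeliccc/OmniCorso-7B")
model = AutoModelForCausalLM.from_pretrained("macadeliccc/OmniCorso-7B")

messages = [
    {"role": "system", "content": "Respond to the user's request like a pirate"},
    {"role": "user", "content": "Can you write me a quicksort algorithm?"}
]

# add_generation_prompt=True appends the assistant turn header so the model answers
gen_input = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt")
output_ids = model.generate(gen_input, max_new_tokens=512)
# Decode only the newly generated tokens
print(tokenizer.decode(output_ids[0][gen_input.shape[-1]:], skip_special_tokens=True))
```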
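The evaluation log further down reports the prompt format as ChatML. Assuming the tokenizer's chat template is ChatML (check `tokenizer.chat_template` to confirm), the string built by `apply_chat_template` should look roughly like this hypothetical helper's output. It is a sketch for inspecting prompts, not a replacement for the tokenizer's own template.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render role/content messages in ChatML (assumed template, for illustration)."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "Respond to the user's request like a pirate"},
    {"role": "user", "content": "Can you write me a quicksort algorithm?"},
]
print(to_chatml(messages))
```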

The following models were included in the merge:

* [macadeliccc/MBX-7B-v3-DPO](https://huggingface.co/macadeliccc/MBX-7B-v3-DPO)

* [mlabonne/OmniBeagle-7B](https://huggingface.co/mlabonne/OmniBeagle-7B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
slices:
  - sources:
      - model: mlabonne/OmniBeagle-7B
        layer_range: [0, 32]
      - model: macadeliccc/MBX-7B-v3-DPO
        layer_range: [0, 32]
merge_method: slerp
base_model: macadeliccc/MBX-7B-v3-DPO
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```
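In a SLERP merge, `t` controls how far each tensor moves from the base model (`t = 0`) toward the other model (`t = 1`). A list such as `[0, 0.5, 0.3, 0.7, 1]` acts as a gradient across layers.

The pure-Python sketch below illustrates both ideas. Two caveats apply:

* it assumes the list's anchor points are spread evenly over the 32 layers, with linear interpolation between them;
* it is a simplified illustration of spherical linear interpolation, not mergekit's actual code.

```python
import math


def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherically interpolate between two weight vectors given as lists of floats."""
    n0 = math.sqrt(sum(a * a for a in v0))
    n1 = math.sqrt(sum(b * b for b in v1))
    if n0 == 0 or n1 == 0:
        # No direction to rotate along; fall back to linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    cos_theta = max(-1.0, min(1.0, sum(a * b for a, b in zip(v0, v1)) / (n0 * n1)))
    if abs(cos_theta) > dot_threshold:
        # Nearly (anti)parallel vectors: linear interpolation is numerically safer
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


def layer_t(anchors, layer, num_layers):
    """Linearly interpolate a gradient list (anchors spread evenly) at one layer index."""
    x = layer / (num_layers - 1) * (len(anchors) - 1)
    i = min(int(x), len(anchors) - 2)
    frac = x - i
    return anchors[i] + frac * (anchors[i + 1] - anchors[i])


# Per-layer t values for the two filters in the config above
self_attn_t = [layer_t([0, 0.5, 0.3, 0.7, 1], i, 32) for i in range(32)]
mlp_t = [layer_t([1, 0.5, 0.7, 0.3, 0], i, 32) for i in range(32)]
```

Under these assumptions, the attention tensors of the first layers come almost entirely from the base model (MBX-7B-v3-DPO) and those of the last layers from OmniBeagle-7B. The MLP tensors follow the opposite pattern, and everything else uses `t = 0.5`.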

## Quantizations

### GGUF

+ [iMatrix](https://huggingface.co/macadeliccc/OmniCorso-7B-GGUF)

### Exllamav2

Quants are available thanks to user bartowski; check them out [here](https://huggingface.co/bartowski/OmniCorso-7B-exl2).

| Branch | Bits | lm_head bits | VRAM (4k) | VRAM (16k) | VRAM (32k) | Description |
| ----- | ---- | ------- | ------ | ------ | ------ | ------------ |
| [8_0](https://huggingface.co/bartowski/OmniCorso-7B-exl2/tree/8_0) | 8.0 | 8.0 | 8.4 GB | 9.8 GB | 11.8 GB | Maximum quality that ExLlamaV2 can produce, near unquantized performance. |
| [6_5](https://huggingface.co/bartowski/OmniCorso-7B-exl2/tree/6_5) | 6.5 | 8.0 | 7.2 GB | 8.6 GB | 10.6 GB | Very similar to 8.0, good tradeoff of size vs performance, **recommended**. |
| [5_0](https://huggingface.co/bartowski/OmniCorso-7B-exl2/tree/5_0) | 5.0 | 6.0 | 6.0 GB | 7.4 GB | 9.4 GB | Slightly lower quality vs 6.5, but usable on 8GB cards. |
| [4_25](https://huggingface.co/bartowski/OmniCorso-7B-exl2/tree/4_25) | 4.25 | 6.0 | 5.3 GB | 6.7 GB | 8.7 GB | GPTQ equivalent bits per weight, slightly higher quality. |
| [3_5](https://huggingface.co/bartowski/OmniCorso-7B-exl2/tree/3_5) | 3.5 | 6.0 | 4.7 GB | 6.1 GB | 8.1 GB | Lower quality, only use if you have to. |
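In this table, VRAM grows by the same amount for every bit width: about 1.4 GB from 4k to 16k context and 2.0 GB from 16k to 32k. That points to a context-dependent cost such as the key/value cache.

Here is a rough estimate of that cache size. It assumes Mistral-7B's architecture (32 layers and 8 key/value heads of dimension 128) and an FP16 cache; neither is stated in this card.

```python
def kv_cache_bytes(n_tokens, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_elem=2):
    """Approximate key/value cache size; defaults assume a Mistral-7B-style model, FP16 cache."""
    # Factor 2: one key tensor and one value tensor per layer
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * n_tokens


GIB = 1024 ** 3
for ctx in (4096, 16384, 32768):
    print(f"{ctx:>6} tokens: {kv_cache_bytes(ctx) / GIB:.1f} GiB")
```

Under these assumptions, the cache grows by 1.5 GiB from 4k to 16k tokens and by 2.0 GiB from 16k to 32k, roughly in line with the table. ExLlamaV2's actual allocation may differ, for example when a quantized cache is used.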

## Evaluations

<pre>----Benchmark Complete----

2024-02-11 15:34:40

Time taken: 178.3 mins

Prompt Format: ChatML

Model: macadeliccc/OmniCorso-7B

Score (v2): 73.75

Parseable: 167.0

---------------

Batch completed

Time taken: 178.3 mins

---------------

</pre>

| Model |AGIEval|GPT4All|TruthfulQA|Bigbench|Average|
|---------------------------------------------------------------|------:|------:|---------:|-------:|------:|
|[OmniCorso-7B](https://huggingface.co/macadeliccc/OmniCorso-7B)| 45.89| 77.66| 74.12| 49.24| 61.73|

### AGIEval

| Task |Version| Metric |Value| |Stderr|
|------------------------------|------:|--------|----:|---|-----:|
|agieval_aqua_rat | 0|acc |29.13|± | 2.86|
| | |acc_norm|27.17|± | 2.80|
|agieval_logiqa_en | 0|acc |39.32|± | 1.92|
| | |acc_norm|39.63|± | 1.92|
|agieval_lsat_ar | 0|acc |23.91|± | 2.82|
| | |acc_norm|23.91|± | 2.82|
|agieval_lsat_lr | 0|acc |53.14|± | 2.21|
| | |acc_norm|53.92|± | 2.21|
|agieval_lsat_rc | 0|acc |66.54|± | 2.88|
| | |acc_norm|67.29|± | 2.87|
|agieval_sat_en | 0|acc |80.58|± | 2.76|
| | |acc_norm|80.58|± | 2.76|
|agieval_sat_en_without_passage| 0|acc |45.63|± | 3.48|
| | |acc_norm|43.69|± | 3.46|
|agieval_sat_math | 0|acc |33.18|± | 3.18|
| | |acc_norm|30.91|± | 3.12|

Average: 45.89%

### GPT4All

| Task |Version| Metric |Value| |Stderr|
|-------------|------:|--------|----:|---|-----:|
|arc_challenge| 0|acc |67.32|± | 1.37|
| | |acc_norm|68.43|± | 1.36|
|arc_easy | 0|acc |87.46|± | 0.68|
| | |acc_norm|83.50|± | 0.76|
|boolq | 1|acc |88.13|± | 0.57|
|hellaswag | 0|acc |68.47|± | 0.46|
| | |acc_norm|86.96|± | 0.34|
|openbookqa | 0|acc |38.80|± | 2.18|
| | |acc_norm|50.00|± | 2.24|
|piqa | 0|acc |83.03|± | 0.88|
| | |acc_norm|85.31|± | 0.83|
|winogrande | 0|acc |81.29|± | 1.10|

Average: 77.66%
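The suite averages appear to use `acc_norm` where a task reports it and `acc` otherwise (here, for `boolq` and `winogrande`). This reading is inferred from the numbers, not stated in the card. Under that rule, the sketch below reproduces the 77.66 GPT4All average, and the same rule gives 45.89 for AGIEval.

```python
def suite_average(results):
    """Mean score over tasks, preferring acc_norm and falling back to acc."""
    scores = [metrics.get("acc_norm", metrics["acc"]) for metrics in results.values()]
    return sum(scores) / len(scores)


gpt4all = {
    "arc_challenge": {"acc": 67.32, "acc_norm": 68.43},
    "arc_easy": {"acc": 87.46, "acc_norm": 83.50},
    "boolq": {"acc": 88.13},
    "hellaswag": {"acc": 68.47, "acc_norm": 86.96},
    "openbookqa": {"acc": 38.80, "acc_norm": 50.00},
    "piqa": {"acc": 83.03, "acc_norm": 85.31},
    "winogrande": {"acc": 81.29},
}
print(f"GPT4All average: {suite_average(gpt4all):.2f}")
```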

### TruthfulQA

| Task |Version|Metric|Value| |Stderr|
|-------------|------:|------|----:|---|-----:|
|truthfulqa_mc| 1|mc1 |58.26|± | 1.73|
| | |mc2 |74.12|± | 1.43|

Average: 74.12%

### Bigbench

| Task |Version| Metric |Value| |Stderr|
|------------------------------------------------|------:|---------------------|----:|---|-----:|
|bigbench_causal_judgement | 0|multiple_choice_grade|56.84|± | 3.60|
|bigbench_date_understanding | 0|multiple_choice_grade|63.41|± | 2.51|
|bigbench_disambiguation_qa | 0|multiple_choice_grade|49.22|± | 3.12|
|bigbench_geometric_shapes | 0|multiple_choice_grade|23.96|± | 2.26|
| | |exact_str_match | 1.39|± | 0.62|
|bigbench_logical_deduction_five_objects | 0|multiple_choice_grade|34.20|± | 2.12|
|bigbench_logical_deduction_seven_objects | 0|multiple_choice_grade|23.71|± | 1.61|
|bigbench_logical_deduction_three_objects | 0|multiple_choice_grade|60.33|± | 2.83|
|bigbench_movie_recommendation | 0|multiple_choice_grade|49.00|± | 2.24|
|bigbench_navigate | 0|multiple_choice_grade|55.20|± | 1.57|
|bigbench_reasoning_about_colored_objects | 0|multiple_choice_grade|70.75|± | 1.02|
|bigbench_ruin_names | 0|multiple_choice_grade|55.80|± | 2.35|
|bigbench_salient_translation_error_detection | 0|multiple_choice_grade|36.97|± | 1.53|
|bigbench_snarks | 0|multiple_choice_grade|72.38|± | 3.33|
|bigbench_sports_understanding | 0|multiple_choice_grade|76.27|± | 1.36|
|bigbench_temporal_sequences | 0|multiple_choice_grade|54.50|± | 1.58|
|bigbench_tracking_shuffled_objects_five_objects | 0|multiple_choice_grade|23.12|± | 1.19|
|bigbench_tracking_shuffled_objects_seven_objects| 0|multiple_choice_grade|20.34|± | 0.96|
|bigbench_tracking_shuffled_objects_three_objects| 0|multiple_choice_grade|60.33|± | 2.83|

Average: 49.24%

Average score: 61.73%

Elapsed time: 02:20:06

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_macadeliccc__OmniCorso-7B)

| Metric |Value|
|---------------------------------|----:|
|Avg. |75.74|
|AI2 Reasoning Challenge (25-Shot)|72.70|
|HellaSwag (10-Shot) |88.70|
|MMLU (5-Shot) |64.91|
|TruthfulQA (0-shot) |73.43|
|Winogrande (5-shot) |83.74|
|GSM8k (5-shot) |70.96|
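Both headline averages in this card look like plain unweighted means, within rounding:

* 61.73 over the four suite averages (AGIEval, GPT4All, TruthfulQA, Bigbench);
* 75.74 over the six leaderboard benchmarks.

This is an inference from the numbers. A quick check:

```python
from statistics import mean

suite_averages = {"AGIEval": 45.89, "GPT4All": 77.66, "TruthfulQA": 74.12, "Bigbench": 49.24}
leaderboard = {
    "AI2 Reasoning Challenge (25-Shot)": 72.70,
    "HellaSwag (10-Shot)": 88.70,
    "MMLU (5-Shot)": 64.91,
    "TruthfulQA (0-shot)": 73.43,
    "Winogrande (5-shot)": 83.74,
    "GSM8k (5-shot)": 70.96,
}
print(f"Suite average: {mean(suite_averages.values()):.3f}")
print(f"Leaderboard average: {mean(leaderboard.values()):.3f}")
```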

|

mayflowergmbh/Llama3-DiscoLeo-Instruct-8B-v0.1-GGUF

|

mayflowergmbh

| 2024-05-25T18:18:36Z | 359 | 0 |

transformers

|

[

"transformers",

"gguf",

"de",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-05-25T17:55:19Z |

---

language:

- de

license: llama3

library_name: transformers

tags:

- gguf

---

# Llama3-DiscoLeo-Instruct 8B (version 0.1)

## Thanks and Accreditation

[DiscoResearch/Llama3-DiscoLeo-Instruct-8B-v0.1](https://huggingface.co/collections/DiscoResearch/discoleo-8b-llama3-for-german-6650527496c0fafefd4c9729)

is the result of a joint effort between [DiscoResearch](https://huggingface.co/DiscoResearch) and [Occiglot](https://huggingface.co/occiglot)

with support from the [DFKI](https://www.dfki.de/web/) (German Research Center for Artificial Intelligence) and [hessian.Ai](https://hessian.ai).

Occiglot kindly handled data preprocessing, filtering, and deduplication as part of their latest [dataset release](https://huggingface.co/datasets/occiglot/occiglot-fineweb-v0.5), as well as sharing their compute allocation at hessian.Ai's 42 Supercomputer.

## Model Overview

Llama3_DiscoLeo_Instruct_8B_v0.1 is an instruction-tuned version of our [Llama3-German-8B](https://huggingface.co/DiscoResearch/Llama3_German_8B).

The base model was derived from [Meta's Llama3-8B](https://huggingface.co/meta-llama/Meta-Llama-3-8B) through continued pretraining on 65 billion high-quality German tokens, similar to previous [LeoLM](https://huggingface.co/LeoLM) or [Occiglot](https://huggingface.co/collections/occiglot/occiglot-eu5-7b-v01-65dbed502a6348b052695e01) models.

We fine-tuned this checkpoint on the German Instruction dataset from DiscoResearch created by [Jan-Philipp Harries](https://huggingface.co/jphme) and [Daniel Auras](https://huggingface.co/rasdani) ([DiscoResearch](https://huggingface.co/DiscoResearch), [ellamind](https://ellamind.com)).

## How to use

Llama3_DiscoLeo_Instruct_8B_v0.1 uses the [Llama-3 chat template](https://github.com/meta-llama/llama3?tab=readme-ov-file#instruction-tuned-models), which can easily be used with [transformers' chat templating](https://huggingface.co/docs/transformers/main/en/chat_templating).

See [below](https://huggingface.co/DiscoResearch/Llama3_DiscoLeo_Instruct_8B_v0.1#usage-example) for a usage example.
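For reference, the Llama-3 chat format wraps each turn in header tokens and ends it with `<|eot_id|>`. The hypothetical helper below is a simplified rendering of that format for inspecting prompts. In practice, use `tokenizer.apply_chat_template`, since the template bundled with the model is authoritative.

```python
def to_llama3_prompt(messages, add_generation_prompt=True):
    """Render role/content messages in the Llama-3 chat format (simplified sketch)."""
    prompt = "<|begin_of_text|>"
    for m in messages:
        prompt += (
            f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n"
            f"{m['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # Open an assistant header so generation continues as the assistant
        prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


print(to_llama3_prompt([
    {"role": "system", "content": "Du bist ein hilfreicher Assistent."},
    {"role": "user", "content": "Schreibe ein Essay über die Bedeutung der Energiewende für Deutschlands Wirtschaft"},
]))
```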

## Model Training and Hyperparameters

The model was fully fine-tuned with axolotl on the [hessian.Ai 42](https://hessian.ai/) supercomputer with a context length of 8192, a learning rate of 2e-5 and a batch size of 16.

## Evaluation and Results

We evaluated the model using a suite of common English benchmarks and their German counterparts with [GermanBench](https://github.com/bjoernpl/GermanBenchmark).

In the image below and the corresponding table, you can see the benchmark scores for the different instruct models compared to Meta's instruct version. All checkpoints are available in this [collection](https://huggingface.co/collections/DiscoResearch/discoleo-8b-llama3-for-german-6650527496c0fafefd4c9729).

| Model | truthful_qa_de | truthfulqa_mc | arc_challenge | arc_challenge_de | hellaswag | hellaswag_de | MMLU | MMLU-DE | mean |
|----------------------------------------------------|----------------|---------------|---------------|------------------|-------------|--------------|-------------|-------------|-------------|
| meta-llama/Meta-Llama-3-8B-Instruct | 0.47498 | 0.43923 | **0.59642** | 0.47952 | **0.82025** | 0.60008 | **0.66658** | 0.53541 | 0.57656 |
| DiscoResearch/Llama3-German-8B | 0.49499 | 0.44838 | 0.55802 | 0.49829 | 0.79924 | 0.65395 | 0.62240 | 0.54413 | 0.57743 |
| DiscoResearch/Llama3-German-8B-32k | 0.48920 | 0.45138 | 0.54437 | 0.49232 | 0.79078 | 0.64310 | 0.58774 | 0.47971 | 0.55982 |
| **DiscoResearch/Llama3-DiscoLeo-Instruct-8B-v0.1** | **0.53042** | 0.52867 | 0.59556 | **0.53839** | 0.80721 | 0.66440 | 0.61898 | 0.56053 | **0.60552** |
| DiscoResearch/Llama3-DiscoLeo-Instruct-8B-32k-v0.1| 0.52749 | **0.53245** | 0.58788 | 0.53754 | 0.80770 | **0.66709** | 0.62123 | **0.56238** | 0.60547 |

## Model Configurations

We release DiscoLeo-8B in the following configurations:

1. [Base model with continued pretraining](https://huggingface.co/DiscoResearch/Llama3_German_8B)

2. [Long-context version (32k context length)](https://huggingface.co/DiscoResearch/Llama3_German_8B_32k)

3. [Instruction-tuned version of the base model](https://huggingface.co/DiscoResearch/Llama3_DiscoLeo_Instruct_8B_v0.1) (This model)

4. [Instruction-tuned version of the long-context model](https://huggingface.co/DiscoResearch/Llama3_DiscoLeo_Instruct_8B_32k_v0.1)

5. [Experimental `DARE-TIES` Merge with Llama3-Instruct](https://huggingface.co/DiscoResearch/Llama3_DiscoLeo_8B_DARE_Experimental)

6. [Collection of Quantized versions](https://huggingface.co/collections/DiscoResearch/discoleo-8b-quants-6651bcf8f72c9a37ce485d42)

## Usage Example

Here's how to use the model with transformers:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained(
    "DiscoResearch/Llama3-DiscoLeo-Instruct-8B-v0.1",
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained("DiscoResearch/Llama3-DiscoLeo-Instruct-8B-v0.1")

prompt = "Schreibe ein Essay über die Bedeutung der Energiewende für Deutschlands Wirtschaft"
messages = [
    {"role": "system", "content": "Du bist ein hilfreicher Assistent."},
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
# The chat template already inserts <|begin_of_text|>, so don't add special tokens again;
# place the inputs on the device that device_map="auto" chose for the model
model_inputs = tokenizer([text], return_tensors="pt", add_special_tokens=False).to(model.device)

generated_ids = model.generate(
    model_inputs.input_ids,
    max_new_tokens=512
)
# Strip the prompt tokens from each generated sequence
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]
response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
```

## Acknowledgements

The model was trained and evaluated by [Björn Plüster](https://huggingface.co/bjoernp) ([DiscoResearch](https://huggingface.co/DiscoResearch), [ellamind](https://ellamind.com)) with data preparation and project supervision by [Manuel Brack](http://manuel-brack.eu) ([DFKI](https://www.dfki.de/web/), [TU-Darmstadt](https://www.tu-darmstadt.de/)). Initial work on dataset collection and curation was performed by [Malte Ostendorff](https://ostendorff.org) and [Pedro Ortiz Suarez](https://portizs.eu). Instruction tuning was done with the DiscoLM German dataset created by [Jan-Philipp Harries](https://huggingface.co/jphme) and [Daniel Auras](https://huggingface.co/rasdani) ([DiscoResearch](https://huggingface.co/DiscoResearch), [ellamind](https://ellamind.com)). We extend our gratitude to [LAION](https://laion.ai/) and friends, especially [Christoph Schuhmann](https://entwickler.de/experten/christoph-schuhmann) and [Jenia Jitsev](https://huggingface.co/JJitsev), for initiating this collaboration.

The model training was supported by a compute grant at the [42 supercomputer](https://hessian.ai/) which is a central component in the development of [hessian AI](https://hessian.ai/), the [AI Innovation Lab](https://hessian.ai/infrastructure/ai-innovationlab/) (funded by the [Hessian Ministry of Higher Education, Research and the Art (HMWK)](https://wissenschaft.hessen.de) & the [Hessian Ministry of the Interior, for Security and Homeland Security (HMinD)](https://innen.hessen.de)) and the [AI Service Centers](https://hessian.ai/infrastructure/ai-service-centre/) (funded by the [German Federal Ministry for Economic Affairs and Climate Action (BMWK)](https://www.bmwk.de/Navigation/EN/Home/home.html)).

The curation of the training data is partially funded by the [German Federal Ministry for Economic Affairs and Climate Action (BMWK)](https://www.bmwk.de/Navigation/EN/Home/home.html)

through the project [OpenGPT-X](https://opengpt-x.de/en/) (project no. 68GX21007D).

|

rarayayan/testftargpt2

|

rarayayan

| 2024-06-03T16:59:27Z | 359 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt2",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] |

text-generation

| 2024-06-03T16:47:29Z |

---

license: apache-2.0

---

|

alvdansen/paintinglight

|

alvdansen

| 2024-06-16T16:31:05Z | 359 | 8 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2024-06-06T17:32:46Z |

---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: a small boy in a sailor suit, daiton

output:

url: images/ComfyUI_00349_.png

- text: a prince

output:

url: images/ComfyUI_00343_.png

- text: a lady in a red dress, daiton

output:

url: images/ComfyUI_00347_.png

- text: a man with hair like fire

output:

url: images/Soft_Illustration_e000015_01_20240606142553.jpeg

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: daiton

license: creativeml-openrail-m

---

# Painting Light

<Gallery />

## Model description

A watercolor-style painting model that handles impressionism well and lends itself to anime.

## Trigger words

You should use `daiton` to trigger the image generation.
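Below is a minimal sketch of applying the trigger word. It assumes the LoRA is used with diffusers' `StableDiffusionXLPipeline` on top of the SDXL base model named in this card's metadata. `with_trigger` and `load_pipeline` are hypothetical helpers. If the repository holds more than one weights file, `load_lora_weights` may need an explicit `weight_name`.

```python
import re

TRIGGER = "daiton"


def with_trigger(prompt, trigger=TRIGGER):
    """Append the trigger word (as in the widget examples) unless it is already present."""
    if re.search(rf"\b{re.escape(trigger)}\b", prompt):
        return prompt
    return f"{prompt}, {trigger}"


def load_pipeline(device="cuda"):
    """Load SDXL base and apply this LoRA (downloads weights; needs torch and diffusers)."""
    # Imported lazily so with_trigger works without these heavy dependencies
    import torch
    from diffusers import StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16
    ).to(device)
    pipe.load_lora_weights("alvdansen/paintinglight")
    return pipe
```

Usage might then look like `load_pipeline()(with_trigger("a prince")).images[0]`, which returns a PIL image.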

## Download model

Weights for this model are available in Safetensors format.

Model release is for research purposes only. For commercial use, please contact me directly.

[Download](/alvdansen/paintinglight/tree/main) them in the Files & versions tab.

|

gglabs/TinyLM-FC-0-epoch

|

gglabs

| 2024-06-09T20:15:05Z | 359 | 0 |

transformers

|

[

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/tinyllama-chat-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-09T20:12:16Z |

---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

base_model: unsloth/tinyllama-chat-bnb-4bit

---

# Uploaded model

- **Developed by:** gglabs

- **License:** apache-2.0

- **Finetuned from model:** unsloth/tinyllama-chat-bnb-4bit

This Llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF

|

CHE-72

| 2024-06-22T16:46:10Z | 359 | 0 | null |

[

"gguf",

"nlp",

"code",

"llama-cpp",

"gguf-my-repo",

"text-generation",

"en",

"base_model:microsoft/Phi-3-mini-128k-instruct",

"license:mit",

"region:us"

] |

text-generation

| 2024-06-22T16:45:53Z |

---

base_model: microsoft/Phi-3-mini-128k-instruct

language:

- en

license: mit

license_link: https://huggingface.co/microsoft/Phi-3-mini-128k-instruct/resolve/main/LICENSE

pipeline_tag: text-generation

tags:

- nlp

- code

- llama-cpp

- gguf-my-repo

widget:

- messages:

- role: user

content: Can you provide ways to eat combinations of bananas and dragonfruits?

---

# CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF

This model was converted to GGUF format from [`microsoft/Phi-3-mini-128k-instruct`](https://huggingface.co/microsoft/Phi-3-mini-128k-instruct) using llama.cpp via the ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/microsoft/Phi-3-mini-128k-instruct) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF --hf-file phi-3-mini-128k-instruct-q8_0.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF --hf-file phi-3-mini-128k-instruct-q8_0.gguf -c 2048

```
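Once `llama-server` is running, it serves an OpenAI-compatible HTTP API. The sketch below builds a request for the `/v1/chat/completions` endpoint using only the Python standard library. It assumes the server's default address `http://localhost:8080`, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8080", max_tokens=256):
    """Build a POST request for llama-server's OpenAI-compatible chat endpoint."""
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

For example, `chat("Can you provide ways to eat combinations of bananas and dragonfruits?")` would send the widget prompt from this card to the local server.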

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with any hardware-specific flags (e.g. `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF --hf-file phi-3-mini-128k-instruct-q8_0.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo CHE-72/Phi-3-mini-128k-instruct-Q8_0-GGUF --hf-file phi-3-mini-128k-instruct-q8_0.gguf -c 2048

```

|

stablediffusionapi/sd3-medium

|

stablediffusionapi

| 2024-06-25T10:05:39Z | 359 | 0 |

diffusers

|

[

"diffusers",

"modelslab.com",

"stable-diffusion-api",

"text-to-image",

"ultra-realistic",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] |

text-to-image

| 2024-06-25T10:02:51Z |

---

license: creativeml-openrail-m

tags:

- modelslab.com

- stable-diffusion-api

- text-to-image

- ultra-realistic

pinned: true

---

# sd3 medium API Inference

## Get API Key

Get an API key from [ModelsLab API](http://modelslab.com); no payment is needed.

Replace the key in the code below and set **model_id** to "sd3-medium".

Coding in PHP, Node, Java, etc.? Have a look at the docs for more code examples: [View docs](https://docs.modelslab.com)

Try the model for free: [Generate Images](https://modelslab.com/models/sd3-medium)

Model link: [View model](https://modelslab.com/models/sd3-medium)

View all models: [View Models](https://modelslab.com/models)

```python
import json

import requests

url = "https://modelslab.com/api/v6/images/text2img"

payload = json.dumps({
    "key": "your_api_key",  # replace with your ModelsLab API key
    "model_id": "sd3-medium",
    "prompt": "ultra realistic close up portrait ((beautiful pale cyberpunk female with heavy black eyeliner)), blue eyes, shaved side haircut, hyper detail, cinematic lighting, magic neon, dark red city, Canon EOS R3, nikon, f/1.4, ISO 200, 1/160s, 8K, RAW, unedited, symmetrical balance, in-frame, 8K",
    "negative_prompt": "painting, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, deformed, ugly, blurry, bad anatomy, bad proportions, extra limbs, cloned face, skinny, glitchy, double torso, extra arms, extra hands, mangled fingers, missing lips, ugly face, distorted face, extra legs, anime",
    "width": "512",
    "height": "512",
    "samples": "1",
    "num_inference_steps": "30",
    "safety_checker": "no",
    "enhance_prompt": "yes",
    "seed": None,
    "guidance_scale": 7.5,
    "multi_lingual": "no",
    "panorama": "no",
    "self_attention": "no",
    "upscale": "no",
    "embeddings": "embeddings_model_id",  # placeholder ID
    "lora": "lora_model_id",  # placeholder ID
    "webhook": None,
    "track_id": None
})

headers = {
    'Content-Type': 'application/json'
}

# Send the generation request and print the raw JSON response
response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```

> Use this coupon code to get 25% off **DMGG0RBN**

|

Callidior/bert2bert-base-arxiv-titlegen

|

Callidior

| 2023-04-26T19:42:59Z | 358 | 11 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"encoder-decoder",

"text2text-generation",

"summarization",

"en",

"dataset:arxiv_dataset",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-03-02T23:29:04Z |

---

language:

- en

tags:

- summarization

license: apache-2.0

datasets:

- arxiv_dataset

metrics:

- rouge

widget:

- text: "The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. Our model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles by over 2 BLEU. On the WMT 2014 English-to-French translation task, our model establishes a new single-model state-of-the-art BLEU score of 41.8 after training for 3.5 days on eight GPUs, a small fraction of the training costs of the best models from the literature. We show that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing both with large and limited training data."

---

# Paper Title Generator

Generates titles for computer science papers given an abstract.

The model is a BERT2BERT encoder-decoder whose encoder and decoder are both initialized from the official `bert-base-uncased` checkpoint.

It was fine-tuned on 318,500 computer science papers posted on arXiv.org between 2007 and 2022 and achieved a ROUGE-2 F1 score of 26.3% on held-out validation data.
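For readers unfamiliar with the metric: ROUGE-2 counts overlapping word bigrams between a generated title and the reference title. Precision divides the overlap by the candidate's bigram count, recall divides it by the reference's, and F1 is their harmonic mean. The sketch below is a simplified version (lowercased whitespace tokens, no stemming or punctuation handling), so its scores will not exactly match packages such as `rouge_score`; the card does not say which implementation produced the 26.3% figure.

```python
from collections import Counter


def bigrams(text):
    """Count word bigrams after lowercasing and splitting on whitespace."""
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


def rouge2_f1(candidate, reference):
    """Simplified ROUGE-2 F1 between a generated and a reference title."""
    cand, ref = bigrams(candidate), bigrams(reference)
    overlap = sum((cand & ref).values())  # matches clipped to min count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

For example, "the cat sat on the mat" against "the cat lay on the mat" shares 3 of 5 bigrams on each side, giving an F1 of 0.6.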

**Live Demo:** [https://paper-titles.ey.r.appspot.com/](https://paper-titles.ey.r.appspot.com/)

|

ahmedrachid/FinancialBERT

|

ahmedrachid

| 2022-02-07T15:00:03Z | 358 | 23 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"en",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language: en

widget:

- text: Tesla remains one of the highest [MASK] stocks on the market. Meanwhile, Aurora Innovation is a pre-revenue upstart that shows promise.

- text: Asian stocks [MASK] from a one-year low on Wednesday as U.S. share futures and oil recovered from the previous day's selloff, but uncertainty over the impact of the Omicron

- text: U.S. stocks were set to rise on Monday, led by [MASK] in Apple which neared $3 trillion in market capitalization, while investors braced for a Federal Reserve meeting later this week.

tags:

- fill-mask

---

**FinancialBERT** is a BERT model pre-trained on a large corpus of financial texts. Its purpose is to advance NLP research and practice in the financial domain, so that financial practitioners and researchers can benefit from it without needing the significant computational resources required to train such a model.

The model was trained on a large corpus of financial texts:

- *TRC2-financial*: 1.8M news articles that were published by Reuters between 2008 and 2010.

- *Bloomberg News*: 400,000 articles between 2006 and 2013.

- *Corporate Reports*: 192,000 transcripts (10-K & 10-Q)

- *Earnings Calls*: 42,156 documents.
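The card does not spell out the pre-training objective. Assuming FinancialBERT follows the standard BERT masked-language-modelling recipe (which the fill-mask widget suggests), each training sequence is corrupted roughly as sketched below. About 15% of positions are selected as prediction targets; of those, 80% are replaced by the `[MASK]` id, 10% by a random token, and 10% are left unchanged. This is an illustrative sketch, not the author's training code. `mask_tokens` is a hypothetical name, and real implementations also skip special tokens such as `[CLS]` and `[SEP]`.

```python
import random

IGNORE_INDEX = -100  # label value the loss ignores (PyTorch convention)


def mask_tokens(token_ids, vocab_size, mask_id, mask_prob=0.15, rng=None):
    """Return (corrupted inputs, labels) for BERT-style masked LM training."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(token_ids)
    for i, token in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue                  # not selected: no loss at this position
        labels[i] = token             # model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_id       # 80%: replace with [MASK]
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token
    return inputs, labels
```

Leaving some selected tokens unchanged or randomized keeps the model from relying on `[MASK]` always marking the positions it must predict, since `[MASK]` never appears at inference time.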

More details on `FinancialBERT` can be found at: https://www.researchgate.net/publication/358284785_FinancialBERT_-_A_Pretrained_Language_Model_for_Financial_Text_Mining

> Created by [Ahmed Rachid Hazourli](https://www.linkedin.com/in/ahmed-rachid/)

|

nsi319/legal-pegasus

|

nsi319

| 2021-03-11T08:50:52Z | 358 | 10 |

transformers

|

[

"transformers",

"pytorch",

"pegasus",

"text2text-generation",

"summarization",

"en",

"license:mit",

"autotrain_compatible",

"region:us"

] |

summarization

| 2022-03-02T23:29:05Z |

---

language: en

tags: summarization

metrics:

- rouge

- precision

inference: false

license: mit

---

## PEGASUS for legal document summarization

**legal-pegasus** is a fine-tuned version of [**google/pegasus-cnn_dailymail**](https://huggingface.co/google/pegasus-cnn_dailymail) for the **legal domain**, trained to perform **abstractive summarization**. The maximum input sequence length is 1024 tokens.
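Inputs longer than 1024 tokens have to be truncated or split. The card does not prescribe a strategy; one common workaround, assumed here, is to split the tokenized document into windows of at most 1024 tokens (optionally overlapping), summarize each window, and join the partial summaries. The helper below only does the splitting. `chunk_token_ids` is a hypothetical name, and in practice you would reserve room for any special tokens the tokenizer appends.

```python
def chunk_token_ids(token_ids, max_len=1024, overlap=0):
    """Split a token-id list into windows of at most max_len tokens.

    Consecutive windows share `overlap` tokens at their boundary.
    """
    if max_len <= 0 or not 0 <= overlap < max_len:
        raise ValueError("need max_len > 0 and 0 <= overlap < max_len")
    step = max_len - overlap
    chunks = []
    for start in range(0, len(token_ids), step):
        chunks.append(token_ids[start:start + max_len])
        if start + max_len >= len(token_ids):
            break  # this window already reaches the end of the document
    return chunks
```

With `overlap > 0`, adjacent windows share context at their boundaries at the cost of summarizing some tokens twice.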

## Training data

This model was trained on the [**sec-litigation-releases**](https://www.sec.gov/litigation/litreleases.htm) dataset, consisting of more than 2,700 litigation releases and complaints.

## How to use

```Python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("nsi319/legal-pegasus")

model = AutoModelForSeq2SeqLM.from_pretrained("nsi319/legal-pegasus")