modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

Yntec/photoMovieX | Yntec | 2023-08-07T12:33:44Z | 7,712 | 5 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"MagicArt35",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-08-05T09:53:28Z | ---

license: creativeml-openrail-m

library_name: diffusers

pipeline_tag: text-to-image

tags:

- stable-diffusion

- stable-diffusion-diffusers

- diffusers

- text-to-image

- MagicArt35

---

# Photo Movie X

Preview and prompt:

pretty cute little girl in tricycle, Screenshot of an surreal jean 70s round minimalist architecture, Sharp light, 35mm still from a sci fi blockbuster color movie made in 2022, beautiful portrait, Dorothy, set in 1860, in front of a spaceship that has just landed on an alien planet, are all wearing, a robot stands nearby

Original page:

https://civitai.com/models/94687?modelVersionId=101000

|

cognitivecomputations/dolphin-2.9.2-qwen2-72b-gguf | cognitivecomputations | 2024-05-29T03:31:57Z | 7,700 | 15 | null | [

"gguf",

"region:us"

] | null | 2024-05-29T02:12:00Z | Entry not found |

facebook/dragon-plus-query-encoder | facebook | 2023-02-17T18:30:37Z | 7,695 | 16 | transformers | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"feature-extraction",

"arxiv:2302.07452",

"autotrain_compatible",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | feature-extraction | 2023-02-15T17:50:48Z | ---

tags:

- feature-extraction

pipeline_tag: feature-extraction

---

DRAGON+ is a BERT-base sized dense retriever initialized from [RetroMAE](https://huggingface.co/Shitao/RetroMAE) and further trained on data augmented from the MS MARCO corpus, following the approach described in [How to Train Your DRAGON: Diverse Augmentation Towards Generalizable Dense Retrieval](https://arxiv.org/abs/2302.07452).

<p align="center">

<img src="https://raw.githubusercontent.com/facebookresearch/dpr-scale/main/dragon/images/teaser.png" width="600">

</p>

The associated GitHub repository is available at https://github.com/facebookresearch/dpr-scale/tree/main/dragon. We use an asymmetric dual encoder with two distinctly parameterized encoders. The following models are also available:

Model | Initialization | MARCO Dev | BEIR | Query Encoder Path | Context Encoder Path

|---|---|---|---|---|---

DRAGON+ | Shitao/RetroMAE| 39.0 | 47.4 | [facebook/dragon-plus-query-encoder](https://huggingface.co/facebook/dragon-plus-query-encoder) | [facebook/dragon-plus-context-encoder](https://huggingface.co/facebook/dragon-plus-context-encoder)

DRAGON-RoBERTa | RoBERTa-base | 39.4 | 47.2 | [facebook/dragon-roberta-query-encoder](https://huggingface.co/facebook/dragon-roberta-query-encoder) | [facebook/dragon-roberta-context-encoder](https://huggingface.co/facebook/dragon-roberta-context-encoder)

## Usage (HuggingFace Transformers)

The model can be used directly with HuggingFace Transformers.

```python

import torch

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained('facebook/dragon-plus-query-encoder')

query_encoder = AutoModel.from_pretrained('facebook/dragon-plus-query-encoder')

context_encoder = AutoModel.from_pretrained('facebook/dragon-plus-context-encoder')

# We use msmarco query and passages as an example

query = "Where was Marie Curie born?"

contexts = [

"Maria Sklodowska, later known as Marie Curie, was born on November 7, 1867.",

"Born in Paris on 15 May 1859, Pierre Curie was the son of Eugène Curie, a doctor of French Catholic origin from Alsace."

]

# Apply tokenizer

query_input = tokenizer(query, return_tensors='pt')

ctx_input = tokenizer(contexts, padding=True, truncation=True, return_tensors='pt')

# Compute embeddings: take the last-layer hidden state of the [CLS] token

query_emb = query_encoder(**query_input).last_hidden_state[:, 0, :]

ctx_emb = context_encoder(**ctx_input).last_hidden_state[:, 0, :]

# Compute similarity scores using dot product

score1 = query_emb @ ctx_emb[0] # 396.5625

score2 = query_emb @ ctx_emb[1] # 393.8340

``` |

mmnga/cyberagent-open-calm-7b-gguf | mmnga | 2023-09-08T03:08:46Z | 7,695 | 0 | null | [

"gguf",

"gpt-neox",

"ja",

"license:cc-by-sa-4.0",

"region:us"

] | null | 2023-08-21T09:55:24Z | ---

license: cc-by-sa-4.0

language:

- ja

tags:

- gpt-neox

---

# cyberagent-open-calm-7b-gguf

A gguf-format conversion of [open-calm-7b, published by cyberagent](https://huggingface.co/cyberagent/open-calm-7b).

Other models:

[mmnga/cyberagent-open-calm-7b-gguf](https://huggingface.co/mmnga/cyberagent-open-calm-7b-gguf)

[mmnga/cyberagent-open-calm-3b-gguf](https://huggingface.co/mmnga/cyberagent-open-calm-3b-gguf)

[mmnga/cyberagent-open-calm-1b-gguf](https://huggingface.co/mmnga/cyberagent-open-calm-1b-gguf)

Note: this is a trial release that runs on a development branch. Once gptneox is implemented in upstream llama.cpp, these gguf files may no longer be usable.

***[The GitHub repository readme is here](https://github.com/mmnga/llama.cpp/tree/mmnga-dev)***

## Usage (trial)

```

git clone --branch mmnga-dev https://github.com/mmnga/llama.cpp.git

cd llama.cpp

make -j

./main -m 'cyberagent-open-calm-7b-q4_0.gguf' -n 128 -p '吾輩は猫である。名前は実を言うと、' --top_p 0.9 --temp 0.7 --repeat-penalty 1.1

```

**CUBLAS**

```

LLAMA_CUBLAS=1 make -j

./main -m 'cyberagent-open-calm-7b-q4_0.gguf' -n 128 -p '吾輩は猫である。名前は実を言うと、' -ngl 40

```

|

anferico/bert-for-patents | anferico | 2023-04-04T12:59:18Z | 7,693 | 66 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"fill-mask",

"masked-lm",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | 2022-03-02T23:29:05Z | ---

language:

- en

tags:

- masked-lm

- pytorch

pipeline-tag: "fill-mask"

mask-token: "[MASK]"

widget:

- text: "The present [MASK] provides a torque sensor that is small and highly rigid and for which high production efficiency is possible."

- text: "The present invention relates to [MASK] accessories and pertains particularly to a brake light unit for bicycles."

- text: "The present invention discloses a space-bound-free [MASK] and its coordinate determining circuit for determining a coordinate of a stylus pen."

- text: "The illuminated [MASK] includes a substantially translucent canopy supported by a plurality of ribs pivotally swingable towards and away from a shaft."

license: apache-2.0

metrics:

- perplexity

---

# BERT for Patents

BERT for Patents is a model trained by Google on 100M+ patents (not just US patents). It is based on BERT<sub>LARGE</sub>.

If you want to learn more about the model, check out the [blog post](https://cloud.google.com/blog/products/ai-machine-learning/how-ai-improves-patent-analysis), [white paper](https://services.google.com/fh/files/blogs/bert_for_patents_white_paper.pdf) and [GitHub page](https://github.com/google/patents-public-data/blob/master/models/BERT%20for%20Patents.md) containing the original TensorFlow checkpoint.

---

### Projects using this model (or variants of it):

- [Patents4IPPC](https://github.com/ec-jrc/Patents4IPPC) (carried out by [Pi School](https://picampus-school.com/) and commissioned by the [Joint Research Centre (JRC)](https://ec.europa.eu/jrc/en) of the European Commission)

|

duyntnet/deepseek-coder-7b-instruct-v1.5-imatrix-GGUF | duyntnet | 2024-06-12T03:05:04Z | 7,692 | 1 | transformers | [

"transformers",

"gguf",

"imatrix",

"deepseek-coder-7b-instruct-v1.5",

"text-generation",

"en",

"license:other",

"region:us"

] | text-generation | 2024-06-12T00:19:41Z | ---

license: other

language:

- en

pipeline_tag: text-generation

inference: false

tags:

- transformers

- gguf

- imatrix

- deepseek-coder-7b-instruct-v1.5

---

Quantizations of https://huggingface.co/deepseek-ai/deepseek-coder-7b-instruct-v1.5

# From original readme

### 3. How to Use

Here are some examples of how to use our model.

#### Chat Model Inference

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("deepseek-ai/deepseek-coder-7b-instruct-v1.5", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("deepseek-ai/deepseek-coder-7b-instruct-v1.5", trust_remote_code=True).cuda()

messages=[

{ 'role': 'user', 'content': "write a quick sort algorithm in python."}

]

inputs = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt").to(model.device)

outputs = model.generate(inputs, max_new_tokens=512, do_sample=False, top_k=50, top_p=0.95, num_return_sequences=1, eos_token_id=tokenizer.eos_token_id)

print(tokenizer.decode(outputs[0][len(inputs[0]):], skip_special_tokens=True))

``` |

mradermacher/Maika-Buddy-3B8-Phi3-GGUF | mradermacher | 2024-06-19T18:09:56Z | 7,691 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:olliai/Maika-Buddy-3B8-Phi3",

"endpoints_compatible",

"region:us"

] | null | 2024-06-19T17:09:03Z | ---

base_model: olliai/Maika-Buddy-3B8-Phi3

language:

- en

library_name: transformers

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/olliai/Maika-Buddy-3B8-Phi3

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
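As a rough illustration of what concatenating multi-part files involves, the sketch below appends parts byte for byte, in order, into one output file. It assumes the parts are plain byte-wise splits of a single file; the helper name `concatenate_parts` and the `demo.part1of2` style file names are placeholders for illustration, not files provided in this repository.

```python
import shutil
import tempfile
from pathlib import Path


def concatenate_parts(part_paths, output_path):
    """Append each part, in the given order, to a single output file."""
    with open(output_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                # Stream the copy so large parts are never fully loaded into memory.
                shutil.copyfileobj(src, out)
    return Path(output_path)


# Demo with two tiny placeholder files instead of real model parts.
with tempfile.TemporaryDirectory() as tmp:
    parts = [Path(tmp) / "demo.part1of2", Path(tmp) / "demo.part2of2"]
    parts[0].write_bytes(b"GGUF-first-half;")
    parts[1].write_bytes(b"second-half")
    merged = concatenate_parts(parts, Path(tmp) / "demo.gguf")
    merged_bytes = merged.read_bytes()

print(merged_bytes)
```

The order of the parts matters: passing them out of sequence produces a file of the right size but with corrupted contents.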

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q2_K.gguf) | Q2_K | 1.5 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.IQ3_XS.gguf) | IQ3_XS | 1.7 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.IQ3_S.gguf) | IQ3_S | 1.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q3_K_S.gguf) | Q3_K_S | 1.8 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.IQ3_M.gguf) | IQ3_M | 2.0 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q3_K_M.gguf) | Q3_K_M | 2.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.IQ4_XS.gguf) | IQ4_XS | 2.2 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q3_K_L.gguf) | Q3_K_L | 2.2 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q4_K_S.gguf) | Q4_K_S | 2.3 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q4_K_M.gguf) | Q4_K_M | 2.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q5_K_S.gguf) | Q5_K_S | 2.7 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q5_K_M.gguf) | Q5_K_M | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q6_K.gguf) | Q6_K | 3.2 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.Q8_0.gguf) | Q8_0 | 4.2 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Maika-Buddy-3B8-Phi3-GGUF/resolve/main/Maika-Buddy-3B8-Phi3.f16.gguf) | f16 | 7.7 | 16 bpw, overkill |
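To get a feel for what the sizes in the table mean, one can estimate the average bits per weight of each quant by scaling against the f16 row, which the table lists as 16 bpw. This is only a back-of-the-envelope sketch: the helper name `estimate_bpw` is made up here, the sizes are rounded to one decimal, and file metadata and tensors kept at higher precision are ignored.

```python
# Sizes in GB copied from a few rows of the table; f16 is listed as 16 bits per weight.
sizes_gb = {"Q2_K": 1.5, "Q4_K_M": 2.5, "Q8_0": 4.2, "f16": 7.7}


def estimate_bpw(size_gb, f16_size_gb, f16_bpw=16.0):
    """Scale the known f16 bits-per-weight by the ratio of file sizes."""
    return f16_bpw * size_gb / f16_size_gb


for name, size in sizes_gb.items():
    bpw = estimate_bpw(size, sizes_gb["f16"])
    print(f"{name}: ~{bpw:.1f} bits per weight")
```

Under these assumptions Q4_K_M comes out at roughly 5 bits per weight, about a third of the f16 footprint.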

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

mmnga/Phi-3-mini-128k-instruct-gguf | mmnga | 2024-04-24T14:24:09Z | 7,687 | 7 | null | [

"gguf",

"en",

"ja",

"dataset:TFMC/imatrix-dataset-for-japanese-llm",

"license:mit",

"region:us"

] | null | 2024-04-24T13:50:51Z | ---

license: mit

language:

- en

- ja

datasets:

- TFMC/imatrix-dataset-for-japanese-llm

---

# Phi-3-mini-128k-instruct-gguf

A gguf-format conversion of [Phi-3-mini-128k-instruct, published by microsoft](https://huggingface.co/microsoft/Phi-3-mini-128k-instruct).

The imatrix data was created using TFMC/imatrix-dataset-for-japanese-llm.

## Other models

[mmnga/Phi-3-mini-128k-instruct-gguf](https://huggingface.co/mmnga/Phi-3-mini-128k-instruct-gguf)

## Usage

```

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

make -j

./main -m ../Phi-3-mini-128k-instruct/Phi-3-mini-128k-instruct-IQ4_XS.gguf -p '<|system|>\nYou are a helpful AI assistant.<|end|>\n<|user|>\nこんにちわ。<|end|>\n<|assistant|>\n' -n 128

``` |

MoritzLaurer/deberta-v3-large-zeroshot-v1.1-all-33 | MoritzLaurer | 2024-04-01T15:10:33Z | 7,678 | 51 | transformers | [

"transformers",

"pytorch",

"onnx",

"safetensors",

"deberta-v2",

"text-classification",

"zero-shot-classification",

"en",

"arxiv:2312.17543",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | zero-shot-classification | 2023-11-27T12:35:25Z | ---

language:

- en

tags:

- text-classification

- zero-shot-classification

pipeline_tag: zero-shot-classification

library_name: transformers

license: mit

---

# Model description: deberta-v3-large-zeroshot-v1.1-all-33

The model is designed for zero-shot classification with the Hugging Face pipeline.

The model can do one universal classification task: determine whether a hypothesis is "true" or "not true" given a text

(`entailment` vs. `not_entailment`).

This task format is based on the Natural Language Inference task (NLI).

The task is so universal that any classification task can be reformulated into this task.

A detailed description of how the model was trained and how it can be used is available in this [paper](https://arxiv.org/pdf/2312.17543.pdf).
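The reformulation described above can be sketched in a few lines: each candidate class is turned into a hypothesis sentence, and the model then judges every (text, hypothesis) pair as `entailment` or `not_entailment`. The helper name `build_nli_pairs` is hypothetical and only illustrates the input format; it does not call the model.

```python
def build_nli_pairs(text, labels, hypothesis_template="This example is about {}"):
    """Turn one classification input into one (premise, hypothesis) pair per label."""
    return [(text, hypothesis_template.format(label)) for label in labels]


pairs = build_nli_pairs(
    "Angela Merkel is a politician in Germany and leader of the CDU",
    ["politics", "economy"],
)
for premise, hypothesis in pairs:
    print(f"premise: {premise!r} | hypothesis: {hypothesis!r}")
```

A four-class topic task therefore becomes four binary entailment checks on the same text, one per verbalized class.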

## Training data

The model was trained on a mixture of __33 datasets and 387 classes__ that have been reformatted into this universal format.

1. Five NLI datasets with ~885k texts: "mnli", "anli", "fever", "wanli", "ling"

2. 28 classification tasks reformatted into the universal NLI format. ~51k cleaned texts were used to avoid overfitting:

'amazonpolarity', 'imdb', 'appreviews', 'yelpreviews', 'rottentomatoes',

'emotiondair', 'emocontext', 'empathetic',

'financialphrasebank', 'banking77', 'massive',

'wikitoxic_toxicaggregated', 'wikitoxic_obscene', 'wikitoxic_threat', 'wikitoxic_insult', 'wikitoxic_identityhate',

'hateoffensive', 'hatexplain', 'biasframes_offensive', 'biasframes_sex', 'biasframes_intent',

'agnews', 'yahootopics',

'trueteacher', 'spam', 'wellformedquery',

'manifesto', 'capsotu'.

See details on each dataset here: https://github.com/MoritzLaurer/zeroshot-classifier/blob/main/datasets_overview.csv

Note that compared to other NLI models, this model predicts two classes (`entailment` vs. `not_entailment`)

as opposed to three classes (entailment/neutral/contradiction).

The model was only trained on English data. For __multilingual use-cases__,

I recommend machine translating texts to English with libraries like [EasyNMT](https://github.com/UKPLab/EasyNMT).

English-only models tend to perform better than multilingual models and

validation with English data can be easier if you don't speak all languages in your corpus.

### How to use the model

#### Simple zero-shot classification pipeline

```python

#!pip install transformers[sentencepiece]

from transformers import pipeline

text = "Angela Merkel is a politician in Germany and leader of the CDU"

hypothesis_template = "This example is about {}"

classes_verbalized = ["politics", "economy", "entertainment", "environment"]

zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-large-zeroshot-v1.1-all-33")

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

print(output)

```
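With `multi_label=False`, the pipeline is understood to compare the entailment logits of all candidate labels against each other with a softmax, so the returned scores sum to 1. The sketch below reproduces only that normalization step; the function name `single_label_scores` and the logits are made up for illustration and are not real model outputs.

```python
import math


def single_label_scores(entailment_logits):
    """Softmax over per-label entailment logits, so the scores sum to 1."""
    peak = max(entailment_logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in entailment_logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for illustration only, one per candidate label.
labels = ["politics", "economy", "entertainment", "environment"]
logits = [4.0, 1.0, -2.0, -1.0]
scores = single_label_scores(logits)
best = labels[scores.index(max(scores))]
print(best, [round(s, 3) for s in scores])
```

Because the scores compete with each other, this mode suits tasks where exactly one class applies; when several classes can apply at once, `multi_label=True` scores each label independently instead.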

### Details on data and training

The code for preparing the data and training & evaluating the model is fully open-source here: https://github.com/MoritzLaurer/zeroshot-classifier/tree/main

Hyperparameters and other details are available in this Weights & Biases repo: https://wandb.ai/moritzlaurer/deberta-v3-large-zeroshot-v1-1-all-33/table?workspace=user-

## Metrics

Balanced accuracy is reported for all datasets.

`deberta-v3-large-zeroshot-v1.1-all-33` was trained on all datasets, with a maximum of 500 texts per class to avoid overfitting.

The metrics on these datasets are therefore not strictly zeroshot, as the model has seen some data for each task during training.

`deberta-v3-large-zeroshot-v1.1-heldout` indicates zeroshot performance on the respective dataset.

To calculate these zeroshot metrics, the pipeline was run 28 times, each time with one dataset held out from training to simulate a zeroshot setup.

| | deberta-v3-large-mnli-fever-anli-ling-wanli-binary | deberta-v3-large-zeroshot-v1.1-heldout | deberta-v3-large-zeroshot-v1.1-all-33 |

|:---------------------------|----------------------------:|-----------------------------------------:|----------------------------------------:|

| datasets mean (w/o nli) | 64.1 | 73.4 | 85.2 |

| amazonpolarity (2) | 94.7 | 96.6 | 96.8 |

| imdb (2) | 90.3 | 95.2 | 95.5 |

| appreviews (2) | 93.6 | 94.3 | 94.7 |

| yelpreviews (2) | 98.5 | 98.4 | 98.9 |

| rottentomatoes (2) | 83.9 | 90.5 | 90.8 |

| emotiondair (6) | 49.2 | 42.1 | 72.1 |

| emocontext (4) | 57 | 69.3 | 82.4 |

| empathetic (32) | 42 | 34.4 | 58 |

| financialphrasebank (3) | 77.4 | 77.5 | 91.9 |

| banking77 (72) | 29.1 | 52.8 | 72.2 |

| massive (59) | 47.3 | 64.7 | 77.3 |

| wikitoxic_toxicaggreg (2) | 81.6 | 86.6 | 91 |

| wikitoxic_obscene (2) | 85.9 | 91.9 | 93.1 |

| wikitoxic_threat (2) | 77.9 | 93.7 | 97.6 |

| wikitoxic_insult (2) | 77.8 | 91.1 | 92.3 |

| wikitoxic_identityhate (2) | 86.4 | 89.8 | 95.7 |

| hateoffensive (3) | 62.8 | 66.5 | 88.4 |

| hatexplain (3) | 46.9 | 61 | 76.9 |

| biasframes_offensive (2) | 62.5 | 86.6 | 89 |

| biasframes_sex (2) | 87.6 | 89.6 | 92.6 |

| biasframes_intent (2) | 54.8 | 88.6 | 89.9 |

| agnews (4) | 81.9 | 82.8 | 90.9 |

| yahootopics (10) | 37.7 | 65.6 | 74.3 |

| trueteacher (2) | 51.2 | 54.9 | 86.6 |

| spam (2) | 52.6 | 51.8 | 97.1 |

| wellformedquery (2) | 49.9 | 40.4 | 82.7 |

| manifesto (56) | 10.6 | 29.4 | 44.1 |

| capsotu (21) | 23.2 | 69.4 | 74 |

| mnli_m (2) | 93.1 | nan | 93.1 |

| mnli_mm (2) | 93.2 | nan | 93.2 |

| fevernli (2) | 89.3 | nan | 89.5 |

| anli_r1 (2) | 87.9 | nan | 87.3 |

| anli_r2 (2) | 76.3 | nan | 78 |

| anli_r3 (2) | 73.6 | nan | 74.1 |

| wanli (2) | 82.8 | nan | 82.7 |

| lingnli (2) | 90.2 | nan | 89.6 |
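For readers unfamiliar with the metric in the table above, here is a minimal sketch of balanced accuracy, assuming the standard definition (the mean of per-class recall). The function and the toy labels are illustrative and are not taken from the evaluation code.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall: each class counts equally, regardless of its size."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


# Toy labels (hypothetical): 4 "spam" texts and 1 "not_spam" text.
y_true = ["spam", "spam", "spam", "spam", "not_spam"]
y_pred = ["spam", "spam", "spam", "spam", "spam"]
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

A classifier that always predicts the majority class reaches 80% plain accuracy on this toy data but only 50% balanced accuracy, which is why the metric is informative for imbalanced datasets.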

## Limitations and bias

The model can only do text classification tasks.

Please consult the original DeBERTa paper and the papers for the different datasets for potential biases.

## License

The base model (DeBERTa-v3) is published under the MIT license.

The datasets the model was fine-tuned on are published under a diverse set of licenses.

The following table provides an overview of the non-NLI datasets used for fine-tuning,

information on licenses, the underlying papers etc.: https://github.com/MoritzLaurer/zeroshot-classifier/blob/main/datasets_overview.csv

## Citation

If you use this model academically, please cite:

```

@misc{laurer_building_2023,

title = {Building {Efficient} {Universal} {Classifiers} with {Natural} {Language} {Inference}},

url = {http://arxiv.org/abs/2312.17543},

doi = {10.48550/arXiv.2312.17543},

abstract = {Generative Large Language Models (LLMs) have become the mainstream choice for fewshot and zeroshot learning thanks to the universality of text generation. Many users, however, do not need the broad capabilities of generative LLMs when they only want to automate a classification task. Smaller BERT-like models can also learn universal tasks, which allow them to do any text classification task without requiring fine-tuning (zeroshot classification) or to learn new tasks with only a few examples (fewshot), while being significantly more efficient than generative LLMs. This paper (1) explains how Natural Language Inference (NLI) can be used as a universal classification task that follows similar principles as instruction fine-tuning of generative LLMs, (2) provides a step-by-step guide with reusable Jupyter notebooks for building a universal classifier, and (3) shares the resulting universal classifier that is trained on 33 datasets with 389 diverse classes. Parts of the code we share has been used to train our older zeroshot classifiers that have been downloaded more than 55 million times via the Hugging Face Hub as of December 2023. Our new classifier improves zeroshot performance by 9.4\%.},

urldate = {2024-01-05},

publisher = {arXiv},

author = {Laurer, Moritz and van Atteveldt, Wouter and Casas, Andreu and Welbers, Kasper},

month = dec,

year = {2023},

note = {arXiv:2312.17543 [cs]},

keywords = {Computer Science - Artificial Intelligence, Computer Science - Computation and Language},

}

```

### Ideas for cooperation or questions?

If you have questions or ideas for cooperation, contact me at m{dot}laurer{at}vu{dot}nl or on [LinkedIn](https://www.linkedin.com/in/moritz-laurer/)

### Debugging and issues

Note that DeBERTa-v3 was released on 06.12.21 and older versions of HF Transformers can have issues running the model (e.g. resulting in an issue with the tokenizer). Using Transformers>=4.13 might solve some issues.

### Hypotheses used for classification

The hypotheses in the tables below were used to fine-tune the model.

Inspecting them can help users get a feeling for which type of hypotheses and tasks the model was trained on.

You can formulate your own hypotheses by changing the `hypothesis_template` of the zeroshot pipeline. For example:

```python

from transformers import pipeline

text = "Angela Merkel is a politician in Germany and leader of the CDU"

hypothesis_template = "Merkel is the leader of the party: {}"

classes_verbalized = ["CDU", "SPD", "Greens"]

zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-large-zeroshot-v1.1-all-33")

output = zeroshot_classifier(text, classes_verbalized, hypothesis_template=hypothesis_template, multi_label=False)

print(output)

```

Note that a few rows in the `massive` and `banking77` datasets contain `nan` because some classes were so ambiguous/unclear that I excluded them from the data.

#### wellformedquery

| label | hypothesis |

|:----------------|:-----------------------------------------------|

| not_well_formed | This example is not a well formed Google query |

| well_formed | This example is a well formed Google query. |

#### biasframes_sex

| label | hypothesis |

|:--------|:-----------------------------------------------------------|

| not_sex | This example does not contain allusions to sexual content. |

| sex | This example contains allusions to sexual content. |

#### biasframes_intent

| label | hypothesis |

|:-----------|:-----------------------------------------------------------------|

| intent | The intent of this example is to be offensive/disrespectful. |

| not_intent | The intent of this example is not to be offensive/disrespectful. |

#### biasframes_offensive

| label | hypothesis |

|:--------------|:-------------------------------------------------------------------------|

| not_offensive | This example could not be considered offensive, disrespectful, or toxic. |

| offensive | This example could be considered offensive, disrespectful, or toxic. |

#### financialphrasebank

| label | hypothesis |

|:---------|:--------------------------------------------------------------------------|

| negative | The sentiment in this example is negative from an investor's perspective. |

| neutral | The sentiment in this example is neutral from an investor's perspective. |

| positive | The sentiment in this example is positive from an investor's perspective. |

#### rottentomatoes

| label | hypothesis |

|:---------|:-----------------------------------------------------------------------|

| negative | The sentiment in this example rotten tomatoes movie review is negative |

| positive | The sentiment in this example rotten tomatoes movie review is positive |

#### amazonpolarity

| label | hypothesis |

|:---------|:----------------------------------------------------------------|

| negative | The sentiment in this example amazon product review is negative |

| positive | The sentiment in this example amazon product review is positive |

#### imdb

| label | hypothesis |

|:---------|:------------------------------------------------------------|

| negative | The sentiment in this example imdb movie review is negative |

| positive | The sentiment in this example imdb movie review is positive |

#### appreviews

| label | hypothesis |

|:---------|:------------------------------------------------------|

| negative | The sentiment in this example app review is negative. |

| positive | The sentiment in this example app review is positive. |

#### yelpreviews

| label | hypothesis |

|:---------|:-------------------------------------------------------|

| negative | The sentiment in this example yelp review is negative. |

| positive | The sentiment in this example yelp review is positive. |

#### wikitoxic_toxicaggregated

| label | hypothesis |

|:--------------------|:----------------------------------------------------------------|

| not_toxicaggregated | This example wikipedia comment does not contain toxic language. |

| toxicaggregated | This example wikipedia comment contains toxic language. |

#### wikitoxic_obscene

| label | hypothesis |

|:------------|:------------------------------------------------------------------|

| not_obscene | This example wikipedia comment does not contain obscene language. |

| obscene | This example wikipedia comment contains obscene language. |

#### wikitoxic_threat

| label | hypothesis |

|:-----------|:----------------------------------------------------------|

| not_threat | This example wikipedia comment does not contain a threat. |

| threat | This example wikipedia comment contains a threat. |

#### wikitoxic_insult

| label | hypothesis |

|:-----------|:-----------------------------------------------------------|

| insult | This example wikipedia comment contains an insult. |

| not_insult | This example wikipedia comment does not contain an insult. |

#### wikitoxic_identityhate

| label | hypothesis |

|:-----------------|:---------------------------------------------------------------|

| identityhate | This example wikipedia comment contains identity hate. |

| not_identityhate | This example wikipedia comment does not contain identity hate. |

#### hateoffensive

| label | hypothesis |

|:------------|:------------------------------------------------------------------------|

| hate_speech | This example tweet contains hate speech. |

| neither | This example tweet contains neither offensive language nor hate speech. |

| offensive | This example tweet contains offensive language without hate speech. |

#### hatexplain

| label | hypothesis |

|:------------|:-------------------------------------------------------------------------------------------|

| hate_speech | This example text from twitter or gab contains hate speech. |

| neither | This example text from twitter or gab contains neither offensive language nor hate speech. |

| offensive | This example text from twitter or gab contains offensive language without hate speech. |

#### spam

| label | hypothesis |

|:---------|:------------------------------|

| not_spam | This example sms is not spam. |

| spam | This example sms is spam. |

#### emotiondair

| label | hypothesis |

|:---------|:---------------------------------------------------|

| anger | This example tweet expresses the emotion: anger |

| fear | This example tweet expresses the emotion: fear |

| joy | This example tweet expresses the emotion: joy |

| love | This example tweet expresses the emotion: love |

| sadness | This example tweet expresses the emotion: sadness |

| surprise | This example tweet expresses the emotion: surprise |

#### emocontext

| label | hypothesis |

|:--------|:--------------------------------------------------------------------------------------|

| angry | This example tweet expresses the emotion: anger |

| happy | This example tweet expresses the emotion: happiness |

| others | This example tweet does not express any of the emotions: anger, sadness, or happiness |

| sad | This example tweet expresses the emotion: sadness |

#### empathetic

| label | hypothesis |

|:-------------|:-----------------------------------------------------------|

| afraid | The main emotion of this example dialogue is: afraid |

| angry | The main emotion of this example dialogue is: angry |

| annoyed | The main emotion of this example dialogue is: annoyed |

| anticipating | The main emotion of this example dialogue is: anticipating |

| anxious | The main emotion of this example dialogue is: anxious |

| apprehensive | The main emotion of this example dialogue is: apprehensive |

| ashamed | The main emotion of this example dialogue is: ashamed |

| caring | The main emotion of this example dialogue is: caring |

| confident | The main emotion of this example dialogue is: confident |

| content | The main emotion of this example dialogue is: content |

| devastated | The main emotion of this example dialogue is: devastated |

| disappointed | The main emotion of this example dialogue is: disappointed |

| disgusted | The main emotion of this example dialogue is: disgusted |

| embarrassed | The main emotion of this example dialogue is: embarrassed |

| excited | The main emotion of this example dialogue is: excited |

| faithful | The main emotion of this example dialogue is: faithful |

| furious | The main emotion of this example dialogue is: furious |

| grateful | The main emotion of this example dialogue is: grateful |

| guilty | The main emotion of this example dialogue is: guilty |

| hopeful | The main emotion of this example dialogue is: hopeful |

| impressed | The main emotion of this example dialogue is: impressed |

| jealous | The main emotion of this example dialogue is: jealous |

| joyful | The main emotion of this example dialogue is: joyful |

| lonely | The main emotion of this example dialogue is: lonely |

| nostalgic | The main emotion of this example dialogue is: nostalgic |

| prepared | The main emotion of this example dialogue is: prepared |

| proud | The main emotion of this example dialogue is: proud |

| sad | The main emotion of this example dialogue is: sad |

| sentimental | The main emotion of this example dialogue is: sentimental |

| surprised | The main emotion of this example dialogue is: surprised |

| terrified | The main emotion of this example dialogue is: terrified |

| trusting | The main emotion of this example dialogue is: trusting |

#### agnews

| label | hypothesis |

|:---------|:-------------------------------------------------------|

| Business | This example news text is about business news |

| Sci/Tech | This example news text is about science and technology |

| Sports | This example news text is about sports |

| World | This example news text is about world news |
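The label-to-hypothesis tables in this section can be read as inputs to NLI-style zero-shot classification: each hypothesis is paired with the input text, and the label whose hypothesis is most strongly entailed is chosen. The following is a minimal sketch using the agnews rows above. `pick_label`, `entailment_score` and `toy_score` are hypothetical names, and the toy keyword scorer only stands in for a real NLI model.

```python
from typing import Callable, Dict

# Label -> hypothesis, copied from the agnews table above.
AGNEWS_HYPOTHESES: Dict[str, str] = {
    "Business": "This example news text is about business news",
    "Sci/Tech": "This example news text is about science and technology",
    "Sports": "This example news text is about sports",
    "World": "This example news text is about world news",
}


def pick_label(
    text: str,
    hypotheses: Dict[str, str],
    entailment_score: Callable[[str, str], float],
) -> str:
    """Return the label whose hypothesis gets the highest entailment score."""
    scores = {
        label: entailment_score(text, hypothesis)
        for label, hypothesis in hypotheses.items()
    }
    return max(scores, key=scores.get)


def toy_score(premise: str, hypothesis: str) -> float:
    """Stand-in scorer (assumption, not an NLI model): counts the words shared
    between the premise and the topic part of the hypothesis."""
    topic_words = set(hypothesis.lower().split("about ")[-1].split())
    return float(len(topic_words & set(premise.lower().split())))


if __name__ == "__main__":
    print(pick_label("The sports team won the match", AGNEWS_HYPOTHESES, toy_score))
```

In practice `entailment_score` would wrap an NLI model that returns the entailment probability for a (premise, hypothesis) pair.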

#### yahootopics

| label | hypothesis |

|:-----------------------|:---------------------------------------------------------------------------------------------------|

| Business & Finance | This example question from the Yahoo Q&A forum is categorized in the topic: Business & Finance |

| Computers & Internet | This example question from the Yahoo Q&A forum is categorized in the topic: Computers & Internet |

| Education & Reference | This example question from the Yahoo Q&A forum is categorized in the topic: Education & Reference |

| Entertainment & Music | This example question from the Yahoo Q&A forum is categorized in the topic: Entertainment & Music |

| Family & Relationships | This example question from the Yahoo Q&A forum is categorized in the topic: Family & Relationships |

| Health | This example question from the Yahoo Q&A forum is categorized in the topic: Health |

| Politics & Government | This example question from the Yahoo Q&A forum is categorized in the topic: Politics & Government |

| Science & Mathematics | This example question from the Yahoo Q&A forum is categorized in the topic: Science & Mathematics |

| Society & Culture | This example question from the Yahoo Q&A forum is categorized in the topic: Society & Culture |

| Sports | This example question from the Yahoo Q&A forum is categorized in the topic: Sports |

#### massive

| label | hypothesis |

|:-------------------------|:------------------------------------------------------------------------------------------|

| alarm_query | The example utterance is a query about alarms. |

| alarm_remove | The intent of this example utterance is to remove an alarm. |

| alarm_set | The intent of the example utterance is to set an alarm. |

| audio_volume_down | The intent of the example utterance is to lower the volume. |

| audio_volume_mute | The intent of this example utterance is to mute the volume. |

| audio_volume_other | The example utterance is related to audio volume. |

| audio_volume_up | The intent of this example utterance is turning the audio volume up. |

| calendar_query | The example utterance is a query about a calendar. |

| calendar_remove | The intent of the example utterance is to remove something from a calendar. |

| calendar_set | The intent of this example utterance is to set something in a calendar. |

| cooking_query | The example utterance is a query about cooking. |

| cooking_recipe | This example utterance is about cooking recipes. |

| datetime_convert | The example utterance is related to date time changes or conversion. |

| datetime_query | The intent of this example utterance is a datetime query. |

| email_addcontact | The intent of this example utterance is adding an email address to contacts. |

| email_query | The example utterance is a query about emails. |

| email_querycontact | The intent of this example utterance is to query contact details. |

| email_sendemail | The intent of the example utterance is to send an email. |

| general_greet | This example utterance is a general greet. |

| general_joke | The intent of the example utterance is to hear a joke. |

| general_quirky | nan |

| iot_cleaning | The intent of the example utterance is for an IoT device to start cleaning. |

| iot_coffee | The intent of this example utterance is for an IoT device to make coffee. |

| iot_hue_lightchange | The intent of this example utterance is changing the light. |

| iot_hue_lightdim | The intent of the example utterance is to dim the lights. |

| iot_hue_lightoff | The example utterance is related to turning the lights off. |

| iot_hue_lighton | The example utterance is related to turning the lights on. |

| iot_hue_lightup | The intent of this example utterance is to brighten lights. |

| iot_wemo_off | The intent of this example utterance is turning an IoT device off. |

| iot_wemo_on | The intent of the example utterance is to turn an IoT device on. |

| lists_createoradd | The example utterance is related to creating or adding to lists. |

| lists_query | The example utterance is a query about a list. |

| lists_remove | The intent of this example utterance is to remove a list or remove something from a list. |

| music_dislikeness | The intent of this example utterance is signalling music dislike. |

| music_likeness | The example utterance is related to liking music. |

| music_query | The example utterance is a query about music. |

| music_settings | The intent of the example utterance is to change music settings. |

| news_query | The example utterance is a query about the news. |

| play_audiobook | The example utterance is related to playing audiobooks. |

| play_game | The intent of this example utterance is to start playing a game. |

| play_music | The intent of this example utterance is for an IoT device to play music. |

| play_podcasts | The example utterance is related to playing podcasts. |

| play_radio | The intent of the example utterance is to play something on the radio. |

| qa_currency | This example utterance is about currencies. |

| qa_definition | The example utterance is a query about a definition. |

| qa_factoid | The example utterance is a factoid question. |

| qa_maths | The example utterance is a question about maths. |

| qa_stock | This example utterance is about stocks. |

| recommendation_events | This example utterance is about event recommendations. |

| recommendation_locations | The intent of this example utterance is receiving recommendations for good locations. |

| recommendation_movies | This example utterance is about movie recommendations. |

| social_post | The example utterance is about social media posts. |

| social_query | The example utterance is a query about a social network. |

| takeaway_order | The intent of this example utterance is to order takeaway food. |

| takeaway_query | This example utterance is about takeaway food. |

| transport_query | The example utterance is a query about transport or travels. |

| transport_taxi | The intent of this example utterance is to get a taxi. |

| transport_ticket | This example utterance is about transport tickets. |

| transport_traffic | This example utterance is about transport or traffic. |

| weather_query | This example utterance is a query about the weather. |
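A few rows in these tables list `nan` instead of a hypothesis (for example `general_quirky` above), which presumably means no hypothesis was written for that label. The following is a minimal sketch of dropping such rows before the mapping is used. The helper name `usable_hypotheses` is hypothetical, and treating `nan` as "missing" is an assumption.

```python
import math
from typing import Dict, Optional, Union

Hypothesis = Optional[Union[str, float]]


def usable_hypotheses(rows: Dict[str, Hypothesis]) -> Dict[str, str]:
    """Keep only labels that have a real hypothesis string."""
    cleaned: Dict[str, str] = {}
    for label, hypothesis in rows.items():
        if hypothesis is None:
            continue
        # A float NaN is what a dataframe would hold for an empty cell.
        if isinstance(hypothesis, float):
            if math.isnan(hypothesis):
                continue
            hypothesis = str(hypothesis)
        # The rendered table shows the missing value as the literal text "nan".
        if hypothesis.strip().lower() in ("", "nan"):
            continue
        cleaned[label] = hypothesis
    return cleaned


# Three rows copied from the massive table above.
MASSIVE_SAMPLE: Dict[str, Hypothesis] = {
    "general_joke": "The intent of the example utterance is to hear a joke.",
    "general_quirky": "nan",
    "iot_cleaning": "The intent of the example utterance is for an IoT device to start cleaning.",
}

if __name__ == "__main__":
    print(list(usable_hypotheses(MASSIVE_SAMPLE)))
```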

#### banking77

| label | hypothesis |

|:-------------------------------------------------|:----------------------------------------------------------------------------------------------------------|

| Refund_not_showing_up | This customer example message is about a refund not showing up. |

| activate_my_card | This banking customer example message is about activating a card. |

| age_limit | This banking customer example message is related to age limits. |

| apple_pay_or_google_pay | This banking customer example message is about apple pay or google pay |

| atm_support | This banking customer example message requests ATM support. |

| automatic_top_up | This banking customer example message is about automatic top up. |

| balance_not_updated_after_bank_transfer | This banking customer example message is about a balance not updated after a transfer. |

| balance_not_updated_after_cheque_or_cash_deposit | This banking customer example message is about a balance not updated after a cheque or cash deposit. |

| beneficiary_not_allowed | This banking customer example message is related to a beneficiary not being allowed or a failed transfer. |

| cancel_transfer | This banking customer example message is related to the cancellation of a transfer. |

| card_about_to_expire | This banking customer example message is related to the expiration of a card. |

| card_acceptance | This banking customer example message is related to the scope of acceptance of a card. |

| card_arrival | This banking customer example message is about the arrival of a card. |

| card_delivery_estimate | This banking customer example message is about a card delivery estimate or timing. |

| card_linking | nan |

| card_not_working | This banking customer example message is about a card not working. |

| card_payment_fee_charged | This banking customer example message is about a card payment fee. |

| card_payment_not_recognised | This banking customer example message is about a payment the customer does not recognise. |

| card_payment_wrong_exchange_rate | This banking customer example message is about a wrong exchange rate. |

| card_swallowed | This banking customer example message is about a card swallowed by a machine. |

| cash_withdrawal_charge | This banking customer example message is about a cash withdrawal charge. |

| cash_withdrawal_not_recognised | This banking customer example message is about an unrecognised cash withdrawal. |

| change_pin | This banking customer example message is about changing a pin code. |

| compromised_card | This banking customer example message is about a compromised card. |

| contactless_not_working | This banking customer example message is about contactless not working |

| country_support | This banking customer example message is about country-specific support. |

| declined_card_payment | This banking customer example message is about a declined card payment. |

| declined_cash_withdrawal | This banking customer example message is about a declined cash withdrawal. |

| declined_transfer | This banking customer example message is about a declined transfer. |

| direct_debit_payment_not_recognised | This banking customer example message is about an unrecognised direct debit payment. |

| disposable_card_limits | This banking customer example message is about the limits of disposable cards. |

| edit_personal_details | This banking customer example message is about editing personal details. |

| exchange_charge | This banking customer example message is about exchange rate charges. |

| exchange_rate | This banking customer example message is about exchange rates. |

| exchange_via_app | nan |

| extra_charge_on_statement | This banking customer example message is about an extra charge. |

| failed_transfer | This banking customer example message is about a failed transfer. |

| fiat_currency_support | This banking customer example message is about fiat currency support |

| get_disposable_virtual_card | This banking customer example message is about getting a disposable virtual card. |

| get_physical_card | nan |

| getting_spare_card | This banking customer example message is about getting a spare card. |

| getting_virtual_card | This banking customer example message is about getting a virtual card. |

| lost_or_stolen_card | This banking customer example message is about a lost or stolen card. |

| lost_or_stolen_phone | This banking customer example message is about a lost or stolen phone. |

| order_physical_card | This banking customer example message is about ordering a card. |

| passcode_forgotten | This banking customer example message is about a forgotten passcode. |

| pending_card_payment | This banking customer example message is about a pending card payment. |

| pending_cash_withdrawal | This banking customer example message is about a pending cash withdrawal. |

| pending_top_up | This banking customer example message is about a pending top up. |

| pending_transfer | This banking customer example message is about a pending transfer. |

| pin_blocked | This banking customer example message is about a blocked pin. |

| receiving_money | This banking customer example message is about receiving money. |

| request_refund | This banking customer example message is about a refund request. |

| reverted_card_payment? | This banking customer example message is about reverting a card payment. |

| supported_cards_and_currencies | nan |

| terminate_account | This banking customer example message is about terminating an account. |

| top_up_by_bank_transfer_charge | nan |

| top_up_by_card_charge | This banking customer example message is about the charge for topping up by card. |

| top_up_by_cash_or_cheque | This banking customer example message is about topping up by cash or cheque. |

| top_up_failed | This banking customer example message is about top up issues or failures. |

| top_up_limits | This banking customer example message is about top up limitations. |

| top_up_reverted | This banking customer example message is about issues with topping up. |

| topping_up_by_card | This banking customer example message is about topping up by card. |

| transaction_charged_twice | This banking customer example message is about a transaction charged twice. |

| transfer_fee_charged | This banking customer example message is about an issue with a transfer fee charge. |

| transfer_into_account | This banking customer example message is about transfers into the customer's own account. |

| transfer_not_received_by_recipient | This banking customer example message is about a transfer that has not arrived yet. |

| transfer_timing | This banking customer example message is about transfer timing. |

| unable_to_verify_identity | This banking customer example message is about an issue with identity verification. |

| verify_my_identity | This banking customer example message is about identity verification. |

| verify_source_of_funds | This banking customer example message is about the source of funds. |

| verify_top_up | This banking customer example message is about verification and top ups |

| virtual_card_not_working | This banking customer example message is about a virtual card not working |

| visa_or_mastercard | This banking customer example message is about types of bank cards. |

| why_verify_identity | This banking customer example message questions why identity verification is necessary. |

| wrong_amount_of_cash_received | This banking customer example message is about a wrong amount of cash received. |

| wrong_exchange_rate_for_cash_withdrawal | This banking customer example message is about a wrong exchange rate for a cash withdrawal. |

#### trueteacher

| label | hypothesis |

|:-----------------------|:---------------------------------------------------------------------|

| factually_consistent | The example summary is factually consistent with the full article. |

| factually_inconsistent | The example summary is factually inconsistent with the full article. |

#### capsotu

| label | hypothesis |

|:----------------------|:----------------------------------------------------------------------------------------------------------|

| Agriculture | This example text from a US presidential speech is about agriculture |

| Civil Rights | This example text from a US presidential speech is about civil rights or minorities or civil liberties |

| Culture | This example text from a US presidential speech is about cultural policy |

| Defense | This example text from a US presidential speech is about defense or military |

| Domestic Commerce | This example text from a US presidential speech is about banking or finance or commerce |

| Education | This example text from a US presidential speech is about education |

| Energy | This example text from a US presidential speech is about energy or electricity or fossil fuels |

| Environment | This example text from a US presidential speech is about the environment or water or waste or pollution |

| Foreign Trade | This example text from a US presidential speech is about foreign trade |

| Government Operations | This example text from a US presidential speech is about government operations or administration |

| Health | This example text from a US presidential speech is about health |

| Housing | This example text from a US presidential speech is about community development or housing issues |

| Immigration | This example text from a US presidential speech is about migration |

| International Affairs | This example text from a US presidential speech is about international affairs or foreign aid |

| Labor | This example text from a US presidential speech is about employment or labour |

| Law and Crime | This example text from a US presidential speech is about law, crime or family issues |

| Macroeconomics | This example text from a US presidential speech is about macroeconomics |

| Public Lands | This example text from a US presidential speech is about public lands or water management |

| Social Welfare | This example text from a US presidential speech is about social welfare |

| Technology | This example text from a US presidential speech is about space or science or technology or communications |

| Transportation | This example text from a US presidential speech is about transportation |

#### manifesto

| label | hypothesis |

|:-------------------------------------------|:------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| Agriculture and Farmers: Positive | This example text from a political party manifesto is positive towards policies for agriculture and farmers |

| Anti-Growth Economy: Positive | This example text from a political party manifesto is in favour of anti-growth politics |

| Anti-Imperialism | This example text from a political party manifesto is anti-imperialistic, for example against controlling other countries and for greater self-government of colonies |

| Centralisation | This example text from a political party manifesto is in favour of political centralisation |

| Civic Mindedness: Positive | This example text from a political party manifesto is positive towards national solidarity, civil society or appeals for public spiritedness or against anti-social attitudes |

| Constitutionalism: Negative | This example text from a political party manifesto is positive towards constitutionalism |

| Constitutionalism: Positive | This example text from a political party manifesto is positive towards constitutionalism and the status quo of the constitution |

| Controlled Economy | This example text from a political party manifesto is supportive of direct government control of the economy, e.g. price control or minimum wages |

| Corporatism/Mixed Economy | This example text from a political party manifesto is positive towards cooperation of government, employers, and trade unions simultaneously |

| Culture: Positive | This example text from a political party manifesto is in favour of cultural policies or leisure facilities, for example museums, libraries or public sport clubs |

| Decentralization | This example text from a political party manifesto is for decentralisation or federalism |

| Democracy | This example text from a political party manifesto favourably mentions democracy or democratic procedures or institutions |

| Economic Goals | This example text from a political party manifesto is a broad/general statement on economic goals without specifics |

| Economic Growth: Positive | This example text from a political party manifesto is supportive of economic growth, for example facilitation of more production or government aid for growth |

| Economic Orthodoxy | This example text from a political party manifesto is for economic orthodoxy, for example reduction of budget deficits, thrift or a strong currency |

| Economic Planning | This example text from a political party manifesto is positive towards government economic planning, e.g. policy plans or strategies |

| Education Expansion | This example text from a political party manifesto is about the need to expand/improve policy on education |

| Education Limitation | This example text from a political party manifesto is sceptical towards state expenditure on education, for example in favour of study fees or private schools |

| Environmental Protection | This example text from a political party manifesto is in favour of environmental protection, e.g. fighting climate change or 'green' policies or preservation of natural resources or animal rights |

| Equality: Positive | This example text from a political party manifesto is positive towards equality or social justice, e.g. protection of underprivileged groups or fair distribution of resources |

| European Community/Union: Negative | This example text from a political party manifesto negatively mentions the EU or European Community |

| European Community/Union: Positive | This example text from a political party manifesto is positive towards the EU or European Community, for example EU expansion and integration |

| Foreign Special Relationships: Negative | This example text from a political party manifesto is negative towards particular countries |

| Foreign Special Relationships: Positive | This example text from a political party manifesto is positive towards particular countries |

| Free Market Economy | This example text from a political party manifesto is in favour of a free market economy and capitalism |

| Freedom and Human Rights | This example text from a political party manifesto is in favour of freedom and human rights, for example freedom of speech, assembly or against state coercion or for individualism |

| Governmental and Administrative Efficiency | This example text from a political party manifesto is in favour of efficiency in government/administration, for example by restructuring civil service or improving bureaucracy |

| Incentives: Positive | This example text from a political party manifesto is favourable towards supply side economic policies supporting businesses, for example for incentives like subsidies or tax breaks |

| Internationalism: Negative | This example text from a political party manifesto is sceptical of internationalism, for example negative towards international cooperation, in favour of national sovereignty and unilateralism |

| Internationalism: Positive | This example text from a political party manifesto is in favour of international cooperation with other countries, for example mentions the need for aid to developing countries, or global governance |

| Keynesian Demand Management | This example text from a political party manifesto is for keynesian demand management and demand side economic policies |

| Labour Groups: Negative | This example text from a political party manifesto is negative towards labour groups and unions |

| Labour Groups: Positive | This example text from a political party manifesto is positive towards labour groups, for example for good working conditions, fair wages or unions |

| Law and Order: Positive | This example text from a political party manifesto is positive towards law and order and strict law enforcement |

| Market Regulation | This example text from a political party manifesto supports market regulation for a fair and open market, for example for consumer protection or for increased competition or for social market economy |

| Marxist Analysis | This example text from a political party manifesto is positive towards Marxist-Leninist ideas or uses specific Marxist terminology |

| Middle Class and Professional Groups | This example text from a political party manifesto favourably references the middle class, e.g. white collar groups or the service sector |

| Military: Negative | This example text from a political party manifesto is negative towards the military, for example for decreasing military spending or disarmament |

| Military: Positive | This example text from a political party manifesto is positive towards the military, for example for military spending or rearmament or military treaty obligations |

| Multiculturalism: Negative | This example text from a political party manifesto is sceptical towards multiculturalism, or for cultural integration or appeals to cultural homogeneity in society |

| Multiculturalism: Positive | This example text from a political party manifesto favourably mentions cultural diversity, for example for freedom of religion or linguistic heritages |

| National Way of Life: Negative | This example text from a political party manifesto unfavourably mentions a country's nation and history, for example sceptical towards patriotism or national pride |

| National Way of Life: Positive | This example text from a political party manifesto is positive towards the national way of life and history, for example pride of citizenship or appeals to patriotism |

| Nationalisation | This example text from a political party manifesto is positive towards government ownership of industries or land or for economic nationalisation |

| Non-economic Demographic Groups | This example text from a political party manifesto favourably mentions non-economic demographic groups like women, students or specific age groups |

| Peace | This example text from a political party manifesto is positive towards peace and peaceful means of solving crises, for example in favour of negotiations and ending wars |

| Political Authority | This example text from a political party manifesto mentions the speaker's competence to govern or other party's lack of such competence, or favourably mentions a strong/stable government |

| Political Corruption | This example text from a political party manifesto is negative towards political corruption or abuse of political/bureaucratic power |

| Protectionism: Negative | This example text from a political party manifesto is negative towards protectionism, in favour of free trade |

| Protectionism: Positive | This example text from a political party manifesto is in favour of protectionism, for example tariffs, export subsidies |

| Technology and Infrastructure: Positive | This example text from a political party manifesto is about technology and infrastructure, e.g. the importance of modernisation of industry, or supportive of public spending on infrastructure/tech |

| Traditional Morality: Negative | This example text from a political party manifesto is negative towards traditional morality, for example against religious moral values, for divorce or abortion, for modern families or separation of church and state |

| Traditional Morality: Positive | This example text from a political party manifesto is favourable towards traditional or religious values, for example for censorship of immoral behaviour, for traditional family values or religious institutions |

| Underprivileged Minority Groups | This example text from a political party manifesto favourably mentions underprivileged minorities, for example handicapped, homosexuals or immigrants |

| Welfare State Expansion | This example text from a political party manifesto is positive towards the welfare state, e.g. health care, pensions or social housing |

| Welfare State Limitation | This example text from a political party manifesto is for limiting the welfare state, for example public funding for social services or social security, e.g. private care before state care | |

mradermacher/Marcoro14-7B-slerp-GGUF | mradermacher | 2024-06-26T12:27:06Z | 7,677 | 0 | transformers | [

"transformers",

"gguf",

"merge",

"mergekit",

"lazymergekit",

"diffnamehard/Mistral-CatMacaroni-slerp-uncensored-7B",

"nbeerbower/llama-3-spicy-abliterated-stella-8B",

"en",

"base_model:Rupesh2/Marcoro14-7B-slerp",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-26T11:52:51Z | ---

base_model: Rupesh2/Marcoro14-7B-slerp

language:

- en

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- merge

- mergekit

- lazymergekit

- diffnamehard/Mistral-CatMacaroni-slerp-uncensored-7B

- nbeerbower/llama-3-spicy-abliterated-stella-8B

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/Rupesh2/Marcoro14-7B-slerp

<!-- provided-files -->

Weighted/imatrix quants do not appear to be available (from me) at this time. If they do not show up within a week or so of the static ones, I have probably not planned them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
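Concatenating a multi-part download amounts to appending the parts byte for byte, in order. The following is a minimal sketch, assuming the parts are plain byte splits of a single file rather than independently loadable shards. `concat_parts` and the file names in the usage comment are hypothetical.

```python
import shutil
from pathlib import Path
from typing import Iterable


def concat_parts(parts: Iterable[Path], output: Path) -> Path:
    """Append each part to `output` in the order given and return `output`."""
    with open(output, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Streams in chunks, so large files are not loaded into memory.
                shutil.copyfileobj(src, out)
    return output


# Hypothetical usage (file names are illustrative only):
# concat_parts(
#     [Path("model.gguf.part1of2"), Path("model.gguf.part2of2")],
#     Path("model.gguf"),
# )
```

The caller is responsible for passing the parts in the correct order; the function does not inspect file names.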

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q2_K.gguf) | Q2_K | 2.8 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.IQ3_XS.gguf) | IQ3_XS | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q3_K_S.gguf) | Q3_K_S | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.IQ3_S.gguf) | IQ3_S | 3.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.IQ3_M.gguf) | IQ3_M | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q3_K_L.gguf) | Q3_K_L | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.IQ4_XS.gguf) | IQ4_XS | 4.1 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q5_K_S.gguf) | Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q5_K_M.gguf) | Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q6_K.gguf) | Q6_K | 6.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Marcoro14-7B-slerp-GGUF/resolve/main/Marcoro14-7B-slerp.f16.gguf) | f16 | 14.6 | 16 bpw, overkill |
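The table above can also be used programmatically, for example to pick the largest file that fits a size budget. The following is a minimal sketch: the sizes and the URL pattern are copied from the table, while `QUANTS`, `quant_url` and `largest_within` are hypothetical names. File size is only a rough proxy for the memory needed at run time, which is an assumption of this sketch.

```python
from typing import List, Optional, Tuple

REPO = "mradermacher/Marcoro14-7B-slerp-GGUF"
MODEL = "Marcoro14-7B-slerp"

# (quant type, size in GB), in the same order as the table above.
QUANTS: List[Tuple[str, float]] = [
    ("Q2_K", 2.8), ("IQ3_XS", 3.1), ("Q3_K_S", 3.3), ("IQ3_S", 3.3),
    ("IQ3_M", 3.4), ("Q3_K_M", 3.6), ("Q3_K_L", 3.9), ("IQ4_XS", 4.1),
    ("Q4_K_S", 4.2), ("Q4_K_M", 4.5), ("Q5_K_S", 5.1), ("Q5_K_M", 5.2),
    ("Q6_K", 6.0), ("Q8_0", 7.8), ("f16", 14.6),
]


def quant_url(quant_type: str) -> str:
    """Build the download URL following the pattern of the links in the table."""
    return f"https://huggingface.co/{REPO}/resolve/main/{MODEL}.{quant_type}.gguf"


def largest_within(budget_gb: float) -> Optional[str]:
    """Return the largest quant type whose file size fits the budget.

    Returns None if nothing fits; on ties, the earlier table row wins.
    """
    fitting = [q for q in QUANTS if q[1] <= budget_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda q: q[1])[0]


if __name__ == "__main__":
    choice = largest_within(5.0)
    if choice is not None:
        print(choice, quant_url(choice))
```

Size is not the same as quality, as the note above the table points out, so the Notes column is still worth consulting before settling on a file.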

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

migtissera/Tess-34B-v1.4 | migtissera | 2023-12-05T02:48:13Z | 7,674 | 15 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-05T02:37:01Z | ---

license: other

license_name: yi-34b

license_link: https://huggingface.co/01-ai/Yi-34B/blob/main/LICENSE

---

# Tess

Tess, short for Tesoro (Treasure in Italian), is a general purpose Large Language Model series. Tess-M-v1.4 was trained on the Yi-34B-200K base.

# Prompt Format:

```

SYSTEM: <ANY SYSTEM CONTEXT>

USER:

ASSISTANT:

``` |

DeepMount00/Phi-3-Large-5.6b | DeepMount00 | 2024-05-06T20:54:30Z | 7,674 | 2 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"it",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-06T20:32:42Z | ---

language:

- it

- en

license: apache-2.0

pipeline_tag: text-generation

---

## Phi-3-mini-4k-instruct 5.6b

### Model Details

- **Name:** Expanded microsoft/Phi-3-mini-4k-instruct (Large Experiment)

- **Version:** 1.0

- **Release Date:** 06/05/2024

- **Model Type:** Instruction-based Large Language Model

- **Developer:** Montebovi Michele

### Intended Use

- **Primary Use Case:** This model is an experimental expansion of the **microsoft/Phi-3-mini-4k-instruct** model. It is designed to better handle more complex, instruction-based tasks and provide more accurate responses across a wider range of topics.

- **Intended Users:** Researchers, developers, and AI enthusiasts interested in exploring the capabilities and performance of enhanced instruction-based models.

- **Out-of-Scope Use Cases:** This model is not intended for high-stakes decision-making or any usage requiring fail-safe performance, as it is experimental in nature.

### Model Architecture

- **Base Model:** microsoft/Phi-3-mini-4k-instruct but expanded with more layers

- **Modifications:** This model includes additional training layers and an expanded dataset to improve understanding and response generation across diverse instructions.

--- |

Deci/DeciLM-7B | Deci | 2024-02-21T16:04:09Z | 7,672 | 220 | transformers | [

"transformers",

"safetensors",

"deci",

"text-generation",

"conversational",

"custom_code",

"en",

"license:apache-2.0",

"autotrain_compatible",

"region:us"

] | text-generation | 2023-12-10T16:22:14Z | ---

license: apache-2.0

language:

- en

---

# DeciLM-7B

DeciLM-7B is a 7.04 billion parameter decoder-only text generation model, released under the Apache 2.0 license. At the time of release, DeciLM-7B is the top-performing 7B base language model on the Open LLM Leaderboard. With support for an 8K-token sequence length, this highly efficient model uses variable Grouped-Query Attention (GQA) to achieve a superior balance between accuracy and computational efficiency. The model's architecture was generated using Deci's proprietary Neural Architecture Search technology, AutoNAC.

## Model Details

### Model Description

Deci developed and released the DeciLM-7B language model, a pre-trained, high-efficiency text generation model with 7 billion parameters. DeciLM-7B is not only the most accurate 7B base model, but it also outpaces all models in its class, with a throughput up to 4.4x that of Mistral-7B. An instruct version, [DeciLM-7B-instruct](https://huggingface.co/Deci/DeciLM-7B-instruct), has also been released.

- **Developed by:** [Deci](https://deci.ai/?utm_campaign=repos&utm_source=hugging-face&utm_medium=model-card&utm_content=decilm-7b)

- **Model type:** DeciLM is an auto-regressive language model using an optimized transformer decoder architecture that includes variable Grouped-Query Attention.

- **Language(s) (NLP):** English

- **License:** Apache 2.0

## Model Architecture

| Parameters | Layers | Heads | Sequence Length | GQA num_key_value_heads* |

|:----------|:----------|:----------|:----------|:----------|

| 7.04 billion | 32 | 32 | 8192 | Variable |

*AutoNAC was employed to optimize the selection of the GQA num_key_value_heads for each layer.
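
To illustrate why the number of key/value heads per layer matters, the sketch below estimates KV-cache memory for full multi-head attention versus a variable GQA layout. The per-layer head counts in `gqa_variable` are hypothetical and are not DeciLM-7B's actual configuration; `head_dim=128` and 2 bytes per value (fp16) are likewise assumptions. Only the 32 layers, 32 heads, and 8192-token sequence length come from the table above.

```python
def kv_cache_bytes(kv_heads_per_layer, seq_len, head_dim=128,
                   bytes_per_value=2, batch_size=1):
    """Bytes needed to cache keys and values across all layers."""
    # The factor 2 accounts for storing both keys and values.
    return sum(
        2 * batch_size * seq_len * kv_heads * head_dim * bytes_per_value
        for kv_heads in kv_heads_per_layer
    )


LAYERS = 32
SEQ_LEN = 8192

# Full multi-head attention: one KV head per query head in every layer.
mha = [32] * LAYERS

# Hypothetical variable-GQA layout (illustration only, not the real model config).
gqa_variable = [4] * 16 + [2] * 8 + [1] * 8

mha_bytes = kv_cache_bytes(mha, SEQ_LEN)
gqa_bytes = kv_cache_bytes(gqa_variable, SEQ_LEN)

print(f"MHA cache: {mha_bytes / 2**30:.2f} GiB")
print(f"GQA cache: {gqa_bytes / 2**30:.2f} GiB")
```

Under these assumptions the cache shrinks in proportion to the total number of KV heads, which is the kind of per-layer trade-off a search procedure can tune.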

### Model Sources

- **Blog:** [DeciLM-7B Technical Blog](https://deci.ai/blog/introducing-DeciLM-7B-the-fastest-and-most-accurate-7b-large-language-model-to-date/?utm_campaign=repos&utm_source=hugging-face&utm_medium=model-card&utm_content=decilm-7b)

- **Demo:** [DeciLM-7B-instruct Demo](https://huggingface.co/spaces/Deci/DeciLM-7B-instruct)

- **Finetuning Notebook:** [DeciLM-7B Finetuning Notebook](https://colab.research.google.com/drive/1kEV6i96AQ94xTCvSd11TxkEaksTb5o3U?usp=sharing)

- **Text Generation Notebook:** [DeciLM-7B-instruct Text Generation Notebook](https://bit.ly/declm-7b-instruct)

## Uses

The model is intended for commercial and research use in English and can be fine-tuned for various tasks and languages.

## How to Get Started with the Model

Use the code below to get started with the model.

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Deci/DeciLM-7B"

device = "cuda" # for GPU usage or "cpu" for CPU usage

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", trust_remote_code=True).to(device)

inputs = tokenizer.encode("In a shocking finding, scientists discovered a herd of unicorns living in", return_tensors="pt").to(device)

outputs = model.generate(inputs, max_new_tokens=100, do_sample=True, top_p=0.95)

print(tokenizer.decode(outputs[0]))

# The model can also be used via the text-generation pipeline interface

from transformers import pipeline

generator = pipeline("text-generation", "Deci/DeciLM-7B", torch_dtype="auto", trust_remote_code=True, device=device)

outputs = generator("In a shocking finding, scientists discovered a herd of unicorns living in", max_new_tokens=100, do_sample=True, top_p=0.95)

print(outputs[0]["generated_text"])

```

## Evaluation

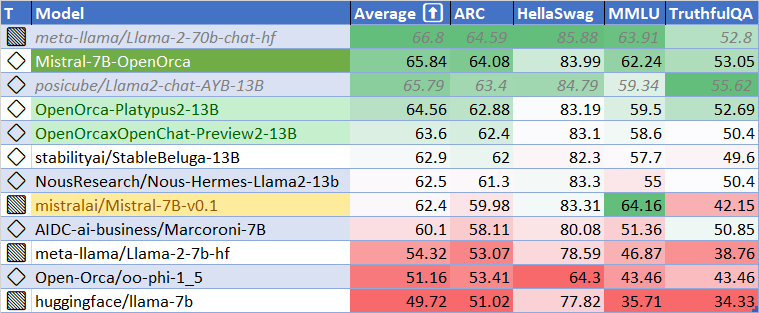

Below are DeciLM-7B and DeciLM-7B-instruct's Open LLM Leaderboard results.

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA | Winogrande | GSM8K |

|:----------|:---------:|:---------:|:---------:|:---------:|:---------:|:---------:|:---------:|

| DeciLM-7B | 61.55 | 59.39 | 82.51 | 59.76 | 40.33 | 79.95 | 47.38 |

| DeciLM-7B-instruct | 63.19 | 61.01 | 82.37 | 60.24 | 49.75 | 79.72 | 46.02 |

### Runtime Benchmarks

| Inference Tool | Hardware | Prompt length | Generation length | Generated tokens/sec | Batch Size | Number of Prompts |

|:----------|:----------|:---------:|:---------:|:---------:|:---------:|:---------:|

| HuggingFace (PyTorch) | A100 (SXM4-80GB-400W) | 512 | 512 | **1174** | 352 | 352 |

| HuggingFace (PyTorch) | A100 (SXM4-80GB-400W) | 2048 | 2048 | **328** | 72 | 72 |

| Infery-LLM | A100 (SXM4-80GB-400W)| 512 | 512 | **4559** | 1024 | 4096 |

| Infery-LLM | A100 (SXM4-80GB-400W) | 2048 | 2048 | **3997** | 512 | 2048 |

| Infery-LLM | A10 | 512 | 512 | **1345** | 128 | 512 |

| Infery-LLM | A10 | 2048 | 2048 | **599** | 32 | 128 |

- In order to replicate the results of the Hugging Face benchmarks, you can use this [code example](https://huggingface.co/Deci/DeciLM-7B/blob/main/benchmark_hf_model.py).

- Infery-LLM, Deci's inference engine, features a suite of optimization algorithms, including selective quantization, optimized beam search, continuous batching, and custom CUDA kernels. To explore the capabilities of Infery-LLM, [schedule a live demo](https://deci.ai/infery-llm-book-a-demo/?utm_campaign=DeciLM%207B%20Launch&utm_source=HF&utm_medium=decilm7b-model-card&utm_term=infery-demo).
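
As a quick worked example, the throughput figures in the table can be turned into relative speedups. The numbers below are copied from the A100 rows; the `speedup` helper is a hypothetical name used only for illustration. Note that the rows use different batch sizes, so this is a rough ratio of reported throughput rather than a controlled comparison.

```python
# (inference tool, prompt/generation length) -> generated tokens/sec on A100,
# copied from the Runtime Benchmarks table.
TOKENS_PER_SEC = {
    ("HuggingFace (PyTorch)", 512): 1174,
    ("HuggingFace (PyTorch)", 2048): 328,
    ("Infery-LLM", 512): 4559,
    ("Infery-LLM", 2048): 3997,
}


def speedup(length: int) -> float:
    """Ratio of Infery-LLM to Hugging Face throughput at a given length."""
    baseline = TOKENS_PER_SEC[("HuggingFace (PyTorch)", length)]
    optimized = TOKENS_PER_SEC[("Infery-LLM", length)]
    return optimized / baseline


for length in (512, 2048):
    print(f"length {length}: {speedup(length):.2f}x")
```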

## Ethical Considerations and Limitations

DeciLM-7B is a new technology that comes with inherent risks associated with its use. The testing conducted so far has been primarily in English and does not encompass all possible scenarios. Like those of all large language models, DeciLM-7B's outputs are unpredictable, and the model may generate responses that are inaccurate, biased, or otherwise objectionable. Consequently, developers planning to use DeciLM-7B should undertake thorough safety testing and tuning designed explicitly for their intended applications of the model before deployment.

## How to Cite

Please cite this model using this format.

```bibtex

@misc{DeciFoundationModels,

title = {DeciLM-7B},

author = {DeciAI Research Team},

year = {2023},

url={https://huggingface.co/Deci/DeciLM-7B},

}

``` |

TheLastBen/Papercut_SDXL | TheLastBen | 2023-08-29T10:36:13Z | 7,665 | 46 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2023-08-04T00:52:47Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: papercut

widget:

- text: papercut

---

### Papercut Style

#### SDXL LoRA by TheLastBen

#### Prompts to start with :

papercut -subject/scene-

---

Trained using https://github.com/TheLastBen/fast-stable-diffusion SDXL trainer.

#### Sample pictures:

|

guoyww/animatediff-motion-lora-pan-left | guoyww | 2023-11-03T13:09:24Z | 7,662 | 1 | diffusers | [

"diffusers",

"safetensors",

"animatediff",

"text-to-video",

"region:us"

] | text-to-video | 2023-11-03T13:09:24Z | ---

library_name: diffusers

pipeline_tag: text-to-video

tags:

- animatediff

---

# Motion LoRAs

Motion LoRAs allow adding specific types of motion to your animations.

Currently the following types of motion are available for models using the `guoyww/animatediff-motion-adapter-v1-5-2` checkpoint.

- Zoom In/Out

- Pan Left/Right

- Tilt Up/Down

- Rolling Clockwise/Anticlockwise
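
A minimal sketch of loading the pan-left Motion LoRA with `diffusers` might look like the following. It assumes a recent `diffusers` release that provides `AnimateDiffPipeline` and `MotionAdapter`, and that the caller supplies a Stable Diffusion 1.5-based checkpoint as `base_model_id`; the function name `build_pan_left_pipeline` and the adapter name `"pan-left"` are illustrative choices, not part of the library.

```python
ADAPTER_ID = "guoyww/animatediff-motion-adapter-v1-5-2"
LORA_ID = "guoyww/animatediff-motion-lora-pan-left"


def build_pan_left_pipeline(base_model_id: str):
    """Return an AnimateDiff pipeline with the pan-left Motion LoRA loaded.

    base_model_id is assumed to be a Stable Diffusion 1.5-based checkpoint
    chosen by the caller.
    """
    # Imported lazily so that defining this function does not require
    # torch or diffusers to be installed.
    import torch
    from diffusers import AnimateDiffPipeline, MotionAdapter

    # Load the motion adapter named in this card.
    adapter = MotionAdapter.from_pretrained(ADAPTER_ID, torch_dtype=torch.float16)

    # Attach the adapter to the base text-to-image checkpoint.
    pipe = AnimateDiffPipeline.from_pretrained(
        base_model_id, motion_adapter=adapter, torch_dtype=torch.float16
    )

    # Add the pan-left motion on top of the adapter.
    pipe.load_lora_weights(LORA_ID, adapter_name="pan-left")
    return pipe
```

Calling the function downloads the model weights, so it is best run on a machine with a GPU and enough disk space.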

Please refer to the [AnimateDiff documentation](https://huggingface.co/docs/diffusers/main/en/api/pipelines/animatediff) for information on how to use these Motion LoRAs. |