modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

alvdansen/littletinies | alvdansen | 2024-06-16T16:25:45Z | 6,636 | 103 | diffusers | [

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"doi:10.57967/hf/2666",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2024-06-11T21:22:18Z | ---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: "a girl wandering through the forest"

output:

url: >-

images/6CD03C101B7F6545EB60E9F48D60B8B3C2D31D42D20F8B7B9B149DD0C646C0C2.jpeg

- text: "a tiny witch child"

output:

url: >-

images/7B482E1FDB39DA5A102B9CD041F4A2902A8395B3835105C736C5AD9C1D905157.jpeg

- text: "an artist leaning over to draw something"

output:

url: >-

images/7CCEA11F1B74C8D8992C47C1C5DEA9BD6F75940B380E9E6EC7D01D85863AF718.jpeg

- text: "a girl with blonde hair and blue eyes, big round glasses"

output:

url: >-

images/227DE29148BC8798591C0EF99A41B71C44C0CAB5A16B976EFCC387C08D748DC0.jpeg

- text: "a girl wandering through the forest"

output:

url: >-

images/EA62C26C5D1B9E1C04FD179679F6924CA27DC3672F0D580ABA9CEB3E110BAD2B.jpeg

- text: "a toad"

output:

url: >-

images/2624AE9AE9B61D337139787B4F4E7529571C05582214CEDAF823BBD8A7E67CDA.jpeg

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: null

license: creativeml-openrail-m

---

# Little Tinies

<Gallery />

## Model description

A very classic hand drawn cartoon style.

## Download model

Weights for this model are available in Safetensors format.

Model release is for research purposes only. For commercial use, please contact me directly.

[Download](/alvdansen/littletinies/tree/main) them in the Files & versions tab.

|

timm/densenet201.tv_in1k | timm | 2023-04-21T22:54:58Z | 6,631 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:1608.06993",

"license:apache-2.0",

"region:us"

] | image-classification | 2023-04-21T22:54:45Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for densenet201.tv_in1k

A DenseNet image classification model. Trained on ImageNet-1k (original torchvision weights).

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 20.0

- GMACs: 4.3

- Activations (M): 7.9

- Image size: 224 x 224

- **Papers:**

- Densely Connected Convolutional Networks: https://arxiv.org/abs/1608.06993

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/pytorch/vision

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('densenet201.tv_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'densenet201.tv_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

# print shape of each feature map in output

# e.g.:

# torch.Size([1, 64, 112, 112])

# torch.Size([1, 256, 56, 56])

# torch.Size([1, 512, 28, 28])

# torch.Size([1, 1792, 14, 14])

# torch.Size([1, 1920, 7, 7])

print(o.shape)

```

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'densenet201.tv_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 1920, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Citation

```bibtex

@inproceedings{huang2017densely,

title={Densely Connected Convolutional Networks},

author={Huang, Gao and Liu, Zhuang and van der Maaten, Laurens and Weinberger, Kilian Q },

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

year={2017}

}

```

|

digiplay/2K | digiplay | 2024-06-18T16:33:48Z | 6,631 | 4 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-06-24T14:10:11Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

|

duyntnet/mpt-7b-8k-chat-imatrix-GGUF | duyntnet | 2024-06-14T03:34:34Z | 6,627 | 0 | transformers | [

"transformers",

"gguf",

"imatrix",

"mpt-7b-8k-chat",

"text-generation",

"en",

"arxiv:2205.14135",

"arxiv:2108.12409",

"arxiv:2010.04245",

"license:other",

"region:us"

] | text-generation | 2024-06-14T01:26:06Z | ---

license: other

language:

- en

pipeline_tag: text-generation

inference: false

tags:

- transformers

- gguf

- imatrix

- mpt-7b-8k-chat

---

Quantizations of https://huggingface.co/mosaicml/mpt-7b-8k-chat

# From original readme

## How to Use

This model is best used with the MosaicML [llm-foundry repository](https://github.com/mosaicml/llm-foundry) for training and finetuning.

```python

import transformers

model = transformers.AutoModelForCausalLM.from_pretrained(

'mosaicml/mpt-7b-chat-8k',

trust_remote_code=True

)

```

Note: This model requires that `trust_remote_code=True` be passed to the `from_pretrained` method.

This is because we use a custom `MPT` model architecture that is not yet part of the Hugging Face `transformers` package.

`MPT` includes options for many training efficiency features such as [FlashAttention](https://arxiv.org/pdf/2205.14135.pdf), [ALiBi](https://arxiv.org/abs/2108.12409), [QK LayerNorm](https://arxiv.org/abs/2010.04245), and more.

To use the optimized [triton implementation](https://github.com/openai/triton) of FlashAttention, you can load the model on GPU (`cuda:0`) with `attn_impl='triton'` and with `bfloat16` precision:

```python

import torch

import transformers

name = 'mosaicml/mpt-7b-chat-8k'

config = transformers.AutoConfig.from_pretrained(name, trust_remote_code=True)

config.attn_config['attn_impl'] = 'triton' # change this to use triton-based FlashAttention

config.init_device = 'cuda:0' # For fast initialization directly on GPU!

model = transformers.AutoModelForCausalLM.from_pretrained(

name,

config=config,

torch_dtype=torch.bfloat16, # Load model weights in bfloat16

trust_remote_code=True

)

```

The model was trained initially with a sequence length of 2048 with an additional pretraining stage for sequence length adapation up to 8192. However, ALiBi enables users to increase the maximum sequence length even further during finetuning and/or inference. For example:

```python

import transformers

name = 'mosaicml/mpt-7b-chat-8k'

config = transformers.AutoConfig.from_pretrained(name, trust_remote_code=True)

config.max_seq_len = 16384 # (input + output) tokens can now be up to 16384

model = transformers.AutoModelForCausalLM.from_pretrained(

name,

config=config,

trust_remote_code=True

)

```

This model was trained with the MPT-7B-chat tokenizer which is based on the [EleutherAI/gpt-neox-20b](https://huggingface.co/EleutherAI/gpt-neox-20b) tokenizer and includes additional ChatML tokens.

```python

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained('mosaicml/mpt-7b-8k')

```

The model can then be used, for example, within a text-generation pipeline.

Note: when running Torch modules in lower precision, it is best practice to use the [torch.autocast context manager](https://pytorch.org/docs/stable/amp.html).

```python

from transformers import pipeline

with torch.autocast('cuda', dtype=torch.bfloat16):

inputs = tokenizer('Here is a recipe for vegan banana bread:\n', return_tensors="pt").to('cuda')

outputs = model.generate(**inputs, max_new_tokens=100)

print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

# or using the HF pipeline

pipe = pipeline('text-generation', model=model, tokenizer=tokenizer, device='cuda:0')

with torch.autocast('cuda', dtype=torch.bfloat16):

print(

pipe('Here is a recipe for vegan banana bread:\n',

max_new_tokens=100,

do_sample=True,

use_cache=True))

``` |

mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF | mradermacher | 2024-06-14T10:16:55Z | 6,626 | 1 | transformers | [

"transformers",

"gguf",

"generated_from_trainer",

"axolotl",

"en",

"dataset:cognitivecomputations/Dolphin-2.9",

"dataset:teknium/OpenHermes-2.5",

"dataset:cognitivecomputations/samantha-data",

"dataset:microsoft/orca-math-word-problems-200k",

"base_model:cognitivecomputations/dolphin-2.9.3-qwen2-0.5b",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-14T10:14:44Z | ---

base_model: cognitivecomputations/dolphin-2.9.3-qwen2-0.5b

datasets:

- cognitivecomputations/Dolphin-2.9

- teknium/OpenHermes-2.5

- cognitivecomputations/samantha-data

- microsoft/orca-math-word-problems-200k

language:

- en

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- generated_from_trainer

- axolotl

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/cognitivecomputations/dolphin-2.9.3-qwen2-0.5b

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q3_K_S.gguf) | Q3_K_S | 0.4 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.IQ3_S.gguf) | IQ3_S | 0.4 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.IQ3_XS.gguf) | IQ3_XS | 0.4 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q2_K.gguf) | Q2_K | 0.4 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.IQ3_M.gguf) | IQ3_M | 0.4 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.IQ4_XS.gguf) | IQ4_XS | 0.5 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q3_K_M.gguf) | Q3_K_M | 0.5 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q3_K_L.gguf) | Q3_K_L | 0.5 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q4_K_S.gguf) | Q4_K_S | 0.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q4_K_M.gguf) | Q4_K_M | 0.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q5_K_S.gguf) | Q5_K_S | 0.5 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q5_K_M.gguf) | Q5_K_M | 0.5 | |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q6_K.gguf) | Q6_K | 0.6 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.Q8_0.gguf) | Q8_0 | 0.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/dolphin-2.9.3-qwen2-0.5b-GGUF/resolve/main/dolphin-2.9.3-qwen2-0.5b.f16.gguf) | f16 | 1.1 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

TencentARC/t2i-adapter-sketch-sdxl-1.0 | TencentARC | 2023-09-08T14:57:24Z | 6,625 | 60 | diffusers | [

"diffusers",

"safetensors",

"art",

"t2i-adapter",

"image-to-image",

"stable-diffusion-xl-diffusers",

"stable-diffusion-xl",

"arxiv:2302.08453",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:apache-2.0",

"region:us"

] | image-to-image | 2023-09-03T14:55:43Z | ---

license: apache-2.0

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- art

- t2i-adapter

- image-to-image

- stable-diffusion-xl-diffusers

- stable-diffusion-xl

---

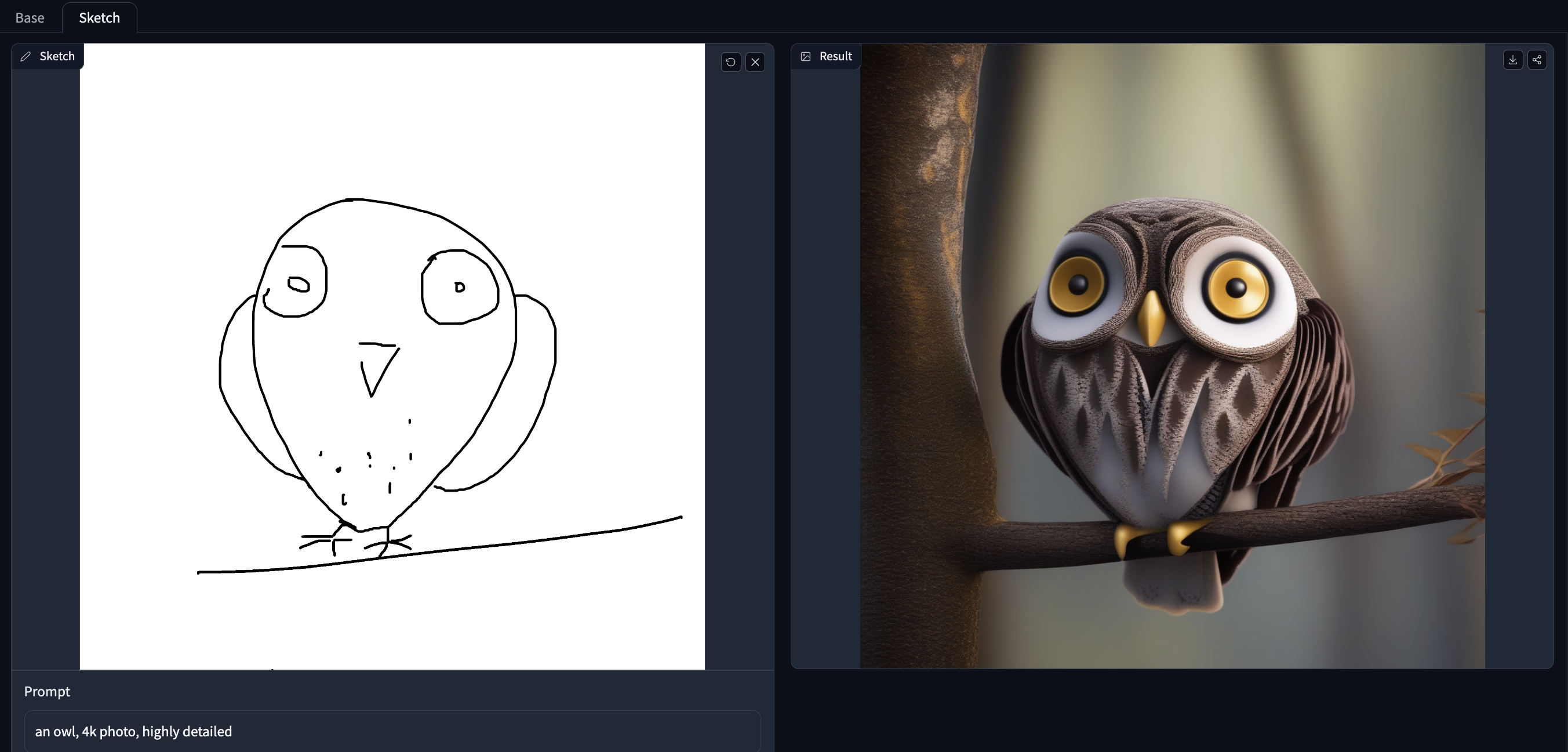

# T2I-Adapter-SDXL - Sketch

T2I Adapter is a network providing additional conditioning to stable diffusion. Each t2i checkpoint takes a different type of conditioning as input and is used with a specific base stable diffusion checkpoint.

This checkpoint provides conditioning on sketch for the StableDiffusionXL checkpoint. This was a collaboration between **Tencent ARC** and [**Hugging Face**](https://huggingface.co/).

## Model Details

- **Developed by:** T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** Apache 2.0

- **Resources for more information:** [GitHub Repository](https://github.com/TencentARC/T2I-Adapter), [Paper](https://arxiv.org/abs/2302.08453).

- **Model complexity:**

| | SD-V1.4/1.5 | SD-XL | T2I-Adapter | T2I-Adapter-SDXL |

| --- | --- |--- |--- |--- |

| Parameters | 860M | 2.6B |77 M | 77/79 M | |

- **Cite as:**

@misc{

title={T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models},

author={Chong Mou, Xintao Wang, Liangbin Xie, Yanze Wu, Jian Zhang, Zhongang Qi, Ying Shan, Xiaohu Qie},

year={2023},

eprint={2302.08453},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

### Checkpoints

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |

|---|---|---|---|

|[TencentARC/t2i-adapter-canny-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-canny-sdxl-1.0)<br/> *Trained with canny edge detection* | A monochrome image with white edges on a black background.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_canny.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_canny.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_canny.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_canny.png"/></a>|

|[TencentARC/t2i-adapter-sketch-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-sketch-sdxl-1.0)<br/> *Trained with [PidiNet](https://github.com/zhuoinoulu/pidinet) edge detection* | A hand-drawn monochrome image with white outlines on a black background.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_sketch.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_sketch.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_sketch.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_sketch.png"/></a>|

|[TencentARC/t2i-adapter-lineart-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0)<br/> *Trained with lineart edge detection* | A hand-drawn monochrome image with white outlines on a black background.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_lin.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_lin.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_lin.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_lin.png"/></a>|

|[TencentARC/t2i-adapter-depth-midas-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-depth-midas-sdxl-1.0)<br/> *Trained with Midas depth estimation* | A grayscale image with black representing deep areas and white representing shallow areas.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_depth_mid.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_depth_mid.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_depth_mid.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_depth_mid.png"/></a>|

|[TencentARC/t2i-adapter-depth-zoe-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-depth-zoe-sdxl-1.0)<br/> *Trained with Zoe depth estimation* | A grayscale image with black representing deep areas and white representing shallow areas.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_depth_zeo.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_depth_zeo.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_depth_zeo.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_depth_zeo.png"/></a>|

|[TencentARC/t2i-adapter-openpose-sdxl-1.0](https://huggingface.co/TencentARC/t2i-adapter-openpose-sdxl-1.0)<br/> *Trained with OpenPose bone image* | A [OpenPose bone](https://github.com/CMU-Perceptual-Computing-Lab/openpose) image.|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/openpose.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/openpose.png"/></a>|<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/res_pose.png"><img width="64" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/res_pose.png"/></a>|

## Demo:

Try out the model with your own hand-drawn sketches/doodles in the [Doodly Space](https://huggingface.co/spaces/TencentARC/T2I-Adapter-SDXL-Sketch)!

## Example

To get started, first install the required dependencies:

```bash

pip install -U git+https://github.com/huggingface/diffusers.git

pip install -U controlnet_aux==0.0.7 # for conditioning models and detectors

pip install transformers accelerate safetensors

```

1. Images are first downloaded into the appropriate *control image* format.

2. The *control image* and *prompt* are passed to the [`StableDiffusionXLAdapterPipeline`](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/t2i_adapter/pipeline_stable_diffusion_xl_adapter.py#L125).

Let's have a look at a simple example using the [Canny Adapter](https://huggingface.co/TencentARC/t2i-adapter-lineart-sdxl-1.0).

- Dependency

```py

from diffusers import StableDiffusionXLAdapterPipeline, T2IAdapter, EulerAncestralDiscreteScheduler, AutoencoderKL

from diffusers.utils import load_image, make_image_grid

from controlnet_aux.pidi import PidiNetDetector

import torch

# load adapter

adapter = T2IAdapter.from_pretrained(

"TencentARC/t2i-adapter-sketch-sdxl-1.0", torch_dtype=torch.float16, varient="fp16"

).to("cuda")

# load euler_a scheduler

model_id = 'stabilityai/stable-diffusion-xl-base-1.0'

euler_a = EulerAncestralDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

vae=AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = StableDiffusionXLAdapterPipeline.from_pretrained(

model_id, vae=vae, adapter=adapter, scheduler=euler_a, torch_dtype=torch.float16, variant="fp16",

).to("cuda")

pipe.enable_xformers_memory_efficient_attention()

pidinet = PidiNetDetector.from_pretrained("lllyasviel/Annotators").to("cuda")

```

- Condition Image

```py

url = "https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/org_sketch.png"

image = load_image(url)

image = pidinet(

image, detect_resolution=1024, image_resolution=1024, apply_filter=True

)

```

<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_sketch.png"><img width="480" style="margin:0;padding:0;" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_sketch.png"/></a>

- Generation

```py

prompt = "a robot, mount fuji in the background, 4k photo, highly detailed"

negative_prompt = "extra digit, fewer digits, cropped, worst quality, low quality, glitch, deformed, mutated, ugly, disfigured"

gen_images = pipe(

prompt=prompt,

negative_prompt=negative_prompt,

image=image,

num_inference_steps=30,

adapter_conditioning_scale=0.9,

guidance_scale=7.5,

).images[0]

gen_images.save('out_sketch.png')

```

<a href="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/cond_sketch.png"><img width="480" style="margin:0;padding:0;" src="https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/res_sketch.png"/></a>

### Training

Our training script was built on top of the official training script that we provide [here](https://github.com/huggingface/diffusers/blob/main/examples/t2i_adapter/README_sdxl.md).

The model is trained on 3M high-resolution image-text pairs from LAION-Aesthetics V2 with

- Training steps: 20000

- Batch size: Data parallel with a single gpu batch size of `16` for a total batch size of `256`.

- Learning rate: Constant learning rate of `1e-5`.

- Mixed precision: fp16 |

TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF | TheBloke | 2023-09-27T12:47:41Z | 6,623 | 53 | transformers | [

"transformers",

"gguf",

"llama",

"en",

"dataset:ehartford/WizardLM_evol_instruct_V2_196k_unfiltered_merged_split",

"base_model:ehartford/WizardLM-1.0-Uncensored-Llama2-13b",

"license:llama2",

"text-generation-inference",

"region:us"

] | null | 2023-09-05T15:11:13Z | ---

language:

- en

license: llama2

datasets:

- ehartford/WizardLM_evol_instruct_V2_196k_unfiltered_merged_split

model_name: WizardLM 1.0 Uncensored Llama2 13B

base_model: ehartford/WizardLM-1.0-Uncensored-Llama2-13b

inference: false

model_creator: Eric Hartford

model_type: llama

prompt_template: 'You are a helpful AI assistant.

USER: {prompt}

ASSISTANT:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# WizardLM 1.0 Uncensored Llama2 13B - GGUF

- Model creator: [Eric Hartford](https://huggingface.co/ehartford)

- Original model: [WizardLM 1.0 Uncensored Llama2 13B](https://huggingface.co/ehartford/WizardLM-1.0-Uncensored-Llama2-13b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Eric Hartford's WizardLM 1.0 Uncensored Llama2 13B](https://huggingface.co/ehartford/WizardLM-1.0-Uncensored-Llama2-13b).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation, and support for special tokens. It is also supports metadata, and is designed to be extensible.

Here is an incomplate list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF)

* [Eric Hartford's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/ehartford/WizardLM-1.0-Uncensored-Llama2-13b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: WizardLM-Vicuna

```

You are a helpful AI assistant.

USER: {prompt}

ASSISTANT:

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [wizardlm-1.0-uncensored-llama2-13b.Q2_K.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [wizardlm-1.0-uncensored-llama2-13b.Q3_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [wizardlm-1.0-uncensored-llama2-13b.Q3_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [wizardlm-1.0-uncensored-llama2-13b.Q3_K_L.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [wizardlm-1.0-uncensored-llama2-13b.Q4_0.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [wizardlm-1.0-uncensored-llama2-13b.Q4_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [wizardlm-1.0-uncensored-llama2-13b.Q4_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [wizardlm-1.0-uncensored-llama2-13b.Q5_0.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [wizardlm-1.0-uncensored-llama2-13b.Q5_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [wizardlm-1.0-uncensored-llama2-13b.Q5_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [wizardlm-1.0-uncensored-llama2-13b.Q6_K.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [wizardlm-1.0-uncensored-llama2-13b.Q8_0.gguf](https://huggingface.co/TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF/blob/main/wizardlm-1.0-uncensored-llama2-13b.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF and below it, a specific filename to download, such as: wizardlm-1.0-uncensored-llama2-13b.q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub>=0.17.1

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF wizardlm-1.0-uncensored-llama2-13b.q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HUGGINGFACE_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF wizardlm-1.0-uncensored-llama2-13b.q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HUGGINGFACE_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m wizardlm-1.0-uncensored-llama2-13b.q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "You are a helpful AI assistant.\n\nUSER: {prompt}\nASSISTANT:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install ctransformers>=0.2.24

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]>=0.2.24

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install ctransformers>=0.2.24 --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install ctransformers>=0.2.24 --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/WizardLM-1.0-Uncensored-Llama2-13B-GGUF", model_file="wizardlm-1.0-uncensored-llama2-13b.q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here's guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Eric Hartford's WizardLM 1.0 Uncensored Llama2 13B

This is a retraining of https://huggingface.co/WizardLM/WizardLM-13B-V1.0 with a filtered dataset, intended to reduce refusals, avoidance, and bias.

Note that LLaMA itself has inherent ethical beliefs, so there's no such thing as a "truly uncensored" model. But this model will be more compliant than WizardLM/WizardLM-13B-V1.0.

Shout out to the open source AI/ML community, and everyone who helped me out.

Note: An uncensored model has no guardrails. You are responsible for anything you do with the model, just as you are responsible for anything you do with any dangerous object such as a knife, gun, lighter, or car. Publishing anything this model generates is the same as publishing it yourself. You are responsible for the content you publish, and you cannot blame the model any more than you can blame the knife, gun, lighter, or car for what you do with it.

Like WizardLM/WizardLM-13B-V1.0, this model is trained with Vicuna-1.1 style prompts.

```

You are a helpful AI assistant.

USER: <prompt>

ASSISTANT:

```

<!-- original-model-card end -->

|

digiplay/CoharuMix_real | digiplay | 2024-06-01T20:13:41Z | 6,620 | 2 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2023-12-01T21:25:15Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info:

https://civitai.com/models/198114?modelVersionId=244531

Original Author's DEMO image :

|

Mozilla/llava-v1.5-7b-llamafile | Mozilla | 2024-07-01T19:27:26Z | 6,610 | 157 | null | [

"gguf",

"llamafile",

"GGUF",

"license:llama2",

"region:us"

] | null | 2023-11-20T05:47:34Z | ---

inference: false

tags:

- llamafile

- GGUF

license: llama2

---

<br>

<br>

# LLaVA Model Card

## Model details

**Model type:**

LLaVA is an open-source chatbot trained by fine-tuning LLaMA/Vicuna on GPT-generated multimodal instruction-following data.

It is an auto-regressive language model, based on the transformer architecture.

**Model date:**

LLaVA-v1.5-7B was trained in September 2023.

**Paper or resources for more information:**

https://llava-vl.github.io/

## License

Llama 2 is licensed under the LLAMA 2 Community License,

Copyright (c) Meta Platforms, Inc. All Rights Reserved.

**Where to send questions or comments about the model:**

https://github.com/haotian-liu/LLaVA/issues

## Intended use

**Primary intended uses:**

The primary use of LLaVA is research on large multimodal models and chatbots.

**Primary intended users:**

The primary intended users of the model are researchers and hobbyists in computer vision, natural language processing, machine learning, and artificial intelligence.

## Training dataset

- 558K filtered image-text pairs from LAION/CC/SBU, captioned by BLIP.

- 158K GPT-generated multimodal instruction-following data.

- 450K academic-task-oriented VQA data mixture.

- 40K ShareGPT data.

## Evaluation dataset

A collection of 12 benchmarks, including 5 academic VQA benchmarks and 7 recent benchmarks specifically proposed for instruction-following LMMs. |

dicta-il/dictalm2.0 | dicta-il | 2024-04-27T20:09:16Z | 6,610 | 8 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"pretrained",

"en",

"he",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-10T22:13:43Z | ---

license: apache-2.0

pipeline_tag: text-generation

language:

- en

- he

tags:

- pretrained

inference:

parameters:

temperature: 0.7

---

[<img src="https://i.ibb.co/5Lbwyr1/dicta-logo.jpg" width="300px"/>](https://dicta.org.il)

# Model Card for DictaLM-2.0

The DictaLM-2.0 Large Language Model (LLM) is a pretrained generative text model with 7 billion parameters trained to specialize in Hebrew text.

For full details of this model please read our [release blog post](https://dicta.org.il/dicta-lm).

This is the full-precision base model.

You can view and access the full collection of base/instruct unquantized/quantized versions of `DictaLM-2.0` [here](https://huggingface.co/collections/dicta-il/dicta-lm-20-collection-661bbda397df671e4a430c27).

## Example Code

```python

from transformers import pipeline

import torch

# This loads the model onto the GPU in bfloat16 precision

model = pipeline('text-generation', 'dicta-il/dictalm2.0', torch_dtype=torch.bfloat16, device_map='cuda')

# Sample few shot examples

prompt = """

עבר: הלכתי

עתיד: אלך

עבר: שמרתי

עתיד: אשמור

עבר: שמעתי

עתיד: אשמע

עבר: הבנתי

עתיד:

"""

print(model(prompt.strip(), do_sample=False, max_new_tokens=8, stop_sequence='\n'))

# [{'generated_text': 'עבר: הלכתי\nעתיד: אלך\n\nעבר: שמרתי\nעתיד: אשמור\n\nעבר: שמעתי\nעתיד: אשמע\n\nעבר: הבנתי\nעתיד: אבין\n\n'}]

```

## Example Code - 4-Bit

There are already pre-quantized 4-bit models using the `GPTQ` and `AWQ` methods available for use: [DictaLM-2.0-AWQ](https://huggingface.co/dicta-il/dictalm2.0-AWQ) and [DictaLM-2.0-GPTQ](https://huggingface.co/dicta-il/dictalm2.0-GPTQ).

For dynamic quantization on the go, here is sample code which loads the model onto the GPU using the `bitsandbytes` package, requiring :

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

model = AutoModelForCausalLM.from_pretrained('dicta-il/dictalm2.0', torch_dtype=torch.bfloat16, device_map='cuda', load_in_4bit=True)

tokenizer = AutoTokenizer.from_pretrained('dicta-il/dictalm2.0')

prompt = """

עבר: הלכתי

עתיד: אלך

עבר: שמרתי

עתיד: אשמור

עבר: שמעתי

עתיד: אשמע

עבר: הבנתי

עתיד:

"""

encoded = tokenizer(prompt.strip(), return_tensors='pt').to(model.device)

print(tokenizer.batch_decode(model.generate(**encoded, do_sample=False, max_new_tokens=4)))

# ['<s> עבר: הלכתי\nעתיד: אלך\n\nעבר: שמרתי\nעתיד: אשמור\n\nעבר: שמעתי\nעתיד: אשמע\n\nעבר: הבנתי\nעתיד: אבין\n\n']

```

## Model Architecture

DictaLM-2.0 is based on the [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) model with the following changes:

- An extended tokenizer with 1,000 injected tokens specifically for Hebrew, increasing the compression rate from 5.78 tokens/word to 2.76 tokens/word.

- Continued pretraining on over 190B tokens of naturally occuring text, 50% Hebrew and 50% English.

## Notice

DictaLM 2.0 is a pretrained base model and therefore does not have any moderation mechanisms.

## Citation

If you use this model, please cite:

```bibtex

[Will be added soon]

``` |

digiplay/fCAnimeMix_v3 | digiplay | 2024-04-05T22:13:55Z | 6,608 | 3 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2024-04-05T00:37:48Z | ---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info:

https://civitai.com/models/64548/fcanimemix-fc-anime

Sample prompt and DEMO image generated by Huggingface's API:

1girl Overalls,anime,sunny day,3 rabbits run with her,sfw,

|

sonoisa/t5-base-japanese | sonoisa | 2022-07-31T08:20:41Z | 6,603 | 42 | transformers | [

"transformers",

"pytorch",

"jax",

"t5",

"feature-extraction",

"text2text-generation",

"seq2seq",

"ja",

"dataset:wikipedia",

"dataset:oscar",

"dataset:cc100",

"license:cc-by-sa-4.0",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text2text-generation | 2022-03-02T23:29:05Z | ---

language: ja

tags:

- t5

- text2text-generation

- seq2seq

license: cc-by-sa-4.0

datasets:

- wikipedia

- oscar

- cc100

---

# 日本語T5事前学習済みモデル

This is a T5 (Text-to-Text Transfer Transformer) model pretrained on Japanese corpus.

次の日本語コーパス(約100GB)を用いて事前学習を行ったT5 (Text-to-Text Transfer Transformer) モデルです。

* [Wikipedia](https://ja.wikipedia.org)の日本語ダンプデータ (2020年7月6日時点のもの)

* [OSCAR](https://oscar-corpus.com)の日本語コーパス

* [CC-100](http://data.statmt.org/cc-100/)の日本語コーパス

このモデルは事前学習のみを行なったものであり、特定のタスクに利用するにはファインチューニングする必要があります。

本モデルにも、大規模コーパスを用いた言語モデルにつきまとう、学習データの内容の偏りに由来する偏った(倫理的ではなかったり、有害だったり、バイアスがあったりする)出力結果になる問題が潜在的にあります。

この問題が発生しうることを想定した上で、被害が発生しない用途にのみ利用するよう気をつけてください。

SentencePieceトークナイザーの学習には上記Wikipediaの全データを用いました。

# 転移学習のサンプルコード

https://github.com/sonoisa/t5-japanese

# ベンチマーク

## livedoorニュース分類タスク

livedoorニュースコーパスを用いたニュース記事のジャンル予測タスクの精度は次の通りです。

Google製多言語T5モデルに比べて、モデルサイズが25%小さく、6ptほど精度が高いです。

日本語T5 ([t5-base-japanese](https://huggingface.co/sonoisa/t5-base-japanese), パラメータ数は222M, [再現用コード](https://github.com/sonoisa/t5-japanese/blob/main/t5_japanese_classification.ipynb))

| label | precision | recall | f1-score | support |

| ----------- | ----------- | ------- | -------- | ------- |

| 0 | 0.96 | 0.94 | 0.95 | 130 |

| 1 | 0.98 | 0.99 | 0.99 | 121 |

| 2 | 0.96 | 0.96 | 0.96 | 123 |

| 3 | 0.86 | 0.91 | 0.89 | 82 |

| 4 | 0.96 | 0.97 | 0.97 | 129 |

| 5 | 0.96 | 0.96 | 0.96 | 141 |

| 6 | 0.98 | 0.98 | 0.98 | 127 |

| 7 | 1.00 | 0.99 | 1.00 | 127 |

| 8 | 0.99 | 0.97 | 0.98 | 120 |

| accuracy | | | 0.97 | 1100 |

| macro avg | 0.96 | 0.96 | 0.96 | 1100 |

| weighted avg | 0.97 | 0.97 | 0.97 | 1100 |

比較対象: 多言語T5 ([google/mt5-small](https://huggingface.co/google/mt5-small), パラメータ数は300M)

| label | precision | recall | f1-score | support |

| ----------- | ----------- | ------- | -------- | ------- |

| 0 | 0.91 | 0.88 | 0.90 | 130 |

| 1 | 0.84 | 0.93 | 0.89 | 121 |

| 2 | 0.93 | 0.80 | 0.86 | 123 |

| 3 | 0.82 | 0.74 | 0.78 | 82 |

| 4 | 0.90 | 0.95 | 0.92 | 129 |

| 5 | 0.89 | 0.89 | 0.89 | 141 |

| 6 | 0.97 | 0.98 | 0.97 | 127 |

| 7 | 0.95 | 0.98 | 0.97 | 127 |

| 8 | 0.93 | 0.95 | 0.94 | 120 |

| accuracy | | | 0.91 | 1100 |

| macro avg | 0.91 | 0.90 | 0.90 | 1100 |

| weighted avg | 0.91 | 0.91 | 0.91 | 1100 |

## JGLUEベンチマーク

[JGLUE](https://github.com/yahoojapan/JGLUE)ベンチマークの結果は次のとおりです(順次追加)。

- MARC-ja: 準備中

- JSTS: 準備中

- JNLI: 準備中

- JSQuAD: EM=0.900, F1=0.945, [再現用コード](https://github.com/sonoisa/t5-japanese/blob/main/t5_JSQuAD.ipynb)

- JCommonsenseQA: 準備中

# 免責事項

本モデルの作者は本モデルを作成するにあたって、その内容、機能等について細心の注意を払っておりますが、モデルの出力が正確であるかどうか、安全なものであるか等について保証をするものではなく、何らの責任を負うものではありません。本モデルの利用により、万一、利用者に何らかの不都合や損害が発生したとしても、モデルやデータセットの作者や作者の所属組織は何らの責任を負うものではありません。利用者には本モデルやデータセットの作者や所属組織が責任を負わないことを明確にする義務があります。

# ライセンス

[CC-BY SA 4.0](https://creativecommons.org/licenses/by-sa/4.0/deed.ja)

[Common Crawlの利用規約](http://commoncrawl.org/terms-of-use/)も守るようご注意ください。

|

euclaise/falcon_1b_stage2 | euclaise | 2023-09-25T06:18:39Z | 6,601 | 3 | transformers | [

"transformers",

"pytorch",

"falcon",

"text-generation",

"generated_from_trainer",

"custom_code",

"base_model:euclaise/falcon_1b_stage1",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-17T22:37:09Z | ---

license: apache-2.0

base_model: euclaise/falcon_1b_stage1

tags:

- generated_from_trainer

model-index:

- name: falcon_1b_stage2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# falcon_1b_stage2

This model is a fine-tuned version of [euclaise/falcon_1b_stage1](https://huggingface.co/euclaise/falcon_1b_stage1) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-06

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8.0

- total_train_batch_size: 128.0

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.13.3

|

avsolatorio/NoInstruct-small-Embedding-v0 | avsolatorio | 2024-05-04T02:11:03Z | 6,596 | 2 | sentence-transformers | [

"sentence-transformers",

"safetensors",

"bert",

"feature-extraction",

"mteb",

"sentence-similarity",

"transformers",

"en",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2024-05-01T16:21:05Z | ---

language:

- en

library_name: sentence-transformers

license: mit

pipeline_tag: sentence-similarity

tags:

- feature-extraction

- mteb

- sentence-similarity

- sentence-transformers

- transformers

model-index:

- name: NoInstruct-small-Embedding-v0

results:

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en)

config: en

split: test

revision: e8379541af4e31359cca9fbcf4b00f2671dba205

metrics:

- type: accuracy

value: 75.76119402985074

- type: ap

value: 39.03628777559392

- type: f1

value: 69.85860402259618

- task:

type: Classification

dataset:

type: mteb/amazon_polarity

name: MTEB AmazonPolarityClassification

config: default

split: test

revision: e2d317d38cd51312af73b3d32a06d1a08b442046

metrics:

- type: accuracy

value: 93.29920000000001

- type: ap

value: 90.03479490717608

- type: f1

value: 93.28554395248467

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (en)

config: en

split: test

revision: 1399c76144fd37290681b995c656ef9b2e06e26d

metrics:

- type: accuracy

value: 49.98799999999999

- type: f1

value: 49.46151232451642

- task:

type: Retrieval

dataset:

type: mteb/arguana

name: MTEB ArguAna

config: default

split: test

revision: c22ab2a51041ffd869aaddef7af8d8215647e41a

metrics:

- type: map_at_1

value: 31.935000000000002

- type: map_at_10

value: 48.791000000000004

- type: map_at_100

value: 49.619

- type: map_at_1000

value: 49.623

- type: map_at_3

value: 44.334

- type: map_at_5

value: 46.908

- type: mrr_at_1

value: 32.93

- type: mrr_at_10

value: 49.158

- type: mrr_at_100

value: 50.00599999999999

- type: mrr_at_1000

value: 50.01

- type: mrr_at_3

value: 44.618

- type: mrr_at_5

value: 47.325

- type: ndcg_at_1

value: 31.935000000000002

- type: ndcg_at_10

value: 57.593

- type: ndcg_at_100

value: 60.841

- type: ndcg_at_1000

value: 60.924

- type: ndcg_at_3

value: 48.416

- type: ndcg_at_5

value: 53.05

- type: precision_at_1

value: 31.935000000000002

- type: precision_at_10

value: 8.549

- type: precision_at_100

value: 0.9900000000000001

- type: precision_at_1000

value: 0.1

- type: precision_at_3

value: 20.081

- type: precision_at_5

value: 14.296000000000001

- type: recall_at_1

value: 31.935000000000002

- type: recall_at_10

value: 85.491

- type: recall_at_100

value: 99.004

- type: recall_at_1000

value: 99.644

- type: recall_at_3

value: 60.242

- type: recall_at_5

value: 71.479

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-p2p

name: MTEB ArxivClusteringP2P

config: default

split: test

revision: a122ad7f3f0291bf49cc6f4d32aa80929df69d5d

metrics:

- type: v_measure

value: 47.78438534940855

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-s2s

name: MTEB ArxivClusteringS2S

config: default

split: test

revision: f910caf1a6075f7329cdf8c1a6135696f37dbd53

metrics:

- type: v_measure

value: 40.12916178519471

- task:

type: Reranking

dataset:

type: mteb/askubuntudupquestions-reranking

name: MTEB AskUbuntuDupQuestions

config: default

split: test

revision: 2000358ca161889fa9c082cb41daa8dcfb161a54

metrics:

- type: map

value: 62.125361608299855

- type: mrr

value: 74.92525172580574

- task:

type: STS

dataset:

type: mteb/biosses-sts

name: MTEB BIOSSES

config: default

split: test

revision: d3fb88f8f02e40887cd149695127462bbcf29b4a

metrics:

- type: cos_sim_pearson

value: 88.64322910336641

- type: cos_sim_spearman

value: 87.20138453306345

- type: euclidean_pearson

value: 87.08547818178234

- type: euclidean_spearman

value: 87.17066094143931

- type: manhattan_pearson

value: 87.30053110771618

- type: manhattan_spearman

value: 86.86824441211934

- task:

type: Classification

dataset:

type: mteb/banking77

name: MTEB Banking77Classification

config: default

split: test

revision: 0fd18e25b25c072e09e0d92ab615fda904d66300

metrics:

- type: accuracy

value: 86.3961038961039

- type: f1

value: 86.3669961645295

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-p2p

name: MTEB BiorxivClusteringP2P

config: default

split: test

revision: 65b79d1d13f80053f67aca9498d9402c2d9f1f40

metrics:

- type: v_measure

value: 39.40291404289857

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-s2s

name: MTEB BiorxivClusteringS2S

config: default

split: test

revision: 258694dd0231531bc1fd9de6ceb52a0853c6d908

metrics:

- type: v_measure

value: 35.102356817746816

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-android

name: MTEB CQADupstackAndroidRetrieval

config: default

split: test

revision: f46a197baaae43b4f621051089b82a364682dfeb

metrics:

- type: map_at_1

value: 31.013

- type: map_at_10

value: 42.681999999999995

- type: map_at_100

value: 44.24

- type: map_at_1000

value: 44.372

- type: map_at_3

value: 39.181

- type: map_at_5

value: 41.071999999999996

- type: mrr_at_1

value: 38.196999999999996

- type: mrr_at_10

value: 48.604

- type: mrr_at_100

value: 49.315

- type: mrr_at_1000

value: 49.363

- type: mrr_at_3

value: 45.756

- type: mrr_at_5

value: 47.43

- type: ndcg_at_1

value: 38.196999999999996

- type: ndcg_at_10

value: 49.344

- type: ndcg_at_100

value: 54.662

- type: ndcg_at_1000

value: 56.665

- type: ndcg_at_3

value: 44.146

- type: ndcg_at_5

value: 46.514

- type: precision_at_1

value: 38.196999999999996

- type: precision_at_10

value: 9.571

- type: precision_at_100

value: 1.542

- type: precision_at_1000

value: 0.202

- type: precision_at_3

value: 21.364

- type: precision_at_5

value: 15.336

- type: recall_at_1

value: 31.013

- type: recall_at_10

value: 61.934999999999995

- type: recall_at_100

value: 83.923

- type: recall_at_1000

value: 96.601

- type: recall_at_3

value: 46.86

- type: recall_at_5

value: 53.620000000000005

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-english

name: MTEB CQADupstackEnglishRetrieval

config: default

split: test

revision: ad9991cb51e31e31e430383c75ffb2885547b5f0

metrics:

- type: map_at_1

value: 29.84

- type: map_at_10

value: 39.335

- type: map_at_100

value: 40.647

- type: map_at_1000

value: 40.778

- type: map_at_3

value: 36.556

- type: map_at_5

value: 38.048

- type: mrr_at_1

value: 36.815

- type: mrr_at_10

value: 45.175

- type: mrr_at_100

value: 45.907

- type: mrr_at_1000

value: 45.946999999999996

- type: mrr_at_3

value: 42.909000000000006

- type: mrr_at_5

value: 44.227

- type: ndcg_at_1

value: 36.815

- type: ndcg_at_10

value: 44.783

- type: ndcg_at_100

value: 49.551

- type: ndcg_at_1000

value: 51.612

- type: ndcg_at_3

value: 40.697

- type: ndcg_at_5

value: 42.558

- type: precision_at_1

value: 36.815

- type: precision_at_10

value: 8.363

- type: precision_at_100

value: 1.385

- type: precision_at_1000

value: 0.186

- type: precision_at_3

value: 19.342000000000002

- type: precision_at_5

value: 13.706999999999999

- type: recall_at_1

value: 29.84

- type: recall_at_10

value: 54.164

- type: recall_at_100

value: 74.36

- type: recall_at_1000

value: 87.484

- type: recall_at_3

value: 42.306

- type: recall_at_5

value: 47.371

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-gaming

name: MTEB CQADupstackGamingRetrieval

config: default

split: test

revision: 4885aa143210c98657558c04aaf3dc47cfb54340

metrics:

- type: map_at_1

value: 39.231

- type: map_at_10

value: 51.44800000000001

- type: map_at_100

value: 52.574

- type: map_at_1000

value: 52.629999999999995

- type: map_at_3

value: 48.077

- type: map_at_5

value: 50.019000000000005

- type: mrr_at_1

value: 44.89

- type: mrr_at_10

value: 54.803000000000004

- type: mrr_at_100

value: 55.556000000000004

- type: mrr_at_1000

value: 55.584

- type: mrr_at_3

value: 52.32

- type: mrr_at_5

value: 53.846000000000004

- type: ndcg_at_1

value: 44.89

- type: ndcg_at_10

value: 57.228

- type: ndcg_at_100

value: 61.57

- type: ndcg_at_1000

value: 62.613

- type: ndcg_at_3

value: 51.727000000000004

- type: ndcg_at_5

value: 54.496

- type: precision_at_1

value: 44.89

- type: precision_at_10

value: 9.266

- type: precision_at_100

value: 1.2309999999999999

- type: precision_at_1000

value: 0.136

- type: precision_at_3

value: 23.051

- type: precision_at_5

value: 15.987000000000002

- type: recall_at_1

value: 39.231

- type: recall_at_10

value: 70.82000000000001

- type: recall_at_100

value: 89.446

- type: recall_at_1000

value: 96.665

- type: recall_at_3

value: 56.40500000000001

- type: recall_at_5

value: 62.993

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-gis

name: MTEB CQADupstackGisRetrieval

config: default

split: test

revision: 5003b3064772da1887988e05400cf3806fe491f2

metrics:

- type: map_at_1

value: 25.296000000000003

- type: map_at_10

value: 34.021

- type: map_at_100

value: 35.158

- type: map_at_1000

value: 35.233

- type: map_at_3

value: 31.424999999999997

- type: map_at_5

value: 33.046

- type: mrr_at_1

value: 27.232

- type: mrr_at_10

value: 36.103

- type: mrr_at_100

value: 37.076

- type: mrr_at_1000

value: 37.135

- type: mrr_at_3

value: 33.635

- type: mrr_at_5

value: 35.211

- type: ndcg_at_1

value: 27.232

- type: ndcg_at_10

value: 38.878

- type: ndcg_at_100

value: 44.284

- type: ndcg_at_1000

value: 46.268

- type: ndcg_at_3

value: 33.94

- type: ndcg_at_5

value: 36.687

- type: precision_at_1

value: 27.232

- type: precision_at_10

value: 5.921

- type: precision_at_100

value: 0.907

- type: precision_at_1000

value: 0.11199999999999999

- type: precision_at_3

value: 14.426

- type: precision_at_5

value: 10.215

- type: recall_at_1

value: 25.296000000000003

- type: recall_at_10

value: 51.708

- type: recall_at_100

value: 76.36699999999999

- type: recall_at_1000

value: 91.306

- type: recall_at_3

value: 38.651

- type: recall_at_5

value: 45.201

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-mathematica

name: MTEB CQADupstackMathematicaRetrieval

config: default

split: test

revision: 90fceea13679c63fe563ded68f3b6f06e50061de

metrics:

- type: map_at_1

value: 16.24

- type: map_at_10

value: 24.696

- type: map_at_100

value: 25.945

- type: map_at_1000

value: 26.069

- type: map_at_3

value: 22.542

- type: map_at_5

value: 23.526

- type: mrr_at_1

value: 20.149

- type: mrr_at_10

value: 29.584

- type: mrr_at_100

value: 30.548

- type: mrr_at_1000

value: 30.618000000000002

- type: mrr_at_3

value: 27.301

- type: mrr_at_5

value: 28.563

- type: ndcg_at_1

value: 20.149

- type: ndcg_at_10

value: 30.029

- type: ndcg_at_100

value: 35.812

- type: ndcg_at_1000

value: 38.755

- type: ndcg_at_3

value: 26.008

- type: ndcg_at_5

value: 27.517000000000003

- type: precision_at_1

value: 20.149

- type: precision_at_10

value: 5.647

- type: precision_at_100

value: 0.968

- type: precision_at_1000

value: 0.136

- type: precision_at_3

value: 12.934999999999999

- type: precision_at_5

value: 8.955

- type: recall_at_1

value: 16.24

- type: recall_at_10

value: 41.464

- type: recall_at_100

value: 66.781

- type: recall_at_1000

value: 87.85300000000001

- type: recall_at_3

value: 29.822

- type: recall_at_5

value: 34.096

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-physics

name: MTEB CQADupstackPhysicsRetrieval

config: default

split: test

revision: 79531abbd1fb92d06c6d6315a0cbbbf5bb247ea4

metrics:

- type: map_at_1

value: 29.044999999999998

- type: map_at_10

value: 39.568999999999996

- type: map_at_100

value: 40.831

- type: map_at_1000

value: 40.948

- type: map_at_3

value: 36.495

- type: map_at_5

value: 38.21

- type: mrr_at_1

value: 35.611

- type: mrr_at_10

value: 45.175

- type: mrr_at_100

value: 45.974

- type: mrr_at_1000

value: 46.025

- type: mrr_at_3

value: 42.765

- type: mrr_at_5

value: 44.151

- type: ndcg_at_1

value: 35.611

- type: ndcg_at_10

value: 45.556999999999995

- type: ndcg_at_100

value: 50.86000000000001

- type: ndcg_at_1000

value: 52.983000000000004

- type: ndcg_at_3

value: 40.881

- type: ndcg_at_5

value: 43.035000000000004

- type: precision_at_1

value: 35.611

- type: precision_at_10

value: 8.306

- type: precision_at_100

value: 1.276

- type: precision_at_1000

value: 0.165

- type: precision_at_3

value: 19.57

- type: precision_at_5

value: 13.725000000000001

- type: recall_at_1

value: 29.044999999999998

- type: recall_at_10

value: 57.513999999999996

- type: recall_at_100

value: 80.152

- type: recall_at_1000

value: 93.982

- type: recall_at_3

value: 44.121

- type: recall_at_5

value: 50.007000000000005

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack-programmers

name: MTEB CQADupstackProgrammersRetrieval

config: default

split: test

revision: 6184bc1440d2dbc7612be22b50686b8826d22b32

metrics:

- type: map_at_1

value: 22.349

- type: map_at_10

value: 33.434000000000005

- type: map_at_100

value: 34.8

- type: map_at_1000

value: 34.919

- type: map_at_3

value: 30.348000000000003

- type: map_at_5

value: 31.917

- type: mrr_at_1

value: 28.195999999999998

- type: mrr_at_10

value: 38.557

- type: mrr_at_100

value: 39.550999999999995

- type: mrr_at_1000

value: 39.607

- type: mrr_at_3

value: 36.035000000000004

- type: mrr_at_5

value: 37.364999999999995

- type: ndcg_at_1

value: 28.195999999999998

- type: ndcg_at_10

value: 39.656000000000006

- type: ndcg_at_100

value: 45.507999999999996

- type: ndcg_at_1000

value: 47.848

- type: ndcg_at_3

value: 34.609

- type: ndcg_at_5

value: 36.65

- type: precision_at_1

value: 28.195999999999998

- type: precision_at_10

value: 7.534000000000001

- type: precision_at_100

value: 1.217

- type: precision_at_1000

value: 0.158

- type: precision_at_3

value: 17.085

- type: precision_at_5

value: 12.169

- type: recall_at_1

value: 22.349

- type: recall_at_10

value: 53.127

- type: recall_at_100

value: 77.884

- type: recall_at_1000

value: 93.705

- type: recall_at_3

value: 38.611000000000004

- type: recall_at_5

value: 44.182

- task:

type: Retrieval

dataset:

type: mteb/cqadupstack

name: MTEB CQADupstackRetrieval

config: default

split: test

revision: 4ffe81d471b1924886b33c7567bfb200e9eec5c4

metrics:

- type: map_at_1

value: 25.215749999999996

- type: map_at_10

value: 34.332750000000004

- type: map_at_100

value: 35.58683333333333

- type: map_at_1000

value: 35.70458333333333

- type: map_at_3

value: 31.55441666666667

- type: map_at_5

value: 33.100833333333334

- type: mrr_at_1

value: 29.697250000000004

- type: mrr_at_10

value: 38.372249999999994

- type: mrr_at_100

value: 39.26708333333334

- type: mrr_at_1000

value: 39.3265

- type: mrr_at_3

value: 35.946083333333334

- type: mrr_at_5

value: 37.336999999999996

- type: ndcg_at_1

value: 29.697250000000004

- type: ndcg_at_10

value: 39.64575

- type: ndcg_at_100

value: 44.996833333333335

- type: ndcg_at_1000

value: 47.314499999999995

- type: ndcg_at_3

value: 34.93383333333334

- type: ndcg_at_5

value: 37.15291666666667

- type: precision_at_1

value: 29.697250000000004

- type: precision_at_10

value: 6.98825

- type: precision_at_100

value: 1.138

- type: precision_at_1000

value: 0.15283333333333332

- type: precision_at_3

value: 16.115583333333333

- type: precision_at_5

value: 11.460916666666666

- type: recall_at_1

value: 25.215749999999996

- type: recall_at_10