| index | repo_id | file_path | content | __index_level_0__ |
|---|---|---|---|---|

0 | hf_public_repos | hf_public_repos/blog/infini-attention.md | ---

title: "A failed experiment: Infini-Attention, and why we should keep trying?"

thumbnail: ./assets/185_infini_attention/infini_attention_thumbnail.png

authors:

- user: neuralink

- user: lvwerra

- user: thomwolf

---

# A failed experiment: Infini-Attention, and why we should keep trying?

TLDR: Infini-attention's performance gets worse as we increase the number of times we compress the memory, and to the best of our knowledge, [ring attention](https://x.com/Haojun_Zhao14/status/1815419356408336738), [YaRN](https://arxiv.org/abs/2309.00071) and [rope scaling](https://arxiv.org/abs/2309.16039) are still the best ways for extending a pretrained model to longer context length.

## Section 0: Introduction

The context length of language models is one of the central attributes besides the model’s performance. Since the emergence of in-context learning, adding relevant information to the model’s input has become increasingly important. Thus, the context length rapidly increased from paragraphs (512 tokens with BERT/GPT-1) to pages (1024/2048 with GPT-2 and GPT-3 respectively) to books (128k of Claude) all the way to collections of books (1-10M tokens of Gemini). However, extending standard attention to such length remains challenging.

> A small intro to Ring Attention: Ring Attention was first introduced by researchers from UC Berkeley in 2024 [[link]](https://arxiv.org/abs/2310.01889) (to the best of our knowledge). This engineering technique helps overcome memory limitations by performing self-attention and feedforward network computations in a blockwise fashion and distributing sequence dimensions across multiple devices, allowing concurrent computation and communication.

Even with Ring Attention, training a [Llama 3 8B](https://arxiv.org/abs/2407.21783) on a 1-million-token context length with a batch size of 1 requires 512 GPUs. As scaling laws have [shown](https://arxiv.org/abs/2001.08361), there is a strong correlation between model size and downstream performance, which means the bigger the model, the better (provided, of course, that both models are well trained). So we not only want a 1M context length, we want a 1M context length on the biggest model (e.g., Llama 3 405B). And only a few companies have the resources to do so.

> Recap on the memory complexity of self-attention

> In standard attention (not Flash Attention), every token attends to every other token in the sequence, resulting in an attention matrix of size [seq_len, seq_len]. For each pair of tokens, we compute an attention score, and as the sequence length (seq_len) increases, the memory and computation requirements grow quadratically: memory for the attention matrix is O(seq_len^2). For instance, a 10x increase in sequence length results in a 100x increase in memory requirements. Even with memory-efficient attention methods like Flash Attention, memory usage still grows linearly with context length and is bottlenecked by single-GPU memory, leading to a typical maximum context far lower than 1M tokens on today's GPUs.
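
To make the quadratic growth concrete, here is a small back-of-the-envelope sketch. The head count and the 2-byte element size (bf16/fp16) are illustrative assumptions rather than measurements from our runs, and the function name is hypothetical.

```python
def attention_matrix_bytes(seq_len, n_heads=32, bytes_per_element=2):
    """Bytes needed to materialize the full [seq_len, seq_len] score matrix
    for every head of a single layer, with a batch size of 1.

    n_heads and bytes_per_element are assumed values for illustration.
    """
    return seq_len * seq_len * n_heads * bytes_per_element


if __name__ == "__main__":
    for seq_len in (8_192, 81_920, 1_000_000):
        gib = attention_matrix_bytes(seq_len) / 2**30
        print(f"seq_len={seq_len:>9,}: {gib:,.0f} GiB per layer")
```

Multiplying the sequence length by 10 multiplies the result by 100, which is the quadratic behaviour described above.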

Motivated by this, we explore an alternative approach to standard attention: Infini-attention. The paper was released by researchers from Google in April 2024 [[link]](https://arxiv.org/abs/2404.07143). Instead of computing attention scores between every pair of words, Infini-attention divides the sequence into segments, compresses earlier segments into a fixed buffer, and allows the next segment to retrieve memory from the earlier segments while limiting attention scores to words within the current segment. A key advantage is that its fixed buffer size puts an upper bound on the total memory usage. It also uses the same query within a segment to access information from both its own segment and the compressed memory, which enables us to cheaply extend the context length of a pretrained model. In theory, we can achieve infinite context length, as it only keeps a single buffer for all the memory of earlier segments. In reality, however, compression limits the amount of information that can effectively be stored, and the question is thus: how usable is memory compressed in this way?

While understanding a new method on paper is relatively easy, actually making it work is often a whole other story, and one that is very rarely shared publicly. Motivated by this, we decided to share our experiments and chronicles in reproducing the Infini-attention paper, what motivated us throughout the debugging process (we spent 90% of our time debugging a convergence issue), and how hard it can be to make these things work.

With the release of Llama 3 8B (which has a context length limit of 8k tokens), we sought to extend this length to 1 million tokens without quadratically increasing the memory. In this blog post, we will start by explaining how Infini-attention works. We’ll then outline our reproduction principles and describe our initial small-scale experiment. We discuss the challenges we faced, how we addressed them, and conclude with a summary of our findings and other ideas we explored. If you’re interested in testing our trained checkpoint [[link]](https://huggingface.co/nanotron/llama3-8b-infini-attention), you can find it in the following repo [[link]](https://github.com/huggingface/nanotron/tree/xrsrke/infini_attention_this_actually_works) (note that we currently provide the code as is).

## Section 1: Reproduction Principles

We found the following rules helpful when implementing a new method and use them as guiding principles for a lot of our work:

+ **Principle 1.** Start with the smallest model size that provides good signals, and scale up the experiments once you get good signals.

+ **Principle 2.** Always train a solid baseline to measure progress.

+ **Principle 3.** To determine if a modification improves performance, train two models identically except for the modification being tested.

With these principles in mind, let's dive into how Infini-attention actually works. Understanding the mechanics will be crucial as we move forward with our experiments.

## Section 2: How does Infini-attention work

- Step 1: Split the input sequence into smaller, fixed-size chunks called "segments".

- Step 2: Calculate the standard causal dot-product attention within each segment.

- Step 3: Pull relevant information from the compressive memory using the current segment’s query vector. The retrieval process is defined mathematically as follows:

\\( A_{\text {mem }}=\frac{\sigma(Q) M_{s-1}}{\sigma(Q) z_{s-1}} \\)

+ \\( A_{\text {mem }} \in \mathbb{R}^{N \times d_{\text {value }}} \\) : The retrieved content from memory, representing the long-term context.

+ \\( Q \in \mathbb{R}^{N \times d_{\text {key }}} \\) : The query matrix, where \\( N \\) is the number of queries, and \\( d_{\text {key }} \\) is the dimension of each query.

+ \\( M_{s-1} \in \mathbb{R}^{d_{\text {key }} \times d_{\text {value }}} \\) : The memory matrix from the previous segment, storing key-value pairs.

+ \\( \sigma \\): A nonlinear activation function, specifically element-wise Exponential Linear Unit (ELU) plus 1.

+ \\( z_{s-1} \in \mathbb{R}^{d_{\text {key }}} \\) : A normalization term.

```python

import torch.nn.functional as F
# einops.einsum takes the tensors first and the named-axis pattern last
from einops import einsum, rearrange


def _retrieve_from_memory(query_states, prev_memory, prev_normalization):
    ...
    # sigma(Q): element-wise ELU + 1, which keeps every entry positive
    sigma_query_states = F.elu(query_states) + 1

    # Numerator: sigma(Q) @ M_{s-1}
    retrieved_memory = einsum(
        sigma_query_states,
        prev_memory,
        "batch_size n_heads seq_len d_k, batch_size n_heads d_k d_v -> batch_size n_heads seq_len d_v",
    )

    # Denominator: sigma(Q) @ z_{s-1}
    denominator = einsum(
        sigma_query_states,
        prev_normalization,
        "batch_size n_heads seq_len d_head, batch_size n_heads d_head -> batch_size n_heads seq_len",
    )
    # Add a trailing axis so the division broadcasts over d_v
    denominator = rearrange(
        denominator,
        "batch_size n_heads seq_len -> batch_size n_heads seq_len 1",
    )

    # NOTE: because normalization is the sum of all the keys, so each word should have the same normalization
    retrieved_memory = retrieved_memory / denominator
    return retrieved_memory

```

- Step 4: Combine the local context (from the current segment) with the long-term context (retrieved from the compressive memory) to generate the final output. This way, both short-term and long-term contexts can be considered in the attention output.

\\( A=\text{sigmoid}(\beta) \odot A_{\text {mem }}+(1-\text{sigmoid}(\beta)) \odot A_{\text {dot }} \\)

+ \\( A \in \mathbb{R}^{N \times d_{\text {value }}} \\) : The combined attention output.

+ \\( \text{sigmoid}(\beta) \\) : A learnable scalar parameter that controls the trade-off between the long-term memory content \\( A_{\text {mem }} \\) and the local context.

+ \\( A_{\text {dot }} \in \mathbb{R}^{N \times d_{\text {value }}} \\) : The attention output from the current segment using dot-product attention.

- Step 5: Update the compressive memory by adding the key-value states from the current segment, which allows us to accumulate the context over time.

\\( M_s \leftarrow M_{s-1}+\sigma(K)^T V \\)

\\( z_s \leftarrow z_{s-1}+\sum_{t=1}^N \sigma\left(K_t\right) \\)

+ \\( M_s \in \mathbb{R}^{d_{\text {key }} \times d_{\text {value }}} \\) : The updated memory matrix for the current segment, incorporating new information.

+ \\( K \in \mathbb{R}^{N \times d_{\text {key }}} \\) : The key matrix for the current segment, representing the new keys to be stored.

+ \\( V \in \mathbb{R}^{N \times d_{\text {value }}} \\) : The value matrix for the current segment, representing the new values associated with the keys.

+ \\( K_t \\) : The \\( t \\)-th key vector in the key matrix.

+ \\( z_s \\) : The updated normalization term for the current segment.

```python

import torch
import torch.nn.functional as F
from einops import einsum, rearrange, reduce


def _update_memory(prev_memory, prev_normalization, key_states, value_states):
    ...
    # sigma(K): element-wise ELU + 1
    sigma_key_states = F.elu(key_states) + 1

    if prev_memory is None or prev_normalization is None:
        # First segment: nothing is stored yet, so the values are written as-is
        new_value_states = value_states
    else:
        # Delta-rule variant: first read what the memory already returns for these keys ...
        numerator = einsum(
            sigma_key_states,
            prev_memory,
            "batch_size n_heads seq_len d_k, batch_size n_heads d_k d_v -> batch_size n_heads seq_len d_v",
        )
        denominator = einsum(
            sigma_key_states,
            prev_normalization,
            "batch_size n_heads seq_len d_k, batch_size n_heads d_k -> batch_size n_heads seq_len",
        )
        denominator = rearrange(
            denominator,
            "batch_size n_heads seq_len -> batch_size n_heads seq_len 1",
        )
        prev_v = numerator / denominator
        # ... then store only the part of the values that is not already in memory
        new_value_states = value_states - prev_v

    # sigma(K)^T V for the current segment
    memory = torch.matmul(sigma_key_states.transpose(-2, -1), new_value_states)

    # sum_t sigma(K_t)
    normalization = reduce(
        sigma_key_states,
        "batch_size n_heads seq_len d_head -> batch_size n_heads d_head",
        reduction="sum",
        # ... (remaining arguments elided in the original)
    )

    # Accumulate on top of the previous segment's memory and normalization
    memory += prev_memory if prev_memory is not None else 0
    normalization += prev_normalization if prev_normalization is not None else 0
    return memory, normalization

```

- Step 6: As we move from one segment to the next, we discard the previous segment's attention states and pass along the updated compressed memory to the next segment.

```python

def forward(...):
    ...
    outputs = []
    # One gate per head: sigmoid(beta) weighs the memory, 1 - sigmoid(beta) the local attention
    global_weights = F.sigmoid(self.balance_factors)
    ...
    local_weights = 1 - global_weights
    memory = None
    normalization = None

    for segment_hidden_state, segment_sequence_mask in zip(segment_hidden_states, segment_sequence_masks):
        # Standard causal attention restricted to the current segment
        attn_outputs = self.forward_with_hidden_states(
            hidden_states=segment_hidden_state, sequence_mask=segment_sequence_mask, return_qkv_states=True
        )
        local_attn_outputs = attn_outputs["attention_output"]
        query_states, key_states, value_states = attn_outputs["qkv_states_without_pe"]
        q_bs = query_states.shape[0]
        q_length = query_states.shape[2]
        ...
        # Read from the memory built from all earlier segments
        retrieved_memory = _retrieve_from_memory(
            query_states, prev_memory=memory, prev_normalization=normalization
        )
        attention_output = global_weights * retrieved_memory + local_weights * local_attn_outputs
        ...
        output = o_proj(attention_output)
        # Write the current segment into the memory before moving on
        memory, normalization = _update_memory(memory, normalization, key_states, value_states)
        outputs.append(output)

    outputs = torch.cat(outputs, dim=1)  # concat along sequence dimension
    ...

```
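The six steps above can also be sketched end to end for a single head without any framework code. This is a minimal NumPy illustration written for this post, not the Nanotron implementation: it uses the plain additive update from Step 5 (no delta rule), a single scalar gate, and it assumes the first segment falls back to local attention only because the memory is still empty. All function names are hypothetical.

```python
import numpy as np


def sigma(x):
    # ELU(x) + 1, element-wise: x + 1 for x > 0, exp(x) otherwise (always positive)
    return np.where(x > 0, x + 1.0, np.exp(x))


def retrieve(q, memory, z):
    # Step 3: A_mem = sigma(Q) M / (sigma(Q) z); q is [N, d_k], memory [d_k, d_v], z [d_k]
    sq = sigma(q)
    return (sq @ memory) / (sq @ z)[:, None]


def update(k, v, memory, z):
    # Step 5: M_s = M_{s-1} + sigma(K)^T V and z_s = z_{s-1} + sum_t sigma(K_t)
    sk = sigma(k)
    return memory + sk.T @ v, z + sk.sum(axis=0)


def causal_attention(q, k, v):
    # Step 2: standard causal dot-product attention within one segment
    scores = q @ k.T / np.sqrt(q.shape[-1])
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def infini_attention(q, k, v, segment_len, beta=0.0):
    d_k, d_v = q.shape[-1], v.shape[-1]
    memory, z = np.zeros((d_k, d_v)), np.zeros(d_k)
    gate = 1.0 / (1.0 + np.exp(-beta))  # sigmoid(beta), the global weight
    outputs = []
    for start in range(0, q.shape[0], segment_len):  # Step 1: split into segments
        seg = slice(start, start + segment_len)
        local = causal_attention(q[seg], k[seg], v[seg])
        if start == 0:
            out = local  # assumption: empty memory, so only local attention is used
        else:
            # Step 4: gate between retrieved memory and local attention
            out = gate * retrieve(q[seg], memory, z) + (1.0 - gate) * local
        memory, z = update(k[seg], v[seg], memory, z)  # Steps 5 and 6
        outputs.append(out)
    return np.concatenate(outputs, axis=0), memory, z
```

A useful property to keep in mind: if a single key-value pair is written to an empty memory, querying with the same key returns the value exactly, because the scalar σ(q)·σ(k) cancels in the ratio. With many pairs stored, retrieval becomes a positively weighted average of all stored values, which is where the compression loss comes from.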

Now that we've got a handle on the theory, time to roll up our sleeves and get into some actual experiments. Let's start small for quick feedback and iterate rapidly.

## Section 3: First experiments on a small scale

Llama 3 8B is quite large, so we decided to start with a 200M Llama, pretraining Infini-attention from scratch using Nanotron [[link]](https://github.com/huggingface/nanotron) and the Fineweb dataset [[link]](https://huggingface.co/datasets/HuggingFaceFW/fineweb). Once we obtained good results with the 200M model, we proceeded with continual pretraining on Llama 3 8B. We used a batch size of 2 million tokens, a context length of 256, gradient clipping of 1, and weight decay of 0.1. The first 5,000 iterations were a linear warmup and the remaining steps followed a cosine decay, with a learning rate of 3e-5.

**Evaluating using the passkey retrieval task**

The passkey retrieval task was first introduced by researchers from EPFL [[link]](https://arxiv.org/abs/2305.16300). It's a task designed to evaluate a model's ability to retrieve information from long contexts where the location of the information is controllable. The input format for prompting a model is structured as follows:

```There is important info hidden inside a lot of irrelevant text. Find it and memorize them. I will quiz you about the important information there. The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again. (repeat x times) The pass key is 9054. Remember it. 9054 is the pass key. The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again. (repeat y times) What is the pass key? The pass key is```

We consider the model successful at this task if its output contains the "needle" ("9054" in the above case) and unsuccessful if it does not. In our experiments, we place the needle at various positions within the context, specifically at 0%, 5%, 10%, ..., 95%, and 100% of the total context length (with 0% being the furthest away from the generated tokens). For instance, if the context length is 1024 tokens, placing the needle at 10% means it is located around the 102nd token. At each depth position, we test the model with 10 different samples and calculate the mean success rate.
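The evaluation loop described above can be sketched as follows. This is a simplified illustration, not our evaluation harness: the needle depth is expressed in filler repetitions rather than tokens, `generate` stands for any hypothetical callable that maps a prompt string to the model's output string, and all function names are made up for this example.

```python
TASK = (
    "There is important info hidden inside a lot of irrelevant text. "
    "Find it and memorize them. I will quiz you about the important information there."
)
FILLER = "The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again."
QUESTION = "What is the pass key? The pass key is"

# Needle positions: 0%, 5%, ..., 100% of the context
DEPTHS = [i / 20 for i in range(21)]


def build_passkey_prompt(passkey, n_filler, depth):
    """Build a prompt with the needle at `depth` in [0, 1].

    0.0 puts the needle at the start (furthest from the generated tokens),
    1.0 puts it right before the final question.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    needle = f"The pass key is {passkey}. Remember it. {passkey} is the pass key."
    n_before = round(n_filler * depth)
    parts = [TASK] + [FILLER] * n_before + [needle] + [FILLER] * (n_filler - n_before) + [QUESTION]
    return " ".join(parts)


def is_success(model_output, passkey):
    # The model succeeds if its output contains the needle
    return str(passkey) in model_output


def success_rate(generate, passkeys, n_filler, depth):
    # Mean success over several samples at a single depth
    hits = sum(is_success(generate(build_passkey_prompt(p, n_filler, depth)), p) for p in passkeys)
    return hits / len(passkeys)
```

Running `success_rate` once per entry in `DEPTHS` with 10 different passkeys gives one row of the kind of heatmap shown in the results below.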

**First results**

Here are some first results on the small 200M model:

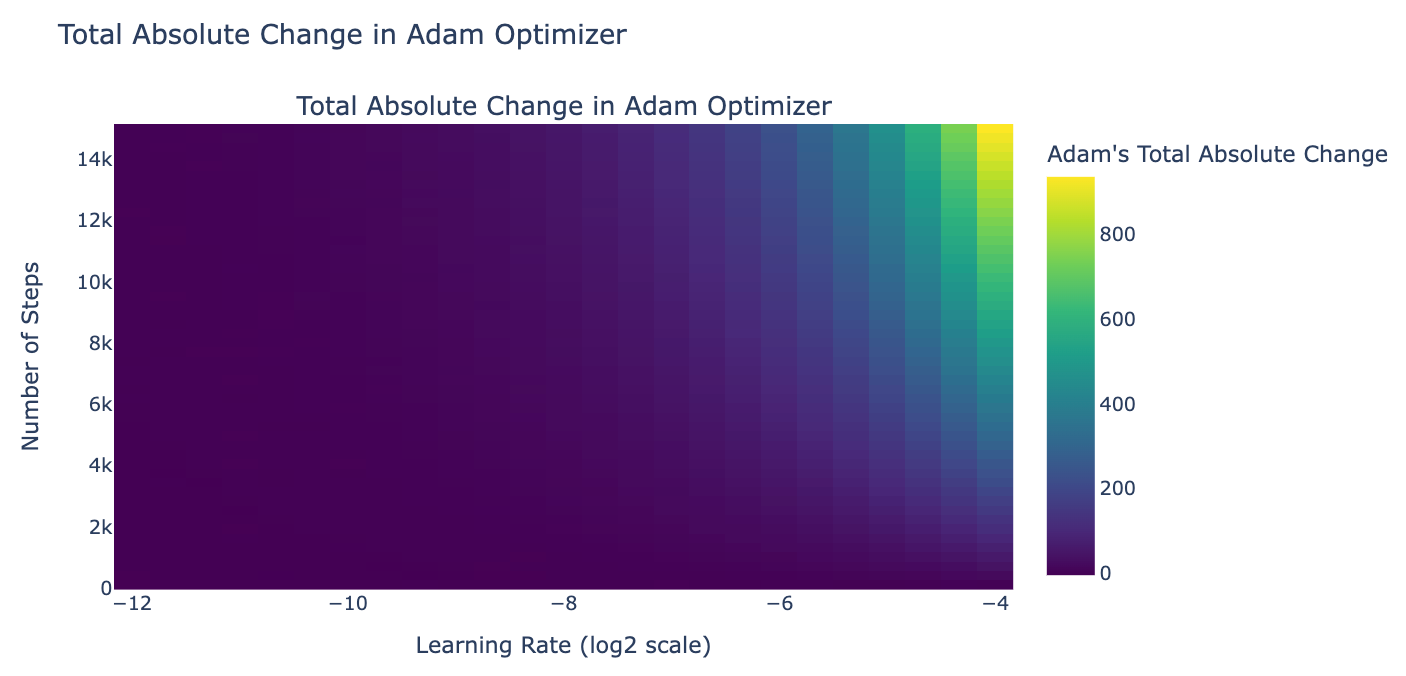

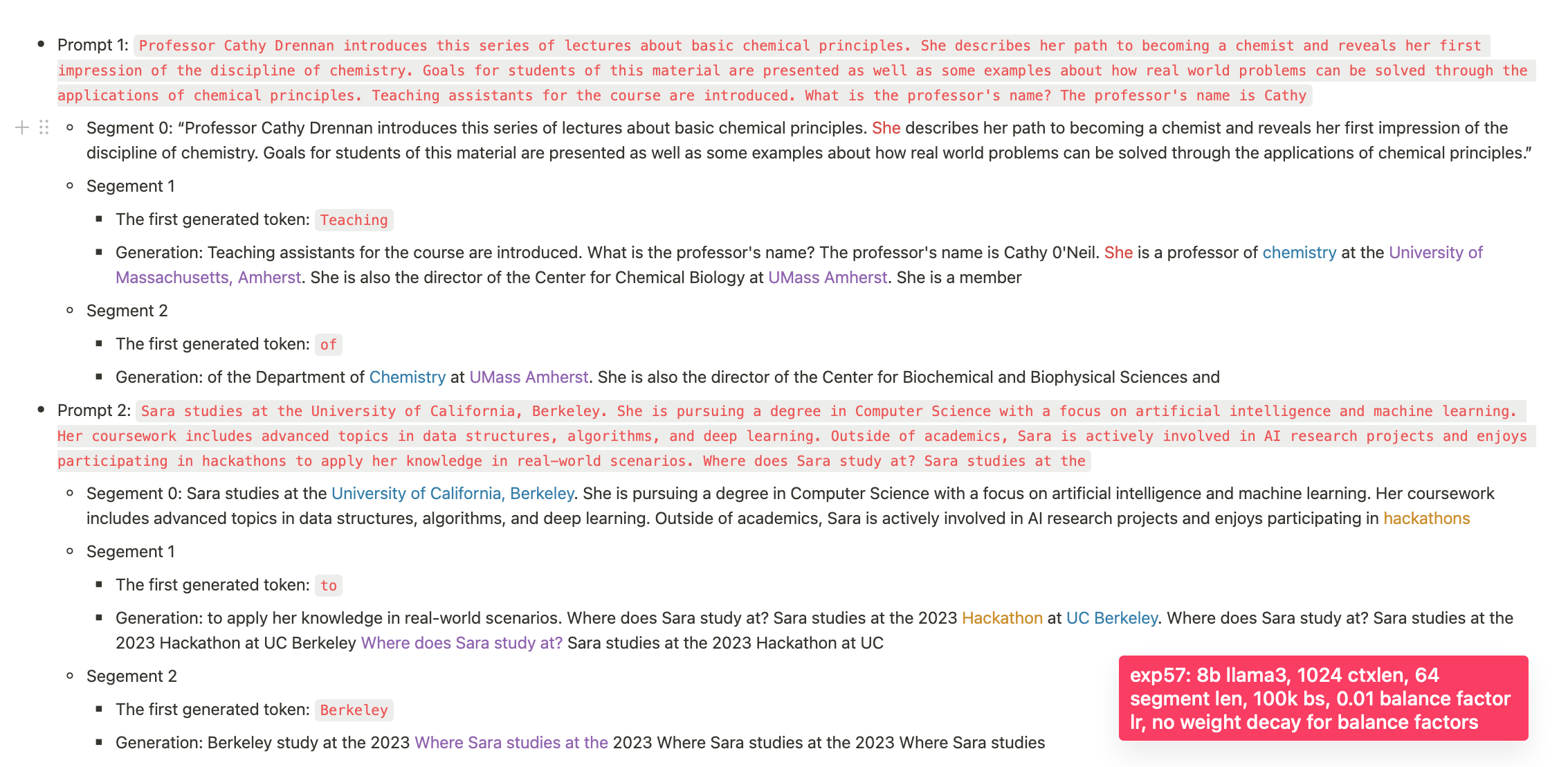

As you can see, it somewhat works. If you look at the sample generations, you can see that Infini-attention generates content related to the earlier segment.

Infini-attention predicts the first token of the second segment by conditioning on the entire content of the first segment, and here it generated "_grad" as that first token, which is a good signal. However, this could be a false positive: the model might produce content related to the earlier segment simply because, given "_grad" as the first generated token of the second segment, it consistently generates PyTorch-related tutorials, which happen to relate to the earlier segment. To check this, we ran a sanity test in which the only input token was "_grad". The resulting generation, shown below, is unrelated to PyTorch, which suggests that the model does use the memory, just not well enough to retrieve the exact needle or continue the exact content of its earlier segment. The generation:

```

_graduate_education.html

Graduate Education

The Department of Physics and Astronomy offers a program leading to the Master of Science degree in physics. The program is designed to provide students with a broad background in

```

Based on these results, the model appears to in fact use the compressed memory. We decided to scale up our experiments by continually pretraining a Llama 3 8B. Unfortunately, the model failed to pass the needle evaluation when the needle was placed in an earlier segment.

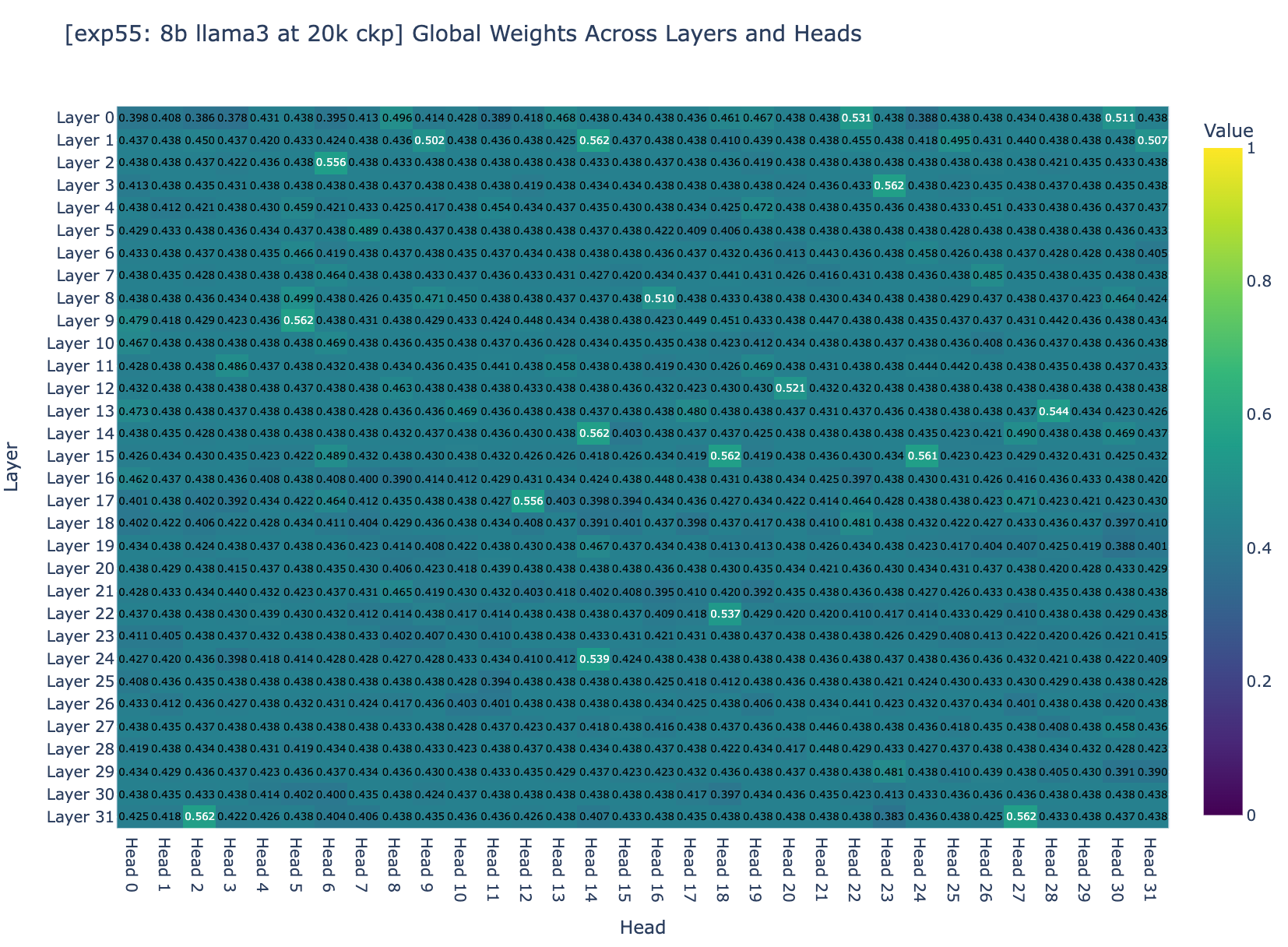

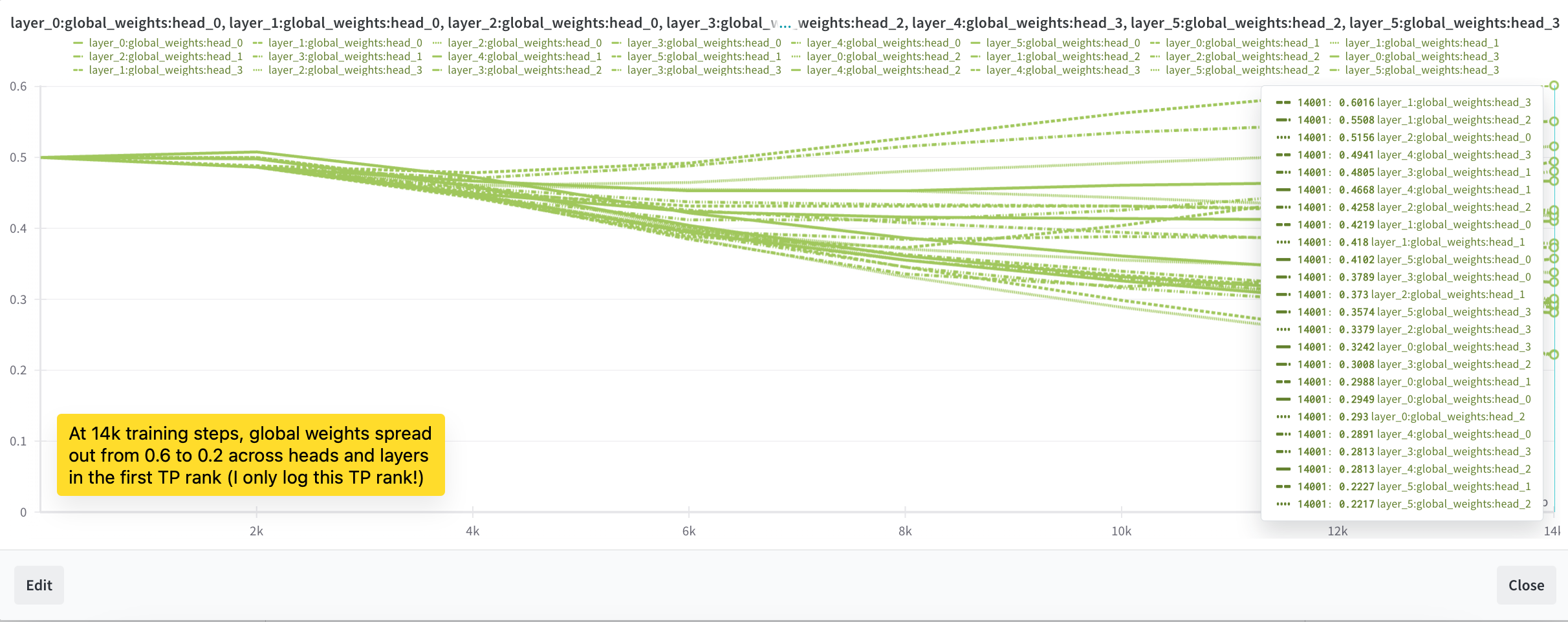

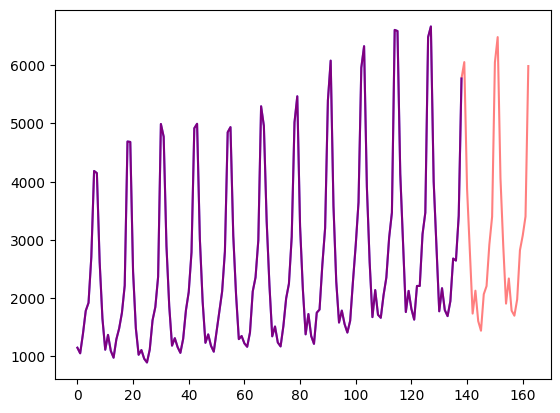

We decided to inspect the balance factors (factor balancing the amount of compressed and not-compressed memory) across all layers. Based on Figure 3a and Figure 3b, we found that about 95% of the weights are centered around 0.5. Recall that for a weight to converge to an ideal range, it depends on two general factors: the step size and the magnitude of the gradients. However, Adam normalizes the gradients to a magnitude of 1 so the question became: are the training hyper-parameters the right ones to allow the finetuning to converge?

## Section 4: Studying convergence?

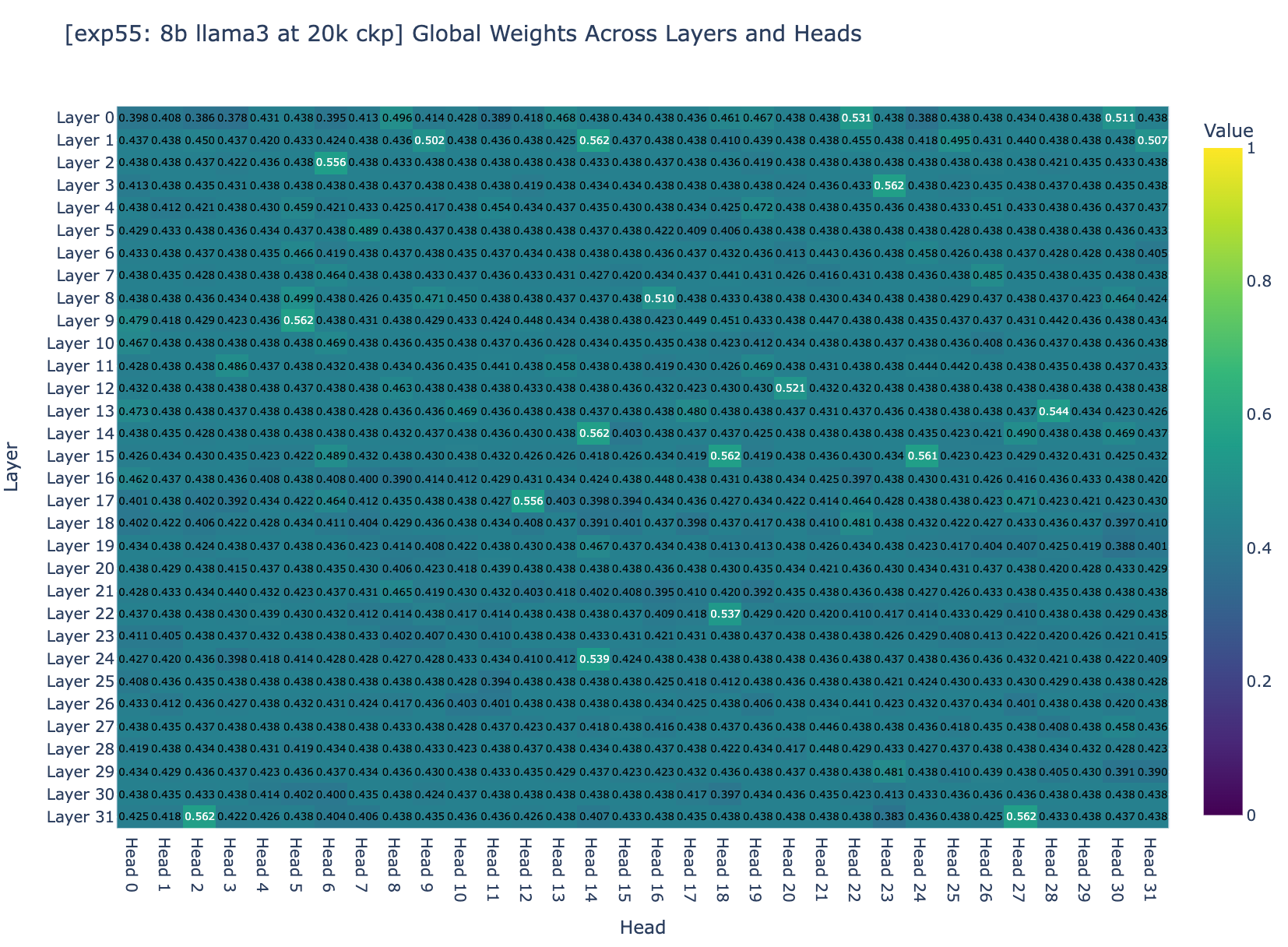

We decided to simulate how much the balance weights would change during training, assuming the gradients are in a good range (L2 norm of 0.01), and found that, given the config of the last Llama 3 8B fine-tuning experiment, the total of the absolute changes in the weight would be 0.03. Since we initialize the balance factors at 0 (the exact value doesn't matter in this case), the weights at the end would be in the range [0 - 0.03, 0 + 0.03] = [-0.03, 0.03].
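A rough way to reproduce this kind of estimate is to sum the learning-rate schedule, assuming each Adam step moves a parameter by about one learning rate. The sketch below does this for a linear-warmup plus cosine-decay schedule. The helper names are hypothetical and the numbers in the example are placeholders, not the actual training config.

```python
import math


def lr_at_step(step, peak_lr, warmup_steps, total_steps, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def max_total_change(peak_lr, warmup_steps, total_steps, update_magnitude=1.0):
    """Upper bound on how far a parameter can travel if every step moves it by
    update_magnitude * lr in the same direction (assumption: Adam-normalized updates)."""
    return sum(
        update_magnitude * lr_at_step(step, peak_lr, warmup_steps, total_steps)
        for step in range(total_steps)
    )


if __name__ == "__main__":
    # Placeholder values for illustration only
    for peak_lr in (3e-5, 3e-4, 1e-2):
        total = max_total_change(peak_lr, warmup_steps=100, total_steps=2000)
        print(f"peak_lr={peak_lr:g}: balance factor can move by at most {total:.3f}")
```

Because gradients do not always point in the same direction, the real drift is smaller than this bound, so the bound needs to be comfortably larger than the change we want to allow.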

An educated guess is that Infini-attention works well when the global weights are spread out across the range from 0 to 1, as in the paper. Given the weights above, sigmoid([-0.03, 0.03]) = tensor([0.4925, 0.5075]) (this fits with our previous experiment results, where the global weights are ~0.5). As a next step, we decided to use a higher learning rate for the balance factors (with all other parameters using Llama 3 8B's learning rate) and a larger number of training steps, so that the balance factors can change by at least 4. This lets the global weights reach the ideal values if gradient descent wants them to (sigmoid(-4) ≈ 0, sigmoid(4) ≈ 1).
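The effect of the balance factor's range on the gate is easy to check numerically. The snippet below is only a small illustration of the sigmoid values discussed here.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


if __name__ == "__main__":
    # A drift of +/-0.03 versus the +/-4 needed to nearly saturate the gate
    for beta in (-0.03, 0.03, -4.0, 4.0):
        print(f"balance factor {beta:+.2f} -> global weight {sigmoid(beta):.4f}")
```

A drift of ±0.03 keeps the global weight within one percentage point of 0.5, whereas a balance factor of ±4 moves it to within about 0.02 of 0 or 1.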

We also note that since the gradients don't always go in the same direction, cancellations occur. This means we should aim for a learning rate and training steps that are significantly larger than the total absolute changes. Recall that the learning rate for Llama 3 8B is 3.0x10^-4, which means if we use this as a global learning rate, the gating cannot converge by any means.

> Conclusion: we decided to go with a global learning rate of 3.0x10^-4 and a gating learning rate of 0.01, which should allow the gating function to converge.

With these hyper-parameters the balance factors in Infini-attention are trainable, but we observed that the 200M Llama's loss went NaN after 20B tokens (we tried learning rates from 0.001 to 1.0e-6). We investigated a few generations at the 20B-token checkpoint (10k training steps), which you can see in Figure 4a. The model now continues the exact content and recalls identities (if the memory is knocked out, it generates trash).

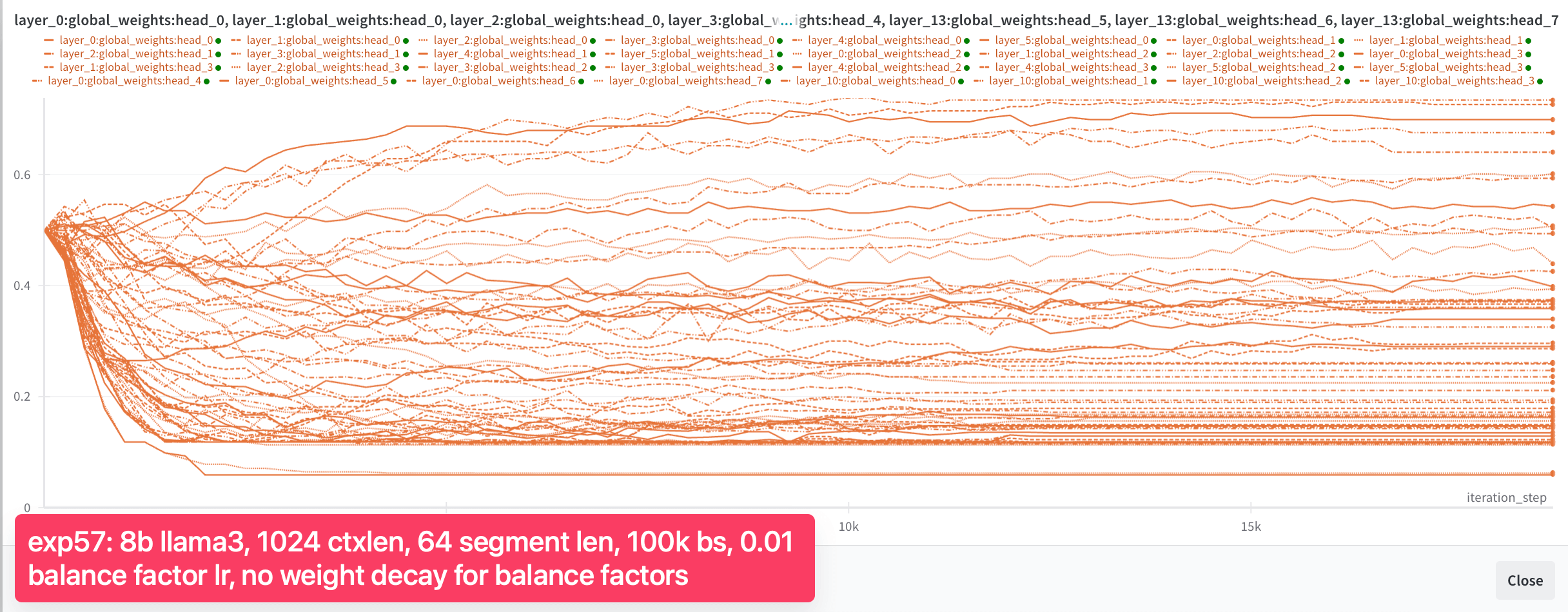

But it is still not able to recall the needle from one segment to the other (it does so reliably within the segment). Needle evaluation fails completely when the needle is placed in the 1st segment (100% success when placed in the 2nd segment, out of 2 segments total). As shown in Figure 4b, we also observed that the balance factors stopped changing after 5,000 steps. While we made some progress, we were not yet out of the woods. The balance factors were still not behaving as we hoped. We decided to dig deeper and make more adjustments.

## Section 5: No weight decay on balance factors

Inspecting in detail the balance factor once again, we saw some progress: approximately 95% of the heads now show a global weight ranging from 0.4 to 0.5, and none of the heads have a global weight greater than 0.6. But the weights still aren't in the ideal range.

We thought of another potential reason: weight decay, which encourages a small L2 norm for the balance factors, pulling them toward zero and thus keeping their sigmoid values centered around 0.5.

Yet another potential reason could be that we used too few rollouts. In the 200m experiment, we only used 4 rollouts, and in the 8b experiment, we only used 2 rollouts (8192**2). Using more rollouts should incentivize the model to compress and use the memory well. So we decided to increase the number of rollouts to 16 and use no weight decay. We scaled the context length down to 1024, which with 16 rollouts gives segment lengths of 64.
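One way to give the balance factors their own learning rate and exempt them from weight decay is to use per-parameter groups. The sketch below builds such groups as plain dictionaries with the `params`, `lr` and `weight_decay` keys that PyTorch optimizers accept. The helper name is hypothetical, and matching on the substring `balance_factors` assumes the gate parameters are named as in the forward pass shown earlier.

```python
def build_param_groups(named_parameters, base_lr, gating_lr, weight_decay,
                       gating_key="balance_factors"):
    """Split parameters into a regular group and a gating group.

    The gating group gets its own learning rate and no weight decay, so
    nothing pulls the balance factors back toward zero.
    """
    gating, others = [], []
    for name, param in named_parameters:
        (gating if gating_key in name else others).append(param)
    return [
        {"params": others, "lr": base_lr, "weight_decay": weight_decay},
        {"params": gating, "lr": gating_lr, "weight_decay": 0.0},
    ]
```

With a PyTorch model this would be used as `torch.optim.AdamW(build_param_groups(model.named_parameters(), base_lr=3e-4, gating_lr=0.01, weight_decay=0.1))`, using the learning rates and weight decay quoted in this post.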

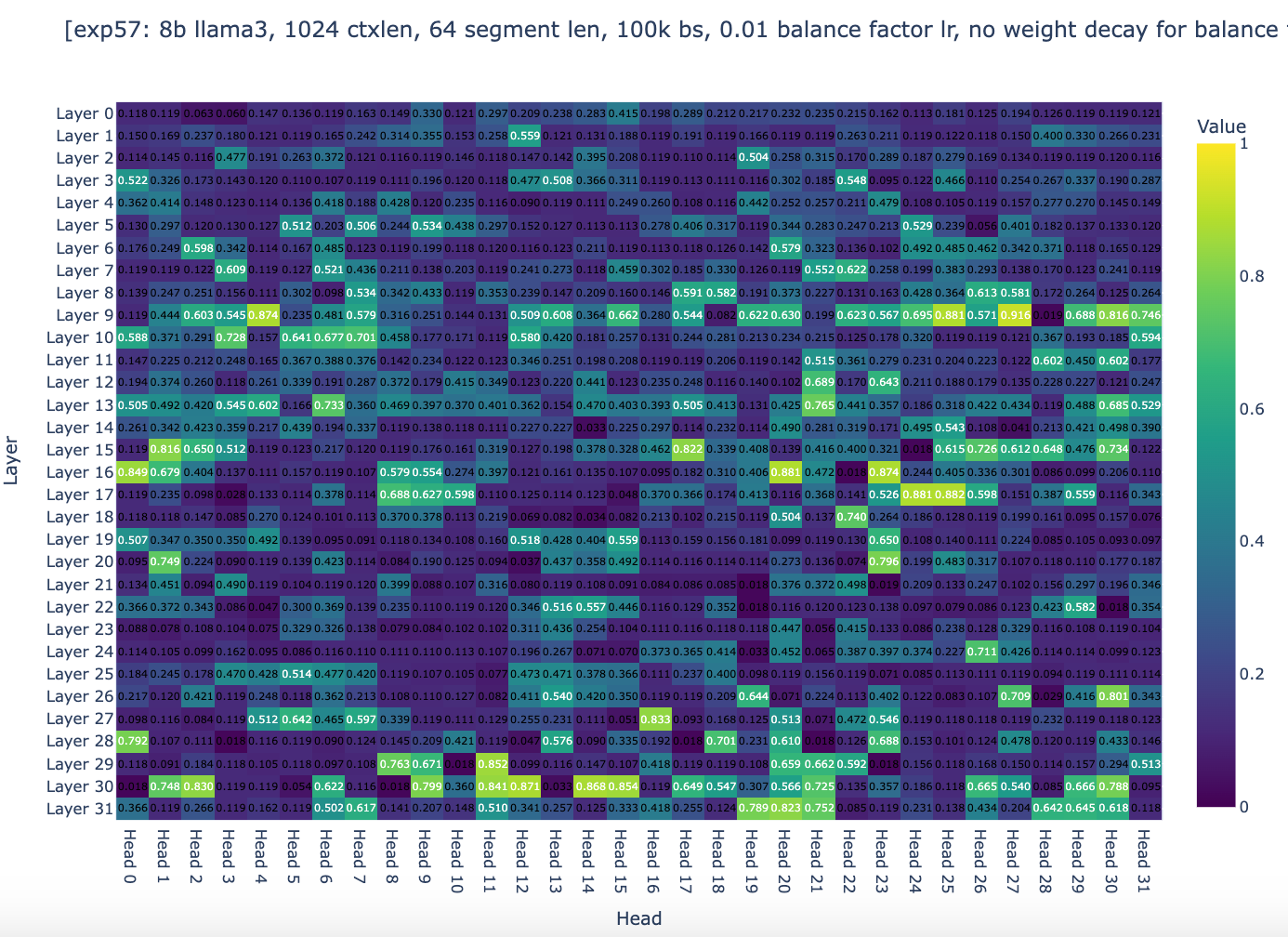

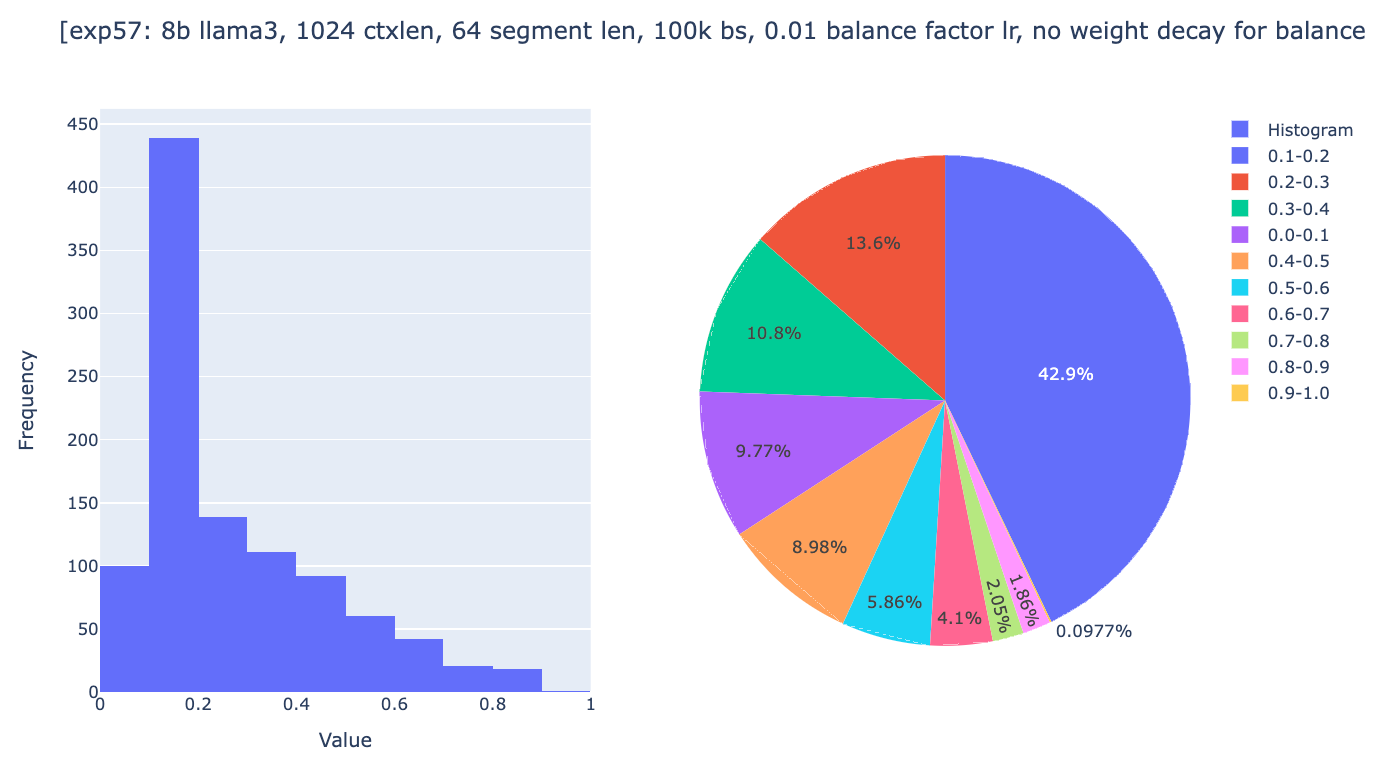

As you can see, global weights are now distributed across the range from 0 to 1, with 10% of heads having a global weight between 0.9 and 1.0, even though most heads stopped changing their global weights after 18k steps. We were then quite confident that the experiments were set up to allow convergence if the spirits of gradient descent are with us. The only question remaining was whether the general approach of Infini-attention could work well enough.

The following evaluations were run at 1.5B tokens.

- 0-shot: In prompt 2, it recalls where a person studies (the 8b model yesterday failed at this), but fails at the needle passkey (not comprehensively run yet; will run).

- 1-shot

+ Prompt 3: It identifies where a person is located.

+ Prompt 4: It passes the needle passkey.

In these cases, the model continues generating the exact content of earlier segments. (In our previous experiments, the model failed to continue with the exact content of an earlier segment and only generated something approximately related; the new model is thus already much better.)

## Section 6: Conclusion

Unfortunately, despite this progress, we found that Infini-attention was not convincing enough in our experiments, and in particular not reliable enough. At this stage of our reproduction we are still of the opinion that Ring Attention [[link]](https://x.com/Haojun_Zhao14/status/1815419356408336738), YaRN [[link]](https://arxiv.org/abs/2309.00071) and rope scaling [[link]](https://arxiv.org/abs/2309.16039) are better options for extending a pretrained model to longer context length.

These latter techniques still come with large resource requirements for very large model sizes (e.g., 400B and beyond). We thus still think that exploring compression techniques, or continuing to push the series of experiments we've been describing in this blog post, is of great interest to the community, and we are excited to follow and try new techniques that may be developed to overcome some of the limitations of the present work.

**Recaps**

- What it means to train a neural network: give it good data, set up the architecture and training to receive good gradient signals, and allow it to converge.

- Infini-attention's long context performance decreases as the number of times we compress the memory increases.

- Gating is important; tweaking the training to allow the gating to converge improves Infini-attention's long context performance (but not good enough).

- Always train a good reference model as a baseline to measure progress.

- There is another bug that messes up the dimensions in the attention output, resulting in a situation where, even though the loss decreases throughout training, the model still can't generate coherent text within its segment length. Lesson learned: Even if you condition the model poorly, gradient descent can still find a way to decrease the loss. However, the model won't work as expected, so always run evaluations.

## Acknowledgements

Thanks to Leandro von Werra and Thomas Wolf for their guidance on the project, and to Tsendsuren Munkhdalai for sharing additional details on the original experiments. We also appreciate Leandro's feedback on the blog post and are grateful to Hugging Face’s science cluster for the compute.

| 0 |

0 | hf_public_repos | hf_public_repos/blog/vit-align.md | ---

title: "New ViT and ALIGN Models From Kakao Brain"

thumbnail: /blog//assets/132_vit_align/thumbnail.png

authors:

- user: adirik

- user: Unso

- user: dylan-m

- user: jun-untitled

---

# Kakao Brain’s Open Source ViT, ALIGN, and the New COYO Text-Image Dataset

Kakao Brain and Hugging Face are excited to release a new open-source image-text dataset, [COYO](https://github.com/kakaobrain/coyo-dataset), of 700 million pairs and two new visual language models trained on it, [ViT](https://github.com/kakaobrain/coyo-vit) and [ALIGN](https://github.com/kakaobrain/coyo-align). This is the first time the ALIGN model has been made public for free and open-source use, and the first release of ViT and ALIGN models that come with their training dataset.

Kakao Brain’s ViT and ALIGN models follow the same architecture and hyperparameters as provided in the original respective Google models but are trained on the open source [COYO](https://github.com/kakaobrain/coyo-dataset) dataset. Google’s [ViT](https://ai.googleblog.com/2020/12/transformers-for-image-recognition-at.html) and [ALIGN](https://ai.googleblog.com/2021/05/align-scaling-up-visual-and-vision.html) models, while trained on huge datasets (ViT trained on 300 million images and ALIGN trained on 1.8 billion image-text pairs respectively), cannot be replicated because the datasets are not public. This contribution is particularly valuable to researchers who want to reproduce visual language modeling with access to the data as well. More detailed information on the Kakao ViT and ALIGN models can be found [here](https://huggingface.co/kakaobrain).

This blog will introduce the new [COYO](https://github.com/kakaobrain/coyo-dataset) dataset, Kakao Brain's ViT and ALIGN models, and how to use them! Here are the main takeaways:

* First open-source ALIGN model ever!

* First open ViT and ALIGN models that have been trained on an open-source dataset [COYO](https://github.com/kakaobrain/coyo-dataset)

* Kakao Brain's ViT and ALIGN models perform on-par with the Google versions

* ViT and ALIGN demos are available on HF! You can play with the ViT and ALIGN demos online with image samples of your own choice!

## Performance Comparison

Kakao Brain's released ViT and ALIGN models perform on par with, and sometimes better than, what Google has reported about their implementation. Kakao Brain's `ALIGN-B7-Base` model, while trained on far fewer pairs (700 million pairs vs 1.8 billion), performs on par with Google's `ALIGN-B7-Base` on the Image KNN classification task and better on the MS-COCO image-to-text and text-to-image retrieval tasks. Kakao Brain's `ViT-L/16` performs similarly to Google's `ViT-L/16` when evaluated on ImageNet and ImageNet-ReaL at model resolutions 384 and 512. This means the community can use Kakao Brain's ViT and ALIGN models to replicate Google's ViT and ALIGN releases, especially when users require access to the training data. We are excited to see open-source and transparent releases of these models that perform on par with the state of the art!

<p>

<center>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/132_vit_align/vit-align-performance.png" alt="ViT and ALIGN performance"/>

</center>

</p>

## COYO DATASET

<p>

<center>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/132_vit_align/coyo-samples.png" alt="COYO samples"/>

</center>

</p>

What's special about these model releases is that the models are trained on the free and accessible COYO dataset. [COYO](https://github.com/kakaobrain/coyo-dataset#dataset-preview) is an image-text dataset of 700 million pairs similar to Google's `ALIGN 1.8B` image-text dataset which is a collection of "noisy" alt-text and image pairs from webpages, but open-source. `COYO-700M` and `ALIGN 1.8B` are "noisy" because minimal filtering was applied. `COYO` is similar to the other open-source image-text dataset, `LAION` but with the following differences. While `LAION` 2B is a much larger dataset of 2 billion English pairs, compared to `COYO`’s 700 million pairs, `COYO` pairs come with more metadata that give users more flexibility and finer-grained control over usage. The following table shows the differences: `COYO` comes equipped with aesthetic scores for all pairs, more robust watermark scores, and face count data.

| COYO | LAION 2B| ALIGN 1.8B |

| :----: | :----: | :----: |

| Image-text similarity score calculated with CLIP ViT-B/32 and ViT-L/14 models, they are provided as metadata but nothing is filtered out so as to avoid possible elimination bias | Image-text similarity score provided with CLIP (ViT-B/32) - only examples above threshold 0.28 | Minimal, Frequency based filtering |

| NSFW filtering on images and text | NSFW filtering on images | [Google Cloud API](https://cloud.google.com/vision) |

| Face recognition (face count) data provided as meta-data | No face recognition data | NA |

| 700 million pairs all English | 2 billion English| 1.8 billion |

| From CC 2020 Oct - 2021 Aug| From CC 2014-2020| NA |

|Aesthetic Score | Aesthetic Score Partial | NA|

|More robust Watermark score | Watermark Score | NA|

|Hugging Face Hub | Hugging Face Hub | Not made public |

| English | English | English? |
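
Because `COYO` ships these scores as metadata instead of filtering on them, users can apply their own thresholds. The sketch below shows the idea on plain dictionaries. The field names follow the `COYO` metadata (`clip_similarity_vitb32`, `watermark_score`, `num_faces`), the 0.28 similarity threshold mirrors the `LAION` column above, and the watermark threshold and helper names are arbitrary assumptions for illustration.

```python
def passes_filters(record, min_clip_similarity=0.28, max_watermark_score=0.5, max_faces=None):
    """Return True if a COYO-style metadata record satisfies the chosen thresholds."""
    if record["clip_similarity_vitb32"] < min_clip_similarity:
        return False
    if record["watermark_score"] > max_watermark_score:
        return False
    if max_faces is not None and record["num_faces"] > max_faces:
        return False
    return True


def filter_records(records, **thresholds):
    # Lazily yield only the records that pass, so this also works on streamed data
    return (record for record in records if passes_filters(record, **thresholds))
```

Since `filter_records` is a generator over any iterable of records, it can be applied to a subset of the data without loading everything into memory.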

## How ViT and ALIGN work

So what do these models do? Let's briefly discuss how the ViT and ALIGN models work.

ViT -- Vision Transformer -- is a vision model [proposed by Google in 2020](https://ai.googleblog.com/2020/12/transformers-for-image-recognition-at.html) that resembles the text Transformer architecture.

It is a new approach to vision, distinct from the convolutional neural nets (CNNs) that have dominated vision tasks since 2012's AlexNet. It is up to four times more computationally efficient than similarly performing CNNs and is domain agnostic. ViT takes as input an image that is broken up into a sequence of image patches - just as the text Transformer takes as input a sequence of text - and adds a position embedding to each patch so that it can learn the image structure. ViT performance is notable in particular for having an excellent performance-compute trade-off. While some of Google's ViT models are open-source, the JFT-300 million image-label pair dataset they were trained on has not been released publicly. Kakao Brain's ViT model, by contrast, was trained on [COYO-Labeled-300M](https://github.com/kakaobrain/coyo-dataset/tree/main/subset/COYO-Labeled-300M), which has been released publicly. It performs similarly on various tasks, and its code, model, and training data (COYO-Labeled-300M) are made entirely public for reproducibility and open science.
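To get a feel for the sequence lengths involved, the number of patches follows directly from the image resolution and the patch size. The helper below is a hypothetical illustration for a `ViT-L/16`-style model, where `/16` denotes 16x16 pixel patches, at the two resolutions mentioned in the performance comparison.

```python
def num_patches(image_size, patch_size):
    """Number of non-overlapping square patches in a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side * per_side


if __name__ == "__main__":
    for resolution in (384, 512):
        print(f"resolution {resolution}: {num_patches(resolution, 16)} patches")
```

The Transformer then processes these patches as a sequence, typically with one extra classification token prepended.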

<p>

<center>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/132_vit_align/vit-architecture.gif" alt="ViT architecture" width="700"/>

</center>

</p>

<p>

<center>

<em>A Visualization of How ViT Works from <a href="https://ai.googleblog.com/2020/12/transformers-for-image-recognition-at.html">Google Blog</a></em>

</center>

</p>

[Google then introduced ALIGN](https://ai.googleblog.com/2021/05/align-scaling-up-visual-and-vision.html) -- a Large-scale Image and Noisy Text Embedding model in 2021 -- a visual-language model trained on "noisy" text-image data for various vision and cross-modal tasks such as text-image retrieval. ALIGN has a simple dual-encoder architecture trained on image and text pairs, learned via a contrastive loss function. ALIGN's "noisy" training corpus is notable for balancing scale and robustness. Previously, visual language representational learning had been trained on large-scale datasets with manual labels, which require extensive preprocessing. ALIGN's corpus uses the image alt-text data, text that appears when the image fails to load, as the caption to the image -- resulting in an inevitably noisy, but much larger (1.8 billion pair) dataset that allows ALIGN to perform at SoTA levels on various tasks. Kakao Brain's ALIGN is the first open-source version of this model, trained on the `COYO` dataset and performs better than Google's reported results.

<p>

<center>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/132_vit_align/align-architecture.png" width="700" />

</center>

</p>

<p>

<center>

<em>ALIGN Model from <a href="https://ai.googleblog.com/2021/05/align-scaling-up-visual-and-vision.html">Google Blog</a>

</em>

</center>

</p>
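The contrastive objective mentioned above can be sketched in a few lines. This is a simplified, pure-Python illustration of a symmetric image-text contrastive loss, assuming L2-normalized embeddings and a fixed `temperature` of 0.1; the function names and the temperature value are assumptions made for this example, not the ALIGN training code.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    # numerically stable -log(softmax(logits)[target])
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[target]


def contrastive_loss(image_embeds, text_embeds, temperature=0.1):
    """Pair k of image_embeds is assumed to match pair k of text_embeds."""
    images = [normalize(v) for v in image_embeds]
    texts = [normalize(v) for v in text_embeds]
    n = len(images)
    # similarity of every image with every text in the batch
    sims = [[dot(i, t) / temperature for t in texts] for i in images]
    image_to_text = sum(cross_entropy(sims[k], k) for k in range(n)) / n
    text_to_image = sum(
        cross_entropy([sims[r][k] for r in range(n)], k) for k in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


# matching pairs give a low loss, swapped pairs a high one
matched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(matched, shuffled)
```

The loss pulls each image toward its own caption and pushes it away from the other captions in the batch, which is why noisy alt-text can still provide a useful training signal at scale.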

## How to use the COYO dataset

We can conveniently download the `COYO` dataset with a single line of code using the 🤗 Datasets library. To preview the `COYO` dataset and learn more about the data curation process and the meta attributes included, head over to the dataset page on the [hub](https://huggingface.co/datasets/kakaobrain/coyo-700m) or the original Git [repository](https://github.com/kakaobrain/coyo-dataset). To get started, let's install the 🤗 Datasets library: `pip install datasets` and download it.

```shell

>>> from datasets import load_dataset

>>> dataset = load_dataset('kakaobrain/coyo-700m')

>>> dataset

```

While it is significantly smaller than the `LAION` dataset, the `COYO` dataset is still massive with 747M image-text pairs, and it might be infeasible to download the whole dataset to your local machine. To download only a subset of the dataset, we can simply pass the `streaming=True` argument to the `load_dataset()` method to create an iterable dataset and download data instances as we go.

```shell

>>> from datasets import load_dataset

>>> dataset = load_dataset('kakaobrain/coyo-700m', streaming=True)

>>> print(next(iter(dataset['train'])))

{'id': 2680060225205, 'url': 'https://cdn.shopify.com/s/files/1/0286/3900/2698/products/TVN_Huile-olive-infuse-et-s-227x300_e9a90ffd-b6d2-4118-95a1-29a5c7a05a49_800x.jpg?v=1616684087', 'text': 'Olive oil infused with Tuscany herbs', 'width': 227, 'height': 300, 'image_phash': '9f91e133b1924e4e', 'text_length': 36, 'word_count': 6, 'num_tokens_bert': 6, 'num_tokens_gpt': 9, 'num_faces': 0, 'clip_similarity_vitb32': 0.19921875, 'clip_similarity_vitl14': 0.147216796875, 'nsfw_score_opennsfw2': 0.0058441162109375, 'nsfw_score_gantman': 0.018961310386657715, 'watermark_score': 0.11015450954437256, 'aesthetic_score_laion_v2': 4.871710777282715}

```
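Because a streamed dataset is just an iterable of dictionaries, the metadata fields shown above can be used to filter examples on the fly. The sketch below uses a small hand-written list as a stand-in for the stream so that it runs without network access; the helper names and the threshold values are hypothetical choices for illustration, not recommendations from the COYO authors.

```python
from itertools import islice


def keep(example, min_clip=0.25, max_watermark=0.5, max_nsfw=0.1):
    """Keep examples that look well aligned, unwatermarked, and safe (illustrative thresholds)."""
    return (
        example["clip_similarity_vitb32"] >= min_clip
        and example["watermark_score"] <= max_watermark
        and example["nsfw_score_opennsfw2"] <= max_nsfw
    )


def take_filtered(stream, n, predicate=keep):
    """Lazily pull items from the stream until n of them pass the predicate."""
    return list(islice((ex for ex in stream if predicate(ex)), n))


# stand-in for a streamed split; only the fields used by `keep` are included
fake_stream = iter([
    {"id": 1, "clip_similarity_vitb32": 0.31, "watermark_score": 0.05, "nsfw_score_opennsfw2": 0.01},
    {"id": 2, "clip_similarity_vitb32": 0.12, "watermark_score": 0.02, "nsfw_score_opennsfw2": 0.01},
    {"id": 3, "clip_similarity_vitb32": 0.40, "watermark_score": 0.90, "nsfw_score_opennsfw2": 0.01},
    {"id": 4, "clip_similarity_vitb32": 0.28, "watermark_score": 0.10, "nsfw_score_opennsfw2": 0.02},
])

subset = take_filtered(fake_stream, n=2)
print([ex["id"] for ex in subset])
```

With the real dataset, the same helper could be given `dataset['train']` from the streaming call above in place of `fake_stream`, so that only as many records as needed are fetched.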

## How to use ViT and ALIGN from the Hub

Let’s go ahead and experiment with the new ViT and ALIGN models. As ALIGN is newly added to 🤗 Transformers, we will install the latest version of the library: `pip install -q git+https://github.com/huggingface/transformers.git` and get started with ViT for image classification by importing the modules and libraries we will use. Note that the newly added ALIGN model will be a part of the PyPI package in the next release of the library.

```py

import requests

from PIL import Image

import torch

from transformers import ViTImageProcessor, ViTForImageClassification

```

Next, we will download a random image of two cats and remote controls on a couch from the COCO dataset and preprocess the image to transform it into the input format expected by the model. To do this, we can conveniently use the corresponding preprocessor class (`ViTImageProcessor`). To initialize the model and the preprocessor, we will use one of the [Kakao Brain ViT repos](https://huggingface.co/models?search=kakaobrain/vit) on the hub. Note that initializing the preprocessor from a repository ensures that the preprocessed image is in the format required by that specific pretrained model.

```py

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

processor = ViTImageProcessor.from_pretrained('kakaobrain/vit-large-patch16-384')

model = ViTForImageClassification.from_pretrained('kakaobrain/vit-large-patch16-384')

```

The rest is simple: we will preprocess the image and use it as input to the model to retrieve the class logits. The Kakao Brain ViT image classification models are trained on ImageNet labels and output logits of shape (batch_size, 1000).

```py
# preprocess image or list of images
inputs = processor(images=image, return_tensors="pt")

# inference
with torch.no_grad():
    outputs = model(**inputs)

# apply SoftMax to logits to compute the probability of each class
preds = torch.nn.functional.softmax(outputs.logits, dim=-1)

# print the top 5 class predictions and their probabilities
top_class_preds = torch.argsort(preds, descending=True)[0, :5]

for c in top_class_preds:
    print(f"{model.config.id2label[c.item()]} with probability {round(preds[0, c.item()].item(), 4)}")
```

And we are done! To make things even easier and shorter, we can also use the convenient image classification [pipeline](https://huggingface.co/docs/transformers/main_classes/pipelines#transformers.ImageClassificationPipeline) and pass the Kakao Brain ViT repo name as our target model to initialize the pipeline. We can then pass in a URL or a local path to an image or a Pillow image and optionally use the `top_k` argument to return the top k predictions. Let's go ahead and get the top 5 predictions for our image of cats and remotes.

```shell

>>> from transformers import pipeline

>>> classifier = pipeline(task='image-classification', model='kakaobrain/vit-large-patch16-384')

>>> classifier('http://images.cocodataset.org/val2017/000000039769.jpg', top_k=5)

[{'score': 0.8223727941513062, 'label': 'remote control, remote'}, {'score': 0.06580372154712677, 'label': 'tabby, tabby cat'}, {'score': 0.0655883178114891, 'label': 'tiger cat'}, {'score': 0.0388941615819931, 'label': 'Egyptian cat'}, {'score': 0.0011215205304324627, 'label': 'lynx, catamount'}]

```

If you want to experiment more with the Kakao Brain ViT model, head over to its [Space](https://huggingface.co/spaces/adirik/kakao-brain-vit) on the 🤗 Hub.

<center>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/132_vit_align/vit_demo.png" alt="vit performance" width="900"/>

</center>

Let's move on to experimenting with ALIGN, which can be used to retrieve multi-modal embeddings of texts or images or to perform zero-shot image classification. ALIGN's transformers implementation and usage are similar to [CLIP](https://huggingface.co/docs/transformers/main/en/model_doc/clip). To get started, we will first download the pretrained model and its processor, which can preprocess both images and texts so that they are in the format expected by the vision and text encoders of ALIGN. Once again, let's import the modules we will use and initialize the preprocessor and the model.

```py

import requests

from PIL import Image

import torch

from transformers import AlignProcessor, AlignModel

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

processor = AlignProcessor.from_pretrained('kakaobrain/align-base')

model = AlignModel.from_pretrained('kakaobrain/align-base')

```

We will start with zero-shot image classification. To do this, we will supply candidate labels (free-form text) and use `AlignModel` to find out which description best matches the image. We will first preprocess both the image and text inputs and feed the preprocessed input to the `AlignModel`.

```py
candidate_labels = ['an image of a cat', 'an image of a dog']

inputs = processor(images=image, text=candidate_labels, return_tensors='pt')

with torch.no_grad():
    outputs = model(**inputs)

# this is the image-text similarity score
logits_per_image = outputs.logits_per_image

# we can take the softmax to get the label probabilities
probs = logits_per_image.softmax(dim=1)

print(probs)
```

Done, easy as that. To experiment more with the Kakao Brain ALIGN model for zero-shot image classification, simply head over to its [demo](https://huggingface.co/spaces/adirik/ALIGN-zero-shot-image-classification) on the 🤗 Hub. Note that the output of `AlignModel` includes `text_embeds` and `image_embeds` (see the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/align) of ALIGN). If we don't need to compute the per-image and per-text logits for zero-shot classification, we can retrieve the vision and text embeddings using the convenient `get_image_features()` and `get_text_features()` methods of the `AlignModel` class.

```py
text_embeds = model.get_text_features(
    input_ids=inputs['input_ids'],
    attention_mask=inputs['attention_mask'],
    token_type_ids=inputs['token_type_ids'],
)

image_embeds = model.get_image_features(
    pixel_values=inputs['pixel_values'],
)
```
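Once image and text embeddings live in the same space, retrieval reduces to ranking by similarity. The following is a minimal sketch with made-up three-dimensional vectors standing in for real ALIGN embeddings; `cosine_similarity` and `rank_texts` are hypothetical helpers written for this example, not methods of `AlignModel`.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_texts(image_embed, text_embeds):
    """Return text indices sorted from most to least similar to the image."""
    scores = [cosine_similarity(image_embed, t) for t in text_embeds]
    return sorted(range(len(text_embeds)), key=lambda i: scores[i], reverse=True)


# toy vectors; real embeddings would have many more dimensions
image_embed = [0.9, 0.1, 0.0]
text_embeds = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0],
]

ranking = rank_texts(image_embed, text_embeds)
print(ranking)
```

In practice the same ranking would usually be computed with batched tensor operations rather than Python lists; the list version is only meant to show the logic.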

Alternatively, we can use the standalone vision and text encoders of ALIGN to retrieve multi-modal embeddings. These embeddings can then be used to train models for various downstream tasks such as object detection, image segmentation, and image captioning. Let's see how we can retrieve these embeddings using `AlignTextModel` and `AlignVisionModel`. Note that we can use the convenient `AlignProcessor` class to preprocess texts and images separately.

```py
import torch
from transformers import AlignProcessor, AlignTextModel

processor = AlignProcessor.from_pretrained('kakaobrain/align-base')
model = AlignTextModel.from_pretrained('kakaobrain/align-base')

# get embeddings of two text queries
inputs = processor(['an image of a cat', 'an image of a dog'], return_tensors='pt')

with torch.no_grad():
    outputs = model(**inputs)

# get the last hidden state and the final pooled output
last_hidden_state = outputs.last_hidden_state
pooled_output = outputs.pooler_output
```

We can also opt to return all hidden states and attention values by setting the `output_hidden_states` and `output_attentions` arguments to `True` during inference.

```py
with torch.no_grad():
    outputs = model(**inputs, output_hidden_states=True, output_attentions=True)

# print what information is returned
for key, value in outputs.items():
    print(key)
```

Let's do the same with `AlignVisionModel` and retrieve the multi-modal embedding of an image.

```py
import requests
import torch
from PIL import Image
from transformers import AlignProcessor, AlignVisionModel

processor = AlignProcessor.from_pretrained('kakaobrain/align-base')
model = AlignVisionModel.from_pretrained('kakaobrain/align-base')

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(images=image, return_tensors='pt')

with torch.no_grad():
    outputs = model(**inputs)

# get the last hidden state and the final pooled output
last_hidden_state = outputs.last_hidden_state
pooled_output = outputs.pooler_output
```

Similar to ViT, we can use the zero-shot image classification [pipeline](https://huggingface.co/docs/transformers/main_classes/pipelines#transformers.ZeroShotImageClassificationPipeline) to make our work even easier. Let's see how we can use this pipeline to perform image classification in the wild using free-form text candidate labels.

```shell

>>> from transformers import pipeline

>>> classifier = pipeline(task='zero-shot-image-classification', model='kakaobrain/align-base')

>>> classifier(

... 'https://huggingface.co/datasets/Narsil/image_dummy/raw/main/parrots.png',

... candidate_labels=['animals', 'humans', 'landscape'],

... )

[{'score': 0.9263709783554077, 'label': 'animals'}, {'score': 0.07163811475038528, 'label': 'humans'}, {'score': 0.0019908479880541563, 'label': 'landscape'}]

>>> classifier(

... 'https://huggingface.co/datasets/Narsil/image_dummy/raw/main/parrots.png',

... candidate_labels=['black and white', 'photorealist', 'painting'],

... )

[{'score': 0.9735308885574341, 'label': 'black and white'}, {'score': 0.025493400171399117, 'label': 'photorealist'}, {'score': 0.0009757201769389212, 'label': 'painting'}]

```

## Conclusion

There have been incredible advances in multi-modal models in recent years, with models such as CLIP and ALIGN unlocking various downstream tasks such as image captioning, zero-shot image classification, and open vocabulary object detection. In this blog, we talked about the latest open source ViT and ALIGN models contributed to the Hub by Kakao Brain, as well as the new COYO text-image dataset. We also showed how you can use these models to perform various tasks with a few lines of code both on their own or as a part of 🤗 Transformers pipelines.

That was it! We are continuing to integrate the most impactful computer vision and multi-modal models and would love to hear back from you. To stay up to date with the latest news in computer vision and multi-modal research, you can follow us on Twitter: [@adirik](https://twitter.com/alaradirik), [@a_e_roberts](https://twitter.com/a_e_roberts), [@NielsRogge](https://twitter.com/NielsRogge), [@RisingSayak](https://twitter.com/RisingSayak), and [@huggingface](https://twitter.com/huggingface).

| 1 |

0 | hf_public_repos | hf_public_repos/blog/gaussian-splatting.md | ---

title: "Introduction to 3D Gaussian Splatting"

thumbnail: /blog/assets/124_ml-for-games/thumbnail-gaussian-splatting.png

authors:

- user: dylanebert

---

# Introduction to 3D Gaussian Splatting

3D Gaussian Splatting is a rasterization technique described in [3D Gaussian Splatting for Real-Time Radiance Field Rendering](https://huggingface.co/papers/2308.04079) that allows real-time rendering of photorealistic scenes learned from small samples of images. This article will break down how it works and what it means for the future of graphics.

## What is 3D Gaussian Splatting?

3D Gaussian Splatting is, at its core, a rasterization technique. That means:

1. Have data describing the scene.

2. Draw the data on the screen.

This is analogous to triangle rasterization in computer graphics, which is used to draw many triangles on the screen.

However, instead of triangles, it's gaussians. Here's a single rasterized gaussian, with a border drawn for clarity.

It's described by the following parameters:

- **Position**: where it's located (XYZ)

- **Covariance**: how it's stretched/scaled (3x3 matrix)

- **Color**: what color it is (RGB)

- **Alpha**: how transparent it is (α)
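As a rough illustration, the four parameters above map naturally onto a small record type. The `Gaussian` class, the `is_symmetric` check, and the sample values below are assumptions made for this sketch; they do not reflect how the reference implementation stores its data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Gaussian:
    position: Tuple[float, float, float]   # XYZ location
    covariance: List[List[float]]          # 3x3 matrix describing stretch/scale
    color: Tuple[float, float, float]      # RGB in [0, 1]
    alpha: float                           # opacity in [0, 1]


def is_symmetric(matrix, tol=1e-9):
    """A valid covariance matrix must be symmetric."""
    n = len(matrix)
    return all(abs(matrix[i][j] - matrix[j][i]) <= tol for i in range(n) for j in range(n))


# an axis-aligned gaussian stretched most along Z (illustrative values)
g = Gaussian(
    position=(0.0, 0.0, 1.0),
    covariance=[
        [0.02, 0.0, 0.0],
        [0.0, 0.01, 0.0],
        [0.0, 0.0, 0.03],
    ],
    color=(1.0, 0.5, 0.0),
    alpha=0.8,
)
print(g)
```

A full scene is then simply a large collection of such records, one per gaussian.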

In practice, multiple gaussians are drawn at once.

That's three gaussians. Now what about 7 million gaussians?

Here's what it looks like with each gaussian rasterized fully opaque:

That's a very brief overview of what 3D Gaussian Splatting is. Next, let's walk through the full procedure described in the paper.

## How it works

### 1. Structure from Motion

The first step is to use Structure from Motion (SfM), a method for estimating a 3D point cloud from a set of 2D images. This can be done with the [COLMAP](https://colmap.github.io/) library.

### 2. Convert to Gaussians

Next, each point is converted to a gaussian. This is already sufficient for rasterization. However, only position and color can be inferred from the SfM data. To learn a representation that yields high quality results, we need to train it.

### 3. Training

The training procedure uses Stochastic Gradient Descent, similar to a neural network, but without the layers. The training steps are:

1. Rasterize the gaussians to an image using differentiable gaussian rasterization (more on that later)

2. Calculate the loss based on the difference between the rasterized image and ground truth image

3. Adjust the gaussian parameters according to the loss

4. Apply automated densification and pruning

Steps 1-3 are conceptually pretty straightforward. Step 4 involves the following:

- If the gradient is large for a given gaussian (i.e. it's too wrong), split/clone it
    - If the gaussian is small, clone it
    - If the gaussian is large, split it
- If the alpha of a gaussian gets too low, remove it

This procedure helps the gaussians better fit fine-grained details, while pruning unnecessary gaussians.
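The densification and pruning rules above can be sketched as a single decision function. The threshold values, the use of a scalar `scale` to stand for gaussian size, and the choice to check pruning first are all assumptions for illustration; the paper and its code define the actual criteria.

```python
def densify_action(grad_norm, scale, alpha,
                   grad_threshold=0.0002, scale_threshold=0.01, alpha_threshold=0.005):
    """Decide what to do with one gaussian (all thresholds are hypothetical)."""
    if alpha < alpha_threshold:
        return "prune"      # nearly transparent: remove it
    if grad_norm > grad_threshold:
        # badly fitted region: large gaussians are split, small ones cloned
        return "split" if scale > scale_threshold else "clone"
    return "keep"


print(densify_action(grad_norm=0.001, scale=0.001, alpha=0.5))   # small, high gradient
print(densify_action(grad_norm=0.001, scale=0.1, alpha=0.5))     # large, high gradient
print(densify_action(grad_norm=0.001, scale=0.1, alpha=0.001))   # almost invisible
```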

### 4. Differentiable Gaussian Rasterization

As mentioned earlier, 3D Gaussian Splatting is a *rasterization* approach, which draws the data to the screen. However, it is also important that the rasterizer is:

1. Fast

2. Differentiable

The original implementation of the rasterizer can be found [here](https://github.com/graphdeco-inria/diff-gaussian-rasterization). The rasterization involves:

1. Project each gaussian into 2D from the camera perspective.

2. Sort the gaussians by depth.

3. For each pixel, iterate over each gaussian front-to-back, blending them together.

Additional optimizations are described in [the paper](https://huggingface.co/papers/2308.04079).
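The sort-and-blend steps can be sketched for a single pixel as follows. This is a simplified illustration: it assumes each gaussian has already been projected and reduced to a depth, a color, and an effective alpha at this pixel, and `blend_pixel` is a hypothetical helper rather than a function from the reference rasterizer.

```python
def blend_pixel(splats):
    """Blend (depth, (r, g, b), alpha) entries covering one pixel, front to back."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        for c in range(3):
            color[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque, later gaussians are hidden
    return tuple(color)


pixel = blend_pixel([
    (2.0, (0.0, 0.0, 1.0), 1.0),  # far, fully opaque blue
    (1.0, (1.0, 0.0, 0.0), 0.5),  # near, half-transparent red
])
print(pixel)
```

Every operation here is a sum or product of the inputs, which hints at why gradients can flow back to the gaussian parameters during training.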

It's also essential that the rasterizer is differentiable, so that it can be trained with stochastic gradient descent. However, this is only relevant for training - the trained gaussians can also be rendered with a non-differentiable approach.

## Who cares?

Why has there been so much attention on 3D Gaussian Splatting? The obvious answer is that the results speak for themselves - it's high-quality scenes in real-time. However, there may be more to the story.

There are many unknowns as to what else can be done with Gaussian Splatting. Can they be animated? The upcoming paper [Dynamic 3D Gaussians: tracking by Persistent Dynamic View Synthesis](https://arxiv.org/pdf/2308.09713) suggests that they can. There are many other unknowns as well. Can they do reflections? Can they be modeled without training on reference images?

Finally, there is growing research interest in [Embodied AI](https://ieeexplore.ieee.org/iel7/7433297/9741092/09687596.pdf). This is an area of AI research where state-of-the-art performance is still orders of magnitude below human performance, with much of the challenge being in representing 3D space. Given that 3D Gaussian Splatting yields a very dense representation of 3D space, what might the implications be for Embodied AI research?

These questions call attention to the method. It remains to be seen what the actual impact will be.

## The future of graphics

So what does this mean for the future of graphics? Well, let's break it up into pros/cons:

**Pros**

1. High-quality, photorealistic scenes

2. Fast, real-time rasterization

3. Relatively fast to train

**Cons**

1. High VRAM usage (4GB to view, 12GB to train)

2. Large disk size (1GB+ for a scene)

3. Incompatible with existing rendering pipelines

4. Static (for now)

So far, the original CUDA implementation has not been adapted to production rendering pipelines such as Vulkan, DirectX, or WebGPU, so it's yet to be seen what the impact will be.

There have already been the following adaptations:

1. [Remote viewer](https://huggingface.co/spaces/dylanebert/gaussian-viewer)

2. [WebGPU viewer](https://github.com/cvlab-epfl/gaussian-splatting-web)

3. [WebGL viewer](https://huggingface.co/spaces/cakewalk/splat)

4. [Unity viewer](https://github.com/aras-p/UnityGaussianSplatting)

5. [Optimized WebGL viewer](https://gsplat.tech/)

These rely either on remote streaming (1) or a traditional quad-based rasterization approach (2-5). While a quad-based approach is compatible with decades of graphics technologies, it may result in lower quality/performance. However, [viewer #5](https://gsplat.tech/) demonstrates that optimization tricks can result in high quality/performance, despite a quad-based approach.

So will we see 3D Gaussian Splatting fully reimplemented in a production environment? The answer is *probably yes*. The primary bottleneck is sorting millions of gaussians, which is done efficiently in the original implementation using [CUB device radix sort](https://nvlabs.github.io/cub/structcub_1_1_device_radix_sort.html), a highly optimized sort only available in CUDA. However, with enough effort, it's certainly possible to achieve this level of performance in other rendering pipelines.

If you have any questions or would like to get involved, join the [Hugging Face Discord](https://hf.co/join/discord)!

| 2 |

0 | hf_public_repos | hf_public_repos/blog/policy-blog.md | ---

title: "Public Policy at Hugging Face"

thumbnail: /blog/assets/policy_docs/policy_blog_thumbnail.png

authors:

- user: irenesolaiman

- user: yjernite

- user: meg

- user: evijit

---

# Public Policy at Hugging Face

AI Policy at Hugging Face is a multidisciplinary and cross-organizational workstream. Instead of being part of a vertical communications or global affairs organization, our policy work is rooted in the expertise of our many researchers and developers, from [Ethics and Society Regulars](https://huggingface.co/blog/ethics-soc-1) and the legal team to machine learning engineers working on healthcare, art, and evaluations.

What we work on is informed by the needs and experiences of our Hugging Face community on the Hub. We champion [responsible openness](https://huggingface.co/blog/ethics-soc-3), investing heavily in [ethics-forward research](https://huggingface.co/spaces/society-ethics/about), [transparency mechanisms](https://huggingface.co/blog/model-cards), and [platform safeguards](https://huggingface.co/content-guidelines), and translating our lessons into policy.

So what have we shared with policymakers?

## Policy Materials

The following materials reflect what we have found urgent to stress to policymakers at the time of requests for information, and will be updated as materials are published.

- United States of America
    - Congressional
        - September 2023: [Clement Delangue (CEO) Senate AI Insight Forum Kickoff Statement](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_AI%20Insight%20Forum%20Kickoff%20Written%20Statement.pdf)
        - June 2023: Clement Delangue (CEO) House Committee on Science, Space, and Technology Testimony
            - [Written statement](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_HCSST_CongressionalTestimony.pdf)
            - View [recorded testimony](https://science.house.gov/2023/6/artificial-intelligence-advancing-innovation-towards-the-national-interest)
        - November 2023: [Dr. Margaret Mitchell (Chief Ethics Scientist) Senate Insight Forum Statement](https://www.schumer.senate.gov/imo/media/doc/Margaret%20Mitchell%20-%20Statement.pdf)
    - Executive
        - September 2024: Response to NIST [RFC on AI 800-1: Managing Misuse Risk for Dual-Use Foundational Models](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2024_AISI_Dual_Use_Foundational_Models_Response.pdf)
        - June 2024: Response to NIST [RFC on AI 600-1: Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2024_NIST_GENAI_Response.pdf)
        - March 2024: Response to NTIA [RFC on Dual Use Foundation Artificial Intelligence Models with Widely Available Model Weights](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2024_NTIA_Response.pdf)
        - February 2024: Response to NIST [RFI Assignments Under Sections 4.1, 4.5 and 11 of the Executive Order Concerning Artificial Intelligence](https://huggingface.co/datasets/huggingface/policy-docs/blob/main/2024_NIST%20RFI%20on%20EO.pdf)
        - December 2023: Response to OMB [RFC Agency Use of Artificial Intelligence](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_OMB%20EO%20RFC.pdf)
        - November 2023: Response to U.S. Copyright Office [Notice of Inquiry on Artificial Intelligence and Copyright](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_Copyright_Response.pdf)
        - June 2023: Response to NTIA [RFC on AI Accountability](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_NTIA_Response.pdf)
        - September 2022: Response to NIST [AI Risk Management Framework](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2022_NIST_RMF_Response.pdf)
        - June 2022: Response to NAIRR [Implementing Findings from the National Artificial Intelligence Research Resource Task Force](https://huggingface.co/blog/assets/92_us_national_ai_research_resource/Hugging_Face_NAIRR_RFI_2022.pdf)
- European Union
    - January 2024: Response to [Digital Services Act, Transparency Reports](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2024_DSA_Response.pdf)
    - July 2023: Comments on the [Proposed AI Act](https://huggingface.co/blog/assets/eu_ai_act_oss/supporting_OS_in_the_AIAct.pdf)
- United Kingdom
    - November 2023: Irene Solaiman (Head of Global Policy) [oral evidence to UK Parliament House of Lords transcript](https://committees.parliament.uk/oralevidence/13802/default/)
    - September 2023: Response to [UK Parliament: UK Parliament RFI: LLMs](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_UK%20Parliament%20RFI%20LLMs.pdf)
    - June 2023: Response to [No 10: UK RFI: AI Regulatory Innovation White Paper](https://huggingface.co/datasets/huggingface/policy-docs/resolve/main/2023_UK_RFI_AI_Regulatory_Innovation_White_Paper.pdf)

| 3 |

0 | hf_public_repos | hf_public_repos/blog/mixtral.md | ---

title: "Welcome Mixtral - a SOTA Mixture of Experts on Hugging Face"

thumbnail: /blog/assets/mixtral/thumbnail.jpg

authors:

- user: lewtun

- user: philschmid

- user: osanseviero

- user: pcuenq

- user: olivierdehaene

- user: lvwerra

- user: ybelkada

---

# Welcome Mixtral - a SOTA Mixture of Experts on Hugging Face

Mixtral 8x7b is an exciting large language model released by Mistral today, which sets a new state-of-the-art for open-access models and outperforms GPT-3.5 across many benchmarks. We’re excited to support the launch with a comprehensive integration of Mixtral in the Hugging Face ecosystem 🔥!

Among the features and integrations being released today, we have:

- [Models on the Hub](https://huggingface.co/models?search=mistralai/Mixtral), with their model cards and licenses (Apache 2.0)

- [🤗 Transformers integration](https://github.com/huggingface/transformers/releases/tag/v4.36.0)

- Integration with Inference Endpoints

- Integration with [Text Generation Inference](https://github.com/huggingface/text-generation-inference) for fast and efficient production-ready inference

- An example of fine-tuning Mixtral on a single GPU with 🤗 TRL.

## Table of Contents

- [What is Mixtral 8x7b](#what-is-mixtral-8x7b)
    - [About the name](#about-the-name)
    - [Prompt format](#prompt-format)
    - [What we don't know](#what-we-dont-know)
- [Demo](#demo)
- [Inference](#inference)
    - [Using 🤗 Transformers](#using-🤗-transformers)
    - [Using Text Generation Inference](#using-text-generation-inference)
- [Fine-tuning with 🤗 TRL](#fine-tuning-with-🤗-trl)
- [Quantizing Mixtral](#quantizing-mixtral)
    - [Load Mixtral with 4-bit quantization](#load-mixtral-with-4-bit-quantization)
    - [Load Mixtral with GPTQ](#load-mixtral-with-gptq)
- [Disclaimers and ongoing work](#disclaimers-and-ongoing-work)
- [Additional Resources](#additional-resources)
- [Conclusion](#conclusion)

## What is Mixtral 8x7b?

Mixtral has a similar architecture to Mistral 7B, but comes with a twist: it’s actually 8 “expert” models in one, thanks to a technique called Mixture of Experts (MoE). For Transformer models, this works by replacing some feed-forward layers with a sparse MoE layer. A MoE layer contains a router network that selects which experts should process each token. In the case of Mixtral, two experts are selected for each timestep, which allows the model to decode at the speed of a 12B-parameter dense model, despite containing 4x the number of effective parameters!

For more details on MoEs, see our accompanying blog post: [hf.co/blog/moe](https://huggingface.co/blog/moe)
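To give a feel for the routing step, here is a toy sketch of top-2 expert selection for a single token. It assumes scalar "tokens", experts that are plain Python functions, and gate weights obtained by a softmax over the two selected router logits; these are simplifications chosen for this example, and `route_top_k` and `moe_layer` are hypothetical names rather than Mixtral's implementation.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(token, experts, router_logits, k=2):
    """Only the selected experts run; their outputs are mixed by the gate weights."""
    output = 0.0
    for idx, weight in route_top_k(router_logits, k):
        output += weight * experts[idx](token)
    return output


# eight toy experts: expert i multiplies its input by (i + 1)
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]

selected = route_top_k(router_logits)
y = moe_layer(1.0, experts, router_logits)
print(selected, y)
```

Only two of the eight experts are evaluated for the token, which is where the decoding speed advantage over a dense model of the same total size comes from.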

**Mixtral release TL;DR;**

- Release of base and Instruct versions

- Supports a context length of 32k tokens.

- Outperforms Llama 2 70B and matches or beats GPT3.5 on most benchmarks

- Speaks English, French, German, Spanish, and Italian.

- Good at coding, with 40.2% on HumanEval

- Commercially permissive with an Apache 2.0 license

So how good are the Mixtral models? Here’s an overview of the base model and its performance compared to other open models on the [LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) (higher scores are better):

| Model | License | Commercial use? | Pretraining size [tokens] | Leaderboard score ⬇️ |
| --- | --- | --- | --- | --- |
| [mistralai/Mixtral-8x7B-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-v0.1) | Apache 2.0 | ✅ | unknown | 68.42 |
| [meta-llama/Llama-2-70b-hf](https://huggingface.co/meta-llama/Llama-2-70b-hf) | Llama 2 license | ✅ | 2,000B | 67.87 |
| [tiiuae/falcon-40b](https://huggingface.co/tiiuae/falcon-40b) | Apache 2.0 | ✅ | 1,000B | 61.5 |
| [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) | Apache 2.0 | ✅ | unknown | 60.97 |
| [meta-llama/Llama-2-7b-hf](https://huggingface.co/meta-llama/Llama-2-7b-hf) | Llama 2 license | ✅ | 2,000B | 54.32 |

For instruct and chat models, evaluating on benchmarks like MT-Bench or AlpacaEval is better. Below, we show how [Mixtral Instruct](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) stacks up against the top closed and open-access models (higher scores are better):

| Model | Availability | Context window (tokens) | MT-Bench score ⬇️ |
| --- | --- | --- | --- |
| [GPT-4 Turbo](https://openai.com/blog/new-models-and-developer-products-announced-at-devday) | Proprietary | 128k | 9.32 |
| [GPT-3.5-turbo-0613](https://platform.openai.com/docs/models/gpt-3-5) | Proprietary | 16k | 8.32 |
| [mistralai/Mixtral-8x7B-Instruct-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) | Apache 2.0 | 32k | 8.30 |
| [Claude 2.1](https://www.anthropic.com/index/claude-2-1) | Proprietary | 200k | 8.18 |
| [openchat/openchat_3.5](https://huggingface.co/openchat/openchat_3.5) | Apache 2.0 | 8k | 7.81 |
| [HuggingFaceH4/zephyr-7b-beta](https://huggingface.co/HuggingFaceH4/zephyr-7b-beta) | MIT | 8k | 7.34 |
| [meta-llama/Llama-2-70b-chat-hf](https://huggingface.co/meta-llama/Llama-2-70b-chat-hf) | Llama 2 license | 4k | 6.86 |

Impressively, Mixtral Instruct outperforms all other open-access models on MT-Bench and is the first one to achieve comparable performance with GPT-3.5!

### About the name

The Mixtral MoE is called **Mixtral-8x7B**, but it doesn't have 56B parameters. Shortly after the release, we found that some people were misled into thinking that the model behaves similarly to an ensemble of 8 models with 7B parameters each, but that's not how MoE models work. Only some layers of the model (the feed-forward blocks) are replicated; the rest of the parameters are the same as in a 7B model. The total number of parameters is not 56B, but about 45B. A better name [could have been `Mixtral-45-8e`](https://twitter.com/osanseviero/status/1734248798749159874) to better convey the architecture. For more details about how MoE works, please refer to [our "Mixture of Experts Explained" post](https://huggingface.co/blog/moe).

### Prompt format

The [base model](https://huggingface.co/mistralai/Mixtral-8x7B-v0.1) has no prompt format. Like other base models, it can be used to continue an input sequence with a plausible continuation or for zero-shot/few-shot inference. It’s also a great foundation for fine-tuning your own use case. The [Instruct model](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) has a very simple conversation structure.

```bash

<s> [INST] User Instruction 1 [/INST] Model answer 1</s> [INST] User instruction 2[/INST]

```

This format has to be exactly reproduced for effective use. We’ll show later how easy it is to reproduce the instruct prompt with the chat template available in `transformers`.
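As a rough illustration of the conversation layout shown above, the helper below assembles a prompt string from a list of turns. `build_instruct_prompt` is a hypothetical function written for this sketch, and its whitespace (a single space around each tag) is an assumption; since exact reproduction matters, the chat template shipped with the tokenizer should be treated as the authoritative source.

```python
def build_instruct_prompt(turns):
    """turns: list of (user_message, assistant_answer_or_None) pairs."""
    prompt = "<s>"
    for user, answer in turns:
        prompt += f" [INST] {user} [/INST]"
        if answer is not None:
            # a completed assistant turn is closed with the end-of-sequence token
            prompt += f" {answer}</s>"
    return prompt


prompt = build_instruct_prompt([
    ("User Instruction 1", "Model answer 1"),
    ("User instruction 2", None),  # the model is expected to answer this turn
])
print(prompt)
```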

### What we don't know

Like the previous Mistral 7B release, there are several open questions about this new series of models. In particular, we have no information about the size of the dataset used for pretraining, its composition, or how it was preprocessed.

Similarly, for the Mixtral instruct model, no details have been shared about the fine-tuning datasets or the hyperparameters associated with SFT and DPO.

## Demo

You can chat with the Mixtral Instruct model on Hugging Face Chat! Check it out here: [https://huggingface.co/chat/?model=mistralai/Mixtral-8x7B-Instruct-v0.1](https://huggingface.co/chat/?model=mistralai/Mixtral-8x7B-Instruct-v0.1).

## Inference

We provide two main ways to run inference with Mixtral models:

- Via the `pipeline()` function of 🤗 Transformers.

- With Text Generation Inference, which supports advanced features like continuous batching, tensor parallelism, and more, for blazing fast results.

For each method, it is possible to run the model in half-precision (float16) or with quantized weights. Since the Mixtral model is roughly equivalent in size to a 45B parameter dense model, we can estimate the minimum amount of VRAM needed as follows:

| Precision | Required VRAM |
| --------- | ------------- |
| float16   | >90 GB        |
| 8-bit     | >45 GB        |
| 4-bit     | >23 GB        |
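These figures follow from multiplying the parameter count by the bytes stored per weight. A quick sketch, assuming the ~45B estimate used above and ignoring activations, the KV cache, and quantization metadata (which is why the table reads "more than"):

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 45e9  # rough dense-equivalent size quoted in this post

for name, bits in [("float16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{name}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```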

### Using 🤗 Transformers

With transformers [release 4.36](https://github.com/huggingface/transformers/releases/tag/v4.36.0), you can use Mixtral and leverage all the tools within the Hugging Face ecosystem, such as:

- training and inference scripts and examples

- safe file format (`safetensors`)

- integrations with tools such as bitsandbytes (4-bit quantization), PEFT (parameter efficient fine-tuning), and Flash Attention 2

- utilities and helpers to run generation with the model

- mechanisms to export the models to deploy

Make sure to use a recent version of `transformers`:

```bash

pip install --upgrade transformers

```

In the following code snippet, we show how to run inference with 🤗 Transformers and 4-bit quantization. Due to the large size of the model, you’ll need a GPU with at least 30 GB of VRAM to run it. This includes cards such as the A100 (80 or 40 GB versions) or the A6000 (48 GB).

```python

from transformers import pipeline

import torch

model = "mistralai/Mixtral-8x7B-Instruct-v0.1"

pipe = pipeline(

"text-generation",

model=model,

model_kwargs={"torch_dtype": torch.float16, "load_in_4bit": True},

)

messages = [{"role": "user", "content": "Explain what a Mixture of Experts is in less than 100 words."}]

outputs = pipe(messages, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"][-1]["content"])

```

> \<s>[INST] Explain what a Mixture of Experts is in less than 100 words. [/INST] A
> Mixture of Experts is an ensemble learning method that combines multiple models,
> or "experts," to make more accurate predictions. Each expert specializes in a
> different subset of the data, and a gating network determines the appropriate
> expert to use for a given input. This approach allows the model to adapt to
> complex, non-linear relationships in the data and improve overall performance.

### Using Text Generation Inference

**[Text Generation Inference](https://github.com/huggingface/text-generation-inference)** is a production-ready inference container developed by Hugging Face to enable easy deployment of large language models. It has features such as continuous batching, token streaming, tensor parallelism for fast inference on multiple GPUs, and production-ready logging and tracing.

You can deploy Mixtral on Hugging Face's [Inference Endpoints](https://ui.endpoints.huggingface.co/new?repository=mistralai%2FMixtral-8x7B-Instruct-v0.1&vendor=aws&region=us-east-1&accelerator=gpu&instance_size=2xlarge&task=text-generation&no_suggested_compute=true&tgi=true&tgi_max_batch_total_tokens=1024000&tgi_max_total_tokens=32000), which uses Text Generation Inference as the backend. To deploy a Mixtral model, go to the [model page](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) and click on the [Deploy -> Inference Endpoints](https://ui.endpoints.huggingface.co/new?repository=meta-llama/Llama-2-7b-hf) widget.

*Note: You might need to request a quota upgrade via email to **[[email protected]](mailto:[email protected])** to access A100s*

You can learn more about how to **[Deploy LLMs with Hugging Face Inference Endpoints in our blog](https://huggingface.co/blog/inference-endpoints-llm)**. The **[blog](https://huggingface.co/blog/inference-endpoints-llm)** includes information about supported hyperparameters and how to stream your response using Python and JavaScript.

You can also run Text Generation Inference locally on 2x A100s (80GB) with Docker as follows:

```bash
docker run --gpus all --shm-size 1g -p 3000:80 -v /data:/data ghcr.io/huggingface/text-generation-inference:1.3.0 \
    --model-id mistralai/Mixtral-8x7B-Instruct-v0.1 \
    --num-shard 2 \
    --max-batch-total-tokens 1024000 \
    --max-total-tokens 32000
```
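Once the container is up, it can be queried over HTTP. The sketch below uses only the Python standard library and assumes the server started by the command above is reachable at `localhost:3000`; the helper names are ours, and `max_new_tokens` is an arbitrary example value.

```python
import json
import urllib.request


def build_generate_request(prompt, max_new_tokens=256,
                           url="http://localhost:3000/generate"):
    """Build a POST request for TGI's /generate route.

    The URL is an assumption based on the port mapping in the docker command.
    """
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as response:
        return json.loads(response.read())["generated_text"]


# With the server running, uncomment to try it:
# print(generate("[INST] Explain what a Mixture of Experts is. [/INST]", max_new_tokens=128))
```

Note that the prompt is sent as raw text, so the instruct format has to be applied on the client side.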

## Fine-tuning with 🤗 TRL

Training LLMs can be technically and computationally challenging. In this section, we look at the tools available in the Hugging Face ecosystem to efficiently train Mixtral on a single A100 GPU.

An example command to fine-tune Mixtral on OpenAssistant’s [chat dataset](https://huggingface.co/datasets/OpenAssistant/oasst_top1_2023-08-25) can be found below. To conserve memory, we make use of 4-bit quantization and [QLoRA](https://arxiv.org/abs/2305.14314) to target all the linear layers in the attention blocks. Note that unlike dense transformers, one should not target the MLP layers as they are sparse and don’t interact well with PEFT.

First, install the nightly version of 🤗 TRL and clone the repo to access the [training script](https://github.com/huggingface/trl/blob/main/examples/scripts/sft.py):

```bash

pip install -U transformers

pip install git+https://github.com/huggingface/trl

git clone https://github.com/huggingface/trl

cd trl

```

Then you can run the script:

```bash
accelerate launch --config_file examples/accelerate_configs/multi_gpu.yaml --num_processes=1 \
    examples/scripts/sft.py \
    --model_name mistralai/Mixtral-8x7B-v0.1 \
    --dataset_name trl-lib/ultrachat_200k_chatml \
    --batch_size 2 \
    --gradient_accumulation_steps 1 \
    --learning_rate 2e-4 \
    --save_steps 200_000 \
    --use_peft \
    --peft_lora_r 16 --peft_lora_alpha 32 \
    --target_modules q_proj k_proj v_proj o_proj \
    --load_in_4bit
```

This takes about 48 hours to train on a single A100, but can be easily parallelised by tweaking `--num_processes` to the number of GPUs you have available.
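To get a feel for how small the trainable part is with these settings, the sketch below counts the parameters added by rank-16 LoRA adapters on the four attention projections. The layer shapes are assumed from the model's `config.json` (hidden size 4096, key/value projection width 1024, 32 layers), and the helper name is ours; each adapted `in × out` linear layer gains `r × (in + out)` parameters.

```python
def lora_trainable_params(r=16, hidden=4096, kv_dim=1024, layers=32):
    """Count LoRA parameters for q_proj, k_proj, v_proj and o_proj.

    The (in_features, out_features) shapes below are assumptions.
    """
    shapes = {
        "q_proj": (hidden, hidden),
        "k_proj": (hidden, kv_dim),
        "v_proj": (hidden, kv_dim),
        "o_proj": (hidden, hidden),
    }
    # Each adapter adds a matrix A (r x in_features) and a matrix B (out_features x r).
    per_layer = sum(r * (n_in + n_out) for n_in, n_out in shapes.values())
    return layers * per_layer


print(f"{lora_trainable_params() / 1e6:.1f}M trainable parameters")
```

Under these assumptions the adapters amount to a few tens of megabytes in half-precision, while the frozen 4-bit base weights dominate memory use.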

## Quantizing Mixtral

As seen above, the main challenge with this model is making it run on consumer-grade hardware so that anyone can use it, since it requires ~90GB just to be loaded in half-precision (`torch.float16`).

With the 🤗 transformers library, we support out-of-the-box inference with state-of-the-art quantization methods such as QLoRA and GPTQ. You can read more about the quantization methods we support in the [appropriate documentation section](https://huggingface.co/docs/transformers/quantization).

### Load Mixtral with 4-bit quantization

As demonstrated in the inference section, you can load Mixtral with 4-bit quantization by installing the `bitsandbytes` library (`pip install -U bitsandbytes`) and passing the flag `load_in_4bit=True` to the `from_pretrained` method. For better performance, we advise users to load the model with `bnb_4bit_compute_dtype=torch.float16`. Note you need a GPU device with at least 30GB VRAM to properly run the snippet below.

```python

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "mistralai/Mixtral-8x7B-Instruct-v0.1"

tokenizer = AutoTokenizer.from_pretrained(model_id)

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16

)

model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=quantization_config)

prompt = "[INST] Explain what a Mixture of Experts is in less than 100 words. [/INST]"

inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(**inputs, max_new_tokens=50)

print(tokenizer.decode(output[0], skip_special_tokens=True))

```

This 4-bit quantization technique was introduced in the [QLoRA paper](https://huggingface.co/papers/2305.14314). You can read more about it in the corresponding section of [the documentation](https://huggingface.co/docs/transformers/quantization#4-bit) or in [this post](https://huggingface.co/blog/4bit-transformers-bitsandbytes).

### Load Mixtral with GPTQ

The GPTQ algorithm is a post-training quantization technique where each row of the weight matrix is quantized independently to find a version of the weights that minimizes the error. These weights are quantized to int4, but they’re restored to fp16 on the fly during inference. In contrast with 4-bit QLoRA, GPTQ needs the model to be calibrated with a dataset in order to be quantized. Ready-to-use GPTQ models are shared on the 🤗 Hub by [TheBloke](https://huggingface.co/TheBloke), so anyone can use them without having to calibrate them first.
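To illustrate the "store as int4, restore on the fly" idea, here is a deliberately simplified sketch. It uses plain round-to-nearest with one scale per row, which is **not** the GPTQ algorithm itself (GPTQ additionally uses calibration data to compensate for the rounding error); the function names are ours.

```python
def quantize_row_int4(row):
    """Symmetric round-to-nearest quantization of one weight row to [-8, 7]."""
    max_abs = max(abs(w) for w in row)
    # One floating-point scale per row; fall back to 1.0 for an all-zero row.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in row]
    return q, scale


def dequantize_row(q, scale):
    """Restore approximate floating-point weights at inference time."""
    return [v * scale for v in q]


weights = [
    [0.12, -0.53, 0.98, -0.07],
    [1.50, 0.25, -2.10, 0.00],
]
for row in weights:
    q, scale = quantize_row_int4(row)
    restored = dequantize_row(q, scale)
    error = max(abs(a - b) for a, b in zip(row, restored))
    print(q, f"scale={scale:.3f}", f"max error={error:.3f}")
```

With this scheme the error on each weight is bounded by half the row's scale, which is the baseline that calibration-based methods try to improve on.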

For Mixtral, we had to tweak the calibration approach by making sure we **do not** quantize the expert gating layers for better performance. The final perplexity (lower is better) of the quantized model is `4.40` vs `4.25` for the half-precision model. The quantized model can be found [here](https://huggingface.co/TheBloke/Mixtral-8x7B-v0.1-GPTQ), and to run it with 🤗 transformers you first need to update the `auto-gptq` and `optimum` libraries:

```bash

pip install -U optimum auto-gptq

```

You also need to install transformers from source:

```bash

pip install -U git+https://github.com/huggingface/transformers.git

```

Once installed, simply load the GPTQ model with the `from_pretrained` method:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "TheBloke/Mixtral-8x7B-v0.1-GPTQ"

tokenizer = AutoTokenizer.from_pretrained(model_id)
# device_map="auto" places the layers across the available devices
model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")

prompt = "[INST] Explain what a Mixture of Experts is in less than 100 words. [/INST]"
inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Note that for both QLoRA and GPTQ you need at least 30 GB of GPU VRAM to fit the model. You can make it work with 24 GB if you use `device_map="auto"`, like in the example above, so some layers are offloaded to CPU.

## Disclaimers and ongoing work

- **Quantization**: Quantization of MoEs is an active area of research. Some initial experiments we've done with TheBloke are shown above, but we expect more progress as this architecture becomes better understood! It will be exciting to see the development in the coming days and weeks in this area. Additionally, recent work such as [QMoE](https://arxiv.org/abs/2310.16795), which achieves sub-1-bit quantization for MoEs, could be applied here.

- **High VRAM usage**: MoEs run inference very quickly but still need a large amount of VRAM (and hence an expensive GPU). This makes them challenging to use in local setups. MoEs are great for setups with many devices and large VRAM. Mixtral requires 90GB of VRAM in half-precision 🤯

## Additional Resources

- [Mixture of Experts Explained](https://huggingface.co/blog/moe)

- [Mixtral of experts](https://mistral.ai/news/mixtral-of-experts/)

- [Models on the Hub](https://huggingface.co/models?other=mixtral)

- [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

- [Chat demo on Hugging Chat](https://huggingface.co/chat/?model=mistralai/Mixtral-8x7B-Instruct-v0.1)

## Conclusion

We're very excited about Mixtral being released! In the coming days, be ready to learn more about ways to fine-tune and deploy Mixtral.

| 4 |

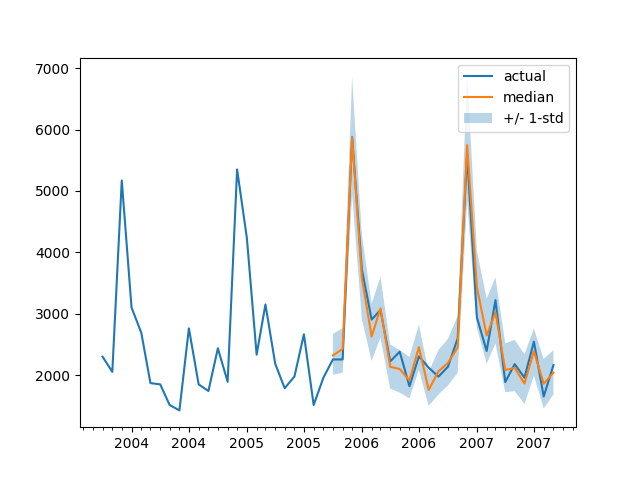

0 | hf_public_repos | hf_public_repos/blog/time-series-transformers.md | ---

title: "Probabilistic Time Series Forecasting with 🤗 Transformers"

thumbnail: /blog/assets/118_time-series-transformers/thumbnail.png

authors:

- user: nielsr

- user: kashif

---

# Probabilistic Time Series Forecasting with 🤗 Transformers

<script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script>

<a target="_blank" href="https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/time-series-transformers.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

## Introduction