| problem_id (string, length 18–22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13–58) | prompt (string, length 1.71k–18.9k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 465–23.6k) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

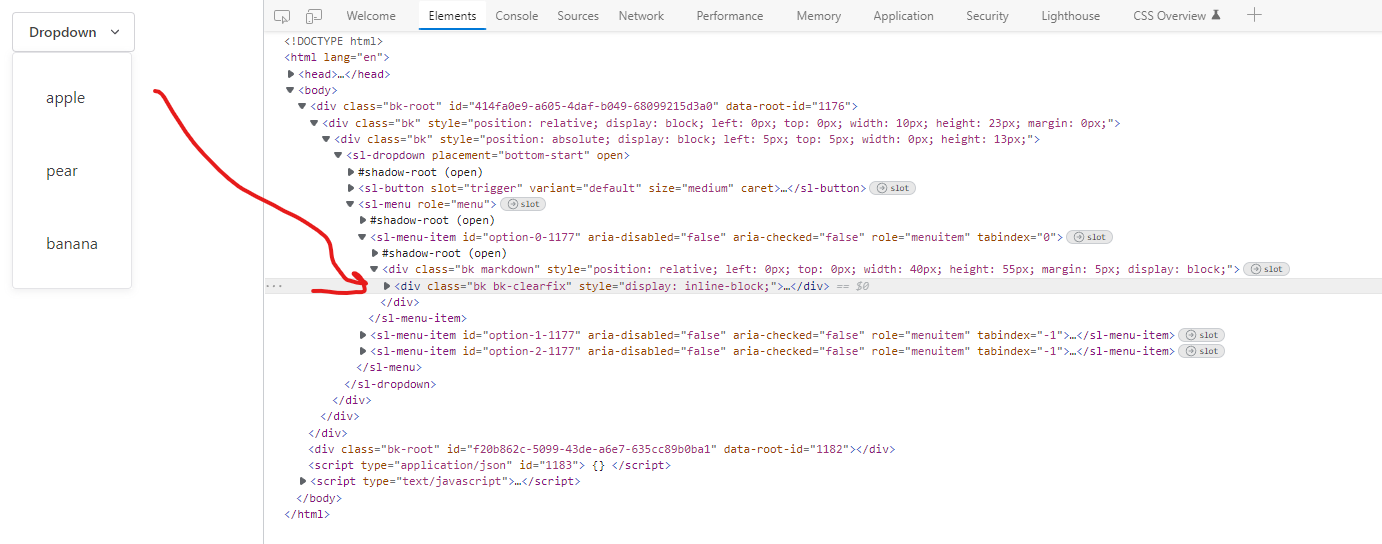

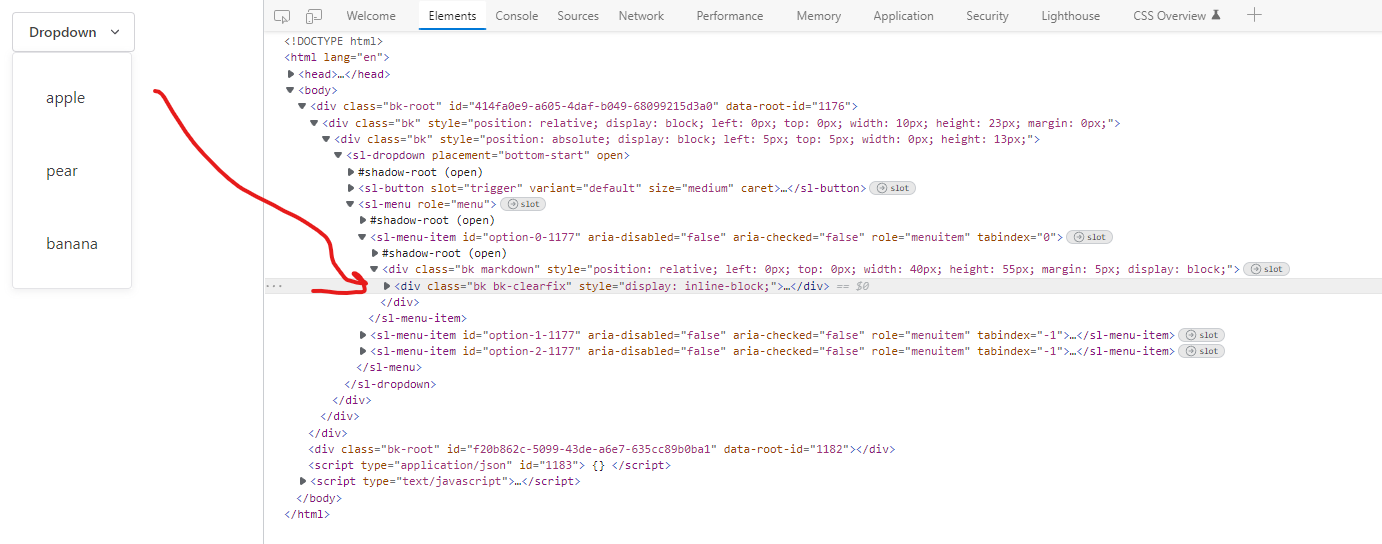

gh_patches_debug_27441 | rasdani/github-patches | git_diff | ivy-llc__ivy-13559 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

index_add

</issue>

<code>

[start of ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py]

1 # local
2 import ivy
3 from ivy.functional.frontends.torch.func_wrapper import to_ivy_arrays_and_back
4
5
6 @to_ivy_arrays_and_back
7 def adjoint(input):
8     return ivy.adjoint(input)
9
10
11 @to_ivy_arrays_and_back
12 def cat(tensors, dim=0, *, out=None):
13     return ivy.concat(tensors, axis=dim, out=out)
14
15
16 @to_ivy_arrays_and_back
17 def chunk(input, chunks, dim=0):
18     if ivy.shape(input) == ():
19         return [input]
20     else:
21         dim_size = ivy.shape(input)[dim]
22         chunk_size = dim_size // chunks
23         if chunk_size == 0:
24             return ivy.split(input, num_or_size_splits=dim_size, axis=dim)
25         else:
26             remainder = dim_size % chunks
27             if remainder == 0:
28                 return ivy.split(input, num_or_size_splits=chunks, axis=dim)
29             else:
30                 return ivy.split(
31                     input,
32                     num_or_size_splits=tuple(
33                         [chunk_size + remainder] + [chunk_size] * (chunks - 1)
34                     ),
35                     axis=dim,
36                 )
37
38
39 @to_ivy_arrays_and_back
40 def concat(tensors, dim=0, *, out=None):
41     return ivy.concat(tensors, axis=dim, out=out)
42
43
44 @to_ivy_arrays_and_back
45 def gather(input, dim, index, *, sparse_grad=False, out=None):
46     if sparse_grad:
47         raise ivy.utils.exceptions.IvyException(
48             "Gather does not yet support the sparse grad functionality"
49         )
50
51     dim = dim % len(input.shape)
52     all_indices = ivy.argwhere(ivy.full(index.shape, True))
53     gather_locations = ivy.reshape(index, [ivy.prod(ivy.array(index.shape))])
54
55     gather_indices = []
56     for axis in range(len(index.shape)):
57         if axis == dim:
58             gather_indices.append(ivy.array(gather_locations, dtype=index.dtype))
59         else:
60             gather_indices.append(ivy.array(all_indices[:, axis], dtype=index.dtype))
61
62     gather_indices = ivy.stack(gather_indices, axis=-1)
63     gathered = ivy.gather_nd(input, gather_indices)
64     reshaped = ivy.reshape(gathered, index.shape)
65     return reshaped
66
67
68 @to_ivy_arrays_and_back
69 def nonzero(input, *, out=None, as_tuple=False):
70     ret = ivy.nonzero(input)
71     if as_tuple is False:
72         ret = ivy.matrix_transpose(ivy.stack(ret))
73
74     if ivy.exists(out):
75         return ivy.inplace_update(out, ret)
76     return ret
77
78
79 @to_ivy_arrays_and_back
80 def permute(input, dims):
81     return ivy.permute_dims(input, axes=dims)
82
83
84 @to_ivy_arrays_and_back
85 def reshape(input, shape):
86     return ivy.reshape(input, shape)
87
88
89 @to_ivy_arrays_and_back
90 def squeeze(input, dim):
91     if isinstance(dim, int) and input.ndim > 0:
92         if input.shape[dim] > 1:
93             return input
94     return ivy.squeeze(input, dim)
95
96
97 @to_ivy_arrays_and_back
98 def stack(tensors, dim=0, *, out=None):
99     return ivy.stack(tensors, axis=dim, out=out)
100
101
102 @to_ivy_arrays_and_back
103 def swapaxes(input, axis0, axis1):
104     return ivy.swapaxes(input, axis0, axis1)
105
106
107 @to_ivy_arrays_and_back
108 def swapdims(input, dim0, dim1):
109     return ivy.swapaxes(input, dim0, dim1)
110
111
112 @to_ivy_arrays_and_back
113 def transpose(input, dim0, dim1):
114     return ivy.swapaxes(input, dim0, dim1)
115
116
117 @to_ivy_arrays_and_back
118 def t(input):
119     if input.ndim > 2:
120         raise ivy.utils.exceptions.IvyException(
121             "t(input) expects a tensor with <= 2 dimensions, but self is %dD"
122             % input.ndim
123         )
124     if input.ndim == 2:
125         return ivy.swapaxes(input, 0, 1)
126     else:
127         return input
128
129

130 @to_ivy_arrays_and_back

131 def tile(input, dims):

132 try:

133 tup = tuple(dims)

134 except TypeError:

135 tup = (dims,)

136 d = len(tup)

137 res = 0

138 if len(input.shape) > len([dims]) - 1:

139 res = input

140 if d < input.ndim:

141 tup = (1,) * (input.ndim - d) + tup

142 res = ivy.tile(input, tup)

143

144 else:

145 res = ivy.tile(input, repeats=dims, out=None)

146 return res

147
148
149 @to_ivy_arrays_and_back
150 def unsqueeze(input, dim=0):
151     return ivy.expand_dims(input, axis=dim)
152
153
154 @to_ivy_arrays_and_back
155 def argwhere(input):
156     return ivy.argwhere(input)
157
158
159 @to_ivy_arrays_and_back
160 def movedim(input, source, destination):
161     return ivy.moveaxis(input, source, destination)
162
163
164 @to_ivy_arrays_and_back
165 def moveaxis(input, source, destination):
166     return ivy.moveaxis(input, source, destination)
167
168
169 @to_ivy_arrays_and_back
170 def hstack(tensors, *, out=None):
171     return ivy.hstack(tensors, out=out)
172
173
174 @to_ivy_arrays_and_back
175 def index_select(input, dim, index, *, out=None):
176     return ivy.gather(input, index, axis=dim, out=out)
177
178
179 @to_ivy_arrays_and_back
180 def dstack(tensors, *, out=None):
181     return ivy.dstack(tensors, out=out)
182
183
184 @to_ivy_arrays_and_back
185 def take_along_dim(input, indices, dim, *, out=None):
186     return ivy.take_along_axis(input, indices, dim, out=out)
187
188
189 @to_ivy_arrays_and_back
190 def vstack(tensors, *, out=None):
191     return ivy.vstack(tensors, out=out)
192
193
194 @to_ivy_arrays_and_back
195 def split(tensor, split_size_or_sections, dim=0):
196     if isinstance(split_size_or_sections, int):
197         split_size = split_size_or_sections
198         split_size_or_sections = [split_size] * (tensor.shape[dim] // split_size)
199         if tensor.shape[dim] % split_size:
200             split_size_or_sections.append(tensor.shape[dim] % split_size)
201     return tuple(
202         ivy.split(
203             tensor,
204             num_or_size_splits=split_size_or_sections,
205             axis=dim,
206             with_remainder=True,
207         )
208     )
209
210
211 @to_ivy_arrays_and_back
212 def tensor_split(input, indices_or_sections, dim=0):
213     if isinstance(indices_or_sections, (list, tuple)):
214         indices_or_sections = (
215             ivy.diff(indices_or_sections, prepend=[0], append=[input.shape[dim]])
216             .astype(ivy.int8)
217             .to_list()
218         )
219     return ivy.split(
220         input, num_or_size_splits=indices_or_sections, axis=dim, with_remainder=False
221     )
222
223
224 @to_ivy_arrays_and_back
225 def unbind(input, dim=0):
226     shape = list(input.shape)
227     shape.pop(dim)
228     return tuple([x.reshape(tuple(shape)) for x in split(input, 1, dim=dim)])
229
230
231 def _get_indices_or_sections(indices_or_sections, indices, sections):
232     if not ivy.exists(indices_or_sections):
233         if ivy.exists(indices) and not ivy.exists(sections):
234             indices_or_sections = indices
235         elif ivy.exists(sections) and not ivy.exists(indices):
236             indices_or_sections = sections
237         else:
238             raise ivy.utils.exception.IvyError(
239                 "got invalid argument for indices_or_sections"
240             )
241     return indices_or_sections
242
243
244 @to_ivy_arrays_and_back
245 def dsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):
246     indices_or_sections = _get_indices_or_sections(
247         indices_or_sections, indices, sections
248     )
249     return tuple(ivy.dsplit(input, indices_or_sections))
250
251
252 @to_ivy_arrays_and_back
253 def hsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):
254     indices_or_sections = _get_indices_or_sections(
255         indices_or_sections, indices, sections
256     )
257     return tuple(ivy.hsplit(input, indices_or_sections))
258
259
260 @to_ivy_arrays_and_back
261 def vsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):
262     indices_or_sections = _get_indices_or_sections(
263         indices_or_sections, indices, sections
264     )
265     return tuple(ivy.vsplit(input, indices_or_sections))
266
267
268 @to_ivy_arrays_and_back
269 def row_stack(tensors, *, out=None):
270     return ivy.vstack(tensors, out=out)
271
272
273 @to_ivy_arrays_and_back
274 def where(condition, input=None, other=None):
275     if not ivy.exists(input) and not ivy.exists(other):
276         return nonzero(condition, as_tuple=True)
277     return ivy.where(condition, input, other)
278
279
280 @to_ivy_arrays_and_back
281 def conj(input):
282     return ivy.conj(input)
283

[end of ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py b/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py

--- a/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py
+++ b/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py
@@ -280,3 +280,45 @@
 @to_ivy_arrays_and_back
 def conj(input):
     return ivy.conj(input)
+
+
+@to_ivy_arrays_and_back
+def index_add(input, dim, index, source, *, alpha=1, out=None):
+    # Potential Bug:
+    # There is an issue with the torch backend (not caused by ivy)
+    # where half precision (float16) values get ignored in summation:
+    #
+    # >>> a = torch.tensor(-14., dtype=torch.float16)
+    # >>> b = torch.tensor(1.014, dtype=torch.float16)
+    # >>> a+b
+    # tensor(-12.9844, dtype=torch.float16)
+    # >>> a = torch.tensor(-24., dtype=torch.float16)
+    # >>> a+b
+    # tensor(-22.9844, dtype=torch.float16)
+    # >>> a = torch.tensor(-34., dtype=torch.float16)
+    # >>> a+b
+    # tensor(-33., dtype=torch.float16)
+    # >>>
+    input = ivy.swapaxes(input, dim, 0)
+    source = ivy.swapaxes(source, dim, 0)
+    _to_adds = []
+    index = sorted(zip(ivy.to_list(index), range(len(index))), key=(lambda x: x[0]))
+    while index:
+        _curr_idx = index[0][0]
+        while len(_to_adds) < _curr_idx:
+            _to_adds.append(ivy.zeros_like(source[0]))
+        _to_add_cum = ivy.get_item(source, index[0][1])
+        while (1 < len(index)) and (index[0][0] == index[1][0]):
+            _to_add_cum = ivy.add(_to_add_cum, ivy.get_item(source, index.pop(1)[1]))
+        index.pop(0)
+        _to_adds.append(_to_add_cum)
+    while len(_to_adds) < input.shape[0]:
+        _to_adds.append(ivy.zeros_like(source[0]))
+    _to_adds = ivy.stack(_to_adds)
+    if len(input.shape) < 2:
+        # Added this line due to the paddle backend treating scalars as 1-d arrays
+        _to_adds = ivy.flatten(_to_adds)
+
+    ret = ivy.add(input, _to_adds, alpha=alpha)
+    ret = ivy.swapaxes(ret, 0, dim, out=out)
+    return ret

| {"golden_diff": "diff --git a/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py b/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py\n--- a/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py\n+++ b/ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py\n@@ -280,3 +280,45 @@\n @to_ivy_arrays_and_back\r\n def conj(input):\r\n return ivy.conj(input)\r\n+\r\n+\r\n+@to_ivy_arrays_and_back\r\n+def index_add(input, dim, index, source, *, alpha=1, out=None):\r\n+ # Potential Bug:\r\n+ # There is an issue with the torch backend (not caused by ivy)\r\n+ # where half precision (float16) values get ignored in summation:\r\n+ #\r\n+ # >>> a = torch.tensor(-14., dtype=torch.float16)\r\n+ # >>> b = torch.tensor(1.014, dtype=torch.float16)\r\n+ # >>> a+b\r\n+ # tensor(-12.9844, dtype=torch.float16)\r\n+ # >>> a = torch.tensor(-24., dtype=torch.float16)\r\n+ # >>> a+b\r\n+ # tensor(-22.9844, dtype=torch.float16)\r\n+ # >>> a = torch.tensor(-34., dtype=torch.float16)\r\n+ # >>> a+b\r\n+ # tensor(-33., dtype=torch.float16)\r\n+ # >>>\r\n+ input = ivy.swapaxes(input, dim, 0)\r\n+ source = ivy.swapaxes(source, dim, 0)\r\n+ _to_adds = []\r\n+ index = sorted(zip(ivy.to_list(index), range(len(index))), key=(lambda x: x[0]))\r\n+ while index:\r\n+ _curr_idx = index[0][0]\r\n+ while len(_to_adds) < _curr_idx:\r\n+ _to_adds.append(ivy.zeros_like(source[0]))\r\n+ _to_add_cum = ivy.get_item(source, index[0][1])\r\n+ while (1 < len(index)) and (index[0][0] == index[1][0]):\r\n+ _to_add_cum = ivy.add(_to_add_cum, ivy.get_item(source, index.pop(1)[1]))\r\n+ index.pop(0)\r\n+ _to_adds.append(_to_add_cum)\r\n+ while len(_to_adds) < input.shape[0]:\r\n+ _to_adds.append(ivy.zeros_like(source[0]))\r\n+ _to_adds = ivy.stack(_to_adds)\r\n+ if len(input.shape) < 2:\r\n+ # Added this line due to the paddle backend treating scalars as 1-d arrays\r\n+ _to_adds = ivy.flatten(_to_adds)\r\n+\r\n+ ret = ivy.add(input, _to_adds, 
alpha=alpha)\r\n+ ret = ivy.swapaxes(ret, 0, dim, out=out)\r\n+ return ret\n", "issue": "index_add\n\n", "before_files": [{"content": "# local\r\nimport ivy\r\nfrom ivy.functional.frontends.torch.func_wrapper import to_ivy_arrays_and_back\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef adjoint(input):\r\n return ivy.adjoint(input)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef cat(tensors, dim=0, *, out=None):\r\n return ivy.concat(tensors, axis=dim, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef chunk(input, chunks, dim=0):\r\n if ivy.shape(input) == ():\r\n return [input]\r\n else:\r\n dim_size = ivy.shape(input)[dim]\r\n chunk_size = dim_size // chunks\r\n if chunk_size == 0:\r\n return ivy.split(input, num_or_size_splits=dim_size, axis=dim)\r\n else:\r\n remainder = dim_size % chunks\r\n if remainder == 0:\r\n return ivy.split(input, num_or_size_splits=chunks, axis=dim)\r\n else:\r\n return ivy.split(\r\n input,\r\n num_or_size_splits=tuple(\r\n [chunk_size + remainder] + [chunk_size] * (chunks - 1)\r\n ),\r\n axis=dim,\r\n )\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef concat(tensors, dim=0, *, out=None):\r\n return ivy.concat(tensors, axis=dim, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef gather(input, dim, index, *, sparse_grad=False, out=None):\r\n if sparse_grad:\r\n raise ivy.utils.exceptions.IvyException(\r\n \"Gather does not yet support the sparse grad functionality\"\r\n )\r\n\r\n dim = dim % len(input.shape)\r\n all_indices = ivy.argwhere(ivy.full(index.shape, True))\r\n gather_locations = ivy.reshape(index, [ivy.prod(ivy.array(index.shape))])\r\n\r\n gather_indices = []\r\n for axis in range(len(index.shape)):\r\n if axis == dim:\r\n gather_indices.append(ivy.array(gather_locations, dtype=index.dtype))\r\n else:\r\n gather_indices.append(ivy.array(all_indices[:, axis], dtype=index.dtype))\r\n\r\n gather_indices = ivy.stack(gather_indices, axis=-1)\r\n gathered = ivy.gather_nd(input, gather_indices)\r\n reshaped = ivy.reshape(gathered, index.shape)\r\n 
return reshaped\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef nonzero(input, *, out=None, as_tuple=False):\r\n ret = ivy.nonzero(input)\r\n if as_tuple is False:\r\n ret = ivy.matrix_transpose(ivy.stack(ret))\r\n\r\n if ivy.exists(out):\r\n return ivy.inplace_update(out, ret)\r\n return ret\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef permute(input, dims):\r\n return ivy.permute_dims(input, axes=dims)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef reshape(input, shape):\r\n return ivy.reshape(input, shape)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef squeeze(input, dim):\r\n if isinstance(dim, int) and input.ndim > 0:\r\n if input.shape[dim] > 1:\r\n return input\r\n return ivy.squeeze(input, dim)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef stack(tensors, dim=0, *, out=None):\r\n return ivy.stack(tensors, axis=dim, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef swapaxes(input, axis0, axis1):\r\n return ivy.swapaxes(input, axis0, axis1)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef swapdims(input, dim0, dim1):\r\n return ivy.swapaxes(input, dim0, dim1)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef transpose(input, dim0, dim1):\r\n return ivy.swapaxes(input, dim0, dim1)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef t(input):\r\n if input.ndim > 2:\r\n raise ivy.utils.exceptions.IvyException(\r\n \"t(input) expects a tensor with <= 2 dimensions, but self is %dD\"\r\n % input.ndim\r\n )\r\n if input.ndim == 2:\r\n return ivy.swapaxes(input, 0, 1)\r\n else:\r\n return input\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef tile(input, dims):\r\n try:\r\n tup = tuple(dims)\r\n except TypeError:\r\n tup = (dims,)\r\n d = len(tup)\r\n res = 0\r\n if len(input.shape) > len([dims]) - 1:\r\n res = input\r\n if d < input.ndim:\r\n tup = (1,) * (input.ndim - d) + tup\r\n res = ivy.tile(input, tup)\r\n\r\n else:\r\n res = ivy.tile(input, repeats=dims, out=None)\r\n return res\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef unsqueeze(input, dim=0):\r\n return ivy.expand_dims(input, 
axis=dim)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef argwhere(input):\r\n return ivy.argwhere(input)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef movedim(input, source, destination):\r\n return ivy.moveaxis(input, source, destination)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef moveaxis(input, source, destination):\r\n return ivy.moveaxis(input, source, destination)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef hstack(tensors, *, out=None):\r\n return ivy.hstack(tensors, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef index_select(input, dim, index, *, out=None):\r\n return ivy.gather(input, index, axis=dim, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef dstack(tensors, *, out=None):\r\n return ivy.dstack(tensors, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef take_along_dim(input, indices, dim, *, out=None):\r\n return ivy.take_along_axis(input, indices, dim, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef vstack(tensors, *, out=None):\r\n return ivy.vstack(tensors, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef split(tensor, split_size_or_sections, dim=0):\r\n if isinstance(split_size_or_sections, int):\r\n split_size = split_size_or_sections\r\n split_size_or_sections = [split_size] * (tensor.shape[dim] // split_size)\r\n if tensor.shape[dim] % split_size:\r\n split_size_or_sections.append(tensor.shape[dim] % split_size)\r\n return tuple(\r\n ivy.split(\r\n tensor,\r\n num_or_size_splits=split_size_or_sections,\r\n axis=dim,\r\n with_remainder=True,\r\n )\r\n )\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef tensor_split(input, indices_or_sections, dim=0):\r\n if isinstance(indices_or_sections, (list, tuple)):\r\n indices_or_sections = (\r\n ivy.diff(indices_or_sections, prepend=[0], append=[input.shape[dim]])\r\n .astype(ivy.int8)\r\n .to_list()\r\n )\r\n return ivy.split(\r\n input, num_or_size_splits=indices_or_sections, axis=dim, with_remainder=False\r\n )\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef unbind(input, dim=0):\r\n shape = list(input.shape)\r\n 
shape.pop(dim)\r\n return tuple([x.reshape(tuple(shape)) for x in split(input, 1, dim=dim)])\r\n\r\n\r\ndef _get_indices_or_sections(indices_or_sections, indices, sections):\r\n if not ivy.exists(indices_or_sections):\r\n if ivy.exists(indices) and not ivy.exists(sections):\r\n indices_or_sections = indices\r\n elif ivy.exists(sections) and not ivy.exists(indices):\r\n indices_or_sections = sections\r\n else:\r\n raise ivy.utils.exception.IvyError(\r\n \"got invalid argument for indices_or_sections\"\r\n )\r\n return indices_or_sections\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef dsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):\r\n indices_or_sections = _get_indices_or_sections(\r\n indices_or_sections, indices, sections\r\n )\r\n return tuple(ivy.dsplit(input, indices_or_sections))\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef hsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):\r\n indices_or_sections = _get_indices_or_sections(\r\n indices_or_sections, indices, sections\r\n )\r\n return tuple(ivy.hsplit(input, indices_or_sections))\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef vsplit(input, indices_or_sections=None, /, *, indices=None, sections=None):\r\n indices_or_sections = _get_indices_or_sections(\r\n indices_or_sections, indices, sections\r\n )\r\n return tuple(ivy.vsplit(input, indices_or_sections))\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef row_stack(tensors, *, out=None):\r\n return ivy.vstack(tensors, out=out)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef where(condition, input=None, other=None):\r\n if not ivy.exists(input) and not ivy.exists(other):\r\n return nonzero(condition, as_tuple=True)\r\n return ivy.where(condition, input, other)\r\n\r\n\r\n@to_ivy_arrays_and_back\r\ndef conj(input):\r\n return ivy.conj(input)\r\n", "path": "ivy/functional/frontends/torch/indexing_slicing_joining_mutating_ops.py"}]} | 3,316 | 696 |

gh_patches_debug_883 | rasdani/github-patches | git_diff | scrapy__scrapy-5880 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

_sent_failed cut the errback chain in MailSender

`MailSender._sent_failed` return `None`, instead of `failure`. This cut the errback call chain, making impossible to detect in the code fail in the mails in client code.

</issue>

<code>

[start of scrapy/mail.py]

1 """

2 Mail sending helpers

3

4 See documentation in docs/topics/email.rst

5 """

6 import logging

7 from email import encoders as Encoders

8 from email.mime.base import MIMEBase

9 from email.mime.multipart import MIMEMultipart

10 from email.mime.nonmultipart import MIMENonMultipart

11 from email.mime.text import MIMEText

12 from email.utils import formatdate

13 from io import BytesIO

14

15 from twisted import version as twisted_version

16 from twisted.internet import defer, ssl

17 from twisted.python.versions import Version

18

19 from scrapy.utils.misc import arg_to_iter

20 from scrapy.utils.python import to_bytes

21

22 logger = logging.getLogger(__name__)

23

24

25 # Defined in the email.utils module, but undocumented:

26 # https://github.com/python/cpython/blob/v3.9.0/Lib/email/utils.py#L42

27 COMMASPACE = ", "

28

29

30 def _to_bytes_or_none(text):

31 if text is None:

32 return None

33 return to_bytes(text)

34

35

36 class MailSender:

37 def __init__(

38 self,

39 smtphost="localhost",

40 mailfrom="scrapy@localhost",

41 smtpuser=None,

42 smtppass=None,

43 smtpport=25,

44 smtptls=False,

45 smtpssl=False,

46 debug=False,

47 ):

48 self.smtphost = smtphost

49 self.smtpport = smtpport

50 self.smtpuser = _to_bytes_or_none(smtpuser)

51 self.smtppass = _to_bytes_or_none(smtppass)

52 self.smtptls = smtptls

53 self.smtpssl = smtpssl

54 self.mailfrom = mailfrom

55 self.debug = debug

56

57 @classmethod

58 def from_settings(cls, settings):

59 return cls(

60 smtphost=settings["MAIL_HOST"],

61 mailfrom=settings["MAIL_FROM"],

62 smtpuser=settings["MAIL_USER"],

63 smtppass=settings["MAIL_PASS"],

64 smtpport=settings.getint("MAIL_PORT"),

65 smtptls=settings.getbool("MAIL_TLS"),

66 smtpssl=settings.getbool("MAIL_SSL"),

67 )

68

69 def send(

70 self,

71 to,

72 subject,

73 body,

74 cc=None,

75 attachs=(),

76 mimetype="text/plain",

77 charset=None,

78 _callback=None,

79 ):

80 from twisted.internet import reactor

81

82 if attachs:

83 msg = MIMEMultipart()

84 else:

85 msg = MIMENonMultipart(*mimetype.split("/", 1))

86

87 to = list(arg_to_iter(to))

88 cc = list(arg_to_iter(cc))

89

90 msg["From"] = self.mailfrom

91 msg["To"] = COMMASPACE.join(to)

92 msg["Date"] = formatdate(localtime=True)

93 msg["Subject"] = subject

94 rcpts = to[:]

95 if cc:

96 rcpts.extend(cc)

97 msg["Cc"] = COMMASPACE.join(cc)

98

99 if charset:

100 msg.set_charset(charset)

101

102 if attachs:

103 msg.attach(MIMEText(body, "plain", charset or "us-ascii"))

104 for attach_name, mimetype, f in attachs:

105 part = MIMEBase(*mimetype.split("/"))

106 part.set_payload(f.read())

107 Encoders.encode_base64(part)

108 part.add_header(

109 "Content-Disposition", "attachment", filename=attach_name

110 )

111 msg.attach(part)

112 else:

113 msg.set_payload(body)

114

115 if _callback:

116 _callback(to=to, subject=subject, body=body, cc=cc, attach=attachs, msg=msg)

117

118 if self.debug:

119 logger.debug(

120 "Debug mail sent OK: To=%(mailto)s Cc=%(mailcc)s "

121 'Subject="%(mailsubject)s" Attachs=%(mailattachs)d',

122 {

123 "mailto": to,

124 "mailcc": cc,

125 "mailsubject": subject,

126 "mailattachs": len(attachs),

127 },

128 )

129 return

130

131 dfd = self._sendmail(rcpts, msg.as_string().encode(charset or "utf-8"))

132 dfd.addCallbacks(

133 callback=self._sent_ok,

134 errback=self._sent_failed,

135 callbackArgs=[to, cc, subject, len(attachs)],

136 errbackArgs=[to, cc, subject, len(attachs)],

137 )

138 reactor.addSystemEventTrigger("before", "shutdown", lambda: dfd)

139 return dfd

140

141 def _sent_ok(self, result, to, cc, subject, nattachs):

142 logger.info(

143 "Mail sent OK: To=%(mailto)s Cc=%(mailcc)s "

144 'Subject="%(mailsubject)s" Attachs=%(mailattachs)d',

145 {

146 "mailto": to,

147 "mailcc": cc,

148 "mailsubject": subject,

149 "mailattachs": nattachs,

150 },

151 )

152

153 def _sent_failed(self, failure, to, cc, subject, nattachs):

154 errstr = str(failure.value)

155 logger.error(

156 "Unable to send mail: To=%(mailto)s Cc=%(mailcc)s "

157 'Subject="%(mailsubject)s" Attachs=%(mailattachs)d'

158 "- %(mailerr)s",

159 {

160 "mailto": to,

161 "mailcc": cc,

162 "mailsubject": subject,

163 "mailattachs": nattachs,

164 "mailerr": errstr,

165 },

166 )

167

168 def _sendmail(self, to_addrs, msg):

169 from twisted.internet import reactor

170

171 msg = BytesIO(msg)

172 d = defer.Deferred()

173

174 factory = self._create_sender_factory(to_addrs, msg, d)

175

176 if self.smtpssl:

177 reactor.connectSSL(

178 self.smtphost, self.smtpport, factory, ssl.ClientContextFactory()

179 )

180 else:

181 reactor.connectTCP(self.smtphost, self.smtpport, factory)

182

183 return d

184

185 def _create_sender_factory(self, to_addrs, msg, d):

186 from twisted.mail.smtp import ESMTPSenderFactory

187

188 factory_keywords = {

189 "heloFallback": True,

190 "requireAuthentication": False,

191 "requireTransportSecurity": self.smtptls,

192 }

193

194 # Newer versions of twisted require the hostname to use STARTTLS

195 if twisted_version >= Version("twisted", 21, 2, 0):

196 factory_keywords["hostname"] = self.smtphost

197

198 factory = ESMTPSenderFactory(

199 self.smtpuser,

200 self.smtppass,

201 self.mailfrom,

202 to_addrs,

203 msg,

204 d,

205 **factory_keywords

206 )

207 factory.noisy = False

208 return factory

209

[end of scrapy/mail.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/scrapy/mail.py b/scrapy/mail.py

--- a/scrapy/mail.py
+++ b/scrapy/mail.py
@@ -164,6 +164,7 @@
                 "mailerr": errstr,
             },
         )
+        return failure
 
     def _sendmail(self, to_addrs, msg):
         from twisted.internet import reactor

| {"golden_diff": "diff --git a/scrapy/mail.py b/scrapy/mail.py\n--- a/scrapy/mail.py\n+++ b/scrapy/mail.py\n@@ -164,6 +164,7 @@\n \"mailerr\": errstr,\n },\n )\n+ return failure\n \n def _sendmail(self, to_addrs, msg):\n from twisted.internet import reactor\n", "issue": "_sent_failed cut the errback chain in MailSender\n`MailSender._sent_failed` return `None`, instead of `failure`. This cut the errback call chain, making impossible to detect in the code fail in the mails in client code.\n\n", "before_files": [{"content": "\"\"\"\nMail sending helpers\n\nSee documentation in docs/topics/email.rst\n\"\"\"\nimport logging\nfrom email import encoders as Encoders\nfrom email.mime.base import MIMEBase\nfrom email.mime.multipart import MIMEMultipart\nfrom email.mime.nonmultipart import MIMENonMultipart\nfrom email.mime.text import MIMEText\nfrom email.utils import formatdate\nfrom io import BytesIO\n\nfrom twisted import version as twisted_version\nfrom twisted.internet import defer, ssl\nfrom twisted.python.versions import Version\n\nfrom scrapy.utils.misc import arg_to_iter\nfrom scrapy.utils.python import to_bytes\n\nlogger = logging.getLogger(__name__)\n\n\n# Defined in the email.utils module, but undocumented:\n# https://github.com/python/cpython/blob/v3.9.0/Lib/email/utils.py#L42\nCOMMASPACE = \", \"\n\n\ndef _to_bytes_or_none(text):\n if text is None:\n return None\n return to_bytes(text)\n\n\nclass MailSender:\n def __init__(\n self,\n smtphost=\"localhost\",\n mailfrom=\"scrapy@localhost\",\n smtpuser=None,\n smtppass=None,\n smtpport=25,\n smtptls=False,\n smtpssl=False,\n debug=False,\n ):\n self.smtphost = smtphost\n self.smtpport = smtpport\n self.smtpuser = _to_bytes_or_none(smtpuser)\n self.smtppass = _to_bytes_or_none(smtppass)\n self.smtptls = smtptls\n self.smtpssl = smtpssl\n self.mailfrom = mailfrom\n self.debug = debug\n\n @classmethod\n def from_settings(cls, settings):\n return cls(\n smtphost=settings[\"MAIL_HOST\"],\n 
mailfrom=settings[\"MAIL_FROM\"],\n smtpuser=settings[\"MAIL_USER\"],\n smtppass=settings[\"MAIL_PASS\"],\n smtpport=settings.getint(\"MAIL_PORT\"),\n smtptls=settings.getbool(\"MAIL_TLS\"),\n smtpssl=settings.getbool(\"MAIL_SSL\"),\n )\n\n def send(\n self,\n to,\n subject,\n body,\n cc=None,\n attachs=(),\n mimetype=\"text/plain\",\n charset=None,\n _callback=None,\n ):\n from twisted.internet import reactor\n\n if attachs:\n msg = MIMEMultipart()\n else:\n msg = MIMENonMultipart(*mimetype.split(\"/\", 1))\n\n to = list(arg_to_iter(to))\n cc = list(arg_to_iter(cc))\n\n msg[\"From\"] = self.mailfrom\n msg[\"To\"] = COMMASPACE.join(to)\n msg[\"Date\"] = formatdate(localtime=True)\n msg[\"Subject\"] = subject\n rcpts = to[:]\n if cc:\n rcpts.extend(cc)\n msg[\"Cc\"] = COMMASPACE.join(cc)\n\n if charset:\n msg.set_charset(charset)\n\n if attachs:\n msg.attach(MIMEText(body, \"plain\", charset or \"us-ascii\"))\n for attach_name, mimetype, f in attachs:\n part = MIMEBase(*mimetype.split(\"/\"))\n part.set_payload(f.read())\n Encoders.encode_base64(part)\n part.add_header(\n \"Content-Disposition\", \"attachment\", filename=attach_name\n )\n msg.attach(part)\n else:\n msg.set_payload(body)\n\n if _callback:\n _callback(to=to, subject=subject, body=body, cc=cc, attach=attachs, msg=msg)\n\n if self.debug:\n logger.debug(\n \"Debug mail sent OK: To=%(mailto)s Cc=%(mailcc)s \"\n 'Subject=\"%(mailsubject)s\" Attachs=%(mailattachs)d',\n {\n \"mailto\": to,\n \"mailcc\": cc,\n \"mailsubject\": subject,\n \"mailattachs\": len(attachs),\n },\n )\n return\n\n dfd = self._sendmail(rcpts, msg.as_string().encode(charset or \"utf-8\"))\n dfd.addCallbacks(\n callback=self._sent_ok,\n errback=self._sent_failed,\n callbackArgs=[to, cc, subject, len(attachs)],\n errbackArgs=[to, cc, subject, len(attachs)],\n )\n reactor.addSystemEventTrigger(\"before\", \"shutdown\", lambda: dfd)\n return dfd\n\n def _sent_ok(self, result, to, cc, subject, nattachs):\n logger.info(\n \"Mail sent OK: 
To=%(mailto)s Cc=%(mailcc)s \"\n 'Subject=\"%(mailsubject)s\" Attachs=%(mailattachs)d',\n {\n \"mailto\": to,\n \"mailcc\": cc,\n \"mailsubject\": subject,\n \"mailattachs\": nattachs,\n },\n )\n\n def _sent_failed(self, failure, to, cc, subject, nattachs):\n errstr = str(failure.value)\n logger.error(\n \"Unable to send mail: To=%(mailto)s Cc=%(mailcc)s \"\n 'Subject=\"%(mailsubject)s\" Attachs=%(mailattachs)d'\n \"- %(mailerr)s\",\n {\n \"mailto\": to,\n \"mailcc\": cc,\n \"mailsubject\": subject,\n \"mailattachs\": nattachs,\n \"mailerr\": errstr,\n },\n )\n\n def _sendmail(self, to_addrs, msg):\n from twisted.internet import reactor\n\n msg = BytesIO(msg)\n d = defer.Deferred()\n\n factory = self._create_sender_factory(to_addrs, msg, d)\n\n if self.smtpssl:\n reactor.connectSSL(\n self.smtphost, self.smtpport, factory, ssl.ClientContextFactory()\n )\n else:\n reactor.connectTCP(self.smtphost, self.smtpport, factory)\n\n return d\n\n def _create_sender_factory(self, to_addrs, msg, d):\n from twisted.mail.smtp import ESMTPSenderFactory\n\n factory_keywords = {\n \"heloFallback\": True,\n \"requireAuthentication\": False,\n \"requireTransportSecurity\": self.smtptls,\n }\n\n # Newer versions of twisted require the hostname to use STARTTLS\n if twisted_version >= Version(\"twisted\", 21, 2, 0):\n factory_keywords[\"hostname\"] = self.smtphost\n\n factory = ESMTPSenderFactory(\n self.smtpuser,\n self.smtppass,\n self.mailfrom,\n to_addrs,\n msg,\n d,\n **factory_keywords\n )\n factory.noisy = False\n return factory\n", "path": "scrapy/mail.py"}]} | 2,542 | 79 |

gh_patches_debug_21680 | rasdani/github-patches | git_diff | conan-io__conan-2943 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Inconsistency between local and remote version of `conan search`

Depending on searching either in remotes or locally we're getting different results for situations where we don't use wildcards.

Example:

```

$ conan search zlib

There are no packages matching the 'zlib' pattern

$ conan search zlib*

Existing package recipes:

zlib/1.2.8@conan/stable

zlib/1.2.11@conan/stable

```

```

$ conan search zlib -r conan-center

Existing package recipes:

zlib/1.2.8@conan/stable

zlib/1.2.11@conan/stable

zlib/1.2.11@conan/testing

```

Same for combinations such as `zlib/1.2.8`, `zlib/1.2.8@`, `zlib/1.2.8@conan`, `zlib/1.2.8@conan/` except for `zlib/`.

Proposition: make local search act in the same manner as remote search.

</issue>

<code>

[start of conans/search/search.py]

1 import re

2 import os

3

4

5 from fnmatch import translate

6

7 from conans.errors import ConanException, NotFoundException

8 from conans.model.info import ConanInfo

9 from conans.model.ref import PackageReference, ConanFileReference

10 from conans.paths import CONANINFO

11 from conans.util.log import logger

12 from conans.search.query_parse import infix_to_postfix, evaluate_postfix

13 from conans.util.files import list_folder_subdirs, load

14

15

16 def filter_outdated(packages_infos, recipe_hash):

17 result = {}

18 for package_id, info in packages_infos.items():

19 try: # Existing package_info of old package might not have recipe_hash

20 if info["recipe_hash"] != recipe_hash:

21 result[package_id] = info

22 except KeyError:

23 pass

24 return result

25

26

27 def filter_packages(query, package_infos):

28 if query is None:

29 return package_infos

30 try:

31 if "!" in query:

32 raise ConanException("'!' character is not allowed")

33 if " not " in query or query.startswith("not "):

34 raise ConanException("'not' operator is not allowed")

35 postfix = infix_to_postfix(query) if query else []

36 result = {}

37 for package_id, info in package_infos.items():

38 if evaluate_postfix_with_info(postfix, info):

39 result[package_id] = info

40 return result

41 except Exception as exc:

42 raise ConanException("Invalid package query: %s. %s" % (query, exc))

43

44

45 def evaluate_postfix_with_info(postfix, conan_vars_info):

46

47 # Evaluate conaninfo with the expression

48

49 def evaluate_info(expression):

50 """Receives an expression like compiler.version="12"

51 Uses conan_vars_info in the closure to evaluate it"""

52 name, value = expression.split("=", 1)

53 value = value.replace("\"", "")

54 return evaluate(name, value, conan_vars_info)

55

56 return evaluate_postfix(postfix, evaluate_info)

57

58

59 def evaluate(prop_name, prop_value, conan_vars_info):

60 """

61 Evaluates a single prop_name, prop_value like "os", "Windows" against conan_vars_info.serialize_min()

62 """

63

64 def compatible_prop(setting_value, prop_value):

65 return setting_value is None or prop_value == setting_value

66

67 info_settings = conan_vars_info.get("settings", [])

68 info_options = conan_vars_info.get("options", [])

69

70 if prop_name in ["os", "compiler", "arch", "build_type"] or prop_name.startswith("compiler."):

71 return compatible_prop(info_settings.get(prop_name, None), prop_value)

72 else:

73 return compatible_prop(info_options.get(prop_name, None), prop_value)

74 return False

75

76

77 def search_recipes(paths, pattern=None, ignorecase=True):

78 # Conan references in main storage

79 if pattern:

80 if isinstance(pattern, ConanFileReference):

81 pattern = str(pattern)

82 pattern = translate(pattern)

83 pattern = re.compile(pattern, re.IGNORECASE) if ignorecase else re.compile(pattern)

84

85 subdirs = list_folder_subdirs(basedir=paths.store, level=4)

86 if not pattern:

87 return sorted([ConanFileReference(*folder.split("/")) for folder in subdirs])

88 else:

89 ret = []

90 for subdir in subdirs:

91 conan_ref = ConanFileReference(*subdir.split("/"))

92 if pattern:

93 if pattern.match(str(conan_ref)):

94 ret.append(conan_ref)

95 return sorted(ret)

96

97

98 def search_packages(paths, reference, query):

99 """ Return a dict like this:

100

101 {package_ID: {name: "OpenCV",

102 version: "2.14",

103 settings: {os: Windows}}}

104 param conan_ref: ConanFileReference object

105 """

106 infos = _get_local_infos_min(paths, reference)

107 return filter_packages(query, infos)

108

109

110 def _get_local_infos_min(paths, reference):

111 result = {}

112 packages_path = paths.packages(reference)

113 subdirs = list_folder_subdirs(packages_path, level=1)

114 for package_id in subdirs:

115 # Read conaninfo

116 try:

117 package_reference = PackageReference(reference, package_id)

118 info_path = os.path.join(paths.package(package_reference,

119 short_paths=None), CONANINFO)

120 if not os.path.exists(info_path):

121 raise NotFoundException("")

122 conan_info_content = load(info_path)

123 conan_vars_info = ConanInfo.loads(conan_info_content).serialize_min()

124 result[package_id] = conan_vars_info

125

126 except Exception as exc:

127 logger.error("Package %s has no ConanInfo file" % str(package_reference))

128 if str(exc):

129 logger.error(str(exc))

130

131 return result

132

[end of conans/search/search.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/conans/search/search.py b/conans/search/search.py

--- a/conans/search/search.py

+++ b/conans/search/search.py

@@ -1,7 +1,6 @@

import re

import os

-

from fnmatch import translate

from conans.errors import ConanException, NotFoundException

@@ -90,11 +89,27 @@

for subdir in subdirs:

conan_ref = ConanFileReference(*subdir.split("/"))

if pattern:

- if pattern.match(str(conan_ref)):

+ if _partial_match(pattern, conan_ref):

ret.append(conan_ref)

+

return sorted(ret)

+def _partial_match(pattern, conan_ref):

+ """

+ Finds if pattern matches any of partial sums of tokens of conan reference

+ """

+

+ tokens = str(conan_ref).replace('/', ' / ').replace('@', ' @ ').split()

+

+ def partial_sums(iterable):

+ sum = ''

+ for i in iterable:

+ sum += i

+ yield sum

+

+ return any(map(pattern.match, list(partial_sums(tokens))))

+

def search_packages(paths, reference, query):

""" Return a dict like this:

| {"golden_diff": "diff --git a/conans/search/search.py b/conans/search/search.py\n--- a/conans/search/search.py\n+++ b/conans/search/search.py\n@@ -1,7 +1,6 @@\n import re\n import os\n \n-\n from fnmatch import translate\n \n from conans.errors import ConanException, NotFoundException\n@@ -90,11 +89,27 @@\n for subdir in subdirs:\n conan_ref = ConanFileReference(*subdir.split(\"/\"))\n if pattern:\n- if pattern.match(str(conan_ref)):\n+ if _partial_match(pattern, conan_ref):\n ret.append(conan_ref)\n+\n return sorted(ret)\n \n \n+def _partial_match(pattern, conan_ref):\n+ \"\"\"\n+ Finds if pattern matches any of partial sums of tokens of conan reference\n+ \"\"\"\n+ \n+ tokens = str(conan_ref).replace('/', ' / ').replace('@', ' @ ').split()\n+\n+ def partial_sums(iterable):\n+ sum = ''\n+ for i in iterable:\n+ sum += i\n+ yield sum\n+\n+ return any(map(pattern.match, list(partial_sums(tokens))))\n+\n def search_packages(paths, reference, query):\n \"\"\" Return a dict like this:\n", "issue": "Inconsistency between local and remote version of `conan search`\nDepending on searching either in remotes or locally we're getting different results for situations where we don't use wildcards. 
\r\nExample:\r\n```\r\n$ conan search zlib\r\nThere are no packages matching the 'zlib' pattern\r\n\r\n$ conan search zlib*\r\nExisting package recipes:\r\n\r\nzlib/1.2.8@conan/stable\r\nzlib/1.2.11@conan/stable\r\n```\r\n```\r\n$ conan search zlib -r conan-center\r\nExisting package recipes:\r\n\r\nzlib/1.2.8@conan/stable\r\nzlib/1.2.11@conan/stable\r\nzlib/1.2.11@conan/testing\r\n```\r\nSame for combinations such as `zlib/1.2.8`, `zlib/1.2.8@`, `zlib/1.2.8@conan`, `zlib/1.2.8@conan/` except for `zlib/`.\r\n\r\nProposition: make local search act in the same manner as remote search.\n", "before_files": [{"content": "import re\nimport os\n\n\nfrom fnmatch import translate\n\nfrom conans.errors import ConanException, NotFoundException\nfrom conans.model.info import ConanInfo\nfrom conans.model.ref import PackageReference, ConanFileReference\nfrom conans.paths import CONANINFO\nfrom conans.util.log import logger\nfrom conans.search.query_parse import infix_to_postfix, evaluate_postfix\nfrom conans.util.files import list_folder_subdirs, load\n\n\ndef filter_outdated(packages_infos, recipe_hash):\n result = {}\n for package_id, info in packages_infos.items():\n try: # Existing package_info of old package might not have recipe_hash\n if info[\"recipe_hash\"] != recipe_hash:\n result[package_id] = info\n except KeyError:\n pass\n return result\n\n\ndef filter_packages(query, package_infos):\n if query is None:\n return package_infos\n try:\n if \"!\" in query:\n raise ConanException(\"'!' character is not allowed\")\n if \" not \" in query or query.startswith(\"not \"):\n raise ConanException(\"'not' operator is not allowed\")\n postfix = infix_to_postfix(query) if query else []\n result = {}\n for package_id, info in package_infos.items():\n if evaluate_postfix_with_info(postfix, info):\n result[package_id] = info\n return result\n except Exception as exc:\n raise ConanException(\"Invalid package query: %s. 
%s\" % (query, exc))\n\n\ndef evaluate_postfix_with_info(postfix, conan_vars_info):\n\n # Evaluate conaninfo with the expression\n\n def evaluate_info(expression):\n \"\"\"Receives an expression like compiler.version=\"12\"\n Uses conan_vars_info in the closure to evaluate it\"\"\"\n name, value = expression.split(\"=\", 1)\n value = value.replace(\"\\\"\", \"\")\n return evaluate(name, value, conan_vars_info)\n\n return evaluate_postfix(postfix, evaluate_info)\n\n\ndef evaluate(prop_name, prop_value, conan_vars_info):\n \"\"\"\n Evaluates a single prop_name, prop_value like \"os\", \"Windows\" against conan_vars_info.serialize_min()\n \"\"\"\n\n def compatible_prop(setting_value, prop_value):\n return setting_value is None or prop_value == setting_value\n\n info_settings = conan_vars_info.get(\"settings\", [])\n info_options = conan_vars_info.get(\"options\", [])\n\n if prop_name in [\"os\", \"compiler\", \"arch\", \"build_type\"] or prop_name.startswith(\"compiler.\"):\n return compatible_prop(info_settings.get(prop_name, None), prop_value)\n else:\n return compatible_prop(info_options.get(prop_name, None), prop_value)\n return False\n\n\ndef search_recipes(paths, pattern=None, ignorecase=True):\n # Conan references in main storage\n if pattern:\n if isinstance(pattern, ConanFileReference):\n pattern = str(pattern)\n pattern = translate(pattern)\n pattern = re.compile(pattern, re.IGNORECASE) if ignorecase else re.compile(pattern)\n\n subdirs = list_folder_subdirs(basedir=paths.store, level=4)\n if not pattern:\n return sorted([ConanFileReference(*folder.split(\"/\")) for folder in subdirs])\n else:\n ret = []\n for subdir in subdirs:\n conan_ref = ConanFileReference(*subdir.split(\"/\"))\n if pattern:\n if pattern.match(str(conan_ref)):\n ret.append(conan_ref)\n return sorted(ret)\n\n\ndef search_packages(paths, reference, query):\n \"\"\" Return a dict like this:\n\n {package_ID: {name: \"OpenCV\",\n version: \"2.14\",\n settings: {os: Windows}}}\n param 
conan_ref: ConanFileReference object\n \"\"\"\n infos = _get_local_infos_min(paths, reference)\n return filter_packages(query, infos)\n\n\ndef _get_local_infos_min(paths, reference):\n result = {}\n packages_path = paths.packages(reference)\n subdirs = list_folder_subdirs(packages_path, level=1)\n for package_id in subdirs:\n # Read conaninfo\n try:\n package_reference = PackageReference(reference, package_id)\n info_path = os.path.join(paths.package(package_reference,\n short_paths=None), CONANINFO)\n if not os.path.exists(info_path):\n raise NotFoundException(\"\")\n conan_info_content = load(info_path)\n conan_vars_info = ConanInfo.loads(conan_info_content).serialize_min()\n result[package_id] = conan_vars_info\n\n except Exception as exc:\n logger.error(\"Package %s has no ConanInfo file\" % str(package_reference))\n if str(exc):\n logger.error(str(exc))\n\n return result\n", "path": "conans/search/search.py"}]} | 2,075 | 265 |

gh_patches_debug_16835 | rasdani/github-patches | git_diff | ESMCI__cime-538 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

PET tests do not work on skybridge

Skybridge insta-fails the single-threaded case because it tries to use 16 procs-per-node and the sbatch only requested 8 ppn.

</issue>

<code>

[start of utils/python/CIME/SystemTests/pet.py]

1 """

2 Implementation of the CIME PET test. This class inherits from SystemTestsCommon

3

4 This is an openmp test to determine that changing thread counts does not change answers.

5 (1) do an initial run where all components are threaded by default (suffix: base)

6 (2) do another initial run with nthrds=1 for all components (suffix: single_thread)

7 """

8

9 from CIME.XML.standard_module_setup import *

10 from CIME.case_setup import case_setup

11 from CIME.SystemTests.system_tests_compare_two import SystemTestsCompareTwo

12

13 logger = logging.getLogger(__name__)

14

15 class PET(SystemTestsCompareTwo):

16

17 _COMPONENT_LIST = ('ATM','CPL','OCN','WAV','GLC','ICE','ROF','LND')

18

19 def __init__(self, case):

20 """

21 initialize a test object

22 """

23 SystemTestsCompareTwo.__init__(self, case,

24 separate_builds = False,

25 run_two_suffix = 'single_thread',

26 run_one_description = 'default threading',

27 run_two_description = 'threads set to 1')

28

29 def _case_one_setup(self):

30 # first make sure that all components have threaded settings

31 for comp in self._COMPONENT_LIST:

32 if self._case.get_value("NTHRDS_%s"%comp) <= 1:

33 self._case.set_value("NTHRDS_%s"%comp, 2)

34

35 # Need to redo case_setup because we may have changed the number of threads

36 case_setup(self._case, reset=True)

37

38 def _case_two_setup(self):

39 #Do a run with all threads set to 1

40 for comp in self._COMPONENT_LIST:

41 self._case.set_value("NTHRDS_%s"%comp, 1)

42

43 # Need to redo case_setup because we may have changed the number of threads

44 case_setup(self._case, reset=True)

45

[end of utils/python/CIME/SystemTests/pet.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/utils/python/CIME/SystemTests/pet.py b/utils/python/CIME/SystemTests/pet.py

--- a/utils/python/CIME/SystemTests/pet.py

+++ b/utils/python/CIME/SystemTests/pet.py

@@ -40,5 +40,14 @@

for comp in self._COMPONENT_LIST:

self._case.set_value("NTHRDS_%s"%comp, 1)

+ # The need for this is subtle. On batch systems, the entire PET test runs

+ # under a single submission and that submission is configured based on

+ # the case settings for case 1, IE 2 threads for all components. This causes

+ # the procs-per-node to be half of what it would be for single thread. On some

+ # machines, if the mpiexec tries to exceed the procs-per-node that were given

+ # to the batch submission, things break. Setting MAX_TASKS_PER_NODE to half of

+ # it original value prevents this.

+ self._case.set_value("MAX_TASKS_PER_NODE", self._case.get_value("MAX_TASKS_PER_NODE") / 2)

+

# Need to redo case_setup because we may have changed the number of threads

case_setup(self._case, reset=True)

| {"golden_diff": "diff --git a/utils/python/CIME/SystemTests/pet.py b/utils/python/CIME/SystemTests/pet.py\n--- a/utils/python/CIME/SystemTests/pet.py\n+++ b/utils/python/CIME/SystemTests/pet.py\n@@ -40,5 +40,14 @@\n for comp in self._COMPONENT_LIST:\n self._case.set_value(\"NTHRDS_%s\"%comp, 1)\n \n+ # The need for this is subtle. On batch systems, the entire PET test runs\n+ # under a single submission and that submission is configured based on\n+ # the case settings for case 1, IE 2 threads for all components. This causes\n+ # the procs-per-node to be half of what it would be for single thread. On some\n+ # machines, if the mpiexec tries to exceed the procs-per-node that were given\n+ # to the batch submission, things break. Setting MAX_TASKS_PER_NODE to half of\n+ # it original value prevents this.\n+ self._case.set_value(\"MAX_TASKS_PER_NODE\", self._case.get_value(\"MAX_TASKS_PER_NODE\") / 2)\n+\n # Need to redo case_setup because we may have changed the number of threads\n case_setup(self._case, reset=True)\n", "issue": "PET tests do not work on skybridge\nSkybridge insta-fails the single-threaded case because it tries to use 16 procs-per-node and the sbatch only requested 8 ppn.\n\n", "before_files": [{"content": "\"\"\"\nImplementation of the CIME PET test. 
This class inherits from SystemTestsCommon\n\nThis is an openmp test to determine that changing thread counts does not change answers.\n(1) do an initial run where all components are threaded by default (suffix: base)\n(2) do another initial run with nthrds=1 for all components (suffix: single_thread)\n\"\"\"\n\nfrom CIME.XML.standard_module_setup import *\nfrom CIME.case_setup import case_setup\nfrom CIME.SystemTests.system_tests_compare_two import SystemTestsCompareTwo\n\nlogger = logging.getLogger(__name__)\n\nclass PET(SystemTestsCompareTwo):\n\n _COMPONENT_LIST = ('ATM','CPL','OCN','WAV','GLC','ICE','ROF','LND')\n\n def __init__(self, case):\n \"\"\"\n initialize a test object\n \"\"\"\n SystemTestsCompareTwo.__init__(self, case,\n separate_builds = False,\n run_two_suffix = 'single_thread',\n run_one_description = 'default threading',\n run_two_description = 'threads set to 1')\n\n def _case_one_setup(self):\n # first make sure that all components have threaded settings\n for comp in self._COMPONENT_LIST:\n if self._case.get_value(\"NTHRDS_%s\"%comp) <= 1:\n self._case.set_value(\"NTHRDS_%s\"%comp, 2)\n\n # Need to redo case_setup because we may have changed the number of threads\n case_setup(self._case, reset=True)\n\n def _case_two_setup(self):\n #Do a run with all threads set to 1\n for comp in self._COMPONENT_LIST:\n self._case.set_value(\"NTHRDS_%s\"%comp, 1)\n\n # Need to redo case_setup because we may have changed the number of threads\n case_setup(self._case, reset=True)\n", "path": "utils/python/CIME/SystemTests/pet.py"}]} | 1,070 | 279 |

gh_patches_debug_53979 | rasdani/github-patches | git_diff | scikit-hep__pyhf-1261 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Remove duplicated libraries in setup.py

# Description

In `setup.py` and `setup.cfg` there are some duplicated libraries that should be removed from `setup.py`.

https://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.py#L47

already exists as a core requirement in `setup.cfg`

https://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.cfg#L45

and so should be removed from `setup.py`.

It also isn't clear if

https://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.py#L42

is still required, given that it was added back in PR #186 when we still used Coveralls for coverage.

</issue>

<code>

[start of setup.py]

1 from setuptools import setup

2

3 extras_require = {

4 'shellcomplete': ['click_completion'],

5 'tensorflow': [

6 'tensorflow~=2.2.0', # TensorFlow minor releases are as volatile as major

7 'tensorflow-probability~=0.10.0',

8 ],

9 'torch': ['torch~=1.2'],

10 'jax': ['jax~=0.2.4', 'jaxlib~=0.1.56'],

11 'xmlio': [

12 'uproot3~=3.14',

13 'uproot~=4.0',

14 ], # uproot3 required until writing to ROOT supported in uproot4

15 'minuit': ['iminuit~=2.1'],

16 }

17 extras_require['backends'] = sorted(

18 set(

19 extras_require['tensorflow']

20 + extras_require['torch']

21 + extras_require['jax']

22 + extras_require['minuit']

23 )

24 )

25 extras_require['contrib'] = sorted({'matplotlib', 'requests'})

26 extras_require['lint'] = sorted({'flake8', 'black'})

27

28 extras_require['test'] = sorted(

29 set(

30 extras_require['backends']

31 + extras_require['xmlio']

32 + extras_require['contrib']

33 + extras_require['shellcomplete']

34 + [

35 'pytest~=6.0',

36 'pytest-cov>=2.5.1',

37 'pytest-mock',

38 'pytest-benchmark[histogram]',

39 'pytest-console-scripts',

40 'pytest-mpl',

41 'pydocstyle',

42 'coverage>=4.0', # coveralls

43 'papermill~=2.0',

44 'nteract-scrapbook~=0.2',

45 'jupyter',

46 'graphviz',

47 'jsonpatch',

48 ]

49 )

50 )

51 extras_require['docs'] = sorted(

52 set(

53 extras_require['xmlio']

54 + [

55 'sphinx>=3.1.2',

56 'sphinxcontrib-bibtex~=2.1',

57 'sphinx-click',

58 'sphinx_rtd_theme',

59 'nbsphinx',

60 'ipywidgets',

61 'sphinx-issues',

62 'sphinx-copybutton>0.2.9',

63 ]

64 )

65 )

66 extras_require['develop'] = sorted(

67 set(

68 extras_require['docs']

69 + extras_require['lint']

70 + extras_require['test']

71 + [

72 'nbdime',

73 'bump2version',

74 'ipython',

75 'pre-commit',

76 'check-manifest',

77 'codemetapy>=0.3.4',

78 'twine',

79 ]

80 )

81 )

82 extras_require['complete'] = sorted(set(sum(extras_require.values(), [])))

83

84

85 setup(

86 extras_require=extras_require,

87 use_scm_version=lambda: {'local_scheme': lambda version: ''},

88 )

89

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -39,12 +39,10 @@

'pytest-console-scripts',

'pytest-mpl',

'pydocstyle',

- 'coverage>=4.0', # coveralls

'papermill~=2.0',

'nteract-scrapbook~=0.2',

'jupyter',

'graphviz',

- 'jsonpatch',

]

)

)

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -39,12 +39,10 @@\n 'pytest-console-scripts',\n 'pytest-mpl',\n 'pydocstyle',\n- 'coverage>=4.0', # coveralls\n 'papermill~=2.0',\n 'nteract-scrapbook~=0.2',\n 'jupyter',\n 'graphviz',\n- 'jsonpatch',\n ]\n )\n )\n", "issue": "Remove duplicated libraries in setup.py\n# Description\r\n\r\nIn `setup.py` and `setup.cfg` there are some duplicated libraries that should be removed from `setup.py`.\r\n\r\nhttps://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.py#L47\r\n\r\nalready exists as a core requirement in `setup.cfg`\r\n\r\nhttps://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.cfg#L45\r\n\r\nand so should be removed from `setup.py`.\r\n\r\nIt also isn't clear if \r\n\r\nhttps://github.com/scikit-hep/pyhf/blob/75f3cd350ed3986d16d680fbb83f312791aafd68/setup.py#L42\r\n\r\nis still required, given that it was added back in PR #186 when we still used Coveralls for coverage.\r\n\n", "before_files": [{"content": "from setuptools import setup\n\nextras_require = {\n 'shellcomplete': ['click_completion'],\n 'tensorflow': [\n 'tensorflow~=2.2.0', # TensorFlow minor releases are as volatile as major\n 'tensorflow-probability~=0.10.0',\n ],\n 'torch': ['torch~=1.2'],\n 'jax': ['jax~=0.2.4', 'jaxlib~=0.1.56'],\n 'xmlio': [\n 'uproot3~=3.14',\n 'uproot~=4.0',\n ], # uproot3 required until writing to ROOT supported in uproot4\n 'minuit': ['iminuit~=2.1'],\n}\nextras_require['backends'] = sorted(\n set(\n extras_require['tensorflow']\n + extras_require['torch']\n + extras_require['jax']\n + extras_require['minuit']\n )\n)\nextras_require['contrib'] = sorted({'matplotlib', 'requests'})\nextras_require['lint'] = sorted({'flake8', 'black'})\n\nextras_require['test'] = sorted(\n set(\n extras_require['backends']\n + extras_require['xmlio']\n + extras_require['contrib']\n + extras_require['shellcomplete']\n + [\n 'pytest~=6.0',\n 
'pytest-cov>=2.5.1',\n 'pytest-mock',\n 'pytest-benchmark[histogram]',\n 'pytest-console-scripts',\n 'pytest-mpl',\n 'pydocstyle',\n 'coverage>=4.0', # coveralls\n 'papermill~=2.0',\n 'nteract-scrapbook~=0.2',\n 'jupyter',\n 'graphviz',\n 'jsonpatch',\n ]\n )\n)\nextras_require['docs'] = sorted(\n set(\n extras_require['xmlio']\n + [\n 'sphinx>=3.1.2',\n 'sphinxcontrib-bibtex~=2.1',\n 'sphinx-click',\n 'sphinx_rtd_theme',\n 'nbsphinx',\n 'ipywidgets',\n 'sphinx-issues',\n 'sphinx-copybutton>0.2.9',\n ]\n )\n)\nextras_require['develop'] = sorted(\n set(\n extras_require['docs']\n + extras_require['lint']\n + extras_require['test']\n + [\n 'nbdime',\n 'bump2version',\n 'ipython',\n 'pre-commit',\n 'check-manifest',\n 'codemetapy>=0.3.4',\n 'twine',\n ]\n )\n)\nextras_require['complete'] = sorted(set(sum(extras_require.values(), [])))\n\n\nsetup(\n extras_require=extras_require,\n use_scm_version=lambda: {'local_scheme': lambda version: ''},\n)\n", "path": "setup.py"}]} | 1,553 | 109 |

gh_patches_debug_28166 | rasdani/github-patches | git_diff | svthalia__concrexit-1818 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add payment_type or full payment to event admin API

### Motivation

`api/v2/admin/events/<eventPk>/registrations/` currently only gives the uuid of a payment, so to display in the admin screen how it was paid, the payment must be requested separately. Doing this for all of the registrations would be very inefficient (like 40 extra requests to load the event admin). If we simply add the payment_type or replace the payment uuid with a payment serializer, it will be much simpler.

</issue>

<code>

[start of website/events/api/v2/serializers/event_registration.py]

1 from rest_framework import serializers

2

3 from events.models import EventRegistration

4 from members.api.v2.serializers.member import MemberSerializer

5

6

7 class EventRegistrationSerializer(serializers.ModelSerializer):

8 """Serializer for event registrations."""

9

10 def __init__(self, *args, **kwargs):

11 # Don't pass the 'fields' arg up to the superclass

12 fields = kwargs.pop("fields", {"pk", "member", "name"})

13

14 # Instantiate the superclass normally

15 super().__init__(*args, **kwargs)

16

17 allowed = set(fields)

18 existing = set(self.fields.keys())

19 for field_name in existing - allowed:

20 self.fields.pop(field_name)

21

22 class Meta:

23 model = EventRegistration

24 fields = (

25 "pk",

26 "present",

27 "queue_position",

28 "date",

29 "payment",

30 "member",

31 "name",

32 )

33

34 member = MemberSerializer(detailed=False, read_only=True)

35

[end of website/events/api/v2/serializers/event_registration.py]

[start of website/events/api/v2/admin/serializers/event_registration.py]

1 from rest_framework import serializers
2 
3 from events.models import EventRegistration
4 from members.api.v2.serializers.member import MemberSerializer
5 from members.models import Member
6 
7 
8 class EventRegistrationAdminSerializer(serializers.ModelSerializer):
9     """Serializer for event registrations."""
10 
11     class Meta:
12         model = EventRegistration
13         fields = (
14             "pk",
15             "present",
16             "queue_position",
17             "date",
18             "date_cancelled",
19             "payment",
20             "member",
21             "name",
22         )
23         read_only_fields = ("payment",)
24 
25     def to_internal_value(self, data):
26         self.fields["member"] = serializers.PrimaryKeyRelatedField(
27             queryset=Member.objects.all()
28         )
29         return super().to_internal_value(data)
30 
31     def to_representation(self, instance):
32         self.fields["member"] = MemberSerializer(detailed=False, read_only=True)
33         return super().to_representation(instance)
34 

[end of website/events/api/v2/admin/serializers/event_registration.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/website/events/api/v2/admin/serializers/event_registration.py b/website/events/api/v2/admin/serializers/event_registration.py
--- a/website/events/api/v2/admin/serializers/event_registration.py
+++ b/website/events/api/v2/admin/serializers/event_registration.py
@@ -3,6 +3,7 @@
 from events.models import EventRegistration
 from members.api.v2.serializers.member import MemberSerializer
 from members.models import Member
+from payments.api.v2.serializers import PaymentSerializer
 
 
 class EventRegistrationAdminSerializer(serializers.ModelSerializer):
@@ -22,6 +23,8 @@
         )
         read_only_fields = ("payment",)
 
+    payment = PaymentSerializer()
+
     def to_internal_value(self, data):
         self.fields["member"] = serializers.PrimaryKeyRelatedField(
             queryset=Member.objects.all()
diff --git a/website/events/api/v2/serializers/event_registration.py b/website/events/api/v2/serializers/event_registration.py
--- a/website/events/api/v2/serializers/event_registration.py
+++ b/website/events/api/v2/serializers/event_registration.py
@@ -2,6 +2,7 @@
 
 from events.models import EventRegistration
 from members.api.v2.serializers.member import MemberSerializer
+from payments.api.v2.serializers import PaymentSerializer
 
 
 class EventRegistrationSerializer(serializers.ModelSerializer):
@@ -31,4 +32,5 @@
             "name",
         )
 
+    payment = PaymentSerializer()
     member = MemberSerializer(detailed=False, read_only=True)
| {"golden_diff": "diff --git a/website/events/api/v2/admin/serializers/event_registration.py b/website/events/api/v2/admin/serializers/event_registration.py\n--- a/website/events/api/v2/admin/serializers/event_registration.py\n+++ b/website/events/api/v2/admin/serializers/event_registration.py\n@@ -3,6 +3,7 @@\n from events.models import EventRegistration\n from members.api.v2.serializers.member import MemberSerializer\n from members.models import Member\n+from payments.api.v2.serializers import PaymentSerializer\n \n \n class EventRegistrationAdminSerializer(serializers.ModelSerializer):\n@@ -22,6 +23,8 @@\n )\n read_only_fields = (\"payment\",)\n \n+ payment = PaymentSerializer()\n+\n def to_internal_value(self, data):\n self.fields[\"member\"] = serializers.PrimaryKeyRelatedField(\n queryset=Member.objects.all()\ndiff --git a/website/events/api/v2/serializers/event_registration.py b/website/events/api/v2/serializers/event_registration.py\n--- a/website/events/api/v2/serializers/event_registration.py\n+++ b/website/events/api/v2/serializers/event_registration.py\n@@ -2,6 +2,7 @@\n \n from events.models import EventRegistration\n from members.api.v2.serializers.member import MemberSerializer\n+from payments.api.v2.serializers import PaymentSerializer\n \n \n class EventRegistrationSerializer(serializers.ModelSerializer):\n@@ -31,4 +32,5 @@\n \"name\",\n )\n \n+ payment = PaymentSerializer()\n member = MemberSerializer(detailed=False, read_only=True)\n", "issue": "Add payment_type or full payment to event admin API\n### Motivation\r\n`api/v2/admin/events/<eventPk>/registrations/` currently only gives the uuid of a payment, so to display in the admin screen how it was paid, the payment must be requested separately. Doing this for all of the registrations would be very inefficient (like 40 extra requests to load the event admin). 
If we simply add the payment_type or replace the payment uuid with a payment serializer, it will be much simpler.\r\n\n", "before_files": [{"content": "from rest_framework import serializers\n\nfrom events.models import EventRegistration\nfrom members.api.v2.serializers.member import MemberSerializer\n\n\nclass EventRegistrationSerializer(serializers.ModelSerializer):\n \"\"\"Serializer for event registrations.\"\"\"\n\n def __init__(self, *args, **kwargs):\n # Don't pass the 'fields' arg up to the superclass\n fields = kwargs.pop(\"fields\", {\"pk\", \"member\", \"name\"})\n\n # Instantiate the superclass normally\n super().__init__(*args, **kwargs)\n\n allowed = set(fields)\n existing = set(self.fields.keys())\n for field_name in existing - allowed:\n self.fields.pop(field_name)\n\n class Meta:\n model = EventRegistration\n fields = (\n \"pk\",\n \"present\",\n \"queue_position\",\n \"date\",\n \"payment\",\n \"member\",\n \"name\",\n )\n\n member = MemberSerializer(detailed=False, read_only=True)\n", "path": "website/events/api/v2/serializers/event_registration.py"}, {"content": "from rest_framework import serializers\n\nfrom events.models import EventRegistration\nfrom members.api.v2.serializers.member import MemberSerializer\nfrom members.models import Member\n\n\nclass EventRegistrationAdminSerializer(serializers.ModelSerializer):\n \"\"\"Serializer for event registrations.\"\"\"\n\n class Meta:\n model = EventRegistration\n fields = (\n \"pk\",\n \"present\",\n \"queue_position\",\n \"date\",\n \"date_cancelled\",\n \"payment\",\n \"member\",\n \"name\",\n )\n read_only_fields = (\"payment\",)\n\n def to_internal_value(self, data):\n self.fields[\"member\"] = serializers.PrimaryKeyRelatedField(\n queryset=Member.objects.all()\n )\n return super().to_internal_value(data)\n\n def to_representation(self, instance):\n self.fields[\"member\"] = MemberSerializer(detailed=False, read_only=True)\n return super().to_representation(instance)\n", "path": 
"website/events/api/v2/admin/serializers/event_registration.py"}]} | 1,185 | 329 |

gh_patches_debug_28466 | rasdani/github-patches | git_diff | scalableminds__webknossos-libs-598 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Warn when multiprocessing fails due to missing if __name__ guard

If wklibs are used with multiprocessing (e.g., calling `downsample` on a layer), python's multiprocessing module will import the main module (if `spawn` is used which is the default on OS X). If that module has side effects (i.e., it is not guarded with `if __name__ == "__main__":`), weird errors can occur.