problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

| prompt

stringlengths 1.71k

18.9k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 465

23.6k

| num_tokens_prompt

int64 556

4.1k

| num_tokens_diff

int64 47

1.02k

|

|---|---|---|---|---|---|---|---|---|

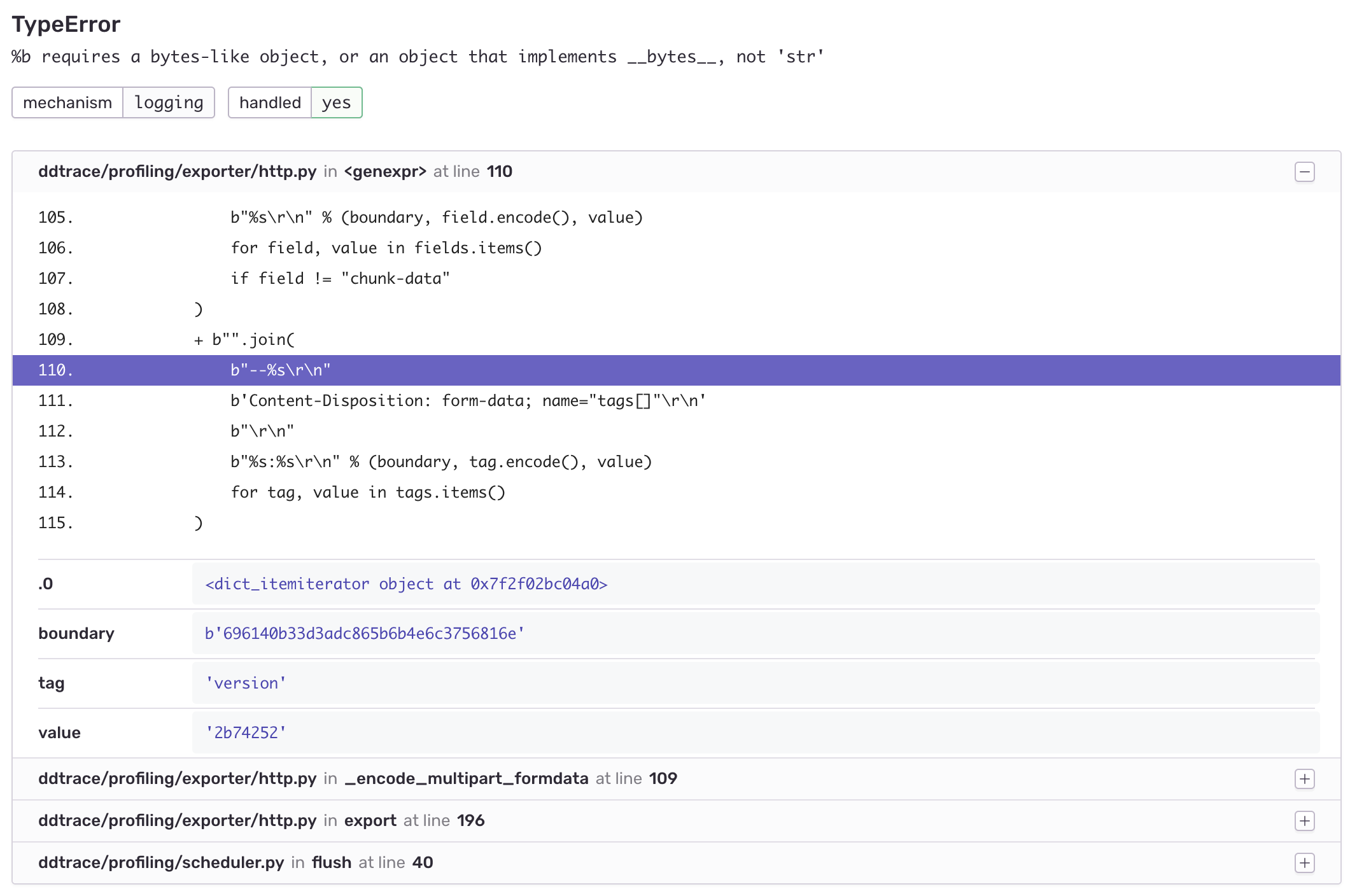

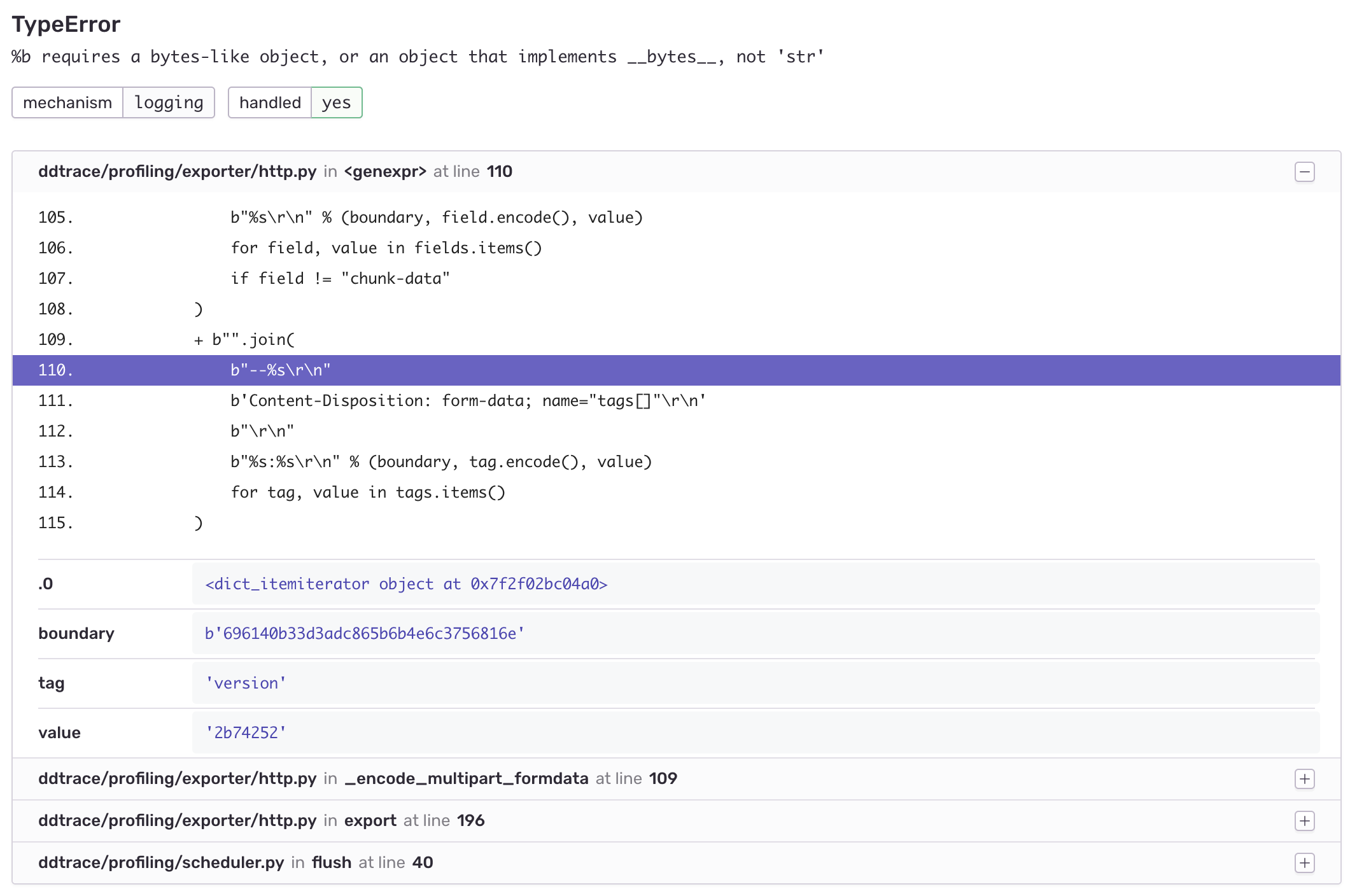

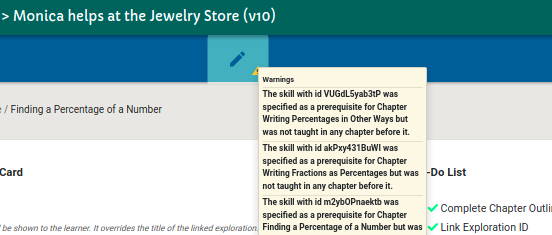

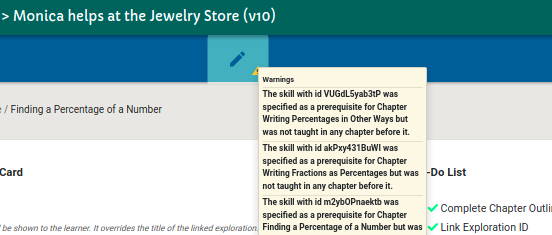

gh_patches_debug_15401 | rasdani/github-patches | git_diff | pytorch__text-1912 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

todo-decorator-remove-solved

Removed the code as the issue is closed.

</issue>

<code>

[start of torchtext/datasets/multi30k.py]

1 import os

2 from functools import partial

3 from typing import Union, Tuple

4

5 from torchtext._internal.module_utils import is_module_available

6 from torchtext.data.datasets_utils import (

7 _wrap_split_argument,

8 _create_dataset_directory,

9 )

10

11 if is_module_available("torchdata"):

12 from torchdata.datapipes.iter import FileOpener, IterableWrapper

13 from torchtext._download_hooks import HttpReader

14

15 # TODO: Update URL to original once the server is back up (see https://github.com/pytorch/text/issues/1756)

16 URL = {

17 "train": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/training.tar.gz",

18 "valid": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/validation.tar.gz",

19 "test": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/mmt16_task1_test.tar.gz",

20 }

21

22 MD5 = {

23 "train": "20140d013d05dd9a72dfde46478663ba05737ce983f478f960c1123c6671be5e",

24 "valid": "a7aa20e9ebd5ba5adce7909498b94410996040857154dab029851af3a866da8c",

25 "test": "6d1ca1dba99e2c5dd54cae1226ff11c2551e6ce63527ebb072a1f70f72a5cd36",

26 }

27

28 _PREFIX = {

29 "train": "train",

30 "valid": "val",

31 "test": "test",

32 }

33

34 NUM_LINES = {

35 "train": 29000,

36 "valid": 1014,

37 "test": 1000,

38 }

39

40 DATASET_NAME = "Multi30k"

41

42

43 def _filepath_fn(root, split, _=None):

44 return os.path.join(root, os.path.basename(URL[split]))

45

46

47 def _decompressed_filepath_fn(root, split, language_pair, i, _):

48 return os.path.join(root, f"{_PREFIX[split]}.{language_pair[i]}")

49

50

51 def _filter_fn(split, language_pair, i, x):

52 return f"{_PREFIX[split]}.{language_pair[i]}" in x[0]

53

54

55 @_create_dataset_directory(dataset_name=DATASET_NAME)

56 @_wrap_split_argument(("train", "valid", "test"))

57 def Multi30k(root: str, split: Union[Tuple[str], str], language_pair: Tuple[str] = ("de", "en")):

58 """Multi30k dataset

59

60 .. warning::

61

62 using datapipes is still currently subject to a few caveats. if you wish

63 to use this dataset with shuffling, multi-processing, or distributed

64 learning, please see :ref:`this note <datapipes_warnings>` for further

65 instructions.

66

67 For additional details refer to https://www.statmt.org/wmt16/multimodal-task.html#task1

68

69 Number of lines per split:

70 - train: 29000

71 - valid: 1014

72 - test: 1000

73

74 Args:

75 root: Directory where the datasets are saved. Default: os.path.expanduser('~/.torchtext/cache')

76 split: split or splits to be returned. Can be a string or tuple of strings. Default: ('train', 'valid', 'test')

77 language_pair: tuple or list containing src and tgt language. Available options are ('de','en') and ('en', 'de')

78

79 :return: DataPipe that yields tuple of source and target sentences

80 :rtype: (str, str)

81 """

82

83 assert len(language_pair) == 2, "language_pair must contain only 2 elements: src and tgt language respectively"

84 assert tuple(sorted(language_pair)) == (

85 "de",

86 "en",

87 ), "language_pair must be either ('de','en') or ('en', 'de')"

88

89 if not is_module_available("torchdata"):

90 raise ModuleNotFoundError(

91 "Package `torchdata` not found. Please install following instructions at https://github.com/pytorch/data"

92 )

93

94 url_dp = IterableWrapper([URL[split]])

95

96 cache_compressed_dp = url_dp.on_disk_cache(

97 filepath_fn=partial(_filepath_fn, root, split),

98 hash_dict={_filepath_fn(root, split): MD5[split]},

99 hash_type="sha256",

100 )

101 cache_compressed_dp = HttpReader(cache_compressed_dp).end_caching(mode="wb", same_filepath_fn=True)

102

103 cache_compressed_dp_1, cache_compressed_dp_2 = cache_compressed_dp.fork(num_instances=2)

104

105 src_cache_decompressed_dp = cache_compressed_dp_1.on_disk_cache(

106 filepath_fn=partial(_decompressed_filepath_fn, root, split, language_pair, 0)

107 )

108 src_cache_decompressed_dp = (

109 FileOpener(src_cache_decompressed_dp, mode="b")

110 .load_from_tar()

111 .filter(partial(_filter_fn, split, language_pair, 0))

112 )

113 src_cache_decompressed_dp = src_cache_decompressed_dp.end_caching(mode="wb", same_filepath_fn=True)

114

115 tgt_cache_decompressed_dp = cache_compressed_dp_2.on_disk_cache(

116 filepath_fn=partial(_decompressed_filepath_fn, root, split, language_pair, 1)

117 )

118 tgt_cache_decompressed_dp = (

119 FileOpener(tgt_cache_decompressed_dp, mode="b")

120 .load_from_tar()

121 .filter(partial(_filter_fn, split, language_pair, 1))

122 )

123 tgt_cache_decompressed_dp = tgt_cache_decompressed_dp.end_caching(mode="wb", same_filepath_fn=True)

124

125 src_data_dp = FileOpener(src_cache_decompressed_dp, encoding="utf-8").readlines(

126 return_path=False, strip_newline=True

127 )

128 tgt_data_dp = FileOpener(tgt_cache_decompressed_dp, encoding="utf-8").readlines(

129 return_path=False, strip_newline=True

130 )

131

132 return src_data_dp.zip(tgt_data_dp).shuffle().set_shuffle(False).sharding_filter()

133

[end of torchtext/datasets/multi30k.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/torchtext/datasets/multi30k.py b/torchtext/datasets/multi30k.py

--- a/torchtext/datasets/multi30k.py

+++ b/torchtext/datasets/multi30k.py

@@ -12,11 +12,10 @@

from torchdata.datapipes.iter import FileOpener, IterableWrapper

from torchtext._download_hooks import HttpReader

-# TODO: Update URL to original once the server is back up (see https://github.com/pytorch/text/issues/1756)

URL = {

- "train": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/training.tar.gz",

- "valid": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/validation.tar.gz",

- "test": r"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/mmt16_task1_test.tar.gz",

+ "train": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/training.tar.gz",

+ "valid": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/validation.tar.gz",

+ "test": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/mmt16_task1_test.tar.gz",

}

MD5 = {

| {"golden_diff": "diff --git a/torchtext/datasets/multi30k.py b/torchtext/datasets/multi30k.py\n--- a/torchtext/datasets/multi30k.py\n+++ b/torchtext/datasets/multi30k.py\n@@ -12,11 +12,10 @@\n from torchdata.datapipes.iter import FileOpener, IterableWrapper\n from torchtext._download_hooks import HttpReader\n \n-# TODO: Update URL to original once the server is back up (see https://github.com/pytorch/text/issues/1756)\n URL = {\n- \"train\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/training.tar.gz\",\n- \"valid\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/validation.tar.gz\",\n- \"test\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/mmt16_task1_test.tar.gz\",\n+ \"train\": \"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/training.tar.gz\",\n+ \"valid\": \"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/validation.tar.gz\",\n+ \"test\": \"http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/mmt16_task1_test.tar.gz\",\n }\n \n MD5 = {\n", "issue": "todo-decorator-remove-solved\nRemoved the code as the issue is closed.\n", "before_files": [{"content": "import os\nfrom functools import partial\nfrom typing import Union, Tuple\n\nfrom torchtext._internal.module_utils import is_module_available\nfrom torchtext.data.datasets_utils import (\n _wrap_split_argument,\n _create_dataset_directory,\n)\n\nif is_module_available(\"torchdata\"):\n from torchdata.datapipes.iter import FileOpener, IterableWrapper\n from torchtext._download_hooks import HttpReader\n\n# TODO: Update URL to original once the server is back up (see https://github.com/pytorch/text/issues/1756)\nURL = {\n \"train\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/training.tar.gz\",\n \"valid\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/validation.tar.gz\",\n \"test\": r\"https://raw.githubusercontent.com/neychev/small_DL_repo/master/datasets/Multi30k/mmt16_task1_test.tar.gz\",\n}\n\nMD5 = {\n \"train\": \"20140d013d05dd9a72dfde46478663ba05737ce983f478f960c1123c6671be5e\",\n \"valid\": \"a7aa20e9ebd5ba5adce7909498b94410996040857154dab029851af3a866da8c\",\n \"test\": \"6d1ca1dba99e2c5dd54cae1226ff11c2551e6ce63527ebb072a1f70f72a5cd36\",\n}\n\n_PREFIX = {\n \"train\": \"train\",\n \"valid\": \"val\",\n \"test\": \"test\",\n}\n\nNUM_LINES = {\n \"train\": 29000,\n \"valid\": 1014,\n \"test\": 1000,\n}\n\nDATASET_NAME = \"Multi30k\"\n\n\ndef _filepath_fn(root, split, _=None):\n return os.path.join(root, os.path.basename(URL[split]))\n\n\ndef _decompressed_filepath_fn(root, split, language_pair, i, _):\n return os.path.join(root, f\"{_PREFIX[split]}.{language_pair[i]}\")\n\n\ndef _filter_fn(split, language_pair, i, x):\n return f\"{_PREFIX[split]}.{language_pair[i]}\" in x[0]\n\n\n@_create_dataset_directory(dataset_name=DATASET_NAME)\n@_wrap_split_argument((\"train\", \"valid\", \"test\"))\ndef Multi30k(root: str, split: Union[Tuple[str], str], language_pair: Tuple[str] = (\"de\", \"en\")):\n \"\"\"Multi30k dataset\n\n .. warning::\n\n using datapipes is still currently subject to a few caveats. if you wish\n to use this dataset with shuffling, multi-processing, or distributed\n learning, please see :ref:`this note <datapipes_warnings>` for further\n instructions.\n\n For additional details refer to https://www.statmt.org/wmt16/multimodal-task.html#task1\n\n Number of lines per split:\n - train: 29000\n - valid: 1014\n - test: 1000\n\n Args:\n root: Directory where the datasets are saved. Default: os.path.expanduser('~/.torchtext/cache')\n split: split or splits to be returned. Can be a string or tuple of strings. Default: ('train', 'valid', 'test')\n language_pair: tuple or list containing src and tgt language. Available options are ('de','en') and ('en', 'de')\n\n :return: DataPipe that yields tuple of source and target sentences\n :rtype: (str, str)\n \"\"\"\n\n assert len(language_pair) == 2, \"language_pair must contain only 2 elements: src and tgt language respectively\"\n assert tuple(sorted(language_pair)) == (\n \"de\",\n \"en\",\n ), \"language_pair must be either ('de','en') or ('en', 'de')\"\n\n if not is_module_available(\"torchdata\"):\n raise ModuleNotFoundError(\n \"Package `torchdata` not found. Please install following instructions at https://github.com/pytorch/data\"\n )\n\n url_dp = IterableWrapper([URL[split]])\n\n cache_compressed_dp = url_dp.on_disk_cache(\n filepath_fn=partial(_filepath_fn, root, split),\n hash_dict={_filepath_fn(root, split): MD5[split]},\n hash_type=\"sha256\",\n )\n cache_compressed_dp = HttpReader(cache_compressed_dp).end_caching(mode=\"wb\", same_filepath_fn=True)\n\n cache_compressed_dp_1, cache_compressed_dp_2 = cache_compressed_dp.fork(num_instances=2)\n\n src_cache_decompressed_dp = cache_compressed_dp_1.on_disk_cache(\n filepath_fn=partial(_decompressed_filepath_fn, root, split, language_pair, 0)\n )\n src_cache_decompressed_dp = (\n FileOpener(src_cache_decompressed_dp, mode=\"b\")\n .load_from_tar()\n .filter(partial(_filter_fn, split, language_pair, 0))\n )\n src_cache_decompressed_dp = src_cache_decompressed_dp.end_caching(mode=\"wb\", same_filepath_fn=True)\n\n tgt_cache_decompressed_dp = cache_compressed_dp_2.on_disk_cache(\n filepath_fn=partial(_decompressed_filepath_fn, root, split, language_pair, 1)\n )\n tgt_cache_decompressed_dp = (\n FileOpener(tgt_cache_decompressed_dp, mode=\"b\")\n .load_from_tar()\n .filter(partial(_filter_fn, split, language_pair, 1))\n )\n tgt_cache_decompressed_dp = tgt_cache_decompressed_dp.end_caching(mode=\"wb\", same_filepath_fn=True)\n\n src_data_dp = FileOpener(src_cache_decompressed_dp, encoding=\"utf-8\").readlines(\n return_path=False, strip_newline=True\n )\n tgt_data_dp = FileOpener(tgt_cache_decompressed_dp, encoding=\"utf-8\").readlines(\n return_path=False, strip_newline=True\n )\n\n return src_data_dp.zip(tgt_data_dp).shuffle().set_shuffle(False).sharding_filter()\n", "path": "torchtext/datasets/multi30k.py"}]} | 2,277 | 324 |

gh_patches_debug_17570 | rasdani/github-patches | git_diff | bridgecrewio__checkov-3644 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

CKV_ARGO_1 / CKV_ARGO_2 - false positives for kinds Application / ApplicationSet / AppProject

**Describe the issue**

CKV_ARGO_1 / CKV_ARGO_2 checks trigger false positives for argocd kinds Application / ApplicationSet / AppProject

**Examples**

```yaml

# AppProject

---

apiVersion: argoproj.io/v1alpha1

kind: AppProject

metadata:

name: default

spec:

clusterResourceWhitelist:

- group: "*"

kind: "*"

destinations:

- namespace: "*"

server: "*"

sourceRepos:

- "*"

```

```yaml

# Application

---

apiVersion: argoproj.io/v1alpha1

kind: Application

metadata:

name: cert-manager

spec:

destination:

namespace: cert-manager

server: https://kubernetes.default.svc

project: default

source:

chart: cert-manager

helm:

values: |

installCRDs: true

prometheus:

enabled: false

repoURL: https://charts.jetstack.io

targetRevision: v1.9.0

syncPolicy:

automated:

prune: true

selfHeal: true

syncOptions:

- CreateNamespace=true

```

```yaml

# ApplicationSet

---

apiVersion: argoproj.io/v1alpha1

kind: ApplicationSet

metadata:

name: cert-manager

spec:

generators:

- matrix:

generators:

- list:

elements:

- env: dev

- env: qa

- env: preprod

- env: demo

- env: training

template:

metadata:

name: "cert-manager-{{env}}"

spec:

project: "{{env}}"

source:

chart: cert-manager

helm:

values: |

installCRDs: true

prometheus:

enabled: false

repoURL: https://charts.jetstack.io

targetRevision: v1.9.0

destination:

namespace: "cert-manager-{{env}}"

server: https://kubernetes.default.svc

```

**Version (please complete the following information):**

- 2.1.207

</issue>

<code>

[start of checkov/argo_workflows/runner.py]

1 from __future__ import annotations

2

3 import re

4 from pathlib import Path

5 from typing import TYPE_CHECKING, Any

6

7 from checkov.common.images.image_referencer import ImageReferencer, Image

8 from checkov.common.output.report import CheckType

9 from checkov.yaml_doc.runner import Runner as YamlRunner

10

11 # Import of the checks registry for a specific resource type

12 from checkov.argo_workflows.checks.registry import registry as template_registry

13

14 if TYPE_CHECKING:

15 from checkov.common.checks.base_check_registry import BaseCheckRegistry

16

17 API_VERSION_PATTERN = re.compile(r"^apiVersion:\s*argoproj.io/", re.MULTILINE)

18

19

20 class Runner(YamlRunner, ImageReferencer):

21 check_type = CheckType.ARGO_WORKFLOWS # noqa: CCE003 # a static attribute

22

23 block_type_registries = { # noqa: CCE003 # a static attribute

24 "template": template_registry,

25 }

26

27 def require_external_checks(self) -> bool:

28 return False

29

30 def import_registry(self) -> BaseCheckRegistry:

31 return self.block_type_registries["template"]

32

33 def _parse_file(

34 self, f: str, file_content: str | None = None

35 ) -> tuple[dict[str, Any] | list[dict[str, Any]], list[tuple[int, str]]] | None:

36 content = self._get_workflow_file_content(file_path=f)

37 if content:

38 return super()._parse_file(f=f, file_content=content)

39

40 return None

41

42 def _get_workflow_file_content(self, file_path: str) -> str | None:

43 if not file_path.endswith((".yaml", ",yml")):

44 return None

45

46 content = Path(file_path).read_text()

47 match = re.search(API_VERSION_PATTERN, content)

48 if match:

49 return content

50

51 return None

52

53 def is_workflow_file(self, file_path: str) -> bool:

54 return self._get_workflow_file_content(file_path=file_path) is not None

55

56 def get_images(self, file_path: str) -> set[Image]:

57 """Get container images mentioned in a file

58

59 Argo Workflows file can have a job and services run within a container.

60

61 in the following sample file we can see a node:14.16 image:

62

63 apiVersion: argoproj.io/v1alpha1

64 kind: Workflow

65 metadata:

66 generateName: template-defaults-

67 spec:

68 entrypoint: main

69 templates:

70 - name: main

71 steps:

72 - - name: retry-backoff

73 template: retry-backoff

74 - - name: whalesay

75 template: whalesay

76

77 - name: whalesay

78 container:

79 image: argoproj/argosay:v2

80 command: [cowsay]

81 args: ["hello world"]

82

83 - name: retry-backoff

84 container:

85 image: python:alpine3.6

86 command: ["python", -c]

87 # fail with a 66% probability

88 args: ["import random; import sys; exit_code = random.choice([0, 1, 1]); sys.exit(exit_code)"]

89

90 Source: https://github.com/argoproj/argo-workflows/blob/master/examples/template-defaults.yaml

91

92 :return: List of container image short ids mentioned in the file.

93 Example return value for a file with node:14.16 image: ['sha256:6a353e22ce']

94 """

95

96 images: set[Image] = set()

97 parsed_file = self._parse_file(file_path)

98

99 if not parsed_file:

100 return images

101

102 workflow, workflow_line_numbers = parsed_file

103

104 if not isinstance(workflow, dict):

105 # make type checking happy

106 return images

107

108 spec = workflow.get("spec")

109 if spec:

110 templates = spec.get("templates")

111 if isinstance(templates, list):

112 for template in templates:

113 container = template.get("container")

114 if container:

115 image = self.extract_image(file_path=file_path, container=container)

116 if image:

117 images.add(image)

118 script = template.get("script")

119 if script:

120 image = self.extract_image(file_path=file_path, container=script)

121 if image:

122 images.add(image)

123

124 return images

125

126 def extract_image(self, file_path: str, container: dict[str, Any]) -> Image | None:

127 image_name = container.get("image")

128 if image_name and isinstance(image_name, str):

129 start_line = container.get("__startline__", 0)

130 end_line = container.get("__endline__", 0)

131 return Image(

132 file_path=file_path,

133 name=image_name,

134 start_line=start_line,

135 end_line=end_line,

136 )

137

138 return None

139

[end of checkov/argo_workflows/runner.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/checkov/argo_workflows/runner.py b/checkov/argo_workflows/runner.py

--- a/checkov/argo_workflows/runner.py

+++ b/checkov/argo_workflows/runner.py

@@ -15,6 +15,7 @@

from checkov.common.checks.base_check_registry import BaseCheckRegistry

API_VERSION_PATTERN = re.compile(r"^apiVersion:\s*argoproj.io/", re.MULTILINE)

+KIND_PATTERN = re.compile(r"^kind:\s*Workflow", re.MULTILINE)

class Runner(YamlRunner, ImageReferencer):

@@ -44,9 +45,12 @@

return None

content = Path(file_path).read_text()

- match = re.search(API_VERSION_PATTERN, content)

- if match:

- return content

+ match_api = re.search(API_VERSION_PATTERN, content)

+ if match_api:

+ match_kind = re.search(KIND_PATTERN, content)

+ if match_kind:

+ # only scan Argo Workflows

+ return content

return None

| {"golden_diff": "diff --git a/checkov/argo_workflows/runner.py b/checkov/argo_workflows/runner.py\n--- a/checkov/argo_workflows/runner.py\n+++ b/checkov/argo_workflows/runner.py\n@@ -15,6 +15,7 @@\n from checkov.common.checks.base_check_registry import BaseCheckRegistry\n \n API_VERSION_PATTERN = re.compile(r\"^apiVersion:\\s*argoproj.io/\", re.MULTILINE)\n+KIND_PATTERN = re.compile(r\"^kind:\\s*Workflow\", re.MULTILINE)\n \n \n class Runner(YamlRunner, ImageReferencer):\n@@ -44,9 +45,12 @@\n return None\n \n content = Path(file_path).read_text()\n- match = re.search(API_VERSION_PATTERN, content)\n- if match:\n- return content\n+ match_api = re.search(API_VERSION_PATTERN, content)\n+ if match_api:\n+ match_kind = re.search(KIND_PATTERN, content)\n+ if match_kind:\n+ # only scan Argo Workflows\n+ return content\n \n return None\n", "issue": "CKV_ARGO_1 / CKV_ARGO_2 - false positives for kinds Application / ApplicationSet / AppProject\n**Describe the issue**\r\nCKV_ARGO_1 / CKV_ARGO_2 checks trigger false positives for argocd kinds Application / ApplicationSet / AppProject\r\n\r\n**Examples**\r\n```yaml\r\n# AppProject\r\n---\r\napiVersion: argoproj.io/v1alpha1\r\nkind: AppProject\r\nmetadata:\r\n name: default\r\nspec:\r\n clusterResourceWhitelist:\r\n - group: \"*\"\r\n kind: \"*\"\r\n destinations:\r\n - namespace: \"*\"\r\n server: \"*\"\r\n sourceRepos:\r\n - \"*\"\r\n```\r\n```yaml\r\n# Application\r\n---\r\napiVersion: argoproj.io/v1alpha1\r\nkind: Application\r\nmetadata:\r\n name: cert-manager\r\nspec:\r\n destination:\r\n namespace: cert-manager\r\n server: https://kubernetes.default.svc\r\n project: default\r\n source:\r\n chart: cert-manager\r\n helm:\r\n values: |\r\n installCRDs: true\r\n\r\n prometheus:\r\n enabled: false\r\n\r\n repoURL: https://charts.jetstack.io\r\n targetRevision: v1.9.0\r\n syncPolicy:\r\n automated:\r\n prune: true\r\n selfHeal: true\r\n syncOptions:\r\n - CreateNamespace=true\r\n```\r\n```yaml\r\n# ApplicationSet\r\n---\r\napiVersion: argoproj.io/v1alpha1\r\nkind: ApplicationSet\r\nmetadata:\r\n name: cert-manager\r\nspec:\r\n generators:\r\n - matrix:\r\n generators:\r\n - list:\r\n elements:\r\n - env: dev\r\n - env: qa\r\n - env: preprod\r\n - env: demo\r\n - env: training\r\n template:\r\n metadata:\r\n name: \"cert-manager-{{env}}\"\r\n spec:\r\n project: \"{{env}}\"\r\n source:\r\n chart: cert-manager\r\n helm:\r\n values: |\r\n installCRDs: true\r\n\r\n prometheus:\r\n enabled: false\r\n\r\n repoURL: https://charts.jetstack.io\r\n targetRevision: v1.9.0\r\n destination:\r\n namespace: \"cert-manager-{{env}}\"\r\n server: https://kubernetes.default.svc\r\n```\r\n\r\n**Version (please complete the following information):**\r\n - 2.1.207\r\n \r\n\n", "before_files": [{"content": "from __future__ import annotations\n\nimport re\nfrom pathlib import Path\nfrom typing import TYPE_CHECKING, Any\n\nfrom checkov.common.images.image_referencer import ImageReferencer, Image\nfrom checkov.common.output.report import CheckType\nfrom checkov.yaml_doc.runner import Runner as YamlRunner\n\n# Import of the checks registry for a specific resource type\nfrom checkov.argo_workflows.checks.registry import registry as template_registry\n\nif TYPE_CHECKING:\n from checkov.common.checks.base_check_registry import BaseCheckRegistry\n\nAPI_VERSION_PATTERN = re.compile(r\"^apiVersion:\\s*argoproj.io/\", re.MULTILINE)\n\n\nclass Runner(YamlRunner, ImageReferencer):\n check_type = CheckType.ARGO_WORKFLOWS # noqa: CCE003 # a static attribute\n\n block_type_registries = { # noqa: CCE003 # a static attribute\n \"template\": template_registry,\n }\n\n def require_external_checks(self) -> bool:\n return False\n\n def import_registry(self) -> BaseCheckRegistry:\n return self.block_type_registries[\"template\"]\n\n def _parse_file(\n self, f: str, file_content: str | None = None\n ) -> tuple[dict[str, Any] | list[dict[str, Any]], list[tuple[int, str]]] | None:\n content = self._get_workflow_file_content(file_path=f)\n if content:\n return super()._parse_file(f=f, file_content=content)\n\n return None\n\n def _get_workflow_file_content(self, file_path: str) -> str | None:\n if not file_path.endswith((\".yaml\", \",yml\")):\n return None\n\n content = Path(file_path).read_text()\n match = re.search(API_VERSION_PATTERN, content)\n if match:\n return content\n\n return None\n\n def is_workflow_file(self, file_path: str) -> bool:\n return self._get_workflow_file_content(file_path=file_path) is not None\n\n def get_images(self, file_path: str) -> set[Image]:\n \"\"\"Get container images mentioned in a file\n\n Argo Workflows file can have a job and services run within a container.\n\n in the following sample file we can see a node:14.16 image:\n\n apiVersion: argoproj.io/v1alpha1\n kind: Workflow\n metadata:\n generateName: template-defaults-\n spec:\n entrypoint: main\n templates:\n - name: main\n steps:\n - - name: retry-backoff\n template: retry-backoff\n - - name: whalesay\n template: whalesay\n\n - name: whalesay\n container:\n image: argoproj/argosay:v2\n command: [cowsay]\n args: [\"hello world\"]\n\n - name: retry-backoff\n container:\n image: python:alpine3.6\n command: [\"python\", -c]\n # fail with a 66% probability\n args: [\"import random; import sys; exit_code = random.choice([0, 1, 1]); sys.exit(exit_code)\"]\n\n Source: https://github.com/argoproj/argo-workflows/blob/master/examples/template-defaults.yaml\n\n :return: List of container image short ids mentioned in the file.\n Example return value for a file with node:14.16 image: ['sha256:6a353e22ce']\n \"\"\"\n\n images: set[Image] = set()\n parsed_file = self._parse_file(file_path)\n\n if not parsed_file:\n return images\n\n workflow, workflow_line_numbers = parsed_file\n\n if not isinstance(workflow, dict):\n # make type checking happy\n return images\n\n spec = workflow.get(\"spec\")\n if spec:\n templates = spec.get(\"templates\")\n if isinstance(templates, list):\n for template in templates:\n container = template.get(\"container\")\n if container:\n image = self.extract_image(file_path=file_path, container=container)\n if image:\n images.add(image)\n script = template.get(\"script\")\n if script:\n image = self.extract_image(file_path=file_path, container=script)\n if image:\n images.add(image)\n\n return images\n\n def extract_image(self, file_path: str, container: dict[str, Any]) -> Image | None:\n image_name = container.get(\"image\")\n if image_name and isinstance(image_name, str):\n start_line = container.get(\"__startline__\", 0)\n end_line = container.get(\"__endline__\", 0)\n return Image(\n file_path=file_path,\n name=image_name,\n start_line=start_line,\n end_line=end_line,\n )\n\n return None\n", "path": "checkov/argo_workflows/runner.py"}]} | 2,407 | 233 |

gh_patches_debug_27074 | rasdani/github-patches | git_diff | OCA__social-937 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[14.0] mail_debrand empty content for sign up

Using mail_debrand, the content of the mail sent at sign up is empty (headers and footer are properly filled) :

```html

<!-- CONTENT -->

<tr>

<td align="center" style="min-width: 590px;">

<table border="0" cellpadding="0" cellspacing="0" width="590" style="min-width: 590px; background-color: white; padding: 0px 8px 0px 8px; border-collapse:separate;">

<tr><td valign="top" style="font-size: 13px;">

</td></tr>

<tr><td style="text-align:center;">

<hr width="100%" style="background-color:rgb(204,204,204);border:medium none;clear:both;display:block;font-size:0px;min-height:1px;line-height:0; margin: 16px 0px 16px 0px;">

</td></tr>

</table>

</td>

</tr>

```

Without mail_debrand module installed I get the following content :

```html

<!-- CONTENT -->

<tr>

<td align="center" style="min-width: 590px;">

<table border="0" cellpadding="0" cellspacing="0" width="590" style="min-width: 590px; background-color: white; padding: 0px 8px 0px 8px; border-collapse:separate;">

<tr><td valign="top" style="font-size: 13px;">

<div>

Cher(e) test,<br/><br/>

Vous avez été invité par Admin LE FILAMENT à rejoindre Odoo.

<div style="margin: 16px 0px 16px 0px;">

<a href="removed_url" style="background-color: #875A7B; padding: 8px 16px 8px 16px; text-decoration: none; color: #fff; border-radius: 5px; font-size:13px;">

Accepter l'invitation

</a>

</div>

Votre nom de domaine Odoo est: <b><a href="removed_url">removed_url</a></b><br/>

Votre courriel de connection est: <b><a href="removed_url" target="_blank">removed_email</a></b><br/><br/>

Jamais entendu parler d'Odoo? C’est un logiciel de gestion tout-en-un apprécié par plus de 3 millions d’utilisateurs. Il améliorera considérablement votre expérience de travail et augmentera votre productivité.

<br/><br/>

Faites un tour dans la section <a href="https://www.odoo.com/page/tour?utm_source=db&utm_medium=auth" style="color: #875A7B;">Odoo Tour</a> afin de découvrir l'outil.

<br/><br/>

Enjoy Odoo!<br/>

--<br/>L'équipe LE FILAMENT

</div>

</td></tr>

<tr><td style="text-align:center;">

<hr width="100%" style="background-color:rgb(204,204,204);border:medium none;clear:both;display:block;font-size:0px;min-height:1px;line-height:0; margin: 16px 0px 16px 0px;"/>

</td></tr>

</table>

</td>

</tr>

```

The issue seems related to this block : https://github.com/OCA/social/blob/02467d14fa4b2ade959ced981f3a0411d46e1d26/mail_debrand/models/mail_render_mixin.py#L51-L52 where the full div is removed because of Odoo tour section.

I am not sure how to address this without breaking the way this module works for other mail templates, any idea would be welcome !

</issue>

<code>

[start of mail_debrand/__manifest__.py]

1 # Copyright 2016 Tecnativa - Jairo Llopis

2 # Copyright 2017 Tecnativa - Pedro M. Baeza

3 # Copyright 2019 ForgeFlow S.L. - Lois Rilo <[email protected]>

4 # 2020 NextERP Romania

5 # Copyright 2021 Tecnativa - João Marques

6 # License AGPL-3.0 or later (http://www.gnu.org/licenses/agpl.html).

7

8 {

9 "name": "Mail Debrand",

10 "summary": """Remove Odoo branding in sent emails

11 Removes anchor <a href odoo.com togheder with it's parent

12 ( for powerd by) form all the templates

13 removes any 'odoo' that are in tempalte texts > 20characters

14 """,

15 "version": "14.0.2.2.1",

16 "category": "Social Network",

17 "website": "https://github.com/OCA/social",

18 "author": """Tecnativa, ForgeFlow, Onestein, Sodexis, Nexterp Romania,

19 Odoo Community Association (OCA)""",

20 "license": "AGPL-3",

21 "installable": True,

22 "depends": ["mail"],

23 "development_status": "Production/Stable",

24 "maintainers": ["pedrobaeza", "joao-p-marques"],

25 }

26

[end of mail_debrand/__manifest__.py]

[start of mail_debrand/models/mail_render_mixin.py]

1 # Copyright 2019 O4SB - Graeme Gellatly

2 # Copyright 2019 Tecnativa - Ernesto Tejeda

3 # Copyright 2020 Onestein - Andrea Stirpe

4 # Copyright 2021 Tecnativa - João Marques

5 # License AGPL-3.0 or later (http://www.gnu.org/licenses/agpl.html).

6 import re

7

8 from lxml import etree, html

9

10 from odoo import api, models

11

12

13 class MailRenderMixin(models.AbstractModel):

14 _inherit = "mail.render.mixin"

15

16 def remove_href_odoo(

17 self, value, remove_parent=True, remove_before=False, to_keep=None

18 ):

19 if len(value) < 20:

20 return value

21 # value can be bytes type; ensure we get a proper string

22 if type(value) is bytes:

23 value = value.decode()

24 has_odoo_link = re.search(r"<a\s(.*)odoo\.com", value, flags=re.IGNORECASE)

25 if has_odoo_link:

26 # We don't want to change what was explicitly added in the message body,

27 # so we will only change what is before and after it.

28 if to_keep:

29 value = value.replace(to_keep, "<body_msg></body_msg>")

30 tree = html.fromstring(value)

31 odoo_anchors = tree.xpath('//a[contains(@href,"odoo.com")]')

32 for elem in odoo_anchors:

33 parent = elem.getparent()

34 previous = elem.getprevious()

35 if remove_before and not remove_parent and previous is not None:

36 # remove 'using' that is before <a and after </span>

37 previous.tail = ""

38 if remove_parent and len(parent.getparent()):

39 # anchor <a href odoo has a parent powered by that must be removed

40 parent.getparent().remove(parent)

41 else:

42 if parent.tag == "td": # also here can be powered by

43 parent.getparent().remove(parent)

44 else:

45 parent.remove(elem)

46 value = etree.tostring(

47 tree, pretty_print=True, method="html", encoding="unicode"

48 )

49 if to_keep:

50 value = value.replace("<body_msg></body_msg>", to_keep)

51 return value

52

53 @api.model

54 def _render_template(

55 self,

56 template_src,

57 model,

58 res_ids,

59 engine="jinja",

60 add_context=None,

61 post_process=False,

62 ):

63 """replace anything that is with odoo in templates

64 if is a <a that contains odoo will delete it completly

65 original:

66 Render the given string on records designed by model / res_ids using

67 the given rendering engine. Currently only jinja is supported.

68

69 :param str template_src: template text to render (jinja) or (qweb)

70 this could be cleaned but hey, we are in a rush

71 :param str model: model name of records on which we want to perform rendering

72 :param list res_ids: list of ids of records (all belonging to same model)

73 :param string engine: jinja

74 :param post_process: perform rendered str / html post processing (see

75 ``_render_template_postprocess``)

76

77 :return dict: {res_id: string of rendered template based on record}"""

78 orginal_rendered = super()._render_template(

79 template_src,

80 model,

81 res_ids,

82 engine=engine,

83 add_context=add_context,

84 post_process=post_process,

85 )

86

87 for key in res_ids:

88 orginal_rendered[key] = self.remove_href_odoo(orginal_rendered[key])

89

90 return orginal_rendered

91

92 def _replace_local_links(self, html, base_url=None):

93 message = super()._replace_local_links(html)

94 message = re.sub(

95 r"""(Powered by\s(.*)Odoo</a>)""", "<div> </div>", message

96 )

97 return message

98

[end of mail_debrand/models/mail_render_mixin.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/mail_debrand/__manifest__.py b/mail_debrand/__manifest__.py

--- a/mail_debrand/__manifest__.py

+++ b/mail_debrand/__manifest__.py

@@ -20,6 +20,9 @@

"license": "AGPL-3",

"installable": True,

"depends": ["mail"],

+ "data": [

+ "data/ir_config_parameter.xml",

+ ],

"development_status": "Production/Stable",

"maintainers": ["pedrobaeza", "joao-p-marques"],

}

diff --git a/mail_debrand/models/mail_render_mixin.py b/mail_debrand/models/mail_render_mixin.py

--- a/mail_debrand/models/mail_render_mixin.py

+++ b/mail_debrand/models/mail_render_mixin.py

@@ -22,6 +22,23 @@

if type(value) is bytes:

value = value.decode()

has_odoo_link = re.search(r"<a\s(.*)odoo\.com", value, flags=re.IGNORECASE)

+ extra_regex_to_skip = (

+ self.env["ir.config_parameter"]

+ .sudo()

+ .get_param("mail_debrand.extra_regex_to_skip", "False")

+ )

+ # value is required field on ir config_parameter, so we have added

+ # safety check for "False"

+ if (

+ has_odoo_link

+ and extra_regex_to_skip

+ and extra_regex_to_skip.strip().lower() != "false"

+ ):

+ # check each regex to be skipped

+ for regex in extra_regex_to_skip.split(","):

+ if re.search(r"{}".format(regex), value, flags=re.IGNORECASE):

+ has_odoo_link = False

+ break

if has_odoo_link:

# We don't want to change what was explicitly added in the message body,

# so we will only change what is before and after it.

| {"golden_diff": "diff --git a/mail_debrand/__manifest__.py b/mail_debrand/__manifest__.py\n--- a/mail_debrand/__manifest__.py\n+++ b/mail_debrand/__manifest__.py\n@@ -20,6 +20,9 @@\n \"license\": \"AGPL-3\",\n \"installable\": True,\n \"depends\": [\"mail\"],\n+ \"data\": [\n+ \"data/ir_config_parameter.xml\",\n+ ],\n \"development_status\": \"Production/Stable\",\n \"maintainers\": [\"pedrobaeza\", \"joao-p-marques\"],\n }\ndiff --git a/mail_debrand/models/mail_render_mixin.py b/mail_debrand/models/mail_render_mixin.py\n--- a/mail_debrand/models/mail_render_mixin.py\n+++ b/mail_debrand/models/mail_render_mixin.py\n@@ -22,6 +22,23 @@\n if type(value) is bytes:\n value = value.decode()\n has_odoo_link = re.search(r\"<a\\s(.*)odoo\\.com\", value, flags=re.IGNORECASE)\n+ extra_regex_to_skip = (\n+ self.env[\"ir.config_parameter\"]\n+ .sudo()\n+ .get_param(\"mail_debrand.extra_regex_to_skip\", \"False\")\n+ )\n+ # value is required field on ir config_parameter, so we have added\n+ # safety check for \"False\"\n+ if (\n+ has_odoo_link\n+ and extra_regex_to_skip\n+ and extra_regex_to_skip.strip().lower() != \"false\"\n+ ):\n+ # check each regex to be skipped\n+ for regex in extra_regex_to_skip.split(\",\"):\n+ if re.search(r\"{}\".format(regex), value, flags=re.IGNORECASE):\n+ has_odoo_link = False\n+ break\n if has_odoo_link:\n # We don't want to change what was explicitly added in the message body,\n # so we will only change what is before and after it.\n", "issue": "[14.0] mail_debrand empty content for sign up\nUsing mail_debrand, the content of the mail sent at sign up is empty (headers and footer are properly filled) :\r\n```html\r\n <!-- CONTENT -->\r\n <tr>\r\n <td align=\"center\" style=\"min-width: 590px;\">\r\n <table border=\"0\" cellpadding=\"0\" cellspacing=\"0\" width=\"590\" style=\"min-width: 590px; background-color: white; padding: 0px 8px 0px 8px; border-collapse:separate;\">\r\n <tr><td valign=\"top\" style=\"font-size: 13px;\">\r\n </td></tr>\r\n <tr><td style=\"text-align:center;\">\r\n <hr width=\"100%\" style=\"background-color:rgb(204,204,204);border:medium none;clear:both;display:block;font-size:0px;min-height:1px;line-height:0; margin: 16px 0px 16px 0px;\">\r\n </td></tr>\r\n </table>\r\n </td>\r\n </tr>\r\n```\r\n\r\nWithout mail_debrand module installed I get the following content : \r\n```html\r\n <!-- CONTENT -->\r\n <tr>\r\n <td align=\"center\" style=\"min-width: 590px;\">\r\n <table border=\"0\" cellpadding=\"0\" cellspacing=\"0\" width=\"590\" style=\"min-width: 590px; background-color: white; padding: 0px 8px 0px 8px; border-collapse:separate;\">\r\n <tr><td valign=\"top\" style=\"font-size: 13px;\">\r\n <div>\r\n Cher(e) test,<br/><br/>\r\n Vous avez \u00e9t\u00e9 invit\u00e9 par Admin LE FILAMENT \u00e0 rejoindre Odoo.\r\n <div style=\"margin: 16px 0px 16px 0px;\">\r\n <a href=\"removed_url\" style=\"background-color: #875A7B; padding: 8px 16px 8px 16px; text-decoration: none; color: #fff; border-radius: 5px; font-size:13px;\">\r\n Accepter l'invitation\r\n </a>\r\n </div>\r\n Votre nom de domaine Odoo est: <b><a href=\"removed_url\">removed_url</a></b><br/>\r\n Votre courriel de connection est: <b><a href=\"removed_url\" target=\"_blank\">removed_email</a></b><br/><br/>\r\n Jamais entendu parler d'Odoo? C\u2019est un logiciel de gestion tout-en-un appr\u00e9ci\u00e9 par plus de 3 millions d\u2019utilisateurs. Il am\u00e9liorera consid\u00e9rablement votre exp\u00e9rience de travail et augmentera votre productivit\u00e9.\r\n <br/><br/>\r\n Faites un tour dans la section <a href=\"https://www.odoo.com/page/tour?utm_source=db&utm_medium=auth\" style=\"color: #875A7B;\">Odoo Tour</a> afin de d\u00e9couvrir l'outil.\r\n <br/><br/>\r\n Enjoy Odoo!<br/>\r\n --<br/>L'\u00e9quipe LE FILAMENT \r\n </div>\r\n </td></tr>\r\n <tr><td style=\"text-align:center;\">\r\n <hr width=\"100%\" style=\"background-color:rgb(204,204,204);border:medium none;clear:both;display:block;font-size:0px;min-height:1px;line-height:0; margin: 16px 0px 16px 0px;\"/>\r\n </td></tr>\r\n </table>\r\n </td>\r\n </tr>\r\n```\r\n\r\nThe issue seems related to this block : https://github.com/OCA/social/blob/02467d14fa4b2ade959ced981f3a0411d46e1d26/mail_debrand/models/mail_render_mixin.py#L51-L52 where the full div is removed because of Odoo tour section.\r\n\r\nI am not sure how to address this without breaking the way this module works for other mail templates, any idea would be welcome !\n", "before_files": [{"content": "# Copyright 2016 Tecnativa - Jairo Llopis\n# Copyright 2017 Tecnativa - Pedro M. Baeza\n# Copyright 2019 ForgeFlow S.L. - Lois Rilo <[email protected]>\n# 2020 NextERP Romania\n# Copyright 2021 Tecnativa - Jo\u00e3o Marques\n# License AGPL-3.0 or later (http://www.gnu.org/licenses/agpl.html).\n\n{\n \"name\": \"Mail Debrand\",\n \"summary\": \"\"\"Remove Odoo branding in sent emails\n Removes anchor <a href odoo.com togheder with it's parent\n ( for powerd by) form all the templates\n removes any 'odoo' that are in tempalte texts > 20characters\n \"\"\",\n \"version\": \"14.0.2.2.1\",\n \"category\": \"Social Network\",\n \"website\": \"https://github.com/OCA/social\",\n \"author\": \"\"\"Tecnativa, ForgeFlow, Onestein, Sodexis, Nexterp Romania,\n Odoo Community Association (OCA)\"\"\",\n \"license\": \"AGPL-3\",\n \"installable\": True,\n \"depends\": [\"mail\"],\n \"development_status\": \"Production/Stable\",\n \"maintainers\": [\"pedrobaeza\", \"joao-p-marques\"],\n}\n", "path": "mail_debrand/__manifest__.py"}, {"content": "# Copyright 2019 O4SB - Graeme Gellatly\n# Copyright 2019 Tecnativa - Ernesto Tejeda\n# Copyright 2020 Onestein - Andrea Stirpe\n# Copyright 2021 Tecnativa - Jo\u00e3o Marques\n# License AGPL-3.0 or later (http://www.gnu.org/licenses/agpl.html).\nimport re\n\nfrom lxml import etree, html\n\nfrom odoo import api, models\n\n\nclass MailRenderMixin(models.AbstractModel):\n _inherit = \"mail.render.mixin\"\n\n def remove_href_odoo(\n self, value, remove_parent=True, remove_before=False, to_keep=None\n ):\n if len(value) < 20:\n return value\n # value can be bytes type; ensure we get a proper string\n if type(value) is bytes:\n value = value.decode()\n has_odoo_link = re.search(r\"<a\\s(.*)odoo\\.com\", value, flags=re.IGNORECASE)\n if has_odoo_link:\n # We don't want to change what was explicitly added in the message body,\n # so we will only change what is before and after it.\n if to_keep:\n value = value.replace(to_keep, \"<body_msg></body_msg>\")\n tree = html.fromstring(value)\n odoo_anchors = tree.xpath('//a[contains(@href,\"odoo.com\")]')\n for elem in odoo_anchors:\n parent = elem.getparent()\n previous = elem.getprevious()\n if remove_before and not remove_parent and previous is not None:\n # remove 'using' that is before <a and after </span>\n previous.tail = \"\"\n if remove_parent and len(parent.getparent()):\n # anchor <a href odoo has a parent powered by that must be removed\n parent.getparent().remove(parent)\n else:\n if parent.tag == \"td\": # also here can be powered by\n parent.getparent().remove(parent)\n else:\n parent.remove(elem)\n value = etree.tostring(\n tree, pretty_print=True, method=\"html\", encoding=\"unicode\"\n )\n if to_keep:\n value = value.replace(\"<body_msg></body_msg>\", to_keep)\n return value\n\n @api.model\n def _render_template(\n self,\n template_src,\n model,\n res_ids,\n engine=\"jinja\",\n add_context=None,\n post_process=False,\n ):\n \"\"\"replace anything that is with odoo in templates\n if is a <a that contains odoo will delete it completly\n original:\n Render the given string on records designed by model / res_ids using\n the given rendering engine. Currently only jinja is supported.\n\n :param str template_src: template text to render (jinja) or (qweb)\n this could be cleaned but hey, we are in a rush\n :param str model: model name of records on which we want to perform rendering\n :param list res_ids: list of ids of records (all belonging to same model)\n :param string engine: jinja\n :param post_process: perform rendered str / html post processing (see\n ``_render_template_postprocess``)\n\n :return dict: {res_id: string of rendered template based on record}\"\"\"\n orginal_rendered = super()._render_template(\n template_src,\n model,\n res_ids,\n engine=engine,\n add_context=add_context,\n post_process=post_process,\n )\n\n for key in res_ids:\n orginal_rendered[key] = self.remove_href_odoo(orginal_rendered[key])\n\n return orginal_rendered\n\n def _replace_local_links(self, html, base_url=None):\n message = super()._replace_local_links(html)\n message = re.sub(\n r\"\"\"(Powered by\\s(.*)Odoo</a>)\"\"\", \"<div> </div>\", message\n )\n return message\n", "path": "mail_debrand/models/mail_render_mixin.py"}]} | 2,873 | 422 |

gh_patches_debug_9212 | rasdani/github-patches | git_diff | jazzband__pip-tools-956 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add python 3.8 support

#### What's the problem this feature will solve?

<!-- What are you trying to do, that you are unable to achieve with pip-tools as it currently stands? -->

Python 3.8 is released, so it's time to support it.

#### Describe the solution you'd like

<!-- A clear and concise description of what you want to happen. -->

1. add "py37" env to `tox.ini`

1. remove 3.8-dev from `.travis.yml`

1. add "Programming Language :: Python :: 3.8" classifier to `setup.py`

1. add "3.8" dimension to `.travis.yml` (supported, see https://travis-ci.community/t/add-python-3-8-support/5463)

1. add "py37" dimension to `.appveyor.yml` (not supported yet, but will be on the nex image update, tracking issue: https://github.com/appveyor/ci/issues/3142)

1. add "3.8" to python-version list in `.github/workflows/cron.yml` (not supported yet, tracking issue: https://github.com/actions/setup-python/issues/30)

<!-- Provide examples of real-world use cases that this would enable and how it solves the problem described above. -->

#### Alternative Solutions

<!-- Have you tried to workaround the problem using pip-tools or other tools? Or a different approach to solving this issue? Please elaborate here. -->

N/A

#### Additional context

<!-- Add any other context, links, etc. about the feature here. -->

https://discuss.python.org/t/python-3-8-0-is-now-available/2478

</issue>

<code>

[start of setup.py]

1 """

2 pip-tools keeps your pinned dependencies fresh.

3 """

4 from os.path import abspath, dirname, join

5

6 from setuptools import find_packages, setup

7

8

9 def read_file(filename):

10 """Read the contents of a file located relative to setup.py"""

11 with open(join(abspath(dirname(__file__)), filename)) as thefile:

12 return thefile.read()

13

14

15 setup(

16 name="pip-tools",

17 use_scm_version=True,

18 url="https://github.com/jazzband/pip-tools/",

19 license="BSD",

20 author="Vincent Driessen",

21 author_email="[email protected]",

22 description=__doc__.strip(),

23 long_description=read_file("README.rst"),

24 long_description_content_type="text/x-rst",

25 packages=find_packages(exclude=["tests"]),

26 package_data={},

27 python_requires=">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*",

28 setup_requires=["setuptools_scm"],

29 install_requires=["click>=6", "six"],

30 zip_safe=False,

31 entry_points={

32 "console_scripts": [

33 "pip-compile = piptools.scripts.compile:cli",

34 "pip-sync = piptools.scripts.sync:cli",

35 ]

36 },

37 platforms="any",

38 classifiers=[

39 "Development Status :: 5 - Production/Stable",

40 "Intended Audience :: Developers",

41 "Intended Audience :: System Administrators",

42 "License :: OSI Approved :: BSD License",

43 "Operating System :: OS Independent",

44 "Programming Language :: Python",

45 "Programming Language :: Python :: 2",

46 "Programming Language :: Python :: 2.7",

47 "Programming Language :: Python :: 3",

48 "Programming Language :: Python :: 3.5",

49 "Programming Language :: Python :: 3.6",

50 "Programming Language :: Python :: 3.7",

51 "Programming Language :: Python :: Implementation :: CPython",

52 "Programming Language :: Python :: Implementation :: PyPy",

53 "Topic :: System :: Systems Administration",

54 ],

55 )

56

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -48,6 +48,7 @@

"Programming Language :: Python :: 3.5",

"Programming Language :: Python :: 3.6",

"Programming Language :: Python :: 3.7",

+ "Programming Language :: Python :: 3.8",

"Programming Language :: Python :: Implementation :: CPython",

"Programming Language :: Python :: Implementation :: PyPy",

"Topic :: System :: Systems Administration",

| {"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -48,6 +48,7 @@\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n+ \"Programming Language :: Python :: 3.8\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Topic :: System :: Systems Administration\",\n", "issue": "Add python 3.8 support\n#### What's the problem this feature will solve?\r\n<!-- What are you trying to do, that you are unable to achieve with pip-tools as it currently stands? -->\r\n\r\nPython 3.8 is released, so it's time to support it. \r\n\r\n#### Describe the solution you'd like\r\n<!-- A clear and concise description of what you want to happen. -->\r\n\r\n1. add \"py37\" env to `tox.ini`\r\n1. remove 3.8-dev from `.travis.yml`\r\n1. add \"Programming Language :: Python :: 3.8\" classifier to `setup.py`\r\n1. add \"3.8\" dimension to `.travis.yml` (supported, see https://travis-ci.community/t/add-python-3-8-support/5463)\r\n1. add \"py37\" dimension to `.appveyor.yml` (not supported yet, but will be on the nex image update, tracking issue: https://github.com/appveyor/ci/issues/3142)\r\n1. add \"3.8\" to python-version list in `.github/workflows/cron.yml` (not supported yet, tracking issue: https://github.com/actions/setup-python/issues/30)\r\n\r\n<!-- Provide examples of real-world use cases that this would enable and how it solves the problem described above. -->\r\n\r\n#### Alternative Solutions\r\n<!-- Have you tried to workaround the problem using pip-tools or other tools? Or a different approach to solving this issue? Please elaborate here. -->\r\n\r\nN/A\r\n\r\n#### Additional context\r\n<!-- Add any other context, links, etc. about the feature here. -->\r\n\r\nhttps://discuss.python.org/t/python-3-8-0-is-now-available/2478\n", "before_files": [{"content": "\"\"\"\npip-tools keeps your pinned dependencies fresh.\n\"\"\"\nfrom os.path import abspath, dirname, join\n\nfrom setuptools import find_packages, setup\n\n\ndef read_file(filename):\n \"\"\"Read the contents of a file located relative to setup.py\"\"\"\n with open(join(abspath(dirname(__file__)), filename)) as thefile:\n return thefile.read()\n\n\nsetup(\n name=\"pip-tools\",\n use_scm_version=True,\n url=\"https://github.com/jazzband/pip-tools/\",\n license=\"BSD\",\n author=\"Vincent Driessen\",\n author_email=\"[email protected]\",\n description=__doc__.strip(),\n long_description=read_file(\"README.rst\"),\n long_description_content_type=\"text/x-rst\",\n packages=find_packages(exclude=[\"tests\"]),\n package_data={},\n python_requires=\">=2.7, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*, !=3.4.*\",\n setup_requires=[\"setuptools_scm\"],\n install_requires=[\"click>=6\", \"six\"],\n zip_safe=False,\n entry_points={\n \"console_scripts\": [\n \"pip-compile = piptools.scripts.compile:cli\",\n \"pip-sync = piptools.scripts.sync:cli\",\n ]\n },\n platforms=\"any\",\n classifiers=[\n \"Development Status :: 5 - Production/Stable\",\n \"Intended Audience :: Developers\",\n \"Intended Audience :: System Administrators\",\n \"License :: OSI Approved :: BSD License\",\n \"Operating System :: OS Independent\",\n \"Programming Language :: Python\",\n \"Programming Language :: Python :: 2\",\n \"Programming Language :: Python :: 2.7\",\n \"Programming Language :: Python :: 3\",\n \"Programming Language :: Python :: 3.5\",\n \"Programming Language :: Python :: 3.6\",\n \"Programming Language :: Python :: 3.7\",\n \"Programming Language :: Python :: Implementation :: CPython\",\n \"Programming Language :: Python :: Implementation :: PyPy\",\n \"Topic :: System :: Systems Administration\",\n ],\n)\n", "path": "setup.py"}]} | 1,430 | 114 |

gh_patches_debug_37695 | rasdani/github-patches | git_diff | Qiskit__qiskit-8625 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Flipping of gate direction in Transpiler for CZ gate

### What should we add?

The current version of the transpiler raises the following error when attempting to set the basis gate of a backend as `cz`

` 'Flipping of gate direction is only supported for CX, ECR, and RZX at this time, not cz.' `

As far as I'm aware and through my own testing flipping of a CZ should be easy to implement as it's "flip" produces the same unitary operator. There is no direct identity needed.

If this could get added that would be great! I would have done this via a pull request myself but it seems "too easy" of a solution that I must be missing something.

The Qiskit file that would need changing.

https://github.com/Qiskit/qiskit-terra/blob/1312624309526812eb62b97e0d47699d46649a25/qiskit/transpiler/passes/utils/gate_direction.py

</issue>

<code>

[start of qiskit/transpiler/passes/utils/gate_direction.py]

1 # This code is part of Qiskit.

2 #

3 # (C) Copyright IBM 2017, 2021.

4 #

5 # This code is licensed under the Apache License, Version 2.0. You may

6 # obtain a copy of this license in the LICENSE.txt file in the root directory

7 # of this source tree or at http://www.apache.org/licenses/LICENSE-2.0.

8 #

9 # Any modifications or derivative works of this code must retain this

10 # copyright notice, and modified files need to carry a notice indicating

11 # that they have been altered from the originals.

12

13 """Rearrange the direction of the cx nodes to match the directed coupling map."""

14

15 from math import pi

16

17 from qiskit.transpiler.layout import Layout

18 from qiskit.transpiler.basepasses import TransformationPass

19 from qiskit.transpiler.exceptions import TranspilerError

20

21 from qiskit.circuit import QuantumRegister

22 from qiskit.dagcircuit import DAGCircuit

23 from qiskit.circuit.library.standard_gates import RYGate, HGate, CXGate, ECRGate, RZXGate

24

25

26 class GateDirection(TransformationPass):

27 """Modify asymmetric gates to match the hardware coupling direction.

28

29 This pass makes use of the following identities::

30

31 ┌───┐┌───┐┌───┐

32 q_0: ──■── q_0: ┤ H ├┤ X ├┤ H ├

33 ┌─┴─┐ = ├───┤└─┬─┘├───┤

34 q_1: ┤ X ├ q_1: ┤ H ├──■──┤ H ├

35 └───┘ └───┘ └───┘

36

37 ┌──────┐ ┌───────────┐┌──────┐┌───┐

38 q_0: ┤0 ├ q_0: ┤ RY(-pi/2) ├┤1 ├┤ H ├

39 │ ECR │ = └┬──────────┤│ ECR │├───┤

40 q_1: ┤1 ├ q_1: ─┤ RY(pi/2) ├┤0 ├┤ H ├

41 └──────┘ └──────────┘└──────┘└───┘

42

43 ┌──────┐ ┌───┐┌──────┐┌───┐

44 q_0: ┤0 ├ q_0: ┤ H ├┤1 ├┤ H ├

45 │ RZX │ = ├───┤│ RZX │├───┤

46 q_1: ┤1 ├ q_1: ┤ H ├┤0 ├┤ H ├

47 └──────┘ └───┘└──────┘└───┘

48 """

49

50 def __init__(self, coupling_map, target=None):

51 """GateDirection pass.

52

53 Args:

54 coupling_map (CouplingMap): Directed graph represented a coupling map.

55 target (Target): The backend target to use for this pass. If this is specified

56 it will be used instead of the coupling map

57 """

58 super().__init__()

59 self.coupling_map = coupling_map

60 self.target = target

61

62 # Create the replacement dag and associated register.

63 self._cx_dag = DAGCircuit()

64 qr = QuantumRegister(2)

65 self._cx_dag.add_qreg(qr)

66 self._cx_dag.apply_operation_back(HGate(), [qr[0]], [])

67 self._cx_dag.apply_operation_back(HGate(), [qr[1]], [])

68 self._cx_dag.apply_operation_back(CXGate(), [qr[1], qr[0]], [])

69 self._cx_dag.apply_operation_back(HGate(), [qr[0]], [])

70 self._cx_dag.apply_operation_back(HGate(), [qr[1]], [])

71

72 self._ecr_dag = DAGCircuit()

73 qr = QuantumRegister(2)

74 self._ecr_dag.add_qreg(qr)

75 self._ecr_dag.apply_operation_back(RYGate(-pi / 2), [qr[0]], [])

76 self._ecr_dag.apply_operation_back(RYGate(pi / 2), [qr[1]], [])

77 self._ecr_dag.apply_operation_back(ECRGate(), [qr[1], qr[0]], [])

78 self._ecr_dag.apply_operation_back(HGate(), [qr[0]], [])

79 self._ecr_dag.apply_operation_back(HGate(), [qr[1]], [])

80

81 @staticmethod

82 def _rzx_dag(parameter):

83 _rzx_dag = DAGCircuit()

84 qr = QuantumRegister(2)

85 _rzx_dag.add_qreg(qr)

86 _rzx_dag.apply_operation_back(HGate(), [qr[0]], [])

87 _rzx_dag.apply_operation_back(HGate(), [qr[1]], [])

88 _rzx_dag.apply_operation_back(RZXGate(parameter), [qr[1], qr[0]], [])

89 _rzx_dag.apply_operation_back(HGate(), [qr[0]], [])

90 _rzx_dag.apply_operation_back(HGate(), [qr[1]], [])

91 return _rzx_dag

92

93 def run(self, dag):

94 """Run the GateDirection pass on `dag`.

95

96 Flips the cx nodes to match the directed coupling map. Modifies the

97 input dag.

98

99 Args:

100 dag (DAGCircuit): DAG to map.

101

102 Returns:

103 DAGCircuit: The rearranged dag for the coupling map

104

105 Raises:

106 TranspilerError: If the circuit cannot be mapped just by flipping the

107 cx nodes.

108 """

109 trivial_layout = Layout.generate_trivial_layout(*dag.qregs.values())

110 layout_map = trivial_layout.get_virtual_bits()

111 if len(dag.qregs) > 1:

112 raise TranspilerError(

113 "GateDirection expects a single qreg input DAG,"

114 "but input DAG had qregs: {}.".format(dag.qregs)

115 )

116 if self.target is None:

117 cmap_edges = set(self.coupling_map.get_edges())

118 if not cmap_edges:

119 return dag

120

121 self.coupling_map.compute_distance_matrix()

122

123 dist_matrix = self.coupling_map.distance_matrix

124

125 for node in dag.two_qubit_ops():

126 control = node.qargs[0]

127 target = node.qargs[1]

128

129 physical_q0 = layout_map[control]

130 physical_q1 = layout_map[target]

131

132 if dist_matrix[physical_q0, physical_q1] != 1:

133 raise TranspilerError(

134 "The circuit requires a connection between physical "

135 "qubits %s and %s" % (physical_q0, physical_q1)

136 )

137

138 if (physical_q0, physical_q1) not in cmap_edges:

139 if node.name == "cx":

140 dag.substitute_node_with_dag(node, self._cx_dag)

141 elif node.name == "ecr":

142 dag.substitute_node_with_dag(node, self._ecr_dag)

143 elif node.name == "rzx":

144 dag.substitute_node_with_dag(node, self._rzx_dag(*node.op.params))

145 else:

146 raise TranspilerError(

147 f"Flipping of gate direction is only supported "

148 f"for CX, ECR, and RZX at this time, not {node.name}."

149 )

150 else:

151 # TODO: Work with the gate instances and only use names as look up keys.

152 # This will require iterating over the target names to build a mapping

153 # of names to gates that implement CXGate, ECRGate, RZXGate (including

154 # fixed angle variants)

155 for node in dag.two_qubit_ops():

156 control = node.qargs[0]

157 target = node.qargs[1]

158

159 physical_q0 = layout_map[control]

160 physical_q1 = layout_map[target]

161

162 if node.name == "cx":

163 if (physical_q0, physical_q1) in self.target["cx"]:

164 continue

165 if (physical_q1, physical_q0) in self.target["cx"]:

166 dag.substitute_node_with_dag(node, self._cx_dag)

167 else:

168 raise TranspilerError(

169 "The circuit requires a connection between physical "

170 "qubits %s and %s for cx" % (physical_q0, physical_q1)

171 )

172 elif node.name == "ecr":

173 if (physical_q0, physical_q1) in self.target["ecr"]:

174 continue

175 if (physical_q1, physical_q0) in self.target["ecr"]:

176 dag.substitute_node_with_dag(node, self._ecr_dag)

177 else:

178 raise TranspilerError(

179 "The circuit requires a connection between physical "

180 "qubits %s and %s for ecr" % (physical_q0, physical_q1)

181 )

182 elif node.name == "rzx":

183 if (physical_q0, physical_q1) in self.target["rzx"]:

184 continue

185 if (physical_q1, physical_q0) in self.target["rzx"]:

186 dag.substitute_node_with_dag(node, self._rzx_dag(*node.op.params))

187 else:

188 raise TranspilerError(

189 "The circuit requires a connection between physical "

190 "qubits %s and %s for rzx" % (physical_q0, physical_q1)

191 )

192 else:

193 raise TranspilerError(

194 f"Flipping of gate direction is only supported "

195 f"for CX, ECR, and RZX at this time, not {node.name}."

196 )

197 return dag

198

[end of qiskit/transpiler/passes/utils/gate_direction.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/qiskit/transpiler/passes/utils/gate_direction.py b/qiskit/transpiler/passes/utils/gate_direction.py

--- a/qiskit/transpiler/passes/utils/gate_direction.py

+++ b/qiskit/transpiler/passes/utils/gate_direction.py

@@ -20,7 +20,7 @@

from qiskit.circuit import QuantumRegister

from qiskit.dagcircuit import DAGCircuit

-from qiskit.circuit.library.standard_gates import RYGate, HGate, CXGate, ECRGate, RZXGate

+from qiskit.circuit.library.standard_gates import RYGate, HGate, CXGate, CZGate, ECRGate, RZXGate

class GateDirection(TransformationPass):

@@ -78,6 +78,11 @@

self._ecr_dag.apply_operation_back(HGate(), [qr[0]], [])

self._ecr_dag.apply_operation_back(HGate(), [qr[1]], [])

+ self._cz_dag = DAGCircuit()

+ qr = QuantumRegister(2)

+ self._cz_dag.add_qreg(qr)

+ self._cz_dag.apply_operation_back(CZGate(), [qr[1], qr[0]], [])

+

@staticmethod

def _rzx_dag(parameter):

_rzx_dag = DAGCircuit()

@@ -138,6 +143,8 @@

if (physical_q0, physical_q1) not in cmap_edges:

if node.name == "cx":

dag.substitute_node_with_dag(node, self._cx_dag)

+ elif node.name == "cz":

+ dag.substitute_node_with_dag(node, self._cz_dag)

elif node.name == "ecr":

dag.substitute_node_with_dag(node, self._ecr_dag)

elif node.name == "rzx":

@@ -169,6 +176,16 @@

"The circuit requires a connection between physical "

"qubits %s and %s for cx" % (physical_q0, physical_q1)

)

+ elif node.name == "cz":

+ if (physical_q0, physical_q1) in self.target["cz"]:

+ continue

+ if (physical_q1, physical_q0) in self.target["cz"]:

+ dag.substitute_node_with_dag(node, self._cz_dag)

+ else:

+ raise TranspilerError(

+ "The circuit requires a connection between physical "

+ "qubits %s and %s for cz" % (physical_q0, physical_q1)

+ )

elif node.name == "ecr":

if (physical_q0, physical_q1) in self.target["ecr"]:

continue