licenses

sequencelengths 1

3

| version

stringclasses 677

values | tree_hash

stringlengths 40

40

| path

stringclasses 1

value | type

stringclasses 2

values | size

stringlengths 2

8

| text

stringlengths 25

67.1M

| package_name

stringlengths 2

41

| repo

stringlengths 33

86

|

|---|---|---|---|---|---|---|---|---|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 21389 | export AffineConstantFlow, AffineHalfFlow, SlowMAF, MAF, IAF, ActNorm,

Invertible1x1Conv, NormalizingFlow, NormalizingFlowModel, NeuralCouplingFlow,

autoregressive_network, FlowOp, LinearFlow, TanhFlow, AffineScalarFlow,

InvertFlow, AffineFlow

abstract type FlowOp end

#------------------------------------------------------------------------------------------

# Users can define their own FlowOp in the Main

forward(fo::T, x::Union{Array{<:Real}, PyObject}) where T<:FlowOp = Main.forward(fo, x)

backward(fo::T, z::Union{Array{<:Real}, PyObject}) where T<:FlowOp = Main.backward(fo, z)

#------------------------------------------------------------------------------------------

mutable struct LinearFlow <: FlowOp

dim::Int64

indim::Int64

outdim::Int64

A::PyObject

b::PyObject

end

@doc raw"""

LinearFlow(indim::Int64, outdim::Int64, A::Union{PyObject, Array{<:Real,2}, Missing}=missing,

b::Union{PyObject, Array{<:Real,1}, Missing}=missing, name::Union{String, Missing}=missing)

LinearFlow(A::Union{PyObject, Array{<:Real,2}},

b::Union{PyObject, Array{<:Real,1}, Missing} = missing; name::Union{String, Missing}=missing)

Creates a linear flow operator,

$$x = Az + b$$

The operator is not necessarily invertible. $A$ has the size `outdim×indim`, $b$ is a length `outdim` vector.

# Example 1: Reconstructing a Gaussian random variable using invertible A

```julia

using ADCME

using Distributions

d = MvNormal([2.0;3.0],[2.0 1.0;1.0 2.0])

flows = [LinearFlow(2, 2)]

prior = ADCME.MultivariateNormalDiag(loc = zeros(2))

model = NormalizingFlowModel(prior, flows)

x = rand(d, 10000)'|>Array

zs, prior_logpdf, logdet = model(x)

log_pdf = prior_logpdf + logdet

loss = -sum(log_pdf)

sess = Session(); init(sess)

BFGS!(sess, loss)

run(sess, flows[1].b) # approximately [2.0 3.0]

run(sess, flows[1].A * flows[1].A') # approximate [2.0 1.0;1.0 2.0]

```

# Example 2: Reconstructing a Gaussian random variable using non-invertible A

```julia

using ADCME

using Distributions

d = Normal()

A = reshape([2. 1.], 2, 1)

b = Variable(ones(2))

flows = [LinearFlow(A, b)]

prior = ADCME.MultivariateNormalDiag(loc = zeros(1))

model = NormalizingFlowModel(prior, flows)

x = rand(d, 10000, 1)

x = x * [2.0 1.0] .+ [1.0 3.0]

x = [2.0 2.0]

zs, prior_logpdf, logdet = model(x)

log_pdf = prior_logpdf + logdet

loss = -sum(log_pdf)

sess = Session(); init(sess)

BFGS!(sess, loss)

run(sess, b[1]*2 + b[2]) # approximate 6.0

```

"""

function LinearFlow(indim::Int64, outdim::Int64, A::Union{PyObject, Array{<:Real,2}, Missing}=missing,

b::Union{PyObject, Array{<:Real,1}, Missing}=missing; name::Union{String, Missing}=missing)

if ismissing(name)

name = randstring(10)

end

if ismissing(A)

if indim==outdim

A = diagm(0=>ones(indim))

A = get_variable(A, name=name*"_A")

else

A = get_variable(Float64, shape=[outdim,indim], name=name*"_A")

end

end

if ismissing(b)

b = get_variable(zeros(1,outdim), name=name*"_b")

end

A = constant(A)

b = constant(b)

b = reshape(b, (1,-1))

if size(A)!=(outdim,indim)

error("Expected A shape: $((outdim,indim)), but got $(size(A))")

end

if size(b)!=(1,outdim)

error("Expected b shape: $((1,outdim)), but got $(size(b))")

end

LinearFlow(outdim, indim, outdim, A, b)

end

function LinearFlow(dim::Int64)

LinearFlow(dim,dim)

end

function LinearFlow(A::Union{PyObject, Array{<:Real,2}},

b::Union{PyObject, Array{<:Real}, Missing} = missing; name::Union{String, Missing}=missing)

outdim, indim = size(A)

if ismissing(b)

b = zeros(outdim)

end

A = constant(A)

b = constant(b)

LinearFlow(outdim, indim, outdim, A, b)

end

function forward(fo::LinearFlow, x::Union{Array{<:Real}, PyObject})

if fo.indim==fo.outdim

return solve_batch(fo.A, x - fo.b), -log(abs(det(fo.A))) * ones(size(x, 1))

else

return solve_batch(fo.A, x - fo.b), -1/2*log(abs(det(fo.A'*fo.A))) * ones(size(x, 1))

end

end

function backward(fo::LinearFlow, z::Union{Array{<:Real}, PyObject})

d = nothing

if fo.indim==fo.outdim

d = log(abs(det(fo.A)))

end

z * fo.A' + fo.b, d

end

#------------------------------------------------------------------------------------------

mutable struct AffineConstantFlow <: FlowOp

dim::Int64

s::Union{Missing,PyObject}

t::Union{Missing,PyObject}

end

function AffineConstantFlow(dim::Int64, name::Union{String, Missing}=missing; scale::Bool=true, shift::Bool=true)

if ismissing(name)

name = randstring(10)

end

s = missing

t = missing

if scale

s = get_variable(Float64, shape=[1,dim], name=name*"_s")

end

if shift

t = get_variable(Float64, shape=[1,dim], name=name*"_t")

end

AffineConstantFlow(dim, s, t)

end

function forward(fo::AffineConstantFlow, x::Union{Array{<:Real}, PyObject})

s = ismissing(fo.s) ? zeros_like(x) : fo.s

t = ismissing(fo.t) ? zeros_like(x) : fo.t

z = x .* exp(s) + t

log_det = sum(s, dims=2)

return z, log_det

end

function backward(fo::AffineConstantFlow, z::Union{Array{<:Real}, PyObject})

s = ismissing(fo.s) ? zeros_like(z) : fo.s

t = ismissing(fo.t) ? zeros_like(z) : fo.t

x = (z-t) .* exp(-s)

log_det = sum(-s, dims=2)

return x, log_det

end

#------------------------------------------------------------------------------------------

mutable struct ActNorm <: FlowOp

dim::Int64

fo::AffineConstantFlow

initialized::PyObject

end

function ActNorm(dim::Int64, name::Union{String, Missing}=missing)

if ismissing(name)

name = "ActNorm_"*randstring(10)

end

initialized = get_variable(1, name = name)

ActNorm(dim, AffineConstantFlow(dim), initialized)

end

function forward(actnorm::ActNorm, x::Union{Array{<:Real}, PyObject})

x = constant(x)

actnorm.fo.s, actnorm.fo.t = tf.cond(tf.equal(actnorm.initialized,constant(0)),

()->(actnorm.fo.s, actnorm.fo.t), #actnorm.fo.s, actnorm.fo.t),

()->begin

setzero = assign(actnorm.initialized, 0)

x0 = copy(x)

s0 = reshape(-log(std(x0, dims=1)), 1, :)

t0 = reshape(mean(-x0 .* exp(s0), dims=1), 1, :)

op = assign([actnorm.fo.s, actnorm.fo.t], [s0, t0])

p = tf.print("ActNorm: initializing s and t...")

op[1] = bind(op[1], setzero)

op[1] = bind(op[1], p)

op[1], op[2]

end)

forward(actnorm.fo, x)

end

function backward(actnorm::ActNorm, z::Union{Array{<:Real}, PyObject})

backward(actnorm.fo, z)

end

#------------------------------------------------------------------------------------------

mutable struct SlowMAF <: FlowOp

dim::Int64

layers::Array{Function}

order::Array{Int64}

p::PyObject

end

function SlowMAF(dim::Int64, parity::Bool, nns::Array)

local order

@assert length(nns)==dim - 1

if parity

order = Array(1:dim)

else

order = reverse(Array(1:dim))

end

p = Variable(zeros(2))

SlowMAF(dim, nns, order, p)

end

function forward(fo::SlowMAF, x::Union{Array{<:Real}, PyObject})

z = tf.zeros_like(x)

log_det = zeros(size(x,1))

for i = 1:fo.dim

if i==1

st = repeat(fo.p', size(x,1), 1)

else

st = fo.layers[i-1](x[:,1:i-1])

@assert size(st, 2)==2

end

s, t = st[:,1], st[:,2]

z = scatter_update(z, :, fo.order[i], x[:, i]*exp(s) + t)

log_det += s

end

return z, log_det

end

function backward(fo::SlowMAF, z::Union{Array{<:Real}, PyObject})

x = tf.zeros_like(z)

log_det = zeros(size(z,1))

for i = 1:fo.dim

if i==1

st = repeat(fo.p', size(x,1), 1)

else

st = fo.layers[i-1](x[:,1:i-1])

end

s, t = st[:,1], st[:,2]

x = scatter_update(x, :, i, (z[:, fo.order[i]] - t) * exp(-s))

log_det += -s

end

return x, log_det

end

#------------------------------------------------------------------------------------------

mutable struct MAF <: FlowOp

dim::Int64

net::Function

parity::Bool

end

function MAF(dim::Int64, parity::Bool, config::Array{Int64}; name::Union{String, Missing} = missing,

activation::Union{Nothing,String} = "relu", kwargs...)

push!(config, 2)

kwargs = jlargs(kwargs)

if ismissing(name)

name = "MAF_"*randstring(10)

end

net = x->autoregressive_network(x, config, name; activation = activation)

MAF(dim, net, parity)

end

function forward(fo::MAF, x::Union{Array{<:Real}, PyObject})

st = fo.net(x)

# @info st, split(st, 1, dims=2)

s, t = squeeze.(split(st, 2, dims=3))

z = x .* exp(s) + t

if fo.parity

z = reverse(z, dims=2)

end

log_det = sum(s, dims=2)

return z, log_det

end

function backward(fo::MAF, z::Union{Array{<:Real}, PyObject})

x = zeros_like(z)

log_det = zeros(size(z, 1))

if fo.parity

z = reverse(z, dims=2)

end

for i = 1:fo.dim

st = fo.net(copy(x))

s, t = squeeze.(split(st, 2, dims=3))

x = scatter_update(x, :, i, (z[:, i] - t[:, i]) * exp(-s[:, i]))

log_det += -s[:,i]

end

return x, log_det

end

"""

autoregressive_network(x::Union{Array{Float64}, PyObject}, config::Array{<:Integer},

scope::String="default"; activation::String=nothing, kwargs...)

Creates an masked autoencoder for distribution estimation.

"""

function autoregressive_network(x::Union{Array{Float64}, PyObject}, config::Array{<:Integer},

scope::String="default"; activation::Union{Nothing,String}="tanh", kwargs...)

local y

x = constant(x)

params = config[end]

hidden_units = PyVector(config[1:end-1])

event_shape = [size(x, 2)]

if haskey(STORAGE, scope*"/autoregressive_network/")

made = STORAGE[scope*"/autoregressive_network/"]

y = made(x)

else

made = ADCME.tfp.bijectors.AutoregressiveNetwork(params=params, hidden_units = hidden_units,

event_shape = event_shape, activation = activation, kwargs...)

STORAGE[scope*"/autoregressive_network/"] = made

y = made(x)

end

return y

end

#------------------------------------------------------------------------------------------

mutable struct IAF <: FlowOp

maf::MAF

end

function IAF(dim::Int64, parity::Bool, config::Array{Int64}; name::Union{String, Missing} = missing,

activation::Union{Nothing,String} = "relu", kwargs...)

MAF(dim, parity, config; name=name, activation=activation, kwargs...)

end

function forward(fo::IAF, x::Union{Array{<:Real}, PyObject})

backward(fo.maf, x)

end

function backward(fo::IAF, z::Union{Array{<:Real}, PyObject})

forward(fo.maf, z)

end

#------------------------------------------------------------------------------------------

mutable struct Invertible1x1Conv <: FlowOp

dim::Int64

P::Array{Float64}

L::PyObject

S::PyObject

U::PyObject

end

function Invertible1x1Conv(dim::Int64, name::Union{Missing,String}=missing)

if ismissing(name)

name = randstring(10)

end

Q = lu(randn(dim,dim))

P, L, U = Q.P, Q.L, Q.U

L = get_variable(L, name=name*"_L")

S = get_variable(diag(U), name=name*"_S")

U = get_variable(triu(U, 1), name=name*"_U")

Invertible1x1Conv(dim, P, L, S, U)

end

function _assemble_W(fo::Invertible1x1Conv)

L = tril(fo.L, -1) + diagm(0=>ones(fo.dim))

U = triu(fo.U, 1)

W = fo.P * L * (U + diagm(fo.S))

return W

end

function forward(fo::Invertible1x1Conv, x::Union{Array{<:Real}, PyObject})

W = _assemble_W(fo)

z = x*W

log_det = sum(log(abs(fo.S)))

return z, log_det

end

function backward(fo::Invertible1x1Conv, z::Union{Array{<:Real}, PyObject})

W = _assemble_W(fo)

Winv = inv(W)

x = z*Winv

log_det = -sum(log(abs(fo.S)))

return x, log_det

end

#------------------------------------------------------------------------------------------

mutable struct AffineHalfFlow <: FlowOp

dim::Int64

parity::Bool

s_cond::Function

t_cond::Function

end

"""

AffineHalfFlow(dim::Int64, parity::Bool, s_cond::Union{Function, Missing} = missing, t_cond::Union{Function, Missing} = missing)

Creates an `AffineHalfFlow` operator.

"""

function AffineHalfFlow(dim::Int64, parity::Bool, s_cond::Union{Function, Missing} = missing, t_cond::Union{Function, Missing} = missing)

if ismissing(s_cond)

s_cond = x->constant(zeros(size(x,1), (dim+1)÷2))

end

if ismissing(t_cond)

t_cond = x->constant(zeros(size(x,1), dim÷2))

end

AffineHalfFlow(dim, parity, s_cond, t_cond)

end

function forward(fo::AffineHalfFlow, x::Union{Array{<:Real}, PyObject})

x = constant(x)

x0 = x[:,1:2:end]

x1 = x[:,2:2:end]

if fo.parity

x0, x1 = x1, x0

end

s = fo.s_cond(x0)

t = fo.t_cond(x0)

z0 = x0

z1 = exp(s) .* x1 + t

if fo.parity

z0, z1 = z1, z0

end

z = [z0 z1]

log_det = sum(s, dims=2)

return z, log_det

end

function backward(fo::AffineHalfFlow, z::Union{Array{<:Real}, PyObject})

z = constant(z)

z0 = z[:,1:2:end]

z1 = z[:,2:2:end]

if fo.parity

z0, z1 = z1, z0

end

s = fo.s_cond(z0)

t = fo.t_cond(z0)

x0 = z0

x1 = (z1-t).*exp(-s)

if fo.parity

x0, x1 = x1, x0

end

x = [x0 x1]

log_det = sum(-s, dims=2)

return x, log_det

end

#------------------------------------------------------------------------------------------

mutable struct NeuralCouplingFlow <: FlowOp

dim::Int64

K::Int64

B::Int64

f1::Function

f2::Function

end

function NeuralCouplingFlow(dim::Int64, f1::Function, f2::Function, K::Int64=8, B::Int64=3)

@assert mod(dim,2)==0

NeuralCouplingFlow(dim, K, B, f1, f2)

end

function forward(fo::NeuralCouplingFlow, x::Union{Array{<:Real}, PyObject})

x = constant(x)

dim, K, B, f1, f2 = fo.dim, fo.K, fo.B, fo.f1, fo.f2

RQS = tfp.bijectors.RationalQuadraticSpline

log_det = constant(zeros(size(x,1)))

lower, upper = x[:,1:dim÷2], x[:,dim÷2+1:end]

f1_out = f1(lower)

@assert size(f1_out,2)==(3K-1)*size(lower,2)

out = reshape(f1_out, (-1, size(lower,2), 3K-1))

W, H, D = split(out, [K, K, K-1], dims=3)

W, H = softmax(W, dims=3), softmax(H, dims=3)

W, H = 2B*W, 2B*H

D = softplus(D)

rqs = RQS(W, H, D, range_min=-B)

upper, ld = rqs.forward(upper), rqs.forward_log_det_jacobian(upper, 1)

log_det += ld

f2_out = f2(upper)

@assert size(f2_out,2)==(3K-1)*size(upper,2)

out = reshape(f2_out, (-1, size(upper,2), 3K -1))

W, H, D = split(out, [K, K, K-1], dims=3)

W, H = softmax(W, dims=3), softmax(H, dims=3)

W, H = 2B*W, 2B*H

D = softplus(D)

rqs = RQS(W, H, D, range_min=-B)

lower, ld = rqs.forward(lower), rqs.forward_log_det_jacobian(lower, 1)

log_det += ld

return [lower upper], log_det

end

function backward(fo::NeuralCouplingFlow, x::Union{Array{<:Real}, PyObject})

x = constant(x)

dim, K, B, f1, f2 = fo.dim, fo.K, fo.B, fo.f1, fo.f2

RQS = tfp.bijectors.RationalQuadraticSpline

log_det = constant(zeros(size(x,1)))

lower, upper = x[:,1:dim÷2], x[:,dim÷2+1:end]

f2_out = f2(upper)

@assert size(f2_out,2)==(3K-1)*size(upper,2)

out = reshape(f2_out, (-1, size(upper,2), 3K-1))

W, H, D = split(out, [K, K, K-1], dims=3)

W, H = softmax(W, dims=3), softmax(H, dims=3)

W, H = 2B*W, 2B*H

D = softplus(D)

rqs = RQS(W, H, D, range_min=-B)

lower, ld = rqs.inverse(lower), rqs.inverse_log_det_jacobian(lower, 1)

log_det += ld

f1_out = f1(lower)

@assert size(f1_out,2)==(3K-1)*size(lower,2)

out = reshape(f1_out, (-1, size(lower,2), 3K -1))

W, H, D = split(out, [K, K, K-1], dims=3)

W, H = softmax(W, dims=3), softmax(H, dims=3)

W, H = 2B*W, 2B*H

D = softplus(D)

rqs = RQS(W, H, D, range_min=-B)

upper, ld = rqs.inverse(upper), rqs.inverse_log_det_jacobian(upper, 1)

log_det += ld

return [lower upper], log_det

end

#------------------------------------------------------------------------------------------

mutable struct TanhFlow <: FlowOp

dim::Int64

o::PyObject

end

function TanhFlow(dim::Int64)

TanhFlow(dim, tfp.bijectors.Tanh())

end

function forward(fo::TanhFlow, x::Union{Array{<:Real}, PyObject})

fo.o.forward(x), fo.o.forward_log_det_jacobian(x, 1)

end

function backward(fo::TanhFlow, z::Union{Array{<:Real}, PyObject})

fo.o.inverse(z), fo.o.inverse_log_det_jacobian(z, 1)

end

#------------------------------------------------------------------------------------------

mutable struct AffineScalarFlow <: FlowOp

dim::Int64

o::PyObject

end

function AffineScalarFlow(dim::Int64, shift::Float64 = 0.0, scale::Float64 = 1.0)

AffineScalarFlow(dim, tfp.bijectors.AffineScalar(shift=shift, scale=constant(scale)))

end

function forward(fo::AffineScalarFlow, x::Union{Array{<:Real}, PyObject})

fo.o.forward(x), fo.o.forward_log_det_jacobian(x, 1)

end

function backward(fo::AffineScalarFlow, z::Union{Array{<:Real}, PyObject})

fo.o.inverse(z), fo.o.inverse_log_det_jacobian(z, 1)

end

#------------------------------------------------------------------------------------------

mutable struct InvertFlow <: FlowOp

dim::Int64

o::FlowOp

end

"""

InvertFlow(fo::FlowOp)

Creates a flow operator that is the invert of `fo`.

# Example

```julia

inv_tanh_flow = InvertFlow(TanhFlow(2))

```

"""

function InvertFlow(fo::FlowOp)

InvertFlow(fo.dim, fo)

end

forward(fo::InvertFlow, x::Union{Array{<:Real}, PyObject}) = backward(fo.o, x)

backward(fo::InvertFlow, z::Union{Array{<:Real}, PyObject}) = forward(fo.o, z)

#------------------------------------------------------------------------------------------

@doc raw"""

AffineFlow(args...;kwargs...)

Creates a linear flow operator,

$$z = Ax + b$$

See [`LinearFlow`](@ref)

"""

AffineFlow(args...;kwargs...) = InvertFlow(LinearFlow(args...;kwargs...))

#------------------------------------------------------------------------------------------

mutable struct NormalizingFlow

flows::Array

NormalizingFlow(flows::Array) = new(flows)

end

function forward(fo::NormalizingFlow, x::Union{PyObject, Array{<:Real,2}})

x = constant(x)

m = size(x,1)

log_det = constant(zeros(m))

zs = PyObject[x]

for flow in fo.flows

x, ld = forward(flow, x)

log_det += ld

push!(zs, x)

end

return zs, log_det

end

function backward(fo::NormalizingFlow, z::Union{PyObject, Array{<:Real,2}})

z = constant(z)

m = size(z,1)

log_det = constant(zeros(m))

xs = [z]

for flow in reverse(fo.flows)

z, ld = backward(flow, z)

if isnothing(ld) || isnothing(log_det)

log_det = nothing

else

log_det += ld

end

push!(xs, z)

end

return xs, log_det

end

#------------------------------------------------------------------------------------------

mutable struct NormalizingFlowModel

prior::ADCMEDistribution

flow::NormalizingFlow

end

function Base.:show(io::IO, model::NormalizingFlowModel)

typevec = typeof.(model.flow.flows)

println("( $(string(typeof(model.prior))[7:end]) )")

println("\t↓")

for i = length(typevec):-1:1

println("$(typevec[i]) (dim = $(model.flow.flows[i].dim))")

println("\t↓")

end

println("( Data )")

end

function NormalizingFlowModel(prior::T, flows::Array{<:FlowOp}) where T<:ADCMEDistribution

NormalizingFlowModel(prior, NormalizingFlow(flows))

end

function forward(nf::NormalizingFlowModel, x::Union{PyObject, Array{<:Real,2}})

x = constant(x)

zs, log_det = forward(nf.flow, x)

prior_logprob = logpdf(nf.prior, zs[end])

return zs, prior_logprob, log_det

end

function backward(nf::NormalizingFlowModel, z::Union{PyObject, Array{<:Real,2}})

z = constant(z)

xs, log_det = backward(nf.flow, z)

return xs, log_det

end

function Base.:rand(nf::NormalizingFlowModel, num_samples::Int64)

z = rand(nf.prior, num_samples)

xs, _ = backward(nf.flow, z)

return xs

end

#------------------------------------------------------------------------------------------

(o::NormalizingFlowModel)(x::Union{PyObject, Array{<:Real,2}}) = forward(o, x)

#------------------------------------------------------------------------------------------

# From TensorFlow Probability

for (op1, op2) in [(:Abs, :AbsoluteValue), (:Exp, :Exp), (:Log, :Log),

(:MaskedAutoregressiveFlow, :MaskedAutoregressiveFlow), (:Permute,:Permute),

(:PowerTransform, :PowerTransform), (:Sigmoid,:Sigmoid), (:Softplus,:Softplus),

(:Square, :Square)]

@eval begin

export $op1

mutable struct $op1 <: FlowOp

dim::Int64

fo::PyObject

end

function $op1(dim::Int64, args...;kwargs...)

fo = tfp.bijectors.$op2(args...;kwargs...)

$op1(dim, fo)

end

forward(o::$op1, x::Union{PyObject, Array{<:Real,2}}) = (o.fo.forward(x), o.fo.forward_log_det_jacobian(x, 1))

backward(o::$op1, z::Union{PyObject, Array{<:Real,2}}) = (o.fo.inverse(z), o.fo.inverse_log_det_jacobian(z, 1))

end

end | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 11989 | export GAN,

jsgan,

klgan,

wgan,

rklgan,

lsgan,

sample,

predict,

dcgan_generator,

dcgan_discriminator,

wgan_stable

mutable struct GAN

latent_dim::Int64

batch_size::Int64

dim::Int64

dat::PyObject

generator::Union{Missing, Function}

discriminator::Union{Missing, Function}

loss::Function

d_vars::Union{Missing,Array{PyObject}}

g_vars::Union{Missing,Array{PyObject}}

d_loss::Union{Missing,PyObject}

g_loss::Union{Missing,PyObject}

is_training::Union{Missing,PyObject, Bool}

update::Union{Missing,Array{PyObject}}

fake_data::Union{Missing,PyObject}

true_data::Union{Missing,PyObject}

ganid::String

noise::Union{Missing,PyObject}

ids::Union{Missing,PyObject}

STORAGE::Dict{String, Any}

end

"""

build!(gan::GAN)

Builds the GAN instances. This function returns `gan` for convenience.

"""

function build!(gan::GAN)

gan.noise = placeholder(get_dtype(gan.dat), shape=(gan.batch_size, gan.latent_dim))

gan.ids = placeholder(Int32, shape=(gan.batch_size,))

variable_scope("generator_$(gan.ganid)") do

gan.fake_data = gan.generator(gan.noise, gan)

end

gan.true_data = tf.gather(gan.dat,gan.ids-1)

variable_scope("discriminator_$(gan.ganid)") do

gan.d_loss, gan.g_loss = gan.loss(gan)

end

gan.d_vars = Array{PyObject}(get_collection("discriminator_$(gan.ganid)"))

gan.d_vars = length(gan.d_vars)>0 ? gan.d_vars : missing

gan.g_vars = Array{PyObject}(get_collection("generator_$(gan.ganid)"))

gan.g_vars = length(gan.g_vars)>0 ? gan.g_vars : missing

gan.update = Array{PyCall.PyObject}(get_collection(UPDATE_OPS))

gan.update = length(gan.update)>0 ? gan.update : missing

# gan.STORAGE["d_grad_magnitude"] = gradient_magnitude(gan.d_loss, gan.d_vars)

# gan.STORAGE["g_grad_magnitude"] = gradient_magnitude(gan.g_loss, gan.g_vars)

gan

end

@doc raw"""

GAN(dat::Union{Array,PyObject}, generator::Function, discriminator::Function,

loss::Union{Missing, Function}=missing; latent_dim::Union{Missing, Int64}=missing,

batch_size::Int64=32)

Creates a GAN instance.

- `dat` ``\in \mathbb{R}^{n\times d}`` is the training data for the GAN, where ``n`` is the number of training data, and ``d`` is the dimension per training data.

- `generator```:\mathbb{R}^{d'} \rightarrow \mathbb{R}^d`` is the generator function, ``d'`` is the hidden dimension.

- `discriminator```:\mathbb{R}^{d} \rightarrow \mathbb{R}`` is the discriminator function.

- `loss` is the loss function. See [`klgan`](@ref), [`rklgan`](@ref), [`wgan`](@ref), [`lsgan`](@ref) for examples.

- `latent_dim` (default=``d``, the same as output dimension) is the latent dimension.

- `batch_size` (default=32) is the batch size in training.

# Example: Constructing a GAN

```julia

dat = rand(10000,10)

generator = (z, gan)->10*z

discriminator = (x, gan)->sum(x)

gan = GAN(dat, generator, discriminator, "wgan_stable")

```

# Example: Learning a Gaussian random variable

```julia

using ADCME

using PyPlot

using Distributions

dat = randn(10000, 1) * 0.5 .+ 3.0

function gen(z, gan)

ae(z, [20,20,20,1], "generator_$(gan.ganid)", activation = "relu")

end

function disc(x, gan)

squeeze(ae(x, [20,20,20,1], "discriminator_$(gan.ganid)", activation = "relu"))

end

gan = GAN(dat, gen, disc, g->wgan_stable(g, 0.001); latent_dim = 10)

dopt = AdamOptimizer(0.0002, beta1=0.5, beta2=0.9).minimize(gan.d_loss, var_list=gan.d_vars)

gopt = AdamOptimizer(0.0002, beta1=0.5, beta2=0.9).minimize(gan.g_loss, var_list=gan.g_vars)

sess = Session(); init(sess)

for i = 1:5000

batch_x = rand(1:10000, 32)

batch_z = randn(32, 10)

for n_critic = 1:1

global _, dl = run(sess, [dopt, gan.d_loss],

feed_dict=Dict(gan.ids=>batch_x, gan.noise=>batch_z))

end

_, gl, gm, dm, gp = run(sess, [gopt, gan.g_loss,

gan.STORAGE["g_grad_magnitude"], gan.STORAGE["d_grad_magnitude"],

gan.STORAGE["gradient_penalty"]],

feed_dict=Dict(gan.ids=>batch_x, gan.noise=>batch_z))

mod(i, 100)==0 && (@info i, dl, gl, gm, dm, gp)

end

hist(run(sess, squeeze(rand(gan,10000))), bins=50, density = true)

nm = Normal(3.0,0.5)

x0 = 1.0:0.01:5.0

y0 = pdf.(nm, x0)

plot(x0, y0, "g")

```

"""

function GAN(dat::Union{Array,PyObject}, generator::Function, discriminator::Function,

loss::Union{Missing, Function, String}=missing; latent_dim::Union{Missing, Int64}=missing,

batch_size::Int64=32)

dim = size(dat, 2)

dat = convert_to_tensor(dat)

if ismissing(latent_dim)

latent_dim=dim

end

if ismissing(loss)

loss = "jsgan"

end

if isa(loss, String)

if loss=="jsgan"

loss = jsgan

elseif loss=="klgan"

loss = klgan

elseif loss=="wgan"

loss = wgan

elseif loss=="wgan_stable"

loss = wgan_stable

elseif loss=="rklgan"

loss = rklgan

elseif loss=="lsgan"

loss = lsgan

else

error("loss function $loss not found!")

end

end

gan = GAN(latent_dim, batch_size, dim, dat, generator, discriminator, loss, missing, missing, missing,

missing, ADCME.options.training.training, missing, missing, missing, randstring(), missing, missing, Dict())

build!(gan)

gan

end

GAN(dat::Array{T}) where T<:Real = GAN(constant(dat))

function Base.:show(io::IO, gan::GAN)

yes_or_no = x->ismissing(x) ? "✘" : "✔️"

print(

"""

( Generative Adversarial Network -- $(gan.ganid) )

==================================================

$(gan.latent_dim) D $(gan.dim) D

(Latent Space)---->(Data Space)

batch_size = $(gan.batch_size)

loss function: $(gan.loss)

generator: $(gan.generator)

discriminator: $(gan.discriminator)

d_vars ... $(yes_or_no(gan.d_vars))

g_vars ... $(yes_or_no(gan.g_vars))

d_loss ... $(yes_or_no(gan.d_loss))

g_loss ... $(yes_or_no(gan.g_loss))

update ... $(yes_or_no(gan.update))

true_data ... $(size(gan.true_data))

fake_data ... $(size(gan.fake_data))

is_training... Placeholder (Bool), $(gan.is_training)

noise ... Placeholder (Float64) of size $(size(gan.noise))

ids ... Placeholder (Int32) of size $(size(gan.ids))

"""

)

end

##################### GAN Library #####################

"""

klgan(gan::GAN)

Computes the KL-divergence GAN loss function.

"""

function klgan(gan::GAN)

P, Q = gan.true_data, gan.fake_data

D_real = gan.discriminator(P, gan)

D_fake = gan.discriminator(Q, gan)

D_loss = -mean(log(D_real) + log(1-D_fake))

G_loss = mean(log((1-D_fake)/D_fake))

D_loss, G_loss

end

"""

jsgan(gan::GAN)

Computes the vanilla GAN loss function.

"""

function jsgan(gan::GAN)

P, Q = gan.true_data, gan.fake_data

D_real = gan.discriminator(P, gan)

D_fake = gan.discriminator(Q, gan)

D_loss = -mean(log(D_real) + log(1-D_fake))

G_loss = -mean(log(D_fake))

D_loss, G_loss

end

"""

wgan(gan::GAN)

Computes the Wasserstein GAN loss function.

"""

function wgan(gan::GAN)

P, Q = gan.true_data, gan.fake_data

D_real = gan.discriminator(P, gan)

D_fake = gan.discriminator(Q, gan)

D_loss = mean(D_fake)-mean(D_real)

G_loss = -mean(D_fake)

D_loss, G_loss

end

@doc raw"""

wgan_stable(gan::GAN, λ::Float64)

Returns the discriminator and generator loss for the Wasserstein GAN loss with penalty parameter $\lambda$

The objective function is

```math

L = E_{\tilde x\sim P_g} [D(\tilde x)] - E_{x\sim P_r} [D(x)] + \lambda E_{\hat x\sim P_{\hat x}}[(||\nabla_{\hat x}D(\hat x)||^2-1)^2]

```

"""

function wgan_stable(gan::GAN, λ::Float64=1.0)

real_data, fake_data = gan.true_data, gan.fake_data

@assert length(size(real_data))==2

@assert length(size(fake_data))==2

D_logits = gan.discriminator(real_data, gan)

D_logits_ = gan.discriminator(fake_data, gan)

g_loss = -mean(D_logits_)

d_loss_real = -mean(D_logits)

d_loss_fake = tf.reduce_mean(D_logits_)

α = tf.random_uniform(

shape=(gan.batch_size, 1),

minval=0.,

maxval=1.,

dtype=tf.float64

)

differences = fake_data - real_data

interpolates = real_data + α .* differences # nb x dim

d_inter = gan.discriminator(interpolates, gan)

@assert length(size(d_inter))==1

gradients = tf.gradients(d_inter, interpolates)[1] # ∇D(xt), nb x dim

slopes = sqrt(sum(gradients^2, dims=2)) # ||∇D(xt)||, (nb,)

gradient_penalty = mean((slopes-1.)^2)

d_loss = d_loss_fake + d_loss_real + λ * gradient_penalty

gan.STORAGE["gradient_penalty"] = mean(slopes)

return d_loss, g_loss

end

"""

rklgan(gan::GAN)

Computes the reverse KL-divergence GAN loss function.

"""

function rklgan(gan::GAN)

P, Q = gan.true_data, gan.fake_data

D_real = gan.discriminator(P, gan)

D_fake = gan.discriminator(Q, gan)

G_loss = mean(log((1-D_fake)/D_fake))

D_loss = -mean(log(D_fake)+log(1-D_real))

D_loss, G_loss

end

"""

lsgan(gan::GAN)

Computes the least square GAN loss function.

"""

function lsgan(gan::GAN)

P, Q = gan.true_data, gan.fake_data

D_real = gan.discriminator(P, gan)

D_fake = gan.discriminator(Q, gan)

D_loss = mean((D_real-1)^2+D_fake^2)

G_loss = mean((D_fake-1)^2)

D_loss, G_loss

end

#######################################################

"""

sample(gan::GAN, n::Int64)

rand(gan::GAN, n::Int64)

Samples `n` instances from `gan`.

"""

function sample(gan::GAN, n::Int64)

local out

@info "Using a normal latent vector"

noise = constant(randn(n, gan.latent_dim))

variable_scope("generator_$(gan.ganid)") do

out = gan.generator(noise, gan)

end

out

end

Base.:rand(gan::GAN) = squeeze(sample(gan, 1), dims=1)

Base.:rand(gan::GAN, n::Int64) = sample(gan, n)

"""

predict(gan::GAN, input::Union{PyObject, Array})

Predicts the GAN `gan` output given input `input`.

"""

function predict(gan::GAN, input::Union{PyObject, Array})

local out

flag = false

if length(size(input))==1

flag = true

input = reshape(input, 1, length(input))

end

input = convert_to_tensor(input)

variable_scope("generator_$(gan.ganid)", initializer=random_uniform_initializer(0.0,1e-3)) do

out = gan.generator(input, gan)

end

if flag; out = squeeze(out); end

out

end

# adapted from https://github.com/aymericdamien/TensorFlow-Examples/blob/master/examples/3_NeuralNetworks/dcgan.py

function dcgan_generator(x::PyObject, n::Int64=1)

if length(size(x))!=2

error("ADCME: input must have rank 2, rank $(length(size(x))) received")

end

variable_scope("generator", reuse=AUTO_REUSE) do

# TensorFlow Layers automatically create variables and calculate their

# shape, based on the input.

x = tf.layers.dense(x, units=6n * 6n * 128)

x = tf.nn.tanh(x)

# Reshape to a 4-D array of images: (batch, height, width, channels)

# New shape: (batch, 6, 6, 128)

x = tf.reshape(x, shape=[-1, 6n, 6n, 128])

# Deconvolution, image shape: (batch, 14, 14, 64)

x = tf.layers.conv2d_transpose(x, 64, 4, strides=2)

# Deconvolution, image shape: (batch, 28, 28, 1)

x = tf.layers.conv2d_transpose(x, 1, 2, strides=2)

# Apply sigmoid to clip values between 0 and 1

x = tf.nn.sigmoid(x)

end

return squeeze(x)

end

function dcgan_discriminator(x)

variable_scope("Discriminator", reuse=AUTO_REUSE) do

# Typical convolutional neural network to classify images.

x = tf.layers.conv2d(x, 64, 5)

x = tf.nn.tanh(x)

x = tf.layers.average_pooling2d(x, 2, 2)

x = tf.layers.conv2d(x, 128, 5)

x = tf.nn.tanh(x)

x = tf.layers.average_pooling2d(x, 2, 2)

x = tf.contrib.layers.flatten(x)

x = tf.layers.dense(x, 1024)

x = tf.nn.tanh(x)

# Output 2 classes: Real and Fake images

x = tf.layers.dense(x, 2)

end

return x

end | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 3783 | export gpu_info, get_gpu, has_gpu

"""

gpu_info()

Returns the CUDA and GPU information. An examplary output is

```

- NVCC: /usr/local/cuda/bin/nvcc

- CUDA library directories: /usr/local/cuda/lib64

- Latest supported version of CUDA: 11000

- CUDA runtime version: 10010

- CUDA include_directories: /usr/local/cuda/include

- CUDA toolkit directories: /home/kailaix/.julia/adcme/pkgs/cudatoolkit-10.0.130-0/lib:/home/kailaix/.julia/adcme/pkgs/cudnn-7.6.5-cuda10.0_0/lib

- Number of GPUs: 7

```

"""

function gpu_info()

NVCC = "missing"

CUDALIB = "missing"

v = "missing"

u = "missing"

dcount = 0

try

NVCC = strip(String(read(`which nvcc`)))

CUDALIB = abspath(joinpath(NVCC, "../../lib64"))

v = zeros(Int32,1)

@eval ccall((:cudaRuntimeGetVersion, $(joinpath(CUDALIB, "libcudart.so"))), Cvoid, (Ref{Cint},), $v)

u = zeros(Int32,1)

@eval ccall((:cudaDriverGetVersion, $(joinpath(CUDALIB, "libcudart.so"))), Cvoid, (Ref{Cint},), $u)

dcount = zeros(Int32, 1)

@eval ccall((:cudaGetDeviceCount, $(joinpath(CUDALIB, "libcudart.so"))), Cvoid, (Ref{Cint},), $dcount)

v = v[1]

if v==0

v = "missing"

end

u = u[1]

dcount = dcount[1]

catch

end

println("- NVCC: ", NVCC)

println("- CUDA library directories: ", CUDALIB)

println("- Latest supported version of CUDA: ", u)

println("- CUDA runtime version: ", v)

println("- CUDA include_directories: ", length(ADCME.CUDA_INC)==0 ? "missing" : ADCME.CUDA_INC)

println("- CUDA toolkit directories: ", length(ADCME.LIBCUDA)==0 ? "missing" : ADCME.LIBCUDA)

println("- Number of GPUs: ", dcount)

if NVCC == "missing"

println("\nTips: nvcc is not found in the path. Please add nvcc to your environment path if you intend to use GPUs.")

end

if length(ADCME.CUDA_INC)==0

println("\nTips: ADCME is not configured to use GPUs. See https://kailaix.github.io/ADCME.jl/latest/tu_customop/#Install-GPU-enabled-TensorFlow-(Linux-and-Windows) for instructions.")

end

if dcount==0

println("\nTips: No GPU resources found. Do you have access to GPUs?")

end

end

"""

get_gpu()

Returns the compiler information for GPUs. An examplary output is

> (NVCC = "/usr/local/cuda/bin/nvcc", LIB = "/usr/local/cuda/lib64", INC = "/usr/local/cuda/include", TOOLKIT = "/home/kailaix/.julia/adcme/pkgs/cudatoolkit-10.0.130-0/lib", CUDNN = "/home/kailaix/.julia/adcme/pkgs/cudnn-7.6.5-cuda10.0_0/lib")

"""

function get_gpu()

NVCC = missing

CUDALIB = missing

CUDAINC = missing

CUDATOOLKIT = missing

CUDNN = missing

try

NVCC = strip(String(read(`which nvcc`)))

CUDALIB = abspath(joinpath(NVCC, "../../lib64"))

CUDAINC = abspath(joinpath(NVCC, "../../include"))

if length(ADCME.CUDA_INC)>0 && CUDAINC!=ADCME.CUDA_INC

@warn """

Inconsistency detected:

ADCME CUDAINC: $(ADCME.CUDA_INC)

Implied CUDAINC: $CUDAINC

"""

end

catch

end

if length(ADCME.LIBCUDA)>0

CUDATOOLKIT = split(ADCME.LIBCUDA, ":")[1]

CUDNN = split(ADCME.LIBCUDA, ":")[2]

else

end

return (NVCC=NVCC, LIB=CUDALIB, INC=CUDAINC, TOOLKIT=CUDATOOLKIT, CUDNN=CUDNN)

end

"""

has_gpu()

Check if the TensorFlow backend is using CUDA GPUs. Operators that have GPU implementations will be executed on GPU devices.

See also [`get_gpu`](@ref)

!!! note

ADCME will use GPU automatically if GPU is available. To disable GPU, set the environment variable `ENV["CUDA_VISIBLE_DEVICES"]=""` before importing ADCME

"""

function has_gpu()

s = tf.test.gpu_device_name()

if length(s)==0

return false

else

return true

end

end

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 4465 | # install.jl collects scripts to install many third party libraries

export install_adept, install_blas, install_openmpi, install_hypre, install_had, install_mfem, install_matplotlib

function install_blas(blas_binary::Bool = true)

if Sys.iswindows()

if isfile(joinpath(ADCME.LIBDIR, "openblas.lib"))

@info "openblas.lib exists"

return

end

if blas_binary

http_file("https://github.com/kailaix/tensorflow-1.15-include/releases/download/v0.1.0/openblas.lib", joinpath(ADCME.LIBDIR, "openblas.lib"))

return

end

@info "You are building openblas from source on Windows, and this process may take a long time.

Alternatively, you can place your precompiled binary to $(joinpath(ADCME.LIBDIR, "openblas.lib"))"

PWD = pwd()

change_directory()

http_file("https://github.com/xianyi/OpenBLAS/archive/v0.3.9.zip", "OpenBlas.zip")

uncompress("OpenBLAS.zip", "OpenBlas-0.3.9")

change_directory("OpenBlas-0.3.9/build")

require_cmakecache() do

ADCME.cmake(CMAKE_ARGS="-DCMAKE_Fortran_COMPILER=flang -DBUILD_WITHOUT_LAPACK=no -DNOFORTRAN=0 -DDYNAMIC_ARCH=ON")

end

require_library("lib/Release/openblas") do

ADCME.make()

end

change_directory("lib/Release")

copy_file("openblas.lib", joinpath(ADCME.LIBDIR, "openblas.lib"))

cd(PWD)

else

required_file = get_library(joinpath(ADCME.LIBDIR, "openblas"))

if !isfile(required_file)

files = readdir(ADCME.LIBDIR)

files = filter(x->!isnothing(x), match.(r"(libopenblas\S*.dylib)", files))[1]

target = joinpath(ADCME.LIBDIR, files[1])

symlink(target, required_file)

@info "Symlink $(required_file) --> $(files[1])"

end

end

end

"""

install_adept()

Install adept-2 library: https://github.com/rjhogan/Adept-2

"""

function install_adept(; blas_binary::Bool = true)

change_directory()

git_repository("https://github.com/ADCMEMarket/Adept-2", "Adept-2")

cd("Adept-2/adept")

install_blas(blas_binary)

cp("$(@__DIR__)/../deps/AdeptCMakeLists.txt", "./CMakeLists.txt", force=true)

change_directory("build")

require_cmakecache() do

ADCME.cmake()

end

if Sys.iswindows()

require_file("$(ADCME.LIBDIR)/adept.lib") do

ADCME.make()

end

else

require_library("$(ADCME.LIBDIR)/adept") do

ADCME.make()

end

end

end

function install_openmpi()

filepath = joinpath(@__DIR__, "..", "deps", "install_openmpi.jl")

include(filepath)

end

function install_hypre()

filepath = joinpath(@__DIR__, "..", "deps", "install_hypre.jl")

include(filepath)

end

function install_mfem()

PWD = pwd()

change_directory()

http_file("https://github.com/kailaix/mfem/archive/shared-msvc-dev.zip", "mfem.zip")

uncompress("mfem.zip", "mfem-shared-msvc-dev")

change_directory("mfem-shared-msvc-dev/build")

require_file("CMakeCache.txt") do

ADCME.cmake(CMAKE_ARGS = ["-DCMAKE_INSTALL_PREFIX=$(joinpath(ADCME.LIBDIR, ".."))", "-SHARED=YES", "-STATIC=NO"])

end

require_library("mfem") do

ADCME.make()

end

require_file(joinpath(ADCME.LIBDIR, get_library_name("mfem"))) do

if Sys.iswindows()

run_with_env(`cmd /c $(ADCME.CMAKE) --install .`)

else

run_with_env(`$(ADCME.NINJA) install`)

end

end

if Sys.iswindows()

mfem_h = """

// Auto-generated file.

#undef NO_ERROR

#undef READ_ERROR

#undef WRITE_ERROR

#undef ALIAS

#undef REGISTERED

#include "mfem/mfem.hpp"

"""

open(joinpath(ADCME.LIBDIR, "..", "include", "mfem.hpp"), "w") do io

write(io, mfem_h)

@info "Fixed mfem.hpp definitions"

end

end

cd(PWD)

end

function install_had()

change_directory()

git_repository("https://github.com/kailaix/had", "had")

end

function install_matplotlib()

PIP = get_pip()

run(`$PIP install matplotlib`)

if Sys.isapple()

CONDA = get_conda()

pkgs = run(`$CONDA list`)

if occursin("pyqt", pkgs)

return

end

if !isdefined(Main, :Pkg)

error("Package Pkg must be imported in the main module using `import Pkg` or `using Pkg`")

end

run(`$CONDA install -y pyqt`)

Main.Pkg.build("PyPlot")

end

end | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 6921 | using MAT

export

save,

load,

Diary,

scalar,

writepb,

psave,

pload,

activate,

logging,

print_tensor

pybytes(b) = PyObject(

ccall(PyCall.@pysym(PyCall.PyString_FromStringAndSize),

PyPtr, (Ptr{UInt8}, Int),

b, sizeof(b)))

"""

psave(o::PyObject, file::String)

Saves a Python objection `o` to `file`.

See also [`pload`](@ref)

"""

function psave(o::PyObject, file::String)

pickle = pyimport("pickle")

f = open(file, "w")

pickle.dump(o, f)

close(f)

end

"""

pload(file::String)

Loads a Python objection from `file`.

See also [`psave`](@ref)

"""

function pload(file::String)

r = nothing

pickle = pyimport("pickle")

@pywith pybuiltin("open")(file,"rb") as f begin

r = pickle.load(f)

end

return r

end

"""

save(sess::PyObject, file::String, vars::Union{PyObject, Nothing, Array{PyObject}}=nothing, args...; kwargs...)

Saves the values of `vars` in the session `sess`. The result is written into `file` as a dictionary. If `vars` is nothing, it saves all the trainable variables.

See also [`save`](@ref), [`load`](@ref)

"""

function save(sess::PyObject, file::String, vars::Union{PyObject, Nothing, Array{PyObject}, Array{Any}}=nothing, args...; kwargs...)

if vars==nothing

vars = get_collection(TRAINABLE_VARIABLES)

elseif isa(vars, PyObject)

vars = [vars]

elseif isa(vars, Array{Any})

vars = Array{PyObject}(vars)

end

d = Dict{String, Any}()

vals = run(sess, vars, args...;kwargs...)

for i = 1:length(vars)

name = replace(vars[i].name, ":"=>"colon")

name = replace(name, "/"=>"backslash")

d[name] = vals[i]

end

matwrite(file, d)

d

end

function save(sess::PyObject, vars::Union{PyObject, Nothing, Array{PyObject}}=nothing, args...; kwargs...)

if vars==nothing

vars = get_collection(TRAINABLE_VARIABLES)

elseif isa(vars, PyObject)

vars = [vars]

end

d = Dict{String, Any}()

vals = run(sess, vars, args...;kwargs...)

for i = 1:length(vars)

name = replace(vars[i].name, ":"=>"colon")

name = replace(name, "/"=>"backslash")

d[name] = vals[i]

end

d

end

"""

load(sess::PyObject, file::String, vars::Union{PyObject, Nothing, Array{PyObject}}=nothing, args...; kwargs...)

Loads the values of variables to the session `sess` from the file `file`. If `vars` is nothing, it loads values to all the trainable variables.

See also [`save`](@ref), [`load`](@ref)

"""

function load(sess::PyObject, file::String, vars::Union{PyObject, Nothing, Array{PyObject}}=nothing, args...; kwargs...)

if vars==nothing

vars = get_collection(TRAINABLE_VARIABLES)

elseif isa(vars, PyObject)

vars = [vars]

end

d = matread(file)

ops = PyObject[]

for i = 1:length(vars)

name = replace(vars[i].name, ":"=>"colon")

name = replace(name, "/"=>"backslash")

if !(name in keys(d))

@warn "$(vars[i].name) not found in the file, skipped"

else

if occursin("bias", name) && isa(d[name], Number)

d[name] = [d[name]]

end

push!(ops, assign(vars[i], d[name]))

end

end

run(sess, ops, args...; kwargs...)

end

function load(sess::PyObject, d::Dict, vars::Union{PyObject, Nothing, Array{PyObject}}=nothing, args...; kwargs...)

if vars==nothing

vars = get_collection(TRAINABLE_VARIABLES)

elseif isa(vars, PyObject)

vars = [vars]

end

ops = PyObject[]

for i = 1:length(vars)

name = replace(vars[i].name, ":"=>"colon")

name = replace(name, "/"=>"backslash")

if !(name in keys(d))

@warn "$(vars[i].name) not found in the file, skipped"

else

push!(ops, assign(vars[i], d[name]))

end

end

run(sess, ops, args...; kwargs...)

end

mutable struct Diary

writer::PyObject

tdir::String

end

"""

Diary(suffix::Union{String, Nothing}=nothing)

Creates a diary at a temporary directory path. It returns a writer and the corresponding directory path

"""

function Diary(suffix::Union{String, Nothing}=nothing)

tdir = mktempdir()

printstyled("tensorboard --logdir=\"$(tdir)\" --port 0\n", color=:blue)

Diary(tf.summary.FileWriter(tdir, tf.get_default_graph(),filename_suffix=suffix), tdir)

end

"""

save(sw::Diary, dirp::String)

Saves [`Diary`](@ref) to `dirp`.

"""

function save(sw::Diary, dirp::String)

cp(sw.tdir, dirp, force=true)

end

"""

load(sw::Diary, dirp::String)

Loads [`Diary`](@ref) from `dirp`.

"""

function load(sw::Diary,dirp::String)

sw.writer = tf.summary.FileWriter(dirp, tf.get_default_graph())

sw.tdir = dirp

end

"""

activate(sw::Diary, port::Int64=6006)

Running [`Diary`](@ref) at http://localhost:port.

"""

function activate(sw::Diary, port::Int64=0)

printstyled("tensorboard --logdir=\"$(sw.tdir)\" --port $port\n", color=:blue)

run(`tensorboard --logdir="$(sw.tdir)" --port $port '&'`)

end

"""

scalar(o::PyObject, name::String)

Returns a scalar summary object.

"""

function scalar(o::PyObject, name::Union{String,Missing}=missing)

if ismissing(name)

name = tensorname(o)

end

tf.summary.scalar(name, o)

end

"""

write(sw::Diary, step::Int64, cnt::Union{String, Array{String}})

Writes to [`Diary`](@ref).

"""

function Base.:write(sw::Diary, step::Int64, cnt::Union{String, Array{String}})

if isa(cnt, String)

sw.writer.add_summary(pybytes(cnt), step)

else

for c in cnt

sw.writer.add_summary(pybytes(c), step)

end

end

end

function writepb(writer::PyObject, sess::PyObject)

output_path = writer.get_logdir()

tf.io.write_graph(sess.graph_def, output_path, "model.pb")

return

end

"""

logging(file::Union{Nothing,String}, o::PyObject...; summarize::Int64 = 3, sep::String = " ")

Logging `o` to `file`. This operator must be used with [`bind`](@ref).

"""

function logging(file::Union{Nothing,String}, o::PyObject...; summarize::Int64 = 3, sep::String = " ")

if isnothing(file)

tf.print(o..., summarize=summarize, sep=sep)

else

filepath = "file://$(abspath(file))"

tf.print(o..., output_stream=filepath, summarize=summarize, sep=sep)

end

end

logging(o::PyObject...; summarize::Int64 = 3, sep::String = " ") = logging(nothing, summarize=summarize, sep=sep)

"""

print_tensor(in::Union{PyObject, Array{Float64,2}})

Prints the tensor `in`

"""

function print_tensor(in::Union{PyObject, Array{Float64,2}}, info::AbstractString = "")

@assert length(size(in))==2

print_tensor_ = load_system_op("print_tensor")

in = convert_to_tensor(Any[in], [Float64]); in = in[1]

info = tf.constant(info)

out = print_tensor_(in, info)

end | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 12965 | export test_jacobian, test_gradients, linedata, lineview, meshdata,

meshview, gradview, jacview, PCLview, pcolormeshview,

animate, saveanim, test_hessian

function require_pyplot()

if !isdefined(Main, :PyPlot)

error("You must load PyPlot to use this function, e.g., `using PyPlot` or `import PyPlot`")

end

Main.PyPlot

end

"""

test_gradients(f::Function, x0::Array{Float64, 1}; scale::Float64 = 1.0, showfig::Bool = true)

Testing the gradients of a vector function `f`:

`y, J = f(x)` where `y` is a scalar output and `J` is the vector gradient.

"""

function test_gradients(f::Function, x0::Array{Float64, 1};

scale::Float64 = 1.0, showfig::Bool = true, mpi::Bool = false)

v0 = rand(Float64,length(x0))

γs = scale ./10 .^(1:5)

err2 = Float64[]

err1 = Float64[]

f0, J = f(x0)

for i = 1:5

f1, _ = f(x0+γs[i]*v0)

push!(err1, abs(f1-f0))

push!(err2, abs(f1-f0-γs[i]*sum(J.*v0)))

end

if showfig

if mpi && mpi_rank()>0

return

end

mp = require_pyplot()

mp.close("all")

mp.loglog(γs, err1, label="Finite Difference")

mp.loglog(γs, err2, label="Automatic Differentiation")

mp.loglog(γs, γs * 0.5*abs(err1[1])/γs[1], "--",label="\$\\mathcal{O}(\\gamma)\$")

mp.loglog(γs, γs.^2 * 0.5*abs(err2[1])/γs[1]^2, "--",label="\$\\mathcal{O}(\\gamma^2)\$")

mp.plt.gca().invert_xaxis()

mp.legend()

mp.savefig("test.png")

@info "Results saved to test.png"

println("Finite difference: $err1")

println("Automatic differentiation: $err2")

end

return err1, err2

end

"""

test_jacobian(f::Function, x0::Array{Float64, 1}; scale::Float64 = 1.0, showfig::Bool = true)

Testing the gradients of a vector function `f`:

`y, J = f(x)` where `y` is a vector output and `J` is the Jacobian.

"""

function test_jacobian(f::Function, x0::Array{Float64, 1}; scale::Float64 = 1.0, showfig::Bool = true)

v0 = rand(Float64,size(x0))

γs = scale ./10 .^(1:5)

err2 = Float64[]

err1 = Float64[]

f0, J = f(x0)

for i = 1:5

f1, _ = f(x0+γs[i]*v0)

push!(err1, norm(f1-f0))

push!(err2, norm(f1-f0-γs[i]*J*v0))

end

if showfig

mp = require_pyplot()

mp.close("all")

mp.loglog(γs, err1, label="Finite Difference")

mp.loglog(γs, err2, label="Automatic Differentiation")

mp.loglog(γs, γs * 0.5*abs(err1[1])/γs[1], "--",label="\$\\mathcal{O}(\\gamma)\$")

mp.loglog(γs, γs.^2 * 0.5*abs(err2[1])/γs[1]^2, "--",label="\$\\mathcal{O}(\\gamma^2)\$")

mp.plt.gca().invert_xaxis()

mp.legend()

mp.savefig("test.png")

@info "Results saved to test.png"

println("Finite difference: $err1")

println("Automatic differentiation: $err2")

end

return err1, err2

end

"""

test_hessian(f::Function, x0::Array{Float64, 1}; scale::Float64 = 1.0)

Testing the Hessian of a scalar function `f`:

`g, H = f(x)` where `y` is a scalar output, `g` is a vector gradient output, and `H` is the Hessian.

"""

test_hessian(f::Function, x0::Array{Float64, 1}; kwargs...) = test_jacobian(f, x0; kwargs...)

function linedata(θ1, θ2=nothing; n::Integer = 10)

if θ2 === nothing

θ2 = θ1 .* (1 .+ randn(size(θ1)...))

end

T = []

for x in LinRange(0.0,1.0,n)

push!(T, θ1*(1-x)+θ2*x)

end

T

end

function lineview(losses::Array{Float64})

mp = require_pyplot()

n = length(losses)

v = collect(LinRange(0.0,1.0,n))

mp.close("all")

mp.plot(v, losses)

mp.xlabel("\$\\alpha\$")

mp.ylabel("loss")

mp.grid("on")

end

@doc raw"""

lineview(sess::PyObject, pl::PyObject, loss::PyObject, θ1, θ2=nothing; n::Integer = 10)

Plots the function

$$h(α) = f((1-α)θ_1 + αθ_2)$$

# Example

```julia

pl = placeholder(Float64, shape=[2])

l = sum(pl^2-pl*0.1)

sess = Session(); init(sess)

lineview(sess, pl, l, rand(2))

```

"""

function lineview(sess::PyObject, pl::PyObject, loss::PyObject, θ1, θ2=nothing; n::Integer = 10)

mp = require_pyplot()

dat = linedata(θ1, θ2, n=n)

V = zeros(length(dat))

for i = 1:length(dat)

@info i, n

V[i] = run(sess, loss, pl=>dat[i])

end

lineview(V)

end

function meshdata(θ;

a::Real=1, b::Real=1, m::Integer=10, n::Integer=10 ,direction::Union{Array{<:Real}, Missing}=missing)

local δ, γ

as = LinRange(-a, a, m)

bs = LinRange(-b, b, n)

α = zeros(m, n)

β = zeros(m, n)

θs = Array{Any}(undef, m, n)

if !ismissing(direction)

δ = direction - θ

γ = randn(size(θ)...)

γ = γ/norm(γ)*norm(δ)

else

δ = randn(size(θ)...)

γ = randn(size(θ)...)

end

for i = 1:m

for j = 1:n

α[i,j] = as[i]

β[i,j] = bs[j]

θs[i,j] = θ + δ*as[i] + γ*bs[j]

end

end

return θs

end

function meshview(losses::Array{Float64}, a::Real=1, b::Real=1)

mp = require_pyplot()

m, n = size(losses)

α = zeros(m, n)

β = zeros(m, n)

as = LinRange(-a, a, m)

bs = LinRange(-b, b, n)

for i = 1:m

for j = 1:n

α[i,j] = as[i]

β[i,j] = bs[j]

end

end

mp.close("all")

mp.mesh(α, β, losses)

mp.xlabel("\$\\alpha\$")

mp.ylabel("\$\\beta\$")

mp.zlabel("loss")

mp.scatter3D(α[(m+1)÷2, (n+1)÷2], β[(m+1)÷2, (n+1)÷2], losses[(m+1)÷2, (n+1)÷2], color="red", s=100)

return α, β, losses

end

function pcolormeshview(losses::Array{Float64}, a::Real=1, b::Real=1)

mp = require_pyplot()

m, n = size(losses)

α = zeros(m, n)

β = zeros(m, n)

as = LinRange(-a, a, m)

bs = LinRange(-b, b, n)

for i = 1:m

for j = 1:n

α[i,j] = as[i]

β[i,j] = bs[j]

end

end

mp.close("all")

mp.pcolormesh(α, β, losses)

mp.xlabel("\$\\alpha\$")

mp.ylabel("\$\\beta\$")

mp.scatter(0.0,0.0, marker="*", s=100)

mp.colorbar()

return α, β, losses

end

function meshview(sess::PyObject, pl::PyObject, loss::PyObject, θ;

a::Real=1, b::Real=1, m::Integer=9, n::Integer=9,

direction::Union{Array{<:Real}, Missing}=missing)

dat = meshdata(θ; a=a, b=b, m=m, n=n, direction=direction)

m, n = size(dat)

V = zeros(m, n)

for i = 1:m

@info i, m

for j = 1:n

V[i,j] = run(sess, loss, pl=>dat[i,j])

end

end

meshview(V, a, b)

end

function pcolormeshview(sess::PyObject, pl::PyObject, loss::PyObject, θ;

a::Real=1, b::Real=1, m::Integer=9, n::Integer=9,

direction::Union{Array{<:Real}, Missing}=missing)

dat = meshdata(θ; a=a, b=b, m=m, n=n, direction=direction)

m, n = size(dat)

V = zeros(m, n)

for i = 1:m

@info i, m

for j = 1:n

V[i,j] = run(sess, loss, pl=>dat[i,j])

end

end

pcolormeshview(V, a, b)

end

function gradview(sess::PyObject, pl::PyObject, loss::PyObject, u0, grad::PyObject;

scale::Float64 = 1.0, mpi::Bool = false)

mp = require_pyplot()

local v

if length(size(u0))==0

v = rand()

else

v = rand(length(u0))

end

γs = scale ./ 10 .^ (1:5)

v1 = Float64[]

v2 = Float64[]

L_ = run(sess, loss, pl=>u0)

J_ = run(sess, grad, pl=>u0)

for i = 1:5

@info i

L__ = run(sess, loss, pl=>u0+v*γs[i])

push!(v1, norm(L__-L_))

if size(J_)==size(v)

if length(size(J_))==0

push!(v2, norm(L__-L_-γs[i]*sum(J_*v)))

else

push!(v2, norm(L__-L_-γs[i]*sum(J_.*v)))

end

else

push!(v2, norm(L__-L_-γs[i]*J_*v))

end

end

if !(mpi) || (mpi && mpi_rank()==0)

mp.close("all")

mp.loglog(γs, abs.(v1), "*-", label="finite difference")

mp.loglog(γs, abs.(v2), "+-", label="automatic linearization")

mp.loglog(γs, γs.^2 * 0.5*abs(v2[1])/γs[1]^2, "--",label="\$\\mathcal{O}(\\gamma^2)\$")

mp.loglog(γs, γs * 0.5*abs(v1[1])/γs[1], "--",label="\$\\mathcal{O}(\\gamma)\$")

mp.plt.gca().invert_xaxis()

mp.legend()

mp.xlabel("\$\\gamma\$")

mp.ylabel("Error")

if mpi

mp.savefig("mpi_gradview.png")

end

end

return v1, v2

end

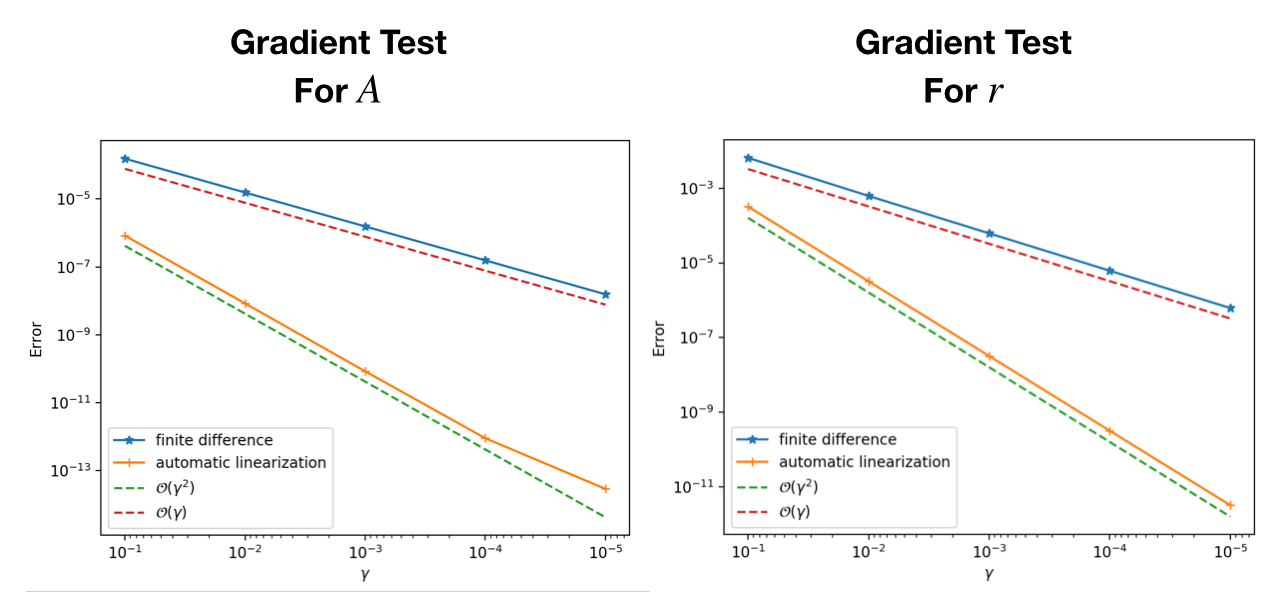

"""

gradview(sess::PyObject, pl::PyObject, loss::PyObject, u0; scale::Float64 = 1.0)

Visualizes the automatic differentiation and finite difference convergence converge. For correctly implemented

differentiable codes, the convergence rate for AD should be 2 and for FD should be 1 (if not evaluated at stationary point).

- `scale`: you can control the step size for perturbation.

"""

function gradview(sess::PyObject, pl::PyObject, loss::PyObject, u0; scale::Float64 = 1.0, mpi::Bool = false)

grad = tf.convert_to_tensor(gradients(loss, pl))

gradview(sess, pl, loss, u0, grad, scale = scale, mpi = mpi)

end

@doc raw"""

jacview(sess::PyObject, f::Function, θ::Union{Array{Float64}, PyObject, Missing},

u0::Array{Float64}, args...)

Performs gradient test for a vector function. `f` has the signature

```

f(θ, u) -> r, J

```

Here `θ` is a nuisance parameter, `u` is the state variables (w.r.t. which the Jacobian is computed),

`r` is the residual vector, and `J` is the Jacobian matrix (a dense matrix or a [`SparseTensor`](@ref)).

# Example 1

```julia

u0 = rand(10)

function verify_jacobian_f(θ, u)

r = u^3+u - u0

r, spdiag(3u^2+1.0)

end

jacview(sess, verify_jacobian_f, missing, u0)

```

# Example 2

```

u0 = rand(10)

rs = rand(10)

function verify_jacobian_f(θ, u)

r = [u^2;u] - [rs;rs]

r, [spdiag(2*u); spdiag(10)]

end

jacview(sess, verify_jacobian_f, missing, u0); close("all")

```

"""

function jacview(sess::PyObject, f::Function, θ::Union{Array{Float64}, PyObject, Missing},

u0::Array{Float64}, args...)

mp = require_pyplot()

u = placeholder(Float64, shape=[length(u0)])

L, J = f(θ, u)

init(sess)

L_ = run(sess, L, u=>u0, args...)

J_ = run(sess, J, u=>u0, args...)

v = rand(length(u0))

γs = 1.0 ./ 10 .^ (1:5)

v1 = Float64[]

v2 = Float64[]

for i = 1:5

L__ = run(sess, L, u=>u0+v*γs[i], args...)

push!(v1, norm(L__-L_))

push!(v2, norm(L__-L_-γs[i]*J_*v))

end

mp.close("all")

mp.loglog(γs, abs.(v1), "*-", label="finite difference")

mp.loglog(γs, abs.(v2), "+-", label="automatic linearization")

mp.loglog(γs, γs.^2 * 0.5*abs(v2[1])/γs[1]^2, "--",label="\$\\mathcal{O}(\\gamma^2)\$")

mp.loglog(γs, γs * 0.5*abs(v1[1])/γs[1], "--",label="\$\\mathcal{O}(\\gamma)\$")

mp.plt.gca().invert_xaxis()

mp.legend()

mp.xlabel("\$\\gamma\$")

mp.ylabel("Error")

end

function PCLview(sess::PyObject, f::Function, L::Function, θ::Union{PyObject,Array{Float64,1}, Float64},

u0::Union{PyObject, Array{Float64}}, args...; options::Union{Dict{String, T}, Missing}=missing) where T<:Real

mp = require_pyplot()

if isa(θ, PyObject)

θ = run(sess, θ, args...)

end

x = placeholder(Float64, shape=[length(θ)])

l, u, g = NonlinearConstrainedProblem(f, L, x, u0; options=options)

init(sess)

L_ = run(sess, l, x=>θ, args...)

J_ = run(sess, g, x=>θ, args...)

v = rand(length(x))

γs = 1.0 ./ 10 .^ (1:5)

v1 = Float64[]

v2 = Float64[]

for i = 1:5

@info i

L__ = run(sess, l, x=>θ+v*γs[i], args...)

# @show L__,L_,J_, v

push!(v1, L__-L_)

push!(v2, L__-L_-γs[i]*sum(J_.*v))

end

mp.close("all")

mp.loglog(γs, abs.(v1), "*-", label="finite difference")

mp.loglog(γs, abs.(v2), "+-", label="automatic linearization")

mp.loglog(γs, γs.^2 * 0.5*abs(v2[1])/γs[1]^2, "--",label="\$\\mathcal{O}(\\gamma^2)\$")

mp.loglog(γs, γs * 0.5*abs(v1[1])/γs[1], "--",label="\$\\mathcal{O}(\\gamma)\$")

mp.plt.gca().invert_xaxis()

mp.legend()

mp.xlabel("\$\\gamma\$")

mp.ylabel("Error")

end

@doc raw"""

animate(update::Function, frames; kwargs...)

Creates an animation using update function `update`.

# Example

```julia

θ = LinRange(0, 2π, 100)

x = cos.(θ)

y = sin.(θ)

pl, = plot([], [], "o-")

t = title("0")

xlim(-1.2,1.2)

ylim(-1.2,1.2)

function update(i)

t.set_text("$i")

pl.set_data([x[1:i] y[1:i]]'|>Array)

end

animate(update, 1:100)

```

"""

function animate(update::Function, frames; kwargs...)

mp = require_pyplot()

animation_ = pyimport("matplotlib.animation")

if !isa(frames, Integer)

frames = Array(frames)

end

animation_.FuncAnimation(mp.gcf(), update, frames=frames; kwargs...)

end

"""

saveanim(anim::PyObject, filename::String; kwargs...)

Saves the animation produced by [`animate`](@ref)

"""

function saveanim(anim::PyObject, filename::String; kwargs...)

if Sys.iswindows()

anim.save(filename, "ffmpeg"; kwargs...)

else

anim.save(filename, "imagemagick"; kwargs...)

end

end | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | code | 25343 | export

dense,

flatten,

ae,

ae_num,

ae_init,

fc,

fc_num,

fc_init,

fc_to_code,

ae_to_code,

fcx,

bn,

sparse_softmax_cross_entropy_with_logits,

Resnet1D

#----------------------------------------------------

# activation functions

_string2fn = Dict(

"relu" => relu,

"tanh" => tanh,

"sigmoid" => sigmoid,

"leakyrelu" => leaky_relu,

"leaky_relu" => leaky_relu,

"relu6" => relu6,

"softmax" => softmax,

"softplus" => softplus,

"selu" => selu,

"elu" => elu,

"concat_elu"=>concat_elu,

"concat_relu"=>concat_relu,

"hard_sigmoid"=>hard_sigmoid,

"swish"=>swish,

"hard_swish"=>hard_swish,

"concat_hard_swish"=>concat_hard_swish,

"sin"=>sin,

"fourier"=>fourier

)

function get_activation_function(a::Union{Function, String, Nothing})

if isnothing(a)

return x->x

end

if isa(a, String)

if haskey(_string2fn, lowercase(a))

return _string2fn[lowercase(a)]

else

error("Activation function $a is not supported, available activation functions:\n$(collect(keys(_string2fn))).")

end

else

return a

end

end

@doc raw"""

fcx(x::Union{Array{Float64,2},PyObject}, output_dims::Array{Int64,1},

θ::Union{Array{Float64,1}, PyObject};

activation::String = "tanh")

Creates a fully connected neural network with output dimension `o` and inputs $x\in \mathbb{R}^{m\times n}$.

$$x \rightarrow o_1 \rightarrow o_2 \rightarrow \ldots \rightarrow o_k$$

`θ` is the weights and biases of the neural network, e.g., `θ = ae_init(output_dims)`.

`fcx` outputs two tensors:

- the output of the neural network: $u\in \mathbb{R}^{m\times o_k}$.

- the sensitivity of the neural network per sample: $\frac{\partial u}{\partial x}\in \mathbb{R}^{m \times o_k \times n}$

"""

function fcx(x::Union{Array{Float64,2},PyObject}, output_dims::Array{Int64,1},

θ::Union{Array{Float64,1}, PyObject};

activation::String = "tanh")

pth = joinpath(@__DIR__, "../deps/Plugin/ExtendedNN/build/ExtendedNn")

pth = get_library(pth)

require_file(pth) do

install_adept()

change_directory(splitdir(pth)[1])

require_cmakecache() do

cmake()

end

make()

end

extended_nn_ = load_op_and_grad(pth, "extended_nn"; multiple=true)

config = [size(x,2);output_dims]

x_,config_,θ_ = convert_to_tensor([x,config,θ], [Float64,Int64,Float64])

x_ = reshape(x_, (-1,))

u, du = extended_nn_(x_,config_,θ_,activation)

reshape(u, (size(x,1), config[end])), reshape(du, (size(x,1), config[end], size(x,2)))

end

"""

ae(x::PyObject, output_dims::Array{Int64}, scope::String = "default";

activation::Union{Function,String} = "tanh")

Alias: `fc`, `ae`

Creates a neural network with intermediate numbers of neurons `output_dims`.

"""

function ae(x::Union{Array{Float64},PyObject}, output_dims::Array{Int64}, scope::String = "default";

activation::Union{Function,String} = "tanh")

if isa(x, Array)

x = constant(x)

end

flag = false

if length(size(x))==1

x = reshape(x, length(x), 1)

flag = true

end

net = x

variable_scope(scope, reuse=AUTO_REUSE) do

for i = 1:length(output_dims)-1

net = dense(net, output_dims[i], activation=activation)

end

net = dense(net, output_dims[end])

end

if flag && (size(net,2)==1)

net = squeeze(net)

end

return net

end

"""

ae(x::Union{Array{Float64}, PyObject}, output_dims::Array{Int64}, θ::Union{Array{Float64}, PyObject};

activation::Union{Function,String, Nothing} = "tanh")

Alias: `fc`, `ae`

Creates a neural network with intermediate numbers of neurons `output_dims`. The weights are given by `θ`

# Example 1: Explicitly construct weights and biases

```julia

x = constant(rand(10,2))

n = ae_num([2,20,20,20,2])

θ = Variable(randn(n)*0.001)

y = ae(x, [20,20,20,2], θ)

```

# Example 2: Implicitly construct weights and biases

```julia

θ = ae_init([10,20,20,20,2])

x = constant(rand(10,10))

y = ae(x, [20,20,20,2], θ)

```

See also [`ae_num`](@ref), [`ae_init`](@ref).

"""

function ae(x::Union{Array{Float64}, PyObject}, output_dims::Array{Int64}, θ::Union{Array{Float64}, PyObject};

activation::Union{Function,String, Nothing} = "tanh")

activation = get_activation_function(activation)

x = convert_to_tensor(x)

θ = convert_to_tensor(θ)

flag = false

if length(size(x))==1

x = reshape(x, length(x), 1)

flag = true

end

offset = 0

net = x

output_dims = [size(x,2); output_dims]

for i = 1:length(output_dims)-2

m = output_dims[i]

n = output_dims[i+1]

net = net * reshape(θ[offset+1:offset+m*n], (m, n)) + θ[offset+m*n+1:offset+m*n+n]

net = activation(net)

offset += m*n+n

end

m = output_dims[end-1]

n = output_dims[end]

net = net * reshape(θ[offset+1:offset+m*n], (m, n)) + θ[offset+m*n+1:offset+m*n+n]

offset += m*n+n

if offset!=length(θ)

error("ADCME: the weights and configuration does not match. Required $offset but given $(length(θ)).")

end

if flag && (size(net,2)==1)

net = squeeze(net)

end

return net

end

"""

ae(x::Union{Array{Float64}, PyObject},

output_dims::Array{Int64},

θ::Union{Array{Array{Float64}}, Array{PyObject}};

activation::Union{Function,String} = "tanh")

Alias: `fc`, `ae`

Constructs a neural network with given weights and biases `θ`

# Example

```julia

x = constant(rand(10,30))

θ = ae_init([30, 20, 20, 5])

y = ae(x, [20, 20, 5], θ) # 10×5

```

"""

function ae(x::Union{Array{Float64}, PyObject},

output_dims::Array{Int64},

θ::Union{Array{Array{Float64}}, Array{PyObject}};

activation::Union{Function,String} = "tanh")

if isa(θ, Array{Array{Float64}})

val = []

for t in θ

push!(val, θ'[:])

end

val = vcat(val...)

else

val = []

for t in θ

push!(val, reshape(θ, (-1,)))

end

vcat(val...)

end

ae(x, output_dims, θ, activation=activation)

end

@doc raw"""

ae_init(output_dims::Array{Int64}; T::Type=Float64, method::String="xavier")

fc_init(output_dims::Array{Int64})

Return the initial weights and bias values by TensorFlow as a vector. The neural network architecture is

```math

o_1 (\text{Input layer}) \rightarrow o_2 \rightarrow \ldots \rightarrow o_n (\text{Output layer})

```

Three types of

random initializers are provided

- `xavier` (default). It is useful for `tanh` fully connected neural network.

```

W^l_i \sim \mathcal{N}\left(0, \sqrt{\frac{1}{n_{l-1}}}\right)

```

- `xavier_avg`. A variant of `xavier`

```math

W^l_i \sim \mathcal{N}\left(0, \sqrt{\frac{2}{n_l + n_{l-1}}}\right)

```

- `he`. This is the activation aware initialization of weights and helps mitigate the problem

of vanishing/exploding gradients.

$$W^l_i \sim \mathcal{N}\left(0, \sqrt{\frac{2}{n_{l-1}}}\right)$$

# Example

```julia

x = constant(rand(10,30))

θ = fc_init([30, 20, 20, 5])

y = fc(x, [20, 20, 5], θ) # 10×5

```

"""

function ae_init(output_dims::Array{Int64}; T::Type=Float64, method::String="xavier")

N = ae_num(output_dims)

W = zeros(T, N)

offset = 0

for i = 1:length(output_dims)-1

m = output_dims[i]

n = output_dims[i+1]

if method=="xavier"

W[offset+1:offset+m*n] = randn(T, m*n) * T(sqrt(1/m))

elseif method=="xavier_normal"

W[offset+1:offset+m*n] = randn(T, m*n) * T(sqrt(2/(n+m)))

elseif method=="xavier_uniform"

W[offset+1:offset+m*n] = rand(T, m*n) * T(sqrt(6/(n+m)))

elseif method=="he"

W[offset+1:offset+m*n] = randn(T, m*n) * T(sqrt(2/(m)))

else

error("Method $method not understood")

end

offset += m*n+n

end

W

end

"""

ae_num(output_dims::Array{Int64})

fc_num(output_dims::Array{Int64})

Estimates the number of weights and biases for the neural network. Note the first dimension

should be the feature dimension (this is different from [`ae`](@ref) since in `ae` the feature

dimension can be inferred), and the last dimension should be the output dimension.

# Example

```julia

x = constant(rand(10,30))

θ = ae_init([30, 20, 20, 5])

@assert ae_num([30, 20, 20, 5])==length(θ)

y = ae(x, [20, 20, 5], θ)

```

"""

function ae_num(output_dims::Array{Int64})

offset = 0

for i = 1:length(output_dims)-2

m = output_dims[i]

n = output_dims[i+1]

offset += m*n+n

end

m = output_dims[end-1]

n = output_dims[end]

offset += m*n+n

return offset

end

function _ae_to_code(d::Dict, scope::String; activation::String)

i = 0

nn_code = ""

while true

si = i==0 ? "" : "_$i"

Wkey = "$(scope)backslashfully_connected$(si)backslashweightscolon0"

bkey = "$(scope)backslashfully_connected$(si)backslashbiasescolon0"

if haskey(d, Wkey)

if i!=0

nn_code *= " isa(net, Array) ? (net = $activation.(net)) : (net=$activation(net))\n"

nn_code *= " #-------------------------------------------------------------------\n"

end

nn_code *= " W$i = aedict$scope[\"$Wkey\"]\n b$i = aedict$scope[\"$bkey\"];\n"

nn_code *= " isa(net, Array) ? (net = net * W$i .+ b$i') : (net = net *W$i + b$i)\n"

i += 1

else

break

end

end

nn_code = """ global nn$scope\n function nn$scope(net)

$(nn_code) return net\n end """

nn_code

end

"""

ae_to_code(file::String, scope::String; activation::String = "tanh")

Return the code string from the feed-forward neural network data in `file`. Usually we can immediately evaluate

the code string into Julia session by

```julia

eval(Meta.parse(s))

```

If `activation` is not specified, `tanh` is the default.

"""

function ae_to_code(file::String, scope::String = "default"; activation::String = "tanh")

d = matread(file)

s = "let aedict$scope = matread(\"$file\")\n"*_ae_to_code(d, scope; activation = activation)*"\nend\n"

return s

end

fc_to_code = ae_to_code

# tensorflow layers from contrib

for (op, tfop) in [(:avg_pool2d, :avg_pool2d), (:avg_pool3d, :avg_pool3d),

(:flatten, :flatten), (:max_pool2d, :max_pool2d), (:max_pool3d, :max_pool3d)]

@eval begin

export $op

$op(args...; kwargs...) = tf.contrib.layers.$tfop(args...; kwargs...)

end

end

for (op, tfop) in [(:conv1d, :conv1d), (:conv2d, :conv2d), (:conv2d_in_plane, :conv2d_in_plane),

(:conv2d_transpose, :conv2d_transpose), (:conv3d, :conv3d), (:conv3d_transpose, :conv3d_transpose)]

@eval begin

export $op

function $op(args...;activation = nothing, bias=false, kwargs...)

activation = get_activation_function(activation)

if bias

biases_initializer = tf.zeros_initializer()

else

biases_initializer = nothing

end

tf.contrib.layers.$tfop(args...; activation_fn = activation, biases_initializer=biases_initializer, kwargs...)

end

end

end

"""

dense(inputs::Union{PyObject, Array{<:Real}}, units::Int64, args...;

activation::Union{String, Function} = nothing, kwargs...)

Creates a fully connected layer with the activation function specified by `activation`

"""

function dense(inputs::Union{PyObject, Array{<:Real}}, units::Int64, args...; activation::Union{String, Function, Nothing} = nothing, kwargs...)

inputs = constant(inputs)

activation = get_activation_function(activation)

tf.contrib.layers.fully_connected(inputs, units, args...; activation_fn=activation, kwargs...)

end

"""

bn(args...;center = true, scale=true, kwargs...)

`bn` accepts a keyword parameter `is_training`.