licenses

sequencelengths 1

3

| version

stringclasses 677

values | tree_hash

stringlengths 40

40

| path

stringclasses 1

value | type

stringclasses 2

values | size

stringlengths 2

8

| text

stringlengths 25

67.1M

| package_name

stringlengths 2

41

| repo

stringlengths 33

86

|

|---|---|---|---|---|---|---|---|---|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 16885 | # PDE Constrained Optimization

In the broad context, the physics based machine learning can be formulated as a PDE-constrained optimization problem. The PDE-constrained optimization problem aims at finding the optimal design variables such that the objective function is minimized and the constraints---usually described by PDEs---are satisfied. PDE-contrained optimization has a large variety of applications, such as optimal control and inverse problem. The topic is at the intersection of numerical PDE discretization, mathematical optimization, software design, and physics modeling.

Mathematically, a PDE-constrained optimization can be formulated as

$$\begin{aligned}

\min_{y\in \mathcal{Y}, u\in \mathcal{U}}&\; J(y, u)\\

\text{s.t.}&\; F(y, u)=0 & \text{the governing PDEs}\\

&\; c(y,u)=0&\text{additional equality constraints}\\

&\; h(y, u)\geq 0&\text{additional inequality constraints}

\end{aligned}$$

Here $J$ is the objective function, $y$ is the **design variable**, $u$ is the **state variable**.

In this section, we will focus on the PDE-constrained optimization with only the governing PDE constraints, and we consider a discretize-then-optimize and gradient-based optimization approach. Specifically, the objective function and the PDEs are first discretized numerically, leading to a constrained optimization problem with a finite dimensional optimization variable. We use gradient-based optimization because it provides fast convergence and an efficient way to integrate optimization and simulation. However, this approach requires insight into the simulator and can be quite all-consuming to obtain the gradients.

## Example

We consider an exemplary PDE-constrained optimization problem: assume we want to have a specific temperature distribution on a metal bar $\bar u(x)$ by imposing a heat source $y(x)$ on the bar

$$\begin{aligned}

\min_{y\in \mathcal{Y}, u\in \mathcal{U}}&\; L(y, u) = \frac{1}{2}\int_0^1 \|u(x) - \bar u(x)\|^2 dx + \frac{\rho}{2}\int_0^1 y(x)^2 dx \\

\text{s.t.}&\; f(y, u)=0

\end{aligned}$$

Here $f(y,u)=0$ is the static heat equation with the boundary conditions.

$$c(x)u_{xx}(x) = y(x),\quad x\in (0,1), \quad u(0)=u_0, u(1)=u_1$$

where $c(x)$ is the diffusivity coefficient, $u_0$ and $u_1$ are fixed boundaries.

After discretization, the optimization problem becomes

$$\begin{aligned}

\min_{y, u}&\; J(y, u) = \frac{1}{2}\|u-\bar u\|_2 + \frac{\rho}{2}\|y\|^2\\

\text{s.t.}&\; F(y, u)= Ku - y = 0

\end{aligned}\tag{1}$$

where $K$ is the stiffness matrix, taking into account of the boundary conditions, $u$, $\bar u$, and $y$ are vectors.

In what follows, we basically apply common optimization method to the constrained optimization problem Equation 1.

## Method 1: Penalty Method

The simplest method for solving the unconstrained optimization problem Equation 1 is via the penalty method. Specifically, instead of solving the original constrained optimization problem, we solve

$$\min_{y, u} \frac{1}{2}\|u-\bar u\|_2 + \frac{\rho}{2}\|y\|^2 + \lambda \|f(y, u)\|^2_2$$

where $\lambda$ is the penalty parameter.

The penalty method is **conceptually simple** and is also **easy-to-implement**. Additionally, it does not require solving the PDE constraint $f(y,u)=0$ and thus the comptuational cost for each iteration can be small. However, avoid solving the PDE constraint is also a disadvantage since it means the penalty method **does not eventually enforce the physical constraint**. The solution from the penalty method only converges to the the true solution when $\lambda\rightarrow \infty$, which is **not computationally feasible**. Additionally, despite less cost per iteration, the total number of iterations can be huge when the PDE constraint is "stiff". To gain some intuition, consider the following problem

$$\begin{aligned}

\min_{u}&\; 0\\

\text{s.t.}&\; Ku - y = 0

\end{aligned}$$

The optimal value is $u = K^{-1}y$ and the cost by solving the linear system is usually propertional to $\mathrm{cond}(K)$. However, if we were to solve the problem using the penalty method

$$\min_{u} \|Ku-y\|^2_2$$

The condition number for solving the least square problem (e.g., by solving the normal equation $K^TK u = K^Ty$) is usually propertional to $\mathrm{cond}(K)^2$. When the problem is stiff, i.e., $\mathrm{cond}(K)$ is large, the penaty formulation can be much more **ill-conditioned** than the original problem.

## Method 2: Primal and Primal-Dual Method

A classical theory regarding constrained optimization is the Karush-Kuhn-Tucker (KKT) Theorm. It states a necessary and sufficient condition for a value to be optimal under certain assumptions. To formulate the KKT condition, consider the **Lagrangian function**

$$L(u, y, \lambda) = \frac{1}{2}\|u-\bar u\|_2 + \frac{\rho}{2}\|y\|^2 + \lambda^T(Ku - y)$$

where $\lambda$ is the **adjoint variable**. The corresponding KKT condition is

$$\begin{aligned}

\frac{\partial L}{\partial u} &= u - \bar u + K^T\lambda = 0 \\

\frac{\partial L}{\partial y} &= \rho y - \lambda = 0 \\

\frac{\partial L}{\partial \lambda} &= Ku - y = 0

\end{aligned}$$

The **primal-dual method** solves for $(u, y, \lambda)$ simultaneously from the linear system

$$\begin{bmatrix} 1 & 0 & K^T\\ 0 & \rho & -1\\K & -1 & 0 \end{bmatrix}\begin{bmatrix}u\\ y\\ \lambda\end{bmatrix} = \begin{bmatrix}\bar u\\ 0 \\ 0\end{bmatrix}$$

In contrast, the **dual method** eliminates the state variables $u$ and $y$ and solves for $\lambda$

$$(1+\rho KK^T)\lambda = \rho K\bar u$$

The primal-dual and dual method have some advantages. For example, we reduce finding the minimum of a constrained optimization method to solving a nonlinear equation, where certain tools are available. For the primal-dual method, the limitation is that the system of equations can be very large and difficult to solve. The dual method requires analytical derivation and is not always obvious in practice, especially for nonlinear problems.

## Method 3: Primal Method

The primal method reduces the constrained optimization problem to an unconstrained optimization problem by "solving" the numerical PDE first. In the previous example, we have $u(y) = K^{-1}y$ and therefore we have

$$\min_y \frac{1}{2}\|K^{-1}y-\bar u\|_2 + \frac{\rho}{2}\|y\|^2$$

The advantage is three-folds

* Dimension reduction. The optimization variables are reduced from $(u,y)$ to the design variables $y$ only.

* Enforced physical constraints. The physical constraints are enforced numerically.

* Unconstrained optimization. The reduced problem is a constrained optimization problem and many off-the-shelf optimizers (gradient descent, BFGS, CG, etc.) are available.

However, to compute the gradients, the primal method requires deep insights into the numerical solver, which may be highly nonlinear and implicit. The usual automatic differentiation (AD) framework are in general not applicable to this type of operators (nodes in the computational graph) and we need special algorithms.

### Link to Adjoint-State Method

The adjoint state method is a standard method for computing the gradients of the objective function with respect to design variables in PDE-constrained optimization. Consider

$$\begin{aligned}

\min_{y, u}&\; J(y, u)\\

\text{s.t.}&\; F(y, u)=0

\end{aligned}$$

Let $u$ be the state variable and $y$ be the design variable. Assume we solve for $u=u(y)$ from the PDE constraint, then we have the reduced objective function

$$\hat J(y) = J(y, u(y))$$

To conduct gradient-based optimization, we need to compute the gradient

$$\frac{d \hat J(y)}{d y} =\nabla_y J(y, u(y)) +\nabla_u J(y, u(y))\frac{du(y)}{dy} \tag{2}$$

To compute the $\frac{du(y)}{dy}$ we have

$$F(y, u(y))=0 \Rightarrow \nabla_y F + \nabla_u F \frac{du}{dy} = 0 \tag{3}$$

Therefore we can use Equations 2 and 3 to evaluate the gradient of the objective function

$$\frac{d \hat J(y)}{d y} = \nabla_y J(y, u(y)) - \nabla_u J(y, u(y)) (\nabla_u F(y,u(y)))^{-1} \nabla_y F(y, u(y))\tag{4}$$

### Link to the Lagrange Function

There is a nice interpretation of Equations 2 and 3 using the Lagrange function. Consider the langrange function

$$L(y, u, \lambda) = J(y, u) + \lambda^T F(y, u) \tag{5}$$

The KKT condition says

$$\begin{aligned}

\frac{\partial L}{\partial \lambda} &= F(y, u) = 0\\

\frac{\partial L}{\partial u} &= \nabla_u J + \lambda^T \nabla_u F(y, u) = 0\\

\frac{\partial L}{\partial y} &= \nabla_y J + \lambda^T \nabla_y F(y, u) = 0\\

\end{aligned}$$

Now we relax the third equation: given a fixed $y$ (not necessarily optimal), we can solve for $(u,\lambda)$ from the first two equations. We plug the solutions into the third equation and obtain (note $u$ satisfies $F(y, u)=0$)

$$\frac{\partial L}{\partial y} = \nabla_y J(y, u) - \nabla_u J(y, u) (\nabla_u F(y,u))^{-1} \nabla_y F(y, u)$$

which is the same expression as Equation 4. When $y$ is optimal, this expression is equal to zero, i.e., all the KKT conditions are satisfied. The gradient of the unconstrained optimization problem is also zero. Both the primal system and the primal-dual system confirm the optimality. This relation also explains why we call the method above as "adjoint" state method. In summary, the adjoint-state method involves a three-step process

* **Step 1.** Create the Lagrangian Equation 5.

* **Step 2**. Conduct forward computation and solve for $u$ from

$$F(y, u) = 0$$

* **Step 3.** Compute the adjoint variable $\lambda$ from

$$\nabla_u J + \lambda^T \nabla_u F(y, u) = 0$$

* **Step 4.** Compute the sensitivity

$$\frac{\partial \hat J}{\partial y} = \nabla_y J + \lambda^T \nabla_y F(y, u)$$

### Link to Automatic Differentiation

#### Computational Graph

The adjoint-state method is also closely related to the reverse-mode automatic differentiation. Consider a concrete PDE-constrained optimization problem

$$\begin{aligned}

\min_{\mathbf{u}_1, {\theta}} &\ J = f_4(\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3, \mathbf{u}_4), \\

\mathrm{s.t.} & \ \mathbf{u}_2 = f_1(\mathbf{u}_1, {\theta}), \\

& \ \mathbf{u}_3 = f_2(\mathbf{u}_2, {\theta}),\\

& \ \mathbf{u}_4 = f_3(\mathbf{u}_3, {\theta}).

\end{aligned}$$

where $f_1$, $f_2$, $f_3$ are PDE constraints, $f_4$ is the loss function, $\mathbf{u}_1$ is the initial condition, and $\theta$ is the model parameter.

#### Adjoint-State Method

The Lagrangian function is

$$\mathcal{L}= f_4(\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3, \mathbf{u}_4) + {\lambda}^{T}_{2}(f_1(\mathbf{u}_1, {\theta}) - \mathbf{u}_2) + {\lambda}^T_{3}(f_2(\mathbf{u}_2, {\theta}) - \mathbf{u}_3) + {\lambda}^T_{4}(f_3(\mathbf{u}_3, {\theta}) - \mathbf{u}_4)$$

Upon conducting the foward computation we have all $\mathbf{u}_i$ available. To compute the adjoint variable $\lambda_i$, we have

$$\begin{aligned}

{\lambda}_4^T &= \frac{\partial f_4}{\partial \mathbf{u}_4} \\

{\lambda}_3^T &= \frac{\partial f_4}{\partial \mathbf{u}_3} + {\lambda}_4^T\frac{\partial f_3}{\partial \mathbf{u}_3} \\

{\lambda}_2^T &= \frac{\partial f_4}{\partial \mathbf{u}_2} + {\lambda}_3^T\frac{\partial f_2}{\partial \mathbf{u}_2}

\end{aligned}$$

The gradient of the objective function in the constrained optimization problem is given by

$$\frac{\partial \mathcal{L}}{\partial {\theta}} = {\lambda}_2^T\frac{\partial f_1}{\partial {\theta}} + {\lambda}_3^T\frac{\partial f_2}{\partial {\theta}} + {\lambda}_4^T\frac{\partial f_3}{\partial {\theta}}$$

#### Automatic Differentiation

Now let's see how the computation is linked to automatic differentiation. As explained in the previous tutorials, when we implement the automatic differentiation operator, we need to backpropagate the "top" gradients to its upstreams in the computational graph. Consider the operator $f_2$, we need to implement two operators

$$\begin{aligned}

\text{Forward:}&\; \mathbf{u}_3 = f_2(\mathbf{u}_2, \theta)\\

\text{Backward:}&\; \frac{\partial J}{\partial \mathbf{u}_2}, \frac{\partial J}{\partial \theta} = b_2\left(\frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_3}, \mathbf{u}_2, \theta\right)

\end{aligned}$$

Here $\frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_3}$ is the "total" gradient $\mathbf{u}_3$ received from the downstream in the computational graph.

#### Relation between AD and Adjoint-State Method

The backward operator is implemented using the chain rule

$$\begin{aligned}

\frac{\partial J}{\partial \mathbf{u}_2} = \frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_3} \frac{\partial f_2}{\partial \mathbf{u}_2}\qquad

\frac{\partial J}{\partial \theta} = \frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_3} \frac{\partial f_2}{\partial \theta}

\end{aligned}$$

The total gradient $\mathbf{u}_2$ received is

$$\frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_2} = \frac{\partial f_4}{\partial \mathbf{u}_2} + \frac{\partial J}{\partial \mathbf{u}_2} = \frac{\partial f_4}{\partial \mathbf{u}_2} + \frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_3} \frac{\partial f_2}{\partial \mathbf{u}_2}$$

The dual constraint in the KKT condition

$${\lambda}_2^T = \frac{\partial f_4}{\partial \mathbf{u}_2} + {\lambda}_3^T\frac{\partial f_2}{\partial \mathbf{u}_2}$$

Now we see the important relation

$$\boxed{{\lambda}_i^T = \frac{\partial J^{\mathrm{tot}}}{\partial \mathbf{u}_i}}$$

That means, in general, **the reverse-mode AD is back-propagating the Lagrange multiplier (adjoint variables)**.

#### Dicussion and Physics based Machine Learning

However, although the link between AD and adjoint state methods enables us to use AD tools for PDE-constrained optimization, many standard numerical schemes, such as implicit ones, involve an iterative process (e.g., Newton-Raphson) in nature. AD is usually designed for explicit operators. To this end, we can borrow the idea from the adjoint-state methods and enhance the current AD framework to differentiate through iterative solvers or implicit schemes. This is known as **physics constrained learning**. For more details, see [the paper](https://arxiv.org/pdf/2002.10521.pdf) here.

Another ongoing research is the combination of neural networks and physical modeling. One idea is to model the unknown relations in the physical system using neural networks. Those includes

* Koopman operator in dynamical systems

* Constitutive relations in solid mechanics.

* Turbulent closure relations in fluid mechanics.

* ......

In the context of PDE-constrained optimization, there is no essential difference between learning a neural network and finding the optimal physical parameters, except that the design variables become the weights and biases of neural networks. However, the neural networks raise some questions, for example: does one optimization technique preferable than the others? How to stabilize the numerical solvers when neural networks are present? How to add physical constraints to the neural network? How to scale the algorithm? How is the well-posedness and conditioning of the optimization problem? How much data do we need? How to stabilize the training (e.g., regularization, projected gradients)? Indeed, the application of neural networks in the physics machine learning leave more problems than what have been answered here.

## Other Optimization Techniques

Besides the formulation and optimization techniques introduced here, there are many other topics, which we have not covered here, on PDE-constrained optimization. It is worthwhile mentioning that we consider the optimize-then-discretize approach. The alternative approach, discretize-then-optimize, derives the optimal condition (KKT condition) on the continuous level and then discretize the dual PDE. In this formulation, we can use the same discretization method for both the primal and dual system, and therefore we may preserve some essential physical properties. However, the gradients derived in this way may deviate from the true gradients of the constrained optimization problem.

Another noteworthy ongoing research is to formulate the optimization as an action functional. Just like every PDE can be viewed as a minimization of an energy function, a PDE-constrained optimization problem can also be formulated as a problem of minimizing a functional. These discussions are beyond the scope of the tutorial.

## Summary

PDE-constrained optimization has a wide variety of applications. Specifically, formulating the physics based machine learning as a PDE-constrained optimization problem lends us a rich toolbox for optimization, discretization, and algorithm design. The combination of neural networks and physics modeling poses a lot of opportunities as well as challenges for solving long standing problems. The gradient based optimization with automatic differentiation has the potential to consolidate the techniques in a single framework. | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 6726 | # Inverse Modeling Recipe

Here is a tip for inverse modeling using ADCME.

## Forward Modeling

The first step is to implement your forward computation in ADCME. Let's consider a simple example. Assume that we want to compute a transformation from $\{x_1,x_2, \ldots, x_n\}$ to $\{f_\theta(x_1), f_\theta(x_2), \ldots, f_\theta(x_n)\}$, where

$$f_\theta(x) = a_2\sigma(a_1x+b_1)+b_2\quad \theta=(a_1,b_2,a_2,b_2)$$

The value $\theta=(1,2,3,4)$. We can code the forward computation as follows

```julia

using ADCME

θ = constant([1.;2.;3.;4.])

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2])+θ[4]

sess = Session(); init(sess)

f0 = run(sess, f)

```

We obtained

```text

10-element Array{Float64,1}:

6.6423912339336475

6.675935315969742

6.706682200447601

6.734800968378825

6.7604627001561575

6.783837569144308

6.805092492614008

6.824389291376896

6.841883301751329

6.8577223804673

```

## Inverse Modeling

Assume that we want to estimate the target variable $\theta$ from observations $\{f_\theta(x_1), f_\theta(x_2), \ldots, f_\theta(x_n)\}$. The inverse modeling is split into 6 steps. Follow the steps one by one

* **Step 1: Mark the target variable as `placeholder`**. That is, we replace `θ = constant([1.;2.;3.;4.])` by `θ = placeholder([1.;2.;3.;4.])`.

* **Step 2: Check that the loss is zero given true values.** The loss function is usually formulated so that it equals zero when we plug the true value to the target variable.

You should expect `0.0` using the following codes.

```julia

using ADCME

θ = placeholder([1.;2.;3.;4.])

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2])+θ[4]

loss = sum((f - f0)^2)

sess = Session(); init(sess)

@show run(sess, loss)

```

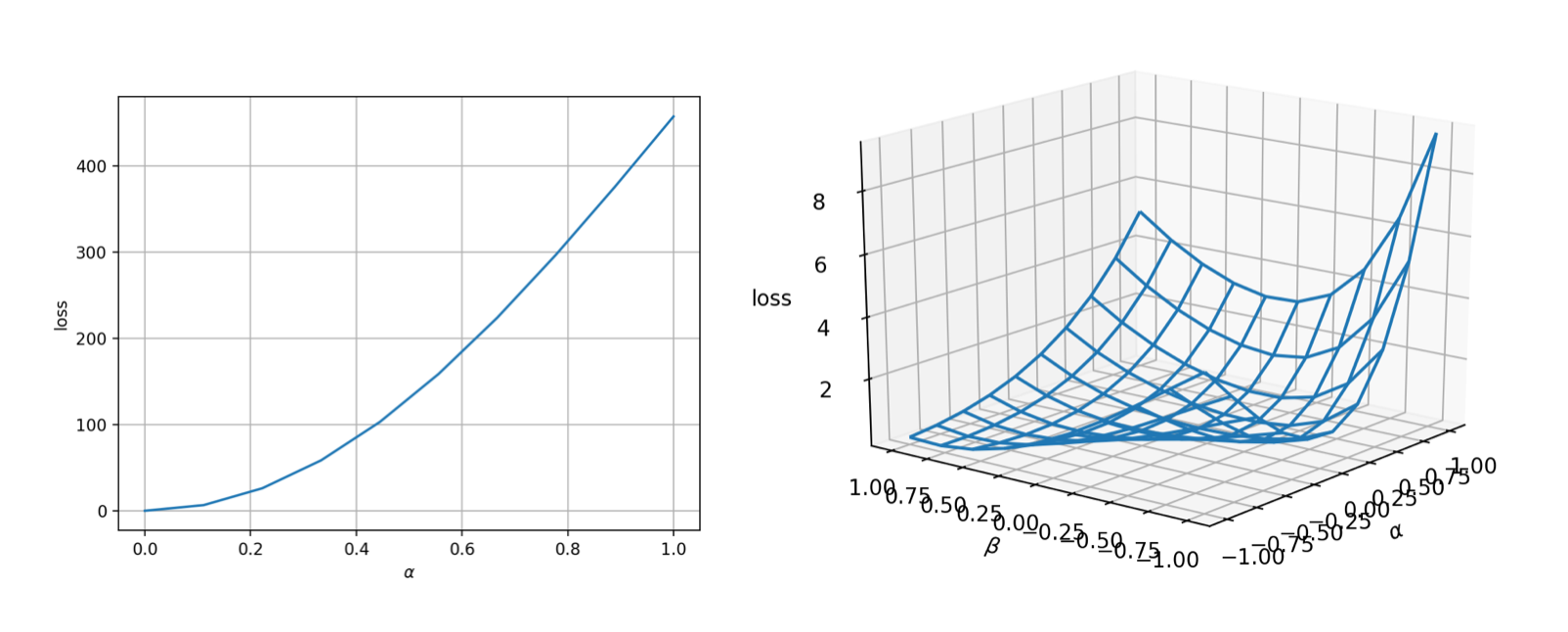

* **Step 3: Use `lineview` to visualize the landscape**. Assume the initial guess is $\theta_0$, we can use the `lineview` function from [`ADCMEKit.jl`](https://github.com/kailaix/ADCMEKit.jl) package to visualize the landscape from $\theta_0=[0,0,0,0]$ to $\theta^*$ (true value). This gives us early confidence on the correctness of the implementation as well as the difficulty of the optimization problem. You can also use `meshview`, which shows a 2D landscape but is more expensive to evaluate.

```julia

using ADCME

using ADCMEKit

θ = placeholder([1.;2.;3.;4.])

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2])+θ[4]

loss = sum((f - f0)^2)

sess = Session(); init(sess)

@show run(sess, loss)

lineview(sess, θ, loss, [1.;2.;3.;4.], zeros(4)) # or meshview(sess, θ, loss, [1.;2.;3.;4.])

```

The landscape is very nice (convex and smooth)! That means the optimization should be very easy.

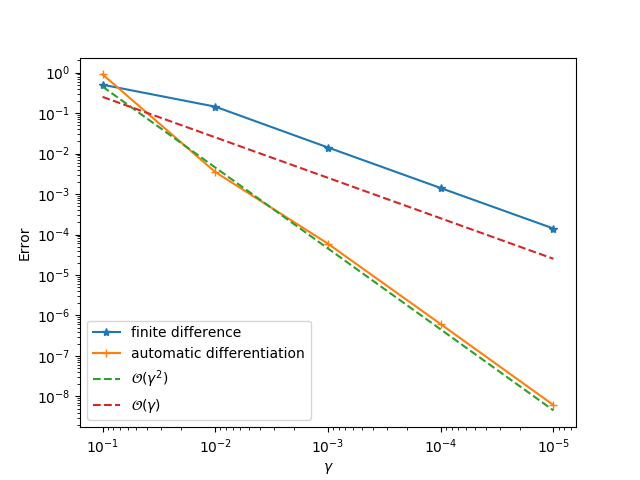

* **Step 4: Use `gradview` to check the gradients.** `ADCMEKit.jl` also provides `gradview` which visualizes the gradients at arbitrary points. This helps us to check whether the gradient is implemented correctly.

```julia

using ADCME

using ADCMEKit

θ = placeholder([1.;2.;3.;4.])

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2])+θ[4]

loss = sum((f - f0)^2)

sess = Session(); init(sess)

@show run(sess, loss)

lineview(sess, θ, loss, [1.;2.;3.;4.], zeros(4)) # or meshview(sess, θ, loss, [1.;2.;3.;4.])

gradview(sess, θ, loss, zeros(4))

```

You should get something like this:

* **Step 5: Change `placeholder` to `Variable` and perform optimization!** We use L-BFGS-B optimizer to solve the minimization problem. A useful trick is to multiply the loss function by a large scalar so that the optimizer does not stop early (or reduce the tolerance).

```julia

using ADCME

using ADCMEKit

θ = Variable(zeros(4))

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2])+θ[4]

loss = 1e10*sum((f - f0)^2)

sess = Session(); init(sess)

BFGS!(sess, loss)

run(sess, θ)

```

You should get

```bash

4-element Array{Float64,1}:

1.0000000000008975

2.0000000000028235

3.0000000000056493

3.999999999994123

```

That's exact what we want.

* **Step 6: Last but not least, repeat step 3 and step 4 if you get stuck in a local minimum.** Scrutinizing the landscape at the local minimum will give you useful information so you can make educated next step!

## Debugging

### Sensitivity Analysis

When the gradient test fails, we can perform _unit sensitivity analysis_. The idea is that given a function $y = f(x_1, x_2, \ldots, x_n)$, if we want to confirm that the gradients $\frac{\partial f}{\partial x_i}$ is correctly implemented, we can perform 1D gradient test with respect to a small perturbation $\varepsilon_i$:

$$y(\varepsilon_i) = f(x_1, x_2, \ldots, x_i + \varepsilon_i, \ldots, x_n)$$

or in the case you are not sure about the scale of $x_i$,

$$y(\varepsilon_i) = f(x_1, x_2, \ldots, x_i (1 + \varepsilon_i), \ldots, x_n)$$

As an example, if we want to check whether the gradients for `sigmoid` is correctly backpropagated in the above code, we have

```julia

using ADCME

using ADCMEKit

ε = placeholder(1.0)

θ = constant([1.;2.;3.;4.])

x = collect(LinRange(0.0,1.0,10))

f = θ[3]*sigmoid(θ[1]*x+θ[2] + ε)+θ[4]

loss = sum((f - f0)^2)

sess = Session(); init(sess)

gradview(sess, ε, loss, 0.01)

```

We will see a second order convergence for the automatic differentiation method while a first order convergence for the finite difference method. The principle for identifying problematic operator is to go from downstream operators to top stream operators in the computational graph. For example, given the computational graph

$$f_1\rightarrow f_2 \rightarrow \cdots \rightarrow f_i \rightarrow f_{i+1} \rightarrow \ldots \rightarrow f_n$$

If we conduct sensitivity analysis for $f_i:o_i \mapsto o_{i+1}$, and find that the gradient is wrong, then we can infer that at least one of the operators in the downstream $f_i \rightarrow f_{i+1} \rightarrow \ldots \rightarrow f_n$ has problematic gradients.

### Check Your Training Data

Sometimes it is also useful to check your training data. For example, if you are working with numerical schemes, check whether your training data are generated from reasonable physical parameters, and whether or not the numerical schemes are stable.

### Local Minimum

To check whether or not the optimization converged to a local minimum, you can either check `meshview` or `lineview`. However, these functions only give you some hints and you should only rely solely on their results. A more reliable check is to consider `gradview`. In principle, if you have a local minimum, the gradient at the local minimum should be zero, and therefore the finite difference curve should also have second order convergence. | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 2831 | # Sparse Linear Algebra

ADCME augments TensorFlow APIs by adding sparse linear algebra support. In ADCME, sparse matrices are represented by [`SparseTensor`](@ref). This data structure stores `indices`, `rows` and `cols` of the sparse matrices and keep track of relevant information such as whether it is diagonal for performance consideration. The default is row major (due to TensorFlow backend).

When evaluating `SparseTensor`, the output will be `SparseMatrixCSC`, the native Julia representation of sparse matrices

```julia

A = run(sess, s) # A has type SparseMatrixCSC{Float64,Int64}

```

## Sparse Matrix Construction

* By passing columns (`Int64`), rows (`Int64`) and values (`Float64`) arrays

```julia

ii = [1;2;3;4]

jj = [1;2;3;4]

vv = [1.0;1.0;1.0;1.0]

s = SparseTensor(ii, jj, vv, 4, 4)

```

* By passing a `SparseMatrixCSC`

```julia

using SparseArrays

s = SparseTensor(sprand(10,10,0.3))

```

* By passing a dense array (tensor or numerical array)

```julia

D = Array(sprand(10,10,0.3)) # a dense array

d = constant(D)

s = dense_to_sparse(d)

```

There are also special constructors.

| Description | Code |

| --------------------------------- | -------------- |

| Diagonal matrix with diagonal `v` | `spdiag(v)` |

| Empty matrix with size `m`, `n` | `spzero(m, n)` |

| Identity matrix with size `m` | `spdiag(m)` |

## Matrix Traits

1. Size of the matrices

```julia

size(s) # (10,20)

size(s,1) # 10

```

2. Return `row`, `col`, `val` arrays (also known as COO arrays)

```julia

ii,jj,vv = find(s)

```

## Arithmetic Operations

1. Add Subtract

```julia

s = s1 + s2

s = s1 - s2

```

2. Scalar Product

```julia

s = 2.0 * s1

s = s1 / 2.0

```

3. Sparse Product

```julia

s = s1 * s2

```

4. Transposition

```julia

s = s1'

```

## Sparse Solvers

1. Solve a linear system (`s` is a square matrix)

```julia

sol = s\rhs

```

2. Solve a least square system (`s` is a tall matrix)

```julia

sol = s\rhs

```

!!! note

The least square solvers are implemented using Eigen sparse linear packages, and the gradients are also implemented. Thus, the following codes will work as expected (the gradients functions will correctly compute the gradients):

```julia

ii = [1;2;3;4]

jj = [1;2;3;4]

vv = constant([1.0;1.0;1.0;1.0])

rhs = constant(rand(4))

s = SparseTensor(ii, jj, vv, 4, 4)

sol = s\rhs

run(sess, sol)

run(sess, gradients(sum(sol), rhs))

run(sess, gradients(sum(sol), vv))

```

## Assembling Sparse Matrix

In many applications, we want to accumulate `row`, `col` and `val` to assemble a sparse matrix in iterations. For this purpose, we provide the `SparseAssembler` utilities.

```@docs

SparseAssembler

accumulate

assemble

```

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 14057 | # What is ADCME? Computational Graph, Automatic Differentiation & TensorFlow

## Computational Graph

A computational graph is a functional description of the required computation. In the computationall graph, an edge represents a value, such as a scalar, a vector, a matrix or a tensor. A node represents a function whose input arguments are the the incoming edges and output values are are the outcoming edges. Based on the number of input arguments, a function can be nullary, unary, binary, ..., and n-ary; based on the number of output arguments, a function can be single-valued or multiple-valued.

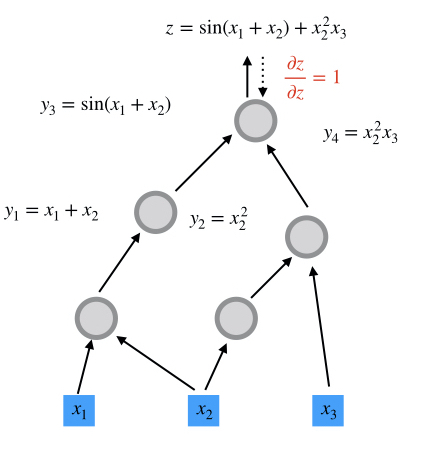

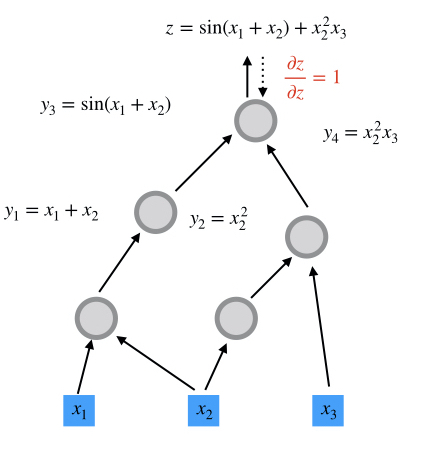

Computational graphs are directed and acyclic. The acyclicity implies the forward propagation computation is well-defined: we loop over edges in topological order and evaluates the outcoming edges for each node. To make the discussion more concrete, we illustrate the computational graph for

$$z = \sin(x_1+x_2) + x_2^2 x_3$$

There are in general two programmatic ways to construct computational graphs: static and dynamic declaration. In the static declaration, the computational graph is first constructed symbolically, i.e., no actual numerical arithmetic are executed. Then a bunch of data is fed to the graph for the actual computation. An advantage of static declarations is that they allow for graph optimization such as removing unused branches. Additionally, the dependencies can be analyzed for parallel execution of independent components. Another approach is the dynamic declaration, where the computational graph is constructed on-the-fly as the forward computation is executed. The dynamic declaration interleaves construction and evaluation of the graph, making software development more intuitive.

## Automatic Differentiation

An important application of computational graphs is automatic differentiation (AD). In general, there are three modes of AD: reverse-mode, forward-mode, and mixed mode. In this tutorial, we focus on the forward-mode and reverse-mode.

Basically, the forward mode and the reverse mode automatic differenation both use the. chain rule for computing the gradients. They evaluate the gradients of "small" functions analytically (symbolically) and chain all the computed **numerical** gradients via the chain rule

$$\frac{\partial f\circ g (x)}{\partial x} = \frac{\partial f'\circ g(x)}{\partial g} {\frac{\partial g'(x)}{\partial x}}$$

### Forward Mode

In the forward mode, the gradients are computed in the same order as function evaluation, i.e., ${\frac{\partial g'(x)}{\partial x}}$ is computed first, and then $\frac{\partial f'\circ g(x)}{\partial g} {\frac{\partial g'(x)}{\partial x}}$ as a whole. The idea is the same for a computational graph, except that we need to **aggregate** all the gradients from up-streams first, and then **forward** the gradients to down-stream nodes. Here we show how the gradient

$$f(x) = \begin{bmatrix}

x^4\\

x^2 + \sin(x) \\

-\sin(x)\end{bmatrix}$$

is computed.

| Forward-mode AD in the Computational Graph | Example |

| ------------------------------------------ | ----------------------------- |

|  |  |

### Reverse Mode

In contrast, the reverse-mode AD computes the gradient in the reverse order of forward computation, i.e., $\frac{\partial f'\circ g(x)}{\partial g}$ is first evaluated and then $\frac{\partial f'\circ g(x)}{\partial g} {\frac{\partial g'(x)}{\partial x}}$ as a whole. In the computational graph, each node first aggregate all the gradients from down-streams and then back-propagates the gradient to upstream nodes.

We show how the gradients of $z = \sin(x_1+x_2) + x_2^2 x_3$ is evaluated.

| Reverse-mode AD in the Computational Graph | Step 1 | Step 2 | Step 3 | Step 4 |

| ------------------------------------------ | ---------------------- | ---------------------- | ---------------------- | ---------------------- |

|  |  |  |  |  |

## Comparison

Reverse-mode AD reuses gradients from down-streams. Therefore, this mode is useful for many-to-few mappings. In contrast, forward-mode AD reuses gradients from upstreams. This mechanism makes forward-mode AD suitable for few-to-many mappings. Therefore, for inverse modeling problems where the objective function is usually a scalar, reverse-mode AD is most relevant. For uncertainty quantification or sensitivity analysis, the forward-mode AD is most useful. We summarize the two modes in the following table:

For a function $f:\mathbf{R}^n \rightarrow \mathbf{R}^m$

| Mode | Suitable for... | Complexity[^OPS] | Application |

| ------- | --------------- | ------------------------------ | ---------------- |

| Forward | $m\gg n$ | $\leq 2.5\;\mathrm{OPS}(f(x))$ | UQ |

| Reverse | $m\ll n$ | $\leq 4\;\mathrm{OPS}(f(x))$ | Inverse Modeling |

[^OPS]: See "Margossian CC. A review of automatic differentiation and its efficient implementation. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery. 2019 Jul;9(4):e1305.".

## A Mathematical Description of Reverse-mode Automatic Differentiation

Because the reverse-mode automatic differentiation is very important for inverse modeling, we devote this section to a rigorious mathematical description of the reverse-mode automatic differentiation.

To explain how reverse-mode AD works, let's consider constructing a computational graph with independent variables

$$\{x_1, x_2, \ldots, x_n\}$$

and the forward propagation produces a single output $x_N$, $N>n$. The gradients $\frac{\partial x_N(x_1, x_2, \ldots, x_n)}{\partial x_i}$

$i=1$, $2$, $\ldots$, $n$ are queried.

The idea is that this algorithm can be decomposed into a sequence of functions $f_i$ ($i=n+1, n+2, \ldots, N$) that can be easily differentiated analytically, such as addition, multiplication, or basic functions like exponential, logarithm and trigonometric functions. Mathematically, we can formulate it as

$$\begin{aligned}

x_{n+1} &= f_{n+1}(\mathbf{x}_{\pi({n+1})})\\

x_{n+2} &= f_{n+2}(\mathbf{x}_{\pi({n+2})})\\

\ldots\\

x_{N} &= f_{N}(\mathbf{x}_{\pi({N})})\\

\end{aligned}$$

where $\mathbf{x} = \{x_i\}_{i=1}^N$ and $\pi(i)$ are the parents of $x_i$, s.t., $\pi(i) \in \{1,2,\ldots,i-1\}$.

The idea to compute $\partial x_N / \partial x_i$ is to start from $i = N$, and establish recurrences to calculate derivatives with respect to $x_i$ in terms of derivatives with respect to $x_j$, $j >i$. To define these recurrences rigorously, we need to define different functions that differ by the choice of independent variables.

The starting point is to define $x_i$ considering all previous $x_j$, $j < i$, as independent variables. Then:

$$x_i(x_1, x_2, \ldots, x_{i-1}) = f_i(\mathbf{x}_{\pi(i)})$$

Next, we observe that $x_{i-1}$ is a function of previous $x_j$, $j < i-1$, and so on; so that we can recursively define $x_i$ in terms of fewer independent variables, say in terms of $x_1$, ..., $x_k$, with $k < i-1$. This is done recursively using the following definition:

$$x_i(x_1, x_2, \ldots, x_j) = x_i(x_1, x_2, \ldots, x_j, f_{j+1}(\mathbf{x}_{\pi(j+1)})), \quad n < j+1 < i$$

Observe that the function of the left-hand side has $j$ arguments, while the function on the right has $j+1$ arguments. This equation is used to "reduce" the number of arguments in $x_i$.

With these definitions, we can define recurrences for our partial derivatives which form the basis of the back-propagation algorithm. The partial derivatives for

$$x_N(x_1, x_2, \ldots, x_{N-1})$$

are readily available since we can differentiate

$$f_N(\mathbf{x}_{\pi(N)})$$

directly. The problem is therefore to calculate partial derivatives for functions of the type $x_N(x_1, x_2, \ldots, x_i)$ with $i<N-1$. This is done using the following recurrence:

$$\frac{\partial x_N(x_1, x_2, \ldots, x_{i})}{\partial x_i} = \sum_{j\,:\,i\in \pi(j)}

\frac{\partial x_N(x_1, x_2, \ldots, x_j)}{\partial x_j}

\frac{\partial x_j(x_1, x_2, \ldots, x_{j-1})}{\partial x_i}$$

with $n < i< N-1$. Since $i \in \pi(j)$, we have $i < j$. So we are defining derivatives with respect to $x_i$ in terms of derivatives with respect to $x_j$ with $j > i$. The last term

$$\frac{\partial x_j(x_1, x_2, \ldots, x_{j-1})}{\partial x_k}$$

is readily available since:

$$x_j(x_1, x_2, \ldots, x_{j-1}) = f_j(\mathbf{x}_{\pi(j)})$$

The computational cost of this recurrence is proportional to the number of edges in the computational graph (excluding the nodes $1$ through $n$), assuming that the cost of differentiating $f_k$ is $O(1)$. The last step is defining

$$\frac{\partial x_N(x_1, x_2, \ldots, x_n)}{\partial x_i} = \sum_{j\,:\,i\in \pi(j)}

\frac{\partial x_N(x_1, x_2, \ldots, x_j)}{\partial x_j}

\frac{\partial x_j(x_1, x_2, \ldots, x_{j-1})}{\partial x_i}$$

with $1 \le i \le n$. Since $n < j$, the first term

$$\frac{\partial x_N(x_1, x_2, \ldots, x_j)}{\partial x_j}$$

has already been computed in earlier steps of the algorithm. The computational cost is equal to the number of edges connected to one of the nodes in $\{1, \dots, n\}$.

We can see that the complexity of the back-propagation is bounded by that of the forward step, up to a constant factor. Reverse mode differentiation is very useful in the penalty method, where the loss function is a scalar, and no other constraints are present.

As a concrete example, we consider the example of evaluating $\frac{dz(x_1,x_2,x_3)}{dx_i}$, where $z = \sin(x_1+x_2) + x_2^2x_3$. The gradients are backward propagated exactly in the reverse order of the forward propagation.

| Step 1 | Step 2 | Step 3 | Step 4 |

| ---------------------- | ---------------------- | ---------------------- | ---------------------- |

|  |  |  |  |

## TensorFlow

Google's TensorFlow provides a convenient way to specify the computational graph statically. TensorFlow has automatic differentiation features and its performance is optimized for large-scale computing. ADCME is built on TensorFlow by overloading numerical operators and augmenting TensorFlow with essential scientific computing functionalities. We contrast the TensorFlow implementation with the ADCME implementation of computing the objective function and its gradient in the following example.

$$y(x) = \|(AA^T+xI)^{-1}b-c\|^2, \; z = y'(x)$$

where $A\in \mathbb{R}^{n\times n}$ is a random matrix, $x,b,c$ are scalars, and $n=10$.

**TensorFlow Implementation**

```python

import tensorflow as tf

import numpy as np

A = tf.constant(np.random.rand(10,10), dtype=tf.float64)

x = tf.constant(1.0, dtype=tf.float64)

b = tf.constant(np.random.rand(10), dtype=tf.float64)

c = tf.constant(np.random.rand(10), dtype=tf.float64)

B = tf.matmul(A, tf.transpose(A)) + x * tf.constant(np.identity(10))

y = tf.reduce_sum((tf.squeeze(tf.matrix_solve(B, tf.reshape(b, (-1,1))))-c)**2)

z = tf.gradients(y, x)[0]

sess = tf.Session()

sess.run([y, z])

```

**Julia Implementation**

```julia

using ADCME, LinearAlgebra

A = constant(rand(10,10))

x = constant(1.0)

b = rand(10)

c = rand(10)

y = sum(((A*A'+x*diagm(0=>ones(10)))\b - c)^2)

z = gradients(y, x)

sess = Session()

run(sess, [y,z])

```

## Summary

The computational graph and automatic differentiation are the core concepts underlying ADCME. TensorFlow works as the workhorse for optimion and execution of the computational graph in a high performance environment.

To construct a computational graph for a Julia program, ADCME overloads most numerical operators like `+`, `-`, `*`, `/` and matrix multiplication in Julia by the corresponding TensorFlow operators. Therefore, you will find many similar workflows and concepts as TensorFlow, such as `constant`, `Variable`, `session`, etc. However, not all operators relevant to scientific computing in Julia have its counterparts in TensorFlow. To that end, custom kernels are implemented to supplement TensorFlow, such as sparse linear algebra related functions.

ADCME aims at providing a easy-to-use, flexible, and high performance interface to do data processing, implement numerical schemes, and conduct mathematical optimization. It is built not only for academic interest but also for real-life large-scale simulations.

Like TensorFlow, ADCME works in sessions, in which each session consumes a computational graph. Usually the workflow is split into three steps:

1. Define independent variables. `constant` for tensors that do not require gradients and `Variable` for those requiring gradients.

```julia

a = constant(0.0)

```

2. Construct the computational graph by defining the computation

```julia

L = (a-1)^2

```

3. Create a session and run the computational graph

```julia

sess = Session()

run(sess, L)

```

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 1813 |

# Overview

> ADCME: Your Gateway to Inverse Modeling with Physics Based Machine Learning

ADCME is an open-source Julia package for inverse modeling in scientific computing using automatic differentiation. The backend of ADCME is the high performance deep learning framework, TensorFlow, which provides parallel computing and automatic differentiation features based on computational graph, but ADCME augments TensorFlow by functionalities---like sparse linear algebra---essential for scientific computing. ADCME leverages the Julia environment for maximum efficiency of computing. Additionally, the syntax of ADCME is designed from the beginning to be compatible with the Julia syntax, which is friendly for scientific computing.

**Prerequisites**

The tutorial does not assume readers with experience in deep learning. However, basic knowledge of scientific computing in Julia is required.

**Tutorial Series**

[What is ADCME? Computational Graph, Automatic Differentiation & TensorFlow](./tu_whatis.md)

[ADCME Basics: Tensor, Type, Operator, Session & Kernel](./tu_basic.md)

[PDE Constrained Optimization](./tu_optimization.md)

[Sparse Linear Algebra in ADCME](./tu_sparse.md)

[Numerical Scheme in ADCME: Finite Difference Example](./tu_fd.md)

[Numerical Scheme in ADCME: Finite Element Example](./tu_fem.md)

[Inverse Modeling in ADCME](./tu_inv.md)

[Inverse Modeling Recipe](./tu_recipe.md)

[Combining NN with Numerical Schemes](./tu_nn.md)

[Advanced: Automatic Differentiation for Implicit Operations](./tu_implicit.md)

[Advanced: Custom Operators](./tu_customop.md)

[Advanced: Debugging and Profiling](./tu_debug.md)

[Exercise](./exercise.md)[^exercise]

[^exercise]: If you want to discuss or check your exercise solutions, you are welcome to send an email to [email protected].

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 14503 | # Uncertainty Quantification

<!-- qunatifying uncertainty of neural networks in inverse problems using linearized Gaussian modeels -->

## Theory

### Basic Model

We consider a physical model

$$\begin{aligned}

y &= h(s) + \delta \\

s &= g(z) + \epsilon

\end{aligned}\tag{1}$$

Here $\delta$ and $\epsilon$ are independent Gaussian noises. $s\in \mathbb{R}^m$ is the physical quantities we are interested in predicting, and $y\in \mathbb{R}^n$ is the measurement. $g$ is a function approximator, which we learn from observations in our inverse problem. $z$ is considered fixed for quantifying the uncertainty for a specific observation under small perturbation, although $z$ and $s$ may have complex dependency.

$\delta$ can be interpreted as the measurement error

$$\mathbb{E}(\delta\delta^T) = R$$

$\epsilon$ is interpreted as our prior for $s$

$$\mathbb{E}(\epsilon\epsilon^T) = Q$$

### Linear Gaussian Model

When the standard deviation of $\epsilon$ is small, we can safely approximate $h(s)$ using its linearized form

$$h(s)\approx \nabla h(s_0) (s-s_0) + h(s_0) := \mu + H s$$

Here

$$\mu = h(s_0) - \nabla h(s_0) s_0\quad H = \nabla h(x_0)$$

Therefore, we have an approximate governing equation for Equation 1:

$$\begin{aligned}

y &= H s + \mu + \delta\\

s &= g(z) + \epsilon

\end{aligned}\tag{2}$$

Using Equation 2, we have

$$\begin{aligned}

\mathbb{E}(y) & = H g(z) + \mu \\

\text{cov}(y) & = \mathbb{E}\left[(H (x-g(z)) + \delta )(H (x-g(z)) + \delta )^T \right] = H QH^T + R

\end{aligned}$$

### Bayesian Inversion

#### Derivation

From the model Equation 2 we can derive the joint distribution of $s$ and $y$, which is a multivariate Gaussian distribution

$$\begin{bmatrix}

x_1\\

x_2

\end{bmatrix}\sim \mathcal{N}\left(

\begin{bmatrix}

g(z)\\

Hg(z) + \mu

\end{bmatrix} \Bigg| \begin{bmatrix}

Q & QH^T \\

HQ & HQH^T + R

\end{bmatrix}

\right)$$

Here the covariance matrix $\text{cov}(s, y)$ is obtained via

$$\text{cov}(s, y) = \mathbb{E}(s, Hs + \mu+\delta) = \mathbb{E}(s-g(z), H(s-g(z))) = \mathbb{E}((s-g(z))(s-g(z))^T) H^T = QH^T$$

Recall the formulas for conditional Gaussian distributions:

Given

$$\begin{bmatrix}

s\\

y

\end{bmatrix}\sim \mathcal{N}\left(

\begin{bmatrix}

\mu_1\\

\mu_2

\end{bmatrix} \Bigg| \begin{bmatrix}

\Sigma_{11} & \Sigma_{12} \\

\Sigma_{21} & \Sigma_{22}

\end{bmatrix}

\right)$$

We have

$$x_1 | x_2 \sim \mathcal{N}(\mu_{1|2}, V_{1|2})$$

where

$$\begin{aligned}

\mu_{1|2} &= \mu_1 + \Sigma_{12}\Sigma_{22}^{-1} (x_2-\mu_2)\\

V_{1|2} &= \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1}\Sigma_{21}

\end{aligned}$$

Let $x_1 = s$, $x_2 = y$, we have the following formula for Baysian inversion:

$$\begin{aligned}

\mu_{s|y} &= g(z) + QH^{T}(HQH^T + R)^{-1} (y - Hg(z) - \mu)\\

V_{s|y} &= Q - QH^T(HQH^T + R)^{-1} HQ

\end{aligned}\tag{3}$$

#### Analysis

Now we consider how to compute Equation 3. In practice, we should avoid direct inverting the matrix $HQH^T + R$ since the cost is cubic in the size of dimensions of the matrix. Instead, the following theorem gives us a convenient way to solve the problem

!!! info "Theorem"

Let $\begin{bmatrix}L \\ x^T\end{bmatrix}$ be the solution to

$$\begin{bmatrix}

HQH^T + R & H g(z) \\

g(z)^T H^T & 0

\end{bmatrix}\begin{bmatrix}

L \\

x^T

\end{bmatrix} = \begin{bmatrix}

HQ \\

g(z)^T

\end{bmatrix}\tag{4}$$

Then we have

$$\begin{aligned}

\mu_{s|y} = g(z) + L^T (y-\mu) \\

V_{s|y} = Q - gx^T - QH^TL

\end{aligned}\tag{5}$$

The linear system in Equation 5 is symmetric but may not be SPD and therefore we may encounter numerical difficulty when solving the linear system Equation 4. In this case, we can add perturbation $\varepsilon g^T g$ to the zero entry.

!!! info "Theorem"

If $\varepsilon> \frac{1}{4\lambda_{\min}}$, where $\lambda_{\min}$ is the minimum eigenvalue of $Q$, then the linear system in Equation 4 is SPD.

The above theorem has a nice interpretation: typically we can choosee our prior for the physical quantity $s$ to be a scalar matrix $Q = \sigma_{{s}}^2 I$, where $\sigma_{s}$ is the standard deviation, then $\lambda_{\min} = \sigma_s^2$. This indicates that if we use a very concentrated prior, the linear system can be far away from SPD and requires us to use a large perturbation for numerical stability. Therefore, in the numerical example below, we choose a moderate $\sigma_s$. The alternative approach is to add the perturbation.

In ADCME, we provide the implementation [`uq`](@ref)

```julia

s, Σ = uqlin(y-μ, H, R, gz, Q)

```

## Benchmark

To show how the proposed method work compared to MCMC, we consider a model problem: estimating Young's modulus and Poisson's ratio from sparse observations.

$$\begin{aligned}

\mathrm{div}\; \sigma &= f & \text{ in } \Omega \\

\sigma n &= 0 & \text{ on }\Gamma_N \\

u &= 0 & \text{ on }\Gamma_D \\

\sigma & = H\epsilon

\end{aligned}$$

Here the computational domain $\Omega=[0,1]\times [0,1.5]$. We fixed the left side ($\Gamma_D$) and impose an upward pressure on the right side. The other side is considered fixed. We consider the plane stress linear elasticity, where the constitutive relation determined by

$$H = \frac{E}{(1+\nu)(1-2\nu)}\begin{bmatrix}

1-\nu & \nu & 0 \\

\nu & 1-\nu & 0 \\

0 & 0 & \frac{1-2\nu}{2}

\end{bmatrix}$$

Here the true parameters

$$E = 200\;\text{GPa} \quad \nu = 0.35$$

They are the parameters to be calibrated in the inverse modeling. The observation is given by the displacement vectors of 20 random points on the plate.

We consider a uniform prior for the random walk MCMC simuation, so the log likelihood up to a constant is given by

$$l(y') = -\frac{(y-y')^2}{2\sigma_0^2}$$

where $y'$ is the current proposal, $y$ is the measurement, and $\sigma_0$ is the standard deviation. We simulate 100000 times, and the first 20% samples are used as "burn-in" and thus discarded.

For the linearized Gaussian model, we use $Q=I$ and $R=\sigma_0^2I$ to account for a unit Gaussian prior and measurement error, respectively.

The following plots show the results

| $\sigma_0=0.01$ | $\sigma_0=0.05$ | $\sigma_0=0.1$ |

| ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

|  |  |  |

| $\sigma_0=0.2$ | $\sigma_0=0.5$ | |

|  |  | |

We see that when $\sigma_0$ is small, the approximation is quite consistent with MCMC results. When $\sigma_0$ is large, due to the assumption that the uncertainty is Gaussian, the linearized Gaussian model does not fit well with the uncertainty shape obtained with MCMC; however, the result is still consistent since the linearized Gaussian model yields a larger standard deviation.

## Example 1: UQ for Parameter Inverse Problems

We consider a simple example for 2D Poisson problem.

$$\begin{aligned}

\nabla (K(x, y) \nabla u(x, y)) &= 1 & \text{ in } \Omega\\

u(x,y) &= 0 & \text{ on } \partial \Omega

\end{aligned}$$

where $K(x,y) = e^{c_1 + c_2 x + c_3 y}$.

Here $c_1$, $c_2$, $c_3$ are parameter to be estimated. We first generate data using $c_1=1,c_2=2,c_3=3$ and add Gaussian noise $\mathcal{N}(0, 10^{-3})$ to 64 observation in the center of the domain $[0,1]^2$. We run the inverse modeling and obtain an estimation of $c_i$'s. Finally, we use [`uq`](@ref) to conduct the uncertainty quantification. We assume $\text{error}_{\text{model}}=0$.

The following plot shows the estimated mean together with 2 standard deviations.

```julia

using ADCME

using PyPlot

using AdFem

Random.seed!(233)

idx = fem_randidx(100, m, n, h)

function poisson1d(c)

m = 40

n = 40

h = 0.1

bdnode = bcnode("all", m, n, h)

c = constant(c)

xy = gauss_nodes(m, n, h)

κ = exp(c[1] + c[2] * xy[:,1] + c[3]*xy[:,2])

κ = compute_space_varying_tangent_elasticity_matrix(κ, m, n, h)

K = compute_fem_stiffness_matrix1(κ, m, n, h)

K, _ = fem_impose_Dirichlet_boundary_condition1(K, bdnode, m, n, h)

rhs = compute_fem_source_term1(ones(4m*n), m, n, h)

rhs[bdnode] .= 0.0

sol = K\rhs

sol[idx]

end

c = Variable(rand(3))

y = poisson1d(c)

Γ = gradients(y, c)

Γ = reshape(Γ, (100, 3))

# generate data

sess = Session(); init(sess)

run(sess, assign(c, [1.0;2.0;3.0]))

obs = run(sess, y) + 1e-3 * randn(100)

# Inverse modeling

loss = sum((y - obs)^2)

init(sess)

BFGS!(sess, loss)

y = obs

H = run(sess, Γ)

R = (2e-3)^2 * diagm(0=>ones(100))

X = run(sess, c)

Q = diagm(0=>ones(3))

m, V = uqlin(y, H, R, X, Q)

plot([1;2;3], [1.;2.;3.], "o", label="Reference")

errorbar([1;2;3],m + run(sess, c), yerr=2diag(V), label="Estimated")

legend()

```

!!! info "The choice of $R$"

The standard deviation $2\times 10^{-3}$ consists of the model error ($10^{-3}$) and the measurement error $10^{-3}$.

## Example 2: UQ for Function Inverse Problems

In this example, let us consider uncertainty quantification for function inverse problems. We consider the same problem as Example 1, except that $K(x,y)$ is represented by a neural network (the weights and biases are represented by $\theta$)

$$\mathcal{NN}_\theta:\mathbb{R}^2 \rightarrow \mathbb{R}$$

We consider a highly nonlinear $K(x,y)$

$$K(x,y) = 0.1 + \sin x+ x(y-1)^2 + \log (1+y)$$

The left panel above shows the exact $K(x,y)$ and the learned $K(x,y)$. We see we have a good approximation but with some error.

The left panel above shows the exact solution while the right panel shows the reconstructed solution after learning.

We apply the UQ method and obtain the standard deviation plot on the left, together with absolute error on the right. We see that our UQ estimation predicts that the right side has larger uncertainty, which is true in consideration of the absolute error.

```julia

using Revise

using ADCME

using PyPlot

using AdFem

m = 40

n = 40

h = 1/n

bdnode = bcnode("all", m, n, h)

xy = gauss_nodes(m, n, h)

xy_fem = fem_nodes(m, n, h)

function poisson1d(κ)

κ = compute_space_varying_tangent_elasticity_matrix(κ, m, n, h)

K = compute_fem_stiffness_matrix1(κ, m, n, h)

K, _ = fem_impose_Dirichlet_boundary_condition1(K, bdnode, m, n, h)

rhs = compute_fem_source_term1(ones(4m*n), m, n, h)

rhs[bdnode] .= 0.0

sol = K\rhs

end

κ = @. 0.1 + sin(xy[:,1]) + (xy[:,2]-1)^2 * xy[:,1] + log(1+xy[:,2])

y = poisson1d(κ)

sess = Session(); init(sess)

SOL = run(sess, y)

# inverse modeling

κnn = squeeze(abs(ae(xy, [20,20,20,1])))

y = poisson1d(κnn)

using Random; Random.seed!(233)

idx = fem_randidx(100, m, n, h)

obs = y[idx]

OBS = SOL[idx]

loss = sum((obs-OBS)^2)

init(sess)

BFGS!(sess, loss, 200)

figure(figsize=(10,4))

subplot(121)

visualize_scalar_on_fem_points(SOL, m, n, h)

subplot(122)

visualize_scalar_on_fem_points(run(sess, y), m, n, h)

plot(xy_fem[idx,1], xy_fem[idx,2], "o", c="red", label="Observation")

legend()

figure(figsize=(10,4))

subplot(121)

visualize_scalar_on_gauss_points(κ, m, n, h)

title("Exact \$K(x, y)\$")

subplot(122)

visualize_scalar_on_gauss_points(run(sess, κnn), m, n, h)

title("Estimated \$K(x, y)\$")

H = gradients(obs, κnn)

H = run(sess, H)

y = OBS

hs = run(sess, obs)

R = (1e-1)^2*diagm(0=>ones(length(obs)))

s = run(sess, κnn)

Q = (1e-2)^2*diagm(0=>ones(length(κnn)))

μ, Σ = uqnlin(y, hs, H, R, s, Q)

σ = diag(Σ)

figure(figsize=(10,4))

subplot(121)

visualize_scalar_on_gauss_points(σ, m, n, h)

title("Standard Deviation")

subplot(122)

visualize_scalar_on_gauss_points(abs.(run(sess, κnn)-κ), m, n, h)

title("Absolute Error")

```

## Example 3: UQ for Function Inverse Problem

In this case, we consider a more challenging case, where $K$ is a function of the state variable, i.e., $K(u)$. $K$ is approximated by a neural network, but we need an iterative solver that involves the neural network to solve the problem

$$\begin{aligned}

\nabla\cdot (K(u) \nabla u(x, y)) &= 1 & \text{ in } \Omega\\

u(x,y) &= 0 & \text{ on } \partial \Omega

\end{aligned}$$

We tested two cases: in the first case, we use the synthetic observation $u_{\text{obs}}\in\mathbb{R}$ without adding any noise, while in the second case, we add 1% Gaussian noise to the observation data

$$u'_{\text{obs}} = u_{\text{obs}} (1+0.01 z)\quad z\sim \mathcal{N}(0, I_n)$$

The prior for $K(u)$ is $\mathcal{N}(0, 10^{-2})$, where one standard deviation is around 10%~20% of the actual $K(u)$ value. The measurement prior is given by

$$\mathcal{N}(0, \sigma_{\text{model}}^2 + \sigma_{\text{noise}}^2)$$

The total error is modeled by $\sigma_{\text{model}}^2 + \sigma_{\text{noise}}^2\approx 10^{-4}$.

| Description | Uncertainty Bound (two standard deviation) | Standard Deviation at Grid Points |

| --------------------------- | ---- | ---- |

| $\sigma_{\text{noise}}=0$ |  |  |

| $\sigma_{\text{noise}}=0.01$ |  |  |

We see that in general when $u$ is larger, the uncertainty bound is larger. For small $u$, we can estimate the map $K(u)$ quite accurately using a neural network. | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 6155 | # Variational Autoencoder

Let's see how to implement an autoencoder for generating MNIST images in ADCME. The mathematics underlying autoencoder is the Bayes formula

$$p(z|x) = \frac{p(x|z)p(z)}{p(x)}$$

where $x$ a sample from the data distribution and $z$ is latent variables. To model the data distribution given the latent variable, $p(x|z)$, we use a deep generative neural network $g_\phi$ that takees $z$ as the input and outputs $x$. This gives us the approximate $p_\phi(x|z) \approx p(x|z)$.

However, computing $p(z|x)$ directly can be intractable. To this end, we approximate the posterior using $z\sim \mathcal{N}(\mu_x, \sigma_x^2I)$, where $\mu_x$ and $\sigma_x$ are both encoded using neural networks, where $x$ is the input to the neural network. In this way, we obtain an approximate posterior

$$p_w(z|x) = \frac{1}{(\sqrt{2\pi \sigma_x^2})^d}\exp\left( -\frac{\|z-\mu_x)\|^2}{2\sigma_x^2} \right) \tag{1}$$

How can we choose the correct weights and biases $\phi$ and $w$? The idea is to minimize the discrepancy between the true posterior and the approximate posterior Equation (1). We can use the KL divergence, which is a metric for measuring the discrepancy between two distributions

$$\mathrm{KL}(p_w(z|x)|| p(z|x)) = \mathbb{E}_{p_w}(\log p_w(z|x) - \log p(z|x)) \tag{2}$$

However, computing Equation 2 is still intractable since we do not know $\log p(z|x)$. Instead, we seek to minimize a maximize bound of the KL divergence

$$\begin{aligned}

\mathrm{ELBO} &= \log p(x) - \mathrm{KL}(p_w(z|x)|| p(z|x))\\

& = \mathbb{E}_{p_w}( \log p(z,x) - \log p_w(z|x)) \\

& = \mathbb{E}_{p_w(z|x)}[\log p_\phi(x|z)] - \mathrm{KL}(p_w(z|x) || p(z))

\end{aligned}$$

Note that we assumed that the generative neural network $g_\phi$ is sufficiently expressive so $p_\phi(y|z)\approx p(y|z)$. Additionally, because KL divergence is always positive

$$\mathrm{ELBO} \leq \log p(x)\tag{3}$$

Equation (3) justifies the name "evidence lower bound".

Let's consider how to compute ELBO for our autoencoder. For the marginal likelihood term $\mathbb{E}_{p_w(z|x)}[\log p_\phi(x|z)]$, for each given sample $y$, we can calculate the mean and covariance of $z$, namely $\mu_x$ and $\sigma_x^2I$. We sample $z_i\sim \mathcal{N}(\mu_x, \sigma_x^2I)$ and plug them into $g_\phi$ and obtain the outputs $x_i = g_\phi(z_i)$. If we assume that the decoder model is subject to Bernoulli distribution $x \sim Ber(g_\phi(z))$ (in this case we have $g_\phi(z)\in [0,1]$), we have the approximation

$$\mathbb{E}_{p_w(z|x)}[\log p_\phi(x|z)] \approx \frac{1}{n}\sum_{i=1}^n \left[x_i\log (g_\phi(z_i)) + (1-x_i) \log(1-g_\phi(z_i))\right]\tag{4}$$

Now let us consider the second term $\mathrm{KL}(p_w(z|x) || p(z))$. If we assign a unit Gaussian prior on $z$, we have

$$\begin{aligned}

\mathrm{KL}(p_w(z|x) || p(z)) &= \mathbb{E}_{p_w}[\log(p_w(z|x)) - \log(p(z)) ]\\

& = \mathbb{E}_{p_w}\left[-\frac{\|z-\mu_x\|^2}{2\sigma_x^2} - d\log(\sigma_x) + \frac{\|z\|^2}{2} \right]\\

& = -d - d\log(\sigma_x) +\frac{1}{2} \|\mu_x\|^2 + \frac{d}{2}\sigma_x^2

\end{aligned} \tag{5}$$

Using Equation 4 and 5 we can formulate a loss function, which we can use a stochastic gradient descent method to minimize.

The following code is an example of applying the autoencoder to learn a data distribution from MNIST dataset. Here is the result using this script:

```julia

using ADCME

using PyPlot

using MLDatasets

using ProgressMeter

function encoder(x, n_hidden, n_output, rate)

local μ, σ

variable_scope("encoder") do

y = dense(x, n_hidden, activation = "elu")

y = dropout(y, rate, ADCME.options.training.training)

y = dense(y, n_hidden, activation = "tanh")

y = dropout(y, rate, ADCME.options.training.training)

y = dense(y, 2n_output)

μ = y[:, 1:n_output]

σ = 1e-6 + softplus(y[:,n_output+1:end])

end

return μ, σ

end

function decoder(z, n_hidden, n_output, rate)

local y

variable_scope("decoder") do

y = dense(z, n_hidden, activation="tanh")

y = dropout(y, rate, ADCME.options.training.training)

y = dense(y, n_hidden, activation="elu")

y = dropout(y, rate, ADCME.options.training.training)

y = dense(y, n_output, activation="sigmoid")

end

return y

end

function autoencoder(xh, x, dim_img, dim_z, n_hidden, rate)

μ, σ = encoder(xh, n_hidden, dim_z, rate)

z = μ + σ .* tf.random_normal(size(μ), 0, 1, dtype=tf.float64)

y = decoder(z, n_hidden, dim_img, rate)

y = clip(y, 1e-8, 1-1e-8)

marginal_likelihood = sum(x .* log(y) + (1-x).*log(1-y), dims=2)

KL_divergence = 0.5 * sum(μ^2 + σ^2 - log(1e-8 + σ^2) - 1, dims=2)

marginal_likelihood = mean(marginal_likelihood)

KL_divergence = mean(KL_divergence)

ELBO = marginal_likelihood - KL_divergence

loss = -ELBO

return y, loss, -marginal_likelihood, KL_divergence

end

function step(epoch)

tx = train_x[1:batch_size,:]

@showprogress for i = 1:div(60000, batch_size)

idx = Array((i-1)*batch_size+1:i*batch_size)

run(sess, opt, x=>train_x[idx,:])

end

y_, loss_, ml_, kl_ = run(sess, [y, loss, ml, KL_divergence],

feed_dict = Dict(

ADCME.options.training.training=>false,

x => tx

))

println("epoch $epoch: L_tot = $(loss_), L_likelihood = $(ml_), L_KL = $(kl_)")

close("all")

for i = 1:3

for j = 1:3

k = (i-1)*3 + j

img = reshape(y_[k,:], 28, 28)'|>Array

subplot(3,3,k)

imshow(img)

end

end

savefig("result$epoch.png")

end

n_hidden = 500

rate = 0.1

dim_z = 20

dim_img = 28^2

batch_size = 128

ADCME.options.training.training = placeholder(true)

x = placeholder(Float64, shape = [128, 28^2])

xh = x

y, loss, ml, KL_divergence = autoencoder(xh, x, dim_img, dim_z, n_hidden, rate)

opt = AdamOptimizer(1e-3).minimize(loss)

train_x = MNIST.traintensor(Float64);

train_x = Array(reshape(train_x, :, 60000)');

sess = Session(); init(sess)

for i = 1:100

step(i)

end

```

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 2881 | # Video Lectures and Slides

---

Do you know...

**ADCME has its own YouTube channel [ADCME](https://www.youtube.com/channel/UCeaZFluNatYpkIYcq2TTklw)!**

---

## Slides

* [Physics Based Machine Learning for Inverse Problems (60 Pages)](https://kailaix.github.io/ADCMESlides/ADCME.pdf)

* [Automatic Differentiation for Scientific Computing (51 Pages)](https://kailaix.github.io/ADCMESlides/AD.pdf)

* [Deep Neural Networks and Inverse Modeling (50 Pages)](https://kailaix.github.io/ADCMESlides/Inverse.pdf)

* [Subsurface Inverse Modeling with Physics Based Machine Learning (35 Pages)](https://kailaix.github.io/ADCMESlides/Subsurface.pdf)

* [Calibrating Multivariate Lévy Processes with Neural Networks](https://kailaix.github.io/ADCMESlides/MSML2020.pdf)

* [ADCME.jl -- Physics Based Machine Learning for Inverse Problems (JuliaCN 2020, 40 Pages)](https://kailaix.github.io/ADCMESlides/JuliaConference2020_08_21.pdf)

* [ADCME -- Machine Learning for Computational Engineering (Berkeley/Stanford CompFest)](https://kailaix.github.io/ADCMESlides/CompFest2020.pdf)

* [Presentation on 09/23/2020 (29 Pages)](https://kailaix.github.io/ADCMESlides/InversePoreFlow2020_09_23.pdf)

* [Presentation on 10/01/2020 (40 Pages)](https://kailaix.github.io/ADCMESlides/2020_10_01.pdf)

* [Presentation on 10/06/2020 (37 Pages)](https://kailaix.github.io/ADCMESlides/2020_10_06.pdf)

* [Presentation in SMS, Peking University 10/22/2020 (35 Pages)](https://kailaix.github.io/ADCMESlides/2020_10_22.pdf)

* [Presentation in Berkeley, 11/17/2020 (60 Pages)](https://kailaix.github.io/ADCMESlides/2020_11_17.pdf); a relative comprehensive slide, see [here (31 Pages)](https://kailaix.github.io/ADCMESlides/2020_12_3.pdf) for a short version.

* [WCCM 2020 (23 Pages)](https://kailaix.github.io/ADCMESlides/2020_11_27.pdf)

* [SIAM CSE21 (21 Pages)](https://kailaix.github.io/ADCMESlides/2020_2_19.pdf)

* [AAAI MLPS 2021 (22 Pages)](https://kailaix.github.io/ADCMESlides/2021_3_9.pdf)

* [Ph.D. Oral Defense (~50 Pages)](https://kailaix.github.io/ADCMESlides/oral_defense.pdf)

## Instruction on Installing ADCME

1. [Installing ADCME (Windows)](https://www.youtube.com/watch?v=Vsc_dpyOD6k)

2. [Installing ADCME (MacOS)](https://youtu.be/nz1g-f-1s9Y)

3. [Installing ADCME (Linux)](https://youtu.be/fH0QrqgzUeo)

4. [Getting Started with ADCME](https://youtu.be/ZQyczBYZjQw)

## Posters

* [SPD-NN Poster](https://kailaix.github.io/ADCMESlides/NNFEM_poster.pdf)

## Videos

* [ADCME.jl -- Physics Based Machine Learning for Inverse Problems (中文)](https://www.bilibili.com/video/BV1va4y177fe)

* [Data-Driven Inverse Modeling with Incomplete Observations](https://www.youtube.com/watch?v=0r9qekmZGqk&t=480s)

* [AAAI Conference](https://studio.slideslive.com/web_recorder/share/20201126T052150Z__WCCM-ECCOMAS20__1328__data-driven-inverse-modeling-a?s=8f06e214-939f-467e-8899-bc66c2d64027) | ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.7.3 | 4ecfc24dbdf551f92b5de7ea2d99da3f7fde73c9 | docs | 2964 | # Install ADCME on Windows

The following sections provide instructions on installing ADCME on Windows computers.

## Install Julia

Windows users can install ADCME following these [instructions](https://julialang.org/downloads/). Choose your version of Windows (32-bit or 64-bit).

[Detailed instructions to install Julia on Windows](https://julialang.org/downloads/platform/#windows)

For Windows users, you can press the Windows button or click the Windows icon (usually located in the lower left of your screen) and type `julia`. Open the Desktop App `Julia` and you will see a Julia prompt.

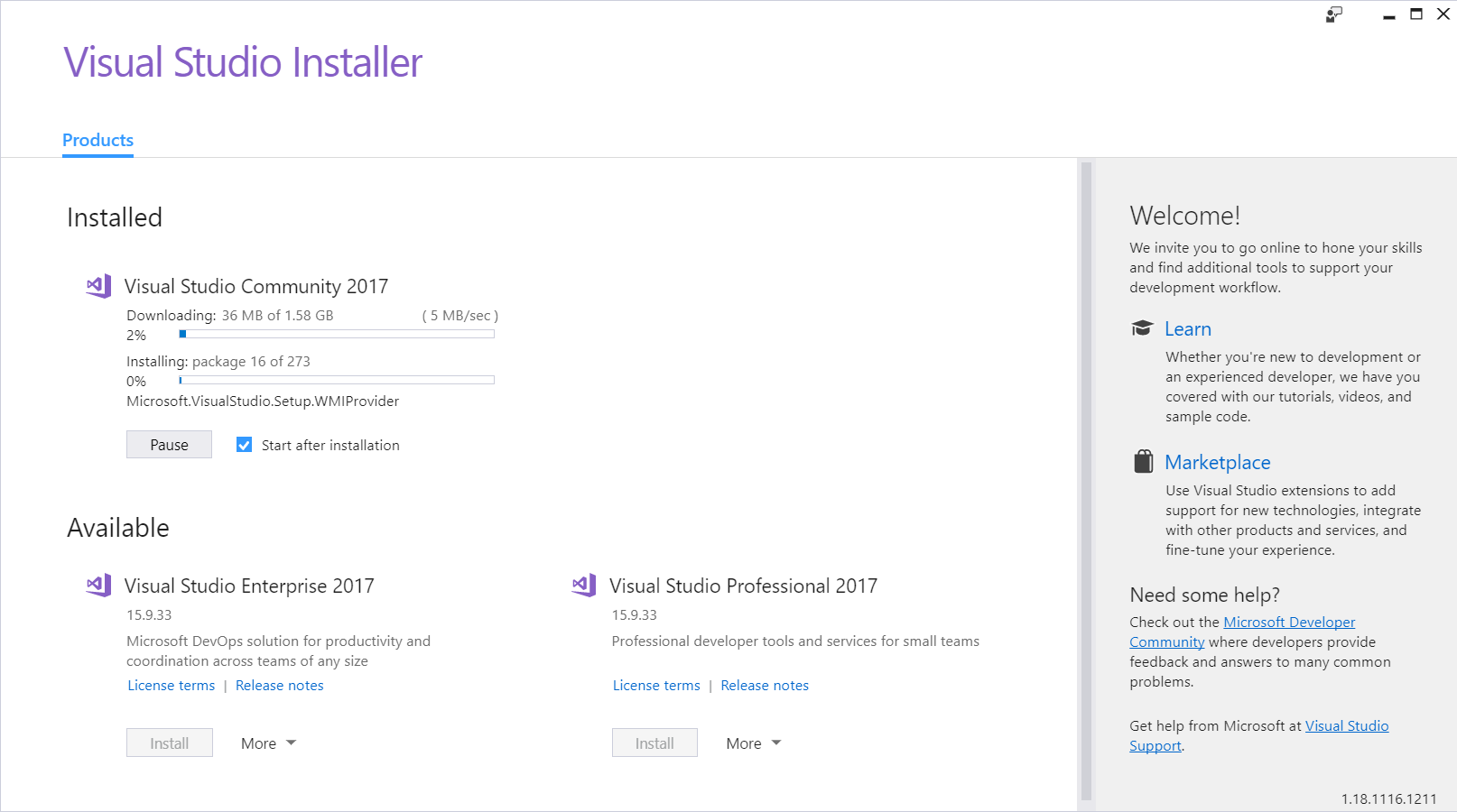

## Install C/C++ Compilers

To use and build custom operators, you need a C/C++ compiler that is compatible with the TensorFlow backend. The prebuilt TensorFlow shipped with ADCME was built using Microsoft Visual Studio 2017 15. Therefore, you need to install this specific version.

1. Download and install from [here](https://visualstudio.microsoft.com/vs/older-downloads/)

Note that this is an older version of Visual Studio. It's not the one from 2019 but the previous version from 2017.

2. Double click the installer that you just downloaded. You will see the following image:

A free community version is available (**Visual Studio Community 2017**). Click **install** and a window will pop up.

3. Make sure the following two checkboxes are checked:

- In the **Workloads** tab, **Desktop development with C++** is checked.

- In the **Indivisual components** tab, **MSBuild** is checked.

4. Click **install** on the lower right corner. You will see the following window.

5. The installation may take some time. Once the installation is finished, you can safely close the installer.

## Configure Paths

In order to locate shared libraries and executable files provided by ADCME, you also need to set an extra set of PATH environment variables. Please add the following environment variables to your system path (my user name is `kaila`; please replace it with yours!)

```

C:\Users\kaila\.julia\adcme\Scripts

C:\Users\kaila\.julia\adcme\Library\bin

C:\Users\kaila\.julia\adcme\

```

Here is how you can add these environment paths:

## Install ADCME

Now you can install ADCME via

```julia

using Pkg

Pkg.add("ADCME")

```

| ADCME | https://github.com/kailaix/ADCME.jl.git |

|

[

"MIT"

] | 0.3.3 | d34a07459e1ebdc6b551ecb28e3c19993f544d91 | code | 662 | using Documenter, Noise

using Images, TestImages, ImageIO, Random

# set seed fixed for documentation

Random.seed!(42)

DocMeta.setdocmeta!(Noise, :DocTestSetup, :(using Noise, Images, TestImages, ImageIO); recursive=true)

makedocs(modules = [Noise],

sitename = "Noise.jl",

pages = ["index.md",

"man/additive_white_gaussian.md",

"man/mult_gauss.md",

"man/salt_pepper.md",

"man/poisson.md",

"man/quantization.md",

"man/function_references.md",

]

)

deploydocs(repo = "github.com/roflmaostc/Noise.jl.git")

| Noise | https://github.com/roflmaostc/Noise.jl.git |

|

[

"MIT"

] | 0.3.3 | d34a07459e1ebdc6b551ecb28e3c19993f544d91 | code | 2761 | module Noise

using PoissonRandom

using Random

using ImageCore

"""

complex_copy(x)

If `x` is real, it returns `x + im * x`

If `x` is complex, it returns `x`,

"""

complex_copy(a::T) where T = (a + 1im * a)::Complex{T}

complex_copy(a::Complex{T}) where T= a::Complex{T}

# clipping a single value

function clip_v(x)

return max(0, min(1, x))

end

function clip_v(x, minv, maxv)

return max(minv, min(maxv, x))

end

# get the maximum single value of a RGB image

function max_rgb(X::AbstractArray{RGB{T}}) where T

max_v = -Inf

for i in eachindex(X)

max_v = max(red(X[i]), max_v)

max_v = max(green(X[i]), max_v)

max_v = max(blue(X[i]), max_v)

end

return max_v

end

function apply_noise!(pixel_f, noise_f, X::Union{AbstractArray{Gray{T}}, AbstractArray{RGB{T}},

AbstractArray{T}}, clip) where T

f(x) = pixel_f(x, noise_f(x))

# defining the core functions inside here, gives 6x speed improvement

# core functions non clipping

function core_f(x::RGB)

return RGB(f(red(x)),

f(green(x)),

f(blue(x)))

end

function core_f(x::Gray)

return Gray(f(gray(x)))

end

function core_f(x)

return f(x)

end

function core_f_clip(x::RGB)

return RGB(clip_v(f(red(x))),

clip_v(f(green(x))),

clip_v(f(blue(x))))

end

function core_f_clip(x::Gray)

return Gray(clip_v(f(gray(x))))

end

function core_f_clip(x)

return clip_v(f(x))

end

# if normed clip the values to be in [0, 1]

if T <: Normed || clip

@inbounds for i in eachindex(X)

X[i] = core_f_clip(X[i])

end

else

@inbounds for i in eachindex(X)

X[i] = core_f(X[i])

end

end

return X

end

# function which use exactly the same noise for each color channel of a pixel

function apply_noise_chn!(pixel_f, noise_f, X::AbstractArray{RGB{T}}, clip) where T

if T <: Normed || clip

@inbounds for i in eachindex(X)

a = X[i]

n = noise_f(red(a)*0)

X[i] = RGB(clip_v(pixel_f(red(a), n)),

clip_v(pixel_f(green(a), n)),

clip_v(pixel_f(blue(a), n)))

end

else

@inbounds for i in eachindex(X)

a = X[i]

n = noise_f(red(a)*0)

X[i] = RGB(pixel_f(red(a), n),

pixel_f(green(a), n),

pixel_f(blue(a), n))

end

end

return X

end

include("poisson.jl")

include("salt_pepper.jl")

include("white_noise_additive.jl")

include("quantization.jl")

include("multiplicative_noise.jl")

end

| Noise | https://github.com/roflmaostc/Noise.jl.git |

|

[

"MIT"

] | 0.3.3 | d34a07459e1ebdc6b551ecb28e3c19993f544d91 | code | 1974 | export mult_gauss, mult_gauss!, mult_gauss_chn, mult_gauss_chn!

@inline function comb_mult_gauss(x, n)

return x * n

end

mult_gauss!(X, σ=0.1, μ=1; clip=false) = apply_noise!(comb_mult_gauss, f_awg(σ, μ, complex_copy(σ), complex_copy(μ)), X, clip)

mult_gauss(X, σ=0.1, μ=1; clip=false) = mult_gauss!(copy(X), σ, μ, clip=clip)

"""

mult_gauss(X; clip=false[, σ=0.1, μ=1])

Returns the array `X` with the array value multiplied with a gauss distribution (standard deviation `σ` and mean `μ`) .

`σ` and `μ` are optional arguments representing standard deviation and mean of gauss.

If keyword argument `clip` is provided the values are clipped to be in [0, 1].

If `X` is a RGB{Normed} or Gray{Normed} image, then the values will be automatically clipped and the keyword

`clip` is meaningless.

If `X<:Complex`, `μ` and `σ` are applied to the imaginary in the same way as for the real part.

If you want to have different behaviour for real and imaginary part, simply

choose `μ` or `σ` complex.

"""

mult_gauss

mult_gauss_chn(X, σ=0.1, μ=1; clip=false) = mult_gauss_chn!(copy(X), σ, μ, clip=clip)

mult_gauss_chn!(X, σ=0.1, μ=1; clip=false) = apply_noise_chn!(comb_mult_gauss, f_awg(σ, μ, complex_copy(σ), complex_copy(μ)), X, clip)

"""

mult_gauss_chn(X; clip=false[, σ=0.1, μ=0.0])

Returns the RGB image `X` with the values of the pixel multiplied with a

gauss distribution (standard deviation `σ` and mean `μ`) pixelwise.

However, every channel of one pixel receives the same amount of noise.

The noise therefore acts roughly as intensity - but not color - changing noise.

If keyword argument `clip` is provided the values are clipped to be in [0, 1].

`σ` and `μ` are optional arguments representing standard deviation and mean of gauss.

If `X<:Complex`, `μ` and `σ` are applied to the imaginary in the same way as for the real part.

If you want to have different behaviour for real and imaginary part, simply

choose `μ` or `σ` complex.

"""

mult_gauss_chn

| Noise | https://github.com/roflmaostc/Noise.jl.git |

|

[

"MIT"

] | 0.3.3 | d34a07459e1ebdc6b551ecb28e3c19993f544d91 | code | 1571 | export poisson, poisson!

# get the maximum intensity of an image

mymax(X::AbstractArray{<:RGB}) = max_rgb(X)

mymax(X::AbstractArray{<:Gray}) = gray(maximum(X))

mymax(X::AbstractArray) = maximum(X)

# noise function for poisson

function noise_f(x, scaling, max_intens)

# scale image to max_intensity and apply poisson noise

# after Poisson noise scale it back

return pois_rand(x * scaling / max_intens) * max_intens / scaling

end

f_pois(scaling, max_intens) = x -> scaling == nothing ? pois_rand(x) : noise_f(x, scaling, max_intens)

comb_pois(x, n) = n

poisson(X::AbstractArray, scaling=nothing; clip=false) = poisson!(copy(X), scaling, clip=clip)

function poisson!(X::AbstractArray, scaling=nothing; clip=false)

max_intens = convert(Float64, mymax(X))

return apply_noise!(comb_pois, f_pois(scaling, max_intens), X, clip)

end

"""

poisson(X; scaling=nothing, clip=false)

Returns the array `X` affected by Poisson noise.

At every position the Poisson noise affects the intensity individually

and the values at the positions represent the expected value of the Poisson

Distribution.

Since Poisson Noise due to discrete events you should

provide the optional argument `scaling`. This `scaling` connects

the highest value of the array with the discrete number of events.

The highest value will be then scaled and the poisson noise is applied

Afterwards we scale the whole array back so that the initial intensity

is preserved but with applied Poisson noise.

`clip` is a keyword argument. If given, it clips the values to [0, 1]

"""

poisson

| Noise | https://github.com/roflmaostc/Noise.jl.git |

|

[

"MIT"

] | 0.3.3 | d34a07459e1ebdc6b551ecb28e3c19993f544d91 | code | 958 | export quantization!, quantization

# apply quantization and clipping

function quant(x, levels, minv, maxv)

return clip_v(round((x - minv)/(maxv-minv) * levels) / levels * (maxv-minv) + minv, minv, maxv)

end

f_quant(levels, minv, maxv) = x -> quant(x, levels, minv, maxv)

comb_quant(x, n) = n

quantization(X::AbstractArray, levels; minv=0, maxv=1) =

quantization!(copy(X), levels, minv=minv, maxv=maxv)

quantization!(X::AbstractArray, levels; minv=0, maxv=1) =

apply_noise!(comb_quant, f_quant(levels-1, minv, maxv), X, false)

"""

quantization(X, levels; minv=0, maxv=1)

Returns array `X` discretized to `levels` different values.

Therefore the array is discretized.

`levels` describes how many different value steps the resulting image has.

`minv=0` and `maxv` indicate the minimum and maximum possible values of the images.

In RGB and Gray images this is usually 0 and 1.

There is also `quantization!` available.

"""

quantization

| Noise | https://github.com/roflmaostc/Noise.jl.git |

|

[

"MIT"