code

stringlengths 2.5k

150k

| kind

stringclasses 1

value |

|---|---|

scikit_learn 1.9. Naive Bayes 1.9. Naive Bayes

================

Naive Bayes methods are a set of supervised learning algorithms based on applying Bayes’ theorem with the “naive” assumption of conditional independence between every pair of features given the value of the class variable. Bayes’ theorem states the following relationship, given class variable \(y\) and dependent feature vector \(x\_1\) through \(x\_n\), :

\[P(y \mid x\_1, \dots, x\_n) = \frac{P(y) P(x\_1, \dots, x\_n \mid y)} {P(x\_1, \dots, x\_n)}\] Using the naive conditional independence assumption that

\[P(x\_i | y, x\_1, \dots, x\_{i-1}, x\_{i+1}, \dots, x\_n) = P(x\_i | y),\] for all \(i\), this relationship is simplified to

\[P(y \mid x\_1, \dots, x\_n) = \frac{P(y) \prod\_{i=1}^{n} P(x\_i \mid y)} {P(x\_1, \dots, x\_n)}\] Since \(P(x\_1, \dots, x\_n)\) is constant given the input, we can use the following classification rule:

\[ \begin{align}\begin{aligned}P(y \mid x\_1, \dots, x\_n) \propto P(y) \prod\_{i=1}^{n} P(x\_i \mid y)\\\Downarrow\\\hat{y} = \arg\max\_y P(y) \prod\_{i=1}^{n} P(x\_i \mid y),\end{aligned}\end{align} \] and we can use Maximum A Posteriori (MAP) estimation to estimate \(P(y)\) and \(P(x\_i \mid y)\); the former is then the relative frequency of class \(y\) in the training set.

The different naive Bayes classifiers differ mainly by the assumptions they make regarding the distribution of \(P(x\_i \mid y)\).

In spite of their apparently over-simplified assumptions, naive Bayes classifiers have worked quite well in many real-world situations, famously document classification and spam filtering. They require a small amount of training data to estimate the necessary parameters. (For theoretical reasons why naive Bayes works well, and on which types of data it does, see the references below.)

Naive Bayes learners and classifiers can be extremely fast compared to more sophisticated methods. The decoupling of the class conditional feature distributions means that each distribution can be independently estimated as a one dimensional distribution. This in turn helps to alleviate problems stemming from the curse of dimensionality.

On the flip side, although naive Bayes is known as a decent classifier, it is known to be a bad estimator, so the probability outputs from `predict_proba` are not to be taken too seriously.

1.9.1. Gaussian Naive Bayes

----------------------------

[`GaussianNB`](generated/sklearn.naive_bayes.gaussiannb#sklearn.naive_bayes.GaussianNB "sklearn.naive_bayes.GaussianNB") implements the Gaussian Naive Bayes algorithm for classification. The likelihood of the features is assumed to be Gaussian:

\[P(x\_i \mid y) = \frac{1}{\sqrt{2\pi\sigma^2\_y}} \exp\left(-\frac{(x\_i - \mu\_y)^2}{2\sigma^2\_y}\right)\] The parameters \(\sigma\_y\) and \(\mu\_y\) are estimated using maximum likelihood.

```

>>> from sklearn.datasets import load_iris

>>> from sklearn.model_selection import train_test_split

>>> from sklearn.naive_bayes import GaussianNB

>>> X, y = load_iris(return_X_y=True)

>>> X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)

>>> gnb = GaussianNB()

>>> y_pred = gnb.fit(X_train, y_train).predict(X_test)

>>> print("Number of mislabeled points out of a total %d points : %d"

... % (X_test.shape[0], (y_test != y_pred).sum()))

Number of mislabeled points out of a total 75 points : 4

```

1.9.2. Multinomial Naive Bayes

-------------------------------

[`MultinomialNB`](generated/sklearn.naive_bayes.multinomialnb#sklearn.naive_bayes.MultinomialNB "sklearn.naive_bayes.MultinomialNB") implements the naive Bayes algorithm for multinomially distributed data, and is one of the two classic naive Bayes variants used in text classification (where the data are typically represented as word vector counts, although tf-idf vectors are also known to work well in practice). The distribution is parametrized by vectors \(\theta\_y = (\theta\_{y1},\ldots,\theta\_{yn})\) for each class \(y\), where \(n\) is the number of features (in text classification, the size of the vocabulary) and \(\theta\_{yi}\) is the probability \(P(x\_i \mid y)\) of feature \(i\) appearing in a sample belonging to class \(y\).

The parameters \(\theta\_y\) is estimated by a smoothed version of maximum likelihood, i.e. relative frequency counting:

\[\hat{\theta}\_{yi} = \frac{ N\_{yi} + \alpha}{N\_y + \alpha n}\] where \(N\_{yi} = \sum\_{x \in T} x\_i\) is the number of times feature \(i\) appears in a sample of class \(y\) in the training set \(T\), and \(N\_{y} = \sum\_{i=1}^{n} N\_{yi}\) is the total count of all features for class \(y\).

The smoothing priors \(\alpha \ge 0\) accounts for features not present in the learning samples and prevents zero probabilities in further computations. Setting \(\alpha = 1\) is called Laplace smoothing, while \(\alpha < 1\) is called Lidstone smoothing.

1.9.3. Complement Naive Bayes

------------------------------

[`ComplementNB`](generated/sklearn.naive_bayes.complementnb#sklearn.naive_bayes.ComplementNB "sklearn.naive_bayes.ComplementNB") implements the complement naive Bayes (CNB) algorithm. CNB is an adaptation of the standard multinomial naive Bayes (MNB) algorithm that is particularly suited for imbalanced data sets. Specifically, CNB uses statistics from the *complement* of each class to compute the model’s weights. The inventors of CNB show empirically that the parameter estimates for CNB are more stable than those for MNB. Further, CNB regularly outperforms MNB (often by a considerable margin) on text classification tasks. The procedure for calculating the weights is as follows:

\[ \begin{align}\begin{aligned}\hat{\theta}\_{ci} = \frac{\alpha\_i + \sum\_{j:y\_j \neq c} d\_{ij}} {\alpha + \sum\_{j:y\_j \neq c} \sum\_{k} d\_{kj}}\\w\_{ci} = \log \hat{\theta}\_{ci}\\w\_{ci} = \frac{w\_{ci}}{\sum\_{j} |w\_{cj}|}\end{aligned}\end{align} \] where the summations are over all documents \(j\) not in class \(c\), \(d\_{ij}\) is either the count or tf-idf value of term \(i\) in document \(j\), \(\alpha\_i\) is a smoothing hyperparameter like that found in MNB, and \(\alpha = \sum\_{i} \alpha\_i\). The second normalization addresses the tendency for longer documents to dominate parameter estimates in MNB. The classification rule is:

\[\hat{c} = \arg\min\_c \sum\_{i} t\_i w\_{ci}\] i.e., a document is assigned to the class that is the *poorest* complement match.

1.9.4. Bernoulli Naive Bayes

-----------------------------

[`BernoulliNB`](generated/sklearn.naive_bayes.bernoullinb#sklearn.naive_bayes.BernoulliNB "sklearn.naive_bayes.BernoulliNB") implements the naive Bayes training and classification algorithms for data that is distributed according to multivariate Bernoulli distributions; i.e., there may be multiple features but each one is assumed to be a binary-valued (Bernoulli, boolean) variable. Therefore, this class requires samples to be represented as binary-valued feature vectors; if handed any other kind of data, a `BernoulliNB` instance may binarize its input (depending on the `binarize` parameter).

The decision rule for Bernoulli naive Bayes is based on

\[P(x\_i \mid y) = P(x\_i = 1 \mid y) x\_i + (1 - P(x\_i = 1 \mid y)) (1 - x\_i)\] which differs from multinomial NB’s rule in that it explicitly penalizes the non-occurrence of a feature \(i\) that is an indicator for class \(y\), where the multinomial variant would simply ignore a non-occurring feature.

In the case of text classification, word occurrence vectors (rather than word count vectors) may be used to train and use this classifier. `BernoulliNB` might perform better on some datasets, especially those with shorter documents. It is advisable to evaluate both models, if time permits.

1.9.5. Categorical Naive Bayes

-------------------------------

[`CategoricalNB`](generated/sklearn.naive_bayes.categoricalnb#sklearn.naive_bayes.CategoricalNB "sklearn.naive_bayes.CategoricalNB") implements the categorical naive Bayes algorithm for categorically distributed data. It assumes that each feature, which is described by the index \(i\), has its own categorical distribution.

For each feature \(i\) in the training set \(X\), [`CategoricalNB`](generated/sklearn.naive_bayes.categoricalnb#sklearn.naive_bayes.CategoricalNB "sklearn.naive_bayes.CategoricalNB") estimates a categorical distribution for each feature i of X conditioned on the class y. The index set of the samples is defined as \(J = \{ 1, \dots, m \}\), with \(m\) as the number of samples.

The probability of category \(t\) in feature \(i\) given class \(c\) is estimated as:

\[P(x\_i = t \mid y = c \: ;\, \alpha) = \frac{ N\_{tic} + \alpha}{N\_{c} + \alpha n\_i},\] where \(N\_{tic} = |\{j \in J \mid x\_{ij} = t, y\_j = c\}|\) is the number of times category \(t\) appears in the samples \(x\_{i}\), which belong to class \(c\), \(N\_{c} = |\{ j \in J\mid y\_j = c\}|\) is the number of samples with class c, \(\alpha\) is a smoothing parameter and \(n\_i\) is the number of available categories of feature \(i\).

[`CategoricalNB`](generated/sklearn.naive_bayes.categoricalnb#sklearn.naive_bayes.CategoricalNB "sklearn.naive_bayes.CategoricalNB") assumes that the sample matrix \(X\) is encoded (for instance with the help of `OrdinalEncoder`) such that all categories for each feature \(i\) are represented with numbers \(0, ..., n\_i - 1\) where \(n\_i\) is the number of available categories of feature \(i\).

1.9.6. Out-of-core naive Bayes model fitting

---------------------------------------------

Naive Bayes models can be used to tackle large scale classification problems for which the full training set might not fit in memory. To handle this case, [`MultinomialNB`](generated/sklearn.naive_bayes.multinomialnb#sklearn.naive_bayes.MultinomialNB "sklearn.naive_bayes.MultinomialNB"), [`BernoulliNB`](generated/sklearn.naive_bayes.bernoullinb#sklearn.naive_bayes.BernoulliNB "sklearn.naive_bayes.BernoulliNB"), and [`GaussianNB`](generated/sklearn.naive_bayes.gaussiannb#sklearn.naive_bayes.GaussianNB "sklearn.naive_bayes.GaussianNB") expose a `partial_fit` method that can be used incrementally as done with other classifiers as demonstrated in [Out-of-core classification of text documents](../auto_examples/applications/plot_out_of_core_classification#sphx-glr-auto-examples-applications-plot-out-of-core-classification-py). All naive Bayes classifiers support sample weighting.

Contrary to the `fit` method, the first call to `partial_fit` needs to be passed the list of all the expected class labels.

For an overview of available strategies in scikit-learn, see also the [out-of-core learning](https://scikit-learn.org/1.1/computing/scaling_strategies.html#scaling-strategies) documentation.

Note

The `partial_fit` method call of naive Bayes models introduces some computational overhead. It is recommended to use data chunk sizes that are as large as possible, that is as the available RAM allows.

scikit_learn 2.2. Manifold learning 2.2. Manifold learning

======================

Manifold learning is an approach to non-linear dimensionality reduction. Algorithms for this task are based on the idea that the dimensionality of many data sets is only artificially high.

2.2.1. Introduction

--------------------

High-dimensional datasets can be very difficult to visualize. While data in two or three dimensions can be plotted to show the inherent structure of the data, equivalent high-dimensional plots are much less intuitive. To aid visualization of the structure of a dataset, the dimension must be reduced in some way.

The simplest way to accomplish this dimensionality reduction is by taking a random projection of the data. Though this allows some degree of visualization of the data structure, the randomness of the choice leaves much to be desired. In a random projection, it is likely that the more interesting structure within the data will be lost.

To address this concern, a number of supervised and unsupervised linear dimensionality reduction frameworks have been designed, such as Principal Component Analysis (PCA), Independent Component Analysis, Linear Discriminant Analysis, and others. These algorithms define specific rubrics to choose an “interesting” linear projection of the data. These methods can be powerful, but often miss important non-linear structure in the data.

Manifold Learning can be thought of as an attempt to generalize linear frameworks like PCA to be sensitive to non-linear structure in data. Though supervised variants exist, the typical manifold learning problem is unsupervised: it learns the high-dimensional structure of the data from the data itself, without the use of predetermined classifications.

The manifold learning implementations available in scikit-learn are summarized below

2.2.2. Isomap

--------------

One of the earliest approaches to manifold learning is the Isomap algorithm, short for Isometric Mapping. Isomap can be viewed as an extension of Multi-dimensional Scaling (MDS) or Kernel PCA. Isomap seeks a lower-dimensional embedding which maintains geodesic distances between all points. Isomap can be performed with the object [`Isomap`](generated/sklearn.manifold.isomap#sklearn.manifold.Isomap "sklearn.manifold.Isomap").

###

2.2.2.1. Complexity

The Isomap algorithm comprises three stages:

1. **Nearest neighbor search.** Isomap uses [`BallTree`](generated/sklearn.neighbors.balltree#sklearn.neighbors.BallTree "sklearn.neighbors.BallTree") for efficient neighbor search. The cost is approximately \(O[D \log(k) N \log(N)]\), for \(k\) nearest neighbors of \(N\) points in \(D\) dimensions.

2. **Shortest-path graph search.** The most efficient known algorithms for this are *Dijkstra’s Algorithm*, which is approximately \(O[N^2(k + \log(N))]\), or the *Floyd-Warshall algorithm*, which is \(O[N^3]\). The algorithm can be selected by the user with the `path_method` keyword of `Isomap`. If unspecified, the code attempts to choose the best algorithm for the input data.

3. **Partial eigenvalue decomposition.** The embedding is encoded in the eigenvectors corresponding to the \(d\) largest eigenvalues of the \(N \times N\) isomap kernel. For a dense solver, the cost is approximately \(O[d N^2]\). This cost can often be improved using the `ARPACK` solver. The eigensolver can be specified by the user with the `eigen_solver` keyword of `Isomap`. If unspecified, the code attempts to choose the best algorithm for the input data.

The overall complexity of Isomap is \(O[D \log(k) N \log(N)] + O[N^2(k + \log(N))] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.3. Locally Linear Embedding

--------------------------------

Locally linear embedding (LLE) seeks a lower-dimensional projection of the data which preserves distances within local neighborhoods. It can be thought of as a series of local Principal Component Analyses which are globally compared to find the best non-linear embedding.

Locally linear embedding can be performed with function [`locally_linear_embedding`](generated/sklearn.manifold.locally_linear_embedding#sklearn.manifold.locally_linear_embedding "sklearn.manifold.locally_linear_embedding") or its object-oriented counterpart [`LocallyLinearEmbedding`](generated/sklearn.manifold.locallylinearembedding#sklearn.manifold.LocallyLinearEmbedding "sklearn.manifold.LocallyLinearEmbedding").

###

2.2.3.1. Complexity

The standard LLE algorithm comprises three stages:

1. **Nearest Neighbors Search**. See discussion under Isomap above.

2. **Weight Matrix Construction**. \(O[D N k^3]\). The construction of the LLE weight matrix involves the solution of a \(k \times k\) linear equation for each of the \(N\) local neighborhoods

3. **Partial Eigenvalue Decomposition**. See discussion under Isomap above.

The overall complexity of standard LLE is \(O[D \log(k) N \log(N)] + O[D N k^3] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.4. Modified Locally Linear Embedding

-----------------------------------------

One well-known issue with LLE is the regularization problem. When the number of neighbors is greater than the number of input dimensions, the matrix defining each local neighborhood is rank-deficient. To address this, standard LLE applies an arbitrary regularization parameter \(r\), which is chosen relative to the trace of the local weight matrix. Though it can be shown formally that as \(r \to 0\), the solution converges to the desired embedding, there is no guarantee that the optimal solution will be found for \(r > 0\). This problem manifests itself in embeddings which distort the underlying geometry of the manifold.

One method to address the regularization problem is to use multiple weight vectors in each neighborhood. This is the essence of *modified locally linear embedding* (MLLE). MLLE can be performed with function [`locally_linear_embedding`](generated/sklearn.manifold.locally_linear_embedding#sklearn.manifold.locally_linear_embedding "sklearn.manifold.locally_linear_embedding") or its object-oriented counterpart [`LocallyLinearEmbedding`](generated/sklearn.manifold.locallylinearembedding#sklearn.manifold.LocallyLinearEmbedding "sklearn.manifold.LocallyLinearEmbedding"), with the keyword `method = 'modified'`. It requires `n_neighbors > n_components`.

###

2.2.4.1. Complexity

The MLLE algorithm comprises three stages:

1. **Nearest Neighbors Search**. Same as standard LLE

2. **Weight Matrix Construction**. Approximately \(O[D N k^3] + O[N (k-D) k^2]\). The first term is exactly equivalent to that of standard LLE. The second term has to do with constructing the weight matrix from multiple weights. In practice, the added cost of constructing the MLLE weight matrix is relatively small compared to the cost of stages 1 and 3.

3. **Partial Eigenvalue Decomposition**. Same as standard LLE

The overall complexity of MLLE is \(O[D \log(k) N \log(N)] + O[D N k^3] + O[N (k-D) k^2] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.5. Hessian Eigenmapping

----------------------------

Hessian Eigenmapping (also known as Hessian-based LLE: HLLE) is another method of solving the regularization problem of LLE. It revolves around a hessian-based quadratic form at each neighborhood which is used to recover the locally linear structure. Though other implementations note its poor scaling with data size, `sklearn` implements some algorithmic improvements which make its cost comparable to that of other LLE variants for small output dimension. HLLE can be performed with function [`locally_linear_embedding`](generated/sklearn.manifold.locally_linear_embedding#sklearn.manifold.locally_linear_embedding "sklearn.manifold.locally_linear_embedding") or its object-oriented counterpart [`LocallyLinearEmbedding`](generated/sklearn.manifold.locallylinearembedding#sklearn.manifold.LocallyLinearEmbedding "sklearn.manifold.LocallyLinearEmbedding"), with the keyword `method = 'hessian'`. It requires `n_neighbors > n_components * (n_components + 3) / 2`.

###

2.2.5.1. Complexity

The HLLE algorithm comprises three stages:

1. **Nearest Neighbors Search**. Same as standard LLE

2. **Weight Matrix Construction**. Approximately \(O[D N k^3] + O[N d^6]\). The first term reflects a similar cost to that of standard LLE. The second term comes from a QR decomposition of the local hessian estimator.

3. **Partial Eigenvalue Decomposition**. Same as standard LLE

The overall complexity of standard HLLE is \(O[D \log(k) N \log(N)] + O[D N k^3] + O[N d^6] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.6. Spectral Embedding

--------------------------

Spectral Embedding is an approach to calculating a non-linear embedding. Scikit-learn implements Laplacian Eigenmaps, which finds a low dimensional representation of the data using a spectral decomposition of the graph Laplacian. The graph generated can be considered as a discrete approximation of the low dimensional manifold in the high dimensional space. Minimization of a cost function based on the graph ensures that points close to each other on the manifold are mapped close to each other in the low dimensional space, preserving local distances. Spectral embedding can be performed with the function [`spectral_embedding`](generated/sklearn.manifold.spectral_embedding#sklearn.manifold.spectral_embedding "sklearn.manifold.spectral_embedding") or its object-oriented counterpart [`SpectralEmbedding`](generated/sklearn.manifold.spectralembedding#sklearn.manifold.SpectralEmbedding "sklearn.manifold.SpectralEmbedding").

###

2.2.6.1. Complexity

The Spectral Embedding (Laplacian Eigenmaps) algorithm comprises three stages:

1. **Weighted Graph Construction**. Transform the raw input data into graph representation using affinity (adjacency) matrix representation.

2. **Graph Laplacian Construction**. unnormalized Graph Laplacian is constructed as \(L = D - A\) for and normalized one as \(L = D^{-\frac{1}{2}} (D - A) D^{-\frac{1}{2}}\).

3. **Partial Eigenvalue Decomposition**. Eigenvalue decomposition is done on graph Laplacian

The overall complexity of spectral embedding is \(O[D \log(k) N \log(N)] + O[D N k^3] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.7. Local Tangent Space Alignment

-------------------------------------

Though not technically a variant of LLE, Local tangent space alignment (LTSA) is algorithmically similar enough to LLE that it can be put in this category. Rather than focusing on preserving neighborhood distances as in LLE, LTSA seeks to characterize the local geometry at each neighborhood via its tangent space, and performs a global optimization to align these local tangent spaces to learn the embedding. LTSA can be performed with function [`locally_linear_embedding`](generated/sklearn.manifold.locally_linear_embedding#sklearn.manifold.locally_linear_embedding "sklearn.manifold.locally_linear_embedding") or its object-oriented counterpart [`LocallyLinearEmbedding`](generated/sklearn.manifold.locallylinearembedding#sklearn.manifold.LocallyLinearEmbedding "sklearn.manifold.LocallyLinearEmbedding"), with the keyword `method = 'ltsa'`.

###

2.2.7.1. Complexity

The LTSA algorithm comprises three stages:

1. **Nearest Neighbors Search**. Same as standard LLE

2. **Weight Matrix Construction**. Approximately \(O[D N k^3] + O[k^2 d]\). The first term reflects a similar cost to that of standard LLE.

3. **Partial Eigenvalue Decomposition**. Same as standard LLE

The overall complexity of standard LTSA is \(O[D \log(k) N \log(N)] + O[D N k^3] + O[k^2 d] + O[d N^2]\).

* \(N\) : number of training data points

* \(D\) : input dimension

* \(k\) : number of nearest neighbors

* \(d\) : output dimension

2.2.8. Multi-dimensional Scaling (MDS)

---------------------------------------

[Multidimensional scaling](https://en.wikipedia.org/wiki/Multidimensional_scaling) ([`MDS`](generated/sklearn.manifold.mds#sklearn.manifold.MDS "sklearn.manifold.MDS")) seeks a low-dimensional representation of the data in which the distances respect well the distances in the original high-dimensional space.

In general, [`MDS`](generated/sklearn.manifold.mds#sklearn.manifold.MDS "sklearn.manifold.MDS") is a technique used for analyzing similarity or dissimilarity data. It attempts to model similarity or dissimilarity data as distances in a geometric spaces. The data can be ratings of similarity between objects, interaction frequencies of molecules, or trade indices between countries.

There exists two types of MDS algorithm: metric and non metric. In scikit-learn, the class [`MDS`](generated/sklearn.manifold.mds#sklearn.manifold.MDS "sklearn.manifold.MDS") implements both. In Metric MDS, the input similarity matrix arises from a metric (and thus respects the triangular inequality), the distances between output two points are then set to be as close as possible to the similarity or dissimilarity data. In the non-metric version, the algorithms will try to preserve the order of the distances, and hence seek for a monotonic relationship between the distances in the embedded space and the similarities/dissimilarities.

Let \(S\) be the similarity matrix, and \(X\) the coordinates of the \(n\) input points. Disparities \(\hat{d}\_{ij}\) are transformation of the similarities chosen in some optimal ways. The objective, called the stress, is then defined by \(\sum\_{i < j} d\_{ij}(X) - \hat{d}\_{ij}(X)\)

###

2.2.8.1. Metric MDS

The simplest metric [`MDS`](generated/sklearn.manifold.mds#sklearn.manifold.MDS "sklearn.manifold.MDS") model, called *absolute MDS*, disparities are defined by \(\hat{d}\_{ij} = S\_{ij}\). With absolute MDS, the value \(S\_{ij}\) should then correspond exactly to the distance between point \(i\) and \(j\) in the embedding point.

Most commonly, disparities are set to \(\hat{d}\_{ij} = b S\_{ij}\).

###

2.2.8.2. Nonmetric MDS

Non metric [`MDS`](generated/sklearn.manifold.mds#sklearn.manifold.MDS "sklearn.manifold.MDS") focuses on the ordination of the data. If \(S\_{ij} < S\_{jk}\), then the embedding should enforce \(d\_{ij} < d\_{jk}\). A simple algorithm to enforce that is to use a monotonic regression of \(d\_{ij}\) on \(S\_{ij}\), yielding disparities \(\hat{d}\_{ij}\) in the same order as \(S\_{ij}\).

A trivial solution to this problem is to set all the points on the origin. In order to avoid that, the disparities \(\hat{d}\_{ij}\) are normalized.

2.2.9. t-distributed Stochastic Neighbor Embedding (t-SNE)

-----------------------------------------------------------

t-SNE ([`TSNE`](generated/sklearn.manifold.tsne#sklearn.manifold.TSNE "sklearn.manifold.TSNE")) converts affinities of data points to probabilities. The affinities in the original space are represented by Gaussian joint probabilities and the affinities in the embedded space are represented by Student’s t-distributions. This allows t-SNE to be particularly sensitive to local structure and has a few other advantages over existing techniques:

* Revealing the structure at many scales on a single map

* Revealing data that lie in multiple, different, manifolds or clusters

* Reducing the tendency to crowd points together at the center

While Isomap, LLE and variants are best suited to unfold a single continuous low dimensional manifold, t-SNE will focus on the local structure of the data and will tend to extract clustered local groups of samples as highlighted on the S-curve example. This ability to group samples based on the local structure might be beneficial to visually disentangle a dataset that comprises several manifolds at once as is the case in the digits dataset.

The Kullback-Leibler (KL) divergence of the joint probabilities in the original space and the embedded space will be minimized by gradient descent. Note that the KL divergence is not convex, i.e. multiple restarts with different initializations will end up in local minima of the KL divergence. Hence, it is sometimes useful to try different seeds and select the embedding with the lowest KL divergence.

The disadvantages to using t-SNE are roughly:

* t-SNE is computationally expensive, and can take several hours on million-sample datasets where PCA will finish in seconds or minutes

* The Barnes-Hut t-SNE method is limited to two or three dimensional embeddings.

* The algorithm is stochastic and multiple restarts with different seeds can yield different embeddings. However, it is perfectly legitimate to pick the embedding with the least error.

* Global structure is not explicitly preserved. This problem is mitigated by initializing points with PCA (using `init='pca'`).

###

2.2.9.1. Optimizing t-SNE

The main purpose of t-SNE is visualization of high-dimensional data. Hence, it works best when the data will be embedded on two or three dimensions.

Optimizing the KL divergence can be a little bit tricky sometimes. There are five parameters that control the optimization of t-SNE and therefore possibly the quality of the resulting embedding:

* perplexity

* early exaggeration factor

* learning rate

* maximum number of iterations

* angle (not used in the exact method)

The perplexity is defined as \(k=2^{(S)}\) where \(S\) is the Shannon entropy of the conditional probability distribution. The perplexity of a \(k\)-sided die is \(k\), so that \(k\) is effectively the number of nearest neighbors t-SNE considers when generating the conditional probabilities. Larger perplexities lead to more nearest neighbors and less sensitive to small structure. Conversely a lower perplexity considers a smaller number of neighbors, and thus ignores more global information in favour of the local neighborhood. As dataset sizes get larger more points will be required to get a reasonable sample of the local neighborhood, and hence larger perplexities may be required. Similarly noisier datasets will require larger perplexity values to encompass enough local neighbors to see beyond the background noise.

The maximum number of iterations is usually high enough and does not need any tuning. The optimization consists of two phases: the early exaggeration phase and the final optimization. During early exaggeration the joint probabilities in the original space will be artificially increased by multiplication with a given factor. Larger factors result in larger gaps between natural clusters in the data. If the factor is too high, the KL divergence could increase during this phase. Usually it does not have to be tuned. A critical parameter is the learning rate. If it is too low gradient descent will get stuck in a bad local minimum. If it is too high the KL divergence will increase during optimization. A heuristic suggested in Belkina et al. (2019) is to set the learning rate to the sample size divided by the early exaggeration factor. We implement this heuristic as `learning_rate='auto'` argument. More tips can be found in Laurens van der Maaten’s FAQ (see references). The last parameter, angle, is a tradeoff between performance and accuracy. Larger angles imply that we can approximate larger regions by a single point, leading to better speed but less accurate results.

[“How to Use t-SNE Effectively”](https://distill.pub/2016/misread-tsne/) provides a good discussion of the effects of the various parameters, as well as interactive plots to explore the effects of different parameters.

###

2.2.9.2. Barnes-Hut t-SNE

The Barnes-Hut t-SNE that has been implemented here is usually much slower than other manifold learning algorithms. The optimization is quite difficult and the computation of the gradient is \(O[d N log(N)]\), where \(d\) is the number of output dimensions and \(N\) is the number of samples. The Barnes-Hut method improves on the exact method where t-SNE complexity is \(O[d N^2]\), but has several other notable differences:

* The Barnes-Hut implementation only works when the target dimensionality is 3 or less. The 2D case is typical when building visualizations.

* Barnes-Hut only works with dense input data. Sparse data matrices can only be embedded with the exact method or can be approximated by a dense low rank projection for instance using [`TruncatedSVD`](generated/sklearn.decomposition.truncatedsvd#sklearn.decomposition.TruncatedSVD "sklearn.decomposition.TruncatedSVD")

* Barnes-Hut is an approximation of the exact method. The approximation is parameterized with the angle parameter, therefore the angle parameter is unused when method=”exact”

* Barnes-Hut is significantly more scalable. Barnes-Hut can be used to embed hundred of thousands of data points while the exact method can handle thousands of samples before becoming computationally intractable

For visualization purpose (which is the main use case of t-SNE), using the Barnes-Hut method is strongly recommended. The exact t-SNE method is useful for checking the theoretically properties of the embedding possibly in higher dimensional space but limit to small datasets due to computational constraints.

Also note that the digits labels roughly match the natural grouping found by t-SNE while the linear 2D projection of the PCA model yields a representation where label regions largely overlap. This is a strong clue that this data can be well separated by non linear methods that focus on the local structure (e.g. an SVM with a Gaussian RBF kernel). However, failing to visualize well separated homogeneously labeled groups with t-SNE in 2D does not necessarily imply that the data cannot be correctly classified by a supervised model. It might be the case that 2 dimensions are not high enough to accurately represent the internal structure of the data.

2.2.10. Tips on practical use

------------------------------

* Make sure the same scale is used over all features. Because manifold learning methods are based on a nearest-neighbor search, the algorithm may perform poorly otherwise. See [StandardScaler](preprocessing#preprocessing-scaler) for convenient ways of scaling heterogeneous data.

* The reconstruction error computed by each routine can be used to choose the optimal output dimension. For a \(d\)-dimensional manifold embedded in a \(D\)-dimensional parameter space, the reconstruction error will decrease as `n_components` is increased until `n_components == d`.

* Note that noisy data can “short-circuit” the manifold, in essence acting as a bridge between parts of the manifold that would otherwise be well-separated. Manifold learning on noisy and/or incomplete data is an active area of research.

* Certain input configurations can lead to singular weight matrices, for example when more than two points in the dataset are identical, or when the data is split into disjointed groups. In this case, `solver='arpack'` will fail to find the null space. The easiest way to address this is to use `solver='dense'` which will work on a singular matrix, though it may be very slow depending on the number of input points. Alternatively, one can attempt to understand the source of the singularity: if it is due to disjoint sets, increasing `n_neighbors` may help. If it is due to identical points in the dataset, removing these points may help.

See also

[Totally Random Trees Embedding](ensemble#random-trees-embedding) can also be useful to derive non-linear representations of feature space, also it does not perform dimensionality reduction.

| programming_docs |

scikit_learn 1.17. Neural network models (supervised) 1.17. Neural network models (supervised)

========================================

Warning

This implementation is not intended for large-scale applications. In particular, scikit-learn offers no GPU support. For much faster, GPU-based implementations, as well as frameworks offering much more flexibility to build deep learning architectures, see [Related Projects](https://scikit-learn.org/1.1/related_projects.html#related-projects).

1.17.1. Multi-layer Perceptron

-------------------------------

**Multi-layer Perceptron (MLP)** is a supervised learning algorithm that learns a function \(f(\cdot): R^m \rightarrow R^o\) by training on a dataset, where \(m\) is the number of dimensions for input and \(o\) is the number of dimensions for output. Given a set of features \(X = {x\_1, x\_2, ..., x\_m}\) and a target \(y\), it can learn a non-linear function approximator for either classification or regression. It is different from logistic regression, in that between the input and the output layer, there can be one or more non-linear layers, called hidden layers. Figure 1 shows a one hidden layer MLP with scalar output.

**Figure 1 : One hidden layer MLP.**

The leftmost layer, known as the input layer, consists of a set of neurons \(\{x\_i | x\_1, x\_2, ..., x\_m\}\) representing the input features. Each neuron in the hidden layer transforms the values from the previous layer with a weighted linear summation \(w\_1x\_1 + w\_2x\_2 + ... + w\_mx\_m\), followed by a non-linear activation function \(g(\cdot):R \rightarrow R\) - like the hyperbolic tan function. The output layer receives the values from the last hidden layer and transforms them into output values.

The module contains the public attributes `coefs_` and `intercepts_`. `coefs_` is a list of weight matrices, where weight matrix at index \(i\) represents the weights between layer \(i\) and layer \(i+1\). `intercepts_` is a list of bias vectors, where the vector at index \(i\) represents the bias values added to layer \(i+1\).

The advantages of Multi-layer Perceptron are:

* Capability to learn non-linear models.

* Capability to learn models in real-time (on-line learning) using `partial_fit`.

The disadvantages of Multi-layer Perceptron (MLP) include:

* MLP with hidden layers have a non-convex loss function where there exists more than one local minimum. Therefore different random weight initializations can lead to different validation accuracy.

* MLP requires tuning a number of hyperparameters such as the number of hidden neurons, layers, and iterations.

* MLP is sensitive to feature scaling.

Please see [Tips on Practical Use](#mlp-tips) section that addresses some of these disadvantages.

1.17.2. Classification

-----------------------

Class [`MLPClassifier`](generated/sklearn.neural_network.mlpclassifier#sklearn.neural_network.MLPClassifier "sklearn.neural_network.MLPClassifier") implements a multi-layer perceptron (MLP) algorithm that trains using [Backpropagation](http://ufldl.stanford.edu/wiki/index.php/Backpropagation_Algorithm).

MLP trains on two arrays: array X of size (n\_samples, n\_features), which holds the training samples represented as floating point feature vectors; and array y of size (n\_samples,), which holds the target values (class labels) for the training samples:

```

>>> from sklearn.neural_network import MLPClassifier

>>> X = [[0., 0.], [1., 1.]]

>>> y = [0, 1]

>>> clf = MLPClassifier(solver='lbfgs', alpha=1e-5,

... hidden_layer_sizes=(5, 2), random_state=1)

...

>>> clf.fit(X, y)

MLPClassifier(alpha=1e-05, hidden_layer_sizes=(5, 2), random_state=1,

solver='lbfgs')

```

After fitting (training), the model can predict labels for new samples:

```

>>> clf.predict([[2., 2.], [-1., -2.]])

array([1, 0])

```

MLP can fit a non-linear model to the training data. `clf.coefs_` contains the weight matrices that constitute the model parameters:

```

>>> [coef.shape for coef in clf.coefs_]

[(2, 5), (5, 2), (2, 1)]

```

Currently, [`MLPClassifier`](generated/sklearn.neural_network.mlpclassifier#sklearn.neural_network.MLPClassifier "sklearn.neural_network.MLPClassifier") supports only the Cross-Entropy loss function, which allows probability estimates by running the `predict_proba` method.

MLP trains using Backpropagation. More precisely, it trains using some form of gradient descent and the gradients are calculated using Backpropagation. For classification, it minimizes the Cross-Entropy loss function, giving a vector of probability estimates \(P(y|x)\) per sample \(x\):

```

>>> clf.predict_proba([[2., 2.], [1., 2.]])

array([[1.967...e-04, 9.998...-01],

[1.967...e-04, 9.998...-01]])

```

[`MLPClassifier`](generated/sklearn.neural_network.mlpclassifier#sklearn.neural_network.MLPClassifier "sklearn.neural_network.MLPClassifier") supports multi-class classification by applying [Softmax](https://en.wikipedia.org/wiki/Softmax_activation_function) as the output function.

Further, the model supports [multi-label classification](multiclass#multiclass) in which a sample can belong to more than one class. For each class, the raw output passes through the logistic function. Values larger or equal to `0.5` are rounded to `1`, otherwise to `0`. For a predicted output of a sample, the indices where the value is `1` represents the assigned classes of that sample:

```

>>> X = [[0., 0.], [1., 1.]]

>>> y = [[0, 1], [1, 1]]

>>> clf = MLPClassifier(solver='lbfgs', alpha=1e-5,

... hidden_layer_sizes=(15,), random_state=1)

...

>>> clf.fit(X, y)

MLPClassifier(alpha=1e-05, hidden_layer_sizes=(15,), random_state=1,

solver='lbfgs')

>>> clf.predict([[1., 2.]])

array([[1, 1]])

>>> clf.predict([[0., 0.]])

array([[0, 1]])

```

See the examples below and the docstring of [`MLPClassifier.fit`](generated/sklearn.neural_network.mlpclassifier#sklearn.neural_network.MLPClassifier.fit "sklearn.neural_network.MLPClassifier.fit") for further information.

1.17.3. Regression

-------------------

Class [`MLPRegressor`](generated/sklearn.neural_network.mlpregressor#sklearn.neural_network.MLPRegressor "sklearn.neural_network.MLPRegressor") implements a multi-layer perceptron (MLP) that trains using backpropagation with no activation function in the output layer, which can also be seen as using the identity function as activation function. Therefore, it uses the square error as the loss function, and the output is a set of continuous values.

[`MLPRegressor`](generated/sklearn.neural_network.mlpregressor#sklearn.neural_network.MLPRegressor "sklearn.neural_network.MLPRegressor") also supports multi-output regression, in which a sample can have more than one target.

1.17.4. Regularization

-----------------------

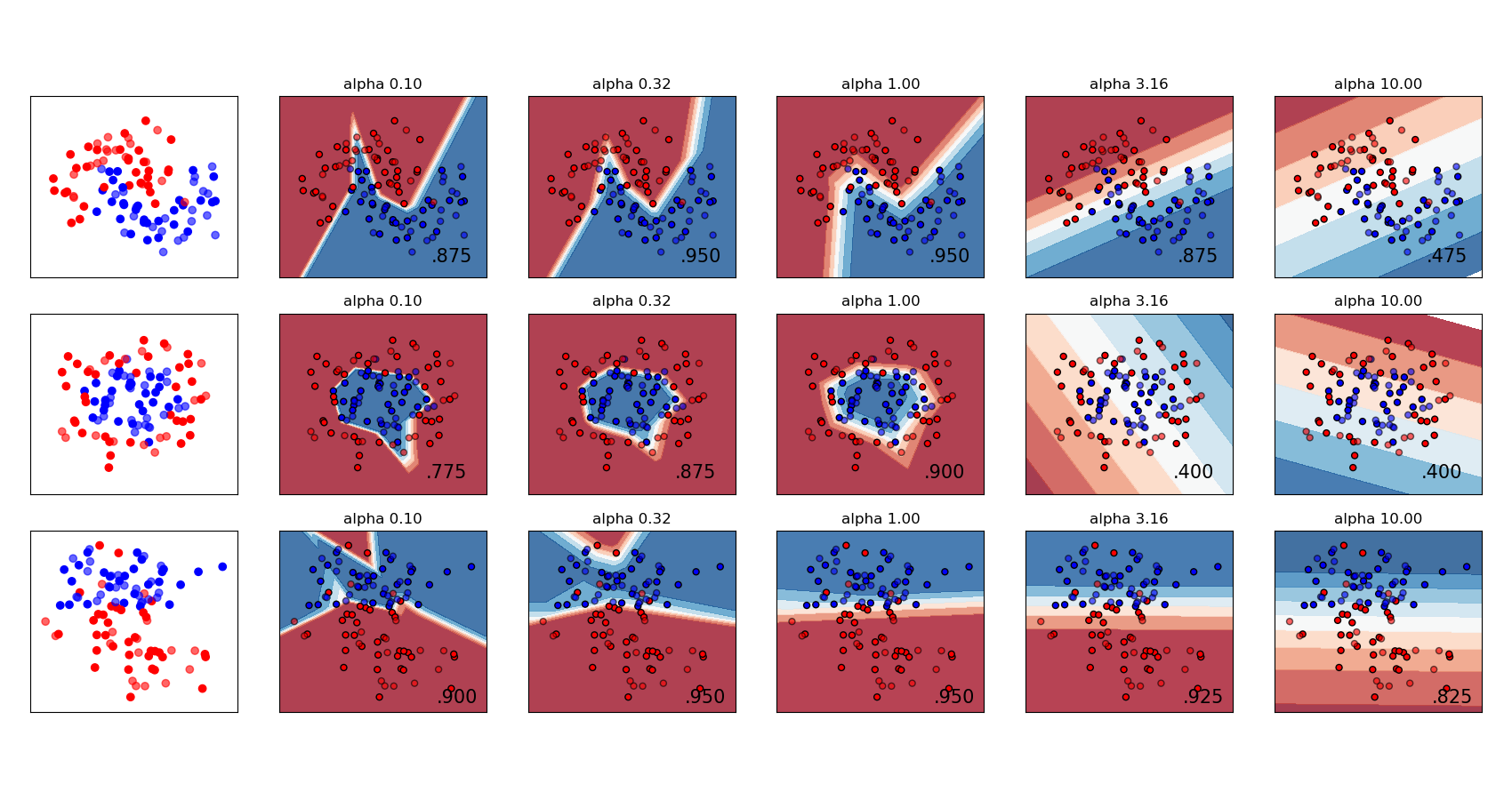

Both [`MLPRegressor`](generated/sklearn.neural_network.mlpregressor#sklearn.neural_network.MLPRegressor "sklearn.neural_network.MLPRegressor") and [`MLPClassifier`](generated/sklearn.neural_network.mlpclassifier#sklearn.neural_network.MLPClassifier "sklearn.neural_network.MLPClassifier") use parameter `alpha` for regularization (L2 regularization) term which helps in avoiding overfitting by penalizing weights with large magnitudes. Following plot displays varying decision function with value of alpha.

[](../auto_examples/neural_networks/plot_mlp_alpha) See the examples below for further information.

1.17.5. Algorithms

-------------------

MLP trains using [Stochastic Gradient Descent](https://en.wikipedia.org/wiki/Stochastic_gradient_descent), [Adam](https://arxiv.org/abs/1412.6980), or [L-BFGS](https://en.wikipedia.org/wiki/Limited-memory_BFGS). Stochastic Gradient Descent (SGD) updates parameters using the gradient of the loss function with respect to a parameter that needs adaptation, i.e.

\[w \leftarrow w - \eta (\alpha \frac{\partial R(w)}{\partial w} + \frac{\partial Loss}{\partial w})\] where \(\eta\) is the learning rate which controls the step-size in the parameter space search. \(Loss\) is the loss function used for the network.

More details can be found in the documentation of [SGD](http://scikit-learn.org/stable/modules/sgd.html)

Adam is similar to SGD in a sense that it is a stochastic optimizer, but it can automatically adjust the amount to update parameters based on adaptive estimates of lower-order moments.

With SGD or Adam, training supports online and mini-batch learning.

L-BFGS is a solver that approximates the Hessian matrix which represents the second-order partial derivative of a function. Further it approximates the inverse of the Hessian matrix to perform parameter updates. The implementation uses the Scipy version of [L-BFGS](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.fmin_l_bfgs_b.html).

If the selected solver is ‘L-BFGS’, training does not support online nor mini-batch learning.

1.17.6. Complexity

-------------------

Suppose there are \(n\) training samples, \(m\) features, \(k\) hidden layers, each containing \(h\) neurons - for simplicity, and \(o\) output neurons. The time complexity of backpropagation is \(O(n\cdot m \cdot h^k \cdot o \cdot i)\), where \(i\) is the number of iterations. Since backpropagation has a high time complexity, it is advisable to start with smaller number of hidden neurons and few hidden layers for training.

1.17.7. Mathematical formulation

---------------------------------

Given a set of training examples \((x\_1, y\_1), (x\_2, y\_2), \ldots, (x\_n, y\_n)\) where \(x\_i \in \mathbf{R}^n\) and \(y\_i \in \{0, 1\}\), a one hidden layer one hidden neuron MLP learns the function \(f(x) = W\_2 g(W\_1^T x + b\_1) + b\_2\) where \(W\_1 \in \mathbf{R}^m\) and \(W\_2, b\_1, b\_2 \in \mathbf{R}\) are model parameters. \(W\_1, W\_2\) represent the weights of the input layer and hidden layer, respectively; and \(b\_1, b\_2\) represent the bias added to the hidden layer and the output layer, respectively. \(g(\cdot) : R \rightarrow R\) is the activation function, set by default as the hyperbolic tan. It is given as,

\[g(z)= \frac{e^z-e^{-z}}{e^z+e^{-z}}\] For binary classification, \(f(x)\) passes through the logistic function \(g(z)=1/(1+e^{-z})\) to obtain output values between zero and one. A threshold, set to 0.5, would assign samples of outputs larger or equal 0.5 to the positive class, and the rest to the negative class.

If there are more than two classes, \(f(x)\) itself would be a vector of size (n\_classes,). Instead of passing through logistic function, it passes through the softmax function, which is written as,

\[\text{softmax}(z)\_i = \frac{\exp(z\_i)}{\sum\_{l=1}^k\exp(z\_l)}\] where \(z\_i\) represents the \(i\) th element of the input to softmax, which corresponds to class \(i\), and \(K\) is the number of classes. The result is a vector containing the probabilities that sample \(x\) belong to each class. The output is the class with the highest probability.

In regression, the output remains as \(f(x)\); therefore, output activation function is just the identity function.

MLP uses different loss functions depending on the problem type. The loss function for classification is Average Cross-Entropy, which in binary case is given as,

\[Loss(\hat{y},y,W) = -\dfrac{1}{n}\sum\_{i=0}^n(y\_i \ln {\hat{y\_i}} + (1-y\_i) \ln{(1-\hat{y\_i})}) + \dfrac{\alpha}{2n} ||W||\_2^2\] where \(\alpha ||W||\_2^2\) is an L2-regularization term (aka penalty) that penalizes complex models; and \(\alpha > 0\) is a non-negative hyperparameter that controls the magnitude of the penalty.

For regression, MLP uses the Mean Square Error loss function; written as,

\[Loss(\hat{y},y,W) = \frac{1}{2n}\sum\_{i=0}^n||\hat{y}\_i - y\_i ||\_2^2 + \frac{\alpha}{2n} ||W||\_2^2\] Starting from initial random weights, multi-layer perceptron (MLP) minimizes the loss function by repeatedly updating these weights. After computing the loss, a backward pass propagates it from the output layer to the previous layers, providing each weight parameter with an update value meant to decrease the loss.

In gradient descent, the gradient \(\nabla Loss\_{W}\) of the loss with respect to the weights is computed and deducted from \(W\). More formally, this is expressed as,

\[W^{i+1} = W^i - \epsilon \nabla {Loss}\_{W}^{i}\] where \(i\) is the iteration step, and \(\epsilon\) is the learning rate with a value larger than 0.

The algorithm stops when it reaches a preset maximum number of iterations; or when the improvement in loss is below a certain, small number.

1.17.8. Tips on Practical Use

------------------------------

* Multi-layer Perceptron is sensitive to feature scaling, so it is highly recommended to scale your data. For example, scale each attribute on the input vector X to [0, 1] or [-1, +1], or standardize it to have mean 0 and variance 1. Note that you must apply the *same* scaling to the test set for meaningful results. You can use `StandardScaler` for standardization.

```

>>> from sklearn.preprocessing import StandardScaler

>>> scaler = StandardScaler()

>>> # Don't cheat - fit only on training data

>>> scaler.fit(X_train)

>>> X_train = scaler.transform(X_train)

>>> # apply same transformation to test data

>>> X_test = scaler.transform(X_test)

```

An alternative and recommended approach is to use `StandardScaler` in a `Pipeline`

* Finding a reasonable regularization parameter \(\alpha\) is best done using `GridSearchCV`, usually in the range `10.0 ** -np.arange(1, 7)`.

* Empirically, we observed that `L-BFGS` converges faster and with better solutions on small datasets. For relatively large datasets, however, `Adam` is very robust. It usually converges quickly and gives pretty good performance. `SGD` with momentum or nesterov’s momentum, on the other hand, can perform better than those two algorithms if learning rate is correctly tuned.

1.17.9. More control with warm\_start

--------------------------------------

If you want more control over stopping criteria or learning rate in SGD, or want to do additional monitoring, using `warm_start=True` and `max_iter=1` and iterating yourself can be helpful:

```

>>> X = [[0., 0.], [1., 1.]]

>>> y = [0, 1]

>>> clf = MLPClassifier(hidden_layer_sizes=(15,), random_state=1, max_iter=1, warm_start=True)

>>> for i in range(10):

... clf.fit(X, y)

... # additional monitoring / inspection

MLPClassifier(...

```

scikit_learn 1.8. Cross decomposition 1.8. Cross decomposition

========================

The cross decomposition module contains **supervised** estimators for dimensionality reduction and regression, belonging to the “Partial Least Squares” family.

Cross decomposition algorithms find the fundamental relations between two matrices (X and Y). They are latent variable approaches to modeling the covariance structures in these two spaces. They will try to find the multidimensional direction in the X space that explains the maximum multidimensional variance direction in the Y space. In other words, PLS projects both `X` and `Y` into a lower-dimensional subspace such that the covariance between `transformed(X)` and `transformed(Y)` is maximal.

PLS draws similarities with [Principal Component Regression](https://en.wikipedia.org/wiki/Principal_component_regression) (PCR), where the samples are first projected into a lower-dimensional subspace, and the targets `y` are predicted using `transformed(X)`. One issue with PCR is that the dimensionality reduction is unsupervized, and may lose some important variables: PCR would keep the features with the most variance, but it’s possible that features with a small variances are relevant from predicting the target. In a way, PLS allows for the same kind of dimensionality reduction, but by taking into account the targets `y`. An illustration of this fact is given in the following example: \* [Principal Component Regression vs Partial Least Squares Regression](../auto_examples/cross_decomposition/plot_pcr_vs_pls#sphx-glr-auto-examples-cross-decomposition-plot-pcr-vs-pls-py).

Apart from CCA, the PLS estimators are particularly suited when the matrix of predictors has more variables than observations, and when there is multicollinearity among the features. By contrast, standard linear regression would fail in these cases unless it is regularized.

Classes included in this module are [`PLSRegression`](generated/sklearn.cross_decomposition.plsregression#sklearn.cross_decomposition.PLSRegression "sklearn.cross_decomposition.PLSRegression"), [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical"), [`CCA`](generated/sklearn.cross_decomposition.cca#sklearn.cross_decomposition.CCA "sklearn.cross_decomposition.CCA") and [`PLSSVD`](generated/sklearn.cross_decomposition.plssvd#sklearn.cross_decomposition.PLSSVD "sklearn.cross_decomposition.PLSSVD")

1.8.1. PLSCanonical

--------------------

We here describe the algorithm used in [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical"). The other estimators use variants of this algorithm, and are detailed below. We recommend section [[1]](#id6) for more details and comparisons between these algorithms. In [[1]](#id6), [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") corresponds to “PLSW2A”.

Given two centered matrices \(X \in \mathbb{R}^{n \times d}\) and \(Y \in \mathbb{R}^{n \times t}\), and a number of components \(K\), [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") proceeds as follows:

Set \(X\_1\) to \(X\) and \(Y\_1\) to \(Y\). Then, for each \(k \in [1, K]\):

* a) compute \(u\_k \in \mathbb{R}^d\) and \(v\_k \in \mathbb{R}^t\), the first left and right singular vectors of the cross-covariance matrix \(C = X\_k^T Y\_k\). \(u\_k\) and \(v\_k\) are called the *weights*. By definition, \(u\_k\) and \(v\_k\) are chosen so that they maximize the covariance between the projected \(X\_k\) and the projected target, that is \(\text{Cov}(X\_k u\_k, Y\_k v\_k)\).

* b) Project \(X\_k\) and \(Y\_k\) on the singular vectors to obtain *scores*: \(\xi\_k = X\_k u\_k\) and \(\omega\_k = Y\_k v\_k\)

* c) Regress \(X\_k\) on \(\xi\_k\), i.e. find a vector \(\gamma\_k \in \mathbb{R}^d\) such that the rank-1 matrix \(\xi\_k \gamma\_k^T\) is as close as possible to \(X\_k\). Do the same on \(Y\_k\) with \(\omega\_k\) to obtain \(\delta\_k\). The vectors \(\gamma\_k\) and \(\delta\_k\) are called the *loadings*.

* d) *deflate* \(X\_k\) and \(Y\_k\), i.e. subtract the rank-1 approximations: \(X\_{k+1} = X\_k - \xi\_k \gamma\_k^T\), and \(Y\_{k + 1} = Y\_k - \omega\_k \delta\_k^T\).

At the end, we have approximated \(X\) as a sum of rank-1 matrices: \(X = \Xi \Gamma^T\) where \(\Xi \in \mathbb{R}^{n \times K}\) contains the scores in its columns, and \(\Gamma^T \in \mathbb{R}^{K \times d}\) contains the loadings in its rows. Similarly for \(Y\), we have \(Y = \Omega \Delta^T\).

Note that the scores matrices \(\Xi\) and \(\Omega\) correspond to the projections of the training data \(X\) and \(Y\), respectively.

Step *a)* may be performed in two ways: either by computing the whole SVD of \(C\) and only retain the singular vectors with the biggest singular values, or by directly computing the singular vectors using the power method (cf section 11.3 in [[1]](#id6)), which corresponds to the `'nipals'` option of the `algorithm` parameter.

###

1.8.1.1. Transforming data

To transform \(X\) into \(\bar{X}\), we need to find a projection matrix \(P\) such that \(\bar{X} = XP\). We know that for the training data, \(\Xi = XP\), and \(X = \Xi \Gamma^T\). Setting \(P = U(\Gamma^T U)^{-1}\) where \(U\) is the matrix with the \(u\_k\) in the columns, we have \(XP = X U(\Gamma^T U)^{-1} = \Xi (\Gamma^T U) (\Gamma^T U)^{-1} = \Xi\) as desired. The rotation matrix \(P\) can be accessed from the `x_rotations_` attribute.

Similarly, \(Y\) can be transformed using the rotation matrix \(V(\Delta^T V)^{-1}\), accessed via the `y_rotations_` attribute.

###

1.8.1.2. Predicting the targets Y

To predict the targets of some data \(X\), we are looking for a coefficient matrix \(\beta \in R^{d \times t}\) such that \(Y = X\beta\).

The idea is to try to predict the transformed targets \(\Omega\) as a function of the transformed samples \(\Xi\), by computing \(\alpha \in \mathbb{R}\) such that \(\Omega = \alpha \Xi\).

Then, we have \(Y = \Omega \Delta^T = \alpha \Xi \Delta^T\), and since \(\Xi\) is the transformed training data we have that \(Y = X \alpha P \Delta^T\), and as a result the coefficient matrix \(\beta = \alpha P \Delta^T\).

\(\beta\) can be accessed through the `coef_` attribute.

1.8.2. PLSSVD

--------------

[`PLSSVD`](generated/sklearn.cross_decomposition.plssvd#sklearn.cross_decomposition.PLSSVD "sklearn.cross_decomposition.PLSSVD") is a simplified version of [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") described earlier: instead of iteratively deflating the matrices \(X\_k\) and \(Y\_k\), [`PLSSVD`](generated/sklearn.cross_decomposition.plssvd#sklearn.cross_decomposition.PLSSVD "sklearn.cross_decomposition.PLSSVD") computes the SVD of \(C = X^TY\) only *once*, and stores the `n_components` singular vectors corresponding to the biggest singular values in the matrices `U` and `V`, corresponding to the `x_weights_` and `y_weights_` attributes. Here, the transformed data is simply `transformed(X) = XU` and `transformed(Y) = YV`.

If `n_components == 1`, [`PLSSVD`](generated/sklearn.cross_decomposition.plssvd#sklearn.cross_decomposition.PLSSVD "sklearn.cross_decomposition.PLSSVD") and [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") are strictly equivalent.

1.8.3. PLSRegression

---------------------

The [`PLSRegression`](generated/sklearn.cross_decomposition.plsregression#sklearn.cross_decomposition.PLSRegression "sklearn.cross_decomposition.PLSRegression") estimator is similar to [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") with `algorithm='nipals'`, with 2 significant differences:

* at step a) in the power method to compute \(u\_k\) and \(v\_k\), \(v\_k\) is never normalized.

* at step c), the targets \(Y\_k\) are approximated using the projection of \(X\_k\) (i.e. \(\xi\_k\)) instead of the projection of \(Y\_k\) (i.e. \(\omega\_k\)). In other words, the loadings computation is different. As a result, the deflation in step d) will also be affected.

These two modifications affect the output of `predict` and `transform`, which are not the same as for [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical"). Also, while the number of components is limited by `min(n_samples, n_features, n_targets)` in [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical"), here the limit is the rank of \(X^TX\), i.e. `min(n_samples, n_features)`.

[`PLSRegression`](generated/sklearn.cross_decomposition.plsregression#sklearn.cross_decomposition.PLSRegression "sklearn.cross_decomposition.PLSRegression") is also known as PLS1 (single targets) and PLS2 (multiple targets). Much like [`Lasso`](generated/sklearn.linear_model.lasso#sklearn.linear_model.Lasso "sklearn.linear_model.Lasso"), [`PLSRegression`](generated/sklearn.cross_decomposition.plsregression#sklearn.cross_decomposition.PLSRegression "sklearn.cross_decomposition.PLSRegression") is a form of regularized linear regression where the number of components controls the strength of the regularization.

1.8.4. Canonical Correlation Analysis

--------------------------------------

Canonical Correlation Analysis was developed prior and independently to PLS. But it turns out that [`CCA`](generated/sklearn.cross_decomposition.cca#sklearn.cross_decomposition.CCA "sklearn.cross_decomposition.CCA") is a special case of PLS, and corresponds to PLS in “Mode B” in the literature.

[`CCA`](generated/sklearn.cross_decomposition.cca#sklearn.cross_decomposition.CCA "sklearn.cross_decomposition.CCA") differs from [`PLSCanonical`](generated/sklearn.cross_decomposition.plscanonical#sklearn.cross_decomposition.PLSCanonical "sklearn.cross_decomposition.PLSCanonical") in the way the weights \(u\_k\) and \(v\_k\) are computed in the power method of step a). Details can be found in section 10 of [[1]](#id6).

Since [`CCA`](generated/sklearn.cross_decomposition.cca#sklearn.cross_decomposition.CCA "sklearn.cross_decomposition.CCA") involves the inversion of \(X\_k^TX\_k\) and \(Y\_k^TY\_k\), this estimator can be unstable if the number of features or targets is greater than the number of samples.

| programming_docs |

scikit_learn 2.3. Clustering 2.3. Clustering

===============

[Clustering](https://en.wikipedia.org/wiki/Cluster_analysis) of unlabeled data can be performed with the module [`sklearn.cluster`](classes#module-sklearn.cluster "sklearn.cluster").

Each clustering algorithm comes in two variants: a class, that implements the `fit` method to learn the clusters on train data, and a function, that, given train data, returns an array of integer labels corresponding to the different clusters. For the class, the labels over the training data can be found in the `labels_` attribute.

2.3.1. Overview of clustering methods

--------------------------------------

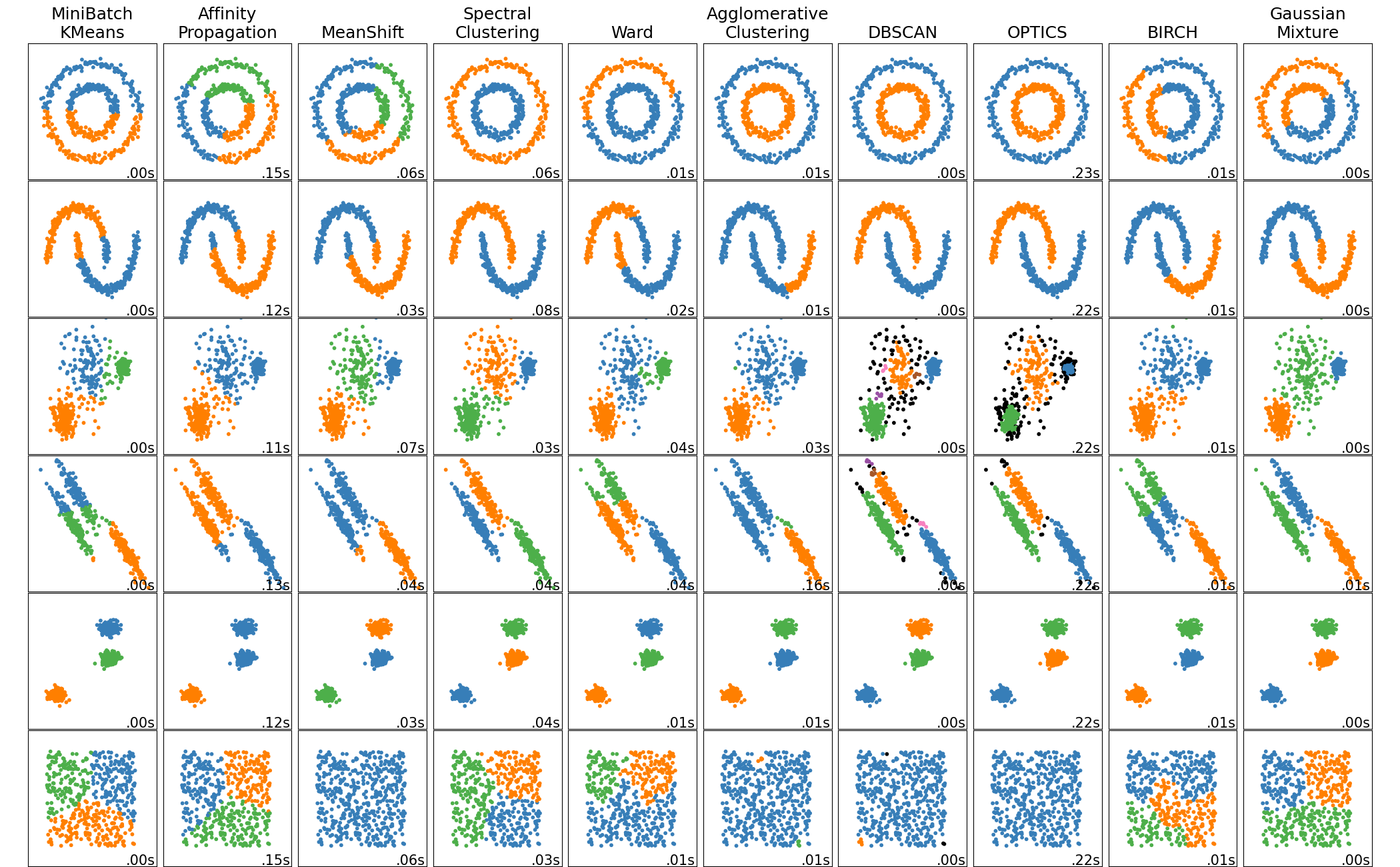

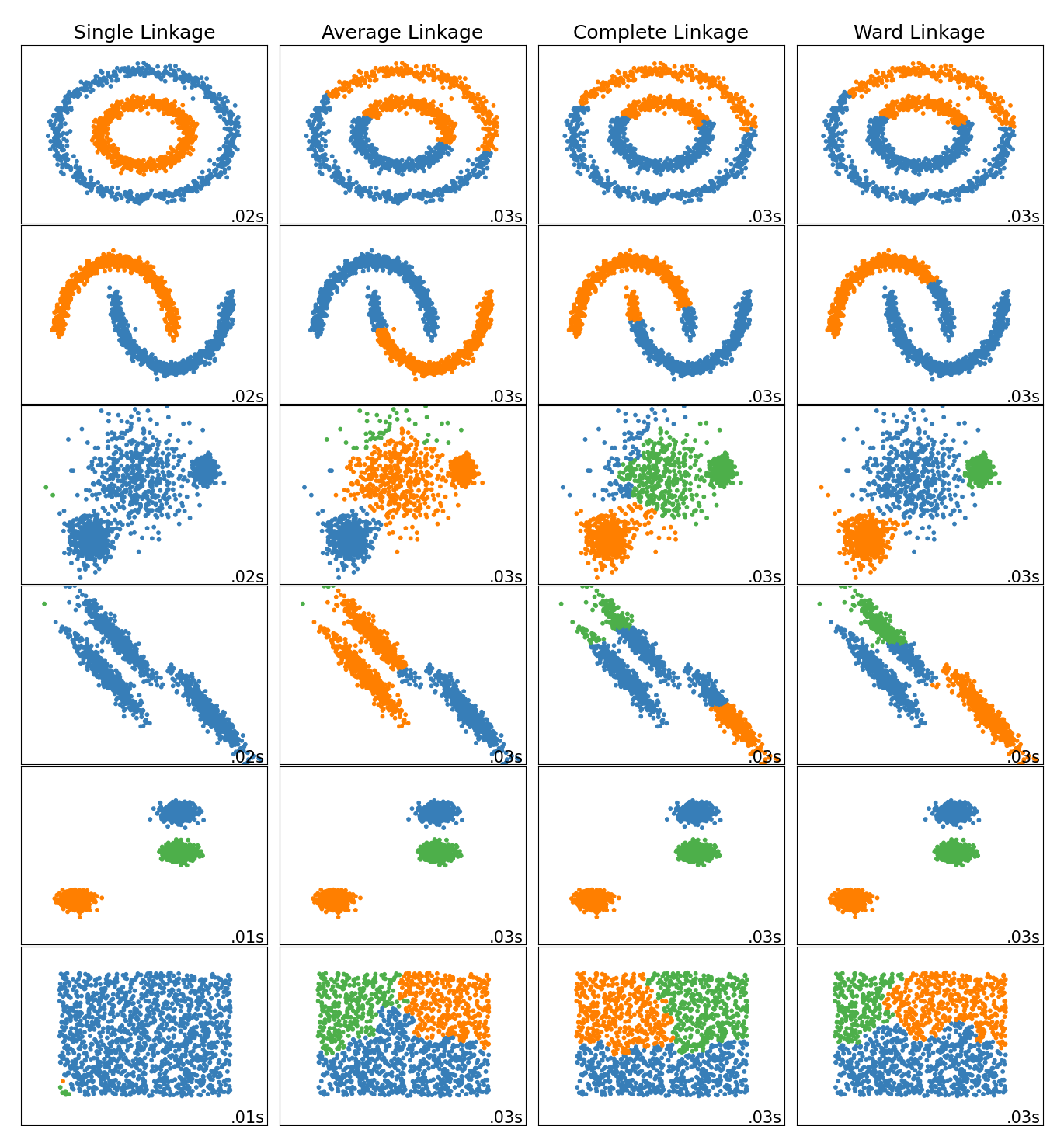

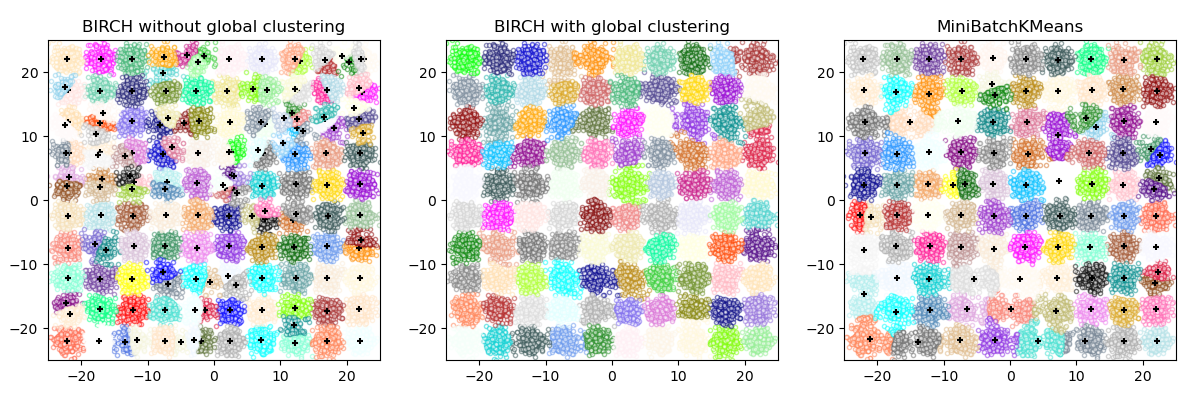

[](../auto_examples/cluster/plot_cluster_comparison) A comparison of the clustering algorithms in scikit-learn

| Method name | Parameters | Scalability | Usecase | Geometry (metric used) |

| --- | --- | --- | --- | --- |

| [K-Means](#k-means) | number of clusters | Very large `n_samples`, medium `n_clusters` with [MiniBatch code](#mini-batch-kmeans) | General-purpose, even cluster size, flat geometry, not too many clusters, inductive | Distances between points |

| [Affinity propagation](#affinity-propagation) | damping, sample preference | Not scalable with n\_samples | Many clusters, uneven cluster size, non-flat geometry, inductive | Graph distance (e.g. nearest-neighbor graph) |

| [Mean-shift](#mean-shift) | bandwidth | Not scalable with `n_samples` | Many clusters, uneven cluster size, non-flat geometry, inductive | Distances between points |

| [Spectral clustering](#spectral-clustering) | number of clusters | Medium `n_samples`, small `n_clusters` | Few clusters, even cluster size, non-flat geometry, transductive | Graph distance (e.g. nearest-neighbor graph) |

| [Ward hierarchical clustering](#hierarchical-clustering) | number of clusters or distance threshold | Large `n_samples` and `n_clusters` | Many clusters, possibly connectivity constraints, transductive | Distances between points |

| [Agglomerative clustering](#hierarchical-clustering) | number of clusters or distance threshold, linkage type, distance | Large `n_samples` and `n_clusters` | Many clusters, possibly connectivity constraints, non Euclidean distances, transductive | Any pairwise distance |

| [DBSCAN](#dbscan) | neighborhood size | Very large `n_samples`, medium `n_clusters` | Non-flat geometry, uneven cluster sizes, outlier removal, transductive | Distances between nearest points |

| [OPTICS](#optics) | minimum cluster membership | Very large `n_samples`, large `n_clusters` | Non-flat geometry, uneven cluster sizes, variable cluster density, outlier removal, transductive | Distances between points |

| [Gaussian mixtures](mixture#mixture) | many | Not scalable | Flat geometry, good for density estimation, inductive | Mahalanobis distances to centers |

| [BIRCH](#birch) | branching factor, threshold, optional global clusterer. | Large `n_clusters` and `n_samples` | Large dataset, outlier removal, data reduction, inductive | Euclidean distance between points |

| [Bisecting K-Means](#bisect-k-means) | number of clusters | Very large `n_samples`, medium `n_clusters` | General-purpose, even cluster size, flat geometry, no empty clusters, inductive, hierarchical | Distances between points |

Non-flat geometry clustering is useful when the clusters have a specific shape, i.e. a non-flat manifold, and the standard euclidean distance is not the right metric. This case arises in the two top rows of the figure above.

Gaussian mixture models, useful for clustering, are described in [another chapter of the documentation](mixture#mixture) dedicated to mixture models. KMeans can be seen as a special case of Gaussian mixture model with equal covariance per component.

[Transductive](https://scikit-learn.org/1.1/glossary.html#term-transductive) clustering methods (in contrast to [inductive](https://scikit-learn.org/1.1/glossary.html#term-inductive) clustering methods) are not designed to be applied to new, unseen data.

2.3.2. K-means

---------------

The [`KMeans`](generated/sklearn.cluster.kmeans#sklearn.cluster.KMeans "sklearn.cluster.KMeans") algorithm clusters data by trying to separate samples in n groups of equal variance, minimizing a criterion known as the *inertia* or within-cluster sum-of-squares (see below). This algorithm requires the number of clusters to be specified. It scales well to large numbers of samples and has been used across a large range of application areas in many different fields.

The k-means algorithm divides a set of \(N\) samples \(X\) into \(K\) disjoint clusters \(C\), each described by the mean \(\mu\_j\) of the samples in the cluster. The means are commonly called the cluster “centroids”; note that they are not, in general, points from \(X\), although they live in the same space.

The K-means algorithm aims to choose centroids that minimise the **inertia**, or **within-cluster sum-of-squares criterion**:

\[\sum\_{i=0}^{n}\min\_{\mu\_j \in C}(||x\_i - \mu\_j||^2)\] Inertia can be recognized as a measure of how internally coherent clusters are. It suffers from various drawbacks:

* Inertia makes the assumption that clusters are convex and isotropic, which is not always the case. It responds poorly to elongated clusters, or manifolds with irregular shapes.

* Inertia is not a normalized metric: we just know that lower values are better and zero is optimal. But in very high-dimensional spaces, Euclidean distances tend to become inflated (this is an instance of the so-called “curse of dimensionality”). Running a dimensionality reduction algorithm such as [Principal component analysis (PCA)](decomposition#pca) prior to k-means clustering can alleviate this problem and speed up the computations.

K-means is often referred to as Lloyd’s algorithm. In basic terms, the algorithm has three steps. The first step chooses the initial centroids, with the most basic method being to choose \(k\) samples from the dataset \(X\). After initialization, K-means consists of looping between the two other steps. The first step assigns each sample to its nearest centroid. The second step creates new centroids by taking the mean value of all of the samples assigned to each previous centroid. The difference between the old and the new centroids are computed and the algorithm repeats these last two steps until this value is less than a threshold. In other words, it repeats until the centroids do not move significantly.

K-means is equivalent to the expectation-maximization algorithm with a small, all-equal, diagonal covariance matrix.

The algorithm can also be understood through the concept of [Voronoi diagrams](https://en.wikipedia.org/wiki/Voronoi_diagram). First the Voronoi diagram of the points is calculated using the current centroids. Each segment in the Voronoi diagram becomes a separate cluster. Secondly, the centroids are updated to the mean of each segment. The algorithm then repeats this until a stopping criterion is fulfilled. Usually, the algorithm stops when the relative decrease in the objective function between iterations is less than the given tolerance value. This is not the case in this implementation: iteration stops when centroids move less than the tolerance.

Given enough time, K-means will always converge, however this may be to a local minimum. This is highly dependent on the initialization of the centroids. As a result, the computation is often done several times, with different initializations of the centroids. One method to help address this issue is the k-means++ initialization scheme, which has been implemented in scikit-learn (use the `init='k-means++'` parameter). This initializes the centroids to be (generally) distant from each other, leading to probably better results than random initialization, as shown in the reference.

K-means++ can also be called independently to select seeds for other clustering algorithms, see [`sklearn.cluster.kmeans_plusplus`](generated/sklearn.cluster.kmeans_plusplus#sklearn.cluster.kmeans_plusplus "sklearn.cluster.kmeans_plusplus") for details and example usage.

The algorithm supports sample weights, which can be given by a parameter `sample_weight`. This allows to assign more weight to some samples when computing cluster centers and values of inertia. For example, assigning a weight of 2 to a sample is equivalent to adding a duplicate of that sample to the dataset \(X\).

K-means can be used for vector quantization. This is achieved using the transform method of a trained model of [`KMeans`](generated/sklearn.cluster.kmeans#sklearn.cluster.KMeans "sklearn.cluster.KMeans").

###

2.3.2.1. Low-level parallelism

[`KMeans`](generated/sklearn.cluster.kmeans#sklearn.cluster.KMeans "sklearn.cluster.KMeans") benefits from OpenMP based parallelism through Cython. Small chunks of data (256 samples) are processed in parallel, which in addition yields a low memory footprint. For more details on how to control the number of threads, please refer to our [Parallelism](https://scikit-learn.org/1.1/computing/parallelism.html#parallelism) notes.

###

2.3.2.2. Mini Batch K-Means

The [`MiniBatchKMeans`](generated/sklearn.cluster.minibatchkmeans#sklearn.cluster.MiniBatchKMeans "sklearn.cluster.MiniBatchKMeans") is a variant of the [`KMeans`](generated/sklearn.cluster.kmeans#sklearn.cluster.KMeans "sklearn.cluster.KMeans") algorithm which uses mini-batches to reduce the computation time, while still attempting to optimise the same objective function. Mini-batches are subsets of the input data, randomly sampled in each training iteration. These mini-batches drastically reduce the amount of computation required to converge to a local solution. In contrast to other algorithms that reduce the convergence time of k-means, mini-batch k-means produces results that are generally only slightly worse than the standard algorithm.

The algorithm iterates between two major steps, similar to vanilla k-means. In the first step, \(b\) samples are drawn randomly from the dataset, to form a mini-batch. These are then assigned to the nearest centroid. In the second step, the centroids are updated. In contrast to k-means, this is done on a per-sample basis. For each sample in the mini-batch, the assigned centroid is updated by taking the streaming average of the sample and all previous samples assigned to that centroid. This has the effect of decreasing the rate of change for a centroid over time. These steps are performed until convergence or a predetermined number of iterations is reached.

[`MiniBatchKMeans`](generated/sklearn.cluster.minibatchkmeans#sklearn.cluster.MiniBatchKMeans "sklearn.cluster.MiniBatchKMeans") converges faster than [`KMeans`](generated/sklearn.cluster.kmeans#sklearn.cluster.KMeans "sklearn.cluster.KMeans"), but the quality of the results is reduced. In practice this difference in quality can be quite small, as shown in the example and cited reference.

2.3.3. Affinity Propagation

----------------------------

[`AffinityPropagation`](generated/sklearn.cluster.affinitypropagation#sklearn.cluster.AffinityPropagation "sklearn.cluster.AffinityPropagation") creates clusters by sending messages between pairs of samples until convergence. A dataset is then described using a small number of exemplars, which are identified as those most representative of other samples. The messages sent between pairs represent the suitability for one sample to be the exemplar of the other, which is updated in response to the values from other pairs. This updating happens iteratively until convergence, at which point the final exemplars are chosen, and hence the final clustering is given.

Affinity Propagation can be interesting as it chooses the number of clusters based on the data provided. For this purpose, the two important parameters are the *preference*, which controls how many exemplars are used, and the *damping factor* which damps the responsibility and availability messages to avoid numerical oscillations when updating these messages.

The main drawback of Affinity Propagation is its complexity. The algorithm has a time complexity of the order \(O(N^2 T)\), where \(N\) is the number of samples and \(T\) is the number of iterations until convergence. Further, the memory complexity is of the order \(O(N^2)\) if a dense similarity matrix is used, but reducible if a sparse similarity matrix is used. This makes Affinity Propagation most appropriate for small to medium sized datasets.

**Algorithm description:** The messages sent between points belong to one of two categories. The first is the responsibility \(r(i, k)\), which is the accumulated evidence that sample \(k\) should be the exemplar for sample \(i\). The second is the availability \(a(i, k)\) which is the accumulated evidence that sample \(i\) should choose sample \(k\) to be its exemplar, and considers the values for all other samples that \(k\) should be an exemplar. In this way, exemplars are chosen by samples if they are (1) similar enough to many samples and (2) chosen by many samples to be representative of themselves.

More formally, the responsibility of a sample \(k\) to be the exemplar of sample \(i\) is given by:

\[r(i, k) \leftarrow s(i, k) - max [ a(i, k') + s(i, k') \forall k' \neq k ]\] Where \(s(i, k)\) is the similarity between samples \(i\) and \(k\). The availability of sample \(k\) to be the exemplar of sample \(i\) is given by:

\[a(i, k) \leftarrow min [0, r(k, k) + \sum\_{i'~s.t.~i' \notin \{i, k\}}{r(i', k)}]\] To begin with, all values for \(r\) and \(a\) are set to zero, and the calculation of each iterates until convergence. As discussed above, in order to avoid numerical oscillations when updating the messages, the damping factor \(\lambda\) is introduced to iteration process:

\[r\_{t+1}(i, k) = \lambda\cdot r\_{t}(i, k) + (1-\lambda)\cdot r\_{t+1}(i, k)\] \[a\_{t+1}(i, k) = \lambda\cdot a\_{t}(i, k) + (1-\lambda)\cdot a\_{t+1}(i, k)\] where \(t\) indicates the iteration times.

2.3.4. Mean Shift

------------------

[`MeanShift`](generated/sklearn.cluster.meanshift#sklearn.cluster.MeanShift "sklearn.cluster.MeanShift") clustering aims to discover *blobs* in a smooth density of samples. It is a centroid based algorithm, which works by updating candidates for centroids to be the mean of the points within a given region. These candidates are then filtered in a post-processing stage to eliminate near-duplicates to form the final set of centroids.

Given a candidate centroid \(x\_i\) for iteration \(t\), the candidate is updated according to the following equation:

\[x\_i^{t+1} = m(x\_i^t)\] Where \(N(x\_i)\) is the neighborhood of samples within a given distance around \(x\_i\) and \(m\) is the *mean shift* vector that is computed for each centroid that points towards a region of the maximum increase in the density of points. This is computed using the following equation, effectively updating a centroid to be the mean of the samples within its neighborhood:

\[m(x\_i) = \frac{\sum\_{x\_j \in N(x\_i)}K(x\_j - x\_i)x\_j}{\sum\_{x\_j \in N(x\_i)}K(x\_j - x\_i)}\] The algorithm automatically sets the number of clusters, instead of relying on a parameter `bandwidth`, which dictates the size of the region to search through. This parameter can be set manually, but can be estimated using the provided `estimate_bandwidth` function, which is called if the bandwidth is not set.

The algorithm is not highly scalable, as it requires multiple nearest neighbor searches during the execution of the algorithm. The algorithm is guaranteed to converge, however the algorithm will stop iterating when the change in centroids is small.

Labelling a new sample is performed by finding the nearest centroid for a given sample.

2.3.5. Spectral clustering

---------------------------

[`SpectralClustering`](generated/sklearn.cluster.spectralclustering#sklearn.cluster.SpectralClustering "sklearn.cluster.SpectralClustering") performs a low-dimension embedding of the affinity matrix between samples, followed by clustering, e.g., by KMeans, of the components of the eigenvectors in the low dimensional space. It is especially computationally efficient if the affinity matrix is sparse and the `amg` solver is used for the eigenvalue problem (Note, the `amg` solver requires that the [pyamg](https://github.com/pyamg/pyamg) module is installed.)

The present version of SpectralClustering requires the number of clusters to be specified in advance. It works well for a small number of clusters, but is not advised for many clusters.

For two clusters, SpectralClustering solves a convex relaxation of the [normalized cuts](https://people.eecs.berkeley.edu/~malik/papers/SM-ncut.pdf) problem on the similarity graph: cutting the graph in two so that the weight of the edges cut is small compared to the weights of the edges inside each cluster. This criteria is especially interesting when working on images, where graph vertices are pixels, and weights of the edges of the similarity graph are computed using a function of a gradient of the image.

Warning

Transforming distance to well-behaved similarities

Note that if the values of your similarity matrix are not well distributed, e.g. with negative values or with a distance matrix rather than a similarity, the spectral problem will be singular and the problem not solvable. In which case it is advised to apply a transformation to the entries of the matrix. For instance, in the case of a signed distance matrix, is common to apply a heat kernel:

```

similarity = np.exp(-beta * distance / distance.std())

```