code

stringlengths 2.5k

150k

| kind

stringclasses 1

value |

|---|---|

statsmodels statsmodels.regression.recursive_ls.RecursiveLSResults.cov_params_oim statsmodels.regression.recursive\_ls.RecursiveLSResults.cov\_params\_oim

========================================================================

`RecursiveLSResults.cov_params_oim()`

(array) The variance / covariance matrix. Computed using the method from Harvey (1989).

statsmodels statsmodels.regression.linear_model.OLSResults.compare_f_test statsmodels.regression.linear\_model.OLSResults.compare\_f\_test

================================================================

`OLSResults.compare_f_test(restricted)`

use F test to test whether restricted model is correct

| Parameters: | **restricted** (*Result instance*) – The restricted model is assumed to be nested in the current model. The result instance of the restricted model is required to have two attributes, residual sum of squares, `ssr`, residual degrees of freedom, `df_resid`. |

| Returns: | * **f\_value** (*float*) – test statistic, F distributed

* **p\_value** (*float*) – p-value of the test statistic

* **df\_diff** (*int*) – degrees of freedom of the restriction, i.e. difference in df between models

|

#### Notes

See mailing list discussion October 17,

This test compares the residual sum of squares of the two models. This is not a valid test, if there is unspecified heteroscedasticity or correlation. This method will issue a warning if this is detected but still return the results under the assumption of homoscedasticity and no autocorrelation (sphericity).

statsmodels statsmodels.graphics.gofplots.qqline statsmodels.graphics.gofplots.qqline

====================================

`statsmodels.graphics.gofplots.qqline(ax, line, x=None, y=None, dist=None, fmt='r-')` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/graphics/gofplots.html#qqline)

Plot a reference line for a qqplot.

| Parameters: | * **ax** (*matplotlib axes instance*) – The axes on which to plot the line

* **line** (*str {'45'**,**'r'**,**'s'**,**'q'}*) – Options for the reference line to which the data is compared.:

+ ‘45’ - 45-degree line

+ ’s‘ - standardized line, the expected order statistics are scaled by the standard deviation of the given sample and have the mean added to them

+ ’r’ - A regression line is fit

+ ’q’ - A line is fit through the quartiles.

+ None - By default no reference line is added to the plot.

* **x** (*array*) – X data for plot. Not needed if line is ‘45’.

* **y** (*array*) – Y data for plot. Not needed if line is ‘45’.

* **dist** (*scipy.stats.distribution*) – A scipy.stats distribution, needed if line is ‘q’.

|

#### Notes

There is no return value. The line is plotted on the given `ax`.

statsmodels statsmodels.genmod.bayes_mixed_glm.PoissonBayesMixedGLM.vb_elbo_base statsmodels.genmod.bayes\_mixed\_glm.PoissonBayesMixedGLM.vb\_elbo\_base

========================================================================

`PoissonBayesMixedGLM.vb_elbo_base(h, tm, fep_mean, vcp_mean, vc_mean, fep_sd, vcp_sd, vc_sd)`

Returns the evidence lower bound (ELBO) for the model.

This function calculates the family-specific ELBO function based on information provided from a subclass.

| Parameters: | **h** (*function mapping 1d vector to 1d vector*) – The contribution of the model to the ELBO function can be expressed as y\_i\*lp\_i + Eh\_i(z), where y\_i and lp\_i are the response and linear predictor for observation i, and z is a standard normal rangom variable. This formulation can be achieved for any GLM with a canonical link function. |

statsmodels statsmodels.emplike.descriptive.DescStatMV.mv_mean_contour statsmodels.emplike.descriptive.DescStatMV.mv\_mean\_contour

============================================================

`DescStatMV.mv_mean_contour(mu1_low, mu1_upp, mu2_low, mu2_upp, step1, step2, levs=(0.001, 0.01, 0.05, 0.1, 0.2), var1_name=None, var2_name=None, plot_dta=False)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/emplike/descriptive.html#DescStatMV.mv_mean_contour)

Creates a confidence region plot for the mean of bivariate data

| Parameters: | * **m1\_low** (*float*) – Minimum value of the mean for variable 1

* **m1\_upp** (*float*) – Maximum value of the mean for variable 1

* **mu2\_low** (*float*) – Minimum value of the mean for variable 2

* **mu2\_upp** (*float*) – Maximum value of the mean for variable 2

* **step1** (*float*) – Increment of evaluations for variable 1

* **step2** (*float*) – Increment of evaluations for variable 2

* **levs** (*list*) – Levels to be drawn on the contour plot. Default = (.001, .01, .05, .1, .2)

* **plot\_dta** (*bool*) – If True, makes a scatter plot of the data on top of the contour plot. Defaultis False.

* **var1\_name** (*str*) – Name of variable 1 to be plotted on the x-axis

* **var2\_name** (*str*) – Name of variable 2 to be plotted on the y-axis

|

#### Notes

The smaller the step size, the more accurate the intervals will be

If the function returns optimization failed, consider narrowing the boundaries of the plot

#### Examples

```

>>> import statsmodels.api as sm

>>> two_rvs = np.random.standard_normal((20,2))

>>> el_analysis = sm.emplike.DescStat(two_rvs)

>>> contourp = el_analysis.mv_mean_contour(-2, 2, -2, 2, .1, .1)

>>> contourp.show()

```

statsmodels statsmodels.tsa.statespace.mlemodel.MLEResults.simulate statsmodels.tsa.statespace.mlemodel.MLEResults.simulate

=======================================================

`MLEResults.simulate(nsimulations, measurement_shocks=None, state_shocks=None, initial_state=None)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/statespace/mlemodel.html#MLEResults.simulate)

Simulate a new time series following the state space model

| Parameters: | * **nsimulations** (*int*) – The number of observations to simulate. If the model is time-invariant this can be any number. If the model is time-varying, then this number must be less than or equal to the number

* **measurement\_shocks** (*array\_like**,* *optional*) – If specified, these are the shocks to the measurement equation, \(\varepsilon\_t\). If unspecified, these are automatically generated using a pseudo-random number generator. If specified, must be shaped `nsimulations` x `k_endog`, where `k_endog` is the same as in the state space model.

* **state\_shocks** (*array\_like**,* *optional*) – If specified, these are the shocks to the state equation, \(\eta\_t\). If unspecified, these are automatically generated using a pseudo-random number generator. If specified, must be shaped `nsimulations` x `k_posdef` where `k_posdef` is the same as in the state space model.

* **initial\_state** (*array\_like**,* *optional*) – If specified, this is the state vector at time zero, which should be shaped (`k_states` x 1), where `k_states` is the same as in the state space model. If unspecified, but the model has been initialized, then that initialization is used. If unspecified and the model has not been initialized, then a vector of zeros is used. Note that this is not included in the returned `simulated_states` array.

|

| Returns: | **simulated\_obs** – An (nsimulations x k\_endog) array of simulated observations. |

| Return type: | array |

statsmodels statsmodels.tsa.vector_ar.var_model.VARResults.forecast statsmodels.tsa.vector\_ar.var\_model.VARResults.forecast

=========================================================

`VARResults.forecast(y, steps, exog_future=None)`

Produce linear minimum MSE forecasts for desired number of steps ahead, using prior values y

| Parameters: | * **y** (*ndarray* *(**p x k**)*) –

* **steps** (*int*) –

|

| Returns: | **forecasts** |

| Return type: | ndarray (steps x neqs) |

#### Notes

Lütkepohl pp 37-38

statsmodels statsmodels.tsa.regime_switching.markov_regression.MarkovRegression.score statsmodels.tsa.regime\_switching.markov\_regression.MarkovRegression.score

===========================================================================

`MarkovRegression.score(params, transformed=True)`

Compute the score function at params.

| Parameters: | * **params** (*array\_like*) – Array of parameters at which to evaluate the score function.

* **transformed** (*boolean**,* *optional*) – Whether or not `params` is already transformed. Default is True.

|

statsmodels statsmodels.sandbox.distributions.transformed.TransfTwo_gen.moment statsmodels.sandbox.distributions.transformed.TransfTwo\_gen.moment

===================================================================

`TransfTwo_gen.moment(n, *args, **kwds)`

n-th order non-central moment of distribution.

| Parameters: | * **n** (*int**,* *n >= 1*) – Order of moment.

* **arg2****,** **arg3****,****..** (*arg1**,*) – The shape parameter(s) for the distribution (see docstring of the instance object for more information).

* **loc** (*array\_like**,* *optional*) – location parameter (default=0)

* **scale** (*array\_like**,* *optional*) – scale parameter (default=1)

|

statsmodels statsmodels.sandbox.distributions.extras.SkewNorm_gen.logsf statsmodels.sandbox.distributions.extras.SkewNorm\_gen.logsf

============================================================

`SkewNorm_gen.logsf(x, *args, **kwds)`

Log of the survival function of the given RV.

Returns the log of the “survival function,” defined as (1 - `cdf`), evaluated at `x`.

| Parameters: | * **x** (*array\_like*) – quantiles

* **arg2****,** **arg3****,****..** (*arg1**,*) – The shape parameter(s) for the distribution (see docstring of the instance object for more information)

* **loc** (*array\_like**,* *optional*) – location parameter (default=0)

* **scale** (*array\_like**,* *optional*) – scale parameter (default=1)

|

| Returns: | **logsf** – Log of the survival function evaluated at `x`. |

| Return type: | ndarray |

statsmodels statsmodels.tsa.arima_model.ARMAResults.tvalues statsmodels.tsa.arima\_model.ARMAResults.tvalues

================================================

`ARMAResults.tvalues()`

Return the t-statistic for a given parameter estimate.

statsmodels statsmodels.genmod.generalized_linear_model.GLMResults.normalized_cov_params statsmodels.genmod.generalized\_linear\_model.GLMResults.normalized\_cov\_params

================================================================================

`GLMResults.normalized_cov_params()`

statsmodels statsmodels.genmod.families.links.CDFLink.deriv2 statsmodels.genmod.families.links.CDFLink.deriv2

================================================

`CDFLink.deriv2(p)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/genmod/families/links.html#CDFLink.deriv2)

Second derivative of the link function g’‘(p)

implemented through numerical differentiation

statsmodels statsmodels.sandbox.distributions.extras.NormExpan_gen.stats statsmodels.sandbox.distributions.extras.NormExpan\_gen.stats

=============================================================

`NormExpan_gen.stats(*args, **kwds)`

Some statistics of the given RV.

| Parameters: | * **arg2****,** **arg3****,****..** (*arg1**,*) – The shape parameter(s) for the distribution (see docstring of the instance object for more information)

* **loc** (*array\_like**,* *optional*) – location parameter (default=0)

* **scale** (*array\_like**,* *optional* *(**continuous RVs only**)*) – scale parameter (default=1)

* **moments** (*str**,* *optional*) – composed of letters [‘mvsk’] defining which moments to compute: ‘m’ = mean, ‘v’ = variance, ‘s’ = (Fisher’s) skew, ‘k’ = (Fisher’s) kurtosis. (default is ‘mv’)

|

| Returns: | **stats** – of requested moments. |

| Return type: | sequence |

statsmodels statsmodels.tsa.holtwinters.ExponentialSmoothing.from_formula statsmodels.tsa.holtwinters.ExponentialSmoothing.from\_formula

==============================================================

`classmethod ExponentialSmoothing.from_formula(formula, data, subset=None, drop_cols=None, *args, **kwargs)`

Create a Model from a formula and dataframe.

| Parameters: | * **formula** (*str* *or* *generic Formula object*) – The formula specifying the model

* **data** (*array-like*) – The data for the model. See Notes.

* **subset** (*array-like*) – An array-like object of booleans, integers, or index values that indicate the subset of df to use in the model. Assumes df is a `pandas.DataFrame`

* **drop\_cols** (*array-like*) – Columns to drop from the design matrix. Cannot be used to drop terms involving categoricals.

* **args** (*extra arguments*) – These are passed to the model

* **kwargs** (*extra keyword arguments*) – These are passed to the model with one exception. The `eval_env` keyword is passed to patsy. It can be either a [`patsy.EvalEnvironment`](http://patsy.readthedocs.io/en/latest/API-reference.html#patsy.EvalEnvironment "(in patsy v0.5.0+dev)") object or an integer indicating the depth of the namespace to use. For example, the default `eval_env=0` uses the calling namespace. If you wish to use a “clean” environment set `eval_env=-1`.

|

| Returns: | **model** |

| Return type: | Model instance |

#### Notes

data must define \_\_getitem\_\_ with the keys in the formula terms args and kwargs are passed on to the model instantiation. E.g., a numpy structured or rec array, a dictionary, or a pandas DataFrame.

statsmodels statsmodels.miscmodels.count.PoissonZiGMLE.score statsmodels.miscmodels.count.PoissonZiGMLE.score

================================================

`PoissonZiGMLE.score(params)`

Gradient of log-likelihood evaluated at params

statsmodels statsmodels.tsa.statespace.structural.UnobservedComponents.score statsmodels.tsa.statespace.structural.UnobservedComponents.score

================================================================

`UnobservedComponents.score(params, *args, **kwargs)`

Compute the score function at params.

| Parameters: | * **params** (*array\_like*) – Array of parameters at which to evaluate the score.

* **args** – Additional positional arguments to the `loglike` method.

* **kwargs** – Additional keyword arguments to the `loglike` method.

|

| Returns: | **score** – Score, evaluated at `params`. |

| Return type: | array |

#### Notes

This is a numerical approximation, calculated using first-order complex step differentiation on the `loglike` method.

Both \*args and \*\*kwargs are necessary because the optimizer from `fit` must call this function and only supports passing arguments via \*args (for example `scipy.optimize.fmin_l_bfgs`).

statsmodels statsmodels.tsa.vector_ar.dynamic.DynamicVAR.equations statsmodels.tsa.vector\_ar.dynamic.DynamicVAR.equations

=======================================================

`DynamicVAR.equations()` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/vector_ar/dynamic.html#DynamicVAR.equations)

statsmodels statsmodels.discrete.count_model.ZeroInflatedNegativeBinomialP.from_formula statsmodels.discrete.count\_model.ZeroInflatedNegativeBinomialP.from\_formula

=============================================================================

`classmethod ZeroInflatedNegativeBinomialP.from_formula(formula, data, subset=None, drop_cols=None, *args, **kwargs)`

Create a Model from a formula and dataframe.

| Parameters: | * **formula** (*str* *or* *generic Formula object*) – The formula specifying the model

* **data** (*array-like*) – The data for the model. See Notes.

* **subset** (*array-like*) – An array-like object of booleans, integers, or index values that indicate the subset of df to use in the model. Assumes df is a `pandas.DataFrame`

* **drop\_cols** (*array-like*) – Columns to drop from the design matrix. Cannot be used to drop terms involving categoricals.

* **args** (*extra arguments*) – These are passed to the model

* **kwargs** (*extra keyword arguments*) – These are passed to the model with one exception. The `eval_env` keyword is passed to patsy. It can be either a [`patsy.EvalEnvironment`](http://patsy.readthedocs.io/en/latest/API-reference.html#patsy.EvalEnvironment "(in patsy v0.5.0+dev)") object or an integer indicating the depth of the namespace to use. For example, the default `eval_env=0` uses the calling namespace. If you wish to use a “clean” environment set `eval_env=-1`.

|

| Returns: | **model** |

| Return type: | Model instance |

#### Notes

data must define \_\_getitem\_\_ with the keys in the formula terms args and kwargs are passed on to the model instantiation. E.g., a numpy structured or rec array, a dictionary, or a pandas DataFrame.

statsmodels statsmodels.genmod.families.family.NegativeBinomial.loglike statsmodels.genmod.families.family.NegativeBinomial.loglike

===========================================================

`NegativeBinomial.loglike(endog, mu, var_weights=1.0, freq_weights=1.0, scale=1.0)`

The log-likelihood function in terms of the fitted mean response.

| Parameters: | * **endog** (*array*) – Usually the endogenous response variable.

* **mu** (*array*) – Usually but not always the fitted mean response variable.

* **var\_weights** (*array-like*) – 1d array of variance (analytic) weights. The default is 1.

* **freq\_weights** (*array-like*) – 1d array of frequency weights. The default is 1.

* **scale** (*float*) – The scale parameter. The default is 1.

|

| Returns: | **ll** – The value of the loglikelihood evaluated at (endog, mu, var\_weights, freq\_weights, scale) as defined below. |

| Return type: | float |

#### Notes

Where \(ll\_i\) is the by-observation log-likelihood:

\[ll = \sum(ll\_i \* freq\\_weights\_i)\] `ll_i` is defined for each family. endog and mu are not restricted to `endog` and `mu` respectively. For instance, you could call both `loglike(endog, endog)` and `loglike(endog, mu)` to get the log-likelihood ratio.

statsmodels statsmodels.sandbox.stats.runs.runstest_2samp statsmodels.sandbox.stats.runs.runstest\_2samp

==============================================

`statsmodels.sandbox.stats.runs.runstest_2samp(x, y=None, groups=None, correction=True)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/sandbox/stats/runs.html#runstest_2samp)

Wald-Wolfowitz runstest for two samples

This tests whether two samples come from the same distribution.

| Parameters: | * **x** (*array\_like*) – data, numeric, contains either one group, if y is also given, or both groups, if additionally a group indicator is provided

* **y** (*array\_like* *(**optional**)*) – data, numeric

* **groups** (*array\_like*) – group labels or indicator the data for both groups is given in a single 1-dimensional array, x. If group labels are not [0,1], then

* **correction** (*bool*) – Following the SAS manual, for samplesize below 50, the test statistic is corrected by 0.5. This can be turned off with correction=False, and was included to match R, tseries, which does not use any correction.

|

| Returns: | * **z\_stat** (*float*) – test statistic, asymptotically normally distributed

* **p-value** (*float*) – p-value, reject the null hypothesis if it is below an type 1 error level, alpha .

|

#### Notes

Wald-Wolfowitz runs test.

If there are ties, then then the test statistic and p-value that is reported, is based on the higher p-value between sorting all tied observations of the same group

This test is intended for continuous distributions SAS has treatment for ties, but not clear, and sounds more complicated (minimum and maximum possible runs prevent use of argsort) (maybe it’s not so difficult, idea: add small positive noise to first one, run test, then to the other, run test, take max(?) p-value - DONE This gives not the minimum and maximum of the number of runs, but should be close. Not true, this is close to minimum but far away from maximum. maximum number of runs would use alternating groups in the ties.) Maybe adding random noise would be the better approach.

SAS has exact distribution for sample size <=30, doesn’t look standard but should be easy to add.

currently two-sided test only

This has not been verified against a reference implementation. In a short Monte Carlo simulation where both samples are normally distribute, the test seems to be correctly sized for larger number of observations (30 or larger), but conservative (i.e. reject less often than nominal) with a sample size of 10 in each group.

See also

`runs_test_1samp`, [`Runs`](statsmodels.sandbox.stats.runs.runs#statsmodels.sandbox.stats.runs.Runs "statsmodels.sandbox.stats.runs.Runs"), `RunsProb`

| programming_docs |

statsmodels statsmodels.sandbox.tsa.fftarma.ArmaFft.acf2spdfreq statsmodels.sandbox.tsa.fftarma.ArmaFft.acf2spdfreq

===================================================

`ArmaFft.acf2spdfreq(acovf, nfreq=100, w=None)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/sandbox/tsa/fftarma.html#ArmaFft.acf2spdfreq)

not really a method just for comparison, not efficient for large n or long acf

this is also similarly use in tsa.stattools.periodogram with window

statsmodels statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.get_prediction statsmodels.tsa.statespace.dynamic\_factor.DynamicFactorResults.get\_prediction

===============================================================================

`DynamicFactorResults.get_prediction(start=None, end=None, dynamic=False, index=None, exog=None, **kwargs)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/statespace/dynamic_factor.html#DynamicFactorResults.get_prediction)

In-sample prediction and out-of-sample forecasting

| Parameters: | * **start** (*int**,* *str**, or* *datetime**,* *optional*) – Zero-indexed observation number at which to start forecasting, ie., the first forecast is start. Can also be a date string to parse or a datetime type. Default is the the zeroth observation.

* **end** (*int**,* *str**, or* *datetime**,* *optional*) – Zero-indexed observation number at which to end forecasting, ie., the first forecast is start. Can also be a date string to parse or a datetime type. However, if the dates index does not have a fixed frequency, end must be an integer index if you want out of sample prediction. Default is the last observation in the sample.

* **exog** (*array\_like**,* *optional*) – If the model includes exogenous regressors, you must provide exactly enough out-of-sample values for the exogenous variables if end is beyond the last observation in the sample.

* **dynamic** (*boolean**,* *int**,* *str**, or* *datetime**,* *optional*) – Integer offset relative to `start` at which to begin dynamic prediction. Can also be an absolute date string to parse or a datetime type (these are not interpreted as offsets). Prior to this observation, true endogenous values will be used for prediction; starting with this observation and continuing through the end of prediction, forecasted endogenous values will be used instead.

* **\*\*kwargs** – Additional arguments may required for forecasting beyond the end of the sample. See `FilterResults.predict` for more details.

|

| Returns: | **forecast** – Array of out of sample forecasts. |

| Return type: | array |

statsmodels statsmodels.tsa.vector_ar.vecm.CointRankResults statsmodels.tsa.vector\_ar.vecm.CointRankResults

================================================

`class statsmodels.tsa.vector_ar.vecm.CointRankResults(rank, neqs, test_stats, crit_vals, method='trace', signif=0.05)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/vector_ar/vecm.html#CointRankResults)

A class for holding the results from testing the cointegration rank.

| Parameters: | * **rank** (int (0 <= `rank` <= `neqs`)) – The rank to choose according to the Johansen cointegration rank test.

* **neqs** (*int*) – Number of variables in the time series.

* **test\_stats** (array-like (`rank` + 1 if `rank` < `neqs` else `rank`)) – A one-dimensional array-like object containing the test statistics of the conducted tests.

* **crit\_vals** (array-like (`rank` +1 if `rank` < `neqs` else `rank`)) – A one-dimensional array-like object containing the critical values corresponding to the entries in the `test_stats` argument.

* **method** (str, {`"trace"`, `"maxeig"`}, default: `"trace"`) – If `"trace"`, the trace test statistic is used. If `"maxeig"`, the maximum eigenvalue test statistic is used.

* **signif** (*float**,* *{0.1**,* *0.05**,* *0.01}**,* *default: 0.05*) – The test’s significance level.

|

#### Methods

| | |

| --- | --- |

| [`summary`](statsmodels.tsa.vector_ar.vecm.cointrankresults.summary#statsmodels.tsa.vector_ar.vecm.CointRankResults.summary "statsmodels.tsa.vector_ar.vecm.CointRankResults.summary")() | |

statsmodels statsmodels.discrete.discrete_model.ProbitResults.resid_pearson statsmodels.discrete.discrete\_model.ProbitResults.resid\_pearson

=================================================================

`ProbitResults.resid_pearson()`

Pearson residuals

#### Notes

Pearson residuals are defined to be

\[r\_j = \frac{(y - M\_jp\_j)}{\sqrt{M\_jp\_j(1-p\_j)}}\] where \(p\_j=cdf(X\beta)\) and \(M\_j\) is the total number of observations sharing the covariate pattern \(j\).

For now \(M\_j\) is always set to 1.

statsmodels statsmodels.regression.linear_model.OLSResults.bse statsmodels.regression.linear\_model.OLSResults.bse

===================================================

`OLSResults.bse()`

statsmodels statsmodels.discrete.discrete_model.NegativeBinomialP.loglikeobs statsmodels.discrete.discrete\_model.NegativeBinomialP.loglikeobs

=================================================================

`NegativeBinomialP.loglikeobs(params)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/discrete/discrete_model.html#NegativeBinomialP.loglikeobs)

Loglikelihood for observations of Generalized Negative Binomial (NB-P) model

| Parameters: | **params** (*array-like*) – The parameters of the model. |

| Returns: | **loglike** – The log likelihood for each observation of the model evaluated at `params`. See Notes |

| Return type: | ndarray |

statsmodels statsmodels.genmod.generalized_linear_model.GLMResults.conf_int statsmodels.genmod.generalized\_linear\_model.GLMResults.conf\_int

==================================================================

`GLMResults.conf_int(alpha=0.05, cols=None, method='default')`

Returns the confidence interval of the fitted parameters.

| Parameters: | * **alpha** (*float**,* *optional*) – The significance level for the confidence interval. ie., The default `alpha` = .05 returns a 95% confidence interval.

* **cols** (*array-like**,* *optional*) – `cols` specifies which confidence intervals to return

* **method** (*string*) – Not Implemented Yet Method to estimate the confidence\_interval. “Default” : uses self.bse which is based on inverse Hessian for MLE “hjjh” : “jac” : “boot-bse” “boot\_quant” “profile”

|

| Returns: | **conf\_int** – Each row contains [lower, upper] limits of the confidence interval for the corresponding parameter. The first column contains all lower, the second column contains all upper limits. |

| Return type: | array |

#### Examples

```

>>> import statsmodels.api as sm

>>> data = sm.datasets.longley.load()

>>> data.exog = sm.add_constant(data.exog)

>>> results = sm.OLS(data.endog, data.exog).fit()

>>> results.conf_int()

array([[-5496529.48322745, -1467987.78596704],

[ -177.02903529, 207.15277984],

[ -0.1115811 , 0.03994274],

[ -3.12506664, -0.91539297],

[ -1.5179487 , -0.54850503],

[ -0.56251721, 0.460309 ],

[ 798.7875153 , 2859.51541392]])

```

```

>>> results.conf_int(cols=(2,3))

array([[-0.1115811 , 0.03994274],

[-3.12506664, -0.91539297]])

```

#### Notes

The confidence interval is based on the standard normal distribution. Models wish to use a different distribution should overwrite this method.

statsmodels statsmodels.discrete.count_model.ZeroInflatedPoissonResults.summary statsmodels.discrete.count\_model.ZeroInflatedPoissonResults.summary

====================================================================

`ZeroInflatedPoissonResults.summary(yname=None, xname=None, title=None, alpha=0.05, yname_list=None)`

Summarize the Regression Results

| Parameters: | * **yname** (*string**,* *optional*) – Default is `y`

* **xname** (*list of strings**,* *optional*) – Default is `var_##` for ## in p the number of regressors

* **title** (*string**,* *optional*) – Title for the top table. If not None, then this replaces the default title

* **alpha** (*float*) – significance level for the confidence intervals

|

| Returns: | **smry** – this holds the summary tables and text, which can be printed or converted to various output formats. |

| Return type: | Summary instance |

See also

[`statsmodels.iolib.summary.Summary`](statsmodels.iolib.summary.summary#statsmodels.iolib.summary.Summary "statsmodels.iolib.summary.Summary")

class to hold summary results

statsmodels statsmodels.tsa.arima_model.ARIMAResults.fittedvalues statsmodels.tsa.arima\_model.ARIMAResults.fittedvalues

======================================================

`ARIMAResults.fittedvalues()`

statsmodels statsmodels.tsa.holtwinters.Holt.score statsmodels.tsa.holtwinters.Holt.score

======================================

`Holt.score(params)`

Score vector of model.

The gradient of logL with respect to each parameter.

statsmodels statsmodels.tsa.vector_ar.vecm.VECMResults.cov_params_default statsmodels.tsa.vector\_ar.vecm.VECMResults.cov\_params\_default

================================================================

`VECMResults.cov_params_default()` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/vector_ar/vecm.html#VECMResults.cov_params_default)

statsmodels statsmodels.discrete.discrete_model.Logit.fit_regularized statsmodels.discrete.discrete\_model.Logit.fit\_regularized

===========================================================

`Logit.fit_regularized(start_params=None, method='l1', maxiter='defined_by_method', full_output=1, disp=1, callback=None, alpha=0, trim_mode='auto', auto_trim_tol=0.01, size_trim_tol=0.0001, qc_tol=0.03, **kwargs)`

Fit the model using a regularized maximum likelihood. The regularization method AND the solver used is determined by the argument method.

| Parameters: | * **start\_params** (*array-like**,* *optional*) – Initial guess of the solution for the loglikelihood maximization. The default is an array of zeros.

* **method** (*'l1'* *or* *'l1\_cvxopt\_cp'*) – See notes for details.

* **maxiter** (*Integer* *or* *'defined\_by\_method'*) – Maximum number of iterations to perform. If ‘defined\_by\_method’, then use method defaults (see notes).

* **full\_output** (*bool*) – Set to True to have all available output in the Results object’s mle\_retvals attribute. The output is dependent on the solver. See LikelihoodModelResults notes section for more information.

* **disp** (*bool*) – Set to True to print convergence messages.

* **fargs** (*tuple*) – Extra arguments passed to the likelihood function, i.e., loglike(x,\*args)

* **callback** (*callable callback**(**xk**)*) – Called after each iteration, as callback(xk), where xk is the current parameter vector.

* **retall** (*bool*) – Set to True to return list of solutions at each iteration. Available in Results object’s mle\_retvals attribute.

* **alpha** (*non-negative scalar* *or* *numpy array* *(**same size as parameters**)*) – The weight multiplying the l1 penalty term

* **trim\_mode** (*'auto**,* *'size'**, or* *'off'*) – If not ‘off’, trim (set to zero) parameters that would have been zero if the solver reached the theoretical minimum. If ‘auto’, trim params using the Theory above. If ‘size’, trim params if they have very small absolute value

* **size\_trim\_tol** (*float* *or* *'auto'* *(**default = 'auto'**)*) – For use when trim\_mode == ‘size’

* **auto\_trim\_tol** (*float*) – For sue when trim\_mode == ‘auto’. Use

* **qc\_tol** (*float*) – Print warning and don’t allow auto trim when (ii) (above) is violated by this much.

* **qc\_verbose** (*Boolean*) – If true, print out a full QC report upon failure

|

#### Notes

Extra parameters are not penalized if alpha is given as a scalar. An example is the shape parameter in NegativeBinomial `nb1` and `nb2`.

Optional arguments for the solvers (available in Results.mle\_settings):

```

'l1'

acc : float (default 1e-6)

Requested accuracy as used by slsqp

'l1_cvxopt_cp'

abstol : float

absolute accuracy (default: 1e-7).

reltol : float

relative accuracy (default: 1e-6).

feastol : float

tolerance for feasibility conditions (default: 1e-7).

refinement : int

number of iterative refinement steps when solving KKT

equations (default: 1).

```

Optimization methodology

With \(L\) the negative log likelihood, we solve the convex but non-smooth problem

\[\min\_\beta L(\beta) + \sum\_k\alpha\_k |\beta\_k|\] via the transformation to the smooth, convex, constrained problem in twice as many variables (adding the “added variables” \(u\_k\))

\[\min\_{\beta,u} L(\beta) + \sum\_k\alpha\_k u\_k,\] subject to

\[-u\_k \leq \beta\_k \leq u\_k.\] With \(\partial\_k L\) the derivative of \(L\) in the \(k^{th}\) parameter direction, theory dictates that, at the minimum, exactly one of two conditions holds:

1. \(|\partial\_k L| = \alpha\_k\) and \(\beta\_k \neq 0\)

2. \(|\partial\_k L| \leq \alpha\_k\) and \(\beta\_k = 0\)

statsmodels statsmodels.genmod.cov_struct.Autoregressive.summary statsmodels.genmod.cov\_struct.Autoregressive.summary

=====================================================

`Autoregressive.summary()` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/genmod/cov_struct.html#Autoregressive.summary)

Returns a text summary of the current estimate of the dependence structure.

statsmodels statsmodels.tsa.statespace.sarimax.SARIMAXResults.forecast statsmodels.tsa.statespace.sarimax.SARIMAXResults.forecast

==========================================================

`SARIMAXResults.forecast(steps=1, **kwargs)`

Out-of-sample forecasts

| Parameters: | * **steps** (*int**,* *str**, or* *datetime**,* *optional*) – If an integer, the number of steps to forecast from the end of the sample. Can also be a date string to parse or a datetime type. However, if the dates index does not have a fixed frequency, steps must be an integer. Default

* **\*\*kwargs** – Additional arguments may required for forecasting beyond the end of the sample. See `FilterResults.predict` for more details.

|

| Returns: | **forecast** – Array of out of sample forecasts. A (steps x k\_endog) array. |

| Return type: | array |

statsmodels statsmodels.stats.proportion.power_ztost_prop statsmodels.stats.proportion.power\_ztost\_prop

===============================================

`statsmodels.stats.proportion.power_ztost_prop(low, upp, nobs, p_alt, alpha=0.05, dist='norm', variance_prop=None, discrete=True, continuity=0, critval_continuity=0)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/stats/proportion.html#power_ztost_prop)

Power of proportions equivalence test based on normal distribution

| Parameters: | * **upp** (*low**,*) – lower and upper limit of equivalence region

* **nobs** (*int*) – number of observations

* **p\_alt** (*float in* *(**0**,**1**)*) – proportion under the alternative

* **alpha** (*float in* *(**0**,**1**)*) – significance level of the test

* **dist** (*string in* *[**'norm'**,* *'binom'**]*) – This defines the distribution to evalute the power of the test. The critical values of the TOST test are always based on the normal approximation, but the distribution for the power can be either the normal (default) or the binomial (exact) distribution.

* **variance\_prop** (*None* *or* *float in* *(**0**,**1**)*) – If this is None, then the variances for the two one sided tests are based on the proportions equal to the equivalence limits. If variance\_prop is given, then it is used to calculate the variance for the TOST statistics. If this is based on an sample, then the estimated proportion can be used.

* **discrete** (*bool*) – If true, then the critical values of the rejection region are converted to integers. If dist is “binom”, this is automatically assumed. If discrete is false, then the TOST critical values are used as floating point numbers, and the power is calculated based on the rejection region that is not discretized.

* **continuity** (*bool* *or* *float*) – adjust the rejection region for the normal power probability. This has and effect only if `dist='norm'`

* **critval\_continuity** (*bool* *or* *float*) – If this is non-zero, then the critical values of the tost rejection region are adjusted before converting to integers. This affects both distributions, `dist='norm'` and `dist='binom'`.

|

| Returns: | * **power** (*float*) – statistical power of the equivalence test.

* **(k\_low, k\_upp, z\_low, z\_upp)** (*tuple of floats*) – critical limits in intermediate steps temporary return, will be changed

|

#### Notes

In small samples the power for the `discrete` version, has a sawtooth pattern as a function of the number of observations. As a consequence, small changes in the number of observations or in the normal approximation can have a large effect on the power.

`continuity` and `critval_continuity` are added to match some results of PASS, and are mainly to investigate the sensitivity of the ztost power to small changes in the rejection region. From my interpretation of the equations in the SAS manual, both are zero in SAS.

works vectorized

**verification:**

The `dist='binom'` results match PASS, The `dist='norm'` results look reasonable, but no benchmark is available.

#### References

SAS Manual: Chapter 68: The Power Procedure, Computational Resources PASS Chapter 110: Equivalence Tests for One Proportion.

statsmodels statsmodels.iolib.foreign.StataReader.variables statsmodels.iolib.foreign.StataReader.variables

===============================================

`StataReader.variables()` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/iolib/foreign.html#StataReader.variables)

Returns a list of the dataset’s StataVariables objects.

statsmodels statsmodels.nonparametric.kernel_density.KDEMultivariate.cdf statsmodels.nonparametric.kernel\_density.KDEMultivariate.cdf

=============================================================

`KDEMultivariate.cdf(data_predict=None)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/nonparametric/kernel_density.html#KDEMultivariate.cdf)

Evaluate the cumulative distribution function.

| Parameters: | **data\_predict** (*array\_like**,* *optional*) – Points to evaluate at. If unspecified, the training data is used. |

| Returns: | **cdf\_est** – The estimate of the cdf. |

| Return type: | array\_like |

#### Notes

See <http://en.wikipedia.org/wiki/Cumulative_distribution_function> For more details on the estimation see Ref. [5] in module docstring.

The multivariate CDF for mixed data (continuous and ordered/unordered discrete) is estimated by:

\[F(x^{c},x^{d})=n^{-1}\sum\_{i=1}^{n}\left[G(\frac{x^{c}-X\_{i}}{h})\sum\_{u\leq x^{d}}L(X\_{i}^{d},x\_{i}^{d}, \lambda)\right]\] where G() is the product kernel CDF estimator for the continuous and L() for the discrete variables.

Used bandwidth is `self.bw`.

statsmodels statsmodels.discrete.discrete_model.NegativeBinomialP.cov_params_func_l1 statsmodels.discrete.discrete\_model.NegativeBinomialP.cov\_params\_func\_l1

============================================================================

`NegativeBinomialP.cov_params_func_l1(likelihood_model, xopt, retvals)`

Computes cov\_params on a reduced parameter space corresponding to the nonzero parameters resulting from the l1 regularized fit.

Returns a full cov\_params matrix, with entries corresponding to zero’d values set to np.nan.

statsmodels statsmodels.iolib.foreign.StataReader.dataset statsmodels.iolib.foreign.StataReader.dataset

=============================================

`StataReader.dataset(as_dict=False)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/iolib/foreign.html#StataReader.dataset)

Returns a Python generator object for iterating over the dataset.

| Parameters: | **as\_dict** (*bool**,* *optional*) – If as\_dict is True, yield each row of observations as a dict. If False, yields each row of observations as a list. |

| Returns: | * *Generator object for iterating over the dataset. Yields each row of*

* *observations as a list by default.*

|

#### Notes

If missing\_values is True during instantiation of StataReader then observations with \_StataMissingValue(s) are not filtered and should be handled by your applcation.

| programming_docs |

statsmodels statsmodels.graphics.tsaplots.plot_acf statsmodels.graphics.tsaplots.plot\_acf

=======================================

`statsmodels.graphics.tsaplots.plot_acf(x, ax=None, lags=None, alpha=0.05, use_vlines=True, unbiased=False, fft=False, title='Autocorrelation', zero=True, vlines_kwargs=None, **kwargs)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/graphics/tsaplots.html#plot_acf)

Plot the autocorrelation function

Plots lags on the horizontal and the correlations on vertical axis.

| Parameters: | * **x** (*array\_like*) – Array of time-series values

* **ax** (*Matplotlib AxesSubplot instance**,* *optional*) – If given, this subplot is used to plot in instead of a new figure being created.

* **lags** (*int* *or* *array\_like**,* *optional*) – int or Array of lag values, used on horizontal axis. Uses np.arange(lags) when lags is an int. If not provided, `lags=np.arange(len(corr))` is used.

* **alpha** (*scalar**,* *optional*) – If a number is given, the confidence intervals for the given level are returned. For instance if alpha=.05, 95 % confidence intervals are returned where the standard deviation is computed according to Bartlett’s formula. If None, no confidence intervals are plotted.

* **use\_vlines** (*bool**,* *optional*) – If True, vertical lines and markers are plotted. If False, only markers are plotted. The default marker is ‘o’; it can be overridden with a `marker` kwarg.

* **unbiased** (*bool*) – If True, then denominators for autocovariance are n-k, otherwise n

* **fft** (*bool**,* *optional*) – If True, computes the ACF via FFT.

* **title** (*str**,* *optional*) – Title to place on plot. Default is ‘Autocorrelation’

* **zero** (*bool**,* *optional*) – Flag indicating whether to include the 0-lag autocorrelation. Default is True.

* **vlines\_kwargs** ([dict](https://docs.python.org/3.2/library/stdtypes.html#dict "(in Python v3.2)")*,* *optional*) – Optional dictionary of keyword arguments that are passed to vlines.

* **\*\*kwargs** (*kwargs**,* *optional*) – Optional keyword arguments that are directly passed on to the Matplotlib `plot` and `axhline` functions.

|

| Returns: | **fig** – If `ax` is None, the created figure. Otherwise the figure to which `ax` is connected. |

| Return type: | Matplotlib figure instance |

See also

`matplotlib.pyplot.xcorr`, `matplotlib.pyplot.acorr`, `mpl_examples`

#### Notes

Adapted from matplotlib’s `xcorr`.

Data are plotted as `plot(lags, corr, **kwargs)`

kwargs is used to pass matplotlib optional arguments to both the line tracing the autocorrelations and for the horizontal line at 0. These options must be valid for a Line2D object.

vlines\_kwargs is used to pass additional optional arguments to the vertical lines connecting each autocorrelation to the axis. These options must be valid for a LineCollection object.

statsmodels statsmodels.tsa.statespace.sarimax.SARIMAXResults.cov_params_robust statsmodels.tsa.statespace.sarimax.SARIMAXResults.cov\_params\_robust

=====================================================================

`SARIMAXResults.cov_params_robust()`

(array) The QMLE variance / covariance matrix. Alias for `cov_params_robust_oim`

statsmodels statsmodels.tsa.statespace.sarimax.SARIMAX.from_formula statsmodels.tsa.statespace.sarimax.SARIMAX.from\_formula

========================================================

`classmethod SARIMAX.from_formula(formula, data, subset=None)`

Not implemented for state space models

statsmodels statsmodels.tsa.regime_switching.markov_autoregression.MarkovAutoregression.initialize_known statsmodels.tsa.regime\_switching.markov\_autoregression.MarkovAutoregression.initialize\_known

===============================================================================================

`MarkovAutoregression.initialize_known(probabilities, tol=1e-08)`

Set initialization of regime probabilities to use known values

statsmodels statsmodels.sandbox.regression.gmm.IVGMM.fititer statsmodels.sandbox.regression.gmm.IVGMM.fititer

================================================

`IVGMM.fititer(start, maxiter=2, start_invweights=None, weights_method='cov', wargs=(), optim_method='bfgs', optim_args=None)`

iterative estimation with updating of optimal weighting matrix

stopping criteria are maxiter or change in parameter estimate less than self.epsilon\_iter, with default 1e-6.

| Parameters: | * **start** (*array*) – starting value for parameters

* **maxiter** (*int*) – maximum number of iterations

* **start\_weights** (*array* *(**nmoms**,* *nmoms**)*) – initial weighting matrix; if None, then the identity matrix is used

* **weights\_method** (*{'cov'**,* *..}*) – method to use to estimate the optimal weighting matrix, see calc\_weightmatrix for details

|

| Returns: | * **params** (*array*) – estimated parameters

* **weights** (*array*) – optimal weighting matrix calculated with final parameter estimates

|

#### Notes

statsmodels statsmodels.robust.norms.RobustNorm.rho statsmodels.robust.norms.RobustNorm.rho

=======================================

`RobustNorm.rho(z)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/robust/norms.html#RobustNorm.rho)

The robust criterion estimator function.

Abstract method:

-2 loglike used in M-estimator

statsmodels statsmodels.tsa.ar_model.ARResults.initialize statsmodels.tsa.ar\_model.ARResults.initialize

==============================================

`ARResults.initialize(model, params, **kwd)`

statsmodels statsmodels.stats.moment_helpers.mnc2mvsk statsmodels.stats.moment\_helpers.mnc2mvsk

==========================================

`statsmodels.stats.moment_helpers.mnc2mvsk(args)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/stats/moment_helpers.html#mnc2mvsk)

convert central moments to mean, variance, skew, kurtosis

statsmodels statsmodels.sandbox.regression.gmm.IVGMM.score statsmodels.sandbox.regression.gmm.IVGMM.score

==============================================

`IVGMM.score(params, weights, epsilon=None, centered=True)`

statsmodels statsmodels.tools.tools.add_constant statsmodels.tools.tools.add\_constant

=====================================

`statsmodels.tools.tools.add_constant(data, prepend=True, has_constant='skip')` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tools/tools.html#add_constant)

Adds a column of ones to an array

| Parameters: | * **data** (*array-like*) – `data` is the column-ordered design matrix

* **prepend** (*bool*) – If true, the constant is in the first column. Else the constant is appended (last column).

* **has\_constant** (*str {'raise'**,* *'add'**,* *'skip'}*) – Behavior if `data` already has a constant. The default will return data without adding another constant. If ‘raise’, will raise an error if a constant is present. Using ‘add’ will duplicate the constant, if one is present.

|

| Returns: | **data** – The original values with a constant (column of ones) as the first or last column. Returned value depends on input type. |

| Return type: | array, recarray or DataFrame |

#### Notes

When the input is recarray or a pandas Series or DataFrame, the added column’s name is ‘const’.

statsmodels statsmodels.genmod.families.links.identity.deriv statsmodels.genmod.families.links.identity.deriv

================================================

`identity.deriv(p)`

Derivative of the power transform

| Parameters: | **p** (*array-like*) – Mean parameters |

| Returns: | **g’(p)** – Derivative of power transform of `p` |

| Return type: | array |

#### Notes

g’(`p`) = `power` \* `p`**(`power` - 1)

statsmodels statsmodels.graphics.functional.rainbowplot statsmodels.graphics.functional.rainbowplot

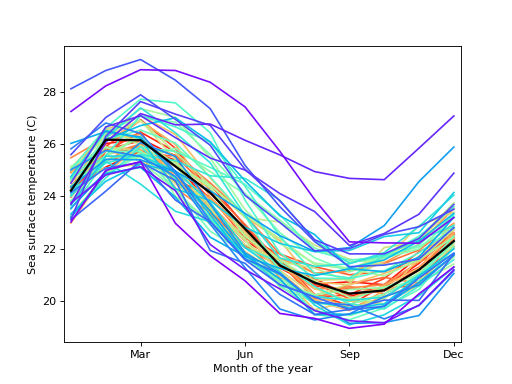

===========================================

`statsmodels.graphics.functional.rainbowplot(data, xdata=None, depth=None, method='MBD', ax=None, cmap=None)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/graphics/functional.html#rainbowplot)

Create a rainbow plot for a set of curves.

A rainbow plot contains line plots of all curves in the dataset, colored in order of functional depth. The median curve is shown in black.

| Parameters: | * **data** (*sequence of ndarrays* *or* *2-D ndarray*) – The vectors of functions to create a functional boxplot from. If a sequence of 1-D arrays, these should all be the same size. The first axis is the function index, the second axis the one along which the function is defined. So `data[0, :]` is the first functional curve.

* **xdata** (*ndarray**,* *optional*) – The independent variable for the data. If not given, it is assumed to be an array of integers 0..N-1 with N the length of the vectors in `data`.

* **depth** (*ndarray**,* *optional*) – A 1-D array of band depths for `data`, or equivalent order statistic. If not given, it will be calculated through `banddepth`.

* **method** (*{'MBD'**,* *'BD2'}**,* *optional*) – The method to use to calculate the band depth. Default is ‘MBD’.

* **ax** (*Matplotlib AxesSubplot instance**,* *optional*) – If given, this subplot is used to plot in instead of a new figure being created.

* **cmap** (*Matplotlib LinearSegmentedColormap instance**,* *optional*) – The colormap used to color curves with. Default is a rainbow colormap, with red used for the most central and purple for the least central curves.

|

| Returns: | **fig** – If `ax` is None, the created figure. Otherwise the figure to which `ax` is connected. |

| Return type: | Matplotlib figure instance |

See also

[`banddepth`](statsmodels.graphics.functional.banddepth#statsmodels.graphics.functional.banddepth "statsmodels.graphics.functional.banddepth"), [`fboxplot`](statsmodels.graphics.functional.fboxplot#statsmodels.graphics.functional.fboxplot "statsmodels.graphics.functional.fboxplot")

#### References

[1] R.J. Hyndman and H.L. Shang, “Rainbow Plots, Bagplots, and Boxplots for Functional Data”, vol. 19, pp. 29-25, 2010. #### Examples

Load the El Nino dataset. Consists of 60 years worth of Pacific Ocean sea surface temperature data.

```

>>> import matplotlib.pyplot as plt

>>> import statsmodels.api as sm

>>> data = sm.datasets.elnino.load()

```

Create a rainbow plot:

```

>>> fig = plt.figure()

>>> ax = fig.add_subplot(111)

>>> res = sm.graphics.rainbowplot(data.raw_data[:, 1:], ax=ax)

```

```

>>> ax.set_xlabel("Month of the year")

>>> ax.set_ylabel("Sea surface temperature (C)")

>>> ax.set_xticks(np.arange(13, step=3) - 1)

>>> ax.set_xticklabels(["", "Mar", "Jun", "Sep", "Dec"])

>>> ax.set_xlim([-0.2, 11.2])

>>> plt.show()

```

([Source code](../plots/graphics_functional_rainbowplot.py), [png](../plots/graphics_functional_rainbowplot.png), [hires.png](../plots/graphics_functional_rainbowplot.hires.png), [pdf](../plots/graphics_functional_rainbowplot.pdf))

statsmodels statsmodels.regression.recursive_ls.RecursiveLSResults.zvalues statsmodels.regression.recursive\_ls.RecursiveLSResults.zvalues

===============================================================

`RecursiveLSResults.zvalues()`

(array) The z-statistics for the coefficients.

statsmodels statsmodels.genmod.generalized_linear_model.GLMResults.null_deviance statsmodels.genmod.generalized\_linear\_model.GLMResults.null\_deviance

=======================================================================

`GLMResults.null_deviance()` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/genmod/generalized_linear_model.html#GLMResults.null_deviance)

statsmodels statsmodels.tsa.ar_model.AR.score statsmodels.tsa.ar\_model.AR.score

==================================

`AR.score(params)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/ar_model.html#AR.score)

Return the gradient of the loglikelihood at params.

| Parameters: | **params** (*array-like*) – The parameter values at which to evaluate the score function. |

#### Notes

Returns numerical gradient.

statsmodels statsmodels.tsa.vector_ar.vecm.VECMResults.conf_int_beta statsmodels.tsa.vector\_ar.vecm.VECMResults.conf\_int\_beta

===========================================================

`VECMResults.conf_int_beta(alpha=0.05)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/vector_ar/vecm.html#VECMResults.conf_int_beta)

statsmodels statsmodels.regression.linear_model.OLSResults.predict statsmodels.regression.linear\_model.OLSResults.predict

=======================================================

`OLSResults.predict(exog=None, transform=True, *args, **kwargs)`

Call self.model.predict with self.params as the first argument.

| Parameters: | * **exog** (*array-like**,* *optional*) – The values for which you want to predict. see Notes below.

* **transform** (*bool**,* *optional*) – If the model was fit via a formula, do you want to pass exog through the formula. Default is True. E.g., if you fit a model y ~ log(x1) + log(x2), and transform is True, then you can pass a data structure that contains x1 and x2 in their original form. Otherwise, you’d need to log the data first.

* **kwargs** (*args**,*) – Some models can take additional arguments or keywords, see the predict method of the model for the details.

|

| Returns: | **prediction** – See self.model.predict |

| Return type: | ndarray, [pandas.Series](http://pandas.pydata.org/pandas-docs/stable/generated/pandas.Series.html#pandas.Series "(in pandas v0.22.0)") or [pandas.DataFrame](http://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.html#pandas.DataFrame "(in pandas v0.22.0)") |

#### Notes

The types of exog that are supported depends on whether a formula was used in the specification of the model.

If a formula was used, then exog is processed in the same way as the original data. This transformation needs to have key access to the same variable names, and can be a pandas DataFrame or a dict like object.

If no formula was used, then the provided exog needs to have the same number of columns as the original exog in the model. No transformation of the data is performed except converting it to a numpy array.

Row indices as in pandas data frames are supported, and added to the returned prediction.

statsmodels statsmodels.discrete.discrete_model.BinaryResults.conf_int statsmodels.discrete.discrete\_model.BinaryResults.conf\_int

============================================================

`BinaryResults.conf_int(alpha=0.05, cols=None, method='default')`

Returns the confidence interval of the fitted parameters.

| Parameters: | * **alpha** (*float**,* *optional*) – The significance level for the confidence interval. ie., The default `alpha` = .05 returns a 95% confidence interval.

* **cols** (*array-like**,* *optional*) – `cols` specifies which confidence intervals to return

* **method** (*string*) – Not Implemented Yet Method to estimate the confidence\_interval. “Default” : uses self.bse which is based on inverse Hessian for MLE “hjjh” : “jac” : “boot-bse” “boot\_quant” “profile”

|

| Returns: | **conf\_int** – Each row contains [lower, upper] limits of the confidence interval for the corresponding parameter. The first column contains all lower, the second column contains all upper limits. |

| Return type: | array |

#### Examples

```

>>> import statsmodels.api as sm

>>> data = sm.datasets.longley.load()

>>> data.exog = sm.add_constant(data.exog)

>>> results = sm.OLS(data.endog, data.exog).fit()

>>> results.conf_int()

array([[-5496529.48322745, -1467987.78596704],

[ -177.02903529, 207.15277984],

[ -0.1115811 , 0.03994274],

[ -3.12506664, -0.91539297],

[ -1.5179487 , -0.54850503],

[ -0.56251721, 0.460309 ],

[ 798.7875153 , 2859.51541392]])

```

```

>>> results.conf_int(cols=(2,3))

array([[-0.1115811 , 0.03994274],

[-3.12506664, -0.91539297]])

```

#### Notes

The confidence interval is based on the standard normal distribution. Models wish to use a different distribution should overwrite this method.

statsmodels statsmodels.sandbox.distributions.extras.ACSkewT_gen.fit statsmodels.sandbox.distributions.extras.ACSkewT\_gen.fit

=========================================================

`ACSkewT_gen.fit(data, *args, **kwds)`

Return MLEs for shape (if applicable), location, and scale parameters from data.

MLE stands for Maximum Likelihood Estimate. Starting estimates for the fit are given by input arguments; for any arguments not provided with starting estimates, `self._fitstart(data)` is called to generate such.

One can hold some parameters fixed to specific values by passing in keyword arguments `f0`, `f1`, …, `fn` (for shape parameters) and `floc` and `fscale` (for location and scale parameters, respectively).

| Parameters: | * **data** (*array\_like*) – Data to use in calculating the MLEs.

* **args** (*floats**,* *optional*) – Starting value(s) for any shape-characterizing arguments (those not provided will be determined by a call to `_fitstart(data)`). No default value.

* **kwds** (*floats**,* *optional*) – Starting values for the location and scale parameters; no default. Special keyword arguments are recognized as holding certain parameters fixed:

+ f0…fn : hold respective shape parameters fixed. Alternatively, shape parameters to fix can be specified by name. For example, if `self.shapes == "a, b"`, `fa``and ``fix_a` are equivalent to `f0`, and `fb` and `fix_b` are equivalent to `f1`.

+ floc : hold location parameter fixed to specified value.

+ fscale : hold scale parameter fixed to specified value.

+ optimizer : The optimizer to use. The optimizer must take `func`, and starting position as the first two arguments, plus `args` (for extra arguments to pass to the function to be optimized) and `disp=0` to suppress output as keyword arguments.

|

| Returns: | **mle\_tuple** – MLEs for any shape parameters (if applicable), followed by those for location and scale. For most random variables, shape statistics will be returned, but there are exceptions (e.g. `norm`). |

| Return type: | tuple of floats |

#### Notes

This fit is computed by maximizing a log-likelihood function, with penalty applied for samples outside of range of the distribution. The returned answer is not guaranteed to be the globally optimal MLE, it may only be locally optimal, or the optimization may fail altogether.

#### Examples

Generate some data to fit: draw random variates from the `beta` distribution

```

>>> from scipy.stats import beta

>>> a, b = 1., 2.

>>> x = beta.rvs(a, b, size=1000)

```

Now we can fit all four parameters (`a`, `b`, `loc` and `scale`):

```

>>> a1, b1, loc1, scale1 = beta.fit(x)

```

We can also use some prior knowledge about the dataset: let’s keep `loc` and `scale` fixed:

```

>>> a1, b1, loc1, scale1 = beta.fit(x, floc=0, fscale=1)

>>> loc1, scale1

(0, 1)

```

We can also keep shape parameters fixed by using `f`-keywords. To keep the zero-th shape parameter `a` equal 1, use `f0=1` or, equivalently, `fa=1`:

```

>>> a1, b1, loc1, scale1 = beta.fit(x, fa=1, floc=0, fscale=1)

>>> a1

1

```

Not all distributions return estimates for the shape parameters. `norm` for example just returns estimates for location and scale:

```

>>> from scipy.stats import norm

>>> x = norm.rvs(a, b, size=1000, random_state=123)

>>> loc1, scale1 = norm.fit(x)

>>> loc1, scale1

(0.92087172783841631, 2.0015750750324668)

```

statsmodels statsmodels.tsa.statespace.varmax.VARMAX.score statsmodels.tsa.statespace.varmax.VARMAX.score

==============================================

`VARMAX.score(params, *args, **kwargs)`

Compute the score function at params.

| Parameters: | * **params** (*array\_like*) – Array of parameters at which to evaluate the score.

* **args** – Additional positional arguments to the `loglike` method.

* **kwargs** – Additional keyword arguments to the `loglike` method.

|

| Returns: | **score** – Score, evaluated at `params`. |

| Return type: | array |

#### Notes

This is a numerical approximation, calculated using first-order complex step differentiation on the `loglike` method.

Both \*args and \*\*kwargs are necessary because the optimizer from `fit` must call this function and only supports passing arguments via \*args (for example `scipy.optimize.fmin_l_bfgs`).

| programming_docs |

statsmodels statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults statsmodels.tsa.statespace.dynamic\_factor.DynamicFactorResults

===============================================================

`class statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults(model, params, filter_results, cov_type='opg', **kwargs)` [[source]](http://www.statsmodels.org/stable/_modules/statsmodels/tsa/statespace/dynamic_factor.html#DynamicFactorResults)

Class to hold results from fitting an DynamicFactor model.

| Parameters: | **model** (*DynamicFactor instance*) – The fitted model instance |

`specification`

*dictionary* – Dictionary including all attributes from the DynamicFactor model instance.

`coefficient_matrices_var`

*array* – Array containing autoregressive lag polynomial coefficient matrices, ordered from lowest degree to highest.

See also

[`statsmodels.tsa.statespace.kalman_filter.FilterResults`](statsmodels.tsa.statespace.kalman_filter.filterresults#statsmodels.tsa.statespace.kalman_filter.FilterResults "statsmodels.tsa.statespace.kalman_filter.FilterResults"), [`statsmodels.tsa.statespace.mlemodel.MLEResults`](statsmodels.tsa.statespace.mlemodel.mleresults#statsmodels.tsa.statespace.mlemodel.MLEResults "statsmodels.tsa.statespace.mlemodel.MLEResults")

#### Methods

| | |

| --- | --- |

| [`aic`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.aic#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.aic "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.aic")() | (float) Akaike Information Criterion |

| [`bic`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.bic#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.bic "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.bic")() | (float) Bayes Information Criterion |

| [`bse`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.bse#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.bse "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.bse")() | |

| [`coefficients_of_determination`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.coefficients_of_determination#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.coefficients_of_determination "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.coefficients_of_determination")() | Coefficients of determination (\(R^2\)) from regressions of individual estimated factors on endogenous variables. |

| [`conf_int`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.conf_int#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.conf_int "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.conf_int")([alpha, cols, method]) | Returns the confidence interval of the fitted parameters. |

| [`cov_params`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params")([r\_matrix, column, scale, cov\_p, …]) | Returns the variance/covariance matrix. |

| [`cov_params_approx`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_approx#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_approx "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_approx")() | (array) The variance / covariance matrix. |

| [`cov_params_oim`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_oim#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_oim "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_oim")() | (array) The variance / covariance matrix. |

| [`cov_params_opg`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_opg#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_opg "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_opg")() | (array) The variance / covariance matrix. |

| [`cov_params_robust`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_robust#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust")() | (array) The QMLE variance / covariance matrix. |

| [`cov_params_robust_approx`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_robust_approx#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust_approx "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust_approx")() | (array) The QMLE variance / covariance matrix. |

| [`cov_params_robust_oim`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.cov_params_robust_oim#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust_oim "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.cov_params_robust_oim")() | (array) The QMLE variance / covariance matrix. |

| [`f_test`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.f_test#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.f_test "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.f_test")(r\_matrix[, cov\_p, scale, invcov]) | Compute the F-test for a joint linear hypothesis. |

| [`fittedvalues`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.fittedvalues#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.fittedvalues "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.fittedvalues")() | (array) The predicted values of the model. |

| [`forecast`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.forecast#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.forecast "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.forecast")([steps]) | Out-of-sample forecasts |

| [`get_forecast`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.get_forecast#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.get_forecast "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.get_forecast")([steps]) | Out-of-sample forecasts |

| [`get_prediction`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.get_prediction#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.get_prediction "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.get_prediction")([start, end, dynamic, index, …]) | In-sample prediction and out-of-sample forecasting |

| [`hqic`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.hqic#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.hqic "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.hqic")() | (float) Hannan-Quinn Information Criterion |

| [`impulse_responses`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.impulse_responses#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.impulse_responses "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.impulse_responses")([steps, impulse, …]) | Impulse response function |

| [`info_criteria`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.info_criteria#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.info_criteria "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.info_criteria")(criteria[, method]) | Information criteria |

| [`initialize`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.initialize#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.initialize "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.initialize")(model, params, \*\*kwd) | |

| [`llf`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.llf#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.llf "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.llf")() | (float) The value of the log-likelihood function evaluated at `params`. |

| [`llf_obs`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.llf_obs#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.llf_obs "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.llf_obs")() | (float) The value of the log-likelihood function evaluated at `params`. |

| [`load`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.load#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.load "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.load")(fname) | load a pickle, (class method) |

| [`loglikelihood_burn`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.loglikelihood_burn#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.loglikelihood_burn "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.loglikelihood_burn")() | (float) The number of observations during which the likelihood is not evaluated. |

| [`normalized_cov_params`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.normalized_cov_params#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.normalized_cov_params "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.normalized_cov_params")() | |

| [`plot_coefficients_of_determination`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.plot_coefficients_of_determination#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.plot_coefficients_of_determination "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.plot_coefficients_of_determination")([…]) | Plot the coefficients of determination |

| [`plot_diagnostics`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.plot_diagnostics#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.plot_diagnostics "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.plot_diagnostics")([variable, lags, fig, figsize]) | Diagnostic plots for standardized residuals of one endogenous variable |

| [`predict`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.predict#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.predict "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.predict")([start, end, dynamic]) | In-sample prediction and out-of-sample forecasting |

| [`pvalues`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.pvalues#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.pvalues "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.pvalues")() | (array) The p-values associated with the z-statistics of the coefficients. |

| [`remove_data`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.remove_data#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.remove_data "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.remove_data")() | remove data arrays, all nobs arrays from result and model |

| [`resid`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.resid#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.resid "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.resid")() | (array) The model residuals. |

| [`save`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.save#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.save "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.save")(fname[, remove\_data]) | save a pickle of this instance |

| [`simulate`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.simulate#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.simulate "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.simulate")(nsimulations[, measurement\_shocks, …]) | Simulate a new time series following the state space model |

| [`summary`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.summary#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.summary "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.summary")([alpha, start, separate\_params]) | Summarize the Model |

| [`t_test`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.t_test#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.t_test "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.t_test")(r\_matrix[, cov\_p, scale, use\_t]) | Compute a t-test for a each linear hypothesis of the form Rb = q |

| [`t_test_pairwise`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.t_test_pairwise#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.t_test_pairwise "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.t_test_pairwise")(term\_name[, method, alpha, …]) | perform pairwise t\_test with multiple testing corrected p-values |

| [`test_heteroskedasticity`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.test_heteroskedasticity#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_heteroskedasticity "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_heteroskedasticity")(method[, …]) | Test for heteroskedasticity of standardized residuals |

| [`test_normality`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.test_normality#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_normality "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_normality")(method) | Test for normality of standardized residuals. |

| [`test_serial_correlation`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.test_serial_correlation#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_serial_correlation "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.test_serial_correlation")(method[, lags]) | Ljung-box test for no serial correlation of standardized residuals |

| [`tvalues`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.tvalues#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.tvalues "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.tvalues")() | Return the t-statistic for a given parameter estimate. |

| [`wald_test`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.wald_test#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.wald_test "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.wald_test")(r\_matrix[, cov\_p, scale, invcov, …]) | Compute a Wald-test for a joint linear hypothesis. |

| [`wald_test_terms`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.wald_test_terms#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.wald_test_terms "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.wald_test_terms")([skip\_single, …]) | Compute a sequence of Wald tests for terms over multiple columns |

| [`zvalues`](statsmodels.tsa.statespace.dynamic_factor.dynamicfactorresults.zvalues#statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.zvalues "statsmodels.tsa.statespace.dynamic_factor.DynamicFactorResults.zvalues")() | (array) The z-statistics for the coefficients. |

#### Attributes

| | |

| --- | --- |