code

stringlengths 2.5k

6.36M

| kind

stringclasses 2

values | parsed_code

stringlengths 0

404k

| quality_prob

float64 0

0.98

| learning_prob

float64 0.03

1

|

|---|---|---|---|---|

# Basics of Deep Learning

In this notebook, we will cover the basics behind Deep Learning. I'm talking about building a brain....

Only kidding. Deep learning is a fascinating new field that has exploded over the last few years. From being used as facial recognition in apps such as Snapchat or challenger banks, to more advanced use cases such as being used in [protein-folding](https://www.independent.co.uk/life-style/gadgets-and-tech/protein-folding-ai-deepmind-google-cancer-covid-b1764008.html).

In this notebook we will:

- Explain the building blocks of neural networks

- Go over some applications of Deep Learning

## Building blocks of Neural Networks

I have no doubt that you have heard/seen how similar neural networks are to....our brains.

### The Perceptron

The building block of neural networks. The perceptron has a rich history (covered in the background section of this book). The perceptron was created in 1958 by Frank Rosenblatt (I love that name) in Cornell, however, that story is for another day....or section in this book (backgrounds!),

The perceptron is an algorithm that can learn a binary classifier (e.g. is that a cat or dog?). This is known as a threshold function, which maps an input vector *x* to an output decision *$f(x)$ = output*. Here is the formal maths to better explain my verbal fluff:

$ f(x) = { 1 (if: w.x+b > 0), 0 (otherwise) $

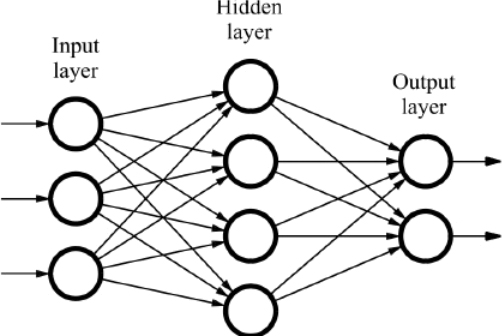

### The Artificial neural network

Lets take a look at the high level architecture first.

The gif above of a neural network classifying images is one of the best visual ways of understanding how neural networks, work. The neural network is made up of a few key concepts:

- An input: this is the data you pass into the network. For example, data relating to a customer (e.g. height, weight etc) or the pixels of an image

- An output: this is the prediction of the neural network

- A hidden layer: more on this later

- Neuron: the network is made up of neurons, that take an input, and give an output

Now, we have a slightly better understanding of what a neuron is. Lets look at a very simple neuron:

From the above image, you can clearly see the three components listed above together.

### But Abdi, what is the goal of a neural network?

Isn't it obvious? To me, it definitely was not when I first started to learn about neural networks. Neural networks are beautifully complex to understand, but with enough time and lots of youtube videos, you'll be able to master this topic.

The goal of a neural network is to make a pretty good guess of something. For example, a phone may have a face unlock feature. The phone probably got you to take a short video/images of yourself in order to set up this security feature, and when it **learned** your face, you were then able to use it to unlock your phone. This is pretty much what we do with neural networks. We teach it by giving it data, and making sure it gets better at making predictions by adjusting the weights between neurons. More on this soon.

## Gradient Descent Algo

One of the best videos on neural networks, by 3Blue1Brown:

<figure class="video_container">

<iframe src="https://www.youtube.com/watch?v=aircAruvnKk" frameborder="0" allowfullscreen="true"> </iframe>

</figure>

His series on Neural networks and Linear algebra are golden sources for learning Deep Learning.

### Simple Gradient Descent Implementation

with the help from our friends over at Udacity, please view below an implementation of the Gradient Descent Algo. This is a very basic neural network that only has its inputs linked directly to the outputs.

We begin by defining some functions.

```

import numpy as np

# We will be using a sigmoid activation function

def sigmoid(x):

return 1/(1+np.exp(-x))

# derivation of sigmoid(x) - will be used for backpropagating errors through the network

def sigmoid_prime(x):

return sigmoid(x)*(1-sigmoid(x))

```

We begin by defining a simple neural network:

- two input neurons: x1 and x2

- one output neuron: y1

```

x = np.array([1,5])

y = 0.4

```

We now define the weights, w1 and w2 for the two input neurons; x1 and x2. Also, we define a learning rate that will help us control our gradient descent step

```

weights = np.array([-0.2,0.4])

learnrate = 0.5

```

we now start moving forwards through the network, known as feed forward. We can combine the input vector with the weight vector using numpy's dot product

```

# linear combination

# h = x[0]*weights[0] + x[1]*weights[1]

h = np.dot(x, weights)

```

We now apply our non-linearity, this will provide us with our output.

```

# apply non-linearity

output = sigmoid(h)

```

Now that we have our prediction, we are able to determine the error of our neural network. Here, we will use the difference between our actual and predicted.

```

error = y - output

```

The goal now is to determine how to change our weights in order to reduce the error above. This is where our good friend gradient descent and the chain rule come into play:

- we determine the derivative of our error with respect to our input weights. Hence:

- change in weights = $ \frac{d}{dw_{i}} \frac{1}{2}{(y - \hat{y})^2}$

- simplifies to = learning rate * error term * $ x_{i}$

- where:

- learning rate = $ n $

- error term = $ (y - \hat{y}) * f'(h) $

- h = $ \sum_{i} W_{i} x_{i} $

We begin by calculating our f'(h)

```

# output gradient - derivative of activation function

output_gradient = sigmoid_prime(h)

```

Now, we can calcualte our error term

```

error_trm = error * output_gradient

```

With that, we can update our weights by combining the error term, learning rate and our x

```

#gradient desc step - updating the weights

dsc_step = [

learnrate * error_trm * x[0],

learnrate * error_trm * x[1]

]

```

Which leaves...

```

print(f'Actual: {y}')

print(f'NN output: {output}')

print(f'Error: {error}')

print(f'Weight change: {dsc_step}')

```

### More in depth...

Lets now build our own end to end example. we will begin by creating some fake data, followed by implementing our neural network.

```

x = np.random.rand(200,2)

y = np.random.randint(low=0, high=2, size=(200,1))

no_data_points, no_features = x.shape

def sig(x):

'''Calc for sigmoid'''

return 1 / (1+np.exp(-x))

weights = np.random.normal(scale=1/no_features**.5, size=no_features)

epochs = 1000

learning_rate = 0.5

last_loss = None

for single_data_pass in range(epochs):

# Creating a weight change tracker

change_in_weights = np.zeros(weights.shape)

for x_i, y_i in zip(x, y):

h = np.dot(x_i, weights)

y_hat = sigmoid(h)

error = y_i - y_hat

# error term = error * f'(h)

error_term = error * (y_hat * (1-y_hat))

# now multiply this by the current x & add to our weight update

change_in_weights += (error_term * x_i)

# now update the actual weights

weights += (learning_rate * change_in_weights / no_data_points)

# print the loss every 100th pass

if single_data_pass % (epochs/10) == 0:

# use current weights in NN to determine outputs

output = sigmoid(np.dot(x_i,weights))

# find the loss

loss = np.mean((output-y_i)**2)

#

if last_loss and last_loss < loss:

print(f'Train loss: {loss}, WARNING - Loss is inscreasing')

else:

print(f'Training loss: {loss}')

last_loss = loss

```

## Multilayer NN

Now, lets build upon our neural network, but this time, we have a hidden layer.

Lets first see how to build the network to make predictions.

```

X = np.random.randn(4)

weights_input_to_hidden = np.random.normal(0, scale=0.1, size=(4, 3))

weights_hidden_to_output = np.random.normal(0, scale=0.1, size=(3, 2))

sum_input = np.dot(X, weights_input_to_hidden)

h = sigmoid(sum_input)

sum_h = np.dot(h, weights_hidden_to_output)

y_pred = sigmoid(sum_h)

```

## Backpropa what?

Ok, so now, how do we refine our weights? Well, this is where **backpropagation** comes in. After feeding our data forwards through the network, using feed forward, we propagate our errors backwards, making use of things such as the chain rule.

Lets do an implementation.

```

# we have three input nodes

x = np.array([0.5, 0.2, -0.3])

# one output node

y = 0.7

learnrate = 0.5

# 2 nodes in hidden layer

weights_input_hidden = np.array(

[

[0.5, -0.6], [0.1, -0.2], [0.1, 0.7]

]

)

weights_hidden_output = np.array([

0.1,-0.3

])

# feeding data forwards through the network

hidden_layer_input = np.dot(x, weights_input_hidden)

hidden_layer_output = sigmoid(hidden_layer_input)

#---

output_layer_input = np.dot(hidden_layer_output, weights_hidden_output)

y_hat = sigmoid(output_layer_input)

# backward propagate the errors to tune the weights

# 1. calculate errors

error = y - y_hat

output_node_error_term = error * (y_hat * (1-y_hat))

#----

hidden_node_error_term = weights_hidden_output * output_node_error_term *(hidden_layer_output * (1-hidden_layer_output))

# 2. calculate weight changes

delta_w_output_node = learnrate * output_node_error_term * hidden_layer_output

#-----

delta_w_hidden_node = learnrate * hidden_node_error_term * x[:,None]

print(f'Original weights:\n{weights_input_hidden}\n{weights_hidden_output}')

print()

print('Change in weights for hidden layer to output layer:')

print(delta_w_output_node)

print('Change in weights for input layer to hidden layer:')

print(delta_w_hidden_node)

```

## Putting it all together

```

features = np.random.rand(200,2)

target = np.random.randint(low=0, high=2, size=(200,1))

def complete_backprop(x,y):

'''Complete implementation of backpropagation'''

n_hidden_units = 2

epochs = 900

learnrate = 0.005

n_records, n_features = features.shape

last_loss = None

w_input_to_hidden = np.random.normal(scale=1/n_features**.5,size=(n_features, n_hidden_units))

w_hidden_to_output = np.random.normal(scale=1/n_features**.5, size=n_hidden_units)

for single_epoch in range(epochs):

delw_input_to_hidden = np.zeros(w_input_to_hidden.shape)

delw_hidden_to_output = np.zeros(w_hidden_to_output.shape)

for x,y in zip(features, target):

# ----------------------

# 1. Feed data forwards

# ----------------------

hidden_layer_input = np.dot(x,w_input_to_hidden)

hidden_layer_output = sigmoid(hidden_layer_input)

output_layer_input = np.dot(hidden_layer_output, w_hidden_to_output)

output_layer_output = sigmoid(output_layer_input)

# ----------------------

# 2. Backpropagate the errors

# ----------------------

# error at output layer

prediction_error = y - output_layer_output

output_error_term = prediction_error * (output_layer_output * (1-output_layer_output))

# error at hidden layer (propagated from output layer)

# scale error from output layer by weights

hidden_layer_error = np.multiply(output_error_term, w_hidden_to_output)

hidden_error_term = hidden_layer_error * (hidden_layer_output * (1-hidden_layer_output))

# ----------------------

# 3. Find change of weights for each data point

# ----------------------

delw_hidden_to_output += output_error_term * hidden_layer_output

delw_input_to_hidden += hidden_error_term * x[:,None]

# Now update the actual weights

w_hidden_to_output += learnrate * delw_hidden_to_output / n_records

w_input_to_hidden += learnrate * delw_input_to_hidden / n_records

# Printing out the mean square error on the training set

if single_epoch % (epochs / 10) == 0:

hidden_output = sigmoid(np.dot(x, w_input_to_hidden))

out = sigmoid(np.dot(hidden_output,

w_hidden_to_output))

loss = np.mean((out - target) ** 2)

if last_loss and last_loss < loss:

print("Train loss: ", loss, " WARNING - Loss Increasing")

else:

print("Train loss: ", loss)

last_loss = loss

complete_backprop(features,target)

```

|

github_jupyter

|

import numpy as np

# We will be using a sigmoid activation function

def sigmoid(x):

return 1/(1+np.exp(-x))

# derivation of sigmoid(x) - will be used for backpropagating errors through the network

def sigmoid_prime(x):

return sigmoid(x)*(1-sigmoid(x))

x = np.array([1,5])

y = 0.4

weights = np.array([-0.2,0.4])

learnrate = 0.5

# linear combination

# h = x[0]*weights[0] + x[1]*weights[1]

h = np.dot(x, weights)

# apply non-linearity

output = sigmoid(h)

error = y - output

# output gradient - derivative of activation function

output_gradient = sigmoid_prime(h)

error_trm = error * output_gradient

#gradient desc step - updating the weights

dsc_step = [

learnrate * error_trm * x[0],

learnrate * error_trm * x[1]

]

print(f'Actual: {y}')

print(f'NN output: {output}')

print(f'Error: {error}')

print(f'Weight change: {dsc_step}')

x = np.random.rand(200,2)

y = np.random.randint(low=0, high=2, size=(200,1))

no_data_points, no_features = x.shape

def sig(x):

'''Calc for sigmoid'''

return 1 / (1+np.exp(-x))

weights = np.random.normal(scale=1/no_features**.5, size=no_features)

epochs = 1000

learning_rate = 0.5

last_loss = None

for single_data_pass in range(epochs):

# Creating a weight change tracker

change_in_weights = np.zeros(weights.shape)

for x_i, y_i in zip(x, y):

h = np.dot(x_i, weights)

y_hat = sigmoid(h)

error = y_i - y_hat

# error term = error * f'(h)

error_term = error * (y_hat * (1-y_hat))

# now multiply this by the current x & add to our weight update

change_in_weights += (error_term * x_i)

# now update the actual weights

weights += (learning_rate * change_in_weights / no_data_points)

# print the loss every 100th pass

if single_data_pass % (epochs/10) == 0:

# use current weights in NN to determine outputs

output = sigmoid(np.dot(x_i,weights))

# find the loss

loss = np.mean((output-y_i)**2)

#

if last_loss and last_loss < loss:

print(f'Train loss: {loss}, WARNING - Loss is inscreasing')

else:

print(f'Training loss: {loss}')

last_loss = loss

X = np.random.randn(4)

weights_input_to_hidden = np.random.normal(0, scale=0.1, size=(4, 3))

weights_hidden_to_output = np.random.normal(0, scale=0.1, size=(3, 2))

sum_input = np.dot(X, weights_input_to_hidden)

h = sigmoid(sum_input)

sum_h = np.dot(h, weights_hidden_to_output)

y_pred = sigmoid(sum_h)

# we have three input nodes

x = np.array([0.5, 0.2, -0.3])

# one output node

y = 0.7

learnrate = 0.5

# 2 nodes in hidden layer

weights_input_hidden = np.array(

[

[0.5, -0.6], [0.1, -0.2], [0.1, 0.7]

]

)

weights_hidden_output = np.array([

0.1,-0.3

])

# feeding data forwards through the network

hidden_layer_input = np.dot(x, weights_input_hidden)

hidden_layer_output = sigmoid(hidden_layer_input)

#---

output_layer_input = np.dot(hidden_layer_output, weights_hidden_output)

y_hat = sigmoid(output_layer_input)

# backward propagate the errors to tune the weights

# 1. calculate errors

error = y - y_hat

output_node_error_term = error * (y_hat * (1-y_hat))

#----

hidden_node_error_term = weights_hidden_output * output_node_error_term *(hidden_layer_output * (1-hidden_layer_output))

# 2. calculate weight changes

delta_w_output_node = learnrate * output_node_error_term * hidden_layer_output

#-----

delta_w_hidden_node = learnrate * hidden_node_error_term * x[:,None]

print(f'Original weights:\n{weights_input_hidden}\n{weights_hidden_output}')

print()

print('Change in weights for hidden layer to output layer:')

print(delta_w_output_node)

print('Change in weights for input layer to hidden layer:')

print(delta_w_hidden_node)

features = np.random.rand(200,2)

target = np.random.randint(low=0, high=2, size=(200,1))

def complete_backprop(x,y):

'''Complete implementation of backpropagation'''

n_hidden_units = 2

epochs = 900

learnrate = 0.005

n_records, n_features = features.shape

last_loss = None

w_input_to_hidden = np.random.normal(scale=1/n_features**.5,size=(n_features, n_hidden_units))

w_hidden_to_output = np.random.normal(scale=1/n_features**.5, size=n_hidden_units)

for single_epoch in range(epochs):

delw_input_to_hidden = np.zeros(w_input_to_hidden.shape)

delw_hidden_to_output = np.zeros(w_hidden_to_output.shape)

for x,y in zip(features, target):

# ----------------------

# 1. Feed data forwards

# ----------------------

hidden_layer_input = np.dot(x,w_input_to_hidden)

hidden_layer_output = sigmoid(hidden_layer_input)

output_layer_input = np.dot(hidden_layer_output, w_hidden_to_output)

output_layer_output = sigmoid(output_layer_input)

# ----------------------

# 2. Backpropagate the errors

# ----------------------

# error at output layer

prediction_error = y - output_layer_output

output_error_term = prediction_error * (output_layer_output * (1-output_layer_output))

# error at hidden layer (propagated from output layer)

# scale error from output layer by weights

hidden_layer_error = np.multiply(output_error_term, w_hidden_to_output)

hidden_error_term = hidden_layer_error * (hidden_layer_output * (1-hidden_layer_output))

# ----------------------

# 3. Find change of weights for each data point

# ----------------------

delw_hidden_to_output += output_error_term * hidden_layer_output

delw_input_to_hidden += hidden_error_term * x[:,None]

# Now update the actual weights

w_hidden_to_output += learnrate * delw_hidden_to_output / n_records

w_input_to_hidden += learnrate * delw_input_to_hidden / n_records

# Printing out the mean square error on the training set

if single_epoch % (epochs / 10) == 0:

hidden_output = sigmoid(np.dot(x, w_input_to_hidden))

out = sigmoid(np.dot(hidden_output,

w_hidden_to_output))

loss = np.mean((out - target) ** 2)

if last_loss and last_loss < loss:

print("Train loss: ", loss, " WARNING - Loss Increasing")

else:

print("Train loss: ", loss)

last_loss = loss

complete_backprop(features,target)

| 0.678753 | 0.989712 |

```

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import requests

import time

from config import weatherKey

from citipy import citipy

from scipy.stats import linregress

weatherAPIurl = f"http://api.openweathermap.org/data/2.5/weather?units=Imperial&APPID={weatherKey}&q="

outputPath = "./output/cities.csv"

citiesTargetTotal = 500

cityCoordinateList = []

cityUsedList = []

#generate random list of coordinates

cityLatRand = np.random.uniform(low = -90, high = 90, size = (citiesTargetTotal*3))

cityLongRand = np.random.uniform(low = -90, high = 90, size = (citiesTargetTotal*3))

cityCoordinateList = zip(cityLatRand, cityLongRand)

#associate each coordinate with nearest city

for x in cityCoordinateList:

city = citipy.nearest_city(x[0], x[1]).city_name

if city not in cityUsedList:

cityUsedList.append(city)

cityWeather = []

print("Retrieving data from openweathermap.org")

print("---------------------------------------")

recordCount = 1

setCount = 1

for index, city in enumerate(cityUsedList):

if(index % 50 == 0 and index >= 50):

recordCount = 0

setCount += 1

lookupURL = weatherAPIurl + city

print(f'Gathering Record {recordCount} of Set {setCount} |{city}')

recordCount += 1

try:

response = requests.get(lookupURL).json()

latitude = response["coord"]["lat"]

longitude = response["coord"]["lon"]

maxTemperature = response["main"]["temp_max"]

humidity = response["main"]["humidity"]

cloudCoverage = response["clouds"]["all"]

wind = response["wind"]["speed"]

country = response["sys"]["country"]

date = response["dt"]

cityWeather.append({"City:" : city,

"Latitude:" : latitude,

"Longitude:" : longitude,

"Max Temp:" : maxTemperature,

"Humidity:" : humidity,

"Cloud Coverage:" : cloudCoverage,

"Wind:" : wind,

"Country:" : country,

"Date:" : date,

})

except:

print(f'{city} not found in data set')

continue

print("---------------------------------------")

print("Data retrieval complete!")

cityWeather_df = pd.DataFrame(cityWeather)

latitude = cityWeather_df["Latitude:"]

maxTemperature = cityWeather_df["Max Temp:"]

humidity = cityWeather_df["Humidity:"]

cloudCoverage = cityWeather_df["Cloud Coverage:"]

wind = cityWeather_df["Wind:"]

cityWeather_df.to_csv(outputPath)

plt.scatter(latitude, maxTemperature, marker = "o", label = "Cities", edgecolor = "orange")

plt.title(f"City Latitude vs Highest Temperature {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Temperature (F)")

plt.savefig("./output/Lat vs. Temp.png")

plt.show()

#it was hottest around 35 latitude and gets colder the further you get away from that latitude

plt.scatter(latitude, humidity, marker = "o", edgecolor = "pink", color = "green")

plt.title(f"City Latitude vs Humidity {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Humidity (%)")

plt.savefig("./output/Lat vs. Humidity.png")

plt.show()

#little change in humidity with change in latitude

plt.scatter(latitude, wind, marker = "o", edgecolor = "green", color = "pink")

plt.title(f"City Latitude vs Wind Speed {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Wind Speed (mph)")

plt.savefig("./output/Lat vs. Wind Speed.png")

plt.show()

#little change in windspeed with change in latitude

plt.scatter(latitude, cloudCoverage, marker = "o", edgecolor = "blue", color = "red")

plt.title(f"City Latitude vs Cloud Coverage {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Cloudiness (%)")

plt.savefig("./output/Lat vs. Cloudiness.png")

plt.show()

#there were a lot of clouds just above the equator on this day

#northern and southern hemisphere dataframes

north_df = cityWeather_df.loc[(cityWeather_df["Latitude:"] >= 0)]

south_df = cityWeather_df.loc[(cityWeather_df["Latitude:"] < 0)]

def plotLinearRegression(x_values, y_values, yLabel, text_coordinates):

(slope, intercept, rvalue, pvalue, stderr) = linregress(x_values, y_values)

regress_values = x_values * slope + intercept

line_eq = "y = " + str(round(slope, 2)) + " x + " + str(round(intercept, 2))

plt.scatter(x_values, y_values)

plt.plot(x_values, regress_values, "r-")

plt.annotate(line_eq, text_coordinates, fontsize = 15, color = "red")

plt.xlabel("Latitude")

plt.ylabel(yLabel)

print(f"The r-squared is : {rvalue}")

plt.show()

#northern hemisphere - Lat vs Max Temp

x_values = north_df["Latitude:"]

y_values = north_df["Max Temp:"]

plotLinearRegression(x_values, y_values, "Max Temp", (20,40))

#the further north lower the max temp

#southern hemisphere - Lat vs Max Temp

x_values = south_df["Latitude:"]

y_values = south_df["Max Temp:"]

plotLinearRegression(x_values, y_values, "Max Temp", (-50,80))

#temperature rises the closer you get to the equator

#northern hemisphere - Lat vs Humidity

x_values = north_df["Latitude:"]

y_values = north_df["Humidity:"]

plotLinearRegression(x_values, y_values, "Humidity", (45,10))

#no relationship between humidity and latitude based off the information in this plot

#southern hemisphere - Lat vs Humidity

x_values = south_df["Latitude:"]

y_values = south_df["Humidity:"]

plotLinearRegression(x_values, y_values, "Humidity", (-55,10))

#little relationship between latitude and humidity in the southern hemisphere on this day.

#northern hemisphere - Lat vs Cloudiness

x_values = north_df["Latitude:"]

y_values = north_df["Cloud Coverage:"]

plotLinearRegression(x_values, y_values, "Cloudiness (%)", (45,10))

#small decrease in reported clouds the further north you go in the Northern Hemisphere.

#southern hemisphere - Lat vs Cloudiness

x_values = south_df["Latitude:"]

y_values = south_df["Cloud Coverage:"]

plotLinearRegression(x_values, y_values, "Cloudiness (%)", (-50,70))

#increase in reported clouds the closer to equator

#northern hemisphere - Lat vs Wind Speed

x_values = north_df["Latitude:"]

y_values = north_df["Wind:"]

plotLinearRegression(x_values, y_values, "Wind Speed (%)", (45,20))

#little relationship between windspeed and latitude in the northern hemisphere

#southern hemisphere - Lat vs Wind Speed

x_values = south_df["Latitude:"]

y_values = south_df["Wind:"]

plotLinearRegression(x_values, y_values, "Wind Speed (%)", (-30,30))

#higher reported wind speed the closer to the equator within the southern hemisphere

```

|

github_jupyter

|

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import requests

import time

from config import weatherKey

from citipy import citipy

from scipy.stats import linregress

weatherAPIurl = f"http://api.openweathermap.org/data/2.5/weather?units=Imperial&APPID={weatherKey}&q="

outputPath = "./output/cities.csv"

citiesTargetTotal = 500

cityCoordinateList = []

cityUsedList = []

#generate random list of coordinates

cityLatRand = np.random.uniform(low = -90, high = 90, size = (citiesTargetTotal*3))

cityLongRand = np.random.uniform(low = -90, high = 90, size = (citiesTargetTotal*3))

cityCoordinateList = zip(cityLatRand, cityLongRand)

#associate each coordinate with nearest city

for x in cityCoordinateList:

city = citipy.nearest_city(x[0], x[1]).city_name

if city not in cityUsedList:

cityUsedList.append(city)

cityWeather = []

print("Retrieving data from openweathermap.org")

print("---------------------------------------")

recordCount = 1

setCount = 1

for index, city in enumerate(cityUsedList):

if(index % 50 == 0 and index >= 50):

recordCount = 0

setCount += 1

lookupURL = weatherAPIurl + city

print(f'Gathering Record {recordCount} of Set {setCount} |{city}')

recordCount += 1

try:

response = requests.get(lookupURL).json()

latitude = response["coord"]["lat"]

longitude = response["coord"]["lon"]

maxTemperature = response["main"]["temp_max"]

humidity = response["main"]["humidity"]

cloudCoverage = response["clouds"]["all"]

wind = response["wind"]["speed"]

country = response["sys"]["country"]

date = response["dt"]

cityWeather.append({"City:" : city,

"Latitude:" : latitude,

"Longitude:" : longitude,

"Max Temp:" : maxTemperature,

"Humidity:" : humidity,

"Cloud Coverage:" : cloudCoverage,

"Wind:" : wind,

"Country:" : country,

"Date:" : date,

})

except:

print(f'{city} not found in data set')

continue

print("---------------------------------------")

print("Data retrieval complete!")

cityWeather_df = pd.DataFrame(cityWeather)

latitude = cityWeather_df["Latitude:"]

maxTemperature = cityWeather_df["Max Temp:"]

humidity = cityWeather_df["Humidity:"]

cloudCoverage = cityWeather_df["Cloud Coverage:"]

wind = cityWeather_df["Wind:"]

cityWeather_df.to_csv(outputPath)

plt.scatter(latitude, maxTemperature, marker = "o", label = "Cities", edgecolor = "orange")

plt.title(f"City Latitude vs Highest Temperature {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Temperature (F)")

plt.savefig("./output/Lat vs. Temp.png")

plt.show()

#it was hottest around 35 latitude and gets colder the further you get away from that latitude

plt.scatter(latitude, humidity, marker = "o", edgecolor = "pink", color = "green")

plt.title(f"City Latitude vs Humidity {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Humidity (%)")

plt.savefig("./output/Lat vs. Humidity.png")

plt.show()

#little change in humidity with change in latitude

plt.scatter(latitude, wind, marker = "o", edgecolor = "green", color = "pink")

plt.title(f"City Latitude vs Wind Speed {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Wind Speed (mph)")

plt.savefig("./output/Lat vs. Wind Speed.png")

plt.show()

#little change in windspeed with change in latitude

plt.scatter(latitude, cloudCoverage, marker = "o", edgecolor = "blue", color = "red")

plt.title(f"City Latitude vs Cloud Coverage {time.strftime('%x')}")

plt.xlabel("Latitude")

plt.ylabel("Cloudiness (%)")

plt.savefig("./output/Lat vs. Cloudiness.png")

plt.show()

#there were a lot of clouds just above the equator on this day

#northern and southern hemisphere dataframes

north_df = cityWeather_df.loc[(cityWeather_df["Latitude:"] >= 0)]

south_df = cityWeather_df.loc[(cityWeather_df["Latitude:"] < 0)]

def plotLinearRegression(x_values, y_values, yLabel, text_coordinates):

(slope, intercept, rvalue, pvalue, stderr) = linregress(x_values, y_values)

regress_values = x_values * slope + intercept

line_eq = "y = " + str(round(slope, 2)) + " x + " + str(round(intercept, 2))

plt.scatter(x_values, y_values)

plt.plot(x_values, regress_values, "r-")

plt.annotate(line_eq, text_coordinates, fontsize = 15, color = "red")

plt.xlabel("Latitude")

plt.ylabel(yLabel)

print(f"The r-squared is : {rvalue}")

plt.show()

#northern hemisphere - Lat vs Max Temp

x_values = north_df["Latitude:"]

y_values = north_df["Max Temp:"]

plotLinearRegression(x_values, y_values, "Max Temp", (20,40))

#the further north lower the max temp

#southern hemisphere - Lat vs Max Temp

x_values = south_df["Latitude:"]

y_values = south_df["Max Temp:"]

plotLinearRegression(x_values, y_values, "Max Temp", (-50,80))

#temperature rises the closer you get to the equator

#northern hemisphere - Lat vs Humidity

x_values = north_df["Latitude:"]

y_values = north_df["Humidity:"]

plotLinearRegression(x_values, y_values, "Humidity", (45,10))

#no relationship between humidity and latitude based off the information in this plot

#southern hemisphere - Lat vs Humidity

x_values = south_df["Latitude:"]

y_values = south_df["Humidity:"]

plotLinearRegression(x_values, y_values, "Humidity", (-55,10))

#little relationship between latitude and humidity in the southern hemisphere on this day.

#northern hemisphere - Lat vs Cloudiness

x_values = north_df["Latitude:"]

y_values = north_df["Cloud Coverage:"]

plotLinearRegression(x_values, y_values, "Cloudiness (%)", (45,10))

#small decrease in reported clouds the further north you go in the Northern Hemisphere.

#southern hemisphere - Lat vs Cloudiness

x_values = south_df["Latitude:"]

y_values = south_df["Cloud Coverage:"]

plotLinearRegression(x_values, y_values, "Cloudiness (%)", (-50,70))

#increase in reported clouds the closer to equator

#northern hemisphere - Lat vs Wind Speed

x_values = north_df["Latitude:"]

y_values = north_df["Wind:"]

plotLinearRegression(x_values, y_values, "Wind Speed (%)", (45,20))

#little relationship between windspeed and latitude in the northern hemisphere

#southern hemisphere - Lat vs Wind Speed

x_values = south_df["Latitude:"]

y_values = south_df["Wind:"]

plotLinearRegression(x_values, y_values, "Wind Speed (%)", (-30,30))

#higher reported wind speed the closer to the equator within the southern hemisphere

| 0.397588 | 0.468851 |

<a href="https://colab.research.google.com/github/mghendi/feedbackclassifier/blob/main/Feedback_and_Question_Classifier.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

## CCI508 - Language Technology Project

### Name: Samuel Mwamburi Mghendi

### Admission Number: P52/37621/2020

### Email: [email protected]

### Course: Language Technology – CCI 508

#### Applying Natural Language Processing (NLP) in the Classification of Bugs, Tasks and Improvements for feedback and questions received by Software Developers.

#### This report is organised as follows.

1. Data Description

* Data Loading and Preparation

* Exploratory Data Analysis

2. Data Preprocessing and Modelling

* Data Preprocessing

* Modelling

3. Model Evaluation

4. Conclusion

### 1. Data Description

#### Data Loading and Preparation

#### Initialization

```

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import pymysql

import os

import datetime

```

#### Import Data from Database

```

database = pymysql.connect (host="localhost", user = "root", passwd = "password", db = "helpdesk")

cursor1 = database.cursor()

cursor1.execute("select * from issues limit 5;")

results = cursor1.fetchall()

print(results)

import pandas as pd

df = pd.read_sql_query("select * from issues limit 70;", database)

df

from sklearn import preprocessing

from nltk.corpus import stopwords

from nltk.stem.snowball import SnowballStemmer

import matplotlib

from matplotlib import pyplot as plt

```

#### Exploratory Data Analysis

```

df.describe()

df.info()

df['issue_type'].astype(str)

del df['id']

del df['created']

del df['user']

df.groupby(['issue_type']).count().plot.bar(ylim=0)

plt.show()

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

```

#### Remove StopWords from issues

```

import nltk

nltk.download('stopwords')

sw = stopwords.words('english')

def stopwords(summary):

summary = [word.lower() for word in summary.split() if word.lower() not in sw]

return " ".join(summary)

df['summary'] = df['summary'].apply(stopwords)

df

```

#### Replacing non-ASCII characters with spaces

```

from unidecode import unidecode

def remove_non_ascii(summary):

return ''.join([i if ord(i) < 128 else ' ' for i in summary])

df['summary'] = df['summary'].apply(remove_non_ascii)

df

```

#### Removing HTML tags from issues

```

def remove_html_tags(summary):

import re

clean = re.compile('<.*?>')

return re.sub(clean, '', summary)

df['summary'] = df['summary'].apply(remove_html_tags)

df

```

#### Removing punctuations from issues

```

def remove_punctuation(summary):

import string

for c in string.punctuation:

summary = summary.replace(c," ")

return summary

df['summary'] = df['summary'].apply(remove_punctuation)

df

```

#### Lowercase all issues

```

def lowercase(summary):

import string

for c in string:

summary = summary.lower(c)

return summary

```

#### Removing emoticons from issues

```

def remove_emoticons(summary):

import re

regrex_pattern = re.compile(pattern = "["

u"\U0001F600-\U0001F64F" # emoticons

u"\U0001F300-\U0001F5FF" # symbols & pictographs

u"\U0001F680-\U0001F6FF" # transport & map symbols

u"\U0001F1E0-\U0001F1FF" # flags (iOS)

"]+", flags = re.UNICODE)

return regrex_pattern.sub(r'',summary)

df['summary'] = df['summary'].apply(remove_emoticons)

df

```

### 2. Data Preprocessing and Modelling

#### Converting Text to Numerical Vector

```

import pandas as pd

import numpy as np

import nltk

import string

import math

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import SGDClassifier

from sklearn.model_selection import train_test_split

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import confusion_matrix

from sklearn.metrics import accuracy_score

from sklearn import metrics

import re

import string

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer

from nltk.stem.wordnet import WordNetLemmatizer

df["issue_type"].replace({"Bug": "0", "Task": "1", "Improvement": "2"}, inplace=True)

print(df)

df["issue_type"].value_counts(normalize= True)

```

#### Vectorize sentences

```

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer(min_df=0, lowercase=False)

vectorizer.fit(df["summary"])

vectorizer.vocabulary_

```

#### Creating a Bag of Words model

```

vectorizer.transform(df["summary"]).toarray()

```

#### Split the data into train and test sets

```

from sklearn.model_selection import train_test_split

summaries = df["summary"].values

y = df["issue_type"].values

summaries_train, summaries_test, y_train, y_test = train_test_split(summaries, y, test_size=0.25, random_state=1000)

```

#### Using the BOW model to vectorize the questions

```

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()

vectorizer.fit(summaries_train)

X_train = vectorizer.transform(summaries_train)

X_test = vectorizer.transform(summaries_test)

X_train

```

#### The resulting feature vectors have 52 samples which are the number of training samples after the train-test split. Each sample has 172 dimensions which is the size of the vocabulary.

### 3. Model Evaluation

```

from sklearn.linear_model import LogisticRegression

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

score = classifier.score(X_test, y_test)

print("Accuracy:", score)

```

### 4. Conclusion

#### Logistic regression classifies the data by considering outcome variables on extreme ends and consequently forms a line to distinguish them.

#### This algorithm provides great efficiency, works well in the segmentation and categorization of a small number of categorical variables.

|

github_jupyter

|

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import pymysql

import os

import datetime

database = pymysql.connect (host="localhost", user = "root", passwd = "password", db = "helpdesk")

cursor1 = database.cursor()

cursor1.execute("select * from issues limit 5;")

results = cursor1.fetchall()

print(results)

import pandas as pd

df = pd.read_sql_query("select * from issues limit 70;", database)

df

from sklearn import preprocessing

from nltk.corpus import stopwords

from nltk.stem.snowball import SnowballStemmer

import matplotlib

from matplotlib import pyplot as plt

df.describe()

df.info()

df['issue_type'].astype(str)

del df['id']

del df['created']

del df['user']

df.groupby(['issue_type']).count().plot.bar(ylim=0)

plt.show()

%matplotlib inline

%config InlineBackend.figure_format = 'retina'

import nltk

nltk.download('stopwords')

sw = stopwords.words('english')

def stopwords(summary):

summary = [word.lower() for word in summary.split() if word.lower() not in sw]

return " ".join(summary)

df['summary'] = df['summary'].apply(stopwords)

df

from unidecode import unidecode

def remove_non_ascii(summary):

return ''.join([i if ord(i) < 128 else ' ' for i in summary])

df['summary'] = df['summary'].apply(remove_non_ascii)

df

def remove_html_tags(summary):

import re

clean = re.compile('<.*?>')

return re.sub(clean, '', summary)

df['summary'] = df['summary'].apply(remove_html_tags)

df

def remove_punctuation(summary):

import string

for c in string.punctuation:

summary = summary.replace(c," ")

return summary

df['summary'] = df['summary'].apply(remove_punctuation)

df

def lowercase(summary):

import string

for c in string:

summary = summary.lower(c)

return summary

def remove_emoticons(summary):

import re

regrex_pattern = re.compile(pattern = "["

u"\U0001F600-\U0001F64F" # emoticons

u"\U0001F300-\U0001F5FF" # symbols & pictographs

u"\U0001F680-\U0001F6FF" # transport & map symbols

u"\U0001F1E0-\U0001F1FF" # flags (iOS)

"]+", flags = re.UNICODE)

return regrex_pattern.sub(r'',summary)

df['summary'] = df['summary'].apply(remove_emoticons)

df

import pandas as pd

import numpy as np

import nltk

import string

import math

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import SGDClassifier

from sklearn.model_selection import train_test_split

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import confusion_matrix

from sklearn.metrics import accuracy_score

from sklearn import metrics

import re

import string

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer

from nltk.stem.wordnet import WordNetLemmatizer

df["issue_type"].replace({"Bug": "0", "Task": "1", "Improvement": "2"}, inplace=True)

print(df)

df["issue_type"].value_counts(normalize= True)

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer(min_df=0, lowercase=False)

vectorizer.fit(df["summary"])

vectorizer.vocabulary_

vectorizer.transform(df["summary"]).toarray()

from sklearn.model_selection import train_test_split

summaries = df["summary"].values

y = df["issue_type"].values

summaries_train, summaries_test, y_train, y_test = train_test_split(summaries, y, test_size=0.25, random_state=1000)

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()

vectorizer.fit(summaries_train)

X_train = vectorizer.transform(summaries_train)

X_test = vectorizer.transform(summaries_test)

X_train

from sklearn.linear_model import LogisticRegression

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

score = classifier.score(X_test, y_test)

print("Accuracy:", score)

| 0.422981 | 0.861247 |

# Project : Advanced Lane Finding

The Goal of this Project

In this project, your goal is to write a software pipeline to identify the lane boundaries in a video from a front-facing camera on a car. The camera calibration images, test road images, and project videos are available in the project repository.

### The goals / steps of this project are the following:

- Compute the camera calibration matrix and distortion coefficients given a set of chessboard images.

- Apply a distortion correction to raw images.

- Use color transforms, gradients, etc., to create a thresholded binary image.

- Apply a perspective transform to rectify binary image ("birds-eye view").

- Detect lane pixels and fit to find the lane boundary.

- Determine the curvature of the lane and vehicle position with respect to center.

- Warp the detected lane boundaries back onto the original image.

- Output visual display of the lane boundaries and numerical estimation of lane curvature and vehicle position.

The images for camera calibration are stored in the folder called camera_cal. The images in test_images are for testing your pipeline on single frames. If you want to extract more test images from the videos, you can simply use an image writing method like cv2.imwrite(), i.e., you can read the video in frame by frame as usual, and for frames you want to save for later you can write to an image file.

To help the reviewer examine your work, please save examples of the output from each stage of your pipeline in the folder called output_images, and include a description in your writeup for the project of what each image shows. The video called project_video.mp4 is the video your pipeline should work well on.

The challenge_video.mp4 video is an extra (and optional) challenge for you if you want to test your pipeline under somewhat trickier conditions. The harder_challenge.mp4 video is another optional challenge and is brutal!

If you're feeling ambitious (again, totally optional though), don't stop there! We encourage you to go out and take video of your own, calibrate your camera and show us how you would implement this project from scratch!

## Import Packages

```

#importing packages

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import numpy as np

import cv2

import os

import collections as clx

from moviepy.editor import VideoFileClip

from IPython.display import HTML

%matplotlib inline

#%config InlineBackend.figure_format = 'retina'

```

## Configurations

```

# configurations Start

cameracal = "camera_cal/"

outputimages = "output_images/"

outputvideos = "output_videos/"

testimages = "test_images/"

testvideos = "test_videos/"

```

## The Camera Calibration

```

# prepare object points

nx = 9 #the number of inside corners in x

ny = 6 #the number of inside corners in y

def getchess(filepath=""):

# Preparing object points

objp = np.zeros((nx * ny, 3), np.float32)

objp[:,:2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)

objpoints = []

imgpoints = []

chessimgs = []

images = []

for filename in images:

img = plt.imread(filename)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, corners = cv2.findChessboardCorners(gray, (nx, ny), None)

if ret == True:

objpoints.append(objp)

imgpoints.append(corners)

chessimgs.append(cv2.drawChessboardCorners(img, (nx, ny), corners, ret))

return objpoints, imgpoints, chessimgs

imagePathList = [x for x in os.listdir("camera_cal") if x.endswith(".jpg")]

print(imagePathList)

```

|

github_jupyter

|

#importing packages

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import numpy as np

import cv2

import os

import collections as clx

from moviepy.editor import VideoFileClip

from IPython.display import HTML

%matplotlib inline

#%config InlineBackend.figure_format = 'retina'

# configurations Start

cameracal = "camera_cal/"

outputimages = "output_images/"

outputvideos = "output_videos/"

testimages = "test_images/"

testvideos = "test_videos/"

# prepare object points

nx = 9 #the number of inside corners in x

ny = 6 #the number of inside corners in y

def getchess(filepath=""):

# Preparing object points

objp = np.zeros((nx * ny, 3), np.float32)

objp[:,:2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)

objpoints = []

imgpoints = []

chessimgs = []

images = []

for filename in images:

img = plt.imread(filename)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, corners = cv2.findChessboardCorners(gray, (nx, ny), None)

if ret == True:

objpoints.append(objp)

imgpoints.append(corners)

chessimgs.append(cv2.drawChessboardCorners(img, (nx, ny), corners, ret))

return objpoints, imgpoints, chessimgs

imagePathList = [x for x in os.listdir("camera_cal") if x.endswith(".jpg")]

print(imagePathList)

| 0.247351 | 0.987424 |

# VacationPy

----

#### Note

* Keep an eye on your API usage. Use https://developers.google.com/maps/reporting/gmp-reporting as reference for how to monitor your usage and billing.

* Instructions have been included for each segment. You do not have to follow them exactly, but they are included to help you think through the steps.

```

# Dependencies and Setup

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import requests

import gmaps

import os

# Import API key

from api_keys import g_key

```

### Store Part I results into DataFrame

* Load the csv exported in Part I to a DataFrame

```

city_data_df = pd.read_csv("output_data/cities.csv")

city_data_df.head()

```

### Humidity Heatmap

* Configure gmaps.

* Use the Lat and Lng as locations and Humidity as the weight.

* Add Heatmap layer to map.

```

#configure gmaps

gmaps.configure(api_key=g_key)

#Heamap of humidity

locations = city_data_df[["Lat", "Lng"]]

humidity = city_data_df["Humidity"]

fig = gmaps.figure()

heat_layer = gmaps.heatmap_layer(locations, weights=humidity, dissipating=False, max_intensity=300, point_radius=5)

fig.add_layer(heat_layer)

fig

```

### Create new DataFrame fitting weather criteria

* Narrow down the cities to fit weather conditions.

* Drop any rows will null values.

```

#Narrowing down cities that fit criteria and drop any results with null values

narrowed_city_df = city_data_df.loc[(city_data_df["Max Temp"] < 80) & (city_data_df["Max Temp"] > 70)\

& (city_data_df["Wind Speed"] < 10)\

& (city_data_df["Cloudiness"] == 0)].dropna()

narrowed_city_df

```

### Hotel Map

* Store into variable named `hotel_df`.

* Add a "Hotel Name" column to the DataFrame.

* Set parameters to search for hotels with 5000 meters.

* Hit the Google Places API for each city's coordinates.

* Store the first Hotel result into the DataFrame.

* Plot markers on top of the heatmap.

```

#Create a Dataframe called hotel_df to store hotel name along with city

hotel_df = narrowed_city_df[["City", "Country", "Lat", "Lng"]].copy()

hotel_df["Hotel Name"]=""

hotel_df

params={

"radius": 5000,

"types": "lodging",

"key": g_key

}

for index, row in hotel_df.iterrows():

#get lat and lng

lat = row["Lat"]

lng = row["Lng"]

params["location"] = f"{lat},{lng}"

#use the search term: hotel and out lat/lng

base_url = "https://maps.googleapis.com/maps/api/place/nearbysearch/json"

name_address = requests.get(base_url, params=params).json()

try:

hotel_df.loc[index, "Hotel Name"] = name_address["results"][0]["name"]

except(KeyError, IndexError):

print("Missing field/result...skipping")

hotel_df

# NOTE: Do not change any of the code in this cell

# Using the template add the hotel marks to the heatmap

info_box_template = """

<dl>

<dt>Name</dt><dd>{Hotel Name}</dd>

<dt>City</dt><dd>{City}</dd>

<dt>Country</dt><dd>{Country}</dd>

</dl>

"""

# Store the DataFrame Row

# NOTE: be sure to update with your DataFrame name

hotel_info = [info_box_template.format(**row) for index, row in hotel_df.iterrows()]

locations = hotel_df[["Lat", "Lng"]]

# Add marker layer ontop of heat map

marker_layer = gmaps.marker_layer(locations, info_box_content=hotel_info)

fig.add_layer(marker_layer)

# Display figure

fig

```

|

github_jupyter

|

# Dependencies and Setup

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import requests

import gmaps

import os

# Import API key

from api_keys import g_key

city_data_df = pd.read_csv("output_data/cities.csv")

city_data_df.head()

#configure gmaps

gmaps.configure(api_key=g_key)

#Heamap of humidity

locations = city_data_df[["Lat", "Lng"]]

humidity = city_data_df["Humidity"]

fig = gmaps.figure()

heat_layer = gmaps.heatmap_layer(locations, weights=humidity, dissipating=False, max_intensity=300, point_radius=5)

fig.add_layer(heat_layer)

fig

#Narrowing down cities that fit criteria and drop any results with null values

narrowed_city_df = city_data_df.loc[(city_data_df["Max Temp"] < 80) & (city_data_df["Max Temp"] > 70)\

& (city_data_df["Wind Speed"] < 10)\

& (city_data_df["Cloudiness"] == 0)].dropna()

narrowed_city_df

#Create a Dataframe called hotel_df to store hotel name along with city

hotel_df = narrowed_city_df[["City", "Country", "Lat", "Lng"]].copy()

hotel_df["Hotel Name"]=""

hotel_df

params={

"radius": 5000,

"types": "lodging",

"key": g_key

}

for index, row in hotel_df.iterrows():

#get lat and lng

lat = row["Lat"]

lng = row["Lng"]

params["location"] = f"{lat},{lng}"

#use the search term: hotel and out lat/lng

base_url = "https://maps.googleapis.com/maps/api/place/nearbysearch/json"

name_address = requests.get(base_url, params=params).json()

try:

hotel_df.loc[index, "Hotel Name"] = name_address["results"][0]["name"]

except(KeyError, IndexError):

print("Missing field/result...skipping")

hotel_df

# NOTE: Do not change any of the code in this cell

# Using the template add the hotel marks to the heatmap

info_box_template = """

<dl>

<dt>Name</dt><dd>{Hotel Name}</dd>

<dt>City</dt><dd>{City}</dd>

<dt>Country</dt><dd>{Country}</dd>

</dl>

"""

# Store the DataFrame Row

# NOTE: be sure to update with your DataFrame name

hotel_info = [info_box_template.format(**row) for index, row in hotel_df.iterrows()]

locations = hotel_df[["Lat", "Lng"]]

# Add marker layer ontop of heat map

marker_layer = gmaps.marker_layer(locations, info_box_content=hotel_info)

fig.add_layer(marker_layer)

# Display figure

fig

| 0.367043 | 0.858896 |

<center>

<img src="images/meme.png">

</center>

# Машинное обучение

> Компьютерная программа обучается на основе опыта $E$ по отношению к некоторому классу задач $T$ и меры качества $P$, если качество решения задач из $T$, измеренное на основе $P$, улучшается с приобретением опыта $E$. (Т. М. Митчелл)

### Формулировка задачи:

$X$ $-$ множество объектов

$Y$ $-$ множество меток классов

$f: X \rightarrow Y$ $-$ неизвестная зависимость

**Дано**:

$x_1, \dots, x_n \subset X$ $-$ обучающая выборка

$y_i = f(x_i), i=1, \dots n$ $-$ известные метки классов

**Найти**:

$a∶ X \rightarrow Y$ $-$ алгоритм, решающую функцию, приближающую $f$ на всём множестве $X$.

```

!conda install -c intel scikit-learn -y

import numpy

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

import warnings

warnings.simplefilter('ignore')

numpy.random.seed(7)

%matplotlib inline

iris = load_iris()

X = iris.data

Y = iris.target

print(X.shape)

random_sample = numpy.random.choice(X.shape[0], 10)

for i in random_sample:

print(f"{X[i]}: {iris.target_names[Y[i]]}")

```

## Типы задач

### Задача классификации

$Y = \{ -1, +1 \}$ $-$ классификация на 2 класса;

$Y = \{1, \dots , K \}$ $-$ на $K$ непересекающихся классов;

$Y = \{0, 1 \}^K$ $-$ на $K$ классов, которые могут пересекаться.

Примеры: распознавание текста по рукописному вводу, определение предмета на фотографии.

### Задача регрессии

$Y = \mathbb{R}$ или $Y = \mathbb{R}^k$.

Примеры: предсказание стоимости акции через полгода, предсказание прибыли магазина в следующем месяце.

### Задача ранжирования

$Y$ $-$ конечное упорядоченное множество.

Пример: выдача поискового запроса.

### Задачи уменьшения размерности

Научиться описывать данные не $M$ признаками, а меньшим числом для повышения точности модели или последующей визуализации. В качестве примера помимо необходимости для визуализации можно привести сжатие данных.

### Задачи кластеризации

Разбиение данных множества объектов на подмножества (кластеры) таким образом, чтобы объекты из одного кластера были более похожи друг на друга, чем на объекты из других кластеров по какому-либо критерию.

<center>

<img src="images/ml_map.png">

</center>

```

from sklearn.svm import SVC

model = SVC(random_state=7)

model.fit(X, Y)

y_pred = model.predict(X)

for i in random_sample:

print(f"predicted: {iris.target_names[y_pred[i]]}, actual: {iris.target_names[Y[i]]}")

f"differences in {(Y != y_pred).sum()} samples"

```

# Оценка качества

## Метрика

### Задача классификации

Определим матрицу ошибок. Допустим, что у нас есть два класса и алгоритм, предсказывающий принадлежность каждого объекта одному из классов, тогда матрица ошибок классификации будет выглядеть следующим образом:

| $ $ | $y=1$ | $y=0$ |

|-------------|---------------------|---------------------|

| $\hat{y}=1$ | True Positive (TP) | False Positive (FP) |

| $\hat{y}=0$ | False Negative (FN) | True Negative (TN) |

Здесь $\hat{y}$ $-$ это ответ алгоритма на объекте, а $y$ $-$ истинная метка класса на этом объекте.

Таким образом, ошибки классификации бывают двух видов: *False Negative (FN)* и *False Positive (FP)*.

- $\textit{accuracy} = \frac{TP + TN}{TP + FP + FN + TN}$

- $\textit{recall} = \frac{TP}{TP + FN}$

- $\textit{precision} = \frac{TP}{TP + FP}$

- $\textit{f1-score} = \frac{2 \cdot \textit{recall} \cdot \textit{precision}}{\textit{precision} + \textit{recall}}$

### Задача регрессии

- $MSE = \frac{1}{n} \sum_{i=1}^n (y_i - \hat{y}_i)^2$

- $RMSE = \sqrt{MSE}$

- $MAE = \frac{1}{n} \sum_{i=1}^n |y_i - \hat{y}_i|$

## Отложенная выборка

$X \rightarrow X_{train}, X_{val}, X_{test}$

- $X_{train}$ $-$ используется для обучения модели

- $X_{val}$ $-$ подбор гиперпараметров ($ \approx{30\%}$ от тренировочной части)

- $X_{test}$ $-$ оценка качества конечной модели

```

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, f1_score

# 1/3 всего датасета возьмём для тестовой выборки

# затем 30% от тренировочной будет валидационной

test_index = numpy.random.choice(X.shape[0], X.shape[0] // 3)

train_index = [i for i in range(X.shape[0]) if i not in test_index]

X_test = X[test_index]

Y_test = Y[test_index]

X_train, X_val, Y_train, Y_val = train_test_split(X[train_index], Y[train_index], test_size=0.3, shuffle=True, random_state=7)

print(f"train size: {X_train.shape[0]}")

print(f"val size: {X_val.shape[0]}")

print(f"test size: {X_test.shape[0]}")

best_score = -1

best_c = None

for c in [0.01, 0.1, 1, 10]:

model = SVC(C=c, random_state=7)

model.fit(X_train, Y_train)

y_pred = model.predict(X_val)

cur_score = f1_score(Y_val, y_pred, average='micro')

if cur_score > best_score:

best_score = cur_score

best_c = c

f"best score is {best_score} for C {best_c}"

full_model = SVC(C=1.0, random_state=7)

full_model.fit(X[train_index], Y[train_index])

y_pred = full_model.predict(X_test)

f"test score is {f1_score(Y_test, y_pred, average='micro')}"

```

# Алгоритмы классификации

## Линейный классификатор

Построение разделяющей гиперплоскости

$$

y = \textit{sign}(Wx + b)

$$

<center>

<img src="images/linear_classifier.png">

</center>

### Стандартизация величин

При использование линейных моделей, иногда полезно стандартизировать их значения, например, оценки пользователей.

$$

X_{stand} = \frac{X - X_{mean}}{X_{std}}

$$

Для этого в `sklearn` есть класс $-$ `StandartScaler`

### Логистическая регрессия

Использование функции логита для получения вероятности

<center>

<img src="images/logit.png">

</center>

## Метод опорных векторов (Support vector machine)

Построение "полоски" максимальной ширины, которая разделяет выборку

<center>

<img src="images/svm.png">

</center>

## Дерево решений (Decision tree)

В каждой вершине определяется критерий, по которому разбивается подвыборка.

<center>

<img src="images/decision_tree.png">

</center>

## Случайный лес (Random forest)

Множество деревьев решений, каждое из которых обучается на случайной подвыборке.

<center>

<img src="images/random_forest.png">

</center>

## Метод ближайших соседей (K-neighbors)

Решение базируется на основе $k$ ближайших известных примеров.

<center>

<img src="images/knn.png">

</center>

```

from sklearn.datasets import make_classification

X, y = make_classification(n_samples=1000, n_features=50, n_informative=20)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.linear_model import LogisticRegression

models = [

LogisticRegression(random_state=7, n_jobs=6),

SVC(random_state=7),

DecisionTreeClassifier(random_state=7),

RandomForestClassifier(random_state=7),

KNeighborsClassifier(n_jobs=6)

]

for model in models:

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"model {model.__class__.__name__} scores {round(f1_score(y_test, y_pred, average='micro'), 2)}")

from sklearn.preprocessing import StandardScaler

standart_scaler = StandardScaler()

standart_scaler.fit(X_train)

X_train_scaled = standart_scaler.transform(X_train)

X_test_scaled = standart_scaler.transform(X_test)

model = SVC(random_state=7)

model.fit(X_train_scaled, y_train)

y_pred = model.predict(X_test_scaled)

f"test score is {f1_score(y_test, y_pred, average='micro')}"

```

# Inclass task #1

Реализуйте модель, которая классифицирует цифры по рисунку.

Ваша задача получить f1-score $0.98$ на тестовом датасете.

Можете пользоваться как алгоритмами выше, так и любыми другими реализованными в `sklearn`.

```

from sklearn.datasets import fetch_openml

# Load data from https://www.openml.org/d/554

X, Y = fetch_openml('mnist_784', return_X_y=True)

print(f"shape of X is {X.shape}")

plt.gray()

fig, axes = plt.subplots(2, 5, figsize=(15, 5))

for i, num in enumerate(numpy.random.choice(X.shape[0], 10)):

axes[i // 5, i % 5].matshow(X[num].reshape(28, 28))

axes[i // 5, i % 5].set_title(Y[num])

axes[i // 5, i % 5].axis('off')

plt.show()

test_shuffle = numpy.random.permutation(X.shape[0])

X_test, X_train = X[test_shuffle[:10000]], X[test_shuffle[10000:]]

Y_test, Y_train = Y[test_shuffle[:10000]], Y[test_shuffle[10000:]]

print(f"train size: {X_train.shape[0]}")

print(f"test size: {X_test.shape[0]}")

model = SVC(random_state=5)

model.fit(X_train, Y_train)

y_pred = model.predict(X_test)

print(f"test score is {f1_score(Y_test, y_pred, average='micro')}")

# test score is 0.9810000000000001

```

# Алгоритмы регрессии

Деревья решений, случайный лес и метод ближайших соседей легко обобщаются на случай регрессии. Ответ, как правило, это среднее из полученных значений (например, среднее значение ближайших примеров).

## Линейная регрессия

$y$ линейно зависим от $x$, т.е. имеет место уравнение

$$

y = Wx + b = W <x; 1>

$$

Такой подход имеет аналитическое решение, однако он требует вычисление обратной матрицы $X$, что не всегда возможно.

Другой подход $-$ минимизация функции ошибки, например $MSE$, с помощью техники градиентного спуска.

## Регуляризация

Чтобы избегать переобучения (когда модель хорошо работает только на тренировочных данных) используют различные техники *регуляризации*.

Один из признаков переобучения $-$ модель имеет большие веса, это можно контролировать путём добавления $L1$ или $L2$ нормы весов к функции ошибки.

То есть, итоговая ошибка, которая будет распространятся на веса модели, считается по формуле:

$$

Error(W) = MSE(W, X, y) + \lambda ||W||

$$

Такие модели, так же реализованы в `sklearn`:

- Lasso

- Ridge

```

from sklearn.datasets import load_boston

X, y = load_boston(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

from sklearn.linear_model import Lasso, Ridge, LinearRegression

from sklearn.ensemble import RandomForestRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.metrics import mean_squared_error

models = [

Lasso(random_state=7),

Ridge(random_state=7),

LinearRegression(n_jobs=6),

RandomForestRegressor(random_state=7, n_jobs=6),

KNeighborsRegressor(n_jobs=6),

SVR()

]

for model in models:

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"model {model.__class__.__name__} scores {round(mean_squared_error(y_test, y_pred), 2)}")

```

# Inclass task #2

Реализуйте модель, которая предсказывает стоимость медицинской страховки. В данных есть текстовые бинарные признаки (`sex` и `smoker`), не забудьте конвертировать их в `0` и `1`. Признак `region` имеет $4$ разных значения, вы можете конвертировать их в числа $0-4$ или создать $4$ бинарных признака. Для этого вам может помочь `sklearn.preprocessing.LabelEncoder` и `pandas.get_dummies`.

Ваша задача получить RMSE-score меньше $5000$ на тестовом датасете.

Можете пользоваться как алгоритмами выше, так и любыми другими реализованными в `sklearn`.

```

def rmse(y_true, y_pred):

return numpy.sqrt(mean_squared_error(y_true, y_pred))

!conda install pandas -y

import pandas

from sklearn import preprocessing

data = pandas.read_csv('data/insurance.csv')

le = preprocessing.LabelEncoder()

data['sex'] = preprocessing.LabelEncoder().fit_transform(data['sex'])

data['smoker'] = preprocessing.LabelEncoder().fit_transform(data['smoker'])

data['region'] = preprocessing.LabelEncoder().fit_transform(data['region'])

data.head()

X = data.drop(['charges'], axis=1)

y = data['charges'].values

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

print(f"train size: {X_train.shape[0]}")

print(f"test size: {X_test.shape[0]}")

model = RandomForestRegressor(random_state=5, n_jobs=6)

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"test score is {rmse(y_test, y_pred)}")

# test score is 4939.426892574252

```

|

github_jupyter

|

!conda install -c intel scikit-learn -y

import numpy

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

import warnings

warnings.simplefilter('ignore')

numpy.random.seed(7)

%matplotlib inline

iris = load_iris()

X = iris.data

Y = iris.target

print(X.shape)

random_sample = numpy.random.choice(X.shape[0], 10)

for i in random_sample:

print(f"{X[i]}: {iris.target_names[Y[i]]}")

from sklearn.svm import SVC

model = SVC(random_state=7)

model.fit(X, Y)

y_pred = model.predict(X)

for i in random_sample:

print(f"predicted: {iris.target_names[y_pred[i]]}, actual: {iris.target_names[Y[i]]}")

f"differences in {(Y != y_pred).sum()} samples"

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, f1_score

# 1/3 всего датасета возьмём для тестовой выборки

# затем 30% от тренировочной будет валидационной

test_index = numpy.random.choice(X.shape[0], X.shape[0] // 3)

train_index = [i for i in range(X.shape[0]) if i not in test_index]

X_test = X[test_index]

Y_test = Y[test_index]

X_train, X_val, Y_train, Y_val = train_test_split(X[train_index], Y[train_index], test_size=0.3, shuffle=True, random_state=7)

print(f"train size: {X_train.shape[0]}")

print(f"val size: {X_val.shape[0]}")

print(f"test size: {X_test.shape[0]}")

best_score = -1

best_c = None

for c in [0.01, 0.1, 1, 10]:

model = SVC(C=c, random_state=7)

model.fit(X_train, Y_train)

y_pred = model.predict(X_val)

cur_score = f1_score(Y_val, y_pred, average='micro')

if cur_score > best_score:

best_score = cur_score

best_c = c

f"best score is {best_score} for C {best_c}"

full_model = SVC(C=1.0, random_state=7)

full_model.fit(X[train_index], Y[train_index])

y_pred = full_model.predict(X_test)

f"test score is {f1_score(Y_test, y_pred, average='micro')}"

from sklearn.datasets import make_classification

X, y = make_classification(n_samples=1000, n_features=50, n_informative=20)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.linear_model import LogisticRegression

models = [

LogisticRegression(random_state=7, n_jobs=6),

SVC(random_state=7),

DecisionTreeClassifier(random_state=7),

RandomForestClassifier(random_state=7),

KNeighborsClassifier(n_jobs=6)

]

for model in models:

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"model {model.__class__.__name__} scores {round(f1_score(y_test, y_pred, average='micro'), 2)}")

from sklearn.preprocessing import StandardScaler

standart_scaler = StandardScaler()

standart_scaler.fit(X_train)

X_train_scaled = standart_scaler.transform(X_train)

X_test_scaled = standart_scaler.transform(X_test)

model = SVC(random_state=7)

model.fit(X_train_scaled, y_train)

y_pred = model.predict(X_test_scaled)

f"test score is {f1_score(y_test, y_pred, average='micro')}"

from sklearn.datasets import fetch_openml

# Load data from https://www.openml.org/d/554

X, Y = fetch_openml('mnist_784', return_X_y=True)

print(f"shape of X is {X.shape}")

plt.gray()

fig, axes = plt.subplots(2, 5, figsize=(15, 5))

for i, num in enumerate(numpy.random.choice(X.shape[0], 10)):

axes[i // 5, i % 5].matshow(X[num].reshape(28, 28))

axes[i // 5, i % 5].set_title(Y[num])

axes[i // 5, i % 5].axis('off')

plt.show()

test_shuffle = numpy.random.permutation(X.shape[0])

X_test, X_train = X[test_shuffle[:10000]], X[test_shuffle[10000:]]

Y_test, Y_train = Y[test_shuffle[:10000]], Y[test_shuffle[10000:]]

print(f"train size: {X_train.shape[0]}")

print(f"test size: {X_test.shape[0]}")

model = SVC(random_state=5)

model.fit(X_train, Y_train)

y_pred = model.predict(X_test)

print(f"test score is {f1_score(Y_test, y_pred, average='micro')}")

# test score is 0.9810000000000001

from sklearn.datasets import load_boston

X, y = load_boston(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

from sklearn.linear_model import Lasso, Ridge, LinearRegression

from sklearn.ensemble import RandomForestRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.metrics import mean_squared_error

models = [

Lasso(random_state=7),

Ridge(random_state=7),

LinearRegression(n_jobs=6),

RandomForestRegressor(random_state=7, n_jobs=6),

KNeighborsRegressor(n_jobs=6),

SVR()

]

for model in models:

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"model {model.__class__.__name__} scores {round(mean_squared_error(y_test, y_pred), 2)}")

def rmse(y_true, y_pred):

return numpy.sqrt(mean_squared_error(y_true, y_pred))

!conda install pandas -y

import pandas

from sklearn import preprocessing

data = pandas.read_csv('data/insurance.csv')

le = preprocessing.LabelEncoder()

data['sex'] = preprocessing.LabelEncoder().fit_transform(data['sex'])

data['smoker'] = preprocessing.LabelEncoder().fit_transform(data['smoker'])

data['region'] = preprocessing.LabelEncoder().fit_transform(data['region'])

data.head()

X = data.drop(['charges'], axis=1)

y = data['charges'].values

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True, random_state=7)

print(f"train size: {X_train.shape[0]}")

print(f"test size: {X_test.shape[0]}")

model = RandomForestRegressor(random_state=5, n_jobs=6)

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(f"test score is {rmse(y_test, y_pred)}")

# test score is 4939.426892574252

| 0.501709 | 0.954095 |

```

import numpy as np

import matplotlib.pyplot as pl

import pickle5 as pickle

rad_ratio = 7.860 / 9.449

temp_ratio = 315 / 95

scale = rad_ratio * temp_ratio

output_dir = '/Users/tgordon/research/exomoons_jwst/JexoSim/output/'

filename = 'OOT_SNR_NIRSpec_BOTS_PRISM_Kepler-1513 b_2020_11_23_2232_57.pickle'

result = pickle.load(open(output_dir + filename, 'rb'))

result['noise_dic']['All noise']['fracNoT14_mean'] * np.sqrt(2)

#std = result['noise_dic']['All noise']['signal_std_mean'] / result['noise_dic']['All noise']['signal_mean_mean']

wl = result['noise_dic']['All noise']['wl']

inwl = result['input_spec_wl']

inspec = result['input_spec']

inspec_interp = np.interp(wl, inwl.value, inspec.value)

saturn = np.loadtxt('../data/saturn.txt', skiprows=13)

saturn_interp = np.interp(wl, saturn[:, 0], saturn[:, 1])

dense_wl = np.linspace(wl[0], wl[-1], 1000)

saturn_smooth_interp = np.interp(dense_wl, saturn[:, 0], saturn[:, 1])

spec = (saturn_interp - np.mean(saturn_interp)) * scale + (0.08 ** 2)

smooth_spec = (saturn_smooth_interp - np.mean(saturn_interp)) * scale + (0.08 ** 2)

pl.figure(figsize=(10, 6))

pl.plot(dense_wl, smooth_spec, 'k')

rand_spec = np.random.randn(len(spec)) * onesig*1e-6 + spec

pl.errorbar(wl, rand_spec, yerr=onesig*1e-6, fmt='ro')

pl.ylim(0.0061, 0.0067)

pl.xlim(0.9, 5.5)

pl.annotate(r'$\mathrm{CH}_4$', xy=(1.0, 0.00653), xycoords='data', fontsize=15, bbox=dict(fc="white", lw=0.5, pad=5))

pl.annotate(r'$\mathrm{CH}_4$', xy=(1.3, 0.0065), xycoords='data', fontsize=15, bbox=dict(fc="white", lw=0.5, pad=5))

pl.annotate(r'$\mathrm{CH}_4$', xy=(1.7, 0.0065), xycoords='data', fontsize=15, bbox=dict(fc="white", lw=0.5, pad=5))

pl.annotate(r'$\mathrm{CH}_4$', xy=(2.2, 0.00655), xycoords='data', fontsize=15, bbox=dict(fc="white", lw=0.5, pad=5))

#pl.annotate(r'$\mathrm{CH}_4$', xy=(3.0, 0.0066), xycoords='data', fontsize=15, bbox=dict(fc="white", lw=0.5, pad=5))