code

stringlengths 2.5k

6.36M

| kind

stringclasses 2

values | parsed_code

stringlengths 0

404k

| quality_prob

float64 0

0.98

| learning_prob

float64 0.03

1

|

|---|---|---|---|---|

# The framework and why do we need it

In the previous notebooks, we introduce some concepts regarding the

evaluation of predictive models. While this section could be slightly

redundant, we intend to go into details into the cross-validation framework.

Before we dive in, let's linger on the reasons for always having training and

testing sets. Let's first look at the limitation of using a dataset without

keeping any samples out.

To illustrate the different concepts, we will use the California housing

dataset.

```

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing(as_frame=True)

data, target = housing.data, housing.target

```

<div class="admonition caution alert alert-warning">

<p class="first admonition-title" style="font-weight: bold;">Caution!</p>

<p class="last">Here and later, we use the name <tt class="docutils literal">data</tt> and <tt class="docutils literal">target</tt> to be explicit. In

scikit-learn documentation, <tt class="docutils literal">data</tt> is commonly named <tt class="docutils literal">X</tt> and <tt class="docutils literal">target</tt> is

commonly called <tt class="docutils literal">y</tt>.</p>

</div>

In this dataset, the aim is to predict the median value of houses in an area

in California. The features collected are based on general real-estate and

geographical information.

Therefore, the task to solve is different from the one shown in the previous

notebook. The target to be predicted is a continuous variable and not anymore

discrete. This task is called regression.

Therefore, we will use predictive model specific to regression and not to

classification.

```

print(housing.DESCR)

data.head()

```

To simplify future visualization, let's transform the prices from the

dollar (\\$) range to the thousand dollars (k\\$) range.

```

target *= 100

target.head()

```

<div class="admonition note alert alert-info">

<p class="first admonition-title" style="font-weight: bold;">Note</p>

<p class="last">If you want a deeper overview regarding this dataset, you can refer to the

Appendix - Datasets description section at the end of this MOOC.</p>

</div>

## Training error vs testing error

To solve this regression task, we will use a decision tree regressor.

```

from sklearn.tree import DecisionTreeRegressor

regressor = DecisionTreeRegressor(random_state=0)

regressor.fit(data, target)

```

After training the regressor, we would like to know its potential statistical

performance once deployed in production. For this purpose, we use the mean

absolute error, which gives us an error in the native unit, i.e. k\\$.

```

from sklearn.metrics import mean_absolute_error

target_predicted = regressor.predict(data)

score = mean_absolute_error(target, target_predicted)

print(f"On average, our regressor makes an error of {score:.2f} k$")

```

We get perfect prediction with no error. It is too optimistic and almost

always revealing a methodological problem when doing machine learning.

Indeed, we trained and predicted on the same dataset. Since our decision tree

was fully grown, every sample in the dataset is stored in a leaf node.

Therefore, our decision tree fully memorized the dataset given during `fit`

and therefore made no error when predicting.

This error computed above is called the **empirical error** or **training

error**.

<div class="admonition note alert alert-info">

<p class="first admonition-title" style="font-weight: bold;">Note</p>

<p class="last">In this MOOC, we will consistently use the term "training error".</p>

</div>

We trained a predictive model to minimize the training error but our aim is

to minimize the error on data that has not been seen during training.

This error is also called the **generalization error** or the "true"

**testing error**.

<div class="admonition note alert alert-info">

<p class="first admonition-title" style="font-weight: bold;">Note</p>

<p class="last">In this MOOC, we will consistently use the term "testing error".</p>

</div>

Thus, the most basic evaluation involves:

* splitting our dataset into two subsets: a training set and a testing set;

* fitting the model on the training set;

* estimating the training error on the training set;

* estimating the testing error on the testing set.

So let's split our dataset.

```

from sklearn.model_selection import train_test_split

data_train, data_test, target_train, target_test = train_test_split(

data, target, random_state=0)

```

Then, let's train our model.

```

regressor.fit(data_train, target_train)

```

Finally, we estimate the different types of errors. Let's start by computing

the training error.

```

target_predicted = regressor.predict(data_train)

score = mean_absolute_error(target_train, target_predicted)

print(f"The training error of our model is {score:.2f} k$")

```

We observe the same phenomena as in the previous experiment: our model

memorized the training set. However, we now compute the testing error.

```

target_predicted = regressor.predict(data_test)

score = mean_absolute_error(target_test, target_predicted)

print(f"The testing error of our model is {score:.2f} k$")

```

This testing error is actually about what we would expect from our model if

it was used in a production environment.

## Stability of the cross-validation estimates

When doing a single train-test split we don't give any indication regarding

the robustness of the evaluation of our predictive model: in particular, if

the test set is small, this estimate of the testing error will be

unstable and wouldn't reflect the "true error rate" we would have observed

with the same model on an unlimited amount of test data.

For instance, we could have been lucky when we did our random split of our

limited dataset and isolated some of the easiest cases to predict in the

testing set just by chance: the estimation of the testing error would be

overly optimistic, in this case.

**Cross-validation** allows estimating the robustness of a predictive model

by repeating the splitting procedure. It will give several training and

testing errors and thus some **estimate of the variability of the

model statistical performance**.

There are different cross-validation strategies, for now we are going to

focus on one called "shuffle-split". At each iteration of this strategy we:

- randomly shuffle the order of the samples of a copy of the full dataset;

- split the shuffled dataset into a train and a test set;

- train a new model on the train set;

- evaluate the testing error on the test set.

We repeat this procedure `n_splits` times. Using `n_splits=40` means that we

will train 40 models in total and all of them will be discarded: we just

record their statistical performance on each variant of the test set.

To evaluate the statistical performance of our regressor, we can use

`cross_validate` with a `ShuffleSplit` object:

```

from sklearn.model_selection import cross_validate

from sklearn.model_selection import ShuffleSplit

cv = ShuffleSplit(n_splits=40, test_size=0.3, random_state=0)

cv_results = cross_validate(

regressor, data, target, cv=cv, scoring="neg_mean_absolute_error")

```

The results `cv_results` are stored into a Python dictionary. We will convert

it into a pandas dataframe to ease visualization and manipulation.

```

import pandas as pd

cv_results = pd.DataFrame(cv_results)

cv_results.head()

```

<div class="admonition tip alert alert-warning">

<p class="first admonition-title" style="font-weight: bold;">Tip</p>

<p>A score is a metric for which higher values mean better results. On the

contrary, an error is a metric for which lower values mean better results.

The parameter <tt class="docutils literal">scoring</tt> in <tt class="docutils literal">cross_validate</tt> always expect a function that is

a score.</p>

<p class="last">To make it easy, all error metrics in scikit-learn, like

<tt class="docutils literal">mean_absolute_error</tt>, can be transformed into a score to be used in

<tt class="docutils literal">cross_validate</tt>. To do so, you need to pass a string of the error metric

with an additional <tt class="docutils literal">neg_</tt> string at the front to the parameter <tt class="docutils literal">scoring</tt>;

for instance <tt class="docutils literal"><span class="pre">scoring="neg_mean_absolute_error"</span></tt>. In this case, the negative

of the mean absolute error will be computed which would be equivalent to a

score.</p>

</div>

Let us revert the negation to get the actual error:

```

cv_results["test_error"] = -cv_results["test_score"]

```

Let's check the results reported by the cross-validation.

```

cv_results.head(10)

```

We get timing information to fit and predict at each round of

cross-validation. Also, we get the test score, which corresponds to the

testing error on each of the split.

```

len(cv_results)

```

We get 40 entries in our resulting dataframe because we performed 40

splits. Therefore, we can show the testing error distribution and thus, have

an estimate of its variability.

```

import matplotlib.pyplot as plt

cv_results["test_error"].plot.hist(bins=10, edgecolor="black", density=True)

plt.xlabel("Mean absolute error (k$)")

_ = plt.title("Test error distribution")

```

We observe that the testing error is clustered around 47 k\\$ and

ranges from 43 k\\$ to 50 k\\$.

```

print(f"The mean cross-validated testing error is: "

f"{cv_results['test_error'].mean():.2f} k$")

print(f"The standard deviation of the testing error is: "

f"{cv_results['test_error'].std():.2f} k$")

```

Note that the standard deviation is much smaller than the mean: we could

summarize that our cross-validation estimate of the testing error is

46.36 +/- 1.17 k\\$.

If we were to train a single model on the full dataset (without

cross-validation) and then had later access to an unlimited amount of test

data, we would expect its true testing error to fall close to that

region.

While this information is interesting in itself, it should be contrasted to

the scale of the natural variability of the vector `target` in our dataset.

Let us plot the distribution of the target variable:

```

target.plot.hist(bins=20, edgecolor="black", density=True)

plt.xlabel("Median House Value (k$)")

_ = plt.title("Target distribution")

print(f"The standard deviation of the target is: {target.std():.2f} k$")

```

The target variable ranges from close to 0 k\\$ up to 500 k\\$ and, with a

standard deviation around 115 k\\$.

We notice that the mean estimate of the testing error obtained by

cross-validation is a bit smaller than the natural scale of variation of the

target variable. Furthermore the standard deviation of the cross validation

estimate of the testing error is even smaller.

This is a good start, but not necessarily enough to decide whether the

generalization performance is good enough to make our prediction useful in

practice.

We recall that our model makes, on average, an error around 47 k\\$. With this

information and looking at the target distribution, such an error might be

acceptable when predicting houses with a 500 k\\$. However, it would be an

issue with a house with a value of 50 k\\$. Thus, this indicates that our

metric (Mean Absolute Error) is not ideal.

We might instead choose a metric relative to the target value to predict: the

mean absolute percentage error would have been a much better choice.

But in all cases, an error of 47 k\\$ might be too large to automatically use

our model to tag house value without expert supervision.

## More detail regarding `cross_validate`

During cross-validation, many models are trained and evaluated. Indeed, the

number of elements in each array of the output of `cross_validate` is a

result from one of this `fit`/`score`. To make it explicit, it is possible

to retrieve theses fitted models for each of the fold by passing the option

`return_estimator=True` in `cross_validate`.

```

cv_results = cross_validate(regressor, data, target, return_estimator=True)

cv_results

cv_results["estimator"]

```

The five decision tree regressors corresponds to the five fitted decision

trees on the different folds. Having access to these regressors is handy

because it allows to inspect the internal fitted parameters of these

regressors.

In the case where you are interested only about the test score, scikit-learn

provide a `cross_val_score` function. It is identical to calling the

`cross_validate` function and to select the `test_score` only (as we

extensively did in the previous notebooks).

```

from sklearn.model_selection import cross_val_score

scores = cross_val_score(regressor, data, target)

scores

```

## Summary

In this notebook, we saw:

* the necessity of splitting the data into a train and test set;

* the meaning of the training and testing errors;

* the overall cross-validation framework with the possibility to study

statistical performance variations;

|

github_jupyter

|

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing(as_frame=True)

data, target = housing.data, housing.target

print(housing.DESCR)

data.head()

target *= 100

target.head()

from sklearn.tree import DecisionTreeRegressor

regressor = DecisionTreeRegressor(random_state=0)

regressor.fit(data, target)

from sklearn.metrics import mean_absolute_error

target_predicted = regressor.predict(data)

score = mean_absolute_error(target, target_predicted)

print(f"On average, our regressor makes an error of {score:.2f} k$")

from sklearn.model_selection import train_test_split

data_train, data_test, target_train, target_test = train_test_split(

data, target, random_state=0)

regressor.fit(data_train, target_train)

target_predicted = regressor.predict(data_train)

score = mean_absolute_error(target_train, target_predicted)

print(f"The training error of our model is {score:.2f} k$")

target_predicted = regressor.predict(data_test)

score = mean_absolute_error(target_test, target_predicted)

print(f"The testing error of our model is {score:.2f} k$")

from sklearn.model_selection import cross_validate

from sklearn.model_selection import ShuffleSplit

cv = ShuffleSplit(n_splits=40, test_size=0.3, random_state=0)

cv_results = cross_validate(

regressor, data, target, cv=cv, scoring="neg_mean_absolute_error")

import pandas as pd

cv_results = pd.DataFrame(cv_results)

cv_results.head()

cv_results["test_error"] = -cv_results["test_score"]

cv_results.head(10)

len(cv_results)

import matplotlib.pyplot as plt

cv_results["test_error"].plot.hist(bins=10, edgecolor="black", density=True)

plt.xlabel("Mean absolute error (k$)")

_ = plt.title("Test error distribution")

print(f"The mean cross-validated testing error is: "

f"{cv_results['test_error'].mean():.2f} k$")

print(f"The standard deviation of the testing error is: "

f"{cv_results['test_error'].std():.2f} k$")

target.plot.hist(bins=20, edgecolor="black", density=True)

plt.xlabel("Median House Value (k$)")

_ = plt.title("Target distribution")

print(f"The standard deviation of the target is: {target.std():.2f} k$")

cv_results = cross_validate(regressor, data, target, return_estimator=True)

cv_results

cv_results["estimator"]

from sklearn.model_selection import cross_val_score

scores = cross_val_score(regressor, data, target)

scores

| 0.879639 | 0.987794 |

```

import numpy as np

```

# Exceptions

An exception is an event, which occurs during the execution of a program, that disrupts the normal flow of the program's instructions.

You've already seen some exceptions in the **Debugging** lesson.

*

Many programs want to know about exceptions when they occur. For example, if the input to a program is a file path. If the user inputs an invalid or non-existent path, the program generates an exception. It may be desired to provide a response to the user in this case.

It may also be that programs will *generate* exceptions. This is a way of indicating that there is an error in the inputs provided. In general, this is the preferred style for dealing with invalid inputs or states inside a python function rather than having an error return.

## Catching Exceptions

Python provides a way to detect when an exception occurs. This is done by the use of a block of code surrounded by a "try" and "except" statement.

```

def divide(numerator, denominator):

result = numerator/denominator

print("result = %f" % result)

divide(1.0, 0)

def divide1(numerator, denominator):

try:

result = numerator/denominator

print("result = %f" % result)

except:

print("You can't divide by 0!")

divide1(1.0, 'a')

divide1(1.0, 2)

divide1("x", 2)

def divide2(numerator, denominator):

try:

result = numerator / denominator

print("result = %f" % result)

except (ZeroDivisionError, TypeError) as err:

print("Got an exception: %s" % err)

divide2(1, "X")

divide2("x, 2)

```

#### Why didn't we catch this `SyntaxError`?

```

# Handle division by 0 by using a small number

SMALL_NUMBER = 1e-3

def divide3(numerator, denominator):

try:

result = numerator/denominator

except ZeroDivisionError:

result = numerator/SMALL_NUMBER

print("result = %f" % result)

except Exception as err:

print("Different error than division by zero:", err)

divide3(1,0)

divide3("1",0)

```

#### What do you do when you get an exception?

First, you can feel relieved that you caught a problematic element of your software! Yes, relieved. Silent fails are much worse. (Again, another plug for testing.)

## Generating Exceptions

#### Why *generate* exceptions? (Don't I have enough unintentional errors?)

```

import pandas as pd

def validateDF(df):

""""

:param pd.DataFrame df: should have a column named "hours"

"""

if not "hours" in df.columns:

raise ValueError("DataFrame should have a column named 'hours'.")

df = pd.DataFrame({'hours': range(10) })

validateDF(df)

df = pd.DataFrame({'years': range(10) })

validateDF(df)

```

## Class exercise

Choose one of the functions from the last exercise. Create two new functions:

- The first function throws an exception if there is a negative argument.

- The second function catches an exception if the modulo operator (`%`) throws an exception and attempts to correct it by coercing the argument to a positive integer.

|

github_jupyter

|

import numpy as np

def divide(numerator, denominator):

result = numerator/denominator

print("result = %f" % result)

divide(1.0, 0)

def divide1(numerator, denominator):

try:

result = numerator/denominator

print("result = %f" % result)

except:

print("You can't divide by 0!")

divide1(1.0, 'a')

divide1(1.0, 2)

divide1("x", 2)

def divide2(numerator, denominator):

try:

result = numerator / denominator

print("result = %f" % result)

except (ZeroDivisionError, TypeError) as err:

print("Got an exception: %s" % err)

divide2(1, "X")

divide2("x, 2)

# Handle division by 0 by using a small number

SMALL_NUMBER = 1e-3

def divide3(numerator, denominator):

try:

result = numerator/denominator

except ZeroDivisionError:

result = numerator/SMALL_NUMBER

print("result = %f" % result)

except Exception as err:

print("Different error than division by zero:", err)

divide3(1,0)

divide3("1",0)

import pandas as pd

def validateDF(df):

""""

:param pd.DataFrame df: should have a column named "hours"

"""

if not "hours" in df.columns:

raise ValueError("DataFrame should have a column named 'hours'.")

df = pd.DataFrame({'hours': range(10) })

validateDF(df)

df = pd.DataFrame({'years': range(10) })

validateDF(df)

| 0.357231 | 0.840193 |

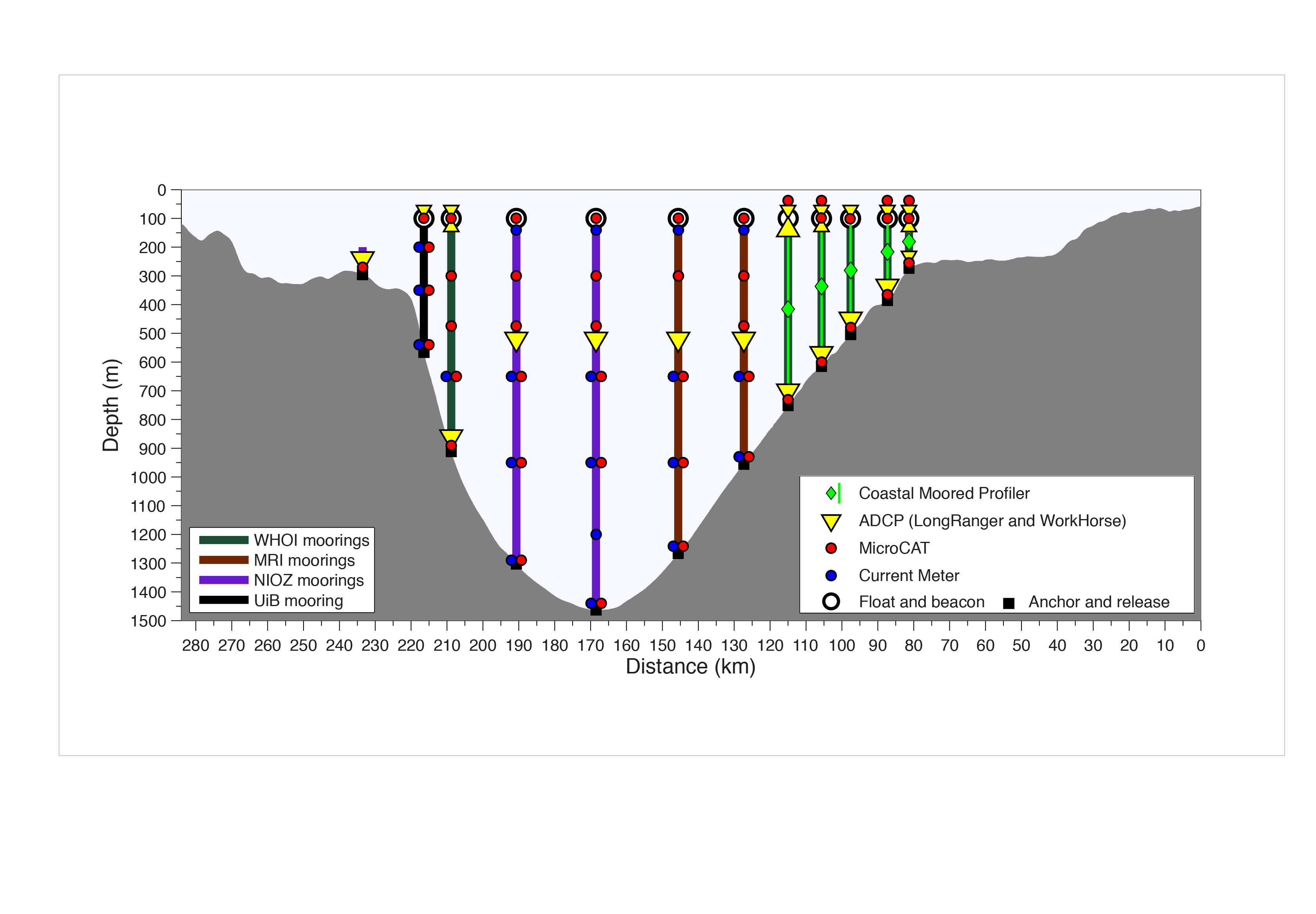

# Use Case 1: Kögur

In this example we will subsample a dataset stored on SciServer using methods resembling field-work procedures.

Specifically, we will estimate volume fluxes through the [Kögur section](http://kogur.whoi.edu) using (i) mooring arrays, and (ii) ship surveys.

```

# Import oceanspy

import oceanspy as ospy

# Import additional packages used in this notebook

import numpy as np

import matplotlib.pyplot as plt

import cartopy.crs as ccrs

```

The following cell starts a dask client (see the [Dask Client section in the tutorial](Tutorial.ipynb#Dask-Client)).

```

# Start client

from dask.distributed import Client

client = Client()

client

```

This command opens one of the datasets avaiable on SciServer.

```

# Open dataset stored on SciServer.

od = ospy.open_oceandataset.from_catalog("EGshelfIIseas2km_ASR_full")

```

The following cell changes the default parameters used by the plotting functions.

```

import matplotlib as mpl

%matplotlib inline

mpl.rcParams["figure.figsize"] = [10.0, 5.0]

```

## Mooring array

The following diagram shows the instrumentation deployed by observational oceanographers to monitor the Kögur section (source: http://kogur.whoi.edu/img/array_boxes.png).

The analogous OceanSpy function (`compute.mooring_array`) extracts vertical sections from the model using two criteria:

* Vertical sections follow great circle paths (unless cartesian coordinates are used).

* Vertical sections follow the grid of the model (extracted moorings are adjacent to each other, and the native grid of the model is preserved).

```

# Kögur information

lats_Kogur = [68.68, 67.52, 66.49]

lons_Kogur = [-26.28, -23.77, -22.99]

depth_Kogur = [0, -1750]

# Select time range:

# September 2007, extracting one snapshot every 3 days

timeRange = ["2007-09-01", "2007-09-30T18"]

timeFreq = "3D"

# Extract mooring array and fields used by this notebook

od_moor = od.subsample.mooring_array(

Xmoor=lons_Kogur,

Ymoor=lats_Kogur,

ZRange=depth_Kogur,

timeRange=timeRange,

timeFreq=timeFreq,

varList=["Temp", "S", "U", "V", "dyG", "dxG", "drF", "HFacS", "HFacW"],

)

```

The following cell shows how to store the mooring array in a NetCDF file. In this use case, we only use this feature to create a checkpoint. Another option could be to move the file to other servers or computers. If the NetCDF is re-opened using OceanSpy (as shown below), all OceanSpy functions are enabled and can be applied to the `oceandataset`.

```

# Store the new mooring dataset

filename = "Kogur_mooring.nc"

od_moor.to_netcdf(filename)

# The NetCDF can now be re-opened with oceanspy at any time,

# and on any computer

od_moor = ospy.open_oceandataset.from_netcdf(filename)

# Print size

print("Size:")

print(" * Original dataset: {0:.1f} TB".format(od.dataset.nbytes * 1.0e-12))

print(" * Mooring dataset: {0:.1f} MB".format(od_moor.dataset.nbytes * 1.0e-6))

print()

```

The following map shows the location of the moorings forming the Kögur section.

```

# Plot map and mooring locations

fig = plt.figure(figsize=(5, 5))

ax = od.plot.horizontal_section(varName="Depth")

XC = od_moor.dataset["XC"].squeeze()

YC = od_moor.dataset["YC"].squeeze()

line = ax.plot(XC, YC, "r.", transform=ccrs.PlateCarree())

```

The following figure shows the grid structure of the mooring array. The original grid structure of the model is unchaged, and each mooring is associated with one C-gridpoint (e.g., hydrography), two U-gridpoint and two V-gridpoint (e.g., velocities), and four G-gripoint (e.g., vertical component of relative vorticity).

```

# Print grid

print(od_moor.grid)

print()

print(od_moor.dataset.coords)

print()

# Plot 10 moorings and their grid points

fig, ax = plt.subplots(1, 1)

n_moorings = 10

# Markers:

for _, (pos, mark, col) in enumerate(

zip(["C", "G", "U", "V"], ["o", "x", ">", "^"], ["k", "m", "r", "b"])

):

X = od_moor.dataset["X" + pos].values[:n_moorings].flatten()

Y = od_moor.dataset["Y" + pos].values[:n_moorings].flatten()

ax.plot(X, Y, col + mark, markersize=20, label=pos)

if pos == "C":

for i in range(n_moorings):

ax.annotate(

str(i),

(X[i], Y[i]),

size=15,

weight="bold",

color="w",

ha="center",

va="center",

)

ax.set_xticks(X, minor=False)

ax.set_yticks(Y, minor=False)

elif pos == "G":

ax.set_xticks(X, minor=True)

ax.set_yticks(Y, minor=True)

ax.legend(prop={"size": 20})

ax.grid(which="major", linestyle="-")

ax.grid(which="minor", linestyle="--")

```

## Plots

### Vertical sections

We can now use OceanSpy to plot vertical sections. Here we plot isopycnal contours on top of the mean meridional velocities (`V`). Although there are two V-points associated with each mooring, the plot can be displayed because OceanSpy automatically performs a linear interpolation using the grid object.

```

# Plot time mean

ax = od_moor.plot.vertical_section(

varName="V",

contourName="Sigma0",

meanAxes="time",

robust=True,

cmap="coolwarm",

)

```

It is possible to visualize all the snapshots by omitting the `meanAxes='time'` argument:

```

# Plot all snapshots

ax = od_moor.plot.vertical_section(

varName="V", contourName="Sigma0", robust=True, cmap="coolwarm", col_wrap=5

)

# Alternatively, use the following command to produce a movie:

# anim = od_moor.animate.vertical_section(varName='V', contourName='Sigma0', ...)

```

### TS-diagrams

Here we use OceanSpy to plot a Temperature-Salinity diagram.

```

ax = od_moor.plot.TS_diagram()

# Alternatively, use the following command

# to explore how the water masses change with time:

# anim = od_moor.animate.TS_diagram()

```

We can also color each TS point using any field in the original dataset, or any field computed by OceanSpy. Fields that are not on the same grid of temperature and salinity are automatically regridded by OceanSpy.

```

ax = od_moor.plot.TS_diagram(

colorName="V",

meanAxes="time",

cmap_kwargs={"robust": True, "cmap": "coolwarm"},

)

```

## Volume flux

OceanSpy can be used to compute accurate volume fluxes through vertical sections.

The function `compute.mooring_volume_transport` calculates the inflow/outflow through all grid faces of the vertical section.

This function creates a new dimension named `path` because transports can be computed using two paths (see the plot below).

```

# Show volume flux variables

ds_Vflux = ospy.compute.mooring_volume_transport(od_moor)

od_moor = od_moor.merge_into_oceandataset(ds_Vflux)

print(ds_Vflux)

# Plot 10 moorings and volume flux directions.

fig, ax = plt.subplots(1, 1)

ms = 10

s = 100

ds = od_moor.dataset

_ = ax.step(

ds["XU"].isel(Xp1=0).squeeze().values,

ds["YV"].isel(Yp1=0).squeeze().values,

"C0.-",

ms=ms,

label="path0",

)

_ = ax.step(

ds["XU"].isel(Xp1=1).squeeze().values,

ds["YV"].isel(Yp1=1).squeeze().values,

"C1.-",

ms=ms,

label="path1",

)

_ = ax.plot(

ds["XC"].squeeze(), ds["YC"].squeeze(), "k.", ms=ms, label="mooring"

)

_ = ax.scatter(

ds["X_Vtransport"].where(ds["dir_Vtransport"] == 1),

ds["Y_Vtransport"].where(ds["dir_Vtransport"] == 1),

s=s,

c="k",

marker="^",

label="meridional direction",

)

_ = ax.scatter(

ds["X_Utransport"].where(ds["dir_Utransport"] == 1),

ds["Y_Utransport"].where(ds["dir_Utransport"] == 1),

s=s,

c="k",

marker=">",

label="zonal direction",

)

_ = ax.scatter(

ds["X_Vtransport"].where(ds["dir_Vtransport"] == -1),

ds["Y_Vtransport"].where(ds["dir_Vtransport"] == -1),

s=s,

c="k",

marker="v",

label="meridional direction",

)

_ = ax.scatter(

ds["X_Utransport"].where(ds["dir_Utransport"] == -1),

ds["Y_Utransport"].where(ds["dir_Utransport"] == -1),

s=s,

c="k",

marker="<",

label="zonal direction",

)

# Only show a few moorings

m_start = 50

m_end = 70

xlim = ax.set_xlim(

sorted(

[

ds["XC"].isel(mooring=m_start).values,

ds["XC"].isel(mooring=m_end).values,

]

)

)

ylim = ax.set_ylim(

sorted(

[

ds["YC"].isel(mooring=m_start).values,

ds["YC"].isel(mooring=m_end).values,

]

)

)

ax.legend()

```

Here we compute and plot the cumulative mean transport through the Kögur mooring array.

```

# Compute cumulative transport

tran_moor = od_moor.dataset["transport"]

cum_tran_moor = tran_moor.sum("Z").mean("time").cumsum("mooring")

cum_tran_moor.attrs = tran_moor.attrs

fig, ax = plt.subplots(1, 1)

lines = cum_tran_moor.squeeze().plot.line(hue="path", linewidth=3)

tot_mean_tran_moor = cum_tran_moor.isel(mooring=-1).mean("path")

title = ax.set_title(

"TOTAL MEAN TRANSPORT: {0:.1f} Sv" "".format(tot_mean_tran_moor.values)

)

```

Here we compute the transport of the overflow, defined as water with density greater than 27.8 kg m$^{-3}$.

```

# Mask transport using density

od_moor = od_moor.compute.potential_density_anomaly()

density = od_moor.dataset["Sigma0"].squeeze()

oflow_moor = tran_moor.where(density > 27.8)

# Compute cumulative transport as before

cum_oflow_moor = oflow_moor.sum("Z").mean("time").cumsum("mooring")

cum_oflow_moor.attrs = oflow_moor.attrs

fig, ax = plt.subplots(1, 1)

lines = cum_oflow_moor.squeeze().plot.line(hue="path", linewidth=3)

tot_mean_oflow_moor = cum_oflow_moor.isel(mooring=-1).mean("path")

title = ax.set_title(

"TOTAL MEAN OVERFLOW TRANSPORT: {0:.1f} Sv"

"".format(tot_mean_oflow_moor.values)

)

```

## Ship survey

The following picture shows the NATO Research Vessel Alliance, a ship designed to carry out research at sea (source: http://www.marina.difesa.it/noi-siamo-la-marina/mezzi/forze-navali/PublishingImages/_alliance.jpg).

The OceanSpy function analogous to a ship survey (`compute.survey_stations`) extracts vertical sections from the model using two criteria:

* Vertical sections follow great circle paths (unless cartesian coordinates are used) with constant horizontal spacing between stations.

* Interpolation is performed and all fields are returned at the same locations (the native grid of the model is NOT preserved).

```

# Spacing between interpolated stations

delta_Kogur = 2 # km

# Extract survey stations

# Reduce dataset to speed things up:

od_surv = od.subsample.survey_stations(

Xsurv=lons_Kogur,

Ysurv=lats_Kogur,

delta=delta_Kogur,

ZRange=depth_Kogur,

timeRange=timeRange,

timeFreq=timeFreq,

varList=["Temp", "S", "U", "V", "drC", "drF", "HFacC", "HFacW", "HFacS"],

)

# Plot map and survey stations

fig = plt.figure(figsize=(5, 5))

ax = od.plot.horizontal_section(varName="Depth")

XC = od_surv.dataset["XC"].squeeze()

YC = od_surv.dataset["YC"].squeeze()

line = ax.plot(XC, YC, "r.", transform=ccrs.PlateCarree())

```

## Orthogonal velocities

We can use OceanSpy to compute the velocity components orthogonal and tangential to the Kögur section.

```

od_surv = od_surv.compute.survey_aligned_velocities()

```

The following animation shows isopycnal contours on top of the velocity component orthogonal to the Kögur section.

```

anim = od_surv.animate.vertical_section(

varName="ort_Vel",

contourName="Sigma0",

robust=True,

cmap="coolwarm",

display=False,

)

# The following code is necessary to display the animation in the documentation.

# When the notebook is executed, remove the code below and set

# display=True in the command above to show the animation.

import matplotlib.pyplot as plt

dirName = "_static"

import os

try:

os.mkdir(dirName)

except FileExistsError:

pass

anim.save("{}/Kogur.mp4".format(dirName))

plt.close()

!ffmpeg -loglevel panic -y -i _static/Kogur.mp4 -filter_complex "[0:v] fps=12,scale=480:-1,split [a][b];[a] palettegen [p];[b][p] paletteuse" _static/Kogur.gif

!rm -f _static/Kogur.mp4

```

Finally, we can infer the volume flux by integrating the orthogonal velocities.

```

# Integrate along Z

od_surv = od_surv.compute.integral(varNameList="ort_Vel", axesList=["Z"])

# Compute transport using weights

od_surv = od_surv.compute.weighted_mean(

varNameList="I(ort_Vel)dZ", axesList=["station"]

)

transport_surv = (

od_surv.dataset["I(ort_Vel)dZ"] * od_surv.dataset["weight_I(ort_Vel)dZ"]

)

# Convert in Sverdrup

transport_surv = transport_surv * 1.0e-6

# Compute cumulative transport

cum_transport_surv = transport_surv.cumsum("station").rename(

"Horizontal volume transport"

)

cum_transport_surv.attrs["units"] = "Sv"

```

Here we plot the cumulative transport for each snapshot.

```

# Plot

fig, ax = plt.subplots(figsize=(13, 5))

lines = cum_transport_surv.squeeze().plot.line(hue="time", linewidth=3)

tot_mean_transport = cum_transport_surv.isel(station=-1).mean("time")

title = ax.set_title(

"TOTAL MEAN TRANSPORT: {0:.1f} Sv".format(tot_mean_transport.values)

)

```

|

github_jupyter

|

# Import oceanspy

import oceanspy as ospy

# Import additional packages used in this notebook

import numpy as np

import matplotlib.pyplot as plt

import cartopy.crs as ccrs

# Start client

from dask.distributed import Client

client = Client()

client

# Open dataset stored on SciServer.

od = ospy.open_oceandataset.from_catalog("EGshelfIIseas2km_ASR_full")

import matplotlib as mpl

%matplotlib inline

mpl.rcParams["figure.figsize"] = [10.0, 5.0]

# Kögur information

lats_Kogur = [68.68, 67.52, 66.49]

lons_Kogur = [-26.28, -23.77, -22.99]

depth_Kogur = [0, -1750]

# Select time range:

# September 2007, extracting one snapshot every 3 days

timeRange = ["2007-09-01", "2007-09-30T18"]

timeFreq = "3D"

# Extract mooring array and fields used by this notebook

od_moor = od.subsample.mooring_array(

Xmoor=lons_Kogur,

Ymoor=lats_Kogur,

ZRange=depth_Kogur,

timeRange=timeRange,

timeFreq=timeFreq,

varList=["Temp", "S", "U", "V", "dyG", "dxG", "drF", "HFacS", "HFacW"],

)

# Store the new mooring dataset

filename = "Kogur_mooring.nc"

od_moor.to_netcdf(filename)

# The NetCDF can now be re-opened with oceanspy at any time,

# and on any computer

od_moor = ospy.open_oceandataset.from_netcdf(filename)

# Print size

print("Size:")

print(" * Original dataset: {0:.1f} TB".format(od.dataset.nbytes * 1.0e-12))

print(" * Mooring dataset: {0:.1f} MB".format(od_moor.dataset.nbytes * 1.0e-6))

print()

# Plot map and mooring locations

fig = plt.figure(figsize=(5, 5))

ax = od.plot.horizontal_section(varName="Depth")

XC = od_moor.dataset["XC"].squeeze()

YC = od_moor.dataset["YC"].squeeze()

line = ax.plot(XC, YC, "r.", transform=ccrs.PlateCarree())

# Print grid

print(od_moor.grid)

print()

print(od_moor.dataset.coords)

print()

# Plot 10 moorings and their grid points

fig, ax = plt.subplots(1, 1)

n_moorings = 10

# Markers:

for _, (pos, mark, col) in enumerate(

zip(["C", "G", "U", "V"], ["o", "x", ">", "^"], ["k", "m", "r", "b"])

):

X = od_moor.dataset["X" + pos].values[:n_moorings].flatten()

Y = od_moor.dataset["Y" + pos].values[:n_moorings].flatten()

ax.plot(X, Y, col + mark, markersize=20, label=pos)

if pos == "C":

for i in range(n_moorings):

ax.annotate(

str(i),

(X[i], Y[i]),

size=15,

weight="bold",

color="w",

ha="center",

va="center",

)

ax.set_xticks(X, minor=False)

ax.set_yticks(Y, minor=False)

elif pos == "G":

ax.set_xticks(X, minor=True)

ax.set_yticks(Y, minor=True)

ax.legend(prop={"size": 20})

ax.grid(which="major", linestyle="-")

ax.grid(which="minor", linestyle="--")

# Plot time mean

ax = od_moor.plot.vertical_section(

varName="V",

contourName="Sigma0",

meanAxes="time",

robust=True,

cmap="coolwarm",

)

# Plot all snapshots

ax = od_moor.plot.vertical_section(

varName="V", contourName="Sigma0", robust=True, cmap="coolwarm", col_wrap=5

)

# Alternatively, use the following command to produce a movie:

# anim = od_moor.animate.vertical_section(varName='V', contourName='Sigma0', ...)

ax = od_moor.plot.TS_diagram()

# Alternatively, use the following command

# to explore how the water masses change with time:

# anim = od_moor.animate.TS_diagram()

ax = od_moor.plot.TS_diagram(

colorName="V",

meanAxes="time",

cmap_kwargs={"robust": True, "cmap": "coolwarm"},

)

# Show volume flux variables

ds_Vflux = ospy.compute.mooring_volume_transport(od_moor)

od_moor = od_moor.merge_into_oceandataset(ds_Vflux)

print(ds_Vflux)

# Plot 10 moorings and volume flux directions.

fig, ax = plt.subplots(1, 1)

ms = 10

s = 100

ds = od_moor.dataset

_ = ax.step(

ds["XU"].isel(Xp1=0).squeeze().values,

ds["YV"].isel(Yp1=0).squeeze().values,

"C0.-",

ms=ms,

label="path0",

)

_ = ax.step(

ds["XU"].isel(Xp1=1).squeeze().values,

ds["YV"].isel(Yp1=1).squeeze().values,

"C1.-",

ms=ms,

label="path1",

)

_ = ax.plot(

ds["XC"].squeeze(), ds["YC"].squeeze(), "k.", ms=ms, label="mooring"

)

_ = ax.scatter(

ds["X_Vtransport"].where(ds["dir_Vtransport"] == 1),

ds["Y_Vtransport"].where(ds["dir_Vtransport"] == 1),

s=s,

c="k",

marker="^",

label="meridional direction",

)

_ = ax.scatter(

ds["X_Utransport"].where(ds["dir_Utransport"] == 1),

ds["Y_Utransport"].where(ds["dir_Utransport"] == 1),

s=s,

c="k",

marker=">",

label="zonal direction",

)

_ = ax.scatter(

ds["X_Vtransport"].where(ds["dir_Vtransport"] == -1),

ds["Y_Vtransport"].where(ds["dir_Vtransport"] == -1),

s=s,

c="k",

marker="v",

label="meridional direction",

)

_ = ax.scatter(

ds["X_Utransport"].where(ds["dir_Utransport"] == -1),

ds["Y_Utransport"].where(ds["dir_Utransport"] == -1),

s=s,

c="k",

marker="<",

label="zonal direction",

)

# Only show a few moorings

m_start = 50

m_end = 70

xlim = ax.set_xlim(

sorted(

[

ds["XC"].isel(mooring=m_start).values,

ds["XC"].isel(mooring=m_end).values,

]

)

)

ylim = ax.set_ylim(

sorted(

[

ds["YC"].isel(mooring=m_start).values,

ds["YC"].isel(mooring=m_end).values,

]

)

)

ax.legend()

# Compute cumulative transport

tran_moor = od_moor.dataset["transport"]

cum_tran_moor = tran_moor.sum("Z").mean("time").cumsum("mooring")

cum_tran_moor.attrs = tran_moor.attrs

fig, ax = plt.subplots(1, 1)

lines = cum_tran_moor.squeeze().plot.line(hue="path", linewidth=3)

tot_mean_tran_moor = cum_tran_moor.isel(mooring=-1).mean("path")

title = ax.set_title(

"TOTAL MEAN TRANSPORT: {0:.1f} Sv" "".format(tot_mean_tran_moor.values)

)

# Mask transport using density

od_moor = od_moor.compute.potential_density_anomaly()

density = od_moor.dataset["Sigma0"].squeeze()

oflow_moor = tran_moor.where(density > 27.8)

# Compute cumulative transport as before

cum_oflow_moor = oflow_moor.sum("Z").mean("time").cumsum("mooring")

cum_oflow_moor.attrs = oflow_moor.attrs

fig, ax = plt.subplots(1, 1)

lines = cum_oflow_moor.squeeze().plot.line(hue="path", linewidth=3)

tot_mean_oflow_moor = cum_oflow_moor.isel(mooring=-1).mean("path")

title = ax.set_title(

"TOTAL MEAN OVERFLOW TRANSPORT: {0:.1f} Sv"

"".format(tot_mean_oflow_moor.values)

)

# Spacing between interpolated stations

delta_Kogur = 2 # km

# Extract survey stations

# Reduce dataset to speed things up:

od_surv = od.subsample.survey_stations(

Xsurv=lons_Kogur,

Ysurv=lats_Kogur,

delta=delta_Kogur,

ZRange=depth_Kogur,

timeRange=timeRange,

timeFreq=timeFreq,

varList=["Temp", "S", "U", "V", "drC", "drF", "HFacC", "HFacW", "HFacS"],

)

# Plot map and survey stations

fig = plt.figure(figsize=(5, 5))

ax = od.plot.horizontal_section(varName="Depth")

XC = od_surv.dataset["XC"].squeeze()

YC = od_surv.dataset["YC"].squeeze()

line = ax.plot(XC, YC, "r.", transform=ccrs.PlateCarree())

od_surv = od_surv.compute.survey_aligned_velocities()

anim = od_surv.animate.vertical_section(

varName="ort_Vel",

contourName="Sigma0",

robust=True,

cmap="coolwarm",

display=False,

)

# The following code is necessary to display the animation in the documentation.

# When the notebook is executed, remove the code below and set

# display=True in the command above to show the animation.

import matplotlib.pyplot as plt

dirName = "_static"

import os

try:

os.mkdir(dirName)

except FileExistsError:

pass

anim.save("{}/Kogur.mp4".format(dirName))

plt.close()

!ffmpeg -loglevel panic -y -i _static/Kogur.mp4 -filter_complex "[0:v] fps=12,scale=480:-1,split [a][b];[a] palettegen [p];[b][p] paletteuse" _static/Kogur.gif

!rm -f _static/Kogur.mp4

# Integrate along Z

od_surv = od_surv.compute.integral(varNameList="ort_Vel", axesList=["Z"])

# Compute transport using weights

od_surv = od_surv.compute.weighted_mean(

varNameList="I(ort_Vel)dZ", axesList=["station"]

)

transport_surv = (

od_surv.dataset["I(ort_Vel)dZ"] * od_surv.dataset["weight_I(ort_Vel)dZ"]

)

# Convert in Sverdrup

transport_surv = transport_surv * 1.0e-6

# Compute cumulative transport

cum_transport_surv = transport_surv.cumsum("station").rename(

"Horizontal volume transport"

)

cum_transport_surv.attrs["units"] = "Sv"

# Plot

fig, ax = plt.subplots(figsize=(13, 5))

lines = cum_transport_surv.squeeze().plot.line(hue="time", linewidth=3)

tot_mean_transport = cum_transport_surv.isel(station=-1).mean("time")

title = ax.set_title(

"TOTAL MEAN TRANSPORT: {0:.1f} Sv".format(tot_mean_transport.values)

)

| 0.670932 | 0.991067 |

# Grove Temperature Sensor 1.2

This example shows how to use the [Grove Temperature Sensor v1.2](http://wiki.seeedstudio.com/Grove-Temperature_Sensor_V1.2/). You will also see how to plot a graph using matplotlib. The Grove Temperature sensor produces an analog signal, and requires an ADC.

A Grove Temperature sensor and Pynq Grove Adapter, or Pynq Shield is required. The Grove Temperature Sensor, Pynq Grove Adapter, and Grove I2C ADC are used for this example.

You can read a single value of temperature or read multiple values at regular intervals for a desired duration.

At the end of this notebook, a Python only solution with single-sample read functionality is provided.

### 1. Load overlay

```

from pynq.overlays.base import BaseOverlay

base = BaseOverlay("base.bit")

```

### 2. Read single temperature

This example shows on how to get a single temperature sample from the Grove TMP sensor.

The Grove ADC is assumed to be attached to the GR4 connector of the StickIt. The StickIt module is assumed to be plugged in the 1st PMOD labeled JB. The Grove TMP sensor is connected to the other connector of the Grove ADC.

Grove ADC provides a raw sample which is converted into resistance first and then converted into temperature.

```

import math

from pynq.lib.pmod import Grove_TMP

from pynq.lib.pmod import PMOD_GROVE_G4

tmp = Grove_TMP(base.PMODB,PMOD_GROVE_G4)

temperature = tmp.read()

print(float("{0:.2f}".format(temperature)),'degree Celsius')

```

### 3. Start logging once every 100ms for 10 seconds

Executing the next cell will start logging the temperature sensor values every 100ms, and will run for 10s. You can try touch/hold the temperature sensor to vary the measured temperature.

You can vary the logging interval and the duration by changing the values 100 and 10 in the cellbelow. The raw samples are stored in the internal memory, and converted into temperature values.

```

import time

%matplotlib inline

import matplotlib.pyplot as plt

tmp.set_log_interval_ms(100)

tmp.start_log()

# Change input during this time

time.sleep(10)

tmp_log = tmp.get_log()

plt.plot(range(len(tmp_log)), tmp_log, 'ro')

plt.title('Grove Temperature Plot')

min_tmp_log = min(tmp_log)

max_tmp_log = max(tmp_log)

plt.axis([0, len(tmp_log), min_tmp_log, max_tmp_log])

plt.show()

```

### 4. A Pure Python class to exercise the AXI IIC Controller inheriting from PMOD_IIC

This class is ported from http://wiki.seeedstudio.com/Grove-Temperature_Sensor/

```

from time import sleep

from math import log

from pynq.lib.pmod import PMOD_GROVE_G3

from pynq.lib.pmod import PMOD_GROVE_G4

from pynq.lib import Pmod_IIC

class Python_Grove_TMP(Pmod_IIC):

"""This class controls the grove temperature sensor.

This class inherits from the PMODIIC class.

Attributes

----------

iop : _IOP

The _IOP object returned from the DevMode.

scl_pin : int

The SCL pin number.

sda_pin : int

The SDA pin number.

iic_addr : int

The IIC device address.

"""

def __init__(self, pmod_id, gr_pins, model = 'v1.2'):

"""Return a new instance of a grove OLED object.

Parameters

----------

pmod_id : int

The PMOD ID (1, 2) corresponding to (PMODA, PMODB).

gr_pins: list

The group pins on Grove Adapter. G3 or G4 is valid.

model : string

Temperature sensor model (can be found on the device).

"""

if gr_pins in [PMOD_GROVE_G3, PMOD_GROVE_G4]:

[scl_pin,sda_pin] = gr_pins

else:

raise ValueError("Valid group numbers are G3 and G4.")

# Each revision has its own B value

if model == 'v1.2':

# v1.2 uses thermistor NCP18WF104F03RC

self.bValue = 4250

elif model == 'v1.1':

# v1.1 uses thermistor NCP18WF104F03RC

self.bValue = 4250

else:

# v1.0 uses thermistor TTC3A103*39H

self.bValue = 3975

super().__init__(pmod_id, scl_pin, sda_pin, 0x50)

# Initialize the Grove ADC

self.send([0x2,0x20]);

def read(self):

"""Read temperature in Celsius from grove temperature sensor.

Parameters

----------

None

Returns

-------

float

Temperature reading in Celsius.

"""

val = self._read_grove_adc()

R = 4095.0/val - 1.0

temp = 1.0/(log(R)/self.bValue + 1/298.15)-273.15

return temp

def _read_grove_adc(self):

self.send([0])

bytes = self.receive(2)

return 2*(((bytes[0] & 0x0f) << 8) | bytes[1])

from pynq import PL

# Flush IOP state

PL.reset()

py_tmp = Python_Grove_TMP(base.PMODB, PMOD_GROVE_G4)

temperature = py_tmp.read()

print(float("{0:.2f}".format(temperature)),'degree Celsius')

```

Copyright (C) 2020 Xilinx, Inc

|

github_jupyter

|

from pynq.overlays.base import BaseOverlay

base = BaseOverlay("base.bit")

import math

from pynq.lib.pmod import Grove_TMP

from pynq.lib.pmod import PMOD_GROVE_G4

tmp = Grove_TMP(base.PMODB,PMOD_GROVE_G4)

temperature = tmp.read()

print(float("{0:.2f}".format(temperature)),'degree Celsius')

import time

%matplotlib inline

import matplotlib.pyplot as plt

tmp.set_log_interval_ms(100)

tmp.start_log()

# Change input during this time

time.sleep(10)

tmp_log = tmp.get_log()

plt.plot(range(len(tmp_log)), tmp_log, 'ro')

plt.title('Grove Temperature Plot')

min_tmp_log = min(tmp_log)

max_tmp_log = max(tmp_log)

plt.axis([0, len(tmp_log), min_tmp_log, max_tmp_log])

plt.show()

from time import sleep

from math import log

from pynq.lib.pmod import PMOD_GROVE_G3

from pynq.lib.pmod import PMOD_GROVE_G4

from pynq.lib import Pmod_IIC

class Python_Grove_TMP(Pmod_IIC):

"""This class controls the grove temperature sensor.

This class inherits from the PMODIIC class.

Attributes

----------

iop : _IOP

The _IOP object returned from the DevMode.

scl_pin : int

The SCL pin number.

sda_pin : int

The SDA pin number.

iic_addr : int

The IIC device address.

"""

def __init__(self, pmod_id, gr_pins, model = 'v1.2'):

"""Return a new instance of a grove OLED object.

Parameters

----------

pmod_id : int

The PMOD ID (1, 2) corresponding to (PMODA, PMODB).

gr_pins: list

The group pins on Grove Adapter. G3 or G4 is valid.

model : string

Temperature sensor model (can be found on the device).

"""

if gr_pins in [PMOD_GROVE_G3, PMOD_GROVE_G4]:

[scl_pin,sda_pin] = gr_pins

else:

raise ValueError("Valid group numbers are G3 and G4.")

# Each revision has its own B value

if model == 'v1.2':

# v1.2 uses thermistor NCP18WF104F03RC

self.bValue = 4250

elif model == 'v1.1':

# v1.1 uses thermistor NCP18WF104F03RC

self.bValue = 4250

else:

# v1.0 uses thermistor TTC3A103*39H

self.bValue = 3975

super().__init__(pmod_id, scl_pin, sda_pin, 0x50)

# Initialize the Grove ADC

self.send([0x2,0x20]);

def read(self):

"""Read temperature in Celsius from grove temperature sensor.

Parameters

----------

None

Returns

-------

float

Temperature reading in Celsius.

"""

val = self._read_grove_adc()

R = 4095.0/val - 1.0

temp = 1.0/(log(R)/self.bValue + 1/298.15)-273.15

return temp

def _read_grove_adc(self):

self.send([0])

bytes = self.receive(2)

return 2*(((bytes[0] & 0x0f) << 8) | bytes[1])

from pynq import PL

# Flush IOP state

PL.reset()

py_tmp = Python_Grove_TMP(base.PMODB, PMOD_GROVE_G4)

temperature = py_tmp.read()

print(float("{0:.2f}".format(temperature)),'degree Celsius')

| 0.664105 | 0.971483 |

# The *new* **Network** Class

## Intro

This notebook demonstrates the new design of skrf's **Network** Class. The new class utilizes a more object-oriented approach which is cleaner and more scalable. The draw-back is that it breaks backward compatibility.

Creating a *new style* **Network** from an old

```

import skrf as rf

%matplotlib inline

from pylab import *

rf.stylely()

from skrf import network2

a = network2.Network.from_ntwkv1(rf.data.ring_slot)

```

The new **Network** class employs nested objects, which makes for a cleaner and more logical namespace. The basic structure is:

* **Network**

* **Frequency ** (same as before)

* **Parameter** (s,z,y,etc)

* **Projection** ( db10, db20, deg, mag,etc)

## Network Parameters

Accessing a Network's parameters like `s`,`z`,or `y` returns a **Parameters** object,

```

type(a.s)

```

You can get at the array by accessing the property `val`.

```

a.s.val[:3]

```

You can also slice the parameter directly. This can be used as an alternative way to access the values.

```

a.s[:2]

```

This nested-object desgin allows for more concise function calls. For example, plot functions become members of the parameters, which behave like you expect

```

a.s.plot()

a.z.plot()

axis('equal')

```

## Projections

Each parameter has members for various scalar projections.

```

type(a.s.db)

type(a.s.deg)

```

Their numerical values may be accessed through `val` attribute or by direct slicing, just like a **Parameter**

```

a.s.db[:2]

```

Projections also `plot()` as you expect

```

a.s.db.plot();

a.s.deg.plot(1,0);

```

## Ipython Notebook display system

One interesting advantage of using an object-oriented model for parameters and projections is that we can create [custom display logic](http://nbviewer.ipython.org/github/ipython/ipython/blob/master/examples/notebooks/Custom%20Display%20Logic.ipynb) for the ipython notebook. This allows us to define graphical representations for an object, removing the need to call any plot method.

```

a.s.db

a.z.im

```

## Accessing numpy array properties

Numpy ndarray properties are accessable on both **Parameters** and **Projections **. These are implemented using python `__getattr__()` operator so they wont tab out, but you can still use em.

```

a.s.db.plot()

axhline(a.s.db.min(),color='k')

axhline(a.s.db.max(),color='k')

```

## Frequency Band Selection

Networks can sliced by an index on the frequency axis or by a human readable frequency selections,

```

a[40:100] #slice by frequency index

a['82-92ghz'] # slice by a human readable string

a['82-92ghz'].s.db

```

## Subnetworks

Individual s-parameters can be accessed by calling a **Network** with the desired port indecies (*index starting from 0*)

```

s11 = a(0,0) # s11

s22 = a(1,1) # s22

s12 = a(0,1) # s12

s21 = a(1,1) # s21

s11

```

## Time domain

Time domain transform is implemented as a Parameter named `s_time`. Note that accessing this parameter implicitly windows the s-parameters before taking the FFT. For finer control over the transform, use the functions `s2time` and `windowed`.

```

b = network2.Network.from_ntwkv1(rf.data.ring_slot_meas)

b.s_time.db

```

|

github_jupyter

|

import skrf as rf

%matplotlib inline

from pylab import *

rf.stylely()

from skrf import network2

a = network2.Network.from_ntwkv1(rf.data.ring_slot)

type(a.s)

a.s.val[:3]

a.s[:2]

a.s.plot()

a.z.plot()

axis('equal')

type(a.s.db)

type(a.s.deg)

a.s.db[:2]

a.s.db.plot();

a.s.deg.plot(1,0);

a.s.db

a.z.im

a.s.db.plot()

axhline(a.s.db.min(),color='k')

axhline(a.s.db.max(),color='k')

a[40:100] #slice by frequency index

a['82-92ghz'] # slice by a human readable string

a['82-92ghz'].s.db

s11 = a(0,0) # s11

s22 = a(1,1) # s22

s12 = a(0,1) # s12

s21 = a(1,1) # s21

s11

b = network2.Network.from_ntwkv1(rf.data.ring_slot_meas)

b.s_time.db

| 0.371365 | 0.921287 |

## Ghana Climate Data

### Exploratory Data Analysis

```

# Climate data source from https://www.ncdc.noaa.gov/cdo-web

# Period of record from 1973

# Contains data records of 17 stations of varying lengths

# Original units of variables - imperial units

%matplotlib inline

# Import libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# Seaborn for additional plotting and styling

import seaborn as sns

# Define file path to connect data source:

fp = 'C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/data_weatherGH/1619559410439.csv'

# Read in data from the csv file

# Low memory set to false to remove memory errors

data = pd.read_csv(fp, parse_dates=['DATE'], dayfirst=True, low_memory=False)

# Print data types

data.dtypes

#Print column names

data.columns.values

# Check DATE type format

type(data['DATE'])

# Check dataframe shape (number of rows, number of columns)

data.shape

# Confirm output of dataframe

data.head()

# Check sum of no-data, not a number (NaN) values in the data set

data[['PRCP', 'TAVG', 'TMAX', 'TMIN']].isna().sum()

# Confirm output of parse date

data.loc[0, 'DATE'].day_name()

# Drop column Unnamed: 0, which contains station unique code

# Drop PRCP column, work only with temperature data

data.drop(columns=['Unnamed: 0', 'PRCP'], inplace=True)

# Check drop of Unnamed: 0 and PRCP column

data.tail()

# Rename column heading of Unnamed: 1 to TOWN and TAVG to TEMP for familiarity

data = data.rename(columns={'Unnamed: 1': 'TOWN', 'TAVG': 'TEMP'})

# Confirm rename of columns

data.head()

# Replace inadvertantly named double named towns falling under 2 columns to its original double word names & KIA for Kotoka International Airport

data.replace(to_replace=['AKIM', 'KOTOKA', 'KETE', 'SEFWI'],

value= ['AKIM ODA', 'KIA', 'KETE KRACHI', 'SEFWI WIASO'],

inplace=True)

# Check confirmation of output

data.head()

# Drop STATION column due to renamed town heading

data.drop(columns=['STATION'], inplace=True)

# Check drop of STATION column

data.head()

# The date of first observation

first_obs = data.at[0, "DATE"]

print('The first record of observation was on', data.at[0, "DATE"])

# Convert to string to change capital case to lower case for TOWN names

data['TOWN'] = data['TOWN'].astype(str)

data['TOWN'] = data['TOWN'].str.title()

# Check conversion string change

data.head()

# Convert imperial units to metric through def function

def fahr_to_celsius(temp_fahrenheit):

"""Function to convert Fahrenheit temperature into Celsius.

Parameters

----------

temp_fahrenheit: int | float

Input temperature in Fahrenheit (should be a number)

Returns

-------

Temperature in Celsius (float)

"""

# Convert the Fahrenheit into Celsius

converted_temp = (temp_fahrenheit - 32) / 1.8

return converted_temp # Use apply to convert each row of value

data[['TEMP_C', 'TMAX_C', 'TMIN_C']] = round(data[['TEMP', 'TMAX', 'TMIN']].apply(fahr_to_celsius), 2)

# Check conversion to metric units

data.head()

# Drop columns with fahrenheit units since they are no longer needed

data.drop(columns=['TEMP', 'TMAX', 'TMIN'], inplace=True)

# print head to evalaute

data.head()

# For later analysis

data['DayofWeek'] = data['DATE'].dt.day_name()

data.head()

# Take mean of mean temperatures of the data set

temp_mean = round(data['TEMP_C'].mean(), 2)

temp_mean

# Set DATE as index

data.set_index('DATE', inplace=True)

data.head()

# Mean daily temperature across all the weather stations

daily_temp = round(data['TEMP_C'].resample('D').mean(), 2)

daily_temp.head()

# Indicate the mean minimum and maximum daily temperature

print(daily_temp.min(),'°C')

print('')

print(daily_temp.max(),'°C')

# Show which dates the minimum and maximum daily mean temperature were recorded

min_daily = daily_temp.loc[daily_temp == 16.67]

print(min_daily)

print(' ')

max_daily = daily_temp.loc[daily_temp == 35.0]

print(max_daily)

# Mean monthly temperature across all the weather stations

monthly_temp = round(data['TEMP_C'].resample('M').mean(), 2)

monthly_temp.head()

# Indicate the mean minimum and maximum monthly temperature

min_monthly_temp = monthly_temp.min()

print(min_monthly_temp)

print('')

max_monthly_temp = monthly_temp.max()

print(max_monthly_temp)

# Show the date the mean minimum and maximum monthly temperature were recorded

print(monthly_temp.loc[monthly_temp == min_monthly_temp])

print(' ')

print(monthly_temp.loc[monthly_temp == max_monthly_temp])

# Mean yearly temperature across all the weather stations

yearly_temp = round(data[['TEMP_C', 'TMAX_C', 'TMIN_C']].resample('Y').mean(), 2)

# Check output

yearly_temp.head()

# Indicate the mean minimum and maximum yearly temperature

min_yearly_temp = yearly_temp['TEMP_C'].min()

print(min_yearly_temp)

print(' ')

max_yearly_temp = yearly_temp['TEMP_C'].max()

print(max_yearly_temp)

# Show the year the minimum and maximum yearly temperature were recorded

print(yearly_temp['TEMP_C'].loc[yearly_temp['TEMP_C'] == min_yearly_temp])

print(' ')

print(yearly_temp['TEMP_C'].loc[yearly_temp['TEMP_C'] == max_yearly_temp])

# Reset DATE index to column

yearly_temp = yearly_temp.reset_index()

yearly_temp.head()

# Convert DATE to year using dt.year

yearly_temp['YEAR']= yearly_temp['DATE'].dt.year

yearly_temp.head()

# Plot mean yearly temperatures

import matplotlib.dates as mdates

import matplotlib.ticker as ticker

# Plot style use Seaborn

plt.style.use('seaborn-whitegrid')

fig, ax = plt.subplots(1, figsize=(10, 6))

# Data values for X and Y

x = yearly_temp.YEAR

y = yearly_temp.TEMP_C

# Plot x, y values & parameters

plt.plot(x, y, color = 'red', linestyle='-', marker='o', mfc='blue')

# Highlight coalescencing temps around 28 °C

plt.plot(x[-6:], y[-6:], 'y*', ms='12')

fig.autofmt_xdate()

# Plot title & attributes

plt.title('Yearly Mean Temperatures, Ghana', fontdict={'fontname': 'comic sans ms',

'fontsize': 15, 'weight': 'bold'})

# Add label to the plot

#plt.text(1983, 30.190, ' Severe Drought & Bushfires', fontsize='large')

# Annotate peak temp with description & arrow

ax.annotate('Severe drought & bushfires', fontsize='large', weight='semibold',

xy=(1983, 30.190), xytext=(1986, 29.5),

arrowprops=dict(arrowstyle='->',

connectionstyle='arc'),

xycoords='data',)

# Labels for axes

plt.xlabel('Year', fontdict={'fontsize': 14})

plt.ylabel('Temperature °C', fontdict={'fontsize': 14})

# legend

plt.legend(['Mean Temperature'], frameon=True, fontsize='x-large')

# major ticks locators

plt.xticks(np.arange(min(x), max(x), 4))

def setup(ax):

ax.xaxis.set_ticks_position('bottom')

ax.tick_params(which='minor', width=0.75, length=2.5)

setup(ax)

ax.xaxis.set_minor_locator(ticker.FixedLocator((x)))

plt.tight_layout()

# To save graph

plt.savefig('C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/Graphs/yearly_mean_gh.jpg')

import pandas_bokeh

pandas_bokeh.output_notebook()

pd.set_option('plotting.backend', 'pandas_bokeh')

# Plot interactive map with Bokeh

from bokeh.models import HoverTool

ax = yearly_temp.plot(x = 'YEAR', y = 'TEMP_C', title='Yearly Mean Temperatures, Ghana',

xlabel = 'Year', ylabel = 'Temperature °C', xticks = (yearly_temp.YEAR[::4]),

legend = False, plot_data_points = True, hovertool_string= r'''<h5> @{YEAR} </h5>

<h5> @{TEMP_C} °C </h5>''') # Change hoovertool stringe text to values in dataframe only

# set output to static HTML file

from bokeh.plotting import figure, output_file, show

output_file(filename='Ghana_Climate_Interactive.html', title='Ghana Climate HTML file')

#show(ax)

# Group by towns

sta_town_grp = data.groupby(['TOWN'])

# Check groupy operation with one example

sta_town_grp.get_group('Akuse').head()

# Filter out the maximum temps ever recorded in each town from TMAX_C

sta_town_max = sta_town_grp['TMAX_C'].max()

sta_town_max = sta_town_max.sort_values(ascending=False)

sta_town_max

# Filter out row values of the date of occurence of highest maximum temp for first few towns

data.loc[(data.TMAX_C == 48.89) | (data.TMAX_C == 43.89)]

# Sort DATE at index to allow slicing, done chronologically

data.sort_index(inplace=True)

# Calculate decadel mean temperatures

avg_temp_1970s = data['1970': '1979']['TEMP_C'].mean()

avg_temp_1980s = data['1980': '1989']['TEMP_C'].mean()

avg_temp_1990s = data['1990': '1999']['TEMP_C'].mean()

avg_temp_2000s = data['2000': '2009']['TEMP_C'].mean()

avg_temp_2010s = data['2010': '2019']['TEMP_C'].mean()

# Print to screen the mean decadal temperatures

print(round(avg_temp_1970s, 2))

print(' ')

print(round(avg_temp_1980s, 2))

print(' ')

print(round(avg_temp_1990s, 2))

print(' ')

print(round(avg_temp_2000s, 2))

print(' ')

print(round(avg_temp_2010s, 2))

# Take monthly mean of all daily records

monthly_data = round(data.resample('M').mean(), 2)

monthly_data.head()

# Return to column heading

monthly_data.reset_index(inplace=True)

# Convert to date month

monthly_data['MONTH'] = monthly_data['DATE'].dt.month_name().str.slice(stop=3)

monthly_data.head()

# Take mean of all indivdual months

monthly_mean = round(monthly_data.groupby(['MONTH']).mean(), 2)

monthly_mean.head()

# Create new order for actual calender months

new_order = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul',

'Aug', 'Sep', 'Oct', 'Nov', 'Dec']

monthly_mean = monthly_mean.reindex(new_order, axis=0)

# check output of reindex

monthly_mean.head()

monthly_mean = monthly_mean.reset_index()

monthly_mean.head()

# Plot mean monthly temperatures for the period of the record

# Plot style use Seaborn

plt.style.use('seaborn')

fig, ax1 = plt.subplots(1, figsize=(10, 6))

x = monthly_mean['MONTH']

y = monthly_mean['TEMP_C']

plt.ylim(23, 31)

plt.plot(x, y, color = 'tomato', linestyle='-', marker='o', mfc='orange', linewidth = 3, markersize = 8)

plt.grid(axis = 'x')

plt.title('Monthly Mean Temperatures, Ghana', fontdict={'fontname': 'comic sans ms',

'fontsize': 15, 'weight': 'bold'})

# Labels for axes

plt.xlabel('Month', fontdict={'fontsize': 14})

plt.ylabel('Temperature °C', fontdict={'fontsize': 14})

# To save graph

plt.savefig('C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/Graphs/monthly_mean_temp.jpg')

# Use bokeh for interactivity

ax1 = monthly_mean.plot.line(x = monthly_mean['MONTH'],

y = 'TEMP_C', title='Mean Monthly Temperatures, Ghana',

xlabel ='Month', ylabel='Temperature °C', ylim=(21, 33),

marker='o', plot_data_points_size = 8, line_width = 3,

legend = False, color = 'tomato', plot_data_points=True,

hovertool_string= r'''<h5> @{MONTH} </h5>

<h5> @{TEMP_C} °C </h5>''') # Change hoovertool stringe text to values in dataframe only

# set output to static HTML file

# Remove x grid line for different fig style

ax1.xgrid.grid_line_color = None

output_file(filename='mean_monthly_gh.html', title='Monthly Mean Temperatures, Ghana')

show(ax1)

# Create new column of Climatic zones in Ghana according "Climatic Regions of Ghana (Abass, 2009)

# To help evaluate changes since the period of the record

data['CLIMATE_ZONES'] = data['TOWN'].map({'Tamale': 'Zone_4', 'Wenchi': 'Zone_4', 'Navrongo': 'Zone_4',

'Wa': 'Zone_4', 'Bole': 'Zone_4', 'Kete Krachi': 'Zone_4',

'Akim Oda': 'Zone_3', 'Sefwi Wiaso': 'Zone_3', 'Koforidua': 'Zone_3',

'Kumasi': 'Zone_3', 'Sunyani': 'Zone_3', 'Ho': 'Zone_3','Kia': 'Zone_2',

'Akuse': 'Zone_2', 'Ada': 'Zone_2', 'Axim': 'Zone_1', 'Takoradi': 'Zone_1'})

data.tail()

# Find mean yearly temp per climate zone

climate_zones_yearly = data.groupby('CLIMATE_ZONES').resample('Y').mean()

climate_zones_yearly.head()

# Return to column heading without column index

climate_zones_yearly.reset_index(inplace=True)

climate_zones_yearly.head()

# Pivot table to get temps for each climate zone per year in column format

climate_zones_yearly = climate_zones_yearly.pivot_table(values = 'TEMP_C', index = 'DATE', columns = 'CLIMATE_ZONES')

climate_zones_yearly.head()

# Check for missing values

climate_zones_yearly.isnull().sum()

# Drop NA values due to grouping operation

climate_zones_yearly = climate_zones_yearly.dropna()

climate_zones_yearly.head()

# Plot mean monthly temperatures for the 4 climate zones in Ghana

# Plot style use Seaborn

plt.style.use('seaborn')

#sns.set_style('whitegrid')

fig, ax1 = plt.subplots(1, figsize=(10, 6))

x = climate_zones_yearly.reset_index()['DATE']

y_1 = climate_zones_yearly['Zone_1']

y_2 = climate_zones_yearly['Zone_2']

y_3 = climate_zones_yearly['Zone_3']

y_4 = climate_zones_yearly['Zone_4']

# x, y limits of axes

plt.ylim(23, 33)

# Plot each lines on same axis

plt.plot(x, y_1, '.-g', label = 'Zone 1', linewidth = 2)

plt.plot(x, y_2, '.--c', label = 'Zone 2', linewidth = 2)

plt.plot(x, y_3, '.-.b', label = 'Zone 3', linewidth = 2)

plt.plot(x, y_4, '.:r', label = 'Zone 4', linewidth = 2)

leg = plt.legend()

# get the lines and texts inside legend box

leg_lines = leg.get_lines()

leg_texts = leg.get_texts()

# bulk-set the properties of all lines and texts

plt.setp(leg_lines, linewidth=4)

plt.setp(leg_texts, fontsize='x-large')

plt.title('Mean Yearly Temperature per Climate Zone, Ghana',

fontdict={'fontname': 'comic sans ms',

'fontsize': 15, 'weight': 'bold'})

# Labels for axes

plt.xlabel('Year', fontdict={'fontsize': 14})

plt.ylabel('Temperature °C', fontdict={'fontsize': 14})

# To save graph

plt.savefig('C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/Graphs/zone_yearly_mean.png')

# Find mean monthly temp per climate zone

climate_zones_monthly = data.groupby('CLIMATE_ZONES').resample('M').mean()

climate_zones_monthly.head()

# Return to column heading without column index

climate_zones_monthly.reset_index(inplace=True)

# Convert to date month in short format for temps months evaluation

climate_zones_monthly['MONTH'] = climate_zones_monthly['DATE'].dt.month_name().str.slice(stop=3)

climate_zones_monthly.tail()

# Take mean of each mean monthly temp for each zone

monthly_mean_zone = climate_zones_monthly.groupby(['CLIMATE_ZONES', 'MONTH'], as_index=False).mean()

monthly_mean_zone.head()

# Re order months by custom sorting per calendar months

months_categories = ('Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec')

monthly_mean_zone['MONTH'] = pd.Categorical(monthly_mean_zone['MONTH'], categories = months_categories)

monthly_mean_zone.head()

# Sort values under MONTH by calender months

monthly_mean_zone = monthly_mean_zone.sort_values(by = 'MONTH')

monthly_mean_zone.head()

# Pivot table to get temps for each climate zone per month in column format

monthly_mean_zone = monthly_mean_zone.pivot_table(values = 'TEMP_C', index = 'MONTH', columns = 'CLIMATE_ZONES')

monthly_mean_zone.head()

# Reset MONTH index to column

monthly_mean_zone = monthly_mean_zone.reset_index()

monthly_mean_zone

# Plot mean monthly temperatures for the 4 climate zones in Ghana

# Plot style use Seaborn

plt.style.use('seaborn')

#sns.set_style('whitegrid')

fig, ax11 = plt.subplots(1, figsize=(10, 6))

x = monthly_mean_zone['MONTH']

y_1 = monthly_mean_zone['Zone_1']

y_2 = monthly_mean_zone['Zone_2']

y_3 = monthly_mean_zone['Zone_3']

y_4 = monthly_mean_zone['Zone_4']

# x, y limits of axes

plt.ylim(23, 33)

# Plot each lines on same axis

plt.plot(x, y_1, '.-g', label = 'Zone 1', linewidth = 2)

plt.plot(x, y_2, '.--c', label = 'Zone 2', linewidth = 2)

plt.plot(x, y_3, '.-.b', label = 'Zone 3', linewidth = 2)

plt.plot(x, y_4, '.:r', label = 'Zone 4', linewidth = 2)

leg = plt.legend()

# get the lines and texts inside legend box

leg_lines = leg.get_lines()

leg_texts = leg.get_texts()

# bulk-set the properties of all lines and texts

plt.setp(leg_lines, linewidth=4)

plt.setp(leg_texts, fontsize='x-large')

plt.title('Mean Monthly Climatic Zone Temperatures, Ghana', fontdict={'fontname': 'comic sans ms',

'fontsize': 15, 'weight': 'bold'})

# Labels for axes

plt.xlabel('Month', fontdict={'fontsize': 14})

plt.ylabel('Temperature °C', fontdict={'fontsize': 14})

# To save graph

plt.savefig('C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/Graphs/zone_monthly_mean.png')

# Plot mean monthly temperatures for the 4 climate zones in Ghana in comparison to the national average

# Plot style use Seaborn

plt.style.use('seaborn')

#sns.set_style('whitegrid')

fig, ax11 = plt.subplots(1, figsize=(10, 6))

x = monthly_mean_zone['MONTH']

y_1 = monthly_mean_zone['Zone_1']

y_2 = monthly_mean_zone['Zone_2']

y_3 = monthly_mean_zone['Zone_3']

y_4 = monthly_mean_zone['Zone_4']

y_5 = monthly_mean['TEMP_C']

# x, y limits of axes

plt.ylim(23, 33)

# Plot each lines on same axis

plt.plot(x, y_1, '.-g', label = 'Zone 1', linewidth = 2)

plt.plot(x, y_2, '.--c', label = 'Zone 2', linewidth = 2)

plt.plot(x, y_3, '.-.b', label = 'Zone 3', linewidth = 2)

plt.plot(x, y_4, '.:r', label = 'Zone 4', linewidth = 2)

plt.plot(x, y_5, '-m', label = 'Average', linewidth = 2)

leg = plt.legend()

# get the lines and texts inside legend box

leg_lines = leg.get_lines()

leg_texts = leg.get_texts()

# bulk-set the properties of all lines and texts

plt.setp(leg_lines, linewidth=4)

plt.setp(leg_texts, fontsize='x-large')

plt.title('Mean Monthly National & Zonal Climatic Temperatures, Ghana', fontdict={'fontname': 'comic sans ms',

'fontsize': 15, 'weight': 'bold'})

# Labels for axes

plt.xlabel('Month', fontdict={'fontsize': 14})

plt.ylabel('Temperature °C', fontdict={'fontsize': 14})

# To save graph

plt.savefig('C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/Graphs/zone_nat_monthly_mean.png')

```

|

github_jupyter

|

# Climate data source from https://www.ncdc.noaa.gov/cdo-web

# Period of record from 1973

# Contains data records of 17 stations of varying lengths

# Original units of variables - imperial units

%matplotlib inline

# Import libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# Seaborn for additional plotting and styling

import seaborn as sns

# Define file path to connect data source:

fp = 'C:/Users/Narteh/earth_analytics/Gh_Climate_EDA/data_weatherGH/1619559410439.csv'

# Read in data from the csv file

# Low memory set to false to remove memory errors

data = pd.read_csv(fp, parse_dates=['DATE'], dayfirst=True, low_memory=False)

# Print data types

data.dtypes

#Print column names

data.columns.values