code

stringlengths 2.5k

6.36M

| kind

stringclasses 2

values | parsed_code

stringlengths 0

404k

| quality_prob

float64 0

0.98

| learning_prob

float64 0.03

1

|

|---|---|---|---|---|

# ShopUp team

In this notebook our team reveal two different approaches for creating recommender system in Retail (ecommerce) during the Summer Data Science School using:

- Deep Collaborative Filtering

- Session Based Recommendations with RNN

You can find more information about the case the other approaches and all other into at our article at [Shopup](https://shopup.me) and the repo for Learning Item Embedding which we call product2Vec.

https://shopup.me/recommenders_systems/

```

from fastai.collab import *

from fastai.tabular import *

from fastai.text import *

import numpy as np

import pandas as pd

from google.colab import drive

drive.mount('/content/gdrive', force_remount=True)

root_dir = "/content/gdrive/My Drive/Colab Notebooks/"

base_dir = root_dir + 'summer_school/data/'

```

# 1. Data Prep

### Load data

```

sorted_df = pd.read_csv(base_dir +'/events.csv')

sorted_df["date"] = pd.DatetimeIndex(sorted_df["timestamp"]).date

sorted_df.head()

# adding ratings if they browse is one if they add to card is 3 and 5 for transaction

sorted_df["rating1"] = np.where(sorted_df.event == "view",1,3)

sorted_df["rating2"] = np.where((sorted_df.event == "transaction") & (sorted_df["rating1"] == 3),2,0)

sorted_df['rating'] = sorted_df["rating1"] + sorted_df["rating2"]

sorted_df.head()

```

## Deep collaborative filtering example

`collab` models use data in a `DataFrame` of user, items, and ratings.

```

ratings = sorted_df[["visitorid", 'itemid', "rating", 'timestamp']].copy()

ratings.head()

```

That's all we need to create and train a model:

```

data = CollabDataBunch.from_df(ratings, seed=42)

data

y_range = [0,5.5]

learn = collab_learner(data, n_factors=50, y_range=y_range)

learn.lr_find()

learn.recorder.plot(skip_end=15)

learn.fit_one_cycle(1, 1e-2)

# save the model

learn.save(base_dir +'/dotcat')

```

## Session base recommendation with RNNs

```

# need to prepare the data in the format shown below

final_text= pd.read_csv(base_dir +'/final_text_no_bracket.csv')

final_text.head()

from fastai.text import *

data_lm = (TextList.from_df(final_text, cols=['visitorid', 'new'])

.split_by_rand_pct()

.label_for_lm()

.databunch())

data_lm.save(base_dir +'/data_lm.pkl')

#data_lm.load(base_dir +'/data_lm.pkl')

data_lm.vocab.itos[:11]

data_lm.show_batch()

bs=48

learn = language_model_learner(data_lm, AWD_LSTM, drop_mult=0.3)

learn.lr_find()

learn.recorder.plot(skip_end=15)

learn.fit_one_cycle(2, moms=(0.8,0.7))

learn.save(base_dir +'fit_head')

learn.save(base_dir +'/fine_tuned')

```

###Let's play

```

#learn.load(base_dir +'/fine_tuned_1')

TEXT = "440866"

N_WORDS = 4

N_SENTENCES = 1

print("\n".join(learn.predict(TEXT, N_WORDS +1 , temperature=0.75) for _ in range(N_SENTENCES)))

```

|

github_jupyter

|

from fastai.collab import *

from fastai.tabular import *

from fastai.text import *

import numpy as np

import pandas as pd

from google.colab import drive

drive.mount('/content/gdrive', force_remount=True)

root_dir = "/content/gdrive/My Drive/Colab Notebooks/"

base_dir = root_dir + 'summer_school/data/'

sorted_df = pd.read_csv(base_dir +'/events.csv')

sorted_df["date"] = pd.DatetimeIndex(sorted_df["timestamp"]).date

sorted_df.head()

# adding ratings if they browse is one if they add to card is 3 and 5 for transaction

sorted_df["rating1"] = np.where(sorted_df.event == "view",1,3)

sorted_df["rating2"] = np.where((sorted_df.event == "transaction") & (sorted_df["rating1"] == 3),2,0)

sorted_df['rating'] = sorted_df["rating1"] + sorted_df["rating2"]

sorted_df.head()

ratings = sorted_df[["visitorid", 'itemid', "rating", 'timestamp']].copy()

ratings.head()

data = CollabDataBunch.from_df(ratings, seed=42)

data

y_range = [0,5.5]

learn = collab_learner(data, n_factors=50, y_range=y_range)

learn.lr_find()

learn.recorder.plot(skip_end=15)

learn.fit_one_cycle(1, 1e-2)

# save the model

learn.save(base_dir +'/dotcat')

# need to prepare the data in the format shown below

final_text= pd.read_csv(base_dir +'/final_text_no_bracket.csv')

final_text.head()

from fastai.text import *

data_lm = (TextList.from_df(final_text, cols=['visitorid', 'new'])

.split_by_rand_pct()

.label_for_lm()

.databunch())

data_lm.save(base_dir +'/data_lm.pkl')

#data_lm.load(base_dir +'/data_lm.pkl')

data_lm.vocab.itos[:11]

data_lm.show_batch()

bs=48

learn = language_model_learner(data_lm, AWD_LSTM, drop_mult=0.3)

learn.lr_find()

learn.recorder.plot(skip_end=15)

learn.fit_one_cycle(2, moms=(0.8,0.7))

learn.save(base_dir +'fit_head')

learn.save(base_dir +'/fine_tuned')

#learn.load(base_dir +'/fine_tuned_1')

TEXT = "440866"

N_WORDS = 4

N_SENTENCES = 1

print("\n".join(learn.predict(TEXT, N_WORDS +1 , temperature=0.75) for _ in range(N_SENTENCES)))

| 0.403332 | 0.899033 |

This notebook compares the output of XGPaint.jl with expected, using Python and healpy.

```

# %config InlineBackend.figure_format = 'retina'

import numpy as np

import matplotlib.pyplot as plt

import healpy as hp

import astropy.units as u

from astropy.cosmology import WMAP9 as cosmo

from astropy.cosmology import z_at_value

def bin_ps(y, nb=1000):

x = np.arange(len(y))

bins = np.arange(0, len(y), nb)

inds = np.digitize(x, bins)

bx = np.array([ np.mean(x[inds==i]) for i in range(2,np.max(inds))])

by = np.array([ np.mean(y[inds==i]) for i in range(2,np.max(inds))])

return bx, by

map_fac = 1

freq_str = ['030', '090', '148', '219', '277', '350']

freqs = [float(f) for f in freq_str]

# conv_freq = [ 0.03701396034733168,

# 0.004683509698460815,

# 0.002595203002861245,

# 0.0020683915234002374,

# 0.002331542380044927,

# 0.0033735887524933045]

def clip_map(m):

# return m

m2 = m.copy()

# m2[m < 1e2] = 0.0

m2[m > 1e6] = 0.0

return m2

sehgal_maps = [hp.read_map(

f'/tigress/zequnl/xgpaint/sehgal/{freq}_rad_pts_healpix.fits', verbose=False)

for freq in freq_str]

sehgal_ps_autos = [hp.anafast(clip_map(m1), iter=0) for m1 in sehgal_maps]

sehgal_cib_150 = hp.read_map(

f'/tigress/zequnl/xgpaint/sehgal/{freq_str[2]}_ir_pts_healpix.fits', verbose=False)

sehgal_cib_150 = hp.alm2map(hp.map2alm(sehgal_cib_150), nside=4096)

hp.write_map(f'/tigress/zequnl/xgpaint/sehgal/{freq_str[2]}_ir_pts_healpix.fits', sehgal_cib_150)

sehgal_cib_ps = hp.anafast(sehgal_cib_150, iter=0)

```

# XGPaint Sims

```

radio_maps = [hp.read_map(f'/tigress/zequnl/xgpaint/jl/radio{freq_str[i]}.fits', verbose=False)

for i in range(len(freqs))]

cib_map_150 = hp.read_map(f'/tigress/zequnl/xgpaint/jl/cib{freq_str[2]}.fits', verbose=False) * 9e12

websky_cib_150 = hp.anafast(cib_map_150, iter=0)

ps3s = hp.anafast(clip_map(sehgal_maps[2]), map2=sehgal_cib_150, iter=0)

ps3 = hp.anafast(clip_map(radio_maps[2]), map2=cib_map_150, iter=0)

bx, by = bin_ps(ps3s)

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(ps3)

plt.plot(bx, by, label='websky')

# plt.yscale('log')

plt.legend()

plt.title(r'150 GHz CIB $\times$ Radio')

bx, by = bin_ps(hp.anafast(clip_map(sehgal_maps[2]), iter=0))

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(hp.anafast(clip_map(radio_maps[2]), iter=0))

plt.plot(bx, by, label='websky')

# plt.yscale('log')

plt.legend()

plt.title(r'150 GHz Radio $\times$ Radio')

bx, by = bin_ps(hp.anafast(sehgal_cib_150, iter=0))

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(hp.anafast(cib_map_150, iter=0))

plt.plot(bx, by, label='websky')

plt.yscale('log')

plt.legend()

plt.title(r'150 GHz CIB $\times$ CIB')

plt.figure(figsize=(12, 5))

plt.hist( np.log10(sehgal_maps[2]+1), histtype="step", label="sehgal", bins=50)

plt.hist( np.log10(maps[2]+1), histtype="step", label="zack", bins=50)

plt.yscale("log")

plt.legend()

plt.xlabel(r"$\log_{10}$ pixel flux in Jy/sr (150 GHz)")

fig, axes = plt.subplots(len(freq_str),1,figsize=(5,12))

for i in range(len(freq_str)):

axes[i].hist( np.log10(sehgal_maps[i] + 1e0), bins=30, histtype="step")

axes[i].hist( np.log10(maps[i] + 1e0), bins=30, histtype="step")

axes[i].set_yscale("log")

%time ps_auto = [hp.anafast(clip_map(m1), iter=0) for m1 in maps]

for i in range(len(ps_auto)):

plt.figure()

plt.title(freq_str[i])

plt.plot(sehgal_ps_autos[i][100:])

plt.plot(ps_auto[i][100:], alpha=0.1)

plt.scatter( freqs, [np.mean(ps[500:]) for ps in ps_auto], label='websky' )

plt.scatter( freqs, [np.mean(ps[500:]) for ps in sehgal_ps_autos], marker='X', label='sehgal', lw=0.5, s=50, color='r', alpha=0.5 )

plt.legend()

plt.xlabel('GHz')

plt.ylabel(r'$\langle C_{\ell} \rangle_{\ell}$ for $\ell > 500$')

plt.yscale('log')

np.array([np.mean(ps[500:]) for ps in ps_auto]) / np.array([np.mean(ps[500:]) for ps in sehgal_ps_autos])

```

|

github_jupyter

|

# %config InlineBackend.figure_format = 'retina'

import numpy as np

import matplotlib.pyplot as plt

import healpy as hp

import astropy.units as u

from astropy.cosmology import WMAP9 as cosmo

from astropy.cosmology import z_at_value

def bin_ps(y, nb=1000):

x = np.arange(len(y))

bins = np.arange(0, len(y), nb)

inds = np.digitize(x, bins)

bx = np.array([ np.mean(x[inds==i]) for i in range(2,np.max(inds))])

by = np.array([ np.mean(y[inds==i]) for i in range(2,np.max(inds))])

return bx, by

map_fac = 1

freq_str = ['030', '090', '148', '219', '277', '350']

freqs = [float(f) for f in freq_str]

# conv_freq = [ 0.03701396034733168,

# 0.004683509698460815,

# 0.002595203002861245,

# 0.0020683915234002374,

# 0.002331542380044927,

# 0.0033735887524933045]

def clip_map(m):

# return m

m2 = m.copy()

# m2[m < 1e2] = 0.0

m2[m > 1e6] = 0.0

return m2

sehgal_maps = [hp.read_map(

f'/tigress/zequnl/xgpaint/sehgal/{freq}_rad_pts_healpix.fits', verbose=False)

for freq in freq_str]

sehgal_ps_autos = [hp.anafast(clip_map(m1), iter=0) for m1 in sehgal_maps]

sehgal_cib_150 = hp.read_map(

f'/tigress/zequnl/xgpaint/sehgal/{freq_str[2]}_ir_pts_healpix.fits', verbose=False)

sehgal_cib_150 = hp.alm2map(hp.map2alm(sehgal_cib_150), nside=4096)

hp.write_map(f'/tigress/zequnl/xgpaint/sehgal/{freq_str[2]}_ir_pts_healpix.fits', sehgal_cib_150)

sehgal_cib_ps = hp.anafast(sehgal_cib_150, iter=0)

radio_maps = [hp.read_map(f'/tigress/zequnl/xgpaint/jl/radio{freq_str[i]}.fits', verbose=False)

for i in range(len(freqs))]

cib_map_150 = hp.read_map(f'/tigress/zequnl/xgpaint/jl/cib{freq_str[2]}.fits', verbose=False) * 9e12

websky_cib_150 = hp.anafast(cib_map_150, iter=0)

ps3s = hp.anafast(clip_map(sehgal_maps[2]), map2=sehgal_cib_150, iter=0)

ps3 = hp.anafast(clip_map(radio_maps[2]), map2=cib_map_150, iter=0)

bx, by = bin_ps(ps3s)

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(ps3)

plt.plot(bx, by, label='websky')

# plt.yscale('log')

plt.legend()

plt.title(r'150 GHz CIB $\times$ Radio')

bx, by = bin_ps(hp.anafast(clip_map(sehgal_maps[2]), iter=0))

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(hp.anafast(clip_map(radio_maps[2]), iter=0))

plt.plot(bx, by, label='websky')

# plt.yscale('log')

plt.legend()

plt.title(r'150 GHz Radio $\times$ Radio')

bx, by = bin_ps(hp.anafast(sehgal_cib_150, iter=0))

plt.plot(bx, by, label='sehgal')

bx, by = bin_ps(hp.anafast(cib_map_150, iter=0))

plt.plot(bx, by, label='websky')

plt.yscale('log')

plt.legend()

plt.title(r'150 GHz CIB $\times$ CIB')

plt.figure(figsize=(12, 5))

plt.hist( np.log10(sehgal_maps[2]+1), histtype="step", label="sehgal", bins=50)

plt.hist( np.log10(maps[2]+1), histtype="step", label="zack", bins=50)

plt.yscale("log")

plt.legend()

plt.xlabel(r"$\log_{10}$ pixel flux in Jy/sr (150 GHz)")

fig, axes = plt.subplots(len(freq_str),1,figsize=(5,12))

for i in range(len(freq_str)):

axes[i].hist( np.log10(sehgal_maps[i] + 1e0), bins=30, histtype="step")

axes[i].hist( np.log10(maps[i] + 1e0), bins=30, histtype="step")

axes[i].set_yscale("log")

%time ps_auto = [hp.anafast(clip_map(m1), iter=0) for m1 in maps]

for i in range(len(ps_auto)):

plt.figure()

plt.title(freq_str[i])

plt.plot(sehgal_ps_autos[i][100:])

plt.plot(ps_auto[i][100:], alpha=0.1)

plt.scatter( freqs, [np.mean(ps[500:]) for ps in ps_auto], label='websky' )

plt.scatter( freqs, [np.mean(ps[500:]) for ps in sehgal_ps_autos], marker='X', label='sehgal', lw=0.5, s=50, color='r', alpha=0.5 )

plt.legend()

plt.xlabel('GHz')

plt.ylabel(r'$\langle C_{\ell} \rangle_{\ell}$ for $\ell > 500$')

plt.yscale('log')

np.array([np.mean(ps[500:]) for ps in ps_auto]) / np.array([np.mean(ps[500:]) for ps in sehgal_ps_autos])

| 0.336767 | 0.867036 |

```

# Load libraries

%matplotlib inline

import numpy

import matplotlib.pyplot as plt

from numpy import arange

from matplotlib import pyplot

from pandas import read_csv

from pandas import set_option

from pandas.tools.plotting import scatter_matrix

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.model_selection import KFold

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LinearRegression

from sklearn.linear_model import Lasso

from sklearn.linear_model import ElasticNet

from sklearn.tree import DecisionTreeRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.pipeline import Pipeline

from sklearn.ensemble import RandomForestRegressor

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.ensemble import ExtraTreesRegressor

from sklearn.ensemble import AdaBoostRegressor

from sklearn.metrics import mean_squared_error

# Load dataset

filename = 'housing.csv'

names = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']

dataset = read_csv(filename, delim_whitespace=True, names=names)

# shape

print(dataset.shape)

# types

print(dataset.dtypes)

# head

print(dataset.head(20))

set_option('precision', 2)

print(dataset.describe())

# correlation

set_option('precision', 2)

print(dataset.corr(method='pearson'))

# histograms

dataset.hist(sharex=False, sharey=False, xlabelsize=1, ylabelsize=1, figsize=(14, 12))

plt.show()

# density

dataset.plot.density(subplots=True, layout=(4,4), sharex=False, legend=False, fontsize=1, figsize=(14, 12))

plt.show()

# box and whisker plots

dataset.plot.box(subplots=True, layout=(4,4), sharex=False, sharey=False, figsize=(14, 12))

plt.show()

# scatter plot matrix

scatter_matrix(dataset, figsize=(14, 12))

plt.show()

# correlation matrix

fig = plt.figure(figsize=(14, 12))

ax = fig.add_subplot(111)

cax = ax.matshow(dataset.corr(), vmin=-1, vmax=1, interpolation='none')

fig.colorbar(cax)

ticks = arange(0,14,1)

ax.set_xticks(ticks)

ax.set_yticks(ticks)

ax.set_xticklabels(names)

ax.set_yticklabels(names)

plt.show()

# Split-out validation dataset

array = dataset.values

X = array[:,0:13]

Y = array[:,13]

validation_size = 0.20

seed = 7

X_train, X_validation, Y_train, Y_validation = train_test_split(X, Y, test_size=validation_size, random_state=seed)

# Test options and evaluation metric

num_folds = 10

seed = 7

scoring = 'neg_mean_squared_error'

# Spot-Check Algorithms

models = []

models.append(('LR', LinearRegression()))

models.append(('LASSO', Lasso()))

models.append(('EN', ElasticNet()))

models.append(('KNN', KNeighborsRegressor()))

models.append(('CART', DecisionTreeRegressor()))

models.append(('SVR' , SVR()))

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# Standardize the dataset

pipelines = []

pipelines.append(('ScaledLR', Pipeline([('Scaler', StandardScaler()),('LR', LinearRegression())])))

pipelines.append(('ScaledLASSO', Pipeline([('Scaler', StandardScaler()),('LASSO', Lasso())])))

pipelines.append(('ScaledEN', Pipeline([('Scaler', StandardScaler()),('EN', ElasticNet())])))

pipelines.append(('ScaledKNN', Pipeline([('Scaler', StandardScaler()),('KNN', KNeighborsRegressor())])))

pipelines.append(('ScaledCART', Pipeline([('Scaler', StandardScaler()),('CART', DecisionTreeRegressor())])))

pipelines.append(('ScaledSVR', Pipeline([('Scaler', StandardScaler()),('SVR', SVR())])))

results = []

names = []

for name, model in pipelines:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

```

Scaled KNN now has lowest MSE.

```

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Scaled Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# KNN Algorithm tuning

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

k_values = numpy.array([1,3,5,7,9,11,13,15,17,19,21])

param_grid = dict(n_neighbors=k_values)

model = KNeighborsRegressor()

kfold = KFold(n_splits=num_folds, random_state=seed)

grid = GridSearchCV(estimator=model, param_grid=param_grid, scoring=scoring, cv=kfold)

grid_result = grid.fit(rescaledX, Y_train)

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

```

You can see that the best for k (n neighbors) is 3 providing a mean squared error of

-18.172137, the best so far.

### Ensemble Methods

```

# ensembles

ensembles = []

ensembles.append(('ScaledAB', Pipeline([('Scaler', StandardScaler()),('AB', AdaBoostRegressor())])))

ensembles.append(('ScaledGBM', Pipeline([('Scaler', StandardScaler()),('GBM', GradientBoostingRegressor())])))

ensembles.append(('ScaledRF', Pipeline([('Scaler', StandardScaler()),('RF', RandomForestRegressor())])))

ensembles.append(('ScaledET', Pipeline([('Scaler', StandardScaler()),('ET', ExtraTreesRegressor())])))

results = []

names = []

for name, model in ensembles:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Scaled Ensemble Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# Tune scaled GBM

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

param_grid = dict(n_estimators=numpy.array([50,100,150,200,250,300,350,400]))

model = GradientBoostingRegressor(random_state=seed)

kfold = KFold(n_splits=num_folds, random_state=seed)

grid = GridSearchCV(estimator=model, param_grid=param_grid, scoring=scoring, cv=kfold)

grid_result = grid.fit(rescaledX, Y_train)

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

```

We can see that the best configuration was n estimators=400 resulting in a mean squared

error of -9.356471, about 0.65 units better than the untuned method.

### Finalize the model

```

# prepare the model

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

model = GradientBoostingRegressor(random_state=seed, n_estimators=400)

model.fit(rescaledX, Y_train)

# transform the validation dataset

rescaledValidationX = scaler.transform(X_validation)

predictions = model.predict(rescaledValidationX)

print(mean_squared_error(Y_validation, predictions))

Y_validation

predictions

```

|

github_jupyter

|

# Load libraries

%matplotlib inline

import numpy

import matplotlib.pyplot as plt

from numpy import arange

from matplotlib import pyplot

from pandas import read_csv

from pandas import set_option

from pandas.tools.plotting import scatter_matrix

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.model_selection import KFold

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LinearRegression

from sklearn.linear_model import Lasso

from sklearn.linear_model import ElasticNet

from sklearn.tree import DecisionTreeRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.svm import SVR

from sklearn.pipeline import Pipeline

from sklearn.ensemble import RandomForestRegressor

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.ensemble import ExtraTreesRegressor

from sklearn.ensemble import AdaBoostRegressor

from sklearn.metrics import mean_squared_error

# Load dataset

filename = 'housing.csv'

names = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT', 'MEDV']

dataset = read_csv(filename, delim_whitespace=True, names=names)

# shape

print(dataset.shape)

# types

print(dataset.dtypes)

# head

print(dataset.head(20))

set_option('precision', 2)

print(dataset.describe())

# correlation

set_option('precision', 2)

print(dataset.corr(method='pearson'))

# histograms

dataset.hist(sharex=False, sharey=False, xlabelsize=1, ylabelsize=1, figsize=(14, 12))

plt.show()

# density

dataset.plot.density(subplots=True, layout=(4,4), sharex=False, legend=False, fontsize=1, figsize=(14, 12))

plt.show()

# box and whisker plots

dataset.plot.box(subplots=True, layout=(4,4), sharex=False, sharey=False, figsize=(14, 12))

plt.show()

# scatter plot matrix

scatter_matrix(dataset, figsize=(14, 12))

plt.show()

# correlation matrix

fig = plt.figure(figsize=(14, 12))

ax = fig.add_subplot(111)

cax = ax.matshow(dataset.corr(), vmin=-1, vmax=1, interpolation='none')

fig.colorbar(cax)

ticks = arange(0,14,1)

ax.set_xticks(ticks)

ax.set_yticks(ticks)

ax.set_xticklabels(names)

ax.set_yticklabels(names)

plt.show()

# Split-out validation dataset

array = dataset.values

X = array[:,0:13]

Y = array[:,13]

validation_size = 0.20

seed = 7

X_train, X_validation, Y_train, Y_validation = train_test_split(X, Y, test_size=validation_size, random_state=seed)

# Test options and evaluation metric

num_folds = 10

seed = 7

scoring = 'neg_mean_squared_error'

# Spot-Check Algorithms

models = []

models.append(('LR', LinearRegression()))

models.append(('LASSO', Lasso()))

models.append(('EN', ElasticNet()))

models.append(('KNN', KNeighborsRegressor()))

models.append(('CART', DecisionTreeRegressor()))

models.append(('SVR' , SVR()))

# evaluate each model in turn

results = []

names = []

for name, model in models:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# Standardize the dataset

pipelines = []

pipelines.append(('ScaledLR', Pipeline([('Scaler', StandardScaler()),('LR', LinearRegression())])))

pipelines.append(('ScaledLASSO', Pipeline([('Scaler', StandardScaler()),('LASSO', Lasso())])))

pipelines.append(('ScaledEN', Pipeline([('Scaler', StandardScaler()),('EN', ElasticNet())])))

pipelines.append(('ScaledKNN', Pipeline([('Scaler', StandardScaler()),('KNN', KNeighborsRegressor())])))

pipelines.append(('ScaledCART', Pipeline([('Scaler', StandardScaler()),('CART', DecisionTreeRegressor())])))

pipelines.append(('ScaledSVR', Pipeline([('Scaler', StandardScaler()),('SVR', SVR())])))

results = []

names = []

for name, model in pipelines:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Scaled Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# KNN Algorithm tuning

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

k_values = numpy.array([1,3,5,7,9,11,13,15,17,19,21])

param_grid = dict(n_neighbors=k_values)

model = KNeighborsRegressor()

kfold = KFold(n_splits=num_folds, random_state=seed)

grid = GridSearchCV(estimator=model, param_grid=param_grid, scoring=scoring, cv=kfold)

grid_result = grid.fit(rescaledX, Y_train)

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

# ensembles

ensembles = []

ensembles.append(('ScaledAB', Pipeline([('Scaler', StandardScaler()),('AB', AdaBoostRegressor())])))

ensembles.append(('ScaledGBM', Pipeline([('Scaler', StandardScaler()),('GBM', GradientBoostingRegressor())])))

ensembles.append(('ScaledRF', Pipeline([('Scaler', StandardScaler()),('RF', RandomForestRegressor())])))

ensembles.append(('ScaledET', Pipeline([('Scaler', StandardScaler()),('ET', ExtraTreesRegressor())])))

results = []

names = []

for name, model in ensembles:

kfold = KFold(n_splits=num_folds, random_state=seed)

cv_results = cross_val_score(model, X_train, Y_train, cv=kfold, scoring=scoring)

results.append(cv_results)

names.append(name)

msg = "%s: %f (%f)" % (name, cv_results.mean(), cv_results.std())

print(msg)

# Compare Algorithms

fig = pyplot.figure(figsize=(14, 12))

fig.suptitle('Scaled Ensemble Algorithm Comparison')

ax = fig.add_subplot(111)

pyplot.boxplot(results)

ax.set_xticklabels(names)

plt.show()

# Tune scaled GBM

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

param_grid = dict(n_estimators=numpy.array([50,100,150,200,250,300,350,400]))

model = GradientBoostingRegressor(random_state=seed)

kfold = KFold(n_splits=num_folds, random_state=seed)

grid = GridSearchCV(estimator=model, param_grid=param_grid, scoring=scoring, cv=kfold)

grid_result = grid.fit(rescaledX, Y_train)

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

# prepare the model

scaler = StandardScaler().fit(X_train)

rescaledX = scaler.transform(X_train)

model = GradientBoostingRegressor(random_state=seed, n_estimators=400)

model.fit(rescaledX, Y_train)

# transform the validation dataset

rescaledValidationX = scaler.transform(X_validation)

predictions = model.predict(rescaledValidationX)

print(mean_squared_error(Y_validation, predictions))

Y_validation

predictions

| 0.779028 | 0.770422 |

```

# pandas/numpy for handling data

import pandas as pd

import numpy as np

# seaborn/matplotlib for graphing

import matplotlib.pyplot as plt

import seaborn as sns

import matplotlib.colors as mc

# statistics

from statistics import mean

import statsmodels.api as sm

from statsmodels.formula.api import ols

from scipy import stats

import scikit_posthocs as sp

from statsmodels.stats.anova import AnovaRM

# helper fxns for analyzing chr aberr data

import chr_aberr_helper_fxns as cah

# setting a global darkgrid style w/ dark edge plot elements for plotting

sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

import importlib

%load_ext autoreload

%autoreload 2

%reload_ext autoreload

```

---

...

---

# Analyzing telomeric aberrations

---

## Reading in telomeric aberration data (inflight astros)

```

melt_all_astro_telo_aberr = pd.read_csv('../data/compiled and processed data/melt_all_astro_telo_aberr.csv')

melt_all_astro_telo_aberr['astro id'] = melt_all_astro_telo_aberr['astro id'].astype('str')

melt_all_astro_telo_aberr = melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of STL-complete']

melt_all_astro_telo_aberr.head(4)

```

## Graphing telomeric aberrations (inflight astros)

```

# let's make two graphs, one w/o and one w/ satellite associations

data=melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of sat associations']

data2=melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] == '# of sat associations']

x='aberration type'

y='count per cell'

hue='flight status'

ax1= sns.catplot(x=x, y=y, hue=hue, data=data, kind='bar', height=4, aspect=3)

ax2= sns.catplot(x=x, y=y, data=data2, hue=hue, kind='bar', height=4, aspect=3)

```

No trend in # fragile telos per spaceflight. Potential but very minor trend in STL-complete for midflight

Hetero telomeric foci @ sister chromatids highly elevated inflight, as are satellite associations..

High levels of recombination between telomeres? Let's combine mid-flight samples, just for curiosity.

```

mid_combined_melt_all_astro_telo_aberr = melt_all_astro_telo_aberr

mid_combined_melt_all_astro_telo_aberr['flight status new'] = (mid_combined_melt_all_astro_telo_aberr['flight status']

.apply(lambda row: cah.combine_midflight(row)))

data=mid_combined_melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of sat associations']

data2=mid_combined_melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] == '# of sat associations']

x='aberration type'

y='count per cell'

hue='flight status new'

ax1= sns.catplot(x=x, y=y, hue=hue, data=data, kind='bar', height=4, aspect=3)

ax2= sns.catplot(x=x, y=y, data=data2, hue=hue, kind='bar', height=4, aspect=3)

```

Same trends, different perspective w/ mid-flight combined. Heterogenous telomere foci between sister chromatids & satellite associations are elevated mid-flight

## Statistics: telomeric aberrations

```

cah.scipy_anova_post_hoc_tests(df=mid_combined_melt_all_astro_telo_aberr)

cah.scipy_anova_post_hoc_tests(df=mid_combined_melt_all_astro_telo_aberr)

```

# Analyzing chromosome rearrangement data

---

```

melt_all_astro_chr_aberr = pd.read_csv('../data/compiled and processed data/All_astronauts_chromosome_aberration_data_tidy_data.csv')

# reformatting (float -> int -> str)

melt_all_astro_chr_aberr['astro id'] = melt_all_astro_chr_aberr['astro id'].astype('int')

melt_all_astro_chr_aberr['astro id'] = melt_all_astro_chr_aberr['astro id'].astype('str')

astro_chr_aberr = melt_all_astro_chr_aberr.copy()

astro_chr_aberr['flight status'] = (astro_chr_aberr['flight status'].apply(lambda row: cah.combine_midflight(row)))

def rename_aberr(row):

if row == 'sister chromatid exchanges':

return 'classic SCEs'

elif row == 'total inversions':

return 'inversions'

elif row == 'satellite associations':

return 'sat. associations'

else:

return row

def rename_flights(row):

if row == 'pre-flight':

return 'Pre-Flight'

elif row == 'mid-flight':

return 'Mid-Flight'

elif row == 'post-flight':

return 'Post-Flight'

astro_chr_aberr['aberration type'] = astro_chr_aberr['aberration type'].apply(lambda row: rename_aberr(row))

astro_chr_aberr['flight status'] = astro_chr_aberr['flight status'].apply(lambda row: rename_flights(row))

```

## Graphing chromosome rearrangements for pre, mid-flight1&2, and post-flight for all astronauts (n=11)

```

order_cat=['dicentrics', 'translocations', 'inversions',]

# 'terminal SCEs','classic SCEs', 'subtelo SCEs', 'sat. associations']

plt.figure(figsize=(9, 3.2))

fontsize=16

ax = sns.barplot(x='aberration type', y='count per cell', data=astro_chr_aberr,

order=order_cat, hue='flight status',

alpha=None, capsize=0.08, linewidth=1.5, errwidth=1, **{'edgecolor':'black'},

ci=95,

palette={'Pre-Flight': '#0000FF',

'Mid-Flight': '#FF0000',

'Post-Flight': '#009900'})

# alpha setting in sns barplot modifies both bar fill AND edge colors; we want to change just fill

# keep alpha set to None, label bar colors w/ palette

# loop through patches (fill color), grab color ID & reset color w/ alpha at 0.2

for patch in range(len(ax.patches)):

color = ax.patches[patch].get_facecolor()

color = list(color)

color[3] = 0.2

color = tuple(color)

ax.patches[patch].set_facecolor(color)

plt.setp(ax.lines, color='black', linewidth=1.5)

plt.xlabel('', fontsize=fontsize)

plt.ylabel('Average frequency per cell', fontsize=fontsize)

plt.tick_params(labelsize=fontsize)

plt.ylim(0, .5)

plt.legend(fontsize=fontsize)

plt.savefig('../MANUSCRIPT 11 ASTROS/figures/dic trans inv chrr aberr (dGH) 11 astros pre mid post.png', dpi=600)

order_cat=['classic SCEs', 'subtelo SCEs', 'terminal SCEs', 'sat. associations']

plt.figure(figsize=(9, 3.2))

fontsize=16

ax = sns.barplot(x='aberration type', y='count per cell', data=astro_chr_aberr,

order=order_cat, hue='flight status',

alpha=None, capsize=0.08, linewidth=1.5, errwidth=1, **{'edgecolor':'black'},

ci=95,

palette={'Pre-Flight': '#0000FF',

'Mid-Flight': '#FF0000',

'Post-Flight': '#009900'})

# alpha setting in sns barplot modifies both bar fill AND edge colors; we want to change just fill

# keep alpha set to None, label bar colors w/ palette

# loop through patches (fill color), grab color ID & reset color w/ alpha at 0.2

for patch in range(len(ax.patches)):

color = ax.patches[patch].get_facecolor()

color = list(color)

color[3] = 0.2

color = tuple(color)

ax.patches[patch].set_facecolor(color)

plt.setp(ax.lines, color='black', linewidth=1.5)

plt.xlabel('', fontsize=fontsize)

plt.ylabel('Average frequency per cell', fontsize=fontsize)

plt.tick_params(labelsize=fontsize)

plt.ylim(0, 1.75)

plt.legend(fontsize=fontsize)

plt.savefig('../MANUSCRIPT 11 ASTROS/figures/SCEs chrr aberr (dGH) 11 astros pre mid post.png', dpi=600)

import importlib

%load_ext autoreload

%autoreload 2

%reload_ext autoreload

```

## Statistics: chromosome rearrangements (n=11)

```

astro_chr_aberr[astro_chr_aberr['aberration type'] == 'dicentrics']['count per cell']

grp_astro_chr_aberr = astro_chr_aberr.groupby(['astro id',

'flight status',

'aberration type']).agg('mean').reset_index()

pivot_chr = grp_astro_chr_aberr.pivot_table(index=['astro id', 'flight status'],

columns='aberration type', values='count per cell').reset_index()

cah.scipy_anova_post_hoc_tests(df=grp_astro_chr_aberr, flight_status_col='flight status')

```

Same results, different perspective. Let's remove the mid-flight data real quick and

then look at just our 3 unrelated astronauts w/ inflight data

```

mid_flight_removed = mid_combined_melt_all_astro_chr_aberr[mid_combined_melt_all_astro_chr_aberr['flight status new'] != 'mid-flight']

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status new', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_removed)

plt.title('chr aberr by subtelo dgh: 11 astros, pre-, post-', fontsize=16)

```

## Graphing chromosome rearrangements for pre, mid-flight1&2, and post-flight for all astronauts (n=3)

```

mid_combined = mid_combined_melt_all_astro_chr_aberr

mid_flight_only_astros = mid_combined[mid_combined['astro id'].isin(['2171', '1536', '5163'])]

list(mid_flight_only_astros['astro id'].unique())

order_cat=['dicentrics', 'translocations', 'total inversions', 'terminal SCEs',

'sister chromatid exchanges', 'subtelo SCEs', 'satellite associations']

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_only_astros)

plt.title('chr aberr by subtelo dgh: 3 astros, pre-, mid1&2-, post-', fontsize=16)

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status new', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_only_astros)

plt.title('chr aberr by subtelo dgh: 3 astros, pre-, mid-, post-', fontsize=16)

grouped_mid_flight_only_astros = mid_flight_only_astros.groupby(['astro id', 'flight status', 'flight status new', 'aberration type']).agg('mean').reset_index()

# mid_flight_only_astros

```

## Statistics: chromosome rearrangements (n=3)

```

cah.scipy_anova_post_hoc_tests(df=mid_flight_only_astros)

df = mid_flight_only_astros

display(sp.posthoc_ttest(df[df['aberration type'] == 'total inversions'], val_col='count per cell',

group_col='flight status new', equal_var=False))

```

|

github_jupyter

|

# pandas/numpy for handling data

import pandas as pd

import numpy as np

# seaborn/matplotlib for graphing

import matplotlib.pyplot as plt

import seaborn as sns

import matplotlib.colors as mc

# statistics

from statistics import mean

import statsmodels.api as sm

from statsmodels.formula.api import ols

from scipy import stats

import scikit_posthocs as sp

from statsmodels.stats.anova import AnovaRM

# helper fxns for analyzing chr aberr data

import chr_aberr_helper_fxns as cah

# setting a global darkgrid style w/ dark edge plot elements for plotting

sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

import importlib

%load_ext autoreload

%autoreload 2

%reload_ext autoreload

melt_all_astro_telo_aberr = pd.read_csv('../data/compiled and processed data/melt_all_astro_telo_aberr.csv')

melt_all_astro_telo_aberr['astro id'] = melt_all_astro_telo_aberr['astro id'].astype('str')

melt_all_astro_telo_aberr = melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of STL-complete']

melt_all_astro_telo_aberr.head(4)

# let's make two graphs, one w/o and one w/ satellite associations

data=melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of sat associations']

data2=melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] == '# of sat associations']

x='aberration type'

y='count per cell'

hue='flight status'

ax1= sns.catplot(x=x, y=y, hue=hue, data=data, kind='bar', height=4, aspect=3)

ax2= sns.catplot(x=x, y=y, data=data2, hue=hue, kind='bar', height=4, aspect=3)

mid_combined_melt_all_astro_telo_aberr = melt_all_astro_telo_aberr

mid_combined_melt_all_astro_telo_aberr['flight status new'] = (mid_combined_melt_all_astro_telo_aberr['flight status']

.apply(lambda row: cah.combine_midflight(row)))

data=mid_combined_melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] != '# of sat associations']

data2=mid_combined_melt_all_astro_telo_aberr[melt_all_astro_telo_aberr['aberration type'] == '# of sat associations']

x='aberration type'

y='count per cell'

hue='flight status new'

ax1= sns.catplot(x=x, y=y, hue=hue, data=data, kind='bar', height=4, aspect=3)

ax2= sns.catplot(x=x, y=y, data=data2, hue=hue, kind='bar', height=4, aspect=3)

cah.scipy_anova_post_hoc_tests(df=mid_combined_melt_all_astro_telo_aberr)

cah.scipy_anova_post_hoc_tests(df=mid_combined_melt_all_astro_telo_aberr)

melt_all_astro_chr_aberr = pd.read_csv('../data/compiled and processed data/All_astronauts_chromosome_aberration_data_tidy_data.csv')

# reformatting (float -> int -> str)

melt_all_astro_chr_aberr['astro id'] = melt_all_astro_chr_aberr['astro id'].astype('int')

melt_all_astro_chr_aberr['astro id'] = melt_all_astro_chr_aberr['astro id'].astype('str')

astro_chr_aberr = melt_all_astro_chr_aberr.copy()

astro_chr_aberr['flight status'] = (astro_chr_aberr['flight status'].apply(lambda row: cah.combine_midflight(row)))

def rename_aberr(row):

if row == 'sister chromatid exchanges':

return 'classic SCEs'

elif row == 'total inversions':

return 'inversions'

elif row == 'satellite associations':

return 'sat. associations'

else:

return row

def rename_flights(row):

if row == 'pre-flight':

return 'Pre-Flight'

elif row == 'mid-flight':

return 'Mid-Flight'

elif row == 'post-flight':

return 'Post-Flight'

astro_chr_aberr['aberration type'] = astro_chr_aberr['aberration type'].apply(lambda row: rename_aberr(row))

astro_chr_aberr['flight status'] = astro_chr_aberr['flight status'].apply(lambda row: rename_flights(row))

order_cat=['dicentrics', 'translocations', 'inversions',]

# 'terminal SCEs','classic SCEs', 'subtelo SCEs', 'sat. associations']

plt.figure(figsize=(9, 3.2))

fontsize=16

ax = sns.barplot(x='aberration type', y='count per cell', data=astro_chr_aberr,

order=order_cat, hue='flight status',

alpha=None, capsize=0.08, linewidth=1.5, errwidth=1, **{'edgecolor':'black'},

ci=95,

palette={'Pre-Flight': '#0000FF',

'Mid-Flight': '#FF0000',

'Post-Flight': '#009900'})

# alpha setting in sns barplot modifies both bar fill AND edge colors; we want to change just fill

# keep alpha set to None, label bar colors w/ palette

# loop through patches (fill color), grab color ID & reset color w/ alpha at 0.2

for patch in range(len(ax.patches)):

color = ax.patches[patch].get_facecolor()

color = list(color)

color[3] = 0.2

color = tuple(color)

ax.patches[patch].set_facecolor(color)

plt.setp(ax.lines, color='black', linewidth=1.5)

plt.xlabel('', fontsize=fontsize)

plt.ylabel('Average frequency per cell', fontsize=fontsize)

plt.tick_params(labelsize=fontsize)

plt.ylim(0, .5)

plt.legend(fontsize=fontsize)

plt.savefig('../MANUSCRIPT 11 ASTROS/figures/dic trans inv chrr aberr (dGH) 11 astros pre mid post.png', dpi=600)

order_cat=['classic SCEs', 'subtelo SCEs', 'terminal SCEs', 'sat. associations']

plt.figure(figsize=(9, 3.2))

fontsize=16

ax = sns.barplot(x='aberration type', y='count per cell', data=astro_chr_aberr,

order=order_cat, hue='flight status',

alpha=None, capsize=0.08, linewidth=1.5, errwidth=1, **{'edgecolor':'black'},

ci=95,

palette={'Pre-Flight': '#0000FF',

'Mid-Flight': '#FF0000',

'Post-Flight': '#009900'})

# alpha setting in sns barplot modifies both bar fill AND edge colors; we want to change just fill

# keep alpha set to None, label bar colors w/ palette

# loop through patches (fill color), grab color ID & reset color w/ alpha at 0.2

for patch in range(len(ax.patches)):

color = ax.patches[patch].get_facecolor()

color = list(color)

color[3] = 0.2

color = tuple(color)

ax.patches[patch].set_facecolor(color)

plt.setp(ax.lines, color='black', linewidth=1.5)

plt.xlabel('', fontsize=fontsize)

plt.ylabel('Average frequency per cell', fontsize=fontsize)

plt.tick_params(labelsize=fontsize)

plt.ylim(0, 1.75)

plt.legend(fontsize=fontsize)

plt.savefig('../MANUSCRIPT 11 ASTROS/figures/SCEs chrr aberr (dGH) 11 astros pre mid post.png', dpi=600)

import importlib

%load_ext autoreload

%autoreload 2

%reload_ext autoreload

astro_chr_aberr[astro_chr_aberr['aberration type'] == 'dicentrics']['count per cell']

grp_astro_chr_aberr = astro_chr_aberr.groupby(['astro id',

'flight status',

'aberration type']).agg('mean').reset_index()

pivot_chr = grp_astro_chr_aberr.pivot_table(index=['astro id', 'flight status'],

columns='aberration type', values='count per cell').reset_index()

cah.scipy_anova_post_hoc_tests(df=grp_astro_chr_aberr, flight_status_col='flight status')

mid_flight_removed = mid_combined_melt_all_astro_chr_aberr[mid_combined_melt_all_astro_chr_aberr['flight status new'] != 'mid-flight']

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status new', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_removed)

plt.title('chr aberr by subtelo dgh: 11 astros, pre-, post-', fontsize=16)

mid_combined = mid_combined_melt_all_astro_chr_aberr

mid_flight_only_astros = mid_combined[mid_combined['astro id'].isin(['2171', '1536', '5163'])]

list(mid_flight_only_astros['astro id'].unique())

order_cat=['dicentrics', 'translocations', 'total inversions', 'terminal SCEs',

'sister chromatid exchanges', 'subtelo SCEs', 'satellite associations']

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_only_astros)

plt.title('chr aberr by subtelo dgh: 3 astros, pre-, mid1&2-, post-', fontsize=16)

ax = sns.set(font_scale=1)

ax = sns.set_style(style="darkgrid",rc= {'patch.edgecolor': 'black'})

ax = sns.catplot(x='aberration type', y='count per cell',

hue='flight status new', kind='bar', order=order_cat,

orient='v', height=4, aspect=3, data=mid_flight_only_astros)

plt.title('chr aberr by subtelo dgh: 3 astros, pre-, mid-, post-', fontsize=16)

grouped_mid_flight_only_astros = mid_flight_only_astros.groupby(['astro id', 'flight status', 'flight status new', 'aberration type']).agg('mean').reset_index()

# mid_flight_only_astros

cah.scipy_anova_post_hoc_tests(df=mid_flight_only_astros)

df = mid_flight_only_astros

display(sp.posthoc_ttest(df[df['aberration type'] == 'total inversions'], val_col='count per cell',

group_col='flight status new', equal_var=False))

| 0.367611 | 0.795698 |

<a href="https://colab.research.google.com/github/kalz2q/mycolabnotebooks/blob/master/magic.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

$\latex$

$\LaTeX$

```

%%script false

と書くと、そのコードセルは実行されないので、まるでテキストセルのように使える。

テキストセルの引用(行頭に>)や、コード表示(バッククオート3つ)のように使える。

勝手に改行もされない。

なにより、Markdownによる解釈がされないので、確実に文字列を書ける。

latexも当然使えない。

```

# magic.ipynb

# はじめに

失敗作なので消していいけれど、云々。

セルマジックについてはいまのところ材料は2つあって、ひとつは

The cell magics in IPython

https://nbviewer.jupyter.org/github/ipython/ipython/blob/1.x/examples/notebooks/Cell%20Magics.ipynb

もう1つは Overview of Colaboratory Features

https://colab.research.google.com/notebooks/basic_features_overview.ipynb#scrollTo=qM4myQGfQboQ

で、このファイルは020magic.ipynbで

%lsmagic

と

%(magicname)?

で作っているみたいだが、数多くのmagicについて、どうやってdocstringを得たのだろう。

自分でもわからない。

あとの方に`%time`を使った例というか実験が載っているが、これはどこかから持ってきたのだろう。

それより、'%javascript`や`%html`をもっと知りたい。

多分、The cell magics in IPythonがよいだろう。 なので、別ファイルで云々。

名前を考えよう。

とりあえずcellmagic.ipynbでいいか。

# %lsmagicと%lsmagic?

`%lsmagic`でまずどんなコマンドがあるか確認

```

# まずどんなコマンドがあるか確認

%lsmagic

```

アウトプットを見てみよう。

ラインマジックは以下のとおり。`lsmagic`もラインマジックである。

%alias %alias_magic %autocall %automagic %autosave %bookmark %cat %cd %clear %colors %config %connect_info %cp %debug %dhist %dirs %doctest_mode %ed %edit %env %gui %hist %history %killbgscripts %ldir %less %lf %lk %ll %load %load_ext %loadpy %logoff %logon %logstart %logstate %logstop %ls %lsmagic %lx %macro %magic %man %matplotlib %mkdir %more %mv %notebook %page %pastebin %pdb %pdef %pdoc %pfile %pinfo %pinfo2 %pip %popd %pprint %precision %profile %prun %psearch %psource %pushd %pwd %pycat %pylab %qtconsole %quickref %recall %rehashx %reload_ext %rep %rerun %reset %reset_selective %rm %rmdir %run %save %sc %set_env %shell %store %sx %system %tb %tensorflow_version %time %timeit %unalias %unload_ext %who %who_ls %whos %xdel %xmode

セルマジックは以下のとおり。

%%! %%HTML %%SVG %%bash %%bigquery %%capture %%debug %%file %%html %%javascript %%js %%latex %%perl %%prun %%pypy %%python %%python2 %%python3 %%ruby %%script %%sh %%shell %%svg %%sx %%system %%time %%timeit %%writefile

`automagic`がオンのときは、ラインマジックに`%`は必要ないとのこと。まあ、どっちでもよい。=> `%automagic`コマンド自体がトグルスイッチ。明示的にオンとかオフとかにするときには、`on`、`off`、`1`、`0`、`True`、 `False`を引数として与える。

## ヘルプコマンド

マジックコマンドに`?`とか`??`をつけることで簡単な説明が得られる。仮にヘルプコマンドと呼ぼう。

```

# %lsmagic? とか %lsmagic?? とかやってみる

# %lsmagic?

```

### magicコマンドのdocstring

以下に各コマンドの`docstring`を示す。よくわからないので、英語のまま。

セルコマンドには`%%javascript`とか`ruby`とかもあり、利用できそう。

```

%alias? Define an alias for a system command.

%alias_magic? Create an alias for an existing line or cell magic.

%autocall? Make functions callable without having to type parentheses.

%automagic? Make magic functions callable without having to type the initial %.

%autosave? Set the autosave interval in the notebook (in seconds).

%bookmark? Manage IPython's bookmark system.

%cat? Repr: <alias cat for 'cat'>

%cd? Change the current working directory.

%clear? Clear the terminal.

%colors? Switch color scheme for prompts, info system and exception handlers.

%config? configure IPython

%connect_info? Print information for connecting other clients to this kernel

%cp? Repr: <alias cp for 'cp'>

%debug? Activate the interactive debugger.

%dhist? Print your history of visited directories.

%dirs? Return the current directory stack.

%doctest_mode? Toggle doctest mode on and off.

%ed? Alias for `%edit`.

%edit? Bring up an editor and execute the resulting code.

%env? Get, set, or list environment variables.

%gui? Enable or disable IPython GUI event loop integration.

%hist? Alias for `%history`.

%history? Print input history (_i<n> variables), with most recent last.

%killbgscripts? ill all BG processes started by %%script and its family.

%ldir? Repr: <alias ldir for 'ls -F -o --color %l | grep /$'>

%less? Show a file through the pager.

%lf? Repr: <alias lf for 'ls -F -o --color %l | grep ^-'>

%lk? Repr: <alias lk for 'ls -F -o --color %l | grep ^l'>

%ll? Repr: <alias ll for 'ls -F -o --color'>

%load? Load code into the current frontend.

%load_ext? Load an IPython extension by its module name.

%loadpy? Alias of `%load`

%logoff? Temporarily stop logging.

%logon? Restart logging.

%logstart? Start logging anywhere in a session.

%logstate? Print the status of the logging system.

%logstop? Fully stop logging and close log file.

%ls? Repr: <alias ls for 'ls -F --color'>

%lsmagic? List currently available magic functions.

%lx? Repr: <alias lx for 'ls -F -o --color %l | grep ^-..x'>

%macro? Define a macro for future re-execution. It accepts ranges of history, filenames or string objects.

%magic? Print information about the magic function system.

%man? Find the man page for the given command and display in pager.

%matplotlib? Set up matplotlib to work interactively.

%mkdir? Repr: <alias mkdir for 'mkdir'>

%more? Show a file through the pager.

%mv? Repr: <alias mv for 'mv'>

%notebook? Export and convert IPython notebooks.

%page? Pretty print the object and display it through a pager.

%pastebin? Upload code to Github's Gist paste bin, returning the URL.

%pdb? Control the automatic calling of the pdb interactive debugger.

%pdef? Print the call signature for any callable object.

%pdoc? Print the docstring for an object.

%pfile? Print (or run through pager) the file where an object is defined.

%pinfo? Provide detailed information about an object.

%pinfo2? Provide extra detailed information about an object.

%pip? Install a package in the current kernel using pip.

%popd? Change to directory popped off the top of the stack.

%pprint? Toggle pretty printing on/off.

%precision? Set floating point precision for pretty printing.

%prun? Run a statement through the python code profiler.

%psearch? Search for object in namespaces by wildcard.

%psource? Print (or run through pager) the source code for an object.

%pushd? lace the current dir on stack and change directory.

%pwd? Return the current working directory path.

%pycat? Show a syntax-highlighted file through a pag

%pylab? Load numpy and matplotlib to work interactively.

%qtconsole? Open a qtconsole connected to this kernel.

%quickref? Show a quick reference sheet

%recall? Repeat a command, or get command to input line for editing.

%rehashx? Update the alias table with all executable files in $PATH.

%reload_ext? Reload an IPython extension by its module name.

%rep? Docstring: Alias for `%recall`.

%rerun? Re-run previous input

%reset? Resets the namespace by removing all names defined by the user, if called without arguments

%reset_selective? Resets the namespace by removing names defined by the user.

%rm? Repr: <alias rm for 'rm'>

%rmdir? Repr: <alias rmdir for 'rmdir'>

%run? Run the named file inside IPython as a program.

%save? Save a set of lines or a macro to a given filename.

%sc? Shell capture - run shell command and capture output (DEPRECATED use !).

%set_env? Set environment variables.

%shell? Runs a shell command, allowing input to be provided.

%store? Lightweight persistence for python variables.

%sx? Shell execute - run shell command and capture output (!! is short-hand).

%system? Shell execute - run shell command and capture output (!! is short-hand).

%tb? Print the last traceback with the currently active exception mode.

%tensorflow_version? Implements the tensorflow_version line magic.

%time? Time execution of a Python statement or expression.

%timeit? Time execution of a Python statement or expression

%unalias? Remove an alias

%unload_ext? Unload an IPython extension by its module name.

%who? Print all interactive variables, with some minimal formatting.

%who_ls? Return a sorted list of all interactive variables.

%whos? Like %who, but gives some extra information about each variable.

%xdel? Delete a variable, trying to clear it from anywhere that IPython's machinery has references to it.

%xmode? Switch modes for the exception handlers.

%%HTML? Alias for `%%html`.

%%SVG? Alias for `%%svg`.

%%bash? %%bash script magic

%%bigquery? Underlying function for bigquery cell magic

%%capture? run the cell, capturing stdout, stderr, and IPython's rich display() calls.

%%debug? Activate the interactive debugger.

%%file? Alias for `%%writefile`.

%%html? ender the cell as a block of HTML

%%javascript? Run the cell block of Javascript code

%%js? Alias of `%%javascript`

%%latex? Render the cell as a block of latex

%%perl? %%perl script magic

%%prun? Run a statement through the python code profiler.

%%pypy? %%pypy script magic

%%python? Run cells with python in a subprocess.

%%python2? Run cells with python2 in a subprocess.

%%python3? Run cells with python3 in a subprocess.

%%ruby? Run cells with ruby in a subprocess.

%%script? Run a cell via a shell command

%%sh? Run cells with sh in a subprocess.

%%shell? Run the cell via a shell command, allowing input to be provided.

%%svg? Render the cell as an SVG literal

%%sx? Shell execute - run shell command and capture output (!! is short-hand).

%%system? Shell execute - run shell command and capture output (!! is short-hand).

%%time? Time execution of a Python statement or expression.

%%timeit? Time execution of a Python statement or expression

%%writefile? Write the contents of the cell to a file.

```

### %time を使ってみる。

```

%time sum(range(10000))

%timeit sum(range(10000))

%%timeit -n 1000 -r 3

for i in range(1000):

i * 2

```

### $matplotlib を使ってみる

```

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

x = np. arange(0, 10, 0.2)

y = np.sin(x)

fig = plt.figure()

ax = fig.add_subplot(111)

ax.plot(x, y)

plt.show()

```

# いまここ

|

github_jupyter

|

%%script false

と書くと、そのコードセルは実行されないので、まるでテキストセルのように使える。

テキストセルの引用(行頭に>)や、コード表示(バッククオート3つ)のように使える。

勝手に改行もされない。

なにより、Markdownによる解釈がされないので、確実に文字列を書ける。

latexも当然使えない。

# まずどんなコマンドがあるか確認

%lsmagic

# %lsmagic? とか %lsmagic?? とかやってみる

# %lsmagic?

%alias? Define an alias for a system command.

%alias_magic? Create an alias for an existing line or cell magic.

%autocall? Make functions callable without having to type parentheses.

%automagic? Make magic functions callable without having to type the initial %.

%autosave? Set the autosave interval in the notebook (in seconds).

%bookmark? Manage IPython's bookmark system.

%cat? Repr: <alias cat for 'cat'>

%cd? Change the current working directory.

%clear? Clear the terminal.

%colors? Switch color scheme for prompts, info system and exception handlers.

%config? configure IPython

%connect_info? Print information for connecting other clients to this kernel

%cp? Repr: <alias cp for 'cp'>

%debug? Activate the interactive debugger.

%dhist? Print your history of visited directories.

%dirs? Return the current directory stack.

%doctest_mode? Toggle doctest mode on and off.

%ed? Alias for `%edit`.

%edit? Bring up an editor and execute the resulting code.

%env? Get, set, or list environment variables.

%gui? Enable or disable IPython GUI event loop integration.

%hist? Alias for `%history`.

%history? Print input history (_i<n> variables), with most recent last.

%killbgscripts? ill all BG processes started by %%script and its family.

%ldir? Repr: <alias ldir for 'ls -F -o --color %l | grep /$'>

%less? Show a file through the pager.

%lf? Repr: <alias lf for 'ls -F -o --color %l | grep ^-'>

%lk? Repr: <alias lk for 'ls -F -o --color %l | grep ^l'>

%ll? Repr: <alias ll for 'ls -F -o --color'>

%load? Load code into the current frontend.

%load_ext? Load an IPython extension by its module name.

%loadpy? Alias of `%load`

%logoff? Temporarily stop logging.

%logon? Restart logging.

%logstart? Start logging anywhere in a session.

%logstate? Print the status of the logging system.

%logstop? Fully stop logging and close log file.

%ls? Repr: <alias ls for 'ls -F --color'>

%lsmagic? List currently available magic functions.

%lx? Repr: <alias lx for 'ls -F -o --color %l | grep ^-..x'>

%macro? Define a macro for future re-execution. It accepts ranges of history, filenames or string objects.

%magic? Print information about the magic function system.

%man? Find the man page for the given command and display in pager.

%matplotlib? Set up matplotlib to work interactively.

%mkdir? Repr: <alias mkdir for 'mkdir'>

%more? Show a file through the pager.

%mv? Repr: <alias mv for 'mv'>

%notebook? Export and convert IPython notebooks.

%page? Pretty print the object and display it through a pager.

%pastebin? Upload code to Github's Gist paste bin, returning the URL.

%pdb? Control the automatic calling of the pdb interactive debugger.

%pdef? Print the call signature for any callable object.

%pdoc? Print the docstring for an object.

%pfile? Print (or run through pager) the file where an object is defined.

%pinfo? Provide detailed information about an object.

%pinfo2? Provide extra detailed information about an object.

%pip? Install a package in the current kernel using pip.

%popd? Change to directory popped off the top of the stack.

%pprint? Toggle pretty printing on/off.

%precision? Set floating point precision for pretty printing.

%prun? Run a statement through the python code profiler.

%psearch? Search for object in namespaces by wildcard.

%psource? Print (or run through pager) the source code for an object.

%pushd? lace the current dir on stack and change directory.

%pwd? Return the current working directory path.

%pycat? Show a syntax-highlighted file through a pag

%pylab? Load numpy and matplotlib to work interactively.

%qtconsole? Open a qtconsole connected to this kernel.

%quickref? Show a quick reference sheet

%recall? Repeat a command, or get command to input line for editing.

%rehashx? Update the alias table with all executable files in $PATH.

%reload_ext? Reload an IPython extension by its module name.

%rep? Docstring: Alias for `%recall`.

%rerun? Re-run previous input

%reset? Resets the namespace by removing all names defined by the user, if called without arguments

%reset_selective? Resets the namespace by removing names defined by the user.

%rm? Repr: <alias rm for 'rm'>

%rmdir? Repr: <alias rmdir for 'rmdir'>

%run? Run the named file inside IPython as a program.

%save? Save a set of lines or a macro to a given filename.

%sc? Shell capture - run shell command and capture output (DEPRECATED use !).

%set_env? Set environment variables.

%shell? Runs a shell command, allowing input to be provided.

%store? Lightweight persistence for python variables.

%sx? Shell execute - run shell command and capture output (!! is short-hand).

%system? Shell execute - run shell command and capture output (!! is short-hand).

%tb? Print the last traceback with the currently active exception mode.

%tensorflow_version? Implements the tensorflow_version line magic.

%time? Time execution of a Python statement or expression.

%timeit? Time execution of a Python statement or expression

%unalias? Remove an alias

%unload_ext? Unload an IPython extension by its module name.

%who? Print all interactive variables, with some minimal formatting.

%who_ls? Return a sorted list of all interactive variables.

%whos? Like %who, but gives some extra information about each variable.

%xdel? Delete a variable, trying to clear it from anywhere that IPython's machinery has references to it.

%xmode? Switch modes for the exception handlers.

%%HTML? Alias for `%%html`.

%%SVG? Alias for `%%svg`.

%%bash? %%bash script magic

%%bigquery? Underlying function for bigquery cell magic

%%capture? run the cell, capturing stdout, stderr, and IPython's rich display() calls.

%%debug? Activate the interactive debugger.

%%file? Alias for `%%writefile`.

%%html? ender the cell as a block of HTML

%%javascript? Run the cell block of Javascript code

%%js? Alias of `%%javascript`

%%latex? Render the cell as a block of latex

%%perl? %%perl script magic

%%prun? Run a statement through the python code profiler.

%%pypy? %%pypy script magic

%%python? Run cells with python in a subprocess.

%%python2? Run cells with python2 in a subprocess.

%%python3? Run cells with python3 in a subprocess.

%%ruby? Run cells with ruby in a subprocess.

%%script? Run a cell via a shell command

%%sh? Run cells with sh in a subprocess.

%%shell? Run the cell via a shell command, allowing input to be provided.

%%svg? Render the cell as an SVG literal

%%sx? Shell execute - run shell command and capture output (!! is short-hand).

%%system? Shell execute - run shell command and capture output (!! is short-hand).

%%time? Time execution of a Python statement or expression.

%%timeit? Time execution of a Python statement or expression

%%writefile? Write the contents of the cell to a file.

%time sum(range(10000))

%timeit sum(range(10000))

%%timeit -n 1000 -r 3

for i in range(1000):

i * 2

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

x = np. arange(0, 10, 0.2)

y = np.sin(x)

fig = plt.figure()

ax = fig.add_subplot(111)

ax.plot(x, y)

plt.show()

| 0.425009 | 0.95877 |

<a href="https://colab.research.google.com/github/Bhavani-Rajan/DS-Unit-1-Sprint-2-Data-Wrangling-and-Storytelling/blob/master/module3-make-explanatory-visualizations/LS_DS_123_Make_Explanatory_Visualizations.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

_Lambda School Data Science_

# Make Explanatory Visualizations

### Objectives

- identify misleading visualizations and how to fix them

- use Seaborn to visualize distributions and relationships with continuous and discrete variables

- add emphasis and annotations to transform visualizations from exploratory to explanatory

- remove clutter from visualizations

### Links

- [How to Spot Visualization Lies](https://flowingdata.com/2017/02/09/how-to-spot-visualization-lies/)

- [Visual Vocabulary - Vega Edition](http://ft.com/vocabulary)

- [Choosing a Python Visualization Tool flowchart](http://pbpython.com/python-vis-flowchart.html)

- [Searborn example gallery](http://seaborn.pydata.org/examples/index.html) & [tutorial](http://seaborn.pydata.org/tutorial.html)

- [Strong Titles Are The Biggest Bang for Your Buck](http://stephanieevergreen.com/strong-titles/)

- [Remove to improve (the data-ink ratio)](https://www.darkhorseanalytics.com/blog/data-looks-better-naked)

- [How to Generate FiveThirtyEight Graphs in Python](https://www.dataquest.io/blog/making-538-plots/)

# Avoid Misleading Visualizations

Did you find/discuss any interesting misleading visualizations in your Walkie Talkie?

## What makes a visualization misleading?

[5 Ways Writers Use Misleading Graphs To Manipulate You](https://venngage.com/blog/misleading-graphs/)

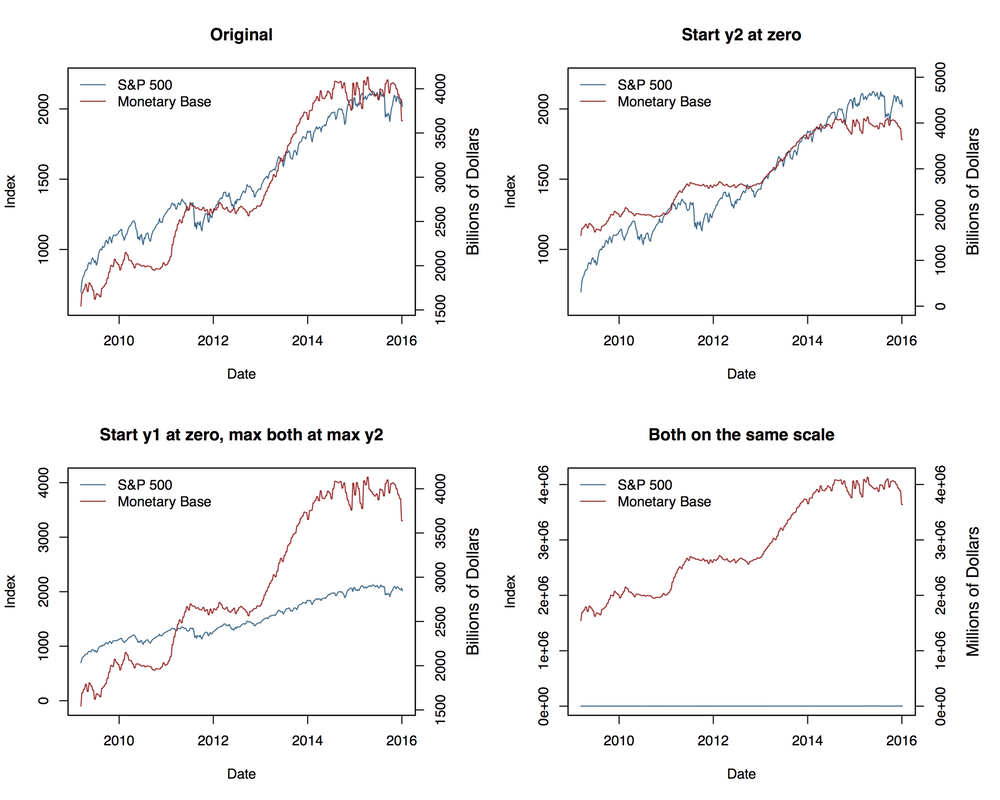

## Two y-axes

Other Examples:

- [Spurious Correlations](https://tylervigen.com/spurious-correlations)

- <https://blog.datawrapper.de/dualaxis/>

- <https://kieranhealy.org/blog/archives/2016/01/16/two-y-axes/>

- <http://www.storytellingwithdata.com/blog/2016/2/1/be-gone-dual-y-axis>

## Y-axis doesn't start at zero.

<img src="https://i.pinimg.com/originals/22/53/a9/2253a944f54bb61f1983bc076ff33cdd.jpg" width="600">

## Pie Charts are bad

<img src="https://i1.wp.com/flowingdata.com/wp-content/uploads/2009/11/Fox-News-pie-chart.png?fit=620%2C465&ssl=1" width="600">

## Pie charts that omit data are extra bad

- A guy makes a misleading chart that goes viral

What does this chart imply at first glance? You don't want your user to have to do a lot of work in order to be able to interpret you graph correctly. You want that first-glance conclusions to be the correct ones.

<img src="https://pbs.twimg.com/media/DiaiTLHWsAYAEEX?format=jpg&name=medium" width='600'>

<https://twitter.com/michaelbatnick/status/1019680856837849090?lang=en>

- It gets picked up by overworked journalists (assuming incompetency before malice)

<https://www.marketwatch.com/story/this-1-chart-puts-mega-techs-trillions-of-market-value-into-eye-popping-perspective-2018-07-18>

- Even after the chart's implications have been refuted, it's hard a bad (although compelling) visualization from being passed around.

<https://www.linkedin.com/pulse/good-bad-pie-charts-karthik-shashidhar/>

**["yea I understand a pie chart was probably not the best choice to present this data."](https://twitter.com/michaelbatnick/status/1037036440494985216)**

## Pie Charts that compare unrelated things are next-level extra bad

<img src="http://www.painting-with-numbers.com/download/document/186/170403+Legalizing+Marijuana+Graph.jpg" width="600">

## Be careful about how you use volume to represent quantities:

radius vs diameter vs volume

<img src="https://static1.squarespace.com/static/5bfc8dbab40b9d7dd9054f41/t/5c32d86e0ebbe80a25873249/1546836082961/5474039-25383714-thumbnail.jpg?format=1500w" width="600">

## Don't cherrypick timelines or specific subsets of your data:

<img src="https://wattsupwiththat.com/wp-content/uploads/2019/02/Figure-1-1.png" width="600">

Look how specifically the writer has selected what years to show in the legend on the right side.

<https://wattsupwiththat.com/2019/02/24/strong-arctic-sea-ice-growth-this-year/>

Try the tool that was used to make the graphic for yourself

<http://nsidc.org/arcticseaicenews/charctic-interactive-sea-ice-graph/>

## Use Relative units rather than Absolute Units

<img src="https://imgs.xkcd.com/comics/heatmap_2x.png" width="600">

## Avoid 3D graphs unless having the extra dimension is effective

Usually you can Split 3D graphs into multiple 2D graphs

3D graphs that are interactive can be very cool. (See Plotly and Bokeh)

<img src="https://thumbor.forbes.com/thumbor/1280x868/https%3A%2F%2Fblogs-images.forbes.com%2Fthumbnails%2Fblog_1855%2Fpt_1855_811_o.jpg%3Ft%3D1339592470" width="600">

## Don't go against typical conventions

<img src="http://www.callingbullshit.org/twittercards/tools_misleading_axes.png" width="600">

# Tips for choosing an appropriate visualization:

## Use Appropriate "Visual Vocabulary"

[Visual Vocabulary - Vega Edition](http://ft.com/vocabulary)

## What are the properties of your data?

- Is your primary variable of interest continuous or discrete?

- Is in wide or long (tidy) format?

- Does your visualization involve multiple variables?

- How many dimensions do you need to include on your plot?

Can you express the main idea of your visualization in a single sentence?

How hard does your visualization make the user work in order to draw the intended conclusion?

## Which Visualization tool is most appropriate?

[Choosing a Python Visualization Tool flowchart](http://pbpython.com/python-vis-flowchart.html)

## Anatomy of a Matplotlib Plot

```

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.ticker import AutoMinorLocator, MultipleLocator, FuncFormatter

np.random.seed(19680801)

X = np.linspace(0.5, 3.5, 100)

Y1 = 3+np.cos(X)

Y2 = 1+np.cos(1+X/0.75)/2

Y3 = np.random.uniform(Y1, Y2, len(X))

fig = plt.figure(figsize=(8, 8))

ax = fig.add_subplot(1, 1, 1, aspect=1)

def minor_tick(x, pos):

if not x % 1.0:

return ""

return "%.2f" % x

ax.xaxis.set_major_locator(MultipleLocator(1.000))

ax.xaxis.set_minor_locator(AutoMinorLocator(4))

ax.yaxis.set_major_locator(MultipleLocator(1.000))

ax.yaxis.set_minor_locator(AutoMinorLocator(4))

ax.xaxis.set_minor_formatter(FuncFormatter(minor_tick))

ax.set_xlim(0, 4)

ax.set_ylim(0, 4)

ax.tick_params(which='major', width=1.0)

ax.tick_params(which='major', length=10)

ax.tick_params(which='minor', width=1.0, labelsize=10)

ax.tick_params(which='minor', length=5, labelsize=10, labelcolor='0.25')

ax.grid(linestyle="--", linewidth=0.5, color='.25', zorder=-10)

ax.plot(X, Y1, c=(0.25, 0.25, 1.00), lw=2, label="Blue signal", zorder=10)

ax.plot(X, Y2, c=(1.00, 0.25, 0.25), lw=2, label="Red signal")

ax.plot(X, Y3, linewidth=0,

marker='o', markerfacecolor='w', markeredgecolor='k')

ax.set_title("Anatomy of a figure", fontsize=20, verticalalignment='bottom')

ax.set_xlabel("X axis label")

ax.set_ylabel("Y axis label")

ax.legend()

def circle(x, y, radius=0.15):

from matplotlib.patches import Circle

from matplotlib.patheffects import withStroke

circle = Circle((x, y), radius, clip_on=False, zorder=10, linewidth=1,

edgecolor='black', facecolor=(0, 0, 0, .0125),

path_effects=[withStroke(linewidth=5, foreground='w')])

ax.add_artist(circle)

def text(x, y, text):

ax.text(x, y, text, backgroundcolor="white",

ha='center', va='top', weight='bold', color='blue')

# Minor tick

circle(0.50, -0.10)

text(0.50, -0.32, "Minor tick label")

# Major tick

circle(-0.03, 4.00)

text(0.03, 3.80, "Major tick")

# Minor tick

circle(0.00, 3.50)

text(0.00, 3.30, "Minor tick")

# Major tick label

circle(-0.15, 3.00)

text(-0.15, 2.80, "Major tick label")

# X Label

circle(1.80, -0.27)

text(1.80, -0.45, "X axis label")

# Y Label

circle(-0.27, 1.80)

text(-0.27, 1.6, "Y axis label")

# Title

circle(1.60, 4.13)

text(1.60, 3.93, "Title")

# Blue plot

circle(1.75, 2.80)

text(1.75, 2.60, "Line\n(line plot)")

# Red plot

circle(1.20, 0.60)