| code (string, lengths 2.5k to 6.36M) | kind (string, 2 classes) | parsed_code (string, lengths 0 to 404k) | quality_prob (float64, 0 to 0.98) | learning_prob (float64, 0.03 to 1) |
|---|---|---|---|---|

```

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

from sklearn.linear_model import LogisticRegression

from sklearn import metrics

from sklearn.metrics import (accuracy_score, classification_report, confusion_matrix, f1_score, make_scorer, precision_recall_fscore_support, precision_score, recall_score)

import itertools

# Helpers used to save the results of the classifier:
# the confusion matrix and the precision/recall/F-score report

def plot_confusion_matrix(cm,

target_names,

title='Confusion matrix',

cmap=None,

normalize=True):

"""

given a sklearn confusion matrix (cm), make a nice plot

Arguments

---------

cm: confusion matrix from sklearn.metrics.confusion_matrix

target_names: given classification classes such as [0, 1, 2]

the class names, for example: ['high', 'medium', 'low']

title: the text to display at the top of the matrix

cmap: the gradient of the values displayed from matplotlib.pyplot.cm

see http://matplotlib.org/examples/color/colormaps_reference.html

plt.get_cmap('jet') or plt.cm.Blues

normalize: If False, plot the raw numbers

If True, plot the proportions

Usage

-----

plot_confusion_matrix(cm = cm, # confusion matrix created by

# sklearn.metrics.confusion_matrix

normalize = True, # show proportions

target_names = y_labels_vals, # list of names of the classes

title = best_estimator_name) # title of graph

Citation

---------

http://scikit-learn.org/stable/auto_examples/model_selection/plot_confusion_matrix.html

"""

accuracy = np.trace(cm) / float(np.sum(cm))

misclass = 1 - accuracy

if normalize:

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

if cmap is None:

cmap = plt.get_cmap('Blues')

plt.figure(figsize=(8, 6))

plt.imshow(cm, interpolation='nearest', cmap=cmap)

plt.title(title)

plt.colorbar()

if target_names is not None:

tick_marks = np.arange(len(target_names))

plt.xticks(tick_marks, target_names, rotation=45)

plt.yticks(tick_marks, target_names)

thresh = cm.max() / 1.5 if normalize else cm.max() / 2

for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):

if normalize:

plt.text(j, i, "{:0.4f}".format(cm[i, j]),

horizontalalignment="center",

color="white" if cm[i, j] > thresh else "black")

else:

plt.text(j, i, "{:,}".format(cm[i, j]),

horizontalalignment="center",

color="white" if cm[i, j] > thresh else "black")

plt.ylabel('True label')

plt.xlabel('Predicted label\naccuracy={:0.4f}; misclass={:0.4f}'.format(accuracy, misclass))

plt.tight_layout()

return plt

##saving the classification report

def pandas_classification_report(y_true, y_pred):

metrics_summary = precision_recall_fscore_support(

y_true=y_true,

y_pred=y_pred)

cm = confusion_matrix(y_true, y_pred)

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

avg = list(precision_recall_fscore_support(

y_true=y_true,

y_pred=y_pred,

average='macro'))

avg.append(accuracy_score(y_true, y_pred, normalize=True))

metrics_sum_index = ['precision', 'recall', 'f1-score', 'support','accuracy']

list_all=list(metrics_summary)

list_all.append(cm.diagonal())

class_report_df = pd.DataFrame(

list_all,

index=metrics_sum_index)

support = class_report_df.loc['support']

total = support.sum()

avg[-2] = total

class_report_df['avg / total'] = avg

return class_report_df.T

from commen_preprocess import *

eng_train_dataset = pd.read_csv('../Data/english_dataset/english_dataset.tsv', sep='\t')

# #hindi_train_dataset = pd.read_csv('../Data/hindi_dataset/hindi_dataset.tsv', sep='\t',header=None)

# german_train_dataset = pd.read_csv('../Data/german_dataset/german_dataset_added_features.tsv', sep=',')

# eng_train_dataset=eng_train_dataset.drop(['Unnamed: 0'], axis=1)

# german_train_dataset=german_train_dataset.drop(['Unnamed: 0'], axis=1)

eng_train_dataset = eng_train_dataset.loc[eng_train_dataset['task_1'] == 'HOF']

eng_train_dataset.head()

l=eng_train_dataset['task_3'].value_counts()

print(l)

import numpy as np

from tqdm import tqdm

import pickle

####loading laser embeddings for english dataset

def load_laser_embeddings():

dim = 1024

engX_commen = np.fromfile("../Data/english_dataset/embeddings_eng_task23_commen.raw", dtype=np.float32, count=-1)

engX_lib = np.fromfile("../Data/english_dataset/embeddings_eng_task23_lib.raw", dtype=np.float32, count=-1)

engX_commen.resize(engX_commen.shape[0] // dim, dim)

engX_lib.resize(engX_lib.shape[0] // dim, dim)

return engX_commen,engX_lib

def load_bert_embeddings():
    # Use a context manager so the file handle is closed after loading
    with open('../Data/english_dataset/no_preprocess_bert_embed_task23.pkl', 'rb') as file:
        embeds = pickle.load(file)
    return np.array(embeds)

def merge_feature(*args):
    # Concatenate the i-th row of every feature matrix into one feature vector
    feat_all=[]
    print(args[0].shape)
    for i in tqdm(range(args[0].shape[0])):
        feat=[]
        for arg in args:
            feat+=list(arg[i])
        feat_all.append(feat)
    return feat_all

convert_label={

'TIN':0,

'UNT':1,

}

convert_reverse_label={

0:'TIN',

1:'UNT',

}

labels=eng_train_dataset['task_3'].values

engX_commen,engX_lib=load_laser_embeddings()

bert_embeds =load_bert_embeddings()

feat_all=merge_feature(engX_commen,engX_lib,bert_embeds)

len(feat_all[0])

from sklearn.utils.multiclass import type_of_target

Classifier_Train_X=np.array(feat_all)

labels_int=[]

for i in range(len(labels)):

labels_int.append(convert_label[labels[i]])

Classifier_Train_Y=np.array(labels_int,dtype='float64')

print(type_of_target(Classifier_Train_Y))

Classifier_Train_Y

from sklearn.metrics import accuracy_score

import joblib

from sklearn.model_selection import StratifiedKFold as skf

###all classifier

from catboost import CatBoostClassifier

from xgboost.sklearn import XGBClassifier

from sklearn.svm import SVC, LinearSVC

from sklearn.linear_model import LogisticRegression

from sklearn import tree

from sklearn import neighbors

from sklearn import ensemble

from sklearn import neural_network

from sklearn import linear_model

import lightgbm as lgbm

from sklearn.naive_bayes import GaussianNB

from sklearn.linear_model import LogisticRegression

from lightgbm import LGBMClassifier

from nltk.classify.scikitlearn import SklearnClassifier

def train_model_no_ext(Classifier_Train_X,Classifier_Train_Y,model_type,save_model=False):

kf = skf(n_splits=10,shuffle=True)

y_total_preds=[]

y_total=[]

count=0

img_name = 'cm.png'

report_name = 'report.csv'

scale=list(Classifier_Train_Y).count(0)/list(Classifier_Train_Y).count(1)

print(scale)

if(save_model==True):

Classifier=get_model(scale,m_type=model_type)

Classifier.fit(Classifier_Train_X,Classifier_Train_Y)

filename = model_type+'_eng_task_2.joblib.pkl'

joblib.dump(Classifier, filename, compress=9)

# filename1 = model_name+'select_features_eng_task1.joblib.pkl'

# joblib.dump(model_featureSelection, filename1, compress=9)

else:

for train_index, test_index in kf.split(Classifier_Train_X,Classifier_Train_Y):

X_train, X_test = Classifier_Train_X[train_index], Classifier_Train_X[test_index]

y_train, y_test = Classifier_Train_Y[train_index], Classifier_Train_Y[test_index]

classifier=get_model(scale,m_type=model_type)

print(type(y_train))

classifier.fit(X_train,y_train)

y_preds = classifier.predict(X_test)

for ele in y_test:

y_total.append(ele)

for ele in y_preds:

y_total_preds.append(ele)

y_pred_train = classifier.predict(X_train)

print(y_pred_train)

print(y_train)

count=count+1

print('accuracy_train:',accuracy_score(y_train, y_pred_train),'accuracy_test:',accuracy_score(y_test, y_preds))

print('TRAINING:')

print(classification_report( y_train, y_pred_train ))

print("TESTING:")

print(classification_report( y_test, y_preds ))

report = classification_report( y_total, y_total_preds )

cm=confusion_matrix(y_total, y_total_preds)

plt=plot_confusion_matrix(cm,normalize= True,target_names = ['TIN','UNT'],title = "Confusion Matrix")

plt.savefig('eng_task3'+model_type+'_'+img_name)

print(classifier)

print(report)

print(accuracy_score(y_total, y_total_preds))

df_result=pandas_classification_report(y_total,y_total_preds)

df_result.to_csv('eng_task3'+model_type+'_'+report_name, sep=',')

def get_model(scale,m_type=None):
    if not m_type:
        print("ERROR: Please specify a model type!")
        return None
    if m_type == 'decision_tree_classifier':
        logreg = tree.DecisionTreeClassifier(max_features=1000,max_depth=3,class_weight='balanced')
    elif m_type == 'gaussian':
        logreg = GaussianNB()
    elif m_type == 'logistic_regression':
        logreg = LogisticRegression(n_jobs=10, random_state=42,class_weight='balanced',solver='liblinear')
    elif m_type == 'MLPClassifier':
        # logreg = neural_network.MLPClassifier((500))
        logreg = neural_network.MLPClassifier((100),random_state=42,early_stopping=True)
    elif m_type == 'RandomForestClassifier':
        logreg = ensemble.RandomForestClassifier(n_estimators=100, class_weight='balanced', n_jobs=12, max_depth=7)
    elif m_type == 'SVC':
        #logreg = LinearSVC(dual=False,max_iter=200)
        logreg = SVC(kernel='linear',random_state=1526)
    elif m_type == 'Catboost':
        logreg = CatBoostClassifier(iterations=100,learning_rate=0.2,
            l2_leaf_reg=500,depth=10,use_best_model=False, random_state=42,loss_function='MultiClass')
        # logreg = CatBoostClassifier(scale_pos_weight=0.8, random_seed=42,);
    elif m_type == 'XGB_classifier':
        # logreg=XGBClassifier(silent=False,eta=0.1,objective='binary:logistic',max_depth=5,min_child_weight=0,gamma=0.2,subsample=0.8, colsample_bytree = 0.8,scale_pos_weight=1,n_estimators=500,reg_lambda=3,nthread=12)
        logreg=XGBClassifier(silent=False,objective='multi:softmax',num_class=3,
            reg_lambda=3,nthread=12, random_state=42)
    elif m_type == 'light_gbm':
        logreg = LGBMClassifier(objective='multiclass',max_depth=3,learning_rate=0.2,num_leaves=20,scale_pos_weight=scale,
            boosting_type='gbdt', metric='multi_logloss',random_state=5,reg_lambda=20,silent=False)
    else:
        # Fail early: logreg would otherwise be undefined in the lines below
        raise ValueError("Unknown model type: {}".format(m_type))
    print(logreg)
    return logreg

models_name=['decision_tree_classifier','gaussian','logistic_regression','MLPClassifier','RandomForestClassifier',

'SVC','light_gbm']

for model in models_name:

train_model_no_ext(Classifier_Train_X,Classifier_Train_Y,model)

```

|

github_jupyter

|

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

from sklearn.linear_model import LogisticRegression

from sklearn import metrics

from sklearn.metrics import (accuracy_score, classification_report, confusion_matrix, f1_score, make_scorer, precision_recall_fscore_support, precision_score, recall_score)

import itertools

# Helpers used to save the results of the classifier:
# the confusion matrix and the precision/recall/F-score report

def plot_confusion_matrix(cm,

target_names,

title='Confusion matrix',

cmap=None,

normalize=True):

"""

given a sklearn confusion matrix (cm), make a nice plot

Arguments

---------

cm: confusion matrix from sklearn.metrics.confusion_matrix

target_names: given classification classes such as [0, 1, 2]

the class names, for example: ['high', 'medium', 'low']

title: the text to display at the top of the matrix

cmap: the gradient of the values displayed from matplotlib.pyplot.cm

see http://matplotlib.org/examples/color/colormaps_reference.html

plt.get_cmap('jet') or plt.cm.Blues

normalize: If False, plot the raw numbers

If True, plot the proportions

Usage

-----

plot_confusion_matrix(cm = cm, # confusion matrix created by

# sklearn.metrics.confusion_matrix

normalize = True, # show proportions

target_names = y_labels_vals, # list of names of the classes

title = best_estimator_name) # title of graph

Citation

---------

http://scikit-learn.org/stable/auto_examples/model_selection/plot_confusion_matrix.html

"""

accuracy = np.trace(cm) / float(np.sum(cm))

misclass = 1 - accuracy

if normalize:

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

if cmap is None:

cmap = plt.get_cmap('Blues')

plt.figure(figsize=(8, 6))

plt.imshow(cm, interpolation='nearest', cmap=cmap)

plt.title(title)

plt.colorbar()

if target_names is not None:

tick_marks = np.arange(len(target_names))

plt.xticks(tick_marks, target_names, rotation=45)

plt.yticks(tick_marks, target_names)

thresh = cm.max() / 1.5 if normalize else cm.max() / 2

for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):

if normalize:

plt.text(j, i, "{:0.4f}".format(cm[i, j]),

horizontalalignment="center",

color="white" if cm[i, j] > thresh else "black")

else:

plt.text(j, i, "{:,}".format(cm[i, j]),

horizontalalignment="center",

color="white" if cm[i, j] > thresh else "black")

plt.ylabel('True label')

plt.xlabel('Predicted label\naccuracy={:0.4f}; misclass={:0.4f}'.format(accuracy, misclass))

plt.tight_layout()

return plt

##saving the classification report

def pandas_classification_report(y_true, y_pred):

metrics_summary = precision_recall_fscore_support(

y_true=y_true,

y_pred=y_pred)

cm = confusion_matrix(y_true, y_pred)

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

avg = list(precision_recall_fscore_support(

y_true=y_true,

y_pred=y_pred,

average='macro'))

avg.append(accuracy_score(y_true, y_pred, normalize=True))

metrics_sum_index = ['precision', 'recall', 'f1-score', 'support','accuracy']

list_all=list(metrics_summary)

list_all.append(cm.diagonal())

class_report_df = pd.DataFrame(

list_all,

index=metrics_sum_index)

support = class_report_df.loc['support']

total = support.sum()

avg[-2] = total

class_report_df['avg / total'] = avg

return class_report_df.T

from commen_preprocess import *

eng_train_dataset = pd.read_csv('../Data/english_dataset/english_dataset.tsv', sep='\t')

# #hindi_train_dataset = pd.read_csv('../Data/hindi_dataset/hindi_dataset.tsv', sep='\t',header=None)

# german_train_dataset = pd.read_csv('../Data/german_dataset/german_dataset_added_features.tsv', sep=',')

# eng_train_dataset=eng_train_dataset.drop(['Unnamed: 0'], axis=1)

# german_train_dataset=german_train_dataset.drop(['Unnamed: 0'], axis=1)

eng_train_dataset = eng_train_dataset.loc[eng_train_dataset['task_1'] == 'HOF']

eng_train_dataset.head()

l=eng_train_dataset['task_3'].value_counts()

print(l)

import numpy as np

from tqdm import tqdm

import pickle

####loading laser embeddings for english dataset

def load_laser_embeddings():

dim = 1024

engX_commen = np.fromfile("../Data/english_dataset/embeddings_eng_task23_commen.raw", dtype=np.float32, count=-1)

engX_lib = np.fromfile("../Data/english_dataset/embeddings_eng_task23_lib.raw", dtype=np.float32, count=-1)

engX_commen.resize(engX_commen.shape[0] // dim, dim)

engX_lib.resize(engX_lib.shape[0] // dim, dim)

return engX_commen,engX_lib

def load_bert_embeddings():

file = open('../Data/english_dataset/no_preprocess_bert_embed_task23.pkl', 'rb')

embeds = pickle.load(file)

return np.array(embeds)

def merge_feature(*args):

feat_all=[]

print(args[0].shape)

for i in tqdm(range(args[0].shape[0])):

feat=[]

for arg in args:

feat+=list(arg[i])

feat_all.append(feat)

return feat_all

convert_label={

'TIN':0,

'UNT':1,

}

convert_reverse_label={

0:'TIN',

1:'UNT',

}

labels=eng_train_dataset['task_3'].values

engX_commen,engX_lib=load_laser_embeddings()

bert_embeds =load_bert_embeddings()

feat_all=merge_feature(engX_commen,engX_lib,bert_embeds)

len(feat_all[0])

from sklearn.utils.multiclass import type_of_target

Classifier_Train_X=np.array(feat_all)

labels_int=[]

for i in range(len(labels)):

labels_int.append(convert_label[labels[i]])

Classifier_Train_Y=np.array(labels_int,dtype='float64')

print(type_of_target(Classifier_Train_Y))

Classifier_Train_Y

from sklearn.metrics import accuracy_score

import joblib

from sklearn.model_selection import StratifiedKFold as skf

###all classifier

from catboost import CatBoostClassifier

from xgboost.sklearn import XGBClassifier

from sklearn.svm import SVC, LinearSVC

from sklearn.linear_model import LogisticRegression

from sklearn import tree

from sklearn import neighbors

from sklearn import ensemble

from sklearn import neural_network

from sklearn import linear_model

import lightgbm as lgbm

from sklearn.naive_bayes import GaussianNB

from sklearn.linear_model import LogisticRegression

from lightgbm import LGBMClassifier

from nltk.classify.scikitlearn import SklearnClassifier

def train_model_no_ext(Classifier_Train_X,Classifier_Train_Y,model_type,save_model=False):

kf = skf(n_splits=10,shuffle=True)

y_total_preds=[]

y_total=[]

count=0

img_name = 'cm.png'

report_name = 'report.csv'

scale=list(Classifier_Train_Y).count(0)/list(Classifier_Train_Y).count(1)

print(scale)

if(save_model==True):

Classifier=get_model(scale,m_type=model_type)

Classifier.fit(Classifier_Train_X,Classifier_Train_Y)

filename = model_type+'_eng_task_2.joblib.pkl'

joblib.dump(Classifier, filename, compress=9)

# filename1 = model_name+'select_features_eng_task1.joblib.pkl'

# joblib.dump(model_featureSelection, filename1, compress=9)

else:

for train_index, test_index in kf.split(Classifier_Train_X,Classifier_Train_Y):

X_train, X_test = Classifier_Train_X[train_index], Classifier_Train_X[test_index]

y_train, y_test = Classifier_Train_Y[train_index], Classifier_Train_Y[test_index]

classifier=get_model(scale,m_type=model_type)

print(type(y_train))

classifier.fit(X_train,y_train)

y_preds = classifier.predict(X_test)

for ele in y_test:

y_total.append(ele)

for ele in y_preds:

y_total_preds.append(ele)

y_pred_train = classifier.predict(X_train)

print(y_pred_train)

print(y_train)

count=count+1

print('accuracy_train:',accuracy_score(y_train, y_pred_train),'accuracy_test:',accuracy_score(y_test, y_preds))

print('TRAINING:')

print(classification_report( y_train, y_pred_train ))

print("TESTING:")

print(classification_report( y_test, y_preds ))

report = classification_report( y_total, y_total_preds )

cm=confusion_matrix(y_total, y_total_preds)

plt=plot_confusion_matrix(cm,normalize= True,target_names = ['TIN','UNT'],title = "Confusion Matrix")

plt.savefig('eng_task3'+model_type+'_'+img_name)

print(classifier)

print(report)

print(accuracy_score(y_total, y_total_preds))

df_result=pandas_classification_report(y_total,y_total_preds)

df_result.to_csv('eng_task3'+model_type+'_'+report_name, sep=',')

def get_model(scale,m_type=None):

if not m_type:

print("ERROR: Please specify a model type!")

return None

if m_type == 'decision_tree_classifier':

logreg = tree.DecisionTreeClassifier(max_features=1000,max_depth=3,class_weight='balanced')

elif m_type == 'gaussian':

logreg = GaussianNB()

elif m_type == 'logistic_regression':

logreg = LogisticRegression(n_jobs=10, random_state=42,class_weight='balanced',solver='liblinear')

elif m_type == 'MLPClassifier':

# logreg = neural_network.MLPClassifier((500))

logreg = neural_network.MLPClassifier((100),random_state=42,early_stopping=True)

elif m_type == 'RandomForestClassifier':

logreg = ensemble.RandomForestClassifier(n_estimators=100, class_weight='balanced', n_jobs=12, max_depth=7)

elif m_type == 'SVC':

#logreg = LinearSVC(dual=False,max_iter=200)

logreg = SVC(kernel='linear',random_state=1526)

elif m_type == 'Catboost':

logreg = CatBoostClassifier(iterations=100,learning_rate=0.2,

l2_leaf_reg=500,depth=10,use_best_model=False, random_state=42,loss_function='MultiClass')

# logreg = CatBoostClassifier(scale_pos_weight=0.8, random_seed=42,);

elif m_type == 'XGB_classifier':

# logreg=XGBClassifier(silent=False,eta=0.1,objective='binary:logistic',max_depth=5,min_child_weight=0,gamma=0.2,subsample=0.8, colsample_bytree = 0.8,scale_pos_weight=1,n_estimators=500,reg_lambda=3,nthread=12)

logreg=XGBClassifier(silent=False,objective='multi:softmax',num_class=3,

reg_lambda=3,nthread=12, random_state=42)

elif m_type == 'light_gbm':

logreg = LGBMClassifier(objective='multiclass',max_depth=3,learning_rate=0.2,num_leaves=20,scale_pos_weight=scale,

boosting_type='gbdt', metric='multi_logloss',random_state=5,reg_lambda=20,silent=False)

else:

raise ValueError("Unknown model type: {}".format(m_type))

print(logreg)

return logreg

models_name=['decision_tree_classifier','gaussian','logistic_regression','MLPClassifier','RandomForestClassifier',

'SVC','light_gbm']

for model in models_name:

train_model_no_ext(Classifier_Train_X,Classifier_Train_Y,model)

| 0.639849 | 0.583144 |

# Lost Rhino

In this notebook, we plot **Figures 1(a) and 1(b)**. To do so, we need to get the ratings for the particular beer called *Lost Rhino* from the brewery *Lost Rhino Ice Breaker*.

**Requirements**:

- You need to run notebook `4-zscores` to get the file `z_score_params_matched_ratings` in `data/tmp` and the files `ratings_ba.txt.gz` and `ratings_rb.txt.gz` in `data/matched`. In other words, you need to **run the first 5 cells of `4-zscores`**.

**Benchmark time**: This notebook has been run on a Dell Latitude (ElementaryOS 0.4.1 Loki, i7-7600U, 16GB RAM).

```

import os

os.chdir('..')

# Helper functions

from python.helpers import parse

# Libraries for preparing data

import json

import gzip

import numpy as np

import pandas as pd

from datetime import datetime

# Libraries for plotting

import seaborn as sns

import matplotlib.pyplot as plt

from matplotlib.ticker import FuncFormatter

import matplotlib

# Folders

data_folder = '../data/'

fig_folder = '../figures/'

# For the Python notebook

%matplotlib inline

%reload_ext autoreload

%autoreload 2

# General info for plotting

colors = {'ba': (232/255,164/255,29/255),

'rb': (0/255,152/255,205/255)}

labels = {'ba': 'BeerAdvocate', 'rb': 'RateBeer'}

# Check that folders exist

if not os.path.exists(data_folder + 'tmp'):
    os.makedirs(data_folder + 'tmp')

if not os.path.exists(data_folder + 'prepared'):
    os.makedirs(data_folder + 'prepared')

if not os.path.exists(fig_folder):
    os.makedirs(fig_folder)

```

# Prepare the data

We simply need to collect all the ratings of the beer *Lost Rhino*, standardize them, and sort them by date.

```

%%time

with open('../data/tmp/z_score_params_matched_ratings.json') as file:
    z_score_params = json.load(file)

lost_rhino = {'ba': [], 'rb': []}
lost_rhino_dates = {'ba': [], 'rb': []}
id_ = {'ba': 78599, 'rb': 166120}

# Go through RB and BA
for key in ['ba', 'rb']:
    print('Parsing {} reviews.'.format(key.upper()))
    # Get the iterator with the ratings of the matched beers
    gen = parse(data_folder + 'matched/ratings_{}.txt.gz'.format(key))
    # Go through the iterator
    for item in gen:
        if int(item['beer_id']) == id_[key]:
            # Get the year
            date = int(item['date'])
            year = str(datetime.fromtimestamp(date).year)
            # Get the rating
            rat = float(item['rating'])
            # Compute its zscore based on the year
            zs = (rat-z_score_params[key][year]['mean'])/z_score_params[key][year]['std']
            # Add date and zscore
            lost_rhino_dates[key].append(date)
            lost_rhino[key].append(zs)

for key in lost_rhino.keys():
    # Get the sorted dates from smallest to biggest
    idx = np.argsort(lost_rhino_dates[key])
    # Sort the zscores for lost_rhino
    lost_rhino_dates[key] = list(np.array(lost_rhino_dates[key])[idx])
    lost_rhino[key] = list(np.array(lost_rhino[key])[idx])

with open(data_folder + 'prepared/lost_rhino.json', 'w') as outfile:
    json.dump(lost_rhino, outfile)

```
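The preparation step standardizes each rating with the mean and standard deviation of the year in which it was given, then orders the z-scores by timestamp. The following is a minimal sketch of that idea using only the standard library. The `params` dictionary and the `raw` ratings are made-up values for illustration, not data from the matched files, and the sketch reads the year in UTC (an assumption; the notebook cell uses the local time zone).

```python
from datetime import datetime, timezone

# Hypothetical per-year parameters; the notebook loads the real ones from JSON.
params = {"2012": {"mean": 3.5, "std": 0.5},
          "2013": {"mean": 4.0, "std": 0.25}}

# Hypothetical (timestamp, rating) pairs, deliberately out of order.
raw = [(1370000000, 4.5), (1340000000, 3.0), (1345000000, 4.0)]


def standardize(timestamp, rating, params):
    """Return the z-score of a rating relative to the year it was given."""
    year = str(datetime.fromtimestamp(timestamp, tz=timezone.utc).year)
    p = params[year]
    return (rating - p["mean"]) / p["std"]


# Sorting the (timestamp, z-score) pairs orders them chronologically.
scored = sorted((ts, standardize(ts, r, params)) for ts, r in raw)
dates = [ts for ts, _ in scored]
zscores = [z for _, z in scored]
```

Sorting tuples whose first element is the timestamp plays the same role as the `np.argsort` call in the cell above.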

## Plot the ratings of the *Lost Rhino*

The first cell plots each rating and the second cell plots the running mean.
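The running mean at index $n$ is the average of the first $n$ standardized ratings. As a small illustration, here is a pure-Python sketch of the same quantity that the cells compute with `np.cumsum`. The function name `running_mean` and the example values are hypothetical.

```python
from itertools import accumulate


def running_mean(values):
    """Return the cumulative average after each element of values."""
    return [total / n
            for n, total in enumerate(accumulate(values), start=1)]


# Made-up standardized ratings, for illustration only.
example = [1.0, -1.0, 3.0, 1.0]
means = running_mean(example)
```

The first entry always equals the first rating, and later entries change less and less as more ratings accumulate.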

```

with open(data_folder + 'prepared/lost_rhino.json', 'r') as infile:
    ratings = json.load(infile)

plt.figure(figsize=(5, 3.5), frameon=False)

sns.set_context("paper")

sns.set(font_scale = 1.3)

sns.set_style("white", {

"font.family": "sans-serif",

"font.serif": ['Helvetica'],

"font.scale": 2

})

sns.set_style("ticks", {"xtick.major.size": 4,

"ytick.major.size": 4})

ax = plt.subplot(111)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

plt.plot([-10, 55], [0, 0], 'grey', linewidth=0.5)

for key in ['ba', 'rb']:
    rats = ratings[key]
    rmean = np.cumsum(rats)/np.array(range(1, len(rats)+1))
    ax.plot(list(range(1, len(rmean)+1)), rats, color=colors[key], label=labels[key], linewidth=2)

plt.xlim([-1, 55])

plt.ylim([-4, 2.5])

plt.yticks(list(range(-4, 3)), list(range(-4, 3)))

plt.ylabel('Rating (standardized)')

plt.xlabel('Rating index')

leg = plt.legend()

leg.get_frame().set_linewidth(0.0)

plt.savefig(fig_folder + 'timeseries_zscore_example.pdf', bbox_inches='tight')

with open(data_folder + 'prepared/lost_rhino.json', 'r') as infile:
    ratings = json.load(infile)

plt.figure(figsize=(5, 3.75), frameon=False)

sns.set_context("paper")

sns.set(font_scale = 1.28)

sns.set_style("white", {

"font.family": "sans-serif",

"font.serif": ['Helvetica'],

"font.scale": 2

})

sns.set_style("ticks", {"xtick.major.size": 4,

"ytick.major.size": 4})

ax = plt.subplot(111)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

plt.plot([-10, 55], [0, 0], 'grey', linewidth=0.5)

for key in ['ba', 'rb']:
    rats = ratings[key]
    rmean = np.cumsum(rats)/np.array(range(1, len(rats)+1))
    ax.plot(list(range(1, len(rmean)+1)), rmean, color=colors[key], label=labels[key], linewidth=2)

plt.ylabel('Cum. avg rating (standardized)')

plt.xlabel('Rating index')

plt.xlim([-1, 55])

plt.ylim([-4, 2.5])

plt.yticks(list(range(-4, 3)), list(range(-4, 3)))

leg = plt.legend(loc=4)

leg.get_frame().set_linewidth(0.0)

plt.tight_layout()

plt.savefig(fig_folder + 'timeseries_avg_zscore_example.pdf', bbox_inches='tight')

```

|

github_jupyter

|

import os

os.chdir('..')

# Helper functions

from python.helpers import parse

# Libraries for preparing data

import json

import gzip

import numpy as np

import pandas as pd

from datetime import datetime

# Libraries for plotting

import seaborn as sns

import matplotlib.pyplot as plt

from matplotlib.ticker import FuncFormatter

import matplotlib

# Folders

data_folder = '../data/'

fig_folder = '../figures/'

# For the Python notebook

%matplotlib inline

%reload_ext autoreload

%autoreload 2

# General info for plotting

colors = {'ba': (232/255,164/255,29/255),

'rb': (0/255,152/255,205/255)}

labels = {'ba': 'BeerAdvocate', 'rb': 'RateBeer'}

# Check that folders exist

if not os.path.exists(data_folder + 'tmp'):

os.makedirs(data_folder + 'tmp')

if not os.path.exists(data_folder + 'prepared'):

os.makedirs(data_folder + 'prepared')

if not os.path.exists(fig_folder):

os.makedirs(fig_folder)

%%time

with open('../data/tmp/z_score_params_matched_ratings.json') as file:

z_score_params = json.load(file)

lost_rhino = {'ba': [], 'rb': []}

lost_rhino_dates = {'ba': [], 'rb': []}

id_ = {'ba': 78599, 'rb': 166120}

# Go through RB and BA

for key in ['ba', 'rb']:

print('Parsing {} reviews.'.format(key.upper()))

# Get the iterator with the ratings of the matched beers

gen = parse(data_folder + 'matched/ratings_{}.txt.gz'.format(key))

# Go through the iterator

for item in gen:

if int(item['beer_id']) == id_[key]:

# Get the year

date = int(item['date'])

year = str(datetime.fromtimestamp(date).year)

# Get the rating

rat = float(item['rating'])

# Compute its zscore based on the year

zs = (rat-z_score_params[key][year]['mean'])/z_score_params[key][year]['std']

# Add date and zscore

lost_rhino_dates[key].append(date)

lost_rhino[key].append(zs)

for key in lost_rhino.keys():

# Get the sorted dates from smallest to biggest

idx = np.argsort(lost_rhino_dates[key])

# Sort the zscores for lost_rhino

lost_rhino_dates[key] = list(np.array(lost_rhino_dates[key])[idx])

lost_rhino[key] = list(np.array(lost_rhino[key])[idx])

with open(data_folder + 'prepared/lost_rhino.json', 'w') as outfile:

json.dump(lost_rhino, outfile)

with open(data_folder + 'prepared/lost_rhino.json', 'r') as infile:

ratings = json.load(infile)

plt.figure(figsize=(5, 3.5), frameon=False)

sns.set_context("paper")

sns.set(font_scale = 1.3)

sns.set_style("white", {

"font.family": "sans-serif",

"font.serif": ['Helvetica'],

"font.scale": 2

})

sns.set_style("ticks", {"xtick.major.size": 4,

"ytick.major.size": 4})

ax = plt.subplot(111)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

plt.plot([-10, 55], [0, 0], 'grey', linewidth=0.5)

for key in ['ba', 'rb']:

rats = ratings[key]

rmean = np.cumsum(rats)/np.array(range(1, len(rats)+1))

ax.plot(list(range(1, len(rmean)+1)), rats, color=colors[key], label=labels[key], linewidth=2)

plt.xlim([-1, 55])

plt.ylim([-4, 2.5])

plt.yticks(list(range(-4, 3)), list(range(-4, 3)))

plt.ylabel('Rating (standardized)')

plt.xlabel('Rating index')

leg = plt.legend()

leg.get_frame().set_linewidth(0.0)

plt.savefig(fig_folder + 'timeseries_zscore_example.pdf', bbox_inches='tight')

with open(data_folder + 'prepared/lost_rhino.json', 'r') as infile:

ratings = json.load(infile)

plt.figure(figsize=(5, 3.75), frameon=False)

sns.set_context("paper")

sns.set(font_scale = 1.28)

sns.set_style("white", {

"font.family": "sans-serif",

"font.serif": ['Helvetica'],

"font.scale": 2

})

sns.set_style("ticks", {"xtick.major.size": 4,

"ytick.major.size": 4})

ax = plt.subplot(111)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

plt.plot([-10, 55], [0, 0], 'grey', linewidth=0.5)

for key in ['ba', 'rb']:

rats = ratings[key]

rmean = np.cumsum(rats)/np.array(range(1, len(rats)+1))

ax.plot(list(range(1, len(rmean)+1)), rmean, color=colors[key], label=labels[key], linewidth=2)

plt.ylabel('Cum. avg rating (standardized)')

plt.xlabel('Rating index')

plt.xlim([-1, 55])

plt.ylim([-4, 2.5])

plt.yticks(list(range(-4, 3)), list(range(-4, 3)))

leg = plt.legend(loc=4)

leg.get_frame().set_linewidth(0.0)

plt.tight_layout()

plt.savefig(fig_folder + 'timeseries_avg_zscore_example.pdf', bbox_inches='tight')

| 0.457379 | 0.827375 |

# Interactive data visualizations

Jupyter Notebook has support for many kinds of interactive outputs, including

the ipywidgets ecosystem as well as many interactive visualization libraries.

These are supported in Jupyter Book, with the right configuration.

This page has a few common examples.

First off, we'll download a little bit of data

and show its structure:

```

import plotly.express as px

data = px.data.iris()

data.head()

```

## Altair

Interactive outputs will work under the assumption that the outputs they produce have

self-contained HTML that works without requiring any external dependencies to load.

See the [`Altair` installation instructions](https://altair-viz.github.io/getting_started/installation.html#installation)

to get set up with Altair. Below is some example output.

```

import altair as alt

alt.Chart(data=data).mark_point().encode(

x="sepal_width",

y="sepal_length",

color="species",

size='sepal_length'

)

```

## Plotly

Plotly is another interactive plotting library that provides a high-level API for

visualization. See the [Plotly JupyterLab documentation](https://plotly.com/python/getting-started/#JupyterLab-Support-(Python-3.5+))

to get started with Plotly in the notebook.

```{margin}

Plotly uses [renderers to output different kinds of information](https://plotly.com/python/renderers/)

when you display a plot. Experiment with renderers to get the output you want.

```

Below is some example output.

:::{important}

For these plots to show, it may be necessary to load `require.js`, in your `_config.yml`:

```yaml

sphinx:
  config:
    html_js_files:
    - https://cdnjs.cloudflare.com/ajax/libs/require.js/2.3.4/require.min.js

```

:::

```

import plotly.io as pio

import plotly.express as px

import plotly.offline as py

df = px.data.iris()

fig = px.scatter(df, x="sepal_width", y="sepal_length", color="species", size="sepal_length")

fig

```

## Bokeh

Bokeh provides several options for interactive visualizations, and is part of the PyViz ecosystem. See

[the Bokeh with Jupyter documentation](https://docs.bokeh.org/en/latest/docs/user_guide/jupyter.html#userguide-jupyter) to

get started.

Below is some example output. First we'll initialize Bokeh with `output_notebook()`.

This needs to be in a separate cell to give the JavaScript time to load.

```

from bokeh.plotting import figure, show, output_notebook

output_notebook()

```

Now we'll make our plot.

```

p = figure()

p.circle(data["sepal_width"], data["sepal_length"], fill_color=data["species"], size=data["sepal_length"])

show(p)

```

## ipywidgets

You may also run code for Jupyter Widgets in your document, and the interactive HTML

outputs will embed themselves in your site. See [the ipywidgets documentation](https://ipywidgets.readthedocs.io/en/latest/user_install.html)

for how to get set up in your own environment.

```{admonition} Widgets often need a kernel

Note that `ipywidgets` tend to behave differently from other interactive visualization libraries. They

interact both with Javascript, and with Python. Some functionality in `ipywidgets` may not

work in default Jupyter Book pages (because no Python kernel is running). You may be able to

get around this with [tools for remote kernels, like thebe](https://thebelab.readthedocs.org).

```

Here are some simple widget elements rendered below.

```

import ipywidgets as widgets

widgets.IntSlider(

value=7,

min=0,

max=10,

step=1,

description='Test:',

disabled=False,

continuous_update=False,

orientation='horizontal',

readout=True,

readout_format='d'

)

tab_contents = ['P0', 'P1', 'P2', 'P3', 'P4']

children = [widgets.Text(description=name) for name in tab_contents]

tab = widgets.Tab()

tab.children = children

for ii in range(len(children)):
    tab.set_title(ii, f"tab_{ii}")

tab

```

You can find [a list of existing Jupyter Widgets](https://ipywidgets.readthedocs.io/en/latest/examples/Widget%20List.html)

in the jupyter-widgets documentation.

|

github_jupyter

|


| 0.746786 | 0.952706 |

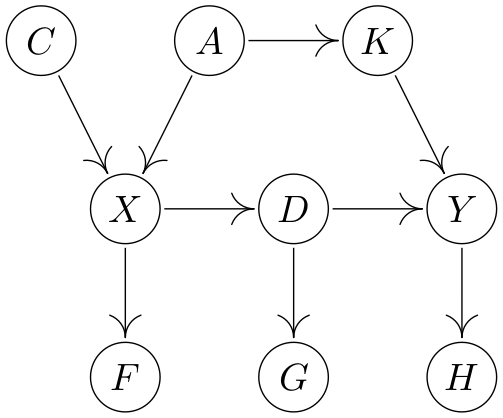

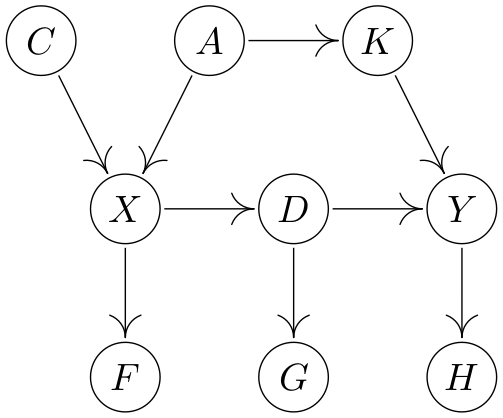

# Causality Tutorial Exercises – Python

Contributors: Rune Christiansen, Jonas Peters, Niklas Pfister, Sorawit Saengkyongam, Sebastian Weichwald.

The MIT License applies; copyright is with the authors.

Some exercises are adapted from "Elements of Causal Inference: Foundations and Learning Algorithms" by J. Peters, D. Janzing and B. Schölkopf.

# Exercise 1 – Structural Causal Model

Let's first draw a sample from an SCM:

```

import numpy as np

# set seed

np.random.seed(1)

rnorm = lambda n: np.random.normal(size=n)

n = 200

C = rnorm(n)

A = .8 * rnorm(n)

K = A + .1 * rnorm(n)

X = C - 2 * A + .2 * rnorm(n)

F = 3 * X + .8 * rnorm(n)

D = -2 * X + .5 * rnorm(n)

G = D + .5 * rnorm(n)

Y = 2 * K - D + .2 * rnorm(n)

H = .5 * Y + .1 * rnorm(n)

data = np.c_[C, A, K, X, F, D, G, Y, H]

```

__a)__

What is the graph corresponding to the above SCM? (Draw it on paper.)

Take a pair of variables and think about whether you expect this pair to be dependent (at this stage you can only guess; later you will have tools to know). Check empirically.

__b)__

Generate a sample of size 300 from the interventional distribution $P_{\mathrm{do}(X=\mathcal{N}(2, 1))}$

and store the data matrix as `data_int`.

```

```
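To illustrate what sampling from an interventional distribution means, here is a minimal sketch on a separate, hypothetical SCM ($Z \to X \to Y$ with $Z \to Y$), not the one from this exercise. The function name `sample_toy_scm` and all coefficients are assumptions made for illustration. The intervention replaces only the assignment for $X$; every other assignment is left as it is.

```python
import random
import statistics


def sample_toy_scm(n, intervene_on_x=False, seed=0):
    """Draw n samples from a hypothetical SCM  Z -> X -> Y  with  Z -> Y.

    If intervene_on_x is True, the assignment for X is replaced by
    X := N(2, 1); the assignments for Z and Y stay untouched.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.gauss(0, 1)
        if intervene_on_x:
            x = rng.gauss(2, 1)  # do(X := N(2, 1)): Z no longer enters X
        else:
            x = z + 0.5 * rng.gauss(0, 1)
        y = 2 * x - z + 0.5 * rng.gauss(0, 1)
        rows.append((z, x, y))
    return rows


obs = sample_toy_scm(2000, intervene_on_x=False, seed=1)
itv = sample_toy_scm(2000, intervene_on_x=True, seed=2)

mean_y_obs = statistics.fmean(y for _, _, y in obs)
mean_y_itv = statistics.fmean(y for _, _, y in itv)
print(f"mean of Y, observational:  {mean_y_obs:.2f}")
print(f"mean of Y, interventional: {mean_y_itv:.2f}")
```

In this toy model the marginal of $Y$ shifts under the intervention because $X$ is an ancestor of $Y$; whether the same holds in the exercise depends on the graph you drew in a).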

__c)__

Do you expect the marginal distribution of $Y$ to be different in both samples?


__d)__

Do you expect the joint distribution of $(A, Y)$ to be different in both samples?


__e)__

Check your answers to c) and d) empirically.

```

```

# Exercise 2 – Adjusting

Suppose we are given a fixed DAG (like the one above).

a) What are valid adjustment sets (VAS) used for?

b) Assume we want to find a VAS for the causal effect from $X$ to $Y$.

What are general recipes (plural 😉) for constructing VASs (no proof)?

Which sets are VAS in the DAG above?

c) The following code samples from an SCM. Perform linear regressions using different VAS and compare the regression coefficient against the causal effect from $X$ to $Y$.

```

import numpy as np

# set seed

np.random.seed(1)

rnorm = lambda n: np.random.normal(size=n)

n = 200

C = rnorm(n)

A = .8 * rnorm(n)

K = A + .1 * rnorm(n)

X = C - 2 * A + .2 * rnorm(n)

F = 3 * X + .8 * rnorm(n)

D = -2 * X + .5 * rnorm(n)

G = D + .5 * rnorm(n)

Y = 2 * K - D + .2 * rnorm(n)

H = .5 * Y + .1 * rnorm(n)

data = np.c_[C, A, K, X, F, D, G, Y, H]

```
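To make the idea behind part c) concrete without solving it, the following sketch uses a different, hypothetical SCM with a single confounder ($Z \to X$, $Z \to Y$, $X \to Y$ with causal effect 2). The helper `ols_no_intercept` and all coefficients are assumptions for illustration; it solves the normal equations by hand so that only the standard library is needed.

```python
import random


def ols_no_intercept(columns, y):
    """Least-squares coefficients for y ~ columns (one or two regressors, no intercept)."""
    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    if len(columns) == 1:
        (a,) = columns
        return [dot(a, y) / dot(a, a)]
    a, b = columns
    # Solve the 2x2 normal equations with Cramer's rule.
    saa, sab, sbb = dot(a, a), dot(a, b), dot(b, b)
    say, sby = dot(a, y), dot(b, y)
    det = saa * sbb - sab * sab
    return [(say * sbb - sab * sby) / det, (saa * sby - sab * say) / det]


rng = random.Random(1)
n = 5000
Z = [rng.gauss(0, 1) for _ in range(n)]                      # confounder
X = [z + 0.5 * rng.gauss(0, 1) for z in Z]                   # Z -> X
Y = [2 * x + 3 * z + 0.5 * rng.gauss(0, 1) for x, z in zip(X, Z)]  # X -> Y, Z -> Y

coef_unadjusted = ols_no_intercept([X], Y)[0]     # regress Y on X only
coef_adjusted = ols_no_intercept([X, Z], Y)[0]    # regress Y on X and Z
print(f"coefficient of X without adjustment: {coef_unadjusted:.2f}")
print(f"coefficient of X adjusting for Z:    {coef_adjusted:.2f}")
```

In this toy model $\{Z\}$ is a valid adjustment set, so the adjusted coefficient should be close to the causal effect 2, while the unadjusted one also picks up the path through $Z$.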

d) Why could it be interesting to have several options for choosing a VAS?

e) If you indeed have access to several VASs, what would you do?

# Exercise 3 – Independence-based Causal Structure Learning

__a)__

Assume $P^{X,Y,Z}$ is Markov and faithful wrt. $G$. Assume all (!) conditional independences are

$$

\newcommand{\indep}{{\,⫫\,}}

\newcommand{\dep}{\not{}\!\!\indep}

$$

$$X \indep Z \mid \emptyset$$

(plus symmetric statements). What is $G$?

__b)__

Assume $P^{W,X,Y,Z}$ is Markov and faithful wrt. $G$. Assume all (!) conditional independences are

$$\begin{aligned}

(Y,Z) &\indep W \mid \emptyset \\

W &\indep Y \mid (X,Z) \\

(X,W) &\indep Y \mid Z

\end{aligned}

$$

(plus symmetric statements). What is $G$?

# Exercise 4 – Additive Noise Models

Set-up required packages:

```

# set up – not needed when run on mybinder

# if needed (colab), change False to True and run cell

if False:
    !mkdir ../data/
    !wget https://raw.githubusercontent.com/sweichwald/causality-tutorial-exercises/main/data/Exercise-ANM.csv -q -O ../data/Exercise-ANM.csv
    !wget https://raw.githubusercontent.com/sweichwald/causality-tutorial-exercises/main/python/kerpy/__init__.py -q -O kerpy.py
    !pip install pygam

from kerpy import hsic

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from pygam import GAM, s

```

Let's load and plot a real data set:

```

data = pd.read_csv('../data/Exercise-ANM.csv')

plt.scatter(data["X"].values, data["Y"].values, s=2.);

```

__a)__

Do you believe that $X \to Y$ or that $X \gets Y$? Why?


$$

\newcommand{\indep}{{\,⫫\,}}

\newcommand{\dep}{\not{}\!\!\indep}

$$

__b)__

Let us now try to get a more statistical answer. We have heard that we cannot

have

$$Y = f(X) + N_Y,\ N_Y \indep X$$

and

$$X = g(Y) + N_X,\ N_X \indep Y$$

at the same time.

Given a data set over $(X,Y)$,

we now want to decide between the two models.

Come up with a method to do so.

Hints:

* `GAM(s(0)).fit(A, B).deviance_residuals(A, B)` provides residuals when regressing $B$ on $A$.

* `hsic(a, b)` can be used as an independence test (here, `a` and `b` are $n \times 1$ numpy arrays).

```

```

__c)__

Assume that the error terms are Gaussian with zero mean and variances

$\sigma_X^2$ and $\sigma_Y^2$, respectively.

The maximized log-likelihood for DAG $G$ is then, up to an additive constant, proportional to

$-\log(\mathrm{var}(R^G_X)) - \log(\mathrm{var}(R^G_Y))$,

where $R^G_X$ and $R^G_Y$ are the residuals obtained from regressing $X$ and $Y$ on

their parents in $G$, respectively (no proof).

Find the maximum likelihood solution.
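The statement given without proof can be made plausible with a short calculation. As a sketch, write $r_{j,i}$ for the $i$-th residual of variable $j \in \{X, Y\}$ in $G$ and $n$ for the sample size (both symbols are introduced here only for illustration). With independent Gaussian errors the log-likelihood is

$$
\ell(\sigma_X^2, \sigma_Y^2) = \sum_{j \in \{X, Y\}} \left( -\frac{n}{2} \log(2\pi\sigma_j^2) - \frac{1}{2\sigma_j^2} \sum_{i=1}^{n} r_{j,i}^2 \right).
$$

Maximizing over $\sigma_j^2$ gives $\hat\sigma_j^2 = \frac{1}{n}\sum_{i} r_{j,i}^2$, which is $\mathrm{var}(R^G_j)$ when the residuals have mean zero. Plugging this back in yields

$$
\max_{\sigma_X^2, \sigma_Y^2} \ell = -\frac{n}{2} \left( \log \mathrm{var}(R^G_X) + \log \mathrm{var}(R^G_Y) \right) - n \left( 1 + \log 2\pi \right),
$$

so comparing two DAGs only requires comparing $-\log(\mathrm{var}(R^G_X)) - \log(\mathrm{var}(R^G_Y))$.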

```

```

# Exercise 5 – Invariant Causal Prediction

Set-up required packages and data:

```

# set up – not needed when run on mybinder

# if needed (colab), change False to True and run cell

if False:
    !mkdir ../data/
    !wget https://raw.githubusercontent.com/sweichwald/causality-tutorial-exercises/main/data/Exercise-ICP.csv -q -O ../data/Exercise-ICP.csv

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

import statsmodels.api as sm

```

__a)__

Generate some observational and interventional data:

```

# Generate n=1000 observations from the observational distribution

na = 1000

Xa = np.random.normal(size=na)

Ya = 1.5*Xa + np.random.normal(size=na)

# Generate n=1000 observations from an interventional distribution

nb = 1000

Xb = np.random.normal(loc=2, scale=1, size=nb)

Yb = 1.5*Xb + np.random.normal(size=nb)

# plot Y vs X

fig, ax = plt.subplots(figsize=(7,5))

ax.scatter(Xa, Ya, label='observational', marker='o', alpha=0.6)

ax.scatter(Xb, Yb, label='interventional', marker ='^', alpha=0.6)

ax.legend();

```

Look at the above plot. Is the predictor $\{X\}$ an invariant set, that is (roughly speaking), does $Y \mid X = x$ have the same distribution in the orange and blue data?
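Besides looking at the plot, one rough numerical way to probe this question is to fit a line in each environment separately and compare intercept, slope, and residual variance. The sketch below regenerates data with the same structure as above using only the standard library; the helper `fit_line` and the variable names are assumptions for illustration, and this is not a formal invariance test.

```python
import random


def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (a, b, residual variance)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    res_var = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    return a, b, res_var


rng = random.Random(0)
n = 1000
# observational environment: X ~ N(0, 1)
x_obs = [rng.gauss(0, 1) for _ in range(n)]
y_obs = [1.5 * x + rng.gauss(0, 1) for x in x_obs]
# interventional environment: X ~ N(2, 1), same mechanism for Y
x_int = [rng.gauss(2, 1) for _ in range(n)]
y_int = [1.5 * x + rng.gauss(0, 1) for x in x_int]

fit_obs = fit_line(x_obs, y_obs)
fit_int = fit_line(x_int, y_int)
print("observational  (intercept, slope, residual variance):", fit_obs)
print("interventional (intercept, slope, residual variance):", fit_int)
```

If the three numbers roughly agree across environments, that is consistent with $Y \mid X = x$ being the same in both data sets.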


__b)__

We now consider data over a response and three covariates $X1, X2$, and $X3$

and try to infer $\mathrm{pa}(Y)$. To do so, we need to find all sets for which this

invariance is satisfied.

```

# load data

data = pd.read_csv('../data/Exercise-ICP.csv')

data['env'] = np.concatenate([np.repeat('observational', 140), np.repeat('interventional', 80)])

# pairplot

sns.pairplot(data, hue='env', height=2, plot_kws={'alpha':0.6});

# The code below plots the residuals versus fitted values for all sets of

# predictors.

# extract response and predictors

Y = data['Y'].to_numpy()

X = data[['X1','X2','X3']].to_numpy()

# get environment indicator

obs_ind = data[data['env'] == 'observational'].index

int_ind = data[data['env'] == 'interventional'].index

# create all sets

all_sets = [(0,), (1,), (2,), (0,1), (0,2), (1,2), (0,1,2)]

# label each set

set_labels = ['X1', 'X2', 'X3', 'X1,X2', 'X1,X3', 'X2,X3', 'X1,X2,X3']

# fit OLS and store fitted values and residuals for each set

fitted = []

resid = []

for s in all_sets:
    model = sm.OLS(Y, X[:, s]).fit()
    fitted += [model.fittedvalues]
    resid += [model.resid]

# plotting function

def plot_fitted_resid(fv, res, ax, title):
    ax.scatter(fv[obs_ind], res[obs_ind], label='observational', marker='o', alpha=0.6)
    ax.scatter(fv[int_ind], res[int_ind], label='interventional', marker ='^', alpha=0.6)
    ax.legend()
    ax.set_xlabel('fitted values')
    ax.set_ylabel('residuals')
    ax.set_title(title)

# creating plots

fig, axes = plt.subplots(4, 2, figsize=(7,14))

# plot result for the empty set predictor

ax0 = axes[0,0]

ax0.scatter(obs_ind, Y[obs_ind], label='observational', marker='o', alpha=0.6)

ax0.scatter(int_ind, Y[int_ind], label='interventional', marker ='^', alpha=0.6)

ax0.legend()

ax0.set_xlabel('index')

ax0.set_ylabel('Y')

ax0.set_title('empty set')

# plot result for the other sets

for i, ax in enumerate(axes.flatten()[1:]):
    plot_fitted_resid(fitted[i], resid[i], ax, set_labels[i])

# make tight layout

plt.tight_layout()

```

Which of the sets are invariant? (There are two plots with four scatter plots each.)


__c)__

What is your best guess for $\mathrm{pa}(Y)$?


__d) (optional)__

Use the function ICP to check your result.

```

# set up – not needed when run on mybinder

# if needed (colab), change False to True and run cell

if False:
    !pip install causalicp

import causalicp as icp

```

|

github_jupyter

|


| 0.6973 | 0.90657 |

```

import os
import sys
from flask import Flask, request, session, g, redirect, url_for, abort, render_template
from flaskext.mysql import MySQL
from flask_wtf import FlaskForm
from wtforms.fields.html5 import DateField
from wtforms import SelectField
from datetime import date
import time
import gmplot
import pandas

app = Flask(__name__)
app.secret_key = 'A0Zr98slkjdf984jnflskj_sdkfjhT'

mysql = MySQL()
app.config['MYSQL_DATABASE_USER'] = 'root'
app.config['MYSQL_DATABASE_PASSWORD'] = '1234'
app.config['MYSQL_DATABASE_DB'] = 'CREDIT'
app.config['MYSQL_DATABASE_HOST'] = 'localhost'
mysql.init_app(app)

conn = mysql.connect()
cursor = conn.cursor()

class AnalyticsForm(FlaskForm):
    attributes = SelectField('Data Attributes', choices=[('Agency', 'Agency'),
        ('Borough', 'Borough'), ('Complaint Type', 'Complaint Type')])

@app.route("/")
def home():
    #session["data_loaded"] = True
    return render_template('home.html', links=get_homepage_links())

def get_df_data():
    query = "select unique_key, agency, complaint_type, borough from incidents;"
    cursor.execute(query)
    data = cursor.fetchall()
    df = pandas.DataFrame(data=list(data),columns=['Unique_key','Agency','Complaint Type','Borough'])
    return df

from flask import Flask
from flask import Flask, request, render_template
import os
import sys
from flask import Flask, request, session, g, redirect, url_for, abort, render_template
from flaskext.mysql import MySQL
from flask_wtf import FlaskForm
from wtforms.fields.html5 import DateField
from wtforms import SelectField
from datetime import date
import time
import gmplot
import pandas

app = Flask(__name__)
app.secret_key = 'A0Zr98slkjdf984jnflskj_sdkfjhT'

mysql = MySQL()
app.config['MYSQL_DATABASE_USER'] = 'root'
app.config['MYSQL_DATABASE_PASSWORD'] = '1234'
app.config['MYSQL_DATABASE_DB'] = 'CREDIT'
app.config['MYSQL_DATABASE_HOST'] = 'localhost'
mysql.init_app(app)

conn = mysql.connect()
cursor = conn.cursor()

class AnalyticsForm(FlaskForm):
    attributes = SelectField('Data Attributes', choices=[('SEX', 'SEX'), ('AGE', 'AGE'), ('EDUCATION', 'EDUCATION')])

def get_homepage_links():
    return [{"href": url_for('analytics'), "label":"Plot"}]

def get_df_data():
    query = "select ID, AGE, SEX, EDUCATION,default_payment from credit_card;"
    cursor.execute(query)
    data = cursor.fetchall()
    df = pandas.DataFrame(data=list(data),columns=['ID', 'AGE', 'SEX', 'EDUCATION','default_payment'])
    return df

@app.route("/")
def home():
    #session["data_loaded"] = True
    return render_template('home.html', links=get_homepage_links())

@app.route('/analytics',methods=['GET','POST'])
def analytics():
    form = AnalyticsForm()
    if form.validate_on_submit():
        df = get_df_data()
        column = request.form.get('attributes')
        group = df.groupby([column,'default_payment'])
        ax = group.size().unstack().plot(kind='bar')
        fig = ax.get_figure()
        filename = 'static/charts/group_by_figure.png' #_'+str(int(time.time()))+".png"
        fig.savefig(filename)
        return render_template('analytics.html', chart_src="/"+filename)
    return render_template('analyticsparams.html', form=form)

if __name__ == "__main__":
    app.run()

```

|

github_jupyter

|


| 0.155174 | 0.071819 |

```

import urllib.parse

from IPython.display import Markdown as md

### change to reflect your notebook

_nb_loc = "07_training/07b_gpumax.ipynb"

_nb_title = "GPU utilization"

_icons=["https://raw.githubusercontent.com/GoogleCloudPlatform/practical-ml-vision-book/master/logo-cloud.png", "https://www.tensorflow.org/images/colab_logo_32px.png", "https://www.tensorflow.org/images/GitHub-Mark-32px.png", "https://www.tensorflow.org/images/download_logo_32px.png"]

_links=["https://console.cloud.google.com/vertex-ai/workbench/deploy-notebook?" + urllib.parse.urlencode({"name": _nb_title, "download_url": "https://github.com/takumiohym/practical-ml-vision-book-ja/raw/master/"+_nb_loc}), "https://colab.research.google.com/github/takumiohym/practical-ml-vision-book-ja/blob/master/{0}".format(_nb_loc), "https://github.com/takumiohym/practical-ml-vision-book-ja/blob/master/{0}".format(_nb_loc), "https://raw.githubusercontent.com/takumiohym/practical-ml-vision-book-ja/master/{0}".format(_nb_loc)]

md("""<table class="tfo-notebook-buttons" align="left"><td><a target="_blank" href="{0}"><img src="{4}"/>Run in Vertex AI Workbench</a></td><td><a target="_blank" href="{1}"><img src="{5}" />Run in Google Colab</a></td><td><a target="_blank" href="{2}"><img src="{6}" />View source on GitHub</a></td><td><a href="{3}"><img src="{7}" />Download notebook</a></td></table><br/><br/>""".format(_links[0], _links[1], _links[2], _links[3], _icons[0], _icons[1], _icons[2], _icons[3]))

```

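# GPU utilization

This notebook shows how to take advantage of TensorFlow's optimizations for GPUs.

## Enable the GPU and set up helper functions

This notebook, and almost every other notebook in this repository, runs faster if you are using a GPU.

On Colab:

- Go to Edit → Notebook settings
- Select GPU from the Hardware accelerator drop-down

On Cloud AI Platform Notebooks:

- Go to https://console.cloud.google.com/ai-platform/notebooks
- Create an instance with a GPU, or select your instance and add a GPU

Next, we confirm that we can connect to the GPU with TensorFlow: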

```

import tensorflow as tf

print('TensorFlow version ' + tf.version.VERSION)
print('Built with GPU support? ' + ('Yes!' if tf.test.is_built_with_cuda() else 'Noooo!'))
print('There are {} GPUs'.format(len(tf.config.experimental.list_physical_devices("GPU"))))

device_name = tf.test.gpu_device_name()
if device_name != '/device:GPU:0':
    raise SystemError('GPU device not found')
print('Found GPU at: {}'.format(device_name))

```

## Ingest code

```
%%writefile input.txt
gs://practical-ml-vision-book/images/california_fire1.jpg
gs://practical-ml-vision-book/images/california_fire2.jpg
```

```
import matplotlib.pylab as plt
import numpy as np
import tensorflow as tf

def read_jpeg(filename):
    img = tf.io.read_file(filename)
    img = tf.image.decode_jpeg(img, channels=3)
    img = tf.image.convert_image_dtype(img, tf.float32)
    img = tf.reshape(img, [338, 600, 3])
    return img

ds = tf.data.TextLineDataset('input.txt').map(read_jpeg)
f, ax = plt.subplots(1, 2, figsize=(15,10))
for idx, img in enumerate(ds):
    ax[idx].imshow( img.numpy() );
```

## Adding a map function

Suppose we want to apply a custom formula to convert the images.
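Written out, the formula implemented by the `to_grayscale` function in this section is the following, where $R$, $G$, $B$ are the channel values in $[0, 1]$. The constants are read off the code; they coincide with the Rec. 709 luma weights and the sRGB transfer function.

$$
C_{\mathrm{linear}} = 0.2126\,R + 0.7152\,G + 0.0722\,B
$$

$$
\mathrm{gray} =
\begin{cases}
12.92\,C_{\mathrm{linear}} & \text{if } C_{\mathrm{linear}} \le 0.0031308 \\
1.055\,C_{\mathrm{linear}}^{1/2.4} - 0.055 & \text{otherwise}
\end{cases}
$$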

```

def to_grayscale(img):
    red = img[:, :, 0]
    green = img[:, :, 1]
    blue = img[:, :, 2]
    c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    print(gray.shape)
    return gray

ds = tf.data.TextLineDataset('input.txt').map(read_jpeg).map(to_grayscale)
f, ax = plt.subplots(1, 2, figsize=(15,10))
for idx, img in enumerate(ds):
    im = ax[idx].imshow( img.numpy() , interpolation='none');
    if idx == 1:
        f.colorbar(im, fraction=0.028, pad=0.04)

```

### 1. Iterating through the image

(Don't do this.)

```
# This function is not accelerated. At all.
def to_grayscale(img):
    rows, cols, _ = img.shape
    result = np.zeros([rows, cols], dtype=np.float32)
    for row in range(rows):
        for col in range(cols):
            red = img[row][col][0]
            green = img[row][col][1]
            blue = img[row][col][2]
            c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
            if c_linear > 0.0031308:
                result[row][col] = 1.055 * pow(c_linear, 1/2.4) - 0.055
            else:
                result[row][col] = 12.92*c_linear
    return result
```

```
%%time
ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg)
overall = tf.constant([0.], dtype=tf.float32)
count = 0
for img in ds:
    # Notice that we have to call .numpy() to move the data outside TF Graph
    gray = to_grayscale(img.numpy())
    # This moves the data back into the graph
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1
print(overall/count)
```

### 2. Pyfunc

If you need to iterate, or to call Python-only functionality (such as time or json), and still need to use map(), you can use py_function.

The data is still moved out of the graph, the work is done, and the data is moved back into the graph. So it brings no gain in efficiency.

```
def to_grayscale_numpy(img):
    # the numpy happens here
    img = img.numpy()
    rows, cols, _ = img.shape
    result = np.zeros([rows, cols], dtype=np.float32)
    for row in range(rows):
        for col in range(cols):
            red = img[row][col][0]
            green = img[row][col][1]
            blue = img[row][col][2]
            c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
            if c_linear > 0.0031308:
                result[row][col] = 1.055 * pow(c_linear, 1/2.4) - 0.055
            else:
                result[row][col] = 12.92*c_linear
    # the convert back happens here
    return tf.convert_to_tensor(result)

def to_grayscale(img):
    return tf.py_function(to_grayscale_numpy, [img], tf.float32)
```

```
%%time
ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)
overall = tf.constant([0.], dtype=tf.float32)
count = 0
for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1
print(overall/count)
```

### 3. Use TensorFlow slicing and tf.where

This is about 10x faster than iterating.

```
# All in GPU
def to_grayscale(img):
    # TensorFlow slicing functionality
    red = img[:, :, 0]
    green = img[:, :, 1]
    blue = img[:, :, 2]
    # All these are actually tf.mul(), tf.add(), etc.
    c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
    # Use tf.cond and tf.where for if-then statements
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    return gray
```

```
%%time
ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)
overall = tf.constant([0.])
count = 0
for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1
print(overall/count)
```

### 4. Use matrix math and tf.where

This is about 3x faster than slicing.

```
def to_grayscale(img):
    wt = tf.constant([[0.2126], [0.7152], [0.0722]]) # 3x1 matrix
    c_linear = tf.matmul(img, wt) # (ht, wd, 3) x (3, 1) -> (ht, wd, 1)
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    return gray
```

```
%%time
ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)
overall = tf.constant([0.])
count = 0
for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1
print(overall/count)
```

### 5. Batching

Fully vectorize the operation so that it works on batches of images.

```
class Grayscale(tf.keras.layers.Layer):
    def __init__(self, **kwargs):
        # forward keyword arguments such as input_shape to the base Layer
        super(Grayscale, self).__init__(**kwargs)

    def call(self, img):
        wt = tf.constant([[0.2126], [0.7152], [0.0722]]) # 3x1 matrix
        c_linear = tf.matmul(img, wt) # (N, ht, wd, 3) x (3, 1) -> (N, ht, wd, 1)
        gray = tf.where(c_linear > 0.0031308,
                        1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                        12.92*c_linear)
        return gray # (N, ht, wd, 1)

model = tf.keras.Sequential([
    Grayscale(input_shape=(338, 600, 3)),  # must match the shape produced by read_jpeg
    tf.keras.layers.Lambda(lambda gray: tf.reduce_mean(gray, axis=[1, 2])) # note axis change
])
```

```
%%time
ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).batch(5)
overall = tf.constant([0.])
count = 0
for batch in ds:
    bm = model(batch)
    overall += tf.reduce_sum(bm)
    count += len(bm)
print(overall/count)
```

## Results

When we ran it, these were the timings we got:

| Method | CPU time | Wall time |
| ---------------------- | ----------- | ------------ |
| Iterate | 39.6 s | 41.1 s |
| Pyfunc | 39.7 s | 41.1 s |
| Slicing | 4.44 s | 3.07 s |
| Matmul | 1.22 s | 2.29 s |
| Batch | 1.11 s | 2.13 s |
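To put the table in perspective, the speedups relative to plain iteration can be computed directly from the reported numbers. This is only arithmetic on the timings above; the dictionary keys are labels chosen here for illustration.

```python
# (CPU seconds, wall-clock seconds) as reported in the results table
timings = {
    "iterate": (39.6, 41.1),
    "py_function": (39.7, 41.1),
    "slicing": (4.44, 3.07),
    "matmul": (1.22, 2.29),
    "batch": (1.11, 2.13),
}

baseline_cpu, baseline_wall = timings["iterate"]

# Speedup factor of each method relative to the iteration baseline
speedups = {
    name: (baseline_cpu / cpu, baseline_wall / wall)
    for name, (cpu, wall) in timings.items()
}

for name, (cpu_x, wall_x) in speedups.items():
    print(f"{name:12s} CPU speedup {cpu_x:5.1f}x   wall-clock speedup {wall_x:5.1f}x")
```

The CPU-time and wall-clock ratios differ noticeably for the faster methods, so it is worth stating which one a quoted speedup refers to.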

## Signature

Playing around with signatures:

```

from inspect import signature

def myfunc(a, b):
    return (a + b)

print(myfunc(3,5))
print(myfunc('foo', 'bar'))
print(signature(myfunc).parameters)
print(signature(myfunc).return_annotation)

from inspect import signature

def myfunc(a: int, b: float) -> float:
    return (a + b)

print(myfunc(3,5))
print(myfunc('foo', 'bar')) # runtime doesn't check
print(signature(myfunc).parameters)
print(signature(myfunc).return_annotation)

from inspect import signature
import tensorflow as tf

@tf.function(input_signature=[
    tf.TensorSpec([3,5], name='a'),
    tf.TensorSpec([5,8], name='b')
])
def myfunc(a, b):
    return (tf.matmul(a,b))

print(myfunc.get_concrete_function(tf.ones((3,5)), tf.ones((5,8))))

```

## License

Copyright 2022 Google Inc. Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

|

github_jupyter

|

import urllib

from IPython.display import Markdown as md

### change to reflect your notebook

_nb_loc = "07_training/07b_gpumax.ipynb"

_nb_title = "GPU utilization"

_icons=["https://raw.githubusercontent.com/GoogleCloudPlatform/practical-ml-vision-book/master/logo-cloud.png", "https://www.tensorflow.org/images/colab_logo_32px.png", "https://www.tensorflow.org/images/GitHub-Mark-32px.png", "https://www.tensorflow.org/images/download_logo_32px.png"]

_links=["https://console.cloud.google.com/vertex-ai/workbench/deploy-notebook?" + urllib.parse.urlencode({"name": _nb_title, "download_url": "https://github.com/takumiohym/practical-ml-vision-book-ja/raw/master/"+_nb_loc}), "https://colab.research.google.com/github/takumiohym/practical-ml-vision-book-ja/blob/master/{0}".format(_nb_loc), "https://github.com/takumiohym/practical-ml-vision-book-ja/blob/master/{0}".format(_nb_loc), "https://raw.githubusercontent.com/takumiohym/practical-ml-vision-book-ja/master/{0}".format(_nb_loc)]

md("""<table class="tfo-notebook-buttons" align="left"><td><a target="_blank" href="{0}"><img src="{4}"/>Run in Vertex AI Workbench</a></td><td><a target="_blank" href="{1}"><img src="{5}" />Run in Google Colab</a></td><td><a target="_blank" href="{2}"><img src="{6}" />View source on GitHub</a></td><td><a href="{3}"><img src="{7}" />Download notebook</a></td></table><br/><br/>""".format(_links[0], _links[1], _links[2], _links[3], _icons[0], _icons[1], _icons[2], _icons[3]))

import tensorflow as tf

print('TensorFlow version ' + tf.version.VERSION)
print('Built with GPU support? ' + ('Yes!' if tf.test.is_built_with_cuda() else 'Noooo!'))
print('There are {} GPUs'.format(len(tf.config.list_physical_devices("GPU"))))
device_name = tf.test.gpu_device_name()
if device_name != '/device:GPU:0':
    raise SystemError('GPU device not found')
print('Found GPU at: {}'.format(device_name))

%%writefile input.txt

gs://practical-ml-vision-book/images/california_fire1.jpg

gs://practical-ml-vision-book/images/california_fire2.jpg

import matplotlib.pylab as plt

import numpy as np

import tensorflow as tf

def read_jpeg(filename):
    img = tf.io.read_file(filename)
    img = tf.image.decode_jpeg(img, channels=3)
    img = tf.image.convert_image_dtype(img, tf.float32)
    img = tf.reshape(img, [338, 600, 3])
    return img

ds = tf.data.TextLineDataset('input.txt').map(read_jpeg)

f, ax = plt.subplots(1, 2, figsize=(15,10))

for idx, img in enumerate(ds):
    ax[idx].imshow(img.numpy())

def to_grayscale(img):
    red = img[:, :, 0]
    green = img[:, :, 1]
    blue = img[:, :, 2]
    c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    print(gray.shape)
    return gray

ds = tf.data.TextLineDataset('input.txt').map(read_jpeg).map(to_grayscale)

f, ax = plt.subplots(1, 2, figsize=(15,10))

for idx, img in enumerate(ds):
    im = ax[idx].imshow(img.numpy(), interpolation='none')
    if idx == 1:
        f.colorbar(im, fraction=0.028, pad=0.04)

# This function is not accelerated at all: it loops over every pixel
# in pure Python, so none of the work runs as TensorFlow ops.
def to_grayscale(img):
    rows, cols, _ = img.shape
    result = np.zeros([rows, cols], dtype=np.float32)
    for row in range(rows):
        for col in range(cols):
            red = img[row][col][0]
            green = img[row][col][1]
            blue = img[row][col][2]
            c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
            if c_linear > 0.0031308:
                result[row][col] = 1.055 * pow(c_linear, 1/2.4) - 0.055
            else:
                result[row][col] = 12.92*c_linear
    return result

%%time

ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg)

overall = tf.constant([0.], dtype=tf.float32)

count = 0

for img in ds:
    # Notice that we have to call .numpy() to move the data out of TensorFlow
    gray = to_grayscale(img.numpy())
    # tf.reduce_mean converts the NumPy array back into a tensor
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1

print(overall/count)

def to_grayscale_numpy(img):
    # the conversion to numpy happens here
    img = img.numpy()
    rows, cols, _ = img.shape
    result = np.zeros([rows, cols], dtype=np.float32)
    for row in range(rows):
        for col in range(cols):
            red = img[row][col][0]
            green = img[row][col][1]
            blue = img[row][col][2]
            c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
            if c_linear > 0.0031308:
                result[row][col] = 1.055 * pow(c_linear, 1/2.4) - 0.055
            else:
                result[row][col] = 12.92*c_linear
    # the conversion back to a tensor happens here
    return tf.convert_to_tensor(result)

def to_grayscale(img):
    return tf.py_function(to_grayscale_numpy, [img], tf.float32)

%%time

ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)

overall = tf.constant([0.], dtype=tf.float32)

count = 0

for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1

print(overall/count)

# All in GPU
def to_grayscale(img):
    # TensorFlow slicing functionality
    red = img[:, :, 0]
    green = img[:, :, 1]
    blue = img[:, :, 2]
    # All these are actually tf.multiply(), tf.add(), etc.
    c_linear = 0.2126 * red + 0.7152 * green + 0.0722 * blue
    # Use tf.where for element-wise if-then selection
    # (tf.cond is for a single scalar predicate)
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    return gray

%%time

ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)

overall = tf.constant([0.])

count = 0

for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1

print(overall/count)

def to_grayscale(img):
    wt = tf.constant([[0.2126], [0.7152], [0.0722]]) # 3x1 matrix
    c_linear = tf.matmul(img, wt) # (ht, wd, 3) x (3x1) -> (ht, wd, 1)
    gray = tf.where(c_linear > 0.0031308,
                    1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                    12.92*c_linear)
    return gray

%%time

ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).map(to_grayscale)

overall = tf.constant([0.])

count = 0

for gray in ds:
    m = tf.reduce_mean(gray, axis=[0, 1])
    overall += m
    count += 1

print(overall/count)

class Grayscale(tf.keras.layers.Layer):
    def __init__(self, **kwargs):
        # Forward keyword arguments (e.g. input_shape) to the base Layer
        super(Grayscale, self).__init__(**kwargs)

    def call(self, img):
        wt = tf.constant([[0.2126], [0.7152], [0.0722]]) # 3x1 matrix
        c_linear = tf.matmul(img, wt) # (N, ht, wd, 3) x (3x1) -> (N, ht, wd, 1)
        gray = tf.where(c_linear > 0.0031308,
                        1.055 * tf.pow(c_linear, 1/2.4) - 0.055,
                        12.92*c_linear)
        return gray # (N, ht, wd, 1)

model = tf.keras.Sequential([
    Grayscale(input_shape=(338, 600, 3)), # matches the reshape in read_jpeg
    tf.keras.layers.Lambda(lambda gray: tf.reduce_mean(gray, axis=[1, 2])) # note axis change
])

%%time

ds = tf.data.TextLineDataset('input.txt').repeat(10).map(read_jpeg).batch(5)

overall = tf.constant([0.])

count = 0

for batch in ds:
    bm = model(batch)
    overall += tf.reduce_sum(bm)
    count += len(bm)

print(overall/count)

from inspect import signature

def myfunc(a, b):