
```

import spynnaker8 as p
import time
from matplotlib import pyplot as plt
import numpy as np
import spynnaker8.spynnaker_plotting as pl
import spynnaker8.utilities.neo_convertor as convert
from pyNN.utility.plotting import Figure, Panel

start_time = time.time()

# time of simulation (ms)
TotalDuration = 100.0

# Izhikevich parameters
a = 0.03
b = -2
c = -50
d = 100

# constant current
current_pulse = 250
current_pulse = current_pulse + 100

# number of output neurons
NumYCells = 10

# model used
model_Izh = p.Izhikevich

'''Starting the SpiNNaker simulation'''
p.setup(timestep=0.1, min_delay=1.0, max_delay=14.0)

# number of neurons per core (this call only limits SpikeSourceArray populations;
# none are created in this script)
p.set_number_of_neurons_per_core(p.SpikeSourceArray, 50)

# setting up the parameters for Izh
cell_params = {'a': a, 'b': b, 'c': c, 'd': d, 'i_offset': current_pulse}
y_Izh_population = p.Population(NumYCells, model_Izh(**cell_params), label='Izh_neuron_input')

# recording the spikes and voltage
y_Izh_population.record(["spikes", "v"])

# running simulation for total duration
p.run(TotalDuration)

# extracting the recorded membrane potential (mV) and spikes
y_izh_data = y_Izh_population.get_data(["v", "spikes"])

Figure(
    # raster plot of the Izhikevich population's spike times
    Panel(y_izh_data.segments[0].spiketrains, yticks=True, markersize=0.4, xlim=(0, TotalDuration)),
    title="Izh").save("Izh_output.png")
plt.show()

# release spinnaker machine
p.end()

import spynnaker8 as p
import time
from matplotlib import pyplot as plt
import numpy as np
import spynnaker8.spynnaker_plotting as pl
import spynnaker8.utilities.neo_convertor as convert
from pyNN.utility.plotting import Figure, Panel

start_time = time.time()

# time of simulation (ms)
TotalDuration = 100.0

# Izhikevich parameters
a = 0.03
b = -2
c = -50
d = 100

# constant current
current_pulse = 250
current_pulse = current_pulse + 100

# number of output neurons
NumYCells = 10

# model used
model_Izh = p.Izhikevich

'''Starting the SpiNNaker simulation'''
p.setup(timestep=0.1, min_delay=1.0, max_delay=14.0)

# number of neurons per core (this call only limits SpikeSourceArray populations;
# none are created in this script)
p.set_number_of_neurons_per_core(p.SpikeSourceArray, 50)

# setting up the parameters for Izh
cell_params = {'a': a, 'b': b, 'c': c, 'd': d, 'i_offset': current_pulse}
y_Izh_population = p.Population(NumYCells, model_Izh(**cell_params), label='Izh_neuron_input')

# recording the spikes and voltage
y_Izh_population.record(["spikes", "v"])

# running simulation for total duration
p.run(TotalDuration)

# extracting the recorded data (the first segment holds the whole run)
# y_izh_data = y_Izh_population.get_data(["v","spikes"])
data = y_Izh_population.get_data().segments[0]
vm = data.filter(name="v")[0]

# membrane potential traces above a raster plot of the spikes
Figure(
    Panel(vm, ylabel="Membrane potential (mV)"),
    Panel(data.spiketrains, xlabel="Time (ms)", xticks=True)
).save("simulation_results.png")

# Figure(
#     # raster plot of the presynaptic neuron spike times
#     Panel(y_izh_data.segments[0].spiketrains, yticks=True, markersize=0.4, xlim=(0, TotalDuration)),
#     title="Izh").save("Izh_output.png")
# plt.show()

# release spinnaker machine
p.end()

```
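Running the script requires SpiNNaker hardware. To get a rough feel for what the chosen parameters do, the sketch below integrates one neuron in plain NumPy. It uses the equations PyNN gives for its `Izhikevich` cell type: $v' = 0.04v^2 + 5v + 140 - u + I$ and $u' = a(bv - u)$, with $v \leftarrow c$, $u \leftarrow u + d$ once $v \ge 30$ mV. The sketch rests on several assumptions. It uses forward Euler at the script's 0.1 ms timestep. It assumes a starting point of $v = -70$ mV and $u = bv$. It also treats `i_offset` directly as $I$, which is itself an assumption about units. sPyNNaker uses its own solver, so spike times will likely differ. `simulate_izhikevich` is a hypothetical helper, not part of sPyNNaker.

```python
import numpy as np


def simulate_izhikevich(a, b, c, d, i_offset, duration=100.0, dt=0.1,
                        v0=-70.0, u0=None, v_peak=30.0):
    """Forward-Euler integration of a single Izhikevich (2003-form) neuron.

    Returns (times, v_trace, spike_times) with times in ms and v in mV.
    v0 and u0 are assumed initial conditions (u0 defaults to b * v0).
    """
    n_steps = int(round(duration / dt))
    v = v0
    u = b * v0 if u0 is None else u0
    times = dt * np.arange(1, n_steps + 1)
    v_trace = np.empty(n_steps)
    spike_times = []
    for step in range(n_steps):
        dv = 0.04 * v * v + 5.0 * v + 140.0 - u + i_offset
        du = a * (b * v - u)
        v += dt * dv
        u += dt * du
        if v >= v_peak:
            # Record the spike, clip the trace at the peak, then reset.
            spike_times.append(times[step])
            v_trace[step] = v_peak
            v, u = c, u + d
        else:
            v_trace[step] = v
    return times, v_trace, np.array(spike_times)


# Parameters from the script above. One neuron stands in for the whole
# population, since every cell receives the same i_offset.
t, v, spikes = simulate_izhikevich(a=0.03, b=-2, c=-50, d=100, i_offset=350)
print(len(spikes), "spikes in", t[-1], "ms")
```

The same function with the textbook "regular spiking" values (`a=0.02, b=0.2, c=-65, d=8`) can be used as a sanity check. It stays silent at `i_offset=0` and fires repeatedly at `i_offset=10`.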


# Hardy's Paradox

Hardy's Paradox nicely illustrates the fundamental difference between quantum mechanics and classical physics. In particular, it can be used to discuss the claim made by Einstein, Podolsky and Rosen ("EPR") in 1935. They objected to the uncertainty seen in quantum mechanics and thought it meant that the theory was incomplete. In their view, a qubit should always know what output it would give for both kinds of measurement, and it only seems random because some information is hidden from us. As Einstein said: "God does not play dice with the universe."

The idea and part of the source code for this tutorial was published in a previous version of the [Qiskit Textbook](https://qiskit.org/textbook/), in the (now removed) chapter [The Unique Properties of Qubits](https://github.com/Qiskit/qiskit-textbook/blob/master/content/ch-states/old-unique-properties-qubits.ipynb).

This variant of Hardy's Paradox is a relatively simple example of an entangled qubit state that cannot be reproduced by a few classical bits and a random number generator. It shows that quantum variables aren't just classical variables with some randomness bundled in.

(hit space or right arrow to move to next slide)

## Usage instructions for the user interface

1. "Ctrl -" and "Ctrl +" (or "command -", "command +") adjust the zoom level to fit the text to the browser window
2. Use "space" and "shift space" to navigate through the slides
3. "Shift Enter" executes the interactive cells (you might need to click the cell first)
4. Execute the interactive cells on each slide ("In [1]:", etc.)
5. In case a cell is not formatted correctly, try double-clicking it and then pressing "Shift Enter" to re-execute it
6. Interactive cells can be modified, if needed
7. "X" at the top left exits the slideshow and enters the Jupyter notebook interface

## Manufacturing Cars

Let's assume we build cars.

The cars have a color (red or blue) and an engine type (gasoline or diesel).

The director of the production plant assures us that the following is always true for the first two cars that leave the plant each morning:

1. If we look at the colors of both cars, it never happens that both are red.
2. If the engine type of one car is diesel, then the other car is red.

Let's encode the two cars with two qubits, and the colors by a measurement in the (standard) Z Basis, where 0 relates to red and 1 relates to blue. The engine type is encoded by a measurement in the X Basis, where 0 relates to gasoline and 1 relates to diesel.

Or in short: <br>

Z color: 0 red, 1 blue <br>

X engine type: 0 gasoline, 1 diesel

We now initialize the quantum circuit and create a specific state of the two qubits.

We will show that this state satisfies the two conditions mentioned before.

We will then analyze whether both cars can be diesel.

```

from qiskit import *
from qiskit.tools.visualization import plot_histogram

```

The following circuit creates a specific entangled state of the two qubits.

```

# hit "shift + Enter" to execute this cell
q = QuantumRegister(2)  # create a quantum register with two qubits
# create a classical register that will hold the results of the measurement
c = ClassicalRegister(2)
qc_hardy = QuantumCircuit(q, c)

qc_hardy.ry(1.911, q[1])
qc_hardy.cx(q[1], q[0])
qc_hardy.ry(0.785, q[0])
qc_hardy.cx(q[1], q[0])
qc_hardy.ry(2.356, q[0])

qc_hardy.draw(output='mpl')

```
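The rotation angles in the circuit look like rounded values of $2\arccos(1/\sqrt{3}) \approx 1.9106$, $\pi/4 \approx 0.7854$ and $3\pi/4 \approx 2.3562$. Assuming those exact angles, the following NumPy sketch builds the same two-qubit state by hand, without Qiskit. It follows Qiskit's convention that qubit 0 is the rightmost bit, and it computes the outcome probabilities for the four measurement settings used in this notebook. The helper names (`hardy_state`, `outcome_probs`, etc.) are illustrative, not part of Qiskit.

```python
import numpy as np

# Basis index = 2*q1 + q0, so bit strings read "q1 q0" like Qiskit's counts.
I2 = np.eye(2)
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
# CX with control q1 and target q0 swaps |10> and |11>.
CX_q1_q0 = np.array([[1, 0, 0, 0],
                     [0, 1, 0, 0],
                     [0, 0, 0, 1],
                     [0, 0, 1, 0]])


def ry(theta):
    """RY rotation matrix, same convention as Qiskit's ry gate."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def on_q0(gate):
    return np.kron(I2, gate)


def on_q1(gate):
    return np.kron(gate, I2)


def hardy_state():
    psi = np.array([1.0, 0.0, 0.0, 0.0])                  # |00>
    psi = on_q1(ry(2 * np.arccos(1 / np.sqrt(3)))) @ psi  # ~ ry(1.911)
    psi = CX_q1_q0 @ psi
    psi = on_q0(ry(np.pi / 4)) @ psi                      # ~ ry(0.785)
    psi = CX_q1_q0 @ psi
    psi = on_q0(ry(3 * np.pi / 4)) @ psi                  # ~ ry(2.356)
    return psi


def outcome_probs(psi, x_on_q0=False, x_on_q1=False):
    """Probability of each 'q1q0' outcome; an x measurement is H followed by z."""
    if x_on_q0:
        psi = on_q0(H) @ psi
    if x_on_q1:
        psi = on_q1(H) @ psi
    return {format(i, '02b'): float(abs(amp) ** 2) for i, amp in enumerate(psi)}


psi = hardy_state()
print(outcome_probs(psi))                              # z on both
print(outcome_probs(psi, x_on_q0=True))                # x on q0, z on q1
print(outcome_probs(psi, x_on_q1=True))                # z on q0, x on q1
print(outcome_probs(psi, x_on_q0=True, x_on_q1=True))  # x on both
```

Up to floating-point noise, the state is $(|01\rangle + |10\rangle + |11\rangle)/\sqrt{3}$. The outcome 00 never appears for two z measurements. The outcome 11 never appears when one qubit is measured in x and the other in z. When both are measured in x, 11 has probability 1/12.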

Let's see what happens if we look at the color of both cars, i.e. if we make a Z measurement on each of the qubits. <br>

A result of 00 would indicate that both cars are red, which is not allowed by rule #1.

```

measurements = QuantumCircuit(q, c)
# z measurement on both qubits
measurements.measure(q[0], c[0])
measurements.measure(q[1], c[1])

qc = qc_hardy + measurements
print('Results for two z (=color) measurements:')
plot_histogram(execute(qc, Aer.get_backend('qasm_simulator')).result().get_counts())

```

The count of 00 is zero, and so these qubits do indeed satisfy property 1.

Next, let's see the results of an x (engine type) measurement of one and a z (color) measurement of the other.<br>

A result of 11 would indicate that the first car (qubit 0) is diesel and the second car (qubit 1) is blue, which is not allowed by rule #2.

```

measurements = QuantumCircuit(q, c)
# x measurement on qubit 0
measurements.h(q[0])
measurements.measure(q[0], c[0])
# z measurement on qubit 1
measurements.measure(q[1], c[1])

qc = qc_hardy + measurements
print('Results for an x (engine type) measurement on qubit 0 and a z (color) measurement on qubit 1:')
plot_histogram(execute(qc, Aer.get_backend('qasm_simulator')).result().get_counts())

```

The count of 11 is zero.

If we can also show the same when we measure the other way round (a z measurement on qubit 0 and an x measurement on qubit 1), we have shown that the cars (qubits) satisfy property #2.

```

measurements = QuantumCircuit(q, c)
# z measurement on qubit 0
measurements.measure(q[0], c[0])
# x measurement on qubit 1
measurements.h(q[1])
measurements.measure(q[1], c[1])

qc = qc_hardy + measurements
print('Results for a z (color) measurement on qubit 0 and an x (engine type) measurement on qubit 1:')
plot_histogram(execute(qc, Aer.get_backend('qasm_simulator')).result().get_counts())

```

As the result 11 never occurs, property #2 also holds.

What can we now infer (classically) about the engine types of both cars?

Let's first recall the properties we have confirmed:

1. If we look at the colors of the cars, it never happens that both are red.
2. If the engine type of one car is diesel, then the other car is red.

Let's assume we measure the engine type for both cars and both would be diesel. Then by applying property #2, we can deduce what the result would have been if we had made color measurements instead: We would have gotten an output of red for both.

However, this result is impossible according to property #1. We can therefore conclude that it must be impossible that both cars are diesel.

But now let's measure the engine type of both cars, i.e. make a measurement in the x basis on both qubits.

```

measurements = QuantumCircuit(q, c)
# x measurement on qubit 0
measurements.h(q[0])
measurements.measure(q[0], c[0])
# x measurement on qubit 1
measurements.h(q[1])
measurements.measure(q[1], c[1])

qc = qc_hardy + measurements
print('Results for x (engine type) measurements on both qubits:')
plot_histogram(execute(qc, Aer.get_backend('qasm_simulator')).result().get_counts())

```

The result is surprising, because in a few cases we actually measured 11, which encodes the "impossible case" that both cars are diesel.

We reasoned that, given properties 1 and 2, it would be impossible to get the output 11 if we measure engine type for both cars. From the results above, we see that our reasoning was not correct: one in every dozen results will have this 'impossible' result.
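The "one in a dozen" figure can be checked by hand. Assume the circuit prepares $|\psi\rangle = \frac{1}{\sqrt{3}}(|01\rangle + |10\rangle + |11\rangle)$, with qubit 1 written first as in Qiskit's bit strings (this holds only approximately, because the angles in the circuit are rounded). Then use $H \otimes H\,|ab\rangle = \frac{1}{2}\sum_{x,y}(-1)^{ax+by}|xy\rangle$:

$$
\langle 11|\,(H \otimes H)\,|\psi\rangle
= \frac{1}{\sqrt{3}} \cdot \frac{1}{2}\Big[(-1)^{0\cdot 1 + 1\cdot 1} + (-1)^{1\cdot 1 + 0\cdot 1} + (-1)^{1\cdot 1 + 1\cdot 1}\Big]
= \frac{1}{2\sqrt{3}}\,(-1 - 1 + 1) = -\frac{1}{2\sqrt{3}},
\qquad
P(11) = \left|-\frac{1}{2\sqrt{3}}\right|^2 = \frac{1}{12}.
$$

By contrast, $\langle 00|\psi\rangle = 0$, which is exactly property #1.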

How can we explain this?

## Background on Hardy's Paradox

In their famous paper in 1935, EPR essentially claimed that qubits can indeed be described by some form of classical variable. They didn’t know how to do it, but they were sure it could be done. Then quantum mechanics could be replaced by a much nicer and more sensible theory.

It took until 1964 to show that they were wrong: J. S. Bell proved that quantum variables behave in a way that is fundamentally different from classical ones. Since then, many new ways have been found to prove this, and extensive experiments have confirmed that this is exactly how the universe works. The car example above is a simple demonstration of this, using a variant of Hardy's paradox.

## What went wrong?

Our mistake was in the following piece of reasoning:

* By applying property 2, we can deduce what the result would have been if we had made z measurements instead.

We used our knowledge of the x (engine type) outputs to work out what the z (color) outputs would have been. Once we'd done that, we assumed that we were certain about the values of both.

Our logic would be completely valid if we weren’t reasoning about quantum objects.

But as D. Mermin concludes at the end of his excellent book "...", for quantum objects you have to accept that "what didn't happen, didn't happen", i.e. we cannot make assumptions about a measurement that wasn't done.

This is (part of) what makes quantum computers able to outperform classical computers. It leads to effects that allow programs made with quantum variables to solve problems in ways that programs with normal variables cannot. But just because qubits don't follow the same logic as the bits in normal computers, it doesn't mean they defy logic entirely. They obey the definite rules laid out by quantum mechanics.

```

import qiskit
qiskit.__qiskit_version__

```

## BACKUP / OLD

Hadamard-Gate maps $\;|0\rangle\;$ to $\;\frac{|0\rangle + |1\rangle}{\sqrt{2}}\;\;$ and $\;\;|1\rangle\;$ to $\;\frac{|0\rangle - |1\rangle}{\sqrt{2}}$.

If we can show that

$$ H(\; id( H(|0\rangle) ) \;) = |0\rangle\, $$

and

$$ H(\;\, X( H(|0\rangle) ) \;) = |0\rangle, $$

it becomes clear that if A applies an H-gate in both of her moves, she wins the game, independent of B's move (X or id).

Remember: Heads is encoded by $|0\rangle$, Tails encoded by $|1\rangle$.

The first equation holds because:

\begin{align*}
H(\; id(\; H(|0\rangle) \;)\; )
= &\;\; H(\; H(|0\rangle)\; ) \\
= &\;\; H(\; \frac{|0\rangle + |1\rangle}{\sqrt{2}}\;) \\
= &\;\; \frac{1}{\sqrt{2}}\;(\; H(|0\rangle) + H(|1\rangle) \;) \\
= &\;\; \frac{1}{\sqrt{2}}\;(\;\frac{|0\rangle + |1\rangle}{\sqrt{2}} + \frac{|0\rangle - |1\rangle}{\sqrt{2}}\;) \\
= &\;\; \frac{1}{2}\; (\;|0\rangle + |1\rangle + |0\rangle - |1\rangle\; ) \\
= &\;\; |0\rangle
\end{align*}

In case B chooses to use an X-gate instead of id, the following identity

$$ X(\; H(|0\rangle) \;) = X\; (\;\frac{|0\rangle + |1\rangle}{\sqrt{2}}\; ) = \frac{|1\rangle + |0\rangle}{\sqrt{2}} = H(|0\rangle) $$

can be used to show that the final state is $ |0\rangle$:

$$ H(\; X( H(|0\rangle) ) \;) = H(\; H(|0\rangle)\; ) = |0\rangle $$

[These charts](https://github.com/JanLahmann/Fun-with-Quantum/raw/master/QuantumTheory-for-QuantumCoinGame.pdf) explain a bit more of the quantum theory and formalism required to prove the above identities, in case you are interested.
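Both identities can also be checked numerically. Here is a minimal NumPy sketch that represents $|0\rangle$ (heads) as the vector $(1, 0)$ and each gate as a $2 \times 2$ matrix:

```python
import numpy as np

ket0 = np.array([1.0, 0.0])                   # |0> = heads
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)  # Hadamard gate
X = np.array([[0, 1], [1, 0]])                # NOT gate
ID = np.eye(2)                                # identity ("id")

for name, move_b in [("id", ID), ("X", X)]:
    # A plays H, then B plays move_b, then A plays H again.
    final = H @ (move_b @ (H @ ket0))
    print(name, np.round(final, 10))
```

The X case works because $HXH = Z$, and $Z$ leaves $|0\rangle$ unchanged.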


# Convolutional Neural Networks

In this notebook, I'll try converting radio images into useful features using a simple convolutional neural network in Keras. The best kinds of CNN to use are apparently fast region-based CNNs, but because computer vision is hard and somewhat off-topic I'll instead be doing this pretty naïvely. The [Keras MNIST example](https://github.com/fchollet/keras/blob/master/examples/mnist_cnn.py) will be a good starting point.

I'll also use SWIRE to find potential hosts (notebook 13) and pull out radio images surrounding them. I'll be using the frozen ATLAS classifications that I prepared earlier (notebook 12).

```

import collections
import io
from pprint import pprint
import sqlite3
import sys
import warnings

import astropy.io.votable
import astropy.wcs
import matplotlib.pyplot
import numpy
import requests
import requests_cache
import sklearn.cross_validation

%matplotlib inline

sys.path.insert(1, '..')

import crowdastro.data
import crowdastro.labels
import crowdastro.rgz_analysis.consensus
import crowdastro.show

warnings.simplefilter('ignore', UserWarning)  # astropy always raises warnings on Windows.

requests_cache.install_cache(cache_name='gator_cache', backend='sqlite', expire_after=None)


def get_potential_hosts(subject):
    """Query IRSA Gator for SWIRE objects near a subject; return their pixel coordinates."""
    if subject['metadata']['source'].startswith('C'):
        # CDFS
        catalog = 'chandra_cat_f05'
    else:
        # ELAIS-S1
        catalog = 'elaiss1_cat_f05'

    query = {
        'catalog': catalog,
        'spatial': 'box',
        'objstr': '{} {}'.format(*subject['coords']),
        'size': '120',
        'outfmt': '3',
    }

    url = 'http://irsa.ipac.caltech.edu/cgi-bin/Gator/nph-query'
    r = requests.get(url, params=query)
    votable = astropy.io.votable.parse_single_table(io.BytesIO(r.content), pedantic=False)

    ras = votable.array['ra']
    decs = votable.array['dec']

    # Convert to px.
    fits = crowdastro.data.get_ir_fits(subject)
    wcs = astropy.wcs.WCS(fits.header)
    xs, ys = wcs.all_world2pix(ras, decs, 0)

    return numpy.array((xs, ys)).T


def get_true_hosts(subject, potential_hosts, conn):
    """Match each consensus radio component to its nearest potential host."""
    consensus = crowdastro.labels.get_subject_consensus(subject, conn, 'atlas_classifications')
    true_hosts = {}  # Maps radio signature to (x, y) tuples.

    for radio, (x, y) in consensus.items():
        if x is not None and y is not None:
            closest = None
            min_distance = float('inf')
            for host in potential_hosts:
                dist = numpy.hypot(x - host[0], y - host[1])
                if dist < min_distance:
                    closest = host
                    min_distance = dist
            true_hosts[radio] = closest

    return true_hosts

```
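`get_true_hosts` loops over every potential host in Python for each consensus point. Here is a minimal vectorised sketch of the same nearest-neighbour match. It assumes the consensus dictionary has the `{radio: (x, y)}` shape used above, with `None` marking a missing coordinate. `match_to_nearest_hosts` is a hypothetical helper, not part of crowdastro, and it returns plain tuples rather than array rows.

```python
import numpy as np


def match_to_nearest_hosts(consensus_points, potential_hosts):
    """Map each consensus (x, y) location to the closest potential host.

    consensus_points: dict of radio signature -> (x, y); either value may be None.
    potential_hosts: (N, 2) array-like of host pixel coordinates.
    """
    potential_hosts = np.asarray(potential_hosts, dtype=float)
    true_hosts = {}
    for radio, (x, y) in consensus_points.items():
        if x is None or y is None:
            continue
        if len(potential_hosts) == 0:
            true_hosts[radio] = None  # same as the loop above when there are no hosts
            continue
        # Euclidean distance from this consensus point to every host at once.
        distances = np.hypot(potential_hosts[:, 0] - x, potential_hosts[:, 1] - y)
        true_hosts[radio] = tuple(potential_hosts[np.argmin(distances)])
    return true_hosts
```

Inside `get_true_hosts`, this would replace the nested loop with a single call such as `match_to_nearest_hosts(consensus, potential_hosts)`.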

## Training data

The first step is to separate out all the training data. I'm well aware that having too much training data at once will cause Python to run out of memory, so I'll need to figure out how to deal with that when I get to it.

For each potential host, I'd like to pull out a $20 \times 20$, $40 \times 40$, and $80 \times 80$ patch of radio image; for now, the code below only extracts the $80 \times 80$ patch. These numbers are totally arbitrary but they seem like nice sizes. Note that this will miss really spread-out black hole jets. I'm probably fine with that.

```

subject = crowdastro.data.db.radio_subjects.find_one({'zooniverse_id': 'ARG0003rga'})
crowdastro.show.subject(subject)
matplotlib.pyplot.show()
crowdastro.show.radio(subject)
matplotlib.pyplot.show()

potential_hosts = get_potential_hosts(subject)

conn = sqlite3.connect('../crowdastro-data/processed.db')
true_hosts = {tuple(i) for i in get_true_hosts(subject, potential_hosts, conn).values()}
conn.close()

xs = []
ys = []
for x, y in true_hosts:
    xs.append(x)
    ys.append(y)

crowdastro.show.subject(subject)
matplotlib.pyplot.scatter(xs, ys, c='r', s=100)
matplotlib.pyplot.show()


def get_training_data(subject, potential_hosts, true_hosts):
    """Cut an 80 x 80 radio patch around each potential host and label it host / not host."""
    radio_image = crowdastro.data.get_radio(subject, size='5x5')
    training_data = []
    radius = 40
    padding = 150

    for host_x, host_y in potential_hosts:
        patch_80 = radio_image[int(host_x - radius + padding) : int(host_x + radius + padding),
                               int(host_y - radius + padding) : int(host_y + radius + padding)]
        classification = (host_x, host_y) in true_hosts
        training_data.append((patch_80, classification))

    return training_data


patches, classifications = zip(*get_training_data(subject, potential_hosts, true_hosts))

```
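`get_training_data` above only cuts the $80 \times 80$ patch. The following sketch pulls all three sizes mentioned earlier from the same centre. It assumes the same `[x, y]` indexing and 150-pixel offset as `get_training_data`, and that the sizes are even. `extract_patches` is a hypothetical helper, and it rounds the centre rather than truncating it.

```python
import numpy as np


def extract_patches(image, centre, sizes=(20, 40, 80), offset=150):
    """Cut square patches of several sizes around one potential host.

    Mirrors the slicing in get_training_data: `centre` is an (x, y) pixel
    position and `offset` shifts it into the larger radio cutout.
    Raises ValueError if a patch would run off the edge of the image.
    """
    x = int(round(centre[0])) + offset
    y = int(round(centre[1])) + offset
    patches = {}
    for size in sizes:
        half = size // 2
        patch = image[x - half:x + half, y - half:y + half]
        if patch.shape != (size, size):
            raise ValueError('patch of size {} runs off the image'.format(size))
        patches[size] = patch
    return patches
```

Used as `extract_patches(radio_image, (host_x, host_y))`, this returns a `{size: patch}` dictionary. The smaller patches are the central crops of the $80 \times 80$ one.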

Now, I'll run this over the ATLAS data.

```

conn = sqlite3.connect('../crowdastro-data/processed.db')

training_inputs = []
training_outputs = []

for index, subject in enumerate(crowdastro.data.get_all_subjects(atlas=True)):
    print('Extracting training data from ATLAS subject #{}'.format(index))
    potential_hosts = get_potential_hosts(subject)
    true_hosts = {tuple(i) for i in get_true_hosts(subject, potential_hosts, conn).values()}
    patches, classifications = zip(*get_training_data(subject, potential_hosts, true_hosts))
    training_inputs.extend(patches)
    training_outputs.extend(classifications)

conn.close()

```

I'll balance the classes by downsampling the non-host galaxies. (Keras's `fit` does accept a `class_weight` argument, so weighting the classes would be an alternative.)

```

n_hosts = sum(training_outputs)
n_not_hosts = len(training_outputs) - n_hosts
n_to_discard = n_not_hosts - n_hosts

new_training_inputs = []
new_training_outputs = []
for inp, out in zip(training_inputs, training_outputs):
    if not out and n_to_discard > 0:
        # Drop non-hosts (in order) until both classes are the same size.
        n_to_discard -= 1
    else:
        new_training_inputs.append(inp)
        new_training_outputs.append(out)

print(sum(new_training_outputs))  # number of hosts kept
print(len(new_training_outputs))  # total number of examples kept

training_inputs = numpy.array(new_training_inputs)
training_outputs = numpy.array(new_training_outputs, dtype=float)

```
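The loop above discards the *first* non-hosts it meets, so which negatives survive depends on catalogue order. The sketch below shows a randomised alternative; it is not what this notebook ran. `downsample_majority` is a hypothetical helper, and `seed` is an assumed parameter for reproducibility.

```python
import numpy as np


def downsample_majority(inputs, outputs, seed=0):
    """Balance a binary dataset by randomly discarding negatives.

    Keeps every positive example and an equal-sized random subset of the
    negatives (or all negatives, if there are fewer of them than positives).
    """
    outputs = np.asarray(outputs, dtype=bool)
    rng = np.random.RandomState(seed)
    positives = np.flatnonzero(outputs)
    negatives = np.flatnonzero(~outputs)
    n_keep = min(len(negatives), len(positives))
    kept_negatives = rng.choice(negatives, size=n_keep, replace=False)
    keep = np.sort(np.concatenate([positives, kept_negatives]))
    inputs = np.asarray(inputs)
    return inputs[keep], outputs[keep].astype(float)
```

It would be called as `training_inputs, training_outputs = downsample_majority(training_inputs, training_outputs)`, in place of the loop.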

## Convolutional neural network

The basic structure will be as follows:

- An input layer ($80 \times 80$ patches with a single channel).
- A 2D convolution layer with 32 filters and a $10 \times 10$ kernel. (This is the same size kernel that Radio Galaxy Zoo uses for their peak detection.)
- A ReLU activation layer.
- A max pooling layer with pool size 5.
- A second convolution layer, ReLU activation layer, and max pooling layer with the same settings.
- A 25% dropout layer.
- A flatten layer.
- A dense layer with 64 nodes.
- A sigmoid activation layer.
- A dense layer with 1 node.
- A sigmoid activation layer.

I may try to split the input into three images of different sizes in future.

```

# Note: this uses the Keras 1.x-era API: Convolution2D(nb_filter, nb_row, nb_col,
# border_mode=...) with channels-first input of shape (1, 80, 80).
import keras.layers.convolutional
import keras.layers.core
import keras.models

model = keras.models.Sequential()

n_filters = 32
conv_size = 10
pool_size = 5
dropout = 0.25
hidden_layer_size = 64

model.add(keras.layers.convolutional.Convolution2D(n_filters, conv_size, conv_size,
                                                   border_mode='valid',
                                                   input_shape=(1, 80, 80)))
model.add(keras.layers.core.Activation('relu'))
model.add(keras.layers.convolutional.MaxPooling2D(pool_size=(pool_size, pool_size)))

model.add(keras.layers.convolutional.Convolution2D(n_filters, conv_size, conv_size,
                                                   border_mode='valid'))
model.add(keras.layers.core.Activation('relu'))
model.add(keras.layers.convolutional.MaxPooling2D(pool_size=(pool_size, pool_size)))

model.add(keras.layers.core.Dropout(dropout))
model.add(keras.layers.core.Flatten())
model.add(keras.layers.core.Dense(hidden_layer_size))
model.add(keras.layers.core.Activation('sigmoid'))
model.add(keras.layers.core.Dense(1))
model.add(keras.layers.core.Activation('sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adadelta')

```
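It is worth checking that the two unpadded convolution/pooling stages in the model above don't shrink an $80 \times 80$ patch to nothing. The sketch below does that arithmetic. It assumes stride-1 "valid" convolutions and non-overlapping pooling that drops any remainder. The function names are illustrative.

```python
def valid_conv_size(n, kernel):
    """Spatial size after an unpadded ('valid') convolution with stride 1."""
    return n - kernel + 1


def pooled_size(n, pool):
    """Spatial size after non-overlapping max pooling (remainder dropped)."""
    return n // pool


def trace_shapes(input_size=80, conv_size=10, pool_size=5, n_blocks=2):
    """List the spatial size after each convolution and pooling step."""
    sizes = [input_size]
    n = input_size
    for _ in range(n_blocks):
        n = valid_conv_size(n, conv_size)
        sizes.append(n)
        n = pooled_size(n, pool_size)
        sizes.append(n)
    return sizes


print(trace_shapes())
```

The sizes go 80 → 71 → 14 → 5 → 1, so the flatten layer sees 32 filters × 1 × 1 = 32 features. A third block with the same settings would not fit.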

Now we can train it!

```

xs_train, xs_test, ts_train, ts_test = sklearn.cross_validation.train_test_split(
    training_inputs, training_outputs, test_size=0.1, random_state=0, stratify=training_outputs)

# Add a channel axis: (n, 80, 80) -> (n, 1, 80, 80), matching input_shape=(1, 80, 80).
image_size = xs_train.shape[1:]
xs_train = xs_train.reshape(xs_train.shape[0], 1, image_size[0], image_size[1])
xs_test = xs_test.reshape(xs_test.shape[0], 1, image_size[0], image_size[1])
print(xs_train.shape)

model.fit(xs_train, ts_train)

```
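`ts_test` is split off but never scored in this notebook. The sketch below scores it. It assumes `model.predict(xs_test)` returns one sigmoid probability per patch, and uses 0.5 as the decision threshold. `binary_scores` is a hypothetical helper. Balanced accuracy averages the recall on hosts and non-hosts.

```python
import numpy as np


def binary_scores(probabilities, targets, threshold=0.5):
    """Accuracy and balanced accuracy for sigmoid outputs against 0/1 targets."""
    predictions = np.ravel(probabilities) >= threshold
    targets = np.ravel(targets).astype(bool)
    accuracy = float(np.mean(predictions == targets))
    # Recall on each class; nan if that class is absent.
    recall_pos = float(np.mean(predictions[targets])) if targets.any() else float('nan')
    recall_neg = float(np.mean(~predictions[~targets])) if (~targets).any() else float('nan')
    return {'accuracy': accuracy,
            'balanced_accuracy': (recall_pos + recall_neg) / 2}
```

It would be called as `binary_scores(model.predict(xs_test), ts_test)`. Because the classes were balanced before splitting, the two numbers should be close.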

Let's see some filters.

```

get_convolutional_output = keras.backend.function([model.layers[0].input],
                                                  [model.layers[2].get_output()])

model.get_weights()[2].shape

# Plot each first-layer filter in an 8 x 4 grid.
figure = matplotlib.pyplot.figure(figsize=(15, 15))
for i in range(32):
    ax = figure.add_subplot(8, 4, i + 1)
    ax.axis('off')
    ax.pcolor(model.get_weights()[0][i, 0], cmap='gray')
matplotlib.pyplot.show()

```

Good enough. Now, let's save the model.

```

model_json = model.to_json()
with open('../crowdastro-data/cnn_model_2.json', 'w') as f:
    f.write(model_json)

model.save_weights('../crowdastro-data/cnn_weights_2.h5')

```

...Now, let's *test* that it saved.

```

with open('../crowdastro-data/cnn_model_2.json', 'r') as f:
    model2 = keras.models.model_from_json(f.read())

model2.load_weights('../crowdastro-data/cnn_weights_2.h5')

figure = matplotlib.pyplot.figure(figsize=(15, 15))
for i in range(32):
    ax = figure.add_subplot(8, 4, i + 1)
    ax.axis('off')
    ax.pcolor(model2.get_weights()[0][i, 0], cmap='gray')
matplotlib.pyplot.show()

```

Looks good. Ideally, we would train this for longer, but I don't have enough time right now. Let's save the training data and move on.

```

import tables

with tables.open_file('../crowdastro-data/atlas_training_data.h5', mode='w', title='ATLAS training data') as f:
    root = f.root
    f.create_array(root, 'training_inputs', training_inputs)
    f.create_array(root, 'training_outputs', training_outputs)

```

|

github_jupyter

|

import collections

import io

from pprint import pprint

import sqlite3

import sys

import warnings

import astropy.io.votable

import astropy.wcs

import matplotlib.pyplot

import numpy

import requests

import requests_cache

import sklearn.cross_validation

%matplotlib inline

sys.path.insert(1, '..')

import crowdastro.data

import crowdastro.labels

import crowdastro.rgz_analysis.consensus

import crowdastro.show

warnings.simplefilter('ignore', UserWarning) # astropy always raises warnings on Windows.

requests_cache.install_cache(cache_name='gator_cache', backend='sqlite', expire_after=None)

def get_potential_hosts(subject):

if subject['metadata']['source'].startswith('C'):

# CDFS

catalog = 'chandra_cat_f05'

else:

# ELAIS-S1

catalog = 'elaiss1_cat_f05'

query = {

'catalog': catalog,

'spatial': 'box',

'objstr': '{} {}'.format(*subject['coords']),

'size': '120',

'outfmt': '3',

}

url = 'http://irsa.ipac.caltech.edu/cgi-bin/Gator/nph-query'

r = requests.get(url, params=query)

votable = astropy.io.votable.parse_single_table(io.BytesIO(r.content), pedantic=False)

ras = votable.array['ra']

decs = votable.array['dec']

# Convert to px.

fits = crowdastro.data.get_ir_fits(subject)

wcs = astropy.wcs.WCS(fits.header)

xs, ys = wcs.all_world2pix(ras, decs, 0)

return numpy.array((xs, ys)).T

def get_true_hosts(subject, potential_hosts, conn):

consensus_xs = []

consensus_ys = []

consensus = crowdastro.labels.get_subject_consensus(subject, conn, 'atlas_classifications')

true_hosts = {} # Maps radio signature to (x, y) tuples.

for radio, (x, y) in consensus.items():

if x is not None and y is not None:

closest = None

min_distance = float('inf')

for host in potential_hosts:

dist = numpy.hypot(x - host[0], y - host[1])

if dist < min_distance:

closest = host

min_distance = dist

true_hosts[radio] = closest

return true_hosts

subject = crowdastro.data.db.radio_subjects.find_one({'zooniverse_id': 'ARG0003rga'})

crowdastro.show.subject(subject)

matplotlib.pyplot.show()

crowdastro.show.radio(subject)

matplotlib.pyplot.show()

potential_hosts = get_potential_hosts(subject)

conn = sqlite3.connect('../crowdastro-data/processed.db')

true_hosts = {tuple(i) for i in get_true_hosts(subject, potential_hosts, conn).values()}

conn.close()

xs = []

ys = []

for x, y in true_hosts:

xs.append(x)

ys.append(y)

crowdastro.show.subject(subject)

matplotlib.pyplot.scatter(xs, ys, c='r', s=100)

matplotlib.pyplot.show()

def get_training_data(subject, potential_hosts, true_hosts):

radio_image = crowdastro.data.get_radio(subject, size='5x5')

training_data = []

radius = 40

padding = 150

for host_x, host_y in potential_hosts:

patch_80 = radio_image[int(host_x - radius + padding) : int(host_x + radius + padding),

int(host_y - radius + padding) : int(host_y + radius + padding)]

classification = (host_x, host_y) in true_hosts

training_data.append((patch_80, classification))

return training_data

patches, classifications = zip(*get_training_data(subject, potential_hosts, true_hosts))

conn = sqlite3.connect('../crowdastro-data/processed.db')

training_inputs = []

training_outputs = []

for index, subject in enumerate(crowdastro.data.get_all_subjects(atlas=True)):

print('Extracting training data from ATLAS subject #{}'.format(index))

potential_hosts = get_potential_hosts(subject)

true_hosts = {tuple(i) for i in get_true_hosts(subject, potential_hosts, conn).values()}

patches, classifications = zip(*get_training_data(subject, potential_hosts, true_hosts))

training_inputs.extend(patches)

training_outputs.extend(classifications)

conn.close()

n_hosts = sum(training_outputs)

n_not_hosts = len(training_outputs) - n_hosts

n_to_discard = n_not_hosts - n_hosts

new_training_inputs = []

new_training_outputs = []

for inp, out in zip(training_inputs, training_outputs):

if not out and n_to_discard > 0:

n_to_discard -= 1

else:

new_training_inputs.append(inp)

new_training_outputs.append(out)

print(sum(new_training_outputs))

print(len(new_training_outputs))

training_inputs = numpy.array(new_training_inputs)

training_outputs = numpy.array(new_training_outputs, dtype=float)

import keras.layers.convolutional

import keras.layers.core

import keras.models

model = keras.models.Sequential()

n_filters = 32

conv_size = 10

pool_size = 5

dropout = 0.25

hidden_layer_size = 64

model.add(keras.layers.convolutional.Convolution2D(n_filters, conv_size, conv_size,

border_mode='valid',

input_shape=(1, 80, 80)))

model.add(keras.layers.core.Activation('relu'))

model.add(keras.layers.convolutional.MaxPooling2D(pool_size=(pool_size, pool_size)))

model.add(keras.layers.convolutional.Convolution2D(n_filters, conv_size, conv_size,

border_mode='valid',))

model.add(keras.layers.core.Activation('relu'))

model.add(keras.layers.convolutional.MaxPooling2D(pool_size=(pool_size, pool_size)))

model.add(keras.layers.core.Dropout(dropout))

model.add(keras.layers.core.Flatten())

model.add(keras.layers.core.Dense(hidden_layer_size))

model.add(keras.layers.core.Activation('sigmoid'))

model.add(keras.layers.core.Dense(1))

model.add(keras.layers.core.Activation('sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adadelta')

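# sklearn.cross_validation was removed in scikit-learn 0.20; train_test_split lives in model_selection
import sklearn.model_selection

xs_train, xs_test, ts_train, ts_test = sklearn.model_selection.train_test_split(
    training_inputs, training_outputs, test_size=0.1, random_state=0, stratify=training_outputs)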

image_size = xs_train.shape[1:]

xs_train = xs_train.reshape(xs_train.shape[0], 1, image_size[0], image_size[1])

xs_test = xs_test.reshape(xs_test.shape[0], 1, image_size[0], image_size[1])

xs_train.shape

model.fit(xs_train, ts_train)

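# Backend function mapping the model input to the output of layer 2
# (the first max-pooling layer, after the first convolution and its ReLU)
get_convolutional_output = keras.backend.function([model.layers[0].input],
                                                  [model.layers[2].output])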

model.get_weights()[2].shape

# Plot each of the 32 first-layer convolution filters
figure = matplotlib.pyplot.figure(figsize=(15, 15))
for i in range(32):
    ax = figure.add_subplot(8, 4, i+1)
    ax.axis('off')
    ax.pcolor(model.get_weights()[0][i, 0], cmap='gray')
matplotlib.pyplot.show()

model_json = model.to_json()

with open('../crowdastro-data/cnn_model_2.json', 'w') as f:
    f.write(model_json)

model.save_weights('../crowdastro-data/cnn_weights_2.h5')

with open('../crowdastro-data/cnn_model_2.json', 'r') as f:
    model2 = keras.models.model_from_json(f.read())

model2.load_weights('../crowdastro-data/cnn_weights_2.h5')

# Plot the reloaded model's first-layer filters to check they match the originals
figure = matplotlib.pyplot.figure(figsize=(15, 15))
for i in range(32):
    ax = figure.add_subplot(8, 4, i+1)
    ax.axis('off')
    ax.pcolor(model2.get_weights()[0][i, 0], cmap='gray')
matplotlib.pyplot.show()

import tables

with tables.open_file('../crowdastro-data/atlas_training_data.h5', mode='w', title='ATLAS training data') as f:
    root = f.root
    f.create_array(root, 'training_inputs', training_inputs)
    f.create_array(root, 'training_outputs', training_outputs)


<h1 align='center'>8.1 Hierarchical Indexing</h1>

Hierarchical indexing is an important feature of pandas that enables you to have multiple (two or more) index levels on an axis.

Somewhat abstractly, it provides a way for you to work with higher dimensional data in a lower dimensional form.

```

import pandas as pd

import numpy as np

data = pd.Series(np.random.randn(9),

index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],

[1, 2, 3, 1, 3, 1, 2, 2, 3]])

data

data.index

```

With a hierarchically indexed object, so-called partial indexing is possible, enabling you to concisely select subsets of the data:

```

data.loc[['b', 'd']]

```
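To make partial indexing concrete, here is a small sketch that rebuilds a Series with the same two-level index. It uses deterministic values 0 to 8 in place of the random numbers above (an assumption made only so the selections can be checked):

```python
import numpy as np
import pandas as pd

# Same two-level index as above, with deterministic values 0..8 instead of random ones
data = pd.Series(np.arange(9),
                 index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],
                        [1, 2, 3, 1, 3, 1, 2, 2, 3]])

# Selecting one outer label drops the outer level and returns the inner Series
b_part = data['b']

# Slicing on the outer level also works (this index is already sorted)
b_to_c = data.loc['b':'c']

print(b_part)
print(b_to_c)
```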

Selection is even possible from an “inner” level:

```

data.loc[:, 2]

```
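The same inner-level selection can also be written with `xs` ("cross-section"), which names the level explicitly. The sketch below uses values 0 to 8 instead of random numbers so that both forms can be compared:

```python
import numpy as np
import pandas as pd

data = pd.Series(np.arange(9),
                 index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],
                        [1, 2, 3, 1, 3, 1, 2, 2, 3]])

# Entries whose inner label is 2, indexed by their outer label
inner_via_loc = data.loc[:, 2]

# Equivalent cross-section on level 1 (the inner level)
inner_via_xs = data.xs(2, level=1)

print(inner_via_xs)
```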

Hierarchical indexing plays an important role in reshaping data and in group-based operations like forming a pivot table.

For example, you could rearrange the data into a DataFrame using its `unstack` method:

```

data.unstack()

data.unstack().stack()

```
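A short sketch of what `unstack` does with combinations that are missing from the index: they become NaN. Depending on the pandas version, `stack()` may keep those NaN placeholders, so the sketch drops them explicitly before comparing with the original. Values 0 to 8 stand in for the random data:

```python
import numpy as np
import pandas as pd

data = pd.Series(np.arange(9),
                 index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],
                        [1, 2, 3, 1, 3, 1, 2, 2, 3]])

# 4 outer labels become rows, 3 inner labels become columns;
# (b, 2), (c, 3) and (d, 1) do not exist, so those cells are NaN
unstacked = data.unstack()
print(unstacked)

# stack() moves the columns back into the inner index level; dropna() removes the NaN cells
restacked = unstacked.stack().dropna()
print(restacked)
```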

With a DataFrame, either axis can have a hierarchical index:

```

frame = pd.DataFrame(np.arange(12).reshape((4, 3)),

index=[['a', 'a', 'b', 'b'],

[1, 2, 1, 2]],

columns=[['Ohio', 'Ohio', 'Colorado'],

['Green', 'Red', 'Green']])

frame

frame.stack()

```

The hierarchical levels can have names (as strings or any Python objects). If so, these will show up in the console output:

```

frame.index.names = ['key1', 'key2']

frame.columns.names = ['state', 'color']

frame

```
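Once levels are named, methods that take a level argument accept the name in place of a position. A minimal sketch using `get_level_values`, with the frame rebuilt so the cell stands alone:

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame(np.arange(12).reshape((4, 3)),
                     index=[['a', 'a', 'b', 'b'], [1, 2, 1, 2]],
                     columns=[['Ohio', 'Ohio', 'Colorado'],
                              ['Green', 'Red', 'Green']])
frame.index.names = ['key1', 'key2']
frame.columns.names = ['state', 'color']

# Look up the labels of one level by its name
key1_values = frame.index.get_level_values('key1')
colors = frame.columns.get_level_values('color')

print(list(key1_values))  # ['a', 'a', 'b', 'b']
print(list(colors))       # ['Green', 'Red', 'Green']
```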

With partial column indexing you can similarly select groups of columns:

```

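# Partial column indexing: select every column under the 'Ohio' state level
frame['Ohio']

# Assigning flat lists to index/columns replaces the hierarchical labels (on a copy)
frame1 = frame.copy()
frame1.index = ['key1', 'key2', 'key3', 'key4']
frame1.columns = ['state', 'color', 'misc']
frame1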

```

A MultiIndex can be created by itself and then reused; the columns in the preceding DataFrame with level names could be created like this:
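A minimal sketch using `pd.MultiIndex.from_arrays`, which builds the two column levels and their names in one step. The second DataFrame, `other`, is illustrative only and shows that the same object can be reused:

```python
import numpy as np
import pandas as pd

# Build the column index once, with level names attached
columns = pd.MultiIndex.from_arrays([['Ohio', 'Ohio', 'Colorado'],
                                     ['Green', 'Red', 'Green']],
                                    names=['state', 'color'])

# The same MultiIndex object can be passed to any number of DataFrames
frame = pd.DataFrame(np.arange(12).reshape((4, 3)), columns=columns)
other = pd.DataFrame(np.zeros((2, 3)), columns=columns)

print(frame)
```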

<b>Reordering and Sorting Levels</b>

At times you will need to rearrange the order of the levels on an axis or sort the data by the values in one specific level.

`swaplevel` takes two level numbers or names and returns a new object with the levels interchanged (but the data is otherwise unaltered):

```

frame.swaplevel('key1', 'key2')

```

`sort_index`, on the other hand, sorts the data using only the values in a single level.

When swapping levels, it’s not uncommon to also use `sort_index` so that the result is lexicographically sorted by the indicated level:

```

frame.sort_index(level=1)

frame.swaplevel(0, 1).sort_index(level=0)

```

Data selection performance is much better on hierarchically indexed objects if the index is lexicographically sorted starting with the outermost level, that is, the result of calling `sort_index(level=0)` or `sort_index()`.
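One way to check whether an index is in this sorted state is `Index.is_monotonic_increasing`. The small sketch below rebuilds a named frame so the cell stands alone. It swaps the levels, which breaks the order, and then restores the order with `sort_index()`:

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame(np.arange(12).reshape((4, 3)),
                     index=[['a', 'a', 'b', 'b'], [1, 2, 1, 2]])
frame.index.names = ['key1', 'key2']

# After swapping, the outer level reads 1, 2, 1, 2, so it is no longer sorted
swapped = frame.swaplevel('key1', 'key2')
print(swapped.index.is_monotonic_increasing)   # False

# sort_index() restores lexicographic order, starting from the outermost level
resorted = swapped.sort_index()
print(resorted.index.is_monotonic_increasing)  # True
print(list(resorted.index))  # [(1, 'a'), (1, 'b'), (2, 'a'), (2, 'b')]
```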

<b>Summary Statistics by Level</b>

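Many descriptive and summary statistics on DataFrame and Series can be computed per level on a particular axis. Older pandas versions exposed this as a `level` option on the statistic itself (e.g. `frame.sum(level='key2')`). That option was deprecated in pandas 1.3 and removed in 2.0, so the same aggregation is now written with `groupby(level=...)`.

Consider the DataFrame above; we can aggregate by level on either the rows or columns like so: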

```

frame

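# Sum the rows that share the same 'key2' label
frame.groupby(level='key2').sum()

# Sum across columns per 'color': transpose, group on the column level, transpose back
frame.T.groupby(level='color').sum().T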

```

<b>Indexing with a DataFrame’s columns</b>

It’s not unusual to want to use one or more columns from a DataFrame as the row index; alternatively, you may wish to move the row index into the DataFrame’s columns. Here’s an example DataFrame:

```

frame = pd.DataFrame({'a': range(7), 'b': range(7, 0, -1),

'c': ['one', 'one', 'one', 'two', 'two','two', 'two'],

'd': [0, 1, 2, 0, 1, 2, 3]})

frame

```

DataFrame’s `set_index` function will create a new DataFrame using one or more of its columns as the index. By default those columns are removed from the result; pass `drop=False` to keep them:

```

frame.set_index(['c','d'])

frame.set_index(['c', 'd'], drop=False)

```
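A short sketch that makes the effect of `drop` visible by comparing the remaining columns. It also looks up a row by its `(c, d)` pair. The frame is rebuilt so the cell stands alone:

```python
import pandas as pd

frame = pd.DataFrame({'a': range(7), 'b': range(7, 0, -1),
                      'c': ['one', 'one', 'one', 'two', 'two', 'two', 'two'],
                      'd': [0, 1, 2, 0, 1, 2, 3]})

# Default: 'c' and 'd' move into the index and disappear from the columns
indexed = frame.set_index(['c', 'd'])

# drop=False: 'c' and 'd' become the index but are also kept as ordinary columns
kept = frame.set_index(['c', 'd'], drop=False)

# Rows can now be looked up by (c, d) pairs
value = indexed.loc[('two', 3), 'a']

print(list(indexed.columns), list(kept.columns), value)
```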

`reset_index`, on the other hand, does the opposite of `set_index`; the hierarchical index levels are moved into the columns:

```

framex=frame.set_index(['c','d'])

framex

framex.reset_index()

```
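Because `reset_index` undoes `set_index`, the two together give a round trip. The restored levels come back as the first columns, so this sketch reorders the columns before comparing with the original:

```python
import pandas as pd

frame = pd.DataFrame({'a': range(7), 'b': range(7, 0, -1),
                      'c': ['one', 'one', 'one', 'two', 'two', 'two', 'two'],
                      'd': [0, 1, 2, 0, 1, 2, 3]})

restored = frame.set_index(['c', 'd']).reset_index()
print(list(restored.columns))  # ['c', 'd', 'a', 'b']

# Put the columns back in the original order and compare
round_trip_ok = restored[list(frame.columns)].equals(frame)
print(round_trip_ok)           # True
```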



# Predict Bike Sharing Demand with AutoGluon Template

## Project: Predict Bike Sharing Demand with AutoGluon

This notebook is a template with each step that you need to complete for the project.

Please fill in your code where there are explicit `?` markers in the notebook. You are welcome to add more cells and code as you see fit.

Once you have completed all the code implementations, please export your notebook as an HTML file so the reviewers can view your code. Make sure all cell outputs are included.

`File-> Export Notebook As... -> Export Notebook as HTML`

There is a writeup to complete as well after all code implementation is done. Please answer all questions and attach the necessary tables and charts. You can complete the writeup in either markdown or PDF.

Completing the code template and writeup template will cover all of the rubric points for this project.

The rubric contains "Stand Out Suggestions" for enhancing the project beyond the minimum requirements. The stand out suggestions are optional. If you decide to pursue the "stand out suggestions", you can include the code in this notebook and also discuss the results in the writeup file.

## Step 1: Create an account with Kaggle

### Create Kaggle Account and download API key

Below is an example of the steps to get the API username and key. Each student will have their own username and key.

1. Open account settings.

2. Scroll down to API and click Create New API Token.

3. Open up `kaggle.json` and use the username and key.

## Step 2: Download the Kaggle dataset using the kaggle python library

### Open up Sagemaker Studio and use starter template

1. Notebook should be using a `ml.t3.medium` instance (2 vCPU + 4 GiB)

2. Notebook should be using kernel: `Python 3 (MXNet 1.8 Python 3.7 CPU Optimized)`

### Install packages

```

!pip install -U pip

!pip install -U setuptools wheel

!pip install -U "mxnet<2.0.0" bokeh==2.0.1

!pip install autogluon --no-cache-dir

# Without --no-cache-dir, smaller aws instances may have trouble installing

```

### Setup Kaggle API Key

```

# create the .kaggle directory and an empty kaggle.json file

!mkdir -p /root/.kaggle

!touch /root/.kaggle/kaggle.json

!chmod 600 /root/.kaggle/kaggle.json

# Fill in your user name and key from creating the kaggle account and API token file
import json

kaggle_username = "YOUR_KAGGLE_USERNAME"
kaggle_key = "YOUR_KAGGLE_KEY"

# Save the API token to the kaggle.json file
with open("/root/.kaggle/kaggle.json", "w") as f:
    f.write(json.dumps({"username": kaggle_username, "key": kaggle_key}))

```

### Download and explore dataset

### Go to the bike sharing demand competition and agree to the terms

```

# Download the dataset, it will be in a .zip file so you'll need to unzip it as well.

!kaggle competitions download -c bike-sharing-demand

# If you already downloaded it you can use the -o command to overwrite the file

!unzip -o bike-sharing-demand.zip

import pandas as pd

from autogluon.tabular import TabularPredictor

# Create the train dataset in pandas by reading the csv

# Set the parsing of the datetime column so you can use some of the `dt` features in pandas later

train = pd.read_csv("train.csv", parse_dates = ["datetime"])

train.head()

# Simple output of the train dataset to view some of the min/max/variation of the dataset features.
train.describe()

# Create the test pandas dataframe in pandas by reading the csv, remember to parse the datetime!

test = pd.read_csv("test.csv", parse_dates = ["datetime"])

test.head()

# Same thing as train and test dataset

submission = pd.read_csv("sampleSubmission.csv", parse_dates = ["datetime"])

submission.head()

```

## Step 3: Train a model using AutoGluon’s Tabular Prediction

Requirements:

* We are predicting `count`, so it is the label we are setting.

* Ignore `casual` and `registered` columns as they are also not present in the test dataset.

* Use the `root_mean_squared_error` as the metric to use for evaluation.

* Set a time limit of 10 minutes (600 seconds).

* Use the preset `best_quality` to focus on creating the best model.

```

predictor = TabularPredictor(
    label="count",
    eval_metric="root_mean_squared_error",
    learner_kwargs={"ignored_columns": ["casual", "registered"]},
).fit(train_data=train, time_limit=600, presets="best_quality")

```

### Review AutoGluon's training run with ranking of models that did the best.

```

predictor.fit_summary()

```

### Create predictions from test dataset

```

predictions = predictor.predict(test)

predictions.head()

```

#### NOTE: Kaggle will reject the submission if any prediction is negative, so we set negative values to zero.

```

# How many negative values do we have? (True = negative)
predictions.lt(0).value_counts()

# Set them to zero (.iloc rejects a boolean Series as a mask, so use plain boolean indexing)
predictions[predictions < 0] = 0

# Confirm that no negative values remain
predictions.lt(0).value_counts()

```

### Set predictions to submission dataframe, save, and submit

```

submission["count"] = predictions

submission.to_csv("submission.csv", index=False)

!kaggle competitions submit -c bike-sharing-demand -f submission.csv -m "first raw submission"

```

#### View submission via the command line or in the web browser under the competition's page - `My Submissions`

```

!kaggle competitions submissions -c bike-sharing-demand | tail -n +1 | head -n 6

```

#### Initial score of `?`

## Step 4: Exploratory Data Analysis and Creating an additional feature

* Any additional feature will do, but a great suggestion would be to separate out the datetime into hour, day, or month parts.
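A minimal sketch of that split on a few made-up timestamps, using pandas' `.dt` accessor. The toy frame stands in for `train`/`test`. The `hour` and `dayofweek` columns are illustrative additions that the cell below does not create:

```python
import pandas as pd

# A tiny stand-in for the Kaggle data: only the datetime column matters here
df = pd.DataFrame({"datetime": pd.to_datetime(["2011-01-01 00:00:00",
                                               "2011-01-01 13:00:00",
                                               "2012-12-19 23:00:00"])})

df["year"] = df["datetime"].dt.year
df["month"] = df["datetime"].dt.month
df["day"] = df["datetime"].dt.day
df["hour"] = df["datetime"].dt.hour            # hypothetical extra feature
df["dayofweek"] = df["datetime"].dt.dayofweek  # hypothetical; Monday=0 ... Sunday=6

print(df)
```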

```

# Create a histogram of all features to show the distribution of each one relative to the data. This is part of the exploratory data analysis

train.hist()

# create a new feature

train["year"] = train.datetime.dt.year

train["month"] = train.datetime.dt.month

train["day"] = train.datetime.dt.day

train.drop(["datetime"], axis=1, inplace=True)

test["year"] = test.datetime.dt.year

test["month"] = test.datetime.dt.month

test["day"] = test.datetime.dt.day

test.drop(["datetime"], axis=1, inplace=True)

train.head()

test.head()

```

## Make category types for these so models know they are not just numbers

* AutoGluon originally sees these as ints, but in reality they are int representations of a category.

* Setting the dtype to category will classify these as categories in AutoGluon.
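One detail worth noting: converting `train` and `test` separately can give them different category sets if a value never appears in one of them. The sketch below fixes the set up front with `pd.CategoricalDtype`. The toy frames are stand-ins for the real ones. The 1 to 4 coding of `season` and `weather` is an assumption based on the Kaggle data description:

```python
import pandas as pd

# Toy stand-ins for the train/test frames (the real ones come from train.csv / test.csv)
train_toy = pd.DataFrame({"season": [1, 2, 3, 4], "weather": [1, 2, 3, 1]})
test_toy = pd.DataFrame({"season": [1, 1, 2], "weather": [1, 4, 2]})

# Assumption: both columns are coded 1-4; declaring the categories keeps train and test consistent
codes = pd.CategoricalDtype(categories=[1, 2, 3, 4])
for df in (train_toy, test_toy):
    for col in ["season", "weather"]:
        df[col] = df[col].astype(codes)

print(test_toy["season"].cat.categories.tolist())  # [1, 2, 3, 4] even though 3 and 4 are absent
```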

```

train["season"] = train["season"].astype("category")

train["weather"] = train["weather"].astype("category")

test["season"] = test["season"].astype("category")

test["weather"] = test["weather"].astype("category")

# View our new features
train.head()

# View the histogram of all features again, now with the new year/month/day features

train.hist()

```

## Step 5: Rerun the model with the same settings as before, just with more features

```

predictor_new_features = TabularPredictor(
    label="count",
    eval_metric="root_mean_squared_error",
    learner_kwargs={"ignored_columns": ["casual", "registered"]},
).fit(train_data=train, time_limit=600, presets="best_quality")

predictor_new_features.fit_summary()

# Remember to set all negative values to zero
predictions_new_features = predictor_new_features.predict(test)
predictions_new_features[predictions_new_features < 0] = 0
predictions_new_features.head()

# Same submitting predictions

submission_new_features = pd.read_csv('./sampleSubmission.csv', parse_dates=["datetime"])

submission_new_features["count"] = predictions_new_features

submission_new_features.to_csv("submission_new_features.csv", index=False)

!kaggle competitions submit -c bike-sharing-demand -f submission_new_features.csv -m "new features"

!kaggle competitions submissions -c bike-sharing-demand | tail -n +1 | head -n 6

```

#### New Score of `?`

## Step 6: Hyperparameter optimization

* There are many options for hyperparameter optimization.

* Options are to change the AutoGluon higher level parameters or the individual model hyperparameters.

* Tuning the hyperparameters of the individual models inside AutoGluon requires the `hyperparameters` and `hyperparameter_tune_kwargs` arguments to `fit`.

```

import autogluon.core as ag

predictor_new_hpo = TabularPredictor(label="count", eval_metric="root_mean_squared_error", learner_kwargs={'ignored_columns': ["casual", "registered"]}).fit(

train_data=train, time_limit=600, num_bag_folds=5, num_bag_sets=1, num_stack_levels=1, presets="best_quality"

)

predictor_new_hpo.fit_summary()

# Remember to set all negative values to zero

predictions_new_hpo = predictor_new_hpo.predict(test)

predictions_new_hpo[predictions_new_hpo < 0] = 0

# Same submitting predictions

submission_new_hpo = pd.read_csv('./sampleSubmission.csv', parse_dates=["datetime"])

submission_new_hpo["count"] = predictions_new_hpo

submission_new_hpo.to_csv("submission_new_hpo.csv", index=False)

!kaggle competitions submit -c bike-sharing-demand -f submission_new_hpo.csv -m "new features with hyperparameters"

!kaggle competitions submissions -c bike-sharing-demand | tail -n +1 | head -n 6

```

#### New Score of `?`

## Step 7: Write a Report

### Refer to the markdown file for the full report

### Creating plots and table for report

```

import pandas as pd

import matplotlib.pyplot as plt

# Taking the top model score from each training run and creating a line plot to show improvement

# You can create these in the notebook and save them to PNG or use some other tool (e.g. google sheets, excel)

fig = pd.DataFrame(

{

"model": ["initial", "add_features", "hpo"],

"score": [ 1.39377 , 1.33121 , 1.32740]

}

).plot(x="model", y="score", figsize=(8, 6)).get_figure()

fig.savefig('model_train_score.png')

# Take the 3 kaggle scores and creating a line plot to show improvement

fig = pd.DataFrame(

{

"test_eval": ["initial", "add_features", "WeightedEnsemble", "WeightedEnsemble"],

"score": [ 1.39377 , 1.33121, 1.31933 , 1.32740 ]

}

).plot(x="test_eval", y="score", figsize=(8, 6)).get_figure()

fig.savefig('model_test_score.png')

```

### Hyperparameter table

```

# The 3 hyperparameters we tuned, with the kaggle score as the result.
# Column names follow the num_bag_folds / num_bag_sets / num_stack_levels arguments used in Step 6;
# a dict literal keeps only the last value of a repeated key, so each column needs its own name.
pd.DataFrame({
    "model": ["initial", "add_features", "WeightedEnsemble"],
    "num_bag_folds": [0, 0, 5],
    "num_bag_sets": [20, 20, 1],
    "num_stack_levels": [0, 0, 1],
    "score": [1.33883, 1.39281, 1.32740]
})

fig = pd.DataFrame(
    {
        "model": ["initial", "add_features", "hpo"],
        "num_bag_folds": [0, 0, 5],
        "num_bag_sets": [20, 20, 1],
        "num_stack_levels": [0, 0, 1],
        "score": [1.33883, 1.39281, 1.32740]
    }
).plot(x="model", y="score", figsize=(8, 6)).get_figure()

fig.savefig('model_test2_score.png')

```


[Home Page](../Start_Here.ipynb)

[Next Notebook](CNN's.ipynb)

# CNN Primer and Keras 101

In this notebook, participants will be introduced to CNNs and will implement a classifier using Keras. For an absolute beginner, this notebook serves as a good starting point.

**Contents of this notebook:**

- [How is a Deep Learning project planned?](#Machine-Learning-Pipeline)

- [Wrapping things up with an example (Classification)](#Image-Classification-on-types-of-clothes)

**By the end of this notebook, participants will:**

- Understand the Machine Learning Pipeline

- Write a Deep Learning Classifier and train it.

**We will be building a _Multi-class Classifier_ to classify images of clothing to their respective classes**

## Machine Learning Pipeline

During the bootcamp we will be making use of the following buckets to help us understand how a Machine Learning project should be planned and executed:

1. **Data**: To start with any ML project we need data which is pre-processed and can be fed into the network.

2. **Task**: There are many tasks present in ML, we need to make sure we understand and define the problem statement accurately.

3. **Model**: We need to build a model that is neither so deep and complex that it takes a lot of computational power, nor so small that it cannot learn the important features.

4. **Loss**: Out of the many _loss functions_ present, we need to carefully choose a _loss function_ which is suitable for the task we are about to carry out.

5. **Learning**: As we mentioned in our last notebook, there are a variety of _optimisers_ each with their advantages and disadvantages. So here we choose an _optimiser_ which is suitable for our task and train our model using the set hyperparameters.

6. **Evaluation**: This is a crucial step in the process to determine if our model has learnt the features properly by analysing how it performs when unseen data is given to it.

**Here we will be building a _Multi-class Classifier_ to classify images of clothing to their respective classes.**

We will follow the above discussed pipeline to complete the example.

## Image Classification on types of clothes

#### Step 1: Data

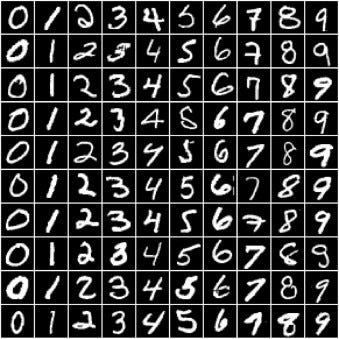

We will be using the **F-MNIST ( Fashion MNIST )** dataset, which is a very popular dataset. This dataset contains 70,000 grayscale images in 10 categories. The images show individual articles of clothing at low resolution (28 by 28 pixels).

<img src="images/fashion-mnist.png" alt="Fashion MNIST sprite" width="600">

*Source: https://www.tensorflow.org/tutorials/keras/classification*

```

# Import Necessary Libraries

from __future__ import absolute_import, division, print_function, unicode_literals

# TensorFlow and tf.keras

import tensorflow as tf

from tensorflow import keras

# Helper libraries

import numpy as np

import matplotlib.pyplot as plt

print(tf.__version__)

# Let's Import the Dataset

fashion_mnist = keras.datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

```

Loading the dataset returns four NumPy arrays:

* The `train_images` and `train_labels` arrays are the *training set*—the data the model uses to learn.

* The model is tested against the *test set*, the `test_images`, and `test_labels` arrays.

The images are 28x28 NumPy arrays, with pixel values ranging from 0 to 255. The *labels* are an array of integers, ranging from 0 to 9. These correspond to the *class* of clothing the image represents:

<table>
<tr><th>Label</th><th>Class</th></tr>
<tr><td>0</td><td>T-shirt/top</td></tr>
<tr><td>1</td><td>Trouser</td></tr>
<tr><td>2</td><td>Pullover</td></tr>
<tr><td>3</td><td>Dress</td></tr>
<tr><td>4</td><td>Coat</td></tr>
<tr><td>5</td><td>Sandal</td></tr>
<tr><td>6</td><td>Shirt</td></tr>
<tr><td>7</td><td>Sneaker</td></tr>
<tr><td>8</td><td>Bag</td></tr>
<tr><td>9</td><td>Ankle boot</td></tr>
</table>

Each image is mapped to a single label. Since the *class names* are not included with the dataset, let us store them in an array so that we can use them later when plotting the images:

```

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

```

## Understanding the Data

```

#Print Array Size of Training Set

print("Size of Training Images :"+str(train_images.shape))

#Print Array Size of Label

print("Size of Training Labels :"+str(train_labels.shape))

#Print Array Size of Test Set

print("Size of Test Images :"+str(test_images.shape))

#Print Array Size of Label

print("Size of Test Labels :"+str(test_labels.shape))

#Let's See how our Outputs Look like

print("Training Set Labels :"+str(train_labels))

#Data in the Test Set

print("Test Set Labels :"+str(test_labels))

```

## Data Pre-processing

```

plt.figure()

plt.imshow(train_images[0])

plt.colorbar()

plt.grid(False)

plt.show()

```

The image pixel values range from 0 to 255. Let us now scale them to the range 0 to 1 in both the *Train* and *Test* sets by dividing by 255. Keeping the inputs small and on a consistent scale generally makes gradient-based training better behaved.
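As a toy illustration of this scaling, the sketch below applies it to random 8-bit arrays that only mimic the shape and dtype of Fashion-MNIST images:

```python
import numpy as np

# Fake 8-bit grayscale images with the same shape and dtype as Fashion-MNIST pixels
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)
images[0, 0, 0] = 0    # make sure both extremes are present
images[0, 0, 1] = 255

scaled = images / 255.0  # uint8 divided by a float gives a float64 array in [0, 1]

print(scaled.dtype, scaled.min(), scaled.max())  # float64 0.0 1.0
```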

```

train_images = train_images / 255.0

test_images = test_images / 255.0

# Plot the first 25 training images to verify that the data is in the correct format.
plt.figure(figsize=(10,10))
for i in range(25):
    plt.subplot(5,5,i+1)
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    plt.imshow(train_images[i], cmap=plt.cm.binary)
    plt.xlabel(class_names[train_labels[i]])
plt.show()

```

## Defining our Model

Our Model has three layers :

- 784 Input features ( 28 * 28 )

- 128 nodes in hidden layer (Feel free to experiment with the value)

- 10 output nodes to denote the Class
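A quick worked count of the trainable parameters in these layers. It assumes each `Dense` unit has one weight per input plus one bias, which is the Keras default. The total should match what `model.summary()` reports for the model defined next:

```python
def dense_params(n_inputs, n_units):
    """Weights (one per input-unit pair) plus one bias per unit."""
    return n_inputs * n_units + n_units

flatten_params = 0                          # Flatten only reshapes; nothing to learn
hidden_params = dense_params(28 * 28, 128)  # 784 * 128 + 128
output_params = dense_params(128, 10)       # 128 * 10 + 10
total_params = flatten_params + hidden_params + output_params

print(hidden_params, output_params, total_params)  # 100480 1290 101770
```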

Implementing the same in Keras (a high-level deep learning API that has run on top of TensorFlow, Theano and other backends; here we use TensorFlow's built-in `tf.keras`):

```

from tensorflow.keras import backend as K

K.clear_session()

model = keras.Sequential([

keras.layers.Flatten(input_shape=(28, 28)),

keras.layers.Dense(128, activation='relu'),

keras.layers.Dense(10, activation='softmax')

])

```

The first layer in this network, `tf.keras.layers.Flatten`, transforms the format of the images from a two-dimensional array (of 28 by 28 pixels) to a one-dimensional array (of 28 * 28 = 784 pixels). Think of this layer as unstacking rows of pixels in the image and lining them up. This layer has no parameters to learn; it only reformats the data.
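A NumPy analogue of this "unstacking rows" step. It reshapes a 28x28 array in row-major order, which is the same order in which Keras's `Flatten` lays out this input:

```python
import numpy as np

# A stand-in 28x28 "image" whose pixel values are just 0, 1, ..., 783
image = np.arange(28 * 28).reshape(28, 28)

# Flatten by laying the rows end to end (row-major order)
flat = image.reshape(-1)

print(flat.shape)                             # (784,)
print(np.array_equal(flat[28:56], image[1]))  # True: row 1 occupies positions 28-55
```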

After the pixels are flattened, the network consists of a sequence of two `tf.keras.layers.Dense` layers. These are densely connected, or fully connected, neural layers. The first `Dense` layer has 128 nodes (or neurons). The second (and last) layer is a 10-node *softmax* layer that returns an array of 10 probability scores that sum to 1. Each node contains a score that indicates the probability that the current image belongs to one of the 10 classes.
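To see how a softmax layer turns raw scores into probabilities that sum to 1, here is a small NumPy sketch. The ten scores are made up, one per clothing class:

```python
import numpy as np

def softmax(logits):
    """Turn a vector of raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtracting the max avoids overflow in exp
    exps = np.exp(shifted)
    return exps / exps.sum()

# Ten made-up scores, one per clothing class
logits = np.array([1.0, 0.5, -2.0, 0.0, 3.0, -1.0, 0.2, 2.5, -0.5, 0.1])
probs = softmax(logits)

print(probs.round(3))
print(probs.sum())       # 1.0 up to floating-point rounding
print(np.argmax(probs))  # 4: the largest score gets the largest probability
```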

### Compile the model

Before the model is ready for training, it needs a few more settings. These are added during the model's *compile* step:

* *Loss function*: This measures how accurate the model is during training. You want to minimize this function to "steer" the model in the right direction.

* *Optimizer*: This is how the model is updated based on the data it sees and its loss function.

* *Metrics*: Used to monitor the training and testing steps. The following example uses *accuracy*, the fraction of the images that are correctly classified.
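The sketch below shows what the loss and metric chosen next compute, in plain NumPy. It is a simplified version that ignores the small probability clipping Keras applies internally. The three predictions are made up, and the labels are integers, as in Fashion-MNIST:

```python
import numpy as np

def sparse_categorical_crossentropy(probs, labels):
    """Mean of -log(probability assigned to the true class); labels are integer ids."""
    picked = probs[np.arange(len(labels)), labels]
    return -np.mean(np.log(picked))

def accuracy(probs, labels):
    """Fraction of rows whose highest-probability class equals the label."""
    return np.mean(np.argmax(probs, axis=1) == labels)

# Three made-up predictions over three classes, with integer labels
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4]])
labels = np.array([0, 1, 0])

loss = sparse_categorical_crossentropy(probs, labels)
acc = accuracy(probs, labels)
print(round(loss, 4))  # 0.5946
print(acc)             # 0.6666666666666666 (2 of 3 correct)
```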

```
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```
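Conceptually, the `'sparse_categorical_crossentropy'` loss and the `'accuracy'` metric can be written out by hand. "Sparse" means the labels are integer class indices, like `train_labels`, rather than one-hot vectors. The sketch below uses invented probabilities for 3 images and 4 classes. It ignores implementation details such as the small clipping Keras applies internally.

```python
import numpy as np

# Hypothetical predicted probabilities for 3 images over 4 classes (each row sums to 1)
probs = np.array([
    [0.7, 0.1, 0.1, 0.1],
    [0.2, 0.5, 0.2, 0.1],
    [0.1, 0.2, 0.3, 0.4],
])
# Integer ("sparse") labels, one class index per image
labels = np.array([0, 1, 2])

# Cross-entropy: mean negative log-probability assigned to the true class
loss = -np.mean(np.log(probs[np.arange(len(labels)), labels]))

# Accuracy: fraction of images whose most probable class equals the label
accuracy = np.mean(np.argmax(probs, axis=1) == labels)

print(round(loss, 4), accuracy)
```

The third image is misclassified (class 3 gets the highest probability), so the accuracy is 2/3. Its true class still contributes to the loss through its probability of 0.3.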

## Train the model

Training the neural network model requires the following steps:

1. Feed the training data to the model. In this example, the training data is in the `train_images` and `train_labels` arrays.

2. The model learns to associate images and labels.

3. You ask the model to make predictions about a test set (in this example, the `test_images` array) and verify that the predictions match the labels from the `test_labels` array.

To start training, call the `model.fit` method, so called because it "fits" the model to the training data:

```
model.fit(train_images, train_labels, epochs=5)
```
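`epochs=5` means five full passes over the training images. Because no `batch_size` is passed, Keras falls back to its default of 32. Each epoch is then split into mini-batches, as this small calculation shows. The 60,000 figure is the size of the Fashion-MNIST training set. It should match the step counter shown in the training progress bar.

```python
import math

num_train_images = 60_000  # Fashion-MNIST training set size
batch_size = 32            # Keras default when fit() gets no batch_size
epochs = 5

steps_per_epoch = math.ceil(num_train_images / batch_size)
total_steps = steps_per_epoch * epochs

print(steps_per_epoch, total_steps)  # 1875 9375
```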

## Evaluate accuracy

Next, compare how the model performs on the test dataset:

```
# Evaluate the model on the test set
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)

print('\nTest accuracy:', test_acc)
```

## Exercise

Try adding more dense layers to the network above and observe the change in accuracy.

We get an accuracy of 87% on the test dataset, which is lower than the 89% we got during training. This gap, where a model performs better on its training data than on unseen data, is called overfitting.

## Important:

<mark>Shutdown the kernel before clicking on “Next Notebook” to free up the GPU memory.</mark>

## Licensing

This material is released by OpenACC-Standard.org, in collaboration with NVIDIA Corporation, under the Creative Commons Attribution 4.0 International (CC BY 4.0).

[Home Page](../Start_Here.ipynb)

[Next Notebook](CNN's.ipynb)



# Assignment 1.1

# Assignment 1.2

$$
R =
\begin{bmatrix}
-2 & 0 & 3 & -10
\end{bmatrix}
$$

The entries are the rewards of the states Rain, Cloudy, Sunny and Meteor, in that order.

# Assignment 1.3

In the two lines below I have taken two example routes that an agent could follow. The rewards of all states the agent visited are added together, which gives the following results.

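$$
\begin{aligned}
G_t &= R(\text{Rain}) + R(\text{Cloudy}) + R(\text{Sunny}) + R(\text{Meteor}) = -2 + 0 + 3 + (-10) = -9 \\
G_t &= R(\text{Cloudy}) + R(\text{Sunny}) + R(\text{Cloudy}) + R(\text{Sunny}) + R(\text{Cloudy}) + R(\text{Sunny}) + R(\text{Meteor}) = 0 + 3 + 0 + 3 + 0 + 3 + (-10) = -1
\end{aligned}
$$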
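The same sums can be computed in a few lines of Python. This sketch generalizes slightly with an optional discount factor `gamma` that weights the k-th reward by gamma^k. With `gamma=1.0` it reproduces the plain sums above. The function name `episode_return` is our own.

```python
# Reward of each weather state, as in the reward vector R above
REWARDS = {"Rain": -2, "Cloudy": 0, "Sunny": 3, "Meteor": -10}


def episode_return(route, gamma=1.0):
    """Sum the (optionally discounted) rewards of the states visited along a route."""
    return sum(gamma ** k * REWARDS[state] for k, state in enumerate(route))


route_1 = ["Rain", "Cloudy", "Sunny", "Meteor"]
route_2 = ["Cloudy", "Sunny"] * 3 + ["Meteor"]

print(episode_return(route_1))  # -9.0
print(episode_return(route_2))  # -1.0
```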

# Assignment 1.4

| State | init | iter 1 | iter 2 |
|-------|------|--------|--------|
| s1 | 0 | 0.1 | 6.56 |
| s2 | 0 | 17.8 | 17.96 |
| s3 | 0 | 1.6 | 2.15 |
| s4 | 0 | 1.6 | 5.17 |
| s5 | 0 | 0 | 0 |

# Assignment 1.5

# Assignment 2

Below I carried out value iteration using the method explained earlier in Assignment 1.4. I noticed that the difference between the new values and the values from the previous iteration kept getting smaller, so I decided to stop once that difference was smaller than 0.01. I could keep iterating, but there is little point since the values hardly change anymore. I also plotted the values for visualization; the plot likewise shows that they barely change anymore.

| State | init | iter 1 | iter 2 | iter 3 | iter 4 | iter 5 | iter 6 | iter 7 | iter 8 | iter 9 | iter 10 | iter 11 | iter 12 | iter 13 |
|-------|------|--------|--------|--------|--------|--------|--------|--------|--------|--------|---------|---------|---------|---------|
| s0 | 0 | -0.1 | -0.62 | -0.7 | -0.975 | -1 | -1.1375 | -1.15 | -1.21875 | -1.225 | -1.259375 | -1.2625 | -1.2796875 | -1.28125 |
| s1 | 0 | -0.55 | -0.6 | -0.875 | -0.9 | -1.0375 | -1.05 | -1.11875 | -1.125 | -1.159375 | -1.1625 | -1.1796875 | -1.18125 | -1.18984375 |
| s2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

```
import matplotlib.pyplot as plt

# Iteration numbers 0 (init) through 13
x = list(range(14))

# Value of each state after every iteration, copied from the table above
s0 = [0, -0.1, -0.62, -0.7, -0.975, -1, -1.1375, -1.15, -1.21875, -1.225, -1.259375, -1.2625, -1.2796875, -1.28125]
s1 = [0, -0.55, -0.6, -0.875, -0.9, -1.0375, -1.05, -1.11875, -1.125, -1.159375, -1.1625, -1.1796875, -1.18125, -1.18984375]
s2 = [0] * 14  # s2 stays at 0 throughout

plt.plot(x, s0)
plt.plot(x, s1)
plt.plot(x, s2)
plt.legend(["s0", "s1", "s2"])
plt.title("Value Iteration (Assignment 2)")
plt.xlabel("Number of iterations")
plt.ylabel("Value")
plt.show()
```
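The stopping rule described above (stop once the values change by less than 0.01 between iterations) can be checked directly against the table. This sketch takes the largest change over all states as "the difference". That is our assumption; the text does not say whether the largest or, for example, the total change was used. The helper name `first_converged_iteration` is ours.

```python
# Value history per state, copied from the table above (index 0 = init)
history = {
    "s0": [0, -0.1, -0.62, -0.7, -0.975, -1, -1.1375, -1.15, -1.21875, -1.225,
           -1.259375, -1.2625, -1.2796875, -1.28125],
    "s1": [0, -0.55, -0.6, -0.875, -0.9, -1.0375, -1.05, -1.11875, -1.125,
           -1.159375, -1.1625, -1.1796875, -1.18125, -1.18984375],
    "s2": [0] * 14,
}


def first_converged_iteration(history, tol=0.01):
    """Return (iteration, largest change) for the first iteration whose change is below tol."""
    n_iters = len(next(iter(history.values())))
    for k in range(1, n_iters):
        delta = max(abs(values[k] - values[k - 1]) for values in history.values())
        if delta < tol:
            return k, delta
    return None


result = first_converged_iteration(history)
print(result)  # the first iteration below 0.01 is iteration 13
```

Under this reading, iteration 12 still changes s0 by about 0.017, and iteration 13 is the first where every state moves by less than 0.01. This matches where the table stops.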

# Assignment 3

Below is an example run of the code, printing the values and the greedy policy. It shows that the cells leading toward the +40 finish get progressively darker, which indicates that the agent moves in that direction.

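```
# Grid is the assignment's own class from its local grid module (not shown here)
from grid import Grid
import numpy as np

# Reward of each cell in the 4x4 grid world
rewards = np.array([
    [-1, -1, -1, 40],
    [-1, -1, -10, -10],
    [-1, -1, -1, -1],
    [10, -2, -1, -1]
])

# Episodes end in the +40 cell (top right) or the +10 cell (bottom left)
terminal_states = [(0, 3), (3, 0)]

# Discount factor
gamma = 0.9

grid = Grid(rewards, terminal_states, gamma)
# verbose=True prints the values and the greedy policy
grid.run(10, verbose=True)
```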
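The `Grid` class itself is not shown, so the following is only a self-contained illustration of what value iteration on this reward grid might compute. It is not the `Grid` implementation, and its numbers need not match that output. It rests on assumptions of our own:

- The four moves are deterministic.
- Moving into a wall leaves the agent in place.
- The reward received is that of the cell the agent enters.
- Terminal cells keep value 0.

It reuses the 0.01 stopping threshold from Assignment 2. All function names are hypothetical.

```python
import numpy as np

# Same reward grid, terminal states and discount factor as the Grid example above
rewards = np.array([
    [-1, -1, -1, 40],
    [-1, -1, -10, -10],
    [-1, -1, -1, -1],
    [10, -2, -1, -1],
])
terminal_states = [(0, 3), (3, 0)]
gamma = 0.9

# Assumption: four deterministic actions given as (row offset, column offset)
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, action, shape):
    """Move one cell; bumping into a wall leaves the agent where it is (assumption)."""
    r, c = state[0] + ACTIONS[action][0], state[1] + ACTIONS[action][1]
    if 0 <= r < shape[0] and 0 <= c < shape[1]:
        return (r, c)
    return state


def backup(state, action, values, rewards, gamma):
    """One-step lookahead: reward of the next cell plus its discounted value."""
    nxt = step(state, action, rewards.shape)
    return rewards[nxt] + gamma * values[nxt]


def value_iteration(rewards, terminal_states, gamma, tol=0.01, max_iter=1000):
    """Apply Bellman optimality backups until no value changes by tol or more."""
    values = np.zeros(rewards.shape)
    for _ in range(max_iter):
        new_values = np.zeros_like(values)
        for s in np.ndindex(rewards.shape):
            if s in terminal_states:
                continue  # terminal cells keep value 0
            new_values[s] = max(backup(s, a, values, rewards, gamma) for a in ACTIONS)
        delta = np.max(np.abs(new_values - values))
        values = new_values
        if delta < tol:
            break
    return values


def greedy_policy(values, rewards, terminal_states, gamma):
    """In every non-terminal cell, pick the action with the best one-step lookahead."""
    return {
        s: max(ACTIONS, key=lambda a: backup(s, a, values, rewards, gamma))
        for s in np.ndindex(rewards.shape)
        if s not in terminal_states
    }


values = value_iteration(rewards, terminal_states, gamma)
policy = greedy_policy(values, rewards, terminal_states, gamma)
print(np.round(values, 2))
print(policy[(0, 2)])  # right: step straight onto the +40 terminal cell
```

Because every non-terminal reward is negative, the only way to collect +40 or +10 is to reach a terminal cell. The greedy policy therefore points along the shortest low-penalty route toward them, mirroring the darkening cells described above.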

