text

stringlengths 2.5k

6.39M

| kind

stringclasses 3

values |

|---|---|

** ----- IMPORTANT ------ **

The code presented here assumes that you're running TensorFlow v1.3.0 or higher. At the time of writing this version had not been released yet, so the easiest way to run the code is to update your TensorFlow installation to TensorFlow's master.

To do that go [here](https://github.com/tensorflow/tensorflow#installation) and then execute:

`pip install --ignore-installed --upgrade <URL for the right binary for your machine>`.

For example, considering a Linux CPU-only running python2:

`pip install --upgrade https://ci.tensorflow.org/view/Nightly/job/nightly-matrix-cpu/TF_BUILD_IS_OPT=OPT,TF_BUILD_IS_PIP=PIP,TF_BUILD_PYTHON_VERSION=PYTHON2,label=cpu-slave/lastSuccessfulBuild/artifact/pip_test/whl/tensorflow-1.2.1-cp27-none-linux_x86_64.whl`

## Here is a walk-through to help you get started with TensorFlow

1) Simple Linear Regression with low-level TensorFlow

2) Simple Linear Regression with a canned estimator

3) Playing with real data: linear regressor and DNN

4) Building a custom estimator to classify handwritten digits (MNIST)

### [What's next?](https://goo.gl/hZaLPA)

## Dependencies

```

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import collections

# tensorflow

import tensorflow as tf

print('Expected TensorFlow version is v1.3.0 or higher')

print('Your TensorFlow version:', tf.__version__)

# data manipulation

import numpy as np

import pandas as pd

# visualization

import matplotlib

import matplotlib.pyplot as plt

%matplotlib inline

matplotlib.rcParams['figure.figsize'] = [12,8]

```

## 1) Simple Linear Regression with low-level TensorFlow

### Generating data

This function creates a noisy dataset that's roughly linear, according to the equation y = mx + b + noise.

Notice that the expected value for m is 0.1 and for b is 0.3. These are the values we expect the model to learn.

```

def make_noisy_data(m=0.1, b=0.3, n=100):
    x = np.random.randn(n)
    noise = np.random.normal(scale=0.01, size=len(x))
    y = m * x + b + noise
    return x, y

```

Create training data

```

x_train, y_train = make_noisy_data()

```

Plot the training data

```

plt.plot(x_train, y_train, 'b.')

```

### The Model

```

# input and output

x = tf.placeholder(shape=[None], dtype=tf.float32, name='x')

y_label = tf.placeholder(shape=[None], dtype=tf.float32, name='y_label')

# variables

W = tf.Variable(tf.random_normal([1]), name="W") # weight

b = tf.Variable(tf.random_normal([1]), name="b") # bias

# actual model

y = W * x + b

```

### The Loss and Optimizer

Define a loss function (here, squared error) and an optimizer (here, gradient descent).

```

loss = tf.reduce_mean(tf.square(y - y_label))

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)

train = optimizer.minimize(loss)

```
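To make the optimizer step concrete, here is a minimal NumPy sketch of what gradient descent on the mean squared error does for this model. It is not TensorFlow's implementation; the names `w`, `b`, `grad_w`, `grad_b`, and the fixed random seed are assumptions made for illustration.

```python
import numpy as np

rng = np.random.RandomState(0)  # fixed seed, an assumption for reproducibility

# Same kind of data as make_noisy_data(): y = 0.1 * x + 0.3 + noise
x_data = rng.randn(100)
y_data = 0.1 * x_data + 0.3 + rng.normal(scale=0.01, size=100)

w, b = rng.randn(), rng.randn()  # random initial weight and bias
learning_rate = 0.1

for step in range(100):
    error = (w * x_data + b) - y_data
    # Gradients of mean(error ** 2) with respect to w and b
    grad_w = 2.0 * np.mean(error * x_data)
    grad_b = 2.0 * np.mean(error)
    # Move each parameter a small step against its gradient
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print('w:', w, 'b:', b)
```

Each loop iteration plays the role of one `sess.run(train, ...)` call: compute the loss gradient on the full dataset, then update both parameters.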

### The Training Loop and generating predictions

```

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init) # initialize variables

    for i in range(100): # train for 100 steps
        sess.run(train, feed_dict={x: x_train, y_label: y_train})

    x_plot = np.linspace(-3, 3, 101) # evenly spaced inputs for plotting the fitted line

    # using the trained model to predict values for the plotting inputs
    y_plot = sess.run(y, feed_dict={x: x_plot})

    # saving final weight and bias
    final_W = sess.run(W)
    final_b = sess.run(b)

```

### Visualizing predictions

```

plt.scatter(x_train, y_train)

plt.plot(x_plot, y_plot, 'g')

```

### What is the final weight and bias?

```

print('W:', final_W, 'expected: 0.1')

print('b:', final_b, 'expected: 0.3')

```
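As a sanity check on the learned values, an ordinary least-squares fit gives a reference for the same kind of data. The sketch below uses `np.polyfit` on freshly generated data with a fixed seed (an assumption, so the numbers will not match the session above exactly).

```python
import numpy as np

rng = np.random.RandomState(42)  # fixed seed, an assumption for reproducibility
x_data = rng.randn(100)
y_data = 0.1 * x_data + 0.3 + rng.normal(scale=0.01, size=100)

# Degree-1 least-squares fit; polyfit returns [slope, intercept]
slope, intercept = np.polyfit(x_data, y_data, 1)
print('slope:', slope, 'intercept:', intercept)
```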

## 2) Simple Linear Regression with a canned estimator

### Input Pipeline

```

x_dict = {'x': x_train}

train_input = tf.estimator.inputs.numpy_input_fn(x_dict, y_train,

shuffle=True,

num_epochs=None) # repeat forever

```

### Describe input feature usage

```

features = [tf.feature_column.numeric_column('x')] # because x is a real number

```

### Build and train the model

```

estimator = tf.estimator.LinearRegressor(features)

estimator.train(train_input, steps = 1000)

```

### Generating and visualizing predictions

```

x_test_dict = {'x': np.linspace(-5, 5, 11)}
data_source = tf.estimator.inputs.numpy_input_fn(x_test_dict, shuffle=False)

predictions = list(estimator.predict(data_source))
preds = [p['predictions'][0] for p in predictions]

for prediction in predictions:
    print(prediction['predictions'])

plt.scatter(x_train, y_train)
plt.plot(x_test_dict['x'], preds, 'g')

```

## 3) Playing with real data: linear regressor and DNN

### Get the data

The Adult dataset is from the Census Bureau, and the task is to predict whether a given adult makes more than $50,000 a year based on attributes such as education, hours of work per week, etc.

The code presented here can easily be applied to any CSV dataset that fits in memory.

More about the data [here](https://archive.ics.uci.edu/ml/machine-learning-databases/adult/old.adult.names)

```

census_train_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.data'

census_train_path = tf.contrib.keras.utils.get_file('census.train', census_train_url)

census_test_url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.test'

census_test_path = tf.contrib.keras.utils.get_file('census.test', census_test_url)

```

### Load the data

```

column_names = [
    'age', 'workclass', 'fnlwgt', 'education', 'education-num',
    'marital-status', 'occupation', 'relationship', 'race', 'sex',
    'capital-gain', 'capital-loss', 'hours-per-week', 'native-country',
    'income'
]

census_train = pd.read_csv(census_train_path, index_col=False, names=column_names)

# Read the test file (the original read the training file a second time).
# Assumption: adult.test starts with one non-data line and its labels end with a period.
census_test = pd.read_csv(census_test_path, index_col=False, names=column_names, skiprows=1)

census_train_label = census_train.pop('income') == " >50K"
census_test_label = census_test.pop('income') == " >50K."

census_train.head(10)
census_train_label[:20]

```

### Input pipeline

```

train_input = tf.estimator.inputs.pandas_input_fn(

census_train,

census_train_label,

shuffle=True,

batch_size = 32, # process 32 examples at a time

num_epochs=None,

)

test_input = tf.estimator.inputs.pandas_input_fn(

census_test,

census_test_label,

shuffle=True,

num_epochs=1)

features, labels = train_input()

features

```

### Feature description

```

features = [

tf.feature_column.numeric_column('hours-per-week'),

tf.feature_column.bucketized_column(tf.feature_column.numeric_column('education-num'), list(range(25))),

tf.feature_column.categorical_column_with_vocabulary_list('sex', ['male','female']),

tf.feature_column.categorical_column_with_hash_bucket('native-country', 1000),

]

estimator = tf.estimator.LinearClassifier(features, model_dir='census/linear',n_classes=2)

estimator.train(train_input, steps=5000)

```
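As a rough illustration of what the `bucketized_column` and `categorical_column_with_hash_bucket` transformations above do to raw values, here is a plain NumPy/Python sketch. The boundaries, the example values, and the `hash_bucket` helper are hypothetical; TensorFlow uses a different hash function, so the actual bucket ids will differ.

```python
import hashlib
import numpy as np

# Bucketizing: map each number to the index of the interval it falls in.
boundaries = [5, 9, 13]                  # hypothetical boundaries
education_num = np.array([3, 5, 10, 16])
bucket_ids = np.digitize(education_num, boundaries)
print(bucket_ids)

# Hash bucketing: map an arbitrary string to one of a fixed number of ids,
# without needing to know the vocabulary in advance.
def hash_bucket(value, num_buckets):
    digest = hashlib.md5(value.encode('utf-8')).hexdigest()
    return int(digest, 16) % num_buckets

countries = [' United-States', ' Cuba', ' United-States']
country_ids = [hash_bucket(c, 1000) for c in countries]
print(country_ids)
```

Equal strings always land in the same bucket, while distinct strings may collide; choosing a bucket count well above the number of distinct values keeps collisions rare.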

### Evaluate the model

```

estimator.evaluate(test_input)

```

## DNN model

### Update input pre-processing

```

features = [

tf.feature_column.numeric_column('education-num'),

tf.feature_column.numeric_column('hours-per-week'),

tf.feature_column.numeric_column('age'),

tf.feature_column.indicator_column(

tf.feature_column.categorical_column_with_vocabulary_list('sex',['male','female'])),

tf.feature_column.embedding_column( # now using embedding!

tf.feature_column.categorical_column_with_hash_bucket('native-country', 1000), 10)

]

estimator = tf.estimator.DNNClassifier(hidden_units=[20,20],

feature_columns=features,

n_classes=2,

model_dir='census/dnn')

estimator.train(train_input, steps=5000)

estimator.evaluate(test_input)

```

## Custom Input Pipeline using Datasets API

### Read the data

```

def census_input_fn(path):
    def input_fn():
        dataset = (
            tf.contrib.data.TextLineDataset(path)
                .map(csv_decoder)
                .shuffle(buffer_size=100)
                .batch(32)
                .repeat())
        columns = dataset.make_one_shot_iterator().get_next()
        income = tf.equal(columns.pop('income'), " >50K")
        return columns, income
    return input_fn

# column name -> default value (the default also fixes the column's type)
csv_defaults = collections.OrderedDict([
    ('age', [0]),
    ('workclass', ['']),
    ('fnlwgt', [0]),
    ('education', ['']),
    ('education-num', [0]),
    ('marital-status', ['']),
    ('occupation', ['']),
    ('relationship', ['']),
    ('race', ['']),
    ('sex', ['']),
    ('capital-gain', [0]),
    ('capital-loss', [0]),
    ('hours-per-week', [0]),
    ('native-country', ['']),
    ('income', ['']),
])

def csv_decoder(line):
    # list(...) so that record_defaults is a real list under Python 3 as well
    parsed = tf.decode_csv(line, list(csv_defaults.values()))
    return dict(zip(csv_defaults.keys(), parsed))

```
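The decoding step can be pictured without TensorFlow. The sketch below parses a single CSV line into a dictionary keyed by column name, casting each field to the type of its default. The three-column `defaults` table and the `decode_line` helper are hypothetical simplifications, not the behaviour of `tf.decode_csv` in every detail.

```python
import collections
import csv

# Hypothetical three-column subset of the census defaults, for brevity
defaults = collections.OrderedDict([
    ('age', 0),
    ('workclass', ''),
    ('fnlwgt', 0),
])

def decode_line(line):
    """Parse one CSV line into {column name: value}, typed like its default."""
    fields = next(csv.reader([line]))
    record = collections.OrderedDict()
    for (name, default), field in zip(defaults.items(), fields):
        if field.strip() == '':
            record[name] = default               # missing field: use the default
        else:
            record[name] = type(default)(field)  # cast to the default's type
    return record

row = decode_line('39, State-gov, 77516')
print(row)
```

String fields keep their leading space here, which mirrors why the label above is compared against `" >50K"` rather than `">50K"`.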

### Try the input function

```

tf.reset_default_graph()
census_input = census_input_fn(census_train_path)
training_batch = census_input()

with tf.Session() as sess:
    features, high_income = sess.run(training_batch)

print(features['education'])
print(features['age'])
print(high_income)

```

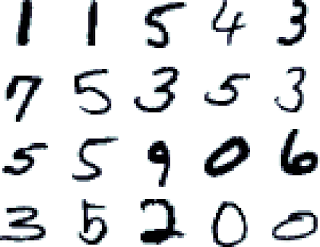

## 4) Building a custom estimator to classify handwritten digits (MNIST)

Image from: http://rodrigob.github.io/are_we_there_yet/build/images/mnist.png?1363085077

```

train,test = tf.contrib.keras.datasets.mnist.load_data()

x_train,y_train = train

x_test,y_test = test

mnist_train_input = tf.estimator.inputs.numpy_input_fn({'x':np.array(x_train, dtype=np.float32)},

np.array(y_train,dtype=np.int32),

shuffle=True,

num_epochs=None)

mnist_test_input = tf.estimator.inputs.numpy_input_fn({'x':np.array(x_test, dtype=np.float32)},

np.array(y_test,dtype=np.int32),

shuffle=True,

num_epochs=1)

```

### tf.estimator.LinearClassifier

```

estimator = tf.estimator.LinearClassifier([tf.feature_column.numeric_column('x',shape=784)],

n_classes=10,

model_dir="mnist/linear")

estimator.train(mnist_train_input, steps = 10000)

estimator.evaluate(mnist_test_input)

```

### Examine the results with [TensorBoard](http://0.0.0.0:6006)

$> tensorboard --logdir mnist/DNN

```

estimator = tf.estimator.DNNClassifier(hidden_units=[256],

feature_columns=[tf.feature_column.numeric_column('x',shape=784)],

n_classes=10,

model_dir="mnist/DNN")

estimator.train(mnist_train_input, steps = 10000)

estimator.evaluate(mnist_test_input)

# Parameters

BATCH_SIZE = 128

STEPS = 10000

```

## A Custom Model

```

def build_cnn(input_layer, mode):
    with tf.name_scope("conv1"):
        conv1 = tf.layers.conv2d(inputs=input_layer, filters=32, kernel_size=[5, 5],
                                 padding='same', activation=tf.nn.relu)

    with tf.name_scope("pool1"):
        pool1 = tf.layers.max_pooling2d(inputs=conv1, pool_size=[2, 2], strides=2)

    with tf.name_scope("conv2"):
        conv2 = tf.layers.conv2d(inputs=pool1, filters=64, kernel_size=[5, 5],
                                 padding='same', activation=tf.nn.relu)

    with tf.name_scope("pool2"):
        pool2 = tf.layers.max_pooling2d(inputs=conv2, pool_size=[2, 2], strides=2)

    with tf.name_scope("dense"):
        pool2_flat = tf.reshape(pool2, [-1, 7 * 7 * 64])
        dense = tf.layers.dense(inputs=pool2_flat, units=1024, activation=tf.nn.relu)

    with tf.name_scope("dropout"):
        # dropout is only active while training
        is_training_mode = mode == tf.estimator.ModeKeys.TRAIN
        dropout = tf.layers.dropout(inputs=dense, rate=0.4, training=is_training_mode)

    logits = tf.layers.dense(inputs=dropout, units=10)
    return logits


def model_fn(features, labels, mode):
    # Describing the model
    input_layer = tf.reshape(features['x'], [-1, 28, 28, 1])
    tf.summary.image('mnist_input', input_layer)
    logits = build_cnn(input_layer, mode)

    # Generate Predictions
    classes = tf.argmax(input=logits, axis=1)
    predictions = {
        'classes': classes,
        'probabilities': tf.nn.softmax(logits, name='softmax_tensor')
    }

    if mode == tf.estimator.ModeKeys.PREDICT:
        # Return an EstimatorSpec object
        return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions)

    with tf.name_scope('loss'):
        loss = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=labels, logits=logits)
        loss = tf.reduce_sum(loss)
        tf.summary.scalar('loss', loss)

    with tf.name_scope('accuracy'):
        accuracy = tf.cast(tf.equal(tf.cast(classes, tf.int32), labels), tf.float32)
        accuracy = tf.reduce_mean(accuracy)
        tf.summary.scalar('accuracy', accuracy)

    # Configure the Training Op (for TRAIN mode)
    if mode == tf.estimator.ModeKeys.TRAIN:
        train_op = tf.contrib.layers.optimize_loss(
            loss=loss,
            global_step=tf.train.get_global_step(),
            learning_rate=1e-4,
            optimizer='Adam')
        return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions,
                                          loss=loss, train_op=train_op)

    # Configure the accuracy metric for evaluation
    eval_metric_ops = {
        'accuracy': tf.metrics.accuracy(labels=labels, predictions=classes)
    }
    return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions,
                                      loss=loss, eval_metric_ops=eval_metric_ops)

```
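The flattening size `7 * 7 * 64` used in `build_cnn` follows from how the layers change the spatial size of a 28 x 28 image. The short sketch below reproduces that arithmetic, assuming stride-1 convolutions with `'same'` padding and max-pooling with `'valid'` padding; the helper name `same_conv_then_pool` is made up for this example.

```python
def same_conv_then_pool(size, pool_size=2, stride=2):
    """Spatial size after a 'same'-padded stride-1 convolution and a 'valid' max-pool."""
    conv_out = size                              # 'same' padding keeps the size
    return (conv_out - pool_size) // stride + 1  # 'valid' pooling shrinks it

size = 28
for _ in range(2):             # two conv + pool stages
    size = same_conv_then_pool(size)

flat_units = size * size * 64  # 64 filters in the second convolution
print(size, flat_units)
```

If the input resolution, the number of pooling stages, or the filter count changes, the reshape in `build_cnn` has to change with it.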

## Runs estimator

```

# create estimator

run_config = tf.contrib.learn.RunConfig(model_dir='mnist/CNN')

estimator = tf.estimator.Estimator(model_fn=model_fn, config=run_config)

# train for 10000 steps

estimator.train(input_fn=mnist_train_input, steps=10000)

# evaluate

estimator.evaluate(input_fn=mnist_test_input)

# predict

preds = estimator.predict(input_fn=mnist_test_input)

```

## Distributed TensorFlow: using experiments

```

# Run an experiment

from tensorflow.contrib.learn.python.learn import learn_runner

# Enable TensorFlow logs

tf.logging.set_verbosity(tf.logging.INFO)

# create experiment

def experiment_fn(run_config, hparams):
    # create estimator
    estimator = tf.estimator.Estimator(model_fn=model_fn,
                                       config=run_config)
    # use the MNIST input functions defined earlier
    return tf.contrib.learn.Experiment(
        estimator,
        train_input_fn=mnist_train_input,
        eval_input_fn=mnist_test_input,
        train_steps=STEPS
    )

# run experiment
learn_runner.run(experiment_fn,
                 run_config=run_config)

```

### Examine the results with [TensorBoard](http://0.0.0.0:6006)

$> tensorboard --logdir mnist/CNN

|

github_jupyter

|

```

from autoreduce import *

import numpy as np

from sympy import symbols

# Post conservation law and other approximations phenomenological model at the RNA level

n = 4 # Number of states

nouts = 2 # Number of outputs

# Inputs by user

x_init = np.zeros(n)

timepoints_ode = np.linspace(0, 100, 100)

C = [[0, 0, 1, 0], [0, 0, 0, 1]]

nstates_tol = 3

error_tol = 0.3

# System dynamics symbolically

# params = [100, 50, 10, 5, 5, 0.02, 0.02, 0.01, 0.01]

# params = [1, 1, 5, 0.1, 0.2, 1, 1, 100, 100] # Parameter set for which reduction doesn't work

# K,b_t,b_l,d_t,d_l,del_t,del_l,beta_t,beta_l = params

x0 = symbols('x0')

x1 = symbols('x1')

x2 = symbols('x2')

x3 = symbols('x3')

x = [x0, x1, x2, x3]

K = symbols('K')

b_t = symbols('b_t')

b_l = symbols('b_l')

d_t = symbols('d_t')

d_l = symbols('d_l')

del_t = symbols('del_t')

del_l = symbols('del_l')

beta_t = symbols('beta_t')

beta_l = symbols('beta_l')

params = [K,b_t,b_l,d_t,d_l,del_t,del_l,beta_t,beta_l]

f0 = K * b_t**2/(b_t**2 + x[3]**2) - d_t * x[0]

f1 = K * b_l**2/(b_l**2 + x[2]**2) - d_l * x[1]

f2 = beta_t * x[0] - del_t * x[2]

f3 = beta_l * x[1] - del_l * x[3]

f = [f0,f1,f2,f3]

# parameter values

params_values = [100, 50, 10, 5, 5, 0.02, 0.02, 0.01, 0.01]

sys = System(x, f, params = params, params_values = params_values, C = C, x_init = x_init)

from autoreduce.utils import get_ODE

sys_ode = get_ODE(sys, timepoints_ode)

sol = sys_ode.solve_system().T

try:
    import matplotlib.pyplot as plt
    plt.plot(timepoints_ode, np.transpose(np.array(C)@sol))
    plt.xlabel('Time')
    plt.ylabel('[Outputs]')
    plt.show()
except ImportError:
    print('Plotting libraries missing.')

from autoreduce.utils import get_SSM

timepoints_ssm = np.linspace(0,100,100)

sys_ssm = get_SSM(sys, timepoints_ssm)

Ss = sys_ssm.compute_SSM() # len(timepoints) x len(params) x len(states)

out_Ss = []
for i in range(len(params)):
    out_Ss.append((np.array(C)@(Ss[:,i,:].T)))
out_Ss = np.reshape(np.array(out_Ss), (len(timepoints_ssm), len(params), nouts))

try:
    import seaborn as sn
    import matplotlib.pyplot as plt
    # one heatmap per output: parameters on the y axis, time on the x axis
    for j in range(nouts):
        sn.heatmap(out_Ss[:,:,j].T)
        plt.xlabel('Time')
        plt.ylabel('Parameters')
        plt.title('Sensitivity of output[{0}] with respect to all parameters'.format(j))
        plt.show()
except ImportError:
    print('Plotting libraries missing.')

from autoreduce.utils import get_reducible

timepoints_ssm = np.linspace(0,100,10)

timepoints_ode = np.linspace(0, 100, 100)

sys_reduce = get_reducible(sys, timepoints_ode, timepoints_ssm)

results = sys_reduce.reduce_simple()

list(results.keys())[0].f[1]

reduced_system, collapsed_system = sys_reduce.solve_timescale_separation([x0,x1], fast_states = [x3, x2])

reduced_system.f[1]

```

|

github_jupyter

|

# Understanding the FFT Algorithm

Copied from http://jakevdp.github.io/blog/2013/08/28/understanding-the-fft/

*This notebook first appeared as a post by Jake Vanderplas on [Pythonic Perambulations](http://jakevdp.github.io/blog/2013/08/28/understanding-the-fft/). The notebook content is BSD-licensed.*

<!-- PELICAN_BEGIN_SUMMARY -->

The Fast Fourier Transform (FFT) is one of the most important algorithms in signal processing and data analysis. I've used it for years, but having no formal computer science background, it occurred to me this week that I've never thought to ask *how* the FFT computes the discrete Fourier transform so quickly. I dusted off an old algorithms book and looked into it, and enjoyed reading about the deceptively simple computational trick that J. W. Cooley and John Tukey outlined in their classic [1965 paper](http://www.ams.org/journals/mcom/1965-19-090/S0025-5718-1965-0178586-1/) introducing the subject.

The goal of this post is to dive into the Cooley-Tukey FFT algorithm, explaining the symmetries that lead to it, and to show some straightforward Python implementations putting the theory into practice. My hope is that this exploration will give data scientists like myself a more complete picture of what's going on in the background of the algorithms we use.

<!-- PELICAN_END_SUMMARY -->

## The Discrete Fourier Transform

The FFT is a fast, $\mathcal{O}[N\log N]$ algorithm to compute the Discrete Fourier Transform (DFT), which

naively is an $\mathcal{O}[N^2]$ computation. The DFT, like the more familiar continuous version of the Fourier transform, has a forward and inverse form which are defined as follows:

**Forward Discrete Fourier Transform (DFT):**

$$X_k = \sum_{n=0}^{N-1} x_n \cdot e^{-i~2\pi~k~n~/~N}$$

**Inverse Discrete Fourier Transform (IDFT):**

$$x_n = \frac{1}{N}\sum_{k=0}^{N-1} X_k e^{i~2\pi~k~n~/~N}$$

The transformation from $x_n \to X_k$ is a translation from configuration space to frequency space, and can be very useful in both exploring the power spectrum of a signal, and also for transforming certain problems for more efficient computation. For some examples of this in action, you can check out Chapter 10 of our upcoming Astronomy/Statistics book, with figures and Python source code available [here](http://www.astroml.org/book_figures/chapter10/). For an example of the FFT being used to simplify an otherwise difficult differential equation integration, see my post on [Solving the Schrodinger Equation in Python](http://jakevdp.github.io/blog/2012/09/05/quantum-python/).

Because of the importance of the FFT in so many fields, Python contains many standard tools and wrappers to compute this. Both NumPy and SciPy have wrappers of the extremely well-tested FFTPACK library, found in the submodules ``numpy.fft`` and ``scipy.fftpack`` respectively. The fastest FFT I am aware of is in the [FFTW](http://www.fftw.org/) package, which is also available in Python via the [PyFFTW](https://pypi.python.org/pypi/pyFFTW) package.

For the moment, though, let's leave these implementations aside and ask how we might compute the FFT in Python from scratch.

## Computing the Discrete Fourier Transform

For simplicity, we'll concern ourselves only with the forward transform, as the inverse transform can be implemented in a very similar manner. Taking a look at the DFT expression above, we see that it is nothing more than a straightforward linear operation: a matrix-vector multiplication of $\vec{x}$,

$$\vec{X} = M \cdot \vec{x}$$

with the matrix $M$ given by

$$M_{kn} = e^{-i~2\pi~k~n~/~N}.$$

With this in mind, we can compute the DFT using simple matrix multiplication as follows:

```

import numpy as np

def DFT_slow(x):
    """Compute the discrete Fourier Transform of the 1D array x"""
    x = np.asarray(x, dtype=float)
    N = x.shape[0]
    n = np.arange(N)
    k = n.reshape((N, 1))
    M = np.exp(-2j * np.pi * k * n / N)
    return np.dot(M, x)

```

We can double-check the result by comparing to numpy's built-in FFT function:

```

x = np.random.random(1024)

np.allclose(DFT_slow(x), np.fft.fft(x))

```

Just to confirm the sluggishness of our algorithm, we can compare the execution times

of these two approaches:

```

%timeit DFT_slow(x)

%timeit np.fft.fft(x)

```

We are over 1000 times slower, which is to be expected for such a simplistic implementation. But that's not the worst of it. For an input vector of length $N$, the FFT algorithm scales as $\mathcal{O}[N\log N]$, while our slow algorithm scales as $\mathcal{O}[N^2]$. That means that for $N=10^6$ elements, we'd expect the FFT to complete in somewhere around 50 ms, while our slow algorithm would take nearly 20 hours!

So how does the FFT accomplish this speedup? The answer lies in exploiting symmetry.

## Symmetries in the Discrete Fourier Transform

One of the most important tools in the belt of an algorithm-builder is to exploit symmetries of a problem. If you can show analytically that one piece of a problem is simply related to another, you can compute the subresult

only once and save that computational cost. Cooley and Tukey used exactly this approach in deriving the FFT.

We'll start by asking what the value of $X_{N+k}$ is. From our above expression:

$$

\begin{align*}

X_{N + k} &= \sum_{n=0}^{N-1} x_n \cdot e^{-i~2\pi~(N + k)~n~/~N}\\

&= \sum_{n=0}^{N-1} x_n \cdot e^{- i~2\pi~n} \cdot e^{-i~2\pi~k~n~/~N}\\

&= \sum_{n=0}^{N-1} x_n \cdot e^{-i~2\pi~k~n~/~N}

\end{align*}

$$

where we've used the identity $\exp[2\pi~i~n] = 1$ which holds for any integer $n$.

The last line shows a nice symmetry property of the DFT:

$$X_{N+k} = X_k.$$

By a simple extension,

$$X_{k + i \cdot N} = X_k$$

for any integer $i$. As we'll see below, this symmetry can be exploited to compute the DFT much more quickly.
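The periodicity is easy to confirm numerically. The sketch below evaluates the DFT sum for a single index, including indices outside $0 \le k < N$; the helper name `dft_term` and the test signal are assumptions for illustration.

```python
import numpy as np

def dft_term(x, k):
    """Evaluate the forward DFT sum for a single (possibly out-of-range) index k."""
    N = len(x)
    n = np.arange(N)
    return np.sum(x * np.exp(-2j * np.pi * k * n / N))

rng = np.random.RandomState(0)
x = rng.random_sample(16)
N = len(x)
k = 3

# X_{k+N} and X_{k-2N} should both coincide with X_k
same_plus_N = np.allclose(dft_term(x, k), dft_term(x, k + N))
same_minus_2N = np.allclose(dft_term(x, k), dft_term(x, k - 2 * N))
print(same_plus_N, same_minus_2N)
```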

## DFT to FFT: Exploiting Symmetry

Cooley and Tukey showed that it's possible to divide the DFT computation into two smaller parts. From

the definition of the DFT we have:

$$

\begin{align}

X_k &= \sum_{n=0}^{N-1} x_n \cdot e^{-i~2\pi~k~n~/~N} \\

&= \sum_{m=0}^{N/2 - 1} x_{2m} \cdot e^{-i~2\pi~k~(2m)~/~N} + \sum_{m=0}^{N/2 - 1} x_{2m + 1} \cdot e^{-i~2\pi~k~(2m + 1)~/~N} \\

&= \sum_{m=0}^{N/2 - 1} x_{2m} \cdot e^{-i~2\pi~k~m~/~(N/2)} + e^{-i~2\pi~k~/~N} \sum_{m=0}^{N/2 - 1} x_{2m + 1} \cdot e^{-i~2\pi~k~m~/~(N/2)}

\end{align}

$$

We've split the single Discrete Fourier transform into two terms which themselves look very similar to smaller Discrete Fourier Transforms, one on the odd-numbered values, and one on the even-numbered values. So far, however, we haven't saved any computational cycles. Each term consists of $(N/2)*N$ computations, for a total of $N^2$.

The trick comes in making use of symmetries in each of these terms. Because the range of $k$ is $0 \le k < N$, while the range of $m$ is $0 \le m < M \equiv N/2$, we see from the symmetry properties above that we need only perform half the computations for each sub-problem. Our $\mathcal{O}[N^2]$ computation has become $\mathcal{O}[M^2]$, with $M$ half the size of $N$.
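A single level of this split can be checked directly. In the sketch below, NumPy's own FFT stands in for the two half-size transforms (an assumption made to keep the example short), and the names `E`, `O`, and `twiddle` are introduced here for illustration.

```python
import numpy as np

rng = np.random.RandomState(1)
x = rng.random_sample(64)
N = len(x)

E = np.fft.fft(x[::2])    # length-N/2 DFT of the even-indexed samples
O = np.fft.fft(x[1::2])   # length-N/2 DFT of the odd-indexed samples
k = np.arange(N // 2)
twiddle = np.exp(-2j * np.pi * k / N)

# The first half of the output uses E_k and O_k directly. The second half
# reuses them, because E and O are periodic with period N/2 and the
# twiddle factor changes sign when k is shifted by N/2.
X = np.concatenate([E + twiddle * O, E - twiddle * O])
split_matches = np.allclose(X, np.fft.fft(x))
print(split_matches)
```

Only $N/2$ values of each sub-transform are ever computed, yet all $N$ outputs are recovered.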

But there's no reason to stop there: as long as our smaller Fourier transforms have an even-valued $M$, we can reapply this divide-and-conquer approach, halving the computational cost each time, until our arrays are small enough that the strategy is no longer beneficial. In the asymptotic limit, this recursive approach scales as $\mathcal{O}[N\log N]$.
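The $N\log N$ scaling can be made explicit with a short recurrence. As a sketch, assume $N$ is a power of two, write $T(N)$ for the operation count of a length-$N$ transform, and let $c$ be the constant cost per output element of combining the two halves (both symbols are notation introduced here):

$$
\begin{align*}
T(N) &= 2\,T(N/2) + cN \\
     &= 4\,T(N/4) + 2cN \\
     &= 2^j\,T(N/2^j) + j\,cN \\
     &= N\,T(1) + cN\log_2 N \qquad (j = \log_2 N)
\end{align*}
$$

The first term is linear in $N$, so the total is dominated by the $cN\log_2 N$ term.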

This recursive algorithm can be implemented very quickly in Python, falling-back on our slow DFT code when the size of the sub-problem becomes suitably small:

```

def FFT(x):
    """A recursive implementation of the 1D Cooley-Tukey FFT"""
    x = np.asarray(x, dtype=float)
    N = x.shape[0]

    if N % 2 > 0:
        raise ValueError("size of x must be a power of 2")
    elif N <= 32:  # this cutoff should be optimized
        return DFT_slow(x)
    else:
        X_even = FFT(x[::2])
        X_odd = FFT(x[1::2])
        factor = np.exp(-2j * np.pi * np.arange(N) / N)
        # integer division so the slice indices are ints under Python 3 as well
        return np.concatenate([X_even + factor[:N // 2] * X_odd,
                               X_even + factor[N // 2:] * X_odd])

```

Here we'll do a quick check that our algorithm produces the correct result:

```

x = np.random.random(1024)

np.allclose(FFT(x), np.fft.fft(x))

```

And we'll time this algorithm against our slow version:

```

%timeit DFT_slow(x)

%timeit FFT(x)

%timeit np.fft.fft(x)

```

Our calculation is faster than the naive version by over an order of magnitude! What's more, our recursive algorithm is asymptotically $\mathcal{O}[N\log N]$: we've implemented the Fast Fourier Transform.

Note that we still haven't come close to the speed of the built-in FFT algorithm in numpy, and this is to be expected. The FFTPACK algorithm behind numpy's ``fft`` is a Fortran implementation which has received years of tweaks and optimizations. Furthermore, our NumPy solution involves both Python-stack recursions and the allocation of many temporary arrays, which adds significant computation time.

A good strategy to speed up code when working with Python/NumPy is to vectorize repeated computations where possible. We can do this, and in the process remove our recursive function calls, and make our Python FFT even more efficient.

## Vectorized Numpy Version

Notice that in the above recursive FFT implementation, at the lowest recursion level we perform $N~/~32$ identical matrix-vector products. The efficiency of our algorithm would benefit by computing these matrix-vector products all at once as a single matrix-matrix product. At each subsequent level of recursion, we also perform duplicate operations which can be vectorized. NumPy excels at this sort of operation, and we can make use of that fact to create this vectorized version of the Fast Fourier Transform:

```

def FFT_vectorized(x):
    """A vectorized, non-recursive version of the Cooley-Tukey FFT"""
    x = np.asarray(x, dtype=float)
    N = x.shape[0]

    if np.log2(N) % 1 > 0:
        raise ValueError("size of x must be a power of 2")

    # N_min here is equivalent to the stopping condition above,
    # and should be a power of 2
    N_min = min(N, 32)

    # Perform an O[N^2] DFT on all length-N_min sub-problems at once
    n = np.arange(N_min)
    k = n[:, None]
    M = np.exp(-2j * np.pi * n * k / N_min)
    X = np.dot(M, x.reshape((N_min, -1)))

    # build-up each level of the recursive calculation all at once
    while X.shape[0] < N:
        # integer division so the slice indices are ints under Python 3 as well
        X_even = X[:, :X.shape[1] // 2]
        X_odd = X[:, X.shape[1] // 2:]
        factor = np.exp(-1j * np.pi * np.arange(X.shape[0])
                        / X.shape[0])[:, None]
        X = np.vstack([X_even + factor * X_odd,
                       X_even - factor * X_odd])

    return X.ravel()

```

Though the algorithm is a bit more opaque, it is simply a rearrangement of the operations used in the recursive version with one exception: we exploit a symmetry in the ``factor`` computation and construct only half of the array. Again, we'll confirm that our function yields the correct result:

```

x = np.random.random(1024)

np.allclose(FFT_vectorized(x), np.fft.fft(x))

```
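The symmetry in the ``factor`` computation mentioned above can be verified on its own: the second half of the full factor array is the negative of the first half, so only half of it needs to be built. The variable names `full` and `half` below are assumptions for illustration.

```python
import numpy as np

N = 16
# All N factors, as in the recursive version
full = np.exp(-2j * np.pi * np.arange(N) / N)
# Only the first N/2 factors, written the way the vectorized version builds them
half = np.exp(-1j * np.pi * np.arange(N // 2) / (N // 2))

first_half_equal = np.allclose(full[:N // 2], half)
second_half_negated = np.allclose(full[N // 2:], -half)
print(first_half_equal, second_half_negated)
```

This is why the vectorized version can stack `X_even + factor * X_odd` on top of `X_even - factor * X_odd`.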

Because our algorithms are becoming much more efficient, we can use a larger array to compare the timings,

leaving out ``DFT_slow``:

```

x = np.random.random(1024 * 16)

%timeit FFT(x)

%timeit FFT_vectorized(x)

%timeit np.fft.fft(x)

```

We've improved our implementation by another order of magnitude! We're now within about a factor of 10 of the FFTPACK benchmark, using only a couple dozen lines of pure Python + NumPy. Though it's still no match computationally speaking, readability-wise the Python version is far superior to the FFTPACK source, which you can browse [here](http://www.netlib.org/fftpack/fft.c).

So how does FFTPACK attain this last bit of speedup? Well, mainly it's just a matter of detailed bookkeeping. FFTPACK spends a lot of time making sure to reuse any sub-computation that can be reused. Our numpy version still involves an excess of memory allocation and copying; in a low-level language like Fortran it's easier to control and minimize memory use. In addition, the Cooley-Tukey algorithm can be extended to use splits of size other than 2 (what we've implemented here is known as the *radix-2* Cooley-Tukey FFT). Also, other more sophisticated FFT algorithms may be used, including fundamentally distinct approaches based on convolutions (see, e.g. Bluestein's algorithm and Rader's algorithm). The combination of the above extensions and techniques can lead to very fast FFTs even on arrays whose size is not a power of two.
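As a small demonstration of the last point, NumPy's FFT accepts input lengths that are not powers of two. The sketch below compares it against a direct $\mathcal{O}[N^2]$ evaluation for a few such lengths; `dft_direct` is a reference helper written for this example, and the chosen sizes are arbitrary.

```python
import numpy as np

def dft_direct(x):
    """Direct O[N^2] DFT, used here only as a reference."""
    x = np.asarray(x, dtype=complex)
    N = x.shape[0]
    n = np.arange(N)
    k = n.reshape((N, 1))
    return np.dot(np.exp(-2j * np.pi * k * n / N), x)

rng = np.random.RandomState(2)
results = {}
for size in (12, 100, 127):   # composite and prime lengths, none a power of two
    x = rng.random_sample(size)
    results[size] = np.allclose(np.fft.fft(x), dft_direct(x))
print(results)
```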

Though the pure-Python functions are probably not useful in practice, I hope they've provided a bit of an intuition into what's going on in the background of FFT-based data analysis. As data scientists, we can make-do with black-box implementations of fundamental tools constructed by our more algorithmically-minded colleagues, but I am a firm believer that the more understanding we have about the low-level algorithms we're applying to our data, the better practitioners we'll be.

*This blog post was written entirely in the IPython Notebook. The full notebook can be downloaded

[here](http://jakevdp.github.io/downloads/notebooks/UnderstandingTheFFT.ipynb),

or viewed statically

[here](http://nbviewer.ipython.org/url/jakevdp.github.io/downloads/notebooks/UnderstandingTheFFT.ipynb).*

|

github_jupyter

|

```

# default_exp label

```

# Label

> A collection of functions to do label-based quantification

```

#hide

from nbdev.showdoc import *

```

## Label search

The label search builds on `compare_frags` from the search module.

We have a fixed number of reporter channels and check, for each channel, whether the spectrum contains a peak within the search tolerance.

Useful resources:

- [IsobaricAnalyzer](https://abibuilder.informatik.uni-tuebingen.de/archive/openms/Documentation/nightly/html/TOPP_IsobaricAnalyzer.html)

- [TMT Talk from Hupo 2015](https://assets.thermofisher.com/TFS-Assets/CMD/Reference-Materials/PP-TMT-Multiplexed-Protein-Quantification-HUPO2015-EN.pdf)
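To illustrate the idea of matching reporter channels within a tolerance, here is a plain NumPy sketch. It is not `compare_frags` and may differ from it in details such as tie-breaking; the function name `match_reporters` and the example masses are hypothetical.

```python
import numpy as np

def match_reporters(query_frag, query_int, label, tol, ppm):
    """For each label mass, report the intensity of the closest peak within tol."""
    report = np.zeros(len(label))
    for idx, mass in enumerate(label):
        delta = np.abs(query_frag - mass)
        if ppm:
            # relative difference in parts per million, using the mean of both masses
            delta = delta / ((query_frag + mass) / 2) * 1e6
        closest = np.argmin(delta)
        if delta[closest] <= tol:
            report[idx] = query_int[closest]
    return report

query_frag = np.array([127.0, 128.0, 129.05, 132.0])
query_int = np.array([100.0, 200.0, 300.0, 400.0])
label = np.array([127.0, 128.0, 129.0, 130.0])

report_da = match_reporters(query_frag, query_int, label, tol=0.1, ppm=False)
report_ppm = match_reporters(query_frag, query_int, label, tol=20, ppm=True)
print(report_da, report_ppm)
```

With a 0.1 Da window the peak at 129.05 is assigned to the 129.0 channel, while a 20 ppm window is too narrow for it; the 130.0 channel has no nearby peak in either case.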

```

#export

from numba import njit

from alphapept.search import compare_frags

import numpy as np

@njit

def label_search(query_frag: np.ndarray, query_int: np.ndarray, label: np.ndarray, reporter_frag_tol:float, ppm:bool)-> (np.ndarray, np.ndarray):

"""Function to search for a label for a given spectrum.

Args:

query_frag (np.ndarray): Array with query fragments.

query_int (np.ndarray): Array with query intensities.

label (np.ndarray): Array with label masses.

reporter_frag_tol (float): Fragment tolerance for search.

ppm (bool): Flag to use ppm instead of Dalton.

Returns:

np.ndarray: Array with intensities for the respective label channel.

np.ndarray: Array with offset masses.

"""

report = np.zeros(len(label))

off_mass = np.zeros_like(label)

hits = compare_frags(query_frag, label, reporter_frag_tol, ppm)

for idx, _ in enumerate(hits):

if _ > 0:

report[idx] = query_int[_-1]

off_mass[idx] = query_frag[_-1] - label[idx]

if ppm:

off_mass[idx] = off_mass[idx] / (query_frag[_-1] + label[idx]) *2 * 1e6

return report, off_mass

def test_label_search():

query_frag = np.array([1,2,3,4,5])

query_int = np.array([1,2,3,4,5])

label = np.array([1.0, 2.0, 3.0, 4.0, 5.0])

frag_tolerance = 0.1

ppm= False

assert np.allclose(label_search(query_frag, query_int, label, frag_tolerance, ppm)[0], query_int)

query_frag = np.array([1,2,3,4,6])

query_int = np.array([1,2,3,4,5])

assert np.allclose(label_search(query_frag, query_int, label, frag_tolerance, ppm)[0], np.array([1,2,3,4,0]))

query_frag = np.array([1,2,3,4,6])

query_int = np.array([5,4,3,2,1])

assert np.allclose(label_search(query_frag, query_int, label, frag_tolerance, ppm)[0], np.array([5,4,3,2,0]))

query_frag = np.array([1.1, 2.2, 3.3, 4.4, 6.6])

query_int = np.array([1,2,3,4,5])

frag_tolerance = 0.5

ppm= False

assert np.allclose(label_search(query_frag, query_int, label, frag_tolerance, ppm)[1], np.array([0.1, 0.2, 0.3, 0.4, 0.0]))

test_label_search()

#Example usage

query_frag = np.array([127, 128, 129.1, 132])

query_int = np.array([100, 200, 300, 400])

label = np.array([127.0, 128.0, 129.0, 130.0])

frag_tolerance = 0.1

ppm = False

report, offset = label_search(query_frag, query_int, label, frag_tolerance, ppm)

print(f'Reported intensities {report}, Offset {offset}')

```
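The matching and offset logic above can be sketched in plain NumPy. This is only an illustrative stand-in, not the AlphaPept implementation: `label_search_sketch` is a hypothetical name, it does not call `compare_frags`, and picking the closest peak inside the tolerance window is an assumption.

```python
import numpy as np

def label_search_sketch(query_frag, query_int, label, tol, ppm):
    """Illustrative pure-NumPy stand-in for label_search (hypothetical helper)."""
    report = np.zeros(len(label))
    off_mass = np.zeros(len(label))
    for idx, mass in enumerate(label):
        delta = query_frag - mass
        if ppm:
            # Relative deviation in ppm, normalised by the mean of both masses,
            # which matches delta / (query + label) * 2 * 1e6 in the cell above.
            delta = delta / ((query_frag + mass) / 2) * 1e6
        within = np.abs(delta) <= tol
        if within.any():
            # Assumption: keep the closest peak when several fall in the window.
            best = np.argmin(np.where(within, np.abs(delta), np.inf))
            report[idx] = query_int[best]
            off_mass[idx] = delta[best]
    return report, off_mass

query_frag = np.array([127.0, 128.0, 129.1, 132.0])
query_int = np.array([100.0, 200.0, 300.0, 400.0])
label = np.array([127.0, 128.0, 129.0, 130.0])

# A tolerance of 0.2 Da keeps the 129.1 peak clearly inside the window.
report, offset = label_search_sketch(query_frag, query_int, label, 0.2, False)
print(report, offset)
```

Channels without a peak inside the window keep an intensity and offset of zero, as in the original function.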

## MS2 Search

```

#export

from typing import NamedTuple

import alphapept.io

def search_label_on_ms_file(file_name:str, label:NamedTuple, reporter_frag_tol:float, ppm:bool):

"""Wrapper function to search labels on an ms_file and write results to the peptide_fdr of the file.

Args:

file_name (str): Path to ms_file.

label (NamedTuple): Label with channels, mod_name and masses.

reporter_frag_tol (float): Fragment tolerance for search.

ppm (bool): Flag to use ppm instead of Dalton.

"""

ms_file = alphapept.io.MS_Data_File(file_name, is_read_only = False)

df = ms_file.read(dataset_name='peptide_fdr')

label_intensities = np.zeros((len(df), len(label.channels)))

off_masses = np.zeros((len(df), len(label.channels)))

labeled = df['sequence'].str.startswith(label.mod_name).values

query_data = ms_file.read_DDA_query_data()

query_indices = query_data["indices_ms2"]

query_frags = query_data['mass_list_ms2']

query_ints = query_data['int_list_ms2']

for idx, query_idx in enumerate(df['raw_idx']):

query_idx_start = query_indices[query_idx]

query_idx_end = query_indices[query_idx + 1]

query_frag = query_frags[query_idx_start:query_idx_end]

query_int = query_ints[query_idx_start:query_idx_end]

query_frag_idx = query_frag < label.masses[-1]+1

query_frag = query_frag[query_frag_idx]

query_int = query_int[query_frag_idx]

if labeled[idx]:

label_int, off_mass = label_search(query_frag, query_int, label.masses, reporter_frag_tol, ppm)

label_intensities[idx, :] = label_int

off_masses[idx, :] = off_mass

df[label.channels] = label_intensities

df[[_+'_off_ppm' for _ in label.channels]] = off_masses

ms_file.write(df, dataset_name="peptide_fdr", overwrite=True) #Overwrite dataframe with label information

#export

import logging

import os

from alphapept.constants import label_dict

def find_labels(

to_process: dict,

callback: callable = None,

parallel:bool = False

) -> bool:

"""Wrapper function to search for labels.

Args:

to_process (dict): A dictionary with settings indicating which files are to be processed and how.

callback (callable): A function that accepts a float between 0 and 1 as progress. Defaults to None.

parallel (bool): If True, process multiple files in parallel.

This is not implemented yet!

Defaults to False.

Returns:

bool: True if and only if the label finding was successful.

"""

index, settings = to_process

raw_file = settings['experiment']['file_paths'][index]

try:

base, ext = os.path.splitext(raw_file)

file_name = base+'.ms_data.hdf'

label = label_dict[settings['isobaric_label']['label']]

reporter_frag_tol = settings['isobaric_label']['reporter_frag_tolerance']

ppm = settings['isobaric_label']['reporter_frag_tolerance_ppm']

search_label_on_ms_file(file_name, label, reporter_frag_tol, ppm)

logging.info(f'Tag finding of file {file_name} complete.')

return True

except Exception as e:

logging.error(f'Tag finding of file {file_name} failed. Exception {e}')

return f"{e}" #Can't return exception object, cast as string

#hide

from nbdev.export import *

notebook2script()

```
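The per-spectrum slicing in `search_label_on_ms_file` relies on a flat array of fragment values plus an index array holding the start of each spectrum. A minimal sketch of that layout, with made-up numbers and the hypothetical helper `spectrum_slice`, might look like this:

```python
import numpy as np

# Hypothetical flat storage: three spectra with 3, 2 and 4 fragments.
indices = np.array([0, 3, 5, 9])
masses = np.array([126.1, 127.1, 500.0, 128.1, 900.0, 126.1, 129.1, 131.1, 700.0])

def spectrum_slice(values, indices, i):
    """Return the values belonging to spectrum i."""
    return values[indices[i]:indices[i + 1]]

# Keep only fragments below the heaviest reporter mass plus 1 Da,
# mirroring the `label.masses[-1] + 1` filter in the cell above.
max_reporter = 131.0
spec = spectrum_slice(masses, indices, 0)
low = spec[spec < max_reporter + 1]
print(low)
```

Restricting the fragments to the reporter region before matching keeps the search window small, since reporter ions sit at the low-mass end of the spectrum.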

|

github_jupyter

|

```

import numpy as np

import pandas as pd

import warnings

warnings.filterwarnings('ignore')

import seaborn as sns

sns.set_palette('Set2')

import matplotlib.pyplot as plt

%matplotlib inline

from sklearn.metrics import confusion_matrix, mean_squared_error

from sklearn.preprocessing import LabelEncoder, MinMaxScaler, StandardScaler

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.linear_model import LinearRegression, Lasso, Ridge, SGDRegressor

from sklearn.preprocessing import KBinsDiscretizer

from sklearn.ensemble import RandomForestRegressor

from sklearn.svm import LinearSVC, SVC, LinearSVR, SVR

from sklearn.tree import DecisionTreeRegressor

from sklearn.model_selection import GridSearchCV

from scipy.stats import zscore

from sklearn.metrics import mean_squared_error

import requests

import json

from datetime import datetime

import time

import os

from pandas.tseries.holiday import USFederalHolidayCalendar as calendar

from config import yelp_api_key

from config import darksky_api_key

```

## Set Up

```

# Analysis Dates

start_date = '2017-01-01' # Start Date Inclusive

end_date = '2019-06-19' # End Date Exclusive

search_business = 'Jupiter Disco'

location = 'Brooklyn, NY'

```

## Pull Weather Data

### Latitude + Longitude from Yelp API

```

host = 'https://api.yelp.com'

path = '/v3/businesses/search'

search_limit = 10

# Yelp Authorization Header with API Key

headers = {

'Authorization': 'Bearer {}'.format(yelp_api_key)

}

# Build Requests Syntax with Yelp Host and Path and URL Parameters

# Return JSON response

def request(host, path, url_params=None):

url_params = url_params or {}

url = '{}{}'.format(host, path)

response = requests.get(url, headers=headers, params=url_params)

return response.json()

# Build URL Params for the Request and provide the host and path

def search(term, location):

url_params = {

'term': term.replace(' ', '+'),

'location': location.replace(' ', '+'),

'limit': search_limit

}

return request(host, path, url_params=url_params)

# Return Coordinates if Exact Match Found

def yelp_lat_long(business, location):

# Call search function here with business name and location

response = search(business, location)

# Set state to 'No Match' in case no Yelp match found

state = 'No Match'

possible_matches = []

# Check search returns for match with business

for i in range(len(response['businesses'])):

# If match found:

if response['businesses'][i]['name'] == business:

# Local variables to help navigate JSON return

response_ = response['businesses'][i]

name_ = response_['name']

print(f'Weather Location: {name_}')

state = 'Match Found'

#print(response['businesses'][0])

return response_['coordinates']['latitude'], response_['coordinates']['longitude']

else:

# If no exact match, append all search returns to list

possible_matches.append(response['businesses'][i]['name'])

# If no match, show user potential matches

if state == 'No Match':

print('Exact match not found, did you mean one of the following? \n')

for possible_match in possible_matches:

print(possible_match)

return None, None

lat, long = yelp_lat_long(search_business, location)

#print(f'Latitude: {lat}\nLongitude: {long}')

```

### Darksky API Call

```

# Create List of Dates of target Weather Data

def find_dates(start_date, end_date):

list_of_days = []

daterange = pd.date_range(start_date, end_date)

for single_date in daterange:

list_of_days.append(single_date.strftime("%Y-%m-%d"))

return list_of_days

# Concatenate URL to make API Call

def build_url(api_key, lat, long, day):

_base_url = 'https://api.darksky.net/forecast/'

_time = 'T20:00:00'

_url = f'{_base_url}{api_key}/{lat},{long},{day + _time}?America/New_York&exclude=flags'

return _url

def make_api_call(url):

r = requests.get(url)

return r.json()

# Try / Except Helper Function for Handling JSON API Output

def find_val(dictionary, *keys):

level = dictionary

for key in keys:

try:

level = level[key]

except (KeyError, IndexError, TypeError):

return np.nan

return level

# Parse API Call Data using Try / Except Helper Function

def parse_data(data):

time = datetime.fromtimestamp(data['currently']['time']).strftime('%Y-%m-%d')

try:

precip_max_time = datetime.fromtimestamp(find_val(data, 'daily', 'data', 0, 'precipIntensityMaxTime')).strftime('%I:%M%p')

except:

precip_max_time = datetime(1900,1,1,5,1).strftime('%I:%M%p')

entry = {'date': time,

'temperature': float(find_val(data, 'currently', 'temperature')),

'apparent_temperature': float(find_val(data, 'currently', 'apparentTemperature')),

'humidity': float(find_val(data, 'currently', 'humidity')),

'precip_intensity_max': float(find_val(data,'daily','data', 0, 'precipIntensityMax')),

'precip_type': find_val(data, 'daily', 'data', 0, 'precipType'),

'precip_prob': float(find_val(data, 'currently', 'precipProbability')),

'pressure': float(find_val(data, 'currently', 'pressure')),

'summary': find_val(data, 'currently', 'icon'),

'precip_max_time': precip_max_time}

return entry

# Create List of Weather Data Dictionaries & Input Target Dates

def weather_call(start_date, end_date, _lat, _long):

weather = []

list_of_days = find_dates(start_date, end_date)

for day in list_of_days:

data = make_api_call(build_url(darksky_api_key, _lat, _long, day))

weather.append(parse_data(data))

return weather

result = weather_call(start_date, end_date, lat, long)

# Build DataFrame from List of Dictionaries

def build_weather_df(api_call_results):

df = pd.DataFrame(api_call_results)

# Add day of week to DataFrame + Set Index as date

df['date'] = pd.to_datetime(df['date'])

df['day_of_week'] = df['date'].dt.weekday

df['month'] = df['date'].dt.month

df.set_index('date', inplace=True)

df['apparent_temperature'].fillna(method='ffill',inplace=True)

df['temperature'].fillna(method='ffill',inplace=True)

df['humidity'].fillna(method='ffill',inplace=True)

df['precip_prob'].fillna(method='ffill', inplace=True)

df['pressure'].fillna(method='ffill', inplace=True)

df['precip_type'].fillna(value='none', inplace=True)

return df

weather_df = build_weather_df(result);

weather_df.to_csv(f'weather_{start_date}_to_{end_date}.csv')

weather_csv_file = f'weather_{start_date}_to_{end_date}.csv'

```
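One detail worth checking in `find_dates`: `pd.date_range` includes both endpoints by default, while the set-up cell describes `end_date` as exclusive. The sketch below, using arbitrary example dates, shows the difference and one possible way to drop the last day if an exclusive range is what is wanted.

```python
import pandas as pd

days = pd.date_range('2019-06-17', '2019-06-19')

# Default behaviour: both endpoints are part of the range.
inclusive = [d.strftime('%Y-%m-%d') for d in days]

# Possible variant if the end date should be excluded.
exclusive = [d.strftime('%Y-%m-%d') for d in days[:-1]]

print(inclusive)
print(exclusive)
```

With the inclusive default, the weather pull requests one more day than the sales filter keeps, which is harmless for the later left merge but costs one extra API call.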

## Import / Clean / Prep File

```

# Import Sales Data

bar_sales_file = 'bar_x_sales_export.csv'

rest_1_file = 'rest_1_dinner_sales_w_covers_061819.csv'

# Set File

current_file = rest_1_file

weather_csv_file = 'weather_2017-01-01_to_2019-06-19.csv'

# HELPER FUNCTION

def filter_df(df, start_date, end_date):

return df[(df.index >= start_date) & (df.index < end_date)]

# HELPER FUNCTION

def import_parse(file):

data = pd.read_csv(file, index_col = 'date', parse_dates=True)

df = pd.DataFrame(data)

# Rename Column to 'sales'

df = df.rename(columns={df.columns[0]: 'sales',

'dinner_covers': 'covers'})

# Drop NaN

#df = df.query('sales > 0').copy()

df.fillna(0, inplace=True)

print(f'"{file}" has been imported + parsed. The file has {len(df)} rows.')

return df

# HELPER FUNCTION

def prepare_data(current_file, weather_file):

df = filter_df(import_parse(current_file), start_date, end_date)

weather_df_csv = pd.read_csv(weather_file, parse_dates=True, index_col='date')

weather_df_csv['summary'].fillna(value='none', inplace=True)

df = pd.merge(df, weather_df_csv, how='left', on='date')

return df

```
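The left merge in `prepare_data` keeps every sales row and attaches weather where a matching date exists. A small sketch with invented values illustrates the effect; it uses `DataFrame.join` on the shared `date` index as a stand-in for `pd.merge(..., on='date')`.

```python
import pandas as pd

sales = pd.DataFrame(
    {'sales': [100.0, 0.0, 250.0]},
    index=pd.to_datetime(['2019-01-01', '2019-01-02', '2019-01-03']).rename('date'),
)
weather = pd.DataFrame(
    {'temperature': [30.0, 28.0]},
    index=pd.to_datetime(['2019-01-01', '2019-01-03']).rename('date'),
)

# Left join: all sales dates survive, missing weather becomes NaN.
merged = sales.join(weather, how='left')
print(merged)
```

Days without a weather record end up with `NaN` in the weather columns, so any gaps in the weather file should be handled before modelling.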

### Encode Closed Days

```

# Set Closed Dates using Sales

## REST 1 CLOSED DATES

additional_closed_dates = ['2018-12-24', '2017-12-24', '2017-02-05', '2017-03-14', '2018-01-01', '2018-02-04', '2019-02-03']

## BAR CLOSED DATES

#additional_closed_dates = ['2018-12-24', '2017-12-24', '2017-10-22']

closed_dates = [pd.to_datetime(date) for date in additional_closed_dates]

# Drop or Encode Closed Days

def encode_closed_days(df):

# CLOSED FEATURE

cal = calendar()

# Local list of days with zero sales

potential_closed_dates = df[df['sales'] == 0].index

# Encodes closed days with 1

df['closed'] = np.where((((df.index.isin(potential_closed_dates)) & \

(df.index.isin(cal.holidays(start_date, end_date)))) | df.index.isin(closed_dates)), 1, 0)

df['sales'] = np.where(df['closed'] == 1, 0, df['sales'])

return df

baseline_df = encode_closed_days(prepare_data(current_file, weather_csv_file))

baseline_df = baseline_df[['sales', 'outside', 'day_of_week', 'month', 'closed']]

baseline_df = add_dummies(add_clusters(baseline_df))

mod_baseline = target_trend_engineering(add_cal_features(impute_outliers(baseline_df)))

mod_baseline = mod_baseline.drop(['month'], axis=1)

```

### Replace Outliers in Training Data

```

# Replace Outliers with Medians

## Targets for Outliers

z_thresh = 3

def impute_outliers(df, *col):

# Check for Outliers in Sales + Covers

for c in col:

# Impute Median for Sales & Covers Based on Day of Week Outliers

for d in df['day_of_week'].unique():

# Median / Mean / STD for each day of the week

daily_median = np.median(df[df['day_of_week'] == d][c])

daily_mean = np.mean(df[df['day_of_week'] == d][c])

daily_std = np.std(df[df['day_of_week'] ==d ][c])

# Temporary column encoded if Target Columns have an Outlier

df['temp_col'] = np.where((df['day_of_week'] == d) & (df['closed'] == 0) & ((np.abs(df[c] - daily_mean)) > (daily_std * z_thresh)), 1, 0)

# Replace Outlier with Median

df[c] = np.where(df['temp_col'] == 1, daily_median, df[c])

df = df.drop(['temp_col'], axis=1)

return df

def add_ppa(df):

df['ppa'] = np.where(df['covers'] > 0, df['sales'] / df['covers'], 0)

return df

```
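The same idea as `impute_outliers` can be written without the explicit weekday loop. The following is a sketch under assumptions, not a drop-in replacement: `impute_outliers_sketch` is a hypothetical name, it handles one column at a time, and it returns a copy instead of modifying the input.

```python
import pandas as pd

def impute_outliers_sketch(df, col, z_thresh=3.0):
    """Replace per-weekday outliers on open days with the weekday median."""
    grouped = df.groupby('day_of_week')[col]
    mean = grouped.transform('mean')
    # ddof=0 matches np.std, which the loop version above uses.
    std = grouped.transform(lambda s: s.std(ddof=0))
    median = grouped.transform('median')
    is_outlier = (df['closed'] == 0) & ((df[col] - mean).abs() > z_thresh * std)
    out = df.copy()
    out[col] = df[col].where(~is_outlier, median)
    return out

# Twelve Fridays: eleven ordinary nights and one extreme value.
demo = pd.DataFrame({
    'sales': [100.0] * 11 + [1000.0],
    'day_of_week': [4] * 12,
    'closed': [0] * 12,
})
cleaned = impute_outliers_sketch(demo, 'sales')
print(cleaned['sales'].tolist())
```

Note that a single extreme value can only exceed three population standard deviations when its group has more than ten rows, so very short histories will never trigger the replacement.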

## Clean File Here

```

data = add_ppa(impute_outliers(encode_closed_days(prepare_data(current_file, weather_csv_file)), 'sales', 'covers'))

```

### Download CSV for EDA

```

data.to_csv('CSV_for_EDA.csv')

df_outside = data['outside']

```

## CHOOSE TARGET --> SALES OR COVERS

```

target = 'sales'

def daily_average_matrix_ann(df, target):

matrix = df.groupby([df.index.dayofweek, df.index.month, df.index.year]).agg({target: 'mean'})

matrix = matrix.rename_axis(['day', 'month', 'year'])

return matrix.unstack(level=1)

daily_average_matrix_ann(data, target)

```

### Create Month Clusters

```

from sklearn.cluster import KMeans

day_k = 7

mo_k = 3

def create_clusters(df, target, col, k):

# MAKE DATAFRAME USING CENTRAL TENDENCIES AS FEATURES

describe = df.groupby(col)[target].aggregate(['median', 'std', 'max'])

df = describe.reset_index()

# SCALE TEMPORARY DF

scaler = MinMaxScaler()

f = scaler.fit_transform(df)

# INSTANTIATE MODEL

km = KMeans(n_clusters=k, random_state=0).fit(f)

# GET KMEANS CLUSTER PREDICTIONS

labels = km.predict(f)

# MAKE SERIES FROM PREDICTIONS

temp = pd.DataFrame(labels, columns = ['cluster'], index=df.index)

# CONCAT CLUSTERS TO DATAFRAME

df = pd.concat([df, temp], axis=1)

# CREATE CLUSTER DICTIONARY

temp_dict = {}

for i in list(df[col]):

temp_dict[i] = df.loc[df[col] == i, 'cluster'].iloc[0]

return temp_dict

# Create Global Dictionaries to Categorize Day / Month

#day_dict = create_clusters(data, 'day_of_week', day_k)

month_dict = create_clusters(data, target, 'month', mo_k)

# Print Clusters

#print('Day Clusters: ', day_dict, '\n', 'Total Clusters: ', len(set(day_dict.values())), '\n')

print('Month Clusters: ', month_dict, '\n', 'Total Clusters: ', len(set(month_dict.values())))

```

### Add Temperature Onehot Categories

```

def encode_temp(df):

temp_enc = KBinsDiscretizer(n_bins=5, encode='onehot', strategy='kmeans')

temp_enc.fit(df[['apparent_temperature']])

return temp_enc

def one_hot_temp(df, temp_enc):

binned_transform = temp_enc.transform(df[['apparent_temperature']])

binned_df = pd.DataFrame(binned_transform.toarray(), index=df.index, columns=['temp_very_cold', 'temp_cold', 'temp_warm', 'temp_hot', 'temp_very_hot'])

df = df.merge(binned_df, how='left', on='date')

df.drop(['apparent_temperature', 'temperature'], axis=1, inplace=True)

return df, temp_enc

```

## Feature Engineering

```

# Add Clusters to DataFrame to use as Features

def add_clusters(df):

#df['day_cluster'] = df['day_of_week'].apply(lambda x: day_dict[x]).astype('category')

df['month_cluster'] = df['month'].apply(lambda x: month_dict[x]).astype('category')

return df

```

### Add Weather Features

```

hours_start = '05:00PM'

hours_end = '11:59PM'

hs_dt = datetime.strptime(hours_start, "%I:%M%p")

he_dt = datetime.strptime(hours_end, "%I:%M%p")

def between_time(check_time):

if hs_dt <= datetime.strptime(check_time, "%I:%M%p") <= he_dt:

return 1

else:

return 0

add_weather = True

temp_delta_window = 1

def add_weather_features(df):

if add_weather:

# POOR WEATHER FEATURES

df['precip_while_open'] = df['precip_max_time'].apply(lambda x: between_time(x))

# DROP FEATURES

features_to_drop = ['precip_max_time']

df.drop(features_to_drop, axis=1, inplace=True)

return df

```
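The `precip_while_open` flag compares clock times only. A compact sketch of the check, with the hypothetical function name `precip_while_open` and the same opening hours as above, is shown below.

```python
from datetime import datetime

FMT = '%I:%M%p'
OPEN = datetime.strptime('05:00PM', FMT)
CLOSE = datetime.strptime('11:59PM', FMT)

def precip_while_open(peak_time):
    """Return 1 if the peak precipitation time falls within opening hours."""
    return int(OPEN <= datetime.strptime(peak_time, FMT) <= CLOSE)

print(precip_while_open('08:30PM'))
print(precip_while_open('05:01AM'))
```

The second call uses `05:01AM`, the fallback that `parse_data` writes when no peak time is available, so days with missing precipitation data are encoded as 0.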

### Add Calendar Features

```

def add_cal_features(df):

cal = calendar()

# THREE DAY WEEKEND FEATURE

sunday_three_days = [date + pd.DateOffset(-1) for date in cal.holidays(start_date, end_date) if date.dayofweek == 0]

df['sunday_three_day'] = np.where(df.index.isin(sunday_three_days), 1, 0)

return df

```
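To see which dates the `sunday_three_day` feature flags, the sketch below lists the Sundays that precede a Monday federal holiday in the first half of 2019. The date window is an arbitrary example, and `pd.Timedelta` is used here in place of `pd.DateOffset(-1)`.

```python
import pandas as pd
from pandas.tseries.holiday import USFederalHolidayCalendar

holidays = USFederalHolidayCalendar().holidays('2019-01-01', '2019-06-19')

# Monday holidays (dayofweek == 0) make the preceding Sunday part of a long weekend.
sundays = [d - pd.Timedelta(days=1) for d in holidays if d.dayofweek == 0]

for d in sundays:
    print(d.strftime('%Y-%m-%d'), d.day_name())
```

Holidays that fall on other weekdays, such as a Tuesday New Year's Day, do not produce a flagged Sunday.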

### Add Dummies

```

def add_dummies(df):

df['day_of_week'] = df['day_of_week'].astype('category')

df = pd.get_dummies(data=df, columns=['day_of_week', 'month_cluster'])

return df

```

### Add Interactions

```

def add_interactions(df):

apply_this_interaction = False

if apply_this_interaction:

for d in [col for col in df.columns if col.startswith('day_cluster')]:

for m in [col for col in df.columns if col.startswith('month_cluster')]:

col_name = d + '_X_' + m

df[col_name] = df[d] * df[m]

df.drop([d], axis=1, inplace=True)

df.drop([col for col in df.columns if col.startswith('month_cluster')], axis=1, inplace=True)

return df

else:

return df

def add_weather_interactions(df):

apply_this_interaction = True

if apply_this_interaction:

try:

df['outside_X_precip_open'] = df['outside'] * df['precip_while_open']

for w in [col for col in df.columns if col.startswith('temp_')]:

col_name = w + '_X_' + 'outside'

df[col_name] = df[w] * df['outside']

df.drop(['outside'], axis=1, inplace=True)

except:

pass

return df

else:

return df

```

### Feature Selection

```

def feature_selection(df):

try:

target_list = ['sales', 'covers', 'ppa']

target_to_drop = [t for t in target_list if t != target]

df = df.drop(target_to_drop, axis=1)

except:

pass

# Feature Selection / Drop unnecessary or correlated columns

cols_to_drop = ['month', 'precip_type', 'summary', 'pressure', 'precip_intensity_max', 'day_of_week_0']

df = df.drop(cols_to_drop, axis=1)

return df

```

### Add Target Trend Feature Engineering

```

trend_days_rolling = 31

trend_days_shift = 7

days_fwd = trend_days_rolling + trend_days_shift + 1

def target_trend_engineering(df):

df['target_trend'] = df[target].rolling(trend_days_rolling).mean() / df[target].shift(trend_days_shift).rolling(trend_days_rolling).mean()

#df['target_delta'] = df[target].shift(7) + df[target].shift(14) - df[target].shift(21) - df[target].shift(28)

return df

```
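The trend feature is a ratio of two rolling means, so the first rows are undefined. A toy series with a window of 3 and a shift of 2 (values chosen only for illustration) shows how many leading rows are lost.

```python
import numpy as np
import pandas as pd

rolling, shift = 3, 2
s = pd.Series(np.arange(1.0, 11.0))

recent = s.rolling(rolling).mean()
lagged = s.shift(shift).rolling(rolling).mean()
trend = recent / lagged

# Number of leading rows without a defined trend value.
warmup = int(trend.isna().sum())
print(warmup, trend.iloc[warmup])
```

The warm-up equals `rolling + shift - 1` rows. With the notebook's settings of 31 and 7 that is 37 rows, so skipping `days_fwd = 39` rows leaves a small safety margin.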

## Start Here

```

# IMPORT & PARSE CLEAN TRAINING SET

data = add_ppa(impute_outliers(encode_closed_days(prepare_data(current_file, weather_csv_file)), 'sales', 'covers'));

# One Hot Encode Temperature Data

data, temp_enc = one_hot_temp(data, encode_temp(data))

# Create CSV

data.to_csv('csv_before_features.csv')

def feature_engineering(df):

df.columns = df.columns.map(str)

# Add day & Month Clusters // Dicts with data held in Global Variable

df = add_clusters(df)

# Add Engineered Features for Weather & Calendar

df = add_weather_features(df)

df = add_cal_features(df)

# Create Dummies

df = add_dummies(df)

# Add Interactions

df = add_interactions(df)

df = add_weather_interactions(df)

# Drop Selected Columns

df = feature_selection(df)

return df

dfx = feature_engineering(data)

dfx = target_trend_engineering(dfx)

def corr_chart(df):

corr = df.corr()

mask = np.zeros_like(corr, dtype=bool)

mask[np.triu_indices_from(mask)] = True

# Set up the matplotlib figure

sns.set_style('whitegrid')

f, ax = plt.subplots(figsize=(16, 12))

# Generate a custom diverging colormap

cmap = sns.diverging_palette(220, 10, as_cmap=True)

# Draw the heatmap with the mask and correct aspect ratio

sns.heatmap(corr, mask=mask, cmap=cmap, vmax=1, vmin=-1, center=0,

square=True, linewidths=.75, annot=False, cbar_kws={"shrink": .75});

corr_chart(dfx)

```

## Update Sales

```

# # File from Start based on Target Variable

# current_sales_df = import_parse(rest_1_file)

# date = '2019-06-13'

# sales = 15209.75

# covers = 207

# outside = 1

# closed = 0

# def add_sales_row(date, sales):

# df = pd.DataFrame({'sales': sales,

# 'covers': covers,

# 'outside': outside,

# 'closed': closed},

# index=[date])

# return df

# temp = add_sales_row(date, sales)

# def build_sales_df(df, temp):

# df = df.append(temp)

# return df

# current_sales_df = build_sales_df(current_sales_df, temp)

# # Download Current DataFrame to CSV

# current_sales_df.to_csv(f'rest_1_clean_updated_{start_date}_to_{end_date}.csv')

# df_import = pd.read_csv('rest_1_clean_updated_2017-01-01_to_2019-06-17.csv', parse_dates=True, index_col='Unnamed: 0')

# def import_current(df):

# df.index = pd.to_datetime(df.index)

# df = add_ppa(df)

# target_list = ['sales', 'covers', 'ppa']

# target_to_drop = [t for t in target_list if t != target]

# df = df.drop(target_to_drop, axis=1)

# return df

# current_df = import_current(df_import)

```

### Add Recent Sales Data

```

# # Import Most Recent DataFrame

# df_before_features = pd.read_csv('csv_before_features.csv', index_col='date', parse_dates=True)

# # Create New Weather DataFrame with Updated Data

# new_date_start = '2019-06-15'

# new_date_end = '2019-06-17'

# def update_current_df(sales_df, df_before_features, new_date_start, new_end_date):

# sales_df = sales_df[new_date_start:]

# sales_df = sales_df.rename_axis(index = 'date')

# sales_df.index = pd.to_datetime(sales_df.index)

# ## Find Lat Long for Business

# lat, long = yelp_lat_long(search_business, location)

# ## Pull Weather Data / Forecast

# weather_df = build_weather_df(weather_call(new_date_start, new_date_end, lat, long))

# ## Parse, Clean, Engineer

# df = pd.merge(sales_df, weather_df, how='left', on='date')

# df, _ = one_hot_temp(df, temp_enc)

# df = pd.concat([df_before_features, df])

# df = target_trend_engineering(feature_engineering(df))

# return df

# current_df = update_current_df(current_df, df_before_features, new_date_start, new_date_end)

```

## Test / Train / Split

### Drop Closed Days?

```

drop_all_closed = False

if drop_all_closed:

current_df = current_df[current_df['closed'] == 0]

dfx.columns

def drop_weather(df):

no_weather = False

if no_weather:

df = df.drop(['humidity', 'precip_prob', 'temp_very_cold', 'temp_cold', 'temp_hot', 'temp_very_hot', 'precip_while_open', \

'temp_very_cold_X_outside', 'temp_cold_X_outside', 'temp_hot_X_outside','temp_very_hot_X_outside', 'outside_X_precip_open'], axis=1)

df = df.merge(df_outside, on='date', how='left')

return df

else:

return df

dfx = drop_weather(dfx)

dfx.head()

def cv_split(df):

features = df.drop([target], axis=1)[days_fwd:]

y = df[target][days_fwd:]

return features, y

cv_features, cv_y = cv_split(dfx)

baseline_cv_x, baseline_cv_y = cv_split(mod_baseline)

def train_test_split(df):

# Separate Target & Features

y = df[target]

features = df.drop([target], axis=1)

# Test / Train / Split

train_date_start = '2017-01-01'

train_date_end = '2018-12-31'

X_train = features[pd.to_datetime(train_date_start) + pd.DateOffset(days_fwd):train_date_end]

X_test = features[pd.to_datetime(train_date_end) + pd.DateOffset(1): ]

y_train = y[pd.to_datetime(train_date_start) + pd.DateOffset(days_fwd):train_date_end]

y_test = y[pd.to_datetime(train_date_end) + pd.DateOffset(1): ]

# Scale

scaler = MinMaxScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

X_train = pd.DataFrame(X_train_scaled, columns=X_train.columns)

X_test = pd.DataFrame(X_test_scaled, columns=X_train.columns)

print('Train set: ', len(X_train))

print('Test set: ', len(X_test))

return X_train, X_test, y_train, y_test, scaler

X_train, X_test, y_train, y_test, scaler = train_test_split(dfx)

baseline_X_train, baseline_X_test, baseline_y_train, baseline_y_test, baseline_scaler = train_test_split(mod_baseline)

```
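The split above is chronological, and the scaler is fitted on the training window only. The sketch below reproduces that pattern with a hand-written min-max scaling on invented data; it is an illustration of the idea, not the `MinMaxScaler` call itself, and it keeps the date index on the scaled frames.

```python
import pandas as pd

idx = pd.date_range('2018-12-28', periods=6, freq='D')
features = pd.DataFrame({'humidity': [0.2, 0.4, 0.6, 0.8, 1.0, 0.5]}, index=idx)

# Chronological split: everything up to the cut-off date is training data.
train = features.loc[:'2018-12-31']
test = features.loc['2019-01-01':]

# Fit the scaling on the training window only, then apply it to both sets.
lo, hi = train.min(), train.max()
train_scaled = (train - lo) / (hi - lo)
test_scaled = (test - lo) / (hi - lo)

print(train_scaled['humidity'].tolist())
print(test_scaled['humidity'].tolist())
```

Test values can fall outside the [0, 1] range because the minimum and maximum come from the training period. That is expected and avoids leaking information from the test period into the scaler.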

### Linear Regression

```

def linear_regression_model(X_train, y_train):

lr = LinearRegression(fit_intercept=True)

lr_rgr = lr.fit(X_train, y_train)

return lr_rgr

lr_rgr = linear_regression_model(X_train, y_train)

baseline_lr_rgr = linear_regression_model(baseline_X_train, baseline_y_train)

def rgr_score(rgr, X_train, y_train, X_test, y_test, cv_features, cv_y):

y_hat = rgr.predict(X_test)

sum_squares_residual = sum((y_test - y_hat)**2)

sum_squares_total = sum((y_test - np.mean(y_test))**2)

r_squared = 1 - (float(sum_squares_residual))/sum_squares_total

adjusted_r_squared = 1 - (1-r_squared)*(len(y_test)-1)/(len(y_test)-X_test.shape[1]-1)

print('Formula Scores - R-Squared: ', r_squared, 'Adjusted R-Squared: ', adjusted_r_squared, '\n')

train_score = rgr.score(X_train, y_train)

test_score = rgr.score(X_test, y_test)

y_pred = rgr.predict(X_test)

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

pred_df = pd.DataFrame(y_pred, index=y_test.index)

pred_df = pred_df.rename(columns={0: target})

print('Train R-Squared: ', train_score)

print('Test R-Squared: ', test_score, '\n')

print('Root Mean Squared Error: ', rmse, '\n')

print('Cross Val Avg R-Squared: ', \

np.mean(cross_val_score(rgr, cv_features, cv_y, cv=10, scoring='r2')), '\n')

print('Intercept: ', rgr.intercept_, '\n')

print('Coefficients: \n')

for index, col_name in enumerate(X_test.columns):

print(col_name, ' --> ', rgr.coef_[index])

return pred_df

pred_df = rgr_score(lr_rgr, X_train, y_train, X_test, y_test, cv_features, cv_y)

baseline_preds = rgr_score(baseline_lr_rgr, baseline_X_train, baseline_y_train, baseline_X_test, baseline_y_test, baseline_cv_x, baseline_cv_y)

```
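The "formula scores" printed by `rgr_score` are the usual R-squared and adjusted R-squared. A standalone sketch with made-up predictions (the function name `r2_scores` is hypothetical) makes the arithmetic explicit.

```python
import numpy as np

def r2_scores(y_true, y_pred, n_features):
    """Return (R-squared, adjusted R-squared) for a set of predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    r2 = 1 - ss_res / ss_tot
    n = len(y_true)
    # The adjustment penalises additional features relative to the sample size.
    adj = 1 - (1 - r2) * (n - 1) / (n - n_features - 1)
    return r2, adj

r2, adj = r2_scores([1, 2, 3, 4, 5], [1.1, 1.9, 3.2, 3.8, 5.0], n_features=1)
print(r2, adj)
```

The adjusted value is always at or below the plain R-squared, and the gap grows as the number of features approaches the number of test rows.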

### Prediction Function

```

outside = 1

def predict_df(clf, scaler, X_train, current_df, date_1, date_2):

# Find Lat Long for Business

lat, long = yelp_lat_long(search_business, location)

# Pull Weather Data / Forecast

weather_df = build_weather_df(weather_call(date_1, date_2, lat, long))

day_of_week, apparent_temperature = weather_df['day_of_week'], weather_df['apparent_temperature']

weather_df['outside'] = outside

# One Hot Encode Temperature Using Fitted Encoder

df, _ = one_hot_temp(weather_df, temp_enc)

df['closed'] = 0

# Add Feature Engineering

df = feature_engineering(df)

# Add Sales Data for Sales Trend Engineering

current_df = current_df[target]

df = pd.merge(df, current_df, on='date', how='left')

df[target] = df[target].fillna(method='ffill')

df = target_trend_engineering(df)

df = df.drop([target], axis=1)

# Ensure Column Parity

missing_cols = set(X_train.columns) - set(df.columns)

for c in missing_cols:

df[c] = 0

df = df[X_train.columns][-2:]

# Scale Transform

df_scaled = scaler.transform(df)

df = pd.DataFrame(df_scaled, columns=df.columns, index=df.index)

# Predict and Build Prediction DataFrame for Review

pred_array = pd.DataFrame(clf.predict(df), index=df.index, columns=[target])

pred_df = df[df.columns[(df != 0).any()]]

pred_df = pd.concat([pred_df, day_of_week, apparent_temperature], axis=1)

final_predict = pd.concat([pred_array, pred_df], axis=1)

return final_predict

tonight = predict_df(lr_rgr, scaler, X_train, dfx, datetime.now().date() + pd.DateOffset(-days_fwd), datetime.now().date())

tonight[-2:]

```
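The "column parity" step in `predict_df` makes the prediction frame match the training columns. One compact alternative, sketched here with invented column names, is `DataFrame.reindex`, which adds missing columns, drops unknown ones and fixes the order in a single call.

```python
import pandas as pd

train_cols = ['outside', 'temp_cold', 'temp_hot']
new = pd.DataFrame({'temp_hot': [1], 'outside': [1], 'extra': [5]})

# Missing training columns are filled with 0; columns unseen in training are dropped.
aligned = new.reindex(columns=train_cols, fill_value=0)
print(aligned)
```

Keeping the exact training column order matters because the scaler and the fitted models work on column positions, not names.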

## Lasso

```

def lasso_model(X_train, y_train):

lassoReg = Lasso(fit_intercept=True, alpha=.05)

lasso_rgr = lassoReg.fit(X_train,y_train)

return lasso_rgr

lasso_rgr = lasso_model(X_train, y_train)

baseline_lasso = lasso_model(baseline_X_train, baseline_y_train)

pred_df_ppa_lasso = rgr_score(lasso_rgr, X_train, y_train, X_test, y_test, cv_features, cv_y)

baseline_lasso = rgr_score(baseline_lasso, baseline_X_train, baseline_y_train, baseline_X_test, baseline_y_test, baseline_cv_x, baseline_cv_y)

tonight = predict_df(lasso_rgr, scaler, X_train, dfx, datetime.now().date() + pd.DateOffset(-days_fwd), datetime.now().date())

tonight[-2:]

from yellowbrick.regressor import ResidualsPlot

from yellowbrick.features.importances import FeatureImportances

plt.figure(figsize=(12,8))

visualizer = ResidualsPlot(lasso_rgr, hist=False)

visualizer.fit(X_train, y_train)

visualizer.score(X_test, y_test)

visualizer.poof()

features = list(X_train.columns)

fig = plt.figure(figsize=(12,8))

ax = fig.add_subplot()

labels = list(map(lambda x: x.title(), features))

visualizer = FeatureImportances(lasso_rgr, ax=ax, labels=labels, relative=False)

visualizer.fit(X_train, y_train)

visualizer.poof()

```

### Random Forest Regression

```

(1/6)**20

8543 * 8543

def rf_regression_model(X_train, y_train):

rfr = RandomForestRegressor(max_depth= 11,

max_features= 0.60,

min_impurity_decrease= 0.005,

n_estimators= 300,

min_samples_leaf = 2,

min_samples_split = 2,

random_state = 0)

rfr_rgr = rfr.fit(X_train, y_train)

return rfr_rgr

rfr_rgr = rf_regression_model(X_train, y_train)

def rfr_score(rgr, X_test, y_test, cv_features, cv_y):

y_hat = rgr.predict(X_test)

sum_squares_residual = sum((y_test - y_hat)**2)

sum_squares_total = sum((y_test - np.mean(y_test))**2)

r_squared = 1 - (float(sum_squares_residual))/sum_squares_total

adjusted_r_squared = 1 - (1-r_squared)*(len(y_test)-1)/(len(y_test)-X_test.shape[1]-1)

print('Formula Scores - R-Squared: ', r_squared, 'Adjusted R-Squared: ', adjusted_r_squared, '\n')

train_score = rgr.score(X_train, y_train)

test_score = rgr.score(X_test, y_test)

y_pred = rgr.predict(X_test)

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

pred_df = pd.DataFrame(y_pred, index=y_test.index)

pred_df = pred_df.rename(columns={0: target})

print('Train R-Squared: ', train_score)

print('Test R-Squared: ', test_score, '\n')

print('Root Mean Squared Error: ', rmse, '\n')

print('Cross Val Avg R-Squared: ', \

np.mean(cross_val_score(rgr, cv_features, cv_y, cv=10, scoring='r2')), '\n')

return pred_df

pred_df = rfr_score(rfr_rgr, X_test, y_test, cv_features, cv_y)

```

### Random Forest Regression Prediction

```

tonight = predict_df(rfr_rgr, scaler, X_train, dfx, datetime.now().date() + pd.DateOffset(-days_fwd), datetime.now().date())

tonight[-2:]

```

### Grid Search Helper Function

```

def run_grid_search(rgr, params, X_train, y_train):

cv = 5

n_jobs = -1

scoring = 'neg_mean_squared_error'

grid = GridSearchCV(rgr, params, cv=cv, n_jobs=n_jobs, scoring=scoring, verbose=10)

grid = grid.fit(X_train, y_train)

best_grid_rgr = grid.best_estimator_

print('Grid Search: ', rgr.__class__.__name__, '\n')

print('Grid Search Best Score: ', grid.best_score_)

print('Grid Search Best Params: ', grid.best_params_)

print('Grid Search Best Estimator: ', grid.best_estimator_)

return best_grid_rgr

params = {

'n_estimators': [250, 275, 300, 350, 400,500],

'max_depth': [5, 7, 9, 11, 13, 15],

'min_impurity_decrease': [0.005, 0.001, 0.0001],

'max_features': ['auto', 0.65, 0.75, 0.85, 0.95]

}

best_grid_rgr = run_grid_search(rfr_rgr, params, X_train, y_train)

```
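Before launching a grid search it helps to estimate its size. The sketch below counts the parameter combinations in the grid above and multiplies by the number of folds; it uses only the standard library and does not fit anything.

```python
from itertools import product

params = {
    'n_estimators': [250, 275, 300, 350, 400, 500],
    'max_depth': [5, 7, 9, 11, 13, 15],
    'min_impurity_decrease': [0.005, 0.001, 0.0001],
    'max_features': ['auto', 0.65, 0.75, 0.85, 0.95],
}
cv = 5

# Every combination of values is one candidate model.
n_candidates = sum(1 for _ in product(*params.values()))
n_fits = n_candidates * cv
print(n_candidates, n_fits)
```

With several hundred trees per forest, this many fits can take a long time, so narrowing the grid around the best values from an earlier run is a reasonable way to keep the search manageable.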

### OLS Model

```

import statsmodels.api as sm

from statsmodels.formula.api import ols

f = ''

for c in dfx.columns:

f += c + '+'

x = f[6:-1]

f= target + '~' + x

model = ols(formula=f, data=dfx).fit()

model.summary()

```
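The formula string above is built by slicing off the first six characters, which only works when the target is the first column and is named `sales`. A slightly more robust construction, sketched with the hypothetical helper `build_formula` and invented column names, filters the target out by name.

```python
def build_formula(columns, target):
    """Build a patsy-style formula string: target ~ col1 + col2 + ..."""
    predictors = [c for c in columns if c != target]
    return f"{target} ~ " + " + ".join(predictors)

cols = ['outside', 'covers', 'closed', 'humidity']
formula = build_formula(cols, 'covers')
print(formula)
```

The resulting string can be passed as the `formula` argument in the same way as before. Column names still need to be valid identifiers for the formula parser.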

## XGB Regressor

```

from xgboost import XGBRegressor

def xgb_model(X_train, y_train):

objective = 'reg:linear'

booster = 'gbtree'

nthread = 4

learning_rate = 0.02

max_depth = 3

colsample_bytree = 0.75

n_estimators = 450

min_child_weight = 2

xgb_rgr= XGBRegressor(booster=booster, objective=objective, colsample_bytree=colsample_bytree, learning_rate=learning_rate, \

max_depth=max_depth, nthread=nthread, n_estimators=n_estimators, min_child_weight=min_child_weight, random_state = 0)

xgb_rgr = xgb_rgr.fit(X_train, y_train)

return xgb_rgr

xgb_rgr = xgb_model(X_train, y_train)

# Convert column names back to original

X_test = X_test[X_train.columns]

pred_df_covers_xgb = rfr_score(xgb_rgr, X_test, y_test, cv_features, cv_y)

```

## HYBRID

```

filex = 'predicted_ppa_timeseries.csv'

df_ts = pd.read_csv(filex,index_col='date',parse_dates=True)

pred_df = pred_df_covers_xgb.merge(df_ts, on='date', how='left')

pred_df.head()

pred_df['sales'] = pred_df['covers'] * pred_df['pred_ppa']

pred_df = pred_df[['sales']]

r2 = metrics.r2_score(y_test, pred_df)

r2

rmse = np.sqrt(mean_squared_error(y_test, pred_df))

rmse

tonight = predict_df(xgb_rgr, scaler, X_train, dfx, pd.datetime.now().date() + pd.DateOffset(-days_fwd), pd.datetime.now().date())

tonight[-2:]

xgb1 = XGBRegressor()

parameters = {'nthread': [4],  # with hyperthreading, xgboost may become slower

              'objective': ['reg:linear'],

              'learning_rate': [.015, 0.02, .025],  # the so-called `eta` value

              'max_depth': [3, 4, 5],

              'min_child_weight': [1, 2, 3],

              'silent': [1],

              'subsample': [0.7],

              'colsample_bytree': [0.55, 0.60, 0.65],

              'n_estimators': [400, 500, 600]}

xgb_grid = GridSearchCV(xgb1,

                        parameters,

                        cv=3,

                        n_jobs=5,

                        verbose=True,

                        scoring='neg_mean_squared_error')

xgb_grid.fit(X_train, y_train)

print(xgb_grid.best_score_)

print(xgb_grid.best_params_)

```
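With `scoring='neg_mean_squared_error'`, scikit-learn reports the negated MSE so that a higher score is always better. The printed `best_score_` is therefore negative and is not directly comparable to the `rmse` computed earlier. A minimal sketch of the conversion, using a made-up score value (`-1250.0` is hypothetical, not a result from this notebook):

```python
import math

# Hypothetical stand-in for xgb_grid.best_score_ (negated mean squared error).
best_score = -1250.0

best_mse = -best_score            # flip the sign to recover the MSE
best_rmse = math.sqrt(best_mse)   # same units as the target variable

print(round(best_rmse, 2))  # 35.36
```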

## CLEAN RUN

|

github_jupyter

|

# Numpy

### GitHub repository: https://github.com/jorgemauricio/curso_itesm

### Instructor: Jorge Mauricio

```

# libraries

import numpy as np

```

# Creating NumPy Arrays

## From a Python list

We create the array directly from a Python list or a list of lists

```

my_list = [1,2,3]

my_list

np.array(my_list)

my_matrix = [[1,2,3],[4,5,6],[7,8,9]]

my_matrix

```
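The cell above defines `my_matrix` but stops short of converting it. As a small sketch, passing the nested list to `np.array` gives a 2D array; the name `matrix_arr` is introduced here for illustration.

```python
import numpy as np

my_matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# A list of equal-length lists becomes a two-dimensional array.
matrix_arr = np.array(my_matrix)

print(matrix_arr.shape)  # (3, 3)
print(matrix_arr.ndim)   # 2
```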

## Methods

### arange

```

np.arange(0,10)

np.arange(0,11,2)

```
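As with Python's `range`, `np.arange(start, stop, step)` includes `start` and excludes `stop`. A short sketch of the two calls above, with the results converted to lists so the values are easy to read:

```python
import numpy as np

digits = np.arange(0, 10)     # stop value 10 is excluded
evens = np.arange(0, 11, 2)   # step of 2; 10 is included because stop is 11

print(digits.tolist())  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(evens.tolist())   # [0, 2, 4, 6, 8, 10]
```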

### zeros and ones

Generate arrays of zeros and ones

```

np.zeros(3)

np.zeros((5,5))

np.ones(3)

np.ones((3,3))

```

### linspace

Generate an array of evenly spaced values over a given interval

```

np.linspace(0,10,3)

np.linspace(0,10,50)

```
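Unlike `arange`, the third argument of `linspace` is the number of points, not the step, and both endpoints are included by default. A minimal sketch using the optional `retstep=True` flag to also return the spacing:

```python
import numpy as np

# Three points from 0 to 10, endpoints included.
points, step = np.linspace(0, 10, 3, retstep=True)

print(points.tolist())  # [0.0, 5.0, 10.0]
print(float(step))      # 5.0
```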

### eye

Generate identity matrices

```

np.eye(4)

```

### Random

### rand

Generate an array of a given shape filled with samples from a uniform distribution over [0, 1)

```

np.random.rand(2)

np.random.rand(5,5)

```

### randn

Generate an array of samples from the standard normal distribution (mean 0, standard deviation 1), unlike rand, which is uniform

```

np.random.randn(2)

np.random.randn(5,5)

```
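To make the difference between `rand` and `randn` concrete, the sketch below draws a larger sample from each and checks the basic properties. The seed and the sample size of 10,000 are arbitrary choices made here so the run is repeatable; they are not part of the original notebook.

```python
import numpy as np

np.random.seed(0)  # arbitrary seed, only for repeatability

uniform = np.random.rand(10000)   # uniform samples in [0, 1)
normal = np.random.randn(10000)   # standard normal samples

# Uniform samples never leave [0, 1); normal samples can be negative.
print(uniform.min() >= 0.0, uniform.max() < 1.0)  # True True
print(normal.min() < 0.0)                         # True

# Sample statistics should land close to the theoretical values:
# uniform mean near 0.5, normal mean near 0 and standard deviation near 1.
print(uniform.mean(), normal.mean(), normal.std())
```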

### randint

Generate random integers in a given range (the lower bound is included, the upper bound is excluded)

```

np.random.randint(1,100)

np.random.randint(1,100,10)

```
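A quick way to see that the upper bound is excluded is to draw many values and check the extremes. The seed and the sample size below are arbitrary choices for this sketch.

```python
import numpy as np

np.random.seed(0)  # arbitrary seed, only for repeatability

draws = np.random.randint(1, 100, 1000)

# Every value lies in 1..99; 100 itself is never produced.
print(draws.min() >= 1, draws.max() <= 99)  # True True
```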

### Array methods and attributes

```

arr = np.arange(25)

ranarr = np.random.randint(0,50,10)

arr

ranarr

```

### Reshape

Returns the same data arranged in a different shape

```

arr.reshape(5,5)

```
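`reshape` only works when the new shape holds exactly the same number of elements. The sketch below also shows `-1`, which asks NumPy to infer one dimension; the names `square` and `inferred` are introduced here for illustration.

```python
import numpy as np

arr = np.arange(25)

square = arr.reshape(5, 5)
inferred = arr.reshape(5, -1)   # -1 lets NumPy work out the second dimension

print(square.shape, inferred.shape)  # (5, 5) (5, 5)
print(arr.shape)                     # (25,)  the original array keeps its shape

# 4 * 6 = 24 does not match the 25 elements, so this raises ValueError.
try:
    arr.reshape(4, 6)
except ValueError as err:
    print('reshape failed:', err)
```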

### max, min, argmax, argmin

Methods for finding the maximum and minimum values and their indices

```

ranarr

ranarr.max()

ranarr.argmax()

ranarr.min()

ranarr.argmin()

```
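Because `ranarr` is random, its output changes on every run. The sketch below uses a fixed array (the values are made up for illustration) to show how the value methods relate to the index methods, including what happens when the maximum appears twice.

```python
import numpy as np

values = np.array([7, 42, 3, 42, 15])

print(values.max(), values.argmax())  # 42 1  (index of the first occurrence)
print(values.min(), values.argmin())  # 3 2

# Indexing with argmax gives back the maximum value.
print(values[values.argmax()] == values.max())  # True
```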

### Shape

Attribute that shows the shape of the array

```

# Vector

arr.shape

# Note the two pairs of brackets in the output: the reshaped array is 2D

arr.reshape(1,25)

arr.reshape(1,25).shape

arr.reshape(25,1)

arr.reshape(25,1).shape

```

### dtype

Shows the data type of the array's elements

```

arr.dtype

```

# Selection and indexing in NumPy

```

# create an array

arr = np.arange(0,11)

# display the array

arr

```

# Selection using brackets

```

# get the value at index 8

arr[8]

# get the values in a range

arr[1:5]

# get the values in another range

arr[2:6]

arr[2:6]

```

# Replacing values

```

# replace the values in a given range

arr[0:5]=100

# display the array

arr

# create the array again

arr = np.arange(0,11)

# display it

arr

# take a slice of the array

slice_of_arr = arr[0:6]

# display the slice

slice_of_arr

# change the values of the slice

slice_of_arr[:]=99

# display the values of the slice

slice_of_arr

# display the original array: it changed too, because a slice is a view of the same data, not a copy

arr

# to get a copy, you must ask for one explicitly

arr_copy = arr.copy()

# display the copied array

arr_copy

```
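The view-versus-copy behaviour above can be checked directly with `np.shares_memory`. This is a minimal sketch; the names `view` and `independent` are introduced here for illustration.

```python
import numpy as np

arr = np.arange(0, 11)

view = arr[0:6]                 # basic slicing returns a view
independent = arr[0:6].copy()   # an explicit copy owns its own data

print(np.shares_memory(arr, view))         # True
print(np.shares_memory(arr, independent))  # False

# Writing through the view changes the original; the copy is unaffected.
view[:] = 99
print(arr.tolist())          # [99, 99, 99, 99, 99, 99, 6, 7, 8, 9, 10]
print(independent.tolist())  # [0, 1, 2, 3, 4, 5]
```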

## Indexing a 2D array (matrices)

The general form for indexing a 2D array is **arr_2d[row][col]** or **arr_2d[row,col]**

```

# create a 2D array

arr_2d = np.array(([5,10,15],[20,25,30],[35,40,45]))

#Show

arr_2d

# index a row

arr_2d[1]

# The format is arr_2d[row][col] or arr_2d[row,col]

# Select a single element

arr_2d[1][0]

# Select a single element

arr_2d[1,0]

# 2D slices

# shape (2,2) slice from the top-right corner

arr_2d[:2,1:]

# the last row

arr_2d[2]

# the last row, using the explicit row,col form

arr_2d[2,:]

# number of columns (the second entry of shape)

arr_length = arr_2d.shape[1]

arr_length

```
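The `shape` tuple of a 2D array is `(rows, columns)`, so `shape[1]` in the cell above is the number of columns. The sketch below unpacks both entries and repeats two of the selections from the cell, adding a column selection for comparison; `n_rows` and `n_cols` are names introduced here.

```python
import numpy as np

arr_2d = np.array([[5, 10, 15], [20, 25, 30], [35, 40, 45]])

n_rows, n_cols = arr_2d.shape
print(n_rows, n_cols)  # 3 3

# Both index forms address the same element.
print(arr_2d[1][0] == arr_2d[1, 0])  # True

# Rows 0-1 and columns 1-2: the top-right 2x2 block.
print(arr_2d[:2, 1:].tolist())  # [[10, 15], [25, 30]]

# A colon in the row position selects a whole column.
print(arr_2d[:, 0].tolist())  # [5, 20, 35]
```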

# Selection

```

arr = np.arange(1,11)

arr

arr > 4

bool_arr = arr>4

bool_arr

arr[bool_arr]

arr[arr>2]

x = 2

arr[arr>x]

```
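Boolean masks can also be combined. A short sketch, assuming the same `arr` as above: use `&`, `|` and `~` (not the Python keywords `and`, `or`, `not`) and wrap each comparison in parentheses.

```python
import numpy as np

arr = np.arange(1, 11)

# Elements greater than 2 AND even.
mask = (arr > 2) & (arr % 2 == 0)
print(arr[mask].tolist())  # [4, 6, 8, 10]

# Elements smaller than 3 OR greater than 8.
print(arr[(arr < 3) | (arr > 8)].tolist())  # [1, 2, 9, 10]

# ~ inverts a mask.
print(arr[~mask].tolist())  # [1, 2, 3, 5, 7, 9]
```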

|

github_jupyter

|

```

import os

import numpy as np

import pandas as pd

import glob

from prediction_utils.util import yaml_read, df_dict_concat

table_path = '../figures/hyperparameters/'

os.makedirs(table_path, exist_ok = True)

param_grid_base = {

"lr": [1e-3, 1e-4, 1e-5],

"batch_size": [128, 256, 512],

"drop_prob": [0.0, 0.25, 0.5, 0.75],

"num_hidden": [1, 2, 3],

"hidden_dim": [128, 256],

}

the_dict = {'hyperparameter': [], 'Grid': []}

for key, value in param_grid_base.items():

    the_dict['hyperparameter'].append(key)

    the_dict['Grid'].append(value)

the_df = pd.DataFrame(the_dict)

rename_grid = {

'hyperparameter': ['lr', 'batch_size', 'drop_prob', 'num_hidden', 'hidden_dim'],

'Hyperparameter': ['Learning Rate', 'Batch Size', 'Dropout Probability', 'Number of Hidden Layers', 'Hidden Dimension']

}

rename_df = pd.DataFrame(rename_grid)

the_df = the_df.merge(rename_df)[['Hyperparameter', 'Grid']].sort_values('Hyperparameter')

the_df

the_df.to_latex(os.path.join(table_path, 'param_grid.txt'), index=False)

selected_models_path = '/share/pi/nigam/projects/spfohl/cohorts/admissions/optum/experiments/baseline_tuning_fold_1/config/selected_models'

selected_models_path_dict = {

'starr': '/share/pi/nigam/projects/spfohl/cohorts/admissions/starr_20200523/experiments/baseline_tuning_fold_1_10/config/selected_models',

'mimic': '/share/pi/nigam/projects/spfohl/cohorts/admissions/mimic_omop/experiments/baseline_tuning_fold_1_10/config/selected_models',

'optum': '/share/pi/nigam/projects/spfohl/cohorts/admissions/optum/experiments/baseline_tuning_fold_1/config/selected_models',

}

selected_param_dict = {

db: {

task: yaml_read(glob.glob(os.path.join(db_path, task, '*.yaml'), recursive=True)[0]) for task in os.listdir(db_path)

}