---
license: mit
datasets:
- flwrlabs/code-alpaca-20k
language:
- en
metrics:
- accuracy
base_model:
- Qwen/Qwen2.5-Coder-0.5B-Instruct
pipeline_tag: text-generation
library_name: peft
tags:
- text-generation-inference
- code
---

Model Card for FlowerTune-Qwen2.5-Coder-0.5B-Instruct-PEFT

Evaluation Results (Accuracy)

- MBPP: 25.60 %

- HumanEval: 37.81 %

- MultiPL-E (JS): 41.00 %

- MultiPL-E (C++): 32.92 %

- Average: 34.34 %
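As a quick sanity check on the Average line, the unweighted mean of the four rounded scores above can be computed exactly with Python's `decimal` module. It comes out at 34.3325 %, i.e. 34.33 %, one hundredth of a point below the reported 34.34 %. Presumably the reported average was taken over the unrounded per-benchmark scores, but that is an assumption; this card does not say how the average was computed.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-benchmark accuracies as reported in this card (percent, already rounded).
SCORES = {
    "MBPP": Decimal("25.60"),
    "HumanEval": Decimal("37.81"),
    "MultiPL-E (JS)": Decimal("41.00"),
    "MultiPL-E (C++)": Decimal("32.92"),
}


def unweighted_mean(scores):
    """Plain arithmetic mean over benchmarks (assumes equal weighting)."""
    return sum(scores.values(), Decimal("0")) / len(scores)


mean = unweighted_mean(SCORES)
print(mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))  # prints 34.33
```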

Model Details

This PEFT adapter was trained with Flower, a friendly federated AI framework.

The adapter and benchmark results have been submitted to the FlowerTune LLM Code Leaderboard.

See the following GitHub project for details on how to reproduce the training and evaluation steps:

https://github.com/ethicalabs-ai/FlowerTune-Qwen2.5-Coder-0.5B-Instruct/

How to Get Started with the Model

Load the adapter on top of the base model as follows:

```python
from peft import PeftModel
from transformers import AutoModelForCausalLM

# Load the base model first, then attach the federated PEFT adapter to it.
base_model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-Coder-0.5B-Instruct")
model = PeftModel.from_pretrained(base_model, "ethicalabs/FlowerTune-Qwen2.5-Coder-0.5B-Instruct")
```

Communication Budget

8766.51 MB
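Roughly speaking, this figure counts the adapter weights exchanged between the server and the clients over the whole federated run. The sketch below shows one way such a total could be accumulated. It assumes that every sampled client downloads the full global adapter and uploads a full update each round, and that 1 MB = 1024² bytes. `CommunicationTracker` is a hypothetical helper, not part of Flower's API, and the adapter size, rounds, and clients per round used in the example are made-up values, not the settings of this run.

```python
from dataclasses import dataclass, field


@dataclass
class CommunicationTracker:
    """Hypothetical helper that accumulates bytes of adapter weights exchanged."""

    total_bytes: int = 0
    per_round_bytes: list = field(default_factory=list)

    def record_round(self, adapter_bytes: int, num_clients: int) -> None:
        # Assumption: each sampled client downloads the global adapter
        # (server -> client) and uploads its updated adapter (client -> server).
        round_bytes = 2 * adapter_bytes * num_clients
        self.per_round_bytes.append(round_bytes)
        self.total_bytes += round_bytes

    @property
    def total_mb(self) -> float:
        # Assumption: 1 MB = 1024**2 bytes.
        return self.total_bytes / 1024**2


tracker = CommunicationTracker()
for _ in range(3):  # hypothetical: 3 rounds, 2 clients per round, 4 MB adapter
    tracker.record_round(adapter_bytes=4 * 1024**2, num_clients=2)
print(f"{tracker.total_mb:.2f} MB")  # prints 48.00 MB
```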

Virtual Machine Details

For this experiment, I used CUDO Compute as the GPU cloud provider.

| Component | Specification |
|---|---|
| GPU | 1 × RTX A4000 16 GB |
| vCPUs | 4 |
| CPU | AMD EPYC (Milan) |
| Memory | 16 GB |

Cost Breakdown

Compute Costs

| Component | Details | Cost/hr |
|---|---|---|
| vCPUs | 4 cores | $0.0088/hr |
| Memory | 16 GB | $0.056/hr |
| GPU | 1 × RTX A4000 | $0.25/hr |

Storage Costs

| Component | Details | Cost/hr |
|---|---|---|
| Boot Disk Size | 70 GB | $0.0077/hr |

Network Costs

| Component | Details | Cost/hr |
|---|---|---|
| Public IPv4 Address | N/A | $0.005/hr |

Total Cost

| Total Cost/hr |
|---|
| $0.3275/hr |
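The hourly total is the sum of the compute, storage, and network rates listed above. A minimal check of that sum, using `Decimal` so the currency arithmetic is exact rather than subject to binary floating-point rounding (the dictionary keys are just labels chosen for this sketch):

```python
from decimal import Decimal

# Hourly rates from the cost tables in this card (USD/hr).
HOURLY_RATES = {
    "vCPUs (4 cores)": Decimal("0.0088"),
    "Memory (16 GB)": Decimal("0.056"),
    "GPU (1 x RTX A4000)": Decimal("0.25"),
    "Boot disk (70 GB)": Decimal("0.0077"),
    "Public IPv4 address": Decimal("0.005"),
}


def total_hourly_cost(rates):
    # Start the sum from Decimal("0") so the result stays a Decimal.
    return sum(rates.values(), Decimal("0"))


print(total_hourly_cost(HOURLY_RATES))  # prints 0.3275
```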

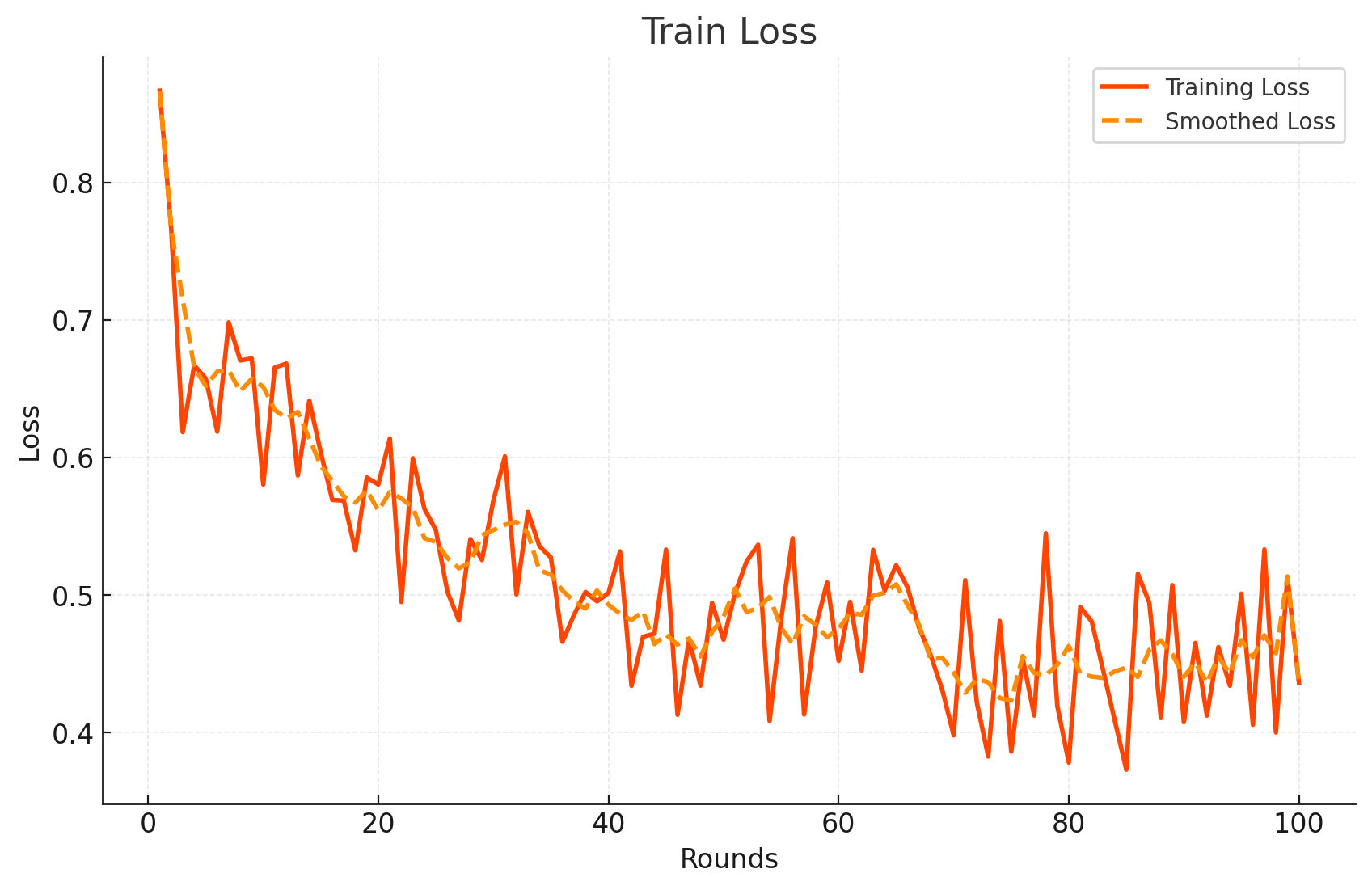

Simulation Details

| Parameter | Value |
|---|---|
| Runtime | 1924.52 seconds (00:32:04) |
| Simulation Cost | $0.18 |
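The simulation cost follows from the runtime and the hourly total: 1924.52 s is about 0.5346 h, and 0.5346 h × $0.3275/hr is about $0.175, which rounds to $0.18. The sketch below reproduces both the `hh:mm:ss` runtime and that cost. It assumes billing is pro rata per second of runtime; the card does not state CUDO Compute's billing granularity, so treat this as an illustration of the arithmetic rather than of the provider's invoice.

```python
from decimal import Decimal, ROUND_HALF_UP

HOURLY_RATE = Decimal("0.3275")  # USD/hr, total from the cost breakdown above
RUNTIME_S = Decimal("1924.52")   # simulation runtime in seconds


def format_hms(seconds):
    """Format a duration as hh:mm:ss, truncating fractional seconds."""
    whole = int(seconds)
    hours, rem = divmod(whole, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def simulation_cost(runtime_s, hourly_rate):
    """Pro-rata cost (assumption: per-second billing), rounded to cents."""
    cost = runtime_s / Decimal(3600) * hourly_rate
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(format_hms(RUNTIME_S))                    # prints 00:32:04
print(simulation_cost(RUNTIME_S, HOURLY_RATE))  # prints 0.18
```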

Framework versions

- PEFT 0.14.0

- Flower 1.13.1
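To check that a local environment matches the versions listed above before reproducing the results, a small sketch using the standard library's `importlib.metadata` could look like this. It assumes the PyPI distribution names `peft` and `flwr` (the name Flower is published under on PyPI); `version_report` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions listed in this card, keyed by PyPI distribution name.
EXPECTED = {"peft": "0.14.0", "flwr": "1.13.1"}


def version_report(expected):
    """Map each distribution to (expected, installed or None, matches)."""
    report = {}
    for dist, want in expected.items():
        try:
            have = version(dist)
        except PackageNotFoundError:
            have = None  # distribution is not installed
        report[dist] = (want, have, have == want)
    return report


if __name__ == "__main__":
    for dist, (want, have, ok) in version_report(EXPECTED).items():
        status = "OK" if ok else "MISMATCH"
        print(f"{dist}: expected {want}, found {have or 'not installed'} [{status}]")
```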