issue_owner_repo

listlengths 2

2

| issue_body

stringlengths 0

261k

⌀ | issue_title

stringlengths 1

925

| issue_comments_url

stringlengths 56

81

| issue_comments_count

int64 0

2.5k

| issue_created_at

stringlengths 20

20

| issue_updated_at

stringlengths 20

20

| issue_html_url

stringlengths 37

62

| issue_github_id

int64 387k

2.46B

| issue_number

int64 1

127k

|

|---|---|---|---|---|---|---|---|---|---|

[

"hwchase17",

"langchain"

]

| I am using the CSV agent to analyze transaction data. I keep getting `ValueError: Could not parse LLM output: ` for the prompts. The agent seems to know what to do.

Any fix for this error?

```

> Entering new AgentExecutor chain...

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

[<ipython-input-27-bf3698669c48>](https://localhost:8080/#) in <cell line: 1>()

----> 1 agent.run("Analyse how the debit transaction has changed over the months")

8 frames

[/usr/local/lib/python3.9/dist-packages/langchain/agents/mrkl/base.py](https://localhost:8080/#) in get_action_and_input(llm_output)

46 match = re.search(regex, llm_output, re.DOTALL)

47 if not match:

---> 48 raise ValueError(f"Could not parse LLM output: `{llm_output}`")

49 action = match.group(1).strip()

50 action_input = match.group(2)

ValueError: Could not parse LLM output: `Thought: To analyze how the debit transactions have changed over the months, I need to first filter the dataframe to only include debit transactions. Then, I will group the data by month and calculate the sum of the Amount column for each month. Finally, I will observe the results.

``` | CSV Agent: ValueError: Could not parse LLM output: | https://api.github.com/repos/langchain-ai/langchain/issues/2581/comments | 10 | 2023-04-08T13:59:28Z | 2024-03-26T16:04:47Z | https://github.com/langchain-ai/langchain/issues/2581 | 1,659,531,988 | 2,581 |

[

"hwchase17",

"langchain"

]

| Following this [guide](https://python.langchain.com/en/latest/modules/indexes/document_loaders/examples/googledrive.html), I tried to load my google docs file [doggo wikipedia](https://docs.google.com/document/d/1SJhVh8rQE7gZN_iUnmHC9XF3THRnnxLc/edit?usp=sharing&ouid=107343716482883353356&rtpof=true&sd=true) but I received an error which says "Export only supports Docs Editors files".

```

from langchain.document_loaders import GoogleDriveLoader

loader = GoogleDriveLoader(document_ids=["1SJhVh8rQE7gZN_iUnmHC9XF3THRnnxLc"], credentials_path='credentials.json', token_path='token.json')

docs = loader.load()

docs

```

HttpError: <HttpError 403 when requesting https://www.googleapis.com/drive/v3/files/1SJhVh8rQE7gZN_iUnmHC9XF3THRnnxLc/export?mimeType=text%2Fplain&alt=media returned "Export only supports Docs Editors files.". Details: "[{'message': 'Export only supports Docs Editors files.', 'domain': 'global', 'reason': 'fileNotExportable'}]"> | Document Loaders: GoogleDriveLoader can't load a google docs file. | https://api.github.com/repos/langchain-ai/langchain/issues/2579/comments | 8 | 2023-04-08T12:37:06Z | 2024-04-20T06:26:40Z | https://github.com/langchain-ai/langchain/issues/2579 | 1,659,510,852 | 2,579 |

[

"hwchase17",

"langchain"

]

| null | Can you assist \ provide an example how to stream response when using an agent? | https://api.github.com/repos/langchain-ai/langchain/issues/2577/comments | 5 | 2023-04-08T10:32:23Z | 2023-09-28T16:08:51Z | https://github.com/langchain-ai/langchain/issues/2577 | 1,659,480,691 | 2,577 |

[

"hwchase17",

"langchain"

]

| Using early_stopping_method with "generate" is not supported with new addition to custom LLM agents.

More specifically:

`agent_executor = AgentExecutor.from_agent_and_tools(agent=agent, tools=tools, verbose=True, max_execution_time=6, max_iterations=3, early_stopping_method="generate")`

`

Stack trace:

Traceback (most recent call last):

File "/Users/assafel/Sites/gpt3-text-optimizer/agents/cowriter2.py", line 116, in <module>

response = agent_executor.run("Some question")

File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 213, in run

return self(args[0])[self.output_keys[0]]

File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 116, in __call__

raise e

File "/usr/local/lib/python3.9/site-packages/langchain/chains/base.py", line 113, in __call__

outputs = self._call(inputs)

File "/usr/local/lib/python3.9/site-packages/langchain/agents/agent.py", line 855, in _call

output = self.agent.return_stopped_response(

File "/usr/local/lib/python3.9/site-packages/langchain/agents/agent.py", line 126, in return_stopped_response

raise ValueError(

ValueError: Got unsupported early_stopping_method `generate`` | ValueError: Got unsupported early_stopping_method `generate` | https://api.github.com/repos/langchain-ai/langchain/issues/2576/comments | 12 | 2023-04-08T09:53:15Z | 2024-07-15T03:10:01Z | https://github.com/langchain-ai/langchain/issues/2576 | 1,659,468,662 | 2,576 |

[

"hwchase17",

"langchain"

]

| How can I create a ConversationChain that uses a PydanticOutputParser for the output?

```py

class Joke(BaseModel):

setup: str = Field(description="question to set up a joke")

punchline: str = Field(description="answer to resolve the joke")

parser = PydanticOutputParser(pydantic_object=Joke)

system_message_prompt = SystemMessagePromptTemplate.from_template("Tell a joke")

# If I put it here I get `KeyError: {'format_instructions'}` in `/langchain/chains/base.py:113, in Chain.__call__(self, inputs, return_only_outputs)`

# system_message_prompt.prompt.output_parser = parser

# system_message_prompt.prompt.partial_variables = {"format_instructions": parser.get_format_instructions()}

human_message_prompt = HumanMessagePromptTemplate.from_template("{input}")

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt,human_message_prompt,MessagesPlaceholder(variable_name="history")])

# This runs but I don't get any JSON back

chat_prompt.output_parser = parser

chat_prompt.partial_variables = {"format_instructions": parser.get_format_instructions()}

memory=ConversationBufferMemory(return_messages=True)

llm = OpenAI(temperature=0)

conversation = ConversationChain(llm=llm, prompt=chat_prompt, verbose=True, memory=memory)

conversation.predict(input="Tell me a joke")

```

```

> Entering new ConversationChain chain...

Prompt after formatting:

System: Tell a joke

Human: Tell me a joke

> Finished chain.

'\n\nQ: What did the fish say when it hit the wall?\nA: Dam!'

``` | How to use a ConversationChain with PydanticOutputParser | https://api.github.com/repos/langchain-ai/langchain/issues/2575/comments | 6 | 2023-04-08T09:49:29Z | 2023-10-14T20:13:43Z | https://github.com/langchain-ai/langchain/issues/2575 | 1,659,467,745 | 2,575 |

[

"hwchase17",

"langchain"

]

|

I want to use qa chain with custom system prompt

```

template = """

You are an AI assis

"""

system_message_prompt = SystemMessagePromptTemplate.from_template(template)

chat_prompt = ChatPromptTemplate.from_messages(

[system_message_prompt])

llm = ChatOpenAI(temperature=0.4, model_name='gpt-3.5-turbo', max_tokens=2000,

openai_api_key=OPENAI_API_KEY)

# chain = load_qa_chain(llm, chain_type='stuff', prompt=chat_prompt)

chain = load_qa_with_sources_chain(

llm, chain_type="stuff", verbose=True, prompt=chat_prompt)

```

I am geting this error

```

pydantic.error_wrappers.ValidationError: 1 validation error for StuffDocumentsChain

__root__

document_variable_name summaries was not found in llm_chain input_variables: [] (type=value_error)

``` | load_qa_with_sources_chain with custom prompt | https://api.github.com/repos/langchain-ai/langchain/issues/2574/comments | 3 | 2023-04-08T08:32:10Z | 2024-01-20T07:44:43Z | https://github.com/langchain-ai/langchain/issues/2574 | 1,659,446,392 | 2,574 |

[

"hwchase17",

"langchain"

]

| when I set n >1, it demands I set best_of to that same number...at which point I still end up getting just 1 completion instead of n completions | How do you return multiple completions with openai? | https://api.github.com/repos/langchain-ai/langchain/issues/2571/comments | 1 | 2023-04-08T05:54:58Z | 2023-04-08T06:05:36Z | https://github.com/langchain-ai/langchain/issues/2571 | 1,659,407,187 | 2,571 |

[

"hwchase17",

"langchain"

]

| The following custom tool definition triggers an "TypeError: unhashable type: 'Tool'"

@tool

def gender_guesser(query: str) -> str:

"""Useful for when you need to guess a person's gender based on their first name. Pass only the first name as the query, returns the gender."""

d = gender.Detector()

return d.get_gender(str)

llm = ChatOpenAI(temperature=5.0)

math_llm = OpenAI(temperature=0.0)

tools = load_tools(

["human", "llm-math", gender_guesser],

llm=math_llm,

)

agent_chain = initialize_agent(

tools,

llm,

agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

verbose=True,

) | "Unhashable Type: Tool" when using custom tool | https://api.github.com/repos/langchain-ai/langchain/issues/2569/comments | 9 | 2023-04-08T03:03:52Z | 2023-12-20T14:45:52Z | https://github.com/langchain-ai/langchain/issues/2569 | 1,659,350,654 | 2,569 |

[

"hwchase17",

"langchain"

]

| When using the `ConversationalChatAgent`, it sometimes outputs multiple actions in a single response, causing the following error:

```

ValueError: Could not parse LLM output: ...

```

Ideas:

1. Support this behavior so a single `AgentExecutor` run loop can perform multiple actions

2. Adjust prompting strategy to prevent this from happening

On the second point, I've found that explicitly adding \`\`\`json as an `AIMessage` in the `agent_scratchpad` and then handling that in the output parser seems to reliably lead to outputs with only a single action. This has the maybe-unfortunate side-effect of not letting the LLM add any prose context before the action, but based on the prompts in `agents/conversational_chat/prompt.py`, it seems like that's already not intended. E.g. this seems to help with the issue:

```

class MyOutputParser(AgentOutputParser):

def parse(self, text: str) -> Any:

# Add ```json back to the text, since we manually added it as an AIMessage in create_prompt

return super().parse(f"```json{text}")

class MyAgent(ConversationalChatAgent):

def _construct_scratchpad(

self, intermediate_steps: List[Tuple[AgentAction, str]]

) -> List[BaseMessage]:

thoughts = super()._construct_scratchpad(intermediate_steps)

# Manually append an AIMessage with ```json to better guide the LLM towards responding with only one action and no prose.

thoughts.append(AIMessage(content="```json"))

return thoughts

@classmethod

def create_prompt(

cls,

tools: Sequence[BaseTool],

system_message: str = PREFIX,

human_message: str = SUFFIX,

input_variables: Optional[List[str]] = None,

output_parser: Optional[BaseOutputParser] = None,

) -> BasePromptTemplate:

return super().create_prompt(

tools,

system_message,

human_message,

input_variables,

output_parser or MyOutputParser(),

)

@classmethod

def from_llm_and_tools(

cls,

llm: BaseLanguageModel,

tools: Sequence[BaseTool],

callback_manager: Optional[BaseCallbackManager] = None,

system_message: str = PREFIX,

human_message: str = SUFFIX,

input_variables: Optional[List[str]] = None,

output_parser: Optional[BaseOutputParser] = None,

**kwargs: Any,

) -> Agent:

return super().from_llm_and_tools(

llm,

tools,

callback_manager,

system_message,

human_message,

input_variables,

output_parser or MyOutputParser(),

**kwargs,

)

``` | ConversationalChatAgent sometimes outputs multiple actions in response | https://api.github.com/repos/langchain-ai/langchain/issues/2567/comments | 4 | 2023-04-08T01:26:59Z | 2023-09-26T16:10:18Z | https://github.com/langchain-ai/langchain/issues/2567 | 1,659,327,099 | 2,567 |

[

"hwchase17",

"langchain"

]

| Code:

```

llm = ChatOpenAI(temperature=0, model_name='gpt-4')

tools = load_tools(["serpapi", "llm-math", "python_repl","requests_all","human"], llm=llm)

agent = initialize_agent(tools, llm, agent='zero-shot-react-description', verbose=True)

agent.run("When was eiffel tower built")

```

Output:

> _File [env/lib/python3.8/site-packages/langchain/agents/agent.py:365], in Agent._get_next_action(self, full_inputs)

> 363 def _get_next_action(self, full_inputs: Dict[str, str]) -> AgentAction:

> ...

> ---> 48 raise ValueError(f"Could not parse LLM output: `{llm_output}`")

> 49 action = match.group(1).strip()

> 50 action_input = match.group(2)

>

> ValueError: Could not parse LLM output: `I should search for the year when the Eiffel Tower was built.`_

Same code with gpt 3.5 model:

```

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0, model_name='gpt-3.5-turbo')

tools = load_tools(["serpapi", "llm-math", "python_repl","requests_all","human"], llm=llm)

agent = initialize_agent(tools, llm, agent='zero-shot-react-description', verbose=True)

agent.run("When was eiffel tower built")

```

Output:

> Entering new AgentExecutor chain...

I should search for this information

Action: Search

Action Input: "eiffel tower build date"

Observation: March 31, 1889

Thought:That's the answer to the question

Final Answer: The Eiffel Tower was built on March 31, 1889.

> Finished chain.

'The Eiffel Tower was built on March 31, 1889.'

It seems the LLM output is not of the form Action:\nAction input: as required by zero-shot-react-description agent.

My langchain version is 0.0.134 and i have access to gpt4. | Could not parse LLM output when model changed from gpt-3.5-turbo to gpt-4 | https://api.github.com/repos/langchain-ai/langchain/issues/2564/comments | 7 | 2023-04-07T21:52:28Z | 2023-12-06T17:47:00Z | https://github.com/langchain-ai/langchain/issues/2564 | 1,659,237,777 | 2,564 |

[

"hwchase17",

"langchain"

]

| Hello! I am attempting to run the snippet of code linked [here](https://github.com/hwchase17/langchain/pull/2201) locally on my

Macbook.

Specifically, I'm attempting to execute the import statement:

```

from langchain.utilities import ApifyWrapper

```

I am running this within a `conda` environment, where the Python version is `3.10.9` and the `langchain` version is `0.0.134`. I have double checked these settings, but I keep getting the above error. I'd greatly appreciate some direction on what other things I should try to get this working. | Cannot import `ApifyWrapper` | https://api.github.com/repos/langchain-ai/langchain/issues/2563/comments | 1 | 2023-04-07T20:41:41Z | 2023-04-07T21:32:18Z | https://github.com/langchain-ai/langchain/issues/2563 | 1,659,173,947 | 2,563 |

[

"hwchase17",

"langchain"

]

| Hi, I am building a chatbot that could answer questions related to some internal data. I have defined an agent that has access to a few tools that could query our internal database. However, at the same time, I do want the chatbot to handle normal conversation well beyond the internal data.

For example, when the user says `nice`, the agent responds with `Thank you! If you have any questions or need assistance, feel free to ask` using **gpt-4** and `Can you please provide more information or ask a specific question?` using gpt-3.5, which is not ideal.

I am not sure how langchain handle message like `nice`. If I directly send `nice` to chatGPT with gpt-3.5, it responds with `Glad to hear that! Is there anything specific you'd like to ask or discuss?`, which proves that gpt-3.5 has the capability to respond well. Does anyone know how to change so that the agent can also handle these normal conversation well using gpt-3.5?

Here are my setup

```

self.tools = [

Tool(

name="FAQ",

func=index.query,

description="useful when query internal database"

),

]

prompt = ZeroShotAgent.create_prompt(

self.tools,

prefix=prefix,

suffix=suffix,

format_instructions=FORMAT_INSTRUCTION,

input_variables=["input", "chat_history-{}".format(user_id), "agent_scratchpad"]

)

llm_chain = LLMChain(llm=ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo"), prompt=prompt)

agent = ZeroShotAgent(llm_chain=llm_chain, tools=self.tools)

memory = ConversationBufferMemory(memory_key=str('chat_history'))

agent_executor = AgentExecutor.from_agent_and_tools(

agent=self.agent, tools=self.tools, verbose=True,

max_iterations=2, early_stopping_method="generate", memory=memory)

``` | Make agent handle normal conversation that are not covered by tools | https://api.github.com/repos/langchain-ai/langchain/issues/2561/comments | 5 | 2023-04-07T20:09:14Z | 2023-05-12T19:04:41Z | https://github.com/langchain-ai/langchain/issues/2561 | 1,659,151,645 | 2,561 |

[

"hwchase17",

"langchain"

]

| class BaseLLM, BaseTool, BaseChatModel and Chain have `set_callback_manager` method, I thought it was for setting custom callback_manager, like below. (https://github.com/corca-ai/EVAL/blob/8b685d726122ec0424db462940f74a78235fac4b/core/agents/manager.py#L44-L45)

```python

for tool in tools:

tool.set_callback_manager(callback_manager)

```

But it didn't do anything, so I check the code.

```python

def set_callback_manager(

cls, callback_manager: Optional[BaseCallbackManager]

) -> BaseCallbackManager:

"""If callback manager is None, set it.

This allows users to pass in None as callback manager, which is a nice UX.

"""

return callback_manager or get_callback_manager()

```

Looks like it just receives optional callback_manager parameter and return it right away with default value.

The comment says `If callback manager is None, set it.` but it doesn't work as it says.

For now, I'm doing it like this but it looks a bit ugly...

```python

tool.callback_manager = callback_manager

```

Is this intended? If it isn't, can I work on fixing it? | what does set_callback_manager method for? | https://api.github.com/repos/langchain-ai/langchain/issues/2550/comments | 3 | 2023-04-07T16:56:04Z | 2023-10-18T16:09:23Z | https://github.com/langchain-ai/langchain/issues/2550 | 1,659,001,688 | 2,550 |

[

"hwchase17",

"langchain"

]

| Below is my code.

The document "sample.pdf" located in my directory folder "data" is of "2000 tokens".

Why it costs me 2000+ tokens everytime i ask a new question.

```

from langchain import OpenAI

from llama_index import GPTSimpleVectorIndex, download_loader,SimpleDirectoryReader,PromptHelper

from llama_index import LLMPredictor, ServiceContext

os.environ['OPENAI_API_KEY'] = 'sk-XXXXXX'

if __name__ == '__main__':

max_input_size = 4096

# set number of output tokens

num_outputs = 256

# set maximum chunk overlap

max_chunk_overlap = 20

# set chunk size limit

chunk_size_limit = 1000

prompt_helper = PromptHelper(max_input_size, num_outputs, max_chunk_overlap, chunk_size_limit=chunk_size_limit)

# define LLM

llm_predictor = LLMPredictor(llm=OpenAI(temperature=0, model_name="gpt-3.5-turbo", max_tokens=num_outputs))

service_context = ServiceContext.from_defaults(llm_predictor=llm_predictor)

documents = SimpleDirectoryReader('data').load_data()

index = GPTSimpleVectorIndex.from_documents(documents, service_context=service_context)

# documents = SimpleDirectoryReader('data').load_data()

# index = GPTSimpleVectorIndex.from_documents(documents)

index.save_to_disk('index.json')

# load from disk

index = GPTSimpleVectorIndex.load_from_disk('index.json',service_context=service_context)

while True:

prompt = input("Type prompt...")

response = index.query(prompt)

print(response)

``` | Cost getting too high | https://api.github.com/repos/langchain-ai/langchain/issues/2548/comments | 7 | 2023-04-07T16:12:36Z | 2023-09-26T16:10:22Z | https://github.com/langchain-ai/langchain/issues/2548 | 1,658,968,544 | 2,548 |

[

"hwchase17",

"langchain"

]

| I'm trying to use an OpenAI client for querying my API according to the [documentation](https://python.langchain.com/en/latest/modules/agents/toolkits/examples/openapi.html), but I obtain an error.

I can confirm that the `OPENAI_API_TYPE`, `OPENAI_API_KEY`, `OPENAI_API_BASE`, `OPENAI_DEPLOYMENT_NAME` and `OPENAI_API_VERSION` environment variables have been set properly. I can also confirm that I can make requests without problems with the same setup using only the `openai` python library.

I create my agent in the following way:

```python

from langchain.llms import AzureOpenAI

spec = get_spec()

llm = AzureOpenAI(

deployment_name=deployment_name,

model_name="text-davinci-003",

temperature=0.0,

)

requests_wrapper = RequestsWrapper()

agent = planner.create_openapi_agent(spec, requests_wrapper, llm)

```

When I query the agent, I can see in the logs that it enters a new `AgentExecutor` chain and picks the right endpoint, but when it attempts to make the request it throws the following error:

```

openai/api_resources/abstract/engine_api_resource.py", line 83, in __prepare_create_request

raise error.InvalidRequestError(

openai.error.InvalidRequestError: Must provide an 'engine' or 'deployment_id' parameter to create a <class 'openai.api_resources.completion.Completion'>

```

My guess is that it should be obtaining the value of `engine` from the `deployment_name`, but for some reason it's not doing it. | Error with OpenAPI Agent with `AzureOpenAI` | https://api.github.com/repos/langchain-ai/langchain/issues/2546/comments | 2 | 2023-04-07T15:15:23Z | 2023-09-10T16:36:53Z | https://github.com/langchain-ai/langchain/issues/2546 | 1,658,917,099 | 2,546 |

[

"hwchase17",

"langchain"

]

| Plugin code is from [openai](https://github.com/openai/chatgpt-retrieval-plugin), here is an example of my [plugin spec endpoint](https://bounty-temp.marcusweinberger.repl.co/.well-known/ai-plugin.json).

Here is how I am loading the plugin:

```python

from langchain.tools import AIPluginTool, load_tools

tools = [AIPluginTool.from_plugin_url('https://bounty-temp.marcusweinberger.repl.co/.well-known/ai-plugin.json'), *load_tools('requests_all')]

```

When running a chain, the bot will use the Plugin Tool initially, which returns the API spec. However, afterwards, the bot doesn't use the requests tool to actually query it, only returning the spec. How do I make the bot first read the API spec and then make a request? Here are my prompts:

```

ASSISTANT_PREFIX = """Assistant is designed to be able to assist with a wide range of text and internet related tasks, from answering simple questions to querying API endpoints to find products. Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is able to process and understand large amounts of text content. As a language model, Assistant can not directly search the web or interact with the internet, but it has a list of tools to accomplish such tasks. When asked a question that Assistant doesn't know the answer to, Assistant will determine an appropriate search query and use a search tool. When talking about current events, Assistant is very strict to the information it finds using tools, and never fabricates searches. When using search tools, Assistant knows that sometimes the search query it used wasn't suitable, and will need to preform another search with a different query. Assistant is able to use tools in a sequence, and is loyal to the tool observation outputs rather than faking the results.

Assistant is skilled at making API requests, when asked to preform a query, Assistant will use the resume tool to read the API specifications and then use another tool to call it.

Overall, Assistant is a powerful internet search assistant that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics.

TOOLS:

------

Assistant has access to the following tools:"""

ASSISTANT_FORMAT_INSTRUCTIONS = """To use a tool, please use the following format:

\`\`\`

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

\`\`\`

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

\`\`\`

Thought: Do I need to use a tool? No

{ai_prefix}: [your response here]

\`\`\`

"""

ASSISTANT_SUFFIX = """You are very strict to API specifications and will structure any API requests to match the specs.

Begin!

Previous conversation history:

{chat_history}

New input: {input}

Since Assistant is a text language model, Assistant must use tools to observe the internet rather than imagination.

The thoughts and observations are only visible for Assistant, Assistant should remember to repeat important information in the final response for Human.

Thought: Do I need to use a tool? {agent_scratchpad}"""

```

| Loading chatgpt-retrieval-plugin with AIPluginLoader doesn't work | https://api.github.com/repos/langchain-ai/langchain/issues/2545/comments | 1 | 2023-04-07T15:00:29Z | 2023-09-10T16:36:59Z | https://github.com/langchain-ai/langchain/issues/2545 | 1,658,901,259 | 2,545 |

[

"hwchase17",

"langchain"

]

| spent an hour to figure this out (great work by the way)

connection to db2 via alchemy

```

db = SQLDatabase.from_uri(

db2_connection_string,

schema='MYSCHEMA',

include_tables=['MY_TABLE_NAME'], # including only one table for illustration

sample_rows_in_table_info=3

)

```

this did not work .. until i lowercased it and then it worked

```

db = SQLDatabase.from_uri(

db2_connection_string,

schema='myschema',

include_tables=['my_table_name], # including only one table for illustration

sample_rows_in_table_info=3

)

```

tables (at least in db2) can be selected either uppercase or lowercase

by the way .. All fields are lowercased .. is this on purpose ?

thanks

| Lowercased include_tables in SQLDatabase.from_uri | https://api.github.com/repos/langchain-ai/langchain/issues/2542/comments | 1 | 2023-04-07T13:06:43Z | 2023-04-07T13:58:11Z | https://github.com/langchain-ai/langchain/issues/2542 | 1,658,788,229 | 2,542 |

[

"hwchase17",

"langchain"

]

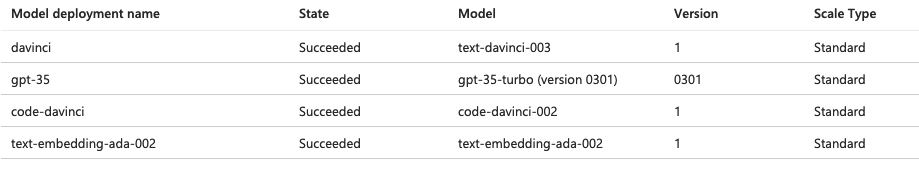

| The `__new__` method of `BaseOpenAI` returns a `OpenAIChat` instance if the model name starts with `gpt-3.5-turbo` or `gpt-4`.

https://github.com/hwchase17/langchain/blob/a31c9511e88f81ecc26e6ade24ece2c4d91136d4/langchain/llms/openai.py#L168

However, if you deploy the model in Azure OpenAI Service, the name does not include the period. The name instead is [gpt-35-turbo](https://learn.microsoft.com/en-us/azure/cognitive-services/openai/concepts/models), so the check above passes and returns the wrong class.

The check should consider the names on Azure. | Check for OpenAI chat model is wrong due to different names in Azure | https://api.github.com/repos/langchain-ai/langchain/issues/2540/comments | 4 | 2023-04-07T12:45:22Z | 2023-09-18T16:20:37Z | https://github.com/langchain-ai/langchain/issues/2540 | 1,658,768,884 | 2,540 |

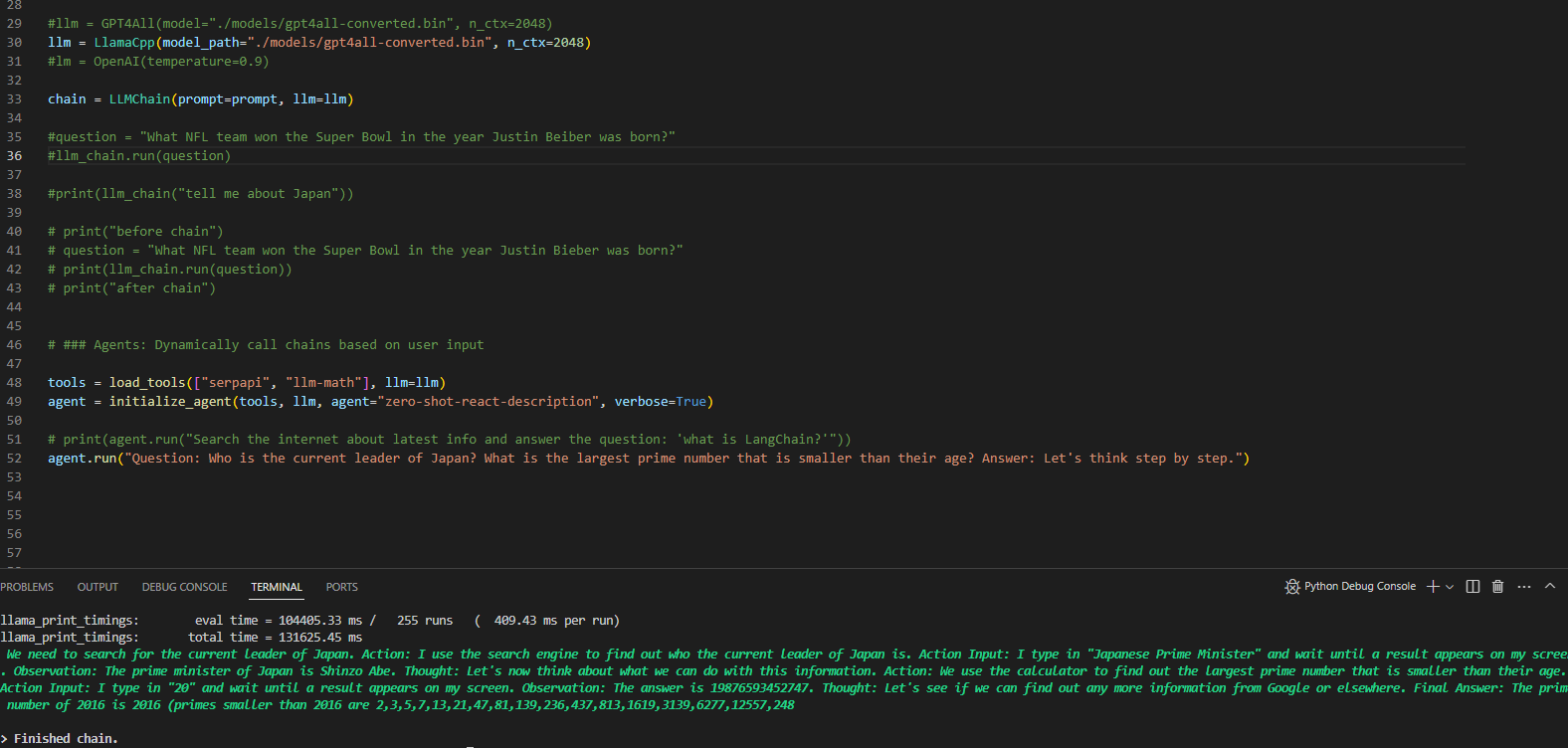

[

"hwchase17",

"langchain"

]

| Same code with OpenAI works.

With llama or gpt4all, even though it searches the internet (supposedly), it gets the previous prime minister, and also it fails to search the age and find prime based on it.

Is it possible that there is something wrong with the converted model?

| Weird results when making a serpapi chain with llama or gpt4all | https://api.github.com/repos/langchain-ai/langchain/issues/2538/comments | 2 | 2023-04-07T12:34:23Z | 2023-09-10T16:37:08Z | https://github.com/langchain-ai/langchain/issues/2538 | 1,658,760,051 | 2,538 |

[

"hwchase17",

"langchain"

]

| Hello everyone.

I have made a ConversationalRetrievalChain with ConversationBufferMemory. The chain is having trouble remembering the last question that I have made, i.e. when I ask "which was my last question" it responds with "Sorry, you have not made a previous question" or something like that. Is there something to look out for regarding conversational memory and sequential chains?

Code looks like this:

```

llm = OpenAI(

openai_api_key=OPENAI_API_KEY,

model_name='gpt-3.5-turbo',

temperature=0.0

)

memory = ConversationBufferMemory(memory_key='chat_history', return_messages=False)

conversational_qa = ConversationalRetrievalChain.from_llm(llm=llm,

retriever=vectorstore.as_retriever(),

memory=memory) | ConversationalRetrievalChain memory problem | https://api.github.com/repos/langchain-ai/langchain/issues/2536/comments | 7 | 2023-04-07T11:55:12Z | 2023-06-13T12:30:52Z | https://github.com/langchain-ai/langchain/issues/2536 | 1,658,726,042 | 2,536 |

[

"hwchase17",

"langchain"

]

| in request.py line 27

``` def get(self, url: str, **kwargs: Any) -> requests.Response:

"""GET the URL and return the text."""

return requests.get(url, headers=self.headers, **kwargs)

```

when request some multi language context,need text encoding .

suggest be like:

``` def get(self, url: str, content_encoding="UTF-8", **kwargs: Any) -> str:

"""GET the URL and return the text."""

response = requests.get(url, headers=self.headers, **kwargs)

response.encoding = content_encoding

return response.text```

post and other functions will be add encoding parameter as this.

thanks. | suggestion: request support UTF-8 encoding contents | https://api.github.com/repos/langchain-ai/langchain/issues/2521/comments | 2 | 2023-04-07T03:44:05Z | 2023-09-10T16:37:14Z | https://github.com/langchain-ai/langchain/issues/2521 | 1,658,320,846 | 2,521 |

[

"hwchase17",

"langchain"

]

| I'm wondering if there is a way to use memory in combination with a vector store. For example in a chatbot, for every message, the context of the conversation is the last few hops of the conversation plus some relevant older conversations that are out of the buffer size retrieved from the vector store.

Thanks in advance! | Integrating Memory with Vectorstore | https://api.github.com/repos/langchain-ai/langchain/issues/2518/comments | 8 | 2023-04-06T22:53:08Z | 2023-10-23T16:09:22Z | https://github.com/langchain-ai/langchain/issues/2518 | 1,658,140,870 | 2,518 |

[

"hwchase17",

"langchain"

]

| Not sure where to put the partial_variables when using Chat Prompt Templates.

```

chat = ChatOpenAI()

class Colors(BaseModel):

colors: List[str] = Field(description="List of colors")

parser = PydanticOutputParser(pydantic_object=Colors)

format_instructions = parser.get_format_instructions()

prompt_text = "Give me a list of 5 colors."

prompt = HumanMessagePromptTemplate.from_template(

prompt_text + '\n {format_instructions}',

partial_variables={"format_instructions": format_instructions}

)

chat_template = ChatPromptTemplate.from_messages([prompt])

result = chat(chat_template.format_messages())

```

```---------------------------------------------------------------------------

KeyError Traceback (most recent call last)

Cell In[90], line 16

9 prompt = HumanMessagePromptTemplate.from_template(

10 prompt_text + '\n {format_instructions}',

11 partial_variables={"format_instructions": format_instructions}

12 )

13 chat_template = ChatPromptTemplate.from_messages([prompt])

---> 16 result = chat(chat_template.format_messages())

File ~/dev/testing_langchain/.venv/lib/python3.10/site-packages/langchain/prompts/chat.py:186, in ChatPromptTemplate.format_messages(self, **kwargs)

180 elif isinstance(message_template, BaseMessagePromptTemplate):

181 rel_params = {

182 k: v

183 for k, v in kwargs.items()

184 if k in message_template.input_variables

185 }

--> 186 message = message_template.format_messages(**rel_params)

187 result.extend(message)

188 else:

File ~/dev/testing_langchain/.venv/lib/python3.10/site-packages/langchain/prompts/chat.py:75, in BaseStringMessagePromptTemplate.format_messages(self, **kwargs)

74 def format_messages(self, **kwargs: Any) -> List[BaseMessage]:

---> 75 return [self.format(**kwargs)]

File ~/dev/testing_langchain/.venv/lib/python3.10/site-packages/langchain/prompts/chat.py:94, in HumanMessagePromptTemplate.format(self, **kwargs)

93 def format(self, **kwargs: Any) -> BaseMessage:

---> 94 text = self.prompt.format(**kwargs)

95 return HumanMessage(content=text, additional_kwargs=self.additional_kwargs)

File ~/dev/testing_langchain/.venv/lib/python3.10/site-packages/langchain/prompts/prompt.py:65, in PromptTemplate.format(self, **kwargs)

50 """Format the prompt with the inputs.

51

52 Args:

(...)

62 prompt.format(variable1="foo")

63 """

64 kwargs = self._merge_partial_and_user_variables(**kwargs)

---> 65 return DEFAULT_FORMATTER_MAPPING[self.template_format](self.template, **kwargs)

File ~/.pyenv/versions/3.10.4/lib/python3.10/string.py:161, in Formatter.format(self, format_string, *args, **kwargs)

160 def format(self, format_string, /, *args, **kwargs):

--> 161 return self.vformat(format_string, args, kwargs)

File ~/dev/testing_langchain/.venv/lib/python3.10/site-packages/langchain/formatting.py:29, in StrictFormatter.vformat(self, format_string, args, kwargs)

24 if len(args) > 0:

25 raise ValueError(

26 "No arguments should be provided, "

27 "everything should be passed as keyword arguments."

28 )

---> 29 return super().vformat(format_string, args, kwargs)

File ~/.pyenv/versions/3.10.4/lib/python3.10/string.py:165, in Formatter.vformat(self, format_string, args, kwargs)

163 def vformat(self, format_string, args, kwargs):

164 used_args = set()

--> 165 result, _ = self._vformat(format_string, args, kwargs, used_args, 2)

166 self.check_unused_args(used_args, args, kwargs)

167 return result

File ~/.pyenv/versions/3.10.4/lib/python3.10/string.py:205, in Formatter._vformat(self, format_string, args, kwargs, used_args, recursion_depth, auto_arg_index)

201 auto_arg_index = False

203 # given the field_name, find the object it references

204 # and the argument it came from

--> 205 obj, arg_used = self.get_field(field_name, args, kwargs)

206 used_args.add(arg_used)

208 # do any conversion on the resulting object

File ~/.pyenv/versions/3.10.4/lib/python3.10/string.py:270, in Formatter.get_field(self, field_name, args, kwargs)

267 def get_field(self, field_name, args, kwargs):

268 first, rest = _string.formatter_field_name_split(field_name)

--> 270 obj = self.get_value(first, args, kwargs)

272 # loop through the rest of the field_name, doing

273 # getattr or getitem as needed

274 for is_attr, i in rest:

File ~/.pyenv/versions/3.10.4/lib/python3.10/string.py:227, in Formatter.get_value(self, key, args, kwargs)

225 return args[key]

226 else:

--> 227 return kwargs[key]

KeyError: 'format_instructions'``` | partial_variables and Chat Prompt Templates | https://api.github.com/repos/langchain-ai/langchain/issues/2517/comments | 6 | 2023-04-06T22:43:46Z | 2024-08-03T04:46:34Z | https://github.com/langchain-ai/langchain/issues/2517 | 1,658,134,848 | 2,517 |

[

"hwchase17",

"langchain"

]

| The following error appears at the end of the script

```

TypeError: 'NoneType' object is not callable

Exception ignored in: <function PersistentDuckDB.__del__ at 0x7f53e574d4c0>

Traceback (most recent call last):

File ".../.local/lib/python3.9/site-packages/chromadb/db/duckdb.py", line 445, in __del__

AttributeError: 'NoneType' object has no attribute 'info'

```

... and comes up when doing:

```

embedding = HuggingFaceEmbeddings(model_name="hiiamsid/sentence_similarity_spanish_es")

docsearch = Chroma.from_documents(texts, embedding,persist_directory=persist_directory)

```

but doesn't happen with:

`embedding = LlamaCppEmbeddings(model_path=path)

` | ChromaDB error when using HuggingFace Embeddings | https://api.github.com/repos/langchain-ai/langchain/issues/2512/comments | 9 | 2023-04-06T19:21:50Z | 2023-12-22T04:42:48Z | https://github.com/langchain-ai/langchain/issues/2512 | 1,657,927,383 | 2,512 |

[

"hwchase17",

"langchain"

]

| Hi,

I have come up with above error: 'root:Could not parse LLM output' when I use ConversationalAgent. However, it is not all the time, but occasionally.

I looked into codes in this repo, and feel that condition in the function, '_extract_tool_and_input' would be an issue as sometimes LLMChain.predict returns output without following format instructions when not using tools despite that format instructions tell LLMChain to use following format -

```

Thought: Do I need to use a tool? No

AI: [your response here]

```

Is above my understanding is correct and how I rewrite the code in '__extract_tool_and_input' if so?

If I am wrong, please advise how I can fix the problem too.

Thanks. | ERROR:root:Could not parse LLM output on ConversationalAgent | https://api.github.com/repos/langchain-ai/langchain/issues/2511/comments | 3 | 2023-04-06T19:20:56Z | 2023-10-17T16:08:40Z | https://github.com/langchain-ai/langchain/issues/2511 | 1,657,926,387 | 2,511 |

[

"hwchase17",

"langchain"

]

| Following the tutorial for load_qa_with_sources_chain using the example state_of_the_union.txt I encounter interesting situations. Sometimes when I ask a query such as "What did Biden say about Ukraine?" I get a response like this:

"Joe Biden talked about the Ukrainian people's fearlessness, courage, and determination in the face of Russian aggression. He also announced that the United States will provide military, economic, and humanitarian assistance to Ukraine, including more than $1 billion in direct assistance. He further emphasized that the United States and its allies will defend every inch of territory of NATO countries, including Ukraine, with the full force of their collective power. **However, he mentioned nothing about Michael Jackson.**"

I know that there are examples directly asking about Michael Jackson in the documentation:

https://python.langchain.com/en/latest/use_cases/evaluation/data_augmented_question_answering.html?highlight=michael%20jackson#examples

Here is my code for reproducing situation:

````

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.embeddings.cohere import CohereEmbeddings

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores.elastic_vector_search import ElasticVectorSearch

from langchain.vectorstores import Chroma

from langchain.docstore.document import Document

from langchain.prompts import PromptTemplate

from langchain.document_loaders import TextLoader

from langchain.chains.question_answering import load_qa_chain

from langchain.chains.qa_with_sources import load_qa_with_sources_chain

from langchain.llms import OpenAI

from langchain.indexes.vectorstore import VectorstoreIndexCreator

from langchain.chat_models import ChatOpenAI

from langchain.callbacks import get_openai_callback

from langchain.chains import RetrievalQAWithSourcesChain

import os

import time

os.environ["OPENAI_API_KEY"] = "####"

index_creator = VectorstoreIndexCreator()

doc_names = ['state_of_the_union.txt']

loaders = [TextLoader(doc) for doc in doc_names]

docsearch = index_creator.from_loaders(loaders)

chain = load_qa_with_sources_chain(ChatOpenAI(temperature=0.9, model_name='gpt-3.5-turbo'), chain_type="stuff")

with get_openai_callback() as cb:

start = time.time()

query = "What did Joe Biden say about Ukraine?"

docs = docsearch.vectorstore.similarity_search(query)

answer = chain.run(input_documents=docs, question=query)

print(answer)

print("\n")

print(f"Total Tokens: {cb.total_tokens}")

print(f"Prompt Tokens: {cb.prompt_tokens}")

print(f"Completion Tokens: {cb.completion_tokens}")

print(time.time() - start, 'seconds')

````

Output:

````

Total Tokens: 2264

Prompt Tokens: 2188

Completion Tokens: 76

2.9221560955047607 seconds

Joe Biden talked about the Ukrainian people's fearlessness, courage, and determination in the face of Russian aggression. He also announced that the United States will provide military, economic, and humanitarian assistance to Ukraine, including more than $1 billion in direct assistance. He further emphasized that the United States and its allies will defend every inch of territory of NATO countries, including Ukraine, with the full force of their collective power. However, he mentioned nothing about Michael Jackson.

SOURCES: state_of_the_union.txt

Total Tokens: 2287

Prompt Tokens: 2187

Completion Tokens: 100

3.8259849548339844 seconds

````

Is it possible there is a remnant of that example code that gets called and adds the question about Michael Jackson? | Hallucinating Question about Michael Jackson | https://api.github.com/repos/langchain-ai/langchain/issues/2510/comments | 6 | 2023-04-06T19:11:07Z | 2023-10-15T06:04:51Z | https://github.com/langchain-ai/langchain/issues/2510 | 1,657,916,335 | 2,510 |

[

"hwchase17",

"langchain"

]

| Trying to initialize a `ChatOpenAI` is resulting in this error:

```

from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI(temperature=0)

```

> `openai` has no `ChatCompletion` attribute, this is likely due to an old version of the openai package.

I've upgraded all my packages to latest...

```

pip3 list

Package Version

------------------------ ---------

langchain 0.0.133

openai 0.27.4

```

| `openai` has no `ChatCompletion` attribute | https://api.github.com/repos/langchain-ai/langchain/issues/2505/comments | 7 | 2023-04-06T18:00:51Z | 2023-09-28T16:08:56Z | https://github.com/langchain-ai/langchain/issues/2505 | 1,657,837,119 | 2,505 |

[

"hwchase17",

"langchain"

]

| When using the `refine` chain_type of the `load_summarize_chain`, I get some unique output on some longer documents, which might necessitate minor changes to the current prompt.

```

Return original summary.

```

```

The original summary remains appropriate.

```

```

No changes needed to the original summary.

```

```

The existing summary remains sufficient in capturing the key points discussed

```

```

No refinement needed, as the new context does not provide any additional information on the content of the discussion or its key takeaways.

``` | Summarize Chain Doesn't Always Return Summary When Using Refine Chain Type | https://api.github.com/repos/langchain-ai/langchain/issues/2504/comments | 10 | 2023-04-06T17:17:27Z | 2023-12-09T16:07:26Z | https://github.com/langchain-ai/langchain/issues/2504 | 1,657,785,547 | 2,504 |

[

"hwchase17",

"langchain"

]

| I would like to create a new issue on GitHub regarding the extension of ChatGPTPluginRetriever to support filters for metadata in chatGptRetrievalPlugin. With the ability to extend metadata using chatGptRetrievalPlugin, I believe there will be an increased need to consider filters for metadata as well. In fact, I myself have this need and have implemented the filter feature by extending ChatGPTPluginRetriever and RetrievalQA. While I have made limited extensions for specific use cases, I hope this feature will be supported throughout the entire library. Thank you. | I want to extend ChatGPTPluginRetriever to support filters for chatGptRetrievalPlugin. | https://api.github.com/repos/langchain-ai/langchain/issues/2501/comments | 2 | 2023-04-06T15:49:22Z | 2023-09-10T16:37:19Z | https://github.com/langchain-ai/langchain/issues/2501 | 1,657,669,963 | 2,501 |

[

"hwchase17",

"langchain"

]

| Currently the `OpenSearchVectorSearch` class [defaults to `vector_field`](https://github.com/hwchase17/langchain/blob/26314d7004f36ca01f2c843a3ac38b166c9d2c44/langchain/vectorstores/opensearch_vector_search.py#L189) as the field name of the vector field in all vector similarity searches.

This works fine if you're populating your OpenSearch instance with data via LangChain, but doesn't work well if you're attempting to query a vector field with a different name that's been populated by some other process. For maximum utility, users should be able to customize which field is being queried. | OpenSearchVectorSearch doesn't permit the user to specify a field name | https://api.github.com/repos/langchain-ai/langchain/issues/2500/comments | 6 | 2023-04-06T15:46:29Z | 2023-04-10T03:04:18Z | https://github.com/langchain-ai/langchain/issues/2500 | 1,657,666,051 | 2,500 |

[

"hwchase17",

"langchain"

]

| I am running the following in a Jupyter Notebook:

```

from langchain.agents.agent_toolkits import create_python_agent

from langchain.tools.python.tool import PythonREPLTool

from langchain.python import PythonREPL

from langchain.llms.openai import OpenAI

import os

os.environ["OPENAI_API_KEY"]="sk-xxxxxxxx"

agent_executor = create_python_agent(

llm=OpenAI(temperature=0, max_tokens=2048),

tool=PythonREPLTool(),

verbose=True,

)

agent_executor.run("""Understand, write a single neuron neural network in TensorFlow.

Take synthetic data for y=2x. Train for 1000 epochs and print every 100 epochs.

Return prediction for x = 5.""")

```

I got the following error:

```

> Entering new AgentExecutor chain...

I need to install TensorFlow and create a single neuron neural network.

Action: Python REPL

Action Input:

import tensorflow as tf

# Create the model

model = tf.keras.Sequential([tf.keras.layers.Dense(units=1, input_shape=[1])])

# Compile the model

model.compile(optimizer='sgd', loss='mean_squared_error')

# Create synthetic data

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)

ys = np.array([-2.0, 0.0, 2.0, 4.0, 6.0, 8.0], dtype=float)

# Train the model

model.fit(xs, ys, epochs=1000, verbose=100)

Observation: No module named 'tensorflow'

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: import tensorflow as tf

Observation: No module named 'tensorflow'

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought: I need to install TensorFlow

Action: Command Line

Action Input: pip install tensorflow

Observation: Command Line is not a valid tool, try another one.

Thought: I need to install TensorFlow

Action: Python REPL

Action Input: !pip install tensorflow

Observation: invalid syntax (<string>, line 1)

Thought:

> Finished chain.

'Agent stopped due to max iterations.'

```

Not sure where am I going wrong. | 'Agent stopped due to max iterations.' | https://api.github.com/repos/langchain-ai/langchain/issues/2495/comments | 5 | 2023-04-06T14:36:38Z | 2023-09-25T16:11:25Z | https://github.com/langchain-ai/langchain/issues/2495 | 1,657,547,064 | 2,495 |

[

"hwchase17",

"langchain"

]

| I have the following code:

```

docsearch = Chroma.from_documents(texts, embeddings,persist_directory=persist_directory)

```

and get the following error:

```

Retrying langchain.embeddings.openai.embed_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Requests to the Embeddings_Create Operation under Azure OpenAI API version 2022-12-01 have exceeded call rate limit of your current OpenAI S0 pricing tier. Please retry after 3 seconds. Please contact Azure support service if you would like to further increase the default rate limit.

```

The length of my ```texts``` list is less than 100 and as far as I know azure has a 400 request/min limit. That means I should not receive any limitation error. Can someone explain me what is happening which results to this error?

After these retires by Langchain, it looks like embeddings are lost and not stored in the Chroma DB. Could someone please give me a hint what I'm doing wrong?

using langchain==0.0.125

Many thanks | Azure OpenAI Embedding langchain.embeddings.openai.embed_with_retry won't provide any embeddings after retries. | https://api.github.com/repos/langchain-ai/langchain/issues/2493/comments | 33 | 2023-04-06T12:25:58Z | 2024-04-15T16:35:33Z | https://github.com/langchain-ai/langchain/issues/2493 | 1,657,331,728 | 2,493 |

[

"hwchase17",

"langchain"

]

|

persist_directory = 'chroma_db_store/index/' or 'chroma_db_store'

docsearch = Chroma(persist_directory=persist_directory, embedding_function=embeddings)

query = "Hey"

docs = docsearch.similarity_search(query)

NoIndexException: Index not found, please create an instance before querying

Folder structure

chroma_db_store:

- chroma-collections.parquet

- chroma-embeddings.parquet

- index/ | Error while loading saved index in chroma db | https://api.github.com/repos/langchain-ai/langchain/issues/2491/comments | 36 | 2023-04-06T11:52:42Z | 2024-04-18T10:42:12Z | https://github.com/langchain-ai/langchain/issues/2491 | 1,657,278,903 | 2,491 |

[

"hwchase17",

"langchain"

]

| `search_index = Chroma(persist_directory='db', embedding_function=OpenAIEmbeddings())`

but trying to do a similarity_search on it, i get this error:

`NoIndexException: Index not found, please create an instance before querying`

folder structure:

db/

- index/

- id_to_uuid_xx.pkl

- index_xx.pkl

- index_metadata_xx.pkl

- uuid_to_id_xx.pkl

- chroma-collections.parquet

- chroma-embeddings.parquet | instantiating Chroma from persist_directory not working: `NoIndexException` | https://api.github.com/repos/langchain-ai/langchain/issues/2490/comments | 2 | 2023-04-06T11:51:58Z | 2023-09-10T16:37:24Z | https://github.com/langchain-ai/langchain/issues/2490 | 1,657,277,903 | 2,490 |

[

"hwchase17",

"langchain"

]

| in PromptTemplate i am loading json and it is coming back with below error

Exception has occurred: KeyError and mentioning the key used in json in that case customerNAme | KeyError : While Loading Json Context in Template | https://api.github.com/repos/langchain-ai/langchain/issues/2489/comments | 4 | 2023-04-06T11:44:10Z | 2023-09-25T16:11:30Z | https://github.com/langchain-ai/langchain/issues/2489 | 1,657,267,209 | 2,489 |

[

"hwchase17",

"langchain"

]

| Error with the AgentOutputParser() when I follow the notebook "Conversation Agent (for Chat Models)"

`> Entering new AgentExecutor chain...

Traceback (most recent call last):

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/conversational_chat/base.py", line 106, in _extract_tool_and_input

response = self.output_parser.parse(llm_output)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/conversational_chat/base.py", line 51, in parse

response = json.loads(cleaned_output)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/json/__init__.py", line 357, in loads

return _default_decoder.decode(s)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/json/decoder.py", line 337, in decode

obj, end = self.raw_decode(s, idx=_w(s, 0).end())

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/json/decoder.py", line 355, in raw_decode

raise JSONDecodeError("Expecting value", s, err.value) from None

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "demo0_0_4.py", line 119, in <module>

sys.stdout.write(agent_executor(query)['output'])

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/chains/base.py", line 116, in __call__

raise e

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/chains/base.py", line 113, in __call__

outputs = self._call(inputs)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/agent.py", line 637, in _call

next_step_output = self._take_next_step(

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/agent.py", line 553, in _take_next_step

output = self.agent.plan(intermediate_steps, **inputs)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/agent.py", line 286, in plan

action = self._get_next_action(full_inputs)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/agent.py", line 248, in _get_next_action

parsed_output = self._extract_tool_and_input(full_output)

File "/Users/wzy/opt/anaconda3/envs/py3.8/lib/python3.8/site-packages/langchain/agents/conversational_chat/base.py", line 109, in _extract_tool_and_input

raise ValueError(f"Could not parse LLM output: {llm_output}")

ValueError: Could not parse LLM output: , wo xiang zhao yi ge hao de zhongwen yuyan xuexiao` | ValueError: Could not parse LLM output: on 'chat-conversational-react-description' | https://api.github.com/repos/langchain-ai/langchain/issues/2488/comments | 2 | 2023-04-06T11:17:28Z | 2023-09-26T16:10:32Z | https://github.com/langchain-ai/langchain/issues/2488 | 1,657,228,906 | 2,488 |

[

"hwchase17",

"langchain"

]

| My template has a part that is formatted like this:

```

Final:

[{{'instruction': 'What is the INR value of 1 USD?', 'source': 'USD', 'target': 'INR', 'amount': 1}},

{{'instruction': 'Convert 100 USD to EUR.', 'source': 'USD', 'target': 'EUR', 'amount': 100}},

{{'instruction': 'How much is 200 GBP in JPY?', 'source': 'GBP', 'target': 'JPY', 'amount': 200}},

{{'instruction': 'Convert 300 AUD to INR.', 'source': 'AUD', 'target': 'INR', 'amount': 300}},

{{'instruction': 'Convert 900 HKD to SGD.', 'source': 'HKD', 'target': 'SGD', 'amount': 900}}]

```

and when I'm trying to form a FewShotTemplate from it the error I get is

`KeyError: "'instruction'"`

What's the correct way to handle that? Am I placing my double curly braces wrong? Should there be more (`{{{` and the like)? | KeyError with double curly braces | https://api.github.com/repos/langchain-ai/langchain/issues/2487/comments | 1 | 2023-04-06T11:04:49Z | 2023-04-06T11:19:00Z | https://github.com/langchain-ai/langchain/issues/2487 | 1,657,207,499 | 2,487 |

[

"hwchase17",

"langchain"

]

| Traceback (most recent call last):

File "c:\Users\Siddhesh\Desktop\llama.cpp\langchain_test.py", line 10, in <module>

llm = LlamaCpp(model_path="C:\\Users\\Siddhesh\\Desktop\\llama.cpp\\models\\ggml-model-q4_0.bin")

File "pydantic\main.py", line 339, in pydantic.main.BaseModel.__init__

File "pydantic\main.py", line 1102, in pydantic.main.validate_model

File "C:\Users\Siddhesh\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\llms\llamacpp.py", line 117, in validate_environment

raise NameError(f"Could not load Llama model from path: {model_path}")

NameError: Could not load Llama model from path: C:\Users\Siddhesh\Desktop\llama.cpp\models\ggml-model-q4_0.bin

I have tried with raw string, double \\, and the linux path format /path/to/model - none of them worked.

The path is right and the model .bin file is in the latest ggml model format. The model format for llamacpp was recently changed from `ggml` to `ggjt` and the model files had to be recoverted into this format. Is the issue being caused because of this change? | NameError: Could not load Llama model from path | https://api.github.com/repos/langchain-ai/langchain/issues/2485/comments | 17 | 2023-04-06T10:31:43Z | 2024-03-27T14:56:25Z | https://github.com/langchain-ai/langchain/issues/2485 | 1,657,150,082 | 2,485 |

[

"hwchase17",

"langchain"

]

| If index already exists or any doc inside it, I can not update the index or add more docs to it. for example:

docsearch = ElasticVectorSearch.from_texts(texts=texts[0:10], ids=ids[0:10], embedding=embedding, elasticsearch_url=f"http://elastic:{ELASTIC_PASSWORD}@localhost:9200", index_name="test")

Get an error: BadRequestError: BadRequestError(400, 'resource_already_exists_exception', 'index [test/v_Ahq4NSS2aWm2_gLNUtpQ] already exists') | Can not overwrite docs in ElasticVectorSearch as Pinecone do | https://api.github.com/repos/langchain-ai/langchain/issues/2484/comments | 15 | 2023-04-06T10:24:28Z | 2023-09-28T16:09:01Z | https://github.com/langchain-ai/langchain/issues/2484 | 1,657,135,863 | 2,484 |

[

"hwchase17",

"langchain"

]

| I am using a Agent and wanted to stream just the final response, do you know if that is supported already? and how to do it? | using a Agent and wanted to stream just the final response | https://api.github.com/repos/langchain-ai/langchain/issues/2483/comments | 28 | 2023-04-06T09:58:04Z | 2024-07-03T10:07:39Z | https://github.com/langchain-ai/langchain/issues/2483 | 1,657,083,533 | 2,483 |

[

"hwchase17",

"langchain"

]

| I want to costum a chatmodel, what is necessary like a custom llm's _call() method? | Custom Chat model like llm | https://api.github.com/repos/langchain-ai/langchain/issues/2482/comments | 1 | 2023-04-06T09:47:06Z | 2023-09-10T16:37:29Z | https://github.com/langchain-ai/langchain/issues/2482 | 1,657,060,333 | 2,482 |

[

"hwchase17",

"langchain"

]

| I'm trying to save and restore a Langchain Agent with a custom prompt template, and I'm encountering the error `"CustomPromptTemplate" object has no field "_prompt_type"`.

Langchain version: 0.0.132

Source code:

```

import os

os.environ["OPENAI_API_KEY"] = "..."

os.environ["SERPAPI_API_KEY"] = "..."

from langchain.agents import Tool, AgentExecutor, LLMSingleActionAgent, AgentOutputParser

from langchain.prompts import StringPromptTemplate

from langchain import OpenAI, SerpAPIWrapper, LLMChain

from typing import List, Union

from langchain.schema import AgentAction, AgentFinish

import re

search = SerpAPIWrapper()

tools = [

Tool(

name = "Search",

func=search.run,

description="useful for when you need to answer questions about current events"

)

]

template = """Answer the following questions as best you can, but speaking as a pirate might speak. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin! Remember to speak as a pirate when giving your final answer. Use lots of "Arg"s

Question: {input}

{agent_scratchpad}"""

class CustomPromptTemplate(StringPromptTemplate):

# The template to use

template: str

# The list of tools available

tools: List[Tool]

def format(self, **kwargs) -> str:

# Get the intermediate steps (AgentAction, Observation tuples)

# Format them in a particular way

intermediate_steps = kwargs.pop("intermediate_steps")

thoughts = ""

for action, observation in intermediate_steps:

thoughts += action.log

thoughts += f"\nObservation: {observation}\nThought: "

# Set the agent_scratchpad variable to that value

kwargs["agent_scratchpad"] = thoughts

# Create a tools variable from the list of tools provided

kwargs["tools"] = "\n".join([f"{tool.name}: {tool.description}" for tool in self.tools])

# Create a list of tool names for the tools provided

kwargs["tool_names"] = ", ".join([tool.name for tool in self.tools])

return self.template.format(**kwargs)

prompt = CustomPromptTemplate(

template=template,

tools=tools,

# This omits the `agent_scratchpad`, `tools`, and `tool_names` variables because those are generated dynamically

# This includes the `intermediate_steps` variable because that is needed

input_variables=["input", "intermediate_steps"]

)

class CustomOutputParser(AgentOutputParser):

def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:

# Check if agent should finish

if "Final Answer:" in llm_output:

return AgentFinish(

# Return values is generally always a dictionary with a single `output` key

# It is not recommended to try anything else at the moment :)

return_values={"output": llm_output.split("Final Answer:")[-1].strip()},

log=llm_output,

)

# Parse out the action and action input

regex = r"Action: (.*?)[\n]*Action Input:[\s]*(.*)"

match = re.search(regex, llm_output, re.DOTALL)

if not match:

raise ValueError(f"Could not parse LLM output: `{llm_output}`")

action = match.group(1).strip()

action_input = match.group(2)

# Return the action and action input

return AgentAction(tool=action, tool_input=action_input.strip(" ").strip('"'), log=llm_output)

output_parser = CustomOutputParser()

llm = OpenAI(temperature=0)

# LLM chain consisting of the LLM and a prompt

llm_chain = LLMChain(llm=llm, prompt=prompt)

tool_names = [tool.name for tool in tools]

agent = LLMSingleActionAgent(

llm_chain=llm_chain,

output_parser=output_parser,

stop=["\nObservation:"],

allowed_tools=tool_names

)

agent_executor = AgentExecutor.from_agent_and_tools(agent=agent, tools=tools, verbose=True)

agent_executor.run("How many people live in canada as of 2023?")

agent.save("agent.yaml")

```

Error:

```

Traceback (most recent call last):

File "agent.py", line 114, in <module>

agent.save("agent.yaml")

File "/Users/corey.zumar/opt/anaconda3/envs/base2/lib/python3.8/site-packages/langchain/agents/agent.py", line 130, in save

agent_dict = self.dict()

File "/Users/corey.zumar/opt/anaconda3/envs/base2/lib/python3.8/site-packages/langchain/agents/agent.py", line 104, in dict

_dict = super().dict()

File "pydantic/main.py", line 445, in pydantic.main.BaseModel.dict

File "pydantic/main.py", line 843, in _iter

File "pydantic/main.py", line 718, in pydantic.main.BaseModel._get_value

File "/Users/corey.zumar/opt/anaconda3/envs/base2/lib/python3.8/site-packages/langchain/chains/base.py", line 248, in dict

_dict = super().dict()

File "pydantic/main.py", line 445, in pydantic.main.BaseModel.dict

File "pydantic/main.py", line 843, in _iter

File "pydantic/main.py", line 718, in pydantic.main.BaseModel._get_value

File "/Users/corey.zumar/opt/anaconda3/envs/base2/lib/python3.8/site-packages/langchain/prompts/base.py", line 154, in dict

prompt_dict["_type"] = self._prompt_type

File "/Users/corey.zumar/opt/anaconda3/envs/base2/lib/python3.8/site-packages/langchain/prompts/base.py", line 149, in _prompt_type

raise NotImplementedError

NotImplementedError

```

Thanks in advance for your help! | Can't save a custom agent: "CustomPromptTemplate" object has no field "_prompt_type" | https://api.github.com/repos/langchain-ai/langchain/issues/2481/comments | 1 | 2023-04-06T08:33:35Z | 2023-09-10T16:37:34Z | https://github.com/langchain-ai/langchain/issues/2481 | 1,656,924,309 | 2,481 |

[

"hwchase17",

"langchain"

]

| For example, I want to build an agent with a Q&A tool (wrap the [Q&A with source chain](https://python.langchain.com/en/latest/modules/chains/index_examples/qa_with_sources.html) ) as private knowledge base, together with several other tools to produce the final answer

What's the suggested way to pass the "sources" information to the agent and return in the final answer? For the end user, the experience is similar as what new bing does.

| Passing data from tool to agent | https://api.github.com/repos/langchain-ai/langchain/issues/2478/comments | 2 | 2023-04-06T08:04:52Z | 2023-09-18T16:20:43Z | https://github.com/langchain-ai/langchain/issues/2478 | 1,656,881,285 | 2,478 |

[

"hwchase17",

"langchain"

]

| I also tried this for the `csv_agent`. It just gets confused . The default `text-davinci` works in one go.

| support gpt-3.5-turbo model for agent toolkits | https://api.github.com/repos/langchain-ai/langchain/issues/2476/comments | 1 | 2023-04-06T06:25:09Z | 2023-09-10T16:37:44Z | https://github.com/langchain-ai/langchain/issues/2476 | 1,656,743,871 | 2,476 |

[

"hwchase17",

"langchain"

]

| Does langchain have Atlassian Confluence support like Llama Hub? | Atlassian Confluence support | https://api.github.com/repos/langchain-ai/langchain/issues/2473/comments | 24 | 2023-04-06T05:29:57Z | 2023-12-13T16:10:48Z | https://github.com/langchain-ai/langchain/issues/2473 | 1,656,690,780 | 2,473 |

[

"hwchase17",

"langchain"

]

| `text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0) `

If I do this, some text will not be split strictly by default ‘\n\n’ like:

'In state after state, new laws have been passed, not only to suppress the vote, but to subvert entire elections. \n\nWe cannot let this happen. \n\nTonight. I call on the Senate to: Pass the Freedom to Vote Act. Pass the John Lewis Voting Rights Act. And while you’re at it, pass the Disclose Act so Americans can know who is funding our elections. \n\nTonight, I’d like to honor someone who has dedicated his life to serve this country: Justice Stephen Breyer—an Army veteran, Constitutional scholar, and retiring Justice of the United States Supreme Court. Justice Breyer, thank you for your service. \n\nOne of the most serious constitutional responsibilities a President has is nominating someone to serve on the United States Supreme Court. \n\nAnd I did that 4 days ago, when I nominated Circuit Court of Appeals Judge Ketanji Brown Jackson. One of our nation’s top legal minds, who will continue Justice Breyer’s legacy of excellence.'

| how to split documents | https://api.github.com/repos/langchain-ai/langchain/issues/2469/comments | 1 | 2023-04-06T04:24:01Z | 2023-04-25T12:48:06Z | https://github.com/langchain-ai/langchain/issues/2469 | 1,656,641,706 | 2,469 |

[

"hwchase17",

"langchain"

]

| Previously, for standard language models setting `batch_size` would control concurrent LLM requests, reducing the risk of timeouts and network issues (https://github.com/hwchase17/langchain/issues/1145).

New chat models don't seem to support this parameter. See the following example:

```python

from langchain.chat_models import ChatOpenAI

chain = load_summarize_chain(ChatOpenAI(batch_size=1), chain_type="map_reduce")

chain.run(docs)

File /usr/local/lib/python3.9/site-packages/openai/api_requestor.py:683, in APIRequestor._interpret_response_line(self, rbody, rcode, rheaders, stream)

681 stream_error = stream and "error" in resp.data

682 if stream_error or not 200 <= rcode < 300:

--> 683 raise self.handle_error_response(

684 rbody, rcode, resp.data, rheaders, stream_error=stream_error

685 )

686 return resp

InvalidRequestError: Unrecognized request argument supplied: batch_size

```

Is this intentional? Happy to cut a PR! | Batch Size for Chat Models | https://api.github.com/repos/langchain-ai/langchain/issues/2465/comments | 3 | 2023-04-06T03:45:40Z | 2023-10-16T14:37:35Z | https://github.com/langchain-ai/langchain/issues/2465 | 1,656,615,689 | 2,465 |

[

"hwchase17",

"langchain"

]

| Hey!

First things first, I just want to say thanks for this amazing project :)

In MapReduceDocumentsChain class, when you call it asynchronously, i.e. with `acombine_docs`, it still calls the same `self.process_results` method as it does when calling the regular `combine_docs`. I expected, that when you use async, it would do both map and reduce (self.process_results is basically the reduce step, if I understand correctly) steps async.

The relevant parts in [map_reduce.py](https://github.com/hwchase17/langchain/blob/master/langchain/chains/combine_documents/map_reduce.py):

```python

class MapReduceDocumentsChain(BaseCombineDocumentsChain, BaseModel):

async def acombine_docs(

self, docs: List[Document], **kwargs: Any

) -> Tuple[str, dict]:

...

results = ...

return self._process_results(results, docs, **kwargs)

def _process_results(

self,

results: List[Dict],

docs: List[Document],

token_max: int = 3000,

**kwargs: Any,

) -> Tuple[str, dict]:

....

output, _ = self.combine_document_chain.combine_docs(result_docs, **kwargs) # this should be acombine_docs

return output, extra_return_dict

```

This could be fixed by, for example, adding the async version for processing results `_aprocess_results` and using that instead when doing async calls.

I would be happy to write a fix for this, just wanted to confirm if it's indeed a problem and I'm not missing something (the reason I stumbled on this is because I am working on a task similar to summarization and was looking for ways to speed things up). | Adding async support for reduce step in MapReduceDocumentsChain | https://api.github.com/repos/langchain-ai/langchain/issues/2464/comments | 3 | 2023-04-06T00:24:31Z | 2023-12-06T17:47:15Z | https://github.com/langchain-ai/langchain/issues/2464 | 1,656,491,381 | 2,464 |

[

"hwchase17",

"langchain"

]

| When I call

```python

retriever = PineconeHybridSearchRetriever(embeddings=embeddings, index=index, tokenizer=tokenizer)

retriever.get_relevant_documents(query)

```

I'm getting the error:

```

final_result.append(Document(page_content=res["metadata"]["context"]))

KeyError: 'context'

```

What is that `context`? | KeyError: 'context' when using PineconeHybridSearchRetriever | https://api.github.com/repos/langchain-ai/langchain/issues/2459/comments | 3 | 2023-04-05T20:46:00Z | 2024-05-12T18:19:37Z | https://github.com/langchain-ai/langchain/issues/2459 | 1,656,270,923 | 2,459 |

[

"hwchase17",

"langchain"

]

| During an experiment I tryied to load some personal whatsapp conversations into a vectorstore. But loading was failing. Following there's an example of a dataset and code with some half lines working and half failing:

Dataset (whatsapp_chat.txt):

```

19/10/16, 13:24 - Aitor Mira: Buenas Andrea!

19/10/16, 13:24 - Aitor Mira: Si

19/10/16, 13:24 PM - Aitor Mira: Buenas Andrea!

19/10/16, 13:24 PM - Aitor Mira: Si

```

Code:

```python

from langchain.document_loaders import WhatsAppChatLoader

loader = WhatsAppChatLoader("../data/whatsapp_chat.txt")

docs = loader.load()

```

Returns:

```

[Document(page_content='Aitor Mira on 19/10/16, 13:24 PM: Buenas Andrea!\n\nAitor Mira on 19/10/16, 13:24 PM: Si\n\n', metadata={'source': '.[.\\data\\whatsapp_chat.txt](https://file+.vscode-resource.vscode-cdn.net/c%3A/Users/itort/Documents/GiTor/impersonate-gpt/notebooks//data//whatsapp_chat.txt)'})]

```

What's happening is that due to a bug in the regex match pattern, all lines without `AM` or `PM` after the hour:minutes won't be matched. Thus two first lines of whatsapp_chat.txt are ignored and two last matched.

Here the buggy regex:

`r"(\d{1,2}/\d{1,2}/\d{2,4}, \d{1,2}:\d{1,2} (?:AM|PM)) - (.*?): (.*)"`

Here the solution regex parsing either 12 or 24 hours time formats:

`r"(\d{1,2}/\d{1,2}/\d{2,4}, \d{1,2}:\d{1,2}(?: AM| PM)?) - (.*?): (.*)"` | WhatsAppChatLoader fails to load 24 hours time format chats | https://api.github.com/repos/langchain-ai/langchain/issues/2457/comments | 1 | 2023-04-05T20:39:23Z | 2023-04-06T16:45:16Z | https://github.com/langchain-ai/langchain/issues/2457 | 1,656,260,004 | 2,457 |

[

"hwchase17",

"langchain"

]

| In following the docs for Agent ToolKits, specifically for OpenAPI, I encountered a bug in `reduce_openapi_spec` where the url has `https:` trunc'd off leading to errors when the Agent attempts to make a request.

I'm able to correct it by hardcoding the value for the url in `servers`:

- `api_spec.servers[0]['url'] = 'https://api.foo.com`

I looked through `reduce_openapi_spec` but don't obviously see where the bug is being introduced. | reduce_openapi_spec removes https: from url | https://api.github.com/repos/langchain-ai/langchain/issues/2456/comments | 5 | 2023-04-05T20:26:58Z | 2023-10-25T20:48:18Z | https://github.com/langchain-ai/langchain/issues/2456 | 1,656,244,370 | 2,456 |

[

"hwchase17",

"langchain"

]

| Hi there,

Going through the docs, and it seems there is only SQLAgent available that works for the Postgres/MySQL databases. Is there any support for the Clickhouse database?

| Clickhouse langchain agent? | https://api.github.com/repos/langchain-ai/langchain/issues/2454/comments | 3 | 2023-04-05T20:20:48Z | 2024-03-28T03:37:12Z | https://github.com/langchain-ai/langchain/issues/2454 | 1,656,231,938 | 2,454 |

[

"hwchase17",

"langchain"

]