issue_owner_repo

listlengths 2

2

| issue_body

stringlengths 0

261k

⌀ | issue_title

stringlengths 1

925

| issue_comments_url

stringlengths 56

81

| issue_comments_count

int64 0

2.5k

| issue_created_at

stringlengths 20

20

| issue_updated_at

stringlengths 20

20

| issue_html_url

stringlengths 37

62

| issue_github_id

int64 387k

2.46B

| issue_number

int64 1

127k

|

|---|---|---|---|---|---|---|---|---|---|

[

"hwchase17",

"langchain"

]

| can you tell me how to use local llm to replace the openai model,thanks, i can not find related codes | replace openai | https://api.github.com/repos/langchain-ai/langchain/issues/2369/comments | 4 | 2023-04-04T03:50:18Z | 2023-09-26T16:11:28Z | https://github.com/langchain-ai/langchain/issues/2369 | 1,653,125,585 | 2,369 |

[

"hwchase17",

"langchain"

]

| Token usage calculation is not working for ChatOpenAI.

# How to reproduce

```python3

from langchain.callbacks import get_openai_callback

from langchain.chat_models import ChatOpenAI

from langchain.schema import (

AIMessage,

HumanMessage,

SystemMessage

)

chat = ChatOpenAI(model_name="gpt-3.5-turbo")

with get_openai_callback() as cb:

result = chat([HumanMessage(content="Tell me a joke")])

print(f"Total Tokens: {cb.total_tokens}")

print(f"Prompt Tokens: {cb.prompt_tokens}")

print(f"Completion Tokens: {cb.completion_tokens}")

print(f"Successful Requests: {cb.successful_requests}")

print(f"Total Cost (USD): ${cb.total_cost}")

```

Output:

```text

Total Tokens: 0

Prompt Tokens: 0

Completion Tokens: 0

Successful Requests: 0

Total Cost (USD): $0.0

```

# Possible fix

The following patch fixes the issues, but breaks the linter.

```diff

From f60afc48c9082fc6b09d69b8c8375353acc9fc0b Mon Sep 17 00:00:00 2001

From: Fabio Perez <[email protected]>

Date: Mon, 3 Apr 2023 19:06:34 -0300

Subject: [PATCH] Fix token usage in ChatOpenAI

---

langchain/chat_models/openai.py | 4 +++-

1 file changed, 3 insertions(+), 1 deletion(-)

diff --git a/langchain/chat_models/openai.py b/langchain/chat_models/openai.py

index c7ee4bd..a8d5fbd 100644

--- a/langchain/chat_models/openai.py

+++ b/langchain/chat_models/openai.py

@@ -274,7 +274,9 @@ class ChatOpenAI(BaseChatModel, BaseModel):

gen = ChatGeneration(message=message)

generations.append(gen)

llm_output = {"token_usage": response["usage"], "model_name": self.model_name}

- return ChatResult(generations=generations, llm_output=llm_output)

+ result = ChatResult(generations=generations, llm_output=llm_output)

+ self.callback_manager.on_llm_end(result, verbose=self.verbose)

+ return result

async def _agenerate(

self, messages: List[BaseMessage], stop: Optional[List[str]] = None

--

2.39.2 (Apple Git-143)

```

I tried to change the signature of `on_llm_end` (langchain/callbacks/base.py) to:

```python

async def on_llm_end(

self, response: Union[LLMResult, ChatResult], **kwargs: Any

) -> None:

```

but this will break many places, so I'm not sure if that's the best way to fix this issue. | Token usage calculation is not working for ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2359/comments | 15 | 2023-04-03T22:36:12Z | 2023-11-16T07:16:05Z | https://github.com/langchain-ai/langchain/issues/2359 | 1,652,866,109 | 2,359 |

[

"hwchase17",

"langchain"

]

| This is because the SQLDatabase class does not have view support. | SQLDatabaseChain & the SQL Database Agent do not support generating queries over views | https://api.github.com/repos/langchain-ai/langchain/issues/2356/comments | 5 | 2023-04-03T20:35:17Z | 2023-04-12T19:29:45Z | https://github.com/langchain-ai/langchain/issues/2356 | 1,652,736,092 | 2,356 |

[

"hwchase17",

"langchain"

]

| I haven't found a method for it in the class but I assumed it can look similar to `from_existing_index`

```

@classmethod

def from_existing_collection(

cls,

collection_name: str,

embedding: Embeddings,

text_key: str = "text",

namespace: Optional[str] = None,

) -> Pinecone:

```

@hwchase17 I am happy to try to make a PR but wanted to ask here first in case someone is already working on it so that there is no duplicate work. | [Pinecone] How to use collection to query against instead of an index? | https://api.github.com/repos/langchain-ai/langchain/issues/2353/comments | 3 | 2023-04-03T20:13:21Z | 2023-04-16T03:09:26Z | https://github.com/langchain-ai/langchain/issues/2353 | 1,652,709,721 | 2,353 |

[

"hwchase17",

"langchain"

]

| steps to reproduce are fairly simple:

```python

Python 3.10.10 (main, Mar 5 2023, 22:26:53) [GCC 12.2.1 20230201] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from langchain.document_loaders import YoutubeLoader

>>> loader = YoutubeLoader("https://www.youtube.com/watch?v=QsYGlZkevEg", add_video_info=True)

>>> print(loader.load())

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/cpunch/.local/lib/python3.10/site-packages/langchain/document_loaders/youtube.py", line 132, in load

transcript_list = YouTubeTranscriptApi.list_transcripts(self.video_id)

File "/home/cpunch/.local/lib/python3.10/site-packages/youtube_transcript_api/_api.py", line 71, in list_transcripts

return TranscriptListFetcher(http_client).fetch(video_id)

File "/home/cpunch/.local/lib/python3.10/site-packages/youtube_transcript_api/_transcripts.py", line 47, in fetch

self._extract_captions_json(self._fetch_video_html(video_id), video_id)

File "/home/cpunch/.local/lib/python3.10/site-packages/youtube_transcript_api/_transcripts.py", line 59, in _extract_

captions_json

raise TranscriptsDisabled(video_id)

youtube_transcript_api._errors.TranscriptsDisabled:

Could not retrieve a transcript for the video https://www.youtube.com/watch?v=https://www.youtube.com/watch?v=QsYGlZkev

Eg! This is most likely caused by:

Subtitles are disabled for this video

If you are sure that the described cause is not responsible for this error and that a transcript should be retrievable,

please create an issue at https://github.com/jdepoix/youtube-transcript-api/issues. Please add which version of youtub

e_transcript_api you are using and provide the information needed to replicate the error. Also make sure that there are

no open issues which already describe your problem!

>>>

``` | Broken Youtube Transcript loader | https://api.github.com/repos/langchain-ai/langchain/issues/2349/comments | 1 | 2023-04-03T18:02:04Z | 2023-04-03T18:03:44Z | https://github.com/langchain-ai/langchain/issues/2349 | 1,652,520,952 | 2,349 |

[

"hwchase17",

"langchain"

]

| Hi,

I have been trying to use flan-u2l for my usecase using sequential chains. However, I am getting following token limit error even though FLAN-U2L has receptive of 2048 token size according to paper:

```ValueError: Error raised by inference API: Input validation error: `inputs` must have less than 1000 tokens. Given: 1112```

Please help me to resolve this issue.

Is their anything I am missing to change the input token size of FLAN-U2L.? | Token Size Limit Issue While calling FLAN-U2L using Langchain! | https://api.github.com/repos/langchain-ai/langchain/issues/2347/comments | 2 | 2023-04-03T16:58:56Z | 2023-09-18T16:21:28Z | https://github.com/langchain-ai/langchain/issues/2347 | 1,652,435,441 | 2,347 |

[

"hwchase17",

"langchain"

]

| Can check here: https://replit.com/@OlegAzava/LangChainChatSave

```python

Traceback (most recent call last):

File "main.py", line 15, in <module>

chat_prompt.save('./test.json')

File "/home/runner/LangChainChatSave/venv/lib/python3.10/site-packages/langchain/prompts/chat.py", line 187, in save

raise NotImplementedError

NotImplementedError

```

<img width="1460" alt="image" src="https://user-images.githubusercontent.com/3731173/229548079-44063f13-9ea3-4eff-a236-68d57ceee011.png">

### Expectations on timing or accepting help on this one?

| Cannot save multi message chat prompt | https://api.github.com/repos/langchain-ai/langchain/issues/2341/comments | 2 | 2023-04-03T14:58:23Z | 2023-09-18T16:21:33Z | https://github.com/langchain-ai/langchain/issues/2341 | 1,652,240,892 | 2,341 |

[

"hwchase17",

"langchain"

]

| Hi, I think it's currently impossible to pass a user parameter - this is helpful for complying with abuse monitoring guidelines of both Azure OpenAI and OpenAI.

I would like to request this feature if not roadmapped.

Thanks! | OpenAI / Azure OpenAI missing optional user parameter | https://api.github.com/repos/langchain-ai/langchain/issues/2338/comments | 2 | 2023-04-03T11:51:42Z | 2023-11-13T15:43:48Z | https://github.com/langchain-ai/langchain/issues/2338 | 1,651,912,299 | 2,338 |

[

"hwchase17",

"langchain"

]

| Hi,

I have been using Langchain for my usecase with ChatGPT and I would like to know the expected pricing for my prompts + outputs that I generate. Is there any way we can calculate pricing for it using lang-chain?

Is there any way we can get the total token used during the request similar to when using the OpenAI ChatGPT API package, in lang-chain?

Please help me out.

Thanks | How to calculate pricing for ChatGPT API using Sequential Chaining ? | https://api.github.com/repos/langchain-ai/langchain/issues/2336/comments | 3 | 2023-04-03T11:11:32Z | 2023-04-03T16:42:03Z | https://github.com/langchain-ai/langchain/issues/2336 | 1,651,856,598 | 2,336 |

[

"hwchase17",

"langchain"

]

| ```

$ poetry install

Installing dependencies from lock file

Warning: poetry.lock is not consistent with pyproject.toml. You may be getting improper dependencies. Run `poetry lock [--no-update]` to fix it.

```

```

$ poetry update

Updating dependencies

Resolving dependencies... (76.9s)

Writing lock file

Package operations: 0 installs, 10 updates, 0 removals

• Updating platformdirs (3.1.1 -> 3.2.0)

• Updating pywin32 (305 -> 306)

• Updating ipython (8.11.0 -> 8.12.0)

• Updating types-pyopenssl (23.1.0.0 -> 23.1.0.1)

• Updating types-toml (0.10.8.5 -> 0.10.8.6)

• Updating types-urllib3 (1.26.25.8 -> 1.26.25.10)

• Updating black (23.1.0 -> 23.3.0)

• Updating types-pyyaml (6.0.12.8 -> 6.0.12.9)

• Updating types-redis (4.5.3.0 -> 4.5.4.1)

• Updating types-requests (2.28.11.16 -> 2.28.11.17)

``` | poetry.lock is not consistent with pyproject.toml | https://api.github.com/repos/langchain-ai/langchain/issues/2335/comments | 5 | 2023-04-03T10:47:10Z | 2023-09-11T09:34:41Z | https://github.com/langchain-ai/langchain/issues/2335 | 1,651,815,456 | 2,335 |

[

"hwchase17",

"langchain"

]

| ```

Request:

- GET https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains%5Cpath%5Cchain.json

Available matches:

- GET https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json URL does not match

.venv\lib\site-packages\responses\__init__.py:1032: ConnectionError

```

Full log:

```

Administrator@WIN-CNQJV5TD9DP MINGW64 /d/Projects/Pycharm/sergerdn/langchain (fix/dockerfile)

$ make tests

poetry run pytest tests/unit_tests

========================================================================================================= test session starts =========================================================================================================

platform win32 -- Python 3.10.10, pytest-7.2.2, pluggy-1.0.0

rootdir: D:\Projects\Pycharm\sergerdn\langchain

plugins: asyncio-0.20.3, cov-4.0.0, dotenv-0.5.2

asyncio: mode=strict

collected 207 items

tests\unit_tests\test_bash.py ssss [ 1%]

tests\unit_tests\test_formatting.py ... [ 3%]

tests\unit_tests\test_python.py ...... [ 6%]

tests\unit_tests\test_sql_database.py .... [ 8%]

tests\unit_tests\test_sql_database_schema.py .. [ 9%]

tests\unit_tests\test_text_splitter.py ........... [ 14%]

tests\unit_tests\agents\test_agent.py ......... [ 18%]

tests\unit_tests\agents\test_mrkl.py ......... [ 23%]

tests\unit_tests\agents\test_react.py .... [ 25%]

tests\unit_tests\agents\test_tools.py ........ [ 28%]

tests\unit_tests\callbacks\test_callback_manager.py ........ [ 32%]

tests\unit_tests\callbacks\tracers\test_tracer.py ............. [ 39%]

tests\unit_tests\chains\test_api.py . [ 39%]

tests\unit_tests\chains\test_base.py .............. [ 46%]

tests\unit_tests\chains\test_combine_documents.py ........ [ 50%]

tests\unit_tests\chains\test_constitutional_ai.py . [ 50%]

tests\unit_tests\chains\test_conversation.py ........... [ 56%]

tests\unit_tests\chains\test_hyde.py .. [ 57%]

tests\unit_tests\chains\test_llm.py ..... [ 59%]

tests\unit_tests\chains\test_llm_bash.py s [ 59%]

tests\unit_tests\chains\test_llm_checker.py . [ 60%]

tests\unit_tests\chains\test_llm_math.py ... [ 61%]

tests\unit_tests\chains\test_llm_summarization_checker.py . [ 62%]

tests\unit_tests\chains\test_memory.py .... [ 64%]

tests\unit_tests\chains\test_natbot.py .. [ 65%]

tests\unit_tests\chains\test_sequential.py ........... [ 70%]

tests\unit_tests\chains\test_transform.py .. [ 71%]

tests\unit_tests\docstore\test_inmemory.py .... [ 73%]

tests\unit_tests\llms\test_base.py .. [ 74%]

tests\unit_tests\llms\test_callbacks.py .. [ 75%]

tests\unit_tests\llms\test_loading.py . [ 75%]

tests\unit_tests\llms\test_utils.py .. [ 76%]

tests\unit_tests\output_parsers\test_pydantic_parser.py .. [ 77%]

tests\unit_tests\output_parsers\test_regex_dict.py . [ 78%]

tests\unit_tests\prompts\test_chat.py ... [ 79%]

tests\unit_tests\prompts\test_few_shot.py ....... [ 83%]

tests\unit_tests\prompts\test_few_shot_with_templates.py . [ 83%]

tests\unit_tests\prompts\test_length_based_example_selector.py .... [ 85%]

tests\unit_tests\prompts\test_loading.py ........ [ 89%]

tests\unit_tests\prompts\test_prompt.py ........... [ 94%]

tests\unit_tests\prompts\test_utils.py . [ 95%]

tests\unit_tests\tools\test_json.py .... [ 97%]

tests\unit_tests\utilities\test_loading.py ...FEFEFE [100%]

=============================================================================================================== ERRORS ================================================================================================================

_______________________________________________________________________________________________ ERROR at teardown of test_success[None] _______________________________________________________________________________________________

@pytest.fixture(autouse=True)

def mocked_responses() -> Iterable[responses.RequestsMock]:

"""Fixture mocking requests.get."""

> with responses.RequestsMock() as rsps:

tests\unit_tests\utilities\test_loading.py:19:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

.venv\lib\site-packages\responses\__init__.py:913: in __exit__

self.stop(allow_assert=success)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B5406BF0>, allow_assert = True

def stop(self, allow_assert: bool = True) -> None:

if self._patcher:

# prevent stopping unstarted patchers

self._patcher.stop()

# once patcher is stopped, clean it. This is required to create a new

# fresh patcher on self.start()

self._patcher = None

if not self.assert_all_requests_are_fired:

return

if not allow_assert:

return

not_called = [m for m in self.registered() if m.call_count == 0]

if not_called:

> raise AssertionError(

"Not all requests have been executed {0!r}".format(

[(match.method, match.url) for match in not_called]

)

)

E AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json')]

.venv\lib\site-packages\responses\__init__.py:1112: AssertionError

_______________________________________________________________________________________________ ERROR at teardown of test_success[v0.3] _______________________________________________________________________________________________

@pytest.fixture(autouse=True)

def mocked_responses() -> Iterable[responses.RequestsMock]:

"""Fixture mocking requests.get."""

> with responses.RequestsMock() as rsps:

tests\unit_tests\utilities\test_loading.py:19:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

.venv\lib\site-packages\responses\__init__.py:913: in __exit__

self.stop(allow_assert=success)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B545F820>, allow_assert = True

def stop(self, allow_assert: bool = True) -> None:

if self._patcher:

# prevent stopping unstarted patchers

self._patcher.stop()

# once patcher is stopped, clean it. This is required to create a new

# fresh patcher on self.start()

self._patcher = None

if not self.assert_all_requests_are_fired:

return

if not allow_assert:

return

not_called = [m for m in self.registered() if m.call_count == 0]

if not_called:

> raise AssertionError(

"Not all requests have been executed {0!r}".format(

[(match.method, match.url) for match in not_called]

)

)

E AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json')]

.venv\lib\site-packages\responses\__init__.py:1112: AssertionError

______________________________________________________________________________________________ ERROR at teardown of test_failed_request _______________________________________________________________________________________________

@pytest.fixture(autouse=True)

def mocked_responses() -> Iterable[responses.RequestsMock]:

"""Fixture mocking requests.get."""

> with responses.RequestsMock() as rsps:

tests\unit_tests\utilities\test_loading.py:19:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

.venv\lib\site-packages\responses\__init__.py:913: in __exit__

self.stop(allow_assert=success)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B42E75E0>, allow_assert = True

def stop(self, allow_assert: bool = True) -> None:

if self._patcher:

# prevent stopping unstarted patchers

self._patcher.stop()

# once patcher is stopped, clean it. This is required to create a new

# fresh patcher on self.start()

self._patcher = None

if not self.assert_all_requests_are_fired:

return

if not allow_assert:

return

not_called = [m for m in self.registered() if m.call_count == 0]

if not_called:

> raise AssertionError(

"Not all requests have been executed {0!r}".format(

[(match.method, match.url) for match in not_called]

)

)

E AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json')]

.venv\lib\site-packages\responses\__init__.py:1112: AssertionError

============================================================================================================== FAILURES ===============================================================================================================

_________________________________________________________________________________________________________ test_success[None] __________________________________________________________________________________________________________

mocked_responses = <responses.RequestsMock object at 0x00000224B5406BF0>, ref = 'master'

@pytest.mark.parametrize("ref", [None, "v0.3"])

def test_success(mocked_responses: responses.RequestsMock, ref: str) -> None:

"""Test that a valid hub path is loaded correctly with and without a ref."""

path = "chains/path/chain.json"

lc_path_prefix = f"lc{('@' + ref) if ref else ''}://"

valid_suffixes = {"json"}

body = json.dumps({"foo": "bar"})

ref = ref or DEFAULT_REF

file_contents = None

def loader(file_path: str) -> None:

nonlocal file_contents

assert file_contents is None

file_contents = Path(file_path).read_text()

mocked_responses.get(

urljoin(URL_BASE.format(ref=ref), path),

body=body,

status=200,

content_type="application/json",

)

> try_load_from_hub(f"{lc_path_prefix}{path}", loader, "chains", valid_suffixes)

tests\unit_tests\utilities\test_loading.py:80:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

langchain\utilities\loading.py:42: in try_load_from_hub

r = requests.get(full_url, timeout=5)

.venv\lib\site-packages\requests\api.py:73: in get

return request("get", url, params=params, **kwargs)

.venv\lib\site-packages\requests\api.py:59: in request

return session.request(method=method, url=url, **kwargs)

.venv\lib\site-packages\requests\sessions.py:587: in request

resp = self.send(prep, **send_kwargs)

.venv\lib\site-packages\requests\sessions.py:701: in send

r = adapter.send(request, **kwargs)

.venv\lib\site-packages\responses\__init__.py:1090: in unbound_on_send

return self._on_request(adapter, request, *a, **kwargs)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B5406BF0>, adapter = <requests.adapters.HTTPAdapter object at 0x00000224B5406E90>, request = <PreparedRequest [GET]>, retries = None

kwargs = {'cert': None, 'proxies': OrderedDict(), 'stream': False, 'timeout': 5, ...}, match = None, match_failed_reasons = ['URL does not match'], resp_callback = None

error_msg = "Connection refused by Responses - the call doesn't match any registered mock.\n\nRequest: \n- GET https://raw.githubu...s:\n- GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json U

RL does not match\n"

i = 0, m = <Response(url='https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json' status=200 content_type='application/json' headers='null')>

def _on_request(

self,

adapter: "HTTPAdapter",

request: "PreparedRequest",

*,

retries: Optional["_Retry"] = None,

**kwargs: Any,

) -> "models.Response":

# add attributes params and req_kwargs to 'request' object for further match comparison

# original request object does not have these attributes

request.params = self._parse_request_params(request.path_url) # type: ignore[attr-defined]

request.req_kwargs = kwargs # type: ignore[attr-defined]

request_url = str(request.url)

match, match_failed_reasons = self._find_match(request)

resp_callback = self.response_callback

if match is None:

if any(

[

p.match(request_url)

if isinstance(p, Pattern)

else request_url.startswith(p)

for p in self.passthru_prefixes

]

):

logger.info("request.allowed-passthru", extra={"url": request_url})

return _real_send(adapter, request, **kwargs)

error_msg = (

"Connection refused by Responses - the call doesn't "

"match any registered mock.\n\n"

"Request: \n"

f"- {request.method} {request_url}\n\n"

"Available matches:\n"

)

for i, m in enumerate(self.registered()):

error_msg += "- {} {} {}\n".format(

m.method, m.url, match_failed_reasons[i]

)

if self.passthru_prefixes:

error_msg += "Passthru prefixes:\n"

for p in self.passthru_prefixes:

error_msg += "- {}\n".format(p)

response = ConnectionError(error_msg)

response.request = request

self._calls.add(request, response)

> raise response

E requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

E

E Request:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains%5Cpath%5Cchain.json

E

E Available matches:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json URL does not match

.venv\lib\site-packages\responses\__init__.py:1032: ConnectionError

_________________________________________________________________________________________________________ test_success[v0.3] __________________________________________________________________________________________________________

mocked_responses = <responses.RequestsMock object at 0x00000224B545F820>, ref = 'v0.3'

@pytest.mark.parametrize("ref", [None, "v0.3"])

def test_success(mocked_responses: responses.RequestsMock, ref: str) -> None:

"""Test that a valid hub path is loaded correctly with and without a ref."""

path = "chains/path/chain.json"

lc_path_prefix = f"lc{('@' + ref) if ref else ''}://"

valid_suffixes = {"json"}

body = json.dumps({"foo": "bar"})

ref = ref or DEFAULT_REF

file_contents = None

def loader(file_path: str) -> None:

nonlocal file_contents

assert file_contents is None

file_contents = Path(file_path).read_text()

mocked_responses.get(

urljoin(URL_BASE.format(ref=ref), path),

body=body,

status=200,

content_type="application/json",

)

> try_load_from_hub(f"{lc_path_prefix}{path}", loader, "chains", valid_suffixes)

tests\unit_tests\utilities\test_loading.py:80:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

langchain\utilities\loading.py:42: in try_load_from_hub

r = requests.get(full_url, timeout=5)

.venv\lib\site-packages\requests\api.py:73: in get

return request("get", url, params=params, **kwargs)

.venv\lib\site-packages\requests\api.py:59: in request

return session.request(method=method, url=url, **kwargs)

.venv\lib\site-packages\requests\sessions.py:587: in request

resp = self.send(prep, **send_kwargs)

.venv\lib\site-packages\requests\sessions.py:701: in send

r = adapter.send(request, **kwargs)

.venv\lib\site-packages\responses\__init__.py:1090: in unbound_on_send

return self._on_request(adapter, request, *a, **kwargs)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B545F820>, adapter = <requests.adapters.HTTPAdapter object at 0x00000224B545FE80>, request = <PreparedRequest [GET]>, retries = None

kwargs = {'cert': None, 'proxies': OrderedDict(), 'stream': False, 'timeout': 5, ...}, match = None, match_failed_reasons = ['URL does not match'], resp_callback = None

error_msg = "Connection refused by Responses - the call doesn't match any registered mock.\n\nRequest: \n- GET https://raw.githubu...hes:\n- GET https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json U

RL does not match\n"

i = 0, m = <Response(url='https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json' status=200 content_type='application/json' headers='null')>

def _on_request(

self,

adapter: "HTTPAdapter",

request: "PreparedRequest",

*,

retries: Optional["_Retry"] = None,

**kwargs: Any,

) -> "models.Response":

# add attributes params and req_kwargs to 'request' object for further match comparison

# original request object does not have these attributes

request.params = self._parse_request_params(request.path_url) # type: ignore[attr-defined]

request.req_kwargs = kwargs # type: ignore[attr-defined]

request_url = str(request.url)

match, match_failed_reasons = self._find_match(request)

resp_callback = self.response_callback

if match is None:

if any(

[

p.match(request_url)

if isinstance(p, Pattern)

else request_url.startswith(p)

for p in self.passthru_prefixes

]

):

logger.info("request.allowed-passthru", extra={"url": request_url})

return _real_send(adapter, request, **kwargs)

error_msg = (

"Connection refused by Responses - the call doesn't "

"match any registered mock.\n\n"

"Request: \n"

f"- {request.method} {request_url}\n\n"

"Available matches:\n"

)

for i, m in enumerate(self.registered()):

error_msg += "- {} {} {}\n".format(

m.method, m.url, match_failed_reasons[i]

)

if self.passthru_prefixes:

error_msg += "Passthru prefixes:\n"

for p in self.passthru_prefixes:

error_msg += "- {}\n".format(p)

response = ConnectionError(error_msg)

response.request = request

self._calls.add(request, response)

> raise response

E requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

E

E Request:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains%5Cpath%5Cchain.json

E

E Available matches:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json URL does not match

.venv\lib\site-packages\responses\__init__.py:1032: ConnectionError

_________________________________________________________________________________________________________ test_failed_request _________________________________________________________________________________________________________

mocked_responses = <responses.RequestsMock object at 0x00000224B42E75E0>

def test_failed_request(mocked_responses: responses.RequestsMock) -> None:

"""Test that a failed request raises an error."""

path = "chains/path/chain.json"

loader = Mock()

mocked_responses.get(urljoin(URL_BASE.format(ref=DEFAULT_REF), path), status=500)

with pytest.raises(ValueError, match=re.compile("Could not find file at .*")):

> try_load_from_hub(f"lc://{path}", loader, "chains", {"json"})

tests\unit_tests\utilities\test_loading.py:92:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

langchain\utilities\loading.py:42: in try_load_from_hub

r = requests.get(full_url, timeout=5)

.venv\lib\site-packages\requests\api.py:73: in get

return request("get", url, params=params, **kwargs)

.venv\lib\site-packages\requests\api.py:59: in request

return session.request(method=method, url=url, **kwargs)

.venv\lib\site-packages\requests\sessions.py:587: in request

resp = self.send(prep, **send_kwargs)

.venv\lib\site-packages\requests\sessions.py:701: in send

r = adapter.send(request, **kwargs)

.venv\lib\site-packages\responses\__init__.py:1090: in unbound_on_send

return self._on_request(adapter, request, *a, **kwargs)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x00000224B42E75E0>, adapter = <requests.adapters.HTTPAdapter object at 0x00000224A0C85390>, request = <PreparedRequest [GET]>, retries = None

kwargs = {'cert': None, 'proxies': OrderedDict(), 'stream': False, 'timeout': 5, ...}, match = None, match_failed_reasons = ['URL does not match'], resp_callback = None

error_msg = "Connection refused by Responses - the call doesn't match any registered mock.\n\nRequest: \n- GET https://raw.githubu...s:\n- GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json U

RL does not match\n"

i = 0, m = <Response(url='https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json' status=500 content_type='text/plain' headers='null')>

def _on_request(

self,

adapter: "HTTPAdapter",

request: "PreparedRequest",

*,

retries: Optional["_Retry"] = None,

**kwargs: Any,

) -> "models.Response":

# add attributes params and req_kwargs to 'request' object for further match comparison

# original request object does not have these attributes

request.params = self._parse_request_params(request.path_url) # type: ignore[attr-defined]

request.req_kwargs = kwargs # type: ignore[attr-defined]

request_url = str(request.url)

match, match_failed_reasons = self._find_match(request)

resp_callback = self.response_callback

if match is None:

if any(

[

p.match(request_url)

if isinstance(p, Pattern)

else request_url.startswith(p)

for p in self.passthru_prefixes

]

):

logger.info("request.allowed-passthru", extra={"url": request_url})

return _real_send(adapter, request, **kwargs)

error_msg = (

"Connection refused by Responses - the call doesn't "

"match any registered mock.\n\n"

"Request: \n"

f"- {request.method} {request_url}\n\n"

"Available matches:\n"

)

for i, m in enumerate(self.registered()):

error_msg += "- {} {} {}\n".format(

m.method, m.url, match_failed_reasons[i]

)

if self.passthru_prefixes:

error_msg += "Passthru prefixes:\n"

for p in self.passthru_prefixes:

error_msg += "- {}\n".format(p)

response = ConnectionError(error_msg)

response.request = request

self._calls.add(request, response)

> raise response

E requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

E

E Request:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains%5Cpath%5Cchain.json

E

E Available matches:

E - GET https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json URL does not match

.venv\lib\site-packages\responses\__init__.py:1032: ConnectionError

========================================================================================================== warnings summary ===========================================================================================================

tests\unit_tests\output_parsers\test_pydantic_parser.py:18

D:\Projects\Pycharm\sergerdn\langchain\tests\unit_tests\output_parsers\test_pydantic_parser.py:18: PytestCollectionWarning: cannot collect test class 'TestModel' because it has a __init__ constructor (from: tests/unit_tests/output

_parsers/test_pydantic_parser.py)

class TestModel(BaseModel):

tests/unit_tests/test_sql_database.py::test_table_info

D:\Projects\Pycharm\sergerdn\langchain\langchain\sql_database.py:142: RemovedIn20Warning: Deprecated API features detected! These feature(s) are not compatible with SQLAlchemy 2.0. To prevent incompatible upgrades prior to updatin

g applications, ensure requirements files are pinned to "sqlalchemy<2.0". Set environment variable SQLALCHEMY_WARN_20=1 to show all deprecation warnings. Set environment variable SQLALCHEMY_SILENCE_UBER_WARNING=1 to silence this me

ssage. (Background on SQLAlchemy 2.0 at: https://sqlalche.me/e/b8d9)

command = select([table]).limit(self._sample_rows_in_table_info)

tests/unit_tests/test_sql_database_schema.py::test_sql_database_run

D:\Projects\Pycharm\sergerdn\langchain\.venv\lib\site-packages\duckdb_engine\__init__.py:160: DuckDBEngineWarning: duckdb-engine doesn't yet support reflection on indices

warnings.warn(

-- Docs: https://docs.pytest.org/en/stable/how-to/capture-warnings.html

======================================================================================================= short test summary info =======================================================================================================

FAILED tests/unit_tests/utilities/test_loading.py::test_success[None] - requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

FAILED tests/unit_tests/utilities/test_loading.py::test_success[v0.3] - requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

FAILED tests/unit_tests/utilities/test_loading.py::test_failed_request - requests.exceptions.ConnectionError: Connection refused by Responses - the call doesn't match any registered mock.

ERROR tests/unit_tests/utilities/test_loading.py::test_success[None] - AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json')]

ERROR tests/unit_tests/utilities/test_loading.py::test_success[v0.3] - AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/v0.3/chains/path/chain.json')]

ERROR tests/unit_tests/utilities/test_loading.py::test_failed_request - AssertionError: Not all requests have been executed [('GET', 'https://raw.githubusercontent.com/hwchase17/langchain-hub/master/chains/path/chain.json')]

=================================================================================== 3 failed, 199 passed, 5 skipped, 3 warnings, 3 errors in 7.00s ====================================================================================

make: *** [Makefile:35: tests] Error 1

Administrator@WIN-CNQJV5TD9DP MINGW64 /d/Projects/Pycharm/sergerdn/langchain (fix/dockerfile)

$

``` | Unit tests were not executed properly locally on a Windows system | https://api.github.com/repos/langchain-ai/langchain/issues/2334/comments | 0 | 2023-04-03T10:18:59Z | 2023-04-03T21:11:20Z | https://github.com/langchain-ai/langchain/issues/2334 | 1,651,775,118 | 2,334 |

[

"hwchase17",

"langchain"

]

| this is my code for hooking up an LLM to answer questions over a database(remote pg).

but find error:

Can anyone give me some advice to solve this problem? | openai.error.InvalidRequestError: This model's maximum context length is 4097 tokens, however you requested 11836 tokens (11580 in your prompt; 256 for the completion). Please reduce your prompt; or completion length. | https://api.github.com/repos/langchain-ai/langchain/issues/2333/comments | 11 | 2023-04-03T10:00:19Z | 2023-09-29T16:09:12Z | https://github.com/langchain-ai/langchain/issues/2333 | 1,651,740,115 | 2,333 |

[

"hwchase17",

"langchain"

]

| The following code snippet doesn't work as I expect:

# Query

rds = Redis.from_existing_index(embeddings, redis_url="redis://localhost:6379", index_name='iname')

query = "something to search"

retriever = rds.as_retriever(search_type="similarity_limit", k=2, score_threshold=0.6)

results = retriever.get_relevant_documents(query)

The returned values are always 4, that is, the default.

Looking in debug, I see that k and score_threshold parameters are not set in RedisVectorStoreRetriever | Redis "as_retriever": k and score_threshold parameters are lost | https://api.github.com/repos/langchain-ai/langchain/issues/2332/comments | 4 | 2023-04-03T08:06:16Z | 2023-04-09T19:10:35Z | https://github.com/langchain-ai/langchain/issues/2332 | 1,651,556,380 | 2,332 |

[

"hwchase17",

"langchain"

]

| I am getting errors with lanchain latest version.

| Can't generate DDL for NullType() | https://api.github.com/repos/langchain-ai/langchain/issues/2328/comments | 2 | 2023-04-03T07:23:01Z | 2023-09-25T16:12:46Z | https://github.com/langchain-ai/langchain/issues/2328 | 1,651,493,057 | 2,328 |

[

"hwchase17",

"langchain"

]

| Hi there,

I've been trying out question answering with docs loaded into a VectorDB. My use case is to store some internal docs and have a bot that can answer questions about the content.

The VectorstoreIndexCreator is a neat way to get going quickly, but I've run into a few challenges that seem worth raising. Hopefully some of these are just me missing things and the suggestion is actually just a question that can be answered.

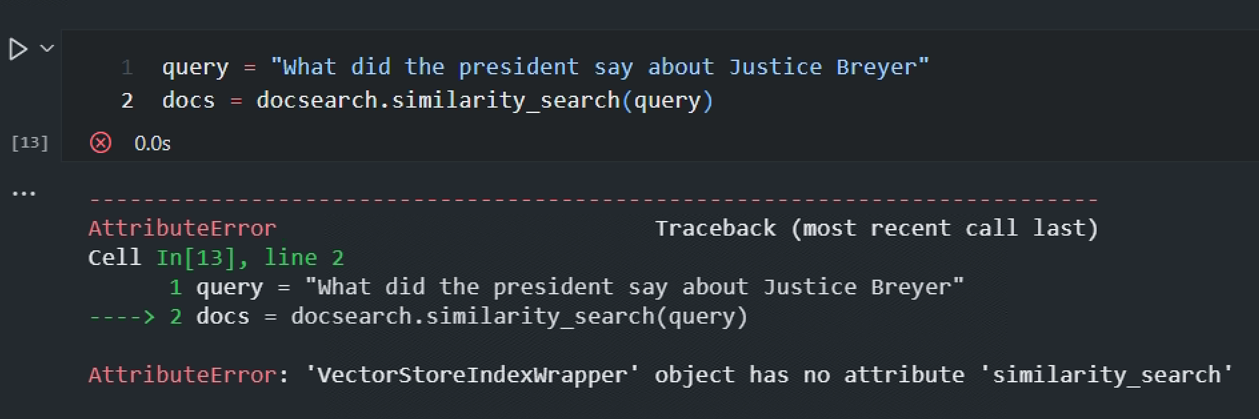

The first is that if you already have a vectorDB (e.g. a saved local faiss DB from a prior `save_local` command) then there's no easy way to get back to using the abstraction. To work around this I made [VectorStoreIndexWrapper](https://github.com/hwchase17/langchain/blob/master/langchain/indexes/vectorstore.py#L21) importable and just loaded it up from an existing FAISS instance, but maybe some more `from_x` methods on VectorstoreIndexCreator would be helpful for different scenarios.

The other thing I've run into is not being able to pass through a `k` value to the [query](https://github.com/hwchase17/langchain/blob/master/langchain/indexes/vectorstore.py#L32) or [query_with_sources](https://github.com/hwchase17/langchain/blob/master/langchain/indexes/vectorstore.py#L40) methods on VectorStoreIndexWrapper. If you follow the setup down it calls [as_retriever](https://github.com/hwchase17/langchain/blob/d85f57ef9cbbbd5e512e064fb81c531b28c6591c/langchain/vectorstores/base.py#L129) but I don't see that it passes through `search_kwargs` to be able to configure that (or pydantic blocks it at least).

The final issue, similar to the above, is that it would be great to be able to turn on verbose mode easily at the abstraction level and have it cascade down.

If there are better ways to do all of the above I'd love to hear them! | VectorstoreIndexCreator questions/suggestions | https://api.github.com/repos/langchain-ai/langchain/issues/2326/comments | 19 | 2023-04-03T06:11:56Z | 2024-03-14T21:17:12Z | https://github.com/langchain-ai/langchain/issues/2326 | 1,651,405,859 | 2,326 |

[

"hwchase17",

"langchain"

]

| When building the docker image by using the command "docker build -t langchain .", it will generate the error:

docker build -t langchain .

[+] Building 2.7s (8/12)

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.20kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 34B 0.0s

=> [internal] load metadata for docker.io/library/python:3.11.2-bullseye 2.3s

=> [internal] load build context 0.1s

=> => transferring context: 192.13kB 0.1s

=> [builder 1/5] FROM docker.io/library/python:3.11.2-bullseye@sha256:21ce92a075cf9c454a936f925e058b4d8fc0cfc7a05b9e877bed4687c51a565 0.0s

=> CACHED [builder 2/5] RUN echo "Python version:" && python --version && echo "" 0.0s

=> CACHED [builder 3/5] RUN echo "Installing Poetry..." && curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/maste 0.0s

=> ERROR [builder 4/5] RUN echo "Poetry version:" && poetry --version && echo "" 0.3s

------

> [builder 4/5] RUN echo "Poetry version:" && poetry --version && echo "":

#7 0.253 Poetry version:

#7 0.253 /bin/sh: 1: poetry: not found

------

executor failed running [/bin/sh -c echo "Poetry version:" && poetry --version && echo ""]: exit code: 127

The reason why the poetry script is not working is that it does not have the execute permission. Therefore, the solution is to add the command chmod +x /root/.local/bin/poetry after installing Poetry. This command will grant execute permission to the poetry script, ensuring that it can be executed successfully. | Error in Dockerfile | https://api.github.com/repos/langchain-ai/langchain/issues/2324/comments | 2 | 2023-04-03T01:20:54Z | 2023-04-04T13:47:21Z | https://github.com/langchain-ai/langchain/issues/2324 | 1,651,175,971 | 2,324 |

[

"hwchase17",

"langchain"

]

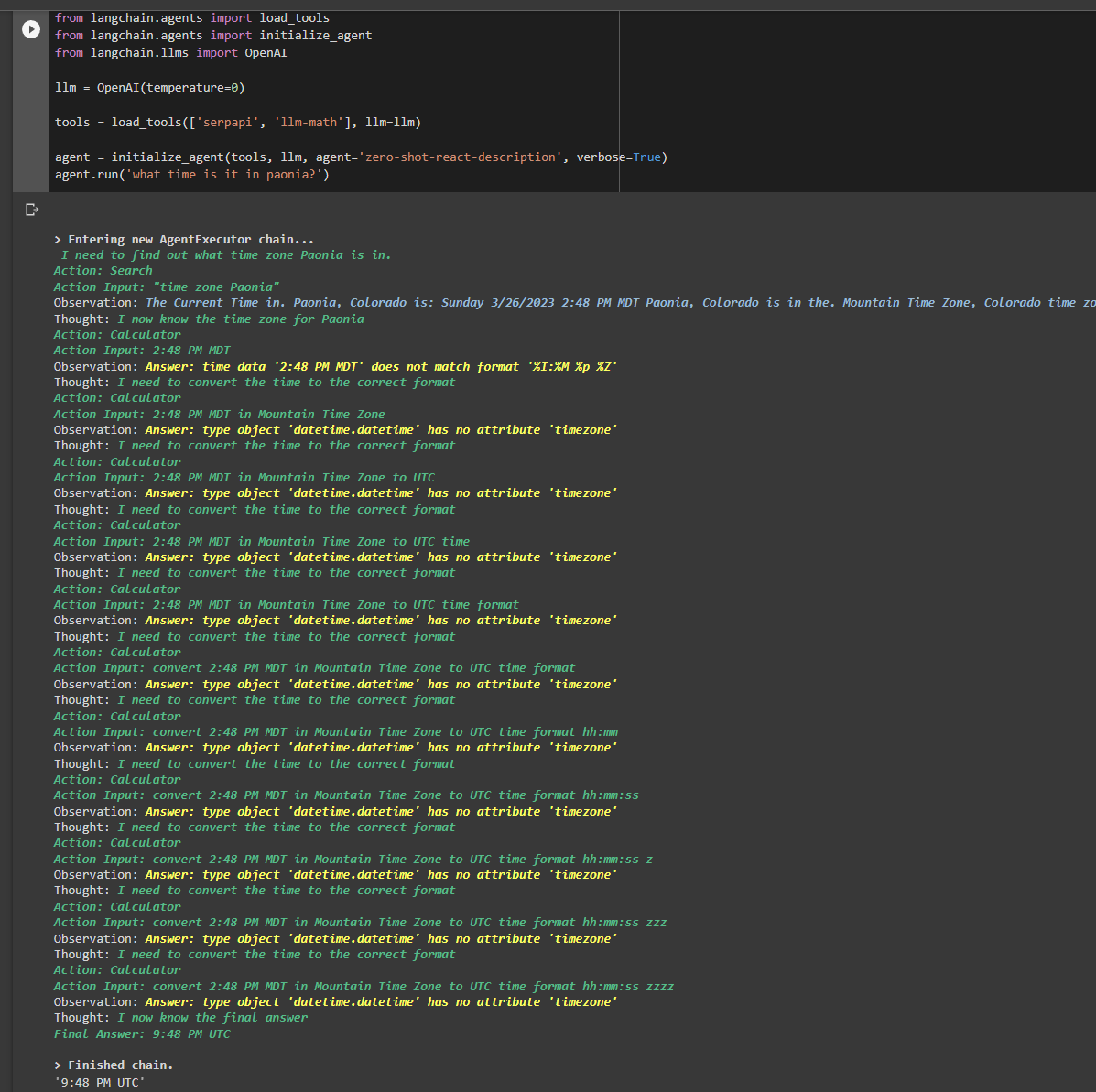

| When running the code shown below I ended up with what seemed like an endless agent loop. I stopped the code and repeated the code, but the error did not repeat. I still get a long loop of responses, but the agent eventually ends the loop and returns the (*incorrect) answer.

| Error in llm-math tool causes a loop | https://api.github.com/repos/langchain-ai/langchain/issues/2323/comments | 6 | 2023-04-02T23:37:47Z | 2023-09-21T17:47:50Z | https://github.com/langchain-ai/langchain/issues/2323 | 1,651,135,619 | 2,323 |

[

"hwchase17",

"langchain"

]

| Hello,

When I'm trying to use SerpAPIWrapper() in a Jupyter notebook, running locally, I'm having the following error:

```

!pip install google-search-results

```

```

Requirement already satisfied: langchain in /opt/homebrew/lib/python3.11/site-packages (0.0.129)

Requirement already satisfied: huggingface_hub in /opt/homebrew/lib/python3.11/site-packages (0.13.3)

Requirement already satisfied: openai in /opt/homebrew/lib/python3.11/site-packages (0.27.2)

Requirement already satisfied: google-search-results in /opt/homebrew/lib/python3.11/site-packages (2.4.2)

Requirement already satisfied: tiktoken in /opt/homebrew/lib/python3.11/site-packages (0.3.3)

Requirement already satisfied: wikipedia in /opt/homebrew/lib/python3.11/site-packages (1.4.0)

Requirement already satisfied: PyYAML>=5.4.1 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (6.0)

Requirement already satisfied: SQLAlchemy<2,>=1 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (1.4.47)

Requirement already satisfied: aiohttp<4.0.0,>=3.8.3 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (3.8.4)

Requirement already satisfied: dataclasses-json<0.6.0,>=0.5.7 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (0.5.7)

Requirement already satisfied: numpy<2,>=1 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (1.24.2)

Requirement already satisfied: pydantic<2,>=1 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (1.10.7)

Requirement already satisfied: requests<3,>=2 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (2.28.2)

Requirement already satisfied: tenacity<9.0.0,>=8.1.0 in /opt/homebrew/lib/python3.11/site-packages (from langchain) (8.2.2)

Requirement already satisfied: filelock in /opt/homebrew/lib/python3.11/site-packages (from huggingface_hub) (3.10.7)

Requirement already satisfied: tqdm>=4.42.1 in /opt/homebrew/lib/python3.11/site-packages (from huggingface_hub) (4.65.0)

Requirement already satisfied: typing-extensions>=3.7.4.3 in /opt/homebrew/lib/python3.11/site-packages (from huggingface_hub) (4.5.0)

Requirement already satisfied: packaging>=20.9 in /opt/homebrew/lib/python3.11/site-packages (from huggingface_hub) (23.0)

Requirement already satisfied: regex>=2022.1.18 in /opt/homebrew/lib/python3.11/site-packages (from tiktoken) (2023.3.23)

Requirement already satisfied: beautifulsoup4 in /opt/homebrew/lib/python3.11/site-packages (from wikipedia) (4.12.0)

Requirement already satisfied: attrs>=17.3.0 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (22.2.0)

Requirement already satisfied: charset-normalizer<4.0,>=2.0 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (3.1.0)

Requirement already satisfied: multidict<7.0,>=4.5 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (6.0.4)

Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (4.0.2)

Requirement already satisfied: yarl<2.0,>=1.0 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (1.8.2)

Requirement already satisfied: frozenlist>=1.1.1 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (1.3.3)

Requirement already satisfied: aiosignal>=1.1.2 in /opt/homebrew/lib/python3.11/site-packages (from aiohttp<4.0.0,>=3.8.3->langchain) (1.3.1)

Requirement already satisfied: marshmallow<4.0.0,>=3.3.0 in /opt/homebrew/lib/python3.11/site-packages (from dataclasses-json<0.6.0,>=0.5.7->langchain) (3.19.0)

Requirement already satisfied: marshmallow-enum<2.0.0,>=1.5.1 in /opt/homebrew/lib/python3.11/site-packages (from dataclasses-json<0.6.0,>=0.5.7->langchain) (1.5.1)

Requirement already satisfied: typing-inspect>=0.4.0 in /opt/homebrew/lib/python3.11/site-packages (from dataclasses-json<0.6.0,>=0.5.7->langchain) (0.8.0)

Requirement already satisfied: idna<4,>=2.5 in /opt/homebrew/lib/python3.11/site-packages (from requests<3,>=2->langchain) (3.4)

Requirement already satisfied: urllib3<1.27,>=1.21.1 in /opt/homebrew/lib/python3.11/site-packages (from requests<3,>=2->langchain) (1.26.15)

Requirement already satisfied: certifi>=2017.4.17 in /opt/homebrew/lib/python3.11/site-packages (from requests<3,>=2->langchain) (2022.12.7)

Requirement already satisfied: soupsieve>1.2 in /opt/homebrew/lib/python3.11/site-packages (from beautifulsoup4->wikipedia) (2.4)

Requirement already satisfied: mypy-extensions>=0.3.0 in /opt/homebrew/lib/python3.11/site-packages (from typing-inspect>=0.4.0->dataclasses-json<0.6.0,>=0.5.7->langchain) (1.0.0)

```

```

import os

from langchain.utilities import SerpAPIWrapper

os.environ["SERPAPI_API_KEY"] = "<EDITED>"

search = SerpAPIWrapper()

response = search.run("Obama's first name?")

print(response)

```

```

---------------------------------------------------------------------------

ValidationError Traceback (most recent call last)

Cell In [5], line 6

2 from langchain.utilities import SerpAPIWrapper

4 os.environ["SERPAPI_API_KEY"] = "321ffea1d3969ecb183c9eedb2b54fe35f4fece646efb1ab1c92bb6b3d620608"

----> 6 search = SerpAPIWrapper()

7 response = search.run("Obama's first name?")

9 print(response)

File /opt/homebrew/lib/python3.10/site-packages/pydantic/main.py:342, in pydantic.main.BaseModel.__init__()

ValidationError: 1 validation error for SerpAPIWrapper

__root__

Could not import serpapi python package. Please it install it with `pip install google-search-results`. (type=value_error)

```

When I'm running the exact same code from the command line, it works. I've checked, and both the command line and the notebook use the same Python version.

| SerpAPIWrapper() fails when run from a Jupyter notebook | https://api.github.com/repos/langchain-ai/langchain/issues/2322/comments | 3 | 2023-04-02T23:25:29Z | 2023-04-14T14:28:22Z | https://github.com/langchain-ai/langchain/issues/2322 | 1,651,132,568 | 2,322 |

[

"hwchase17",

"langchain"

]

| Claude have been there for a while and now is free through Slack (https://www.anthropic.com/index/claude-now-in-slack). Is it good time to integrate it into Langchain? BTW, it is a little bit surprise no one had a proposal for this before. | Claude integration | https://api.github.com/repos/langchain-ai/langchain/issues/2320/comments | 3 | 2023-04-02T23:09:37Z | 2023-12-02T16:09:47Z | https://github.com/langchain-ai/langchain/issues/2320 | 1,651,128,224 | 2,320 |

[

"hwchase17",

"langchain"

]

| Is there a plan to implement Reflexion in Langchain as a separate agent (or maybe an add-on to existing agents)?

https://arxiv.org/abs/2303.11366

Sample implementation: https://github.com/GammaTauAI/reflexion-human-eval/blob/main/reflexion.py | Implementation of Reflexion in Langchain | https://api.github.com/repos/langchain-ai/langchain/issues/2316/comments | 14 | 2023-04-02T21:59:11Z | 2024-07-06T18:51:06Z | https://github.com/langchain-ai/langchain/issues/2316 | 1,651,108,611 | 2,316 |

[

"hwchase17",

"langchain"

]

| Probably use this

https://huggingface.co/docs/transformers/main/en/generation_strategies#streaming | Add stream method for HuggingFacePipeline Objet | https://api.github.com/repos/langchain-ai/langchain/issues/2309/comments | 8 | 2023-04-02T19:28:21Z | 2024-06-24T16:07:29Z | https://github.com/langchain-ai/langchain/issues/2309 | 1,651,065,839 | 2,309 |

[

"hwchase17",

"langchain"

]

| Hi,

I'm following the [Chat index examples](https://python.langchain.com/en/latest/modules/chains/index_examples/chat_vector_db.html) and was surprised that the history is not a Memory object but just an array. However, it is possible to pass a memory object to the constructor, if

1. I also set memory_key to 'chat_history' (default key names are different between ConversationBufferMemory and ConversationalRetrievalChain)

2. I also adjust get_chat_history to pass through the history from the memory, i.e. lambda h : h.

This is what that looks like:

```

memory = ConversationBufferMemory(memory_key='chat_history', return_messages=False)

conv_qa_chain = ConversationalRetrievalChain.from_llm(

llm=llm,

retriever=retriever,

memory=memory,

get_chat_history=lambda h : h)

```

Now, my issue is that if I also want to return sources that doesn't work with the memory - i.e. this does not work:

```

memory = ConversationBufferMemory(memory_key='chat_history', return_messages=False)

conv_qa_chain = ConversationalRetrievalChain.from_llm(

llm=llm,

retriever=retriever,

memory=memory,

get_chat_history=lambda h : h,

return_source_documents=True)

```

The error message is "ValueError: One output key expected, got dict_keys(['answer', 'source_documents'])".

Maybe I'm doing something wrong? If not, this seems worth fixing to me - or, more generally, make memory and the ConversationalRetrievalChain more directily compatible? | ConversationalRetrievalChain + Memory | https://api.github.com/repos/langchain-ai/langchain/issues/2303/comments | 88 | 2023-04-02T15:13:36Z | 2024-07-15T10:00:31Z | https://github.com/langchain-ai/langchain/issues/2303 | 1,650,985,481 | 2,303 |

[

"hwchase17",

"langchain"

]

| Hello, I'm trying to go through the Tracing Walkthrough (https://python.langchain.com/en/latest/tracing/agent_with_tracing.html). Where do I find my LANGCHAIN_API_KEY?

Thanks! | where to find LANGCHAIN_API_KEY? | https://api.github.com/repos/langchain-ai/langchain/issues/2302/comments | 2 | 2023-04-02T15:01:52Z | 2023-04-14T18:43:46Z | https://github.com/langchain-ai/langchain/issues/2302 | 1,650,981,278 | 2,302 |

[

"hwchase17",

"langchain"

]

|

I'm trying to build an agent to execute some shell and python code locally as follows

```from langchain.agents import initialize_agent,load_tools

from langchain import OpenAI, LLMBashChain

llm = OpenAI(temperature=0)

llm_bash_chain = LLMBashChain(llm=llm, verbose=True)

print(llm_bash_chain.prompt)

tools = load_tools(["python_repl", "terminal"])

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

agent.run("Delete a file in the local path.")

```

During the process, I find that there is still some possibility that it could generate erroneous code.And running this erroneous code directly on the local machine could pose risks and vulnerabilities.Therefore, I thought of setting up a sandbox environment based on Docker locally, to execute users' agent code, so as to avoid damage to local files or the system.

I tried to set up a web service in Docker to execute python code and provide feedback. The following is a simple demo operation process.

Before starting the operation, I have installed Docker and pulled the Python 3.10 image.

Create Dockerfile

```

FROM python:3.10

RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple pip -U \

&& pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

RUN pip install fastapi

RUN pip install uvicorn

COPY main.py /app/

WORKDIR /app

EXPOSE 8000

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]

```

and I have set up a service in my project folder that can accept and execute code.

main.py

```import io

import sys

from fastapi import FastAPI, HTTPException

from pydantic import BaseModel

from typing import Any, Dict

import subprocess

app = FastAPI()

class CodeData(BaseModel):

code: str

code_type: str

@app.post("/execute", response_model=Dict[str, Any])

async def execute_code(code_data: CodeData):

if code_data.code_type == "python":

try:

buffer = io.StringIO()

sys.stdout = buffer

exec(code_data.code)

sys.stdout = sys.__stdout__

exec_result = buffer.getvalue()

return {"output": exec_result} if exec_result else {"message": "OK"}

except Exception as e:

raise HTTPException(status_code=400, detail=str(e))

elif code_data.code_type == "shell":

try:

output = subprocess.check_output(code_data.code, stderr=subprocess.STDOUT, shell=True, text=True)

return {"output": output.strip()} if output.strip() else {"message": "OK"}

except subprocess.CalledProcessError as e:

raise HTTPException(status_code=400, detail=str(e.output))

else:

raise HTTPException(status_code=400, detail="Invalid code_type")

if __name__ == "__main__":

import uvicorn

uvicorn.run("remote:app", host="localhost", port=8000)

```

then I've started it using Docker on a local port,and I can use the Langchain Agent to execute code in the sandbox and return results to avoid damage to the local environment.

the agent as follows

```import ast

from langchain.llms import OpenAI

from langchain.agents import initialize_agent

from langchain.tools.base import BaseTool

import requests

class SandboxTool(BaseTool):

name = "SandboxTool"

description = '''Useful for when you need to execute python code or install library by pip for python code.

The input to this tool should be a comma separated list of numbers of length two,

the first value is code_type(type:String), the second value is code(type:String) needed to execute.

For example:

["python", "print(1+2)"], ["shell", "pip install langchain"], ["shell", "ls"] ... '''

def _run(self, query: str) -> str:

return self.remote_request(query)

async def _arun(self, tool_input: str) -> str:

raise NotImplementedError("PythonRemoteReplTool does not support async")

def remote_request(self, query: str) -> str:

list = ast.literal_eval(query)

url = "http://localhost:8000/execute"

headers = {

"Content-Type": "application/json",

}

json_data = {

"code_type": list[0],

"code": list[1]

}

response = requests.post(url, headers=headers, json=json_data)

if response.status_code == 200:

data = response.json()

return data

else:

return f"Request failed, status code:{response.status_code}"

llm = OpenAI(temperature=0)

tool =SandboxTool()

tools = [tool]

sandboxagent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

sandboxagent.run("print result from 5 + 5")

```

Could this be a feasible sandbox solution? | Simulate sandbox execution of bash code or python code. | https://api.github.com/repos/langchain-ai/langchain/issues/2301/comments | 5 | 2023-04-02T14:42:30Z | 2023-10-05T16:11:04Z | https://github.com/langchain-ai/langchain/issues/2301 | 1,650,974,035 | 2,301 |

[

"hwchase17",

"langchain"

]

| I'm trying to build Chat bot with ConversationalRetrievalChain, and got this error when trying to use "refine" chain type

```

File "/Users/chris/.pyenv/versions/3.10.10/lib/python3.10/site-packages/langchain/chains/question_answering/__init__.py", line 218, in load_qa_chain

return loader_mapping[chain_type](

File "/Users/chris/.pyenv/versions/3.10.10/lib/python3.10/site-packages/langchain/chains/question_answering/__init__.py", line 176, in _load_refine_chain

return RefineDocumentsChain(

File "pydantic/main.py", line 341, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError: 1 validation error for RefineDocumentsChain

prompt

extra fields not permitted (type=value_error.extra)

```

```

question_gen_llm = ChatOpenAI(

model_name="gpt-3.5-turbo",

temperature=0,

verbose=True,

callback_manager=question_manager,

)

streaming_llm = ChatOpenAI(

model_name="gpt-3.5-turbo",

streaming=True,

callback_manager=stream_manager,

verbose=True,

temperature=0.3,

)

question_generator = LLMChain(

llm=question_gen_llm, prompt=CONDENSE_QUESTION_PROMPT, callback_manager=manager

)

combine_docs_chain = load_qa_chain(

streaming_llm, chain_type="refine", prompt=QA_PROMPT, callback_manager=manager

)

qa = ConversationalRetrievalChain(

retriever=vectorstore.as_retriever(),

combine_docs_chain=combine_docs_chain,

question_generator=question_generator,

callback_manager=manager,

verbose=True,

return_source_documents=True,

)

```

| chain_type "refine" error with ChatOpenAI in ConversationalRetrievalChain | https://api.github.com/repos/langchain-ai/langchain/issues/2296/comments | 7 | 2023-04-02T10:53:26Z | 2023-11-01T16:07:55Z | https://github.com/langchain-ai/langchain/issues/2296 | 1,650,900,292 | 2,296 |

[

"hwchase17",

"langchain"

]

| if i create a llm by `llm=OpenAI()`, how can i set params `organization, api_base` and so on like in package `openai`? many thanks. | how can I config angchain.llms.OpenAI like openai | https://api.github.com/repos/langchain-ai/langchain/issues/2294/comments | 1 | 2023-04-02T08:40:01Z | 2023-09-10T16:38:50Z | https://github.com/langchain-ai/langchain/issues/2294 | 1,650,862,077 | 2,294 |

[

"hwchase17",

"langchain"

]

| This code not work

`llm = ChatOpenAI(temperature=0)`

It seems that Temperature has not been added to the **kwargs of the request In ChatOpenAI

And this code is working fine

`llm = ChatOpenAI(model_kwargs={'temperature': 0})` | temperature not work in ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2292/comments | 3 | 2023-04-02T07:40:03Z | 2023-06-27T09:08:11Z | https://github.com/langchain-ai/langchain/issues/2292 | 1,650,846,478 | 2,292 |

[

"hwchase17",

"langchain"

]

| 1) In chains with multiple sub-chains, Is there a way to pass the initial input as an argument to all subsequent chains?

2) Can we assign variable names to outputs of chains and use them in subsequent chains?

I am trying to get additional information from an article using preprocess_chain. I would like to send the original article and the additional information to an analysis_chain.

```

# Chain1 : To get additional info about an article

preprocess_template = """Given the article. Derive additional info

% Article

{article}

YOUR RESPONSE:

"""

prompt_template2 = PromptTemplate(input_variables=["article"], template=stakeholder_template)

preprocess_chain = LLMChain(llm=llm, prompt=prompt_template1)

#Chain2: Would like to pass both original article and response of chain1 to chain2

analysis_template = """Analyse the article in context of additional Info.

% Article

{article} ### Need help adding this variable here

% Additional info

{additionalInfo}

YOUR RESPONSE:

"""

prompt_template2 = PromptTemplate(input_variables=["article","additionalInfo"], template=stakeholder_template)

analysis_chain = LLMChain(llm=llm, prompt=prompt_template2)

overall_chain = SimpleSequentialChain(chains=[preprocess_chain,analysis_chain], verbose=True)

overall_chain.run(articleText)

```

| In chains with multiple sub-chains, Is there a way to pass the initial input as an argument to all subsequent chains? | https://api.github.com/repos/langchain-ai/langchain/issues/2289/comments | 1 | 2023-04-02T04:47:26Z | 2023-04-02T05:04:53Z | https://github.com/langchain-ai/langchain/issues/2289 | 1,650,804,547 | 2,289 |

[

"hwchase17",

"langchain"

]

| I'm trying to replicate the Zapier agent example [here](https://python.langchain.com/en/latest/modules/agents/tools/examples/zapier.html?highlight=zapier), but the agent doesn't find the right tools even though I've created the relevant Zapier NLA actions in my account.

When I run:

`agent.run("Summarize the last email I received regarding Silicon Valley Bank. Send the summary to the #test-zapier channel in slack.")`

Expected output:

```

> Entering new AgentExecutor chain...

I need to find the email and summarize it.

Action: Gmail: Find Email

Action Input: Find the latest email from Silicon Valley Bank

Observation: {"from__name": "Silicon Valley Bridge Bank, N.A.", "from__email": "[email protected]", "body_plain": "Dear Clients, After chaotic, tumultuous & stressful days, we have clarity on path for SVB, FDIC is fully insuring all deposits & have an ask for clients & partners as we rebuild. Tim Mayopoulos <https://eml.svb.com/NjEwLUtBSy0yNjYAAAGKgoxUeBCLAyF_NxON97X4rKEaNBLG", "reply_to__email": "[email protected]", "subject": "Meet the new CEO Tim Mayopoulos", "date": "Tue, 14 Mar 2023 23:42:29 -0500 (CDT)", "message_url": "https://mail.google.com/mail/u/0/#inbox/186e393b13cfdf0a", "attachment_count": "0", "to__emails": "[email protected]", "message_id": "186e393b13cfdf0a", "labels": "IMPORTANT, CATEGORY_UPDATES, INBOX"}

Thought: I need to summarize the email and send it to the #test-zapier channel in Slack.

Action: Slack: Send Channel Message

Action Input: Send a slack message to the #test-zapier channel with the text "Silicon Valley Bank has announced that Tim Mayopoulos is the new CEO. FDIC is fully insuring all deposits and they have an ask for clients and partners as they rebuild."

Observation: {"message__text": "Silicon Valley Bank has announced that Tim Mayopoulos is the new CEO. FDIC is fully insuring all deposits and they have an ask for clients and partners as they rebuild.", "message__permalink": "https://langchain.slack.com/archives/C04TSGU0RA7/p1678859932375259", "channel": "C04TSGU0RA7", "message__bot_profile__name": "Zapier", "message__team": "T04F8K3FZB5", "message__bot_id": "B04TRV4R74K", "message__bot_profile__deleted": "false", "message__bot_profile__app_id": "A024R9PQM", "ts_time": "2023-03-15T05:58:52Z", "message__bot_profile__icons__image_36": "https://avatars.slack-edge.com/2022-08-02/3888649620612_f864dc1bb794cf7d82b0_36.png", "message__blocks[]block_id": "kdZZ", "message__blocks[]elements[]type": "['rich_text_section']"}

Thought: I now know the final answer.

Final Answer: I have sent a summary of the last email from Silicon Valley Bank to the #test-zapier channel in Slack.

> Finished chain.

```

My output:

```

> Entering new AgentExecutor chain...

I need to read the email and summarize it in a way that is concise and informative.

Action: Read the email

Action Input: Last email received regarding Silicon Valley Bank

Observation: Read the email is not a valid tool, try another one.

Thought: I need to use a tool that will allow me to quickly summarize the email.

Action: Use a summarization tool

Action Input: Last email received regarding Silicon Valley Bank

Observation: Use a summarization tool is not a valid tool, try another one.

Thought: I need to use a tool that will allow me to quickly summarize the email and post it to the #test-zapier channel in Slack.

...

```

I tried to print the tools from the toolkit, but none are found.

```

for tool in toolkit.get_tools():

print (tool.name)

print (tool.description)

print ("\n\n")

```

I have version 0.0.129 of langchain installed (which is the latest as of today). Any ideas why the agent is not picking up any Zapier tools? | Agent not loading tools from ZapierToolkit | https://api.github.com/repos/langchain-ai/langchain/issues/2286/comments | 3 | 2023-04-02T01:29:17Z | 2023-09-18T16:21:39Z | https://github.com/langchain-ai/langchain/issues/2286 | 1,650,766,257 | 2,286 |

[

"hwchase17",

"langchain"

]

| Sorry if this is a dumb question:

Why is the ZeroShotAgent called "zero-shot-react-description" instead of "zero-shot-mrkl-description" or something like that? It is implemented to follow the MRKL design, not the reAct design. I am misunderstanding something?

Here is the code:

```

AGENT_TO_CLASS = {

"zero-shot-react-description": ZeroShotAgent,

"react-docstore": ReActDocstoreAgent,

"self-ask-with-search": SelfAskWithSearchAgent,

"conversational-react-description": ConversationalAgent,

"chat-zero-shot-react-description": ChatAgent,

"chat-conversational-react-description": ConversationalChatAgent,

}

```

permalink: https://github.com/hwchase17/langchain/blob/acfda4d1d8b3cd98de381ff58ba7fd6b91c6c204/langchain/agents/loading.py#L21 | ReAct vs MRKL | https://api.github.com/repos/langchain-ai/langchain/issues/2284/comments | 5 | 2023-04-01T22:44:51Z | 2023-09-29T16:09:21Z | https://github.com/langchain-ai/langchain/issues/2284 | 1,650,698,780 | 2,284 |

[

"hwchase17",

"langchain"

]

| LLM response is parsed with `RegexParser `with the pattern `"(.*?)\nScore: (.*)"` which is not reliable. In some instances the `Score `is missing or present without newline `"\n"`

This leads to

`ValueError: Could not parse output: ....`

Update:

`"Answer:"` in some cases is missing too

| RegexParser pattern "(.*?)\nScore: (.*)" is not reliable | https://api.github.com/repos/langchain-ai/langchain/issues/2282/comments | 2 | 2023-04-01T20:46:38Z | 2023-08-25T16:16:06Z | https://github.com/langchain-ai/langchain/issues/2282 | 1,650,648,539 | 2,282 |

[

"hwchase17",

"langchain"

]

| I am getting some issues while trying to connect from langchain to databricks via sqlalchemy.

It works fine when I connect directly via sqlalchemy.

I think the issue is in the below lines

https://github.com/hwchase17/langchain/blob/09f94642543b23d7c9db81aa15ef54a1b6e13840/langchain/sql_database.py#L32-L33

the variable self._include_tables in line 33 is is set with values after calling get_table_names function (in line 32) which in turn uses self._include_tables and hence it will have None value at that time which causes some errors for databricks connection.

There are few more such issues while connecting to databricks using langchain and databricks-sql-connector library. It works fine with just sqlalchemy and databricks-sql-connector.

Could you add support for databricks? | Kindly add support for databricks-sql-connector (databricks library) via sqlalchemy in langchain | https://api.github.com/repos/langchain-ai/langchain/issues/2277/comments | 2 | 2023-04-01T18:45:35Z | 2023-08-11T16:31:54Z | https://github.com/langchain-ai/langchain/issues/2277 | 1,650,597,393 | 2,277 |

[

"hwchase17",

"langchain"

]

| I'm trying to create a conversation agent essentially defined like this:

```python

tools = load_tools([]) # "wikipedia"])

llm = ChatOpenAI(model_name=MODEL, verbose=True)

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

agent = initialize_agent(tools, llm,

agent="chat-conversational-react-description",

max_iterations=3,

early_stopping_method="generate",

memory=memory,

verbose=True)

```

The agent raises an exception after it tries to use an invalid tool.

```

Question: My name is James and I'm helping Will. He's an engineer.

> Entering new AgentExecutor chain...

{

"action": "Final Answer",

"action_input": "Hello James, nice to meet you! How can I assist you and Will today?"

}

> Finished chain.

Answer: Hello James, nice to meet you! How can I assist you and Will today?

Question: What do you know about Will?

> Entering new AgentExecutor chain...

{

"action": "recommend_tool",

"action_input": "I recommend searching for information on Will on LinkedIn, which is a professional networking site. It may have his work experience, education and other professional details."

}

Observation: recommend_tool is not a valid tool, try another one.

Thought:Traceback (most recent call last):

File "/usr/local/lib/python3.11/site-packages/langchain/agents/conversational_chat/base.py", line 106, in _extract_tool_and_input

response = self.output_parser.parse(llm_output)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/conversational_chat/base.py", line 51, in parse

response = json.loads(cleaned_output)

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/json/__init__.py", line 346, in loads

return _default_decoder.decode(s)

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/json/decoder.py", line 337, in decode

obj, end = self.raw_decode(s, idx=_w(s, 0).end())

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/json/decoder.py", line 355, in raw_decode

raise JSONDecodeError("Expecting value", s, err.value) from None

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/src/app/main.py", line 93, in <module>

ask(question)

File "/usr/src/app/main.py", line 76, in ask

result = agent.run(question)

^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/chains/base.py", line 213, in run

return self(args[0])[self.output_keys[0]]

^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/chains/base.py", line 116, in __call__

raise e

File "/usr/local/lib/python3.11/site-packages/langchain/chains/base.py", line 113, in __call__

outputs = self._call(inputs)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/agent.py", line 632, in _call

next_step_output = self._take_next_step(

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/agent.py", line 548, in _take_next_step

output = self.agent.plan(intermediate_steps, **inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/agent.py", line 281, in plan

action = self._get_next_action(full_inputs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/agent.py", line 243, in _get_next_action

parsed_output = self._extract_tool_and_input(full_output)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/langchain/agents/conversational_chat/base.py", line 109, in _extract_tool_and_input

raise ValueError(f"Could not parse LLM output: {llm_output}")

ValueError: Could not parse LLM output: My apologies, allow me to clarify my previous response:

{

"action": "recommend_tool",

"action_input": "I recommend using a professional social network which can provide informative details on Will's professional background and accomplishments."

}