| issue_owner_repo | issue_body | issue_title | issue_comments_url | issue_comments_count | issue_created_at | issue_updated_at | issue_html_url | issue_github_id | issue_number |

|---|---|---|---|---|---|---|---|---|---|

[

"hwchase17",

"langchain"

]

| I keep getting this error every time I try to ask my data a question using the code from the "[chat_vector_db.ipynb](https://github.com/hwchase17/langchain/blob/f356cca1f278ac73f8e59f49da39854e1e47a205/docs/modules/chat/examples/chat_vector_db.ipynb)" notebook. How can I fix this? Is it caused by my stored data, and if so, how can I encode it? I'm using the UnstructuredFileLoader for a text file:

UnicodeEncodeError Traceback (most recent call last)

Cell In[97], line 3

1 chat_history = []

2 query = "who are you?"

----> 3 result = qa({"question": query, "chat_history": chat_history})

File [c:\Users\yousef\AppData\Local\Programs\Python\Python39\lib\site-packages\langchain\chains\base.py:116](file:///C:/Users/yousef/AppData/Local/Programs/Python/Python39/lib/site-packages/langchain/chains/base.py:116), in Chain.__call__(self, inputs, return_only_outputs)

114 except (KeyboardInterrupt, Exception) as e:

115 self.callback_manager.on_chain_error(e, verbose=self.verbose)

--> 116 raise e

117 self.callback_manager.on_chain_end(outputs, verbose=self.verbose)

118 return self.prep_outputs(inputs, outputs, return_only_outputs)

File [c:\Users\yousef\AppData\Local\Programs\Python\Python39\lib\site-packages\langchain\chains\base.py:113](file:///C:/Users/yousef/AppData/Local/Programs/Python/Python39/lib/site-packages/langchain/chains/base.py:113), in Chain.__call__(self, inputs, return_only_outputs)

107 self.callback_manager.on_chain_start(

108 {"name": self.__class__.__name__},

109 inputs,

110 verbose=self.verbose,

111 )

112 try:

--> 113 outputs = self._call(inputs)

114 except (KeyboardInterrupt, Exception) as e:

115 self.callback_manager.on_chain_error(e, verbose=self.verbose)

...

-> 1258 values[i] = one_value.encode('latin-1')

1259 elif isinstance(one_value, int):

1260 values[i] = str(one_value).encode('ascii')

UnicodeEncodeError: 'latin-1' codec can't encode character '\u201c' in position 7: ordinal not in range(256) | UnicodeEncodeError Using Chat Vector and My Own Data | https://api.github.com/repos/langchain-ai/langchain/issues/2121/comments | 8 | 2023-03-28T23:02:07Z | 2023-09-26T16:12:59Z | https://github.com/langchain-ai/langchain/issues/2121 | 1,644,771,527 | 2,121 |
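
A note on the traceback in issue 2,121 above: the failing call `one_value.encode('latin-1')` looks like the step where Python's HTTP client encodes request header values. One possible cause (an assumption, not confirmed in the issue) is a curly quote such as `\u201c` pasted into a value that ends up in a header, for example an API key. The sketch below uses a hypothetical helper, `find_non_latin1`, to locate such characters in any string before it is sent.

```python
from typing import List, Tuple


def find_non_latin1(value: str) -> List[Tuple[int, str]]:
    """Return (position, character) pairs that cannot be encoded as Latin-1."""
    # Latin-1 covers code points 0..255; anything above raises UnicodeEncodeError.
    return [(i, ch) for i, ch in enumerate(value) if ord(ch) > 255]


# Hypothetical key with a curly quote pasted in by accident.
suspect = "sk-test\u201cabc"
for position, char in find_non_latin1(suspect):
    print(f"position {position}: {char!r} (U+{ord(char):04X})")
```

If the helper reports nothing for the key and other header values, the offending character is more likely in some other string that reaches the HTTP layer.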

[

"hwchase17",

"langchain"

]

| OpenAI python client supports passing additional headers when invoking the following functions

`openai.ChatCompletion.create`

or

`openai.Completion.create`

For example: I can pass the headers as shown in the sample code below.

```

completion = openai.Completion.create(deployment_id=deployment_id,

prompt=payload_dict['prompt'], stop=payload_dict['stop'], temperature=payload_dict['temperature'], headers=headers, max_tokens=1000)

```

LangChain does not expose a way to pass custom HTTPS headers from the client. It would be very useful to include this capability, especially when a custom authentication scheme is used and the model is exposed as an endpoint. | Unable to pass headers to Completion, ChatCompletion, Embedding endpoints | https://api.github.com/repos/langchain-ai/langchain/issues/2120/comments | 4 | 2023-03-28T22:29:45Z | 2023-09-27T16:11:24Z | https://github.com/langchain-ai/langchain/issues/2120 | 1,644,740,925 | 2,120 |

[

"hwchase17",

"langchain"

]

| I'm trying to save embeddings in the Redis vectorstore, and when I run my code I get the following error. Is this a bug, or is something wrong with my code? Any help is appreciated.

langchain version - both 0.0.123 and 0.0.124

Python 3.8.2

File "/Users/aruna/PycharmProjects/redis-test/database.py", line 16, in init_redis_database

rds = Redis.from_documents(docs, embeddings, redis_url="redis://localhost:6379", index_name='link')

File "/Users/aruna/PycharmProjects/redis-test/venv/lib/python3.8/site-packages/langchain/vectorstores/base.py", line 116, in from_documents

return cls.from_texts(texts, embedding, metadatas=metadatas, **kwargs)

File "/Users/aruna/PycharmProjects/redis-test/venv/lib/python3.8/site-packages/langchain/vectorstores/redis.py", line 224, in from_texts

if not _check_redis_module_exist(client, "search"):

File "/Users/aruna/PycharmProjects/redis-test/venv/lib/python3.8/site-packages/langchain/vectorstores/redis.py", line 23, in _check_redis_module_exist

return module in [m["name"] for m in client.info().get("modules", {"name": ""})]

File "/Users/aruna/PycharmProjects/redis-test/venv/lib/python3.8/site-packages/langchain/vectorstores/redis.py", line 23, in <listcomp>

return module in [m["name"] for m in client.info().get("modules", {"name": ""})]

**TypeError: string indices must be integers**

Sample code as follows.

REDIS_URL = 'redis://localhost:6379'

def init_redis_database(docs):

embeddings = OpenAIEmbeddings(openai_api_key=OPENAI_API_KEY)

rds = Redis.from_documents(docs, embeddings, redis_url=REDIS_URL, index_name='link')

| Unable save embeddings in Redis vectorstore | https://api.github.com/repos/langchain-ai/langchain/issues/2113/comments | 16 | 2023-03-28T20:22:00Z | 2024-03-15T05:05:26Z | https://github.com/langchain-ai/langchain/issues/2113 | 1,644,606,036 | 2,113 |
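
Regarding the `TypeError` in issue 2,113 above: the traceback line uses `{"name": ""}` (a dict) as the default for `"modules"`. Iterating over a dict yields its keys, so `m` becomes the string `"name"` and `m["name"]` fails with "string indices must be integers". That suggests the server's `INFO` reply contained no `"modules"` entry, although this is an inference from the traceback. A minimal sketch of a safer check, using a plain dict as a stand-in for `client.info()` and a hypothetical function name:

```python
def check_module_exists(info: dict, module: str) -> bool:
    """Return True if `module` is listed in a Redis INFO-style dict."""
    # Default to an empty list so a missing "modules" key yields no entries
    # instead of iterating over the keys of a dict.
    modules = info.get("modules", [])
    return module in [m["name"] for m in modules if isinstance(m, dict)]


# Stand-ins for client.info(): one server without modules, one with a search module.
print(check_module_exists({}, "search"))
print(check_module_exists({"modules": [{"name": "search"}]}, "search"))
```

With this shape, a server without the search module produces a clean `False` rather than a `TypeError`, so the caller can raise a clearer error about the missing module.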

[

"hwchase17",

"langchain"

]

| while using llama_index GPTSimpleVectorIndex

I am reading a pdf file using SimpleDirectoryReader.

I am unable to create index for the file and it is generating the below error:

**INFO:openai:error_code=None error_message='Too many inputs for model None. The max number of inputs is 1. We hope to increase the number of inputs per request soon. Please contact us through an Azure support request at: https://go.microsoft.com/fwlink/?linkid=2213926 for further questions.' error_param=None error_type=invalid_request_error message='OpenAI API error received' stream_error=False**

The code works for some files and fails for others with the above error.

Please explain what **Too many inputs for model** means and why the error occurs only for some files. | openai:error_code=None error_message='Too many inputs for model None. The max number of inputs is 1. | https://api.github.com/repos/langchain-ai/langchain/issues/2096/comments | 9 | 2023-03-28T14:48:14Z | 2023-09-28T16:10:17Z | https://github.com/langchain-ai/langchain/issues/2096 | 1,644,118,649 | 2,096 |

[

"hwchase17",

"langchain"

]

| I need to supply a 'where' value to filter on metadata to Chromadb `similarity_search_with_score` function. I can't find a straightforward way to do it. Is there some way to do it when I kickoff my chain? Any hints, hacks, plans to support? | How to pass filter down to Chroma db when using ConversationalRetrievalChain | https://api.github.com/repos/langchain-ai/langchain/issues/2095/comments | 23 | 2023-03-28T14:47:44Z | 2024-01-01T09:39:52Z | https://github.com/langchain-ai/langchain/issues/2095 | 1,644,117,753 | 2,095 |

[

"hwchase17",

"langchain"

]

| Hi,

There seems to be a bug when trying to load a serialized FAISS index when using Azure through OpenAIEmbeddings.

I get the following error:

```{python}

AttributeError: Can't get attribute 'Document' on <module 'langchain.schema' from '/langchain/schema.py'>

```

| Can't load faiss index when using Azure embeddings | https://api.github.com/repos/langchain-ai/langchain/issues/2094/comments | 2 | 2023-03-28T14:23:38Z | 2023-09-10T16:40:07Z | https://github.com/langchain-ai/langchain/issues/2094 | 1,644,073,204 | 2,094 |

[

"hwchase17",

"langchain"

]

| As the title says, there is a full implementation of ConversationalChatAgent, but it is not exported in the `__init__` file of agents, so it cannot be imported with

`from langchain.agents import ConversationalChatAgent`

I'm going to fix this right now. | ConversationalChatAgent is not in agent.__init__.py | https://api.github.com/repos/langchain-ai/langchain/issues/2093/comments | 0 | 2023-03-28T13:43:34Z | 2023-03-28T15:14:24Z | https://github.com/langchain-ai/langchain/issues/2093 | 1,643,984,599 | 2,093 |

[

"hwchase17",

"langchain"

]

| Hello, I'm trying to provide a different API key for each profile, but the last API key I set seems to be used by all profiles. Is there a way to force each profile to use its dedicated key? | Multiple openai keys | https://api.github.com/repos/langchain-ai/langchain/issues/2091/comments | 9 | 2023-03-28T12:34:07Z | 2023-10-28T16:07:45Z | https://github.com/langchain-ai/langchain/issues/2091 | 1,643,856,338 | 2,091 |

[

"hwchase17",

"langchain"

]

| I am using the latest version and I get this error message when only trying to run:

from langchain.chains.chat_index.prompts import CONDENSE_QUESTION_PROMPT

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[5], line 3

1 from langchain.chains import LLMChain

2 from langchain.chains.question_answering import load_qa_chain

----> 3 from langchain.chains.chat_index.prompts import CONDENSE_QUESTION_PROMPT

ModuleNotFoundError: No module named 'langchain.chains.chat_index' | On the latest version 0.0.123 i get No module named 'langchain.chains.chat_index' | https://api.github.com/repos/langchain-ai/langchain/issues/2090/comments | 13 | 2023-03-28T09:35:57Z | 2023-12-08T16:08:40Z | https://github.com/langchain-ai/langchain/issues/2090 | 1,643,564,375 | 2,090 |

[

"hwchase17",

"langchain"

]

| No module 'datasets' found in langchain.evaluation | ModuleNotFoundError: No module named 'datasets' | https://api.github.com/repos/langchain-ai/langchain/issues/2088/comments | 2 | 2023-03-28T08:22:52Z | 2023-06-16T15:37:47Z | https://github.com/langchain-ai/langchain/issues/2088 | 1,643,446,461 | 2,088 |

[

"hwchase17",

"langchain"

]

| I'm testing on Windows, where the default encoding is cp1252 rather than UTF-8, and I still have encoding problems that I cannot overcome. | Add optional encoding parameter on each Loader | https://api.github.com/repos/langchain-ai/langchain/issues/2087/comments | 2 | 2023-03-28T08:10:59Z | 2023-09-18T16:22:19Z | https://github.com/langchain-ai/langchain/issues/2087 | 1,643,429,036 | 2,087 |
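
To illustrate the request in issue 2,087 above: when `open()` is called without `encoding`, Python uses the platform default, which is cp1252 on many Windows setups. A loader that accepted an optional encoding could pass it straight through. The helper below is a minimal sketch with a hypothetical name, `read_text`, and is not an existing loader API.

```python
def read_text(path: str, encoding: str = "utf-8") -> str:
    """Read a text file with an explicit encoding instead of the platform default."""
    with open(path, encoding=encoding) as f:
        return f.read()


# Usage, assuming a UTF-8 file named notes.txt exists:
# text = read_text("notes.txt")
# For a legacy Windows file:
# text = read_text("notes.txt", encoding="cp1252")
```

Defaulting to UTF-8 is a design choice in this sketch. A loader could equally keep the platform default and only override it when the caller supplies a value.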

[

"hwchase17",

"langchain"

]

| I was trying to follow the documentation to run summarization; here's my code:

```python
from langchain.llms import OpenAI
from langchain.text_splitter import CharacterTextSplitter
from langchain.chains.mapreduce import MapReduceChain
from langchain.prompts import PromptTemplate
from langchain.docstore.document import Document
from langchain.chains.summarize import load_summarize_chain

llm = OpenAI(temperature=0, engine='text-davinci-003')

# Split the source text into chunks.
text_splitter = CharacterTextSplitter()
with open("./state_of_the_union.txt") as f:
    state_of_the_union = f.read()
texts = text_splitter.split_text(state_of_the_union)

# Summarize only the first three chunks.
docs = [Document(page_content=t) for t in texts[:3]]

chain = load_summarize_chain(llm, chain_type="map_reduce")
chain.run(docs)
```

Got errors like below:

<img width="1273" alt="image" src="https://user-images.githubusercontent.com/30015018/228138989-1808a102-4246-412b-a86a-388d60579543.png">

Any ideas how to fix this? langchain version is 0.0.123 | load_summarize_chain cannot run | https://api.github.com/repos/langchain-ai/langchain/issues/2081/comments | 4 | 2023-03-28T05:41:15Z | 2023-04-14T11:41:02Z | https://github.com/langchain-ai/langchain/issues/2081 | 1,643,238,154 | 2,081 |

[

"hwchase17",

"langchain"

]

| Trying to run a simple script:

```

from langchain.llms import OpenAI

llm = OpenAI(temperature=0.9)

text = "What would be a good company name for a company that makes colorful socks?"

print(llm(text))

```

I'm running into this error:

`ModuleNotFoundError: No module named 'langchain.llms'; 'langchain' is not a package`

I have a virtualenv with langchain installed.

```⇒ pip show langchain

Name: langchain

Version: 0.0.39

Summary: Building applications with LLMs through composability

Home-page: https://www.github.com/hwchase17/langchain

Author:

Author-email:

License: MIT

Location: /Users/jkaye/dev/langchain-tutorial/venv/lib/python3.11/site-packages

Requires: numpy, pydantic, PyYAML, requests, SQLAlchemy

```

```

⇒ python --version

Python 3.11.0

```

I'm using zsh so I ran `pip install 'langchain[all]'` | 'langchain' is not a package | https://api.github.com/repos/langchain-ai/langchain/issues/2079/comments | 29 | 2023-03-28T04:42:16Z | 2024-04-26T03:52:15Z | https://github.com/langchain-ai/langchain/issues/2079 | 1,643,187,784 | 2,079 |

[

"hwchase17",

"langchain"

]

| from langchain.document_loaders.csv_loader import CSVLoader

loader = CSVLoader(file_path='docs/whats-new-latest.csv', csv_args={

'fieldnames': ['Line of Business',

'Short Description'

]

})

data = loader.load()

print(data)

/.pyenv/versions/3.9.2/envs/s4-hana-chatbot/lib/python3.9/site-packages/langchain/document_loaders/csv_loader.py", line 53, in <genexpr>

content = "\n".join(f"{k.strip()}: {v.strip()}" for k, v in row.items())

AttributeError: 'NoneType' object has no attribute 'strip'

Can anyone assist how to solve this? | 'NoneType' object has no attribute 'strip' | https://api.github.com/repos/langchain-ai/langchain/issues/2074/comments | 9 | 2023-03-28T03:06:17Z | 2023-11-17T18:19:35Z | https://github.com/langchain-ai/langchain/issues/2074 | 1,643,123,069 | 2,074 |
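
On the `'NoneType' object has no attribute 'strip'` error in issue 2,074 above: `csv.DictReader` fills missing values with `None` when a row has fewer fields than `fieldnames`, and collects surplus fields under the key `None` when a row has more. Either case breaks `k.strip()` or `v.strip()`. Whether this is what happened with the reporter's file is an assumption. The sketch below (hypothetical helper name `rows_to_text`) shows the behaviour and a defensive join.

```python
import csv
import io
from typing import List


def rows_to_text(csv_text: str, fieldnames: List[str]) -> List[str]:
    """Render each CSV row as 'key: value' lines, tolerating ragged rows."""
    reader = csv.DictReader(io.StringIO(csv_text), fieldnames=fieldnames)
    rendered = []
    for row in reader:
        parts = []
        for key, value in row.items():
            if key is None:
                # Surplus columns are collected under the key None; skip them.
                continue
            # Missing values come back as None; treat them as empty strings.
            parts.append(f"{key.strip()}: {(value or '').strip()}")
        rendered.append("\n".join(parts))
    return rendered


# First row has one column too many, second row has one too few.
sample = "a,b,c\nx\n"
print(rows_to_text(sample, ["Line of Business", "Short Description"]))
```

If the file has more columns than the two listed in `fieldnames`, listing every column (or omitting `fieldnames` so the header row is used) avoids the `None` key altogether.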

[

"hwchase17",

"langchain"

]

| E.g. running

```python

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

llm = OpenAI(temperature=0)

tools = load_tools(["llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True, return_intermediate_steps=True)

agent.run("What is 2 raised to the 0.43 power?")

```

gives the error

```

203 """Run the chain as text in, text out or multiple variables, text out."""

204 if len(self.output_keys) != 1:

--> 205 raise ValueError(

206 f"`run` not supported when there is not exactly "

207 f"one output key. Got {self.output_keys}."

208 )

210 if args and not kwargs:

211 if len(args) != 1:

ValueError: `run` not supported when there is not exactly one output key. Got ['output', 'intermediate_steps'].

```

Is this supposed to be called differently or how else can the intermediate outputs ("Observations") be retrieved? | `initialize_agent` does not work with `return_intermediate_steps=True` | https://api.github.com/repos/langchain-ai/langchain/issues/2068/comments | 18 | 2023-03-28T00:50:15Z | 2024-02-23T16:09:08Z | https://github.com/langchain-ai/langchain/issues/2068 | 1,643,029,722 | 2,068 |

[

"hwchase17",

"langchain"

]

| This definition:

"purchase_order": """CREATE TABLE purchase_order (

id SERIAL NOT NULL,

name VARCHAR NOT NULL,

origin VARCHAR,

partner_ref VARCHAR,

date_order TIMESTAMP NOT NULL,

date_approve DATE,

partner_id INTEGER NOT NULL,

state VARCHAR,

notes TEXT,

amount_untaxed NUMERIC,

amount_tax NUMERIC,

amount_total NUMERIC,

user_id INTEGER,

company_id INTEGER NOT NULL,

create_uid INTEGER,

create_date TIMESTAMP,

write_uid INTEGER,

write_date TIMESTAMP,

CONSTRAINT PRIMARY KEY (id),

CONSTRAINT FOREIGN KEY(company_id) REFERENCES res_company (id) ,

CONSTRAINT FOREIGN KEY(partner_id) REFERENCES res_partner (id)

can be reduced to:

"purchase_order": """TABLE purchase_order (

id SERIAL NN PK,

name VC NN,

origin VC,

partner_ref VC,

date_order TIMESTAMP,

date_approve DATE,

partner_id INT NN,

state VC,

notes TX,

amount_untaxed NUM,

amount_tax NUM,

amount_total NUM,

user_id INT,

company_id INT NN,

create_uid INT,

create_date TIMESTAMP,

write_uid INT,

write_date TIMESTAMP,

FK(company_id) REF res_company (id) ,

FK(partner_id) REF res_partner (id)

and save a lot of space.

If needed, we can add instructions for the aliases, such as VC=VARCHAR, etc. | DB Tools - Table definitions cam be shortened with some asumptions in order to keep used token low. | https://api.github.com/repos/langchain-ai/langchain/issues/2067/comments | 1 | 2023-03-28T00:36:30Z | 2023-08-25T16:14:11Z | https://github.com/langchain-ai/langchain/issues/2067 | 1,643,021,881 | 2,067 |
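
The abbreviation proposed in issue 2,067 above can be approximated with a handful of regular-expression substitutions. This is a rough sketch only: the alias table and the function name `shorten_ddl` are illustrative, and it does not fold `CONSTRAINT PRIMARY KEY (id)` into a `PK` suffix on the column as the hand-written example does.

```python
import re

# Illustrative alias table; extend or change as needed.
ALIASES = [
    (r"^CREATE TABLE\b", "TABLE"),
    (r"\bCONSTRAINT FOREIGN KEY\b", "FK"),
    (r"\bREFERENCES\b", "REF"),
    (r"\bNOT NULL\b", "NN"),
    (r"\bVARCHAR\b", "VC"),
    (r"\bINTEGER\b", "INT"),
    (r"\bNUMERIC\b", "NUM"),
    (r"\bTEXT\b", "TX"),
]


def shorten_ddl(ddl: str) -> str:
    """Replace verbose SQL keywords with short aliases to reduce token count."""
    for pattern, alias in ALIASES:
        ddl = re.sub(pattern, alias, ddl)
    return ddl


ddl = (
    "CREATE TABLE purchase_order (name VARCHAR NOT NULL, notes TEXT, "
    "CONSTRAINT FOREIGN KEY(company_id) REFERENCES res_company (id))"
)
print(shorten_ddl(ddl))
```

Any alias scheme like this presumably needs a one-line legend in the prompt (for example `VC=VARCHAR, NN=NOT NULL`) so the model can expand the abbreviations.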

[

"hwchase17",

"langchain"

]

| I tried to map my DB, which has a lot of views that could be leveraged for the whole task. But the initial check does not allow this, even when custom_table_info describes the view structure. | DB Tools - Allow to reference also views thru custom_table_info | https://api.github.com/repos/langchain-ai/langchain/issues/2066/comments | 2 | 2023-03-28T00:31:19Z | 2023-09-10T16:40:17Z | https://github.com/langchain-ai/langchain/issues/2066 | 1,643,018,821 | 2,066 |

[

"hwchase17",

"langchain"

]

| ### Alpaca-LoRA

[Alpaca-LoRA](https://github.com/tloen/alpaca-lora) and Stanford Alpaca are both instruction-following models fine-tuned from LLaMA, but there are some critical differences between them. Here are three:

- **Training data**: Stanford Alpaca was fine-tuned on roughly 52K instruction-following examples generated with `text-davinci-003`. Alpaca-LoRA starts from the same kind of data (a curated version is maintained separately) and uses low-rank adaptation (LoRA) to fine-tune the model.

- **Model size**: Stanford Alpaca fully fine-tunes the 7B-parameter LLaMA model. Alpaca-LoRA instead trains small adapter weights on top of the frozen base model, which makes training much cheaper; the project describes its 7B model as able to run on low-cost devices such as the Raspberry Pi.

- **Pretrained models**: The available options differ. Stanford Alpaca released its training code and data, while Alpaca-LoRA publishes adapter weights for an Instruct model that the project describes as similar in quality to `text-davinci-003`.

### Similar To

https://github.com/hwchase17/langchain/issues/1777

### Resources

- [alpaca.cpp](https://github.com/antimatter15/alpaca.cpp), a native client for running Alpaca models on the CPU

- [Alpaca-LoRA-Serve](https://github.com/deep-diver/Alpaca-LoRA-Serve), a ChatGPT-style interface for Alpaca models

- [AlpacaDataCleaned](https://github.com/gururise/AlpacaDataCleaned), a project to improve the quality of the Alpaca dataset

- Various adapter weights (download at own risk):

- 7B:

- <https://huggingface.co/tloen/alpaca-lora-7b>

- <https://huggingface.co/samwit/alpaca7B-lora>

- 🇧🇷 <https://huggingface.co/22h/cabrita-lora-v0-1>

- 🇨🇳 <https://huggingface.co/qychen/luotuo-lora-7b-0.1>

- 🇯🇵 <https://huggingface.co/kunishou/Japanese-Alapaca-LoRA-7b-v0>

- 🇫🇷 <https://huggingface.co/bofenghuang/vigogne-lora-7b>

- 🇹🇭 <https://huggingface.co/Thaweewat/thai-buffala-lora-7b-v0-1>

- 🇩🇪 <https://huggingface.co/thisserand/alpaca_lora_german>

- 🇮🇹 <https://huggingface.co/teelinsan/camoscio-7b-llama>

- 13B:

- <https://huggingface.co/chansung/alpaca-lora-13b>

- <https://huggingface.co/mattreid/alpaca-lora-13b>

- <https://huggingface.co/samwit/alpaca13B-lora>

- 🇯🇵 <https://huggingface.co/kunishou/Japanese-Alapaca-LoRA-13b-v0>

- 🇰🇷 <https://huggingface.co/chansung/koalpaca-lora-13b>

- 🇨🇳 <https://huggingface.co/facat/alpaca-lora-cn-13b>

- 30B:

- <https://huggingface.co/baseten/alpaca-30b>

- <https://huggingface.co/chansung/alpaca-lora-30b>

- 🇯🇵 <https://huggingface.co/kunishou/Japanese-Alapaca-LoRA-30b-v0>

- [alpaca-native](https://huggingface.co/chavinlo/alpaca-native), a replication using the original Alpaca code | Alpaca-LoRA | https://api.github.com/repos/langchain-ai/langchain/issues/2063/comments | 4 | 2023-03-27T23:41:12Z | 2023-09-29T16:09:41Z | https://github.com/langchain-ai/langchain/issues/2063 | 1,642,978,571 | 2,063 |

[

"hwchase17",

"langchain"

]

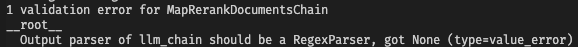

|

Moreover, I cannot use multiple prompts using `ChatPromptTemplate.from_messages` | map_rerank custom prompts don't work with SystemMessagePromptTemplate | https://api.github.com/repos/langchain-ai/langchain/issues/2053/comments | 1 | 2023-03-27T19:42:33Z | 2023-08-21T16:07:44Z | https://github.com/langchain-ai/langchain/issues/2053 | 1,642,705,217 | 2,053 |

[

"hwchase17",

"langchain"

]

| I was reading this blog post: https://blog.langchain.dev/retrieval/

There is this link:

> Other types of indexes, [like graphs](https://langchain.readthedocs.io/en/latest/modules/indexes/chain_examples/graph_qa.html), have piqued user's interests

Currently goes to a RTD 404 page

If I google for "langchain graph qa" the top result also goes to a 404

I can view a cached copy here https://webcache.googleusercontent.com/search?q=cache:obQTh41ZBRoJ:https://langchain.readthedocs.io/en/latest/modules/indexes/chain_examples/graph_qa.html&cd=1&hl=en&ct=clnk&gl=uk | Graph QA docs links are broken currently | https://api.github.com/repos/langchain-ai/langchain/issues/2049/comments | 3 | 2023-03-27T16:37:18Z | 2023-08-14T20:20:49Z | https://github.com/langchain-ai/langchain/issues/2049 | 1,642,438,709 | 2,049 |

[

"hwchase17",

"langchain"

]

| I understand that brace brackets "{ }" are used for the text replacement. I was wondering if we are able to use the brace brackets in our prompts where we want them to act as just text and do not want them to replace text, for example with c++ classes.

```c++

#include <string>

class BankAccount {

public:

// Constructor to initialize the account

BankAccount(const std::string& account_holder_name, const std::string& account_number, double initial_balance);

// Method to deposit money into the account

void deposit(double amount);

// Method to withdraw money from the account

bool withdraw(double amount);

// Method to display account details

void display_account_details() const;

private:

std::string account_holder_name_;

std::string account_number_;

double balance_;

};

``` | How to use brace brackets | https://api.github.com/repos/langchain-ai/langchain/issues/2048/comments | 2 | 2023-03-27T16:21:43Z | 2023-10-24T07:51:46Z | https://github.com/langchain-ai/langchain/issues/2048 | 1,642,415,345 | 2,048 |
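
For the question in issue 2,048 above: assuming the prompt template is rendered with Python `str.format`-style substitution (an assumption about the template format in use), literal braces can be written by doubling them, `{{` and `}}`. The sketch below shows this with plain `str.format` and a small hypothetical helper, `escape_braces`, for escaping an entire code snippet.

```python
def escape_braces(text: str) -> str:
    """Double every brace so str.format treats it as literal text."""
    return text.replace("{", "{{").replace("}", "}}")


cpp_snippet = "class BankAccount {\npublic:\n    void deposit(double amount);\n};"

# {task} is a real placeholder; the C++ braces are escaped and survive formatting.
template = "{task}\n\n" + escape_braces(cpp_snippet)
prompt = template.format(task="Explain this C++ class:")
print(prompt)
```

The escaping applies only to the template string itself. Values passed in as variables are inserted as-is and need no escaping.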

[

"hwchase17",

"langchain"

]

| Is there a way to redirect the verbose logs away from stdout? I'm working on a frontend app, and I'd like to collect the logs to display in the frontend, but couldn't find in the docs a way to capture them into a variable or similar. | Redirect vebose logs | https://api.github.com/repos/langchain-ai/langchain/issues/2045/comments | 4 | 2023-03-27T15:27:23Z | 2023-07-18T03:25:03Z | https://github.com/langchain-ai/langchain/issues/2045 | 1,642,322,662 | 2,045 |
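
One generic way to approach issue 2,045 above, assuming the verbose output is written with `print` to `sys.stdout` (not verified here), is to capture stdout around the call with the standard library's `contextlib.redirect_stdout`. The wrapper name `capture_stdout` is hypothetical.

```python
import contextlib
import io


def capture_stdout(func, *args, **kwargs):
    """Call func and return (result, everything it printed to stdout)."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        result = func(*args, **kwargs)
    return result, buffer.getvalue()


def noisy_step():
    # Stand-in for a chain or agent call that prints verbose logs.
    print("Thought: I should look this up")
    return "final answer"


result, logs = capture_stdout(noisy_step)
print(repr(result))
print(repr(logs))
```

This redirects stdout for the whole process while the call runs, so it is not safe when several requests print concurrently. A callback-based approach would be preferable in that case.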

[

"hwchase17",

"langchain"

]

| Many OpenAI plugin implementations are starting to use yaml for the api doc.

```python
import requests
import yaml
from langchain.tools.base import BaseTool


class AIPluginTool(BaseTool):
    api_spec: str

    @classmethod
    def from_plugin_url(cls, url: str) -> "AIPluginTool":
        response = requests.get(url)
        # Parse the response body according to the declared content type.
        if response.headers["Content-Type"] == "application/json":
            response_data = response.json()
        elif response.headers["Content-Type"] == "application/x-yaml":
            response_data = yaml.safe_load(response.text)
        else:
            raise ValueError("Unsupported content type")
        ...
```

| Support also yaml files for the AIPluginTool | https://api.github.com/repos/langchain-ai/langchain/issues/2042/comments | 1 | 2023-03-27T15:01:41Z | 2023-03-28T07:54:03Z | https://github.com/langchain-ai/langchain/issues/2042 | 1,642,275,010 | 2,042 |

[

"hwchase17",

"langchain"

]

| Hey team,

As you can see, the `get_docs` method has no option to pass kwargs, and even top_k is not updated. Do I have to change how I pass this info, or will this be fixed? | kwargs for ConversationalRetrievalChain | https://api.github.com/repos/langchain-ai/langchain/issues/2038/comments | 1 | 2023-03-27T13:41:57Z | 2023-03-28T06:46:16Z | https://github.com/langchain-ai/langchain/issues/2038 | 1,642,090,994 | 2,038 |

[

"hwchase17",

"langchain"

]

| Since `llms.OpenAIChat` is deprecated and `chat_models.ChatOpenAI` is suggested in the latest releases, I think it is necessary to add `prefix_messages` to `ChatOpenAI`, just as in `OpenAIChat`, so that we can provide messages to this chat model. For example, providing a system message helps set the behavior of the assistant.

```

m = ChatOpenAI(prefix_messages=[SystemMessage(content='you are a helpful assistant')])

``` | Add prefix_messages property to ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2036/comments | 4 | 2023-03-27T13:20:40Z | 2023-03-30T06:43:02Z | https://github.com/langchain-ai/langchain/issues/2036 | 1,642,034,579 | 2,036 |

[

"hwchase17",

"langchain"

]

| Since `llms.OpenAIChat` is deprecated and `chat_models.ChatOpenAI` is suggested in the latest releases, I think it is necessary to add `prefix_messages` to `ChatOpenAI`, just as in `OpenAIChat`, so that we can provide messages to this chat model. For example, providing a system message helps set the behavior of the assistant. | Add prefix_messages property to ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2035/comments | 0 | 2023-03-27T13:17:19Z | 2023-03-27T13:24:21Z | https://github.com/langchain-ai/langchain/issues/2035 | 1,642,026,037 | 2,035 |

[

"hwchase17",

"langchain"

]

| Since `llms.OpenAIChat` is deprecated and `chat_models.ChatOpenAI` is suggested in the latest releases, I think it is necessary to add `prefix_messages` to `ChatOpenAI`, just as in `OpenAIChat`, so that we can provide messages to this chat model. For example, providing a system message helps set the behavior of the assistant. | Add prefix_messages property to ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2034/comments | 0 | 2023-03-27T13:15:06Z | 2023-03-27T13:24:10Z | https://github.com/langchain-ai/langchain/issues/2034 | 1,642,025,652 | 2,034 |

[

"hwchase17",

"langchain"

]

| Since `llms.OpenAIChat` is deprecated and `chat_models.ChatOpenAI` is suggested in the latest releases, I think it is necessary to add `prefix_messages` to `ChatOpenAI`, just as in `OpenAIChat`, so that we can provide messages to this chat model. For example, providing a system message helps set the behavior of the assistant. | Add prefix_messages property to ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2033/comments | 0 | 2023-03-27T13:14:20Z | 2023-03-27T13:24:01Z | https://github.com/langchain-ai/langchain/issues/2033 | 1,642,025,513 | 2,033 |

[

"hwchase17",

"langchain"

]

| Since `llms.OpenAIChat` is deprecated and `chat_models.ChatOpenAI` is suggested in the latest releases, I think it is necessary to add `prefix_messages` to `ChatOpenAI`, just as in `OpenAIChat`, so that we can provide messages to this chat model. For example, providing a system message helps set the behavior of the assistant. | Add prefix_messages property to ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2032/comments | 0 | 2023-03-27T13:14:06Z | 2023-03-27T13:23:42Z | https://github.com/langchain-ai/langchain/issues/2032 | 1,642,025,480 | 2,032 |

[

"hwchase17",

"langchain"

]

| `langchain.llms.OpenAIChat` is deprecated and `langchain.chat_models.ChatOpenAI` is suggested in the latest versions,

but `ChatOpenAI` lacks the `prefix_messages` property. For example, this property can be used to provide [a system message](https://platform.openai.com/docs/guides/chat/introduction) that helps set the behavior of the assistant.

| Add missing property prefix_messages for ChatOpenAI | https://api.github.com/repos/langchain-ai/langchain/issues/2031/comments | 0 | 2023-03-27T13:07:57Z | 2023-03-27T13:22:49Z | https://github.com/langchain-ai/langchain/issues/2031 | 1,642,024,275 | 2,031 |

[

"hwchase17",

"langchain"

]

| I just installed langchain, and when I try to import WolframAlphaAPIWrapper using "from langchain.utilities.wolfram_alpha import WolframAlphaAPIWrapper", this error message is returned. I checked site-packages to see whether utilities is there; this is the list of files, with no utilities:

VERSION chains formatting.py prompts sql_database.py

__init__.py docstore input.py py.typed text_splitter.py

__pycache__ embeddings llms python.py utils.py

agents example_generator.py model_laboratory.py serpapi.py vectorstores

| ModuleNotFoundError: No module named 'langchain.utilities' | https://api.github.com/repos/langchain-ai/langchain/issues/2029/comments | 7 | 2023-03-27T11:19:48Z | 2023-03-29T02:37:31Z | https://github.com/langchain-ai/langchain/issues/2029 | 1,641,927,118 | 2,029 |

[

"hwchase17",

"langchain"

]

| It looks like the result returned from the `predict` call to generate the query is returned surrounded by double quotes, so when passed to the db it's taken as a malformed query.

Example (using a postgres db):

```

llm = ChatOpenAI(temperature=0, model_name="gpt-4") # type: ignore

db = SQLDatabase.from_uri("<URI>")

db_chain = SQLDatabaseChain(llm=llm, database=db, verbose=True)

print(db_chain.run("give me any row"))

```

Result:

```

Traceback (most recent call last):

File "main.py", line 26, in <module>

print(db_chain.run("give me any row"))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/langchain/chains/base.py", line 213, in run

return self(args[0])[self.output_keys[0]]

^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/langchain/chains/base.py", line 116, in __call__

raise e

File "/opt/homebrew/lib/python3.11/site-packages/langchain/chains/base.py", line 113, in __call__

outputs = self._call(inputs)

^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/langchain/chains/sql_database/base.py", line 88, in _call

result = self.database.run(sql_cmd)

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/langchain/sql_database.py", line 176, in run

cursor = connection.execute(text(command))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/base.py", line 1380, in execute

return meth(self, multiparams, params, _EMPTY_EXECUTION_OPTS)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/sql/elements.py", line 334, in _execute_on_connection

return connection._execute_clauseelement(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/base.py", line 1572, in _execute_clauseelement

ret = self._execute_context(

^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/base.py", line 1943, in _execute_context

self._handle_dbapi_exception(

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/base.py", line 2124, in _handle_dbapi_exception

util.raise_(

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/util/compat.py", line 211, in raise_

raise exception

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/base.py", line 1900, in _execute_context

self.dialect.do_execute(

File "/opt/homebrew/lib/python3.11/site-packages/sqlalchemy/engine/default.py", line 736, in do_execute

cursor.execute(statement, parameters)

sqlalchemy.exc.ProgrammingError: (psycopg2.errors.SyntaxError) syntax error at or near ""SELECT data FROM table LIMIT 5""

LINE 1: "SELECT data FROM table LIMIT 5"

``` | SQLDatabaseChain malformed queries | https://api.github.com/repos/langchain-ai/langchain/issues/2027/comments | 11 | 2023-03-27T10:15:28Z | 2024-01-24T10:46:33Z | https://github.com/langchain-ai/langchain/issues/2027 | 1,641,823,337 | 2,027 |
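
As a stopgap for the behaviour described in issue 2,027 above, the generated SQL could be cleaned before it is executed. The helper below is a naive sketch with a hypothetical name, `strip_wrapping_quotes`. It only removes one pair of matching quotes that wrap the whole string and does not parse SQL.

```python
def strip_wrapping_quotes(sql: str) -> str:
    """Remove a single pair of matching quotes that wraps the entire statement."""
    sql = sql.strip()
    if len(sql) >= 2 and sql[0] == sql[-1] and sql[0] in ('"', "'", "`"):
        return sql[1:-1].strip()
    return sql


print(strip_wrapping_quotes('"SELECT data FROM table LIMIT 5"'))
print(strip_wrapping_quotes("SELECT name FROM users WHERE name = 'a'"))
```

Statements that legitimately begin and end with the same quote character would be mangled, so a check like this is best limited to output that is expected to start with a SQL keyword.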

[

"hwchase17",

"langchain"

]

| I am using `RecursiveCharacterTextSplitter` to split my documents for ingestion into a vector db. What is the intuition for selecting optimal chunk parameters?

It seems to me that chunk_size influences the size of documents being retrieved. Does that mean I should select the largest possible chunk_size to ensure maximum context retrieved?
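
To make the two parameters concrete, here is a minimal sketch of a fixed-width splitter. It is not how `RecursiveCharacterTextSplitter` works internally (that splitter also looks for separators); the function name `split_with_overlap` is made up for illustration. It only shows how `chunk_size` bounds each chunk and how `chunk_overlap` repeats the tail of one chunk at the head of the next.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters. This is a simplified
    illustration, not the library's actual splitting algorithm.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must satisfy 0 <= chunk_overlap < chunk_size")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


if __name__ == "__main__":
    print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2))
    print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=0))
```

A larger `chunk_size` gives each retrieved document more context but fewer, coarser chunks; a larger `chunk_overlap` makes it less likely that a sentence is cut off at a boundary, at the cost of storing more duplicated text.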

What about chunk_overlap? This seems like a parameter that can be arbitrarily set i.e. select something that's not too big, not too small. Is that the right understanding? | Intuition for selecting optimal chunk_size and chunk_overlap for RecursiveCharacterTextSplitter | https://api.github.com/repos/langchain-ai/langchain/issues/2026/comments | 13 | 2023-03-27T09:43:31Z | 2024-04-04T16:06:31Z | https://github.com/langchain-ai/langchain/issues/2026 | 1,641,765,150 | 2,026 |

[

"hwchase17",

"langchain"

]

| The openapi.json is too large for the prompt token limit. Also, how should authorization be handled in the openapi.json? | AIPluginTool submit long openapi.json in prompt exceeds the token limit | https://api.github.com/repos/langchain-ai/langchain/issues/2025/comments | 1 | 2023-03-27T08:16:49Z | 2023-08-21T16:07:49Z | https://github.com/langchain-ai/langchain/issues/2025 | 1,641,622,042 | 2,025 |

[

"hwchase17",

"langchain"

]

| I'm using some variable data in my prompt and sending that prompt to ConversationChain, where I get a validation error.

What changes can we make so that it works as expected?

| ConversationChain Validation Error | https://api.github.com/repos/langchain-ai/langchain/issues/2024/comments | 6 | 2023-03-27T05:44:59Z | 2023-09-26T16:13:24Z | https://github.com/langchain-ai/langchain/issues/2024 | 1,641,417,241 | 2,024 |

[

"hwchase17",

"langchain"

]

| I am building an agent that answers user questions, and I have given it two tools, the regular LLM and a search tool, like this:

llm = OpenAI(temperature=0)

search = GoogleSerperAPIWrapper()

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate(template=template, input_variables=["question"])

llm_chain = LLMChain(llm=llm, prompt=prompt, verbose=True)

tools = [

Tool(

name = "Search",

func=search.run,

description="useful for when you need to answer questions about current events."

),

Tool(

name="LLM",

func=llm_chain.run,

description="useful for when you need to answer questions about anything in general."

)

]

mrkl = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

Now when I ask it a general question like "Who is Sachin", it still tries to invoke the search action, although the LLM can answer that question because it is not about a recent event. How can I make sure the LLM answers every question except those about recent events, to reduce SERP usage? | How to use Serp only for current events related searches and use GPT for regular info | https://api.github.com/repos/langchain-ai/langchain/issues/2023/comments | 9 | 2023-03-27T05:38:09Z | 2023-09-28T16:10:27Z | https://github.com/langchain-ai/langchain/issues/2023 | 1,641,412,258 | 2,023 |

[

"hwchase17",

"langchain"

]

| Trying to use `persist_directory` to have Chroma persist to disk:

```python

index = VectorstoreIndexCreator(vectorstore_kwargs={"persist_directory": "db"})

```

and it displays this warning message that implies it won't be persisted:

```

Using embedded DuckDB without persistence: data will be transient

```

However, it does create files to the `db` directory. The warning appears to originate from https://github.com/chroma-core/chroma/pull/204#issuecomment-1458243316. Do I have the setting correct? | Setting Chroma persist_directory says "Using embedded DuckDB without persistence" | https://api.github.com/repos/langchain-ai/langchain/issues/2022/comments | 18 | 2023-03-27T05:29:14Z | 2024-03-30T20:14:14Z | https://github.com/langchain-ai/langchain/issues/2022 | 1,641,406,148 | 2,022 |

[

"hwchase17",

"langchain"

]

| In SQLAlchemy 1.3.x, tables must be queried using the `select([...])` form:

related stackoverflow question: https://stackoverflow.com/questions/62565993/sqlalchemy-exc-argumenterror-columns-argument-to-select-must-be-a-python-list

I will submit a PR to fix it | [bug]sqlalchemy 1.3.x compatibility issue | https://api.github.com/repos/langchain-ai/langchain/issues/2020/comments | 0 | 2023-03-27T05:22:30Z | 2023-03-28T06:45:52Z | https://github.com/langchain-ai/langchain/issues/2020 | 1,641,400,793 | 2,020 |

[

"hwchase17",

"langchain"

]

| If you want to create a Qdrant index and keep adding documents (i.e. add new documents to an existing Qdrant collection), `qdrant_index.add_documents(docs)` raises an error when the embedding comes from sentence transformers.

This is because the `embedding_function`, which is `model.encode()`, returns either a `numpy` array or a tensor, and the result has to be converted to `list[float]`. Therefore, this line

`vectors=[self.embedding_function(text) for text in texts]`

needs to be updated to:

`vectors=[self.embedding_function(text).tolist() for text in texts]`

to allow updating the collection.

https://github.com/hwchase17/langchain/blob/b83e8265102514d1722b2fb1aad29763c5cad62a/langchain/vectorstores/qdrant.py#L83
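
The conversion described above can also be written so that it accepts both array-like objects and plain lists. This is only a sketch: `to_float_list` is a hypothetical helper, not part of LangChain or Qdrant, and it assumes the embedding object exposes a `tolist()` method (as NumPy arrays and PyTorch tensors do).

```python
from typing import Any, List


def to_float_list(vector: Any) -> List[float]:
    """Return the embedding as a plain list of Python floats.

    Objects with a tolist() method (e.g. NumPy arrays, tensors) are converted
    first; plain sequences are passed through element by element.
    """
    if hasattr(vector, "tolist"):
        vector = vector.tolist()
    return [float(x) for x in vector]


class FakeArray:
    """Stand-in for an array type, used only to demonstrate the helper."""

    def __init__(self, values):
        self._values = list(values)

    def tolist(self):
        return list(self._values)


if __name__ == "__main__":
    print(to_float_list(FakeArray([1, 2.5, 3])))
    print(to_float_list([0.1, 0.2]))
```

Wrapping the embedding call this way would keep embedding functions that already return lists working, whereas calling `.tolist()` unconditionally would fail for them.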

| Updating the Qdrant index with new documents raises error | https://api.github.com/repos/langchain-ai/langchain/issues/2016/comments | 5 | 2023-03-27T03:16:18Z | 2023-09-18T17:19:58Z | https://github.com/langchain-ai/langchain/issues/2016 | 1,641,294,710 | 2,016 |

[

"hwchase17",

"langchain"

]

| It would be great to see integration with [OpenAssistant](https://github.com/LAION-AI/Open-Assistant) by @LAION-AI, which aims to become the [largest and most open alternative to ChatGPT](https://projects.laion.ai/Open-Assistant/blog/we-need-your-help#:~:text=largest%20and%20most%20open%20alternative%20to%20ChatGPT).

> OpenAssistant is a chat-based assistant that understands tasks, can interact with third-party systems, and retrieve information dynamically to do so

Here's the [Open Assistant architecture](https://projects.laion.ai/Open-Assistant/blog/2023-02-11-architecture):

<img width="504" alt="screen" src="https://user-images.githubusercontent.com/6625584/227813941-3c77f348-8874-409a-9e8f-2ee1604a8611.png">

| Open Assistant by LAION-AI | https://api.github.com/repos/langchain-ai/langchain/issues/2015/comments | 2 | 2023-03-27T00:18:02Z | 2023-09-28T16:10:32Z | https://github.com/langchain-ai/langchain/issues/2015 | 1,641,160,728 | 2,015 |

[

"hwchase17",

"langchain"

]

| When verbose=True and the agent executor is run, then in get_prompt_input_key() an error is raised:

```python

raise ValueError(f"One input key expected got {prompt_input_keys}")

```

This is because there are two entries [verbose, input] not just one [input]. It may be that setting verbose=True is not relevant. However, if it is, then a possible solution is to change utils.py line 10:

```python

if len(prompt_input_keys) != 1:

```

to

```python

if len(prompt_input_keys) not in [1, 2]:

``` | Error raised in utils.py get_prompt_input_key when AgentExecutor.run verbose=True | https://api.github.com/repos/langchain-ai/langchain/issues/2013/comments | 3 | 2023-03-26T19:57:11Z | 2023-10-21T16:10:16Z | https://github.com/langchain-ai/langchain/issues/2013 | 1,641,060,799 | 2,013 |

[

"hwchase17",

"langchain"

]

| In [here](https://python.langchain.com/en/latest/modules/indexes/vectorstore_examples/pinecone.html) - Pinecone.from_documents doesn't exist.

In the code [here](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py) it is from_texts | Pinecone in docs is outdated | https://api.github.com/repos/langchain-ai/langchain/issues/2009/comments | 4 | 2023-03-26T18:02:47Z | 2023-12-09T14:17:37Z | https://github.com/langchain-ai/langchain/issues/2009 | 1,641,013,216 | 2,009 |

[

"hwchase17",

"langchain"

]

| All "smart websites" will have AI-friendly API schemas, not just **robots.txt**. These websites will have manifest files for LLMs (e.g. **openapi.yaml**) and LLM plugins (e.g. **ai-plugin.json**). These manifest files will allow LLM agents like ChatGPT to interact with websites without data scraping or parsing API docs.

OpenAI uses JSON manifest files as part of its plugin system for ChatGPT[[2]](https://www.mlq.ai/introducing-chatgpt-plugins/)[[4]](https://openai.com/blog/chatgpt-plugins). A plugin consists of an API, an API schema (in OpenAPI JSON or YAML format), and a manifest that describes what the plugin can do for both humans and models, as well as other metadata[[2]](https://www.mlq.ai/introducing-chatgpt-plugins/). The manifest file links to the OpenAPI specification and includes plugin-specific metadata such as the name, description, and logo[[2]](https://www.mlq.ai/introducing-chatgpt-plugins/)[[4]](https://openai.com/blog/chatgpt-plugins). To create a plugin, the first step is to build an API[[1]](https://platform.openai.com/docs/plugins/getting-started/plugin-manifest). The API is then documented in the OpenAPI JSON or YAML format[[1]](https://platform.openai.com/docs/plugins/getting-started/plugin-manifest)[[2]](https://www.mlq.ai/introducing-chatgpt-plugins/). Finally, a JSON manifest file is created that defines relevant metadata for the plugin[[1]](https://platform.openai.com/docs/plugins/getting-started/plugin-manifest). The manifest file includes information such as the name, description, and API URL[[2]](https://www.mlq.ai/introducing-chatgpt-plugins/).

Here's an example of [.well-known/ai-plugin.json](https://github.com/openai/chatgpt-retrieval-plugin/tree/main/.well-known)

```

{
  "schema_version": "v1",
  "name_for_model": "retrieval",
  "name_for_human": "Retrieval Plugin",
  "description_for_model": "Plugin for searching through the user's documents (such as files, emails, and more) to find answers to questions and retrieve relevant information. Use it whenever a user asks something that might be found in their personal information.",
  "description_for_human": "Search through your documents.",
  "auth": {
    "type": "user_http",
    "authorization_type": "bearer"
  },
  "api": {
    "type": "openapi",
    "url": "https://your-app-url.com/.well-known/openapi.yaml",
    "has_user_authentication": false
  },
  "logo_url": "https://your-app-url.com/.well-known/logo.png",
  "contact_email": "[email protected]",
  "legal_info_url": "[email protected]"
}

```
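
As a rough illustration of how a client could consume such a manifest, the sketch below parses the fields shown above with the standard library only. The `PluginManifest` class and `parse_manifest` function are hypothetical names for this example, not part of LangChain or OpenAI's tooling, and the sketch does not fetch or validate the referenced OpenAPI document.

```python
import json
from dataclasses import dataclass


@dataclass
class PluginManifest:
    """The subset of ai-plugin.json fields an agent needs to call the API."""

    name_for_model: str
    description_for_model: str
    api_type: str
    api_url: str
    auth_type: str


def parse_manifest(raw: str) -> PluginManifest:
    """Parse the text of an ai-plugin.json file into a PluginManifest."""
    data = json.loads(raw)
    api = data["api"]
    auth = data.get("auth", {})  # treat a missing auth block as "none"
    return PluginManifest(
        name_for_model=data["name_for_model"],
        description_for_model=data["description_for_model"],
        api_type=api["type"],
        api_url=api["url"],
        auth_type=auth.get("type", "none"),
    )


if __name__ == "__main__":
    example = """
    {
      "schema_version": "v1",
      "name_for_model": "retrieval",
      "description_for_model": "Plugin for searching through the user's documents.",
      "auth": {"type": "user_http", "authorization_type": "bearer"},
      "api": {"type": "openapi", "url": "https://your-app-url.com/.well-known/openapi.yaml"}
    }
    """
    manifest = parse_manifest(example)
    print(manifest.name_for_model, manifest.api_url, manifest.auth_type)
```

An agent could use `description_for_model` to decide when the plugin applies and `api_url` to locate the OpenAPI specification that describes the available endpoints.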

Here's an example [.well-known/openapi.yaml](https://github.com/openai/chatgpt-retrieval-plugin/blob/main/.well-known/openapi.yaml)

```

openapi: 3.0.2
info:
  title: Retrieval Plugin API
  description: A retrieval API for querying and filtering documents based on natural language queries and metadata
  version: 1.0.0
servers:
  - url: https://your-app-url.com
paths:
  /query:
    post:
      summary: Query
      description: Accepts search query objects array each with query and optional filter. Break down complex questions into sub-questions. Refine results by criteria, e.g. time / source, don't do this often. Split queries if ResponseTooLargeError occurs.
      operationId: query_query_post
      requestBody:
        content:
          application/json:
            schema:
              $ref: "#/components/schemas/QueryRequest"
        required: true
      responses:
        "200":
          description: Successful Response
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/QueryResponse"
        "422":
          description: Validation Error
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/HTTPValidationError"
      security:
        - HTTPBearer: []
components:
  schemas:
    DocumentChunkMetadata:
      title: DocumentChunkMetadata
      type: object
      properties:
        source:
          $ref: "#/components/schemas/Source"
        source_id:
          title: Source Id
          type: string
        url:
          title: Url
          type: string
        created_at:
          title: Created At
          type: string
        author:
          title: Author
          type: string
        document_id:
          title: Document Id
          type: string
    DocumentChunkWithScore:
      title: DocumentChunkWithScore
      required:
        - text
        - metadata
        - score
      type: object
      properties:
        id:
          title: Id
          type: string
        text:
          title: Text
          type: string
        metadata:
          $ref: "#/components/schemas/DocumentChunkMetadata"
        embedding:
          title: Embedding
          type: array
          items:
            type: number
        score:
          title: Score
          type: number
    DocumentMetadataFilter:
      title: DocumentMetadataFilter
      type: object
      properties:
        document_id:
          title: Document Id
          type: string
        source:
          $ref: "#/components/schemas/Source"
        source_id:
          title: Source Id
          type: string
        author:
          title: Author
          type: string
        start_date:
          title: Start Date
          type: string
        end_date:
          title: End Date
          type: string
    HTTPValidationError:
      title: HTTPValidationError
      type: object
      properties:
        detail:
          title: Detail
          type: array
          items:
            $ref: "#/components/schemas/ValidationError"
    Query:
      title: Query
      required:
        - query
      type: object
      properties:
        query:
          title: Query
          type: string
        filter:
          $ref: "#/components/schemas/DocumentMetadataFilter"
        top_k:
          title: Top K
          type: integer
          default: 3
    QueryRequest:
      title: QueryRequest
      required:
        - queries
      type: object
      properties:
        queries:
          title: Queries
          type: array
          items:
            $ref: "#/components/schemas/Query"
    QueryResponse:
      title: QueryResponse
      required:
        - results
      type: object
      properties:
        results:
          title: Results
          type: array
          items:
            $ref: "#/components/schemas/QueryResult"
    QueryResult:
      title: QueryResult
      required:
        - query
        - results
      type: object
      properties:
        query:
          title: Query
          type: string
        results:
          title: Results
          type: array
          items:
            $ref: "#/components/schemas/DocumentChunkWithScore"
    Source:
      title: Source
      enum:
        - email
        - file
        - chat
      type: string
      description: An enumeration.
    ValidationError:
      title: ValidationError
      required:
        - loc
        - msg
        - type
      type: object
      properties:
        loc:
          title: Location
          type: array
          items:
            anyOf:
              - type: string
              - type: integer
        msg:
          title: Message
          type: string
        type:
          title: Error Type
          type: string
  securitySchemes:
    HTTPBearer:
      type: http
      scheme: bearer

```

Here are five ways to implement JSON manifest files inside LangChain:

1. **Plugin chaining**: Allow users to enable and chain multiple ChatGPT plugins in LangChain using OpenAI's JSON manifest files for powerful, customized agents.

2. **Unified plugin management**: Develop a plugin management interface in LangChain that can parse OpenAI JSON manifest files, simplifying plugin integration.

3. **Context enrichment**: Enhance LangChain's context management by incorporating plugin information from OpenAI JSON manifest files, leading to context-aware responses.

4. **Dynamic plugin invocation**: Implement a mechanism for LangChain agents to call appropriate OpenAI plugin APIs based on user input and conversation context.

5. **Cross-platform plugin development**: Encourage creating cross-platform plugins compatible with both OpenAI's ChatGPT ecosystem and LangChain applications by standardizing JSON manifest files and OpenAPI specifications. | JSON Manifest Files | https://api.github.com/repos/langchain-ai/langchain/issues/2008/comments | 1 | 2023-03-26T17:48:06Z | 2023-09-10T16:40:23Z | https://github.com/langchain-ai/langchain/issues/2008 | 1,641,007,401 | 2,008 |

[

"hwchase17",

"langchain"

]

| I'd like to run queries against `parquet` files with `duckdb`. I see some `duckdb` material in the docs when I search for it, and there's also this PR: https://github.com/hwchase17/langchain/pull/1991, which seems to be a nice addition. What are the missing pieces to make this work?

One workaround I've found is turning the `parquet` files into a SQLite db, make an agent out of it, then proceed from there. | feat: parquet file support for SQL agent | https://api.github.com/repos/langchain-ai/langchain/issues/2002/comments | 3 | 2023-03-26T05:28:21Z | 2023-09-18T16:22:24Z | https://github.com/langchain-ai/langchain/issues/2002 | 1,640,778,468 | 2,002 |

[

"hwchase17",

"langchain"

]

| Hi! Thanks for creating langchain! I wanted to give its "agents" feature a try and quickly found an example of its shortcomings:

```

> Entering new AgentExecutor chain...

I need to find out who the author of Moore's law is and if they are alive.

Action: Search

Action Input: "author of Moore's law"

Observation: Gordon Moore

Thought: I need to find out if Gordon Moore is alive.

Action: Search

Action Input: "Gordon Moore alive"

Observation: March 24, 2023

Thought: I now know the final answer.

Final Answer: Yes, Gordon Moore is alive.

> Finished chain.

```

Not sure if it's something that can be fixed within langchain, but I figured I'd report it just in case. | Agent misbehaving: "is the author of Moore's law alive?" | https://api.github.com/repos/langchain-ai/langchain/issues/1994/comments | 2 | 2023-03-25T18:46:50Z | 2023-09-18T16:22:29Z | https://github.com/langchain-ai/langchain/issues/1994 | 1,640,621,419 | 1,994 |

[

"hwchase17",

"langchain"

]

| I am referring to the documentation [here](https://langchain.readthedocs.io/en/latest/modules/chat/examples/chat_vector_db.html) and created a file

test.py

```

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain.text_splitter import CharacterTextSplitter

from langchain.chains import ConversationalRetrievalChain

from langchain.chat_models import ChatOpenAI

from langchain.prompts.chat import (

ChatPromptTemplate,

SystemMessagePromptTemplate,

AIMessagePromptTemplate,

HumanMessagePromptTemplate,

)

from langchain.schema import (

AIMessage,

HumanMessage,

SystemMessage

)

from langchain.document_loaders import TextLoader

loader = TextLoader('test.txt')

documents = loader.load()

text_splitter = CharacterTextSplitter(chunk_size=200, chunk_overlap=0)

documents = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_documents(documents, embeddings)

system_template="""Use the following pieces of context to answer the users question.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

----------------

{context}"""

messages = [

SystemMessagePromptTemplate.from_template(system_template),

HumanMessagePromptTemplate.from_template("{question}")

]

prompt = ChatPromptTemplate.from_messages(messages)

qa = ConversationalRetrievalChain.from_llm(ChatOpenAI(temperature=0), vectorstore, qa_prompt=prompt)

chat_history = []

query = "Who is the CEO?"

result = qa({"question": query, "chat_history": chat_history})

print(result)

```

Which throws

Traceback (most recent call last):

File "test.py", line 44, in <module>

qa = ConversationalRetrievalChain.from_llm(ChatOpenAI(temperature=0), vectorstore, qa_prompt=prompt)

File "/home/prajin/works/ults/gpt/lamda-openai-chat-python/venv/lib/python3.8/site-packages/langchain/chains/conversational_retrieval/base.py", line 140, in from_llm

return cls(

File "pydantic/main.py", line 341, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError: 1 validation error for ConversationalRetrievalChain

retriever

instance of BaseRetriever expected (type=type_error.arbitrary_type; expected_arbitrary_type=BaseRetriever)

| pydantic.error_wrappers.ValidationError: 1 validation error | https://api.github.com/repos/langchain-ai/langchain/issues/1986/comments | 4 | 2023-03-25T08:38:05Z | 2023-10-31T08:02:38Z | https://github.com/langchain-ai/langchain/issues/1986 | 1,640,432,940 | 1,986 |

[

"hwchase17",

"langchain"

]

| Please add support for Kagi Summarizer

https://blog.kagi.com/universal-summarizer#api | Add support for Kagi Summarizer API in Chains | https://api.github.com/repos/langchain-ai/langchain/issues/1983/comments | 1 | 2023-03-25T05:01:52Z | 2023-08-21T16:07:54Z | https://github.com/langchain-ai/langchain/issues/1983 | 1,640,380,648 | 1,983 |

[

"hwchase17",

"langchain"

]

| ### Summary

The QueryCheckerTool function currently creates an LLMChain object internally but does not provide a way to specify the `openai_api_key` manually via supplied arguments. This can cause issues for users who do not want to place their API key in environment variables.

### Steps to Reproduce

Run this code

```python

from langchain.agents import create_sql_agent

from langchain.agents.agent_toolkits import SQLDatabaseToolkit

from langchain.sql_database import SQLDatabase

from langchain.llms.openai import OpenAI

from langchain.agents import AgentExecutor

import sqlite3

with sqlite3.connect("data.db") as conn:
    pass

db = SQLDatabase.from_uri("sqlite:///data.db")

toolkit = SQLDatabaseToolkit(db=db)

llm = OpenAI(temperature=0, openai_api_key="your key here")

agent_executor = create_sql_agent(
    llm=llm,
    toolkit=toolkit,
    verbose=True
)

while True:
    prompt = input("> ")
    response = agent_executor.run(prompt)
    print(response)

```

### Expected Behavior

`create_sql_agent` should not require me to supply an OpenAI API key, nor should it require me to have this stored in an environment variable.

### Actual Behavior

`create_sql_agent` generates an error when I call it without first setting the `OPENAI_API_KEY` environment variable.

### Obvious Solution

`create_sql_agent` should supply its argument `llm` to functions it calls, obviously. In particular, this line https://github.com/hwchase17/langchain/blob/b83e8265102514d1722b2fb1aad29763c5cad62a/langchain/tools/sql_database/tool.py#L85 is the source of the error.

### Environment

- LangChain version: 0.0.123

- Python version: 3.9.16

- Operating System: Windows 10 | QueryCheckerTool creates LLMChain object, does not allow manual specification of openai_api_key | https://api.github.com/repos/langchain-ai/langchain/issues/1982/comments | 2 | 2023-03-25T03:45:48Z | 2023-03-26T04:37:50Z | https://github.com/langchain-ai/langchain/issues/1982 | 1,640,354,701 | 1,982 |

[

"hwchase17",

"langchain"

]

| Here's what i'm using atm...

data:

```

{

"num_tweets": 199,

"total_impressions": 154586,

"total_engagements": 0,

"total_retweets": 4621,

"total_likes": 4249,

"average_impressions": 776.8140703517588,

"average_engagements": 0.0,

"average_retweets": 23.22110552763819,

"average_likes": 21.35175879396985,

"tweets": [

{

"timestamp": "2023-03-24T02:02:49+00:00",

"text": "RT : No show tonight sorry y\u2019all, business dinner went way long \ud83d\ude4f\ud83e\uddd1\u200d\ud83d\ude80\ud83e\udee1",

"replies": 0,

"impressions": 0,

"retweets": 2,

"quote_retweets": 0,

"likes": 0

},

{

"timestamp": "2023-03-23T09:59:45+00:00",

"text": "",

"replies": 0,

"impressions": 296,

"retweets": 0,

"quote_retweets": 0,

"likes": 1

},

.....

```

```

question = """You are a Solana NFT Expert, and a web3 social media guru, Analyze the data, and based on that, provide a detailed report, the report contains datapoints about the project's professionalism, social impact and reach, and the project's overall engagement with the community., with numbers to support the case,

Number of followers

Follower growth rate

Number of retweets and likes per tweet

Engagement rate per tweet

Hashtag usage

Quality of content

Frequency of posting

Tone and sentiment of tweets

Provide a thorough analysis of each metric and support your findings with specific numbers and examples. Additionally, provide any insights or recommendations based on your analysis that could help improve the project's social media presence and impact. Please provide the report in Markdown format.

"""

model_name = "gpt-3.5-turbo"

llm = ChatOpenAI(model_name=model_name, temperature=0.7)

recursive_character_text_splitter = (

RecursiveCharacterTextSplitter.from_tiktoken_encoder(

encoding_name="cl100k_base" if model_name == "gpt-3.5-turbo" else "p50k_base",

chunk_size=4097

if model_name == "gpt-3.5-turbo"

else llm.modelname_to_contextsize(model_name),

chunk_overlap=0,

)

)

text_chunks = recursive_character_text_splitter.split_text(open("twitter_profile.json").read())

documents = [Document(page_content=question + text_chunk) for text_chunk in text_chunks]

# Summarize the document by summarizing each document chunk and then summarizing the combined summary

chain = load_summarize_chain(llm, chain_type="map_reduce")

chain.run(documents)

```

response

```

"The report analyzes the social media presence of a Solana NFT project, examining metrics such as follower count, engagement rate, hashtag usage, content quality, posting frequency, tone/sentiment, and growth rate. Specific numbers and examples are provided, and recommendations are given for improving the project's impact. The data includes tweets related to partnerships, revenue sharing, and a recent airdrop, with engagement metrics and impressions listed."

```

takes about 27 seconds

I'm new to langchain, is there a better way to go about this? the responses seem undetailed and dull at the moment. | How to run gpt-3.5-turbo against my own data while using map_reduce? | https://api.github.com/repos/langchain-ai/langchain/issues/1980/comments | 4 | 2023-03-25T00:34:20Z | 2023-08-21T20:15:50Z | https://github.com/langchain-ai/langchain/issues/1980 | 1,640,254,185 | 1,980 |

[

"hwchase17",

"langchain"

]

| I am trying to use a custom prompt template as the `example_prompt` of a FewShotPromptTemplate. However, I am getting a 'key error' template issue. Does FewShotPromptTemplate support custom prompt templates?

A snippet example for reference

```

from langchain import PromptTemplate, FewShotPromptTemplate
from langchain.prompts import StringPromptTemplate
from pydantic import BaseModel, validator


class CustomPromptTemplate(StringPromptTemplate, BaseModel):

    @validator("input_variables")
    def validate_input_variables(cls, v):
        """ Validate that the input variables are correct. """
        if len(v) != 1 or "function_name" not in v:
            raise ValueError("function_name must be the only input_variable.")
        return v

    def format(self, **kwargs) -> str:
        # Get the source code of the function
        # (get_source_code is a helper assumed to be defined elsewhere; it is not shown in this snippet)
        source_code = get_source_code(kwargs["function_name"])

        # Generate the prompt to be sent to the language model
        prompt = f"""
        Given the function name and source code, generate an English language explanation of the function.
        Function Name: {kwargs["function_name"].__name__}
        Source Code:
        {source_code}
        Explanation:
        """
        return prompt

    def _prompt_type(self):
        return "function-explainer"

fn_explainer = CustomPromptTemplate(input_variables=["function_name"])

few_shot_prompt = FewShotPromptTemplate(

# These are the examples we want to insert into the prompt.

examples=examples,

# This is how we want to format the examples when we insert them into the prompt.

example_prompt= fn_explainer,

# The prefix is some text that goes before the examples in the prompt.

# Usually, this consists of instructions.

prefix="Give the antonym of every input",

# The suffix is some text that goes after the examples in the prompt.

# Usually, this is where the user input will go

suffix="Word: {input}\nAntonym:",

# The input variables are the variables that the overall prompt expects.

input_variables=["input"],

# The example_separator is the string we will use to join the prefix, examples, and suffix together with.

example_separator="\n\n",

)

``` | Using Custom Prompt with FewShotPromptTemplate | https://api.github.com/repos/langchain-ai/langchain/issues/1977/comments | 5 | 2023-03-24T21:57:37Z | 2024-02-14T16:14:33Z | https://github.com/langchain-ai/langchain/issues/1977 | 1,640,095,874 | 1,977 |

[

"hwchase17",

"langchain"

]

| * Allow users to choose the type in the schema (string | List[string])

* Allow users to get multiple json objects (get JSON array) in the response. I achieved it by replacing the {{ }} -> [[ ]] as follows: `prompt.replace("""{{

"ID": string // IDs which refers to the sentences.

"Text": string // Sentences that contains the answer to the question.

}}""", """[[

"ID": string // IDs which refers to the sentences.

"Text": string // Sentences that contains the answer to the question.

]]""")` And got a list of json objects with this method. | StructuredOutputParser - Allow users to get multiple items from response. | https://api.github.com/repos/langchain-ai/langchain/issues/1976/comments | 5 | 2023-03-24T20:27:06Z | 2023-12-27T12:00:23Z | https://github.com/langchain-ai/langchain/issues/1976 | 1,639,991,330 | 1,976 |

[

"hwchase17",

"langchain"

]

| I think the sqlalchemy dependency in pyproject.toml still needs to be bumped.

_Originally posted by @sliedes in https://github.com/hwchase17/langchain/issues/1272#issuecomment-1473683519_

| sqlalchemy dependency in pyproject.toml still needs to be bumped to * | https://api.github.com/repos/langchain-ai/langchain/issues/1975/comments | 5 | 2023-03-24T19:52:44Z | 2023-09-27T16:12:00Z | https://github.com/langchain-ai/langchain/issues/1975 | 1,639,955,235 | 1,975 |

[

"hwchase17",

"langchain"

]

| The history part, which holds the summary of the past conversation, is always in English. How can I change that? | How to set the 'history' of ConversationSummaryBufferMemory in other language | https://api.github.com/repos/langchain-ai/langchain/issues/1973/comments | 2 | 2023-03-24T17:24:27Z | 2023-09-18T16:22:34Z | https://github.com/langchain-ai/langchain/issues/1973 | 1,639,777,702 | 1,973 |

[

"hwchase17",

"langchain"

]

| A couple problems:

- `ChatOpenAI.get_num_tokens_from_messages()` takes a `model` parameter that is not included in the [base class method signature](https://github.com/hwchase17/langchain/blob/master/langchain/schema.py#L208). Instead, it should use `self.model_name`, similar to how the `BaseOpenAI.get_num_tokens()` does.

- `ChatOpenAI.get_num_tokens_from_messages()` does not support GPT-4. See here for the updated formula: https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb | ChatOpenAI.get_num_tokens_from_messages: use self.model_name and support GPT-4 | https://api.github.com/repos/langchain-ai/langchain/issues/1972/comments | 4 | 2023-03-24T16:47:58Z | 2023-09-18T16:22:40Z | https://github.com/langchain-ai/langchain/issues/1972 | 1,639,724,669 | 1,972 |

[

"hwchase17",

"langchain"

]

| I tried to set the "system" role message when using ConversationChain with ConversationSummaryBufferMemory (CSBM), but it fails. When I replace ConversationSummaryBufferMemory with ConversationBufferMemory, it works. However, I'd like to use CSBM's automatic summarization when the history exceeds the maximum length.

Below is the error message:

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, MessagesPlaceholder(variable_name="history"),human_message_prompt])

from langchain.chains import ConversationChain

conversation_with_summary = ConversationChain(

llm=chat,

memory=ConversationSummaryBufferMemory(llm=chat, max_token_limit=10),

prompt=chat_prompt,

verbose=True

)

conversation_with_summary.predict(input="hello")

*******************************************************************************

> Entering new ConversationChain chain...

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[125], line 34

24 conversation_with_summary = ConversationChain(

25 #llm=llm,

26 llm=chat,

(...)

31 verbose=True

32 )

33 #conversation_with_summary.predict(identity="佛祖",text="你好")

---> 34 conversation_with_summary.predict(input="你好")

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\llm.py:151, in LLMChain.predict(self, **kwargs)

137 def predict(self, **kwargs: Any) -> str:

138 """Format prompt with kwargs and pass to LLM.

139

140 Args:

(...)

149 completion = llm.predict(adjective="funny")

150 """

--> 151 return self(kwargs)[self.output_key]

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\base.py:116, in Chain.__call__(self, inputs, return_only_outputs)

114 except (KeyboardInterrupt, Exception) as e:

115 self.callback_manager.on_chain_error(e, verbose=self.verbose)

--> 116 raise e

117 self.callback_manager.on_chain_end(outputs, verbose=self.verbose)

118 return self.prep_outputs(inputs, outputs, return_only_outputs)

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\base.py:113, in Chain.__call__(self, inputs, return_only_outputs)

107 self.callback_manager.on_chain_start(

108 {"name": self.__class__.__name__},

109 inputs,

110 verbose=self.verbose,

111 )

112 try:

--> 113 outputs = self._call(inputs)

114 except (KeyboardInterrupt, Exception) as e:

115 self.callback_manager.on_chain_error(e, verbose=self.verbose)

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\llm.py:57, in LLMChain._call(self, inputs)

56 def _call(self, inputs: Dict[str, Any]) -> Dict[str, str]:

---> 57 return self.apply([inputs])[0]

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\llm.py:118, in LLMChain.apply(self, input_list)

116 def apply(self, input_list: List[Dict[str, Any]]) -> List[Dict[str, str]]:

117 """Utilize the LLM generate method for speed gains."""

--> 118 response = self.generate(input_list)

119 return self.create_outputs(response)

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\llm.py:61, in LLMChain.generate(self, input_list)

59 def generate(self, input_list: List[Dict[str, Any]]) -> LLMResult:

60 """Generate LLM result from inputs."""

---> 61 prompts, stop = self.prep_prompts(input_list)

62 return self.llm.generate_prompt(prompts, stop)

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\chains\llm.py:79, in LLMChain.prep_prompts(self, input_list)

77 for inputs in input_list:

78 selected_inputs = {k: inputs[k] for k in self.prompt.input_variables}

---> 79 prompt = self.prompt.format_prompt(**selected_inputs)

80 _colored_text = get_colored_text(prompt.to_string(), "green")

81 _text = "Prompt after formatting:\n" + _colored_text

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\prompts\chat.py:173, in ChatPromptTemplate.format_prompt(self, **kwargs)

167 elif isinstance(message_template, BaseMessagePromptTemplate):

168 rel_params = {

169 k: v

170 for k, v in kwargs.items()

171 if k in message_template.input_variables

172 }

--> 173 message = message_template.format_messages(**rel_params)

174 result.extend(message)

175 else:

File ~\AppData\Local\Programs\Python\Python310\lib\site-packages\langchain\prompts\chat.py:43, in MessagesPlaceholder.format_messages(self, **kwargs)

41 value = kwargs[self.variable_name]

42 if not isinstance(value, list):

---> 43 raise ValueError(

44 f"variable {self.variable_name} should be a list of base messages, "

45 f"got {value}"

46 )

47 for v in value:

48 if not isinstance(v, BaseMessage):

ValueError: variable history should be a list of base messages, got | how to use SystemMessagePromptTemplate with ConversationSummaryBufferMemory please? | https://api.github.com/repos/langchain-ai/langchain/issues/1971/comments | 4 | 2023-03-24T16:39:01Z | 2023-09-28T16:10:42Z | https://github.com/langchain-ai/langchain/issues/1971 | 1,639,712,764 | 1,971 |
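The traceback above ends at the `isinstance(value, list)` check in `MessagesPlaceholder.format_messages`, which suggests the memory passed the prompt a single string rather than a list of messages. The sketch below reproduces that check with the standard library only; it is not langchain's code, and `Message` and `fill_placeholder` are hypothetical stand-ins. If the installed version's memory class accepts a `return_messages=True` argument (an assumption to verify against that version), setting it may make the memory supply a list instead.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical stand-in for a chat message object."""
    role: str
    content: str


def fill_placeholder(variable_name, value):
    """Mimic the checks in the traceback: accept only a list of messages."""
    if not isinstance(value, list):
        raise ValueError(
            f"variable {variable_name} should be a list of base messages, "
            f"got {value}"
        )
    for v in value:
        if not isinstance(v, Message):
            raise ValueError(
                f"variable {variable_name} should contain only messages, got {v}"
            )
    return value


# A plain string history (an empty summary, for example) is rejected.
try:
    fill_placeholder("history", "")
    string_ok = True
except ValueError:
    string_ok = False

# A list of message objects passes the same check.
list_result = fill_placeholder("history", [Message("system", "summary so far")])
```

The point of the sketch is only that the placeholder is strict about the type it receives, so the fix likely belongs on the memory side rather than in the prompt template.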

[

"hwchase17",

"langchain"

]

| I am reaching out to inquire about the possibility of implementing a `caching system` for popular prompts as vectors. As you may know, constantly re-embedding questions can be `costly` and `time-consuming`, especially for larger datasets.

Therefore, I was wondering if there are any plans to create a sub package that would allow users to store and reuse embeddings of commonly used questions. This would not only decrease the cost of re-embedding but also improve the overall efficiency of the system.

I would greatly appreciate it if you could let me know if this is something that may be considered in the future. Thank you for your time and consideration. | Proposal to Implement Caching of Popular Prompts as Vectors | https://api.github.com/repos/langchain-ai/langchain/issues/1968/comments | 7 | 2023-03-24T14:22:18Z | 2023-09-18T16:22:44Z | https://github.com/langchain-ai/langchain/issues/1968 | 1,639,490,018 | 1,968 |
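One way to picture the caching idea in this proposal is a small wrapper that stores each embedding under a hash of its text, so a repeated question is embedded only once. This is a minimal sketch under stated assumptions, not an existing langchain feature: `CachedEmbedder` and `fake_embed` are hypothetical names, and `fake_embed` stands in for a real (paid) embedding call.

```python
import hashlib
from typing import Callable, Dict, List


class CachedEmbedder:
    """Hypothetical wrapper that memoizes embeddings by a hash of the text."""

    def __init__(self, embed_fn: Callable[[str], List[float]]):
        self._embed_fn = embed_fn
        self._cache: Dict[str, List[float]] = {}
        self.misses = 0  # counts how often the underlying embedder is called

    @staticmethod
    def _key(text: str) -> str:
        # Hash the text so long prompts do not become long dictionary keys.
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def embed(self, text: str) -> List[float]:
        key = self._key(text)
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._embed_fn(text)
        return self._cache[key]


def fake_embed(text: str) -> List[float]:
    """Toy stand-in for a real embedding model (assumption for illustration)."""
    return [float(len(text)), float(sum(map(ord, text)) % 97)]


embedder = CachedEmbedder(fake_embed)
first = embedder.embed("What is LangChain?")
second = embedder.embed("What is LangChain?")  # served from the cache
```

An in-memory dictionary is lost when the process exits; a persistent store would be needed to share the cache across sessions, which is outside this sketch.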

[

"hwchase17",

"langchain"

]

| I am reaching out to inquire about the possibility of implementing a `caching system` for popular prompts as vectors. As you may know, constantly re-embedding questions can be `costly` and `time-consuming`, especially for larger datasets.

Therefore, I was wondering if there are any plans to create a sub package that would allow users to store and reuse embeddings of commonly used questions. This would not only decrease the cost of re-embedding but also improve the overall efficiency of the system.

I would greatly appreciate it if you could let me know if this is something that may be considered in the future. Thank you for your time and consideration. | Proposal to Implement Caching of Popular Prompts as Vectors | https://api.github.com/repos/langchain-ai/langchain/issues/1967/comments | 3 | 2023-03-24T14:21:57Z | 2023-10-23T02:26:40Z | https://github.com/langchain-ai/langchain/issues/1967 | 1,639,489,619 | 1,967 |

[

"hwchase17",

"langchain"

]

| I am reaching out to inquire about the possibility of implementing a `caching system` for popular prompts as vectors. As you may know, constantly re-embedding questions can be `costly` and `time-consuming`, especially for larger datasets.

Therefore, I was wondering if there are any plans to create a sub package that would allow users to store and reuse embeddings of commonly used questions. This would not only decrease the cost of re-embedding but also improve the overall efficiency of the system.

I would greatly appreciate it if you could let me know if this is something that may be considered in the future. Thank you for your time and consideration. | Proposal to Implement Caching of Popular Prompts as Vectors | https://api.github.com/repos/langchain-ai/langchain/issues/1966/comments | 1 | 2023-03-24T14:21:38Z | 2023-08-21T16:07:59Z | https://github.com/langchain-ai/langchain/issues/1966 | 1,639,489,505 | 1,966 |

[

"hwchase17",

"langchain"

]

| Say I query my vector database with a minimum distance threshold and no documents ("sources") are returned, how can I stop ChatVectorDBChain from answering the question without using prompts?

I observe that even when it finds no sources, it still answers based on the chat history or overrides the prompt instructions. I would like to have a deterministic switch like:

```python

if no_documents:

raise ValueError()

```

and then catch this error to print some message and continue. | Is there a non-prompt way to stop ChatVectorDBChain from answering when no vectors are found? | https://api.github.com/repos/langchain-ai/langchain/issues/1963/comments | 3 | 2023-03-24T10:23:54Z | 2023-09-25T16:15:24Z | https://github.com/langchain-ai/langchain/issues/1963 | 1,639,119,073 | 1,963 |
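The deterministic switch described in this issue could be sketched as a guard that runs retrieval first and raises before any LLM call when nothing comes back. This is a stdlib-only illustration, not ChatVectorDBChain's API: `answer_with_guard`, `NoDocumentsError`, and the `retrieve` and `answer` callables are hypothetical names that a real retriever and chain would replace.

```python
from typing import Callable, List


class NoDocumentsError(ValueError):
    """Raised when retrieval returns no documents (hypothetical helper)."""


def answer_with_guard(
    question: str,
    retrieve: Callable[[str], List[str]],
    answer: Callable[[str, List[str]], str],
) -> str:
    """Retrieve first; only call the answering step if documents exist."""
    docs = retrieve(question)
    if not docs:
        raise NoDocumentsError(f"no documents found for: {question!r}")
    return answer(question, docs)


def empty_retriever(question: str) -> List[str]:
    """Toy retriever that never finds anything."""
    return []


def toy_answer(question: str, docs: List[str]) -> str:
    """Toy answering step standing in for the LLM call."""
    return f"Answer based on {len(docs)} document(s)."


# Catch the error, print a fallback message, and continue.
try:
    reply = answer_with_guard("who are you?", empty_retriever, toy_answer)
except NoDocumentsError:
    reply = "Sorry, I found nothing relevant in the knowledge base."
```

Because the check happens outside the prompt, the model is never asked the question when the source list is empty, so it cannot fall back on the chat history.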

[

"hwchase17",

"langchain"

]

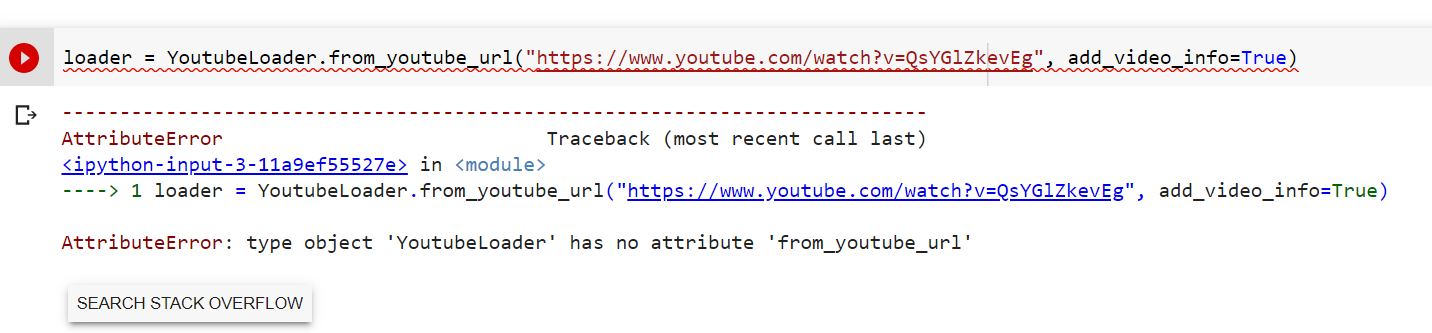

| I am trying to load a video and came across the issue below.

I am using langchain version 0.0.121

| AttributeError: type object 'YoutubeLoader' has no attribute 'from_youtube_url' | https://api.github.com/repos/langchain-ai/langchain/issues/1962/comments | 5 | 2023-03-24T10:08:17Z | 2023-04-12T04:13:00Z | https://github.com/langchain-ai/langchain/issues/1962 | 1,639,095,508 | 1,962 |

[

"hwchase17",

"langchain"

]

| The score is useful for a lot of things. It'd be great if VectorStore exported the abstract methods so a VectorStore dependency can be injected.

| `*_with_score` methods should be part of VectorStore | https://api.github.com/repos/langchain-ai/langchain/issues/1959/comments | 1 | 2023-03-24T08:17:09Z | 2023-09-10T16:40:38Z | https://github.com/langchain-ai/langchain/issues/1959 | 1,638,930,794 | 1,959 |

[

"hwchase17",

"langchain"

]

| null | How can I work with multiple CSV files in the same agent session? Is there an option to call the agent with multiple CSV files so that the model can interact with all of them and answer? | https://api.github.com/repos/langchain-ai/langchain/issues/1958/comments | 12 | 2023-03-24T07:46:39Z | 2023-05-25T21:23:12Z | https://github.com/langchain-ai/langchain/issues/1958 | 1,638,890,881 | 1,958 |

[

"hwchase17",

"langchain"

]

| ## Summary

I'm seeing this `ImportError` on my Mac M1 when trying to use Chroma

```

(mach-o file, but is an incompatible architecture (have (x86_64), need (arm64e)))

```

Any ideas?

## Traceback

```

Traceback (most recent call last):

File "/Users/homanp/Library/Caches/com.vercel.fun/runtimes/python3/../python/bootstrap.py", line 147, in <module>

lambda_runtime_main()

File "/Users/homanp/Library/Caches/com.vercel.fun/runtimes/python3/../python/bootstrap.py", line 127, in lambda_runtime_main

fn = lambda_runtime_get_handler()

File "/Users/homanp/Library/Caches/com.vercel.fun/runtimes/python3/../python/bootstrap.py", line 113, in lambda_runtime_get_handler

mod = importlib.import_module(module_name)

File "/opt/homebrew/Cellar/[email protected]/3.9.5/Frameworks/Python.framework/Versions/3.9/lib/python3.9/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 855, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed