url (stringlengths 62-66) | repository_url (stringclasses 1 value) | labels_url (stringlengths 76-80) | comments_url (stringlengths 71-75) | events_url (stringlengths 69-73) | html_url (stringlengths 50-56) | id (int64 377M-2.15B) | node_id (stringlengths 18-32) | number (int64 1-29.2k) | title (stringlengths 1-487) | user (dict) | labels (list) | state (stringclasses 2 values) | locked (bool 2 classes) | assignee (dict) | assignees (list) | comments (list) | created_at (int64 1.54k-1.71k) | updated_at (int64 1.54k-1.71k) | closed_at (int64 1.54k-1.71k ⌀) | author_association (stringclasses 4 values) | active_lock_reason (stringclasses 2 values) | body (stringlengths 0-234k ⌀) | reactions (dict) | timeline_url (stringlengths 71-75) | state_reason (stringclasses 3 values) | draft (bool 2 classes) | pull_request (dict) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/13046 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13046/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13046/comments | https://api.github.com/repos/huggingface/transformers/issues/13046/events | https://github.com/huggingface/transformers/issues/13046 | 963,796,051 | MDU6SXNzdWU5NjM3OTYwNTE= | 13,046 | TFBertPreTrainingLoss has something wrong | {

"login": "ultimatedaotu",

"id": 58505034,

"node_id": "MDQ6VXNlcjU4NTA1MDM0",

"avatar_url": "https://avatars.githubusercontent.com/u/58505034?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ultimatedaotu",

"html_url": "https://github.com/ultimatedaotu",

"followers_url": "https://api.github.com/users/ultimatedaotu/followers",

"following_url": "https://api.github.com/users/ultimatedaotu/following{/other_user}",

"gists_url": "https://api.github.com/users/ultimatedaotu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ultimatedaotu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ultimatedaotu/subscriptions",

"organizations_url": "https://api.github.com/users/ultimatedaotu/orgs",

"repos_url": "https://api.github.com/users/ultimatedaotu/repos",

"events_url": "https://api.github.com/users/ultimatedaotu/events{/privacy}",

"received_events_url": "https://api.github.com/users/ultimatedaotu/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | {

"login": "Rocketknight1",

"id": 12866554,

"node_id": "MDQ6VXNlcjEyODY2NTU0",

"avatar_url": "https://avatars.githubusercontent.com/u/12866554?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Rocketknight1",

"html_url": "https://github.com/Rocketknight1",

"followers_url": "https://api.github.com/users/Rocketknight1/followers",

"following_url": "https://api.github.com/users/Rocketknight1/following{/other_user}",

"gists_url": "https://api.github.com/users/Rocketknight1/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Rocketknight1/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Rocketknight1/subscriptions",

"organizations_url": "https://api.github.com/users/Rocketknight1/orgs",

"repos_url": "https://api.github.com/users/Rocketknight1/repos",

"events_url": "https://api.github.com/users/Rocketknight1/events{/privacy}",

"received_events_url": "https://api.github.com/users/Rocketknight1/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "Rocketknight1",

"id": 12866554,

"node_id": "MDQ6VXNlcjEyODY2NTU0",

"avatar_url": "https://avatars.githubusercontent.com/u/12866554?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Rocketknight1",

"html_url": "https://github.com/Rocketknight1",

"followers_url": "https://api.github.com/users/Rocketknight1/followers",

"following_url": "https://api.github.com/users/Rocketknight1/following{/other_user}",

"gists_url": "https://api.github.com/users/Rocketknight1/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Rocketknight1/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Rocketknight1/subscriptions",

"organizations_url": "https://api.github.com/users/Rocketknight1/orgs",

"repos_url": "https://api.github.com/users/Rocketknight1/repos",

"events_url": "https://api.github.com/users/Rocketknight1/events{/privacy}",

"received_events_url": "https://api.github.com/users/Rocketknight1/received_events",

"type": "User",

"site_admin": false

}

] | [

"Hi, thanks for the issue but I'll need a little more info to investigate! Do you encounter an error when you run the code, or do you believe the outputted loss is incorrect? If you encounter an error, can you paste it here? If the loss is incorrect, can you upload a sample batch of data (e.g. a pickled dict of Numpy arrays) that gets different loss values on the PyTorch versus the TF version of the model, when both are initialized from the same checkpoint? \r\n\r\nAll of that will help us track down the problem here. Thanks for helping!",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,628 | 1,631 | 1,631 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform:

- Python version: 3.8

- PyTorch version (GPU?):

- Tensorflow version (GPU?): 2.5

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @Rocketknight1

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

Model hub:

- for issues with a model report at https://discuss.huggingface.co/ and tag the model's creator.

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

## Information

Model I am using (Bert, XLNet ...): TFBertPreTrainingLoss

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Construct some inputs for an MLM task.

2. Call TFBertForMaskedLM.

3. Something goes wrong while computing the loss.

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

```python

masked_lm_loss = loss_fn(y_true=masked_lm_labels, y_pred=masked_lm_reduced_logits)

next_sentence_loss = loss_fn(y_true=next_sentence_label, y_pred=next_sentence_reduced_logits)

masked_lm_loss = tf.reshape(tensor=masked_lm_loss, shape=(-1, shape_list(next_sentence_loss)[0]))

masked_lm_loss = tf.reduce_mean(input_tensor=masked_lm_loss, axis=0)

```
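
As a minimal sketch of averaging over only the labelled tokens, rather than reshaping by batch size as in the snippet above: the version below is plain Python, not the library's implementation. The names `masked_mean_loss`, `cross_entropy`, and `IGNORE_INDEX` are hypothetical, and the value `-100` for unlabelled positions is an assumption. It only illustrates that a mean over labelled positions is well defined even when their count is not a multiple of the batch size.

```python
import math

IGNORE_INDEX = -100  # assumed label value for positions that carry no loss


def cross_entropy(logits, label):
    """Cross-entropy of one token, computed with a stable log-sum-exp."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[label]


def masked_mean_loss(batch_logits, batch_labels):
    """Average the loss over labelled tokens only, whatever their count."""
    total, count = 0.0, 0
    for seq_logits, seq_labels in zip(batch_logits, batch_labels):
        for logits, label in zip(seq_logits, seq_labels):
            if label == IGNORE_INDEX:
                continue  # unlabelled position: contributes nothing
            total += cross_entropy(logits, label)
            count += 1
    return total / count if count else 0.0


# Two sequences with three labelled tokens in total. Three values cannot be
# reshaped to (-1, 2), but the mean over them is still well defined.
logits = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
labels = [[0, IGNORE_INDEX], [1, 0]]
loss = masked_mean_loss(logits, labels)
```

With uniform two-class logits, every labelled token contributes the same loss, so the batch mean equals the per-token value regardless of how many tokens are labelled.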

The number of masked labels is not fixed, so the `reshape` op is unsuitable here. Why not compute the loss over all masked tokens in the batch instead? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13046/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13046/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/13045 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13045/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13045/comments | https://api.github.com/repos/huggingface/transformers/issues/13045/events | https://github.com/huggingface/transformers/pull/13045 | 963,510,502 | MDExOlB1bGxSZXF1ZXN0NzA2MTAzMjQ5 | 13,045 | Add FNet | {

"login": "gchhablani",

"id": 29076344,

"node_id": "MDQ6VXNlcjI5MDc2MzQ0",

"avatar_url": "https://avatars.githubusercontent.com/u/29076344?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gchhablani",

"html_url": "https://github.com/gchhablani",

"followers_url": "https://api.github.com/users/gchhablani/followers",

"following_url": "https://api.github.com/users/gchhablani/following{/other_user}",

"gists_url": "https://api.github.com/users/gchhablani/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gchhablani/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gchhablani/subscriptions",

"organizations_url": "https://api.github.com/users/gchhablani/orgs",

"repos_url": "https://api.github.com/users/gchhablani/repos",

"events_url": "https://api.github.com/users/gchhablani/events{/privacy}",

"received_events_url": "https://api.github.com/users/gchhablani/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Not sure why this test fails:\r\n```python\r\n=========================== short test summary info ============================\r\nFAILED tests/extended/test_trainer_ext.py::TestTrainerExt::test_run_seq2seq_no_dist\r\n==== 1 failed, 7169 passed, 3466 skipped, 708 warnings in 832.52s (0:13:52) ====\r\n\r\n```",

"The failure is due to the new release of sacrebleu. If you rebase on master to get the commit that pins it to < 2.0.0, the failure will go away (but it's not necessary for this PR to be merged as we know it has nothing to do with it).",

"Thanks for reviews @sgugger @patil-suraj\r\nI'll address them quickly. \r\n\r\nOne more concern apart from the ones I have mentioned above:\r\n\r\n~I have removed the slow integration testing from tokenization tests as it expects `attention_mask`. I'll take a look and update the test accordingly.~\r\n\r\nEDIT:\r\n------\r\nThis test has been updated.\r\n",

"Hey @gchhablani :-) \r\n\r\nWe've just added you to the Google org, so that you can move the model weights there. If you find some time, it would also be very nice to add some model cards (I can definitely help you with that). \r\n\r\nRegarding the failing doc test, you can just rebase to current master and it'll be fixed",

"I found two issues with the fourier transform.\r\n\r\n### Issue 1\r\nThe actual implementation uses `jax.vmap` on the `self.fourier_transform`. I made a mistake earlier in the implementation and do it for all dimensions - `hidden_size`, `sequence_length`, and `batch_size`, but it is just `sequence_length` and `batch_size`.\r\n\r\nThis leads to a mismatch issue when using `batch_size` more than one. I have fixed this issue by passing in the correct dimensions to `torch.fft.fftn` and using `functools.partial`.\r\n\r\nPlease check the Flax/Torch output match for `batch_size=2` [here](https://colab.research.google.com/drive/13cqOgP4DNrYbBdjwD0NwSxORCUTRxiZ-?usp=sharing).\r\n\r\nUnfortunately, there is no `vmap` in torch as of now in the stable version, but only in the nightly version [here](https://pytorch.org/tutorials/prototype/vmap_recipe.html).\r\n\r\n### Issue 2\r\nFollowing @sgugger's suggestion to add the optimizations for TPUs, I tried adding the `einsum` version of fourier transform where they use DFT matrix multiplication and the axis-wise FFT. I have had to make changes and few additions to support them in PyTorch. Currently, the outputs from those don't match (but they should, at least to some extent). So I am fixing that as well.\r\n\r\n\r\nEDIT:\r\n------\r\nI understand the issue with the `einsum` implementation. The original code uses the maximum sequence length possible as their sequence length during training - 512. Hence, during the initialization, they specify this maximum sequence length, and then use this variable to initialize the `DFT` matrix for sequence length. While that may have made sense for them, I'm not sure if it makes sense here?\r\n\r\nI think we can take in another parameter `sequence_length` during config initialization. This will be used to specify the sequence length (because `max_position_embeddings` is used to initialize the `self.position_embeddings`, so that shouldn't be changed). 
Along with this, a check that throws an error if the `sequence_length` does not match sequence length passed to the model. \r\n",

"With the latest changes, an error occurs:\r\n\r\n```python\r\nImportError while importing test module '/home/circleci/transformers/tests/test_modeling_fnet.py'.\r\nHint: make sure your test modules/packages have valid Python names.\r\nTraceback:\r\n/usr/local/lib/python3.7/importlib/__init__.py:127: in import_module\r\n return _bootstrap._gcd_import(name[level:], package, level)\r\ntests/test_modeling_fnet.py:23: in <module>\r\n from transformers.models.fnet.modeling_fnet import FNetBasicFourierTransform\r\nsrc/transformers/models/fnet/modeling_fnet.py:28: in <module>\r\n from scipy import linalg\r\nE ModuleNotFoundError: No module named 'scipy'\r\n```\r\nI am trying to use `scipy.linalg.dft` to get `DFT matrix`. Any chance this can be a dependency?\r\n\r\nEDIT\r\n------\r\nI have added a variable called `_scipy_available` which is used when initializing the fourier transform, and if it is not available, I add a warning. The users can install SciPy if they want?",

"I don't see a problem with using `scipy` as an optional dependency for this specific model",

"Let me know if need help making the tests pass with the dependency - I can fix this in your PR if you want :-)",

"Hi @patrickvonplaten\r\n\r\nI have pushed the code where I used the global variable `_scipy_available`, does that seem okay? The tests are working fine locally.\r\n\r\nAlso, in model tests I'm verifying whether `fourier_transform` implementations match or not in the test: `create_and_check_fourier_transform` for which I need to access `modeling_fnet`.\r\n\r\nI get this error on CircleCI:\r\n\r\n```python\r\n_________________ ERROR collecting tests/test_modeling_fnet.py _________________\r\nImportError while importing test module '/home/circleci/transformers/tests/test_modeling_fnet.py'.\r\nHint: make sure your test modules/packages have valid Python names.\r\nTraceback:\r\n/usr/local/lib/python3.7/importlib/__init__.py:127: in import_module\r\n return _bootstrap._gcd_import(name[level:], package, level)\r\ntests/test_modeling_fnet.py:23: in <module>\r\n from transformers.models.fnet.modeling_fnet import FNetBasicFourierTransform, _scipy_available\r\nsrc/transformers/models/fnet/modeling_fnet.py:22: in <module>\r\n import torch\r\nE ModuleNotFoundError: No module named 'torch'\r\n```\r\n\r\nAny idea how do I fix this?\r\n\r\nEDIT\r\n------\r\nTest is fixed. I followed `fsmt` tests. Had to add the imports under `is_torch_available()`. ",

"I have updated the checkpoints and added basic model cards. The model performance isn't great on MLM, not sure why. The accuracy scores are low, though.\r\n\r\nCheckpoints\r\n- [fnet-base](https://huggingface.co/google/fnet-base)\r\n- [fnet-large](https://huggingface.co/google/fnet-large)",

"Also, just to check :-) The reported eval metrics on GLUE - did you run them once with `run_glue.py` or is it a copy-paste of the paper? ",

"@patrickvonplaten No, I just copy pasted from the paper 🙈. Should I try fine-tuning it?\n\nMaybe, that itself can be the demo?",

"I am checking the checkpoint conversion. Ideally, there should be less than `1e-3`/`1e-4` differences in the outputs. I'm not sure how to exactly fix this, but the arg-sorted order of the predictions is different for the PyTorch and the Flax model. :/ \r\n\r\nFor different fourier transforms, I matched them against `np.fft.fftn` and `jnp.fft.fftn`, both give at best `1e-4` match, which means the problem is not the fourier transform.\r\n\r\nI'll do a layer-wise debugging and update here.\r\n\r\nNonetheless, the original masked LM weights lead to similar predictions, so fine-tuning example will be helpful.\r\n\r\n\r\nEDIT\r\n------\r\nThe issue was that the original implementation uses gelu from BERT, which is equivalent to `gelu_new`, I suppose. Changing the activation to `gelu_new` leads to a `1e-4` match on all logits and sequence output ^_^\r\n\r\nI am still working on verifying model outputs.",

"The original MLM model performs decently for the following: \"the man worked as a [MASK].\" The masked token top-10 predictions are:\r\n```\r\nman\r\nperson\r\nuse\r\nguide\r\nwork\r\nexample\r\nreason\r\nsource\r\none\r\nright\r\n```\r\nI had to modify the tokens as expected by the model. The tokenizer is having issues. The original one gives this output for the text above:\r\n```python\r\n[13, 283, 2479, 106, 8, 16657, 6, 16678]\r\n[ '▁the', '▁man', '▁worked', '▁as', '▁a', '▁', '[MASK]', '.' ]\r\n```\r\nThe tokenizer I wrote is returning this:\r\n```python\r\n[13, 283, 2479, 106, 8, 6, 845]\r\n['▁the', '▁man', '▁worked', '▁as', '▁a', '[MASK]', '▁.']\r\n```\r\nNotice how the space - `▁` is missing and that the period is actually `.` but becomes `▁.` in our tokenizer.\r\n\r\nAny ideas on why this might be happening?\r\n\r\nWhen I change `[MASK]` to `mask`, both lead to same output:\r\n\r\n```python\r\n[13, 283, 2479, 106, 8, 10469, 16678]\r\n['▁the', '▁man', '▁worked', '▁as', '▁a', '▁mask', '.']\r\n```\r\nIn their [input_pipeline](https://github.com/google-research/google-research/blob/master/f_net/input_pipeline.py#L258), they add the mask, cls and sep ids manually. Hence, they never use `[MASK]` in the text input. So, maybe, it's okay if we get `▁a`, `[MASK]`?\r\nBut in either case, we shouldn't get `▁.`? How do I handle this?\r\n\r\nThe problem happens in `tokenize`, where we split based on the `[MASK]` token. But if we don't do that, then `[MASK]` is broken into several tokens. `tokenize('.')` results in `▁.` ",

"I tried fixing the issue using a fix which basically skips first character after a mask token, only if it is not a `no_split_token`.\r\n\r\nI'm not sure if this is 100% correct.\r\n\r\nAlso, there is an error with `FNetTokenizerFast`, the `[MASK]` token is not working as expected:\r\n\r\n```python\r\n[4, 13, 283, 2479, 106, 8, 1932, 2594, 16681, 6154, 5]\r\n['[CLS]', '▁the', '▁man', '▁worked', '▁as', '▁a', '▁[', 'mas', 'k', '].', '[SEP]']\r\n```",

"@patrickvonplaten @LysandreJik @sgugger\nCan you please check the tokenizer once when you get a chance?\n\nOnce that is working, I can proceed with the fine-tuning without any issues.",

"Checking now!",

"Here @gchhablani, I looked into it and the tokenizer actually looks correct to me. See this colab: https://colab.research.google.com/drive/1QC4yvSHk0DSOObD6U2fbUE-9-6W3D3_F?usp=sharing \r\nNote that in the original code tokens are just \"manually\" replaced by the \"[MASK]\" token. So in the colab above, if the token for \"guide\" (3106) is replaced by the mask token id in the original code then the current tokenizer would be correct\r\n\r\nI'm wondering whether the model is actually the same. Checking this now...",

"@patrickvonplaten The tokenizer is working as expected because of the fixed I pushed in the previous commit. It handles the mask token, but I am not 100% sure if it is correct or if there is a better way to deal with this.",

"BTW, to fix the pipelines torch tests I think you just have to rebase to current master (or merge master into your branch :-) ) ",

"@patrickvonplaten\r\n\r\nThere is an issue with `FNetTokenizerFast`:\r\n\r\n```python\r\n>>> from src.transformers.models.fnet.tokenization_fnet_fast import FNetTokenizerFast\r\n2021-08-30 20:30:09.070691: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory\r\n2021-08-30 20:30:09.070758: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.\r\n>>> tokenizer = FNetTokenizerFast.from_pretrained('google/fnet-base')\r\n>>> text = \"the man worked as a [MASK].\"\r\n>>> tokenizer.encode(text)\r\n[4, 13, 283, 2479, 106, 8, 1932, 2594, 16681, 6154, 5]\r\n>>> tokenizer.tokenize(text)\r\n['▁the', '▁man', '▁worked', '▁as', '▁a', '▁[', 'mas', 'k', '].']\r\n```\r\n\r\nThe `[MASK]` should not get tokenized. Any idea why this might be happening?",

"@gchhablani it seems that FNet was trained with a SPM vocab, so the corect masking token should be `<mask>` :)",

"@stefan-it \r\nI haven't worked with sentencepiece before so I'm not sure. But, in the [original code](https://github.com/google-research/google-research/blob/8077479d91cca79b16417055511b7744c155c344/f_net/input_pipeline.py#L256-L258), they specify `[CLS], [SEP], [MASK]` explicitly. However, they do not use the `[MASK]` string token anywhere, but only the id.\r\n\r\nWhat do you think about this?\r\n\r\nIf changing to `<mask>` will fix things, then we can go with it. I will try it out.",

"Hi @gchhablani , oh I'm sorry I haven't yet read the official implementation. But it seems that they're really using `[MASK]` as the masking token (as previously done in [ALBERT](https://github.com/google-research/albert#sentencepiece)).",

"> @patrickvonplaten\r\n> \r\n> There is an issue with `FNetTokenizerFast`:\r\n> \r\n> ```python\r\n> >>> from src.transformers.models.fnet.tokenization_fnet_fast import FNetTokenizerFast\r\n> 2021-08-30 20:30:09.070691: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory\r\n> 2021-08-30 20:30:09.070758: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.\r\n> >>> tokenizer = FNetTokenizerFast.from_pretrained('google/fnet-base')\r\n> >>> text = \"the man worked as a [MASK].\"\r\n> >>> tokenizer.encode(text)\r\n> [4, 13, 283, 2479, 106, 8, 1932, 2594, 16681, 6154, 5]\r\n> >>> tokenizer.tokenize(text)\r\n> ['▁the', '▁man', '▁worked', '▁as', '▁a', '▁[', 'mas', 'k', '].']\r\n> ```\r\n> \r\n> The `[MASK]` should not get tokenized. Any idea why this might be happening?\r\n\r\nI can fix this once the changes to how `token_type_ids` are generated are applied :-)"

] | 1,628 | 1,632 | 1,632 | CONTRIBUTOR | null | # What does this PR do?

This PR adds the [FNet](https://arxiv.org/abs/2105.03824) model in PyTorch. I was working on it in another PR #12335 which got closed due to inactivity ;-;. This PR closes issue #12411.

## Checklist

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

Requesting @LysandreJik to review.

~**Note**:This model uses a SentencePiece tokenizer. They have provided the sentence-piece .model file which can be loaded. While creating FNetTokenizer should I inherit from some other existing tokenizer? Alternatively, I can copy the tokenizer from `ALBERT` (which is what I am doing right now). Wdyt?~

**Note**: I am trying to make this model as similar to BERT as possible. The original implementation has slightly different layers. For example, the `FNetIntermediate` and `FNetOutput` equivalents are combined into a single layer in the original FNet code, but I keep them separate. Hope this is okay?

EDIT 1:

------

I have made necessary changes for the model. And since the model compares against Bert, it makes sense to have (almost) all tasks - MultipleChoice, QuestionAnswering, etc. I am still working on:

- [x] Tokenizer (regular and fast)

- [x] Documentation

- [x] Checkpoint Conversion

- [x] Tests

EDIT 2:

------

~We also need to skip `attention_mask` totally from the tokenizer. The user, ideally, should not have an option to get the `attention_mask` using `FNetTokenizer`. I am using `model_input_names` for this.~

EDIT 3:

------

~One more concern is that, since I am implementing in PyTorch, do we expect the user to run this on TPU? The reason is that the original implementation changes the way they calculate FFT on TPU, based on the sequence length (they found some optimal rules for faster processing). Currently, I have only used `torch.fft.fftn` directly (they use `jnp.fft.fftn` in the CPU/GPU case). Please let me know what you think.~

EDIT 4:

------

One more thing to consider is that the original code allows `type_vocab_size` of 4, which is used only for GLUE tasks. During pre-training they only use `0` and `1`.

However, the embedding weights in the checkpoints also have shape `(4, 768)`. Does that mean that the tokenizer might need to support something like:

```python

tokenizer = FNetTokenizer.from_pretrained('fnet-base')

inputs = tokenizer(text1, text2, text3, text4)

```

?

EDIT 5:

------

~The colab link to outputs on checkpoint conversion: [Flax to PyTorch](https://colab.research.google.com/drive/1CxxDwaH4Tei9cUBHRaMYWPHCpS2El2He?usp=sharing).

The model outputs, embedding layer, encoder layer 0 outputs match up to `1e-2`, except masked lm output for masked token, which matches to `1e-1`. Any idea on how I can improve this?~

~One reason I can think of for this reduction in precision is the difference between `torch.fft.fftn` and `jnp.fft.fftn`, which is at most `1e-4`. From a difference of at most `1e-6`, after applying the corresponding transforms, the difference becomes at most `1e-3` in the real part. Over layers, this might accumulate. Just a guess, however.~

~This was fixed by using `gelu_new` instead of `gelu`.~
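
For reference, a minimal sketch of the two activation variants involved, written in plain Python rather than PyTorch or JAX. The function names `gelu_erf` and `gelu_tanh` are hypothetical. In `transformers`, `gelu` is the exact erf-based form and `gelu_new` is the tanh approximation. The per-call gap is small, and this sketch does not claim to reproduce the mismatch described above.

```python
import math


def gelu_erf(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    """Tanh approximation of GELU (the form `gelu_new` refers to)."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Largest absolute gap between the two forms on a grid over [-5, 5].
max_gap = max(abs(gelu_erf(x / 10) - gelu_tanh(x / 10)) for x in range(-50, 51))
```

The two functions agree exactly at zero and differ slightly elsewhere, so a checkpoint trained with one form may not match bit-for-bit when evaluated with the other.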

EDIT 6:

------

They use a projection layer in the embeddings, and hence the embedding size and hidden size for the model are provided separately in the config. In their experiments they keep the two the same, but the flexibility is still there. Do we want to keep both sizes separate?

EDIT 7:

------

~The FastTokenizer requires a `tokenizer.json` file, which I have created using `convert_slow_tokenizer`. I used `AlbertConverter` for this model. I don't know in detail how SentencePiece and FastTokenizers work. Please let me know if I'm missing anything.~

EDIT 8:

------

Just realized that the original model is denoted as `f_net`. I am using `fnet` everywhere, is this acceptable?

EDIT 9:

------

~I am not sure about the special tokens in the tokenizer. The original model gives some special tokens as empty strings. Using the current tokenizer code to load these gives `<s>` and `</s>` for those tokens (bos, eos), `<unk>` for unknown, and `<pad>` for pad tokens. Not sure which is the right way to go. Any suggestions?~ | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13045/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13045/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/13045",

"html_url": "https://github.com/huggingface/transformers/pull/13045",

"diff_url": "https://github.com/huggingface/transformers/pull/13045.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/13045.patch",

"merged_at": 1632137071000

} |

https://api.github.com/repos/huggingface/transformers/issues/13044 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13044/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13044/comments | https://api.github.com/repos/huggingface/transformers/issues/13044/events | https://github.com/huggingface/transformers/issues/13044 | 963,507,665 | MDU6SXNzdWU5NjM1MDc2NjU= | 13,044 | MLM example not able to run_mlm_flax.py | {

"login": "R4ZZ3",

"id": 25264037,

"node_id": "MDQ6VXNlcjI1MjY0MDM3",

"avatar_url": "https://avatars.githubusercontent.com/u/25264037?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/R4ZZ3",

"html_url": "https://github.com/R4ZZ3",

"followers_url": "https://api.github.com/users/R4ZZ3/followers",

"following_url": "https://api.github.com/users/R4ZZ3/following{/other_user}",

"gists_url": "https://api.github.com/users/R4ZZ3/gists{/gist_id}",

"starred_url": "https://api.github.com/users/R4ZZ3/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/R4ZZ3/subscriptions",

"organizations_url": "https://api.github.com/users/R4ZZ3/orgs",

"repos_url": "https://api.github.com/users/R4ZZ3/repos",

"events_url": "https://api.github.com/users/R4ZZ3/events{/privacy}",

"received_events_url": "https://api.github.com/users/R4ZZ3/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hey @R4ZZ3,\r\n\r\nCan you run:\r\n\r\n```transformers-cli env```\r\n\r\nAnd post the output here?",

"Sure, I will run it late this evening and post output here (UTC +3)",

"Ok, found time in between the day @patrickvonplaten \r\n\r\n- `transformers` version: 4.3.3\r\n- Platform: Linux-5.4.0-1043-gcp-x86_64-with-debian-bullseye-sid\r\n- Python version: 3.7.11\r\n- PyTorch version (GPU?): not installed (NA)\r\n- Tensorflow version (GPU?): not installed (NA)\r\n- Using GPU in script?: To my knowledge this script should only use TPUs\r\n- Using distributed or parallel set-up in script?: To my knowledge processing is spread out to 8 tpu cores",

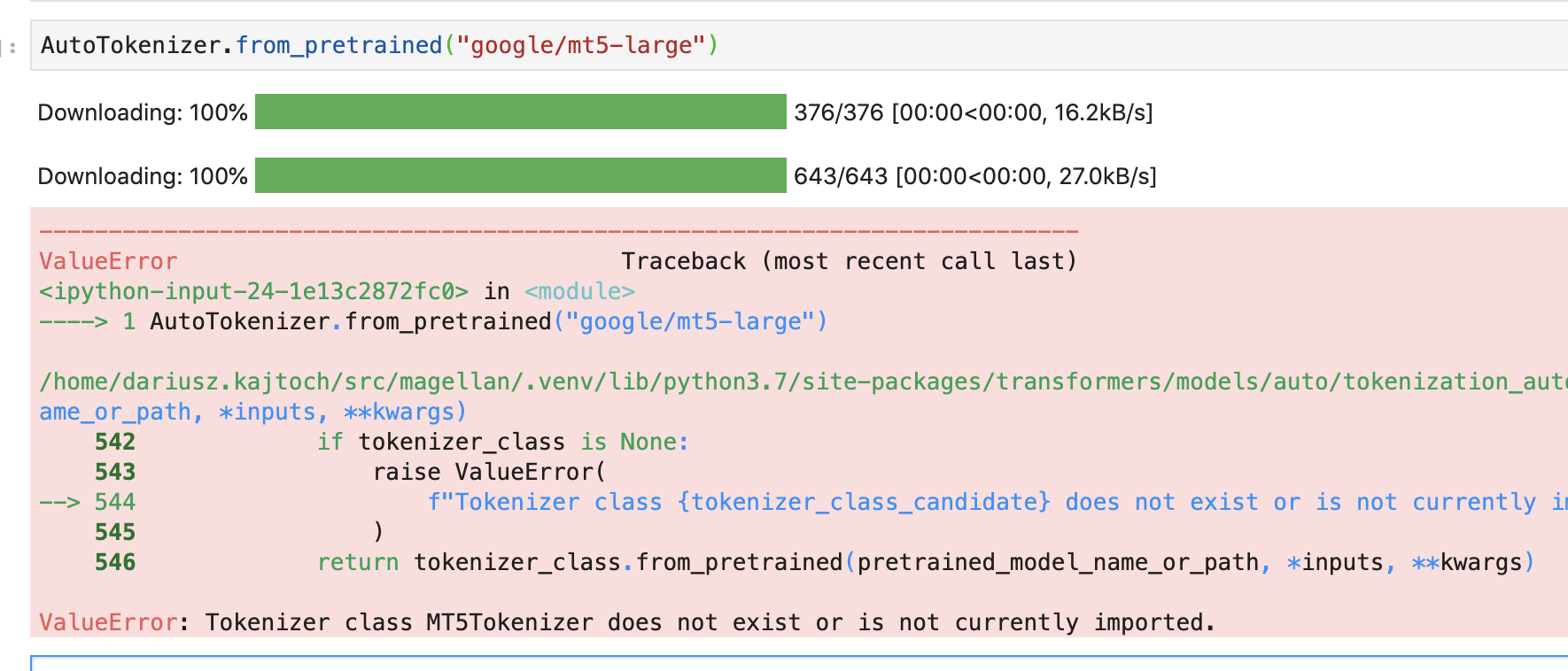

"I did pull changes. Tokenizer saving gives error (Doing this from norwegian-roberta-base folder)\r\n\r\n\r\n\r\n\r\ntokenizer.save(./tokenizer.json) works",

"I was able to fix symbolic link issue with by giving full paths but still have the same error with. Also FYI installed Pytorch 1.9 as I remember from Flax event that for some things it was necessary to have for some processing but no change to error\r\n\r\n",

"Hey @R4ZZ3,\r\n\r\nCould you please update your transformer version to a newer one? Ideally master for Flax examples as they have been added very recently?",

"Sure thing, ill try",

"Ok, now seems to move further @patrickvonplaten Thanks!\r\nStill had to save tokenizer with tokenizer.save(./tokenizer.json) though\r\n\r\n\r\n"

] | 1,628 | 1,628 | 1,628 | NONE | null | I am going through this MLM example on a Google TPU VM instance (v3-8): https://github.com/huggingface/transformers/tree/master/examples/flax/language-modeling

I have defined MODEL_DIR with:

export MODEL_DIR="./norwegian-roberta-base"

I have defined a symbolic link with:

ln -s home/Admin/Research/transformers/examples/flax/language-modeling/run_mlm_flax.py norwegian-roberta-base/run_mlm_flax.py

I am running with remote VS Code and am able to run the first 2 steps. Now, at run_mlm_flax.py, if I run it via the symbolic link I get:

If I run the original script directly, I get:

Do you have some idea what I have done wrong?
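
A follow-up comment in this thread reports that the symbolic link problem went away once full paths were used. The sketch below is only an illustration of why that can happen, assuming a Linux-like filesystem: a link target without a leading slash is resolved relative to the directory that contains the link, so it can end up dangling. The temporary directory layout and the `make_link` helper are hypothetical and not part of the example scripts.

```python
import os
import tempfile


def make_link(target, link_path):
    """Create a symlink (hypothetical helper) and report whether it resolves."""
    os.symlink(target, link_path)
    # os.path.exists follows the link and returns False when it is dangling.
    return os.path.exists(link_path)


with tempfile.TemporaryDirectory() as root:
    # Stand-in for the real script location (assumed layout).
    script = os.path.join(root, "examples", "run_mlm_flax.py")
    os.makedirs(os.path.dirname(script))
    open(script, "w").close()

    model_dir = os.path.join(root, "norwegian-roberta-base")
    os.makedirs(model_dir)

    # Target without a leading slash: looked up relative to model_dir.
    relative_ok = make_link(
        "examples/run_mlm_flax.py", os.path.join(model_dir, "rel_link.py")
    )
    # Absolute target: resolves regardless of where the link lives.
    absolute_ok = make_link(
        os.path.abspath(script), os.path.join(model_dir, "abs_link.py")
    )

print("relative target resolves:", relative_ok)
print("absolute target resolves:", absolute_ok)
```

This only covers the link itself. According to the later comments, the remaining error was addressed separately by updating the transformers version.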

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13044/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13044/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/13043 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13043/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13043/comments | https://api.github.com/repos/huggingface/transformers/issues/13043/events | https://github.com/huggingface/transformers/issues/13043 | 963,451,880 | MDU6SXNzdWU5NjM0NTE4ODA= | 13,043 | [DeepSpeed] DeepSpeed 0.4.4 does not run with Wav2Vec2 pretraining script | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"@patrickvonplaten,\r\n\r\nThis is definitely something for Deepspeed and not our integration since you have a segfault in building the kernels:\r\n\r\n```\r\n[1/3] /usr/bin/nvcc --generate-dependencies-with-compile --dependency-output custom_cuda_kernel.cuda.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\\\"_gcc\\\" -DPYBIND11_STDLIB=\\\"_libstdcpp\\\" -DPYBIND11_BUILD_ABI=\\\"_cxxabi1011\\\" -I/home/patrick/anaconda3/envs/hu\r\ngging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/includes -I/usr/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /home/\r\npatrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/TH -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/THC -isystem /home/patrick/anaconda3/envs/hugging_face/include/python3.9 -D_GLIBCXX_USE_CXX11_ABI=0 -D__CUDA_NO_HALF_OPERATORS__ -D_\r\n_CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -gencode=arch=compute_75,code=compute_75 -gencode=arch=compute_75,code=sm_75 --compiler-options '-fPIC' -O3 --use_fast_math -std=c++14 -U__CUDA_NO_HALF_OPERATORS__ -U__CUDA_NO_HALF_CONVERS\r\nIONS__ -U__CUDA_NO_HALF2_OPERATORS__ -gencode=arch=compute_75,code=sm_75 -gencode=arch=compute_75,code=compute_75 -c /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/adam/custom_cuda_kernel.cu -o custom_cuda_kernel.cuda.o \r\nFAILED: custom_cuda_kernel.cuda.o \r\n/usr/bin/nvcc --generate-dependencies-with-compile --dependency-output custom_cuda_kernel.cuda.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\\\"_gcc\\\" -DPYBIND11_STDLIB=\\\"_libstdcpp\\\" -DPYBIND11_BUILD_ABI=\\\"_cxxabi1011\\\" 
-I/home/patrick/anaconda3/envs/hugging_\r\nface/lib/python3.9/site-packages/deepspeed/ops/csrc/includes -I/usr/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /home/patric\r\nk/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/TH -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/THC -isystem /home/patrick/anaconda3/envs/hugging_face/include/python3.9 -D_GLIBCXX_USE_CXX11_ABI=0 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_\r\nNO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -gencode=arch=compute_75,code=compute_75 -gencode=arch=compute_75,code=sm_75 --compiler-options '-fPIC' -O3 --use_fast_math -std=c++14 -U__CUDA_NO_HALF_OPERATORS__ -U__CUDA_NO_HALF_CONVERSIONS__\r\n -U__CUDA_NO_HALF2_OPERATORS__ -gencode=arch=compute_75,code=sm_75 -gencode=arch=compute_75,code=compute_75 -c /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/adam/custom_cuda_kernel.cu -o custom_cuda_kernel.cuda.o \r\n/usr/include/c++/10/chrono: In substitution of ‘template<class _Rep, class _Period> template<class _Period2> using __is_harmonic = std::__bool_constant<(std::ratio<((_Period2::num / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::num, _Period::num)) * (_Period::den / std::chrono::duration<_Rep, _Pe\r\nriod>::_S_gcd(_Period2::den, _Period::den))), ((_Period2::den / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::den, _Period::den)) * (_Period::num / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::num, _Period::num)))>::den == 1)> [with _Period2 = _Period2; _Rep = _Rep; _Period = _Period]’:\r\n/usr/include/c++/10/chrono:473:154: required from here \r\n/usr/include/c++/10/chrono:428:27: internal compiler error: Segmentation fault \r\n 428 
| _S_gcd(intmax_t __m, intmax_t __n) noexcept \r\n```\r\n\r\nCould you please file a bug report at https://github.com/microsoft/DeepSpeed and tag @RezaYazdaniAminabadi\r\n\r\nIt probably has something to do with your specific environment, since deepspeed==0.4.4 passes all wav2vec2 tests on my setup:\r\n\r\n```\r\n$ RUN_SLOW=1 pyt examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py\r\n====================================================================== test session starts ======================================================================\r\nplatform linux -- Python 3.8.10, pytest-6.2.4, py-1.10.0, pluggy-0.13.1\r\nrootdir: /mnt/nvme1/code/huggingface, configfile: pytest.ini\r\nplugins: dash-1.20.0, forked-1.3.0, xdist-2.3.0, instafail-0.4.2\r\ncollected 16 items \r\n\r\nexamples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py ................ [100%]\r\n==================================================================== short test summary info ====================================================================\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_distributed_zero2_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_distributed_zero2_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_distributed_zero3_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_distributed_zero3_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_non_distributed_zero2_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_non_distributed_zero2_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_non_distributed_zero3_base\r\nPASSED 
examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp16_non_distributed_zero3_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_distributed_zero2_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_distributed_zero2_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_distributed_zero3_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_distributed_zero3_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_non_distributed_zero2_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_non_distributed_zero2_robust\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_non_distributed_zero3_base\r\nPASSED examples/research_projects/wav2vec2/test_wav2vec2_deepspeed.py::TestDeepSpeedWav2Vec2::test_fp32_non_distributed_zero3_robust\r\n================================================================ 16 passed in 491.63s (0:08:11) =================================================================\r\n```\r\n\r\nAlso yours is python-3.9, do you have access to 3.8 by chance to validate if perhaps it's a py39 incompatibility? Mine is 3.8.\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"It's actually solved - thanks for the help :-)"

] | 1,628 | 1,631 | 1,631 | MEMBER | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.10.0.dev0

- Platform: Linux-5.11.0-25-generic-x86_64-with-glibc2.33

- Python version: 3.9.1

- PyTorch version (GPU?): 1.9.0.dev20210217 (True)

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: yes

- Deepspeed: 0.4.4

- CUDA Version: 11.2

- GPU: 4 x TITAN RTX

### Who can help

@stas00

## To reproduce

Running the Wav2Vec2 pre-training script: https://github.com/huggingface/transformers/blob/master/examples/research_projects/wav2vec2/README.md#pretraining-wav2vec2

with the versions as defined above (on vorace) yields the following error:

<details>

<summary>Click for error message</summary>

<br>

```

Using amp fp16 backend

[2021-08-08 14:05:34,113] [INFO] [logging.py:68:log_dist] [Rank 0] DeepSpeed info: version=0.4.4, git-hash=unknown, git-branch=unknown

[2021-08-08 14:05:35,930] [INFO] [utils.py:11:_initialize_parameter_parallel_groups] data_parallel_size: 4, parameter_parallel_size: 4

[2021-08-08 14:57:51,866] [INFO] [engine.py:179:__init__] DeepSpeed Flops Profiler Enabled: False

Using /home/patrick/.cache/torch_extensions as PyTorch extensions root...

Creating extension directory /home/patrick/.cache/torch_extensions/cpu_adam...

Using /home/patrick/.cache/torch_extensions as PyTorch extensions root...

Using /home/patrick/.cache/torch_extensions as PyTorch extensions root...

Using /home/patrick/.cache/torch_extensions as PyTorch extensions root...

Detected CUDA files, patching ldflags

Emitting ninja build file /home/patrick/.cache/torch_extensions/cpu_adam/build.ninja...

Building extension module cpu_adam...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/3] /usr/bin/nvcc --generate-dependencies-with-compile --dependency-output custom_cuda_kernel.cuda.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -I/home/patrick/anaconda3/envs/hu

gging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/includes -I/usr/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /home/

patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/TH -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/THC -isystem /home/patrick/anaconda3/envs/hugging_face/include/python3.9 -D_GLIBCXX_USE_CXX11_ABI=0 -D__CUDA_NO_HALF_OPERATORS__ -D_

_CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -gencode=arch=compute_75,code=compute_75 -gencode=arch=compute_75,code=sm_75 --compiler-options '-fPIC' -O3 --use_fast_math -std=c++14 -U__CUDA_NO_HALF_OPERATORS__ -U__CUDA_NO_HALF_CONVERS

IONS__ -U__CUDA_NO_HALF2_OPERATORS__ -gencode=arch=compute_75,code=sm_75 -gencode=arch=compute_75,code=compute_75 -c /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/adam/custom_cuda_kernel.cu -o custom_cuda_kernel.cuda.o

FAILED: custom_cuda_kernel.cuda.o

/usr/bin/nvcc --generate-dependencies-with-compile --dependency-output custom_cuda_kernel.cuda.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -I/home/patrick/anaconda3/envs/hugging_

face/lib/python3.9/site-packages/deepspeed/ops/csrc/includes -I/usr/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /home/patric

k/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/TH -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/THC -isystem /home/patrick/anaconda3/envs/hugging_face/include/python3.9 -D_GLIBCXX_USE_CXX11_ABI=0 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_

NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr -gencode=arch=compute_75,code=compute_75 -gencode=arch=compute_75,code=sm_75 --compiler-options '-fPIC' -O3 --use_fast_math -std=c++14 -U__CUDA_NO_HALF_OPERATORS__ -U__CUDA_NO_HALF_CONVERSIONS__

-U__CUDA_NO_HALF2_OPERATORS__ -gencode=arch=compute_75,code=sm_75 -gencode=arch=compute_75,code=compute_75 -c /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/adam/custom_cuda_kernel.cu -o custom_cuda_kernel.cuda.o

/usr/include/c++/10/chrono: In substitution of ‘template<class _Rep, class _Period> template<class _Period2> using __is_harmonic = std::__bool_constant<(std::ratio<((_Period2::num / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::num, _Period::num)) * (_Period::den / std::chrono::duration<_Rep, _Pe

riod>::_S_gcd(_Period2::den, _Period::den))), ((_Period2::den / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::den, _Period::den)) * (_Period::num / std::chrono::duration<_Rep, _Period>::_S_gcd(_Period2::num, _Period::num)))>::den == 1)> [with _Period2 = _Period2; _Rep = _Rep; _Period = _Period]’:

/usr/include/c++/10/chrono:473:154: required from here

/usr/include/c++/10/chrono:428:27: internal compiler error: Segmentation fault

428 | _S_gcd(intmax_t __m, intmax_t __n) noexcept

| ^~~~~~

Please submit a full bug report,

with preprocessed source if appropriate.

See <file:///usr/share/doc/gcc-10/README.Bugs> for instructions.

[2/3] c++ -MMD -MF cpu_adam.o.d -DTORCH_EXTENSION_NAME=cpu_adam -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -I/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/includes -I/usr

/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/torch/csrc/api/include -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/inc

lude/TH -isystem /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/include/THC -isystem /home/patrick/anaconda3/envs/hugging_face/include/python3.9 -D_GLIBCXX_USE_CXX11_ABI=0 -fPIC -std=c++14 -O3 -std=c++14 -L/usr/lib64 -lcudart -lcublas -g -Wno-reorder -march=native -fopenmp -D_

_AVX512__ -c /home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/csrc/adam/cpu_adam.cpp -o cpu_adam.o

ninja: build stopped: subcommand failed.

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1662, in _run_ninja_build

subprocess.run(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/subprocess.py", line 524, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 394, in <module>

main()

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 390, in main

trainer.train()

File "/home/patrick/hugging_face/transformers/src/transformers/trainer.py", line 1136, in train

deepspeed_engine, optimizer, lr_scheduler = deepspeed_init(

File "/home/patrick/hugging_face/transformers/src/transformers/deepspeed.py", line 370, in deepspeed_init

model, optimizer, _, lr_scheduler = deepspeed.initialize(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/__init__.py", line 126, in initialize

engine = DeepSpeedEngine(args=args,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 194, in __init__

self._configure_optimizer(optimizer, model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 709, in _configure_optimizer

basic_optimizer = self._configure_basic_optimizer(model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 778, in _configure_basic_optimizer

optimizer = DeepSpeedCPUAdam(model_parameters,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 79, in __init__

self.ds_opt_adam = CPUAdamBuilder().load()

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 347, in load

return self.jit_load(verbose)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 379, in jit_load

op_module = load(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1074, in load

return _jit_compile(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1287, in _jit_compile

_write_ninja_file_and_build_library(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1399, in _write_ninja_file_and_build_library

_run_ninja_build(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1678, in _run_ninja_build

raise RuntimeError(message) from e

RuntimeError: Error building extension 'cpu_adam'

Loading extension module cpu_adam...

Traceback (most recent call last):

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 394, in <module>

main()

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 390, in main

trainer.train()

File "/home/patrick/hugging_face/transformers/src/transformers/trainer.py", line 1136, in train

deepspeed_engine, optimizer, lr_scheduler = deepspeed_init(

File "/home/patrick/hugging_face/transformers/src/transformers/deepspeed.py", line 370, in deepspeed_init

model, optimizer, _, lr_scheduler = deepspeed.initialize(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/__init__.py", line 126, in initialize

engine = DeepSpeedEngine(args=args,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 194, in __init__

self._configure_optimizer(optimizer, model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 709, in _configure_optimizer

basic_optimizer = self._configure_basic_optimizer(model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 778, in _configure_basic_optimizer

optimizer = DeepSpeedCPUAdam(model_parameters,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 79, in __init__

self.ds_opt_adam = CPUAdamBuilder().load()

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 347, in load

return self.jit_load(verbose)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 379, in jit_load

op_module = load(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1074, in load

return _jit_compile(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1312, in _jit_compile

return _import_module_from_library(name, build_directory, is_python_module)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1694, in _import_module_from_library

file, path, description = imp.find_module(module_name, [path])

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/imp.py", line 296, in find_module

raise ImportError(_ERR_MSG.format(name), name=name)

ImportError: No module named 'cpu_adam'

Loading extension module cpu_adam...

Traceback (most recent call last):

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 394, in <module>

main()

File "/home/patrick/hugging_face/transformers/examples/research_projects/wav2vec2/run_pretrain.py", line 390, in main

trainer.train()

File "/home/patrick/hugging_face/transformers/src/transformers/trainer.py", line 1136, in train

deepspeed_engine, optimizer, lr_scheduler = deepspeed_init(

File "/home/patrick/hugging_face/transformers/src/transformers/deepspeed.py", line 370, in deepspeed_init

model, optimizer, _, lr_scheduler = deepspeed.initialize(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/__init__.py", line 126, in initialize

engine = DeepSpeedEngine(args=args,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 194, in __init__

self._configure_optimizer(optimizer, model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 709, in _configure_optimizer

basic_optimizer = self._configure_basic_optimizer(model_parameters)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/runtime/engine.py", line 778, in _configure_basic_optimizer

optimizer = DeepSpeedCPUAdam(model_parameters,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 79, in __init__

self.ds_opt_adam = CPUAdamBuilder().load()

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 347, in load

return self.jit_load(verbose)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/op_builder/builder.py", line 379, in jit_load

op_module = load(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1074, in load

return _jit_compile(

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1312, in _jit_compile

return _import_module_from_library(name, build_directory, is_python_module)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/torch/utils/cpp_extension.py", line 1694, in _import_module_from_library

file, path, description = imp.find_module(module_name, [path])

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/imp.py", line 296, in find_module

raise ImportError(_ERR_MSG.format(name), name=name)

ImportError: No module named 'cpu_adam'

Exception ignored in: <function DeepSpeedCPUAdam.__del__ at 0x7f1a372f01f0>

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 93, in __del__

self.ds_opt_adam.destroy_adam(self.opt_id)

AttributeError: 'DeepSpeedCPUAdam' object has no attribute 'ds_opt_adam'

Exception ignored in: <function DeepSpeedCPUAdam.__del__ at 0x7f961a8561f0>

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 93, in __del__

self.ds_opt_adam.destroy_adam(self.opt_id)

AttributeError: 'DeepSpeedCPUAdam' object has no attribute 'ds_opt_adam'

Exception ignored in: <function DeepSpeedCPUAdam.__del__ at 0x7fcc1f2be1f0>

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 93, in __del__

self.ds_opt_adam.destroy_adam(self.opt_id)

AttributeError: 'DeepSpeedCPUAdam' object has no attribute 'ds_opt_adam'

Exception ignored in: <function DeepSpeedCPUAdam.__del__ at 0x7f5bbf5cc1f0>

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/ops/adam/cpu_adam.py", line 93, in __del__

AttributeError: 'DeepSpeedCPUAdam' object has no attribute 'ds_opt_adam'

Killing subprocess 1135563

Killing subprocess 1135564

Killing subprocess 1135565

Killing subprocess 1135566

Traceback (most recent call last):

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/runpy.py", line 197, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/launcher/launch.py", line 171, in <module>

main()

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deepspeed/launcher/launch.py", line 161, in main

sigkill_handler(signal.SIGTERM, None) # not coming back

File "/home/patrick/anaconda3/envs/hugging_face/lib/python3.9/site-packages/deep

```

</details>

## Expected behavior

The script should run without problem.
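
The log above is long, and the reply in this thread points at the compiler segfault during the `cpu_adam` build as the underlying failure; the later `ImportError` appears to be a consequence of that failed build. Below is a minimal sketch, assuming the log is available as plain text, of pulling the failed ninja target and the compiler crash message out of such a log. The `summarize_build_log` helper and its patterns are hypothetical and only match the two line shapes seen in this log.

```python
import re

# Patterns for the two line shapes that appear in the log above (assumed stable).
FAILED_PATTERN = re.compile(r"^FAILED: (\S+)", re.MULTILINE)
ICE_PATTERN = re.compile(r"internal compiler error: (?P<reason>.+)")


def summarize_build_log(log_text):
    """Return the failed build targets and the compiler crash reason, if any."""
    crash = ICE_PATTERN.search(log_text)
    return {
        "failed_targets": FAILED_PATTERN.findall(log_text),
        "compiler_crash": crash.group("reason").strip() if crash else None,
    }


# Three lines copied from the error message in this issue.
sample = """FAILED: custom_cuda_kernel.cuda.o
/usr/include/c++/10/chrono:428:27: internal compiler error: Segmentation fault
ninja: build stopped: subcommand failed.
"""

summary = summarize_build_log(sample)
print(summary)
```

A non-empty `compiler_crash` field suggests the problem lies in the compiler toolchain rather than in the training script, which matches the advice in the thread to report it to the DeepSpeed project.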

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13043/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13043/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/13042 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13042/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13042/comments | https://api.github.com/repos/huggingface/transformers/issues/13042/events | https://github.com/huggingface/transformers/pull/13042 | 963,439,918 | MDExOlB1bGxSZXF1ZXN0NzA2MDQ5MTk0 | 13,042 | Squad bert | {

"login": "kamfonas",

"id": 13737870,

"node_id": "MDQ6VXNlcjEzNzM3ODcw",

"avatar_url": "https://avatars.githubusercontent.com/u/13737870?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kamfonas",

"html_url": "https://github.com/kamfonas",

"followers_url": "https://api.github.com/users/kamfonas/followers",

"following_url": "https://api.github.com/users/kamfonas/following{/other_user}",

"gists_url": "https://api.github.com/users/kamfonas/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kamfonas/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kamfonas/subscriptions",

"organizations_url": "https://api.github.com/users/kamfonas/orgs",

"repos_url": "https://api.github.com/users/kamfonas/repos",

"events_url": "https://api.github.com/users/kamfonas/events{/privacy}",

"received_events_url": "https://api.github.com/users/kamfonas/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Please disregard. "

] | 1,628 | 1,628 | 1,628 | NONE | null | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13042/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13042/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/13042",

"html_url": "https://github.com/huggingface/transformers/pull/13042",

"diff_url": "https://github.com/huggingface/transformers/pull/13042.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/13042.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/13041 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13041/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13041/comments | https://api.github.com/repos/huggingface/transformers/issues/13041/events | https://github.com/huggingface/transformers/issues/13041 | 963,423,018 | MDU6SXNzdWU5NjM0MjMwMTg= | 13,041 | Script to convert the bart model from pytorch checkpoint to tensorflow checkpoint | {

"login": "mazicwong",

"id": 17029801,

"node_id": "MDQ6VXNlcjE3MDI5ODAx",

"avatar_url": "https://avatars.githubusercontent.com/u/17029801?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mazicwong",

"html_url": "https://github.com/mazicwong",

"followers_url": "https://api.github.com/users/mazicwong/followers",

"following_url": "https://api.github.com/users/mazicwong/following{/other_user}",

"gists_url": "https://api.github.com/users/mazicwong/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mazicwong/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mazicwong/subscriptions",

"organizations_url": "https://api.github.com/users/mazicwong/orgs",

"repos_url": "https://api.github.com/users/mazicwong/repos",

"events_url": "https://api.github.com/users/mazicwong/events{/privacy}",

"received_events_url": "https://api.github.com/users/mazicwong/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,628 | 1,628 | 1,628 | CONTRIBUTOR | null | # Feature request

Request for a script to convert the BART model from a PyTorch checkpoint to a TensorFlow checkpoint

# Solution

https://github.com/huggingface/transformers/blob/master/src/transformers/convert_pytorch_checkpoint_to_tf2.py | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13041/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13041/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/13040 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13040/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13040/comments | https://api.github.com/repos/huggingface/transformers/issues/13040/events | https://github.com/huggingface/transformers/pull/13040 | 963,399,896 | MDExOlB1bGxSZXF1ZXN0NzA2MDE3OTc0 | 13,040 | Add try-except for torch_scatter | {

"login": "JetRunner",

"id": 22514219,

"node_id": "MDQ6VXNlcjIyNTE0MjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/22514219?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/JetRunner",

"html_url": "https://github.com/JetRunner",

"followers_url": "https://api.github.com/users/JetRunner/followers",

"following_url": "https://api.github.com/users/JetRunner/following{/other_user}",

"gists_url": "https://api.github.com/users/JetRunner/gists{/gist_id}",

"starred_url": "https://api.github.com/users/JetRunner/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/JetRunner/subscriptions",

"organizations_url": "https://api.github.com/users/JetRunner/orgs",

"repos_url": "https://api.github.com/users/JetRunner/repos",

"events_url": "https://api.github.com/users/JetRunner/events{/privacy}",

"received_events_url": "https://api.github.com/users/JetRunner/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"I don't think this will work. It's a `RuntimeError` being raised by `torch_scatter`, not an `OSError`. See the specific code at [line 59 of `__init__.py`](https://github.com/rusty1s/pytorch_scatter/blob/2.0.8/torch_scatter/__init__.py#L59). Also, this replaces the existing informative error message from `torch_scatter` with a less informative one.",

"@aphedges Thanks for the note - I have edited the description so that it no longer indicates an association with your issue. Also, the intention of this PR is simply to circumvent the error, since in most cases people don't use TAPAS but still get blocked by this error.\r\n\r\nAlso, the original `torch_scatter` error message is not informative at all. It just says some file cannot be located, and after some googling I realized it's due to the CUDA version. So I'm basically replacing that with my googled solution.",

"@JetRunner, thanks for editing the description!\r\n\r\nSorry about my note about `RuntimeError` vs. `OSError` earlier. I think I got confused by the fact that `torch-scatter` explicitly throws a runtime error for some CUDA version mismatches, but the error you're logging here is for a different CUDA version mismatch that doesn't have a good error message. I think I had to google this one, too, so your error message is definitely an improvement."

] | 1,628 | 1,628 | 1,628 | CONTRIBUTOR | null | Add an error message for the CUDA version mismatch of `torch_scatter`.
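A minimal sketch of the kind of guard discussed in the comments above, assuming the failure surfaces as an `OSError` when the compiled extension cannot load its shared libraries. The helper name `try_import` and the hint text are hypothetical and are not taken from the merged change.

```python
import importlib
import logging

logger = logging.getLogger(__name__)


def try_import(module_name, hint):
    """Return the imported module, or None if it cannot be loaded."""
    try:
        return importlib.import_module(module_name)
    except ImportError:
        # The package is not installed at all; treat it as optional.
        return None
    except OSError as err:
        # A compiled extension failed to load (assumed here to be the
        # symptom of a CUDA version mismatch); log a hint and continue.
        logger.error("%s could not be loaded: %s. %s", module_name, err, hint)
        return None


# Hypothetical usage: callers check for None before using the module.
torch_scatter = try_import(
    "torch_scatter",
    "Check that the installed build matches your CUDA version.",
)
```

With a guard like this, users who never touch TAPAS are not blocked at import time, while those who do still see a pointer toward the likely cause.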

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/13040/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/13040/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/13040",

"html_url": "https://github.com/huggingface/transformers/pull/13040",

"diff_url": "https://github.com/huggingface/transformers/pull/13040.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/13040.patch",

"merged_at": 1628580575000

} |

https://api.github.com/repos/huggingface/transformers/issues/13039 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/13039/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/13039/comments | https://api.github.com/repos/huggingface/transformers/issues/13039/events | https://github.com/huggingface/transformers/pull/13039 | 963,379,401 | MDExOlB1bGxSZXF1ZXN0NzA2MDAyNDQw | 13,039 | Remove usage of local variables related with model parallel and move … | {

"login": "hyunwoongko",

"id": 38183241,

"node_id": "MDQ6VXNlcjM4MTgzMjQx",

"avatar_url": "https://avatars.githubusercontent.com/u/38183241?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/hyunwoongko",

"html_url": "https://github.com/hyunwoongko",

"followers_url": "https://api.github.com/users/hyunwoongko/followers",

"following_url": "https://api.github.com/users/hyunwoongko/following{/other_user}",

"gists_url": "https://api.github.com/users/hyunwoongko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/hyunwoongko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hyunwoongko/subscriptions",

"organizations_url": "https://api.github.com/users/hyunwoongko/orgs",

"repos_url": "https://api.github.com/users/hyunwoongko/repos",

"events_url": "https://api.github.com/users/hyunwoongko/events{/privacy}",

"received_events_url": "https://api.github.com/users/hyunwoongko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Most of these modifications are encoder-decoder models from Bart's code and encoder models that have token type IDs. As you said, it is difficult to work on all models at once, so I will exclude the case where the model needs to be modified. I also agree that modifying multiple models at the same time makes it difficult to test. First, let's start with one decoder model like GPT-Neo. I will close this PR and upload a new one soon.",

"One more note: besides the dozens of models we also have a template. In this case it's mostly: https://github.com/huggingface/transformers/blob/master/templates/adding_a_new_model/cookiecutter-template-%7B%7Bcookiecutter.modelname%7D%7D/modeling_%7B%7Bcookiecutter.lowercase_modelname%7D%7D.py so when all is happy here, please let's not forget to apply the changes there as well.\r\n",

"I would like an approach that does one model first, so we can clearly comment on the design, then all models after (unless it's very different for each model in which case, similar models by similar models if that makes sense).\r\n\r\nAs for the changes themselves, I would need a clear explanation as to why the `token_type_ids` device needs to be changed from the position_ids device. That kind of code should not be present in the modeling files as is, as people adding or tweaking models won't need/understand it. We can abstract away things in `PreTrainedModel` as you suggest @stas00, that seems like a better approach. Or maybe a method that creates those `token_type_ids` properly, at the very least."

] | 1,628 | 1,628 | 1,628 | CONTRIBUTOR | null | # What does this PR do?

This PR is related to [model parallel integration from Parallelformers](https://github.com/huggingface/transformers/issues/12772).

You can find the details of this PR here: https://github.com/tunib-ai/parallelformers/issues/11#issuecomment-894719918

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@stas00

| {