url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | state_reason | draft | pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/12946 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12946/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12946/comments | https://api.github.com/repos/huggingface/transformers/issues/12946/events | https://github.com/huggingface/transformers/issues/12946 | 956,500,360 | MDU6SXNzdWU5NTY1MDAzNjA= | 12,946 | ImportError: cannot import name 'BigBirdTokenizer' from 'transformers' | {

"login": "zynos",

"id": 8973150,

"node_id": "MDQ6VXNlcjg5NzMxNTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/8973150?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zynos",

"html_url": "https://github.com/zynos",

"followers_url": "https://api.github.com/users/zynos/followers",

"following_url": "https://api.github.com/users/zynos/following{/other_user}",

"gists_url": "https://api.github.com/users/zynos/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zynos/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zynos/subscriptions",

"organizations_url": "https://api.github.com/users/zynos/orgs",

"repos_url": "https://api.github.com/users/zynos/repos",

"events_url": "https://api.github.com/users/zynos/events{/privacy}",

"received_events_url": "https://api.github.com/users/zynos/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The sentencepiece library was missing. ",

"`BigBirdTokenizer` requires a sentencepiece installation, but you should have had that error instead of an import error. This is because the `BigBirdTokenizer` was misplaced in the init, the PR linked above fixes it.",

"I sadly only got the import error, nothing else. An error indicating that sentencepiece is missing is definitely more helpful. Thanks for creating the PR",

"I installed sentencepiece but I got the same error:\r\n\r\n```\r\n!pip install --quiet sentencepiece\r\nfrom transformers import BigBirdTokenizer\r\n```\r\nImportError: cannot import name 'BigBirdTokenizer' from 'transformers' (/usr/local/lib/python3.7/dist-packages/transformers/__init__.py)",

"@MariamDundua what is the version of your transformers package?",

"Hi @zynos @sgugger . I'm using transformers 4.8.0 and have installed sentencepiece. But I'm having same cannot import name 'BigBirdTokenizer' issue. Thanks. ",

"Make sure you use the latest version of Transformers. It should include a clearer error message if the import fails."

] | 1,627 | 1,646 | 1,627 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.9.1

- Platform: windows

- Python version: 3.9

- PyTorch version (GPU?): 1.9 (CPU)

- Tensorflow version (GPU?):

- Using GPU in script?: no

- Using distributed or parallel set-up in script?:

## Information

Model I am using: BigBird

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [X] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

```python
from transformers import BigBirdTokenizer, BigBirdModel

print("hello")
```
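A reply in this thread notes that `BigBirdTokenizer` requires a sentencepiece installation, while the reporter observes that `BigBirdTokenizerFast` imports fine. As a minimal sketch, assuming only that the slow tokenizer depends on the `sentencepiece` package, the helper below checks for that dependency with the standard-library `importlib.util.find_spec` before importing, so a missing package surfaces as an explicit message rather than a bare `cannot import name` error. `missing_modules` and `load_bigbird_tokenizer_class` are hypothetical helper names, not part of Transformers.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found on this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def load_bigbird_tokenizer_class():
    """Hypothetical helper: import BigBirdTokenizer only if sentencepiece is available."""
    missing = missing_modules(["sentencepiece"])
    if missing:
        raise ImportError(
            "BigBirdTokenizer requires: " + ", ".join(missing)
            + " (e.g. `pip install sentencepiece`)"
        )
    # Deferred import: only reached once the dependency check has passed
    from transformers import BigBirdTokenizer

    return BigBirdTokenizer
```

The check runs before `transformers` is touched, so the error message names the missing package directly.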

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

No import error.

Importing **BigBirdTokenizerFast** works without a problem. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12946/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12946/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12945 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12945/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12945/comments | https://api.github.com/repos/huggingface/transformers/issues/12945/events | https://github.com/huggingface/transformers/issues/12945 | 956,096,918 | MDU6SXNzdWU5NTYwOTY5MTg= | 12,945 | Transformers tokenizer pickling issue using hydra and submitit_slurm | {

"login": "asishgeek",

"id": 5291773,

"node_id": "MDQ6VXNlcjUyOTE3NzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/5291773?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/asishgeek",

"html_url": "https://github.com/asishgeek",

"followers_url": "https://api.github.com/users/asishgeek/followers",

"following_url": "https://api.github.com/users/asishgeek/following{/other_user}",

"gists_url": "https://api.github.com/users/asishgeek/gists{/gist_id}",

"starred_url": "https://api.github.com/users/asishgeek/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/asishgeek/subscriptions",

"organizations_url": "https://api.github.com/users/asishgeek/orgs",

"repos_url": "https://api.github.com/users/asishgeek/repos",

"events_url": "https://api.github.com/users/asishgeek/events{/privacy}",

"received_events_url": "https://api.github.com/users/asishgeek/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This has been solved in v4.9, you should upgrade to the latest version of Transformers.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,627 | 1,630 | 1,630 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.8.2

- Platform: Linux-5.4.0-1051-aws-x86_64-with-glibc2.10

- Python version: 3.8.5

- PyTorch version (GPU?): 1.9.0 (False)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No


### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

-->

@LysandreJik

## Information

Model I am using (Bert, XLNet ...): t5

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Run the script using the command:

```
python hf_hydra.py hydra/launcher=submitit_slurm -m
```

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

Code (hf_hydra.py):

```python
import hydra
import logging
# from transformers import AutoTokenizer
import transformers


@hydra.main(config_path=None)
def main(cfg):
    logger = logging.getLogger(__name__)
    # tokenizer = AutoTokenizer.from_pretrained("t5-small")
    tokenizer = transformers.T5Tokenizer.from_pretrained("t5-small")
    logger.info(f"vocab size: {tokenizer.vocab_size}")


if __name__ == '__main__':
    main()
```

Using AutoTokenizer works, but using T5Tokenizer fails with the following error:

```
Traceback (most recent call last):
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/hydra/_internal/utils.py", line 211, in run_and_report
    return func()
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/hydra/_internal/utils.py", line 376, in <lambda>
    lambda: hydra.multirun(
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/hydra/_internal/hydra.py", line 139, in multirun
    ret = sweeper.sweep(arguments=task_overrides)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/hydra/_internal/core_plugins/basic_sweeper.py", line 157, in sweep
    results = self.launcher.launch(batch, initial_job_idx=initial_job_idx)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/hydra_plugins/hydra_submitit_launcher/submitit_launcher.py", line 145, in launch
    jobs = executor.map_array(self, *zip(*job_params))
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/core/core.py", line 631, in map_array
    return self._internal_process_submissions(submissions)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/auto/auto.py", line 213, in _internal_process_submissions
    return self._executor._internal_process_submissions(delayed_submissions)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/slurm/slurm.py", line 313, in _internal_process_submissions
    return super()._internal_process_submissions(delayed_submissions)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/core/core.py", line 749, in _internal_process_submissions
    delayed.dump(pickle_path)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/core/utils.py", line 136, in dump
    cloudpickle_dump(self, filepath)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/submitit/core/utils.py", line 240, in cloudpickle_dump
    cloudpickle.dump(obj, ofile, pickle.HIGHEST_PROTOCOL)
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py", line 55, in dump
    CloudPickler(
  File "/data/home/aghoshal/miniconda/lib/python3.8/site-packages/cloudpickle/cloudpickle_fast.py", line 563, in dump
    return Pickler.dump(self, obj)
TypeError: cannot pickle '_LazyModule' object
```
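A reply in this thread says this was solved in v4.9. The traceback shows the pickler failing on a `_LazyModule` object, and the failing script refers to the `transformers` module as a global inside `main`, whereas the working variant imports the class directly (`from transformers import AutoTokenizer`). We have not checked exactly how v4.9 fixed it. As a general illustration of the technique only, the sketch below gives a module-like object a `__reduce__` method so that `pickle` stores it by its import name and re-imports it on load, instead of trying to serialize the module object itself. `ReimportingModule` is a hypothetical stand-in, not the actual `_LazyModule` class.

```python
import importlib
import pickle
import types


class ReimportingModule(types.ModuleType):
    """Hypothetical module subclass that pickles by reference (its import name)."""

    def __reduce__(self):
        # On unpickling, call importlib.import_module(name) to get the real module
        return (importlib.import_module, (self.__name__,))


# A plain ModuleType instance cannot be pickled; this subclass round-trips by name.
proxy = ReimportingModule("json")
restored = pickle.loads(pickle.dumps(proxy, protocol=pickle.HIGHEST_PROTOCOL))
print(restored.__name__)  # json
```

The unpickled object is the real, already-importable module rather than a copy, which is the usual way to make module references survive serialization.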

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

Job should run and print the vocab size. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12945/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12945/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12944 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12944/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12944/comments | https://api.github.com/repos/huggingface/transformers/issues/12944/events | https://github.com/huggingface/transformers/issues/12944 | 956,067,289 | MDU6SXNzdWU5NTYwNjcyODk= | 12,944 | run_mlm crashes with bookcorpus and --preprocessing_num_workers | {

"login": "shairoz-deci",

"id": 73780196,

"node_id": "MDQ6VXNlcjczNzgwMTk2",

"avatar_url": "https://avatars.githubusercontent.com/u/73780196?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shairoz-deci",

"html_url": "https://github.com/shairoz-deci",

"followers_url": "https://api.github.com/users/shairoz-deci/followers",

"following_url": "https://api.github.com/users/shairoz-deci/following{/other_user}",

"gists_url": "https://api.github.com/users/shairoz-deci/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shairoz-deci/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shairoz-deci/subscriptions",

"organizations_url": "https://api.github.com/users/shairoz-deci/orgs",

"repos_url": "https://api.github.com/users/shairoz-deci/repos",

"events_url": "https://api.github.com/users/shairoz-deci/events{/privacy}",

"received_events_url": "https://api.github.com/users/shairoz-deci/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,627 | 1,630 | 1,630 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.9.0

- Platform: Linux-5.8.0-63-generic-x86_64-with-debian-bullseye-sid

- Python version: 3.7.10

- PyTorch version (GPU?): 1.8.1+cu111 (True)

- Tensorflow version (GPU?): 2.5.0 (False)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: yes

### Who can help

@LysandreJik

Models:

- albert, bert, xlm: @LysandreJik @sgugger @patil-suraj

Library:

- trainer: @sgugger

- pipelines: @LysandreJik

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

## Information

I am trying to train BERT from scratch on wikipedia and bookcorpus using the run_mlm.py example.

As the dataset is large and I am using a strong machine (80 CPU cores, 350GB RAM), I set the `--preprocessing_num_workers` flag to 64 to accelerate preprocessing.

When running on wikipedia or squad as a sanity check, everything works fine. With bookcorpus, however, after the dataset mapping has supposedly completed (all three occurrences), it gets stuck with the message:

`Spawning 64 processes `

for a while and then crashes with

`BrokenPipeError: [Errno 32] Broken pipe`

This does not occur when I drop the `--preprocessing_num_workers` flag, but then preprocessing wiki + bookcorpus takes nearly two days.

I tried changing the Transformers version and upgrading/downgrading the multiprocessing and dill packages, but it didn't help.

The problem arises when using:

* [x] the official example scripts: (give details below)

## To reproduce

Steps to reproduce the behavior:

run:

```
python transformers/examples/pytorch/language-modeling/run_mlm.py --output_dir transformers/trained_models/bert_base --dataset_name bookcorpus --model_type bert --preprocessing_num_workers 64 --tokenizer_name bert-base-uncased --do_train --do_eval --per_device_train_batch_size 16 --overwrite_output_dir --dataloader_num_workers 64 --max_steps 1000000 --learning_rate 1e-4 --warmup_steps 10000 --save_steps 25000 --adam_epsilon 1e-6 --adam_beta1 0.9 --adam_beta2 0.999 --weight_decay 0.0
```

## Expected behavior

Training should begin, as it does when loading wiki and other datasets.

Thanks in advance, | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12944/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12944/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12943 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12943/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12943/comments | https://api.github.com/repos/huggingface/transformers/issues/12943/events | https://github.com/huggingface/transformers/pull/12943 | 956,065,225 | MDExOlB1bGxSZXF1ZXN0Njk5NzIxNjY0 | 12,943 | Moving fill-mask pipeline to new testing scheme | {

"login": "Narsil",

"id": 204321,

"node_id": "MDQ6VXNlcjIwNDMyMQ==",

"avatar_url": "https://avatars.githubusercontent.com/u/204321?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Narsil",

"html_url": "https://github.com/Narsil",

"followers_url": "https://api.github.com/users/Narsil/followers",

"following_url": "https://api.github.com/users/Narsil/following{/other_user}",

"gists_url": "https://api.github.com/users/Narsil/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Narsil/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Narsil/subscriptions",

"organizations_url": "https://api.github.com/users/Narsil/orgs",

"repos_url": "https://api.github.com/users/Narsil/repos",

"events_url": "https://api.github.com/users/Narsil/events{/privacy}",

"received_events_url": "https://api.github.com/users/Narsil/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"@LysandreJik I think it's ready for a 2nd review to check that everything you raised is fixed. I'll go on to the next pipeline after that."

] | 1,627 | 1,628 | 1,628 | CONTRIBUTOR | null | # What does this PR do?

Changes the testing of fill-mask so we can test all supported architectures.

It turns out quite a few architectures are NOT testable (because their reference tokenizers do not include a mask token, and Reformer is a bit tricky to handle too).
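The PR text explains that many architectures cannot be exercised by the fill-mask tests because their reference tokenizers have no mask token. A minimal sketch of that filtering step, assuming only that each tokenizer exposes a `mask_token` attribute that is `None` when unset (as Transformers tokenizers do). The function name and the toy tokenizer objects are hypothetical; this is not the PR's actual test code.

```python
from types import SimpleNamespace
from typing import Dict, List, Tuple


def split_by_mask_token(tokenizers: Dict[str, object]) -> Tuple[List[str], List[str]]:
    """Partition architecture names into (testable, skipped) for a fill-mask test.

    An architecture counts as testable only if its tokenizer defines a mask token.
    """
    testable, skipped = [], []
    for name, tok in sorted(tokenizers.items()):
        # getattr with a default also covers objects lacking the attribute entirely
        if getattr(tok, "mask_token", None) is not None:
            testable.append(name)
        else:
            skipped.append(name)
    return testable, skipped


# Toy stand-ins for real tokenizers (hypothetical objects)
toy = {
    "bert": SimpleNamespace(mask_token="[MASK]"),
    "roberta": SimpleNamespace(mask_token="<mask>"),
    "gpt2": SimpleNamespace(mask_token=None),
}
print(split_by_mask_token(toy))  # (['bert', 'roberta'], ['gpt2'])
```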

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

--> | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12943/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12943/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12943",

"html_url": "https://github.com/huggingface/transformers/pull/12943",

"diff_url": "https://github.com/huggingface/transformers/pull/12943.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12943.patch",

"merged_at": 1628849058000

} |

https://api.github.com/repos/huggingface/transformers/issues/12942 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12942/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12942/comments | https://api.github.com/repos/huggingface/transformers/issues/12942/events | https://github.com/huggingface/transformers/issues/12942 | 955,976,366 | MDU6SXNzdWU5NTU5NzYzNjY= | 12,942 | trainer is not reproducible | {

"login": "jackfeinmann5",

"id": 59409879,

"node_id": "MDQ6VXNlcjU5NDA5ODc5",

"avatar_url": "https://avatars.githubusercontent.com/u/59409879?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jackfeinmann5",

"html_url": "https://github.com/jackfeinmann5",

"followers_url": "https://api.github.com/users/jackfeinmann5/followers",

"following_url": "https://api.github.com/users/jackfeinmann5/following{/other_user}",

"gists_url": "https://api.github.com/users/jackfeinmann5/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jackfeinmann5/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jackfeinmann5/subscriptions",

"organizations_url": "https://api.github.com/users/jackfeinmann5/orgs",

"repos_url": "https://api.github.com/users/jackfeinmann5/repos",

"events_url": "https://api.github.com/users/jackfeinmann5/events{/privacy}",

"received_events_url": "https://api.github.com/users/jackfeinmann5/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The average training loss is indeed not saved and thus you will have a different one restarting from a checkpoint. It's also not a useful metric in most cases, which is why we don't bother. You will notice however that your eval BLEU is exactly the same, so the training yielded the same model at the end.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,627 | 1,630 | 1,630 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.9.1

- Platform: linux

- Python version: 3.7

- PyTorch version (GPU?): 1.9

- Tensorflow version (GPU?): -

- Using GPU in script?: -

- Using distributed or parallel set-up in script?: -

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @Rocketknight1

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

Model hub:

- for issues with a model report at https://discuss.huggingface.co/ and tag the model's creator.

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

trainer: @sgugger

## Information

I am using the T5-small model and testing the original run_translation.py script [1] for reproducibility when training has to be restarted from previously saved checkpoints (I only have access to GPUs for short periods, so I need to restart training).

## To reproduce

Steps to reproduce the behavior:

1) Run this command:

```

python run_translation.py --model_name_or_path t5-small --do_train --do_eval --source_lang en --target_lang ro --source_prefix "translate English to Romanian: " --dataset_name wmt16 --dataset_config_name ro-en --output_dir /temp/jack/tst-translation --per_device_train_batch_size=4 --per_device_eval_batch_size=4 --overwrite_output_dir --predict_with_generate --max_steps 100 --eval_step 10 --evaluation_strategy steps --max_train_samples 100 --max_eval_samples 100 --save_total_limit 1 --load_best_model_at_end --metric_for_best_model bleu --greater_is_better true

```

Then interrupt the run at this point:

```

{'eval_loss': 1.3589547872543335, 'eval_bleu': 10.9552, 'eval_gen_len': 18.05, 'eval_runtime': 4.0518, 'eval_samples_per_second': 24.68, 'eval_steps_per_second': 6.17, 'epoch': 0.8}

20%|██████████████████████████████▍ | 20/100 [00:11<00:21, 3.70it/s[INFO|trainer.py:1919] 2021-07-29 17:22:43,852 >> Saving model checkpoint to /temp/jack/tst-translation/checkpoint-20

[INFO|configuration_utils.py:379] 2021-07-29 17:22:43,857 >> Configuration saved in /temp/jack/tst-translation/checkpoint-20/config.json

[INFO|modeling_utils.py:997] 2021-07-29 17:22:44,351 >> Model weights saved in /temp/jack/tst-translation/checkpoint-20/pytorch_model.bin

[INFO|tokenization_utils_base.py:2006] 2021-07-29 17:22:44,355 >> tokenizer config file saved in /temp/jack/tst-translation/checkpoint-20/tokenizer_config.json

[INFO|tokenization_utils_base.py:2012] 2021-07-29 17:22:44,357 >> Special tokens file saved in /temp/jack/tst-translation/checkpoint-20/special_tokens_map.json

29%|████████████████████████████████████████████ | 29/100 [00:14<00:22, 3.20it/s][INFO|trainer.py:2165] 2021-07-29 17:22:46,444 >> ***** Running Evaluation *****

[INFO|trainer.py:2167] 2021-07-29 17:22:46,444 >> Num examples = 100

[INFO|trainer.py:2170] 2021-07-29 17:22:46,444 >> Batch size = 4

```

Interrupt the run here as well:

```

{'eval_loss': 1.3670727014541626, 'eval_bleu': 10.9234, 'eval_gen_len': 18.01, 'eval_runtime': 3.9468, 'eval_samples_per_second': 25.337, 'eval_steps_per_second': 6.334, 'epoch': 2.4}

[INFO|trainer.py:1919] 2021-07-29 17:24:01,570 >> Saving model checkpoint to /temp/jack/tst-translation/checkpoint-60

[INFO|configuration_utils.py:379] 2021-07-29 17:24:01,576 >> Configuration saved in /temp/jack/tst-translation/checkpoint-60/config.json | 60/100 [00:23<00:11, 3.42it/s]

[INFO|modeling_utils.py:997] 2021-07-29 17:24:02,197 >> Model weights saved in /temp/jack/tst-translation/checkpoint-60/pytorch_model.bin

[INFO|tokenization_utils_base.py:2006] 2021-07-29 17:24:02,212 >> tokenizer config file saved in /temp/jack/tst-translation/checkpoint-60/tokenizer_config.json

[INFO|tokenization_utils_base.py:2012] 2021-07-29 17:24:02,218 >> Special tokens file saved in /temp/jack/tst-translation/checkpoint-60/special_tokens_map.json

[INFO|trainer.py:1995] 2021-07-29 17:24:03,216 >> Deleting older checkpoint [/temp/jack/tst-translation/checkpoint-50] due to args.save_total_limit

[INFO|trainer.py:2165] 2021-07-29 17:24:03,810 >> ***** Running Evaluation *****██████████████████████████████▉ | 69/100 [00:26<00:09, 3.37it/s]

[INFO|trainer.py:2167] 2021-07-29 17:24:03,810 >> Num examples = 100

[INFO|trainer.py:2170] 2021-07-29 17:24:03,810 >> Batch size = 4

```

Interrupt the run here, then resume it and let it run to the end:

```

final train metrics

***** train metrics *****

epoch = 4.0

train_loss = 0.1368

train_runtime = 0:00:27.13

train_samples = 100

train_samples_per_second = 14.741

train_steps_per_second = 3.685

07/29/2021 17:25:08 - INFO - __main__ - *** Evaluate ***

[INFO|trainer.py:2165] 2021-07-29 17:25:08,774 >> ***** Running Evaluation *****

[INFO|trainer.py:2167] 2021-07-29 17:25:08,774 >> Num examples = 100

[INFO|trainer.py:2170] 2021-07-29 17:25:08,774 >> Batch size = 4

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 25/25 [00:08<00:00, 2.92it/s]

***** eval metrics *****

epoch = 4.0

eval_bleu = 24.3863

eval_gen_len = 32.84

eval_loss = 1.3565

eval_runtime = 0:00:09.08

eval_samples = 100

eval_samples_per_second = 11.005

eval_steps_per_second = 2.751

```

The final metrics when running without interruptions:

```

***** train metrics *****

epoch = 4.0

train_loss = 0.3274

train_runtime = 0:01:04.19

train_samples = 100

train_samples_per_second = 6.231

train_steps_per_second = 1.558

07/29/2021 17:00:12 - INFO - __main__ - *** Evaluate ***

[INFO|trainer.py:2165] 2021-07-29 17:00:12,315 >> ***** Running Evaluation *****

[INFO|trainer.py:2167] 2021-07-29 17:00:12,315 >> Num examples = 100

[INFO|trainer.py:2170] 2021-07-29 17:00:12,315 >> Batch size = 4

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 25/25 [00:08<00:00, 2.97it/s]

***** eval metrics *****

epoch = 4.0

eval_bleu = 24.3863

eval_gen_len = 32.84

eval_loss = 1.3565

eval_runtime = 0:00:08.95

eval_samples = 100

eval_samples_per_second = 11.164

eval_steps_per_second = 2.791

```

The training loss differs between the two runs: 0.1368 with the break versus 0.3274 without it, even though the evaluation metrics are identical.
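One possible explanation, offered only as an assumption and not as a confirmed diagnosis: if the reported `train_loss` sums the per-step losses of the current session only, but divides that sum by the total global step count (which includes the steps restored from the checkpoint), a resumed run reports a lower average even when every step's loss is identical. The sketch below models that hypothetical accounting; the function names and the per-step losses are made up for illustration and are not values from these runs.

```python
from typing import Sequence


def true_mean_loss(step_losses: Sequence[float]) -> float:
    """Mean loss over every optimizer step, regardless of any interruption."""
    return sum(step_losses) / len(step_losses)


def reported_train_loss(step_losses: Sequence[float], resumed_at_step: int = 0) -> float:
    """Hypothetical accounting: only losses from steps run in the current session are
    summed, but the sum is divided by the total global step count (restored + new)."""
    total_steps = len(step_losses)
    if not 0 <= resumed_at_step < total_steps:
        raise ValueError("resumed_at_step must be in [0, total_steps)")
    session_sum = sum(step_losses[resumed_at_step:])  # steps before the break are not summed
    return session_sum / total_steps


losses = [0.5] * 100  # made-up: 100 optimizer steps with a constant loss of 0.5
print(true_mean_loss(losses))                           # 0.5
print(reported_train_loss(losses, resumed_at_step=60))  # 0.2
```

Under this assumption the training metric alone is not a reliable way to compare the two runs; the evaluation metrics, which match exactly here, are the more meaningful check that resuming reproduces the uninterrupted run.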

I would appreciate it if you could take a look, as I need this to work to be able to use the great Hugging Face code. Thank you and your colleagues very much for the second-to-none work you are doing.

## Expected behavior

The training loss should be the same whether the code is trained without any break or with a break in between. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12942/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12942/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12941 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12941/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12941/comments | https://api.github.com/repos/huggingface/transformers/issues/12941/events | https://github.com/huggingface/transformers/issues/12941 | 955,959,361 | MDU6SXNzdWU5NTU5NTkzNjE= | 12,941 | OSError: Can't load config for 'bert-base-uncased | {

"login": "WinMinTun",

"id": 22287008,

"node_id": "MDQ6VXNlcjIyMjg3MDA4",

"avatar_url": "https://avatars.githubusercontent.com/u/22287008?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/WinMinTun",

"html_url": "https://github.com/WinMinTun",

"followers_url": "https://api.github.com/users/WinMinTun/followers",

"following_url": "https://api.github.com/users/WinMinTun/following{/other_user}",

"gists_url": "https://api.github.com/users/WinMinTun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/WinMinTun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/WinMinTun/subscriptions",

"organizations_url": "https://api.github.com/users/WinMinTun/orgs",

"repos_url": "https://api.github.com/users/WinMinTun/repos",

"events_url": "https://api.github.com/users/WinMinTun/events{/privacy}",

"received_events_url": "https://api.github.com/users/WinMinTun/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Was it just a fluke or is the issue still happening? On Colab I have no problem downloading that model.",

"@sgugger Hi it is still happening now. Not just me, many people I know of. I can access the config file from browser, but not through the code. Thanks",

"Still not okay online, but I managed to do it locally\r\n\r\ngit clone https://huggingface.co/bert-base-uncased\r\n\r\n#model = AutoModelWithHeads.from_pretrained(\"bert-base-uncased\")\r\nmodel = AutoModelWithHeads.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)\r\n\r\n#tokenizer = AutoTokenizer.from_pretrained(\"bert-base-uncased\")\r\ntokenizer = AutoTokenizer.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)\r\n\r\nadapter_name = model2.load_adapter(localpath, config=config, model_name=BERT_LOCAL_PATH)",

"This, like #12940, is probably related to a change we've made on the infra side (cc @n1t0), which we'll partially revert. Please let us know if this still occurs.",

"@WinMinTun Could you share a small collab that reproduces the bug? I'd like to have a look at it.",

"With additional testing, I've found that this issue only occurs with adapter-tranformers, the AdapterHub.ml modified version of the transformers module. With the HuggingFace module, we can pull pretrained weights without issue.\r\n\r\nUsing adapter-transformers this is now working again from Google Colab, but is still failing locally and from servers running in AWS. Interestingly, with adapter-transformers I get a 403 even if I try to load a nonexistent model (e.g. fake-model-that-should-fail). I would expect this to fail with a 401, as there is no corresponding config.json on huggingface.co. The fact that it fails with a 403 seems to indicate that something in front of the web host is rejecting the request before the web host has a change to respond with a not found error.",

"Thanks so much @jason-weddington. This will help us pinpoint the issue. (@n1t0 @Pierrci)",

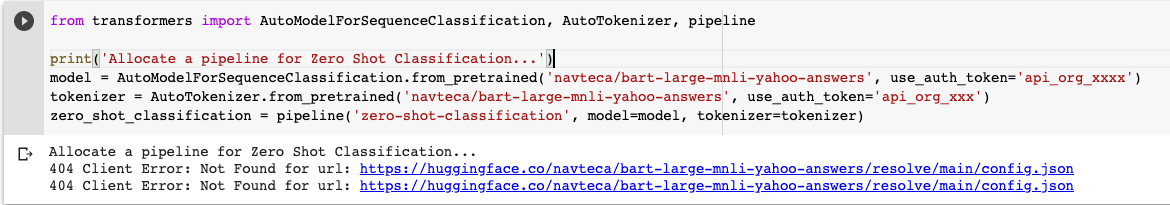

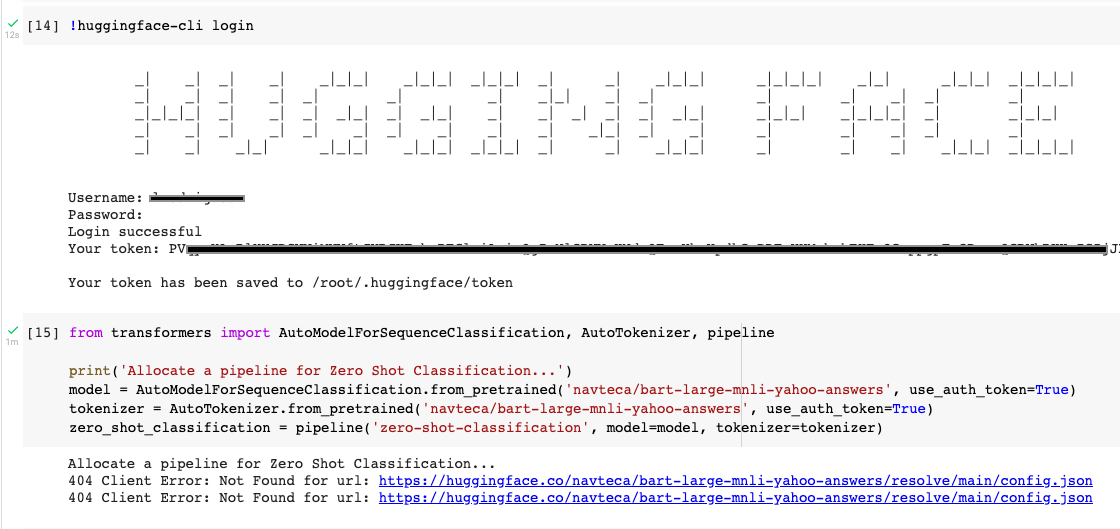

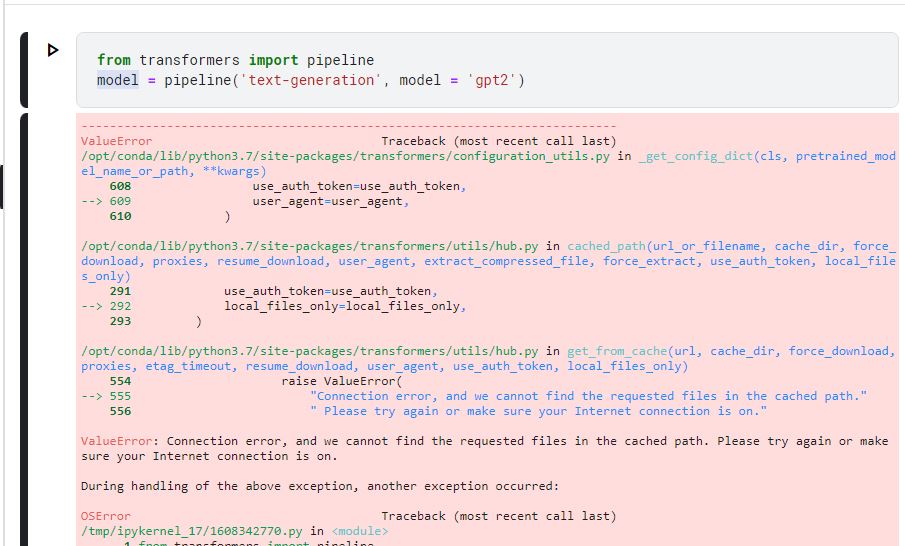

"I have the same problem, but it only happens when the model is private.\r\n\r\n\r\n",

"Your token for `use_auth_token` is not the same as your API token. The easiest way to get it is to login with `!huggingface-cli login` and then just pass `use_auth_token=True`.",

"I think the problem is something else:\r\n\r\n\r\n",

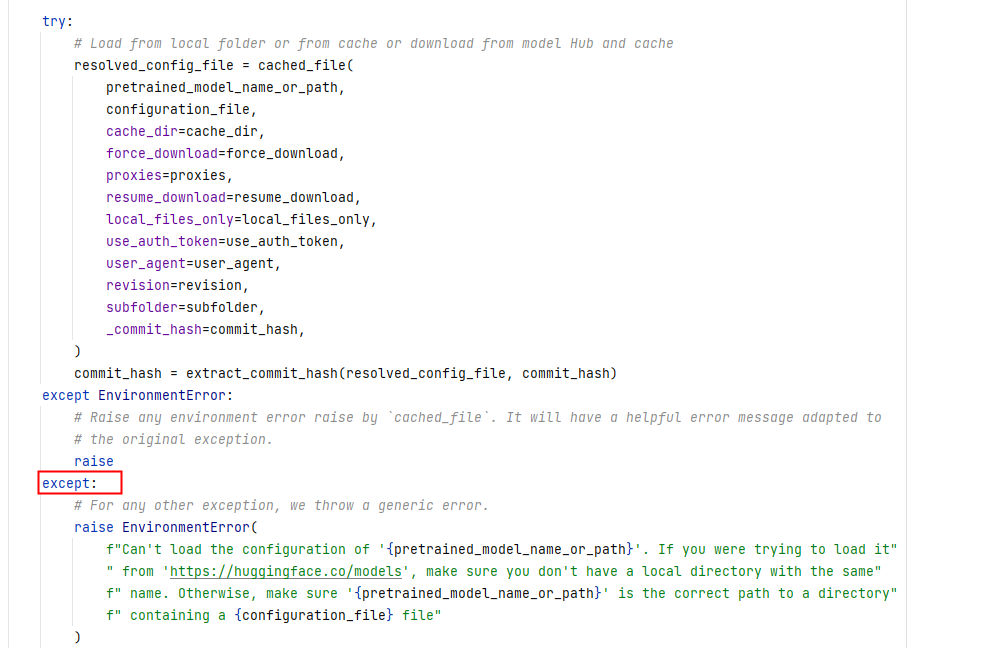

"Yes, I have come across this as well. I have tracked it down to this line\r\n\r\nhttps://github.com/huggingface/transformers/blob/143738214cb83e471f3a43652617c8881370342c/src/transformers/pipelines/__init__.py#L422\r\n\r\nIt's because the `use_auth_token` has not been set up early enough in the model_kwargs. The line referenced above needs to be moved above instantiate config section. \r\n\r\n",

"I've added a pull request to which I think will fix this issue. You can get round it for now by adding `use_auth_token` to the model_kwargs param when creating a pipeline e.g.:\r\n`pipeline('zero-shot-classification', model=model, tokenizer=tokenizer, model_kwargs={'use_auth_token': True})`",

"Still getting the same error \r\nHere is my code : \r\n```\r\nfrom transformers import AutoModelForTokenClassification, AutoTokenizer\r\nmodel = AutoModelForTokenClassification.from_pretrained(\"hemangjoshi37a/autotrain-ratnakar_600_sample_test-1427753567\", use_auth_token=True)\r\ntokenizer = AutoTokenizer.from_pretrained(\"hemangjoshi37a/autotrain-ratnakar_600_sample_test-1427753567\", use_auth_token=True)\r\ninputs = tokenizer(\"I love AutoTrain\", return_tensors=\"pt\")\r\noutputs = model(**inputs)\r\n```\r\nError :\r\n```\r\n----> 3 model = AutoModelForTokenClassification.from_pretrained(\"hemangjoshi37a/autotrain-ratnakar_600_sample_test-1427753567\", use_auth_token=True)\r\nOSError: Can't load config for 'hemangjoshi37a/autotrain-ratnakar_600_sample_test-1427753567'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'hemangjoshi37a/autotrain-ratnakar_600_sample_test-1427753567' is the correct path to a directory containing a config.json file\r\n```\r\n\r\nI have transformers version : `4.21.3`\r\nhttps://hjlabs.in",

"runnign this command and authenticating it solved issue: `huggingface-cli login`\r\nhttps://hjlabs.in",

"I am facing the same problem in Kaggle too... How can I\r\n\r\n resolve this issue ?",

"Hello, I had the same problem when using transformers - pipeline in the aws-sagemaker notebook.\r\n\r\nI started to think it was the version or the network problem. But, after some local tests, this guess is wrong. So, I just debug the source code. I find that:\r\n\r\nThis will raise any error as EnviromentError. So, from experience, I solve it, by running this pip:\r\n!pip install --upgrade jupyter\r\n!pip install --upgrade ipywidgets\r\n\r\nYou guys can try it when meeting the problem in aws-notebook or colab!",

"\r\n\r\n\r\nI am unable to solve this issues Since Morning .. i had been trying to Solve it ... \r\n\r\nIm working on my Final Year Project .. can someone pls help me in it ...",

"Just ask chatGPT LOL...😂😂",

"I dont understand it ?? What do u mean ..\r\nThe Hugging Face Website is also not working ...",

"@VRDJ goto this website [chatGPT](chat.openai.com) and enter your error in the chatbox in this website and for the 99% you will get your solution there.",

"> Still not okay online, but I managed to do it locally\r\n> \r\n> git clone https://huggingface.co/bert-base-uncased\r\n> \r\n> #model = AutoModelWithHeads.from_pretrained(\"bert-base-uncased\") model = AutoModelWithHeads.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)\r\n> \r\n> #tokenizer = AutoTokenizer.from_pretrained(\"bert-base-uncased\") tokenizer = AutoTokenizer.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)\r\n> \r\n> adapter_name = model2.load_adapter(localpath, config=config, model_name=BERT_LOCAL_PATH)\r\n-------------------\r\n\r\nHello! Thanks for your sharing. I wonder in \r\n'tokenizer = AutoTokenizer.from_pretrained(BERT_LOCAL_PATH, local_files_only=True)', \r\nwhich file does 'BERT_LOCAL_PATH' refer to specifically? Is it the path for the directory 'bert-base-uncased', or the 'pytorch_model.bin', or something else?"

] | 1,627 | 1,681 | 1,630 | NONE | null | ## Environment info

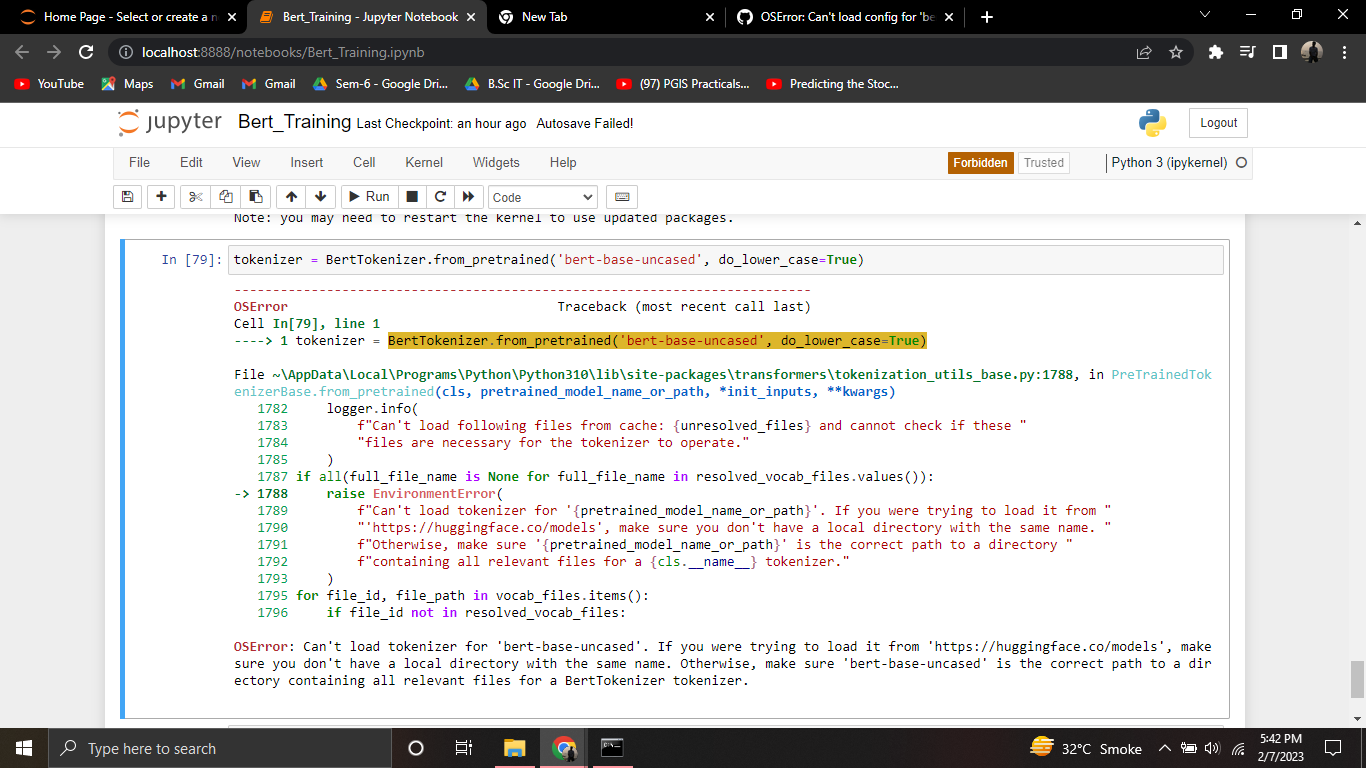

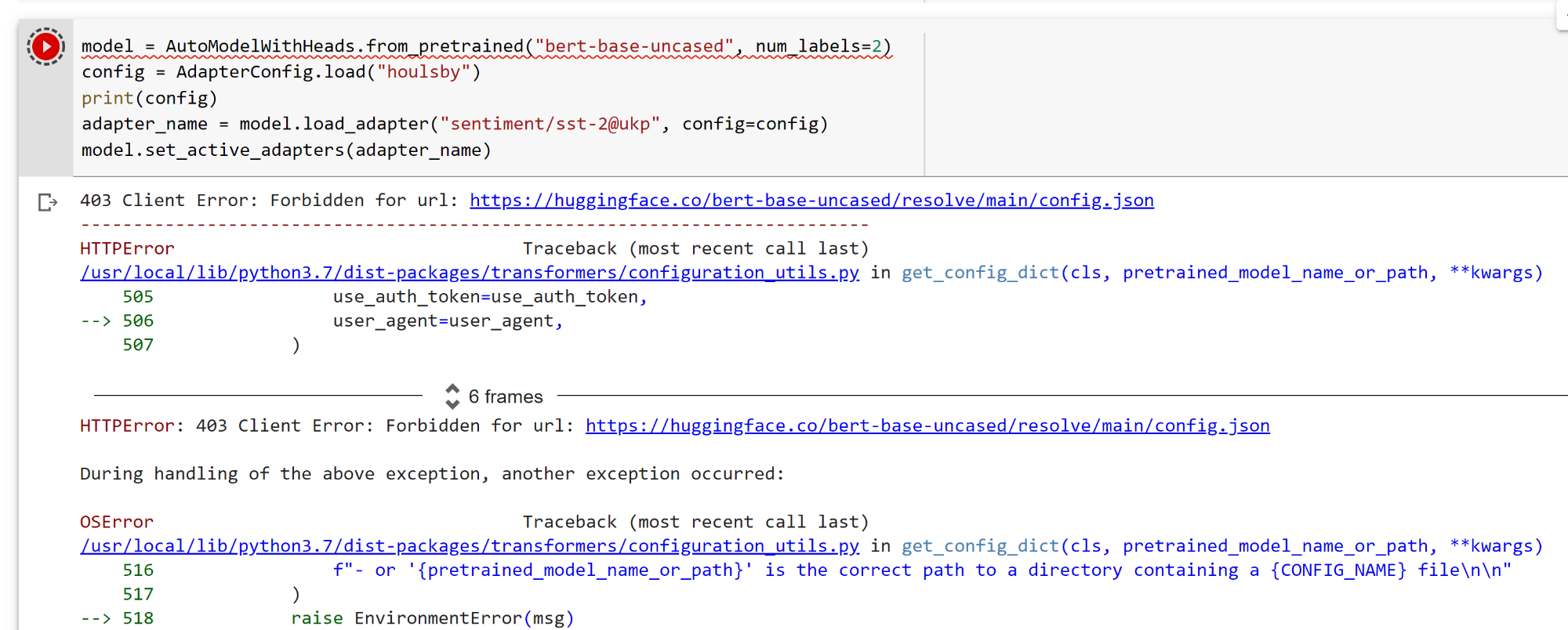

It happens on my local machine, on Colab, and for my colleagues as well.

- `transformers` version:

- Platform: Windows, Colab

- Python version: 3.7

- PyTorch version (GPU?): 1.8.1 (GPU yes)

- Tensorflow version (GPU?):

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@LysandreJik It has to do with 'bert-base-uncased'

## Information

Hi, I suddenly started getting this error this afternoon; everything had worked fine for days before that. It happens on my local machine, on Colab, and for my colleagues as well. I can open this file in the browser without any problem: https://huggingface.co/bert-base-uncased/resolve/main/config.json. By the way, I'm in Singapore. Any urgent help would be appreciated, because I'm rushing a project and am stuck.
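To check whether the failure comes from the HTTP request itself rather than from `transformers`, a minimal sketch like the following requests the same kind of config URL shown in the error (the `https://huggingface.co/{repo}/resolve/{revision}/{filename}` pattern is read off that URL) and reports the status code. The helper names and the status-code hints are illustrative assumptions, not part of any library.

```python
import urllib.error
import urllib.request

HUB_URL = "https://huggingface.co"


def config_url(repo_id: str, revision: str = "main", filename: str = "config.json") -> str:
    """Build a download URL following the pattern seen in the traceback."""
    return f"{HUB_URL}/{repo_id}/resolve/{revision}/{filename}"


def explain_status(code: int) -> str:
    """Rough, illustrative reading of common HTTP status codes for this URL."""
    hints = {
        200: "reachable: the problem is probably on the client side (cache, proxy, library)",
        401: "unauthorized: the repo may be private or may not exist",
        403: "forbidden: something in front of the server rejected the request (e.g. a firewall)",
        404: "not found: check the repo id, revision and filename",
    }
    return hints.get(code, f"unexpected status {code}")


def probe(url: str, timeout: float = 10.0) -> int:
    """GET the URL and return its HTTP status code (requires network access)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses are raised as HTTPError by urllib
```

For example, `status = probe(config_url("bert-base-uncased"))` followed by `print(status, explain_status(status))` should show 403 and the firewall hint if requests from that machine are still being rejected the way the traceback shows.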

Thanks

403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

---------------------------------------------------------------------------

HTTPError Traceback (most recent call last)

/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)

505 use_auth_token=use_auth_token,

--> 506 user_agent=user_agent,

507 )

6 frames

HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

During handling of the above exception, another exception occurred:

OSError Traceback (most recent call last)

/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)

516 f"- or '{pretrained_model_name_or_path}' is the correct path to a directory containing a {CONFIG_NAME} file\n\n"

517 )

--> 518 raise EnvironmentError(msg)

519

520 except json.JSONDecodeError:

OSError: Can't load config for 'bert-base-uncased'. Make sure that:

- 'bert-base-uncased' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'bert-base-uncased' is the correct path to a directory containing a config.json file | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12941/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12941/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12940 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12940/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12940/comments | https://api.github.com/repos/huggingface/transformers/issues/12940/events | https://github.com/huggingface/transformers/issues/12940 | 955,950,852 | MDU6SXNzdWU5NTU5NTA4NTI= | 12,940 | Starting today, I get an error downloading pre-trained models | {

"login": "jason-weddington",

"id": 7495045,

"node_id": "MDQ6VXNlcjc0OTUwNDU=",

"avatar_url": "https://avatars.githubusercontent.com/u/7495045?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jason-weddington",

"html_url": "https://github.com/jason-weddington",

"followers_url": "https://api.github.com/users/jason-weddington/followers",

"following_url": "https://api.github.com/users/jason-weddington/following{/other_user}",

"gists_url": "https://api.github.com/users/jason-weddington/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jason-weddington/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jason-weddington/subscriptions",

"organizations_url": "https://api.github.com/users/jason-weddington/orgs",

"repos_url": "https://api.github.com/users/jason-weddington/repos",

"events_url": "https://api.github.com/users/jason-weddington/events{/privacy}",

"received_events_url": "https://api.github.com/users/jason-weddington/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi @jason-weddington, are you calling those URLs from any particular workload or infrastructure?\r\n\r\nThe only reason I can see where you would get a 403 on this URL is if your usage triggers our infra's firewall. Would you mind contacting us at `expert-acceleration at huggingface.co` so we can take a look?",

"Thanks, I'll email you. I'm running this in a notebook on my desktop, using my home internet connection, but we're also seeing this in Google Colab. The issue just stated today.",

"This is working again, thanks for the help."

] | 1,627 | 1,627 | 1,627 | NONE | null | ## Environment info


- `transformers` version: 2.1.1

- Platform: Linux-5.8.0-63-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyTorch version (GPU?): 1.9.0+cu111 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no

## Information

Model I am using (Bert, XLNet ...):

roberta-base, but this is currently an issue with all models

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

- downloading pre-trained models is currently failing; this seems to have started just in the last day

## To reproduce

Steps to reproduce the behavior:

1. attempt to load any pre-trained model from HuggingFace (code below)

This code:

`generator = pipeline("text-generation", model="bert-base-uncased")`

Generates this error:

403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

---------------------------------------------------------------------------

HTTPError Traceback (most recent call last)

...

OSError: Can't load config for 'bert-base-uncased'. Make sure that:

- 'bert-base-uncased' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'bert-base-uncased' is the correct path to a directory containing a config.json file

## Expected behavior

I expect the pre-trained model to be downloaded. This issue just started today.
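Until downloads work again, the workaround from the `bert-base-uncased` thread above (cloning the model repository and loading it with `local_files_only=True`) can be wrapped in a small fallback. This is a minimal sketch assuming a loader with a `from_pretrained`-style signature; `load_with_fallback`, `fake_loader`, and the `./bert-base-uncased` path are hypothetical, and the fake loader exists only so the sketch runs offline.

```python
from typing import Any, Callable


def load_with_fallback(loader: Callable[..., Any], model_id: str, local_dir: str) -> Any:
    """Try the Hub id first; if that raises OSError (as in "Can't load config for ..."),
    load from a local clone instead, without touching the network."""
    try:
        return loader(model_id)
    except OSError:
        # If this also fails, Python chains both errors in the traceback.
        return loader(local_dir, local_files_only=True)


def fake_loader(name: str, local_files_only: bool = False) -> str:
    """Hypothetical stand-in for a from_pretrained-style loader whose Hub download fails."""
    if not local_files_only:
        raise OSError(f"Can't load config for '{name}'")
    return f"loaded from {name}"


print(load_with_fallback(fake_loader, "bert-base-uncased", "./bert-base-uncased"))
# loaded from ./bert-base-uncased
```

With `transformers` installed, a real loader such as `AutoConfig.from_pretrained` or `AutoModel.from_pretrained` could be passed instead, since both accept `local_files_only`; the local directory would then be the one created by `git clone https://huggingface.co/bert-base-uncased`, with Git LFS installed so the weight files are actually downloaded.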

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12940/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12940/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12939 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12939/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12939/comments | https://api.github.com/repos/huggingface/transformers/issues/12939/events | https://github.com/huggingface/transformers/pull/12939 | 955,946,165 | MDExOlB1bGxSZXF1ZXN0Njk5NjIxMDE3 | 12,939 | Fix from_pretrained with corrupted state_dict | {

"login": "sgugger",

"id": 35901082,

"node_id": "MDQ6VXNlcjM1OTAxMDgy",

"avatar_url": "https://avatars.githubusercontent.com/u/35901082?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sgugger",

"html_url": "https://github.com/sgugger",

"followers_url": "https://api.github.com/users/sgugger/followers",

"following_url": "https://api.github.com/users/sgugger/following{/other_user}",

"gists_url": "https://api.github.com/users/sgugger/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sgugger/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sgugger/subscriptions",

"organizations_url": "https://api.github.com/users/sgugger/orgs",

"repos_url": "https://api.github.com/users/sgugger/repos",

"events_url": "https://api.github.com/users/sgugger/events{/privacy}",

"received_events_url": "https://api.github.com/users/sgugger/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The test caught something weird with `sshleifer/tiny-distilbert-base-uncased-finetuned-sst-2-english` (another plus for this PR in my opinion!)\r\n\r\nThis model is used in the benchmark tests and in the zero shot pipeline but that model is beyond salvation: its weights have the names of BERT (in the keys) when it's a DistilBERT architecture, the number of labels of the config don't match the weights, the embedding size of the weights does not match the vocab size of the tokenzier or the embedding size in the config... \r\nLoading it for now just results in a random model (silently) since none of the weights can't be loaded.\r\n\r\nTo fix this, I created a new tiny random model following the same kind of config as `sshleifer/tiny-distilbert-base-uncased-finetuned-sst-2-english` (but not messed up) and stored it in `sgugger/tiny-distilbert-classification`.",

"I'll address @patrickvonplaten 's remarks regarding a more general refactor of the method to clean the code later on, merging this PR in the meantime."

] | 1,627 | 1,628 | 1,628 | COLLABORATOR | null | # What does this PR do?

As we discovered in #12843, when a state dictionary contains keys for the body of the model that are not prefixed *and* keys for the head, the body is loaded but the head is ignored with no warning.
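A minimal sketch of how such a checkpoint can be detected from key names alone, written as standalone Python. The function name, the `bert` prefix, and the example keys are hypothetical; this is an illustration of the idea, not the code from this PR.

```python
from typing import Iterable


def check_body_only_load(loaded_keys: Iterable[str], expected_keys: Iterable[str], prefix: str) -> bool:
    """Return True if only the base model (body) would be loaded.

    Raise ValueError when the checkpoint's body keys lack the prefix but it also
    contains head keys, which would otherwise be silently ignored.
    """
    loaded = set(loaded_keys)
    expected = set(expected_keys)
    has_prefix = any(k.startswith(prefix + ".") for k in loaded)
    expects_prefix = any(k.startswith(prefix + ".") for k in expected)
    body_only = expects_prefix and not has_prefix
    if body_only:
        # Keys the model expects outside the base model, i.e. the head.
        head_keys = {k for k in expected if not k.startswith(prefix + ".")}
        found = sorted(head_keys & loaded)
        if found:
            raise ValueError(f"Checkpoint mixes unprefixed body keys with head keys: {found}")
    return body_only
```

A checkpoint saved from the full model passes through unchanged, a base-model checkpoint without head keys is loaded into the body as before, and only the mixed case raises.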

This PR fixes that by keeping track of the expected keys that do not contain the prefix, and erroring out if we load only the body of the model while the state dictionary contains keys from that list of expected keys without the prefix. I chose to raise an error because such state dictionaries should not exist: neither `from_pretrained` nor `torch.save(model.state_dict())` generates them. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12939/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12939/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12939",

"html_url": "https://github.com/huggingface/transformers/pull/12939",

"diff_url": "https://github.com/huggingface/transformers/pull/12939.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12939.patch",

"merged_at": 1628070519000

} |

https://api.github.com/repos/huggingface/transformers/issues/12938 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12938/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12938/comments | https://api.github.com/repos/huggingface/transformers/issues/12938/events | https://github.com/huggingface/transformers/pull/12938 | 955,878,521 | MDExOlB1bGxSZXF1ZXN0Njk5NTYzNjU4 | 12,938 | Add CpmTokenizerFast | {

"login": "JetRunner",

"id": 22514219,

"node_id": "MDQ6VXNlcjIyNTE0MjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/22514219?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/JetRunner",

"html_url": "https://github.com/JetRunner",

"followers_url": "https://api.github.com/users/JetRunner/followers",

"following_url": "https://api.github.com/users/JetRunner/following{/other_user}",

"gists_url": "https://api.github.com/users/JetRunner/gists{/gist_id}",

"starred_url": "https://api.github.com/users/JetRunner/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/JetRunner/subscriptions",

"organizations_url": "https://api.github.com/users/JetRunner/orgs",

"repos_url": "https://api.github.com/users/JetRunner/repos",

"events_url": "https://api.github.com/users/JetRunner/events{/privacy}",

"received_events_url": "https://api.github.com/users/JetRunner/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"> I don't think the fast tokenizer as it's written works for now, as the fast tokenizer do not call the `_tokenize` method.\n\nOops! It looks the old pull request isn't right. I'll take a closer look",

"@sgugger I've updated and tested it. It works fine - only needs to wait for the `tokenizer.json` to be uploaded.",

"Tokenizer file uploaded. Merging it."

] | 1,627 | 1,627 | 1,627 | CONTRIBUTOR | null | # What does this PR do?

Add a fast version of `CpmTokenizer`
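As noted in the review above, the fast tokenizer does not call `_tokenize`, so any pre-processing done there has to be reproduced some other way. A minimal sketch of that kind of pre-processing, assuming for illustration that spaces and newlines inside word segments are mapped to the placeholder characters U+2582 and U+2583, the segments are joined with spaces, and the mapping is reversed on decoding. In practice the segments would come from a Chinese word segmenter; here they are passed in directly, and the function names are hypothetical.

```python
from typing import Iterable

# Assumed placeholder characters: U+2582 stands for a space, U+2583 for a newline.
TRANSLATOR = str.maketrans(" \n", "\u2582\u2583")


def pre_tokenize(segments: Iterable[str]) -> str:
    """Replace spaces/newlines inside each word segment, then join segments with spaces
    so that a whitespace-based subword model sees the segment boundaries."""
    return " ".join(segment.translate(TRANSLATOR) for segment in segments)


def post_decode(text: str) -> str:
    """Undo pre_tokenize: drop the joining spaces, then restore spaces and newlines."""
    return text.replace(" ", "").replace("\u2582", " ").replace("\u2583", "\n")


segments = ["你好", " ", "世界", "\n"]  # e.g. the output of a word segmenter (hypothetical)
encoded = pre_tokenize(segments)
print(post_decode(encoded) == "".join(segments))  # True
```

Because both steps live outside the subword model, a fast tokenizer has to apply them around its own encode and decode calls rather than relying on `_tokenize`.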

Fixes #12837 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12938/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12938/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12938",

"html_url": "https://github.com/huggingface/transformers/pull/12938",

"diff_url": "https://github.com/huggingface/transformers/pull/12938.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12938.patch",

"merged_at": 1627585516000

} |

https://api.github.com/repos/huggingface/transformers/issues/12937 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12937/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12937/comments | https://api.github.com/repos/huggingface/transformers/issues/12937/events | https://github.com/huggingface/transformers/issues/12937 | 955,717,183 | MDU6SXNzdWU5NTU3MTcxODM= | 12,937 | Not able use TF Dataset on TPU when created via generator in Summarization example | {

"login": "prikmm",

"id": 47216475,

"node_id": "MDQ6VXNlcjQ3MjE2NDc1",

"avatar_url": "https://avatars.githubusercontent.com/u/47216475?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/prikmm",

"html_url": "https://github.com/prikmm",

"followers_url": "https://api.github.com/users/prikmm/followers",

"following_url": "https://api.github.com/users/prikmm/following{/other_user}",

"gists_url": "https://api.github.com/users/prikmm/gists{/gist_id}",

"starred_url": "https://api.github.com/users/prikmm/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/prikmm/subscriptions",

"organizations_url": "https://api.github.com/users/prikmm/orgs",

"repos_url": "https://api.github.com/users/prikmm/repos",

"events_url": "https://api.github.com/users/prikmm/events{/privacy}",

"received_events_url": "https://api.github.com/users/prikmm/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"cc @Rocketknight1 ",

"Hi, I'm sorry for the slow response here! It does seem like an upstream bug, but we'll hopefully be supporting TF 2.6 in the next release. I'm also working on a refactor of the examples using a new data pipeline, so I'll test TPU training with this example when that's implemented to make sure it's working then.",

"> Hi, I'm sorry for the slow response here! It does seem like an upstream bug, but we'll hopefully be supporting TF 2.6 in the next release. I'm also working on a refactor of the examples using a new data pipeline, so I'll test TPU training with this example when that's implemented to make sure it's working then.\r\n\r\n@Rocketknight1 Ohh alright. I will keep this issue open for now since it is not yet solved just incase someone needs it. Eagerly waiting for increased TensorFlow support. :smiley:",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,627 | 1,633 | 1,633 | NONE | null | ## Environment info


- `transformers` version: 4.9.1

- Platform: Kaggle/Colab

- Python version: 3.7.10

- Tensorflow version (GPU?): 2.4.1 / 2.5.1

### Who can help


@patil-suraj, @Rocketknight1

## Information

Model I am using (Bert, XLNet ...):

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: (XSum)

* [ ] my own task or dataset: (give details below)

I am trying to replicate the summarization example present [here](https://github.com/huggingface/transformers/blob/master/examples/tensorflow/summarization/run_summarization.py) on the XSum dataset using T5, but I am facing an error when trying to use a TPU (it works on GPU).

## To reproduce

[Kaggle link](https://www.kaggle.com/rehanwild/tpu-tf-huggingface-error?scriptVersionId=69298817)

Error in TF 2.4.1:

```

---------------------------------------------------------------------------

UnavailableError Traceback (most recent call last)

<ipython-input-11-8513f78e8e35> in <module>

72 model.fit(tf_tokenized_train_ds,

73 validation_data=tf_tokenized_valid_ds,

---> 74 epochs=1,

75 )

76 #callbacks=[WandbCallback()])

/opt/conda/lib/python3.7/site-packages/wandb/integration/keras/keras.py in new_v2(*args, **kwargs)

122 for cbk in cbks:

123 set_wandb_attrs(cbk, val_data)

--> 124 return old_v2(*args, **kwargs)

125

126 training_arrays.orig_fit_loop = old_arrays

/opt/conda/lib/python3.7/site-packages/tensorflow/python/keras/engine/training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

1100 tmp_logs = self.train_function(iterator)

1101 if data_handler.should_sync:

-> 1102 context.async_wait()

1103 logs = tmp_logs # No error, now safe to assign to logs.

1104 end_step = step + data_handler.step_increment

/opt/conda/lib/python3.7/site-packages/tensorflow/python/eager/context.py in async_wait()

2328 an error state.

2329 """

-> 2330 context().sync_executors()

2331

2332

/opt/conda/lib/python3.7/site-packages/tensorflow/python/eager/context.py in sync_executors(self)

643 """

644 if self._context_handle:

--> 645 pywrap_tfe.TFE_ContextSyncExecutors(self._context_handle)

646 else:

647 raise ValueError("Context is not initialized.")

UnavailableError: 9 root error(s) found.

(0) Unavailable: {{function_node __inference_train_function_49588}} failed to connect to all addresses

Additional GRPC error information from remote target /job:localhost/replica:0/task:0/device:CPU:0:

:{"created":"@1627548744.739596558","description":"Failed to pick subchannel","file":"third_party/grpc/src/core/ext/filters/client_channel/client_channel.cc","file_line":4143,"referenced_errors":[{"created":"@1627548744.739593083","description":"failed to connect to all addresses","file":"third_party/grpc/src/core/ext/filters/client_channel/lb_policy/pick_first/pick_first.cc","file_line":398,"grpc_status":14}]}

[[{{node MultiDeviceIteratorGetNextFromShard}}]]

[[RemoteCall]]

[[IteratorGetNextAsOptional]]

[[cond_14/switch_pred/_200/_88]]

(1) Unavailable: {{function_node __inference_train_function_49588}} failed to connect to all addresses

Additional GRPC error information from remote target /job:localhost/replica:0/task:0/device:CPU:0:

:{"created":"@1627548744.739596558","description":"Failed to pick subchannel","file":"third_party/grpc/src/core/ext/filters/client_channel/client_channel.cc","file_line":4143,"referenced_errors":[{"created":"@1627548744.739593083","description":"failed to connect to all addresses","file":"third_party/grpc/src/core/ext/filters/client_channel/lb_policy/pick_first/pick_first.cc","file_line":398,"grpc_status":14}]}

[[{{node MultiDeviceIteratorGetNextFromShard}}]]

[[RemoteCall]]

[[IteratorGetNextAsOptional]]

[[strided_slice_18/_288]]

(2) Unavailable: {{function_node __inference_train_function_49588}} failed to connect to all addresses

Additional GRPC error information from remote target /job:localhost/replica:0/task:0/device:CPU:0:

:{"created":"@1627548744.739596558","description":"Failed to pick subchannel","file":"third_party/grpc/src/core/ext/filters/client_channel/client_channel.cc","file_line":4143,"referenced_errors":[{"created":"@1627548744.739593083","description":"failed to connect to all addresses","file":"third_party/grpc/src/core/ext/filters/client_channel/lb_policy/pick_first/pick_first.cc","file_line":398,"grpc_status":14}]}

[[{{node MultiDeviceIteratorGetNextFromShard}}]]

[[RemoteCall]]

[[IteratorGetNextAsOptional]]

[[tpu_compile_succeeded_assert/_1965840270157496994/_8/_335]]

(3) Unavailable: {{function_node __inference_train_function_49588}} failed to connect to all addresses

Additional GRPC error information from remote target /job:localhost/replica:0/task:0/device:CPU:0:

:{"created":"@1627548744.739596558","description":"Failed to pick subchannel","file":"third_party/grpc/src/core/ext/filters/client_channel/client_channel.cc","file_line":4143,"referenced_errors":[{"created":"@1627548744.739593083","description":"failed to connect to all addresses","file":"third_party/grpc/src/core/ext/filters/client_channel/lb_policy/pick_first/pick_first.cc","file_line":398,"grpc_status":14}]}

[[{{node MultiDeviceIteratorGetNextFromShard}}]]

[[RemoteCall]]

[[IteratorGetNextAsOptional]]

[[Pad_27/paddings/_218]]

(4) Unavailable: ... [truncated]

```

Error in TF 2.5.1:

```

NotFoundError: Op type not registered 'XlaSetDynamicDimensionSize' in binary running on n-f62ff7a1-w-0. Make sure the Op and Kernel are registered in the binary running in this process. Note that if you are loading a saved graph which used ops from tf.contrib, accessing (e.g.) `tf.contrib.resampler` should be done before importing the graph, as contrib ops are lazily registered when the module is first accessed.

```

## Expected behavior

No such error

EDIT:

I found tensorflow/tensorflow#48268. Although it has been closed, I suspect it is not completely solved yet, since I also found tensorflow/tensorflow#50980. I was not able to try TF 2.6.0-rc1, as it is not yet supported by transformers. Since this is an upstream bug, I think [run_summarization.py](https://github.com/huggingface/transformers/blob/master/examples/tensorflow/summarization/run_summarization.py) should include a note stating that it is incompatible with TPU for the time being.

PS: Since I have not run the original script, I would like to know whether my Kaggle kernel above is missing anything. It runs fine on GPU; the problem only occurs on TPU. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12937/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12937/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12936 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12936/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12936/comments | https://api.github.com/repos/huggingface/transformers/issues/12936/events | https://github.com/huggingface/transformers/issues/12936 | 955,493,727 | MDU6SXNzdWU5NTU0OTM3Mjc= | 12,936 | `PretrainedTokenizer.return_special_tokens` returns incorrect mask | {

"login": "tamuhey",

"id": 24998666,

"node_id": "MDQ6VXNlcjI0OTk4NjY2",

"avatar_url": "https://avatars.githubusercontent.com/u/24998666?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tamuhey",

"html_url": "https://github.com/tamuhey",

"followers_url": "https://api.github.com/users/tamuhey/followers",

"following_url": "https://api.github.com/users/tamuhey/following{/other_user}",

"gists_url": "https://api.github.com/users/tamuhey/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tamuhey/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tamuhey/subscriptions",

"organizations_url": "https://api.github.com/users/tamuhey/orgs",

"repos_url": "https://api.github.com/users/tamuhey/repos",

"events_url": "https://api.github.com/users/tamuhey/events{/privacy}",

"received_events_url": "https://api.github.com/users/tamuhey/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Indeed, we have an error in the way the special tokens mask is computed here. See here for the slow tokenizer: https://github.com/huggingface/transformers/blob/3f44a66cb617c72efeef0c0b4201cbe2945d8edf/src/transformers/models/bert/tokenization_bert.py#L297-L299\r\n\r\nThis seems to also be the case for the fast tokenizer. Would you like to propose a fix? Pinging @SaulLu as it might be of interest to her.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"Unstale",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"unstale\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"@SaulLu do you have time to look at this?",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"**TL;DR:**\r\nTo come back to this issue, I would tend to think that in their current state this method (`get_special_tokens_mask`) and this argument (`return_special_tokens_mask` in `__call__`) are very useful. \r\n\r\nIndeed, this behavior is common to all tokenizers (I checked all tokenizers listed in `AutoTokenizer`, and I can share the code if you want to have a look). From my point of view, it allows identifying the special tokens that are added by the `add_special_tokens` argument in the `__call__` method (the unknown token is not included in them; see the details section below). \r\n\r\nNevertheless, I imagine that this is not obvious at all, and we should perhaps see how it could be better explained in the documentation. Furthermore, we could think about creating a new method that would generate a mask that also includes the unknown token if needed.\r\n\r\nWhat do you think?\r\n\r\n**Details:**\r\nThe unknown special token does indeed differ from other special tokens: it is essential to the proper functioning of the tokenization algorithm and is therefore not an \"add-on\" or optional like all other special tokens. An \"unknown\" token will correspond to a part of the initial text. \r\n\r\nBy the way, the documentation of `get_special_tokens_mask` is `Retrieves sequence ids from a token list that has no special tokens added. This method is called when adding special tokens using the tokenizer prepare_for_model or encode_plus methods.` The unknown token is not added by the `prepare_for_model` or `encode_plus` methods but by the heart of the tokenizer: the tokenization algorithm.\r\n\r\n@tamuhey, could you share your use case where you need to identify the position of unknown tokens? That would be really useful to us :blush: ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"Re-opened this issue as I thought a fix needed to be done - but reading @SaulLu's answer I believe the current behavior is correct.\r\n\r\nPlease let us know if this is an issue to your workflow and we'll look into solutions.",

"Hello @LysandreJik, I also encountered the problem. I will use the example in this [issue](https://github.com/huggingface/transformers/issues/16938).\r\n\r\n``` Python\r\nimport transformers\r\nprint(transformers.__version__)\r\ntokenizer = transformers.AutoTokenizer.from_pretrained('roberta-base')\r\n\r\nspecial_tokens_dict = {\"additional_special_tokens\": [\"<test1>\", \"<test2>\"]}\r\ntokenizer.add_special_tokens(special_tokens_dict)\r\n\r\nprocessed = tokenizer(\"this <test1> that <test2> this\", return_special_tokens_mask=True)\r\ntokens = tokenizer.convert_ids_to_tokens(processed.input_ids)\r\n\r\nfor i in range(len(processed.input_ids)):\r\n print(f\"{processed.input_ids[i]}\\t{tokens[i]}\\t{processed.special_tokens_mask[i]}\")\r\n```\r\n\r\n``` Python\r\nReturned output:\r\n\r\n0 <s> 1\r\n9226 this 0\r\n1437 Ġ 0\r\n50265 <test1> 0\r\n14 Ġthat 0\r\n1437 Ġ 0\r\n50266 <test2> 0\r\n42 Ġthis 0\r\n2 </s> 1\r\n\r\n\r\nExpected output:\r\n\r\n\r\n0 <s> 1\r\n9226 this 0\r\n1437 Ġ 0\r\n50265 <test1> 1\r\n14 Ġthat 0\r\n1437 Ġ 0\r\n50266 <test2> 1\r\n42 Ġthis 0\r\n2 </s> 1\r\n```\r\nMy goal is to train a RoBERTa model from scratch with two additional special tokens `<test1>` and `<test2>`. \r\n\r\n\r\nFor masked language modelling, I don't want customized special tokens to be masked during training. I used `tokenizer` and `DataCollatorForLanguageModeling`. I thought `special_tokens_mask` from tokenizer could [disable special token masking](https://github.com/huggingface/transformers/blob/v4.26.0/src/transformers/data/data_collator.py#L767) in `DataCollatorForLanguageModeling`.\r\n``` Python\r\nprocessed = tokenizer(\"this <test1> that <test2> this\", return_special_tokens_mask=True)\r\n```\r\nBut it didn't recognize `<test1>` and `<test2>`. 
\r\n\r\nThe workaround is \r\n``` Python\r\nprocessed = tokenizer(\"this <test1> that <test2> this\")\r\nprocessed['special_tokens_mask'] = tokenizer.get_special_tokens_mask(processed['input_ids'], already_has_special_tokens=True)\r\n```\r\nIt works fine for me on one sentence, but it seems `get_special_tokens_mask` cannot encode in batch, unlike the default tokenizer. \r\n\r\nDo you think it makes sense to modify the behaviour of `return_special_tokens_mask` or to create a new method?\r\n"

] | 1,627 | 1,675 | 1,641 | CONTRIBUTOR | null | ## Environment info

- `transformers` version: 4.9.1

- Platform: ubuntu

- Python version: 3.8

- PyTorch version (GPU?): 1.9

- Tensorflow version (GPU?):

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@LysandreJik

## Information

Model I am using (Bert, XLNet ...): Bert

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

## To reproduce

```python
import transformers
tokenizer = transformers.AutoTokenizer.from_pretrained("bert-base-uncased")

text = "foo 雲 bar"

tokens=tokenizer.tokenize(text)

print("tokens : ", tokens)

inputs = tokenizer(text, return_special_tokens_mask=True)