url (string, 62–66 chars) | repository_url (string, 1 value) | labels_url (string, 76–80 chars) | comments_url (string, 71–75 chars) | events_url (string, 69–73 chars) | html_url (string, 50–56 chars) | id (int64, 377M–2.15B) | node_id (string, 18–32 chars) | number (int64, 1–29.2k) | title (string, 1–487 chars) | user (dict) | labels (list) | state (string, 2 values) | locked (bool) | assignee (dict) | assignees (list) | comments (sequence) | created_at (int64, 1.54k–1.71k) | updated_at (int64, 1.54k–1.71k) | closed_at (int64, 1.54k–1.71k, nullable) | author_association (string, 4 values) | active_lock_reason (string, 2 values) | body (string, 0–234k chars, nullable) | reactions (dict) | timeline_url (string, 71–75 chars) | state_reason (string, 3 values) | draft (bool) | pull_request (dict) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/6321 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6321/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6321/comments | https://api.github.com/repos/huggingface/transformers/issues/6321/events | https://github.com/huggingface/transformers/pull/6321 | 674,886,481 | MDExOlB1bGxSZXF1ZXN0NDY0NDk2ODA2 | 6,321 | [Community notebooks] Add notebook on fine-tuning Electra and interpreting with IG | {

"login": "elsanns",

"id": 3648991,

"node_id": "MDQ6VXNlcjM2NDg5OTE=",

"avatar_url": "https://avatars.githubusercontent.com/u/3648991?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/elsanns",

"html_url": "https://github.com/elsanns",

"followers_url": "https://api.github.com/users/elsanns/followers",

"following_url": "https://api.github.com/users/elsanns/following{/other_user}",

"gists_url": "https://api.github.com/users/elsanns/gists{/gist_id}",

"starred_url": "https://api.github.com/users/elsanns/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/elsanns/subscriptions",

"organizations_url": "https://api.github.com/users/elsanns/orgs",

"repos_url": "https://api.github.com/users/elsanns/repos",

"events_url": "https://api.github.com/users/elsanns/events{/privacy}",

"received_events_url": "https://api.github.com/users/elsanns/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=h1) Report\n> Merging [#6321](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/c72f9c90a160e74108d50568fa71e1f216949846&el=desc) will **decrease** coverage by `0.25%`.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #6321 +/- ##\n==========================================\n- Coverage 79.52% 79.27% -0.26% \n==========================================\n Files 148 148 \n Lines 27194 27194 \n==========================================\n- Hits 21627 21559 -68 \n- Misses 5567 5635 +68 \n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [src/transformers/tokenization\\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fcm9iZXJ0YS5weQ==) | `76.71% <0.00%> (-21.92%)` | :arrow_down: |\n| [src/transformers/tokenization\\_utils\\_base.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHNfYmFzZS5weQ==) | `86.43% <0.00%> (-7.42%)` | :arrow_down: |\n| [src/transformers/tokenization\\_transfo\\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdHJhbnNmb194bC5weQ==) | `38.73% <0.00%> (-3.76%)` | :arrow_down: |\n| [src/transformers/tokenization\\_utils\\_fast.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHNfZmFzdC5weQ==) | `92.14% <0.00%> (-2.15%)` | :arrow_down: |\n| 
[src/transformers/tokenization\\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fb3BlbmFpLnB5) | `82.57% <0.00%> (-1.52%)` | :arrow_down: |\n| [src/transformers/tokenization\\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYmVydC5weQ==) | `91.07% <0.00%> (-0.45%)` | :arrow_down: |\n| [src/transformers/tokenization\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `90.00% <0.00%> (-0.41%)` | :arrow_down: |\n| [src/transformers/file\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `82.18% <0.00%> (-0.26%)` | :arrow_down: |\n| [src/transformers/tokenization\\_bart.py](https://codecov.io/gh/huggingface/transformers/pull/6321/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYmFydC5weQ==) | `95.77% <0.00%> (+35.21%)` | :arrow_up: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=footer). Last update [c72f9c9...6505a38](https://codecov.io/gh/huggingface/transformers/pull/6321?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"Hey @elsanns - thanks a lot for your notebook! It looks great :-) \r\n\r\nAlso cc @LysandreJik, you might be interested in this!",

"Thank you;)"

] | 1,596 | 1,596 | 1,596 | CONTRIBUTOR | null | Adding a link to a community notebook containing an example of:

- fine-tuning Electra on GLUE SST-2 with Trainer,

- running Captum Integrated Gradients token importance attribution on the results,

- visualizing the attributions with `captum.attr.visualization`. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6321/reactions",

"total_count": 5,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 3,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6321/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/6321",

"html_url": "https://github.com/huggingface/transformers/pull/6321",

"diff_url": "https://github.com/huggingface/transformers/pull/6321.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/6321.patch",

"merged_at": 1596880054000

} |

https://api.github.com/repos/huggingface/transformers/issues/6320 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6320/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6320/comments | https://api.github.com/repos/huggingface/transformers/issues/6320/events | https://github.com/huggingface/transformers/issues/6320 | 674,844,085 | MDU6SXNzdWU2NzQ4NDQwODU= | 6,320 | Multi-gpu LM finetuning | {

"login": "cppntn",

"id": 26765504,

"node_id": "MDQ6VXNlcjI2NzY1NTA0",

"avatar_url": "https://avatars.githubusercontent.com/u/26765504?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cppntn",

"html_url": "https://github.com/cppntn",

"followers_url": "https://api.github.com/users/cppntn/followers",

"following_url": "https://api.github.com/users/cppntn/following{/other_user}",

"gists_url": "https://api.github.com/users/cppntn/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cppntn/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cppntn/subscriptions",

"organizations_url": "https://api.github.com/users/cppntn/orgs",

"repos_url": "https://api.github.com/users/cppntn/repos",

"events_url": "https://api.github.com/users/cppntn/events{/privacy}",

"received_events_url": "https://api.github.com/users/cppntn/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,596 | 1,602 | 1,602 | NONE | null | Hello,

How can I run LM fine-tuning on more than one GPU? Specifically, I want to train gpt2-medium on Google Cloud with four NVIDIA T4 GPUs (64 GB in total).

Which arguments should I pass to the `run_language_modeling.py` script?

Thanks | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6320/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6320/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6319 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6319/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6319/comments | https://api.github.com/repos/huggingface/transformers/issues/6319/events | https://github.com/huggingface/transformers/issues/6319 | 674,821,137 | MDU6SXNzdWU2NzQ4MjExMzc= | 6,319 | num_beams error in GPT2DoubleHead model | {

"login": "vibhavagarwal5",

"id": 23319631,

"node_id": "MDQ6VXNlcjIzMzE5NjMx",

"avatar_url": "https://avatars.githubusercontent.com/u/23319631?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/vibhavagarwal5",

"html_url": "https://github.com/vibhavagarwal5",

"followers_url": "https://api.github.com/users/vibhavagarwal5/followers",

"following_url": "https://api.github.com/users/vibhavagarwal5/following{/other_user}",

"gists_url": "https://api.github.com/users/vibhavagarwal5/gists{/gist_id}",

"starred_url": "https://api.github.com/users/vibhavagarwal5/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/vibhavagarwal5/subscriptions",

"organizations_url": "https://api.github.com/users/vibhavagarwal5/orgs",

"repos_url": "https://api.github.com/users/vibhavagarwal5/repos",

"events_url": "https://api.github.com/users/vibhavagarwal5/events{/privacy}",

"received_events_url": "https://api.github.com/users/vibhavagarwal5/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

}

] | [

"encountered the same issue",

"I think @patrickvonplaten might have some ideas."

] | 1,596 | 1,598 | 1,598 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 2.9.1

- Platform: Linux

- Python version: 3.6

- PyTorch version (GPU?): 1.5

- Tensorflow version (GPU?):

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Yes

### Who can help

@LysandreJik @patil-suraj

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

tokenizers: @mfuntowicz

Trainer: @sgugger

Speed and Memory Benchmarks: @patrickvonplaten

Model Cards: @julien-c

Translation: @sshleifer

Summarization: @sshleifer

TextGeneration: @TevenLeScao

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @TevenLeScao

blenderbot: @mariamabarham

Bart: @sshleifer

Marian: @sshleifer

T5: @patrickvonplaten

Longformer/Reformer: @patrickvonplaten

TransfoXL/XLNet: @TevenLeScao

examples/seq2seq: @sshleifer

tensorflow: @jplu

documentation: @sgugger

-->

## Information

I am trying to use `model.generate()` with `GPT2DoubleHeadsModel`, but beam search raises an error.

Setting `num_beams > 1` results in the following error:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/hdd1/vibhav/anaconda3/envs/vesnli/lib/python3.7/site-packages/torch/autograd/grad_mode.py", line 15, in decorate_context

return func(*args, **kwargs)

File "/home/hdd1/vibhav/anaconda3/envs/vesnli/lib/python3.7/site-packages/transformers/modeling_utils.py", line 1125, in generate

model_specific_kwargs=model_specific_kwargs,

File "/home/hdd1/vibhav/anaconda3/envs/vesnli/lib/python3.7/site-packages/transformers/modeling_utils.py", line 1481, in _generate_beam_search

past = self._reorder_cache(past, beam_idx)

File "/home/hdd1/vibhav/anaconda3/envs/vesnli/lib/python3.7/site-packages/transformers/modeling_utils.py", line 1551, in _reorder_cache

return tuple(layer_past.index_select(1, beam_idx) for layer_past in past)

File "/home/hdd1/vibhav/anaconda3/envs/vesnli/lib/python3.7/site-packages/transformers/modeling_utils.py", line 1551, in <genexpr>

return tuple(layer_past.index_select(1, beam_idx) for layer_past in past)

IndexError: Dimension out of range (expected to be in range of [-1, 0], but got 1)

```

However, everything works fine with `num_beams=1`, and with `GPT2LMHeadModel` (both with and without beam search).

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6319/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6319/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6318 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6318/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6318/comments | https://api.github.com/repos/huggingface/transformers/issues/6318/events | https://github.com/huggingface/transformers/issues/6318 | 674,714,837 | MDU6SXNzdWU2NzQ3MTQ4Mzc= | 6,318 | TFBert runs slower than keras-bert, any plan to speed up? | {

"login": "kismit",

"id": 17515460,

"node_id": "MDQ6VXNlcjE3NTE1NDYw",

"avatar_url": "https://avatars.githubusercontent.com/u/17515460?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/kismit",

"html_url": "https://github.com/kismit",

"followers_url": "https://api.github.com/users/kismit/followers",

"following_url": "https://api.github.com/users/kismit/following{/other_user}",

"gists_url": "https://api.github.com/users/kismit/gists{/gist_id}",

"starred_url": "https://api.github.com/users/kismit/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/kismit/subscriptions",

"organizations_url": "https://api.github.com/users/kismit/orgs",

"repos_url": "https://api.github.com/users/kismit/repos",

"events_url": "https://api.github.com/users/kismit/events{/privacy}",

"received_events_url": "https://api.github.com/users/kismit/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"This is related: https://github.com/huggingface/transformers/pull/6877",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,596 | 1,604 | 1,604 | NONE | null | A classification task takes 7 ms with keras-bert but more than 50 ms with TFBert; both run on GPU. Details:

hidden layers: 6

max_seq_length: 64

GPU: RTX 2080 Ti

As far as I can tell, most of the time is spent in the BERT encoder: about 6 ms per encoder layer on average, whereas a keras-bert encoder layer takes less than 1 ms.

Are there any plans to address this?

Thanks! | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6318/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6318/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6317 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6317/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6317/comments | https://api.github.com/repos/huggingface/transformers/issues/6317/events | https://github.com/huggingface/transformers/issues/6317 | 674,714,165 | MDU6SXNzdWU2NzQ3MTQxNjU= | 6,317 | codecov invalid reports due to inconsistent code coverage outputs (non-idempotent test-suite) | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

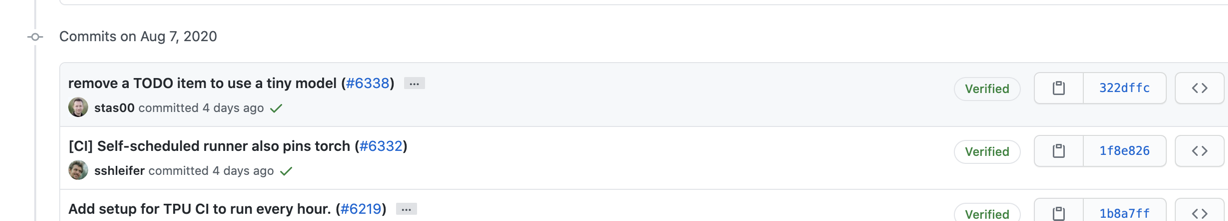

"Here is another gem - PR to remove a single comment from a test file https://github.com/huggingface/transformers/pull/6338 - guess what codecov's report was - it will increase coverage by 0.32%! Sounds like its output would make a pretty good RNG.",

"Pinging @thomasrockhu\r\n\r\nWe've seen such reports for a while now, could you explain why these diffs in coverage happen, or provide a link that explains why? Thank you!",

"I took a look at #6338 because that is extremely strange.\r\n\r\n-- Codecov --\r\nFirst, I took a look at the commits to make sure we were comparing against the right commit SHAs here: https://codecov.io/gh/huggingface/transformers/pull/6338/commits\r\n\r\n\r\n\r\nwhich roughly matches the commit stack on `master` (merging commits changes the SHA, I'm fairly sure, but the commit messages are consistent) https://github.com/huggingface/transformers/commits/master?after=3f071c4b6e36c4f2d4aee35d76fd2196f82b7936+34&branch=master\r\n\r\n\r\n\r\nSo, I thought that maybe we read the coverage reports wrong. I focused on this file `src/transformers/modeling_tf_electra.py`, because it had the most changes. Going into the build tab of the [base commit](https://codecov.io/gh/huggingface/transformers/commit/1f8e8265188de8b76f5c28539056d6eb772e4e0f/build) and the [head commit](https://codecov.io/gh/huggingface/transformers/commit/8721f03d83b58c52db266be1f10bc0de2dea5a10/build), I noticed that the coverage reports uploaded to `Codecov` show different coverages\r\n\r\n**Base commit**\r\n\r\n\r\n**Head commit**\r\n\r\n\r\nTo further confirm, I went into the CircleCI builds and compared the coverage generated by running `python -m pytest -n 8 --dist=loadfile -s ./tests/ --cov | tee output.txt`\r\n\r\n**Base commit**\r\nhttps://app.circleci.com/pipelines/github/huggingface/transformers/10177/workflows/c12a8e4b-4ec1-4c7c-be7a-e54b0d6b9835/jobs/70077\r\n\r\n\r\n**Head commit**\r\nhttps://app.circleci.com/pipelines/github/huggingface/transformers/10180/workflows/90610a5f-d9b1-4468-8c80-fcbd874dbe22/jobs/70104\r\n\r\n\r\nI don't know the codebase well enough here, but my suspicion is that your test suite is not idempotent",

"As for notifications, could I get some more details here? One thing to note is `target` and `threshold` are not the same. `Target` is the coverage percentage to hit (like 80% of the total project), while `threshold` is the \"wiggle\" room (if set to 1%, it allows a 1% drop from the `target` to be considered acceptable)",

"> As for notifications, could I get some more details here?\r\n\r\nMy thinking was that the project could set a threshold so that when it's crossed, codecov makes itself heard; say, a 1% decrease would raise a flag. That way codecov becomes a useful ally and not something that most people start ignoring because it's always there. But that's just IMHO.\r\n\r\n",

"> To further confirm, I went into the CircleCI builds and compared the coverage generated by running python -m pytest -n 8 --dist=loadfile -s ./tests/ --cov | tee output.txt\r\n\r\nThank you for pointing out how we could use coverage data to explain this discrepancy, @thomasrockhu \r\n\r\n> I don't know the codebase well enough here, but my suspicion is that your test suite is not idempotent\r\n\r\nIs there a tool that can narrow down which tests cause the non-idempotent behavior? Other than doing a binary search, which often fails in such a complex situation with many tests.\r\n\r\nThank you!\r\n",

"If you are talking about a `notification` not in `GitHub` ([comments](https://docs.codecov.io/docs/pull-request-comments) and [status checks](https://docs.codecov.io/docs/commit-status)), you could do something like this in the [codecov.yml](https://github.com/huggingface/transformers/blob/master/codecov.yml) file\r\n```\r\ncoverage:\r\n notify:\r\n {{ notification_provider (e.g. slack) }}:\r\n default:\r\n threshold: 1%\r\n```\r\n\r\nThis should only notify in cases of a 1% drop. (https://docs.codecov.io/docs/notifications#threshold)",

"> Is there a tool that can narrow down which tests cause the non-idempotent behavior? Other than doing a binary search, which often fails in such a complex situation with many tests.\r\n\r\nUnfortunately, if there is one, we are not aware of it. I wish we could be a little more helpful here right now.",

"> > Is there a tool that can narrow down which tests cause the non-idempotent behavior? Other than doing a binary search, which often fails in such a complex situation with many tests.\r\n> \r\n> Unfortunately, if there is one, we are not aware of it. I wish we could be a little more helpful here right now.\r\n\r\nSo, the brute force approach would be to run groups of tests on the same code base, comparing the coverage before and after, narrowing it down to the smallest group of tests that cause the coverage to vary - am I correct? \r\n\r\nTo make an intelligent guess instead of using brute force, I'd probably look at the codecov bot reports for PRs that had no changes in code and yet reported wild swings in some files. Then, from those files that are consistently reported at the top, deduce the suspect tests.\r\n\r\nedit: I looked a bit and most likely this issue has to do with TF tests, as most of the time the large coverage changes get reported in `src/transformers/modeling_tf_*py`, when the changes have nothing to do with TF.\r\n",

"@thomasrockhu, I ran and re-ran a bunch of tests, comparing the coverage reports, and I can't reproduce the suggested possible lack of idempotency in the test suite. \r\n\r\nHowever, if I look at, for example, https://codecov.io/gh/huggingface/transformers/pull/6505 it says it doesn't have a base to compare to, yet it produces an (invalid) codecov report https://github.com/huggingface/transformers/pull/6505#issuecomment-674440545. This tells me that something else is broken, i.e. it's not comparing that PR to the base, but to some totally unrelated nearest code branch that codecov happened to have the coverage file for. Does it make sense?",

"Hi @stas00, basically what that's saying is that in this [PR](https://github.com/huggingface/transformers/pull/6505), GitHub told us the parent was `24107c2` (https://codecov.io/gh/huggingface/transformers/pull/6505/commits). Unfortunately, we did not receive coverage reports or the CI might have failed. So we took the next parent from the `master` branch\r\n\r\n\r\n\r\nThis is an unfortunate consequence of not having coverage for a base commit.",

"Thank you for confirming that, @thomasrockhu. \r\n\r\n> Unfortunately, we did not receive coverage reports or the CI might have failed. So we took the next parent from the master branch\r\n\r\nGiven that the report is misleading then, would it be possible to let the user configure codecov to not provide any report in such situation or a note that a report couldn't be generated? And perhaps make that the default?\r\n\r\nOr, perhaps, there should be a special internal action triggered that will go back to the base hash, run a CI on it, generate the coverage report, and now codecov can compare the 2 reports it was awesomely designed for. If that is possible at all.\r\n\r\nIt oddly seems to happen a lot, here is just a sample of a few recent PRs.\r\n\r\n- https://codecov.io/gh/huggingface/transformers/pull/6494?src=pr&el=desc\r\n- https://codecov.io/gh/huggingface/transformers/pull/6505?src=pr&el=desc\r\n- https://codecov.io/gh/huggingface/transformers/pull/6504?src=pr&el=desc\r\n- https://codecov.io/gh/huggingface/transformers/pull/6511?src=pr&el=desc\r\n\r\nI did find a few recent ones that were fine, i.e. there was a base coverage report.",

"> Given that the report is misleading then, would it be possible to let the user configure codecov to not provide any report in such situation or a note that a report couldn't be generated? And perhaps make that the default?\r\n\r\n@stas00, unfortunately this is not possible in the Codecov UI. It is possible on the comments sent by Codecov in PRs via [require_base](https://docs.codecov.io/docs/pull-request-comments#configuration).\r\n\r\n> Or, perhaps, there should be a special internal action triggered that will go back to the base hash, run a CI on it, generate the coverage report, and now codecov can compare the 2 reports it was awesomely designed for. If that is possible at all.\r\n\r\nWe depend on users to make this determination. We often find that users will use [blocking status checks](https://docs.codecov.io/docs/commit-status#target) to enforce a failed commit which would imply that Codecov receives a coverage report.\r\n\r\n> It oddly seems to happen a lot, here is just a sample of a few recent PRs.\r\n\r\nLooking at these PRs, they all depend on the same commit `24107c2c83e79d195826f18f66892feab6b000e9` as their base, so it makes sense that it would be breaking for those PRs.",

"Thank you very much, @thomasrockhu! Going to try to fix this issue by adding `require_base=yes` as you suggested: https://github.com/huggingface/transformers/pull/6553\r\n\r\nThank you for your awesome support!\r\n",

"For sure, let me know if there's anything else I can do to help!",

"@thomasrockhu, could you please have another look at the situation of this project? \r\n\r\nAfter applying https://github.com/huggingface/transformers/pull/6553 it should now not generate invalid reports when the base is missing - this is good.\r\n\r\nHowever, the problem of code coverage diff when there should be none is still there. e.g. here are some recent examples of pure non-code changes:\r\n\r\n- https://codecov.io/gh/huggingface/transformers/pull/6650/changes \r\n- https://codecov.io/gh/huggingface/transformers/pull/6650/changes\r\n- https://codecov.io/gh/huggingface/transformers/pull/6629/changes\r\n- https://codecov.io/gh/huggingface/transformers/pull/6649/changes\r\n\r\nI did multiple experiments and tried hard to get the test suite to behave in a non-idempotent way, but I couldn't get any such results other than very minor 1-line differences in coverage. This was done on the same machine. I'm not sure how to approach this issue - perhaps CI ends up running different PRs on different types of hardware/different libraries - which perhaps could lead to significant discrepancies in coverage.\r\n\r\nIf changes in hardware and system software libraries could cause such an impact, is there some way of doing a fingerprinting of the host setup so that we know the report came from the same type of setup?\r\n\r\nThank you!\r\n",

"Apologies here @stas00 this got lost. Do you have a more recent pull request to take a look at so I can dig into the logs?",

"Yes, of course, @thomasrockhu.\r\n\r\nHere is a fresh one: https://codecov.io/gh/huggingface/transformers/pull/6852 (no code change)\r\n\r\nLet me know if it'd help to have a few.",

"@stas00 this is really strange. I was focusing in on `src/transformers/trainer.py`\r\n\r\nMost recently on `master`, [this commit](https://codecov.io/gh/huggingface/transformers/src/367235ee52537ff7cada5e1c5c41cdd78731f092/src/transformers/trainer.py) is showing much lower coverage than normal (13.55% vs ~50%)\r\n\r\nI'm comparing it to the commit [right after](https://codecov.io/gh/huggingface/transformers/commit/a497dee6f52f3b8f308675a50601added7e738c3)\r\n\r\nThe [CI build](https://app.circleci.com/pipelines/github/huggingface/transformers/11349/workflows/9ba002b6-c63e-4078-96d0-0feb988b304f/jobs/79674/steps) for the first, shows that there are fewer tests run by 1\r\n\r\n\r\nCompared to the `a497de` [run](https://app.circleci.com/pipelines/github/huggingface/transformers/11356/workflows/1ba4518f-e8d0-4970-9ef6-b8bba290f9bb/jobs/79726).\r\n\r\n\r\nMaybe there's something here?",

"Thank you, @thomasrockhu!\r\nThis is definitely a great find. I'm requesting to add `-rA` to `pytest` runs https://github.com/huggingface/transformers/pull/6861\r\nthen we can easily diff which tests aren't being run. I will follow up once this is merged and we have new data to work with.",

"OK, the `-rA` report is active now, so we can see and diff the exact tests that were run and skipped.\r\n\r\nHave a look at these recent ones with no code changes:\r\n\r\n- https://github.com/huggingface/transformers/pull/6861\r\n- https://github.com/huggingface/transformers/pull/6867\r\n\r\nI double-checked that the same number of tests were run in both, but the codecov report shows huge coverage differences.\r\n\r\nThis is odd:\r\n- https://codecov.io/gh/huggingface/transformers/pull/6861/changes\r\n- https://codecov.io/gh/huggingface/transformers/pull/6867/changes\r\n\r\nIt seems to be reporting a huge number of changes, which mostly cancel each other out.\r\n\r\n",

"@thomasrockhu? Please, let me know when you can have a look at it - otherwise your logs will be gone again and the provided examples per your request will be unusable again. Thanks.",

"Hi @stas00, apologies. I took a look a few days ago, but I really couldn't find a good reason or a next step to figure out what is going on in your testing setup. I'll take another look today.",

"@thomasrockhu, thank you for all your attempts so far. As you originally guessed correctly, the `transformers` test suite is not idempotent. I was finally able to reproduce that, first in a large sub-set of randomly run tests, and then reduced it to a very small sub-set. So from here on it's totally up to us to either sort it out or let `codecov` go.\r\n\r\n# reproducing the problem\r\n\r\nnote: this is not the only guilty sub-test, there are others (I have more sub-groups that I haven't reduced to a very small sub-set yet), but it's good enough to demonstrate the problem and see if we can find a solution.\r\n\r\n## Step 1. prep\r\n\r\n```\r\npip install pytest-flakefinder pytest-randomly\r\n```\r\nnote: make sure you `pip uninstall pytest-randomly` when you're done here, since it'll randomize your tests w/o asking you, i.e. there are no flags to enable it: once it's installed, all your test suites are random.\r\n\r\n**why randomize? because `pytest -n auto` ends up running tests somewhat randomly across the many processors**\r\n\r\n`flakefinder` is the only pytest plugin that I know of that allows repetition of unittests, but this one you can leave around, since it doesn't do anything on its own unless you tell it to.\r\n\r\n## Case 1. 
multiprocess\r\n\r\nWe will run 2 sub-tests in a random order:\r\n```\r\nexport TESTS=\"tests/test_benchmark_tf.py::TFBenchmarkTest::test_inference_encoder_decoder_with_configs \\ \r\ntests/test_benchmark_tf.py::TFBenchmarkTest::test_trace_memory\"\r\npytest $TESTS --cov --flake-finder --flake-runs=5 | tee k1; \\\r\npytest $TESTS --cov --flake-finder --flake-runs=5 | tee k2; \\\r\ndiff -u k1 k2 | egrep \"^(\\-|\\+)\"\r\n```\r\nand we get:\r\n```\r\n--- k1 2020-09-11 20:00:32.246210967 -0700\r\n+++ k2 2020-09-11 20:01:31.778468283 -0700\r\n-Using --randomly-seed=1418403633\r\n+Using --randomly-seed=1452350401\r\n-src/transformers/benchmark/benchmark_tf.py 152 62 59%\r\n-src/transformers/benchmark/benchmark_utils.py 401 239 40%\r\n+src/transformers/benchmark/benchmark_tf.py 152 50 67%\r\n+src/transformers/benchmark/benchmark_utils.py 401 185 54%\r\n-src/transformers/configuration_t5.py 32 16 50%\r\n+src/transformers/configuration_t5.py 32 4 88%\r\n-src/transformers/modeling_tf_t5.py 615 526 14%\r\n+src/transformers/modeling_tf_t5.py 615 454 26%\r\n-src/transformers/modeling_tf_utils.py 309 214 31%\r\n+src/transformers/modeling_tf_utils.py 309 212 31%\r\n-TOTAL 32394 24146 25%\r\n+TOTAL 32394 23994 26%\r\n-================== 10 passed, 3 warnings in 71.87s (0:01:11) ===================\r\n+======================= 10 passed, 3 warnings in 58.82s ========================\r\n```\r\nWhoah! Non-Idempotent test suite it is! 
A whopping 1% change in coverage with no change in code.\r\n\r\nSaving the seeds, I'm now able to reproduce this at will by passing the specific seeds of the first runs:\r\n\r\n```\r\nexport TESTS=\"tests/test_benchmark_tf.py::TFBenchmarkTest::test_inference_encoder_decoder_with_configs \\ \r\ntests/test_benchmark_tf.py::TFBenchmarkTest::test_trace_memory\"\r\npytest $TESTS --cov --flake-finder --flake-runs=5 --randomly-seed=1418403633 | tee k1; \\\r\npytest $TESTS --cov --flake-finder --flake-runs=5 --randomly-seed=1452350401 | tee k2; \\\r\ndiff -u k1 k2 | egrep \"^(\\-|\\+)\"\r\n```\r\ngetting the same results.\r\n\r\n## Case 2. randomization issue\r\n\r\nHere are some other tests with the same problem, but a different cause: randomization\r\n\r\n```\r\nCUDA_VISIBLE_DEVICES=\"\" pytest -n 3 --dist=loadfile tests/test_data_collator.py --cov | tee c1; \\\r\nCUDA_VISIBLE_DEVICES=\"\" pytest -n 3 --dist=loadfile tests/test_data_collator.py --cov | tee c2; \\\r\ndiff -u c1 c2 | egrep \"^(\\-|\\+)\"\r\n```\r\nthis time w/o using flake-finder, but instead relying on `-n 3` + randomly.\r\n\r\n```\r\n--- c1 2020-09-11 19:00:00.259221772 -0700\r\n+++ c2 2020-09-11 19:00:14.103276713 -0700\r\n-Using --randomly-seed=4211396884\r\n+Using --randomly-seed=3270809055\r\n-src/transformers/data/datasets/language_modeling.py 168 23 86%\r\n+src/transformers/data/datasets/language_modeling.py 168 25 85%\r\n-src/transformers/tokenization_utils_base.py 750 321 57%\r\n+src/transformers/tokenization_utils_base.py 750 316 58%\r\n-TOTAL 32479 23282 28%\r\n+TOTAL 32479 23279 28%\r\n-======================= 9 passed, 13 warnings in 13.10s ========================\r\n+======================= 9 passed, 13 warnings in 13.44s ========================\r\n```\r\na much smaller diff, but a diff nevertheless\r\n\r\nThe next step is to try to resolve this or give up codecov.\r\n\r\nThe preliminary reading points the finger at `multiprocessing` (`Pool`, and others). 
\r\n\r\nThank you for reading.\r\n",

"@stas00 this is absolutely incredible. I'll admit that I wouldn't be able to have found this myself, you've done a hell of an investigation here. How can I be useful?",

"Thank you for the kind words, @thomasrockhu. I have a solution for the randomization issue (set a fixed seed before the test), but not yet for the multiprocessing one. The culprits are potentially all the tools that fork sub-processes, as those don't all get accounted for consistently. I need more time staring at the screen doing experiments.\r\n\r\nBut I do need your help here: https://codecov.io/gh/huggingface/transformers/pull/7067/changes\r\n\r\nWhat does it mean? If you look at +X/-X, the numbers are all identical and should add up to 0. Yet we get a 2.41% diff in coverage. How does that get calculated, and why are those numbers identical but with flipped signs? Clearly something is off there; I'm not sure it's related to coverage, since they are perfectly complementary. \r\n\r\nI did see many other cases where they weren't complementary, but in this case they are 100% so. Ideas?\r\n\r\nOr let me rephrase: how can I see the 2.41% difference on that screen if I treat it as a black box? I imagine the numbers are the same but perhaps refer to different lines in the code, hence the difference. But it's impossible to see that from that presentation. Clicking on a specific diff doesn't help me either: it's just one screen of one type/color, and I can't see the actual diff.",

"@thomasrockhu? Could you please have a look at the issue mentioned in my last comment? Thank you.",

"@stas00 apologies will take a look today/tomorrow",

"@stas00, so the +X/-X values show the coverage change for that file. So as an example, \r\n\r\n\r\nyou see -1 -> +1. This means that, in total, one line that was not covered is now covered (this is not always a zero-sum game if a line is removed). You can see that two lines have gained coverage (red to green) and one line has lost coverage (green to red).\r\n\r\nSo taking the total over all those changes actually leads to a -777 line coverage drop. You can see that in the commits of this PR \r\n\r\nbase -> https://codecov.io/gh/huggingface/transformers/tree/8fcbe486e1592321e868f872545c8fd9d359a515\r\n\r\n\r\nhead -> https://codecov.io/gh/huggingface/transformers/tree/a4dd71ef19033ec8e059a0a76c7141a8a5840e66\r\n\r\n\r\nDoes this make more sense?",

"The case you're showing makes total sense. I'm absolutely clear on that one.\r\n\r\nBut your explanation doesn't fit https://codecov.io/gh/huggingface/transformers/pull/7067/changes\r\n\r\nLet's pick a small one: `Changes in src/transformers/modelcard.py`\r\n\r\n\r\n\r\nAs you can see, there are only additions; I don't see any subtractions, i.e. I only see red lines. Where are the green ones? If it's +2/-2, I'd expect to see 2 in red and 2 in green. Does it make sense which parts of the report I'm struggling to understand?\r\n"

] | 1,596 | 1,601 | 1,601 | CONTRIBUTOR | null | Currently PRs get a codecov report

1. Judging by various commits, especially pure doc commits, it's either not working right or it needs to be configured.

e.g., this PR has 0.00% change to the code:

https://github.com/huggingface/transformers/pull/6315/files

yet codecov reports a 0.51% decrease in coverage, which makes no sense. (edit: it updates itself as other commits land on master; it now shows -0.31%)

2. Does it have to send a notification and not just comment on the PR?

It appears that it can be fine-tuned to send notifications only when a desired threshold is crossed (https://docs.codecov.io/docs/notifications#standard-notification-fields), so that it flags an issue only when there actually is one.
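To make the threshold idea concrete, here is a minimal sketch of the rule as I understand it. It assumes that a threshold of X means "tolerate a coverage drop of up to X percentage points". This is not Codecov's code, and `project_status_passes` is a hypothetical name:

```python
def project_status_passes(base_coverage: float, head_coverage: float, threshold: float = 1.0) -> bool:
    """Hypothetical threshold rule: pass unless coverage fell by more than `threshold` points.

    Coverage values are percentages, e.g. 79.93 means 79.93%.
    """
    drop = base_coverage - head_coverage
    return drop <= threshold


# The base value 80.0 is arbitrary. With a 1% threshold, a 0.51-point drop
# (the size reported for the docs-only PR above) passes, while a 1.5-point drop is still flagged.
print(project_status_passes(80.0, 79.49))
print(project_status_passes(80.0, 78.5))
```

With such a rule, noise-level fluctuations on docs-only PRs would stop producing alerts, while real regressions would still be reported.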

Here is a ready-made config file from another project: https://github.com/zulip/zulip/blob/master/.codecov.yml

We could reuse it as is, except perhaps adjusting the threshold to 1%. I'm also not sure whether we want it to comment by default. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6317/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6317/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6316 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6316/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6316/comments | https://api.github.com/repos/huggingface/transformers/issues/6316/events | https://github.com/huggingface/transformers/issues/6316 | 674,711,324 | MDU6SXNzdWU2NzQ3MTEzMjQ= | 6,316 | Dataloader number of workers in Trainer | {

"login": "ggaemo",

"id": 8081512,

"node_id": "MDQ6VXNlcjgwODE1MTI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8081512?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ggaemo",

"html_url": "https://github.com/ggaemo",

"followers_url": "https://api.github.com/users/ggaemo/followers",

"following_url": "https://api.github.com/users/ggaemo/following{/other_user}",

"gists_url": "https://api.github.com/users/ggaemo/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ggaemo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ggaemo/subscriptions",

"organizations_url": "https://api.github.com/users/ggaemo/orgs",

"repos_url": "https://api.github.com/users/ggaemo/repos",

"events_url": "https://api.github.com/users/ggaemo/events{/privacy}",

"received_events_url": "https://api.github.com/users/ggaemo/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"+1.\r\n\r\nI suppose it should not be difficult to add an additional argument to `self.args` specifying the number of workers to use, and to use it as:\r\n```\r\nreturn DataLoader(\r\n self.train_dataset,\r\n batch_size=self.args.train_batch_size,\r\n sampler=train_sampler,\r\n collate_fn=self.data_collator,\r\n drop_last=self.args.dataloader_drop_last,\r\n num_workers=self.args.num_workers\r\n )\r\n```\r\n\r\nIs there any particular reason why this has not been implemented yet? ",

"+1",

"+1",

"No particular reason why it was not implemented, would welcome a PR!"

] | 1,596 | 1,600 | 1,600 | NONE | null | https://github.com/huggingface/transformers/blob/175cd45e13b2e33d1efec9e2ac217cba99f6ae58/src/transformers/trainer.py#L252

If you use the Trainer from trainer.py, the only option for your dataloader is 0 workers.

However, even if I change the source code to use 10 workers for the dataloader, the model still uses the same thread.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6316/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6316/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6315 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6315/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6315/comments | https://api.github.com/repos/huggingface/transformers/issues/6315/events | https://github.com/huggingface/transformers/pull/6315 | 674,702,744 | MDExOlB1bGxSZXF1ZXN0NDY0MzQ3MTk3 | 6,315 | [examples] consistently use --gpus, instead of --n_gpu | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"I had to update all the files since n_gpu seems like a common param.",

"You could create an issue, suggesting to use `n_gpu` everywhere instead, supporting it with some stats that would be in favor of this naming. As long as it's consistent across the project either way works, IMHO."

] | 1,596 | 1,603 | 1,596 | CONTRIBUTOR | null | - some docs were wrongly suggesting to use `--n_gpu`, when the code is `--gpus`

- `examples/distillation/` used `--n_gpu` in the code; switched both the code and the docs to `--gpus` | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6315/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6315/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/6315",

"html_url": "https://github.com/huggingface/transformers/pull/6315",

"diff_url": "https://github.com/huggingface/transformers/pull/6315.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/6315.patch",

"merged_at": 1596810993000

} |

https://api.github.com/repos/huggingface/transformers/issues/6314 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6314/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6314/comments | https://api.github.com/repos/huggingface/transformers/issues/6314/events | https://github.com/huggingface/transformers/pull/6314 | 674,700,588 | MDExOlB1bGxSZXF1ZXN0NDY0MzQ1NTEx | 6,314 | [pl] restore lr logging behavior for glue, ner examples | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=h1) Report\n> Merging [#6314](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/be1520d3a3c09d729649c49fa3163bd938b6a238&el=desc) will **decrease** coverage by `0.85%`.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #6314 +/- ##\n==========================================\n- Coverage 79.93% 79.08% -0.86% \n==========================================\n Files 153 153 \n Lines 27888 27888 \n==========================================\n- Hits 22293 22054 -239 \n- Misses 5595 5834 +239 \n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [src/transformers/tokenization\\_xlm.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25feGxtLnB5) | `16.26% <0.00%> (-66.67%)` | :arrow_down: |\n| [src/transformers/tokenization\\_marian.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fbWFyaWFuLnB5) | `66.66% <0.00%> (-32.50%)` | :arrow_down: |\n| [src/transformers/modeling\\_tf\\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9iZXJ0LnB5) | `69.06% <0.00%> (-27.52%)` | :arrow_down: |\n| [src/transformers/tokenization\\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fb3BlbmFpLnB5) | `71.21% <0.00%> (-12.88%)` | :arrow_down: |\n| [src/transformers/tokenization\\_gpt2.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fZ3B0Mi5weQ==) | `87.50% <0.00%> 
(-9.73%)` | :arrow_down: |\n| [src/transformers/tokenization\\_transfo\\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdHJhbnNmb194bC5weQ==) | `33.56% <0.00%> (-8.93%)` | :arrow_down: |\n| [src/transformers/tokenization\\_utils\\_base.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHNfYmFzZS5weQ==) | `92.08% <0.00%> (-1.39%)` | :arrow_down: |\n| [src/transformers/modeling\\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19iZXJ0LnB5) | `88.26% <0.00%> (-0.17%)` | :arrow_down: |\n| [src/transformers/modeling\\_tf\\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9kaXN0aWxiZXJ0LnB5) | `97.41% <0.00%> (+32.94%)` | :arrow_up: |\n| [src/transformers/tokenization\\_albert.py](https://codecov.io/gh/huggingface/transformers/pull/6314/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYWxiZXJ0LnB5) | `87.50% <0.00%> (+58.65%)` | :arrow_up: |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=footer). Last update [be1520d...6321287](https://codecov.io/gh/huggingface/transformers/pull/6314?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n",

"How do we know this works?",

"> How do we know this works?\r\n\r\nI described what it did in the first comment:\r\n1. use public API instead of digging into PL internals\r\n2. restore bits removed by https://github.com/huggingface/transformers/pull/6027; you have to compare against the original (pre-6027) code, see the second-to-last part of the diff: https://github.com/huggingface/transformers/pull/6027/files#diff-6cf9887b73b621b2d881039a61ccfa5fR47\r\n```\r\n # tensorboard_logs = {\"loss\": loss, \"rate\": self.lr_scheduler.get_last_lr()[-1]}\r\n tensorboard_logs = {\"loss\": loss}\r\n```\r\nWhy was `rate` removed?\r\n\r\ni.e. this PR is restoring, not changing anything. PR 6027 did change behavior w/o testing the change.",

"note: This does not affect seq2seq/ because of `Seq2SeqLoggingCallback`",

"Thanks @stas00 !"

] | 1,596 | 1,603 | 1,597 | CONTRIBUTOR | null | 2 more fixes for https://github.com/huggingface/transformers/pull/6027

1. restore the original code and add what was there already, instead of a complex line of code.

2. restore the removed `rate` field (the missing bit)

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6314/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6314/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/6314",

"html_url": "https://github.com/huggingface/transformers/pull/6314",

"diff_url": "https://github.com/huggingface/transformers/pull/6314.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/6314.patch",

"merged_at": 1597177632000

} |

https://api.github.com/repos/huggingface/transformers/issues/6313 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6313/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6313/comments | https://api.github.com/repos/huggingface/transformers/issues/6313/events | https://github.com/huggingface/transformers/issues/6313 | 674,674,583 | MDU6SXNzdWU2NzQ2NzQ1ODM= | 6,313 | Error trying to import SquadDataset | {

"login": "brian8128",

"id": 10691563,

"node_id": "MDQ6VXNlcjEwNjkxNTYz",

"avatar_url": "https://avatars.githubusercontent.com/u/10691563?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/brian8128",

"html_url": "https://github.com/brian8128",

"followers_url": "https://api.github.com/users/brian8128/followers",

"following_url": "https://api.github.com/users/brian8128/following{/other_user}",

"gists_url": "https://api.github.com/users/brian8128/gists{/gist_id}",

"starred_url": "https://api.github.com/users/brian8128/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/brian8128/subscriptions",

"organizations_url": "https://api.github.com/users/brian8128/orgs",

"repos_url": "https://api.github.com/users/brian8128/repos",

"events_url": "https://api.github.com/users/brian8128/events{/privacy}",

"received_events_url": "https://api.github.com/users/brian8128/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi @brian8128 , `SquadDataset` was added after the 3.0.2 release. You'll need to install from source to use it",

"Thanks! It's working now. You guys have written some code around this stuff!"

] | 1,596 | 1,596 | 1,596 | NONE | null | ## Environment info

- `transformers` version: 3.0.2

- Platform: Linux-4.15.0-108-generic-x86_64-with-glibc2.10

- Python version: 3.8.2

- PyTorch version (GPU?): 1.4.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help

@sgugger @julien-c

## Information

I am trying to follow the run_squad_trainer example. However, I am unable to import `SquadDataset` from transformers. I tried updating to 3.0.2 but got the same error.

https://github.com/huggingface/transformers/blob/master/examples/question-answering/run_squad_trainer.py

```
from transformers import SquadDataset
---------------------------------------------------------------------------
ImportError Traceback (most recent call last)
<ipython-input-6-13f8e9ce9352> in <module>
----> 1 from transformers import SquadDataset
ImportError: cannot import name 'SquadDataset' from 'transformers' (/home/brian/miniconda3/envs/ML38/lib/python3.8/site-packages/transformers/__init__.py)
```

## Expected behavior

Import runs without error. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6313/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6313/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6312 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6312/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6312/comments | https://api.github.com/repos/huggingface/transformers/issues/6312/events | https://github.com/huggingface/transformers/pull/6312 | 674,674,023 | MDExOlB1bGxSZXF1ZXN0NDY0MzI0NDg4 | 6,312 | clarify shuffle | {

"login": "xujiaze13",

"id": 37360975,

"node_id": "MDQ6VXNlcjM3MzYwOTc1",

"avatar_url": "https://avatars.githubusercontent.com/u/37360975?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/xujiaze13",

"html_url": "https://github.com/xujiaze13",

"followers_url": "https://api.github.com/users/xujiaze13/followers",

"following_url": "https://api.github.com/users/xujiaze13/following{/other_user}",

"gists_url": "https://api.github.com/users/xujiaze13/gists{/gist_id}",

"starred_url": "https://api.github.com/users/xujiaze13/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/xujiaze13/subscriptions",

"organizations_url": "https://api.github.com/users/xujiaze13/orgs",

"repos_url": "https://api.github.com/users/xujiaze13/repos",

"events_url": "https://api.github.com/users/xujiaze13/events{/privacy}",

"received_events_url": "https://api.github.com/users/xujiaze13/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"# [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=h1) Report\n> Merging [#6312](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/0eecaceac7a2cb3c067a435a7571a2ee0de619b9?el=desc) will **increase** coverage by `0.24%`.\n> The diff coverage is `n/a`.\n\n[](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=tree)\n\n```diff\n@@ Coverage Diff @@\n## master #6312 +/- ##\n==========================================\n+ Coverage 79.72% 79.96% +0.24% \n==========================================\n Files 157 157 \n Lines 28586 28586 \n==========================================\n+ Hits 22790 22859 +69 \n+ Misses 5796 5727 -69 \n```\n\n\n| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=tree) | Coverage Δ | |\n|---|---|---|\n| [src/transformers/modeling\\_tf\\_electra.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl9lbGVjdHJhLnB5) | `25.13% <0.00%> (-73.83%)` | :arrow_down: |\n| [src/transformers/tokenization\\_marian.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fbWFyaWFuLnB5) | `32.20% <0.00%> (-66.95%)` | :arrow_down: |\n| [src/transformers/modeling\\_marian.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19tYXJpYW4ucHk=) | `60.00% <0.00%> (-30.00%)` | :arrow_down: |\n| [src/transformers/tokenization\\_xlm\\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25feGxtX3JvYmVydGEucHk=) | `84.52% <0.00%> (-10.72%)` | :arrow_down: |\n| [src/transformers/activations.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9hY3RpdmF0aW9ucy5weQ==) | `85.00% 
<0.00%> (-5.00%)` | :arrow_down: |\n| [src/transformers/tokenization\\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYXV0by5weQ==) | `95.55% <0.00%> (-2.23%)` | :arrow_down: |\n| [src/transformers/configuration\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX3V0aWxzLnB5) | `96.00% <0.00%> (-0.67%)` | :arrow_down: |\n| [src/transformers/modeling\\_bart.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19iYXJ0LnB5) | `95.06% <0.00%> (-0.35%)` | :arrow_down: |\n| [src/transformers/modeling\\_tf\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `86.97% <0.00%> (-0.33%)` | :arrow_down: |\n| [src/transformers/generation\\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9nZW5lcmF0aW9uX3V0aWxzLnB5) | `96.94% <0.00%> (+0.27%)` | :arrow_up: |\n| ... and [11 more](https://codecov.io/gh/huggingface/transformers/pull/6312/diff?src=pr&el=tree-more) | |\n\n------\n\n[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=continue).\n> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)\n> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`\n> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=footer). Last update [0eecace...5871cac](https://codecov.io/gh/huggingface/transformers/pull/6312?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).\n"

] | 1,596 | 1,598 | 1,598 | CONTRIBUTOR | null | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6312/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6312/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/6312",

"html_url": "https://github.com/huggingface/transformers/pull/6312",

"diff_url": "https://github.com/huggingface/transformers/pull/6312.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/6312.patch",

"merged_at": 1598786770000

} |

|

https://api.github.com/repos/huggingface/transformers/issues/6311 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6311/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6311/comments | https://api.github.com/repos/huggingface/transformers/issues/6311/events | https://github.com/huggingface/transformers/pull/6311 | 674,671,965 | MDExOlB1bGxSZXF1ZXN0NDY0MzIyNzc4 | 6,311 | modify ``val_loss_mean`` | {

"login": "xujiaze13",

"id": 37360975,

"node_id": "MDQ6VXNlcjM3MzYwOTc1",

"avatar_url": "https://avatars.githubusercontent.com/u/37360975?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/xujiaze13",

"html_url": "https://github.com/xujiaze13",

"followers_url": "https://api.github.com/users/xujiaze13/followers",

"following_url": "https://api.github.com/users/xujiaze13/following{/other_user}",

"gists_url": "https://api.github.com/users/xujiaze13/gists{/gist_id}",

"starred_url": "https://api.github.com/users/xujiaze13/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/xujiaze13/subscriptions",

"organizations_url": "https://api.github.com/users/xujiaze13/orgs",

"repos_url": "https://api.github.com/users/xujiaze13/repos",

"events_url": "https://api.github.com/users/xujiaze13/events{/privacy}",

"received_events_url": "https://api.github.com/users/xujiaze13/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,596 | 1,602 | 1,602 | CONTRIBUTOR | null | Revised in response to this warning:

***/lib/python3.*/site-packages/pytorch_lightning/utilities/distributed.py:25: RuntimeWarning: The metric you returned 1.234 must be a `torch.Tensor` instance, checkpoint not saved HINT: what is the value of val_loss in validation_epoch_end()? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6311/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6311/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/6311",

"html_url": "https://github.com/huggingface/transformers/pull/6311",

"diff_url": "https://github.com/huggingface/transformers/pull/6311.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/6311.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/6310 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6310/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6310/comments | https://api.github.com/repos/huggingface/transformers/issues/6310/events | https://github.com/huggingface/transformers/issues/6310 | 674,648,681 | MDU6SXNzdWU2NzQ2NDg2ODE= | 6,310 | collision between different cl arg definitions in examples | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Here is a potential idea of how to keep all the common cl arg definitions in `BaseTransformer` and then let each example subclass tell which ones it wants to support, w/o needing to duplicate the same thing everywhere.\r\n```\r\nimport argparse\r\n\r\n# removes an option from the parser after parser.add_argument's are all done\r\n#https://stackoverflow.com/a/49753634/9201239\r\ndef remove_option(parser, arg):\r\n for action in parser._actions:\r\n if (vars(action)['option_strings']\r\n and vars(action)['option_strings'][0] == arg) \\\r\n or vars(action)['dest'] == arg:\r\n parser._remove_action(action)\r\n\r\n for action in parser._action_groups:\r\n vars_action = vars(action)\r\n var_group_actions = vars_action['_group_actions']\r\n for x in var_group_actions:\r\n if x.dest == arg:\r\n var_group_actions.remove(x)\r\n return\r\n\r\n# another way to remove an arg, but perhaps incomplete\r\n#parser._handle_conflict_resolve(None, [('--bar',parser._actions[2])])\r\n\r\n# tell the parser which args to keep (the rest will be removed)\r\ndef keep_arguments(parser, supported_args):\r\n for act in parser._actions:\r\n arg = act.dest\r\n if not arg in supported_args:\r\n remove_option(parser, arg)\r\n\r\nparser = argparse.ArgumentParser()\r\n\r\n# superclass can register all kinds of options\r\nparser.add_argument('--foo', help='foo argument', required=False)\r\nparser.add_argument('--bar', help='bar argument', required=False)\r\nparser.add_argument('--tar', help='bar argument', required=False)\r\n\r\n# then a subclass can choose which of them it wants/can support\r\nsupported_args = ('foo bar'.split()) # no --tar please\r\n\r\nkeep_arguments(parser, supported_args)\r\n\r\nargs = parser.parse_args()\r\n```\r\n\r\nGranted, there is no public API to remove args once registered. 
This idea uses a hack that taps into an internal API.\r\n\r\n----\r\n\r\nAlternatively, `BaseTransformer` could maintain a dict of all the common args with help/defaults/etc w/o registering any of them, and then the subclass can just tell it which cl args it wants to be registered. This will be just a matter of formatting the dict and then a subclass would call:\r\n\r\n```\r\n# a potential new function to be called by a subclass \r\nregister_arguments(parser, 'foo bar'.split())\r\n```\r\nor if no abstraction is desired it could go as explicit as:\r\n```\r\ndefs = self.args_def() # non-existing method fetching the possible args\r\nparser.add_argument(defs['foo'])\r\nparser.add_argument(defs['bar'])\r\n```\r\nbut this probably defeats the purpose, just as well copy the whole thing.\r\n\r\n---\r\n\r\n\r\nOne thing to consider in either solution is that a subclass may want to have different defaults, so the new API could provide for defaults override as well.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,596 | 1,603 | 1,603 | CONTRIBUTOR | null | The `examples` are inconsistent in how the cl args are defined and parsed. Some rely on PL's main args, as `finetune.py` does: https://github.com/huggingface/transformers/blob/master/examples/seq2seq/finetune.py#L410

```python
parser = argparse.ArgumentParser()
parser = pl.Trainer.add_argparse_args(parser)
```

Others, like `run_pl_glue.py`, rely on `lightning_base.py`'s main args: https://github.com/huggingface/transformers/blob/master/examples/text-classification/run_pl_glue.py#L176

```python
parser = argparse.ArgumentParser()
add_generic_args(parser, os.getcwd())
```

Now that we have moved `--gpus` into `lightning_base.py`'s main args, the scripts that use PL's main args collide with it and we get:

```

fail.argparse.ArgumentError: argument --gpus: conflicting option string: --gpus

```

That is, PL already supplies `--gpus` and many other args that some of the scripts in `examples` redefine.
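The collision can be reproduced with plain `argparse`, no PL needed. In the sketch below, `add_trainer_like_args` and `add_generic_like_args` are hypothetical stand-ins (assumptions, not the real `pl.Trainer.add_argparse_args` / `add_generic_args`), each registering `--gpus`. With the default `conflict_handler="error"` the second registration raises the same `ArgumentError`; `conflict_handler="resolve"` is a standard `argparse` option that makes the later definition win.

```python
import argparse


# Hypothetical stand-in for pl.Trainer.add_argparse_args (the real one adds many more options).
def add_trainer_like_args(parser):
    parser.add_argument("--gpus", type=int, default=None)


# Hypothetical stand-in for lightning_base.add_generic_args once --gpus lives there too.
def add_generic_like_args(parser):
    parser.add_argument("--gpus", type=int, default=0)


def build_parser(conflict_handler="error"):
    parser = argparse.ArgumentParser(conflict_handler=conflict_handler)
    add_trainer_like_args(parser)
    add_generic_like_args(parser)  # second --gpus definition
    return parser


try:
    build_parser()
except argparse.ArgumentError as err:
    print(err)  # argument --gpus: conflicting option string: --gpus

# "resolve" drops the earlier --gpus action, so the later default (0) applies.
print(build_parser("resolve").parse_args([]).gpus)  # 0
```

Note that `"resolve"` silently discards PL's own definition (help text, defaults), so it hides the inconsistency rather than fixing it.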

So either the example scripts need to stop using `pl.Trainer.add_argparse_args(parser)` and rely exclusively on `lightning_base.add_generic_args`, or we need a different, cleaner approach. Different scripts appear to have different needs arg-wise, but they all use `lightning_base`.
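One possible middle ground, sketched under assumptions: the shared code registers each generic option only if no earlier parser call (e.g. PL's) already claimed that flag. `add_argument_if_missing`, `GENERIC_ARGS` and `add_generic_args_sketch` are hypothetical names, not part of transformers or PyTorch Lightning. The sketch relies on `argparse` checking for conflicts before it stores the new action, so a failed `add_argument` call leaves the parser unchanged.

```python
import argparse


def add_argument_if_missing(parser, *flags, **kwargs):
    """Hypothetical helper: register an option unless its flag is already taken.

    Returns True if the option was added, False if an existing definition was kept.
    """
    try:
        parser.add_argument(*flags, **kwargs)
        return True
    except argparse.ArgumentError:
        # argparse raised before storing the action, so the parser is untouched.
        return False


# Hypothetical shared definitions; names and defaults are illustrative only.
GENERIC_ARGS = {
    "--gpus": dict(type=int, default=0, help="number of GPUs"),
    "--seed": dict(type=int, default=42, help="random seed"),
}


def add_generic_args_sketch(parser):
    """Add every generic option that is still free; return the flags that were skipped."""
    skipped = []
    for flag, kwargs in GENERIC_ARGS.items():
        if not add_argument_if_missing(parser, flag, **kwargs):
            skipped.append(flag)
    return skipped


parser = argparse.ArgumentParser()
parser.add_argument("--gpus", type=int, default=None)  # e.g. already registered by PL
skipped = add_generic_args_sketch(parser)
args = parser.parse_args(["--seed", "7"])
print(skipped, args.gpus, args.seed)  # ['--gpus'] None 7
```

Here the first definition wins, which keeps PL's behavior intact. A script that wants different defaults could still call `parser.set_defaults(...)` after all options are registered.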

The problem got exposed in: https://github.com/huggingface/transformers/pull/6027 and https://github.com/huggingface/transformers/pull/6307 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/6310/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/6310/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/6309 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/6309/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/6309/comments | https://api.github.com/repos/huggingface/transformers/issues/6309/events | https://github.com/huggingface/transformers/pull/6309 | 674,632,888 | MDExOlB1bGxSZXF1ZXN0NDY0MjkwMzQy | 6,309 | pl version: examples/requirements.txt is single source of truth | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [