| markdown | code | output | license | path | repo_name |

|---|---|---|---|---|---|

Shapiro-Wilk Test | # define Shapiro-Wilk test function
import pandas as pd
from scipy.stats import shapiro

def shapiro_test(data):
    '''Calculate the Shapiro-Wilk test for every numeric column and return the results as a table.'''
    data = data._get_numeric_data()
    rows = []
    # Iterate over columns, calculate test statistic & collect one row per column
    for column in data:
        column_shapiro_test = shapiro(data[column])
        shapiro_pvalue_column = column_shapiro_test.pvalue
        if column_shapiro_test.pvalue < .05:
            # mark significant deviations from normality with an asterisk
            shapiro_pvalue_column = '{:.6f}'.format(shapiro_pvalue_column) + '*'
            column_distr = 'non-normal'
        else:
            column_distr = 'normal'
        rows.append({'variable': column,
                     'Shapiro Wilk p-value': shapiro_pvalue_column,
                     'Shapiro Wilk statistic': column_shapiro_test.statistic,
                     'distribution': column_distr})
    # DataFrame.append was removed in pandas 2.0, so build the table once from the list of rows
    data_shapiro_test = pd.DataFrame(rows)
    data_shapiro_test = data_shapiro_test[['variable', 'Shapiro Wilk statistic', 'Shapiro Wilk p-value', 'distribution']]
    return data_shapiro_test

shapiro_test(df.dropna()) | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |
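To see how the table should be read, here is a small self-contained sketch on synthetic data (not the survey data). The helper name `shapiro_table` is hypothetical; it keeps p-values numeric instead of starred strings and uses the public `select_dtypes` instead of the private `_get_numeric_data`. A p-value below `alpha` rejects the null hypothesis that the column is normally distributed.

```python
import numpy as np
import pandas as pd
from scipy.stats import shapiro


def shapiro_table(data: pd.DataFrame, alpha: float = 0.05) -> pd.DataFrame:
    """Run Shapiro-Wilk on every numeric column (hypothetical helper)."""
    rows = []
    for column in data.select_dtypes(include="number"):
        result = shapiro(data[column].dropna())
        rows.append({
            "variable": column,
            "Shapiro Wilk statistic": result.statistic,
            "Shapiro Wilk p-value": result.pvalue,
            # a small p-value rejects normality for this column
            "distribution": "non-normal" if result.pvalue < alpha else "normal",
        })
    # build the frame once from a list of dicts (works on pandas 1.x and 2.x)
    return pd.DataFrame(rows)


rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "gaussian": rng.normal(size=200),     # drawn from a normal distribution
    "skewed": rng.exponential(size=200),  # strongly right-skewed
})
print(shapiro_table(demo))
```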

Histograms **Histograms: Likert-scale variables** | for column in df._get_numeric_data().drop(columns=['assessed PEB','age']):
    sns.set(rc={'figure.figsize':(5,5)})
    data = df[column]
    # bin edges 0.5, 1.5, ..., 7.5 centre one bar on each integer answer
    sns.histplot(data, bins=np.arange(1,9)-.5)
    plt.xlabel(column)
    plt.show() | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |
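The bin edges `np.arange(1,9)-.5` place each integer answer in the middle of its own bin, so every bar corresponds to exactly one Likert value. This sketch, assuming a 7-point scale (inferred from the edges) and made-up answers, shows the edges and the resulting counts with `np.histogram`:

```python
import numpy as np

# Edges 0.5, 1.5, ..., 7.5 give seven bins, one per answer 1..7
edges = np.arange(1, 9) - 0.5
answers = np.array([1, 2, 2, 4, 4, 4, 7])  # made-up responses
counts, _ = np.histogram(answers, bins=edges)
print(edges)   # [0.5 1.5 2.5 3.5 4.5 5.5 6.5 7.5]
print(counts)  # [1 2 0 3 0 0 1]
```

The same idea explains `np.arange(0,8)-.5` for `assessed PEB`, which centres bars on the values 0 to 6.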

**Histogram: age** | sns.histplot(df['age'], bins=10) | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |

**Histogram: assessed PEB** | sns.histplot(df['assessed PEB'], bins=np.arange(0,8)-.5) | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |

Kendall's Tau correlation | # create df with correlation coefficient and p-value indication
def kendall_pval(x,y):
    return kendalltau(x,y)[1]

# calculate Kendall's tau correlation with p-values ( < .01 = ***, < .05 = **, < .1 = *)
tau = df.corr(method = 'kendall').round(decimals=2)
# pandas puts 1 on the diagonal for callables; subtracting the identity sets it to 0 (so the diagonal is starred too)
pval = df.corr(method=kendall_pval) - np.eye(*tau.shape)
# one star per threshold passed (DataFrame.applymap is renamed DataFrame.map in pandas 2.1)
p = pval.applymap(lambda x: ''.join(['*' for t in [0.1, 0.05, 0.01] if x<=t]))
tau_corr_with_p_values = tau.round(4).astype(str) + p

# set colored highlights for correlation matrix
def color_sig_blue(val):
    """
    color all significant values in blue
    """
    color = 'blue' if val.endswith('*') else 'black'
    return 'color: %s' % color

tau_corr_with_p_values.style.applymap(color_sig_blue) | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |
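As a cross-check of the star convention, this sketch computes Kendall's tau pair by pair with `scipy.stats.kendalltau` and appends one star per threshold passed (* p < .1, ** p < .05, *** p < .01). The helper name `kendall_table` and the synthetic columns are hypothetical, and the diagonal is written as `1.00` without stars rather than reproducing the p = 0 trick above.

```python
import numpy as np
import pandas as pd
from scipy.stats import kendalltau


def kendall_table(df: pd.DataFrame) -> pd.DataFrame:
    """Kendall's tau for every column pair, formatted with significance stars."""
    cols = list(df.columns)
    out = pd.DataFrame("", index=cols, columns=cols)
    for a in cols:
        for b in cols:
            if a == b:
                out.loc[a, b] = "1.00"  # a column is perfectly concordant with itself
                continue
            tau, p = kendalltau(df[a], df[b])
            # one star per threshold passed: * p < .1, ** p < .05, *** p < .01
            stars = "*" * sum(1 for t in (0.1, 0.05, 0.01) if p < t)
            out.loc[a, b] = f"{tau:.2f}{stars}"
    return out


rng = np.random.default_rng(1)
x = rng.normal(size=50)
demo = pd.DataFrame({"x": x,
                     "y": x + rng.normal(scale=0.1, size=50),  # nearly monotone in x
                     "z": rng.normal(size=50)})                # independent noise
print(kendall_table(demo))
```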

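Correlation Heatmap The first heatmap shows all Kendall's tau coefficients; the second shows only the lower triangle and hides every correlation that is not significant (p ≥ .05). | # calculate correlation coefficient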

corr = df.corr(method='kendall')

# calculate column correlations and make a seaborn heatmap

sns.set(rc={'figure.figsize':(12,12)})

ax = sns.heatmap(

corr,

vmin=-1, vmax=1, center=0,

cmap=sns.diverging_palette(20, 220, n=200),

square=True

)

ax.set_xticklabels(

ax.get_xticklabels(),

rotation=45,

horizontalalignment='right'

);

heatmap = ax.get_figure()

# calculate correlation coefficient and p-values

corr_p_values = df.corr(method = kendall_pval)

corr = df.corr(method='kendall')

# calculate column correlations and make a seaborn heatmap

sns.set(rc={'figure.figsize':(12,12)})

#set mask so that only significant values (p < .05) in the lower triangle are shown

mask = np.invert(np.tril(corr_p_values<.05))

ax = sns.heatmap(

corr,

vmin=-1, vmax=1, center=0,

cmap=sns.diverging_palette(20, 220, n=200),

square=True,

annot=True,

mask=mask

)

ax.set_xticklabels(

ax.get_xticklabels(),

rotation=45,

horizontalalignment='right'

);

heatmap = ax.get_figure() | _____no_output_____ | MIT | 210601 gca data analyses.ipynb | rbnjd/gca_data_analyses |
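The mask logic in the second heatmap can be checked on a tiny hypothetical p-value matrix. In seaborn, cells where the mask is `True` are not drawn, so a cell stays visible only if it lies in the lower triangle and is significant; the diagonal (p = 1 for a callable `corr`) is always hidden.

```python
import numpy as np

# Hypothetical p-values for three variables (diagonal = 1.0, as pandas sets it for callables)
p_values = np.array([[1.00, 0.01, 0.20],
                     [0.01, 1.00, 0.03],
                     [0.20, 0.03, 1.00]])

# True = hide the cell; np.tril zeroes (False) everything above the diagonal
mask = np.invert(np.tril(p_values < 0.05))
print(mask)
```

Only the cells (1, 0) and (2, 1) remain unmasked here: both are below the diagonal and have p < .05.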

Cherry Blossoms! If we travel back in time a few months, [cherry blossoms](https://en.wikipedia.org/wiki/Cherry_blossom) were in full bloom! We don't live in Japan or DC, but we do have our fair share of the trees - buuut you sadly missed [Brooklyn Botanic Garden's annual festival](https://www.bbg.org/visit/event/sakura_matsuri_2019). We'll have to make up for it with data-driven cherry blossoms instead. Once upon a time [Data is Plural](https://tinyletter.com/data-is-plural) linked to [a dataset](http://atmenv.envi.osakafu-u.ac.jp/aono/kyophenotemp4/) about when the cherry trees blossom each year. It's a little out of date, but it's quirky in a real nice way so we're sticking with it. 0. Do all of your importing/setup stuff | import pandas as pd

import numpy as np

%matplotlib inline | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

1. Read in the file using pandas, and look at the first five rows | df = pd.read_excel("KyotoFullFlower7.xls")

df.head(5) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

2. Read in the file using pandas CORRECTLY, and look at the first five rows. Hrm, how do your column names look? Read the file in again but this time add a parameter to make sure your columns look right. **TIP: The first year should be 801 AD, and it should not have any dates or anything.** | df=df[25:]

df

df.dtypes

df.head(5)

| _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

3. Look at the final five rows of the data | df.tail(5) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

4. Add some more NaN values. It looks like you should probably have some NaN/missing values earlier on in the dataset under "Reference name." Read in the file *one more time*, this time making sure all of those missing reference names actually show up as `NaN` instead of `-`. | df.replace("-", np.nan, inplace=True)

df

df.rename(columns={'Full-flowering dates of Japanese cherry (Prunus jamasakura) at Kyoto, Japan. (Latest version, Jun. 12, 2012)': 'AD'}, inplace=True)

df.rename(columns={'Unnamed: 1': 'DOY', 'Unnamed: 2': 'Full_flowering_date', 'Unnamed: 3': 'Source_code'}, inplace=True)

df.rename(columns={'Unnamed: 4': 'Data_type_code', 'Unnamed: 5': 'Reference_Name'}, inplace=True)

df | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

5. What source is the most common as a reference? | df.dtypes

df.Source_code.value_counts() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

6. Filter the list to only include rows where the `Full-flowering date (DOY)` is not missing. If you'd like to do it in two steps (which might be easier to think through), first figure out how to test whether a value is empty/missing/null/NaN, get the list of `True`/`False` values, and then later feed it to your `df`. | df[df.DOY.notna()] | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

7. Make a histogram of the full-flowering date. Is it not showing up? Remember the "magic" command that makes graphs show up in matplotlib notebooks! | df.DOY.hist() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

8. Make another histogram of the full-flowering date, but with 39 bins instead of 10 | df.DOY.hist(bins=39) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

9. What's the average number of days it takes for the flowers to blossom? And how many records do we have? Answer these both with one line of code. | df.DOY.describe() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

10. On average, how many days into the year did cherry flowers blossom before 1900? | df[df.AD < 1900].DOY.mean() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

11. How about from 1900 onwards? | df[df.AD >= 1900].DOY.mean() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |
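The difference between a share of rows and an average day of year is easy to see on a tiny made-up frame: `(df.AD >= 1900).mean()` averages booleans and returns the fraction of rows from 1900 onwards, while filtering rows first and then taking the mean of `DOY` answers the question. The numbers below are invented for illustration only.

```python
import pandas as pd

# Made-up stand-in for the Kyoto data: year (AD) and full-flowering day of year (DOY)
toy = pd.DataFrame({"AD": [1800, 1850, 1899, 1900, 1950, 2000],
                    "DOY": [110, 108, 106, 104, 102, 100]})

share_after = (toy.AD >= 1900).mean()              # fraction of rows, not a day of year
mean_before = toy.loc[toy.AD < 1900, "DOY"].mean()
mean_after = toy.loc[toy.AD >= 1900, "DOY"].mean()
print(share_after, mean_before, mean_after)  # 0.5 108.0 102.0
```

Using `<` for one side and `>=` for the other keeps the year 1900 from being counted twice.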

12. How many times was our data from a title in Japanese poetry? You'll need to read the documentation inside of the Excel file. | #Data_type_code

#4=poetry

df.Data_type_code.value_counts() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

13. Show only the years where our data was from a title in Japanese poetry | df[df.Data_type_code == 4]

| _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

14. Graph the full-flowering date (DOY) over time | df.plot(x="AD", y="DOY", figsize=(10,7)) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

15. Smooth out the graph. It's so jagged! You can use `df.rolling` to calculate a rolling average. The following code calculates a **10-year mean**, using the `AD` column as the anchor. If there aren't 10 samples to work with in a row, it'll accept down to 5. Neat, right? (We're only looking at the final 5) | df.rolling(10, on='AD', min_periods=5)['DOY'].mean().tail()

df.rolling(10, on='AD', min_periods=5)['DOY'].mean().tail().plot(ylim=(80, 120)) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |
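How `window` and `min_periods` interact can be shown on a few made-up years. With an integer window, rows are counted positionally, so this sketch assumes the frame is sorted by year. On the real data, the same pattern with a 20-row window, e.g. `df["rolling_date"] = df.rolling(20, on="AD", min_periods=5)["DOY"].mean()` followed by `df.plot(x="AD", y="rolling_date", ylim=(80, 120))`, should produce the requested `rolling_date` column and plot.

```python
import numpy as np
import pandas as pd

# Made-up years and flowering days, sorted by year
toy = pd.DataFrame({"AD": range(2000, 2008),
                    "DOY": [100, 102, 98, 104, 96, 100, 101, 99]})

# 4-row window; windows with fewer than 2 values (only the first row) stay NaN
toy["rolling_date"] = toy["DOY"].rolling(4, min_periods=2).mean()
print(toy)
```

Rows 1 and 2 are averaged over 2 and 3 values; from row 3 on, every mean uses the full 4-row window.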

Use the code above to create a new column called `rolling_date` in our dataset. It should be the 20-year rolling average of the flowering date. Then plot it, with the year on the x axis and the day of the year on the y axis. Try adding `ylim=(80, 120)` to your `.plot` command to make things look a little less dire. 16. Add a month column. Right now the "Full-flowering date" column is pretty rough. It uses numbers like '402' to mean "April 2nd" and "416" to mean "April 16th." Let's make a column to explain what month it happened in. * Every row that happened in April should have 'April' in the `month` column. * Every row that happened in March should have 'March' as the `month` column. * Every row that happened in May should have 'May' as the `month` column. **I've given you March as an example**, you just need to add in two more lines to do April and May. | df.loc[df['Full_flowering_date'] < 400, 'month'] = 'March'
# each later condition needs a lower bound, otherwise it overwrites the months assigned before it
df.loc[(df['Full_flowering_date'] >= 400) & (df['Full_flowering_date'] < 500), 'month'] = 'April'
df.loc[(df['Full_flowering_date'] >= 500) & (df['Full_flowering_date'] < 600), 'month'] = 'May' | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |
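An alternative that cannot overwrite earlier months is to bin the MMDD numbers into half-open ranges in one call with `pd.cut`. This is a sketch on a few made-up values; missing dates stay missing.

```python
import numpy as np
import pandas as pd

dates = pd.Series([331.0, 402.0, 416.0, np.nan, 501.0])  # made-up MMDD values
# Half-open bins [300, 400), [400, 500), [500, 600): one label per month, NaN stays NaN
month = pd.cut(dates, bins=[300, 400, 500, 600], right=False,
               labels=["March", "April", "May"])
print(month.tolist())
```

Because every value falls into exactly one interval, the order of the labels does not matter, unlike a sequence of `df.loc[...]` assignments.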

17. Using your new column, how many blossomings happened in each month? | df.month.value_counts() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

18. Graph how many blossomings happened in each month. | df.month.value_counts().plot(kind='bar') | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

19. Adding a day-of-month column. Now we're going to add a new column called "day of month." It's actually a little tougher than it should be since the `Full-flowering date` column is a *float* instead of an integer. | df.Full_flowering_date.astype(int) | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

And if you try to convert it to an int, **pandas yells at you!** That's because, as you can read, you can't have a `NaN` be an integer. But, for some reason, it *can* be a float. Ugh! So what we'll do is **drop all of the na values, then convert them to integers to get rid of the decimals.** I'll show you the first 5 here. | df['Full_flowering_date'].dropna().astype(int).head() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

On the next line, I take the first character of the row and add a bunch of exclamation points on it. I want you to edit this code to **return the last TWO digits of the number**. This only shows you the first 5, by the way.You might want to look up 'list slicing.' | df['Full_flowering_date'].dropna().astype(int).astype(str).apply(lambda value: value[0] + "!!!").head() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |
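For reference, the slice `value[-2:]` returns the last two characters of each string, which for MMDD values is the zero-padded day. A numeric alternative is the remainder after dividing by 100. Both are shown on made-up values:

```python
import numpy as np
import pandas as pd

dates = pd.Series([402.0, 416.0, np.nan, 501.0])  # made-up MMDD values
whole = dates.dropna().astype(int)           # 402, 416, 501 (NaN row removed first)
last_two = whole.astype(str).apply(lambda value: value[-2:])
print(last_two.tolist())    # ['02', '16', '01']
day_number = whole % 100    # arithmetic alternative
print(day_number.tolist())  # [2, 16, 1]
```

When such a result is assigned back to a column, pandas aligns on the index, so the dropped NaN rows simply get `NaN` in the new column.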

Now that you've successfully extracted the last two digits, save them into a new column called `'day-of-month'` | df['day-of-month'] = df['Full_flowering_date'].dropna().astype(int).astype(str).apply(lambda value: value[-2:])

df.head() | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

20. Adding a date column. Now take the `'month'` and `'day-of-month'` columns and combine them in order to create a new column called `'date'` | df["date"] = df["month"] + " " + df["day-of-month"]

df | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

YOU ARE DONE. And **incredible.** | !!!!!!!!!!! | _____no_output_____ | MIT | 07-homework/cherry-blossoms/Cherry Blossoms.ipynb | giovanafleck/foundations_homework |

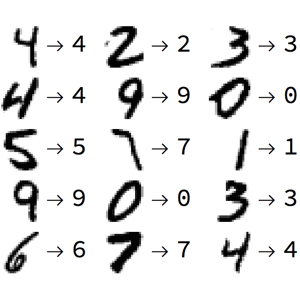

Homework No. 02 Instructions 1.- Fill in your personal details (name and USM student ID, "rol") in the following cell. **Name**: Fabián Rubilar Álvarez **Rol**: 201510509-K 2.- You must push this file with your changes to your personal course repository, including data, images, scripts, etc. 3.- The following will be assessed: - Solutions - Code - That Binder is configured correctly. - When you press `Kernel -> Restart Kernel and Run All Cells`, all cells must run without errors. I.- Digit classification In this lab we will recognize a digit from an image. The goal is to make the best possible prediction for each image from the data. This requires the classic steps of a _Machine Learning_ project, such as descriptive statistics, visualization and preprocessing. * You must fit at least three classification models: * Logistic regression * K-Nearest Neighbours * One or more algorithms of your choice [link](https://scikit-learn.org/stable/supervised_learning.html#supervised-learning) (you must choose an _estimator_ that has at least one hyperparameter). * For models that have hyperparameters, you must search for the best value(s) with one of the techniques available in `scikit-learn` ([see more](https://scikit-learn.org/stable/modules/grid_search.html#tuning-the-hyper-parameters-of-an-estimator)). * For each model, perform _Cross Validation_ with 10 _folds_ on the training data in order to determine a confidence interval for the model's _score_. * Make a prediction with each of the three models on the _test_ data and obtain the _score_. * Analyze their error metrics (**accuracy**, **precision**, **recall**, **f-score**) Data exploration The dataset is loaded below through the `datasets` sub-module of `sklearn`. | import numpy as np

import pandas as pd

from sklearn import datasets

import matplotlib.pyplot as plt

%matplotlib inline

digits_dict = datasets.load_digits()

print(digits_dict["DESCR"])

digits_dict.keys()

digits_dict["target"] | _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

Next, a dataframe named `digits` is created from the data in `digits_dict` so that it has 65 columns: the first 64 hold the grayscale representation of the image (0 = white, 16 = black) and the last one, named _target_, holds the digit (`target`). | digits = (

pd.DataFrame(

digits_dict["data"],

)

.rename(columns=lambda x: f"c{x:02d}")

.assign(target=digits_dict["target"])

.astype(int)

)

digits.head() | _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

Exercise 1 **Exploratory analysis:** Carry out your exploratory analysis, and don't leave anything out! Remember, each analysis should answer a question. Some suggestions: * How are the data distributed? * How much memory am I using? * What data types are there? * How many records are there per class? * Are there records that don't match your prior knowledge of the data? | #First, look at the DataFrame's data types and other useful information (info() also reports memory usage)
digits.info()
#Check whether any column has null values
if True not in digits.isnull().any().values:
    print('There are no null values')
#Unique values in the target column
digits.target.unique()
#Count the records per class, now that we know target has 10 classes
(u,v) = np.unique(digits['target'] , return_counts = True)
for i in range(0,10):
    print ('There are', v[i], 'records for', u[i])
#Since target has 10 classes, summarize the class sizes
caract_datos = [len(digits[digits['target'] ==i ].target) for i in range(0,10)]
print ('Total number of records:', sum(caract_datos))
print ('Largest class size:', max(caract_datos))
print ('Smallest class size:', min(caract_datos))
print ('Mean class size:', 0.1*sum(caract_datos)) | Total number of records: 1797
Largest class size: 183
Smallest class size: 174
Mean class size: 179.70000000000002
| MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

So there are about 180 records per class on average (179.7, rounded up), with a minimum of 174 and a maximum of 183. | #To make this easier to see, plot a histogram with one bar per digit (bin edges at -0.5, 0.5, ..., 9.5)
digits.target.plot.hist(bins=np.arange(11) - 0.5, alpha=0.5) | _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

We know that each record is an 8$\times$8 matrix with integer entries from 0 to 16. According to `DESCR`, each one comes from a 32$\times$32 bitmap that was divided into non-overlapping 4$\times$4 blocks, counting the "on" pixels in each block; this reduces the dimensionality. Each record is an image of a digit from 0 to 9, so it is stored as 8$\times$8 = 64 integer values plus one more value for the label. With 1797 records, that is 1797$\times$65 = 116805 stored values in total (about 0.9 MB if each is stored as a 64-bit integer; `digits.info()` shows the actual memory usage). Without the reduction, the bitmaps would have 32$\times$32$\times$1797 = 1840128 entries, about 15.75 times as many values, although each of those is a single bit. Exercise 2 **Visualization:** To visualize the data we will use the `imshow` method from `matplotlib`. The array has to be reshaped from (1,64) to (8,8) so that the image is square and the digit can be recognized. We will also overlay the corresponding label using the `text` method, which lets us compare the generated image with the label associated with the values. We will do this for the first 25 records in the file. | digits_dict["images"][0] | _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |
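The size arithmetic above can be reproduced with NumPy. This sketch assumes the table is stored as 64-bit integers, which is what `.astype(int)` gives on most 64-bit platforms (older NumPy on Windows used 32-bit integers instead); the zeros only stand in for the real values, since only the shape and dtype matter here.

```python
import numpy as np

n_samples, n_features = 1797, 64
table = np.zeros((n_samples, n_features + 1), dtype=np.int64)  # 64 pixels + target
print(table.size)    # 116805 stored values
print(table.nbytes)  # 934440 bytes (8 bytes per int64)

raw_entries = n_samples * 32 * 32  # entries in the original 32x32 bitmaps
print(raw_entries, round(raw_entries / table.size, 2))  # 1840128 15.75
```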

Visualize images of the digits using the `images` key of `digits_dict`. Hint: use `plt.subplots` and the `imshow` method. You can make a grid of several images at once! | nx, ny = 5, 5
fig, axs = plt.subplots(nx, ny, figsize=(12, 12))
for x in range(nx):
    for y in range(ny):
        # image number ny*x + y, with its target label drawn on top
        axs[x, y].imshow(digits_dict['images'][ny*x + y], cmap='plasma')
        axs[x, y].text(3, 4, s=digits['target'][ny*x + y], fontsize=30) | _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

Exercise 3 **Machine Learning**: In this part you must train the different models chosen from the `sklearn` library. For each model, carry out the following steps: * **train-test** * Create training and test sets (you decide the appropriate proportions). * Print the sizes of the training and test sets. * **model**: * Instantiate the target model from the sklearn library. * *Hyperparameters*: Use `sklearn.model_selection.GridSearchCV` to obtain the best estimate of the target model's parameters. * **Metrics**: * Plot the confusion matrix. * Analyze the error metrics. __Questions to answer:__ * Which model is best based on its metrics? * Which model takes the least time to fit? * Which model do you choose? | X = digits.drop(columns="target").values

y = digits["target"].values

from sklearn.model_selection import train_test_split

#Build the training and test sets (80% / 20%)

X_train, X_test, y_train, y_test = train_test_split(X,y,test_size=0.2,random_state=42)

print('Size of the test set:', len(y_test))

print('Size of the training set:', len(y_train))

#LOGISTIC REGRESSION

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

#summary_metrics comes from the course's local helper module metrics_classification

from metrics_classification import summary_metrics

#Create the model (max_iter raised because the default 100 iterations may not converge on the unscaled pixel values)

rlog = LogisticRegression(max_iter=1000)

rlog.fit(X_train, y_train) #Fit the model

#Confusion matrix

y_true = list(y_test)

y_pred = list(rlog.predict(X_test))

print('\nConfusion matrix:\n ')

print(confusion_matrix(y_true,y_pred))

#Metrics

df_temp = pd.DataFrame(

{

'y':y_true,

'yhat':y_pred

}

)

df_metrics = summary_metrics(df_temp)

print("\nMetrics for the classifier")

print("")

print(df_metrics)

#K-NEAREST NEIGHBORS

from sklearn.neighbors import KNeighborsClassifier

from sklearn import neighbors

#Create the model

knn = neighbors.KNeighborsClassifier()

knn.fit(X_train,y_train) #Fit the model

#Confusion matrix

y_true = list(y_test)

y_pred = list(knn.predict(X_test))

print('\nConfusion matrix:\n ')

print(confusion_matrix(y_true,y_pred))

#Metrics

df_temp = pd.DataFrame(

{

'y':y_true,

'yhat':y_pred

}

)

df_metrics = summary_metrics(df_temp)

print("\nMetrics for the classifier")

print("")

print(df_metrics)

#DECISION TREE

from sklearn.tree import DecisionTreeClassifier

#Create the model (max_depth fixed at 10 here; it is tuned with GridSearchCV below)

add = DecisionTreeClassifier(max_depth=10)

add = add.fit(X_train, y_train) #Fit the model

#Confusion matrix

y_true = list(y_test)

y_pred = list(add.predict(X_test))

print('\nConfusion matrix:\n ')

print(confusion_matrix(y_true,y_pred))

#Metrics

df_temp = pd.DataFrame(

{

'y':y_true,

'yhat':y_pred

}

)

df_metrics = summary_metrics(df_temp)

print("\nMetrics for the classifier")

print("")

print(df_metrics)

#GRIDSEARCH

from sklearn.model_selection import GridSearchCV

model = DecisionTreeClassifier()

# parameter ranges to search

rango_criterion = ['gini','entropy']

rango_max_depth = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 15, 20, 30, 40, 50, 70, 90, 120, 150])

param_grid = dict(criterion = rango_criterion, max_depth = rango_max_depth)

print(param_grid)

print('\n')

gs = GridSearchCV(estimator=model,

param_grid=param_grid,

scoring='accuracy',

cv=10,

n_jobs=-1)

gs = gs.fit(X_train, y_train)

print(gs.best_score_)

print('\n')

print(gs.best_params_)

| {'criterion': ['gini', 'entropy'], 'max_depth': array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 15,

20, 30, 40, 50, 70, 90, 120, 150])}

0.8761308281141267

{'criterion': 'entropy', 'max_depth': 11}

| MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |
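The instructions ask for a hyperparameter search for every model that has hyperparameters, but only the decision tree is tuned above, while the later exercises work with K-Nearest Neighbours. This is a sketch of the same `GridSearchCV` pattern applied to `KNeighborsClassifier`; the grid over `n_neighbors` and `weights` is an assumption, and the data are reloaded with `load_digits` so the block runs on its own.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Hypothetical grid: number of neighbours and how their votes are weighted
param_grid = {"n_neighbors": list(range(1, 11)), "weights": ["uniform", "distance"]}
gs_knn = GridSearchCV(KNeighborsClassifier(), param_grid, scoring="accuracy", cv=10)
gs_knn.fit(X_train, y_train)

print(gs_knn.best_params_, round(gs_knn.best_score_, 4))
# With refit=True (the default) the best estimator is refitted on all training data
print("test accuracy:", gs_knn.score(X_test, y_test))
```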

Exercise 4 __Understanding the model:__ Taking into account the best model found in `Exercise 3`, you must thoroughly understand and interpret the results and plots associated with the model under study. To do so, address the following points: * **Cross validation**: using **cv** (with n_fold = 10), obtain a kind of "confidence interval" for one of the metrics studied in class: * $\mu \pm \sigma$ = mean $\pm$ standard deviation * **Validation curve**: Replicate the example at the following [link](https://scikit-learn.org/stable/auto_examples/model_selection/plot_validation_curve.html#sphx-glr-auto-examples-model-selection-plot-validation-curve-py) but with the appropriate model, parameters and metric. Draw conclusions from the plot. * **AUC–ROC curve**: Replicate the example at the following [link](https://scikit-learn.org/stable/auto_examples/model_selection/plot_roc.html#sphx-glr-auto-examples-model-selection-plot-roc-py) but with the appropriate model, parameters and metric. Draw conclusions from the plot. | #Cross Validation

from sklearn.model_selection import cross_val_score

model = KNeighborsClassifier()

#cross_val_score uses the classifier's default scorer, which is accuracy (not precision)

scores = cross_val_score(estimator = model, X = X_train, y = y_train, cv = 10)

med = scores.mean() #Mean

desv = scores.std() #Standard deviation

a = med - desv

b = med + desv

print('(',a,',', b,')')

#Validation curve

from sklearn.model_selection import validation_curve

parameters = np.arange(1,10)

train_scores, test_scores = validation_curve(model,

X_train,

y_train,

param_name = 'n_neighbors',

param_range = parameters,

scoring = 'accuracy',

n_jobs = -1)

train_scores_mean = np.mean(train_scores, axis = 1)

train_scores_std = np.std(train_scores, axis = 1)

test_scores_mean = np.mean(test_scores, axis = 1)

test_scores_std = np.std(test_scores, axis = 1)

plt.figure(figsize=(12,8))

plt.title('Validation Curve (KNeighbors)')

plt.xlabel('n_neighbors')

plt.ylabel('scores')

#Train

plt.semilogx(parameters,

train_scores_mean,

label = 'Training Score',

color = 'red',

lw =2)

plt.fill_between(parameters,

train_scores_mean - train_scores_std,

train_scores_mean + train_scores_std,

alpha = 0.2,

color = 'red',

lw = 2)

#Test

plt.semilogx(parameters,

test_scores_mean,

label = 'Cross Validation Score',

color = 'navy',

lw =2)

plt.fill_between(parameters,

test_scores_mean - test_scores_std,

test_scores_mean + test_scores_std,

alpha = 0.2,

color = 'navy',

lw = 2)

plt.legend(loc = 'best')

plt.show()

#AUC–ROC curve

| _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |
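The AUC–ROC part of this exercise is left empty above. For a 10-class problem, one common approach (the one-vs-rest strategy in the linked scikit-learn example) is to build one ROC curve per digit from the predicted probabilities. This is a sketch assuming the default `KNeighborsClassifier()` and the same 80/20 split; plotting each `fpr`/`tpr` pair with `plt.plot` would reproduce the figure.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.metrics import auc, roc_auc_score, roc_curve
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import label_binarize

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

knn = KNeighborsClassifier().fit(X_train, y_train)
proba = knn.predict_proba(X_test)  # shape (n_test, 10): one score column per digit

# One-vs-rest: binarize the labels and build one ROC curve per digit
y_bin = label_binarize(y_test, classes=np.arange(10))
per_class_auc = {}
for k in range(10):
    fpr, tpr, _ = roc_curve(y_bin[:, k], proba[:, k])
    per_class_auc[k] = auc(fpr, tpr)

# Macro average of the per-class AUCs, computed directly by scikit-learn
macro_auc = roc_auc_score(y_test, proba, multi_class="ovr", average="macro")
print({k: round(v, 3) for k, v in per_class_auc.items()})
print("macro OvR AUC:", round(macro_auc, 4))
```

With the default 5 neighbours, the probabilities only take the values 0, 0.2, ..., 1, so each ROC curve has few thresholds and looks step-like.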

Exercise 5 __Dimensionality reduction:__ Taking into account the best model found in `Exercise 3`, you must reduce the dimensionality of the dataset. Approach the problem using the two criteria seen in class: * **Feature selection** * **Feature extraction** __Questions to answer:__ Once the dimensionality reduction is done, compute some statistics and comparative plots between the original dataset and the new dataset (dataset size, model run time, etc.) | #Feature selection

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import f_classif

df = pd.DataFrame(X)

df.columns = [f'P{k}' for k in range(1,X.shape[1]+1)]

df['y']=y

print('The resulting DataFrame looks like this:')

print('\n')

print(df.head())

# Separate the features from the target column

x_training = df.drop(['y',], axis=1)

y_training = df['y']

# Apply the univariate F-test (ANOVA F-value) to score each feature

# Note: the selector is fit on all rows, including those later used as the test set

k = 40 # number of features to keep

columnas = list(x_training.columns.values)

seleccionadas = SelectKBest(f_classif, k=k).fit(x_training, y_training)

catrib = seleccionadas.get_support()

atributos = [columnas[i] for i in list(catrib.nonzero()[0])]

print('\n')

print('Selected features:')

print('\n')

print(atributos)

#Train a new K-NEAREST NEIGHBORS model on the selected features only

x=df[atributos]

x_train,x_test,y_train,y_test = train_test_split(x,y,test_size=0.2,random_state=42)

#Create the model

knn = neighbors.KNeighborsClassifier()

knn.fit(x_train,y_train) #Fit the model

#Confusion matrix

y_true = list(y_test)

y_pred = list(knn.predict(x_test))

print('\nConfusion matrix:\n ')

print(confusion_matrix(y_true,y_pred))

#Metrics

df_temp = pd.DataFrame(

{

'y':y_true,

'yhat':y_pred

}

)

df_metrics = summary_metrics(df_temp)

print("\nMetrics for the classifier")

print("")

print(df_metrics)

#Feature extraction

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

x = StandardScaler().fit_transform(X)

n_components = 50

pca = PCA(n_components)

principalComponents = pca.fit_transform(x)

# Plot the variance explained by each component

percent_variance = np.round(pca.explained_variance_ratio_* 100, decimals =2)

columns = [ 'P'+str(i) for i in range(n_components)]

plt.figure(figsize=(20,4))

plt.bar(x= range(0,n_components), height=percent_variance, tick_label=columns)

plt.ylabel('Percentage of Variance Explained')

plt.xlabel('Principal Component')

plt.title('PCA Scree Plot')

plt.show()

# Plot the cumulative explained variance

percent_variance_cum = np.cumsum(percent_variance)

columns = [ 'P' + str(0) + '+...+P' + str(i) for i in range(n_components) ]

plt.figure(figsize=(20,4))

plt.bar(x= range(0,n_components), height=percent_variance_cum, tick_label=columns)

plt.xticks(range(len(columns)), columns, rotation=90)

plt.ylabel('Cumulative Percentage of Variance Explained')

plt.xlabel('Principal Component Cumsum')

plt.title('Cumulative PCA Scree Plot')

plt.show()

| _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |
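The PCA cell only plots explained variance; it does not train a model on the components or measure run time, which the exercise asks for. This is a sketch of such a comparison, assuming 30 components (an arbitrary choice, not taken from the notebook) and putting `StandardScaler` and `PCA` in a pipeline so that both are fitted on the training split only. Timings depend on the machine, so none are quoted here.

```python
from time import perf_counter

from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)


def fit_and_score(model):
    """Fit on the training split, return (test accuracy, elapsed seconds)."""
    start = perf_counter()
    model.fit(X_train, y_train)
    acc = model.score(X_test, y_test)
    return acc, perf_counter() - start


full = KNeighborsClassifier()
# Scaler and PCA are fitted inside the pipeline, on the training data only
reduced = make_pipeline(StandardScaler(), PCA(n_components=30), KNeighborsClassifier())

for name, model in [("64 pixels", full), ("30 PCA components", reduced)]:
    acc, secs = fit_and_score(model)
    print(f"{name}: accuracy={acc:.3f}, fit+predict time={secs:.4f}s")
```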

Exercise 6 __Visualizing results:__ The code below compares the predicted labels with the true labels of the _test_ set. | def mostar_resultados(digits, model, nx=5, ny=5, label="correctos"):
    """
    Show the predictions of a particular classification model.
    The first nx*ny matching test images are shown (they are not sampled at random).
    - label == 'correctos': show the images the model classifies correctly.
    - label == 'incorrectos': show the images the model misclassifies.
    Note: the model passed in should NOT be trained yet; it is fitted inside this function.
    :param digits: 'digits' dataset
    :param model: sklearn model
    :param nx: number of rows (subplots)
    :param ny: number of columns (subplots)
    :param label: 'correctos' or 'incorrectos'
    :return: matplotlib plots
    """
    X = digits.drop(columns="target").values
    y = digits["target"].values
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
    model.fit(X_train, y_train)  # fit the model
    y_pred = model.predict(X_test)
    # Show the correct predictions
    if label == "correctos":
        mask = (y_pred == y_test)
        color = "green"
    # Show the incorrect predictions
    elif label == "incorrectos":
        mask = (y_pred != y_test)
        color = "red"
    else:
        raise ValueError("label must be 'correctos' or 'incorrectos'")
    X_aux = X_test[mask]
    y_aux_true = y_test[mask]
    y_aux_pred = y_pred[mask]
    # Plot the first nx*ny selected examples, in test-set order
    fig, ax = plt.subplots(nx, ny, figsize=(12, 12))
    for i in range(nx):
        for j in range(ny):
            index = j + ny * i
            ax[i][j].get_xaxis().set_visible(False)
            ax[i][j].get_yaxis().set_visible(False)
            # A good model may make fewer than nx*ny mistakes: leave the remaining panels empty
            if index >= len(X_aux):
                continue
            data = X_aux[index, :].reshape(8, 8)
            label_pred = str(int(y_aux_pred[index]))
            label_true = str(int(y_aux_true[index]))
            ax[i][j].imshow(data, interpolation='nearest', cmap='gray_r')
            # predicted label at the top left, true label (blue) at the top right
            ax[i][j].text(0, 0, label_pred, horizontalalignment='center', verticalalignment='center', fontsize=10, color=color)
            ax[i][j].text(7, 0, label_true, horizontalalignment='center', verticalalignment='center', fontsize=10, color='blue')
    plt.show()
| _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

**Question** Taking into account the best model found in `Exercise 3`, plot the results when: * the predicted and original values are equal * the predicted and original values differ * When the predicted and original values differ, why do these failures occur? | mostar_resultados(digits, KNeighborsClassifier(), nx=5, ny=5,label = "correctos")

mostar_resultados(digits, KNeighborsClassifier(), nx=5, ny=5,label = "incorrectos")

| _____no_output_____ | MIT | homeworks/tarea_02/tarea_02.ipynb | FabianSaulRubilarAlvarez/mat281_portfolio_template |

Ocean heat transport in CMIP5 models Read data | import matplotlib.pyplot as plt

import iris

import iris.plot as iplt

import iris.coord_categorisation

import cf_units

import numpy

%matplotlib inline

infile = '/g/data/ua6/DRSv2/CMIP5/NorESM1-M/rcp85/mon/ocean/r1i1p1/hfbasin/latest/hfbasin_Omon_NorESM1-M_rcp85_r1i1p1_200601-210012.nc'

cube = iris.load_cube(infile)

print(cube)

dim_coord_names = [coord.name() for coord in cube.dim_coords]

print(dim_coord_names)

cube.coord('latitude').points

aux_coord_names = [coord.name() for coord in cube.aux_coords]

print(aux_coord_names)

cube.coord('region')

global_cube = cube.extract(iris.Constraint(region='global_ocean'))

def convert_to_annual(cube):

"""Convert data to annual timescale.

Args:

cube (iris.cube.Cube): monthly data with a 'time' coordinate

"""

iris.coord_categorisation.add_year(cube, 'time')

iris.coord_categorisation.add_month(cube, 'time')

cube = cube.aggregated_by(['year'], iris.analysis.MEAN)

cube.remove_coord('year')

cube.remove_coord('month')

return cube

global_cube_annual = convert_to_annual(global_cube)

print(global_cube_annual)

iplt.plot(global_cube_annual[5, ::])

iplt.plot(global_cube_annual[20, ::])

plt.show() | _____no_output_____ | MIT | development/hfbasin.ipynb | DamienIrving/ocean-analysis |
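`convert_to_annual` relies on iris to group the monthly time steps by year and average them. The iris version averages whatever months a partial year contains. The same unweighted annual mean can be sketched in NumPy for a plain array whose first axis is time. This sketch assumes the series starts in January and drops a trailing incomplete year. `monthly_to_annual` is a hypothetical helper:

```python
import numpy as np


def monthly_to_annual(monthly):
    """Unweighted annual means of a monthly array whose first axis is time (starting in January)."""
    monthly = np.asarray(monthly, dtype=float)
    n_years = monthly.shape[0] // 12
    # Drop a trailing partial year so every annual value averages exactly 12 months
    complete = monthly[: n_years * 12]
    # Split the time axis into (year, month) and average over the months
    return complete.reshape(n_years, 12, *monthly.shape[1:]).mean(axis=1)


series = np.arange(30.0)  # 2.5 years of fake monthly values
print(monthly_to_annual(series))  # annual means 5.5 and 17.5
```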

So for any given year, the annual mean shows ocean heat transport away from the tropics. Trends | def convert_to_seconds(time_axis):

"""Convert time axis units to seconds.

Args:

time_axis(iris.DimCoord)

"""

old_units = str(time_axis.units)

old_timestep = old_units.split(' ')[0]

new_units = old_units.replace(old_timestep, 'seconds')

new_unit = cf_units.Unit(new_units, calendar=time_axis.units.calendar)

time_axis.convert_units(new_unit)

return time_axis

def linear_trend(data, time_axis):

"""Calculate the linear trend.

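polyfit returns [a, b] corresponding to y = a + bx; the slope b is returned (or the fill value when the series is masked, checked via its first element)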

"""

masked_flag = False

if type(data) == numpy.ma.core.MaskedArray:

if type(data.mask) == numpy.bool_:

if data.mask:

masked_flag = True

elif data.mask[0]:

masked_flag = True

if masked_flag:

return data.fill_value

else:

return numpy.polynomial.polynomial.polyfit(time_axis, data, 1)[-1]

def calc_trend(cube):

"""Calculate linear trend.

Args:

cube (iris.cube.Cube): data with a 'time' coordinate; the trend is returned per second

"""

time_axis = cube.coord('time')

time_axis = convert_to_seconds(time_axis)

trend = numpy.ma.apply_along_axis(linear_trend, 0, cube.data, time_axis.points)

trend = numpy.ma.masked_values(trend, cube.data.fill_value)

return trend

trend_data = calc_trend(global_cube_annual)

trend_cube = global_cube_annual[0, ::].copy()

trend_cube.data = trend_data

trend_cube.remove_coord('time')

#trend_unit = ' yr-1'

#trend_cube.units = str(global_cube_annual.units) + trend_unit

iplt.plot(trend_cube)

plt.show() | _____no_output_____ | MIT | development/hfbasin.ipynb | DamienIrving/ocean-analysis |
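`calc_trend` converts the time axis to seconds, so the fitted slope is in data units per second. The per-year units are left commented out in the original. The sketch below extracts the slope with NumPy and rescales it to per-year units. The year length of 365.25 days is an assumption: model calendars such as 365-day calendars would need a different constant. `slope_per_year` is a hypothetical helper:

```python
import numpy as np
from numpy.polynomial import polynomial as P

SECONDS_PER_YEAR = 365.25 * 86400  # assumption: Julian year; depends on the model calendar


def slope_per_year(times_s, values):
    """Least-squares slope of `values` against time in seconds, rescaled to per year."""
    # P.polyfit returns coefficients in increasing degree order: [intercept, slope]
    intercept, slope_per_second = P.polyfit(times_s, values, 1)
    return slope_per_second * SECONDS_PER_YEAR


years = np.arange(10)
times_s = years * SECONDS_PER_YEAR
values = 5.0 + 0.3 * years  # synthetic series rising by 0.3 units per year
print(slope_per_year(times_s, values))  # approximately 0.3
```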

So the trends in ocean heat transport suggest reduced transport in the RCP 8.5 simulation (i.e. the trend plot is almost the inverse of the climatology plot). Convergence | print(global_cube_annual)

diffs_data = numpy.diff(global_cube_annual.data, axis=1)

lats = global_cube_annual.coord('latitude').points

diffs_lats = (lats[1:] + lats[:-1]) / 2.

print(diffs_data.shape)

print(len(diffs_lats))

plt.plot(diffs_lats, diffs_data[0, :])

plt.plot(lats, global_cube_annual[0, ::].data / 10.0)

plt.show() | _____no_output_____ | MIT | development/hfbasin.ipynb | DamienIrving/ocean-analysis |
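The convergence cell differences the transport between neighbouring latitudes and places each difference at the midpoint of the pair. Assume `hfbasin` is positive northward. A latitude band then gains the heat entering from the south minus the heat leaving to the north, so convergence is the negative of the difference. This explains why the trend plot multiplies by -1. The values are per band and are not normalised by band width or area. Below is a minimal sketch with a hypothetical helper:

```python
import numpy as np


def band_convergence(lats, transport):
    """Heat convergence in each band between adjacent latitudes (northward-positive transport).

    Returns the band midpoints and -diff(transport) along the last (latitude) axis,
    positive where more heat enters a band than leaves it. Not normalised by band width.
    """
    lats = np.asarray(lats, dtype=float)
    transport = np.asarray(transport, dtype=float)
    mid_lats = (lats[1:] + lats[:-1]) / 2.0
    convergence = -np.diff(transport, axis=-1)
    return mid_lats, convergence


mids, conv = band_convergence([0.0, 10.0, 20.0], [0.0, 1.0, 0.5])
print(mids, conv)  # midpoints 5 and 15; convergence -1 and 0.5
```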

Convergence trend | time_axis = global_cube_annual.coord('time')

time_axis = convert_to_seconds(time_axis)

diffs_trend = numpy.ma.apply_along_axis(linear_trend, 0, diffs_data, time_axis.points)

diffs_trend = numpy.ma.masked_values(diffs_trend, global_cube_annual.data.fill_value)

print(diffs_trend.shape)

plt.plot(diffs_lats, diffs_trend * -1)

plt.axhline(y=0)

plt.show()

plt.plot(diffs_lats, diffs_trend * -1, color='black')

plt.axhline(y=0)

plt.axvline(x=30)

plt.axvline(x=50)

plt.axvline(x=77)

plt.xlim(20, 90)

plt.show() | _____no_output_____ | MIT | development/hfbasin.ipynb | DamienIrving/ocean-analysis |
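`numpy.ma.apply_along_axis` calls `linear_trend` once per latitude. `numpy.polynomial.polynomial.polyfit` also accepts a 2-D `y` and fits every column in one call, which should give the same slopes for unmasked data. Masked points would still need separate handling, and this sketch does not do that. `trends_along_time` is a hypothetical helper:

```python
import numpy as np
from numpy.polynomial import polynomial as P


def trends_along_time(time, data):
    """Least-squares slope of every column of `data` (shape: n_time x n_points)."""
    # With a 2-D y, polyfit returns shape (deg + 1, n_points): row 0 intercepts, row 1 slopes
    coefs = P.polyfit(time, data, 1)
    return coefs[1]


time = np.arange(5.0)
data = np.column_stack([1.0 + 2.0 * time, -0.5 * time])  # two series with known slopes
print(trends_along_time(time, data))  # slopes 2 and -0.5
```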

Baseline | def customVectorizer(df, toRemove):

# leEmbarked.fit(df_raw['Embarked'])

leSex = preprocessing.LabelEncoder()

leEmbarked = preprocessing.LabelEncoder()

df.fillna(inplace=True, value=0)

leSex.fit(df['Sex'])

# leEmbarked.fit(df['Embarked'])

# df['Embarked'] = leEmbarked.transform(df['Embarked'])

df['Sex'] = leSex.transform(df['Sex'])

return df.drop(labels=toRemove, axis=1)

X = customVectorizer(X, ['Embarked', 'PassengerId', 'Name', 'Age', 'Ticket', 'Cabin'])

print(X.shape)

from sklearn.linear_model import LogisticRegression

model = LogisticRegression(random_state=42)

from sklearn.model_selection import cross_val_score

lr_model = LogisticRegression()

cv_scores = cross_val_score(lr_model, X=X, y=y, cv=5, n_jobs=4)

print(cv_scores)

model.fit(X,y)

df_raw_test = pd.read_csv('test.csv')

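df_test = customVectorizer(df_raw_test, ['Embarked', 'PassengerId', 'Name', 'Age', 'Ticket', 'Cabin'])  # same columns as the training features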

y_test_predicted = model.predict(df_test)

print('\n'.join(["{},{}".format(892 + i, y_test_predicted[i]) for i in range(len(y_test_predicted))]) , file=open('test_pred.csv', 'w'))

import matplotlib.pyplot as plt

plt.hist(pd.read_csv('train.csv')['Age'].dropna(), bins=30)  # `Age` was dropped from X above | _____no_output_____ | MIT | titanic/Titanic Clean.ipynb | bhi5hmaraj/Applied-ML |
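The submission line numbers test passengers by assuming consecutive IDs starting at 892, and it leaves the file handle open. The sketch below writes to any text stream with the `csv` module. The `PassengerId,Survived` header assumes Kaggle's Titanic submission format, and `write_submission` is a hypothetical helper:

```python
import csv
import io


def write_submission(stream, passenger_ids, predictions):
    """Write a header row plus one `id,prediction` line per passenger to a text stream."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["PassengerId", "Survived"])
    for pid, pred in zip(passenger_ids, predictions):
        writer.writerow([int(pid), int(pred)])


buffer = io.StringIO()
write_submission(buffer, [892, 893], [0, 1])
print(buffer.getvalue())
```

For a real file, a context manager closes the handle: `with open('test_pred.csv', 'w', newline='') as f: write_submission(f, df_raw_test['PassengerId'], y_test_predicted)`. Here `df_raw_test` still holds `PassengerId`, because `customVectorizer` returns a new frame without it.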

Train a regressor for Age and use it to fill gaps So first we split train.csv into two parts (one with non-null age and the other with null age). We train a regressor on the non-null data points to predict age and use this trained regressor to fill in the missing ages. Then we combine the two parts into a single dataset and train a logistic regression classifier. | X = pd.read_csv('train.csv')

age_present = X['Age'] > 0

age_present.describe()

False in age_present.values  # `in` on a Series checks the index labels, not the values

X_age_p = X[age_present].copy()  # explicit copy: the in-place edits below would otherwise raise SettingWithCopyWarning

X_age_p.shape

age = X_age_p['Age']

# X_age_p

X_age_p = customVectorizer(X_age_p, ['Embarked', 'Age', 'PassengerId', 'Name', 'Ticket', 'Cabin'])

from sklearn.linear_model import LinearRegression

reg = LinearRegression().fit(X_age_p, age)

X_null_age = X[X['Age'].isnull()].copy()  # explicit copy, as above

pred_age = reg.predict(customVectorizer(X_null_age, ['Embarked', 'Age', 'PassengerId', 'Name', 'Ticket', 'Cabin']))

age.mean()

pred_age = list(map(lambda x : max(0, x), pred_age))

X_null_age['Age'] = pred_age

y = X['Survived']

# from sklearn.model_selection import cross_val_score

# lr_model = LogisticRegression()

X_age_p['Age'] = age

X_age_p.shape

X = pd.concat([X_age_p, customVectorizer(X_null_age, ['Embarked', 'PassengerId', 'Name', 'Ticket', 'Cabin'])])

y = X['Survived']

X = X.drop(labels=['Survived'], axis=1)

lr_model = LogisticRegression()

cv_scores = cross_val_score(lr_model, X=X, y=y, cv=10, n_jobs=4)

print(cv_scores) | [0.76666667 0.76666667 0.85393258 0.7752809 0.80898876 0.78651685

0.79775281 0.80898876 0.86516854 0.79545455]

| MIT | titanic/Titanic Clean.ipynb | bhi5hmaraj/Applied-ML |
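This cell uses regression imputation: fit on the rows where `Age` is known, predict the missing ones, and clip negative predictions to 0, as the notebook does with `max(0, x)`. Below is a NumPy-only sketch using an ordinary least-squares fit with an intercept. The notebook itself uses scikit-learn's `LinearRegression`, which also fits an intercept by default. The sketch assumes the feature matrix has no missing values. `impute_by_regression` is a hypothetical helper:

```python
import numpy as np


def impute_by_regression(features, target):
    """Fill NaNs in `target` using a least-squares fit on the rows where it is present."""
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float).copy()
    missing = np.isnan(target)
    # Prepend a column of ones so the fit includes an intercept
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design[~missing], target[~missing], rcond=None)
    # Predict the missing values and clip at 0, since ages cannot be negative
    target[missing] = np.clip(design[missing] @ coef, 0.0, None)
    return target


features = [[1.0], [2.0], [3.0], [4.0]]
ages = [2.0, 4.0, np.nan, 8.0]  # exactly age = 2 * feature
print(impute_by_regression(features, ages))  # missing value filled with about 6
```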

Try an ensemble of LR, SVC and a decision tree (CART) | # taken from https://machinelearningmastery.com/ensemble-machine-learning-algorithms-python-scikit-learn/

from sklearn import model_selection

from sklearn.tree import DecisionTreeClassifier

from sklearn.svm import SVC

from sklearn.ensemble import VotingClassifier

kfold = model_selection.KFold(n_splits=10, shuffle=True, random_state=42)  # random_state only takes effect when shuffle=True

# create the sub models

estimators = []

model1 = LogisticRegression()

estimators.append(('logistic', model1))

model2 = DecisionTreeClassifier()

estimators.append(('cart', model2))

model3 = SVC()

estimators.append(('svm', model3))

# create the ensemble model

ensemble = VotingClassifier(estimators)

results = model_selection.cross_val_score(ensemble, X, y, cv=kfold)

print(results.mean())

print(results)

| /home/bhishma/anaconda3/lib/python3.7/site-packages/sklearn/linear_model/logistic.py:433: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

/home/bhishma/anaconda3/lib/python3.7/site-packages/sklearn/svm/base.py:196: FutureWarning: The default value of gamma will change from 'auto' to 'scale' in version 0.22 to account better for unscaled features. Set gamma explicitly to 'auto' or 'scale' to avoid this warning.

"avoid this warning.", FutureWarning)


| MIT | titanic/Titanic Clean.ipynb | bhi5hmaraj/Applied-ML |
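`VotingClassifier` defaults to hard voting: each sub-model predicts a label, and the most common label wins. Below is a NumPy sketch of that rule for non-negative integer labels. In this sketch, ties go to the smallest label. That is believed, but not verified here, to match scikit-learn's `argmax`-based rule. `hard_vote` is a hypothetical helper:

```python
import numpy as np


def hard_vote(predictions):
    """Majority vote over integer class predictions of shape (n_models, n_samples)."""
    predictions = np.asarray(predictions)
    n_classes = predictions.max() + 1
    # Count the votes per class for every sample: result has shape (n_classes, n_samples)
    counts = np.apply_along_axis(np.bincount, 0, predictions, minlength=n_classes)
    # argmax picks the first maximum, so ties go to the smallest class label
    return counts.argmax(axis=0)


preds = np.array([[0, 1, 1],   # model 1
                  [0, 0, 1],   # model 2
                  [1, 0, 1]])  # model 3
print(hard_vote(preds))  # majority labels 0, 0, 1
```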

Notebook Basics In this lesson we'll learn how to work with **notebooks**. Notebooks allow us to do interactive and visual computing, which makes them a great learning tool. We'll use notebooks to code in Python and learn the basics of machine learning. Set Up 1. Sign into your [Google](https://accounts.google.com/signin) account to start using the notebook. If you don't want to save your work, you can skip the steps below. 2. If you do want to save your work, click the **COPY TO DRIVE** button on the toolbar. This will open a new notebook in a new tab. 3. Rename this new notebook by removing the words `Copy of` from the title (change "`Copy of 00_Notebooks`" to "`00_Notebooks`"). 4. Now you can run the code, make changes, and have it all saved to your personal Google Drive. Types of cells Notebooks are made up of cells. Each cell can either be a **code cell** or a **text cell**. * **code cell**: used for writing and executing code. * **text cell**: used for writing text, HTML, Markdown, etc. Creating cells First, let's create a text cell. Click on a desired location in the notebook and create the cell by clicking on **➕TEXT** (located in the top left corner). Once you create the cell, click on it and type the following inside it:
```
This is a header
Hello world!
```
Running cells Once you type inside the cell, press **SHIFT** and **RETURN** (the Enter key) together to run the cell. Editing cells To edit a cell, double-click on it. Moving cells Once you create the cell, you can move it up and down by clicking on the cell and then pressing the ⬆️ and ⬇️ buttons on the top right of the cell. Deleting cells You can delete the cell by clicking on it and pressing the trash can button 🗑️ on the top right corner of the cell. Alternatively, you can press ⌘/Ctrl + M + D. Creating a code cell You can repeat the steps above to create and edit a *code* cell.
You can create a code cell by clicking on **➕CODE** (located in the top left corner). Once you've created the code cell, double-click on it, type the following inside it, and then press `Shift + Enter` to execute the code. ```print ("Hello world!")``` | print ("Hello world!") | Hello world!

| MIT | notebooks/00_Notebooks.ipynb | raidery/practicalAI |

Tutorial 2: Learning Hyperparameters **Week 1, Day 2: Linear Deep Learning** **By Neuromatch Academy** __Content creators:__ Saeed Salehi, Andrew Saxe __Content reviewers:__ Polina Turishcheva, Antoine De Comite, Kelson Shilling-Scrivo __Content editors:__ Anoop Kulkarni __Production editors:__ Khalid Almubarak, Spiros Chavlis **Our 2021 Sponsors, including Presenting Sponsor Facebook Reality Labs** --- Tutorial Objectives * Training landscape * The effect of depth * Choosing a learning rate * Initialization matters | # @title Tutorial slides

# @markdown These are the slides for the videos in the tutorial

# @markdown If you want to download the slides locally, click [here](https://osf.io/sne2m/download)

from IPython.display import IFrame

IFrame(src=f"https://mfr.ca-1.osf.io/render?url=https://osf.io/sne2m/?direct%26mode=render%26action=download%26mode=render", width=854, height=480) | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |

--- Setup This is a GPU-free tutorial! | # @title Install dependencies

!pip install git+https://github.com/NeuromatchAcademy/evaltools --quiet

from evaltools.airtable import AirtableForm

# Imports

import time

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

# @title Figure settings

from ipywidgets import interact, IntSlider, FloatSlider, fixed

from ipywidgets import HBox, interactive_output, ToggleButton, Layout

from mpl_toolkits.axes_grid1 import make_axes_locatable

%config InlineBackend.figure_format = 'retina'

plt.style.use("https://raw.githubusercontent.com/NeuromatchAcademy/content-creation/main/nma.mplstyle")

# @title Plotting functions

def plot_x_y_(x_t_, y_t_, x_ev_, y_ev_, loss_log_, weight_log_):

"""Plot the training data with the test predictions, the loss curve, and the weight evolution."""

plt.figure(figsize=(12, 4))

plt.subplot(1, 3, 1)

plt.scatter(x_t_, y_t_, c='r', label='training data')

plt.plot(x_ev_, y_ev_, c='b', label='test results', linewidth=2)

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.subplot(1, 3, 2)

plt.plot(loss_log_, c='r')

plt.xlabel('epochs')

plt.ylabel('mean squared error')

plt.subplot(1, 3, 3)

plt.plot(weight_log_)

plt.xlabel('epochs')

plt.ylabel('weights')

plt.show()

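def plot_vector_field(what, init_weights=None):

"""Plot ShallowNarrowLNN over (w1, w2): `what` is 'all', 'vectors', 'trajectory' or 'loss'. The loss grid is only filled in the vector-field branch, so the loss contours need 'all'."""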

n_epochs=40

lr=0.15

x_pos = np.linspace(2.0, 0.5, 100, endpoint=True)

y_pos = 1. / x_pos

xx, yy = np.mgrid[-1.9:2.0:0.2, -1.9:2.0:0.2]

zz = np.empty_like(xx)

x, y = xx[:, 0], yy[0]

x_temp, y_temp = gen_samples(10, 1.0, 0.0)

cmap = matplotlib.cm.plasma

plt.figure(figsize=(8, 7))

ax = plt.gca()

if what == 'all' or what == 'vectors':

for i, a in enumerate(x):

for j, b in enumerate(y):

temp_model = ShallowNarrowLNN([a, b])

da, db = temp_model.dloss_dw(x_temp, y_temp)

zz[i, j] = temp_model.loss(temp_model.forward(x_temp), y_temp)

scale = min(40 * np.sqrt(da**2 + db**2), 50)

ax.quiver(a, b, - da, - db, scale=scale, color=cmap(np.sqrt(da**2 + db**2)))

if what == 'all' or what == 'trajectory':

if init_weights is None:

for init_weights in [[0.5, -0.5], [0.55, -0.45], [-1.8, 1.7]]:

temp_model = ShallowNarrowLNN(init_weights)

_, temp_records = temp_model.train(x_temp, y_temp, lr, n_epochs)

ax.scatter(temp_records[:, 0], temp_records[:, 1],

c=np.arange(len(temp_records)), cmap='Greys')

ax.scatter(temp_records[0, 0], temp_records[0, 1], c='blue', zorder=9)

ax.scatter(temp_records[-1, 0], temp_records[-1, 1], c='red', marker='X', s=100, zorder=9)

else:

temp_model = ShallowNarrowLNN(init_weights)

_, temp_records = temp_model.train(x_temp, y_temp, lr, n_epochs)

ax.scatter(temp_records[:, 0], temp_records[:, 1],

c=np.arange(len(temp_records)), cmap='Greys')

ax.scatter(temp_records[0, 0], temp_records[0, 1], c='blue', zorder=9)

ax.scatter(temp_records[-1, 0], temp_records[-1, 1], c='red', marker='X', s=100, zorder=9)

if what == 'all' or what == 'loss':

contplt = ax.contourf(x, y, np.log(zz+0.001), zorder=-1, cmap='coolwarm', levels=100)

divider = make_axes_locatable(ax)

cax = divider.append_axes("right", size="5%", pad=0.05)

cbar = plt.colorbar(contplt, cax=cax)

cbar.set_label('log (Loss)')

ax.set_xlabel("$w_1$")

ax.set_ylabel("$w_2$")

ax.set_xlim(-1.9, 1.9)

ax.set_ylim(-1.9, 1.9)

plt.show()

def plot_loss_landscape():

"""Plot the 3D log-loss surface of ShallowNarrowLNN with two gradient-descent trajectories."""

x_temp, y_temp = gen_samples(10, 1.0, 0.0)

xx, yy = np.mgrid[-1.9:2.0:0.2, -1.9:2.0:0.2]

zz = np.empty_like(xx)

x, y = xx[:, 0], yy[0]

for i, a in enumerate(x):

for j, b in enumerate(y):

temp_model = ShallowNarrowLNN([a, b])

zz[i, j] = temp_model.loss(temp_model.forward(x_temp), y_temp)

temp_model = ShallowNarrowLNN([-1.8, 1.7])

loss_rec_1, w_rec_1 = temp_model.train(x_temp, y_temp, 0.02, 240)

temp_model = ShallowNarrowLNN([1.5, -1.5])

loss_rec_2, w_rec_2 = temp_model.train(x_temp, y_temp, 0.02, 240)

plt.figure(figsize=(12, 8))

ax = plt.subplot(1, 1, 1, projection='3d')

ax.plot_surface(xx, yy, np.log(zz+0.5), cmap='coolwarm', alpha=0.5)

ax.scatter3D(w_rec_1[:, 0], w_rec_1[:, 1], np.log(loss_rec_1+0.5),

c='k', s=50, zorder=9)

ax.scatter3D(w_rec_2[:, 0], w_rec_2[:, 1], np.log(loss_rec_2+0.5),

c='k', s=50, zorder=9)

plt.axis("off")

ax.view_init(45, 260)

plt.show()

def depth_widget(depth):

if depth == 0:

depth_lr_init_interplay(depth, 0.02, 0.9)

else:

depth_lr_init_interplay(depth, 0.01, 0.9)

def lr_widget(lr):

depth_lr_init_interplay(50, lr, 0.9)

def depth_lr_interplay(depth, lr):

depth_lr_init_interplay(depth, lr, 0.9)

def depth_lr_init_interplay(depth, lr, init_weights):

n_epochs = 600

x_train, y_train = gen_samples(100, 2.0, 0.1)

model = DeepNarrowLNN(np.full((1, depth+1), init_weights))

plt.figure(figsize=(10, 5))

plt.plot(model.train(x_train, y_train, lr, n_epochs),

linewidth=3.0, c='m')

plt.title("Training a {}-layer LNN with"

r" $\eta=${} initialized with $w_i=${}".format(depth, lr, init_weights), pad=15)

plt.yscale('log')

plt.xlabel('epochs')

plt.ylabel('Log mean squared error')

plt.ylim(0.001, 1.0)

plt.show()

def plot_init_effect():

depth = 15

n_epochs = 250

lr = 0.02

x_train, y_train = gen_samples(100, 2.0, 0.1)

plt.figure(figsize=(12, 6))

for init_w in np.arange(0.7, 1.09, 0.05):

model = DeepNarrowLNN(np.full((1, depth), init_w))

plt.plot(model.train(x_train, y_train, lr, n_epochs),

linewidth=3.0, label="initial weights {:.2f}".format(init_w))

plt.title(r"Training a {}-layer narrow LNN with $\eta=${}".format(depth, lr), pad=15)

plt.yscale('log')

plt.xlabel('epochs')

plt.ylabel('Log mean squared error')

plt.legend(loc='lower left', ncol=4)

plt.ylim(0.001, 1.0)

plt.show()

class InterPlay:

def __init__(self):

self.lr = [None]

self.depth = [None]

self.success = [None]

self.min_depth, self.max_depth = 5, 65

self.depth_list = np.arange(10, 61, 10)

self.i_depth = 0

self.min_lr, self.max_lr = 0.001, 0.105

self.n_epochs = 600

self.x_train, self.y_train = gen_samples(100, 2.0, 0.1)

self.converged = False

self.button = None

self.slider = None

def train(self, lr, update=False, init_weights=0.9):

if update and self.converged and self.i_depth < len(self.depth_list):

depth = self.depth_list[self.i_depth]

self.plot(depth, lr)

self.i_depth += 1

self.lr.append(None)

self.depth.append(None)

self.success.append(None)

self.converged = False

self.slider.value = 0.005

if self.i_depth < len(self.depth_list):

self.button.value = False

self.button.description = 'Explore!'

self.button.disabled = True

self.button.button_style = 'danger'

else:

self.button.value = False

self.button.button_style = ''

self.button.disabled = True

self.button.description = 'Done!'

time.sleep(1.0)

elif self.i_depth < len(self.depth_list):

depth = self.depth_list[self.i_depth]

# assert self.min_depth <= depth <= self.max_depth

assert self.min_lr <= lr <= self.max_lr

self.converged = False

model = DeepNarrowLNN(np.full((1, depth), init_weights))

self.losses = np.array(model.train(self.x_train, self.y_train, lr, self.n_epochs))

if np.any(self.losses < 1e-2):

success = np.argwhere(self.losses < 1e-2)[0][0]

if np.all((self.losses[success:] < 1e-2)):

self.converged = True

self.success[-1] = success

self.lr[-1] = lr

self.depth[-1] = depth

self.button.disabled = False

self.button.button_style = 'success'

self.button.description = 'Register!'

else:

self.button.disabled = True

self.button.button_style = 'danger'

self.button.description = 'Explore!'

else:

self.button.disabled = True

self.button.button_style = 'danger'

self.button.description = 'Explore!'

self.plot(depth, lr)

def plot(self, depth, lr):

fig = plt.figure(constrained_layout=False, figsize=(10, 8))

gs = fig.add_gridspec(2, 2)

ax1 = fig.add_subplot(gs[0, :])

ax2 = fig.add_subplot(gs[1, 0])

ax3 = fig.add_subplot(gs[1, 1])

ax1.plot(self.losses, linewidth=3.0, c='m')

ax1.set_title("Training a {}-layer LNN with"

r" $\eta=${}".format(depth, lr), pad=15, fontsize=16)

ax1.set_yscale('log')

ax1.set_xlabel('epochs')

ax1.set_ylabel('Log mean squared error')

ax1.set_ylim(0.001, 1.0)

ax2.set_xlim(self.min_depth, self.max_depth)

ax2.set_ylim(-10, self.n_epochs)

ax2.set_xlabel('Depth')

ax2.set_ylabel('Learning time (Epochs)')

ax2.set_title("Learning time vs depth", fontsize=14)

ax2.scatter(np.array(self.depth), np.array(self.success), c='r')

# ax3.set_yscale('log')

ax3.set_xlim(self.min_depth, self.max_depth)

ax3.set_ylim(self.min_lr, self.max_lr)

ax3.set_xlabel('Depth')

ax3.set_ylabel('Optimal learning rate')

ax3.set_title(r"Empirically optimal $\eta$ vs depth", fontsize=14)

ax3.scatter(np.array(self.depth), np.array(self.lr), c='r')

plt.show()

# @title Helper functions

atform = AirtableForm('appn7VdPRseSoMXEG','W1D2_T2','https://portal.neuromatchacademy.org/api/redirect/to/9c55f6cb-cdf9-4429-ac1c-ec44fe64c303')

def gen_samples(n, a, sigma):

"""

Generates `n` samples with the linear relation `y = a * x + noise(sigma)`.

Args:

n : int

a : float

sigma : float

Returns:

x : np.array

y : np.array

"""

assert n > 0

assert sigma >= 0

if sigma > 0:

x = np.random.rand(n)

noise = np.random.normal(scale=sigma, size=(n))

y = a * x + noise

else:

x = np.linspace(0.0, 1.0, n, endpoint=True)

y = a * x

return x, y

class ShallowNarrowLNN:

"""

Shallow and narrow (one neuron per layer) linear neural network

"""

def __init__(self, init_ws):

"""

init_ws: initial weights as a list

"""

assert isinstance(init_ws, list)

assert len(init_ws) == 2

self.w1 = init_ws[0]

self.w2 = init_ws[1]

def forward(self, x):

"""

The forward pass through the network y = x * w1 * w2

"""

y = x * self.w1 * self.w2

return y

def loss(self, y_p, y_t):

"""

Mean squared error (L2 loss), without the 1/2 factor

"""

assert y_p.shape == y_t.shape

mse = ((y_t - y_p)**2).mean()

return mse

def dloss_dw(self, x, y_t):

"""

partial derivative of loss with respect to weights

Args:

x : np.array

y_t : np.array

"""

assert x.shape == y_t.shape

Error = y_t - self.w1 * self.w2 * x

dloss_dw1 = - (2 * self.w2 * x * Error).mean()

dloss_dw2 = - (2 * self.w1 * x * Error).mean()

return dloss_dw1, dloss_dw2

def train(self, x, y_t, eta, n_ep):

"""

Gradient descent algorithm

Args:

x : np.array

y_t : np.array

eta: float

n_ep : int

"""

assert x.shape == y_t.shape

loss_records = np.empty(n_ep) # pre allocation of loss records

weight_records = np.empty((n_ep, 2)) # pre allocation of weight records

for i in range(n_ep):

y_p = self.forward(x)

loss_records[i] = self.loss(y_p, y_t)

dloss_dw1, dloss_dw2 = self.dloss_dw(x, y_t)

self.w1 -= eta * dloss_dw1

self.w2 -= eta * dloss_dw2

weight_records[i] = [self.w1, self.w2]

return loss_records, weight_records

class DeepNarrowLNN:

"""

Deep but thin (one neuron per layer) linear neural network

"""

def __init__(self, init_ws):

"""

init_ws: initial weights as a numpy array

"""

self.n = init_ws.size

self.W = init_ws.reshape(1, -1)

def forward(self, x):

"""

x : np.array

input features

"""

y = np.prod(self.W) * x

return y

def loss(self, y_t, y_p):

"""

mean squared error (L2 loss)

Args:

y_t : np.array

y_p : np.array

"""

assert y_p.shape == y_t.shape

mse = ((y_t - y_p)**2 / 2).mean()

return mse

def dloss_dw(self, x, y_t, y_p):

"""

analytical gradient of weights

Args:

x : np.array

y_t : np.array

y_p : np.array

"""

E = y_t - y_p # = y_t - x * np.prod(self.W)

Ex = np.multiply(x, E).mean()

Wp = np.prod(self.W) / (self.W + 1e-9)

dW = - Ex * Wp

return dW

def train(self, x, y_t, eta, n_epochs):

"""

training using gradient descent

Args:

x : np.array

y_t : np.array

eta: float

n_epochs : int

"""

loss_records = np.empty(n_epochs)

loss_records[:] = np.nan

for i in range(n_epochs):

y_p = self.forward(x)

loss_records[i] = self.loss(y_t, y_p).mean()

dloss_dw = self.dloss_dw(x, y_t, y_p)

if np.isnan(dloss_dw).any() or np.isinf(dloss_dw).any():

return loss_records

self.W -= eta * dloss_dw

return loss_records

#@title Set random seed

#@markdown Executing `set_seed(seed=seed)` you are setting the seed

# for DL it's critical to set the random seed so that students can have a

# baseline to compare their results to expected results.

# Read more here: https://pytorch.org/docs/stable/notes/randomness.html

# Call `set_seed` function in the exercises to ensure reproducibility.

import random

import torch

def set_seed(seed=None, seed_torch=True):

if seed is None:

seed = np.random.choice(2 ** 32)

random.seed(seed)

np.random.seed(seed)

if seed_torch:

torch.manual_seed(seed)

torch.cuda.manual_seed_all(seed)

torch.cuda.manual_seed(seed)

torch.backends.cudnn.benchmark = False

torch.backends.cudnn.deterministic = True

print(f'Random seed {seed} has been set.')

# In case that `DataLoader` is used

def seed_worker(worker_id):

worker_seed = torch.initial_seed() % 2**32

np.random.seed(worker_seed)

random.seed(worker_seed)

#@title Set device (GPU or CPU). Execute `set_device()`

# especially if torch modules used.

# inform the user if the notebook uses GPU or CPU.

def set_device():

device = "cuda" if torch.cuda.is_available() else "cpu"

if device != "cuda":

print("GPU is not enabled in this notebook. \n"

"If you want to enable it, in the menu under `Runtime` -> \n"

"`Hardware accelerator.` and select `GPU` from the dropdown menu")

else:

print("GPU is enabled in this notebook. \n"

"If you want to disable it, in the menu under `Runtime` -> \n"

"`Hardware accelerator.` and select `None` from the dropdown menu")

return device

SEED = 2021

set_seed(seed=SEED)

DEVICE = set_device() | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |
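`DeepNarrowLNN.dloss_dw` uses the closed form dL/dW_i = -mean(x * E) * prod_{j != i} W_j. It computes the product over the other weights as `np.prod(self.W) / (self.W + 1e-9)`, which breaks down when a weight is (near) zero. Below is a self-contained finite-difference check of that formula. It computes the leave-one-out product with `np.delete` instead. The function names are hypothetical and not part of the tutorial:

```python
import numpy as np


def deep_loss(W, x, y):
    """Half mean squared error of a deep narrow linear net, y_hat = prod(W) * x."""
    return ((y - np.prod(W) * x) ** 2 / 2).mean()


def analytic_grad(W, x, y):
    """dL/dW_i = -mean(x * E) * prod_{j != i} W_j with E = y - prod(W) * x."""
    E = y - np.prod(W) * x
    others = np.array([np.prod(np.delete(W, i)) for i in range(W.size)])
    return -(x * E).mean() * others


def numeric_grad(W, x, y, eps=1e-6):
    """Central finite differences, one weight at a time."""
    grad = np.zeros_like(W)
    for i in range(W.size):
        step = np.zeros_like(W)
        step[i] = eps
        grad[i] = (deep_loss(W + step, x, y) - deep_loss(W - step, x, y)) / (2 * eps)
    return grad


W = np.array([0.9, 1.1, 0.0, 0.8])  # includes a zero weight on purpose
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * x
print(np.max(np.abs(analytic_grad(W, x, y) - numeric_grad(W, x, y))))  # should be close to 0
```

For the zero weight, the division-based product in the helper returns 0. The leave-one-out product gives the non-zero gradient that the finite differences confirm.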

--- Section 1: A Shallow Narrow Linear Neural Network *Time estimate: ~30 mins* | # @title Video 1: Shallow Narrow Linear Net

from ipywidgets import widgets

out2 = widgets.Output()

with out2:

from IPython.display import IFrame

class BiliVideo(IFrame):

def __init__(self, id, page=1, width=400, height=300, **kwargs):

self.id=id

src = "https://player.bilibili.com/player.html?bvid={0}&page={1}".format(id, page)

super(BiliVideo, self).__init__(src, width, height, **kwargs)

video = BiliVideo(id=f"BV1F44y117ot", width=854, height=480, fs=1)

print("Video available at https://www.bilibili.com/video/{0}".format(video.id))

display(video)

out1 = widgets.Output()

with out1:

from IPython.display import YouTubeVideo

video = YouTubeVideo(id=f"6e5JIYsqVvU", width=854, height=480, fs=1, rel=0)

print("Video available at https://youtube.com/watch?v=" + video.id)

display(video)

out = widgets.Tab([out1, out2])

out.set_title(0, 'Youtube')

out.set_title(1, 'Bilibili')

#add event to airtable

atform.add_event('video 1: Shallow Narrow Linear Net')

display(out) | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |

Section 1.1: A Shallow Narrow Linear Net To better understand the behavior of neural network training with gradient descent, we start with the incredibly simple case of a shallow narrow linear neural net, since state-of-the-art models are impossible to dissect and comprehend with our current mathematical tools. The model we use has one hidden layer with only one neuron, and two weights. We consider the squared error (or L2 loss) as the cost function. As you may have already guessed, we can visualize the model as a neural network or by its computation graph, or, on a rare occasion, even write it as a reasonably compact mapping: $$ loss = (y - w_1 \cdot w_2 \cdot x)^2 $$ Implementing a neural network from scratch without using any automatic differentiation tool is rarely necessary. The following two exercises are therefore **Bonus** (optional) exercises. Feel free to skip them if you are short on time and continue to Section 1.2. Analytical Exercise 1.1: Loss Gradients (Optional) Once again, we ask you to calculate the network gradients analytically, since you will need them for the next exercise. We understand how annoying this is. $\dfrac{\partial{loss}}{\partial{w_1}} = ?$ and $\dfrac{\partial{loss}}{\partial{w_2}} = ?$ --- Solution $\dfrac{\partial{loss}}{\partial{w_1}} = -2 \cdot w_2 \cdot x \cdot (y - w_1 \cdot w_2 \cdot x)$ and $\dfrac{\partial{loss}}{\partial{w_2}} = -2 \cdot w_1 \cdot x \cdot (y - w_1 \cdot w_2 \cdot x)$ --- Coding Exercise 1.1: Implement simple narrow LNN (Optional) Next, we ask you to implement the `forward` pass for our model from scratch without using PyTorch. Also, although our model gets a single input feature and outputs a single prediction, we could calculate the loss and perform training for multiple samples at once. This is the common practice for neural networks, since computers are much faster at matrix (or tensor) operations on batches of data than at processing samples one at a time through `for` loops.
Therefore, for the `loss` function, please implement the **mean** squared error (MSE), and adjust your analytical gradients accordingly when implementing the `dloss_dw` function. Finally, complete the `train` function for the gradient descent algorithm: \begin{equation}\mathbf{w}^{(t+1)} = \mathbf{w}^{(t)} - \eta \nabla loss (\mathbf{w}^{(t)})\end{equation} | class ShallowNarrowExercise:

  """Shallow and narrow (one neuron per layer) linear neural network
  """

  def __init__(self, init_weights):
    """
    Args:
      init_weights (list): initial weights
    """
    assert isinstance(init_weights, (list, np.ndarray, tuple))
    assert len(init_weights) == 2
    self.w1 = init_weights[0]
    self.w2 = init_weights[1]

  def forward(self, x):
    """The forward pass through network y = x * w1 * w2

    Args:
      x (np.ndarray): features (inputs) to neural net

    Returns:
      (np.ndarray): neural network output (prediction)
    """
    #################################################
    ## Implement the forward pass to calculate prediction
    ## Note that prediction is not the loss
    # Complete the function and remove or comment the line below
    raise NotImplementedError("Forward Pass `forward`")
    #################################################
    y = ...
    return y

  def dloss_dw(self, x, y_true):
    """Gradient of loss with respect to weights

    Args:
      x (np.ndarray): features (inputs) to neural net
      y_true (np.ndarray): true labels

    Returns:
      (float): mean gradient of loss with respect to w1
      (float): mean gradient of loss with respect to w2
    """
    assert x.shape == y_true.shape
    #################################################
    ## Implement the gradient computation function
    # Complete the function and remove or comment the line below
    raise NotImplementedError("Gradient of Loss `dloss_dw`")
    #################################################
    dloss_dw1 = ...
    dloss_dw2 = ...
    return dloss_dw1, dloss_dw2

  def train(self, x, y_true, lr, n_ep):
    """Training with Gradient descent algorithm

    Args:
      x (np.ndarray): features (inputs) to neural net
      y_true (np.ndarray): true labels
      lr (float): learning rate
      n_ep (int): number of epochs (training iterations)

    Returns:
      (np.ndarray): training loss records
      (np.ndarray): training weight records (evolution of weights)
    """
    assert x.shape == y_true.shape

    loss_records = np.empty(n_ep)  # preallocate loss records
    weight_records = np.empty((n_ep, 2))  # preallocate weight records

    for i in range(n_ep):
      y_prediction = self.forward(x)
      loss_records[i] = loss(y_prediction, y_true)
      dloss_dw1, dloss_dw2 = self.dloss_dw(x, y_true)
      #################################################
      ## Implement the gradient descent step
      # Complete the function and remove or comment the line below
      raise NotImplementedError("Training loop `train`")
      #################################################
      self.w1 -= ...
      self.w2 -= ...
      weight_records[i] = [self.w1, self.w2]

    return loss_records, weight_records


def loss(y_prediction, y_true):
  """Mean squared error

  Args:
    y_prediction (np.ndarray): model output (prediction)
    y_true (np.ndarray): true label

  Returns:
    (float): mean squared error loss
  """
  assert y_prediction.shape == y_true.shape
  #################################################
  ## Implement the MEAN squared error
  # Complete the function and remove or comment the line below
  raise NotImplementedError("Loss function `loss`")
  #################################################
  mse = ...
  return mse


#add event to airtable
atform.add_event('Coding Exercise 1.1: Implement simple narrow LNN')

set_seed(seed=SEED)
n_epochs = 211
learning_rate = 0.02
initial_weights = [1.4, -1.6]
x_train, y_train = gen_samples(n=73, a=2.0, sigma=0.2)
x_eval = np.linspace(0.0, 1.0, 37, endpoint=True)

## Uncomment to run
# sn_model = ShallowNarrowExercise(initial_weights)
# loss_log, weight_log = sn_model.train(x_train, y_train, learning_rate, n_epochs)
# y_eval = sn_model.forward(x_eval)
# plot_x_y_(x_train, y_train, x_eval, y_eval, loss_log, weight_log) | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |
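For reference, here is a minimal NumPy sketch of one way to complete the exercise; it is not the official solution. Averaging the per-sample gradients from Analytical Exercise 1.1 over a batch of $N$ samples gives

$$\dfrac{\partial{loss}}{\partial{w_1}} = -\dfrac{2}{N}\sum_{i=1}^{N} w_2 x_i (y_i - w_1 w_2 x_i), \qquad \dfrac{\partial{loss}}{\partial{w_2}} = -\dfrac{2}{N}\sum_{i=1}^{N} w_1 x_i (y_i - w_1 w_2 x_i)$$

A few assumptions apply:

- The sketch uses plain functions instead of the `ShallowNarrowExercise` class.
- The data generator is a hypothetical stand-in for the notebook's `gen_samples` helper. It is assumed to draw $x$ uniformly from $[0, 1]$ and return $y = a x$ plus Gaussian noise.

A central finite-difference check compares the analytical gradients with numerical ones.

```python
import numpy as np


def forward(w1, w2, x):
  """Prediction of the shallow narrow linear net: y_hat = w1 * w2 * x."""
  return w1 * w2 * x


def mse(y_prediction, y_true):
  """Mean squared error over the batch."""
  return np.mean((y_true - y_prediction) ** 2)


def dloss_dw(w1, w2, x, y_true):
  """Batch-averaged analytical gradients of the MSE with respect to w1 and w2."""
  residual = y_true - w1 * w2 * x
  dloss_dw1 = np.mean(-2.0 * w2 * x * residual)
  dloss_dw2 = np.mean(-2.0 * w1 * x * residual)
  return dloss_dw1, dloss_dw2


def train(w1, w2, x, y_true, lr, n_ep):
  """Plain gradient descent; records the loss before and the weights after each step."""
  loss_records = np.empty(n_ep)
  weight_records = np.empty((n_ep, 2))
  for i in range(n_ep):
    loss_records[i] = mse(forward(w1, w2, x), y_true)
    g1, g2 = dloss_dw(w1, w2, x, y_true)
    # Both gradients are computed before either weight changes (simultaneous update)
    w1, w2 = w1 - lr * g1, w2 - lr * g2
    weight_records[i] = [w1, w2]
  return loss_records, weight_records


# Hypothetical stand-in for gen_samples(n=73, a=2.0, sigma=0.2)
rng = np.random.default_rng(2021)
x_train = rng.uniform(0.0, 1.0, size=73)
y_train = 2.0 * x_train + rng.normal(0.0, 0.2, size=73)

# Central finite differences at the initial weights [1.4, -1.6]
w1, w2, eps = 1.4, -1.6, 1e-6
num_dw1 = (mse(forward(w1 + eps, w2, x_train), y_train)
           - mse(forward(w1 - eps, w2, x_train), y_train)) / (2 * eps)
num_dw2 = (mse(forward(w1, w2 + eps, x_train), y_train)
           - mse(forward(w1, w2 - eps, x_train), y_train)) / (2 * eps)
ana_dw1, ana_dw2 = dloss_dw(w1, w2, x_train, y_train)
print("gradient check:", num_dw1 - ana_dw1, num_dw2 - ana_dw2)

loss_log, weight_log = train(1.4, -1.6, x_train, y_train, lr=0.02, n_ep=211)
print("first/last loss:", loss_log[0], loss_log[-1])
print("final w1 * w2:", weight_log[-1, 0] * weight_log[-1, 1])
```

Only the product $w_1 w_2$ is determined by the data, so it should end up close to the slope $a = 2$. The individual weights at the end of training depend on the initialization.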

[*Click for solution*](https://github.com/NeuromatchAcademy/course-content-dl/tree/main//tutorials/W1D2_LinearDeepLearning/solutions/W1D2_Tutorial2_Solution_46492cd6.py)

Section 1.2: Learning landscapes | # @title Video 2: Training Landscape

from ipywidgets import widgets

out2 = widgets.Output()
with out2:
  from IPython.display import IFrame
  class BiliVideo(IFrame):
    def __init__(self, id, page=1, width=400, height=300, **kwargs):
      self.id=id
      src = "https://player.bilibili.com/player.html?bvid={0}&page={1}".format(id, page)
      super(BiliVideo, self).__init__(src, width, height, **kwargs)

  video = BiliVideo(id=f"BV1Nv411J71X", width=854, height=480, fs=1)
  print("Video available at https://www.bilibili.com/video/{0}".format(video.id))
  display(video)

out1 = widgets.Output()
with out1:
  from IPython.display import YouTubeVideo
  video = YouTubeVideo(id=f"k28bnNAcOEg", width=854, height=480, fs=1, rel=0)
  print("Video available at https://youtube.com/watch?v=" + video.id)
  display(video)

out = widgets.Tab([out1, out2])
out.set_title(0, 'Youtube')
out.set_title(1, 'Bilibili')

#add event to airtable
atform.add_event('Video 2: Training Landscape')

display(out) | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |

As you may have already asked yourself, we can find the solutions analytically, without using gradient descent: any pair of weights with

\begin{equation}w_1 \cdot w_2 = \dfrac{y}{x}\end{equation}

In fact, we can plot the gradients, the loss function, and all possible solutions in one figure. In this example, we use the $y = 1x$ mapping:

- **Blue ribbon**: shows all possible solutions: $~ w_1 w_2 = \dfrac{y}{x} = \dfrac{x}{x} = 1 \Rightarrow w_1 = \dfrac{1}{w_2}$
- **Contour background**: shows the loss values, with red indicating higher loss.
- **Vector field (arrows)**: shows the gradient vector field. Larger yellow arrows indicate larger gradients, which correspond to bigger steps of gradient descent.
- **Scatter circles**: show the trajectory (evolution) of the weights during training for three different initializations. Blue dots mark the start of training and red crosses ( **x** ) mark the end of training.

You can also try your own initializations (keep the initial values between `-2.0` and `2.0`), as shown here:

```python
plot_vector_field('all', [1.0, -1.0])
```

Finally, if the plot is too crowded, feel free to pass one of the following strings as the argument:

```python
plot_vector_field('vectors')     # for vector field
plot_vector_field('trajectory')  # for training trajectory
plot_vector_field('loss')        # for loss contour
```

**Think!**

Explore the next two plots. Try different initial values. Can you find the saddle point? Why does training slow down near the minima? | plot_vector_field('all') | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |

Here, we also visualize the loss landscape in a 3-D plot, with two training trajectories for different initial conditions.

Note: the trajectories in the 3-D plot are independent of, and different from, those in the previous plot. | plot_loss_landscape()

# @title Student Response
from ipywidgets import widgets

text=widgets.Textarea(
   value='Type your answer here and click on `Submit!`',
   placeholder='Type something',
   description='',
   disabled=False
)

button = widgets.Button(description="Submit!")

display(text,button)

def on_button_clicked(b):
   atform.add_answer('q1', text.value)
   print("Submission successful!")

button.on_click(on_button_clicked)

# @title Video 3: Training Landscape - Discussion
from ipywidgets import widgets

out2 = widgets.Output()
with out2:
  from IPython.display import IFrame
  class BiliVideo(IFrame):
    def __init__(self, id, page=1, width=400, height=300, **kwargs):
      self.id=id
      src = "https://player.bilibili.com/player.html?bvid={0}&page={1}".format(id, page)
      super(BiliVideo, self).__init__(src, width, height, **kwargs)

  video = BiliVideo(id=f"BV1py4y1j7cv", width=854, height=480, fs=1)
  print("Video available at https://www.bilibili.com/video/{0}".format(video.id))
  display(video)

out1 = widgets.Output()
with out1:
  from IPython.display import YouTubeVideo
  video = YouTubeVideo(id=f"0EcUGgxOdkI", width=854, height=480, fs=1, rel=0)
  print("Video available at https://youtube.com/watch?v=" + video.id)
  display(video)

out = widgets.Tab([out1, out2])
out.set_title(0, 'Youtube')
out.set_title(1, 'Bilibili')

#add event to airtable
atform.add_event('Video 3: Training Landscape - Discussion')

display(out) | _____no_output_____ | CC-BY-4.0 | tutorials/W1D2_LinearDeepLearning/student/W1D2_Tutorial2.ipynb | eduardojdiniz/course-content-dl |
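The features of the learning landscape described above can also be checked numerically. The sketch below assumes a single training sample $x = y = 1$, so the loss is $(1 - w_1 w_2)^2$. The notebook's `plot_vector_field` and `plot_loss_landscape` helpers may use a different dataset. Under this assumption:

- Every point on the ribbon $w_1 w_2 = 1$ has zero loss and zero gradient.
- The origin also has zero gradient, but its Hessian has one negative and one positive eigenvalue, so it is a saddle point rather than a minimum.
- Gradient descent started on the line $w_1 = -w_2$ (for example from `[1.0, -1.0]`) never leaves that line and converges to the saddle.

Because every gradient component is proportional to the residual $y - w_1 w_2 x$, the steps also shrink as the weights approach the ribbon.

```python
import numpy as np

# Assumed setup: one training sample with x = 1 and y = 1 (the y = 1x mapping)
X, Y = 1.0, 1.0


def loss(w1, w2):
  return (Y - w1 * w2 * X) ** 2


def grad(w1, w2):
  """Analytical gradient of the single-sample loss."""
  residual = Y - w1 * w2 * X
  return np.array([-2.0 * w2 * X * residual, -2.0 * w1 * X * residual])


def hessian(w1, w2):
  """Second derivatives of (Y - w1 * w2 * X) ** 2, derived by hand."""
  off_diag = -2.0 * X * Y + 4.0 * w1 * w2 * X ** 2
  return np.array([[2.0 * w2 ** 2 * X ** 2, off_diag],
                   [off_diag, 2.0 * w1 ** 2 * X ** 2]])


def gradient_descent(w_init, lr=0.1, n_steps=200):
  """Plain gradient descent on the single-sample loss; returns the final weights."""
  w = np.array(w_init, dtype=float)
  for _ in range(n_steps):
    w = w - lr * grad(w[0], w[1])
  return w


# Points on the blue ribbon w1 = 1 / w2 are global minima: zero loss, zero gradient
for w2 in [-2.0, -0.5, 0.5, 2.0]:
  print(w2, loss(1.0 / w2, w2), grad(1.0 / w2, w2))

# The origin has zero gradient, but the Hessian eigenvalues have opposite signs
print("gradient at origin:", grad(0.0, 0.0))
print("Hessian eigenvalues at origin:", np.linalg.eigvalsh(hessian(0.0, 0.0)))

w_a = gradient_descent([1.5, 0.2])   # ends on the ribbon, w1 * w2 close to 1
w_b = gradient_descent([1.0, -1.0])  # stays on w1 = -w2 and converges to the saddle
print("from [1.5, 0.2]:", w_a, "w1 * w2 =", w_a[0] * w_a[1])
print("from [1.0, -1.0]:", w_b, "loss =", loss(w_b[0], w_b[1]))
```

Starting from `[1.0, -1.0]`, the two gradient components are exact negatives of each other, so $w_1 = -w_2$ holds after every step. On that line the product $w_1 w_2 = -w_1^2$ can never reach the target value 1, which is why this run ends at the origin with loss 1.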

---

Section 2: Depth, Learning rate, and initialization

*Time estimate: ~45 mins*

Successful deep learning models are often developed by teams of very clever people who spend many hours "tuning" learning hyperparameters and finding effective initializations. In this section, we look at three basic (but often not simple) hyperparameters: depth, learning rate, and initialization.

Section 2.1: The effect of depth | # @title Video 4: Effect of Depth

from ipywidgets import widgets

out2 = widgets.Output()

with out2:

from IPython.display import IFrame

class BiliVideo(IFrame):