hexsha

stringlengths 40

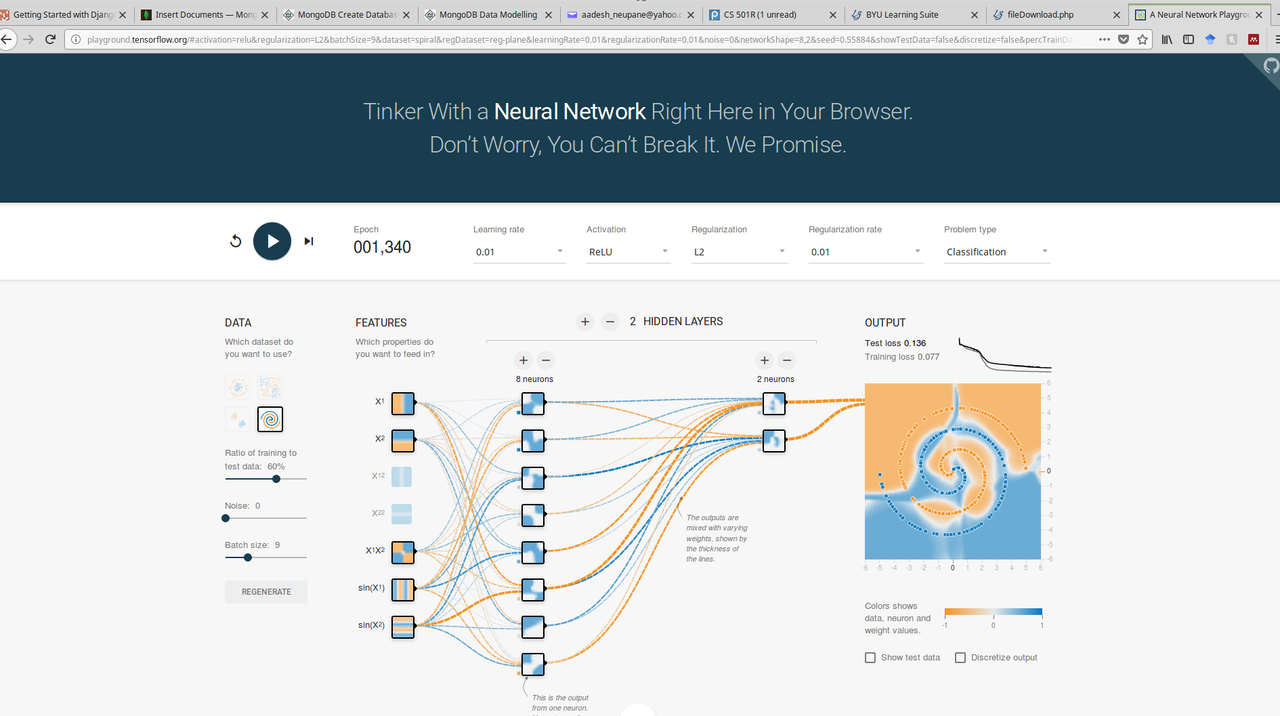

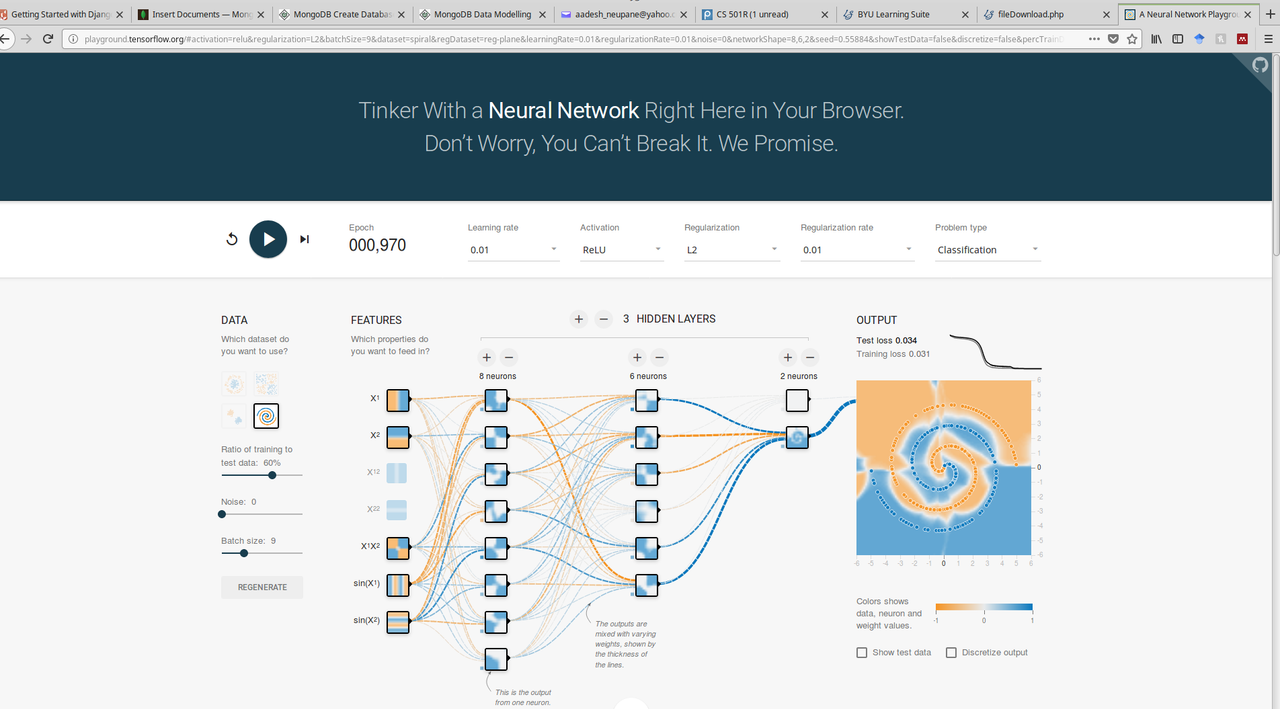

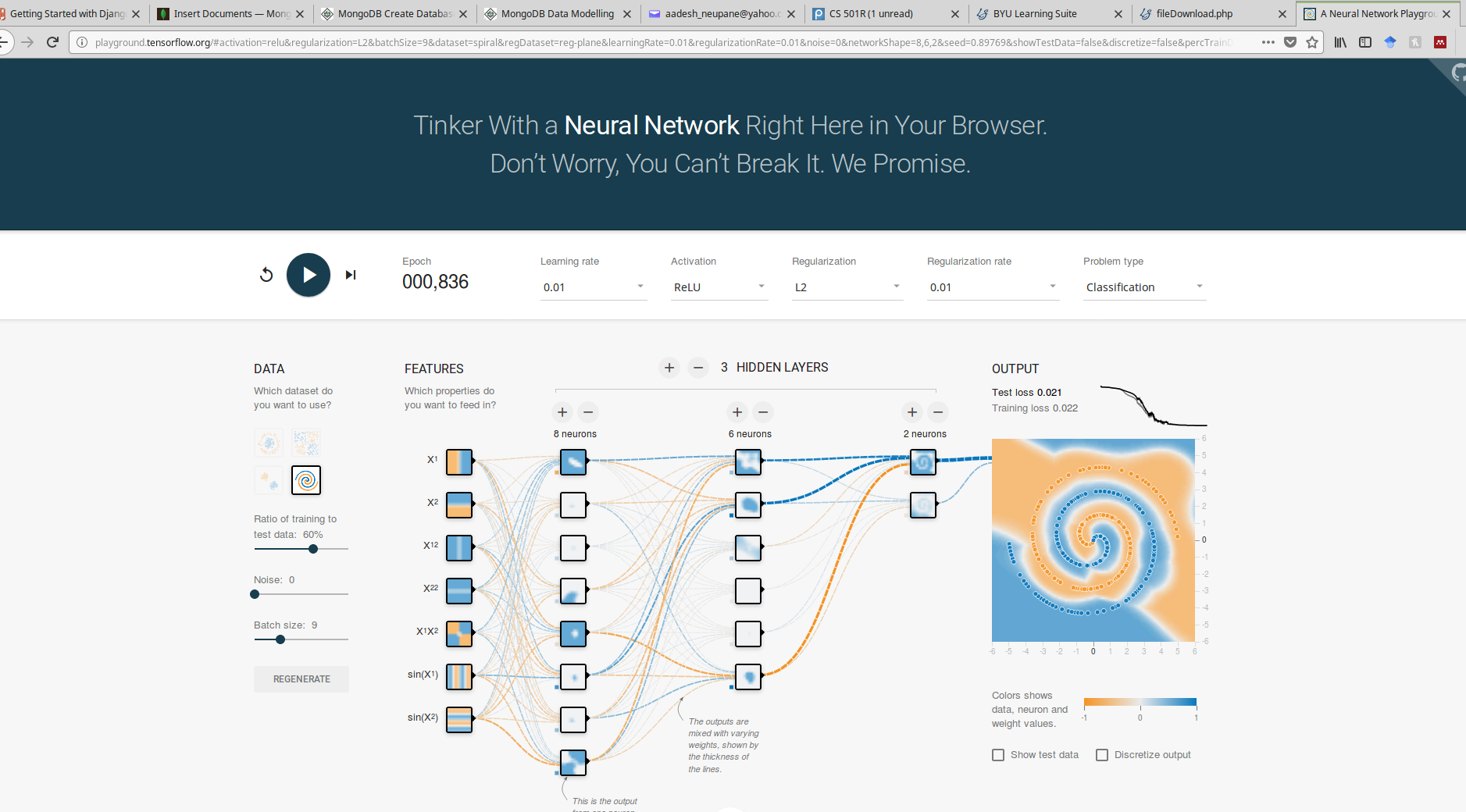

40

| size

int64 6

14.9M

| ext

stringclasses 1

value | lang

stringclasses 1

value | max_stars_repo_path

stringlengths 6

260

| max_stars_repo_name

stringlengths 6

119

| max_stars_repo_head_hexsha

stringlengths 40

41

| max_stars_repo_licenses

list | max_stars_count

int64 1

191k

⌀ | max_stars_repo_stars_event_min_datetime

stringlengths 24

24

⌀ | max_stars_repo_stars_event_max_datetime

stringlengths 24

24

⌀ | max_issues_repo_path

stringlengths 6

260

| max_issues_repo_name

stringlengths 6

119

| max_issues_repo_head_hexsha

stringlengths 40

41

| max_issues_repo_licenses

list | max_issues_count

int64 1

67k

⌀ | max_issues_repo_issues_event_min_datetime

stringlengths 24

24

⌀ | max_issues_repo_issues_event_max_datetime

stringlengths 24

24

⌀ | max_forks_repo_path

stringlengths 6

260

| max_forks_repo_name

stringlengths 6

119

| max_forks_repo_head_hexsha

stringlengths 40

41

| max_forks_repo_licenses

list | max_forks_count

int64 1

105k

⌀ | max_forks_repo_forks_event_min_datetime

stringlengths 24

24

⌀ | max_forks_repo_forks_event_max_datetime

stringlengths 24

24

⌀ | avg_line_length

float64 2

1.04M

| max_line_length

int64 2

11.2M

| alphanum_fraction

float64 0

1

| cells

list | cell_types

list | cell_type_groups

list |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

cb3a96dd0de35e3ee9ff26128f44972678ea29bf | 499,535 | ipynb | Jupyter Notebook | Quantium/Quantium_task_2.ipynb | tiffanysn/general_learning | e4a17bf566fe696d69ba8fb2ee936616adf1abf1 | [

"Apache-2.0"

] | null | null | null | Quantium/Quantium_task_2.ipynb | tiffanysn/general_learning | e4a17bf566fe696d69ba8fb2ee936616adf1abf1 | [

"Apache-2.0"

] | 27 | 2020-07-19T16:14:40.000Z | 2021-09-19T01:24:42.000Z | Quantium/Quantium_task_2.ipynb | tiffanysn/general_learning | e4a17bf566fe696d69ba8fb2ee936616adf1abf1 | [

"Apache-2.0"

] | 2 | 2020-05-16T18:47:05.000Z | 2020-10-15T10:58:42.000Z | 40.27209 | 8,680 | 0.322736 | [

[

[

"<a href=\"https://colab.research.google.com/github/tiffanysn/general_learning/blob/dev/Quantium/Quantium_task_2.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>",

"_____no_output_____"

]

],

[

[

"from google.colab import drive\ndrive.mount('/content/drive')",

"Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount(\"/content/drive\", force_remount=True).\n"

]

],

[

[

"## Load required libraries and datasets",

"_____no_output_____"

]

],

[

[

"! cp drive/My\\ Drive/QVI_data.csv .",

"_____no_output_____"

],

[

"import pandas as pd",

"_____no_output_____"

],

[

"import plotly.express as px",

"_____no_output_____"

],

[

"import numpy as np\n",

"_____no_output_____"

],

[

"df=pd.read_csv('QVI_data.csv')",

"_____no_output_____"

],

[

"df.shape",

"_____no_output_____"

],

[

"df.info()",

"<class 'pandas.core.frame.DataFrame'>\nRangeIndex: 264834 entries, 0 to 264833\nData columns (total 12 columns):\n # Column Non-Null Count Dtype \n--- ------ -------------- ----- \n 0 LYLTY_CARD_NBR 264834 non-null int64 \n 1 DATE 264834 non-null object \n 2 STORE_NBR 264834 non-null int64 \n 3 TXN_ID 264834 non-null int64 \n 4 PROD_NBR 264834 non-null int64 \n 5 PROD_NAME 264834 non-null object \n 6 PROD_QTY 264834 non-null int64 \n 7 TOT_SALES 264834 non-null float64\n 8 PACK_SIZE 264834 non-null int64 \n 9 BRAND 264834 non-null object \n 10 LIFESTAGE 264834 non-null object \n 11 PREMIUM_CUSTOMER 264834 non-null object \ndtypes: float64(1), int64(6), object(5)\nmemory usage: 24.2+ MB\n"

],

[

"df.describe(include= 'all')",

"_____no_output_____"

],

[

"df.info()",

"<class 'pandas.core.frame.DataFrame'>\nRangeIndex: 264834 entries, 0 to 264833\nData columns (total 12 columns):\n # Column Non-Null Count Dtype \n--- ------ -------------- ----- \n 0 LYLTY_CARD_NBR 264834 non-null int64 \n 1 DATE 264834 non-null object \n 2 STORE_NBR 264834 non-null int64 \n 3 TXN_ID 264834 non-null int64 \n 4 PROD_NBR 264834 non-null int64 \n 5 PROD_NAME 264834 non-null object \n 6 PROD_QTY 264834 non-null int64 \n 7 TOT_SALES 264834 non-null float64\n 8 PACK_SIZE 264834 non-null int64 \n 9 BRAND 264834 non-null object \n 10 LIFESTAGE 264834 non-null object \n 11 PREMIUM_CUSTOMER 264834 non-null object \ndtypes: float64(1), int64(6), object(5)\nmemory usage: 24.2+ MB\n"

]

],

[

[

"# Trial store 77",

"_____no_output_____"

],

[

"## Select control store",

"_____no_output_____"

],

[

"#### Add Month column",

"_____no_output_____"

]

],

[

[

"import datetime",

"_____no_output_____"

],

[

"df['year'] = pd.DatetimeIndex(df['DATE']).year\ndf['month']=pd.DatetimeIndex(df['DATE']).month\ndf['year_month']=pd.to_datetime(df['DATE']).dt.floor('d') - pd.offsets.MonthBegin(1)\ndf",

"_____no_output_____"

]

],

[

[

"#### Monthly calculation for each store",

"_____no_output_____"

]

],

[

[

"totSales= df.groupby(['STORE_NBR','year_month'])['TOT_SALES'].sum().reset_index()\ntotSales",

"_____no_output_____"

],

[

"measureOverTime2 = pd.DataFrame(data=totSales)",

"_____no_output_____"

],

[

"nTxn= df.groupby(['STORE_NBR','year_month'])['TXN_ID'].count().reset_index(drop=True)\nnTxn",

"_____no_output_____"

],

[

"sorted(df['year_month'].unique())",

"_____no_output_____"

],

[

"measureOverTime2['nCustomers'] = df.groupby(['STORE_NBR','year_month','LYLTY_CARD_NBR'])['DATE'].count().groupby(['STORE_NBR','year_month']).count().reset_index(drop=True)\nmeasureOverTime2.head()",

"_____no_output_____"

],

[

"measureOverTime2['nTxnPerCust'] = nTxn/measureOverTime2['nCustomers']\nmeasureOverTime2.head()",

"_____no_output_____"

],

[

"totQty = df.groupby(['STORE_NBR','year_month'])['PROD_QTY'].sum().reset_index(drop=True)\ntotQty",

"_____no_output_____"

],

[

"measureOverTime2['nChipsPerTxn'] = totQty/nTxn\nmeasureOverTime2",

"_____no_output_____"

],

[

"measureOverTime2['avgPricePerUnit'] = totSales['TOT_SALES']/totQty\nmeasureOverTime2",

"_____no_output_____"

]

],

[

[

"#### Filter pre-trial & stores with full obs",

"_____no_output_____"

]

],

[

[

"measureOverTime2.set_index('year_month', inplace=True)",

"_____no_output_____"

],

[

"preTrialMeasures = measureOverTime2.loc['2018-06-01':'2019-01-01'].reset_index()\npreTrialMeasures",

"_____no_output_____"

]

],

[

[

"#### Owen's *Solution*",

"_____no_output_____"

]

],

[

[

"measureOverTime = df.groupby(['STORE_NBR','year_month','LYLTY_CARD_NBR']).\\\n agg(\n totSalesPerCust=('TOT_SALES', sum),\n nTxn=('TXN_ID', \"count\"),\n nChips=('PROD_QTY', sum)\n ).\\\n groupby(['STORE_NBR','year_month']).\\\n agg(\n totSales=(\"totSalesPerCust\", sum),\n nCustomers=(\"nTxn\", \"count\"),\n nTxnPerCust=(\"nTxn\", lambda x: x.sum()/x.count()),\n totChips=(\"nChips\", sum),\n totTxn=(\"nTxn\", sum)).\\\n reset_index()",

"_____no_output_____"

],

[

"measureOverTime['nChipsPerTxn'] = measureOverTime['totChips']/measureOverTime['totTxn']\nmeasureOverTime['avgPricePerUnit'] = measureOverTime['totSales']/measureOverTime['totChips']\nmeasureOverTime.drop(['totChips', 'totTxn'], axis=1, inplace=True)",

"_____no_output_____"

]

],

[

[

"#### Calculate correlation",

"_____no_output_____"

]

],

[

[

"preTrialMeasures",

"_____no_output_____"

],

[

"# Input\ninputTable = preTrialMeasures\nmetricCol = 'TOT_SALES'\nstoreComparison = 77\n\nx = 1",

"_____no_output_____"

],

[

"corr = preTrialMeasures.\\\n loc[preTrialMeasures['STORE_NBR'].\\\n isin([x,storeComparison])].\\\n loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\n pivot(index='year_month', columns='STORE_NBR', values=metricCol).\\\n corr().\\\n iloc[0, 1]",

"_____no_output_____"

],

[

"preTrialMeasures.loc[preTrialMeasures['STORE_NBR'].isin([x,storeComparison])].loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\npivot(index='year_month', columns='STORE_NBR', values=metricCol).corr()\n ",

"_____no_output_____"

],

[

"df = pd.DataFrame(columns=['Store1', 'Store2', 'corr_measure'])",

"_____no_output_____"

],

[

"df.append({'Store1':x, 'Store2':storeComparison, 'corr_measure':corr}, ignore_index=True)",

"_____no_output_____"

],

[

"def calculateCorrelation(inputTable, metricCol, storeComparison):\n df = pd.DataFrame(columns=['Store1', 'Store2', 'corr_measure'])\n for x in inputTable.STORE_NBR.unique():\n if x in [77, 86, 88]:\n pass\n else:\n corr = inputTable.\\\n loc[inputTable['STORE_NBR'].\\\n isin([x,storeComparison])].\\\n loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\n pivot(index='year_month', columns='STORE_NBR', values=metricCol).\\\n corr().\\\n iloc[0, 1]\n df = df.append({'Store1':storeComparison, 'Store2':x, 'corr_measure':corr}, ignore_index=True)\n return(df)",

"_____no_output_____"

],

[

"calcCorrTable = calculateCorrelation(inputTable=preTrialMeasures, metricCol='nCustomers', storeComparison=77)",

"_____no_output_____"

],

[

"calcCorrTable",

"_____no_output_____"

]

],

[

[

"#### Calculate magnitude distance",

"_____no_output_____"

]

],

[

[

"inputTable = preTrialMeasures\nmetricCol = 'TOT_SALES'\nstoreComparison = '77'\n\nx='2'",

"_____no_output_____"

],

[

"mag = preTrialMeasures.\\\n loc[preTrialMeasures['STORE_NBR'].isin([x, storeComparison])].\\\n loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\n pivot(index='year_month', columns='STORE_NBR', values=metricCol).\\\n reset_index().rename_axis(None, axis=1)\nmag",

"_____no_output_____"

],

[

"mag.columns = mag.columns.map(str)\nmag",

"_____no_output_____"

],

[

"mag['measures'] = mag.apply(lambda row: row[x]-row[storeComparison], axis=1).abs()\n\nmag",

"_____no_output_____"

],

[

"mag['Store1'] = x\nmag['Store2'] = storeComparison",

"_____no_output_____"

],

[

"df_temp = mag.loc[:, ['Store1', 'Store2', 'year_month','measures']]",

"_____no_output_____"

],

[

"df_temp",

"_____no_output_____"

],

[

"df = pd.DataFrame(columns=['Store1', 'Store2', 'year_month','measures'])\ndf",

"_____no_output_____"

],

[

"inputTable = preTrialMeasures\nmetricCol = 'TOT_SALES'\nstoreComparison = '77'\ndf = pd.DataFrame(columns=['Store1', 'Store2', 'year_month','measures'])\nfor x in inputTable.STORE_NBR.unique():\n if x in [77, 86, 88]:\n pass\n else:\n mag = preTrialMeasures.\\\n loc[preTrialMeasures['STORE_NBR'].\\\n isin([x, storeComparison])].\\\n loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\n pivot(index='year_month', columns='STORE_NBR', values=metricCol).\\\n reset_index().rename_axis(None, axis=1)\n mag.columns = ['year_month', 'Store1', 'Store2']\n mag['measures'] = mag.apply(lambda row: row['Store1']-row['Store2'], axis=1).abs()\n mag['Store1'] = x\n mag['Store2'] = storeComparison \n df_temp = mag.loc[:, ['Store1', 'Store2', 'year_month','measures']]\n df = pd.concat([df, df_temp])",

"_____no_output_____"

],

[

"df",

"_____no_output_____"

],

[

"def calculateMagnitudeDistance(inputTable, metricCol, storeComparison):\n df = pd.DataFrame(columns=['Store1', 'Store2', 'year_month','measures'])\n for x in inputTable.STORE_NBR.unique():\n if x in [77, 86, 88]:\n pass\n else:\n mag = preTrialMeasures.\\\n loc[preTrialMeasures['STORE_NBR'].\\\n isin([x, storeComparison])].\\\n loc[:, ['year_month', 'STORE_NBR', metricCol]].\\\n pivot(index='year_month', columns='STORE_NBR', values=metricCol).\\\n reset_index().rename_axis(None, axis=1)\n mag.columns = ['year_month', 'Store1', 'Store2']\n mag['measures'] = mag.apply(lambda row: row['Store1']-row['Store2'], axis=1).abs()\n mag['Store1'] = storeComparison\n mag['Store2'] = x \n df_temp = mag.loc[:, ['Store1', 'Store2', 'year_month','measures']]\n df = pd.concat([df, df_temp])\n return df",

"_____no_output_____"

],

[

"def finalDistTable(inputTable, metricCol, storeComparison):\n calcDistTable = calculateMagnitudeDistance(inputTable, metricCol, storeComparison)\n minMaxDist = calcDistTable.groupby(['Store1','year_month'])['measures'].agg(['max','min']).reset_index()\n distTable = calcDistTable.merge(minMaxDist, on=['year_month', 'Store1'])\n distTable['magnitudeMeasure']= distTable.apply(lambda row: 1- (row['measures']-row['min'])/(row['max']-row['min']),axis=1)\n finalDistTable = distTable.groupby(['Store1','Store2'])['magnitudeMeasure'].mean().reset_index()\n finalDistTable.columns = ['Store1','Store2','mag_measure']\n return finalDistTable",

"_____no_output_____"

],

[

"calcDistTable = calculateMagnitudeDistance(inputTable=preTrialMeasures, metricCol='nCustomers', storeComparison='77')\ncalcDistTable",

"_____no_output_____"

]

],

[

[

"#### Standardise the magnitude distance",

"_____no_output_____"

]

],

[

[

"#calcDistTable.groupby(['Store1','year_month'])['measures'].apply(lambda g: g.max() - g.min()).reset_index()",

"_____no_output_____"

],

[

"minMaxDist = calcDistTable.groupby(['Store1','year_month'])['measures'].agg(['max','min']).reset_index()\nminMaxDist",

"_____no_output_____"

],

[

"calcDistTable.merge(minMaxDist, on=['year_month', 'Store1'])",

"_____no_output_____"

],

[

"distTable = calcDistTable.merge(minMaxDist, on=['year_month', 'Store1'])\ndistTable",

"_____no_output_____"

],

[

"distTable['magnitudeMeasure']= distTable.apply(lambda row: 1- (row['measures']-row['min'])/(row['max']-row['min']),axis=1)\ndistTable",

"_____no_output_____"

]

],

[

[

"#### Merge nTotSals & nCustomers\n\n\n\n",

"_____no_output_____"

]

],

[

[

"corr_nSales = calculateCorrelation(inputTable=preTrialMeasures, metricCol='TOT_SALES',storeComparison='77')\ncorr_nSales",

"_____no_output_____"

],

[

"corr_nCustomers = calculateCorrelation(inputTable=preTrialMeasures, metricCol='nCustomers',storeComparison='77')\ncorr_nCustomers",

"_____no_output_____"

],

[

"magnitude_nSales = finalDistTable(inputTable=preTrialMeasures, metricCol='TOT_SALES',storeComparison='77')\nmagnitude_nSales",

"_____no_output_____"

],

[

"magnitude_nCustomers = finalDistTable(inputTable=preTrialMeasures, metricCol='nCustomers',storeComparison='77')\nmagnitude_nCustomers",

"_____no_output_____"

]

],

[

[

"#### Get control store\n\n\n",

"_____no_output_____"

]

],

[

[

"score_nSales = corr_nSales.merge(magnitude_nSales, on=['Store1','Store2'])\nscore_nSales['scoreNSales'] = score_nSales.apply(lambda row: row['corr_measure']*0.5 + row['mag_measure']*0.5, axis=1)\nscore_nSales = score_nSales.loc[:,['Store1','Store2', 'scoreNSales']]\nscore_nSales",

"_____no_output_____"

],

[

"score_nCustomers = corr_nCustomers.merge(magnitude_nCustomers, on=['Store1','Store2'])\nscore_nCustomers['scoreNCust'] = score_nCustomers.apply(lambda row: row['corr_measure']*0.5 + row['mag_measure']*0.5, axis=1)\nscore_nCustomers = score_nCustomers.loc[:,['Store1','Store2','scoreNCust']]\nscore_nCustomers",

"_____no_output_____"

],

[

"score_Control = score_nSales.merge(score_nCustomers, on=['Store1','Store2'])\nscore_Control",

"_____no_output_____"

],

[

"score_Control['finalControlScore'] = score_Control.apply(lambda row: row['scoreNSales']*0.5 + row['scoreNCust']*0.5, axis=1)\nscore_Control",

"_____no_output_____"

],

[

"final_control_store = score_Control['finalControlScore'].max()",

"_____no_output_____"

],

[

"score_Control[score_Control['finalControlScore']==final_control_store]",

"_____no_output_____"

]

],

[

[

"#### Visualization the control store ",

"_____no_output_____"

]

],

[

[

"measureOverTime['Store_type'] = measureOverTime.apply(lambda row: 'Trail' if row['STORE_NBR']==77 else ('Control' if row['STORE_NBR']==233 else 'Other stores'), axis=1)\nmeasureOverTime",

"_____no_output_____"

],

[

"measureOverTime['Store_type'].unique()",

"_____no_output_____"

],

[

"measureOverTimeSales = measureOverTime.groupby(['year_month','Store_type'])['totSales'].mean().reset_index()\nmeasureOverTimeSales",

"_____no_output_____"

],

[

"measureOverTimeSales.set_index('year_month',inplace=True)",

"_____no_output_____"

],

[

"pastSales = measureOverTimeSales.loc['2018-06-01':'2019-01-01'].reset_index()\npastSales",

"_____no_output_____"

],

[

"px.line(data_frame=pastSales, x='year_month', y='totSales', color='Store_type', title='Total sales by month',labels={'year_month':'Month of operation','totSales':'Total sales'})",

"_____no_output_____"

],

[

"measureOverTimeCusts = measureOverTime.groupby(['year_month','Store_type'])['nCustomers'].mean().reset_index()\nmeasureOverTimeCusts",

"_____no_output_____"

],

[

"measureOverTimeCusts.set_index('year_month',inplace=True)\npastCustomers = measureOverTimeCusts.loc['2018-06-01':'2019-01-01'].reset_index()\npastCustomers",

"_____no_output_____"

],

[

"px.line(data_frame=pastCustomers, x='year_month', y='nCustomers', color='Store_type', title='Total customers by month',labels={'year_month':'Month of operation','nCustomers':'Total customers'})",

"_____no_output_____"

]

],

[

[

"## Assessment of trial period",

"_____no_output_____"

],

[

"### Calculate for totSales",

"_____no_output_____"

],

[

"#### Scale sales ",

"_____no_output_____"

]

],

[

[

"preTrialMeasures",

"_____no_output_____"

],

[

"preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==77, 'TOT_SALES'].sum()",

"_____no_output_____"

],

[

"preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==233, 'TOT_SALES'].sum()",

"_____no_output_____"

],

[

"scalingFactorForControlSales = preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==77, 'TOT_SALES'].sum() / preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==233, 'TOT_SALES'].sum()\nscalingFactorForControlSales",

"_____no_output_____"

]

],

[

[

"#### Apply the scaling factor",

"_____no_output_____"

]

],

[

[

"scaledControlSales = measureOverTimeSales.loc[measureOverTimeSales['Store_type']=='Control','totSales'].reset_index()\nscaledControlSales",

"_____no_output_____"

],

[

"scaledControlSales['scaledControlSales'] = scaledControlSales.apply(lambda row: row['totSales']*scalingFactorForControlSales,axis=1)\nscaledControlSales",

"_____no_output_____"

],

[

"TrailStoreSales = measureOverTimeSales.loc[measureOverTimeSales['Store_type']=='Trail',['totSales']]\nTrailStoreSales",

"_____no_output_____"

],

[

"TrailStoreSales.columns = ['trailSales']\nTrailStoreSales",

"_____no_output_____"

]

],

[

[

"#### %Diff between scaled control and trial for sales\n\n",

"_____no_output_____"

]

],

[

[

"percentageDiff = scaledControlSales.merge(TrailStoreSales, on='year_month',)\npercentageDiff",

"_____no_output_____"

],

[

"percentageDiff['percentDiff'] = percentageDiff.apply(lambda row: (row['scaledControlSales']-row['trailSales'])/row['scaledControlSales'], axis=1)",

"_____no_output_____"

],

[

"percentageDiff",

"_____no_output_____"

]

],

[

[

"#### Get standard deviation",

"_____no_output_____"

]

],

[

[

"stdDev = percentageDiff.loc[percentageDiff['year_month']< '2019-02-01', 'percentDiff'].std(ddof=8-1)\nstdDev",

"_____no_output_____"

]

],

[

[

"#### Calculate the t-values for the trial months",

"_____no_output_____"

]

],

[

[

"from scipy.stats import ttest_ind",

"_____no_output_____"

],

[

"control = percentageDiff.loc[percentageDiff['year_month']>'2019-01-01',['scaledControlSales']]\ncontrol",

"_____no_output_____"

],

[

"trail = percentageDiff.loc[percentageDiff['year_month']>'2019-01-01',['trailSales']]\ntrail",

"_____no_output_____"

],

[

"ttest_ind(control,trail)",

"_____no_output_____"

]

],

[

[

"The null hypothesis here is \"the sales between control and trial stores has **NO** significantly difference in trial period.\" The pvalue is 0.32, which is 32% that they are same in sales,which is much greater than 5%. Fail to reject the null hypothesis. Therefore, we are not confident to say \"the trial period impact trial store sales.\"",

"_____no_output_____"

]

],

[

[

"percentageDiff['t-value'] = percentageDiff.apply(lambda row: (row['percentDiff']- 0) / stdDev,axis=1)\npercentageDiff",

"_____no_output_____"

]

],

[

[

"We can observe that the t-value is much larger than the 95th percentile value of the t-distribution for March and April. \ni.e. the increase in sales in the trial store in March and April is statistically greater than in the control store.",

"_____no_output_____"

],

[

"#### 95th & 5th percentile of control store ",

"_____no_output_____"

]

],

[

[

"measureOverTimeSales",

"_____no_output_____"

],

[

"pastSales_Controls95 = measureOverTimeSales.loc[measureOverTimeSales['Store_type']=='Control']\npastSales_Controls95['totSales'] = pastSales_Controls95.apply(lambda row: row['totSales']*(1+stdDev*2),axis=1)\npastSales_Controls95.iloc[0:13,0] = 'Control 95th % confidence interval'\npastSales_Controls95.reset_index()",

"/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n/usr/local/lib/python3.6/dist-packages/pandas/core/indexing.py:966: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n"

],

[

"pastSales_Controls5 = measureOverTimeSales.loc[measureOverTimeSales['Store_type']=='Control']\npastSales_Controls5['totSales'] = pastSales_Controls95.apply(lambda row: row['totSales']*(1-stdDev*2),axis=1)\npastSales_Controls5.iloc[0:13,0] = 'Control 5th % confidence interval'\npastSales_Controls5.reset_index()",

"/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n/usr/local/lib/python3.6/dist-packages/pandas/core/indexing.py:966: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n"

],

[

"trialAssessment = pd.concat([measureOverTimeSales,pastSales_Controls5,pastSales_Controls95])\ntrialAssessment = trialAssessment.sort_values(by=['year_month'])\ntrialAssessment = trialAssessment.reset_index()\ntrialAssessment",

"_____no_output_____"

]

],

[

[

"#### Visualization Trial ",

"_____no_output_____"

]

],

[

[

"px.line(data_frame=trialAssessment, x='year_month', y='totSales', color='Store_type', title='Total sales by month',labels={'year_month':'Month of operation','totSales':'Total sales'})",

"_____no_output_____"

]

],

[

[

"### Calculate for nCustomers",

"_____no_output_____"

],

[

"#### Scales nCustomers",

"_____no_output_____"

]

],

[

[

"preTrialMeasures",

"_____no_output_____"

],

[

"preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==77,'nCustomers'].sum()",

"_____no_output_____"

],

[

"preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==233,'nCustomers'].sum()",

"_____no_output_____"

],

[

"scalingFactorForControlnCustomers = preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==77,'nCustomers'].sum() / preTrialMeasures.loc[preTrialMeasures['STORE_NBR']==233,'nCustomers'].sum()\nscalingFactorForControlnCustomers",

"_____no_output_____"

]

],

[

[

"#### Apply the scaling factor",

"_____no_output_____"

]

],

[

[

"measureOverTime",

"_____no_output_____"

],

[

"scaledControlNcustomers = measureOverTime.loc[measureOverTime['Store_type']=='Control',['year_month','nCustomers']]\nscaledControlNcustomers",

"_____no_output_____"

],

[

"scaledControlNcustomers['scaledControlNcus'] = scaledControlNcustomers.apply(lambda row: row['nCustomers']*scalingFactorForControlnCustomers, axis=1)\nscaledControlNcustomers",

"_____no_output_____"

]

],

[

[

"#### %Diff between scaled control & trail for nCustomers",

"_____no_output_____"

]

],

[

[

"measureOverTime.loc[measureOverTime['Store_type']=='Trail',['year_month','nCustomers']]",

"_____no_output_____"

],

[

"percentageDiff = scaledControlNcustomers.merge(measureOverTime.loc[measureOverTime['Store_type']=='Trail',['year_month','nCustomers']],on='year_month')\npercentageDiff",

"_____no_output_____"

],

[

"percentageDiff.columns=['year_month','controlCustomers','scaledControlNcus','trialCustomers']\npercentageDiff",

"_____no_output_____"

],

[

"percentageDiff['%Diff'] = percentageDiff.apply(lambda row: (row['scaledControlNcus']-row['trialCustomers'])/row['scaledControlNcus'],axis=1)\npercentageDiff",

"_____no_output_____"

]

],

[

[

"#### Get standard deviation",

"_____no_output_____"

]

],

[

[

"stdDev = percentageDiff.loc[percentageDiff['year_month']< '2019-02-01', '%Diff'].std(ddof=8-1)\nstdDev",

"_____no_output_____"

]

],

[

[

"#### Calculate the t-values for the trial months",

"_____no_output_____"

]

],

[

[

"percentageDiff['t-value'] = percentageDiff.apply(lambda row: (row['%Diff']- 0) / stdDev,axis=1)\npercentageDiff",

"_____no_output_____"

]

],

[

[

"#### 95th & 5th percentile of control store ",

"_____no_output_____"

]

],

[

[

"measureOverTimeCusts",

"_____no_output_____"

],

[

"pastNcus_Controls95 = measureOverTimeCusts.loc[measureOverTimeCusts['Store_type']=='Control']\npastNcus_Controls95['nCustomers'] = pastNcus_Controls95.apply(lambda row: row['nCustomers']*(1+stdDev*2),axis=1)\npastNcus_Controls95.iloc[0:13,0] = 'Control 95th % confidence interval'\npastNcus_Controls95.reset_index()",

"/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n/usr/local/lib/python3.6/dist-packages/pandas/core/indexing.py:966: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n"

],

[

"pastNcus_Controls5 = measureOverTimeCusts.loc[measureOverTimeCusts['Store_type']=='Control']\npastNcus_Controls5['nCustomers'] = pastNcus_Controls5.apply(lambda row: row['nCustomers']*(1-stdDev*2),axis=1)\npastNcus_Controls5.iloc[0:13,0] = 'Control 5th % confidence interval'\npastNcus_Controls5.reset_index()",

"/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n/usr/local/lib/python3.6/dist-packages/pandas/core/indexing.py:966: SettingWithCopyWarning:\n\n\nA value is trying to be set on a copy of a slice from a DataFrame.\nTry using .loc[row_indexer,col_indexer] = value instead\n\nSee the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n\n"

],

[

"trialAssessment = pd.concat([measureOverTimeCusts,pastNcus_Controls5,pastNcus_Controls95])\ntrialAssessment = trialAssessment.sort_values(by=['year_month'])\ntrialAssessment = trialAssessment.reset_index()\ntrialAssessment",

"_____no_output_____"

]

],

[

[

"#### Visualization Trial ",

"_____no_output_____"

]

],

[

[

"px.line(data_frame=trialAssessment, x='year_month', y='nCustomers', color='Store_type', title='Total nCustomers by month',labels={'year_month':'Month of operation','nCustomers':'Total nCustomers'})",

"_____no_output_____"

]

],

[

[

"# Trial store 86",

"_____no_output_____"

],

[

"## Select control store",

"_____no_output_____"

],

[

"#### corr_nSales",

"_____no_output_____"

]

],

[

[

"measureOverTime",

"_____no_output_____"

],

[

"",

"_____no_output_____"

]

]

] | [

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code",

"markdown",

"code"

] | [

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown",

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

],

[

"markdown",

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown"

],

[

"code",

"code",

"code",

"code"

],

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown",

"markdown"

],

[

"code",

"code"

]

] |

cb3aa5d76711c0a29023a7bc10bc173bbed6a1bd | 235,498 | ipynb | Jupyter Notebook | Lab1.ipynb | aadeshnpn/cs501r | 72c59e91d28bbcd6e620842a33d83d278d4c13a4 | [

"Apache-2.0"

] | null | null | null | Lab1.ipynb | aadeshnpn/cs501r | 72c59e91d28bbcd6e620842a33d83d278d4c13a4 | [

"Apache-2.0"

] | null | null | null | Lab1.ipynb | aadeshnpn/cs501r | 72c59e91d28bbcd6e620842a33d83d278d4c13a4 | [

"Apache-2.0"

] | 1 | 2018-12-14T10:17:47.000Z | 2018-12-14T10:17:47.000Z | 1,110.839623 | 230,072 | 0.954552 | [

[

[

"# 501R Lab1\n## Part 1\n## Program to generate random image",

"_____no_output_____"

]

],

[

[

"import cairo\nimport numpy as np\n\n# Set the random seed\nseed = None # Populate for using specific value for consistency\nrandom = np.random.RandomState(seed)\n\n# Function to draw random integers in a range\ndef randinteger(n, m=1):\n if m == 1:\n return random.randint(1, n, m)[0]\n else:\n return random.randint(1, n, m)\n\n# Generate random colors\ndef randcolor():\n r = random.rand()\n g = random.rand()\n b = random.rand()\n a = random.rand()\n cr.set_source_rgba(r, g, b, a)\n\n# Get random line width\ndef linewidth():\n cr.set_line_width(randinteger(30))\n \n# Draw random curve \ndef curve():\n x, x1, x2, x3 = randinteger(512, 4)\n y, y1, y2, y3 = randinteger(288, 4)\n randcolor()\n linewidth()\n cr.move_to(x, y)\n cr.curve_to(x1, y1, x2, y2, x3, y3)\n cr.set_line_join(cairo.LINE_JOIN_ROUND)\n cr.stroke()\n \n# Draw random line \ndef line():\n randcolor()\n linewidth()\n x,x1 = randinteger(512, 2)\n y,y1 = randinteger(288, 2)\n cr.move_to(x, y)\n cr.line_to(x1, y1)\n \n cr.stroke() \n\n# Draw random arc\ndef arc():\n randcolor()\n linewidth()\n c1 = randinteger(512)\n c2 = randinteger(288)\n r = randinteger(30)\n a1 = np.pi * random.randint(0, 3) * random.rand()\n a2 = np.pi * random.randint(0, 3) * random.rand()\n cr.arc(c1, c2, r, a1, a2)\n cr.fill()\n cr.stroke()\n\n# Draw border \ndef border():\n # Setting line width and color\n randcolor()\n cr.set_line_width(5.0)\n cr.rectangle(0, 0, 512, 288)\n cr.set_line_join(cairo.LINE_JOIN_ROUND)\n cr.set_source_rgba(0.0, 0.0, 0.0, 0.3)\n cr.fill()\n # Filling all the commands\n cr.stroke() \n \n# Draw random rectangle \ndef rectangle():\n randcolor()\n linewidth()\n x,x1 = randinteger(512, 2)\n y,y1 = randinteger(288, 2)\n cr.rectangle(x,y,x1,y1)\n cr.set_line_join(cairo.LINE_JOIN_ROUND)\n cr.fill()\n cr.stroke() \n\n# Drawing the objects\ndef draw():\n border()\n for i in range(60):\n curve()\n line()\n arc()\n #rectangle()\n \n \ndef nbimage(data):\n from IPython.display import display\n from PIL.Image import fromarray\n \n # Creating image data from numpy array\n image = fromarray(data)\n # Saving the image to the disk\n image.save('shape.png')\n \n # Displaying the image in Notebook\n display(image)\n \n \nWIDTH = 512\nHEIGHT = 288\n\ndata = np.zeros((HEIGHT, WIDTH, 4), dtype=np.uint8)\n\n# Setting up cairo\nims = cairo.ImageSurface.create_for_data(data, cairo.FORMAT_ARGB32, WIDTH, HEIGHT)\ncr = cairo.Context(ims)\n\ndraw()\n\nnbimage(data)\n",

"_____no_output_____"

]

],

[

[

"## Part 2\n### Tensorplaygound with Spiral Dataset\n#### Experiment 1",

"_____no_output_____"

],

[

"",

"_____no_output_____"

],

[

"#### Experiment 2\n",

"_____no_output_____"

],

[

"#### Experiment 3\n",

"_____no_output_____"

]

]

] | [

"markdown",

"code",

"markdown"

] | [

[

"markdown"

],

[

"code"

],

[

"markdown",

"markdown",

"markdown",

"markdown"

]

] |

cb3ac68516afcb8ba4d87554988edd7c1168d7ab | 51,770 | ipynb | Jupyter Notebook | tests/loginterp_tests.ipynb | kokron/velocileptors | 50016dd66ec9a2d33effecc248a48ca7ea7322bf | [

"MIT"

] | 11 | 2020-04-30T02:59:36.000Z | 2022-03-30T08:12:51.000Z | tests/loginterp_tests.ipynb | kokron/velocileptors | 50016dd66ec9a2d33effecc248a48ca7ea7322bf | [

"MIT"

] | null | null | null | tests/loginterp_tests.ipynb | kokron/velocileptors | 50016dd66ec9a2d33effecc248a48ca7ea7322bf | [

"MIT"

] | 4 | 2021-02-17T12:55:05.000Z | 2022-03-16T08:57:11.000Z | 154.537313 | 16,816 | 0.898976 | [

[

[

"import numpy as np\n\nfrom matplotlib import pyplot as plt",

"_____no_output_____"

],

[

"# Make trial function\nx = np.logspace(-2,2,10**2)\ny = - (x - 1)**2 + 10",

"_____no_output_____"

],

[

"plt.loglog(x, np.abs(y), '.')",

"_____no_output_____"

],

[

"plt.semilogx(x,np.abs(y))\nplt.ylim(-1,11)",

"_____no_output_____"

],

[

"# Find the zero:\nii = np.argmin(np.abs(y))\nprint(y[ii-1], y[ii], y[ii+1])",

"1.8573745648763982 -0.4280095417953529 -3.2611692330933018\n"

],

[

"# import routine\nimport sys\nsys.path.append('../../velocileptors/')\nfrom velocileptors.Utils.loginterp import loginterp",

"_____no_output_____"

],

[

"xint = np.logspace(-3,3,1000)\nind = ii -1\nyint = loginterp(x[:ind], y[:ind], interp_max = 3, interp_min = -3, Nint = 20)(xint)",

"0.0024062827925594044 -2.725445575878377\n[ 1. 1.35304777 1.83073828 2.47707636 3.35160265\n 4.53487851 6.13590727 8.30217568 11.23324033 15.19911083\n 20.56512308 27.82559402 37.64935807 50.94138015 68.92612104\n 93.26033469 126.18568831 170.73526475 231.01297001 312.57158497]\n"

],

[

"plt.loglog(xint, np.abs(yint) )\nplt.loglog(x[:ind],np.abs(y)[:ind],'.')\nplt.loglog(x[ind-1], np.abs(y[ind-1]),'+',label='final point included')\nplt.ylim(1e0,30)\nplt.xlim(5e-1,10)\nplt.legend()",

"_____no_output_____"

],

[

"plt.loglog(xint, np.abs(yint) )\nplt.ylim(1e-2,30)",

"_____no_output_____"

],

[

"import time\nt1 = time.time()\nyint = loginterp(x[:ind], y[:ind], interp_max = 3, interp_min = -3, Nint = 100)(xint)\nt2 = time.time()\nprint(t2-t1)",

"0.0024062827925594044 -2.725445575878377\n[ 1. 1.05974533 1.12306016 1.19015775 1.26126412\n 1.33661875 1.41647548 1.50110327 1.59078717 1.68582927\n 1.78654969 1.89328769 2.00640278 2.12627597 2.25331102\n 2.38793582 2.53060383 2.68179558 2.84202033 3.01181777\n 3.1917598 3.38245254 3.58453827 3.79869768 4.02565211\n 4.26616601 4.52104949 4.79116107 5.07741055 5.38076211\n 5.7022375 6.04291954 6.40395574 6.78656217 7.19202754\n 7.62171757 8.07707958 8.55964734 9.07104626 9.61299889\n 10.18733064 10.79597604 11.44098516 12.12453055 12.84891459\n 13.61657719 14.43010404 15.29223532 16.20587491 17.1741002\n 18.20017243 19.28754767 20.43988851 21.66107633 22.95522441\n 24.32669179 25.78009793 27.3203383 28.95260084 30.68238343\n 32.51551245 34.45816237 36.51687653 38.69858925 41.0106491\n 43.46084373 46.05742603 48.80914199 51.72526013 54.81560269\n 58.09057877 61.56121938 65.23921454 69.13695272 73.26756254\n 77.644957 82.28388031 87.19995762 92.40974757 97.93079812\n 103.78170564 109.98217754 116.55309866 123.51660161 130.89614132\n 138.71657404 147.00424106 155.78705746 165.0946061 174.95823726\n 185.41117429 196.48862546 208.22790257 220.66854662 233.85246102\n 247.82405268 262.63038166 278.3213196 294.94971774 312.57158497]\n0.0031173229217529297\n"

],

[

"t1 = time.time()\nyint = loginterp(x[:ind], y[:ind], interp_max = 3, interp_min = -3, Nint = 100,option='B')(xint)\nt2 = time.time()\nprint(t2-t1)",

"0.0024062827925594044 -2.725445575878377\n0.0020127296447753906\n"

]

]

] | [

"code"

] | [

[

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code",

"code"

]

] |

cb3ac6a4385ec59dcb885192b1756def8ec76b51 | 79,846 | ipynb | Jupyter Notebook | site/en-snapshot/guide/migrate.ipynb | ilyaspiridonov/docs-l10n | a061a44e40d25028d0a4458094e48ab717d3565c | [

"Apache-2.0"

] | 1 | 2021-09-23T09:56:29.000Z | 2021-09-23T09:56:29.000Z | site/en-snapshot/guide/migrate.ipynb | ilyaspiridonov/docs-l10n | a061a44e40d25028d0a4458094e48ab717d3565c | [

"Apache-2.0"

] | null | null | null | site/en-snapshot/guide/migrate.ipynb | ilyaspiridonov/docs-l10n | a061a44e40d25028d0a4458094e48ab717d3565c | [

"Apache-2.0"

] | 1 | 2020-06-02T13:44:09.000Z | 2020-06-02T13:44:09.000Z | 36.795392 | 518 | 0.541317 | [

[

[

"##### Copyright 2018 The TensorFlow Authors.",

"_____no_output_____"

]

],

[

[

"#@title Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# https://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.",

"_____no_output_____"

]

],

[

[

"# Migrate your TensorFlow 1 code to TensorFlow 2\n\n<table class=\"tfo-notebook-buttons\" align=\"left\">\n <td>\n <a target=\"_blank\" href=\"https://www.tensorflow.org/guide/migrate\">\n <img src=\"https://www.tensorflow.org/images/tf_logo_32px.png\" />\n View on TensorFlow.org</a>\n </td>\n <td>\n <a target=\"_blank\" href=\"https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/guide/migrate.ipynb\">\n <img src=\"https://www.tensorflow.org/images/colab_logo_32px.png\" />\n Run in Google Colab</a>\n </td>\n <td>\n <a target=\"_blank\" href=\"https://github.com/tensorflow/docs/blob/master/site/en/guide/migrate.ipynb\">\n <img src=\"https://www.tensorflow.org/images/GitHub-Mark-32px.png\" />\n View source on GitHub</a>\n </td>\n <td>\n <a href=\"https://storage.googleapis.com/tensorflow_docs/docs/site/en/guide/migrate.ipynb\"><img src=\"https://www.tensorflow.org/images/download_logo_32px.png\" />Download notebook</a>\n </td>\n</table>",

"_____no_output_____"

],

[

"This doc for users of low level TensorFlow APIs. If you are using \nthe high level APIs (`tf.keras`) there may be little or no action\nyou need to take to make your code fully TensorFlow 2.0 compatible: \n \n* Check your [optimizer's default learning rate](#keras_optimizer_lr). \n* Note that the \"name\" that metrics are logged to [may have changed](#keras_metric_names).",

"_____no_output_____"

],

[

"It is still possible to run 1.X code, unmodified ([except for contrib](https://github.com/tensorflow/community/blob/master/rfcs/20180907-contrib-sunset.md)), in TensorFlow 2.0:\n\n```\nimport tensorflow.compat.v1 as tf\ntf.disable_v2_behavior()\n```\n\nHowever, this does not let you take advantage of many of the improvements made in TensorFlow 2.0. This guide will help you upgrade your code, making it simpler, more performant, and easier to maintain.",

"_____no_output_____"

],

[

"## Automatic conversion script\n\nThe first step, before attempting to implement the changes described in this doc, is to try running the [upgrade script](./upgrade.md).\n\nThis will do an initial pass at upgrading your code to TensorFlow 2.0. But it can't make your code idiomatic to 2.0. Your code may still make use of `tf.compat.v1` endpoints to access placeholders, sessions, collections, and other 1.x-style functionality.",

"_____no_output_____"

],

[

"## Top-level behavioral changes\n\nIf your code works in TensorFlow 2.0 using `tf.compat.v1.disable_v2_behavior()`, there are still global behavioral changes you may need to address. The major changes are:",

"_____no_output_____"

],

[

"* *Eager execution, `v1.enable_eager_execution()`* : Any code that implicitly uses a `tf.Graph` will fail. Be sure to wrap this code in a `with tf.Graph().as_default()` context. \n \n* *Resource variables, `v1.enable_resource_variables()`*: Some code may depends on non-deterministic behaviors enabled by TF reference variables. \nResource variables are locked while being written to, and so provide more intuitive consistency guarantees.\n\n * This may change behavior in edge cases.\n * This may create extra copies and can have higher memory usage.\n * This can be disabled by passing `use_resource=False` to the `tf.Variable` constructor.\n\n* *Tensor shapes, `v1.enable_v2_tensorshape()`*: TF 2.0 simplifies the behavior of tensor shapes. Instead of `t.shape[0].value` you can say `t.shape[0]`. These changes should be small, and it makes sense to fix them right away. See [TensorShape](#tensorshape) for examples.\n\n* *Control flow, `v1.enable_control_flow_v2()`*: The TF 2.0 control flow implementation has been simplified, and so produces different graph representations. Please [file bugs](https://github.com/tensorflow/tensorflow/issues) for any issues.",

"_____no_output_____"

],

[

"## Make the code 2.0-native\n\n\nThis guide will walk through several examples of converting TensorFlow 1.x code to TensorFlow 2.0. These changes will let your code take advantage of performance optimizations and simplified API calls.\n\nIn each case, the pattern is:",

"_____no_output_____"

],

[

"### 1. Replace `v1.Session.run` calls\n\nEvery `v1.Session.run` call should be replaced by a Python function.\n\n* The `feed_dict` and `v1.placeholder`s become function arguments.\n* The `fetches` become the function's return value. \n* During conversion eager execution allows easy debugging with standard Python tools like `pdb`.\n\nAfter that add a `tf.function` decorator to make it run efficiently in graph. See the [Autograph Guide](function.ipynb) for more on how this works.\n\nNote that:\n\n* Unlike `v1.Session.run` a `tf.function` has a fixed return signature, and always returns all outputs. If this causes performance problems, create two separate functions.\n\n* There is no need for a `tf.control_dependencies` or similar operations: A `tf.function` behaves as if it were run in the order written. `tf.Variable` assignments and `tf.assert`s, for example, are executed automatically.\n",

"_____no_output_____"

],

[

"### 2. Use Python objects to track variables and losses\n\nAll name-based variable tracking is strongly discouraged in TF 2.0. Use Python objects to to track variables.\n\nUse `tf.Variable` instead of `v1.get_variable`.\n\nEvery `v1.variable_scope` should be converted to a Python object. Typically this will be one of:\n\n* `tf.keras.layers.Layer`\n* `tf.keras.Model`\n* `tf.Module`\n\nIf you need to aggregate lists of variables (like `tf.Graph.get_collection(tf.GraphKeys.VARIABLES)`), use the `.variables` and `.trainable_variables` attributes of the `Layer` and `Model` objects.\n\nThese `Layer` and `Model` classes implement several other properties that remove the need for global collections. Their `.losses` property can be a replacement for using the `tf.GraphKeys.LOSSES` collection.\n\nSee the [keras guides](keras.ipynb) for details.\n\nWarning: Many `tf.compat.v1` symbols use the global collections implicitly.\n",

"_____no_output_____"

],

[

"### 3. Upgrade your training loops\n\nUse the highest level API that works for your use case. Prefer `tf.keras.Model.fit` over building your own training loops.\n\nThese high level functions manage a lot of the low-level details that might be easy to miss if you write your own training loop. For example, they automatically collect the regularization losses, and set the `training=True` argument when calling the model.\n",

"_____no_output_____"

],

[

"### 4. Upgrade your data input pipelines\n\nUse `tf.data` datasets for data input. These objects are efficient, expressive, and integrate well with tensorflow.\n\nThey can be passed directly to the `tf.keras.Model.fit` method.\n\n```\nmodel.fit(dataset, epochs=5)\n```\n\nThey can be iterated over directly standard Python:\n\n```\nfor example_batch, label_batch in dataset:\n break\n```\n",

"_____no_output_____"

],

[

"#### 5. Migrate off `compat.v1` symbols \n\nThe `tf.compat.v1` module contains the complete TensorFlow 1.x API, with its original semantics.\n\nThe [TF2 upgrade script](upgrade.ipynb) will convert symbols to their 2.0 equivalents if such a conversion is safe, i.e., if it can determine that the behavior of the 2.0 version is exactly equivalent (for instance, it will rename `v1.arg_max` to `tf.argmax`, since those are the same function). \n\nAfter the upgrade script is done with a piece of code, it is likely there are many mentions of `compat.v1`. It is worth going through the code and converting these manually to the 2.0 equivalent (it should be mentioned in the log if there is one).",

"_____no_output_____"

],

[

"## Converting models\n\n### Setup",

"_____no_output_____"

]

],

[

[

"import tensorflow as tf\n\n\nimport tensorflow_datasets as tfds",

"_____no_output_____"

]

],

[

[

"### Low-level variables & operator execution\n\nExamples of low-level API use include:\n\n* using variable scopes to control reuse\n* creating variables with `v1.get_variable`.\n* accessing collections explicitly\n* accessing collections implicitly with methods like :\n\n * `v1.global_variables`\n * `v1.losses.get_regularization_loss`\n\n* using `v1.placeholder` to set up graph inputs\n* executing graphs with `Session.run`\n* initializing variables manually\n",

"_____no_output_____"

],

[

"#### Before converting\n\nHere is what these patterns may look like in code using TensorFlow 1.x.\n\n```python\nin_a = tf.placeholder(dtype=tf.float32, shape=(2))\nin_b = tf.placeholder(dtype=tf.float32, shape=(2))\n\ndef forward(x):\n with tf.variable_scope(\"matmul\", reuse=tf.AUTO_REUSE):\n W = tf.get_variable(\"W\", initializer=tf.ones(shape=(2,2)),\n regularizer=tf.contrib.layers.l2_regularizer(0.04))\n b = tf.get_variable(\"b\", initializer=tf.zeros(shape=(2)))\n return W * x + b\n\nout_a = forward(in_a)\nout_b = forward(in_b)\n\nreg_loss=tf.losses.get_regularization_loss(scope=\"matmul\")\n\nwith tf.Session() as sess:\n sess.run(tf.global_variables_initializer())\n outs = sess.run([out_a, out_b, reg_loss],\n \t feed_dict={in_a: [1, 0], in_b: [0, 1]})\n\n```",

"_____no_output_____"

],

[

"#### After converting",

"_____no_output_____"

],

[

"In the converted code:\n\n* The variables are local Python objects.\n* The `forward` function still defines the calculation.\n* The `Session.run` call is replaced with a call to `forward`\n* The optional `tf.function` decorator can be added for performance.\n* The regularizations are calculated manually, without referring to any global collection.\n* **No sessions or placeholders.**",

"_____no_output_____"

]

],

[

[

"W = tf.Variable(tf.ones(shape=(2,2)), name=\"W\")\nb = tf.Variable(tf.zeros(shape=(2)), name=\"b\")\n\[email protected]\ndef forward(x):\n return W * x + b\n\nout_a = forward([1,0])\nprint(out_a)",

"_____no_output_____"

],

[

"out_b = forward([0,1])\n\nregularizer = tf.keras.regularizers.l2(0.04)\nreg_loss=regularizer(W)",

"_____no_output_____"

]

],

[

[

"### Models based on `tf.layers`",

"_____no_output_____"

],

[

"The `v1.layers` module is used to contain layer-functions that relied on `v1.variable_scope` to define and reuse variables.",

"_____no_output_____"

],

[

"#### Before converting\n```python\ndef model(x, training, scope='model'):\n with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):\n x = tf.layers.conv2d(x, 32, 3, activation=tf.nn.relu,\n kernel_regularizer=tf.contrib.layers.l2_regularizer(0.04))\n x = tf.layers.max_pooling2d(x, (2, 2), 1)\n x = tf.layers.flatten(x)\n x = tf.layers.dropout(x, 0.1, training=training)\n x = tf.layers.dense(x, 64, activation=tf.nn.relu)\n x = tf.layers.batch_normalization(x, training=training)\n x = tf.layers.dense(x, 10)\n return x\n\ntrain_out = model(train_data, training=True)\ntest_out = model(test_data, training=False)\n```",

"_____no_output_____"

],

[

"#### After converting",

"_____no_output_____"

],

[

"* The simple stack of layers fits neatly into `tf.keras.Sequential`. (For more complex models see [custom layers and models](keras/custom_layers_and_models.ipynb), and [the functional API](keras/functional.ipynb).)\n* The model tracks the variables, and regularization losses.\n* The conversion was one-to-one because there is a direct mapping from `v1.layers` to `tf.keras.layers`.\n\nMost arguments stayed the same. But notice the differences:\n\n* The `training` argument is passed to each layer by the model when it runs.\n* The first argument to the original `model` function (the input `x`) is gone. This is because object layers separate building the model from calling the model.\n\n\nAlso note that:\n\n* If you were using regularizers of initializers from `tf.contrib`, these have more argument changes than others.\n* The code no longer writes to collections, so functions like `v1.losses.get_regularization_loss` will no longer return these values, potentially breaking your training loops.",

"_____no_output_____"

]

],

[

[

"model = tf.keras.Sequential([\n tf.keras.layers.Conv2D(32, 3, activation='relu',\n kernel_regularizer=tf.keras.regularizers.l2(0.04),\n input_shape=(28, 28, 1)),\n tf.keras.layers.MaxPooling2D(),\n tf.keras.layers.Flatten(),\n tf.keras.layers.Dropout(0.1),\n tf.keras.layers.Dense(64, activation='relu'),\n tf.keras.layers.BatchNormalization(),\n tf.keras.layers.Dense(10)\n])\n\ntrain_data = tf.ones(shape=(1, 28, 28, 1))\ntest_data = tf.ones(shape=(1, 28, 28, 1))",

"_____no_output_____"

],

[

"train_out = model(train_data, training=True)\nprint(train_out)",

"_____no_output_____"

],

[

"test_out = model(test_data, training=False)\nprint(test_out)",

"_____no_output_____"

],

[

"# Here are all the trainable variables.\nlen(model.trainable_variables)",

"_____no_output_____"

],

[

"# Here is the regularization loss.\nmodel.losses",

"_____no_output_____"

]

],

[

[

"### Mixed variables & `v1.layers`\n",

"_____no_output_____"

],

[

"Existing code often mixes lower-level TF 1.x variables and operations with higher-level `v1.layers`.",

"_____no_output_____"

],

[

"#### Before converting\n```python\ndef model(x, training, scope='model'):\n with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):\n W = tf.get_variable(\n \"W\", dtype=tf.float32,\n initializer=tf.ones(shape=x.shape),\n regularizer=tf.contrib.layers.l2_regularizer(0.04),\n trainable=True)\n if training:\n x = x + W\n else:\n x = x + W * 0.5\n x = tf.layers.conv2d(x, 32, 3, activation=tf.nn.relu)\n x = tf.layers.max_pooling2d(x, (2, 2), 1)\n x = tf.layers.flatten(x)\n return x\n\ntrain_out = model(train_data, training=True)\ntest_out = model(test_data, training=False)\n```",

"_____no_output_____"

],

[

"#### After converting",

"_____no_output_____"

],

[

"To convert this code, follow the pattern of mapping layers to layers as in the previous example.\n\nA `v1.variable_scope` is effectively a layer of its own. So rewrite it as a `tf.keras.layers.Layer`. See [the guide](keras/custom_layers_and_models.ipynb) for details.\n\nThe general pattern is:\n\n* Collect layer parameters in `__init__`.\n* Build the variables in `build`.\n* Execute the calculations in `call`, and return the result.\n\nThe `v1.variable_scope` is essentially a layer of its own. So rewrite it as a `tf.keras.layers.Layer`. See [the guide](keras/custom_layers_and_models.ipynb) for details.",

"_____no_output_____"

]

],

[

[

"# Create a custom layer for part of the model\nclass CustomLayer(tf.keras.layers.Layer):\n def __init__(self, *args, **kwargs):\n super(CustomLayer, self).__init__(*args, **kwargs)\n\n def build(self, input_shape):\n self.w = self.add_weight(\n shape=input_shape[1:],\n dtype=tf.float32,\n initializer=tf.keras.initializers.ones(),\n regularizer=tf.keras.regularizers.l2(0.02),\n trainable=True)\n\n # Call method will sometimes get used in graph mode,\n # training will get turned into a tensor\n @tf.function\n def call(self, inputs, training=None):\n if training:\n return inputs + self.w\n else:\n return inputs + self.w * 0.5",

"_____no_output_____"

],

[

"custom_layer = CustomLayer()\nprint(custom_layer([1]).numpy())\nprint(custom_layer([1], training=True).numpy())",

"_____no_output_____"

],

[

"train_data = tf.ones(shape=(1, 28, 28, 1))\ntest_data = tf.ones(shape=(1, 28, 28, 1))\n\n# Build the model including the custom layer\nmodel = tf.keras.Sequential([\n CustomLayer(input_shape=(28, 28, 1)),\n tf.keras.layers.Conv2D(32, 3, activation='relu'),\n tf.keras.layers.MaxPooling2D(),\n tf.keras.layers.Flatten(),\n])\n\ntrain_out = model(train_data, training=True)\ntest_out = model(test_data, training=False)\n",

"_____no_output_____"

]

],

[

[

"Some things to note:\n\n* Subclassed Keras models & layers need to run in both v1 graphs (no automatic control dependencies) and in eager mode\n * Wrap the `call()` in a `tf.function()` to get autograph and automatic control dependencies\n\n* Don't forget to accept a `training` argument to `call`.\n * Sometimes it is a `tf.Tensor`\n * Sometimes it is a Python boolean.\n\n* Create model variables in constructor or `Model.build` using `self.add_weight()`.\n * In `Model.build` you have access to the input shape, so can create weights with matching shape.\n * Using `tf.keras.layers.Layer.add_weight` allows Keras to track variables and regularization losses.\n\n* Don't keep `tf.Tensors` in your objects.\n * They might get created either in a `tf.function` or in the eager context, and these tensors behave differently.\n * Use `tf.Variable`s for state, they are always usable from both contexts\n * `tf.Tensors` are only for intermediate values.",

"_____no_output_____"

],

[

"### A note on Slim & contrib.layers\n\nA large amount of older TensorFlow 1.x code uses the [Slim](https://ai.googleblog.com/2016/08/tf-slim-high-level-library-to-define.html) library, which was packaged with TensorFlow 1.x as `tf.contrib.layers`. As a `contrib` module, this is no longer available in TensorFlow 2.0, even in `tf.compat.v1`. Converting code using Slim to TF 2.0 is more involved than converting repositories that use `v1.layers`. In fact, it may make sense to convert your Slim code to `v1.layers` first, then convert to Keras.\n\n* Remove `arg_scopes`, all args need to be explicit\n* If you use them, split `normalizer_fn` and `activation_fn` into their own layers\n* Separable conv layers map to one or more different Keras layers (depthwise, pointwise, and separable Keras layers)\n* Slim and `v1.layers` have different arg names & default values\n* Some args have different scales\n* If you use Slim pre-trained models, try out Keras's pre-traimed models from `tf.keras.applications` or [TF Hub](https://tfhub.dev/s?q=slim%20tf2)'s TF2 SavedModels exported from the original Slim code.\n\nSome `tf.contrib` layers might not have been moved to core TensorFlow but have instead been moved to the [TF add-ons package](https://github.com/tensorflow/addons).\n",

"_____no_output_____"

],

[

"## Training",

"_____no_output_____"

],

[

"There are many ways to feed data to a `tf.keras` model. They will accept Python generators and Numpy arrays as input.\n\nThe recommended way to feed data to a model is to use the `tf.data` package, which contains a collection of high performance classes for manipulating data.\n\nIf you are still using `tf.queue`, these are now only supported as data-structures, not as input pipelines.",

"_____no_output_____"

],

[

"### Using Datasets",

"_____no_output_____"

],

[

"The [TensorFlow Datasets](https://tensorflow.org/datasets) package (`tfds`) contains utilities for loading predefined datasets as `tf.data.Dataset` objects.\n\nFor this example, load the MNISTdataset, using `tfds`:",

"_____no_output_____"

]

],

[

[

"datasets, info = tfds.load(name='mnist', with_info=True, as_supervised=True)\nmnist_train, mnist_test = datasets['train'], datasets['test']",

"_____no_output_____"

]

],

[

[

"Then prepare the data for training:\n\n * Re-scale each image.\n * Shuffle the order of the examples.\n * Collect batches of images and labels.\n",

"_____no_output_____"

]

],

[

[

"BUFFER_SIZE = 10 # Use a much larger value for real code.\nBATCH_SIZE = 64\nNUM_EPOCHS = 5\n\n\ndef scale(image, label):\n image = tf.cast(image, tf.float32)\n image /= 255\n\n return image, label",

"_____no_output_____"

]

],

[

[

" To keep the example short, trim the dataset to only return 5 batches:",

"_____no_output_____"

]

],

[

[

"train_data = mnist_train.map(scale).shuffle(BUFFER_SIZE).batch(BATCH_SIZE)\ntest_data = mnist_test.map(scale).batch(BATCH_SIZE)\n\nSTEPS_PER_EPOCH = 5\n\ntrain_data = train_data.take(STEPS_PER_EPOCH)\ntest_data = test_data.take(STEPS_PER_EPOCH)",

"_____no_output_____"

],

[

"image_batch, label_batch = next(iter(train_data))",

"_____no_output_____"

]

],

[

[

"### Use Keras training loops\n\nIf you don't need low level control of your training process, using Keras's built-in `fit`, `evaluate`, and `predict` methods is recommended. These methods provide a uniform interface to train the model regardless of the implementation (sequential, functional, or sub-classed).\n\nThe advantages of these methods include:\n\n* They accept Numpy arrays, Python generators and, `tf.data.Datasets`\n* They apply regularization, and activation losses automatically.\n* They support `tf.distribute` [for multi-device training](distributed_training.ipynb).\n* They support arbitrary callables as losses and metrics.\n* They support callbacks like `tf.keras.callbacks.TensorBoard`, and custom callbacks.\n* They are performant, automatically using TensorFlow graphs.\n\nHere is an example of training a model using a `Dataset`. (For details on how this works see [tutorials](../tutorials).)",

"_____no_output_____"

]

],

[

[

"model = tf.keras.Sequential([\n tf.keras.layers.Conv2D(32, 3, activation='relu',\n kernel_regularizer=tf.keras.regularizers.l2(0.02),\n input_shape=(28, 28, 1)),\n tf.keras.layers.MaxPooling2D(),\n tf.keras.layers.Flatten(),\n tf.keras.layers.Dropout(0.1),\n tf.keras.layers.Dense(64, activation='relu'),\n tf.keras.layers.BatchNormalization(),\n tf.keras.layers.Dense(10)\n])\n\n# Model is the full model w/o custom layers\nmodel.compile(optimizer='adam',\n loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),\n metrics=['accuracy'])\n\nmodel.fit(train_data, epochs=NUM_EPOCHS)\nloss, acc = model.evaluate(test_data)\n\nprint(\"Loss {}, Accuracy {}\".format(loss, acc))",

"_____no_output_____"

]

],

[

[

"### Write your own loop\n\nIf the Keras model's training step works for you, but you need more control outside that step, consider using the `tf.keras.Model.train_on_batch` method, in your own data-iteration loop.\n\nRemember: Many things can be implemented as a `tf.keras.callbacks.Callback`.\n\nThis method has many of the advantages of the methods mentioned in the previous section, but gives the user control of the outer loop.\n\nYou can also use `tf.keras.Model.test_on_batch` or `tf.keras.Model.evaluate` to check performance during training.\n\nNote: `train_on_batch` and `test_on_batch`, by default return the loss and metrics for the single batch. If you pass `reset_metrics=False` they return accumulated metrics and you must remember to appropriately reset the metric accumulators. Also remember that some metrics like `AUC` require `reset_metrics=False` to be calculated correctly.\n\nTo continue training the above model:\n",

"_____no_output_____"

]

],

[

[

"# Model is the full model w/o custom layers\nmodel.compile(optimizer='adam',\n loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),\n metrics=['accuracy'])\n\nfor epoch in range(NUM_EPOCHS):\n #Reset the metric accumulators\n model.reset_metrics()\n\n for image_batch, label_batch in train_data:\n result = model.train_on_batch(image_batch, label_batch)\n metrics_names = model.metrics_names\n print(\"train: \",\n \"{}: {:.3f}\".format(metrics_names[0], result[0]),\n \"{}: {:.3f}\".format(metrics_names[1], result[1]))\n for image_batch, label_batch in test_data:\n result = model.test_on_batch(image_batch, label_batch,\n # return accumulated metrics\n reset_metrics=False)\n metrics_names = model.metrics_names\n print(\"\\neval: \",\n \"{}: {:.3f}\".format(metrics_names[0], result[0]),\n \"{}: {:.3f}\".format(metrics_names[1], result[1]))",

"_____no_output_____"

]

],

[

[

"<a name=\"custom_loop\"></a>\n\n### Customize the training step\n\nIf you need more flexibility and control, you can have it by implementing your own training loop. There are three steps:\n\n1. Iterate over a Python generator or `tf.data.Dataset` to get batches of examples.\n2. Use `tf.GradientTape` to collect gradients.\n3. Use one of the `tf.keras.optimizers` to apply weight updates to the model's variables.\n\nRemember:\n\n* Always include a `training` argument on the `call` method of subclassed layers and models.\n* Make sure to call the model with the `training` argument set correctly.\n* Depending on usage, model variables may not exist until the model is run on a batch of data.\n* You need to manually handle things like regularization losses for the model.\n\nNote the simplifications relative to v1:\n\n* There is no need to run variable initializers. Variables are initialized on creation.\n* There is no need to add manual control dependencies. Even in `tf.function` operations act as in eager mode.",

"_____no_output_____"

]

],

[

[

"model = tf.keras.Sequential([\n tf.keras.layers.Conv2D(32, 3, activation='relu',\n kernel_regularizer=tf.keras.regularizers.l2(0.02),\n input_shape=(28, 28, 1)),\n tf.keras.layers.MaxPooling2D(),\n tf.keras.layers.Flatten(),\n tf.keras.layers.Dropout(0.1),\n tf.keras.layers.Dense(64, activation='relu'),\n tf.keras.layers.BatchNormalization(),\n tf.keras.layers.Dense(10)\n])\n\noptimizer = tf.keras.optimizers.Adam(0.001)\nloss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)\n\[email protected]\ndef train_step(inputs, labels):\n with tf.GradientTape() as tape:\n predictions = model(inputs, training=True)\n regularization_loss=tf.math.add_n(model.losses)\n pred_loss=loss_fn(labels, predictions)\n total_loss=pred_loss + regularization_loss\n\n gradients = tape.gradient(total_loss, model.trainable_variables)\n optimizer.apply_gradients(zip(gradients, model.trainable_variables))\n\nfor epoch in range(NUM_EPOCHS):\n for inputs, labels in train_data:\n train_step(inputs, labels)\n print(\"Finished epoch\", epoch)\n",

"_____no_output_____"

]

],

[

[

"### New-style metrics and losses\n\nIn TensorFlow 2.0, metrics and losses are objects. These work both eagerly and in `tf.function`s. \n\nA loss object is callable, and expects the (y_true, y_pred) as arguments:\n",

"_____no_output_____"

]

],

[

[

"cce = tf.keras.losses.CategoricalCrossentropy(from_logits=True)\ncce([[1, 0]], [[-1.0,3.0]]).numpy()",

"_____no_output_____"

]

],

[

[

"A metric object has the following methods:\n\n* `Metric.update_state()` — add new observations\n* `Metric.result()` —get the current result of the metric, given the observed values\n* `Metric.reset_states()` — clear all observations.\n\nThe object itself is callable. Calling updates the state with new observations, as with `update_state`, and returns the new result of the metric.\n\nYou don't have to manually initialize a metric's variables, and because TensorFlow 2.0 has automatic control dependencies, you don't need to worry about those either.\n\nThe code below uses a metric to keep track of the mean loss observed within a custom training loop.",

"_____no_output_____"

]

],

[

[

"# Create the metrics\nloss_metric = tf.keras.metrics.Mean(name='train_loss')\naccuracy_metric = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')\n\[email protected]\ndef train_step(inputs, labels):\n with tf.GradientTape() as tape:\n predictions = model(inputs, training=True)\n regularization_loss=tf.math.add_n(model.losses)\n pred_loss=loss_fn(labels, predictions)\n total_loss=pred_loss + regularization_loss\n\n gradients = tape.gradient(total_loss, model.trainable_variables)\n optimizer.apply_gradients(zip(gradients, model.trainable_variables))\n # Update the metrics\n loss_metric.update_state(total_loss)\n accuracy_metric.update_state(labels, predictions)\n\n\nfor epoch in range(NUM_EPOCHS):\n # Reset the metrics\n loss_metric.reset_states()\n accuracy_metric.reset_states()\n\n for inputs, labels in train_data:\n train_step(inputs, labels)\n # Get the metric results\n mean_loss=loss_metric.result()\n mean_accuracy = accuracy_metric.result()\n\n print('Epoch: ', epoch)\n print(' loss: {:.3f}'.format(mean_loss))\n print(' accuracy: {:.3f}'.format(mean_accuracy))\n",

"_____no_output_____"

]

],

[

[

"<a id=\"keras_metric_names\"></a>\n\n### Keras metric names",

"_____no_output_____"

],

[

"In TensorFlow 2.0 keras models are more consistent about handling metric names.\n\nNow when you pass a string in the list of metrics, that _exact_ string is used as the metric's `name`. These names are visible in the history object returned by `model.fit`, and in the logs passed to `keras.callbacks`. is set to the string you passed in the metric list. ",

"_____no_output_____"

]

],

[

[

"model.compile(\n optimizer = tf.keras.optimizers.Adam(0.001),\n loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),\n metrics = ['acc', 'accuracy', tf.keras.metrics.SparseCategoricalAccuracy(name=\"my_accuracy\")])\nhistory = model.fit(train_data)",

"_____no_output_____"

],

[

"history.history.keys()",

"_____no_output_____"

]

],

[

[

"This differs from previous versions where passing `metrics=[\"accuracy\"]` would result in `dict_keys(['loss', 'acc'])` ",

"_____no_output_____"

],

[

"### Keras optimizers",

"_____no_output_____"

],

[

"The optimizers in `v1.train`, like `v1.train.AdamOptimizer` and `v1.train.GradientDescentOptimizer`, have equivalents in `tf.keras.optimizers`.",

"_____no_output_____"

],

[

"#### Convert `v1.train` to `keras.optimizers`\n\nHere are things to keep in mind when converting your optimizers:\n\n* Upgrading your optimizers [may make old checkpoints incompatible](#checkpoints).\n* All epsilons now default to `1e-7` instead of `1e-8` (which is negligible in most use cases).\n* `v1.train.GradientDescentOptimizer` can be directly replaced by `tf.keras.optimizers.SGD`. \n* `v1.train.MomentumOptimizer` can be directly replaced by the `SGD` optimizer using the momentum argument: `tf.keras.optimizers.SGD(..., momentum=...)`.\n* `v1.train.AdamOptimizer` can be converted to use `tf.keras.optimizers.Adam`. The `beta1` and `beta2` arguments have been renamed to `beta_1` and `beta_2`.\n* `v1.train.RMSPropOptimizer` can be converted to `tf.keras.optimizers.RMSprop`. The `decay` argument has been renamed to `rho`.\n* `v1.train.AdadeltaOptimizer` can be converted directly to `tf.keras.optimizers.Adadelta`.\n* `tf.train.AdagradOptimizer` can be converted directly to `tf.keras.optimizers.Adagrad`.\n* `tf.train.FtrlOptimizer` can be converted directly to `tf.keras.optimizers.Ftrl`. The `accum_name` and `linear_name` arguments have been removed.\n* The `tf.contrib.AdamaxOptimizer` and `tf.contrib.NadamOptimizer`, can be converted directly to `tf.keras.optimizers.Adamax` and `tf.keras.optimizers.Nadam`. The `beta1`, and `beta2` arguments have been renamed to `beta_1` and `beta_2`.\n",

"_____no_output_____"

],

[

"#### New defaults for some `tf.keras.optimizers`\n<a id=\"keras_optimizer_lr\"></a>\n\nWarning: If you see a change in convergence behavior for your models, check the default learning rates.\n\nThere are no changes for `optimizers.SGD`, `optimizers.Adam`, or `optimizers.RMSprop`.\n\nThe following default learning rates have changed:\n\n* `optimizers.Adagrad` from 0.01 to 0.001\n* `optimizers.Adadelta` from 1.0 to 0.001\n* `optimizers.Adamax` from 0.002 to 0.001\n* `optimizers.Nadam` from 0.002 to 0.001",

"_____no_output_____"

],

[

"### TensorBoard",

"_____no_output_____"

],

[

"TensorFlow 2 includes significant changes to the `tf.summary` API used to write summary data for visualization in TensorBoard. For a general introduction to the new `tf.summary`, there are [several tutorials available](https://www.tensorflow.org/tensorboard/get_started) that use the TF 2 API. This includes a [TensorBoard TF 2 Migration Guide](https://www.tensorflow.org/tensorboard/migrate)",

"_____no_output_____"

],

[

"## Saving & Loading\n",

"_____no_output_____"

],

[