modelId

stringlengths 4

112

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 21

values | files

list | publishedBy

stringlengths 2

37

| downloads_last_month

int32 0

9.44M

| library

stringclasses 15

values | modelCard

large_stringlengths 0

100k

|

|---|---|---|---|---|---|---|---|---|

fspanda/Medical-Bio-BERT2 | 2021-05-19T16:57:41.000Z | [

"pytorch",

"jax",

"bert",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"config.json",

"flax_model.msgpack",

"pytorch_model.bin",

"vocab.txt"

]

| fspanda | 81 | transformers | |

fspanda/electra-medical-discriminator | 2020-10-28T11:33:37.000Z | [

"pytorch",

"electra",

"pretraining",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"tokenizer_config.json",

"vocab.txt"

]

| fspanda | 11 | transformers | ||

fspanda/electra-medical-small-discriminator | 2020-10-29T00:30:38.000Z | [

"pytorch",

"electra",

"pretraining",

"transformers"

]

| [

".gitattributes",

"config.json",

"pytorch_model.bin",

"tokenizer_config.json",

"vocab.txt"

]

| fspanda | 12 | transformers | ||

fspanda/electra-medical-small-generator | 2020-10-29T00:33:04.000Z | [

"pytorch",

"electra",

"masked-lm",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

"config.json",

"pytorch_model.bin",

"tokenizer_config.json",

"vocab.txt"

]

| fspanda | 24 | transformers | |

fuliucansheng/adsplus | 2021-04-06T08:07:09.000Z | []

| [

".gitattributes",

"mass/mainstream/mass-base-uncased-config.json",

"mass/mainstream/mass-base-uncased-slab-desc-from-lp.bin",

"mass/mainstream/mass-base-uncased-slab-desc-from-title.bin",

"mass/mainstream/mass-base-uncased-slab-title-from-lp.bin",

"mass/mainstream/mass-base-uncased-vocab.txt",

"twinbert/mainstream/mt_twinbert_tri_letter_weight.bin",

"twinbert/mainstream/twinbert_tri_letter_config.json",

"twinbert/mainstream/twinbert_tri_letter_pretrain_weight.bin",

"twinbert/mainstream/twinbert_tri_letter_vocab.txt",

"unilm/infoxlm/infoxlm-roberta-config.json",

"unilm/mainstream/deepgen_v3_config.json",

"unilm/mainstream/deepgen_v3_model.bin"

]

| fuliucansheng | 0 | |||

fuliucansheng/mass | 2021-02-21T15:35:33.000Z | []

| [

".gitattributes",

"mass-base-uncased-config.json",

"mass-base-uncased-pytorch-model.bin",

"mass-base-uncased-vocab.txt",

"mass_for_generation.ini"

]

| fuliucansheng | 0 | |||

fuliucansheng/unilm | 2021-06-08T05:37:10.000Z | []

| [

".gitattributes",

"cnndm-unilm-base-cased-config.json",

"cnndm-unilm-large-cased-config.json",

"cnndm-unilm-large-cased.bin",

"infoxlm-roberta-config.json",

"unilm-base-uncased-config.json"

]

| fuliucansheng | 0 | |||

fullshowbox/DSADAWF | 2021-04-08T18:33:40.000Z | []

| [

".gitattributes",

"README.md"

]

| fullshowbox | 0 | https://vrip.unmsm.edu.pe/forum/profile/liexylezzy/

https://vrip.unmsm.edu.pe/forum/profile/ellindanatasya/

https://vrip.unmsm.edu.pe/forum/profile/oploscgv/

https://vrip.unmsm.edu.pe/forum/profile/Zackoplos/

https://vrip.unmsm.edu.pe/forum/profile/unholyzulk/

https://vrip.unmsm.edu.pe/forum/profile/aurorarezash/ |

||

fullshowbox/full-tv-free | 2021-04-22T02:47:22.000Z | []

| [

".gitattributes",

"README.md"

]

| fullshowbox | 0 | https://community.afpglobal.org/network/members/profile?UserKey=fb4fdcef-dde4-4258-a423-2159545d84c1

https://community.afpglobal.org/network/members/profile?UserKey=e6ccc088-b709-45ec-b61e-4d56088acbda

https://community.afpglobal.org/network/members/profile?UserKey=ba280059-0890-4510-81d0-a79522b75ac8

https://community.afpglobal.org/network/members/profile?UserKey=799ba769-6e99-4a6a-a173-4f1b817e978c

https://community.afpglobal.org/network/members/profile?UserKey=babb84d7-e91a-4972-b26a-51067c66d793

https://community.afpglobal.org/network/members/profile?UserKey=8e4656bc-8d0d-44e1-b280-e68a2ace9353

https://community.afpglobal.org/network/members/profile?UserKey=8e7b41a8-9bed-4cb0-9021-a164b0aa6dd3

https://community.afpglobal.org/network/members/profile?UserKey=e4f38596-d772-4fbe-9e93-9aef5618f26e

https://community.afpglobal.org/network/members/profile?UserKey=18221e49-74ba-4155-ac1e-6f184bfb2398

https://community.afpglobal.org/network/members/profile?UserKey=ef4391e8-03df-467f-bf3f-4a45087817eb

https://community.afpglobal.org/network/members/profile?UserKey=832774fd-a035-421a-8236-61cf45a7747d

https://community.afpglobal.org/network/members/profile?UserKey=9f05cd73-b75c-4820-b60a-5df6357b2af9

https://community.afpglobal.org/network/members/profile?UserKey=c1727992-5024-4321-b0c9-ecc6f51e6532

https://www.hybrid-analysis.com/sample/255948e335dd9f873d11bf0224f8d180cd097509d23d27506292c22443fa92b8

https://www.facebook.com/PS5Giveaways2021

https://cgvmovie.cookpad-blog.jp/articles/589986

https://myanimelist.net/blog.php?eid=850892

https://comicvine.gamespot.com/profile/full-tv-free/about-me/

https://pantip.com/topic/40658194 |

||

fullshowbox/nacenetwork21 | 2021-04-13T02:57:06.000Z | []

| [

".gitattributes",

"README.md"

]

| fullshowbox | 0 | https://volunteer.alz.org/network/members/profile?UserKey=f4774542-39b3-4cfd-8c21-7b834795f7d7

https://volunteer.alz.org/network/members/profile?UserKey=05a00b90-f854-45fb-9a3a-7420144d290c

https://volunteer.alz.org/network/members/profile?UserKey=45cceddd-29b9-4c6c-8612-e2a16aaa391a

https://volunteer.alz.org/network/members/profile?UserKey=ae3c28f9-72a3-4af5-bd50-3b2ea2c0d3a3

https://volunteer.alz.org/network/members/profile?UserKey=7ab8e28e-e31f-4906-ab06-84b9ea3a880f

https://volunteer.alz.org/network/members/profile?UserKey=1b31fc90-e18e-4ef6-81f0-5c0b55fb95a3

https://volunteer.alz.org/network/members/profile?UserKey=23971b11-04ad-4eb4-abc5-6e659c6b071c

123movies-watch-online-movie-full-free-2021

https://myanimelist.net/blog.php?eid=849353

https://comicvine.gamespot.com/profile/nacenetwork21/about-me/

https://pantip.com/topic/40639721 |

||

fullshowbox/networkprofile | 2021-04-13T03:40:48.000Z | []

| [

".gitattributes",

"README.md"

]

| fullshowbox | 0 | https://www.nace.org/network/members/profile?UserKey=461a690a-bff6-4e4c-be63-ea8e39264459

https://www.nace.org/network/members/profile?UserKey=b4a6a66a-fb8a-4f2b-8af9-04f003ad9d46

https://www.nace.org/network/members/profile?UserKey=24544ab2-551d-42aa-adbe-7a1c1d68fd9c

https://www.nace.org/network/members/profile?UserKey=3e8035d5-056a-482d-9010-9883e5990f4a

https://www.nace.org/network/members/profile?UserKey=d7241c69-28c4-4146-a077-a00cc2c9ccf5

https://www.nace.org/network/members/profile?UserKey=2c58c2fb-13a4-4e5a-b044-f467bb295d83

https://www.nace.org/network/members/profile?UserKey=dd8a290c-e53a-4b56-9a17-d35dbcb6b8bd

https://www.nace.org/network/members/profile?UserKey=0e96a1af-91f4-496a-af02-6d753a1bbded |

||

fullshowbox/ragbrai | 2021-04-12T05:10:28.000Z | []

| [

".gitattributes",

"README.md"

]

| fullshowbox | 0 | https://ragbrai.com/groups/hd-movie-watch-french-exit-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-nobody-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-voyagers-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-godzilla-vs-kong-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-raya-and-the-last-dragon-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-mortal-kombat-2021-full-movie-online-for-free/

https://ragbrai.com/groups/hd-movie-watch-the-father-2021-full-movie-online-for-free/ |

||

funnel-transformer/intermediate-base | 2020-12-11T21:40:21.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 235 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer intermediate model (B6-6-6 without decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

**Note:** This model does not contain the decoder, so it ouputs hidden states that have a sequence length of one fourth

of the inputs. It's good to use for tasks requiring a summary of the sentence (like sentence classification) but not if

you need one input per initial token. You should use the `intermediate` model in that case.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/intermediate-base")

model = FunnelBaseModel.from_pretrained("funnel-transformer/intermediate-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/intermediate-base")

model = TFFunnelBaseModel.from_pretrained("funnel-transformer/intermediate-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/intermediate | 2020-12-11T21:40:25.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 432 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer intermediate model (B6-6-6 with decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/intermediate")

model = FunneModel.from_pretrained("funnel-transformer/intermediate")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/intermediate")

model = TFFunnelModel.from_pretrained("funnel-transformer/intermediatesmall")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/large-base | 2020-12-11T21:40:28.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 745 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer large model (B8-8-8 without decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

**Note:** This model does not contain the decoder, so it ouputs hidden states that have a sequence length of one fourth

of the inputs. It's good to use for tasks requiring a summary of the sentence (like sentence classification) but not if

you need one input per initial token. You should use the `large` model in that case.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/large-base")

model = FunnelBaseModel.from_pretrained("funnel-transformer/large-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/large-base")

model = TFFunnelBaseModel.from_pretrained("funnel-transformer/large-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/large | 2020-12-11T21:40:31.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 188 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer large model (B8-8-8 with decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/large")

model = FunneModel.from_pretrained("funnel-transformer/large")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/large")

model = TFFunnelModel.from_pretrained("funnel-transformer/large")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/medium-base | 2020-12-11T21:40:34.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 242 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer medium model (B6-3x2-3x2 without decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

**Note:** This model does not contain the decoder, so it ouputs hidden states that have a sequence length of one fourth

of the inputs. It's good to use for tasks requiring a summary of the sentence (like sentence classification) but not if

you need one input per initial token. You should use the `medium` model in that case.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/medium-base")

model = FunnelBaseModel.from_pretrained("funnel-transformer/medium-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/medium-base")

model = TFFunnelBaseModel.from_pretrained("funnel-transformer/medium-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/medium | 2020-12-11T21:40:38.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 179 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer medium model (B6-3x2-3x2 with decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/medium")

model = FunneModel.from_pretrained("funnel-transformer/medium")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/medium")

model = TFFunnelModel.from_pretrained("funnel-transformer/medium")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/small-base | 2020-12-11T21:40:41.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 5,642 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer small model (B4-4-4 without decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

**Note:** This model does not contain the decoder, so it ouputs hidden states that have a sequence length of one fourth

of the inputs. It's good to use for tasks requiring a summary of the sentence (like sentence classification) but not if

you need one input per initial token. You should use the `small` model in that case.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/small-base")

model = FunnelBaseModel.from_pretrained("funnel-transformer/small-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/small-base")

model = TFFunnelBaseModel.from_pretrained("funnel-transformer/small-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/small | 2020-12-11T21:40:44.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 517 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer small model (B4-4-4 with decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/small")

model = FunneModel.from_pretrained("funnel-transformer/small")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/small")

model = TFFunnelModel.from_pretrained("funnel-transformer/small")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/xlarge-base | 2020-12-11T21:40:48.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 71,624 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer xlarge model (B10-10-10 without decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

**Note:** This model does not contain the decoder, so it ouputs hidden states that have a sequence length of one fourth

of the inputs. It's good to use for tasks requiring a summary of the sentence (like sentence classification) but not if

you need one input per initial token. You should use the `xlarge` model in that case.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/xlarge-base")

model = FunnelBaseModel.from_pretrained("funnel-transformer/xlarge-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelBaseModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/xlarge-base")

model = TFFunnelBaseModel.from_pretrained("funnel-transformer/xlarge-base")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

funnel-transformer/xlarge | 2020-12-11T21:40:51.000Z | [

"pytorch",

"tf",

"funnel",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"dataset:gigaword",

"arxiv:2006.03236",

"transformers",

"license:apache-2.0"

]

| [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tf_model.h5",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| funnel-transformer | 144 | transformers | ---

language: en

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

- gigaword

---

# Funnel Transformer xlarge model (B10-10-10 with decoder)

Pretrained model on English language using a similar objective objective as [ELECTRA](https://huggingface.co/transformers/model_doc/electra.html). It was introduced in

[this paper](https://arxiv.org/pdf/2006.03236.pdf) and first released in

[this repository](https://github.com/laiguokun/Funnel-Transformer). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing Funnel Transformer did not write a model card for this model so this model card has been

written by the Hugging Face team.

## Model description

Funnel Transformer is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts.

More precisely, a small language model corrupts the input texts and serves as a generator of inputs for this model, and

the pretraining objective is to predict which token is an original and which one has been replaced, a bit like a GAN training.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model to extract a vector representation of a given text, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=funnel-transformer) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation you should look at model like GPT2.

### How to use

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import FunnelTokenizer, FunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/xlarge")

model = FunneModel.from_pretrained("funnel-transformer/xlarge")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import FunnelTokenizer, TFFunnelModel

tokenizer = FunnelTokenizer.from_pretrained("funnel-transformer/xlarge")

model = TFFunnelModel.from_pretrained("funnel-transformer/xlarge")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

The BERT model was pretrained on:

- [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038 unpublished books,

- [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and headers),

- [Clue Web](https://lemurproject.org/clueweb12/), a dataset of 733,019,372 English web pages,

- [GigaWord](https://catalog.ldc.upenn.edu/LDC2011T07), an archive of newswire text data,

- [Common Crawl](https://commoncrawl.org/), a dataset of raw web pages.

### BibTeX entry and citation info

```bibtex

@misc{dai2020funneltransformer,

title={Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing},

author={Zihang Dai and Guokun Lai and Yiming Yang and Quoc V. Le},

year={2020},

eprint={2006.03236},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

|

|

furkanbilgin/gpt2-eksisozluk | 2021-05-02T18:20:53.000Z | []

| [

".gitattributes"

]

| furkanbilgin | 0 | |||

fuyunhuayu/face | 2021-03-16T13:44:55.000Z | []

| [

".gitattributes"

]

| fuyunhuayu | 0 | |||

fvillena/bio-bert-base-spanish-wwm-uncased | 2021-06-08T16:12:08.000Z | [

"pytorch",

"bert",

"masked-lm",

"es",

"transformers",

"fill-mask"

]

| fill-mask | [

".gitattributes",

".gitignore",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer.json",

"tokenizer_config.json",

"vocab.txt"

]

| fvillena | 165 | transformers | ---

language:

- es

widget:

- text: "Periodontitis [MASK] generalizada severa."

- text: "Caries dentinaria [MASK]."

- text: "Movilidad aumentada en pza [MASK]."

- text: "Pcte con dm en tto con [MASK]."

- text: "Pcte con erc en tto con [MASK]."

---

# Bio-BERT-Spanish

BERT masked language model fine-tuned from `dccuchile/bert-base-Spanish-wwm-uncased` over clinical text in Spanish.

## Training data

This model was fine-tuned over a clinical corpus comprised of 5,157,902 free-text diagnostic suspicions extracted from Chilean waiting list referrals. |

g4brielvs/gaga | 2021-01-19T22:29:44.000Z | []

| [

".gitattributes"

]

| g4brielvs | 0 | |||

gael1130/gael_first_model | 2020-12-05T12:54:42.000Z | []

| [

".gitattributes",

"README.md"

]

| gael1130 | 0 | I am adding my first README in order to test the interface. How good is it really? |

||

gagan3012/Fox-News-Generator | 2021-05-21T16:03:28.000Z | [

"pytorch",

"jax",

"gpt2",

"lm-head",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"README.md",

"config.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"train_results.txt",

"trainer_state.json",

"training_args.bin",

"vocab.json"

]

| gagan3012 | 43 | transformers | # Generating Right Wing News Using GPT2

### I have built a custom model for it using data from Kaggle

Creating a new finetuned model using data from FOX news

### My model can be accessed at gagan3012/Fox-News-Generator

Check the [BenchmarkTest](https://github.com/gagan3012/Fox-News-Generator/blob/master/BenchmarkTest.ipynb) notebook for results

Find the model at [gagan3012/Fox-News-Generator](https://huggingface.co/gagan3012/Fox-News-Generator)

```

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("gagan3012/Fox-News-Generator")

model = AutoModelWithLMHead.from_pretrained("gagan3012/Fox-News-Generator")

```

|

gagan3012/k2t-base | 2021-05-08T00:53:41.000Z | [

"pytorch",

"t5",

"lm-head",

"seq2seq",

"en",

"dataset:WebNLG",

"dataset:Dart",

"transformers",

"keytotext",

"k2t-base",

"Keywords to Sentences",

"license:mit",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"tokenizer.json"

]

| gagan3012 | 477 | transformers | ---

language: "en"

thumbnail: "Keywords to Sentences"

tags:

- keytotext

- k2t-base

- Keywords to Sentences

license: "MIT"

datasets:

- WebNLG

- Dart

metrics:

- NLG

---

# keytotext

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

### Keytotext is powered by Huggingface 🤗

[](https://pypi.org/project/keytotext/)

[](https://pepy.tech/project/keytotext)

[](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the Amazing T5 Model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training Notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) Folder

## Usage:

Example usage: [](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example Notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) Folder

```

pip install keytotext

```

## UI:

UI: [](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom streamlit component built by me: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

gagan3012/k2t-new | 2021-06-18T22:26:34.000Z | [

"pytorch",

"t5",

"seq2seq",

"en",

"dataset:common_gen",

"transformers",

"keytotext",

"k2t",

"Keywords to Sentences",

"license:mit",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"special_tokens_map.json",

"spiece.model",

"tokenizer.json",

"tokenizer_config.json"

]

| gagan3012 | 0 | transformers | |

gagan3012/k2t-tiny | 2021-05-08T00:53:27.000Z | [

"pytorch",

"t5",

"seq2seq",

"en",

"dataset:WebNLG",

"dataset:Dart",

"transformers",

"keytotext",

"k2t-tiny",

"Keywords to Sentences",

"license:mit",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"tokenizer.json"

]

| gagan3012 | 16 | transformers | ---

language: "en"

thumbnail: "Keywords to Sentences"

tags:

- keytotext

- k2t-tiny

- Keywords to Sentences

license: "MIT"

datasets:

- WebNLG

- Dart

metrics:

- NLG

---

# keytotext

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

### Keytotext is powered by Huggingface 🤗

[](https://pypi.org/project/keytotext/)

[](https://pepy.tech/project/keytotext)

[](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the Amazing T5 Model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training Notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) Folder

## Usage:

Example usage: [](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example Notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) Folder

```

pip install keytotext

```

## UI:

UI: [](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom streamlit component built by me: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

gagan3012/k2t | 2021-05-08T00:52:45.000Z | [

"pytorch",

"t5",

"lm-head",

"seq2seq",

"en",

"dataset:WebNLG",

"dataset:Dart",

"transformers",

"keytotext",

"k2t",

"Keywords to Sentences",

"license:mit",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"tokenizer.json"

]

| gagan3012 | 689 | transformers | ---

language: "en"

thumbnail: "Keywords to Sentences"

tags:

- keytotext

- k2t

- Keywords to Sentences

license: "MIT"

datasets:

- WebNLG

- Dart

metrics:

- NLG

---

# keytotext

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

### Keytotext is powered by Huggingface 🤗

[](https://pypi.org/project/keytotext/)

[](https://pepy.tech/project/keytotext)

[](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

[](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

## Model:

Keytotext is based on the Amazing T5 Model:

- `k2t`: [Model](https://huggingface.co/gagan3012/k2t)

- `k2t-tiny`: [Model](https://huggingface.co/gagan3012/k2t-tiny)

- `k2t-base`: [Model](https://huggingface.co/gagan3012/k2t-base)

Training Notebooks can be found in the [`Training Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Training%20Notebooks) Folder

## Usage:

Example usage: [](https://colab.research.google.com/github/gagan3012/keytotext/blob/master/Examples/K2T.ipynb)

Example Notebooks can be found in the [`Notebooks`](https://github.com/gagan3012/keytotext/tree/master/Examples) Folder

```

pip install keytotext

```

## UI:

UI: [](https://share.streamlit.io/gagan3012/keytotext/UI/app.py)

```

pip install streamlit-tags

```

This uses a custom streamlit component built by me: [GitHub](https://github.com/gagan3012/streamlit-tags)

|

gagan3012/keytotext-gpt | 2021-05-21T16:04:39.000Z | [

"pytorch",

"jax",

"gpt2",

"lm-head",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"added_tokens.json",

"config.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer.json",

"tokenizer_config.json",

"vocab.json"

]

| gagan3012 | 82 | transformers | |

gagan3012/keytotext-small | 2021-03-11T23:33:47.000Z | [

"pytorch",

"t5",

"lm-head",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"tokenizer.json"

]

| gagan3012 | 47 | transformers | # keytotext

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

### Model:

Two Models have been built:

- Using T5-base size = 850 MB can be found here: https://huggingface.co/gagan3012/keytotext

- Using T5-small size = 230 MB can be found here: https://huggingface.co/gagan3012/keytotext-small

#### Usage:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("gagan3012/keytotext-small")

model = AutoModelWithLMHead.from_pretrained("gagan3012/keytotext-small")

```

### Demo:

[](https://share.streamlit.io/gagan3012/keytotext/app.py)

https://share.streamlit.io/gagan3012/keytotext/app.py

### Example:

['India', 'Wedding'] -> We are celebrating today in New Delhi with three wedding anniversary parties.

|

gagan3012/keytotext | 2021-03-11T20:23:32.000Z | [

"pytorch",

"t5",

"lm-head",

"seq2seq",

"transformers",

"text2text-generation"

]

| text2text-generation | [

".gitattributes",

"README.md",

"config.json",

"pytorch_model.bin",

"tokenizer.json"

]

| gagan3012 | 40 | transformers | # keytotext

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

### Model:

Two Models have been built:

- Using T5-base size = 850 MB can be found here: https://huggingface.co/gagan3012/keytotext

- Using T5-small size = 230 MB can be found here: https://huggingface.co/gagan3012/keytotext-small

#### Usage:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("gagan3012/keytotext-small")

model = AutoModelWithLMHead.from_pretrained("gagan3012/keytotext-small")

```

### Demo:

[](https://share.streamlit.io/gagan3012/keytotext/app.py)

https://share.streamlit.io/gagan3012/keytotext/app.py

### Example:

['India', 'Wedding'] -> We are celebrating today in New Delhi with three wedding anniversary parties.

|

gagan3012/project-code-py-micro | 2021-05-21T16:05:34.000Z | [

"pytorch",

"jax",

"gpt2",

"lm-head",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"all_results.json",

"config.json",

"eval_results.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"train_results.json",

"trainer_state.json",

"training_args.bin",

"vocab.json"

]

| gagan3012 | 13 | transformers | |

gagan3012/project-code-py-neo | 2021-05-25T07:32:07.000Z | [

"pytorch",

"gpt_neo",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"all_results.json",

"config.json",

"eval_results.json",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer.json",

"tokenizer_config.json",

"train_results.json",

"trainer_state.json",

"training_args.bin",

"vocab.json"

]

| gagan3012 | 40 | transformers | |

gagan3012/project-code-py-small | 2021-05-21T16:06:24.000Z | [

"pytorch",

"jax",

"gpt2",

"lm-head",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"README.md",

"all_results.json",

"config.json",

"eval_results.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"train_results.json",

"trainer_state.json",

"training_args.bin",

"vocab.json"

]

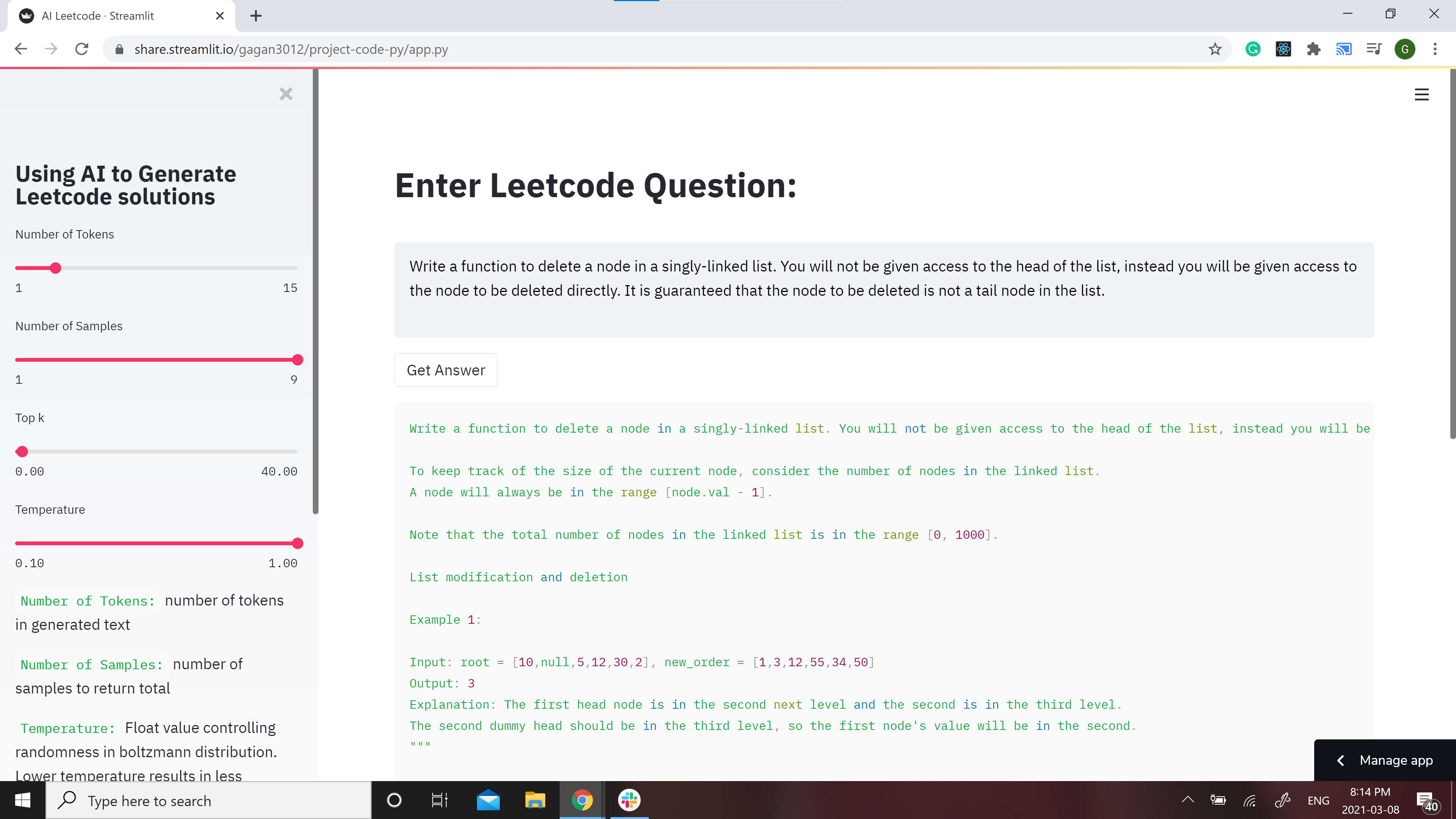

| gagan3012 | 137 | transformers | # Leetcode using AI :robot:

GPT-2 Model for Leetcode Questions in python

**Note**: the Answers might not make sense in some cases because of the bias in GPT-2

**Contribtuions:** If you would like to make the model better contributions are welcome Check out [CONTRIBUTIONS.md](https://github.com/gagan3012/project-code-py/blob/master/CONTRIBUTIONS.md)

### 📢 Favour:

It would be highly motivating, if you can STAR⭐ this repo if you find it helpful.

## Model

Two models have been developed for different use cases and they can be found at https://huggingface.co/gagan3012

The model weights can be found here: [GPT-2](https://huggingface.co/gagan3012/project-code-py) and [DistilGPT-2](https://huggingface.co/gagan3012/project-code-py-small)

### Example usage:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("gagan3012/project-code-py")

model = AutoModelWithLMHead.from_pretrained("gagan3012/project-code-py")

```

## Demo

[](https://share.streamlit.io/gagan3012/project-code-py/app.py)

A streamlit webapp has been setup to use the model: https://share.streamlit.io/gagan3012/project-code-py/app.py

## Example results:

### Question:

```

Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

```

### Answer:

```python

""" Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

For example,

a = 1->2->3

b = 3->1->2

t = ListNode(-1, 1)

Note: The lexicographic ordering of the nodes in a tree matters. Do not assign values to nodes in a tree.

Example 1:

Input: [1,2,3]

Output: 1->2->5

Explanation: 1->2->3->3->4, then 1->2->5[2] and then 5->1->3->4.

Note:

The length of a linked list will be in the range [1, 1000].

Node.val must be a valid LinkedListNode type.

Both the length and the value of the nodes in a linked list will be in the range [-1000, 1000].

All nodes are distinct.

"""

# Definition for singly-linked list.

# class ListNode:

# def __init__(self, x):

# self.val = x

# self.next = None

class Solution:

def deleteNode(self, head: ListNode, val: int) -> None:

"""

BFS

Linked List

:param head: ListNode

:param val: int

:return: ListNode

"""

if head is not None:

return head

dummy = ListNode(-1, 1)

dummy.next = head

dummy.next.val = val

dummy.next.next = head

dummy.val = ""

s1 = Solution()

print(s1.deleteNode(head))

print(s1.deleteNode(-1))

print(s1.deleteNode(-1))

```

|

gagan3012/project-code-py | 2021-05-21T16:08:09.000Z | [

"pytorch",

"jax",

"gpt2",

"lm-head",

"causal-lm",

"transformers",

"text-generation"

]

| text-generation | [

".gitattributes",

"README.md",

"config.json",

"flax_model.msgpack",

"merges.txt",

"pytorch_model.bin",

"special_tokens_map.json",

"tokenizer_config.json",

"training_args.bin",

"vocab.json"

]

| gagan3012 | 109 | transformers | # Leetcode using AI :robot:

GPT-2 Model for Leetcode Questions in python

**Note**: the Answers might not make sense in some cases because of the bias in GPT-2

**Contribtuions:** If you would like to make the model better contributions are welcome Check out [CONTRIBUTIONS.md](https://github.com/gagan3012/project-code-py/blob/master/CONTRIBUTIONS.md)

### 📢 Favour:

It would be highly motivating, if you can STAR⭐ this repo if you find it helpful.

## Model

Two models have been developed for different use cases and they can be found at https://huggingface.co/gagan3012

The model weights can be found here: [GPT-2](https://huggingface.co/gagan3012/project-code-py) and [DistilGPT-2](https://huggingface.co/gagan3012/project-code-py-small)

### Example usage:

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("gagan3012/project-code-py")

model = AutoModelWithLMHead.from_pretrained("gagan3012/project-code-py")

```

## Demo

[](https://share.streamlit.io/gagan3012/project-code-py/app.py)

A streamlit webapp has been setup to use the model: https://share.streamlit.io/gagan3012/project-code-py/app.py

## Example results:

### Question:

```

Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

```

### Answer:

```python

""" Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

For example,

a = 1->2->3

b = 3->1->2

t = ListNode(-1, 1)