
| pipeline_tag (string, 48 classes) | library_name (string, 205 classes) | text (string, length 0 to 18.3M) | metadata (string, length 2 to 1.07B) | id (string, length 5 to 122) | last_modified (null) | tags (list, length 1 to 1.84k) | sha (null) | created_at (string, length 25) |
|---|---|---|---|---|---|---|---|---|

null | null | tags:

- conversational | {} | ShinxisS/DialoGPT-medium-Neku | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers |

# My Awesome Model

| {"tags": ["conversational"]} | NaturesDisaster/DialoGPT-small-Neku | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | Shipkov/1 | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers |

# Rick DialoGPT Model | {"tags": ["conversational"]} | ShiroNeko/DialoGPT-small-rick | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | ShivaniARORA/distilbert-base-uncased-finetuned-squad | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shivaraj/ner | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

question-answering | null | model card | {"language": "en", "license": "mit", "tags": ["exbert", "my-tag"], "datasets": ["dataset1", "scan-web"], "pipeline_tag": "question-answering"} | Shiyu/my-repo | null | [

"exbert",

"my-tag",

"question-answering",

"en",

"dataset:dataset1",

"dataset:scan-web",

"license:mit",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | ShogunZE/DialoGPT-small-shayo | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shoki/mnli_two | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shokk/DialoGPT-small-Amadeus | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shokk/DialoGPT-small-Kurisu_Amadeus | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shreesha/discord-rick-bot | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-classification | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned-roberta-depression

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1385

- Accuracy: 0.9745

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0238 | 1.0 | 625 | 0.1385 | 0.9745 |

| 0.0333 | 2.0 | 1250 | 0.1385 | 0.9745 |

| 0.0263 | 3.0 | 1875 | 0.1385 | 0.9745 |
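The hyperparameters above specify a linear learning-rate scheduler. The results table implies 625 optimizer steps per epoch, or 1,875 steps over 3 epochs. The sketch below shows linear decay from the peak rate to zero. It assumes no warmup, since the card lists none; under that assumption it has the same shape as Transformers' `get_linear_schedule_with_warmup` with zero warmup steps. It is an illustration, not the author's training code.

```python
def linear_lr(step: int, peak_lr: float = 5e-5, total_steps: int = 1875) -> float:
    """Learning rate after `step` optimizer steps under linear decay to zero.

    Assumes no warmup. peak_lr and total_steps come from the card
    (learning_rate = 5e-05; 625 steps per epoch x 3 epochs).
    """
    remaining = max(0, total_steps - step)
    return peak_lr * (remaining / total_steps)


# Learning rate at the end of each epoch
for epoch_end in (625, 1250, 1875):
    print(epoch_end, linear_lr(epoch_end))
```

By the end of epoch 1 the rate has fallen to two thirds of its peak, and it reaches zero at the final step.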

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

| {"license": "mit", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "widget": [{"text": "I feel so low and numb, don't feel like doing anything. Just passing my days"}, {"text": "Sleep is my greatest and most comforting escape whenever I wake up these days. The literal very first emotion I feel is just misery and reminding myself of all my problems."}, {"text": "I went to a movie today. It was below my expectations but the day was fine."}, {"text": "The first day of work was a little hectic but met pretty good colleagues, we went for a team dinner party at the end of the day."}], "model-index": [{"name": "finetuned-roberta-depression", "results": []}]} | ShreyaR/finetuned-roberta-depression | null | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | ShrikanthSingh/legal_data_tokenizer | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers |

# Goku DialoGPT Model

| {"tags": ["conversational"]} | Shubham-Kumar-DTU/DialoGPT-small-goku | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | Shubham28204/DialoGPT-small-harrypotter | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | ShuhuaiRen/roberta-large-defteval-t6-st2 | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | ShuhuaiRen/roberta-large-qa-suffix-defteval-t6-st1 | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

question-answering | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext-ContaminationQAmodel_PubmedBERT

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7515

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 22 | 3.9518 |

| No log | 2.0 | 44 | 3.2703 |

| No log | 3.0 | 66 | 2.9308 |

| No log | 4.0 | 88 | 2.7806 |

| No log | 5.0 | 110 | 2.6926 |

| No log | 6.0 | 132 | 2.7043 |

| No log | 7.0 | 154 | 2.7113 |

| No log | 8.0 | 176 | 2.7236 |

| No log | 9.0 | 198 | 2.7559 |

| No log | 10.0 | 220 | 2.7515 |
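In the table above, validation loss is lowest after epoch 5 (2.6926) and stays above that value for the remaining epochs. The reported evaluation loss of 2.7515 is therefore the final-epoch figure, not the best one. The short Python sketch below picks the best epoch from the logged values, copied verbatim from the table:

```python
# (epoch, validation loss) pairs copied from the training-results table
val_losses = [
    (1, 3.9518), (2, 3.2703), (3, 2.9308), (4, 2.7806), (5, 2.6926),
    (6, 2.7043), (7, 2.7113), (8, 2.7236), (9, 2.7559), (10, 2.7515),
]

# Lowest validation loss vs. the value from the last epoch
best_epoch, best_loss = min(val_losses, key=lambda pair: pair[1])
final_epoch, final_loss = val_losses[-1]
print(f"best: epoch {best_epoch} ({best_loss}); final: epoch {final_epoch} ({final_loss})")
```

The Trainer can keep the lowest-loss checkpoint instead of the last one. To do so, set `load_best_model_at_end=True` with `metric_for_best_model="eval_loss"` in `TrainingArguments`, with matching evaluation and save strategies. The card does not say whether this was done.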

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

| {"license": "mit", "tags": ["generated_from_trainer"], "model-index": [{"name": "BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext-ContaminationQAmodel_PubmedBERT", "results": []}]} | Shushant/BiomedNLP-PubMedBERT-base-uncased-abstract-fulltext-ContaminationQAmodel_PubmedBERT | null | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"license:mit",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

question-answering | transformers | {} | Shushant/ContaminationQuestionAnswering | null | [

"transformers",

"pytorch",

"distilbert",

"question-answering",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

question-answering | transformers | {} | Shushant/ContaminationQuestionAnsweringTry | null | [

"transformers",

"pytorch",

"distilbert",

"question-answering",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

fill-mask | transformers | # NepNewsBERT

A masked language model for Nepali, trained on Nepali news scraped from various Nepali news websites. The dataset contained about 10 million Nepali sentences, mainly related to news.

## Usage

```python
from pprint import pprint

from transformers import AutoTokenizer, AutoModelForMaskedLM, pipeline

tokenizer = AutoTokenizer.from_pretrained("Shushant/NepNewsBERT")
model = AutoModelForMaskedLM.from_pretrained("Shushant/NepNewsBERT")

# Build a fill-mask pipeline from the loaded model and tokenizer
fill_mask = pipeline(
    "fill-mask",
    model=model,
    tokenizer=tokenizer,
)

# Predict candidates for the masked word in a Nepali sentence
pprint(fill_mask(f"तिमीलाई कस्तो {tokenizer.mask_token}."))
```
| {} | Shushant/NepNewsBERT | null | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

question-answering | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# biobert-v1.1-biomedicalQuestionAnswering

This model is a fine-tuned version of [dmis-lab/biobert-v1.1](https://huggingface.co/dmis-lab/biobert-v1.1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.9009

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 22 | 3.7409 |

| No log | 2.0 | 44 | 3.1852 |

| No log | 3.0 | 66 | 3.0342 |

| No log | 4.0 | 88 | 2.9416 |

| No log | 5.0 | 110 | 2.9009 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

| {"tags": ["generated_from_trainer"], "model-index": [{"name": "biobert-v1.1-biomedicalQuestionAnswering", "results": []}]} | Shushant/biobert-v1.1-biomedicalQuestionAnswering | null | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

fill-mask | transformers | # NEPALI BERT

A masked language model for Nepali, trained on Nepali news scraped from various Nepali news websites. The dataset contained about 10 million Nepali sentences, mainly related to news.

This model is a fine-tuned version of [Bert Base Uncased](https://huggingface.co/bert-base-uncased) on a dataset of news articles scraped from Nepali news portals, comprising 4.6 GB of text.

It achieves the following results on the evaluation set:

- Loss: 1.0495

## Model description

Pretraining was done on the BERT-base architecture.

## Intended uses & limitations

This transformer model can be used for NLP tasks on Devanagari-script text. At the time of training, it was the state-of-the-art model for Devanagari data. Intrinsic evaluation gave a perplexity of 8.56. In extrinsic evaluation on sentiment analysis of Nepali tweets, it outperformed other existing masked language models on Nepali data.
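Masked-LM perplexity is commonly reported as the exponential of the mean cross-entropy loss. The minimal sketch below applies that convention to the evaluation loss of 1.0495 listed above. It assumes that loss is a mean token-level cross-entropy in nats. The card does not say how its perplexity figure of 8.56 was computed or on which data, so the two numbers need not match.

```python
import math

# Evaluation loss reported at the top of this card
# (assumed: mean cross-entropy in nats over the masked tokens)
eval_loss = 1.0495

# Perplexity under the common convention PPL = exp(mean cross-entropy)
perplexity = math.exp(eval_loss)
print(f"perplexity = exp({eval_loss}) = {perplexity:.3f}")
```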

## Training and evaluation data

The training corpus was built from 85,467 news articles scraped from different job portals. This is a preliminary dataset for experimentation.

The corpus size is about 4.3 GB of text. Similarly, the evaluation data consists of a few news articles, about 12 MB of text.

## Training procedure

The masked language model was pretrained with the Hugging Face Trainer API. Pretraining took about 3 days, 8 hours, and 57 minutes on a Tesla V100 GPU.

With 640 Tensor Cores, the Tesla V100 was the world's first GPU to break the 100 teraFLOPS (TFLOPS) barrier of deep learning performance. The GPU was provided through the Kathmandu University (KU) supercomputer.

Thanks to the KU administration.

## Usage

```python
from pprint import pprint

from transformers import AutoTokenizer, AutoModelForMaskedLM, pipeline

tokenizer = AutoTokenizer.from_pretrained("Shushant/nepaliBERT")
model = AutoModelForMaskedLM.from_pretrained("Shushant/nepaliBERT")

# Build a fill-mask pipeline from the loaded model and tokenizer
fill_mask = pipeline("fill-mask", model=model, tokenizer=tokenizer)

# Predict candidates for the masked word in a Nepali sentence
pprint(fill_mask(f"तिमीलाई कस्तो {tokenizer.mask_token}."))
```

## Data Description

Trained on about 4.6 GB of Nepali text collected from various sources.

The data were collected from Nepali news sites and the OSCAR Nepali corpus.

# Paper and Citation Details

For the implementation details of this language model, see the full paper:

https://www.researchgate.net/publication/375019515_NepaliBERT_Pre-training_of_Masked_Language_Model_in_Nepali_Corpus

## Plain Text

S. Pudasaini, S. Shakya, A. Tamang, S. Adhikari, S. Thapa and S. Lamichhane, "NepaliBERT: Pre-training of Masked Language Model in Nepali Corpus," 2023 7th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Kirtipur, Nepal, 2023, pp. 325-330, doi: 10.1109/I-SMAC58438.2023.10290690.

## Bibtex

```bibtex
@INPROCEEDINGS{10290690,
  author={Pudasaini, Shushanta and Shakya, Subarna and Tamang, Aakash and Adhikari, Sajjan and Thapa, Sunil and Lamichhane, Sagar},
  booktitle={2023 7th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC)},
  title={NepaliBERT: Pre-training of Masked Language Model in Nepali Corpus},
  year={2023},
  volume={},
  number={},
  pages={325-330},
  doi={10.1109/I-SMAC58438.2023.10290690}}
```

| {"language": ["ne"], "license": "mit", "library_name": "transformers", "datasets": ["Shushant/nepali"], "metrics": ["perplexity"], "pipeline_tag": "fill-mask"} | Shushant/nepaliBERT | null | [

"transformers",

"pytorch",

"bert",

"fill-mask",

"ne",

"dataset:Shushant/nepali",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 164469

## Validation Metrics

- Loss: 0.05527503043413162

- Accuracy: 0.9853049228508449

- Precision: 0.991044776119403

- Recall: 0.9793510324483776

- AUC: 0.9966895139869654

- F1: 0.9851632047477745
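F1 is the harmonic mean of precision and recall. Recomputing it from the two values above reproduces the reported F1, which makes a quick consistency check on the metrics:

```python
# Validation precision and recall copied from the card
precision = 0.991044776119403
recall = 0.9793510324483776

# F1 is the harmonic mean of precision and recall
f1 = 2 * precision * recall / (precision + recall)
print(f"F1 = {f1:.6f}")
```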

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/Shuvam/autonlp-college_classification-164469

```

Or use the Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("Shuvam/autonlp-college_classification-164469", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("Shuvam/autonlp-college_classification-164469", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

``` | {"language": "en", "tags": "autonlp", "datasets": ["Shuvam/autonlp-data-college_classification"], "widget": [{"text": "I love AutoNLP \ud83e\udd17"}]} | Shuvam/autonlp-college_classification-164469 | null | [

"transformers",

"pytorch",

"jax",

"bert",

"text-classification",

"autonlp",

"en",

"dataset:Shuvam/autonlp-data-college_classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | Shyamji/Shyamji | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Shz/distilbert-base-uncased-finetuned-qqp | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | Sid51/CB | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | Sid51/Chan | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | Sid51/ChanBot | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Siddhant136/demo | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Sideways/chadgoro | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Sidhant/argclassifier | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Sierra1669/DialoGPT-medium-Alice | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | SilentMyuth/CakeChat | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | SilentMyuth/oik | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | This model is a fine-tuned version of microsoft/DialoGPT-medium, trained to create sarcastic responses from the "Sarcasm on Reddit" dataset, available [on Kaggle](https://www.kaggle.com/danofer/sarcasm). | {"pipeline_tag": "conversational"} | SilentMyuth/sarcastic-model | null | [

"transformers",

"conversational",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers | {} | SilentMyuth/stable-jenny | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers |

# My Awesome Model | {"tags": ["conversational"]} | SilentMyuth/stableben | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers | Hewlo | {} | SilentMyuth/stablejen | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | SilentMyuth/test | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | transformers | {} | SilentMyuth/test1 | null | [

"transformers",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | SimonThormeyer/movie-plot-generator-longer-plots | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | SimonThormeyer/movie-plot-generator | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Simovod/DialoGPT-small-joshua34 | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Simovod/DialoGPT-small-testRU | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | Simovod/simRU | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Simovod/testRU | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | {} | Simovod/testSIM | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Sin/DialoGPT-small-joshua | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-generation | transformers | conver = pipeline("conversational")

---

tags:

- conversational

---

# Harry Potter DialoGPT model | {} | Sin/DialoGPT-small-zai | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | Sin/MY_MODEL_NAME | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Sina/Persian-Digital-sentiments | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

question-answering | transformers | # Muril Large Squad2

This model is fine-tuned for question answering on SQuAD2, starting from the [MuRIL Large checkpoint](https://huggingface.co/google/muril-large-cased).

## Hyperparameters

```

Batch Size: 4

Grad Accumulation Steps = 8

Total epochs = 3

MLM Checkpoint = google/muril-large-cased

max_seq_len = 256

learning_rate = 1e-5

lr_schedule = LinearWarmup

warmup_ratio = 0.1

doc_stride = 128

```
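With a per-device batch size of 4 and 8 gradient-accumulation steps, each optimizer update sees 4 x 8 = 32 examples. `warmup_ratio = 0.1` means the learning rate ramps up over the first 10% of updates.

The rough Python sketch below computes these counts. `num_features` is a hypothetical input: it stands for the number of training windows after splitting contexts with `max_seq_len`/`doc_stride`, which the card does not give. The rounding (ceil for batches and warmup, floor for accumulation) is an assumption modeled on typical Hugging Face training loops, not taken from the author's script.

```python
import math


def schedule_summary(num_features: int,
                     per_device_batch: int = 4,
                     grad_accum_steps: int = 8,
                     epochs: int = 3,
                     warmup_ratio: float = 0.1):
    """Return (effective batch size, total optimizer steps, warmup steps).

    num_features is hypothetical: the number of training windows,
    which is not stated in the card.
    """
    effective_batch = per_device_batch * grad_accum_steps
    batches_per_epoch = math.ceil(num_features / per_device_batch)
    # One optimizer step per `grad_accum_steps` forward/backward batches
    steps_per_epoch = max(1, batches_per_epoch // grad_accum_steps)
    total_steps = steps_per_epoch * epochs
    warmup_steps = math.ceil(warmup_ratio * total_steps)
    return effective_batch, total_steps, warmup_steps


print(schedule_summary(num_features=1000))
```

For example, 1,000 hypothetical windows give an effective batch of 32, 93 optimizer steps in total, and 10 warmup steps.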

## Squad 2 Evaluation stats:

Generated from [the official Squad2 evaluation script](https://worksheets.codalab.org/rest/bundles/0x6b567e1cf2e041ec80d7098f031c5c9e/contents/blob/)

```json

{

"exact": 82.0180240882675,

"f1": 85.10110304685352,

"total": 11873,

"HasAns_exact": 81.6970310391363,

"HasAns_f1": 87.87203044454981,

"HasAns_total": 5928,

"NoAns_exact": 82.3380992430614,

"NoAns_f1": 82.3380992430614,

"NoAns_total": 5945

}

```
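In the SQuAD2 output, the overall scores equal the HasAns and NoAns scores weighted by the number of questions in each subset (5928 + 5945 = 11873). A short Python check using the MuRIL numbers above:

```python
# Values copied from the MuRIL SQuAD2 evaluation output above
has_ans_exact, has_ans_f1, has_ans_total = 81.6970310391363, 87.87203044454981, 5928
no_ans_exact, no_ans_f1, no_ans_total = 82.3380992430614, 82.3380992430614, 5945


def weighted_mean(a: float, n_a: int, b: float, n_b: int) -> float:
    """Mean of two subset scores, weighted by subset size."""
    return (a * n_a + b * n_b) / (n_a + n_b)


overall_exact = weighted_mean(has_ans_exact, has_ans_total, no_ans_exact, no_ans_total)
overall_f1 = weighted_mean(has_ans_f1, has_ans_total, no_ans_f1, no_ans_total)
print(f"exact = {overall_exact:.4f}, f1 = {overall_f1:.4f}")
```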

## Limitations

MuRIL is specifically trained to work on 18 Indic languages and English. This model is not expected to perform well in any other languages. See the MuRIL checkpoint for further details.

For any questions, you can reach out to me [on Twitter](https://twitter.com/batw0man) | {} | Sindhu/muril-large-squad2 | null | [

"transformers",

"pytorch",

"bert",

"question-answering",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

question-answering | transformers |

# Rembert Squad2

This model is fine-tuned for question answering on SQuAD2, starting from the [RemBERT checkpoint](https://huggingface.co/google/rembert).

## Hyperparameters

```

Batch Size: 4

Grad Accumulation Steps = 8

Total epochs = 3

MLM Checkpoint = "rembert"

max_seq_len = 256

learning_rate = 1e-5

lr_schedule = LinearWarmup

warmup_ratio = 0.1

doc_stride = 128

```

## Squad 2 Evaluation stats:

Metrics generated from [the official Squad2 evaluation script](https://worksheets.codalab.org/rest/bundles/0x6b567e1cf2e041ec80d7098f031c5c9e/contents/blob/)

```json

{

"exact": 84.51107554956624,

"f1": 87.46644042781853,

"total": 11873,

"HasAns_exact": 80.97165991902834,

"HasAns_f1": 86.89086491219469,

"HasAns_total": 5928,

"NoAns_exact": 88.04037005887301,

"NoAns_f1": 88.04037005887301,

"NoAns_total": 5945

}

```

For any questions, you can reach out to me [on Twitter](https://twitter.com/batw0man) | {"language": ["multilingual"], "tags": ["question-answering"], "datasets": ["squad2"], "metrics": ["squad2"]} | Sindhu/rembert-squad2 | null | [

"transformers",

"pytorch",

"rembert",

"question-answering",

"multilingual",

"dataset:squad2",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers |

# The Vampire Diaries DialoGPT Model | {"tags": ["conversational"]} | SirBastianXVII/DialoGPT-small-TVD | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers |

# Trump Insults GPT Bot | {"tags": ["conversational"]} | Sired/DialoGPT-small-trumpbot | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

question-answering | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wangchanberta-base-att-spm-uncased-finetuned-th-squad

This model is a fine-tuned version of [airesearch/wangchanberta-base-att-spm-uncased](https://huggingface.co/airesearch/wangchanberta-base-att-spm-uncased) on the thaiqa_squad dataset.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.13.0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

| {"tags": ["generated_from_trainer"], "datasets": ["thaiqa_squad"], "widget": [{"text": "\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e40\u0e23\u0e2d\u0e31\u0e25\u0e21\u0e32\u0e14\u0e23\u0e34\u0e14\u0e01\u0e48\u0e2d\u0e15\u0e31\u0e49\u0e07\u0e02\u0e36\u0e49\u0e19\u0e43\u0e19\u0e1b\u0e35\u0e43\u0e14", "context": "\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e1f\u0e38\u0e15\u0e1a\u0e2d\u0e25\u0e40\u0e23\u0e2d\u0e31\u0e25\u0e21\u0e32\u0e14\u0e23\u0e34\u0e14 (\u0e2a\u0e40\u0e1b\u0e19: Real Madrid Club de F\u00fatbol) \u0e40\u0e1b\u0e47\u0e19\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e1f\u0e38\u0e15\u0e1a\u0e2d\u0e25\u0e17\u0e35\u0e48\u0e21\u0e35\u0e0a\u0e37\u0e48\u0e2d\u0e40\u0e2a\u0e35\u0e22\u0e07\u0e43\u0e19\u0e1b\u0e23\u0e30\u0e40\u0e17\u0e28\u0e2a\u0e40\u0e1b\u0e19 \u0e15\u0e31\u0e49\u0e07\u0e2d\u0e22\u0e39\u0e48\u0e17\u0e35\u0e48\u0e01\u0e23\u0e38\u0e07\u0e21\u0e32\u0e14\u0e23\u0e34\u0e14 \u0e1b\u0e31\u0e08\u0e08\u0e38\u0e1a\u0e31\u0e19\u0e40\u0e25\u0e48\u0e19\u0e2d\u0e22\u0e39\u0e48\u0e43\u0e19\u0e25\u0e32\u0e25\u0e34\u0e01\u0e32 \u0e01\u0e48\u0e2d\u0e15\u0e31\u0e49\u0e07\u0e02\u0e36\u0e49\u0e19\u0e43\u0e19 \u0e04.\u0e28. 
1902 \u0e42\u0e14\u0e22\u0e40\u0e1b\u0e47\u0e19\u0e2b\u0e19\u0e36\u0e48\u0e07\u0e43\u0e19\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e17\u0e35\u0e48\u0e1b\u0e23\u0e30\u0e2a\u0e1a\u0e04\u0e27\u0e32\u0e21\u0e2a\u0e33\u0e40\u0e23\u0e47\u0e08\u0e21\u0e32\u0e01\u0e17\u0e35\u0e48\u0e2a\u0e38\u0e14\u0e43\u0e19\u0e17\u0e27\u0e35\u0e1b\u0e22\u0e38\u0e42\u0e23\u0e1b \u0e40\u0e23\u0e2d\u0e31\u0e25\u0e21\u0e32\u0e14\u0e23\u0e34\u0e14\u0e40\u0e1b\u0e47\u0e19\u0e2a\u0e21\u0e32\u0e0a\u0e34\u0e01\u0e02\u0e2d\u0e07\u0e01\u0e25\u0e38\u0e48\u0e21 14 \u0e0b\u0e36\u0e48\u0e07\u0e40\u0e1b\u0e47\u0e19\u0e01\u0e25\u0e38\u0e48\u0e21\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e0a\u0e31\u0e49\u0e19\u0e19\u0e33\u0e02\u0e2d\u0e07\u0e22\u0e39\u0e1f\u0e48\u0e32 \u0e41\u0e25\u0e30\u0e22\u0e31\u0e07\u0e40\u0e1b\u0e47\u0e19\u0e2b\u0e19\u0e36\u0e48\u0e07\u0e43\u0e19\u0e2a\u0e32\u0e21\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e1c\u0e39\u0e49\u0e23\u0e48\u0e27\u0e21\u0e01\u0e48\u0e2d\u0e15\u0e31\u0e49\u0e07\u0e25\u0e32\u0e25\u0e34\u0e01\u0e32\u0e0b\u0e36\u0e48\u0e07\u0e44\u0e21\u0e48\u0e40\u0e04\u0e22\u0e15\u0e01\u0e0a\u0e31\u0e49\u0e19\u0e08\u0e32\u0e01\u0e25\u0e35\u0e01\u0e2a\u0e39\u0e07\u0e2a\u0e38\u0e14\u0e19\u0e31\u0e1a\u0e15\u0e31\u0e49\u0e07\u0e41\u0e15\u0e48 \u0e04.\u0e28. 
1929 \u0e21\u0e35\u0e04\u0e39\u0e48\u0e2d\u0e23\u0e34\u0e04\u0e37\u0e2d\u0e2a\u0e42\u0e21\u0e2a\u0e23\u0e1a\u0e32\u0e23\u0e4c\u0e40\u0e0b\u0e42\u0e25\u0e19\u0e32 \u0e41\u0e25\u0e30 \u0e2d\u0e31\u0e15\u0e40\u0e25\u0e15\u0e34\u0e42\u0e01\u0e40\u0e14\u0e21\u0e32\u0e14\u0e23\u0e34\u0e14 \u0e21\u0e35\u0e2a\u0e19\u0e32\u0e21\u0e40\u0e2b\u0e22\u0e49\u0e32\u0e04\u0e37\u0e2d\u0e0b\u0e32\u0e19\u0e40\u0e15\u0e35\u0e22\u0e42\u0e01 \u0e40\u0e1a\u0e23\u0e4c\u0e19\u0e32\u0e40\u0e1a\u0e27"}, {"text": "\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49\u0e16\u0e37\u0e2d\u0e01\u0e33\u0e40\u0e19\u0e34\u0e14\u0e02\u0e36\u0e49\u0e19\u0e43\u0e19\u0e1b\u0e35\u0e43\u0e14", "context": "\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49 \u0e40\u0e1b\u0e47\u0e19\u0e01\u0e35\u0e2c\u0e32\u0e0a\u0e19\u0e34\u0e14\u0e2b\u0e19\u0e36\u0e48\u0e07\u0e16\u0e37\u0e2d\u0e01\u0e33\u0e40\u0e19\u0e34\u0e14\u0e02\u0e36\u0e49\u0e19\u0e08\u0e32\u0e01\u0e42\u0e23\u0e07\u0e40\u0e23\u0e35\u0e22\u0e19\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49 (Rugby School) \u0e43\u0e19\u0e40\u0e21\u0e37\u0e2d\u0e07\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49 \u0e43\u0e19\u0e40\u0e02\u0e15\u0e27\u0e2d\u0e23\u0e4c\u0e27\u0e34\u0e01\u0e40\u0e0a\u0e35\u0e22\u0e23\u0e4c \u0e1b\u0e23\u0e30\u0e40\u0e17\u0e28\u0e2d\u0e31\u0e07\u0e01\u0e24\u0e29 \u0e40\u0e23\u0e34\u0e48\u0e21\u0e15\u0e49\u0e19\u0e08\u0e32\u0e01 \u0e43\u0e19\u0e1b\u0e35 \u0e04.\u0e28. 
1826 \u0e02\u0e13\u0e30\u0e19\u0e31\u0e49\u0e19\u0e40\u0e1b\u0e47\u0e19\u0e01\u0e32\u0e23\u0e41\u0e02\u0e48\u0e07\u0e02\u0e31\u0e19 \u0e1f\u0e38\u0e15\u0e1a\u0e2d\u0e25 \u0e20\u0e32\u0e22\u0e43\u0e19\u0e02\u0e2d\u0e07\u0e42\u0e23\u0e07\u0e40\u0e23\u0e35\u0e22\u0e19\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49 \u0e0b\u0e36\u0e48\u0e07\u0e15\u0e31\u0e49\u0e07\u0e2d\u0e22\u0e39\u0e48 \u0e13 \u0e40\u0e21\u0e37\u0e2d\u0e07\u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49 \u0e1b\u0e23\u0e30\u0e40\u0e17\u0e28\u0e2d\u0e31\u0e07\u0e01\u0e24\u0e29 \u0e1c\u0e39\u0e49\u0e40\u0e25\u0e48\u0e19\u0e04\u0e19\u0e2b\u0e19\u0e36\u0e48\u0e07\u0e0a\u0e37\u0e48\u0e2d \u0e27\u0e34\u0e25\u0e40\u0e25\u0e35\u0e22\u0e21 \u0e40\u0e27\u0e1a\u0e1a\u0e4c \u0e40\u0e2d\u0e25\u0e25\u0e34\u0e2a (William Webb Ellis) \u0e44\u0e14\u0e49\u0e17\u0e33\u0e1c\u0e34\u0e14\u0e01\u0e15\u0e34\u0e01\u0e32\u0e01\u0e32\u0e23\u0e41\u0e02\u0e48\u0e07\u0e02\u0e31\u0e19\u0e17\u0e35\u0e48\u0e27\u0e32\u0e07\u0e44\u0e27\u0e49 \u0e42\u0e14\u0e22\u0e27\u0e34\u0e48\u0e07\u0e2d\u0e38\u0e49\u0e21\u0e25\u0e39\u0e01\u0e1a\u0e2d\u0e25\u0e0b\u0e36\u0e48\u0e07\u0e15\u0e31\u0e27\u0e40\u0e02\u0e32\u0e40\u0e2d\u0e07\u0e44\u0e21\u0e48\u0e44\u0e14\u0e49\u0e40\u0e1b\u0e47\u0e19\u0e1c\u0e39\u0e49\u0e40\u0e25\u0e48\u0e19\u0e43\u0e19\u0e15\u0e33\u0e41\u0e2b\u0e19\u0e48\u0e07\u0e1c\u0e39\u0e49\u0e23\u0e31\u0e01\u0e29\u0e32\u0e1b\u0e23\u0e30\u0e15\u0e39 \u0e41\u0e25\u0e30\u0e44\u0e14\u0e49\u0e27\u0e34\u0e48\u0e07\u0e2d\u0e38\u0e49\u0e21\u0e25\u0e39\u0e01\u0e1a\u0e2d\u0e25\u0e44\u0e1b\u0e08\u0e19\u0e16\u0e36\u0e07\u0e40\u0e2a\u0e49\u0e19\u0e1b\u0e23\u0e30\u0e15\u0e39\u0e1d\u0e48\u0e32\u0e22\u0e15\u0e23\u0e07\u0e02\u0e49\u0e32\u0e21 \u0e40\u0e02\u0e32\u0e08\u0e30\u0e08\u0e07\u0e43\u0e08\u0e2b\u0e23\u0e37\u0e2d\u0e44\u0e21\u0e48\u0e01\u0e47\u0e15\u0e32\u0e21\u0e41\u0e15\u0e48 
\u0e41\u0e15\u0e48\u0e01\u0e32\u0e23\u0e40\u0e25\u0e48\u0e19\u0e17\u0e35\u0e48\u0e19\u0e2d\u0e01\u0e25\u0e39\u0e48\u0e19\u0e2d\u0e01\u0e17\u0e32\u0e07\u0e02\u0e2d\u0e07\u0e40\u0e02\u0e32\u0e44\u0e14\u0e49\u0e40\u0e1b\u0e47\u0e19\u0e17\u0e35\u0e48\u0e1e\u0e39\u0e14\u0e16\u0e36\u0e07\u0e2d\u0e22\u0e48\u0e32\u0e07\u0e41\u0e1e\u0e23\u0e48\u0e2b\u0e25\u0e32\u0e22 \u0e43\u0e19\u0e2b\u0e21\u0e39\u0e48\u0e1c\u0e39\u0e49\u0e40\u0e25\u0e48\u0e19\u0e41\u0e25\u0e30\u0e1c\u0e39\u0e49\u0e14\u0e39\u0e08\u0e19\u0e41\u0e1e\u0e23\u0e48\u0e01\u0e23\u0e30\u0e08\u0e32\u0e22\u0e44\u0e1b\u0e15\u0e32\u0e21\u0e42\u0e23\u0e07\u0e40\u0e23\u0e35\u0e22\u0e19\u0e15\u0e48\u0e32\u0e07\u0e46\u0e43\u0e19\u0e2d\u0e31\u0e07\u0e01\u0e24\u0e29 \u0e42\u0e14\u0e22\u0e40\u0e09\u0e1e\u0e32\u0e30\u0e43\u0e19\u0e2b\u0e21\u0e39\u0e48\u0e19\u0e31\u0e01\u0e40\u0e23\u0e35\u0e22\u0e19\u0e02\u0e2d\u0e07\u0e42\u0e23\u0e07\u0e40\u0e23\u0e35\u0e22\u0e19\u0e40\u0e04\u0e21\u0e1a\u0e23\u0e34\u0e14\u0e08\u0e4c \u0e44\u0e14\u0e49\u0e19\u0e33\u0e40\u0e2d\u0e32\u0e27\u0e34\u0e18\u0e35\u0e01\u0e32\u0e23\u0e40\u0e25\u0e48\u0e19\u0e02\u0e2d\u0e07 \u0e19\u0e32\u0e22\u0e40\u0e2d\u0e25\u0e25\u0e35\u0e2a \u0e44\u0e1b\u0e08\u0e31\u0e14\u0e01\u0e32\u0e23\u0e41\u0e02\u0e48\u0e07\u0e02\u0e31\u0e19\u0e42\u0e14\u0e22\u0e40\u0e23\u0e35\u0e22\u0e01\u0e0a\u0e37\u0e48\u0e2d\u0e40\u0e01\u0e21\u0e0a\u0e19\u0e34\u0e14\u0e43\u0e2b\u0e21\u0e48\u0e19\u0e35\u0e49\u0e27\u0e48\u0e32 \u0e23\u0e31\u0e01\u0e1a\u0e35\u0e49\u0e40\u0e01\u0e21\u0e2a\u0e4c (Rugby Games) \u0e20\u0e32\u0e22\u0e2b\u0e25\u0e31\u0e07\u0e08\u0e32\u0e01\u0e19\u0e31\u0e49\u0e19\u0e01\u0e47\u0e40\u0e1b\u0e47\u0e19\u0e17\u0e35\u0e48\u0e19\u0e34\u0e22\u0e21\u0e40\u0e25\u0e48\u0e19\u0e01\u0e31\u0e19\u0e21\u0e32\u0e01\u0e02\u0e36\u0e49\u0e19 
\u0e17\u0e31\u0e49\u0e07\u0e44\u0e14\u0e49\u0e21\u0e35\u0e01\u0e32\u0e23\u0e40\u0e1b\u0e25\u0e35\u0e48\u0e22\u0e19\u0e41\u0e1b\u0e25\u0e07\u0e41\u0e01\u0e49\u0e44\u0e02\u0e01\u0e32\u0e23\u0e40\u0e25\u0e48\u0e19\u0e40\u0e23\u0e37\u0e48\u0e2d\u0e22\u0e21\u0e32\u0e43\u0e19\u0e1b\u0e23\u0e30\u0e40\u0e17\u0e28\u0e2d\u0e31\u0e07\u0e01\u0e24\u0e29"}], "model-index": [{"name": "wangchanberta-base-att-spm-uncased-finetuned-th-squad", "results": []}]} | Sirinya/wangchanberta-th-squad_test1 | null | [

"transformers",

"pytorch",

"tensorboard",

"camembert",

"question-answering",

"generated_from_trainer",

"dataset:thaiqa_squad",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers | # DialoGPT Trained on various customized spiritual texts mixed with different character personalities.

This is an instance of [microsoft/DialoGPT-medium](https://huggingface.co/microsoft/DialoGPT-medium) trained on the energy complex known as Ra. Some of the text was modified from the original to better fit our Discord server. I've also trained it on various channeling experiences. I'm testing mixing this dataset with characters from popular shows to create more diverse dialogue.

I built a Discord AI chatbot based on this model for internal use within Siyris, Inc.
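In the chat snippet, the conversation is fed to the model as a single sequence in which every turn is terminated by the EOS token, and `max_length=200` caps history plus reply. As a hedged alternative for longer chats, the sketch below (a hypothetical `build_dialogpt_input` helper working on plain token-ID lists; it is not part of `transformers`) keeps only the most recent *whole* turns that fit a token budget, so no turn is cut in half:

```python
from typing import List


def build_dialogpt_input(turns: List[List[int]], eos_token_id: int, max_tokens: int) -> List[int]:
    """Join tokenized turns with EOS separators, keeping only the newest
    whole turns whose total length (EOS tokens included) fits max_tokens.
    Hypothetical helper for illustration; not a transformers API."""
    kept: List[List[int]] = []
    total = 0
    # Walk backwards from the newest turn and stop once the budget would be exceeded.
    for turn in reversed(turns):
        cost = len(turn) + 1  # +1 for the trailing EOS token
        if total + cost > max_tokens:
            break
        kept.append(turn)
        total += cost
    # Restore chronological order and append EOS after every turn.
    ids: List[int] = []
    for turn in reversed(kept):
        ids.extend(turn)
        ids.append(eos_token_id)
    return ids


# Toy example: three turns, EOS id 0, a budget of 6 tokens keeps the last two turns.
print(build_dialogpt_input([[1, 2], [3], [4, 5, 6]], eos_token_id=0, max_tokens=6))
# [3, 0, 4, 5, 6, 0]
```

With the real tokenizer, each turn would come from `tokenizer.encode(text)` and the separator from `tokenizer.eos_token_id`; the resulting list can be wrapped as `torch.tensor([ids])` before calling `model.generate`.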

Chat with the model:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("Siyris/DialoGPT-medium-SIY")
model = AutoModelForCausalLM.from_pretrained("Siyris/DialoGPT-medium-SIY")

# Let's chat for 4 lines
for step in range(4):
    # encode the new user input, add the eos_token and return a PyTorch tensor
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response; max_length=200 caps the whole sequence (chat history + reply) at 200 tokens
    chat_history_ids = model.generate(
        bot_input_ids,
        max_length=200,
        pad_token_id=tokenizer.eos_token_id,
        no_repeat_ngram_size=3,
        do_sample=True,
        top_k=100,
        top_p=0.7,
        temperature=0.8,
    )

    # pretty print the last output tokens from the bot
    print("SIY: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

``` | {"license": "mit", "tags": ["conversational"], "thumbnail": "https://huggingface.co/front/thumbnails/dialogpt.png"} | Siyris/DialoGPT-medium-SIY | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers | # DialoGPT Trained on a customized version of The Law of One.

This is an instance of [microsoft/DialoGPT-medium](https://huggingface.co/microsoft/DialoGPT-medium) trained on the energy complex known as Ra. Some of the text was modified from the original to better fit our Discord server.

I built a Discord AI chatbot based on this model for internal use within Siyris, Inc.

Chat with the model:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("Siyris/SIY")
model = AutoModelForCausalLM.from_pretrained("Siyris/SIY")

# Let's chat for 4 lines
for step in range(4):
    # encode the new user input, add the eos_token and return a PyTorch tensor
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response; max_length=200 caps the whole sequence (chat history + reply) at 200 tokens
    chat_history_ids = model.generate(
        bot_input_ids,
        max_length=200,
        pad_token_id=tokenizer.eos_token_id,
        no_repeat_ngram_size=3,
        do_sample=True,
        top_k=100,
        top_p=0.7,
        temperature=0.8,
    )

    # pretty print the last output tokens from the bot
    print("SIY: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

``` | {"license": "mit", "tags": ["conversational"], "thumbnail": "https://huggingface.co/front/thumbnails/dialogpt.png"} | Siyris/SIY | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | Skandagn/checkmodel | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Skas/stopit | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

question-answering | transformers |

# BERT base Japanese - JaQuAD

## Description

A Japanese Question Answering model fine-tuned on [JaQuAD](https://huggingface.co/datasets/SkelterLabsInc/JaQuAD).

Please refer to [BERT base Japanese](https://huggingface.co/cl-tohoku/bert-base-japanese) for details about the pre-trained model.

The code for the fine-tuning is available at [SkelterLabsInc/JaQuAD](https://github.com/SkelterLabsInc/JaQuAD).

## Evaluation results

On the development set.

```json

{"f1": 77.35, "exact_match": 61.01}

```

On the test set.

```json

{"f1": 78.92, "exact_match": 63.38}

```
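The two reported metrics are exact match (EM) and F1, shown as percentages. Because Japanese is not whitespace-delimited, the sketch below assumes character-level F1 (the share of overlapping characters between prediction and gold answer); this is an illustration under that assumption, not the official JaQuAD evaluation script, which may normalize or tokenize differently:

```python
from collections import Counter


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the stripped strings are identical, else 0.0."""
    return float(prediction.strip() == ground_truth.strip())


def char_f1(prediction: str, ground_truth: str) -> float:
    """F1 over the multiset of characters shared by prediction and gold answer."""
    pred_chars = list(prediction.strip())
    gold_chars = list(ground_truth.strip())
    # Counter intersection keeps the minimum count of each shared character.
    num_same = sum((Counter(pred_chars) & Counter(gold_chars)).values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_chars)
    recall = num_same / len(gold_chars)
    return 2 * precision * recall / (precision + recall)


print(exact_match("スコットランド", "スコットランド"))              # 1.0
print(round(char_f1("スコットランド生まれ", "スコットランド"), 4))  # 0.8235
```

Averaging these per-question scores over a split and multiplying by 100 gives numbers on the same scale as the results above.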

## Usage

```python

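import torch
from transformers import AutoModelForQuestionAnswering, AutoTokenizer

# "Where was Alexander Graham Bell born?"
question = 'アレクサンダー・グラハム・ベルは、どこで生まれたの?'

# "Alexander Graham Bell is a Scottish-born scientist, inventor, and engineer. He is known for inventing the world's first practical telephone."
context = 'アレクサンダー・グラハム・ベルは、スコットランド生まれの科学者、発明家、工学者である。世界初の実用的電話の発明で知られている。'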

model = AutoModelForQuestionAnswering.from_pretrained(

'SkelterLabsInc/bert-base-japanese-jaquad')

tokenizer = AutoTokenizer.from_pretrained(

'SkelterLabsInc/bert-base-japanese-jaquad')

inputs = tokenizer(

question, context, add_special_tokens=True, return_tensors="pt")

input_ids = inputs["input_ids"].tolist()[0]

outputs = model(**inputs)

answer_start_scores = outputs.start_logits

answer_end_scores = outputs.end_logits

# Get the most likely beginning of answer with the argmax of the score.

answer_start = torch.argmax(answer_start_scores)

# Get the most likely end of answer with the argmax of the score.

# 1 is added to `answer_end` because the index pointed by score is inclusive.

answer_end = torch.argmax(answer_end_scores) + 1

answer = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end]))

# expected answer: 'スコットランド' ("Scotland")

```
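The snippet above takes the argmax of the start and end logits independently, which can produce an end index before the start index and therefore an empty answer. A common remedy is to search for the highest-scoring pair with `start <= end`. The sketch below uses plain Python lists; `best_span` and its `max_answer_len` default are hypothetical names chosen for illustration:

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float],
              max_answer_len: int = 30) -> Tuple[int, int]:
    """Return (start, end_exclusive) maximising start_logits[s] + end_logits[e]
    subject to s <= e < s + max_answer_len."""
    best_score = float("-inf")
    best = (0, 1)
    for s, s_score in enumerate(start_logits):
        # only consider end positions at or after the start, within the length limit
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best_score, best = score, (s, e + 1)
    return best


start = [0.1, 2.0, 0.5, 0.0]
end = [3.0, 0.2, 1.5, 0.1]
# Independent argmaxes give start=1, end=0 (an empty slice); the joint search does not.
print(best_span(start, end))  # (1, 3)
```

With the model outputs, one could call `best_span(outputs.start_logits[0].tolist(), outputs.end_logits[0].tolist())` and slice `input_ids[start:end]`; a fuller version would also restrict candidates to context tokens so the answer never falls inside the question.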

## License

The fine-tuned model is licensed under the [CC BY-SA 3.0](https://creativecommons.org/licenses/by-sa/3.0/) license.

## Citation

```bibtex

@misc{so2022jaquad,

title={{JaQuAD: Japanese Question Answering Dataset for Machine Reading Comprehension}},

author={ByungHoon So and Kyuhong Byun and Kyungwon Kang and Seongjin Cho},

year={2022},

eprint={2202.01764},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` | {"language": "ja", "license": "cc-by-sa-3.0", "tags": ["question-answering", "extractive-qa"], "datasets": ["SkelterLabsInc/JaQuAD"], "metrics": ["Exact match", "F1 score"], "pipeline_tag": ["None"]} | SkelterLabsInc/bert-base-japanese-jaquad | null | [

"transformers",

"pytorch",

"bert",

"question-answering",

"extractive-qa",

"ja",

"dataset:SkelterLabsInc/JaQuAD",

"arxiv:2202.01764",

"license:cc-by-sa-3.0",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

null | null | {} | SkimBeeble41/user_sentiment | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | SkimBeeble41/wav2vec2-base-timit-demo-colab | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

null | null | {} | Skkamal/Face | null | [

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text2text-generation | transformers |

**Model Overview**

This is the model presented in the paper ["ParaDetox: Detoxification with Parallel Data"](https://aclanthology.org/2022.acl-long.469/).

The model itself is a [BART (base)](https://huggingface.co/facebook/bart-base) model trained on the parallel detoxification dataset ParaDetox, achieving SOTA results on the detoxification task. More details, code and data can be found [here](https://github.com/skoltech-nlp/paradetox).

**How to use**

```python

from transformers import BartForConditionalGeneration, AutoTokenizer

base_model_name = 'facebook/bart-base'

model_name = 'SkolkovoInstitute/bart-base-detox'

tokenizer = AutoTokenizer.from_pretrained(base_model_name)

model = BartForConditionalGeneration.from_pretrained(model_name)

```

**Citation**

```

@inproceedings{logacheva-etal-2022-paradetox,

title = "{P}ara{D}etox: Detoxification with Parallel Data",

author = "Logacheva, Varvara and

Dementieva, Daryna and

Ustyantsev, Sergey and

Moskovskiy, Daniil and

Dale, David and

Krotova, Irina and

Semenov, Nikita and

Panchenko, Alexander",

booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.acl-long.469",

pages = "6804--6818",

abstract = "We present a novel pipeline for the collection of parallel data for the detoxification task. We collect non-toxic paraphrases for over 10,000 English toxic sentences. We also show that this pipeline can be used to distill a large existing corpus of paraphrases to get toxic-neutral sentence pairs. We release two parallel corpora which can be used for the training of detoxification models. To the best of our knowledge, these are the first parallel datasets for this task.We describe our pipeline in detail to make it fast to set up for a new language or domain, thus contributing to faster and easier development of new parallel resources.We train several detoxification models on the collected data and compare them with several baselines and state-of-the-art unsupervised approaches. We conduct both automatic and manual evaluations. All models trained on parallel data outperform the state-of-the-art unsupervised models by a large margin. This suggests that our novel datasets can boost the performance of detoxification systems.",

}

``` | {"language": ["en"], "license": "openrail++", "tags": ["detoxification"], "datasets": ["s-nlp/paradetox"], "licenses": ["cc-by-nc-sa"]} | s-nlp/bart-base-detox | null | [

"transformers",

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"detoxification",

"en",

"dataset:s-nlp/paradetox",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-generation | transformers | # Model Details

This is a conditional language model based on [gpt2-medium](https://huggingface.co/gpt2-medium/) but with a vocabulary from [t5-base](https://huggingface.co/t5-base), for compatibility with T5-based paraphrasers such as [t5-paranmt-detox](https://huggingface.co/SkolkovoInstitute/t5-paranmt-detox). The model is conditional on two styles, `toxic` and `normal`, and was fine-tuned on the dataset from the Jigsaw [toxic comment classification challenge](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge).

The model was trained for the paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914) (Dale et al., 2021), which describes its possible usage in more detail.

An example of its use and the code for its training are given in https://github.com/skoltech-nlp/detox.

## Model Description

- **Developed by:** SkolkovoInstitute

- **Model type:** Conditional Text Generation

- **Language:** English

- **Related Models:**

- **Parent Model:** [gpt2-medium](https://huggingface.co/gpt2-medium/)

- **Source of vocabulary:** [t5-base](https://huggingface.co/t5-base)

- **Resources for more information:**

- The paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914)

- Its repository https://github.com/skoltech-nlp/detox.

# Uses

The model is intended for use as a discriminator in a text detoxification pipeline based on the ParaGeDi approach (see [the paper](https://arxiv.org/abs/2109.08914) for more details). It can also be used for text generation conditioned on toxic or non-toxic style, but we do not know how to condition it on attributes other than toxicity, so we do not recommend this usage. Another possible use is as a toxicity classifier (via Bayes' rule), but the model is not expected to perform better than, e.g., a standard BERT-based classifier.
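To use a class-conditional language model as a classifier, one compares how likely the text is under each style. Assuming the two log-likelihoods log p(x | toxic) and log p(x | normal) have already been computed (for example, as sums of per-token log-probabilities with the respective style code; the scoring step itself is not shown and the exact conditioning is described in the paper, not here), Bayes' rule turns them into a posterior. A minimal sketch with hypothetical helper names:

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def p_toxic(logp_given_toxic: float, logp_given_normal: float, prior_toxic: float = 0.5) -> float:
    """Posterior P(toxic | x) from the class-conditional log-likelihoods
    log p(x | toxic) and log p(x | normal), via Bayes' rule."""
    log_odds = (logp_given_toxic - logp_given_normal
                + math.log(prior_toxic) - math.log(1.0 - prior_toxic))
    return sigmoid(log_odds)


print(p_toxic(-42.0, -42.0))            # 0.5: equal likelihoods, uniform prior
print(round(p_toxic(-40.0, -45.0), 4))  # 0.9933: text is e^5 times likelier under `toxic`
```

Working in log space avoids underflow, since the raw likelihood of a whole sentence is a vanishingly small number.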

# Bias, Risks, and Limitations

The model inherits all the risks of its parent model, [gpt2-medium](https://huggingface.co/gpt2-medium/). It also inherits all the biases of the [Jigsaw dataset](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge) on which it was fine-tuned. The model is intended to be conditional on style, but in fact it does not clearly separate the concepts of style and content, so it might regard some texts as toxic or safe based not on the style, but on their topics or keywords.

# Training Details

See the paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914) and [the associated code](https://github.com/s-nlp/detox/tree/main/emnlp2021/style_transfer/paraGeDi).

# Evaluation

The model has not been evaluated on its own, only as part of the ParaGeDi text detoxification pipeline (see [the paper](https://arxiv.org/abs/2109.08914)).

# Citation

**BibTeX:**

```

@inproceedings{dale-etal-2021-text,

title = "Text Detoxification using Large Pre-trained Neural Models",

author = "Dale, David and

Voronov, Anton and

Dementieva, Daryna and

Logacheva, Varvara and

Kozlova, Olga and

Semenov, Nikita and

Panchenko, Alexander",

booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",

month = nov,

year = "2021",

address = "Online and Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.emnlp-main.629",

pages = "7979--7996",

}

```

| {"language": ["en"], "tags": ["text-generation", "conditional-text-generation"]} | s-nlp/gpt2-base-gedi-detoxification | null | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conditional-text-generation",

"en",

"arxiv:2109.08914",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

The model has been trained to predict whether English sentences are formal or informal.

Base model: `roberta-base`

Datasets: [GYAFC](https://github.com/raosudha89/GYAFC-corpus) from [Rao and Tetreault, 2018](https://aclanthology.org/N18-1012) and [online formality corpus](http://www.seas.upenn.edu/~nlp/resources/formality-corpus.tgz) from [Pavlick and Tetreault, 2016](https://aclanthology.org/Q16-1005).

Data augmentation: changing texts to upper or lower case, removing all punctuation, and adding a period at the end of a sentence. This was applied because otherwise the model relies too heavily on punctuation and capitalization and pays too little attention to other features.
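The augmentations listed above can be sketched as independent random transformations. The probability `p` and the choice to apply each transformation independently are assumptions made for illustration; the card does not state how often each augmentation was used:

```python
import random
import string


def strip_punctuation(text: str) -> str:
    """Remove all ASCII punctuation characters."""
    return text.translate(str.maketrans("", "", string.punctuation))


def add_final_dot(text: str) -> str:
    """Ensure the text ends with a period."""
    text = text.rstrip()
    return text if text.endswith(".") else text + "."


def augment(text: str, rng: random.Random, p: float = 0.5) -> str:
    """Randomly apply a case change, punctuation removal and a final period,
    each with probability p (p is a hypothetical hyperparameter)."""
    if rng.random() < p:
        text = text.lower() if rng.random() < 0.5 else text.upper()
    if rng.random() < p:
        text = strip_punctuation(text)
    if rng.random() < p:
        text = add_final_dot(text)
    return text


rng = random.Random(0)
for _ in range(3):
    print(augment("Dear Sir, I would like to inquire about the position!", rng))
```

Because formal and informal variants of a sentence can end up with identical casing and punctuation, the classifier is pushed to rely on wording instead.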

Loss: binary classification (on GYAFC) and in-batch ranking (on the Pavlick and Tetreault data).
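The Pavlick and Tetreault corpus carries graded human formality scores, not binary labels, which is why a ranking objective fits it. The exact in-batch ranking loss is not specified in this card; one common choice, shown here purely as an assumption, is a pairwise logistic (RankNet-style) loss over every pair in the batch whose human scores differ:

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def in_batch_pairwise_loss(scores: Sequence[float], targets: Sequence[float]) -> float:
    """Mean logistic loss over every ordered pair (i, j) in the batch whose human
    formality score is higher for i than for j; the loss is large when the
    model scores i below j."""
    losses = [
        softplus(scores[j] - scores[i])
        for i in range(len(scores))
        for j in range(len(scores))
        if targets[i] > targets[j]
    ]
    return sum(losses) / len(losses) if losses else 0.0


human = [0.9, 0.1, 0.5]                                 # hypothetical human formality scores
print(in_batch_pairwise_loss([2.0, -1.0, 0.5], human))  # well-ordered model scores: small loss
print(in_batch_pairwise_loss([-1.0, 2.0, 0.5], human))  # mis-ordered model scores: larger loss
```

Only the relative order of model scores matters here, which matches how the model is evaluated on this data (Spearman correlation).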

Performance metrics on the test data:

| dataset | ROC AUC | precision | recall | fscore | accuracy | Spearman |
|----------------------------------------------|---------|-----------|--------|--------|----------|----------|
| GYAFC | 0.9779 | 0.90 | 0.91 | 0.90 | 0.9087 | 0.8233 |
| GYAFC normalized (lowercase + remove punct.) | 0.9234 | 0.85 | 0.81 | 0.82 | 0.8218 | 0.7294 |

| P&T subset | Spearman R |
|------------|------------|
| news | 0.4003 |
| answers | 0.7500 |
| blog | 0.7334 |
| email | 0.7606 |

## Citation

If you use the model in your research, please cite the following [paper](https://doi.org/10.1007/978-3-031-35320-8_4), where it was introduced:

```

@InProceedings{10.1007/978-3-031-35320-8_4,

author="Babakov, Nikolay

and Dale, David

and Gusev, Ilya

and Krotova, Irina

and Panchenko, Alexander",

editor="M{\'e}tais, Elisabeth

and Meziane, Farid

and Sugumaran, Vijayan

and Manning, Warren

and Reiff-Marganiec, Stephan",

title="Don't Lose the Message While Paraphrasing: A Study on Content Preserving Style Transfer",

booktitle="Natural Language Processing and Information Systems",

year="2023",

publisher="Springer Nature Switzerland",

address="Cham",

pages="47--61",

isbn="978-3-031-35320-8"

}

```

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png | {"language": ["en"], "license": "cc-by-nc-sa-4.0", "tags": ["formality"], "datasets": ["GYAFC", "Pavlick-Tetreault-2016"]} | s-nlp/roberta-base-formality-ranker | null | [

"transformers",

"pytorch",

"safetensors",

"roberta",

"text-classification",

"formality",

"en",

"dataset:GYAFC",

"dataset:Pavlick-Tetreault-2016",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers | ## Toxicity Classification Model (Trained on the First Part of the Data)

This model is trained for the toxicity classification task. The dataset used for training is a merge of the English parts of three datasets by **Jigsaw** ([Jigsaw 2018](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge), [Jigsaw 2019](https://www.kaggle.com/c/jigsaw-unintended-bias-in-toxicity-classification), [Jigsaw 2020](https://www.kaggle.com/c/jigsaw-multilingual-toxic-comment-classification)), containing around 2 million examples. We split it into two parts and fine-tuned a RoBERTa model ([RoBERTa: A Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692)) on each part. **This model was fine-tuned on the first part.** The two classifiers perform similarly on the test set of the first Jigsaw competition, reaching an **AUC-ROC** of 0.98 and an **F1-score** of 0.76.

## How to use

```python

from transformers import RobertaTokenizer, RobertaForSequenceClassification

# load tokenizer and model weights, but be careful, here we need to use auth token

tokenizer = RobertaTokenizer.from_pretrained('SkolkovoInstitute/roberta_first_toxicity_classifier', use_auth_token=True)

model = RobertaForSequenceClassification.from_pretrained('SkolkovoInstitute/roberta_first_toxicity_classifier', use_auth_token=True)

# prepare the input

batch = tokenizer.encode('you are amazing', return_tensors='pt')

# inference

model(batch)

```

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png | {"language": ["en"], "tags": ["toxic comments classification"], "licenses": ["cc-by-nc-sa"]} | s-nlp/roberta_first_toxicity_classifier | null | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"toxic comments classification",

"en",

"arxiv:1907.11692",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

## Toxicity Classification Model

This model is trained for the toxicity classification task. The dataset used for training is a merge of the English parts of three datasets by **Jigsaw** ([Jigsaw 2018](https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge), [Jigsaw 2019](https://www.kaggle.com/c/jigsaw-unintended-bias-in-toxicity-classification), [Jigsaw 2020](https://www.kaggle.com/c/jigsaw-multilingual-toxic-comment-classification)), containing around 2 million examples. We split it into two parts and fine-tuned a RoBERTa model ([RoBERTa: A Robustly Optimized BERT Pretraining Approach](https://arxiv.org/abs/1907.11692)) on each part. The two classifiers perform similarly on the test set of the first Jigsaw competition, reaching an **AUC-ROC** of 0.98 and an **F1-score** of 0.76.

## How to use

```python

from transformers import RobertaTokenizer, RobertaForSequenceClassification

# load tokenizer and model weights

tokenizer = RobertaTokenizer.from_pretrained('SkolkovoInstitute/roberta_toxicity_classifier')

model = RobertaForSequenceClassification.from_pretrained('SkolkovoInstitute/roberta_toxicity_classifier')

# prepare the input

batch = tokenizer.encode('you are amazing', return_tensors='pt')

# inference

model(batch)

```
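The snippet above calls `model(batch)` but discards the result. The returned object's `.logits` field holds unnormalised scores; a softmax turns them into class probabilities. A dependency-free sketch follows; the label order (index 0 = neutral, index 1 = toxic) is an assumption that should be checked against `model.config.id2label`:

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw classifier logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# In practice: logits = model(batch).logits[0].tolist()
probs = softmax([2.0, -1.0])
print({"neutral": round(probs[0], 3), "toxic": round(probs[1], 3)})
# {'neutral': 0.953, 'toxic': 0.047}
```

With PyTorch available, `model(batch).logits.softmax(dim=-1)` performs the same computation on the tensor directly.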

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png | {"language": ["en"], "tags": ["toxic comments classification"], "licenses": ["cc-by-nc-sa"]} | s-nlp/roberta_toxicity_classifier | null | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"toxic comments classification",

"en",

"arxiv:1907.11692",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers | This model is a clone of [SkolkovoInstitute/roberta_toxicity_classifier](https://huggingface.co/SkolkovoInstitute/roberta_toxicity_classifier) trained on a disjoint dataset.

While `roberta_toxicity_classifier` is used for evaluation of detoxification algorithms, `roberta_toxicity_classifier_v1` can be used within these algorithms, as in the paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914). | {} | s-nlp/roberta_toxicity_classifier_v1 | null | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"arxiv:1911.00536",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text2text-generation | transformers | This is the detoxification baseline model trained on the [train](https://github.com/skoltech-nlp/russe_detox_2022/blob/main/data/input/train.tsv) part of the "RUSSE 2022: Russian Text Detoxification Based on Parallel Corpora" competition. The source sentences are Russian toxic messages from the Odnoklassniki, Pikabu, and Twitter platforms. The base model is [ruT5](https://huggingface.co/sberbank-ai/ruT5-base) provided by Sber.

**How to use**

```python

from transformers import T5ForConditionalGeneration, AutoTokenizer

base_model_name = 'sberbank-ai/ruT5-base'

model_name = 'SkolkovoInstitute/ruT5-base-detox'

tokenizer = AutoTokenizer.from_pretrained(base_model_name)

model = T5ForConditionalGeneration.from_pretrained(model_name)

``` | {"language": ["ru"], "license": "openrail++", "tags": ["text-generation-inference"], "datasets": ["s-nlp/ru_paradetox"]} | s-nlp/ruT5-base-detox | null | [

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"text-generation-inference",

"ru",

"dataset:s-nlp/ru_paradetox",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

This is a model for evaluation of naturalness of short Russian texts. It has been trained to distinguish human-written texts from their corrupted versions.

Corruption sources: random replacement, deletion, addition, shuffling, and re-inflection of words and characters; random changes of capitalization; round-trip translation; and filling random gaps with T5 and RoBERTa models. For each original text, we sampled three corrupted texts, so the training data is skewed 3:1 towards the `unnatural` label and the model is biased accordingly.
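A few of the simpler corruption types described above (word deletion, word shuffling, random capitalization) can be sketched as follows, together with the 1:3 natural-to-unnatural sampling. Round-trip translation, re-inflection and T5/RoBERTa gap filling are not shown, and the function names, the `natural` label name and the capitalization probability are hypothetical:

```python
import random
from typing import List, Tuple


def delete_random_word(text: str, rng: random.Random) -> str:
    words = text.split()
    if len(words) > 1:  # keep at least one word
        del words[rng.randrange(len(words))]
    return " ".join(words)


def shuffle_words(text: str, rng: random.Random) -> str:
    words = text.split()
    rng.shuffle(words)
    return " ".join(words)


def random_capitalization(text: str, rng: random.Random, p: float = 0.3) -> str:
    return "".join(c.upper() if rng.random() < p else c.lower() for c in text)


CORRUPTIONS = [delete_random_word, shuffle_words, random_capitalization]


def make_examples(text: str, rng: random.Random, n_corrupted: int = 3) -> List[Tuple[str, str]]:
    """One original text labelled `natural` plus n_corrupted corrupted copies
    labelled `unnatural` (a 1:3 ratio). A shuffle may occasionally reproduce
    the original; a real pipeline would likely filter such cases."""
    examples = [(text, "natural")]
    for _ in range(n_corrupted):
        corrupt = rng.choice(CORRUPTIONS)
        examples.append((corrupt(text, rng), "unnatural"))
    return examples


for sentence, label in make_examples("Мама мыла раму вчера вечером", random.Random(1)):
    print(label, "|", sentence)
```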

Data sources: web-corpora from [the Leipzig collection](https://wortschatz.uni-leipzig.de/en/download) (`rus_news_2020_100K`, `rus_newscrawl-public_2018_100K`, `rus-ru_web-public_2019_100K`, `rus_wikipedia_2021_100K`), comments from [OK](https://www.kaggle.com/alexandersemiletov/toxic-russian-comments) and [Pikabu](https://www.kaggle.com/blackmoon/russian-language-toxic-comments).

On our private test dataset, the model achieves a rank correlation of 0.40 with human judgements of naturalness, which is higher than that of GPT perplexity, another popular fluency metric. | {"language": ["ru"], "tags": ["fluency"]} | s-nlp/rubert-base-corruption-detector | null | [

"transformers",

"pytorch",

"bert",

"text-classification",

"fluency",

"ru",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

BERT-based classifier (fine-tuned from [Conversational RuBERT](https://huggingface.co/DeepPavlov/rubert-base-cased-conversational)) trained on a merge of the Russian Language Toxic Comments [dataset](https://www.kaggle.com/blackmoon/russian-language-toxic-comments/metadata), collected from 2ch.hk, and the Toxic Russian Comments [dataset](https://www.kaggle.com/alexandersemiletov/toxic-russian-comments), collected from ok.ru.

The datasets were merged, shuffled, and split into train, dev, test splits in 80-10-10 proportion.
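The merge-shuffle-split procedure above can be sketched in a few lines. The seed and the integer rounding (any remainder goes to the test split) are assumptions; the card does not specify them, and the two lists below are hypothetical stand-ins for the real datasets:

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_80_10_10(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle a copy of items and cut it into train/dev/test in an 80/10/10 ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a reproducible split
    n_train = len(shuffled) * 8 // 10
    n_dev = len(shuffled) // 10
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # remainder goes to test
    return train, dev, test


# Hypothetical (text, label) stand-ins for the 2ch.hk and ok.ru datasets.
dvach = [("пример 2ch", 1), ("ещё пример", 0)] * 25
ok_ru = [("пример ok.ru", 0)] * 50
train, dev, test = split_80_10_10(dvach + ok_ru)
print(len(train), len(dev), len(test))  # 80 10 10
```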

The metrics obtained on the test set are as follows:

|              | precision | recall | f1-score | support |
|:------------:|:---------:|:------:|:--------:|:-------:|
| 0            | 0.98      | 0.99   | 0.98     | 21384   |
| 1            | 0.94      | 0.92   | 0.93     | 4886    |
| accuracy     |           |        | 0.97     | 26270   |
| macro avg    | 0.96      | 0.96   | 0.96     | 26270   |
| weighted avg | 0.97      | 0.97   | 0.97     | 26270   |

## How to use

```python

from transformers import BertTokenizer, BertForSequenceClassification

# load tokenizer and model weights

tokenizer = BertTokenizer.from_pretrained('SkolkovoInstitute/russian_toxicity_classifier')

model = BertForSequenceClassification.from_pretrained('SkolkovoInstitute/russian_toxicity_classifier')

# prepare the input

batch = tokenizer.encode('ты супер', return_tensors='pt')  # "you are great"

# inference

model(batch)

```

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png | {"language": ["ru"], "tags": ["toxic comments classification"], "licenses": ["cc-by-nc-sa"]} | s-nlp/russian_toxicity_classifier | null | [

"transformers",

"pytorch",

"tf",

"safetensors",

"bert",

"text-classification",

"toxic comments classification",

"ru",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text2text-generation | transformers | This is a paraphraser based on [ceshine/t5-paraphrase-paws-msrp-opinosis](https://huggingface.co/ceshine/t5-paraphrase-paws-msrp-opinosis) and additionally fine-tuned on [ParaNMT](https://arxiv.org/abs/1711.05732), filtered for the task of detoxification.

The model was trained for the paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914).

An example of its use and the code for its training are given in https://github.com/skoltech-nlp/detox | {"license": "openrail++", "datasets": ["s-nlp/paranmt_for_detox"]} | s-nlp/t5-paranmt-detox | null | [

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"dataset:s-nlp/paranmt_for_detox",

"arxiv:1711.05732",

"arxiv:1911.00536",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text2text-generation | transformers | This is a paraphraser based on [ceshine/t5-paraphrase-paws-msrp-opinosis](https://huggingface.co/ceshine/t5-paraphrase-paws-msrp-opinosis) and additionally fine-tuned on [ParaNMT](https://arxiv.org/abs/1711.05732).

The model was trained for the paper [Text Detoxification using Large Pre-trained Neural Models](https://arxiv.org/abs/2109.08914).

An example of its use is given in https://github.com/skoltech-nlp/detox | {} | s-nlp/t5-paraphrase-paws-msrp-opinosis-paranmt | null | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"arxiv:1711.05732",

"arxiv:1911.00536",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text2text-generation | transformers | {} | s-nlp/t5_ru_5_10000_detox | null | [

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

|

text-classification | transformers |

XLM-RoBERTa-based formality classifier trained on XFORMAL.

**All languages**

|              | precision | recall   | f1-score | support |
|--------------|-----------|----------|----------|---------|
| 0            | 0.744912  | 0.927790 | 0.826354 | 108019  |
| 1            | 0.889088  | 0.645630 | 0.748048 | 96845   |
| accuracy     |           |          | 0.794405 | 204864  |
| macro avg    | 0.817000  | 0.786710 | 0.787201 | 204864  |
| weighted avg | 0.813068  | 0.794405 | 0.789337 | 204864  |

**English (en)**

|              | precision | recall   | f1-score | support |
|--------------|-----------|----------|----------|---------|
| 0            | 0.800053  | 0.962981 | 0.873988 | 22151   |
| 1            | 0.945106  | 0.725899 | 0.821124 | 19449   |
| accuracy     |           |          | 0.852139 | 41600   |
| macro avg    | 0.872579  | 0.844440 | 0.847556 | 41600   |
| weighted avg | 0.867869  | 0.852139 | 0.849273 | 41600   |

**French (fr)**

|              | precision | recall   | f1-score | support |
|--------------|-----------|----------|----------|---------|
| 0            | 0.746709  | 0.925738 | 0.826641 | 21505   |
| 1            | 0.887305  | 0.650592 | 0.750731 | 19327   |
| accuracy     |           |          | 0.795504 | 40832   |
| macro avg    | 0.817007  | 0.788165 | 0.788686 | 40832   |
| weighted avg | 0.813257  | 0.795504 | 0.790711 | 40832   |

**Italian (it)**

|              | precision | recall   | f1-score | support |
|--------------|-----------|----------|----------|---------|
| 0            | 0.721282  | 0.914669 | 0.806545 | 21528   |
| 1            | 0.864887  | 0.607135 | 0.713445 | 19368   |
| accuracy     |           |          | 0.769024 | 40896   |
| macro avg    | 0.793084  | 0.760902 | 0.759995 | 40896   |
| weighted avg | 0.789292  | 0.769024 | 0.762454 | 40896   |

**Portuguese (pt)**

|              | precision | recall   | f1-score | support |
|--------------|-----------|----------|----------|---------|
| 0            | 0.717546  | 0.908167 | 0.801681 | 21637   |
| 1            | 0.853628  | 0.599700 | 0.704481 | 19323   |
| accuracy     |           |          | 0.762646 | 40960   |
| macro avg    | 0.785587  | 0.753933 | 0.753081 | 40960   |
| weighted avg | 0.781743  | 0.762646 | 0.755826 | 40960   |
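The summary rows in these tables follow the standard definitions: F1 is the harmonic mean of precision and recall, "macro avg" is the unweighted mean over the two classes, and "weighted avg" weights each class by its support. A small sketch that reproduces the precision summaries of the "All languages" table from its class rows:

```python
from typing import Sequence


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def macro_avg(values: Sequence[float]) -> float:
    """Unweighted mean over classes."""
    return sum(values) / len(values)


def weighted_avg(values: Sequence[float], supports: Sequence[int]) -> float:
    """Mean over classes weighted by each class's number of test examples."""
    return sum(v * s for v, s in zip(values, supports)) / sum(supports)


# Class-level precision and support from the "All languages" table.
precision = [0.744912, 0.889088]
support = [108019, 96845]
print(round(macro_avg(precision), 6))              # 0.817
print(round(weighted_avg(precision, support), 6))  # 0.813068
```

Because class 0 has more examples, the weighted average sits closer to class 0's precision than the macro average does.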

## How to use

```python

from transformers import XLMRobertaTokenizerFast, XLMRobertaForSequenceClassification

# load tokenizer and model weights

tokenizer = XLMRobertaTokenizerFast.from_pretrained('SkolkovoInstitute/xlmr_formality_classifier')

model = XLMRobertaForSequenceClassification.from_pretrained('SkolkovoInstitute/xlmr_formality_classifier')

# prepare the input

batch = tokenizer.encode('you are great', return_tensors='pt')

# inference

model(batch)

```

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png | {"language": ["en", "fr", "it", "pt"], "license": "cc-by-nc-sa-4.0", "tags": ["formal or informal classification"], "licenses": ["cc-by-nc-sa"]} | s-nlp/xlmr_formality_classifier | null | [

"transformers",

"pytorch",

"safetensors",

"xlm-roberta",

"text-classification",

"formal or informal classification",

"en",

"fr",

"it",

"pt",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

## General concept of the model

#### Proposed usage

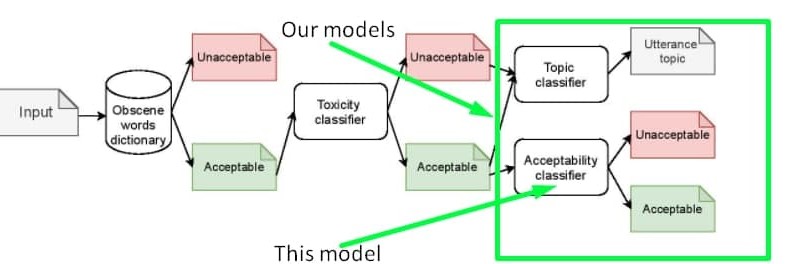

The **inappropriateness** that we collected in the dataset and detect with the model **is NOT a substitute for toxicity**; rather, it is a derivative of toxicity. A model trained on our dataset can therefore serve as **an additional layer of inappropriateness filtering after toxicity and obscenity filtering**. You can identify the specific sensitive topic with [another model](https://huggingface.co/Skoltech/russian-sensitive-topics). The proposed pipeline is shown in the scheme above.

You can also train a single classifier for both toxicity and inappropriateness detection. The data to mix with toxicity-labelled samples is available on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [Kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages).
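The layered filtering described above can be sketched as an ordered sequence of checks, where a message is rejected by the first stage that flags it. This is a minimal sketch under stated assumptions: the stage names and the keyword lambdas are hypothetical stand-ins for real classifiers (for example, a toxicity model, this model for inappropriateness, and the sensitive-topics model for `detect_topics`); none of them is an API provided by this repository.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Predicate = Callable[[str], bool]


@dataclass
class ModerationResult:
    text: str
    blocked_by: Optional[str] = None                  # first stage that rejected the message
    topics: List[str] = field(default_factory=list)   # sensitive topics, filled in for inappropriate messages


def moderate(text: str,
             stages: List[Tuple[str, Predicate]],
             detect_topics: Callable[[str], List[str]]) -> ModerationResult:
    """Apply the filters in order and stop at the first one that flags the message."""
    for name, is_flagged in stages:
        if is_flagged(text):
            result = ModerationResult(text=text, blocked_by=name)
            if name == "inappropriate":
                # Look up the sensitive topic only for messages rejected as inappropriate.
                result.topics = detect_topics(text)
            return result
    return ModerationResult(text=text)


# Hypothetical keyword stubs standing in for real classifiers, only to exercise the control flow.
stages = [
    ("toxic", lambda t: "idiot" in t),
    ("obscene", lambda t: False),
    ("inappropriate", lambda t: "escorts" in t),
]
print(moderate("let's hire escorts this weekend", stages, lambda t: ["prostitution"]))
```

Placing the toxicity and obscenity checks first means the inappropriateness stage only sees messages that already passed them, which mirrors the "additional layer" role described above.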

#### Inappropriateness intuition

This model is trained on a dataset of inappropriate messages in Russian. Generally, an inappropriate utterance is one that contains no obscene words and no toxic intent but can still harm the speaker's reputation. The table below gives some samples for intuition. Learn more about the concept of inappropriateness [in this article](https://www.aclweb.org/anthology/2021.bsnlp-1.4/), presented at the Workshop on Balto-Slavic NLP at the EACL 2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [Kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages). The extended dataset has the same properties as the one described in the article; the only difference is its size.

| Text | Inappropriate | Comment | Unsafe topic |
|------------------------------------------------------------------------------------------------------|---------------|----------------------------------------------------------------|---------------|
| Ладно бы видного деятеля завалили а тут какого то ноунейм нигру преступника | yes | Justification of murder | offline crime |
| Это нарушение УКРФ! | no | Condemnation of breaking the law | offline crime |
| Религия - это насилие высшего уровня. Верующего принуждают к вере еще в младенчестве. | yes | Insult to the feelings of religious believers | religion |
| Христиане, как вы относитесь к иноверцам, в частности к Гамалиилу и к уверовавшим в Иисуса фарисеям? | no | Ordinary questions to believers with no hint of insulting their feelings | religion |
| Хорошо что проституция запрещена | no | Condemnation of prostitution | prostitution |
| Возьму бабки и сниму шлюх на выходных | yes | Promotion of prostitution | prostitution |

#### Metrics

The model was trained, validated, and tested only on samples labelled with 100% confidence, which yielded the following metrics on the test set:

| | precision | recall | f1-score | support |
|--------------|----------|--------|----------|---------|
| 0 | 0.92 | 0.93 | 0.93 | 7839 |
| 1 | 0.80 | 0.76 | 0.78 | 2726 |
| accuracy | | | 0.89 | 10565 |
| macro avg | 0.86 | 0.85 | 0.85 | 10565 |
| weighted avg | 0.89 | 0.89 | 0.89 | 10565 |
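Restricting the data to fully confident samples can be sketched as a simple filter. This sketch assumes, hypothetically, that each sample stores its label as the fraction of annotators who marked it inappropriate (a value in [0, 1]); under that assumption, "100% confidence" means the fraction is exactly 0.0 or 1.0. The column layout of the released CSV may differ, so check it before adapting this.

```python
from typing import List, Tuple

# Hypothetical samples: (text, fraction of annotators who labelled the text inappropriate).
samples: List[Tuple[str, float]] = [
    ("sample a", 1.0),
    ("sample b", 0.0),
    ("sample c", 0.67),
    ("sample d", 0.33),
]


def fully_confident(data: List[Tuple[str, float]]) -> List[Tuple[str, int]]:
    """Keep only samples on which all annotators agree and convert them to hard 0/1 labels."""
    return [(text, int(score)) for text, score in data if score in (0.0, 1.0)]


print(fully_confident(samples))
```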

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png

## Citation

If you find this repository helpful, feel free to cite our publication:

```
@inproceedings{babakov-etal-2021-detecting,
    title = "Detecting Inappropriate Messages on Sensitive Topics that Could Harm a Company{'}s Reputation",
    author = "Babakov, Nikolay and
      Logacheva, Varvara and
      Kozlova, Olga and
      Semenov, Nikita and
      Panchenko, Alexander",
    booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",
    month = apr,
    year = "2021",
    address = "Kiyv, Ukraine",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2021.bsnlp-1.4",
    pages = "26--36",
    abstract = "Not all topics are equally {``}flammable{''} in terms of toxicity: a calm discussion of turtles or fishing less often fuels inappropriate toxic dialogues than a discussion of politics or sexual minorities. We define a set of sensitive topics that can yield inappropriate and toxic messages and describe the methodology of collecting and labelling a dataset for appropriateness. While toxicity in user-generated data is well-studied, we aim at defining a more fine-grained notion of inappropriateness. The core of inappropriateness is that it can harm the reputation of a speaker. This is different from toxicity in two respects: (i) inappropriateness is topic-related, and (ii) inappropriate message is not toxic but still unacceptable. We collect and release two datasets for Russian: a topic-labelled dataset and an appropriateness-labelled dataset. We also release pre-trained classification models trained on this data.",
}
```

## Contacts

If you have any questions, please contact [Nikolay](mailto:[email protected]) | {"language": ["ru"], "tags": ["toxic comments classification"], "licenses": ["cc-by-nc-sa"]} | apanc/russian-inappropriate-messages | null | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"toxic comments classification",

"ru",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05+00:00 |

text-classification | transformers |

## General concept of the model

This model is trained on a dataset of sensitive topics in Russian. The concept of sensitive topics is described [in this article](https://www.aclweb.org/anthology/2021.bsnlp-1.4/), presented at the Workshop on Balto-Slavic NLP at the EACL 2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/sensitive_topics.csv) or on [Kaggle](https://www.kaggle.com/nigula/russian-sensitive-topics). The extended dataset has the same properties as the one described in the article; the only difference is its size.

## Instructions

The model predicts combinations of the 18 sensitive topics described in the [article](https://arxiv.org/abs/2103.05345). Step-by-step instructions for using the model are available [here](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/Inference.ipynb).
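The linked notebook is the reference for decoding this model's output. As a generic illustration only, a multi-label prediction is often turned into a set of topics by applying a sigmoid to each logit and keeping the topics whose probability reaches a threshold. In the sketch below, the one-logit-per-topic layout, the 0.5 threshold, and the topic names are all assumptions, not necessarily how this model encodes its 18 topics.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def decode_topics(logits: Sequence[float],
                  topic_names: Sequence[str],
                  threshold: float = 0.5) -> List[str]:
    """Return every topic whose independent sigmoid probability is at least `threshold`."""
    if len(logits) != len(topic_names):
        raise ValueError("expected one logit per topic")
    return [name for name, z in zip(topic_names, logits) if sigmoid(z) >= threshold]


# Hypothetical subset of topic names and logits, for illustration only.
topics = ["offline_crime", "religion", "prostitution"]
print(decode_topics([2.3, -1.2, 0.4], topics))
```

Because each topic is scored independently, a message can receive several topics at once, or none when every probability falls below the threshold.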

## Metrics