| modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |
|---|---|---|---|---|---|---|

Ayham/xlnet_bert_summarization_cnn_dailymail | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

] | text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9265

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2101

- Accuracy: 0.9265

- F1 Score: 0.9265

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 Score |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:--------:|

| No log | 1.0 | 250 | 0.3016 | 0.9095 | 0.9067 |

| No log | 2.0 | 500 | 0.2101 | 0.9265 | 0.9265 |
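The `linear` scheduler listed in the hyperparameters decays the learning rate from its initial value to zero over training. Below is a minimal sketch of that decay, assuming no warmup steps (the card lists none) and the 500 total optimizer steps shown in the table above. The function name `linear_lr` is illustrative and is not part of Transformers.

```python
def linear_lr(step: int, base_lr: float = 2e-05, total_steps: int = 500,
              warmup_steps: int = 0) -> float:
    """Learning rate at `step` under linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    # Start, end of epoch 1, end of epoch 2.
    for step in (0, 250, 500):
        print(step, linear_lr(step))
```

Under these assumptions the rate has halved by the end of the first epoch (step 250) and reaches zero at step 500.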

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Ayham/xlnet_distilgpt2_summarization_cnn_dailymail | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

] | text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 13 | null | ---

language: ms

---

# roberta-base-bahasa-cased

Pretrained RoBERTa base language model for Malay.

## Pretraining Corpus

The `roberta-base-bahasa-cased` model was pretrained on ~400 million words. Below is the list of data we trained on:

1. IIUM confession, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

2. local Instagram, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

3. local news, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

4. local parliament hansards, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

5. local research papers related to `kebudayaan`, `keagaaman` and `etnik`, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

6. local twitter, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

7. Malay Wattpad, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

8. Malay Wikipedia, https://github.com/huseinzol05/malay-dataset/tree/master/dumping/clean

## Pretraining details

- All steps can be reproduced from https://github.com/huseinzol05/Malaya/tree/master/pretrained-model/roberta.

## Example using AutoModelForMaskedLM

```python

from transformers import AutoTokenizer, AutoModelForMaskedLM, pipeline

model = AutoModelForMaskedLM.from_pretrained('mesolitica/roberta-base-bahasa-cased')

tokenizer = AutoTokenizer.from_pretrained(

'mesolitica/roberta-base-bahasa-cased',

do_lower_case = False,

)

fill_mask = pipeline('fill-mask', model=model, tokenizer=tokenizer)

fill_mask('Permohonan Najib, anak untuk dengar isu perlembagaan <mask> .')

```

The output is:

```python

[{'score': 0.3368818759918213,

'token': 746,

'token_str': ' negara',

'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan negara.'},

{'score': 0.09646568447351456,

'token': 598,

'token_str': ' Malaysia',

'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan Malaysia.'},

{'score': 0.029483484104275703,

'token': 3265,

'token_str': ' UMNO',

'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan UMNO.'},

{'score': 0.026470622047781944,

'token': 2562,

'token_str': ' parti',

'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan parti.'},

{'score': 0.023237623274326324,

'token': 391,

'token_str': ' ini',

'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan ini.'}]

```
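The pipeline returns a list of candidate fills, each a dictionary with `score`, `token`, `token_str` and `sequence` keys. Here is a short sketch of picking the highest-scoring candidate, using the first two entries of the output above as literal data. The helper name `best_fill` is illustrative.

```python
# First two candidates copied from the output above.
predictions = [
    {'score': 0.3368818759918213, 'token': 746, 'token_str': ' negara',
     'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan negara.'},
    {'score': 0.09646568447351456, 'token': 598, 'token_str': ' Malaysia',
     'sequence': 'Permohonan Najib, anak untuk dengar isu perlembagaan Malaysia.'},
]


def best_fill(candidates):
    """Return the candidate dictionary with the highest score."""
    return max(candidates, key=lambda c: c['score'])


top = best_fill(predictions)
# token_str keeps the leading space produced by the tokenizer, so strip it.
print(top['token_str'].strip(), round(top['score'], 4))
```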

|

Ayham/xlnetgpt2_xsum7 | [

"pytorch",

"tensorboard",

"encoder-decoder",

"text2text-generation",

"transformers",

"generated_from_trainer",

"autotrain_compatible"

] | text2text-generation | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

library_name: stable-baselines3

tags:

- AntBulletEnv-v0

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- metrics:

- type: mean_reward

value: 1704.47 +/- 175.74

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: AntBulletEnv-v0

type: AntBulletEnv-v0

---

# **A2C** Agent playing **AntBulletEnv-v0**

This is a trained model of an **A2C** agent playing **AntBulletEnv-v0**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
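The `mean_reward` value in the metadata above has the form mean +/- standard deviation. Below is a minimal sketch of producing such a string from per-episode returns, assuming the population standard deviation is used. The episode returns are made-up placeholders, not this agent's results, and `summarize_returns` is an illustrative name.

```python
from statistics import fmean, pstdev


def summarize_returns(episode_returns):
    """Format per-episode returns as 'mean +/- std' with two decimals."""
    mean = fmean(episode_returns)
    std = pstdev(episode_returns)  # population standard deviation (assumption)
    return f"{mean:.2f} +/- {std:.2f}"


# Hypothetical returns from four evaluation episodes.
print(summarize_returns([1.0, 2.0, 3.0, 4.0]))
```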

|

Ayoola/pytorch_model | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- generated_from_trainer

model-index:

- name: GPT-Neo_DnD_Control

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GPT-Neo_DnD_Control

This model is a fine-tuned version of [PatrickTyBrown/GPT-Neo_DnD](https://huggingface.co/PatrickTyBrown/GPT-Neo_DnD) on an unspecified dataset.

It achieves the following results on the evaluation set:

- eval_loss: 2.6518

- eval_runtime: 141.422

- eval_samples_per_second: 6.527

- eval_steps_per_second: 3.267

- epoch: 3.9

- step: 36000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1.5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Ayoola/wav2vec2-large-xlsr-turkish-demo-colab | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | Access to model clam004/emerg-intent-consistent-good-gpt2-xl-v2 is restricted and you are not in the authorized list. Visit https://huggingface.co/clam004/emerg-intent-consistent-good-gpt2-xl-v2 to ask for access. |

Ayran/DialoGPT-medium-harry-1 | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:

- name: donut-base-sroie

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# donut-base-sroie

This model is a fine-tuned version of [naver-clova-ix/donut-base](https://huggingface.co/naver-clova-ix/donut-base) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.23.0.dev0

- Pytorch 1.8.1+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Ayran/DialoGPT-small-gandalf | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 11 | null | ---

license: mit

---

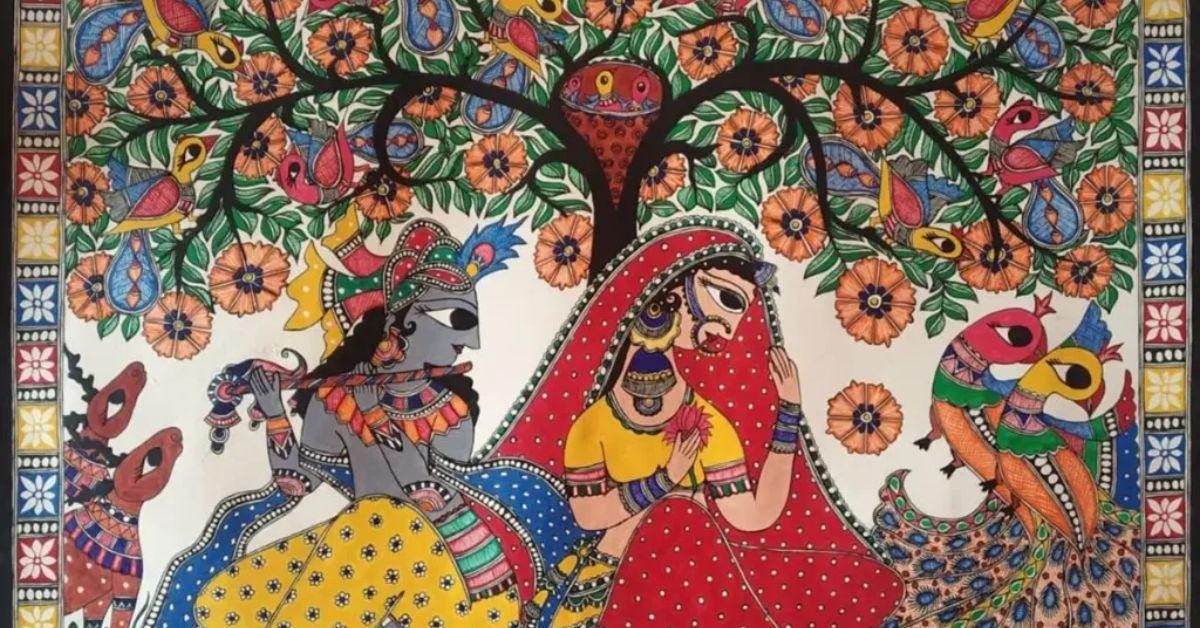

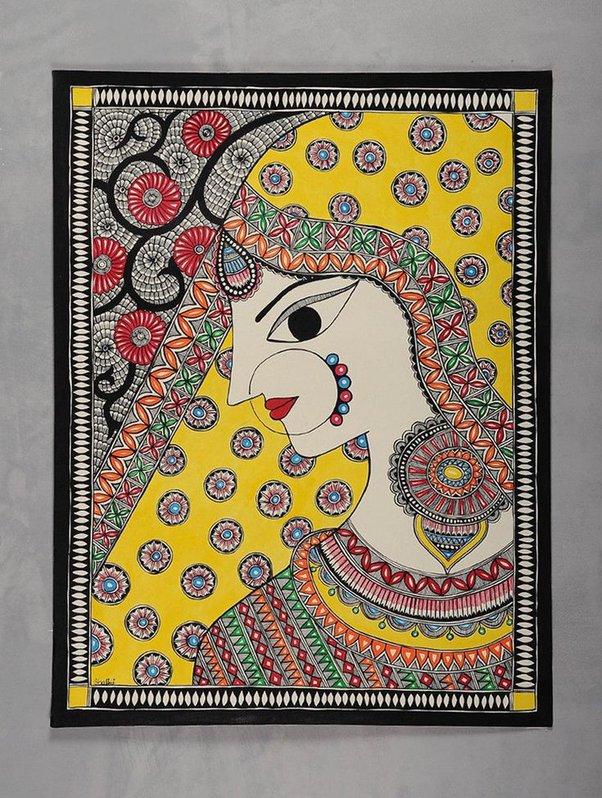

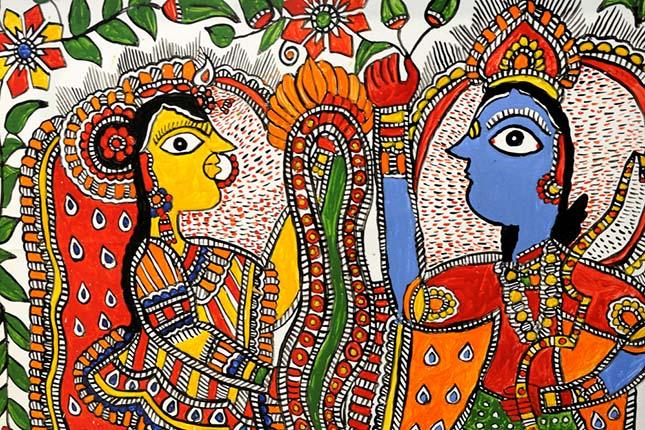

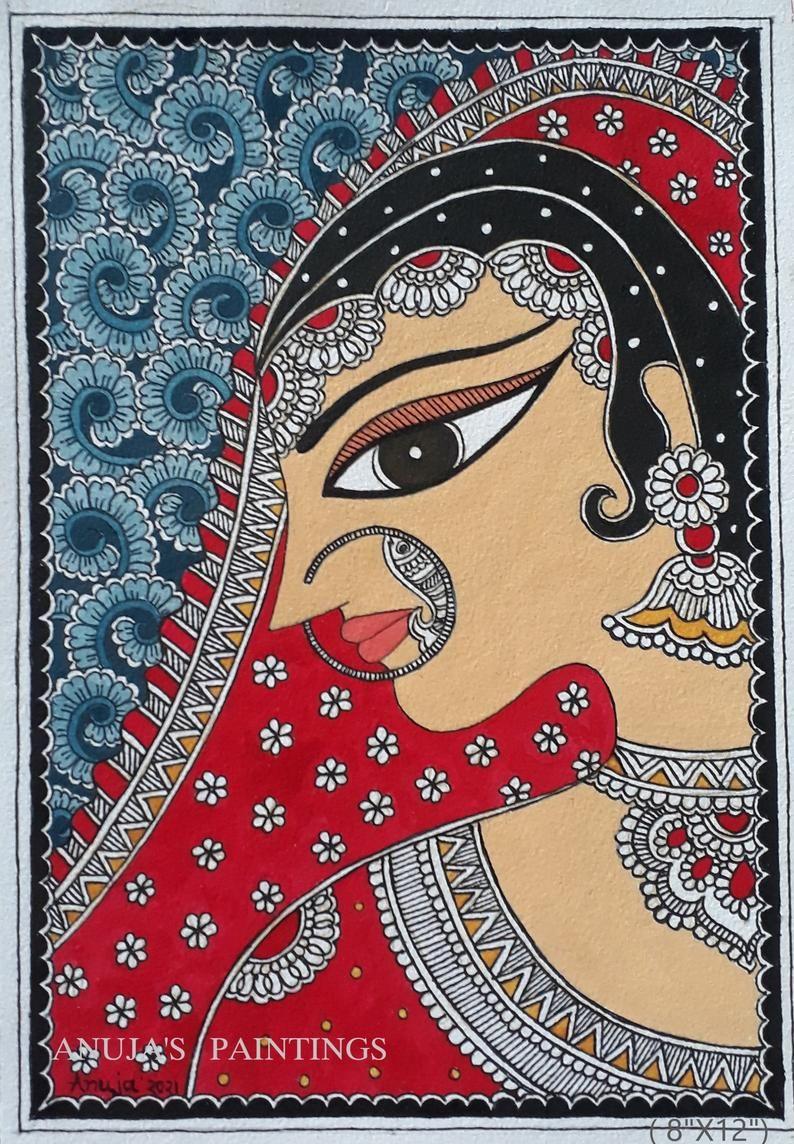

### madhubani art on Stable Diffusion

This is the `<madhubani-art>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Ayran/DialoGPT-small-harry-potter-1-through-3 | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null | ---

tags:

- CartPole-v1

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: 208.80 +/- 135.81

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

---

# PPO Agent Playing CartPole-v1

This is a trained model of a PPO agent playing CartPole-v1.

To learn to code your own PPO agent and train it, check out Unit 8 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit8

# Hyperparameters

```python

{'exp_name': 'ppo',
 'seed': 1,
 'torch_deterministic': True,
 'cuda': True,
 'track': False,
 'wandb_project_name': 'cleanRL',
 'wandb_entity': None,
 'capture_video': False,
 'env_id': 'CartPole-v1',
 'total_timesteps': 50000,
 'learning_rate': 0.00025,
 'num_envs': 4,
 'num_steps': 128,
 'anneal_lr': True,
 'gae': True,
 'gamma': 0.99,
 'gae_lambda': 0.95,
 'num_minibatches': 4,
 'update_epochs': 4,
 'norm_adv': True,
 'clip_coef': 0.2,
 'clip_vloss': True,
 'ent_coef': 0.01,
 'vf_coef': 0.5,
 'max_grad_norm': 0.5,
 'target_kl': None,
 'virtual_display': True,
 'repo_id': 'NithirojTripatarasit/ppo-CartPole-v1',
 'batch_size': 512,
 'minibatch_size': 128}

```
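The last two entries, `batch_size` and `minibatch_size`, are consistent with being derived from the other settings rather than set independently. Here is a short sketch of that arithmetic, assuming the usual convention of one rollout batch per policy update. The variable `num_updates` is not in the list above and is computed only for illustration.

```python
num_envs = 4
num_steps = 128
num_minibatches = 4
total_timesteps = 50000

# One rollout collects num_steps transitions from each parallel environment.
batch_size = num_envs * num_steps
# Each rollout batch is split into equally sized minibatches per update epoch.
minibatch_size = batch_size // num_minibatches
# Number of full rollouts (policy updates) that fit into the timestep budget.
num_updates = total_timesteps // batch_size

print(batch_size, minibatch_size, num_updates)
```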

|

Azaghast/GPT2-SCP-Descriptions | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

] | text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- matthews_correlation

model-index:

- name: distilbert-base-uncased-finetuned-cola

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: cola

split: train

args: cola

metrics:

- name: Matthews Correlation

type: matthews_correlation

value: 0.5451837431775948

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5532

- Matthews Correlation: 0.5452

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5248 | 1.0 | 535 | 0.5479 | 0.3922 |

| 0.3503 | 2.0 | 1070 | 0.5148 | 0.4822 |

| 0.2386 | 3.0 | 1605 | 0.5532 | 0.5452 |

| 0.1773 | 4.0 | 2140 | 0.6818 | 0.5282 |
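The Matthews correlation reported here is computed from the binary confusion matrix. Below is a minimal sketch of the formula, followed by a check of which epoch in the table scores highest. The confusion-matrix counts in the example call are invented for illustration.

```python
from math import sqrt


def matthews_corrcoef(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient for a binary confusion matrix."""
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention the score is 0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# (epoch, validation loss, Matthews correlation) copied from the table above.
results = [(1, 0.5479, 0.3922), (2, 0.5148, 0.4822),
           (3, 0.5532, 0.5452), (4, 0.6818, 0.5282)]
best_epoch = max(results, key=lambda row: row[2])[0]

print(matthews_corrcoef(tp=6, tn=3, fp=1, fn=2))  # made-up counts
print(best_epoch)
```

In this table the highest correlation (epoch 3) does not coincide with the lowest validation loss (epoch 2).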

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Azuris/DialoGPT-medium-senorita | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 14 | null | ---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.5911706349206349

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.3235294117647059

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.314540059347181

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.4118954974986103

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.43

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.34649122807017546

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.3125

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8142232936567726

- name: F1 (macro)

type: f1_macro

value: 0.7823150685401111

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.7779342723004695

- name: F1 (macro)

type: f1_macro

value: 0.4495225434483775

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.5357529794149513

- name: F1 (macro)

type: f1_macro

value: 0.45418166183928343

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8190164846630034

- name: F1 (macro)

type: f1_macro

value: 0.6465234410767566

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8834221247257913

- name: F1 (macro)

type: f1_macro

value: 0.8771202456083294

---

# relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more detail).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.3235294117647059

- Accuracy on SAT: 0.314540059347181

- Accuracy on BATS: 0.4118954974986103

- Accuracy on U2: 0.34649122807017546

- Accuracy on U4: 0.3125

- Accuracy on Google: 0.43

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.8142232936567726

- Micro F1 score on CogALexV: 0.7779342723004695

- Micro F1 score on EVALution: 0.5357529794149513

- Micro F1 score on K&H+N: 0.8190164846630034

- Micro F1 score on ROOT09: 0.8834221247257913

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.5911706349206349

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```
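Relation embeddings such as `vector` can be compared with cosine similarity, so that word pairs standing in a similar relation score close to 1. Below is a minimal, dependency-free sketch that uses short toy vectors in place of real 1024-dimensional model output. The function and variable names are illustrative.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy stand-ins for embeddings of two word pairs (not real model output).
v_tokyo_japan = [0.2, 0.9, 0.1]
v_paris_france = [0.25, 0.85, 0.05]
print(cosine_similarity(v_tokyo_japan, v_paris_france))
```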

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: Today, I finally discovered the relation between <subj> and <obj> : <mask>

- loss_function: nce_logout

- classification_loss: True

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 24

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-c-nce-classification-conceptnet-validated/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

BSC-LT/roberta-large-bne-capitel-ner | [

"pytorch",

"roberta",

"token-classification",

"es",

"dataset:bne",

"dataset:capitel",

"arxiv:1907.11692",

"arxiv:2107.07253",

"transformers",

"national library of spain",

"spanish",

"bne",

"capitel",

"ner",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"RobertaForTokenClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- huggingface/autotrain-data-imdb-full

co2_eq_emissions:

emissions: 74.06131825522797

---

# Model Trained Using AutoTrain

- Problem type: Binary Classification

- Model ID: 1373552879

- CO2 Emissions (in grams): 74.0613

## Validation Metrics

- Loss: 0.193

- Accuracy: 0.933

- Precision: 0.941

- Recall: 0.923

- AUC: 0.980

- F1: 0.932
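The reported F1 is the harmonic mean of precision and recall, which can be checked against the numbers above. A minimal sketch (the function name is illustrative):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as listed in the validation metrics.
print(round(f1_score(0.941, 0.923), 3))
```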

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/huggingface/autotrain-imdb-full-1373552879

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("huggingface/autotrain-imdb-full-1373552879", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("huggingface/autotrain-imdb-full-1373552879", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

Badr/model1 | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: bsd

---

A lightweight solution for the Kaggle ELL competition using DistilBERT.

Info about the Kaggle ELL competition: <a href="https://www.kaggle.com/competitions/feedback-prize-english-language-learning/code">https://www.kaggle.com/competitions/feedback-prize-english-language-learning/code</a>

|

Bagus/ser-japanese | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null |

- ./gcc-arm-8.3-2019.03-x86_64-arm-linux-gnueabihf.tar.xz

| Filename | Download URL | Comment |
|---|---|---|

|./gcc-arm-8.3-2019.03-x86_64-arm-linux-gnueabihf.tar.xz | <https://developer.arm.com/tools-and-software/open-source-software/developer-tools/gnu-toolchain/gnu-a/downloads/8-3-2019-03> | <https://developer.arm.com/downloads/-/gnu-a> |

|

BaptisteDoyen/camembert-base-xnli | [

"pytorch",

"tf",

"camembert",

"text-classification",

"fr",

"dataset:xnli",

"transformers",

"zero-shot-classification",

"xnli",

"nli",

"license:mit",

"has_space"

] | zero-shot-classification | {

"architectures": [

"CamembertForSequenceClassification"

],

"model_type": "camembert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 405,474 | 2022-09-07T13:15:31Z | ---

license: cc-by-4.0

---

Install Instructions

1. Download the model into Google Drive > AI > DiscoDiffusion > Models

2. Add the path '/content/drive/MyDrive/AI/DiscoDiffusion/Models/AIDM_130k_v01.pt' to Disco Diffusion Step 2 > Custom Model > Custom Path

3. In Custom Model Settings, add the code below

4. Run All

Custom Model Settings

---

#@markdown ####**Custom Model Settings:**

if diffusion_model == 'custom':

    model_config.update({

        'attention_resolutions': '32, 16, 8',

        'class_cond': False,

        'diffusion_steps': 1000,

        'rescale_timesteps': True,

        'image_size': 512,

        'learn_sigma': True,

        'noise_schedule': 'linear',

        'num_channels': 128,

        'num_heads': 4,

        'num_res_blocks': 2,

        'resblock_updown': True,

        'use_checkpoint': use_checkpoint,

        'use_fp16': True,

        'use_scale_shift_norm': True,

    })

|

BatuhanYilmaz/bert-finetuned-nerxD | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- huggingface/autotrain-data-emotions

co2_eq_emissions:

emissions: 0.05402221758817422

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1374752887

- CO2 Emissions (in grams): 0.0540

## Validation Metrics

- Loss: 0.122

- Accuracy: 0.940

- Macro F1: 0.912

- Micro F1: 0.940

- Weighted F1: 0.940

- Macro Precision: 0.902

- Micro Precision: 0.940

- Weighted Precision: 0.943

- Macro Recall: 0.928

- Micro Recall: 0.940

- Weighted Recall: 0.940
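
The macro, micro and weighted figures above differ only in how per-class scores are averaged. The sketch below, in plain Python with made-up labels (the label lists and the helper name `f1_averages` are illustrative assumptions, not data or code from this model), computes the three averages for F1. For single-label multi-class problems micro F1 equals accuracy, which is consistent with the matching Accuracy and Micro F1 values reported here.

```python
from collections import Counter


def f1_averages(y_true, y_pred):
    """Return (macro, micro, weighted) F1 for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # number of true examples per class
    per_class = {}
    tp_total = fp_total = fn_total = 0
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        per_class[label] = 2 * tp / denom if denom else 0.0
        tp_total += tp
        fp_total += fp
        fn_total += fn
    # Macro: every class counts equally, however rare it is.
    macro = sum(per_class.values()) / len(labels)
    # Micro: pool all decisions first, then compute one F1.
    micro = 2 * tp_total / (2 * tp_total + fp_total + fn_total)
    # Weighted: per-class F1 weighted by class frequency in y_true.
    weighted = sum(per_class[l] * support[l] for l in labels) / len(y_true)
    return macro, micro, weighted


# Hypothetical toy labels, not taken from the emotions dataset.
y_true = ["joy", "joy", "joy", "joy", "anger", "sadness"]
y_pred = ["joy", "joy", "joy", "joy", "joy", "sadness"]

macro, micro, weighted = f1_averages(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"macro={macro:.3f} micro={micro:.3f} weighted={weighted:.3f} accuracy={accuracy:.3f}")
```

In this toy case the rare class that is never predicted pulls the macro average well below the micro average, which is the usual reason a macro score trails the micro score on imbalanced data.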

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/huggingface/autotrain-emotions-1374752887

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("huggingface/autotrain-emotions-1374752887", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("huggingface/autotrain-emotions-1374752887", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

BatuhanYilmaz/code-search-net-tokenizer1 | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- huggingface/autotrain-data-emotions

co2_eq_emissions:

emissions: 0.030012388645982102

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1374752888

- CO2 Emissions (in grams): 0.0300

## Validation Metrics

- Loss: 0.136

- Accuracy: 0.938

- Macro F1: 0.901

- Micro F1: 0.938

- Weighted F1: 0.937

- Macro Precision: 0.923

- Micro Precision: 0.938

- Weighted Precision: 0.938

- Macro Recall: 0.888

- Micro Recall: 0.938

- Weighted Recall: 0.938

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/huggingface/autotrain-emotions-1374752888

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("huggingface/autotrain-emotions-1374752888", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("huggingface/autotrain-emotions-1374752888", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

BatuhanYilmaz/distilbert-base-uncased-finetuned-squad-d5716d28 | [

"pytorch",

"distilbert",

"fill-mask",

"en",

"dataset:squad",

"arxiv:1910.01108",

"transformers",

"question-answering",

"license:apache-2.0",

"autotrain_compatible"

] | question-answering | {

"architectures": [

"DistilBertForMaskedLM"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 18 | null | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- huggingface/autotrain-data-emotions

co2_eq_emissions:

emissions: 5.378843181503548

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1374752889

- CO2 Emissions (in grams): 5.3788

## Validation Metrics

- Loss: 0.136

- Accuracy: 0.938

- Macro F1: 0.903

- Micro F1: 0.938

- Weighted F1: 0.938

- Macro Precision: 0.925

- Micro Precision: 0.938

- Weighted Precision: 0.939

- Macro Recall: 0.890

- Micro Recall: 0.938

- Weighted Recall: 0.938

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/huggingface/autotrain-emotions-1374752889

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("huggingface/autotrain-emotions-1374752889", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("huggingface/autotrain-emotions-1374752889", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

BatuhanYilmaz/dummy-model | [

"tf",

"camembert",

"fill-mask",

"transformers",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"CamembertForMaskedLM"

],

"model_type": "camembert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | ---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- huggingface/autotrain-data-emotions

co2_eq_emissions:

emissions: 18.738323825083565

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 1374752890

- CO2 Emissions (in grams): 18.7383

## Validation Metrics

- Loss: 0.141

- Accuracy: 0.939

- Macro F1: 0.905

- Micro F1: 0.939

- Weighted F1: 0.939

- Macro Precision: 0.914

- Micro Precision: 0.939

- Weighted Precision: 0.942

- Macro Recall: 0.903

- Micro Recall: 0.939

- Weighted Recall: 0.939

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/huggingface/autotrain-emotions-1374752890

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("huggingface/autotrain-emotions-1374752890", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("huggingface/autotrain-emotions-1374752890", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

BatuhanYilmaz/mlm-finetuned-imdb | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- yelp_review_full

metrics:

- accuracy

model-index:

- name: Bert_Classifier

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: yelp_review_full

type: yelp_review_full

config: yelp_review_full

split: train

args: yelp_review_full

metrics:

- name: Accuracy

type: accuracy

value: 0.634

---


# Bert_Classifier

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the yelp_review_full dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0546

- Accuracy: 0.634

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.9528 | 1.0 | 2500 | 0.9097 | 0.5985 |

| 0.7607 | 2.0 | 5000 | 0.8969 | 0.627 |

| 0.5039 | 3.0 | 7500 | 1.0546 | 0.634 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Baybars/debateGPT | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- MRC

- Natural Questions List

- xlm-roberta-large

language:

- multilingual

---

# Model description

An XLM-RoBERTa reading comprehension model for list question answering. It starts from a fine-tuned [xlm-roberta-large](https://huggingface.co/xlm-roberta-large/) model and is further fine-tuned on the list questions in the [Natural Questions](https://huggingface.co/datasets/natural_questions) dataset.

## Intended uses & limitations

You can use the raw model for the reading comprehension task. Biases present in the underlying language model, xlm-roberta-large, may carry over to our fine-tuned model, listqa_nq-task-xlm-roberta-large.

## Usage

You can use this model directly with the [PrimeQA](https://github.com/primeqa/primeqa) pipeline for reading comprehension; see the [listqa.ipynb](https://github.com/primeqa/primeqa/blob/main/notebooks/mrc/listqa.ipynb) notebook.

### BibTeX entry and citation info

```bibtex

@article{kwiatkowski-etal-2019-natural,

title = "Natural Questions: A Benchmark for Question Answering Research",

author = "Kwiatkowski, Tom and

Palomaki, Jennimaria and

Redfield, Olivia and

Collins, Michael and

Parikh, Ankur and

Alberti, Chris and

Epstein, Danielle and

Polosukhin, Illia and

Devlin, Jacob and

Lee, Kenton and

Toutanova, Kristina and

Jones, Llion and

Kelcey, Matthew and

Chang, Ming-Wei and

Dai, Andrew M. and

Uszkoreit, Jakob and

Le, Quoc and

Petrov, Slav",

journal = "Transactions of the Association for Computational Linguistics",

volume = "7",

year = "2019",

address = "Cambridge, MA",

publisher = "MIT Press",

url = "https://aclanthology.org/Q19-1026",

doi = "10.1162/tacl_a_00276",

pages = "452--466",

}

```

```bibtex

@article{DBLP:journals/corr/abs-1911-02116,

author = {Alexis Conneau and

Kartikay Khandelwal and

Naman Goyal and

Vishrav Chaudhary and

Guillaume Wenzek and

Francisco Guzm{\'{a}}n and

Edouard Grave and

Myle Ott and

Luke Zettlemoyer and

Veselin Stoyanov},

title = {Unsupervised Cross-lingual Representation Learning at Scale},

journal = {CoRR},

volume = {abs/1911.02116},

year = {2019},

url = {http://arxiv.org/abs/1911.02116},

eprinttype = {arXiv},

eprint = {1911.02116},

timestamp = {Mon, 11 Nov 2019 18:38:09 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1911-02116.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

Bia18/Beatriz | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: Imene/vit-base-patch16-224-in21k-Wr

results: []

---


# Imene/vit-base-patch16-224-in21k-Wr

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.3104

- Train Accuracy: 0.9956

- Train Top-3-accuracy: 0.9981

- Validation Loss: 1.6041

- Validation Accuracy: 0.5770

- Validation Top-3-accuracy: 0.8035

- Epoch: 7

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 0.0001, 'decay_steps': 1500, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16
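
The `PolynomialDecay` entry in the optimizer config above describes how the learning rate shrinks over training. Below is a minimal sketch of that formula in plain Python, using the values from the config (`initial_learning_rate` 0.0001, `decay_steps` 1500, `end_learning_rate` 0.0, `power` 1.0, `cycle` False). The function name `polynomial_decay` is illustrative, and this is a re-statement of the formula rather than the Keras implementation itself.

```python
def polynomial_decay(step, initial_lr=1e-4, decay_steps=1500, end_lr=0.0, power=1.0):
    """Learning rate at `step` for a non-cycling polynomial decay schedule."""
    step = min(step, decay_steps)  # stay at end_lr once decay_steps is reached
    fraction_left = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


for step in (0, 750, 1500, 3000):
    print(step, polynomial_decay(step))
```

With `power` set to 1.0 the schedule is a straight line from the initial rate down to zero at step 1500, and it stays at zero afterwards.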

### Training results

| Train Loss | Train Accuracy | Train Top-3-accuracy | Validation Loss | Validation Accuracy | Validation Top-3-accuracy | Epoch |

|:----------:|:--------------:|:--------------------:|:---------------:|:-------------------:|:-------------------------:|:-----:|

| 3.8300 | 0.0583 | 0.1381 | 3.6801 | 0.0951 | 0.2203 | 0 |

| 3.2915 | 0.2418 | 0.4557 | 3.0277 | 0.3004 | 0.5507 | 1 |

| 2.6535 | 0.4438 | 0.7106 | 2.5932 | 0.3780 | 0.6546 | 2 |

| 2.0541 | 0.6308 | 0.8575 | 2.2998 | 0.4556 | 0.6871 | 3 |

| 1.4622 | 0.7924 | 0.9496 | 2.0054 | 0.5056 | 0.7234 | 4 |

| 0.9098 | 0.9201 | 0.9887 | 1.8079 | 0.5695 | 0.7785 | 5 |

| 0.5220 | 0.9821 | 0.9969 | 1.6444 | 0.5845 | 0.7922 | 6 |

| 0.3104 | 0.9956 | 0.9981 | 1.6041 | 0.5770 | 0.8035 | 7 |

### Framework versions

- Transformers 4.21.3

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Biasface/DDDC | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 14 | null | ---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-hmdb-2-shot

results:

- task:

type: video-classification

dataset:

name: HMDB-51

type: hmdb-51

metrics:

- type: top-1 accuracy

value: 53.0

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=2) on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model to determine how well a text matches a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) for fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, the shorter edge of each frame is resized, after which a center crop to a fixed resolution (such as 224x224) is taken. Frames are then normalized across the RGB channels with the ImageNet mean and standard deviation.
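
The validation preprocessing described above can be sketched on frame dimensions and pixel values alone. This is a simplified illustration in plain Python, not the X-CLIP code: the function names and the example frame size are made up, and resizing the shorter edge to exactly the crop size is an assumption (the linked `build.py` has the exact values). The mean and standard deviation are the commonly used ImageNet statistics.

```python
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def resize_shorter_edge(width, height, target=224):
    """New (width, height) with the shorter edge equal to `target`, aspect ratio kept."""
    scale = target / min(width, height)
    return round(width * scale), round(height * scale)


def center_crop_box(width, height, size=224):
    """(left, top, right, bottom) of a centered size x size crop."""
    left = (width - size) // 2
    top = (height - size) // 2
    return left, top, left + size, top + size


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, IMAGENET_MEAN, IMAGENET_STD))


# Hypothetical 320x240 input frame.
w, h = resize_shorter_edge(320, 240)
box = center_crop_box(w, h)
print((w, h), box, normalize_pixel((255, 255, 255)))
```

The same three steps are applied to every sampled frame of a video before the frames are stacked and passed to the model.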

## Evaluation results

This model achieves a top-1 accuracy of 53.0%.

|

BigDaddyNe1L/Hhaa | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-hmdb-8-shot

results:

- task:

type: video-classification

dataset:

name: HMDB-51

type: hmdb-51

metrics:

- type: top-1 accuracy

value: 62.8

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=8) on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.
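
Because video and text are embedded in a shared space, classification reduces to comparing one video embedding against the embedding of each candidate label. The sketch below uses plain Python and tiny made-up vectors; the embeddings, label names, helper names, and the scale factor of 100 are illustrative assumptions, not outputs or settings of this model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(video_emb, text_embs, scale=100.0):
    """Map each label to a probability based on scaled cosine similarity."""
    labels = list(text_embs)
    sims = [scale * cosine(video_emb, text_embs[label]) for label in labels]
    return dict(zip(labels, softmax(sims)))


# Made-up 3-dimensional embeddings, for illustration only.
video_embedding = [0.9, 0.1, 0.2]
label_embeddings = {
    "playing guitar": [1.0, 0.0, 0.1],
    "riding a bike": [0.0, 1.0, 0.3],
}

probs = classify(video_embedding, label_embeddings)
best = max(probs, key=probs.get)
print(best, probs)
```

Zero-shot and few-shot classification differ in how the model was trained, not in this scoring step: in both cases the label with the most similar text embedding wins.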

## Intended uses & limitations

You can use the raw model to determine how well a text matches a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) for fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, the shorter edge of each frame is resized, after which a center crop to a fixed resolution (such as 224x224) is taken. Frames are then normalized across the RGB channels with the ImageNet mean and standard deviation.

## Evaluation results

This model achieves a top-1 accuracy of 62.8%.

|

BigSalmon/FormalBerta | [

"pytorch",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 10 | null | ---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-ucf-8-shot

results:

- task:

type: video-classification

dataset:

name: UCF101

type: ucf101

metrics:

- type: top-1 accuracy

value: 88.3

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=8) on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model to determine how well a text matches a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) for fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, the shorter edge of each frame is resized, after which a center crop to a fixed resolution (such as 224x224) is taken. Frames are then normalized across the RGB channels with the ImageNet mean and standard deviation.

## Evaluation results

This model achieves a top-1 accuracy of 88.3%.

|

BigSalmon/FormalBerta2 | [

"pytorch",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 16 | null | ---

language: en

widget:

- text: "Moody’s decision to upgrade the credit rating of Air Liquide is all the more remarkable as it is taking place in a more difficult macroeconomic and geopolitical environment. It underlines the Group’s capacity to maintain a high level of cash flow despite the fluctuations of the economy. Following Standard & Poor’s decision to upgrade Air Liquide’s credit rating, this decision recognizes the Group’s level of debt, which has been brought back to its pre-Airgas 2016 acquisition level in five years. It also reflects the largely demonstrated resilience of the Group’s business model."

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: roberta-sent-generali

results: []

---


# roberta-sent-generali

This model is a fine-tuned version of RoBERTa Large, trained on a private dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4885

- F1: 0.9104

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 5
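
Two of the hyperparameters above interact in ways that are easy to misread: `total_train_batch_size` is `train_batch_size` times `gradient_accumulation_steps`, and the `linear` scheduler first ramps the learning rate up over the warmup steps and then decays it to zero. Below is a rough sketch in plain Python, assuming a single device and the 1310 total optimizer steps shown in the training results table. The function name `linear_warmup_lr` is illustrative, not a Trainer API.

```python
def linear_warmup_lr(step, base_lr=5e-5, warmup_steps=100, total_steps=1310):
    """Learning rate at optimizer step `step`: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


train_batch_size = 8
gradient_accumulation_steps = 2
# Samples contributing to each optimizer update (single device assumed).
total_train_batch_size = train_batch_size * gradient_accumulation_steps

for step in (0, 50, 100, 705, 1310):
    print(step, linear_warmup_lr(step))
```

The peak rate of 5e-05 is reached only at step 100; before and after that point the effective learning rate is lower than the configured value.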

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.355 | 1.0 | 262 | 0.3005 | 0.8829 |

| 0.2201 | 2.0 | 524 | 0.3566 | 0.8930 |

| 0.1293 | 3.0 | 786 | 0.3644 | 0.9193 |

| 0.0662 | 4.0 | 1048 | 0.4202 | 0.9145 |

| 0.026 | 5.0 | 1310 | 0.4885 | 0.9104 |
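
The evaluation figures reported at the top of the card (Loss 0.4885, F1 0.9104) match the final epoch in this table. The card does not say whether an earlier checkpoint was kept, so the following is only a sketch of how the rows could be compared; `best_by_f1` and `best_by_loss` are hypothetical helper names.

```python
# Rows copied from the table above: (epoch, step, validation loss, F1).
RESULTS = [
    (1, 262, 0.3005, 0.8829),
    (2, 524, 0.3566, 0.8930),
    (3, 786, 0.3644, 0.9193),
    (4, 1048, 0.4202, 0.9145),
    (5, 1310, 0.4885, 0.9104),
]


def best_by_f1(rows):
    """Return the row with the highest F1 score."""
    return max(rows, key=lambda row: row[3])


def best_by_loss(rows):
    """Return the row with the lowest validation loss."""
    return min(rows, key=lambda row: row[2])


if __name__ == "__main__":
    print("best F1:", best_by_f1(RESULTS))
    print("lowest validation loss:", best_by_loss(RESULTS))
    print("final epoch:", RESULTS[-1])
```

In these rows, F1 is highest at epoch 3 and validation loss is lowest at epoch 1, while training loss keeps falling through epoch 5.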

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

BigSalmon/GPTHeHe | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"has_space"

] | text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: mit

---

### Cheburashka on Stable Diffusion

This is the `<cheburashka>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

BigSalmon/GPTIntro | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-zero-shot

results:

- task:

type: video-classification

dataset:

name: HMDB-51

type: hmdb-51

metrics:

- type: top-1 accuracy

value: 44.6