modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |

|---|---|---|---|---|---|---|

AlekseyKorshuk/comedy-scripts | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

] | text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 20 | 2022-09-13T23:26:30Z | ---

license: mit

---

### Tubby Cats on Stable Diffusion

This is the `<tubby>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

AlexMaclean/sentence-compression-roberta | [

"pytorch",

"roberta",

"token-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"RobertaForTokenClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 13 | null | ```

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("BigSalmon/Infill04")

model = AutoModelForCausalLM.from_pretrained("BigSalmon/Infill04")

```

```

Try it out here:

https://huggingface.co/spaces/BigSalmon/TestAnyGPTModel

```

```

prompt = """few sights are as [blank] new york city as the colorful, flashing signage of its bodegas [sep]"""

input_ids = tokenizer.encode(prompt, return_tensors='pt')

outputs = model.generate(input_ids=input_ids,

max_length=10 + len(prompt),

temperature=1.0,

top_k=50,

top_p=0.95,

do_sample=True,

num_return_sequences=5,

early_stopping=True)

for i in range(5):

    print(tokenizer.decode(outputs[i]))

```

Most likely outputs (Disclaimer: I highly recommend using this over just generating):

```

import torch

prompt = """few sights are as [blank] new york city as the colorful, flashing signage of its bodegas [sep]"""

text = tokenizer.encode(prompt)

# Use the GPU when available; the model and its input must share a device.

device = "cuda" if torch.cuda.is_available() else "cpu"

model = model.to(device)

myinput, past_key_values = torch.tensor([text]).to(device), None

logits, past_key_values = model(myinput, past_key_values=past_key_values, return_dict=False)

# Keep only the scores for the token that follows the prompt.

logits = logits[0, -1]

probabilities = torch.nn.functional.softmax(logits, dim=-1)

best_logits, best_indices = logits.topk(250)

best_words = [tokenizer.decode([idx.item()]) for idx in best_indices]

text.append(best_indices[0].item())

best_probabilities = probabilities[best_indices].tolist()

words = []

print(best_words)

```
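The snippet above scores every vocabulary entry for the next position and keeps the 250 highest-scoring candidates. As a minimal, framework-free sketch of that ranking step, the softmax and top-k selection can be written in plain Python. The vocabulary and logits below are made up for illustration and are not taken from the model.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, vocab, k):
    """Return the k most probable (token, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical vocabulary and logits, for illustration only.
toy_vocab = [" synonymous", " emblematic", " typical", " blue"]
toy_logits = [3.0, 2.0, 1.0, -1.0]

for token, prob in top_k(toy_logits, toy_vocab, k=3):
    print(f"{token!r}: {prob:.3f}")
```

Because softmax preserves order, ranking by logit (as the snippet above does with `logits.topk`) and ranking by probability select the same tokens.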

Infill / Infilling / Masking / Phrase Masking

```

His contention [blank] by the evidence [sep] was refuted [answer]

***

Few sights are as [blank] New York City as the colorful, flashing signage of its bodegas [sep] synonymous with [answer]

***

When Rick won the lottery, all of his distant relatives [blank] his winnings [sep] clamored for [answer]

***

The library’s quiet atmosphere encourages visitors to [blank] in their work [sep] immerse themselves [answer]

***

```
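In the examples above, each `[blank]` marks a gap, `[sep]` ends the prompt, and every phrase the model produces is closed by `[answer]`. A small sketch of how a completed line could be turned back into a plain sentence follows. The helper name `fill_blanks` is hypothetical and not part of the model's tooling.

```python
def fill_blanks(completed):
    """Substitute each [blank] with the matching phrase listed after [sep]."""
    text, _, tail = completed.partition("[sep]")
    # Every answer is terminated by an [answer] marker; drop empty leftovers.
    answers = [a.strip() for a in tail.split("[answer]") if a.strip()]
    pieces = text.strip().split("[blank]")
    if len(answers) != len(pieces) - 1:
        raise ValueError("number of answers does not match number of blanks")
    result = pieces[0]
    for answer, piece in zip(answers, pieces[1:]):
        result += answer + piece
    return result


example = "His contention [blank] by the evidence [sep] was refuted [answer]"
print(fill_blanks(example))
```

The same function handles lines with several blanks, provided the answers appear in the same order as the blanks.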

```

original: Other film stars to have appeared in Scrubs include Heather Graham, while Friends actor Matthew Perry has guest-starred and directed an episode of the [MASK] star, who recently played the title role in historical blockbuster Alexander, will make a cameo appearance as an unruly Irishman. Its leading star, Zach Braff, has recently [MASK] the big screen in Garden State, which he also directed. Farrell is pencilled in to [MASK] of Crockett in a film version of 1980s police [MASK] Farrell's appearance is said to be a result of his friendship with Zach Braff, who stars in the programme.

infill: Other film stars to have appeared in Scrubs include Heather Graham, while Friends actor Matthew Perry has guest-starred and directed an episode of the show. The film star, who recently played the title role in historical blockbuster Alexander, will make a cameo appearance as an unruly Irishman. Its leading star, Zach Braff, has recently been seen on the big screen in Garden State, which he also directed. Farrell is pencilled in to play the role of Crockett in a film version of 1980s police drama Miami Vice. Farrell's appearance is said to be a result of his friendship with Zach Braff, who stars in the programme.

``` |

AlexMaclean/sentence-compression | [

"pytorch",

"distilbert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"DistilBertForTokenClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 16 | null | ---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.840515873015873

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6818181818181818

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.685459940652819

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.8076709282934964

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.94

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6535087719298246

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6388888888888888

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9281301792978756

- name: F1 (macro)

type: f1_macro

value: 0.9254620165261186

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8856807511737088

- name: F1 (macro)

type: f1_macro

value: 0.7505936116426153

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.7210184182015169

- name: F1 (macro)

type: f1_macro

value: 0.707381518416115

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9625791194268624

- name: F1 (macro)

type: f1_macro

value: 0.8830231594217628

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9207145095581323

- name: F1 (macro)

type: f1_macro

value: 0.9189981669115016

---

# relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via the [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more details).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.6818181818181818

- Accuracy on SAT: 0.685459940652819

- Accuracy on BATS: 0.8076709282934964

- Accuracy on U2: 0.6535087719298246

- Accuracy on U4: 0.6388888888888888

- Accuracy on Google: 0.94

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9281301792978756

- Micro F1 score on CogALexV: 0.8856807511737088

- Micro F1 score on EVALution: 0.7210184182015169

- Micro F1 score on K&H+N: 0.9625791194268624

- Micro F1 score on ROOT09: 0.9207145095581323

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.840515873015873

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as follows.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```
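Relation embeddings such as the vector returned above are typically compared with cosine similarity, for example to check whether two word pairs stand in a similar relation. The sketch below shows only that comparison step and does not call the relbert library. The three-dimensional vectors are hypothetical stand-ins for real 1024-dimensional embeddings.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical 3-dimensional stand-ins for real relation embeddings.
tokyo_japan = [0.9, 0.1, 0.2]
paris_france = [0.8, 0.2, 0.1]
apple_fruit = [0.1, 0.9, 0.3]

print(cosine_similarity(tokyo_japan, paris_france))
print(cosine_similarity(tokyo_japan, apple_fruit))
```

With real embeddings, a higher score for the first comparison than for the second would suggest that the capital-of pairs are closer in relation space than an unrelated pair.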

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: I wasn’t aware of this relationship, but I just read in the encyclopedia that <subj> is the <mask> of <obj>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 25

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-mask-prompt-d-nce-conceptnet-validated/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

AlexN/xls-r-300m-fr | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"fr",

"dataset:mozilla-foundation/common_voice_8_0",

"transformers",

"generated_from_trainer",

"hf-asr-leaderboard",

"mozilla-foundation/common_voice_8_0",

"robust-speech-event",

"model-index"

] | automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 17 | null | data: https://github.com/BigSalmon2/InformalToFormalDataset

```

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("BigSalmon/InformalToFormalLincoln78Paraphrase")

model = AutoModelForCausalLM.from_pretrained("BigSalmon/InformalToFormalLincoln78Paraphrase")

```

```

Demo:

https://huggingface.co/spaces/BigSalmon/FormalInformalConciseWordy

```

```

prompt = """informal english: corn fields are all across illinois, visible once you leave chicago.\nTranslated into the Style of Abraham Lincoln:"""

input_ids = tokenizer.encode(prompt, return_tensors='pt')

outputs = model.generate(input_ids=input_ids,

max_length=10 + len(prompt),

temperature=1.0,

top_k=50,

top_p=0.95,

do_sample=True,

num_return_sequences=5,

early_stopping=True)

for i in range(5):

    print(tokenizer.decode(outputs[i]))

```

Most likely outputs (Disclaimer: I highly recommend using this over just generating):

```

import torch

prompt = """informal english: corn fields are all across illinois, visible once you leave chicago.\nTranslated into the Style of Abraham Lincoln:"""

text = tokenizer.encode(prompt)

# Use the GPU when available; the model and its input must share a device.

device = "cuda" if torch.cuda.is_available() else "cpu"

model = model.to(device)

myinput, past_key_values = torch.tensor([text]).to(device), None

logits, past_key_values = model(myinput, past_key_values=past_key_values, return_dict=False)

# Keep only the scores for the token that follows the prompt.

logits = logits[0, -1]

probabilities = torch.nn.functional.softmax(logits, dim=-1)

best_logits, best_indices = logits.topk(250)

best_words = [tokenizer.decode([idx.item()]) for idx in best_indices]

text.append(best_indices[0].item())

best_probabilities = probabilities[best_indices].tolist()

words = []

print(best_words)

```

```

How To Make Prompt:

informal english: i am very ready to do just that.

Translated into the Style of Abraham Lincoln: you can assure yourself of my readiness to work toward this end.

Translated into the Style of Abraham Lincoln: please be assured that i am most ready to undertake this laborious task.

***

informal english: space is huge and needs to be explored.

Translated into the Style of Abraham Lincoln: space awaits traversal, a new world whose boundaries are endless.

Translated into the Style of Abraham Lincoln: space is a ( limitless / boundless ) expanse, a vast virgin domain awaiting exploration.

***

informal english: corn fields are all across illinois, visible once you leave chicago.

Translated into the Style of Abraham Lincoln: corn fields ( permeate illinois / span the state of illinois / ( occupy / persist in ) all corners of illinois / line the horizon of illinois / envelop the landscape of illinois ), manifesting themselves visibly as one ventures beyond chicago.

informal english:

```
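The prompt layout above (solved examples separated by `***`, ending on an unanswered label) can be assembled programmatically. The following is a minimal sketch. The helper `build_prompt` is hypothetical, and it assumes single newlines between lines, as in the `\n` of the generation snippet earlier in this card.

```python
STYLE_LABEL = "Translated into the Style of Abraham Lincoln:"


def build_prompt(examples, query):
    """Join solved examples with '***' and end on an open style label."""
    blocks = []
    for informal, rewrites in examples:
        lines = [f"informal english: {informal}"]
        lines += [f"{STYLE_LABEL} {rewrite}" for rewrite in rewrites]
        blocks.append("\n".join(lines))
    # The final block has no rewrite, so the model continues from the label.
    blocks.append(f"informal english: {query}\n{STYLE_LABEL}")
    return "\n***\n".join(blocks)


few_shot = [
    (
        "space is huge and needs to be explored.",
        ["space awaits traversal, a new world whose boundaries are endless."],
    ),
]
print(build_prompt(few_shot, "corn fields are all across illinois, visible once you leave chicago."))
```

The returned string can be passed to `tokenizer.encode` in place of the hand-written `prompt`.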

```

original: microsoft word's [MASK] pricing invites competition.

Translated into the Style of Abraham Lincoln: microsoft word's unconscionable pricing invites competition.

***

original: the library’s quiet atmosphere encourages visitors to [blank] in their work.

Translated into the Style of Abraham Lincoln: the library’s quiet atmosphere encourages visitors to immerse themselves in their work.

```

```

Essay Intro (Warriors vs. Rockets in Game 7):

text: eagerly anticipated by fans, game 7's are the highlight of the post-season.

text: ever-building in suspense, game 7's have the crowd captivated.

***

Essay Intro (South Korean TV Is Becoming Popular):

text: maturing into a bona fide paragon of programming, south korean television ( has much to offer / entertains without fail / never disappoints ).

text: increasingly held in critical esteem, south korean television continues to impress.

text: at the forefront of quality content, south korea is quickly achieving celebrity status.

***

Essay Intro (

```

```

Search: What is the definition of Checks and Balances?

https://en.wikipedia.org/wiki/Checks_and_balances

Checks and Balances is the idea of having a system where each and every action in government should be subject to one or more checks that would not allow one branch or the other to overly dominate.

https://www.harvard.edu/glossary/Checks_and_Balances

Checks and Balances is a system that allows each branch of government to limit the powers of the other branches in order to prevent abuse of power.

https://www.law.cornell.edu/library/constitution/Checks_and_Balances

Checks and Balances is a system of separation through which branches of government can control the other, thus preventing excess power.

***

Search: What is the definition of Separation of Powers?

https://en.wikipedia.org/wiki/Separation_of_powers

The separation of powers is a principle in government, whereby governmental powers are separated into different branches, each with their own set of powers, that prevent one branch from aggregating too much power.

https://www.yale.edu/tcf/Separation_of_Powers.html

Separation of Powers is the division of governmental functions between the executive, legislative and judicial branches, clearly demarcating each branch's authority, in the interest of ensuring that individual liberty or security is not undermined.

***

Search: What is the definition of Connection of Powers?

https://en.wikipedia.org/wiki/Connection_of_powers

Connection of Powers is a feature of some parliamentary forms of government where different branches of government are intermingled, typically the executive and legislative branches.

https://simple.wikipedia.org/wiki/Connection_of_powers

The term Connection of Powers describes a system of government in which there is overlap between different parts of the government.

***

Search: What is the definition of

```

```

Search: What are phrase synonyms for "second-guess"?

https://www.powerthesaurus.org/second-guess/synonyms

Shortest to Longest:

- feel dubious about

- raise an eyebrow at

- wrinkle their noses at

- cast a jaundiced eye at

- teeter on the fence about

***

Search: What are phrase synonyms for "mean to newbies"?

https://www.powerthesaurus.org/mean_to_newbies/synonyms

Shortest to Longest:

- readiness to balk at rookies

- absence of tolerance for novices

- hostile attitude toward newcomers

***

Search: What are phrase synonyms for "make use of"?

https://www.powerthesaurus.org/make_use_of/synonyms

Shortest to Longest:

- call upon

- glean value from

- reap benefits from

- derive utility from

- seize on the merits of

- draw on the strength of

- tap into the potential of

***

Search: What are phrase synonyms for "hurting itself"?

https://www.powerthesaurus.org/hurting_itself/synonyms

Shortest to Longest:

- erring

- slighting itself

- forfeiting its integrity

- doing itself a disservice

- evincing a lack of backbone

***

Search: What are phrase synonyms for "

```

```

- nebraska

- unicameral legislature

- different from federal house and senate

text: featuring a unicameral legislature, nebraska's political system stands in stark contrast to the federal model, comprised of a house and senate.

***

- penny has practically no value

- should be taken out of circulation

- just as other coins have been in us history

- lost use

- value not enough

- to make environmental consequences worthy

text: all but valueless, the penny should be retired. as with other coins in american history, it has become defunct. too minute to warrant the environmental consequences of its production, it has outlived its usefulness.

***

-

```

```

original: sports teams are profitable for owners. [MASK], their valuations experience a dramatic uptick.

infill: sports teams are profitable for owners. ( accumulating vast sums / stockpiling treasure / realizing benefits / cashing in / registering robust financials / scoring on balance sheets ), their valuations experience a dramatic uptick.

***

original:

```
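Many outputs in this card pack several alternatives into one line with the `( a / b / c )` notation, sometimes nested. As a rough sketch, such a line could be expanded into its individual sentences with a small recursive parser. The function names are hypothetical, and the parser assumes that every parenthesis and slash in the text belongs to this notation.

```python
import re


def expand(text):
    """Return every sentence obtainable by picking one option per ( a / b ) group."""
    tokens = re.findall(r"[()/]|[^\s()/]+", text)
    variants, position = _parse_sequence(tokens, 0)
    if position != len(tokens):
        raise ValueError("unexpected '/' or ')' outside a group")
    sentences = [" ".join(words) for words in variants]
    # Re-attach punctuation that was split off after a closing parenthesis.
    return [re.sub(r"\s+([,.;:!?])", r"\1", s) for s in sentences]


def _parse_sequence(tokens, position):
    """Parse words and groups until '/', ')' or the end; return (variants, next position)."""
    variants = [[]]
    while position < len(tokens) and tokens[position] not in ("/", ")"):
        if tokens[position] == "(":
            options, position = _parse_group(tokens, position + 1)
            variants = [left + right for left in variants for right in options]
        else:
            variants = [left + [tokens[position]] for left in variants]
            position += 1
    return variants, position


def _parse_group(tokens, position):
    """Parse 'a / b / c )' after an opening '('; return (options, next position)."""
    options = []
    while True:
        branch, position = _parse_sequence(tokens, position)
        options.extend(branch)
        if position >= len(tokens):
            raise ValueError("missing ')'")
        if tokens[position] == ")":
            return options, position + 1
        position += 1  # skip the '/' separator


for sentence in expand("sports teams ( cashing in / realizing benefits ), their valuations rise."):
    print(sentence)
```

Text that uses parentheses for other purposes, such as numbered outline items, would need to be excluded before calling `expand`.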

```

wordy: classical music is becoming less popular more and more.

Translate into Concise Text: interest in classical music is fading.

***

wordy:

```

```

sweet: savvy voters ousted him.

longer: voters who were informed delivered his defeat.

***

sweet:

```

```

1: commercial space company spacex plans to launch a whopping 52 flights in 2022.

2: spacex, a commercial space company, intends to undertake a total of 52 flights in 2022.

3: in 2022, commercial space company spacex has its sights set on undertaking 52 flights.

4: 52 flights are in the pipeline for 2022, according to spacex, a commercial space company.

5: a commercial space company, spacex aims to conduct 52 flights in 2022.

***

1:

```

Keywords to sentences or sentence.

```

ngos are characterized by:

□ voluntary citizens' group that is organized on a local, national or international level

□ encourage political participation

□ often serve humanitarian functions

□ work for social, economic, or environmental change

***

what are the drawbacks of living near an airbnb?

□ noise

□ parking

□ traffic

□ security

□ strangers

***

```

```

original: musicals generally use spoken dialogue as well as songs to convey the story. operas are usually fully sung.

adapted: musicals generally use spoken dialogue as well as songs to convey the story. ( in a stark departure / on the other hand / in contrast / by comparison / at odds with this practice / far from being alike / in defiance of this standard / running counter to this convention ), operas are usually fully sung.

***

original: akoya and tahitian are types of pearls. akoya pearls are mostly white, and tahitian pearls are naturally dark.

adapted: akoya and tahitian are types of pearls. ( a far cry from being indistinguishable / easily distinguished / on closer inspection / setting them apart / not to be mistaken for one another / hardly an instance of mere synonymy / differentiating the two ), akoya pearls are mostly white, and tahitian pearls are naturally dark.

***

original:

```

```

original: had trouble deciding.

translated into journalism speak: wrestled with the question, agonized over the matter, furrowed their brows in contemplation.

***

original:

```

```

input: not loyal

1800s english: ( two-faced / inimical / perfidious / duplicitous / mendacious / double-dealing / shifty ).

***

input:

```

```

first: ( was complicit in / was involved in ).

antonym: ( was blameless / was not an accomplice to / had no hand in / was uninvolved in ).

***

first: ( have no qualms about / see no issue with ).

antonym: ( are deeply troubled by / harbor grave reservations about / have a visceral aversion to / take ( umbrage at / exception to ) / are wary of ).

***

first: ( do not see eye to eye / disagree often ).

antonym: ( are in sync / are united / have excellent rapport / are like-minded / are in step / are of one mind / are in lockstep / operate in perfect harmony / march in lockstep ).

***

first:

```

```

stiff with competition, law school {A} is the launching pad for countless careers, {B} is a crowded field, {C} ranks among the most sought-after professional degrees, {D} is a professional proving ground.

***

languishing in viewership, saturday night live {A} is due for a creative renaissance, {B} is no longer a ratings juggernaut, {C} has been eclipsed by its imitators, {D} can still find its mojo.

***

dubbed the "manhattan of the south," atlanta {A} is a bustling metropolis, {B} is known for its vibrant downtown, {C} is a city of rich history, {D} is the pride of georgia.

***

embattled by scandal, harvard {A} is feeling the heat, {B} cannot escape the media glare, {C} is facing its most intense scrutiny yet, {D} is in the spotlight for all the wrong reasons.

```

Infill / Infilling / Masking / Phrase Masking (Works pretty decently actually, especially when you use logprobs code from above):

```

his contention [blank] by the evidence [sep] was refuted [answer]

***

few sights are as [blank] new york city as the colorful, flashing signage of its bodegas [sep] synonymous with [answer]

***

when rick won the lottery, all of his distant relatives [blank] his winnings [sep] clamored for [answer]

***

the library’s quiet atmosphere encourages visitors to [blank] in their work [sep] immerse themselves [answer]

***

the joy of sport is that no two games are alike. for every exhilarating experience, however, there is an interminable one. the national pastime, unfortunately, has a penchant for the latter. what begins as a summer evening at the ballpark can quickly devolve into a game of tedium. the primary culprit is the [blank] of play. from batters readjusting their gloves to fielders spitting on their mitts, the action is [blank] unnecessary interruptions. the sport's future is [blank] if these tendencies are not addressed [sep] plodding pace [answer] riddled with [answer] bleak [answer]

***

microsoft word's [blank] pricing [blank] competition [sep] unconscionable [answer] invites [answer]

***

```
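Going the other way, an infill prompt in the format above can be produced from a finished sentence by masking chosen phrases. The sketch below uses a hypothetical helper, `make_infill_prompt`, and assumes the phrases are given in the order in which they occur in the sentence.

```python
def make_infill_prompt(sentence, phrases):
    """Replace each phrase, in order of appearance, with [blank]; return (prompt, target)."""
    masked = sentence
    cursor = 0
    for phrase in phrases:
        index = masked.find(phrase, cursor)
        if index == -1:
            raise ValueError(f"phrase not found in order: {phrase!r}")
        masked = masked[:index] + "[blank]" + masked[index + len(phrase):]
        cursor = index + len("[blank]")
    prompt = f"{masked} [sep]"
    # Each answer is followed by the [answer] marker, matching the examples above.
    target = "".join(f" {phrase} [answer]" for phrase in phrases)
    return prompt, target


prompt, target = make_infill_prompt(
    "microsoft word's unconscionable pricing invites competition",
    ["unconscionable", "invites"],
)
print(prompt)
print(prompt + target)
```

The `prompt` part is what would be fed to the model; `prompt + target` reproduces the full line format shown in the examples.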

Backwards

```

Essay Intro (National Parks):

text: tourists are at ease in the national parks, ( swept up in the beauty of their natural splendor ).

***

Essay Intro (D.C. Statehood):

text: washington, d.c. is a city of outsize significance, ( ground zero for the nation's political life / center stage for the nation's political machinations ).

```

```

topic: the Golden State Warriors.

characterization 1: the reigning kings of the NBA.

characterization 2: possessed of a remarkable cohesion.

characterization 3: helmed by superstar Stephen Curry.

characterization 4: perched atop the league’s hierarchy.

characterization 5: boasting a litany of hall-of-famers.

***

topic: emojis.

characterization 1: shorthand for a digital generation.

characterization 2: more versatile than words.

characterization 3: the latest frontier in language.

characterization 4: a form of self-expression.

characterization 5: quintessentially millennial.

characterization 6: reflective of a tech-centric world.

***

topic:

```

```

regular: illinois went against the census' population-loss prediction by getting more residents.

VBG: defying the census' prediction of population loss, illinois experienced growth.

***

regular: microsoft word’s high pricing increases the likelihood of competition.

VBG: extortionately priced, microsoft word is inviting competition.

***

regular:

```

```

source: badminton should be more popular in the US.

QUERY: Based on the given topic, can you develop a story outline?

target: (1) games played with racquets are popular, (2) just look at tennis and ping pong, (3) but badminton underappreciated, (4) fun, fast-paced, competitive, (5) needs to be marketed more

text: the sporting arena is dominated by games that are played with racquets. tennis and ping pong, in particular, are immensely popular. somewhat curiously, however, badminton is absent from this pantheon. exciting, fast-paced, and competitive, it is an underappreciated pastime. all that it lacks is more effective marketing.

***

source: movies in theaters should be free.

QUERY: Based on the given topic, can you develop a story outline?

target: (1) movies provide vital life lessons, (2) many venues charge admission, (3) those without much money

text: the lessons that movies impart are far from trivial. the vast catalogue of cinematic classics is replete with inspiring sagas of friendship, bravery, and tenacity. it is regrettable, then, that admission to theaters is not free. in their current form, the doors of this most vital of institutions are closed to those who lack the means to pay.

***

source:

```

```

in the private sector, { transparency } is vital to the business’s credibility. the { disclosure of information } can be the difference between success and failure.

***

the labor market is changing, with { remote work } now the norm. this { flexible employment } allows the individual to design their own schedule.

***

the { cubicle } is the locus of countless grievances. many complain that the { enclosed workspace } restricts their freedom of movement.

***

```

```

indeed, many business leaders have { ceded control } to their workers. this change has led to a flowering of innovation.

question: what does “this change” mean in the above context?

(a) collaborative culture

(b) empowerment of employees

(c) decentralization of power

(d) participatory management

***

employees' refusal to assume { extraneous responsibilities } has not affected their performance in their official capacity. this trend, however, has been a particular irritant to management.

question: what does “this trend” mean in the above context?

(a) demarcating work boundaries

(b) resisting management's overreach

(c) sticking to one's prescribed duties

(d) working within a contract

***

in the private sector, { transparency } is vital to the business’s credibility. this orientation can be the difference between success and failure.

question: what does “this orientation” mean in the above context?

(a) visible business practices

(b) candor with the public

(c) open, honest communication

(d) culture of accountability

``` |

Aliskin/xlm-roberta-base-finetuned-marc | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

### Collage3-HubCity on Stable Diffusion

This is the `<C3Hub>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Amba/wav2vec2-large-xls-r-300m-turkish-colab | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

### Goku on Stable Diffusion

This is the `<goku>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Amrrs/indian-foods | [

"pytorch",

"tensorboard",

"vit",

"image-classification",

"transformers",

"huggingpics",

"model-index",

"autotrain_compatible"

] | image-classification | {

"architectures": [

"ViTForImageClassification"

],

"model_type": "vit",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: results

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# results

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.1822

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0
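
The `linear` scheduler listed above can be sketched as follows. This is a minimal illustration, assuming no warmup (none is listed) and the 39 total optimizer steps shown in the training-results table; `linear_lr` is a hypothetical helper name, not a function of the Trainer.

```python
def linear_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate after `step` updates under linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 2e-05   # learning_rate from the list above
TOTAL_STEPS = 39  # 3 epochs x 13 steps, taken from the training-results table

# Learning rate at the start and at each epoch boundary.
for step in (0, 13, 26, 39):
    print(step, linear_lr(step, TOTAL_STEPS, BASE_LR))
```

Under these assumptions the rate falls by one third of its initial value per epoch and reaches zero at the final step.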

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 13 | 3.1802 |

| No log | 2.0 | 26 | 3.1813 |

| No log | 3.0 | 39 | 3.1822 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.13.0.dev20220912

- Datasets 2.4.0

- Tokenizers 0.11.0

|

Analufm/Ana | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | Access to model ncthuan/tmp is restricted and you are not in the authorized list. Visit https://huggingface.co/ncthuan/tmp to ask for access. |

AndrewMcDowell/wav2vec2-xls-r-1b-japanese-hiragana-katakana | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"ja",

"dataset:common_voice",

"transformers",

"mozilla-foundation/common_voice_8_0",

"generated_from_trainer",

"robust-speech-event",

"hf-asr-leaderboard",

"license:apache-2.0"

] | automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | ---

license: mit

---

### Daycare Attendant Sun FNAF on Stable Diffusion

This is the `<biblic-sun-fnaf>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

AnonymousSub/AR_EManuals-RoBERTa | [

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | 2022-09-14T13:52:06Z | ---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-hoofdthemas

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-hoofdthemas

This model is a fine-tuned version of [GroNLP/bert-base-dutch-cased](https://huggingface.co/GroNLP/bert-base-dutch-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1925

- Accuracy: 0.7327

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 1.0712 | 1.0 | 2107 | 0.9968 | 0.7035 |

| 0.8567 | 2.0 | 4214 | 0.9297 | 0.7230 |

| 0.6865 | 3.0 | 6321 | 0.9586 | 0.7346 |

| 0.5546 | 4.0 | 8428 | 0.9767 | 0.7387 |

| 0.4429 | 5.0 | 10535 | 1.0708 | 0.7370 |

| 0.3395 | 6.0 | 12642 | 1.1925 | 0.7327 |
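
The headline figures reported above come from the final epoch. A short sketch of how one might pick the best epoch from the table instead is shown here; the tuples are copied from the table, and the variable names are illustrative only.

```python
# (epoch, validation_loss, accuracy), copied from the training-results table.
rows = [
    (1, 0.9968, 0.7035),
    (2, 0.9297, 0.7230),
    (3, 0.9586, 0.7346),
    (4, 0.9767, 0.7387),
    (5, 1.0708, 0.7370),
    (6, 1.1925, 0.7327),
]

# Epoch with the lowest validation loss and epoch with the highest accuracy.
best_by_loss = min(rows, key=lambda r: r[1])
best_by_accuracy = max(rows, key=lambda r: r[2])

print("lowest validation loss:", best_by_loss)
print("highest accuracy:", best_by_accuracy)
```

Validation loss rises after its minimum while training loss keeps falling, which is one common sign of overfitting, so an earlier checkpoint may be worth considering.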

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

AnonymousSub/AR_rule_based_twostagetriplet_hier_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | ---

license: apache-2.0

tags:

- Summarization

- generated_from_trainer

datasets:

- amazon_reviews_multi

metrics:

- rouge

model-index:

- name: t5-finetuned-amazon-english

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: amazon_reviews_multi

type: amazon_reviews_multi

config: en

split: train

args: en

metrics:

- name: Rouge1

type: rouge

value: 19.1814

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-finetuned-amazon-english

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the amazon_reviews_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 3.1713

- Rouge1: 19.1814

- Rouge2: 9.8673

- Rougel: 18.1982

- Rougelsum: 18.2963

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|

| 3.3583 | 1.0 | 771 | 3.2513 | 16.6865 | 9.0598 | 15.8299 | 15.8472 |

| 3.1022 | 2.0 | 1542 | 3.2147 | 16.8499 | 9.4849 | 16.1568 | 16.2437 |

| 3.0067 | 3.0 | 2313 | 3.1718 | 16.9516 | 8.762 | 16.104 | 16.2186 |

| 2.9482 | 4.0 | 3084 | 3.1854 | 18.9582 | 9.5416 | 18.0846 | 18.2938 |

| 2.8934 | 5.0 | 3855 | 3.1669 | 18.857 | 9.934 | 17.9027 | 18.0272 |

| 2.8389 | 6.0 | 4626 | 3.1782 | 18.6736 | 9.326 | 17.6943 | 17.8852 |

| 2.8174 | 7.0 | 5397 | 3.1709 | 18.4342 | 9.6936 | 17.5714 | 17.6516 |

| 2.8 | 8.0 | 6168 | 3.1713 | 19.1814 | 9.8673 | 18.1982 | 18.2963 |

### Framework versions

- Transformers 4.22.0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

AnonymousSub/AR_specter | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

license: apache-2.0

---

This is a copy of [trinart_stable_diffusion_v2](https://huggingface.co/naclbit/trinart_stable_diffusion_v2) ported for use with the [diffusers](https://github.com/huggingface/diffusers) library.

All credit for this model goes to [naclbit](https://huggingface.co/naclbit).

|

AnonymousSub/SR_EManuals-RoBERTa | [

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```
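
Until the author fills in the snippet above, the following is a minimal sketch of how an unconditional DDPM checkpoint is typically sampled with Diffusers. The repository id is an assumption taken from the TensorBoard link further down this card, and `generate_butterflies` is a hypothetical helper name.

```python
def generate_butterflies(num_images: int = 1,
                         repo_id: str = "rvidaurre/ddpm-butterflies-128"):
    """Sample `num_images` images from the DDPM checkpoint at `repo_id` (assumed id)."""
    # Imported lazily so this module can be loaded without diffusers installed.
    from diffusers import DDPMPipeline

    # Downloads the checkpoint on first use and builds the sampling pipeline.
    pipeline = DDPMPipeline.from_pretrained(repo_id)

    # The pipeline output exposes the generated PIL images via `.images`.
    return pipeline(batch_size=num_images).images
```

Calling `generate_butterflies(4)` would download the weights and return a list of four PIL images, each of which can be written to disk with `.save("butterfly.png")`. Sampling on a CPU can be slow.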

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/rvidaurre/ddpm-butterflies-128/tensorboard?#scalars)

|

AnonymousSub/SR_rule_based_roberta_hier_triplet_epochs_1_shard_1_wikiqa_copy | [

"pytorch",

"roberta",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: sbi-model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sbi-model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5290

- F1: 0.8211

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.813 | 1.0 | 40 | 1.5304 | 0.5227 |

| 1.2312 | 2.0 | 80 | 0.9138 | 0.7439 |

| 0.7428 | 3.0 | 120 | 0.6869 | 0.7518 |

| 0.5055 | 4.0 | 160 | 0.5766 | 0.8050 |

| 0.3581 | 5.0 | 200 | 0.5454 | 0.8052 |

| 0.2664 | 6.0 | 240 | 0.5208 | 0.8200 |

| 0.2145 | 7.0 | 280 | 0.5218 | 0.8241 |

| 0.1853 | 8.0 | 320 | 0.5290 | 0.8211 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Tokenizers 0.12.1

|

AnonymousSub/bert_mean_diff_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

language: en

thumbnail: http://www.huggingtweets.com/ashoswai/1663179098941/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1493344204449275911/qQgn0Rtu_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Ashok Swain</div>

<div style="text-align: center; font-size: 14px;">@ashoswai</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Ashok Swain.

| Data | Ashok Swain |

| --- | --- |

| Tweets downloaded | 3249 |

| Retweets | 664 |

| Short tweets | 157 |

| Tweets kept | 2428 |
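
The counts in the table are consistent with a purely subtractive filter. The check below is a sketch that assumes retweets and short tweets are disjoint groups, both dropped from the download.

```python
def tweets_kept(downloaded: int, retweets: int, short_tweets: int) -> int:
    """Tweets remaining after dropping retweets and short tweets (assumed disjoint)."""
    return downloaded - retweets - short_tweets


# Values copied from the table above.
kept = tweets_kept(downloaded=3249, retweets=664, short_tweets=157)
print(kept)
```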

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1x78488z/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @ashoswai's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2x38jczu) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2x38jczu/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/ashoswai')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

AnonymousSub/cline-emanuals-s10-AR | [

"pytorch",

"roberta",

"text-classification",

"transformers"

] | text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 27 | null | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: engg48112-ds

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# engg48112-ds

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1
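
Two of the values above follow from the others, and the sketch below illustrates both: the total batch size as per-device batch size times accumulation steps, and a cosine schedule with linear warmup. It assumes a single device (32 x 8 = 256 only holds for one). The card does not report the total step count, so `total_steps=5000` is a hypothetical value, and the function names are illustrative.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer update."""
    return per_device * grad_accum * num_devices


def cosine_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int) -> float:
    """Linear warmup to base_lr, then half-cosine decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase completed, clipped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * min(1.0, progress)))


print(effective_batch_size(per_device=32, grad_accum=8))

# Hypothetical run length; the card does not state the total number of steps.
for step in (0, 500, 1000, 3000, 5000):
    print(step, cosine_lr(step, total_steps=5000, base_lr=0.0005, warmup_steps=1000))
```

The rate peaks at the end of warmup (step 1000) and falls to half its peak midway through the decay phase.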

### Training results

### Framework versions

- Transformers 4.22.0

- Pytorch 1.12.1+cu113

- Tokenizers 0.12.1

|

AnonymousSub/dummy_2_parent | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

tags:

- FrozenLake-v1-8x8

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-isSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-8x8

type: FrozenLake-v1-8x8

metrics:

- type: mean_reward

value: 0.44 +/- 0.50

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="michael20at/q-FrozenLake-v1-4x4-isSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
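
The snippet above calls `evaluate_agent` without defining it. The following is a minimal sketch of what such a helper might look like: it acts greedily on the Q-table and reports the mean and standard deviation of episode rewards. It assumes the older Gym step API (a 4-tuple from `step`, a bare state from `reset`), and `ChainEnv` is a hypothetical toy environment included only so the sketch runs on its own.

```python
import statistics


def greedy_action(qtable, state):
    """Index of the highest-valued action for `state`."""
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


def evaluate_agent(env, max_steps, n_eval_episodes, qtable, eval_seed=None):
    """Run greedy episodes and return (mean_reward, std_reward)."""
    episode_rewards = []
    for episode in range(n_eval_episodes):
        seed = eval_seed[episode] if eval_seed else None
        state = env.reset(seed=seed)
        total = 0.0
        for _ in range(max_steps):
            state, reward, done, _info = env.step(greedy_action(qtable, state))
            total += reward
            if done:
                break
        episode_rewards.append(total)
    # Population standard deviation, matching the usual "mean +/- std" report.
    return statistics.mean(episode_rewards), statistics.pstdev(episode_rewards)


class ChainEnv:
    """Toy stand-in for a Gym environment (hypothetical, for illustration only)."""

    def __init__(self, n_states=4):
        self.n_states = n_states
        self.state = 0

    def reset(self, seed=None):
        self.state = 0
        return self.state

    def step(self, action):
        if action == 1:  # action 1 moves right; any other action stays put
            self.state += 1
        done = self.state == self.n_states - 1
        return self.state, (1.0 if done else 0.0), done, {}


qtable = [[0.0, 1.0] for _ in range(4)]  # always prefers "move right"
mean_reward, std_reward = evaluate_agent(ChainEnv(), max_steps=10, n_eval_episodes=5, qtable=qtable)
print(mean_reward, std_reward)
```

When every episode's reward is 0 or 1, as in FrozenLake, a mean of 0.44 implies a population standard deviation of sqrt(0.44 x 0.56), roughly 0.50, which agrees with the `mean_reward` reported in the metadata.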

|

AnonymousSub/hier_triplet_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
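
`load_from_hub` is likewise not defined in the snippet above. One plausible sketch, assuming the model is a pickled dictionary stored in the repository, downloads the file with `hf_hub_download` from `huggingface_hub` and unpickles it. `load_pickled_model` is a hypothetical helper split out so the local part can be exercised without network access.

```python
import pickle


def load_pickled_model(path):
    """Load a pickled model dictionary from a local file."""
    with open(path, "rb") as f:
        return pickle.load(f)


def load_from_hub(repo_id, filename):
    """Download `filename` from the Hub repo `repo_id` and unpickle it."""
    # Imported lazily so this module can be loaded without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    local_path = hf_hub_download(repo_id=repo_id, filename=filename)
    return load_pickled_model(local_path)
```

Because unpickling can execute arbitrary code, this approach is only appropriate for repositories you trust.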

|

AnonymousSub/roberta-base_wikiqa | [

"pytorch",

"roberta",

"text-classification",

"transformers"

] | text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

license: mit

---

### kaneoya sachiko on Stable Diffusion

This is the `<Kaneoya>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

AnonymousSub/rule_based_bert_hier_diff_equal_wts_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: mit

---

### Retro-Girl on Stable Diffusion

This is the `<retro-girl>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

AnonymousSub/rule_based_bert_mean_diff_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

license: mit

---

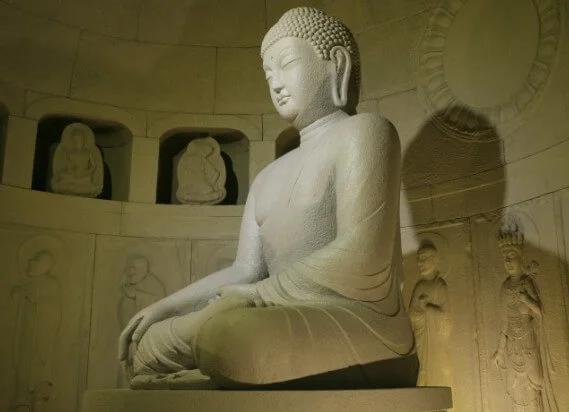

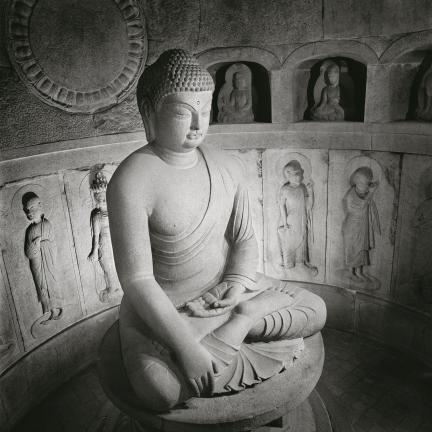

### Buddha statue on Stable Diffusion

This is the `<buddha-statue>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

AnonymousSub/rule_based_bert_quadruplet_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: mit

---

### Note: Waifu Diffusion was used

<https://huggingface.co/hakurei/waifu-diffusion>

### hitokomoru-style

Artist: <https://www.pixiv.net/en/users/30837811>

This is the `<hitokomoru-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

AnonymousSub/rule_based_bert_quadruplet_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 8 | null | ---

license: mit

---

### plant style on Stable Diffusion