| modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |
|---|---|---|---|---|---|---|

CAMeL-Lab/bert-base-arabic-camelbert-ca-pos-msa | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 71 | null | language: kor

thumbnail: "Keywords to Sentences"

tags:

- keytotext

- k2t

- Keywords to Sentences

license: "MIT"

datasets:

- dataset.py

--- |

CAMeL-Lab/bert-base-arabic-camelbert-ca | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 580 | null | ---

license: mit

---

### UZUMAKI on Stable Diffusion

This is the `<NARUTO>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

CAMeL-Lab/bert-base-arabic-camelbert-da-ner | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 42 | null | ---

language:

- en

thumbnail: null

tags:

- automatic-speech-recognition

- CTC

- Attention

- pytorch

- speechbrain

license: apache-2.0

datasets:

- switchboard

metrics:

- wer

- cer

---

<iframe src="https://ghbtns.com/github-btn.html?user=speechbrain&repo=speechbrain&type=star&count=true&size=large&v=2" frameborder="0" scrolling="0" width="170" height="30" title="GitHub"></iframe>

<br/><br/>

# wav2vec 2.0 with CTC/Attention trained on Switchboard (No LM)

This repository provides all the necessary tools to perform automatic speech recognition from an end-to-end system pretrained on the Switchboard (EN) corpus within SpeechBrain.

For a better experience, we encourage you to learn more about [SpeechBrain](https://speechbrain.github.io).

The performance of the model is the following:

| Release | Swbd CER | Callhome CER | Eval2000 CER | Swbd WER | Callhome WER | Eval2000 WER | GPUs |

|:--------:|:--------:|:------------:|:------------:|:--------:|:------------:|:------------:|:-----------:|

| 17-09-22 | 5.24 | 9.69 | 7.44 | 8.76 | 14.67 | 11.78 | 4xA100 40GB |
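The WER and CER columns are edit-distance rates. The following is a minimal sketch of how such rates are commonly computed, assuming whitespace tokenization for WER and single characters for CER. The `edit_distance` and `error_rate` helpers are illustrative names, not SpeechBrain's scoring code, and the actual evaluation may apply text normalization that is not shown here.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(reference, hypothesis, unit="word"):
    """Error rate in percent; unit is "word" (WER) or "char" (CER)."""
    ref = reference.split() if unit == "word" else list(reference)
    hyp = hypothesis.split() if unit == "word" else list(hypothesis)
    if not ref:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(ref, hyp) / len(ref)
```

Because the rate is normalized by the reference length, it can exceed 100 when the hypothesis contains many insertions.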

## Pipeline Description

This ASR system is composed of 2 different but linked blocks:

- Tokenizer (unigram) that transforms words into subword units trained on the Switchboard training transcriptions and the Fisher corpus.

- Acoustic model (wav2vec2.0 + CTC). A pretrained wav2vec 2.0 model ([facebook/wav2vec2-large-lv60](https://huggingface.co/facebook/wav2vec2-large-lv60)) is combined with a feature encoder consisting of three DNN layers and finetuned on Switchboard. The obtained final acoustic representation is given to a greedy CTC decoder.
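Greedy CTC decoding, as mentioned in the acoustic model description, can be sketched in a few lines: take the best token per frame, collapse consecutive repeats, then drop blanks. This is an illustrative pure-Python version, not SpeechBrain's decoder. The function name and the choice of `blank_id=0` are assumptions, since the card does not state the blank index.

```python
def ctc_greedy_decode(frame_scores, blank_id=0):
    """Decode per-frame scores (one list of token scores per frame) greedily.

    blank_id is an assumed index for the CTC blank symbol.
    """
    # 1. Pick the best-scoring token id in every frame.
    best_path = [max(range(len(f)), key=f.__getitem__) for f in frame_scores]
    decoded, previous = [], None
    for token_id in best_path:
        # 2. Collapse consecutive repeats and 3. drop blanks.
        if token_id != previous and token_id != blank_id:
            decoded.append(token_id)
        previous = token_id
    return decoded
```

A blank between two identical tokens keeps both of them, which is how CTC represents genuinely repeated subword units.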

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling `transcribe_file` if needed.
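To make the normalization step concrete, here is a rough sketch of mono channel selection followed by resampling to 16 kHz. It assumes the audio is a list of frames with one value per channel and uses plain linear interpolation. Both helper names are hypothetical, and production resamplers (including whatever `transcribe_file` uses internally) normally apply proper low-pass filtering instead.

```python
def to_mono(samples):
    """samples: list of frames, each a list of per-channel values. Keeps channel 0."""
    return [frame[0] for frame in samples]


def resample_linear(signal, orig_rate, target_rate=16000):
    """Resample a mono signal by linear interpolation (illustration only)."""
    if orig_rate == target_rate or len(signal) < 2:
        return list(signal)
    n_out = int(len(signal) * target_rate / orig_rate)
    out = []
    for i in range(n_out):
        pos = i * orig_rate / target_rate  # fractional position in the input
        left = min(int(pos), len(signal) - 1)
        right = min(left + 1, len(signal) - 1)
        frac = pos - left
        out.append((1.0 - frac) * signal[left] + frac * signal[right])
    return out
```

For example, a stereo clip recorded at 32 kHz would first pass through `to_mono` and then through `resample_linear(mono, 32000)`, which halves the number of samples.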

## Install SpeechBrain

First of all, please install transformers and SpeechBrain with the following command:

```

pip install speechbrain transformers

```

Note that we encourage you to read our tutorials and learn more about

[SpeechBrain](https://speechbrain.github.io).

## Transcribing Your Own Audio Files

```python

from speechbrain.pretrained import EncoderASR

asr_model = EncoderASR.from_hparams(source="speechbrain/asr-wav2vec2-switchboard", savedir="pretrained_models/asr-wav2vec2-switchboard")

asr_model.transcribe_file('speechbrain/asr-wav2vec2-switchboard/example.wav')

```

## Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.
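As a small sketch of the option described above, the keyword arguments can be assembled once and reused for CPU or GPU loading. The `from_hparams_kwargs` helper is hypothetical and exists only for illustration. The `source`, `savedir`, and `run_opts` arguments are the ones shown in this card.

```python
def from_hparams_kwargs(source, savedir, use_gpu=False):
    """Build keyword arguments for `from_hparams` (hypothetical helper)."""
    kwargs = {"source": source, "savedir": savedir}
    if use_gpu:
        # Same option as described in the text: run inference on the GPU.
        kwargs["run_opts"] = {"device": "cuda"}
    return kwargs


kwargs = from_hparams_kwargs(
    "speechbrain/asr-wav2vec2-switchboard",
    "pretrained_models/asr-wav2vec2-switchboard",
    use_gpu=True,
)
# With SpeechBrain installed, the model would then be loaded as:
#   from speechbrain.pretrained import EncoderASR
#   asr_model = EncoderASR.from_hparams(**kwargs)
```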

## Training

The model was trained with SpeechBrain (commit hash: `70904d0`).

To train it from scratch, follow these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```bash

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```bash

cd recipes/Switchboard/ASR/CTC

python train_with_wav2vec.py hparams/train_with_wav2vec.yaml --data_folder=your_data_folder

```

## Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

## Credits

This model was trained with resources provided by the [THN Center for AI](https://www.th-nuernberg.de/en/kiz).

# About SpeechBrain

SpeechBrain is an open-source and all-in-one speech toolkit. It is designed to be simple, extremely flexible, and user-friendly.

Competitive or state-of-the-art performance is obtained in various domains.

- Website: https://speechbrain.github.io/

- GitHub: https://github.com/speechbrain/speechbrain/

- HuggingFace: https://huggingface.co/speechbrain/

# Citing SpeechBrain

Please cite SpeechBrain if you use it for your research or business.

```bibtex

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-poetry | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:1905.05700",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 37 | null | ---

language:

- en

thumbnail: null

tags:

- automatic-speech-recognition

- CTC

- Attention

- Transformer

- pytorch

- speechbrain

license: apache-2.0

datasets:

- switchboard

metrics:

- wer

- cer

---

<iframe src="https://ghbtns.com/github-btn.html?user=speechbrain&repo=speechbrain&type=star&count=true&size=large&v=2" frameborder="0" scrolling="0" width="170" height="30" title="GitHub"></iframe>

<br/><br/>

# Transformer for Switchboard (with Transformer LM)

This repository provides all the necessary tools to perform automatic speech

recognition from an end-to-end system pretrained on Switchboard (EN) within SpeechBrain.

For a better experience, we encourage you to learn more about [SpeechBrain](https://speechbrain.github.io).

The performance of the model is the following:

| Release | Swbd WER | Callhome WER | Eval2000 WER | GPUs |

|:--------:|:--------:|:------------:|:------------:|:-----------:|

| 17-09-22 | 9.80 | 17.89 | 13.94 | 1xA100 40GB |

## Pipeline Description

This ASR system is composed of 3 different but linked blocks:

- Tokenizer (unigram) that transforms words into subword units trained on the Switchboard training transcriptions and the Fisher corpus.

- Neural language model (Transformer LM) trained on the Switchboard training transcriptions and the Fisher corpus.

- Acoustic model made of a transformer encoder and a joint decoder with CTC +

transformer. Hence, the decoding also incorporates the CTC probabilities.
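Joint CTC/attention decoding is commonly implemented by interpolating the two log-probabilities when ranking hypotheses. The sketch below shows that general idea only. The function names and the `ctc_weight=0.3` value are assumptions for illustration and are not taken from this recipe, and the language model term that this system also uses is left out.

```python
import math


def joint_score(log_p_attention, log_p_ctc, ctc_weight=0.3):
    """Interpolate attention and CTC log-probabilities (ctc_weight is assumed)."""
    return (1.0 - ctc_weight) * log_p_attention + ctc_weight * log_p_ctc


def pick_best(hypotheses, ctc_weight=0.3):
    """hypotheses: list of (text, log_p_attention, log_p_ctc) tuples."""
    return max(hypotheses, key=lambda h: joint_score(h[1], h[2], ctc_weight))[0]


hypotheses = [
    ("a", math.log(0.6), math.log(0.1)),  # attention prefers this one
    ("b", math.log(0.5), math.log(0.4)),  # CTC strongly prefers this one
]
best_joint = pick_best(hypotheses)                     # CTC evidence is used
best_attention_only = pick_best(hypotheses, ctc_weight=0.0)
```

With the toy numbers above, the attention-only ranking and the joint ranking disagree, which is the situation where incorporating CTC probabilities changes the output.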

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling `transcribe_file` if needed.

## Install SpeechBrain

First of all, please install SpeechBrain with the following command:

```

pip install speechbrain

```

Please notice that we encourage you to read our tutorials and learn more about

[SpeechBrain](https://speechbrain.github.io).

## Transcribing Your Own Audio Files

```python

from speechbrain.pretrained import EncoderDecoderASR

asr_model = EncoderDecoderASR.from_hparams(source="speechbrain/asr-transformer-switchboard", savedir="pretrained_models/asr-transformer-switchboard")

asr_model.transcribe_file("speechbrain/asr-transformer-switchboard/example.wav")

```

## Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

## Parallel Inference on a Batch

See [this Colab notebook](https://colab.research.google.com/drive/1hX5ZI9S4jHIjahFCZnhwwQmFoGAi3tmu?usp=sharing) to learn how to transcribe a batch of input sentences in parallel using a pre-trained model.

## Training

The model was trained with SpeechBrain (commit hash: `70904d0`).

To train it from scratch, follow these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```bash

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```bash

cd recipes/Switchboard/ASR/transformer

python train.py hparams/transformer.yaml --data_folder=your_data_folder

```

## Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

## Credits

This model was trained with resources provided by the [THN Center for AI](https://www.th-nuernberg.de/en/kiz).

# About SpeechBrain

SpeechBrain is an open-source and all-in-one speech toolkit. It is designed to be simple, extremely flexible, and user-friendly.

Competitive or state-of-the-art performance is obtained in various domains.

- Website: https://speechbrain.github.io/

- GitHub: https://github.com/speechbrain/speechbrain/

- HuggingFace: https://huggingface.co/speechbrain/

# Citing SpeechBrain

Please cite SpeechBrain if you use it for your research or business.

```bibtex

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-pos-egy | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 32 | null | ---

datasets:

- relbert/semeval2012_relational_similarity

model-index:

- name: relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated

results:

- task:

name: Relation Mapping

type: sorting-task

dataset:

name: Relation Mapping

args: relbert/relation_mapping

type: relation-mapping

metrics:

- name: Accuracy

type: accuracy

value: 0.8450793650793651

- task:

name: Analogy Questions (SAT full)

type: multiple-choice-qa

dataset:

name: SAT full

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6176470588235294

- task:

name: Analogy Questions (SAT)

type: multiple-choice-qa

dataset:

name: SAT

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6261127596439169

- task:

name: Analogy Questions (BATS)

type: multiple-choice-qa

dataset:

name: BATS

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.7498610339077265

- task:

name: Analogy Questions (Google)

type: multiple-choice-qa

dataset:

name: Google

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.886

- task:

name: Analogy Questions (U2)

type: multiple-choice-qa

dataset:

name: U2

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.618421052631579

- task:

name: Analogy Questions (U4)

type: multiple-choice-qa

dataset:

name: U4

args: relbert/analogy_questions

type: analogy-questions

metrics:

- name: Accuracy

type: accuracy

value: 0.6203703703703703

- task:

name: Lexical Relation Classification (BLESS)

type: classification

dataset:

name: BLESS

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9199939731806539

- name: F1 (macro)

type: f1_macro

value: 0.9158483158560947

- task:

name: Lexical Relation Classification (CogALexV)

type: classification

dataset:

name: CogALexV

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.8457746478873239

- name: F1 (macro)

type: f1_macro

value: 0.6760195209742395

- task:

name: Lexical Relation Classification (EVALution)

type: classification

dataset:

name: EVALution

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.6684723726977249

- name: F1 (macro)

type: f1_macro

value: 0.65910797043685

- task:

name: Lexical Relation Classification (K&H+N)

type: classification

dataset:

name: K&H+N

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.959379564582319

- name: F1 (macro)

type: f1_macro

value: 0.8779321856206035

- task:

name: Lexical Relation Classification (ROOT09)

type: classification

dataset:

name: ROOT09

args: relbert/lexical_relation_classification

type: relation-classification

metrics:

- name: F1

type: f1

value: 0.9031651519899718

- name: F1 (macro)

type: f1_macro

value: 0.9015700872047177

---

# relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated

RelBERT fine-tuned from [roberta-large](https://huggingface.co/roberta-large) on

[relbert/semeval2012_relational_similarity](https://huggingface.co/datasets/relbert/semeval2012_relational_similarity).

Fine-tuning is done via [RelBERT](https://github.com/asahi417/relbert) library (see the repository for more detail).

It achieves the following results on the relation understanding tasks:

- Analogy Question ([dataset](https://huggingface.co/datasets/relbert/analogy_questions), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/analogy.json)):

- Accuracy on SAT (full): 0.6176470588235294

- Accuracy on SAT: 0.6261127596439169

- Accuracy on BATS: 0.7498610339077265

- Accuracy on U2: 0.618421052631579

- Accuracy on U4: 0.6203703703703703

- Accuracy on Google: 0.886

- Lexical Relation Classification ([dataset](https://huggingface.co/datasets/relbert/lexical_relation_classification), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/classification.json)):

- Micro F1 score on BLESS: 0.9199939731806539

- Micro F1 score on CogALexV: 0.8457746478873239

- Micro F1 score on EVALution: 0.6684723726977249

- Micro F1 score on K&H+N: 0.959379564582319

- Micro F1 score on ROOT09: 0.9031651519899718

- Relation Mapping ([dataset](https://huggingface.co/datasets/relbert/relation_mapping), [full result](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/relation_mapping.json)):

- Accuracy on Relation Mapping: 0.8450793650793651
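The metadata of this card reports both F1 (micro) and F1 (macro) for the classification tasks, and the two can differ noticeably, as on CogALexV. The following sketch, which assumes single-label multi-class predictions and uses a hypothetical `f1_scores` helper, shows how the two averages are usually computed. It is not the RelBERT evaluation code.

```python
def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = {label: 0 for label in labels}
    fp = {label: 0 for label in labels}
    fn = {label: 0 for label in labels}
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1
            fn[truth] += 1

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro
```

Micro F1 is dominated by frequent classes, whereas macro F1 weights every class equally, so a gap between them usually points to weaker performance on rare relation types.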

### Usage

This model can be used through the [relbert library](https://github.com/asahi417/relbert). Install the library via pip

```shell

pip install relbert

```

and load the model as shown below.

```python

from relbert import RelBERT

model = RelBERT("relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated")

vector = model.get_embedding(['Tokyo', 'Japan']) # shape of (1024, )

```
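Relation embeddings such as the one returned by `get_embedding` are typically compared with cosine similarity. The snippet below is a minimal sketch using toy 3-dimensional vectors in place of the 1024-dimensional embeddings. The `cosine_similarity` helper is an assumption for illustration and is not part of the relbert library.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Toy stand-ins for embeddings of word pairs; real vectors would come from
# calls such as model.get_embedding(['Tokyo', 'Japan']).
capital_of_1 = [0.9, 0.1, 0.2]
capital_of_2 = [0.8, 0.2, 0.1]
unrelated = [-0.1, 0.9, -0.3]

similar = cosine_similarity(capital_of_1, capital_of_2)
dissimilar = cosine_similarity(capital_of_1, unrelated)
```

Word pairs that stand in the same relation are expected to score higher than pairs that do not, which is the property the analogy benchmarks above rely on.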

### Training hyperparameters

The following hyperparameters were used during training:

- model: roberta-large

- max_length: 64

- mode: average_no_mask

- data: relbert/semeval2012_relational_similarity

- split: train

- data_eval: relbert/conceptnet_high_confidence

- split_eval: full

- template_mode: manual

- template: I wasn’t aware of this relationship, but I just read in the encyclopedia that <obj> is <subj>’s <mask>

- loss_function: nce_logout

- classification_loss: False

- temperature_nce_constant: 0.05

- temperature_nce_rank: {'min': 0.01, 'max': 0.05, 'type': 'linear'}

- epoch: 29

- batch: 128

- lr: 5e-06

- lr_decay: False

- lr_warmup: 1

- weight_decay: 0

- random_seed: 0

- exclude_relation: None

- exclude_relation_eval: None

- n_sample: 640

- gradient_accumulation: 8

The full configuration can be found at [fine-tuning parameter file](https://huggingface.co/relbert/roberta-large-semeval2012-average-no-mask-prompt-e-nce-conceptnet-validated/raw/main/trainer_config.json).

### Reference

If you use any resource from RelBERT, please consider citing our [paper](https://aclanthology.org/2021.eacl-demos.7/).

```

@inproceedings{ushio-etal-2021-distilling-relation-embeddings,

title = "{D}istilling {R}elation {E}mbeddings from {P}re-trained {L}anguage {M}odels",

author = "Ushio, Asahi and

Schockaert, Steven and

Camacho-Collados, Jose",

booktitle = "EMNLP 2021",

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

}

```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-pos-glf | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 54 | null | ---

language:

- en

thumbnail: null

tags:

- automatic-speech-recognition

- CTC

- Attention

- pytorch

- speechbrain

license: "apache-2.0"

datasets:

- switchboard

metrics:

- wer

- cer

---

<iframe src="https://ghbtns.com/github-btn.html?user=speechbrain&repo=speechbrain&type=star&count=true&size=large&v=2" frameborder="0" scrolling="0" width="170" height="30" title="GitHub"></iframe>

<br/><br/>

# CRDNN with CTC/Attention trained on Switchboard (No LM)

This repository provides all the necessary tools to perform automatic speech recognition from an end-to-end system pretrained on Switchboard (EN) within SpeechBrain.

For a better experience, we encourage you to learn more about [SpeechBrain](https://speechbrain.github.io).

The performance of the model is the following:

| Release | Swbd CER | Callhome CER | Eval2000 CER | Swbd WER | Callhome WER | Eval2000 WER | GPUs |

|:--------:|:--------:|:------------:|:------------:|:--------:|:------------:|:------------:|:-----------:|

| 17-09-22 | 9.89 | 16.30 | 13.17 | 16.01 | 25.12 | 20.71 | 1xA100 40GB |

## Pipeline description

This ASR system is composed of 2 different but linked blocks:

- Tokenizer (unigram) that transforms words into subword units trained on

the training transcriptions of the Switchboard and Fisher corpus.

- Acoustic model (CRDNN + CTC/Attention). The CRDNN architecture is made of

N blocks of convolutional neural networks with normalisation and pooling on the

frequency domain. Then, a bidirectional LSTM is connected to a final DNN to obtain

the final acoustic representation that is given to the CTC and attention decoders.

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling `transcribe_file` if needed.

## Install SpeechBrain

First of all, please install SpeechBrain with the following command:

```

pip install speechbrain

```

Note that we encourage you to read our tutorials and learn more about

[SpeechBrain](https://speechbrain.github.io).

## Transcribing Your Own Audio Files

```python

from speechbrain.pretrained import EncoderDecoderASR

asr_model = EncoderDecoderASR.from_hparams(source="speechbrain/asr-crdnn-switchboard", savedir="pretrained_models/speechbrain/asr-crdnn-switchboard")

asr_model.transcribe_file('speechbrain/asr-crdnn-switchboard/example.wav')

```

## Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

## Parallel Inference on a Batch

See [this Colab notebook](https://colab.research.google.com/drive/1hX5ZI9S4jHIjahFCZnhwwQmFoGAi3tmu?usp=sharing) to learn how to transcribe a batch of input sentences in parallel using a pre-trained model.

## Training

The model was trained with SpeechBrain (commit hash: `70904d0`).

To train it from scratch, follow these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```bash

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```bash

cd recipes/Switchboard/ASR/seq2seq

python train.py hparams/train_BPE_2000.yaml --data_folder=your_data_folder

```

## Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

## Credits

This model was trained with resources provided by the [THN Center for AI](https://www.th-nuernberg.de/en/kiz).

# About SpeechBrain

SpeechBrain is an open-source and all-in-one speech toolkit. It is designed to be simple, extremely flexible, and user-friendly.

Competitive or state-of-the-art performance is obtained in various domains.

- Website: https://speechbrain.github.io/

- GitHub: https://github.com/speechbrain/speechbrain/

- HuggingFace: https://huggingface.co/speechbrain/

# Citing SpeechBrain

Please cite SpeechBrain if you use it for your research or business.

```bibtex

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-sentiment | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"has_space"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 19,850 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: mit-b2-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8523956723338485

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8580847578266328

name: F1

- type: precision

value: 0.8587893412503379

name: Precision

- type: recall

value: 0.8593508500772797

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mit-b2-finetuned-memes

This model is a fine-tuned version of [aaraki/vit-base-patch16-224-in21k-finetuned-cifar10](https://huggingface.co/aaraki/vit-base-patch16-224-in21k-finetuned-cifar10) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4137

- Accuracy: 0.8524

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
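Two of these values follow from the others: the total batch size is the per-device batch size times the gradient accumulation steps, and the linear scheduler with a warmup ratio of 0.1 ramps the learning rate up over the first tenth of training before decaying it to zero. The sketch below illustrates both, assuming a single device and 400 optimizer steps in total (the last step in the training results table of this card). The function names are hypothetical and this is not the Trainer's own code.

```python
def effective_batch_size(train_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of samples contributing to each optimizer step."""
    return train_batch_size * gradient_accumulation_steps * num_devices


def linear_warmup_decay_lr(step, base_lr, total_steps, warmup_ratio):
    """Linear warmup to base_lr, then linear decay to zero."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return base_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


total = effective_batch_size(32, 4)                        # 32 * 4
peak = linear_warmup_decay_lr(40, 0.00012, 400, 0.1)       # end of warmup
halfway = linear_warmup_decay_lr(20, 0.00012, 400, 0.1)    # mid warmup
final = linear_warmup_decay_lr(400, 0.00012, 400, 0.1)     # end of training
```

Under these assumptions the learning rate peaks at step 40, which coincides with the end of the first epoch in the results table.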

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.9727 | 0.99 | 40 | 0.8400 | 0.7334 |

| 0.5305 | 1.99 | 80 | 0.5147 | 0.8284 |

| 0.3124 | 2.99 | 120 | 0.4698 | 0.8145 |

| 0.2263 | 3.99 | 160 | 0.3892 | 0.8563 |

| 0.1453 | 4.99 | 200 | 0.3874 | 0.8570 |

| 0.1255 | 5.99 | 240 | 0.4097 | 0.8470 |

| 0.0989 | 6.99 | 280 | 0.3860 | 0.8570 |

| 0.0755 | 7.99 | 320 | 0.4141 | 0.8539 |

| 0.08 | 8.99 | 360 | 0.4049 | 0.8594 |

| 0.0639 | 9.99 | 400 | 0.4137 | 0.8524 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CAMeL-Lab/bert-base-arabic-camelbert-da | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 449 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: swin-base-patch4-window7-224-20epochs-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.847758887171561

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8504084378729573

name: F1

- type: precision

value: 0.8519647060733512

name: Precision

- type: recall

value: 0.8523956723338485

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-base-patch4-window7-224-20epochs-finetuned-memes

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7090

- Accuracy: 0.8478

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 20
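
The effective batch size and the shape of the learning-rate schedule follow from the values listed above. The sketch below is a minimal illustration, assuming the scheduler is a standard linear warmup followed by a linear decay to zero, and taking the total of 800 optimizer steps from the last row of the training results table. The function name `linear_warmup_lr` is illustrative and not part of any library.

```python
import math

# Values copied from the hyperparameter list above.
LEARNING_RATE = 0.00012
TRAIN_BATCH_SIZE = 32
GRADIENT_ACCUMULATION_STEPS = 4
WARMUP_RATIO = 0.1
TOTAL_STEPS = 800  # assumption: final step reported in the training results table

# Gradients are accumulated over several batches before each optimizer step.
total_train_batch_size = TRAIN_BATCH_SIZE * GRADIENT_ACCUMULATION_STEPS

# The number of warmup steps is derived from the warmup ratio.
warmup_steps = math.ceil(TOTAL_STEPS * WARMUP_RATIO)


def linear_warmup_lr(step: int) -> float:
    """Learning rate at an optimizer step: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return LEARNING_RATE * step / warmup_steps
    remaining = max(0, TOTAL_STEPS - step)
    return LEARNING_RATE * remaining / (TOTAL_STEPS - warmup_steps)


if __name__ == "__main__":
    print(total_train_batch_size)
    for step in (0, 40, 80, 440, 800):
        print(step, linear_warmup_lr(step))
```

Under these assumptions the learning rate peaks at the end of warmup and reaches zero at the final step, so the later epochs in the table are trained with a much smaller step size than the early ones.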

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0238 | 0.99 | 40 | 0.9636 | 0.6445 |

| 0.777 | 1.99 | 80 | 0.6591 | 0.7666 |

| 0.4763 | 2.99 | 120 | 0.5381 | 0.8130 |

| 0.3215 | 3.99 | 160 | 0.5244 | 0.8253 |

| 0.2179 | 4.99 | 200 | 0.5123 | 0.8238 |

| 0.1868 | 5.99 | 240 | 0.5052 | 0.8308 |

| 0.154 | 6.99 | 280 | 0.5444 | 0.8338 |

| 0.1166 | 7.99 | 320 | 0.6318 | 0.8238 |

| 0.1099 | 8.99 | 360 | 0.5656 | 0.8338 |

| 0.0925 | 9.99 | 400 | 0.6057 | 0.8338 |

| 0.0779 | 10.99 | 440 | 0.5942 | 0.8393 |

| 0.0629 | 11.99 | 480 | 0.6112 | 0.8400 |

| 0.0742 | 12.99 | 520 | 0.6588 | 0.8331 |

| 0.0752 | 13.99 | 560 | 0.6143 | 0.8408 |

| 0.0577 | 14.99 | 600 | 0.6450 | 0.8516 |

| 0.0589 | 15.99 | 640 | 0.6787 | 0.8400 |

| 0.0555 | 16.99 | 680 | 0.6641 | 0.8454 |

| 0.052 | 17.99 | 720 | 0.7213 | 0.8524 |

| 0.0589 | 18.99 | 760 | 0.6917 | 0.8470 |

| 0.0506 | 19.99 | 800 | 0.7090 | 0.8478 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CAMeL-Lab/bert-base-arabic-camelbert-mix-ner | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1,860 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

config: plain_text

split: train

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8766666666666667

- name: F1

type: f1

value: 0.877887788778878

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3275

- Accuracy: 0.8767

- F1: 0.8779

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CAMeL-Lab/bert-base-arabic-camelbert-mix-poetry | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:1905.05700",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 31 | null | ---

tags:

- autotrain

- translation

language:

- tr

- en

datasets:

- Tritkoman/autotrain-data-qjnwjkwnw

co2_eq_emissions:

emissions: 148.66763338560511

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1490354394

- CO2 Emissions (in grams): 148.6676

## Validation Metrics

- Loss: 2.112

- SacreBLEU: 8.676

- Gen len: 13.161 |

CAMeL-Lab/bert-base-arabic-camelbert-mix-pos-glf | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 132 | null | ---

license: mit

---

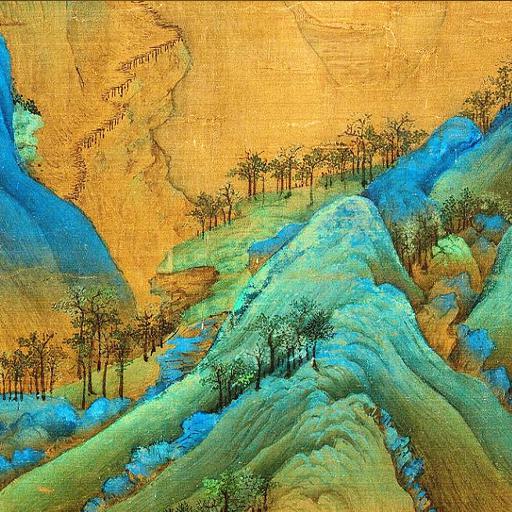

### Sorami style on Stable Diffusion

This is the `<sorami-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

CAMeL-Lab/bert-base-arabic-camelbert-mix | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"Arabic",

"Dialect",

"Egyptian",

"Gulf",

"Levantine",

"Classical Arabic",

"MSA",

"Modern Standard Arabic",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 20,880 | null | ---

license: bigscience-bloom-rail-1.0

widget :

- text: "ആധുനിക ഭാരതം കണ്ട "

example_title: "ആധുനിക ഭാരതം"

- text : "മലയാളഭാഷ എഴുതുന്നതിനായി"

example_title: "മലയാളഭാഷ എഴുതുന്നതിനായി"

- text : "ഇന്ത്യയിൽ കേരള സംസ്ഥാനത്തിലും"

example_title : "ഇന്ത്യയിൽ കേരള"

---

# GPT2-Malayalam

## Model description

GPT2-Malayalam is a GPT-2 transformer model fine-tuned on a large corpus of Malayalam data in a self-supervised fashion. This means it was trained on raw text only, with no human labelling; an automatic process generates the inputs and labels from the text. Specifically, it was trained to predict the next word in a sentence.

Inputs are sequences of continuous text of a certain length, and the targets are the same sequences shifted one token (a word or piece of a word) to the right. Internally, the model uses a masking mechanism so that the prediction for token i uses only the inputs from 1 to i and never the future tokens.

This way, the model learns an inner representation of the Malayalam language that can then be used to extract features useful for downstream tasks.
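
The shifted targets and the masking described above can be sketched in a few lines of plain Python. This is a toy illustration, not the model's actual implementation: the function names and token ids are hypothetical, and positions are counted from 0 rather than 1.

```python
from typing import List, Tuple


def make_inputs_and_targets(token_ids: List[int]) -> Tuple[List[int], List[int]]:
    """Split one token sequence into model inputs and next-token targets."""
    # The target at position i is the token that follows input position i.
    return token_ids[:-1], token_ids[1:]


def causal_mask(length: int) -> List[List[bool]]:
    """Return a lower-triangular mask: position i may attend to positions 0..i only."""
    return [[j <= i for j in range(length)] for i in range(length)]


if __name__ == "__main__":
    tokens = [11, 42, 7, 99]  # hypothetical token ids
    inputs, targets = make_inputs_and_targets(tokens)
    print(inputs)
    print(targets)
    for row in causal_mask(len(inputs)):
        print(row)
```

Each row of the mask corresponds to one prediction position; a `False` entry marks a future token that the prediction is not allowed to see.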

## Intended uses & limitations

You can use the raw model for text generation or fine-tune it on a downstream task. See the [model hub](https://huggingface.co/models?filter=gpt2) for fine-tuned versions on a task that interests you.

## Usage

You can use this model for Malayalam text generation:

```python

>>> from transformers import TFGPT2LMHeadModel, GPT2Tokenizer

>>> tokenizer = GPT2Tokenizer.from_pretrained("ashiqabdulkhader/GPT2-Malayalam")

>>> model = TFGPT2LMHeadModel.from_pretrained("ashiqabdulkhader/GPT2-Malayalam")

>>> text = "മലയാളത്തിലെ പ്രധാന ഭാഷയാണ്"

>>> encoded_text = tokenizer.encode(text, return_tensors='tf')

>>> beam_output = model.generate(
...     encoded_text,
...     max_length=100,
...     num_beams=5,
...     temperature=0.7,
...     no_repeat_ngram_size=2,
...     num_return_sequences=5
... )

>>> print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

``` |

CAMeL-Lab/bert-base-arabic-camelbert-msa-did-madar-twitter5 | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 75 | null | ---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: pegasus-model-3-x25

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-model-3-x25

This model is a fine-tuned version of [theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback](https://huggingface.co/theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5668

- Rouge1: 61.9972

- Rouge2: 48.1531

- Rougel: 48.845

- Rougelsum: 59.5019

- Gen Len: 123.0814

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:------:|:---------:|:--------:|

| 1.144 | 1.0 | 883 | 0.5668 | 61.9972 | 48.1531 | 48.845 | 59.5019 | 123.0814 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-ner | [

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

] | token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 229 | null | ---

tags:

- Pong-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pong-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pong-PLE-v0

type: Pong-PLE-v0

metrics:

- type: mean_reward

value: -16.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pong-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pong-PLE-v0**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-poetry | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:1905.05700",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

tags:

- audio

- spectrograms

datasets:

- teticio/audio-diffusion-instrumental-hiphop-256

---

Denoising Diffusion Probabilistic Model trained on [teticio/audio-diffusion-instrumental-hiphop-256](https://huggingface.co/datasets/teticio/audio-diffusion-instrumental-hiphop-256) to generate mel spectrograms of 256x256 corresponding to 5 seconds of audio. The audio consists of samples of instrumental Hip Hop music. The code to convert from audio to spectrogram and vice versa can be found in https://github.com/teticio/audio-diffusion along with scripts to train and run inference.

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-quarter | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 12 | null | ---

tags:

- autotrain

- translation

language:

- en

- es

datasets:

- Tritkoman/autotrain-data-akakka

co2_eq_emissions:

emissions: 4.471184695619804

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1492154441

- CO2 Emissions (in grams): 4.4712

## Validation Metrics

- Loss: 0.899

- SacreBLEU: 59.218

- Gen len: 9.889 |

CAMeL-Lab/bert-base-arabic-camelbert-msa-sentiment | [

"pytorch",

"tf",

"bert",

"text-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 574 | null | ---

tags:

- autotrain

- translation

language:

- en

- es

datasets:

- Tritkoman/autotrain-data-akakka

co2_eq_emissions:

emissions: 0.26170356193686023

---

# Model Trained Using AutoTrain

- Problem type: Translation

- Model ID: 1492154444

- CO2 Emissions (in grams): 0.2617

## Validation Metrics

- Loss: 0.770

- SacreBLEU: 62.097

- Gen len: 8.635 |

CAMeL-Lab/bert-base-arabic-camelbert-msa-sixteenth | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 26 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.54 +/- 2.70

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

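import gym

# load_from_hub and evaluate_agent are helper functions rather than library calls;
# they are assumed here to be defined beforehand (for example, as in the Deep RL Class notebooks).
model = load_from_hub(repo_id="matemato/q-Taxi-v3", filename="q-learning.pkl")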

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```
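
The snippet above does not show how the Q-table turns into actions. As a minimal sketch, assuming `model["qtable"]` is a two-dimensional table indexed by state and then by action (an assumption, since the card does not describe the stored format), a greedy policy picks the highest-valued action in each state, and a `mean_reward` such as `7.54 +/- 2.70` reads as the mean and standard deviation of per-episode returns. The function names and toy numbers below are illustrative only.

```python
import statistics
from typing import List, Sequence


def greedy_action(qtable: Sequence[Sequence[float]], state: int) -> int:
    """Return the index of the highest-valued action for the given state."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


def summarize_rewards(episode_rewards: List[float]) -> str:
    """Format episode returns as 'mean +/- std', the shape used in the card metadata."""
    mean = statistics.mean(episode_rewards)
    std = statistics.pstdev(episode_rewards)  # population standard deviation
    return f"{mean:.2f} +/- {std:.2f}"


if __name__ == "__main__":
    # Toy Q-table with 2 states and 3 actions (hypothetical values).
    toy_qtable = [[0.1, 0.5, 0.2], [0.9, 0.3, 0.4]]
    print(greedy_action(toy_qtable, 0))
    print(greedy_action(toy_qtable, 1))
    print(summarize_rewards([8.0, 6.0, 10.0, 4.0]))
```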

|

CAMeL-Lab/bert-base-arabic-camelbert-msa | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"BertForMaskedLM"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,967 | null | ---

license: mit

---

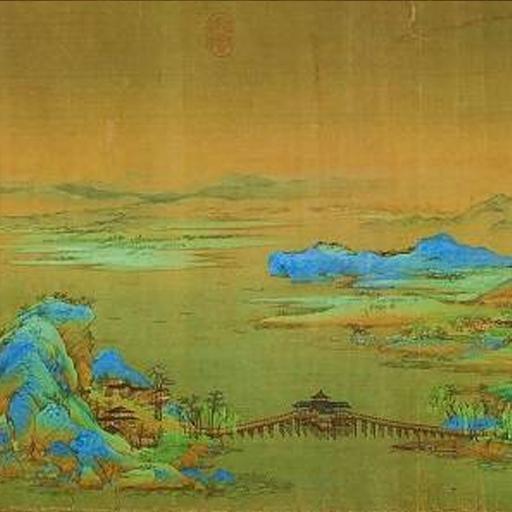

### lxj-o4 on Stable Diffusion

This is the `<csp>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

CBreit00/DialoGPT_small_Rick | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

tags:

- text-classification

- generated_from_trainer

datasets:

- paws

metrics:

- f1

- precision

- recall

model-index:

- name: deberta-v3-large-finetuned-paws-paraphrase-detector

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: paws

type: paws

args: labeled_final

metrics:

- name: F1

type: f1

value: 0.9426698284279537

- name: Precision

type: precision

value: 0.9300853289292595

- name: Recall

type: recall

value: 0.9555995475113123

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-v3-large-finetuned-paws-paraphrase-detector

Feel free to use for paraphrase detection tasks!

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on the paws dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3046

- F1: 0.9427

- Precision: 0.9301

- Recall: 0.9556
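
The three metrics above are related: F1 is the harmonic mean of precision and recall. The short check below is a sketch, assuming the reported F1 is the binary F1 of the positive (paraphrase) class; it uses the unrounded precision and recall values from the card metadata, and the helper name `f1_score` is defined here rather than imported from a library.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Values copied from the card metadata.
    precision = 0.9300853289292595
    recall = 0.9555995475113123
    print(round(f1_score(precision, recall), 4))
```

If the printed value agrees with the reported F1, the three numbers are mutually consistent under that assumption.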

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Precision | Recall |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:---------:|:------:|

| 0.1492 | 1.0 | 6176 | 0.1650 | 0.9537 | 0.9385 | 0.9695 |

| 0.1018 | 2.0 | 12352 | 0.1968 | 0.9544 | 0.9427 | 0.9664 |

| 0.0482 | 3.0 | 18528 | 0.2419 | 0.9521 | 0.9388 | 0.9658 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

CL/safe-math-bot | [] | null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

### She-Hulk Law Art on Stable Diffusion

This is the `<shehulk-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

CLAck/indo-pure | [

"pytorch",

"marian",

"text2text-generation",

"en",

"id",

"dataset:ALT",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | {

"architectures": [

"MarianMTModel"

],

"model_type": "marian",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

license: mit

---

### led-toy on Stable Diffusion

This is the `<led-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

CLTL/MedRoBERTa.nl | [

"pytorch",

"roberta",

"fill-mask",

"nl",

"transformers",

"license:mit",

"autotrain_compatible"

] | fill-mask | {

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2,988 | null | ---

license: mit

---

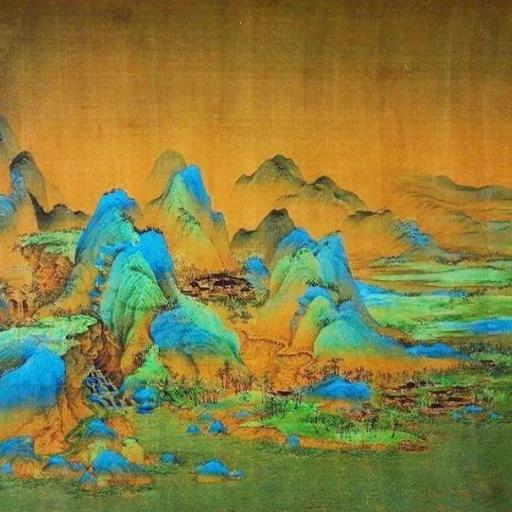

### durer style on Stable Diffusion

This is the `<drr-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

CLTL/icf-levels-stm | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

] | text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 32 | null | ---

language: el

tags:

- summarization

license: apache-2.0

---

# Abstractive Greek Text Summarization

The application is deployed on [Hugging Face Spaces](https://huggingface.co/spaces/kriton/greek-text-summarization).

We trained mT5-small on the downstream task of Greek text summarization using this [News Article Dataset](https://www.kaggle.com/datasets/kpittos/news-articles).

```python

from transformers import AutoTokenizer

from transformers import AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("kriton/greek-text-summarization")

model = AutoModelForSeq2SeqLM.from_pretrained("kriton/greek-text-summarization")

```

```python

from transformers import pipeline

summarizer = pipeline("summarization", model="kriton/greek-text-summarization")

article = """ Στη ΔΕΘ πρόκειται να ανακοινωθεί και με τον πλέον επίσημο τρόπο το Food Pass - το τρίτο επίδομα που εξετάσει η κυβέρνηση έπειτα από τις επιδοτήσει σε καύσιμα και ηλεκτρικό ρεύμα.

Η επιταγή ακρίβειας για τρόφιμα θα δοθεί εφάπαξ σε οικογένειες, που πληρούν συγκεκριμένα κριτήρια, με στόχο να στηριχτούν όσοι πλήττονται περισσότερο από την αύξηση του πληθωρισμού και την ακρίβεια, όπως:

χαμηλοσυνταξιούχοι, άνεργοι και ευάλωτες κοινωνικές ομάδες.

Σύμφωνα με τις διαθέσιμες πληροφορίες, η πληρωμή θα γίνει κοντά στις γιορτές των Χριστουγέννων, όταν - σύμφωνα με εκτιμήσεις - θα έχει βαθύνει η ενεργειακή κρίση και θα έχουν αυξηθεί ακόμη παραπάνω οι ανάγκες των νοικοκυριών.

Ουσιαστικά, με τον τρόπο αυτό, η κυβέρνηση θα επιδοτήσει τις αγορές του σούπερ μάρκετ για έναν μήνα για ευάλωτες ομάδες.

Αντιδράσεις από τους κρεοπώλες

Υπενθυμίζεται πως την Πέμπτη η Πανελλήνια Ομοσπονδία Καταστηματαρχών Κρεοπωλών (ΠΟΚΚ) Οικονομικών - με επιστολή της προς τα συναρμόδια υπουργεία - διαμαρτυρήθηκε σχετικά με τη χορήγηση του Food Pass στα σούπερ μάρκετ.

Στην επιστολή της ομοσπονδίας, την οποία κοινοποίησε και στην Κεντρική Ένωση Επιμελητηρίων και στη ΓΣΕΒΕΕ, σημειώνεται ότι εάν το μέτρο εξαργύρωσης του επιδόματος τροφίμων αφορά μόνο στις αλυσίδες τροφίμων τότε απαξιώνεται ο κλάδος των κρεοπωλών και «εντείνεται ο αθέμιτος ανταγωνισμός, εφόσον κατευθύνεται ο καταναλωτή σε συγκεκριμένες επαγγελματικές κατηγορίες».

Στο πλαίσιο αυτό, οι κρεοπώλες ζητούν να διευθυνθεί η λίστα των επαγγελματιών όπου ο καταναλωτής θα μπορεί να εξαργυρώσει το εν λόγω βοήθημα.

"""

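# `tokenizer` and `model` are the objects loaded in the previous snippet.
def generate_summary(article):
    # Prepend the task prefix and tokenize, truncating or padding to 1024 tokens.
    inputs = tokenizer(
        'summarize: ' + article,
        return_tensors="pt",
        max_length=1024,
        truncation=True,
        padding="max_length",
    )
    # Generate with beam search plus length and repetition penalties.
    outputs = model.generate(
        inputs["input_ids"],
        max_length=512,
        min_length=130,
        length_penalty=3.0,
        num_beams=8,
        early_stopping=True,
        repetition_penalty=3.0,
    )
    # Decode the top-ranked sequence back to text.
    return tokenizer.decode(outputs[0], skip_special_tokens=True)

print(generate_summary(article))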

# Example output:
# Το Food Pass - το τρίτο επίδομα που εξετάζει η κυβέρνηση έπειτα από τις επιδοτήσεις σε καύσιμα και ηλεκτρικό ρεύμα για έναν μήνα για ευάλωτες ομάδες. Σύμφωνα με πληροφορίες της Πανελλήνια Ομοσπονδίας Καταστηματαρχών Κρεοπωλών (ΠΟΚΚ) Οικονομικών, οι κρεοπώλες διαμαρτυρήθηκαν σχετικά με τη χορήγηση του «fast food pass» προκειμένου να αυξάνουν ακόμη περισσότερες κοινωνικές ανάγκους στο κλάδο

``` |

Cameron/BERT-Jigsaw | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {