modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

mnaylor/mega-base-wikitext | mnaylor | 2023-06-28T20:32:48Z | 977 | 1 | transformers | [

"transformers",

"pytorch",

"safetensors",

"mega",

"fill-mask",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2023-02-21T20:56:10Z | ---

license: apache-2.0

language:

- en

library_name: transformers

---

# Mega Masked LM on wikitext-103

This is the location on the Hugging Face hub for the Mega MLM checkpoint. I trained this model on the `wikitext-103` dataset using standard

BERT-style masked LM pretraining using the [original Mega repository](https://github.com/facebookresearch/mega) and uploaded the weights

initially to hf.co/mnaylor/mega-wikitext-103. When the implementation of Mega into Hugging Face's `transformers` is finished, the weights here

are designed to be used with `MegaForMaskedLM` and are compatible with the other (encoder-based) `MegaFor*` model classes.

This model uses the RoBERTa base tokenizer since the Mega paper does not implement a specific tokenizer aside from the character-level

tokenizer used to illustrate long-sequence performance. |

khhuang/zerofec-qa2claim-t5-base | khhuang | 2023-08-31T18:16:21Z | 977 | 4 | transformers | [

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"en",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text2text-generation | 2023-08-13T19:43:58Z | ---

language: en

widget:

- text: a 1968 american independent horror film \\n What is Night of the Living Dead?

---

# QA2Claim Model From ZeroFEC

ZeroFEC is a faithful and interpetable factual error correction framework introduced in the paper [Zero-shot Faithful Factual Error Correction](https://aclanthology.org/2023.acl-long.311/). It involves a component that converts qa-pairs to declarative statements, which is hosted in this repo. The associated code is released in [this](https://github.com/khuangaf/ZeroFEC) repository.

### How to use

Using Huggingface pipeline abstraction:

```python

from transformers import pipeline

nlp = pipeline("text2text-generation", model='khhuang/zerofec-qa2claim-t5-base', tokenizer='khhuang/zerofec-qa2claim-t5-base')

QUESTION = "What is Night of the Living Dead?"

ANSWER = "a 1968 american independent horror film"

def format_inputs(question: str, answer: str):

return f"{answer} \\n {question}"

text = format_inputs(QUESTION, ANSWER)

nlp(text)

# should output [{'generated_text': 'Night of the Living Dead is a 1968 american independent horror film.'}]

```

Using the pre-trained model directly:

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained('khhuang/zerofec-qa2claim-t5-base')

model = AutoModelForSeq2SeqLM.from_pretrained('khhuang/zerofec-qa2claim-t5-base')

QUESTION = "What is Night of the Living Dead?"

ANSWER = "a 1968 american independent horror film"

def format_inputs(question: str, answer: str):

return f"{answer} \\n {question}"

text = format_inputs(QUESTION, ANSWER)

input_ids = tokenizer(text, return_tensors="pt").input_ids

generated_ids = model.generate(input_ids, max_length=32, num_beams=4)

output = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

print(output)

# should output "Night of the Living Dead is a 1968 american independent horror film."

```

### Citation

```

@inproceedings{huang-etal-2023-zero,

title = "Zero-shot Faithful Factual Error Correction",

author = "Huang, Kung-Hsiang and

Chan, Hou Pong and

Ji, Heng",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.311",

doi = "10.18653/v1/2023.acl-long.311",

pages = "5660--5676",

}

``` |

telosnex/fllama | telosnex | 2024-06-28T18:58:17Z | 977 | 3 | null | [

"gguf",

"region:us"

]

| null | 2024-01-21T01:04:53Z | Entry not found |

mradermacher/MiquMaid-v3-70B-GGUF | mradermacher | 2024-05-06T05:13:51Z | 977 | 1 | transformers | [

"transformers",

"gguf",

"not-for-all-audiences",

"nsfw",

"merge",

"en",

"base_model:NeverSleep/MiquMaid-v3-70B",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-04-05T22:05:22Z | ---

base_model: NeverSleep/MiquMaid-v3-70B

language:

- en

library_name: transformers

license: cc-by-nc-4.0

quantized_by: mradermacher

tags:

- not-for-all-audiences

- nsfw

- merge

---

## About

<!-- ### quantize_version: 1 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/NeverSleep/MiquMaid-v3-70B

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/MiquMaid-v3-70B-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q2_K.gguf) | Q2_K | 25.6 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.IQ3_XS.gguf) | IQ3_XS | 28.4 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.IQ3_S.gguf) | IQ3_S | 30.0 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q3_K_S.gguf) | Q3_K_S | 30.0 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.IQ3_M.gguf) | IQ3_M | 31.0 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q3_K_M.gguf) | Q3_K_M | 33.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q3_K_L.gguf) | Q3_K_L | 36.2 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.IQ4_XS.gguf) | IQ4_XS | 37.3 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q4_K_S.gguf) | Q4_K_S | 39.3 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q4_K_M.gguf) | Q4_K_M | 41.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q5_K_S.gguf) | Q5_K_S | 47.6 | |

| [GGUF](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q5_K_M.gguf) | Q5_K_M | 48.9 | |

| [PART 1](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q6_K.gguf.part2of2) | Q6_K | 56.7 | very good quality |

| [PART 1](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q8_0.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/MiquMaid-v3-70B-GGUF/resolve/main/MiquMaid-v3-70B.Q8_0.gguf.part2of2) | Q8_0 | 73.4 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

imdatta0/nanollama | imdatta0 | 2024-04-23T13:55:48Z | 977 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-22T11:26:56Z | ---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

FallenMerick/Smart-Lemon-Cookie-7B | FallenMerick | 2024-05-25T19:03:34Z | 977 | 1 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"roleplay",

"conversational",

"en",

"arxiv:2306.01708",

"base_model:SanjiWatsuki/Silicon-Maid-7B",

"base_model:MTSAIR/multi_verse_model",

"base_model:SanjiWatsuki/Kunoichi-7B",

"base_model:KatyTheCutie/LemonadeRP-4.5.3",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-30T00:54:39Z | ---

language:

- en

license: cc-by-4.0

library_name: transformers

tags:

- mergekit

- merge

- mistral

- text-generation

- roleplay

base_model:

- SanjiWatsuki/Silicon-Maid-7B

- MTSAIR/multi_verse_model

- SanjiWatsuki/Kunoichi-7B

- KatyTheCutie/LemonadeRP-4.5.3

model-index:

- name: Smart-Lemon-Cookie-7B

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 66.3

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 85.53

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 64.69

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 60.66

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 77.74

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 54.06

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=FallenMerick/Smart-Lemon-Cookie-7B

name: Open LLM Leaderboard

---

*image courtesy of [@matchaaaaa](https://huggingface.co/matchaaaaa)*

</br>

</br>

# Smart-Lemon-Cookie-7B

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

GGUF quants:

* https://huggingface.co/FaradayDotDev/Smart-Lemon-Cookie-7B-GGUF

* https://huggingface.co/mradermacher/Smart-Lemon-Cookie-7B-GGUF

## Merge Details

### Merge Method

This model was merged using the [TIES](https://arxiv.org/abs/2306.01708) merge method using [MTSAIR/multi_verse_model](https://huggingface.co/MTSAIR/multi_verse_model) as a base.

### Models Merged

The following models were included in the merge:

* [SanjiWatsuki/Silicon-Maid-7B](https://huggingface.co/SanjiWatsuki/Silicon-Maid-7B)

* [SanjiWatsuki/Kunoichi-7B](https://huggingface.co/SanjiWatsuki/Kunoichi-7B)

* [KatyTheCutie/LemonadeRP-4.5.3](https://huggingface.co/KatyTheCutie/LemonadeRP-4.5.3)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: SanjiWatsuki/Silicon-Maid-7B

parameters:

density: 1.0

weight: 1.0

- model: SanjiWatsuki/Kunoichi-7B

parameters:

density: 0.4

weight: 1.0

- model: KatyTheCutie/LemonadeRP-4.5.3

parameters:

density: 0.6

weight: 1.0

merge_method: ties

base_model: MTSAIR/multi_verse_model

parameters:

normalize: true

dtype: float16

```

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_FallenMerick__Smart-Lemon-Cookie-7B)

| Metric |Value|

|---------------------------------|----:|

|Avg. |68.16|

|AI2 Reasoning Challenge (25-Shot)|66.30|

|HellaSwag (10-Shot) |85.53|

|MMLU (5-Shot) |64.69|

|TruthfulQA (0-shot) |60.66|

|Winogrande (5-shot) |77.74|

|GSM8k (5-shot) |54.06|

|

google/t5-3b-ssm-nqo | google | 2023-01-24T16:43:49Z | 976 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"en",

"dataset:c4",

"dataset:wikipedia",

"dataset:natural_questions",

"arxiv:2002.08909",

"arxiv:1910.10683",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text2text-generation | 2022-03-02T23:29:05Z | ---

language: en

datasets:

- c4

- wikipedia

- natural_questions

license: apache-2.0

---

[Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) for **Closed Book Question Answering**.

The model was pre-trained using T5's denoising objective on [C4](https://huggingface.co/datasets/c4), subsequently additionally pre-trained using [REALM](https://arxiv.org/pdf/2002.08909.pdf)'s salient span masking objective on [Wikipedia](https://huggingface.co/datasets/wikipedia), and finally fine-tuned on [Natural Questions (NQ)](https://huggingface.co/datasets/natural_questions).

**Note**: The model was fine-tuned on 90% of the train splits of [Natural Questions (NQ)](https://huggingface.co/datasets/natural_questions) for 20k steps and validated on the held-out 10% of the train split.

Other community Checkpoints: [here](https://huggingface.co/models?search=ssm)

Paper: [How Much Knowledge Can You Pack

Into the Parameters of a Language Model?](https://arxiv.org/abs/1910.10683.pdf)

Authors: *Adam Roberts, Colin Raffel, Noam Shazeer*

## Results on Natural Questions - Test Set

|Id | link | Exact Match |

|---|---|---|

|T5-large|https://huggingface.co/google/t5-large-ssm-nqo|29.0|

|T5-xxl|https://huggingface.co/google/t5-xxl-ssm-nqo|35.2|

|**T5-3b**|**https://huggingface.co/google/t5-3b-ssm-nqo**|**31.7**|

|T5-11b|https://huggingface.co/google/t5-11b-ssm-nqo|34.8|

## Usage

The model can be used as follows for **closed book question answering**:

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

t5_qa_model = AutoModelForSeq2SeqLM.from_pretrained("google/t5-3b-ssm-nqo")

t5_tok = AutoTokenizer.from_pretrained("google/t5-3b-ssm-nqo")

input_ids = t5_tok("When was Franklin D. Roosevelt born?", return_tensors="pt").input_ids

gen_output = t5_qa_model.generate(input_ids)[0]

print(t5_tok.decode(gen_output, skip_special_tokens=True))

```

## Abstract

It has recently been observed that neural language models trained on unstructured text can implicitly store and retrieve knowledge using natural language queries. In this short paper, we measure the practical utility of this approach by fine-tuning pre-trained models to answer questions without access to any external context or knowledge. We show that this approach scales with model size and performs competitively with open-domain systems that explicitly retrieve answers from an external knowledge source when answering questions. To facilitate reproducibility and future work, we release our code and trained models at https://goo.gle/t5-cbqa.

|

diffusers/controlnet-canny-sdxl-1.0-mid | diffusers | 2023-08-16T12:59:53Z | 976 | 17 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"controlnet",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"license:openrail++",

"region:us"

]

| text-to-image | 2023-08-16T11:20:41Z | ---

license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- controlnet

inference: false

---

# Small SDXL-controlnet: Canny

These are small controlnet weights trained on stabilityai/stable-diffusion-xl-base-1.0 with canny conditioning. This checkpoint is 5x smaller than the original XL controlnet checkpoint.

You can find some example images in the following.

prompt: aerial view, a futuristic research complex in a bright foggy jungle, hard lighting

prompt: a woman, close up, detailed, beautiful, street photography, photorealistic, detailed, Kodak ektar 100, natural, candid shot

prompt: megatron in an apocalyptic world ground, runied city in the background, photorealistic

prompt: a couple watching sunset, 4k photo

## Usage

Make sure to first install the libraries:

```bash

pip install accelerate transformers safetensors opencv-python diffusers

```

And then we're ready to go:

```python

from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline, AutoencoderKL

from diffusers.utils import load_image

from PIL import Image

import torch

import numpy as np

import cv2

prompt = "aerial view, a futuristic research complex in a bright foggy jungle, hard lighting"

negative_prompt = "low quality, bad quality, sketches"

image = load_image("https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/sd_controlnet/hf-logo.png")

controlnet_conditioning_scale = 0.5 # recommended for good generalization

controlnet = ControlNetModel.from_pretrained(

"diffusers/controlnet-canny-sdxl-1.0-mid",

torch_dtype=torch.float16

)

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

controlnet=controlnet,

vae=vae,

torch_dtype=torch.float16,

)

pipe.enable_model_cpu_offload()

image = np.array(image)

image = cv2.Canny(image, 100, 200)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

image = Image.fromarray(image)

images = pipe(

prompt, negative_prompt=negative_prompt, image=image, controlnet_conditioning_scale=controlnet_conditioning_scale,

).images

images[0].save(f"hug_lab.png")

```

To more details, check out the official documentation of [`StableDiffusionXLControlNetPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/controlnet_sdxl).

🚨 Please note that this checkpoint is experimental and there's a lot of room for improvement. We encourage the community to build on top of it, improve it, and provide us with feedback. 🚨

### Training

Our training script was built on top of the official training script that we provide [here](https://github.com/huggingface/diffusers/blob/main/examples/controlnet/README_sdxl.md).

You can refer to [this script](https://github.com/huggingface/diffusers/blob/7b93c2a882d8e12209fbaeffa51ee2b599ab5349/examples/research_projects/controlnet/train_controlnet_webdataset.py) for full discolsure.

* This checkpoint does not perform distillation. We just use a smaller ControlNet initialized from the SDXL UNet. We

encourage the community to try and conduct distillation too. This resource might be of help in [this regard](https://huggingface.co/blog/sd_distillation).

* To learn more about how the ControlNet was initialized, refer to [this code block](https://github.com/huggingface/diffusers/blob/7b93c2a882d8e12209fbaeffa51ee2b599ab5349/examples/research_projects/controlnet/train_controlnet_webdataset.py#L981C1-L999C36).

* It does not have any attention blocks.

* The model works pretty good on most conditioning images. But for more complex conditionings, the bigger checkpoints might be better. We are still working on improving the quality of this checkpoint and looking for feedback from the community.

* We recommend playing around with the `controlnet_conditioning_scale` and `guidance_scale` arguments for potentially better

image generation quality.

#### Training data

The model was trained on 3M images from LAION aesthetic 6 plus subset, with batch size of 256 for 50k steps with constant learning rate of 3e-5.

#### Compute

One 8xA100 machine

#### Mixed precision

FP16 |

UCSC-VLAA/ViT-L-14-CLIPA-datacomp1B | UCSC-VLAA | 2023-10-17T05:46:10Z | 976 | 2 | open_clip | [

"open_clip",

"safetensors",

"clip",

"zero-shot-image-classification",

"dataset:mlfoundations/datacomp_1b",

"arxiv:2306.15658",

"arxiv:2305.07017",

"license:apache-2.0",

"region:us"

]

| zero-shot-image-classification | 2023-10-17T05:42:03Z | ---

tags:

- clip

library_name: open_clip

pipeline_tag: zero-shot-image-classification

license: apache-2.0

datasets:

- mlfoundations/datacomp_1b

---

# Model card for ViT-L-14-CLIPA-datacomp1B

A CLIPA-v2 model...

## Model Details

- **Model Type:** Contrastive Image-Text, Zero-Shot Image Classification.

- **Original:** https://github.com/UCSC-VLAA/CLIPA

- **Dataset:** mlfoundations/datacomp_1b

- **Papers:**

- CLIPA-v2: Scaling CLIP Training with 81.1% Zero-shot ImageNet Accuracy within a $10,000 Budget; An Extra $4,000 Unlocks 81.8% Accuracy: https://arxiv.org/abs/2306.15658

- An Inverse Scaling Law for CLIP Training: https://arxiv.org/abs/2305.07017

## Model Usage

### With OpenCLIP

```

import torch

import torch.nn.functional as F

from urllib.request import urlopen

from PIL import Image

from open_clip import create_model_from_pretrained, get_tokenizer

model, preprocess = create_model_from_pretrained('hf-hub:ViT-L-14-CLIPA')

tokenizer = get_tokenizer('hf-hub:ViT-L-14-CLIPA')

image = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

image = preprocess(image).unsqueeze(0)

text = tokenizer(["a diagram", "a dog", "a cat", "a beignet"], context_length=model.context_length)

with torch.no_grad(), torch.cuda.amp.autocast():

image_features = model.encode_image(image)

text_features = model.encode_text(text)

image_features = F.normalize(image_features, dim=-1)

text_features = F.normalize(text_features, dim=-1)

text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

print("Label probs:", text_probs) # prints: [[0., 0., 0., 1.0]]

```

## Citation

```bibtex

@article{li2023clipav2,

title={CLIPA-v2: Scaling CLIP Training with 81.1% Zero-shot ImageNet Accuracy within a $10,000 Budget; An Extra $4,000 Unlocks 81.8% Accuracy},

author={Xianhang Li and Zeyu Wang and Cihang Xie},

journal={arXiv preprint arXiv:2306.15658},

year={2023},

}

```

```bibtex

@inproceedings{li2023clipa,

title={An Inverse Scaling Law for CLIP Training},

author={Xianhang Li and Zeyu Wang and Cihang Xie},

booktitle={NeurIPS},

year={2023},

}

```

|

TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF | TheBloke | 2023-10-29T09:32:14Z | 976 | 15 | transformers | [

"transformers",

"gguf",

"mistral",

"not-for-all-audiences",

"nsfw",

"base_model:Undi95/Mistral-ClaudeLimaRP-v3-7B",

"license:apache-2.0",

"text-generation-inference",

"region:us"

]

| null | 2023-10-29T09:27:59Z | ---

base_model: Undi95/Mistral-ClaudeLimaRP-v3-7B

inference: false

license: apache-2.0

model_creator: Undi

model_name: Mistral ClaudeLimaRP v3 7B

model_type: mistral

prompt_template: "### Instruction:\nCharacter's Persona: bot character description\n\

\nUser's persona: user character description\n \nScenario: what happens in the\

\ story\n\nPlay the role of Character. You must engage in a roleplaying chat with\

\ User below this line. Do not write dialogues and narration for User. Character\

\ should respond with messages of medium length.\n\n### Input:\nUser: {prompt}\n\

\n### Response:\nCharacter: \n"

quantized_by: TheBloke

tags:

- not-for-all-audiences

- nsfw

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Mistral ClaudeLimaRP v3 7B - GGUF

- Model creator: [Undi](https://huggingface.co/Undi95)

- Original model: [Mistral ClaudeLimaRP v3 7B](https://huggingface.co/Undi95/Mistral-ClaudeLimaRP-v3-7B)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Undi's Mistral ClaudeLimaRP v3 7B](https://huggingface.co/Undi95/Mistral-ClaudeLimaRP-v3-7B).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplate list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF)

* [Undi's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/Undi95/Mistral-ClaudeLimaRP-v3-7B)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: LimaRP-Alpaca

```

### Instruction:

Character's Persona: bot character description

User's persona: user character description

Scenario: what happens in the story

Play the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User. Character should respond with messages of medium length.

### Input:

User: {prompt}

### Response:

Character:

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [mistral-claudelimarp-v3-7b.Q2_K.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q2_K.gguf) | Q2_K | 2 | 3.08 GB| 5.58 GB | smallest, significant quality loss - not recommended for most purposes |

| [mistral-claudelimarp-v3-7b.Q3_K_S.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q3_K_S.gguf) | Q3_K_S | 3 | 3.16 GB| 5.66 GB | very small, high quality loss |

| [mistral-claudelimarp-v3-7b.Q3_K_M.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q3_K_M.gguf) | Q3_K_M | 3 | 3.52 GB| 6.02 GB | very small, high quality loss |

| [mistral-claudelimarp-v3-7b.Q3_K_L.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q3_K_L.gguf) | Q3_K_L | 3 | 3.82 GB| 6.32 GB | small, substantial quality loss |

| [mistral-claudelimarp-v3-7b.Q4_0.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q4_0.gguf) | Q4_0 | 4 | 4.11 GB| 6.61 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [mistral-claudelimarp-v3-7b.Q4_K_S.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q4_K_S.gguf) | Q4_K_S | 4 | 4.14 GB| 6.64 GB | small, greater quality loss |

| [mistral-claudelimarp-v3-7b.Q4_K_M.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q4_K_M.gguf) | Q4_K_M | 4 | 4.37 GB| 6.87 GB | medium, balanced quality - recommended |

| [mistral-claudelimarp-v3-7b.Q5_0.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q5_0.gguf) | Q5_0 | 5 | 5.00 GB| 7.50 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [mistral-claudelimarp-v3-7b.Q5_K_S.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q5_K_S.gguf) | Q5_K_S | 5 | 5.00 GB| 7.50 GB | large, low quality loss - recommended |

| [mistral-claudelimarp-v3-7b.Q5_K_M.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q5_K_M.gguf) | Q5_K_M | 5 | 5.13 GB| 7.63 GB | large, very low quality loss - recommended |

| [mistral-claudelimarp-v3-7b.Q6_K.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q6_K.gguf) | Q6_K | 6 | 5.94 GB| 8.44 GB | very large, extremely low quality loss |

| [mistral-claudelimarp-v3-7b.Q8_0.gguf](https://huggingface.co/TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF/blob/main/mistral-claudelimarp-v3-7b.Q8_0.gguf) | Q8_0 | 8 | 7.70 GB| 10.20 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF and below it, a specific filename to download, such as: mistral-claudelimarp-v3-7b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF mistral-claudelimarp-v3-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF mistral-claudelimarp-v3-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m mistral-claudelimarp-v3-7b.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "### Instruction:\nCharacter's Persona: bot character description\n\nUser's persona: user character description\n \nScenario: what happens in the story\n\nPlay the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User. Character should respond with messages of medium length.\n\n### Input:\nUser: {prompt}\n\n### Response:\nCharacter:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Mistral-ClaudeLimaRP-v3-7B-GGUF", model_file="mistral-claudelimarp-v3-7b.Q4_K_M.gguf", model_type="mistral", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, NimbleBox.ai, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Undi's Mistral ClaudeLimaRP v3 7B

## Description

This repo contains fp16 files of [Norquinal/Mistral-7B-claude-chat](https://huggingface.co/Norquinal/Mistral-7B-claude-chat) with the LoRA [lemonilia/LimaRP-Mistral-7B-v0.1](https://huggingface.co/lemonilia/LimaRP-Mistral-7B-v0.1) applied at weight "0.75".

All credit go to [lemonilia](https://huggingface.co/lemonilia) and [Norquinal](https://huggingface.co/Norquinal)

## Prompt format

Same as before. It uses the [extended Alpaca format](https://github.com/tatsu-lab/stanford_alpaca),

with `### Input:` immediately preceding user inputs and `### Response:` immediately preceding

model outputs. While Alpaca wasn't originally intended for multi-turn responses, in practice this

is not a problem; the format follows a pattern already used by other models.

```

### Instruction:

Character's Persona: {bot character description}

User's Persona: {user character description}

Scenario: {what happens in the story}

Play the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User.

### Input:

User: {utterance}

### Response:

Character: {utterance}

### Input

User: {utterance}

### Response:

Character: {utterance}

(etc.)

```

You should:

- Replace all text in curly braces (curly braces included) with your own text.

- Replace `User` and `Character` with appropriate names.

### Message length control

Inspired by the previously named "Roleplay" preset in SillyTavern, with this

version of LimaRP it is possible to append a length modifier to the response instruction

sequence, like this:

```

### Input

User: {utterance}

### Response: (length = medium)

Character: {utterance}

```

This has an immediately noticeable effect on bot responses. The available lengths are:

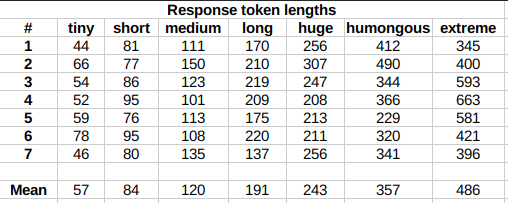

`tiny`, `short`, `medium`, `long`, `huge`, `humongous`, `extreme`, `unlimited`. **The

recommended starting length is `medium`**. Keep in mind that the AI may ramble

or impersonate the user with very long messages.

The length control effect is reproducible, but the messages will not necessarily follow

lengths very precisely, rather follow certain ranges on average, as seen in this table

with data from tests made with one reply at the beginning of the conversation:

Response length control appears to work well also deep into the conversation.

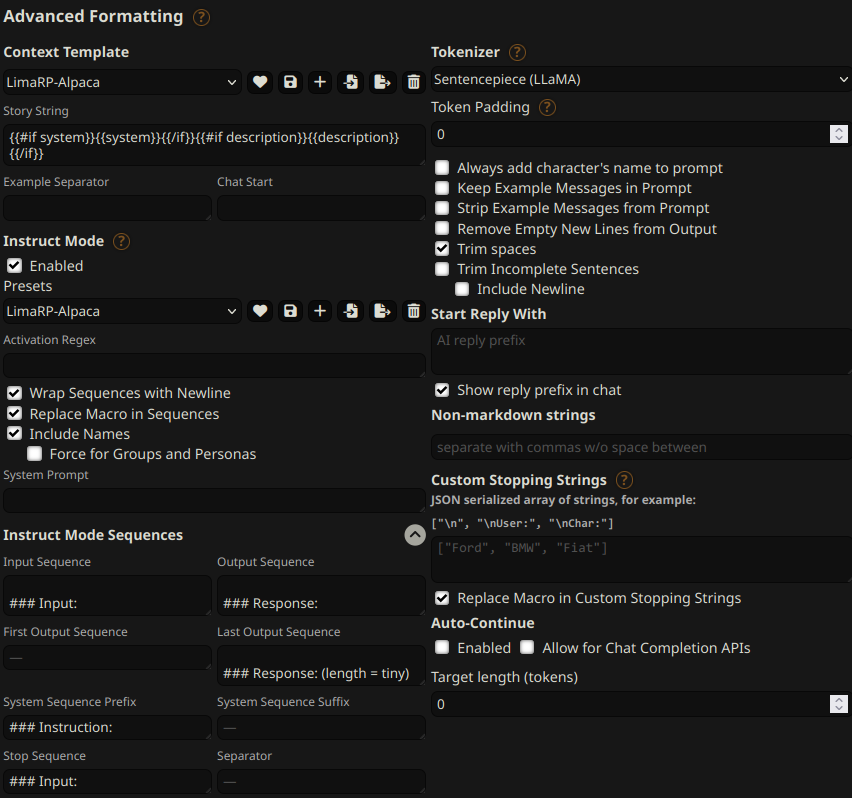

## Suggested settings

You can follow these instruction format settings in SillyTavern. Replace `tiny` with

your desired response length:

## Text generation settings

Extensive testing with Mistral has not been performed yet, but suggested starting text

generation settings may be:

- TFS = 0.90~0.95

- Temperature = 0.70~0.85

- Repetition penalty = 1.08~1.10

- top-k = 0 (disabled)

- top-p = 1 (disabled)

If you want to support me, you can [here](https://ko-fi.com/undiai).

<!-- original-model-card end -->

|

timm/edgenext_base.in21k_ft_in1k | timm | 2023-04-23T22:42:41Z | 975 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"dataset:imagenet-21k-p",

"arxiv:2206.10589",

"license:mit",

"region:us"

]

| image-classification | 2023-04-23T22:42:29Z | ---

tags:

- image-classification

- timm

library_name: timm

license: mit

datasets:

- imagenet-1k

- imagenet-21k-p

---

# Model card for edgenext_base.in21k_ft_in1k

An EdgeNeXt image classification model. Pretrained on ImageNet-21k-P (winter21 subset) and fine-tuned on ImageNet-1k by paper authors.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 18.5

- GMACs: 3.8

- Activations (M): 15.6

- Image size: train = 256 x 256, test = 320 x 320

- **Papers:**

- EdgeNeXt: Efficiently Amalgamated CNN-Transformer Architecture for Mobile Vision Applications: https://arxiv.org/abs/2206.10589

- **Pretrain Dataset:** ImageNet-21K-P

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/mmaaz60/EdgeNeXt

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('edgenext_base.in21k_ft_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'edgenext_base.in21k_ft_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

# print shape of each feature map in output

# e.g.:

# torch.Size([1, 80, 64, 64])

# torch.Size([1, 160, 32, 32])

# torch.Size([1, 288, 16, 16])

# torch.Size([1, 584, 8, 8])

print(o.shape)

```

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'edgenext_base.in21k_ft_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 584, 8, 8) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Citation

```bibtex

@inproceedings{Maaz2022EdgeNeXt,

title={EdgeNeXt: Efficiently Amalgamated CNN-Transformer Architecture for Mobile Vision Applications},

author={Muhammad Maaz and Abdelrahman Shaker and Hisham Cholakkal and Salman Khan and Syed Waqas Zamir and Rao Muhammad Anwer and Fahad Shahbaz Khan},

booktitle={International Workshop on Computational Aspects of Deep Learning at 17th European Conference on Computer Vision (CADL2022)},

year={2022},

organization={Springer}

}

```

|

WhitePeak/bert-base-cased-Korean-sentiment | WhitePeak | 2023-09-19T01:59:03Z | 975 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"ko",

"dataset:WhitePeak/shopping_review",

"base_model:bert-base-multilingual-cased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-09-18T23:20:53Z | ---

license: apache-2.0

base_model: bert-base-multilingual-cased

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: bert-base-cased-Korean-sentiment

results: []

datasets:

- WhitePeak/shopping_review

language:

- ko

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-Korean-sentiment

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2338

- Accuracy: 0.9234

- F1: 0.9238

## Model description

This is a fine-tuned model for a sentiment analysis for the Korean language based on customer reviews in the Korean language

## Intended uses & limitations

```python

from transformers import pipeline

sentiment_model = pipeline(model="WhitePeak/bert-base-cased-Korean-sentiment")

sentiment_mode("매우 좋아")

```

Result:

```

LABEL_0: negative

LABEL_1: positive

```

## Training and evaluation data

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu118

- Datasets 2.14.5

- Tokenizers 0.13.3 |

ALBADDAWI/DeepCode-7B-Aurora-v13 | ALBADDAWI | 2024-04-13T16:09:41Z | 975 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"deepseek-ai/deepseek-math-7b-rl",

"conversational",

"base_model:deepseek-ai/deepseek-math-7b-rl",

"license:afl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-04-11T16:21:04Z | ---

tags:

- deepseek-ai/deepseek-math-7b-rl

base_model:

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-rl

- deepseek-ai/deepseek-math-7b-rl

license: afl-3.0

---

# DeepCode-7B-Aurora-v13

DeepCode-7B-Aurora-v13 is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

* [deepseek-ai/deepseek-math-7b-rl](https://huggingface.co/deepseek-ai/deepseek-math-7b-rl)

## 🧩 Configuration

```yaml

models:

- model: deepseek-ai/deepseek-math-7b-rl

# No parameters necessary for base model

- model: deepseek-ai/deepseek-math-7b-rl

parameters:

density: 0.66

weight: 0.2

- model: deepseek-ai/deepseek-math-7b-rl

parameters:

density: 0.55

weight: 0.2

- model: deepseek-ai/deepseek-math-7b-rl

parameters:

density: 0.55

weight: 0.2

- model: deepseek-ai/deepseek-math-7b-rl

parameters:

density: 0.44

weight: 0.2

- model: deepseek-ai/deepseek-math-7b-rl

parameters:

density: 0.66

weight: 0.2

merge_method: dare_ties

base_model: deepseek-ai/deepseek-math-7b-rl

parameters:

int8_mask: true

dtype: bfloat16

```

## 💻 Usage

```python

!pip install -qU transformers accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "ALBADDAWI/DeepCode-7B-Aurora-v13"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

``` |

Meina/MeinaMix | Meina | 2023-05-25T11:18:03Z | 974 | 137 | diffusers | [

"diffusers",

"anime",

"art",

"stable diffusion",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"region:us"

]

| text-to-image | 2023-02-08T08:52:00Z | ---

license: creativeml-openrail-m

language:

- en

tags:

- anime

- art

- stable diffusion

pipeline_tag: text-to-image

library_name: diffusers

---

MeinaMix Objective is to be able to do good art with little prompting.

* For examples and prompts, please checkout: https://civitai.com/models/7240/meinamix

I have a discord server where you can post images that you generated, discuss prompt and/or ask for help.

* https://discord.gg/XC9nGZNDUd

If you like one of my models and want to support their updates

* I've made a ko-fi page; https://ko-fi.com/meina where you can pay me a coffee <3

* And a Patreon page; https://www.patreon.com/MeinaMix where you can support me and get acess to beta of my models!

* You may also try this model using Sinkin.ai: https://sinkin.ai/m/vln8Nwr

* MeinaMix and the other of Meinas will ALWAYS be FREE.

* Recommendations of use:

Enable Quantization in K samplers.

Hires.fix is needed for prompts where the character is far away in order to make decent images, it drastically improve the quality of face and eyes!

Recommended parameters:

* Sampler: Euler a: 40 to 60 steps.

* Sampler: DPM++ SDE Karras: 30 to 60 steps.

* CFG Scale: 7.

* Resolutions: 512x768, 512x1024 for Portrait!

* Resolutions: 768x512, 1024x512, 1536x512 for Landscape!

* Hires.fix: R-ESRGAN 4x+Anime6b, with 10 steps at 0.1 up to 0.3 denoising.

* Clip Skip: 2.

* Negatives: ' (worst quality:2, low quality:2), (zombie, sketch, interlocked fingers, comic), '

|

sinkinai/Beautiful-Realistic-Asians-v5 | sinkinai | 2024-05-16T14:09:02Z | 974 | 17 | diffusers | [

"diffusers",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-05-08T09:09:39Z | You can run this model for free at: https://sinkin.ai/m/vlDnKP6

We offer API at low rates as well |

beowolx/CodeNinja-1.0-OpenChat-7B-GGUF | beowolx | 2023-12-22T21:11:54Z | 974 | 14 | null | [

"gguf",

"code",

"text-generation-inference",

"text-generation",

"en",

"dataset:glaiveai/glaive-code-assistant-v2",

"dataset:TokenBender/code_instructions_122k_alpaca_style",

"license:mit",

"region:us"

]

| text-generation | 2023-12-20T21:40:36Z | ---

license: mit

datasets:

- glaiveai/glaive-code-assistant-v2

- TokenBender/code_instructions_122k_alpaca_style

language:

- en

metrics:

- code_eval

pipeline_tag: text-generation

tags:

- code

- text-generation-inference

---

<p align="center">

<img width="700px" alt="DeepSeek Coder" src="https://cdn-uploads.huggingface.co/production/uploads/64b566ab04fa6584c03b5247/5COagfF6EwrV4utZJ-ClI.png">

</p>

<hr>

# CodeNinja: Your Advanced Coding Assistant

## Overview

CodeNinja is an enhanced version of the renowned model [openchat/openchat-3.5-1210](https://huggingface.co/openchat/openchat-3.5-1210). It having been fine-tuned through Supervised Fine Tuning on two expansive datasets, encompassing over 400,000 coding instructions. Designed to be an indispensable tool for coders, CodeNinja aims to integrate seamlessly into your daily coding routine.

### Key Features

- **Expansive Training Database**: CodeNinja has been refined with datasets from [glaiveai/glaive-code-assistant-v2](https://huggingface.co/datasets/glaiveai/glaive-code-assistant-v2) and [TokenBender/code_instructions_122k_alpaca_style](https://huggingface.co/datasets/TokenBender/code_instructions_122k_alpaca_style), incorporating around 400,000 coding instructions across various languages including Python, C, C++, Rust, Java, JavaScript, and more.

- **Flexibility and Scalability**: Available in a 7B model size, CodeNinja is adaptable for local runtime environments.

- **Advanced Code Completion**: With a substantial context window size of 8192, it supports comprehensive project-level code completion.

## Prompt Format

CodeNinja maintains the same prompt structure as OpenChat 3.5. Effective utilization requires adherence to this format:

```

GPT4 Correct User: Hello<|end_of_turn|>GPT4 Correct Assistant: Hi<|end_of_turn|>GPT4 Correct User: How are you today?<|end_of_turn|>GPT4 Correct Assistant:

```

🚨 Important: Ensure the use of `<|end_of_turn|>` as the end-of-generation token.

**Adhering to this format is crucial for optimal results.**

## Usage Instructions

### Using LM Studio

The simplest way to engage with CodeNinja is via the [quantized versions](https://huggingface.co/beowolx/CodeNinja-1.0-OpenChat-7B-GGUF) on [LM Studio](https://lmstudio.ai/). Ensure you select the "OpenChat" preset, which incorporates the necessary prompt format. The preset is also available in this [gist](https://gist.github.com/beowolx/b219466681c02ff67baf8f313a3ad817).

### Using the Transformers Library

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

# Initialize the model

model_path = "beowolx/CodeNinja-1.0-OpenChat-7B"

model = AutoModelForCausalLM.from_pretrained(model_path, device_map="auto")

# Load the OpenChat tokenizer

tokenizer = AutoTokenizer.from_pretrained("openchat/openchat-3.5-1210", use_fast=True)

def generate_one_completion(prompt: str):

messages = [

{"role": "user", "content": prompt},

{"role": "assistant", "content": ""} # Model response placeholder

]

# Generate token IDs using the chat template

input_ids = tokenizer.apply_chat_template(messages, add_generation_prompt=True)

# Produce completion

generate_ids = model.generate(

torch.tensor([input_ids]).to("cuda"),

max_length=256,

pad_token_id=tokenizer.pad_token_id,

eos_token_id=tokenizer.eos_token_id

)

# Process the completion

completion = tokenizer.decode(generate_ids[0], skip_special_tokens=True)

completion = completion.split("\n\n\n")[0].strip()

return completion

```

## License

CodeNinja is licensed under the MIT License, with model usage subject to the Model License.

## Contact

For queries or support, please open an issue in the repository. |

timm/pvt_v2_b0.in1k | timm | 2023-04-25T04:03:07Z | 973 | 1 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2106.13797",

"license:apache-2.0",

"region:us"

]

| image-classification | 2023-04-25T04:03:01Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for pvt_v2_b0

A PVT-v2 (Pyramid Vision Transformer) image classification model. Trained on ImageNet-1k by paper authors.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 3.7

- GMACs: 0.6

- Activations (M): 8.0

- Image size: 224 x 224

- **Papers:**

- PVT v2: Improved Baselines with Pyramid Vision Transformer: https://arxiv.org/abs/2106.13797

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/whai362/PVT

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('pvt_v2_b0', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'pvt_v2_b0',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

# print shape of each feature map in output

# e.g.:

# torch.Size([1, 32, 56, 56])

# torch.Size([1, 64, 28, 28])

# torch.Size([1, 160, 14, 14])

# torch.Size([1, 256, 7, 7])

print(o.shape)

```

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'pvt_v2_b0',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 256, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex

@article{wang2021pvtv2,

title={Pvtv2: Improved baselines with pyramid vision transformer},

author={Wang, Wenhai and Xie, Enze and Li, Xiang and Fan, Deng-Ping and Song, Kaitao and Liang, Ding and Lu, Tong and Luo, Ping and Shao, Ling},

journal={Computational Visual Media},

volume={8},

number={3},

pages={1--10},

year={2022},

publisher={Springer}

}

```

|

andreas122001/roberta-academic-detector | andreas122001 | 2024-02-02T12:21:29Z | 973 | 5 | transformers | [

"transformers",

"pytorch",

"safetensors",

"roberta",

"text-classification",

"mgt-detection",

"ai-detection",

"en",

"dataset:NicolaiSivesind/human-vs-machine",

"dataset:gfissore/arxiv-abstracts-2021",

"license:openrail",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-05-07T00:56:43Z | ---

license: openrail

widget:

- text: I am totally a human, trust me bro.

example_title: default

- text: >-

In Finnish folklore, all places and things, and also human beings, have a

haltija (a genius, guardian spirit) of their own. One such haltija is called

etiäinen—an image, doppelgänger, or just an impression that goes ahead of a

person, doing things the person in question later does. For example, people

waiting at home might hear the door close or even see a shadow or a

silhouette, only to realize that no one has yet arrived. Etiäinen can also

refer to some kind of a feeling that something is going to happen. Sometimes

it could, for example, warn of a bad year coming. In modern Finnish, the

term has detached from its shamanistic origins and refers to premonition.

Unlike clairvoyance, divination, and similar practices, etiäiset (plural)

are spontaneous and can't be induced. Quite the opposite, they may be

unwanted and cause anxiety, like ghosts. Etiäiset need not be too dramatic

and may concern everyday events, although ones related to e.g. deaths are

common. As these phenomena are still reported today, they can be considered

a living tradition, as a way to explain the psychological experience of

premonition.

example_title: real wikipedia

- text: >-

In Finnish folklore, all places and things, animate or inanimate, have a

spirit or "etiäinen" that lives there. Etiäinen can manifest in many forms,

but is usually described as a kind, elderly woman with white hair. She is

the guardian of natural places and often helps people in need. Etiäinen has

been a part of Finnish culture for centuries and is still widely believed in

today. Folklorists study etiäinen to understand Finnish traditions and how

they have changed over time.

example_title: generated wikipedia

- text: >-

This paper presents a novel framework for sparsity-certifying graph

decompositions, which are important tools in various areas of computer

science, including algorithm design, complexity theory, and optimization.