| modelId (string, lengths 5-122) | author (string, lengths 2-42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0-738M) | likes (int64, 0-11k) | library_name (string, 245 classes) | tags (list, lengths 1-4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, lengths 1-901k) |

|---|---|---|---|---|---|---|---|---|---|

stablediffusionapi/real-dream-sdxl | stablediffusionapi | 2024-04-30T21:14:44Z | 592 | 2 | diffusers | [

"diffusers",

"modelslab.com",

"stable-diffusion-api",

"text-to-image",

"ultra-realistic",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

]

| text-to-image | 2024-04-30T21:11:15Z | ---

license: creativeml-openrail-m

tags:

- modelslab.com

- stable-diffusion-api

- text-to-image

- ultra-realistic

pinned: true

---

# Real Dream SDXL API Inference

## Get API Key

Get an API key from [ModelsLab API](http://modelslab.com); no payment is needed.

Replace the key in the code below and set **model_id** to "real-dream-sdxl".

Coding in PHP/Node/Java etc? Have a look at docs for more code examples: [View docs](https://modelslab.com/docs)

Try model for free: [Generate Images](https://modelslab.com/models/real-dream-sdxl)

Model link: [View model](https://modelslab.com/models/real-dream-sdxl)

View all models: [View Models](https://modelslab.com/models)

```python
import requests
import json

url = "https://modelslab.com/api/v6/images/text2img"

payload = json.dumps({
    "key": "your_api_key",
    "model_id": "real-dream-sdxl",
    "prompt": "ultra realistic close up portrait ((beautiful pale cyberpunk female with heavy black eyeliner)), blue eyes, shaved side haircut, hyper detail, cinematic lighting, magic neon, dark red city, Canon EOS R3, nikon, f/1.4, ISO 200, 1/160s, 8K, RAW, unedited, symmetrical balance, in-frame, 8K",
    "negative_prompt": "painting, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, deformed, ugly, blurry, bad anatomy, bad proportions, extra limbs, cloned face, skinny, glitchy, double torso, extra arms, extra hands, mangled fingers, missing lips, ugly face, distorted face, extra legs, anime",
    "width": "512",
    "height": "512",
    "samples": "1",
    "num_inference_steps": "30",
    "safety_checker": "no",
    "enhance_prompt": "yes",
    "seed": None,
    "guidance_scale": 7.5,
    "multi_lingual": "no",
    "panorama": "no",
    "self_attention": "no",
    "upscale": "no",
    "embeddings": "embeddings_model_id",
    "lora": "lora_model_id",
    "webhook": None,
    "track_id": None
})

headers = {
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```
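The request above hard-codes the API key in the script. As a minimal sketch, the same JSON body can be built by a small helper that reads the key from the environment. The helper name `build_payload` and the variable name `MODELSLAB_API_KEY` are assumptions made for illustration, not part of the ModelsLab documentation, and only a subset of the fields from the example is included.

```python
import json
import os


def build_payload(prompt, negative_prompt="", model_id="real-dream-sdxl"):
    """Return the JSON request body as a string (hypothetical helper)."""
    # Assumption: the key is stored in an environment variable of this name.
    api_key = os.environ.get("MODELSLAB_API_KEY", "your_api_key")
    return json.dumps({
        "key": api_key,
        "model_id": model_id,
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "width": "512",
        "height": "512",
        "samples": "1",
        "num_inference_steps": "30",
        "guidance_scale": 7.5,
        "seed": None,  # serialized as JSON null
    })


payload = build_payload("ultra realistic close up portrait", "blurry, anime")
print(json.loads(payload)["model_id"])
```

The returned string can be passed as `data=payload` in the `requests` call shown in the card.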

> Use this coupon code to get 25% off **DMGG0RBN** |

mradermacher/Halu-8B-Llama3-v0.35-GGUF | mradermacher | 2024-06-03T17:29:13Z | 592 | 1 | transformers | [

"transformers",

"gguf",

"en",

"base_model:Hastagaras/Halu-8B-Llama3-v0.35",

"license:llama3",

"endpoints_compatible",

"region:us"

]

| null | 2024-05-31T20:36:09Z | ---

base_model: Hastagaras/Halu-8B-Llama3-v0.35

language:

- en

library_name: transformers

license: llama3

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/Hastagaras/Halu-8B-Llama3-v0.35

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Halu-8B-Llama3-v0.35-GGUF/resolve/main/Halu-8B-Llama3-v0.35.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |
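The download links in the table follow a single naming pattern. As a rough sketch, the snippet below rebuilds a link from a quant type and picks the largest listed quant whose file fits a size budget. The helper names are hypothetical, and the size dictionary copies only a few rows from the table.

```python
REPO = "mradermacher/Halu-8B-Llama3-v0.35-GGUF"
MODEL = "Halu-8B-Llama3-v0.35"

# File sizes in GB, copied from a few rows of the table above.
SIZES_GB = {"Q2_K": 3.3, "Q4_K_S": 4.8, "Q4_K_M": 5.0, "Q6_K": 6.7, "Q8_0": 8.6}


def quant_url(quant):
    """Rebuild the download link for one quant type."""
    return f"https://huggingface.co/{REPO}/resolve/main/{MODEL}.{quant}.gguf"


def largest_fitting(budget_gb):
    """Return the largest listed quant whose file size fits the budget, or None."""
    fitting = [q for q, size in SIZES_GB.items() if size <= budget_gb]
    return max(fitting, key=SIZES_GB.get) if fitting else None


choice = largest_fitting(6.0)
print(choice, quant_url(choice))
```

File size is only a lower bound on what is needed at run time, so the budget here should be read as disk or download size rather than required memory.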

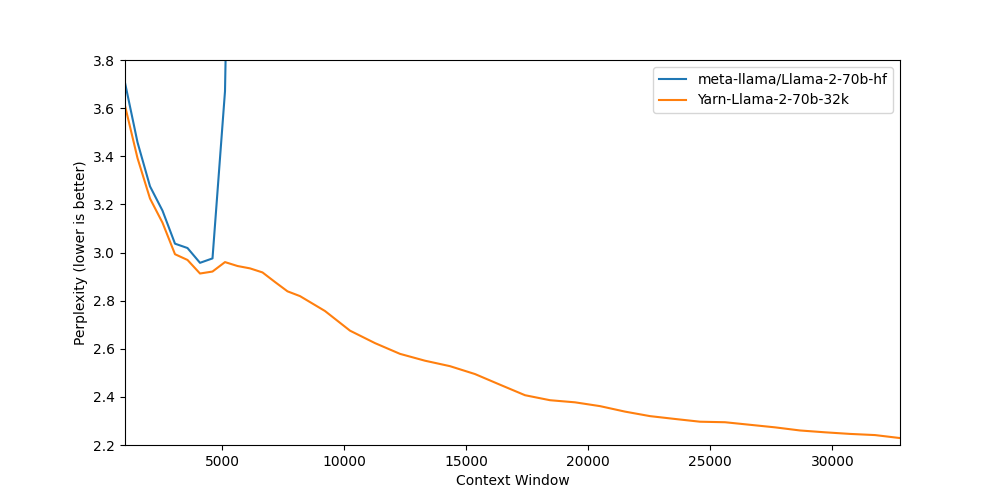

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

mohitsha/Llama-2-70b-chat-hf-FP8-KV-AMMO | mohitsha | 2024-06-25T13:57:47Z | 592 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-06-25T12:59:25Z | ---

license: llama2

---

|

IVN-RIN/medBIT-r3-plus | IVN-RIN | 2024-05-24T11:58:02Z | 591 | 2 | transformers | [

"transformers",

"pytorch",

"safetensors",

"bert",

"fill-mask",

"Biomedical Language Modeling",

"it",

"dataset:IVN-RIN/BioBERT_Italian",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-12-01T12:03:49Z | ---

language:

- it

tags:

- Biomedical Language Modeling

widget:

- text: >-

L'asma allergica è una patologia dell'[MASK] respiratorio causata dalla

presenza di allergeni responsabili dell'infiammazione dell'albero

bronchiale.

example_title: Example 1

- text: >-

Il pancreas produce diversi [MASK] molto importanti tra i quali l'insulina e

il glucagone.

example_title: Example 2

- text: >-

Il GABA è un amminoacido ed è il principale neurotrasmettitore inibitorio

del [MASK].

example_title: Example 3

datasets:

- IVN-RIN/BioBERT_Italian

---

🤗 + 📚🩺🇮🇹 + 📖🧑⚕️ + 🌐⚕️ = **MedBIT-r3-plus**

From this repository you can download the **MedBIT-r3-plus** (Medical Bert for ITalian) checkpoint.

**MedBIT-r3-plus** is built on top of [BioBIT](https://huggingface.co/IVN-RIN/bioBIT), further pretrained on a corpus of medical textbooks, either directly written by Italian authors or translated by human professional translators, used in formal medical doctors’ education and specialized training. The size of this corpus amounts to 100 MB of data.

These comprehensive collections of medical concepts can impact the encoding of biomedical knowledge in language models, with the advantage of being natively available in Italian, and not being translated.

Online healthcare information dissemination is another source of biomedical texts that is commonly available in many less-resourced languages. Therefore, we also gathered an additional 100 MB of web-crawled data from reliable Italian, health-related websites.

More details in the paper.

**MedBIT-r3-plus** has been evaluated on 3 downstream tasks: **NER** (Named Entity Recognition), extractive **QA** (Question Answering), **RE** (Relation Extraction).

Here are the results, summarized:

- NER:

- [BC2GM](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb32) = 81.87%

- [BC4CHEMD](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb35) = 80.68%

- [BC5CDR(CDR)](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb31) = 81.97%

- [BC5CDR(DNER)](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb31) = 76.32%

- [NCBI_DISEASE](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb33) = 63.36%

- [SPECIES-800](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb34) = 63.90%

- QA:

- [BioASQ 4b](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb30) = 68.21%

- [BioASQ 5b](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb30) = 77.89%

- [BioASQ 6b](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb30) = 75.28%

- RE:

- [CHEMPROT](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb36) = 38.82%

- [BioRED](http://refhub.elsevier.com/S1532-0464(23)00152-1/sb37) = 67.62%

[Check the full paper](https://www.sciencedirect.com/science/article/pii/S1532046423001521) for further details, and feel free to contact us if you have any questions! |

diabolic6045/harry_potter_chatbot | diabolic6045 | 2023-05-02T17:38:38Z | 591 | 1 | transformers | [

"transformers",

"pytorch",

"safetensors",

"gpt2",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-05-02T13:04:38Z | # Harry Potter Chatbot

This model is a chatbot designed to generate responses in the style of Harry Potter, the protagonist from J.K. Rowling's popular book series and its movie adaptations.

## Model Architecture

The `harry_potter_chatbot` is based on the [`DialoGPT-medium`](https://huggingface.co/microsoft/DialoGPT-medium) model, a powerful GPT-based architecture designed for generating conversational responses. It has been fine-tuned on a dataset of Harry Potter's dialogues from movie transcripts.

## Usage

You can use this model to generate responses for a given input text using the following code:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("diabolic6045/harry_potter_chatbot")

model = AutoModelForCausalLM.from_pretrained("diabolic6045/harry_potter_chatbot")

input_text = "What's your favorite spell?"

input_tokens = tokenizer.encode(input_text, return_tensors='pt')

output_tokens = model.generate(input_tokens, max_length=50, num_return_sequences=1)

output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)

print(output_text)

```
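The snippet above encodes a single message. DialoGPT-style models are commonly prompted with the conversation so far, each turn terminated by the end-of-sequence token. The sketch below shows only that string construction. It assumes the EOS token is GPT-2's `<|endoftext|>` (in real code, read it from `tokenizer.eos_token`), and `build_dialogue` is a hypothetical helper, not part of this model's card.

```python
EOS = "<|endoftext|>"  # assumed value; prefer tokenizer.eos_token in real code


def build_dialogue(turns, eos=EOS):
    """Concatenate alternating user/bot turns, ending each one with the EOS token."""
    return "".join(turn + eos for turn in turns)


# Made-up example turns, for illustration only.
history = ["What's your favorite spell?", "Expelliarmus.", "Why that one?"]
prompt = build_dialogue(history)
print(prompt)
```

The resulting string would take the place of `input_text` in the `tokenizer.encode(...)` call above.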

## Limitations

This model is specifically designed to generate responses in the style of Harry Potter and may not provide accurate or coherent answers to general knowledge questions. It may also sometimes generate inappropriate responses. Be cautious while using this model in a public setting or for critical applications.

## Training Data

The model was fine-tuned on a dataset of Harry Potter's dialogues from movie transcripts. The dataset was collected from publicly available movie scripts and includes conversations and quotes from various Harry Potter films.

## Acknowledgments

This model was trained using the Hugging Face [Transformers](https://github.com/huggingface/transformers) library, and it is based on the [`DialoGPT-medium`](https://huggingface.co/microsoft/DialoGPT-medium) model by Microsoft. Special thanks to the Hugging Face team and Microsoft for their contributions to the NLP community.

---

Feel free to test the model and provide feedback or report any issues. Enjoy chatting with Harry Potter!

|

shihab17/bangla-sentence-transformer | shihab17 | 2023-09-08T10:40:51Z | 591 | 2 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"bn",

"en",

"autotrain_compatible",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

]

| sentence-similarity | 2023-05-18T02:58:45Z | ---

language:

- bn

- en

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# Bangla Sentence Transformer

Sentence Transformer is a cutting-edge natural language processing (NLP) model that is capable of encoding and transforming sentences into high-dimensional embeddings. With this technology, we can unlock powerful insights and applications in various fields like text classification, information retrieval, semantic search, and more.

This model is finetuned from ```stsb-xlm-r-multilingual```

It's now available on Hugging Face! 🎉🎉

## Install

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

```python

from sentence_transformers import SentenceTransformer

sentences = ['আমি আপেল খেতে পছন্দ করি। ', 'আমার একটি আপেল মোবাইল আছে।','আপনি কি এখানে কাছাকাছি থাকেন?', 'আশেপাশে কেউ আছেন?']

model = SentenceTransformer('shihab17/bangla-sentence-transformer')

embeddings = model.encode(sentences)

print(embeddings)

```

Without sentence-transformers, you can use the model through Hugging Face Transformers: pass the input through the model, then apply mean pooling over the token embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['আমি আপেল খেতে পছন্দ করি। ', 'আমার একটি আপেল মোবাইল আছে।','আপনি কি এখানে কাছাকাছি থাকেন?', 'আশেপাশে কেউ আছেন?']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('shihab17/bangla-sentence-transformer')

model = AutoModel.from_pretrained('shihab17/bangla-sentence-transformer')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
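To make the pooling step concrete, here is a minimal pure-Python sketch of masked mean pooling for a single sentence. It is an illustration of the arithmetic only, not the library's implementation, and the function name `masked_mean` and the toy numbers are assumptions.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors where mask == 1; padded positions are ignored."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            total = [t + v for t, v in zip(total, vector)]
            count += 1
    # Mirror the clamp in mean_pooling to avoid dividing by zero.
    return [t / max(count, 1e-9) for t in total]


# One sentence, three token slots; the last slot is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

The padded vector `[100.0, 100.0]` does not affect the result, which is why the attention mask is passed to the pooling function.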

## How to get sentence similarity

```python

from sentence_transformers import SentenceTransformer

from sentence_transformers.util import pytorch_cos_sim

transformer = SentenceTransformer('shihab17/bangla-sentence-transformer')

sentences = ['আমি আপেল খেতে পছন্দ করি। ', 'আমার একটি আপেল মোবাইল আছে।','আপনি কি এখানে কাছাকাছি থাকেন?', 'আশেপাশে কেউ আছেন?']

sentences_embeddings = transformer.encode(sentences)

for i in range(len(sentences)):
    for j in range(i, len(sentences)):
        sen_1 = sentences[i]
        sen_2 = sentences[j]
        sim_score = float(pytorch_cos_sim(sentences_embeddings[i], sentences_embeddings[j]))
        print(sen_1, '----->', sen_2, sim_score)

```
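The score printed above is a cosine similarity between two embedding vectors. A minimal sketch of that formula on plain Python lists follows; `cosine_similarity` is a hypothetical stand-in written for illustration, not the library function.

```python
import math


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [2.0, 0.0]))  # 1.0
print(cosine_similarity([1.0, 0.0], [0.0, 3.0]))  # 0.0
```

Vectors pointing the same way score 1 regardless of length, and orthogonal vectors score 0. This sketch does not guard against zero-length vectors.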

## Best MSE: 7.57528096437454 |

timm/efficientvit_b2.r224_in1k | timm | 2023-11-21T21:39:27Z | 591 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2205.14756",

"license:apache-2.0",

"region:us"

]

| image-classification | 2023-08-18T22:45:12Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for efficientvit_b2.r224_in1k

An EfficientViT (MIT) image classification model. Trained on ImageNet-1k by paper authors.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 24.3

- GMACs: 1.6

- Activations (M): 14.6

- Image size: 224 x 224

- **Papers:**

- EfficientViT: Multi-Scale Linear Attention for High-Resolution Dense Prediction: https://arxiv.org/abs/2205.14756

- **Original:** https://github.com/mit-han-lab/efficientvit

- **Dataset:** ImageNet-1k

## Model Usage

### Image Classification

```python

from urllib.request import urlopen
from PIL import Image
import timm
import torch  # needed for torch.topk below

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model('efficientvit_b2.r224_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```
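The last line of the example turns raw scores into percentages and keeps the five largest. A small pure-Python sketch of those two steps follows, using made-up scores for four classes. The helper names `softmax` and `top_k` are defined here for illustration and are not the torch functions.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(values, k):
    """Return (value, index) pairs for the k largest entries, largest first."""
    ranked = sorted(enumerate(values), key=lambda pair: pair[1], reverse=True)
    return [(value, index) for index, value in ranked[:k]]


logits = [0.1, 2.0, -1.0, 0.5]  # made-up scores for four classes
percentages = [p * 100 for p in softmax(logits)]
for value, index in top_k(percentages, k=2):
    print(f"class {index}: {value:.1f}%")
```

The indices returned are class indices; mapping them to label names needs a label list, which the card does not include.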

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'efficientvit_b2.r224_in1k',
    pretrained=True,
    features_only=True,
)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 48, 56, 56])
    #  torch.Size([1, 96, 28, 28])
    #  torch.Size([1, 192, 14, 14])
    #  torch.Size([1, 384, 7, 7])
    print(o.shape)

```
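The example shapes in the comments are consistent with four stages that downsample the 224 x 224 input by factors of 4, 8, 16 and 32. The sketch below reproduces that arithmetic. The strides and channel counts are read off the shapes above rather than stated in the card, so treat them as assumptions.

```python
INPUT_SIZE = 224

# (stride, channels) per stage, inferred from the example shapes above.
STAGES = [(4, 48), (8, 96), (16, 192), (32, 384)]


def feature_shapes(batch_size=1, input_size=INPUT_SIZE):
    """Return the expected (batch, channels, height, width) of each feature map."""
    return [
        (batch_size, channels, input_size // stride, input_size // stride)
        for stride, channels in STAGES
    ]


for shape in feature_shapes():
    print(shape)
```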

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(
    'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'
))

model = timm.create_model(
    'efficientvit_b2.r224_in1k',
    pretrained=True,
    num_classes=0,  # remove classifier nn.Linear
)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 384, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Citation

```bibtex

@article{cai2022efficientvit,

title={EfficientViT: Enhanced linear attention for high-resolution low-computation visual recognition},

author={Cai, Han and Gan, Chuang and Han, Song},

journal={arXiv preprint arXiv:2205.14756},

year={2022}

}

```

|

mmnga/ELYZA-japanese-CodeLlama-7b-instruct-gguf | mmnga | 2023-11-16T14:28:24Z | 591 | 7 | null | [

"gguf",

"llama2",

"ja",

"arxiv:2308.12950",

"arxiv:2307.09288",

"license:llama2",

"region:us"

]

| null | 2023-11-15T09:48:32Z | ---

license: llama2

language:

- ja

tags:

- llama2

---

# ELYZA-japanese-CodeLlama-7b-instruct-gguf

This is a GGUF-format conversion of [ELYZA-japanese-CodeLlama-7b-instruct](https://huggingface.co/ELYZA/ELYZA-japanese-CodeLlama-7b-instruct), published by ELYZA.

Other models:

Standard version: Llama 2 models further trained on Japanese datasets

[mmnga/ELYZA-japanese-Llama-2-7b-gguf](https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-7b-gguf)

[mmnga/ELYZA-japanese-Llama-2-7b-instruct-gguf](https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-7b-instruct-gguf)

Fast version: models with added Japanese vocabulary that reduce token cost and run 1.8x faster

[mmnga/ELYZA-japanese-Llama-2-7b-fast-gguf](https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-7b-fast-gguf)

[mmnga/ELYZA-japanese-Llama-2-7b-fast-instruct-gguf](https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-7b-fast-instruct-gguf)

CodeLlama version, GGUF

[mmnga/ELYZA-japanese-CodeLlama-7b-gguf](https://huggingface.co/mmnga/ELYZA-japanese-CodeLlama-7b-gguf)

[mmnga/ELYZA-japanese-CodeLlama-7b-instruct-gguf](https://huggingface.co/mmnga/ELYZA-japanese-CodeLlama-7b-instruct-gguf)

CodeLlama version, GPTQ

[mmnga/ELYZA-japanese-CodeLlama-7b-instruct-GPTQ-calib-ja-1k](https://huggingface.co/mmnga/ELYZA-japanese-CodeLlama-7b-instruct-GPTQ-calib-ja-1k)

## Usage

```

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

make -j

./main -m 'ELYZA-japanese-CodeLlama-7b-instruct-q4_0.gguf' -n 256 -p '[INST] <<SYS>>あなたは誠実で優秀な日本人のアシスタントです。<</SYS>>エラトステネスの篩についてサンプルコードを示し、解説してください。 [/INST]'

```
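The `-p` argument in the command above wraps a system message and a user message in instruction tags. A minimal sketch of building that string programmatically follows. `build_prompt` is a hypothetical helper that mirrors the exact layout used in this card's command, which may differ in whitespace from other Llama 2 prompt templates.

```python
def build_prompt(user_message, system_message):
    """Format one instruction following the layout of the command above."""
    return f"[INST] <<SYS>>{system_message}<</SYS>>{user_message} [/INST]"


prompt = build_prompt(
    user_message="エラトステネスの篩についてサンプルコードを示し、解説してください。",
    system_message="あなたは誠実で優秀な日本人のアシスタントです。",
)
print(prompt)
```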

## Converting to GGUF

Converting with llama.cpp's convert.py as-is results in an error, so use the following procedure instead.

- Download [tokenizer.model](https://huggingface.co/elyza/ELYZA-japanese-Llama-2-7b/resolve/main/tokenizer.model?download=true) and place it in the model directory.

- Save the following content as added_tokens.json and place it in the model directory.

~~~javascript

{
  "<SU": 32000,
  "<SUF": 32001,
  "<PRE": 32002,
  "<M": 32003,
  "<MID": 32004,
  "<E": 32005,
  "<EOT": 32006,
  "<PRE>": 32007,
  "<SUF>": 32008,
  "<MID>": 32009,
  "<EOT>": 32010,
  "<EOT><EOT>": 32011,
  "<EOT><EOT><EOT>": 32012,
  "<EOT><EOT><EOT><EOT>": 32013,
  "<EOT><EOT><EOT><EOT><EOT>": 32014,
  "<EOT><EOT><EOT><EOT><EOT><EOT>": 32015
}

~~~
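The token table above can also be generated and written to disk with a short script. This is a sketch only: the function names are hypothetical, and a temporary directory stands in for the real model directory.

```python
import json
import tempfile
from pathlib import Path

PREFIX_TOKENS = ["<SU", "<SUF", "<PRE", "<M", "<MID", "<E", "<EOT"]
INFILL_TOKENS = ["<PRE>", "<SUF>", "<MID>", "<EOT>"]
REPEATED_EOT = ["<EOT>" * n for n in range(2, 7)]  # two to six repetitions


def added_tokens(first_id=32000):
    """Map each extra token to consecutive ids, in the order listed above."""
    tokens = PREFIX_TOKENS + INFILL_TOKENS + REPEATED_EOT
    return {token: first_id + offset for offset, token in enumerate(tokens)}


def write_added_tokens(model_dir):
    """Write added_tokens.json into model_dir and return its path."""
    path = Path(model_dir) / "added_tokens.json"
    path.write_text(json.dumps(added_tokens(), indent=2), encoding="utf-8")
    return path


# Demonstration in a temporary directory standing in for the model directory.
with tempfile.TemporaryDirectory() as model_dir:
    written = json.loads(write_added_tokens(model_dir).read_text(encoding="utf-8"))

print(len(written), written["<EOT>"])
```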

~~~bash

convert.py "<path_to_model>" --outtype f16

~~~

The command above then performs the conversion.

### Licence

Llama 2 is licensed under the LLAMA 2 Community License, Copyright (c) Meta Platforms, Inc. All Rights Reserved.

### Citations

```tex

@misc{elyzacodellama2023,

title={ELYZA-japanese-CodeLlama-7b},

url={https://huggingface.co/elyza/ELYZA-japanese-CodeLlama-7b},

author={Akira Sasaki and Masato Hirakawa and Shintaro Horie and Tomoaki Nakamura},

year={2023},

}

@misc{rozière2023code,

title={Code Llama: Open Foundation Models for Code},

author={Baptiste Rozière and Jonas Gehring and Fabian Gloeckle and Sten Sootla and Itai Gat and Xiaoqing Ellen Tan and Yossi Adi and Jingyu Liu and Tal Remez and Jérémy Rapin and Artyom Kozhevnikov and Ivan Evtimov and Joanna Bitton and Manish Bhatt and Cristian Canton Ferrer and Aaron Grattafiori and Wenhan Xiong and Alexandre Défossez and Jade Copet and Faisal Azhar and Hugo Touvron and Louis Martin and Nicolas Usunier and Thomas Scialom and Gabriel Synnaeve},

year={2023},

eprint={2308.12950},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{touvron2023llama,

title={Llama 2: Open Foundation and Fine-Tuned Chat Models},

author={Hugo Touvron and Louis Martin and Kevin Stone and Peter Albert and Amjad Almahairi and Yasmine Babaei and Nikolay Bashlykov and Soumya Batra and Prajjwal Bhargava and Shruti Bhosale and Dan Bikel and Lukas Blecher and Cristian Canton Ferrer and Moya Chen and Guillem Cucurull and David Esiobu and Jude Fernandes and Jeremy Fu and Wenyin Fu and Brian Fuller and Cynthia Gao and Vedanuj Goswami and Naman Goyal and Anthony Hartshorn and Saghar Hosseini and Rui Hou and Hakan Inan and Marcin Kardas and Viktor Kerkez and Madian Khabsa and Isabel Kloumann and Artem Korenev and Punit Singh Koura and Marie-Anne Lachaux and Thibaut Lavril and Jenya Lee and Diana Liskovich and Yinghai Lu and Yuning Mao and Xavier Martinet and Todor Mihaylov and Pushkar Mishra and Igor Molybog and Yixin Nie and Andrew Poulton and Jeremy Reizenstein and Rashi Rungta and Kalyan Saladi and Alan Schelten and Ruan Silva and Eric Michael Smith and Ranjan Subramanian and Xiaoqing Ellen Tan and Binh Tang and Ross Taylor and Adina Williams and Jian Xiang Kuan and Puxin Xu and Zheng Yan and Iliyan Zarov and Yuchen Zhang and Angela Fan and Melanie Kambadur and Sharan Narang and Aurelien Rodriguez and Robert Stojnic and Sergey Edunov and Thomas Scialom},

year={2023},

eprint={2307.09288},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

looppayments/table_cell_value_classification_model | looppayments | 2023-11-27T01:38:06Z | 591 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2023-11-15T23:43:41Z | Entry not found |

mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF | mradermacher | 2024-05-06T06:20:44Z | 591 | 4 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:sophosympatheia/Midnight-Miqu-70B-v1.0",

"license:other",

"endpoints_compatible",

"region:us"

]

| null | 2024-03-02T19:05:24Z | ---

base_model: sophosympatheia/Midnight-Miqu-70B-v1.0

language:

- en

library_name: transformers

license: other

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

weighted/imatrix quants of https://huggingface.co/sophosympatheia/Midnight-Miqu-70B-v1.0

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
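For the multi-part files mentioned above, a minimal sketch of joining the parts in Python is shown here. It assumes the parts are plain byte-wise splits that only need to be appended in order. The helper name `concat_parts` is hypothetical, and the demonstration uses two small stand-in files rather than real model parts.

```python
import shutil
import tempfile
from pathlib import Path


def concat_parts(part_paths, out_path):
    """Join split files byte-for-byte, in the order given."""
    with open(out_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return Path(out_path)


# Demonstration on two small stand-in files named like the split quants.
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    (tmp / "demo.gguf.part1of2").write_bytes(b"GGUF")
    (tmp / "demo.gguf.part2of2").write_bytes(b"data")
    # Lexicographic sorting is only safe for fewer than ten parts.
    parts = sorted(tmp.glob("demo.gguf.part*"))
    joined = concat_parts(parts, tmp / "demo.gguf").read_bytes()

print(joined)
```

For real downloads, pass the `.part1of2` and `.part2of2` files in order and write to the file name without the part suffix.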

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ1_S.gguf) | i1-IQ1_S | 15.0 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ1_M.gguf) | i1-IQ1_M | 16.0 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 18.7 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ2_XS.gguf) | i1-IQ2_XS | 20.8 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ2_S.gguf) | i1-IQ2_S | 21.8 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ2_M.gguf) | i1-IQ2_M | 23.7 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q2_K.gguf) | i1-Q2_K | 25.9 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 27.0 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ3_XS.gguf) | i1-IQ3_XS | 28.6 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ3_S.gguf) | i1-IQ3_S | 30.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q3_K_S.gguf) | i1-Q3_K_S | 30.3 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ3_M.gguf) | i1-IQ3_M | 31.4 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q3_K_M.gguf) | i1-Q3_K_M | 33.7 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q3_K_L.gguf) | i1-Q3_K_L | 36.6 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ4_XS.gguf) | i1-IQ4_XS | 37.2 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-IQ4_NL.gguf) | i1-IQ4_NL | 39.4 | prefer IQ4_XS |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q4_0.gguf) | i1-Q4_0 | 39.4 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q4_K_S.gguf) | i1-Q4_K_S | 39.7 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q4_K_M.gguf) | i1-Q4_K_M | 41.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q5_K_S.gguf) | i1-Q5_K_S | 47.9 | |

| [GGUF](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q5_K_M.gguf) | i1-Q5_K_M | 49.2 | |

| [PART 1](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/Midnight-Miqu-70B-v1.0-i1-GGUF/resolve/main/Midnight-Miqu-70B-v1.0.i1-Q6_K.gguf.part2of2) | i1-Q6_K | 57.0 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

pmking27/PrathameshLLM-2B-GGUF | pmking27 | 2024-04-09T08:34:44Z | 591 | 1 | transformers | [

"transformers",

"gguf",

"gemma",

"text-generation-inference",

"llama.cpp",

"en",

"base_model:pmking27/PrathameshLLM-2B",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-03-30T15:23:46Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- gemma

- gguf

- llama.cpp

base_model: pmking27/PrathameshLLM-2B

---

<img src="https://github.com/Pmking27/AutoTalker/assets/97112558/96853321-e460-4464-a062-9bd1633964d8" width="600" height="600">

# Uploaded model

- **Developed by:** pmking27

- **License:** apache-2.0

- **Finetuned from model :** pmking27/PrathameshLLM-2B

## Provided Quants Files

| Name | Quant method | Bits | Size |

| ---- | ---- | ---- | ---- |

| [PrathameshLLM-2B.IQ3_M.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.IQ3_M.gguf) | IQ3_M | 3 | 1.31 GB|

| [PrathameshLLM-2B.IQ3_S.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.IQ3_S.gguf) | IQ3_S | 3 | 1.29 GB|

| [PrathameshLLM-2B.IQ3_XS.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.IQ3_XS.gguf) | IQ3_XS | 3 | 1.24 GB|

| [PrathameshLLM-2B.IQ4_NL.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.IQ4_NL.gguf) | IQ4_NL | 4 | 1.56 GB|

| [PrathameshLLM-2B.IQ4_XS.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.IQ4_XS.gguf) | IQ4_XS | 4 | 1.5 GB|

| [PrathameshLLM-2B.Q2_K.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q2_K.gguf) | Q2_K | 2 | 1.16 GB|

| [PrathameshLLM-2B.Q3_K_L.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q3_K_L.gguf) | Q3_K_L | 3 | 1.47 GB|

| [PrathameshLLM-2B.Q3_K_M.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q3_K_M.gguf) | Q3_K_M | 3 | 1.38 GB|

| [PrathameshLLM-2B.Q3_K_S.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q3_K_S.gguf) | Q3_K_S | 3 | 1.29 GB|

| [PrathameshLLM-2B.Q4_0.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q4_0.gguf) | Q4_0 | 4 | 1.55 GB|

| [PrathameshLLM-2B.Q4_K_M.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q4_K_M.gguf) | Q4_K_M | 4 | 1.63 GB|

| [PrathameshLLM-2B.Q4_K_S.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q4_K_S.gguf) | Q4_K_S | 4 | 1.56 GB|

| [PrathameshLLM-2B.Q5_0.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q5_0.gguf) | Q5_0 | 5 | 1.8 GB|

| [PrathameshLLM-2B.Q5_K_M.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q5_K_M.gguf) | Q5_K_M | 5 | 1.84 GB|

| [PrathameshLLM-2B.Q5_K_S.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q5_K_S.gguf) | Q5_K_S | 5 | 1.8 GB|

| [PrathameshLLM-2B.Q6_K.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q6_K.gguf) | Q6_K | 6 | 2.06 GB|

| [PrathameshLLM-2B.Q8_0.gguf](https://huggingface.co/pmking27/PrathameshLLM-2B-GGUF/blob/main/PrathameshLLM-2B.Q8_0.gguf) | Q8_0 | 8 | 2.67 GB|
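
To choose among the files above, one option is to take the largest quant that fits a size budget. The sketch below is only an illustration: the sizes are copied from the table, while `QUANT_SIZES_GB` and `pick_quant` are hypothetical names, and file size is only a rough proxy for the memory needed at inference time.

```python
# File sizes in GB, copied from the table above.
QUANT_SIZES_GB = {
    "Q2_K": 1.16, "IQ3_XS": 1.24, "IQ3_S": 1.29, "Q3_K_S": 1.29, "IQ3_M": 1.31,
    "Q3_K_M": 1.38, "Q3_K_L": 1.47, "IQ4_XS": 1.5, "Q4_0": 1.55, "IQ4_NL": 1.56,
    "Q4_K_S": 1.56, "Q4_K_M": 1.63, "Q5_0": 1.8, "Q5_K_S": 1.8, "Q5_K_M": 1.84,
    "Q6_K": 2.06, "Q8_0": 2.67,
}


def pick_quant(budget_gb):
    """Return (quant, filename) for the largest file within budget_gb, or None."""
    # Keep only the quants whose file size fits the budget.
    fitting = {q: s for q, s in QUANT_SIZES_GB.items() if s <= budget_gb}
    if not fitting:
        return None
    # Larger files generally mean less quantization loss, so take the biggest.
    quant = max(fitting, key=fitting.get)
    return quant, f"PrathameshLLM-2B.{quant}.gguf"


print(pick_quant(1.7))
```

The returned filename can be used as `filename` in the download script further down.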

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base llama-cpp-python with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# On Windows, set the CMAKE_ARGS variable in PowerShell using this format; e.g. for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"

pip install llama-cpp-python

```

## Model Download Script

```python

import os

from huggingface_hub import hf_hub_download

# Specify model details

model_repo_id = "pmking27/PrathameshLLM-2B-GGUF" # Replace with the desired model repo

filename = "PrathameshLLM-2B.Q4_K_M.gguf" # Replace with the specific GGUF filename

local_folder = "." # Replace with your desired local storage path

# Create the local directory if it doesn't exist

os.makedirs(local_folder, exist_ok=True)

# Download the model file to the specified local folder

filepath = hf_hub_download(repo_id=model_repo_id, filename=filename, local_dir=local_folder)

print(f"GGUF model downloaded and saved to: {filepath}")

```

Replace `model_repo_id` and `filename` with the desired model repository ID and specific GGUF filename respectively. Also, modify `local_folder` to specify where you want to save the downloaded model file.

#### Simple llama-cpp-python inference example code

```python

from llama_cpp import Llama

llm = Llama(

model_path = filepath, # Download the model file first

n_ctx = 32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads = 8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers = 35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Defining the Alpaca prompt template

alpaca_prompt = """

### Instruction:

{}

### Input:

{}

### Response:

{}"""

output = llm(

alpaca_prompt.format(

'''

You're an assistant trained to answer questions using the given context.

context:

General elections will be held in India from 19 April 2024 to 1 June 2024 to elect the 543 members of the 18th Lok Sabha. The elections will be held in seven phases and the results will be announced on 4 June 2024. This will be the largest-ever election in the world, surpassing the 2019 Indian general election, and will be the longest-held general elections in India with a total span of 44 days (excluding the first 1951–52 Indian general election). The incumbent prime minister Narendra Modi who completed a second term will be contesting elections for a third consecutive term.

Approximately 960 million individuals out of a population of 1.4 billion are eligible to participate in the elections, which are expected to span a month for completion. The Legislative assembly elections in the states of Andhra Pradesh, Arunachal Pradesh, Odisha, and Sikkim will be held simultaneously with the general election, along with the by-elections for 35 seats among 16 states.

''', # instruction

"In how many phases will the general elections in India be held?", # input

"", # output - leave this blank for generation!

), #Alpaca Prompt

max_tokens = 512, # Generate up to 512 tokens

stop = ["<eos>"], #stop token

echo = True # Whether to echo the prompt

)

output_text = output['choices'][0]['text']

start_marker = "### Response:"

end_marker = "<eos>"

start_pos = output_text.find(start_marker) + len(start_marker)

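end_pos = output_text.find(end_marker, start_pos)

# The stop token is normally not included in the output; if it is absent, keep the text up to the end

end_pos = len(output_text) if end_pos == -1 else end_pos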

# Extracting the response text

response_text = output_text[start_pos:end_pos].strip()

print(response_text)

```
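
The prompt assembly and response extraction in the example above can be factored into two small helpers that are easy to check without loading a model. This is a minimal sketch: `build_alpaca_prompt` and `extract_response` are hypothetical names, and the exact blank lines in the template are an assumption.

```python
# Alpaca-style template; the exact whitespace is an assumption.
ALPACA_TEMPLATE = """
### Instruction:
{}

### Input:
{}

### Response:
{}"""


def build_alpaca_prompt(instruction, user_input):
    """Fill the template, leaving the response slot empty for generation."""
    return ALPACA_TEMPLATE.format(instruction, user_input, "")


def extract_response(output_text, start_marker="### Response:", end_marker="<eos>"):
    """Return the text after start_marker, cut at end_marker when it is present."""
    start = output_text.find(start_marker)
    # If the marker is missing (e.g. echo=False), treat the whole text as the response.
    start = 0 if start == -1 else start + len(start_marker)
    end = output_text.find(end_marker, start)
    # str.find returns -1 when the marker is absent; avoid slicing off the last character.
    if end == -1:
        end = len(output_text)
    return output_text[start:end].strip()


prompt = build_alpaca_prompt("Answer using the context.", "How many phases?")
print(extract_response(prompt + " Seven phases."))
```

Passing `build_alpaca_prompt(...)` to `llm(...)` and the returned `output['choices'][0]['text']` to `extract_response` would mirror the flow shown above.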

#### Simple llama-cpp-python Chat Completion API Example Code

```python

from llama_cpp import Llama

llm = Llama(model_path = filepath, chat_format="gemma") # Set chat_format according to the model you are using

message=llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

print(message['choices'][0]["message"]["content"])

``` |

RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf | RichardErkhov | 2024-04-17T10:23:47Z | 591 | 0 | null | [

"gguf",

"arxiv:2012.05628",

"region:us"

]

| null | 2024-04-17T10:20:46Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

gpt2-small-italian-embeddings - GGUF

- Model creator: https://huggingface.co/GroNLP/

- Original model: https://huggingface.co/GroNLP/gpt2-small-italian-embeddings/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [gpt2-small-italian-embeddings.Q2_K.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q2_K.gguf) | Q2_K | 0.06GB |

| [gpt2-small-italian-embeddings.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.IQ3_XS.gguf) | IQ3_XS | 0.06GB |

| [gpt2-small-italian-embeddings.IQ3_S.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.IQ3_S.gguf) | IQ3_S | 0.06GB |

| [gpt2-small-italian-embeddings.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q3_K_S.gguf) | Q3_K_S | 0.06GB |

| [gpt2-small-italian-embeddings.IQ3_M.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.IQ3_M.gguf) | IQ3_M | 0.07GB |

| [gpt2-small-italian-embeddings.Q3_K.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q3_K.gguf) | Q3_K | 0.07GB |

| [gpt2-small-italian-embeddings.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q3_K_M.gguf) | Q3_K_M | 0.07GB |

| [gpt2-small-italian-embeddings.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q3_K_L.gguf) | Q3_K_L | 0.07GB |

| [gpt2-small-italian-embeddings.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.IQ4_XS.gguf) | IQ4_XS | 0.07GB |

| [gpt2-small-italian-embeddings.Q4_0.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q4_0.gguf) | Q4_0 | 0.08GB |

| [gpt2-small-italian-embeddings.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.IQ4_NL.gguf) | IQ4_NL | 0.08GB |

| [gpt2-small-italian-embeddings.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q4_K_S.gguf) | Q4_K_S | 0.08GB |

| [gpt2-small-italian-embeddings.Q4_K.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q4_K.gguf) | Q4_K | 0.08GB |

| [gpt2-small-italian-embeddings.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q4_K_M.gguf) | Q4_K_M | 0.08GB |

| [gpt2-small-italian-embeddings.Q4_1.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q4_1.gguf) | Q4_1 | 0.08GB |

| [gpt2-small-italian-embeddings.Q5_0.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q5_0.gguf) | Q5_0 | 0.09GB |

| [gpt2-small-italian-embeddings.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q5_K_S.gguf) | Q5_K_S | 0.09GB |

| [gpt2-small-italian-embeddings.Q5_K.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q5_K.gguf) | Q5_K | 0.09GB |

| [gpt2-small-italian-embeddings.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q5_K_M.gguf) | Q5_K_M | 0.09GB |

| [gpt2-small-italian-embeddings.Q5_1.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q5_1.gguf) | Q5_1 | 0.1GB |

| [gpt2-small-italian-embeddings.Q6_K.gguf](https://huggingface.co/RichardErkhov/GroNLP_-_gpt2-small-italian-embeddings-gguf/blob/main/gpt2-small-italian-embeddings.Q6_K.gguf) | Q6_K | 0.1GB |

Original model description:

---

language: it

tags:

- adaption

- recycled

- gpt2-small

pipeline_tag: text-generation

---

# GPT-2 recycled for Italian (small, adapted lexical embeddings)

[Wietse de Vries](https://www.semanticscholar.org/author/Wietse-de-Vries/144611157) •

[Malvina Nissim](https://www.semanticscholar.org/author/M.-Nissim/2742475)

## Model description

This model is based on the small OpenAI GPT-2 ([`gpt2`](https://huggingface.co/gpt2)) model.

The Transformer layer weights in this model are identical to the original English model, but the lexical layer has been retrained for an Italian vocabulary.

For details, check out our paper on [arXiv](https://arxiv.org/abs/2012.05628) and the code on [Github](https://github.com/wietsedv/gpt2-recycle).

## Related models

### Dutch

- [`gpt2-small-dutch-embeddings`](https://huggingface.co/GroNLP/gpt2-small-dutch-embeddings): Small model size with only retrained lexical embeddings.

- [`gpt2-small-dutch`](https://huggingface.co/GroNLP/gpt2-small-dutch): Small model size with retrained lexical embeddings and additional fine-tuning of the full model. (**Recommended**)

- [`gpt2-medium-dutch-embeddings`](https://huggingface.co/GroNLP/gpt2-medium-dutch-embeddings): Medium model size with only retrained lexical embeddings.

### Italian

- [`gpt2-small-italian-embeddings`](https://huggingface.co/GroNLP/gpt2-small-italian-embeddings): Small model size with only retrained lexical embeddings.

- [`gpt2-small-italian`](https://huggingface.co/GroNLP/gpt2-small-italian): Small model size with retrained lexical embeddings and additional fine-tuning of the full model. (**Recommended**)

- [`gpt2-medium-italian-embeddings`](https://huggingface.co/GroNLP/gpt2-medium-italian-embeddings): Medium model size with only retrained lexical embeddings.

## How to use

```python

from transformers import pipeline

pipe = pipeline("text-generation", model="GroNLP/gpt2-small-italian-embeddings")

```

```python

from transformers import AutoTokenizer, AutoModel, TFAutoModel

tokenizer = AutoTokenizer.from_pretrained("GroNLP/gpt2-small-italian-embeddings")

model = AutoModel.from_pretrained("GroNLP/gpt2-small-italian-embeddings") # PyTorch

model = TFAutoModel.from_pretrained("GroNLP/gpt2-small-italian-embeddings") # Tensorflow

```

## BibTeX entry

```bibtex

@misc{devries2020good,

title={As good as new. How to successfully recycle English GPT-2 to make models for other languages},

author={Wietse de Vries and Malvina Nissim},

year={2020},

eprint={2012.05628},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

llmware/bling-phi-3 | llmware | 2024-05-02T20:38:06Z | 591 | 5 | transformers | [

"transformers",

"pytorch",

"phi3",

"text-generation",

"conversational",

"custom_code",

"license:apache-2.0",

"autotrain_compatible",

"region:us"

]

| text-generation | 2024-05-01T22:54:46Z | ---

license: apache-2.0

inference: false

---

# bling-phi-3

<!-- Provide a quick summary of what the model is/does. -->

bling-phi-3 is part of the BLING ("Best Little Instruct No-GPU") model series, RAG-instruct trained on top of a Microsoft Phi-3 base model.

### Benchmark Tests

Evaluated against the benchmark test: [RAG-Instruct-Benchmark-Tester](https://www.huggingface.co/datasets/llmware/rag_instruct_benchmark_tester)

1 Test Run (temperature=0.0, sample=False) with 1 point for a correct answer, 0.5 points for a partially correct or blank / NF answer, 0.0 points for an incorrect answer, and -1 point for a hallucination.

--**Accuracy Score**: **99.5** correct out of 100

--Not Found Classification: 95.0%

--Boolean: 97.5%

--Math/Logic: 80.0%

--Complex Questions (1-5): 4 (Above Average - multiple-choice, causal)

--Summarization Quality (1-5): 4 (Above Average)

--Hallucinations: No hallucinations observed in test runs.
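
The scoring rule described above can be sketched as a small function. The outcome labels and the names `POINTS` and `score_run` are hypothetical, chosen only for illustration; the point values come from the description of the test run.

```python
# Points per outcome, as stated in the benchmark description.
POINTS = {
    "correct": 1.0,
    "partial": 0.5,        # partially correct
    "not_found": 0.5,      # blank / NF
    "incorrect": 0.0,
    "hallucination": -1.0,
}


def score_run(outcomes):
    """Sum the points for a list of per-question outcome labels."""
    return sum(POINTS[o] for o in outcomes)


# Illustrative only: 99 correct answers plus one "Not Found" answer.
print(score_run(["correct"] * 99 + ["not_found"]))
```

Under this rule, a single hallucination costs as much as two incorrect-to-correct swaps would gain, which is why the hallucination count is reported separately.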

For test run results (and good indicator of target use cases), please see the files ("core_rag_test" and "answer_sheet" in this repo).

Note: compare results with [bling-phi-2](https://www.huggingface.co/llmware/bling-phi-2-v0), and [dragon-mistral-7b](https://www.huggingface.co/llmware/dragon-mistral-7b-v0).

Note: see also the quantized gguf version of the model- [bling-phi-3-gguf](https://www.huggingface.co/llmware/bling-phi-3-gguf).

Note: the Pytorch version answered 1 question with "Not Found" while the quantized version answered it correctly, hence the small difference in scores.

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** llmware

- **Model type:** bling

- **Language(s) (NLP):** English

- **License:** Apache 2.0

- **Finetuned from model:** Microsoft Phi-3

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

The intended use of BLING models is two-fold:

1. Provide high-quality RAG-Instruct models designed for fact-based, no "hallucination" question-answering in connection with an enterprise RAG workflow.

2. BLING models are fine-tuned on top of leading base foundation models, generally in the 1-3B+ range, and purposefully rolled-out across multiple base models to provide choices and "drop-in" replacements for RAG specific use cases.

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

BLING is designed for enterprise automation use cases, especially in knowledge-intensive industries, such as financial services,

legal and regulatory industries with complex information sources.

BLING models have been trained for common RAG scenarios, specifically: question-answering, key-value extraction, and basic summarization as the core instruction types

without the need for a lot of complex instruction verbiage - provide a text passage context, ask questions, and get clear fact-based responses.

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

Any model can provide inaccurate or incomplete information, and should be used in conjunction with appropriate safeguards and fact-checking mechanisms.

## How to Get Started with the Model

The fastest way to get started with BLING is through direct import in transformers:

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("llmware/bling-phi-3", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("llmware/bling-phi-3", trust_remote_code=True)

Please refer to the generation_test .py files in the Files repository, which include 200 samples and a script to test the model. The **generation_test_llmware_script.py** includes built-in llmware capabilities for fact-checking, as well as easy integration with document parsing and actual retrieval to swap out the test set for a RAG workflow consisting of business documents.

The BLING model was fine-tuned with a simple "\<human> and \<bot> wrapper", so to get the best results, wrap inference entries as:

full_prompt = "<human>: " + my_prompt + "\n" + "<bot>:"

(As an aside, we intended to retire "human-bot" and tried several variations of the new Microsoft Phi-3 prompt template and ultimately had slightly better results with the very simple "human-bot" separators, so we opted to keep them.)

The BLING model was fine-tuned with closed-context samples, which assume generally that the prompt consists of two sub-parts:

1. Text Passage Context, and

2. Specific question or instruction based on the text passage

To get the best results, package "my_prompt" as follows:

my_prompt = {{text_passage}} + "\n" + {{question/instruction}}
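
Putting the two packaging rules together, a minimal sketch of a prompt builder could look like this. The function name `build_bling_prompt` is hypothetical; the wrapper strings are the ones given above.

```python
def build_bling_prompt(text_passage, question):
    """Wrap a context passage and a question in the <human>/<bot> format."""
    # Closed-context packaging: passage first, then the question or instruction.
    my_prompt = text_passage + "\n" + question
    # Wrapper used during fine-tuning.
    return "<human>: " + my_prompt + "\n" + "<bot>:"


print(build_bling_prompt("The invoice total is $22,500.", "What is the invoice total?"))
```

The resulting string is what would be passed to the tokenizer before calling `model.generate`.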

If you are using a HuggingFace generation script:

# prepare prompt packaging used in fine-tuning process

new_prompt = "<human>: " + entries["context"] + "\n" + entries["query"] + "\n" + "<bot>:"

inputs = tokenizer(new_prompt, return_tensors="pt")

start_of_output = len(inputs.input_ids[0])

# temperature: set at 0.0 with do_sample=False for consistency of output

# max_new_tokens: set at 100 - may prematurely stop a few of the summaries

outputs = model.generate(

inputs.input_ids.to(device),

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.eos_token_id,

do_sample=False,

temperature=0.0,

max_new_tokens=100,

)

output_only = tokenizer.decode(outputs[0][start_of_output:],skip_special_tokens=True)

## Model Card Contact

Darren Oberst & llmware team

|

RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf | RichardErkhov | 2024-05-03T10:59:29Z | 591 | 1 | null | [

"gguf",

"region:us"

]

| null | 2024-05-03T10:55:42Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

MagicPrompt-Stable-Diffusion - GGUF

- Model creator: https://huggingface.co/Gustavosta/

- Original model: https://huggingface.co/Gustavosta/MagicPrompt-Stable-Diffusion/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [MagicPrompt-Stable-Diffusion.Q2_K.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q2_K.gguf) | Q2_K | 0.07GB |

| [MagicPrompt-Stable-Diffusion.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.IQ3_XS.gguf) | IQ3_XS | 0.08GB |

| [MagicPrompt-Stable-Diffusion.IQ3_S.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.IQ3_S.gguf) | IQ3_S | 0.08GB |

| [MagicPrompt-Stable-Diffusion.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q3_K_S.gguf) | Q3_K_S | 0.08GB |

| [MagicPrompt-Stable-Diffusion.IQ3_M.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.IQ3_M.gguf) | IQ3_M | 0.09GB |

| [MagicPrompt-Stable-Diffusion.Q3_K.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q3_K.gguf) | Q3_K | 0.09GB |

| [MagicPrompt-Stable-Diffusion.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q3_K_M.gguf) | Q3_K_M | 0.09GB |

| [MagicPrompt-Stable-Diffusion.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q3_K_L.gguf) | Q3_K_L | 0.09GB |

| [MagicPrompt-Stable-Diffusion.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.IQ4_XS.gguf) | IQ4_XS | 0.09GB |

| [MagicPrompt-Stable-Diffusion.Q4_0.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q4_0.gguf) | Q4_0 | 0.1GB |

| [MagicPrompt-Stable-Diffusion.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.IQ4_NL.gguf) | IQ4_NL | 0.1GB |

| [MagicPrompt-Stable-Diffusion.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q4_K_S.gguf) | Q4_K_S | 0.1GB |

| [MagicPrompt-Stable-Diffusion.Q4_K.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q4_K.gguf) | Q4_K | 0.1GB |

| [MagicPrompt-Stable-Diffusion.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q4_K_M.gguf) | Q4_K_M | 0.1GB |

| [MagicPrompt-Stable-Diffusion.Q4_1.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q4_1.gguf) | Q4_1 | 0.1GB |

| [MagicPrompt-Stable-Diffusion.Q5_0.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q5_0.gguf) | Q5_0 | 0.11GB |

| [MagicPrompt-Stable-Diffusion.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q5_K_S.gguf) | Q5_K_S | 0.11GB |

| [MagicPrompt-Stable-Diffusion.Q5_K.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q5_K.gguf) | Q5_K | 0.12GB |

| [MagicPrompt-Stable-Diffusion.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q5_K_M.gguf) | Q5_K_M | 0.12GB |

| [MagicPrompt-Stable-Diffusion.Q5_1.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q5_1.gguf) | Q5_1 | 0.12GB |

| [MagicPrompt-Stable-Diffusion.Q6_K.gguf](https://huggingface.co/RichardErkhov/Gustavosta_-_MagicPrompt-Stable-Diffusion-gguf/blob/main/MagicPrompt-Stable-Diffusion.Q6_K.gguf) | Q6_K | 0.13GB |

Original model description:

---

license: mit

---

# MagicPrompt - Stable Diffusion

This is a model from the MagicPrompt series of models, which are [GPT-2](https://huggingface.co/gpt2) models intended to generate prompt texts for imaging AIs, in this case: [Stable Diffusion](https://huggingface.co/CompVis/stable-diffusion).

## 🖼️ Here's an example:

<img src="https://files.catbox.moe/ac3jq7.png">

This model was trained for 150,000 steps on a set of about 80,000 entries filtered and extracted from the image finder for Stable Diffusion: "[Lexica.art](https://lexica.art/)". Extracting the data was a little difficult, since the search engine does not yet have a public API that is not protected by Cloudflare, but if you want to take a look at the original dataset, you can find it here: [datasets/Gustavosta/Stable-Diffusion-Prompts](https://huggingface.co/datasets/Gustavosta/Stable-Diffusion-Prompts).

If you want to test the model with a demo, you can go to: "[spaces/Gustavosta/MagicPrompt-Stable-Diffusion](https://huggingface.co/spaces/Gustavosta/MagicPrompt-Stable-Diffusion)".

## 💻 You can see other MagicPrompt models:

- For Dall-E 2: [Gustavosta/MagicPrompt-Dalle](https://huggingface.co/Gustavosta/MagicPrompt-Dalle)

- For Midjourney: [Gustavosta/MagicPrompt-Midjourney](https://huggingface.co/Gustavosta/MagicPrompt-Midjourney) **[⚠️ In progress]**

- MagicPrompt full: [Gustavosta/MagicPrompt](https://huggingface.co/Gustavosta/MagicPrompt) **[⚠️ In progress]**

## ⚖️ Licence:

[MIT](https://huggingface.co/models?license=license:mit)

When using this model, please credit: [Gustavosta](https://huggingface.co/Gustavosta)

**Thanks for reading this far! :)**

|

Helsinki-NLP/opus-mt-ro-fr | Helsinki-NLP | 2023-08-16T12:03:13Z | 590 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"marian",

"text2text-generation",

"translation",

"ro",

"fr",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| translation | 2022-03-02T23:29:04Z | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-ro-fr

* source languages: ro

* target languages: fr

* OPUS readme: [ro-fr](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/ro-fr/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/ro-fr/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/ro-fr/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/ro-fr/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.ro.fr | 54.5 | 0.697 |

|

KPF/KPF-bert-ner | KPF | 2024-04-03T04:44:41Z | 590 | 1 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-07-04T07:48:49Z | # KPF-BERT-NER

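- This is the named entity recognition model used for named entity analysis in the Inside menu of BIGKINDS-LAB.

- Usage instructions and code are available on the [KPF-bigkinds github](https://github.com/KPF-bigkinds/BIGKINDS-LAB/tree/main/KPF-BERT-NER).

## Model introduction

### KPF-BERT-NER

We design and develop kpf-BERT-ner, a model that performs the NER (Named Entity Recognition) task, based on the kpf-BERT model developed by the Korea Press Foundation. NER means recognizing objects that have names. According to the ICT terminology dictionary provided by the Telecommunications Technology Association of Korea, NER is defined as follows.

“NER is a technique that recognizes, extracts, and classifies the words (named entities) in a document that correspond to predefined categories such as person, company, place, time, and unit. Extracted named entities are classified as person, location, organization, time, and so on. Named entity recognition began with the goal of information extraction and is used in natural language processing, information retrieval, and related fields.”

In practical terms, it is 'a multi-class classification task that takes a string as input and outputs the corresponding tag for each word'. In this project, we design and develop the kpf-BERT-ner model and train it on news articles to classify 150 classes.

- The kpf-BERT used in this example is publicly available at [kpfBERT](https://github.com/KPFBERT/kpfbert).

- For the Korean dataset, we used the [National Institute of Korean Language newspaper corpus extract](https://corpus.korean.go.kr/request/reausetMain.do) provided by Modu Corpus (모두의 말뭉치).

The kpf-BERT-ner model is built by adding a classification layer on top of kpf-BERT, which was developed by the Korea Press Foundation.

BERT uses a large amount of data for pre-training.

kpf-BERT is a BERT model specialized for newspaper articles, and it is robust on press and broadcast media text.

Training a BERT model requires extracting tokens from sentences.

For this, we use the tokenizer provided with kpf-BERT.

The kpf-BERT tokenizer tokenizes a sentence to build the full sentence vector.

It then adds special tokens for the start and end of the sentence, plus a few others.

In this process it also generates segment tokens that distinguish sentences and position tokens that mark each token's position.

Developing an NER model additionally requires information about which class each token belongs to.

In this project, we use the tokenizer to split sentences into tokens and then perform NER tagging on each token.

We also use BIO (Begin-Inside-Outside) notation to improve accuracy.

B marks the beginning of a named entity, I marks the inside of a named entity, and O marks tokens that are not part of a named entity.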
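
As an illustration of BIO notation, the sketch below groups per-token tags back into entity spans. It is not the model's own post-processing: the function name `bio_to_spans` and the tag names `PER` and `LOC` are hypothetical, and the actual 150-class label set may be named differently.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_type, text) spans."""
    spans = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity; close any open one first.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-") and current_type == tag[2:]:
            # An I- tag of the same type continues the open entity.
            current_tokens.append(token)
        else:
            # O tags (and I- tags that do not match) end the open entity.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_tokens:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = ["Kim", "Min", "visited", "Seoul"]
tags = ["B-PER", "I-PER", "O", "B-LOC"]
print(bio_to_spans(tokens, tags))
```

Joining tokens with a space is a simplification; subword tokens from a real tokenizer would need to be merged according to that tokenizer's rules.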

|

TheBloke/fiction.live-Kimiko-V2-70B-GGUF | TheBloke | 2023-09-27T12:46:49Z | 590 | 11 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation",

"en",

"base_model:nRuaif/fiction.live-Kimiko-V2-70B",

"license:creativeml-openrail-m",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-08-30T23:05:20Z | ---

language:

- en

license: creativeml-openrail-m

model_name: Fiction Live Kimiko V2 70B

base_model: nRuaif/fiction.live-Kimiko-V2-70B

inference: false

model_creator: nRuaif

model_type: llama

pipeline_tag: text-generation

prompt_template: 'A chat between a curious user and an artificial intelligence assistant.

The assistant gives helpful, detailed, and polite answers to the user''s questions.

USER: {prompt} ASSISTANT:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Fiction Live Kimiko V2 70B - GGUF

- Model creator: [nRuaif](https://huggingface.co/nRuaif)

- Original model: [Fiction Live Kimiko V2 70B](https://huggingface.co/nRuaif/fiction.live-Kimiko-V2-70B)

<!-- description start -->

## Description

This repo contains GGUF format model files for [nRuaif's Fiction Live Kimiko V2 70B](https://huggingface.co/nRuaif/fiction.live-Kimiko-V2-70B).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata, and is designed to be extensible.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF)

* [Unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-fp16)

* [nRuaif's original LoRA adapter, which can be merged on to the base model.](https://huggingface.co/nRuaif/fiction.live-Kimiko-V2-70B)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Vicuna

```

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: {prompt} ASSISTANT:

```
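
As a minimal sketch, the template above can be filled programmatically. The helper name `build_vicuna_prompt` and the example message are illustrative assumptions, not part of the original model card; only the template text itself comes from this README.

```python
# System preamble copied from the Vicuna template above.
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(user_message: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Fill the single-turn Vicuna template (hypothetical helper)."""
    return f"{system_prompt} USER: {user_message} ASSISTANT:"


if __name__ == "__main__":
    print(build_vicuna_prompt("Write the opening line of a mystery story."))
```

The resulting string is what gets passed to the model as the raw prompt; the model's reply is whatever it generates after `ASSISTANT:`.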

<!-- prompt-template end -->

<!-- licensing start -->

## Licensing

The creator of the source model has listed its license as `creativeml-openrail-m`, and this quantization has therefore used that same license.

As this model is based on Llama 2, it is also subject to the Meta Llama 2 license terms, and the license files for that are additionally included. It should therefore be considered as being claimed to be licensed under both licenses. I contacted Hugging Face for clarification on dual licensing but they do not yet have an official position. Should this change, or should Meta provide any feedback on this situation, I will update this section accordingly.

In the meantime, any questions regarding licensing, and in particular how these two licenses might interact, should be directed to the original model repository: [nRuaif's Fiction Live Kimiko V2 70B](https://huggingface.co/nRuaif/fiction.live-Kimiko-V2-70B).

<!-- licensing end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th 2023 onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.
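
The bits-per-weight figures for the 3- to 6-bit types can be reproduced with some simple arithmetic. The sketch below assumes, in addition to what the list states, that each super-block also stores one 16-bit (fp16) scale, plus one 16-bit min for "type-1" methods. That detail is an assumption for illustration and is not stated in the text above; the function name is hypothetical.

```python
FP16_BITS = 16  # assumed width of each per-super-block scale/min


def bits_per_weight(quant_bits, blocks, weights_per_block, scale_bits, has_mins):
    """Effective bits per weight for one super-block (illustrative only)."""
    n_weights = blocks * weights_per_block
    # "type-1" methods store a scale and a min per block; "type-0" only a scale.
    fields = 2 if has_mins else 1
    total_bits = (
        n_weights * quant_bits          # the quantised weights themselves
        + blocks * fields * scale_bits  # per-block scales (and mins)
        + fields * FP16_BITS            # assumed fp16 super-block scale (and min)
    )
    return total_bits / n_weights


print(bits_per_weight(3, 16, 16, 6, has_mins=False))  # Q3_K -> 3.4375
print(bits_per_weight(4, 8, 32, 6, has_mins=True))    # Q4_K -> 4.5
print(bits_per_weight(5, 8, 32, 6, has_mins=True))    # Q5_K -> 5.5
print(bits_per_weight(6, 16, 16, 8, has_mins=False))  # Q6_K -> 6.5625
```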

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [fiction.live-Kimiko-V2-70B.Q2_K.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q2_K.gguf) | Q2_K | 2 | 29.28 GB| 31.78 GB | smallest, significant quality loss - not recommended for most purposes |
| [fiction.live-Kimiko-V2-70B.Q3_K_S.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q3_K_S.gguf) | Q3_K_S | 3 | 29.92 GB| 32.42 GB | very small, high quality loss |
| [fiction.live-Kimiko-V2-70B.Q3_K_M.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q3_K_M.gguf) | Q3_K_M | 3 | 33.19 GB| 35.69 GB | very small, high quality loss |
| [fiction.live-Kimiko-V2-70B.Q3_K_L.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q3_K_L.gguf) | Q3_K_L | 3 | 36.15 GB| 38.65 GB | small, substantial quality loss |
| [fiction.live-Kimiko-V2-70B.Q4_0.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q4_0.gguf) | Q4_0 | 4 | 38.87 GB| 41.37 GB | legacy; small, very high quality loss - prefer using Q3_K_M |
| [fiction.live-Kimiko-V2-70B.Q4_K_S.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q4_K_S.gguf) | Q4_K_S | 4 | 39.07 GB| 41.57 GB | small, greater quality loss |
| [fiction.live-Kimiko-V2-70B.Q4_K_M.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q4_K_M.gguf) | Q4_K_M | 4 | 41.42 GB| 43.92 GB | medium, balanced quality - recommended |
| [fiction.live-Kimiko-V2-70B.Q5_0.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q5_0.gguf) | Q5_0 | 5 | 47.46 GB| 49.96 GB | legacy; medium, balanced quality - prefer using Q4_K_M |
| [fiction.live-Kimiko-V2-70B.Q5_K_S.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q5_K_S.gguf) | Q5_K_S | 5 | 47.46 GB| 49.96 GB | large, low quality loss - recommended |
| [fiction.live-Kimiko-V2-70B.Q5_K_M.gguf](https://huggingface.co/TheBloke/fiction.live-Kimiko-V2-70B-GGUF/blob/main/fiction.live-Kimiko-V2-70B.Q5_K_M.gguf) | Q5_K_M | 5 | 48.75 GB| 51.25 GB | large, very low quality loss - recommended |
| fiction.live-Kimiko-V2-70B.Q6_K.gguf | Q6_K | 6 | 56.59 GB| 59.09 GB | very large, extremely low quality loss |
| fiction.live-Kimiko-V2-70B.Q8_0.gguf | Q8_0 | 8 | 73.29 GB| 75.79 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
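
As a rough aid for choosing a file, the sketch below picks the largest K-quant whose "Max RAM required" figure from the table fits a given RAM budget. The figures are copied from the table; the function name is hypothetical, the legacy Q4_0 and Q5_0 files are deliberately left out, and treating "largest that fits" as a stand-in for "best quality" is a simplifying assumption. It also assumes no GPU offloading, as in the table.

```python
# "Max RAM required" in GB, copied from the Provided files table
# (legacy Q4_0 / Q5_0 omitted on purpose).
MAX_RAM_GB = {
    "Q2_K": 31.78,
    "Q3_K_S": 32.42,
    "Q3_K_M": 35.69,
    "Q3_K_L": 38.65,
    "Q4_K_S": 41.57,
    "Q4_K_M": 43.92,
    "Q5_K_S": 49.96,
    "Q5_K_M": 51.25,
    "Q6_K": 59.09,
    "Q8_0": 75.79,
}


def largest_quant_that_fits(available_ram_gb: float):
    """Return the quant with the highest RAM figure that still fits, or None."""
    fitting = {q: ram for q, ram in MAX_RAM_GB.items() if ram <= available_ram_gb}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


print(largest_quant_that_fits(48))  # Q4_K_M
print(largest_quant_that_fits(64))  # Q6_K
print(largest_quant_that_fits(16))  # None
```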

### Q6_K and Q8_0 files are split and require joining

**Note:** HF does not support uploading files larger than 50GB. Therefore I have uploaded the Q6_K and Q8_0 files as split files.

<details>

<summary>Click for instructions regarding Q6_K and Q8_0 files</summary>

### q6_K

Please download:

* `fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-a`

* `fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-b`

### q8_0

Please download:

* `fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-a`

* `fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-b`

To join the files, do the following:

Linux and macOS:

```

cat fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-* > fiction.live-Kimiko-V2-70B.Q6_K.gguf && rm fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-*

cat fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-* > fiction.live-Kimiko-V2-70B.Q8_0.gguf && rm fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-*

```

Windows command line:

```

COPY /B fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-a + fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-b fiction.live-Kimiko-V2-70B.Q6_K.gguf

del fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-a fiction.live-Kimiko-V2-70B.Q6_K.gguf-split-b

COPY /B fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-a + fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-b fiction.live-Kimiko-V2-70B.Q8_0.gguf

del fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-a fiction.live-Kimiko-V2-70B.Q8_0.gguf-split-b

```
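
If you prefer one approach that works on any platform, the same join can be sketched in Python using only the standard library. The function name `join_split_files` is a hypothetical helper, not something shipped with this repo; it assumes the parts sit next to the target file and sort correctly by name (`-split-a`, `-split-b`).

```python
import glob
import shutil
from pathlib import Path


def join_split_files(target: Path, remove_parts: bool = False) -> Path:
    """Concatenate '<target>-split-*' parts, in name order, into <target>."""
    pattern = glob.escape(target.name) + "-split-*"
    parts = sorted(target.parent.glob(pattern))
    if not parts:
        raise FileNotFoundError(f"no split parts found for {target}")
    with target.open("wb") as out:
        for part in parts:
            with part.open("rb") as src:
                # Stream in chunks so multi-GB parts are never held in memory.
                shutil.copyfileobj(src, out)
    if remove_parts:
        for part in parts:
            part.unlink()
    return target


# Example call (run from the directory holding the downloaded parts):
# join_split_files(Path("fiction.live-Kimiko-V2-70B.Q6_K.gguf"), remove_parts=True)
```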

</details>

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.
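
For scripted downloads, a single file can be fetched from Python. This is a minimal sketch that assumes the `huggingface_hub` package is installed (`pip install huggingface-hub`); the helper names `gguf_filename` and `download_quant` are illustrative. The filename pattern follows the Provided files table and does not apply to the split Q6_K and Q8_0 files.

```python
REPO_ID = "TheBloke/fiction.live-Kimiko-V2-70B-GGUF"


def gguf_filename(quant: str) -> str:
    """Build a filename as listed in the Provided files table (not for split files)."""
    return f"fiction.live-Kimiko-V2-70B.{quant}.gguf"


def download_quant(quant: str = "Q4_K_M", local_dir: str = ".") -> str:
    """Download one quantised file and return its local path."""
    # Imported lazily so the helpers above work without the package installed.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(
        repo_id=REPO_ID,
        filename=gguf_filename(quant),
        local_dir=local_dir,
    )


# Example call (downloads roughly 41 GB):
# path = download_quant("Q4_K_M")
```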

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/fiction.live-Kimiko-V2-70B-GGUF and below it, a specific filename to download, such as: fiction.live-Kimiko-V2-70B.Q4_K_M.gguf.

Then click Download.