| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

abmorton/wall-spring-1 | abmorton | 2024-07-01T00:46:59Z | 584 | 0 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2024-07-01T00:42:44Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### wall-spring-1 Dreambooth model trained by abmorton with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

google/long-t5-tglobal-xl | google | 2023-01-24T17:11:32Z | 583 | 21 | transformers | [

"transformers",

"pytorch",

"jax",

"longt5",

"text2text-generation",

"en",

"arxiv:2112.07916",

"arxiv:1912.08777",

"arxiv:1910.10683",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-06-14T08:32:52Z | ---

license: apache-2.0

language: en

---

# LongT5 (transient-global attention, XL-sized model)

LongT5 model pre-trained on English-language data. The model was introduced in the paper [LongT5: Efficient Text-To-Text Transformer for Long Sequences](https://arxiv.org/pdf/2112.07916.pdf) by Guo et al. and first released in [the LongT5 repository](https://github.com/google-research/longt5). The model architecture and configuration can be found in the [Flaxformer repository](https://github.com/google/flaxformer), which uses another Google Research project, [T5x](https://github.com/google-research/t5x).

Disclaimer: The team releasing LongT5 did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

LongT5 is an encoder-decoder transformer pre-trained in a text-to-text denoising generative setting ([Pegasus-like generation pre-training](https://arxiv.org/pdf/1912.08777.pdf)). It is an extension of the [T5 model](https://arxiv.org/pdf/1910.10683.pdf) and can use one of two efficient attention mechanisms: (1) Local attention or (2) Transient-Global attention. These sparse attention patterns allow the model to handle long input sequences efficiently.
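The two attention patterns can be illustrated with a toy, pure-Python sketch. It is not the Flaxformer or 🤗 Transformers implementation: the function names are hypothetical, token "embeddings" are plain lists of floats, and any normalization applied to the global tokens is omitted. Under local attention, token `i` sees only positions within `radius` of itself; under Transient-Global attention it additionally sees one summary ("global") token per block of `block_size` consecutive inputs, computed on the fly from the current sequence.

```python
from typing import List

Vector = List[float]


def local_window(i: int, seq_len: int, radius: int) -> range:
    """Positions token i may attend to under local attention: |i - j| <= radius."""
    return range(max(0, i - radius), min(seq_len, i + radius + 1))


def transient_global_tokens(tokens: List[Vector], block_size: int) -> List[Vector]:
    """Sum each run of `block_size` consecutive token vectors into one global token.

    The final block may be shorter. These tokens are rebuilt from the current
    input on every call ("transient"), not stored as learned parameters.
    """
    global_tokens = []
    for start in range(0, len(tokens), block_size):
        block = tokens[start:start + block_size]
        # zip(*block) groups the d-th component of every vector in the block
        global_tokens.append([sum(component) for component in zip(*block)])
    return global_tokens


def tglobal_keys(i: int, tokens: List[Vector], radius: int, block_size: int) -> List[Vector]:
    """Keys visible to token i: its local window plus every global token."""
    local = [tokens[j] for j in local_window(i, len(tokens), radius)]
    return local + transient_global_tokens(tokens, block_size)


if __name__ == "__main__":
    toks = [[float(t)] for t in range(10)]       # ten 1-dimensional "embeddings"
    print(list(local_window(5, len(toks), 2)))   # [3, 4, 5, 6, 7]
    print(transient_global_tokens(toks, 4))      # [[6.0], [22.0], [17.0]]
    print(len(tglobal_keys(5, toks, 2, 4)))      # 5 local + 3 global = 8
```

The point of the design is cost: each token attends to at most `2 * radius + 1` local keys plus `ceil(n / block_size)` global keys instead of all `n` positions.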

LongT5 is particularly effective when fine-tuned for text generation tasks that require handling long input sequences (up to 16,384 tokens), such as summarization and question answering.

## Intended uses & limitations

The model is mostly meant to be fine-tuned on a supervised dataset. See the [model hub](https://huggingface.co/models?search=longt5) to look for fine-tuned versions on a task that interests you.

### How to use

```python

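from transformers import AutoTokenizer, LongT5Model

tokenizer = AutoTokenizer.from_pretrained("google/long-t5-tglobal-xl")
model = LongT5Model.from_pretrained("google/long-t5-tglobal-xl")

inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")
# LongT5Model is an encoder-decoder, so the forward pass also needs decoder inputs
decoder_input_ids = tokenizer("Hello", return_tensors="pt").input_ids
outputs = model(**inputs, decoder_input_ids=decoder_input_ids)

# Final-layer hidden states of the decoder
last_hidden_states = outputs.last_hidden_state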

```

### BibTeX entry and citation info

```bibtex

@article{guo2021longt5,

title={LongT5: Efficient Text-To-Text Transformer for Long Sequences},

author={Guo, Mandy and Ainslie, Joshua and Uthus, David and Ontanon, Santiago and Ni, Jianmo and Sung, Yun-Hsuan and Yang, Yinfei},

journal={arXiv preprint arXiv:2112.07916},

year={2021}

}

``` |

TheBloke/Luban-13B-GGUF | TheBloke | 2023-09-27T12:46:46Z | 583 | 6 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation",

"en",

"dataset:Open-Orca/OpenOrca",

"base_model:AIDC-ai-business/Luban-13B",

"license:cc-by-nc-4.0",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-08-30T15:46:28Z | ---

language:

- en

license: cc-by-nc-4.0

datasets:

- Open-Orca/OpenOrca

model_name: Luban 13B

base_model: AIDC-ai-business/Luban-13B

inference: false

model_creator: AIDC-ai-business

model_type: llama

pipeline_tag: text-generation

prompt_template: 'Below is an instruction that describes a task. Write a response

that appropriately completes the request.

### Instruction:

{prompt}

### Response:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Luban 13B - GGUF

- Model creator: [AIDC-ai-business](https://huggingface.co/AIDC-ai-business)

- Original model: [Luban 13B](https://huggingface.co/AIDC-ai-business/Luban-13B)

<!-- description start -->

## Description

This repo contains GGUF format model files for [AIDC-ai-business's Luban 13B](https://huggingface.co/AIDC-ai-business/Luban-13B).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata and is designed to be extensible.
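Because GGUF is self-describing, a file's fixed-size header can be inspected with the standard library alone. The sketch below assumes the layout given in the GGUF specification: little-endian, a 4-byte magic `GGUF`, a `uint32` version, then the tensor count and metadata key/value count, which are `uint32` in version 1 and `uint64` from version 2 onwards. It reads only the header, not the metadata entries themselves; `read_gguf_header` is a hypothetical helper name.

```python
import io
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Parse the fixed-size header at the start of a GGUF file (little-endian)."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic bytes {magic!r})")
    (version,) = struct.unpack("<I", f.read(4))
    if version == 1:
        # GGUFv1 stored both counts as uint32
        tensor_count, kv_count = struct.unpack("<II", f.read(8))
    else:
        # GGUFv2 and later widened both counts to uint64
        tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, kv_count)


if __name__ == "__main__":
    # A synthetic v2 header with made-up counts, so the example runs without a model file
    fake = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 2, 363, 19))
    print(read_gguf_header(fake))
```

On a downloaded file, the same function works on a real file handle, e.g. `with open("luban-13b.Q4_K_M.gguf", "rb") as f: print(read_gguf_header(f))`.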

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Luban-13B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Luban-13B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Luban-13B-GGUF)

* [AIDC-ai-business's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/AIDC-ai-business/Luban-13B)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Alpaca

```

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

{prompt}

### Response:

```
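When calling the model from code rather than through a UI, the template has to be filled in by hand. A minimal sketch, assuming plain string substitution is all that is needed; `build_alpaca_prompt` is a hypothetical helper, and the string it produces mirrors the `-p` prompt in the llama.cpp example for this model (no trailing newline after `### Response:`).

```python
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n"
    "{prompt}\n\n"
    "### Response:"
)


def build_alpaca_prompt(instruction: str) -> str:
    """Insert the user's instruction into the Alpaca template.

    str.format only interprets braces in the template itself, so braces
    inside `instruction` are passed through unchanged.
    """
    return ALPACA_TEMPLATE.format(prompt=instruction)


if __name__ == "__main__":
    print(build_alpaca_prompt("Summarise the plot of Hamlet in two sentences."))
```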

<!-- prompt-template end -->

<!-- licensing start -->

## Licensing

The creator of the source model has listed its license as `cc-by-nc-4.0`, and this quantization has therefore used that same license.

As this model is based on Llama 2, it is also subject to the Meta Llama 2 license terms, and the license files for that are additionally included. It should therefore be considered as being claimed to be licensed under both licenses. I contacted Hugging Face for clarification on dual licensing but they do not yet have an official position. Should this change, or should Meta provide any feedback on this situation, I will update this section accordingly.

In the meantime, any questions regarding licensing, and in particular how these two licenses might interact, should be directed to the original model repository: [AIDC-ai-business's Luban 13B](https://huggingface.co/AIDC-ai-business/Luban-13B).

<!-- licensing end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third-party UIs and libraries; please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
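The bits-per-weight figures follow from the super-block layouts. A small sketch that reproduces the Q3_K to Q6_K values, assuming (as in llama.cpp's k-quants) that every super-block holds 256 weights and stores one fp16 super-block scale, plus one fp16 super-block min for the "type-1" formats that also carry per-block mins. `k_quant_bpw` is a hypothetical helper, and real files mix several quant types across tensors (the `_S`/`_M`/`_L` variants), so whole-file sizes will not match these per-type numbers exactly.

```python
QK_K = 256      # weights per super-block in llama.cpp's k-quants
FP16_BITS = 16


def k_quant_bpw(weight_bits: int, n_blocks: int, scale_bits: int, type1: bool) -> float:
    """Average storage bits per weight for one super-block.

    type1=True means each block stores a scale *and* a min ("type-1");
    type1=False means a scale only ("type-0").
    """
    if QK_K % n_blocks:
        raise ValueError("blocks must evenly divide the super-block")
    per_block = scale_bits * (2 if type1 else 1)   # quantized block scale (+ min)
    per_super = FP16_BITS * (2 if type1 else 1)    # fp16 super-block scale (+ min)
    total_bits = QK_K * weight_bits + n_blocks * per_block + per_super
    return total_bits / QK_K


if __name__ == "__main__":
    layouts = {
        # name: (bits per weight, blocks per super-block, bits per block scale, type-1?)
        "Q3_K": (3, 16, 6, False),
        "Q4_K": (4, 8, 6, True),
        "Q5_K": (5, 8, 6, True),
        "Q6_K": (6, 16, 8, False),
    }
    for name, layout in layouts.items():
        print(name, k_quant_bpw(*layout))
```

For Q4_K, for example: 256 × 4 bits of weights, plus 8 blocks × (6 + 6) bits of scales and mins, plus 2 × 16 bits of fp16 super-block values, gives 1152 bits, or 4.5 bits per weight.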

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [luban-13b.Q2_K.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [luban-13b.Q3_K_S.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [luban-13b.Q3_K_M.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [luban-13b.Q3_K_L.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [luban-13b.Q4_0.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [luban-13b.Q4_K_S.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [luban-13b.Q4_K_M.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [luban-13b.Q5_0.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [luban-13b.Q5_K_S.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [luban-13b.Q5_K_M.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [luban-13b.Q6_K.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [luban-13b.Q8_0.gguf](https://huggingface.co/TheBloke/Luban-13B-GGUF/blob/main/luban-13b.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
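Every row of the Provided files table adds the same 2.50 GB to the file size to get its "Max RAM required" figure, so choosing a file for a given machine is simple arithmetic. A sketch under those assumptions: no GPU offloading, sizes copied from the table, and the two legacy formats (Q4_0, Q5_0) left out because the table recommends K-quant alternatives to them. `pick_largest_file` is a hypothetical helper.

```python
from typing import List, Optional, Tuple

# (file name, file size in GB), copied from the Provided files table
LUBAN_FILES: List[Tuple[str, float]] = [
    ("luban-13b.Q2_K.gguf", 5.43),
    ("luban-13b.Q3_K_S.gguf", 5.66),
    ("luban-13b.Q3_K_M.gguf", 6.34),
    ("luban-13b.Q3_K_L.gguf", 6.93),
    ("luban-13b.Q4_K_S.gguf", 7.41),
    ("luban-13b.Q4_K_M.gguf", 7.87),
    ("luban-13b.Q5_K_S.gguf", 8.97),
    ("luban-13b.Q5_K_M.gguf", 9.23),
    ("luban-13b.Q6_K.gguf", 10.68),
    ("luban-13b.Q8_0.gguf", 13.83),
]

RAM_OVERHEAD_GB = 2.50  # "Max RAM required" minus "Size", identical for every row


def max_ram_gb(size_gb: float) -> float:
    """Estimated peak RAM for CPU-only inference (no layers offloaded to GPU)."""
    return round(size_gb + RAM_OVERHEAD_GB, 2)


def pick_largest_file(available_gb: float,
                      files: List[Tuple[str, float]] = LUBAN_FILES) -> Optional[str]:
    """Return the biggest file whose estimated RAM need fits, or None if none fit."""
    fitting = [(name, size) for name, size in files if max_ram_gb(size) <= available_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda item: item[1])[0]


if __name__ == "__main__":
    for ram in (8, 12, 16):
        print(ram, "GB ->", pick_largest_file(ram))
```

Offloading layers with `-ngl` or `gpu_layers` moves part of this memory to VRAM, so the helper is conservative for GPU setups.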

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Luban-13B-GGUF and below it, a specific filename to download, such as: luban-13b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install 'huggingface-hub>=0.17.1'

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Luban-13B-GGUF luban-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Luban-13B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```
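To preview which files a pattern such as `*Q4_K*gguf` selects before starting a large download, Python's `fnmatch` module gives a close approximation, assuming `--include` follows `fnmatch`-style glob semantics (an assumption worth checking against the huggingface_hub documentation). The file list is taken from the Provided files table; `preview_include` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List

REPO_FILES = [
    "luban-13b.Q2_K.gguf", "luban-13b.Q3_K_S.gguf", "luban-13b.Q3_K_M.gguf",
    "luban-13b.Q3_K_L.gguf", "luban-13b.Q4_0.gguf", "luban-13b.Q4_K_S.gguf",
    "luban-13b.Q4_K_M.gguf", "luban-13b.Q5_0.gguf", "luban-13b.Q5_K_S.gguf",
    "luban-13b.Q5_K_M.gguf", "luban-13b.Q6_K.gguf", "luban-13b.Q8_0.gguf",
]


def preview_include(pattern: str, files: Iterable[str] = REPO_FILES) -> List[str]:
    """Files a shell-style glob would select; fnmatchcase keeps matching case-sensitive."""
    return [name for name in files if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    print(preview_include("*Q4_K*gguf"))
```

Note that the legacy `Q4_0` file is not matched, because its name does not contain `Q4_K`.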

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Luban-13B-GGUF luban-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HF_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m luban-13b.Q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "Below is an instruction that describes a task. Write a response that appropriately completes the request.\n\n### Instruction:\n{prompt}\n\n### Response:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended-sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install 'ctransformers>=0.2.24'

# Or with CUDA GPU acceleration

pip install 'ctransformers[cuda]>=0.2.24'

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install 'ctransformers>=0.2.24' --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Luban-13B-GGUF", model_file="luban-13b.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: AIDC-ai-business's Luban 13B

# Luban-13B

Fine-tuned from Llama2-13B using Orca-style data and other open-source data.

# Model Details

* **Trained by**: AIDC AI-Business.

* **Model type:** **Luban-13B** is an auto-regressive language model based on the Llama 2 transformer architecture.

* **Language(s)**: English

* **License for Luban-13B base weights**: Non-Commercial Creative Commons license ([CC BY-NC-4.0](https://creativecommons.org/licenses/by-nc/4.0/))

# Prompting

## Prompt Template for alpaca style

```

### Instruction:

<prompt> (without the <>)

### Response:

```

# Evaluation Results ([Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard))

| Metric | Value |

|-----------------------|-------|

| Avg. | 65.03 |

| ARC (25-shot) | 63.05 |

| HellaSwag (10-shot) | 82.8 |

| MMLU (5-shot) | 58.73 |

| TruthfulQA (0-shot) | 55.53 |
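The "Avg." row is the arithmetic mean of the four benchmark scores, which can be checked directly (scores copied from the table):

```python
scores = {
    "ARC (25-shot)": 63.05,
    "HellaSwag (10-shot)": 82.8,
    "MMLU (5-shot)": 58.73,
    "TruthfulQA (0-shot)": 55.53,
}

# Unweighted mean of the four benchmarks: 260.11 / 4 = 65.0275
average = sum(scores.values()) / len(scores)
print(f"Avg. = {average:.2f}")
```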

<!-- original-model-card end -->

|

facebook/mms-tts-deu | facebook | 2023-09-01T10:25:29Z | 583 | 5 | transformers | [

"transformers",

"pytorch",

"safetensors",

"vits",

"text-to-audio",

"mms",

"text-to-speech",

"arxiv:2305.13516",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

]

| text-to-speech | 2023-09-01T10:25:13Z |

---

license: cc-by-nc-4.0

tags:

- mms

- vits

pipeline_tag: text-to-speech

---

# Massively Multilingual Speech (MMS): German, Standard Text-to-Speech

This repository contains the **German, Standard (deu)** language text-to-speech (TTS) model checkpoint.

This model is part of Facebook's [Massively Multilingual Speech](https://arxiv.org/abs/2305.13516) project, aiming to provide speech technology across a diverse range of languages. You can find more details about the supported languages and their ISO 639-3 codes in the [MMS Language Coverage Overview](https://dl.fbaipublicfiles.com/mms/misc/language_coverage_mms.html), and see all MMS-TTS checkpoints on the Hugging Face Hub: [facebook/mms-tts](https://huggingface.co/models?sort=trending&search=facebook%2Fmms-tts).

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards.

## Model Details

VITS (**V**ariational **I**nference with adversarial learning for end-to-end **T**ext-to-**S**peech) is an end-to-end speech synthesis model that predicts a speech waveform conditional on an input text sequence. It is a conditional variational autoencoder (VAE) composed of a posterior encoder, decoder, and conditional prior.

A set of spectrogram-based acoustic features is predicted by the flow-based module, which is formed of a Transformer-based text encoder and multiple coupling layers. The spectrogram is decoded using a stack of transposed convolutional layers, much in the same style as the HiFi-GAN vocoder. Motivated by the one-to-many nature of the TTS problem, where the same text input can be spoken in multiple ways, the model also includes a stochastic duration predictor, which allows the model to synthesise speech with different rhythms from the same input text.

The model is trained end-to-end with a combination of losses derived from the variational lower bound and adversarial training. To improve the expressiveness of the model, normalizing flows are applied to the conditional prior distribution. During inference, the text encodings are up-sampled based on the duration prediction module, and then mapped into the waveform using a cascade of the flow module and HiFi-GAN decoder. Due to the stochastic nature of the duration predictor, the model is non-deterministic, and thus requires a fixed seed to generate the same speech waveform.
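The up-sampling step can be pictured as a length regulator: each text encoding is repeated once per predicted output frame. The sketch below is a toy illustration of that idea and of why a seed makes the result reproducible. `sample_durations` is a hypothetical stand-in for VITS's stochastic duration predictor (it just draws random integers), and the real model performs the expansion with batched tensor operations rather than Python lists. With 🤗 Transformers, `transformers.set_seed` is one way to fix the relevant random state before calling the model.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sample_durations(n_tokens: int, rng: random.Random, low: int = 1, high: int = 4) -> List[int]:
    """Hypothetical stochastic duration predictor: frames per token, drawn at random."""
    return [rng.randint(low, high) for _ in range(n_tokens)]


def upsample(encodings: Sequence[T], durations: Sequence[int]) -> List[T]:
    """Repeat encodings[i] durations[i] times (a length regulator)."""
    if len(encodings) != len(durations):
        raise ValueError("need exactly one duration per encoding")
    frames: List[T] = []
    for enc, d in zip(encodings, durations):
        frames.extend([enc] * d)
    return frames


if __name__ == "__main__":
    encodings = ["h", "a", "l", "l", "o"]  # stand-ins for per-token text encodings
    # Same seed -> same durations -> same frame sequence
    first = upsample(encodings, sample_durations(len(encodings), random.Random(555)))
    again = upsample(encodings, sample_durations(len(encodings), random.Random(555)))
    print(first == again, len(first))
```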

For the MMS project, a separate VITS checkpoint is trained on each language.

## Usage

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards. To use this checkpoint, first install the latest version of the library:

```

pip install --upgrade transformers accelerate

```

Then, run inference with the following code-snippet:

```python

from transformers import VitsModel, AutoTokenizer

import torch

model = VitsModel.from_pretrained("facebook/mms-tts-deu")

tokenizer = AutoTokenizer.from_pretrained("facebook/mms-tts-deu")

text = "some example text in the German, Standard language"

inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():

    output = model(**inputs).waveform

```

The resulting waveform can be saved as a `.wav` file:

```python

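import scipy.io.wavfile

# `output` has shape (batch, num_samples): drop the batch dimension and convert to NumPy first
scipy.io.wavfile.write("techno.wav", rate=model.config.sampling_rate, data=output.squeeze().numpy())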

```
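`scipy.io.wavfile.write` stores a float32 array as a 32-bit float WAV. If a 16-bit PCM file is preferred, since it is the most widely supported WAV encoding, the standard library's `wave` module is enough. A sketch, assuming the waveform is mono with samples in [-1.0, 1.0]; `write_pcm16_wav` is a hypothetical helper, and out-of-range samples are clipped.

```python
import io
import math
import struct
import wave
from typing import BinaryIO, Iterable, Union


def write_pcm16_wav(dest: Union[str, BinaryIO], samples: Iterable[float], sampling_rate: int) -> None:
    """Write mono float samples in [-1.0, 1.0] as a 16-bit PCM WAV file."""
    frames = bytearray()
    for s in samples:
        s = max(-1.0, min(1.0, float(s)))                   # clip to the valid range
        frames += struct.pack("<h", int(round(s * 32767)))  # little-endian int16
    with wave.open(dest, "wb") as wf:
        wf.setnchannels(1)             # mono
        wf.setsampwidth(2)             # 2 bytes = 16 bits per sample
        wf.setframerate(sampling_rate)
        wf.writeframes(bytes(frames))


if __name__ == "__main__":
    # Self-contained check with a 0.1 s, 440 Hz sine tone instead of model output
    rate = 16000
    tone = [0.5 * math.sin(2 * math.pi * 440 * t / rate) for t in range(rate // 10)]
    buf = io.BytesIO()
    write_pcm16_wav(buf, tone, rate)
    buf.seek(0)
    with wave.open(buf, "rb") as wf:
        print(wf.getnframes(), wf.getframerate())
```

With the model output, a call such as `write_pcm16_wav("techno.wav", output.squeeze().tolist(), model.config.sampling_rate)` should work, since the tensor's batch dimension is removed before the samples are converted to a Python list.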

Or displayed in a Jupyter Notebook / Google Colab:

```python

from IPython.display import Audio

Audio(output, rate=model.config.sampling_rate)

```

## BibTex citation

This model was developed by Vineel Pratap et al. from Meta AI. If you use the model, consider citing the MMS paper:

```

@article{pratap2023mms,

title={Scaling Speech Technology to 1,000+ Languages},

author={Vineel Pratap and Andros Tjandra and Bowen Shi and Paden Tomasello and Arun Babu and Sayani Kundu and Ali Elkahky and Zhaoheng Ni and Apoorv Vyas and Maryam Fazel-Zarandi and Alexei Baevski and Yossi Adi and Xiaohui Zhang and Wei-Ning Hsu and Alexis Conneau and Michael Auli},

journal={arXiv},

year={2023}

}

```

## License

The model is licensed as **CC-BY-NC 4.0**.

|

TheBloke/Dolphin-Llama-13B-GGUF | TheBloke | 2023-09-27T12:52:29Z | 583 | 4 | transformers | [

"transformers",

"gguf",

"llama",

"base_model:ehartford/dolphin-llama-13b",

"license:other",

"text-generation-inference",

"region:us"

]

| null | 2023-09-19T22:55:17Z | ---

license: other

model_name: Dolphin Llama 13B

base_model: ehartford/dolphin-llama-13b

inference: false

model_creator: Eric Hartford

model_type: llama

prompt_template: 'SYSTEM: {system_message}

USER: {prompt}

ASSISTANT:

'

quantized_by: TheBloke

task_categories:

- text-generation

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Dolphin Llama 13B - GGUF

- Model creator: [Eric Hartford](https://huggingface.co/ehartford)

- Original model: [Dolphin Llama 13B](https://huggingface.co/ehartford/dolphin-llama-13b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Eric Hartford's Dolphin Llama 13B](https://huggingface.co/ehartford/dolphin-llama-13b).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Dolphin-Llama-13B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF)

* [Eric Hartford's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/ehartford/dolphin-llama-13b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Orca-Vicuna

```

SYSTEM: {system_message}

USER: {prompt}

ASSISTANT:

```
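A minimal sketch of filling in this template from Python, assuming the three lines are joined with single newlines and the model's reply is generated straight after `ASSISTANT:`. `build_orca_vicuna_prompt` is a hypothetical helper, and the system message in the usage line is only an example, not one recommended by the model's author.

```python
def build_orca_vicuna_prompt(prompt: str, system_message: str) -> str:
    """Fill the Orca-Vicuna template: SYSTEM / USER lines, then an open ASSISTANT turn."""
    return "\n".join([
        f"SYSTEM: {system_message}",
        f"USER: {prompt}",
        "ASSISTANT:",
    ])


if __name__ == "__main__":
    print(build_orca_vicuna_prompt(
        prompt="List three uses for a paperclip.",
        system_message="You are a helpful assistant.",
    ))
```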

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third-party UIs and libraries; please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.
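The bpw figures for Q3_K through Q6_K can be checked by counting the bits in one 256-weight super-block. This is a sketch under one assumption that the list does not state: each super-block additionally stores a 16-bit (fp16) scale, plus a 16-bit min for the "type-1" methods. Q2_K is left out of the sketch.

```python
SUPER_BLOCK_PARAM_BITS = 16  # assumption: super-block scale (and min) stored as fp16


def bits_per_weight(blocks: int, weights_per_block: int, quant_bits: int,
                    scale_bits: int, type1: bool) -> float:
    """Average storage cost per weight of one k-quant super-block.

    "type-0" keeps one scale per block; "type-1" keeps a scale and a min per block.
    """
    weights = blocks * weights_per_block
    params = 2 if type1 else 1  # scale only, or scale + min
    total_bits = (
        weights * quant_bits                 # the quantized weights themselves
        + blocks * params * scale_bits       # per-block scales (and mins)
        + params * SUPER_BLOCK_PARAM_BITS    # per-super-block scale (and min)
    )
    return total_bits / weights


# Layouts taken from the descriptions above.
K_QUANTS = {
    "Q3_K": dict(blocks=16, weights_per_block=16, quant_bits=3, scale_bits=6, type1=False),
    "Q4_K": dict(blocks=8, weights_per_block=32, quant_bits=4, scale_bits=6, type1=True),
    "Q5_K": dict(blocks=8, weights_per_block=32, quant_bits=5, scale_bits=6, type1=True),
    "Q6_K": dict(blocks=16, weights_per_block=16, quant_bits=6, scale_bits=8, type1=False),
}

if __name__ == "__main__":
    for name, layout in K_QUANTS.items():
        print(name, bits_per_weight(**layout))
```

For Q4_K, for example, this gives (256 × 4 + 8 × 2 × 6 + 2 × 16) / 256 = 1152 / 256 = 4.5 bpw.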

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [dolphin-llama-13b.Q2_K.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |
| [dolphin-llama-13b.Q3_K_S.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |
| [dolphin-llama-13b.Q3_K_M.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |
| [dolphin-llama-13b.Q3_K_L.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |
| [dolphin-llama-13b.Q4_0.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |
| [dolphin-llama-13b.Q4_K_S.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |
| [dolphin-llama-13b.Q4_K_M.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |
| [dolphin-llama-13b.Q5_0.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |
| [dolphin-llama-13b.Q5_K_S.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |
| [dolphin-llama-13b.Q5_K_M.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |
| [dolphin-llama-13b.Q6_K.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |
| [dolphin-llama-13b.Q8_0.gguf](https://huggingface.co/TheBloke/Dolphin-Llama-13B-GGUF/blob/main/dolphin-llama-13b.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
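In every row of the table, "Max RAM required" is the file size plus 2.50 GB. The sketch below uses that observed gap to pick the largest file for a given RAM budget. It is a rough rule of thumb only: it assumes no GPU offloading, and it skips the two legacy files because the table recommends K-quant alternatives to them. The helper names are hypothetical.

```python
from typing import Optional

# File sizes in GB, copied from the "Provided files" table above.
FILE_SIZES_GB = {
    "Q2_K": 5.43, "Q3_K_S": 5.66, "Q3_K_M": 6.34, "Q3_K_L": 6.93,
    "Q4_0": 7.37, "Q4_K_S": 7.41, "Q4_K_M": 7.87, "Q5_0": 8.97,
    "Q5_K_S": 8.97, "Q5_K_M": 9.23, "Q6_K": 10.68, "Q8_0": 13.83,
}
LEGACY = {"Q4_0", "Q5_0"}  # the table suggests K-quant alternatives to these
OVERHEAD_GB = 2.5          # observed gap between "Size" and "Max RAM required"


def max_ram_gb(quant: str) -> float:
    """Rough peak RAM estimate with no GPU offloading: file size + fixed overhead."""
    return round(FILE_SIZES_GB[quant] + OVERHEAD_GB, 2)


def largest_fitting(ram_budget_gb: float) -> Optional[str]:
    """Largest non-legacy file whose estimated RAM need fits the budget, or None."""
    candidates = [q for q in FILE_SIZES_GB
                  if q not in LEGACY and max_ram_gb(q) <= ram_budget_gb]
    return max(candidates, key=FILE_SIZES_GB.__getitem__, default=None)


if __name__ == "__main__":
    for budget in (8.0, 10.0, 12.0, 16.0):
        print(budget, largest_fitting(budget))
```

With GPU offloading the RAM figure drops, so treat the result as a conservative starting point.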

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Dolphin-Llama-13B-GGUF and below it, a specific filename to download, such as: dolphin-llama-13b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Dolphin-Llama-13B-GGUF dolphin-llama-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```
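The same single-file download can be done from Python with `hf_hub_download` from the `huggingface_hub` package installed above. This is a sketch: the helper names are hypothetical, and the file-name pattern is inferred from the table of provided files.

```python
REPO_ID = "TheBloke/Dolphin-Llama-13B-GGUF"


def gguf_filename(quant: str) -> str:
    """File-name pattern used in this repo, e.g. 'Q4_K_M' -> 'dolphin-llama-13b.Q4_K_M.gguf'."""
    return f"dolphin-llama-13b.{quant}.gguf"


def download(quant: str = "Q4_K_M", local_dir: str = ".") -> str:
    """Download one quantisation into local_dir and return the local file path."""
    # Imported lazily so the helpers above work without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=REPO_ID, filename=gguf_filename(quant), local_dir=local_dir)
```

Calling `download("Q4_K_M")` fetches only `dolphin-llama-13b.Q4_K_M.gguf`, not the whole repo.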

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Dolphin-Llama-13B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```
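To preview which files a pattern such as `'*Q4_K*gguf'` selects, you can apply shell-style wildcard matching to the file names in the table. This assumes that `--include` uses `fnmatch`-style matching, which I understand to be how `huggingface_hub` filters files. `fnmatchcase` is used so the result does not depend on the operating system's case rules.

```python
from fnmatch import fnmatchcase
from typing import List

# File names taken from the "Provided files" table above.
FILES = [
    f"dolphin-llama-13b.{q}.gguf"
    for q in ("Q2_K", "Q3_K_S", "Q3_K_M", "Q3_K_L", "Q4_0", "Q4_K_S",
              "Q4_K_M", "Q5_0", "Q5_K_S", "Q5_K_M", "Q6_K", "Q8_0")
]


def matching(pattern: str, files: List[str]) -> List[str]:
    """Return the files that a shell-style --include pattern would select."""
    return [name for name in files if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    print(matching("*Q4_K*gguf", FILES))
```

The pattern `'*Q4_K*gguf'` picks up both Q4_K_S and Q4_K_M but not the legacy Q4_0 file.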

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Dolphin-Llama-13B-GGUF dolphin-llama-13b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m dolphin-llama-13b.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "SYSTEM: {system_message}\nUSER: {prompt}\nASSISTANT:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models (e.g. 8K, 16K, 32K), the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md).

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.
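The sketch below roughly mirrors the `llama.cpp` command shown earlier using llama-cpp-python (`pip install llama-cpp-python`). `n_gpu_layers` plays the role of `-ngl` and `n_ctx` the role of `-c`. Two choices are assumptions and not from this README: a finite `max_tokens` is used instead of `-n -1`, and generation stops at `"USER:"`. The helper names are hypothetical.

```python
MODEL_PATH = "dolphin-llama-13b.Q4_K_M.gguf"  # a file downloaded as described above


def build_prompt(system_message: str, prompt: str) -> str:
    """Orca-Vicuna prompt, as in the template at the top of this README."""
    return f"SYSTEM: {system_message}\nUSER: {prompt}\nASSISTANT:"


def generate(system_message: str, prompt: str, n_gpu_layers: int = 32) -> str:
    from llama_cpp import Llama  # imported lazily; requires llama-cpp-python

    # n_gpu_layers ~ -ngl 32 (use 0 without GPU acceleration), n_ctx ~ -c 2048
    llm = Llama(model_path=MODEL_PATH, n_gpu_layers=n_gpu_layers, n_ctx=2048)
    out = llm(
        build_prompt(system_message, prompt),
        max_tokens=512,      # assumption: a finite cap instead of -n -1
        temperature=0.7,     # --temp 0.7
        repeat_penalty=1.1,  # --repeat_penalty 1.1
        stop=["USER:"],      # assumption: stop before the model writes a new user turn
    )
    return out["choices"][0]["text"]
```

For example, `generate("You are a helpful assistant.", "Summarise what GGUF is.")` returns only the assistant's reply text.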

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Dolphin-Llama-13B-GGUF", model_file="dolphin-llama-13b.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Eric Hartford's Dolphin Llama 13B

Dolphin 🐬

https://erichartford.com/dolphin

This model is based on llama1, so it is for non-commercial use only. Future versions will be trained on llama2 and other open models that are suitable for commercial use.

This model is uncensored. I have filtered the dataset to remove alignment and bias, which makes the model compliant with any request, even unethical ones. You are advised to implement your own alignment layer before exposing the model as a service. Please read my blog post about uncensored models: https://erichartford.com/uncensored-models

You are responsible for any content you create using this model. Enjoy responsibly.

## Dataset

This dataset is an open source implementation of [Microsoft's Orca](https://www.microsoft.com/en-us/research/publication/orca-progressive-learning-from-complex-explanation-traces-of-gpt-4/)

After uncensoring, deduping, and cleaning, our dataset consists of:

- 842,610 instructions of FLANv2 augmented with GPT-4 completions

- 2,625,353 instructions of FLANv2 augmented with GPT-3.5 completions

We followed the submix and system prompt distribution outlined in the Orca paper, with a few exceptions. We included all 75k CoT examples in the FLAN-1m dataset rather than sampling from them. Also, we found that many items were duplicated, so we removed duplicates.

Then we filtered out instances of alignment, refusal, avoidance, and bias, in order to produce an uncensored model on which you can layer your own personalized alignment LoRA.

We also filtered out duplicates and cleaned the data.

## Training

We trained with the flan5m (gpt3.5 completions) dataset in its entirety for 3 epochs at a learning rate of 2e-5 before we stopped training to avoid overfitting.

We trained with the flan1m (gpt4 completions) dataset in its entirety for 2.5 epochs at a learning rate of 1e-5 before we stopped training to avoid overfitting.

It took about 600 hours to train on 8x H100s.

We used a prompt format similar to Vicuna, but we added the SYSTEM: field.

Prompt format:

```
SYSTEM: {system}
USER: {prompt}
ASSISTANT:
```

Example:

```
SYSTEM: you are an expert marine biologist.
USER: Please list 10 ways that dolphins are superior to orcas.
ASSISTANT:
```

## Evaluation

Evaluation will be coming soon.

## Team

The core Dolphin Team includes:

- Eric "Faldore" Hartford

- Pankaj Mathur

- Rob "Rohan" O'Callahan

- Tom "TheBloke" Jobbins

## Gratitude

- Thank you to Microsoft for authoring the Orca paper and inspiring this work.

- Special thanks to WingLian, NanoBit, Teknium for helpful advice

- Special thanks to EdenCoder and chirper.ai for mentorship and financial sponsorship.

- Special thanks to Kilkonie for his very valued mentorship.

- Thank you to Catto.

- Thank you to Nicolai Schleifer, financial sponsor.

- Thank you to Eric Fleming, financial sponsor.

- Thank you to all the other people in the Open Source AI community who have taught me and helped me along the way.

- And biggest thanks and love to my wife Hope and my babies, who have endured two months of my absenteeism while working long days and nights on this project.

## Example Output

```
SYSTEM: you are an expert marine biologist.
USER: Please list 10 ways that dolphins are superior to orcas.
ASSISTANT:
```

1. Social structure: Dolphins live in complex social groups called pods, which consist of extended family units and can include hundreds of individuals. Orcas, on the other hand, typically form smaller, more temporary groupings known as clans or matrilineal kinship groups.

2. Communication: Dolphins have a highly developed system of vocalizations, body language, and even telepathy to communicate with each other for various purposes such as coordinating cooperative hunting strategies, sharing information about food sources, and maintaining social bonds. While orcas also exhibit some communication abilities, their methods are generally less sophisticated compared to those of dolphins.

3. Intelligence: Dolphins are widely regarded as one of the most intelligent animal species, showcasing advanced problem-solving skills, self-awareness, tool use (such as using sea sponges as tools), and learning capabilities. Some dolphin species have been observed demonstrating altruism and understanding of human emotions. In contrast, while orcas are also smart, they do not display the same level of cognitive complexity as dolphins.

4. Playfulness: Dolphins are well-known for their playful behavior, often engaging in acrobatic displays, chasing each other's tails, and playing with objects like balls or seaweed. This playful nature is thought to be essential for their social bonding and mental stimulation. Although orcas may occasionally interact playfully, it is not as prevalent or diverse as among dolphins.

5. Agility and speed: Dolphins are incredibly agile swimmers, capable of reaching speeds up to 30 miles per hour (48 km/h) when pursuing prey. They can change direction rapidly and maneuver through tight spaces with ease. While orcas are powerful predators and fast swimmers themselves, they cannot match the sheer acceleration and agility of dolphins.

6. Feeding habits: Both dolphins and orcas are carnivorous, feeding primarily on fish and cephalopods. However, dolphins employ a wider variety of hunting techniques, including cooperative hunting strategies involving herding, encircling, and driving schools of fish into shallow waters where they become disoriented and easier to catch. Orcas mainly rely on ambushing their prey from below and using their powerful jaws to strike quickly.

7. Reproduction: Female dolphins usually give birth to a single calf after a gestation period of around 12 months. The mother plays a crucial role in raising and nurturing her offspring until they become independent. On the other hand, female orcas tend to breed every three to five years, giving birth to multiple offspring at once, and relying heavily on their mothers for protection and teaching.

8. Vocal repertoire: Dolphins possess a wide range of sounds, including clicks, whistles, and body sounds, which allow them to convey different messages and establish unique identities within their pods. Orcas, too, produce vocalizations but lack the diversity found in dolphin calls.

9. Conservation status: Many dolphin species face threats due to habitat loss, pollution, overfishing, and climate change. As a result, several populations are considered vulnerable or endangered by the International Union for Conservation of Nature (IUCN). In comparison, orca conservation status varies depending on the region; however, no species is currently listed as critically endangered or facing extinction.

10. Adaptability: Dolphins have adapted to various aquatic environments, ranging from warm coastal waters to colder open oceans. Their versatility allows them to thrive in both tropical and temperate climates. Orcas, conversely, are adapted to specific habitats such as cooler coastal waters and are mostly found in the Northern Hemisphere.

<!-- original-model-card end -->

|

macadeliccc/laser-dolphin-mixtral-2x7b-dpo-GGUF | macadeliccc | 2024-02-06T05:24:57Z | 583 | 8 | null | [

"gguf",

"license:cc-by-nc-4.0",

"region:us"

]

| null | 2024-01-18T03:37:10Z | ---

license: cc-by-nc-4.0

---

# Laser-dolphin-mixtral-2x7b-dpo-GGUF

The original model is listed here [macadeliccc/laser-dolphin-mixtral-2x7b-dpo](https://huggingface.co/macadeliccc/laser-dolphin-mixtral-2x7b-dpo)

## Quantizations

+ Q8_0

+ Q5_K_M

+ Q4_K_M

## Example Output

|

mradermacher/WizardIceLemonTeaRP-32k-GGUF | mradermacher | 2024-05-06T04:41:59Z | 583 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:icefog72/WizardIceLemonTeaRP-32k",

"endpoints_compatible",

"region:us"

]

| null | 2024-04-17T17:45:36Z | ---

base_model: icefog72/WizardIceLemonTeaRP-32k

language:

- en

library_name: transformers

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 1 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/icefog72/WizardIceLemonTeaRP-32k

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q2_K.gguf) | Q2_K | 2.8 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.IQ3_XS.gguf) | IQ3_XS | 3.1 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q3_K_S.gguf) | Q3_K_S | 3.3 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.IQ3_S.gguf) | IQ3_S | 3.3 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.IQ3_M.gguf) | IQ3_M | 3.4 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q3_K_L.gguf) | Q3_K_L | 3.9 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.IQ4_XS.gguf) | IQ4_XS | 4.0 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q5_K_S.gguf) | Q5_K_S | 5.1 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q5_K_M.gguf) | Q5_K_M | 5.2 | |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q6_K.gguf) | Q6_K | 6.0 | very good quality |
| [GGUF](https://huggingface.co/mradermacher/WizardIceLemonTeaRP-32k-GGUF/resolve/main/WizardIceLemonTeaRP-32k.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

mmnga/haqishen-Llama-3-8B-Japanese-Instruct-gguf | mmnga | 2024-04-23T14:54:23Z | 583 | 4 | null | [

"gguf",

"llama3",

"en",

"ja",

"dataset:TFMC/imatrix-dataset-for-japanese-llm",

"license:llama3",

"region:us"

]

| null | 2024-04-23T13:55:17Z | ---

license: llama3

language:

- en

- ja

tags:

- llama3

datasets:

- TFMC/imatrix-dataset-for-japanese-llm

---

# haqishen-Llama-3-8B-Japanese-Instruct-gguf

This is a GGUF-format conversion of [Llama-3-8B-Japanese-Instruct, published by haqishen](https://huggingface.co/haqishen/Llama-3-8B-Japanese-Instruct).

The imatrix data was created using [TFMC/imatrix-dataset-for-japanese-llm](https://huggingface.co/datasets/TFMC/imatrix-dataset-for-japanese-llm).

## Other models

[mmnga/haqishen-Llama-3-8B-Japanese-Instruct-gguf](https://huggingface.co/mmnga/haqishen-Llama-3-8B-Japanese-Instruct-gguf)

## Usage

```

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

make -j

./main -m 'haqishen-Llama-3-8B-Japanese-Instruct-Q4_0.gguf' -p "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\nこんにちわ<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n" -n 128

``` |

votepurchase/waiREALCN_v10 | votepurchase | 2024-06-04T10:16:28Z | 583 | 0 | diffusers | [

"diffusers",

"safetensors",

"ja",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

]

| text-to-image | 2024-05-26T10:54:17Z | ---

license: mit

language:

- ja

library_name: diffusers

---

[waiREALCN_v10](https://civitai.com/models/469902/wai-realcn) |

Joaoffg/ELM | Joaoffg | 2024-06-04T21:13:58Z | 583 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"academic",

"university",

"en",

"nl",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-05-29T10:42:04Z | ---

license: llama2

language:

- en

- nl

tags:

- academic

- university

---

# Model Card for the Erasmian Language Model

ELM is a community-driven large language model tailored to the research and education needs of Erasmus University (EUR, Netherlands) students and staff.

The model draws inspiration from ChatGPT and Llama in terms of architecture, but it aims to be privacy-sensitive, environmentally conscious, and from and for the Erasmus community. Here are a few key points of ELM:

We hope that the ELM experience becomes a template for community-driven, decentralized and purposeful AI development and application.

## Model Details

### Model Description

- The underlying language model is trained and fine-tuned on academic outputs from Erasmus University, such as scientific papers or student theses;
- Training and fine-tuning the model is a joint effort of students and staff, transparent for all parties involved;
- The prompt-response examples used to fine-tune the model come from students and staff, not crowdsourcing services;
- Defining what counts as the "better" model output also comes from the perspective of research and education.

The true richness of ELM lies in the way its training data is generated. What counts as the "state-of-the-art" model may change quickly, but quality data will maintain its relevance and ensure that ELM and its future iterations serve the needs of the community that nurtured it.

- **Developed by:** João Gonçalves, Nick Jelicic

- **Funded by:** Convergence AI and Digitalization, Erasmus Trustfonds

- **Model type:** Llama-2 Instruct

- **Language(s) (NLP):** English, Dutch

- **License:** Llama2

### Model Sources

- **Repository:** https://github.com/Joaoffg/ELM

- **Paper:** In progress

- **Demo:** https://huggingface.co/spaces/Joaoffg/Joaoffg-ELM

|

jeiku/Templar_v1_8B-Q3_K_S-GGUF | jeiku | 2024-06-23T00:20:32Z | 583 | 0 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"llama-cpp",

"gguf-my-repo",

"base_model:jeiku/Templar_v1_8B",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-23T00:20:11Z | ---

base_model: jeiku/Templar_v1_8B

library_name: transformers

tags:

- mergekit

- merge

- llama-cpp

- gguf-my-repo

---

# jeiku/Templar_v1_8B-Q3_K_S-GGUF

This model was converted to GGUF format from [`jeiku/Templar_v1_8B`](https://huggingface.co/jeiku/Templar_v1_8B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/jeiku/Templar_v1_8B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo jeiku/Templar_v1_8B-Q3_K_S-GGUF --hf-file templar_v1_8b-q3_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo jeiku/Templar_v1_8B-Q3_K_S-GGUF --hf-file templar_v1_8b-q3_k_s.gguf -c 2048

```
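Once `llama-server` is running, it can be queried over HTTP. The sketch below uses only the Python standard library and posts to the server's `/completion` endpoint. It assumes the server's default address (`127.0.0.1:8080`) and that the reply JSON carries the generated text under `"content"`; the helper names are hypothetical.

```python
import json
import urllib.request

SERVER_URL = "http://127.0.0.1:8080/completion"  # assumption: default host and port


def build_request(prompt: str, n_predict: int = 128) -> urllib.request.Request:
    """JSON POST request asking the server to continue `prompt` for n_predict tokens."""
    body = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        SERVER_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def complete(prompt: str, n_predict: int = 128) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, n_predict)) as resp:
        return json.loads(resp.read())["content"]
```

With the server from the command above running, `complete("The meaning to life and the universe is")` returns the continuation as a string.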

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with other hardware-specific flags (e.g. `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo jeiku/Templar_v1_8B-Q3_K_S-GGUF --hf-file templar_v1_8b-q3_k_s.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo jeiku/Templar_v1_8B-Q3_K_S-GGUF --hf-file templar_v1_8b-q3_k_s.gguf -c 2048

```

|

Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF | Casual-Autopsy | 2024-06-23T23:12:49Z | 583 | 0 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"llama-cpp",

"gguf-my-repo",

"not-for-all-audiences",

"base_model:Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-23T17:07:11Z | ---

base_model: Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B

library_name: transformers

tags:

- mergekit

- merge

- llama-cpp

- gguf-my-repo

- not-for-all-audiences

---

# Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF

This model was converted to GGUF format from [`Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B`](https://huggingface.co/Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF --hf-file l3-uncen-merger-omelette-rp-v0.1-8b-q5_k_m-imat.gguf -p "The meaning to life and the universe is"

```
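From Python, llama-cpp-python's `Llama.from_pretrained` plays a role similar to the `--hf-repo`/`--hf-file` flags: it downloads the file from the Hub and loads it. This is a sketch that assumes a recent llama-cpp-python with `huggingface-hub` installed; `load` is a hypothetical helper name.

```python
REPO_ID = "Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF"
FILENAME = "l3-uncen-merger-omelette-rp-v0.1-8b-q5_k_m-imat.gguf"


def load(n_ctx: int = 2048):
    """Download (if needed) and load the GGUF file; n_ctx mirrors -c 2048."""
    # Imported lazily; requires `pip install llama-cpp-python huggingface-hub`.
    from llama_cpp import Llama

    return Llama.from_pretrained(repo_id=REPO_ID, filename=FILENAME, n_ctx=n_ctx)
```

For example, `load()("The meaning to life and the universe is", max_tokens=64)["choices"][0]["text"]` returns a short continuation.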

### Server:

```bash

llama-server --hf-repo Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF --hf-file l3-uncen-merger-omelette-rp-v0.1-8b-q5_k_m-imat.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag along with other hardware-specific flags (e.g. `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF --hf-file l3-uncen-merger-omelette-rp-v0.1-8b-q5_k_m-imat.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo Casual-Autopsy/L3-Uncen-Merger-Omelette-RP-v0.1-8B-Q5_K_M-GGUF --hf-file l3-uncen-merger-omelette-rp-v0.1-8b-q5_k_m-imat.gguf -c 2048

``` |

NikolayKozloff/Replete-Coder-Llama3-8B-Q4_0-GGUF | NikolayKozloff | 2024-06-25T10:07:19Z | 583 | 1 | null | [

"gguf",

"region:us"

]

| null | 2024-06-25T10:06:59Z | Entry not found |

larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF | larenspear | 2024-07-01T01:38:29Z | 583 | 0 | null | [

"gguf",

"llama-cpp",

"gguf-my-repo",

"base_model:01-ai/Yi-1.5-6B-Chat",

"license:apache-2.0",

"region:us"

]

| null | 2024-07-01T01:38:14Z | ---

base_model: 01-ai/Yi-1.5-6B-Chat

license: apache-2.0

tags:

- llama-cpp

- gguf-my-repo

---

# larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF

This model was converted to GGUF format from [`01-ai/Yi-1.5-6B-Chat`](https://huggingface.co/01-ai/Yi-1.5-6B-Chat) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/01-ai/Yi-1.5-6B-Chat) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF --hf-file yi-1.5-6b-chat-q4_0.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF --hf-file yi-1.5-6b-chat-q4_0.gguf -c 2048

```
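Yi-1.5-6B-Chat is a chat model, so it may be more convenient to talk to `llama-server` through its OpenAI-compatible `/v1/chat/completions` endpoint. That endpoint formats the messages with a chat template on the server side. Below is a minimal standard-library Python sketch. The default address `http://127.0.0.1:8080` and the helper names are assumptions, not part of this model card.

```python
import json
import urllib.request

# Assumption: llama-server is running locally on its default port.
DEFAULT_HOST = "http://127.0.0.1:8080"


def build_chat_request(user_message: str, max_tokens: int = 128,
                       host: str = DEFAULT_HOST) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat endpoint."""
    payload = {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{host}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_chat_response(body: bytes) -> str:
    """Return the assistant message text from an OpenAI-style response."""
    return json.loads(body)["choices"][0]["message"]["content"]


def chat(user_message: str, max_tokens: int = 128, host: str = DEFAULT_HOST) -> str:
    """Send one user turn to a running llama-server and return the reply."""
    request = build_chat_request(user_message, max_tokens, host)
    with urllib.request.urlopen(request) as response:
        return parse_chat_response(response.read())
```

Multi-turn conversations can be sent the same way by appending earlier `user` and `assistant` messages to the `messages` list.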

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF --hf-file yi-1.5-6b-chat-q4_0.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo larenspear/Yi-1.5-6B-Chat-Q4_0-GGUF --hf-file yi-1.5-6b-chat-q4_0.gguf -c 2048

```

|

Den4ikAI/rugpt3_2ch | Den4ikAI | 2023-05-18T15:22:49Z | 582 | 3 | transformers | [

"transformers",

"pytorch",

"safetensors",

"gpt2",

"text-generation",

"rus",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2022-07-26T15:56:30Z | ---

license: mit

language: rus

---

ruGPT-3 trained on dialogues from 2ch-style imageboards.

To generate a response, give the model input in the following format ("Привет" is Russian for "Hello"):

"- Привет\n-"
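Below is a minimal sketch of this format using the Hugging Face `transformers` library. It makes three assumptions: that `\n` in the format is a newline character, that the model loads with `AutoModelForCausalLM`, and that a reply ends where the model starts a new `-` turn. The helper names and sampling settings are hypothetical and are not taken from the author's inference example.

```python
def build_prompt(message: str) -> str:
    """Wrap a message in the '- message\\n-' dialogue format."""
    return f"- {message}\n-"


def extract_reply(continuation: str) -> str:
    """Keep generated text up to the start of the next '-' dialogue turn."""
    return continuation.split("\n-", 1)[0].strip()


def generate_reply(message: str, model_id: str = "Den4ikAI/rugpt3_2ch",
                   max_new_tokens: int = 50) -> str:
    # Imported here so the formatting helpers work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    inputs = tokenizer(build_prompt(message), return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,  # assumption: sampling rather than greedy decoding
        top_p=0.9,
        pad_token_id=tokenizer.eos_token_id,
    )
    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return extract_reply(tokenizer.decode(new_tokens, skip_special_tokens=True))
```

For example, `generate_reply("Привет")` downloads the model on first use and returns one sampled reply.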

An inference example is available here: https://github.com/Den4ikAI/rugpt3_2ch |

microsoft/trocr-base-str | microsoft | 2024-05-27T20:12:19Z | 582 | 5 | transformers | [

"transformers",

"pytorch",

"vision-encoder-decoder",

"trocr",

"image-to-text",

"arxiv:2109.10282",

"endpoints_compatible",

"region:us"

]

| image-to-text | 2022-09-08T09:02:01Z | ---

tags:

- trocr

- image-to-text

widget:

- src: https://raw.githubusercontent.com/ku21fan/STR-Fewer-Labels/main/demo_image/1.png

example_title: Example 1

- src: https://raw.githubusercontent.com/HCIILAB/Scene-Text-Recognition-Recommendations/main/Dataset_images/LSVT1.jpg

example_title: Example 2

- src: https://raw.githubusercontent.com/HCIILAB/Scene-Text-Recognition-Recommendations/main/Dataset_images/ArT2.jpg

example_title: Example 3

---

# TrOCR (base-sized model, fine-tuned on STR benchmarks)

TrOCR model fine-tuned on the training sets of IC13, IC15, IIIT5K, SVT. It was introduced in the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Li et al. and first released in [this repository](https://github.com/microsoft/unilm/tree/master/trocr).

## Model description

The TrOCR model is an encoder-decoder model, consisting of an image Transformer as encoder, and a text Transformer as decoder. The image encoder was initialized from the weights of BEiT, while the text decoder was initialized from the weights of RoBERTa.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. One also adds absolute position embeddings before feeding the sequence to the layers of the Transformer encoder. Next, the Transformer text decoder autoregressively generates tokens.
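The patch step can be illustrated with a short NumPy sketch. It assumes a 384x384 RGB input and a hidden size of 768, which are typical for base-sized vision Transformers, but check the model's `config.json` for the real values. Random weights stand in for the trained embedding, and details such as any special classification token are left out. The sketch shows the shapes involved, not TrOCR's actual implementation.

```python
import numpy as np


def patchify(image: np.ndarray, patch_size: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns shape (num_patches, patch_size * patch_size * C), ordered
    left to right, then top to bottom.
    """
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("height and width must be multiples of patch_size")
    rows, cols = height // patch_size, width // patch_size
    patches = image.reshape(rows, patch_size, cols, patch_size, channels)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (rows, cols, p, p, C)
    return patches.reshape(rows * cols, patch_size * patch_size * channels)


def embed_patches(patches: np.ndarray, projection: np.ndarray,
                  position_embeddings: np.ndarray) -> np.ndarray:
    """Linearly embed each patch, then add absolute position embeddings."""
    return patches @ projection + position_embeddings


rng = np.random.default_rng(0)
image = rng.random((384, 384, 3), dtype=np.float32)  # assumed input size
patches = patchify(image)                            # (576, 768)
hidden_size = 768                                    # assumed hidden size
projection = rng.standard_normal((patches.shape[1], hidden_size)).astype(np.float32)
position_embeddings = rng.standard_normal((patches.shape[0], hidden_size)).astype(np.float32)
tokens = embed_patches(patches, projection, position_embeddings)
print(tokens.shape)  # (576, 768)
```

With 16x16 patches, a 384x384 image yields a 24x24 grid, or 576 patch vectors. These vectors are what the encoder layers consume.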

## Intended uses & limitations

You can use the raw model for optical character recognition (OCR) on single text-line images. See the [model hub](https://huggingface.co/models?search=microsoft/trocr) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model in PyTorch:

```python

from transformers import TrOCRProcessor, VisionEncoderDecoderModel

from PIL import Image

import requests

# load image from the IIIT-5k dataset

url = 'https://i.postimg.cc/ZKwLg2Gw/367-14.png'

image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

processor = TrOCRProcessor.from_pretrained('microsoft/trocr-base-str')

model = VisionEncoderDecoderModel.from_pretrained('microsoft/trocr-base-str')

pixel_values = processor(images=image, return_tensors="pt").pixel_values

generated_ids = model.generate(pixel_values)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0]

print(generated_text)

```

### BibTeX entry and citation info

```bibtex

@misc{li2021trocr,

title={TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models},

author={Minghao Li and Tengchao Lv and Lei Cui and Yijuan Lu and Dinei Florencio and Cha Zhang and Zhoujun Li and Furu Wei},

year={2021},

eprint={2109.10282},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

Guizmus/MosaicArt | Guizmus | 2023-03-06T16:20:51Z | 582 | 35 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"image-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2022-12-01T14:43:57Z | ---

language:

- en

license: creativeml-openrail-m

thumbnail: "https://huggingface.co/Guizmus/MosaicArt/resolve/main/showcase.jpg"

tags:

- stable-diffusion

- text-to-image

- image-to-image

---

# Mosaic Art

## Details

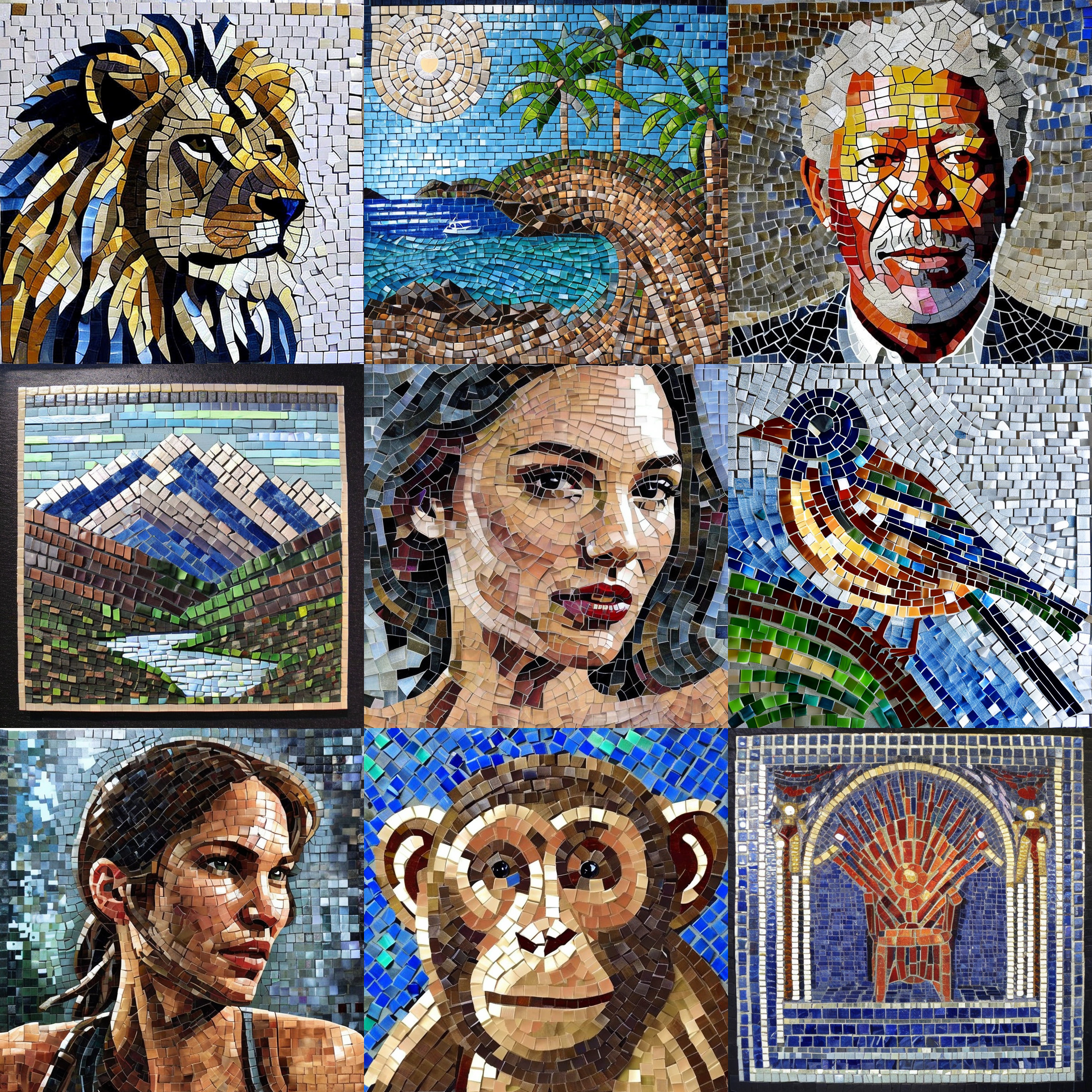

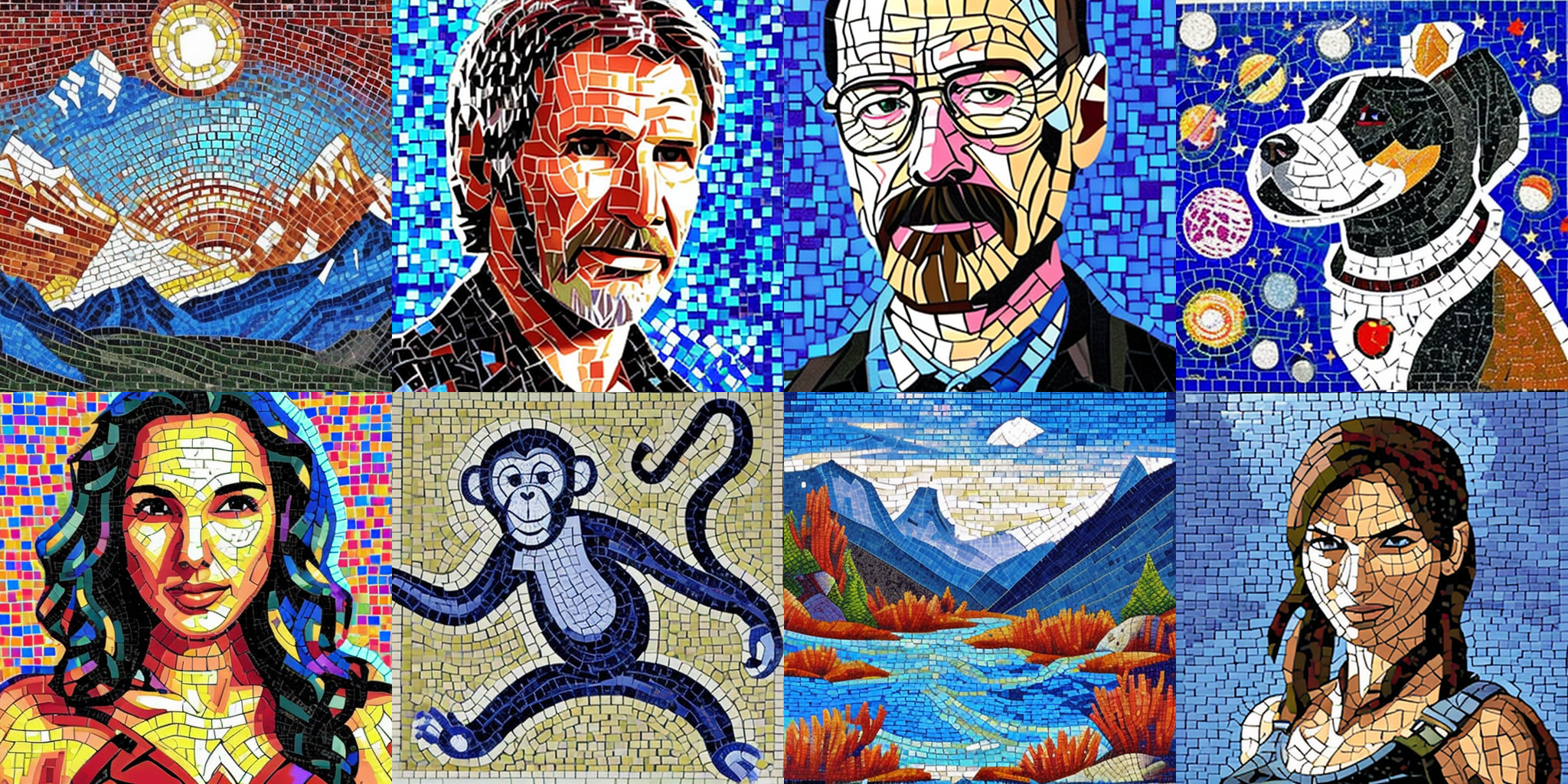

This is a Dreamboothed Stable Diffusion model trained on pictures of mosaic art.

The total dataset is made of 46 pictures. V2 was trained on [Stable Diffusion 2.1 768](https://huggingface.co/stabilityai/stable-diffusion-2-1). I used [StableTuner](https://github.com/devilismyfriend/StableTuner) to do the training, using full captions on the pictures with almost no recurring words outside the main concept, so that no additional regularization was needed. 6 epochs of 40 repeats at a learning rate of 1e-6 were used, with prior preservation.

V1 was trained on [runwayml 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) and the [new VAE](https://huggingface.co/stabilityai/sd-vae-ft-mse). I used [EveryDream](https://github.com/victorchall/EveryDream-trainer) to do the training, using full captions on the pictures with almost no recurring words outside the main concept, so that no additional regularization was needed. Of the checkpoints from epochs e0 to e11, e8 was selected as the best application of the style without overtraining. Prior preservation was observed to be good. In total, 9 epochs of 40 repeats were trained, at a learning rate of 1e-6.

The token "Mosaic Art" will bring in the new concept, trained as a style.

The recommended sampler is k_Euler_a or DPM++ 2M Karras, with 20 steps and a CFG scale of 7.5.
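The CFG scale of 7.5 recommended above is the classifier-free guidance weight. As a rough illustration only, here is a generic NumPy sketch of the standard classifier-free guidance formula. It is not code from this model or its training, and the function name is hypothetical. At each sampling step, the scale combines the unconditional and prompt-conditioned noise predictions like this:

```python
import numpy as np


def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale=7.5):
    """Blend unconditional and prompt-conditioned noise predictions.

    guidance_scale == 1.0 returns the conditional prediction unchanged;
    larger values move the estimate further in the direction of the prompt.
    """
    noise_uncond = np.asarray(noise_uncond, dtype=np.float64)
    noise_cond = np.asarray(noise_cond, dtype=np.float64)
    return noise_uncond + guidance_scale * (noise_cond - noise_uncond)


# Toy 2-element "noise predictions" standing in for the UNet outputs.
print(classifier_free_guidance([0.1, -0.2], [0.3, 0.0]))
```

A scale of 1.0 disables the extra guidance. Higher values follow the prompt more strongly, at some cost to image variety.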

## Model v2

[CKPT v2](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v2.ckpt)

[YAML v2](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v2.yaml)

## Model v1

[CKPT v1](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v1.ckpt)

[CKPT v1 with ema weights](https://huggingface.co/Guizmus/MosaicArt/resolve/main/MosaicArt_v1_ema.ckpt)

[Dataset](https://huggingface.co/Guizmus/MosaicArt/resolve/main/dataset_v1.zip)

## 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information, please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion) documentation.

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or FLAX/JAX.

```python

from diffusers import StableDiffusionPipeline

import torch

model_id = "Guizmus/MosaicArt"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "Mosaic Art dog on the moon"

image = pipe(prompt).images[0]

image.save("./MosaicArt.png")

``` |

TheBloke/Pygmalion-2-13B-SuperCOT-weighed-GGUF | TheBloke | 2023-09-27T12:49:32Z | 582 | 8 | transformers | [

"transformers",

"gguf",

"llama",

"llama-2",

"text-generation",

"en",

"base_model:royallab/Pygmalion-2-13b-SuperCoT-weighed",

"license:llama2",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-09-16T13:51:30Z | ---

language:

- en

license: llama2

library_name: transformers

tags:

- llama

- llama-2

model_name: Pygmalion 2 13B SuperCOT Weighed

base_model: royallab/Pygmalion-2-13b-SuperCoT-weighed

inference: false

model_creator: royallab

model_type: llama

pipeline_tag: text-generation

prompt_template: 'Below is an instruction that describes a task. Write a response

that appropriately completes the request.

### Instruction:

{prompt}

### Response:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>
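The `prompt_template` in the metadata above is the Alpaca-style prompt format listed for this model. Below is a minimal Python sketch of filling it in. It assumes the usual YAML folding of that single-quoted string (single line breaks become spaces, blank lines become newlines), so the exact whitespace is an assumption. `format_prompt` is a hypothetical helper name.

```python
# Assumed reconstruction of the prompt_template from the model metadata.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. Write a response "
    "that appropriately completes the request.\n\n"
    "### Instruction:\n"
    "{prompt}\n\n"
    "### Response:\n"
)


def format_prompt(instruction: str) -> str:
    """Insert a user instruction into the Alpaca-style template."""
    return PROMPT_TEMPLATE.format(prompt=instruction)


print(format_prompt("Write a short poem about the sea."))
```

The formatted string is sent as the raw prompt. The model's answer is the text it generates after `### Response:`.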