modelId (string, 5 to 122 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, 1 to 901k chars) |
|---|---|---|---|---|---|---|---|---|---|

timm/pit_b_distilled_224.in1k | timm | 2023-04-26T00:07:39Z | 564 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2103.16302",

"license:apache-2.0",

"region:us"

]

| image-classification | 2023-04-26T00:06:44Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for pit_b_distilled_224.in1k

A PiT (Pooling-based Vision Transformer) image classification model. Trained on ImageNet-1k with token-based distillation by the paper authors.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 74.8

- GMACs: 12.5

- Activations (M): 33.1

- Image size: 224 x 224

- **Papers:**

- Rethinking Spatial Dimensions of Vision Transformers: https://arxiv.org/abs/2103.16302

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/naver-ai/pit

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('pit_b_distilled_224.in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```
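The last line of the snippet above turns the raw model scores into percentages and keeps the five largest. As a minimal sketch of what those two steps compute, here is a dependency-free version; the helper names `softmax_percent` and `top_k` and the example scores are made up for illustration and are not part of `timm` or `torch`.

```python
import math


def softmax_percent(logits):
    """Convert raw scores to percentages that sum to 100."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [100.0 * e / total for e in exps]


def top_k(values, k):
    """Return (values, indices) of the k largest entries, largest first."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    return [values[i] for i in order], order


logits = [2.0, 1.0, 0.1, -1.0]  # made-up scores for four classes
probs = softmax_percent(logits)
top_probs, top_indices = top_k(probs, k=2)
print(top_indices, [round(p, 1) for p in top_probs])
```

The returned indices are class positions in the model output; mapping them to label names needs the ImageNet-1k class list, which this sketch does not include.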

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'pit_b_distilled_224.in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

    # print shape of each feature map in output

    # e.g.:

    #  torch.Size([1, 256, 31, 31])

    #  torch.Size([1, 512, 16, 16])

    #  torch.Size([1, 1024, 8, 8])

    print(o.shape)

```
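The spatial sizes in the example output (31, 16, 8) can be reproduced with the standard convolution output-size formula. The sketch below assumes a patch embedding with kernel 14 and stride 7, followed by two pooling layers with kernel 3, stride 2 and padding 1. These hyperparameters are not stated in this card, so treat them as assumptions to check against the `timm` source or the paper.

```python
def conv_out(size, kernel, stride, padding=0):
    """Standard output-size formula for a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed patch embedding (not stated in the card): kernel 14, stride 7, no padding.
size = conv_out(224, kernel=14, stride=7)
sizes = [size]

# Assumed pooling between stages: kernel 3, stride 2, padding 1.
for _ in range(2):
    size = conv_out(size, kernel=3, stride=2, padding=1)
    sizes.append(size)

print(sizes)
```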

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'pit_b_distilled_224.in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 2, 1024) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex

@inproceedings{heo2021pit,

title={Rethinking Spatial Dimensions of Vision Transformers},

author={Byeongho Heo and Sangdoo Yun and Dongyoon Han and Sanghyuk Chun and Junsuk Choe and Seong Joon Oh},

booktitle = {International Conference on Computer Vision (ICCV)},

year={2021},

}

```

|

MariaK/vilt_finetuned_200 | MariaK | 2023-08-01T17:08:24Z | 564 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vilt",

"visual-question-answering",

"generated_from_trainer",

"dataset:vqa",

"base_model:dandelin/vilt-b32-mlm",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| visual-question-answering | 2023-08-01T16:10:06Z | ---

license: apache-2.0

base_model: dandelin/vilt-b32-mlm

tags:

- generated_from_trainer

datasets:

- vqa

model-index:

- name: vilt_finetuned_200

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vilt_finetuned_200

This model is a fine-tuned version of [dandelin/vilt-b32-mlm](https://huggingface.co/dandelin/vilt-b32-mlm) on the vqa dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20
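As a rough illustration of what `lr_scheduler_type: linear` implies, the sketch below decays the learning rate from 5e-05 to zero over training. It assumes no warmup steps and a hypothetical total of 1000 optimizer steps; neither value is given in this card.

```python
def linear_lr(step, total_steps, base_lr=5e-5, warmup_steps=0):
    """Linear warmup (optional) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 1000  # hypothetical; the real count depends on dataset size and batch size
for step in (0, 250, 500, 1000):
    print(step, linear_lr(step, TOTAL_STEPS))
```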

### Training results

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.2

- Tokenizers 0.13.3

|

TheBloke/WizardLM-13B-V1.1-GGUF | TheBloke | 2023-09-27T12:52:57Z | 564 | 2 | transformers | [

"transformers",

"gguf",

"llama",

"arxiv:2304.12244",

"arxiv:2306.08568",

"arxiv:2308.09583",

"base_model:WizardLM/WizardLM-13B-V1.1",

"license:other",

"text-generation-inference",

"region:us"

]

| null | 2023-09-20T00:48:08Z | ---

license: other

model_name: WizardLM 13B V1.1

base_model: WizardLM/WizardLM-13B-V1.1

inference: false

model_creator: WizardLM

model_type: llama

prompt_template: 'A chat between a curious user and an artificial intelligence assistant.

The assistant gives helpful, detailed, and polite answers to the user''s questions.

USER: {prompt} ASSISTANT:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# WizardLM 13B V1.1 - GGUF

- Model creator: [WizardLM](https://huggingface.co/WizardLM)

- Original model: [WizardLM 13B V1.1](https://huggingface.co/WizardLM/WizardLM-13B-V1.1)

<!-- description start -->

## Description

This repo contains GGUF format model files for [WizardLM's WizardLM 13B V1.1](https://huggingface.co/WizardLM/WizardLM-13B-V1.1).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF)

* [WizardLM's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/WizardLM/WizardLM-13B-V1.1)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Vicuna

```

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: {prompt} ASSISTANT:

```

<!-- prompt-template end -->
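A minimal sketch of filling the template above from Python. The constant and function names are illustrative only; the template text is copied from this card.

```python
VICUNA_TEMPLATE = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions. "
    "USER: {prompt} ASSISTANT:"
)


def build_prompt(user_message: str) -> str:
    """Insert the user's message into the {prompt} slot of the template."""
    return VICUNA_TEMPLATE.format(prompt=user_message.strip())


print(build_prompt("What is GGUF?"))
```

The resulting string is what you would pass as the prompt to whichever client you use.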

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->
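The bits-per-weight figures quoted for Q3_K, Q4_K, Q5_K and Q6_K can be reproduced with a little arithmetic. The sketch below counts the quantized weights, the per-block scales (and mins for "type-1" methods), and additionally assumes one 16-bit float per super-block for the scale plus one more for the min in "type-1" methods. That last assumption is not stated in this card. Q2_K is left out of the sketch.

```python
def bits_per_weight(quant_bits, blocks, weights_per_block, scale_bits, has_mins, fp16_fields):
    """Average storage cost per weight for one super-block."""
    weights = blocks * weights_per_block
    per_block_meta = scale_bits * (2 if has_mins else 1)  # scale, plus min for type-1
    total_bits = weights * quant_bits + blocks * per_block_meta + 16 * fp16_fields
    return total_bits / weights


# Block layouts as described above; fp16_fields is an assumption (see lead-in).
LAYOUTS = {
    "Q3_K": dict(quant_bits=3, blocks=16, weights_per_block=16, scale_bits=6, has_mins=False, fp16_fields=1),
    "Q4_K": dict(quant_bits=4, blocks=8, weights_per_block=32, scale_bits=6, has_mins=True, fp16_fields=2),
    "Q5_K": dict(quant_bits=5, blocks=8, weights_per_block=32, scale_bits=6, has_mins=True, fp16_fields=2),
    "Q6_K": dict(quant_bits=6, blocks=16, weights_per_block=16, scale_bits=8, has_mins=False, fp16_fields=1),
}

for name, layout in LAYOUTS.items():
    print(name, bits_per_weight(**layout))
```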

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [wizardlm-13b-v1.1.Q2_K.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [wizardlm-13b-v1.1.Q3_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |

| [wizardlm-13b-v1.1.Q3_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q3_K_M.gguf) | Q3_K_M | 3 | 6.34 GB| 8.84 GB | very small, high quality loss |

| [wizardlm-13b-v1.1.Q3_K_L.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q3_K_L.gguf) | Q3_K_L | 3 | 6.93 GB| 9.43 GB | small, substantial quality loss |

| [wizardlm-13b-v1.1.Q4_0.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q4_0.gguf) | Q4_0 | 4 | 7.37 GB| 9.87 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [wizardlm-13b-v1.1.Q4_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q4_K_S.gguf) | Q4_K_S | 4 | 7.41 GB| 9.91 GB | small, greater quality loss |

| [wizardlm-13b-v1.1.Q4_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q4_K_M.gguf) | Q4_K_M | 4 | 7.87 GB| 10.37 GB | medium, balanced quality - recommended |

| [wizardlm-13b-v1.1.Q5_0.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q5_0.gguf) | Q5_0 | 5 | 8.97 GB| 11.47 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [wizardlm-13b-v1.1.Q5_K_S.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q5_K_S.gguf) | Q5_K_S | 5 | 8.97 GB| 11.47 GB | large, low quality loss - recommended |

| [wizardlm-13b-v1.1.Q5_K_M.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q5_K_M.gguf) | Q5_K_M | 5 | 9.23 GB| 11.73 GB | large, very low quality loss - recommended |

| [wizardlm-13b-v1.1.Q6_K.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q6_K.gguf) | Q6_K | 6 | 10.68 GB| 13.18 GB | very large, extremely low quality loss |

| [wizardlm-13b-v1.1.Q8_0.gguf](https://huggingface.co/TheBloke/WizardLM-13B-V1.1-GGUF/blob/main/wizardlm-13b-v1.1.Q8_0.gguf) | Q8_0 | 8 | 13.83 GB| 16.33 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
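In the table above, every "Max RAM required" figure is the file size plus 2.50 GB. The sketch below uses the table's own numbers to pick the largest file that fits a given RAM budget with no GPU offloading. The function name and the tie-breaking rule (the later row wins on equal size) are illustrative choices, not part of any library.

```python
# (quant method, file size in GB, max RAM in GB), copied from the table above.
PROVIDED_FILES = [
    ("Q2_K", 5.43, 7.93),
    ("Q3_K_S", 5.66, 8.16),
    ("Q3_K_M", 6.34, 8.84),
    ("Q3_K_L", 6.93, 9.43),
    ("Q4_0", 7.37, 9.87),
    ("Q4_K_S", 7.41, 9.91),
    ("Q4_K_M", 7.87, 10.37),
    ("Q5_0", 8.97, 11.47),
    ("Q5_K_S", 8.97, 11.47),
    ("Q5_K_M", 9.23, 11.73),
    ("Q6_K", 10.68, 13.18),
    ("Q8_0", 13.83, 16.33),
]


def pick_quant(ram_budget_gb):
    """Return the largest quant whose max RAM fits the budget, or None."""
    best = None
    for name, size_gb, ram_gb in PROVIDED_FILES:
        if ram_gb <= ram_budget_gb and (best is None or size_gb >= best[1]):
            best = (name, size_gb)
    return best[0] if best else None


print(pick_quant(12.0))
```

Larger is not always the recommended choice (see the "Use case" column), so treat the result as an upper bound on what fits rather than a recommendation.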

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

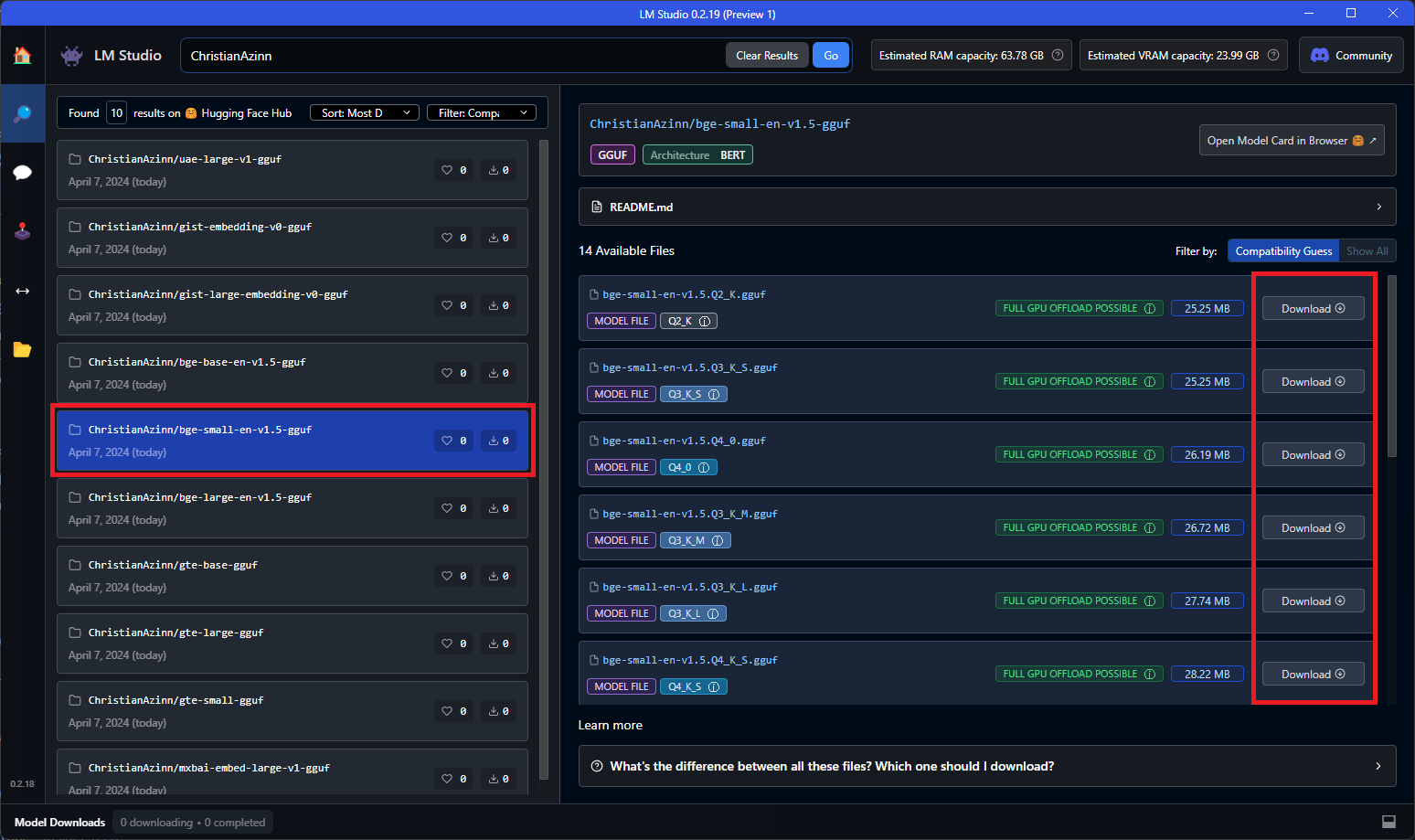

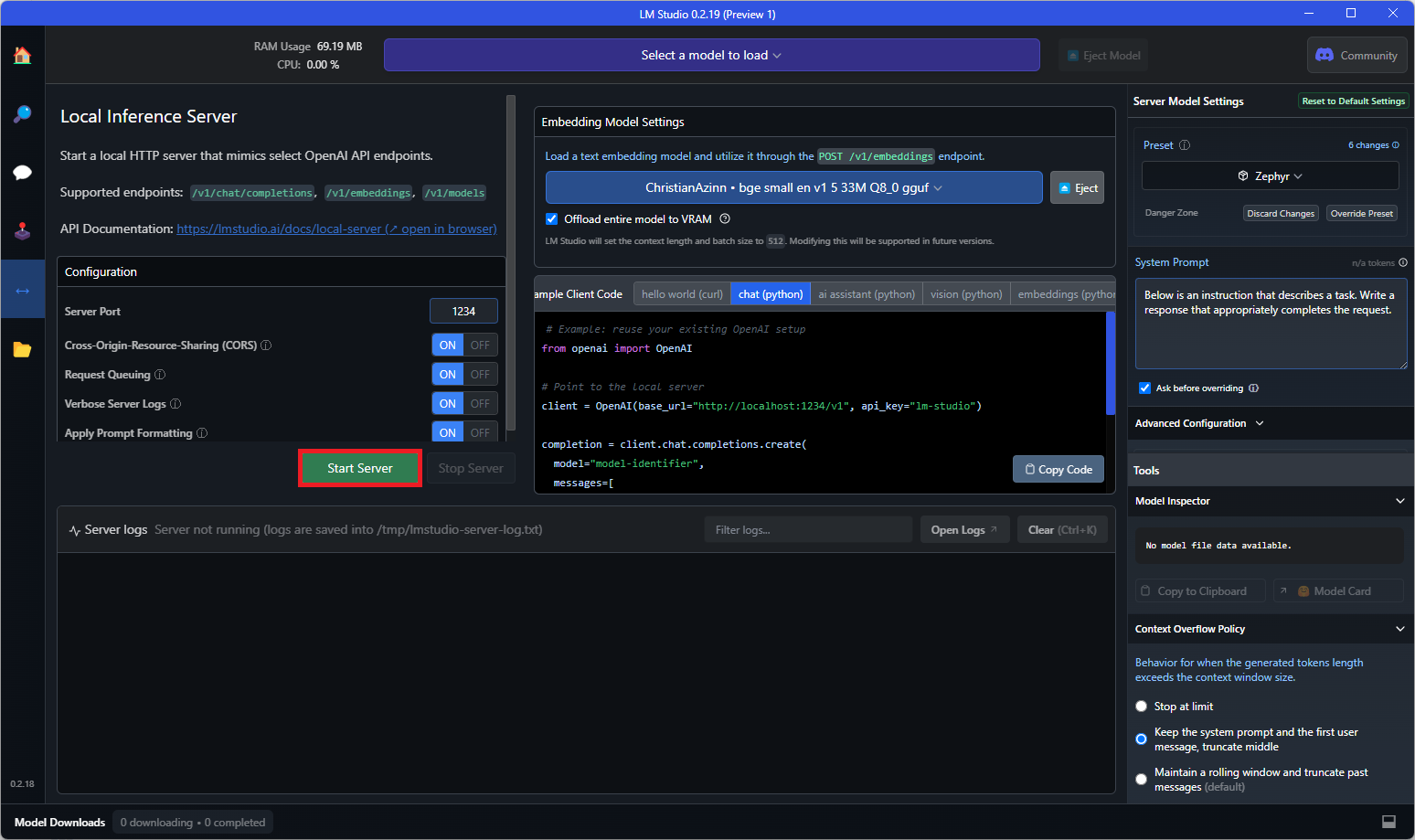

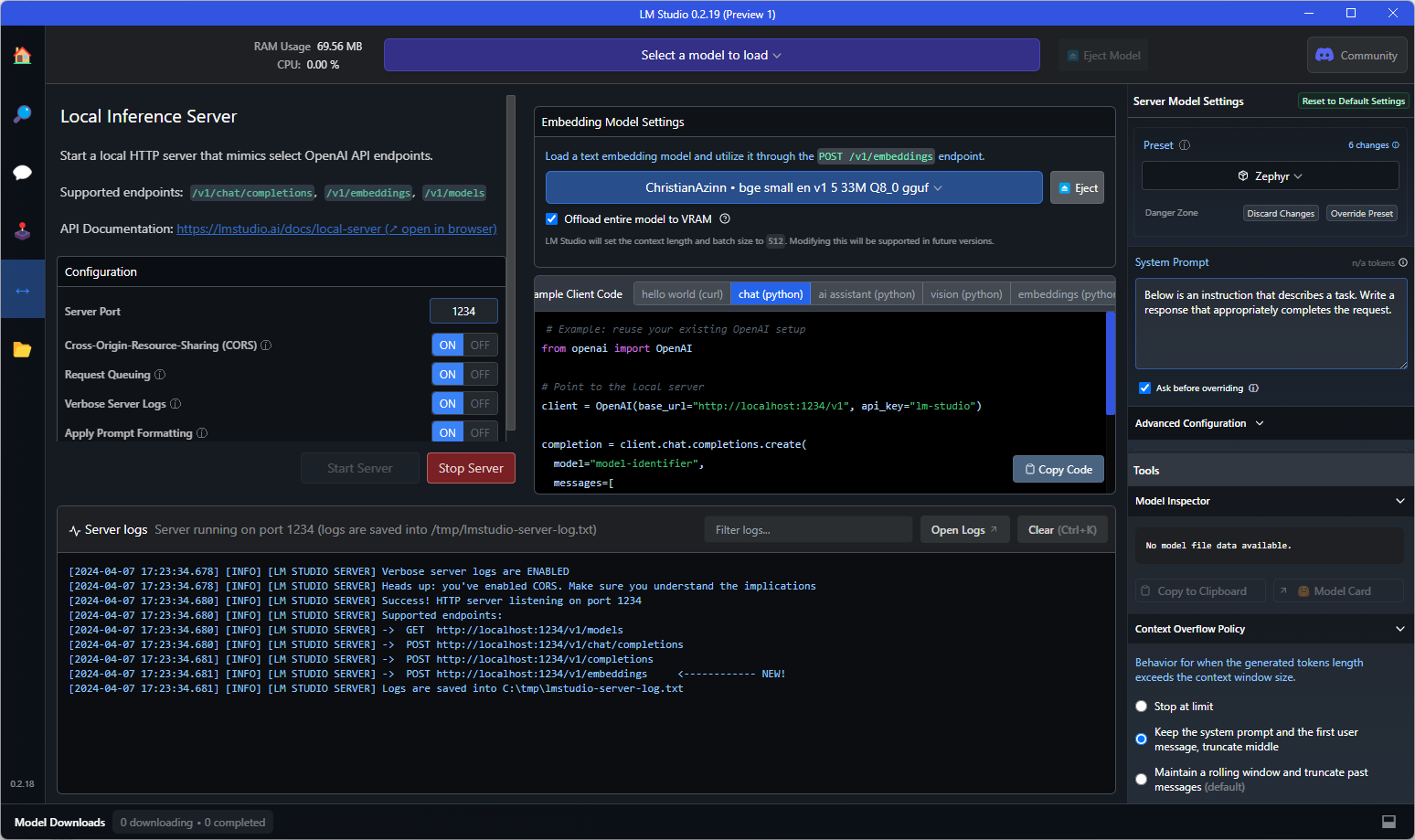

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/WizardLM-13B-V1.1-GGUF and below it, a specific filename to download, such as: wizardlm-13b-v1.1.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/WizardLM-13B-V1.1-GGUF wizardlm-13b-v1.1.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/WizardLM-13B-V1.1-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/WizardLM-13B-V1.1-GGUF wizardlm-13b-v1.1.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
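To preview which files a pattern such as `*Q4_K*gguf` would select before downloading, the sketch below applies shell-style glob matching to a few file names from the Provided files table. It assumes the `--include` option follows semantics similar to Python's `fnmatch`; check the `huggingface-cli` documentation linked above if exact behaviour matters.

```python
from fnmatch import fnmatchcase

# A few file names taken from the Provided files table.
REPO_FILES = [
    "wizardlm-13b-v1.1.Q3_K_M.gguf",
    "wizardlm-13b-v1.1.Q4_0.gguf",
    "wizardlm-13b-v1.1.Q4_K_S.gguf",
    "wizardlm-13b-v1.1.Q4_K_M.gguf",
    "wizardlm-13b-v1.1.Q5_K_M.gguf",
]


def select(files, pattern):
    """Keep the file names that match a shell-style glob pattern."""
    return [name for name in files if fnmatchcase(name, pattern)]


matched = select(REPO_FILES, "*Q4_K*gguf")
print(matched)
```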

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m wizardlm-13b-v1.1.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: {prompt} ASSISTANT:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)
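The adjustments described above (dropping `-ngl` without GPU acceleration, changing `-c`, and swapping `-p` for `-i -ins`) can be captured in a small helper that assembles the argument list. This is only a sketch: the function name is made up, and the flags are the ones shown in this README, which may differ in newer `llama.cpp` versions.

```python
import shlex


def llama_cpp_args(model_path, prompt=None, gpu_layers=32, context=2048):
    """Build the ./main argument list used in the example command above."""
    args = ["./main"]
    if gpu_layers > 0:  # omit -ngl entirely when there is no GPU acceleration
        args += ["-ngl", str(gpu_layers)]
    args += ["-m", model_path, "--color", "-c", str(context),
             "--temp", "0.7", "--repeat_penalty", "1.1", "-n", "-1"]
    if prompt is None:  # chat-style conversation instead of a one-shot prompt
        args += ["-i", "-ins"]
    else:
        args += ["-p", prompt]
    return args


cpu_chat = llama_cpp_args("wizardlm-13b-v1.1.Q4_K_M.gguf", gpu_layers=0)
print(shlex.join(cpu_chat))
```

The list can be passed to `subprocess.run` as-is, which avoids shell quoting problems with long prompts.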

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/WizardLM-13B-V1.1-GGUF", model_file="wizardlm-13b-v1.1.Q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: WizardLM's WizardLM 13B V1.1

These are the **full weights** of the WizardLM-13B V1.1 model.

## WizardLM: Empowering Large Pre-Trained Language Models to Follow Complex Instructions

<p align="center">

🤗 <a href="https://huggingface.co/WizardLM" target="_blank">HF Repo</a> •🐱 <a href="https://github.com/nlpxucan/WizardLM" target="_blank">Github Repo</a> • 🐦 <a href="https://twitter.com/WizardLM_AI" target="_blank">Twitter</a> • 📃 <a href="https://arxiv.org/abs/2304.12244" target="_blank">[WizardLM]</a> • 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> • 📃 <a href="https://arxiv.org/abs/2308.09583" target="_blank">[WizardMath]</a> <br>

</p>

<p align="center">

👋 Join our <a href="https://discord.gg/VZjjHtWrKs" target="_blank">Discord</a>

</p>

| Model | Checkpoint | Paper | HumanEval | MBPP | Demo | License |

| ----- |------| ---- |------|-------| ----- | ----- |

| WizardCoder-Python-34B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-Python-34B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 73.2 | 61.2 | [Demo](http://47.103.63.15:50085/) | <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama2</a> |

| WizardCoder-15B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-15B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 59.8 |50.6 | -- | <a href="https://huggingface.co/spaces/bigcode/bigcode-model-license-agreement" target="_blank">OpenRAIL-M</a> |

| WizardCoder-Python-13B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-Python-13B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 64.0 | 55.6 | -- | <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama2</a> |

| WizardCoder-Python-7B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-Python-7B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 55.5 | 51.6 | [Demo](http://47.103.63.15:50088/) | <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama2</a> |

| WizardCoder-3B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-3B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 34.8 |37.4 | -- | <a href="https://huggingface.co/spaces/bigcode/bigcode-model-license-agreement" target="_blank">OpenRAIL-M</a> |

| WizardCoder-1B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardCoder-1B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2306.08568" target="_blank">[WizardCoder]</a> | 23.8 |28.6 | -- | <a href="https://huggingface.co/spaces/bigcode/bigcode-model-license-agreement" target="_blank">OpenRAIL-M</a> |

| Model | Checkpoint | Paper | GSM8k | MATH |Online Demo| License|

| ----- |------| ---- |------|-------| ----- | ----- |

| WizardMath-70B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardMath-70B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2308.09583" target="_blank">[WizardMath]</a>| **81.6** | **22.7** |[Demo](http://47.103.63.15:50083/)| <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama 2 </a> |

| WizardMath-13B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardMath-13B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2308.09583" target="_blank">[WizardMath]</a>| **63.9** | **14.0** |[Demo](http://47.103.63.15:50082/)| <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama 2 </a> |

| WizardMath-7B-V1.0 | 🤗 <a href="https://huggingface.co/WizardLM/WizardMath-7B-V1.0" target="_blank">HF Link</a> | 📃 <a href="https://arxiv.org/abs/2308.09583" target="_blank">[WizardMath]</a>| **54.9** | **10.7** | [Demo](http://47.103.63.15:50080/)| <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama 2 </a>|

<font size=4>

| <sup>Model</sup> | <sup>Checkpoint</sup> | <sup>Paper</sup> |<sup>MT-Bench</sup> | <sup>AlpacaEval</sup> | <sup>WizardEval</sup> | <sup>HumanEval</sup> | <sup>License</sup>|

| ----- |------| ---- |------|-------| ----- | ----- | ----- |

| <sup>WizardLM-13B-V1.2</sup> | <sup>🤗 <a href="https://huggingface.co/WizardLM/WizardLM-13B-V1.2" target="_blank">HF Link</a> </sup>| | <sup>7.06</sup> | <sup>89.17%</sup> | <sup>101.4% </sup>|<sup>36.6 pass@1</sup>|<sup> <a href="https://ai.meta.com/resources/models-and-libraries/llama-downloads/" target="_blank">Llama 2 License </a></sup> |

| <sup>WizardLM-13B-V1.1</sup> |<sup> 🤗 <a href="https://huggingface.co/WizardLM/WizardLM-13B-V1.1" target="_blank">HF Link</a> </sup> | | <sup>6.76</sup> |<sup>86.32%</sup> | <sup>99.3% </sup> |<sup>25.0 pass@1</sup>| <sup>Non-commercial</sup>|

| <sup>WizardLM-30B-V1.0</sup> | <sup>🤗 <a href="https://huggingface.co/WizardLM/WizardLM-30B-V1.0" target="_blank">HF Link</a></sup> | | <sup>7.01</sup> | | <sup>97.8% </sup> | <sup>37.8 pass@1</sup>| <sup>Non-commercial</sup> |

| <sup>WizardLM-13B-V1.0</sup> | <sup>🤗 <a href="https://huggingface.co/WizardLM/WizardLM-13B-V1.0" target="_blank">HF Link</a> </sup> | | <sup>6.35</sup> | <sup>75.31%</sup> | <sup>89.1% </sup> |<sup> 24.0 pass@1 </sup> | <sup>Non-commercial</sup>|

| <sup>WizardLM-7B-V1.0 </sup>| <sup>🤗 <a href="https://huggingface.co/WizardLM/WizardLM-7B-V1.0" target="_blank">HF Link</a> </sup> |<sup> 📃 <a href="https://arxiv.org/abs/2304.12244" target="_blank">[WizardLM]</a> </sup>| | | <sup>78.0% </sup> |<sup>19.1 pass@1 </sup>|<sup> Non-commercial</sup>|

</font>

**Repository**: https://github.com/nlpxucan/WizardLM

**Twitter**: https://twitter.com/WizardLM_AI/status/1677282955490918401

- 🔥🔥🔥 [7/7/2023] We released the **WizardLM V1.1** models. **WizardLM-13B-V1.1** is available here ([Demo_13B-V1.1](https://e8a06366ccd1c4d1.gradio.app), [Demo_13B-V1.1_bak-1](https://59da107262a25764.gradio.app), [Demo_13B-V1.1_bak-2](https://dfc5113f66739c80.gradio.app), [Full Model Weight](https://huggingface.co/WizardLM/WizardLM-13B-V1.1)). **WizardLM-7B-V1.1**, **WizardLM-30B-V1.1**, and **WizardLM-65B-V1.1** are coming soon. Please check out the [Full Model Weights](https://huggingface.co/WizardLM) and [paper](https://arxiv.org/abs/2304.12244).

- 🔥🔥🔥 [7/7/2023] **WizardLM-13B-V1.1** achieves **6.74** on the [MT-Bench Leaderboard](https://chat.lmsys.org/?leaderboard), **86.32%** on the [AlpacaEval Leaderboard](https://tatsu-lab.github.io/alpaca_eval/), and **99.3%** on [WizardLM Eval](https://github.com/nlpxucan/WizardLM/blob/main/WizardLM/data/WizardLM_testset.jsonl). (Note: the MT-Bench and AlpacaEval results are self-tested; we will push updates and request review. All tests were completed under the official settings.)

## Inference WizardLM Demo Script

We provide the inference WizardLM demo code [here](https://github.com/nlpxucan/WizardLM/tree/main/demo).

<!-- original-model-card end -->

|

PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed | PrunaAI | 2024-05-02T23:57:58Z | 564 | 0 | null | [

"gguf",

"pruna-ai",

"region:us"

]

| null | 2024-05-02T21:54:56Z | ---

thumbnail: "https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg"

metrics:

- memory_disk

- memory_inference

- inference_latency

- inference_throughput

- inference_CO2_emissions

- inference_energy_consumption

tags:

- pruna-ai

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<a href="https://www.pruna.ai/" target="_blank" rel="noopener noreferrer">

<img src="https://i.imgur.com/eDAlcgk.png" alt="PrunaAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</a>

</div>

<!-- header end -->

[Twitter](https://twitter.com/PrunaAI)

[GitHub](https://github.com/PrunaAI)

[LinkedIn](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

[Discord](https://discord.com/invite/vb6SmA3hxu)

## This repo contains GGUF versions of the mlabonne/ChimeraLlama-3-8B-v2 model.

# Simply make AI models cheaper, smaller, faster, and greener!

- Give a thumbs up if you like this model!

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

- Read the documentation to learn more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/).

- Join the Pruna AI community on Discord [here](https://discord.com/invite/vb6SmA3hxu) to share feedback/suggestions or get help.

**Frequently Asked Questions**

- ***How does the compression work?*** The model is compressed with GGUF.

- ***How does the model quality change?*** The quality of the model output might vary compared to the base model.

- ***What is the model format?*** We use GGUF format.

- ***What calibration data has been used?*** If needed by the compression method, we used WikiText as the calibration data.

- ***How to compress my own models?*** You can request premium access to more compression methods and tech support for your specific use-cases [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

# Downloading and running the models

You can download the individual files from the Files & versions section. Here is a list of the different versions we provide. For more info, check out [this chart](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9) and [this guide](https://www.reddit.com/r/LocalLLaMA/comments/1ba55rj/overview_of_gguf_quantization_methods/):

| Quant type | Description |

|------------|--------------------------------------------------------------------------------------------|

| Q5_K_M | High quality, recommended. |

| Q5_K_S | High quality, recommended. |

| Q4_K_M | Good quality, uses about 4.83 bits per weight, recommended. |

| Q4_K_S | Slightly lower quality with more space savings, recommended. |

| IQ4_NL | Decent quality, slightly smaller than Q4_K_S with similar performance, recommended. |

| IQ4_XS | Decent quality, smaller than Q4_K_S with similar performance, recommended. |

| Q3_K_L | Lower quality but usable, good for low RAM availability. |

| Q3_K_M | Even lower quality. |

| IQ3_M | Medium-low quality, new method with decent performance comparable to Q3_K_M. |

| IQ3_S | Lower quality, new method with decent performance, recommended over Q3_K_S quant, same size with better performance. |

| Q3_K_S | Low quality, not recommended. |

| IQ3_XS | Lower quality, new method with decent performance, slightly better than Q3_K_S. |

| Q2_K | Very low quality but surprisingly usable. |
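As a rough worked example of what a bits-per-weight figure implies, the sketch below estimates the size of the quantized weights from a parameter count. The 8-billion parameter count is an assumption read off the "8B" in the model name, and the estimate ignores file metadata and any tensors kept at higher precision, so real files will differ somewhat.

```python
def estimate_gguf_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size, in gigabytes (10**9 bytes), of the quantized weights."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: roughly 8e9 parameters, inferred from the "8B" in the model name.
# 4.83 bits per weight is the figure quoted for Q4_K_M in the table above.
q4_k_m_gb = estimate_gguf_size_gb(8e9, 4.83)
print(f"Q4_K_M: about {q4_k_m_gb:.2f} GB")
```

The same function can be used to compare quant types once their bits-per-weight figures are known; only the Q4_K_M figure is given in this README.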

## How to download GGUF files?

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

- **Option A** - Downloading in `text-generation-webui`:

- **Step 1**: Under Download Model, you can enter the model repo: PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed and below it, a specific filename to download, such as: ChimeraLlama-3-8B-v2.IQ3_M.gguf.

- **Step 2**: Then click Download.

- **Option B** - Downloading on the command line (including multiple files at once):

- **Step 1**: We recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

- **Step 2**: Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed ChimeraLlama-3-8B-v2.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

Alternatively, you can also download multiple files at once with a pattern:

```shell

huggingface-cli download PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed ChimeraLlama-3-8B-v2.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->
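The same download can also be scripted from Python. The following is a minimal sketch, assuming the `huggingface_hub` package is installed: `select_files` mimics the `--include` glob shown above, and `download_file` wraps `hf_hub_download`. The file listing is hypothetical and only follows the naming pattern used in this README; check the Files & versions section for the real names.

```python
from fnmatch import fnmatchcase


def select_files(filenames, pattern):
    """Return the filenames that match a shell-style glob pattern (case-sensitive)."""
    return [name for name in filenames if fnmatchcase(name, pattern)]


def download_file(repo_id, filename, local_dir="."):
    """Download one file from the Hub into local_dir and return its local path.

    huggingface_hub is imported lazily so that select_files works without it.
    """
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename=filename, local_dir=local_dir)


# Hypothetical listing, following the naming pattern used in this README.
example_files = [
    "ChimeraLlama-3-8B-v2.IQ3_M.gguf",
    "ChimeraLlama-3-8B-v2.Q4_K_M.gguf",
    "ChimeraLlama-3-8B-v2.Q4_K_S.gguf",
]
print(select_files(example_files, "*Q4_K*gguf"))
```

Calling `download_file("PrunaAI/ChimeraLlama-3-8B-v2-GGUF-smashed", name)` for each selected name would then fetch the files one at a time.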

<!-- README_GGUF.md-how-to-run start -->

## How to run model in GGUF format?

- **Option A** - Introductory example with `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m ChimeraLlama-3-8B-v2.IQ3_M.gguf --color -c 32768 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<s>[INST] {prompt} [/INST]"

```

Change `-ngl 35` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 32768` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

- **Option B** - Running in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20-%20Model%20Tab.md#llamacpp).

- **Option C** - Running from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base llama-cpp-python with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# On Windows, to set the CMAKE_ARGS variable in PowerShell, follow this format; e.g. for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set n_gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = Llama(

model_path="./ChimeraLlama-3-8B-v2.IQ3_M.gguf", # Download the model file first

n_ctx=32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads=8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers=35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Simple inference example

output = llm(

"<s>[INST] {prompt} [/INST]", # Prompt

max_tokens=512, # Generate up to 512 tokens

stop=["</s>"], # Example stop token - not necessarily correct for this specific model! Please check before using.

echo=True # Whether to echo the prompt

)

# Chat Completion API

llm = Llama(model_path="./ChimeraLlama-3-8B-v2.IQ3_M.gguf", chat_format="llama-2") # Set chat_format according to the model you are using

llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

```

- **Option D** - Running with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

## Configurations

The configuration info is in `smash_config.json`.

## Credits & License

The license of the smashed model follows the license of the original model. Please check the license of the original model, which provided the base for this one, before using this model. The license of the `pruna-engine` is [here](https://pypi.org/project/pruna-engine/) on PyPI.

## Want to compress other models?

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

- Request access to easily compress your own AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

nvidia/parakeet-tdt_ctc-1.1b | nvidia | 2024-05-08T03:33:30Z | 564 | 10 | nemo | [

"nemo",

"automatic-speech-recognition",

"speech",

"audio",

"Transducer",

"TDT",

"FastConformer",

"Conformer",

"pytorch",

"NeMo",

"hf-asr-leaderboard",

"en",

"dataset:librispeech_asr",

"dataset:fisher_corpus",

"dataset:mozilla-foundation/common_voice_8_0",

"dataset:National-Singapore-Corpus-Part-1",

"dataset:vctk",

"dataset:voxpopuli",

"dataset:europarl",

"dataset:multilingual_librispeech",

"arxiv:2305.05084",

"arxiv:2304.06795",

"arxiv:2104.02821",

"license:cc-by-4.0",

"model-index",

"region:us"

]

| automatic-speech-recognition | 2024-05-07T11:42:30Z | ---

language:

- en

library_name: nemo

datasets:

- librispeech_asr

- fisher_corpus

- mozilla-foundation/common_voice_8_0

- National-Singapore-Corpus-Part-1

- vctk

- voxpopuli

- europarl

- multilingual_librispeech

thumbnail: null

tags:

- automatic-speech-recognition

- speech

- audio

- Transducer

- TDT

- FastConformer

- Conformer

- pytorch

- NeMo

- hf-asr-leaderboard

license: cc-by-4.0

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

model-index:

- name: parakeet_tdt_1.1b

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: AMI (Meetings test)

type: edinburghcstr/ami

config: ihm

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 15.94

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Earnings-22

type: revdotcom/earnings22

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 11.86

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: GigaSpeech

type: speechcolab/gigaspeech

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 10.19

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: LibriSpeech (clean)

type: librispeech_asr

config: clean

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 1.82

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: LibriSpeech (other)

type: librispeech_asr

config: other

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 3.67

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: SPGI Speech

type: kensho/spgispeech

config: test

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 2.24

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: tedlium-v3

type: LIUM/tedlium

config: release1

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 3.87

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Vox Populi

type: facebook/voxpopuli

config: en

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 6.19

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Mozilla Common Voice 9.0

type: mozilla-foundation/common_voice_9_0

config: en

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 8.69

metrics:

- wer

pipeline_tag: automatic-speech-recognition

---

# Parakeet TDT-CTC 1.1B PnC(en)

<style>

img {

display: inline;

}

</style>


`parakeet-hyb-pnc-1.1b` is an ASR model that transcribes speech with punctuation and capitalization of the English alphabet. This model is jointly developed by the [NVIDIA NeMo](https://github.com/NVIDIA/NeMo) and [Suno.ai](https://www.suno.ai/) teams.

It is an XXL version of the Hybrid FastConformer [1] TDT-CTC [2] model (around 1.1B parameters). This model has been trained with Local Attention and a Global token, so it can transcribe **11 hrs** of audio in a single pass. For reference, it can transcribe 90 minutes of audio in under 16 seconds on an A100.

See the [model architecture](#model-architecture) section and [NeMo documentation](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html#fast-conformer) for complete architecture details.

## NVIDIA NeMo: Training

To train, fine-tune or play with the model you will need to install [NVIDIA NeMo](https://github.com/NVIDIA/NeMo). We recommend you install it after you've installed the latest PyTorch version.

```

pip install nemo_toolkit['all']

```

## How to Use this Model

The model is available for use in the NeMo toolkit [4], and can be used as a pre-trained checkpoint for inference or for fine-tuning on another dataset.

### Automatically instantiate the model

```python

import nemo.collections.asr as nemo_asr

asr_model = nemo_asr.models.ASRModel.from_pretrained(model_name="nvidia/parakeet-tdt_ctc-1.1b")

```

### Transcribing using Python

First, let's get a sample

```

wget https://dldata-public.s3.us-east-2.amazonaws.com/2086-149220-0033.wav

```

Then simply do:

```

asr_model.transcribe(['2086-149220-0033.wav'])

```

### Transcribing many audio files

By default the model uses TDT to transcribe audio files. To switch the decoder to CTC, use `decoding_type='ctc'`.

```shell

python [NEMO_GIT_FOLDER]/examples/asr/transcribe_speech.py \
  pretrained_name="nvidia/parakeet-tdt_ctc-1.1b" \
  audio_dir="<DIRECTORY CONTAINING AUDIO FILES>"

```

### Input

This model accepts 16000 Hz mono-channel audio (wav files) as input.
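Before transcribing, it can help to confirm that a file matches this input format. The sketch below uses only Python's standard `wave` module; the helper name `is_16k_mono` is hypothetical and not part of NeMo.

```python
import wave


def is_16k_mono(path: str) -> bool:
    """Return True if the WAV file at `path` is 16000 Hz with a single channel."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate() == 16000 and wav.getnchannels() == 1
```

For example, `is_16k_mono("2086-149220-0033.wav")` could be called on the sample file before passing it to `asr_model.transcribe`. Files that fail the check would need to be resampled or downmixed first.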

### Output

This model provides transcribed speech as a string for a given audio sample.

## Model Architecture

This model uses a Hybrid FastConformer-TDT-CTC architecture. FastConformer [1] is an optimized version of the Conformer model with 8x depthwise-separable convolutional downsampling. You may find more information on the details of FastConformer here: [Fast-Conformer Model](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html#fast-conformer).

## Training

The NeMo toolkit [4] was used to fine-tune this model for 20,000 steps, starting from the `parakeet-tdt-1.1` model. This model is trained with this [example script](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/asr_hybrid_transducer_ctc/speech_to_text_hybrid_rnnt_ctc_bpe.py) and this [base config](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/conf/fastconformer/hybrid_transducer_ctc/fastconformer_hybrid_transducer_ctc_bpe.yaml).

The tokenizers for these models were built using the text transcripts of the train set with this [script](https://github.com/NVIDIA/NeMo/blob/main/scripts/tokenizers/process_asr_text_tokenizer.py).

### Datasets

The model was trained on 36K hours of English speech collected and prepared by NVIDIA NeMo and Suno teams.

The training dataset consists of a private subset with 27K hours of English speech plus 9K hours from the following public PnC datasets:

- Librispeech 960 hours of English speech

- Fisher Corpus

- National Speech Corpus Part 1

- VCTK

- VoxPopuli (EN)

- Europarl-ASR (EN)

- Multilingual Librispeech (MLS EN) - 2,000 hour subset

- Mozilla Common Voice (v7.0)

## Performance

The performance of Automatic Speech Recognition models is measured using Word Error Rate. Since this model is trained on multiple domains and a much larger corpus, it will generally perform better at transcribing audio in general.
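For readers unfamiliar with the metric, here is a minimal sketch of how Word Error Rate is commonly computed: the word-level edit distance (substitutions, deletions, insertions) divided by the number of reference words. It assumes simple whitespace tokenization and applies no text normalization, so it will not reproduce leaderboard numbers exactly.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER in percent between a reference and a hypothesis transcript."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

In the example call, one word is substituted and one is deleted out of six reference words.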

The following table summarizes the performance of the available models in this collection with the Transducer decoder. Performance of the ASR models is reported in terms of Word Error Rate (WER%) with greedy decoding.

|**Version**|**Tokenizer**|**Vocabulary Size**|**AMI**|**Earnings-22**|**Giga Speech**|**LS test-clean**|**LS test-other**|**SPGI Speech**|**TEDLIUM-v3**|**Vox Populi**|**Common Voice**|
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1.23.0 | SentencePiece Unigram | 1024 | 15.94 | 11.86 | 10.19 | 1.82 | 3.67 | 2.24 | 3.87 | 6.19 | 8.69 |

These are greedy WER numbers without external LM. More details on evaluation can be found at [HuggingFace ASR Leaderboard](https://huggingface.co/spaces/hf-audio/open_asr_leaderboard)

## Model Fairness Evaluation

As outlined in the paper "Towards Measuring Fairness in AI: the Casual Conversations Dataset" [7], we assessed the parakeet-tdt_ctc-1.1b model for fairness. The model was evaluated on the CasualConversations-v1 dataset, and the results are reported as follows:

### Gender Bias:

| Gender | Male | Female | N/A | Other |

| :--- | :--- | :--- | :--- | :--- |

| Num utterances | 19325 | 24532 | 926 | 33 |

| % WER | 12.81 | 10.49 | 13.88 | 23.12 |

### Age Bias:

| Age Group | (18-30) | (31-45) | (46-85) | (1-100) |

| :--- | :--- | :--- | :--- | :--- |

| Num utterances | 15956 | 14585 | 13349 | 43890 |

| % WER | 11.50 | 11.63 | 11.38 | 11.51 |

(Error rates for fairness evaluation are determined by normalizing both the reference and predicted text, similar to the methods used in the evaluations found at https://github.com/huggingface/open_asr_leaderboard.)
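As a quick sanity check on the age table, the sketch below recomputes the overall figure as an utterance-weighted average of the three age groups. This is only an approximation under a stated assumption: WER is properly weighted by reference word counts, which are not given here, so weighting by utterance count is a stand-in.

```python
# (number of utterances, % WER) per age group, copied from the table above.
groups = {
    "18-30": (15956, 11.50),
    "31-45": (14585, 11.63),
    "46-85": (13349, 11.38),
}

total_utterances = sum(count for count, _ in groups.values())
weighted_wer = sum(count * wer for count, wer in groups.values()) / total_utterances

print(total_utterances, round(weighted_wer, 2))
```

The utterance total matches the (1-100) column, and the weighted average lands close to the reported overall WER, which suggests the last column is an aggregate over the three groups.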

## NVIDIA Riva: Deployment

[NVIDIA Riva](https://developer.nvidia.com/riva) is an accelerated speech AI SDK deployable on-prem, in all clouds, multi-cloud, hybrid, on edge, and embedded.

Additionally, Riva provides:

* World-class out-of-the-box accuracy for the most common languages with model checkpoints trained on proprietary data with hundreds of thousands of GPU-compute hours

* Best in class accuracy with run-time word boosting (e.g., brand and product names) and customization of acoustic model, language model, and inverse text normalization

* Streaming speech recognition, Kubernetes compatible scaling, and enterprise-grade support

Although this model isn’t supported yet by Riva, the [list of supported models is here](https://huggingface.co/models?other=Riva).

Check out [Riva live demo](https://developer.nvidia.com/riva#demos).

## References

[1] [Fast Conformer with Linearly Scalable Attention for Efficient Speech Recognition](https://arxiv.org/abs/2305.05084)

[2] [Efficient Sequence Transduction by Jointly Predicting Tokens and Durations](https://arxiv.org/abs/2304.06795)

[3] [Google Sentencepiece Tokenizer](https://github.com/google/sentencepiece)

[4] [NVIDIA NeMo Toolkit](https://github.com/NVIDIA/NeMo)

[5] [Suno.ai](https://suno.ai/)

[6] [HuggingFace ASR Leaderboard](https://huggingface.co/spaces/hf-audio/open_asr_leaderboard)

[7] [Towards Measuring Fairness in AI: the Casual Conversations Dataset](https://arxiv.org/abs/2104.02821)

## Licence

License to use this model is covered by the [CC-BY-4.0](https://creativecommons.org/licenses/by/4.0/). By downloading the public and release version of the model, you accept the terms and conditions of the [CC-BY-4.0](https://creativecommons.org/licenses/by/4.0/) license. |

cognitivecomputations/dolphin-2.9.3-qwen2-1.5b | cognitivecomputations | 2024-06-14T01:17:55Z | 564 | 9 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"axolotl",

"conversational",

"dataset:cognitivecomputations/Dolphin-2.9",

"dataset:teknium/OpenHermes-2.5",

"dataset:m-a-p/CodeFeedback-Filtered-Instruction",

"dataset:cognitivecomputations/dolphin-coder",

"dataset:cognitivecomputations/samantha-data",

"dataset:microsoft/orca-math-word-problems-200k",

"dataset:Locutusque/function-calling-chatml",

"dataset:internlm/Agent-FLAN",

"base_model:Qwen/Qwen2-1.5B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-06-10T15:16:01Z | ---

license: apache-2.0

base_model: Qwen/Qwen2-1.5B

tags:

- generated_from_trainer

- axolotl

datasets:

- cognitivecomputations/Dolphin-2.9

- teknium/OpenHermes-2.5

- m-a-p/CodeFeedback-Filtered-Instruction

- cognitivecomputations/dolphin-coder

- cognitivecomputations/samantha-data

- microsoft/orca-math-word-problems-200k

- Locutusque/function-calling-chatml

- internlm/Agent-FLAN

---

# Dolphin 2.9.3 Qwen2 1.5B 🐬

Curated and trained by Eric Hartford, Lucas Atkins, Fernando Fernandes, and Cognitive Computations

Discord: https://discord.gg/cognitivecomputations

<img src="https://cdn-uploads.huggingface.co/production/uploads/63111b2d88942700629f5771/ldkN1J0WIDQwU4vutGYiD.png" width="600" />

Our appreciation for the sponsors of Dolphin 2.9.3:

- [Crusoe Cloud](https://crusoe.ai/) - provided an excellent on-demand 8xH100 node

This model is based on Qwen2-1.5b, and is governed by the Apache-2.0 license.

The base model has 128k context, and the full-weight fine-tuning was with 16k sequence length.

Due to the complexities of fine tuning smaller models on datasets created by/for larger models - we removed coding, function calling and systemchat-multilingual datasets when tuning these models.

Example prompt (ChatML format):

```

<|im_start|>system

You are Dolphin, a helpful AI assistant.<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant

```
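The template above can be assembled programmatically. The following is a minimal sketch; `build_chatml_prompt` is a hypothetical helper name, and the default system message is simply the one shown in the example.

```python
def build_chatml_prompt(
    user_prompt: str,
    system_prompt: str = "You are Dolphin, a helpful AI assistant.",
) -> str:
    """Format a single-turn prompt in the ChatML layout shown above."""
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>user\n{user_prompt}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


print(build_chatml_prompt("Explain what a dolphin is in one sentence."))
```

The string ends with the opening of the assistant turn, so the model's generation continues from there. If you load the model through a tokenizer that ships its own chat template, prefer that template over hand-built strings.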

Dolphin-2.9.3 has a variety of instruction and conversational skills.

Dolphin is uncensored. We have filtered the dataset to remove alignment and bias. This makes the model more compliant. You are advised to implement your own alignment layer before exposing the model as a service. It will be highly compliant with any requests, even unethical ones. Please read my blog post about uncensored models. https://erichartford.com/uncensored-models You are responsible for any content you create using this model. Enjoy responsibly.

Dolphin is licensed under Apache-2.0. We grant permission for any use, including commercial, that is in accordance with said license. Dolphin was trained on data generated from GPT4, among other models.

Evals:

<img src="https://i.ibb.co/JpNLRYG/file-9-Fs-Qpm4-LKPz-CZy08-Bu-MZj-Xi-V.png" width="600" /> |

Quant-Cartel/TeTO-MS-8x7b-iMat-GGUF | Quant-Cartel | 2024-06-13T16:18:58Z | 564 | 1 | null | [

"gguf",

"conversational",

"mixtral",

"merge",

"mergekit",

"arxiv:2403.19522",

"license:cc-by-nc-4.0",

"region:us"

]

| null | 2024-06-11T03:20:56Z | ---

license: cc-by-nc-4.0

tags:

- conversational

- mixtral

- merge

- mergekit

---

```

e88 88e d8

d888 888b 8888 8888 ,"Y88b 888 8e d88

C8888 8888D 8888 8888 "8" 888 888 88b d88888

Y888 888P Y888 888P ,ee 888 888 888 888

"88 88" "88 88" "88 888 888 888 888

b

8b,

e88'Y88 d8 888

d888 'Y ,"Y88b 888,8, d88 ,e e, 888

C8888 "8" 888 888 " d88888 d88 88b 888

Y888 ,d ,ee 888 888 888 888 , 888

"88,d88 "88 888 888 888 "YeeP" 888

PROUDLY PRESENTS

```

<img src="https://files.catbox.moe/zdxyzv.png" width="400"/>

## TeTO-MS-8x7b-iMat-GGUF

<i>Weighted quants were made using the full precision fp16 model and groups_merged_enhancedV3.</i>

<u><b>Te</b></u>soro + <u><b>T</b></u>yphon + <u><b>O</b></u>penGPT

Presenting a Model Stock experiment combining the unique strengths from the following 8x7b Mixtral models:

* Tess-2.0-Mixtral-8x7B-v0.2 / [migtissera](https://huggingface.co/migtissera) / General Purpose

* Typhon-Mixtral-v1 / [Sao10K](https://huggingface.co/Sao10K) / Creative & Story Completion

* Open_Gpt4_8x7B_v0.2 / [rombodawg](https://huggingface.co/rombodawg) / Conversational

# Recommended Template

* Basic: Alpaca Format

* Advanced: See context/instruct/sampler settings in [our new Recommended Settings repo](https://huggingface.co/Quant-Cartel/Recommended-Settings/tree/main/Teto-MS-8x7b).

* Huge shout out to [rAIfle](https://huggingface.co/rAIfle) for his original work on the Wizard 8x22b templates which were modified for this model.

<H2>Methodology</H2>

> [I]nnovative layer-wise weight averaging technique surpasses state-of-the-art model methods such as Model Soup, utilizing only two fine-tuned models. This strategy can be aptly coined Model Stock, highlighting its reliance on selecting a minimal number of models to draw a more optimized-averaged model

<i> (From [arXiv:2403.19522](https://arxiv.org/pdf/2403.19522))</i>

* Methodology and merging process were based on the following paper - [Model Stock: All we need is just a few fine-tuned models](https://arxiv.org/abs/2403.19522)

* Initial model selection was based on top performing models of Mixtral architecture covering a variety of use cases and skills

* Base model (Mixtral Instruct 8x7b v0.1) was chosen after outperforming two other potential base models in terms of MMLU benchmark performance.

# Output

<img src="https://files.catbox.moe/bw97yg.PNG" width="400"/>

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using Mixtral-8x7B-v0.1-Instruct as a base.

### Models Merged

The following models were included in the merge:

* migtissera_Tess-2.0-Mixtral-8x7B-v0.2

* rombodawg_Open_Gpt4_8x7B_v0.2

* Sao10K_Typhon-Mixtral-v1

### Configuration

The following YAML configuration was used to produce this model:

```yaml

models:

- model: models/migtissera_Tess-2.0-Mixtral-8x7B-v0.2

- model: models/Sao10K_Typhon-Mixtral-v1

- model: models/rombodawg_Open_Gpt4_8x7B_v0.2

merge_method: model_stock

base_model: models/Mixtral-8x7B-v0.1-Instruct

dtype: float16

```
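To give a feel for what `merge_method: model_stock` does per layer, here is a simplified sketch in plain Python. It is not mergekit's implementation. It follows my reading of the Model Stock paper: the fine-tuned weights are averaged, then interpolated back toward the base with ratio `t = N*cos / (1 + (N-1)*cos)`, where `cos` is the cosine between fine-tuned deltas. Averaging the pairwise cosines for more than two models is an assumption made for this illustration.

```python
import math
from itertools import combinations


def _cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def model_stock_layer(base, finetuned):
    """Merge one layer.

    base: flat list of base-model weights for the layer.
    finetuned: list of flat weight lists, one per fine-tuned model (at least two).
    """
    n = len(finetuned)
    # Deltas of each fine-tuned model relative to the base model.
    deltas = [[w - b for w, b in zip(ft, base)] for ft in finetuned]
    # Assumption: use the mean pairwise cosine when there are more than two models.
    pairs = list(combinations(deltas, 2))
    cos_theta = sum(_cosine(u, v) for u, v in pairs) / len(pairs)
    # Interpolation ratio between the fine-tuned average and the base weights.
    t = n * cos_theta / (1 + (n - 1) * cos_theta)
    average = [sum(ws) / n for ws in zip(*finetuned)]
    return [t * a + (1 - t) * b for a, b in zip(average, base)]


print(model_stock_layer([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```

When the deltas agree closely the result approaches the plain average of the fine-tuned weights; when they are orthogonal the result falls back to the base weights. The sketch does not guard against the degenerate case where the denominator is zero.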

## Appendix - Llama.cpp MMLU Benchmark Results*

<i>These results were calculated via perplexity.exe from llama.cpp using the following params:</i>

`.\perplexity -m .\models\TeTO-8x7b-MS-v0.03\TeTO-MS-8x7b-Q6_K.gguf -bf .\evaluations\mmlu-test.bin --multiple-choice -c 8192 -t 23 -ngl 200`

```

* V0.01 (4 model / Mixtral Base):

Final result: 43.3049 +/- 0.4196

Random chance: 25.0000 +/- 0.3667

* V0.02 (3 model / Tess Mixtral Base):

Final result: 43.8356 +/- 0.4202

Random chance: 25.0000 +/- 0.3667

* V0.03 (4 model / Mixtral Instruct Base):

Final result: 45.7004 +/- 0.4219

Random chance: 25.0000 +/- 0.3667

```

*Please be advised metrics above are not representative of final HF benchmark scores for reasons given [here](https://github.com/ggerganov/llama.cpp/pull/5047) |

abmorton/standard-small-2 | abmorton | 2024-06-30T23:07:21Z | 564 | 0 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2024-06-30T23:03:17Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### standard-small-2 Dreambooth model trained by abmorton with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

ai-forever/ruclip-vit-base-patch16-384 | ai-forever | 2022-01-11T02:29:57Z | 563 | 1 | transformers | [

"transformers",

"pytorch",

"endpoints_compatible",

"region:us"

]

| null | 2022-03-02T23:29:05Z | # ruclip-vit-base-patch16-384

**RuCLIP** (**Ru**ssian **C**ontrastive **L**anguage–**I**mage **P**retraining) is a multimodal model for computing image-text similarities and for ranking captions and pictures. RuCLIP builds on a large body of work on zero-shot transfer, computer vision, natural language processing and multimodal learning.

The model was trained by [Sber AI](https://github.com/sberbank-ai) and [SberDevices](https://sberdevices.ru/) teams.

* Task: `text ranking`; `image ranking`; `zero-shot image classification`;

* Type: `encoder`

* Num Parameters: `150M`

* Training Data Volume: `240 million text-image pairs`

* Language: `Russian`

* Context Length: `77`

* Transformer Layers: `12`

* Transformer Width: `512`

* Transformer Heads: `8`

* Image Size: `384`

* Vision Layers: `12`

* Vision Width: `768`

* Vision Patch Size: `16`

## Usage [Github](https://github.com/sberbank-ai/ru-clip)

```

pip install ruclip

```

```python

import ruclip

# Load the model and its processor onto the GPU
clip, processor = ruclip.load("ruclip-vit-base-patch16-384", device="cuda")

```
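How embeddings are extracted from `clip` and `processor` is not shown in this card, so the sketch below deliberately avoids the ruclip API. It only illustrates the ranking step a CLIP-style model enables: given one image embedding and several caption embeddings (plain lists of floats, hypothetical here), captions are ordered by cosine similarity to the image.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_captions(image_embedding, caption_embeddings):
    """Return caption indices sorted from most to least similar to the image."""
    scores = [cosine_similarity(image_embedding, c) for c in caption_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Toy 2-D embeddings, for illustration only.
image = [1.0, 0.0]
captions = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]]
print(rank_captions(image, captions))
```

Zero-shot classification works the same way: each class name is turned into a caption, and the top-ranked caption gives the predicted class.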

## Performance

We have evaluated the performance on the following datasets:

| Dataset | Metric Name | Metric Result |

|:--------------|:---------------|:--------------------|

| Food101 | acc | 0.689 |

| CIFAR10 | acc | 0.845 |

| CIFAR100 | acc | 0.569 |

| Birdsnap | acc | 0.195 |

| SUN397 | acc | 0.521 |

| Stanford Cars | acc | 0.626 |

| DTD | acc | 0.421 |

| MNIST | acc | 0.478 |

| STL10 | acc | 0.964 |

| PCam | acc | 0.501 |

| CLEVR | acc | 0.132 |

| Rendered SST2 | acc | 0.525 |

| ImageNet | acc | 0.482 |

| FGVC Aircraft | mean-per-class | 0.046 |

| Oxford Pets | mean-per-class | 0.635 |

| Caltech101 | mean-per-class | 0.835 |

| Flowers102 | mean-per-class | 0.452 |

| HatefulMemes | roc-auc | 0.543 |

# Authors

+ Alex Shonenkov: [Github](https://github.com/shonenkov), [Kaggle GM](https://www.kaggle.com/shonenkov)

+ Daniil Chesakov: [Github](https://github.com/Danyache)

+ Denis Dimitrov: [Github](https://github.com/denndimitrov)

+ Igor Pavlov: [Github](https://github.com/boomb0om)

|

DucHaiten/DH_ClassicAnime | DucHaiten | 2023-03-02T17:04:56Z | 563 | 49 | diffusers | [

"diffusers",

"stable-diffusion",

"text-to-image",

"image-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-02-13T15:41:07Z | ---

license: creativeml-openrail-m

language:

- en

tags:

- stable-diffusion

- text-to-image

- image-to-image

- diffusers

---

I don't know about you, but in my opinion this is the best anime model I've ever created. With a bit of romance, a little bit of classic and indispensable NSFW, this is my favorite anime model. I even intended to sell it but changed my mind in the end, it wouldn't be good if it couldn't be used by everyone.

After working with this model for a while, I have picked up a few tips for creating better images:

1. Always add the keyword **(80s anime style)** at the beginning of the prompt. A GTA style has also been added; its trigger keyword is **(gtav style)**. Note that only one of the two keywords can be used in a prompt: GTA without anime, or anime without GTA.

2. use this negative prompt <pre>illustration, painting, cartoons, sketch, (worst quality:2), (low quality:2), (normal quality:2), lowres, bad anatomy, bad hands, ((monochrome)), ((grayscale)), collapsed eyeshadow, multiple eyebrows, vaginas in breasts, (cropped), oversaturated, extra limb, missing limbs, deformed hands, long neck, long body, imperfect, (bad hands), signature, watermark, username, artist name, conjoined fingers, deformed fingers, ugly eyes, imperfect eyes, skewed eyes, unnatural face, unnatural body, error</pre>

3. CFG Scale to range from 12.5 to 15

Note that my sample image has no VAE
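
As a rough illustration, the three tips can be bundled into a small helper. This is only a sketch: `build_settings`, `TRIGGERS` and the example subject are hypothetical names made up for this example, and the negative prompt is shortened for readability (use the full one from tip 2 in practice).

```python
# Trigger keywords quoted in the tips above; exactly one may be used per prompt.
TRIGGERS = {"anime": "(80s anime style)", "gta": "(gtav style)"}

# Shortened for readability; paste the full negative prompt from tip 2 in practice.
NEGATIVE_PROMPT = "illustration, painting, cartoons, sketch, (worst quality:2), (low quality:2)"


def build_settings(subject: str, style: str = "anime", cfg_scale: float = 12.5) -> dict:
    """Return generation settings that follow the three tips (hypothetical helper)."""
    if style not in TRIGGERS:
        raise ValueError(f"style must be one of {sorted(TRIGGERS)}")
    if not 12.5 <= cfg_scale <= 15:
        raise ValueError("cfg_scale should stay between 12.5 and 15")
    return {
        # Tip 1: the single trigger keyword goes at the very beginning.
        "prompt": f"{TRIGGERS[style]}, {subject}",
        # Tip 2: always pass the negative prompt.
        "negative_prompt": NEGATIVE_PROMPT,
        # Tip 3: CFG Scale between 12.5 and 15.
        "cfg_scale": cfg_scale,
    }


settings = build_settings("a girl walking in a rainy city at night", cfg_scale=13)
print(settings["prompt"])
```

If you load the model through the diffusers `StableDiffusionPipeline`, the CFG Scale is passed as `guidance_scale`, alongside `prompt` and `negative_prompt`. Keep in mind that the parenthesised weighting syntax is a web UI convention and may not be interpreted the same way by other front ends.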

|

TheBloke/OrcaMaidXL-17B-32k-GGUF | TheBloke | 2023-12-21T13:34:28Z | 563 | 6 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation",

"base_model:ddh0/OrcaMaidXL-17B-32k",

"license:other",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-12-21T11:48:13Z | ---

base_model: ddh0/OrcaMaidXL-17B-32k

inference: false

license: other

license_link: https://huggingface.co/microsoft/Orca-2-13b/blob/main/LICENSE

license_name: microsoft-research-license

model_creator: ddh0

model_name: OrcaMaidXL 17B 32K

model_type: llama

pipeline_tag: text-generation

prompt_template: 'Below is an instruction that describes a task. Write a response

that appropriately completes the request.

### Instruction:

{prompt}

### Response:

'

quantized_by: TheBloke

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# OrcaMaidXL 17B 32K - GGUF

- Model creator: [ddh0](https://huggingface.co/ddh0)

- Original model: [OrcaMaidXL 17B 32K](https://huggingface.co/ddh0/OrcaMaidXL-17B-32k)

<!-- description start -->

## Description

This repo contains GGUF format model files for [ddh0's OrcaMaidXL 17B 32K](https://huggingface.co/ddh0/OrcaMaidXL-17B-32k).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF)

* [ddh0's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/ddh0/OrcaMaidXL-17B-32k)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Alpaca

```

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

{prompt}

### Response:

```
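
A minimal sketch of filling this template from Python is shown below. The helper name `format_alpaca` is hypothetical, and the exact blank-line layout between sections is an assumption based on the usual Alpaca convention.

```python
# Alpaca-style template as quoted above; the blank lines between sections
# are an assumption, not something this README specifies.
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{prompt}\n\n"
    "### Response:\n"
)


def format_alpaca(instruction: str) -> str:
    """Insert a user instruction into the template (hypothetical helper)."""
    return ALPACA_TEMPLATE.format(prompt=instruction.strip())


full_prompt = format_alpaca("Summarise the plot of Hamlet in two sentences.")
print(full_prompt)
```

The resulting string is what you would pass as the prompt to whichever GGUF client you use; the model's reply is expected to follow the `### Response:` line.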

<!-- prompt-template end -->

<!-- licensing start -->

## Licensing

The creator of the source model has listed its license as `other`, and this quantization has therefore used that same license.

As this model is based on Llama 2, it is also subject to the Meta Llama 2 license terms, and the license files for that are additionally included. It should therefore be considered as being claimed to be licensed under both licenses. I contacted Hugging Face for clarification on dual licensing but they do not yet have an official position. Should this change, or should Meta provide any feedback on this situation, I will update this section accordingly.

In the meantime, any questions regarding licensing, and in particular how these two licenses might interact, should be directed to the original model repository: [ddh0's OrcaMaidXL 17B 32K](https://huggingface.co/ddh0/OrcaMaidXL-17B-32k).

<!-- licensing end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th 2023 onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K, resulting in 5.5 bpw.

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

Refer to the Provided Files table below to see what files use which methods, and how.
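
The bpw figures for Q3_K to Q6_K can be reproduced with some simple arithmetic. The sketch below assumes, beyond what the list states, that each super-block additionally stores one 16-bit scale (plus one 16-bit min for "type-1" methods); the function name `bits_per_weight` is hypothetical. Q2_K is left out because these assumptions alone do not reproduce its quoted figure.

```python
FP16_BITS = 16  # assumed width of the per-super-block scale/min (not stated in the list)


def bits_per_weight(quant_bits, blocks, weights_per_block, scale_bits, has_mins):
    """Effective bits per weight for one super-block (illustrative sketch)."""
    n_weights = blocks * weights_per_block
    # "type-1" methods store a scale and a min per block; "type-0" only a scale.
    per_block_meta = scale_bits * (2 if has_mins else 1)
    super_block_meta = FP16_BITS * (2 if has_mins else 1)
    total_bits = n_weights * quant_bits + blocks * per_block_meta + super_block_meta
    return total_bits / n_weights


q3_k = bits_per_weight(3, blocks=16, weights_per_block=16, scale_bits=6, has_mins=False)
q4_k = bits_per_weight(4, blocks=8, weights_per_block=32, scale_bits=6, has_mins=True)
q5_k = bits_per_weight(5, blocks=8, weights_per_block=32, scale_bits=6, has_mins=True)
q6_k = bits_per_weight(6, blocks=16, weights_per_block=16, scale_bits=8, has_mins=False)
print(q3_k, q4_k, q5_k, q6_k)  # 3.4375 4.5 5.5 6.5625
```

For example, Q4_K has 256 weights per super-block: 256 × 4 bits of quantized weights, 8 × 12 bits of block scales and mins, and 32 bits of assumed super-block metadata give 1152 bits, or 4.5 bpw.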

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [orcamaidxl-17b-32k.Q2_K.gguf](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF/blob/main/orcamaidxl-17b-32k.Q2_K.gguf) | Q2_K | 2 | 7.26 GB| 9.76 GB | smallest, significant quality loss - not recommended for most purposes |

| [orcamaidxl-17b-32k.Q3_K_S.gguf](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF/blob/main/orcamaidxl-17b-32k.Q3_K_S.gguf) | Q3_K_S | 3 | 7.57 GB| 10.07 GB | very small, high quality loss |

| [orcamaidxl-17b-32k.Q3_K_M.gguf](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF/blob/main/orcamaidxl-17b-32k.Q3_K_M.gguf) | Q3_K_M | 3 | 8.48 GB| 10.98 GB | very small, high quality loss |

| [orcamaidxl-17b-32k.Q3_K_L.gguf](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF/blob/main/orcamaidxl-17b-32k.Q3_K_L.gguf) | Q3_K_L | 3 | 9.28 GB| 11.78 GB | small, substantial quality loss |

| [orcamaidxl-17b-32k.Q4_0.gguf](https://huggingface.co/TheBloke/OrcaMaidXL-17B-32k-GGUF/blob/main/orcamaidxl-17b-32k.Q4_0.gguf) | Q4_0 | 4 | 9.87 GB| 12.37 GB | legacy; small, very high quality loss - prefer using Q3_K_M |