modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

mradermacher/llama-3-8b-chat-music-v2-GGUF | mradermacher | 2024-06-16T22:58:39Z | 3,254 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:wstcpyt1988/llama-3-8b-chat-music-v2",

"endpoints_compatible",

"region:us"

] | null | 2024-06-16T20:59:46Z | ---

base_model: wstcpyt1988/llama-3-8b-chat-music-v2

language:

- en

library_name: transformers

quantized_by: mradermacher

tags: []

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/wstcpyt1988/llama-3-8b-chat-music-v2

<!-- provided-files -->

weighted/imatrix quants seem not to be available (by me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
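As a rough illustration of the concatenation step mentioned above, here is a minimal Python sketch. It assumes the parts are plain byte-wise splits that only need to be joined in order. The helper name `concatenate_parts` and the `.part1of2`-style file names in the usage note are hypothetical and not taken from this repository.

```python
import shutil
from pathlib import Path


def concatenate_parts(parts, output):
    """Join split files byte-for-byte, in the order given, into `output`.

    Assumption: the parts are raw byte-wise splits of one file, so simple
    concatenation restores the original.
    """
    output = Path(output)
    with output.open("wb") as dst:
        for part in parts:
            with Path(part).open("rb") as src:
                # Stream in chunks so large model files are never fully in memory.
                shutil.copyfileobj(src, dst)
    return output
```

A call might look like `concatenate_parts(["model.gguf.part1of2", "model.gguf.part2of2"], "model.gguf")`; the order of the list matters.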

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/llama-3-8b-chat-music-v2-GGUF/resolve/main/llama-3-8b-chat-music-v2.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |
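To make the table easier to use programmatically, the following sketch picks the largest file that fits a given size budget and builds its download URL. The sizes and the URL pattern are copied from the table above; the helper names `largest_quant_within` and `quant_url` are hypothetical. File size is only a lower bound on the memory actually needed, and, as noted above, size does not strictly track quality.

```python
# (type, size in GB) copied from the table above.
QUANTS = [
    ("Q2_K", 3.3), ("IQ3_XS", 3.6), ("Q3_K_S", 3.8), ("IQ3_S", 3.8),
    ("IQ3_M", 3.9), ("Q3_K_M", 4.1), ("Q3_K_L", 4.4), ("IQ4_XS", 4.6),
    ("Q4_K_S", 4.8), ("Q4_K_M", 5.0), ("Q5_K_S", 5.7), ("Q5_K_M", 5.8),
    ("Q6_K", 6.7), ("Q8_0", 8.6), ("f16", 16.2),
]

REPO = "mradermacher/llama-3-8b-chat-music-v2-GGUF"
MODEL = "llama-3-8b-chat-music-v2"


def largest_quant_within(budget_gb, quants=QUANTS):
    """Return the (type, size) pair with the largest size <= budget_gb, or None."""
    fitting = [q for q in quants if q[1] <= budget_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda q: q[1])


def quant_url(quant_type, repo=REPO, model=MODEL):
    """Build the download URL following the link pattern used in the table."""
    return f"https://huggingface.co/{repo}/resolve/main/{model}.{quant_type}.gguf"
```

For example, `largest_quant_within(5.0)` selects the Q4_K_M row, and `quant_url("Q4_K_M")` reproduces the link in that row.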

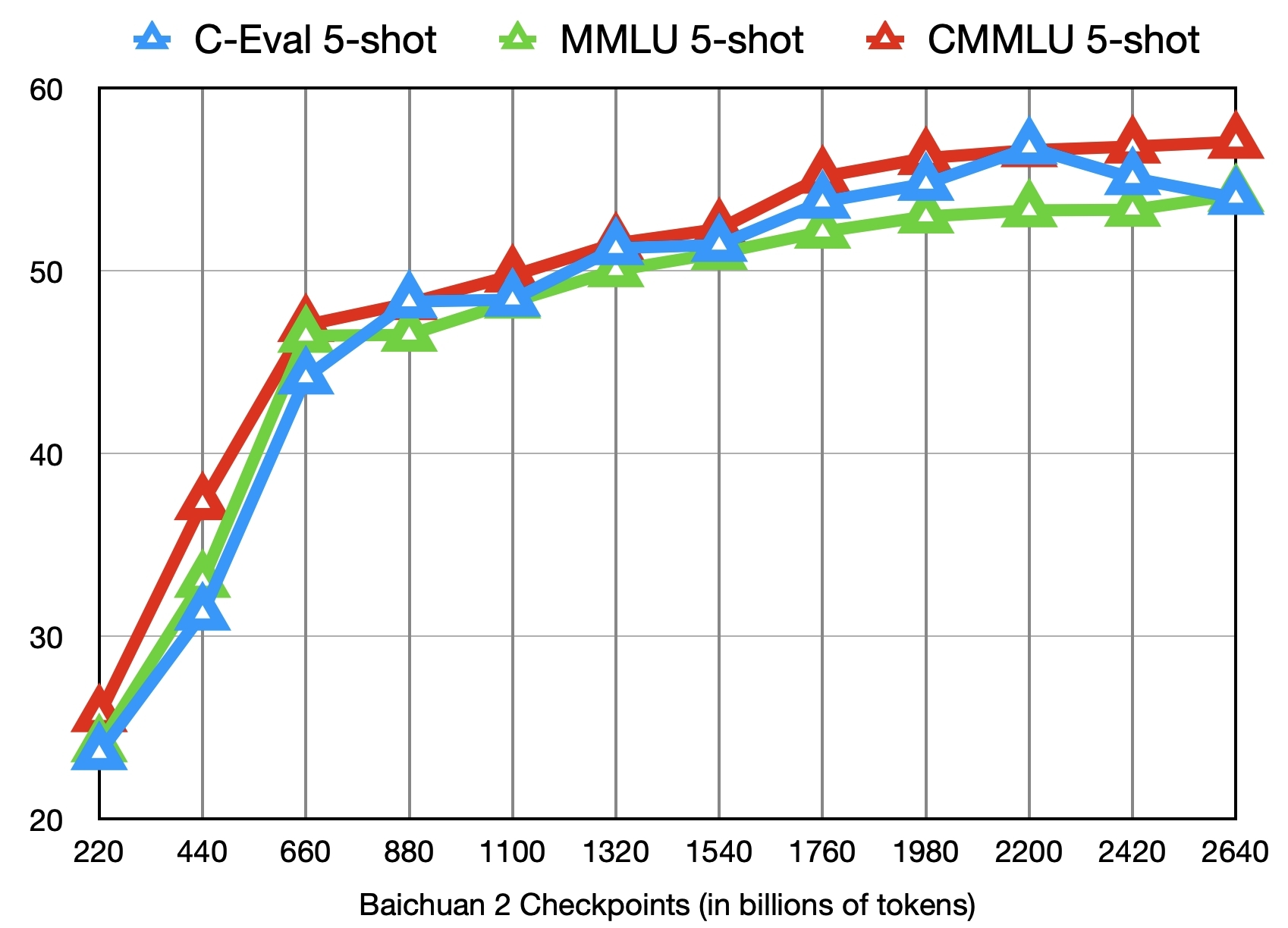

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

timm/vit_large_patch32_384.orig_in21k_ft_in1k | timm | 2023-05-06T00:26:10Z | 3,252 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"dataset:imagenet-21k",

"arxiv:2010.11929",

"license:apache-2.0",

"region:us"

] | image-classification | 2022-12-22T07:51:06Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

- imagenet-21k

---

# Model card for vit_large_patch32_384.orig_in21k_ft_in1k

A Vision Transformer (ViT) image classification model. Trained on ImageNet-21k and fine-tuned on ImageNet-1k in JAX by the paper authors, then ported to PyTorch by Ross Wightman.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 306.6

- GMACs: 44.3

- Activations (M): 32.2

- Image size: 384 x 384

- **Papers:**

- An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale: https://arxiv.org/abs/2010.11929v2

- **Dataset:** ImageNet-1k

- **Pretrain Dataset:** ImageNet-21k

- **Original:** https://github.com/google-research/vision_transformer

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('vit_large_patch32_384.orig_in21k_ft_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```
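The last line of the snippet above turns the logits into percentages and keeps the five largest. As a dependency-free illustration of that step only, here is a small sketch in plain Python. The names `softmax_percent` and `top_k` are hypothetical helpers, not timm or PyTorch APIs, and the logits in the usage note are made up.

```python
import math


def softmax_percent(logits):
    """Softmax over a list of logits, scaled to percentages."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [100.0 * e / total for e in exps]


def top_k(values, k):
    """Return (top values, their indices), largest first."""
    ranked = sorted(enumerate(values), key=lambda pair: pair[1], reverse=True)[:k]
    return [v for _, v in ranked], [i for i, _ in ranked]
```

For instance, `top_k(softmax_percent([1.0, 3.0, 2.0]), 2)` ranks index 1 first and index 2 second, mirroring what `torch.topk` does on the batched tensor.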

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'vit_large_patch32_384.orig_in21k_ft_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 145, 1024) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```
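The `(1, 145, 1024)` shape noted in the comments above follows from the patch arithmetic: a 384 x 384 image cut into 32 x 32 patches gives 12 x 12 = 144 patch tokens, plus one class token. A minimal sketch of that calculation, where `vit_token_count` is a hypothetical helper and not part of timm:

```python
def vit_token_count(image_size, patch_size, prefix_tokens=1):
    """Number of tokens a ViT sees for a square image.

    prefix_tokens=1 accounts for the class token used by the original ViT.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size
    return per_side * per_side + prefix_tokens


tokens = vit_token_count(384, 32)  # 12 * 12 patches + 1 class token
```

The final dimension of that shape is the embedding width of the model and is independent of the image and patch sizes.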

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

## Citation

```bibtex

@article{dosovitskiy2020vit,

title={An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale},

author={Dosovitskiy, Alexey and Beyer, Lucas and Kolesnikov, Alexander and Weissenborn, Dirk and Zhai, Xiaohua and Unterthiner, Thomas and Dehghani, Mostafa and Minderer, Matthias and Heigold, Georg and Gelly, Sylvain and Uszkoreit, Jakob and Houlsby, Neil},

journal={ICLR},

year={2021}

}

```

```bibtex

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}

}

```

|

uukuguy/speechless-codellama-orca-airoboros-13b-0.10e | uukuguy | 2023-09-04T10:17:20Z | 3,252 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"llama-2",

"en",

"dataset:garage-bAInd/Open-Platypus",

"arxiv:2308.12950",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-04T09:49:05Z | ---

language:

- en

library_name: transformers

pipeline_tag: text-generation

datasets:

- garage-bAInd/Open-Platypus

tags:

- llama-2

license: llama2

---

<p><h1> speechless-codellama-orca-airoboros-13b </h1></p>

A fine-tune of codellama/CodeLlama-13b-hf using the Orca and Airoboros datasets.

| Metric | Value |

| --- | --- |

| ARC | |

| HellaSwag | |

| MMLU | |

| TruthfulQA | |

| Average | |

# **Code Llama**

Code Llama is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 34 billion parameters. This is the repository for the base 13B version in the Hugging Face Transformers format. This model is designed for general code synthesis and understanding. Links to other models can be found in the index at the bottom.

| | Base Model | Python | Instruct |

| --- | ----------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------- | ----------------------------------------------------------------------------------------------- |

| 7B | [codellama/CodeLlama-7b-hf](https://huggingface.co/codellama/CodeLlama-7b-hf) | [codellama/CodeLlama-7b-Python-hf](https://huggingface.co/codellama/CodeLlama-7b-Python-hf) | [codellama/CodeLlama-7b-Instruct-hf](https://huggingface.co/codellama/CodeLlama-7b-Instruct-hf) |

| 13B | [codellama/CodeLlama-13b-hf](https://huggingface.co/codellama/CodeLlama-13b-hf) | [codellama/CodeLlama-13b-Python-hf](https://huggingface.co/codellama/CodeLlama-13b-Python-hf) | [codellama/CodeLlama-13b-Instruct-hf](https://huggingface.co/codellama/CodeLlama-13b-Instruct-hf) |

| 34B | [codellama/CodeLlama-34b-hf](https://huggingface.co/codellama/CodeLlama-34b-hf) | [codellama/CodeLlama-34b-Python-hf](https://huggingface.co/codellama/CodeLlama-34b-Python-hf) | [codellama/CodeLlama-34b-Instruct-hf](https://huggingface.co/codellama/CodeLlama-34b-Instruct-hf) |

## Model Use

To use this model, please make sure to install transformers from `main` until the next version is released:

```bash

pip install git+https://github.com/huggingface/transformers.git@main accelerate

```

Model capabilities:

- [x] Code completion.

- [x] Infilling.

- [ ] Instructions / chat.

- [ ] Python specialist.

```python

from transformers import AutoTokenizer

import transformers

import torch

model = "codellama/CodeLlama-13b-hf"

tokenizer = AutoTokenizer.from_pretrained(model)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

sequences = pipeline(

'import socket\n\ndef ping_exponential_backoff(host: str):',

do_sample=True,

top_k=10,

temperature=0.1,

top_p=0.95,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

max_length=200,

)

for seq in sequences:

print(f"Result: {seq['generated_text']}")

```

## Model Details

*Note: Use of this model is governed by the Meta license. Meta developed and publicly released the Code Llama family of large language models (LLMs).*

**Model Developers** Meta

**Variations** Code Llama comes in three model sizes, and three variants:

* Code Llama: base models designed for general code synthesis and understanding

* Code Llama - Python: designed specifically for Python

* Code Llama - Instruct: for instruction following and safer deployment

All variants are available in sizes of 7B, 13B and 34B parameters.

**This repository contains the base version of the 13B parameters model.**

**Input** Models input text only.

**Output** Models generate text only.

**Model Architecture** Code Llama is an auto-regressive language model that uses an optimized transformer architecture.

**Model Dates** Code Llama and its variants have been trained between January 2023 and July 2023.

**Status** This is a static model trained on an offline dataset. Future versions of Code Llama - Instruct will be released as we improve model safety with community feedback.

**License** A custom commercial license is available at: [https://ai.meta.com/resources/models-and-libraries/llama-downloads/](https://ai.meta.com/resources/models-and-libraries/llama-downloads/)

**Research Paper** More information can be found in the paper "[Code Llama: Open Foundation Models for Code](https://ai.meta.com/research/publications/code-llama-open-foundation-models-for-code/)" or its [arXiv page](https://arxiv.org/abs/2308.12950).

## Intended Use

**Intended Use Cases** Code Llama and its variants are intended for commercial and research use in English and relevant programming languages. The base model Code Llama can be adapted for a variety of code synthesis and understanding tasks, Code Llama - Python is designed specifically to handle the Python programming language, and Code Llama - Instruct is intended to be safer to use for code assistant and generation applications.

**Out-of-Scope Uses** Use in any manner that violates applicable laws or regulations (including trade compliance laws). Use in languages other than English. Use in any other way that is prohibited by the Acceptable Use Policy and Licensing Agreement for Code Llama and its variants.

## Hardware and Software

**Training Factors** We used custom training libraries. The training and fine-tuning of the released models were performed on Meta’s Research Super Cluster.

**Carbon Footprint** In aggregate, training all 9 Code Llama models required 400K GPU hours of computation on hardware of type A100-80GB (TDP of 350-400W). Estimated total emissions were 65.3 tCO2eq, 100% of which were offset by Meta’s sustainability program.
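As a back-of-the-envelope reading of the figures above, the sketch below converts the reported GPU hours and TDP range into an energy range and derives emissions per GPU hour. It assumes every GPU ran at its full TDP for the whole time and ignores datacenter overhead, so it is an illustration of the arithmetic rather than Meta's own accounting method. The name `energy_mwh` is hypothetical.

```python
GPU_HOURS = 400_000          # reported aggregate GPU hours
TDP_WATTS = (350, 400)       # reported TDP range of the A100-80GB
EMISSIONS_TCO2EQ = 65.3      # reported total emissions


def energy_mwh(gpu_hours, watts):
    """Energy in MWh if each GPU drew `watts` for the whole duration."""
    return gpu_hours * watts / 1_000_000  # Wh -> MWh


low_mwh = energy_mwh(GPU_HOURS, TDP_WATTS[0])   # 140.0 MWh
high_mwh = energy_mwh(GPU_HOURS, TDP_WATTS[1])  # 160.0 MWh

# Emissions per GPU hour, in kg CO2eq.
kg_per_gpu_hour = EMISSIONS_TCO2EQ * 1000 / GPU_HOURS
```

Under these assumptions the energy use falls between 140 and 160 MWh, and the reported emissions work out to roughly 0.16 kg CO2eq per GPU hour.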

## Training Data

All experiments reported here and the released models have been trained and fine-tuned using the same data as Llama 2 with different weights (see Section 2 and Table 1 in the [research paper](https://ai.meta.com/research/publications/code-llama-open-foundation-models-for-code/) for details).

## Evaluation Results

See evaluations for the main models and detailed ablations in Section 3 and safety evaluations in Section 4 of the research paper.

## Ethical Considerations and Limitations

Code Llama and its variants are a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover all scenarios. For these reasons, as with all LLMs, Code Llama’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate or objectionable responses to user prompts. Therefore, before deploying any applications of Code Llama, developers should perform safety testing and tuning tailored to their specific applications of the model.

Please see the Responsible Use Guide available at [https://ai.meta.com/llama/responsible-user-guide](https://ai.meta.com/llama/responsible-user-guide).

|

NousResearch/CodeLlama-34b-hf | NousResearch | 2023-08-24T17:57:35Z | 3,250 | 2 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-24T17:51:05Z | Entry not found |

jiaowobaba02/stable-diffusion-v2-1-GGUF | jiaowobaba02 | 2024-01-16T14:37:10Z | 3,250 | 11 | null | [

"gguf",

"art",

"stable-diffusion",

"text-to-image",

"region:us"

] | text-to-image | 2024-01-16T12:53:54Z | ---

pipeline_tag: text-to-image

tags:

- art

- stable-diffusion

---

# Stable-diffusion-GGUF

This repository provides files quantized to q8_0, q5_0, q5_1, and q4_1.

To run these models, download the code from [this page](https://github.com/leejet/stable-diffusion.cpp) or clone it with this command:

```

git clone --recursive https://github.com/leejet/stable-diffusion.cpp.git

```

Then compile it following the instructions on the GitHub page.

Finally, run:

```

./sd -m '/model/stable_diffusion-ema-pruned-v2-1_768.q8_0.gguf' -p "a lovely cat" -s -1

```

You can then view the result in `output.png`. |

mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF | mradermacher | 2024-06-05T14:14:05Z | 3,250 | 0 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"en",

"base_model:arcee-ai/MyAlee-Mistral-Instruct-v2-32k-v3-merged",

"endpoints_compatible",

"region:us"

] | null | 2024-06-05T12:17:53Z | ---

base_model: arcee-ai/MyAlee-Mistral-Instruct-v2-32k-v3-merged

language:

- en

library_name: transformers

quantized_by: mradermacher

tags:

- mergekit

- merge

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/arcee-ai/MyAlee-Mistral-Instruct-v2-32k-v3-merged

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q2_K.gguf) | Q2_K | 2.8 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.IQ3_XS.gguf) | IQ3_XS | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q3_K_S.gguf) | Q3_K_S | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.IQ3_S.gguf) | IQ3_S | 3.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.IQ3_M.gguf) | IQ3_M | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q3_K_L.gguf) | Q3_K_L | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.IQ4_XS.gguf) | IQ4_XS | 4.0 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q5_K_S.gguf) | Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q5_K_M.gguf) | Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q6_K.gguf) | Q6_K | 6.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/MyAlee-Mistral-Instruct-v2-32k-v3-merged-GGUF/resolve/main/MyAlee-Mistral-Instruct-v2-32k-v3-merged.f16.gguf) | f16 | 14.6 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

liddlefish/privacy_embedding_rag_10k_base_final | liddlefish | 2024-06-10T06:39:48Z | 3,250 | 1 | sentence-transformers | [

"sentence-transformers",

"safetensors",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"mteb",

"en",

"arxiv:2401.03462",

"arxiv:2312.15503",

"arxiv:2311.13534",

"arxiv:2310.07554",

"arxiv:2309.07597",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | feature-extraction | 2024-06-10T06:39:16Z | ---

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- mteb

model-index:

- name: bge-base-en-v1.5

results:

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en)

config: en

split: test

revision: e8379541af4e31359cca9fbcf4b00f2671dba205

metrics:

- type: accuracy

value: 76.14925373134328

- type: ap

value: 39.32336517995478

- type: f1

value: 70.16902252611425

- task:

type: Classification

dataset:

type: mteb/amazon_polarity

name: MTEB AmazonPolarityClassification

config: default

split: test

revision: e2d317d38cd51312af73b3d32a06d1a08b442046

metrics:

- type: accuracy

value: 93.386825

- type: ap

value: 90.21276917991995

- type: f1

value: 93.37741030006174

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (en)

config: en

split: test

revision: 1399c76144fd37290681b995c656ef9b2e06e26d

metrics:

- type: accuracy

value: 48.846000000000004

- type: f1

value: 48.14646269778261

- task:

type: Retrieval

dataset:

type: arguana

name: MTEB ArguAna

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 40.754000000000005

- type: map_at_10

value: 55.761

- type: map_at_100

value: 56.330999999999996

- type: map_at_1000

value: 56.333999999999996

- type: map_at_3

value: 51.92

- type: map_at_5

value: 54.010999999999996

- type: mrr_at_1

value: 41.181

- type: mrr_at_10

value: 55.967999999999996

- type: mrr_at_100

value: 56.538

- type: mrr_at_1000

value: 56.542

- type: mrr_at_3

value: 51.980000000000004

- type: mrr_at_5

value: 54.208999999999996

- type: ndcg_at_1

value: 40.754000000000005

- type: ndcg_at_10

value: 63.605000000000004

- type: ndcg_at_100

value: 66.05199999999999

- type: ndcg_at_1000

value: 66.12

- type: ndcg_at_3

value: 55.708

- type: ndcg_at_5

value: 59.452000000000005

- type: precision_at_1

value: 40.754000000000005

- type: precision_at_10

value: 8.841000000000001

- type: precision_at_100

value: 0.991

- type: precision_at_1000

value: 0.1

- type: precision_at_3

value: 22.238

- type: precision_at_5

value: 15.149000000000001

- type: recall_at_1

value: 40.754000000000005

- type: recall_at_10

value: 88.407

- type: recall_at_100

value: 99.14699999999999

- type: recall_at_1000

value: 99.644

- type: recall_at_3

value: 66.714

- type: recall_at_5

value: 75.747

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-p2p

name: MTEB ArxivClusteringP2P

config: default

split: test

revision: a122ad7f3f0291bf49cc6f4d32aa80929df69d5d

metrics:

- type: v_measure

value: 48.74884539679369

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-s2s

name: MTEB ArxivClusteringS2S

config: default

split: test

revision: f910caf1a6075f7329cdf8c1a6135696f37dbd53

metrics:

- type: v_measure

value: 42.8075893810716

- task:

type: Reranking

dataset:

type: mteb/askubuntudupquestions-reranking

name: MTEB AskUbuntuDupQuestions

config: default

split: test

revision: 2000358ca161889fa9c082cb41daa8dcfb161a54

metrics:

- type: map

value: 62.128470519187736

- type: mrr

value: 74.28065778481289

- task:

type: STS

dataset:

type: mteb/biosses-sts

name: MTEB BIOSSES

config: default

split: test

revision: d3fb88f8f02e40887cd149695127462bbcf29b4a

metrics:

- type: cos_sim_pearson

value: 89.24629081484655

- type: cos_sim_spearman

value: 86.93752309911496

- type: euclidean_pearson

value: 87.58589628573816

- type: euclidean_spearman

value: 88.05622328825284

- type: manhattan_pearson

value: 87.5594959805773

- type: manhattan_spearman

value: 88.19658793233961

- task:

type: Classification

dataset:

type: mteb/banking77

name: MTEB Banking77Classification

config: default

split: test

revision: 0fd18e25b25c072e09e0d92ab615fda904d66300

metrics:

- type: accuracy

value: 86.9512987012987

- type: f1

value: 86.92515357973708

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-p2p

name: MTEB BiorxivClusteringP2P

config: default

split: test

revision: 65b79d1d13f80053f67aca9498d9402c2d9f1f40

metrics:

- type: v_measure

value: 39.10263762928872

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-s2s

name: MTEB BiorxivClusteringS2S

config: default

split: test

revision: 258694dd0231531bc1fd9de6ceb52a0853c6d908

metrics:

- type: v_measure

value: 36.69711517426737

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackAndroidRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 32.327

- type: map_at_10

value: 44.099

- type: map_at_100

value: 45.525

- type: map_at_1000

value: 45.641999999999996

- type: map_at_3

value: 40.47

- type: map_at_5

value: 42.36

- type: mrr_at_1

value: 39.199

- type: mrr_at_10

value: 49.651

- type: mrr_at_100

value: 50.29

- type: mrr_at_1000

value: 50.329

- type: mrr_at_3

value: 46.924

- type: mrr_at_5

value: 48.548

- type: ndcg_at_1

value: 39.199

- type: ndcg_at_10

value: 50.773

- type: ndcg_at_100

value: 55.67999999999999

- type: ndcg_at_1000

value: 57.495

- type: ndcg_at_3

value: 45.513999999999996

- type: ndcg_at_5

value: 47.703

- type: precision_at_1

value: 39.199

- type: precision_at_10

value: 9.914000000000001

- type: precision_at_100

value: 1.5310000000000001

- type: precision_at_1000

value: 0.198

- type: precision_at_3

value: 21.984

- type: precision_at_5

value: 15.737000000000002

- type: recall_at_1

value: 32.327

- type: recall_at_10

value: 63.743

- type: recall_at_100

value: 84.538

- type: recall_at_1000

value: 96.089

- type: recall_at_3

value: 48.065000000000005

- type: recall_at_5

value: 54.519

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackEnglishRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 32.671

- type: map_at_10

value: 42.954

- type: map_at_100

value: 44.151

- type: map_at_1000

value: 44.287

- type: map_at_3

value: 39.912

- type: map_at_5

value: 41.798

- type: mrr_at_1

value: 41.465

- type: mrr_at_10

value: 49.351

- type: mrr_at_100

value: 49.980000000000004

- type: mrr_at_1000

value: 50.016000000000005

- type: mrr_at_3

value: 47.144000000000005

- type: mrr_at_5

value: 48.592999999999996

- type: ndcg_at_1

value: 41.465

- type: ndcg_at_10

value: 48.565999999999995

- type: ndcg_at_100

value: 52.76499999999999

- type: ndcg_at_1000

value: 54.749

- type: ndcg_at_3

value: 44.57

- type: ndcg_at_5

value: 46.759

- type: precision_at_1

value: 41.465

- type: precision_at_10

value: 9.107999999999999

- type: precision_at_100

value: 1.433

- type: precision_at_1000

value: 0.191

- type: precision_at_3

value: 21.423000000000002

- type: precision_at_5

value: 15.414

- type: recall_at_1

value: 32.671

- type: recall_at_10

value: 57.738

- type: recall_at_100

value: 75.86500000000001

- type: recall_at_1000

value: 88.36

- type: recall_at_3

value: 45.626

- type: recall_at_5

value: 51.812000000000005

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGamingRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 41.185

- type: map_at_10

value: 53.929

- type: map_at_100

value: 54.92

- type: map_at_1000

value: 54.967999999999996

- type: map_at_3

value: 50.70400000000001

- type: map_at_5

value: 52.673

- type: mrr_at_1

value: 47.398

- type: mrr_at_10

value: 57.303000000000004

- type: mrr_at_100

value: 57.959

- type: mrr_at_1000

value: 57.985

- type: mrr_at_3

value: 54.932

- type: mrr_at_5

value: 56.464999999999996

- type: ndcg_at_1

value: 47.398

- type: ndcg_at_10

value: 59.653

- type: ndcg_at_100

value: 63.627

- type: ndcg_at_1000

value: 64.596

- type: ndcg_at_3

value: 54.455

- type: ndcg_at_5

value: 57.245000000000005

- type: precision_at_1

value: 47.398

- type: precision_at_10

value: 9.524000000000001

- type: precision_at_100

value: 1.243

- type: precision_at_1000

value: 0.13699999999999998

- type: precision_at_3

value: 24.389

- type: precision_at_5

value: 16.752

- type: recall_at_1

value: 41.185

- type: recall_at_10

value: 73.193

- type: recall_at_100

value: 90.357

- type: recall_at_1000

value: 97.253

- type: recall_at_3

value: 59.199999999999996

- type: recall_at_5

value: 66.118

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGisRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.27

- type: map_at_10

value: 36.223

- type: map_at_100

value: 37.218

- type: map_at_1000

value: 37.293

- type: map_at_3

value: 33.503

- type: map_at_5

value: 35.097

- type: mrr_at_1

value: 29.492

- type: mrr_at_10

value: 38.352000000000004

- type: mrr_at_100

value: 39.188

- type: mrr_at_1000

value: 39.247

- type: mrr_at_3

value: 35.876000000000005

- type: mrr_at_5

value: 37.401

- type: ndcg_at_1

value: 29.492

- type: ndcg_at_10

value: 41.239

- type: ndcg_at_100

value: 46.066

- type: ndcg_at_1000

value: 47.992000000000004

- type: ndcg_at_3

value: 36.11

- type: ndcg_at_5

value: 38.772

- type: precision_at_1

value: 29.492

- type: precision_at_10

value: 6.260000000000001

- type: precision_at_100

value: 0.914

- type: precision_at_1000

value: 0.11100000000000002

- type: precision_at_3

value: 15.104000000000001

- type: precision_at_5

value: 10.644

- type: recall_at_1

value: 27.27

- type: recall_at_10

value: 54.589

- type: recall_at_100

value: 76.70700000000001

- type: recall_at_1000

value: 91.158

- type: recall_at_3

value: 40.974

- type: recall_at_5

value: 47.327000000000005

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackMathematicaRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 17.848

- type: map_at_10

value: 26.207

- type: map_at_100

value: 27.478

- type: map_at_1000

value: 27.602

- type: map_at_3

value: 23.405

- type: map_at_5

value: 24.98

- type: mrr_at_1

value: 21.891

- type: mrr_at_10

value: 31.041999999999998

- type: mrr_at_100

value: 32.092

- type: mrr_at_1000

value: 32.151999999999994

- type: mrr_at_3

value: 28.358

- type: mrr_at_5

value: 29.969

- type: ndcg_at_1

value: 21.891

- type: ndcg_at_10

value: 31.585

- type: ndcg_at_100

value: 37.531

- type: ndcg_at_1000

value: 40.256

- type: ndcg_at_3

value: 26.508

- type: ndcg_at_5

value: 28.894

- type: precision_at_1

value: 21.891

- type: precision_at_10

value: 5.795999999999999

- type: precision_at_100

value: 0.9990000000000001

- type: precision_at_1000

value: 0.13799999999999998

- type: precision_at_3

value: 12.769

- type: precision_at_5

value: 9.279

- type: recall_at_1

value: 17.848

- type: recall_at_10

value: 43.452

- type: recall_at_100

value: 69.216

- type: recall_at_1000

value: 88.102

- type: recall_at_3

value: 29.18

- type: recall_at_5

value: 35.347

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackPhysicsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 30.94

- type: map_at_10

value: 41.248000000000005

- type: map_at_100

value: 42.495

- type: map_at_1000

value: 42.602000000000004

- type: map_at_3

value: 37.939

- type: map_at_5

value: 39.924

- type: mrr_at_1

value: 37.824999999999996

- type: mrr_at_10

value: 47.041

- type: mrr_at_100

value: 47.83

- type: mrr_at_1000

value: 47.878

- type: mrr_at_3

value: 44.466

- type: mrr_at_5

value: 46.111999999999995

- type: ndcg_at_1

value: 37.824999999999996

- type: ndcg_at_10

value: 47.223

- type: ndcg_at_100

value: 52.394

- type: ndcg_at_1000

value: 54.432

- type: ndcg_at_3

value: 42.032000000000004

- type: ndcg_at_5

value: 44.772

- type: precision_at_1

value: 37.824999999999996

- type: precision_at_10

value: 8.393

- type: precision_at_100

value: 1.2890000000000001

- type: precision_at_1000

value: 0.164

- type: precision_at_3

value: 19.698

- type: precision_at_5

value: 14.013

- type: recall_at_1

value: 30.94

- type: recall_at_10

value: 59.316

- type: recall_at_100

value: 80.783

- type: recall_at_1000

value: 94.15400000000001

- type: recall_at_3

value: 44.712

- type: recall_at_5

value: 51.932

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackProgrammersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.104

- type: map_at_10

value: 36.675999999999995

- type: map_at_100

value: 38.076

- type: map_at_1000

value: 38.189

- type: map_at_3

value: 33.733999999999995

- type: map_at_5

value: 35.287

- type: mrr_at_1

value: 33.904

- type: mrr_at_10

value: 42.55

- type: mrr_at_100

value: 43.434

- type: mrr_at_1000

value: 43.494

- type: mrr_at_3

value: 40.126

- type: mrr_at_5

value: 41.473

- type: ndcg_at_1

value: 33.904

- type: ndcg_at_10

value: 42.414

- type: ndcg_at_100

value: 48.203

- type: ndcg_at_1000

value: 50.437

- type: ndcg_at_3

value: 37.633

- type: ndcg_at_5

value: 39.67

- type: precision_at_1

value: 33.904

- type: precision_at_10

value: 7.82

- type: precision_at_100

value: 1.2409999999999999

- type: precision_at_1000

value: 0.159

- type: precision_at_3

value: 17.884

- type: precision_at_5

value: 12.648000000000001

- type: recall_at_1

value: 27.104

- type: recall_at_10

value: 53.563

- type: recall_at_100

value: 78.557

- type: recall_at_1000

value: 93.533

- type: recall_at_3

value: 39.92

- type: recall_at_5

value: 45.457

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.707749999999997

- type: map_at_10

value: 36.961

- type: map_at_100

value: 38.158833333333334

- type: map_at_1000

value: 38.270333333333326

- type: map_at_3

value: 34.07183333333334

- type: map_at_5

value: 35.69533333333334

- type: mrr_at_1

value: 32.81875

- type: mrr_at_10

value: 41.293

- type: mrr_at_100

value: 42.116499999999995

- type: mrr_at_1000

value: 42.170249999999996

- type: mrr_at_3

value: 38.83983333333333

- type: mrr_at_5

value: 40.29775

- type: ndcg_at_1

value: 32.81875

- type: ndcg_at_10

value: 42.355

- type: ndcg_at_100

value: 47.41374999999999

- type: ndcg_at_1000

value: 49.5805

- type: ndcg_at_3

value: 37.52825

- type: ndcg_at_5

value: 39.83266666666667

- type: precision_at_1

value: 32.81875

- type: precision_at_10

value: 7.382416666666666

- type: precision_at_100

value: 1.1640833333333334

- type: precision_at_1000

value: 0.15383333333333335

- type: precision_at_3

value: 17.134166666666665

- type: precision_at_5

value: 12.174833333333336

- type: recall_at_1

value: 27.707749999999997

- type: recall_at_10

value: 53.945

- type: recall_at_100

value: 76.191

- type: recall_at_1000

value: 91.101

- type: recall_at_3

value: 40.39083333333334

- type: recall_at_5

value: 46.40083333333333

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackStatsRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 26.482

- type: map_at_10

value: 33.201

- type: map_at_100

value: 34.107

- type: map_at_1000

value: 34.197

- type: map_at_3

value: 31.174000000000003

- type: map_at_5

value: 32.279

- type: mrr_at_1

value: 29.908

- type: mrr_at_10

value: 36.235

- type: mrr_at_100

value: 37.04

- type: mrr_at_1000

value: 37.105

- type: mrr_at_3

value: 34.355999999999995

- type: mrr_at_5

value: 35.382999999999996

- type: ndcg_at_1

value: 29.908

- type: ndcg_at_10

value: 37.325

- type: ndcg_at_100

value: 41.795

- type: ndcg_at_1000

value: 44.105

- type: ndcg_at_3

value: 33.555

- type: ndcg_at_5

value: 35.266999999999996

- type: precision_at_1

value: 29.908

- type: precision_at_10

value: 5.721

- type: precision_at_100

value: 0.8630000000000001

- type: precision_at_1000

value: 0.11299999999999999

- type: precision_at_3

value: 14.008000000000001

- type: precision_at_5

value: 9.754999999999999

- type: recall_at_1

value: 26.482

- type: recall_at_10

value: 47.072

- type: recall_at_100

value: 67.27

- type: recall_at_1000

value: 84.371

- type: recall_at_3

value: 36.65

- type: recall_at_5

value: 40.774

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackTexRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 18.815

- type: map_at_10

value: 26.369999999999997

- type: map_at_100

value: 27.458

- type: map_at_1000

value: 27.588

- type: map_at_3

value: 23.990000000000002

- type: map_at_5

value: 25.345000000000002

- type: mrr_at_1

value: 22.953000000000003

- type: mrr_at_10

value: 30.342999999999996

- type: mrr_at_100

value: 31.241000000000003

- type: mrr_at_1000

value: 31.319000000000003

- type: mrr_at_3

value: 28.16

- type: mrr_at_5

value: 29.406

- type: ndcg_at_1

value: 22.953000000000003

- type: ndcg_at_10

value: 31.151

- type: ndcg_at_100

value: 36.309000000000005

- type: ndcg_at_1000

value: 39.227000000000004

- type: ndcg_at_3

value: 26.921

- type: ndcg_at_5

value: 28.938000000000002

- type: precision_at_1

value: 22.953000000000003

- type: precision_at_10

value: 5.602

- type: precision_at_100

value: 0.9530000000000001

- type: precision_at_1000

value: 0.13899999999999998

- type: precision_at_3

value: 12.606

- type: precision_at_5

value: 9.119

- type: recall_at_1

value: 18.815

- type: recall_at_10

value: 41.574

- type: recall_at_100

value: 64.84400000000001

- type: recall_at_1000

value: 85.406

- type: recall_at_3

value: 29.694

- type: recall_at_5

value: 34.935

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackUnixRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 27.840999999999998

- type: map_at_10

value: 36.797999999999995

- type: map_at_100

value: 37.993

- type: map_at_1000

value: 38.086999999999996

- type: map_at_3

value: 34.050999999999995

- type: map_at_5

value: 35.379

- type: mrr_at_1

value: 32.649

- type: mrr_at_10

value: 41.025

- type: mrr_at_100

value: 41.878

- type: mrr_at_1000

value: 41.929

- type: mrr_at_3

value: 38.573

- type: mrr_at_5

value: 39.715

- type: ndcg_at_1

value: 32.649

- type: ndcg_at_10

value: 42.142

- type: ndcg_at_100

value: 47.558

- type: ndcg_at_1000

value: 49.643

- type: ndcg_at_3

value: 37.12

- type: ndcg_at_5

value: 38.983000000000004

- type: precision_at_1

value: 32.649

- type: precision_at_10

value: 7.08

- type: precision_at_100

value: 1.1039999999999999

- type: precision_at_1000

value: 0.13899999999999998

- type: precision_at_3

value: 16.698

- type: precision_at_5

value: 11.511000000000001

- type: recall_at_1

value: 27.840999999999998

- type: recall_at_10

value: 54.245

- type: recall_at_100

value: 77.947

- type: recall_at_1000

value: 92.36999999999999

- type: recall_at_3

value: 40.146

- type: recall_at_5

value: 44.951

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWebmastersRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 26.529000000000003

- type: map_at_10

value: 35.010000000000005

- type: map_at_100

value: 36.647

- type: map_at_1000

value: 36.857

- type: map_at_3

value: 31.968000000000004

- type: map_at_5

value: 33.554

- type: mrr_at_1

value: 31.818

- type: mrr_at_10

value: 39.550999999999995

- type: mrr_at_100

value: 40.54

- type: mrr_at_1000

value: 40.596

- type: mrr_at_3

value: 36.726

- type: mrr_at_5

value: 38.416

- type: ndcg_at_1

value: 31.818

- type: ndcg_at_10

value: 40.675

- type: ndcg_at_100

value: 46.548

- type: ndcg_at_1000

value: 49.126

- type: ndcg_at_3

value: 35.829

- type: ndcg_at_5

value: 38.0

- type: precision_at_1

value: 31.818

- type: precision_at_10

value: 7.826

- type: precision_at_100

value: 1.538

- type: precision_at_1000

value: 0.24

- type: precision_at_3

value: 16.601

- type: precision_at_5

value: 12.095

- type: recall_at_1

value: 26.529000000000003

- type: recall_at_10

value: 51.03

- type: recall_at_100

value: 77.556

- type: recall_at_1000

value: 93.804

- type: recall_at_3

value: 36.986000000000004

- type: recall_at_5

value: 43.096000000000004

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWordpressRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 23.480999999999998

- type: map_at_10

value: 30.817

- type: map_at_100

value: 31.838

- type: map_at_1000

value: 31.932

- type: map_at_3

value: 28.011999999999997

- type: map_at_5

value: 29.668

- type: mrr_at_1

value: 25.323

- type: mrr_at_10

value: 33.072

- type: mrr_at_100

value: 33.926

- type: mrr_at_1000

value: 33.993

- type: mrr_at_3

value: 30.436999999999998

- type: mrr_at_5

value: 32.092

- type: ndcg_at_1

value: 25.323

- type: ndcg_at_10

value: 35.514

- type: ndcg_at_100

value: 40.489000000000004

- type: ndcg_at_1000

value: 42.908

- type: ndcg_at_3

value: 30.092000000000002

- type: ndcg_at_5

value: 32.989000000000004

- type: precision_at_1

value: 25.323

- type: precision_at_10

value: 5.545

- type: precision_at_100

value: 0.861

- type: precision_at_1000

value: 0.117

- type: precision_at_3

value: 12.446

- type: precision_at_5

value: 9.131

- type: recall_at_1

value: 23.480999999999998

- type: recall_at_10

value: 47.825

- type: recall_at_100

value: 70.652

- type: recall_at_1000

value: 88.612

- type: recall_at_3

value: 33.537

- type: recall_at_5

value: 40.542

- task:

type: Retrieval

dataset:

type: climate-fever

name: MTEB ClimateFEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 13.333999999999998

- type: map_at_10

value: 22.524

- type: map_at_100

value: 24.506

- type: map_at_1000

value: 24.715

- type: map_at_3

value: 19.022

- type: map_at_5

value: 20.693

- type: mrr_at_1

value: 29.186

- type: mrr_at_10

value: 41.22

- type: mrr_at_100

value: 42.16

- type: mrr_at_1000

value: 42.192

- type: mrr_at_3

value: 38.013000000000005

- type: mrr_at_5

value: 39.704

- type: ndcg_at_1

value: 29.186

- type: ndcg_at_10

value: 31.167

- type: ndcg_at_100

value: 38.879000000000005

- type: ndcg_at_1000

value: 42.376000000000005

- type: ndcg_at_3

value: 25.817

- type: ndcg_at_5

value: 27.377000000000002

- type: precision_at_1

value: 29.186

- type: precision_at_10

value: 9.693999999999999

- type: precision_at_100

value: 1.8030000000000002

- type: precision_at_1000

value: 0.246

- type: precision_at_3

value: 19.11

- type: precision_at_5

value: 14.344999999999999

- type: recall_at_1

value: 13.333999999999998

- type: recall_at_10

value: 37.092000000000006

- type: recall_at_100

value: 63.651

- type: recall_at_1000

value: 83.05

- type: recall_at_3

value: 23.74

- type: recall_at_5

value: 28.655

- task:

type: Retrieval

dataset:

type: dbpedia-entity

name: MTEB DBPedia

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 9.151

- type: map_at_10

value: 19.653000000000002

- type: map_at_100

value: 28.053

- type: map_at_1000

value: 29.709000000000003

- type: map_at_3

value: 14.191

- type: map_at_5

value: 16.456

- type: mrr_at_1

value: 66.25

- type: mrr_at_10

value: 74.4

- type: mrr_at_100

value: 74.715

- type: mrr_at_1000

value: 74.726

- type: mrr_at_3

value: 72.417

- type: mrr_at_5

value: 73.667

- type: ndcg_at_1

value: 54.25

- type: ndcg_at_10

value: 40.77

- type: ndcg_at_100

value: 46.359

- type: ndcg_at_1000

value: 54.193000000000005

- type: ndcg_at_3

value: 44.832

- type: ndcg_at_5

value: 42.63

- type: precision_at_1

value: 66.25

- type: precision_at_10

value: 32.175

- type: precision_at_100

value: 10.668

- type: precision_at_1000

value: 2.067

- type: precision_at_3

value: 47.667

- type: precision_at_5

value: 41.3

- type: recall_at_1

value: 9.151

- type: recall_at_10

value: 25.003999999999998

- type: recall_at_100

value: 52.976

- type: recall_at_1000

value: 78.315

- type: recall_at_3

value: 15.487

- type: recall_at_5

value: 18.999

- task:

type: Classification

dataset:

type: mteb/emotion

name: MTEB EmotionClassification

config: default

split: test

revision: 4f58c6b202a23cf9a4da393831edf4f9183cad37

metrics:

- type: accuracy

value: 51.89999999999999

- type: f1

value: 46.47777925067403

- task:

type: Retrieval

dataset:

type: fever

name: MTEB FEVER

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 73.706

- type: map_at_10

value: 82.423

- type: map_at_100

value: 82.67999999999999

- type: map_at_1000

value: 82.694

- type: map_at_3

value: 81.328

- type: map_at_5

value: 82.001

- type: mrr_at_1

value: 79.613

- type: mrr_at_10

value: 87.07000000000001

- type: mrr_at_100

value: 87.169

- type: mrr_at_1000

value: 87.17

- type: mrr_at_3

value: 86.404

- type: mrr_at_5

value: 86.856

- type: ndcg_at_1

value: 79.613

- type: ndcg_at_10

value: 86.289

- type: ndcg_at_100

value: 87.201

- type: ndcg_at_1000

value: 87.428

- type: ndcg_at_3

value: 84.625

- type: ndcg_at_5

value: 85.53699999999999

- type: precision_at_1

value: 79.613

- type: precision_at_10

value: 10.399

- type: precision_at_100

value: 1.1079999999999999

- type: precision_at_1000

value: 0.11499999999999999

- type: precision_at_3

value: 32.473

- type: precision_at_5

value: 20.132

- type: recall_at_1

value: 73.706

- type: recall_at_10

value: 93.559

- type: recall_at_100

value: 97.188

- type: recall_at_1000

value: 98.555

- type: recall_at_3

value: 88.98700000000001

- type: recall_at_5

value: 91.373

- task:

type: Retrieval

dataset:

type: fiqa

name: MTEB FiQA2018

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 19.841

- type: map_at_10

value: 32.643

- type: map_at_100

value: 34.575

- type: map_at_1000

value: 34.736

- type: map_at_3

value: 28.317999999999998

- type: map_at_5

value: 30.964000000000002

- type: mrr_at_1

value: 39.660000000000004

- type: mrr_at_10

value: 48.620000000000005

- type: mrr_at_100

value: 49.384

- type: mrr_at_1000

value: 49.415

- type: mrr_at_3

value: 45.988

- type: mrr_at_5

value: 47.361

- type: ndcg_at_1

value: 39.660000000000004

- type: ndcg_at_10

value: 40.646

- type: ndcg_at_100

value: 47.657

- type: ndcg_at_1000

value: 50.428

- type: ndcg_at_3

value: 36.689

- type: ndcg_at_5

value: 38.211

- type: precision_at_1

value: 39.660000000000004

- type: precision_at_10

value: 11.235000000000001

- type: precision_at_100

value: 1.8530000000000002

- type: precision_at_1000

value: 0.23600000000000002

- type: precision_at_3

value: 24.587999999999997

- type: precision_at_5

value: 18.395

- type: recall_at_1

value: 19.841

- type: recall_at_10

value: 48.135

- type: recall_at_100

value: 74.224

- type: recall_at_1000

value: 90.826

- type: recall_at_3

value: 33.536

- type: recall_at_5

value: 40.311

- task:

type: Retrieval

dataset:

type: hotpotqa

name: MTEB HotpotQA

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 40.358

- type: map_at_10

value: 64.497

- type: map_at_100

value: 65.362

- type: map_at_1000

value: 65.41900000000001

- type: map_at_3

value: 61.06700000000001

- type: map_at_5

value: 63.317

- type: mrr_at_1

value: 80.716

- type: mrr_at_10

value: 86.10799999999999

- type: mrr_at_100

value: 86.265

- type: mrr_at_1000

value: 86.27

- type: mrr_at_3

value: 85.271

- type: mrr_at_5

value: 85.82499999999999

- type: ndcg_at_1

value: 80.716

- type: ndcg_at_10

value: 72.597

- type: ndcg_at_100

value: 75.549

- type: ndcg_at_1000

value: 76.61

- type: ndcg_at_3

value: 67.874

- type: ndcg_at_5

value: 70.655

- type: precision_at_1

value: 80.716

- type: precision_at_10

value: 15.148

- type: precision_at_100

value: 1.745

- type: precision_at_1000

value: 0.188

- type: precision_at_3

value: 43.597

- type: precision_at_5

value: 28.351

- type: recall_at_1

value: 40.358

- type: recall_at_10

value: 75.739

- type: recall_at_100

value: 87.259

- type: recall_at_1000

value: 94.234

- type: recall_at_3

value: 65.39500000000001

- type: recall_at_5

value: 70.878

- task:

type: Classification

dataset:

type: mteb/imdb

name: MTEB ImdbClassification

config: default

split: test

revision: 3d86128a09e091d6018b6d26cad27f2739fc2db7

metrics:

- type: accuracy

value: 90.80799999999998

- type: ap

value: 86.81350378180757

- type: f1

value: 90.79901248314215

- task:

type: Retrieval

dataset:

type: msmarco

name: MTEB MSMARCO

config: default

split: dev

revision: None

metrics:

- type: map_at_1

value: 22.096

- type: map_at_10

value: 34.384

- type: map_at_100

value: 35.541

- type: map_at_1000

value: 35.589999999999996

- type: map_at_3

value: 30.496000000000002

- type: map_at_5

value: 32.718

- type: mrr_at_1

value: 22.750999999999998

- type: mrr_at_10

value: 35.024

- type: mrr_at_100

value: 36.125

- type: mrr_at_1000

value: 36.168

- type: mrr_at_3

value: 31.225

- type: mrr_at_5

value: 33.416000000000004

- type: ndcg_at_1

value: 22.750999999999998

- type: ndcg_at_10

value: 41.351

- type: ndcg_at_100

value: 46.92

- type: ndcg_at_1000

value: 48.111

- type: ndcg_at_3

value: 33.439

- type: ndcg_at_5

value: 37.407000000000004

- type: precision_at_1

value: 22.750999999999998

- type: precision_at_10

value: 6.564

- type: precision_at_100

value: 0.935

- type: precision_at_1000

value: 0.104

- type: precision_at_3

value: 14.288

- type: precision_at_5

value: 10.581999999999999

- type: recall_at_1

value: 22.096

- type: recall_at_10

value: 62.771

- type: recall_at_100

value: 88.529

- type: recall_at_1000

value: 97.55

- type: recall_at_3

value: 41.245

- type: recall_at_5

value: 50.788

- task:

type: Classification

dataset:

type: mteb/mtop_domain

name: MTEB MTOPDomainClassification (en)

config: en

split: test

revision: d80d48c1eb48d3562165c59d59d0034df9fff0bf

metrics:

- type: accuracy

value: 94.16780665754673

- type: f1

value: 93.96331194859894

- task:

type: Classification

dataset:

type: mteb/mtop_intent

name: MTEB MTOPIntentClassification (en)

config: en

split: test

revision: ae001d0e6b1228650b7bd1c2c65fb50ad11a8aba

metrics:

- type: accuracy

value: 76.90606475148198

- type: f1

value: 58.58344986604187

- task:

type: Classification

dataset:

type: mteb/amazon_massive_intent

name: MTEB MassiveIntentClassification (en)

config: en

split: test

revision: 31efe3c427b0bae9c22cbb560b8f15491cc6bed7

metrics:

- type: accuracy

value: 76.14660390047075

- type: f1

value: 74.31533923533614

- task:

type: Classification

dataset:

type: mteb/amazon_massive_scenario

name: MTEB MassiveScenarioClassification (en)

config: en

split: test

revision: 7d571f92784cd94a019292a1f45445077d0ef634

metrics:

- type: accuracy

value: 80.16139878950908

- type: f1

value: 80.18532656824924

- task:

type: Clustering

dataset:

type: mteb/medrxiv-clustering-p2p

name: MTEB MedrxivClusteringP2P

config: default

split: test

revision: e7a26af6f3ae46b30dde8737f02c07b1505bcc73

metrics:

- type: v_measure

value: 32.949880906135085

- task:

type: Clustering

dataset:

type: mteb/medrxiv-clustering-s2s

name: MTEB MedrxivClusteringS2S

config: default

split: test

revision: 35191c8c0dca72d8ff3efcd72aa802307d469663

metrics:

- type: v_measure

value: 31.56300351524862

- task:

type: Reranking

dataset:

type: mteb/mind_small

name: MTEB MindSmallReranking

config: default

split: test

revision: 3bdac13927fdc888b903db93b2ffdbd90b295a69

metrics:

- type: map

value: 31.196521894371315

- type: mrr

value: 32.22644231694389

- task:

type: Retrieval

dataset:

type: nfcorpus

name: MTEB NFCorpus

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 6.783

- type: map_at_10

value: 14.549000000000001

- type: map_at_100

value: 18.433

- type: map_at_1000

value: 19.949

- type: map_at_3

value: 10.936

- type: map_at_5

value: 12.514

- type: mrr_at_1

value: 47.368

- type: mrr_at_10

value: 56.42

- type: mrr_at_100

value: 56.908

- type: mrr_at_1000

value: 56.95

- type: mrr_at_3

value: 54.283

- type: mrr_at_5

value: 55.568

- type: ndcg_at_1

value: 45.666000000000004

- type: ndcg_at_10

value: 37.389

- type: ndcg_at_100

value: 34.253

- type: ndcg_at_1000

value: 43.059999999999995

- type: ndcg_at_3

value: 42.725

- type: ndcg_at_5

value: 40.193

- type: precision_at_1

value: 47.368

- type: precision_at_10

value: 27.988000000000003

- type: precision_at_100

value: 8.672

- type: precision_at_1000

value: 2.164

- type: precision_at_3

value: 40.248

- type: precision_at_5

value: 34.737

- type: recall_at_1

value: 6.783

- type: recall_at_10

value: 17.838

- type: recall_at_100

value: 33.672000000000004

- type: recall_at_1000

value: 66.166

- type: recall_at_3

value: 11.849

- type: recall_at_5

value: 14.205000000000002

- task:

type: Retrieval

dataset:

type: nq

name: MTEB NQ

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 31.698999999999998

- type: map_at_10

value: 46.556

- type: map_at_100

value: 47.652

- type: map_at_1000

value: 47.68

- type: map_at_3

value: 42.492000000000004

- type: map_at_5

value: 44.763999999999996

- type: mrr_at_1

value: 35.747

- type: mrr_at_10

value: 49.242999999999995

- type: mrr_at_100

value: 50.052

- type: mrr_at_1000

value: 50.068

- type: mrr_at_3

value: 45.867000000000004

- type: mrr_at_5

value: 47.778999999999996

- type: ndcg_at_1

value: 35.717999999999996

- type: ndcg_at_10

value: 54.14600000000001

- type: ndcg_at_100

value: 58.672999999999995

- type: ndcg_at_1000

value: 59.279

- type: ndcg_at_3

value: 46.407

- type: ndcg_at_5

value: 50.181

- type: precision_at_1

value: 35.717999999999996

- type: precision_at_10

value: 8.844000000000001

- type: precision_at_100

value: 1.139

- type: precision_at_1000

value: 0.12

- type: precision_at_3

value: 20.993000000000002

- type: precision_at_5

value: 14.791000000000002

- type: recall_at_1

value: 31.698999999999998

- type: recall_at_10

value: 74.693

- type: recall_at_100

value: 94.15299999999999

- type: recall_at_1000

value: 98.585

- type: recall_at_3

value: 54.388999999999996

- type: recall_at_5

value: 63.08200000000001

- task:

type: Retrieval

dataset:

type: quora

name: MTEB QuoraRetrieval

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 71.283

- type: map_at_10

value: 85.24000000000001

- type: map_at_100

value: 85.882

- type: map_at_1000

value: 85.897

- type: map_at_3

value: 82.326

- type: map_at_5

value: 84.177

- type: mrr_at_1

value: 82.21000000000001

- type: mrr_at_10

value: 88.228

- type: mrr_at_100

value: 88.32

- type: mrr_at_1000

value: 88.32

- type: mrr_at_3

value: 87.323

- type: mrr_at_5

value: 87.94800000000001

- type: ndcg_at_1

value: 82.17999999999999

- type: ndcg_at_10

value: 88.9

- type: ndcg_at_100

value: 90.079

- type: ndcg_at_1000

value: 90.158

- type: ndcg_at_3

value: 86.18299999999999

- type: ndcg_at_5

value: 87.71799999999999

- type: precision_at_1

value: 82.17999999999999

- type: precision_at_10

value: 13.464

- type: precision_at_100

value: 1.533

- type: precision_at_1000

value: 0.157

- type: precision_at_3

value: 37.693

- type: precision_at_5

value: 24.792

- type: recall_at_1

value: 71.283

- type: recall_at_10

value: 95.742

- type: recall_at_100

value: 99.67200000000001

- type: recall_at_1000

value: 99.981

- type: recall_at_3

value: 87.888

- type: recall_at_5

value: 92.24

- task:

type: Clustering

dataset:

type: mteb/reddit-clustering

name: MTEB RedditClustering

config: default

split: test

revision: 24640382cdbf8abc73003fb0fa6d111a705499eb

metrics:

- type: v_measure

value: 56.24267063669042

- task:

type: Clustering

dataset:

type: mteb/reddit-clustering-p2p

name: MTEB RedditClusteringP2P

config: default

split: test

revision: 282350215ef01743dc01b456c7f5241fa8937f16

metrics:

- type: v_measure

value: 62.88056988932578

- task:

type: Retrieval

dataset:

type: scidocs

name: MTEB SCIDOCS

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 4.903

- type: map_at_10

value: 13.202

- type: map_at_100

value: 15.5

- type: map_at_1000

value: 15.870999999999999

- type: map_at_3

value: 9.407

- type: map_at_5

value: 11.238

- type: mrr_at_1

value: 24.2

- type: mrr_at_10

value: 35.867

- type: mrr_at_100

value: 37.001

- type: mrr_at_1000

value: 37.043

- type: mrr_at_3

value: 32.5

- type: mrr_at_5

value: 34.35

- type: ndcg_at_1

value: 24.2

- type: ndcg_at_10

value: 21.731

- type: ndcg_at_100

value: 30.7

- type: ndcg_at_1000

value: 36.618

- type: ndcg_at_3

value: 20.72

- type: ndcg_at_5

value: 17.954

- type: precision_at_1

value: 24.2

- type: precision_at_10

value: 11.33

- type: precision_at_100

value: 2.4410000000000003

- type: precision_at_1000

value: 0.386

- type: precision_at_3

value: 19.667

- type: precision_at_5

value: 15.86

- type: recall_at_1

value: 4.903

- type: recall_at_10

value: 22.962

- type: recall_at_100

value: 49.563

- type: recall_at_1000

value: 78.238

- type: recall_at_3

value: 11.953

- type: recall_at_5

value: 16.067999999999998

- task:

type: STS

dataset:

type: mteb/sickr-sts

name: MTEB SICK-R

config: default

split: test

revision: a6ea5a8cab320b040a23452cc28066d9beae2cee

metrics:

- type: cos_sim_pearson

value: 84.12694254604078

- type: cos_sim_spearman

value: 80.30141815181918

- type: euclidean_pearson

value: 81.34015449877128

- type: euclidean_spearman

value: 80.13984197010849

- type: manhattan_pearson

value: 81.31767068124086

- type: manhattan_spearman

value: 80.11720513114103

- task:

type: STS

dataset:

type: mteb/sts12-sts

name: MTEB STS12

config: default

split: test

revision: a0d554a64d88156834ff5ae9920b964011b16384

metrics:

- type: cos_sim_pearson

value: 86.13112984010417

- type: cos_sim_spearman

value: 78.03063573402875

- type: euclidean_pearson

value: 83.51928418844804

- type: euclidean_spearman

value: 78.4045235411144

- type: manhattan_pearson

value: 83.49981637388689

- type: manhattan_spearman

value: 78.4042575139372

- task:

type: STS

dataset:

type: mteb/sts13-sts

name: MTEB STS13

config: default

split: test

revision: 7e90230a92c190f1bf69ae9002b8cea547a64cca

metrics:

- type: cos_sim_pearson

value: 82.50327987379504

- type: cos_sim_spearman

value: 84.18556767756205

- type: euclidean_pearson

value: 82.69684424327679

- type: euclidean_spearman

value: 83.5368106038335

- type: manhattan_pearson

value: 82.57967581007374

- type: manhattan_spearman

value: 83.43009053133697

- task:

type: STS

dataset:

type: mteb/sts14-sts

name: MTEB STS14

config: default

split: test

revision: 6031580fec1f6af667f0bd2da0a551cf4f0b2375

metrics:

- type: cos_sim_pearson

value: 82.50756863007814

- type: cos_sim_spearman

value: 82.27204331279108

- type: euclidean_pearson

value: 81.39535251429741

- type: euclidean_spearman

value: 81.84386626336239

- type: manhattan_pearson

value: 81.34281737280695

- type: manhattan_spearman

value: 81.81149375673166

- task:

type: STS

dataset:

type: mteb/sts15-sts

name: MTEB STS15

config: default

split: test

revision: ae752c7c21bf194d8b67fd573edf7ae58183cbe3

metrics:

- type: cos_sim_pearson

value: 86.8727714856726

- type: cos_sim_spearman

value: 87.95738287792312

- type: euclidean_pearson

value: 86.62920602795887

- type: euclidean_spearman

value: 87.05207355381243

- type: manhattan_pearson

value: 86.53587918472225

- type: manhattan_spearman

value: 86.95382961029586

- task:

type: STS

dataset:

type: mteb/sts16-sts

name: MTEB STS16

config: default

split: test

revision: 4d8694f8f0e0100860b497b999b3dbed754a0513

metrics:

- type: cos_sim_pearson

value: 83.52240359769479

- type: cos_sim_spearman

value: 85.47685776238286

- type: euclidean_pearson

value: 84.25815333483058

- type: euclidean_spearman

value: 85.27415639683198

- type: manhattan_pearson

value: 84.29127757025637

- type: manhattan_spearman

value: 85.30226224917351

- task:

type: STS

dataset:

type: mteb/sts17-crosslingual-sts

name: MTEB STS17 (en-en)

config: en-en

split: test

revision: af5e6fb845001ecf41f4c1e033ce921939a2a68d

metrics:

- type: cos_sim_pearson

value: 86.42501708915708

- type: cos_sim_spearman

value: 86.42276182795041

- type: euclidean_pearson

value: 86.5408207354761

- type: euclidean_spearman

value: 85.46096321750838

- type: manhattan_pearson

value: 86.54177303026881

- type: manhattan_spearman

value: 85.50313151916117

- task:

type: STS

dataset:

type: mteb/sts22-crosslingual-sts

name: MTEB STS22 (en)

config: en

split: test

revision: 6d1ba47164174a496b7fa5d3569dae26a6813b80

metrics:

- type: cos_sim_pearson

value: 64.86521089250766

- type: cos_sim_spearman

value: 65.94868540323003

- type: euclidean_pearson

value: 67.16569626533084

- type: euclidean_spearman

value: 66.37667004134917

- type: manhattan_pearson

value: 67.1482365102333

- type: manhattan_spearman

value: 66.53240122580029

- task:

type: STS

dataset:

type: mteb/stsbenchmark-sts

name: MTEB STSBenchmark

config: default

split: test

revision: b0fddb56ed78048fa8b90373c8a3cfc37b684831

metrics:

- type: cos_sim_pearson

value: 84.64746265365318

- type: cos_sim_spearman

value: 86.41888825906786

- type: euclidean_pearson

value: 85.27453642725811

- type: euclidean_spearman

value: 85.94095796602544

- type: manhattan_pearson

value: 85.28643660505334

- type: manhattan_spearman

value: 85.95028003260744

- task:

type: Reranking

dataset:

type: mteb/scidocs-reranking

name: MTEB SciDocsRR

config: default

split: test

revision: d3c5e1fc0b855ab6097bf1cda04dd73947d7caab

metrics:

- type: map

value: 87.48903153618527

- type: mrr

value: 96.41081503826601

- task:

type: Retrieval

dataset:

type: scifact

name: MTEB SciFact

config: default

split: test

revision: None

metrics:

- type: map_at_1

value: 58.594

- type: map_at_10

value: 69.296

- type: map_at_100

value: 69.782

- type: map_at_1000

value: 69.795

- type: map_at_3

value: 66.23

- type: map_at_5

value: 68.293

- type: mrr_at_1

value: 61.667

- type: mrr_at_10

value: 70.339

- type: mrr_at_100

value: 70.708

- type: mrr_at_1000

value: 70.722

- type: mrr_at_3

value: 68.0

- type: mrr_at_5

value: 69.56700000000001

- type: ndcg_at_1

value: 61.667

- type: ndcg_at_10

value: 74.039

- type: ndcg_at_100

value: 76.103

- type: ndcg_at_1000

value: 76.47800000000001

- type: ndcg_at_3

value: 68.967

- type: ndcg_at_5

value: 71.96900000000001

- type: precision_at_1

value: 61.667

- type: precision_at_10

value: 9.866999999999999

- type: precision_at_100

value: 1.097

- type: precision_at_1000

value: 0.11299999999999999

- type: precision_at_3

value: 27.111

- type: precision_at_5

value: 18.2

- type: recall_at_1

value: 58.594

- type: recall_at_10

value: 87.422

- type: recall_at_100

value: 96.667

- type: recall_at_1000