index

int64 0

0

| repo_id

stringclasses 179

values | file_path

stringlengths 26

186

| content

stringlengths 1

2.1M

| __index_level_0__

int64 0

9

|

|---|---|---|---|---|

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/smollm2.yml | task: llm-sft

base_model: HuggingFaceTB/SmolLM2-1.7B-Instruct

project_name: autotrain-smollm2-finetune

log: tensorboard

backend: local

data:

path: HuggingFaceH4/no_robots

train_split: train

valid_split: null

chat_template: tokenizer

column_mapping:

text_column: messages

params:

block_size: 2048

model_max_length: 4096

epochs: 2

batch_size: 1

lr: 1e-5

peft: true

quantization: int4

target_modules: all-linear

padding: right

optimizer: paged_adamw_8bit

scheduler: linear

gradient_accumulation: 8

mixed_precision: bf16

merge_adapter: true

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true

| 0 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/llama3-70b-sft.yml | task: llm-sft

base_model: meta-llama/Meta-Llama-3-70B-Instruct

project_name: autotrain-llama3-70b-math-v1

log: tensorboard

backend: local

data:

path: rishiraj/guanaco-style-metamath-40k

train_split: train

valid_split: null

chat_template: null

column_mapping:

text_column: text

params:

block_size: 2048

model_max_length: 8192

epochs: 2

batch_size: 1

lr: 1e-5

peft: true

quantization: null

target_modules: all-linear

padding: right

optimizer: paged_adamw_8bit

scheduler: linear

gradient_accumulation: 8

mixed_precision: bf16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 1 |

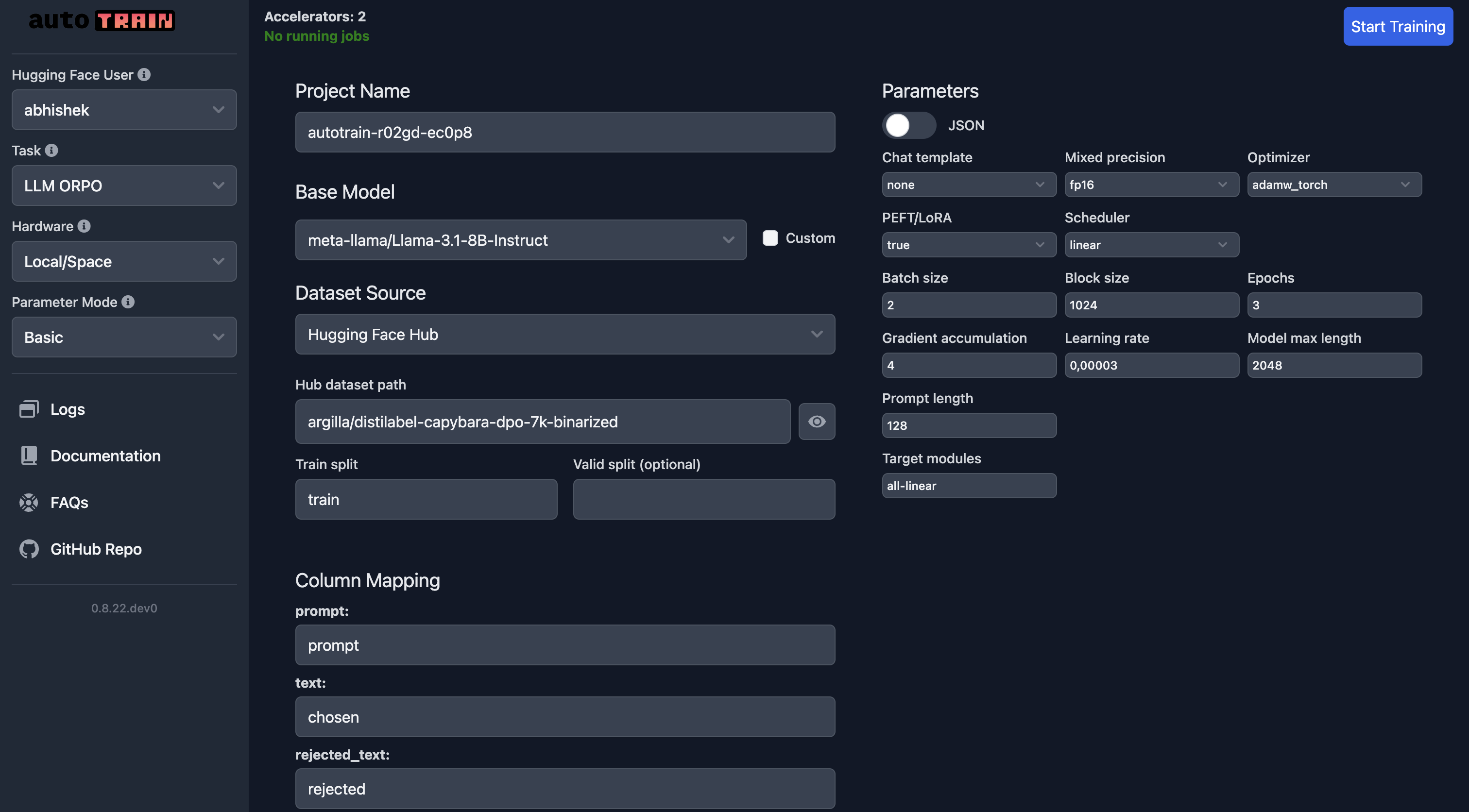

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/smollm2_orpo.yml | task: llm-orpo

base_model: HuggingFaceTB/SmolLM2-1.7B-Instruct

project_name: autotrain-smallm2-orpo

log: tensorboard

backend: local

data:

path: argilla/distilabel-capybara-dpo-7k-binarized

train_split: train

valid_split: null

chat_template: chatml

column_mapping:

text_column: chosen

rejected_text_column: rejected

prompt_text_column: prompt

params:

block_size: 1024

model_max_length: 2048

max_prompt_length: 512

epochs: 3

batch_size: 2

lr: 3e-5

peft: true

quantization: int4

target_modules: all-linear

padding: right

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 4

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: false | 2 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/smollm2_guanaco.yml | task: llm-sft

base_model: HuggingFaceTB/SmolLM2-135M-Instruct

project_name: autotrain-smollm2-135m-finetune-guanaco

log: tensorboard

backend: local

data:

path: timdettmers/openassistant-guanaco

train_split: train

valid_split: null

chat_template: null

column_mapping:

text_column: text

params:

block_size: 1024

model_max_length: 2048

epochs: 1

batch_size: 1

lr: 1e-5

peft: true

quantization: int4

target_modules: all-linear

padding: right

optimizer: paged_adamw_8bit

scheduler: linear

gradient_accumulation: 8

mixed_precision: bf16

merge_adapter: true

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 3 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/llama32-1b-sft.yml | task: llm-sft

base_model: meta-llama/Llama-3.2-1B

project_name: autotrain-llama32-1b-finetune

log: tensorboard

backend: local

data:

path: HuggingFaceH4/no_robots

train_split: train

valid_split: null

chat_template: tokenizer

column_mapping:

text_column: messages

params:

block_size: 2048

model_max_length: 4096

epochs: 2

batch_size: 1

lr: 1e-5

peft: true

quantization: int4

target_modules: all-linear

padding: right

optimizer: paged_adamw_8bit

scheduler: linear

gradient_accumulation: 8

mixed_precision: bf16

merge_adapter: true

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true

| 4 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/llm_finetuning/qwen.yml | task: llm-sft

base_model: Qwen/Qwen2.5-Coder-7B-Instruct

project_name: autotrain-qwen-finetune

log: tensorboard

backend: local

data:

path: HuggingFaceH4/no_robots

train_split: test

valid_split: null

chat_template: tokenizer

column_mapping:

text_column: messages

params:

block_size: 2048

model_max_length: 4096

epochs: 1

batch_size: 1

lr: 1e-5

peft: true

quantization: int4

target_modules: all-linear

padding: right

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

merge_adapter: true

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true

| 5 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/local_dataset.yml | task: sentence-transformers:pair_score

base_model: microsoft/mpnet-base

project_name: autotrain-st-pair-score-local-dataset

log: tensorboard

backend: local

data:

path: /path/to/your/dataset # this must be the path to the directory containing the train and valid files

train_split: train # this is the name of the train file (csv or jsonl)

valid_split: null # this is the name of the valid file (csv or jsonl), optional

column_mapping:

sentence1_column: input_sentence

sentence2_column: target_sentence

target_column: score

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 6 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/pair_score.yml | task: sentence-transformers:pair_score

base_model: microsoft/mpnet-base

project_name: autotrain-st-pair-score

log: tensorboard

backend: local

data:

path: sentence-transformers/all-nli

train_split: pair-score:train

valid_split: pair-score:dev

column_mapping:

sentence1_column: sentence1

sentence2_column: sentence2

target_column: score

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 7 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/qa.yml | task: sentence-transformers:qa

base_model: microsoft/mpnet-base

project_name: autotrain-st-qa

log: tensorboard

backend: local

data:

path: sentence-transformers/natural-questions

train_split: train

valid_split: null

column_mapping:

sentence1_column: query

sentence2_column: answer

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 8 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/pair.yml | task: sentence-transformers:pair

base_model: microsoft/mpnet-base

project_name: autotrain-st-pair

log: tensorboard

backend: local

data:

path: sentence-transformers/all-nli

train_split: pair:train

valid_split: pair:dev

column_mapping:

sentence1_column: anchor

sentence2_column: positive

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 9 |

0 | hf_public_repos/api-inference-community/docker_images/timm | hf_public_repos/api-inference-community/docker_images/timm/app/main.py | import functools

import logging

import os

from typing import Dict, Type

from api_inference_community.routes import pipeline_route, status_ok

from app.pipelines import ImageClassificationPipeline, Pipeline

from starlette.applications import Starlette

from starlette.middleware import Middleware

from starlette.middleware.gzip import GZipMiddleware

from starlette.routing import Route

TASK = os.getenv("TASK")

MODEL_ID = os.getenv("MODEL_ID")

logger = logging.getLogger(__name__)

# Add the allowed tasks

# Supported tasks are:

# - text-generation

# - text-classification

# - token-classification

# - translation

# - summarization

# - automatic-speech-recognition

# - ...

# For instance

# from app.pipelines import AutomaticSpeecRecognitionPipeline

# ALLOWED_TASKS = {"automatic-speech-recognition": AutomaticSpeecRecognitionPipeline}

# You can check the requirements and expectations of each pipelines in their respective

# directories. Implement directly within the directories.

ALLOWED_TASKS: Dict[str, Type[Pipeline]] = {

"image-classification": ImageClassificationPipeline

}

@functools.lru_cache()

def get_pipeline() -> Pipeline:

task = os.environ["TASK"]

model_id = os.environ["MODEL_ID"]

if task not in ALLOWED_TASKS:

raise EnvironmentError(f"{task} is not a valid pipeline for model : {model_id}")

return ALLOWED_TASKS[task](model_id)

routes = [

Route("/{whatever:path}", status_ok),

Route("/{whatever:path}", pipeline_route, methods=["POST"]),

]

middleware = [Middleware(GZipMiddleware, minimum_size=1000)]

if os.environ.get("DEBUG", "") == "1":

from starlette.middleware.cors import CORSMiddleware

middleware.append(

Middleware(

CORSMiddleware,

allow_origins=["*"],

allow_headers=["*"],

allow_methods=["*"],

)

)

app = Starlette(routes=routes, middleware=middleware)

@app.on_event("startup")

async def startup_event():

logger = logging.getLogger("uvicorn.access")

handler = logging.StreamHandler()

handler.setFormatter(logging.Formatter("%(asctime)s - %(levelname)s - %(message)s"))

logger.handlers = [handler]

# Link between `api-inference-community` and framework code.

app.get_pipeline = get_pipeline

try:

get_pipeline()

except Exception:

# We can fail so we can show exception later.

pass

if __name__ == "__main__":

try:

get_pipeline()

except Exception:

# We can fail so we can show exception later.

pass

| 0 |

0 | hf_public_repos/api-inference-community/docker_images/timm/app | hf_public_repos/api-inference-community/docker_images/timm/app/pipelines/base.py | from abc import ABC, abstractmethod

from typing import Any, Optional

class Pipeline(ABC):

task: Optional[str] = None

model_id: Optional[str] = None

@abstractmethod

def __init__(self, model_id: str):

raise NotImplementedError("Pipelines should implement an __init__ method")

@abstractmethod

def __call__(self, inputs: Any) -> Any:

raise NotImplementedError("Pipelines should implement a __call__ method")

class PipelineException(Exception):

pass

| 1 |

0 | hf_public_repos/api-inference-community/docker_images/timm/app | hf_public_repos/api-inference-community/docker_images/timm/app/pipelines/__init__.py | from app.pipelines.base import Pipeline, PipelineException # isort:skip

from app.pipelines.image_classification import ImageClassificationPipeline

| 2 |

0 | hf_public_repos/api-inference-community/docker_images/timm/app | hf_public_repos/api-inference-community/docker_images/timm/app/pipelines/image_classification.py | from typing import Any, Dict, List

import timm

import torch

from app.pipelines import Pipeline

from PIL import Image

from timm.data import (

CustomDatasetInfo,

ImageNetInfo,

create_transform,

infer_imagenet_subset,

resolve_model_data_config,

)

class ImageClassificationPipeline(Pipeline):

def __init__(self, model_id: str):

self.model = timm.create_model(f"hf_hub:{model_id}", pretrained=True)

self.transform = create_transform(

**resolve_model_data_config(self.model, use_test_size=True)

)

self.top_k = min(self.model.num_classes, 5)

self.model.eval()

self.dataset_info = None

label_names = self.model.pretrained_cfg.get("label_names", None)

label_descriptions = self.model.pretrained_cfg.get("label_descriptions", None)

if label_names is None:

# if no labels added to config, use imagenet labeller in timm

imagenet_subset = infer_imagenet_subset(self.model)

if imagenet_subset:

self.dataset_info = ImageNetInfo(imagenet_subset)

else:

# fallback label names

label_names = [f"LABEL_{i}" for i in range(self.model.num_classes)]

if self.dataset_info is None:

self.dataset_info = CustomDatasetInfo(

label_names=label_names,

label_descriptions=label_descriptions,

)

def __call__(self, inputs: Image.Image) -> List[Dict[str, Any]]:

"""

Args:

inputs (:obj:`PIL.Image`):

The raw image representation as PIL.

No transformation made whatsoever from the input. Make all necessary transformations here.

Return:

A :obj:`list`:. The list contains items that are dicts should be liked {"label": "XXX", "score": 0.82}

It is preferred if the returned list is in decreasing `score` order

"""

im = inputs.convert("RGB")

inputs = self.transform(im).unsqueeze(0)

with torch.no_grad():

out = self.model(inputs)

probabilities = out.squeeze(0).softmax(dim=0)

values, indices = torch.topk(probabilities, self.top_k)

labels = [

{

"label": self.dataset_info.index_to_description(i, detailed=True),

"score": v.item(),

}

for i, v in zip(indices, values)

]

return labels

| 3 |

0 | hf_public_repos/api-inference-community/docker_images/timm | hf_public_repos/api-inference-community/docker_images/timm/tests/test_docker_build.py | import os

import subprocess

from unittest import TestCase

class cd:

"""Context manager for changing the current working directory"""

def __init__(self, newPath):

self.newPath = os.path.expanduser(newPath)

def __enter__(self):

self.savedPath = os.getcwd()

os.chdir(self.newPath)

def __exit__(self, etype, value, traceback):

os.chdir(self.savedPath)

class DockerBuildTestCase(TestCase):

def test_can_build_docker_image(self):

with cd(os.path.dirname(os.path.dirname(__file__))):

subprocess.check_output(["docker", "build", "."])

| 4 |

0 | hf_public_repos/api-inference-community/docker_images/timm | hf_public_repos/api-inference-community/docker_images/timm/tests/test_api.py | import os

from typing import Dict, List

from unittest import TestCase, skipIf

from app.main import ALLOWED_TASKS, get_pipeline

# Must contain at least one example of each implemented pipeline

# Tests do not check the actual values of the model output, so small dummy

# models are recommended for faster tests.

TESTABLE_MODELS: Dict[str, List[str]] = {

"image-classification": [

"timm/vit_base_patch32_clip_224.laion2b_ft_in1k",

"timm/convnext_nano.in12k",

"nateraw/timm-resnet50-beans",

]

}

ALL_TASKS = {

"automatic-speech-recognition",

"audio-source-separation",

"image-classification",

"question-answering",

"text-generation",

"text-to-speech",

}

class PipelineTestCase(TestCase):

@skipIf(

os.path.dirname(os.path.dirname(__file__)).endswith("common"),

"common is a special case",

)

def test_has_at_least_one_task_enabled(self):

self.assertGreater(

len(ALLOWED_TASKS.keys()), 0, "You need to implement at least one task"

)

def test_unsupported_tasks(self):

unsupported_tasks = ALL_TASKS - ALLOWED_TASKS.keys()

for unsupported_task in unsupported_tasks:

with self.subTest(msg=unsupported_task, task=unsupported_task):

os.environ["TASK"] = unsupported_task

os.environ["MODEL_ID"] = "XX"

with self.assertRaises(EnvironmentError):

get_pipeline()

| 5 |

0 | hf_public_repos/api-inference-community/docker_images/timm | hf_public_repos/api-inference-community/docker_images/timm/tests/test_api_image_classification.py | import json

import os

from unittest import TestCase, skipIf

from app.main import ALLOWED_TASKS

from parameterized import parameterized_class

from starlette.testclient import TestClient

from tests.test_api import TESTABLE_MODELS

@skipIf(

"image-classification" not in ALLOWED_TASKS,

"image-classification not implemented",

)

@parameterized_class(

[{"model_id": model_id} for model_id in TESTABLE_MODELS["image-classification"]]

)

class ImageClassificationTestCase(TestCase):

def setUp(self):

self.old_model_id = os.getenv("MODEL_ID")

self.old_task = os.getenv("TASK")

os.environ["MODEL_ID"] = self.model_id

os.environ["TASK"] = "image-classification"

from app.main import app, get_pipeline

get_pipeline.cache_clear()

self.app = app

@classmethod

def setUpClass(cls):

from app.main import get_pipeline

get_pipeline.cache_clear()

def tearDown(self):

if self.old_model_id is not None:

os.environ["MODEL_ID"] = self.old_model_id

else:

del os.environ["MODEL_ID"]

if self.old_task is not None:

os.environ["TASK"] = self.old_task

else:

del os.environ["TASK"]

def read(self, filename: str) -> bytes:

dirname = os.path.dirname(os.path.abspath(__file__))

filename = os.path.join(dirname, "samples", filename)

with open(filename, "rb") as f:

bpayload = f.read()

return bpayload

def test_simple(self):

bpayload = self.read("plane.jpg")

with TestClient(self.app) as client:

response = client.post("/", data=bpayload)

self.assertEqual(

response.status_code,

200,

)

content = json.loads(response.content)

self.assertEqual(type(content), list)

self.assertEqual(set(type(el) for el in content), {dict})

self.assertEqual(

set((k, type(v)) for el in content for (k, v) in el.items()),

{("label", str), ("score", float)},

)

def test_different_resolution(self):

bpayload = self.read("plane2.jpg")

with TestClient(self.app) as client:

response = client.post("/", data=bpayload)

self.assertEqual(

response.status_code,

200,

)

content = json.loads(response.content)

self.assertEqual(type(content), list)

self.assertEqual(set(type(el) for el in content), {dict})

self.assertEqual(

set(k for el in content for k in el.keys()), {"label", "score"}

)

| 6 |

0 | hf_public_repos/api-inference-community/docker_images | hf_public_repos/api-inference-community/docker_images/spacy/requirements.txt | starlette==0.27.0

api-inference-community==0.0.23

huggingface_hub==0.5.1

requests==2.31.0

| 7 |

0 | hf_public_repos/api-inference-community/docker_images | hf_public_repos/api-inference-community/docker_images/spacy/Dockerfile | FROM tiangolo/uvicorn-gunicorn:python3.8

LABEL maintainer="Omar Sanseviero [email protected]"

# Add any system dependency here

# RUN apt-get update -y && apt-get install libXXX -y

COPY ./requirements.txt /app

RUN pip install --no-cache-dir -r requirements.txt

COPY ./prestart.sh /app/

# Most DL models are quite large in terms of memory, using workers is a HUGE

# slowdown because of the fork and GIL with python.

# Using multiple pods seems like a better default strategy.

# Feel free to override if it does not make sense for your library.

ARG max_workers=1

ENV MAX_WORKERS=$max_workers

ENV HUGGINGFACE_HUB_CACHE=/data

ENV PIP_CACHE=/data

# Necessary on GPU environment docker.

# TIMEOUT env variable is used by nvcr.io/nvidia/pytorch:xx for another purpose

# rendering TIMEOUT defined by uvicorn impossible to use correctly

# We're overriding it to be renamed UVICORN_TIMEOUT

# UVICORN_TIMEOUT is a useful variable for very large models that take more

# than 30s (the default) to load in memory.

# If UVICORN_TIMEOUT is too low, uvicorn will simply never loads as it will

# kill workers all the time before they finish.

RUN sed -i 's/TIMEOUT/UVICORN_TIMEOUT/g' /gunicorn_conf.py

COPY ./app /app/app

| 8 |

0 | hf_public_repos/api-inference-community/docker_images | hf_public_repos/api-inference-community/docker_images/spacy/prestart.sh | python app/main.py

| 9 |

0 | hf_public_repos/candle/candle-kernels | hf_public_repos/candle/candle-kernels/src/fill.cu | #include<stdint.h>

#include "cuda_fp16.h"

template<typename T>

__device__ void fill_with(T *buf, T value, const size_t numel) {

for (unsigned int i = blockIdx.x * blockDim.x + threadIdx.x; i < numel; i += blockDim.x * gridDim.x) {

buf[i] = value;

}

}

extern "C" __global__ void fill_u8(uint8_t *buf, uint8_t value, const size_t numel) { fill_with(buf, value, numel); }

extern "C" __global__ void fill_u32(uint32_t *buf, uint32_t value, const size_t numel) { fill_with(buf, value, numel); }

extern "C" __global__ void fill_i64(int64_t *buf, int64_t value, const size_t numel) { fill_with(buf, value, numel); }

extern "C" __global__ void fill_f32(float *buf, float value, const size_t numel) { fill_with(buf, value, numel); }

extern "C" __global__ void fill_f64(double *buf, double value, const size_t numel) { fill_with(buf, value, numel); }

template<typename T>

__device__ void copy2d(const T *src, T *dst, uint32_t d1, uint32_t d2, uint32_t src_s, uint32_t dst_s) {

uint32_t idx = blockIdx.x * blockDim.x + threadIdx.x;

if (idx >= d1 * d2) {

return;

}

uint32_t idx1 = idx / d2;

uint32_t idx2 = idx - d2 * idx1;

dst[idx1 * dst_s + idx2] = src[idx1 * src_s + idx2];

}

#define COPY2D_OP(TYPENAME, FNNAME) \

extern "C" __global__ \

void FNNAME(const TYPENAME *src, TYPENAME *dst, uint32_t d1, uint32_t d2, uint32_t src_s, uint32_t dst_s) { \

copy2d(src, dst, d1, d2, src_s, dst_s); \

} \

COPY2D_OP(float, copy2d_f32)

COPY2D_OP(double, copy2d_f64)

COPY2D_OP(uint8_t, copy2d_u8)

COPY2D_OP(uint32_t, copy2d_u32)

COPY2D_OP(int64_t, copy2d_i64)

#if __CUDA_ARCH__ >= 530

extern "C" __global__ void fill_f16(__half *buf, __half value, const size_t numel) { fill_with(buf, value, numel); }

COPY2D_OP(__half, copy2d_f16)

#endif

#if __CUDA_ARCH__ >= 800

#include <cuda_bf16.h>

extern "C" __global__ void fill_bf16(__nv_bfloat16 *buf, __nv_bfloat16 value, const size_t numel) { fill_with(buf, value, numel); }

COPY2D_OP(__nv_bfloat16, copy2d_bf16)

#endif

| 0 |

0 | hf_public_repos/candle/candle-kernels | hf_public_repos/candle/candle-kernels/src/reduce.cu | #include "cuda_utils.cuh"

#include <cmath>

#include <stdint.h>

#define WARP_SIZE 32

const int BLOCK_SIZE = 1024;

// TODO: Maybe add some fast_sum_f16_f32 variant that not only accumulate in f32

// but also expect a f32 output so that this can be used for normalization e.g.

// in softmax.

// Fast reduce sum kernel, this assumes that the dimensions to loop over are at

// the end, each block is responsible for populating one value in the output

// array. There are at most 1024 threads per block.

template <typename T>

__device__ void

fast_sum(const size_t src_numel, const size_t el_to_sum_per_block,

const size_t num_dims, const size_t *info, const T *src, T *dst) {

const size_t *dims = info;

const size_t *strides = info + num_dims;

__shared__ T shr[BLOCK_SIZE];

size_t tid = threadIdx.x;

size_t dst_id = blockIdx.x;

shr[tid] = 0;

// Elements summed in this block range from dst_id * el_to_sum_per_block

// to (dst_id + 1) * el_to_sum_per_block.

size_t start_idx = dst_id * el_to_sum_per_block;

size_t stop_idx = min(start_idx + el_to_sum_per_block, src_numel);

size_t idx = start_idx + tid;

while (idx < stop_idx) {

// TODO: Fast version for the contiguous case.

size_t strided_i = get_strided_index(idx, num_dims, dims, strides);

shr[tid] += src[strided_i];

idx += blockDim.x;

}

// Parallel reduction, see the slides:

// https://www.olcf.ornl.gov/wp-content/uploads/2019/12/05_Atomics_Reductions_Warp_Shuffle.pdf

// https://stackoverflow.com/questions/66078814/is-cuda-atomicadd-operation-faster-than-launch-another-kernel-when-we-do-reduce

for (int s = blockDim.x / 2; s > 0; s >>= 1) {

__syncthreads();

if (tid < s)

shr[tid] += shr[tid + s];

}

if (tid == 0)

dst[dst_id] = shr[0];

}

static __device__ __forceinline__ float2 warp_reduce_sum(float2 a) {

#pragma unroll

for (int mask = 16; mask > 0; mask >>= 1) {

a.x += __shfl_xor_sync(0xffffffff, a.x, mask, 32);

a.y += __shfl_xor_sync(0xffffffff, a.y, mask, 32);

}

return a;

}

static __device__ __forceinline__ float warp_reduce_sum(float x) {

#pragma unroll

for (int mask = 16; mask > 0; mask >>= 1) {

x += __shfl_xor_sync(0xffffffff, x, mask, 32);

}

return x;

}

// LayerNorm implementation adapted from ggml, accumulation is made using f32.

// https://github.com/ggerganov/llama.cpp/blob/d59bd97065cd7ded6c4ecab54b1d5e0b1b11e318/ggml-cuda.cu#L477

template <typename T>

__device__ void layernorm(const T * x, T * dst, const T * alpha, const T * beta, const int ncols, const int block_size, const float eps) {

const int row = blockIdx.x*blockDim.y + threadIdx.y;

const int tid = threadIdx.x;

float2 mean_var = make_float2(0.f, 0.f);

for (int col = tid; col < ncols; col += block_size) {

const float xi = x[row*ncols + col];

mean_var.x += xi;

mean_var.y += xi * xi;

}

// sum up partial sums

mean_var = warp_reduce_sum(mean_var);

if (block_size > WARP_SIZE) {

__shared__ float2 s_sum[32];

int warp_id = threadIdx.x / WARP_SIZE;

int lane_id = threadIdx.x % WARP_SIZE;

if (lane_id == 0) {

s_sum[warp_id] = mean_var;

}

__syncthreads();

mean_var = s_sum[lane_id];

mean_var = warp_reduce_sum(mean_var);

}

const float mean = mean_var.x / ncols;

const float var = mean_var.y / ncols - mean * mean;

const float inv_std = rsqrtf(var + eps);

if (alpha == nullptr && beta == nullptr) {

for (int col = tid; col < ncols; col += block_size) {

float lhs = (static_cast<float>(x[row*ncols + col]) - mean) * inv_std;

dst[row*ncols + col] = static_cast<T>(lhs);

}

}

else if (alpha == nullptr && beta != nullptr) {

for (int col = tid; col < ncols; col += block_size) {

float b = static_cast<float>(beta[col]);

float lhs = (static_cast<float>(x[row*ncols + col]) - mean) * inv_std;

dst[row*ncols + col] = static_cast<T>(lhs + b);

}

}

else if (alpha != nullptr && beta == nullptr) {

for (int col = tid; col < ncols; col += block_size) {

float a = static_cast<float>(alpha[col]);

float lhs = (static_cast<float>(x[row*ncols + col]) - mean) * inv_std;

dst[row*ncols + col] = static_cast<T>(lhs * a);

}

}

else {

for (int col = tid; col < ncols; col += block_size) {

float a = static_cast<float>(alpha[col]);

float b = static_cast<float>(beta[col]);

float lhs = (static_cast<float>(x[row*ncols + col]) - mean) * inv_std;

dst[row*ncols + col] = static_cast<T>(lhs * a + b);

}

}

}

// RmsNorm implementation adapted from ggml, accumulation is made using f32.

// https://github.com/ggerganov/llama.cpp/blob/d59bd97065cd7ded6c4ecab54b1d5e0b1b11e318/ggml-cuda.cu#L523

template <typename T>

__device__ void rmsnorm(const T * x, T * dst, const T * alpha, const int ncols, const int block_size, const float eps) {

const int row = blockIdx.x*blockDim.y + threadIdx.y;

const int tid = threadIdx.x;

float tmp = 0.0f; // partial sum for thread in warp

for (int col = tid; col < ncols; col += block_size) {

const float xi = static_cast<float>(x[row*ncols + col]);

tmp += xi * xi;

}

// sum up partial sums

tmp = warp_reduce_sum(tmp);

if (block_size > WARP_SIZE) {

__shared__ float s_sum[32];

int warp_id = threadIdx.x / WARP_SIZE;

int lane_id = threadIdx.x % WARP_SIZE;

if (lane_id == 0) {

s_sum[warp_id] = tmp;

}

__syncthreads();

tmp = s_sum[lane_id];

tmp = warp_reduce_sum(tmp);

}

const float mean = tmp / ncols;

const float scale = rsqrtf(mean + eps);

if (alpha == nullptr) {

for (int col = tid; col < ncols; col += block_size) {

dst[row*ncols + col] = static_cast<T>(scale * static_cast<float>(x[row*ncols + col]));

}

}

else {

for (int col = tid; col < ncols; col += block_size) {

float a = static_cast<float>(alpha[col]);

dst[row*ncols + col] = static_cast<T>(scale * static_cast<float>(x[row*ncols + col]) * a);

}

}

}

// Softmax implementation adapted from ggml.

// https://github.com/ggerganov/llama.cpp/blob/d59bd97065cd7ded6c4ecab54b1d5e0b1b11e318/ggml-cuda.cu#L4159

template <typename T, typename ACC>

__device__ void softmax(const T * x, T * dst, const int ncols) {

const int row = blockDim.x*blockIdx.x + threadIdx.x;

const int block_size = blockDim.y;

const int tid = threadIdx.y;

T max_val = -INFINITY;

for (int col = tid; col < ncols; col += block_size) {

const int i = row*ncols + col;

max_val = maxg(max_val, x[i]);

}

// find the max value in the block

#pragma unroll

for (int mask = 16; mask > 0; mask >>= 1) {

max_val = maxg(max_val, __shfl_xor_sync(0xffffffff, max_val, mask, 32));

}

ACC tmp = 0.;

for (int col = tid; col < ncols; col += block_size) {

const int i = row*ncols + col;

const T val = expg(x[i] - max_val);

tmp += static_cast<ACC>(val);

dst[i] = val;

}

// sum up partial sums

#pragma unroll

for (int mask = 16; mask > 0; mask >>= 1) {

tmp += __shfl_xor_sync(0xffffffff, tmp, mask, 32);

}

const ACC inv_tmp = 1. / tmp;

for (int col = tid; col < ncols; col += block_size) {

const int i = row*ncols + col;

dst[i] *= inv_tmp;

}

}

template <typename T>

__device__ void ropei(const T * src, const T * cos, const T * sin, T * dst, const uint32_t bh, const uint32_t td) {

const int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (2 * idx >= bh * td) return;

uint32_t rope_idx = idx % (td / 2);

T c = cos[rope_idx];

T s = sin[rope_idx];

dst[2 * idx] = src[2 * idx] * c - src[2 * idx + 1] * s;

dst[2 * idx + 1] = src[2 * idx] * s + src[2 * idx + 1] * c;

}

template <typename T>

__device__ void rope(const T * src, const T * cos, const T * sin, T * dst, const uint32_t bh, const uint32_t td, const uint32_t d) {

const int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (2 * idx >= bh * td) return;

uint32_t i_bh = idx / (td / 2);

uint32_t i_td = idx - (td / 2) * i_bh;

uint32_t i_t = i_td / (d / 2);

uint32_t i_d = i_td - (d / 2) * i_t;

uint32_t i1 = i_bh * td + i_t * d + i_d;

uint32_t i2 = i1 + d / 2;

uint32_t i_cs = i_t * (d / 2) + i_d;

T c = cos[i_cs];

T s = sin[i_cs];

dst[i1] = src[i1] * c - src[i2] * s;

dst[i2] = src[i1] * s + src[i2] * c;

}

template <typename T>

__device__ void rope_thd(

const T * src,

const T * cos,

const T * sin,

T * dst,

const uint32_t b,

const uint32_t t,

const uint32_t h,

const uint32_t d

) {

const int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (2 * idx >= b * t * h * d) return;

uint32_t i_bth = idx / (d / 2);

uint32_t i_d = idx - (d / 2) * i_bth;

uint32_t i_t = (i_bth / h) % t;

uint32_t i1 = i_bth * d + i_d;

uint32_t i2 = i1 + d / 2;

uint32_t i_cs = i_t * (d / 2) + i_d;

T c = cos[i_cs];

T s = sin[i_cs];

dst[i1] = src[i1] * c - src[i2] * s;

dst[i2] = src[i1] * s + src[i2] * c;

}

template <typename T>

__device__ void

fast_max(const size_t src_numel, const size_t el_to_sum_per_block,

const size_t num_dims, const size_t *info, const T *src, T *dst) {

const size_t *dims = info;

const size_t *strides = info + num_dims;

__shared__ T shr[BLOCK_SIZE];

size_t tid = threadIdx.x;

size_t dst_id = blockIdx.x;

shr[tid] = -INFINITY;

// Elements summed in this block range from dst_id * el_to_sum_per_block

// to (dst_id + 1) * el_to_sum_per_block.

size_t start_idx = dst_id * el_to_sum_per_block;

size_t stop_idx = min(start_idx + el_to_sum_per_block, src_numel);

size_t idx = start_idx + tid;

while (idx < stop_idx) {

// TODO: Fast version for the contiguous case.

size_t strided_i = get_strided_index(idx, num_dims, dims, strides);

shr[tid] = maxg(shr[tid], src[strided_i]);

idx += blockDim.x;

}

// Parallel reduction, see the slides:

// https://www.olcf.ornl.gov/wp-content/uploads/2019/12/05_Atomics_Reductions_Warp_Shuffle.pdf

// https://stackoverflow.com/questions/66078814/is-cuda-atomicadd-operation-faster-than-launch-another-kernel-when-we-do-reduce

for (int s = blockDim.x / 2; s > 0; s >>= 1) {

__syncthreads();

if (tid < s)

shr[tid] = maxg(shr[tid], shr[tid + s]);

}

if (tid == 0)

dst[dst_id] = shr[0];

}

template <typename T>

__device__ void

fast_min(const size_t src_numel, const size_t el_to_sum_per_block,

const size_t num_dims, const size_t *info, const T *src, T *dst) {

const size_t *dims = info;

const size_t *strides = info + num_dims;

__shared__ T shr[BLOCK_SIZE];

size_t tid = threadIdx.x;

size_t dst_id = blockIdx.x;

shr[tid] = INFINITY;

// Elements summed in this block range from dst_id * el_to_sum_per_block

// to (dst_id + 1) * el_to_sum_per_block.

size_t start_idx = dst_id * el_to_sum_per_block;

size_t stop_idx = min(start_idx + el_to_sum_per_block, src_numel);

size_t idx = start_idx + tid;

while (idx < stop_idx) {

// TODO: Fast version for the contiguous case.

size_t strided_i = get_strided_index(idx, num_dims, dims, strides);

shr[tid] = ming(shr[tid], src[strided_i]);

idx += blockDim.x;

}

// Parallel reduction, see the slides:

// https://www.olcf.ornl.gov/wp-content/uploads/2019/12/05_Atomics_Reductions_Warp_Shuffle.pdf

// https://stackoverflow.com/questions/66078814/is-cuda-atomicadd-operation-faster-than-launch-another-kernel-when-we-do-reduce

for (int s = blockDim.x / 2; s > 0; s >>= 1) {

__syncthreads();

if (tid < s)

shr[tid] = ming(shr[tid], shr[tid + s]);

}

if (tid == 0)

dst[dst_id] = shr[0];

}

template <typename T>

__device__ void

fast_argmin(const size_t src_numel, const size_t el_to_sum_per_block,

const size_t num_dims, const size_t *info, const T *src, uint32_t *dst) {

const size_t *dims = info;

const size_t *strides = info + num_dims;

__shared__ T shr[BLOCK_SIZE];

__shared__ uint32_t shr_index[BLOCK_SIZE];

size_t tid = threadIdx.x;

size_t dst_id = blockIdx.x;

// Not sure how that works on uint32_t and uint8_t but it seems to do ok.

shr[tid] = INFINITY;

shr_index[tid] = 0xFFFFFFFF;

bool not_set = true;

// Elements summed in this block range from dst_id * el_to_sum_per_block

// to (dst_id + 1) * el_to_sum_per_block.

size_t start_idx = dst_id * el_to_sum_per_block;

size_t stop_idx = min(start_idx + el_to_sum_per_block, src_numel);

size_t idx = start_idx + tid;

while (idx < stop_idx) {

// TODO: Fast version for the contiguous case.

size_t strided_i = get_strided_index(idx, num_dims, dims, strides);

if (not_set || src[strided_i] < shr[tid]) {

shr[tid] = src[strided_i];

// Assume that the reduction takes place over the last dimension which is contiguous.

shr_index[tid] = idx % dims[num_dims - 1];

not_set = false;

}

idx += blockDim.x;

}

// Parallel reduction, see the slides:

// https://www.olcf.ornl.gov/wp-content/uploads/2019/12/05_Atomics_Reductions_Warp_Shuffle.pdf

// https://stackoverflow.com/questions/66078814/is-cuda-atomicadd-operation-faster-than-launch-another-kernel-when-we-do-reduce

for (int s = blockDim.x / 2; s > 0; s >>= 1) {

__syncthreads();

if (tid < s && shr[tid + s] < shr[tid]) {

shr[tid] = shr[tid + s];

shr_index[tid] = shr_index[tid + s];

}

}

if (tid == 0)

dst[dst_id] = shr_index[0];

}

template <typename T>

__device__ void

fast_argmax(const size_t src_numel, const size_t el_to_sum_per_block,

const size_t num_dims, const size_t *info, const T *src, uint32_t *dst) {

const size_t *dims = info;

const size_t *strides = info + num_dims;

__shared__ T shr[BLOCK_SIZE];

__shared__ uint32_t shr_index[BLOCK_SIZE];

size_t tid = threadIdx.x;

size_t dst_id = blockIdx.x;

shr[tid] = -INFINITY;

shr_index[tid] = 0xFFFFFFFF;

bool not_set = true;

// Elements summed in this block range from dst_id * el_to_sum_per_block

// to (dst_id + 1) * el_to_sum_per_block.

size_t start_idx = dst_id * el_to_sum_per_block;

size_t stop_idx = min(start_idx + el_to_sum_per_block, src_numel);

size_t idx = start_idx + tid;

while (idx < stop_idx) {

// TODO: Fast version for the contiguous case.

size_t strided_i = get_strided_index(idx, num_dims, dims, strides);

if (not_set || src[strided_i] > shr[tid]) {

shr[tid] = src[strided_i];

// Assume that the reduction takes place over the last dimension which is contiguous.

shr_index[tid] = idx % dims[num_dims - 1];

not_set = false;

}

idx += blockDim.x;

}

// Parallel reduction, see the slides:

// https://www.olcf.ornl.gov/wp-content/uploads/2019/12/05_Atomics_Reductions_Warp_Shuffle.pdf

// https://stackoverflow.com/questions/66078814/is-cuda-atomicadd-operation-faster-than-launch-another-kernel-when-we-do-reduce

for (int s = blockDim.x / 2; s > 0; s >>= 1) {

__syncthreads();

if (tid < s && shr[tid + s] > shr[tid]) {

shr[tid] = shr[tid + s];

shr_index[tid] = shr_index[tid + s];

}

}

if (tid == 0)

dst[dst_id] = shr_index[0];

}

#define FAST_OP(TYPENAME, MIN_NAME, MAX_NAME, ARGMIN_NAME, ARGMAX_NAME, SUM_NAME) \

extern "C" __global__ void ARGMIN_NAME( \

const size_t src_numel, const size_t el_to_sum_per_block, \

const size_t num_dims, const size_t *info, const TYPENAME *src, \

uint32_t *dst) { \

fast_argmin(src_numel, el_to_sum_per_block, num_dims, info, src, dst); \

} \

extern "C" __global__ void ARGMAX_NAME( \

const size_t src_numel, const size_t el_to_sum_per_block, \

const size_t num_dims, const size_t *info, const TYPENAME *src, \

uint32_t *dst) { \

fast_argmax(src_numel, el_to_sum_per_block, num_dims, info, src, dst); \

} \

extern "C" __global__ void MIN_NAME( \

const size_t src_numel, const size_t el_to_sum_per_block, \

const size_t num_dims, const size_t *info, const TYPENAME *src, \

TYPENAME *dst) { \

fast_min(src_numel, el_to_sum_per_block, num_dims, info, src, dst); \

} \

extern "C" __global__ void MAX_NAME( \

const size_t src_numel, const size_t el_to_sum_per_block, \

const size_t num_dims, const size_t *info, const TYPENAME *src, \

TYPENAME *dst) { \

fast_max(src_numel, el_to_sum_per_block, num_dims, info, src, dst); \

} \

extern "C" __global__ void SUM_NAME( \

const size_t src_numel, const size_t el_to_sum_per_block, \

const size_t num_dims, const size_t *info, const TYPENAME *src, \

TYPENAME *dst) { \

fast_sum(src_numel, el_to_sum_per_block, num_dims, info, src, dst); \

}

#define SUM_OP(TYPENAME, FN_NAME) \

extern "C" __global__ void FN_NAME( \

const size_t numel, const size_t num_dims, const size_t num_sum_dims, \

const size_t *info, const TYPENAME *inp, TYPENAME *out) { \

const size_t *dims = info; \

const size_t *strides = info + num_dims; \

const size_t *sum_dims_l = info + 2 * num_dims; \

const size_t *sum_dims_s = info + 2 * num_dims + num_sum_dims; \

if (is_contiguous(num_dims, dims, strides)) { \

for (unsigned int i = blockIdx.x * blockDim.x + threadIdx.x; i < numel; \

i += blockDim.x * gridDim.x) { \

size_t dst_index = i; \

for (unsigned int nd = 0; nd < num_sum_dims; ++nd) { \

size_t stride = sum_dims_s[nd]; \

size_t pre = dst_index / stride; \

size_t post = dst_index % stride; \

dst_index = (pre / sum_dims_l[nd]) * stride + post; \

} \

atomicAdd(out + dst_index, inp[i]); \

} \

} else { \

for (unsigned int i = blockIdx.x * blockDim.x + threadIdx.x; i < numel; \

i += blockDim.x * gridDim.x) { \

unsigned strided_i = get_strided_index(i, num_dims, dims, strides); \

size_t dst_index = i; \

for (unsigned int nd = 0; nd < num_sum_dims; ++nd) { \

size_t stride = sum_dims_s[nd]; \

size_t pre = dst_index / stride; \

size_t post = dst_index % stride; \

dst_index = (pre / sum_dims_l[nd]) * stride + post; \

} \

atomicAdd(out + dst_index, inp[strided_i]); \

} \

} \

}

#define SOFTMAX_OP(TYPENAME, ACC_TYPENAME, FN_NAME) \

extern "C" __global__ void FN_NAME( \

const TYPENAME *src, TYPENAME *dst, \

const int n_cols) { \

softmax<TYPENAME, ACC_TYPENAME>(src, dst, n_cols); \

} \

#define RMSNORM_OP(TYPENAME, FN_NAME) \

extern "C" __global__ void FN_NAME( \

const TYPENAME *src, TYPENAME *dst, const TYPENAME *alpha, \

const int n_cols, const int block_size, const float eps) { \

rmsnorm<TYPENAME>(src, dst, alpha, n_cols, block_size, eps); \

} \

#define LAYERNORM_OP(TYPENAME, FN_NAME) \

extern "C" __global__ void FN_NAME( \

const TYPENAME *src, TYPENAME *dst, const TYPENAME *alpha, \

const TYPENAME *beta, const int n_cols, const int block_size, const float eps) { \

layernorm<TYPENAME>(src, dst, alpha, beta, n_cols, block_size, eps); \

} \

#define ROPE_OP(TYPENAME, FN_NAME, FN_NAME_I, FN_NAME_THD) \

extern "C" __global__ void FN_NAME_I( \

const TYPENAME *src, \

const TYPENAME *cos, \

const TYPENAME *sin, \

TYPENAME *dst, \

const uint32_t bh, \

const uint32_t td) { \

ropei<TYPENAME>(src, cos, sin, dst, bh, td); \

} \

extern "C" __global__ void FN_NAME( \

const TYPENAME *src, \

const TYPENAME *cos, \

const TYPENAME *sin, \

TYPENAME *dst, \

const uint32_t bh, \

const uint32_t td, \

const uint32_t d) { \

rope<TYPENAME>(src, cos, sin, dst, bh, td, d); \

} \

extern "C" __global__ void FN_NAME_THD( \

const TYPENAME *src, \

const TYPENAME *cos, \

const TYPENAME *sin, \

TYPENAME *dst, \

const uint32_t b, \

const uint32_t t, \

const uint32_t h, \

const uint32_t d) { \

rope_thd<TYPENAME>(src, cos, sin, dst, b, t, h, d); \

} \

#if __CUDA_ARCH__ >= 800

SOFTMAX_OP(__nv_bfloat16, float, softmax_bf16)

RMSNORM_OP(__nv_bfloat16, rmsnorm_bf16)

LAYERNORM_OP(__nv_bfloat16, layernorm_bf16)

ROPE_OP(__nv_bfloat16, rope_bf16, rope_i_bf16, rope_thd_bf16)

SUM_OP(__nv_bfloat16, sum_bf16)

FAST_OP(__nv_bfloat16, fast_min_bf16, fast_max_bf16, fast_argmin_bf16, fast_argmax_bf16, fast_sum_bf16)

#endif

#if __CUDA_ARCH__ >= 530

SOFTMAX_OP(__half, float, softmax_f16)

RMSNORM_OP(__half, rmsnorm_f16)

LAYERNORM_OP(__half, layernorm_f16)

ROPE_OP(__half, rope_f16, rope_i_f16, rope_thd_f16)

SUM_OP(__half, sum_f16)

FAST_OP(__half, fast_min_f16, fast_max_f16, fast_argmin_f16, fast_argmax_f16, fast_sum_f16)

#endif

SUM_OP(float, sum_f32)

SUM_OP(double, sum_f64)

SUM_OP(uint32_t, sum_u32)

SOFTMAX_OP(float, float, softmax_f32)

SOFTMAX_OP(double, double, softmax_f64)

RMSNORM_OP(float, rmsnorm_f32)

RMSNORM_OP(double, rmsnorm_f64)

LAYERNORM_OP(float, layernorm_f32)

LAYERNORM_OP(double, layernorm_f64)

ROPE_OP(float, rope_f32, rope_i_f32, rope_thd_f32)

ROPE_OP(double, rope_f64, rope_i_f64, rope_thd_f64)

FAST_OP(float, fast_min_f32, fast_max_f32, fast_argmin_f32, fast_argmax_f32, fast_sum_f32)

FAST_OP(double, fast_min_f64, fast_max_f64, fast_argmin_f64, fast_argmax_f64, fast_sum_f64)

FAST_OP(uint32_t, fast_min_u32, fast_max_u32, fast_argmin_u32, fast_argmax_u32, fast_sum_u32)

FAST_OP(int64_t, fast_min_i64, fast_max_i64, fast_argmin_i64, fast_argmax_i64, fast_sum_i64)

FAST_OP(uint8_t, fast_min_u8, fast_max_u8, fast_argmin_u8, fast_argmax_u8, fast_sum_u8)

| 1 |

0 | hf_public_repos/candle/candle-kernels | hf_public_repos/candle/candle-kernels/src/sort.cu | // Adapted from https://github.com/ggerganov/llama.cpp/blob/master/ggml-cuda/argsort.cu

#define SORT_ORDER_ASC 1

#define SORT_ORDER_DESC 0

#include "cuda_utils.cuh"

#include<stdint.h>

template<typename T>

static inline __device__ void ggml_cuda_swap(T & a, T & b) {

T tmp = a;

a = b;

b = tmp;

}

template<int order, typename T>

static __device__ void k_argsort(const T * x, uint32_t * dst, const int ncols, int ncols_pad) {

// bitonic sort

int col = threadIdx.x;

int row = blockIdx.y;

if (col >= ncols_pad) {

return;

}

const T * x_row = x + row * ncols;

extern __shared__ int dst_row[];

// initialize indices

dst_row[col] = col;

__syncthreads();

for (int k = 2; k <= ncols_pad; k *= 2) {

for (int j = k / 2; j > 0; j /= 2) {

int ixj = col ^ j;

if (ixj > col) {

if ((col & k) == 0) {

if (dst_row[col] >= ncols ||

(dst_row[ixj] < ncols && (order == SORT_ORDER_ASC ?

x_row[dst_row[col]] > x_row[dst_row[ixj]] :

x_row[dst_row[col]] < x_row[dst_row[ixj]]))

) {

ggml_cuda_swap(dst_row[col], dst_row[ixj]);

}

} else {

if (dst_row[ixj] >= ncols ||

(dst_row[col] < ncols && (order == SORT_ORDER_ASC ?

x_row[dst_row[col]] < x_row[dst_row[ixj]] :

x_row[dst_row[col]] > x_row[dst_row[ixj]]))

) {

ggml_cuda_swap(dst_row[col], dst_row[ixj]);

}

}

}

__syncthreads();

}

}

// copy the result to dst without the padding

if (col < ncols) {

dst[row * ncols + col] = dst_row[col];

}

}

#define ASORT_OP(TYPENAME, RUST_NAME) \

extern "C" __global__ void asort_asc_##RUST_NAME( \

const TYPENAME * x, uint32_t * dst, const int ncols, int ncols_pad \

) { \

k_argsort<SORT_ORDER_ASC>(x, dst, ncols, ncols_pad); \

} \

extern "C" __global__ void asort_desc_##RUST_NAME( \

const TYPENAME * x, uint32_t * dst, const int ncols, int ncols_pad \

) { \

k_argsort<SORT_ORDER_DESC>(x, dst, ncols, ncols_pad); \

} \

#if __CUDA_ARCH__ >= 800

ASORT_OP(__nv_bfloat16, bf16)

#endif

#if __CUDA_ARCH__ >= 530

ASORT_OP(__half, f16)

#endif

ASORT_OP(float, f32)

ASORT_OP(double, f64)

ASORT_OP(uint8_t, u8)

ASORT_OP(uint32_t, u32)

ASORT_OP(int64_t, i64)

| 2 |

0 | hf_public_repos/candle/candle-kernels | hf_public_repos/candle/candle-kernels/src/cuda_utils.cuh | #include "compatibility.cuh"

#include<stdint.h>

#include<cmath>

// TODO: This is often used to check that the data is contiguous so that

// kernels can be easily mapped. However this only returns true for row

// major, if all the inputs are column major, we could apply the fast path

// too (but we wouldn't if some of them are row major and some column major).

__device__ bool is_contiguous(

const size_t num_dims,

const size_t *dims,

const size_t *strides

) {

size_t acc = 1;

for (unsigned int d = 0; d < num_dims; d++) {

unsigned int dim_idx = num_dims - 1 - d;

if (dims[dim_idx] > 1 && acc != strides[dim_idx]) {

return false;

}

acc *= dims[dim_idx];

}

return true;

}

__device__ unsigned int get_strided_index(

unsigned int idx,

const size_t num_dims,

const size_t *dims,

const size_t *strides

) {

unsigned int strided_i = 0;

for (unsigned int d = 0; d < num_dims; d++) {

unsigned int dim_idx = num_dims - 1 - d;

strided_i += (idx % dims[dim_idx]) * strides[dim_idx];

idx /= dims[dim_idx];

}

return strided_i;

}

__device__ unsigned int restrided(

const unsigned int strided_i,

const size_t num_dims,

const size_t *dims,

const size_t *strides,

const size_t *new_strides

) {

unsigned int idx = 0;

for (int d = 0; d < num_dims; d++) {

idx += (strides[d] == 0 ? 0 : (strided_i / strides[d]) % dims[d]) * new_strides[d];

}

return idx;

}

// Sourced from https://graphics.stanford.edu/~seander/bithacks.html#RoundUpPowerOf2

// Input must be less than or equal to 2 ^ 16

// used in reductions

__device__ __forceinline__ unsigned int next_power_of_two(unsigned int v) {

v--;

v |= v >> 1;

v |= v >> 2;

v |= v >> 4;

v |= v >> 8;

v++;

return v;

}

// Efficiently computes the sum of each chunk in "data" of size chunk_len, and

// stores the sums in out[i / chunk_len]

template<typename T>

__device__ void chunk_sum(

const size_t chunk_len,

const T data,

T* out

) {

__shared__ T buf[1024];

// assumes that threads where i >= numel have already exited

unsigned int i = blockIdx.x * blockDim.x + threadIdx.x;

unsigned int block_i = threadIdx.x;

// Fall back to atomicAdd if chunk_len is small to reduce overhead

if (chunk_len <= 2) {

atomicAdd(out + i / chunk_len, data);

return;

}

buf[block_i] = data;

unsigned int chunk_i = i % chunk_len;

unsigned int chunk_start = max((int)(block_i - chunk_i), 0);

unsigned int chunk_end = min((unsigned int)(block_i + chunk_len - chunk_i), blockDim.x);

chunk_i = block_i - chunk_start;

size_t max_chunk_len = min(chunk_end - chunk_start, blockDim.x);

size_t incr = next_power_of_two(max_chunk_len) >> 1;

__syncthreads();

// Uses sequential addressing as discussed in

// https://developer.download.nvidia.com/assets/cuda/files/reduction.pdf

for (; incr > 0; incr >>= 1) {

unsigned int block_i_2 = block_i + incr;

if (block_i_2 < chunk_end && chunk_i < incr) {

// This is sound because __syncthreads and the conditions above

// ensure that no data races occur

buf[block_i] += buf[block_i_2];

}

__syncthreads();

}

if (block_i == chunk_start) {

atomicAdd(out + i / chunk_len, buf[block_i]);

}

}

__device__ __forceinline__ bool isnang(float a) { return isnan(a); }

__device__ __forceinline__ bool isnang(double a) { return isnan(a); }

__device__ __forceinline__ float recipg(float a) { return 1.0 / a; }

__device__ __forceinline__ double recipg(double a) { return 1.0 / a; }

__device__ __forceinline__ float cosg(float a) { return cosf(a); }

__device__ __forceinline__ double cosg(double a) { return cos(a); }

__device__ __forceinline__ float sing(float a) { return sinf(a); }

__device__ __forceinline__ double sing(double a) { return sin(a); }

__device__ __forceinline__ float sqrtg(float a) { return sqrtf(a); }

__device__ __forceinline__ double sqrtg(double a) { return sqrt(a); }

__device__ __forceinline__ float powg(float a, float b) { return powf(a, b); }

__device__ __forceinline__ double powg(double a, double b) { return pow(a, b); }

__device__ __forceinline__ float tanhg(float a) { return tanhf(a); }

__device__ __forceinline__ double tanhg(double a) { return tanh(a); }

__device__ __forceinline__ float erfg(float a) { return erff(a); }

__device__ __forceinline__ double erfg(double a) { return erf(a); }

__device__ __forceinline__ float ceilg(float a) { return ceilf(a); }

__device__ __forceinline__ double ceilg(double a) { return ceil(a); }

__device__ __forceinline__ float floorg(float a) { return floorf(a); }

__device__ __forceinline__ double floorg(double a) { return floor(a); }

__device__ __forceinline__ float roundg(float a) { return roundf(a); }

__device__ __forceinline__ double roundg(double a) { return round(a); }

__device__ __forceinline__ float normcdfg(float a) { return normcdff(a); }

__device__ __forceinline__ double normcdfg(double a) { return normcdf(a); }

__device__ __forceinline__ float maxg(float a, float b) { return fmaxf(a, b); }

__device__ __forceinline__ double maxg(double a, double b) { return fmax(a, b); }

__device__ __forceinline__ float ming(float a, float b) { return fminf(a, b); }

__device__ __forceinline__ double ming(double a, double b) { return fmin(a, b); }

__device__ __forceinline__ float logg(float a) { return logf(a); }

__device__ __forceinline__ double logg(double a) { return log(a); }

__device__ __forceinline__ float expg(float a) { return expf(a); }

__device__ __forceinline__ double expg(double a) { return exp(a); }

__device__ __forceinline__ float absg(float a) { return fabsf(a); }

__device__ __forceinline__ double absg(double a) { return fabs(a); }

__device__ __forceinline__ float copysigng(float a, float b) { return copysignf(a, b); }

__device__ __forceinline__ double copysigng(double a, double b) { return copysign(a, b); }

__device__ __forceinline__ int64_t ming(int64_t a, int64_t b) { return min(a, b); }

__device__ __forceinline__ int64_t maxg(int64_t a, int64_t b) { return max(a, b); }

__device__ __forceinline__ uint32_t ming(uint32_t a, uint32_t b) { return min(a, b); }

__device__ __forceinline__ uint32_t maxg(uint32_t a, uint32_t b) { return max(a, b); }

__device__ __forceinline__ uint8_t ming(uint8_t a, uint8_t b) { return min(a, b); }

__device__ __forceinline__ uint8_t maxg(uint8_t a, uint8_t b) { return max(a, b); }

#if __CUDA_ARCH__ >= 530

__device__ __forceinline__ __half powg(__half a, __half b) { return __float2half(powf(__half2float(a), __half2float(b))); }

__device__ __forceinline__ bool isnang(__half a) { return __hisnan(a); }

__device__ __forceinline__ __half sqrtg(__half a) { return hsqrt(a); }

__device__ __forceinline__ __half cosg(__half a) { return hcos(a); }

__device__ __forceinline__ __half sing(__half a) { return hsin(a); }

__device__ __forceinline__ __half recipg(__half a) { __half one = 1.0; return one / a; }

__device__ __forceinline__ __half maxg(__half a, __half b) { return __hmax_nan(a, b); }

__device__ __forceinline__ __half tanhg(__half a) { return __float2half(tanhf(__half2float(a))); }

__device__ __forceinline__ __half erfg(__half a) { return __float2half(erff(__half2float(a))); }

__device__ __forceinline__ __half ceilg(__half a) { return __float2half(ceilf(__half2float(a))); }

__device__ __forceinline__ __half floorg(__half a) { return __float2half(floorf(__half2float(a))); }

__device__ __forceinline__ __half roundg(__half a) { return __float2half(roundf(__half2float(a))); }

__device__ __forceinline__ __half normcdfg(__half a) { return __float2half(normcdff(__half2float(a))); }

__device__ __forceinline__ __half ming(__half a, __half b) { return __hmin_nan(a, b); }

__device__ __forceinline__ __half logg(__half a) { return hlog(a); }

__device__ __forceinline__ __half expg(__half a) { return hexp(a); }

__device__ __forceinline__ __half absg(__half a) { return __habs(a); }

__device__ __forceinline__ __half copysigng(__half a, __half b) { return __float2half(copysignf(__half2float(a), __half2float(b))); }

#endif

#if __CUDA_ARCH__ >= 800

__device__ __forceinline__ __nv_bfloat16 powg(__nv_bfloat16 a, __nv_bfloat16 b) { return __float2bfloat16(powf(__bfloat162float(a), __bfloat162float(b))); }

__device__ __forceinline__ bool isnang(__nv_bfloat16 a) { return __hisnan(a); }

__device__ __forceinline__ __nv_bfloat16 sqrtg(__nv_bfloat16 a) { return hsqrt(a); }

__device__ __forceinline__ __nv_bfloat16 cosg(__nv_bfloat16 a) { return hcos(a); }

__device__ __forceinline__ __nv_bfloat16 sing(__nv_bfloat16 a) { return hsin(a); }

__device__ __forceinline__ __nv_bfloat16 recipg(__nv_bfloat16 a) { __nv_bfloat16 one = 1.0; return one / a; }

__device__ __forceinline__ __nv_bfloat16 maxg(__nv_bfloat16 a, __nv_bfloat16 b) { return __hmax_nan(a, b); }

__device__ __forceinline__ __nv_bfloat16 tanhg(__nv_bfloat16 a) { return __float2bfloat16(tanhf(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 erfg(__nv_bfloat16 a) { return __float2bfloat16(erff(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 ceilg(__nv_bfloat16 a) { return __float2bfloat16(ceilf(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 floorg(__nv_bfloat16 a) { return __float2bfloat16(floorf(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 roundg(__nv_bfloat16 a) { return __float2bfloat16(roundf(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 normcdfg(__nv_bfloat16 a) { return __float2bfloat16(normcdff(__bfloat162float(a))); }

__device__ __forceinline__ __nv_bfloat16 ming(__nv_bfloat16 a, __nv_bfloat16 b) { return __hmin_nan(a, b); }

__device__ __forceinline__ __nv_bfloat16 logg(__nv_bfloat16 a) { return hlog(a); }

__device__ __forceinline__ __nv_bfloat16 expg(__nv_bfloat16 a) { return hexp(a); }

__device__ __forceinline__ __nv_bfloat16 absg(__nv_bfloat16 a) { return __habs(a); }

__device__ __forceinline__ __nv_bfloat16 copysigng(__nv_bfloat16 a, __nv_bfloat16 b) { return __float2bfloat16(copysignf(__bfloat162float(a), __bfloat162float(b))); }

#endif

| 3 |

0 | hf_public_repos/candle/candle-wasm-examples | hf_public_repos/candle/candle-wasm-examples/bert/bertWorker.js | //load Candle Bert Module wasm module

import init, { Model } from "./build/m.js";

async function fetchArrayBuffer(url) {

const cacheName = "bert-candle-cache";

const cache = await caches.open(cacheName);

const cachedResponse = await cache.match(url);

if (cachedResponse) {

const data = await cachedResponse.arrayBuffer();

return new Uint8Array(data);

}

const res = await fetch(url, { cache: "force-cache" });

cache.put(url, res.clone());

return new Uint8Array(await res.arrayBuffer());

}

class Bert {

static instance = {};

static async getInstance(weightsURL, tokenizerURL, configURL, modelID) {

if (!this.instance[modelID]) {

await init();

self.postMessage({ status: "loading", message: "Loading Model" });

const [weightsArrayU8, tokenizerArrayU8, mel_filtersArrayU8] =

await Promise.all([

fetchArrayBuffer(weightsURL),

fetchArrayBuffer(tokenizerURL),

fetchArrayBuffer(configURL),

]);

this.instance[modelID] = new Model(

weightsArrayU8,

tokenizerArrayU8,

mel_filtersArrayU8

);

} else {

self.postMessage({ status: "ready", message: "Model Already Loaded" });

}

return this.instance[modelID];

}

}

self.addEventListener("message", async (event) => {

const {

weightsURL,

tokenizerURL,

configURL,

modelID,

sentences,

normalize = true,

} = event.data;

try {

self.postMessage({ status: "ready", message: "Starting Bert Model" });

const model = await Bert.getInstance(

weightsURL,

tokenizerURL,

configURL,

modelID

);

self.postMessage({

status: "embedding",

message: "Calculating Embeddings",

});

const output = model.get_embeddings({

sentences: sentences,

normalize_embeddings: normalize,

});

self.postMessage({

status: "complete",

message: "complete",

output: output.data,

});

} catch (e) {

self.postMessage({ error: e });

}

});

| 4 |

0 | hf_public_repos/candle/candle-wasm-examples | hf_public_repos/candle/candle-wasm-examples/bert/Cargo.toml | [package]

name = "candle-wasm-example-bert"

version.workspace = true

edition.workspace = true

description.workspace = true

repository.workspace = true

keywords.workspace = true

categories.workspace = true

license.workspace = true

[dependencies]

candle = { workspace = true }

candle-nn = { workspace = true }

candle-transformers = { workspace = true }

num-traits = { workspace = true }

tokenizers = { workspace = true, features = ["unstable_wasm"] }

# App crates.

anyhow = { workspace = true }

byteorder = { workspace = true }

log = { workspace = true }

rand = { workspace = true }

serde = { workspace = true }

serde_json = { workspace = true }

safetensors = { workspace = true }

# Wasm specific crates.

console_error_panic_hook = "0.1.7"

getrandom = { version = "0.2", features = ["js"] }

gloo = "0.11"

js-sys = "0.3.64"

wasm-bindgen = "0.2.87"

serde-wasm-bindgen = "0.6.0"

| 5 |

0 | hf_public_repos/candle/candle-wasm-examples | hf_public_repos/candle/candle-wasm-examples/bert/build-lib.sh | cargo build --target wasm32-unknown-unknown --release

wasm-bindgen ../../target/wasm32-unknown-unknown/release/m.wasm --out-dir build --target web

| 6 |

0 | hf_public_repos/candle/candle-wasm-examples | hf_public_repos/candle/candle-wasm-examples/bert/lib-example.html | <html>

<head>

<meta content="text/html;charset=utf-8" http-equiv="Content-Type" />

<title>Candle Bert</title>

</head>

<body></body>

</html>

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<style>

@import url("https://fonts.googleapis.com/css2?family=Source+Code+Pro:wght@200;300;400&family=Source+Sans+3:wght@100;200;300;400;500;600;700;800;900&display=swap");

html,

body {

font-family: "Source Sans 3", sans-serif;

}

</style>

<script src="https://cdn.tailwindcss.com"></script>

<script type="module" src="./code.js"></script>

<script type="module">

import { hcl } from "https://cdn.skypack.dev/d3-color@3";

import { interpolateReds } from "https://cdn.skypack.dev/d3-scale-chromatic@3";

import { scaleLinear } from "https://cdn.skypack.dev/d3-scale@4";

import {

getModelInfo,

getEmbeddings,

getWikiText,

cosineSimilarity,

} from "./utils.js";

const bertWorker = new Worker("./bertWorker.js", {

type: "module",

});

const inputContainerEL = document.querySelector("#input-container");

const textAreaEl = document.querySelector("#input-area");

const outputAreaEl = document.querySelector("#output-area");

const formEl = document.querySelector("#form");

const searchInputEl = document.querySelector("#search-input");

const formWikiEl = document.querySelector("#form-wiki");

const searchWikiEl = document.querySelector("#search-wiki");

const outputStatusEl = document.querySelector("#output-status");

const modelSelectEl = document.querySelector("#model");

const sentencesRegex =

/(?<!\w\.\w.)(?<![A-Z][a-z]\.)(?<![A-Z]\.)(?<=\.|\?)\s/gm;

let sentenceEmbeddings = [];

let currInputText = "";

let isCalculating = false;

function toggleTextArea(state) {

if (state) {

textAreaEl.hidden = false;

textAreaEl.focus();

} else {

textAreaEl.hidden = true;

}

}

inputContainerEL.addEventListener("focus", (e) => {

toggleTextArea(true);

});

textAreaEl.addEventListener("blur", (e) => {

toggleTextArea(false);

});

textAreaEl.addEventListener("focusout", (e) => {

toggleTextArea(false);

if (currInputText === textAreaEl.value || isCalculating) return;

populateOutputArea(textAreaEl.value);

calculateEmbeddings(textAreaEl.value);

});

modelSelectEl.addEventListener("change", (e) => {

if (currInputText === "" || isCalculating) return;

populateOutputArea(textAreaEl.value);

calculateEmbeddings(textAreaEl.value);

});

function populateOutputArea(text) {

currInputText = text;

const sentences = text.split(sentencesRegex);

outputAreaEl.innerHTML = "";

for (const [id, sentence] of sentences.entries()) {

const sentenceEl = document.createElement("span");

sentenceEl.id = `sentence-${id}`;

sentenceEl.innerText = sentence + " ";

outputAreaEl.appendChild(sentenceEl);

}

}

formEl.addEventListener("submit", async (e) => {

e.preventDefault();

if (isCalculating || currInputText === "") return;

toggleInputs(true);

const modelID = modelSelectEl.value;

const { modelURL, tokenizerURL, configURL, search_prefix } =

getModelInfo(modelID);

const text = searchInputEl.value;

const query = search_prefix + searchInputEl.value;

outputStatusEl.classList.remove("invisible");

outputStatusEl.innerText = "Calculating embeddings for query...";

isCalculating = true;

const out = await getEmbeddings(

bertWorker,

modelURL,

tokenizerURL,

configURL,

modelID,

[query]

);

outputStatusEl.classList.add("invisible");

const queryEmbeddings = out.output[0];

// calculate cosine similarity with all sentences given the query

const distances = sentenceEmbeddings

.map((embedding, id) => ({

id,

similarity: cosineSimilarity(queryEmbeddings, embedding),

}))

.sort((a, b) => b.similarity - a.similarity)

// getting top 10 most similar sentences

.slice(0, 10);

const colorScale = scaleLinear()

.domain([

distances[distances.length - 1].similarity,

distances[0].similarity,

])

.range([0, 1])

.interpolate(() => interpolateReds);

outputAreaEl.querySelectorAll("span").forEach((el) => {

el.style.color = "unset";

el.style.backgroundColor = "unset";

});

distances.forEach((d) => {

const el = outputAreaEl.querySelector(`#sentence-${d.id}`);

const color = colorScale(d.similarity);

const fontColor = hcl(color).l < 70 ? "white" : "black";

el.style.color = fontColor;

el.style.backgroundColor = color;

});

outputAreaEl

.querySelector(`#sentence-${distances[0].id}`)

.scrollIntoView({

behavior: "smooth",

block: "center",

inline: "nearest",

});

isCalculating = false;

toggleInputs(false);

});

async function calculateEmbeddings(text) {

isCalculating = true;

toggleInputs(true);

const modelID = modelSelectEl.value;

const { modelURL, tokenizerURL, configURL, document_prefix } =

getModelInfo(modelID);

const sentences = text.split(sentencesRegex);

const allEmbeddings = [];

outputStatusEl.classList.remove("invisible");

for (const [id, sentence] of sentences.entries()) {

const query = document_prefix + sentence;

outputStatusEl.innerText = `Calculating embeddings: sentence ${

id + 1

} of ${sentences.length}`;

const embeddings = await getEmbeddings(

bertWorker,

modelURL,

tokenizerURL,

configURL,

modelID,

[query],

updateStatus

);

allEmbeddings.push(embeddings);

}

outputStatusEl.classList.add("invisible");

sentenceEmbeddings = allEmbeddings.map((e) => e.output[0]);

isCalculating = false;

toggleInputs(false);

}

function updateStatus(data) {

if ("status" in data) {

if (data.status === "loading") {

outputStatusEl.innerText = data.message;

outputStatusEl.classList.remove("invisible");

}

}

}

function toggleInputs(state) {

const interactive = document.querySelectorAll(".interactive");

interactive.forEach((el) => {

if (state) {

el.disabled = true;

} else {

el.disabled = false;

}

});

}

searchWikiEl.addEventListener("input", () => {

searchWikiEl.setCustomValidity("");

});

formWikiEl.addEventListener("submit", async (e) => {

e.preventDefault();

if ("example" in e.submitter.dataset) {

searchWikiEl.value = e.submitter.innerText;

}

const text = searchWikiEl.value;

if (isCalculating || text === "") return;

try {

const wikiText = await getWikiText(text);

searchWikiEl.setCustomValidity("");

textAreaEl.innerHTML = wikiText;

populateOutputArea(wikiText);

calculateEmbeddings(wikiText);

searchWikiEl.value = "";

} catch {

searchWikiEl.setCustomValidity("Invalid Wikipedia article name");

searchWikiEl.reportValidity();

}

});

</script>

</head>

<body class="container max-w-4xl mx-auto p-4">

<main class="grid grid-cols-1 gap-5 relative">

<span class="absolute text-5xl -ml-[1em]"> 🕯️ </span>

<div>

<h1 class="text-5xl font-bold">Candle BERT</h1>

<h2 class="text-2xl font-bold">Rust/WASM Demo</h2>

<p class="max-w-lg">

Running sentence embeddings and similarity search in the browser using

the Bert Model written with

<a

href="https://github.com/huggingface/candle/"

target="_blank"

class="underline hover:text-blue-500 hover:no-underline"

>Candle

</a>

and compiled to Wasm. Embeddings models from are from

<a

href="https://huggingface.co/sentence-transformers/"

target="_blank"

class="underline hover:text-blue-500 hover:no-underline"

>

Sentence Transformers

</a>

and

<a

href="https://huggingface.co/intfloat/"

target="_blank"

class="underline hover:text-blue-500 hover:no-underline"

>

Liang Wang - e5 Models

</a>

</p>

</div>

<div>

<label for="model" class="font-medium block">Models Options: </label>

<select

id="model"

class="border-2 border-gray-500 rounded-md font-light interactive disabled:cursor-not-allowed w-full max-w-max"

>

<option value="intfloat_e5_small_v2" selected>

intfloat/e5-small-v2 (133 MB)

</option>

<option value="intfloat_e5_base_v2">

intfloat/e5-base-v2 (438 MB)

</option>

<option value="intfloat_multilingual_e5_small">

intfloat/multilingual-e5-small (471 MB)

</option>

<option value="sentence_transformers_all_MiniLM_L6_v2">

sentence-transformers/all-MiniLM-L6-v2 (90.9 MB)

</option>

<option value="sentence_transformers_all_MiniLM_L12_v2">

sentence-transformers/all-MiniLM-L12-v2 (133 MB)

</option>

</select>

</div>

<div>

<h3 class="font-medium">Examples:</h3>

<form

id="form-wiki"

class="flex text-xs rounded-md justify-between w-min gap-3"

>

<input type="submit" hidden />

<button data-example class="disabled:cursor-not-allowed interactive">

Pizza

</button>

<button data-example class="disabled:cursor-not-allowed interactive">

Paris

</button>

<button data-example class="disabled:cursor-not-allowed interactive">

Physics

</button>

<input

type="text"

id="search-wiki"

title="Search Wikipedia article by title"

class="font-light py-0 mx-1 resize-none outline-none w-32 disabled:cursor-not-allowed interactive"

placeholder="Load Wikipedia article..."

/>

<button

title="Search Wikipedia article and load into input"

class="bg-gray-700 hover:bg-gray-800 text-white font-normal px-2 py-1 rounded disabled:bg-gray-300 disabled:cursor-not-allowed interactive"

>

Load

</button>

</form>

</div>

<form

id="form"

class="flex text-normal px-1 py-1 border border-gray-700 rounded-md items-center"

>

<input type="submit" hidden />

<input

type="text"

id="search-input"

class="font-light w-full px-3 py-2 mx-1 resize-none outline-none interactive disabled:cursor-not-allowed"

placeholder="Search query here..."

/>

<button

class="bg-gray-700 hover:bg-gray-800 text-white font-normal py-2 w-16 rounded disabled:bg-gray-300 disabled:cursor-not-allowed interactive"

>

Search

</button>

</form>

<div>

<h3 class="font-medium">Input text:</h3>

<div class="flex justify-between items-center">

<div class="rounded-md inline text-xs">

<span id="output-status" class="m-auto font-light invisible"

>C</span

>

</div>

</div>

<div

id="input-container"

tabindex="0"

class="min-h-[250px] bg-slate-100 text-gray-500 rounded-md p-4 flex flex-col gap-2 relative"

>

<textarea

id="input-area"

hidden

value=""

placeholder="Input text to perform semantic similarity search..."

class="flex-1 resize-none outline-none left-0 right-0 top-0 bottom-0 m-4 absolute interactive disabled:invisible"

></textarea>

<p id="output-area" class="grid-rows-2">

Input text to perform semantic similarity search...

</p>

</div>

</div>

</main>

</body>

</html>

| 7 |

0 | hf_public_repos/candle/candle-wasm-examples | hf_public_repos/candle/candle-wasm-examples/bert/utils.js | export async function getEmbeddings(

worker,

weightsURL,

tokenizerURL,

configURL,

modelID,

sentences,

updateStatus = null

) {

return new Promise((resolve, reject) => {

worker.postMessage({

weightsURL,

tokenizerURL,

configURL,

modelID,

sentences,

});

function messageHandler(event) {

if ("error" in event.data) {

worker.removeEventListener("message", messageHandler);

reject(new Error(event.data.error));

}

if (event.data.status === "complete") {

worker.removeEventListener("message", messageHandler);

resolve(event.data);

}

if (updateStatus) updateStatus(event.data);

}

worker.addEventListener("message", messageHandler);

});

}

const MODELS = {

intfloat_e5_small_v2: {

base_url: "https://huggingface.co/intfloat/e5-small-v2/resolve/main/",

search_prefix: "query: ",

document_prefix: "passage: ",

},

intfloat_e5_base_v2: {

base_url: "https://huggingface.co/intfloat/e5-base-v2/resolve/main/",

search_prefix: "query: ",

document_prefix: "passage:",

},

intfloat_multilingual_e5_small: {

base_url:

"https://huggingface.co/intfloat/multilingual-e5-small/resolve/main/",

search_prefix: "query: ",

document_prefix: "passage: ",

},

sentence_transformers_all_MiniLM_L6_v2: {