index

int64 0

0

| repo_id

stringclasses 179

values | file_path

stringlengths 26

186

| content

stringlengths 1

2.1M

| __index_level_0__

int64 0

9

|

|---|---|---|---|---|

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter4/demo.mdx | # Build a demo with Gradio

In this final section on audio classification, we'll build a [Gradio](https://gradio.app) demo to showcase the music

classification model that we just trained on the [GTZAN](https://huggingface.co/datasets/marsyas/gtzan) dataset. The first

thing to do is load up the fine-tuned checkpoint using the `pipeline()` class - this is very familiar now from the section

on [pre-trained models](classification_models). You can change the `model_id` to the namespace of your fine-tuned model

on the Hugging Face Hub:

```python

from transformers import pipeline

model_id = "sanchit-gandhi/distilhubert-finetuned-gtzan"

pipe = pipeline("audio-classification", model=model_id)

```

Secondly, we'll define a function that takes the filepath for an audio input and passes it through the pipeline. Here,

the pipeline automatically takes care of loading the audio file, resampling it to the correct sampling rate, and running

inference with the model. We take the models predictions of `preds` and format them as a dictionary object to be displayed on the

output:

```python

def classify_audio(filepath):

preds = pipe(filepath)

outputs = {}

for p in preds:

outputs[p["label"]] = p["score"]

return outputs

```

Finally, we launch the Gradio demo using the function we've just defined:

```python

import gradio as gr

demo = gr.Interface(

fn=classify_audio, inputs=gr.Audio(type="filepath"), outputs=gr.outputs.Label()

)

demo.launch(debug=True)

```

This will launch a Gradio demo similar to the one running on the Hugging Face Space:

<iframe src="https://course-demos-song-classifier.hf.space" frameBorder="0" height="450" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

| 0 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter4/classification_models.mdx | # Pre-trained models and datasets for audio classification

The Hugging Face Hub is home to over 500 pre-trained models for audio classification. In this section, we'll go through

some of the most common audio classification tasks and suggest appropriate pre-trained models for each. Using the `pipeline()`

class, switching between models and tasks is straightforward - once you know how to use `pipeline()` for one model, you'll

be able to use it for any model on the Hub no code changes! This makes experimenting with the `pipeline()` class extremely

fast, allowing you to quickly select the best pre-trained model for your needs.

Before we jump into the various audio classification problems, let's quickly recap the transformer architectures typically

used. The standard audio classification architecture is motivated by the nature of the task; we want to transform a sequence

of audio inputs (i.e. our input audio array) into a single class label prediction. Encoder-only models first map the input

audio sequence into a sequence of hidden-state representations by passing the inputs through a transformer block. The

sequence of hidden-state representations is then mapped to a class label output by taking the mean over the hidden-states,

and passing the resulting vector through a linear classification layer. Hence, there is a preference for _encoder-only_

models for audio classification.

Decoder-only models introduce unnecessary complexity to the task, since they assume that the outputs can also be a _sequence_

of predictions (rather than a single class label prediction), and so generate multiple outputs. Therefore, they have slower

inference speed and tend not to be used. Encoder-decoder models are largely omitted for the same reason. These architecture

choices are analogous to those in NLP, where encoder-only models such as [BERT](https://huggingface.co/blog/bert-101)

are favoured for sequence classification tasks, and decoder-only models such as GPT reserved for sequence generation tasks.

Now that we've recapped the standard transformer architecture for audio classification, let's jump into the different

subsets of audio classification and cover the most popular models!

## 🤗 Transformers Installation

At the time of writing, the latest updates required for audio classification pipeline are only on the `main` version of

the 🤗 Transformers repository, rather than the latest PyPi version. To make sure we have these updates locally, we'll

install Transformers from the `main` branch with the following command:

```

pip install git+https://github.com/huggingface/transformers

```

## Keyword Spotting

Keyword spotting (KWS) is the task of identifying a keyword in a spoken utterance. The set of possible keywords forms the

set of predicted class labels. Hence, to use a pre-trained keyword spotting model, you should ensure that your keywords

match those that the model was pre-trained on. Below, we'll introduce two datasets and models for keyword spotting.

### Minds-14

Let's go ahead and use the same [MINDS-14](https://huggingface.co/datasets/PolyAI/minds14) dataset that you have explored

in the previous unit. If you recall, MINDS-14 contains recordings of people asking an e-banking system questions in several

languages and dialects, and has the `intent_class` for each recording. We can classify the recordings by intent of the call.

```python

from datasets import load_dataset

minds = load_dataset("PolyAI/minds14", name="en-AU", split="train")

```

We'll load the checkpoint [`"anton-l/xtreme_s_xlsr_300m_minds14"`](https://huggingface.co/anton-l/xtreme_s_xlsr_300m_minds14),

which is an XLS-R model fine-tuned on MINDS-14 for approximately 50 epochs. It achieves 90% accuracy over all languages

from MINDS-14 on the evaluation set.

```python

from transformers import pipeline

classifier = pipeline(

"audio-classification",

model="anton-l/xtreme_s_xlsr_300m_minds14",

)

```

Finally, we can pass a sample to the classification pipeline to make a prediction:

```python

classifier(minds[0]["audio"])

```

**Output:**

```

[

{"score": 0.9631525278091431, "label": "pay_bill"},

{"score": 0.02819698303937912, "label": "freeze"},

{"score": 0.0032787492964416742, "label": "card_issues"},

{"score": 0.0019414445850998163, "label": "abroad"},

{"score": 0.0008378693601116538, "label": "high_value_payment"},

]

```

Great! We've identified that the intent of the call was paying a bill, with probability 96%. You can imagine this kind of

keyword spotting system being used as the first stage of an automated call centre, where we want to categorise incoming

customer calls based on their query and offer them contextualised support accordingly.

### Speech Commands

Speech Commands is a dataset of spoken words designed to evaluate audio classification models on simple command words.

The dataset consists of 15 classes of keywords, a class for silence, and an unknown class to include the false positive.

The 15 keywords are single words that would typically be used in on-device settings to control basic tasks or launch

other processes.

A similar model is running continuously on your mobile phone. Here, instead of having single command words, we have

'wake words' specific to your device, such as "Hey Google" or "Hey Siri". When the audio classification model detects

these wake words, it triggers your phone to start listening to the microphone and transcribe your speech using a speech

recognition model.

The audio classification model is much smaller and lighter than the speech recognition model, often only several millions

of parameters compared to several hundred millions for speech recognition. Thus, it can be run continuously on your device

without draining your battery! Only when the wake word is detected is the larger speech recognition model launched, and

afterwards it is shut down again. We'll cover transformer models for speech recognition in the next Unit, so by the end

of the course you should have the tools you need to build your own voice activated assistant!

As with any dataset on the Hugging Face Hub, we can get a feel for the kind of audio data it has present without downloading

or committing it memory. After heading to the [Speech Commands' dataset card](https://huggingface.co/datasets/speech_commands)

on the Hub, we can use the Dataset Viewer to scroll through the first 100 samples of the dataset, listening to the audio

files and checking any other metadata information:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/audio-course-images/resolve/main/speech_commands.png" alt="Diagram of datasets viewer.">

</div>

The Dataset Preview is a brilliant way of experiencing audio datasets before committing to using them. You can pick any

dataset on the Hub, scroll through the samples and listen to the audio for the different subsets and splits, gauging whether

it's the right dataset for your needs. Once you've selected a dataset, it's trivial to load the data so that you can start

using it.

Let's do exactly that and load a sample of the Speech Commands dataset using streaming mode:

```python

speech_commands = load_dataset(

"speech_commands", "v0.02", split="validation", streaming=True

)

sample = next(iter(speech_commands))

```

We'll load an official [Audio Spectrogram Transformer](https://huggingface.co/docs/transformers/model_doc/audio-spectrogram-transformer)

checkpoint fine-tuned on the Speech Commands dataset, under the namespace [`"MIT/ast-finetuned-speech-commands-v2"`](https://huggingface.co/MIT/ast-finetuned-speech-commands-v2):

```python

classifier = pipeline(

"audio-classification", model="MIT/ast-finetuned-speech-commands-v2"

)

classifier(sample["audio"].copy())

```

**Output:**

```

[{'score': 0.9999892711639404, 'label': 'backward'},

{'score': 1.7504888774055871e-06, 'label': 'happy'},

{'score': 6.703040185129794e-07, 'label': 'follow'},

{'score': 5.805884484288981e-07, 'label': 'stop'},

{'score': 5.614546694232558e-07, 'label': 'up'}]

```

Cool! Looks like the example contains the word "backward" with high probability. We can take a listen to the sample

and verify this is correct:

```

from IPython.display import Audio

Audio(sample["audio"]["array"], rate=sample["audio"]["sampling_rate"])

```

Now, you might be wondering how we've selected these pre-trained models to show you in these audio classification examples.

The truth is, finding pre-trained models for your dataset and task is very straightforward! The first thing we need to do

is head to the Hugging Face Hub and click on the "Models" tab: https://huggingface.co/models

This is going to bring up all the models on the Hugging Face Hub, sorted by downloads in the past 30 days:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/audio-course-images/resolve/main/all_models.png">

</div>

You'll notice on the left-hand side that we have a selection of tabs that we can select to filter models by task, library,

dataset, etc. Scroll down and select the task "Audio Classification" from the list of audio tasks:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/audio-course-images/resolve/main/by_audio_classification.png">

</div>

We're now presented with the sub-set of 500+ audio classification models on the Hub. To further refine this selection, we

can filter models by dataset. Click on the tab "Datasets", and in the search box type "speech_commands". As you begin typing,

you'll see the selection for `speech_commands` appear underneath the search tab. You can click this button to filter all

audio classification models to those fine-tuned on the Speech Commands dataset:

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/audio-course-images/resolve/main/by_speech_commands.png">

</div>

Great! We see that we have 6 pre-trained models available to us for this specific dataset and task. You'll recognise the

first of these models as the Audio Spectrogram Transformer checkpoint that we used in the previous example. This process

of filtering models on the Hub is exactly how we went about selecting the checkpoint to show you!

## Language Identification

Language identification (LID) is the task of identifying the language spoken in an audio sample from a list of candidate

languages. LID can form an important part in many speech pipelines. For example, given an audio sample in an unknown language,

an LID model can be used to categorise the language(s) spoken in the audio sample, and then select an appropriate speech

recognition model trained on that language to transcribe the audio.

### FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition

systems in 102 languages, including many that are classified as 'low-resource'. Take a look at the FLEURS dataset

card on the Hub and explore the different languages that are present: [google/fleurs](https://huggingface.co/datasets/google/fleurs).

Can you find your native tongue here? If not, what's the most closely related language?

Let's load up a sample from the validation split of the FLEURS dataset using streaming mode:

```python

fleurs = load_dataset("google/fleurs", "all", split="validation", streaming=True)

sample = next(iter(fleurs))

```

Great! Now we can load our audio classification model. For this, we'll use a version of [Whisper](https://arxiv.org/pdf/2212.04356.pdf)

fine-tuned on the FLEURS dataset, which is currently the most performant LID model on the Hub:

```python

classifier = pipeline(

"audio-classification", model="sanchit-gandhi/whisper-medium-fleurs-lang-id"

)

```

We can then pass the audio through our classifier and generate a prediction:

```python

classifier(sample["audio"])

```

**Output:**

```

[{'score': 0.9999330043792725, 'label': 'Afrikaans'},

{'score': 7.093023668858223e-06, 'label': 'Northern-Sotho'},

{'score': 4.269149485480739e-06, 'label': 'Icelandic'},

{'score': 3.2661141631251667e-06, 'label': 'Danish'},

{'score': 3.2580724109720904e-06, 'label': 'Cantonese Chinese'}]

```

We can see that the model predicted the audio was in Afrikaans with extremely high probability (near 1). The FLEURS dataset

contains audio data from a wide range of languages - we can see that possible class labels include Northern-Sotho, Icelandic,

Danish and Cantonese Chinese amongst others. You can find the full list of languages on the dataset card here: [google/fleurs](https://huggingface.co/datasets/google/fleurs).

Over to you! What other checkpoints can you find for FLEURS LID on the Hub? What transformer models are they using under-the-hood?

## Zero-Shot Audio Classification

In the traditional paradigm for audio classification, the model predicts a class label from a _pre-defined_ set of

possible classes. This poses a barrier to using pre-trained models for audio classification, since the label set of the

pre-trained model must match that of the downstream task. For the previous example of LID, the model must predict one of

the 102 langauge classes on which it was trained. If the downstream task actually requires 110 languages, the model would

not be able to predict 8 of the 110 languages, and so would require re-training to achieve full coverage. This limits the

effectiveness of transfer learning for audio classification tasks.

Zero-shot audio classification is a method for taking a pre-trained audio classification model trained on a set of labelled

examples and enabling it to be able to classify new examples from previously unseen classes. Let's take a look at how we

can achieve this!

Currently, 🤗 Transformers supports one kind of model for zero-shot audio classification: the [CLAP model](https://huggingface.co/docs/transformers/model_doc/clap).

CLAP is a transformer-based model that takes both audio and text as inputs, and computes the _similarity_ between the two.

If we pass a text input that strongly correlates with an audio input, we'll get a high similarity score. Conversely, passing

a text input that is completely unrelated to the audio input will return a low similarity.

We can use this similarity prediction for zero-shot audio classification by passing one audio input to the model and

multiple candidate labels. The model will return a similarity score for each of the candidate labels, and we can pick the

one that has the highest score as our prediction.

Let's take an example where we use one audio input from the [Environmental Speech Challenge (ESC)](https://huggingface.co/datasets/ashraq/esc50)

dataset:

```python

dataset = load_dataset("ashraq/esc50", split="train", streaming=True)

audio_sample = next(iter(dataset))["audio"]["array"]

```

We then define our candidate labels, which form the set of possible classification labels. The model will return a

classification probability for each of the labels we define. This means we need to know _a-priori_ the set of possible

labels in our classification problem, such that the correct label is contained within the set and is thus assigned a

valid probability score. Note that we can either pass the full set of labels to the model, or a hand-selected subset

that we believe contains the correct label. Passing the full set of labels is going to be more exhaustive, but comes

at the expense of lower classification accuracy since the classification space is larger (provided the correct label is

our chosen subset of labels):

```python

candidate_labels = ["Sound of a dog", "Sound of vacuum cleaner"]

```

We can run both through the model to find the candidate label that is _most similar_ to the audio input:

```python

classifier = pipeline(

task="zero-shot-audio-classification", model="laion/clap-htsat-unfused"

)

classifier(audio_sample, candidate_labels=candidate_labels)

```

**Output:**

```

[{'score': 0.9997242093086243, 'label': 'Sound of a dog'}, {'score': 0.0002758323971647769, 'label': 'Sound of vacuum cleaner'}]

```

Alright! The model seems pretty confident we have the sound of a dog - it predicts it with 99.96% probability, so we'll

take that as our prediction. Let's confirm whether we were right by listening to the audio sample (don't turn up your

volume too high or else you might get a jump!):

```python

Audio(audio_sample, rate=16000)

```

Perfect! We have the sound of a dog barking 🐕, which aligns with the model's prediction. Have a play with different audio

samples and different candidate labels - can you define a set of labels that give good generalisation across the ESC

dataset? Hint: think about where you could find information on the possible sounds in ESC and construct your labels accordingly!

You might be wondering why we don't use the zero-shot audio classification pipeline for **all** audio classification tasks?

It seems as though we can make predictions for any audio classification problem by defining appropriate class labels _a-priori_,

thus bypassing the constraint that our classification task needs to match the labels that the model was pre-trained on.

This comes down to the nature of the CLAP model used in the zero-shot pipeline: CLAP is pre-trained on _generic_ audio

classification data, similar to the environmental sounds in the ESC dataset, rather than specifically speech data, like

we had in the LID task. If you gave it speech in English and speech in Spanish, CLAP would know that both examples were

speech data 🗣️ But it wouldn't be able to differentiate between the languages in the same way a dedicated LID model is

able to.

## What next?

We've covered a number of different audio classification tasks and presented the most relevant datasets and models that

you can download from the Hugging Face Hub and use in just several lines of code using the `pipeline()` class. These tasks

included keyword spotting, language identification and zero-shot audio classification.

But what if we want to do something **new**? We've worked extensively on speech processing tasks, but this is only one

aspect of audio classification. Another popular field of audio processing involves **music**. While music has inherently

different features to speech, many of the same principles that we've learnt about already can be applied to music.

In the following section, we'll go through a step-by-step guide on how you can fine-tune a transformer model with 🤗

Transformers on the task of music classification. By the end of it, you'll have a fine-tuned checkpoint that you can plug

into the `pipeline()` class, enabling you to classify songs in exactly the same way that we've classified speech here!

| 1 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter4/introduction.mdx | # Unit 4. Build a music genre classifier

## What you'll learn and what you'll build

Audio classification is one of the most common applications of transformers in audio and speech processing. Like other

classification tasks in machine learning, this task involves assigning one or more labels to an audio recording based on

its content. For example, in the case of speech, we might want to detect when wake words like "Hey Siri" are spoken, or

infer a key word like "temperature" from a spoken query like "What is the weather today?". Environmental sounds

provide another example, where we might want to automatically distinguish between sounds such as "car horn", "siren",

"dog barking", etc.

In this section, we'll look at how pre-trained audio transformers can be applied to a range of audio classification tasks.

We'll then fine-tune a transformer model on the task of music classification, classifying songs into genres like "pop" and

"rock". This is an important part of music streaming platforms like [Spotify](https://en.wikipedia.org/wiki/Spotify), which

recommend songs that are similar to the ones the user is listening to.

By the end of this section, you'll know how to:

* Find suitable pre-trained models for audio classification tasks

* Use the 🤗 Datasets library and the Hugging Face Hub to select audio classification datasets

* Fine-tune a pretrained model to classify songs by genre

* Build a Gradio demo that lets you classify your own songs

| 2 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter4/fine-tuning.mdx | # Fine-tuning a model for music classification

In this section, we'll present a step-by-step guide on fine-tuning an encoder-only transformer model for music classification.

We'll use a lightweight model for this demonstration and fairly small dataset, meaning the code is runnable end-to-end

on any consumer grade GPU, including the T4 16GB GPU provided in the Google Colab free tier. The section includes various

tips that you can try should you have a smaller GPU and encounter memory issues along the way.

## The Dataset

To train our model, we'll use the [GTZAN](https://huggingface.co/datasets/marsyas/gtzan) dataset, which is a popular

dataset of 1,000 songs for music genre classification. Each song is a 30-second clip from one of 10 genres of music,

spanning disco to metal. We can get the audio files and their corresponding labels from the Hugging Face Hub with the

`load_dataset()` function from 🤗 Datasets:

```python

from datasets import load_dataset

gtzan = load_dataset("marsyas/gtzan", "all")

gtzan

```

**Output:**

```out

Dataset({

features: ['file', 'audio', 'genre'],

num_rows: 999

})

```

<Tip warning={true}>

One of the recordings in GTZAN is corrupted, so it's been removed from the dataset. That's why we have 999 examples

instead of 1,000.

</Tip>

GTZAN doesn't provide a predefined validation set, so we'll have to create one ourselves. The dataset is balanced across

genres, so we can use the `train_test_split()` method to quickly create a 90/10 split as follows:

```python

gtzan = gtzan["train"].train_test_split(seed=42, shuffle=True, test_size=0.1)

gtzan

```

**Output:**

```out

DatasetDict({

train: Dataset({

features: ['file', 'audio', 'genre'],

num_rows: 899

})

test: Dataset({

features: ['file', 'audio', 'genre'],

num_rows: 100

})

})

```

Great, now that we've got our training and validation sets, let's take a look at one of the audio files:

```python

gtzan["train"][0]

```

**Output:**

```out

{

"file": "~/.cache/huggingface/datasets/downloads/extracted/fa06ce46130d3467683100aca945d6deafb642315765a784456e1d81c94715a8/genres/pop/pop.00098.wav",

"audio": {

"path": "~/.cache/huggingface/datasets/downloads/extracted/fa06ce46130d3467683100aca945d6deafb642315765a784456e1d81c94715a8/genres/pop/pop.00098.wav",

"array": array(

[

0.10720825,

0.16122437,

0.28585815,

...,

-0.22924805,

-0.20629883,

-0.11334229,

],

dtype=float32,

),

"sampling_rate": 22050,

},

"genre": 7,

}

```

As we saw in [Unit 1](../chapter1/audio_data), the audio files are represented as 1-dimensional NumPy arrays,

where the value of the array represents the amplitude at that timestep. For these songs, the sampling rate is 22,050 Hz,

meaning there are 22,050 amplitude values sampled per second. We'll have to keep this in mind when using a pretrained model

with a different sampling rate, converting the sampling rates ourselves to ensure they match. We can also see the genre

is represented as an integer, or _class label_, which is the format the model will make it's predictions in. Let's use the

`int2str()` method of the `genre` feature to map these integers to human-readable names:

```python

id2label_fn = gtzan["train"].features["genre"].int2str

id2label_fn(gtzan["train"][0]["genre"])

```

**Output:**

```out

'pop'

```

This label looks correct, since it matches the filename of the audio file. Let's now listen to a few more examples by

using Gradio to create a simple interface with the `Blocks` API:

```python

import gradio as gr

def generate_audio():

example = gtzan["train"].shuffle()[0]

audio = example["audio"]

return (

audio["sampling_rate"],

audio["array"],

), id2label_fn(example["genre"])

with gr.Blocks() as demo:

with gr.Column():

for _ in range(4):

audio, label = generate_audio()

output = gr.Audio(audio, label=label)

demo.launch(debug=True)

```

<iframe src="https://course-demos-gtzan-samples.hf.space" frameBorder="0" height="450" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

From these samples we can certainly hear the difference between genres, but can a transformer do this too? Let's train a

model to find out! First, we'll need to find a suitable pretrained model for this task. Let's see how we can do that.

## Picking a pretrained model for audio classification

To get started, let's pick a suitable pretrained model for audio classification. In this domain, pretraining is typically

carried out on large amounts of unlabeled audio data, using datasets like [LibriSpeech](https://huggingface.co/datasets/librispeech_asr)

and [Voxpopuli](https://huggingface.co/datasets/facebook/voxpopuli). The best way to find these models on the Hugging

Face Hub is to use the "Audio Classification" filter, as described in the previous section. Although models like Wav2Vec2 and

HuBERT are very popular, we'll use a model called _DistilHuBERT_. This is a much smaller (or _distilled_) version of the [HuBERT](https://huggingface.co/docs/transformers/model_doc/hubert)

model, which trains around 73% faster, yet preserves most of the performance.

<iframe src="https://autoevaluate-leaderboards.hf.space" frameBorder="0" height="450" title="Gradio app" class="container p-0 flex-grow space-iframe" allow="accelerometer; ambient-light-sensor; autoplay; battery; camera; document-domain; encrypted-media; fullscreen; geolocation; gyroscope; layout-animations; legacy-image-formats; magnetometer; microphone; midi; oversized-images; payment; picture-in-picture; publickey-credentials-get; sync-xhr; usb; vr ; wake-lock; xr-spatial-tracking" sandbox="allow-forms allow-modals allow-popups allow-popups-to-escape-sandbox allow-same-origin allow-scripts allow-downloads"></iframe>

## From audio to machine learning features

## Preprocessing the data

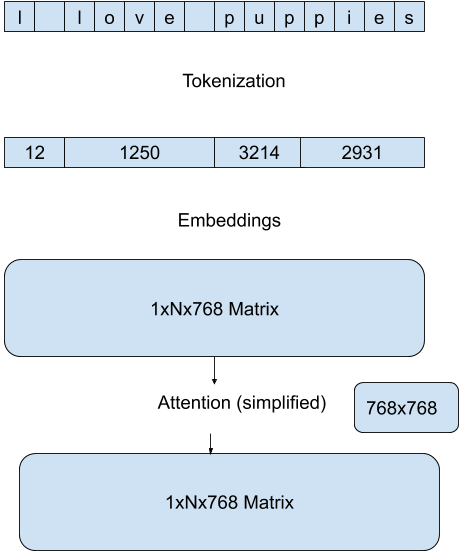

Similar to tokenization in NLP, audio and speech models require the input to be encoded in a format that the model

can process. In 🤗 Transformers, the conversion from audio to the input format is handled by the _feature extractor_ of

the model. Similar to tokenizers, 🤗 Transformers provides a convenient `AutoFeatureExtractor` class that can automatically

select the correct feature extractor for a given model. To see how we can process our audio files, let's begin by instantiating

the feature extractor for DistilHuBERT from the pre-trained checkpoint:

```python

from transformers import AutoFeatureExtractor

model_id = "ntu-spml/distilhubert"

feature_extractor = AutoFeatureExtractor.from_pretrained(

model_id, do_normalize=True, return_attention_mask=True

)

```

Since the sampling rate of the model and the dataset are different, we'll have to resample the audio file to 16,000

Hz before passing it to the feature extractor. We can do this by first obtaining the model's sample rate from the feature

extractor:

```python

sampling_rate = feature_extractor.sampling_rate

sampling_rate

```

**Output:**

```out

16000

```

Next, we resample the dataset using the `cast_column()` method and `Audio` feature from 🤗 Datasets:

```python

from datasets import Audio

gtzan = gtzan.cast_column("audio", Audio(sampling_rate=sampling_rate))

```

We can now check the first sample of the train-split of our dataset to verify that it is indeed at 16,000 Hz. 🤗 Datasets

will resample the audio file _on-the-fly_ when we load each audio sample:

```python

gtzan["train"][0]

```

**Output:**

```out

{

"file": "~/.cache/huggingface/datasets/downloads/extracted/fa06ce46130d3467683100aca945d6deafb642315765a784456e1d81c94715a8/genres/pop/pop.00098.wav",

"audio": {

"path": "~/.cache/huggingface/datasets/downloads/extracted/fa06ce46130d3467683100aca945d6deafb642315765a784456e1d81c94715a8/genres/pop/pop.00098.wav",

"array": array(

[

0.0873509,

0.20183384,

0.4790867,

...,

-0.18743178,

-0.23294401,

-0.13517427,

],

dtype=float32,

),

"sampling_rate": 16000,

},

"genre": 7,

}

```

Great! We can see that the sampling rate has been downsampled to 16kHz. The array values are also different, as we've

now only got approximately one amplitude value for every 1.5 that we had before.

A defining feature of Wav2Vec2 and HuBERT like models is that they accept a float array corresponding to the raw waveform

of the speech signal as an input. This is in contrast to other models, like Whisper, where we pre-process the raw audio waveform

to spectrogram format.

We mentioned that the audio data is represented as a 1-dimensional array, so it's already in the right format to be read

by the model (a set of continuous inputs at discrete time steps). So, what exactly does the feature extractor do?

Well, the audio data is in the right format, but we've imposed no restrictions on the values it can take. For our model to

work optimally, we want to keep all the inputs within the same dynamic range. This is going to make sure we get a similar

range of activations and gradients for our samples, helping with stability and convergence during training.

To do this, we _normalise_ our audio data, by rescaling each sample to zero mean and unit variance, a process called

_feature scaling_. It's exactly this feature normalisation that our feature extractor performs!

We can take a look at the feature extractor in operation by applying it to our first audio sample. First, let's compute

the mean and variance of our raw audio data:

```python

import numpy as np

sample = gtzan["train"][0]["audio"]

print(f"Mean: {np.mean(sample['array']):.3}, Variance: {np.var(sample['array']):.3}")

```

**Output:**

```out

Mean: 0.000185, Variance: 0.0493

```

We can see that the mean is close to zero already, but the variance is closer to 0.05. If the variance for the sample was

larger, it could cause our model problems, since the dynamic range of the audio data would be very small and thus difficult to

separate. Let's apply the feature extractor and see what the outputs look like:

```python

inputs = feature_extractor(sample["array"], sampling_rate=sample["sampling_rate"])

print(f"inputs keys: {list(inputs.keys())}")

print(

f"Mean: {np.mean(inputs['input_values']):.3}, Variance: {np.var(inputs['input_values']):.3}"

)

```

**Output:**

```out

inputs keys: ['input_values', 'attention_mask']

Mean: -4.53e-09, Variance: 1.0

```

Alright! Our feature extractor returns a dictionary of two arrays: `input_values` and `attention_mask`. The `input_values`

are the preprocessed audio inputs that we'd pass to the HuBERT model. The [`attention_mask`](https://huggingface.co/docs/transformers/glossary#attention-mask)

is used when we process a _batch_ of audio inputs at once - it is used to tell the model where we have padded inputs of

different lengths.

We can see that the mean value is now very much closer to zero, and the variance bang-on one! This is exactly the form we

want our audio samples in prior to feeding them to the HuBERT model.

<Tip warning={true}>

Note how we've passed the sampling rate of our audio data to our feature extractor. This is good practice, as the feature

extractor performs a check under-the-hood to make sure the sampling rate of our audio data matches the sampling rate

expected by the model. If the sampling rate of our audio data did not match the sampling rate of our model, we'd need to

up-sample or down-sample the audio data to the correct sampling rate.

</Tip>

Great, so now we know how to process our resampled audio files, the last thing to do is define a function that we can

apply to all the examples in the dataset. Since we expect the audio clips to be 30 seconds in length, we'll also

truncate any longer clips by using the `max_length` and `truncation` arguments of the feature extractor as follows:

```python

max_duration = 30.0

def preprocess_function(examples):

audio_arrays = [x["array"] for x in examples["audio"]]

inputs = feature_extractor(

audio_arrays,

sampling_rate=feature_extractor.sampling_rate,

max_length=int(feature_extractor.sampling_rate * max_duration),

truncation=True,

return_attention_mask=True,

)

return inputs

```

With this function defined, we can now apply it to the dataset using the [`map()`](https://huggingface.co/docs/datasets/v2.14.0/en/package_reference/main_classes#datasets.Dataset.map)

method. The `.map()` method supports working with batches of examples, which we'll enable by setting `batched=True`.

The default batch size is 1000, but we'll reduce it to 100 to ensure the peak RAM stays within a sensible range for

Google Colab's free tier:

<!--- TODO(SG): revert to multiprocessing when bug in datasets is fixed

Since audio datasets can be quite

slow to process, it is usually a good idea to use multiprocessing. We can do this by passing the `num_proc` argument to

`map()` and we'll use Python's `psutil` module to determine the number of CPU cores on the system:

--->

```python

gtzan_encoded = gtzan.map(

preprocess_function,

remove_columns=["audio", "file"],

batched=True,

batch_size=100,

num_proc=1,

)

gtzan_encoded

```

**Output:**

```out

DatasetDict({

train: Dataset({

features: ['genre', 'input_values','attention_mask'],

num_rows: 899

})

test: Dataset({

features: ['genre', 'input_values','attention_mask'],

num_rows: 100

})

})

```

<Tip warning={true}>

If you exhaust your device's RAM executing the above code, you can adjust the batch parameters to reduce the peak

RAM usage. In particular, the following two arguments can be modified:

* `batch_size`: defaults to 1000, but set to 100 above. Try reducing by a factor of 2 again to 50

* `writer_batch_size`: defaults to 1000. Try reducing it to 500, and if that doesn't work, then reduce it by a factor of 2 again to 250

</Tip>

To simplify the training, we've removed the `audio` and `file` columns from the dataset. The `input_values` column contains

the encoded audio files, the `attention_mask` a binary mask of 0/1 values that indicate where we have padded the audio input,

and the `genre` column contains the corresponding labels (or targets). To enable the `Trainer` to process the class labels,

we need to rename the `genre` column to `label`:

```python

gtzan_encoded = gtzan_encoded.rename_column("genre", "label")

```

Finally, we need to obtain the label mappings from the dataset. This mapping will take us from integer ids (e.g. `7`) to

human-readable class labels (e.g. `"pop"`) and back again. In doing so, we can convert our model's integer id prediction

into human-readable format, enabling us to use the model in any downstream application. We can do this by using the `int2str()`

method as follows:

```python

id2label = {

str(i): id2label_fn(i)

for i in range(len(gtzan_encoded["train"].features["label"].names))

}

label2id = {v: k for k, v in id2label.items()}

id2label["7"]

```

```out

'pop'

```

OK, we've now got a dataset that's ready for training! Let's take a look at how we can train a model on this dataset.

## Fine-tuning the model

To fine-tune the model, we'll use the `Trainer` class from 🤗 Transformers. As we've seen in other chapters, the `Trainer`

is a high-level API that is designed to handle the most common training scenarios. In this case, we'll use the `Trainer`

to fine-tune the model on GTZAN. To do this, we'll first need to load a model for this task. We can do this by using the

`AutoModelForAudioClassification` class, which will automatically add the appropriate classification head to our pretrained

DistilHuBERT model. Let's go ahead and instantiate the model:

```python

from transformers import AutoModelForAudioClassification

num_labels = len(id2label)

model = AutoModelForAudioClassification.from_pretrained(

model_id,

num_labels=num_labels,

label2id=label2id,

id2label=id2label,

)

```

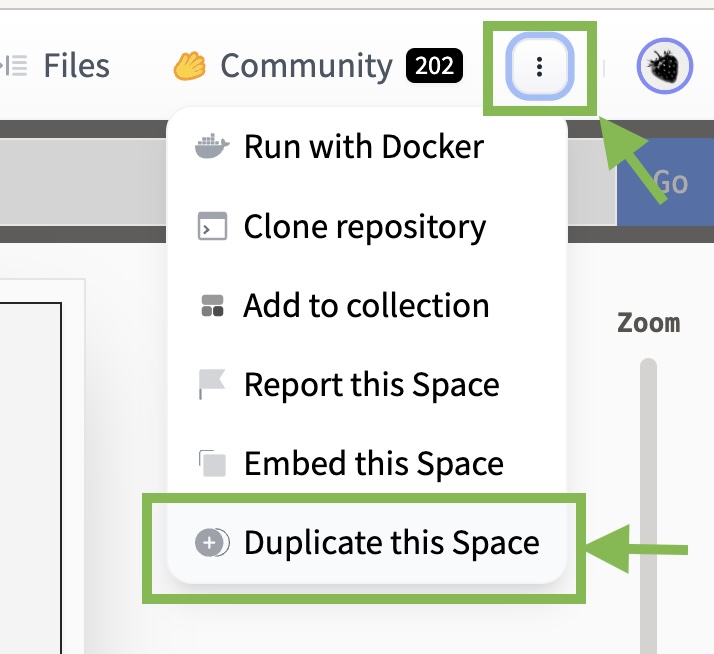

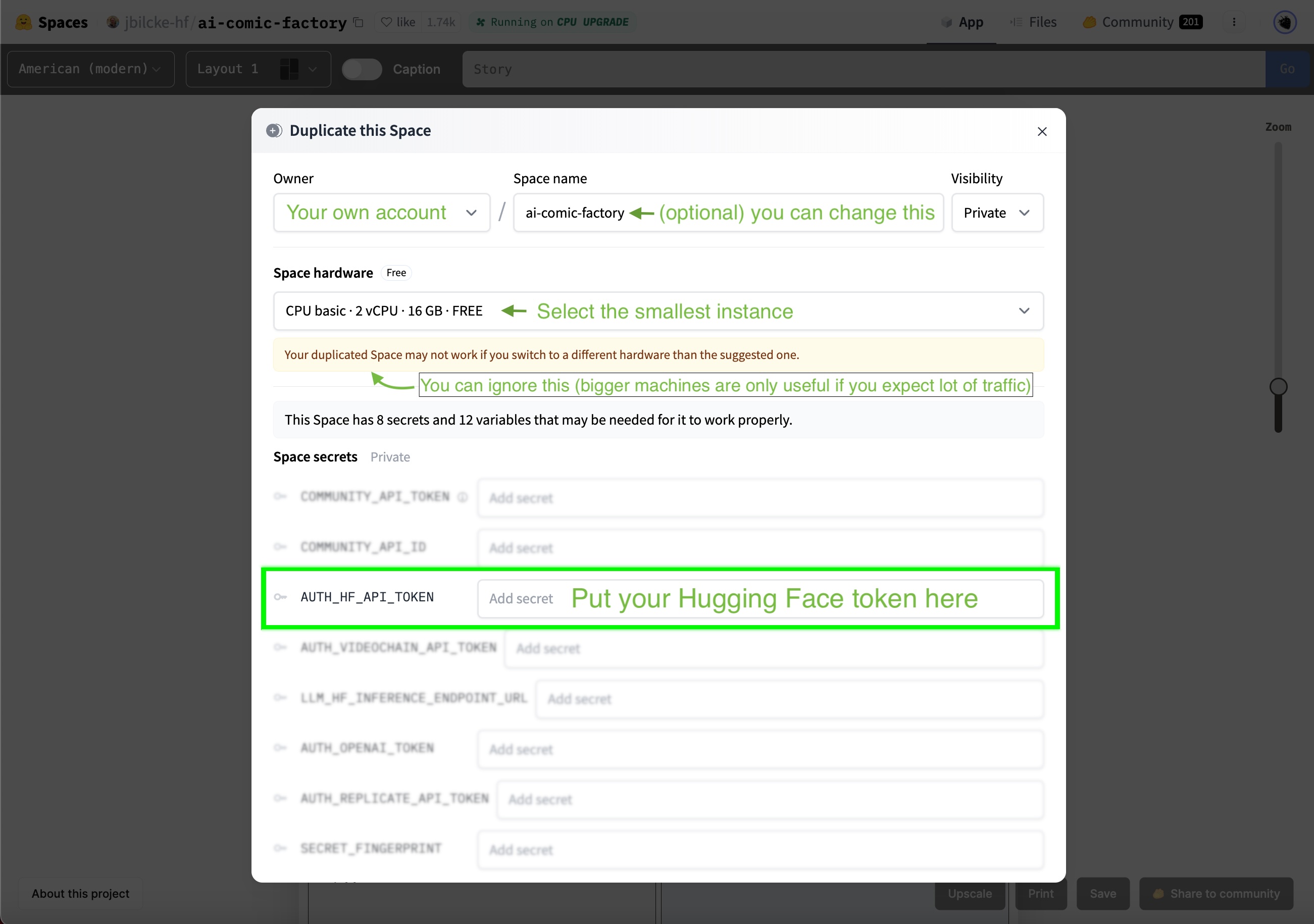

We strongly advise you to upload model checkpoints directly the [Hugging Face Hub](https://huggingface.co/) while training.

The Hub provides:

- Integrated version control: you can be sure that no model checkpoint is lost during training.

- Tensorboard logs: track important metrics over the course of training.

- Model cards: document what a model does and its intended use cases.

- Community: an easy way to share and collaborate with the community! 🤗

Linking the notebook to the Hub is straightforward - it simply requires entering your Hub authentication token when prompted.

Find your Hub authentication token [here](https://huggingface.co/settings/tokens):

```python

from huggingface_hub import notebook_login

notebook_login()

```

**Output:**

```bash

Login successful

Your token has been saved to /root/.huggingface/token

```

The next step is to define the training arguments, including the batch size, gradient accumulation steps, number of

training epochs and learning rate:

```python

from transformers import TrainingArguments

model_name = model_id.split("/")[-1]

batch_size = 8

gradient_accumulation_steps = 1

num_train_epochs = 10

training_args = TrainingArguments(

f"{model_name}-finetuned-gtzan",

evaluation_strategy="epoch",

save_strategy="epoch",

learning_rate=5e-5,

per_device_train_batch_size=batch_size,

gradient_accumulation_steps=gradient_accumulation_steps,

per_device_eval_batch_size=batch_size,

num_train_epochs=num_train_epochs,

warmup_ratio=0.1,

logging_steps=5,

load_best_model_at_end=True,

metric_for_best_model="accuracy",

fp16=True,

push_to_hub=True,

)

```

<Tip warning={true}>

Here we have set `push_to_hub=True` to enable automatic upload of our fine-tuned checkpoints during training. Should you

not wish for your checkpoints to be uploaded to the Hub, you can set this to `False`.

</Tip>

The last thing we need to do is define the metrics. Since the dataset is balanced, we'll use accuracy as our metric and

load it using the 🤗 Evaluate library:

```python

import evaluate

import numpy as np

metric = evaluate.load("accuracy")

def compute_metrics(eval_pred):

"""Computes accuracy on a batch of predictions"""

predictions = np.argmax(eval_pred.predictions, axis=1)

return metric.compute(predictions=predictions, references=eval_pred.label_ids)

```

We've now got all the pieces! Let's instantiate the `Trainer` and train the model:

```python

from transformers import Trainer

trainer = Trainer(

model,

training_args,

train_dataset=gtzan_encoded["train"],

eval_dataset=gtzan_encoded["test"],

tokenizer=feature_extractor,

compute_metrics=compute_metrics,

)

trainer.train()

```

<Tip warning={true}>

Depending on your GPU, it is possible that you will encounter a CUDA `"out-of-memory"` error when you start training.

In this case, you can reduce the `batch_size` incrementally by factors of 2 and employ [`gradient_accumulation_steps`](https://huggingface.co/docs/transformers/main_classes/trainer#transformers.TrainingArguments.gradient_accumulation_steps)

to compensate.

</Tip>

**Output:**

```out

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.7297 | 1.0 | 113 | 1.8011 | 0.44 |

| 1.24 | 2.0 | 226 | 1.3045 | 0.64 |

| 0.9805 | 3.0 | 339 | 0.9888 | 0.7 |

| 0.6853 | 4.0 | 452 | 0.7508 | 0.79 |

| 0.4502 | 5.0 | 565 | 0.6224 | 0.81 |

| 0.3015 | 6.0 | 678 | 0.5411 | 0.83 |

| 0.2244 | 7.0 | 791 | 0.6293 | 0.78 |

| 0.3108 | 8.0 | 904 | 0.5857 | 0.81 |

| 0.1644 | 9.0 | 1017 | 0.5355 | 0.83 |

| 0.1198 | 10.0 | 1130 | 0.5716 | 0.82 |

```

Training will take approximately 1 hour depending on your GPU or the one allocated to the Google Colab. Our best

evaluation accuracy is 83% - not bad for just 10 epochs with 899 examples of training data! We could certainly improve

upon this result by training for more epochs, using regularisation techniques such as _dropout_, or sub-diving each

audio example from 30s into 15s segments to use a more efficient data pre-processing strategy.

The big question is how this compares to other music classification systems 🤔

For that, we can view the [autoevaluate leaderboard](https://huggingface.co/spaces/autoevaluate/leaderboards?dataset=marsyas%2Fgtzan&only_verified=0&task=audio-classification&config=all&split=train&metric=accuracy),

a leaderboard that categorises models by language and dataset, and subsequently ranks them according to their accuracy.

We can automatically submit our checkpoint to the leaderboard when we push the training results to the Hub - we simply have

to set the appropriate key-word arguments (kwargs). You can change these values to match your dataset, language and model name

accordingly:

```python

kwargs = {

"dataset_tags": "marsyas/gtzan",

"dataset": "GTZAN",

"model_name": f"{model_name}-finetuned-gtzan",

"finetuned_from": model_id,

"tasks": "audio-classification",

}

```

The training results can now be uploaded to the Hub. To do so, execute the `.push_to_hub` command:

```python

trainer.push_to_hub(**kwargs)

```

This will save the training logs and model weights under `"your-username/distilhubert-finetuned-gtzan"`. For this example,

check out the upload at [`"sanchit-gandhi/distilhubert-finetuned-gtzan"`](https://huggingface.co/sanchit-gandhi/distilhubert-finetuned-gtzan).

## Share Model

You can now share this model with anyone using the link on the Hub. They can load it with the identifier `"your-username/distilhubert-finetuned-gtzan"`

directly into the `pipeline()` class. For instance, to load the fine-tuned checkpoint [`"sanchit-gandhi/distilhubert-finetuned-gtzan"`](https://huggingface.co/sanchit-gandhi/distilhubert-finetuned-gtzan):

```python

from transformers import pipeline

pipe = pipeline(

"audio-classification", model="sanchit-gandhi/distilhubert-finetuned-gtzan"

)

```

## Conclusion

In this section, we've covered a step-by-step guide for fine-tuning the DistilHuBERT model for music classification. While

we focussed on the task of music classification and the GTZAN dataset, the steps presented here apply more generally to any

audio classification task - the same script can be used for spoken language audio classification tasks like keyword spotting

or language identification. You just need to swap out the dataset for one that corresponds to your task of interest! If

you're interested in fine-tuning other Hugging Face Hub models for audio classification, we encourage you to check out the

other [examples](https://github.com/huggingface/transformers/tree/main/examples/pytorch/audio-classification) in the 🤗

Transformers repository.

In the next section, we'll take the model that you just fine-tuned and build a music classification demo that you can share

on the Hugging Face Hub.

| 3 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter4/hands_on.mdx | # Hands-on exercise

It's time to get your hands on some Audio models and apply what you have learned so far.

This exercise is one of the four hands-on exercises required to qualify for a course completion certificate.

Here are the instructions.

In this unit, we demonstrated how to fine-tune a Hubert model on `marsyas/gtzan` dataset for music classification. Our example achieved 83% accuracy.

Your task is to improve upon this accuracy metric.

Feel free to choose any model on the [🤗 Hub](https://huggingface.co/models) that you think is suitable for audio classification,

and use the exact same dataset [`marsyas/gtzan`](https://huggingface.co/datasets/marsyas/gtzan) to build your own classifier.

Your goal is to achieve 87% accuracy on this dataset with your classifier. You can choose the exact same model, and play with the training hyperparameters,

or pick an entirely different model - it's up to you!

For your result to count towards your certificate, don't forget to push your model to Hub as was shown in this unit with

the following `**kwargs` at the end of the training:

```python

kwargs = {

"dataset_tags": "marsyas/gtzan",

"dataset": "GTZAN",

"model_name": f"{model_name}-finetuned-gtzan",

"finetuned_from": model_id,

"tasks": "audio-classification",

}

trainer.push_to_hub(**kwargs)

```

Here are some additional resources that you may find helpful when working on this exercise:

* [Audio classification task guide in Transformers documentation](https://huggingface.co/docs/transformers/tasks/audio_classification)

* [Hubert model documentation](https://huggingface.co/docs/transformers/model_doc/hubert)

* [M-CTC-T model documentation](https://huggingface.co/docs/transformers/model_doc/mctct)

* [Audio Spectrogram Transformer documentation](https://huggingface.co/docs/transformers/model_doc/audio-spectrogram-transformer)

* [Wav2Vec2 documentation](https://huggingface.co/docs/transformers/model_doc/wav2vec2)

Feel free to build a demo of your model, and share it on Discord! If you have questions, post them in the #audio-study-group channel.

| 4 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter1/supplemental_reading.mdx | # Learn more

This unit covered many fundamental concepts relevant to understanding of audio data and working with it.

Want to learn more? Here you will find additional resources that will help you deepen your understanding of the topics and

enhance your learning experience.

In the following video, Monty Montgomery from xiph.org presents a real-time demonstrations of sampling, quantization,

bit-depth, and dither on real audio equipment using both modern digital analysis and vintage analog bench equipment, check it out:

<Youtube id="cIQ9IXSUzuM"/>

If you'd like to dive deeper into digital signal processing, check out the free ["Digital Signals Theory" book](https://brianmcfee.net/dstbook-site/content/intro.html)

authored by Brian McFee, an Assistant Professor of Music Technology and Data Science at New York University and the principal maintainer

of the `librosa` package.

| 5 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter1/preprocessing.mdx | # Preprocessing an audio dataset

Loading a dataset with 🤗 Datasets is just half of the fun. If you plan to use it either for training a model, or for running

inference, you will need to pre-process the data first. In general, this will involve the following steps:

* Resampling the audio data

* Filtering the dataset

* Converting audio data to model's expected input

## Resampling the audio data

The `load_dataset` function downloads audio examples with the sampling rate that they were published with. This is not

always the sampling rate expected by a model you plan to train, or use for inference. If there's a discrepancy between

the sampling rates, you can resample the audio to the model's expected sampling rate.

Most of the available pretrained models have been pretrained on audio datasets at a sampling rate of 16 kHz.

When we explored MINDS-14 dataset, you may have noticed that it is sampled at 8 kHz, which means we will likely need

to upsample it.

To do so, use 🤗 Datasets' `cast_column` method. This operation does not change the audio in-place, but rather signals

to datasets to resample the audio examples on the fly when they are loaded. The following code will set the sampling

rate to 16kHz:

```py

from datasets import Audio

minds = minds.cast_column("audio", Audio(sampling_rate=16_000))

```

Re-load the first audio example in the MINDS-14 dataset, and check that it has been resampled to the desired `sampling rate`:

```py

minds[0]

```

**Output:**

```out

{

"path": "/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-AU~PAY_BILL/response_4.wav",

"audio": {

"path": "/root/.cache/huggingface/datasets/downloads/extracted/f14948e0e84be638dd7943ac36518a4cf3324e8b7aa331c5ab11541518e9368c/en-AU~PAY_BILL/response_4.wav",

"array": array(

[

2.0634243e-05,

1.9437837e-04,

2.2419340e-04,

...,

9.3852862e-04,

1.1302452e-03,

7.1531429e-04,

],

dtype=float32,

),

"sampling_rate": 16000,

},

"transcription": "I would like to pay my electricity bill using my card can you please assist",

"intent_class": 13,

}

```

You may notice that the array values are now also different. This is because we've now got twice the number of amplitude values for

every one that we had before.

<Tip>

💡 Some background on resampling: If an audio signal has been sampled at 8 kHz, so that it has 8000 sample readings per

second, we know that the audio does not contain any frequencies over 4 kHz. This is guaranteed by the Nyquist sampling

theorem. Because of this, we can be certain that in between the sampling points the original continuous signal always

makes a smooth curve. Upsampling to a higher sampling rate is then a matter of calculating additional sample values that go in between

the existing ones, by approximating this curve. Downsampling, however, requires that we first filter out any frequencies

that would be higher than the new Nyquist limit, before estimating the new sample points. In other words, you can't

downsample by a factor 2x by simply throwing away every other sample — this will create distortions in the signal called

aliases. Doing resampling correctly is tricky and best left to well-tested libraries such as librosa or 🤗 Datasets.

</Tip>

## Filtering the dataset

You may need to filter the data based on some criteria. One of the common cases involves limiting the audio examples to a

certain duration. For instance, we might want to filter out any examples longer than 20s to prevent out-of-memory errors

when training a model.

We can do this by using the 🤗 Datasets' `filter` method and passing a function with filtering logic to it. Let's start by writing a

function that indicates which examples to keep and which to discard. This function, `is_audio_length_in_range`,

returns `True` if a sample is shorter than 20s, and `False` if it is longer than 20s.

```py

MAX_DURATION_IN_SECONDS = 20.0

def is_audio_length_in_range(input_length):

return input_length < MAX_DURATION_IN_SECONDS

```

The filtering function can be applied to a dataset's column but we do not have a column with audio track duration in this

dataset. However, we can create one, filter based on the values in that column, and then remove it.

```py

# use librosa to get example's duration from the audio file

new_column = [librosa.get_duration(path=x) for x in minds["path"]]

minds = minds.add_column("duration", new_column)

# use 🤗 Datasets' `filter` method to apply the filtering function

minds = minds.filter(is_audio_length_in_range, input_columns=["duration"])

# remove the temporary helper column

minds = minds.remove_columns(["duration"])

minds

```

**Output:**

```out

Dataset({features: ["path", "audio", "transcription", "intent_class"], num_rows: 624})

```

We can verify that dataset has been filtered down from 654 examples to 624.

## Pre-processing audio data

One of the most challenging aspects of working with audio datasets is preparing the data in the right format for model

training. As you saw, the raw audio data comes as an array of sample values. However, pre-trained models, whether you use them

for inference, or want to fine-tune them for your task, expect the raw data to be converted into input features. The

requirements for the input features may vary from one model to another — they depend on the model's architecture, and the data it was

pre-trained with. The good news is, for every supported audio model, 🤗 Transformers offer a feature extractor class

that can convert raw audio data into the input features the model expects.

So what does a feature extractor do with the raw audio data? Let's take a look at [Whisper](https://huggingface.co/papers/2212.04356)'s

feature extractor to understand some common feature extraction transformations. Whisper is a pre-trained model for

automatic speech recognition (ASR) published in September 2022 by Alec Radford et al. from OpenAI.

First, the Whisper feature extractor pads/truncates a batch of audio examples such that all

examples have an input length of 30s. Examples shorter than this are padded to 30s by appending zeros to the end of the

sequence (zeros in an audio signal correspond to no signal or silence). Examples longer than 30s are truncated to 30s.

Since all elements in the batch are padded/truncated to a maximum length in the input space, there is no need for an attention

mask. Whisper is unique in this regard, most other audio models require an attention mask that details

where sequences have been padded, and thus where they should be ignored in the self-attention mechanism. Whisper is

trained to operate without an attention mask and infer directly from the speech signals where to ignore the inputs.

The second operation that the Whisper feature extractor performs is converting the padded audio arrays to log-mel spectrograms.

As you recall, these spectrograms describe how the frequencies of a signal change over time, expressed on the mel scale

and measured in decibels (the log part) to make the frequencies and amplitudes more representative of human hearing.

All these transformations can be applied to your raw audio data with a couple of lines of code. Let's go ahead and load

the feature extractor from the pre-trained Whisper checkpoint to have ready for our audio data:

```py

from transformers import WhisperFeatureExtractor

feature_extractor = WhisperFeatureExtractor.from_pretrained("openai/whisper-small")

```

Next, you can write a function to pre-process a single audio example by passing it through the `feature_extractor`.

```py

def prepare_dataset(example):

audio = example["audio"]

features = feature_extractor(

audio["array"], sampling_rate=audio["sampling_rate"], padding=True

)

return features

```

We can apply the data preparation function to all of our training examples using 🤗 Datasets' map method:

```py

minds = minds.map(prepare_dataset)

minds

```

**Output:**

```out

Dataset(

{

features: ["path", "audio", "transcription", "intent_class", "input_features"],

num_rows: 624,

}

)

```

As easy as that, we now have log-mel spectrograms as `input_features` in the dataset.

Let's visualize it for one of the examples in the `minds` dataset:

```py

import numpy as np

example = minds[0]

input_features = example["input_features"]

plt.figure().set_figwidth(12)

librosa.display.specshow(

np.asarray(input_features[0]),

x_axis="time",

y_axis="mel",

sr=feature_extractor.sampling_rate,

hop_length=feature_extractor.hop_length,

)

plt.colorbar()

```

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface-course/audio-course-images/resolve/main/log_mel_whisper.png" alt="Log mel spectrogram plot">

</div>

Now you can see what the audio input to the Whisper model looks like after preprocessing.

The model's feature extractor class takes care of transforming raw audio data to the format that the model expects. However,

many tasks involving audio are multimodal, e.g. speech recognition. In such cases 🤗 Transformers also offer model-specific

tokenizers to process the text inputs. For a deep dive into tokenizers, please refer to our [NLP course](https://huggingface.co/course/chapter2/4).

You can load the feature extractor and tokenizer for Whisper and other multimodal models separately, or you can load both via

a so-called processor. To make things even simpler, use `AutoProcessor` to load a model's feature extractor and processor from a

checkpoint, like this:

```py

from transformers import AutoProcessor

processor = AutoProcessor.from_pretrained("openai/whisper-small")

```

Here we have illustrated the fundamental data preparation steps. Of course, custom data may require more complex preprocessing.

In this case, you can extend the function `prepare_dataset` to perform any sort of custom data transformations. With 🤗 Datasets,

if you can write it as a Python function, you can [apply it](https://huggingface.co/docs/datasets/audio_process) to your dataset!

| 6 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter1/streaming.mdx | # Streaming audio data

One of the biggest challenges faced with audio datasets is their sheer size. A single minute of uncompressed CD-quality audio (44.1kHz, 16-bit)

takes up a bit more than 5 MB of storage. Typically, an audio dataset would contains hours of recordings.

In the previous sections we used a very small subset of MINDS-14 audio dataset, however, typical audio datasets are much larger.

For example, the `xs` (smallest) configuration of [GigaSpeech from SpeechColab](https://huggingface.co/datasets/speechcolab/gigaspeech)

contains only 10 hours of training data, but takes over 13GB of storage space for download and preparation. So what

happens when we want to train on a larger split? The full `xl` configuration of the same dataset contains 10,000 hours of

training data, requiring over 1TB of storage space. For most of us, this well exceeds the specifications of a typical

hard drive disk. Do we need to fork out and buy additional storage? Or is there a way we can train on these datasets with no disk space constraints?

🤗 Datasets comes to the rescue by offering the [streaming mode](https://huggingface.co/docs/datasets/stream). Streaming allows us to load the data progressively as

we iterate over the dataset. Rather than downloading the whole dataset at once, we load the dataset one example at a time.

We iterate over the dataset, loading and preparing examples on the fly when they are needed. This way, we only ever

load the examples that we're using, and not the ones that we're not!

Once we're done with an example sample, we continue iterating over the dataset and load the next one.

Streaming mode has three primary advantages over downloading the entire dataset at once:

* Disk space: examples are loaded to memory one-by-one as we iterate over the dataset. Since the data is not downloaded

locally, there are no disk space requirements, so you can use datasets of arbitrary size.

* Download and processing time: audio datasets are large and need a significant amount of time to download and process.

With streaming, loading and processing is done on the fly, meaning you can start using the dataset as soon as the first

example is ready.

* Easy experimentation: you can experiment on a handful of examples to check that your script works without having to

download the entire dataset.

There is one caveat to streaming mode. When downloading a full dataset without streaming, both the raw data and processed

data are saved locally to disk. If we want to re-use this dataset, we can directly load the processed data from disk,

skipping the download and processing steps. Consequently, we only have to perform the downloading and processing

operations once, after which we can re-use the prepared data.

With streaming mode, the data is not downloaded to disk. Thus, neither the downloaded nor pre-processed data are cached.

If we want to re-use the dataset, the streaming steps must be repeated, with the audio files loaded and processed on

the fly again. For this reason, it is advised to download datasets that you are likely to use multiple times.

How can you enable streaming mode? Easy! Just set `streaming=True` when you load your dataset. The rest will be taken

care for you:

```py

gigaspeech = load_dataset("speechcolab/gigaspeech", "xs", streaming=True)

```

Just like we applied preprocessing steps to a downloaded subset of MINDS-14, you can do the same preprocessing with a

streaming dataset in the exactly the same manner.

The only difference is that you can no longer access individual samples using Python indexing (i.e. `gigaspeech["train"][sample_idx]`).

Instead, you have to iterate over the dataset. Here's how you can access an example when streaming a dataset:

```py

next(iter(gigaspeech["train"]))

```

**Output:**

```out

{

"segment_id": "YOU0000000315_S0000660",

"speaker": "N/A",

"text": "AS THEY'RE LEAVING <COMMA> CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY <QUESTIONMARK>",

"audio": {

"path": "xs_chunks_0000/YOU0000000315_S0000660.wav",

"array": array(

[0.0005188, 0.00085449, 0.00012207, ..., 0.00125122, 0.00076294, 0.00036621]

),

"sampling_rate": 16000,

},

"begin_time": 2941.89,

"end_time": 2945.07,

"audio_id": "YOU0000000315",

"title": "Return to Vasselheim | Critical Role: VOX MACHINA | Episode 43",

"url": "https://www.youtube.com/watch?v=zr2n1fLVasU",

"source": 2,

"category": 24,

"original_full_path": "audio/youtube/P0004/YOU0000000315.opus",

}

```

If you'd like to preview several examples from a large dataset, use the `take()` to get the first n elements. Let's grab

the first two examples in the gigaspeech dataset:

```py

gigaspeech_head = gigaspeech["train"].take(2)

list(gigaspeech_head)

```

**Output:**

```out

[

{

"segment_id": "YOU0000000315_S0000660",

"speaker": "N/A",

"text": "AS THEY'RE LEAVING <COMMA> CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY <QUESTIONMARK>",

"audio": {

"path": "xs_chunks_0000/YOU0000000315_S0000660.wav",

"array": array(

[

0.0005188,

0.00085449,

0.00012207,

...,

0.00125122,

0.00076294,

0.00036621,

]

),

"sampling_rate": 16000,

},

"begin_time": 2941.89,

"end_time": 2945.07,

"audio_id": "YOU0000000315",

"title": "Return to Vasselheim | Critical Role: VOX MACHINA | Episode 43",

"url": "https://www.youtube.com/watch?v=zr2n1fLVasU",

"source": 2,

"category": 24,

"original_full_path": "audio/youtube/P0004/YOU0000000315.opus",

},

{

"segment_id": "AUD0000001043_S0000775",

"speaker": "N/A",

"text": "SIX TOMATOES <PERIOD>",

"audio": {

"path": "xs_chunks_0000/AUD0000001043_S0000775.wav",

"array": array(

[

1.43432617e-03,

1.37329102e-03,

1.31225586e-03,

...,

-6.10351562e-05,

-1.22070312e-04,

-1.83105469e-04,

]

),

"sampling_rate": 16000,

},

"begin_time": 3673.96,

"end_time": 3675.26,

"audio_id": "AUD0000001043",

"title": "Asteroid of Fear",

"url": "http//www.archive.org/download/asteroid_of_fear_1012_librivox/asteroid_of_fear_1012_librivox_64kb_mp3.zip",

"source": 0,

"category": 28,

"original_full_path": "audio/audiobook/P0011/AUD0000001043.opus",

},

]

```

Streaming mode can take your research to the next level: not only are the biggest datasets accessible to you, but you

can easily evaluate systems over multiple datasets in one go without worrying about your disk space. Compared to

evaluating on a single dataset, multi-dataset evaluation gives a better metric for the generalisation abilities of a

speech recognition system (c.f. End-to-end Speech Benchmark (ESB)).

| 7 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter1/quiz.mdx | <!-- DISABLE-FRONTMATTER-SECTIONS -->

# Check your understanding of the course material

### 1. What units is the sampling rate measured in?

<Question

choices={[

{

text: "dB",

explain: "No, the amplitude is measured in decibels (dB)."

},

{

text: "Hz",

explain: "The sampling rate is the number of samples taken in one second and is measured in hertz (Hz).",

correct: true

},

{

text: "bit",

explain: "Bits are used to describe bit depth, which refers to the number of bits of information used to represent each sample of an audio signal.",

}

]}

/>

### 2. When streaming a large audio dataset, how soon can you start using it?

<Question

choices={[

{

text: "As soon as the full dataset is downloaded.",

explain: "The goal of streaming data is to be able to work with it without having to fully download a dataset."

},

{

text: "As soon as the first 16 examples are downloaded.",

explain: "Try again!"

},

{

text: "As soon as the first example is downloaded.",

explain: "",

correct: true

}

]}

/>

### 3. What is a spectrogram?

<Question

choices={[

{

text: "A device used to digitize the audio that is first captured by a microphone, which converts the sound waves into an electrical signal.",

explain: "A device used to digitize such electrical signal is called Analog-to-Digital Converter. Try again!"

},

{

text: "A plot that shows how the amplitude of an audio signal change over time. It is also known as the *time domain* representation of sound.",

explain: "The description above refers to waveform, not spectrogram."

},

{

text: "A visual representation of the frequency spectrum of a signal as it varies with time.",

explain: "",

correct: true

}

]}

/>

### 4. What is the easiest way to convert raw audio data into log-mel spectrogram expected by Whisper?

A.

```python

librosa.feature.melspectrogram(audio["array"])

```

B.

```python

feature_extractor = WhisperFeatureExtractor.from_pretrained("openai/whisper-small")

feature_extractor(audio["array"])

```

C.

```python

dataset.feature(audio["array"], model="whisper")

```

<Question

choices={[

{

text: "A",

explain: "`librosa.feature.melspectrogram()` creates a power spectrogram."

},

{

text: "B",

explain: "",

correct: true

},

{

text: "C",

explain: "Dataset does not prepare features for Transformer models, this is done by the model's preprocessor."

}

]}

/>

### 5. How do you load a dataset from 🤗 Hub?

A.

```python

from datasets import load_dataset

dataset = load_dataset(DATASET_NAME_ON_HUB)

```

B.

```python

import librosa

dataset = librosa.load(PATH_TO_DATASET)

```

C.

```python

from transformers import load_dataset

dataset = load_dataset(DATASET_NAME_ON_HUB)

```

<Question

choices={[

{

text: "A",

explain: "The best way is to use the 🤗 Datasets library.",

correct: true

},

{

text: "B",

explain: "Librosa.load is useful to load an individual audio file from a path into a tuple with audio time series and a sampling rate, but not an entire dataset with many examples and multiple features. "

},

{

text: "C",

explain: "load_dataset method comes in the 🤗 Datasets library, not in 🤗 Transformers."

}

]}

/>

### 6. Your custom dataset contains high-quality audio with 32 kHz sampling rate. You want to train a speech recognition model that expects the audio examples to have a 16 kHz sampling rate. What should you do?

<Question

choices={[

{

text: "Use the examples as is, the model will easily generalize to higher quality audio examples.",

explain: "Due to reliance on attention mechanisms, it is challenging for models to generalize between sampling rates."

},

{

text: "Use Audio module from the 🤗 Datasets library to downsample the examples in the custom dataset",

explain: "",

correct: true

},

{

text: "Downsample by a factor 2x by throwing away every other sample.",

explain: "This will create distortions in the signal called aliases. Doing resampling correctly is tricky and best left to well-tested libraries such as librosa or 🤗 Datasets."

}

]}

/>

### 7. How can you convert a spectrogram generated by a machine learning model into a waveform?

<Question

choices={[

{

text: "We can use a neural network called a vocoder to reconstruct a waveform from the spectrogram.",

explain: "Since the phase information is missing in this case, we need to use a vocoder, or the classic Griffin-Lim algorithm to reconstruct the waveform.",

correct: true

},

{

text: "We can use the inverse STFT to convert the generated spectrogram into a waveform",

explain: "A generated spectrogram is missing phase information that is required to use the inverse STFT."

},

{

text: "You can't convert a spectrogram generated by a machine learning model into a waveform.",

explain: "Try again!"

}

]}

/>

| 8 |

0 | hf_public_repos/audio-transformers-course/chapters/en | hf_public_repos/audio-transformers-course/chapters/en/chapter1/introduction.mdx | # Unit 1. Working with audio data

## What you'll learn in this unit

Every audio or speech task starts with an audio file. Before we can dive into solving these tasks, it's important to

understand what these files actually contain, and how to work with them.

In this unit, you will gain an understanding of the fundamental terminology related to audio data, including waveform,

sampling rate, and spectrogram. You will also learn how to work with audio datasets, including loading and preprocessing

audio data, and how to stream large datasets efficiently.

By the end of this unit, you will have a strong grasp of the essential audio data terminology and will be equipped with the

skills necessary to work with audio datasets for various applications. The knowledge you'll gain in this unit is going to

lay a foundation to understanding the remainder of the course. | 9 |

0 | hf_public_repos/candle/candle-examples/examples | hf_public_repos/candle/candle-examples/examples/dinov2/README.md | # candle-dinov2

[DINOv2](https://github.com/facebookresearch/dinov2) is a computer vision model.

In this example, it is used as an ImageNet classifier: the model returns the

probability for the image to belong to each of the 1000 ImageNet categories.

## Running some example

```bash

cargo run --example dinov2 --release -- --image candle-examples/examples/yolo-v8/assets/bike.jpg

> mountain bike, all-terrain bike, off-roader: 43.67%

> bicycle-built-for-two, tandem bicycle, tandem: 33.20%

> crash helmet : 13.23%

> unicycle, monocycle : 2.44%

> maillot : 2.42%

```

| 0 |

0 | hf_public_repos/candle/candle-examples/examples | hf_public_repos/candle/candle-examples/examples/siglip/main.rs | #[cfg(feature = "mkl")]

extern crate intel_mkl_src;