
# Industrial Chain Enterprise Knowledge Graph Based on SPG Modeling

[English](./README.md) | [简体中文](./README_cn.md)

## 1. Schema File

For an introduction to the schema file syntax, see [Declarative Schema](https://openspg.yuque.com/ndx6g9/0.6/fzhov4l2sst6bede).

The reference schema for the enterprise supply chain graph is [SupplyChain.schema](./SupplyChain.schema).

Run the following command to create the schema:

```bash
knext schema commit
```

## 2. SPG Modeling vs. Property Graph Modeling

This section compares SPG semantic modeling with conventional property graph modeling.

### 2.1 Semantic Properties vs. Text Properties

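Suppose we have the following company record: the company "北大药份限公司" deals in four products, "医疗器械批发,医药批发,制药,其他化学药品" (medical device wholesale, pharmaceutical wholesale, drug manufacturing, and other chemical drugs).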

```text
id,name,products
CSF0000000254,北大*药*份限公司,"医疗器械批发,医药批发,制药,其他化学药品"
```

#### 2.1.1 Modeling with a Text Property

```text
// text-property modeling
Company(企业): EntityType
    properties:
        product(经营产品): Text
```

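Here the products are plain text with no semantic information, so the upstream and downstream industrial-chain information for "北大药份限公司" cannot be derived from them, and the data is hard both to maintain and to use.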

#### 2.1.2 Modeling with a Relation

```text
Product(产品): EntityType
    properties:
        name(产品名): Text
    relations:
        isA(上位产品): Product

Company(企业): EntityType
    relations:
        product(经营产品): Product
```

With this model, however, the data must be restructured into three columns, with one row per product:

```text
id,name,product
CSF0000000254,北大*药*份限公司,医疗器械批发
CSF0000000254,北大*药*份限公司,医药批发
CSF0000000254,北大*药*份限公司,制药
CSF0000000254,北大*药*份限公司,其他化学药品
```

This approach has two drawbacks:

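1. The raw data must first be cleaned and exploded into multiple rows.

2. The relation data must be added and maintained separately. When the raw data changes, the existing relations have to be deleted and new ones inserted, which is error-prone.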
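The cleaning step in drawback 1 can be sketched in Python with only the standard `csv` module. This is a minimal illustration, assuming the raw file has the `id,name,products` header shown in section 2.1 and that products are comma-separated inside one quoted field; the function names `explode_products` and `to_csv` are hypothetical and not part of KAG or OpenSPG.

```python
import csv
import io

# Raw data in the one-row-per-company layout shown in section 2.1.
RAW = '''id,name,products
CSF0000000254,北大*药*份限公司,"医疗器械批发,医药批发,制药,其他化学药品"
'''


def explode_products(raw_csv: str) -> list[dict[str, str]]:
    """Turn one row per company into one row per (company, product) pair."""
    rows = []
    for record in csv.DictReader(io.StringIO(raw_csv)):
        for product in record["products"].split(","):
            product = product.strip()
            if product:  # skip empty items, e.g. from a trailing comma
                rows.append({"id": record["id"], "name": record["name"], "product": product})
    return rows


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize the exploded rows with the id,name,product header."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["id", "name", "product"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    print(to_csv(explode_products(RAW)), end="")
```

Every time the raw data changes, a script like this must be rerun and the previously imported relation rows deleted, which is the maintenance burden described in drawback 2.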

#### 2.1.3 Modeling with an SPG Semantic Property

SPG supports semantic properties, which simplify knowledge construction:

```text
Product(产品): ConceptType
    hypernymPredicate: isA

Company(企业): EntityType
    properties:
        product(经营产品): Product
            constraint: MultiValue
```

`Company` has a `product` property whose type is `Product`. Simply importing the data below converts the property into relations automatically:

```text
id,name,products
CSF0000000254,北大*药*份限公司,"医疗器械批发,医药批发,制药,其他化学药品"
```
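Conceptually, the automatic property-to-relation conversion behaves like the sketch below: the `MultiValue` property value is split, and each value becomes an edge to a `Product` node. This is a hypothetical in-memory model for illustration only (the `Graph` class, the `upsert_company` function, and the comma separator are assumptions), not OpenSPG's import code. Because the edges are always rebuilt from the stored property, updating the property cannot leave stale relations behind.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    # company id -> properties as stored on the entity
    companies: dict[str, dict[str, object]] = field(default_factory=dict)
    # (subject, predicate, object) edges derived from semantic properties
    edges: set[tuple[str, str, str]] = field(default_factory=set)


def upsert_company(graph: Graph, cid: str, name: str, products: str, sep: str = ",") -> None:
    """Store the raw property value and (re)derive the product edges from it."""
    values = [p.strip() for p in products.split(sep) if p.strip()]
    graph.companies[cid] = {"name": name, "product": values}
    # Drop every product edge previously derived for this company, then re-add.
    graph.edges = {e for e in graph.edges if not (e[0] == cid and e[1] == "product")}
    graph.edges |= {(cid, "product", v) for v in values}


g = Graph()
upsert_company(g, "CSF0000000254", "北大*药*份限公司", "医疗器械批发,医药批发,制药,其他化学药品")
print(sorted(o for s, p, o in g.edges if s == "CSF0000000254"))
```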

### 2.2 Logically Defined Properties and Relations vs. Data-Defined Properties and Relations

Suppose we need each company's industry. With the existing data, it can be retrieved with the following query:

```cypher
MATCH
    (s:Company)-[:product]->(o:Product)-[:belongToIndustry]->(i:Industry)
RETURN
    s.id, i.id
```

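This requires familiarity with the graph schema, which raises the bar for new users. A common practice is therefore to import such derived relations back into the graph as explicit data: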

```text
Company(企业): EntityType
    properties:
        product(经营产品): Product
            constraint: MultiValue
    relations:
        belongToIndustry(所在行业): Industry
```

A new relation type is added so that a company's industry can be retrieved directly.

This approach has two main drawbacks:

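1. Users must maintain the new relation data manually, which increases usage and maintenance costs.

2. Because the new relation is derived from other graph data, the two easily drift out of sync, leaving the graph inconsistent.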
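The consistency problem in drawback 2 can be reproduced in a few lines. The sketch below assumes a toy triple store built from a Python set; the product `WindPower` and the industries `PharmaIndustry` and `EnergyIndustry` are hypothetical names invented for the example. It materializes `belongToIndustry` once, then changes the company's product: the stored copy no longer matches what the two-hop path returns.

```python
# Toy triple store of (subject, predicate, object). Apart from the company id
# and "制药", every name here is hypothetical.
facts = {
    ("CSF0000000254", "product", "制药"),
    ("制药", "belongToIndustry", "PharmaIndustry"),
    ("WindPower", "belongToIndustry", "EnergyIndustry"),
}


def two_hop_industries(triples, company):
    """Industries reachable via company -product-> p -belongToIndustry-> i."""
    products = {o for s, p, o in triples if s == company and p == "product"}
    return {o for s, p, o in triples if s in products and p == "belongToIndustry"}


# Materialize the shortcut relation once, as in the re-import practice above.
materialized = {
    ("CSF0000000254", "belongToIndustry", i)
    for i in two_hop_industries(facts, "CSF0000000254")
}

# Later the company's product changes, but nobody refreshes the stored copy.
facts.discard(("CSF0000000254", "product", "制药"))
facts.add(("CSF0000000254", "product", "WindPower"))

stored = {o for _, _, o in materialized}
fresh = two_hop_industries(facts, "CSF0000000254")
print("stored:", stored, "fresh:", fresh)
```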

To address these drawbacks, SPG supports properties and relations defined by logical rules, modeled as follows:

```text
Company(企业): EntityType
    properties:
        product(经营产品): Product
            constraint: MultiValue
    relations:
        belongToIndustry(所在行业): Industry
            rule: [[
                Define (s:Company)-[p:belongToIndustry]->(o:Industry) {
                    Structure {
                        (s)-[:product]->(c:Product)-[:belongToIndustry]->(o)
                    }
                    Constraint {
                    }
                }
            ]]
```

For details, see the examples for scenarios 1 and 2 in [Industrial Chain Enterprise Credit Graph Query Tasks](../reasoner/README_cn.md).
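The rule declares `belongToIndustry` as the path `(s)-[:product]->(c:Product)-[:belongToIndustry]->(o)` instead of storing it. As a rough analogy only (OpenSPG evaluates such rules in its own reasoner, not like this), the sketch below treats the relation as a function over the current facts, so it always reflects the latest `product` data. The triples and industry names are hypothetical.

```python
from collections.abc import Iterable

Triple = tuple[str, str, str]


def derived_belong_to_industry(triples: Iterable[Triple], company: str) -> set[str]:
    """Evaluate Company-[belongToIndustry]->Industry at query time from the path
    (s)-[:product]->(c:Product)-[:belongToIndustry]->(o)."""
    triples = list(triples)
    products = {o for s, p, o in triples if s == company and p == "product"}
    return {o for s, p, o in triples if p == "belongToIndustry" and s in products}


facts: set[Triple] = {
    ("CSF0000000254", "product", "制药"),
    ("CSF0000000254", "product", "医药批发"),
    ("制药", "belongToIndustry", "PharmaIndustry"),         # hypothetical industry
    ("医药批发", "belongToIndustry", "WholesaleIndustry"),  # hypothetical industry
}
print(sorted(derived_belong_to_industry(facts, "CSF0000000254")))
```

Nothing is copied, so there is no second dataset to keep in sync: removing a `product` fact immediately changes the derived answer.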

### 2.3 Concept Hierarchies vs. Entity Hierarchies

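Existing graph solutions include commonsense graphs such as ConceptNet. In practice, however, each business has its own taxonomy that reflects its semantics, and essentially no single commonsense graph fits every business scenario. The common practice is therefore to create the business taxonomy as entities mixed in with other entity data. As a result, the same classification system has to carry both schema extensions and fine-grained semantic categories. This coupling of data-structure definitions with semantic modeling complicates engineering implementation, maintenance, and management, and also makes it harder for the business to organize and represent (understand) its domain knowledge.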

SPG distinguishes concepts from entities to decouple semantics from data:

```text
Product(产品): ConceptType
    hypernymPredicate: isA

Company(企业): EntityType
    properties:
        product(经营产品): Product
            constraint: MultiValue
```

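`Product` is defined as a concept and `Company` as an entity, so the two evolve independently. They are linked through the semantic property provided by SPG, and users do not need to maintain company-product links manually.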
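Because `Product` is a concept with `hypernymPredicate: isA`, a query for a broad product category can reach companies linked only to narrower concepts. The sketch below is a minimal illustration of that idea, assuming a hypothetical `isA` hierarchy (the parent concepts `Pharmaceuticals`, `ChemicalDrugs`, and `Wholesale` are invented) and plain Python dicts in place of the graph store.

```python
# Hypothetical concept hierarchy: child concept -> parent concept (isA).
IS_A = {
    "制药": "Pharmaceuticals",
    "其他化学药品": "ChemicalDrugs",
    "ChemicalDrugs": "Pharmaceuticals",
    "医药批发": "Wholesale",
    "医疗器械批发": "Wholesale",
}

# Entity data: company id -> product concepts (the MultiValue property).
COMPANY_PRODUCTS = {
    "CSF0000000254": ["医疗器械批发", "医药批发", "制药", "其他化学药品"],
}


def ancestors(concept: str) -> set[str]:
    """All hypernyms of a concept, following isA transitively (cycle-safe)."""
    seen: set[str] = set()
    while concept in IS_A and IS_A[concept] not in seen:
        concept = IS_A[concept]
        seen.add(concept)
    return seen


def companies_under(category: str) -> list[str]:
    """Companies with a product equal to, or more specific than, the category."""
    return sorted(
        cid
        for cid, products in COMPANY_PRODUCTS.items()
        if any(p == category or category in ancestors(p) for p in products)
    )


print(companies_under("Pharmaceuticals"))
```

Reorganizing the taxonomy only edits `IS_A`; `COMPANY_PRODUCTS` stays untouched, which mirrors the decoupling described above.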

### 2.4 Multi-Element Spatio-Temporal Event Representation

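Representing the multi-element structure of an event is a lossless hypergraph representation problem: an event expresses the spatio-temporal association among several elements. An event is a temporary association among its elements created by some action, and the association disappears once the action ends. In traditional property graphs, an event can only be stood in for by an entity whose content is expressed as text properties, for example: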

```text
Event(事件): EntityType
    properties:
        eventTime(发生时间): Long
        subject(涉事主体): Text
        object(客体): Text
        place(地点): Text
        industry(涉事行业): Text
```

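This representation cannot capture the multi-element associations of a real event. SPG provides event modeling that links an event's elements together: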

```text
CompanyEvent(公司事件): EventType
    properties:
        subject(主体): Company
        index(指标): Index
        trend(趋势): Trend
        belongTo(属于): TaxOfCompanyEvent
```

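In this event, every property type is a previously defined type rather than a basic type, and SPG uses this declaration to represent the event's multiple elements. For a concrete example, see scenario 3 in [Industrial Chain Enterprise Credit Graph Query Tasks](../reasoner/README_cn.md).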
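One way to picture the difference is to treat a `CompanyEvent` as a single n-ary record (a hyperedge) whose roles point at typed nodes, rather than a vertex holding free text. The sketch below assumes a toy node registry and hypothetical node ids (`revenue`, `up`, `RevenueRise`); it only checks that each role references an existing node of the type declared in the schema above.

```python
from dataclasses import dataclass

# Role -> required node type, copied from the CompanyEvent schema above.
COMPANY_EVENT_ROLES = {
    "subject": "Company",
    "index": "Index",
    "trend": "Trend",
    "belongTo": "TaxOfCompanyEvent",
}


@dataclass(frozen=True)
class Node:
    id: str
    type: str


def make_company_event(event_id: str, nodes: dict[str, Node], **roles: str) -> dict[str, Node]:
    """Bind every role to an existing node of the declared type."""
    missing = COMPANY_EVENT_ROLES.keys() - roles.keys()
    if missing:
        raise ValueError(f"{event_id}: missing roles {sorted(missing)}")
    bound = {}
    for role, node_id in roles.items():
        if role not in COMPANY_EVENT_ROLES:
            raise ValueError(f"{event_id}: unknown role {role!r}")
        expected = COMPANY_EVENT_ROLES[role]
        node = nodes.get(node_id)
        if node is None or node.type != expected:
            raise TypeError(f"{event_id}: role {role!r} must reference a {expected} node")
        bound[role] = node
    return bound


nodes = {n.id: n for n in [
    Node("CSF0000000254", "Company"),
    Node("revenue", "Index"),                  # hypothetical index node
    Node("up", "Trend"),                       # hypothetical trend node
    Node("RevenueRise", "TaxOfCompanyEvent"),  # hypothetical event category
]}
event = make_company_event("evt-1", nodes, subject="CSF0000000254",
                           index="revenue", trend="up", belongTo="RevenueRise")
print({role: n.id for role, n in event.items()})
```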


{

"source": "OpenSPG/KAG",

"title": "kag/examples/supplychain/schema/README_cn.md",

"url": "https://github.com/OpenSPG/KAG/blob/master/kag/examples/supplychain/schema/README_cn.md",

"date": "2024-09-21T13:56:44",

"stars": 5456,

"description": "KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs. It is used to build logical reasoning and factual Q&A solutions for professional domain knowledge bases. It can effectively overcome the shortcomings of the traditional RAG vector similarity calculation model.",

"file_size": 3871

}


# 1. Jay Chou

<font style="color:rgb(51, 51, 51);background-color:rgb(224, 237, 255);">Mandopop singer, musician, actor, director, and screenwriter</font>

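Jay Chou (周杰伦), born on January 18, 1979, in New Taipei City, Taiwan Province, with ancestral roots in Yongchun County, Fujian Province, is a Mandopop singer, musician, actor, director, and screenwriter. He graduated from [Tamkang Senior High School](https://baike.baidu.com/item/%E6%B7%A1%E6%B1%9F%E4%B8%AD%E5%AD%A6/5340877?fromModule=lemma_inlink).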

In 2000, he released his debut album *[Jay](https://baike.baidu.com/item/Jay/5291?fromModule=lemma_inlink)* [26]. In 2001, the album *[范特西](https://baike.baidu.com/item/%E8%8C%83%E7%89%B9%E8%A5%BF/22666?fromModule=lemma_inlink)* established his signature fusion of Chinese and Western music [16]. In 2002, he held "The One" world tour [1]. In 2003, he appeared on the cover of the American magazine *[Time](https://baike.baidu.com/item/%E6%97%B6%E4%BB%A3/1944848?fromModule=lemma_inlink)* [2]; the same year, he released the album *[叶惠美](https://baike.baidu.com/item/%E5%8F%B6%E6%83%A0%E7%BE%8E/893?fromModule=lemma_inlink)* [21], which won Best Pop Vocal Album at the [15th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC15%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/9773084?fromModule=lemma_inlink) [23]. In 2004, he released the album *[七里香](https://baike.baidu.com/item/%E4%B8%83%E9%87%8C%E9%A6%99/2181450?fromModule=lemma_inlink)* [29], which sold 3 million copies in Asia in its first month [316]; the same year, he won the Best-Selling Chinese Artist award at the [World Music Awards](https://baike.baidu.com/item/%E4%B8%96%E7%95%8C%E9%9F%B3%E4%B9%90%E5%A4%A7%E5%A5%96/6690633?fromModule=lemma_inlink) [320]. In 2005, he starred in his first film, *[头文字D](https://baike.baidu.com/item/%E5%A4%B4%E6%96%87%E5%AD%97D/2711022?fromModule=lemma_inlink)* [314], which earned him Best New Performer at both the [25th Hong Kong Film Awards](https://baike.baidu.com/item/%E7%AC%AC25%E5%B1%8A%E9%A6%99%E6%B8%AF%E7%94%B5%E5%BD%B1%E9%87%91%E5%83%8F%E5%A5%96/10324781?fromModule=lemma_inlink) and the [42nd Golden Horse Awards](https://baike.baidu.com/item/%E7%AC%AC42%E5%B1%8A%E5%8F%B0%E6%B9%BE%E7%94%B5%E5%BD%B1%E9%87%91%E9%A9%AC%E5%A5%96/10483829?fromModule=lemma_inlink) [3] [315]. From 2006, he won the World Music Awards' Best-Selling Chinese Artist award three years in a row [4].

In 2007, he wrote and directed the romance film *[不能说的秘密](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86/39267?fromModule=lemma_inlink)* [321]; the same year, he founded [JVR Music](https://baike.baidu.com/item/%E6%9D%B0%E5%A8%81%E5%B0%94%E9%9F%B3%E4%B9%90%E6%9C%89%E9%99%90%E5%85%AC%E5%8F%B8/5929467?fromModule=lemma_inlink) [10]. In 2008, the song *[青花瓷](https://baike.baidu.com/item/%E9%9D%92%E8%8A%B1%E7%93%B7/9864403?fromModule=lemma_inlink)* won him Best Composer at the [19th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC19%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/3968762?fromModule=lemma_inlink) [292]. In 2009, he was named one of [CNN](https://baike.baidu.com/item/CNN/86482?fromModule=lemma_inlink)'s "25 Most Influential People in Asia" [6]; the same year, the album *[魔杰座](https://baike.baidu.com/item/%E9%AD%94%E6%9D%B0%E5%BA%A7/49875?fromModule=lemma_inlink)* won him Best Mandarin Male Singer at the [20th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC20%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/8055336?fromModule=lemma_inlink) [7]. In 2010, he was named one of the "Global Top 100 Creative People" by the American magazine *[Fast Company](https://baike.baidu.com/item/Fast%20Company/6508066?fromModule=lemma_inlink)*. In 2011, the album *[跨时代](https://baike.baidu.com/item/%E8%B7%A8%E6%97%B6%E4%BB%A3/516122?fromModule=lemma_inlink)* won him Best Mandarin Male Singer at the [22nd Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC22%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/7220967?fromModule=lemma_inlink) [294]. In 2012, he topped the [Forbes China Celebrity List](https://baike.baidu.com/item/%E7%A6%8F%E5%B8%83%E6%96%AF%E4%B8%AD%E5%9B%BD%E5%90%8D%E4%BA%BA%E6%A6%9C/2125?fromModule=lemma_inlink) [8]. In 2014, he released his first digital album, *[哎呦,不错哦](https://baike.baidu.com/item/%E5%93%8E%E5%91%A6%EF%BC%8C%E4%B8%8D%E9%94%99%E5%93%A6/9851748?fromModule=lemma_inlink)* [295]. In 2023, his album *[最伟大的作品](https://baike.baidu.com/item/%E6%9C%80%E4%BC%9F%E5%A4%A7%E7%9A%84%E4%BD%9C%E5%93%81/61539892?fromModule=lemma_inlink)* made him the first Chinese-language singer to top the Global Album Sales Chart of the [International Federation of the Phonographic Industry](https://baike.baidu.com/item/%E5%9B%BD%E9%99%85%E5%94%B1%E7%89%87%E4%B8%9A%E5%8D%8F%E4%BC%9A/1486316?fromModule=lemma_inlink) [287].

## <font style="color:rgb(51, 51, 51);">1.1 Early Life</font>

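Jay Chou was born in New Taipei City, Taiwan Province, with ancestral roots in Yongchun County, Quanzhou, Fujian Province [13]. When he was 4, his mother, [Yeh Hui-mei](https://baike.baidu.com/item/%E5%8F%B6%E6%83%A0%E7%BE%8E/2325933?fromModule=lemma_inlink), enrolled him in the Tamkang Yamaha children's music class to study piano. In his second year of junior high school, his parents divorced because of incompatible personalities, and he was raised by his mother. He did not pass the entrance exam for a regular senior high school, but that same year his piano skills won him a place in the first music class at [Tamkang Senior High School](https://baike.baidu.com/item/%E6%B7%A1%E6%B1%9F%E4%B8%AD%E5%AD%A6/5340877?fromModule=lemma_inlink). After graduating, he applied twice to the music department of [Taipei University](https://baike.baidu.com/item/%E5%8F%B0%E5%8C%97%E5%A4%A7%E5%AD%A6/7685732?fromModule=lemma_inlink) without success, and began working at a restaurant.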

In September 1997, encouraged by his mother, he entered the talent show *[超级新人王](https://baike.baidu.com/item/%E8%B6%85%E7%BA%A7%E6%96%B0%E4%BA%BA%E7%8E%8B/6107880?fromModule=lemma_inlink)* on Taipei Xingguang Television [26], where he had someone else perform *梦有翅膀*, a song he had written. After the host, [Jacky Wu](https://baike.baidu.com/item/%E5%90%B4%E5%AE%97%E5%AE%AA/29494?fromModule=lemma_inlink), saw the song's score, he invited Jay Chou to work as a music assistant at [Alfa Music](https://baike.baidu.com/item/%E9%98%BF%E5%B0%94%E5%8F%91%E9%9F%B3%E4%B9%90/279418?fromModule=lemma_inlink). In 1998, he wrote *[眼泪知道](https://baike.baidu.com/item/%E7%9C%BC%E6%B3%AA%E7%9F%A5%E9%81%93/2106916?fromModule=lemma_inlink)*, which the company offered to [Andy Lau](https://baike.baidu.com/item/%E5%88%98%E5%BE%B7%E5%8D%8E/114923?fromModule=lemma_inlink) but which was turned down; *[双截棍](https://baike.baidu.com/item/%E5%8F%8C%E6%88%AA%E6%A3%8D/2986610?fromModule=lemma_inlink)* and *[忍者](https://baike.baidu.com/item/%E5%BF%8D%E8%80%85/1498981?fromModule=lemma_inlink)*, which he later wrote for [A-Mei](https://baike.baidu.com/item/%E5%BC%A0%E6%83%A0%E5%A6%B9/234310?fromModule=lemma_inlink) (both later included on his own album *[范特西](https://baike.baidu.com/item/%E8%8C%83%E7%89%B9%E8%A5%BF/22666?fromModule=lemma_inlink)*), were also rejected [14].

## 1.2 Career

In 2000, on the recommendation of [JR Yang](https://baike.baidu.com/item/%E6%9D%A8%E5%B3%BB%E8%8D%A3/8379373?fromModule=lemma_inlink), Jay Chou began singing his own compositions. On November 7, he released his debut album *[Jay](https://baike.baidu.com/item/Jay/5291?fromModule=lemma_inlink)* [26], handling all of its composition, vocal-harmony arrangement, and production himself. The album blended [R&B](https://baike.baidu.com/item/R&B/15271596?fromModule=lemma_inlink), [hip-hop](https://baike.baidu.com/item/%E5%98%BB%E5%93%88/161896?fromModule=lemma_inlink), and other styles, and its lead single *[星晴](https://baike.baidu.com/item/%E6%98%9F%E6%99%B4/4798844?fromModule=lemma_inlink)* won the Gold Award for Outstanding Mandarin Song at the 24th [Top Ten Chinese Gold Songs Awards](https://baike.baidu.com/item/%E5%8D%81%E5%A4%A7%E4%B8%AD%E6%96%87%E9%87%91%E6%9B%B2/823339?fromModule=lemma_inlink) [15]. The album brought him attention in the Mandopop scene: at the [12th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC12%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/61016222?fromModule=lemma_inlink) the following year, it won Best Pop Vocal Album and was nominated for Best Producer, and its song *[可爱女人](https://baike.baidu.com/item/%E5%8F%AF%E7%88%B1%E5%A5%B3%E4%BA%BA/3225780?fromModule=lemma_inlink)* earned him a Best Composer nomination at the same [12th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC12%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/61016222?fromModule=lemma_inlink).

In September 2001, Jay Chou released his second album, *[范特西](https://baike.baidu.com/item/%E8%8C%83%E7%89%B9%E8%A5%BF/22666?fromModule=lemma_inlink)* [26]. Besides producing it, he composed every song. The album established his signature style: its lead single *[双截棍](https://baike.baidu.com/item/%E5%8F%8C%E6%88%AA%E6%A3%8D/2986610?fromModule=lemma_inlink)*, which blends Chinese and Western musical elements, became one of the representative rap songs, and the album opened up the Southeast Asian market for him [16]. The following year, it won Best Album Producer and Best Pop Album at the [13th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC13%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/12761754?fromModule=lemma_inlink) [241], as well as a Top Ten Best-Selling Mandarin Album award at the Hong Kong Record Sales Awards, among other honors, and its song *[爱在西元前](https://baike.baidu.com/item/%E7%88%B1%E5%9C%A8%E8%A5%BF%E5%85%83%E5%89%8D/3488?fromModule=lemma_inlink)* won him Best Composer at the 13th Golden Melody Awards [228]. In October 2001, he wrote *[刀马旦](https://baike.baidu.com/item/%E5%88%80%E9%A9%AC%E6%97%A6/3894792?fromModule=lemma_inlink)*, a song fusing Chinese and Western elements, for [CoCo Lee](https://baike.baidu.com/item/%E6%9D%8E%E7%8E%9F/333755?fromModule=lemma_inlink) [325]. On December 24, he released the EP *[范特西plus](https://baike.baidu.com/item/%E8%8C%83%E7%89%B9%E8%A5%BFplus/4950842?fromModule=lemma_inlink)*, which includes songs such as *[你比从前快乐](https://baike.baidu.com/item/%E4%BD%A0%E6%AF%94%E4%BB%8E%E5%89%8D%E5%BF%AB%E4%B9%90/3564385?fromModule=lemma_inlink)* and *[世界末日](https://baike.baidu.com/item/%E4%B8%96%E7%95%8C%E6%9C%AB%E6%97%A5/5697158?fromModule=lemma_inlink)* performed at his Taoyuan Arena concert. The same year, he won the Gold Award for Most Popular Singer-Songwriter at the 19th [Jade Solid Gold Best Ten Music Awards](https://baike.baidu.com/item/%E5%8D%81%E5%A4%A7%E5%8A%B2%E6%AD%8C%E9%87%91%E6%9B%B2%E9%A2%81%E5%A5%96%E5%85%B8%E7%A4%BC/477072?fromModule=lemma_inlink) and the Gold Award for New Male Singer at the [Ultimate Song Chart Awards](https://baike.baidu.com/item/%E5%8F%B1%E5%92%A4%E4%B9%90%E5%9D%9B%E6%B5%81%E8%A1%8C%E6%A6%9C%E9%A2%81%E5%A5%96%E5%85%B8%E7%A4%BC/1325994?fromModule=lemma_inlink), among other awards.

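In 2002, he appeared in his first TV series, *[星情花园](https://baike.baidu.com/item/%E6%98%9F%E6%83%85%E8%8A%B1%E5%9B%AD/8740841?fromModule=lemma_inlink)*. In February, he held a concert at the Suntec Singapore International Convention and Exhibition Centre. In July, he released his third album, *[八度空间](https://baike.baidu.com/item/%E5%85%AB%E5%BA%A6%E7%A9%BA%E9%97%B4/1347996?fromModule=lemma_inlink)* [26] [317], composing every song and also serving as producer [17]. Dominated by rhythm-and-blues songs, the album won a Top Ten Gold Album award at the [g-music](https://baike.baidu.com/item/g-music/6992427?fromModule=lemma_inlink) Chart Platinum Music Awards, a Top Ten Chinese Album award at the Chinese Pop Music Media Awards, and Best-Selling Male Artist Album of the Year at the [Singapore Hit Awards](https://baike.baidu.com/item/%E6%96%B0%E5%8A%A0%E5%9D%A1%E9%87%91%E6%9B%B2%E5%A5%96/6360377?fromModule=lemma_inlink), among others [18]. On September 28, he held "THE ONE" concert at Taipei Stadium; from December 12 to 16, he held five "THE ONE" concerts at the [Hong Kong Coliseum](https://baike.baidu.com/item/%E9%A6%99%E6%B8%AF%E4%BD%93%E8%82%B2%E9%A6%86/2370398?fromModule=lemma_inlink); and on December 25, he held "THE ONE" concert in Las Vegas, United States. The same year, he won Most Outstanding Asian Artist at the 1st MTV Video Music Awards Japan, Most Popular Singer-Songwriter and Best Producer at the 2nd [Global Chinese Songs Chart](https://baike.baidu.com/item/%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%AD%8C%E6%9B%B2%E6%8E%92%E8%A1%8C%E6%A6%9C/3189656?fromModule=lemma_inlink) [350], and Most Recommended Asia-Pacific Male Singer at the 9th [Singapore Hit Awards](https://baike.baidu.com/item/%E6%96%B0%E5%8A%A0%E5%9D%A1%E9%87%91%E6%9B%B2%E5%A5%96/6360377?fromModule=lemma_inlink), among others [19].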

In February 2003, he appeared on the cover of the Asian edition of *[Time](https://baike.baidu.com/item/%E6%97%B6%E4%BB%A3%E5%91%A8%E5%88%8A/6643818?fromModule=lemma_inlink)* [2]. In March, at the [3rd Music Fengyun Awards](https://baike.baidu.com/item/%E7%AC%AC3%E5%B1%8A%E9%9F%B3%E4%B9%90%E9%A3%8E%E4%BA%91%E6%A6%9C/23707987?fromModule=lemma_inlink), he won Best Hong Kong/Taiwan Singer-Songwriter of the Year and the Grand Award of the Year, among others, and his song *[暗号](https://baike.baidu.com/item/%E6%9A%97%E5%8F%B7/3948301?fromModule=lemma_inlink)* was named one of the Top Ten Hong Kong/Taiwan Songs of the Year [236]. On May 17, he held "THE ONE" concert at [Merdeka Stadium](https://baike.baidu.com/item/%E9%BB%98%E8%BF%AA%E5%8D%A1%E4%BD%93%E8%82%B2%E5%9C%BA/8826151?fromModule=lemma_inlink) in [Kuala Lumpur](https://baike.baidu.com/item/%E5%90%89%E9%9A%86%E5%9D%A1/967683?fromModule=lemma_inlink), [Malaysia](https://baike.baidu.com/item/%E9%A9%AC%E6%9D%A5%E8%A5%BF%E4%BA%9A/202243?fromModule=lemma_inlink). On July 16, his song *[以父之名](https://baike.baidu.com/item/%E4%BB%A5%E7%88%B6%E4%B9%8B%E5%90%8D/1341?fromModule=lemma_inlink)* premiered on more than 50 radio stations across Asia, with an estimated 800 million simultaneous listeners, and those stations designated the premiere day "[Jay Chou Day](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E6%97%A5/9734555?fromModule=lemma_inlink)" [20]. On July 31, he released his fourth album, *[叶惠美](https://baike.baidu.com/item/%E5%8F%B6%E6%83%A0%E7%BE%8E/893?fromModule=lemma_inlink)* [21] [26], composing every song and serving as its producer and stylist [21]. The album sold more than 2 million copies in Asia in its first month [22], and the following year it won Best Pop Vocal Album at the [15th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC15%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/9773084?fromModule=lemma_inlink) and Most Popular Album of the Year at the 4th Global Chinese Songs Chart, among others [23-24]. Its lead single *[东风破](https://baike.baidu.com/item/%E4%B8%9C%E9%A3%8E%E7%A0%B4/1674691?fromModule=lemma_inlink)* is one of his most representative "Chinese-style" works, and it won him Best Composer at the [4th Chinese Music Media Awards](https://baike.baidu.com/item/%E7%AC%AC4%E5%B1%8A%E5%8D%8E%E8%AF%AD%E9%9F%B3%E4%B9%90%E4%BC%A0%E5%AA%92%E5%A4%A7%E5%A5%96/18003952?fromModule=lemma_inlink). On September 12, he held "THE ONE" concert at [Beijing Workers' Stadium](https://baike.baidu.com/item/%E5%8C%97%E4%BA%AC%E5%B7%A5%E4%BA%BA%E4%BD%93%E8%82%B2%E5%9C%BA/2214906?fromModule=lemma_inlink). On November 13, he released the EP *[寻找周杰伦](https://baike.baidu.com/item/%E5%AF%BB%E6%89%BE%E5%91%A8%E6%9D%B0%E4%BC%A6/2632938?fromModule=lemma_inlink)* [25], which contains two songs he wrote for the film of the same name, *[寻找周杰伦](https://baike.baidu.com/item/%E5%AF%BB%E6%89%BE%E5%91%A8%E6%9D%B0%E4%BC%A6/1189?fromModule=lemma_inlink)*: *[轨迹](https://baike.baidu.com/item/%E8%BD%A8%E8%BF%B9/2770132?fromModule=lemma_inlink)* and *[断了的弦](https://baike.baidu.com/item/%E6%96%AD%E4%BA%86%E7%9A%84%E5%BC%A6/1508695?fromModule=lemma_inlink)* [25]. On December 12, he held "THE ONE" concert at [Shanghai Stadium](https://baike.baidu.com/item/%E4%B8%8A%E6%B5%B7%E4%BD%93%E8%82%B2%E5%9C%BA/9679224?fromModule=lemma_inlink), performing a variation of *[双截棍](https://baike.baidu.com/item/%E5%8F%8C%E6%88%AA%E6%A3%8D/2986610?fromModule=lemma_inlink)* and an extended version of *[爷爷泡的茶](https://baike.baidu.com/item/%E7%88%B7%E7%88%B7%E6%B3%A1%E7%9A%84%E8%8C%B6/2746283?fromModule=lemma_inlink)*, among other songs. The same year, *[寻找周杰伦](https://baike.baidu.com/item/%E5%AF%BB%E6%89%BE%E5%91%A8%E6%9D%B0%E4%BC%A6/1189?fromModule=lemma_inlink)*, his film debut in a cameo role, was released [90].

On January 21, 2004, he appeared on the [CCTV Spring Festival Gala](https://baike.baidu.com/item/%E4%B8%AD%E5%A4%AE%E7%94%B5%E8%A7%86%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/7622174?fromModule=lemma_inlink) for the first time, performing *[龙拳](https://baike.baidu.com/item/%E9%BE%99%E6%8B%B3/2929202?fromModule=lemma_inlink)* [27-28]. In March, at the [4th Music Fengyun Awards](https://baike.baidu.com/item/%E7%AC%AC4%E5%B1%8A%E9%9F%B3%E4%B9%90%E9%A3%8E%E4%BA%91%E6%A6%9C/23707984?fromModule=lemma_inlink), he won Most Popular Male Singer (Taiwan), the Grand Award of the Year, and Best Hong Kong/Taiwan and Overseas Chinese Producer of the Year, among others [326]. On August 3, he released *[七里香](https://baike.baidu.com/item/%E4%B8%83%E9%87%8C%E9%A6%99/2181450?fromModule=lemma_inlink)* [29] [289], an album blending hip-hop, R&B, [classical music](https://baike.baidu.com/item/%E5%8F%A4%E5%85%B8%E9%9F%B3%E4%B9%90/106197?fromModule=lemma_inlink), and other styles. It sold more than 3 million copies in Asia in its release month [316], and its title track *[七里香](https://baike.baidu.com/item/%E4%B8%83%E9%87%8C%E9%A6%99/12009481?fromModule=lemma_inlink)* won a Top Ten Gold Song award and the Gold Award for Outstanding Mandarin Pop Song at the [27th Top Ten Chinese Gold Songs Awards](https://baike.baidu.com/item/%E7%AC%AC27%E5%B1%8A%E5%8D%81%E5%A4%A7%E4%B8%AD%E6%96%87%E9%87%91%E6%9B%B2/12709616?fromModule=lemma_inlink), and a place among the Top 25 Songs of the Year at the [5th Global Chinese Songs Chart](https://baike.baidu.com/item/%E7%AC%AC5%E5%B1%8A%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%AD%8C%E6%9B%B2%E6%8E%92%E8%A1%8C%E6%A6%9C/24682097?fromModule=lemma_inlink), among others [30]. In September, he won Best-Selling Chinese Artist at the 16th [World Music Awards](https://baike.baidu.com/item/%E4%B8%96%E7%95%8C%E9%9F%B3%E4%B9%90%E5%A4%A7%E5%A5%96/6690633?fromModule=lemma_inlink) [320]. From October, he held the "Incomparable" world tour in Taipei, Hong Kong, Los Angeles, Montville, and elsewhere.

On January 11, 2005, at the 11th [Global Chinese Top Chart Awards](https://baike.baidu.com/item/%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%A6%9C%E4%B8%AD%E6%A6%9C/10768347?fromModule=lemma_inlink), he won Best Male Singer (Hong Kong/Taiwan), Most Popular Male Singer (Hong Kong/Taiwan), and Best Singer-Songwriter (Hong Kong/Taiwan), among others [31]. In April, the album *[七里香](https://baike.baidu.com/item/%E4%B8%83%E9%87%8C%E9%A6%99/2181450?fromModule=lemma_inlink)* earned him nominations for Best Mandarin Male Singer and Best Pop Vocal Album at the [16th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC16%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/4745538?fromModule=lemma_inlink), and the song *[七里香](https://baike.baidu.com/item/%E4%B8%83%E9%87%8C%E9%A6%99/12009481?fromModule=lemma_inlink)* a Best Composer nomination at the same ceremony. On June 23, *[头文字D](https://baike.baidu.com/item/%E5%A4%B4%E6%96%87%E5%AD%97D/2711022?fromModule=lemma_inlink)*, in which he played the male lead, was released [91]. He played [Takumi Fujiwara](https://baike.baidu.com/item/%E8%97%A4%E5%8E%9F%E6%8B%93%E6%B5%B7/702611?fromModule=lemma_inlink) [314] [347] in this, his first starring film role [314], and won Best New Performer at both the [42nd Golden Horse Awards](https://baike.baidu.com/item/%E7%AC%AC42%E5%B1%8A%E5%8F%B0%E6%B9%BE%E7%94%B5%E5%BD%B1%E9%87%91%E9%A9%AC%E5%A5%96/10483829?fromModule=lemma_inlink) [3] and the [25th Hong Kong Film Awards](https://baike.baidu.com/item/%E7%AC%AC25%E5%B1%8A%E9%A6%99%E6%B8%AF%E7%94%B5%E5%BD%B1%E9%87%91%E5%83%8F%E5%A5%96/10324781?fromModule=lemma_inlink) [315]. On July 1, he held an "Incomparable" tour concert at Shanghai Stadium [32], and on July 9 another at Beijing Workers' Stadium [33]. On August 31, he released his first compilation album, *[Initial J](https://baike.baidu.com/item/Initial%20J/2268270?fromModule=lemma_inlink)*, in Japan [327]; it includes *[一路向北](https://baike.baidu.com/item/%E4%B8%80%E8%B7%AF%E5%90%91%E5%8C%97/52259?fromModule=lemma_inlink)* and *[飘移](https://baike.baidu.com/item/%E9%A3%98%E7%A7%BB/1246934?fromModule=lemma_inlink)*, the theme songs he sang for *头文字D* [34]. On November 1, he released his sixth album, *[11月的萧邦](https://baike.baidu.com/item/11%E6%9C%88%E7%9A%84%E8%90%A7%E9%82%A6/467565?fromModule=lemma_inlink)* [296], composing every song and handling the album's styling design [35]; it topped Taiwan's [G-MUSIC](https://baike.baidu.com/item/G-MUSIC/6992427?fromModule=lemma_inlink) year-end chart with a 4.28% sales share. The same year, his song *[蜗牛](https://baike.baidu.com/item/%E8%9C%97%E7%89%9B/8578273?fromModule=lemma_inlink)* was included in the "Recommended Patriotic Songs for Shanghai Secondary School Students" list [328].

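On January 11, 2006, at the 12th Global Chinese Top Chart Awards, he won Best Male Singer, Best Singer-Songwriter, and Most Popular Male Singer, and the song *[夜曲](https://baike.baidu.com/item/%E5%A4%9C%E6%9B%B2/3886391?fromModule=lemma_inlink)* and its music video won Best Song of the Year and Most Popular Music Video respectively [234]. On January 20, he released the EP *[霍元甲](https://baike.baidu.com/item/%E9%9C%8D%E5%85%83%E7%94%B2/24226609?fromModule=lemma_inlink)* [329], whose title track *[霍元甲](https://baike.baidu.com/item/%E9%9C%8D%E5%85%83%E7%94%B2/8903362?fromModule=lemma_inlink)* is the theme song of the film *[霍元甲](https://baike.baidu.com/item/%E9%9C%8D%E5%85%83%E7%94%B2/8903304?fromModule=lemma_inlink)* starring [Jet Li](https://baike.baidu.com/item/%E6%9D%8E%E8%BF%9E%E6%9D%B0/202569?fromModule=lemma_inlink) [36]. On January 23, at the [28th Top Ten Chinese Gold Songs Awards](https://baike.baidu.com/item/%E7%AC%AC28%E5%B1%8A%E5%8D%81%E5%A4%A7%E4%B8%AD%E6%96%87%E9%87%91%E6%9B%B2/13467291?fromModule=lemma_inlink), he won the Outstanding Pop Singer Award and the Highest-Selling Male Singer of the Year award [246]. On February 5 and 6, he held concerts in Tokyo. In September, he released his seventh album, *[依然范特西](https://baike.baidu.com/item/%E4%BE%9D%E7%84%B6%E8%8C%83%E7%89%B9%E8%A5%BF/7709602?fromModule=lemma_inlink)* [290], which continues his earlier sound while blending in Chinese-style and rap elements. Its Chinese-style duet with [Fei Yu-ching](https://baike.baidu.com/item/%E8%B4%B9%E7%8E%89%E6%B8%85/651674?fromModule=lemma_inlink), *[千里之外](https://baike.baidu.com/item/%E5%8D%83%E9%87%8C%E4%B9%8B%E5%A4%96/781?fromModule=lemma_inlink)*, won Best Song of the Year at the 13th Global Chinese Top Chart Awards and Most Popular Chinese Song Nationwide at the [29th Top Ten Chinese Gold Songs Awards](https://baike.baidu.com/item/%E7%AC%AC29%E5%B1%8A%E5%8D%81%E5%A4%A7%E4%B8%AD%E6%96%87%E9%87%91%E6%9B%B2/7944447?fromModule=lemma_inlink), among others [37-38]. The album topped Taiwan's Five Major Record Stores chart with a 5.34% sales share [39], and won a Top Ten Album of the Year award from the [Chinese Musicians Exchange Association](https://baike.baidu.com/item/%E4%B8%AD%E5%8D%8E%E9%9F%B3%E4%B9%90%E4%BA%BA%E4%BA%A4%E6%B5%81%E5%8D%8F%E4%BC%9A/3212583?fromModule=lemma_inlink) and Best-Selling Mandarin Album at the IFPI Hong Kong Record Sales Awards, among others [40]. In December, he released the EP *[黄金甲](https://baike.baidu.com/item/%E9%BB%84%E9%87%91%E7%94%B2/62490685?fromModule=lemma_inlink)* [330], which won a Top Ten Best-Selling Mandarin Album award at the IFPI Hong Kong Record Sales Awards [332]. The same year, he won Best-Selling Chinese Artist at the World Music Awards [4]. On December 14, the period action film *[满城尽带黄金甲](https://baike.baidu.com/item/%E6%BB%A1%E5%9F%8E%E5%B0%BD%E5%B8%A6%E9%BB%84%E9%87%91%E7%94%B2/18156?fromModule=lemma_inlink)*, in which he starred, opened in mainland China [331]. He played Yuan Jie, the second prince, a formidable martial artist, and won Best Actor at the 16th Shanghai Film Critics Awards, while *[菊花台](https://baike.baidu.com/item/%E8%8F%8A%E8%8A%B1%E5%8F%B0/2999088?fromModule=lemma_inlink)*, the theme song he wrote and sang for the film, won Best Original Film Song at the [26th Hong Kong Film Awards](https://baike.baidu.com/item/%E7%AC%AC26%E5%B1%8A%E9%A6%99%E6%B8%AF%E7%94%B5%E5%BD%B1%E9%87%91%E5%83%8F%E5%A5%96/10324838?fromModule=lemma_inlink) [92] [220].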

In February 2007, he made his directorial debut with the romance film *[不能说的秘密](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86/39267?fromModule=lemma_inlink)*, in which he also starred [93] [321]. The film won Outstanding Taiwanese Film of the Year at the [44th Golden Horse Awards](https://baike.baidu.com/item/%E7%AC%AC44%E5%B1%8A%E5%8F%B0%E6%B9%BE%E7%94%B5%E5%BD%B1%E9%87%91%E9%A9%AC%E5%A5%96/10483746?fromModule=lemma_inlink) and a Best Asian Film nomination at the [27th Hong Kong Film Awards](https://baike.baidu.com/item/%E7%AC%AC27%E5%B1%8A%E9%A6%99%E6%B8%AF%E7%94%B5%E5%BD%B1%E9%87%91%E5%83%8F%E5%A5%96/3846497?fromModule=lemma_inlink), among others [5], and its theme song of the same name, *[不能说的秘密](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86/1863255?fromModule=lemma_inlink)*, which he wrote and sang, won Best Original Film Song at the 44th Golden Horse Awards [5]. In May, *千里之外* and *[红模仿](https://baike.baidu.com/item/%E7%BA%A2%E6%A8%A1%E4%BB%BF/8705177?fromModule=lemma_inlink)* earned him nominations for Song of the Year and Best Music Video Director, respectively, among others, at the [18th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC18%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/4678259?fromModule=lemma_inlink) [41]. In June, the single *[霍元甲](https://baike.baidu.com/item/%E9%9C%8D%E5%85%83%E7%94%B2/8903362?fromModule=lemma_inlink)* won him Best Single Producer at the 18th Golden Melody Awards [42]. On November 2, he released his eighth album, *[我很忙](https://baike.baidu.com/item/%E6%88%91%E5%BE%88%E5%BF%99/1374653?fromModule=lemma_inlink)* [243] [291], trying American country music for the first time; the following year, the album's Chinese-style song *[青花瓷](https://baike.baidu.com/item/%E9%9D%92%E8%8A%B1%E7%93%B7/9864403?fromModule=lemma_inlink)* won him Best Composer and Song of the Year at the [19th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC19%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/3968762?fromModule=lemma_inlink) [43] [292]. On November 4, the album *[依然范特西](https://baike.baidu.com/item/%E4%BE%9D%E7%84%B6%E8%8C%83%E7%89%B9%E8%A5%BF/7709602?fromModule=lemma_inlink)* won him the World Music Awards' Best-Selling Chinese Artist award again [44]. On November 24, he held a concert at Shanghai's 80,000-seat stadium, where he imitated the falsetto of [Vitas](https://baike.baidu.com/item/%E7%BB%B4%E5%A1%94%E6%96%AF/3770095?fromModule=lemma_inlink) [45]. In December, he held seven "Jay Chou 2007-08 World Tour Hong Kong" concerts at the Hong Kong Coliseum.

On January 10, 2008, *[不能说的秘密](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86/39267?fromModule=lemma_inlink)*, the romance he directed and starred in, was released in South Korea [94]. On February 6, he performed *青花瓷* at the [2008 CCTV Spring Festival Gala](https://baike.baidu.com/item/2008%E5%B9%B4%E4%B8%AD%E5%A4%AE%E7%94%B5%E8%A7%86%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/8970911?fromModule=lemma_inlink) [46]; afterwards, the lyrics of *青花瓷* appeared in college entrance exam questions in Shandong and Jiangsu provinces [47]. On February 16, he held two consecutive concerts at Japan's [Nippon Budokan](https://baike.baidu.com/item/%E6%AD%A6%E9%81%93%E9%A6%86/1989260?fromModule=lemma_inlink), becoming the third Chinese singer to perform there, after [Teresa Teng](https://baike.baidu.com/item/%E9%82%93%E4%B8%BD%E5%90%9B/27007?fromModule=lemma_inlink) and [Faye Wong](https://baike.baidu.com/item/%E7%8E%8B%E8%8F%B2/11029?fromModule=lemma_inlink). That same month, the romantic comedy *[大灌篮](https://baike.baidu.com/item/%E5%A4%A7%E7%81%8C%E7%AF%AE/9173184?fromModule=lemma_inlink)*, in which he starred, was released [334]; he played [Fang Shijie](https://baike.baidu.com/item/%E6%96%B9%E4%B8%96%E6%9D%B0/9936534?fromModule=lemma_inlink), a brave orphan who stands up for the weak [335], and wrote and sang its theme song, *[周大侠](https://baike.baidu.com/item/%E5%91%A8%E5%A4%A7%E4%BE%A0/10508241?fromModule=lemma_inlink)* [334]. On April 30, he released *[千山万水](https://baike.baidu.com/item/%E5%8D%83%E5%B1%B1%E4%B8%87%E6%B0%B4/3167078?fromModule=lemma_inlink)*, a song he wrote and sang for the [Beijing Olympics](https://baike.baidu.com/item/%E5%8C%97%E4%BA%AC%E5%A5%A5%E8%BF%90%E4%BC%9A/335299?fromModule=lemma_inlink) [253]. In July, at the 19th Golden Melody Awards, the album *[不能说的秘密电影原声带](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86%E7%94%B5%E5%BD%B1%E5%8E%9F%E5%A3%B0%E5%B8%A6/7752656?fromModule=lemma_inlink)* won him Best Album Producer (instrumental category), and *[琴房](https://baike.baidu.com/item/%E7%90%B4%E6%88%BF/2920397?fromModule=lemma_inlink)* won him Best Composer (instrumental category) [43]. On October 15, he released his ninth album, *[魔杰座](https://baike.baidu.com/item/%E9%AD%94%E6%9D%B0%E5%BA%A7/49875?fromModule=lemma_inlink)* [297], which blends hip-hop, folk, and other styles; it topped the G-MUSIC and Five Major Record Stores charts in its first week and sold more than 1 million copies in Asia within a week of release [48]. In November, the album *[我很忙](https://baike.baidu.com/item/%E6%88%91%E5%BE%88%E5%BF%99/1374653?fromModule=lemma_inlink)* won him the World Music Awards' Best-Selling Chinese Artist award for the fourth time [4], making him the first Chinese singer to win it three times in a row [44].

On January 25, 2009, he performed *[本草纲目](https://baike.baidu.com/item/%E6%9C%AC%E8%8D%89%E7%BA%B2%E7%9B%AE/10619620?fromModule=lemma_inlink)* with [Song Zuying](https://baike.baidu.com/item/%E5%AE%8B%E7%A5%96%E8%8B%B1/275282?fromModule=lemma_inlink) at the [2009 CCTV Spring Festival Gala](https://baike.baidu.com/item/2009%E5%B9%B4%E4%B8%AD%E5%A4%AE%E7%94%B5%E8%A7%86%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/5938543?fromModule=lemma_inlink) [333]. In May, he held a concert at the stadium of the [Kunshan Sports Center](https://baike.baidu.com/item/%E6%98%86%E5%B1%B1%E5%B8%82%E4%BD%93%E8%82%B2%E4%B8%AD%E5%BF%83/10551658?fromModule=lemma_inlink). In June, at the [20th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC20%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/8055336?fromModule=lemma_inlink), the song *[稻香](https://baike.baidu.com/item/%E7%A8%BB%E9%A6%99/11539?fromModule=lemma_inlink)* won Song of the Year, *[魔术先生](https://baike.baidu.com/item/%E9%AD%94%E6%9C%AF%E5%85%88%E7%94%9F/6756619?fromModule=lemma_inlink)* won Best Music Video, and the album *魔杰座* won him Best Mandarin Male Singer [7]. In July, his Sydney concert ranked second on the US Billboard box-office chart, making it the year's second-highest-grossing single concert worldwide and breaking the box-office record for a Chinese singer's concert in Australia. From August, he held tour concerts at venues including the stadiums of the [Foshan Century Lotus Sports Center](https://baike.baidu.com/item/%E4%BD%9B%E5%B1%B1%E4%B8%96%E7%BA%AA%E8%8E%B2%E4%BD%93%E8%82%B2%E4%B8%AD%E5%BF%83/2393458?fromModule=lemma_inlink) and the [Shenyang Olympic Sports Center](https://baike.baidu.com/item/%E6%B2%88%E9%98%B3%E5%A5%A5%E4%BD%93%E4%B8%AD%E5%BF%83/665665?fromModule=lemma_inlink). In December, he was named one of [CNN](https://baike.baidu.com/item/CNN/86482?fromModule=lemma_inlink)'s "25 Most Influential People in Asia" [49]. On December 9, the adventure film *[刺陵](https://baike.baidu.com/item/%E5%88%BA%E9%99%B5/7759069?fromModule=lemma_inlink)*, co-starring [Lin Chi-ling](https://baike.baidu.com/item/%E6%9E%97%E5%BF%97%E7%8E%B2/172898?fromModule=lemma_inlink), was released [336]; he played Qiao Fei, a guardian of an ancient city's tombs who possesses mysterious powers [95].

On February 9, 2010, the period martial-arts film *[苏乞儿](https://baike.baidu.com/item/%E8%8B%8F%E4%B9%9E%E5%84%BF/7887736?fromModule=lemma_inlink)*, in which he appeared, was released [337]; he played the cold, unsmiling [God of Wushu](https://baike.baidu.com/item/%E6%AD%A6%E7%A5%9E/61764957?fromModule=lemma_inlink) [338]. The same year, he directed the sci-fi series *[熊猫人](https://baike.baidu.com/item/%E7%86%8A%E7%8C%AB%E4%BA%BA/23175?fromModule=lemma_inlink)*, made a special cameo in it [339], and wrote songs for it, including *[熊猫人](https://baike.baidu.com/item/%E7%86%8A%E7%8C%AB%E4%BA%BA/19687027?fromModule=lemma_inlink)* and *[爱情引力](https://baike.baidu.com/item/%E7%88%B1%E6%83%85%E5%BC%95%E5%8A%9B/8585685?fromModule=lemma_inlink)* [96]. On March 28, at the [14th Global Chinese Top Chart Awards](https://baike.baidu.com/item/%E7%AC%AC14%E5%B1%8A%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%A6%9C%E4%B8%AD%E6%A6%9C/2234155?fromModule=lemma_inlink) and Asian Influence Ceremony, he won the 12530 Wireless Music Grand Award of the Year [242]. On May 18, he released his tenth album, *[跨时代](https://baike.baidu.com/item/%E8%B7%A8%E6%97%B6%E4%BB%A3/516122?fromModule=lemma_inlink)* [293], composing and producing every song; the following year it won Best Mandarin Album at the [22nd Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC22%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/7220967?fromModule=lemma_inlink) and Most Outstanding Album at the [China Original Music Pop Chart](https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E5%8E%9F%E5%88%9B%E9%9F%B3%E4%B9%90%E6%B5%81%E8%A1%8C%E6%A6%9C/10663228?fromModule=lemma_inlink), among others, and earned him Best Mandarin Male Singer at the 22nd Golden Melody Awards [50] [294]. In June, he was named one of the "Global Top 100 Creative People" by the American magazine *[Fast Company](https://baike.baidu.com/item/Fast%20Company/6508066?fromModule=lemma_inlink)*, the first Chinese male singer on the list. On June 11, he opened "The Era" concert series at the [Taipei Arena](https://baike.baidu.com/item/%E5%8F%B0%E5%8C%97%E5%B0%8F%E5%B7%A8%E8%9B%8B/10648327?fromModule=lemma_inlink). In August, a survey of the "Singers with the Most Song Downloads Worldwide" (covering early 2008 through August 10, 2010) ranked his songs third in the world by downloads [51]. In December, asteroid number 257248 was named "[Jay Chou Star](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E6%98%9F/8257706?fromModule=lemma_inlink)", and he wrote the song *[爱的飞行日记](https://baike.baidu.com/item/%E7%88%B1%E7%9A%84%E9%A3%9E%E8%A1%8C%E6%97%A5%E8%AE%B0/1842823?fromModule=lemma_inlink)* about it. On December 30, the city of Cupertino in the United States declared December 31 of each year "Jay Chou Day" [52].

In January 2011, he broke into Hollywood with the action film *[青蜂侠](https://baike.baidu.com/item/%E9%9D%92%E8%9C%82%E4%BE%A0/7618833?fromModule=lemma_inlink)* [340] and was named one of the "Top 10 Most Anticipated Breakout Actors" by the American film website Screen Crave. On February 11, he appeared on the [2011 CCTV Spring Festival Gala](https://baike.baidu.com/item/2011%E5%B9%B4%E4%B8%AD%E5%A4%AE%E7%94%B5%E8%A7%86%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/3001908?fromModule=lemma_inlink), performing *[兰亭序](https://baike.baidu.com/item/%E5%85%B0%E4%BA%AD%E5%BA%8F/2879867?fromModule=lemma_inlink)* with Lin Chi-ling [341]. On February 23, he shot a Sprite commercial and music video with [Kobe Bryant](https://baike.baidu.com/item/%E7%A7%91%E6%AF%94%C2%B7%E5%B8%83%E8%8E%B1%E6%81%A9%E7%89%B9/318773?fromModule=lemma_inlink) and wrote the commercial's theme song, *[天地一斗](https://baike.baidu.com/item/%E5%A4%A9%E5%9C%B0%E4%B8%80%E6%96%97/6151126?fromModule=lemma_inlink)*. On April 21, the American magazine *[Time](https://baike.baidu.com/item/%E6%97%B6%E4%BB%A3%E5%91%A8%E5%88%8A/6643818?fromModule=lemma_inlink)* published its "100 Most Influential People in the World" list, on which he ranked second. On May 13, the album *[跨时代](https://baike.baidu.com/item/%E8%B7%A8%E6%97%B6%E4%BB%A3/516122?fromModule=lemma_inlink)* and the songs *[超人不会飞](https://baike.baidu.com/item/%E8%B6%85%E4%BA%BA%E4%B8%8D%E4%BC%9A%E9%A3%9E/39269?fromModule=lemma_inlink)* and *[烟花易冷](https://baike.baidu.com/item/%E7%83%9F%E8%8A%B1%E6%98%93%E5%86%B7/211?fromModule=lemma_inlink)* earned him nominations for Best Album Producer, Song of the Year, and Best Composer, respectively, among others, at the 22nd Golden Melody Awards [53-54]. In May, *青蜂侠* earned him a Best Breakout Star nomination at the 20th [MTV Movie & TV Awards](https://baike.baidu.com/item/MTV%E7%94%B5%E5%BD%B1%E7%94%B5%E8%A7%86%E5%A5%96/20817009?fromModule=lemma_inlink) [97]. On November 11, he released his 11th album, *[惊叹号!](https://baike.baidu.com/item/%E6%83%8A%E5%8F%B9%E5%8F%B7%EF%BC%81/10482087?fromModule=lemma_inlink)* [247] [298], which blends [heavy metal rock](https://baike.baidu.com/item/%E9%87%8D%E9%87%91%E5%B1%9E%E6%91%87%E6%BB%9A/1514206?fromModule=lemma_inlink), hip-hop, rhythm and blues, [jazz](https://baike.baidu.com/item/%E7%88%B5%E5%A3%AB/8315440?fromModule=lemma_inlink), and other styles, and introduces [electronic dance music](https://baike.baidu.com/item/%E7%94%B5%E5%AD%90%E8%88%9E%E6%9B%B2/5673907?fromModule=lemma_inlink) for the first time [55]. The same year, he held "The Era" world tour concerts in Los Angeles, Kuala Lumpur, Kaohsiung, and elsewhere [56].

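In 2012, he starred in the gunfight action film *[逆战](https://baike.baidu.com/item/%E9%80%86%E6%88%98/9261017?fromModule=lemma_inlink)*, playing Wan Fei, an international police officer with a clear sense of right and wrong and a strong sense of justice [98]. In April, at the [16th Global Chinese Top Chart Awards](https://baike.baidu.com/item/%E7%AC%AC16%E5%B1%8A%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%A6%9C%E4%B8%AD%E6%A6%9C/2211134?fromModule=lemma_inlink) Asian Influence Ceremony, he won Best Chinese Artist of Asian Influence and Best Digital Music, and his album *惊叹号!* won Best Hong Kong/Taiwan Album [342]. In May, he ranked first on the [Forbes China Celebrity List](https://baike.baidu.com/item/%E7%A6%8F%E5%B8%83%E6%96%AF%E4%B8%AD%E5%9B%BD%E5%90%8D%E4%BA%BA%E6%A6%9C/2125?fromModule=lemma_inlink). On May 15, the album *惊叹号!* and the song *[水手怕水](https://baike.baidu.com/item/%E6%B0%B4%E6%89%8B%E6%80%95%E6%B0%B4/9504982?fromModule=lemma_inlink)* earned him nominations for Best Mandarin Male Singer and Best Arranger, respectively, at the [23rd Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC23%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/2044143?fromModule=lemma_inlink). On September 22, he held a concert at the Singapore F1 circuit, becoming the first Chinese singer to perform at an F1 event [57]. On December 28, he released his 12th album, *[12新作](https://baike.baidu.com/item/12%E6%96%B0%E4%BD%9C/8186612?fromModule=lemma_inlink)* [299], which spans Chinese-style, rap, blues, R&B, jazz, and other styles; its lead single *[红尘客栈](https://baike.baidu.com/item/%E7%BA%A2%E5%B0%98%E5%AE%A2%E6%A0%88/8396283?fromModule=lemma_inlink)* won a Top 20 Song award at the 13th Global Chinese Songs Chart and the Silver Award for Outstanding Mandarin Pop Song at the [36th Top Ten Chinese Gold Songs Awards](https://baike.baidu.com/item/%E7%AC%AC36%E5%B1%8A%E5%8D%81%E5%A4%A7%E4%B8%AD%E6%96%87%E9%87%91%E6%9B%B2/12632953?fromModule=lemma_inlink), among others.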

On May 17, 2013, he held a "魔天伦" World Tour concert at the [Mercedes-Benz Arena](https://baike.baidu.com/item/%E6%A2%85%E8%B5%9B%E5%BE%B7%E6%96%AF%EF%BC%8D%E5%A5%94%E9%A9%B0%E6%96%87%E5%8C%96%E4%B8%AD%E5%BF%83/12524895?fromModule=lemma_inlink) in Shanghai. On May 22, the album 《12新作》 earned nominations at the [24th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC24%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/4788862?fromModule=lemma_inlink) for Best Mandarin Album, Best Mandarin Male Singer, and Best Album Producer. On June 1, he voiced the character [太乙真人](https://baike.baidu.com/item/%E5%A4%AA%E4%B9%99%E7%9C%9F%E4%BA%BA/23686155?fromModule=lemma_inlink) in the animated film 《[十万个冷笑话](https://baike.baidu.com/item/%E5%8D%81%E4%B8%87%E4%B8%AA%E5%86%B7%E7%AC%91%E8%AF%9D/2883102?fromModule=lemma_inlink)》. On June 22, he held a concert in the stadium of the [Chengdu Sports Center](https://baike.baidu.com/item/%E6%88%90%E9%83%BD%E5%B8%82%E4%BD%93%E8%82%B2%E4%B8%AD%E5%BF%83/4821286?fromModule=lemma_inlink). On July 11, the romance film 《[天台爱情](https://baike.baidu.com/item/%E5%A4%A9%E5%8F%B0%E7%88%B1%E6%83%85/3568321?fromModule=lemma_inlink)》, which he both directed and starred in, was released [344]. The film was also chosen as the closing film of the [New York Asian Film Festival](https://baike.baidu.com/item/%E7%BA%BD%E7%BA%A6%E4%BA%9A%E6%B4%B2%E7%94%B5%E5%BD%B1%E8%8A%82/12609945?fromModule=lemma_inlink) [99]. From September 6 to 8, he held three "魔天伦" concerts at the Taipei Arena [58]. On October 4, he served as music director of the musical romance film 《[听见下雨的声音](https://baike.baidu.com/item/%E5%90%AC%E8%A7%81%E4%B8%8B%E9%9B%A8%E7%9A%84%E5%A3%B0%E9%9F%B3/7239472?fromModule=lemma_inlink)》 [100].

Starting in April 2014, he took the "魔天伦" World Tour to Sydney, Guiyang, Shanghai, Kuala Lumpur, and other cities [59]. In May, he ranked third on the Forbes China Celebrity List [60]. In November, he was cast as Li, the owner of a magic shop, in the action film 《[惊天魔盗团2](https://baike.baidu.com/item/%E6%83%8A%E5%A4%A9%E9%AD%94%E7%9B%97%E5%9B%A22/9807509?fromModule=lemma_inlink)》 (*Now You See Me 2*) [101]. On December 10, he released his first digital album, 《[哎呦,不错哦](https://baike.baidu.com/item/%E5%93%8E%E5%91%A6%EF%BC%8C%E4%B8%8D%E9%94%99%E5%93%A6/9851748?fromModule=lemma_inlink)》 [295], and became the first Chinese singer to release a digital album [61]. The album went on to win Best-Selling Digital Album of the Year at the 2nd [QQ音乐年度盛典](https://baike.baidu.com/item/QQ%E9%9F%B3%E4%B9%90%E5%B9%B4%E5%BA%A6%E7%9B%9B%E5%85%B8/13131216?fromModule=lemma_inlink). Its track 《[鞋子特大号](https://baike.baidu.com/item/%E9%9E%8B%E5%AD%90%E7%89%B9%E5%A4%A7%E5%8F%B7/16261949?fromModule=lemma_inlink)》 won a Top 20 Song of the Year award at the 5th [全球流行音乐金榜](https://baike.baidu.com/item/%E5%85%A8%E7%90%83%E6%B5%81%E8%A1%8C%E9%9F%B3%E4%B9%90%E9%87%91%E6%A6%9C/3621354?fromModule=lemma_inlink).

In April 2015, at the Asian Influence Awards ceremony of the [第19届全球华语榜中榜](https://baike.baidu.com/item/%E7%AC%AC19%E5%B1%8A%E5%85%A8%E7%90%83%E5%8D%8E%E8%AF%AD%E6%A6%9C%E4%B8%AD%E6%A6%9C/16913437?fromModule=lemma_inlink), he won Asia's Most Popular All-Round Chinese Artist and the award for a cross-era singer-songwriter in Chinese pop [343]. In May, he ranked second on the Forbes China Celebrity List [63]. On June 27, the album 《[哎呦,不错哦](https://baike.baidu.com/item/%E5%93%8E%E5%91%A6%EF%BC%8C%E4%B8%8D%E9%94%99%E5%93%A6/9851748?fromModule=lemma_inlink)》 received two nominations at the [26th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC26%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/16997436?fromModule=lemma_inlink): Best Mandarin Album and Best Album Producer. Starting in July, he served as a coach on 《[中国好声音第四季](https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E5%A5%BD%E5%A3%B0%E9%9F%B3%E7%AC%AC%E5%9B%9B%E5%AD%A3/16040352?fromModule=lemma_inlink)》, an inspirational music show on [Zhejiang Satellite TV](https://baike.baidu.com/item/%E6%B5%99%E6%B1%9F%E5%8D%AB%E8%A7%86/868580?fromModule=lemma_inlink) [62]. On September 26, he held a "魔天伦" concert in the stadium of the [Foshan Century Lotus Sports Center](https://baike.baidu.com/item/%E4%BD%9B%E5%B1%B1%E4%B8%96%E7%BA%AA%E8%8E%B2%E4%BD%93%E8%82%B2%E4%B8%AD%E5%BF%83/2393458?fromModule=lemma_inlink). On December 20, he held a "魔天伦" concert at Kunming Tuodong Stadium.

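In March 2016, at the [QQ音乐巅峰盛典](https://baike.baidu.com/item/QQ%E9%9F%B3%E4%B9%90%E5%B7%85%E5%B3%B0%E7%9B%9B%E5%85%B8/19430591?fromModule=lemma_inlink), he won Most Popular Singer of the Year, All-Round Music Artist of the Year, and Most Influential Concert of the Year. On March 24, he released the single 《[英雄](https://baike.baidu.com/item/%E8%8B%B1%E9%9B%84/19459565?fromModule=lemma_inlink)》, for which he wrote both the lyrics and the music, and it passed 80 million plays within two weeks of release. On June 1, 《[Now You See Me](https://baike.baidu.com/item/Now%20You%20See%20Me/19708831?fromModule=lemma_inlink)》, the theme song he wrote for the film 《[惊天魔盗团2](https://baike.baidu.com/item/%E6%83%8A%E5%A4%A9%E9%AD%94%E7%9B%97%E5%9B%A22/9807509?fromModule=lemma_inlink)》, was released [64]. On June 24, he released the digital album 《[周杰伦的床边故事](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E7%9A%84%E5%BA%8A%E8%BE%B9%E6%95%85%E4%BA%8B/19711456?fromModule=lemma_inlink)》, which blends classical, rock, hip-hop, and other styles [65] [300]. The album sold more than one million copies in two days, breaking the record for digital album sales in mainland China [66]. Its cumulative sales in Greater China exceeded two million copies, with revenue of more than 40 million yuan [67]. In June, the Hollywood film 《[惊天魔盗团2](https://baike.baidu.com/item/%E6%83%8A%E5%A4%A9%E9%AD%94%E7%9B%97%E5%9B%A22/9807509?fromModule=lemma_inlink)》, in which he appeared, was released in mainland China. Starting July 15, he served as a coach on the Zhejiang Satellite TV music show 《[中国新歌声第一季](https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E6%96%B0%E6%AD%8C%E5%A3%B0%E7%AC%AC%E4%B8%80%E5%AD%A3/19837166?fromModule=lemma_inlink)》 [68]. Starting December 23, the musical 《[不能说的秘密](https://baike.baidu.com/item/%E4%B8%8D%E8%83%BD%E8%AF%B4%E7%9A%84%E7%A7%98%E5%AF%86/19661975?fromModule=lemma_inlink)》 held its world premiere at the [Beijing Tianqiao Performing Arts Center](https://baike.baidu.com/item/%E5%8C%97%E4%BA%AC%E5%A4%A9%E6%A1%A5%E8%89%BA%E6%9C%AF%E4%B8%AD%E5%BF%83/17657501?fromModule=lemma_inlink). The musical is adapted from the film of the same name, which Jay Chou wrote and directed, and he also wrote its music, lyrics, and original story [102-103]. In the same year, he took the [Jay Chou "地表最强" World Tour](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E2%80%9C%E5%9C%B0%E8%A1%A8%E6%9C%80%E5%BC%BA%E2%80%9D%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/53069809?fromModule=lemma_inlink) to Shanghai, Beijing, Qingdao, Zhengzhou, Changzhou, and other cities.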

On January 6, 2017, the romance film 《[一万公里的约定](https://baike.baidu.com/item/%E4%B8%80%E4%B8%87%E5%85%AC%E9%87%8C%E7%9A%84%E7%BA%A6%E5%AE%9A/17561190?fromModule=lemma_inlink)》, produced by Jay Chou, was released in mainland China [104]. On January 13, he appeared as a guest on 《[最强大脑第四季](https://baike.baidu.com/item/%E6%9C%80%E5%BC%BA%E5%A4%A7%E8%84%91%E7%AC%AC%E5%9B%9B%E5%AD%A3/19450808?fromModule=lemma_inlink)》, a science reality show on Jiangsu TV [69]. On April 15 and 16, he held two solo concerts at Kunming Tuodong Stadium, and he then continued the "地表最强" World Tour in Chongqing, Nanjing, Shenyang, Xiamen, and other cities [70]. On May 16, the songs 《[告白气球](https://baike.baidu.com/item/%E5%91%8A%E7%99%BD%E6%B0%94%E7%90%83/19713859?fromModule=lemma_inlink)》 and 《[床边故事](https://baike.baidu.com/item/%E5%BA%8A%E8%BE%B9%E6%95%85%E4%BA%8B/19710370?fromModule=lemma_inlink)》 and the album 《周杰伦的床边故事》 earned nominations at the [28th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC28%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/20804578?fromModule=lemma_inlink) for Song of the Year, Best Music Video, and Best Mandarin Male Singer, respectively [235]. On June 4, he won Best Male Singer of the Year at the Hito awards. He then joined the original music show 《[中国新歌声第二季](https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E6%96%B0%E6%AD%8C%E5%A3%B0%E7%AC%AC%E4%BA%8C%E5%AD%A3/20128840?fromModule=lemma_inlink)》 as a coach [71]. On August 9, his album 《周杰伦的床边故事》 won Best Mandarin Album of the Year at the [华语金曲奖](https://baike.baidu.com/item/%E5%8D%8E%E8%AF%AD%E9%87%91%E6%9B%B2%E5%A5%96/2477095?fromModule=lemma_inlink) [72].

On January 6, 2018, he played the opening show of the "地表最强2" World Tour in Singapore [73]. On January 18, he released the single 《[等你下课](https://baike.baidu.com/item/%E7%AD%89%E4%BD%A0%E4%B8%8B%E8%AF%BE/22344815?fromModule=lemma_inlink)》, for which he wrote both the lyrics and the music [250]. The song is performed by Jay Chou together with [杨瑞代](https://baike.baidu.com/item/%E6%9D%A8%E7%91%9E%E4%BB%A3/1538482?fromModule=lemma_inlink) [74]. On February 15, at the [2018 CCTV Spring Festival Gala](https://baike.baidu.com/item/2018%E5%B9%B4%E4%B8%AD%E5%A4%AE%E7%94%B5%E8%A7%86%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/20848218?fromModule=lemma_inlink), he performed a magic act and the song 《[告白气球](https://baike.baidu.com/item/%E5%91%8A%E7%99%BD%E6%B0%94%E7%90%83/22388056?fromModule=lemma_inlink)》 with [蔡威泽](https://baike.baidu.com/item/%E8%94%A1%E5%A8%81%E6%B3%BD/20863889?fromModule=lemma_inlink), and the act ranked first among the gala's Top 10 programs by viewership [75-76]. On May 15, he released the self-written single 《[不爱我就拉倒](https://baike.baidu.com/item/%E4%B8%8D%E7%88%B1%E6%88%91%E5%B0%B1%E6%8B%89%E5%80%92/22490709?fromModule=lemma_inlink)》 [77] [346]. On November 21, he joined the cast of the film 《[极限特工4](https://baike.baidu.com/item/%E6%9E%81%E9%99%90%E7%89%B9%E5%B7%A54/20901306?fromModule=lemma_inlink)》 (*xXx 4*), directed by [D. J. Caruso](https://baike.baidu.com/item/D%C2%B7J%C2%B7%E5%8D%A1%E5%8D%A2%E7%B4%A2/16013808?fromModule=lemma_inlink) [105].

On February 9, 2019, he held a solo concert in Las Vegas [78]. On July 24, he announced that the "嘉年华" World Tour would launch in October [79]. The tour celebrated the 20th anniversary of his debut [80]. On September 16, he released the single 《[说好不哭](https://baike.baidu.com/item/%E8%AF%B4%E5%A5%BD%E4%B8%8D%E5%93%AD/23748447?fromModule=lemma_inlink)》, a duet with [陈信宏](https://baike.baidu.com/item/%E9%99%88%E4%BF%A1%E5%AE%8F/334?fromModule=lemma_inlink) [355], with lyrics by [方文山](https://baike.baidu.com/item/%E6%96%B9%E6%96%87%E5%B1%B1/135622?fromModule=lemma_inlink) [81]. On October 17, he played the opening show of the [Jay Chou "嘉年华" World Tour](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E2%80%9C%E5%98%89%E5%B9%B4%E5%8D%8E%E2%80%9D%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/62969657?fromModule=lemma_inlink) in Shanghai [80]. On November 1, he released a live album from the "地表最强" World Tour [82]. On December 15, 《[我是如此相信](https://baike.baidu.com/item/%E6%88%91%E6%98%AF%E5%A6%82%E6%AD%A4%E7%9B%B8%E4%BF%A1/24194094?fromModule=lemma_inlink)》, the theme song he sang for the film 《[天·火](https://baike.baidu.com/item/%E5%A4%A9%C2%B7%E7%81%AB/23375274?fromModule=lemma_inlink)》, was released [84].

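On January 10 and 11, 2020, he held two "嘉年华" World Tour concerts at the [National Stadium, Singapore](https://baike.baidu.com/item/%E6%96%B0%E5%8A%A0%E5%9D%A1%E5%9B%BD%E5%AE%B6%E4%BD%93%E8%82%B2%E5%9C%BA/8820507?fromModule=lemma_inlink) [85]. On March 21, he served as the initiator of 《[周游记](https://baike.baidu.com/item/%E5%91%A8%E6%B8%B8%E8%AE%B0/22427755?fromModule=lemma_inlink)》, a global outdoor lifestyle and culture reality show on Zhejiang Satellite TV [86]. On May 29, his first Chinese-language social media account opened on Kuaishou [267]. On June 12, he released the single 《[Mojito](https://baike.baidu.com/item/Mojito/50474451?fromModule=lemma_inlink)》 [88] [249]. On July 26, he made his livestreaming debut on Kuaishou, which drew more than 68 million views within half an hour [268]. In October, he produced and made a special appearance in the racing film 《[叱咤风云](https://baike.baidu.com/item/%E5%8F%B1%E5%92%A4%E9%A3%8E%E4%BA%91/22756550?fromModule=lemma_inlink)》 [106-107].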

On January 29, 2021, he won Best Male Singer on the [中国歌曲TOP排行榜](https://baike.baidu.com/item/%E4%B8%AD%E5%9B%BD%E6%AD%8C%E6%9B%B2TOP%E6%8E%92%E8%A1%8C%E6%A6%9C/53567645?fromModule=lemma_inlink). On February 12, he performed 《Mojito》 in a remotely recorded ("cloud recording") segment at the [2021 CMG Spring Festival Gala](https://baike.baidu.com/item/2021%E5%B9%B4%E4%B8%AD%E5%A4%AE%E5%B9%BF%E6%92%AD%E7%94%B5%E8%A7%86%E6%80%BB%E5%8F%B0%E6%98%A5%E8%8A%82%E8%81%94%E6%AC%A2%E6%99%9A%E4%BC%9A/23312983?fromModule=lemma_inlink) [89]. Also on February 12, his "既来之,则乐之" sing-and-chat session went live on Kuaishou [269]. On May 12, the single 《Mojito》 earned him a Best Single Producer nomination at the [32nd Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC32%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/56977769?fromModule=lemma_inlink) [240].

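On May 20 and 21, 2022, Jay Chou's online concert-replay series "奇迹现场重映计划" began streaming [259]. On July 6, more than 5.68 million people had pre-registered on QQ Music for the album 《[最伟大的作品](https://baike.baidu.com/item/%E6%9C%80%E4%BC%9F%E5%A4%A7%E7%9A%84%E4%BD%9C%E5%93%81/61539892?fromModule=lemma_inlink)》 [263]. The same day, the music video for the album's title single premiered online [262] [264]. On July 8, pre-sales of 《最伟大的作品》 began [265], and within eight hours pre-sales on QQ Music, [Migu Music](https://baike.baidu.com/item/%E5%92%AA%E5%92%95%E9%9F%B3%E4%B9%90/4539596?fromModule=lemma_inlink), and other platforms exceeded 30 million yuan [266]. On July 15, he officially released his 15th studio album, 《[最伟大的作品](https://baike.baidu.com/item/%E6%9C%80%E4%BC%9F%E5%A4%A7%E7%9A%84%E4%BD%9C%E5%93%81/61539892?fromModule=lemma_inlink)》 [261], and its total sales passed 100 million within an hour of release [270]. By 5 p.m. that day, the album had sold more than 5 million copies across the four major music platforms, with revenue above 150 million yuan [272]. On July 18, he held an exclusive livestream on Kuaishou that drew a cumulative 110 million viewers, with peak concurrent viewership above 6.54 million [273]. In September, he took part in the 2022 联盟嘉年华 [274]. On November 19, he held an online fan meeting ("哥友会") via a Kuaishou livestream [277-278] [280], his first online fan meeting [276]. During the stream he sang five songs, including 《[还在流浪](https://baike.baidu.com/item/%E8%BF%98%E5%9C%A8%E6%B5%81%E6%B5%AA/61707897?fromModule=lemma_inlink)》 and 《[半岛铁盒](https://baike.baidu.com/item/%E5%8D%8A%E5%B2%9B%E9%93%81%E7%9B%92/2268287?fromModule=lemma_inlink)》 [279]. On December 16, he appeared at the 动感地带 World Cup Music Gala, where he performed 《我是如此相信》 and 《[安静](https://baike.baidu.com/item/%E5%AE%89%E9%9D%99/2940419?fromModule=lemma_inlink)》 [282] [284].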

In March 2023, his album 《[最伟大的作品](https://baike.baidu.com/item/%E6%9C%80%E4%BC%9F%E5%A4%A7%E7%9A%84%E4%BD%9C%E5%93%81/61539892?fromModule=lemma_inlink)》 topped the "Global Album Sales Chart 2022" published by the [International Federation of the Phonographic Industry](https://baike.baidu.com/item/%E5%9B%BD%E9%99%85%E5%94%B1%E7%89%87%E4%B8%9A%E5%8D%8F%E4%BC%9A/1486316?fromModule=lemma_inlink) (IFPI), which made him the first Chinese-language singer to top that chart [287]. On May 16, his song 《[最伟大的作品](https://baike.baidu.com/item/%E6%9C%80%E4%BC%9F%E5%A4%A7%E7%9A%84%E4%BD%9C%E5%93%81/61702109?fromModule=lemma_inlink)》 was nominated for Song of the Year at the [34th Golden Melody Awards](https://baike.baidu.com/item/%E7%AC%AC34%E5%B1%8A%E5%8F%B0%E6%B9%BE%E9%87%91%E6%9B%B2%E5%A5%96/62736300?fromModule=lemma_inlink) [288]. From August 17 to 20, he held "嘉年华" World Tour concerts in Hohhot [349]. On November 25, 《[周游记2](https://baike.baidu.com/item/%E5%91%A8%E6%B8%B8%E8%AE%B02/53845056?fromModule=lemma_inlink)》, an outdoor interactive reality show he took part in, premiered on Zhejiang Satellite TV [312]. On December 6, [Universal Music Group](https://baike.baidu.com/item/%E7%8E%AF%E7%90%83%E9%9F%B3%E4%B9%90%E9%9B%86%E5%9B%A2/1964357?fromModule=lemma_inlink) entered a strategic partnership with Jay Chou and his management company, JVR Music (杰威尔音乐) [318]. On December 9, he held a "嘉年华" World Tour concert at [Rajamangala National Stadium](https://baike.baidu.com/item/%E6%8B%89%E5%8A%A0%E6%9B%BC%E5%8A%A0%E6%8B%89%E5%9B%BD%E5%AE%B6%E4%BD%93%E8%82%B2%E5%9C%BA/6136556?fromModule=lemma_inlink) in Bangkok, Thailand [324]. On December 21, he released the single 《[圣诞星](https://baike.baidu.com/item/%E5%9C%A3%E8%AF%9E%E6%98%9F/63869869?fromModule=lemma_inlink)》 [345].

In April 2024, 《说唱梦工厂》, a rap reality show produced by 坚果工作室, held a media set visit in Beijing, with Jay Chou among its main guests [356]. On May 23, 《说唱梦工厂》, in which he appears, premiered [358].

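## 1.3 Personal Life

### 1.3.1 Family

Jay Chou's father, [周耀中](https://baike.baidu.com/item/%E5%91%A8%E8%80%80%E4%B8%AD/4326853?fromModule=lemma_inlink), was a biology teacher at Tamkang Senior High School [123]. His mother, [叶惠美](https://baike.baidu.com/item/%E5%8F%B6%E6%83%A0%E7%BE%8E/2325933?fromModule=lemma_inlink), was an art teacher at the same school. Chou's relationship with his mother is like that of a younger brother and an older sister. He has written several songs for her, such as 《[听妈妈的话](https://baike.baidu.com/item/%E5%90%AC%E5%A6%88%E5%A6%88%E7%9A%84%E8%AF%9D/79604?fromModule=lemma_inlink)》, and he even named an album after her (《叶惠美》). Because his parents divorced, Chou rarely mentioned his father. Later, at the urging of his mother and his maternal grandmother, [叶詹阿妹](https://baike.baidu.com/item/%E5%8F%B6%E8%A9%B9%E9%98%BF%E5%A6%B9/926323?fromModule=lemma_inlink), he reconciled with his father.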

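### 1.3.2 Relationships

In late 2004, Jay Chou began dating [侯佩岑](https://baike.baidu.com/item/%E4%BE%AF%E4%BD%A9%E5%B2%91/257126?fromModule=lemma_inlink). The two went public with the relationship in 2005 and broke up in May 2006 [237-238].

On November 17, 2014, Jay Chou made his relationship with [昆凌](https://baike.baidu.com/item/%E6%98%86%E5%87%8C/1545451?fromModule=lemma_inlink) public [124]. On January 17, 2015, Chou and 昆凌 held their wedding in the UK [125]. On February 9, they held an outdoor poolside wedding banquet in Taipei, and on March 9 they held a family wedding in Australia [126]. On July 10, their daughter [Hathaway](https://baike.baidu.com/item/Hathaway/18718544?fromModule=lemma_inlink) was born [127-128]. On February 13, 2017, Chou announced that his wife was pregnant with their second child [129], and their son [Romeo](https://baike.baidu.com/item/Romeo/22180208?fromModule=lemma_inlink) was born on June 8 [130]. On January 19, 2022, Chou announced that 昆凌 was pregnant with their third child [256]. On April 22, 昆凌 said the third child would be a girl [258], and their daughter [Jacinda](https://baike.baidu.com/item/Jacinda/61280507?fromModule=lemma_inlink) was born on May 6 [281].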

## 1.4 Major Works

### 1.4.1 Singles

| **Title** | **Release date** | **Notes** |
|---|---|---|
| [圣诞星](https://baike.baidu.com/item/%E5%9C%A3%E8%AF%9E%E6%98%9F/63869869?fromModule=lemma_inlink) | 2023-12-21 | - |
| [Mojito](https://baike.baidu.com/item/Mojito/50474451?fromModule=lemma_inlink) | 2020-06-12 | Single [131] |
| [我是如此相信](https://baike.baidu.com/item/%E6%88%91%E6%98%AF%E5%A6%82%E6%AD%A4%E7%9B%B8%E4%BF%A1/24194094?fromModule=lemma_inlink) | 2019-12-15 | Theme song of the film 《天火》 [83] |
| [说好不哭](https://baike.baidu.com/item/%E8%AF%B4%E5%A5%BD%E4%B8%8D%E5%93%AD/23748447?fromModule=lemma_inlink) | 2019-09-16 | With 阿信 of 五月天 (Mayday) |
| [不爱我就拉倒](https://baike.baidu.com/item/%E4%B8%8D%E7%88%B1%E6%88%91%E5%B0%B1%E6%8B%89%E5%80%92/22490709?fromModule=lemma_inlink) | 2018-05-15 | - |
| [等你下课](https://baike.baidu.com/item/%E7%AD%89%E4%BD%A0%E4%B8%8B%E8%AF%BE/22344815?fromModule=lemma_inlink) | 2018-01-18 | Featuring vocals by 杨瑞代 |
| [英雄](https://baike.baidu.com/item/%E8%8B%B1%E9%9B%84/19459565?fromModule=lemma_inlink) | 2016-03-24 | Theme song of the game *League of Legends* |
| [Try](https://baike.baidu.com/item/Try/19208892?fromModule=lemma_inlink) | 2016-01-06 | Duet with 派伟俊; theme song of the film *Kung Fu Panda 3* |
| [婚礼曲](https://baike.baidu.com/item/%E5%A9%9A%E7%A4%BC%E6%9B%B2/22913856?fromModule=lemma_inlink) | 2015 | Instrumental |
| [夜店咖](https://baike.baidu.com/item/%E5%A4%9C%E5%BA%97%E5%92%96/16182672?fromModule=lemma_inlink) | 2014-11-25 | Duet with 嘻游记 |

### 1.4.2 Songs Written for Other Artists

| Title | Role | Performer | Album | Release date |
|---|---|---|---|---|
| [AMIGO](https://baike.baidu.com/item/AMIGO/62130287?fromModule=lemma_inlink) [275] | Composer | 玖壹壹 | - | 2022-10-25 |
| [叱咤风云](https://baike.baidu.com/item/%E5%8F%B1%E5%92%A4%E9%A3%8E%E4%BA%91/55751566?fromModule=lemma_inlink) | Composer, electric guitar | 范逸臣, 柯有伦 | - | 2021-01-10 |
| [等风雨经过](https://baike.baidu.com/item/%E7%AD%89%E9%A3%8E%E9%9B%A8%E7%BB%8F%E8%BF%87/24436567?fromModule=lemma_inlink) | Composer | 张学友 | - | 2020-02-23 |
| [一路上小心](https://baike.baidu.com/item/%E4%B8%80%E8%B7%AF%E4%B8%8A%E5%B0%8F%E5%BF%83/9221406?fromModule=lemma_inlink) | Composer | 吴宗宪 | - | 2019-05-17 |
| [谢谢一辈子](https://baike.baidu.com/item/%E8%B0%A2%E8%B0%A2%E4%B8%80%E8%BE%88%E5%AD%90/22823424?fromModule=lemma_inlink) | Composer | 成龙 | [我还是成龙](https://baike.baidu.com/item/%E6%88%91%E8%BF%98%E6%98%AF%E6%88%90%E9%BE%99/0?fromModule=lemma_inlink) | 2018-12-20 |
| [连名带姓](https://baike.baidu.com/item/%E8%BF%9E%E5%90%8D%E5%B8%A6%E5%A7%93/22238578?fromModule=lemma_inlink) | Composer | [张惠妹](https://baike.baidu.com/item/%E5%BC%A0%E6%83%A0%E5%A6%B9/234310?fromModule=lemma_inlink) | [偷故事的人](https://baike.baidu.com/item/%E5%81%B7%E6%95%85%E4%BA%8B%E7%9A%84%E4%BA%BA/0?fromModule=lemma_inlink) | 2017-12-12 |
| [时光之墟](https://baike.baidu.com/item/%E6%97%B6%E5%85%89%E4%B9%8B%E5%A2%9F/22093813?fromModule=lemma_inlink) | Composer | [许魏洲](https://baike.baidu.com/item/%E8%AE%B8%E9%AD%8F%E6%B4%B2/18762132?fromModule=lemma_inlink) | [时光之墟](https://baike.baidu.com/item/%E6%97%B6%E5%85%89%E4%B9%8B%E5%A2%9F/0?fromModule=lemma_inlink) | 2017-08-25 |
| [超猛](https://baike.baidu.com/item/%E8%B6%85%E7%8C%9B/19543891?fromModule=lemma_inlink) | Composer | 草蜢, MATZKA | [Music Walker](https://baike.baidu.com/item/Music%20Walker/0?fromModule=lemma_inlink) | 2016-04-22 |
| [东山再起](https://baike.baidu.com/item/%E4%B8%9C%E5%B1%B1%E5%86%8D%E8%B5%B7/19208906?fromModule=lemma_inlink) | Composer | [南拳妈妈](https://baike.baidu.com/item/%E5%8D%97%E6%8B%B3%E5%A6%88%E5%A6%88/167625?fromModule=lemma_inlink) | [拳新出击](https://baike.baidu.com/item/%E6%8B%B3%E6%96%B0%E5%87%BA%E5%87%BB/19662007?fromModule=lemma_inlink) | 2016-04-20 |
| [剩下的盛夏](https://baike.baidu.com/item/%E5%89%A9%E4%B8%8B%E7%9A%84%E7%9B%9B%E5%A4%8F/18534130?fromModule=lemma_inlink) | Composer | TFBOYS, 嘻游记 | [大梦想家](https://baike.baidu.com/item/%E5%A4%A7%E6%A2%A6%E6%83%B3%E5%AE%B6/0?fromModule=lemma_inlink) | 2015-08-28 |

### 1.4.3 Concert Tours

| **Dates** | **Tour** | **Total shows** |
|:---|:---|:---|
| 2019-10-17 | "嘉年华" World Tour | |
| 2016-06-30 to 2019-05 [142] | [Jay Chou "地表最强" World Tour](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A6%E2%80%9C%E5%9C%B0%E8%A1%A8%E6%9C%80%E5%BC%BA%E2%80%9D%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/53069809?fromModule=lemma_inlink) | 120 |
| 2013-05-17 to 2015-12-20 | ["魔天伦" World Tour](https://baike.baidu.com/item/%E9%AD%94%E5%A4%A9%E4%BC%A6%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/24146025?fromModule=lemma_inlink) | 76 |
| 2010-06-11 to 2011-12-18 | [Jay Chou 2010 "超时代" World Tour](https://baike.baidu.com/item/%E5%91%A8%E6%9D%B0%E4%BC%A62010%E8%B6%85%E6%97%B6%E4%BB%A3%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/3238718?fromModule=lemma_inlink) | 46 |
| 2007-11-10 to 2009-08-28 | [2007 World Tour](https://baike.baidu.com/item/2007%E4%B8%96%E7%95%8C%E5%B7%A1%E5%9B%9E%E6%BC%94%E5%94%B1%E4%BC%9A/12678549?fromModule=lemma_inlink) | 42 |
| 2004-10-02 to 2006-02-06 | ["无与伦比" Concert Tour](https://baike.baidu.com/item/%E6%97%A0%E4%B8%8E%E4%BC%A6%E6%AF%94%E6%BC%94%E5%94%B1%E4%BC%9A/1655166?fromModule=lemma_inlink) | 24 |
| 2002-09-28 to 2004-01-03 | [THEONE Concert Tour](https://baike.baidu.com/item/THEONE%E6%BC%94%E5%94%B1%E4%BC%9A/1543469?fromModule=lemma_inlink) | 16 |
| 2001-11-03 to 2002-02-10 | "范特西" Concert Tour | 5 |

## 1.5 Public Activities

### 1.5.1 Ambassador Roles

| **Year** | **Role** |
|:---|:---|
| 2005 | Environmental protection ambassador [165] |
| 2010 | Campus anti-smoking ambassador [190] |
| 2011 | Henan youth entrepreneurship ambassador [191] |
| 2013 | 蒲公英 (Dandelion) Dream ambassador [192] |
| 2014 | China anti-drug publicity ambassador |
| 2014 | Promotional ambassador for the Mission Hills World Celebrity Pro-Am (观澜湖世界明星赛) [193] |
| 2016 | WildAid global ambassador [194] |

|

{

"source": "OpenSPG/KAG",

"title": "tests/unit/builder/data/test_markdown.md",

"url": "https://github.com/OpenSPG/KAG/blob/master/tests/unit/builder/data/test_markdown.md",

"date": "2024-09-21T13:56:44",

"stars": 5456,

"description": "KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs. It is used to build logical reasoning and factual Q&A solutions for professional domain knowledge bases. It can effectively overcome the shortcomings of the traditional RAG vector similarity calculation model.",

"file_size": 59840

}

|

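Role information table: [aml.adm_cust_role_dd](https://www.baidu.com/assets/catalog/detail/table/adm_cust_role_dd/info)

### Background

This table explains why a single customer's ip_id (i.e., cust_id, which begins with 3333) maps to multiple ip_role_id values (i.e., role_id, which also begins with 3333). In business terms, once a customer opens an account, a separate role ID is generated for each business scenario. For example, a customer who has both a settlement account and a cloud-funds merchant account will have a personal role and a merchant role. The two roles have different role types and different role IDs.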

### Key fields

#### role_id (role ID)

Also begins with 3333. Its relationship to cust_id is many-to-one: one customer can have multiple role_ids.

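#### role_type (role type)

Role types mainly include member, merchant, and associated roles. Member and merchant are the ones most commonly used.

The corresponding descriptions are stored in the field role_type_desc.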

#### cust_id (customer ID)

Has a one-to-many relationship with role_id.

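#### enable_status (enabled status)

The enabled/disabled state in this field applies to the role_id in the row. The corresponding description is stored in the field enable_status_desc.

*Note: each customer (cust_id) also has an enabled/disabled state at the customer level. That state is not stored in this table and is unrelated to this field, so pick the field that matches the level (role or customer) you need to check.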
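The fields above describe a one-to-many cust_id → role_id mapping, in which enable_status is tracked per role. The small standard-library Python sketch below makes that concrete. It works on in-memory rows rather than querying aml.adm_cust_role_dd. The IDs, the "member"/"merchant" descriptions, and the "enabled" label are invented placeholders, because the actual code values are not documented here. `roles_by_customer` and `enabled_roles` are hypothetical helper names.

```python
from collections import defaultdict

# Hypothetical rows shaped like aml.adm_cust_role_dd; every value is a placeholder.
ROWS = [
    {"cust_id": "3333000000000001", "role_id": "3333000000000101",
     "role_type_desc": "member", "enable_status_desc": "enabled"},
    {"cust_id": "3333000000000001", "role_id": "3333000000000102",
     "role_type_desc": "merchant", "enable_status_desc": "disabled"},
    {"cust_id": "3333000000000002", "role_id": "3333000000000201",
     "role_type_desc": "member", "enable_status_desc": "enabled"},
]


def roles_by_customer(rows):
    """Group role_ids under their cust_id (one customer -> many roles)."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["cust_id"]].append(row["role_id"])
    return dict(grouped)


def enabled_roles(rows, enabled_label="enabled"):
    """Return role_ids whose role-level status matches enabled_label.

    The customer-level status lives in a different table and is not
    consulted here.
    """
    return [r["role_id"] for r in rows if r["enable_status_desc"] == enabled_label]


if __name__ == "__main__":
    print(roles_by_customer(ROWS))
    print(enabled_roles(ROWS))
```

Customer 3333000000000001 keeps two roles even though only one of them is enabled. This reflects the note above: a disabled role says nothing about whether the customer is disabled.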

#### reg_from (role registration source)

Records the customer's registration source. It is rarely used, and reg_from_desc is empty.

#### lifecycle_status (role lifecycle)

Records the lifecycle stage of the customer role. It is rarely used, and lifecycle_status_desc is empty.

|

{

"source": "OpenSPG/KAG",

"title": "tests/unit/builder/data/角色信息表说明.md",

"url": "https://github.com/OpenSPG/KAG/blob/master/tests/unit/builder/data/角色信息表说明.md",

"date": "2024-09-21T13:56:44",

"stars": 5456,

"description": "KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs. It is used to build logical reasoning and factual Q&A solutions for professional domain knowledge bases. It can effectively overcome the shortcomings of the traditional RAG vector similarity calculation model.",

"file_size": 947

}

|

# Introduction to the Enterprise Supply Chain Data

[English](./README.md) |

[简体中文](./README_cn.md)

## 1. Directory Structure

```text
supplychain
├── builder
│   ├── data
│   │   ├── Company.csv
│   │   ├── CompanyUpdate.csv
│   │   ├── Company_fundTrans_Company.csv
│   │   ├── Index.csv
│   │   ├── Industry.csv
│   │   ├── Person.csv
│   │   ├── Product.csv
│   │   ├── ProductChainEvent.csv
│   │   ├── TaxOfCompanyEvent.csv
│   │   ├── TaxOfProdEvent.csv
│   │   └── Trend.csv
```

Each table is introduced below with a sample row.

## 2. The company instances (Company.csv)

```text
id,name,products
CSF0000002238,三角*胎股*限公司,"轮胎,全钢子午线轮胎"
```

* ``id``: The unique id of the company

* ``name``: Name of the company

* ``products``: Products produced by the company, separated by commas
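KAG's builder is expected to expand the quoted `products` field itself. To inspect the raw file outside of KAG, the following sketch shows how that field turns into a list. It uses only the Python standard library and is not KAG's own loader. `read_companies` is a hypothetical helper name.

```python
import csv
import io

# Sample row copied from the Company.csv excerpt above.
SAMPLE = 'id,name,products\nCSF0000002238,三角*胎股*限公司,"轮胎,全钢子午线轮胎"\n'


def read_companies(text):
    """Parse Company.csv text and split the quoted products field on commas."""
    companies = []
    for row in csv.DictReader(io.StringIO(text)):
        # csv removes the surrounding quotes; the inner commas separate products.
        products = [p.strip() for p in row["products"].split(",") if p.strip()]
        companies.append({"id": row["id"], "name": row["name"], "products": products})
    return companies


if __name__ == "__main__":
    for company in read_companies(SAMPLE):
        print(company["id"], company["products"])
```

The quotes matter. Without them the CSV reader would treat each product as a separate column.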

## 3. Fund transfers between companies (Company_fundTrans_Company.csv)

```text
src,dst,transDate,transAmt
CSF0000002227,CSF0000001579,20230506,73
```

* ``src``: The source of the fund transfer

* ``dst``: The destination of the fund transfer

* ``transDate``: The date of the fund transfer

* ``transAmt``: The total amount of the fund transfer
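The sample suggests that `transDate` is a compact YYYYMMDD string. This is an assumption based on the single row shown. The data description does not state the unit of `transAmt`, so the sketch keeps it as a plain number. It parses the rows and totals outgoing amounts per company. `read_transfers` and `outflow_by_company` are hypothetical helpers.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime

# Sample row copied from the Company_fundTrans_Company.csv excerpt above.
SAMPLE = "src,dst,transDate,transAmt\nCSF0000002227,CSF0000001579,20230506,73\n"


def read_transfers(text):
    """Parse transfer rows; transDate is assumed to be YYYYMMDD."""
    transfers = []
    for row in csv.DictReader(io.StringIO(text)):
        transfers.append({
            "src": row["src"],
            "dst": row["dst"],
            "date": datetime.strptime(row["transDate"], "%Y%m%d").date(),
            # The unit/currency of transAmt is not stated, so keep the raw number.
            "amount": float(row["transAmt"]),
        })
    return transfers


def outflow_by_company(transfers):
    """Total amount transferred out by each source company."""
    totals = defaultdict(float)
    for t in transfers:
        totals[t["src"]] += t["amount"]
    return dict(totals)


if __name__ == "__main__":
    print(outflow_by_company(read_transfers(SAMPLE)))
```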

## 4. The Person instances (Person.csv)

```text
id,name,age,legalRep
0,路**,63,"新疆*花*股*限公司,三角*胎股*限公司,传化*联*份限公司"
```

* ``id``: The unique id of the person

* ``name``: Name of the person

* ``age``: Age of the person

* ``legalRep``: Company list with the person as the legal representative, separated by commas

## 5. The industry concepts (Industry.csv)

```text
fullname
能源
能源-能源
能源-能源-能源设备与服务
能源-能源-能源设备与服务-能源设备与服务
能源-能源-石油、天然气与消费用燃料
```

Each industry concept is represented only by its name, in which dashes separate it from its higher-level concepts. For example, the higher-level concept of "能源-能源-能源设备与服务" is "能源-能源", and the higher-level concept of "能源-能源-能源设备与服务-能源设备与服务" is "能源-能源-能源设备与服务".
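The naming rule above means that a concept's parent is its name with the last dash-separated segment removed. A minimal sketch of that rule follows. It assumes that no individual segment contains an ASCII hyphen of its own, which holds for the sample rows. `parent_concept` and `ancestors` are hypothetical helpers and not part of KAG.

```python
def parent_concept(fullname):
    """Return the higher-level concept of a dash-separated name, or None for a root."""
    head, sep, _ = fullname.rpartition("-")
    return head if sep else None


def ancestors(fullname):
    """Walk up the hierarchy, nearest higher-level concept first."""
    chain = []
    current = parent_concept(fullname)
    while current is not None:
        chain.append(current)
        current = parent_concept(current)
    return chain


if __name__ == "__main__":
    print(ancestors("能源-能源-能源设备与服务-能源设备与服务"))
```

Because "石油、天然气与消费用燃料" uses the Chinese enumeration comma rather than a hyphen, it stays a single segment, and its parent is "能源-能源".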

## 6. The product concepts (Product.csv)

```text
fullname,belongToIndustry,hasSupplyChain
商品化工-橡胶-合成橡胶-顺丁橡胶,原材料-原材料-化学制品-商品化工,"化工商品贸易-化工产品贸易-橡塑制品贸易,轮胎与橡胶-轮胎,轮胎与橡胶-轮胎-特种轮胎,轮胎与橡胶-轮胎-工程轮胎,轮胎与橡胶-轮胎-斜交轮胎,轮胎与橡胶-轮胎-全钢子午线轮胎,轮胎与橡胶-轮胎-半钢子午线轮胎"
```

* ``fullname``: The name of the product, with dashes separating it from its higher-level concepts.

* ``belongToIndustry``: The industry the product belongs to. In this example, "顺丁橡胶" (butadiene rubber) belongs to "商品化工" (commodity chemicals).

* ``hasSupplyChain``: The downstream industries of the product, separated by commas. For example, the downstream industries of "顺丁橡胶" include "橡塑制品贸易" (rubber and plastic products trade), "轮胎" (tires), and others.
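One way to read a Product.csv row is as a set of subject-predicate-object triples. The sketch below makes that reading explicit. The `belongToIndustry` and `hasSupplyChain` predicates are simply the column names. The `isA` predicate is borrowed from the `hypernymPredicate: isA` declared for `Product` in the schema example, and it is derived from the dashed name. How KAG actually stores these relations is not shown here. The sample keeps only the first two downstream entries of the row above, and `product_triples` is a hypothetical helper.

```python
import csv
import io

# The Product.csv sample above, with hasSupplyChain shortened to two entries.
SAMPLE = (
    "fullname,belongToIndustry,hasSupplyChain\n"
    "商品化工-橡胶-合成橡胶-顺丁橡胶,原材料-原材料-化学制品-商品化工,"
    '"化工商品贸易-化工产品贸易-橡塑制品贸易,轮胎与橡胶-轮胎"\n'
)


def product_triples(text):
    """Turn each Product.csv row into (subject, predicate, object) triples."""
    triples = []
    for row in csv.DictReader(io.StringIO(text)):
        name = row["fullname"]
        parent, sep, _ = name.rpartition("-")
        if sep:  # a dash means there is a higher-level product concept
            triples.append((name, "isA", parent))
        triples.append((name, "belongToIndustry", row["belongToIndustry"]))
        for downstream in row["hasSupplyChain"].split(","):
            if downstream.strip():
                triples.append((name, "hasSupplyChain", downstream.strip()))
    return triples


if __name__ == "__main__":
    for triple in product_triples(SAMPLE):
        print(triple)
```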

## 7. The industry chain events (ProductChainEvent.csv)

```text
id,name,subject,index,trend
1,顺丁橡胶成本上涨,商品化工-橡胶-合成橡胶-顺丁橡胶,价格,上涨
```

* ``id``: The ID of the event

* ``name``: The name of the event

* ``subject``: The subject of the event. In this example, it is "顺丁橡胶".

* ``index``: The index related to the event. In this example, it is "价格" (price).

* ``trend``: The trend of the event. In this example, it is "上涨" (rising).

## 8. The index concepts (Index.csv) and the trend concepts (Trend.csv)

Index and trend are atomic conceptual categories that can be combined to form industrial chain events and company events.

* index: The index related to the event, with possible values of "价格" (price), "成本" (cost) or "利润" (profit).

* trend: The trend of the event, with possible values of "上涨" (rising) or "下跌" (falling).
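The category names in the event categorization files (for example "价格上涨") read as an index concept followed by a trend concept. The minimal sketch below shows that composition. It assumes plain string concatenation over the value domains listed above, since the actual KAG mapping is not described here. `event_category` is a hypothetical helper.

```python
# Value domains from the Index.csv / Trend.csv description above.
INDEXES = {"价格", "成本", "利润"}  # price, cost, profit
TRENDS = {"上涨", "下跌"}  # rising, falling


def event_category(index, trend):
    """Combine an index concept and a trend concept into a category name."""
    if index not in INDEXES:
        raise ValueError(f"unknown index: {index}")
    if trend not in TRENDS:
        raise ValueError(f"unknown trend: {trend}")
    return index + trend


if __name__ == "__main__":
    print(event_category("价格", "上涨"))  # price rising
```

Rejecting values outside the two domains keeps the composed names within the set of combinations the data can actually produce.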

## 9. Event categorization (TaxOfProdEvent.csv, TaxOfCompanyEvent.csv)

Event categorization covers industrial chain events and company events, with the following values:

* Industrial chain event categories: "价格上涨" (price rising).

* Company event categories: "成本上涨" (cost rising), "利润下跌" (profit falling).

|

{

"source": "OpenSPG/KAG",

"title": "kag/examples/supplychain/builder/data/README.md",

"url": "https://github.com/OpenSPG/KAG/blob/master/kag/examples/supplychain/builder/data/README.md",

"date": "2024-09-21T13:56:44",

"stars": 5456,

"description": "KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs. It is used to build logical reasoning and factual Q&A solutions for professional domain knowledge bases. It can effectively overcome the shortcomings of the traditional RAG vector similarity calculation model.",

"file_size": 3647

}

|

# Introduction to the Industry Chain Case Data

[English](./README.md) |

[简体中文](./README_cn.md)

## 1. Data directory

```text
supplychain
├── builder
│   ├── data
│   │   ├── Company.csv
│   │   ├── CompanyUpdate.csv
│   │   ├── Company_fundTrans_Company.csv
│   │   ├── Index.csv
│   │   ├── Industry.csv
│   │   ├── Person.csv
│   │   ├── Product.csv
│   │   ├── ProductChainEvent.csv
│   │   ├── TaxOfCompanyEvent.csv
│   │   ├── TaxOfProdEvent.csv
│   │   └── Trend.csv
```

分别抽样部分数据进行介绍。

## 2. Company data (Company.csv)

```text
id,name,products
CSF0000002238,三角*胎股*限公司,"轮胎,全钢子午线轮胎"
```

* ``id``: The unique ID of the company in the system

* ``name``: The company name

* ``products``: The products the company makes, separated by commas
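Because ``products`` packs several values into one quoted cell, the file needs a real CSV parser rather than a naive split on commas. A minimal standalone sketch using Python's standard `csv` module, for inspecting the file outside the KAG builder; `read_companies` is a hypothetical helper and the sample text is the row shown above.

```python
import csv
import io

# The sample row shown above; pass the contents of Company.csv instead for real data.
SAMPLE = 'id,name,products\nCSF0000002238,三角*胎股*限公司,"轮胎,全钢子午线轮胎"\n'


def read_companies(text: str) -> list:
    """Parse Company.csv text, turning the quoted products cell into a list."""
    companies = []
    for row in csv.DictReader(io.StringIO(text)):
        # csv has already removed the quotes; now split the multi-value cell.
        row["products"] = [p.strip() for p in row["products"].split(",") if p.strip()]
        companies.append(row)
    return companies


companies = read_companies(SAMPLE)
print(companies[0]["products"])  # ['轮胎', '全钢子午线轮胎']
```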

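## 3. Company fund transfers (Company_fundTrans_Company.csv)

```text
src,dst,transDate,transAmt
CSF0000002227,CSF0000001579,20230506,73
```

* ``src``: The company sending the funds

* ``dst``: The company receiving the funds

* ``transDate``: The transfer date, e.g. 20230506

* ``transAmt``: The total transfer amount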
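A sketch of loading the transfer rows with typed fields and totalling them per company pair. It assumes ``transDate`` is in `YYYYMMDD` form (inferred from the sample value `20230506`) and treats ``transAmt`` as a plain number, since this README does not state its unit. `read_transfers` and `total_by_pair` are hypothetical names.

```python
import csv
import io
from collections import defaultdict
from datetime import date, datetime

# The sample row shown above.
SAMPLE = "src,dst,transDate,transAmt\nCSF0000002227,CSF0000001579,20230506,73\n"


def read_transfers(text: str) -> list:
    """Return (src, dst, transDate, transAmt) tuples with a typed date and amount."""
    transfers = []
    for row in csv.DictReader(io.StringIO(text)):
        # Assumption: transDate is YYYYMMDD, inferred from the sample value.
        day = datetime.strptime(row["transDate"], "%Y%m%d").date()
        transfers.append((row["src"], row["dst"], day, float(row["transAmt"])))
    return transfers


def total_by_pair(transfers) -> dict:
    """Sum transAmt for each (src, dst) pair."""
    totals = defaultdict(float)
    for src, dst, _day, amount in transfers:
        totals[(src, dst)] += amount
    return dict(totals)


rows = read_transfers(SAMPLE)
print(rows[0][2])           # 2023-05-06
print(total_by_pair(rows))  # {('CSF0000002227', 'CSF0000001579'): 73.0}
```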

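## 4. Legal representatives (Person.csv)

```text
id,name,age,legalRep
0,路**,63,"新疆*花*股*限公司,三角*胎股*限公司,传化*联*份限公司"
```

* ``id``: The unique identifier of the person in the system

* ``name``: The person's name

* ``age``: The person's age

* ``legalRep``: The names of the companies for which the person is the legal representative, separated by commas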
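``legalRep`` refers to companies by name rather than by ``id``, so linking a person to Company.csv requires a name lookup. A hypothetical sketch that expands each person into (person id, company name) pairs and then resolves names through a name-to-id map. With only the sample rows in this README, just one of the three companies can be resolved.

```python
import csv
import io

# Sample row from Person.csv above.
PERSON_SAMPLE = 'id,name,age,legalRep\n0,路**,63,"新疆*花*股*限公司,三角*胎股*限公司,传化*联*份限公司"\n'
# name -> id, built by hand here from the Company.csv sample row.
COMPANY_IDS = {"三角*胎股*限公司": "CSF0000002238"}


def legal_rep_pairs(text: str) -> list:
    """Expand each comma-separated legalRep cell into (person_id, company_name) pairs."""
    pairs = []
    for row in csv.DictReader(io.StringIO(text)):
        for company in row["legalRep"].split(","):
            if company.strip():
                pairs.append((row["id"], company.strip()))
    return pairs


def resolve(pairs, company_ids):
    """Map company names to ids; names missing from company_ids resolve to None."""
    return [(person, company_ids.get(name)) for person, name in pairs]


pairs = legal_rep_pairs(PERSON_SAMPLE)
print(resolve(pairs, COMPANY_IDS))  # [('0', None), ('0', 'CSF0000002238'), ('0', None)]
```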

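## 5. Industry category concepts (Industry.csv)

```text
fullname
能源
能源-能源
能源-能源-能源设备与服务
能源-能源-能源设备与服务-能源设备与服务
能源-能源-石油、天然气与消费用燃料
```

An industry has only a name. Hyphens separate the levels of the hierarchy, so a name's hypernym (parent concept) is the name with its last segment removed. For example, the hypernym of "能源-能源-能源设备与服务" is "能源-能源", and the hypernym of "能源-能源-能源设备与服务-能源设备与服务" is "能源-能源-能源设备与服务".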
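The hypernym rule above can be written as a small function. This sketch assumes that no single level name contains a hyphen itself, which holds for the samples shown. `hypernym` and `ancestors` are illustrative names. The same rule applies to the product names in Product.csv.

```python
from typing import Optional


def hypernym(fullname: str) -> Optional[str]:
    """Drop the last hyphen-separated segment; top-level names have no hypernym."""
    parent, sep, _ = fullname.rpartition("-")
    return parent if sep else None


def ancestors(fullname: str) -> list:
    """All hypernyms of fullname, nearest first."""
    chain = []
    node = hypernym(fullname)
    while node is not None:
        chain.append(node)
        node = hypernym(node)
    return chain


print(hypernym("能源-能源-能源设备与服务"))  # 能源-能源
print(ancestors("能源-能源-能源设备与服务-能源设备与服务"))
# ['能源-能源-能源设备与服务', '能源-能源', '能源']
```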

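## 6. Product category concepts (Product.csv)

```text
fullname,belongToIndustry,hasSupplyChain
商品化工-橡胶-合成橡胶-顺丁橡胶,原材料-原材料-化学制品-商品化工,"化工商品贸易-化工产品贸易-橡塑制品贸易,轮胎与橡胶-轮胎,轮胎与橡胶-轮胎-特种轮胎,轮胎与橡胶-轮胎-工程轮胎,轮胎与橡胶-轮胎-斜交轮胎,轮胎与橡胶-轮胎-全钢子午线轮胎,轮胎与橡胶-轮胎-半钢子午线轮胎"
```

* ``fullname``: The product name. As with industries, hyphens separate the hierarchy levels

* ``belongToIndustry``: The industry the product belongs to. In this example, 顺丁橡胶 (butadiene rubber) belongs to 商品化工 (commodity chemicals)

* ``hasSupplyChain``: The product's downstream industries. For example, the downstream industries of 顺丁橡胶 include 橡塑制品贸易 (rubber and plastic products trade) and 轮胎 (tires)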
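A sketch that reads Product.csv into a dictionary keyed by ``fullname`` and splits the quoted ``hasSupplyChain`` cell into a list. `read_products` is a hypothetical helper, and the sample string is the row shown above. The last hyphen-separated segment of ``belongToIndustry`` is the short industry name used in the description (商品化工).

```python
import csv
import io

# The sample row shown above, split across several string literals for readability.
SAMPLE = (
    "fullname,belongToIndustry,hasSupplyChain\n"
    "商品化工-橡胶-合成橡胶-顺丁橡胶,原材料-原材料-化学制品-商品化工,"
    '"化工商品贸易-化工产品贸易-橡塑制品贸易,轮胎与橡胶-轮胎,轮胎与橡胶-轮胎-特种轮胎,'
    "轮胎与橡胶-轮胎-工程轮胎,轮胎与橡胶-轮胎-斜交轮胎,轮胎与橡胶-轮胎-全钢子午线轮胎,"
    '轮胎与橡胶-轮胎-半钢子午线轮胎"\n'
)


def read_products(text: str) -> dict:
    """Map each product fullname to its industry and its list of downstream entries."""
    products = {}
    for row in csv.DictReader(io.StringIO(text)):
        products[row["fullname"]] = {
            "industry": row["belongToIndustry"],
            "downstream": [d.strip() for d in row["hasSupplyChain"].split(",") if d.strip()],
        }
    return products


products = read_products(SAMPLE)
rubber = products["商品化工-橡胶-合成橡胶-顺丁橡胶"]
print(rubber["industry"].rsplit("-", 1)[-1])  # 商品化工
print(len(rubber["downstream"]))              # 7
```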

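## 7. Industry chain events (ProductChainEvent.csv)

```text
id,name,subject,index,trend
1,顺丁橡胶成本上涨,商品化工-橡胶-合成橡胶-顺丁橡胶,价格,上涨
```

* ``id``: The ID of the event

* ``name``: The name of the event

* ``subject``: The subject of the event. In this example, it is 顺丁橡胶 (butadiene rubber)

* ``index``: The index. In this example, it is 价格 (price)

* ``trend``: The trend. In this example, it is 上涨 (rising)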

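## 8. Indexes (Index.csv) and trends (Trend.csv)

Indexes and trends are atomic concept categories that can be combined to form industry chain events and company events.

* Index, with possible values: 价格 (price), 成本 (cost), 利润 (profit)

* Trend, with possible values: 上涨 (rising), 下跌 (falling)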

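## 9. Event categorization (TaxOfProdEvent.csv, TaxOfCompanyEvent.csv)

Event categorization covers both industry chain events and company events, with the following data:

* Industry chain event categories, possible values: 价格上涨 (price rising)

* Company event categories, possible values: 成本上涨 (cost rising), 利润下跌 (profit falling)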

|

{

"source": "OpenSPG/KAG",

"title": "kag/examples/supplychain/builder/data/README_cn.md",

"url": "https://github.com/OpenSPG/KAG/blob/master/kag/examples/supplychain/builder/data/README_cn.md",

"date": "2024-09-21T13:56:44",

"stars": 5456,

"description": "KAG is a logical form-guided reasoning and retrieval framework based on OpenSPG engine and LLMs. It is used to build logical reasoning and factual Q&A solutions for professional domain knowledge bases. It can effectively overcome the shortcomings of the traditional RAG vector similarity calculation model.",

"file_size": 1996

}

|

# Welcome!

Hi there! Welcome to the project. We're glad you're here.

# Amateur's guide to React

1. Wrap RPC calls in useCallback. This one should have an empty dependency array (but don't omit the array entirely: without it, useCallback returns a new function on every render, so any effect that depends on it runs on every render!)

2. The useCallback result is a function. Assign it to a constant.

3. Call the function from inside useEffect. The dependency array will include the function; that's okay, because the function never changes, so it won't re-trigger the effect.

Do not put rpcClient into any dependency arrays (just in case).

Actually, pure event handlers don't need to be wrapped (I think). But anything called from inside a useCallback does need to be wrapped.

# Webview contract

1. Call humanXYZ() when user does XYZ. This sends updateTask and then returns to indicate complete.

2. Immediately after, call startBotTurn() (returns immediately), which takes care of figuring out what to do to get back to human control.

3. Call stopBotTurn() if needed.

All task updates are sent via updateTask. Eventually there will be an endBotTurn notification, but we don't need it yet.

# Issues encountered

## Unable to launch browser

Problem: Unable to launch browser: Could not connect to debug target at http://localhost:9222: Could not find any debuggable target

Solution: restart VS Code.

## A JavaScript error occurred in the main process

Solution: run `yarn watch`.

|

{

"source": "meltylabs/melty",

"title": "CHARLIE_README.md",

"url": "https://github.com/meltylabs/melty/blob/main/CHARLIE_README.md",

"date": "2024-09-02T00:01:16",

"stars": 5355,

"description": "Chat first code editor. To download the packaged app:",

"file_size": 1376

}

|

# Contributing to Melty

Thanks for helping make Melty better!

## Feedback

We'd love to hear your feedback at [[email protected]](mailto:[email protected]).

## Issues and feature requests

Feel free to create a new GitHub issue for bugs or feature requests.

If you're reporting a bug, please include:

- Version of Melty (About -> Melty)

- Your operating system

- Errors from the Dev Tools Console (open from the menu: Help > Toggle Developer Tools)

## Pull requests

We're working hard to get the Melty code into a stable state where it's easy to accept contributions. If you're interested in adding something to Melty, please reach out first and we can discuss how it fits into our roadmap.

## Local development

To get started with local development, clone the repository and open it in Melty or VS Code. Then run:

```bash
# install dependencies
yarn run melty:install

# auto-rebuild extensions/spectacular source
yarn run melty:extension-dev
```

Then run the default debug configuration.

In development mode, any changes you make to the `./extensions/spectacular/` directory are watched and the extension is rebuilt. To see your changes, reload the code editor (`cmd+R` or `ctrl+R`).

### Set up Claude API key

Melty uses Claude, and you may need to set the `melty.anthropicApiKey` in Melty's settings. You can do that by:

- Waiting for the editor to launch.

- Opening preferences by pressing `cmd+,` or `ctrl+,`.

- Searching for `melty.anthropicApiKey` and setting the API key.

|

{

"source": "meltylabs/melty",

"title": "CONTRIBUTING.md",

"url": "https://github.com/meltylabs/melty/blob/main/CONTRIBUTING.md",

"date": "2024-09-02T00:01:16",

"stars": 5355,

"description": "Chat first code editor. To download the packaged app:",

"file_size": 1508

}

|

# Melty is an AI code editor where every chat message is a git commit

Revert, branch, reset, and squash your chats. Melty stays in sync with you like a pair programmer so you never have to explain what you’re doing.

[Melty](https://melty.sh) 0.2 is almost ready. We'll start sending it out to people on the waitlist within the next few days and weeks. If we don't, we'll eat a hat or something.

### [Get Early Access](https://docs.google.com/forms/d/e/1FAIpQLSc6uBe0ea26q7Iq0Co_q5fjW2nypUl8G_Is5M_6t8n7wZHuPA/viewform)

---

## Meet Melty

We're Charlie and Jackson. We're longtime friends who met playing ultimate frisbee at Brown.

[Charlie](http://charlieholtz.com) comes from [Replicate](https://replicate.com/), where he started the Hacker in Residence team and [accidentally struck fear into the heart of Hollywood](https://www.businessinsider.com/david-attenborough-ai-video-hollywood-actors-afraid-sag-aftra-2023-11). [Jackson](http://jdecampos.com) comes from Netflix, where he [built machine learning infrastructure](https://netflixtechblog.com/scaling-media-machine-learning-at-netflix-f19b400243) that picks the artwork you see for your favorite shows.

We've used most of the AI coding tools out there, and often ended up copy-pasting code, juggling ten chats for the same task, or committing buggy code that came back to bite us later. AI has already transformed how we code, but we know it can do a lot more.

**So we're building Melty, the first AI code editor that can make big changes across multiple files and integrate with your entire workflow.**

We've been building Melty for 28 days, and it's already writing about half of its own code. Melty can…

_(all demos real-time)_

## Refactor

https://github.com/user-attachments/assets/603ae418-038d-477c-aa36-83c139172ab8

## Create web apps from scratch

https://github.com/user-attachments/assets/26518a2e-cd75-4dc7-8ee6-32657727db80

## Navigate large codebases

https://github.com/user-attachments/assets/916c0d00-e451-40f8-9146-32ab044e76ad

## Write its own commits

We're designing Melty to:

- Help you understand your code better, not worse

- Watch every change you make, like a pair programmer

- Learn and adapt to your codebase

- Integrate with your compiler, terminal, and debugger, as well as tools like Linear and GitHub

Want to try Melty? Sign up here, and we'll get you early access:

### [Get Early Access](https://docs.google.com/forms/d/e/1FAIpQLSc6uBe0ea26q7Iq0Co_q5fjW2nypUl8G_Is5M_6t8n7wZHuPA/viewform)

https://twitter.com/charliebholtz/status/1825647665776046472

### Contributing

Please see our [contributing guidelines](CONTRIBUTING.md) for details on how to set up/contribute to the project.

|

{

"source": "meltylabs/melty",

"title": "README.md",

"url": "https://github.com/meltylabs/melty/blob/main/README.md",

"date": "2024-09-02T00:01:16",

"stars": 5355,

"description": "Chat first code editor. To download the packaged app:",

"file_size": 2879

}

|

# Code - OSS Development Container

[Open in Dev Containers](https://vscode.dev/redirect?url=vscode://ms-vscode-remote.remote-containers/cloneInVolume?url=https://github.com/microsoft/vscode)

This repository includes configuration for a development container for working with Code - OSS in a local container or using [GitHub Codespaces](https://github.com/features/codespaces).

> **Tip:** The default VNC password is `vscode`. The VNC server runs on port `5901` and a web client is available on port `6080`.

## Quick start - local

If you already have VS Code and Docker installed, you can click the badge above or [here](https://vscode.dev/redirect?url=vscode://ms-vscode-remote.remote-containers/cloneInVolume?url=https://github.com/microsoft/vscode) to get started. Clicking these links will cause VS Code to automatically install the Dev Containers extension if needed, clone the source code into a container volume, and spin up a dev container for use.

1. Install Docker Desktop or Docker for Linux on your local machine. (See [docs](https://aka.ms/vscode-remote/containers/getting-started) for additional details.)

2. **Important**: Docker needs at least **4 Cores and 8 GB of RAM** to run a full build with **9 GB of RAM** being recommended. If you are on macOS, or are using the old Hyper-V engine for Windows, update these values for Docker Desktop by right-clicking on the Docker status bar item and going to **Preferences/Settings > Resources > Advanced**.

> **Note:** The [Resource Monitor](https://marketplace.visualstudio.com/items?itemName=mutantdino.resourcemonitor) extension is included in the container so you can keep an eye on CPU/Memory in the status bar.

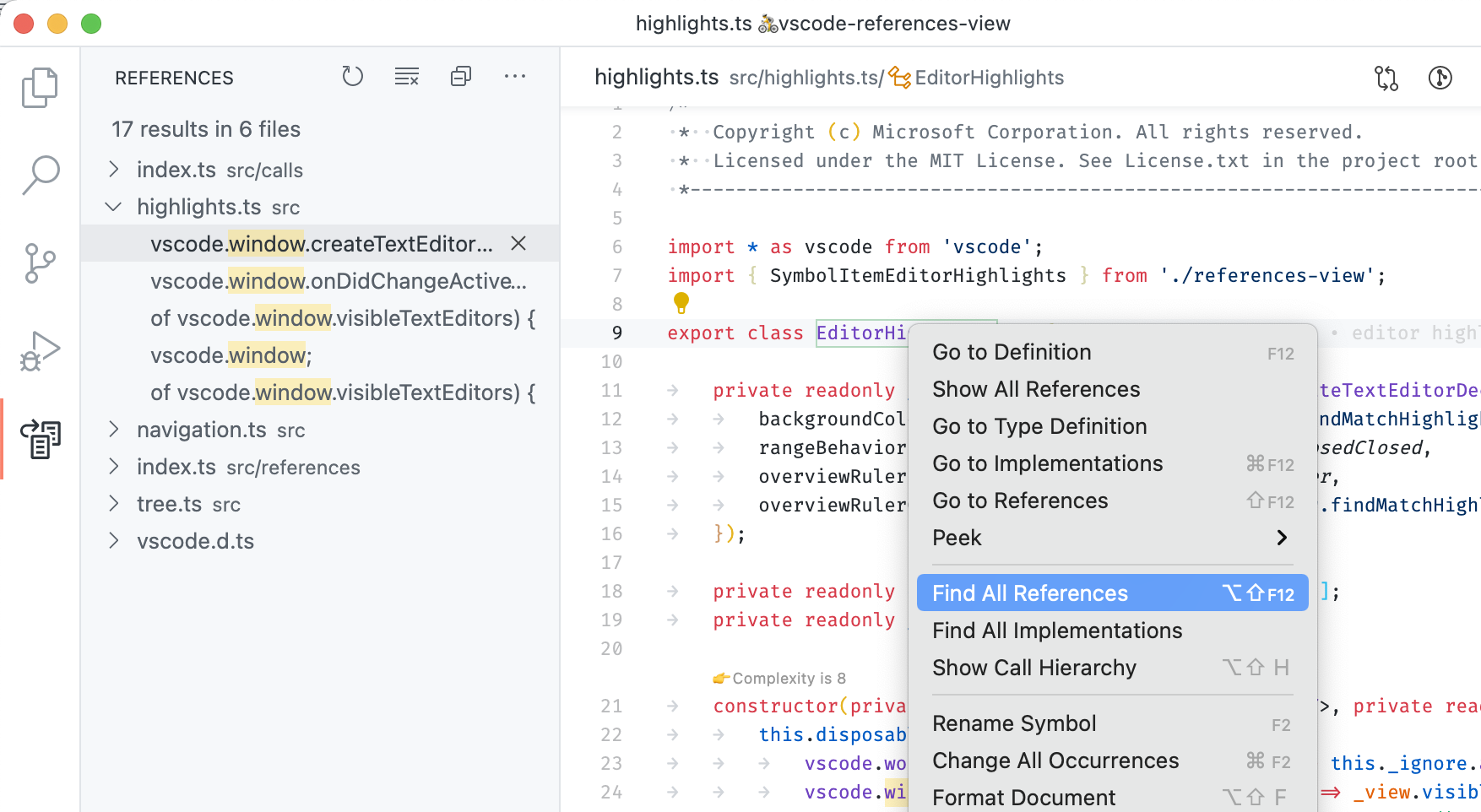

3. Install [Visual Studio Code Stable](https://code.visualstudio.com/) or [Insiders](https://code.visualstudio.com/insiders/) and the [Dev Containers](https://aka.ms/vscode-remote/download/containers) extension.

> **Note:** The Dev Containers extension requires the Visual Studio Code distribution of Code - OSS. See the [FAQ](https://aka.ms/vscode-remote/faq/license) for details.

4. Press <kbd>Ctrl/Cmd</kbd> + <kbd>Shift</kbd> + <kbd>P</kbd> or <kbd>F1</kbd> and select **Dev Containers: Clone Repository in Container Volume...**.

> **Tip:** While you can use your local source tree instead, operations like `yarn install` can be slow on macOS or when using the Hyper-V engine on Windows. We recommend using the WSL filesystem on Windows or the "clone repository in container" approach on Windows and macOS instead since it uses "named volume" rather than the local filesystem.

5. Type `https://github.com/microsoft/vscode` (or a branch or PR URL) in the input box and press <kbd>Enter</kbd>.

6. After the container is running:

1. If you have the `DISPLAY` or `WAYLAND_DISPLAY` environment variables set locally (or in WSL on Windows), desktop apps in the container will be shown in local windows.

2. If these are not set, open a web browser and go to [http://localhost:6080](http://localhost:6080), or use a [VNC Viewer][def] to connect to `localhost:5901` and enter `vscode` as the password. Anything you start in VS Code, or the integrated terminal, will appear here.

Next: **[Try it out!](#try-it)**

## Quick start - GitHub Codespaces

1. From the [microsoft/vscode GitHub repository](https://github.com/microsoft/vscode), click on the **Code** dropdown, select **Open with Codespaces**, and then click on **New codespace**. If prompted, select the **Standard** machine size (which is also the default).

> **Note:** You will not see these options within GitHub if you are not in the Codespaces beta.

2. After the codespace is up and running in your browser, press <kbd>Ctrl/Cmd</kbd> + <kbd>Shift</kbd> + <kbd>P</kbd> or <kbd>F1</kbd> and select **Ports: Focus on Ports View**.