---
dataset_info:
  features:
  - name: image
    dtype: image
  - name: question_id
    dtype: int64
  - name: question
    dtype: string
  - name: answers
    sequence: string
  - name: data_split
    dtype: string
  - name: ocr_results
    struct:
    - name: page
      dtype: int64
    - name: clockwise_orientation
      dtype: float64
    - name: width
      dtype: int64
    - name: height
      dtype: int64
    - name: unit
      dtype: string
    - name: lines
      list:
      - name: bounding_box
        sequence: int64
      - name: text
        dtype: string
      - name: words
        list:
        - name: bounding_box
          sequence: int64
        - name: text
          dtype: string
        - name: confidence
          dtype: string
  - name: other_metadata
    struct:
    - name: ucsf_document_id
      dtype: string
    - name: ucsf_document_page_no
      dtype: string
    - name: doc_id
      dtype: int64
    - name: image
      dtype: string
  splits:
  - name: train
    num_examples: 39463
  - name: validation
    num_examples: 5349
  - name: test
    num_examples: 5188
configs:
- config_name: default
  data_files:
  - split: train
    path: data/train-*
  - split: validation
    path: data/validation-*
  - split: test
    path: data/test-*
license: mit
task_categories:
- question-answering
language:
- en
size_categories:
- 10K<n<100K
---

Dataset Card for DocVQA Dataset

Dataset Description

- Points of Contact (curators): Minesh Mathew, Dimosthenis Karatzas, C. V. Jawahar

- Point of Contact (Hugging Face): Pablo Montalvo

Dataset Summary

The DocVQA dataset is a visual question answering dataset on document images, introduced in Mathew et al. (2021). It consists of 50,000 questions defined on 12,000+ document images.

Please visit the challenge page (https://rrc.cvc.uab.es/?ch=17) and the paper (https://arxiv.org/abs/2007.00398) for further information.

Usage

This dataset can be used with current releases of the Hugging Face `datasets` library.

Here is an example that loads the dataset and lists the fields of the first training example:

```python
from datasets import load_dataset

# Load all splits as a DatasetDict (pass split="train" to load only the training split)
docvqa_dataset = load_dataset("pixparse/docvqa-single-page-questions")

next(iter(docvqa_dataset["train"])).keys()
# dict_keys(['image', 'question_id', 'question', 'answers', 'data_split', 'ocr_results', 'other_metadata'])
```

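`image` is an image feature: by default, the `datasets` library decodes it into a `PIL.Image.Image`, and the raw encoded bytes are available when decoding is disabled. `answers` is a list of accepted answers, in the format expected by the ANLS (Average Normalized Levenshtein Similarity) metric.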
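ANLS compares a prediction against every accepted answer, keeps the best match, and zeroes out matches whose normalized edit distance reaches a threshold τ (0.5 for DocVQA), then averages over questions. The sketch below assumes that definition; the lowercasing and whitespace stripping mirror common evaluation scripts but are assumptions here, and the official evaluation on the challenge page (https://rrc.cvc.uab.es/?ch=17) remains authoritative. The function names `levenshtein` and `anls` are hypothetical.

```python
from typing import Sequence


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insert/delete/substitute each cost 1)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution
            ))
        previous = current
    return previous[-1]


def anls(predictions: Sequence[str],
         gold_answers: Sequence[Sequence[str]],
         tau: float = 0.5) -> float:
    """Average Normalized Levenshtein Similarity over a set of questions (sketch)."""
    if len(predictions) != len(gold_answers):
        raise ValueError("predictions and gold_answers must have the same length")
    if not predictions:
        return 0.0
    total = 0.0
    for prediction, answers in zip(predictions, gold_answers):
        # ASSUMPTION: case-insensitive comparison after stripping surrounding whitespace
        pred = prediction.strip().lower()
        best = 0.0
        for answer in answers:
            gold = answer.strip().lower()
            longest = max(len(pred), len(gold))
            distance = levenshtein(pred, gold) / longest if longest else 0.0
            # Similarity is 1 - normalized distance, or 0 when the distance reaches tau
            similarity = 1.0 - distance if distance < tau else 0.0
            best = max(best, similarity)
        total += best
    return total / len(predictions)
```

Because each entry of `answers` is already a list of strings, a list of model outputs can be scored directly against a split's `answers` column, for example `anls(predictions, docvqa_dataset["validation"]["answers"])`, where `predictions` is a hypothetical list of model outputs in the same order.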

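Opening the raw bytes of the first training example with PIL yields the image below. Decoding is disabled first, so that the `image` column returns a dict with `bytes` and `path` keys:

```python
from io import BytesIO

from datasets import Image as ImageFeature
from PIL import Image

# Keep the "image" column undecoded so each value is {"bytes": ..., "path": ...}
train_raw = docvqa_dataset["train"].cast_column("image", ImageFeature(decode=False))
image = Image.open(BytesIO(train_raw[0]["image"]["bytes"]))
# With default decoding, docvqa_dataset["train"][0]["image"] is already a PIL image
```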

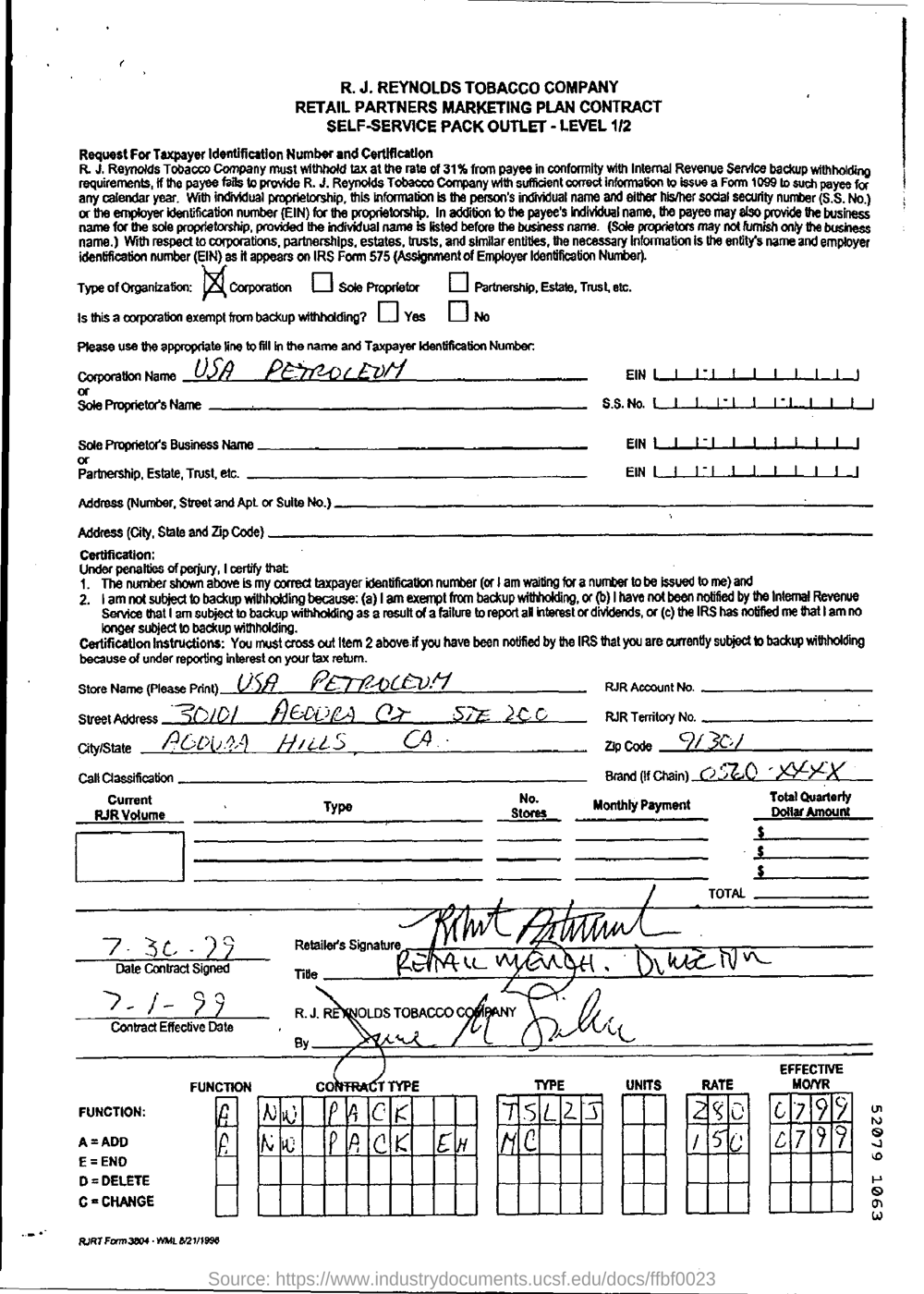

A tobacco-related document on which questions such as 'When is the contract effective date?' are asked, with the answer ['7 - 1 - 99'].

The dataset can then be iterated over normally, yielding one question per example. Many questions refer to the same image, so images are duplicated across examples.
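To see how questions share documents, the examples can be grouped by document. The sketch below assumes that `other_metadata["doc_id"]` identifies the underlying document image; the helper name `group_questions_by_document` is hypothetical. It takes columns rather than rows, because column access such as `docvqa_dataset["train"]["other_metadata"]` reads only that column and does not decode the images.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Mapping


def group_questions_by_document(
    question_ids: Iterable[int],
    other_metadata: Iterable[Mapping[str, Any]],
) -> Dict[int, List[int]]:
    """Map each doc_id to the question_ids asked about it (order preserved)."""
    groups: Dict[int, List[int]] = defaultdict(list)
    for question_id, meta in zip(question_ids, other_metadata):
        # ASSUMPTION: doc_id is a per-document-image identifier
        groups[meta["doc_id"]].append(question_id)
    return dict(groups)
```

For example, `group_questions_by_document(train["question_id"], train["other_metadata"])` with `train = docvqa_dataset["train"]` gives one entry per document, so `len(...)` of the result counts distinct documents and each value lists the questions that reuse that image.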

```python
sample = docvqa_dataset["train"][0]

# int identifier of the question
print(sample["question_id"])
# 9951

# actual question
print(sample["question"])
# When is the contract effective date?

# one-element list of accepted/ground truth answers for this question
print(sample["answers"])
# ['7 - 1 - 99']
```
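To bundle examples into batches for training, a custom collate function can gather each field into a list. The minimal sketch below uses a hypothetical name, `docvqa_collate`; a real training collator would typically also run a model-specific processor or tokenizer over the images and questions, which is omitted here. It uses `.get` so that rows without a field (for example, if a test split lacks answers) yield `None` instead of raising.

```python
from typing import Any, Dict, List, Sequence

# Fields bundled per batch; extend with "ocr_results" for text- or layout-aware models
COLLATE_KEYS = ("image", "question_id", "question", "answers")


def docvqa_collate(batch: Sequence[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Turn a list of DocVQA rows into a dict of per-field lists."""
    return {key: [example.get(key) for example in batch] for key in COLLATE_KEYS}
```

For instance, it could be passed as `collate_fn` to a PyTorch `torch.utils.data.DataLoader` wrapping `docvqa_dataset["train"]`, so each batch arrives as lists of images, questions and answer lists.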

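`ocr_results` contains the OCR output for each document image (page dimensions, orientation, and the recognized lines and words with their bounding boxes), which can be used by models that take text or layout input in addition to the image.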
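Following the `ocr_results` schema in the metadata (a `lines` list whose entries hold `text`, `bounding_box` and a nested `words` list), a minimal sketch for turning one example's OCR output into plain text and word/box pairs is shown below. The helper names are hypothetical, and the reading order is simply the stored order of `lines`. The box conversion assumes `bounding_box` alternates x and y coordinates (for example, the corners of a polygon); that layout is not documented in this card, so check it against real examples before relying on it.

```python
from typing import Any, Dict, List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]


def to_axis_aligned(polygon: Sequence[int]) -> Box:
    """Collapse an (x, y, x, y, ...) coordinate list into (x_min, y_min, x_max, y_max)."""
    # ASSUMPTION: even indices are x coordinates and odd indices are y coordinates
    xs, ys = polygon[0::2], polygon[1::2]
    return min(xs), min(ys), max(xs), max(ys)


def page_text(ocr_results: Optional[Dict[str, Any]]) -> str:
    """Join the OCR line texts in their stored order, one line per row."""
    lines = (ocr_results or {}).get("lines") or []
    return "\n".join(line["text"] for line in lines)


def word_boxes(ocr_results: Optional[Dict[str, Any]]) -> List[Tuple[str, Box]]:
    """List (word text, axis-aligned box) pairs across all OCR lines."""
    lines = (ocr_results or {}).get("lines") or []
    return [
        (word["text"], to_axis_aligned(word["bounding_box"]))
        for line in lines
        for word in line["words"]
    ]
```

If the stored boxes turn out to be four values already in (x_min, y_min, x_max, y_max) order, `to_axis_aligned` returns them unchanged, since the min/max over two x and two y values is the box itself.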

Data Splits

Train

- 10,194 images, 39,463 questions and answers.

Validation

- 1,286 images, 5,349 questions and answers.

Test

- 1,287 images, 5,188 questions.
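The split sizes add up to the totals quoted in the summary (50,000 questions on 12,000+ document images), which a few lines of arithmetic confirm. The counts below are copied from the split list; the per-image ratio is only an average, since questions are not spread evenly across documents.

```python
# Split sizes as listed in this card
SPLITS = {
    "train": {"images": 10194, "questions": 39463},
    "validation": {"images": 1286, "questions": 5349},
    "test": {"images": 1287, "questions": 5188},
}

total_images = sum(split["images"] for split in SPLITS.values())
total_questions = sum(split["questions"] for split in SPLITS.values())
print(total_images, total_questions)  # 12767 50000

# Average number of questions asked per image in each split
for name, split in SPLITS.items():
    print(f"{name}: {split['questions'] / split['images']:.2f} questions per image")
```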

Additional Information

Dataset Curators

For the original authors of the dataset, see the citation below.

Hugging Face points of contact for this instance: Pablo Montalvo, Ross Wightman

Licensing Information

MIT

Citation Information

```bibtex
@InProceedings{docvqa_wacv,
  author    = {Mathew, Minesh and Karatzas, Dimosthenis and Jawahar, C.V.},
  title     = {DocVQA: A Dataset for VQA on Document Images},
  booktitle = {WACV},
  year      = {2021},
  pages     = {2200-2209}
}
```