| problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_23012

|

rasdani/github-patches

|

git_diff

|

tensorflow__addons-549

|

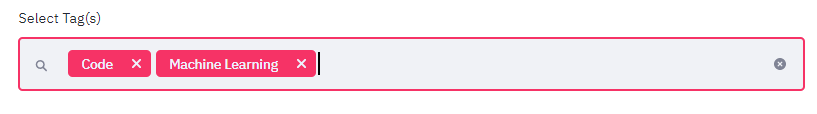

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Package TFA 0.5.2 pinned to TF2-RC2

RC2 is released so we can do a minor release pinned to this. For now this is blocked until #539 is merged.

</issue>

<code>

[start of setup.py]

1 # Copyright 2019 The TensorFlow Authors. All Rights Reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 # ==============================================================================

15 """TensorFlow Addons.

16

17 TensorFlow Addons is a repository of contributions that conform to well-

18 established API patterns, but implement new functionality not available

19 in core TensorFlow. TensorFlow natively supports a large number of

20 operators, layers, metrics, losses, and optimizers. However, in a fast

21 moving field like ML, there are many interesting new developments that

22 cannot be integrated into core TensorFlow (because their broad

23 applicability is not yet clear, or it is mostly used by a smaller subset

24 of the community).

25 """

26

27 from __future__ import absolute_import

28 from __future__ import division

29 from __future__ import print_function

30

31 import os

32 import platform

33 import sys

34

35 from datetime import datetime

36 from setuptools import find_packages

37 from setuptools import setup

38 from setuptools.dist import Distribution

39 from setuptools import Extension

40

41 DOCLINES = __doc__.split('\n')

42

43 TFA_NIGHTLY = 'tfa-nightly'

44 TFA_RELEASE = 'tensorflow-addons'

45

46 if '--nightly' in sys.argv:
47     project_name = TFA_NIGHTLY
48     nightly_idx = sys.argv.index('--nightly')
49     sys.argv.pop(nightly_idx)
50 else:
51     project_name = TFA_RELEASE
52
53 # Version
54 version = {}
55 base_dir = os.path.dirname(os.path.abspath(__file__))
56 with open(os.path.join(base_dir, "tensorflow_addons", "version.py")) as fp:
57     # yapf: disable
58     exec(fp.read(), version)
59     # yapf: enable
60
61 if project_name == TFA_NIGHTLY:
62     version['__version__'] += datetime.strftime(datetime.today(), "%Y%m%d")
63
64 # Dependencies
65 REQUIRED_PACKAGES = [
66     'six >= 1.10.0',
67 ]
68
69 if project_name == TFA_RELEASE:
70     # TODO: remove if-else condition when tf supports package consolidation.
71     if platform.system() == 'Linux':
72         REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0-rc1')
73     else:
74         REQUIRED_PACKAGES.append('tensorflow == 2.0.0-rc1')
75 elif project_name == TFA_NIGHTLY:
76     # TODO: remove if-else condition when tf-nightly supports package consolidation.
77     if platform.system() == 'Linux':
78         REQUIRED_PACKAGES.append('tf-nightly-gpu-2.0-preview')
79     else:
80         REQUIRED_PACKAGES.append('tf-nightly-2.0-preview')
81
82
83 class BinaryDistribution(Distribution):
84     """This class is needed in order to create OS specific wheels."""
85
86     def has_ext_modules(self):
87         return True
88
89
90 setup(
91     name=project_name,
92     version=version['__version__'],
93     description=DOCLINES[0],
94     long_description='\n'.join(DOCLINES[2:]),
95     author='Google Inc.',
96     author_email='[email protected]',
97     packages=find_packages(),
98     ext_modules=[Extension('_foo', ['stub.cc'])],
99     install_requires=REQUIRED_PACKAGES,
100     include_package_data=True,
101     zip_safe=False,
102     distclass=BinaryDistribution,
103     classifiers=[
104         'Development Status :: 4 - Beta',
105         'Intended Audience :: Developers',
106         'Intended Audience :: Education',
107         'Intended Audience :: Science/Research',
108         'License :: OSI Approved :: Apache Software License',
109         'Programming Language :: Python :: 2.7',
110         'Programming Language :: Python :: 3.5',
111         'Programming Language :: Python :: 3.6',
112         'Programming Language :: Python :: 3.7',
113         'Topic :: Scientific/Engineering :: Mathematics',
114         'Topic :: Software Development :: Libraries :: Python Modules',
115         'Topic :: Software Development :: Libraries',
116     ],
117     license='Apache 2.0',
118     keywords='tensorflow addons machine learning',
119 )

120

[end of setup.py]

[start of tensorflow_addons/version.py]

1 # Copyright 2019 The TensorFlow Authors. All Rights Reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 # ============================================================================

15 """Define TensorFlow Addons version information."""

16 from __future__ import absolute_import

17 from __future__ import division

18 from __future__ import print_function

19

20 # We follow Semantic Versioning (https://semver.org/)

21 _MAJOR_VERSION = '0'

22 _MINOR_VERSION = '5'

23 _PATCH_VERSION = '1'

24

25 # When building releases, we can update this value on the release branch to

26 # reflect the current release candidate ('rc0', 'rc1') or, finally, the official

27 # stable release (indicated by `_VERSION_SUFFIX = ''`). Outside the context of a

28 # release branch, the current version is by default assumed to be a

29 # 'development' version, labeled 'dev'.

30 _VERSION_SUFFIX = ''

31

32 # Example, '0.1.0-dev'

33 __version__ = '.'.join([
34     _MAJOR_VERSION,
35     _MINOR_VERSION,
36     _PATCH_VERSION,
37 ])
38 if _VERSION_SUFFIX:
39     __version__ = '{}-{}'.format(__version__, _VERSION_SUFFIX)

40

[end of tensorflow_addons/version.py]

</code>
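As a worked example of the versioning comments in `version.py` above, the sketch below shows how the three numeric parts and the optional suffix combine into one string. `compose_version` is a hypothetical helper introduced only for illustration; the repository builds `__version__` inline rather than through a function.

```python
def compose_version(major, minor, patch, suffix=''):
    """Join the numeric parts with dots and append an optional suffix.

    Hypothetical helper that mirrors the inline logic in version.py.
    """
    version = '.'.join([major, minor, patch])
    if suffix:
        # A non-empty suffix such as 'rc0' or 'dev' is attached with a hyphen.
        version = '{}-{}'.format(version, suffix)
    return version


stable = compose_version('0', '5', '2')
candidate = compose_version('0', '5', '2', 'rc0')
print(stable)
print(candidate)
```

An empty suffix leaves the plain `major.minor.patch` string, which is the case the file above describes as the official stable release.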

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -69,9 +69,9 @@
 if project_name == TFA_RELEASE:
     # TODO: remove if-else condition when tf supports package consolidation.
     if platform.system() == 'Linux':
-        REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0-rc1')
+        REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0')
     else:
-        REQUIRED_PACKAGES.append('tensorflow == 2.0.0-rc1')
+        REQUIRED_PACKAGES.append('tensorflow == 2.0.0')
 elif project_name == TFA_NIGHTLY:
     # TODO: remove if-else condition when tf-nightly supports package consolidation.
     if platform.system() == 'Linux':
diff --git a/tensorflow_addons/version.py b/tensorflow_addons/version.py
--- a/tensorflow_addons/version.py
+++ b/tensorflow_addons/version.py
@@ -20,7 +20,7 @@
 # We follow Semantic Versioning (https://semver.org/)
 _MAJOR_VERSION = '0'
 _MINOR_VERSION = '5'
-_PATCH_VERSION = '1'
+_PATCH_VERSION = '2'
 
 # When building releases, we can update this value on the release branch to
 # reflect the current release candidate ('rc0', 'rc1') or, finally, the official

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -69,9 +69,9 @@\n if project_name == TFA_RELEASE:\n # TODO: remove if-else condition when tf supports package consolidation.\n if platform.system() == 'Linux':\n- REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0-rc1')\n+ REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0')\n else:\n- REQUIRED_PACKAGES.append('tensorflow == 2.0.0-rc1')\n+ REQUIRED_PACKAGES.append('tensorflow == 2.0.0')\n elif project_name == TFA_NIGHTLY:\n # TODO: remove if-else condition when tf-nightly supports package consolidation.\n if platform.system() == 'Linux':\ndiff --git a/tensorflow_addons/version.py b/tensorflow_addons/version.py\n--- a/tensorflow_addons/version.py\n+++ b/tensorflow_addons/version.py\n@@ -20,7 +20,7 @@\n # We follow Semantic Versioning (https://semver.org/)\n _MAJOR_VERSION = '0'\n _MINOR_VERSION = '5'\n-_PATCH_VERSION = '1'\n+_PATCH_VERSION = '2'\n \n # When building releases, we can update this value on the release branch to\n # reflect the current release candidate ('rc0', 'rc1') or, finally, the official\n", "issue": "Package TFA 0.5.2 pinned to TF2-RC2\nRC2 is released so we can do a minor release pinned to this. For now this is blocked until #539 is merged.\n", "before_files": [{"content": "# Copyright 2019 The TensorFlow Authors. 
All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ==============================================================================\n\"\"\"TensorFlow Addons.\n\nTensorFlow Addons is a repository of contributions that conform to well-\nestablished API patterns, but implement new functionality not available\nin core TensorFlow. TensorFlow natively supports a large number of\noperators, layers, metrics, losses, and optimizers. However, in a fast\nmoving field like ML, there are many interesting new developments that\ncannot be integrated into core TensorFlow (because their broad\napplicability is not yet clear, or it is mostly used by a smaller subset\nof the community).\n\"\"\"\n\nfrom __future__ import absolute_import\nfrom __future__ import division\nfrom __future__ import print_function\n\nimport os\nimport platform\nimport sys\n\nfrom datetime import datetime\nfrom setuptools import find_packages\nfrom setuptools import setup\nfrom setuptools.dist import Distribution\nfrom setuptools import Extension\n\nDOCLINES = __doc__.split('\\n')\n\nTFA_NIGHTLY = 'tfa-nightly'\nTFA_RELEASE = 'tensorflow-addons'\n\nif '--nightly' in sys.argv:\n project_name = TFA_NIGHTLY\n nightly_idx = sys.argv.index('--nightly')\n sys.argv.pop(nightly_idx)\nelse:\n project_name = TFA_RELEASE\n\n# Version\nversion = {}\nbase_dir = os.path.dirname(os.path.abspath(__file__))\nwith open(os.path.join(base_dir, \"tensorflow_addons\", \"version.py\")) as fp:\n # yapf: disable\n 
exec(fp.read(), version)\n # yapf: enable\n\nif project_name == TFA_NIGHTLY:\n version['__version__'] += datetime.strftime(datetime.today(), \"%Y%m%d\")\n\n# Dependencies\nREQUIRED_PACKAGES = [\n 'six >= 1.10.0',\n]\n\nif project_name == TFA_RELEASE:\n # TODO: remove if-else condition when tf supports package consolidation.\n if platform.system() == 'Linux':\n REQUIRED_PACKAGES.append('tensorflow-gpu == 2.0.0-rc1')\n else:\n REQUIRED_PACKAGES.append('tensorflow == 2.0.0-rc1')\nelif project_name == TFA_NIGHTLY:\n # TODO: remove if-else condition when tf-nightly supports package consolidation.\n if platform.system() == 'Linux':\n REQUIRED_PACKAGES.append('tf-nightly-gpu-2.0-preview')\n else:\n REQUIRED_PACKAGES.append('tf-nightly-2.0-preview')\n\n\nclass BinaryDistribution(Distribution):\n \"\"\"This class is needed in order to create OS specific wheels.\"\"\"\n\n def has_ext_modules(self):\n return True\n\n\nsetup(\n name=project_name,\n version=version['__version__'],\n description=DOCLINES[0],\n long_description='\\n'.join(DOCLINES[2:]),\n author='Google Inc.',\n author_email='[email protected]',\n packages=find_packages(),\n ext_modules=[Extension('_foo', ['stub.cc'])],\n install_requires=REQUIRED_PACKAGES,\n include_package_data=True,\n zip_safe=False,\n distclass=BinaryDistribution,\n classifiers=[\n 'Development Status :: 4 - Beta',\n 'Intended Audience :: Developers',\n 'Intended Audience :: Education',\n 'Intended Audience :: Science/Research',\n 'License :: OSI Approved :: Apache Software License',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3.5',\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Topic :: Scientific/Engineering :: Mathematics',\n 'Topic :: Software Development :: Libraries :: Python Modules',\n 'Topic :: Software Development :: Libraries',\n ],\n license='Apache 2.0',\n keywords='tensorflow addons machine learning',\n)\n", "path": "setup.py"}, {"content": "# 
Copyright 2019 The TensorFlow Authors. All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n# ============================================================================\n\"\"\"Define TensorFlow Addons version information.\"\"\"\nfrom __future__ import absolute_import\nfrom __future__ import division\nfrom __future__ import print_function\n\n# We follow Semantic Versioning (https://semver.org/)\n_MAJOR_VERSION = '0'\n_MINOR_VERSION = '5'\n_PATCH_VERSION = '1'\n\n# When building releases, we can update this value on the release branch to\n# reflect the current release candidate ('rc0', 'rc1') or, finally, the official\n# stable release (indicated by `_VERSION_SUFFIX = ''`). Outside the context of a\n# release branch, the current version is by default assumed to be a\n# 'development' version, labeled 'dev'.\n_VERSION_SUFFIX = ''\n\n# Example, '0.1.0-dev'\n__version__ = '.'.join([\n _MAJOR_VERSION,\n _MINOR_VERSION,\n _PATCH_VERSION,\n])\nif _VERSION_SUFFIX:\n __version__ = '{}-{}'.format(__version__, _VERSION_SUFFIX)\n", "path": "tensorflow_addons/version.py"}]}

| 2,194 | 310 |

gh_patches_debug_23993

|

rasdani/github-patches

|

git_diff

|

sanic-org__sanic-2640

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

There is an obvious bug in ASGI WebsocketConnection of Sanic

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Describe the bug

I started my Sanic app with UvicornWorker, so the original websocket becomes a `WebSocketConnection`. When I call `ws.recv`, it raises an error if bytes data is received at that time:

`KeyError: 'text'`

[https://github.com/sanic-org/sanic/blob/main/sanic/server/websockets/connection.py](url)

```python
async def recv(self, *args, **kwargs) -> Optional[str]:
    message = await self._receive()

    if message["type"] == "websocket.receive":
        return message["text"]
    elif message["type"] == "websocket.disconnect":
        pass

    return None
```

Bytes data is not handled here.

### Code snippet

_No response_

### Expected Behavior

_No response_

### How do you run Sanic?

ASGI

### Operating System

ubuntu

### Sanic Version

22.3

### Additional context

_No response_

</issue>
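The failure described in the issue can be reproduced without a running server. The sketch below is a minimal illustration and not Sanic's actual fix: `extract_payload` is a hypothetical helper, and it assumes a `websocket.receive` event carries its payload under either a `text` key or a `bytes` key.

```python
from typing import Any, Mapping, Optional, Union


def extract_payload(message: Mapping[str, Any]) -> Optional[Union[str, bytes]]:
    """Return the payload of a websocket.receive event, or None otherwise.

    Hypothetical helper for illustration; it is not part of Sanic.
    """
    if message.get("type") != "websocket.receive":
        return None
    # Prefer text; fall back to bytes when the text key is missing or None.
    text = message.get("text")
    if text is not None:
        return text
    data = message.get("bytes")
    if data is not None:
        return data
    raise ValueError("websocket.receive event has neither text nor bytes")


text_event = {"type": "websocket.receive", "text": "hello"}
bytes_event = {"type": "websocket.receive", "bytes": b"\x00\x01"}

print(extract_payload(text_event))
print(extract_payload(bytes_event))
# Indexing bytes_event["text"] directly is what raises KeyError in the issue.
```

Whether binary frames should be returned as raw bytes (as here) or decoded to `str` is a design choice; the sketch returns them unchanged so that non-UTF-8 payloads survive.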

<code>

[start of sanic/server/websockets/connection.py]

1 from typing import (
2     Any,
3     Awaitable,
4     Callable,
5     Dict,
6     List,
7     MutableMapping,
8     Optional,
9     Union,
10 )
11
12
13 ASIMessage = MutableMapping[str, Any]
14
15
16 class WebSocketConnection:
17     """
18     This is for ASGI Connections.
19     It provides an interface similar to WebsocketProtocol, but
20     sends/receives over an ASGI connection.
21     """
22
23     # TODO
24     # - Implement ping/pong
25
26     def __init__(
27         self,
28         send: Callable[[ASIMessage], Awaitable[None]],
29         receive: Callable[[], Awaitable[ASIMessage]],
30         subprotocols: Optional[List[str]] = None,
31     ) -> None:
32         self._send = send
33         self._receive = receive
34         self._subprotocols = subprotocols or []
35
36     async def send(self, data: Union[str, bytes], *args, **kwargs) -> None:
37         message: Dict[str, Union[str, bytes]] = {"type": "websocket.send"}
38
39         if isinstance(data, bytes):
40             message.update({"bytes": data})
41         else:
42             message.update({"text": str(data)})
43
44         await self._send(message)
45
46     async def recv(self, *args, **kwargs) -> Optional[str]:
47         message = await self._receive()
48
49         if message["type"] == "websocket.receive":
50             return message["text"]
51         elif message["type"] == "websocket.disconnect":
52             pass
53
54         return None
55
56     receive = recv
57
58     async def accept(self, subprotocols: Optional[List[str]] = None) -> None:
59         subprotocol = None
60         if subprotocols:
61             for subp in subprotocols:
62                 if subp in self.subprotocols:
63                     subprotocol = subp
64                     break
65
66         await self._send(
67             {
68                 "type": "websocket.accept",
69                 "subprotocol": subprotocol,
70             }
71         )
72
73     async def close(self, code: int = 1000, reason: str = "") -> None:
74         pass
75
76     @property
77     def subprotocols(self):
78         return self._subprotocols
79
80     @subprotocols.setter
81     def subprotocols(self, subprotocols: Optional[List[str]] = None):
82         self._subprotocols = subprotocols or []

83

[end of sanic/server/websockets/connection.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/sanic/server/websockets/connection.py b/sanic/server/websockets/connection.py
--- a/sanic/server/websockets/connection.py
+++ b/sanic/server/websockets/connection.py
@@ -9,8 +9,10 @@
     Union,
 )
 
+from sanic.exceptions import InvalidUsage
 
-ASIMessage = MutableMapping[str, Any]
+
+ASGIMessage = MutableMapping[str, Any]
 
 
 class WebSocketConnection:
@@ -25,8 +27,8 @@
 
     def __init__(
         self,
-        send: Callable[[ASIMessage], Awaitable[None]],
-        receive: Callable[[], Awaitable[ASIMessage]],
+        send: Callable[[ASGIMessage], Awaitable[None]],
+        receive: Callable[[], Awaitable[ASGIMessage]],
         subprotocols: Optional[List[str]] = None,
     ) -> None:
         self._send = send
@@ -47,7 +49,13 @@
         message = await self._receive()
 
         if message["type"] == "websocket.receive":
-            return message["text"]
+            try:
+                return message["text"]
+            except KeyError:
+                try:
+                    return message["bytes"].decode()
+                except KeyError:
+                    raise InvalidUsage("Bad ASGI message received")
         elif message["type"] == "websocket.disconnect":
             pass

|

{"golden_diff": "diff --git a/sanic/server/websockets/connection.py b/sanic/server/websockets/connection.py\n--- a/sanic/server/websockets/connection.py\n+++ b/sanic/server/websockets/connection.py\n@@ -9,8 +9,10 @@\n Union,\n )\n \n+from sanic.exceptions import InvalidUsage\n \n-ASIMessage = MutableMapping[str, Any]\n+\n+ASGIMessage = MutableMapping[str, Any]\n \n \n class WebSocketConnection:\n@@ -25,8 +27,8 @@\n \n def __init__(\n self,\n- send: Callable[[ASIMessage], Awaitable[None]],\n- receive: Callable[[], Awaitable[ASIMessage]],\n+ send: Callable[[ASGIMessage], Awaitable[None]],\n+ receive: Callable[[], Awaitable[ASGIMessage]],\n subprotocols: Optional[List[str]] = None,\n ) -> None:\n self._send = send\n@@ -47,7 +49,13 @@\n message = await self._receive()\n \n if message[\"type\"] == \"websocket.receive\":\n- return message[\"text\"]\n+ try:\n+ return message[\"text\"]\n+ except KeyError:\n+ try:\n+ return message[\"bytes\"].decode()\n+ except KeyError:\n+ raise InvalidUsage(\"Bad ASGI message received\")\n elif message[\"type\"] == \"websocket.disconnect\":\n pass\n", "issue": "There is an obvious bug in ASGI WebsocketConnection of Sanic\n### Is there an existing issue for this?\n\n- [X] I have searched the existing issues\n\n### Describe the bug\n\nI started my sanic app with UvicornWorker. The original websocket will become WebsocketConnection. 
When I call\r\nthe ws.recv function will report an error if bytes data is received at this time.\r\n`KeyError\uff1a\u2018text\u2019`\r\n[https://github.com/sanic-org/sanic/blob/main/sanic/server/websockets/connection.py](url)\r\n` async def recv(self, *args, **kwargs) -> Optional[str]:\r\n message = await self._receive()\r\n\r\n if message[\"type\"] == \"websocket.receive\":\r\n return message[\"text\"]\r\n elif message[\"type\"] == \"websocket.disconnect\":\r\n pass\r\n\r\n return None`\r\nThere is no data of bytes type processed here.\n\n### Code snippet\n\n_No response_\n\n### Expected Behavior\n\n_No response_\n\n### How do you run Sanic?\n\nASGI\n\n### Operating System\n\nubuntu\n\n### Sanic Version\n\n22.3\n\n### Additional context\n\n_No response_\n", "before_files": [{"content": "from typing import (\n Any,\n Awaitable,\n Callable,\n Dict,\n List,\n MutableMapping,\n Optional,\n Union,\n)\n\n\nASIMessage = MutableMapping[str, Any]\n\n\nclass WebSocketConnection:\n \"\"\"\n This is for ASGI Connections.\n It provides an interface similar to WebsocketProtocol, but\n sends/receives over an ASGI connection.\n \"\"\"\n\n # TODO\n # - Implement ping/pong\n\n def __init__(\n self,\n send: Callable[[ASIMessage], Awaitable[None]],\n receive: Callable[[], Awaitable[ASIMessage]],\n subprotocols: Optional[List[str]] = None,\n ) -> None:\n self._send = send\n self._receive = receive\n self._subprotocols = subprotocols or []\n\n async def send(self, data: Union[str, bytes], *args, **kwargs) -> None:\n message: Dict[str, Union[str, bytes]] = {\"type\": \"websocket.send\"}\n\n if isinstance(data, bytes):\n message.update({\"bytes\": data})\n else:\n message.update({\"text\": str(data)})\n\n await self._send(message)\n\n async def recv(self, *args, **kwargs) -> Optional[str]:\n message = await self._receive()\n\n if message[\"type\"] == \"websocket.receive\":\n return message[\"text\"]\n elif message[\"type\"] == \"websocket.disconnect\":\n pass\n\n return None\n\n receive 
= recv\n\n async def accept(self, subprotocols: Optional[List[str]] = None) -> None:\n subprotocol = None\n if subprotocols:\n for subp in subprotocols:\n if subp in self.subprotocols:\n subprotocol = subp\n break\n\n await self._send(\n {\n \"type\": \"websocket.accept\",\n \"subprotocol\": subprotocol,\n }\n )\n\n async def close(self, code: int = 1000, reason: str = \"\") -> None:\n pass\n\n @property\n def subprotocols(self):\n return self._subprotocols\n\n @subprotocols.setter\n def subprotocols(self, subprotocols: Optional[List[str]] = None):\n self._subprotocols = subprotocols or []\n", "path": "sanic/server/websockets/connection.py"}]}

| 1,412 | 298 |

gh_patches_debug_30786

|

rasdani/github-patches

|

git_diff

|

open-mmlab__mmdetection-9241

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[Bug] metric should be one of \\\'bbox\\\' when using tools/test.py

### Prerequisite

- [X] I have searched [Issues](https://github.com/open-mmlab/mmdetection/issues) and [Discussions](https://github.com/open-mmlab/mmdetection/discussions) but cannot get the expected help.

- [X] I have read the [FAQ documentation](https://mmdetection.readthedocs.io/en/latest/faq.html) but cannot get the expected help.

- [X] The bug has not been fixed in the [latest version (master)](https://github.com/open-mmlab/mmdetection) or [latest version (3.x)](https://github.com/open-mmlab/mmdetection/tree/dev-3.x).

### Task

I have modified the scripts/configs, or I'm working on my own tasks/models/datasets.

### Branch

3.x branch https://github.com/open-mmlab/mmdetection/tree/3.x

### Environment

OrderedDict([('sys.platform', 'linux'),

('Python',

'3.7.12 | packaged by conda-forge | (default, Oct 26 2021, 06:08:21) [GCC 9.4.0]'),

('CUDA available', True),

('numpy_random_seed', 2147483648),

('GPU 0', 'NVIDIA GeForce RTX 3080'),

('CUDA_HOME', '/usr/local/cuda-11.5'),

('NVCC', 'Cuda compilation tools, release 11.5, V11.5.50'),

('GCC', 'gcc (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0'),

('PyTorch', '1.12.1+cu113'),

('PyTorch compiling details',

'PyTorch built with:\n - GCC 9.3\n - C++ Version: 201402\n - Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications\n - Intel(R) MKL-DNN v2.6.0 (Git Hash 52b5f107dd9cf10910aaa19cb47f3abf9b349815)\n - OpenMP 201511 (a.k.a. OpenMP 4.5)\n - LAPACK is enabled (usually provided by MKL)\n - NNPACK is enabled\n - CPU capability usage: AVX2\n - CUDA Runtime 11.3\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86\n - CuDNN 8.3.2 (built against CUDA 11.5)\n - Magma 2.5.2\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.3.2, CXX_COMPILER=/opt/rh/devtoolset-9/root/usr/bin/c++, CXX_FLAGS= -fabi-version=11 -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.12.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, 
USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF, \n'),

('TorchVision', '0.13.1+cu113'),

('OpenCV', '4.6.0'),

('MMEngine', '0.2.0'),

('MMDetection', '3.0.0rc2+5897b32')])

### Reproduces the problem - code sample

Here is my config:

```python

test_evaluator = dict(

type='CocoMetric',

ann_file='./ballondatasets/balloon/val/annotation_coco.json',

metric=['bbox', 'segm'],

format_only=False)

```

If I use `python config checkpoint --out result.pkl`, it will be modified to

```python

test_evaluator = dict(

type='CocoMetric',

ann_file='./ballondatasets/balloon/val/annotation_coco.json',

metric=[None, {

'type': 'DumpResults',

'out_file_path': 'ceshi.pkl'

}],

format_only=False)

```

### Reproduces the problem - error message

```

File "/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/runner/runner.py", line 1533, in build_test_loop

evaluator=self._test_evaluator))

File "/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/registry/registry.py", line 421, in build

return self.build_func(cfg, *args, **kwargs, registry=self)

File "/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/registry/build_functions.py", line 136, in build_from_cfg

f'class `{obj_cls.__name__}` in ' # type: ignore

KeyError: 'class `TestLoop` in mmengine/runner/loops.py: \'class `CocoMetric` in mmdet/evaluation/metrics/coco_metric.py: "metric should be one of \\\'bbox\\\', \\\'segm\\\', \\\'proposal\\\', \\\'proposal_fast\\\', but got {\\\'type\\\': \\\'DumpResults\\\', \\\'out_file_path\\\': \\\'ceshi.pkl\\\'}."\''

```

### Additional information

I used tools/test.py to output a pkl file and hit this problem: the script modifies my config.

The mmdet documentation says that `--eval bbox` or `--eval segm` can be used to specify the metric parameter, but tools/test.py doesn't have the `--eval` option. I don't know whether 3.x has implemented this function.

</issue>
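The config dump in the issue shows the `DumpResults` entry landing inside the `metric` argument of `CocoMetric`. One way to avoid that, sketched below with plain dictionaries, is to leave the existing evaluator config untouched and add the dump entry as a sibling. `with_dump_results` is a hypothetical helper, not the project's implementation, and the sketch assumes the runner accepts a list of metric configs.

```python
from copy import deepcopy


def with_dump_results(test_evaluator, out_file_path):
    """Return a list of evaluator configs with a DumpResults entry appended.

    Hypothetical helper for illustration; the input config is not mutated.
    """
    dump = {"type": "DumpResults", "out_file_path": out_file_path}
    if isinstance(test_evaluator, (list, tuple)):
        evaluators = [deepcopy(item) for item in test_evaluator]
    else:
        # A single config becomes a one-element list before appending.
        evaluators = [deepcopy(test_evaluator)]
    evaluators.append(dump)
    return evaluators


coco = dict(
    type="CocoMetric",
    ann_file="./ballondatasets/balloon/val/annotation_coco.json",
    metric=["bbox", "segm"],
    format_only=False,
)
result = with_dump_results(coco, "result.pkl")
print(result[0]["metric"])
print(result[1])
```

Copying the input keeps the user's `metric=['bbox', 'segm']` setting intact, which is the behavior the reporter expected.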

<code>

[start of mmdet/utils/__init__.py]

1 # Copyright (c) OpenMMLab. All rights reserved.

2 from .collect_env import collect_env

3 from .compat_config import compat_cfg

4 from .dist_utils import (all_reduce_dict, allreduce_grads, reduce_mean,
5                          sync_random_seed)
6 from .logger import get_caller_name, log_img_scale
7 from .memory import AvoidCUDAOOM, AvoidOOM
8 from .misc import add_dump_metric, find_latest_checkpoint, update_data_root
9 from .replace_cfg_vals import replace_cfg_vals
10 from .setup_env import register_all_modules, setup_multi_processes
11 from .split_batch import split_batch
12 from .typing import (ConfigType, InstanceList, MultiConfig, OptConfigType,
13                      OptInstanceList, OptMultiConfig, OptPixelList, PixelList,
14                      RangeType)
15
16 __all__ = [
17     'collect_env', 'find_latest_checkpoint', 'update_data_root',
18     'setup_multi_processes', 'get_caller_name', 'log_img_scale', 'compat_cfg',
19     'split_batch', 'register_all_modules', 'replace_cfg_vals', 'AvoidOOM',
20     'AvoidCUDAOOM', 'all_reduce_dict', 'allreduce_grads', 'reduce_mean',
21     'sync_random_seed', 'ConfigType', 'InstanceList', 'MultiConfig',
22     'OptConfigType', 'OptInstanceList', 'OptMultiConfig', 'OptPixelList',
23     'PixelList', 'RangeType', 'add_dump_metric'
24 ]

25

[end of mmdet/utils/__init__.py]

[start of mmdet/utils/misc.py]

1 # Copyright (c) OpenMMLab. All rights reserved.

2 import glob

3 import os

4 import os.path as osp

5 import warnings

6

7 from mmengine.config import Config, ConfigDict

8 from mmengine.logging import print_log

9

10

11 def find_latest_checkpoint(path, suffix='pth'):

12 """Find the latest checkpoint from the working directory.

13

14 Args:

15 path(str): The path to find checkpoints.

16 suffix(str): File extension.

17 Defaults to pth.

18

19 Returns:

20 latest_path(str | None): File path of the latest checkpoint.

21 References:

22 .. [1] https://github.com/microsoft/SoftTeacher

23 /blob/main/ssod/utils/patch.py

24 """

25 if not osp.exists(path):

26 warnings.warn('The path of checkpoints does not exist.')

27 return None

28 if osp.exists(osp.join(path, f'latest.{suffix}')):

29 return osp.join(path, f'latest.{suffix}')

30

31 checkpoints = glob.glob(osp.join(path, f'*.{suffix}'))

32 if len(checkpoints) == 0:

33 warnings.warn('There are no checkpoints in the path.')

34 return None

35 latest = -1

36 latest_path = None

37 for checkpoint in checkpoints:

38 count = int(osp.basename(checkpoint).split('_')[-1].split('.')[0])

39 if count > latest:

40 latest = count

41 latest_path = checkpoint

42 return latest_path

43

44

45 def update_data_root(cfg, logger=None):

46 """Update data root according to env MMDET_DATASETS.

47

48 If set env MMDET_DATASETS, update cfg.data_root according to

49 MMDET_DATASETS. Otherwise, using cfg.data_root as default.

50

51 Args:

52 cfg (:obj:`Config`): The model config need to modify

53 logger (logging.Logger | str | None): the way to print msg

54 """

55 assert isinstance(cfg, Config), \

56 f'cfg got wrong type: {type(cfg)}, expected mmengine.Config'

57

58 if 'MMDET_DATASETS' in os.environ:

59 dst_root = os.environ['MMDET_DATASETS']

60 print_log(f'MMDET_DATASETS has been set to be {dst_root}.'

61 f'Using {dst_root} as data root.')

62 else:

63 return

64

65 assert isinstance(cfg, Config), \

66 f'cfg got wrong type: {type(cfg)}, expected mmengine.Config'

67

68 def update(cfg, src_str, dst_str):

69 for k, v in cfg.items():

70 if isinstance(v, ConfigDict):

71 update(cfg[k], src_str, dst_str)

72 if isinstance(v, str) and src_str in v:

73 cfg[k] = v.replace(src_str, dst_str)

74

75 update(cfg.data, cfg.data_root, dst_root)

76 cfg.data_root = dst_root

77

78

79 def add_dump_metric(args, cfg):

80 dump_metric = dict(type='DumpResults', out_file_path=args.out)

81 if isinstance(cfg.test_evaluator, (list, tuple)):

82 cfg.test_evaluator = list(cfg.test_evaluator).append(dump_metric)

83 elif isinstance(cfg.test_evaluator, dict):

84 if isinstance(cfg.test_evaluator.metric, str):

85 cfg.test_evaluator = [cfg.test_evaluator, dump_metric]

86 elif isinstance(cfg.test_evaluator.metric, (list, tuple)):

87 cfg.test_evaluator.metric = list(

88 cfg.test_evaluator.metric).append(dump_metric)

89 else:

90 cfg.test_evaluator.metric = [

91 cfg.test_evaluator.metric, dump_metric

92 ]

93

[end of mmdet/utils/misc.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/mmdet/utils/__init__.py b/mmdet/utils/__init__.py

--- a/mmdet/utils/__init__.py

+++ b/mmdet/utils/__init__.py

@@ -5,7 +5,7 @@

sync_random_seed)

from .logger import get_caller_name, log_img_scale

from .memory import AvoidCUDAOOM, AvoidOOM

-from .misc import add_dump_metric, find_latest_checkpoint, update_data_root

+from .misc import find_latest_checkpoint, update_data_root

from .replace_cfg_vals import replace_cfg_vals

from .setup_env import register_all_modules, setup_multi_processes

from .split_batch import split_batch

@@ -20,5 +20,5 @@

'AvoidCUDAOOM', 'all_reduce_dict', 'allreduce_grads', 'reduce_mean',

'sync_random_seed', 'ConfigType', 'InstanceList', 'MultiConfig',

'OptConfigType', 'OptInstanceList', 'OptMultiConfig', 'OptPixelList',

- 'PixelList', 'RangeType', 'add_dump_metric'

+ 'PixelList', 'RangeType'

]

diff --git a/mmdet/utils/misc.py b/mmdet/utils/misc.py

--- a/mmdet/utils/misc.py

+++ b/mmdet/utils/misc.py

@@ -74,19 +74,3 @@

update(cfg.data, cfg.data_root, dst_root)

cfg.data_root = dst_root

-

-

-def add_dump_metric(args, cfg):

- dump_metric = dict(type='DumpResults', out_file_path=args.out)

- if isinstance(cfg.test_evaluator, (list, tuple)):

- cfg.test_evaluator = list(cfg.test_evaluator).append(dump_metric)

- elif isinstance(cfg.test_evaluator, dict):

- if isinstance(cfg.test_evaluator.metric, str):

- cfg.test_evaluator = [cfg.test_evaluator, dump_metric]

- elif isinstance(cfg.test_evaluator.metric, (list, tuple)):

- cfg.test_evaluator.metric = list(

- cfg.test_evaluator.metric).append(dump_metric)

- else:

- cfg.test_evaluator.metric = [

- cfg.test_evaluator.metric, dump_metric

- ]

|

{"golden_diff": "diff --git a/mmdet/utils/__init__.py b/mmdet/utils/__init__.py\n--- a/mmdet/utils/__init__.py\n+++ b/mmdet/utils/__init__.py\n@@ -5,7 +5,7 @@\n sync_random_seed)\n from .logger import get_caller_name, log_img_scale\n from .memory import AvoidCUDAOOM, AvoidOOM\n-from .misc import add_dump_metric, find_latest_checkpoint, update_data_root\n+from .misc import find_latest_checkpoint, update_data_root\n from .replace_cfg_vals import replace_cfg_vals\n from .setup_env import register_all_modules, setup_multi_processes\n from .split_batch import split_batch\n@@ -20,5 +20,5 @@\n 'AvoidCUDAOOM', 'all_reduce_dict', 'allreduce_grads', 'reduce_mean',\n 'sync_random_seed', 'ConfigType', 'InstanceList', 'MultiConfig',\n 'OptConfigType', 'OptInstanceList', 'OptMultiConfig', 'OptPixelList',\n- 'PixelList', 'RangeType', 'add_dump_metric'\n+ 'PixelList', 'RangeType'\n ]\ndiff --git a/mmdet/utils/misc.py b/mmdet/utils/misc.py\n--- a/mmdet/utils/misc.py\n+++ b/mmdet/utils/misc.py\n@@ -74,19 +74,3 @@\n \n update(cfg.data, cfg.data_root, dst_root)\n cfg.data_root = dst_root\n-\n-\n-def add_dump_metric(args, cfg):\n- dump_metric = dict(type='DumpResults', out_file_path=args.out)\n- if isinstance(cfg.test_evaluator, (list, tuple)):\n- cfg.test_evaluator = list(cfg.test_evaluator).append(dump_metric)\n- elif isinstance(cfg.test_evaluator, dict):\n- if isinstance(cfg.test_evaluator.metric, str):\n- cfg.test_evaluator = [cfg.test_evaluator, dump_metric]\n- elif isinstance(cfg.test_evaluator.metric, (list, tuple)):\n- cfg.test_evaluator.metric = list(\n- cfg.test_evaluator.metric).append(dump_metric)\n- else:\n- cfg.test_evaluator.metric = [\n- cfg.test_evaluator.metric, dump_metric\n- ]\n", "issue": "[Bug] metric should be one of \\\\\\'bbox\\\\\\' when using tools/test.py\n### Prerequisite\r\n\r\n- [X] I have searched [Issues](https://github.com/open-mmlab/mmdetection/issues) and [Discussions](https://github.com/open-mmlab/mmdetection/discussions) but cannot get the expected 
help.\r\n- [X] I have read the [FAQ documentation](https://mmdetection.readthedocs.io/en/latest/faq.html) but cannot get the expected help.\r\n- [X] The bug has not been fixed in the [latest version (master)](https://github.com/open-mmlab/mmdetection) or [latest version (3.x)](https://github.com/open-mmlab/mmdetection/tree/dev-3.x).\r\n\r\n### Task\r\n\r\nI have modified the scripts/configs, or I'm working on my own tasks/models/datasets.\r\n\r\n### Branch\r\n\r\n3.x branch https://github.com/open-mmlab/mmdetection/tree/3.x\r\n\r\n### Environment\r\n\r\nOrderedDict([('sys.platform', 'linux'),\r\n ('Python',\r\n '3.7.12 | packaged by conda-forge | (default, Oct 26 2021, 06:08:21) [GCC 9.4.0]'),\r\n ('CUDA available', True),\r\n ('numpy_random_seed', 2147483648),\r\n ('GPU 0', 'NVIDIA GeForce RTX 3080'),\r\n ('CUDA_HOME', '/usr/local/cuda-11.5'),\r\n ('NVCC', 'Cuda compilation tools, release 11.5, V11.5.50'),\r\n ('GCC', 'gcc (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0'),\r\n ('PyTorch', '1.12.1+cu113'),\r\n ('PyTorch compiling details',\r\n 'PyTorch built with:\\n - GCC 9.3\\n - C++ Version: 201402\\n - Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications\\n - Intel(R) MKL-DNN v2.6.0 (Git Hash 52b5f107dd9cf10910aaa19cb47f3abf9b349815)\\n - OpenMP 201511 (a.k.a. 
OpenMP 4.5)\\n - LAPACK is enabled (usually provided by MKL)\\n - NNPACK is enabled\\n - CPU capability usage: AVX2\\n - CUDA Runtime 11.3\\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86\\n - CuDNN 8.3.2 (built against CUDA 11.5)\\n - Magma 2.5.2\\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.3.2, CXX_COMPILER=/opt/rh/devtoolset-9/root/usr/bin/c++, CXX_FLAGS= -fabi-version=11 -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Werror=cast-function-type -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.12.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF, \\n'),\r\n ('TorchVision', '0.13.1+cu113'),\r\n ('OpenCV', '4.6.0'),\r\n ('MMEngine', '0.2.0'),\r\n ('MMDetection', '3.0.0rc2+5897b32')])\r\n\r\n### Reproduces the problem - code sample\r\n\r\nHere is my 
config:\r\n```python\r\ntest_evaluator = dict(\r\n type='CocoMetric',\r\n ann_file='./ballondatasets/balloon/val/annotation_coco.json',\r\n metric=['bbox', 'segm'],\r\n format_only=False)\r\n```\r\n\r\nif i use `python config checkpoint --out result.pkl`, it will be modified to \r\n```\r\n```python\r\ntest_evaluator = dict(\r\n type='CocoMetric',\r\n ann_file='./ballondatasets/balloon/val/annotation_coco.json',\r\n metric=[None, {\r\n 'type': 'DumpResults',\r\n 'out_file_path': 'ceshi.pkl'\r\n }],\r\n format_only=False)\r\n```\r\n\r\n\r\n### Reproduces the problem - error message\r\n\r\n```\r\n File \"/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/runner/runner.py\", line 1533, in build_test_loop\r\n evaluator=self._test_evaluator))\r\n File \"/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/registry/registry.py\", line 421, in build\r\n return self.build_func(cfg, *args, **kwargs, registry=self)\r\n File \"/home/sanbu/anaconda3/envs/mmlab2/lib/python3.7/site-packages/mmengine/registry/build_functions.py\", line 136, in build_from_cfg\r\n f'class `{obj_cls.__name__}` in ' # type: ignore\r\nKeyError: 'class `TestLoop` in mmengine/runner/loops.py: \\'class `CocoMetric` in mmdet/evaluation/metrics/coco_metric.py: \"metric should be one of \\\\\\'bbox\\\\\\', \\\\\\'segm\\\\\\', \\\\\\'proposal\\\\\\', \\\\\\'proposal_fast\\\\\\', but got {\\\\\\'type\\\\\\': \\\\\\'DumpResults\\\\\\', \\\\\\'out_file_path\\\\\\': \\\\\\'ceshi.pkl\\\\\\'}.\"\\''\r\n\r\n```\r\n### Additional information\r\n\r\nI use the tools/test.py to output pkl file but i got the problem,the python file will modify my conifg.\r\n\r\nI see the mmdet documentation says that we can use --eval bbox or segm to specify the metric parameter. But the tools/test.py doesn't has the `--eval` option.I don't know if 3.x have implemented the function.\n", "before_files": [{"content": "# Copyright (c) OpenMMLab. 
All rights reserved.\nfrom .collect_env import collect_env\nfrom .compat_config import compat_cfg\nfrom .dist_utils import (all_reduce_dict, allreduce_grads, reduce_mean,\n sync_random_seed)\nfrom .logger import get_caller_name, log_img_scale\nfrom .memory import AvoidCUDAOOM, AvoidOOM\nfrom .misc import add_dump_metric, find_latest_checkpoint, update_data_root\nfrom .replace_cfg_vals import replace_cfg_vals\nfrom .setup_env import register_all_modules, setup_multi_processes\nfrom .split_batch import split_batch\nfrom .typing import (ConfigType, InstanceList, MultiConfig, OptConfigType,\n OptInstanceList, OptMultiConfig, OptPixelList, PixelList,\n RangeType)\n\n__all__ = [\n 'collect_env', 'find_latest_checkpoint', 'update_data_root',\n 'setup_multi_processes', 'get_caller_name', 'log_img_scale', 'compat_cfg',\n 'split_batch', 'register_all_modules', 'replace_cfg_vals', 'AvoidOOM',\n 'AvoidCUDAOOM', 'all_reduce_dict', 'allreduce_grads', 'reduce_mean',\n 'sync_random_seed', 'ConfigType', 'InstanceList', 'MultiConfig',\n 'OptConfigType', 'OptInstanceList', 'OptMultiConfig', 'OptPixelList',\n 'PixelList', 'RangeType', 'add_dump_metric'\n]\n", "path": "mmdet/utils/__init__.py"}, {"content": "# Copyright (c) OpenMMLab. All rights reserved.\nimport glob\nimport os\nimport os.path as osp\nimport warnings\n\nfrom mmengine.config import Config, ConfigDict\nfrom mmengine.logging import print_log\n\n\ndef find_latest_checkpoint(path, suffix='pth'):\n \"\"\"Find the latest checkpoint from the working directory.\n\n Args:\n path(str): The path to find checkpoints.\n suffix(str): File extension.\n Defaults to pth.\n\n Returns:\n latest_path(str | None): File path of the latest checkpoint.\n References:\n .. 
[1] https://github.com/microsoft/SoftTeacher\n /blob/main/ssod/utils/patch.py\n \"\"\"\n if not osp.exists(path):\n warnings.warn('The path of checkpoints does not exist.')\n return None\n if osp.exists(osp.join(path, f'latest.{suffix}')):\n return osp.join(path, f'latest.{suffix}')\n\n checkpoints = glob.glob(osp.join(path, f'*.{suffix}'))\n if len(checkpoints) == 0:\n warnings.warn('There are no checkpoints in the path.')\n return None\n latest = -1\n latest_path = None\n for checkpoint in checkpoints:\n count = int(osp.basename(checkpoint).split('_')[-1].split('.')[0])\n if count > latest:\n latest = count\n latest_path = checkpoint\n return latest_path\n\n\ndef update_data_root(cfg, logger=None):\n \"\"\"Update data root according to env MMDET_DATASETS.\n\n If set env MMDET_DATASETS, update cfg.data_root according to\n MMDET_DATASETS. Otherwise, using cfg.data_root as default.\n\n Args:\n cfg (:obj:`Config`): The model config need to modify\n logger (logging.Logger | str | None): the way to print msg\n \"\"\"\n assert isinstance(cfg, Config), \\\n f'cfg got wrong type: {type(cfg)}, expected mmengine.Config'\n\n if 'MMDET_DATASETS' in os.environ:\n dst_root = os.environ['MMDET_DATASETS']\n print_log(f'MMDET_DATASETS has been set to be {dst_root}.'\n f'Using {dst_root} as data root.')\n else:\n return\n\n assert isinstance(cfg, Config), \\\n f'cfg got wrong type: {type(cfg)}, expected mmengine.Config'\n\n def update(cfg, src_str, dst_str):\n for k, v in cfg.items():\n if isinstance(v, ConfigDict):\n update(cfg[k], src_str, dst_str)\n if isinstance(v, str) and src_str in v:\n cfg[k] = v.replace(src_str, dst_str)\n\n update(cfg.data, cfg.data_root, dst_root)\n cfg.data_root = dst_root\n\n\ndef add_dump_metric(args, cfg):\n dump_metric = dict(type='DumpResults', out_file_path=args.out)\n if isinstance(cfg.test_evaluator, (list, tuple)):\n cfg.test_evaluator = list(cfg.test_evaluator).append(dump_metric)\n elif isinstance(cfg.test_evaluator, dict):\n if 
isinstance(cfg.test_evaluator.metric, str):\n cfg.test_evaluator = [cfg.test_evaluator, dump_metric]\n elif isinstance(cfg.test_evaluator.metric, (list, tuple)):\n cfg.test_evaluator.metric = list(\n cfg.test_evaluator.metric).append(dump_metric)\n else:\n cfg.test_evaluator.metric = [\n cfg.test_evaluator.metric, dump_metric\n ]\n", "path": "mmdet/utils/misc.py"}]}

| 3,644 | 478 |

gh_patches_debug_20314

|

rasdani/github-patches

|

git_diff

|

urllib3__urllib3-773

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Broken vendoring in socks contrib module

Awkwardly, the SOCKS contrib module doesn't work properly when vendored because it tries to do an absolute import. We should probably rewrite it to use relative imports.

My bad.
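
To illustrate the failure mode described here, the following is a minimal sketch that builds a throwaway package tree in a temporary directory. The package names `hostpkg_demo` and `minilib_demo` are hypothetical and exist only for this demonstration; they are not part of urllib3. It assumes no top-level package called `minilib_demo` is installed in the environment.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical layout: minilib_demo is vendored inside hostpkg_demo.
root = Path(tempfile.mkdtemp())
host = root / "hostpkg_demo"
lib = host / "vendored" / "minilib_demo"
(lib / "contrib").mkdir(parents=True)

# Make every directory in the tree a regular package.
for pkg_dir in (host, host / "vendored", lib, lib / "contrib"):
    (pkg_dir / "__init__.py").write_text("")

(lib / "exceptions.py").write_text(
    "class DependencyWarning(Warning):\n    pass\n")

# Absolute import: resolves only if minilib_demo is a top-level package.
(lib / "contrib" / "absolute.py").write_text(
    "from minilib_demo.exceptions import DependencyWarning\n")

# Relative import: resolves wherever the package tree has been placed.
(lib / "contrib" / "relative.py").write_text(
    "from ..exceptions import DependencyWarning\n")

sys.path.insert(0, str(root))
importlib.invalidate_caches()

prefix = "hostpkg_demo.vendored.minilib_demo.contrib"
relative = importlib.import_module(prefix + ".relative")

try:
    importlib.import_module(prefix + ".absolute")
    absolute_import_worked = True
except ImportError:
    # The vendored copy is not importable under its top-level name.
    absolute_import_worked = False

print(relative.DependencyWarning.__name__, absolute_import_worked)
```

The module with the relative import loads from the vendored location, while the one with the absolute import fails because the library is no longer reachable under its original top-level name.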

</issue>

<code>

[start of urllib3/contrib/socks.py]

1 # -*- coding: utf-8 -*-

2 """

3 SOCKS support for urllib3

4 ~~~~~~~~~~~~~~~~~~~~~~~~~

5

6 This contrib module contains provisional support for SOCKS proxies from within

7 urllib3. This module supports SOCKS4 (specifically the SOCKS4A variant) and

8 SOCKS5. To enable its functionality, either install PySocks or install this

9 module with the ``socks`` extra.

10

11 Known Limitations:

12

13 - Currently PySocks does not support contacting remote websites via literal

14 IPv6 addresses. Any such connection attempt will fail.

15 - Currently PySocks does not support IPv6 connections to the SOCKS proxy. Any

16 such connection attempt will fail.

17 """

18 from __future__ import absolute_import

19

20 try:

21 import socks

22 except ImportError:

23 import warnings

24 from urllib3.exceptions import DependencyWarning

25

26 warnings.warn((

27 'SOCKS support in urllib3 requires the installation of optional '

28 'dependencies: specifically, PySocks. For more information, see '

29 'https://urllib3.readthedocs.org/en/latest/contrib.html#socks-proxies'

30 ),

31 DependencyWarning

32 )

33 raise

34

35 from socket import error as SocketError, timeout as SocketTimeout

36

37 from urllib3.connection import (

38 HTTPConnection, HTTPSConnection

39 )

40 from urllib3.connectionpool import (

41 HTTPConnectionPool, HTTPSConnectionPool

42 )

43 from urllib3.exceptions import ConnectTimeoutError, NewConnectionError

44 from urllib3.poolmanager import PoolManager

45 from urllib3.util.url import parse_url

46

47 try:

48 import ssl

49 except ImportError:

50 ssl = None

51

52

53 class SOCKSConnection(HTTPConnection):

54 """

55 A plain-text HTTP connection that connects via a SOCKS proxy.

56 """

57 def __init__(self, *args, **kwargs):

58 self._socks_options = kwargs.pop('_socks_options')

59 super(SOCKSConnection, self).__init__(*args, **kwargs)

60

61 def _new_conn(self):

62 """

63 Establish a new connection via the SOCKS proxy.

64 """

65 extra_kw = {}

66 if self.source_address:

67 extra_kw['source_address'] = self.source_address

68

69 if self.socket_options:

70 extra_kw['socket_options'] = self.socket_options

71

72 try:

73 conn = socks.create_connection(

74 (self.host, self.port),

75 proxy_type=self._socks_options['socks_version'],

76 proxy_addr=self._socks_options['proxy_host'],

77 proxy_port=self._socks_options['proxy_port'],

78 proxy_username=self._socks_options['username'],

79 proxy_password=self._socks_options['password'],

80 timeout=self.timeout,

81 **extra_kw

82 )

83

84 except SocketTimeout as e:

85 raise ConnectTimeoutError(

86 self, "Connection to %s timed out. (connect timeout=%s)" %

87 (self.host, self.timeout))

88

89 except socks.ProxyError as e:

90 # This is fragile as hell, but it seems to be the only way to raise

91 # useful errors here.

92 if e.socket_err:

93 error = e.socket_err

94 if isinstance(error, SocketTimeout):

95 raise ConnectTimeoutError(

96 self,

97 "Connection to %s timed out. (connect timeout=%s)" %

98 (self.host, self.timeout)

99 )

100 else:

101 raise NewConnectionError(

102 self,

103 "Failed to establish a new connection: %s" % error

104 )

105 else:

106 raise NewConnectionError(

107 self,

108 "Failed to establish a new connection: %s" % e

109 )

110

111 except SocketError as e: # Defensive: PySocks should catch all these.

112 raise NewConnectionError(

113 self, "Failed to establish a new connection: %s" % e)

114

115 return conn

116

117

118 # We don't need to duplicate the Verified/Unverified distinction from

119 # urllib3/connection.py here because the HTTPSConnection will already have been

120 # correctly set to either the Verified or Unverified form by that module. This

121 # means the SOCKSHTTPSConnection will automatically be the correct type.

122 class SOCKSHTTPSConnection(SOCKSConnection, HTTPSConnection):

123 pass

124

125

126 class SOCKSHTTPConnectionPool(HTTPConnectionPool):

127 ConnectionCls = SOCKSConnection

128

129

130 class SOCKSHTTPSConnectionPool(HTTPSConnectionPool):

131 ConnectionCls = SOCKSHTTPSConnection

132

133

134 class SOCKSProxyManager(PoolManager):

135 """

136 A version of the urllib3 ProxyManager that routes connections via the

137 defined SOCKS proxy.

138 """

139 pool_classes_by_scheme = {

140 'http': SOCKSHTTPConnectionPool,

141 'https': SOCKSHTTPSConnectionPool,

142 }

143

144 def __init__(self, proxy_url, username=None, password=None,

145 num_pools=10, headers=None, **connection_pool_kw):

146 parsed = parse_url(proxy_url)

147

148 if parsed.scheme == 'socks5':

149 socks_version = socks.PROXY_TYPE_SOCKS5

150 elif parsed.scheme == 'socks4':

151 socks_version = socks.PROXY_TYPE_SOCKS4

152 else:

153 raise ValueError(

154 "Unable to determine SOCKS version from %s" % proxy_url

155 )

156

157 self.proxy_url = proxy_url

158

159 socks_options = {

160 'socks_version': socks_version,

161 'proxy_host': parsed.host,

162 'proxy_port': parsed.port,

163 'username': username,

164 'password': password,

165 }

166 connection_pool_kw['_socks_options'] = socks_options

167

168 super(SOCKSProxyManager, self).__init__(

169 num_pools, headers, **connection_pool_kw

170 )

171

172 self.pool_classes_by_scheme = SOCKSProxyManager.pool_classes_by_scheme

173

[end of urllib3/contrib/socks.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/urllib3/contrib/socks.py b/urllib3/contrib/socks.py

--- a/urllib3/contrib/socks.py

+++ b/urllib3/contrib/socks.py

@@ -21,7 +21,7 @@

import socks

except ImportError:

import warnings

- from urllib3.exceptions import DependencyWarning

+ from ..exceptions import DependencyWarning

warnings.warn((

'SOCKS support in urllib3 requires the installation of optional '

@@ -34,15 +34,15 @@

from socket import error as SocketError, timeout as SocketTimeout

-from urllib3.connection import (

+from ..connection import (

HTTPConnection, HTTPSConnection

)

-from urllib3.connectionpool import (

+from ..connectionpool import (

HTTPConnectionPool, HTTPSConnectionPool

)

-from urllib3.exceptions import ConnectTimeoutError, NewConnectionError

-from urllib3.poolmanager import PoolManager

-from urllib3.util.url import parse_url

+from ..exceptions import ConnectTimeoutError, NewConnectionError

+from ..poolmanager import PoolManager

+from ..util.url import parse_url

try:

import ssl

|

{"golden_diff": "diff --git a/urllib3/contrib/socks.py b/urllib3/contrib/socks.py\n--- a/urllib3/contrib/socks.py\n+++ b/urllib3/contrib/socks.py\n@@ -21,7 +21,7 @@\n import socks\n except ImportError:\n import warnings\n- from urllib3.exceptions import DependencyWarning\n+ from ..exceptions import DependencyWarning\n \n warnings.warn((\n 'SOCKS support in urllib3 requires the installation of optional '\n@@ -34,15 +34,15 @@\n \n from socket import error as SocketError, timeout as SocketTimeout\n \n-from urllib3.connection import (\n+from ..connection import (\n HTTPConnection, HTTPSConnection\n )\n-from urllib3.connectionpool import (\n+from ..connectionpool import (\n HTTPConnectionPool, HTTPSConnectionPool\n )\n-from urllib3.exceptions import ConnectTimeoutError, NewConnectionError\n-from urllib3.poolmanager import PoolManager\n-from urllib3.util.url import parse_url\n+from ..exceptions import ConnectTimeoutError, NewConnectionError\n+from ..poolmanager import PoolManager\n+from ..util.url import parse_url\n \n try:\n import ssl\n", "issue": "Broken vendoring in socks contrib module\nAwkwardly, the SOCKS contrib module doesn't work properly when vendored because it tries to do an absolute import. We should probably rewrite it to use relative imports.\n\nMy bad.\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\"\"\"\nSOCKS support for urllib3\n~~~~~~~~~~~~~~~~~~~~~~~~~\n\nThis contrib module contains provisional support for SOCKS proxies from within\nurllib3. This module supports SOCKS4 (specifically the SOCKS4A variant) and\nSOCKS5. To enable its functionality, either install PySocks or install this\nmodule with the ``socks`` extra.\n\nKnown Limitations:\n\n- Currently PySocks does not support contacting remote websites via literal\n IPv6 addresses. Any such connection attempt will fail.\n- Currently PySocks does not support IPv6 connections to the SOCKS proxy. 
Any\n such connection attempt will fail.\n\"\"\"\nfrom __future__ import absolute_import\n\ntry:\n import socks\nexcept ImportError:\n import warnings\n from urllib3.exceptions import DependencyWarning\n\n warnings.warn((\n 'SOCKS support in urllib3 requires the installation of optional '\n 'dependencies: specifically, PySocks. For more information, see '\n 'https://urllib3.readthedocs.org/en/latest/contrib.html#socks-proxies'\n ),\n DependencyWarning\n )\n raise\n\nfrom socket import error as SocketError, timeout as SocketTimeout\n\nfrom urllib3.connection import (\n HTTPConnection, HTTPSConnection\n)\nfrom urllib3.connectionpool import (\n HTTPConnectionPool, HTTPSConnectionPool\n)\nfrom urllib3.exceptions import ConnectTimeoutError, NewConnectionError\nfrom urllib3.poolmanager import PoolManager\nfrom urllib3.util.url import parse_url\n\ntry:\n import ssl\nexcept ImportError:\n ssl = None\n\n\nclass SOCKSConnection(HTTPConnection):\n \"\"\"\n A plain-text HTTP connection that connects via a SOCKS proxy.\n \"\"\"\n def __init__(self, *args, **kwargs):\n self._socks_options = kwargs.pop('_socks_options')\n super(SOCKSConnection, self).__init__(*args, **kwargs)\n\n def _new_conn(self):\n \"\"\"\n Establish a new connection via the SOCKS proxy.\n \"\"\"\n extra_kw = {}\n if self.source_address:\n extra_kw['source_address'] = self.source_address\n\n if self.socket_options:\n extra_kw['socket_options'] = self.socket_options\n\n try:\n conn = socks.create_connection(\n (self.host, self.port),\n proxy_type=self._socks_options['socks_version'],\n proxy_addr=self._socks_options['proxy_host'],\n proxy_port=self._socks_options['proxy_port'],\n proxy_username=self._socks_options['username'],\n proxy_password=self._socks_options['password'],\n timeout=self.timeout,\n **extra_kw\n )\n\n except SocketTimeout as e:\n raise ConnectTimeoutError(\n self, \"Connection to %s timed out. 
(connect timeout=%s)\" %\n (self.host, self.timeout))\n\n except socks.ProxyError as e:\n # This is fragile as hell, but it seems to be the only way to raise\n # useful errors here.\n if e.socket_err:\n error = e.socket_err\n if isinstance(error, SocketTimeout):\n raise ConnectTimeoutError(\n self,\n \"Connection to %s timed out. (connect timeout=%s)\" %\n (self.host, self.timeout)\n )\n else:\n raise NewConnectionError(\n self,\n \"Failed to establish a new connection: %s\" % error\n )\n else:\n raise NewConnectionError(\n self,\n \"Failed to establish a new connection: %s\" % e\n )\n\n except SocketError as e: # Defensive: PySocks should catch all these.\n raise NewConnectionError(\n self, \"Failed to establish a new connection: %s\" % e)\n\n return conn\n\n\n# We don't need to duplicate the Verified/Unverified distinction from\n# urllib3/connection.py here because the HTTPSConnection will already have been\n# correctly set to either the Verified or Unverified form by that module. This\n# means the SOCKSHTTPSConnection will automatically be the correct type.\nclass SOCKSHTTPSConnection(SOCKSConnection, HTTPSConnection):\n pass\n\n\nclass SOCKSHTTPConnectionPool(HTTPConnectionPool):\n ConnectionCls = SOCKSConnection\n\n\nclass SOCKSHTTPSConnectionPool(HTTPSConnectionPool):\n ConnectionCls = SOCKSHTTPSConnection\n\n\nclass SOCKSProxyManager(PoolManager):\n \"\"\"\n A version of the urllib3 ProxyManager that routes connections via the\n defined SOCKS proxy.\n \"\"\"\n pool_classes_by_scheme = {\n 'http': SOCKSHTTPConnectionPool,\n 'https': SOCKSHTTPSConnectionPool,\n }\n\n def __init__(self, proxy_url, username=None, password=None,\n num_pools=10, headers=None, **connection_pool_kw):\n parsed = parse_url(proxy_url)\n\n if parsed.scheme == 'socks5':\n socks_version = socks.PROXY_TYPE_SOCKS5\n elif parsed.scheme == 'socks4':\n socks_version = socks.PROXY_TYPE_SOCKS4\n else:\n raise ValueError(\n \"Unable to determine SOCKS version from %s\" % proxy_url\n )\n\n 
self.proxy_url = proxy_url\n\n socks_options = {\n 'socks_version': socks_version,\n 'proxy_host': parsed.host,\n 'proxy_port': parsed.port,\n 'username': username,\n 'password': password,\n }\n connection_pool_kw['_socks_options'] = socks_options\n\n super(SOCKSProxyManager, self).__init__(\n num_pools, headers, **connection_pool_kw\n )\n\n self.pool_classes_by_scheme = SOCKSProxyManager.pool_classes_by_scheme\n", "path": "urllib3/contrib/socks.py"}]}

| 2,204 | 253 |

gh_patches_debug_10479

|

rasdani/github-patches

|

git_diff

|

kubeflow__pipelines-6993

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[bug] TFX sample fails after upgrading to 1.4

### What steps did you take

<!-- A clear and concise description of what the bug is.-->

* MLMD & TFX upgrade: https://github.com/kubeflow/pipelines/pull/6910

### What happened:

* Check test: https://oss-prow.knative.dev/view/gs/oss-prow/logs/kubeflow-pipeline-postsubmit-integration-test/1464225919869652992

### What did you expect to happen:

We need to upgrade the TFX sample to 1.4.0

### Anything else you would like to add:

<!-- Miscellaneous information that will assist in solving the issue.-->

### Labels

<!-- Please include labels below by uncommenting them to help us better triage issues -->

<!-- /area frontend -->

<!-- /area backend -->

<!-- /area sdk -->

<!-- /area testing -->

/area samples

<!-- /area components -->

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

</issue>

<code>

[start of samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py]

1 #!/usr/bin/env python3

2 # Copyright 2019 The Kubeflow Authors

3 #

4 # Licensed under the Apache License, Version 2.0 (the "License");

5 # you may not use this file except in compliance with the License.

6 # You may obtain a copy of the License at

7 #

8 # http://www.apache.org/licenses/LICENSE-2.0

9 #

10 # Unless required by applicable law or agreed to in writing, software

11 # distributed under the License is distributed on an "AS IS" BASIS,

12 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 # See the License for the specific language governing permissions and

14 # limitations under the License.

15

16 import json

17 import os

18

19 import kfp

20 import tensorflow_model_analysis as tfma

21 from tfx import v1 as tfx

22

23 # Define pipeline params used for pipeline execution.

24 # Path to the module file, should be a GCS path,

25 # or a module file baked in the docker image used by the pipeline.

26 _taxi_module_file_param = tfx.dsl.experimental.RuntimeParameter(

27 name='module-file',

28 default='/opt/conda/lib/python3.7/site-packages/tfx/examples/chicago_taxi_pipeline/taxi_utils_native_keras.py',

29 ptype=str,

30 )

31

32 # Path to the CSV data file, under which there should be a data.csv file.

33 _data_root = '/opt/conda/lib/python3.7/site-packages/tfx/examples/chicago_taxi_pipeline/data/simple'

34

35 # Path of pipeline root, should be a GCS path.

36 _pipeline_root = os.path.join(

37 'gs://{{kfp-default-bucket}}', 'tfx_taxi_simple', kfp.dsl.RUN_ID_PLACEHOLDER

38 )

39

40 # Path that ML models are pushed, should be a GCS path.

41 _serving_model_dir = os.path.join('gs://your-bucket', 'serving_model', 'tfx_taxi_simple')

42 _push_destination = tfx.dsl.experimental.RuntimeParameter(

43 name='push_destination',

44 default=json.dumps({'filesystem': {'base_directory': _serving_model_dir}}),

45 ptype=str,

46 )

47

48 def _create_pipeline(

49 pipeline_root: str,

50 csv_input_location: str,

51 taxi_module_file: tfx.dsl.experimental.RuntimeParameter,

52 push_destination: tfx.dsl.experimental.RuntimeParameter,

53 enable_cache: bool

54 ):

55 """Creates a simple Kubeflow-based Chicago Taxi TFX pipeline.

56

57 Args:

58 pipeline_root: The root of the pipeline output.

59 csv_input_location: The location of the input data directory.

60 taxi_module_file: The location of the module file for Transform/Trainer.

61 enable_cache: Whether to enable cache or not.

62

63 Returns:

64 A logical TFX pipeline.Pipeline object.

65 """

66 example_gen = tfx.components.CsvExampleGen(input_base=csv_input_location)

67 statistics_gen = tfx.components.StatisticsGen(

68 examples=example_gen.outputs['examples'])

69 schema_gen = tfx.components.SchemaGen(

70 statistics=statistics_gen.outputs['statistics'],

71 infer_feature_shape=False,

72 )

73 example_validator = tfx.components.ExampleValidator(

74 statistics=statistics_gen.outputs['statistics'],

75 schema=schema_gen.outputs['schema'],

76 )

77 transform = tfx.components.Transform(

78 examples=example_gen.outputs['examples'],

79 schema=schema_gen.outputs['schema'],

80 module_file=taxi_module_file,

81 )

82 trainer = tfx.components.Trainer(

83 module_file=taxi_module_file,

84 examples=transform.outputs['transformed_examples'],

85 schema=schema_gen.outputs['schema'],

86 transform_graph=transform.outputs['transform_graph'],

87 train_args=tfx.proto.TrainArgs(num_steps=10),

88 eval_args=tfx.proto.EvalArgs(num_steps=5),

89 )

90 # Set the TFMA config for Model Evaluation and Validation.

91 eval_config = tfma.EvalConfig(

92 model_specs=[

93 tfma.ModelSpec(

94 signature_name='serving_default', label_key='tips_xf',

95 preprocessing_function_names=['transform_features'])

96 ],

97 metrics_specs=[

98 tfma.MetricsSpec(

99 # The metrics added here are in addition to those saved with the

100 # model (assuming either a keras model or EvalSavedModel is used).

101 # Any metrics added into the saved model (for example using

102 # model.compile(..., metrics=[...]), etc) will be computed

103 # automatically.

104 metrics=[tfma.MetricConfig(class_name='ExampleCount')],

105 # To add validation thresholds for metrics saved with the model,

106 # add them keyed by metric name to the thresholds map.

107 thresholds={

108 'binary_accuracy':

109 tfma.MetricThreshold(

110 value_threshold=tfma.GenericValueThreshold(

111 lower_bound={'value': 0.5}

112 ),

113 change_threshold=tfma.GenericChangeThreshold(

114 direction=tfma.MetricDirection.HIGHER_IS_BETTER,

115 absolute={'value': -1e-10}

116 )

117 )

118 }

119 )

120 ],

121 slicing_specs=[

122 # An empty slice spec means the overall slice, i.e. the whole dataset.

123 tfma.SlicingSpec(),

124 # Data can be sliced along a feature column. In this case, data is

125 # sliced along feature column trip_start_hour.

126 tfma.SlicingSpec(feature_keys=['trip_start_hour'])

127 ]

128 )

129

130 evaluator = tfx.components.Evaluator(

131 examples=example_gen.outputs['examples'],

132 model=trainer.outputs['model'],

133 eval_config=eval_config,

134 )

135

136 pusher = tfx.components.Pusher(

137 model=trainer.outputs['model'],

138 model_blessing=evaluator.outputs['blessing'],

139 push_destination=push_destination,

140 )

141

142 return tfx.dsl.Pipeline(

143 pipeline_name='parameterized_tfx_oss',

144 pipeline_root=pipeline_root,

145 components=[

146 example_gen, statistics_gen, schema_gen, example_validator, transform,

147 trainer, evaluator, pusher

148 ],

149 enable_cache=enable_cache,

150 )

151

152

153 if __name__ == '__main__':

154 enable_cache = True

155 pipeline = _create_pipeline(

156 _pipeline_root,

157 _data_root,

158 _taxi_module_file_param,

159 _push_destination,

160 enable_cache=enable_cache,

161 )

162 # Make sure the version of TFX image used is consistent with the version of

163 # TFX SDK.

164 config = tfx.orchestration.experimental.KubeflowDagRunnerConfig(

165 kubeflow_metadata_config=tfx.orchestration.experimental.

166 get_default_kubeflow_metadata_config(),

167 tfx_image='gcr.io/tfx-oss-public/tfx:1.2.0',

168 )

169 kfp_runner = tfx.orchestration.experimental.KubeflowDagRunner(

170 output_filename=__file__ + '.yaml', config=config

171 )

172

173 kfp_runner.run(pipeline)

174

[end of samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py b/samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py

--- a/samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py

+++ b/samples/core/parameterized_tfx_oss/parameterized_tfx_oss.py

@@ -164,7 +164,7 @@

config = tfx.orchestration.experimental.KubeflowDagRunnerConfig(

kubeflow_metadata_config=tfx.orchestration.experimental.

get_default_kubeflow_metadata_config(),

- tfx_image='gcr.io/tfx-oss-public/tfx:1.2.0',