| problem_id (string, length 18-22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13-58) | prompt (string, length 1.71k-18.9k) | golden_diff (string, length 145-5.13k) | verification_info (string, length 465-23.6k) | num_tokens_prompt (int64, 556-4.1k) | num_tokens_diff (int64, 47-1.02k) |

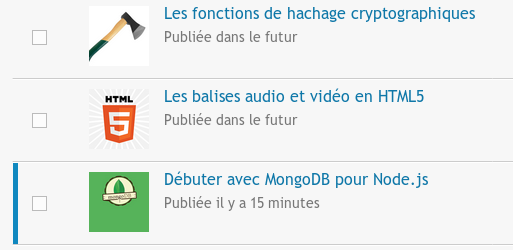

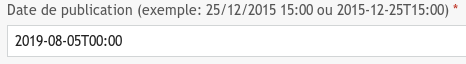

|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_4176 | rasdani/github-patches | git_diff | pallets__click-1081 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add newlines between options in help text

In case of multi-line help messages for options the list of options ``cmd --help`` gets difficult to read. It would be great to have an option to toggle extra newlines after multi-line help messages / in general between option help messages.

</issue>

<code>

[start of click/formatting.py]

1 from contextlib import contextmanager
2 from .termui import get_terminal_size
3 from .parser import split_opt
4 from ._compat import term_len
5
6
7 # Can force a width. This is used by the test system
8 FORCED_WIDTH = None
9
10
11 def measure_table(rows):
12     widths = {}
13     for row in rows:
14         for idx, col in enumerate(row):
15             widths[idx] = max(widths.get(idx, 0), term_len(col))
16     return tuple(y for x, y in sorted(widths.items()))
17
18
19 def iter_rows(rows, col_count):
20     for row in rows:
21         row = tuple(row)
22         yield row + ('',) * (col_count - len(row))
23
24
25 def wrap_text(text, width=78, initial_indent='', subsequent_indent='',
26               preserve_paragraphs=False):
27     """A helper function that intelligently wraps text. By default, it
28     assumes that it operates on a single paragraph of text but if the
29     `preserve_paragraphs` parameter is provided it will intelligently
30     handle paragraphs (defined by two empty lines).
31
32     If paragraphs are handled, a paragraph can be prefixed with an empty
33     line containing the ``\\b`` character (``\\x08``) to indicate that
34     no rewrapping should happen in that block.
35
36     :param text: the text that should be rewrapped.
37     :param width: the maximum width for the text.
38     :param initial_indent: the initial indent that should be placed on the
39                            first line as a string.
40     :param subsequent_indent: the indent string that should be placed on
41                               each consecutive line.
42     :param preserve_paragraphs: if this flag is set then the wrapping will
43                                 intelligently handle paragraphs.
44     """
45     from ._textwrap import TextWrapper
46     text = text.expandtabs()
47     wrapper = TextWrapper(width, initial_indent=initial_indent,
48                           subsequent_indent=subsequent_indent,
49                           replace_whitespace=False)
50     if not preserve_paragraphs:
51         return wrapper.fill(text)
52
53     p = []
54     buf = []
55     indent = None
56
57     def _flush_par():
58         if not buf:
59             return
60         if buf[0].strip() == '\b':
61             p.append((indent or 0, True, '\n'.join(buf[1:])))
62         else:
63             p.append((indent or 0, False, ' '.join(buf)))
64         del buf[:]
65
66     for line in text.splitlines():
67         if not line:
68             _flush_par()
69             indent = None
70         else:
71             if indent is None:
72                 orig_len = term_len(line)
73                 line = line.lstrip()
74                 indent = orig_len - term_len(line)
75             buf.append(line)
76     _flush_par()
77
78     rv = []
79     for indent, raw, text in p:
80         with wrapper.extra_indent(' ' * indent):
81             if raw:
82                 rv.append(wrapper.indent_only(text))
83             else:
84                 rv.append(wrapper.fill(text))
85
86     return '\n\n'.join(rv)
87
88
89 class HelpFormatter(object):
90     """This class helps with formatting text-based help pages. It's
91     usually just needed for very special internal cases, but it's also
92     exposed so that developers can write their own fancy outputs.
93
94     At present, it always writes into memory.
95
96     :param indent_increment: the additional increment for each level.
97     :param width: the width for the text. This defaults to the terminal
98                   width clamped to a maximum of 78.
99     """
100
101     def __init__(self, indent_increment=2, width=None, max_width=None):
102         self.indent_increment = indent_increment
103         if max_width is None:
104             max_width = 80
105         if width is None:
106             width = FORCED_WIDTH
107             if width is None:
108                 width = max(min(get_terminal_size()[0], max_width) - 2, 50)
109         self.width = width
110         self.current_indent = 0
111         self.buffer = []
112
113     def write(self, string):
114         """Writes a unicode string into the internal buffer."""
115         self.buffer.append(string)
116
117     def indent(self):
118         """Increases the indentation."""
119         self.current_indent += self.indent_increment
120
121     def dedent(self):
122         """Decreases the indentation."""
123         self.current_indent -= self.indent_increment
124
125     def write_usage(self, prog, args='', prefix='Usage: '):
126         """Writes a usage line into the buffer.
127
128         :param prog: the program name.
129         :param args: whitespace separated list of arguments.
130         :param prefix: the prefix for the first line.
131         """
132         usage_prefix = '%*s%s ' % (self.current_indent, prefix, prog)
133         text_width = self.width - self.current_indent
134
135         if text_width >= (term_len(usage_prefix) + 20):
136             # The arguments will fit to the right of the prefix.
137             indent = ' ' * term_len(usage_prefix)
138             self.write(wrap_text(args, text_width,
139                                  initial_indent=usage_prefix,
140                                  subsequent_indent=indent))
141         else:
142             # The prefix is too long, put the arguments on the next line.
143             self.write(usage_prefix)
144             self.write('\n')
145             indent = ' ' * (max(self.current_indent, term_len(prefix)) + 4)
146             self.write(wrap_text(args, text_width,
147                                  initial_indent=indent,
148                                  subsequent_indent=indent))
149
150         self.write('\n')
151
152     def write_heading(self, heading):
153         """Writes a heading into the buffer."""
154         self.write('%*s%s:\n' % (self.current_indent, '', heading))
155
156     def write_paragraph(self):
157         """Writes a paragraph into the buffer."""
158         if self.buffer:
159             self.write('\n')
160
161     def write_text(self, text):
162         """Writes re-indented text into the buffer. This rewraps and
163         preserves paragraphs.
164         """
165         text_width = max(self.width - self.current_indent, 11)
166         indent = ' ' * self.current_indent
167         self.write(wrap_text(text, text_width,
168                              initial_indent=indent,
169                              subsequent_indent=indent,
170                              preserve_paragraphs=True))
171         self.write('\n')
172
173     def write_dl(self, rows, col_max=30, col_spacing=2):
174         """Writes a definition list into the buffer. This is how options
175         and commands are usually formatted.
176
177         :param rows: a list of two item tuples for the terms and values.
178         :param col_max: the maximum width of the first column.
179         :param col_spacing: the number of spaces between the first and
180                             second column.
181         """
182         rows = list(rows)
183         widths = measure_table(rows)
184         if len(widths) != 2:
185             raise TypeError('Expected two columns for definition list')
186
187         first_col = min(widths[0], col_max) + col_spacing
188
189         for first, second in iter_rows(rows, len(widths)):
190             self.write('%*s%s' % (self.current_indent, '', first))
191             if not second:
192                 self.write('\n')
193                 continue
194             if term_len(first) <= first_col - col_spacing:
195                 self.write(' ' * (first_col - term_len(first)))
196             else:
197                 self.write('\n')
198                 self.write(' ' * (first_col + self.current_indent))
199
200             text_width = max(self.width - first_col - 2, 10)
201             wrapped_text = wrap_text(second, text_width, preserve_paragraphs=True)
202             lines = wrapped_text.splitlines()
203
204             if lines:
205                 self.write(lines[0] + '\n')
206
207                 for line in lines[1:]:
208                     self.write('%*s%s\n' % (first_col + self.current_indent, '', line))
209             else:
210                 self.write('\n')
211
212     @contextmanager
213     def section(self, name):
214         """Helpful context manager that writes a paragraph, a heading,
215         and the indents.
216
217         :param name: the section name that is written as heading.
218         """
219         self.write_paragraph()
220         self.write_heading(name)
221         self.indent()
222         try:
223             yield
224         finally:
225             self.dedent()
226
227     @contextmanager
228     def indentation(self):
229         """A context manager that increases the indentation."""
230         self.indent()
231         try:
232             yield
233         finally:
234             self.dedent()
235
236     def getvalue(self):
237         """Returns the buffer contents."""
238         return ''.join(self.buffer)
239
240
241 def join_options(options):
242     """Given a list of option strings this joins them in the most appropriate
243     way and returns them in the form ``(formatted_string,
244     any_prefix_is_slash)`` where the second item in the tuple is a flag that
245     indicates if any of the option prefixes was a slash.
246     """
247     rv = []
248     any_prefix_is_slash = False
249     for opt in options:
250         prefix = split_opt(opt)[0]
251         if prefix == '/':
252             any_prefix_is_slash = True
253         rv.append((len(prefix), opt))
254
255     rv.sort(key=lambda x: x[0])
256
257     rv = ', '.join(x[1] for x in rv)
258     return rv, any_prefix_is_slash
259

[end of click/formatting.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/click/formatting.py b/click/formatting.py
--- a/click/formatting.py
+++ b/click/formatting.py
@@ -206,6 +206,10 @@
 
                 for line in lines[1:]:
                     self.write('%*s%s\n' % (first_col + self.current_indent, '', line))
+
+                if len(lines) > 1:
+                    # separate long help from next option
+                    self.write("\n")
             else:
                 self.write('\n')
 

|

{"golden_diff": "diff --git a/click/formatting.py b/click/formatting.py\n--- a/click/formatting.py\n+++ b/click/formatting.py\n@@ -206,6 +206,10 @@\n \n for line in lines[1:]:\n self.write('%*s%s\\n' % (first_col + self.current_indent, '', line))\n+\n+ if len(lines) > 1:\n+ # separate long help from next option\n+ self.write(\"\\n\")\n else:\n self.write('\\n')\n", "issue": "Add newlines between options in help text\nIn case of multi-line help messages for options the list of options ``cmd --help`` gets difficult to read. It would be great to have an option to toggle extra newlines after multi-line help messages / in general between option help messages.\n", "before_files": [{"content": "from contextlib import contextmanager\nfrom .termui import get_terminal_size\nfrom .parser import split_opt\nfrom ._compat import term_len\n\n\n# Can force a width. This is used by the test system\nFORCED_WIDTH = None\n\n\ndef measure_table(rows):\n widths = {}\n for row in rows:\n for idx, col in enumerate(row):\n widths[idx] = max(widths.get(idx, 0), term_len(col))\n return tuple(y for x, y in sorted(widths.items()))\n\n\ndef iter_rows(rows, col_count):\n for row in rows:\n row = tuple(row)\n yield row + ('',) * (col_count - len(row))\n\n\ndef wrap_text(text, width=78, initial_indent='', subsequent_indent='',\n preserve_paragraphs=False):\n \"\"\"A helper function that intelligently wraps text. 
By default, it\n assumes that it operates on a single paragraph of text but if the\n `preserve_paragraphs` parameter is provided it will intelligently\n handle paragraphs (defined by two empty lines).\n\n If paragraphs are handled, a paragraph can be prefixed with an empty\n line containing the ``\\\\b`` character (``\\\\x08``) to indicate that\n no rewrapping should happen in that block.\n\n :param text: the text that should be rewrapped.\n :param width: the maximum width for the text.\n :param initial_indent: the initial indent that should be placed on the\n first line as a string.\n :param subsequent_indent: the indent string that should be placed on\n each consecutive line.\n :param preserve_paragraphs: if this flag is set then the wrapping will\n intelligently handle paragraphs.\n \"\"\"\n from ._textwrap import TextWrapper\n text = text.expandtabs()\n wrapper = TextWrapper(width, initial_indent=initial_indent,\n subsequent_indent=subsequent_indent,\n replace_whitespace=False)\n if not preserve_paragraphs:\n return wrapper.fill(text)\n\n p = []\n buf = []\n indent = None\n\n def _flush_par():\n if not buf:\n return\n if buf[0].strip() == '\\b':\n p.append((indent or 0, True, '\\n'.join(buf[1:])))\n else:\n p.append((indent or 0, False, ' '.join(buf)))\n del buf[:]\n\n for line in text.splitlines():\n if not line:\n _flush_par()\n indent = None\n else:\n if indent is None:\n orig_len = term_len(line)\n line = line.lstrip()\n indent = orig_len - term_len(line)\n buf.append(line)\n _flush_par()\n\n rv = []\n for indent, raw, text in p:\n with wrapper.extra_indent(' ' * indent):\n if raw:\n rv.append(wrapper.indent_only(text))\n else:\n rv.append(wrapper.fill(text))\n\n return '\\n\\n'.join(rv)\n\n\nclass HelpFormatter(object):\n \"\"\"This class helps with formatting text-based help pages. 
It's\n usually just needed for very special internal cases, but it's also\n exposed so that developers can write their own fancy outputs.\n\n At present, it always writes into memory.\n\n :param indent_increment: the additional increment for each level.\n :param width: the width for the text. This defaults to the terminal\n width clamped to a maximum of 78.\n \"\"\"\n\n def __init__(self, indent_increment=2, width=None, max_width=None):\n self.indent_increment = indent_increment\n if max_width is None:\n max_width = 80\n if width is None:\n width = FORCED_WIDTH\n if width is None:\n width = max(min(get_terminal_size()[0], max_width) - 2, 50)\n self.width = width\n self.current_indent = 0\n self.buffer = []\n\n def write(self, string):\n \"\"\"Writes a unicode string into the internal buffer.\"\"\"\n self.buffer.append(string)\n\n def indent(self):\n \"\"\"Increases the indentation.\"\"\"\n self.current_indent += self.indent_increment\n\n def dedent(self):\n \"\"\"Decreases the indentation.\"\"\"\n self.current_indent -= self.indent_increment\n\n def write_usage(self, prog, args='', prefix='Usage: '):\n \"\"\"Writes a usage line into the buffer.\n\n :param prog: the program name.\n :param args: whitespace separated list of arguments.\n :param prefix: the prefix for the first line.\n \"\"\"\n usage_prefix = '%*s%s ' % (self.current_indent, prefix, prog)\n text_width = self.width - self.current_indent\n\n if text_width >= (term_len(usage_prefix) + 20):\n # The arguments will fit to the right of the prefix.\n indent = ' ' * term_len(usage_prefix)\n self.write(wrap_text(args, text_width,\n initial_indent=usage_prefix,\n subsequent_indent=indent))\n else:\n # The prefix is too long, put the arguments on the next line.\n self.write(usage_prefix)\n self.write('\\n')\n indent = ' ' * (max(self.current_indent, term_len(prefix)) + 4)\n self.write(wrap_text(args, text_width,\n initial_indent=indent,\n subsequent_indent=indent))\n\n self.write('\\n')\n\n def write_heading(self, 
heading):\n \"\"\"Writes a heading into the buffer.\"\"\"\n self.write('%*s%s:\\n' % (self.current_indent, '', heading))\n\n def write_paragraph(self):\n \"\"\"Writes a paragraph into the buffer.\"\"\"\n if self.buffer:\n self.write('\\n')\n\n def write_text(self, text):\n \"\"\"Writes re-indented text into the buffer. This rewraps and\n preserves paragraphs.\n \"\"\"\n text_width = max(self.width - self.current_indent, 11)\n indent = ' ' * self.current_indent\n self.write(wrap_text(text, text_width,\n initial_indent=indent,\n subsequent_indent=indent,\n preserve_paragraphs=True))\n self.write('\\n')\n\n def write_dl(self, rows, col_max=30, col_spacing=2):\n \"\"\"Writes a definition list into the buffer. This is how options\n and commands are usually formatted.\n\n :param rows: a list of two item tuples for the terms and values.\n :param col_max: the maximum width of the first column.\n :param col_spacing: the number of spaces between the first and\n second column.\n \"\"\"\n rows = list(rows)\n widths = measure_table(rows)\n if len(widths) != 2:\n raise TypeError('Expected two columns for definition list')\n\n first_col = min(widths[0], col_max) + col_spacing\n\n for first, second in iter_rows(rows, len(widths)):\n self.write('%*s%s' % (self.current_indent, '', first))\n if not second:\n self.write('\\n')\n continue\n if term_len(first) <= first_col - col_spacing:\n self.write(' ' * (first_col - term_len(first)))\n else:\n self.write('\\n')\n self.write(' ' * (first_col + self.current_indent))\n\n text_width = max(self.width - first_col - 2, 10)\n wrapped_text = wrap_text(second, text_width, preserve_paragraphs=True)\n lines = wrapped_text.splitlines()\n\n if lines:\n self.write(lines[0] + '\\n')\n\n for line in lines[1:]:\n self.write('%*s%s\\n' % (first_col + self.current_indent, '', line))\n else:\n self.write('\\n')\n\n @contextmanager\n def section(self, name):\n \"\"\"Helpful context manager that writes a paragraph, a heading,\n and the indents.\n\n :param 
name: the section name that is written as heading.\n \"\"\"\n self.write_paragraph()\n self.write_heading(name)\n self.indent()\n try:\n yield\n finally:\n self.dedent()\n\n @contextmanager\n def indentation(self):\n \"\"\"A context manager that increases the indentation.\"\"\"\n self.indent()\n try:\n yield\n finally:\n self.dedent()\n\n def getvalue(self):\n \"\"\"Returns the buffer contents.\"\"\"\n return ''.join(self.buffer)\n\n\ndef join_options(options):\n \"\"\"Given a list of option strings this joins them in the most appropriate\n way and returns them in the form ``(formatted_string,\n any_prefix_is_slash)`` where the second item in the tuple is a flag that\n indicates if any of the option prefixes was a slash.\n \"\"\"\n rv = []\n any_prefix_is_slash = False\n for opt in options:\n prefix = split_opt(opt)[0]\n if prefix == '/':\n any_prefix_is_slash = True\n rv.append((len(prefix), opt))\n\n rv.sort(key=lambda x: x[0])\n\n rv = ', '.join(x[1] for x in rv)\n return rv, any_prefix_is_slash\n", "path": "click/formatting.py"}]}

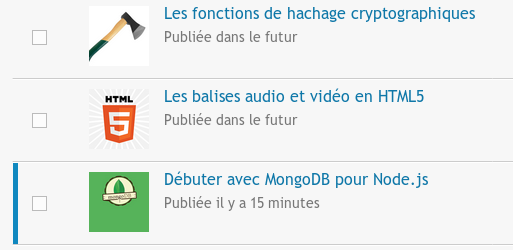

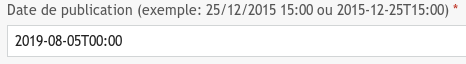

| 3,213 | 114 |
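The click golden diff above changes only one thing in `HelpFormatter.write_dl`: after an option whose help text wraps onto more than one line, an extra blank line is written before the next option. The following is a minimal, self-contained sketch of that loop, not click's code. It assumes the standard-library `textwrap.wrap` in place of click's internal `wrap_text`, plain `len` in place of `term_len`, and a hypothetical function name `write_dl_sketch`.

```python
import textwrap


def write_dl_sketch(rows, width=60, col_max=30, col_spacing=2, indent=2):
    """Render (term, help) pairs as a two-column list, click-style.

    Hypothetical simplification of click's HelpFormatter.write_dl: it uses
    textwrap.wrap instead of click's wrap_text and len() instead of term_len().
    """
    out = []
    widest = max((len(term) for term, _ in rows), default=0)
    first_col = min(widest, col_max) + col_spacing
    text_width = max(width - first_col - 2, 10)
    for term, help_text in rows:
        out.append(" " * indent + term)
        if not help_text:
            out.append("\n")
            continue
        if len(term) <= first_col - col_spacing:
            # Pad the term so the help text starts in the second column.
            out.append(" " * (first_col - len(term)))
        else:
            # Term too wide: start the help text on the next line.
            out.append("\n" + " " * (first_col + indent))
        lines = textwrap.wrap(help_text, text_width)
        if lines:
            out.append(lines[0] + "\n")
            for line in lines[1:]:
                out.append(" " * (first_col + indent) + line + "\n")
            if len(lines) > 1:
                # The golden-diff change: separate long help from the next option.
                out.append("\n")
        else:
            out.append("\n")
    return "".join(out)


rows = [
    ("--name TEXT", "The name to greet."),
    ("--count INTEGER",
     "How many times to print the greeting; this text is long "
     "enough to wrap onto a second line."),
    ("--help", "Show this message and exit."),
]
print(write_dl_sketch(rows))
```

Only the wrapped `--count` entry is followed by a blank line; single-line entries stay packed together, which is the behavior the issue asks for without adding a toggle.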

| gh_patches_debug_3304 | rasdani/github-patches | git_diff | DataDog__dd-trace-py-2699 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

0.50 bug sanic APM

Thanks for taking the time for reporting an issue!

Before reporting an issue on dd-trace-py, please be sure to provide all

necessary information.

If you're hitting a bug, make sure that you're using the latest version of this

library.

### Which version of dd-trace-py are you using?

0.50.0

### Which version of pip are you using?

19.0.3

### Which version of the libraries are you using?

Package Version

------------------- ---------

aiofiles 0.7.0

aiohttp 3.6.2

aiomysql 0.0.20

astroid 2.3.3

async-timeout 3.0.1

attrs 19.3.0

certifi 2019.9.11

cffi 1.13.2

chardet 3.0.4

Click 7.0

cryptography 2.8

ddtrace 0.50.0

Deprecated 1.2.11

elasticsearch 7.5.1

elasticsearch-async 6.2.0

h11 0.8.1

h2 3.1.1

hpack 3.0.0

hstspreload 2020.1.7

httpcore 0.3.0

httptools 0.0.13

httpx 0.9.3

hyperframe 5.2.0

idna 2.8

isort 4.3.21

lazy-object-proxy 1.4.3

mccabe 0.6.1

motor 2.4.0

multidict 5.1.0

packaging 21.0

peewee 3.13.1

pip 19.0.3

protobuf 3.17.3

pycparser 2.19

PyJWT 1.7.1

pymongo 3.11.4

PyMySQL 0.9.2

pyparsing 2.4.7

pytz 2019.3

PyYAML 5.3

requests 2.22.0

requests-async 0.5.0

rfc3986 1.3.2

sanic 21.3.4

sanic-motor 0.5.0

sanic-routing 0.6.2

sanic-scheduler 1.0.7

setuptools 40.8.0

six 1.14.0

sniffio 1.1.0

stringcase 1.2.0

tenacity 8.0.1

typed-ast 1.4.1

ujson 1.35

urllib3 1.25.6

uvloop 0.13.0

websockets 8.1

wrapt 1.11.2

yarl 1.4.2

### How can we reproduce your problem?

#### Description

It's not working patch when apply APM on Sanic

If path variable type is int on sanic route

Case code

```
@app.route('/<gam_id:int>/slot/count', methods=['GET'])
async def slot_count(request, gam_id):
    try:
        pass
    except Exception as e:
        abort(500, e)
    return json(response(200, 'Complete Successfully', {}))
```

Error

```

[2021-07-13 19:50:48 +0000] [13] [ERROR] Exception occurred while handling uri: 'http://xxxxxxxxx.xxx/25/slot/count'

NoneType: None

```

### What is the result that you get?

my production env is not working on Sanic

### What is the result that you expected?

I wanna use datadog APM on my production SANIC

I made already pull request

- https://github.com/DataDog/dd-trace-py/pull/2662

</issue>

<code>

[start of ddtrace/contrib/sanic/patch.py]

1 import asyncio
2
3 import sanic
4
5 import ddtrace
6 from ddtrace import config
7 from ddtrace.constants import ANALYTICS_SAMPLE_RATE_KEY
8 from ddtrace.ext import SpanTypes
9 from ddtrace.pin import Pin
10 from ddtrace.utils.wrappers import unwrap as _u
11 from ddtrace.vendor import wrapt
12 from ddtrace.vendor.wrapt import wrap_function_wrapper as _w
13
14 from .. import trace_utils
15 from ...internal.logger import get_logger
16
17
18 log = get_logger(__name__)
19
20 config._add("sanic", dict(_default_service="sanic", distributed_tracing=True))
21
22 SANIC_PRE_21 = None
23
24
25 def update_span(span, response):
26     if isinstance(response, sanic.response.BaseHTTPResponse):
27         status_code = response.status
28         response_headers = response.headers
29     else:
30         # invalid response causes ServerError exception which must be handled
31         status_code = 500
32         response_headers = None
33     trace_utils.set_http_meta(span, config.sanic, status_code=status_code, response_headers=response_headers)
34
35
36 def _wrap_response_callback(span, callback):
37     # Only for sanic 20 and older
38     # Wrap response callbacks (either sync or async function) to set HTTP
39     # response span tags
40
41     @wrapt.function_wrapper
42     def wrap_sync(wrapped, instance, args, kwargs):
43         r = wrapped(*args, **kwargs)
44         response = args[0]
45         update_span(span, response)
46         return r
47
48     @wrapt.function_wrapper
49     async def wrap_async(wrapped, instance, args, kwargs):
50         r = await wrapped(*args, **kwargs)
51         response = args[0]
52         update_span(span, response)
53         return r
54
55     if asyncio.iscoroutinefunction(callback):
56         return wrap_async(callback)
57
58     return wrap_sync(callback)
59
60
61 async def patch_request_respond(wrapped, instance, args, kwargs):
62     # Only for sanic 21 and newer
63     # Wrap the framework response to set HTTP response span tags
64     response = await wrapped(*args, **kwargs)
65     pin = Pin._find(instance.ctx)
66     if pin is not None and pin.enabled():
67         span = pin.tracer.current_span()
68         if span is not None:
69             update_span(span, response)
70     return response
71
72
73 def _get_path(request):
74     """Get path and replace path parameter values with names if route exists."""
75     path = request.path
76     try:
77         match_info = request.match_info
78     except sanic.exceptions.SanicException:
79         return path
80     for key, value in match_info.items():
81         path = path.replace(value, f"<{key}>")
82     return path
83
84
85 async def patch_run_request_middleware(wrapped, instance, args, kwargs):
86     # Set span resource from the framework request
87     request = args[0]
88     pin = Pin._find(request.ctx)
89     if pin is not None and pin.enabled():
90         span = pin.tracer.current_span()
91         if span is not None:
92             span.resource = "{} {}".format(request.method, _get_path(request))
93     return await wrapped(*args, **kwargs)
94
95
96 def patch():
97     """Patch the instrumented methods."""
98     global SANIC_PRE_21
99
100     if getattr(sanic, "__datadog_patch", False):
101         return
102     setattr(sanic, "__datadog_patch", True)
103
104     SANIC_PRE_21 = sanic.__version__[:2] < "21"
105
106     _w("sanic", "Sanic.handle_request", patch_handle_request)
107     if not SANIC_PRE_21:
108         _w("sanic", "Sanic._run_request_middleware", patch_run_request_middleware)
109         _w(sanic.request, "Request.respond", patch_request_respond)
110
111
112 def unpatch():
113     """Unpatch the instrumented methods."""
114     _u(sanic.Sanic, "handle_request")
115     if not SANIC_PRE_21:
116         _u(sanic.Sanic, "_run_request_middleware")
117         _u(sanic.request.Request, "respond")
118     if not getattr(sanic, "__datadog_patch", False):
119         return
120     setattr(sanic, "__datadog_patch", False)
121
122
123 async def patch_handle_request(wrapped, instance, args, kwargs):
124     """Wrapper for Sanic.handle_request"""
125
126     def unwrap(request, write_callback=None, stream_callback=None, **kwargs):
127         return request, write_callback, stream_callback, kwargs
128
129     request, write_callback, stream_callback, new_kwargs = unwrap(*args, **kwargs)
130
131     if request.scheme not in ("http", "https"):
132         return await wrapped(*args, **kwargs)
133
134     pin = Pin()
135     if SANIC_PRE_21:
136         # Set span resource from the framework request
137         resource = "{} {}".format(request.method, _get_path(request))
138     else:
139         # The path is not available anymore in 21.x. Get it from
140         # the _run_request_middleware instrumented method.
141         resource = None
142     pin.onto(request.ctx)
143
144     headers = request.headers.copy()
145
146     trace_utils.activate_distributed_headers(ddtrace.tracer, int_config=config.sanic, request_headers=headers)
147
148     with pin.tracer.trace(
149         "sanic.request",
150         service=trace_utils.int_service(None, config.sanic),
151         resource=resource,
152         span_type=SpanTypes.WEB,
153     ) as span:
154         sample_rate = config.sanic.get_analytics_sample_rate(use_global_config=True)
155         if sample_rate is not None:
156             span.set_tag(ANALYTICS_SAMPLE_RATE_KEY, sample_rate)
157
158         method = request.method
159         url = "{scheme}://{host}{path}".format(scheme=request.scheme, host=request.host, path=request.path)
160         query_string = request.query_string
161         if isinstance(query_string, bytes):
162             query_string = query_string.decode()
163         trace_utils.set_http_meta(
164             span, config.sanic, method=method, url=url, query=query_string, request_headers=headers
165         )
166
167         if write_callback is not None:
168             new_kwargs["write_callback"] = _wrap_response_callback(span, write_callback)
169         if stream_callback is not None:
170             new_kwargs["stream_callback"] = _wrap_response_callback(span, stream_callback)
171
172         return await wrapped(request, **new_kwargs)
173

173

[end of ddtrace/contrib/sanic/patch.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/ddtrace/contrib/sanic/patch.py b/ddtrace/contrib/sanic/patch.py
--- a/ddtrace/contrib/sanic/patch.py
+++ b/ddtrace/contrib/sanic/patch.py
@@ -78,6 +78,11 @@
     except sanic.exceptions.SanicException:
         return path
     for key, value in match_info.items():
+        try:
+            value = str(value)
+        except Exception:
+            # Best effort
+            continue
         path = path.replace(value, f"<{key}>")
     return path
 

|
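The sanic failure comes from `str.replace` in `_get_path`: for a typed route such as `/<gam_id:int>/slot/count`, the value in `request.match_info` is not a string (the golden diff's `str(value)` call addresses exactly this), and `'/25/slot/count'.replace(25, '<gam_id>')` raises `TypeError`. The following sketch isolates the patched loop as a hypothetical standalone function `get_path(path, match_info)` that takes a plain dict instead of a Sanic request, so it runs without Sanic or ddtrace installed.

```python
def get_path(path, match_info):
    """Replace path-parameter values with their names, e.g. /25/x -> /<gam_id>/x.

    Standalone sketch of the patched ``_get_path`` loop; ``match_info`` is a
    plain dict here rather than a Sanic request attribute.
    """
    for key, value in match_info.items():
        try:
            # Typed route parameters (e.g. <gam_id:int>) arrive as non-strings,
            # and str.replace() only accepts str arguments, so convert first.
            value = str(value)
        except Exception:
            # Best effort: leave the path untouched for unconvertible values.
            continue
        path = path.replace(value, f"<{key}>")
    return path


# What the unpatched loop effectively did with an int parameter:
try:
    "/25/slot/count".replace(25, "<gam_id>")
except TypeError as exc:
    print("unpatched:", exc)

print("patched:", get_path("/25/slot/count", {"gam_id": 25}))
```

Because `str.replace` substitutes every occurrence, a value that also appears elsewhere in the path is replaced there too: `get_path("/1/page/1", {"id": 1})` returns `"/<id>/page/<id>"`. The patch accepts this as best-effort resource naming rather than reconstructing the route pattern.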

{"golden_diff": "diff --git a/ddtrace/contrib/sanic/patch.py b/ddtrace/contrib/sanic/patch.py\n--- a/ddtrace/contrib/sanic/patch.py\n+++ b/ddtrace/contrib/sanic/patch.py\n@@ -78,6 +78,11 @@\n except sanic.exceptions.SanicException:\n return path\n for key, value in match_info.items():\n+ try:\n+ value = str(value)\n+ except Exception:\n+ # Best effort\n+ continue\n path = path.replace(value, f\"<{key}>\")\n return path\n", "issue": "0.50 bug sanic APM\nThanks for taking the time for reporting an issue!\r\n\r\nBefore reporting an issue on dd-trace-py, please be sure to provide all\r\nnecessary information.\r\n\r\nIf you're hitting a bug, make sure that you're using the latest version of this\r\nlibrary.\r\n\r\n### Which version of dd-trace-py are you using?\r\n0.50.0\r\n\r\n### Which version of pip are you using?\r\n19.0.3\r\n\r\n### Which version of the libraries are you using?\r\nPackage Version\r\n------------------- ---------\r\naiofiles 0.7.0\r\naiohttp 3.6.2\r\naiomysql 0.0.20\r\nastroid 2.3.3\r\nasync-timeout 3.0.1\r\nattrs 19.3.0\r\ncertifi 2019.9.11\r\ncffi 1.13.2\r\nchardet 3.0.4\r\nClick 7.0\r\ncryptography 2.8\r\nddtrace 0.50.0\r\nDeprecated 1.2.11\r\nelasticsearch 7.5.1\r\nelasticsearch-async 6.2.0\r\nh11 0.8.1\r\nh2 3.1.1\r\nhpack 3.0.0\r\nhstspreload 2020.1.7\r\nhttpcore 0.3.0\r\nhttptools 0.0.13\r\nhttpx 0.9.3\r\nhyperframe 5.2.0\r\nidna 2.8\r\nisort 4.3.21\r\nlazy-object-proxy 1.4.3\r\nmccabe 0.6.1\r\nmotor 2.4.0\r\nmultidict 5.1.0\r\npackaging 21.0\r\npeewee 3.13.1\r\npip 19.0.3\r\nprotobuf 3.17.3\r\npycparser 2.19\r\nPyJWT 1.7.1\r\npymongo 3.11.4\r\nPyMySQL 0.9.2\r\npyparsing 2.4.7\r\npytz 2019.3\r\nPyYAML 5.3\r\nrequests 2.22.0\r\nrequests-async 0.5.0\r\nrfc3986 1.3.2\r\nsanic 21.3.4\r\nsanic-motor 0.5.0\r\nsanic-routing 0.6.2\r\nsanic-scheduler 1.0.7\r\nsetuptools 40.8.0\r\nsix 1.14.0\r\nsniffio 1.1.0\r\nstringcase 1.2.0\r\ntenacity 8.0.1\r\ntyped-ast 1.4.1\r\nujson 1.35\r\nurllib3 1.25.6\r\nuvloop 0.13.0\r\nwebsockets 8.1\r\nwrapt 
1.11.2\r\nyarl 1.4.2\r\n\r\n### How can we reproduce your problem?\r\n#### Description\r\nIt's not working patch when apply APM on Sanic\r\nIf path variable type is int on sanic route\r\n\r\nCase code\r\n```\r\n@app.route('/<gam_id:int>/slot/count', methods=['GET'])\r\nasync def slot_count(request, gam_id):\r\n try:\r\n pass\r\n except Exception as e:\r\n abort(500, e)\r\n return json(response(200, 'Complete Successfully', {}))\r\n\r\n```\r\n\r\nError\r\n```\r\n[2021-07-13 19:50:48 +0000] [13] [ERROR] Exception occurred while handling uri: 'http://xxxxxxxxx.xxx/25/slot/count'\r\nNoneType: None\r\n\r\n```\r\n\r\n### What is the result that you get?\r\nmy production env is not working on Sanic\r\n\r\n\r\n### What is the result that you expected?\r\nI wanna use datadog APM on my production SANIC\r\n\r\nI made already pull request\r\n- https://github.com/DataDog/dd-trace-py/pull/2662\r\n\n", "before_files": [{"content": "import asyncio\n\nimport sanic\n\nimport ddtrace\nfrom ddtrace import config\nfrom ddtrace.constants import ANALYTICS_SAMPLE_RATE_KEY\nfrom ddtrace.ext import SpanTypes\nfrom ddtrace.pin import Pin\nfrom ddtrace.utils.wrappers import unwrap as _u\nfrom ddtrace.vendor import wrapt\nfrom ddtrace.vendor.wrapt import wrap_function_wrapper as _w\n\nfrom .. 
import trace_utils\nfrom ...internal.logger import get_logger\n\n\nlog = get_logger(__name__)\n\nconfig._add(\"sanic\", dict(_default_service=\"sanic\", distributed_tracing=True))\n\nSANIC_PRE_21 = None\n\n\ndef update_span(span, response):\n if isinstance(response, sanic.response.BaseHTTPResponse):\n status_code = response.status\n response_headers = response.headers\n else:\n # invalid response causes ServerError exception which must be handled\n status_code = 500\n response_headers = None\n trace_utils.set_http_meta(span, config.sanic, status_code=status_code, response_headers=response_headers)\n\n\ndef _wrap_response_callback(span, callback):\n # Only for sanic 20 and older\n # Wrap response callbacks (either sync or async function) to set HTTP\n # response span tags\n\n @wrapt.function_wrapper\n def wrap_sync(wrapped, instance, args, kwargs):\n r = wrapped(*args, **kwargs)\n response = args[0]\n update_span(span, response)\n return r\n\n @wrapt.function_wrapper\n async def wrap_async(wrapped, instance, args, kwargs):\n r = await wrapped(*args, **kwargs)\n response = args[0]\n update_span(span, response)\n return r\n\n if asyncio.iscoroutinefunction(callback):\n return wrap_async(callback)\n\n return wrap_sync(callback)\n\n\nasync def patch_request_respond(wrapped, instance, args, kwargs):\n # Only for sanic 21 and newer\n # Wrap the framework response to set HTTP response span tags\n response = await wrapped(*args, **kwargs)\n pin = Pin._find(instance.ctx)\n if pin is not None and pin.enabled():\n span = pin.tracer.current_span()\n if span is not None:\n update_span(span, response)\n return response\n\n\ndef _get_path(request):\n \"\"\"Get path and replace path parameter values with names if route exists.\"\"\"\n path = request.path\n try:\n match_info = request.match_info\n except sanic.exceptions.SanicException:\n return path\n for key, value in match_info.items():\n path = path.replace(value, f\"<{key}>\")\n return path\n\n\nasync def 
patch_run_request_middleware(wrapped, instance, args, kwargs):\n # Set span resource from the framework request\n request = args[0]\n pin = Pin._find(request.ctx)\n if pin is not None and pin.enabled():\n span = pin.tracer.current_span()\n if span is not None:\n span.resource = \"{} {}\".format(request.method, _get_path(request))\n return await wrapped(*args, **kwargs)\n\n\ndef patch():\n \"\"\"Patch the instrumented methods.\"\"\"\n global SANIC_PRE_21\n\n if getattr(sanic, \"__datadog_patch\", False):\n return\n setattr(sanic, \"__datadog_patch\", True)\n\n SANIC_PRE_21 = sanic.__version__[:2] < \"21\"\n\n _w(\"sanic\", \"Sanic.handle_request\", patch_handle_request)\n if not SANIC_PRE_21:\n _w(\"sanic\", \"Sanic._run_request_middleware\", patch_run_request_middleware)\n _w(sanic.request, \"Request.respond\", patch_request_respond)\n\n\ndef unpatch():\n \"\"\"Unpatch the instrumented methods.\"\"\"\n _u(sanic.Sanic, \"handle_request\")\n if not SANIC_PRE_21:\n _u(sanic.Sanic, \"_run_request_middleware\")\n _u(sanic.request.Request, \"respond\")\n if not getattr(sanic, \"__datadog_patch\", False):\n return\n setattr(sanic, \"__datadog_patch\", False)\n\n\nasync def patch_handle_request(wrapped, instance, args, kwargs):\n \"\"\"Wrapper for Sanic.handle_request\"\"\"\n\n def unwrap(request, write_callback=None, stream_callback=None, **kwargs):\n return request, write_callback, stream_callback, kwargs\n\n request, write_callback, stream_callback, new_kwargs = unwrap(*args, **kwargs)\n\n if request.scheme not in (\"http\", \"https\"):\n return await wrapped(*args, **kwargs)\n\n pin = Pin()\n if SANIC_PRE_21:\n # Set span resource from the framework request\n resource = \"{} {}\".format(request.method, _get_path(request))\n else:\n # The path is not available anymore in 21.x. 
Get it from\n # the _run_request_middleware instrumented method.\n resource = None\n pin.onto(request.ctx)\n\n headers = request.headers.copy()\n\n trace_utils.activate_distributed_headers(ddtrace.tracer, int_config=config.sanic, request_headers=headers)\n\n with pin.tracer.trace(\n \"sanic.request\",\n service=trace_utils.int_service(None, config.sanic),\n resource=resource,\n span_type=SpanTypes.WEB,\n ) as span:\n sample_rate = config.sanic.get_analytics_sample_rate(use_global_config=True)\n if sample_rate is not None:\n span.set_tag(ANALYTICS_SAMPLE_RATE_KEY, sample_rate)\n\n method = request.method\n url = \"{scheme}://{host}{path}\".format(scheme=request.scheme, host=request.host, path=request.path)\n query_string = request.query_string\n if isinstance(query_string, bytes):\n query_string = query_string.decode()\n trace_utils.set_http_meta(\n span, config.sanic, method=method, url=url, query=query_string, request_headers=headers\n )\n\n if write_callback is not None:\n new_kwargs[\"write_callback\"] = _wrap_response_callback(span, write_callback)\n if stream_callback is not None:\n new_kwargs[\"stream_callback\"] = _wrap_response_callback(span, stream_callback)\n\n return await wrapped(request, **new_kwargs)\n", "path": "ddtrace/contrib/sanic/patch.py"}]}

| 3,277 | 127 |

gh_patches_debug_14171

|

rasdani/github-patches

|

git_diff

|

sql-machine-learning__elasticdl-373

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

When worker/master image creation failed, client should fail instead of trying to launch master.

</issue>

<code>
[start of elasticdl/client/client.py]
1 import argparse
2 import os
3 import inspect
4 import tempfile
5 import time
6 import getpass
7 import sys
8 from string import Template
9 import docker
10 import yaml
11 from kubernetes.client.apis import core_v1_api
12 from kubernetes import config
13 
14 
15 def _m_file_in_docker(model_file):
16     return "/model/" + os.path.basename(model_file)
17 
18 def _build_docker_image(
19     m_file, image_name, image_base="elasticdl:dev",
20     repository=None
21 ):
22     DOCKER_TEMPLATE = """
23 FROM {}
24 COPY {} {}
25 """
26 
27     with tempfile.NamedTemporaryFile(mode="w+", delete=False) as df:
28         df.write(DOCKER_TEMPLATE.format(image_base, m_file, _m_file_in_docker(m_file)))
29 
30     client = docker.APIClient(base_url="unix://var/run/docker.sock")
31     print("===== Building Docker Image =====")
32     for line in client.build(
33         dockerfile=df.name, path=".", rm=True, tag=image_name, decode=True
34     ):
35         text = line.get("stream", None)
36         if text:
37             sys.stdout.write(text)
38             sys.stdout.flush()
39     print("===== Docker Image Built =====")
40     if repository != None:
41         for line in client.push(image_name, stream=True, decode=True):
42             print(line)
43 
44 def _gen_master_def(image_name, model_file, job_name, argv):
45     master_yaml = """
46 apiVersion: v1
47 kind: Pod
48 metadata:
49   name: "elasticdl-master-{job_name}"
50   labels:
51     purpose: test-command
52 spec:
53   containers:
54   - name: "elasticdl-master-{job_name}"
55     image: "{image_name}"
56     command: ["python"]
57     args: [
58         "-m", "elasticdl.master.main",
59         "--job_name", "{job_name}",
60         "--worker_image", "{image_name}",
61         "--model_file", "{m_file}"
62     ]
63     imagePullPolicy: IfNotPresent
64     env:
65     - name: MY_POD_IP
66       valueFrom:
67         fieldRef:
68           fieldPath: status.podIP
69   restartPolicy: Never
70 """ .format(m_file=_m_file_in_docker(model_file), image_name=image_name, job_name=job_name)
71 
72     master_def = yaml.safe_load(master_yaml)
73 
74     # Build master arguments
75     master_def['spec']['containers'][0]['args'].extend(argv)
76     return master_def
77 
78 def _submit(image_name, model_file, job_name, argv):
79     master_def = _gen_master_def(image_name, model_file, job_name, argv)
80     config.load_kube_config()
81     api = core_v1_api.CoreV1Api()
82     resp = api.create_namespaced_pod(body=master_def, namespace="default")
83     print("Master launched. status='%s'" % str(resp.status))
84 
85 def main():
86     parser = argparse.ArgumentParser(description="ElasticDL Client")
87     # Rewrite model_file argument and pass all other arguments to master.
88     parser.add_argument("--model_file", help="Path to Model file", required=True)
89     parser.add_argument("--image_base", help="Base image containing elasticdl runtime environment.", required=True)
90     parser.add_argument("--repository", help="The repository to push docker image to.")
91     parser.add_argument("--job_name", help="ElasticDL job name", required=True)
92     args, argv = parser.parse_known_args()
93 
94     job_name = args.job_name + "-" + str(int(round(time.time() * 1000)))
95     image_name = args.image_base + '_' + job_name
96     _build_docker_image(args.model_file, image_name, image_base=args.image_base,
97                         repository=args.repository)
98     _submit(image_name, args.model_file, job_name, argv)
99 
100 
101 if __name__ == "__main__":
102     main()
103
[end of elasticdl/client/client.py]
</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/elasticdl/client/client.py b/elasticdl/client/client.py
--- a/elasticdl/client/client.py
+++ b/elasticdl/client/client.py
@@ -32,12 +32,14 @@
     for line in client.build(
         dockerfile=df.name, path=".", rm=True, tag=image_name, decode=True
     ):
+        if "error" in line:
+            raise RuntimeError("Docker image build failure: " % line["error"])
         text = line.get("stream", None)
         if text:
             sys.stdout.write(text)
             sys.stdout.flush()
     print("===== Docker Image Built =====")
-    if repository != None:
+    if repository is not None:
         for line in client.push(image_name, stream=True, decode=True):
             print(line)
 

|

{"golden_diff": "diff --git a/elasticdl/client/client.py b/elasticdl/client/client.py\n--- a/elasticdl/client/client.py\n+++ b/elasticdl/client/client.py\n@@ -32,12 +32,14 @@\n for line in client.build(\n dockerfile=df.name, path=\".\", rm=True, tag=image_name, decode=True\n ):\n+ if \"error\" in line:\n+ raise RuntimeError(\"Docker image build failure: \" % line[\"error\"])\n text = line.get(\"stream\", None)\n if text:\n sys.stdout.write(text)\n sys.stdout.flush()\n print(\"===== Docker Image Built =====\")\n- if repository != None:\n+ if repository is not None:\n for line in client.push(image_name, stream=True, decode=True):\n print(line)\n", "issue": "When worker/master image creation failed, client should fail instead of trying to launch master.\n\n", "before_files": [{"content": "import argparse\nimport os\nimport inspect\nimport tempfile\nimport time\nimport getpass\nimport sys\nfrom string import Template\nimport docker\nimport yaml\nfrom kubernetes.client.apis import core_v1_api\nfrom kubernetes import config\n\n\ndef _m_file_in_docker(model_file):\n return \"/model/\" + os.path.basename(model_file)\n\ndef _build_docker_image(\n m_file, image_name, image_base=\"elasticdl:dev\",\n repository=None\n):\n DOCKER_TEMPLATE = \"\"\"\nFROM {}\nCOPY {} {}\n\"\"\"\n\n with tempfile.NamedTemporaryFile(mode=\"w+\", delete=False) as df:\n df.write(DOCKER_TEMPLATE.format(image_base, m_file, _m_file_in_docker(m_file)))\n\n client = docker.APIClient(base_url=\"unix://var/run/docker.sock\")\n print(\"===== Building Docker Image =====\")\n for line in client.build(\n dockerfile=df.name, path=\".\", rm=True, tag=image_name, decode=True\n ):\n text = line.get(\"stream\", None)\n if text:\n sys.stdout.write(text)\n sys.stdout.flush()\n print(\"===== Docker Image Built =====\")\n if repository != None:\n for line in client.push(image_name, stream=True, decode=True):\n print(line)\n\ndef _gen_master_def(image_name, model_file, job_name, argv):\n master_yaml = \"\"\"\napiVersion: 
v1\nkind: Pod\nmetadata:\n name: \"elasticdl-master-{job_name}\"\n labels:\n purpose: test-command\nspec:\n containers:\n - name: \"elasticdl-master-{job_name}\"\n image: \"{image_name}\"\n command: [\"python\"]\n args: [\n \"-m\", \"elasticdl.master.main\",\n \"--job_name\", \"{job_name}\",\n \"--worker_image\", \"{image_name}\",\n \"--model_file\", \"{m_file}\"\n ]\n imagePullPolicy: IfNotPresent \n env:\n - name: MY_POD_IP\n valueFrom:\n fieldRef:\n fieldPath: status.podIP\n restartPolicy: Never\n\"\"\" .format(m_file=_m_file_in_docker(model_file), image_name=image_name, job_name=job_name)\n\n master_def = yaml.safe_load(master_yaml)\n\n # Build master arguments\n master_def['spec']['containers'][0]['args'].extend(argv)\n return master_def\n\ndef _submit(image_name, model_file, job_name, argv):\n master_def = _gen_master_def(image_name, model_file, job_name, argv)\n config.load_kube_config()\n api = core_v1_api.CoreV1Api()\n resp = api.create_namespaced_pod(body=master_def, namespace=\"default\")\n print(\"Master launched. 
status='%s'\" % str(resp.status))\n\ndef main():\n parser = argparse.ArgumentParser(description=\"ElasticDL Client\")\n # Rewrite model_file argument and pass all other arguments to master.\n parser.add_argument(\"--model_file\", help=\"Path to Model file\", required=True)\n parser.add_argument(\"--image_base\", help=\"Base image containing elasticdl runtime environment.\", required=True)\n parser.add_argument(\"--repository\", help=\"The repository to push docker image to.\")\n parser.add_argument(\"--job_name\", help=\"ElasticDL job name\", required=True)\n args, argv = parser.parse_known_args()\n\n job_name = args.job_name + \"-\" + str(int(round(time.time() * 1000)))\n image_name = args.image_base + '_' + job_name \n _build_docker_image(args.model_file, image_name, image_base=args.image_base,\n repository=args.repository)\n _submit(image_name, args.model_file, job_name, argv)\n\n\nif __name__ == \"__main__\":\n main()\n", "path": "elasticdl/client/client.py"}]}

| 1,544 | 172 |

gh_patches_debug_2469

|

rasdani/github-patches

|

git_diff

|

ansible-collections__community.aws-1197

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>
ec2_customer_gateway: bgp_asn is not required
### Summary

The ec2_customer_gateway module has incorrect documentation for the bgp_asn parameter.

It says the ASN must be passed when state=present, but the code defaults to 25000 if the parameter is absent. See the ensure_cgw_present() method:

```
    def ensure_cgw_present(self, bgp_asn, ip_address):
        if not bgp_asn:
            bgp_asn = 65000
        response = self.ec2.create_customer_gateway(
            DryRun=False,
            Type='ipsec.1',
            PublicIp=ip_address,
            BgpAsn=bgp_asn,
        )
        return response

### Issue Type

Documentation Report

### Component Name

ec2_customer_gateway

### Ansible Version

```console (paste below)
$ ansible --version
ansible [core 2.12.4]
  config file = None
  configured module search path = ['/home/neil/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']
  ansible python module location = /home/neil/.local/share/virtualenvs/community.aws-uRL047Ho/lib/python3.10/site-packages/ansible
  ansible collection location = /home/neil/.ansible/collections:/usr/share/ansible/collections
  executable location = /home/neil/.local/share/virtualenvs/community.aws-uRL047Ho/bin/ansible
  python version = 3.10.1 (main, Jan 10 2022, 00:00:00) [GCC 11.2.1 20211203 (Red Hat 11.2.1-7)]
  jinja version = 3.1.1
  libyaml = True
```

### Collection Versions

```console (paste below)
$ ansible-galaxy collection list
```

### Configuration

```console (paste below)
$ ansible-config dump --only-changed

```

### OS / Environment

main branch, as of 2022-04-18.

### Additional Information

Suggested rewording:

```
options:
  bgp_asn:
    description:
      - Border Gateway Protocol (BGP) Autonomous System Number (ASN), defaults to 25000.
    type: int
```

### Code of Conduct

- [X] I agree to follow the Ansible Code of Conduct
</issue>

<code>
[start of plugins/modules/ec2_customer_gateway.py]
1 #!/usr/bin/python
2 #
3 # GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)
4 
5 from __future__ import absolute_import, division, print_function
6 __metaclass__ = type
7 
8 
9 DOCUMENTATION = '''
10 ---
11 module: ec2_customer_gateway
12 version_added: 1.0.0
13 short_description: Manage an AWS customer gateway
14 description:
15   - Manage an AWS customer gateway.
16 author: Michael Baydoun (@MichaelBaydoun)
17 notes:
18   - You cannot create more than one customer gateway with the same IP address. If you run an identical request more than one time, the
19     first request creates the customer gateway, and subsequent requests return information about the existing customer gateway. The subsequent
20     requests do not create new customer gateway resources.
21   - Return values contain customer_gateway and customer_gateways keys which are identical dicts. You should use
22     customer_gateway. See U(https://github.com/ansible/ansible-modules-extras/issues/2773) for details.
23 options:
24   bgp_asn:
25     description:
26       - Border Gateway Protocol (BGP) Autonomous System Number (ASN), required when I(state=present).
27     type: int
28   ip_address:
29     description:
30       - Internet-routable IP address for customers gateway, must be a static address.
31     required: true
32     type: str
33   name:
34     description:
35       - Name of the customer gateway.
36     required: true
37     type: str
38   routing:
39     description:
40       - The type of routing.
41     choices: ['static', 'dynamic']
42     default: dynamic
43     type: str
44   state:
45     description:
46       - Create or terminate the Customer Gateway.
47     default: present
48     choices: [ 'present', 'absent' ]
49     type: str
50 extends_documentation_fragment:
51 - amazon.aws.aws
52 - amazon.aws.ec2
53 
54 '''
55 
56 EXAMPLES = '''
57 - name: Create Customer Gateway
58   community.aws.ec2_customer_gateway:
59     bgp_asn: 12345
60     ip_address: 1.2.3.4
61     name: IndianapolisOffice
62     region: us-east-1
63   register: cgw
64 
65 - name: Delete Customer Gateway
66   community.aws.ec2_customer_gateway:
67     ip_address: 1.2.3.4
68     name: IndianapolisOffice
69     state: absent
70     region: us-east-1
71   register: cgw
72 '''
73 
74 RETURN = '''
75 gateway.customer_gateways:
76     description: details about the gateway that was created.
77     returned: success
78     type: complex
79     contains:
80         bgp_asn:
81             description: The Border Gateway Autonomous System Number.
82             returned: when exists and gateway is available.
83             sample: 65123
84             type: str
85         customer_gateway_id:
86             description: gateway id assigned by amazon.
87             returned: when exists and gateway is available.
88             sample: cgw-cb6386a2
89             type: str
90         ip_address:
91             description: ip address of your gateway device.
92             returned: when exists and gateway is available.
93             sample: 1.2.3.4
94             type: str
95         state:
96             description: state of gateway.
97             returned: when gateway exists and is available.
98             sample: available
99             type: str
100         tags:
101             description: Any tags on the gateway.
102             returned: when gateway exists and is available, and when tags exist.
103             type: list
104         type:
105             description: encryption type.
106             returned: when gateway exists and is available.
107             sample: ipsec.1
108             type: str
109 '''
110 
111 try:
112     import botocore
113 except ImportError:
114     pass  # Handled by AnsibleAWSModule
115 
116 from ansible.module_utils.common.dict_transformations import camel_dict_to_snake_dict
117 
118 from ansible_collections.amazon.aws.plugins.module_utils.core import AnsibleAWSModule
119 from ansible_collections.amazon.aws.plugins.module_utils.ec2 import AWSRetry
120 
121 
122 class Ec2CustomerGatewayManager:
123 
124     def __init__(self, module):
125         self.module = module
126 
127         try:
128             self.ec2 = module.client('ec2')
129         except (botocore.exceptions.ClientError, botocore.exceptions.BotoCoreError) as e:
130             module.fail_json_aws(e, msg='Failed to connect to AWS')
131 
132     @AWSRetry.jittered_backoff(delay=2, max_delay=30, retries=6, catch_extra_error_codes=['IncorrectState'])
133     def ensure_cgw_absent(self, gw_id):
134         response = self.ec2.delete_customer_gateway(
135             DryRun=False,
136             CustomerGatewayId=gw_id
137         )
138         return response
139 
140     def ensure_cgw_present(self, bgp_asn, ip_address):
141         if not bgp_asn:
142             bgp_asn = 65000
143         response = self.ec2.create_customer_gateway(
144             DryRun=False,
145             Type='ipsec.1',
146             PublicIp=ip_address,
147             BgpAsn=bgp_asn,
148         )
149         return response
150 
151     def tag_cgw_name(self, gw_id, name):
152         response = self.ec2.create_tags(
153             DryRun=False,
154             Resources=[
155                 gw_id,
156             ],
157             Tags=[
158                 {
159                     'Key': 'Name',
160                     'Value': name
161                 },
162             ]
163         )
164         return response
165 
166     def describe_gateways(self, ip_address):
167         response = self.ec2.describe_customer_gateways(
168             DryRun=False,
169             Filters=[
170                 {
171                     'Name': 'state',
172                     'Values': [
173                         'available',
174                     ]
175                 },
176                 {
177                     'Name': 'ip-address',
178                     'Values': [
179                         ip_address,
180                     ]
181                 }
182             ]
183         )
184         return response
185 
186 
187 def main():
188     argument_spec = dict(
189         bgp_asn=dict(required=False, type='int'),
190         ip_address=dict(required=True),
191         name=dict(required=True),
192         routing=dict(default='dynamic', choices=['dynamic', 'static']),
193         state=dict(default='present', choices=['present', 'absent']),
194     )
195 
196     module = AnsibleAWSModule(
197         argument_spec=argument_spec,
198         supports_check_mode=True,
199         required_if=[
200             ('routing', 'dynamic', ['bgp_asn'])
201         ]
202     )
203 
204     gw_mgr = Ec2CustomerGatewayManager(module)
205 
206     name = module.params.get('name')
207 
208     existing = gw_mgr.describe_gateways(module.params['ip_address'])
209 
210     results = dict(changed=False)
211     if module.params['state'] == 'present':
212         if existing['CustomerGateways']:
213             existing['CustomerGateway'] = existing['CustomerGateways'][0]
214             results['gateway'] = existing
215             if existing['CustomerGateway']['Tags']:
216                 tag_array = existing['CustomerGateway']['Tags']
217                 for key, value in enumerate(tag_array):
218                     if value['Key'] == 'Name':
219                         current_name = value['Value']
220                         if current_name != name:
221                             results['name'] = gw_mgr.tag_cgw_name(
222                                 results['gateway']['CustomerGateway']['CustomerGatewayId'],
223                                 module.params['name'],
224                             )
225                             results['changed'] = True
226         else:
227             if not module.check_mode:
228                 results['gateway'] = gw_mgr.ensure_cgw_present(
229                     module.params['bgp_asn'],
230                     module.params['ip_address'],
231                 )
232                 results['name'] = gw_mgr.tag_cgw_name(
233                     results['gateway']['CustomerGateway']['CustomerGatewayId'],
234                     module.params['name'],
235                 )
236             results['changed'] = True
237 
238     elif module.params['state'] == 'absent':
239         if existing['CustomerGateways']:
240             existing['CustomerGateway'] = existing['CustomerGateways'][0]
241             results['gateway'] = existing
242             if not module.check_mode:
243                 results['gateway'] = gw_mgr.ensure_cgw_absent(
244                     existing['CustomerGateway']['CustomerGatewayId']
245                 )
246             results['changed'] = True
247 
248     pretty_results = camel_dict_to_snake_dict(results)
249     module.exit_json(**pretty_results)
250 
251 
252 if __name__ == '__main__':
253     main()
254
[end of plugins/modules/ec2_customer_gateway.py]
</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/plugins/modules/ec2_customer_gateway.py b/plugins/modules/ec2_customer_gateway.py
--- a/plugins/modules/ec2_customer_gateway.py
+++ b/plugins/modules/ec2_customer_gateway.py
@@ -23,7 +23,8 @@
 options:
   bgp_asn:
     description:
-      - Border Gateway Protocol (BGP) Autonomous System Number (ASN), required when I(state=present).
+      - Border Gateway Protocol (BGP) Autonomous System Number (ASN).
+      - Defaults to C(65000) if not specified when I(state=present).
     type: int
   ip_address:
     description:

|

{"golden_diff": "diff --git a/plugins/modules/ec2_customer_gateway.py b/plugins/modules/ec2_customer_gateway.py\n--- a/plugins/modules/ec2_customer_gateway.py\n+++ b/plugins/modules/ec2_customer_gateway.py\n@@ -23,7 +23,8 @@\n options:\n bgp_asn:\n description:\n- - Border Gateway Protocol (BGP) Autonomous System Number (ASN), required when I(state=present).\n+ - Border Gateway Protocol (BGP) Autonomous System Number (ASN).\n+ - Defaults to C(65000) if not specified when I(state=present).\n type: int\n ip_address:\n description:\n", "issue": "ec2_customer_gateway: bgp_asn is not required\n### Summary\n\nThe ec2_customer_gateway module has incorrect documentation for the bgp_asn parameter.\r\n\r\nIt says the ASN must be passed when state=present, but the code defaults to 25000 if the parameter is absent. See the ensure_cgw_present() method:\r\n\r\n```\r\n def ensure_cgw_present(self, bgp_asn, ip_address):\r\n if not bgp_asn:\r\n bgp_asn = 65000\r\n response = self.ec2.create_customer_gateway(\r\n DryRun=False,\r\n Type='ipsec.1',\r\n PublicIp=ip_address,\r\n BgpAsn=bgp_asn,\r\n )\r\n return response\n\n### Issue Type\n\nDocumentation Report\n\n### Component Name\n\nec2_customer_gateway\n\n### Ansible Version\n\n```console (paste below)\r\n$ ansible --version\r\nansible [core 2.12.4]\r\n config file = None\r\n configured module search path = ['/home/neil/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']\r\n ansible python module location = /home/neil/.local/share/virtualenvs/community.aws-uRL047Ho/lib/python3.10/site-packages/ansible\r\n ansible collection location = /home/neil/.ansible/collections:/usr/share/ansible/collections\r\n executable location = /home/neil/.local/share/virtualenvs/community.aws-uRL047Ho/bin/ansible\r\n python version = 3.10.1 (main, Jan 10 2022, 00:00:00) [GCC 11.2.1 20211203 (Red Hat 11.2.1-7)]\r\n jinja version = 3.1.1\r\n libyaml = True\r\n```\r\n\n\n### Collection Versions\n\n```console (paste below)\r\n$ ansible-galaxy 
collection list\r\n```\r\n\n\n### Configuration\n\n```console (paste below)\r\n$ ansible-config dump --only-changed\r\n\r\n```\r\n\n\n### OS / Environment\n\nmain branch, as of 2022-04-18.\n\n### Additional Information\n\nSuggested rewording:\r\n\r\n```\r\noptions:\r\n bgp_asn:\r\n description:\r\n - Border Gateway Protocol (BGP) Autonomous System Number (ASN), defaults to 25000.\r\n type: int\r\n```\n\n### Code of Conduct\n\n- [X] I agree to follow the Ansible Code of Conduct\n", "before_files": [{"content": "#!/usr/bin/python\n#\n# GNU General Public License v3.0+ (see COPYING or https://www.gnu.org/licenses/gpl-3.0.txt)\n\nfrom __future__ import absolute_import, division, print_function\n__metaclass__ = type\n\n\nDOCUMENTATION = '''\n---\nmodule: ec2_customer_gateway\nversion_added: 1.0.0\nshort_description: Manage an AWS customer gateway\ndescription:\n - Manage an AWS customer gateway.\nauthor: Michael Baydoun (@MichaelBaydoun)\nnotes:\n - You cannot create more than one customer gateway with the same IP address. If you run an identical request more than one time, the\n first request creates the customer gateway, and subsequent requests return information about the existing customer gateway. The subsequent\n requests do not create new customer gateway resources.\n - Return values contain customer_gateway and customer_gateways keys which are identical dicts. You should use\n customer_gateway. 
See U(https://github.com/ansible/ansible-modules-extras/issues/2773) for details.\noptions:\n bgp_asn:\n description:\n - Border Gateway Protocol (BGP) Autonomous System Number (ASN), required when I(state=present).\n type: int\n ip_address:\n description:\n - Internet-routable IP address for customers gateway, must be a static address.\n required: true\n type: str\n name:\n description:\n - Name of the customer gateway.\n required: true\n type: str\n routing:\n description:\n - The type of routing.\n choices: ['static', 'dynamic']\n default: dynamic\n type: str\n state:\n description:\n - Create or terminate the Customer Gateway.\n default: present\n choices: [ 'present', 'absent' ]\n type: str\nextends_documentation_fragment:\n- amazon.aws.aws\n- amazon.aws.ec2\n\n'''\n\nEXAMPLES = '''\n- name: Create Customer Gateway\n community.aws.ec2_customer_gateway:\n bgp_asn: 12345\n ip_address: 1.2.3.4\n name: IndianapolisOffice\n region: us-east-1\n register: cgw\n\n- name: Delete Customer Gateway\n community.aws.ec2_customer_gateway:\n ip_address: 1.2.3.4\n name: IndianapolisOffice\n state: absent\n region: us-east-1\n register: cgw\n'''\n\nRETURN = '''\ngateway.customer_gateways:\n description: details about the gateway that was created.\n returned: success\n type: complex\n contains:\n bgp_asn:\n description: The Border Gateway Autonomous System Number.\n returned: when exists and gateway is available.\n sample: 65123\n type: str\n customer_gateway_id:\n description: gateway id assigned by amazon.\n returned: when exists and gateway is available.\n sample: cgw-cb6386a2\n type: str\n ip_address:\n description: ip address of your gateway device.\n returned: when exists and gateway is available.\n sample: 1.2.3.4\n type: str\n state:\n description: state of gateway.\n returned: when gateway exists and is available.\n sample: available\n type: str\n tags:\n description: Any tags on the gateway.\n returned: when gateway exists and is available, and when tags exist.\n type: 
list\n type:\n description: encryption type.\n returned: when gateway exists and is available.\n sample: ipsec.1\n type: str\n'''\n\ntry:\n import botocore\nexcept ImportError:\n pass # Handled by AnsibleAWSModule\n\nfrom ansible.module_utils.common.dict_transformations import camel_dict_to_snake_dict\n\nfrom ansible_collections.amazon.aws.plugins.module_utils.core import AnsibleAWSModule\nfrom ansible_collections.amazon.aws.plugins.module_utils.ec2 import AWSRetry\n\n\nclass Ec2CustomerGatewayManager:\n\n def __init__(self, module):\n self.module = module\n\n try:\n self.ec2 = module.client('ec2')\n except (botocore.exceptions.ClientError, botocore.exceptions.BotoCoreError) as e:\n module.fail_json_aws(e, msg='Failed to connect to AWS')\n\n @AWSRetry.jittered_backoff(delay=2, max_delay=30, retries=6, catch_extra_error_codes=['IncorrectState'])\n def ensure_cgw_absent(self, gw_id):\n response = self.ec2.delete_customer_gateway(\n DryRun=False,\n CustomerGatewayId=gw_id\n )\n return response\n\n def ensure_cgw_present(self, bgp_asn, ip_address):\n if not bgp_asn:\n bgp_asn = 65000\n response = self.ec2.create_customer_gateway(\n DryRun=False,\n Type='ipsec.1',\n PublicIp=ip_address,\n BgpAsn=bgp_asn,\n )\n return response\n\n def tag_cgw_name(self, gw_id, name):\n response = self.ec2.create_tags(\n DryRun=False,\n Resources=[\n gw_id,\n ],\n Tags=[\n {\n 'Key': 'Name',\n 'Value': name\n },\n ]\n )\n return response\n\n def describe_gateways(self, ip_address):\n response = self.ec2.describe_customer_gateways(\n DryRun=False,\n Filters=[\n {\n 'Name': 'state',\n 'Values': [\n 'available',\n ]\n },\n {\n 'Name': 'ip-address',\n 'Values': [\n ip_address,\n ]\n }\n ]\n )\n return response\n\n\ndef main():\n argument_spec = dict(\n bgp_asn=dict(required=False, type='int'),\n ip_address=dict(required=True),\n name=dict(required=True),\n routing=dict(default='dynamic', choices=['dynamic', 'static']),\n state=dict(default='present', choices=['present', 'absent']),\n )\n\n 
module = AnsibleAWSModule(\n argument_spec=argument_spec,\n supports_check_mode=True,\n required_if=[\n ('routing', 'dynamic', ['bgp_asn'])\n ]\n )\n\n gw_mgr = Ec2CustomerGatewayManager(module)\n\n name = module.params.get('name')\n\n existing = gw_mgr.describe_gateways(module.params['ip_address'])\n\n results = dict(changed=False)\n if module.params['state'] == 'present':\n if existing['CustomerGateways']:\n existing['CustomerGateway'] = existing['CustomerGateways'][0]\n results['gateway'] = existing\n if existing['CustomerGateway']['Tags']:\n tag_array = existing['CustomerGateway']['Tags']\n for key, value in enumerate(tag_array):\n if value['Key'] == 'Name':\n current_name = value['Value']\n if current_name != name:\n results['name'] = gw_mgr.tag_cgw_name(\n results['gateway']['CustomerGateway']['CustomerGatewayId'],\n module.params['name'],\n )\n results['changed'] = True\n else:\n if not module.check_mode:\n results['gateway'] = gw_mgr.ensure_cgw_present(\n module.params['bgp_asn'],\n module.params['ip_address'],\n )\n results['name'] = gw_mgr.tag_cgw_name(\n results['gateway']['CustomerGateway']['CustomerGatewayId'],\n module.params['name'],\n )\n results['changed'] = True\n\n elif module.params['state'] == 'absent':\n if existing['CustomerGateways']:\n existing['CustomerGateway'] = existing['CustomerGateways'][0]\n results['gateway'] = existing\n if not module.check_mode:\n results['gateway'] = gw_mgr.ensure_cgw_absent(\n existing['CustomerGateway']['CustomerGatewayId']\n )\n results['changed'] = True\n\n pretty_results = camel_dict_to_snake_dict(results)\n module.exit_json(**pretty_results)\n\n\nif __name__ == '__main__':\n main()\n", "path": "plugins/modules/ec2_customer_gateway.py"}]}

| 3,462 | 136 |

gh_patches_debug_9153

|

rasdani/github-patches

|

git_diff

|

RedHatInsights__insights-core-2101

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

bash_version example doesn't work with json format

Running `insights run -p examples/rules -f json` results in a traceback because the `bash_version` rule puts an `InstalledRpm` object into its response:

```

TypeError: Object of type 'InstalledRpm' is not JSON serializable

```

</issue>

<code>

[start of examples/rules/bash_version.py]

1 """

2 Bash Version

3 ============

4

5 This is a simple rule and can be run against the local host

6 using the following command::

7

8 $ insights-run -p examples.rules.bash_version

9

10 or from the examples/rules directory::

11

12 $ python sample_rules.py

13 """

14 from insights.core.plugins import make_pass, rule

15 from insights.parsers.installed_rpms import InstalledRpms

16

17 KEY = "BASH_VERSION"

18

19 CONTENT = "Bash RPM Version: {{ bash_version }}"

20

21

22 @rule(InstalledRpms)

23 def report(rpms):

24 bash_ver = rpms.get_max('bash')

25 return make_pass(KEY, bash_version=bash_ver)

26

[end of examples/rules/bash_version.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/examples/rules/bash_version.py b/examples/rules/bash_version.py

--- a/examples/rules/bash_version.py

+++ b/examples/rules/bash_version.py

@@ -11,7 +11,7 @@

$ python sample_rules.py

"""

-from insights.core.plugins import make_pass, rule

+from insights.core.plugins import make_info, rule

from insights.parsers.installed_rpms import InstalledRpms

KEY = "BASH_VERSION"

@@ -21,5 +21,5 @@

@rule(InstalledRpms)

def report(rpms):

- bash_ver = rpms.get_max('bash')

- return make_pass(KEY, bash_version=bash_ver)

+ bash = rpms.get_max('bash')

+ return make_info(KEY, bash_version=bash.nvra)

|

{"golden_diff": "diff --git a/examples/rules/bash_version.py b/examples/rules/bash_version.py\n--- a/examples/rules/bash_version.py\n+++ b/examples/rules/bash_version.py\n@@ -11,7 +11,7 @@\n \n $ python sample_rules.py\n \"\"\"\n-from insights.core.plugins import make_pass, rule\n+from insights.core.plugins import make_info, rule\n from insights.parsers.installed_rpms import InstalledRpms\n \n KEY = \"BASH_VERSION\"\n@@ -21,5 +21,5 @@\n \n @rule(InstalledRpms)\n def report(rpms):\n- bash_ver = rpms.get_max('bash')\n- return make_pass(KEY, bash_version=bash_ver)\n+ bash = rpms.get_max('bash')\n+ return make_info(KEY, bash_version=bash.nvra)\n", "issue": "bash_version example doesn't work with json format\nRunning `insights run -p examples/rules -f json` results in a traceback because the `bash_version` rule puts an `InstalledRpm` object into its response:\r\n\r\n```\r\nTypeError: Object of type 'InstalledRpm' is not JSON serializable\r\n```\n", "before_files": [{"content": "\"\"\"\nBash Version\n============\n\nThis is a simple rule and can be run against the local host\nusing the following command::\n\n$ insights-run -p examples.rules.bash_version\n\nor from the examples/rules directory::\n\n$ python sample_rules.py\n\"\"\"\nfrom insights.core.plugins import make_pass, rule\nfrom insights.parsers.installed_rpms import InstalledRpms\n\nKEY = \"BASH_VERSION\"\n\nCONTENT = \"Bash RPM Version: {{ bash_version }}\"\n\n\n@rule(InstalledRpms)\ndef report(rpms):\n bash_ver = rpms.get_max('bash')\n return make_pass(KEY, bash_version=bash_ver)\n", "path": "examples/rules/bash_version.py"}]}

| 777 | 167 |
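The bash_version row above fails under `-f json` because the rule response carries a parsed RPM object rather than a plain value; the golden diff fixes this by passing `bash.nvra`, a string. The minimal sketch below reproduces that failure mode with Python's standard `json` module. `InstalledRpm` here is a hypothetical stand-in written for illustration, not the insights-core class. Only the `nvra` attribute name comes from the diff above, and the package field values are made up.

```python
import json


class InstalledRpm:
    """Hypothetical stand-in for a parsed RPM record (not the insights-core class)."""

    def __init__(self, name, version, release, arch):
        self.name = name
        self.version = version
        self.release = release
        self.arch = arch

    @property
    def nvra(self):
        # name-version-release.arch as a plain string, which json can encode
        return f"{self.name}-{self.version}-{self.release}.{self.arch}"


def to_json(response):
    """Serialize a rule response dict; return None if it is not JSON-safe."""
    try:
        return json.dumps(response)
    except TypeError:
        # json.dumps raises TypeError for arbitrary objects, as in the issue
        return None


bash = InstalledRpm("bash", "4.2.46", "34.el7", "x86_64")

# Putting the object itself into the response fails to serialize.
broken = to_json({"bash_version": bash})

# Putting a string attribute into the response serializes cleanly.
fixed = to_json({"bash_version": bash.nvra})
print(fixed)  # {"bash_version": "bash-4.2.46-34.el7.x86_64"}
```

The design point is that anything placed in a rule response should already be a JSON-native type (str, int, list, dict), so every output format can render it without custom encoders.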

gh_patches_debug_1896

|

rasdani/github-patches

|

git_diff

|

graspologic-org__graspologic-207

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

GClust bug

<img width="558" alt="Screen Shot 2019-06-22 at 3 46 06 PM" src="https://user-images.githubusercontent.com/25714207/59968259-eb346c80-9504-11e9-984c-8c13dff93a37.png">

should be `- self.min_components` rather than `- 1`

This causes an indexing error when `min_components` does not equal 1

</issue>

<code>

[start of graspy/cluster/gclust.py]

1 # Copyright 2019 NeuroData (http://neurodata.io)

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #