| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens_prompt | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|
| string, length 18 to 22 | string, 1 distinct value | string, 1 distinct value | string, length 13 to 58 | string, length 1.71k to 18.9k | string, length 145 to 5.13k | string, length 465 to 23.6k | int64, 556 to 4.1k | int64, 47 to 1.02k |

gh_patches_debug_41603

|

rasdani/github-patches

|

git_diff

|

liqd__a4-meinberlin-675

|

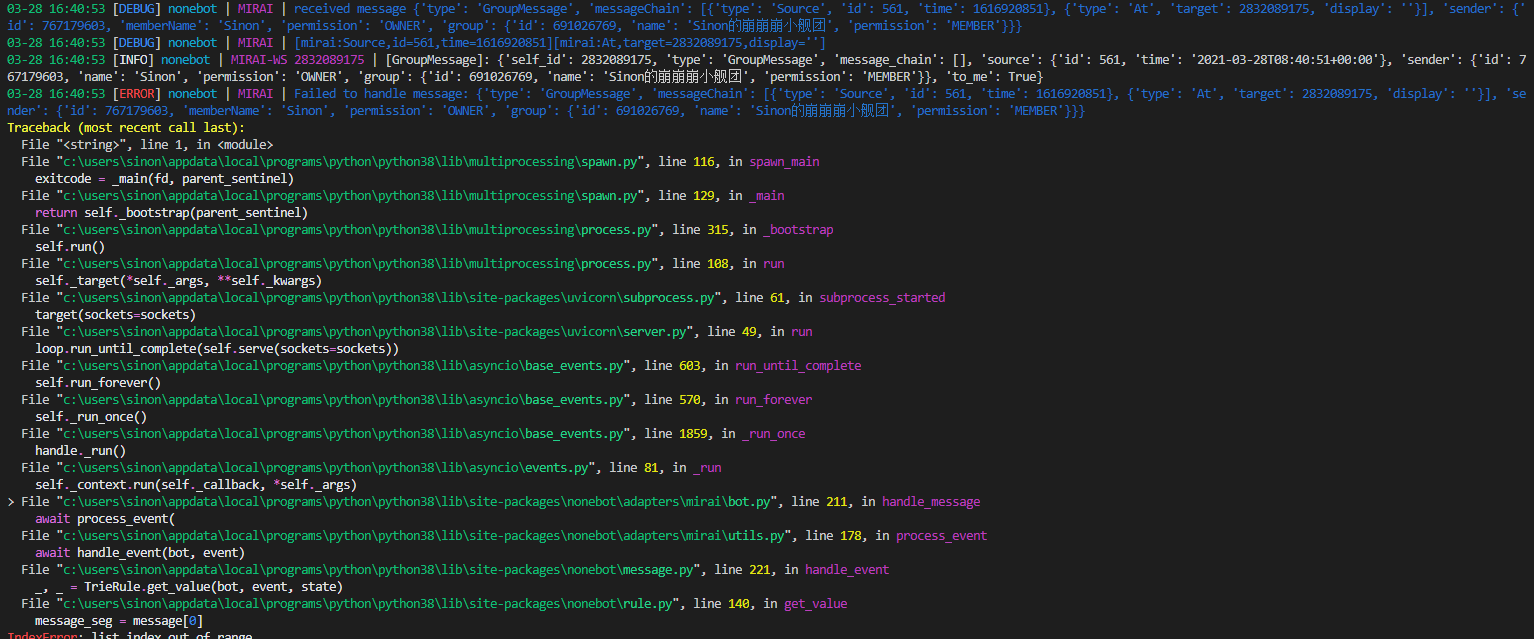

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Send wagtail form emails asynchronously

Currently wagtail form emails are sent synchronously because the `send` attributes are not serializable. see #671 for the temporary fix.

Rather then sending sync we should fix the problem and provide the required data as a serializable type

</issue>

<code>

[start of meinberlin/apps/cms/models.py]

1 from django.db import models

2 from modelcluster.fields import ParentalKey

3 from modelcluster.models import ClusterableModel

4 from wagtail.wagtailadmin import edit_handlers

5 from wagtail.wagtailcore import blocks

6 from wagtail.wagtailcore import fields

7 from wagtail.wagtailcore.models import Orderable

8 from wagtail.wagtailcore.models import Page

9 from wagtail.wagtailforms.models import AbstractEmailForm

10 from wagtail.wagtailforms.models import AbstractFormField

11 from wagtail.wagtailimages.edit_handlers import ImageChooserPanel

12 from wagtail.wagtailsnippets.models import register_snippet

13

14 from meinberlin.apps.actions import blocks as actions_blocks

15

16 from . import blocks as cms_blocks

17 from . import emails

18

19

20 class SimplePage(Page):

21 body = fields.RichTextField(blank=True)

22

23 content_panels = [

24 edit_handlers.FieldPanel('title'),

25 edit_handlers.FieldPanel('body'),

26 ]

27

28 subpage_types = []

29

30

31 class HomePage(Page):

32 body = fields.StreamField([

33 ('paragraph', blocks.RichTextBlock(

34 template='meinberlin_cms/blocks/richtext_block.html'

35 )),

36 ('call_to_action', cms_blocks.CallToActionBlock()),

37 ('columns_text', cms_blocks.ColumnsBlock()),

38 ('projects', cms_blocks.ProjectsWrapperBlock()),

39 ('activities', actions_blocks.PlatformActivityBlock()),

40 ])

41

42 subtitle = models.CharField(max_length=120)

43

44 header_image = models.ForeignKey(

45 'wagtailimages.Image',

46 null=True,

47 blank=False,

48 on_delete=models.SET_NULL,

49 related_name='+'

50 )

51

52 content_panels = Page.content_panels + [

53 edit_handlers.FieldPanel('subtitle'),

54 ImageChooserPanel('header_image'),

55 edit_handlers.StreamFieldPanel('body'),

56 ]

57

58

59 class MenuItem(models.Model):

60 title = models.CharField(max_length=255)

61 link_page = models.ForeignKey('wagtailcore.Page')

62

63 @property

64 def url(self):

65 return self.link_page.url

66

67 def __str__(self):

68 return self.title

69

70 panels = [

71 edit_handlers.FieldPanel('title'),

72 edit_handlers.PageChooserPanel('link_page')

73 ]

74

75

76 @register_snippet

77 class NavigationMenu(ClusterableModel):

78 title = models.CharField(max_length=255, null=False, blank=False)

79

80 def __str__(self):

81 return self.title

82

83 panels = [

84 edit_handlers.FieldPanel('title'),

85 edit_handlers.InlinePanel('items')

86 ]

87

88

89 class NavigationMenuItem(Orderable, MenuItem):

90 parent = ParentalKey('meinberlin_cms.NavigationMenu', related_name='items')

91

92

93 class EmailFormField(AbstractFormField):

94 page = ParentalKey('EmailFormPage', related_name='form_fields')

95

96

97 class EmailFormPage(AbstractEmailForm):

98 intro = fields.RichTextField(

99 help_text='Introduction text shown above the form'

100 )

101 thank_you = fields.RichTextField(

102 help_text='Text shown after form submission',

103 )

104 email_content = models.CharField(

105 max_length=200,

106 help_text='Email content message',

107 )

108 attach_as = models.CharField(

109 max_length=3,

110 choices=(

111 ('xls', 'XLSX Document'),

112 ('txt', 'Text'),

113 ),

114 default='xls',

115 help_text='Form results are send in this document format',

116 )

117

118 content_panels = AbstractEmailForm.content_panels + [

119 edit_handlers.MultiFieldPanel([

120 edit_handlers.FieldPanel('intro', classname='full'),

121 edit_handlers.FieldPanel('thank_you', classname='full'),

122 ], 'Page'),

123 edit_handlers.MultiFieldPanel([

124 edit_handlers.FieldPanel('to_address'),

125 edit_handlers.FieldPanel('subject'),

126 edit_handlers.FieldPanel('email_content', classname='full'),

127 edit_handlers.FieldPanel('attach_as'),

128 ], 'Email'),

129 edit_handlers.InlinePanel('form_fields', label='Form fields'),

130 ]

131

132 def send_mail(self, form):

133 self.form = form

134 if self.attach_as == 'xls':

135 emails.XlsxFormEmail.send(self)

136 elif self.attach_as == 'txt':

137 emails.TextFormEmail.send(self)

138

139 @property

140 def field_values(self):

141 fields = {}

142 for field in self.form:

143 value = field.value()

144 if isinstance(value, list):

145 value = ', '.join(value)

146 fields[field.label] = value

147 return fields

148

[end of meinberlin/apps/cms/models.py]

[start of meinberlin/apps/cms/emails.py]

1 import io

2 from email.mime.application import MIMEApplication

3 from email.mime.text import MIMEText

4

5 import xlsxwriter

6 from django.utils import timezone

7 from django.utils.translation import ugettext as _

8

9 from adhocracy4.emails.mixins import SyncEmailMixin

10 from meinberlin.apps.contrib.emails import Email

11

12

13 class FormEmail(SyncEmailMixin, Email):

14 template_name = 'meinberlin_cms/emails/form_submission'

15

16 def get_receivers(self):

17 return [x.strip() for x in self.object.to_address.split(',')]

18

19

20 class XlsxFormEmail(FormEmail):

21

22 def _generate_xlsx(self):

23 stream = io.BytesIO()

24 workbook = xlsxwriter.Workbook(stream, {'in_memory': True})

25 worksheet = workbook.add_worksheet()

26

27 worksheet.write(0, 0, _('Form Field'))

28 worksheet.write(0, 1, _('Response'))

29

30 rows = self.object.field_values.items()

31 for rownum, row in enumerate(rows, start=1):

32 worksheet.write(rownum, 0, row[0])

33 worksheet.write(rownum, 1, self._fix_newline_if_string(row[1]))

34

35 workbook.close()

36 return stream.getvalue()

37

38 def _fix_newline_if_string(self, value):

39 if isinstance(value, str):

40 return value.replace('\r', '')

41 return value

42

43 def get_attachments(self):

44 attachments = super().get_attachments()

45 xlsx_data = self._generate_xlsx()

46 mime_doc = MIMEApplication(

47 _data=xlsx_data,

48 _subtype='vnd.openxmlformats-officedocument.spreadsheetml.sheet')

49 timestamp = timezone.now().strftime("%Y-%m-%d")

50 form_title = self.object.title.replace(' ', '_')

51 submission_pk = self.object.get_submission_class().objects.last().pk

52 filename = '{}_{}_{}.xlsx'.format(timestamp, form_title, submission_pk)

53 mime_doc.add_header(

54 'Content-Disposition',

55 'attachment; filename="{}"'.format(filename)

56 )

57 return attachments + [mime_doc]

58

59

60 class TextFormEmail(FormEmail):

61

62 def get_attachments(self):

63 attachments = super().get_attachments()

64 text = ''

65 for field, value in self.object.field_values.items():

66 text += '{}:\n{}\n\n'.format(field, value)

67 mime_doc = MIMEText(_text=text, _charset='utf-8')

68 return attachments + [mime_doc]

69

[end of meinberlin/apps/cms/emails.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/meinberlin/apps/cms/emails.py b/meinberlin/apps/cms/emails.py

--- a/meinberlin/apps/cms/emails.py

+++ b/meinberlin/apps/cms/emails.py

@@ -6,15 +6,14 @@

from django.utils import timezone

from django.utils.translation import ugettext as _

-from adhocracy4.emails.mixins import SyncEmailMixin

from meinberlin.apps.contrib.emails import Email

-class FormEmail(SyncEmailMixin, Email):

+class FormEmail(Email):

template_name = 'meinberlin_cms/emails/form_submission'

def get_receivers(self):

- return [x.strip() for x in self.object.to_address.split(',')]

+ return [x.strip() for x in self.kwargs.get('to_addresses')]

class XlsxFormEmail(FormEmail):

@@ -27,8 +26,8 @@

worksheet.write(0, 0, _('Form Field'))

worksheet.write(0, 1, _('Response'))

- rows = self.object.field_values.items()

- for rownum, row in enumerate(rows, start=1):

+ field_values = self.kwargs.get('field_values')

+ for rownum, row in enumerate(field_values.items(), start=1):

worksheet.write(rownum, 0, row[0])

worksheet.write(rownum, 1, self._fix_newline_if_string(row[1]))

@@ -47,8 +46,8 @@

_data=xlsx_data,

_subtype='vnd.openxmlformats-officedocument.spreadsheetml.sheet')

timestamp = timezone.now().strftime("%Y-%m-%d")

- form_title = self.object.title.replace(' ', '_')

- submission_pk = self.object.get_submission_class().objects.last().pk

+ form_title = self.kwargs.get('title')

+ submission_pk = self.kwargs.get('submission_pk')

filename = '{}_{}_{}.xlsx'.format(timestamp, form_title, submission_pk)

mime_doc.add_header(

'Content-Disposition',

@@ -62,7 +61,8 @@

def get_attachments(self):

attachments = super().get_attachments()

text = ''

- for field, value in self.object.field_values.items():

+ field_values = self.kwargs.get('field_values')

+ for field, value in field_values.items():

text += '{}:\n{}\n\n'.format(field, value)

mime_doc = MIMEText(_text=text, _charset='utf-8')

return attachments + [mime_doc]

diff --git a/meinberlin/apps/cms/models.py b/meinberlin/apps/cms/models.py

--- a/meinberlin/apps/cms/models.py

+++ b/meinberlin/apps/cms/models.py

@@ -130,16 +130,20 @@

]

def send_mail(self, form):

- self.form = form

+ kwargs = {

+ 'title': self.title.replace(' ', '_'),

+ 'to_addresses': self.to_address.split(','),

+ 'field_values': self.get_field_values(form),

+ 'submission_pk': self.get_submission_class().objects.last().pk

+ }

if self.attach_as == 'xls':

- emails.XlsxFormEmail.send(self)

+ emails.XlsxFormEmail.send(self, **kwargs)

elif self.attach_as == 'txt':

- emails.TextFormEmail.send(self)

+ emails.TextFormEmail.send(self, **kwargs)

- @property

- def field_values(self):

+ def get_field_values(self, form):

fields = {}

- for field in self.form:

+ for field in form:

value = field.value()

if isinstance(value, list):

value = ', '.join(value)

|

{"golden_diff": "diff --git a/meinberlin/apps/cms/emails.py b/meinberlin/apps/cms/emails.py\n--- a/meinberlin/apps/cms/emails.py\n+++ b/meinberlin/apps/cms/emails.py\n@@ -6,15 +6,14 @@\n from django.utils import timezone\n from django.utils.translation import ugettext as _\n \n-from adhocracy4.emails.mixins import SyncEmailMixin\n from meinberlin.apps.contrib.emails import Email\n \n \n-class FormEmail(SyncEmailMixin, Email):\n+class FormEmail(Email):\n template_name = 'meinberlin_cms/emails/form_submission'\n \n def get_receivers(self):\n- return [x.strip() for x in self.object.to_address.split(',')]\n+ return [x.strip() for x in self.kwargs.get('to_addresses')]\n \n \n class XlsxFormEmail(FormEmail):\n@@ -27,8 +26,8 @@\n worksheet.write(0, 0, _('Form Field'))\n worksheet.write(0, 1, _('Response'))\n \n- rows = self.object.field_values.items()\n- for rownum, row in enumerate(rows, start=1):\n+ field_values = self.kwargs.get('field_values')\n+ for rownum, row in enumerate(field_values.items(), start=1):\n worksheet.write(rownum, 0, row[0])\n worksheet.write(rownum, 1, self._fix_newline_if_string(row[1]))\n \n@@ -47,8 +46,8 @@\n _data=xlsx_data,\n _subtype='vnd.openxmlformats-officedocument.spreadsheetml.sheet')\n timestamp = timezone.now().strftime(\"%Y-%m-%d\")\n- form_title = self.object.title.replace(' ', '_')\n- submission_pk = self.object.get_submission_class().objects.last().pk\n+ form_title = self.kwargs.get('title')\n+ submission_pk = self.kwargs.get('submission_pk')\n filename = '{}_{}_{}.xlsx'.format(timestamp, form_title, submission_pk)\n mime_doc.add_header(\n 'Content-Disposition',\n@@ -62,7 +61,8 @@\n def get_attachments(self):\n attachments = super().get_attachments()\n text = ''\n- for field, value in self.object.field_values.items():\n+ field_values = self.kwargs.get('field_values')\n+ for field, value in field_values.items():\n text += '{}:\\n{}\\n\\n'.format(field, value)\n mime_doc = MIMEText(_text=text, _charset='utf-8')\n return attachments + 
[mime_doc]\ndiff --git a/meinberlin/apps/cms/models.py b/meinberlin/apps/cms/models.py\n--- a/meinberlin/apps/cms/models.py\n+++ b/meinberlin/apps/cms/models.py\n@@ -130,16 +130,20 @@\n ]\n \n def send_mail(self, form):\n- self.form = form\n+ kwargs = {\n+ 'title': self.title.replace(' ', '_'),\n+ 'to_addresses': self.to_address.split(','),\n+ 'field_values': self.get_field_values(form),\n+ 'submission_pk': self.get_submission_class().objects.last().pk\n+ }\n if self.attach_as == 'xls':\n- emails.XlsxFormEmail.send(self)\n+ emails.XlsxFormEmail.send(self, **kwargs)\n elif self.attach_as == 'txt':\n- emails.TextFormEmail.send(self)\n+ emails.TextFormEmail.send(self, **kwargs)\n \n- @property\n- def field_values(self):\n+ def get_field_values(self, form):\n fields = {}\n- for field in self.form:\n+ for field in form:\n value = field.value()\n if isinstance(value, list):\n value = ', '.join(value)\n", "issue": "Send wagtail form emails asynchronously \nCurrently wagtail form emails are sent synchronously because the `send` attributes are not serializable. see #671 for the temporary fix.\r\nRather then sending sync we should fix the problem and provide the required data as a serializable type\n", "before_files": [{"content": "from django.db import models\nfrom modelcluster.fields import ParentalKey\nfrom modelcluster.models import ClusterableModel\nfrom wagtail.wagtailadmin import edit_handlers\nfrom wagtail.wagtailcore import blocks\nfrom wagtail.wagtailcore import fields\nfrom wagtail.wagtailcore.models import Orderable\nfrom wagtail.wagtailcore.models import Page\nfrom wagtail.wagtailforms.models import AbstractEmailForm\nfrom wagtail.wagtailforms.models import AbstractFormField\nfrom wagtail.wagtailimages.edit_handlers import ImageChooserPanel\nfrom wagtail.wagtailsnippets.models import register_snippet\n\nfrom meinberlin.apps.actions import blocks as actions_blocks\n\nfrom . import blocks as cms_blocks\nfrom . 
import emails\n\n\nclass SimplePage(Page):\n body = fields.RichTextField(blank=True)\n\n content_panels = [\n edit_handlers.FieldPanel('title'),\n edit_handlers.FieldPanel('body'),\n ]\n\n subpage_types = []\n\n\nclass HomePage(Page):\n body = fields.StreamField([\n ('paragraph', blocks.RichTextBlock(\n template='meinberlin_cms/blocks/richtext_block.html'\n )),\n ('call_to_action', cms_blocks.CallToActionBlock()),\n ('columns_text', cms_blocks.ColumnsBlock()),\n ('projects', cms_blocks.ProjectsWrapperBlock()),\n ('activities', actions_blocks.PlatformActivityBlock()),\n ])\n\n subtitle = models.CharField(max_length=120)\n\n header_image = models.ForeignKey(\n 'wagtailimages.Image',\n null=True,\n blank=False,\n on_delete=models.SET_NULL,\n related_name='+'\n )\n\n content_panels = Page.content_panels + [\n edit_handlers.FieldPanel('subtitle'),\n ImageChooserPanel('header_image'),\n edit_handlers.StreamFieldPanel('body'),\n ]\n\n\nclass MenuItem(models.Model):\n title = models.CharField(max_length=255)\n link_page = models.ForeignKey('wagtailcore.Page')\n\n @property\n def url(self):\n return self.link_page.url\n\n def __str__(self):\n return self.title\n\n panels = [\n edit_handlers.FieldPanel('title'),\n edit_handlers.PageChooserPanel('link_page')\n ]\n\n\n@register_snippet\nclass NavigationMenu(ClusterableModel):\n title = models.CharField(max_length=255, null=False, blank=False)\n\n def __str__(self):\n return self.title\n\n panels = [\n edit_handlers.FieldPanel('title'),\n edit_handlers.InlinePanel('items')\n ]\n\n\nclass NavigationMenuItem(Orderable, MenuItem):\n parent = ParentalKey('meinberlin_cms.NavigationMenu', related_name='items')\n\n\nclass EmailFormField(AbstractFormField):\n page = ParentalKey('EmailFormPage', related_name='form_fields')\n\n\nclass EmailFormPage(AbstractEmailForm):\n intro = fields.RichTextField(\n help_text='Introduction text shown above the form'\n )\n thank_you = fields.RichTextField(\n help_text='Text shown after form 
submission',\n )\n email_content = models.CharField(\n max_length=200,\n help_text='Email content message',\n )\n attach_as = models.CharField(\n max_length=3,\n choices=(\n ('xls', 'XLSX Document'),\n ('txt', 'Text'),\n ),\n default='xls',\n help_text='Form results are send in this document format',\n )\n\n content_panels = AbstractEmailForm.content_panels + [\n edit_handlers.MultiFieldPanel([\n edit_handlers.FieldPanel('intro', classname='full'),\n edit_handlers.FieldPanel('thank_you', classname='full'),\n ], 'Page'),\n edit_handlers.MultiFieldPanel([\n edit_handlers.FieldPanel('to_address'),\n edit_handlers.FieldPanel('subject'),\n edit_handlers.FieldPanel('email_content', classname='full'),\n edit_handlers.FieldPanel('attach_as'),\n ], 'Email'),\n edit_handlers.InlinePanel('form_fields', label='Form fields'),\n ]\n\n def send_mail(self, form):\n self.form = form\n if self.attach_as == 'xls':\n emails.XlsxFormEmail.send(self)\n elif self.attach_as == 'txt':\n emails.TextFormEmail.send(self)\n\n @property\n def field_values(self):\n fields = {}\n for field in self.form:\n value = field.value()\n if isinstance(value, list):\n value = ', '.join(value)\n fields[field.label] = value\n return fields\n", "path": "meinberlin/apps/cms/models.py"}, {"content": "import io\nfrom email.mime.application import MIMEApplication\nfrom email.mime.text import MIMEText\n\nimport xlsxwriter\nfrom django.utils import timezone\nfrom django.utils.translation import ugettext as _\n\nfrom adhocracy4.emails.mixins import SyncEmailMixin\nfrom meinberlin.apps.contrib.emails import Email\n\n\nclass FormEmail(SyncEmailMixin, Email):\n template_name = 'meinberlin_cms/emails/form_submission'\n\n def get_receivers(self):\n return [x.strip() for x in self.object.to_address.split(',')]\n\n\nclass XlsxFormEmail(FormEmail):\n\n def _generate_xlsx(self):\n stream = io.BytesIO()\n workbook = xlsxwriter.Workbook(stream, {'in_memory': True})\n worksheet = workbook.add_worksheet()\n\n worksheet.write(0, 
0, _('Form Field'))\n worksheet.write(0, 1, _('Response'))\n\n rows = self.object.field_values.items()\n for rownum, row in enumerate(rows, start=1):\n worksheet.write(rownum, 0, row[0])\n worksheet.write(rownum, 1, self._fix_newline_if_string(row[1]))\n\n workbook.close()\n return stream.getvalue()\n\n def _fix_newline_if_string(self, value):\n if isinstance(value, str):\n return value.replace('\\r', '')\n return value\n\n def get_attachments(self):\n attachments = super().get_attachments()\n xlsx_data = self._generate_xlsx()\n mime_doc = MIMEApplication(\n _data=xlsx_data,\n _subtype='vnd.openxmlformats-officedocument.spreadsheetml.sheet')\n timestamp = timezone.now().strftime(\"%Y-%m-%d\")\n form_title = self.object.title.replace(' ', '_')\n submission_pk = self.object.get_submission_class().objects.last().pk\n filename = '{}_{}_{}.xlsx'.format(timestamp, form_title, submission_pk)\n mime_doc.add_header(\n 'Content-Disposition',\n 'attachment; filename=\"{}\"'.format(filename)\n )\n return attachments + [mime_doc]\n\n\nclass TextFormEmail(FormEmail):\n\n def get_attachments(self):\n attachments = super().get_attachments()\n text = ''\n for field, value in self.object.field_values.items():\n text += '{}:\\n{}\\n\\n'.format(field, value)\n mime_doc = MIMEText(_text=text, _charset='utf-8')\n return attachments + [mime_doc]\n", "path": "meinberlin/apps/cms/emails.py"}]}

| 2,562 | 814 |
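The golden diff for `liqd__a4-meinberlin-675` drops `SyncEmailMixin` and passes the email classes only primitive data (`title`, `to_addresses`, `field_values`, `submission_pk`) instead of reading everything from the page instance. A minimal sketch of why that matters, assuming the asynchronous path serializes task arguments as JSON, as background task queues commonly do. `FakeFormPage`, `build_email_kwargs` and `is_json_serializable` are hypothetical helpers, not meinberlin or Wagtail APIs.

```python
import json


class FakeFormPage:
    """Hypothetical stand-in for a Wagtail form page instance (not JSON-serializable)."""

    def __init__(self, title, to_address):
        self.title = title
        self.to_address = to_address


def build_email_kwargs(page, field_values, submission_pk):
    """Collect only primitive values, mirroring the kwargs built in the golden diff."""
    return {
        'title': page.title.replace(' ', '_'),
        'to_addresses': page.to_address.split(','),
        'field_values': field_values,
        'submission_pk': submission_pk,
    }


def is_json_serializable(value):
    """Return True if json.dumps accepts the value."""
    try:
        json.dumps(value)
    except TypeError:
        return False
    return True


page = FakeFormPage('Contact form', 'a@example.com, b@example.com')
kwargs = build_email_kwargs(page, {'Name': 'Ada', 'Topics': 'x, y'}, 42)

print(is_json_serializable(page))    # False: json.dumps rejects arbitrary objects
print(is_json_serializable(kwargs))  # True: only str, list, dict and int inside

# What a worker would see after a JSON round trip, and how the receivers are derived
restored = json.loads(json.dumps(kwargs))
receivers = [x.strip() for x in restored['to_addresses']]
print(receivers)
```

The design choice is to compute everything that depends on the live request (the bound form, the latest submission) before handing off, so the worker never needs the page object or the form.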

gh_patches_debug_39538

|

rasdani/github-patches

|

git_diff

|

rotki__rotki-4037

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

User already exists during setup (DAppNode)

## Problem Definition

Using DAppNode Package:

1. Install Rotki

2. Setup Rotki using Premium API Key with restore sync'd database option

3. Enter incorrect password

4. Get error that password could not decrypt database

5. Go back

6. Enter correct password

7. "User $USERNAME already exists. User data dir: /data/$USERNAME"

Can't proceed...have to delete and re-create Rotki package

## Logs

None

### System Description

Operating system: DAppNode

Rotki version: DAppNode Package `0.1.9 (v1.23.1 upstream)`

</issue>
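The reported loop happens because the user data directory created for the new account survives a failed decryption, so the retry trips the "already exists" check. `try_premium_at_start` already moves the directory to an `auto_backup_...` location when the API key is invalid; a minimal sketch of applying the same cleanup when pulling or decrypting the remote database fails. `DecryptError`, `create_user_with_sync` and the `rotkehlchen.db` filename are hypothetical, not rotki APIs.

```python
import shutil
import tempfile
import time
from pathlib import Path


class DecryptError(Exception):
    """Hypothetical stand-in for a failed remote database decryption."""


def create_user_with_sync(data_dir: Path, username: str, pull_and_decrypt) -> Path:
    """Create a user dir, sync into it, and move it aside if the sync fails."""
    user_dir = data_dir / username
    if user_dir.exists():
        raise FileExistsError(f'User {username} already exists. User data dir: {user_dir}')
    user_dir.mkdir(parents=True)
    try:
        pull_and_decrypt(user_dir)
    except DecryptError:
        # Keep a backup instead of deleting, then let the caller report the error
        backup = data_dir / f'auto_backup_{username}_{int(time.time())}'
        shutil.move(str(user_dir), str(backup))
        raise
    return user_dir


def wrong_password(_user_dir):
    raise DecryptError('wrong password')


def correct_password(user_dir):
    (user_dir / 'rotkehlchen.db').write_bytes(b'')


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    try:
        create_user_with_sync(root, 'alice', wrong_password)
    except DecryptError:
        pass
    # The retry no longer hits "already exists"
    second_attempt_ok = create_user_with_sync(root, 'alice', correct_password).exists()
    backups = [p.name for p in root.iterdir() if p.name.startswith('auto_backup_alice_')]

print(second_attempt_ok, len(backups))
```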

<code>

[start of rotkehlchen/premium/sync.py]

1 import base64

2 import logging

3 import shutil

4 from enum import Enum

5 from typing import Any, Dict, NamedTuple, Optional, Tuple

6

7 from typing_extensions import Literal

8

9 from rotkehlchen.data_handler import DataHandler

10 from rotkehlchen.errors import (

11 PremiumAuthenticationError,

12 RemoteError,

13 RotkehlchenPermissionError,

14 UnableToDecryptRemoteData,

15 )

16 from rotkehlchen.logging import RotkehlchenLogsAdapter

17 from rotkehlchen.premium.premium import Premium, PremiumCredentials, premium_create_and_verify

18 from rotkehlchen.utils.misc import ts_now

19

20 logger = logging.getLogger(__name__)

21 log = RotkehlchenLogsAdapter(logger)

22

23

24 class CanSync(Enum):

25 YES = 0

26 NO = 1

27 ASK_USER = 2

28

29

30 class SyncCheckResult(NamedTuple):

31 # The result of the sync check

32 can_sync: CanSync

33 # If result is ASK_USER, what should the message be?

34 message: str

35 payload: Optional[Dict[str, Any]]

36

37

38 class PremiumSyncManager():

39

40 def __init__(self, data: DataHandler, password: str) -> None:

41 # Initialize this with the value saved in the DB

42 self.last_data_upload_ts = data.db.get_last_data_upload_ts()

43 self.data = data

44 self.password = password

45 self.premium: Optional[Premium] = None

46

47 def _can_sync_data_from_server(self, new_account: bool) -> SyncCheckResult:

48 """

49 Checks if the remote data can be pulled from the server.

50

51 Returns a SyncCheckResult denoting whether we can pull for sure,

52 whether we can't pull or whether the user should be asked. If the user

53 should be asked a message is also returned

54 """

55 log.debug('can sync data from server -- start')

56 if self.premium is None:

57 return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)

58

59 b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)

60

61 try:

62 metadata = self.premium.query_last_data_metadata()

63 except RemoteError as e:

64 log.debug('can sync data from server failed', error=str(e))

65 return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)

66

67 if new_account:

68 return SyncCheckResult(can_sync=CanSync.YES, message='', payload=None)

69

70 if not self.data.db.get_premium_sync():

71 # If it's not a new account and the db setting for premium syncing is off stop

72 return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)

73

74 log.debug(

75 'CAN_PULL',

76 ours=our_hash,

77 theirs=metadata.data_hash,

78 )

79 if our_hash == metadata.data_hash:

80 log.debug('sync from server stopped -- same hash')

81 # same hash -- no need to get anything

82 return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)

83

84 our_last_write_ts = self.data.db.get_last_write_ts()

85 data_bytes_size = len(base64.b64decode(b64_encoded_data))

86

87 local_more_recent = our_last_write_ts >= metadata.last_modify_ts

88

89 if local_more_recent:

90 log.debug('sync from server stopped -- local is newer')

91 return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)

92

93 # else remote is bigger

94 return SyncCheckResult(

95 can_sync=CanSync.ASK_USER,

96 message='Detected remote database with bigger size than the local one. ',

97 payload={

98 'local_size': data_bytes_size,

99 'remote_size': metadata.data_size,

100 'local_last_modified': our_last_write_ts,

101 'remote_last_modified': metadata.last_modify_ts,

102 },

103 )

104

105 def _sync_data_from_server_and_replace_local(self) -> Tuple[bool, str]:

106 """

107 Performs syncing of data from server and replaces local db

108

109 Returns true for success and False for error/failure

110

111 May raise:

112 - PremiumAuthenticationError due to an UnableToDecryptRemoteData

113 coming from decompress_and_decrypt_db. This happens when the given password

114 does not match the one on the saved DB.

115 """

116 if self.premium is None:

117 return False, 'Pulling failed. User does not have active premium.'

118

119 try:

120 result = self.premium.pull_data()

121 except RemoteError as e:

122 log.debug('sync from server -- pulling failed.', error=str(e))

123 return False, f'Pulling failed: {str(e)}'

124

125 if result['data'] is None:

126 log.debug('sync from server -- no data found.')

127 return False, 'No data found'

128

129 try:

130 self.data.decompress_and_decrypt_db(self.password, result['data'])

131 except UnableToDecryptRemoteData as e:

132 raise PremiumAuthenticationError(

133 'The given password can not unlock the database that was retrieved from '

134 'the server. Make sure to use the same password as when the account was created.',

135 ) from e

136

137 return True, ''

138

139 def maybe_upload_data_to_server(self, force_upload: bool = False) -> bool:

140 # if user has no premium do nothing

141 if self.premium is None:

142 return False

143

144 if not self.data.db.get_premium_sync() and not force_upload:

145 return False

146

147 # upload only once per hour

148 diff = ts_now() - self.last_data_upload_ts

149 if diff < 3600 and not force_upload:

150 return False

151

152 try:

153 metadata = self.premium.query_last_data_metadata()

154 except RemoteError as e:

155 log.debug('upload to server -- fetching metadata error', error=str(e))

156 return False

157 b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)

158

159 log.debug(

160 'CAN_PUSH',

161 ours=our_hash,

162 theirs=metadata.data_hash,

163 )

164 if our_hash == metadata.data_hash and not force_upload:

165 log.debug('upload to server stopped -- same hash')

166 # same hash -- no need to upload anything

167 return False

168

169 our_last_write_ts = self.data.db.get_last_write_ts()

170 if our_last_write_ts <= metadata.last_modify_ts and not force_upload:

171 # Server's DB was modified after our local DB

172 log.debug(

173 f'upload to server stopped -- remote db({metadata.last_modify_ts}) '

174 f'more recent than local({our_last_write_ts})',

175 )

176 return False

177

178 data_bytes_size = len(base64.b64decode(b64_encoded_data))

179 if data_bytes_size < metadata.data_size and not force_upload:

180 # Let's be conservative.

181 # TODO: Here perhaps prompt user in the future

182 log.debug(

183 f'upload to server stopped -- remote db({metadata.data_size}) '

184 f'bigger than local({data_bytes_size})',

185 )

186 return False

187

188 try:

189 self.premium.upload_data(

190 data_blob=b64_encoded_data,

191 our_hash=our_hash,

192 last_modify_ts=our_last_write_ts,

193 compression_type='zlib',

194 )

195 except RemoteError as e:

196 log.debug('upload to server -- upload error', error=str(e))

197 return False

198

199 # update the last data upload value

200 self.last_data_upload_ts = ts_now()

201 self.data.db.update_last_data_upload_ts(self.last_data_upload_ts)

202 log.debug('upload to server -- success')

203 return True

204

205 def sync_data(self, action: Literal['upload', 'download']) -> Tuple[bool, str]:

206 msg = ''

207

208 if action == 'upload':

209 success = self.maybe_upload_data_to_server(force_upload=True)

210

211 if not success:

212 msg = 'Upload failed'

213 return success, msg

214

215 return self._sync_data_from_server_and_replace_local()

216

217 def _sync_if_allowed(

218 self,

219 sync_approval: Literal['yes', 'no', 'unknown'],

220 result: SyncCheckResult,

221 ) -> None:

222 if result.can_sync == CanSync.ASK_USER:

223 if sync_approval == 'unknown':

224 log.info('Remote DB is possibly newer. Ask user.')

225 raise RotkehlchenPermissionError(result.message, result.payload)

226

227 if sync_approval == 'yes':

228 log.info('User approved data sync from server')

229 # this may raise due to password

230 self._sync_data_from_server_and_replace_local()

231

232 else:

233 log.debug('Could sync data from server but user refused')

234 elif result.can_sync == CanSync.YES:

235 log.info('User approved data sync from server')

236 self._sync_data_from_server_and_replace_local() # this may raise due to password

237

238 def try_premium_at_start(

239 self,

240 given_premium_credentials: Optional[PremiumCredentials],

241 username: str,

242 create_new: bool,

243 sync_approval: Literal['yes', 'no', 'unknown'],

244 sync_database: bool,

245 ) -> Optional[Premium]:

246 """

247 Check if new user provided api pair or we already got one in the DB

248

249 Returns the created premium if user's premium credentials were fine.

250

251 If not it will raise PremiumAuthenticationError.

252

253 If no credentials were given it returns None

254 """

255

256 if given_premium_credentials is not None:

257 assert create_new, 'We should never get here for an already existing account'

258

259 try:

260 self.premium = premium_create_and_verify(given_premium_credentials)

261 except PremiumAuthenticationError as e:

262 log.error('Given API key is invalid')

263 # At this point we are at a new user trying to create an account with

264 # premium API keys and we failed. But a directory was created. Remove it.

265 # But create a backup of it in case something went really wrong

266 # and the directory contained data we did not want to lose

267 shutil.move(

268 self.data.user_data_dir, # type: ignore

269 self.data.data_directory / f'auto_backup_{username}_{ts_now()}',

270 )

271 raise PremiumAuthenticationError(

272 'Could not verify keys for the new account. '

273 '{}'.format(str(e)),

274 ) from e

275

276 # else, if we got premium data in the DB initialize it and try to sync with the server

277 db_credentials = self.data.db.get_rotkehlchen_premium()

278 if db_credentials:

279 assert not create_new, 'We should never get here for a new account'

280 try:

281 self.premium = premium_create_and_verify(db_credentials)

282 except PremiumAuthenticationError as e:

283 message = (

284 f'Could not authenticate with the rotkehlchen server with '

285 f'the API keys found in the Database. Error: {str(e)}'

286 )

287 log.error(message)

288 raise PremiumAuthenticationError(message) from e

289

290 if self.premium is None:

291 return None

292

293 result = self._can_sync_data_from_server(new_account=create_new)

294 if create_new:

295 # if this is a new account, make sure the api keys are properly stored

296 # in the DB

297 if sync_database:

298 self._sync_if_allowed(sync_approval, result)

299 self.data.db.set_rotkehlchen_premium(self.premium.credentials)

300 else:

301 self._sync_if_allowed(sync_approval, result)

302

303 # Success, return premium

304 return self.premium

305

[end of rotkehlchen/premium/sync.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/rotkehlchen/premium/sync.py b/rotkehlchen/premium/sync.py

--- a/rotkehlchen/premium/sync.py

+++ b/rotkehlchen/premium/sync.py

@@ -235,6 +235,23 @@

log.info('User approved data sync from server')

self._sync_data_from_server_and_replace_local() # this may raise due to password

+ def _abort_new_syncing_premium_user(

+ self,

+ username: str,

+ original_exception: PremiumAuthenticationError,

+ ) -> None:

+ """At this point we are at a new user trying to create an account with

+ premium API keys and we failed. But a directory was created. Remove it.

+ But create a backup of it in case something went really wrong

+ and the directory contained data we did not want to lose"""

+ shutil.move(

+ self.data.user_data_dir, # type: ignore

+ self.data.data_directory / f'auto_backup_{username}_{ts_now()}',

+ )

+ raise PremiumAuthenticationError(

+ f'Could not verify keys for the new account. {str(original_exception)}', # noqa: E501

+ ) from original_exception

+

def try_premium_at_start(

self,

given_premium_credentials: Optional[PremiumCredentials],

@@ -259,19 +276,7 @@

try:

self.premium = premium_create_and_verify(given_premium_credentials)

except PremiumAuthenticationError as e:

- log.error('Given API key is invalid')

- # At this point we are at a new user trying to create an account with

- # premium API keys and we failed. But a directory was created. Remove it.

- # But create a backup of it in case something went really wrong

- # and the directory contained data we did not want to lose

- shutil.move(

- self.data.user_data_dir, # type: ignore

- self.data.data_directory / f'auto_backup_{username}_{ts_now()}',

- )

- raise PremiumAuthenticationError(

- 'Could not verify keys for the new account. '

- '{}'.format(str(e)),

- ) from e

+ self._abort_new_syncing_premium_user(username=username, original_exception=e)

# else, if we got premium data in the DB initialize it and try to sync with the server

db_credentials = self.data.db.get_rotkehlchen_premium()

@@ -295,7 +300,11 @@

# if this is a new account, make sure the api keys are properly stored

# in the DB

if sync_database:

- self._sync_if_allowed(sync_approval, result)

+ try:

+ self._sync_if_allowed(sync_approval, result)

+ except PremiumAuthenticationError as e:

+ self._abort_new_syncing_premium_user(username=username, original_exception=e)

+

self.data.db.set_rotkehlchen_premium(self.premium.credentials)

else:

self._sync_if_allowed(sync_approval, result)

|

{"golden_diff": "diff --git a/rotkehlchen/premium/sync.py b/rotkehlchen/premium/sync.py\n--- a/rotkehlchen/premium/sync.py\n+++ b/rotkehlchen/premium/sync.py\n@@ -235,6 +235,23 @@\n log.info('User approved data sync from server')\n self._sync_data_from_server_and_replace_local() # this may raise due to password\n \n+ def _abort_new_syncing_premium_user(\n+ self,\n+ username: str,\n+ original_exception: PremiumAuthenticationError,\n+ ) -> None:\n+ \"\"\"At this point we are at a new user trying to create an account with\n+ premium API keys and we failed. But a directory was created. Remove it.\n+ But create a backup of it in case something went really wrong\n+ and the directory contained data we did not want to lose\"\"\"\n+ shutil.move(\n+ self.data.user_data_dir, # type: ignore\n+ self.data.data_directory / f'auto_backup_{username}_{ts_now()}',\n+ )\n+ raise PremiumAuthenticationError(\n+ f'Could not verify keys for the new account. {str(original_exception)}', # noqa: E501\n+ ) from original_exception\n+\n def try_premium_at_start(\n self,\n given_premium_credentials: Optional[PremiumCredentials],\n@@ -259,19 +276,7 @@\n try:\n self.premium = premium_create_and_verify(given_premium_credentials)\n except PremiumAuthenticationError as e:\n- log.error('Given API key is invalid')\n- # At this point we are at a new user trying to create an account with\n- # premium API keys and we failed. But a directory was created. Remove it.\n- # But create a backup of it in case something went really wrong\n- # and the directory contained data we did not want to lose\n- shutil.move(\n- self.data.user_data_dir, # type: ignore\n- self.data.data_directory / f'auto_backup_{username}_{ts_now()}',\n- )\n- raise PremiumAuthenticationError(\n- 'Could not verify keys for the new account. 
'\n- '{}'.format(str(e)),\n- ) from e\n+ self._abort_new_syncing_premium_user(username=username, original_exception=e)\n \n # else, if we got premium data in the DB initialize it and try to sync with the server\n db_credentials = self.data.db.get_rotkehlchen_premium()\n@@ -295,7 +300,11 @@\n # if this is a new account, make sure the api keys are properly stored\n # in the DB\n if sync_database:\n- self._sync_if_allowed(sync_approval, result)\n+ try:\n+ self._sync_if_allowed(sync_approval, result)\n+ except PremiumAuthenticationError as e:\n+ self._abort_new_syncing_premium_user(username=username, original_exception=e)\n+\n self.data.db.set_rotkehlchen_premium(self.premium.credentials)\n else:\n self._sync_if_allowed(sync_approval, result)\n", "issue": "User already exists during setup (DAppNode)\n## Problem Definition\r\n\r\nUsing DAppNode Package:\r\n\r\n1. Install Rotki\r\n2. Setup Rotki using Premium API Key with restore sync'd database option\r\n3. Enter incorrect password\r\n4. Get error that password could not decrypt database\r\n5. Go back\r\n6. Enter correct password\r\n7. \"User $USERNAME already exists. 
User data dir: /data/$USERNAME\"\r\n\r\nCan't proceed...have to delete and re-create Rotki package\r\n\r\n## Logs\r\n\r\nNone\r\n\r\n### System Description\r\n\r\nOperating system: DAppNode\r\nRotki version: DAppNode Package `0.1.9 (v1.23.1 upstream)`\r\n\n", "before_files": [{"content": "import base64\nimport logging\nimport shutil\nfrom enum import Enum\nfrom typing import Any, Dict, NamedTuple, Optional, Tuple\n\nfrom typing_extensions import Literal\n\nfrom rotkehlchen.data_handler import DataHandler\nfrom rotkehlchen.errors import (\n PremiumAuthenticationError,\n RemoteError,\n RotkehlchenPermissionError,\n UnableToDecryptRemoteData,\n)\nfrom rotkehlchen.logging import RotkehlchenLogsAdapter\nfrom rotkehlchen.premium.premium import Premium, PremiumCredentials, premium_create_and_verify\nfrom rotkehlchen.utils.misc import ts_now\n\nlogger = logging.getLogger(__name__)\nlog = RotkehlchenLogsAdapter(logger)\n\n\nclass CanSync(Enum):\n YES = 0\n NO = 1\n ASK_USER = 2\n\n\nclass SyncCheckResult(NamedTuple):\n # The result of the sync check\n can_sync: CanSync\n # If result is ASK_USER, what should the message be?\n message: str\n payload: Optional[Dict[str, Any]]\n\n\nclass PremiumSyncManager():\n\n def __init__(self, data: DataHandler, password: str) -> None:\n # Initialize this with the value saved in the DB\n self.last_data_upload_ts = data.db.get_last_data_upload_ts()\n self.data = data\n self.password = password\n self.premium: Optional[Premium] = None\n\n def _can_sync_data_from_server(self, new_account: bool) -> SyncCheckResult:\n \"\"\"\n Checks if the remote data can be pulled from the server.\n\n Returns a SyncCheckResult denoting whether we can pull for sure,\n whether we can't pull or whether the user should be asked. 
If the user\n should be asked a message is also returned\n \"\"\"\n log.debug('can sync data from server -- start')\n if self.premium is None:\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)\n\n try:\n metadata = self.premium.query_last_data_metadata()\n except RemoteError as e:\n log.debug('can sync data from server failed', error=str(e))\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n if new_account:\n return SyncCheckResult(can_sync=CanSync.YES, message='', payload=None)\n\n if not self.data.db.get_premium_sync():\n # If it's not a new account and the db setting for premium syncing is off stop\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n log.debug(\n 'CAN_PULL',\n ours=our_hash,\n theirs=metadata.data_hash,\n )\n if our_hash == metadata.data_hash:\n log.debug('sync from server stopped -- same hash')\n # same hash -- no need to get anything\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n our_last_write_ts = self.data.db.get_last_write_ts()\n data_bytes_size = len(base64.b64decode(b64_encoded_data))\n\n local_more_recent = our_last_write_ts >= metadata.last_modify_ts\n\n if local_more_recent:\n log.debug('sync from server stopped -- local is newer')\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n # else remote is bigger\n return SyncCheckResult(\n can_sync=CanSync.ASK_USER,\n message='Detected remote database with bigger size than the local one. 
',\n payload={\n 'local_size': data_bytes_size,\n 'remote_size': metadata.data_size,\n 'local_last_modified': our_last_write_ts,\n 'remote_last_modified': metadata.last_modify_ts,\n },\n )\n\n def _sync_data_from_server_and_replace_local(self) -> Tuple[bool, str]:\n \"\"\"\n Performs syncing of data from server and replaces local db\n\n Returns true for success and False for error/failure\n\n May raise:\n - PremiumAuthenticationError due to an UnableToDecryptRemoteData\n coming from decompress_and_decrypt_db. This happens when the given password\n does not match the one on the saved DB.\n \"\"\"\n if self.premium is None:\n return False, 'Pulling failed. User does not have active premium.'\n\n try:\n result = self.premium.pull_data()\n except RemoteError as e:\n log.debug('sync from server -- pulling failed.', error=str(e))\n return False, f'Pulling failed: {str(e)}'\n\n if result['data'] is None:\n log.debug('sync from server -- no data found.')\n return False, 'No data found'\n\n try:\n self.data.decompress_and_decrypt_db(self.password, result['data'])\n except UnableToDecryptRemoteData as e:\n raise PremiumAuthenticationError(\n 'The given password can not unlock the database that was retrieved from '\n 'the server. 
Make sure to use the same password as when the account was created.',\n ) from e\n\n return True, ''\n\n def maybe_upload_data_to_server(self, force_upload: bool = False) -> bool:\n # if user has no premium do nothing\n if self.premium is None:\n return False\n\n if not self.data.db.get_premium_sync() and not force_upload:\n return False\n\n # upload only once per hour\n diff = ts_now() - self.last_data_upload_ts\n if diff < 3600 and not force_upload:\n return False\n\n try:\n metadata = self.premium.query_last_data_metadata()\n except RemoteError as e:\n log.debug('upload to server -- fetching metadata error', error=str(e))\n return False\n b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)\n\n log.debug(\n 'CAN_PUSH',\n ours=our_hash,\n theirs=metadata.data_hash,\n )\n if our_hash == metadata.data_hash and not force_upload:\n log.debug('upload to server stopped -- same hash')\n # same hash -- no need to upload anything\n return False\n\n our_last_write_ts = self.data.db.get_last_write_ts()\n if our_last_write_ts <= metadata.last_modify_ts and not force_upload:\n # Server's DB was modified after our local DB\n log.debug(\n f'upload to server stopped -- remote db({metadata.last_modify_ts}) '\n f'more recent than local({our_last_write_ts})',\n )\n return False\n\n data_bytes_size = len(base64.b64decode(b64_encoded_data))\n if data_bytes_size < metadata.data_size and not force_upload:\n # Let's be conservative.\n # TODO: Here perhaps prompt user in the future\n log.debug(\n f'upload to server stopped -- remote db({metadata.data_size}) '\n f'bigger than local({data_bytes_size})',\n )\n return False\n\n try:\n self.premium.upload_data(\n data_blob=b64_encoded_data,\n our_hash=our_hash,\n last_modify_ts=our_last_write_ts,\n compression_type='zlib',\n )\n except RemoteError as e:\n log.debug('upload to server -- upload error', error=str(e))\n return False\n\n # update the last data upload value\n self.last_data_upload_ts = ts_now()\n 
self.data.db.update_last_data_upload_ts(self.last_data_upload_ts)\n log.debug('upload to server -- success')\n return True\n\n def sync_data(self, action: Literal['upload', 'download']) -> Tuple[bool, str]:\n msg = ''\n\n if action == 'upload':\n success = self.maybe_upload_data_to_server(force_upload=True)\n\n if not success:\n msg = 'Upload failed'\n return success, msg\n\n return self._sync_data_from_server_and_replace_local()\n\n def _sync_if_allowed(\n self,\n sync_approval: Literal['yes', 'no', 'unknown'],\n result: SyncCheckResult,\n ) -> None:\n if result.can_sync == CanSync.ASK_USER:\n if sync_approval == 'unknown':\n log.info('Remote DB is possibly newer. Ask user.')\n raise RotkehlchenPermissionError(result.message, result.payload)\n\n if sync_approval == 'yes':\n log.info('User approved data sync from server')\n # this may raise due to password\n self._sync_data_from_server_and_replace_local()\n\n else:\n log.debug('Could sync data from server but user refused')\n elif result.can_sync == CanSync.YES:\n log.info('User approved data sync from server')\n self._sync_data_from_server_and_replace_local() # this may raise due to password\n\n def try_premium_at_start(\n self,\n given_premium_credentials: Optional[PremiumCredentials],\n username: str,\n create_new: bool,\n sync_approval: Literal['yes', 'no', 'unknown'],\n sync_database: bool,\n ) -> Optional[Premium]:\n \"\"\"\n Check if new user provided api pair or we already got one in the DB\n\n Returns the created premium if user's premium credentials were fine.\n\n If not it will raise PremiumAuthenticationError.\n\n If no credentials were given it returns None\n \"\"\"\n\n if given_premium_credentials is not None:\n assert create_new, 'We should never get here for an already existing account'\n\n try:\n self.premium = premium_create_and_verify(given_premium_credentials)\n except PremiumAuthenticationError as e:\n log.error('Given API key is invalid')\n # At this point we are at a new user trying to create 
an account with\n # premium API keys and we failed. But a directory was created. Remove it.\n # But create a backup of it in case something went really wrong\n # and the directory contained data we did not want to lose\n shutil.move(\n self.data.user_data_dir, # type: ignore\n self.data.data_directory / f'auto_backup_{username}_{ts_now()}',\n )\n raise PremiumAuthenticationError(\n 'Could not verify keys for the new account. '\n '{}'.format(str(e)),\n ) from e\n\n # else, if we got premium data in the DB initialize it and try to sync with the server\n db_credentials = self.data.db.get_rotkehlchen_premium()\n if db_credentials:\n assert not create_new, 'We should never get here for a new account'\n try:\n self.premium = premium_create_and_verify(db_credentials)\n except PremiumAuthenticationError as e:\n message = (\n f'Could not authenticate with the rotkehlchen server with '\n f'the API keys found in the Database. Error: {str(e)}'\n )\n log.error(message)\n raise PremiumAuthenticationError(message) from e\n\n if self.premium is None:\n return None\n\n result = self._can_sync_data_from_server(new_account=create_new)\n if create_new:\n # if this is a new account, make sure the api keys are properly stored\n # in the DB\n if sync_database:\n self._sync_if_allowed(sync_approval, result)\n self.data.db.set_rotkehlchen_premium(self.premium.credentials)\n else:\n self._sync_if_allowed(sync_approval, result)\n\n # Success, return premium\n return self.premium\n", "path": "rotkehlchen/premium/sync.py"}]}

| 3,952 | 687 |

gh_patches_debug_18243

|

rasdani/github-patches

|

git_diff

|

plone__Products.CMFPlone-1465

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

CSS bundles generation breaks background images relative urls

This is a bug related to PR #1300.

</issue>

<code>

[start of Products/CMFPlone/resources/browser/combine.py]

1 from zExceptions import NotFound

2 from Acquisition import aq_base

3 from datetime import datetime

4 from plone.registry.interfaces import IRegistry

5 from plone.resource.file import FilesystemFile

6 from plone.resource.interfaces import IResourceDirectory

7 from Products.CMFPlone.interfaces import IBundleRegistry

8 from Products.CMFPlone.interfaces.resources import (

9 OVERRIDE_RESOURCE_DIRECTORY_NAME,

10 )

11 from StringIO import StringIO

12 from zope.component import getUtility

13 from zope.component import queryUtility

14

15 PRODUCTION_RESOURCE_DIRECTORY = "production"

16

17

18 def get_production_resource_directory():

19 persistent_directory = queryUtility(IResourceDirectory, name="persistent")

20 if persistent_directory is None:

21 return ''

22 container = persistent_directory[OVERRIDE_RESOURCE_DIRECTORY_NAME]

23 try:

24 production_folder = container[PRODUCTION_RESOURCE_DIRECTORY]

25 except NotFound:

26 return "%s/++unique++1" % PRODUCTION_RESOURCE_DIRECTORY

27 timestamp = production_folder.readFile('timestamp.txt')

28 return "%s/++unique++%s" % (

29 PRODUCTION_RESOURCE_DIRECTORY, timestamp)

30

31

32 def get_resource(context, path):

33 resource = context.unrestrictedTraverse(path)

34 if isinstance(resource, FilesystemFile):

35 (directory, sep, filename) = path.rpartition('/')

36 return context.unrestrictedTraverse(directory).readFile(filename)

37 else:

38 if hasattr(aq_base(resource), 'GET'):

39 # for FileResource

40 return resource.GET()

41 else:

42 # any BrowserView

43 return resource()

44

45

46 def write_js(context, folder, meta_bundle):

47 registry = getUtility(IRegistry)

48 resources = []

49

50 # default resources

51 if meta_bundle == 'default' and registry.records.get(

52 'plone.resources/jquery.js'

53 ):

54 resources.append(get_resource(context,

55 registry.records['plone.resources/jquery.js'].value))

56 resources.append(get_resource(context,

57 registry.records['plone.resources.requirejs'].value))

58 resources.append(get_resource(context,

59 registry.records['plone.resources.configjs'].value))

60

61 # bundles

62 bundles = registry.collectionOfInterface(

63 IBundleRegistry, prefix="plone.bundles", check=False)

64 for bundle in bundles.values():

65 if bundle.merge_with == meta_bundle:

66 resources.append(get_resource(context, bundle.jscompilation))

67

68 fi = StringIO()

69 for script in resources:

70 fi.write(script + '\n')

71 folder.writeFile(meta_bundle + ".js", fi)

72

73

74 def write_css(context, folder, meta_bundle):

75 registry = getUtility(IRegistry)

76 resources = []

77

78 bundles = registry.collectionOfInterface(

79 IBundleRegistry, prefix="plone.bundles", check=False)

80 for bundle in bundles.values():

81 if bundle.merge_with == meta_bundle:

82 resources.append(get_resource(context, bundle.csscompilation))

83

84 fi = StringIO()

85 for script in resources:

86 fi.write(script + '\n')

87 folder.writeFile(meta_bundle + ".css", fi)

88

89

90 def combine_bundles(context):

91 persistent_directory = queryUtility(IResourceDirectory, name="persistent")

92 if persistent_directory is None:

93 return

94 if OVERRIDE_RESOURCE_DIRECTORY_NAME not in persistent_directory:

95 persistent_directory.makeDirectory(OVERRIDE_RESOURCE_DIRECTORY_NAME)

96 container = persistent_directory[OVERRIDE_RESOURCE_DIRECTORY_NAME]

97 if PRODUCTION_RESOURCE_DIRECTORY not in container:

98 container.makeDirectory(PRODUCTION_RESOURCE_DIRECTORY)

99 production_folder = container[PRODUCTION_RESOURCE_DIRECTORY]

100

101 # store timestamp

102 fi = StringIO()

103 fi.write(datetime.now().isoformat())

104 production_folder.writeFile("timestamp.txt", fi)

105

106 # generate new combined bundles

107 write_js(context, production_folder, 'default')

108 write_js(context, production_folder, 'logged-in')

109 write_css(context, production_folder, 'default')

110 write_css(context, production_folder, 'logged-in')

111

[end of Products/CMFPlone/resources/browser/combine.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/Products/CMFPlone/resources/browser/combine.py b/Products/CMFPlone/resources/browser/combine.py

--- a/Products/CMFPlone/resources/browser/combine.py

+++ b/Products/CMFPlone/resources/browser/combine.py

@@ -1,3 +1,4 @@

+import re

from zExceptions import NotFound

from Acquisition import aq_base

from datetime import datetime

@@ -79,7 +80,15 @@

IBundleRegistry, prefix="plone.bundles", check=False)

for bundle in bundles.values():

if bundle.merge_with == meta_bundle:

- resources.append(get_resource(context, bundle.csscompilation))

+ css = get_resource(context, bundle.csscompilation)

+ # Preserve relative urls:

+ # we prefix with '../'' any url not starting with '/'

+ # or http: or data:

+ css = re.sub(

+ r"""(url\(['"]?(?!['"]?([a-z]+:|\/)))""",

+ r'\1../',

+ css)

+ resources.append(css)

fi = StringIO()

for script in resources:

|

{"golden_diff": "diff --git a/Products/CMFPlone/resources/browser/combine.py b/Products/CMFPlone/resources/browser/combine.py\n--- a/Products/CMFPlone/resources/browser/combine.py\n+++ b/Products/CMFPlone/resources/browser/combine.py\n@@ -1,3 +1,4 @@\n+import re\n from zExceptions import NotFound\n from Acquisition import aq_base\n from datetime import datetime\n@@ -79,7 +80,15 @@\n IBundleRegistry, prefix=\"plone.bundles\", check=False)\n for bundle in bundles.values():\n if bundle.merge_with == meta_bundle:\n- resources.append(get_resource(context, bundle.csscompilation))\n+ css = get_resource(context, bundle.csscompilation)\n+ # Preserve relative urls:\n+ # we prefix with '../'' any url not starting with '/'\n+ # or http: or data:\n+ css = re.sub(\n+ r\"\"\"(url\\(['\"]?(?!['\"]?([a-z]+:|\\/)))\"\"\",\n+ r'\\1../',\n+ css)\n+ resources.append(css)\n \n fi = StringIO()\n for script in resources:\n", "issue": "CSS bundles generation breaks background images relative urls\nThis is a bug related to PR #1300.\n\n", "before_files": [{"content": "from zExceptions import NotFound\nfrom Acquisition import aq_base\nfrom datetime import datetime\nfrom plone.registry.interfaces import IRegistry\nfrom plone.resource.file import FilesystemFile\nfrom plone.resource.interfaces import IResourceDirectory\nfrom Products.CMFPlone.interfaces import IBundleRegistry\nfrom Products.CMFPlone.interfaces.resources import (\n OVERRIDE_RESOURCE_DIRECTORY_NAME,\n)\nfrom StringIO import StringIO\nfrom zope.component import getUtility\nfrom zope.component import queryUtility\n\nPRODUCTION_RESOURCE_DIRECTORY = \"production\"\n\n\ndef get_production_resource_directory():\n persistent_directory = queryUtility(IResourceDirectory, name=\"persistent\")\n if persistent_directory is None:\n return ''\n container = persistent_directory[OVERRIDE_RESOURCE_DIRECTORY_NAME]\n try:\n production_folder = container[PRODUCTION_RESOURCE_DIRECTORY]\n except NotFound:\n return \"%s/++unique++1\" % 
PRODUCTION_RESOURCE_DIRECTORY\n timestamp = production_folder.readFile('timestamp.txt')\n return \"%s/++unique++%s\" % (\n PRODUCTION_RESOURCE_DIRECTORY, timestamp)\n\n\ndef get_resource(context, path):\n resource = context.unrestrictedTraverse(path)\n if isinstance(resource, FilesystemFile):\n (directory, sep, filename) = path.rpartition('/')\n return context.unrestrictedTraverse(directory).readFile(filename)\n else:\n if hasattr(aq_base(resource), 'GET'):\n # for FileResource\n return resource.GET()\n else:\n # any BrowserView\n return resource()\n\n\ndef write_js(context, folder, meta_bundle):\n registry = getUtility(IRegistry)\n resources = []\n\n # default resources\n if meta_bundle == 'default' and registry.records.get(\n 'plone.resources/jquery.js'\n ):\n resources.append(get_resource(context,\n registry.records['plone.resources/jquery.js'].value))\n resources.append(get_resource(context,\n registry.records['plone.resources.requirejs'].value))\n resources.append(get_resource(context,\n registry.records['plone.resources.configjs'].value))\n\n # bundles\n bundles = registry.collectionOfInterface(\n IBundleRegistry, prefix=\"plone.bundles\", check=False)\n for bundle in bundles.values():\n if bundle.merge_with == meta_bundle:\n resources.append(get_resource(context, bundle.jscompilation))\n\n fi = StringIO()\n for script in resources:\n fi.write(script + '\\n')\n folder.writeFile(meta_bundle + \".js\", fi)\n\n\ndef write_css(context, folder, meta_bundle):\n registry = getUtility(IRegistry)\n resources = []\n\n bundles = registry.collectionOfInterface(\n IBundleRegistry, prefix=\"plone.bundles\", check=False)\n for bundle in bundles.values():\n if bundle.merge_with == meta_bundle:\n resources.append(get_resource(context, bundle.csscompilation))\n\n fi = StringIO()\n for script in resources:\n fi.write(script + '\\n')\n folder.writeFile(meta_bundle + \".css\", fi)\n\n\ndef combine_bundles(context):\n persistent_directory = queryUtility(IResourceDirectory, 
name=\"persistent\")\n if persistent_directory is None:\n return\n if OVERRIDE_RESOURCE_DIRECTORY_NAME not in persistent_directory:\n persistent_directory.makeDirectory(OVERRIDE_RESOURCE_DIRECTORY_NAME)\n container = persistent_directory[OVERRIDE_RESOURCE_DIRECTORY_NAME]\n if PRODUCTION_RESOURCE_DIRECTORY not in container:\n container.makeDirectory(PRODUCTION_RESOURCE_DIRECTORY)\n production_folder = container[PRODUCTION_RESOURCE_DIRECTORY]\n\n # store timestamp\n fi = StringIO()\n fi.write(datetime.now().isoformat())\n production_folder.writeFile(\"timestamp.txt\", fi)\n\n # generate new combined bundles\n write_js(context, production_folder, 'default')\n write_js(context, production_folder, 'logged-in')\n write_css(context, production_folder, 'default')\n write_css(context, production_folder, 'logged-in')\n", "path": "Products/CMFPlone/resources/browser/combine.py"}]}

| 1,585 | 251 |

gh_patches_debug_2033

|

rasdani/github-patches

|

git_diff

|

googleapis__python-bigquery-802

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

ChunkedEncodingError is not retried when fetching data with list_rows()

Original issue: https://github.com/googleapis/python-bigquery-storage/issues/242

A user reported that they saw an error in production when fetching table data with `Client.list_rows()`. That method uses the [default retry object](https://github.com/googleapis/python-bigquery/blob/7e0e2bafc4c3f98a4246100f504fd78a01a28e7d/google/cloud/bigquery/retry.py#L49), which currently does not consider `requests.exceptions.ChunkedEncodingError` retryable.

(it does retry `requests.exceptions.ConnectionError`, but `ChunkedEncodingError` is not a subclass of that.

</issue>

<code>

[start of google/cloud/bigquery/retry.py]

1 # Copyright 2018 Google LLC

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 from google.api_core import exceptions

16 from google.api_core import retry

17 from google.auth import exceptions as auth_exceptions

18 import requests.exceptions

19

20

21 _RETRYABLE_REASONS = frozenset(

22 ["rateLimitExceeded", "backendError", "internalError", "badGateway"]

23 )

24

25 _UNSTRUCTURED_RETRYABLE_TYPES = (

26 ConnectionError,

27 exceptions.TooManyRequests,

28 exceptions.InternalServerError,

29 exceptions.BadGateway,

30 requests.exceptions.ConnectionError,

31 auth_exceptions.TransportError,

32 )

33

34

35 def _should_retry(exc):

36 """Predicate for determining when to retry.

37

38 We retry if and only if the 'reason' is 'backendError'

39 or 'rateLimitExceeded'.

40 """

41 if not hasattr(exc, "errors") or len(exc.errors) == 0:

42 # Check for unstructured error returns, e.g. from GFE

43 return isinstance(exc, _UNSTRUCTURED_RETRYABLE_TYPES)

44

45 reason = exc.errors[0]["reason"]

46 return reason in _RETRYABLE_REASONS

47

48

49 DEFAULT_RETRY = retry.Retry(predicate=_should_retry)

50 """The default retry object.

51

52 Any method with a ``retry`` parameter will be retried automatically,

53 with reasonable defaults. To disable retry, pass ``retry=None``.

54 To modify the default retry behavior, call a ``with_XXX`` method

55 on ``DEFAULT_RETRY``. For example, to change the deadline to 30 seconds,

56 pass ``retry=bigquery.DEFAULT_RETRY.with_deadline(30)``.

57 """

58

[end of google/cloud/bigquery/retry.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/google/cloud/bigquery/retry.py b/google/cloud/bigquery/retry.py
--- a/google/cloud/bigquery/retry.py
+++ b/google/cloud/bigquery/retry.py
@@ -27,6 +27,7 @@
     exceptions.TooManyRequests,
     exceptions.InternalServerError,
     exceptions.BadGateway,
+    requests.exceptions.ChunkedEncodingError,
     requests.exceptions.ConnectionError,
     auth_exceptions.TransportError,
 )

|

{"golden_diff": "diff --git a/google/cloud/bigquery/retry.py b/google/cloud/bigquery/retry.py\n--- a/google/cloud/bigquery/retry.py\n+++ b/google/cloud/bigquery/retry.py\n@@ -27,6 +27,7 @@\n exceptions.TooManyRequests,\n exceptions.InternalServerError,\n exceptions.BadGateway,\n+ requests.exceptions.ChunkedEncodingError,\n requests.exceptions.ConnectionError,\n auth_exceptions.TransportError,\n )\n", "issue": "ChunkedEncodingError is not retried when fetching data with list_rows()\nOriginal issue: https://github.com/googleapis/python-bigquery-storage/issues/242\r\n\r\nA user reported that they saw an error in production when fetching table data with `Client.list_rows()`. That method uses the [default retry object](https://github.com/googleapis/python-bigquery/blob/7e0e2bafc4c3f98a4246100f504fd78a01a28e7d/google/cloud/bigquery/retry.py#L49), which currently does not consider `requests.exceptions.ChunkedEncodingError` retryable.\r\n\r\n(it does retry `requests.exceptions.ConnectionError`, but `ChunkedEncodingError` is not a subclass of that.\n", "before_files": [{"content": "# Copyright 2018 Google LLC\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom google.api_core import exceptions\nfrom google.api_core import retry\nfrom google.auth import exceptions as auth_exceptions\nimport requests.exceptions\n\n\n_RETRYABLE_REASONS = frozenset(\n [\"rateLimitExceeded\", \"backendError\", \"internalError\", \"badGateway\"]\n)\n\n_UNSTRUCTURED_RETRYABLE_TYPES = (\n 
ConnectionError,\n exceptions.TooManyRequests,\n exceptions.InternalServerError,\n exceptions.BadGateway,\n requests.exceptions.ConnectionError,\n auth_exceptions.TransportError,\n)\n\n\ndef _should_retry(exc):\n \"\"\"Predicate for determining when to retry.\n\n We retry if and only if the 'reason' is 'backendError'\n or 'rateLimitExceeded'.\n \"\"\"\n if not hasattr(exc, \"errors\") or len(exc.errors) == 0:\n # Check for unstructured error returns, e.g. from GFE\n return isinstance(exc, _UNSTRUCTURED_RETRYABLE_TYPES)\n\n reason = exc.errors[0][\"reason\"]\n return reason in _RETRYABLE_REASONS\n\n\nDEFAULT_RETRY = retry.Retry(predicate=_should_retry)\n\"\"\"The default retry object.\n\nAny method with a ``retry`` parameter will be retried automatically,\nwith reasonable defaults. To disable retry, pass ``retry=None``.\nTo modify the default retry behavior, call a ``with_XXX`` method\non ``DEFAULT_RETRY``. For example, to change the deadline to 30 seconds,\npass ``retry=bigquery.DEFAULT_RETRY.with_deadline(30)``.\n\"\"\"\n", "path": "google/cloud/bigquery/retry.py"}]}

| 1,261 | 92 |

gh_patches_debug_1899

|

rasdani/github-patches

|

git_diff

|

translate__translate-3683

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

setcontext is not working correctly for mounit
Calling setcontext on mounit does currently nothing as it inherits code from base class:
``` python
def setcontext(self, context):
    """Set the message context"""
    pass
```
I'd expect it to properly update context as it does for other storages.

</issue>

<code>

[start of translate/storage/mo.py]

1 # -*- coding: utf-8 -*-
2 #
3 # Copyright 2007 Zuza Software Foundation
4 #
5 # the function "serialize" was derived from Python v2.4
6 # (Tools/i18n/msgfmt.py - function "generate"):
7 # Written by Martin v. Löwis
8 # Copyright (c) 2001, 2002, 2003, 2004, 2005, 2006 Python Software Foundation.
9 # All rights reserved.
10 # original license: Python Software Foundation (version 2)
11 #
12 #
13 # This file is part of translate.
14 #
15 # translate is free software; you can redistribute it and/or modify
16 # it under the terms of the GNU General Public License as published by
17 # the Free Software Foundation; either version 2 of the License, or
18 # (at your option) any later version.
19 #
20 # translate is distributed in the hope that it will be useful,
21 # but WITHOUT ANY WARRANTY; without even the implied warranty of
22 # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
23 # GNU General Public License for more details.
24 #
25 # You should have received a copy of the GNU General Public License
26 # along with this program; if not, see <http://www.gnu.org/licenses/>.
27 #
28
29 """Module for parsing Gettext .mo files for translation.
30
31 The coding of .mo files was produced from `Gettext documentation
32 <http://www.gnu.org/software/gettext/manual/gettext.html#MO-Files>`_,
33 Pythons msgfmt.py and by observing and testing existing .mo files in the wild.
34
35 The hash algorithm is implemented for MO files, this should result in
36 faster access of the MO file. The hash is optional for Gettext
37 and is not needed for reading or writing MO files, in this implementation
38 it is always on and does produce sometimes different results to Gettext
39 in very small files.
40 """
41
42 import array
43 import re
44 import six
45 import struct
46
47 from translate.misc.multistring import multistring
48 from translate.storage import base, poheader
49
50
51 MO_MAGIC_NUMBER = 0x950412de
52
53
54 def mounpack(filename='messages.mo'):
55     """Helper to unpack Gettext MO files into a Python string"""
56     with open(filename, 'rb') as fh:
57         s = fh.read()
58         print("\\x%02x" * len(s) % tuple(map(ord, s)))
59
60
61 def my_swap4(result):
62     c0 = (result >> 0) & 0xff
63     c1 = (result >> 8) & 0xff
64     c2 = (result >> 16) & 0xff
65     c3 = (result >> 24) & 0xff
66
67     return (c0 << 24) | (c1 << 16) | (c2 << 8) | c3
68
69
70 def hashpjw(str_param):
71     HASHWORDBITS = 32
72     hval = 0
73     g = None
74     s = str_param
75     for s in str_param:
76         hval = hval << 4
77         hval += ord(s) if six.PY2 else s
78         g = hval & 0xf << (HASHWORDBITS - 4)
79         if (g != 0):
80             hval = hval ^ g >> (HASHWORDBITS - 8)
81             hval = hval ^ g
82     return hval
83
84
85 def get_next_prime_number(start):
86     # find the smallest prime number that is greater or equal "start"
87
88     def is_prime(num):
89         # special small numbers
90         if (num < 2) or (num == 4):
91             return False
92         if (num == 2) or (num == 3):
93             return True
94         # check for numbers > 4
95         for divider in range(2, num // 2):
96             if num % divider == 0:
97                 return False
98         return True
99
100     candidate = start
101     while not is_prime(candidate):
102         candidate += 1
103     return candidate
104
105
106 class mounit(base.TranslationUnit):
107     """A class representing a .mo translation message."""
108
109     def __init__(self, source=None, **kwargs):
110         self.msgctxt = []
111         self.msgidcomments = []
112         super(mounit, self).__init__(source)
113
114     def getcontext(self):
115         """Get the message context"""
116         # Still need to handle KDE comments
117         if self.msgctxt is None:
118             return None
119         return "".join(self.msgctxt)
120
121     def isheader(self):
122         """Is this a header entry?"""
123         return self.source == u""
124
125     def istranslatable(self):
126         """Is this message translateable?"""
127         return bool(self.source)
128
129
130 class mofile(poheader.poheader, base.TranslationStore):
131     """A class representing a .mo file."""
132
133     UnitClass = mounit
134     Name = "Gettext MO file"
135     Mimetypes = ["application/x-gettext-catalog", "application/x-mo"]
136     Extensions = ["mo", "gmo"]
137     _binary = True
138
139     def __init__(self, inputfile=None, **kwargs):
140         super(mofile, self).__init__(**kwargs)
141         self.filename = ''
142         if inputfile is not None:
143             self.parsestring(inputfile)